Abstract

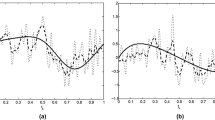

In this paper we study generalized semi-Markov high dimension regression models in continuous time, observed at fixed discrete time moments. The generalized semi-Markov process has dependent jumps and, therefore, it is an extension of the semi-Markov regression introduced in Barbu et al. (Stat Inference Stoch Process 22:187–231, 2019a). For such models we consider estimation problems in a nonparametric setting. To this end, we develop model selection procedures for which sharp non-asymptotic oracle inequalities for the robust risks are obtained. Moreover, we give constructive sufficient conditions which, through the obtained oracle inequalities, provide the adaptive robust efficiency property in the minimax sense. It should be noted also that, for these results, we use neither sparsity conditions nor knowledge of the parameter dimension in the model. As examples, regression models constructed through spherically symmetric noise impulses and truncated fractional Poisson processes are considered. Numerical Monte-Carlo simulations confirming the theoretical results are given in the supplementary materials.


1 Introduction

1.1 Motivations

In this paper we study the following linear regression model in continuous time

where the functions \((\mathbf{u}_{{j}})_{{1\le j\le q}}\) are known linearly independent 1-periodic \({{\mathbb {R}}}\rightarrow {{\mathbb {R}}}\) functions, the duration of observations T is an integer number and \((\xi _{{t}})_{{t\ge 0}}\) is an unobservable noise process defined in Sect. 2. The process (1) is observed only at the fixed time moments

where the observation frequency p is some fixed integer number. We consider the model (1) in the case when the parameter dimension is greater than the number of observations, i.e., \(q>n\). Such models are called big data or high dimension regression in continuous time (see, for example, Fujimori, 2019 for diffusion processes). The problem is to estimate the unknown parameters \((\beta _{{j}})_{{1\le j\le q}}\) on the basis of the observations (2). Usually for such problems one uses either the Lasso algorithm or the Dantzig selector method. It should be emphasized that to apply these methods one needs to assume sparsity conditions which ensure a small (“reasonable”) number of nonzero unknown parameters and, moreover, the parameter dimension q must be known (see, for example, Hastie et al., 2008). It should be noted also that the case of unknown parameter dimension q is one of the crucial points in important practical problems such as, for example, statistical signal and image processing (see, for example, Beltaief et al., 2020 and the references therein).

For the model (1) we consider a nonparametric setting, i.e., this is the setting for the estimation problem of the function

i.e.,

where S is an unknown 1-periodic function, \(S: {{\mathbb {R}}}\rightarrow {{\mathbb {R}}},\) such that the restriction of S to the interval [0, 1] belongs to \(\mathcal{L}_{{2}}[0,1].\) This model means that we observe T times, i.e. on the interval [0, T], the same function S defined on [0, 1], with values in \({{\mathbb {R}}}.\) Here we assume neither sparsity conditions nor that the parameter dimension is known; in particular, we can assume that \(q=+\infty .\) Now the problem is to estimate the unknown function S in the model (3) on the basis of observations (2). Originally, such problems were considered in the framework of “signal+white noise” models (see, for example, Ibragimov and Khasminskii, 1981; Kutoyants, 1994; Pinsker, 1981). Later, these models were extended to the “colour noise” models defined through non-Gaussian Ornstein-Uhlenbeck processes (see Barndorff-Nielsen and Shephard, 2001; Konev and Pergamenshchikov, 2012, 2015). The problem here is that the dependence defined on the basis of the Ornstein-Uhlenbeck processes disappears very fast, i.e., at a geometric rate. This means that such models are asymptotically equivalent to models with independent observations. To keep the dependence in the observations for large time periods for the estimation problem on the complete data, Barbu et al. (2019a) proposed to define the model (3) through semi-Markov processes with jumps. Such models considerably extend the potential applications of statistical results in many important practical fields such as finance, insurance, signal and image processing, reliability, biology (see, for example, Barbu et al., 2019a; Barbu and Limnios, 2008 and the references therein). In this paper we extend the semi-Markov regression models to the generalized semi-Markov processes by introducing an additional dependence in jump sizes of \((\xi _{{t}})_{{t\ge 0}}\).

1.2 Methods

In order to estimate the 1-periodic function S defined on the interval [0, 1], we develop model selection methods using the quadratic risks defined as

where \(\widehat{S}_{{T}}(\cdot )\) is some estimate based on T periods of the observations of the model (3) (i.e. any 1-periodic function measurable with respect to the observations \(\sigma \{y_{{t_{{0}}}},\ldots ,y_{{t_{{n}}}}\}\) given in (2)) and \(\mathbf{E}_{{Q,S}}\) is the expectation with respect to the distribution \(\mathbf{P}_{{Q,S}}\) of the process (3) corresponding to the unknown noise distribution Q in the Skorokhod space \(\mathcal{D}[0,T]\) and to the function S. We assume that this distribution belongs to some distribution family \(\mathcal{Q}_{{T}}\) specified in Sect. 2. To study the properties of the estimators uniformly over the noise distribution (which is what is needed in practice), we use the robust risk defined as

It should be noted that statistical procedures that are optimal in the sense of this risk possess stable mean square accuracy uniformly over all possible admissible noise distributions in the model (3). This means that the corresponding statistical optimal algorithms have high noise immunity and, therefore, significantly improve the quality and reliability of statistical inference obtained on their basis.

To construct model selection procedures on the basis of the discrete data (2) we use the approach proposed in Konev and Pergamenshchikov (2015). It should be noted that the main analytic tool in that paper is based on the exponential decrease rate of the dependence in Ornstein-Uhlenbeck models, and, therefore, we cannot apply those methods to the semi-Markov models of the current work, since these models can retain a dependence in noises for a long time. So, in this paper, to study the estimation problem based on the discrete observations (2) for the model (3) with noises defined through semi-Markov processes, we develop new methods based on the special renewal theory from Barbu et al. (2019a); based on these techniques we can analyse the approximation errors in the discrete observations and obtain non-asymptotic sharp oracle inequalities. Moreover, as a consequence, we found constructive sufficient conditions on the observation frequency that provide the robust efficiency for proposed model selection procedures in an adaptive setting, i.e., in the case when the regularity properties of the function S are unknown.

1.3 Main contributions of this paper

As previously mentioned, in this paper we use for the first time nonparametric adaptive methods for estimation problems in the framework of the big data generalized semi-Markov regression models. To this end, we develop model selection procedures and corresponding analytical tools providing, under some constructive sufficient conditions, the optimality in the sharp oracle inequality sense and the robust adaptive efficiency in the minimax sense for the proposed estimators. It turns out that these conditions hold true for important practical cases such as, for example, regression models constructed through truncated fractional Poisson processes introduced in Barbu et al. (2019b). Moreover, in this paper, we extend for the first time the model from Barbu et al. (2019a) using the generalized semi-Markov models obtained by introducing a dependence structure in the sizes of the jumps. As an example, we use spherically symmetric random variables, which play a very important role in many practical applications (see, for example, Fourdrinier and Pergamenshchikov, 2007 and the references therein).

1.4 Organization of the paper

The rest of the paper is organized as follows. In Sect. 2 we state the main conditions under which we consider the model (3). In Sect. 3 we present the truncated fractional Poisson process and its main properties. In Sect. 4 we construct model selection procedures on the basis of weighted least squares estimates. In Sect. 5 we state the main results. In Sect. 6 we develop the stochastic calculus for the generalized semi-Markov processes. Section 7 gives the proofs of the main results. Some auxiliary tools are given in an Appendix.

2 Main conditions

First, we assume that the noise process \((\xi _{{t}})_{{t\ge \, 0}}\) in the model (3) is defined as

where \(\varrho _{{1}}\), \(\varrho _{{2}}\) and \(\varrho _{{3}}\) are unknown coefficients, \((w_{{t}})_{{t\ge \,0}}\) is a standard Brownian motion, \( L_{{t}}= \int ^{t}_{{0}}\int _{{{{\mathbb {R}}}_{{*}}}}x (\mu (\mathrm {d}s,\mathrm {d}x) -\widetilde{\mu }(\mathrm {d}s,\mathrm {d}x))\), \(\mu (\mathrm {d}s\,\mathrm {d}x)\) is the jump measure with deterministic compensator \(\widetilde{\mu }(\mathrm {d}s\,\mathrm {d}x)=\mathrm {d}s\varPi (\mathrm {d}x)\), \(\varPi (\cdot )\) is the Lévy measure on \({{\mathbb {R}}}_{{*}}={{\mathbb {R}}}\setminus \{0\}\) (see, for example, Liptser and Shiryaev, 1989 for details), with

Here we use the usual notations for \(\varPi (\vert x\vert ^{m})=\int _{{{{\mathbb {R}}}}}\,\vert z\vert ^{m}\,\varPi (\mathrm {d}z)\). Note that \(\varPi (\vert x\vert )\) may be equal to \(+\infty \). In this paper we assume that the “dependent part” in the noise (6) is modelled by the generalized semi-Markov process \((z_{{t}})_{{t\ge \, 0}} \) defined as

where \((\zeta _{{i}})_{{i\ge \, 1}}\) are random variables satisfying the following conditions:

\((\mathbf{C}_{{1}})\) For any \(i\ge 1,\) we have \( \mathbf{E}\,\zeta _{{i}}=0\), \(\mathbf{E}\,\zeta _{{i}}^2=1\) and \(\zeta _{{*}}=\sup _{{l \ge 1}}\mathbf{E}\,\zeta ^4_{{l}}<\infty \);

\((\mathbf{C}_{{2}})\) \(\mathbf{E}\,\zeta _{{i}}\, \zeta _{{j}} =0\) for any \(i\ne j\);

\((\mathbf{C}_{{3}})\) For any \(1\le k_{{1}}< k_{{2}}< k_{{3}}< k_{{4}},\) the random variables \((\zeta _{{k_{{i}}}})_{{1\le i\le 4}}\) are such that \( \mathbf{E}\,\zeta ^{\iota _{{1}}}_{{k_{{1}}}}\, \zeta ^{\iota _{{2}}}_{{k_{{2}}}}\, \zeta ^{\iota _{{3}}}_{{k_{{3}}}}\, \zeta ^{\iota _{{4}}}_{{k_{{4}}}}=0\) for any \(\iota _{{1}},\ldots , \iota _{{4}} \in \{0,1,2,3\}\) for which \(3\le \sum _{i=1}^{4} \iota _{{i}} \le 4\) and at least one among them is equal to one.

Now we give some examples for the correlation conditions \((\mathbf{C}_{{1}})\)–\((\mathbf{C}_{{3}})\). To this end, we first recall the definition of a spherically symmetric distribution (see, for example, Fourdrinier and Pergamenshchikov, 2007). A random vector \(\zeta =(\zeta _{{1}},\ldots ,\zeta _{{d}})'\) is called spherically symmetric if its density in \({{\mathbb {R}}}^{d}\) has the form \(\mathbf{g}(\vert \cdot \vert ^{2})\) for some nonnegative function \(\mathbf{g}\). Here the prime denotes the transposition. Note that there is a very important particular case of the spherically symmetric vectors represented by Gaussian mixture distributions. The vector \(\zeta =(\zeta _{{1}},\ldots ,\zeta _{{d}})'\) is called a Gaussian mixture in \({{\mathbb {R}}}^{d}\) if it has the spherically symmetric distribution with

where \(\mathbf{s}\) is a non-negative random variable. It should be emphasized that in radio-physics such distributions are very popular for statistical signal processing (see, for example, Middleton, 1979; Kassam, 1988). Using these definitions it is easy to see that the following random variables satisfy the conditions \((\mathbf{C}_{{1}})\)–\((\mathbf{C}_{{3}})\):

- \((\zeta _{{j}})_{{j\ge \,1}}\) that are i.i.d. random variables satisfying condition \((\mathbf{C}_{{1}})\);

- For some \(d>1,\) a random vector \((\zeta _{{1}},\ldots ,\zeta _{{d}})'\) that has a spherically symmetric distribution in \({{\mathbb {R}}}^{d},\) with \(\mathbf{E}\,\zeta _{{1}}^2=1\), \(\mathbf{E}\,\zeta _{{1}}^4 < \infty \) and such that the random variables \((\zeta _{{j}})_{{j>\,d}}\) are independent and satisfy condition \((\mathbf{C}_{{1}})\);

- For some \(d\ge 1,\) a random vector \((\zeta _{{1}},\ldots ,\zeta _{{d}})'\) that is a Gaussian mixture with mixture variable \(\mathbf{s}\) for which \(\mathbf{E}\,\mathbf{s}^{2}=1\) and \(\mathbf{E}\,\mathbf{s}^{4}<\infty .\)
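The Gaussian mixture example can be illustrated by a small simulation. The sketch below assumes that the mixture is realised as \(\zeta =\mathbf{s}\,g\), where g is a standard Gaussian vector independent of \(\mathbf{s}\); this representation, the two-point law chosen for \(\mathbf{s}^{2}\) and all function names are illustrative assumptions and are not taken from the text. It checks empirically that the coordinates are centred, have unit variance and are uncorrelated, while \(\mathbf{E}\,\zeta ^{2}_{{1}}\zeta ^{2}_{{2}}=\mathbf{E}\,\mathbf{s}^{4}\ne 1\) indicates that they are dependent.

```python
import math
import random


def sample_gaussian_mixture(d, rng):
    """Draw one vector zeta = s * g with g ~ N(0, I_d) and s independent of g."""
    # Hypothetical two-point law for s^2: E s^2 = 1 and E s^4 = 1.25.
    s = math.sqrt(rng.choice([0.5, 1.5]))
    return [s * rng.gauss(0.0, 1.0) for _ in range(d)]


def empirical_moments(n_samples=100_000, seed=0):
    """Monte Carlo estimates of E z1, E z1^2, E z1 z2 and E z1^2 z2^2."""
    rng = random.Random(seed)
    m1 = m2 = m12 = m1122 = 0.0
    for _ in range(n_samples):
        z1, z2 = sample_gaussian_mixture(2, rng)
        m1 += z1
        m2 += z1 * z1
        m12 += z1 * z2
        m1122 += z1 * z1 * z2 * z2
    return (m1 / n_samples, m2 / n_samples,
            m12 / n_samples, m1122 / n_samples)


mean, var, cov, cross4 = empirical_moments()
```

For independent coordinates with unit variance the last moment would be close to 1; here it is close to \(\mathbf{E}\,\mathbf{s}^{4}=1.25\), so the jump sizes are uncorrelated but not independent.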

Note that the process \(N_{{t}}\) in (8) is a general counting process defined as

with \((\tau _{{l}})_{{l\ge \,1}}\) an i.i.d. sequence of positive integrable random variables with distribution \(\eta \) and mean \(\overline{\tau }=\mathbf{E}_{{Q}}\,\tau _{{1}}>0.\) We assume that the processes \((N_{{t}})_{{t\ge 0}}\), \((Y_{{i}})_{i\ge \, 1}\) and \((L_{{t}})_{{t\ge 0}}\) are independent. In the sequel we will use the renewal measure defined as

where \(\eta ^{(l)}\) is the lth convolution power of the measure \(\eta \).
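A minimal sketch of such a counting process, assuming the usual renewal convention that \(N_{{t}}\) counts the partial sums \(\tau _{{1}}+\ldots +\tau _{{k}}\) that do not exceed t (the displayed definition is not reproduced here, so this convention is an assumption). The gamma inter-arrival law and the function names are purely illustrative.

```python
import random
from bisect import bisect_right
from itertools import accumulate


def jump_times(taus):
    """Partial sums T_k = tau_1 + ... + tau_k of the inter-arrival times."""
    return list(accumulate(taus))


def counting_process(taus, t):
    """N_t = number of jump times T_k with T_k <= t (assumed convention)."""
    return bisect_right(jump_times(taus), t)


def simulate_interarrivals(n, rng, order=2, scale=1.0):
    """I.i.d. gamma inter-arrival times; order and scale are illustrative."""
    return [rng.gammavariate(order, scale) for _ in range(n)]


rng = random.Random(1)
taus = simulate_interarrivals(50, rng)
n_at_10 = counting_process(taus, 10.0)
```

Only the first 50 inter-arrival times are simulated here, so `n_at_10` is the value of the process at time 10 only as long as fewer than 50 jumps occur before that time.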

Remark 1

Note that in the case when the random variables \((\zeta _{{j}})_{{j\ge 1}}\) are i.i.d., then (8) is the semi-Markov process used in Barbu et al. (2019a).

To use the renewal methods from Barbu et al. (2019a) we assume that the distribution \(\eta \) has a density g for which the following conditions hold true.

\((\mathbf{H}_{{1}}\)) Assume that, for any \(x\in {{\mathbb {R}}},\) there exist the finite limits \( g(x-)=\lim _{{z\rightarrow x-}}g(z)\) and \(g(x+)=\lim _{{z\rightarrow x+}}g(z)\) and, \(\forall \, K>0\), \( \exists \delta =\delta (K)>0\) for which

\((\mathbf{H}_{{2}}\)) \(\forall \gamma >0,\) the upper bound \( \sup _{{z\ge 0}}\,z^{\gamma }\vert 2g(z) -g(z-)-g(z+) \vert \,<\,\infty \).

\((\mathbf{H}_{{3}}\)) There exists \(\beta >0\) such that \(\int _{{{{\mathbb {R}}}_{{+}}}}\,e^{\beta x}\,g(x)\,\mathrm {d}x<\infty .\)

\((\mathbf{H}_{{4}}\)) There exists \(t^{*}>0\) such that the Fourier transformation \(\widehat{g}(\theta -it)\) belongs to \(\mathcal{L}_{{1}}({{\mathbb {R}}})\) for any \(0\le t\le t^{*}\), where \( \widehat{g}(z)= (2\pi )^{-1}\, \int _{{{{\mathbb {R}}}}}\,e^{i z v} g(v)\mathrm {d}v\).

Moreover, to check these conditions, we will use the following assumption.

\((\mathbf{H}^{*}_{{4}}\)) The density g is two times continuously differentiable with \(g(0)=0\) and there exists \(\beta >0\) such that \( \int ^{+\infty }_{{0}}\,e^{\beta x}\left( g(x) +\vert g^{'}(x)\vert + \vert g^{''}(x)\vert \right) \mathrm {d}x<\infty \) and \(\lim _{{x\rightarrow \infty }}\,e^{\beta x}\left( g(x)+ \vert g^{'}(x)\vert \right) =0\).

It is clear that the conditions \((\mathbf{H}_{{1}})\)–\((\mathbf{H}_{{3}})\) hold true in this case. To obtain the condition \((\mathbf{H}_{{4}})\) it suffices to calculate the integral in \(\widehat{g},\) integrating by parts two times. For example, one can take the gamma distribution of order \(m\ge 2\)

It should be noted that, in view of Proposition 5.2 from Barbu et al. (2019a), Conditions \((\mathbf{H}_{{1}})\)–\((\mathbf{H}_{{4}})\) imply that the renewal measure (11) has a continuous density \(\rho \) such that for any \(\mathbf{b}>0\)

Note that this implies

Remark 2

It should be noted that Condition \((\mathbf{H}_{{4}})\) does not hold for exponential random variables \((\tau _{{j}})_{{j\ge 1}}\) since their density is not continuous at zero. But for exponential random variables, i.e. in the case when \((N_{{t}})_{{t\ge 0}}\) is a Poisson process, the renewal density can be calculated directly, i.e. \(\rho (x)\equiv 1/\overline{\tau }\) and \(\varUpsilon \equiv 0\).
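The direct calculation in Remark 2 can be written out as a short sketch, assuming that the renewal measure is the sum of the convolution powers \(\eta ^{(l)}\) over \(l\ge 1\) and writing \(\kappa =1/\overline{\tau }\) for the rate of the exponential law (the symbol \(\kappa \) is introduced here only for this computation). The lth convolution power of the exponential density is the gamma density of order l, so that, for \(x>0\),

\[
\rho (x)=\sum _{l\ge 1}\frac{\kappa ^{l}x^{l-1}}{(l-1)!}\,e^{-\kappa x}
=\kappa \,e^{-\kappa x}\sum _{m\ge 0}\frac{(\kappa x)^{m}}{m!}
=\kappa =\frac{1}{\overline{\tau }}.
\]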

Now we describe the class of possible admissible noise distributions used in the robust risk (5). To this end we set

As to the parameters in (6), we assume that

where the unknown bounds \(0<\varsigma _{{*}}\le \varsigma ^{*}\) can be functions of T, i.e. \(\varsigma _{{*}}=\varsigma _{{*}}(T)\) and \(\varsigma ^{*}=\varsigma ^{*}(T)\), such that for any \(\mathbf{b}>0\)

We denote by \(\mathcal{Q}_{{T}}\) the family of all distributions of the process (6) in \(\mathcal{D}[0,T]\) satisfying the properties (15)–(16).

Remark 3

As we will see later, the parameter (14) is the limit of the Fourier transform of the noise process (6). This limit is called variance proxy (see Konev and Pergamenshchikov, 2012).

3 Truncated fractional Poisson processes

As an example of the process (10) that satisfies Conditions \((\mathbf{H}_{{1}})\)–\((\mathbf{H}_{{4}}),\) we give the truncated fractional Poisson process introduced in Barbu et al. (2019b). To this end, we recall the definition of the fractional Poisson process (see, for example, Biard and Saussereau, 2014; Laskin, 2003). The process (10) is called a fractional Poisson process if the i.i.d. random variables \((\tau _{{j}})\) have the Mittag-Leffler distribution which, for some \(\mathbf{a}>0,\) is defined as

where \(0<H\le 1\) is called the Hurst index,

Note that, if \(H=1\), then we obtain the exponential distribution with parameter \(\mathbf{a}>0\) and, therefore, the process (10) is a Poisson process. If \(0<H<1\), then the density of the distribution (17) (see, for example, Repin and Saichev, 2000) can be represented as

From here we can directly obtain that

and

In particular, this implies that the Mittag-Leffler distribution has a heavy tail, i.e.

i.e. \(\mathbf{E}\tau _{{1}}=+\infty \). Therefore, the condition \((\mathbf{H}_{{3}})\) does not hold for the distribution (17). To correct this effect, in Barbu et al. (2019b) it is proposed to replace the Mittag-Leffler random variables in (10) with i.i.d. random variables distributed as \(\tau ^{*}_{{1}}=\min (X^{\mathbf{b}}_{{*}},X^{*})\), where \(X_{{*}}\) is a Mittag-Leffler random variable with \(0<H<1\), \(0<\mathbf{b}\le H/3\) and \(X^{*}\) is a positive random variable satisfying the condition \((\mathbf{H}^{*}_{{4}})\). Such processes are called truncated fractional Poisson processes. Using the asymptotic properties (19) and (20) one can check directly that the random variable \(\tau ^{*}_{{1}}\) satisfies the condition \((\mathbf{H}^{*}_{{4}})\) and, therefore, the conditions \((\mathbf{H}_{{1}})\)–\((\mathbf{H}_{{4}})\) hold true for this case.
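The truncation \(\tau ^{*}_{{1}}=\min (X^{\mathbf{b}}_{{*}},X^{*})\) can be sketched as follows. The sketch relies on several assumptions that are not part of the text: the Mittag-Leffler variable is generated from two independent uniforms through a representation commonly used in simulation, the `scale` argument is a hypothetical stand-in whose relation to the parameter \(\mathbf{a}\) in (17) should be checked against the parameterisation used there, and \(X^{*}\) is taken to be a gamma variable of order 2.

```python
import math
import random


def mittag_leffler_from_uniforms(u, v, hurst, scale=1.0):
    """Mittag-Leffler variate from two uniforms u, v in (0, 1).

    Assumed representation:
        -scale * ln(u) * (sin(H*pi*(1 - v)) / sin(H*pi*v)) ** (1/H).
    For H = 1 the ratio equals 1 and the variate is exponential.
    """
    ratio = (math.sin(hurst * math.pi * (1.0 - v))
             / math.sin(hurst * math.pi * v))
    return -scale * math.log(u) * ratio ** (1.0 / hurst)


def truncated_interarrival(rng, hurst=0.6, b=0.15, gamma_order=2):
    """tau* = min(X_*^b, X^*) with 0 < b <= H/3 and X^* gamma distributed."""
    if not (0.0 < hurst < 1.0 and 0.0 < b <= hurst / 3.0):
        raise ValueError("need 0 < H < 1 and 0 < b <= H/3")
    u = 1.0 - rng.random()                 # lies in (0, 1], so log(u) is finite
    v = rng.uniform(1e-12, 1.0 - 1e-12)    # stay away from the end points
    x_ml = mittag_leffler_from_uniforms(u, v, hurst)
    x_light = rng.gammavariate(gamma_order, 1.0)
    return min(x_ml ** b, x_light)


rng = random.Random(2024)
taus = [truncated_interarrival(rng) for _ in range(10_000)]
sample_mean = sum(taus) / len(taus)
```

Since \(\tau ^{*}_{{1}}\le X^{*}\), the simulated inter-arrival times inherit the light tail of \(X^{*}\), which is the purpose of the truncation.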

Remark 4

It should be noted also that the process (10) with the Mittag-Leffler random variables has a “memory” in its increments (see, for example, Maheshwari and Vellaisamy, 2016) in the sense that, for any \(\delta >0\) and \(s>0,\) the correlation coefficient

It should be noted that this property is very important for many practical problems and it substantially expands the possible applications of the statistical results.

Unfortunately, we cannot use the fractional Poisson process directly in the regression model (3): since the time between jumps is not integrable, the waiting times are typically very large, so the noise impulses are very rare and have an almost negligible influence on the observation model. On the contrary, the truncated process has an exponential moment, i.e. the same property as Poisson processes, and, moreover, it keeps a dependence on large time intervals.

4 Model selection

In this section we construct a model selection procedure for estimating the unknown function S given in (3) starting from the discrete-time observations (2) and we establish the oracle inequality for the associated risk. To this end, note that for any function \(f:[0,T] \rightarrow {{\mathbb {R}}}\) from \(\mathcal{L}_{{2}}[0,T]\), the integral

is well defined, with \(\mathbf{E}_{{Q}}\,I_{{T}}(f)=0\). Moreover, as it is shown in Lemma 1 under the conditions \((\mathbf{H}_{{1}})\)–\((\mathbf{H}_{{4}}),\)

In this paper we assume that the observation frequency p in (2) is odd and we will use the trigonometric basis \((\phi _{{j}})_{{j\ge \, 1}}\) in \(\mathcal{L}_{{2}}[0,1]\) defined as

where the function \(\text{ Tr}_{{j}}(x)= \cos (x)\) for even j and \(\text{ Tr}_{{j}}(x)= \sin (x)\) for odd j, and [x] denotes the integer part of x. Note that these functions are orthonormal on the points \((t_{{j}})_{{1\le j\le p}}\), i.e. for any \(1\le i,j\le p\)

In the sequel we denote by \(\Vert x\Vert ^{2}_{{p}}=(x,x)_{{p}}\). Now note that, for any \(1\le l\le p,\)

Using the approach from Konev and Pergamenshchikov (2015), we estimate the Fourier coefficients \(\theta _{{j,p}}\) on the basis of the observations from the interval [0, T] as

Note here that the functions \((\psi _{{j,p}})_{1 \le j \le p }\) are 1-periodic and orthonormal on the interval [0, 1], i.e. in \(\mathcal{L}_{{2}}[0,1]\),

The Fourier coefficients of S for this basis in \(\mathcal{L}_{{2}}[0,1]\) can be represented as

where \(h_{j,p}(S)= \sum _{{l=1}}^{p} \int _{{t_{l-1}}}^{t_l} \phi _{{j}}(t_l) (S(t)-S(t_l)) \mathrm {d}\,t\). Taking into account here that the functions \((\psi _{{j,p}})_{1 \le j \le p }\) are 1-periodic and using the observation model (3) in (27) we obtain, that

Therefore,

As in Barbu et al. (2019a), we use the model selection procedures based on the following weighted least squares estimators

where the weight vector \(\lambda =(\lambda (1),\ldots ,\lambda (p))'\) belongs to some finite set \(\varLambda \) from \([0,1]^{p}\). Here the prime \('\) denotes the transposition. Moreover, we set

where \(\text{card }(\varLambda )\) is the cardinal number of the set \(\varLambda \). We assume that \(\varLambda _{{*}}\le T\). Now we use the same criteria as in Barbu et al. (2019a) to choose a weight vector in \(\varLambda \), i.e., we minimize the empirical error

which can be represented as

Note that the Fourier coefficients \((\theta _{{j,p}})_{{j\ge \,1}}\) are unknown. Therefore, using the approach from Barbu et al. (2019a) to minimize this function we replace the terms \(\widehat{\theta }_{{j,p}}\overline{\theta }_{{j,p}}\) by their estimators

where the proxy variance \(\sigma _{{Q}}\) is defined in (15). In the case when this variance is unknown we use its estimator, i.e.

Now, using this estimator we define the penalty term as

In the case when the variance \(\sigma _{{Q}}\) is known we set

Finally, we define the cost function as

where \(\delta >0\) is some threshold which will be specified later. Now we set the model selection procedure as

In the case when the minimizer \(\widehat{\lambda }\) is not unique we take any one of the minimizers.
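The selection step of this section can be sketched in code. Since the displayed formulas are not reproduced here, the forms used below are assumptions modelled on the general approach described above: the products \(\widehat{\theta }_{{j,p}}\overline{\theta }_{{j,p}}\) are replaced by \(\widehat{\theta }^{2}_{{j,p}}-\widehat{\sigma }_{{T}}/T\), the penalty is taken as \(\widehat{\sigma }_{{T}}\vert \lambda \vert ^{2}/T\), and the cost is the empirical quadratic term plus \(\delta \) times the penalty. All function names and the numerical values are hypothetical.

```python
def cost(lam, theta_hat, sigma_hat, T, delta):
    """Assumed cost: sum lam^2 theta^2 - 2 sum lam theta_tilde + delta * penalty."""
    # Assumed estimator of the unknown products theta_hat * theta_bar.
    theta_tilde = [th * th - sigma_hat / T for th in theta_hat]
    quad = sum(l * l * th * th for l, th in zip(lam, theta_hat))
    cross = sum(l * tt for l, tt in zip(lam, theta_tilde))
    # Assumed penalty term sigma_hat * |lam|^2 / T.
    penalty = sigma_hat * sum(l * l for l in lam) / T
    return quad - 2.0 * cross + delta * penalty


def select_weights(weight_set, theta_hat, sigma_hat, T, delta):
    """Return a minimiser of the cost over the finite set of weight vectors."""
    # min() returns the first minimiser, i.e. one of them if there are ties.
    return min(weight_set,
               key=lambda lam: cost(lam, theta_hat, sigma_hat, T, delta))


def weighted_coefficients(lam, theta_hat):
    """Coefficients lam(j) * theta_hat_j of the weighted least squares estimator."""
    return [l * th for l, th in zip(lam, theta_hat)]


# Toy example with projection weights (illustrative numbers only).
theta_hat = [1.0, 0.5, 0.01, -0.02]
weight_set = [[1, 0, 0, 0], [1, 1, 0, 0], [1, 1, 1, 0], [1, 1, 1, 1]]
lam_hat = select_weights(weight_set, theta_hat, sigma_hat=1.0, T=100, delta=0.1)
coefficients = weighted_coefficients(lam_hat, theta_hat)
```

In this toy example the two small coefficients are below the noise level \(\widehat{\sigma }_{{T}}/T\), so keeping them increases the cost and the procedure retains only the first two components.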

5 Main results

5.1 Oracle inequalities

First, we obtain non-asymptotic oracle inequalities for the procedure (39).

Theorem 1

Assume that the conditions \((\mathbf{C}_{{1}})\)–\((\mathbf{C}_{{3}})\) and \((\mathbf{H}_{{1}})\)–\((\mathbf{H}_{{4}})\) hold true. Then, there exists some constant \(\mathbf{c}^{*}>0\) such that for any \(T\ge \, 1\) and any noise distribution \(Q\in \mathcal{Q}_{{T}}\) and \( 0 <\delta \le 1/6\), the procedure (39) satisfies the following oracle inequality

In the case when \(\sigma _{{Q}}\) is known, the inequality (40) has the form given in the next result.

Corollary 1

Assume that the conditions \((\mathbf{C}_{{1}})\)–\((\mathbf{C}_{{3}})\) and \((\mathbf{H}_{{1}})\)–\((\mathbf{H}_{{4}})\) hold true and that the proxy variance \(\sigma _{{Q}}\) is known. Then there exists some constant \(\mathbf{c}^{*}>0\) such that for any \(T\ge \,1\) and for any noise distribution \(Q\in \mathcal{Q}_{{T}}\) and \( 0 <\delta \le 1/6\), the procedure (39) with \(\widehat{\sigma }_{{T}}=\sigma _{{Q}}\) satisfies the following oracle inequality

Now we study the estimator \(\widehat{\sigma }_{{T}}\) defined in (35).

Proposition 1

Assume that the conditions \((\mathbf{C}_{{1}})\)–\((\mathbf{C}_{{3}})\) and \((\mathbf{H}_{{1}})\)–\((\mathbf{H}_{{4}})\) hold true for the model (3) and that \(S(\cdot )\) is continuously differentiable. Then, there exists a constant \(\mathbf{c}^{*}>0\) such that for any \(T\ge 4\), \(Q\in \mathcal{Q}_{{T}}\) and \(p>\sqrt{T}\),

where \(\mathbf{g}^{*}_{{T,p}}=\sqrt{T}/p+ 1/\sqrt{p}\).

Now Theorem 1 and this proposition imply directly the following result.

Theorem 2

Assume that the function S is continuously differentiable and that the conditions \((\mathbf{C}_{{1}})\)–\((\mathbf{C}_{{3}})\) and \((\mathbf{H}_{{1}})\)–\((\mathbf{H}_{{4}})\) hold true. Then there exists some constant \(\mathbf{c}^{*}>0\) such that for any \(T\ge \, 4 \), for any noise distribution \(Q\in \mathcal{Q}_{{T}}\), \(p> \sqrt{T}\) and \( 0 <\delta \le 1/6\),

Now, to study asymptotic properties of the term \(\mathbf{g}^{*}_{{T,p}}\) as \(T\rightarrow \infty \) and to provide an efficient estimation for the function S, we have to assume some condition on the frequency of the observations p.
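To see why a growth condition on p is needed, the term \(\mathbf{g}^{*}_{{T,p}}=\sqrt{T}/p+1/\sqrt{p}\) can be tabulated for a few frequencies. The sketch below uses the hypothetical choice \(p=T^{\gamma }\), which is not the condition stated in the text, and ignores the requirement that p be an odd integer.

```python
import math


def g_star(T, p):
    """g*_{T,p} = sqrt(T)/p + 1/sqrt(p)."""
    return math.sqrt(T) / p + 1.0 / math.sqrt(p)


def g_star_power_frequency(T, gamma):
    """g* for the hypothetical frequency p = T**gamma (not rounded)."""
    return g_star(T, T ** gamma)


horizons = (10**2, 10**4, 10**6)
table = {gamma: [g_star_power_frequency(T, gamma) for T in horizons]
         for gamma in (0.5, 0.75, 1.0)}
```

With \(p=T^{\gamma }\) one has \(\mathbf{g}^{*}_{{T,p}}=T^{1/2-\gamma }+T^{-\gamma /2}\), which tends to zero only for \(\gamma >1/2\); at the boundary \(\gamma =1/2\) it stays above 1.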

\((\mathbf{H}_{{5}}\)) Assume that the frequency p is a function of T, i.e. \(p=p_{{T}}\), such that

Remark 5

This condition is the same as in Konev and Pergamenshchikov (2015) (see (3.41)). Note that it is not too restrictive since we use only observations of the process (3) at discrete time moments. It should be noted also that, to provide optimal asymptotic properties for the model selection procedure (39), one needs to approximate the model (3) on the basis of the observations (2) in such a way that the right-hand side of the inequality (42) is minimised. To do this, we need to find a family of optimal estimators \(\widehat{S}_{{\lambda \in \varLambda }}\) and, moreover, we need to minimise the estimation error for the variance \(\sigma _{{Q}}\). For these reasons we have to use the lower bound (43).

Moreover, to study the last term of the right-hand side of the inequality (42), we also need a condition for weights.

\((\mathbf{H}_{{6}}\)) The parameters \(\mathbf{m}_{{*}}\) and \(\varLambda _{{*}}\) defined in (32) can be functions of T, i.e., \(\mathbf{m}_{{*}}=\mathbf{m}_{{*}}(T)\) and \(\varLambda _{{*}}=\varLambda _{{*}}(T)\), such that \(\forall \,\mathbf{b}> 0\) \( \lim _{{T\rightarrow \infty }} T^{-\mathbf{b}}\mathbf{m}_{{*}}(T) =0\) and \(\lim _{{T\rightarrow \infty }} T^{-1/3-\mathbf{b}} \varLambda _{{*}}(T) =0\).

Now, Theorem 2 implies the following oracle inequality.

Theorem 3

Assume that the function S is continuously differentiable and that the conditions \((\mathbf{C}_{{1}})\)–\((\mathbf{C}_{{3}})\) and \((\mathbf{H}_{{1}})\)–\((\mathbf{H}_{{6}})\) hold true. Then, for any \(T\ge 4\), \(p> \sqrt{T}\) and \( 0<\delta <1/6,\) the procedure (39) satisfies the following oracle inequality

where the term \(\mathbf{U}^{*}_{{T}}>0\) is such that, for any \(r>0\) and \(\mathbf{b}>0,\)

To obtain the efficiency property, we specify the weight coefficients in the procedure (39). Consider, for some \(0<\varepsilon <1,\) a numerical grid of the form

where \(k^*\ge 1\) and \(\varepsilon \) are functions of T, i.e. \(k^*=k^*(T)\) and \(\varepsilon =\varepsilon (T)\), such that

for any \(\mathbf{b}>0\). One can take, for example, for \(T\ge 2\)

where \(k^{*}_{{0}}\ge 0\) is a fixed constant. For each \(\alpha =(k, r)\in {{\mathcal {A}}}\), we set the vector

through its components which are defined as

where \( \omega _{{\alpha }}= \left( \tau _{{k}} \,r\upsilon _{{T}} \right) ^{1/(2k+1)}\),

and \(\varsigma ^{*}\) is introduced in (15). Now we define the set \(\varLambda \) as

These weight coefficients are used in Konev and Pergamenshchikov (2009a; 2012; 2015) for continuous time regression models to show the asymptotic efficiency. Note also that in this case the cardinality of the set \(\varLambda \) is \(\mathbf{m}_{{*}}=k^{*} m\). Moreover, taking into account that for \(k\ge 1\) the coefficient \(\omega _{{\alpha }}<(r\upsilon _{{T}})^{1/(2k+1)}\), we obtain that the norm of the set \(\varLambda \) defined in (32) can be bounded as \(\varLambda _{{*}}\, \le \, \sup _{{\alpha \in {{\mathcal {A}}}}} \omega _{{\alpha }} \le (\upsilon _{{T}}/\varepsilon )^{1/3}\). Therefore, the properties (46) imply the condition \((\mathbf{H}_{{6}})\).

5.2 Robust asymptotic efficiency

Now we study the asymptotic efficiency properties for the procedure (39), (48) with respect to the robust risks (5) defined by the distribution family (15)–(16). To this end, we assume that on the interval [0, 1] the unknown function S in the model (3) belongs to the Sobolev ball

where \(\mathbf{r}>0\), \(\mathbf{k}\ge 1\) are some unknown parameters, \({{\mathcal {C}}}^{\mathbf{k}}_{{per}}[0,1]\) is the set of \(\mathbf{k}\) times continuously differentiable functions \(f\,:\,[0,1]\rightarrow {{\mathbb {R}}}\) such that \(f^{(i)}(0)=f^{(i)}(1)\) for all \(0\le i \le \mathbf{k}\). Note that the class (49) is an ellipsoid, i.e.

where \(a_{{j}}=\sum ^{\mathbf{k}}_{{i=0}}\left( 2\pi [j/2]\right) ^{2i}\) and \(\theta _{{j}}=(f,\phi _{{j}})=\int ^{1}_{{0}}f(t)\phi _{{j}}(t)\mathrm {d}t\). Similarly to Barbu et al. (2019a) we will show here that the asymptotic sharp lower bound for the normalized robust risk (5) is given by the well-known Pinsker constant defined as

To study the efficiency properties we need to use the set \(\Xi _{{T}}\) of all possible estimators \(\widehat{S}_{{T}}\) measurable with respect to the sigma-algebra \(\sigma \{y_{{t}}\,,\,0\le t\le T\}\).
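The ellipsoid coefficients \(a_{{j}}=\sum ^{\mathbf{k}}_{{i=0}}(2\pi [j/2])^{2i}\) introduced above are easy to compute. The sketch below also tests membership in the ellipsoid under the assumption that it has the form \(\sum _{{j\ge 1}}a_{{j}}\theta ^{2}_{{j}}\le \mathbf{r}\); this form and the function names are assumptions, since the displayed definition is not reproduced here.

```python
import math


def ellipsoid_coefficient(j, k):
    """a_j = sum_{i=0}^{k} (2*pi*[j/2])**(2*i), with [x] the integer part."""
    base = 2.0 * math.pi * (j // 2)
    # For j = 1 the base is 0 and only the i = 0 term contributes (0.0**0 == 1.0).
    return sum(base ** (2 * i) for i in range(k + 1))


def in_sobolev_ellipsoid(theta, k, r):
    """Check sum_j a_j * theta_j**2 <= r for coefficients theta_1, theta_2, ..."""
    total = sum(ellipsoid_coefficient(j, k) * th * th
                for j, th in enumerate(theta, start=1))
    return total <= r


a_first = [ellipsoid_coefficient(j, 1) for j in range(1, 6)]
```

The coefficients grow like \(j^{2\mathbf{k}}\), so membership in the ellipsoid forces the Fourier coefficients of higher order to decay accordingly.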

Theorem 4

For the risk (5) with the coefficient rate \(\upsilon _{{T}}=T/\varsigma ^{*}\)

Note that, if the radius \(\mathbf{r}\) and the regularity \(\mathbf{k}\) are known, i.e. for the nonadaptive estimation problem on the continuous observations \((y_{{t}})_{{0\le t\le T}}\), in Barbu et al. (2019a) it is proposed to use the estimate \(\widehat{S}_{{\lambda _{{0}}}}\) defined in (31) with the weights (48)

Now, we show the same result for the discrete observations (2).

Proposition 2

Assume that the conditions \((\mathbf{C}_{{1}})\)–\((\mathbf{C}_{{2}})\) and \((\mathbf{H}_{{1}}\))–\((\mathbf{H}_{{5}}\)) hold true. Then

For the adaptive estimation we use the model selection procedure (39) with the parameter \(\delta \) defined as a function of T, i.e. \(\delta =\delta _{{T}}\), such that

for any \(\mathbf{b}>0\). For example, we can take \(\delta _{{T}}=(6+\ln T)^{-1}\).
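A minimal numerical check of the example \(\delta _{{T}}=(6+\ln T)^{-1}\): for \(T\ge 1\) it never exceeds the bound 1/6 required in the oracle inequalities, and it decreases to zero only logarithmically. The function name is hypothetical.

```python
import math


def delta_T(T):
    """Example threshold delta_T = 1 / (6 + ln T), defined for T >= 1."""
    return 1.0 / (6.0 + math.log(T))


values = [(T, delta_T(T)) for T in (1, 4, 100, 10**6)]
```

At \(T=1\) the value equals 1/6 exactly, and for \(T\ge 4\) it is strictly smaller, in line with the range of \(\delta \) used in the theorems above.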

Theorem 5

Assume that the conditions \((\mathbf{C}_{{1}})\)–\((\mathbf{C}_{{3}})\) and \((\mathbf{H}_{{1}})\)–\((\mathbf{H}_{{6}})\) hold true. Then the robust risk (5) for the procedure (39) with the coefficients (48) and the parameter \(\delta =\delta _{{T}}\) satisfying (55) has the following upper bound

Theorems 4 and 5 imply the following result.

Theorem 6

Assume that the conditions \((\mathbf{C}_{{1}})\)–\((\mathbf{C}_{{3}})\) and \((\mathbf{H}_{{1}})\)–\((\mathbf{H}_{{6}})\) hold true. Then the procedure (39) with the weight coefficients (48) and the parameter \(\delta =\delta _{{T}}\) satisfying (55) is asymptotically efficient, i.e.

and

Remark 6

It is well known that the optimal (minimax) risk convergence rate for the Sobolev ball \(\mathcal{W}_{{\mathbf{r},\mathbf{k}}}\) is \(T^{2\mathbf{k}/(2\mathbf{k}+1)}\) (see, for example, Pinsker, 1981; Konev and Pergamenshchikov, 2009b). We see here that the efficient robust rate is \(\upsilon ^{2\mathbf{k}/(2\mathbf{k}+1)}_{{T}}\), i.e. if the distribution upper bound \(\varsigma ^{*}\rightarrow 0\) as \(T\rightarrow \infty \) we obtain a faster rate with respect to \(T^{2\mathbf{k}/(2\mathbf{k}+1)}\), and if \(\varsigma ^{*}\rightarrow \infty \) as \(T\rightarrow \infty \) we obtain a slower rate. In the case when \(\varsigma ^{*}\) is constant the robust rate is the same as the classical non robust convergence rate.
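The effect described in Remark 6 can be illustrated numerically. The sketch below evaluates the robust rate \(\upsilon ^{2\mathbf{k}/(2\mathbf{k}+1)}_{{T}}\) with \(\upsilon _{{T}}=T/\varsigma ^{*}\) for three hypothetical choices of the bound \(\varsigma ^{*}\) (constant, \(1/\ln T\) and \(\ln T\)); these choices are illustrative and do not come from the text.

```python
import math


def robust_rate(T, varsigma_star, k):
    """upsilon_T ** (2k / (2k + 1)) with upsilon_T = T / varsigma_star."""
    upsilon = T / varsigma_star
    return upsilon ** (2.0 * k / (2.0 * k + 1.0))


T, k = 10**4, 2
classical = robust_rate(T, 1.0, k)                # varsigma* constant
faster = robust_rate(T, 1.0 / math.log(T), k)     # varsigma* -> 0
slower = robust_rate(T, math.log(T), k)           # varsigma* -> infinity
```

With \(\varsigma ^{*}=1\) the value coincides with the classical normalisation \(T^{2\mathbf{k}/(2\mathbf{k}+1)}\); the other two choices give a larger and a smaller normalising factor respectively.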

5.3 Big data analysis for the model (1)

Now we consider the estimation problem for the parameters \((\beta _{{j}})_{{1\le j\le q}}\) in (3) with unknown q. In this case we have to estimate the sequence \(\beta =(\beta _{{j}})_{{j\ge 1}}\) in which \(\beta _{{j}}=0\) for \(j\ge q+1\). To this end we assume that the functions \((\mathbf{u}_{{j}})_{{j\ge 1}}\) are orthonormal in \(\mathcal{L}_{{2}}[0,1]\), i.e. \((\mathbf{u}_{{i}},\mathbf{u}_{{j}})=\mathbf{1}_{{\{i\ne j\}}}\).

Indeed, we can always use the Gram-Schmidt orthogonalization procedure to provide this property. Thus, in this case we estimate the parameters \(\beta =(\beta _{{j}})_{{j\ge 1}}\) through the estimator (39) as \(\widehat{\beta }_{{*}}=(\widehat{\beta }_{{*,j}})_{{j\ge 1}}\) and \(\widehat{\beta }_{{*,j}}=(\mathbf{u}_{{j}},\widehat{S}_{{*}})\). Similarly, using the weighted estimators (31) we define the basic estimators \((\widehat{\beta }_{{\lambda }})_{{\lambda \in \varLambda }}\) as \(\widehat{\beta }_{{\lambda }}=(\widehat{\beta }_{{j,\lambda }})_{{j\ge 1}}\) and \( \widehat{\beta }_{{j,\lambda }}=(\mathbf{u}_{{j}},\widehat{S}_{{\lambda }})\). Taking into account that in this case \( \vert \widehat{\beta }_{{*}}-\beta \vert ^{2}=\sum ^{\infty }_{{j=1}}\,(\widehat{\beta }_{{*,j}}-\beta _{{j}})^{2} =\Vert \widehat{S}_{{*}}- S\Vert ^{2}\) and \( \vert \widehat{\beta }_{{\lambda }}-\beta \vert ^{2}=\Vert \widehat{S}_{{\lambda }}- S\Vert ^{2}\), Theorem 3 implies the following oracle inequality.

Theorem 7

Assume that the function (3) is continuously differentiable and that the conditions \((\mathbf{C}_{{1}})\)–\((\mathbf{C}_{{3}})\), \((\mathbf{H}_{{1}})\)–\((\mathbf{H}_{{5}})\) and (15)–(16) hold true. Then, for any \(T\ge \, 4 \) and \( 0<\delta <1/6,\) the following oracle inequality holds true

where the term \(\mathbf{U}^{*}_{{T}}>0\) satisfies the property (44).

Moreover, Theorem 6 implies the following efficiency property.

Theorem 8

Assume that the conditions \((\mathbf{C}_{{1}})\)–\((\mathbf{C}_{{3}})\) and \((\mathbf{H}_{{1}})\)–\((\mathbf{H}_{{6}})\) hold true. Then the estimator \(\widehat{\beta }_{{*}}\) constructed through the procedure (39) with the weight coefficients (48) and the parameter \(\delta =\delta _{{T}}\) satisfying (55) is asymptotically efficient in the minimax sense, i.e.

and

where the infimum is taken over all possible estimators \(\widehat{\beta }_{{T}}\) measurable with respect to the \(\sigma \)-field \(\sigma \{y_{{t}}\,,\,0\le t\le T\}\) and the lower bound \(\mathbf{l}_{{*}}\) is defined in (51).

Remark 7

It should be emphasized that the efficiency properties (56) are obtained without sparsity conditions on the number of nonzero parameters \(\beta _{{j}}\) in the model (1) (see, for example, Hastie et al., 2008). Moreover, we do not even use the parameter dimension q, which can be equal to \(+\infty \).
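The reduction used in this subsection, from estimating \(\beta \) to estimating S, rests on two facts: any linearly independent family can be orthonormalized by Gram-Schmidt, and for an orthonormal family the coefficient error equals the function error. The following Python sketch illustrates both on a grid. It is only a sketch under stated assumptions: the four 1-periodic functions, the grid size, the midpoint-rule inner product and the coefficient values are hypothetical choices, and no noise model from the paper is simulated.

```python
import math

N = 2000  # grid size used to approximate integrals over [0, 1] (illustrative)
GRID = [(i + 0.5) / N for i in range(N)]


def inner(f, g):
    """Midpoint-rule approximation of the L2[0,1] inner product (f, g)."""
    return sum(a * b for a, b in zip(f, g)) / N


def gram_schmidt(vectors):
    """Orthonormalise sampled functions with respect to `inner`."""
    basis = []
    for v in vectors:
        w = list(v)
        for u in basis:
            c = inner(w, u)
            w = [wi - c * ui for wi, ui in zip(w, u)]
        norm = math.sqrt(inner(w, w))
        basis.append([wi / norm for wi in w])
    return basis


def combine(coeffs, basis):
    """Sampled values of sum_j coeffs[j] * basis[j]."""
    return [sum(c * u[i] for c, u in zip(coeffs, basis)) for i in range(N)]


# Hypothetical linearly independent 1-periodic functions, sampled on the grid.
raw = [
    [1.0 for _ in GRID],
    [1.0 + math.sin(2 * math.pi * t) for t in GRID],
    [math.sin(2 * math.pi * t) + math.cos(2 * math.pi * t) for t in GRID],
    [math.cos(2 * math.pi * t) ** 2 for t in GRID],
]
U = gram_schmidt(raw)

beta = [1.0, -0.5, 0.0, 2.0]      # hypothetical true coefficients
beta_hat = [0.9, -0.4, 0.1, 1.8]  # hypothetical estimates

S = combine(beta, U)
S_hat = combine(beta_hat, U)
diff = [a - b for a, b in zip(S_hat, S)]

coef_err = sum((bh - b) ** 2 for bh, b in zip(beta_hat, beta))  # |beta_hat - beta|^2
func_err = inner(diff, diff)                                    # ||S_hat - S||^2
recovered = [inner(u, S_hat) for u in U]                        # (u_j, S_hat)
```

Here `coef_err` and `func_err` agree up to rounding, and `recovered` reproduces `beta_hat`, mirroring the identities \(\widehat{\beta }_{{*,j}}=(\mathbf{u}_{{j}},\widehat{S}_{{*}})\) and \(\vert \widehat{\beta }_{{*}}-\beta \vert ^{2}=\Vert \widehat{S}_{{*}}- S\Vert ^{2}\).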

6 Stochastic calculus for generalized semi-Markov processes

In this section we study some properties of the stochastic integrals (22). First, note that using the conditions \((\mathbf{C}_{{1}})\) and \((\mathbf{C}_{{2}})\) and the stochastic calculus developed in Barbu et al. (2019a) for semi-Markov processes we can show the following Lemmas 1 and 2.

Lemma 1

Assume that the conditions \((\mathbf{C}_{{1}})\)–\((\mathbf{C}_{{2}})\) and \((\mathbf{H}_{{1}})\)–\((\mathbf{H}_{{4}})\) hold true. Then, for any nonrandom functions f and h from \(\mathcal{L}_{{2}}[0,T]\)

where \((f,h)_{{t}}=\int _{{0}}^{t} f(s)\,h(s) \mathrm {d}s\) and \(\rho \) is the density of the renewal measure (11).

It should be noted that this lemma implies directly that the stochastic integral (22) satisfies the properties (23).

Lemma 2

Assume that the conditions \((\mathbf{C}_{{1}})\)–\((\mathbf{C}_{{2}})\) and \((\mathbf{H}_{{1}})\)–\((\mathbf{H}_{{4}})\) hold true. Then, for any bounded \([0,\infty ) \rightarrow {{\mathbb {R}}}\) functions f and h and for any \(k\ge 1,\)

where \(\mathbf{t}_{{k}}=\sum ^{k}_{{j=1}}\tau _{{j}}\) and \(\mathcal{G}=\sigma \{\mathbf{t}_{{l}}\,,\,l\ge 1\}\).

Lemma 3

Assume that the conditions \((\mathbf{C}_{{1}})\)–\((\mathbf{C}_{{3}})\) and \((\mathbf{H}_{{1}})\)–\((\mathbf{H}_{{4}})\) hold true. Then, for any nonrandom bounded \([0,T]\rightarrow {{\mathbb {R}}}\) functions f and h,

the expectation \( \mathbf{E}_{{Q}} \int _{{0}}^{T} I^2_{{t-}}(f) I_{{t-}}(h) h(t) \mathrm {d}\xi _{{t}} = 0\).

Proof

Setting \( \check{L}_{{t}} =\varrho _{1} w_{{t}}+ \varrho _{2} L_{{t}}\), we can represent the integral (22) as

where \(\check{I}_{{t}}(f)=\int ^{t}_{{0}} f(u)\mathrm {d}\check{L}_{{u}}\) and \(I^{z}_{{t}}(f)=\int ^{t}_{{0}} f(u)\mathrm {d}z_{{u}}\). Note that, using the condition (7) and the inequality for martingales from Novikov (1975), we obtain \(\mathbf{E}_{{Q}}\,\sup _{{0\le t\le T}}\,\check{I}^{8}_{{t}}(f) <\infty \). Since \(\check{L}_{{t}}\) and \(z_{{t}}\) are independent, we get \(\mathbf{E}_{{Q}} \int _{{0}}^{T} I^2_{{t-}}(f) I_{{t-}}(h) h(t) \mathrm {d}\check{L}_{{t}} = 0\). Moreover, the conditions \((\mathbf{C}_{{1}})\)–\((\mathbf{C}_{{3}})\) yield that, for any nonrandom \((c_{{i,j}})\) and \(k\ge 1,\) \(\mathbf{E}\,\left( \sum ^{k-1}_{{j=1}}c_{{1,j}} \zeta _{{j}}\right) ^{2} \zeta _{{k}}=0\) and \(\mathbf{E}\,\left( \sum ^{k-1}_{{j=1}}c_{{1,j}} \zeta _{{j}}\right) ^{2}\, \left( \sum ^{k-1}_{{j=1}}c_{{2,j}} \zeta _{{j}}\right) \zeta _{{k}}=0\). Therefore, taking into account that the sequence \((\zeta _{{k}})_{{k\ge 1}}\) does not depend on the moments \((\mathbf{t}_{{k}})_{{k\ge 1}}\) and the process \((\check{L}_{{t}})_{{t\ge 0}}\), and using the same method as in the proof of Lemma 8.4 from Barbu et al. (2019a), we obtain

This implies the desired result. \(\square \)

Now we study the integrals \(\widetilde{I}_{{T}}(f)= I^2_{{T}}(f)- \mathbf{E}_{{Q}} I_{{T}}^2(f)\) as functions of f.

Proposition 3

Assume that the conditions \((\mathbf{C}_{{1}})\)–\((\mathbf{C}_{{3}})\) and \((\mathbf{H}_{{1}})\)–\((\mathbf{H}_{{4}})\) hold true. Then, for any \([0,\infty ) \rightarrow {{\mathbb {R}}}\) functions f, h such that \( \vert f \vert _{{*}} \le 1\) and \( \vert h \vert _{{*}} \le 1\), one has

where \( \widetilde{\mathbf{c}}=4\overline{\tau } \Vert \varUpsilon \Vert _{{1}} \left( 2+ \overline{\tau }\vert \rho \vert _{{*}}+\Vert \varUpsilon \Vert _{{1}}\right) +\varPi (x^{4})+\zeta _{{*}}\vert \rho \vert _{{*}}\), \(\zeta _{{*}}=\sup _{{j\ge 1}} \mathbf{E}\zeta ^{4}_{{j}}\) and \( \vert f \vert _{{*}}=\sup _{{t\ge 0}}\vert f(t)\vert \).

Proof

First of all, note that in view of the Ito formula and using the fact that for the process (6) the jumps \(\varDelta z_{{s}} \varDelta L_{{s}}=0\) a.s. for any \(s\ge 0\), we obtain that

Note also that Lemma 1 yields \( \mathbf{E}_{{Q}} I^2_{{t}}(f) = (\varrho ^{2}_{{1}}+\varrho ^{2}_{{2}})\Vert f\Vert ^{2}_{{t}} +\varrho ^{2}_{{3}} \Vert f\sqrt{\rho }\Vert ^2_{{t}}\) with \( \Vert f\Vert ^{2}_{{t}}=\int ^{t}_{{0}}\,f^{2}(s)\mathrm {d}s\). Therefore,

where \( \check{m}_{{t}}= \sum _{{0\le s \le t}}(\varDelta L_{{s}})^2 - t\) and \( m_{{t}} = \sum _{{0\le s \le t}}(\varDelta z_{{s}})^2 - \int _{{0}}^{t} \rho (s) \mathrm {d}s\). Thus,

Using here Lemma 3 and, taking into account that \((\check{m}_{{t}})_{{t\ge 0}}\) is a square integrable martingale, we get

The last integral can be represented as

where \(J_{{1}}=\mathbf{E}_{{Q}}\sum _{{k\ge 1}}\,I^{2}_{{\mathbf{t}_{{k}}-}}(f) h^{2}(\mathbf{t}_{{k}})\mathbf{1}_{{\{\mathbf{t}_{{k}}\le T\}}}\) and \(J_{{2}}=\int ^{T}_0 \,\mathbf{E}_{{Q}}\,I^{2}_{{t}}(f) h^{2}(t)\rho (t) \mathrm {d}t\). By Lemma 2 we get

where \( J_{{1,1}}=\mathbf{E}_{{Q}}\sum _{{k\ge 1}}\, \Vert f\Vert ^{2}_{{\mathbf{t}_{{k}}}} h^{2}(\mathbf{t}_{{k}}) \mathbf{1}_{{\{\mathbf{t}_{{k}}\le T\}}} =\int ^{T}_{{0}}\,\Vert f\Vert ^{2}_{{t}} h^{2}(t)\rho (t)\mathrm {d}t\) and

Moreover, using Lemma 1 for the last term in (60), we obtain that

and we can represent the expectation in (60) as

Therefore, \( \vert \mathbf{E}_{{Q}}\int ^{T}_0 \,I^{2}_{{t-}}(f) h^{2}(t) \mathrm {d}m_{{t}}\vert \le 2 \varrho ^{2}_{{3}}T\Vert \varUpsilon \Vert _{{1}}\, \vert \rho \vert _{{*}}\) and

Furthermore, note that

where \( \widetilde{I}^c_{{t}} (f)=2\varrho _{{1}} \int ^{t}_{{0}}\,I_{{s}}(f) f(s) \mathrm {d}w_{{s}}\) and \(\mathbf{D}_{{T}}(f,h)= \sum _{0\le t \le T} \varDelta \widetilde{I}^{d}_{{t}}(f) \varDelta \widetilde{I}^{d}_{{t}}(h)\). In this case \( \widetilde{I}^{d}_{{t}} (f)=2 \int ^{t}_{{0}}\,I_{{s-}}(f) f(s) \mathrm {d}\xi ^{d}_{{s}} + \int ^{t}_{{0}}\,f^2(s) \mathrm {d}\widetilde{m}_{{s}}\) and \(\xi ^{d}_{{t}}=\varrho _{{2}}L_{{t}}+\varrho _{{3}}z_{{t}}\). Therefore, in view of Lemma 1,

Since \(\vert f \vert _{{*}} \le 1\) and \( \vert h \vert _{{*}} \le 1,\) we get \(\int ^{T}_{{0}} \vert (f,h\varUpsilon )_{{t}} f(t) h(t)\vert \mathrm {d}t\le T \Vert \varUpsilon \Vert _{{1}}\) and

To study the process \(\mathbf{D}_{{T}}(f,h)\) note that \( \varDelta \xi ^{d}_{{t}} \varDelta \widetilde{m}_{{t}} = \varrho ^{3}_{{2}} (\varDelta L_{{t}})^{3} + \varrho ^{3}_{{3}} (\varDelta z_{{t}})^{3}\). Note also that for any \(t\ge 0\) the expectation \(\mathbf{E}_{{Q}}I_{{t}}(f)=0\). Therefore, using the definition of the process \(L_{{t}},\) we obtain through the Fubini theorem that, for any bounded measurable nonrandom functions V, \(V: [0,T]\rightarrow {{\mathbb {R}}},\) we have

Moreover, since the processes \((\check{L}_{{t}})_{{t\ge 0}}\) and \((z_{{t}})_{{t\ge 0}}\) are independent we get

where the integral \(\check{I}_{{t}}(f)\) is defined in (58) and \(\mathcal{G}^{z}=\sigma \{z_{{t}}\,,\,t\ge 0\}\). Note that the condition \((\mathbf{C}_{{3}})\) implies that, for any \(k\ge 1\) and nonrandom \((c_{{j}})_{{j\ge 1}}\), \( \mathbf{E}_{{Q}}\left( \sum ^{ k-1}_{{j=1}}c_{{j}} \zeta _{{j}}\right) \,\zeta ^{3}_{{k}} =0\). Therefore, \( \mathbf{E}_{{Q}}\,\sum _{{0\le t\le T}}\,V(t)I^{z}_{{t-}}(f) (\varDelta z_{{t}})^{3}=0\) and

So, the expectation of \(\mathbf{D}_{{T}}(f,h)\) can be represented as

where \(\mathbf{D}_{{1,T}}(f,h)=\sum _{{0\le t\le T}}\, I_{{t-}}(f)I_{{t-}}(h) f(t)h(t)(\varDelta L_{{t}})^{2}\),

and \( \mathbf{D}_{{3,T}}(f,h)=\sum _{{0\le t\le T}}\,f^2(t)\,h^{2}(t) (\varDelta \widetilde{m}_{{t}})^{2}\). First, since \(\varPi (x^{2})=1\), we get

and \(\vert \mathbf{E}_{{Q}}\,\mathbf{D}_{{1,T}}(f,h)\vert \le \sigma _{{Q}} \left( (f,h)^{2}_{{T}}/2 +T \overline{\tau }\Vert \varUpsilon \Vert _{{1}} \right) \). Then, taking into account that \(\mathbf{E}\,\zeta ^{2}_{{j}}=1\) and using Lemma 2, we find that

where \( \mathbf{D}^{'}_{{2,T}}(f,h)= \sum _{{k\ge 1}} \sum _{{l=1}}^{k-1}\, f(\mathbf{t}_{{l}})\,h(\mathbf{t}_{{l}}) f(\mathbf{t}_{{k}})h(\mathbf{t}_{{k}}) \,\mathbf{1}_{{\{\mathbf{t}_{{k}}\le T\}}}\). Note that

i.e.

Furthermore, the expectation of \(\mathbf{D}^{'}_{{2,T}}(f,h)\) can be represented as

where

Since \(T\ge 1\), we can obtain that

and, therefore,

Moreover, using that \(\zeta _{{*}}=\sup _{{j\ge 1}} \mathbf{E}\zeta ^{4}_{{j}}<\infty \), we get directly

Therefore, \( \vert \mathbf{E}_{{Q}}\,\mathbf{D}_{{T}}(f,h)\vert \le \sigma ^{2}_{{Q}} \left( 2(f,h)^{2}_{{T}} +T\widetilde{\mathbf{c}} \right) \), where \(\widetilde{\mathbf{c}}\) is given in (59). Now, using (62), we get \( \mathbf{E}_{{Q}}\, [ \widetilde{I} (f),\widetilde{I} (h)]_{{T}} \le \sigma ^{2}_{{Q}} \left( 4 (f,h)^{2}_{{T}} +2T\widetilde{\mathbf{c}} \right) \). This bound together with (61) implies (59), which completes the proof. \(\square \)

In order to prove the oracle inequalities we need to study the conditions introduced in Konev and Pergamenshchikov (2012) for the general semi-martingale model (3). To this end, we set for any \(x\in {{\mathbb {R}}}^{p}\) the functions

where \(\sigma _{{Q}}\) is defined in (15) and \(\widetilde{\xi }_{{j,p}}=\xi ^2_{{j,p}}- \mathbf{E}_{{Q}}\xi ^2_{{j,p}}\).

Proposition 4

Assume that the conditions \((\mathbf{C}_{{1}})\)–\((\mathbf{C}_{{2}})\), \((\mathbf{H}_{{1}})\)–\((\mathbf{H}_{{5}})\) hold true. Then there exists some constant \(\mathbf{c}^{*}>0\) such that for any \(Q\in \cup _{{k\ge 1}}\,\mathcal{Q}_{{k}}\)

and

where \(|x|^2 = \sum _{{j=1}}^{p} x^2_{{j}}\) and \(\#(x)=\sum _{{j=1}}^{p} \mathbf{1}_{{\{x_{{j}}\ne 0\}}}\).

Proof

Firstly, using here Lemma 1, we obtain that

From (13) it follows that \( \left| \mathbf{E}_{{Q}}\xi ^2_{{j,p}}-\sigma _{{Q}} \right| \le 2 \varrho ^2_3 \Vert \varUpsilon \Vert _{{1}}/T\) and, therefore, taking into account that \(\#(x)\le T\), we obtain the inequality (64). Next, note that

where \(\widetilde{I}_{{T}}(f)= I^2_{{T}}(f)- \mathbf{E}_{{Q}} I_{{T}}^2(f)\). Now Proposition 3 and the property (28) imply that, for some constant \(\mathbf{c}^{*}>0\) and for \(\vert x\vert \le 1\),

Since \(\#(x)\le T\), we obtain the upper bound (65). \(\square \)

7 Proofs

7.1 Proof of Theorem 1

Using the cost function given in (38), we can rewrite the empirical squared error in (34) as follows

where

with \(\varsigma _{{j,p}}=\mathbf{E}_{{Q}}\xi ^{2}_{{j,p}}-\sigma _{{Q}}\) and \(\widetilde{\xi }_{{j,p}}=\xi ^{2}_{{j,p}}-\mathbf{E}_{{Q}}\xi ^{2}_{{j,p}}\). Setting

and using the functions (63) through the penalty term (37), we rewrite (67) as

where \(\nu (\lambda )=\lambda /|\lambda |\). Let \(\lambda _{{0}}= (\lambda _{{0}}(j))_{{1\le j\le \,p}}\) be a fixed sequence in \(\varLambda \) and \(\widehat{\lambda }\) be defined as in (39). Substituting \(\lambda _{{0}}\) and \(\widehat{\lambda }\) in (69), we obtain

where \(\varpi = \widehat{\lambda } - \lambda _{{0}}\), \(\widehat{\nu } = \nu (\widehat{\lambda })\) and \(\nu _{{0}} = \nu (\lambda _{{0}})\). Now, in view of the inequality \(2|ab| \le \delta a^2 + \delta ^{-1} b^2\) we get that

Then, taking into account that \(|L(\varpi )| \le \,L(\widehat{\lambda }) + L(\lambda ) \le 2\varLambda _{{*}}\) and using the definition (64) we get

where \(B^*_{{2,Q}} = \sup _{{\lambda \in \varLambda }} B^2_{{2,Q}}(\nu (\lambda ))\). Using now the definitions (36), (37) and the inequality \(|\lambda _{{0}}|^{2}=\sum ^{p}_{{j=1}} \lambda ^2_{{0}}(j)\le \varLambda _{{*}}\), we can estimate the penalty term \(\widehat{P}_{{T}}(\lambda _{{0}})\) as \(\widehat{P}_{{T}}(\lambda _{{0}})\le P_{{T}}(\lambda _{{0}})+\varLambda _{{*}} |\widehat{\sigma }_{{T}} -\sigma _{{Q}}|/T\). Therefore, using this in the last inequality, we get that, for \(0< \delta < 1\),

To study here the term \(\mathbf{M}(\cdot )\) we define for any \(x=(x(j))_{{1\le j\le p}}\in {{\mathbb {R}}}^{p}\) the function \( S_{{x}} = \sum _{{j=1}}^{p} x(j) \overline{\theta }_{{j,p}} \psi _{{j,p}}\). Then, using the definition of \(\xi _{{j,p}}\) in (30) we get through (68), that \(\mathbf{M}(x)=I_{{T}}(S_{{x}})/\sqrt{T}\) and, therefore, thanks to (23)

Moreover, setting here \( Z^* = \sup _{{x \in \varLambda _{{1}}}} T \mathbf{M}^2 (x)/\Vert S_{{x}}\Vert ^2\) and \(\varLambda _{{1}} = \varLambda - \lambda _{{0}}\), we get

The last term here can be estimated from above as

where \(\mathbf{m}_{{*}} = \text{ card }(\varLambda )\). Moreover, note that, for any \(x\in \varLambda _{{1}}\),

where \(\mathbf{M}_{{1}}(x) = T^{-1/2}\,\sum _{{j=1}}^{p}\, x^2(j)\overline{\theta }_{{j,p}} \xi _{{j,p}}\). Taking into account now that, for any \(x \in \varLambda _{{1}}\), the components \(|x(j)|\le 1\), we can estimate this term as in (70), i.e. \( \mathbf{E}_{{Q}}\, \mathbf{M}^2_{{1}}(x) \le \varkappa _{{Q}}\,\Vert S_{{x}}\Vert ^2/T\). Similarly to the previous reasoning, setting \( Z^*_{{1}} = \sup _{{x \in \varLambda _{{1}}}}T \mathbf{M}^2_1 (x)/\Vert S_{{x}}\Vert ^2\), we get \(\mathbf{E}_{{Q}}\, Z^*_1 \le \varkappa _{{Q}}\,\mathbf{m}_{{*}}\). Using the same type of arguments as in (71), we can derive

From here and (72), we get

for any \(0<\delta <1\). Using this bound in (71) yields

Taking into account that \(\vert \widehat{S}_{{\varpi }}\vert ^{2}\le 2\,(\text{ Err }(\widehat{\lambda })+\text{ Err }(\lambda _{{0}}))\), we obtain

and, therefore,

Note here that (65) implies \( \mathbf{E}_{{Q}}\, B^*_{{2,Q}} \le \sum _{{\lambda \in \varLambda }}\mathbf{E}_{{Q}} B^2_{{2,Q}} (\nu (\lambda )) \le \mathbf{m}_{{*}} \mathbf{L}_{{2,Q}}\) and, therefore, taking into account that \(1-3\delta \ge 1/2\) for \(0<\delta <1/6\), we get

Now Lemma 4 yields

Moreover, noting that \(\varkappa _{{Q}}\le (1+\overline{\tau }\vert \rho \vert _{{*}})\sigma _{{Q}}\) and using the bounds (64) and (65), we obtain the inequality (40), which is the desired result. \(\square \)
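The two elementary facts used in the proof above (the weighted inequality \(2|ab| \le \delta a^2 + \delta ^{-1} b^2\) and the bound on \(1-3\delta \)) can be checked directly. The short derivation below is a reader's aid written under the standing assumption \(\delta >0\); it is not part of the original argument.

```latex
% Weighted Young-type inequality, valid for all real a, b and any \delta > 0:
\[
0 \le \Bigl(\sqrt{\delta}\,|a| - \frac{|b|}{\sqrt{\delta}}\Bigr)^{2}
  = \delta a^{2} - 2|ab| + \delta^{-1} b^{2}
\quad\Longrightarrow\quad
2|ab| \le \delta a^{2} + \delta^{-1} b^{2}.
\]
% Lower bound on the factor 1 - 3\delta in the range used in Theorem 1:
\[
0 < \delta < \tfrac{1}{6}
\quad\Longrightarrow\quad
1 - 3\delta > 1 - \tfrac{1}{2} = \tfrac{1}{2},
\qquad\text{hence}\qquad
\frac{1}{1 - 3\delta} < 2 .
\]
```

The second display explains why dividing by \(1-3\delta \) costs at most a factor 2 in the final bound.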

7.2 Proof of Proposition 1

Let \(x^{'}=(x^{'}_{{j}})_{{1\le j\le p}}\) with \(x^{'}_{{j}}= \mathbf{1}_{{\{[\sqrt{T}] \leqslant j\leqslant p\}}}\). Then (30) and (35) yield

where \(\mathbf{M}\) is given in (68). Setting \(x^{''}=(x^{''}_{{j}})_{{1\le j\le p}}\) and \( x^{''}_{{j}}= p^{-1/2} \mathbf{1}_{{\{[\sqrt{T}] \leqslant j\leqslant p\}}}\), one can write the last term on the right-hand side of (75) as

where the functions \(B_{{1,Q}}\) and \(B_{{2,Q}}\) are defined in (63). To estimate the first term in (75) we note that \(\sum _{{j\ge [\sqrt{T}]}}j^{-2}\le 2[\sqrt{T}]^{-1}\) and \(\sqrt{T}\ge 2\). Therefore, using Proposition 4 and Lemma 6, we come to the following upper bound

In the same way as in (70) through Lemma 6, we obtain

Taking into account that \(\int ^{1}_{{0}}\vert \dot{S}(t)\vert \mathrm {d}t \le \Vert \dot{S}\Vert \) and \(\varkappa _{{Q}}\le (1+\overline{\tau }\vert \rho \vert _{{*}})\sigma _{{Q}}\) and using the bounds (64) and (65), we obtain the inequality (41). Hence Proposition 1 holds true. \(\square \)
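The tail bound \(\sum _{{j\ge [\sqrt{T}]}}j^{-2}\le 2[\sqrt{T}]^{-1}\) used in this proof, and the fact that the auxiliary vector \(x^{''}\) has \(|x^{''}|^{2}\le 1\), can be checked numerically. The Python sketch below does this for a few integer values of T. The function names, the truncation length and the sample values of T and p are illustrative assumptions; the infinite tail is bounded from above by a partial sum plus the integral remainder \(\sum _{{j\ge M}}j^{-2}\le 1/(M-1)\).

```python
import math


def tail_sum_upper(N: int, terms: int = 100_000) -> float:
    """Upper bound for sum_{j >= N} j**-2: partial sum plus integral remainder."""
    M = N + terms
    partial = sum(1.0 / (j * j) for j in range(N, M))
    return partial + 1.0 / (M - 1)  # sum_{j >= M} j**-2 <= 1 / (M - 1)


def tail_bound_holds(T: int) -> bool:
    """Check sum_{j >= [sqrt(T)]} j**-2 <= 2 / [sqrt(T)] for an integer T >= 4."""
    N = math.isqrt(T)  # integer part of sqrt(T)
    return tail_sum_upper(N) <= 2.0 / N


def norm_sq_x2(T: int, p: int) -> float:
    """|x''|^2 for x''_j = p**-0.5 * 1{[sqrt(T)] <= j <= p}, 1 <= j <= p."""
    N = math.isqrt(T)
    count = max(p - N + 1, 0)  # number of indices j with N <= j <= p
    return count / p


if __name__ == "__main__":
    for T in (4, 9, 100, 10**4):
        print(T, tail_bound_holds(T), norm_sq_x2(T, p=10 * T))
```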

7.3 Proof of Theorem 2

This proof directly follows from Theorem 1 and Proposition 1. \(\square \)

7.4 Proof of Theorem 4

First, we denote by \(Q_{{0}}\) the distribution in \(\mathcal{D}[0,n]\) of the noise (6) with the parameters \(\varrho _{{1}}=\varsigma ^{*}\), \(\varrho _{{2}}=0\) and \(\varrho _{{3}}=0\), i.e., the distribution for the “signal + white noise” model. So, we can estimate from below the robust risk \( \mathcal{R}^{*}_{{T}}(\widetilde{S}_{{T}},S)\ge \mathcal{R}_{{Q_{{0}}}}(\widetilde{S}_{{T}},S)\). Now, Theorem 6.1 from Konev and Pergamenshchikov (2009b) yields the bound (52). Hence we obtain the desired result. \(\square \)

7.5 Proof of Proposition 2

First, we note that in view of (31) one can represent the quadratic risk for the empirical norm \(\Vert \cdot \Vert _{{p}}\) defined in (25) as

where \(\overline{\varTheta }_{{p}}= \sum _{j=1}^{p}\, \left( \theta _{{j,p}}-\lambda _{{0}}(j)\,\overline{\theta }_{{j,p}} \right) ^2\). First, note that

where \(\mathbf{L}^{*}_{{1,T}}=\sup _{{Q\in \mathcal{Q}_{{T}}}}\,\mathbf{L}_{{1,Q}}\). Taking into account that \(\upsilon _{{T}}=T/\varsigma ^{*}\), we get

Recalling here that \(\lambda _{{0}}=\lambda _{{\alpha _{{0}}}}\), we get that

where \(\omega _{{0}}=\omega _{{\alpha _{{0}}}}= \left( \tau _{{\mathbf{k}}} r_{{0}} \upsilon _{{T}}\right) ^{1/(2\mathbf{k}+1)}\), \(r_{{0}}=\left[ \mathbf{r}/ \varepsilon \right] \varepsilon \) and \(\tau _{{\mathbf{k}}}\) is given in (47). Indeed, this follows immediately from the fact that \(\lim _{{T\rightarrow \infty }}r_{{0}}=\mathbf{r}\) and

Now, from (29) we obtain that for any \(0<\widetilde{\varepsilon }<1\)

where \(\varTheta _{{p}}= \sum ^{p}_{j=1}\,(1-\lambda _{{0}}(j))^2\,\theta ^2_{{j,p}}\). Moreover, in view of the definition (53)

Note here that \(\theta ^2_{{j,p}}\le (1+\widetilde{\varepsilon })\theta ^{2}_{{j}}+(1+\widetilde{\varepsilon }^{-1})(\theta _{{j,p}}-\theta _{{j}})^{2}\) for any \(\widetilde{\varepsilon }>0\). Therefore, in view of Lemma 8 using the bound \(\sum ^{[\omega _{{0}}]}_{{j=[\ln T]}}j^{2}\le \omega ^{3}_{{0}}\), we get

Through Lemma 7 we have \( \varTheta _{{2,p}}\le (1+\widetilde{\varepsilon }) \sum _{{j\ge [\omega _{{0}}]+1}}\,\theta ^{2}_{{j}} +(1+\widetilde{\varepsilon }^{-1})\,\mathbf{r}\,p^{-2}\). Hence, \( \varTheta _{{p}}\, \le (1+\widetilde{\varepsilon })\, \varTheta ^{*} + (1+\widetilde{\varepsilon }^{-1})\, \left( 4\pi ^{2}r\omega ^{3}_{{0}}+r\right) \,p^{-2}\), where the first term \(\varTheta ^{*} =\sum _{{j\ge \ln T}}\,(1-\lambda _{{0}}(j))^2\,\theta ^{2}_{{j}}\). Moreover, note that

Moreover, \(\mathcal{W}_{{\mathbf{r},\mathbf{k}}}\subseteq \mathcal{W}_{{\mathbf{r},2}}\) for any \(\mathbf{k}\ge 2\). From here and Lemma 9 we get

and, therefore, in view of the condition \((\mathbf{H}_{{5}})\)

This implies that

To estimate the term \(\varTheta ^{*}\) we set

where the sequence \((a_{{j}})_{{j\ge 1}}\) is defined in (50). This leads to the inequality

Using \(\lim _{{T\rightarrow \infty }} r_{{0}}=\mathbf{r}\), we get \( \limsup _{T\rightarrow \infty }\, \mathbf{U}_{{T}} \le \, \pi ^{-2\mathbf{k}}\left( \tau _{{\mathbf{k}}}\,r \right) ^{-2\mathbf{k}/(2\mathbf{k}+1)}\), where the coefficient \(\tau _{{\mathbf{k}}}\) is given in (47). This implies immediately that

Therefore, from (76) and (78) it follows that

Using now Lemma 5 and the condition \((\mathbf{H}_{{5}})\), we get the upper bound (54). Hence we obtain the desired result. \(\square \)
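Two elementary bounds are used without comment in the proof above: \(\sum ^{[\omega _{{0}}]}_{{j=[\ln T]}}j^{2}\le \omega ^{3}_{{0}}\) and \(a^{2}\le (1+\widetilde{\varepsilon })b^{2}+(1+\widetilde{\varepsilon }^{-1})(a-b)^{2}\), applied with \(a=\theta _{{j,p}}\) and \(b=\theta _{{j}}\). The following Python sketch checks both on sample values. It is an illustration only: the function names and all numerical values of T, \(\omega _{{0}}\), a, b and \(\widetilde{\varepsilon }\) are hypothetical, and the coefficient \(\tau _{{\mathbf{k}}}\) that determines \(\omega _{{0}}\) in the paper is not used.

```python
import math


def sum_squares(lo: int, hi: int) -> int:
    """Sum of j**2 over the integers lo <= j <= hi (zero if the range is empty)."""
    return sum(j * j for j in range(lo, hi + 1))


def cube_bound_holds(T: float, omega0: float) -> bool:
    """Check sum_{j=[ln T]}^{[omega0]} j**2 <= omega0**3, assuming T >= 4."""
    lo = math.floor(math.log(T))
    hi = math.floor(omega0)
    return sum_squares(lo, hi) <= omega0 ** 3


def split_bound(a: float, b: float, eps: float) -> float:
    """Right-hand side (1 + eps) * b**2 + (1 + 1/eps) * (a - b)**2, eps > 0."""
    return (1.0 + eps) * b * b + (1.0 + 1.0 / eps) * (a - b) ** 2


if __name__ == "__main__":
    print(cube_bound_holds(T=100, omega0=12.7))
    print(0.5 ** 2, split_bound(0.5, 0.45, eps=0.1))
```

The first bound follows from \(\sum ^{n}_{{j=1}}j^{2}=n(n+1)(2n+1)/6\le n^{3}\) for integers \(n\ge 1\); the second from expanding \(a=b+(a-b)\) and applying \(2|b(a-b)|\le \widetilde{\varepsilon }b^{2}+\widetilde{\varepsilon }^{-1}(a-b)^{2}\).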

References

Barbu, V. S., Beltaief, S., Pergamenshchikov, S. M. (2019a). Robust adaptive efficient estimation for semi-Markov nonparametric regression models. Statistical Inference for Stochastic Processes, 22(2), 187–231.

Barbu, V. S., Beltaief, S., Pergamenshchikov, S. M. (2019b). Robust statistical signal processing in semi-Markov nonparametric regression models. Les Annales de l’I.S.U.P, 63(2–3), 45–56.

Beltaief, S., Chernoyarov, O., Pergamenshchikov, S. M. (2020). Model selection for the robust efficient signal processing observed with small Lévy noise. Annals of the Institute of Statistical Mathematics, 72, 1205–1235.

Barbu, V. S., Limnios, N. (2008). Semi-Markov chains and hidden semi-Markov models toward applications: Their use in reliability and DNA analysis. Lecture notes in statistics. New York: Springer.

Barndorff-Nielsen, O. E., Shephard, N. (2001). Non-Gaussian Ornstein-Uhlenbeck-based models and some of their uses in financial mathematics. Journal of the Royal Statistical Society Series B (Statistical Methodology), 63, 167–241.

Biard, R., Saussereau, B. (2014). Fractional Poisson processes: Long-range dependence and applications in ruin theory. Journal of Applied Probability, 51, 727–740.

Fourdrinier, D., Pergamenshchikov, S. M. (2007). Improved selection model method for the regression with dependent noise. Annals of the Institute of Statistical Mathematics, 59(3), 435–464.

Fujimori, K. (2019). The Dantzig selector for a linear model of diffusion processes. Statistical Inference for Stochastic Processes, 22, 475–498.

Hastie, T., Friedman, J., Tibshirani, R. (2008). The elements of statistical learning: Data mining, inference and prediction (2nd ed.). New York: Springer, Springer Series in Statistics.

Ibragimov, I. A., Khasminskii, R. Z. (1981). Statistical estimation: Asymptotic theory. New York: Springer.

Kassam, S. A. (1988). Signal detection in Non-Gaussian Noise. IX. New York: Springer.

Konev, V. V., Pergamenshchikov, S. M. (2009a). Nonparametric estimation in a semimartingale regression model. Part 1. Oracle inequalities. Vestnik Tomskogo Gosudarstvennogo Universiteta. Matematika i Mekhanika, 3(7), 23–41.

Konev, V. V., Pergamenshchikov, S. M. (2009b). Nonparametric estimation in a semimartingale regression model. Part 2. Robust asymptotic efficiency. Vestnik Tomskogo Gosudarstvennogo Universiteta. Matematika i Mekhanika, 4(8), 31–45.

Konev, V. V., Pergamenshchikov, S. M. (2012). Efficient robust nonparametric estimation in a semimartingale regression model. Annales de l’Institut Henri Poincaré, Probabilités et Statistiques, 48(4), 1217–1244.

Konev, V. V., Pergamenshchikov, S. M. (2015). Robust model selection for a semimartingale continuous time regression from discrete data. Stochastic Processes and their Applications, 125, 294–326.

Kutoyants, Yu. A. (1994). Identification of dynamical systems with small noise. Dordrecht: Kluwer Academic Publishers Group.

Laskin, N. (2003). Fractional Poisson processes. Communications in Nonlinear Science and Numerical Simulation, 8, 201–213.

Liptser, R. S., Shiryaev, A. N. (1989). Theory of martingales. New York: Springer.

Maheshwari, A., Vellaisamy, P. (2016). On the long-range dependence of fractional Poisson processes. Journal of Applied Probability, 53(4), 989–1000.

Middleton, D. (1979). Canonical non-Gaussian noise models: Their implications for measurement and for prediction of receiver performance. IEEE Transactions on Electromagnetic Compatibility, 21, 209–220.

Novikov, A. A. (1975). On discontinuous martingales. Theory of Probability and its Applications, 20(1), 11–26.

Pinsker, M. S. (1981). Optimal filtration of square integrable signals in Gaussian white noise. Problems of Information Transmission, 17, 120–133.

Repin, O. N., Saichev, A. I. (2000). Fractional Poisson law. Radiophysics and Quantum Electronics, 43(9), 738–741.

Ethics declarations

Conflict of interest

The authors declare that there is no conflict of interest.

This research was supported by RSF, Project No 20-61-47043 (National Research Tomsk State University, Russia).

Appendix

1.1 Property of the penalty term

Lemma 4

For any \(n\ge \,1\) and \(\lambda \in \varLambda \),

where the coefficient \(P_{{T}}(\lambda )\) is defined in (68) and \(\mathbf{L}_{{1,Q}}\) is defined in (64).

Proof

Now Proposition 4 implies

Hence we obtain the result. \(\square \)

1.2 Properties of the Fourier coefficients

Lemma 5

Let f be an absolutely continuous function, \(f: [0,1]\rightarrow {{\mathbb {R}}},\) with \(\Vert \dot{f}\Vert <\infty \) and g be a simple function, \(g: [0,1]\rightarrow {{\mathbb {R}}}\) of the form \( g(t)=\sum _{j=1}^p\,c_{{j}}\,\chi _{(t_{j-1},t_{j}]}(t),\) where \(c_{{j}}\) are some constants. Then, for any \(\varepsilon >0,\) the function \(\varDelta =f-g\) satisfies the following inequalities

Lemma 6

Let the function S(t) in (3) be absolutely continuous and have an absolutely integrable derivative. Then the coefficients \((\overline{\theta }_{{j,p}})_{1\leqslant j \leqslant p}\) defined in (29) satisfy the inequalities \(\max _{{2\leqslant j \leqslant p}} j \vert \overline{\theta }_{{j,p}} \vert \leqslant 2 \sqrt{2} \int ^{1}_{{0}}\vert \dot{S}(t) \vert \mathrm {d}t\).

Lemma 7

For any \(p\ge 2\), \(1\le N\le p\) and \(r>0\), the coefficients \((\theta _{{j,p}})_{{1\le j\le p}}\) of functions S from the class \(\mathcal{W}_{{\mathbf{r},1}}\) satisfy, for any \(\widetilde{\varepsilon }>0\), the following inequality \( \sum ^{p}_{{j=N}} \theta ^{2}_{{j,p}} \, \le \,(1+\widetilde{\varepsilon }) \,\sum _{{j\ge N}}\,\theta ^{2}_{{j}} \, +(1+\widetilde{\varepsilon }^{-1})\,r\,p^{-2}\).

Lemma 8

For any \(p\ge 2\) and \(r>0\), the coefficients \((\theta _{{j,p}})_{{1\le j\le p}}\) of functions S satisfy the inequality \( \max _{{1\le j\le p}} \,\sup _{{S\in \mathcal{W}_{{\mathbf{r},1}}}} \left( |\theta _{{j,p}} - \theta _{{j}}| -2\pi \sqrt{r} \,j\,p^{-1} \right) \, \le 0\).

Lemma 9

For any \(p\ge 2\) and \(r>0\) the correction coefficients from (29) satisfy the inequality \( \sup _{{S\in \mathcal{W}_{{\mathbf{r},2}}}} \sum ^{p}_{{j=1}} h^{2}_{{j,p}} \le \,3r\,p^{-2}\).

About this article

Cite this article

Barbu, V.S., Beltaief, S. & Pergamenchtchikov, S. Adaptive efficient estimation for generalized semi-Markov big data models. Ann Inst Stat Math 74, 925–955 (2022). https://doi.org/10.1007/s10463-022-00820-y
