Abstract

In this paper, we consider testing the homogeneity of proportions in independent binomial distributions, especially when data are sparse and the number of groups is large. We study the proposed tests from several angles: theory, simulations and a real data application. We present the asymptotic null distributions and asymptotic powers of the proposed tests and compare their performance with that of existing tests. Our simulation studies show that none of the tests dominates the others; however, our proposed tests and a few other tests are expected to control the nominal size and attain substantial power. We also present a real data example concerning the safety of Avandia (rosiglitazone), based on Nissen and Wolski (New Engl J Med 356:2457–2471, 2007).

1 Introduction

An important step in statistical meta-analysis is to carry out appropriate tests of homogeneity of the relevant effect sizes before pooling evidence or information across studies. Although the familiar Cochran (1954) Chi-square goodness-of-fit test is widely used in this context, it may perform poorly in the sense that it fails to maintain the Type I error rate in many problems. This is a particularly serious drawback of Cochran's test when testing the homogeneity of several proportions with sparse data. A recent meta-analysis (Nissen and Wolski 2007) addressing the cardiovascular safety concerns associated with Avandia (rosiglitazone) has received wide attention (Cai et al. 2010; Tian et al. 2009; Shuster et al. 2007; Shuster 2010; Stijnen et al. 2010). This study presents two difficulties. First, study sizes (N) are highly unequal, especially in the control arm: over \(95 \%\) of the studies have sizes below 400, while two studies have sizes over 2500. Second, event rates are extremely low, especially for the death end point: the maximum death rate in the treatment arm is \(2 \%\), and over \(80 \%\) of the studies have zero events in the control arm. The original meta-analysis (Nissen and Wolski 2007) was performed in a fixed effects framework because the diagnostic test based on Cochran's Chi-square test failed to reject homogeneity. However, with two large studies dominating the combined result, random effects analysis is generally regarded as the better choice (Shuster et al. 2007). Moreover, the results of the fixed and random effects analyses are discordant. While various fixed effect and random effect approaches have been proposed, the problem of testing the homogeneity of effect sizes is less familiar and often not properly addressed. This is precisely the objective of this paper, namely a thorough discussion of tests of homogeneity of proportions for sparse data.
Recently, several studies have considered testing the equality of means when the number of groups increases while the sample sizes are fixed, in either ANOVA (analysis of variance) or MANOVA (multivariate analysis of variance); see, for example, Bathke and Harrar (2008), Bathke and Lankowski (2005) and Boos and Brownie (1995). The asymptotic results of these studies are limited because they assume that all sample sizes are equal, i.e., a balanced design. In contrast, we emphasize the case in which sample sizes are highly unbalanced and present more general asymptotic results covering a variety of cases, including unbalanced designs and small values of the binomial proportions.

In this paper, we first point out that the classical Chi-square test may fail to control the size when the number of groups is large and data are sparse. We then modify the classical Chi-square test and provide asymptotic results for the modification. Moreover, we propose two new tests for homogeneity of proportions when there are many groups with sparse count data. Throughout this study, we present theoretical conditions under which the proposed tests are asymptotically normal, whereas the asymptotic properties of most existing tests have not been rigorously investigated.

A formulation of the testing problem for proportions is provided in Sect. 2, along with a review of the literature and the proposed new tests; the asymptotic theory needed to apply the suggested tests is also developed there. Results of simulation studies are reported in Sect. 3, and an application to the Nissen and Wolski (2007) data set is presented in Sect. 4. Concluding remarks are given in Sect. 5.

2 Testing the homogeneity of proportions with sparse data

In this section, we present a modification of Cochran's classical test and propose two new tests. Throughout this paper, our theoretical results are based on triangular arrays, which are commonly used in high-dimensional asymptotic theory; see Park and Ghosh (2007) and Park (2009) for triangular arrays in binary data and Greenshtein and Ritov (2004) for more general cases. More specifically, let \({\varTheta }^{(k)} = \{ (\pi _1^{(k)}, \pi _2^{(k)}, \ldots , \pi _k^{(k)}) : 0<\pi _{i}^{(k)}<1~~\text{ for } 1\le i \le k\}\) be the parameter space, in which the \(\pi _i^{(k)}\)s are allowed to vary with k as k increases. The sample sizes \((n_1^{(k)}, \ldots , n_k^{(k)})\) may also change with k. For notational simplicity, however, we suppress the superscript k in \(\pi _i^{(k)}\) and \(n_i^{(k)}\). The triangular array accommodates more flexible settings, for example, sample sizes that all increase and \(\pi _i\)s that all decrease. In contrast, the asymptotic results in Bathke and Lankowski (2005) and Boos and Brownie (1995) are based on increasing k with all sample sizes and \(\pi _i\)s fixed. That setup yields somewhat limited results, whereas we present asymptotic results on the triangular array. Our results also include the asymptotic power functions of the proposed tests, which existing studies do not provide.

2.1 Modification of Cochran’s test

Suppose that there are k independent populations and that the ith population has \(X_{i} \sim \hbox {Binomial}(n_i, \pi _i)\). Denote the total sample size and the weighted average of the \(\pi _i\)'s by \(N=\sum _{i=1}^kn_i\) and \(\bar{\pi }= \frac{1}{N} \sum _{i=1}^kn_i \pi _i\), respectively. We are interested in testing the homogeneity of the \(\pi _{i}\)'s across groups,

$$\begin{aligned} H_0 : \pi _1 = \pi _2 = \cdots = \pi _k \quad \text{ versus } \quad H_1 : \pi _i \ne \pi _j \text{ for some } i \ne j. \end{aligned}$$(1)

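To test hypothesis (1), one familiar procedure is Cochran's Chi-square test (Cochran 1954), namely \(T_S\):

$$\begin{aligned} T_S = \sum _{i=1}^k \frac{(X_i - n_i \hat{\pi })^2}{n_i \hat{\pi }(1-\hat{\pi })}, \end{aligned}$$(2)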

where \({\hat{\pi }} = \frac{\sum _{i=1}^kX_i }{\sum _{i=1}^kn_i}\). \(T_S\) is referred to an approximate Chi-square distribution with \((k-1)\) degrees of freedom under \(H_0\), and \(H_0\) is rejected when \({T}_S > \chi ^2_{1-\alpha , k-1}\), where \(\chi ^2_{1-\alpha , k-1}\) is the \(1-\alpha \) quantile of the Chi-square distribution with \((k-1)\) degrees of freedom. In particular, when k is large, \( \frac{T_S -k}{\sqrt{2k}}\) is approximated by a standard normal distribution under \(H_0\). Although Cochran's test for homogeneity is widely used, the \(\chi ^2\) or normal approximation to the distribution of \(T_S\) may be poor when the sample sizes within the groups are small or when the counts in one of the two categories are low. This is partly because the distribution of the test statistic becomes noticeably discrete and partly because its moments beyond the first may differ considerably from those of the \(\chi ^2\) distribution.
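For concreteness, here is a minimal Python sketch (assuming NumPy) of Cochran's statistic \(T_S\) and of the large-k normal approximation \((T_S-k)/\sqrt{2k}\). The function names are hypothetical, and the chi-square quantile \(\chi ^2_{1-\alpha , k-1}\) is not computed here; it could be taken from, e.g., `scipy.stats.chi2.ppf`.

```python
import numpy as np
from statistics import NormalDist


def cochran_statistic(x, n):
    """Cochran's chi-square statistic T_S for H0: pi_1 = ... = pi_k.

    x: event counts X_i; n: group sizes n_i. The pooled estimate must lie
    strictly between 0 and 1 (at least one event and one non-event overall).
    """
    x = np.asarray(x, dtype=float)
    n = np.asarray(n, dtype=float)
    pi_hat = x.sum() / n.sum()  # pooled estimate sum(X_i) / sum(n_i)
    return float(np.sum((x - n * pi_hat) ** 2 / (n * pi_hat * (1.0 - pi_hat))))


def cochran_normal_test(x, n, alpha=0.05):
    """Large-k normal approximation: reject H0 if (T_S - k)/sqrt(2k) > z_{1-alpha}."""
    k = len(x)
    z_stat = (cochran_statistic(x, n) - k) / np.sqrt(2.0 * k)
    return z_stat, bool(z_stat > NormalDist().inv_cdf(1.0 - alpha))


x = [1, 2, 3]
n = [10, 10, 10]
print(cochran_statistic(x, n))  # pooled pi_hat = 0.2, so T_S = 2 / 1.6 = 1.25
print(cochran_normal_test(x, n))
```

With only three groups this toy example is of course far from the large-k regime; it only shows how the two pieces are computed.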

We show that the asymptotic Chi-square approximation to \({T}_S\), or the normal approximation based on \(\frac{{T}_S-k}{\sqrt{2k}}\), may be very poor when k is large or when the \(\pi _i\)s are small relative to the \(n_i\)s (that is, when the expected counts \(n_i\pi _i\) are small). We provide the following theorem and propose a modified approximation to \(T_S\) that is expected to be more accurate. Let us define

$$\begin{aligned} T = \frac{\mathcal{T}_S - E(\mathcal{T}_S)}{\sqrt{\mathcal{B}_k}}, \end{aligned}$$(3)

where \(\mathcal{T}_S = \sum _{i=1}^k \frac{(X_i - n_i \bar{\pi })^2}{n_i \bar{\pi }(1-\bar{\pi })}\), \(\mathcal{B}_k \equiv \hbox {Var}(\mathcal{T}_S) = \sum _{i=1}^k \hbox {Var} \left( \frac{(X_i - n_i \bar{\pi })^2}{n_i \bar{\pi }(1-\bar{\pi })} \right) \equiv \sum _{i=1}^k B_i\) and

Note that \(\mathcal{T}_S\) is not a statistic because it involves the unknown parameter \(\bar{\pi }= \sum _{i=1}^k \frac{n_i \pi _i}{N}\). It will be shown later that \(\bar{\pi }\) can be replaced by \(\hat{\bar{\pi }} = \frac{1}{N} \sum _{i=1}^k n_i \hat{\pi }_i\) under \(H_0\), since \(\hat{\bar{\pi }}\) is ratio consistent (\(\frac{\hat{\bar{\pi }}}{\bar{\pi }} \rightarrow 1\) in probability) under mild conditions. Define

$$\begin{aligned} \mathcal{B}_{0k} = \sum _{i=1}^k \left( 2-\frac{6}{n_i} + \frac{1}{n_i \bar{\pi }(1-\bar{\pi })} \right) \end{aligned}$$

and

$$\begin{aligned} T_0 = \frac{\mathcal{T}_S - k}{\sqrt{\mathcal{B}_{0k}}}, \end{aligned}$$(4)

which is the T defined in (3) under \(H_0\) since \(E(\mathcal{T}_S) =k\) and \(\mathcal{B}_k = \mathcal{B}_{0k}\) under \(H_0\). The following theorem shows the asymptotic properties of \(T_0\) in (4).

Theorem 1

For \(\theta _i=\pi _i(1-\pi _i)\) and \(\bar{\theta }= \bar{\pi }(1-\bar{\pi })\), if \(\frac{\sum _{i=1}^k\left( \theta _i^4 + \frac{\theta _i}{n_i} \right) }{(\bar{\pi }(1-\bar{\pi }))^4 \mathcal{B}_k^2 } \rightarrow 0\) and \( \frac{\sum _{i=1}^kn_i^2 \theta _i (\pi _i -\bar{\pi })^4 (\theta _i + \frac{1}{n_i}) }{(\bar{\pi }(1-\bar{\pi }))^4\mathcal{B}_k^2} \rightarrow 0\) as \(k\rightarrow \infty \), then we have

$$\begin{aligned} P(T_0 > z) - \bar{\varPhi }\left( \frac{z}{\sigma _k} - \mu _k \right) \rightarrow 0 \quad \text{ for every } z, \end{aligned}$$

where \(\mu _k = \frac{E(\mathcal{T}_S)-k}{\sqrt{\mathcal{B}_{k}}}\), \(\sigma ^2_k = \frac{\mathcal{B}_k}{\mathcal{B}_{0k}}\) and \(\bar{\varPhi }(z) = 1-{\varPhi }(z) =P(Z\ge z) \) for a standard normal distribution Z.

Proof

See “Appendix”. \(\square \)

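We propose a test that rejects \(H_0\) if

$$\begin{aligned} T_{\chi } \equiv \frac{T_S - k}{\sqrt{\hat{\mathcal{B}}_{0k}}} > z_{1-\alpha }, \end{aligned}$$(5)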

where \(z_{1-\alpha }\) is the \(1-\alpha \) quantile of a standard normal distribution, \(\hat{\mathcal{B}}_{0k} = \sum _{i=1}^k \left( 2-\frac{6}{n_i} + \frac{1}{n_i \hat{\bar{\pi }}(1-\hat{\bar{\pi }})} \right) \) and \(\hat{\bar{\pi }} = \frac{\sum _{i=1}^k n_i \hat{\pi }_i}{N}\).
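A minimal sketch of the modified test \(T_{\chi }\), again assuming NumPy; `modified_cochran_test` is a hypothetical name. The only change from the naive normal approximation is that \(2k\) in the denominator is replaced by \(\hat{\mathcal{B}}_{0k}\), computed with the pooled estimate \(\hat{\bar{\pi }}\).

```python
import numpy as np
from statistics import NormalDist


def modified_cochran_test(x, n, alpha=0.05):
    """Return (T_chi, reject) for the modified Cochran test.

    T_chi = (T_S - k) / sqrt(B0_hat), where
    B0_hat = sum_i (2 - 6/n_i + 1 / (n_i * pbar * (1 - pbar))).
    """
    x = np.asarray(x, dtype=float)
    n = np.asarray(n, dtype=float)
    k = n.size
    pbar = x.sum() / n.sum()          # \hat{\bar\pi} = sum_i n_i pi_hat_i / N
    theta = pbar * (1.0 - pbar)
    t_s = np.sum((x - n * pbar) ** 2 / (n * theta))
    b0_hat = np.sum(2.0 - 6.0 / n + 1.0 / (n * theta))
    t_chi = float((t_s - k) / np.sqrt(b0_hat))
    return t_chi, t_chi > NormalDist().inv_cdf(1.0 - alpha)


print(modified_cochran_test([1, 2, 3], [10, 10, 10]))
```

For the toy counts above the statistic is negative and \(H_0\) is not rejected. The sketch assumes \(0<\hat{\bar{\pi }}<1\), i.e., at least one event and at least one non-event overall.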

Using Theorem 1, we obtain the following result, which states that the proposed modification of Cochran's test in (5) is an asymptotically size-\(\alpha \) test, whereas the test based on \(\frac{{T}_S -k}{\sqrt{2k}}\) may fail to control the size \(\alpha \) under some conditions.

Corollary 1

Under \(H_0\) and the conditions in Theorem 1, \(T_{\chi }\) in (5) is an asymptotically size-\(\alpha \) test. The test based on the normal approximation to \(\frac{{T}_S -k}{\sqrt{2k}}\) is not an asymptotically size-\(\alpha \) test unless \(\frac{\mathcal{B}_{0k}}{2k} \rightarrow 1\).

Proof

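We first show that \(\hat{\bar{\pi }}/\bar{\pi }\rightarrow 1\) in probability. Under \(H_0\), \(\pi _i \equiv \pi \), so \(\sum _{i=1}^k n_i \hat{\pi }_i \sim \hbox {Binomial}(N,\pi )\). Since \( \sum _{i=1}^k n_i \pi _i = N\pi \rightarrow \infty \) under \(H_0\), we have

$$\begin{aligned} E\left( \frac{\hat{\bar{\pi }}}{\bar{\pi }} - 1 \right) ^2 = \frac{\hbox {Var}(\hat{\bar{\pi }})}{\pi ^2} = \frac{1-\pi }{N\pi } \rightarrow 0, \end{aligned}$$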

leading to \(\hat{\bar{\pi }}/\bar{\pi }\rightarrow 1\) in probability. From this, we have \( \frac{\hat{\mathcal{B}}_{0k}}{\mathcal{B}_{0k} } \rightarrow 1 \) in probability under \(H_0\). Furthermore, under \(H_0\), since \( \frac{\mathcal{B}_{0k}}{\mathcal{B}_k}=1\) and \(E(\mathcal{T}_S)=k\), we obtain \(T_{\chi } - T = ( \sqrt{\frac{\mathcal{B}_{0k}}{ \hat{\mathcal{B}}_{0k} }} -1 )T = o_p(1) O_p(1) = o_p(1)\), which means that \(T_{\chi }\) and T are asymptotically equivalent under \(H_0\). Since \(P_{H_0}(T > z_{1-\alpha } ) - \bar{\varPhi }(z_{1-\alpha }) \rightarrow 0 \), we have \(P_{H_0}(T_{\chi } > z_{1-\alpha } ) - \alpha \rightarrow 0\), so \(T_{\chi }\) is an asymptotically size-\(\alpha \) test. On the other hand, \( \frac{\mathcal{T}_S -k}{\sqrt{2k}}\) is not asymptotically standard normal unless \({\mathcal{B}_{0k}}/(2k) \rightarrow 1\), since \( \frac{\mathcal{T}_S-k}{\sqrt{2k}} = \sqrt{\frac{\hat{\mathcal{B}}_{0k}}{2k}} T_{\chi }\) under \(H_0\). \(\square \)

Under \(H_0\), since \(\mathcal{B}_{0k} = 2k + (\frac{1}{ \bar{\pi }(1-\bar{\pi })} -6 ) \sum _{i=1}^k \frac{1}{n_i}\), we expect \(\frac{\mathcal{B}_{0k}}{2k}\) to converge to 1 when \( (\frac{1}{\pi (1-\pi )} -6 ) \sum _{i=1}^k \frac{1}{n_i} =o(k)\), where \(\pi _i= \bar{\pi }\equiv \pi \) under \(H_0\). This may happen when \(\pi \) is bounded away from 0 and 1 and the \(n_i\)s are large. If all \(n_i\)s are bounded by some constant, say C, and \(|\frac{1}{\pi (1-\pi )} -6| \ge \delta >0\) (which holds, for example, when \(\pi <\epsilon _1\) or \(\pi > 1-\epsilon _2\) for sufficiently small \(\epsilon _1>0\) and \(\epsilon _2>0\)), then \(\frac{\mathcal{B}_{0k}}{2k}\) does not converge to 1. Even when the \(n_i\)s are large, \(\frac{\mathcal{B}_{0k}}{2k}\) does not converge to 1 if \(\pi \rightarrow 0\) fast enough. For example, if \(\pi =1/k\) and \(n_i=k\) as \(k \rightarrow \infty \), then \(\sum _{i=1}^k \frac{1}{n_i} = 1\) and \(\frac{\mathcal{B}_{0k}}{2k} \rightarrow 3/2\), which leads to \(\frac{\mathcal{T}_S-k}{\sqrt{2k}} \rightarrow N(0, \frac{3}{2})\) in distribution. This implies that \(P(\frac{\mathcal{T}_S -k}{\sqrt{2k}}> z_{1-\alpha }) \rightarrow 1-{\varPhi }( \sqrt{\frac{2}{3}} z_{1-\alpha } ) > \alpha \), so the test has a larger asymptotic size than the nominal level. To summarize, if either \(\pi \) is small or the \(n_i\)s are small, the normal approximation to \(\frac{\mathcal{T}_S-k}{\sqrt{2k}}\) cannot be expected to be accurate, so sparse binary data with small \(n_i\)s and a large number of groups (k) must be handled more carefully.
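The size distortion in the example above can be checked numerically. This standard-library sketch (the helper name `b0_over_2k` is hypothetical) evaluates \(\mathcal{B}_{0k}/(2k)\) for \(\pi =1/k\) and \(n_i=k\), and the limiting size \(1-{\varPhi }(\sqrt{2/3}\, z_{1-\alpha })\) at \(\alpha =0.05\).

```python
import math
from statistics import NormalDist


def b0_over_2k(pi, n_sizes):
    """B_{0k} / (2k) under H0, using B_{0k} = 2k + (1/(pi(1-pi)) - 6) * sum_i 1/n_i."""
    k = len(n_sizes)
    inv_sum = sum(1.0 / m for m in n_sizes)
    b0 = 2.0 * k + (1.0 / (pi * (1.0 - pi)) - 6.0) * inv_sum
    return b0 / (2.0 * k)


# The example in the text: pi = 1/k and n_i = k for every group.
for k in (10, 100, 1000, 10000):
    print(k, b0_over_2k(1.0 / k, [k] * k))

# Limiting size of the naive test when B_{0k}/(2k) -> 3/2.
alpha = 0.05
z = NormalDist().inv_cdf(1.0 - alpha)
limit_size = 1.0 - NormalDist().cdf(math.sqrt(2.0 / 3.0) * z)
print(limit_size)
```

The ratio increases toward 3/2 as k grows, and the limiting size is roughly 0.09, well above the nominal 0.05.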

2.2 New tests

In addition to the modified Cochran's test \(T_{\chi }\), we propose new tests designed for sparse data when k is large. As with \(T_{\chi }\), we will show that the proposed tests are asymptotically normal as \(k \rightarrow \infty \), even though the \(n_i\)s are not required to increase. To this end, let \(||{\varvec{\pi }} - {\varvec{\bar{\pi }}}||^2_{\mathbf{n}} = \sum _{i=1}^k n_i (\pi _i - \bar{\pi })^2\), the weighted squared \(l_2\) distance from \({\varvec{\pi }}=(\pi _1,\pi _2,\ldots , \pi _k)\) to \({\varvec{\bar{\pi }}} = (\bar{\pi }, \bar{\pi }, \ldots , \bar{\pi })\), where \(\mathbf{n}=(n_1,\ldots , n_k)\). The proposed tests are based on \(||{\varvec{\pi }} - {\varvec{\bar{\pi }}}||^2_{\mathbf{n}}\), which is unknown and must be estimated. A natural choice is the plug-in estimator \(||\hat{{\varvec{\pi }}} - {{\varvec{\hat{\bar{\pi }}}}}||^2_{\mathbf{n}}\); however, this estimator may have a substantial bias. To see this, note that

$$\begin{aligned} E\left( ||\hat{{\varvec{\pi }}} - {{\varvec{\hat{\bar{\pi }}}}}||^2_{\mathbf{n}} \right) = ||{\varvec{\pi }} - {\varvec{\bar{\pi }}}||^2_{\mathbf{n}} + \sum _{i=1}^k c_i \pi _i (1-\pi _i), \end{aligned}$$

where \(c_i =1 - \frac{n_i}{N}\). This shows that, on average, \(||\hat{{\varvec{\pi }}} - {{\varvec{\hat{ \bar{\pi }}}}}||^2_{\mathbf{n}}\) overestimates \(||{\varvec{\pi }} - {\varvec{\bar{\pi }}}||^2_{\mathbf{n}}\) by \(\sum _{i=1}^kc_i \pi _i (1-\pi _i)\), which needs to be corrected. Using \(E\left[ \frac{n_i}{n_i-1} \hat{\pi }_i (1-\hat{\pi }_i)\right] = \pi _i (1-\pi _i)\) for \(\hat{\pi }_i = \frac{X_i}{n_i}\), we define \(d_i=\frac{n_i c_i}{n_i-1}\) and

$$\begin{aligned} T = \sum _{i=1}^k n_i \left( \hat{\pi }_i - \hat{\bar{\pi }}\right) ^2 - \sum _{i=1}^k d_i \hat{\pi }_i (1-\hat{\pi }_i), \end{aligned}$$

which is an unbiased estimator of \(||{\varvec{\pi }} - {\varvec{\bar{\pi }}}||^2_{\mathbf{n}}\). Hence \(E(T)= ||{\varvec{\pi }} - {\varvec{\bar{\pi }}}||^2_{\mathbf{n}} \ge 0\), with equality if and only if \(H_0\) is true. It is therefore natural to regard large values of T as evidence in favor of \(H_1\), and we propose a one-sided (upper) rejection region based on T for testing \(H_0\). Our proposed test statistics are based on T, whose asymptotic distribution is normal under some conditions.
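To see the bias correction at work, the following sketch (assuming NumPy; the configuration of \(n_i\) and \(\pi _i\) is an arbitrary illustration, not taken from the paper, and the function names are hypothetical) compares the Monte Carlo means of the plug-in distance and of T with the target \(||{\varvec{\pi }} - {\varvec{\bar{\pi }}}||^2_{\mathbf{n}}\).

```python
import numpy as np


def plug_in_distance(x, n):
    """sum_i n_i (pi_hat_i - pbar_hat)^2; the last axis indexes the groups."""
    x = np.asarray(x, dtype=float)
    n = np.asarray(n, dtype=float)
    pi_hat = x / n
    pbar_hat = x.sum(axis=-1, keepdims=True) / n.sum()
    return np.sum(n * (pi_hat - pbar_hat) ** 2, axis=-1)


def corrected_distance(x, n):
    """Bias-corrected statistic T; requires every n_i >= 2."""
    x = np.asarray(x, dtype=float)
    n = np.asarray(n, dtype=float)
    N = n.sum()
    pi_hat = x / n
    d = n * (1.0 - n / N) / (n - 1.0)  # d_i = n_i c_i / (n_i - 1)
    return plug_in_distance(x, n) - np.sum(d * pi_hat * (1.0 - pi_hat), axis=-1)


# Monte Carlo check with an illustrative configuration.
rng = np.random.default_rng(0)
n = np.array([5, 8, 12, 20])
pi = np.array([0.05, 0.10, 0.10, 0.20])
pbar = np.sum(n * pi) / n.sum()
target = np.sum(n * (pi - pbar) ** 2)            # ||pi - pbar||_n^2
x = rng.binomial(n, pi, size=(200_000, n.size))  # one row per replicate
print(target, plug_in_distance(x, n).mean(), corrected_distance(x, n).mean())
```

Here the target is about 0.144, the plug-in mean should exceed it by about \(\sum _i c_i\pi _i(1-\pi _i)\approx 0.271\), and the mean of T should agree with the target up to Monte Carlo error.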

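We derive the asymptotic normality of a standardized version of T under some regularity conditions. Let us decompose T into two components, \(T_1\) and \(T_2\):

$$\begin{aligned} T = T_1 + T_2, \qquad T_2 = -N\left( \hat{\bar{\pi }} - \bar{\pi }\right) ^2, \end{aligned}$$(8)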

where \(T_1 \equiv \sum _{i=1}^kT_{1i}\) for \(T_{1i} = n_i (\hat{\pi }_i - \pi _i)^2 - d_i \hat{\pi }_i (1-\hat{\pi }_i)+2 n_i (\hat{\pi }_i - \pi _i ) (\pi _i-\bar{\pi })+n_i (\pi _i -\bar{\pi })^2\). To prove the asymptotic normality of the proposed tests, we need the preliminary results stated below in Lemmas 1, 2 and 3, and we show the ratio consistency of the proposed estimators of \(\hbox {Var}(T_1)\) in Lemma 5.
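The decomposition can be checked numerically. The sketch below (assuming NumPy; function names hypothetical) takes \(T_2=-N(\hat{\bar{\pi }}-\bar{\pi })^2\), which is what remains after subtracting \(T_1\) from T, because \(\sum _i n_i(\hat{\pi }_i-\bar{\pi })=N(\hat{\bar{\pi }}-\bar{\pi })\); it evaluates both sides for simulated data.

```python
import numpy as np


def t_stat(x, n):
    """Bias-corrected statistic T (requires n_i >= 2)."""
    N = n.sum()
    pi_hat = x / n
    pbar_hat = np.sum(x) / N
    d = n * (1.0 - n / N) / (n - 1.0)
    return np.sum(n * (pi_hat - pbar_hat) ** 2) - np.sum(d * pi_hat * (1.0 - pi_hat))


def t1_t2(x, n, pi):
    """T_1 = sum_i T_{1i} and T_2 = -N (pbar_hat - pbar)^2 for true proportions pi."""
    N = n.sum()
    pi_hat = x / n
    pbar = np.sum(n * pi) / N
    pbar_hat = np.sum(x) / N
    d = n * (1.0 - n / N) / (n - 1.0)
    t1 = np.sum(n * (pi_hat - pi) ** 2
                - d * pi_hat * (1.0 - pi_hat)
                + 2.0 * n * (pi_hat - pi) * (pi - pbar)
                + n * (pi - pbar) ** 2)
    t2 = -N * (pbar_hat - pbar) ** 2
    return t1, t2


rng = np.random.default_rng(1)
n = rng.integers(2, 30, size=50).astype(float)
pi = rng.uniform(0.01, 0.2, size=50)
x = rng.binomial(n.astype(int), pi).astype(float)
t1, t2 = t1_t2(x, n, pi)
print(t_stat(x, n), t1 + t2)  # equal up to rounding error
```
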

Lemma 1

Let \(\theta _i= \pi _i (1-\pi _i)\). When \(X_i \sim Binomial (n_i, \pi _i)\) and \(\hat{\pi }_i = \frac{X_i}{n_i}\), we have

Proof

The first three results follow from direct computation. For the last result, note that when \(X_{i}\sim \hbox {Binomial}(n_{i},\pi _{i})\), \(E[X_i(X_i-1)\cdots (X_i-l+1)] = n_i(n_i-1)\cdots (n_i-l+1) \pi _{i}^l\), so \(\frac{X_i(X_i-1)\cdots (X_i-l+1)}{n_i(n_i-1)\cdots (n_i-l+1)}\) is an unbiased estimator of \(\pi _i^l\). Let \(X = \sum _{i=1}^k X_i\), which follows \(\hbox {Binomial}(N, \pi )\) under \(H_0\); the same argument then yields the above unbiased estimators under \(H_0\) using \(\hat{\pi }= \frac{X}{N} = \frac{1}{N} \sum _{i=1}^kn_i \hat{\pi }_i\). \(\square \)
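The factorial-moment argument translates directly into code. In this standard-library sketch (function names hypothetical), `eta_hat(x, n, l)` computes \(\prod _{j=0}^{l-1}(x-j)/(n-j)\), which equals \(\frac{n^l}{\prod _{j=0}^{l-1}(n-j)}\prod _{j=0}^{l-1}(\hat{\pi }-j/n)\); summing over the binomial pmf confirms unbiasedness. The pooled estimators are obtained by passing \(X=\sum _i X_i\) and N instead. This estimator needs \(n\ge l\): for \(n<l\) no unbiased estimator of \(\pi ^l\) exists, since every expectation is a polynomial of degree at most n in \(\pi \).

```python
from math import comb, prod


def eta_hat(x, n, l):
    """Unbiased estimator of pi**l from X = x ~ Binomial(n, pi).

    Uses E[X (X-1) ... (X-l+1)] = n (n-1) ... (n-l+1) pi**l; requires n >= l.
    """
    if n < l:
        raise ValueError("an unbiased estimator of pi**l needs n >= l")
    return prod((x - j) / (n - j) for j in range(l))


def exact_mean(n, pi, l):
    """E[eta_hat(X, n, l)] computed by summing over the Binomial(n, pi) pmf."""
    return sum(comb(n, x) * pi ** x * (1 - pi) ** (n - x) * eta_hat(x, n, l)
               for x in range(n + 1))


for l in range(1, 5):
    print(l, exact_mean(7, 0.3, l), 0.3 ** l)  # the two columns agree
```
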

We now derive the asymptotic null distribution of \(\frac{T_1}{\sqrt{\hbox {Var}(T_1)}}\) and propose an unbiased estimator of \({\hbox {Var}(T_1)}\) which has the ratio consistency property. We first compute \(\hbox {Var}(T_1)\) and then propose an estimator \(\widehat{\hbox {Var}(T_1)}\).

Lemma 2

The variance of \(T_1\), \(\hbox {Var}(T_1)\), is

where \( \mathcal{A}_{1i}= \left( 2-\frac{6}{n_i} - \frac{d_i^2}{n_i} + \frac{8d_i^2}{n_i^2}-\frac{6d_i^2}{n_i^3} + 12 d_i \frac{n_i-1}{n_i^2} \right) \) and \(\mathcal{A}_{2i}= \frac{ n_i}{N^2}\) for \(d_i = \frac{n_i}{n_i-1} \left( 1-\frac{n_i}{N} \right) \) .

Proof

See “Appendix”. \(\square \)

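Under \(H_0\) (\(\pi _i = \pi \) for all \(1\le i \le k\)), the third and fourth terms in (9), which involve \(\pi _i-\bar{\pi }\), vanish. Therefore, \(\hbox {Var}(T_1)\) under \(H_0\) is

$$\begin{aligned} \mathcal{V}_1 = \sum _{i=1}^k \left( \mathcal{A}_{1i} \theta _i^2 + \mathcal{A}_{2i} \theta _i \right) , \end{aligned}$$(10)

or, writing the common value \(\theta = \pi (1-\pi )\) explicitly,

$$\begin{aligned} \mathcal{V}_{1*} = \sum _{i=1}^k \left( \mathcal{A}_{1i} \theta ^2 + \mathcal{A}_{2i} \theta \right) . \end{aligned}$$(11)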

\(\mathcal{V}_1\) in (10) and \(\mathcal{V}_{1*}\) in (11) are equal under \(H_0\); however, their estimators may differ depending on whether the \(\theta _i\)s are estimated individually from the \(X_i\)s or the common value \(\pi \) in \(\mathcal{V}_{1*}\) is estimated by the pooled estimator \(\hat{\pi }\). We consider both approaches for estimating \(\mathcal{V}_1\) and \(\mathcal{V}_{1*}\).

First, we describe the estimator of \(\mathcal{V}_1\) in (10). Since \( \mathcal{V}_{1i} \equiv \mathcal{A}_{1i} \theta _i^2 + \mathcal{A}_{2i} \theta _i\) is a fourth-degree polynomial in \(\pi _i\), we can write \(\mathcal{V}_{1i} = a_{1i} \pi _i + a_{2i} \pi _i^2 + a_{3i} \pi _i^3 + a_{4i} \pi _i^4\), where the \(a_{li}\)'s depend only on N and \(n_i\). To estimate \(\mathcal{V}_1 = \sum _{i=1}^k(a_{1i} \pi _i + a_{2i} \pi _i^2 + a_{3i} \pi _i^3 + a_{4i} \pi _i^4)\), we use unbiased estimators of \(\pi _i\), \(\pi _i^2\), \(\pi _i^3\) and \(\pi _i^4\). Let \(\eta _{li}= \pi _{i}^l\), \(l=1,2,3,4\); the unbiased estimators \(\hat{\eta }_{li}\) of \(\eta _{li}\) are obtained directly from Lemma 1, leading to the first estimator of \(\mathcal{V}_{1}\):

$$\begin{aligned} \hat{\mathcal{V}}_1 = \sum _{i=1}^k \sum _{l=1}^4 a_{li} \hat{\eta }_{li}, \end{aligned}$$(12)

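where \( \hat{\eta }_{li} = \frac{n_i^l}{\prod _{j=0}^{l-1} (n_i-j)} \prod _{j=0}^{l-1} \left( \hat{\pi }_i - \frac{j}{n_i} \right) \) for \(l=1,2,3,4\) from Lemma 1 and, expanding \(\theta _i = \pi _i - \pi _i^2\),

$$\begin{aligned} a_{1i} = \mathcal{A}_{2i}, \quad a_{2i} = \mathcal{A}_{1i} - \mathcal{A}_{2i}, \quad a_{3i} = -2\mathcal{A}_{1i}, \quad a_{4i} = \mathcal{A}_{1i}. \end{aligned}$$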

The second estimator is based on \(\mathcal{V}_{1*}\) in (11). Since all \(\pi _i=\pi \) under \(H_0\), we can write \(\mathcal{V}_{1*}= \sum _{i=1}^{k} \sum _{l=1}^4 a_{li} \pi _i^l = \sum _{i=1}^k\sum _{l=1}^4 a_{li} \pi ^l\) and use unbiased estimators of \(\pi ^l\) based on \(\sum _{i=1}^k x_i \sim \hbox {Binomial}(N, \pi )\) from Lemma 1. This leads to the following estimator of \(\mathcal{V}_{1*}\) under \(H_0\):

$$\begin{aligned} \hat{\mathcal{V}}_{1*} = \sum _{i=1}^k \sum _{l=1}^4 a_{li} \hat{\eta }_{l}, \end{aligned}$$(13)

where \(\hat{\eta }_{l} = \frac{N^l}{\prod _{j=0}^{l-1}(N-j)} \prod _{j=0}^{l-1} \left( \hat{\pi }- \frac{j}{N} \right) \) and \(\hat{\pi }= \frac{1}{N} \sum _{i=1}^kn_i \hat{\pi }_i\), as before.
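The two variance estimators differ only in where the unbiased power estimates come from. The NumPy sketch below assumes that the coefficients \(\mathcal{A}_{1i}\) and \(\mathcal{A}_{2i}\) of Lemma 2 are supplied by the caller (the function names are hypothetical). It expands \(\mathcal{A}_{1i}\theta _i^2+\mathcal{A}_{2i}\theta _i\) with \(\theta _i=\pi _i-\pi _i^2\) into \(a_{1i}=\mathcal{A}_{2i}\), \(a_{2i}=\mathcal{A}_{1i}-\mathcal{A}_{2i}\), \(a_{3i}=-2\mathcal{A}_{1i}\), \(a_{4i}=\mathcal{A}_{1i}\), and needs every \(n_i\ge 4\) so that all four factorial-moment estimators exist.

```python
import numpy as np
from math import comb


def eta_hat(x, n, l):
    """Unbiased estimator of pi**l from Binomial(n, pi) counts (elementwise; n >= l)."""
    x = np.asarray(x, dtype=float)
    n = np.asarray(n, dtype=float)
    out = np.ones(np.broadcast(x, n).shape)
    for j in range(l):
        out = out * (x - j) / (n - j)
    return out


def poly_coeffs(A1, A2):
    """(a_1, ..., a_4) with A1*theta^2 + A2*theta = sum_l a_l pi**l, theta = pi - pi**2."""
    A1 = np.asarray(A1, dtype=float)
    A2 = np.asarray(A2, dtype=float)
    return [A2, A1 - A2, -2.0 * A1, A1]


def v1_hat(x, n, A1, A2):
    """First estimator: group-wise unbiased power estimates eta_hat_{li}."""
    a = poly_coeffs(A1, A2)
    return float(sum(np.sum(a[l - 1] * eta_hat(x, n, l)) for l in range(1, 5)))


def v1_star_hat(x, n, A1, A2):
    """Second estimator: pooled X = sum_i x_i, Binomial(N, pi) under H0."""
    X, N = float(np.sum(x)), float(np.sum(n))
    a = poly_coeffs(A1, A2)
    shape = np.shape(n)
    return float(sum(np.sum(np.broadcast_to(a[l - 1], shape)) * eta_hat(X, N, l)
                     for l in range(1, 5)))


def exact_mean_single(n, pi, fn):
    """E[fn(X)] for X ~ Binomial(n, pi), by direct summation over the pmf."""
    return sum(comb(n, x) * pi ** x * (1 - pi) ** (n - x) * fn(x) for x in range(n + 1))


# One group with n = 6, pi = 0.2 and illustrative coefficients A1 = 2.3, A2 = 0.01.
print(exact_mean_single(6, 0.2, lambda x: v1_hat([x], [6], [2.3], [0.01])),
      2.3 * 0.16 ** 2 + 0.01 * 0.16)
```

Averaging either estimator over the relevant binomial distribution recovers \(\sum _i(\mathcal{A}_{1i}\theta _i^2+\mathcal{A}_{2i}\theta _i)\) exactly; only the pooled version relies on \(H_0\).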

Remark 1

Note that \(\hat{\mathcal{V}}_1\) is an unbiased estimator of \(\mathcal{V}_1\) under both \(H_0\) and \(H_1\). On the other hand, \(\hat{\mathcal{V}}_{1*}\) is an unbiased estimator of \(\mathcal{V}_{1*}\) only under \(H_0\), since it relies on the binomial distribution of the pooled count \(\sum _{i=1}^k x_i\) and on Lemma 1.

For sequences of \(a_n (>0)\) and \(b_n(>0)\), let us define \(a_n \asymp b_n \) if \( 0< \liminf \frac{a_n}{b_n} \le \limsup \frac{a_n}{b_n} < \infty \). The following lemmas will be used in the asymptotic normality of the proposed test.

Lemma 3

Suppose \(n_i \ge 2\) for \(1\le i \le k\). Then

1. \(\mathcal{V}_1 \asymp \sum _{i=1}^k \theta _i^2 + \frac{1}{N^2} \sum _{i=1}^k n_i \theta _i\). In particular, if \(0<c\le \pi _i \le 1-c <1\) for all i and some constant c, then \(\mathcal{V}_1\asymp k\).

2. For some constant \(K>0\),

$$\begin{aligned} \sum _{i=1}^k \mathcal{A}_{1i} \theta _i^2 \le \hbox {Var}(T_1) \le K\left( \mathcal{V}_1 + ||{{\varvec{\pi }}} - \bar{{\varvec{\pi }}} ||_{\mathbf{n} \theta }^2\right) , \end{aligned}$$(14)

where \(||{{\varvec{\pi }}} - \bar{{\varvec{\pi }}} ||_{\mathbf{n} \theta }^2 = \sum _{i=1}^k n_i (\pi _i - \bar{\pi })^2 \theta _i\). If \( |\pi _i - \bar{\pi }| \ge \frac{1+\epsilon }{N}\) for some \(\epsilon >0\) and all \(1\le i\le k\), then

$$\begin{aligned} \hbox {Var}(T_1) \asymp \mathcal{V}_1 + ||{{\varvec{\pi }}} - \bar{{\varvec{\pi }}} ||_{\mathbf{n} \theta }^2. \end{aligned}$$(15)

Proof

See “Appendix”. \(\square \)

We now provide another lemma that plays a crucial role in the proofs of the main results. As mentioned, there are two types of variances, \(\mathcal{V}_1\) in (10) and \(\mathcal{V}_{1*}\) in (11), with estimators \(\hat{\mathcal{V}}_1\) and \(\hat{\mathcal{V}}_{1*}\). Accordingly, for \(T_1\) in (8), we consider two types of standard deviations, based on \(\hbox {Var}(T_1)\) and \(\hbox {Var}(T_1)_*\).

The following lemma provides upper bounds on \(n^4 E(\hat{\pi }- \pi )^8\) and \(E(\hat{\pi }(1-\hat{\pi }))^4\), which are needed in the proofs of our main results.

Lemma 4

If \(X \sim \hbox {Binomial}(n,\pi )\), \(\hat{\pi }= \frac{X}{n}\) and \(\hat{\eta }_l\) is the unbiased estimator of \(\pi ^l\) defined in Lemma 1, then we have, for \(\theta \equiv \pi (1-\pi )\),

where C and \(C'\) are universal constants which do not depend on \(\pi \) and n.

Proof

See “Appendix”. \(\square \)

Remark 2

Note that the bounds in Lemma 4 depend on the behavior of \(\theta =\pi (1-\pi )\) and on the sample size n of the binomial distribution. In classical asymptotic theory with a fixed value of \(\pi \), if \(\pi \) is bounded away from 0 and 1 and n is large, then \(\theta ^4\) dominates \(\frac{\theta }{n}\) (or \(\frac{\theta }{n^3}\)). However, if n is not large and \(\pi \) is close to 0 or 1, then \(\frac{\theta }{n}\) (or \(\frac{\theta }{n^3}\)) is a tighter bound on \(n^4 E(\hat{\pi }- \pi )^8\) (or \(E(\hat{\pi }(1-\hat{\pi }))^4\)) than \(\theta ^4\).

The following lemma shows that \(\hat{\mathcal{V}}_1\) and \(\hat{\mathcal{V}}_{1*}\) have the ratio consistency under some conditions.

Lemma 5

For \(\tilde{\theta }= \bar{\pi }(1-\bar{\pi })\), \(\bar{\pi }= \frac{1}{N} \sum _{i=1}^kn_i \pi _i\) and \(\pi _i \le \delta <1\) for some \(0<\delta <1\), the following hold:

1. If \(\frac{\sum _{i=1}^k \left( \frac{\theta _i^3}{n_i}+ \frac{\theta _i}{n_i^3}\right) }{\left( \sum _{i=1}^k \left( \theta _i^2 + \frac{1}{N^2} \frac{\theta _i}{n_i} \right) \right) ^2} \rightarrow 0\) as \(k\rightarrow \infty \), then \(\frac{\hat{\mathcal{V}}_1}{\mathcal{V}_1} \rightarrow 1\) in probability.

2. If \(\frac{ (\tilde{\theta })^3 \sum _{i=1}^k\frac{1}{n_i} + \tilde{\theta }\sum _{i=1}^k \frac{1}{n_i^3}}{ \left( k (\tilde{\theta })^2+ \frac{\tilde{\theta }}{N^2} \sum _{i=1}^k \frac{1}{n_i} \right) ^2 } \rightarrow 0 \) as \(k\rightarrow \infty \), then \(\frac{\hat{\mathcal{V}}_{1*}}{\mathcal{V}_{1*}} \rightarrow 1\) in probability.

Proof

See “Appendix”. \(\square \)

Remark 3

Lemma 5 includes the condition \(\pi _i \le \delta <1\), which excludes the dense case in which most observations equal 1. Since our study focuses on the sparse case, it is realistic to exclude \(\pi _i\)s that are very close to 1. When data are dense, homogeneity of the \(\pi _i\)s can be tested by applying the tests to \(\pi _i^* \equiv 1-\pi _i\), that is, to the complementary observations \(x_{ij}^*=1-x_{ij}\).

Remark 4

As estimators of \(\pi _i^l\) or \(\pi ^l\) for \(l=1,2,3,4\), we used unbiased estimators. Instead, we may simply use the MLEs \( (\hat{\pi }_i)^l\) or \( (\hat{\pi })^l\) for \(l=1,2,3,4\). For the first estimator \(\hat{\mathcal{V}}_1\), the unbiased estimators and the MLEs differ when the sample sizes \(n_i\) are not large. In particular, if all \(n_i\)s are small and k is large, these small differences accumulate, so the two variance estimators are expected to behave quite differently. This will be demonstrated in our simulation studies. On the other hand, for \(\hat{\mathcal{V}}_{1*}\), the unbiased estimators and the MLEs of \(\pi ^l\) under \(H_0\) behave almost identically even for small \(n_i\), since the total sample size \(N=\sum _{i=1}^k n_i\) is large when k is large. The estimator of \(\mathcal{V}_{1}\) based on \(\hat{\pi }_i\), denoted \(\hat{\mathcal{V}}_{1}^{mle}\), has the larger variance

while \(E(\hat{\mathcal{V}}_1 - \mathcal{V}_1)^2 \asymp \sum _{i=1}^k\left( \frac{\theta _i^3}{n_i} + \frac{\theta _i}{n_i^3}\right) \). Similarly, we can also define \({\hat{\mathcal{V}}}_{1*}^{mle}\) based on the \(\hat{\pi } = \frac{\sum _{i=1}^k x_{i}}{N}\). Even with the given condition \( \sum _{i=1}^k\left( \frac{\theta _i^3}{n_i}+\frac{\theta _i}{n_i^3}\right) /(\sum _{i=1}^k\theta _i^2 + \frac{1}{N^2} \sum _{i=1}^k\frac{\theta _i}{n_i})^2 =o(1)\), \(\hat{\mathcal{V}}_{1}^{mle}\) may not be a ratio-consistent estimator due to the additional variation from biased estimation of \(\pi _i^l\) for \(l=2,3,4\). We present simulation studies comparing tests with \(\hat{\mathcal{V}}_1\) and \(\hat{\mathcal{V}}_1^{mle}\) later.

In Lemma 5, we establish the ratio consistency of \(\hat{\mathcal{V}}_1\) and \(\hat{\mathcal{V}}_{1*}\) under some conditions. Both conditions rule out \(\pi _i\)s that are too small relative to the \(n_i\)s across the k groups. Clearly, the conditions are satisfied if all \(\pi _i\)s are uniformly bounded away from 0 and 1. In general, however, the conditions allow small \(\pi _i\)s that converge to zero at rates compatible with the conditions on the \(\theta _i\)s in the lemmas and theorems.

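Under \(H_0\), we have two different estimators, \(\hat{\mathcal{V}}_1\) and \(\hat{\mathcal{V}}_{1*}\), and their corresponding test statistics, \(T_\mathrm{new1}\) and \(T_\mathrm{new2}\), respectively:

$$\begin{aligned} T_\mathrm{new1} = \frac{T}{\sqrt{\hat{\mathcal{V}}_1}}, \qquad T_\mathrm{new2} = \frac{T}{\sqrt{\hat{\mathcal{V}}_{1*}}}. \end{aligned}$$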

The following theorem shows that the proposed tests, \(T_\mathrm{new1}\) and \(T_\mathrm{new2}\), are asymptotically size \(\alpha \) tests.

Theorem 2

Under \(H_0 : \pi _i \equiv \pi \) for all \(1\le i \le k\), if the condition in Lemma 5 holds and \(\frac{ \sum _{i=1}^k \frac{1}{n_i} }{k\theta ^3} \rightarrow 0\) for \(\theta = \pi (1-\pi )\) under \(H_0\), then \(T_\mathrm{new1}\rightarrow N(0,1)\) in distribution and \(T_\mathrm{new2} \rightarrow N(0,1)\) in distribution as \(k \rightarrow \infty \).

Proof

See “Appendix”. \(\square \)

Remark 5

The condition in Lemma 5 under \(H_0\) is \(\frac{\theta ^3 \sum _{i=1}^k\frac{1}{n_i} + \theta \sum _{i=1}^k\frac{1}{n_i^3}}{ \left( k\theta ^2 + \frac{\theta }{N^2} \sum _{i=1}^k \frac{1}{n_i} \right) ^2 } =o(1)\). This condition covers a variety of situations, including small values of \(\pi \) and small sample sizes, as well as inhomogeneous sample sizes. For example, when the sample sizes are bounded, we have \(\sum _{i=1}^k\frac{1}{n_i} \asymp k\) and \(\sum _{i=1}^k\frac{1}{n_i^3} \asymp k\), leading to \(\frac{\theta ^3 \sum _{i=1}^k\frac{1}{n_i} + \theta \sum _{i=1}^k\frac{1}{n_i^3}}{ \left( k\theta ^2 + \frac{\theta }{N^2} \sum _{i=1}^k \frac{1}{n_i} \right) ^2 } \le \frac{1}{k\theta ^3}\), which converges to 0 when \(k \theta ^3 \rightarrow \infty \). This happens, for instance, when \(\pi = k^{\epsilon -1/3}\) for \(0< \epsilon <1/3\), so \(\pi \) is allowed to converge to 0. Another case is that of highly unbalanced sample sizes. For example, suppose \( n_i \asymp i^{\alpha }\) for some \( \alpha >1\), which implies \(\sum _{i=1}^{\infty } \frac{1}{n_i} < \infty \) and \(\sum _{i=1}^{\infty } \frac{1}{n_i^3} <\infty \). Then the condition becomes \( \frac{\theta ^3 \sum _{i=1}^k\frac{1}{n_i} + \theta \sum _{i=1}^k\frac{1}{n_i^3}}{ \left( k\theta ^2 + \frac{\theta }{N^2} \sum _{i=1}^k \frac{1}{n_i} \right) ^2 } \asymp \frac{ \theta ^3 + \theta }{ (k \theta ^2 + \theta /N^2 )^2 } \le \frac{ \theta ^3 + \theta }{k^2 \theta ^4 } = \frac{1}{k^2 \theta } + \frac{1}{k^2 \theta ^3} \rightarrow 0 \) if \( \pi \asymp k ^{\epsilon } \) for \( -\frac{2}{3}< \epsilon < 0\). In this case, \(n_i\) diverges as \(i \rightarrow \infty \), so the sample sizes are highly unbalanced. The additional condition \(\sum _{i=1}^k\frac{1}{n_i}/(k\theta ^3) \rightarrow 0\) in Theorem 2, required for asymptotic normality, is satisfied for \( -\frac{1}{3}< \epsilon <0\).
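The first example in this remark can be explored numerically. The sketch below (assuming NumPy; sample sizes cycling through 2, ..., 10 and \(\epsilon =0.1\) are illustrative choices, and the helper names are hypothetical) evaluates the left-hand side of the condition together with the bound \(1/(k\theta ^3)\).

```python
import numpy as np


def lemma5_null_ratio(theta, n):
    """Left-hand side of the H0 condition in Remark 5 for a common theta = pi(1-pi)."""
    n = np.asarray(n, dtype=float)
    k, N = n.size, n.sum()
    s1, s3 = np.sum(1.0 / n), np.sum(1.0 / n ** 3)
    num = theta ** 3 * s1 + theta * s3
    den = (k * theta ** 2 + theta / N ** 2 * s1) ** 2
    return num / den


def example_ratio(k, eps=0.1):
    """(ratio, bound 1/(k theta^3)) for bounded n_i and pi = k**(eps - 1/3)."""
    n = 2 + np.arange(k) % 9          # sample sizes cycle through 2, ..., 10
    pi = k ** (eps - 1.0 / 3.0)
    theta = pi * (1.0 - pi)
    return lemma5_null_ratio(theta, n), 1.0 / (k * theta ** 3)


for k in (10 ** 2, 10 ** 3, 10 ** 4, 10 ** 5):
    print(k, *example_ratio(k))
```

Both quantities shrink as k grows, in line with \(k\theta ^3\rightarrow \infty \), even though \(\pi \rightarrow 0\) and the sample sizes stay bounded.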

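From Theorem 2, we reject \(H_0\) with \(T_\mathrm{new1}\) (respectively, \(T_\mathrm{new2}\)) if

$$\begin{aligned} T_\mathrm{new1} > z_{1-\alpha } \quad (\text{respectively, } T_\mathrm{new2} > z_{1-\alpha }), \end{aligned}$$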

where \(z_{1-\alpha }\) is the \((1-\alpha )\) quantile of the standard normal distribution. As explained above, the rejection region is one-sided because \(E(T) \ge 0\), so large values of the test statistics support the alternative hypothesis.

Although the two tests have the same asymptotic null distribution, their power functions differ because \(\hat{\mathcal{V}}_1\) and \(\hat{\mathcal{V}}_{1*}\) behave differently under \(H_1\). Asymptotic normality under \(H_1\) is not needed for the validity of the tests; however, explicit asymptotic power functions are useful for comparing the powers analytically.

The following theorem states the asymptotic normality of \(T/\sqrt{{\hbox {Var}(T_1)}}\), where \(\hbox {Var}(T_1)\) is given in (9) in Lemma 2. Note that, in the following asymptotic results, we impose conditions on the \(\theta _i\)s so that they do not approach 0 too fast.

Theorem 3

If (i) \( |\pi _i -\bar{\pi }| \ge \frac{1+\epsilon }{N}\) for some \(\epsilon >0\) and all \(1\le i \le k\), (ii) \(\frac{\sum _{i=1}^k(\theta _i^4 + \frac{\theta _i}{n_i})}{\left( \sum _{i=1}^k\theta _i^4 + \frac{1}{N^2} \sum _{i=1}^k\frac{\theta _i}{N} \right) ^2 } \rightarrow 0\) and (iii) \(\frac{ \max _i (\pi _i - \bar{\pi })^2 (n_i\theta _i +1)}{ \mathcal{V}_1 + ||{{\varvec{\pi }}} - \bar{{\varvec{\pi }}} ||^2_{\theta \mathbf{n}}} \rightarrow 0\), where \(||{{\varvec{\pi }}} - \bar{{\varvec{\pi }}} ||^2_{\theta \mathbf{n}} = \sum _{i=1}^k n_i (\pi _i-\bar{\pi })^2 \theta _i\), then

$$\begin{aligned} \frac{T - ||{\varvec{\pi }} - {\varvec{\bar{\pi }}}||^2_{\mathbf{n}}}{\sqrt{\hbox {Var}(T_1)}} \rightarrow N(0,1) \text{ in distribution}, \end{aligned}$$

where \(\hbox {Var}(T_1)\) is defined in (9).

Proof

See “Appendix”. \(\square \)

Using Theorem 3, we obtain the asymptotic power of the proposed tests. We state this in the following corollary.

Corollary 2

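Under the assumptions in Lemma 5 and Theorem 3, the powers of \(T_\mathrm{new1}\) and \(T_\mathrm{new2}\) satisfy

$$\begin{aligned} P(T_\mathrm{new1}> z_{1-\alpha }) - \bar{\varPhi }\left( z_{1-\alpha } \sqrt{\frac{\mathcal{V}_1}{\hbox {Var}(T_1)}} - \frac{||{\varvec{\pi }} - {\varvec{\bar{\pi }}}||^2_{\mathbf{n}}}{\sqrt{\hbox {Var}(T_1)}} \right) \rightarrow 0 \end{aligned}$$

and

$$\begin{aligned} P(T_\mathrm{new2}> z_{1-\alpha }) - \bar{\varPhi }\left( z_{1-\alpha } \sqrt{\frac{\mathcal{V}_{1*}}{\hbox {Var}(T_1)}} - \frac{||{\varvec{\pi }} - {\varvec{\bar{\pi }}}||^2_{\mathbf{n}}}{\sqrt{\hbox {Var}(T_1)}} \right) \rightarrow 0, \end{aligned}$$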

where \(\bar{\varPhi }(x) = 1-{\varPhi }(x) = P(Z > x)\) for a standard normal random variable Z and \(\hbox {Var}(T_1)\) defined in (9).
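Combining Theorem 3 with the ratio consistency in Lemma 5, a test that divides T by a variance estimator converging in ratio to some V has power approximately \(\bar{\varPhi }\big (z_{1-\alpha }\sqrt{V/\hbox {Var}(T_1)} - ||{\varvec{\pi }} - {\varvec{\bar{\pi }}}||^2_{\mathbf{n}}/\sqrt{\hbox {Var}(T_1)}\big )\), with \(V=\mathcal{V}_1\) for \(T_\mathrm{new1}\) and \(V=\mathcal{V}_{1*}\) for \(T_\mathrm{new2}\). The standard-library sketch below (hypothetical function name) evaluates this approximation from user-supplied quantities; it makes explicit that, other things being equal, a smaller V yields larger power.

```python
import math
from statistics import NormalDist


def approx_power(distance, var_t1, v_null, alpha=0.05):
    """Normal approximation to P(T / sqrt(V_hat) > z_{1-alpha}).

    distance: ||pi - pbar||_n^2 (the mean of T); var_t1: Var(T_1);
    v_null: the ratio limit of the variance estimator (V_1 or V_{1*}).
    """
    z = NormalDist().inv_cdf(1.0 - alpha)
    arg = z * math.sqrt(v_null / var_t1) - distance / math.sqrt(var_t1)
    return 1.0 - NormalDist().cdf(arg)


# Same signal and Var(T_1); only the variance used for standardization differs.
print(approx_power(1.0, 2.0, 1.5), approx_power(1.0, 2.0, 2.5))
```

With zero signal and \(V=\hbox {Var}(T_1)\), the approximation returns the nominal level \(\alpha \), as it should under \(H_0\).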

2.3 Comparison of powers

In the previous section, we presented the asymptotic powers of the tests \(T_\mathrm{new1}\) and \(T_\mathrm{new2}\). It is not straightforward to determine whether one test is uniformly better than the other. However, comparing the tests under specific scenarios may help us understand their properties better. The asymptotic powers depend on the configuration of the \(\pi _i\)s, the \(n_i\)s and k. Although it is not possible to consider all configurations, what we want to show is that neither \(T_\mathrm{new1}\) nor \(T_\mathrm{new2}\) dominates the other.

Let \(\beta (T) = P(T >z_{1-\alpha })\) denote the power of a test statistic T, where T is one of \(T_{\chi }\), \(T_\mathrm{new1}\) and \(T_\mathrm{new2}\); its asymptotic power is \(\lim _{k\rightarrow \infty } \beta (T)\).

Theorem 4

-

1.

If sample sizes \(n_1=\cdots =n_k\equiv n\) and \(\max _{1\le i \le k} \pi _i < \frac{1}{2}-\frac{1}{\sqrt{3}}\), then

$$\begin{aligned} \lim _{k\rightarrow \infty }(\beta (T_\mathrm{new2}) - \beta (T_\mathrm{new1}) ) \ge 0. \end{aligned}$$If \(n_i=n\) for all \(1\le i \le k\) and \( n\bar{\pi }(1-\bar{\pi }) \rightarrow \infty \), then

$$\begin{aligned} \lim _{k \rightarrow \infty } (\beta ({T_\mathrm{new2}}) - \beta (T_{\chi }) )\ge & {} 0. \end{aligned}$$ -

2. Suppose \(\pi _i =\pi =k^{-\gamma }\) for \(1\le i\le k-1\) and \(\pi _k = k^{-\gamma }+\delta \) with \(0<\gamma <1\), and \(n_i =n\) for \(1\le i\le k-1\) and \(n_k = [nk^{\alpha }]\) with \(0<\alpha < 1\), where [x] is the greatest integer not exceeding x. Then, if \(n \rightarrow \infty \):

(a) if \((\alpha ,\gamma ) \in \{(\alpha ,\gamma ) : 0<\alpha<1, 0<\gamma<1, 0<\alpha + \gamma<1, 0<\gamma \le \frac{1}{2}\}\), then \(\lim _k (\beta (T_\mathrm{new1}) -\beta (T_\mathrm{new2}))=0\);

(b) if \((\alpha ,\gamma ) \in \{ (\alpha , \gamma ) :0<\alpha<1, 0<\gamma <1, \alpha + \gamma>1, \alpha > \frac{1}{2} \} \), then \(\lim _k (\beta (T_\mathrm{new1}) -\beta (T_\mathrm{new2})) >0\).

3. Suppose \(\pi _1 = k^{-\gamma }+\delta \) and \(n_1 =n \rightarrow \infty \), and \(\pi _i = k^{-\gamma }\) and \(n_i = [n k^{\alpha }]\) for \(2 \le i \le k\), where \(0<\gamma <1\) and \(0<\alpha <1\). If \(0<\gamma <1/2\) and \(k^{1-\alpha -\gamma } =o(n)\), then

$$\begin{aligned} \lim _{k \rightarrow \infty } (\beta (T_\mathrm{new2}) -\beta (T_\mathrm{new1})) >0. \end{aligned}$$(18)

Proof

See “Appendix”. \(\square \)
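The two elementary facts behind part 1 of Theorem 4 can be verified by direct calculation; the worked equations below are our own check rather than part of the original proof. For the function \(f(x)= 2 x^2(1-x)^2 + \frac{x(1-x)}{n}\) used in the Appendix proof, differentiating twice gives

$$\begin{aligned} f''(x) = 24x^2 - 24x + 4 - \frac{2}{n} = 24\left( x-\frac{1}{2}\right) ^2 - 2\left( 1+\frac{1}{n}\right) , \end{aligned}$$

so \(f''(x)>0\) exactly when \(|x-\frac{1}{2}| > \frac{1}{2\sqrt{3}}\sqrt{1+\frac{1}{n}}\). In particular, f is convex on \(0<x<\frac{1}{2}-\frac{1}{2\sqrt{3}}\sqrt{1+\frac{1}{n}}\), whose right end point tends to \((3-\sqrt{3})/6 \approx 0.211\) as \(n\rightarrow \infty \). Second, when \(n_i = n\) the pooled estimate \(\hat{\bar{\pi }}\) is the average of the \(\hat{\pi }_i\), and

$$\begin{aligned} k\hat{\bar{\pi }}(1-\hat{\bar{\pi }}) - \sum _{i=1}^k \hat{\pi }_i(1-\hat{\pi }_i) = \sum _{i=1}^k \hat{\pi }_i^2 - k\hat{\bar{\pi }}^2 = \sum _{i=1}^k (\hat{\pi }_i-\hat{\bar{\pi }})^2 \ge 0, \end{aligned}$$

which is the inequality used to compare \(T_\mathrm{new2}\) with \(T_{\chi }\).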

From Theorem 4, we conjecture that \(T_\mathrm{new2}\) is more powerful than the other tests when sample sizes are equal or similar to each other. For inhomogeneous sample sizes, parts 2 and 3 of Theorem 4 show that either \(T_\mathrm{new1}\) or \(T_\mathrm{new2}\) can be more powerful, depending on the configuration. We present numerical studies reflecting these cases later.

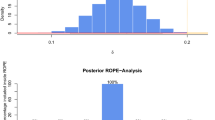

Although we have compared the powers of the proposed tests under some local alternatives, it is also of interest to compare powers in other scenarios. Instead of an analytical approach, we use numerical studies as follows. Since the asymptotic powers of \(T_\mathrm{new1}\) and \(T_\mathrm{new2}\) depend on the behavior of \(\mathcal{V}_1\) and \(\mathcal{V}_{1*}\), we compare these two variances in a variety of situations: if \(\mathcal{V}_{1*}> \mathcal{V}_1\), then \(T_\mathrm{new1}\) is more powerful than \(T_\mathrm{new2}\); otherwise, the opposite holds. Theorem 4 covers only a few situations analytically, so we examine additional situations numerically. We take \(k=100\) and draw the sample sizes \(n_i\) uniformly from \(\{20,21,\ldots ,200 \}\). The left panel of Fig. 1 is for \(\pi _i \sim U(0.01, 0.2)\) and the right panel is for \(\pi _i \sim U(0.01,0.5)\), where U(a, b) is the uniform distribution on (a, b). We consider 1,000 different configurations of \((n_i, \pi _i)_{1\le i \le 100}\) for each panel. We see that \(\hbox {Var}(T_1)\) and \(\hbox {Var}(T_1)_*\) behave differently depending on how the \(\pi _i\)s are generated: if the \(\pi _i\)s are widely spread out, then \(\hbox {Var}(T_1)_*\) is larger; otherwise, \(\hbox {Var}(T_1)\) tends to be larger in our simulations (Fig. 1).
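A minimal sketch of this numerical comparison follows, using only the Python standard library. It computes \(\mathcal{V}_1\) from the coefficients \(\mathcal{A}_{1i}\) and \(\mathcal{A}_{2i}\) of Lemma 2 (see the Appendix). This section does not give a formula for \(\mathcal{V}_{1*}\), so the sketch assumes, as a labeled simplification, that \(\mathcal{V}_{1*}\) is the same expression with every \(\theta _i\) replaced by the pooled \(\bar{\pi }(1-\bar{\pi })\); the function names are hypothetical, and the sketch does not claim to reproduce Fig. 1.

```python
import random


def a_coefficients(n_i, N):
    """Return (A_1i, A_2i) from Lemma 2, with d_i = n_i/(n_i - 1) * (1 - n_i/N)."""
    d = n_i / (n_i - 1) * (1 - n_i / N)
    a1 = (2 - 6 / n_i - d**2 / n_i + 8 * d**2 / n_i**2
          - 6 * d**2 / n_i**3 + 12 * d * (n_i - 1) / n_i**2)
    a2 = n_i / N**2  # closed form stated at the end of the proof of Lemma 2
    return a1, a2


def variances(n, pi):
    """Return (V_1, V_1*) for sample sizes n and success probabilities pi.

    V_1 = sum_i A_1i theta_i^2 + A_2i theta_i, with theta_i = pi_i (1 - pi_i).
    ASSUMPTION: V_1* uses the same formula with every theta_i replaced by the
    pooled theta_bar = pi_bar (1 - pi_bar), where pi_bar = sum(n_i pi_i) / N.
    """
    N = sum(n)
    pi_bar = sum(ni * p for ni, p in zip(n, pi)) / N
    theta_bar = pi_bar * (1 - pi_bar)
    v1 = v1_star = 0.0
    for ni, p in zip(n, pi):
        a1, a2 = a_coefficients(ni, N)
        theta = p * (1 - p)
        v1 += a1 * theta**2 + a2 * theta
        v1_star += a1 * theta_bar**2 + a2 * theta_bar
    return v1, v1_star


def share_v1_star_larger(upper, k=100, reps=1000, seed=1):
    """Fraction of random configurations with V_1* > V_1.

    n_i is uniform on {20, ..., 200} and pi_i ~ U(0.01, upper), as in the text.
    """
    rng = random.Random(seed)
    larger = 0
    for _ in range(reps):
        n = [rng.randint(20, 200) for _ in range(k)]
        pi = [rng.uniform(0.01, upper) for _ in range(k)]
        v1, v1_star = variances(n, pi)
        larger += v1_star > v1
    return larger / reps


if __name__ == "__main__":
    for upper in (0.2, 0.5):  # the two panels described in the text
        share = share_v1_star_larger(upper)
        print(f"pi_i ~ U(0.01, {upper}): share with V_1* > V_1 = {share:.3f}")
```

When all \(\pi _i\) are equal (the null hypothesis), the two quantities coincide in this sketch, which is a useful sanity check on the implementation.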

In the next section, we present simulation studies comparing the performance of \(T_\mathrm{new1}\), \(T_\mathrm{new2}\) and existing tests; their relative performance depends on the situation.

3 Simulations

In this section, we present simulation studies to compare our proposed tests with existing procedures.

We first adopt the following simulation setup and evaluate our proposed tests. Let us define

where \({\varvec{n}}_{m}^*=(2^m,2^m,\ldots , 2^m)\) is an 8-dimensional vector. We consider the following setups (Tables 1, 2, 3, 4, 5, 6).

Setup 1

\(\pi _i=0.001\) for \(1\le i \le k-1\) and \(\pi _k=0.001+\delta \), with \(k=8\) and \({\varvec{n}}_8\)

Setup 2

\(\pi _1=0.001+\delta \) and \(\pi _i = 0.001\) for \(2\le i \le k\), with \(k=8\) and \({\varvec{n}}_8\)

Setup 3

\(\pi _1 = 0.001 + \delta \) and \(\pi _i=0.001\) for \(2\le i \le 8\), with \(k=8\) and \(n_i= 2560\) for \(1\le i \le 8\)

Setup 4

\(\pi _i=0.001\) for \(1\le i \le k-1\) and \(\pi _k=0.001+\delta \), with \(k=40\) and \({\varvec{n}}_{40}\)

Setup 5

\(\pi _1=0.001+\delta \) and \(\pi _i = 0.001\) for \(2\le i \le k\), with \(k=40\) and \({\varvec{n}}_{40}\)

Setup 6

\(\pi _1=0.001+\delta \) and \(\pi _i=0.001\) for \(2 \le i \le k\), with \(k=40\) and \(n_i= 2560\) for \(1\le i \le 40\)

As test statistics, we use \(T_\mathrm{new1}\), \(M_1\), \(T_\mathrm{new2}\), \(M_2\), TS, modTS and PW. Here, as discussed in Remark 4, \(M_1\) uses \(\hat{\mathcal{V}}_1^{mle}\) as an estimator of \(\mathcal{V}_1\) in \(T_\mathrm{new1}\), and \(M_2\) uses \(\hat{\mathcal{V}}_{1*}^{mle}\) for \(\mathcal{V}_{1*}\) in \(T_\mathrm{new2}\). TS represents the test in () and modTS represents the test in (10). Chi represents the Chi-square test that rejects when \(T_S > \chi ^2_{k-1, 1-\alpha }\), where \(\chi ^2_{k-1,1-\alpha }\) is the \((1-\alpha )\) quantile of the Chi-square distribution with \(k-1\) degrees of freedom. PW is the test in Potthoff and Whittinghill (1966), and BL represents the test in Bathke and Lankowski (2005). Note that BL is available only when all sample sizes are equal. To compute the size and power of each test, we simulate 10,000 samples and obtain the empirical size and power from the resulting 10,000 p values.
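The Monte Carlo recipe above can be sketched for the Chi test alone. This is an illustrative sketch under stated assumptions, not the code used for the tables: \(T_S\) is taken to be the Pearson statistic with the pooled estimate \(\hat{\pi } = \sum _i X_i/N\) (modelled on \(\mathcal{T}_{Si}\) in the proof of Theorem 1), the Chi-square quantile uses the Wilson–Hilferty approximation so that only the Python standard library is needed, and only Setup 3 is encoded because its sample sizes are fully specified in the text. The value \(\delta = 0.01\) is an arbitrary illustration, and all function names are hypothetical.

```python
import math
import random
from statistics import NormalDist


def rbinom(n, p, rng):
    """Draw Binomial(n, p) by CDF inversion.

    Adequate for the sparse settings here (small n*p); for large n*p the
    starting term q**n can underflow, so a library sampler is preferable.
    """
    if p <= 0.0:
        return 0
    if p >= 1.0:
        return n
    u = rng.random()
    q = 1.0 - p
    pmf = q**n  # P(X = 0)
    cdf = pmf
    x = 0
    while u > cdf and x < n:
        pmf *= (n - x) / (x + 1) * (p / q)  # P(X = x + 1) from P(X = x)
        x += 1
        cdf += pmf
    return x


def pearson_ts(x, n):
    """sum_i (X_i - n_i p_hat)^2 / (n_i p_hat (1 - p_hat)) with pooled p_hat."""
    p_hat = sum(x) / sum(n)
    if p_hat in (0.0, 1.0):
        return 0.0  # statistic undefined; treated here as "do not reject"
    return sum((xi - ni * p_hat) ** 2 / (ni * p_hat * (1 - p_hat))
               for xi, ni in zip(x, n))


def chi2_quantile_wh(df, prob):
    """Wilson-Hilferty approximation to the Chi-square quantile."""
    z = NormalDist().inv_cdf(prob)
    c = 2.0 / (9.0 * df)
    return df * (1.0 - c + z * math.sqrt(c)) ** 3


def rejection_rate(pi, n, alpha=0.05, reps=2000, seed=0):
    """Monte Carlo rejection rate of the Chi test (T_S > chi^2_{k-1, 1-alpha})."""
    rng = random.Random(seed)
    crit = chi2_quantile_wh(len(n) - 1, 1.0 - alpha)
    rejections = 0
    for _ in range(reps):
        x = [rbinom(ni, p, rng) for ni, p in zip(n, pi)]
        rejections += pearson_ts(x, n) > crit
    return rejections / reps


def setup3(delta):
    """Setup 3: pi_1 = 0.001 + delta, pi_i = 0.001 otherwise, k = 8, n_i = 2560."""
    return [0.001 + delta] + [0.001] * 7, [2560] * 8


if __name__ == "__main__":
    print("empirical size:", rejection_rate(*setup3(0.0)))
    print("empirical power, delta = 0.01:", rejection_rate(*setup3(0.01)))
```

Other tests can be plugged into `rejection_rate` by replacing the statistic and critical value; the empirical size is simply the rejection rate at \(\delta = 0\).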

The setups above cover inhomogeneous sample sizes (Setups 1, 2, 4 and 5) and homogeneous sample sizes (Setups 3 and 6). When sample sizes are inhomogeneous, two cases are considered: the differing \(\pi _i\) occurs in a study with a large sample (Setups 1 and 4) or in a study with a small sample (Setups 2 and 5). In Setups 1–6, only one study has a different probability (\(0.001+\delta \)) and all the others share the same probability (0.001). We also consider the following cases, in which all probabilities differ from each other (Tables 7, 8).

Setup 7

\(\pi _i=0.001(1+\epsilon _i)\), \(k=40\) and \(n_i = 2560\) for \(1\le i \le 40\), where the \(\epsilon _i\)s form an equally spaced grid on \([-\delta , \delta ]\).

Setup 8

\(\pi _i=0.01(1+\epsilon _i)\), \(k=40\) and \({\varvec{n}}_{40}^*\), where the \(\epsilon _i\)s form an equally spaced grid on \([-\delta , \delta ]\).

From our simulations, we first see that \(T_\mathrm{new1}\) attains higher power than \(M_1\), while \(T_\mathrm{new2}\) and \(M_2\) attain almost the same power. The relative performance of \(T_\mathrm{new1}\) and \(T_\mathrm{new2}\) depends on the situation. When sample sizes are homogeneous (Setups 3, 6 and 7), \(T_\mathrm{new2}\) attains slightly higher power than \(T_\mathrm{new1}\), as suggested by part 1 of Theorem 4. On the other hand, when sample sizes are inhomogeneous, \(T_\mathrm{new1}\) seems to have an advantage when the differing probability occurs in a study with a large sample, while \(T_\mathrm{new2}\) seems to attain better power in the opposite case. Cochran’s test seems to fail to control the given size, whereas the modified TS achieves reasonable empirical sizes. When sample sizes are homogeneous, the modified TS has comparable power; however, for inhomogeneous sample sizes, it has substantially lower power than \(T_\mathrm{new1}\) and \(T_\mathrm{new2}\) in Setup 8 (Table 8).

As suggested by a reviewer, we consider two additional numerical studies in which k is extremely large (Tables 9, 10).

Setup 9

\(\pi _i=0.01(1+\epsilon _i)\), \(k=2000\) and \(n_i = 100\) for \(1\le i \le 2000\), where the \(\epsilon _i\)s form an equally spaced grid on \([-\delta , \delta ]\).

Setup 10

\(\pi _i=0.01(1+\epsilon _i)\), \(k=2000\) and \({\varvec{n}}=({\varvec{n}}_{1,250}, {\varvec{n}}_{2,250},\ldots , {\varvec{n}}_{8,250})\), where \({\varvec{n}}_{m,250}=(2^m, 2^m, \ldots , 2^m)\) is a 250-dimensional vector with all components equal to \(2^m\), and the \(\epsilon _i\)s form an equally spaced grid on \([-\,\delta , \delta ]\).

Setup 9 is a case with an extremely large number of groups and small sample sizes. As mentioned in the Introduction, we focus on sparse count data in the sense that the \(\pi _i\)s are small, so we take \(\pi _i\) around 0.01 and homogeneous sample sizes \(n_i=100\), giving \(E(X_i)=n_i\pi _i \approx 1\), which represents very sparse data in each group. The number of groups, \(k=2000\), is much larger than \(n_i=100\). Table 9 shows the sizes and powers of all tests; all tests perform similarly when sample sizes are homogeneous. On the other hand, when sample sizes are highly unbalanced, as in Setup 10, Table 10 shows that our proposed tests control the nominal size and have power that increases with \(\delta \), while the tests based on Chi-square statistics fail to control the nominal size and have little power. In particular, the power of these Chi-square-based tests decreases even as the effect size (\(\delta \) in this case) increases. PW controls the size and its power increases with \(\delta \); however, its power is much smaller than that of our proposed tests. All code will be available upon request.
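A small sketch of how the Setup 9 and Setup 10 configurations can be generated follows. The text does not say whether the grid on \([-\delta , \delta ]\) includes its endpoints or in which order the \(\epsilon _i\) are assigned to groups; the sketch assumes both endpoints are included and that \(\epsilon _i\) increases with i. The helper names are hypothetical.

```python
def eps_grid(k, delta):
    """k equally spaced points on [-delta, delta], endpoints included (assumed)."""
    if k == 1:
        return [0.0]
    step = 2.0 * delta / (k - 1)
    return [-delta + j * step for j in range(k)]


def setup9(delta, k=2000):
    """pi_i = 0.01 (1 + eps_i) and n_i = 100 for every group."""
    pi = [0.01 * (1.0 + e) for e in eps_grid(k, delta)]
    return pi, [100] * k


def setup10(delta):
    """pi_i = 0.01 (1 + eps_i); n = (n_{1,250}, ..., n_{8,250}).

    n_{m,250} repeats 2^m a total of 250 times, so k = 8 * 250 = 2000.
    """
    k = 2000
    pi = [0.01 * (1.0 + e) for e in eps_grid(k, delta)]
    n = [2 ** m for m in range(1, 9) for _ in range(250)]
    return pi, n


pi9, n9 = setup9(0.5)
pi10, n10 = setup10(0.5)
# average expected count n_i * pi_i per group in Setup 9 (about 1)
mean_count9 = sum(ni * p for ni, p in zip(n9, pi9)) / len(n9)
```

With this ordering, the largest probabilities in Setup 10 fall in the groups with the largest sample sizes; a different assignment of the \(\epsilon _i\) could change the simulated results.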

4 Real examples

In this section, we provide real examples for testing the homogeneity of binomial proportions from a large number of independent groups.

We apply our proposed tests and existing tests to the rosiglitazone data in Nissen and Wolski (2007). The data set includes 42 studies and consists of the study size (N), the number of myocardial infarctions (MI) and the number of deaths (D) for rosiglitazone (treatment), together with the corresponding results in the control arm for each study.

We test (1) for the proportions of myocardial infarction (MI) and of death from cardiovascular causes (DCV). There are four situations: (i) MI/rosiglitazone, (ii) DCV/rosiglitazone, (iii) MI/control and (iv) DCV/control. Table 11 shows the p values of the different test statistics in each situation. For MI/rosiglitazone and MI/control, all tests give p values of 0. For the other two cases, however, some tests lead to different results. For DCV/rosiglitazone, \(T_\mathrm{new2}\), TS and modTS have small p values, while \(T_\mathrm{new1}\) and PW have slightly larger p values. For DCV/control, \(T_\mathrm{new1}\) and \(T_\mathrm{new2}\) have much smaller p values (0.107 and 0.079) than \(T_S\), modTS, Chi and PW (0.609, 0.406, 0.584 and 0.229, respectively).

5 Concluding remarks

In this paper, we considered testing the homogeneity of binomial proportions from a large number of independent studies. In particular, we focused on sparse data and heterogeneous sample sizes, which complicate the identification of the null distributions. We proposed new tests and derived their asymptotic properties under some regularity conditions. Our simulations and real data examples show that the proposed tests perform well for sparse data from a large number of studies. In our simulations, the proposed tests were the most reliable in controlling the given size, so small p values from the proposed tests provide strong evidence against the null hypothesis.

References

Bathke, A. C., Harrar, S. W. (2008). Nonparametric methods in multivariate factorial designs for large number of factor levels. Journal of Statistical Planning and Inference, 138(3), 588–610.

Bathke, A., Lankowski, D. (2005). Rank procedures for a large number of treatments. Journal of Statistical Planning and Inference, 133(2), 223–238.

Billingsley, P. (1995). Probability and Measure (3rd ed.). Hoboken: Wiley.

Boos, D. D., Brownie, C. (1995). ANOVA and rank tests when the number of treatments is large. Statistics and Probability Letters, 23, 183–191.

Cai, T., Parast, L., Ryan, L. (2010). Meta-analysis for rare events. Statistics in Medicine, 29(20), 2078–2089.

Cochran, W. G. (1954). Some methods for strengthening the common \(\chi ^2\) tests. Biometrics, 10, 417–451.

Greenshtein, E., Ritov, Y. (2004). Persistence in high-dimensional linear predictor selection and the virtue of overparametrization. Bernoulli, 10, 971–988.

Nissen, S. E., Wolski, K. (2007). Effect of rosiglitazone on the risk of myocardial infarction and death from cardiovascular causes. New England Journal of Medicine, 356(24), 2457–2471.

Park, J. (2009). Independent rule in classification of multivariate binary data. Journal of Multivariate Analysis, 100, 2270–2286.

Park, J., Ghosh, J. K. (2007). Persistence of the plug-in rule in classification of high dimensional multivariate binary data. Journal of Statistical Planning and Inference, 137, 3687–3705.

Potthoff, R. F., Whittinghill, M. (1966). Testing for homogeneity: I. The binomial and multinomial distributions. Biometrika, 53, 167–182.

Shuster, J. J. (2010). Empirical versus natural weighting in random effects meta analysis. Statistics in Medicine, 29, 1259–1265.

Shuster, J. J., Jones, L. S., Salmon, D. A. (2007). Fixed vs random effects meta-analysis in rare event studies: The rosiglitazone link with myocardial infarction and cardiac death. Statistics in Medicine, 26, 4375–4385.

Stijnen, T., Hamza, T. H., Özdemir, P. (2010). Random effects meta-analysis of event outcome in the framework of the generalized linear mixed model with applications in sparse data. Statistics in Medicine, 29, 3046–3067.

Tian, L., Cai, T., Pfeffer, M. A., Piankov, N., Cremieux, P. Y., Wei, L. J. (2009). Exact and efficient inference procedure for meta-analysis and its application to the analysis of independent \(2\times 2\) tables with all available data but without artificial continuity correction. Biostatistics, 10, 275–281.


Appendix

Proof of Theorem 1

We use the Lyapunov condition to establish the asymptotic normality of \(\frac{\mathcal{T}_S- E(\mathcal{T}_S)}{\sqrt{\mathcal{B}_k}}\). Let \(\mathcal{T}_{Si} = \frac{(X_i - n_i \bar{\pi })^2}{n_i \bar{\pi }(1-\bar{\pi })}\), and define \(\mathcal{D}_i = \mathcal{T}_{Si} - E(\mathcal{T}_{Si}) = \frac{(X_i - n_i \bar{\pi })^2}{n_i \bar{\pi }(1-\bar{\pi })} - \frac{n_i^2(\pi _i -\bar{\pi })^2}{n_i \bar{\pi }(1-\bar{\pi })} - \frac{n_i\pi _i (1-\pi _i)}{n_i \bar{\pi }(1-\bar{\pi })} = \frac{1}{n_i \bar{\pi }(1-\bar{\pi }) } ((X_i - n_i \pi _i)^2 + 2n_i (X_i - n_i \pi _i) (\pi _i -\bar{\pi }) - n_i \pi _i (1-\pi _i))\), where we used \(E(X_i - n_i\bar{\pi })^2 = n_i\pi _i(1-\pi _i) + n_i^2(\pi _i-\bar{\pi })^2\). We show that the Lyapunov condition \( \frac{\sum _{i=1}^kE(\mathcal{D}_i^4) }{\mathcal{B}_k^2} \rightarrow 0\) is satisfied. We see that

from the given conditions. Therefore, we have the asymptotic normality of \(\frac{\mathcal{T}_S-E(\mathcal{T}_S)}{\sqrt{\mathcal{B}_k}} \rightarrow N(0,1) \) in distribution. Furthermore, we also have the asymptotic normality of

which leads to \( P( T_0 \ge z_{1-\alpha }) = P(\sigma _k \frac{T_S-k}{\sqrt{\mathcal{B}_{k}}} + \mu _k \ge z_{1-\alpha } ) = P(\frac{T_S -k}{\sqrt{\mathcal{B}_k}} \ge \frac{z_{1-\alpha }}{\sigma _k} -\mu _k)\). Using \(\frac{T_S -k}{\sqrt{\mathcal{B}_k}} \rightarrow N(0,1)\) in distribution, we have \( P(T_0 \ge z_{1-\alpha }) - \bar{\varPhi }( \frac{z_{1-\alpha }}{\sigma _k} -\mu _k) \rightarrow 0.\)\(\square \)

Proof of Lemma 2

Since \(T_{1i}\) and \(T_{1j}\) for \(i\ne j\) are independent, we have \(\mathcal{V}_1 \equiv \hbox {Var}(T_1) = \sum _{i=1}^k\hbox {Var}(T_{1i})\) where

Using the following results

we derive

where \( \mathcal{A}_{1i}= \left( 2-\frac{6}{n_i} - \frac{d_i^2}{n_i} + \frac{8d_i^2}{n_i^2}-\frac{6d_i^2}{n_i^3} + 12 d_i \frac{n_i-1}{n_i^2} \right) \) and \(\mathcal{A}_{2i} =\Big (\frac{1}{n_i} +\frac{d_i^2}{n_i} - \frac{2d_i^2}{n_i^2} + \frac{d_i^2}{n_i^3}- 2d_i\frac{n_i-1}{n_i^2} \Big ) = \frac{n_i}{N^2}\) from \(d_i = \frac{n_i}{n_i-1} \left( 1 -\frac{n_i}{N} \right) \). \(\square \)
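The closed form \(\mathcal{A}_{2i} = n_i/N^2\) can be checked by hand: with \(r = n_i/N\), the terms involving \(d_i^2\) combine to \(d_i^2(n_i-1)^2/n_i^3 = (1-r)^2/n_i\) and \(2d_i(n_i-1)/n_i^2 = 2(1-r)/n_i\), so \(\mathcal{A}_{2i} = \{1-(1-r)\}^2/n_i = r^2/n_i\). The short sketch below is our own check, using exact rational arithmetic from Python's standard library; the function names are illustrative.

```python
from fractions import Fraction


def d_coef(n, N):
    """d_i = n_i/(n_i - 1) * (1 - n_i/N), as in Lemma 2."""
    return Fraction(n, n - 1) * (1 - Fraction(n, N))


def a2_expanded(n, N):
    """A_2i in the expanded form of Lemma 2, evaluated exactly."""
    d = d_coef(n, N)
    return (Fraction(1, n) + d**2 / n - 2 * d**2 / n**2 + d**2 / n**3
            - 2 * d * Fraction(n - 1, n**2))


# The expanded form collapses to n_i / N^2 for every tested (n_i, N).
for n in range(2, 30):
    for N in (n + 2, 3 * n, 1000):
        assert a2_expanded(n, N) == Fraction(n, N**2)
```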

Proof of Lemma 3

-

1.

Using \(d_i = \frac{n_i}{n_i-1} (1-\frac{n_i}{N}) < 2\), we can show that \(\mathcal{A}_{1i}\) is uniformly bounded, since \(\mathcal{A}_{1i} = 2 - \frac{6}{n_i} - \frac{d_i^2}{n_i} + \frac{8d_i^2}{n_i^2} -\frac{6d_i^2}{n_i^3} + 12 \frac{n_i}{n_i-1} \frac{n_i-1}{n_i^2}(1-\frac{n_i}{N}) = 2 + \frac{6}{n_i} -\frac{12}{N} + \frac{d_i^2}{n_i}( -1 + \frac{8}{n_i} -\frac{6}{n_i^2})\). Let \(x = \frac{1}{n_i} \le \frac{1}{2}\); then \( f(x) = -1 + 8x -6x^2 = -6(x-\frac{2}{3})^2 + \frac{5}{3}\), which satisfies \( -1< f(x) \le \frac{3}{2}\). Therefore, we have \( 2 + \frac{6}{n_i} -\frac{12}{N} + \frac{6}{n_i} \ge \mathcal{A}_{1i} \ge 2 + \frac{6}{n_i} -\frac{12}{N} - \frac{4}{n_i}\). Since \(n_i\ge 2\) and \(N \rightarrow \infty \) as \(k \rightarrow \infty \), the lower and upper bounds are bounded away from 0 and \(\infty \) uniformly in i. Therefore, we have \(\mathcal{A}_{1i} \asymp 1\) and \(\mathcal{A}_{2i} = \frac{n_i}{N^2}\), leading to \(\mathcal{V}_1 = \sum _{i=1}^k\mathcal{A}_{1i} \theta _i^2 + \sum _{i=1}^k\mathcal{A}_{2i} \theta _i \asymp \sum _{i=1}^k\theta _i^2 + \frac{1}{N^2} \sum _{i=1}^kn_i \theta _i\).

-

2.

Let \(\mathcal{G}_n = 4 \sum _{i=1}^kn_i (\pi _i -\bar{\pi })^2 \theta _i + 4 \frac{1}{N} \sum _{i=1}^kn_i (\pi _i-\bar{\pi })(1-2\pi _i)\theta _i = 4 \sum _{i=1}^k\theta _i G_{i} \) where \(G_i = n_i(\pi _i -\bar{\pi })^2 + \frac{n_i}{N}(\pi _i-\bar{\pi })(1-2\pi _i)\). If we define \(\mathcal{B} = \{ i : |\pi _i - \bar{\pi }| \ge \frac{(1+\epsilon )}{N} \}\) for some \(\epsilon >0\), then we decompose

$$\begin{aligned} \mathcal{V}_1= & {} \underbrace{ \sum _{i \in \mathcal{B}} ( \mathcal{A}_{1i} \theta _i^2 + \mathcal{A}_{2i} \theta _i)}_{\mathcal{F}_1} + \underbrace{\sum _{i \in \mathcal{B}^c} ( \mathcal{A}_{1i} \theta _i^2 + \mathcal{A}_{2i} \theta _i)}_{\mathcal{F}_2} \end{aligned}$$(19)

$$\begin{aligned} \mathcal{G}_n= & {} 4 \underbrace{\sum _{i \in \mathcal{B}} \theta _i G_i}_{\mathcal{G}_{n1}} + 4 \underbrace{\sum _{i \in \mathcal{B}^c}\theta _i G_i}_{\mathcal{G}_{n2}} \equiv 4 \mathcal{G}_{n1} + 4 \mathcal{G}_{n2}. \end{aligned}$$(20)

For \(i \in \mathcal{B}\), we have \( \frac{n_i}{N}| (1-2\pi _i) (\pi _i - \bar{\pi }) \theta _i| \le \frac{n_i}{(1+\epsilon )} (\pi _i - \bar{\pi })^2 \theta _i\), which implies

$$\begin{aligned} \frac{4\epsilon }{1+\epsilon } \sum _{i \in \mathcal{B}} n_i ( \pi _i -\bar{\pi })^2 \theta _i \le 4 \mathcal{G}_{n1} \le \frac{4 (2+\epsilon )}{1+\epsilon } \sum _{i \in \mathcal{B}} n_i ( \pi _i -\bar{\pi })^2 \theta _i. \end{aligned}$$

This leads to \(4 \mathcal{G}_{n1} \asymp \sum _{i \in \mathcal{B}} n_i (\pi _i- \bar{\pi })^2 \theta _i\) and

$$\begin{aligned} \mathcal{F}_1+ 4 \mathcal{G}_{n1} \asymp \mathcal{F}_1+ \sum _{i \in \mathcal{B}} n_i ( \pi _i -\bar{\pi })^2 \theta _i. \end{aligned}$$(21)

For \(\mathcal{B}^c = \{ i : |\pi _i -\bar{\pi }| < \frac{(1+\epsilon )}{N}\}\), we first show \( \mathcal{F}_2 + 4\mathcal{G}_{n2} \ge \sum _{i \in \mathcal{B}^c} \mathcal{A}_{1i} \theta _i^2\). For \(i \in \mathcal{B}^c\) and \(x = \pi _i-\bar{\pi }\), completing the square gives \( G_i = n_i( x+ \frac{1}{2N} (1-2\pi _i))^2 - \frac{(1-2\pi _i)^2 n_i }{4N^2} \ge -\frac{ (1-2\pi _i)^2 n_i}{4N^2}\), leading to

$$\begin{aligned} \mathcal{F}_2 + 4 \mathcal{G}_{n2}\ge & {} \sum _{i \in \mathcal{B}^c} \mathcal{A}_{1i}\theta _i^2 + \frac{1}{N^2} \sum _{i \in \mathcal{B}^c} {n_i\theta _i} \left( 1-(1-2\pi _i)^2 \right) \nonumber \\= & {} \sum _{i\in \mathcal{B}^c} \mathcal{A}_{1i} \theta _i^2 + \frac{4}{N^2} \sum _{i \in \mathcal{B}^c} {n_i \theta _i^2} = \sum _{i\in \mathcal{B}^c} \mathcal{A}_{1i} \theta _i^2 + 4 \sum _{i \in \mathcal{B}^c} \mathcal{A}_{2i}\theta _i^2 \nonumber \\> & {} \sum _{i\in \mathcal{B}^c} \mathcal{A}_{1i} \theta _i^2. \end{aligned}$$(22)

The upper bound of \(4\mathcal{G}_{n2}\) is

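$$\begin{aligned} 4 \mathcal{G}_{n2}\le & {} 4 \sum _{i \in \mathcal{B}^c} n_i (\pi _i - \bar{\pi })^2 \theta _i + \frac{4(1+\epsilon )}{N^2} \sum _{i \in \mathcal{B}^c} n_i \theta _i = 4 \sum _{i \in \mathcal{B}^c} n_i (\pi _i - \bar{\pi })^2 \theta _i + 4(1+\epsilon ) \sum _{i \in \mathcal{B}^c} \mathcal{A}_{2i} \theta _i \end{aligned}$$

resulting in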

$$\begin{aligned} \mathcal{F}_2 + 4 \mathcal{G}_{n2}\le & {} 4(1+\epsilon ) \sum _{i\in \mathcal{B}^c} \mathcal{A}_{1i} \theta _i^2 + 4(1+\epsilon ) \sum _{i \in \mathcal{B}^c} \mathcal{A}_{2i} \theta _i + 4 \sum _{i \in \mathcal{B}^c} n_i (\pi _i - \bar{\pi })^2 \theta _i \nonumber \\< & {} 4(1+\epsilon ) ( \mathcal{F}_2 + \sum _{i \in \mathcal{B}^c} n_i (\pi _i - \bar{\pi })^2 \theta _i). \end{aligned}$$(23)

Combining (22) and (23), we have

$$\begin{aligned} \sum _{i \in \mathcal{B}^c} \mathcal{A}_{1i} \theta _i^2< \mathcal{F}_2 + 4\mathcal{G}_{n2} < 4(1+\epsilon )( \mathcal{F}_2 + \sum _{i \in \mathcal{B}^c} n_i (\pi _i - \bar{\pi })^2 \theta _i). \end{aligned}$$(24)

From (21) and (24), we conclude, for \(K=4(1+\epsilon )\),

$$\begin{aligned} \sum _{i=1}^k \mathcal{A}_{1i} \theta _i^2 < \hbox {Var}(T_1) \le K (\mathcal{V}_1 + ||{{\varvec{\pi }}} - \bar{{\varvec{\pi }}} ||_{\theta \mathbf{n}}^2). \end{aligned}$$

In particular, if \( \mathcal{B}^c\) is empty, then \(\hbox {Var}(T_1) = \mathcal{F}_{1} + 4 \mathcal{G}_{n1}\), so (21) implies (15). \(\square \)
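Part 1 of the proof of Lemma 3 can also be checked with exact rational arithmetic. The sketch below is our own check (function names are illustrative): it evaluates \(\mathcal{A}_{1i}\) exactly as defined in Lemma 2, verifies the simplified form \(2 + \frac{6}{n_i} - \frac{12}{N} + \frac{d_i^2}{n_i}f(1/n_i)\) with \(f(x) = -1+8x-6x^2 = -6(x-\frac{2}{3})^2 + \frac{5}{3}\), and confirms the bounds \(2 + \frac{2}{n_i} - \frac{12}{N} \le \mathcal{A}_{1i} \le 2 + \frac{12}{n_i} - \frac{12}{N}\) on a grid of \(n_i \ge 2\) and \(N \ge n_i + 2\).

```python
from fractions import Fraction


def d_coef(n, N):
    """d_i = n_i/(n_i - 1) * (1 - n_i/N), as in Lemma 2."""
    return Fraction(n, n - 1) * (1 - Fraction(n, N))


def a1(n, N):
    """A_1i as defined in Lemma 2, evaluated exactly."""
    d = d_coef(n, N)
    return (2 - Fraction(6, n) - d**2 / n + 8 * d**2 / n**2
            - 6 * d**2 / n**3 + 12 * d * Fraction(n - 1, n**2))


def f(x):
    return -1 + 8 * x - 6 * x**2


for m in range(2, 200):  # x = 1/n_i with n_i >= 2
    x = Fraction(1, m)
    assert f(x) == -6 * (x - Fraction(2, 3)) ** 2 + Fraction(5, 3)
    assert -1 < f(x) <= Fraction(3, 2)

for n in range(2, 60):
    for N in (n + 2, 2 * n + 5, 10_000):
        d = d_coef(n, N)
        # simplified form used in the proof
        simplified = 2 + Fraction(6, n) - Fraction(12, N) + d**2 / n * f(Fraction(1, n))
        assert a1(n, N) == simplified
        lower = 2 + Fraction(6, n) - Fraction(12, N) - Fraction(4, n)
        upper = 2 + Fraction(12, n) - Fraction(12, N)
        assert lower <= a1(n, N) <= upper
```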

Proof of Lemma 4

Let \(X = \sum _{i=1}^n X_i\), where the \(X_i\)s are iid Bernoulli(\(\pi \)). In the expansion of \((X-n\pi )^m = \{\sum _{i=1}^n (X_i-\pi )\}^m\), each term has the form \((X_{i_1}-\pi )^{m_1}(X_{i_2}-\pi )^{m_2}\cdots (X_{i_k}-\pi )^{m_k}\) with distinct indices \(1\le i_1,\ldots , i_k \le n\) and \( m_1 + \cdots + m_k=m\). If \(m_j=1\) for at least one j, the expectation of the term is zero by independence, since \(E(X_{i_j}-\pi )=0\). Hence only terms in which every factor appears with exponent at least 2 contribute, and we obtain

We have \(E(X_1-\pi )^m = \sum _{i=0}^m {m \atopwithdelims ()i} E(X_1^i) (-\pi )^{m-i} = (-\pi )^m + \sum _{i=1}^m {m \atopwithdelims ()i} E(X_1^i) (-\pi )^{m-i}\), and using \(E(X_1^i) = E(X_1) =\pi \) for \(i\ge 1\), we obtain \(E(X_1-\pi )^m = (-\pi )^m + \pi \sum _{i=1}^m {m \atopwithdelims ()i} (-\pi )^{m-i} = (-\pi )^m-\pi (-\pi )^m + \pi \sum _{i=0}^m {m \atopwithdelims ()i} (-\pi )^{m-i} = (1-\pi )(-\pi )^m + \pi (1-\pi )^m = \pi (1-\pi )( (-1)^m \pi ^{m-1}+(1-\pi )^{m-1})\le \pi (1-\pi )\) for \(m \ge 2\). Since all coefficients in the expansion of \(E\{\sum _{i=1}^n (X_i - \pi )\}^m\) are fixed constants, for some universal constant \(C>0\), we have

since the maximum is attained at either \(n\pi (1-\pi )\) or \((n\pi (1-\pi ))^4\), depending on whether \(n\pi (1-\pi ) \le 1 \) or \(n\pi (1-\pi )>1\).
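The closed form for the central moments of a single Bernoulli variable, and the bound \(E(X_1-\pi )^m \le \pi (1-\pi )\) for \(m\ge 2\), can be confirmed exactly. A minimal sketch using Python's `fractions` module (the function name is illustrative):

```python
from fractions import Fraction


def bernoulli_central_moment(p, m):
    """E(X - p)^m for X ~ Bernoulli(p): X = 0 w.p. 1 - p and X = 1 w.p. p."""
    return (1 - p) * (-p) ** m + p * (1 - p) ** m


for p in (Fraction(1, 1000), Fraction(1, 10), Fraction(1, 2), Fraction(9, 10)):
    theta = p * (1 - p)
    for m in range(2, 9):
        closed = theta * ((-1) ** m * p ** (m - 1) + (1 - p) ** (m - 1))
        assert bernoulli_central_moment(p, m) == closed
        assert closed <= theta  # the bound pi(1 - pi) used in the proof
```

For \(m=2\) the bound holds with equality, since \(E(X_1-\pi )^2 = \pi (1-\pi )\).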

For the second equation, we first consider the moments \(E(\hat{\pi }^4)\) and \(E((1-\hat{\pi })^4)\). The latter is easily obtained from the former by replacing the distribution \(B(n,\pi )\) with \(B(n,1-\pi )\). We first obtain

where the last equality holds due to the fact that the maximum is obtained at either \(\pi ^4\) or \(\frac{\pi }{n^3}\) depending on \(\pi \ge \frac{1}{n}\) or \(\pi < \frac{1}{n}\). Similarly, the following inequality is obtained

Using \(E \hat{\pi }^4 (1-\hat{\pi })^4 \le \min (E\hat{\pi }^4, E (1-\hat{\pi })^4 )\), we have

If \(\pi \le \frac{1}{2}\), then \(\pi \le 2\pi (1-\pi ) = 2\theta \); if \(\pi > \frac{1}{2}\), then \(1-\pi \le 2\theta \). So the last bound becomes

for some universal constant \(C'\).

We use the following relationship: for some constants \(b_m\), \(m=1,\ldots ,l-1\)

For example we have \(x^3 = x(x-1)(x-2) + 3x(x-1) +x\). Using \(E\prod _{j=1}^{l} (X-j+1) = \prod _{j=1}^{l} (n-j+1) \pi ^l\),

Using this, we can derive

Since \(\hat{\eta }_l -\hat{\pi }^l = {\hat{\pi }^l}O(\frac{1}{n}) + \sum _{i=1}^{l-1} {\hat{\pi }^{l-i}} O(\frac{1}{n^i})\), we have \(E(\hat{\eta }_l -\hat{\pi }^l)^2 \le \left\{ E(\hat{\pi }^{2l})O(\frac{1}{n^2}) + \sum _{i=1}^{l-1} E({\hat{\pi }^{2l-2i}}) O(\frac{1}{n^{2i}}) \right\} .\) Using \(E \hat{\pi }^{2l}=\pi ^{2l}+ O \left( \frac{\pi }{n^{2l-1}} + \frac{\pi ^{2l-1}}{n} \right) \) from (4), we obtain

We can show \(\frac{\pi ^{2(l-i)}}{n^{2i}} \le \frac{\pi }{n^{2l-1}} + \frac{\pi ^{2l-1}}{n}\) for \(2\le i \le l-1\), since \(\frac{\pi ^{2(l-i)}}{n^{2i}} \le \frac{\pi ^{2l-1}}{n}\) for \(\pi \ge \frac{1}{n}\) and \(\frac{\pi ^{2(l-i)}}{n^{2i}} \le \frac{\pi }{n^{2l-1}}\) for \(\pi <\frac{1}{n}\). Using this, we have (25) \(\le O\left( \frac{\pi }{n^{2l-1}} + \frac{\pi ^{2l-1}}{n} \right) \), which proves \(E(\hat{\eta }_l -\hat{\pi }^l)^2 = O\left( \frac{\pi }{n^{2l-1}} + \frac{\pi ^{2l-1}}{n} \right) \). \(\square \)
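The identities used in this part of the proof can be checked exactly for small n. The sketch below verifies the example \(x^3 = x(x-1)(x-2) + 3x(x-1) + x\) and the factorial-moment identity \(E\prod _{j=1}^{l}(X-j+1) = \prod _{j=1}^{l}(n-j+1)\pi ^l\). Since the definition of \(\hat{\eta }_l\) is not reproduced in this section, the sketch assumes that \(\hat{\eta }_l = \prod _{j=1}^{l}(X-j+1)/\prod _{j=1}^{l}(n-j+1)\), which that identity makes exactly unbiased for \(\pi ^l\); the helper names are hypothetical.

```python
from fractions import Fraction
from math import comb


def falling(x, l):
    """Falling factorial x (x - 1) ... (x - l + 1); zero when 0 <= x < l."""
    out = 1
    for j in range(l):
        out *= x - j
    return out


def binom_pmf(n, p, x):
    return comb(n, x) * p**x * (1 - p) ** (n - x)


def eta_hat(x, n, l):
    """Assumed estimator of p^l: falling(X, l) / falling(n, l), for l <= n."""
    return Fraction(falling(x, l), falling(n, l))


# Example from the text: x^3 = x(x-1)(x-2) + 3x(x-1) + x.
assert all(x**3 == falling(x, 3) + 3 * falling(x, 2) + x for x in range(50))

# E[falling(X, l)] = falling(n, l) p^l, so eta_hat is exactly unbiased for p^l.
n, p = 12, Fraction(3, 100)
for l in range(1, 5):
    mean = sum(binom_pmf(n, p, x) * eta_hat(x, n, l) for x in range(n + 1))
    assert mean == p**l
```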

Proof of Lemma 5

For the ratio consistency of \({\hat{\mathcal{V}}}_{1}\), it is enough to show \( \frac{E[( {\hat{\mathcal{V}}}_1 - \mathcal{V}_1 )^2]}{(\mathcal{V}_1)^2} \rightarrow 0\) as \(k \rightarrow \infty \). Since \(\hat{\mathcal{V}}_1\) is an unbiased estimator of \(\mathcal{V}_1\),

where the last equality follows since \(E[(\hat{\eta }_{li}- \eta _{li})(\hat{\eta }_{l'i'} - \eta _{l'i'})] = E[(\hat{\eta }_{li}-\eta _{li})] E[(\hat{\eta }_{l'i'}-\eta _{l'i'})]=0\) for \(i \ne i'\), because \(\hat{\eta }_{li}\) and \(\hat{\eta }_{l'i'}\) are independent and both are unbiased estimators. Since \(\mathcal{V}_1\) depends on the \(\pi _i\) only through \(\theta _i=\pi _i(1-\pi _i)\), the same result holds if we replace \(\pi _i\) with \(1-\pi _i\); in other words, \(\hbox {Var}(\hat{\mathcal{V}}_1) =\sum _{i=1}^k \sum _{l=1}^4 a_{li}^2 E(\hat{\eta }_{li} -\eta _{li})^2 = \sum _{i=1}^k \sum _{l=1}^4 a^2_{li} E(\hat{\eta }^*_{li} -\eta ^*_{li})^2\), where \(\eta ^*_{li} = (1-\pi _i)^l\) and \(\hat{\eta }^*_{li}\) is the corresponding unbiased estimator. For \(\pi _i \le 1/2\), we use \(\mathcal{V}_1 =\sum _{i=1}^k \sum _{l=1}^4 a_{li} \pi _i^l\) and obtain \(\hbox {Var}(\hat{\mathcal{V}}_1) = O(\sum _{i=1}^k (\frac{\pi _i^3}{n_i} + \frac{\pi _i}{n_i^3}))\) from Lemma 4. Since \( \pi _i \le \delta <1\), we have \(\hbox {Var}(\hat{\mathcal{V}}_1) = O(\sum _{i=1}^k (\frac{\pi _i^3}{n_i} + \frac{\pi _i}{n_i^3})) = O(\sum _{i=1}^k (\frac{\theta _i^3}{n_i} + \frac{\theta _i}{n_i^3})).\) From Lemma 3 and the given condition, we obtain

Similarly, we can show, for some constant \(C'\),

\(\square \)

Proof of Theorem 2

Since the condition in Lemma 5 holds, \(\hat{\mathcal{V}}_1\) and \(\hat{\mathcal{V}}_{1*}\) are ratio-consistent estimators of \( \mathcal{V}_1 = \mathcal{V}_{1*}\) under \(H_0\). Since \( \frac{T}{\sqrt{\mathcal{V}_1}} = \frac{T_1 - T_2}{\sqrt{\mathcal{V}_1}}\), we only need to show (i) \(\frac{T_1}{\sqrt{\mathcal{V}_1}} \rightarrow N(0,1)\) in distribution and (ii) \(\frac{T_2}{\sqrt{\mathcal{V}_1}} \rightarrow 0\) in probability. To prove (i), we verify the Lyapunov condition for asymptotic normality (see Billingsley 1995). Under \(H_0\), we have \(T_{1i} = n_i(\hat{\pi }_i -\pi _i)^2 - d_i \hat{\pi }_i (1-\hat{\pi }_i)\) with \(E(T_{1i})=0\); therefore, the Lyapunov condition is \( \sum _{i=1}^kE(T_{1i}^4)/ \hbox {Var}(T_1)^2 \rightarrow 0\). Using Lemma 4, we have \(\sum _{i=1}^kE(T_{1i}^4) \le 2^4\sum _{i=1}^k(n_i^4 E(\hat{\pi }_i -\pi _i )^8 + d_i^4 E(\hat{\pi }_i (1-\hat{\pi }_i))^4 ) = O( \sum _{i=1}^k(\theta _i^4 + \frac{\theta _i}{n_i})) + O( \sum _{i=1}^k(\theta _i^4 + \frac{\theta _i}{n_i^3}) ) = O(k \theta ^4 + \theta \sum _{i=1}^k\frac{1}{n_i})\), since all \(\theta _i =\theta \) under \(H_0\). Combining this with result 1 in Lemma 3, we have \(\frac{\sum _{i=1}^k E(T_{1i}^4)}{ \hbox {Var}(T_1)^2} = \frac{ O(k\theta ^4 + \theta \sum _{i=1}^k\frac{1}{n_i}) }{ (k\theta ^2 + \frac{\theta }{N^2} \sum _{i=1}^k\frac{1}{n_i})^2} \le O\left( \frac{ k \theta ^4 + \theta \sum _{i=1}^k\frac{1}{n_i}}{ k^2 \theta ^4}\right) = O\left( \frac{1}{k} + \frac{\sum _{i=1}^k\frac{1}{n_i}}{ k^2 \theta ^3}\right) \rightarrow 0\) as \(k \rightarrow \infty \), by the given condition \(\frac{\sum _{i=1}^k\frac{1}{n_i}}{ k \theta ^3} \rightarrow 0\) (note that \(k^2\theta ^3 \ge k\theta ^3\)). This shows \(\frac{T_1}{\sqrt{\mathcal{V}_1}} \rightarrow N(0,1)\) in distribution.

Furthermore, from Lemma 3 under \(H_0\), we have \(\mathcal{V}_1 \asymp k\theta ^2 + \theta \sum _{i=1}^k\frac{1}{n_i} \); therefore, we obtain \(E\left( \frac{T_2}{\sqrt{\mathcal{V}_1}} \right) = \frac{E( N(\hat{\bar{\pi }} - \bar{\pi })^2 )}{\sqrt{\mathcal{V}_1}} \asymp \frac{ \theta }{\sqrt{k \theta ^2 + \frac{\theta }{N^2} \sum _{i=1}^k\frac{1}{n_i} }} \le \frac{1}{\sqrt{k}} \rightarrow 0\). Since \(T_2 \ge 0\), Markov's inequality then gives \(\frac{T_2}{\sqrt{\mathcal{V}_1}} \rightarrow 0\) in probability. Combining the asymptotic normality of \(\frac{T}{\sqrt{\mathcal{V}_1}}\) with the ratio consistency of \(\hat{\mathcal{V}}_1\) and \(\hat{\mathcal{V}}_{1*}\), we obtain the asymptotic normality of \(T_\mathrm{new1}\) and \(T_\mathrm{new2}\) under \(H_0\). \(\square \)
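The expectation of \(T_2\) used in this proof (and again in the proof of Theorem 3) follows from a one-line variance calculation. Assuming, as the notation suggests, that \(\hat{\bar{\pi }} = \frac{1}{N}\sum _{i=1}^k X_i\) with independent \(X_i \sim B(n_i,\pi _i)\), the estimator is unbiased for \(\bar{\pi }\) and

$$\begin{aligned} E\{ N(\hat{\bar{\pi }} - \bar{\pi })^2\} = N\,\hbox {Var}(\hat{\bar{\pi }}) = \frac{N}{N^2}\sum _{i=1}^k n_i\pi _i(1-\pi _i) = \frac{1}{N}\sum _{i=1}^k n_i\theta _i \equiv \bar{\theta }, \end{aligned}$$

which reduces to \(\theta \) under \(H_0\), where all \(\theta _i=\theta \) and \(\sum _{i=1}^k n_i = N\).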

Proof of Theorem 3

Since \(T=T_1 -T_2\) from (8), we only need to show the following:

(I) \(\frac{T_1 -\sum _{i=1}^k n_i (\pi _i - \bar{\pi })^2 }{\sqrt{{\hbox {Var}(T_1)}}} \rightarrow N(0,1)\) in distribution;

(II) \(\frac{T_2}{\sqrt{{\hbox {Var}(T_1)}}} \rightarrow 0\) in probability.

For (I), we use the Lyapunov condition for the asymptotic normality of \(T_1\). We show \(\frac{\sum _{i=1}^k E(T_{1i}- n_i(\pi _i -\bar{\pi })^2)^4}{\hbox {Var}(T_1)^2 } \rightarrow 0\), where \( G_i = T_{1i}- n_i(\pi _i -\bar{\pi })^2 = n_i (\hat{\pi }_i -\pi _i)^2 -d_i \hat{\pi }_i (1-\hat{\pi }_i) + 2n_i (\hat{\pi }_i -\pi _i)(\pi _i-\bar{\pi })\). By the inequality \((a+b+c)^4 \le 3^3(a^4+b^4+c^4)\), we have \(\sum _{i=1}^k E(G_i^4) \le 3^3\sum _{i=1}^k \left( n_i^4E((\hat{\pi }_i - \pi _i)^8) + d_i^4 E( (\hat{\pi }_i (1-\hat{\pi }_i))^4) + 2^4 n_i^4 E(\hat{\pi }_i -\pi _i)^4 (\pi _i-\bar{\pi })^4 \right) \). From Lemma 4, we have \(n_i^4E((\hat{\pi }_i - \pi _i)^8) \le O\left( \theta _i^4 + \frac{\theta _i}{n_i} \right) \) and \(d_i^4 E( (\hat{\pi }_i (1-\hat{\pi }_i))^4) \le 2^4 \left( \frac{3\theta _i^2}{n_i^2} + \frac{(1-6\theta _i)\theta _i}{n_i^3} \right) \le O( \frac{\theta _i^2}{n_i^2} + \frac{\theta _i}{n_i^3} )\), where \(O(\cdot )\) is uniform in \(1\le i \le k\). Using the result in Lemma 1, we have \(2^4 \sum _{i=1}^k n_i^4 E(\hat{\pi }_i -\pi _i)^4 (\pi _i-\bar{\pi })^4 = 2^4 \sum _{i=1}^k n_i^4 (\pi _i-\bar{\pi })^4 \left( \frac{3\theta _i^2}{n_i^2}+ \frac{(1-6\theta _i)\theta _i}{n_i^3} \right) \le 48 \max _{1\le i \le k} \left\{ n_i (\pi _i-\bar{\pi })^2 \left( \theta _i + \frac{1}{n_i}\right) \right\} \sum _{i=1}^k n_i (\pi _i-\bar{\pi })^2 \theta _i = 48 \max _{1\le i \le k} \left\{ n_i (\pi _i-\bar{\pi })^2 \left( \theta _i + \frac{1}{n_i}\right) \right\} ||{{\varvec{\pi }}} - \bar{{\varvec{\pi }}} ||^2_{\theta \mathbf{n}}\). Therefore, we have

from the given conditions.
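The fourth-moment formula used above, \(E(\hat{\pi }_i-\pi _i)^4 = \frac{3\theta _i^2}{n_i^2} + \frac{(1-6\theta _i)\theta _i}{n_i^3}\) for \(\hat{\pi }_i = X_i/n_i\), can be confirmed by summing over the binomial probability mass function with exact rational arithmetic. A minimal sketch (the function name is illustrative):

```python
from fractions import Fraction
from math import comb


def binom_central_moment(n, p, m):
    """Exact E(X - n p)^m for X ~ Binomial(n, p)."""
    return sum(comb(n, x) * p**x * (1 - p) ** (n - x) * (x - n * p) ** m
               for x in range(n + 1))


for n in (1, 2, 5, 17, 40):
    for p in (Fraction(1, 1000), Fraction(1, 20), Fraction(1, 3)):
        theta = p * (1 - p)
        # E(pi_hat - pi)^4 with pi_hat = X / n
        fourth = binom_central_moment(n, p, 4) / Fraction(n) ** 4
        assert fourth == 3 * theta**2 / n**2 + (1 - 6 * theta) * theta / n**3
```

Equivalently, \(E(X-n\pi )^4 = n\theta \{1+3(n-2)\theta \}\), the standard fourth central moment of the binomial distribution.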

The negligibility of \(T_2 = N(\hat{\bar{\pi }} - \bar{\pi })^2\) can be proven by noting that \(\frac{N E(\hat{\bar{\pi }} - \bar{\pi })^2}{\sqrt{\hbox {Var}(T_1)}} = \frac{ \bar{\theta }}{\sqrt{\hbox {Var}(T_1)}} = \frac{1}{N} \frac{\sum _{i=1}^kn_i \theta _i}{\sqrt{\hbox {Var}(T_1)}} \le C \frac{\max _i \theta _i \sum _{i=1}^kn_i}{ N \sqrt{ \mathcal{V}_1 + ||{{\varvec{\pi }}} - \bar{{\varvec{\pi }}} ||^2_{\theta \mathbf{n}} } }\) for some constant \(C>0\), by (15) and condition (i). This leads to \(\left( \frac{\max _i \theta _i^2 }{{ \mathcal{V}_1 + ||{{\varvec{\pi }}} - \bar{{\varvec{\pi }}} ||^2_{\theta \mathbf{n}} } } \right) ^{1/2} \rightarrow 0\) by condition (ii), so we have \(\frac{N(\hat{\bar{\pi }} - \bar{\pi })^2}{\sqrt{\hbox {Var}(T_1)}} \rightarrow 0\) in probability. Combining (I) and (II), we conclude \(\frac{T - \sum _{i=1}^k n_i (\pi _i - \bar{\pi })^2}{\sqrt{{\hbox {Var}(T_1)}}} \rightarrow N(0,1)\) in distribution. \(\square \)

Proof of Theorem 4

-

1.

Proof of 1: We prove \(\beta (T_\mathrm{new2}) \ge \beta (T_\mathrm{new1})\). By Corollary 2, it suffices to show that \(\mathcal{V}_{1} \ge \mathcal{V}_{1*}\). Let \(f(x)= 2 x^2(1-x)^2 + \frac{x(1-x)}{n}\). Then f is convex for \(0< x < \frac{1}{2} -\frac{1}{2\sqrt{3}} \sqrt{1+\frac{1}{n}}\), since \(f''(x) >0 \) on this interval. Furthermore, \(\mathcal{V}_1 = \sum _{i=1}^kf(\pi _i)\) and \(\mathcal{V}_{1*} = k f(\bar{\pi })\) for \(\bar{\pi }= \frac{1}{N} \sum _{i=1}^kn_i \pi _i\). If \(n_i=n\) for all \(1\le i\le k\), then \(\bar{\pi } = \frac{1}{k}\sum _{i=1}^k\pi _i\), and the convexity of f (Jensen's inequality) gives \(\frac{1}{k} \mathcal{V}_1 = \frac{1}{k} \sum _{i=1}^kf(\pi _i) \ge f(\bar{\pi }) = \frac{1}{k} \mathcal{V}_{1*}\). Therefore, \(\mathcal{V}_1 \ge \mathcal{V}_{1*}\), which leads to \( \lim _{k\rightarrow \infty }(\beta (T_\mathrm{new2}) - \beta (T_\mathrm{new1})) \ge 0\) when \(0< \pi _i < \frac{1}{2} -\frac{1}{2\sqrt{3}}\sqrt{1+\frac{1}{n}}\) for all i.

Under the given condition, \(\hat{\mathcal{B}}_{0k} = 2k (1+o_p(1))\) and

$$\begin{aligned} T_\mathrm{new2}= & {} \frac{ \sum _{i=1}^k n_i (\hat{\pi }_i -\hat{\bar{\pi }})^2 - \sum _{i=1}^k \hat{\pi }_{i}(1-\hat{\pi }_i) }{\sqrt{2k \hat{\bar{\pi }} (1-\hat{\bar{\pi }}) }} (1+o_p(1))\\ T_{\chi }= & {} \frac{ \sum _{i=1}^k n_i (\hat{\pi }_i -\hat{\bar{\pi }})^2 - k \hat{\bar{\pi }}(1-\hat{\bar{\pi }}) }{\sqrt{2k \hat{\bar{\pi }} (1-\hat{\bar{\pi }}) }} (1+o_p(1)) \end{aligned}$$

which leads to

$$\begin{aligned} T_\mathrm{new2} - T_{\chi } = \frac{k \hat{\bar{\pi }}(1-\hat{\bar{\pi }}) - \sum _{i=1}^k \hat{\pi }_{i}(1-\hat{\pi }_i)}{\sqrt{2k \hat{\bar{\pi }} (1-\hat{\bar{\pi }})}} (1+o_p(1)). \end{aligned}$$

Using \(k\hat{\bar{\pi }}(1-\hat{\bar{\pi }}) \ge \sum _{i=1}^k \hat{\pi }_i(1-\hat{\pi }_i)\) (when \(\hat{\bar{\pi }}\) is the simple average of the \(\hat{\pi }_i\), as when \(n_i=n\) for all i, the difference equals \(\sum _{i=1}^k (\hat{\pi }_i-\hat{\bar{\pi }})^2\)), we obtain \(P( T_\mathrm{new2} - T_{\chi } \ge 0) \rightarrow 1\) as \(k \rightarrow \infty \), which leads to \(\lim _{k \rightarrow \infty } (\beta (T_\mathrm{new2}) - \beta (T_{\chi })) \ge 0\).
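The inequality behind the comparison with \(T_{\chi }\) is an algebraic identity once the pooled estimate is a simple average \(\bar{p}\) of proportions \(p_i\): \(k\bar{p}(1-\bar{p}) - \sum _i p_i(1-p_i) = \sum _i p_i^2 - k\bar{p}^2 = \sum _i (p_i-\bar{p})^2\). The Python sketch below confirms this on simulated sparse proportions; the common group size, number of groups, and seed are hypothetical choices.

```python
import random


def variance_gap(phats):
    """k * pbar * (1 - pbar) - sum_i p_i (1 - p_i), with pbar the simple average."""
    k = len(phats)
    pbar = sum(phats) / k
    return k * pbar * (1 - pbar) - sum(p * (1 - p) for p in phats)


def spread(phats):
    """sum_i (p_i - pbar)^2 around the simple average pbar."""
    pbar = sum(phats) / len(phats)
    return sum((p - pbar) ** 2 for p in phats)


rng = random.Random(2019)
n, k = 20, 1000  # hypothetical common group size and number of groups
# Sparse data: each group has 0, 1 or 2 events out of n.
phats = [rng.randint(0, 2) / n for _ in range(k)]
print(variance_gap(phats), spread(phats))
```

The gap is zero only when all \(\hat{\pi }_i\) coincide, which is why the numerator of \(T_\mathrm{new2} - T_{\chi }\) is nonnegative rather than strictly positive.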

2.

Proof of 2: Note that \(\mathcal{A}_{1i} = 2(1+o(1))\) and \(\mathcal{A}_{2i} = 4(1+o(1))\) where o(1) is uniform in i. Using \(\bar{\pi }= (k^{-\gamma } + \delta k^{\alpha -1}) (1+O(k^{-1}))\) and \(\tilde{\theta }= \bar{\pi }(1+ o(1))\), we obtain

$$\begin{aligned} \mathcal{V}_1= & {} \left( 2 \sum _{i=1}^k\theta _i^2 + 4 \sum _{i=1}^k\frac{\theta _i}{n_i} \right) (1+o(1)) \\= & {} \left( 2 (k-1) k^{-2\gamma } + 2 (k^{-\gamma }+\delta )^2+ \frac{4(k-1)k^{-\gamma }}{n} + \frac{4(k^{-\gamma } + \delta )}{ n k^{\alpha }} \right) (1+o(1)) \\= & {} \left( 2k^{1-2\gamma } + 2 \delta ^2 + \frac{4k^{1-\gamma }}{n}\right) (1+o(1))\\ \mathcal{V}_{1*}= & {} 2 k(k^{-\gamma } + \delta k^{\alpha -1})^2 (1+O(k^{-1})) + 4 \tilde{\theta }\sum _{i=1}^k\frac{1}{n_i} \\= & {} 2 k^{1-2\gamma } + 4\delta k^{\alpha -\gamma } +2 \delta ^2 k^{2\alpha -1}\\&+ 4(k^{-\gamma } + \delta k^{\alpha -1}) \left( \frac{k-1}{n} + \frac{1}{ nk^{\alpha }} \right) (1+o(1)) \\= & {} 2 k^{1-2\gamma } + 4 \delta k^{\alpha -\gamma } +2 \delta ^2 k^{2\alpha -1} + 4 \frac{k^{1-\gamma } + \delta k^{\alpha }}{n} (1+o(1)) \end{aligned}$$

so

$$\begin{aligned} \frac{\mathcal{V}_{1*} - \mathcal{V}_1}{\mathcal{V}_1} = \frac{ (2\delta k^{\alpha -\gamma } + \delta ^2 (k^{2\alpha -1}-1)) + 2\frac{\delta k^{\alpha }}{n} (1+o(1))}{k^{1-2\gamma } + \delta ^2 + 2 \frac{k^{1-\gamma }}{n} (1+o(1))}. \end{aligned}\tag{28}$$

(a)

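if \(\alpha + \gamma <1\) and \(\alpha \ge \frac{1}{2}\), then \(\gamma < \frac{1}{2} \le \alpha \), so \(k^{\alpha -\gamma } \rightarrow \infty \) and \(\delta ^2 (k^{2\alpha -1}-1) = o(k^{\alpha -\gamma })\) because \((\alpha -\gamma ) - (2\alpha -1) = 1-\alpha -\gamma >0\). Therefore (28) \(= \frac{2\delta k^{\alpha -\gamma } + 2\delta \frac{k^{\alpha }}{n}}{ k^{1-2\gamma } + \delta ^2 + 2\frac{k^{1-\gamma }}{n}} (1+o(1)) \rightarrow 0\), since \(\frac{k^{\alpha -\gamma }}{k^{1-2\gamma }} = \frac{k^{\alpha }/n}{k^{1-\gamma }/n} = k^{\alpha +\gamma -1} \rightarrow 0\).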

(b)

if \(\alpha +\gamma <1\), \(\alpha < \frac{1}{2}\) and \(\alpha \ge \gamma \), then (28) \(= \frac{2 \delta k^{\alpha -\gamma }-\delta ^2 + 2\frac{k^{\alpha }}{n}}{k^{1-2\gamma } + \delta ^2 + 2\frac{k^{1-\gamma }}{n}} \rightarrow 0\).

(c)

if \(\alpha +\gamma <1\), \(\alpha < \frac{1}{2}\), \(\gamma \le \frac{1}{2}\) and \(\alpha <\gamma \), then (28)\(= \frac{-\delta ^2 + 2\frac{k^{\alpha }}{n}}{ k^{1-2\gamma } + \delta ^2 + 2\frac{k^{1-\gamma }}{n}} \rightarrow 0\).

(d)

if \(\alpha +\gamma <1\), \(\alpha < \frac{1}{2}\) and \(\gamma > \frac{1}{2}\), then there are two cases, depending on the behavior of n. When \(\frac{k^{1-\gamma }}{ n} \rightarrow 0\), (28) \(\rightarrow \frac{-\delta ^2}{\delta ^2} = -1\). When \(\frac{k^{1-\gamma }}{n} \rightarrow \infty \), (28) \(= \frac{\frac{k^{\alpha }}{n}}{\frac{k^{1-\gamma }}{n}} (1+o(1)) = k^{\alpha +\gamma -1} \rightarrow 0\).

(e)

if \(\alpha +\gamma >1\), \(\alpha >\frac{1}{2}\) and \(\gamma < \frac{1}{2}\), then (28) \(= \frac{\delta ^2 k^{2\alpha -1} + 2\frac{k^{\alpha }}{n}}{ k^{1-2\gamma } + 2\frac{k^{1-\gamma }}{n}} (1+o(1)) \rightarrow \infty \).

(f)

if \(\alpha +\gamma >1\), \(\alpha >\frac{1}{2}\) and \(\gamma \ge \frac{1}{2}\), then (28) \(= \frac{ k^{\alpha -\gamma } + \delta ^2 k^{2\alpha -1} + 2\frac{k^{\alpha }}{n} }{ I(\gamma =\frac{1}{2}) +\delta ^2 + 2\frac{k^{1-\gamma }}{n}} (1+o(1)) \rightarrow \infty \).

(g)

if \(\alpha +\gamma >1\), \(\alpha <\frac{1}{2}\) and \(\gamma >\frac{1}{2}\), then \(\alpha <\gamma \) and (28) \(= \frac{ - \delta ^2 + 2\frac{k^{\alpha }}{n} }{ \delta ^2 + 2\frac{k^{1-\gamma }}{n}} (1+o(1))\). There are two situations, depending on n. When \(\frac{k^{\alpha }}{n} \rightarrow \infty \), (28) \(\rightarrow \infty \), because \(k^{1-\gamma } = o(k^{\alpha })\) when \(\alpha +\gamma >1\). When \(\frac{k^{\alpha }}{n} \rightarrow 0\), we also have \(\frac{k^{1-\gamma }}{n} \rightarrow 0\), so (28) \(= \frac{-\delta ^2}{ \delta ^2 } (1+o(1)) \rightarrow -1\).

In \( (a) \cup (b) \cup (c) = \{ (\alpha ,\gamma ) : 0<\alpha<1, 0<\gamma<1, 0< \alpha + \gamma<1, 0< \gamma \le \frac{1}{2} \}\), we have \(\lim _n \frac{\mathcal{V}_{1*}}{\mathcal{V}_1} =1\), leading to \(\lim _n (\beta (T_\mathrm{new1}) - \beta (T_\mathrm{new2}))=0\). In \((e)\cup (f) = \{(\alpha , \gamma ) : 0<\alpha<1, 0<\gamma <1, \alpha + \gamma> 1, 1>\alpha >\frac{1}{2} \}\), we have \( \lim _n \frac{\mathcal{V}_{1*}}{\mathcal{V}_1} >1\), which leads to \(\lim _n (\beta (T_\mathrm{new1}) - \beta (T_\mathrm{new2})) >0\).

In (d) and (g), the comparison depends on how the sample size n grows.
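The case analysis can be illustrated numerically. The Python sketch below evaluates the leading-order forms \(\mathcal{V}_1 \approx 2\sum _i\theta _i^2 + 4\sum _i\theta _i/n_i\) and \(\mathcal{V}_{1*} \approx 2k\bar{\pi }^2 + 4\bar{\pi }\sum _i 1/n_i\) from the display preceding (28), under a configuration read off that display and therefore labelled as an assumption: \(k-1\) groups of size n with \(\pi _i = k^{-\gamma }\), and one group of size \(nk^{\alpha }\) with \(\pi = k^{-\gamma }+\delta \), with \(\theta _i\) replaced by \(\pi _i\). It is a heuristic check of the limits in cases (a) and (e), not a computation of exact variances; the function name and parameter values are hypothetical.

```python
def ratio_28(k: float, alpha: float, gamma: float, delta: float, n: float) -> float:
    """Leading-order (V_{1*} - V_1) / V_1 for the Part 2 configuration.

    Hypothetical design read off the display: k - 1 groups of size n with
    pi = k^-gamma, and one group of size n k^alpha with pi = k^-gamma + delta.
    theta_i is approximated by pi_i and theta_tilde by pibar, as in the display.
    """
    a = k ** (-gamma)  # common proportion of the k - 1 null groups
    b = a + delta  # proportion of the single shifted group
    m = n * k**alpha  # size of the shifted group
    V1 = 2 * ((k - 1) * a**2 + b**2) + 4 * ((k - 1) * a / n + b / m)
    pibar = ((k - 1) * n * a + m * b) / ((k - 1) * n + m)
    V1_star = 2 * k * pibar**2 + 4 * pibar * ((k - 1) / n + 1 / m)
    return (V1_star - V1) / V1


# Case (a): alpha + gamma < 1, alpha >= 1/2 (ratio should shrink toward 0).
# Case (e): alpha + gamma > 1, alpha > 1/2, gamma < 1/2 (ratio should grow).
# Convergence is slow (small powers of k), so k spans several orders of magnitude.
for label, alpha, gamma in [("(a)", 0.6, 0.2), ("(e)", 0.8, 0.3)]:
    values = [ratio_28(10.0**e, alpha, gamma, delta=0.1, n=50) for e in (2, 4, 6)]
    print(label, [round(v, 3) for v in values])
```

Because the rates are only small powers of k (for example \(k^{\alpha +\gamma -1}\) in case (a)), the trends are visible only across several orders of magnitude of k.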


3.

We first have

$$\begin{aligned} \mathcal{V}_1= & {} 2 (k^{-\gamma } + \delta )^2 + 2 (k -1) k^{-2\gamma } + \frac{4(\delta + k^{-\gamma })}{n} + 4 (k-1)\frac{k^{-\gamma }}{ nk^{\alpha }} \\= & {} \left( 2 \delta ^2 + 2 k^{1-2\gamma } + \frac{4 k^{1-\gamma -\alpha }}{n} \right) (1+o(1)). \end{aligned}$$

Since \( 0< \alpha <1\) and \(0<\gamma <1\), we have \(\tilde{\theta }= \bar{\pi }(1-\bar{\pi }) = \frac{\delta + k^{\alpha -\gamma +1}}{k^{\alpha +1}} (1+o(1)) = k^{-\gamma }(1+o(1))\), and hence

$$\begin{aligned} \mathcal{V}_{1*}= & {} 2 k^{1-2\gamma } + \frac{4k^{-\gamma }}{n} + \frac{ 4(k-1)k^{-\gamma } }{nk^{\alpha }} \\= & {} \left( 2 k^{1-2\gamma } + \frac{4 k^{1-\gamma -\alpha }}{n} \right) (1+o(1)). \end{aligned}$$

If \(1-2\gamma <0\) and \( k^{1-\gamma -\alpha } =o(n)\), then \(\mathcal{V}_1 = 2\delta ^2 (1+o(1))\) and \(\mathcal{V}_{1*} =o(1)\), so \(\frac{\mathcal{V}_1}{ \mathcal{V}_{1*} } \rightarrow \infty \), which leads to \( \beta (T_\mathrm{new2}) - \beta (T_\mathrm{new1}) >0\). \(\square \)
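The Part 3 conclusion can be illustrated the same way. The Python sketch below evaluates the first lines of the two displays above, taking \(\mathcal{V}_{1*} \approx 2k\tilde{\theta }^2 + 4\tilde{\theta }\sum _i 1/n_i\) with \(\tilde{\theta } = \bar{\pi }(1-\bar{\pi })\), for a configuration read off those displays and therefore an assumption: one group of size n with \(\pi = k^{-\gamma }+\delta \), and \(k-1\) groups of size \(nk^{\alpha }\) with \(\pi _i = k^{-\gamma }\), with \(\theta _i\) replaced by \(\pi _i\). The parameter values (\(\gamma = 0.7 > 1/2\), \(\alpha = 0.5\), so \(k^{1-\gamma -\alpha } \rightarrow 0\)) and the function name are hypothetical.

```python
def part3_ratio(k: float, alpha: float, gamma: float, delta: float, n: float) -> float:
    """Leading-order V_1 / V_{1*} for the Part 3 configuration.

    Hypothetical design read off the display: one group of size n with
    pi = k^-gamma + delta, and k - 1 groups of size n k^alpha with pi = k^-gamma.
    theta_i is approximated by pi_i, and theta_tilde = pibar (1 - pibar).
    """
    a = k ** (-gamma)  # common proportion of the k - 1 null groups
    b = a + delta  # proportion of the single shifted group
    m = n * k**alpha  # common size of the k - 1 null groups
    V1 = 2 * b**2 + 2 * (k - 1) * a**2 + 4 * b / n + 4 * (k - 1) * a / m
    pibar = (n * b + (k - 1) * m * a) / (n + (k - 1) * m)
    theta_tilde = pibar * (1 - pibar)
    V1_star = 2 * k * theta_tilde**2 + 4 * theta_tilde * (1 / n + (k - 1) / m)
    return V1 / V1_star


# gamma > 1/2 (so 1 - 2 gamma < 0) and k^(1 - gamma - alpha) = o(n).
for e in (4, 6, 8, 10):
    print(f"k=1e{e}: V1/V1* = {part3_ratio(10.0**e, 0.5, 0.7, delta=0.1, n=50):.2f}")
```

Here \(\mathcal{V}_1\) stays bounded away from zero because of the single shifted group, while \(\mathcal{V}_{1*}\) vanishes, so the ratio grows without bound as k increases.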

About this article

Cite this article

Park, J. Testing homogeneity of proportions from sparse binomial data with a large number of groups. Ann Inst Stat Math 71, 505–535 (2019). https://doi.org/10.1007/s10463-018-0652-2

DOI: https://doi.org/10.1007/s10463-018-0652-2