Abstract

In a multivariate nonparametric regression problem with fixed, deterministic design, asymptotic uniform confidence bands for the regression function are constructed. The construction of the bands is based on the asymptotic distribution of the maximal deviation between a suitable nonparametric estimator and the true regression function, which is derived by multivariate strong approximation methods and a limit theorem for the supremum of a stationary Gaussian field over an increasing system of sets. The results are derived for a general class of estimators which includes local polynomial estimators as a special case. The finite sample properties of the proposed asymptotic bands are investigated by means of a small simulation study.

1 Introduction

Within the last decades, nonparametric regression has received a great deal of attention as a powerful tool for data analysis. Various models and methods have been discussed and thoroughly investigated. Nonparametric curve estimation provides many useful applications, not only for graphical visualization but also as a basis for the development of means of statistical inference such as goodness of fit tests or the construction of confidence sets for the unknown regression function. While interval estimates can be used for its point-wise analysis, simultaneous confidence bands have to be employed to draw conclusions regarding global features of the curve under consideration and thus shed more light on the connection between dependent and independent variables.

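In this paper, we develop new asymptotic uniform confidence sets in a nonparametric regression setting with a deterministic and multivariate predictor. To be precise, we consider the multivariate regression model

$$\begin{aligned} Y_{\mathbf {i}}=f(\mathbf {t}_{\mathbf {i}})+\varepsilon _{\mathbf {i}}, \end{aligned}$$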

where \(\mathbf {i}=(i_1,\ldots ,i_d)\) is a multi-index, the \(\{\mathbf {t}_{\mathbf {i}}:=(t_{i_1},\ldots ,t_{i_d})\in \mathbb {R}^d\,|\,1\le i_1,\ldots ,i_d\le n\}\) are deterministic design points in \(\mathbb {R}^d, \{\varepsilon _{(i_1,\ldots ,i_d)}\,|\,1\le i_1,\ldots ,i_d\le n\}\) is a field of centered, independent identically distributed random variables with common variance \(\sigma ^2\) and \(f\) is an unknown, smooth regression function.

The construction of confidence sets requires a reliable estimate of the unknown object. Often kernel smoothing techniques are applied in this context [cf., e.g., Wand and Jones (1995)]. Alternative approaches, such as spline smoothing for instance, often show similar asymptotic behavior in the sense that corresponding estimators have approximately the same form, that is, linear in the observations and with a kernel that is of convolution form and possibly variable with respect to the sample size \(n\).

Given a suitable estimate, a well-established method to construct asymptotic uniform confidence bands is based on the original work of Bickel and Rosenblatt (1973b), who extended results of Smirnov (1950) for a histogram estimate and constructed confidence bands for a univariate density function of independent identically distributed observations. Their method is based on the asymptotic distribution of the supremum of a centered kernel density estimator and is closely related to extreme value theory. Since this seminal paper, the idea has been elaborated, advanced and adapted to various situations. For example, Johnston (1982) constructed confidence bands based on the Nadaraya–Watson and Yang estimator, and Härdle (1989) derived asymptotic uniform confidence bands for \(M\)-smoothers. Eubank and Speckman (1993), who considered deterministic, uniform design and local constant estimation, and Xia (1998), who considered random design points under dependence and local linear estimation, employed an explicit bias correction. Bootstrap confidence bands for nonparametric regression were proposed by Neumann and Polzehl (1998) and Claeskens and Keilegom (2003). Härdle and Song (2010) investigated asymptotic uniform confidence bands for a quantile regression curve with a one-dimensional predictor. In the context of density estimation, Giné et al. (2004) derived asymptotic distributions of weighted suprema. Further, confidence bands were proposed in adaptive density estimation based on linear wavelet and kernel density estimators (Giné and Nickl 2010), density deconvolution (Bissantz et al. 2007) or adaptive density deconvolution (Lounici and Nickl 2011). All these authors, if not otherwise indicated, employed undersmoothing to cope with the bias. Also, in all the references listed above, one-dimensional models are considered and the results are not applicable in cases where the quantity of interest depends on a multivariate predictor.
On the other hand, only a few results can be found in the multivariate setting, which has attracted comparatively little attention so far. For instance, in the same year in which the well-known paper of Bickel and Rosenblatt (1973b) appeared, a multivariate extension was published (Bickel and Rosenblatt 1973a), which received far less attention. Rosenblatt (1976) studied maximal deviations of multivariate density estimates, Konakov and Piterbarg (1984) investigated the convergence of the distribution of the maximal deviation for the Nadaraya–Watson estimate in a multivariate, random design regression setting and Rio (1994) investigated local invariance principles in the context of density estimation. An alternative approach was recently proposed by Hall and Horowitz (2013), who addressed the bias difficulty explicitly and constructed confidence bands based on normal approximations and a bootstrap method that is used to adjust the level \(\alpha \) in the normal quantiles in such a way that a coverage of at least \(1-\alpha _0\) is attained on at least a predefined portion of values \(x\in \mathcal {R},\) where \(\mathcal {R}\subset \mathbb {R}^d.\) They discuss both nonparametric density and regression estimation.

In this paper, we construct asymptotic uniform confidence bands for a regression function in a multivariate setting for a general class of nonparametric estimators of the regression function. For the sake of a transparent notation, we focus on local polynomial estimators. However, our approach is generally applicable for several other estimators in use (see Theorem 3 and Remark 2 below).

Notations and definitions as well as the assumptions required for the asymptotic theory can be found in Sect. 2. For a clear exposition, we examine in Sect. 3 the two-dimensional case, briefly discuss the properties of the estimator and state the main results. The general case of a \(d\)-dimensional predictor is discussed in Sect. 4. The finite sample properties of the proposed asymptotic bands are investigated in Sect. 5 and detailed proofs for the two-dimensional case are given in Sect. 6 while the case \(d>2\) is considered in Sect. 7. Our arguments heavily rely on results by Piterbarg (1996) who provided a limit theorem for the supremum

$$\begin{aligned} \sup _{t\in T_n}X(t) \end{aligned}$$

of a stationary Gaussian field \(\{X(t)\,|\,t \in \mathbb {R}^d\} \), where \(\{T_n\subset \mathbb {R}^d\}_{n\in \mathbb {N}}\) is an increasing system of sets such that \(\lambda ^d(T_n)\rightarrow \infty \) as \(n\rightarrow \infty \) and also on multivariate strong approximation methods provided by Rio (1993).

2 General setup and assumptions

Let \(\varOmega :=(0,1)^d\) and suppose that for two positive constants \(k\in \mathbb {N}\) and \(a\in (0,1)\) the function \(f:\overline{\varOmega }\rightarrow \mathbb {R}\) from model (1) belongs to the Hölder class of functions \(C^{k,a}(\overline{\varOmega }),\) i.e., for all multi-indices \(\pmb \beta =(\beta _1,\ldots ,\beta _d)\) with \(|\pmb \beta |=\beta _1+\cdots +\beta _d\le k\) the derivatives \(D^{\pmb \beta }f\) exist and \(\Vert f\Vert _{C^{k,a}}<\infty .\) Here, we use the following notation

and

where \(\Vert \cdot \Vert \) without a subscript denotes the Euclidean distance. Also, in what follows, more of the usual multi-index notation will be used, such as

Further, with a slight abuse of notation, we shall denote the vector \(\Bigl (\frac{t_1-x_1}{h_1},\ldots ,\frac{t_d-x_d}{h_d}\Bigr )^T\) by \(\frac{\mathbf {t}_{\mathbf {i}}-\mathbf {x}}{\mathbf {h}}\) for the sake of brevity.
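For orientation, the displays referred to as (2) to (4) are not reproduced in this version of the text. The following block is only a sketch of the standard multi-index conventions that the surrounding text appears to rely on, not a transcription of the original displays. In particular, the form of the Hölder norm shown here is an assumption (one common normalization), and the original may differ by equivalent choices of norm.

$$\begin{aligned} D^{\pmb \beta }f&=\frac{\partial ^{|\pmb \beta |}f}{\partial x_1^{\beta _1}\cdots \partial x_d^{\beta _d}},\qquad \mathbf {u}^{\pmb \alpha }=u_1^{\alpha _1}\cdots u_d^{\alpha _d},\qquad \pmb \alpha !=\alpha _1!\cdots \alpha _d!,\\ \Vert f\Vert _{C^{k,a}}&=\max _{|\pmb \beta |\le k}\,\sup _{\mathbf {x}\in \overline{\varOmega }}\bigl |D^{\pmb \beta }f(\mathbf {x})\bigr |+\max _{|\pmb \beta |=k}\,\sup _{\mathbf {x}\ne \mathbf {y}}\frac{\bigl |D^{\pmb \beta }f(\mathbf {x})-D^{\pmb \beta }f(\mathbf {y})\bigr |}{\Vert \mathbf {x}-\mathbf {y}\Vert ^{a}}. \end{aligned}$$

With \(\pmb \alpha =\mathbf {1}=(1,\ldots ,1)\) the second convention gives \(\mathbf {h}^{\mathbf {1}}=h_1\cdot \ldots \cdot h_d\), which is the quantity used in Sect. 4.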

Assumption 1

Assume that the following three conditions hold:

- (i) The kernel \(K\) has compact support: \(\text {supp}(K)\subset [-1,1]^d\).

- (ii) There exist constants \(D,K_1>0\) and \(K_{2}<\infty \) such that

$$\begin{aligned} K_1\cdot I_{[-D,D]^d}(\mathbf {u})\le K(\mathbf {u})\le K_2\cdot I_{[-1,1]^d}(\mathbf {u}). \end{aligned}$$

- (iii) All derivatives of \(K\) up to the order \(d\) exist and are continuous.

Assumption 2

Suppose that the design points \(\{t_{\mathbf {i}}=(t_{i_1},\ldots ,t_{i_d})|\mathbf {i}=(i_1,\ldots ,i_d)\in \{1,\ldots ,n\}^d\}\) satisfy

for positive design densities \(g_j,\,j=1,\ldots ,d\) on \([0,1]\) [see also Sacks and Ylvisaker (1970)] that are bounded away from zero and continuously differentiable on \((0,1)\) with bounded derivatives up to order \((d-1)\vee 1\).

Remark 1

If for some \(j\in \{1,\ldots ,d\}\, g_j\) is the uniform density, that is, \(g_j=I_{[0,1]},\) Assumption 2 gives

Hence, the case of equally spaced design is included in Assumption 2 as a special case.
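The following is a minimal numerical sketch of how design points of this type can be generated. It assumes the quantile convention \(t_{i_j}=G_j^{-1}(i_j/(n+1))\) that is used in the proof of Lemma 2, where \(G_j\) denotes the distribution function of \(g_j\). The function name `design_points`, the grid-based inversion and the example density `tilted` are illustrative choices, not part of the original text.

```python
import numpy as np

def design_points(g, n, grid_size=20001):
    """Return t_1 < ... < t_n with G(t_i) = i / (n + 1),
    where G is the distribution function of the density g on [0, 1]."""
    z = np.linspace(0.0, 1.0, grid_size)
    gz = g(z)
    # Cumulative trapezoidal rule for G(z) = int_0^z g(s) ds.
    G = np.concatenate(([0.0], np.cumsum(0.5 * (gz[1:] + gz[:-1]) * np.diff(z))))
    G /= G[-1]  # guard against small quadrature error so that G(1) = 1
    levels = np.arange(1, n + 1) / (n + 1)
    # G is strictly increasing because g is bounded away from zero,
    # so it can be inverted by linear interpolation.
    return np.interp(levels, G, z)

# Uniform design density: equally spaced points i / (n + 1).
uniform = lambda z: np.ones_like(z)
t_uniform = design_points(uniform, 9)

# A hypothetical non-uniform density on [0, 1], bounded away from zero.
tilted = lambda z: 0.5 + z
t_tilted = design_points(tilted, 9)
```

A full \(d\)-dimensional design is then the Cartesian product of the one-dimensional point sets, for instance via `np.meshgrid`.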

3 Bivariate nonparametric regression

3.1 Notation, estimation and auxiliary results

In the following, we shall adapt the notation introduced in Tsybakov (2009), Chapter 1.6, to the two-dimensional setting. We shall also make use of some of the results stated therein and extend the proofs, if necessary, to the case where the design only meets Assumption 2, i.e., can be but is not necessarily uniform. To define the estimator, we need to fix some notation first. For \(j=1,\ldots ,k\) let \(U_j:\mathbb {R}^2\rightarrow \mathbb {R}^{j+1}\) be defined as

and let further \(U:\mathbb {R}^2\rightarrow \mathbb {R}^{(k+1)(k+2)/2}\) be defined as \(U(\mathbf {u}):=(1,U_1(\mathbf {u}), U_2(\mathbf {u}),\ldots ,U_k(\mathbf {u}))^T.\) Moreover, for \(j=1,\ldots ,k\) and \(\mathbf {h}=(h_1,h_2)\) define \( \theta (\mathbf {x})=(f(\mathbf {x}),\mathbf {h}^1 f^{(1)}(\mathbf {x}),\mathbf {h}^2f^{(2)}(\mathbf {x}),\ldots ,\mathbf {h}^k f^{(k)}(\mathbf {x}))^T,\) where \( \mathbf {h}^jf^{(j)}:=(\mathbf {h}^{(j,0)}\cdot D^{(j,0)}f,\mathbf {h}^{(j-1,1)}\cdot D^{(j-1,1)}f,\mathbf {h}^{(j-2,2)}\cdot D^{(j-2,2)}f,\ldots ,\mathbf {h}^{(0,j)}\cdot D^{(0,j)}f)\) with the multi-index notation \(\mathbf {h}^{\pmb \alpha }\) and \(D^{(\alpha _1,\alpha _2)}\) as defined in (4) and (2), respectively. Let \(K:\mathbb {R}^2\rightarrow \mathbb {R}^+_0\) be a kernel function as specified in Assumption 1 in the previous section. Recall that, given the above notation, the quantity

is called the local polynomial estimator of order \(k\) of \(\theta (\mathbf {x})\) and that the statistic

is called the local polynomial estimator of order \(k\) of \(f(\mathbf {x})\) [see Tsybakov (2009)]. Introducing some more notation, we can rewrite the estimators \(\hat{\theta }_n(\mathbf {x})\) and \(\hat{f}_n(\mathbf {x})\) in a perhaps more intuitive way. For \(\mathbf {x}\in \overline{\varOmega }\) let

and \({\mathcal B }_{n,\mathbf {x}}\in \mathbb {R}^{(k+1)(k+2)/2\times (k+1)(k+2)/2}\) be defined as

and

Now we can write

, which yields the necessary condition

. It is obvious that for a positive definite matrix \({\mathcal B}_{n,\mathbf {x}}\) the estimator \(\hat{\theta }_{n}(\mathbf {x})\) is defined by the equation

and that, also for a positive definite matrix \({\mathcal B}_{n,\mathbf {x}}\), with the definition of the weights \(W_{n,\mathbf {i}}(\mathbf {x})\) by

we obtain

that is, the estimator \(\hat{f}_n(\mathbf {x})\) is linear in \(Y_{\mathbf {i}}.\)
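The construction can be illustrated numerically. The sketch below computes a bivariate local linear estimate (\(k=1\)) as the solution of a kernel-weighted least squares problem, following the general scheme in Tsybakov (2009), Chapter 1.6. The normalizing constants of \({\mathcal B}_{n,\mathbf {x}}\) in the displays above are not reproduced here; they cancel when the normal equations are solved. The product kernel is the one used later in Sect. 5.1, and all function names as well as the test function are illustrative assumptions.

```python
import numpy as np

def kernel(u):
    """Product kernel K(u1, u2) = K1(u1) * K1(u2), K1(x) = (1 - x^2)^4 on [-1, 1]."""
    k1 = np.where(np.abs(u) <= 1.0, (1.0 - u**2) ** 4, 0.0)
    return k1.prod(axis=-1)

def local_linear(x, t, y, h):
    """Local linear estimate of f(x) from design points t (N x 2) and responses y (N,)."""
    u = (t - x) / h                              # componentwise (t_i - x) / h
    w = kernel(u)                                # kernel weights K((t_i - x) / h)
    U = np.column_stack([np.ones(len(u)), u])    # rows U(u)^T = (1, u1, u2)
    B = (U * w[:, None]).T @ U                   # sum_i U U^T K  (unnormalized)
    a = (U * w[:, None]).T @ y                   # sum_i Y_i U K  (unnormalized)
    theta = np.linalg.solve(B, a)
    return theta[0]                              # f_hat(x) = U(0)^T theta_hat

# Equally spaced design on (0, 1)^2 and a noise-free linear test function.
n = 30
g = np.arange(1, n + 1) / (n + 1)
T1, T2 = np.meshgrid(g, g, indexing="ij")
t = np.column_stack([T1.ravel(), T2.ravel()])
y = 1.0 + 2.0 * t[:, 0] - 3.0 * t[:, 1]
estimate = local_linear(np.array([0.5, 0.5]), t, y, np.array([0.2, 0.2]))
```

Since the estimate is `theta[0]`, a fixed linear functional of the responses `y`, the sketch also makes the linearity in \(Y_{\mathbf {i}}\) visible. Without noise, a linear function is recovered up to floating point error, in line with the polynomial reproduction property used in the proof of Lemma 3.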

To conclude this section, we give some useful results regarding the estimator and its defining quantities such as the asymptotic form of the matrix \({\mathcal B}_{n,\mathbf {x}}\), the asymptotic variance and a uniform estimate of the bias which will be needed for the subsequent considerations.

Lemma 1

Let \({\mathcal B}_{n,\mathbf {x}}\) be as defined in (6), \(K\) a kernel as specified in Assumption 1 and define the matrices \({\mathcal B},{\mathcal B}_{\mathbf {x}}\in \mathbb {R}^{(k+1)(k+2)/2\times (k+1)(k+2)/2}\) as

where integration is carried out componentwise. Let further Assumption 2 be satisfied. Then,

- (i) for each \((p,q)\in \{1,\ldots ,(k+1)(k+2)/2\}\times \{1,\ldots ,(k+1)(k+2)/2\},\;0<\delta <1/2,\)

$$\begin{aligned} \sup _{\mathbf {x}\in [\delta ,1-\delta ]^2}\bigl |({\mathcal B}_{n,\mathbf {x}}-{\mathcal B}_{\mathbf {x}})_{p,q}\bigr |=O\left( h_1+h_2\right) \end{aligned}$$

- (ii) and the matrix \({\mathcal B}_{\mathbf {x}}\) is positive definite.

Note that the matrix \({\mathcal B}\) is independent of the variable \(\mathbf {x}.\)

Lemma 2

(Variance of the local polynomial estimator) Let \({\mathcal B}\) be as defined in (9), \(K\) a kernel as specified in Assumption 1 and define

Then \(\mathrm{Var} [\hat{f}_n(\mathbf {x})]=s^2(\mathbf {x})/(n^2h_1h_2)+ o\bigl (1/(n^2h_1h_2)\bigr )\), where the estimate \(o\bigl (1/(n^2h_1h_2)\bigr )\) is independent of the variable \(\mathbf {x}.\)

Lemma 3

(Bias of the local polynomial estimator) If Assumption 1 and Assumption 2 are satisfied we find for the bias of the local polynomial estimate (5) of a function \(f\in C^{k,a}([0,1]^2)\)

3.2 A limit theorem and its implications

Given the notation and the auxiliary results presented in the previous Sect. 3.1, we can now state the main results for the two-dimensional regression model (1).

Theorem 1

Let Assumption 1 and Assumption 2 be satisfied. Assume that there exists a constant \(\nu \in (0,1]\) such that \(\mathbb {E}|\varepsilon _{(1,1)}|^r<\infty \) for some \(r>4/(2-\nu ),\) and \(\sqrt{\log (n)}\bigl (1/(n^{\nu }h_1h_2)+1/(nh_1^2)+1/(nh_2^2)\bigr )=o(1).\) Further assume that there exist constants \(0<l<1\) and \(L<\infty \) such that the inequality \(h_1+h_2\le L(h_1h_2)^{1-l}\) holds. Then, for all \(0<\delta <1/2,\,\kappa \in \mathbb {R}\)

and

It is clear that in nonparametric curve estimation one always has to deal with the bias introduced by smoothing. In the context of the construction of (simultaneous) confidence bands, one of two major strategies to cope with this difficulty is usually pursued: explicit bias correction, which allows for an "optimal" choice of the smoothing parameter, or slight undersmoothing, i.e., accepting a higher variability in the estimation to suppress the bias. In this paper, we shall follow the latter strategy, for which Hall (1992) gave theoretical justification by showing that it results in minimal coverage error as compared to explicit bias correction. The price, however, is slightly wider asymptotic bands. As a direct consequence of Theorem 1 and the use of an undersmoothing bandwidth, we obtain the following result.

Corollary 1

Let the assumptions of Theorem 1 be satisfied and let \((h_1+h_2)^{k+a}\cdot n\cdot \sqrt{h_1h_2\log (n)}=o(1).\) Then the set

where \(\varPhi _{n,\alpha }(\mathbf {x}):=\bigl (\kappa _{\alpha }/l_n+l_n\bigr )s(\mathbf {x})/(n\sqrt{h_1h_2}) \quad \text {and}\quad \kappa _{\alpha }=-\log (-0.5\log (1-\alpha )),\) defines an asymptotic uniform \((1-\alpha )\)-confidence band for the bivariate function \(f\in C^{k,a}(\overline{\varOmega })\) from regression model (1).
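As a small illustration, the half width \(\varPhi _{n,\alpha }(\mathbf {x})\) can be evaluated as follows. This is a sketch under stated assumptions: the normalizing sequence \(l_n\) comes from Theorem 1, whose display is not reproduced here, so it is passed in as a plain number, and the numerical inputs in the example (`l_n=3.0`, `s_x=0.4`, the bandwidths) are hypothetical. The function names are illustrative.

```python
import math

def kappa(alpha):
    """kappa_alpha = -log(-0.5 * log(1 - alpha)) as in Corollary 1."""
    return -math.log(-0.5 * math.log(1.0 - alpha))

def half_width(alpha, l_n, s_x, n, h1, h2):
    """Phi_{n,alpha}(x) = (kappa_alpha / l_n + l_n) * s(x) / (n * sqrt(h1 * h2)).

    l_n is the normalizing sequence of Theorem 1 (treated as an input here),
    s_x is the value s(x) of the standard deviation function from Lemma 2.
    """
    return (kappa(alpha) / l_n + l_n) * s_x / (n * math.sqrt(h1 * h2))

# Hypothetical inputs, for illustration only.
w_small = half_width(alpha=0.05, l_n=3.0, s_x=0.4, n=75, h1=0.2, h2=0.2)
w_large = half_width(alpha=0.05, l_n=3.0, s_x=0.4, n=250, h1=0.2, h2=0.2)
```

By construction, \(\kappa _{\alpha }\) solves \(\exp (-2\exp (-\kappa ))=1-\alpha \), which is consistent with a double-exponential limit law for the maximal absolute deviation.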

4 Multivariate nonparametric regression

In this section, we first introduce more notation that is needed to define the local polynomial estimator of order \(k\) for the multivariate function \(f.\) Then, we state the \(d\)-dimensional versions of Theorem 1 and Corollary 1 presented in the previous section and conclude with a further generalization regarding the estimator. For \(j=1,\ldots ,k\) and \(N_{j,d}:=\left( {\begin{array}{c}d+j\\ d\end{array}}\right) \) let

be an enumeration of the set \(\bigl \{\pmb \alpha \in \{0,1,\ldots ,j\}^d\,\bigl |\,|\pmb \alpha |= j\bigr \}\) and let \(U:\mathbb {R}^d\rightarrow \mathbb {R}^{N_{k,d}}\) be defined as

where \(U_{j,\varPsi _j(p)}(\mathbf {u})=\frac{\mathbf {u}^{\varPsi _j(p)}}{\varPsi _j(p)!},\;p=1,\ldots ,N_{j,d-1},\;j=1,\ldots ,k\).
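The bookkeeping behind this definition can be checked with a short sketch. It enumerates the multi-indices of exact order \(j\), whose number is \(N_{j,d-1}=\left( {\begin{array}{c}d+j-1\\ d-1\end{array}}\right) \) (the index range used for \(p\)), and stacks the scaled monomials \(\mathbf {u}^{\pmb \alpha }/\pmb \alpha !\) for orders \(0,\ldots ,k\), which gives \(N_{k,d}\) components in total. The particular ordering used below (lexicographically decreasing, matching \((j,0),(j-1,1),\ldots ,(0,j)\) for \(d=2\)) is an assumption, since the enumeration \(\varPsi _j\) itself is not reproduced here.

```python
from itertools import product
from math import comb, factorial, prod

def multi_indices(j, d):
    """All alpha in {0,...,j}^d with |alpha| = j, in lexicographically decreasing order."""
    return sorted((a for a in product(range(j + 1), repeat=d) if sum(a) == j),
                  reverse=True)

def U(u, k):
    """Stack the scaled monomials u^alpha / alpha! for |alpha| = 0, 1, ..., k."""
    out = []
    for j in range(k + 1):
        for alpha in multi_indices(j, len(u)):
            num = prod(ui ** ai for ui, ai in zip(u, alpha))
            den = prod(factorial(ai) for ai in alpha)
            out.append(num / den)
    return out

# Bivariate example of order k = 2: components 1, u1, u2, u1^2/2, u1*u2, u2^2/2.
example = U((2.0, 3.0), 2)
```

For \(d=2\) this reproduces the dimension \((k+1)(k+2)/2\) used in Sect. 3.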

Moreover, for \(j=1,\ldots ,k\) and \(\mathbf {h}=(h_1,\ldots ,h_d)\) define \(\theta (\mathbf {x})=(f(\mathbf {x}),\mathbf {h}^1 f^{(1)}(\mathbf {x}),\mathbf {h}^2f^{(2)}(\mathbf {x}),\ldots ,\mathbf {h}^k\cdot f^{(k)}(\mathbf {x}))^T,\) where \(\mathbf {h}^jf^{(j)}:=(\mathbf {h}^{\varPsi _{j}(1)}\cdot D^{\varPsi _{j}(1)}f,\ldots ,\mathbf {h}^{\varPsi _j\left( N_{j,d-1}\right) }\cdot D^{\varPsi _j\left( N_{j,d-1}\right) }f).\) Using the notation just introduced, we can define the \(d\)-dimensional local polynomial estimator of order \(k\) of \(f(\mathbf {x})\) exactly as in (5). For \(\mathbf {x}\in \overline{\varOmega }\) let

and \({\mathcal B}_{n,\mathbf {x},d}\in \mathbb {R}^{N_{k,d}\times N_{k,d}}\) be the \(d\)-dimensional analogs of

and \({\mathcal B}_{n,\mathbf {x}}\). Again we have

provided the matrix \({\mathcal B}_{n,\mathbf {x},d}\) is positive definite. Recall from definition (4) that \(\mathbf {h}^{\mathbf {1}}=h_1\cdot h_2\cdot \ldots \cdot h_d.\)

Theorem 2

Let Assumption 1 and Assumption 2 be satisfied. Assume that there exists a constant \(\nu \in (0,1]\cap (0,d)\) such that \(\mathbb {E}|\varepsilon _{(1,1)}|^r<\infty \) for some \(r>4/(2-\nu ),\) and \(\sqrt{\log (n)}\bigl (1/(n^{\nu }\mathbf {h}^{\mathbf {1}})+1/(nh_1^d)+\cdots +1/(nh_d^d)\bigr )=o(1).\) Further assume that there exist constants \(0<l<1\) and \(L<\infty \) such that the inequality \(\sum _{p=1}^{d}h_p\le L(\prod _{p=1}^dh_p)^{1-l}\) holds. Then, for all \(0<\delta <1/2,\,\kappa \in \mathbb {R}, s_d(\mathbf {x}):=\sigma \Vert \widetilde{K}_{{\mathcal B},U}\Vert /\sqrt{g_1(x_1)\cdot \ldots \cdot g_d(x_d)}\)

and

Corollary 2

Let the assumptions of Theorem 2 be satisfied and let \((h_1+\cdots +h_d)^{k+a}\cdot n^{\frac{d}{2}}\cdot \sqrt{\mathbf {h}^{\mathbf {1}}\log (n)}=o(1).\) Then the set

where \( \varPhi _{n,\alpha ,d}(\mathbf {x}):=\Bigl (\frac{\kappa _{\alpha }}{l_n}+l_n\Bigr )\frac{s_d(\mathbf {x})}{n^{\frac{d}{2}} \sqrt{h_1\cdot \ldots \cdot h_d}} \quad \text {and}\quad \kappa _{\alpha }=-\log (-0.5\log (1-\alpha )),\) defines an asymptotic uniform \((1-\alpha )\)-confidence band for the multivariate function \(f\in C^{k,a}(\overline{\varOmega })\) from regression model (1).

The results stated above hold for general linear nonparametric kernel regression estimates with a kernel of convolution form, or even a sequence of such kernels, satisfying Assumption 1. This is a consequence of the fact that all of these kernel estimates can be approximated by a stationary Gaussian field whose supremum, taken over a growing system of sets, has an extreme value limit distribution. To conclude the section, we now present a further limit theorem in which this generalization is formalized.

Theorem 3

Assume that the conditions of Theorem 2 are satisfied and that

with a sequence of kernels \((K^{(n)})_{n\in \mathbb {N}}\) meeting Assumption 1 and one of the following two conditions

- (i) There exists a number \(M\in \mathbb {N}\), which is independent of \(n\), kernels \(K,K_1,\ldots ,K_M\), each satisfying Assumption 1, and sequences \((a_{n,1})_{n\in \mathbb {N}},\ldots ,(a_{n,M})_{n\in \mathbb {N}}\) such that \(a_{n,p}=o(1/\sqrt{\log (n)}),\,p=1,\ldots ,M\) and \(K^{(n)}-K=\sum _{p=1}^{M}a_{n,p}K_p.\)

- (ii) There exists a limit kernel \(K\), meeting Assumption 1 such that \(\Vert K^{(n)}-K\Vert _{\infty }=o\bigl (\sqrt{\mathbf {h}^{\mathbf {1}}}/\sqrt{\log (n)}\bigr ).\)

Then, Theorem 2 holds with each \(\widetilde{K}_{{\mathcal B},U}\) replaced by \(K\) in the definitions of the quantities \(s_d(\mathbf {x})\) and \(\varLambda _2.\)

Remark 2

The results from Theorem 3 are relevant in several applications. For instance, in the context of spline smoothing, Silverman (1984) showed that a one-dimensional cubic spline estimator is asymptotically of convolution-kernel form with a bounded, smooth kernel \(K_S\) defined by \(K_S(u)=1/2\exp (-|u|/\sqrt{2})\sin (|u|/\sqrt{2}+\pi /4)\). The associated estimator satisfies the assumptions of Theorem 3 except for Assumption 1 (i). The results of this paper also hold in this case, even for relatively mild (polynomial) decay of the kernel function in each direction. However, the technical complexity is disproportionately greater, hence we will not include this case in our considerations.

5 Finite sample properties

In this section, the finite sample properties of the proposed asymptotic confidence bands are investigated. First, the simulation setup is described in Sect. 5.1 and the results are presented and discussed in Sect. 5.2.

5.1 Simulation setup

All results are based on 2500 simulation runs. We simulate data from the bivariate regression model (1) with normally distributed errors \(\varepsilon _{i,j}\sim \mathcal {N}(0,\sigma ^2)\) where \(\sigma =0.3\) and \((n_1,n_2)\in \{1,\ldots ,n\}^2, n\in \{75,150,250\}\). For the unknown regression function, we consider two different versions \(f_1\) and \(f_2\) of a product of trigonometric functions defined by

with increasing complexity (see Fig. 1, central column for a contour plot of both functions under consideration). As kernel function \(K\), we consider a product kernel \(K(x_1,x_2)=K_1(x_1)\cdot K_1(x_2)\) with a compactly supported, three times continuously differentiable function \(K_1(x)=(1-x^2)^4I_{[-1,1]}(x).\) In these settings, we compare the performance of a local linear and a local quadratic estimator. The corresponding limit kernels \(\widetilde{K}_{{\mathcal B},U,\mathrm{lin}}\) and \(\widetilde{K}_{{\mathcal B},U,\mathrm{quad}}\) are then given by \(\widetilde{K}_{{\mathcal B},U,\mathrm{lin}}(\mathbf {x})=1.514\cdot K(\mathbf {x})\) and \(\widetilde{K}_{{\mathcal B},U,\mathrm{quad}}(\mathbf {x})=(3.482-10.826(x_1^2+x_2^2))K(\mathbf {x})\). A difference-based variance estimator is used to estimate \(\sigma ^2.\) Concerning the smoothing parameter \(h\), we first determine a suitable value for each setting by a small preliminary simulation study. These fixed smoothing parameters are then used in all runs for the respective simulation setting.
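The constants in the two limit kernels can be checked with exact rational arithmetic. The sketch below assumes that the limit kernel has the usual equivalent-kernel form, i.e., a polynomial times \(K\) that integrates to one and annihilates the non-constant monomials up to the order of the fit; this form is an assumption here, since the defining display (10) is not reproduced. By the symmetry of \(K\), the local linear case reduces to \(K/\int K\), and the local quadratic case to a kernel of the form \((c_0+c_1(x_1^2+x_2^2))K\) with two moment conditions. All variable names are illustrative.

```python
from fractions import Fraction

# Coefficients of K1(x) = (1 - x^2)^4 = 1 - 4x^2 + 6x^4 - 4x^6 + x^8.
COEFFS = [1, -4, 6, -4, 1]

def moment(p):
    """Integral of x^(2p) * K1(x) over [-1, 1], computed exactly."""
    return 2 * sum(Fraction(c, 2 * (i + p) + 1) for i, c in enumerate(COEFFS))

m0, m2, m4 = moment(0), moment(1), moment(2)

# Local linear: odd moments vanish, so the limit kernel is K / integral(K).
c_lin = 1 / m0**2

# Local quadratic: (c0 + c1 * (x1^2 + x2^2)) * K with the two conditions
#   integral of (c0 + c1 * (x1^2 + x2^2)) * K          = 1,
#   integral of (c0 + c1 * (x1^2 + x2^2)) * x1^2 * K   = 0.
a = m0 * m0              # integral of K
b = m0 * m2              # integral of x1^2 * K
c = m0 * m4 + m2 * m2    # integral of (x1^2 + x2^2) * x1^2 * K
c0 = 1 / (a - 2 * b * b / c)
c1 = -c0 * b / c

constants = (float(c_lin), float(c0), float(c1))
```

Under these assumptions the computed values agree with the constants 1.514, 3.482 and 10.826 quoted above up to rounding in the third decimal place.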

5.2 Simulation results

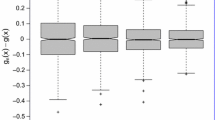

We now summarize the results of the simulation study. Figure 2 illustrates the confidence bands based on the local quadratic estimator for the regression function \(f_2\), top down for growing sample sizes. In each row, the contour plots show the lower confidence surface, the true object and the upper confidence surface (from left to right), and the improvement in the performance for growing \(n\) is clearly visible. Tables 1 and 2 contain the simulated coverage probabilities and the average half widths of the bands for the local linear and the local quadratic estimator, respectively. We observe that, even for moderate sample sizes, the simulated coverage probabilities are close to the nominal values and that the bands are reasonably narrow. For \(n=150\) and \(n=250\), the widths of the asymptotic bands are clearly below the noise level of \(0.3\) in all cases. The bands are narrow enough to provide, for instance, lower bounds on the number of local minima and maxima, since maximal regions are clearly distinguishable from minimal regions by the fact that the lower bands in a neighborhood of the maxima are much higher than the upper bands in a neighborhood of the minima.

Further, it is evident that the bands for \(f_1\) constructed with the local linear estimator are narrower than the ones for the local quadratic estimator. This is due to the fact that the local linear estimator produces a smaller variance because the \(L^2\)-norms of the limit kernels \(\widetilde{K}_{{\mathcal B},U,\mathrm{lin}}\) and \(\widetilde{K}_{{\mathcal B},U,\mathrm{quad}}\) are not equal, more precisely \(\Vert \widetilde{K}_{{\mathcal B},U,\mathrm{lin}}\Vert _2<\Vert \widetilde{K}_{{\mathcal B},U,\mathrm{quad}}\Vert _2\). Nevertheless, the results for the local quadratic estimator are slightly better, which is due to the smaller bias of this estimator as compared to its linear counterpart. The smaller bias guarantees a more accurate centering of the bands and results in higher coverage. We also find that, while there seems to be hardly any difference between the settings for the local quadratic estimator, the bands for \(f_2\) based on the local linear estimator are clearly wider. The effect is shown in Fig. 2 where plots of both cases for the local linear estimator are displayed.

6 Proofs

In this section, we present the proofs of the results stated in the previous sections. Those that are completely analogous to the ones presented in Tsybakov (2009), Chapter 1.6, are omitted; only some extensions are included in this section.

6.1 Proofs of auxiliary results

Proof of Lemma 1

(i) Since each entry \(U^{(p_0)}(\mathbf {u})U^{(q_0)}(\mathbf {u})\) of the matrix

is a polynomial of degree \(\le 2k\), the smoothness properties of \(K\) transfer to the products \(U^{(p_0)}(\mathbf {u})U^{(q_0)}(\mathbf {u})\cdot K.\) Hence, it follows by Assumption 2 that

Finally, again by Assumption 2

for sufficiently large \(n\).

(ii) Let \(\mathbf {v}\in \mathbb {R}^{(k+1)(k+2)/2}\backslash \{\mathbf {0}\}.\) Assumption 1 implies that

For \(\mathbf {v}\ne \mathbf {0}\) the quantity \(\mathbf {v}^TU(\mathbf {u})\) is a nonzero polynomial in \(\mathbf {u}\) of degree \(\le k\) and can therefore only vanish on a set of Lebesgue measure zero. Since also \(D>0\), it follows that \(\mathbf {v}^T{\mathcal B}\mathbf {v}>0\), and since the design densities \(g_1\) and \(g_2\) are bounded away from zero it also follows that \(\mathbf {v}^T{\mathcal B}_{\mathbf {x}}\mathbf {v}=g_1(x_1)g_2(x_2)\mathbf {v}^T{\mathcal B}\mathbf {v}>0\), which concludes the proof of the lemma. \(\square \)

Proof of Lemma 2

By definition of the weights \(W_{n,\mathbf {i}}\) it follows that

Now, we apply Lemma 1 and obtain

By Assumption 1 and Assumption 2 we find

since \(t_{i_j}-t_{i_j-1}=G^{-1}_j(i_j/(n+1))-G^{-1}_j((i_j-1)/(n+1))=1/(ng_j(t_j))+O(1/n^2)\) by differentiation of the inverse function \(G^{-1}_j\) and the mean value theorem. The assertion of the lemma immediately follows. \(\square \)

Proof of Lemma 3

Lemma 1 implies that the matrix \({\mathcal B}_{n,\mathbf {x}}\) is positive definite for sufficiently large \(n\in \mathbb {N}\). Hence, we can use the linear representation of the local polynomial estimator which is given in (8). This also implies that there exists a positive constant \(\lambda _0\) and a positive number \(n_0\in \mathbb {N}\) such that the smallest eigenvalue \(\lambda _{\text {min}}({\mathcal B}_{n,\mathbf {x}})\ge \lambda _0\), for all \(\mathbf {x}\in [0,1]^2\) if \(n\ge n_0\). Furthermore, we make use of the fact that the local polynomial estimator of order \(k\) reproduces polynomials of degree \(\le k.\) This means that for any polynomial \(Q\) with \( Q(\mathbf {x})=\sum _{\pmb \beta \in \{0,\ldots ,k\}^2,\,|\pmb \beta |\le k}a_{\pmb \beta }\mathbf {x}^{\pmb \beta }\) for \(\mathbf {x}\in \mathbb {R}^2\) the following equality holds

and hence

since (12) implies the identity \(\sum _{i_1,i_2=1}^nW_{n,(i_1,i_2)}(\mathbf {x})=1.\) Equation (12) further implies that \(\sum _{i_1,i_2=1}^{n}(\mathbf {t}_{(i_1,i_2)}-\mathbf {x})^{\pmb \beta }W_{n,(i_1,i_2)}(\mathbf {x})=0\) for all multi-indices \(\pmb \beta \in \{0,\ldots ,k\}^2,\,|\pmb \beta |\le k.\) By Taylor expansion and from (3) we obtain

Since \(t_{i_j}=G_j^{-1}(i_j/(n+1))\) and \(G_j\) is strictly increasing, the indicator functions \(I_{[x_j-h_j,x_j+h_j]}(t_{i_j})\) can be replaced by \(I_{[G_j(x_j-h_j),G_j(x_j+h_j)]}(G_j(t_{i_j}))\) for \(j=1\) and \(j=2.\) This finally implies

where the last estimate \(O\left( (h_1+h_2)^{k+a}\right) \) no longer depends on \(\mathbf {x}\). \(\square \)

6.2 Proofs of Theorem 1 and Corollary 1

To prove Theorem 1, we perform several steps to approximate the quantity \(n\sqrt{h_1h_2}s(\mathbf {x})^{-1}\bigl (\hat{f}_n(\mathbf {x})-\mathbb {E}\hat{f}_n(\mathbf {x})\bigr )\) uniformly in \(\mathbf {x}\in [\delta ,1-\delta ]^2\) by a stationary Gaussian field \(Z(\mathbf {x}):=\int _{\mathbb {R}^2}\widetilde{K}_{{\mathcal B},U}(\mathbf {t}-\mathbf {x})\,\mathrm{d}W(\mathbf {t})\), where \(W\) is a Wiener sheet on \(\mathbb {R}^2\), the function \(\widetilde{K}_{{\mathcal B},U}\) is defined in (10) and the supremum is then taken over the set \(\frac{1}{h_1}I_1\times \frac{1}{h_2}I_2\). Then, we apply Theorem 14.1 in Piterbarg (1996) to this stationary field which will complete the proof of the theorem. Define the process

\(Z_{n,0}\) can be decomposed as follows (see Lemma 8 below for details)

where the processes \(Z_{n,1}(\mathbf {x})\) and \(R_{n,0}(\mathbf {x})\) are defined in an obvious manner. In a first approximation step, \(Z_{n,0}\) is approximated by \(Z_{n,1}.\) In a next step, the observation errors are replaced by their partial sums, which allows us to replace \(Z_{n,1}\) by \(Z_{n,2}\):

where \(W\) is the Wiener sheet specified in Lemma 4,

and \(G^{-1}(\mathbf {z}):=\bigl (G_1^{-1}(z_1),G_{2}^{-1}(z_2)\bigr )\). To this end, we extend an approach introduced by Stadtmüller (1986) or Eubank and Speckman (1993) for one-dimensional models with deterministic (close to) uniform design. Note that it is not immediate how to generalize this methodology to higher dimensions, to a not necessarily uniform design under general design assumptions and to a broader class of estimators, all of which is done here. Next, the sum is approximated by the corresponding Wiener integral which gives the approximation of \(Z_{n,2}\) by \(Z_{n,3},\) defined by

where \(G(\mathbf {z}):=\bigl (G_1(z_1),G_2(z_2)\bigr ).\) We now define

Note that \(Z_{n,3}\) and \(Z_{n,4}\) have the same probability structure, i.e., \(\{Z_{n,3}(\mathbf {x})\}\mathop {=}\limits ^{\mathcal {D}}\{Z_{n,4}(\mathbf {x})\}.\) Hence, in a next step we replace \(Z_{n,4}\) by \(Z_{n,5},\) defined by

In a further step, we replace the process \(Z_{n,5}\) by the stationary process \(Z_{n,6}\)

and take the supremum with respect to \(\mathbf {x}\in 1/h_1[\delta ,1-\delta ]\times 1/h_2[\delta ,1-\delta ].\) Last, we show that the remainder process \(R_{n,0}\) is negligible, that is \(\sup _{\mathbf {x}\in [\delta ,1-\delta ]^2}|R_{n,0}(\mathbf {x})|=o_P\bigl (\log (n)^{-1/2}\bigr )\). Each approximation step corresponds to one of the Lemmas 4 to 8 listed and proven below.

Lemma 4

There exists a Wiener sheet \(W\) on a suitable probability space such that

where \(\nu \in (0,1]\) is the constant defined in Theorem 1.

Proof

Here and in what follows let \(I\) denote the unit cube, i.e., \(I:=[0,1]^d.\) Define the partial sums \(S_{(i_1,i_2)},\) indexed by double-indices \((i_1,i_2)\in \{0,1,\ldots ,n\}^2\) by \(S_{(i_1,i_2)}:=\sum _{p=1}^{i_1}\sum _{q=1}^{i_2}\varepsilon _{(p,q)}\) and set \(S_{(i_1,0)}\equiv S_{(0,i_2)}\equiv 0\) for all \((i_1,i_2)\in \{0,\ldots ,n\}^2.\) Note that, the following identity holds:

i.e., the errors can be replaced by the respective "increments" on \([\mathbf {i}-\mathbf {1},\mathbf {i}]=:[i_1-1,i_1]\times [i_2-1,i_2]\) of the partial sum process on the grid \(\{0,\ldots ,n\}\times \{0,\ldots ,n\}.\) We thus obtain
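The increment representation can be verified numerically. The sketch below assumes that the omitted identity is the standard rectangular increment \(\varepsilon _{(i_1,i_2)}=S_{(i_1,i_2)}-S_{(i_1-1,i_2)}-S_{(i_1,i_2-1)}+S_{(i_1-1,i_2-1)}\); the grid size and the random seed are arbitrary illustrative choices.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 6
eps = rng.normal(size=(n, n))   # eps[p - 1, q - 1] plays the role of epsilon_(p, q)

# Partial sums S_(i1, i2) on the grid {0, ..., n}^2 with S_(i1, 0) = S_(0, i2) = 0.
S = np.zeros((n + 1, n + 1))
S[1:, 1:] = eps.cumsum(axis=0).cumsum(axis=1)

# Rectangular increment of S over [i1 - 1, i1] x [i2 - 1, i2].
increments = S[1:, 1:] - S[:-1, 1:] - S[1:, :-1] + S[:-1, :-1]
```

Up to floating point error, `increments` coincides with `eps`, which is the discrete analog of recovering a density from a distribution function by a mixed second difference.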

We can now re-sort the sum and obtain a sum that contains the increments of the function \(\mathbf {z}\mapsto \widetilde{K}_{{\mathcal B},U}\Bigl (\frac{G^{-1}(\mathbf {z})-\mathbf {x}}{\mathbf {h}}\Bigr )\) instead of the increments of the partial sum process and obtain

Observe that \(\mathbf {x}\in [\delta ,1-\delta ]^2, \mathbf {t}_{(i_1,n)}=(G_1^{-1}(i_1/n),1), \mathbf {t}_{(n,i_2)}=(1,G_2^{-1}(i_2/n))\) and for large enough \(n, \delta /h_1\wedge \delta /h_2>1.\) From Assumption 1 and from \(S_{(i_1,0)}\equiv S_{(0,i_2)}\equiv 0\) for all \((i_1,i_2)\in \{0,\ldots ,n\}^2\), it now follows that all terms except the first one in the latter representation of \(Z_{n,1}(\mathbf {x})\) are equal to zero for sufficiently large \(n,\) which implies

for all \(n\ge n_0,\) for some \(n_0\in \mathbb {N}.\) This yields

The assertion of the lemma now follows from Theorem 1 in Rio (1993), which gives the estimate

since, under the assumptions of Theorem 1, \(\mathbb {E}|\varepsilon _{(1,1)}|^r<\infty \) for \(r>4/(2-\nu ).\)

It follows that

\(\square \)
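The two elementary steps at the start of this proof, replacing the errors by rectangle increments of the partial sums and then re-sorting the sum, can be checked numerically. The following is a minimal sketch under stated assumptions: the product weights `a` are a hypothetical stand-in for the kernel values \(\widetilde{K}_{{\mathcal B},U}\bigl ((\mathbf {t}_{\mathbf {i}}-\mathbf {x})/\mathbf {h}\bigr )\) (the actual kernel depends on \({\mathcal B}\) and \(U\)), the design is taken to be equidistant, and the function names are illustrative.

```python
import numpy as np

def partial_sums(eps):
    """Return S on the grid {0,...,n}^2 with S[i1, 0] = S[0, i2] = 0."""
    n1, n2 = eps.shape
    S = np.zeros((n1 + 1, n2 + 1))
    S[1:, 1:] = eps.cumsum(axis=0).cumsum(axis=1)
    return S

def rectangle_increments(S):
    """Increment of S over each cell [i1-1, i1] x [i2-1, i2]."""
    return S[1:, 1:] - S[:-1, 1:] - S[1:, :-1] + S[:-1, :-1]

rng = np.random.default_rng(0)
n = 30
eps = rng.standard_normal((n, n))      # i.i.d. centered errors (assumed Gaussian here)
S = partial_sums(eps)
recovered = rectangle_increments(S)    # should reproduce eps exactly

# Hypothetical compactly supported weights: zero near the boundary of the grid,
# mimicking a kernel centered at x = (0.5, 0.5) with bandwidth 0.2.
grid = np.arange(1, n + 1) / n
u = (grid - 0.5) / 0.2
k1 = np.where(np.abs(u) < 1, (1 - u**2) ** 2, 0.0)
a = np.outer(k1, k1)                   # a[i1-1, i2-1] is the weight at (i1, i2)

# Weighted sum of errors ...
lhs = np.sum(a * eps)
# ... equals the sum of partial sums times increments of the weights,
# because all boundary terms vanish (a is zero in the last row and column).
da = a[:-1, :-1] - a[1:, :-1] - a[:-1, 1:] + a[1:, 1:]
rhs = np.sum(S[1:n, 1:n] * da)
```

In this sketch `lhs` and `rhs` agree up to floating-point error only because the weights vanish on the boundary of the grid, which mirrors the role of \(\mathbf {x}\in [\delta ,1-\delta ]^2\) and the bounded support of the kernel in the proof.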

For the next approximation step we need that \(1/(nh_1h_2)=o(1/\log (n)^2),\) which is implied by the conditions of Theorem 1.

Lemma 5

Under the assumptions of Theorem 1 the process \(Z_{n,2}(\mathbf {x})\) can be approximated by \(Z_{n,3}(\mathbf {x})\) uniformly with respect to \(\mathbf {x}\in [\delta ,1-\delta ]^2,\) i.e.,

Proof

There exists a number \(n_0\in \mathbb {N}\) such that we obtain by integration by parts

for all \(n\ge n_0.\) Here, all terms obtained by integration by parts except the one on the right-hand side vanish for sufficiently large \(n\) since \(W(z_1,0)\equiv W(0,z_2)\equiv 0\) and all edge points lie outside the support of the kernel \(\widetilde{K}_{{\mathcal B},U}\). The increment of \(\widetilde{K}_{{\mathcal B},U}\) in the definition of the process \(Z_{n,2}\) can be expressed in terms of an integral as follows

see, e.g., Owen (2005), Section 9, where we used the notation

This gives

By a change of variables, we further obtain

by definition of the design points. Moreover,

and hence

Next, we apply Theorem 3.2.1 in Khoshnevisan (2002) which gives a modulus of continuity for the Wiener sheet

almost surely. We also observe that

which concludes the proof of this lemma. \(\square \)
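As a reminder of the kind of integral representation used for increments in this proof, consider a generic function \(f\) on a rectangle \([a_1,b_1]\times [a_2,b_2]\) whose mixed partial derivative is continuous. This smoothness is an assumption of the sketch, and \(f\) is a placeholder rather than the specific kernel \(\widetilde{K}_{{\mathcal B},U}\). Applying the fundamental theorem of calculus in each coordinate gives:

```latex
\begin{align*}
f(b_1,b_2)-f(a_1,b_2)-f(b_1,a_2)+f(a_1,a_2)
  &= \int_{a_1}^{b_1}\Bigl(\frac{\partial f}{\partial z_1}(z_1,b_2)
       -\frac{\partial f}{\partial z_1}(z_1,a_2)\Bigr)\,dz_1 \\
  &= \int_{a_1}^{b_1}\int_{a_2}^{b_2}
       \frac{\partial^2 f}{\partial z_1\,\partial z_2}(z_1,z_2)\,dz_2\,dz_1 .
\end{align*}
```

In particular, the absolute value of the increment is bounded by \((b_1-a_1)(b_2-a_2)\) times the supremum of the absolute mixed partial derivative over the rectangle.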

Lemma 6

Proof

Since \(Z_{n,3}\) and \(Z_{n,4}\) have the same probability structure, we show that \(\sup _{\mathbf {x}\in [\delta ,1-\delta ]^2}|Z_{n,4}(\mathbf {x})-Z_{n,5}(\mathbf {x})|=O\left( \log (n)\,\frac{(h_1+h_2)^{\frac{3}{2}}}{\sqrt{h_1h_2}}\right) \) almost surely which proves the assertion of the lemma. Again, by integration by parts for sufficiently large \(n\), since \(\widetilde{K}_{{\mathcal B},U}\) is of bounded support,

By a change of variables and the modulus of continuity of the Brownian sheet, we obtain under Assumption 2 (here, \(|u|\le 1\), since \(K\) has support contained in the cube \([-1,1]\times [-1,1]\))

almost surely. Furthermore,

which implies

For sufficiently large \(n\in \mathbb {N}\), the first four summands vanish completely and thus

which completes the proof of this lemma. \(\square \)

Lemma 7

Under the assumptions of Theorem 1 the following result holds

Proof

A combination of integration by parts, change of variables and the scaling property of the Brownian sheet yields

With the definition of the sets \(D_{<0}:=\{(z_1,z_2)\in \mathbb {R}^2\,|\,z_1<0\;\vee \; z_2<0\}\) and \(D_{>\frac{1}{h}}:=\{(z_1,z_2)\in \mathbb {R}^2\,|\,z_1>1/h_1\;\vee \; z_2>1/h_2\}\) we obtain

For \(\mathbf {z}\in D_{>\frac{1}{h}},\,\mathbf {x}\in [\delta ,1-\delta ]^2\) we further have \(z_j-x_j/h_j>\delta /h_j\quad \text {for}\quad j=1\,\vee \,j=2,\) and for \(\mathbf {z}\in D_{<0},\,\mathbf {x}\in [\delta ,1-\delta ]^2\) we obtain \(z_j-x_j/h_j<-\delta /h_j\quad \text {for}\quad j=1\,\vee \,j=2.\) Since \(\delta \) is a fixed positive constant, there exists a number \(n_0\in \mathbb {N}\) such that \(\widetilde{K}_{{\mathcal B},U}\bigl (\mathbf {z}-\mathbf {x}/\mathbf {h}\bigr )\equiv 0 \quad \text {for all}\quad \mathbf {z}\in D_{<0}\cup D_{>\frac{1}{h}},\, \mathbf {x}\in [\delta ,1-\delta ]^2\). Hence, for \(n\ge n_0,\) we have \(Z_{n,6}(\mathbf {x}/\mathbf {h})\mathop {=}\limits ^{\mathcal {D}}Z_{n,5}(\mathbf {x})\) and \(\sup _{\mathbf {x}\in [\delta ,1-\delta ]^2} |Z_{n,6}(\mathbf {x}/\mathbf {h})|\mathop {=}\limits ^{\mathcal {D}}\sup _{\mathbf {x}\in [\delta ,1-\delta ]^2} |Z_{n,5}(\mathbf {x})|\mathop {=}\limits ^{\mathcal {D}} \sup _{\mathbf {x}\in \frac{1}{h_1}[\delta ,1-\delta ]\times \frac{1}{h_2}[\delta ,1-\delta ]}|Z_{n,6}(\mathbf {x})|,\) which completes the proof of the lemma. \(\square \)
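The scaling property of the Brownian sheet invoked in this proof can be made explicit with a short worked computation. The sketch below uses only the standard covariance of the two-parameter Wiener sheet; the symbols \(\mathbf {s},\mathbf {t}\) for generic points in \([0,\infty )^2\) are introduced here for illustration.

```latex
\begin{align*}
\operatorname{Cov}\bigl(W(s_1,s_2),W(t_1,t_2)\bigr)
  &= (s_1\wedge t_1)\,(s_2\wedge t_2), \\
\operatorname{Cov}\bigl(W(h_1s_1,h_2s_2),W(h_1t_1,h_2t_2)\bigr)
  &= (h_1s_1\wedge h_1t_1)\,(h_2s_2\wedge h_2t_2) \\
  &= h_1h_2\,(s_1\wedge t_1)\,(s_2\wedge t_2) \\
  &= \operatorname{Cov}\bigl(\sqrt{h_1h_2}\,W(s_1,s_2),\sqrt{h_1h_2}\,W(t_1,t_2)\bigr).
\end{align*}
```

Since both fields are centered and Gaussian, equality of the covariance functions gives \(\{W(h_1z_1,h_2z_2)\}\mathop {=}\limits ^{\mathcal {D}}\{\sqrt{h_1h_2}\,W(z_1,z_2)\}\) as processes, which is the form in which the rescaling by the bandwidths enters.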

Proof of Theorem 1

Given the assumptions of Theorem 1 regarding the relative growth of the bandwidths \(h_1\) and \(h_2,\) the system of sets \(\Bigl \{\frac{1}{h_1}[\delta ,1-\delta ]\times \frac{1}{h_2}[\delta ,1-\delta ]\,\bigl |\,n\in \mathbb {N}\Bigr \}\) with volumes \((1-2\delta )^2/(h_1h_2)\) is a blowing up system of sets according to Definition 14.1 in Piterbarg (1996). An application of Theorem 14.3 therein thus yields

The following lemma provides the last missing piece, the negligibility of the remainder \(R_{n,0}.\) \(\square \)

Lemma 8

Let Assumptions 1 and 2 be satisfied. Then,

Proof

Lemma 1 implies the decomposition \({\mathcal B}_{n,\mathbf {x}}^{-1}={\mathcal B}_{\mathbf {x}}^{-1}+{\mathcal R}_{n,\mathbf {x}}^{-}\) with a \((k+1)(k+2)/2\times (k+1)(k+2)/2\)-matrix \({\mathcal R}_{n,\mathbf {x}}^{-}\) that has the property

The quantity \(U^{T}(\mathbf {0}){\mathcal R}_{n,\mathbf {x}}^{-}=\bigl ((r_{n,\mathbf {x}}^{-})_{1,1},\ldots ,(r_{n,\mathbf {x}}^{-})_{1,(k+1)(k+2)/2}\bigr ) \in \mathbb {R}^{1\times (k+1)(k+2)/2}\) is the first row of the matrix \({\mathcal R}_{n,\mathbf {x}}^{-}\) and \(U\Bigl (\frac{\mathbf {t}_{\mathbf {i}}-\mathbf {x}}{\mathbf {h}}\Bigr )=\biggl (U^{(1)}\Bigl (\frac{\mathbf {t}_{\mathbf {i}}-\mathbf {x}}{\mathbf {h}}\Bigr ),\ldots , U^{((k+1)(k+2)/2)}\Bigl (\frac{\mathbf {t}_{\mathbf {i}}-\mathbf {x}}{\mathbf {h}}\Bigr )\biggr )\in \mathbb {R}^{(k+1)(k+2)/2},\) hence we can write

For each fixed number \(p_0\in \{1,\ldots ,(k+1)(k+2)/2\}\) we find

with the same arguments as used before to prove the convergence of \(\sup _{\mathbf {x}\in [\delta ,1-\delta ]^2}|Z_{n,1}(\mathbf {x})|\), property (19) and the boundedness of the design densities \(g_1\) and \(g_2\). \(\square \)

An application of Lemmas 4–8 finally completes the proof of Theorem 1.

Proof of Corollary 1

Under the assumptions of Corollary 1, Lemma 3 implies that \(\sup _{\mathbf {x}\in [\delta ,1-\delta ]^2}|\mathrm{bias}(\hat{f}_n,f,\mathbf {x})|=O\bigl ((h_1+h_2)^{k+a}\bigr ).\) Hence,

that is, the bias is asymptotically negligible. \(\square \)

7 Proofs of Theorem 2 and Corollary 2

In this section, we sketch the extension of the proofs of the results of Sect. 6.2 to the case of general dimension \(d.\) Here, we need a multivariate generalization of the concept of functions of bounded variation, for which we use the elementary, intuitive approach in terms of suitable generalizations of increments given in Owen (2005). The generalization of the concept of increments \(\varDelta _d\) of a function \(f\) over \(d\)-dimensional intervals \([\mathbf {a},\mathbf {b}]\) that is relevant in this context is given by the definition

where \(\pmb \alpha \odot (\mathbf {a}-\mathbf {b})=(\alpha _1\cdot (a_1-b_1),\ldots ,\alpha _d\cdot (a_d-b_d))^T\) denotes the vector of component-wise products of the multi-index \(\pmb \alpha \) and the vector \(\mathbf {a}-\mathbf {b}.\) The above defined increments \(\varDelta _d\) of a function \(f\) over \(d\)-dimensional intervals \([\mathbf {a},\mathbf {b}]\) have the following property

Lemma 9

There exists a Wiener sheet \(W\) on a suitable probability space such that

where \(Z_{n,1,d}\) and \(Z_{n,2,d}\) are the \(d\)-dimensional analogs of \(Z_{n,1}\) and \(Z_{n,2}\) (see (13) and (14), respectively).

Proof

For general dimension \(d\) define the partial sum \(S_{(i_1,\ldots ,i_d)}:=\sum _{p_1=1}^{i_1}\ldots \sum _{p_d=1}^{i_d}\varepsilon _{(p_1,\ldots ,p_d)}\) and set \(S_{\mathbf {i}}\equiv 0\) if \(i_j=0\) for at least one \(j\in \{1,\ldots ,d\}.\) Again, we can replace the errors by suitable increments of the partial sum \(S_{(\cdot )}\) over \([\mathbf {i}-\mathbf {1},\mathbf {i}]:\)

With the same arguments as in the two-dimensional case, the replacement of the errors by the increments of the partial sums yields for sufficiently large \(n\) (such that all boundary terms vanish)

Another application of Theorem 1 in Rio (1993) yields the estimate

\(\square \)
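The \(d\)-dimensional increments and their use in recovering the errors from the partial sums can be illustrated with a short sketch. It assumes the alternating-sum convention \(\sum _{\pmb \alpha \in \{0,1\}^d}(-1)^{|\pmb \alpha |}f(\mathbf {b}+\pmb \alpha \odot (\mathbf {a}-\mathbf {b}))\) for \(\varDelta _d\), which follows Owen (2005) and is consistent with the notation above; the function names `delta_d` and `S_at` are hypothetical.

```python
import itertools
import numpy as np

def delta_d(f, a, b):
    """Alternating sum of f over the 2^d vertices of the interval [a, b].

    Assumed convention: sum over alpha in {0,1}^d of
    (-1)^{|alpha|} * f(b + alpha * (a - b)), so for d = 1 this is f(b) - f(a).
    """
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    total = 0.0
    for alpha in itertools.product((0, 1), repeat=a.size):
        alpha = np.array(alpha)
        sign = (-1) ** int(alpha.sum())
        total += sign * f(b + alpha * (a - b))
    return total

# Sanity check: for f(z) = z_1 * ... * z_d the increment is the volume of [a, b].
vol = delta_d(lambda z: np.prod(z), [0.0, 1.0, 2.0], [1.0, 3.0, 5.0])

# Partial sums on {0,...,n}^d with S = 0 whenever some index is 0.
rng = np.random.default_rng(1)
n, d = 5, 3
eps = rng.standard_normal((n,) * d)
inner = eps
for axis in range(d):
    inner = inner.cumsum(axis=axis)
S = np.zeros((n + 1,) * d)
S[(slice(1, None),) * d] = inner

def S_at(z):
    """Evaluate the partial sum array at an integer grid point given as floats."""
    return S[tuple(np.rint(z).astype(int))]

# The increment of S over [i - 1, i] recovers the error with multi-index i.
i = np.array([3, 1, 4])
inc = delta_d(S_at, i - 1, i)
err = eps[tuple(i - 1)]   # eps is stored with zero-based indices
```

The enumeration over all \(2^d\) vertices is meant for illustration in small dimensions only; it is not how the proof proceeds, which works with the increments analytically.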

Lemma 10

Under the assumptions of Theorem 2, the process \(Z_{n,2,d}(\mathbf {x})\) can be approximated by \(Z_{n,3,d}(\mathbf {x})\) uniformly with respect to \(\mathbf {x}\in [\delta ,1-\delta ]^d,\) i.e.,

where \(Z_{n,3,d}\) is the \(d\)-dimensional analog of \(Z_{n,3},\) defined in (15).

Proof

Also for the \(d\)-dimensional case there exists a number \(n_0\in \mathbb {N}\) such that we obtain by integration by parts for all \(n\ge n_0\)

i.e., all boundary terms vanish for sufficiently large \(n.\) To prove the assertion of the lemma, we can now use Eq. (21) and follow the lines of the proof of Lemma 5 and obtain the estimate

\(\square \)

Lemma 11

Assume that the assumptions of Theorem 2 hold. Then,

where \(Z_{n,5,d}\) is the \(d\)-dimensional analog of (17).

Proof

Again, we make an intermediate step by introducing a further process, \(Z_{n,4,d},\) that has the same probability structure as \(Z_{n,3,d}\) and which is defined as the \(d\)-dimensional analog of \(Z_{n,4}\) [see (16)]. By assumption, the design densities \(g_j\) are continuously differentiable up to order \((d-1)\vee 1,\,j=1,\ldots ,d\). By a higher-order Taylor expansion of the difference \(\sqrt{\prod _{j=1}^dg_j(x_j+u_jh_j)}-\sqrt{\prod _{j=1}^dg_j(x_j)}\), with the same arguments as in the proof of Lemma 6, we obtain the estimate

which holds almost surely and uniformly in \(\mathbf {x}\in [\delta ,1-\delta ]^d.\) \(\square \)

The generalizations of Lemma 7 and Lemma 8 and of their proofs, as well as the further steps in the proofs of Theorem 2 and Corollary 2, are straightforward and are, therefore, omitted.

References

Bickel, P., Rosenblatt, M. (1973a). Two-dimensional random fields. In: Multivariate Analysis, III (Proceedings of the Third International Symposium, Wright State University, Dayton, Ohio, 1972) (pp. 3–15). New York: Academic Press.

Bickel, P. J., Rosenblatt, M. (1973b). On some global measures of the deviations of density function estimates. Annals of Statistics, 1, 1071–1095.

Bissantz, N., Dümbgen, L., Holzmann, H., Munk, A. (2007). Nonparametric confidence bands in deconvolution density estimation. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 69, 483–506.

Claeskens, G., van Keilegom, I. (2003). Bootstrap confidence bands for regression curves and their derivatives. Annals of Statistics, 31, 1852–1884.

Eubank, R. L., Speckman, P. L. (1993). Confidence bands in nonparametric regression. Journal of the American Statistical Association, 88, 1287–1301.

Giné, E., Nickl, R. (2010). Confidence bands in density estimation. Annals of Statistics, 38, 1122–1170.

Giné, E., Koltchinskii, V., Sakhanenko, L. (2004). Kernel density estimators: convergence in distribution for weighted sup-norms. Probability Theory and Related Fields, 130(2), 167–198.

Hall, P. (1992). Effect of bias estimation on coverage accuracy of bootstrap confidence intervals for a probability density. Annals of Statistics, 20, 675–694.

Hall, P., Horowitz, J. (2013). A simple bootstrap method for constructing confidence bands for functions. Annals of Statistics, 41(4), 1892–1921.

Härdle, W. (1989). Asymptotic maximal deviation of m-smoothers. Journal of Multivariate Analysis, 29, 163–179.

Härdle, W., Song, S. (2010). Confidence bands in quantile regression. Econometric Theory, 26(4), 1180–1200.

Johnston, G. J. (1982). Probabilities of maximal deviations for nonparametric regression function estimates. Journal of Multivariate Analysis, 12, 402–414.

Khoshnevisan, D. (2002). Multiparameter processes: an introduction to random fields. New York: Springer.

Konakov, V. D., Piterbarg, V. I. (1984). On the convergence rate of maximal deviation distribution for kernel regression estimate. Journal of Multivariate Analysis, 15, 279–294.

Lounici, K., Nickl, R. (2011). Global uniform risk bounds for wavelet deconvolution estimators. Annals of Statistics, 39, 201–231.

Neumann, M. H., Polzehl, J. (1998). Simultaneous bootstrap confidence bands in nonparametric regression. Journal of Nonparametric Statistics, 9, 307–333.

Owen, A. B. (2005). Multidimensional variation for quasi-Monte Carlo. In: Fan, J., Li, G. (Eds.), Contemporary multivariate analysis and design of experiments (In celebration of Prof. Kai-Tai Fang’s 65th birthday), series in biostatistics (Vol. 2, pp. 49–74). Hackensack: World Scientific Publishing.

Piterbarg, V. I. (1996). Asymptotic methods in the theory of Gaussian processes and fields, translations of mathematical monographs (Vol. 148). Providence: American Mathematical Society.

Rio, E. (1993). Strong approximation for set-indexed partial sum processes via KMT constructions I. Annals of Probability, 21(2), 759–790.

Rio, E. (1994). Local invariance principles and their application to density estimation. Probability Theory and Related Fields, 98(1), 21–45.

Rosenblatt, M. (1976). On the maximal deviation of k-dimensional density estimates. Annals of Probability, 4(6), 1009–1015.

Sacks, J., Ylvisaker, D. (1970). Designs for regression problems with correlated errors. Annals of Mathematical Statistics, 41, 2057–2074.

Silverman, B. W. (1984). Spline smoothing: the equivalent variable kernel method. Annals of Statistics, 12, 898–916.

Smirnov, N. V. (1950). On the construction of confidence regions for the density of distribution of random variables. Doklady Akademii Nauk SSSR, 74, 189–191.

Stadtmüller, U. (1986). Asymptotic properties of nonparametric curve estimates. Periodica Mathematica Hungarica, 17, 83–108.

Tsybakov, A. B. (2009). Introduction to nonparametric estimation. New York: Springer Series in Statistics, Springer.

Wand, M. P., Jones, M. C. (1995). Kernel smoothing. Monographs on statistics and applied probability, 60. London: Chapman and Hall Ltd.

Xia, Y. (1998). Bias-corrected confidence bands in nonparametric regression. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 60, 797–811.

Acknowledgments

This work has been supported by the Collaborative Research Center "Statistical modeling of nonlinear dynamic processes" (SFB 823, Teilprojekt C4) of the German Research Foundation (DFG). The author would like to thank Holger Dette for helpful discussions and a careful reading of this manuscript. The author is grateful to an Associate Editor and a referee for their helpful suggestions and comments that improved the presentation of the results.

Cite this article

Proksch, K. On confidence bands for multivariate nonparametric regression. Ann Inst Stat Math 68, 209–236 (2016). https://doi.org/10.1007/s10463-014-0494-5
