Abstract

We present a comprehensive review of the evolutionary design of neural network architectures. This work is motivated by the fact that the success of an Artificial Neural Network (ANN) depends heavily on its architecture, and among many approaches, Evolutionary Computation, a set of global-search methods inspired by biological evolution, has proved to be an efficient approach for optimizing neural network structures. Initial attempts to automate architecture design with evolutionary approaches date back to the late 1980s and have attracted significant interest ever since. In this context, we examined the historical progress and analyzed all relevant scientific papers, with a special emphasis on how evolutionary computation techniques were adopted and which encoding strategies were proposed. We summarized key aspects of the methodology, discussed common challenges, and investigated the works in chronological order by dividing the entire timeframe into three periods. The first period covers early works focusing on the optimization of simple ANN architectures, with a variety of solutions proposed for chromosome representation. The second period surveys the rise of more powerful methods and hybrid approaches. In parallel with recent advances, the last period covers the Deep Learning Era, in which the research direction shifted towards configuring advanced deep neural network models. Finally, we propose open problems for future research in the field of neural architecture search and provide insights for fully automated machine learning. Our aim is to provide a complete reference of works on this subject and to guide researchers towards promising directions.


1 Introduction

An Artificial Neural Network (ANN) is a computational machine learning model loosely inspired by the human brain. It is typically composed of several processing units (neurons) interconnected in a layered structure (Haykin 1993). Such models can demonstrate human-like skills such as image recognition and natural language processing. Learning takes place by training the network on structured data with a learning algorithm.

Since Frank Rosenblatt introduced the Mark I Perceptron, often regarded as the first trainable ANN model, in 1958 (Rosenblatt 1958), Artificial Neural Networks have evolved from single-layer models into complex structures consisting of hundreds or even thousands of layers in various architectures. Such networks are called Deep Neural Networks, and the process of training them is called Deep Learning. Thanks to the recent availability of massive amounts of data (Big Data) and advances in Graphics Processing Unit (GPU) technology, modern deep learning architectures have surpassed human performance on image classification tasks by achieving state-of-the-art results, which has helped develop revolutionary technologies such as self-driving cars and cancer diagnosis from x-ray images (Abdel-Zaher and Eldeib 2016; Levine et al. 2019; Rashed and El Seoud 2019; Spielberg et al. 2019).

Extensive experimental data reveal that the success of a neural network in solving a particular problem depends essentially on its architecture (Weiß 1994a). From simple ANN models to today's highly complex deep structures, designing artificial neural networks has been a difficult and laborious task. Even today, network architectures are usually determined manually by domain experts through trial and error. Furthermore, there is no known analytical formula relating a network architecture to its performance. Given the vast computational resources and time required to explore the space of possible neural architectures, manual methods clearly cannot be relied upon to find optimal solutions. This motivated researchers to employ advanced algorithms such as metaheuristics to automate this process and improve network performance through better architectures.

A review examining the optimization of Artificial Neural Network design from a broad spectrum, covering all types of solution methods including metaheuristics and other advanced algorithms, would be a very demanding undertaking. Among many approaches, Evolutionary Computation, a set of global-search techniques inspired by the theory of evolution, has become the most popular, offering promising and competitive solutions on a wide range of real-world tasks. For this reason, this review narrows its scope and focuses only on works that combine artificial neural networks and evolutionary algorithms, two powerful paradigms of Artificial Intelligence (AI).

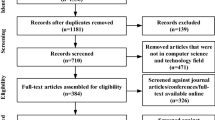

The first studies aiming to design network architectures with evolutionary methods date back to the late 1980s, and considerable progress has been achieved over the past 30 years. We conducted a thorough literature search and surveyed all relevant papers from this period. Examining the historical progress, we analyzed the studies in chronological order and divided the whole timeframe into three periods based on significant achievements and scientific trends. The first period covers initial attempts to evolve simple ANN architectures, characterized by competing proposals for efficient chromosome representation strategies. The second period starts with the introduction of Neuroevolution of Augmenting Topologies (NEAT) by Stanley and Miikkulainen (2001). NEAT was considered a major breakthrough and a key milestone in this field, and the second period involves many attempts to improve on or outperform NEAT in various respects. The third period covers the Deep Learning Era, when researchers explored methods to automate the design and configuration of deep neural networks. Figure 1 shows the number of papers published on the evolutionary design of neural network architectures throughout the investigated period and how the focus has shifted from simple ANN models to Deep Neural Network (DNN) architectures. The works include, but are not limited to, journal articles, conference papers, and dissertations. We aimed to include all relevant papers without applying any selection criteria, such as the number of citations or the journal impact factor, and searched exhaustively through all available databases, cross-checking against interim review papers in the historical context.

The primary purpose of this study is to present all innovative works by examining novel evolutionary approaches adopted in the design of artificial neural network architectures, and to analyze solution strategies comparatively, with a special emphasis on the evolutionary computation techniques adopted and the encoding strategies proposed. In this respect, it complements previous studies. Owing to the surge of interest in the subject, many review papers have been published at various intervals. The first review paper was published in 1992 by Schaeffer (1992), who examined the early steps and surveyed approaches to encoding strategies. Later, Yao carried out quite extensive reviews in the first decade (1993; 1998; 1999). Further reviews have been published covering up-to-date surveys and comparative analyses (Azzini and Tettamanzi 2011; Balakrishnan and Honavar 1995; Branke 1995; Cantú-Paz and Kamath 2005; Castellani 2013; Castillo et al. 2003; Castillo et al. 2007; de Campos et al. 2015; De Campos et al. 2011; Drchal and Šnorek 2008; Floreano et al. 2008; Vonk et al. 1995b; Weiß 1993; Weiß 1994a; Weiß 1994b; Whitley 1995). The most recent surveys are by Ojha et al. (2017), Chiroma et al. (2017), Stanley et al. (2019), and Baldominos et al. (2020). Owing to a shift of interest from conventional neural models to deep architectures, some of the latest surveys concentrate mostly on recently developed Neural Architecture Search (NAS) methods (Elsken et al. 2018b; Wistuba et al. 2019). Although these works provide comprehensive analyses, only a few of them cover the whole spectrum of historical progress. Furthermore, some papers are still ignored, not sufficiently examined, or not compared in terms of encoding strategies and the techniques adopted. Despite being recently published, the review by Baldominos et al. (2020) does not sufficiently cover the latest advances in the evolutionary design of deep neural networks, such as AmoebaNet-A by Real et al. (2019). The rapid growth of interest in this subject calls for more frequent updates, since significant achievements with state-of-the-art results have been obtained with evolutionary approaches in the last two years. Published review papers are depicted on a timeline in Fig. 2.

To summarize, this paper presents an extensive survey on the evolutionary design of neural network architectures with the following contributions:

- We provide a detailed and systematic review of evolutionary approaches for searching optimal neural network architectures, covering the complete spectrum of historical progress.
- We examine the use of various evolutionary computation techniques, such as Genetic Algorithms and Evolutionary Programming, and analyze genetic operations, population initialization methods, and evaluation techniques with a variety of fitness functions.
- We put a special emphasis on chromosome encoding strategies, with a comparative analysis of direct and indirect representation approaches, since they have a significant effect on the performance of the optimization process.
- We survey not only approaches for optimizing simple ANN architectures but also recent advances in the evolutionary design of deep neural architectures such as Convolutional Neural Networks (CNNs).
- We raise open questions for future research on reducing the computational cost of architecture search and on building systems that fully automate machine learning tasks without expert knowledge.

The rest of this review is organized as follows. In Sec. 2, we introduce Artificial Neural Networks together with their biological background and historical development. In Sec. 3, we investigate the optimization methodology and summarize Evolutionary Computation techniques together with the genetic operators applied. In Sec. 4, we classify representation methods and survey various encoding strategies, along with common challenges such as the Competing Conventions Problem. In Sec. 5, we investigate the historical progress in three periods of development, namely the early works, the rise, and the deep learning era. Finally, we conclude the survey in Sec. 6.

2 Artificial neural networks

An Artificial Neural Network is a machine learning model inspired by the human brain (Haykin 1993). Designed as a simulation of biological nerve cells, it consists of neurons arranged in layers and linked by weighted connections. An ANN essentially serves as a function that maps inputs to outputs for a particular problem. The basic skeleton of an ANN has an input layer, which collects signals from the external world, and an output layer, which produces output values as a function of the received inputs. Between the input layer and the output layer lie hidden layers of arbitrary depth and width, containing a given number of processing elements (neurons) and connections. This part is usually regarded as a “black box”, since the effect of its structure on a given problem cannot readily be explained. A typical Artificial Neural Network with two hidden layers and a single output is depicted in Fig. 3.

An artificial neuron can be defined as the weighted sum of incoming signals transformed by an activation function (Floreano et al. 2008) (Eq. 2). Each connection between two neurons acts as a variable multiplier, commonly referred to as a synaptic weight, and training is carried out to determine the best values for these weights. By learning from the sample data fed to it, this mathematical model can generalize and thereby acquire learning-related skills such as classification and regression. In theory, an artificial neural network with a single hidden layer containing sufficiently many neurons with a suitable non-linear activation can approximate any continuous function on a compact domain to arbitrary accuracy. Because of this property, it is described as a Universal Function Approximator (Cybenko 1989; Funahashi 1989; Hecht-Nielsen 1987; Hornik 1991; Kolmogorov 1957).

2.1 Biological motivation

The human brain contains a huge network of biological nerve cells called neurons. Examined closely, this highly complex structure shows that neurons are connected to other neurons through dendrites, synapses, and axons. Signals received through the dendrites are processed inside the cell and transmitted to other neurons via the axon and synapses (Fig. 4). The receiving nerve cell, in turn, passes the signal on to the next neuron. Each neuron thus acts as a kind of activation unit: it either excites, passing the signals it receives on to the next neuron, or inhibits, stopping them, following an all-or-none principle.

In 1943, McCulloch and Pitts (1943) laid the foundations of Artificial Neural Networks by creating a model of the biological nerve cell (Fig. 5). In 1958, Frank Rosenblatt invented a machine called the Mark I Perceptron (Rosenblatt 1958). In the Perceptron project, funded by the US Navy, Rosenblatt aimed to recognize and classify simple geometric shapes by building artificial neurons in hardware. Despite the simplicity of the perceptron, the New York Times described the project as the “embryo of an electronic computer that will be able to see, speak, write, walk, multiply and be conscious of its existence” in the near future (Baldominos et al. 2020).

An artificial neuron in the Perceptron can be described as a binary threshold device that receives inputs through excitatory or inhibitory synapses. The neuron becomes active if the weighted sum of its inputs exceeds its threshold. It can also be expressed as a function that maps its input x to an output value f(x):

\[ f(x) = \begin{cases} 1 & \text{if } w \cdot x + b > 0, \\ 0 & \text{otherwise,} \end{cases} \tag{1} \]

where w is the vector of weights, \(w \cdot x = \sum\limits_{i = 1}^{m} w_{i} x_{i}\) is the dot product, m is the number of inputs, and b is the bias (Fig. 6).

A network in which activation starts at the inputs and flows through the hidden layers towards the output is called a feedforward neural network. Likewise, the output y of a neuron in a feedforward neural network is the weighted sum of its inputs squashed by an activation function:

\[ y = f\left( \sum\limits_{i = 1}^{n} w_{i} x_{i} + b \right) \tag{2} \]

where \(x_{1}, x_{2}, \ldots, x_{n}\) are the input signals, \(w_{1}, w_{2}, \ldots, w_{n}\) are the connection weights, b is the bias, and \(f\) is the activation function (e.g., sigmoid or tanh).
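To make the weighted-sum-and-squash computation concrete, the following minimal sketch propagates an input through a small fully connected feedforward network with two hidden layers and a single output. It assumes sigmoid activations everywhere and an arbitrary 3-4-3-1 layout with hand-picked weights; the helper names `neuron_output` and `forward` are hypothetical and not taken from any library.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic activation that squashes z into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron_output(inputs, weights, bias, activation=sigmoid):
    """Weighted sum of the inputs plus bias, passed through the activation function."""
    return activation(sum(w * x for w, x in zip(weights, inputs)) + bias)


def forward(x, layers):
    """Propagate x layer by layer; each layer is a list of (weights, bias) pairs, one per neuron."""
    activations = x
    for layer in layers:
        activations = [neuron_output(activations, w, b) for w, b in layer]
    return activations


# Hypothetical 3-4-3-1 network (3 inputs, two hidden layers, one output) with arbitrary weights.
layers = [
    [([0.2, -0.1, 0.4], 0.0), ([0.5, 0.3, -0.2], 0.1), ([-0.3, 0.8, 0.1], -0.1), ([0.1, 0.1, 0.1], 0.0)],
    [([0.3, -0.2, 0.5, 0.1], 0.0), ([-0.4, 0.2, 0.1, 0.3], 0.05), ([0.2, 0.2, -0.1, 0.4], -0.05)],
    [([0.6, -0.3, 0.2], 0.1)],
]
y = forward([1.0, 0.5, -1.0], layers)
print(y)  # a single value in (0, 1)
```

Each neuron in a layer must have as many weights as the previous layer has neurons; in an architecture search, these layer widths are exactly the structural parameters being optimized.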

Some networks contain feedback loops in which outputs are fed back as inputs, allowing them to capture temporal dependencies (Fig. 7). Such networks are called Recurrent Neural Networks (RNNs) and are commonly used for natural language processing (Stanley 2004).
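The feedback loop can be illustrated with a minimal Elman-style recurrent step, a sketch assuming tanh units and hypothetical weight matrices `W_in` (input to hidden) and `W_rec` (hidden to hidden). The hidden state computed at one time step is fed back as an extra input at the next step, so the network still responds after the external input drops to zero.

```python
import math


def rnn_step(x_t, h_prev, W_in, W_rec, b):
    """One Elman-style recurrent step: h_t = tanh(W_in x_t + W_rec h_prev + b)."""
    return [
        math.tanh(
            sum(w * x for w, x in zip(W_in[j], x_t))
            + sum(u * h for u, h in zip(W_rec[j], h_prev))
            + b[j]
        )
        for j in range(len(b))
    ]


def run_rnn(sequence, W_in, W_rec, b):
    """Feed a sequence one step at a time, carrying the hidden state forward (the feedback loop)."""
    h = [0.0] * len(b)
    states = []
    for x_t in sequence:
        h = rnn_step(x_t, h, W_in, W_rec, b)
        states.append(h)
    return states


# Hypothetical recurrent layer with 1 input and 2 hidden units.
W_in = [[0.5], [-0.3]]
W_rec = [[0.1, 0.4], [0.2, -0.1]]
b = [0.0, 0.0]
states = run_rnn([[1.0], [0.0], [0.0]], W_in, W_rec, b)
print(states)  # later states are non-zero even though the later inputs are zero
```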

2.2 Multi-layer perceptron (MLP)

Although Rosenblatt's perceptron became popular and created excitement, it could only solve linearly separable problems. In 1969, Minsky and Papert published a book titled Perceptrons, which exposed the inadequacy of this model by showing that a single-layer perceptron cannot represent non-linear functions such as XOR (Minsky and Papert 1969). This publication is widely seen as the start of the AI Winter, a period of greatly reduced research and investment in artificial intelligence that lasted until the mid-1980s.
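The limitation can be reproduced with a minimal sketch of the perceptron learning rule, assuming a single threshold unit that outputs 1 when \(w \cdot x + b > 0\), zero initial weights, and a learning rate of 0.1. The unit learns AND, which is linearly separable, but no choice of weights lets it classify all four XOR cases. The function names are hypothetical.

```python
def predict(w, b, x):
    """Threshold unit: output 1 if w·x + b > 0, else 0."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else 0


def train_perceptron(samples, epochs=50, lr=0.1):
    """Perceptron learning rule: w <- w + lr * (target - output) * x, and likewise for b."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, target in samples:
            error = target - predict(w, b, x)
            w = [wi + lr * error * xi for wi, xi in zip(w, x)]
            b += lr * error
    return w, b


def accuracy(samples, w, b):
    return sum(predict(w, b, x) == t for x, t in samples) / len(samples)


inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
AND = [(x, x[0] & x[1]) for x in inputs]
XOR = [(x, x[0] ^ x[1]) for x in inputs]

print(accuracy(AND, *train_perceptron(AND)))  # 1.0: AND is linearly separable
print(accuracy(XOR, *train_perceptron(XOR)))  # below 1.0: no straight line separates XOR
```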

The development that made artificial neural networks popular again was a gradient-based training method called Backpropagation (Rumelhart et al. 1986). Generalizing the delta rule, Backpropagation updates the synaptic weights of multi-layer artificial neural networks (also called Multi-Layer Perceptrons (MLPs)) by computing the derivatives of the network output error with respect to each weight and propagating them backwards through the layers (Werbos 1974). It made the training of such networks efficient, and it is still the most commonly used training method. As a result, multi-layer feedforward neural networks became popular again as an effective machine learning model and started to solve many real-world problems, including those involving non-linear functions. Many models were developed from the 1980s to the mid-1990s, some of which are still in use today: Hopfield networks (Hopfield 1982), Learning Vector Quantization (LVQ) (Kohonen 1995), Adaptive Resonance Theory (ART) (Carpenter and Grossberg 1986), Self-Organizing Maps (SOM) (Kohonen 1989), the Elman network (Elman 1990), Support Vector Machines (Cortes and Vapnik 1995), and Radial Basis Function networks (Park and Sandberg 1991) were introduced to the literature as variants of, or alternatives to, Artificial Neural Networks.
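A minimal sketch of the backward pass helps show what "propagating the derivatives backwards" means. It assumes a one-hidden-layer network with sigmoid units and the per-sample squared error \(E = \tfrac{1}{2}(y - t)^2\); the helper names are hypothetical. The chain rule gives the output error signal first, which is then sent back to each hidden unit through its outgoing weight. A central finite-difference check confirms the analytic derivative.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(params, x):
    """One-hidden-layer MLP with sigmoid units: returns hidden activations h and output y."""
    W1, b1, w2, b2 = params
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + bj) for row, bj in zip(W1, b1)]
    y = sigmoid(sum(wj * hj for wj, hj in zip(w2, h)) + b2)
    return h, y


def loss(params, x, t):
    """Squared error E = 0.5 * (y - t)^2 for a single training sample."""
    return 0.5 * (forward(params, x)[1] - t) ** 2


def backprop(params, x, t):
    """Backward pass: apply the chain rule from the output error back to every weight."""
    W1, b1, w2, b2 = params
    h, y = forward(params, x)
    delta_out = (y - t) * y * (1 - y)  # dE/dz at the output unit
    grad_w2 = [delta_out * hj for hj in h]
    grad_b2 = delta_out
    # Error signal sent back to each hidden unit through its outgoing weight.
    delta_hid = [delta_out * w2[j] * h[j] * (1 - h[j]) for j in range(len(h))]
    grad_W1 = [[d * xi for xi in x] for d in delta_hid]
    grad_b1 = delta_hid
    return grad_W1, grad_b1, grad_w2, grad_b2


rng = random.Random(0)
params = ([[rng.uniform(-1, 1) for _ in range(2)] for _ in range(3)],  # W1: 3 hidden x 2 inputs
          [rng.uniform(-1, 1) for _ in range(3)],                      # b1
          [rng.uniform(-1, 1) for _ in range(3)],                      # w2
          rng.uniform(-1, 1))                                          # b2
x, t = [1.0, 0.0], 1.0
grad_W1, grad_b1, grad_w2, grad_b2 = backprop(params, x, t)

# Gradient check: compare one analytic derivative with a central finite difference.
eps = 1e-6
W1 = params[0]
W1[0][0] += eps
loss_up = loss(params, x, t)
W1[0][0] -= 2 * eps
loss_down = loss(params, x, t)
W1[0][0] += eps
numeric = (loss_up - loss_down) / (2 * eps)
print(grad_W1[0][0], numeric)  # the two values should agree to several decimal places
```

A training loop would then subtract a learning rate times each gradient from the corresponding weight.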

2.3 Towards deep architectures

In 1980, Fukushima (1980) laid the foundations of Convolutional Neural Networks (CNNs) with the Neocognitron, inspired by Hubel and Wiesel’s neuroscience studies (1959, 1962). This model consisted of two layers, similar to the visual cortex in the brains of mammals: the first layer detected rough image features such as corners and edges, and the second layer carried out more detailed processing and classification. Later, in the late 1980s, LeCun (1989, 1990a) applied convolutional networks trained with backpropagation to handwritten character recognition (Fig. 8). In addition, progress in natural language processing (NLP) led to advanced Recurrent Neural Network (RNN) architectures, such as Long Short-Term Memory (LSTM) in the late 1990s (Hochreiter and Schmidhuber 1997).
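The core operation of a convolutional layer can be sketched as sliding a small kernel over an image and summing element-wise products at each position. This minimal example assumes a "valid" cross-correlation (the form that CNN layers usually compute under the name convolution) and a hand-crafted vertical-edge kernel; in a real CNN, the kernel weights are learned rather than fixed. The function name `conv2d` is hypothetical.

```python
def conv2d(image, kernel):
    """'Valid' 2D cross-correlation: slide the kernel over the image and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(kernel[i][j] * image[r + i][c + j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# A 4x4 image with a vertical edge between columns 1 and 2 (dark left, bright right).
image = [[0, 0, 1, 1]] * 4
vertical_edge = [[-1, 1], [-1, 1]]
print(conv2d(image, vertical_edge))  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The response is non-zero only where the kernel straddles the edge, which is the kind of low-level feature the first layers of such networks detect.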

Training Artificial Neural Networks remained a difficult and time-consuming process even in the 2000s, despite progress in processor technology. As the number of layers in a network increases, so does the number of parameters to be learned, so these multi-layer structures, called deep neural networks, require more processing power and memory. A second AI winter lasted until 2006, when Geoffrey Hinton, one of the pioneers of the field, published groundbreaking work showing that deep networks can be trained in a more reasonable time with a structure called Deep Belief Nets (DBN). This development revived research on Artificial Neural Networks and helped trigger the AI boom (Hinton et al. 2006; Hinton and Salakhutdinov 2006).

Overcoming these obstacles increased interest in Deep Neural Networks and opened new research directions. Prof. Fei-Fei Li of Stanford University argued that the algorithms had reached maturity many years earlier, but the available datasets were still very poor. Starting in 2007, Fei-Fei Li and her colleagues built ImageNet (Deng et al. 2009), a dataset of millions of images collected from the internet and organized into thousands of categories. At the time, it was the largest dataset of its kind ever created. What distinguished ImageNet most from other datasets was that its categories were organized hierarchically according to the WordNet system (Fig. 9).

Beginning in 2010, an annual image recognition competition, the ImageNet Large Scale Visual Recognition Challenge (ILSVRC), was organized using the ImageNet dataset. Researchers competed with the deep neural network models they developed to obtain the highest accuracy in recognizing ImageNet images, and this race led to one of the most important developments in the field of Deep Learning in 2012. Alex Krizhevsky and his colleagues made one of the biggest leaps in artificial intelligence by dramatically reducing the error rate achieved on ImageNet with a deep neural network model they named AlexNet (Krizhevsky et al. 2012). No such improvement was expected: researchers had estimated that the error rate, then around 25%, would improve by about 1% per year, so AlexNet achieved in one step an advance that could have taken approximately 12 years. The model combined several then-recent innovations, such as ReLU activations, GPU training, and dropout. Furthermore, it demonstrated that deep networks with millions of parameters and connections perform better than shallow ones. This success raised interest in the competition and in deep learning research to the highest level, ushering in a new spring of artificial intelligence. In the following years, successive records were set in the ImageNet contest. Key milestones in the development of Artificial Neural Networks are depicted in Fig. 10.

3 ANN optimization

The optimization of Artificial Neural Networks has been studied from various aspects, mainly architectural design, connection weights, learning algorithms, node transfer functions, initial weights, the input layer, and learning parameters such as the learning rate or momentum. In short, virtually every variable in an artificial neural network model can be optimized in several ways. These optimization areas can, however, be grouped into two main categories: network design and network training.

3.1 Optimization of ANN architectures

Architecture optimization in Artificial Neural Networks is mainly concerned with structural parameters such as the number of layers, the number of neurons in each layer, and the connection scheme. The selection of node activation functions, which some works study separately, is also part of architecture design. In contrast, reducing the number of input parameters, known as input layer optimization, falls into the field of data science and cannot be regarded as architecture optimization. The general flow of ANN optimization is depicted in Fig. 11.

3.2 Optimization of synaptic weights

Backpropagation (BP) is the most common and one of the most effective methods for finding optimal weights. It is a gradient-descent-based algorithm that aims to minimize the mean squared error between the actual and desired outputs. In every iteration, the gradient of this error guides the weight updates towards values that produce the desired output. Although very effective, BP tends to get trapped in local minima and, especially in deep networks, often suffers from vanishing or exploding gradients. Furthermore, in some real-world problems it can be inefficient because of the structure of the error surface. For best results, the user must also select suitable hyperparameters, such as the learning rate, momentum, and batch size, which in turn requires additional heuristics.
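The local-minimum problem of gradient descent can be seen on a toy one-dimensional error surface. This sketch assumes the hypothetical function \(f(x) = x^4 - 3x^2 + x\), which has a local minimum near \(x \approx 1.13\) and a global minimum near \(x \approx -1.30\); it is not a real network's error surface. Plain gradient descent simply follows the slope, so the starting point alone decides which minimum it reaches.

```python
def f(x):
    """Toy error surface: local minimum near x = 1.13, global minimum near x = -1.30."""
    return x**4 - 3 * x**2 + x


def df(x):
    """Derivative of f, i.e. the gradient used by the descent steps."""
    return 4 * x**3 - 6 * x + 1


def gradient_descent(x, lr=0.01, steps=1000):
    for _ in range(steps):
        x -= lr * df(x)
    return x


x_right = gradient_descent(2.0)   # settles in the local minimum
x_left = gradient_descent(-2.0)   # settles in the global minimum
print(round(x_right, 2), round(f(x_right), 2))  # 1.13 -1.07
print(round(x_left, 2), round(f(x_left), 2))    # -1.3 -3.51
```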

Therefore, other methods have also been proposed to train Artificial Neural Networks. Initial attempts aimed to improve the performance of BP through gradient-based variants using more sophisticated algorithms such as Conjugate Gradient (Charalambous 1992; Fletcher and Reeves 1964; Hestenes and Stiefel 1952) and Quasi-Newton methods (Dennis and Moré 1977; Huang 1970; Nocedal and Wright 2006). To improve convergence, adaptive learning rates were applied in some applications (Barzilai and Borwein 1988). Later, nature-inspired metaheuristics were thoroughly investigated as competitive alternatives to Backpropagation. These approaches include, but are not limited to, Evolutionary Computation techniques such as Genetic Algorithms (Gonzalez-Seco 1992; Gupta and Sexton 1999; Montana and Davis 1989; Sexton and Gupta 2000), Evolutionary Strategies (Greenwood 1997), and Differential Evolution (Ilonen et al. 2003), as well as other popular optimization methods such as Simulated Annealing (Sexton et al. 1999), the Artificial Bee Colony Algorithm (Karaboga and Akay 2007), and Particle Swarm Optimization (Roy et al. 2013). With the surge of interest in Artificial Intelligence, many other techniques have also been applied as alternatives to BP, including Fuzzy Sets (Juang et al. 1999) and APPM (Artificial Photosynthesis and Phototropism Mechanisms) (Cui et al. 2012).
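As an illustration of gradient-free weight training, the following sketch uses a (1+1) evolution strategy, one of the simplest ES variants, and not the specific method of any cited work. It assumes a hypothetical 2-2-1 network for XOR whose nine weights and biases are stored in a flat list, Gaussian mutation with a fixed step size `sigma` (no step-size adaptation), and elitist selection: a mutated child replaces its parent only if its error is no worse.

```python
import math
import random

XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]


def predict(w, x):
    """Hypothetical 2-2-1 network; w is a flat list of 9 weights and biases."""
    h1 = math.tanh(w[0] * x[0] + w[1] * x[1] + w[2])
    h2 = math.tanh(w[3] * x[0] + w[4] * x[1] + w[5])
    z = max(-60.0, min(60.0, w[6] * h1 + w[7] * h2 + w[8]))  # clamp to avoid overflow in exp
    return 1.0 / (1.0 + math.exp(-z))


def mse(w):
    return sum((predict(w, x) - t) ** 2 for x, t in XOR) / len(XOR)


def one_plus_one_es(generations=2000, sigma=0.5, seed=1):
    """(1+1)-ES: mutate the single parent with Gaussian noise; keep the child if it is no worse."""
    rng = random.Random(seed)
    parent = [rng.gauss(0, 1) for _ in range(9)]
    parent_err = initial_err = mse(parent)
    for _ in range(generations):
        child = [wi + rng.gauss(0, sigma) for wi in parent]
        child_err = mse(child)
        if child_err <= parent_err:  # elitist selection: a worse network is never accepted
            parent, parent_err = child, child_err
    return parent, initial_err, parent_err


best, err_start, err_end = one_plus_one_es()
print(f"MSE before: {err_start:.3f}, after: {err_end:.3f}")
```

No gradient is ever computed; only the error of each candidate weight vector is needed, which is why such methods are unaffected by non-differentiable or deceptive error surfaces, at the price of many more evaluations.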

3.3 Simultaneous optimization of architecture and weights

The general goal of an ANN optimization process is to achieve the best generalization. During optimization, each candidate solution is typically evaluated by training the network with BP or another method and then measuring its error rate on test data. Because every evaluation requires a full training run, iterating through possible solutions to find optimal architectures demands vast computational resources and time. To address this, many researchers optimized architectures and weights simultaneously in order to reduce computational costs.

3.4 Invasive and non-invasive approaches

The typical strategy in ANN architecture optimization is to search for better models and evaluate each candidate by training it with gradient-based methods such as backpropagation. Many researchers avoided this computationally intensive path and instead optimized architecture and weights simultaneously. These two strategies are classified as non-invasive and invasive approaches, respectively: non-invasive approaches optimize the architecture and obtain the weights with BP-like algorithms, whereas invasive approaches optimize the architecture and weights together (Palmes et al. 2005).

3.5 Methodology

In Artificial Neural Networks, network architecture design can be viewed as a search problem in the architecture space. The error obtained for each architecture defines a surface over this search space, and the proposed methods aim to find the lowest (or, for a performance measure, the highest) point on this surface (Liu and Yao 1996a). According to Miller et al. (1989), this surface is:

- infinitely large, because there is no limit on the number of neurons and connections that can be used;
- nondifferentiable, because the decision variables are discrete;
- complex and deceptive, because there is no direct relationship between network performance and network size, and similar architectures may yield different performance;
- multimodal, because networks with different topologies can give the same result.

For these reasons, finding the ideal architecture of an artificial neural network is extremely difficult, or even impossible, with conventional methods, even for small networks. As Miller et al. (1989) put it, “the network design stage remains something of a black art”.

3.5.1 Generalization and architecture

A considerable number of reports in the literature state that the speed and generalization ability of a neural network usually depend on its complexity (Weiß 1994a). For example, a deep ANN with a large number of hidden layers and nodes may fit the training data more accurately but generalize poorly to unseen test data, a phenomenon called overfitting (Yen and Lu 2000; Zhang and Muhlenbein 1993). In this case, the network simply memorizes the training samples, including the noise in the training data, and loses its ability to generalize (Fiszelew et al. 2007). On the other hand, a smaller network with only a few hidden layers and neurons usually has limited learning ability and may be unable to approximate the target function. Some researchers followed the principle of Occam’s Razor, which states that simpler models should be preferred to unnecessarily complex ones (Thorburn 1918; Zhang and Muhlenbein 1993; Zhang and Mühlenbein 1993). Several criteria have been used to identify an optimal and efficient neural network, such as the Schwarz Information Criterion (SIC), the Akaike Information Criterion (AIC), and Predictive Minimum Description Length (PMDL). Although used by many researchers, all of these methods have significant drawbacks and weaknesses.
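The trade-off that information criteria encode can be shown with a small worked example. This sketch assumes the common least-squares forms with Gaussian errors and additive constants dropped, \(\mathrm{AIC} = n \ln(\mathrm{RSS}/n) + 2k\) and \(\mathrm{SIC} = n \ln(\mathrm{RSS}/n) + k \ln n\), where n is the number of samples, RSS the residual sum of squares, and k the number of free parameters; lower values are better. The residual sums of squares below are hypothetical, and PMDL is not shown.

```python
import math


def aic(rss, n, k):
    """Akaike Information Criterion for a least-squares fit (additive constants dropped)."""
    return n * math.log(rss / n) + 2 * k


def sic(rss, n, k):
    """Schwarz Information Criterion: the parameter penalty grows with ln(n) instead of 2."""
    return n * math.log(rss / n) + k * math.log(n)


def mlp_param_count(n_in, n_hidden, n_out):
    """Weights plus biases of a fully connected network with one hidden layer."""
    return (n_in * n_hidden + n_hidden * n_out) + (n_hidden + n_out)


n = 200  # hypothetical number of training samples
candidates = {
    "2-5-1": (12.0, mlp_param_count(2, 5, 1)),    # hypothetical RSS, parameter count k = 21
    "2-40-1": (10.0, mlp_param_count(2, 40, 1)),  # fits better, but k = 161
}
for name, (rss, k) in candidates.items():
    print(name, k, round(aic(rss, n, k), 1), round(sic(rss, n, k), 1))
```

Although the larger network has the lower training error, both criteria favor the smaller one, because the penalty for its many extra parameters outweighs the gain in fit.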

3.5.2 Conventional methods

Conventional techniques such as brute-force, enumerative, or random search can explore only a very limited set of options and therefore yield low-quality solutions. Constructive and destructive methods are among the classical approaches introduced in the early years. Constructive methods, as the name suggests, aim to obtain the ideal topology by gradually expanding the model, starting from a minimal architecture; the cascade-correlation approach is an example (Fahlman and Lebiere 1990). Destructive methods, on the other hand, start from a large architecture and then remove neurons or prune connections, which is why they are often referred to as pruning methods. A popular example of a destructive method is LeCun’s Optimal Brain Damage (OBD) (1990b). Although these methods address the problem of structuring ANN models, they investigate restricted topological subsets rather than the entire surface of possible ANN architectures (Fischer and Leung 1998). The inefficiency of conventional methods has led researchers to exploit global search algorithms. Mostly inspired by natural phenomena, metaheuristics are usually applied in circumstances where the search space is infinitely large. A general characteristic of metaheuristics is that they can find optimal or near-optimal solutions to very difficult problems in a reasonable time.
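A single destructive step can be sketched with the saliency measure of Optimal Brain Damage, \(s_k = \tfrac{1}{2} h_{kk} w_k^2\), where \(h_{kk}\) is the diagonal entry of the Hessian of the training error for weight \(w_k\). In this sketch, the Hessian diagonal values are hypothetical inputs rather than computed from a network, and the helper names are illustrative; in the full procedure, the network is retrained after each pruning round.

```python
def obd_saliencies(weights, hessian_diag):
    """Optimal Brain Damage saliency s_k = h_kk * w_k**2 / 2 for each weight."""
    return [0.5 * h * w * w for w, h in zip(weights, hessian_diag)]


def prune(weights, hessian_diag, fraction):
    """Zero out the given fraction of weights with the lowest saliency (a destructive step)."""
    s = obd_saliencies(weights, hessian_diag)
    n_prune = int(len(weights) * fraction)
    order = sorted(range(len(weights)), key=lambda k: s[k])
    removed = set(order[:n_prune])
    return [0.0 if k in removed else w for k, w in enumerate(weights)]


weights = [0.8, -0.05, 0.3, -1.2, 0.02, 0.5]
hessian_diag = [0.1, 2.0, 1.5, 0.05, 4.0, 0.2]  # hypothetical second derivatives of the error
print(prune(weights, hessian_diag, 0.5))  # [0.8, 0.0, 0.3, -1.2, 0.0, 0.0]
```

Note that the weight 0.3 survives while 0.5 is removed: the error is far more sensitive to the former, so pure weight magnitude is not the criterion.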

3.5.3 Metaheuristics

Metaheuristics are stochastic (non-deterministic) global optimization methods, generally inspired by nature, the collective behavior of animal swarms, or everyday life. Although they are classified in different ways, they generally fall into three types: single-solution-based, population-based, and hybrid (Fig. 12) (Blum and Roli 2003; Dréo et al. 2006; Ojha et al. 2017). Some of them keep a memory of the search history, while others are memoryless.

3.5.3.1 Single-solution-based metaheuristics

As the name suggests, these methods maintain a single candidate solution throughout the search. Examples of single-solution-based metaheuristics are Simulated Annealing (SA) (Kirkpatrick et al. 1983), which simulates the heating and controlled cooling of materials in metallurgical annealing, and Tabu Search (TS) (Glover 1989, 1990), inspired by the phenomenon of taboo in human behavior. Local search algorithms such as Variable Neighborhood Search (VNS) (Mladenović and Hansen 1997) and the Greedy Randomized Adaptive Search Procedure (GRASP) (Feo et al. 1994) also fall into this class.
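Simulated annealing can be sketched in a few lines on the same kind of toy surface, assuming the hypothetical \(f(x) = x^4 - 3x^2 + x\) (local minimum near 1.13, global minimum near -1.30), Gaussian neighbor moves, and a geometric cooling schedule. Unlike plain gradient descent, a worse move is accepted with probability \(e^{-\Delta/T}\), which lets the single candidate climb out of a local basin while the temperature T is still high.

```python
import math
import random


def cost(x):
    """Toy 1-D error surface: local minimum near x = 1.13, global minimum near x = -1.30."""
    return x**4 - 3 * x**2 + x


def neighbor(x, rng):
    """Propose a nearby candidate by a small random perturbation."""
    return x + rng.gauss(0, 0.3)


def simulated_annealing(x0, t0=1.0, cooling=0.995, steps=3000, seed=0):
    """Accept worse moves with probability exp(-delta / T); lower T geometrically each step."""
    rng = random.Random(seed)
    x, fx = x0, cost(x0)
    best, best_f = x, fx
    t = t0
    for _ in range(steps):
        y = neighbor(x, rng)
        fy = cost(y)
        delta = fy - fx
        if delta <= 0 or rng.random() < math.exp(-delta / t):
            x, fx = y, fy
            if fx < best_f:
                best, best_f = x, fx
        t *= cooling
    return best, best_f


best, best_f = simulated_annealing(2.0)
print(round(best, 2), round(best_f, 2))
```

Starting from x = 2.0, where gradient descent would stop in the local minimum, the annealing run can cross the barrier while T is high; whether it does for a given seed and schedule is not guaranteed.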

3.5.3.2 Population-based metaheuristics

Population-based algorithms are global optimization methods, mostly inspired by nature, that perform the search with more than one candidate solution in every iteration. Unlike single-solution-based approaches, they have global search capability. Evolutionary computation approaches, based on the survival-of-the-fittest principle of the theory of evolution, are among the most popular population-based methods. Another example of population-based approaches is Swarm Intelligence. Its most common methods are Particle Swarm Optimization (PSO) (Eberhart and Kennedy 1995), Ant Colony Optimization (ACO) (Dorigo et al. 1996), and the Artificial Bee Colony Algorithm (ABC) (Karaboga 2005), which are inspired by the self-organized behavior of animals such as fish, birds, bees, and ants (Ojha et al. 2017). In these methods, a random swarm is created initially, and the behavioral patterns of the swarm move the search towards promising solutions. In PSO, for example, each particle of the flock represents a candidate solution (such as a weight vector) and moves through the search space, guided by its own best position and the best position found by the swarm, towards the food source (good solutions). Similarly, ACO is inspired by ants searching for food and depositing pheromone along the paths to food sources; as the pheromone level on a path increases, the search is steered towards better solutions. Many other nature-inspired algorithms have been developed, including, but not limited to, Gray Wolf Optimization (Mirjalili et al. 2014), Cuckoo Search (Yang and Deb 2009), and the Firefly Algorithm (Yang 2009).
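The PSO movement rule described above can be sketched as a minimal global-best PSO. It assumes the commonly used inertia weight w = 0.7 and acceleration coefficients c1 = c2 = 1.5, and it uses the sphere function as a stand-in objective; in neuroevolution, the objective would instead be a network's error for the weight vector a particle represents.

```python
import random


def sphere(v):
    """Stand-in objective: in neuroevolution this would be a network's error for weight vector v."""
    return sum(x * x for x in v)


def pso(cost, dim, n_particles=20, iters=100, w=0.7, c1=1.5, c2=1.5, bound=5.0, seed=0):
    """Global-best PSO: velocity blends inertia, pull to the personal best, and pull to the swarm best."""
    rng = random.Random(seed)
    pos = [[rng.uniform(-bound, bound) for _ in range(dim)] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]
    pbest_f = [cost(p) for p in pos]
    g = min(range(n_particles), key=lambda i: pbest_f[i])
    gbest, gbest_f = pbest[g][:], pbest_f[g]
    history = [gbest_f]
    for _ in range(iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                pos[i][d] += vel[i][d]
            f = cost(pos[i])
            if f < pbest_f[i]:  # update the particle's own best
                pbest[i], pbest_f[i] = pos[i][:], f
                if f < gbest_f:  # and, if better, the swarm's best
                    gbest, gbest_f = pos[i][:], f
        history.append(gbest_f)
    return gbest, gbest_f, history


best, best_f, history = pso(sphere, dim=3)
print(best_f)  # typically close to 0, the minimum of the sphere function
```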

3.5.3.3 Hybrid metaheuristics

Another important paradigm in metaheuristics is hybridization, in which more than one global or local search algorithm is combined to obtain a stronger algorithm; memetic algorithms, which combine evolutionary search with local refinement, are a well-known example. Conventional or local search algorithms converge fairly quickly but are more likely to become trapped in a local minimum. Population-based global search methods, on the other hand, are slower but are better able to reach the global minimum. Hybrid approaches aim to exploit the synergy between the two to obtain more effective results.
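The structure of a memetic algorithm, a global evolutionary loop whose offspring are each polished by a local search, can be sketched as follows. This is a minimal illustration on a hypothetical bit-string matching task, assuming binary tournaments, one-point crossover, and first-improvement hill climbing; on such an easy objective the local search alone would suffice, so the example shows the structure rather than the benefit.

```python
import random


def local_search(bits, fitness):
    """First-improvement hill climbing: keep single-bit flips that raise fitness."""
    bits = bits[:]
    best = fitness(bits)
    improved = True
    while improved:
        improved = False
        for i in range(len(bits)):
            bits[i] ^= 1
            f = fitness(bits)
            if f > best:
                best, improved = f, True
            else:
                bits[i] ^= 1  # undo the flip
    return bits


def memetic(fitness, n_bits, pop_size=10, generations=20, seed=0):
    rng = random.Random(seed)
    pop = [local_search([rng.randint(0, 1) for _ in range(n_bits)], fitness)
           for _ in range(pop_size)]
    for _ in range(generations):
        children = []
        for _ in range(pop_size):
            # Global step: binary tournaments, one-point crossover, bit-flip mutation
            a = max(rng.sample(pop, 2), key=fitness)
            b = max(rng.sample(pop, 2), key=fitness)
            cut = rng.randrange(1, n_bits)
            child = [g ^ (rng.random() < 1.0 / n_bits) for g in a[:cut] + b[cut:]]
            # Local step: refine every offspring before it competes for survival
            children.append(local_search(child, fitness))
        pop = sorted(pop + children, key=fitness, reverse=True)[:pop_size]
    return max(pop, key=fitness)


target = [1, 0, 1, 1, 0, 0, 1, 0]
matches = lambda bits: sum(b == t for b, t in zip(bits, target))
best = memetic(matches, n_bits=len(target))
```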

3.6 Evolutionary computation

Evolutionary computation is a family of global optimization techniques that have been widely used for training and automatically designing neural networks (García-Pedrajas et al. 2003). It is arguably the most popular and successful population-based metaheuristic paradigm inspired by biological evolution (Sun et al. 2019c). Over its history, several evolutionary approaches have been proposed, including Genetic Algorithms (GA), Evolutionary Programming (EP), Genetic Programming (GP), and Evolutionary Strategies (ES). Among these, GAs became the most popular owing to their biological grounding and their strong performance on a wide range of optimization problems within reasonable time. A general classification of Evolutionary Computation methods is depicted in Fig. 13.

Evolutionary approaches mimic natural selection, adaptation to the environment, and the survival-of-the-fittest principle of biological evolution. Just as living organisms evolve in nature, these methods improve a set of candidate solutions, called individuals, from generation to generation in search of a global solution. Without requiring any a priori information, they evaluate many points of the architecture space in parallel and are much less prone than local methods to becoming trapped in a local minimum. This makes them among the most successful methods for designing artificial neural network architectures. The discipline that uses evolutionary computation to design or train artificial neural networks is called Neuroevolution (Stanley et al. 2019).

In evolutionary computation, all features of an individual in the population are encoded on a chromosome, analogous to DNA. This encoding can be binary, real-valued, or categorical. The encoded chromosome is called the genotype, while the decoded features are called the phenotype. Each position of the chromosome holds a gene, and the value a gene takes is called an allele. Evolutionary computation is divided into several sub-disciplines: evolutionary programming, genetic algorithms, genetic programming, evolution strategies, and differential evolution. Although they all mimic the natural processes of biological evolution and have many features in common, they differ methodologically. For example, evolutionary programming uses only selection and mutation operators, whereas genetic algorithms use selection, crossover, and mutation. In genetic programming, individuals are represented as trees rather than as binary or real-valued strings (Bäck et al. 1997; Baldominos et al. 2020; Spears et al. 1993).

3.6.1 Evolutionary programming (EP)

Evolutionary Programming focuses on evolving the parameters of fixed computer programs. It was proposed by Fogel et al. (1962; 1964; 1966). The parameters are optimized through selection and random mutation over generations; no crossover operator is applied. Because it does not rely on crossover, it is less affected by encoding restrictions. Many authors consider EP the evolutionary computation paradigm best suited to evolving ANNs (Angeline et al. 1994; García-Pedrajas et al. 2003).
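To illustrate the mutation-only character of EP, the sketch below evolves a real-valued parameter vector with Gaussian mutation and (μ + μ) survivor selection. Classic EP variants often use stochastic tournament selection and self-adapted step sizes; the fixed `sigma` and deterministic truncation used here are simplifying assumptions, and the function name is illustrative.

```python
import random


def evolutionary_programming(f, dim, mu=20, generations=300, sigma=0.3, seed=0):
    """Minimize f with mutation and selection only; no crossover is ever applied."""
    rng = random.Random(seed)
    pop = [[rng.uniform(-5.0, 5.0) for _ in range(dim)] for _ in range(mu)]
    for _ in range(generations):
        # Each parent creates exactly one offspring by Gaussian perturbation
        offspring = [[x + rng.gauss(0.0, sigma) for x in parent] for parent in pop]
        # (mu + mu) survivor selection over parents and offspring together
        pop = sorted(pop + offspring, key=f)[:mu]
    return pop[0]


best = evolutionary_programming(lambda v: sum(x * x for x in v), dim=3)
```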

3.6.2 Genetic algorithms (GAs)

Genetic algorithms are an evolutionary global optimization technique introduced by John Holland in 1975 (De Jong 1975; Goldberg and Holland 1988; Holland 1975; Mitchell 1998). They have been applied to a wide variety of problems with strong results. Unlike evolutionary programming and the original evolution strategies, GAs incorporate a ‘crossover’ operator that imitates the effect of sexual reproduction (Jones 1993). In artificial neural network design, however, some researchers avoided the crossover operator because of the permutation problem, also known as competing conventions. In this problem, discussed in Section 4.6, chromosomes with different encodings represent networks that compute exactly the same function. Crossing over such chromosomes tends to produce offspring that duplicate some hidden units and lose others, which hampers optimization.

3.6.3 Evolutionary strategies (ES)

In this approach, a vector of real numbers is evolved using only selection and mutation operators. The paradigm was introduced in the 1970s by Rechenberg (1973) and Schwefel (1977). Its representation, a real-valued vector, is the same regardless of the problem being solved. Later, the Covariance Matrix Adaptation Evolution Strategy (CMA-ES) was developed; it uses the results of each generation to adapt the covariance matrix of its mutation distribution, adaptively widening or narrowing the search region for the next generation (Hansen and Ostermeier 1996, 1997).
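The simplest evolution strategy, the (1+1)-ES, can be sketched as below, assuming Rechenberg's 1/5 success rule for step-size control. This rule is a far simpler form of adaptation than CMA-ES, which adapts a full covariance matrix; the adaptation interval of 20 mutations and the factor 0.82 are common illustrative choices, and the function name is hypothetical.

```python
import random


def one_plus_one_es(f, x0, sigma=1.0, iters=2000, seed=0):
    """(1+1)-ES: one parent, one Gaussian mutant per generation, 1/5 success rule."""
    rng = random.Random(seed)
    x, fx = list(x0), f(x0)
    successes = 0
    for t in range(1, iters + 1):
        y = [xi + sigma * rng.gauss(0.0, 1.0) for xi in x]
        fy = f(y)
        if fy <= fx:                 # selection: keep the mutant if it is not worse
            x, fx = y, fy
            successes += 1
        if t % 20 == 0:              # adapt the step size every 20 mutations
            rate = successes / 20
            if rate > 0.2:
                sigma /= 0.82        # succeeding often: take larger steps
            elif rate < 0.2:
                sigma *= 0.82        # succeeding rarely: take smaller steps
            successes = 0
    return x, fx


x, fx = one_plus_one_es(lambda v: sum(c * c for c in v), [3.0, -4.0, 1.0])
```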

3.6.4 Differential evolution (DE)

Differential Evolution was developed by Storn and Price (1997) to overcome various shortcomings of earlier evolutionary approaches. Their method does not require the cost function to be differentiable, handles nonlinear and multimodal cost functions easily, and can be parallelized. It has few control parameters and good convergence properties. Because new vectors are generated from differences between population members, the distances between population vectors grow rapidly when the objective function surface is flat. This “divergence feature” prevents DE from progressing very slowly in shallow regions of the objective function surface and ensures rapid progress after the population has passed through a narrow valley.
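A minimal sketch of the classic DE/rand/1/bin scheme follows. The setting `F = 0.8`, `CR = 0.9` is a commonly used choice rather than a value taken from this survey, and for brevity the sketch does not clip trial vectors to the bounds.

```python
import random


def differential_evolution(f, bounds, pop_size=20, F=0.8, CR=0.9, generations=200, seed=0):
    """DE/rand/1/bin minimizer."""
    rng = random.Random(seed)
    dim = len(bounds)
    pop = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(pop_size)]
    fit = [f(x) for x in pop]
    for _ in range(generations):
        for i in range(pop_size):
            # Mutation: add a scaled difference of two random members to a third
            a, b, c = rng.sample([j for j in range(pop_size) if j != i], 3)
            mutant = [pop[a][d] + F * (pop[b][d] - pop[c][d]) for d in range(dim)]
            # Binomial crossover; j_rand guarantees at least one component from the mutant
            j_rand = rng.randrange(dim)
            trial = [mutant[d] if (rng.random() < CR or d == j_rand) else pop[i][d]
                     for d in range(dim)]
            # Greedy one-to-one selection between the target and the trial vector
            ft = f(trial)
            if ft <= fit[i]:
                pop[i], fit[i] = trial, ft
    best = min(range(pop_size), key=lambda k: fit[k])
    return pop[best], fit[best]


xbest, fbest = differential_evolution(lambda v: sum(x * x for x in v), [(-5.0, 5.0)] * 2)
```

Because the step `pop[b] - pop[c]` is drawn from the population itself, steps are large while the population is spread out and shrink as it converges; this is the self-scaling behavior behind the divergence feature described above.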

3.6.5 Genetic programming (GP)

Genetic Programming is an extension of Genetic Algorithms, introduced by Cramer (1985) and further developed by Koza (1992, 1995). It enables machines to build computer programs automatically (Gruau 1994). Koza used LISP, whose programs have a natural tree structure, to evolve computer programs for several tasks. A LISP program can be viewed as a rooted, labeled tree called an S-expression: internal nodes are labeled with LISP functions, and leaves are labeled with constants or inputs. Evaluating the S-expression produces the output. Crossover of two parent trees is performed by cutting a subtree from one parent and pasting it into the other parent in place of one of its subtrees. As a key researcher in this paradigm, John Koza also applied it to generating neural networks, optimizing both architectures and weights (Koza and Rice 1991).
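The S-expression representation and subtree crossover can be sketched with nested Python tuples standing in for LISP lists. This is an illustrative sketch, not Koza's implementation; the function set (`+`, `-`, `*`), the tuple layout, and all helper names are assumptions.

```python
import operator
import random

FUNCS = {'+': operator.add, '-': operator.sub, '*': operator.mul}


def evaluate(tree, env):
    """Evaluate an S-expression; internal nodes are (function, left, right) tuples."""
    if isinstance(tree, tuple):
        op, left, right = tree
        return FUNCS[op](evaluate(left, env), evaluate(right, env))
    return env[tree] if isinstance(tree, str) else tree   # input variable or constant


def subtrees(tree, path=()):
    """Yield (path, subtree) pairs; a path lists child indices from the root."""
    yield path, tree
    if isinstance(tree, tuple):
        for i in (1, 2):
            yield from subtrees(tree[i], path + (i,))


def replace(tree, path, new):
    """Return a copy of `tree` with the subtree at `path` replaced by `new`."""
    if not path:
        return new
    children = list(tree)
    children[path[0]] = replace(tree[path[0]], path[1:], new)
    return tuple(children)


def subtree_crossover(p1, p2, rng):
    """Cut a random subtree from p2 and paste it over a random subtree of p1."""
    path1, _ = rng.choice(list(subtrees(p1)))
    _, donor = rng.choice(list(subtrees(p2)))
    return replace(p1, path1, donor)


parent1 = ('+', 'x', ('*', 'x', 2.0))      # (+ x (* x 2)) in LISP notation
parent2 = ('-', ('*', 'x', 'x'), 1.0)      # (- (* x x) 1)
child = subtree_crossover(parent1, parent2, random.Random(0))
```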

3.6.5.1 Gene expression programming (GEP)

Gene Expression Programming was invented by Ferreira (2001; 2006) as a variation of Genetic Algorithms. In GAs, individuals are linear strings of fixed length; in GP, they are nonlinear entities of different sizes and shapes (parse trees). GEP combines both: individuals are encoded as linear strings of fixed length, which are then expressed as expression trees (ETs). Expression trees are written in linear form using the Karva language, and the resulting string is called a K-expression. GEP allows multiple genes per chromosome, each encoding a small program or sub-expression tree. Ferreira also used GEP to evolve ANNs, arguing that the algorithm is very well suited to the task because it always produces valid structures.
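The Karva decoding step, which reads a K-expression breadth-first into an expression tree, can be sketched as follows. The function set and terminals `a`, `b` are hypothetical; the example genes use a head of length 3 followed by a tail of 4 terminals, the tail length h(n − 1) + 1 that guarantees a complete tree for head length h = 3 and maximum arity n = 2.

```python
from collections import deque

ARITY = {'+': 2, '-': 2, '*': 2}   # any other symbol is a terminal (arity 0)


def karva_to_tree(kexpr):
    """Decode a K-expression level by level into nested [symbol, children] lists.

    Symbols left over once every branch is closed (the unused tail) are ignored.
    """
    symbols = iter(kexpr)
    root = [next(symbols), []]
    queue = deque([root])
    while queue:
        node = queue.popleft()
        for _ in range(ARITY.get(node[0], 0)):
            child = [next(symbols), []]
            node[1].append(child)
            queue.append(child)
    return root


def evaluate_et(node, env):
    sym, kids = node
    if not kids:
        return env[sym]
    x, y = (evaluate_et(k, env) for k in kids)
    return {'+': x + y, '-': x - y, '*': x * y}[sym]


gene1 = "*+-abab"   # decodes to (a + b) * (a - b), using all 7 symbols
gene2 = "+a*bbab"   # decodes to a + b * b; the last two symbols are unused
```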

3.6.5.2 Grammatical evolution (GE)

Grammatical Evolution is an evolutionary search framework typically used to generate computer programs defined by a context-free grammar, which describes the syntax of expressions (Noorian et al. 2016). It was introduced by Ryan, Collins, and O’Neill (1998). GE is designed to evolve programs in any language using a variable-length linear genome, and it expresses the grammar as production rules in Backus–Naur Form (BNF). Compared with GP, it is more flexible, since the user can constrain how the program symbols are assembled (Drchal and Šnorek 2008). Lourenço et al. (2016) later improved it as Structured Grammatical Evolution (SGE), which addresses the redundancy and locality issues of GE by using a genotype made of a list of genes, one for each non-terminal symbol (Assunçao et al. 2017).
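GE's genotype-to-phenotype mapping, in which each integer codon chooses a production for the leftmost non-terminal via a modulo rule, can be sketched as below. The toy grammar is hypothetical. In this sketch every expansion consumes one codon (some implementations skip codons when a non-terminal has only one production), and the genome may be "wrapped", that is, reread from the start, a limited number of times.

```python
GRAMMAR = {
    '<expr>': [['<expr>', '<op>', '<expr>'], ['<var>']],
    '<op>': [['+'], ['-'], ['*']],
    '<var>': [['x'], ['y']],
}


def ge_map(genome, grammar, start='<expr>', max_wraps=2):
    """Map a list of integer codons to a program string (None if mapping fails)."""
    derivation = [start]
    used = 0
    limit = len(genome) * (max_wraps + 1)      # wrapping: reread the genome from the start
    while True:
        pending = [k for k, sym in enumerate(derivation) if sym in grammar]
        if not pending:
            return ' '.join(derivation)        # only terminals left: a valid program
        if used >= limit:
            return None                        # ran out of codons: invalid individual
        pos = pending[0]                       # always expand the leftmost non-terminal
        choices = grammar[derivation[pos]]
        codon = genome[used % len(genome)]
        derivation[pos:pos + 1] = choices[codon % len(choices)]   # the GE modulo rule
        used += 1


program = ge_map([0, 1, 0, 2, 1, 1], GRAMMAR)
```

Tracing the genome `[0, 1, 0, 2, 1, 1]`: codon 0 picks `<expr> <op> <expr>`, 1 picks `<var>`, 0 picks `x`, 2 picks `*`, 1 picks `<var>`, and 1 picks `y`, giving `x * y`.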

3.7 Genetic operators

Evolutionary algorithms typically rely on the following genetic operators: initialization, selection, reproduction (crossover), and mutation. Elitism is also commonly added to improve performance on some real-world tasks. These biologically inspired operators are the essential tools for searching for the global optimum of a given problem, and the performance of an algorithm depends largely on how they are used.

3.7.1 Generating the initial population

An evolutionary algorithm begins by generating a population of a predetermined size, in which each individual represents a candidate solution to the problem. Population size is one of the important parameters affecting the solution: a large population increases diversity but adds computational cost, whereas a small population narrows the search. The initial population is usually generated randomly, so that the search starts from many different points in the solution space.

3.7.2 Fitness function and evaluation

The quality of each individual in the population is evaluated by the fitness function, which assigns a fitness value to its genotype. The fitness function is problem-specific and measures how well an individual solves the problem. The performance of a genetic algorithm therefore depends heavily on how precisely the fitness function is defined.

3.7.3 Selection

Selection eliminates low-quality individuals and passes better individuals on to the next step, reproduction. Following the Darwinian principle of survival of the fittest, individuals with higher fitness are more likely to be selected, although the process is stochastic. Many selection methods exist in the literature; roulette wheel, tournament, and rank-based selection are among the most frequently used.
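The three selection schemes named above can be sketched as follows. These are minimal illustrations: the tournament size `k = 3` and linear rank weights are common defaults rather than prescribed values, and roulette-wheel selection as written assumes non-negative fitness values.

```python
import random


def roulette_wheel(pop, fitness, rng):
    """Fitness-proportionate selection (assumes non-negative fitness values)."""
    return rng.choices(pop, weights=[fitness(ind) for ind in pop], k=1)[0]


def tournament(pop, fitness, rng, k=3):
    """Draw k individuals at random and return the fittest of them."""
    return max(rng.sample(pop, k), key=fitness)


def rank_selection(pop, fitness, rng):
    """Selection probability proportional to rank (1 = worst), not to raw fitness."""
    ranked = sorted(pop, key=fitness)
    return rng.choices(ranked, weights=range(1, len(ranked) + 1), k=1)[0]


rng = random.Random(42)
pop = ['a', 'b', 'c', 'd']
fit = {'a': 1.0, 'b': 2.0, 'c': 3.0, 'd': 10.0}.get
picks = [tournament(pop, fit, rng, k=4) for _ in range(5)]   # k = |pop| always picks 'd'
```

Rank selection is less sensitive than the roulette wheel to a single individual with very large fitness (such as `'d'` here), which would otherwise dominate the wheel.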

3.7.4 Reproduction (Crossover)

Reproduction is carried out by crossover, which simulates sexual reproduction, in which an offspring (child) is produced from two parents (Koehn 1994). The offspring of individuals selected for reproduction inherit characteristics of both parents. Algorithmically, this is done by taking parts of the two parent chromosomes and combining them to form the child (Fig. 14). How crossover is carried out depends on the structure of the encoding and the nature of the problem; the most commonly used methods are single-point, two-point, arithmetic, and uniform crossover. Although this step may seem arbitrary at first glance, recombining parts of good solutions produces new candidates that can bring the search closer to the optimal solution. In genetic algorithms, the entire population is generally not subjected to crossover; only a predetermined fraction, set by the crossover rate, is.
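The four crossover methods listed above can be sketched as follows, assuming list-based chromosomes of equal length (at least 3 genes for two-point crossover). Applying crossover only to a fraction of parent pairs, according to the crossover rate, is left to the surrounding GA loop; the `alpha` parameter of arithmetic crossover is an illustrative choice.

```python
import random


def single_point(p1, p2, rng):
    cut = rng.randrange(1, len(p1))
    return p1[:cut] + p2[cut:], p2[:cut] + p1[cut:]


def two_point(p1, p2, rng):
    a, b = sorted(rng.sample(range(1, len(p1)), 2))
    return p1[:a] + p2[a:b] + p1[b:], p2[:a] + p1[a:b] + p2[b:]


def uniform(p1, p2, rng):
    mask = [rng.random() < 0.5 for _ in p1]   # an independent coin flip per gene
    c1 = [x if m else y for x, y, m in zip(p1, p2, mask)]
    c2 = [y if m else x for x, y, m in zip(p1, p2, mask)]
    return c1, c2


def arithmetic(p1, p2, alpha=0.5):
    """Real-coded crossover: children are weighted averages of the parents."""
    c1 = [alpha * x + (1 - alpha) * y for x, y in zip(p1, p2)]
    c2 = [(1 - alpha) * x + alpha * y for x, y in zip(p1, p2)]
    return c1, c2


rng = random.Random(0)
p1, p2 = [0] * 8, [1] * 8
children = [op(p1, p2, rng) for op in (single_point, two_point, uniform)]
```

For the three discrete operators, every gene of each child comes from one parent, and the two children are complementary at every position.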

3.7.5 Mutation

In nature, a mutation is a change in, or damage to, the DNA found in the nucleus of a living cell, and it enables the emergence of new heritable traits. Possible causes of mutation include radiation such as X-rays and ultraviolet light, sudden temperature changes, and chemical damage. Mutations are rare and affect only a very small part of the chromosome. In genetic algorithms, mutation is similarly a small structural change in a chromosome (Fig. 15). As with selection and crossover, the ultimate goal is to reach an optimal solution without getting caught in local optima. Because there is always a risk that a genetic algorithm becomes trapped in a local optimum, some chromosomes are mutated to reintroduce diversity, which increases the chance of finding the global optimum.
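Bit-flip mutation for binary chromosomes and its Gaussian counterpart for real-coded chromosomes can be sketched as below. A per-gene rate of about 1/L for a chromosome of length L is a common rule of thumb, assumed here rather than taken from the survey.

```python
import random


def bit_flip_mutation(chromosome, rate, rng):
    """Flip each bit independently with a small probability `rate`."""
    return [1 - g if rng.random() < rate else g for g in chromosome]


def gaussian_mutation(chromosome, sigma, rate, rng):
    """Real-coded variant: perturb each selected gene with zero-mean Gaussian noise."""
    return [g + rng.gauss(0.0, sigma) if rng.random() < rate else g for g in chromosome]


rng = random.Random(1)
parent = [0] * 100
child = bit_flip_mutation(parent, 1.0 / len(parent), rng)   # about one bit changes on average
```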

3.7.6 Elitism

Although selection favors good candidates, some strong individuals can still be eliminated because the process is stochastic. Elitism prevents the loss of good solutions by guaranteeing that the best candidates are carried over unchanged to the next generation. Although it has been criticized for encouraging premature convergence, the elitist strategy has been used in many studies with encouraging results. The key design decision is how many individuals to preserve through elitism: too many elites reduce population diversity and can lead the search into a local minimum, so the ratio should be chosen with care.
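A compact generational GA with elitism illustrates how the best individuals are protected. This is a sketch under stated assumptions: binary tournaments, one-point crossover applied with probability `p_cross`, bit-flip mutation at rate 1/L, and `n_elite = 2`; all of these settings and the function name are illustrative.

```python
import random


def ga_with_elitism(fitness, n_bits, pop_size=30, n_elite=2, generations=60,
                    p_cross=0.9, seed=0):
    """Generational GA that copies the n_elite best individuals unchanged each generation."""
    rng = random.Random(seed)
    pop = [[rng.randint(0, 1) for _ in range(n_bits)] for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        ranked = sorted(pop, key=fitness, reverse=True)
        next_pop = [ind[:] for ind in ranked[:n_elite]]        # elitism
        while len(next_pop) < pop_size:
            a = max(rng.sample(pop, 2), key=fitness)           # binary tournament
            b = max(rng.sample(pop, 2), key=fitness)
            if rng.random() < p_cross:                         # crossover rate
                cut = rng.randrange(1, n_bits)
                child = a[:cut] + b[cut:]
            else:
                child = a[:]
            child = [1 - g if rng.random() < 1.0 / n_bits else g for g in child]
            next_pop.append(child)
        pop = next_pop
        history.append(max(map(fitness, pop)))
    return max(pop, key=fitness), history


best, history = ga_with_elitism(sum, n_bits=20)   # OneMax: fitness = number of 1 bits
```

Because the elites are copied before any variation is applied, the best fitness in `history` can never decrease from one generation to the next.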

3.8 Multi-objective evolutionary algorithms

High accuracy and good generalization are not the only goals of architecture optimization for artificial neural networks. A network that generalizes well but has a high computational cost is unsuitable for many real-world problems with time and hardware constraints. Therefore, in addition to network performance, the optimization often has to satisfy other criteria, such as model size and computational complexity. Multi-Objective Evolutionary Algorithms, which were developed for such problems, have also been applied to the architectural optimization of artificial neural networks.

When two objectives conflict, improving one usually worsens the other, so in general no single solution is best in both. A solution is said to dominate another if it is no worse in every objective and strictly better in at least one. A solution that is not dominated by any other solution is called Pareto-optimal, and the set of all Pareto-optimal solutions is called the Pareto front. A plot of a Pareto front with two objectives is depicted in Fig. 16.
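Pareto dominance and the extraction of the non-dominated set can be written directly from the definitions. In this sketch both objectives are assumed to be minimized, for example validation error and parameter count in millions; the candidate values are hypothetical.

```python
def dominates(a, b):
    """True if a Pareto-dominates b (minimization): no worse in all, better in one."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def pareto_front(points):
    """Return the points that no other point dominates (the Pareto-optimal set)."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


# Hypothetical candidate networks: (validation error, parameters in millions)
models = [(0.10, 5.0), (0.08, 9.0), (0.12, 4.0), (0.11, 6.0), (0.08, 12.0)]
front = pareto_front(models)
```

Here `(0.11, 6.0)` is dominated by `(0.10, 5.0)` and `(0.08, 12.0)` by `(0.08, 9.0)`; the remaining three models each trade accuracy against size and form the front.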

Multi-objective evolutionary algorithms generally fall into two categories: non-Pareto-based and Pareto-based approaches. Non-Pareto-based approaches aggregate the costs of the individual criteria into a single objective: the user assigns each criterion a weight reflecting its importance, and the net fitness is calculated as a weighted sum. Pareto-based approaches, on the other hand, optimize the criteria jointly and present the user with the set of solutions that make up the Pareto front.
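The weighted-sum aggregation used by non-Pareto approaches can be sketched as below, with hypothetical candidates and weights (both objectives minimized). Changing the weights changes which single candidate wins, which is why the weights must be chosen by the user in advance.

```python
def weighted_sum_fitness(objectives, weights):
    """Non-Pareto scalarization: collapse several costs into one number."""
    return sum(w * f for w, f in zip(weights, objectives))


def pick(candidates, weights):
    """Return the name of the candidate with the lowest weighted cost."""
    return min(candidates, key=lambda name: weighted_sum_fitness(candidates[name], weights))


# Hypothetical candidates: (validation error, parameters in millions)
candidates = {'A': (0.10, 5.0), 'B': (0.08, 9.0), 'C': (0.12, 4.0)}
balanced = pick(candidates, (1.0, 0.01))   # 1M parameters cost as much as 0.01 error
```

With these weights the costs are 0.15, 0.17, and 0.16, so `A` wins; ignoring size (`(1.0, 0.0)`) selects `B`, and ignoring error (`(0.0, 1.0)`) selects `C`. A known limitation is that a weighted sum can only reach solutions on the convex parts of the Pareto front.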

Multi-Objective Evolutionary Algorithms emerged with the Vector Evaluated Genetic Algorithm (VEGA) introduced by Schaffer (1985; 1986). MOGA (Fonseca and Fleming 1993) and NSGA (Srinivas and Deb 1994) followed. These algorithms relied on Pareto-based ranking of individuals (non-dominated sorting) combined with fitness sharing to maintain population diversity. Later, fast non-dominated sorting and elitist strategies based on external archives were adopted. NSGA-II, which works on this principle, has been one of the most successful algorithms in the literature (Deb et al. 2002). In addition, SPEA (Zitzler and Thiele 1999), SPEA2 (Zitzler et al. 2001), and PAES (Knowles and Corne 1999) have been successfully applied to various problems. For detailed surveys of these works, the reader can refer to Zhou et al. (2011) and Zhang and Xing (2017).
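The fast non-dominated sorting step popularized by NSGA-II, which ranks a population into successive fronts, can be sketched as follows for minimization. NSGA-II additionally uses a crowding-distance measure to break ties within a front; that part is not shown here.

```python
def fast_non_dominated_sort(points):
    """Rank points into fronts F1, F2, ... (lists of indices), minimizing every objective."""
    def dominates(a, b):
        return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))

    n = len(points)
    dominated_set = [[] for _ in range(n)]   # S_p: indices that p dominates
    counts = [0] * n                         # n_p: how many points dominate p
    fronts = [[]]
    for p in range(n):
        for q in range(n):
            if dominates(points[p], points[q]):
                dominated_set[p].append(q)
            elif dominates(points[q], points[p]):
                counts[p] += 1
        if counts[p] == 0:
            fronts[0].append(p)              # nobody dominates p: first front
    i = 0
    while fronts[i]:
        nxt = []
        for p in fronts[i]:
            for q in dominated_set[p]:
                counts[q] -= 1               # remove p's domination of q
                if counts[q] == 0:
                    nxt.append(q)
        i += 1
        fronts.append(nxt)
    return fronts[:-1]                       # drop the trailing empty front


# Hypothetical (error, parameters in millions) pairs
pts = [(0.10, 5.0), (0.08, 9.0), (0.12, 4.0), (0.11, 6.0), (0.08, 12.0)]
ranks = fast_non_dominated_sort(pts)
```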

3.9 Coevolutionary approaches

Although powerful, evolutionary algorithms may perform poorly on problems with very large search spaces. This is especially limiting when the fitness function cannot be fully specified. In such situations, researchers employ coevolutionary methods. A coevolutionary algorithm is a type of evolutionary algorithm in which the fitness of an individual depends on its relationships with other individuals in the population; fitness is determined by evaluating these interactions. In other words, fitness is relative rather than computed directly, which is what distinguishes coevolutionary algorithms from classical evolutionary algorithms (Azzini and Tettamanzi 2006; Potter and De Jong 1994; Potter and De Jong 1995; Wiegand 2003). Coevolutionary algorithms are divided into two main subcategories: cooperative and competitive.

3.9.1 Cooperative coevolution

In cooperative coevolution, each individual solves part of a larger problem and is evaluated by how well it works together with other individuals. A complete solution is obtained by bringing the partial solutions together.
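One common way to realize cooperative coevolution, assumed here for illustration, is to give each variable of a problem its own subpopulation and to score a candidate only after combining it with the current best members (representatives) of the other subpopulations. The mutation-only subpopulations and the toy objective are simplifying assumptions.

```python
import random


def cooperative_coevolution(f, dim, pop_size=10, generations=50, sigma=0.5, seed=0):
    """One subpopulation per variable; an individual is scored only in collaboration."""
    rng = random.Random(seed)
    subpops = [[rng.uniform(-5.0, 5.0) for _ in range(pop_size)] for _ in range(dim)]
    reps = [sp[0] for sp in subpops]           # current representative of each subpopulation

    def collab_fitness(d, value):
        # Plug the candidate into slot d and borrow every other slot from the representatives
        trial = reps[:]
        trial[d] = value
        return f(trial)

    for _ in range(generations):
        for d in range(dim):
            offspring = [x + rng.gauss(0.0, sigma) for x in subpops[d]]
            pool = sorted(subpops[d] + offspring, key=lambda v: collab_fitness(d, v))
            subpops[d] = pool[:pop_size]
            reps[d] = subpops[d][0]            # best collaborator found so far
    return reps, f(reps)


reps, value = cooperative_coevolution(lambda v: sum(x * x for x in v), dim=3)
```

No individual has a fitness of its own: the same value can score well or badly depending on the collaborators it is combined with.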

3.9.2 Competitive coevolution

In competitive coevolution, individuals evolve in competition with each other: following Darwin's principle of survival of the fittest, individuals with high fitness survive, while those with low fitness disappear. Consider, for example, two models in a predator–prey relationship. If one model is a network that performs sorting or pattern recognition and the other is a mechanism that generates inputs for this network, then over the course of evolution the first model learns to sort or recognize better, while the second learns to produce more difficult inputs for it. In this way, each side drives the other toward better solutions (Hillis 1990).
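A toy host–parasite setup in the spirit of the predator–prey example can be sketched as follows. Hosts are threshold classifiers for a hypothetical concept (x ≥ 50), and parasites are test inputs rewarded for being misclassified; each side's fitness is computed only against the current opposing population. The concept, population sizes, and mutation range are all assumptions, and this sketch does not reproduce Hillis's sorting-network experiments.

```python
import random


def truth(x):
    return x >= 50                      # hypothetical concept the hosts must learn


def host_correct(theta, x):
    return (x >= theta) == truth(x)     # a host is a threshold classifier


def coevolve(generations=200, size=20, seed=0):
    rng = random.Random(seed)
    hosts = [rng.randint(0, 100) for _ in range(size)]       # thresholds
    parasites = [rng.randint(0, 100) for _ in range(size)]   # test inputs

    def evolve(pop, fitness):
        # Keep the better half and refill with mutated copies of the survivors
        survivors = sorted(pop, key=fitness, reverse=True)[:size // 2]
        children = [min(100, max(0, s + rng.randint(-3, 3))) for s in survivors]
        return survivors + children

    for _ in range(generations):
        # Relative fitness: each side is scored only against the current opponents
        def host_fit(h):
            return sum(host_correct(h, x) for x in parasites)

        def parasite_fit(x):
            return sum(not host_correct(h, x) for h in hosts)

        hosts, parasites = evolve(hosts, host_fit), evolve(parasites, parasite_fit)
    return hosts, parasites


hosts, parasites = coevolve()
```

Parasites that land between a host's threshold and the true boundary score highly, which tends to push host thresholds toward the boundary.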

4 Representation

Arguably the most important aspect of evolutionary design is the genetic representation. The representation specifies how the genetic chromosome, in other words the genotype, is encoded and how an encoded genotype is transformed into an explicit form, called the phenotype. The genetic encoding directly affects the speed and efficiency of the solution process, so an effective encoding is one of the most important factors determining network performance. Although named differently by different authors, there are basically two representation methods (Floreano et al. 2008; Gruau 1994; Yao 1993): direct encoding and indirect encoding (Fig. 17).

4.1 Direct encoding

Direct encoding, also called strong representation (Miller et al. 1989) or high-level encoding (Schiffmann et al. 1993), is a method in which the structural parameters of an ANN architecture are encoded directly in the chromosome. The nodes of the network and the connections between them are often expressed in binary form using a connection matrix. For instance, an $N \times N$ matrix can represent an ANN architecture with $N$ nodes, where $c_{ij}$ indicates the presence or absence of a connection from node $i$ to node $j$: $c_{ij} = 1$ indicates an existing connection and $c_{ij} = 0$ indicates no connection. The final chromosome is formed by concatenating the rows of the matrix (Fig. 18).
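The connection-matrix encoding and its inverse can be sketched in a few lines. The 2-2-1 feedforward network below, with nodes 0 and 1 as inputs, 2 and 3 as hidden units, and 4 as the output, is a hypothetical example; the helper names are illustrative.

```python
def encode(matrix):
    """Direct encoding: concatenate the rows of the N x N connection matrix."""
    return [c for row in matrix for c in row]


def decode(chromosome, n):
    """Inverse mapping: cut the bit string back into N rows of length N."""
    return [chromosome[i * n:(i + 1) * n] for i in range(n)]


# C[i][j] = 1 means a connection from node i to node j
C = [
    [0, 0, 1, 1, 0],
    [0, 0, 1, 1, 0],
    [0, 0, 0, 0, 1],
    [0, 0, 0, 0, 1],
    [0, 0, 0, 0, 0],
]
chromosome = encode(C)
```

The chromosome always has $N^2$ bits (25 here), however sparse the network is, which is why this representation scales poorly to large networks.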

The main advantage of direct representation is that each parameter is expressed explicitly. In addition, since no special encoding is required, conversion from genotype to phenotype and back is very fast. On the other hand, the chromosome grows quadratically with network size ($N^2$ bits for $N$ nodes), so the method is preferred only for small networks. If enough prior domain knowledge about the network is available, for example if the network is known to be a fully connected feedforward network, a much smaller chromosome can be obtained by encoding only the number of layers and the number of nodes in each layer. When direct representation is used, evolutionary operators must be applied with extra care, because operations such as crossover may impair model integrity and create infeasible child networks (Stanley 2004).

4.2 Indirect encoding

The representation approach in which the Artificial Neural Network model is expressed in various production rules and systems is called low level, weak, recipe, or indirect encoding (Branke 1995; Schiffmann et al. 1993). The structural features that make up the network are encoded (or generated) using various parameters or developmental rewriting rules. Also, not all features of the network model need to be specified. The chromosome structure can be reduced by encoding only important parameters.

The emergence of indirect encoding is motivated by biological phenomena. Human DNA contains only about 30,000 genes, yet the human brain has billions of neurons and trillions of connections between them (Mjolsness et al. 1989). This strongly suggests that DNA encodes the human brain indirectly. For such a compact encoding to be possible, the resulting structures must be highly regular.

Over the years, indirect encoding has been implemented in various ways and used for the evolutionary design of artificial neural network architectures. Yao (1993) classifies indirect encoding into three categories:

- Parametric (blueprint) encoding, which encodes parameters for constructing connectivity.
- Developmental rules, rewriting grammars, and nature-inspired systems.
- Fractals from biology.

According to Stanley (2004), the effectiveness of indirect encoding stems from gene reuse: by using a single gene multiple times at different developmental stages, a compact representation, as in DNA, can be obtained. Stanley divided indirect encoding into two categories. In the first, the same gene is reused to create repeating phenotypic patterns that share a structural theme. In the second, the same gene is reused in different developmental pathways, so that different structures can be expressed in different locations. He cited the many left/right symmetries of vertebrates and the numerous receptive fields of the visual cortex as biological examples of this type of encoding.

4.2.1 Parametric representation

Parametric representation, also known as blueprint encoding, is one of the earliest forms of indirect encoding, in which the properties of an artificial neural network architecture are encoded with several parameters. These parameters define the number of layers, the number of neurons in each layer, and how the neurons connect with each other. Its most important advantage is that large models can be expressed with relatively small chromosomes. On the other hand, it is restricted to a limited family of architectures and may fail to capture modular architectures (Gruau 1994). The pioneering example of this type of encoding is the work of Harp et al. (1989); Hancock (1993), Dodd (1990), and Mandischer (1993) later used similar techniques.
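A hypothetical parametric genotype illustrates how a few fields can describe a network whose connection matrix would be far larger. The layout assumed here, 2 bits for the number of hidden layers (1 to 4) followed by four 5-bit width fields (1 to 32 units each, unused fields ignored), is invented for illustration and is not Harp et al.'s Blueprint format.

```python
def bits_to_int(bits):
    value = 0
    for b in bits:
        value = (value << 1) | b
    return value


def decode_blueprint(chromosome, size_bits=5):
    """Hypothetical layout: 2 bits for depth, then one 5-bit width field per layer slot."""
    n_layers = bits_to_int(chromosome[:2]) + 1                     # 1..4 hidden layers
    widths = []
    for i in range(n_layers):
        start = 2 + i * size_bits
        widths.append(bits_to_int(chromosome[start:start + size_bits]) + 1)  # 1..32 units
    return widths


# 22-bit genotype: depth field '10' (3 layers), then four width slots (the last is unused)
chromosome = [1, 0] + [0, 1, 1, 1, 1] + [0, 0, 1, 1, 1] + [0, 0, 0, 0, 0] + [1, 1, 1, 1, 1]
layers = decode_blueprint(chromosome)
```

The 22-bit genotype decodes to hidden layers of 16, 8, and 1 units; a connection matrix over just those 25 hidden units would already need 25² = 625 bits. The price is that only architectures expressible in this fixed layout can ever be found.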

4.2.2 Developmental approaches

In developmental representation, which early studies also called grammatical encoding (Kitano 1990), the artificial neural network architecture is generated by predetermined production or growth rules. Its most important features are that it is scalable, abstract, and modular, so even very large networks can be represented hierarchically with compact chromosomes. It is also a biologically plausible encoding method. In the terms of Boers and Kuiper (1992), this approach encodes a recipe rather than a blueprint. Living organisms are highly modular: following certain growth rules, cells of the same type repeat to form tissues and organs (Dawkins 1986). Following this recipe corresponds to the ontogenesis of an organism, in which the rules for cell division and specialization are encoded in the genome (Grönroos 1998). Developmental rules for creating artificial neural network architectures are usually implemented with recursive equations or graph generation rules. The first examples of this type of encoding are the works of Mjolsness (1989) and Kitano (1990), followed in later years by Gruau's Cellular Encoding (1994) and Luke and Spector's Edge Encoding (1996).

4.2.3 Fractals

Fractals are never-ending patterns inspired by biological organisms. Popularized by Benoit Mandelbrot (1982), fractals are created by repeating a simple process over and over. They often start with a simple geometric object and a rule for modifying it, which together lead to a complex structure. One of the earliest and most popular descriptions of a fractal is the Koch snowflake (shown in Fig. 19), which begins with an equilateral triangle and then replaces the middle third of every line segment with a pair of line segments that form an equilateral bump (Koch 1906).
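The Koch construction can be sketched with complex numbers as 2-D points. Each step turns every segment into four segments one third as long, so after n steps a snowflake built on a unit triangle has 3·4ⁿ segments and perimeter 3·(4/3)ⁿ, which grows without bound. The function names are illustrative.

```python
import cmath


def koch_step(points):
    """Replace the middle third of every edge of a closed polygon with an outward bump."""
    rot = cmath.exp(-1j * cmath.pi / 3)        # rotate by -60 degrees (outward for CCW order)
    new = []
    for a, b in zip(points, points[1:] + points[:1]):
        d = (b - a) / 3
        p1, p2 = a + d, a + 2 * d
        new += [a, p1, p1 + d * rot, p2]       # the bump's apex sits between p1 and p2
    return new


def koch_snowflake(iterations):
    pts = [0j, 1 + 0j, cmath.exp(1j * cmath.pi / 3)]   # counter-clockwise unit triangle
    for _ in range(iterations):
        pts = koch_step(pts)
    return pts


def perimeter(points):
    return sum(abs(b - a) for a, b in zip(points, points[1:] + points[:1]))


snowflake = koch_snowflake(3)
```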

The first six iterations of the Koch-Snowflake (redrawn from Mandelbrot (1982))

Merrill and Port (1991) proposed a fractal representation of ANN connectivity, arguing that it is biologically more plausible than growth rules. They also pointed to strong evidence that parts of the human body, such as the lungs, have fractal structures.

4.3 Lindenmayer systems (L-Systems)

L-systems are a class of string-rewriting grammars, closely related to fractals, that mathematically model biological growth in multicellular organisms, especially plants. They were introduced and developed by Aristid Lindenmayer (1971), a Hungarian theoretical biologist and botanist at the University of Utrecht. An L-system starts from an initial string, called the axiom, made of symbols that may carry associated numerical values, and applies production rules that rewrite each symbol into a string describing the morphology (Lee et al. 2005). Applying these rules repeatedly is called string rewriting, and it allows highly complex morphologies to be built from relatively simple rules. L-systems are especially suitable for describing fractal structures, such as cell divisions in biological organisms, and for modeling plant growth in computer graphics (Lee et al. 2005). A popular example of an L-system is the Sierpinski triangle (Fig. 20). Many researchers have developed artificial neural network architectures optimized with evolutionary algorithms using representations inspired by L-systems (Boers and Kuiper 1992; Gruau 1994; Kitano 1990).
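Parallel string rewriting is the core of an L-system and fits in a few lines. The rule set below is the standard Sierpinski-triangle L-system (axiom `F-G-G`, rules F → F-G+F+G-F and G → GG, where F and G both mean "draw forward" and `+`/`-` mean turning by 120 degrees). The drawing step is omitted, and the helper name is illustrative.

```python
def lsystem(axiom, rules, iterations):
    """Rewrite every symbol of the string simultaneously, `iterations` times."""
    s = axiom
    for _ in range(iterations):
        s = ''.join(rules.get(ch, ch) for ch in s)   # constants such as '+' map to themselves
    return s


SIERPINSKI = {'F': 'F-G+F+G-F', 'G': 'GG'}

step1 = lsystem('F-G-G', SIERPINSKI, 1)
step3 = lsystem('F-G-G', SIERPINSKI, 3)
```

Each F produces three new Fs, so the number of F segments triples at every step (27 after three steps), while a short rule set stays fixed; this is the compactness that motivates grammar-based network encodings.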

4.4 Artificial embryogeny (AE)

Stanley and Miikkulainen (2003) introduced the term Artificial Embryogeny for artificial evolutionary systems that employ a developmental process modeled on embryonic development in nature. In their taxonomic study, they gathered the various developmental approaches, including Artificial Ontogeny (Bongard and Pfeifer 2001), Computational Embryogeny (Bentley and Kumar 1999), Cellular Encoding (Gruau 1994), and Morphogenesis (Jakobi 1995), under this single term. In doing so, they created a framework for future studies and emphasized that indirect encoding will have an important place in the evolution of artificial neural networks.

4.5 Other Nature-Inspired Approaches

Many authors view neural networks in the broader biological context of artificial life. Inspired by neural development in animals, Nolfi and Parisi (1994; 1997) developed an innovative method for encoding neural network architectures in genetic strings. In this model, neurons are represented by coordinates in a two-dimensional space, and connections are defined by letting axon trees grow forward from the neurons. These trees were essentially L-system fractals generated from a grammar. Cangelosi et al. (1994) extended this work by adding cell division and migration rules to grow the neuron population instead of encoding each neuron directly in the chromosome. Later, Cangelosi and Elman (1995) simulated a model of regulatory ontogenetic development of artificial neural networks, in which network growth is controlled by genes that produce elements regulating the activation, inhibition, and delay of neurogenetic events. In another nature-inspired study, Dellaert and Beer (1994; 1996) described a model based on Boolean networks to evolve autonomous agents through developmental processes. The reader may refer to Cangelosi et al. (2003) for an extensive survey of studies on biologically inspired neural development.

4.6 Competing conventions problem

The Competing Conventions problem, also called the Permutation problem (Radcliffe 1993) or the Structural–Functional Mapping problem (Whitley et al. 1990), is one of the key problems in optimizing artificial neural networks with evolutionary methods. During evolution, some individuals in the population differ completely in genotype and phenotype yet are functionally equivalent, producing the same output. This phenomenon slows down evolutionary optimization unnecessarily and causes child networks obtained by crossover to be infeasible or to have lower fitness. For the two network models shown in Fig. 21, permuting the nodes of the hidden layer does not change the function computed by the network.
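The permutation problem can be demonstrated concretely: reordering the hidden units of a one-hidden-layer network changes its genome but not its function, and crossing over two such equivalent parents can duplicate one hidden unit while losing another. The weights are arbitrary illustrative values, and the unit-level crossover is a simplified assumption.

```python
import math


def mlp(x, W1, b1, w2, b2):
    """One hidden layer of tanh units followed by a linear output unit."""
    hidden = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(W1, b1)]
    return sum(w * h for w, h in zip(w2, hidden)) + b2


# Network A with hidden units (h1, h2, h3)
W1 = [[0.5, -1.0], [2.0, 0.3], [-0.7, 0.8]]
b1 = [0.1, -0.2, 0.0]
w2 = [1.5, -0.4, 0.9]
b2 = 0.05

# Network B: the same hidden units listed in the order (h3, h1, h2)
perm = [2, 0, 1]
W1_b, b1_b, w2_b = [W1[i] for i in perm], [b1[i] for i in perm], [w2[i] for i in perm]

genome_a = [v for row in W1 for v in row] + b1 + w2
genome_b = [v for row in W1_b for v in row] + b1_b + w2_b

# One-point crossover at the level of whole hidden units
units_a = list(zip(W1, b1, w2))
units_b = list(zip(W1_b, b1_b, w2_b))
child_units = units_a[:2] + units_b[2:]    # (h1, h2, h2): h2 duplicated, h3 lost
```

Although both parents compute the same function, the child has lost unit h3 entirely, which is exactly why crossover between competing conventions tends to produce weaker offspring.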

4.7 Noisy fitness evaluation problem

Because weights are initialized randomly, the fitness evaluation of an ANN architecture is noisy unless the weights are optimized simultaneously (Yao and Liu 1995). Decoding a genotype into a phenotype and training the network yields a fitness that generally differs from one random weight initialization to another. This gets worse when an indirect encoding is used, because the developmental rules may not be deterministic. Some authors evolve architecture and weights simultaneously to alleviate this problem. Another approach is to train each architecture several times with different initial weights and use the best result as its fitness; however, this greatly increases computation time (Fiszelew et al. 2007).
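The repeated-training remedy can be sketched as follows. `noisy_train` is a hypothetical stand-in for "train this architecture from a random initialization": its quality formula and noise level are made up purely for illustration. The text describes keeping the best of several runs (`max`); averaging with `statistics.mean` is shown as a common alternative.

```python
import random
import statistics


def noisy_train(arch, seed):
    """Toy stand-in for training `arch` from one random initialization (made-up numbers)."""
    rng = random.Random(f"{arch}-{seed}")              # deterministic per (arch, seed)
    true_quality = 1.0 - abs(sum(arch) - 20) / 100      # hypothetical ground truth
    return true_quality + rng.gauss(0.0, 0.05)          # initialization-dependent noise


def fitness(arch, repeats=5, aggregate=max):
    """Train the same architecture `repeats` times and aggregate the scores."""
    scores = [noisy_train(arch, seed) for seed in range(repeats)]
    return aggregate(scores)


best_of_5 = fitness([8, 12])
mean_of_5 = fitness([8, 12], aggregate=statistics.mean)
```

Each fitness evaluation now costs `repeats` full training runs, which is the computational price mentioned above.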

4.8 Ensembles

The primary objective of an artificial neural network is to generalize: a network that achieves high accuracy on the training set may still perform poorly on previously unseen test data. The aim of evolutionary methods, on the other hand, is optimization, and the fitness function of a network optimized by evolutionary methods typically rewards high accuracy on the training data. However, the individual with the highest fitness is not necessarily the one that generalizes best; the population may contain other individuals with lower fitness but better generalization ability. In such cases, ensemble methods, which combine several individuals from the population, are used to obtain the best generalization.

5 Historical progress

We investigated the historical progress in three periods. In the first period, we investigated the early works by explaining the roots of diverse ideas for chromosome representation. In the second period, the emergence and rise of more advanced methods were surveyed. In the last period, recent advances in the deep learning era were reviewed.

5.1 Early work

Research on the evolutionary design of ANN architectures begins with Harp et al.’s NeuroGENESYS (Guha et al. 1988; Harp et al. 1989, 1990), which is based on parametric indirect encoding. Introducing a high-level scheme called Blueprints, the authors defined a variable-length binary chromosome consisting of parameters such as the number of layers, layer sizes, and connections. In this scheme, each segment, called an area, refers to a set of nodes in the network. Each area contains its relevant parameters and projections to several other areas. The start and end of each segment are marked, which helps align genotypes during crossover (Fig. 22). This enabled larger networks to be represented with smaller chromosomes. A disadvantage of this encoding is that it can only search a limited subset of architectures.

Network Blueprint Representation (redrawn from Harp et al. (1990))

In contrast, Miller et al. (1989) proposed a direct-encoding approach, which they call a strong representation. This model, called Innervator, represents the artificial neural network with a simple connection matrix, in which the connection from each node to another node is 1 if the connection exists and 0 if it does not. The GA chromosome is then built by concatenating the rows of this matrix. The most important advantage of this direct encoding is that it can search all possible network architectures (feasible and infeasible) in the search space without any restriction. Its major disadvantage, however, is that the chromosome length grows with the square of the number of nodes, so it can only be applied to small networks (Fig. 23).

Connectivity Constraint Matrix (redrawn from Miller et al. (1989)). The first N columns of matrix C specify the constraints on the connections between the N units, while the final, (N + 1)th, column contains the constraints for the threshold biases of each unit. Here 1 indicates a connection, 0 indicates no connection, and L indicates a learnable connection
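The direct encoding can be sketched in a few lines. This version keeps only 0/1 entries and omits the bias column and the learnable (L) entries shown in the figure; the convention that entry [i][j] means "unit i feeds unit j" is our own choice and may differ from Miller et al.'s.

```python
def encode(matrix):
    """Concatenate the rows of an N x N connection matrix into one bit string."""
    return "".join(str(bit) for row in matrix for bit in row)


def decode(chromosome, n):
    """Cut the bit string back into n rows of length n."""
    bits = [int(c) for c in chromosome]
    return [bits[i * n:(i + 1) * n] for i in range(n)]


# 2-2-1 network: units 0-1 are inputs, 2-3 hidden, 4 output;
# entry [i][j] == 1 means a connection from unit i to unit j
xor_net = [
    [0, 0, 1, 1, 0],
    [0, 0, 1, 1, 0],
    [0, 0, 0, 0, 1],
    [0, 0, 0, 0, 1],
    [0, 0, 0, 0, 0],
]
chrom = encode(xor_net)
print(chrom, len(chrom))  # 0011000110000010000100000 25

# the chromosome length grows quadratically with the number of units
print([n * n for n in (5, 50, 500)])  # [25, 2500, 250000]
```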

One of the earliest studies implementing a nature-inspired indirect encoding is the graph generation grammar introduced by Kitano (1990). Kitano defined a context-free production grammar, a modified L-system, that creates the connection matrix of the artificial neural network: starting from a 1 × 1 axiom matrix, each symbol is recursively rewritten into a 2 × 2 matrix of symbols. By creating a simple model of neurogenesis in nature, he demonstrated that large networks can be expressed with very small chromosomes (Fig. 24). Although he employed conventional search methods, Mjolsness (1989) described a similar compact encoding scheme in which the connection matrix of a network is specified by recursive application of developmental patterns.

Graph generation rules used for generation of the 2-2-1 XOR network (redrawn from Kitano (1990))
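The rewriting process can be sketched as follows. The rule table is hypothetical and only written in the style of Kitano's grammar: uppercase symbols are non-terminals, and lowercase symbols are terminals that expand into 2 × 2 binary blocks. In an evolutionary setting, the chromosome would hold the rule table rather than the matrix itself.

```python
# Hypothetical rule set: every symbol rewrites into a 2 x 2 block.
RULES = {
    "S": [["A", "B"], ["C", "D"]],
    "A": [["c", "p"], ["a", "c"]],
    "B": [["a", "a"], ["a", "e"]],
    "C": [["a", "a"], ["a", "a"]],
    "D": [["a", "a"], ["a", "b"]],
    # terminals expand into fixed 2 x 2 blocks of connection bits
    "a": [[0, 0], [0, 0]],
    "b": [[0, 0], [0, 1]],
    "c": [[1, 0], [0, 1]],
    "e": [[0, 1], [0, 1]],
    "p": [[1, 1], [1, 1]],
}


def rewrite(matrix):
    """Replace every symbol by its 2 x 2 block, doubling the side length."""
    size = len(matrix)
    out = [[None] * (2 * size) for _ in range(2 * size)]
    for i, row in enumerate(matrix):
        for j, symbol in enumerate(row):
            block = RULES[symbol]
            for di in range(2):
                for dj in range(2):
                    out[2 * i + di][2 * j + dj] = block[di][dj]
    return out


def develop(axiom="S", steps=3):
    """Start from a 1 x 1 axiom; with these rules, three steps leave only 0/1 entries."""
    matrix = [[axiom]]
    for _ in range(steps):
        matrix = rewrite(matrix)
    return matrix


connections = develop()
for row in connections:
    print("".join(map(str, row)))
```

Here ten short rules determine all 64 entries of the resulting 8 × 8 connection matrix.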

The late 1980s and the beginning of the 1990s witnessed many other studies in which architectures were generated automatically by evolutionary approaches. Wilson (1989) conducted experiments on perceptrons and analyzed the performance of models obtained using a GA. Schiffmann et al. (1990) investigated the relations between network structure and the classification ability of BP-trained networks. They used the so-called BP-Generator, based on a mutation-only evolutionary strategy, to create ANN architectures and compared their performance with standard BP-nets. Later, they extended their approach with a crossover operator (Schiffmann et al. 1992; 1993). Hintz and Spofford (1990) proposed a combination of ANN and GA that optimizes the network by evolving the number of neurons, weights, and connections. Another study that optimizes artificial neural network architectures using a GA is Dodd's (1990) Structured Neural Network model, which uses a parametric indirect encoding. He proposed an approach that simultaneously optimizes generalization ability and network compactness for a pattern recognition problem, the classification of dolphin sounds.

In another pioneering work, Whitley et al. (1990) took a pruning-based approach, optimizing both the weights and the architecture of ANNs. Instead of augmenting topologies, they started from a fully connected, already trained network and used a modified GA, which they call the GENITOR algorithm, to find connections to be pruned. A significant amount of training time was saved by initializing the pruned network with the weights of the starting network (Branke 1995). Similarly, Höffgen et al. (1990) used a GA to minimize networks for better generalization. In the same year, Hancock and Smith (1990) developed GANNET to specify the structure of BP-networks and applied their method to real-world problems. Later, Hancock (1992b) proposed a GA-based approach to pruning the connections of BP-trained neural networks, similar to Whitley et al. (1990), and explored solutions to the permutation problem (Hancock 1992a; Hancock 1993). These early works were thoroughly investigated and compared by various authors (Balakrishnan and Honavar 1995; Branke 1995; Radcliffe 1990; Rudnick 1990; Schaffer et al. 1992; Weiß 1994a; Whitley 1995).
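A pruning search of this kind can be sketched with a binary mask over the connections of an already trained network. The sketch uses a generic steady-state GA, loosely in the spirit of GENITOR (one offspring per step, after which the worst individual is dropped), but it is not Whitley et al.'s algorithm; the toy weights, the data set, and the per-connection penalty are hypothetical. Because the unpruned mask is seeded into the population and the best individual is never discarded, the result is never worse than the original network under this fitness.

```python
import functools
import itertools
import random

# Hypothetical "already trained" threshold unit: only inputs 0 and 1 really matter.
WEIGHTS = (1.0, 1.0, 0.05, -0.05, 0.1, 0.02)
BIAS = -1.5
DATA = [(x, int(x[0] and x[1])) for x in itertools.product((0, 1), repeat=6)]
PENALTY = 0.01  # assumed cost per retained connection


def accuracy(mask):
    correct = 0
    for x, target in DATA:
        net = BIAS + sum(w * m * xi for w, m, xi in zip(WEIGHTS, mask, x))
        correct += int(net > 0) == target
    return correct / len(DATA)


@functools.lru_cache(maxsize=None)
def fitness(mask):
    """Reward accuracy, penalize every connection the mask keeps."""
    return accuracy(mask) - PENALTY * sum(mask)


def prune(pop_size=20, steps=400, p_mut=0.1, seed=0):
    rng = random.Random(seed)
    n = len(WEIGHTS)
    # seed the population with the unpruned network plus random masks
    pop = [(1,) * n] + [tuple(rng.randint(0, 1) for _ in range(n)) for _ in range(pop_size - 1)]
    pop.sort(key=fitness, reverse=True)
    for _ in range(steps):
        # rank-biased choice: the smaller of two random indices favors fitter masks
        a, b = (pop[min(rng.randrange(pop_size), rng.randrange(pop_size))] for _ in range(2))
        cut = rng.randrange(1, n)
        child = tuple(1 - g if rng.random() < p_mut else g for g in a[:cut] + b[cut:])
        pop.append(child)
        pop.sort(key=fitness, reverse=True)
        pop.pop()  # steady state: drop the worst of the pop_size + 1 individuals
    return pop[0]


best = prune()
print(best, accuracy(best), round(fitness(best), 2))
```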

As a key figure behind Genetic Programming, Koza (1989) showed that computer programs can be evolved to perform a particular task by extending genetic algorithms to tree-structured programs, such as those written in LISP. Koza and Rice (1991) then used this Genetic Programming paradigm to obtain both the weights and the architecture of a neural network and applied it to the one-bit adder problem (Fig. 25). This differs from the previous approaches in that both the network architecture and the weights are encoded in the chromosome and evolved simultaneously. Later, Vonk et al. (1995c) proposed GPNN (Genetically Programmed Neural Network), which applied the same method to toy problems, and extended it in a further work that also reviewed the related research of the time (Vonk et al. 1995b).

A graphical depiction of a LISP S-Expression as a rooted tree, representing a neural network for XOR problem (redrawn from Koza & Rice (1991)). Here the root is a linear threshold processing function P. W is the weight function with D0 and D1 as inputs
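The tree representation can be sketched with nested tuples standing in for LISP S-expressions. Following the figure, `P` is a threshold processing function and `W` a weight function; the fixed threshold of 0.5 and the literal weight constants are simplifying assumptions, so this is not Koza and Rice's exact function set. In Genetic Programming such trees would be produced by subtree crossover and mutation; here one XOR tree is written by hand only to show how a tree is interpreted as a network.

```python
import itertools


def evaluate(expr, inputs):
    """Recursively evaluate an S-expression tree that encodes a neural network."""
    if isinstance(expr, str):  # terminal: an input such as "D0"
        return inputs[expr]
    op, *args = expr
    if op == "P":  # threshold neuron over the sum of its (weighted) arguments
        return int(sum(evaluate(arg, inputs) for arg in args) > 0.5)
    if op == "W":  # weight function: ("W", weight, subtree)
        weight, subtree = args
        return weight * evaluate(subtree, inputs)
    raise ValueError(f"unknown function {op!r}")


# Hand-written XOR network: two hidden threshold units feeding one output unit
XOR_TREE = ("P",
            ("W", 1.0, ("P", ("W", 1.0, "D0"), ("W", -1.0, "D1"))),
            ("W", 1.0, ("P", ("W", -1.0, "D0"), ("W", 1.0, "D1"))))

for d0, d1 in itertools.product((0, 1), repeat=2):
    print(d0, d1, evaluate(XOR_TREE, {"D0": d0, "D1": d1}))
```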

Optimizing both weights and architecture was also the objective of Marshall (1991). However, instead of optimizing both at the same time, he took a two-stage approach: he first evolved the network parameters with a GA, using BP to calculate fitness, and once an optimal structure was found, he optimized the weights with a GA. Dasgupta and McGregor (1992) described a Structured Genetic Algorithm (sGA) that optimizes both architecture and weights with a hierarchical two-level direct representation, where high-level genes activate or deactivate sets of lower-level genes. In this approach, the high-level part of the chromosome encodes the connectivity scheme, while the low-level part encodes weights and biases as binary strings. Robbins et al. (1993) used GANNET, which adopts a direct encoding in which each gene directly represents the presence or absence of a connection in the network. Although it produces long strings, which are unsuitable for large networks, their prototype was capable of designing, implementing, and evaluating a variety of multi-layer perceptrons, and it outperformed conventional methods such as random search, hill climbing, and parallel hill climbing.
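The two-level idea behind the sGA can be sketched as follows: control genes at the front of the chromosome switch connections on or off, and each control gene owns a binary segment that is decoded into a weight only while that connection is active. The 4-bit segments and the weight range [-2, 2] are assumptions for illustration, not Dasgupta and McGregor's settings.

```python
def decode_bits(bits, low=-2.0, high=2.0):
    """Map a binary string linearly onto a real-valued weight in [low, high]."""
    return low + (high - low) * int(bits, 2) / (2 ** len(bits) - 1)


def express(chromosome, n_connections, bits_per_weight=4):
    """Two-level decoding: high-level control genes activate low-level weight genes."""
    control = chromosome[:n_connections]
    weight_genes = chromosome[n_connections:]
    weights = []
    for i, active in enumerate(control):
        segment = weight_genes[i * bits_per_weight:(i + 1) * bits_per_weight]
        # an inactive segment stays in the genome and can be switched back on later
        weights.append(decode_bits(segment) if active == "1" else None)
    return weights


chromosome = "101" + "1111" + "0000" + "1000"
print(express(chromosome, 3))  # connection 1 is absent (None)
print(express("111" + chromosome[3:], 3))  # flipping one control gene restores it
```

Flipping a single control gene reactivates a connection together with its dormant weight, which is what lets the high-level genes change the architecture without destroying low-level information.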

Early works introduced many innovative approaches to chromosome representation. Fullmer & Miikkulainen (1992) proposed a marker-based encoding scheme inspired by the biological structure of DNA. In this approach, markers separate individual node definitions, each containing all the information about a node, such as its identifier, initial activation value, and a list of values specifying its input sources and weights. This enabled the use of recurrent nodes and of nodes without inputs, which act as the bias nodes normally used in BP learning. This approach was later extended by Moriarty & Miikkulainen (1993; 1995a; 1995b), who developed new game-playing strategies based on the evolution of ANNs. They treated the marker-based chromosome as a continuous circular entity and improved on the predecessor work by defining only hidden nodes, which made the encoding more compact when the output layer is large. The flexibility of location-independent alleles gave the genetic algorithm more freedom to explore useful schemata. Their solution was able to discover new strategies in Othello, a strategy board game popular in Japan, similar to Go. In a preliminary study, Gruau (1992; 1993) introduced Cellular Encoding, which uses a cell-rewriting developmental process, to improve on Kitano's (1990) graph generation grammar. The author claimed that this sophisticated indirect encoding is biologically more plausible, compact, modular, and abstract. It evolves both architecture and weights, represented in binary form, with Boolean functions as target functions. Later, in his doctoral dissertation (Gruau 1994), he presented Cellular Encoding as a machine language for neural networks and published many other works implementing his approach and comparing it with other encoding strategies (Gruau and Quatramaran 1997; Gruau et al. 1996; Whitley et al. 1995).
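A marker-based, circular genome can be parsed roughly as follows. The integer marker values, the gene layout inside a definition (node id, initial activation, then source/weight pairs), and the choice to keep the first definition of a duplicated node id are assumptions for illustration; the formats of Fullmer & Miikkulainen and Moriarty & Miikkulainen differ in detail. Genes outside a start/end pair are ignored, and a definition may wrap around the end of the genome.

```python
START, END = 256, 257  # hypothetical marker values outside the normal gene range 0..255


def parse(genome):
    """Extract node definitions from a circular, marker-delimited integer genome."""
    n = len(genome)
    nodes = {}
    for i, gene in enumerate(genome):
        if gene != START:
            continue
        body, g = [], None
        for k in range(1, n):
            g = genome[(i + k) % n]  # wrap around: the genome is circular
            if g in (START, END):
                break
            body.append(g)
        if g != END or len(body) < 2:
            continue  # unterminated or too short: treated as junk
        node_id, activation, *links = body
        # remaining genes are (source, weight) pairs; a trailing odd gene is dropped
        pairs = list(zip(links[0::2], links[1::2]))
        nodes.setdefault(node_id, {"activation": activation, "inputs": pairs})
    return nodes


genome = [1, 30, END, 9, START, 5, 0, 2, 100, END, 44, START, 6, 0]
print(parse(genome))
# {5: {'activation': 0, 'inputs': [(2, 100)]}, 6: {'activation': 0, 'inputs': [(1, 30)]}}
```

The definition of node 6 starts near the end of the list and is completed by the genes at its beginning, while the genes 9 and 44 lie outside any start/end pair and are never expressed.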

After Kitano and Gruau, many successful studies followed, inspired by biological developmental patterns. Since the brain is considered a highly modular structure, a significant amount of work has been devoted to understanding the cooperative interaction between modules in the visual system of the mammalian brain. Happel and Murre (1992) explored such modular constraints on neural networks and used a GA to search for suitable modular architectures built from CALM (Categorization and Learning Modules). In an extension of their initial work (Happel and Murre 1994), they proposed a modular neural network framework to model the brain's global and local structural regularities. Instead of beginning with a fully connected network, they built a modular structure with sparse connections. In a case study on recognizing handwritten digits, a significant improvement in generalization performance was observed (Fig. 26). Also inspired by the brain, Elias (1992) described a connection pattern modeled after morphologically complex biological neurons; these patterns are evolved with a GA and implemented in analog electronic hardware built from artificial dendritic trees, which exhibit spatiotemporal processing capability. In another biologically motivated work, Bornholdt and Graudenz (1992) allowed arbitrary connections among the neurons of a generally diluted model brain, including asymmetric and backward-directed connections and feedback loops. They divided the neurons of the model into three groups: input neurons, cortex neurons, and output neurons. The cortex is not grouped into layers, and its architecture is left arbitrary.

Similarly, Jacob and Rehder (1993) proposed a hierarchically structured connectionist model, inspired by the intuition of a network designer who builds architectures in two main stages. In the first stage, a coarse connectivity structure is evolved. In the second stage, the network architecture is fine-tuned through learning and adaptation to the specific task. They used a context-free grammar to represent network connectivity and optimized both the weights and the architecture of neural networks. Inspired by Kitano's work and aiming to develop a universal network generator, Voigt et al. (1993) described a model called Building Blocks in Cascades Learning (BBC-Algorithms) and its extension with an evolutionary framework based on L-systems, called the BBC-EVO algorithm. This evolutionary framework was based on the classical Evolution Strategy with self-adaptation of strategy parameters. They later extended their work with BBC-EA (Born and Santibánez-Koref 1995; Born et al. 1994), treating the structuring task as a pseudo-Boolean optimization problem.

The modular structure of the winning network after GA search of CALM (redrawn from Happel and Murre (1992)). Dashed arrows indicate learning input connections, while solid arrows indicate unidirectional learning connections between modules
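Self-adaptation of strategy parameters, as used in BBC-EVO, can be illustrated with a textbook (1, λ)-ES in which every offspring first mutates its own step size log-normally and then uses that step size to mutate its genes. The sphere function only stands in for whatever structural objective BBC-EVO actually optimized, and λ, τ, and the other settings are common textbook choices rather than Voigt et al.'s.

```python
import math
import random


def sphere(x):
    """Toy objective standing in for a network-structuring cost (minimize)."""
    return sum(v * v for v in x)


def self_adaptive_es(f, dim=5, lam=10, generations=200, seed=1):
    """(1, lambda)-ES with log-normal self-adaptation of a single step size."""
    rng = random.Random(seed)
    tau = 1 / math.sqrt(dim)  # learning rate for the step size
    parent = ([rng.uniform(-5, 5) for _ in range(dim)], 1.0)
    for _ in range(generations):
        x, sigma = parent
        offspring = []
        for _ in range(lam):
            s = sigma * math.exp(tau * rng.gauss(0, 1))  # mutate the strategy parameter first
            y = [xi + s * rng.gauss(0, 1) for xi in x]  # then the object variables with it
            offspring.append((y, s))
        # comma selection: the parent is discarded and the best offspring survives
        parent = min(offspring, key=lambda ind: f(ind[0]))
    return parent


best_x, best_sigma = self_adaptive_es(sphere)
print(sphere(best_x), best_sigma)
```

No external schedule adjusts the step size: step sizes that produce good offspring are inherited together with them.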

Measuring the effects of representation was of interest to several authors, including Marti (1992), who used GAs to obtain parameters for generating neural networks and analyzed their behavior under various genome representations. Karunanithi et al. (1992) adopted a constructive approach named Genetic Cascade Learning as an intuitive solution to the competing conventions problem. Their method combined a GA with the Cascade-Correlation learning algorithm (Fahlman and Lebiere 1990), adding one hidden unit at a time. Alba et al. (1993a; 1993b) built a three-level genetic ANN design, in which the top level defines the structure, the middle level defines the connectivity, and the lowest level sets the weights. They developed a tool called GRIAL (Genetic Research in Artificial Learning) to apply various GA techniques and used PARLOG, a concurrent logic language, to implement GA and ANN behavior in GRIAL, achieving intra-level distributed search and parallelism. Following the principle of natural evolution and growth, Boers et al. (1992) described a reverse-engineering model of the mammalian brain, using L-systems combined with a GA to design ANN architectures trained with BP. With an indirect representation scheme based on production rules, they were able to reduce the computation cost with modular and scalable architectures. Following a different path, Braun and Weisbrod (1993) used a direct representation scheme for a constrained architecture space. Aiming to overcome the competing conventions problem, they proposed ENZO, a genetic-algorithm-driven neural network generator that evolves both the architecture and the weights for specific problems. Mandischer (1993) developed a representation scheme to construct backpropagation networks using a GA. The scheme is layer-based and structures the network as a list of network parameters and layer blocks.
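The competing conventions (permutation) problem targeted by several of these works is easy to demonstrate: listing the hidden units of a network in a different order changes the chromosome but not the function it computes, and crossing two such equivalent parents can produce a damaged child. The 2-2-1 tanh network, its weights, and the helper names below are hypothetical.

```python
import math
from itertools import product


def forward(genome, x):
    """2-2-1 tanh network; genome = h1 (w1, w2, b) + h2 (w1, w2, b) + output (v1, v2, c)."""
    h = [math.tanh(genome[3 * k] * x[0] + genome[3 * k + 1] * x[1] + genome[3 * k + 2])
         for k in range(2)]
    v1, v2, c = genome[6:9]
    return math.tanh(v1 * h[0] + v2 * h[1] + c)


def swap_hidden(genome):
    """Encode the same network with its two hidden units listed in the other order."""
    h1, h2, (v1, v2, c) = genome[0:3], genome[3:6], genome[6:9]
    return h2 + h1 + [v2, v1, c]


parent_a = [2.0, -1.0, 0.5, -3.0, 1.5, 0.0, 1.0, -2.0, 0.1]
parent_b = swap_hidden(parent_a)
grid = list(product((-1.0, 0.0, 1.0), repeat=2))
same = all(math.isclose(forward(parent_a, x), forward(parent_b, x)) for x in grid)
print(parent_a != parent_b, same)  # True True: different genotypes, same phenotype

# one-point crossover between the equivalent parents, cut after the first hidden unit
child = parent_a[:3] + parent_b[3:]
print(child[:6])  # [2.0, -1.0, 0.5, 2.0, -1.0, 0.5]: h1 appears twice, h2 is lost
```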
Another attempt to optimize both the architecture and the weights of a neural network was reported by White & Ligomenides (1993), using a node-based encoding. In their algorithm, which they call GANNet, they used a distributed GA with multiple populations to discover and protect the best individuals across subpopulations. Another survey was carried out by Koehn (1994) in his master's thesis, investigating how various encoding strategies influence the combination of GAs and ANNs.
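A distributed GA with multiple populations is commonly organized as an island model. The sketch below is a generic island GA with per-island elitism and periodic ring migration on a toy bit-string problem (OneMax) that stands in for network fitness; it is not GANNet, and the migration interval, island sizes, and operators are all assumptions.

```python
import random


def onemax(bits):
    """Toy fitness standing in for the quality of an encoded network."""
    return sum(bits)


def evolve_island(pop, rng, p_mut):
    """One generation inside an island: tournament selection, uniform crossover, mutation."""
    def pick():
        a, b = rng.sample(pop, 2)
        return max(a, b, key=onemax)

    children = [max(pop, key=onemax)]  # elitism protects the island's best individual
    while len(children) < len(pop):
        p, q = pick(), pick()
        child = [rng.choice(pair) for pair in zip(p, q)]
        children.append([1 - g if rng.random() < p_mut else g for g in child])
    return children


def island_ga(n_islands=4, size=10, length=20, generations=60, every=5, seed=3):
    rng = random.Random(seed)
    islands = [[[rng.randint(0, 1) for _ in range(length)] for _ in range(size)]
               for _ in range(n_islands)]
    for gen in range(1, generations + 1):
        islands = [evolve_island(pop, rng, 1 / length) for pop in islands]
        if gen % every == 0:
            # ring migration: each island's best replaces the worst of the next island
            migrants = [max(pop, key=onemax) for pop in islands]
            for i, pop in enumerate(islands):
                worst = min(range(size), key=lambda k: onemax(pop[k]))
                pop[worst] = list(migrants[i - 1])
    return max((ind for pop in islands for ind in pop), key=onemax)


best = island_ga()
print(onemax(best), "of", len(best))
```

Keeping the subpopulations mostly separate lets each island preserve its own good individuals, while infrequent migration spreads them to the other islands.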