Abstract

Deep neural networks (DNNs), which are extensions of artificial neural networks, can learn higher levels of a feature hierarchy built from lower-level features by transforming the raw feature space into another, more complex feature space. Although deep networks are successful in a wide range of problems in different fields, several issues affect their overall performance, such as selecting appropriate values for model parameters, deciding the optimal architecture and feature representation, and determining optimal weight and bias values. Recently, metaheuristic algorithms have been proposed to automate these tasks. This survey briefly describes common basic DNN architectures, including convolutional neural networks, unsupervised pretrained models, recurrent neural networks and recursive neural networks. We formulate the optimization problems in DNN design, such as architecture optimization, hyper-parameter optimization, training, and optimization at the feature representation level. The encoding schemes used in metaheuristics to represent network architectures are categorized. The evolutionary and selection operators, as well as speed-up methods, are summarized, and the main approaches to validating the results of networks designed by metaheuristics are provided. Moreover, we group the studies on metaheuristics for deep neural networks based on the problem type considered and present the datasets most frequently used in these studies. We discuss the pros and cons of using metaheuristics in the deep learning field and give some future directions for connecting metaheuristics and deep learning. To the best of our knowledge, this is the most comprehensive survey of metaheuristics used in the deep learning field.


1 Introduction

Artificial Intelligence (AI) algorithms can learn feature hierarchies and generalize them to new contexts, and automatically learning features at multiple levels of abstraction makes it possible to learn complex mappings. Shallow learning algorithms extract features using artificial or empirical sampling. As the data size increases, shallow techniques become insufficient for large-scale, high-dimensional data. More advanced AI techniques are needed to extract features at high levels of abstraction and to learn complex mappings from large-scale, incompletely annotated data. One of the advanced representation learning techniques that can address these issues is deep learning (DL) (Hinton et al. 2006), which is inspired by the processing performed in the visual cortex and establishes non-linear relationships. DNNs can learn higher levels of a feature hierarchy by transforming the raw feature space into another feature space. Although DNNs had been proposed earlier, their applicability remained limited because of their high computational requirements. Recently, however, the growth in computational power has enabled their use in many studies.

A DL architecture has several specific layers, each corresponding to a different area of the cortex. Each layer has an arbitrary number of neurons and outputs, as well as its own initialization method and activation function. The values assigned to model and learning parameters, the way the feature representation is described, and the weight and bias values all affect the overall performance of DL methods, and there is a trade-off between generalization capability and computational complexity. Although Grid Search, Random Search and Bayesian Optimization (Bergstra et al. 2011) are popular for configuring hyper-parameters, they become impractical when the number of parameters and the complexity of the problem are high. Moreover, there is no analytic approach to automatically design the optimal architecture, and designing it manually or by exhaustive search requires a high computational cost even when high-performance facilities such as GPUs and parallel computing are used. In the training of DL models, derivative-based methods are difficult to parallelize and can converge slowly. Recently, researchers have proposed methods that automate the search for DNN designs and parameters. Among these, neuro-evolution applies evolutionary computation to explore the huge search space and mitigate the challenges of the above approaches. Nature-inspired evolutionary metaheuristics, which combine a natural phenomenon with randomness, can deal with dynamic changes in the problem space by transferring problem-specific knowledge from previous generations. They can produce high-quality, near-optimal solutions for large-scale problems within an acceptable time.

Tian and Fong (2016) reviewed the genetic algorithm (GA) and particle swarm optimization (PSO) for the training and parameter optimization of traditional neural networks. Fong et al. (2017) reviewed the applications of metaheuristics in DL. Gülcü and Kuş (2019) reviewed the metaheuristic methods used to optimize hyper-parameters in Convolutional Neural Networks (CNNs). Chiroma et al. (2019) pointed out recent development issues and created a taxonomy of nature-inspired algorithms for deep learning. These surveys focus on only limited aspects of DL models (training or hyper-parameter optimization) or on a few architectures. Therefore, we have prepared a more comprehensive review that considers most aspects of DL that can be treated as optimization problems and solved by metaheuristics. As far as we know, this is the most comprehensive review of metaheuristics used in the DL field. We believe that this review will be beneficial for researchers who plan to study the hybridization of metaheuristics and DL approaches.

Our motivation is to review the metaheuristic algorithms used for the optimization problems arising in the deep learning field and to answer the research questions listed below:

- Which optimization problems arise in the field of deep learning?
- Which metaheuristic algorithms are used to optimize DNNs?
- Which encodings can be used to map the solution space to the network space?
- Which problems are solved by DNNs optimized by metaheuristics?
- Which DNN architectures are optimized by metaheuristics?
- How can we validate the results of DNNs optimized by metaheuristics?
- Which datasets are most often used in the benchmarks for DNN optimization by metaheuristics?

To answer these research questions, we first briefly describe common DNN architectures that are widely used in engineering and have been optimized by metaheuristics. Second, we group the metaheuristic algorithms based on the number of solutions evolved and on the natural phenomenon underpinning the nature-inspired algorithms, including evolutionary algorithms and swarm intelligence algorithms. We then describe how DNNs can be designed and trained by metaheuristics. The problem statements for hyper-parameter optimization of DNNs, training DNNs, architecture optimization and optimization at the DNN feature representation level are presented to highlight the decision variables and the search space. In this section, encoding schemes, which convert a network into a solution vector to be evolved by a metaheuristic, are also grouped based on how the mapping between the genotype (solution) space and the phenotype (network) space is achieved. How researchers can validate the performance of a DNN designed by metaheuristics is also described. Next, we group the studies related to metaheuristics and DNNs based on the problem type considered. A section is also dedicated to the datasets that can be used to validate methodologies under the same experimental setup and to compare them with state-of-the-art algorithms.

The rest of the paper is organized as follows. In Sect. 2, the most common DL architectures are briefly introduced; in Sect. 3, the properties of metaheuristic algorithms are summarized. The fourth section is dedicated to automated DNN search by metaheuristics: the problem statements of hyper-parameter optimization, training DNNs, architecture search and optimization at the feature representation level of DNNs are provided, the network encodings used to represent solutions in metaheuristics are grouped, and how the performance of metaheuristics can be validated is briefly described. In Sect. 6, the literature review methodology is explained and the reviewed studies are grouped according to the optimization problem arising in DNNs. Brief information about the commonly used datasets in this field is also provided. Section 7 provides a discussion, and the last section is dedicated to the conclusion and future directions.

2 Deep neural network architectures

A neural network (NN) is composed of connected layers of interacting neurons that perform computations to achieve intelligent behaviour or to approximate a complex function. Deep NN models can be categorized into two groups: discriminative and generative models. Discriminative models adopt a bottom-up approach: they model a decision boundary between classes based on the observed data and produce a class label for unseen data by computing its maximum similarity to labeled items. Generative models adopt a top-down approach: they model the actual distribution of each class and produce an output for unseen data based on the joint probability, in an unsupervised manner. Based on architectural properties, deep neural networks can be categorized into four groups, as shown in Fig. 1 (Patterson and Gibson 2017): Unsupervised Pretrained Networks (UPNs), Convolutional Neural Networks (CNNs) (Fukushima 1980), Recurrent Neural Networks (RNNs) (Rumelhart et al. 1986) and Recursive Neural Networks. UPNs include Auto-Encoders (AEs) (Kramer 1991), Restricted Boltzmann Machines (RBMs) (Smolensky 1986), Deep Belief Networks (DBNs) (Hinton et al. 2006) and Generative Adversarial Networks (GANs) (Goodfellow et al. 2014). Long Short-Term Memory (LSTM) (Hochreiter and Schmidhuber 1997) is a widely used type of RNN.

Fig. 1 A taxonomy for DNN architectures (Patterson and Gibson 2017)

2.1 Convolutional neural networks

CNN (Fukushima 1980) is one of the most widely used DNN models and is generally applied to grid-structured data such as images. In a CNN (Fig. 2), each layer transforms its input and propagates the result to the next layer. The dimensionality of the hidden layers determines the width of the model, while the depth of the model is the number of layers. The degree of abstraction depends on the depth of the architecture. Dense, dropout, reshape, flatten, convolution and pooling layers are examples of layers used in CNNs. The convolution layer slides a set of learnable filters (kernels) over the input and computes a weighted sum over each local receptive field to produce feature maps. The number of filters and the filter sizes are important hyper-parameters of the convolution layer. The pooling layer downsamples the feature maps using operators such as max, min, average or median. The pool size and stride have to be assigned in each pooling layer. In dense layers, outputs are computed by passing the weighted inputs through an activation function. The weight initialization mode, the activation function (softmax, softplus, softsign, relu, tanh, sigmoid, hard sigmoid, linear, etc.) and the number of outputs have to be determined for each dense layer. The dropout layer sets randomly selected inputs to zero during training to prevent overfitting. The reshape layer converts a feature map into the shape expected by the following layers. The flatten layer reshapes multi-dimensional data into a one-dimensional form.
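The forward pass through convolution, pooling and flatten layers can be sketched in a few lines of pure Python. This is a minimal single-channel illustration under simplifying assumptions (no padding, no bias, a fixed hand-picked filter instead of learned weights); the function names are hypothetical, and, as in most DL libraries, the "convolution" is computed as a cross-correlation (the kernel is not flipped).

```python
# Single-channel CNN forward pass in pure Python (illustrative sketch).

def conv2d(image, kernel, stride=1):
    """Slide `kernel` over `image` (no padding) and return the feature map."""
    kh, kw = len(kernel), len(kernel[0])
    out = []
    for r in range(0, len(image) - kh + 1, stride):
        row = []
        for c in range(0, len(image[0]) - kw + 1, stride):
            # weighted sum over the local receptive field (cross-correlation)
            row.append(sum(image[r + i][c + j] * kernel[i][j]
                           for i in range(kh) for j in range(kw)))
        out.append(row)
    return out


def relu(fmap):
    """Element-wise ReLU activation."""
    return [[max(0.0, v) for v in row] for row in fmap]


def max_pool(fmap, size=2, stride=2):
    """Downsample by keeping the maximum of each size x size window."""
    return [[max(fmap[r + i][c + j] for i in range(size) for j in range(size))
             for c in range(0, len(fmap[0]) - size + 1, stride)]
            for r in range(0, len(fmap) - size + 1, stride)]


def flatten(fmap):
    """Reshape a 2-D feature map into a 1-D vector for a dense layer."""
    return [v for row in fmap for v in row]


image = [[float(5 * r + c) for c in range(5)] for r in range(5)]  # 5x5 input
kernel = [[-1.0, 0.0], [0.0, 1.0]]                                # 2x2 filter
features = flatten(max_pool(relu(conv2d(image, kernel))))         # 5x5 -> 4x4 -> 2x2 -> 4
```

With this ramp-shaped input every 2×2 window gives the same response (6.0), so `features` is `[6.0, 6.0, 6.0, 6.0]`; the point of the example is how each layer changes the dimensionality (5×5 → 4×4 → 2×2 → 4).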

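The cost function for a sample (x, y) can be defined by Eq. 1, and the error at a sub-sampling layer is calculated by Eq. 2 (Shrestha and Mahmood 2019):

\[ J(W, b; x, y) = \frac{1}{2} \left\| h_{W,b}(x) - y \right\|^{2} \qquad (1) \]

\[ \delta_k^{(l)} = \operatorname{upsample}\left( \big(W_k^{(l)}\big)^{T} \delta_k^{(l+1)} \right) \odot f'\big(z_k^{(l)}\big) \qquad (2) \]

where \(h_{W,b}(x)\) is the network output for input x with weights W and biases b, \(\delta _k^{(l + 1)}\) is the error of the kth feature map in the \((l+1)\)th layer of the network, \(z_k^{(l)}\) is the pre-activation input in layer l, upsample propagates the error back through the pooling operation, and \(f'(z_k^{(l)} )\) is the derivative of the activation function. AlexNet (Krizhevsky et al. 2012), GoogLeNet (Inception v1) (Szegedy et al. 2015), ResNet (He et al. 2016) and VGG (Simonyan and Zisserman 2014) are some popular and widely used CNN models. The CNN models can be categorized as in Fig. 3 (Khan et al. 2020).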

Fig. 3 A taxonomy for CNN architectures (Khan et al. 2020)

2.2 Unsupervised pretrained networks

UPNs employ greedy layer-wise unsupervised pretraining followed by a second stage of supervised fine-tuning. In the first stage, each layer is trained by an unsupervised algorithm based on a nonlinear transformation that extracts the variations in its input. This stage initializes the weights in a region of the parameter space that reflects the structure of the input data, which acts as a regularizer for the subsequent supervised stage. In the second stage, the labels are used and a training criterion is minimized (Erhan et al. 2010). Initializing several layers in this way has an advantage over random initialization (Bengio 2009). Auto-Encoders (AEs) (Kramer 1991), Restricted Boltzmann Machines (RBMs) (Smolensky 1986), Deep Belief Networks (DBNs) (Hinton et al. 2006) and Generative Adversarial Networks (GANs) (Goodfellow et al. 2014) are representatives of UPNs that use training algorithms based on layer-local unsupervised criteria. They are briefly described below.

2.2.1 Auto-encoders

An auto-encoder (Kramer 1991) (Fig. 4) is a special case of feed-forward NNs that learns features by reconstructing its input at its output. AEs can be trained using greedy layer-by-layer unsupervised pretraining followed by fine-tuning with backpropagation (Kramer 1991). An AE is composed of two parts: an encoder function \(h = f (x)\) that maps data from a high-dimensional space to a low-dimensional space as a lossy compression, and a decoder that produces a reconstruction \(r = g(h)\). The aim is to learn a nonlinear representation such that \(g(f (x)) \approx x\); training is unsupervised because the target is the input itself rather than a separate output.

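When the AE has a single linear hidden layer and is trained to minimize the mean squared error, the k units of this layer learn to project the input onto the span of its first k principal components. When the hidden layer is non-linear, the AE does not act like Principal Component Analysis and can capture multi-modal variations in the data by minimizing the negative log-likelihood of the reconstruction given by Eq. 3; when the inputs are binary, this loss takes the cross-entropy form of Eq. 4 (Bengio 2009):

\[ RE = -\log P\big(x \mid f(x)\big) \qquad (3) \]

\[ -\log P\big(x \mid f(x)\big) = -\sum_i \Big[ x_i \log g_i\big(f(x)\big) + (1 - x_i) \log\Big(1 - g_i\big(f(x)\big)\Big) \Big] \qquad (4) \]

where \(g_i(f(x))\) is the ith component of the reconstruction r.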
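A minimal sketch of one forward pass of a single-hidden-layer AE and its binary cross-entropy reconstruction error (the binary-input case described above). The tied decoder weights (the decoder reuses the transpose of the encoder matrix), the example weights and the function names are illustrative assumptions, and no training loop is shown.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def encode(x, W, b):
    """h = f(x): one sigmoid hidden layer; W has one row per hidden unit."""
    return [sigmoid(sum(w * xi for w, xi in zip(row, x)) + bj)
            for row, bj in zip(W, b)]


def decode(h, W, c):
    """r = g(h): tied weights, i.e. the decoder uses the transpose of W."""
    return [sigmoid(sum(W[j][i] * h[j] for j in range(len(h))) + c[i])
            for i in range(len(W[0]))]


def bce_reconstruction_error(x, r, eps=1e-12):
    """-sum_i [x_i log r_i + (1 - x_i) log(1 - r_i)] for binary inputs x."""
    return -sum(xi * math.log(ri + eps) + (1 - xi) * math.log(1 - ri + eps)
                for xi, ri in zip(x, r))


x = [1.0, 0.0, 1.0, 0.0]
W = [[0.5, -0.5, 0.5, -0.5],      # 2 hidden units, 4 inputs (hypothetical weights)
     [-0.3, 0.3, -0.3, 0.3]]
h = encode(x, W, [0.0, 0.0])
r = decode(h, W, [0.0] * 4)
loss = bce_reconstruction_error(x, r)
```

Training would adjust W and the biases to reduce `loss`, for example by backpropagation or, as in the studies surveyed here, by a metaheuristic.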

2.2.2 Restricted Boltzmann machine and deep belief networks

RBMs (Smolensky 1986) (Fig. 5) can build non-linear generative models from unlabeled data to reconstruct the input by learning its probability distribution. The architecture has a visible layer and a hidden layer; every visible unit is connected to every hidden unit, and there are no connections between units in the same layer. The energy of the RBM network is calculated by Eq. 5:

\[ E(\upsilon, h) = -\sum_{i \in \upsilon} b_{\upsilon_i} \upsilon_i - \sum_{j \in h} b_{h_j} h_j - \sum_{i, j} \upsilon_i h_j \omega_{ij} \qquad (5) \]

where \(\upsilon _i\) and \(h_j\) are the states of the ith visible node and the jth hidden node, respectively, \(\upsilon\) is the set of nodes in the input (visible) layer, h is the set of nodes in the hidden layer, and \(b_{\upsilon _i}\) and \(b_{ h_j}\) are the biases of the input layer and the hidden layer, respectively. \(\omega _{ij}\) is the weight of the edge connecting visible node i and hidden node j. The probability of a joint configuration of the nodes is calculated from the energy by Eq. 6:

\[ P(\upsilon, h) = \frac{e^{-E(\upsilon, h)}}{\sum_{\upsilon', h'} e^{-E(\upsilon', h')}} \qquad (6) \]
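For a very small RBM, the energy of Eq. 5 and the probability of Eq. 6 can be checked by brute force. The sketch below assumes binary units and the standard Boltzmann form \(P(\upsilon, h) = e^{-E(\upsilon, h)}/Z\); it enumerates all states to compute the partition function Z, which is only feasible for toy sizes (practical RBM training avoids computing Z). The weights and biases are hypothetical.

```python
import itertools
import math


def energy(v, h, W, b_v, b_h):
    """E(v, h) = -sum_i b_v[i] v_i - sum_j b_h[j] h_j - sum_ij v_i h_j W[i][j]."""
    return (-sum(bi * vi for bi, vi in zip(b_v, v))
            - sum(bj * hj for bj, hj in zip(b_h, h))
            - sum(v[i] * h[j] * W[i][j]
                  for i in range(len(v)) for j in range(len(h))))


def joint_probability(v, h, W, b_v, b_h):
    """P(v, h) = exp(-E(v, h)) / Z, with Z summed over every binary state."""
    Z = sum(math.exp(-energy(vv, hh, W, b_v, b_h))
            for vv in itertools.product((0, 1), repeat=len(b_v))
            for hh in itertools.product((0, 1), repeat=len(b_h)))
    return math.exp(-energy(v, h, W, b_v, b_h)) / Z


W = [[0.5, -0.2], [0.1, 0.3], [-0.4, 0.2]]   # 3 visible x 2 hidden (hypothetical)
b_v, b_h = [0.0, 0.1, -0.1], [0.2, -0.3]
total = sum(joint_probability(v, h, W, b_v, b_h)
            for v in itertools.product((0, 1), repeat=3)
            for h in itertools.product((0, 1), repeat=2))   # sums to 1
```

Low-energy configurations receive high probability, which is why training lowers the energy of configurations that resemble the data.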

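Hinton et al. (2006) showed that deeper networks could be trained efficiently using greedy layer-wise pretraining and proposed DBNs, which are composed of a stack of RBMs with both directed and undirected edges: the top two layers are connected by undirected edges, while the lower layers receive directed, top-down connections. A DBN with \(\ell\) layers models the joint distribution between the observed vector x and the \(\ell\) hidden layers \(h^k\) by Eq. 7:

\[ P\big(x, h^1, \ldots, h^{\ell}\big) = \left( \prod_{k=1}^{\ell - 1} P\big(h^{k-1} \mid h^{k}\big) \right) P\big(h^{\ell - 1}, h^{\ell}\big) \qquad (7) \]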

where \(x = h^0\), \(P(h^{k - 1}|h^k)\) is the visible-given-hidden conditional distribution of the RBM associated with level k of the DBN, and \(P(h^{\ell - 1} ,h^\ell )\) is the joint distribution of the top-level RBM. Samples from the top-level RBM are drawn by running a Gibbs chain. The DBN is trained in a greedy layer-wise fashion.
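The greedy layer-wise procedure can be sketched as follows: each RBM is trained with one-step contrastive divergence (CD-1), a common choice that is assumed here rather than taken from the text, and its hidden-unit probabilities become the training data of the next RBM. Supervised fine-tuning and sampling from the top-level RBM are omitted, and all names, data and settings are illustrative.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def hidden_probs(v, W, b_h):
    """P(h_j = 1 | v) for every hidden unit j."""
    return [sigmoid(b_h[j] + sum(v[i] * W[i][j] for i in range(len(v))))
            for j in range(len(b_h))]


def visible_probs(h, W, b_v):
    """P(v_i = 1 | h) for every visible unit i."""
    return [sigmoid(b_v[i] + sum(h[j] * W[i][j] for j in range(len(h))))
            for i in range(len(b_v))]


def train_rbm(data, n_hidden, epochs=50, lr=0.1, seed=0):
    """Train one binary RBM with one-step contrastive divergence (CD-1)."""
    rng = random.Random(seed)
    n_vis = len(data[0])
    W = [[rng.gauss(0.0, 0.01) for _ in range(n_hidden)] for _ in range(n_vis)]
    b_v, b_h = [0.0] * n_vis, [0.0] * n_hidden
    for _ in range(epochs):
        for v0 in data:
            ph0 = hidden_probs(v0, W, b_h)
            h0 = [1.0 if rng.random() < p else 0.0 for p in ph0]   # sample hidden states
            v1 = visible_probs(h0, W, b_v)                          # mean-field reconstruction
            ph1 = hidden_probs(v1, W, b_h)
            for i in range(n_vis):
                for j in range(n_hidden):
                    W[i][j] += lr * (v0[i] * ph0[j] - v1[i] * ph1[j])
            b_v = [b + lr * (a - r) for b, a, r in zip(b_v, v0, v1)]
            b_h = [b + lr * (p0 - p1) for b, p0, p1 in zip(b_h, ph0, ph1)]
    return W, b_v, b_h


def train_dbn(data, layer_sizes, **kwargs):
    """Greedy layer-wise pretraining: each RBM learns from the layer below it."""
    layers, inputs = [], data
    for n_hidden in layer_sizes:
        W, b_v, b_h = train_rbm(inputs, n_hidden, **kwargs)
        layers.append((W, b_v, b_h))
        inputs = [hidden_probs(v, W, b_h) for v in inputs]   # propagate upward
    return layers


data = [[1, 1, 0, 0], [0, 0, 1, 1], [1, 1, 0, 0], [0, 0, 1, 1]]
layers = train_dbn(data, [3, 2], epochs=20)
```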

2.2.3 Generative adversarial neural networks

GANs (Goodfellow et al. 2014) train two adversarial networks: a generative model G and a discriminative model D. G, a differentiable function represented by a multilayer perceptron, learns the generator's distribution \(p_g\) over data x, while D estimates the probability that a sample comes from the training data rather than from G. A prior on input noise variables \(p_z(z)\) is defined, and G maps each latent code z to the data space as \(G(z; \theta _g)\), where \(\theta _g\) are the multilayer perceptron parameters. The second network, \(D(x; \theta _d)\), aims to assign the correct label to both training examples and samples from G, while G is trained to generate samples that fool D by minimizing \(\log (1 - D(G(z)))\). This is a two-player minimax game with the value function V(G, D) defined by Eq. 8 (Yinka-Banjo and Ugot 2019):

\[ \min_G \max_D V(G, D) = \mathbb{E}_{x \sim p_{data}(x)}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z))\big)\big] \qquad (8) \]

where \(p_{data}\) is the distribution of the training data.

To avoid saturation in the early stages of learning, G can instead be trained to maximize \(\log D(G(z))\) (Goodfellow et al. 2014).
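The value function can be estimated by Monte Carlo averaging over real samples and noise samples. In this sketch, D and G are toy, hypothetical one-dimensional functions standing in for the two multilayer perceptrons, and `generator_loss` contrasts the original objective (minimize \(\log (1 - D(G(z)))\)) with the non-saturating variant (minimize \(-\log D(G(z))\), that is, maximize \(\log D(G(z))\)).

```python
import math
import random


def value_function(D, G, real_samples, noise_samples):
    """Monte Carlo estimate of V(G, D) = E_x[log D(x)] + E_z[log(1 - D(G(z)))]."""
    real_term = sum(math.log(D(x)) for x in real_samples) / len(real_samples)
    fake_term = sum(math.log(1.0 - D(G(z))) for z in noise_samples) / len(noise_samples)
    return real_term + fake_term


def generator_loss(D, G, noise_samples, non_saturating=True):
    """Loss minimised by G: -log D(G(z)) if non_saturating, else log(1 - D(G(z)))."""
    if non_saturating:
        return -sum(math.log(D(G(z))) for z in noise_samples) / len(noise_samples)
    return sum(math.log(1.0 - D(G(z))) for z in noise_samples) / len(noise_samples)


def D(x):
    """Toy discriminator: probability that x is a real sample."""
    return 1.0 / (1.0 + math.exp(-(x - 1.0)))


def G(z):
    """Toy generator mapping noise z to the data space."""
    return 0.5 * z


rng = random.Random(0)
real = [rng.gauss(2.0, 0.5) for _ in range(256)]    # samples standing in for p_data
noise = [rng.gauss(0.0, 1.0) for _ in range(256)]   # samples from p_z
v = value_function(D, G, real, noise)
```

When D outputs 0.5 everywhere (it cannot tell real from generated samples), the estimate equals \(-\log 4\), the value at the global optimum reported by Goodfellow et al. (2014).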

2.3 Recurrent neural networks

RNNs (Rumelhart et al. 1986) are special-purpose networks for processing sequential data, especially time series. The primary function of each layer is introducing memory rather than hierarchical processing. RNNs illustrated in Fig. 6 produce an output at each time step by Eq. 9 and have recurrent connections between hidden units (Eq. 10).

where \(h_t\) denotes the hidden state defined by Eq. 10.

where \(x_t\) and \(y_t\) are the input and output at time t, and f denotes a nonlinear function. The learning phase updates the weights, which determine what information is passed onward or discarded.
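The recurrence can be sketched in pure Python, assuming the common Elman-style parameterization \(h_t = \tanh(W_{xh} x_t + W_{hh} h_{t-1} + b_h)\) with a linear read-out \(y_t = W_{hy} h_t + b_y\); the weight names and values are assumptions for illustration. The example shows how the recurrent connection carries information from an early input to later outputs.

```python
import math


def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def rnn_forward(xs, W_xh, W_hh, W_hy, b_h, b_y):
    """Run an Elman-style RNN over the sequence xs; return outputs and hidden states."""
    h = [0.0] * len(b_h)                                  # initial hidden state h_0
    outputs, states = [], []
    for x in xs:
        # recurrent connection: h_t depends on x_t and on h_{t-1}
        h = [math.tanh(a + r + b)
             for a, r, b in zip(matvec(W_xh, x), matvec(W_hh, h), b_h)]
        y = [o + b for o, b in zip(matvec(W_hy, h), b_y)]   # linear read-out y_t
        states.append(h)
        outputs.append(y)
    return outputs, states


W_xh = [[0.8], [-0.5]]             # 2 hidden units, 1 input feature (hypothetical)
W_hh = [[0.3, 0.1], [0.0, 0.4]]
W_hy = [[1.0, -1.0]]               # 1 output
b_h, b_y = [0.0, 0.0], [0.1]
ys, hs = rnn_forward([[1.0], [0.0], [0.0]], W_xh, W_hh, W_hy, b_h, b_y)
```

Even though the second and third inputs are zero, `ys[2]` differs from the bias-only output because the first input is still stored in the hidden state.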

2.3.1 Long short-term memory

LSTM (Hochreiter and Schmidhuber 1997) is an implementation of the RNN that partly addresses the problems of vanishing gradients and long time delays. LSTM cells (Fig. 7), which replace ordinary hidden units that simply apply an element-wise nonlinearity, contain an internal recurrence (a self-loop) that creates paths along which the gradient can flow for long durations. LSTM can retain information from previous states and can therefore be trained for applications requiring memory or state awareness. It replaces hidden neurons with memory cells in which multiple gating units control the flow of information.

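In Fig. 7, x is the input, h is the hidden state, c is the memory cell state, \(\sigma\) is the sigmoid function, tanh is the hyperbolic tangent function, \(\oplus\) is element-wise summation and \(\otimes\) is element-wise multiplication. The input and output gates regulate read and write access to the cell, and the forget gate learns to reset the memory cell once its contents are no longer useful. The outputs of the gates, the cell state and the hidden state can be given by Eqs. 11–15 (Shrestha and Mahmood 2019):

\[ f_t = \sigma\big(W_f x_t + w_f h_{t-1} + b_f\big) \qquad (11) \]

\[ i_t = \sigma\big(W_i x_t + w_i h_{t-1} + b_i\big) \qquad (12) \]

\[ o_t = \sigma\big(W_o x_t + w_o h_{t-1} + b_o\big) \qquad (13) \]

\[ c_t = f_t \otimes c_{t-1} \oplus i_t \otimes \tanh\big(W_c x_t + w_c h_{t-1} + b_c\big) \qquad (14) \]

\[ h_t = o_t \otimes \tanh(c_t) \qquad (15) \]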

where f, i, o correspond to the forget, input and output gate vectors, W, w and b are weights of input, weights of recurrent output and bias, respectively.
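A single LSTM time step in pure Python, assuming the common formulation without peephole connections: sigmoid forget, input and output gates, a tanh candidate cell value \(\tilde{c}_t\), \(c_t = f_t \otimes c_{t-1} \oplus i_t \otimes \tilde{c}_t\) and \(h_t = o_t \otimes \tanh(c_t)\). The parameter dictionary layout is a hypothetical choice for illustration. The usage shows a forget gate saturated open and an input gate saturated closed, so the cell state is carried over unchanged.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def affine(W, w, b, x, h):
    """W x_t + w h_{t-1} + b, with W the input and w the recurrent weights."""
    return [sum(a * xi for a, xi in zip(W_row, x))
            + sum(a * hi for a, hi in zip(w_row, h)) + bi
            for W_row, w_row, bi in zip(W, w, b)]


def lstm_step(x, h_prev, c_prev, params):
    """One time step; params maps 'f', 'i', 'o', 'c' to (W, w, b) triples."""
    f = [sigmoid(z) for z in affine(*params["f"], x, h_prev)]    # forget gate
    i = [sigmoid(z) for z in affine(*params["i"], x, h_prev)]    # input gate
    o = [sigmoid(z) for z in affine(*params["o"], x, h_prev)]    # output gate
    g = [math.tanh(z) for z in affine(*params["c"], x, h_prev)]  # candidate value
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h, c


# One unit: forget gate saturated open, input gate saturated closed.
params = {"f": ([[0.0]], [[0.0]], [100.0]),
          "i": ([[0.0]], [[0.0]], [-100.0]),
          "o": ([[0.0]], [[0.0]], [100.0]),
          "c": ([[1.0]], [[0.0]], [0.0])}
h, c = lstm_step([5.0], [0.0], [0.7], params)   # the cell keeps c = 0.7
```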

2.4 Recursive neural networks

Recursive NNs are defined over tree structures (Fig. 8); a recurrent NN can be seen as the special case of a recursive NN over a fully skewed tree. A deep recursive NN can be constructed by stacking multiple recursive layers (Irsoy and Cardie 2014).

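Assuming that \(l(\eta )\) and \(r(\eta )\) are the left and right children of a node \(\eta\) in a binary tree, a recursive NN computes the representation \(h_\eta\) at each internal node \(\eta\) with a nonlinear activation function f by Eq. 16:

\[ h_\eta = f\big(W_L h_{l(\eta)} + W_R h_{r(\eta)} + b\big) \qquad (16) \]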

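where \(W_L\) and \(W_R\) are the square weight matrices of the left and right child, respectively, and b is a bias vector. The output at node \(\eta\) is computed from its representation \(h_\eta\), using an output activation function g, by Eq. 17:

\[ y_\eta = g\big(W_o h_\eta + b_o\big) \qquad (17) \]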

where \(W_o\) is the output weight matrix and \(b_o\) is the bias vector to the output layer.

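When each hidden layer lies in a different space, as in stacked deep learners, Irsoy and Cardie (2014) proposed a deep recursive NN that stacks individual recursive layers, as given by Eq. 18:

\[ h_\eta^{(i)} = f\big(W_L^{(i)} h_{l(\eta)}^{(i)} + W_R^{(i)} h_{r(\eta)}^{(i)} + V^{(i)} h_\eta^{(i-1)} + b^{(i)}\big) \qquad (18) \]

where i is the layer index of the multiple stacked layers, \(W_L^{(i)}\) and \(W_R^{(i)}\) are the weight matrices of the left and right child in layer i, \(V^{(i)}\) is the weight matrix between the \((i-1)\)th and ith hidden layers, \(b^{(i)}\) is the bias vector of layer i, and f is a nonlinear activation function.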
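A recursive NN over a binary tree can be sketched as a recursive function: leaves are input vectors and each internal node combines the representations of its two children with shared weights. This sketch assumes tanh as the nonlinearity f and softmax as the output function, uses a single (unstacked) layer, and represents trees as nested Python tuples; these are illustrative choices.

```python
import math


def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def compose(tree, W_L, W_R, b):
    """Representation of a node: leaves are input vectors (lists); an internal node
    is a (left, right) tuple combined as tanh(W_L h_left + W_R h_right + b)."""
    if isinstance(tree, list):
        return tree
    left = compose(tree[0], W_L, W_R, b)
    right = compose(tree[1], W_L, W_R, b)
    return [math.tanh(lv + rv + bv)
            for lv, rv, bv in zip(matvec(W_L, left), matvec(W_R, right), b)]


def output(h, W_o, b_o):
    """Softmax read-out g(W_o h + b_o) for one node."""
    z = [s + bv for s, bv in zip(matvec(W_o, h), b_o)]
    m = max(z)                                   # subtract the max for stability
    e = [math.exp(v - m) for v in z]
    total = sum(e)
    return [v / total for v in e]


W_L = [[0.5, 0.0], [0.0, 0.5]]   # hypothetical 2x2 square weight matrices
W_R = [[0.0, 0.5], [0.5, 0.0]]
b = [0.0, 0.0]
tree = (([1.0, 0.0], [0.0, 1.0]), [1.0, 1.0])    # ((a b) c)
root = compose(tree, W_L, W_R, b)
probs = output(root, [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]], [0.0, 0.0, 0.0])
```

Because the same W_L, W_R and b are applied at every internal node, trees of any shape and size map to a fixed-size representation.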

3 Metaheuristic algorithms

Most real-world optimization problems are hard: their objective functions and constraints may be highly nonlinear, multimodal, discontinuous and in conflict with each other (Karaboga and Akay 2009). Such problems may not be solvable by exact methods in a reasonable time, so approximate algorithms are preferred to find good solutions. Metaheuristic algorithms are a group of approximate algorithms that mimic a natural phenomenon using operators that perform intensification and diversification. They adopt an encoding scheme to represent a solution to the problem and use search operators to systematically change solutions in the search space. These operators try to find high-quality solutions by exploiting current knowledge (intensification) or by exploring new solutions (diversification) to avoid getting stuck in local minima. A good algorithm balances intensification and diversification. To guide the search towards better regions of the search space, algorithms employ greedy and stochastic selection operators.

The metaheuristic algorithms can be divided into two main groups as given in Fig. 9 depending on the number of solutions considered during the search.

3.1 Single solution-based algorithms

The first group, called trajectory methods or single solution-based methods, starts with a single solution and constructs the next solution using a perturbation mechanism. Tabu Search (TS) (Glover 1986) and Simulated Annealing (SA) (Kirkpatrick et al. 1983) are popular examples of this group.

TS, proposed by Glover (1986), applies local search to construct a solution and uses a tabu list as a memory that prohibits revisiting recently visited solutions. In each iteration, a worse solution can be accepted if no improving move is available, which helps the search escape local optima. SA, proposed by Kirkpatrick et al. (1983), mimics metallurgical annealing, in which a material is heated and then slowly cooled to optimize its crystal structure and reduce defects. At each time step, the algorithm generates a random neighbouring solution, which is accepted or rejected according to a probability calculated from thermodynamic principles (the Metropolis criterion): an improving solution is always accepted, while a worse solution is accepted with probability \(\exp(-\Delta /T)\), where \(\Delta\) is the increase in cost and T is the current temperature.
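A minimal sketch of SA for a one-dimensional continuous problem, assuming a geometric cooling schedule \(T \leftarrow \alpha T\) and the Metropolis acceptance rule (a worse move with cost increase \(\Delta\) is accepted with probability \(\exp(-\Delta /T)\)). The neighbourhood function, schedule and parameter values are illustrative assumptions, not prescriptions.

```python
import math
import random


def simulated_annealing(cost, x0, neighbour, t0=1.0, alpha=0.95, steps=2000, seed=0):
    """Minimise `cost` with geometric cooling and the Metropolis acceptance rule."""
    rng = random.Random(seed)
    x, fx = x0, cost(x0)
    best, fbest = x, fx
    t = t0
    for _ in range(steps):
        y = neighbour(x, rng)
        fy = cost(y)
        delta = fy - fx
        # always accept improvements; accept worse moves with probability exp(-delta / t)
        if delta <= 0 or rng.random() < math.exp(-delta / t):
            x, fx = y, fy
            if fx < fbest:
                best, fbest = x, fx
        t = max(t * alpha, 1e-12)   # cool down while keeping t positive
    return best, fbest


best, fbest = simulated_annealing(
    cost=lambda x: (x - 3.0) ** 2,
    x0=-5.0,
    neighbour=lambda x, rng: x + rng.uniform(-0.5, 0.5),
)
```

At high temperature many uphill moves are accepted (diversification); as T decreases the search becomes nearly greedy (intensification).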

3.2 Population-based algorithms

The second group maintains a set of solutions called a population and tries to improve the population collectively. In population-based algorithms, solutions may use information from other solutions in the population, or the next population may be generated by considering the whole population. Evolutionary Computation (EC) algorithms such as Evolution Strategies (ES) (Rechenberg 1965), Evolutionary Programming (EP) (Fogel et al. 1966), Genetic Algorithms (GA) (Holland 1975), Genetic Programming (GP) (Koza 1990) and Differential Evolution (DE) (Storn and Price 1995), and Swarm Intelligence algorithms such as Artificial Bee Colony (ABC) (Karaboga 2005), Particle Swarm Optimization (PSO) (Kennedy and Eberhart 1995) and Ant Colony Optimization (ACO) (Dorigo et al. 1996) are the most popular population-based algorithms.

3.2.1 Evolutionary computation algorithms

EC algorithms mimic natural selection and genetic operations during the search, and they include ES by Rechenberg (1965), EP by Fogel et al. (1966), GA by Holland (1975) and GP by Koza (1990). EC algorithms generate offspring from the solutions in the population using recombination and mutation operators, and the next population is formed by the solutions corresponding to better regions of the search space. DE, proposed by Storn and Price (1995), is an evolutionary algorithm for optimizing functions of real-valued decision variables.
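As an example of an EC algorithm for real-valued variables, the sketch below implements the classic DE/rand/1/bin scheme of Storn and Price: mutation by a scaled difference of two random individuals added to a third, binomial crossover with rate CR, and greedy one-to-one selection. Clipping mutants to the box bounds is one of several possible boundary-handling choices and is an assumption here, as are the parameter values.

```python
import random


def differential_evolution(f, bounds, pop_size=20, F=0.8, CR=0.9, generations=200, seed=0):
    """Minimise f over a box with the classic DE/rand/1/bin scheme."""
    rng = random.Random(seed)
    dim = len(bounds)
    pop = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(pop_size)]
    fit = [f(x) for x in pop]
    for _ in range(generations):
        for k in range(pop_size):
            # mutation: three distinct individuals other than the target k
            a, b, c = rng.sample([j for j in range(pop_size) if j != k], 3)
            j_rand = rng.randrange(dim)   # guarantees at least one mutated gene
            trial = []
            for d in range(dim):
                if rng.random() < CR or d == j_rand:        # binomial crossover
                    lo, hi = bounds[d]
                    v = pop[a][d] + F * (pop[b][d] - pop[c][d])
                    v = min(max(v, lo), hi)                 # clip to the box
                else:
                    v = pop[k][d]
                trial.append(v)
            ft = f(trial)
            if ft <= fit[k]:                                # greedy one-to-one selection
                pop[k], fit[k] = trial, ft
    best = min(range(pop_size), key=fit.__getitem__)
    return pop[best], fit[best]


def sphere(x):
    return sum(v * v for v in x)


best, fbest = differential_evolution(sphere, [(-5.0, 5.0)] * 3)
```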

3.2.2 Swarm intelligence algorithms

Swarm intelligence algorithms mimic the collective behaviour of creatures, in which a global response emerges from the local interactions of agents in a swarm. They rely on self-organization and division of labour. Self-organization is characterized by positive feedback that intensifies better patterns, negative feedback that abandons sufficiently exploited patterns, multiple interactions between agents, and fluctuations that bring diversity to the swarm. PSO, developed by Kennedy and Eberhart (1995), simulates the flocking of birds and schooling of fish. The algorithm updates the velocity and position of each particle using the particle's own experience and the global information of all particles. ACO, proposed by Dorigo et al. (1996), is an analogy of ants finding paths between the nest and a food source using pheromone trails. In each iteration, ants move between nodes with probabilities proportional to the amount of pheromone on the connecting edges. An edge visited by more ants receives more pheromone, and a higher amount of pheromone attracts more ants, while pheromone evaporates over time. ABC, proposed by Karaboga (2005), is another well-known swarm intelligence algorithm that simulates the foraging behaviour of honey bees. Three types of bees are allocated to the foraging task: employed bees, onlooker bees and scout bees, which all try to maximize the amount of nectar unloaded at the hive. There are also many other metaheuristics, as well as modifications of these algorithms.
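The velocity and position update described for PSO can be sketched as a global-best PSO with an inertia weight w (a widely used variant introduced after the original 1995 algorithm). The coefficient values, the position clipping and the test function are illustrative assumptions.

```python
import random


def pso(f, bounds, n_particles=20, iters=200, w=0.7, c1=1.5, c2=1.5, seed=0):
    """Minimise f with a basic global-best PSO (inertia weight w)."""
    rng = random.Random(seed)
    dim = len(bounds)
    pos = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]                  # each particle's own best position
    pbest_f = [f(p) for p in pos]
    g = min(range(n_particles), key=pbest_f.__getitem__)
    gbest, gbest_f = pbest[g][:], pbest_f[g]     # best position found by the swarm
    for _ in range(iters):
        for k in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                vel[k][d] = (w * vel[k][d]
                             + c1 * r1 * (pbest[k][d] - pos[k][d])   # self-experience
                             + c2 * r2 * (gbest[d] - pos[k][d]))     # global information
                lo, hi = bounds[d]
                pos[k][d] = min(max(pos[k][d] + vel[k][d], lo), hi)
            fk = f(pos[k])
            if fk < pbest_f[k]:
                pbest[k], pbest_f[k] = pos[k][:], fk
                if fk < gbest_f:
                    gbest, gbest_f = pos[k][:], fk
    return gbest, gbest_f


def sphere(x):
    return sum(v * v for v in x)


best, fbest = pso(sphere, [(-5.0, 5.0)] * 2)
```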

4 Automated DNN search by metaheuristics

When solving a specific problem with a DNN, several design decisions influence the performance of the DNN. Selecting the hyper-parameters of a DNN, training a DNN to find the optimal weight and bias values, deciding the optimal architecture parameters of a DNN, and reducing the dimensionality at the feature representation level of a DNN can all be considered optimization problems, and metaheuristic algorithms can be used to solve them. In each evolution cycle, the current solution(s) are assigned fitness values, and new solution(s) are generated by reproduction operators. The selection operators of metaheuristics guide the search to promising regions of the search space based on the information carried by the solutions, which makes metaheuristics efficient tools for solving complex problems.

4.1 Problem statements

The optimization problems encountered in DNN design and training are formulated in the subsections.

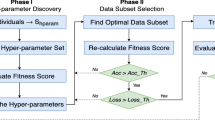

4.1.1 Hyper-parameter optimization

Most DL models have model-specific hyper-parameters that have to be tuned before the model starts the learning phase. Some of these parameters are related to the macro structure of the DNN (outer level), while others are cell- or block-level parameters (inner level). The values of these hyper-parameters strongly influence the behaviour and performance of the trained network. Assuming that \(\lambda _h\) is a hyper-parameter vector, hyper-parameter optimization can be defined by Eq. 19 (Li et al. 2017):

\[ \lambda_h^{*} = \arg\min_{\lambda_h \in \Lambda_h} \mathrm{Err}\big(x_{test};\ \theta = A_{\lambda_h}(x_{train})\big) \qquad (19) \]

where Err is the error on the test set, \(\Lambda _h\) is the hyper-parameter space, \(A_{\lambda _h}\) is the learning algorithm configured by \(\lambda _h\), \(\theta\) represents the model parameters it learns, and \(x_{train}\) and \(x_{test}\) are the training and test datasets, respectively. The optimal hyper-parameter values are those that minimize the error of the model.

Running different configurations manually and evaluating them to decide the best parameter setting is labour-intensive and time-consuming, especially when the search space is high-dimensional. Moreover, a hyper-parameter setting tuned by an experienced user is application-dependent. Therefore, automatic hyper-parameter optimization is needed to reduce computational cost and user involvement. Steepest gradient descent is not suitable for optimizing hyper-parameter configurations, because many hyper-parameters are integer or categorical and the error is generally not differentiable with respect to them. Although Grid Search, Random Search (RS) and Bayesian Optimization are popular for configuring hyper-parameters, they become impractical as the DNN model gets deeper and more complicated, or when the number of parameters and the complexity of the problem are high (Nakisa et al. 2018). Some studies evaluate the hyper-parameters on a low-dimensional model and apply the resulting values to the real model (Hinz et al. 2018). Metaheuristic algorithms have been used to obtain faster convergence and to find a near-optimal configuration in a reasonable time. They systematically search the hyper-parameter space by evolving the population at each iteration.
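To make the search over a mixed hyper-parameter space concrete, the sketch below evolves configurations containing a real, an integer and a categorical gene with a simple GA (elitism, binary tournament selection, uniform crossover and per-gene mutation). The search space and `validation_error` are hypothetical: the latter is a cheap stand-in for training a DNN with the configuration and measuring its error. In practice each evaluation would be a full (or shortened) training run, which is why evaluation cost dominates.

```python
import random

# Hypothetical search space: one real, one integer and one categorical hyper-parameter.
SPACE = {
    "log10_lr": (-5.0, -1.0),                   # real, searched on a log scale
    "n_layers": (1, 8),                         # integer
    "activation": ["relu", "tanh", "sigmoid"],  # categorical
}


def validation_error(cfg):
    """Hypothetical stand-in for 'train the DNN with cfg and return its error'."""
    penalty = {"relu": 0.0, "tanh": 0.05, "sigmoid": 0.1}[cfg["activation"]]
    return (cfg["log10_lr"] + 3.0) ** 2 + 0.1 * abs(cfg["n_layers"] - 4) + penalty


def random_config(rng):
    return {"log10_lr": rng.uniform(*SPACE["log10_lr"]),
            "n_layers": rng.randint(*SPACE["n_layers"]),
            "activation": rng.choice(SPACE["activation"])}


def mutate(cfg, rng, rate=0.3):
    child = dict(cfg)
    if rng.random() < rate:   # Gaussian step on the real gene, clipped to its range
        lo, hi = SPACE["log10_lr"]
        child["log10_lr"] = min(max(child["log10_lr"] + rng.gauss(0, 0.5), lo), hi)
    if rng.random() < rate:   # +/-1 step on the integer gene
        lo, hi = SPACE["n_layers"]
        child["n_layers"] = min(max(child["n_layers"] + rng.choice((-1, 1)), lo), hi)
    if rng.random() < rate:   # resample the categorical gene
        child["activation"] = rng.choice(SPACE["activation"])
    return child


def genetic_search(pop_size=20, generations=30, seed=0):
    rng = random.Random(seed)
    pop = [random_config(rng) for _ in range(pop_size)]
    for _ in range(generations):
        elite = sorted(pop, key=validation_error)[: pop_size // 4]  # keep the best quarter
        children = []
        while len(elite) + len(children) < pop_size:
            # binary tournament selection of two parents
            p1 = min(rng.sample(pop, 2), key=validation_error)
            p2 = min(rng.sample(pop, 2), key=validation_error)
            child = {k: (p1 if rng.random() < 0.5 else p2)[k] for k in SPACE}  # uniform crossover
            children.append(mutate(child, rng))
        pop = elite + children
    return min(pop, key=validation_error)


best = genetic_search()
```

Elitism guarantees that the best configuration found never gets worse from one generation to the next.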

4.1.2 Training DNNs

Although deep learning models can be successfully applied to many problems, training them is complicated for several reasons. Conventional approaches employ derivative-based methods such as stochastic gradient descent, conjugate gradient and Hessian-free optimization to determine the optimal values of weights and biases. These methods suffer from premature convergence and from the vanishing (or exploding) gradient problem: because gradients are computed layer by layer in a cascading manner, they can decrease or increase exponentially with depth. Since the weights are updated based on the gradient values, training may stagnate or overshoot, especially when saturating activation functions with a small output range are employed, and hence the solutions may not converge to the optimum. Gradient-based algorithms can also get stuck at saddle points arising from flat regions of the search space. Besides, steep edges in the error surface lead to overshooting during weight updates. It is also hard to parallelize derivative-based training algorithms and distribute them over computing facilities to reduce the computational cost. Therefore, metaheuristic algorithms have been introduced for the training of deep learning methods. Training a DNN can be expressed as an optimization problem defined by Eq. 20:

\[ (W^{*}, b^{*}) = \arg\min_{W, b} \frac{1}{N} \sum_{n=1}^{N} \mathcal{L}\big(f(x_n; W, b), y_n\big) \qquad (20) \]

where W and b are the weights and biases of the network, \(f(x_n; W, b)\) is the network output for the nth of N training samples, \(y_n\) is its target, and \(\mathcal{L}\) is a loss function such as the squared error or cross-entropy.
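Because the training problem only needs loss values, weights can be optimized without gradients. The sketch below trains a hypothetical 2-2-1 network on XOR with a simple elitist \((1+\lambda)\) evolution strategy using a fixed mutation step size; practical ES variants adapt the step size (for example, with the 1/5 success rule or CMA-ES), and the network size and settings here are illustrative.

```python
import math
import random

XOR = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def forward(w, x):
    """2-2-1 network whose 9 weights and biases are stored in the flat vector w."""
    h1 = math.tanh(w[0] * x[0] + w[1] * x[1] + w[2])
    h2 = math.tanh(w[3] * x[0] + w[4] * x[1] + w[5])
    return sigmoid(w[6] * h1 + w[7] * h2 + w[8])


def mse(w):
    return sum((forward(w, x) - y) ** 2 for x, y in XOR) / len(XOR)


def one_plus_lambda_es(n_weights=9, lam=10, sigma=0.5, generations=300, seed=1):
    """Gradient-free training: create lam Gaussian mutants, keep the best if not worse."""
    rng = random.Random(seed)
    parent = [rng.gauss(0.0, 1.0) for _ in range(n_weights)]
    parent_loss = mse(parent)
    for _ in range(generations):
        offspring = [[wi + rng.gauss(0.0, sigma) for wi in parent] for _ in range(lam)]
        losses = [mse(child) for child in offspring]
        k = min(range(lam), key=losses.__getitem__)
        if losses[k] <= parent_loss:           # elitist (1 + lambda) replacement
            parent, parent_loss = offspring[k], losses[k]
    return parent, parent_loss


weights, final_loss = one_plus_lambda_es()
```

The `lam` loss evaluations in each generation are independent of each other, which is what makes this kind of training easy to parallelize.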

4.1.3 Architecture optimization (architecture search)

Deep learning models require an application-dependent architecture, which affects model accuracy. The architecture is described by model-specific parameters such as the parameters of the convolutional layers, the parameters of the fully-connected layers and the parameters of the training process itself. The problem of finding an optimal CNN architecture (Eq. 21) can be considered an expensive combinatorial optimization problem.

where \(i=1,2,\ldots ,LN\), LN is the number of layers, \(\lambda _N\) is the global parameter set of the network, \(\lambda _{L_i}\) is the parameter set of the ith layer, and \(\Lambda _N\) and \(\Lambda _{L_i}\) are the parameter spaces for the global and layer-wise parameters, respectively.

If a compact model is adopted, accuracy may be adversely affected, whereas a complex model increases training time and computational cost. Additionally, when the network is expanded with a large number of computation units, it may overfit to outliers in the data. Hence, there is a trade-off between generalization capability and computational complexity. In one-shot models (Brock et al. 2017; Bender et al. 2018), a single large network that represents a wide variety of architectures is trained, and weights are shared between sub-models instead of training separate models from scratch. Architecture optimization is hard because it involves optimizing many real, integer, binary and categorical variables. Depending on the values of the outer-level parameters, the number of inner-level parameters to be optimized changes; therefore, the search space has a variable dimension. There is no analytic approach to automating the design of DL model architectures, and since there are many possible configurations, an exhaustive search is too expensive. Therefore, researchers have employed metaheuristics to automatically search for the optimal architecture that maximizes the performance metrics defined in Sect. 4.6.
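The variable-dimension search space can be illustrated with a hypothetical layer-wise genome: an outer-level gene (the depth) determines how many inner-level genes (filters and kernel size per convolutional layer) exist, and mutation may add, remove or modify a layer. The search space, bounds and operator probabilities are assumptions for illustration; evaluating each genome would require building and training the corresponding CNN.

```python
import random

# Hypothetical search space for a chained CNN.
FILTERS = [16, 32, 64, 128]
KERNELS = [3, 5, 7]
MAX_CONV = 6


def random_layer(rng):
    return {"filters": rng.choice(FILTERS), "kernel": rng.choice(KERNELS)}


def random_architecture(rng):
    """The outer-level gene (depth) decides how many inner-level genes exist."""
    depth = rng.randint(1, MAX_CONV)
    return {"lr": 10 ** rng.uniform(-4, -2),                    # global parameter
            "conv": [random_layer(rng) for _ in range(depth)]}   # layer-wise parameters


def mutate_architecture(arch, rng):
    """Add, remove or modify one layer, so the genome length can change."""
    child = {"lr": arch["lr"], "conv": [dict(layer) for layer in arch["conv"]]}
    op = rng.choice(["add", "remove", "modify"])
    if op == "add" and len(child["conv"]) < MAX_CONV:
        child["conv"].insert(rng.randint(0, len(child["conv"])), random_layer(rng))
    elif op == "remove" and len(child["conv"]) > 1:
        child["conv"].pop(rng.randrange(len(child["conv"])))
    else:                                   # modify (also the fallback at the bounds)
        layer = rng.choice(child["conv"])
        key = rng.choice(["filters", "kernel"])
        layer[key] = rng.choice(FILTERS if key == "filters" else KERNELS)
    return child


def n_decision_variables(arch):
    """1 global gene plus 2 genes per convolutional layer."""
    return 1 + 2 * len(arch["conv"])


rng = random.Random(0)
arch = random_architecture(rng)
depths = []
for _ in range(200):
    arch = mutate_architecture(arch, rng)
    depths.append(len(arch["conv"]))
```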

4.1.4 Optimization at feature representation level

Metaheuristic algorithms have been used at the feature representation level of deep models and for dimensionality reduction. They find a subset of features by maximizing a fitness function that measures how well the subset \(F_s\) represents the whole set F (Eq. 22):

\[ F_s^{*} = \arg\max_{F_s \subseteq F} I(F_s, F) \qquad (22) \]

where F is the feature set, \(F_s\) is a subset of F and I is a fitness function such as mutual information. The selected features are then fed to the input layer of the deep model.
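Eq. 22 is also missing from the extracted text; a common way to state this kind of problem is \(F_s^{*} = \arg \max _{F_s \subseteq F} I(F_s)\). The sketch below searches that space with a simple (1+1) evolutionary algorithm over bit masks. As a hypothetical fitness it uses the empirical (plug-in) mutual information between the selected discrete feature columns and a class label, minus a small penalty per selected feature; the penalty, the label-based fitness and the toy data are assumptions made for illustration.

```python
import math
import random
from collections import Counter


def mutual_information(xs, ys):
    """Empirical mutual information (in bits) between two discrete sequences."""
    n = len(xs)
    pxy, px, py = Counter(zip(xs, ys)), Counter(xs), Counter(ys)
    return sum((c / n) * math.log2(c * n / (px[x] * py[y]))
               for (x, y), c in pxy.items())


def fitness(mask, rows, labels, penalty=0.05):
    """Hypothetical fitness: MI(selected columns; label) minus a size penalty."""
    if not any(mask):
        return 0.0
    projected = [tuple(v for v, keep in zip(row, mask) if keep) for row in rows]
    return mutual_information(projected, labels) - penalty * sum(mask)


def one_plus_one_ea(rows, labels, iters=200, seed=0):
    """(1+1)-EA: flip each bit with probability 1/d, keep the child if not worse."""
    rng = random.Random(seed)
    d = len(rows[0])
    best = [rng.random() < 0.5 for _ in range(d)]
    best_fit = fitness(best, rows, labels)
    for _ in range(iters):
        child = [(not b) if rng.random() < 1.0 / d else b for b in best]
        child_fit = fitness(child, rows, labels)
        if child_fit >= best_fit:
            best, best_fit = child, child_fit
    return best, best_fit
```

On a toy dataset where the label copies the first feature and the other two features are independent noise, the search keeps only the first feature. In a deep learning pipeline, the columns kept by `best` would form the input layer.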

4.2 Representations used in metaheuristics for encoding DNN parameters

Encoding converts the network parameters or structure into the solution representation of the metaheuristic, so that the reproduction operators can act on the solutions and generate candidate solutions. Adopting an efficient representation and encoding strategy is a critical design issue because the search operators are chosen according to the selected encoding, and both affect the performance of the metaheuristic and of the resulting DNN. The suitable representation depends on how the DNN optimization is handled. If the network topology is fixed and only the hyper-parameters or weights are optimized, a fixed-length representation can be employed. However, when the topology evolves during the search, a variable-length representation is required to encode the larger or smaller networks constructed by the search operators. Network encodings can be divided into three main groups: direct, indirect and implicit encoding. Depending on the encoding type, solutions can be represented by bit strings, real-valued vectors, index vectors for categorical data, adjacency matrices, context-free grammars, directed cyclic graphs or trees.

In direct encoding, the genotype specifies the network (the phenotype) explicitly, so the genotype-phenotype mapping is straightforward. There are a variety of direct encoding types, including, but not limited to, connection-based, cell-based, layer-based, module-based, pathway-based, block-based and operation-based encodings (Fekiač et al. 2011; Kassahun et al. 2007). An example of direct encoding for a chained CNN architecture and its hyper-parameters is shown in Fig. 10.

- Connection-based: in a predetermined network structure, each connection can be switched on or off by a single bit, or the weights can be represented by bit strings or real values. If the connectivity of a network with n neurons is represented by a matrix, the matrix has \(n^2\) entries, which can cause scalability problems. After reproduction, the solutions may be infeasible and require repair mechanisms.

- Cell-based: the individual contains all the information about the nodes and their connectivity. A list can contain the nodes and their connections, or a tree can represent the nodes and connection weights. The complete architecture is constructed by stacking the cells according to a predefined rule (Koza and Rice 1991; Schiffmann 2000; Suganuma et al. 2017; Pham et al. 2018; Zoph et al. 2018; Real et al. 2019).

- Layer-based: the individual encodes the parameters of each layer together with the global network parameters (Jain et al. 2018; Baldominos et al. 2019; Mattioli et al. 2019; Sun et al. 2019b; Pandey and Kaur 2018; Martín et al. 2018).

- Module-based: this can be seen as a special case of cell-based representation. Because some modules are repeated in networks such as GoogleNet and ResNet, the modules and the blueprints are encoded in separate chromosomes. The blueprint chromosomes correspond to a graph of nodes, each pointing to a specific module, while each module chromosome corresponds to a small network. Blueprint and module chromosomes are assembled together to construct a large network (Miikkulainen et al. 2017).

- Pathway-based: a set of paths represented by a context-free grammar describes the paths from the inputs to the outputs. This encoding is particularly suited to recurrent networks (Jacob and Rehder 1993; Cai et al. 2018a). Especially for multi-branch structures such as Inception models (Szegedy et al. 2016), ResNets (He et al. 2016) and DenseNets (Huang et al. 2017), path-based representation and evolution modify the path topologies in the network while maintaining functionality by means of weight reuse. A bidirectional tree-structured representation can be used for efficient exploration (Cai et al. 2018a) (Fig. 11).

- Block-based: in block-based networks, two-dimensional arrays hold different internal configurations depending on the structure settings and weights (Zhong et al. 2018; Junior and Yen 2019; Wang et al. 2019b). Sun et al. (2018a) proposed a block-based definition of CNN architectures composed of ResNet blocks, DenseNet blocks and pooling layer units.

- Operation-based: phases are encoded by a directed acyclic graph describing the operations within a phase, such as convolution, pooling, batch normalization or a sequence of operations. Skip connections are introduced to bypass some blocks. The same phases are not repeated in network construction (Xie and Yuille 2017; Lu et al. 2019).

- Multi-level encoding: two or more encoding types can be used jointly to represent structures, nodes, pathways and connections. Stanley and Miikkulainen (2002) represented augmenting topologies with node genes and connection genes. Each connection gene refers to the two node genes being connected and specifies the in-node, the out-node, the weight of the connection, whether or not the connection gene is expressed (an enable bit), and an innovation number, which allows corresponding genes to be found during crossover, as shown in Fig. 12.

Transforming a single layer to a tree-structured motif via path-level transformation operations (Cai et al. 2018a)

Augmenting topologies encoding (Stanley and Miikkulainen 2002)
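The role of the innovation number can be illustrated with a simplified sketch of NEAT-style connection genes. It is not the full method of Stanley and Miikkulainen (2002): node genes, the rule for re-enabling disabled genes and speciation are omitted. Genes are aligned by innovation number; matching genes are inherited at random from either parent, while disjoint and excess genes are taken from the fitter parent.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class ConnectionGene:
    in_node: int
    out_node: int
    weight: float
    enabled: bool    # the "enable bit"
    innovation: int  # historical marking used to align genes


def align(parent_a, parent_b):
    """Group innovation numbers into matching genes and genes unique to each parent."""
    a = {g.innovation for g in parent_a}
    b = {g.innovation for g in parent_b}
    return sorted(a & b), sorted(a - b), sorted(b - a)


def crossover(fitter, other, rng):
    """Matching genes come from either parent; unmatched ones from the fitter parent."""
    other_by_innovation = {g.innovation: g for g in other}
    child = []
    for gene in sorted(fitter, key=lambda g: g.innovation):
        partner = other_by_innovation.get(gene.innovation)
        child.append(rng.choice([gene, partner]) if partner is not None else gene)
    return child
```

Because alignment uses historical markings rather than gene positions, two genomes of different sizes can be recombined without first analysing their topologies.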

In indirect encoding, the network (phenotype) is encoded as a compact genotype in a smaller search space, and the phenotype is generated from the genotype by production rules. L-systems, cellular encoding, developmental and generative encoding, and connective compositional pattern producing networks are examples of indirect encoding.

- L-system: an L-system is a mathematical model of natural growth (Lindenmayer 1968). Starting from an initial string, grammar production rules are applied repeatedly in rewriting steps, information is exchanged between neighboring cells as the structure grows, and the result is interpreted as a neural network.

- Cellular encoding: cellular encoding is similar to an L-system in that it relies on grammar-based production rules. It starts from an initial cell and applies graph-grammar rewriting until the network contains only terminals (Gruau 1994).

- Developmental and generative encoding: this encoding mimics the mapping from DNA to a mature phenotype through a growth process in which genes can be reactivated during development. Connectivity patterns are generated by applying suitable activation functions that produce repetition-with-variation motifs (Gauci and Stanley 2007).

- Connective compositional pattern producing networks: these exploit geometry and represent connectivity patterns as functions of Cartesian space (Stanley et al. 2009).

Another group is implicit encoding, in which a network is represented by a biological gene regulatory network model (Floreano et al. 2008) or by the parameters of a Gaussian distribution from which the weights are assumed to be sampled (Sun et al. 2019a).

When designing a search space, many architectures can be represented by a single one-shot model that acts as a super-graph of DNN components. This template network is trained once, and its accuracy on each sub-architecture is used to predict that architecture's validation accuracy. The pretrained one-shot model is used to evaluate the candidate architectures, and the best ones are re-trained from scratch (Bender et al. 2018). An example of a one-shot model is shown in Fig. 13.

Diagram of a one-shot architecture (Bender et al. 2018)

Representations can also be grouped into flat and hierarchical representations (Liu et al. 2017b).

- Flat Representation: each architecture \(A=\operatorname{assemble}(G,o)\) is represented as a single-source, single-sink directed acyclic graph in which each node corresponds to a feature map and each edge is associated with one of the available primitive operators \(o = \{o_1,o_2,\ldots \}\), such as convolution or pooling. G is the adjacency matrix, where \(G_{ij}=k\) if operation \(o_k\) is performed between nodes i and j. To compute the feature map of a node, the feature maps of its predecessor nodes are merged, either by depth-wise concatenation or by element-wise addition.

- Hierarchical Representation: in this representation there are several motifs at different levels of the hierarchy. Assume that the hierarchy has L levels and that the \(\ell\)th level includes \(M_\ell\) motifs. Lower-level motifs are the operations used to construct higher-level motifs. The lowest level, \(\ell =1\), contains a set of primitive operations, while the highest level, \(\ell =L\), corresponds to the full architecture as a composition of the motifs of the previous levels (Liu et al. 2017b, 2018). The motifs at level \(\ell\) are defined recursively from the operations at level \(\ell -1\) as \(o_m^{(\ell )} = \operatorname{assemble}\left(G_m^{(\ell )}, o^{(\ell - 1)}\right), \forall \ell = 2, \ldots ,L\), where \(o^{(\ell - 1)} = \left\{ o_1^{(\ell - 1)}, o_2^{(\ell - 1)}, \ldots , o_{M_{\ell - 1}}^{(\ell - 1)} \right\}\) (Liu et al. 2017b). An example of this operation is shown in Fig. 14.

An example of how level-1 primitive operations are merged into a level-2 motif (Liu et al. 2017b)
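The recursive `assemble` operation can be sketched with toy stand-ins. In the sketch below, scalars play the role of feature maps, element-wise addition is the merge, and three lambda primitives replace real operations such as convolution or pooling; all of these are illustrative assumptions. `G[i][j] = k` (k ≥ 1) means that operation k is applied from node j to node i (j < i), and 0 means no edge; node 0 is the source and the last node is the sink.

```python
from typing import Callable, List, Sequence

Op = Callable[[float], float]


def assemble(G: Sequence[Sequence[int]], ops: Sequence[Op]) -> Op:
    """Turn an adjacency matrix over `ops` into a single new operation (a motif)."""
    n = len(G)

    def motif(x: float) -> float:
        outs: List[float] = [x]  # output of the source node
        for i in range(1, n):
            inputs = [ops[G[i][j] - 1](outs[j]) for j in range(i) if G[i][j] > 0]
            outs.append(sum(inputs))  # merge by element-wise addition
        return outs[-1]  # output of the sink node

    return motif


# Level 1: hypothetical primitive operations.
level1: List[Op] = [lambda x: x, lambda x: 2 * x, lambda x: x + 1]
# Level 2: motifs assembled from level-1 primitives.
motif_a = assemble([[0, 0, 0], [2, 0, 0], [1, 3, 0]], level1)  # computes 3x + 1
motif_b = assemble([[0, 0], [3, 0]], level1)                   # computes x + 1
# Level 3: the full "architecture" assembled from level-2 motifs.
top = assemble([[0, 0, 0], [1, 0, 0], [0, 2, 0]], [motif_a, motif_b])
```

Since every motif returned by `assemble` is itself an operation, the same function builds each level from the one below, mirroring the recursion \(o_m^{(\ell )} = \operatorname{assemble}(G_m^{(\ell )}, o^{(\ell - 1)})\).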

A problem related to network encoding is that multiple different genotypes can be mapped to the same phenotype. This is called the competing conventions problem, and it can mislead the search process (Stanley and Miikkulainen 2002; Hoekstra 2011).
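A minimal example of competing conventions, assuming a hypothetical encoding of a one-hidden-layer ReLU network as a list of hidden units, each stored as an (input weight, output weight) pair: permuting the units yields a different genotype with exactly the same function, and naive one-point crossover between the two can duplicate one unit and lose the other.

```python
import math


def forward(genotype, x):
    """One-hidden-layer ReLU network; each hidden unit is (w_in, w_out)."""
    return sum(w_out * max(0.0, w_in * x) for w_in, w_out in genotype)


g1 = [(1.0, 2.0), (-0.5, 3.0)]
g2 = [(-0.5, 3.0), (1.0, 2.0)]  # same hidden units, different order

# One-point crossover at position 1 without aligning the units.
child = g1[:1] + g2[1:]  # both genes describe the same unit


def same_function(a, b, xs=(-2.0, -1.0, 0.0, 1.0, 2.0)):
    return all(math.isclose(forward(a, x), forward(b, x)) for x in xs)
```

Here `g1` and `g2` behave identically, yet their offspring no longer responds to negative inputs because the second unit has been lost.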

4.3 Evolutionary operators used for evolving DNN architecture and parameters

For neural architecture search, the initial solutions of a metaheuristic can be generated randomly, by applying a large number of mutations to a trivial genotype (Liu et al. 2017b), by a constructive heuristic (Lorenzo and Nalepa 2018) or a rule (Zoph and Le 2016), or by morphing networks known a priori. Elsken et al. (2018) and Real et al. (2017) inherit knowledge from a parent network by means of network morphism and obtain a speed-up compared to random initialization. To find optimal parameters in one-shot or separate models, initial solutions are generated in real or discrete space depending on the parameter type. To evolve the solutions and guide the search towards the optimum, operators compatible with the representation, such as arithmetic and binary crossover and mutation operators, can be used during the search.

Crossover combines the information of two or more individuals to create new networks or parameter sets, exploiting the information in the architecture space (Miikkulainen et al. 2017). It enables information sharing among the solutions in the population. Single-point crossover, n-point crossover, uniform crossover, shuffle crossover, arithmetic crossover, flat crossover, unimodal normal distribution crossover, fuzzy connective based crossover, simplex crossover, average bound crossover, geometrical crossover, partially mapped crossover, order-based crossover and hybrid crossover operators (Lim et al. 2017) can be used to produce new solutions that exploit the information in the current solutions. When an integer representation is adopted, position-based, edge-based, order-based, subset-based, graph partition and swap-based crossover operators can be used, whereas swap, semantic, cut and merge, matrix addition, distance-based and probability-based operators are suitable when trees are used to represent solutions (Pavai and Geetha 2016). Although the crossover operator is very helpful for numeric and binary problems, it has been shown not to provide significant improvement over the mutation operator in combinatorial problems (Osaba et al. 2014; Salih and Moshaiov 2016). For variable-size chromosomes, Sun et al. (2019a) proposed unit alignment and unit restore, which align the chromosomes to the top according to their order before reproduction and restore them afterwards.
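Three of the listed operators are sketched below for fixed-length chromosomes, for example a vector of encoded hyper-parameters. The sketch is generic and not tied to any cited study. One-point and uniform crossover only exchange genes, so they also apply to binary and integer encodings; arithmetic crossover blends genes and is meaningful only for real-valued ones.

```python
import random


def one_point(a, b, rng):
    """Swap the tails of two parents after a random cut point."""
    cut = rng.randint(1, len(a) - 1)
    return a[:cut] + b[cut:], b[:cut] + a[cut:]


def uniform(a, b, rng, p=0.5):
    """Swap each gene independently with probability p."""
    c1, c2 = [], []
    for x, y in zip(a, b):
        if rng.random() < p:
            x, y = y, x
        c1.append(x)
        c2.append(y)
    return c1, c2


def arithmetic(a, b, rng):
    """Children are complementary convex combinations of the parents."""
    alpha = rng.random()
    c1 = [alpha * x + (1 - alpha) * y for x, y in zip(a, b)]
    c2 = [(1 - alpha) * x + alpha * y for x, y in zip(a, b)]
    return c1, c2
```

In every operator above, the two children jointly preserve the parents' genetic material at each position: exactly for the exchanging operators, and in sum for arithmetic crossover.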

Mutation operators can serve different purposes during evolution, mimicking the actions of a human designer, including layer deepening, layer widening, kernel widening, adding a branch layer, inserting an SE-skip connection, inserting a dense connection (Chen et al. 2015a; Wistuba 2018; Zhu et al. 2019), altering the learning rate, resetting weights, inserting a convolution, removing a convolution, altering the stride, altering the number of channels, changing the filter size, inserting a one-to-one identity connection, adding a skip connection and removing a skip connection (Real et al. 2017). Cai et al. (2018a) presented an RNN controller that iteratively generates transformation operations based on the current network topology and is trained with reinforcement learning.
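A few of the designer-like mutations listed above are sketched below on a hypothetical genotype: a dictionary holding a learning rate and a list of convolutional layers. The genotype layout and the allowed values are assumptions for illustration, and the operators only edit the encoding; they do not transfer trained weights as network morphisms would.

```python
import copy
import random


def insert_conv(g, rng):
    pos = rng.randint(0, len(g["layers"]))
    g["layers"].insert(pos, {"filters": rng.choice([16, 32, 64]), "kernel": 3, "stride": 1})


def remove_conv(g, rng):
    if len(g["layers"]) > 1:  # keep at least one layer so the network stays valid
        g["layers"].pop(rng.randrange(len(g["layers"])))


def alter_stride(g, rng):
    layer = rng.choice(g["layers"])
    layer["stride"] = 2 if layer["stride"] == 1 else 1


def change_filter_size(g, rng):
    layer = rng.choice(g["layers"])
    layer["kernel"] = rng.choice([1, 3, 5, 7])


def alter_learning_rate(g, rng):
    g["learning_rate"] *= rng.choice([0.5, 2.0])


MUTATIONS = [insert_conv, remove_conv, alter_stride, change_filter_size, alter_learning_rate]


def mutate(parent, rng):
    """Apply one randomly chosen mutation to a copy; the parent is left unchanged."""
    child = copy.deepcopy(parent)
    rng.choice(MUTATIONS)(child, rng)
    return child
```

Copying the parent before mutating matters in steady-state schemes such as that of Real et al. (2017), where the parent remains in the population after producing offspring.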

4.4 Selection operators and diversity preserving

The selection operator in evolutionary algorithms is analogous to natural selection, which leads to the survival of the fittest individuals. During evolution, the genetic information of the best solutions is given a higher chance of being transferred to the next generations through reproduction. A very strong selection that considers only the fittest solutions can cause genetic drift and stagnation in the population due to reduced diversity. Although a weak selection can maintain diversity in the population, it may slow down convergence. There are different types of selection operators. Some are used to sample the individuals to be reproduced, while others determine which solutions survive into the next generation (Real et al. 2017, 2019; Liu et al. 2017b), i.e., \(p[t + 1]= s(v(p[t]))\), where p[t] is the population at generation t, v(.) is the variation operator and s(.) is the selection operator. Truncation selection, stochastic universal sampling, tournament selection, roulette wheel selection, rank-based selection, greedy selection, elitism and Boltzmann selection can be used in evolutionary search.
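The generational update \(p[t + 1]= s(v(p[t]))\) can be sketched as follows, using tournament selection to pick parents for the variation operator v and elitist truncation of parents plus offspring as the survivor selection s. The choice of these two operators, the one-dimensional toy objective and the Gaussian mutation are assumptions for illustration.

```python
import random


def tournament(pop, fitness, k, rng):
    """Parent selection: the best of k individuals drawn at random (with replacement)."""
    return max((rng.choice(pop) for _ in range(k)), key=fitness)


def truncation(candidates, fitness, mu):
    """Survivor selection: keep the mu fittest candidates."""
    return sorted(candidates, key=fitness, reverse=True)[:mu]


def generation(pop, fitness, vary, rng, k=2):
    """One step of p[t+1] = s(v(p[t])) with (mu + lambda) survivor selection."""
    offspring = [vary(tournament(pop, fitness, k, rng), rng) for _ in range(len(pop))]
    return truncation(pop + offspring, fitness, len(pop))
```

Larger tournament sizes k make parent selection stronger, and keeping parents in the survivor pool makes the scheme elitist, so the best fitness never decreases; both choices trade diversity for convergence speed, as discussed above.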

Especially for population-based metaheuristics, diversity is an important factor both during initialization and during the evolution of the population (Moriarty and Miikkulainen 1997). Diversity-preserving mechanisms can be integrated into the algorithms by applying weak selection strategies that prevent individuals from becoming too similar, or by applying mutation at a high rate. Fitness sharing (Goldberg et al. 1987) to prevent genetic drift, niching (Mahfoud 1995) to reduce the effect of genetic drift, and crowding mechanisms (De Jong 1975) also help maintain diversity.
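Fitness sharing can be sketched with the commonly used triangular-type sharing function \(sh(d) = 1 - (d/\sigma )^{\alpha }\) for \(d < \sigma\) and 0 otherwise: each raw fitness is divided by the niche count \(\sum _j sh(d_{ij})\). The bit-string genotypes, the Hamming distance and \(\sigma = 2\) below are illustrative assumptions.

```python
def sharing(d, sigma, alpha=1.0):
    """Sharing function: 1 at distance 0, decreasing to 0 at distance sigma."""
    return 1.0 - (d / sigma) ** alpha if d < sigma else 0.0


def shared_fitness(pop, fitness, distance, sigma, alpha=1.0):
    """Divide each raw fitness by its niche count (which includes the individual itself)."""
    shared = []
    for p in pop:
        niche_count = sum(sharing(distance(p, q), sigma, alpha) for q in pop)
        shared.append(fitness(p) / niche_count)
    return shared


def hamming(p, q):
    return sum(x != y for x, y in zip(p, q))


pop = [(1, 1, 1, 1)] * 3 + [(0, 0, 1, 1)]
scores = shared_fitness(pop, fitness=sum, distance=hamming, sigma=2)
```

The three copies of the fittest genotype share their raw fitness of 4, so each receives 4/3, while the isolated individual keeps its full fitness of 2; selection therefore no longer favors piling up near a single optimum.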

When an offspring is generated during initialization or by reproduction operators, it may not satisfy all problem-specific constraints; such solutions are called infeasible. Constraint handling techniques may distinguish between feasible and infeasible solutions, penalize constraint violations or use a repair mechanism to transform infeasible solutions into feasible ones (Koziel and Michalewicz 1999).
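Two of these techniques, a static penalty and a repair mechanism, are sketched below for a hypothetical constraint: a maximum number of weights in a chain of 3×3 convolutional layers encoded by their widths. The weight-count formula ignores biases, and the penalty coefficient `rho` and the "halve the widest layer" repair rule are assumptions for illustration.

```python
def num_params(widths, in_channels=3, kernel=3):
    """Weights of a chain of kernel x kernel conv layers (biases ignored)."""
    total, prev = 0, in_channels
    for w in widths:
        total += kernel * kernel * prev * w
        prev = w
    return total


def penalized(score, widths, budget, rho=1e-6):
    """Static penalty: subtract rho times the amount by which the budget is exceeded."""
    violation = max(0, num_params(widths) - budget)
    return score - rho * violation


def repair(widths, budget, min_width=1):
    """Repair: halve the widest layer until the parameter budget is satisfied."""
    widths = list(widths)
    while num_params(widths) > budget:
        i = max(range(len(widths)), key=lambda j: widths[j])
        if widths[i] <= min_width:
            break  # cannot shrink further; the solution stays infeasible
        widths[i] = max(min_width, widths[i] // 2)
    return widths
```

A penalty keeps infeasible networks in the population but makes them less attractive, whereas repair always hands a feasible network to the (expensive) training step, at the cost of biasing the search towards the repaired region.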

4.5 Speed-up methods

Because finding the optimal architecture, parameters and weights can require thousands of GPU days, several speed-up methods have been introduced. To reduce training time, a downscaled model and data can be used (Li et al. 2017; Zoph et al. 2018), a learning curve can be extrapolated from the results of previous epochs (Baker et al. 2017), training can be warm-started through weight inheritance or network morphisms (Real et al. 2017; Elsken et al. 2018; Cai et al. 2018a), one-shot models can be used (Brock et al. 2017; Pham et al. 2018; Bender et al. 2018), or surrogate models can approximate the objective functions (Gaier and Ha 2019). Parallel implementations of the approaches can also yield a speed-up (Desell 2017; Real et al. 2017; Martinez et al. 2018).

4.6 Validating the performance of the designed DNN architectures

To validate the performance of a network designed by metaheuristics, experiments may rely on performance metrics, including loss functions such as cross entropy, validation error, predictive accuracy, precision, recall and \(F_1\); network size metrics, including the number of parameters, neurons and layers and the depth; and resource consumption metrics, including the number of processing units, the number of floating point operations (FLOPs), memory usage and network inference time. One or more of these metrics can be considered during optimization in a single-objective or multi-objective manner, based on penalty, decomposition or Pareto-based approaches (Coello 2003). Some of these metrics can be treated as constraints, in which case constraint handling techniques are used during optimization to guide the search towards the feasible region (Koziel and Michalewicz 1999). When the objective function is expensive to calculate, surrogate models can be used as an approximation to reduce computational cost (Jin 2011).
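For the Pareto-based approach, the basic building block is a dominance test. The sketch below keeps the non-dominated candidates when two objectives are minimized, here validation error and number of parameters in millions; the candidate values are hypothetical.

```python
def dominates(a, b):
    """a dominates b (minimization): no worse in every objective, better in at least one."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def pareto_front(points):
    """Return the candidates that no other candidate dominates."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


# Hypothetical candidates: (validation error, parameters in millions).
candidates = [(0.08, 5.0), (0.06, 9.0), (0.10, 4.0), (0.09, 6.0), (0.06, 12.0)]
front = pareto_front(candidates)
```

Here (0.09, 6.0) is dominated by (0.08, 5.0) and (0.06, 12.0) by (0.06, 9.0), so three trade-off networks remain, and a designer can choose among them according to the deployment budget. This quadratic-time filter is adequate for small populations; Pareto-based algorithms usually rank the whole population into successive fronts.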

When a study focuses on architecture optimization, although no algorithm has been established as the state of the art for architecture search (Li and Talwalkar 2020), the efficiency of the metaheuristics can be verified against random search, grid search, tree-based Bayesian optimization (Bergstra et al. 2011; Hutter et al. 2011), Monte Carlo tree search (Negrinho and Gordon 2017), reinforcement learning (Zoph and Le 2016; Baker et al. 2016) or discrete gradient estimators (Xie et al. 2018). Jin et al. (2019) proposed an approach based on network morphism and Bayesian optimization for architecture search.

5 Literature review

5.1 Methodology and paper inclusion/exclusion criteria

In this section, we review the studies that use metaheuristic algorithms to solve the optimization problems encountered in the DL field. In October 2019, we searched the Elsevier, Springer, IEEE Xplore, Web of Science, Scopus and Google Scholar databases using combinations of the keywords deep learning, deep neural network, optimization, evolutionary, metaheuristic, hyper-parameter, parameter tuning, training, architecture, topology and structure. The search returned 179 publications, including articles, book chapters and conference papers reporting original studies. The papers range from 2012, when the first paper appeared, to 2019, when the search was conducted. Of the 179 publications, 77 were excluded because they used optimization methods other than metaheuristics or were not written in English. Among the 102 included publications, there were 4 survey papers; 21 studies addressed training DL models using metaheuristics, 30 addressed hyper-parameter optimization of DL models, 39 addressed architecture (topology) optimization of DL models, and 8 used metaheuristics at the feature representation level.

In the following sections, we summarize how metaheuristics have been applied to optimization problems in the deep learning field. The DNN architecture used, the encoding scheme, the fitness function and the search operators tailored for the metaheuristics are presented insofar as they are described in the original papers. The datasets on which each study was validated and the reported results are also presented.

5.2 Studies using metaheuristics for hyper-parameter optimization of deep neural networks

5.2.1 Articles

Zhang et al. (2017) integrated MOEA/D with a DBN ensemble for remaining useful life estimation, which is a core task in condition-based maintenance (CBM) in industrial applications. The method incorporated a DE operator and Gaussian mutation to evolve multiple DBNs, optimizing both accuracy and diversity. Each DBN, with a fixed number of hidden layers, was trained using contrastive divergence followed by BP. The number of hidden neurons per hidden layer, the weight cost, and the learning rates used by contrastive divergence and BP were encoded as decision variables. The method was evaluated on NASA's C-MAPSS aero-engine data set (Saxena and Goebel 2008), which contains four sub-datasets, and the authors reported that it outperformed several existing approaches.

Delowar Hossain and Capi (2017) and Hossain et al. (2018) presented an evolutionary learning method that combined a GA and a DBNN for robot object recognition and grasping. The DBNN consisted of a visible layer, three hidden layers and an output layer. The parameters of the DBNN, including the number of hidden units, the number of epochs, and the learning rates and momentum of the hidden layers, were optimized using a GA. Visible units were set to the activation probabilities, while hidden units were set to binary values. The authors generated a dataset of images of six objects graspable by the robot, in different orientations, positions and lighting conditions in the experimental environment. They minimized the error rate and the network training time, and reported that their method was efficient for the robotic tasks considered.

Lopez-Rincon et al. (2018) proposed an evolutionarily optimized CNN classifier for tumor-specific microRNA signatures. In their approach, the number of layers was fixed to 3, and each layer was described by its output channels, convolution window and max-pooling window. Together with the output channels of the fully connected rectifier layer, 10 hyper-parameters were tuned by an EA whose fitness was the average accuracy of the CNN in 10-fold cross-validation. The approach was validated on a real-world dataset containing miRNA sequencing isoform values taken from The Cancer Genome Atlas (TCGA) (2006), featuring 8129 patients, 29 different classes of tumors and 1046 different biomarkers. The approach was compared against 21 state-of-the-art classifiers. The hyper-parameters optimized by the EA raised the average validation accuracy of the CNN to 96.6%, and the approach was reported as the best classifier on this problem.

Nakisa et al. (2018) presented a framework that uses the DE algorithm to automate the search for LSTM hyper-parameters, including the number of hidden neurons and the batch size. The fitness function was the accuracy of emotion classification. Performance evaluation and comparison with other algorithms (PSO, SA, RS and TPE) were carried out on a new dataset collected from a lightweight wireless EEG headset (Emotiv) and a smart wristband (Empatica E4), which measure EEG and BVP signals. Performance was evaluated on four-quadrant dimensional emotions: High Arousal-Positive (HA-P), Low Arousal-Positive (LA-P), High Arousal-Negative (HA-N) and Low Arousal-Negative (LA-N) emotions. The experimental results showed that the average accuracy of the system based on the LSTM network optimized by DE and PSO improved in each time interval and that emotion classification could be improved significantly. In terms of average time, SA required the least processing time, while DE consumed the most over all iterations; however, DE achieved higher performance than all other algorithms. After about 100 iterations, the performance of the systems using the optimization algorithms (PSO, SA and DE) did not change significantly, which the authors attributed to premature convergence.
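Several studies in this section, such as Zhang et al. (2017) and Nakisa et al. (2018), rely on DE. For reference, the classic DE/rand/1/bin scheme is sketched below for a box-constrained hyper-parameter vector. The exact DE variants and settings of those studies are not given here, and the quadratic objective over (log10 learning rate, number of hidden units) is a hypothetical, cheap stand-in for a validation error; in practice each evaluation is a full training run, and integer parameters are rounded when decoded.

```python
import random


def de_minimize(f, bounds, pop_size=20, F=0.5, CR=0.9, generations=100, seed=0):
    """DE/rand/1/bin: differential mutation, binomial crossover, greedy replacement."""
    rng = random.Random(seed)
    dim = len(bounds)
    pop = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(pop_size)]
    cost = [f(x) for x in pop]
    for _ in range(generations):
        for i in range(pop_size):
            a, b, c = rng.sample([j for j in range(pop_size) if j != i], 3)
            j_rand = rng.randrange(dim)  # at least one gene comes from the mutant
            trial = []
            for j in range(dim):
                if rng.random() < CR or j == j_rand:
                    v = pop[a][j] + F * (pop[b][j] - pop[c][j])
                else:
                    v = pop[i][j]
                lo, hi = bounds[j]
                trial.append(min(max(v, lo), hi))  # clip to the search box
            trial_cost = f(trial)
            if trial_cost <= cost[i]:  # replace the target if the trial is no worse
                pop[i], cost[i] = trial, trial_cost
    best = min(range(pop_size), key=cost.__getitem__)
    return pop[best], cost[best]


# Hypothetical stand-in objective: x = (log10 learning rate, hidden units).
def toy_error(x):
    return (x[0] + 2.5) ** 2 + ((x[1] - 64.0) / 64.0) ** 2
```

Calling `de_minimize(toy_error, [(-5.0, -1.0), (8.0, 256.0)])` drives the population towards a learning rate of about \(10^{-2.5}\) and 64 hidden units. With 20 individuals and 100 generations this costs 2,020 evaluations, which illustrates why DE was the slowest method in the comparison above when every evaluation trains an LSTM.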

Loussaief and Abdelkrim (2018) proposed a GA-based approach to learn optimal CNN hyper-parameters. For a CNN with \(D_p\) convolutional layers (the CNN depth), the GA optimized \(2D_p\) variables, i.e., \(D_p\) pairs of values (the number of filters per layer and the filter size per layer). Experiments on the Caltech-256 dataset (Griffin et al. 2007) showed that the GA could improve the CNN model to a classification accuracy of 90%. Inserting a batch normalization layer after each convolutional layer further improved the quality of network training, and the GA produced a pretrained CNN achieving an accuracy of 98.94%.

Soon et al. (2018) trained a CNN for vehicle logo recognition and tuned its hyper-parameters with the PSO algorithm on the XMU dataset (Huang et al. 2015). The optimized hyper-parameters were the learning rate (\(\alpha\)), the spatial size of the convolutional kernels and the number of convolutional kernels in each convolutional layer. They reported that the CNN framework optimized by PSO achieved better accuracy than methods based on handcrafted feature extraction.

Hinz et al. (2018) aimed to reduce the time needed for hyper-parameter optimization of deep CNNs. The parameters considered were the learning rate, the number of convolutional and fully connected layers, the number and size of the filters per convolutional layer, the number of units per fully connected layer, the batch size, and the L1 and L2 regularization parameters. They identified suitable value ranges on lower-dimensional data representations and then increased the dimensionality of the input later during optimization. They performed experiments with random search, TPE, a sequential model-based algorithm and a GA. The approaches were validated on the Cohn-Kanade (CK+) data set (Lucey et al. 2010) for classifying the displayed emotion, the STL-10 data set (Coates et al. 2011) and the Flowers 102 dataset (Nilsback and Zisserman 2008). All three optimization algorithms (random search, TPE and GA) produced similar values of the hyper-parameters at all resolutions, with minor differences in the number of filters and units per convolutional or fully connected layer. The hyper-parameters found by the GA and TPE produced better results than those found by RS.

de Rosa and Papa (2019) presented a GP approach for hyper-parameter tuning of DBNs for binary image reconstruction. Hyper-parameters were encoded as terminal nodes, and internal nodes were combinations of mathematical operators. Each solution was composed of four design variables: the learning rate (\(\eta\)), the number of hidden units (n), the weight decay (\(\lambda\)) and the momentum (\(\alpha\)). The difference between the original and reconstructed images was used as the fitness function. Each DBN was trained with three distinct learning algorithms: contrastive divergence, persistent contrastive divergence (PCD) and fast persistent contrastive divergence (FPCD). GP was compared with nine approaches: RS, RS-Hyperopt, Hyper-TPE, HS, IHS, Global-Best HS (GHS), Novel GHS (NGHS), Self-Adaptive GHS (SGHS) and Parameter-Setting-Free HS (PSF-HS). The methods were evaluated on the MNIST, CalTech 101 Silhouettes (Marlin et al. 2010) and Semeion Handwritten Digit (Semeion 2008) datasets. GP achieved the best results and was statistically similar to IHS in some cases; on the Semeion Handwritten Digit dataset, only GP achieved the best results.

Yoo (2019) proposed a search algorithm called the univariate dynamic encoding algorithm, which combines local and global search to speed up the training of a network with several parameters to configure. In the local search, a bisectional search and a unidirectional search were performed, while the global search employed a multi-start method. The batch size, the number of epochs and the learning rate were the hyper-parameters optimized. The cost function was the average difference between the decoded and original images for the AE, and the inverse of the accuracy for the CNN. The method was tested for an AE and a CNN on the MNIST dataset and compared with SA, GA and PSO, and it was shown to achieve faster convergence and lower computational cost.

Wang et al. (2019b) proposed a cPSO to optimize the hyper-parameters of CNNs. cPSO-CNN employed a confidence function defined by a normal compound distribution to improve exploration and updated the scalar acceleration coefficients according to the ranges of the CNN hyper-parameters. A linear prediction model predicted the ranking of the PSO particles to reduce the cost of fitness evaluation. In the first part of the experiments, the first convolutional layers of AlexNet and of other CNNs, including VGGNet-16, VGGNet-19, GoogleNet, ResNet-52, ResNet-101 and DenseNet-121, were optimized on the CIFAR-10 dataset using CER as the performance metric, and the approach was effective for all CNN architectures. In the second part, eight layers of AlexNet were optimized using cPSO, and the results were compared with those of other methods, including GA, PSO, SA and Nelder-Mead. cPSO-CNN performed competitively in terms of both performance metrics and overall computational cost.

Sabar et al. (2019) proposed a hyper-heuristic parameter optimization framework (HHPO) to set DBN parameters. HHPO iteratively selects a suitable heuristic from a heuristic set using a multi-armed bandit (MAB) and applies it to tune the DBN so that it better fits the current search space. The DBN parameters optimized were the learning rate, weight decay, penalty parameter and number of hidden units, and MSE was used as the cost function. HHPO was compared with PSO, HS, IHS and FFA on the MNIST, CalTech 101 Silhouettes and Semeion datasets. It achieved the best test MSE on almost all datasets, except on the Semeion data with a one-layer DBN trained using CD. The p-values of the Wilcoxon test showed that HHPO was statistically better than the other algorithms.
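The heuristic-selection step of such a hyper-heuristic can be illustrated with UCB1, a standard multi-armed bandit policy. Whether HHPO uses UCB1 or another MAB rule is not stated here, so this is an assumption; the "heuristics" below are hypothetical stand-ins that return a reward of 1.0 when they improve the current DBN (for example, lower MSE) and 0.0 otherwise.

```python
import math
import random


def ucb1_select(counts, rewards, t, c=math.sqrt(2)):
    """Pick the arm with the highest mean reward plus exploration bonus."""
    for arm, n in enumerate(counts):
        if n == 0:
            return arm  # try every heuristic once first
    return max(range(len(counts)),
               key=lambda a: rewards[a] / counts[a] + c * math.sqrt(math.log(t) / counts[a]))


def run_bandit(heuristics, steps, rng):
    """Repeatedly choose a heuristic, apply it, and credit it with the reward it earns."""
    counts = [0] * len(heuristics)
    rewards = [0.0] * len(heuristics)
    for t in range(1, steps + 1):
        arm = ucb1_select(counts, rewards, t)
        counts[arm] += 1
        rewards[arm] += heuristics[arm](rng)
    return counts
```

With three simulated heuristics that succeed with probabilities 0.2, 0.5 and 0.8, most of the budget goes to the third one while the others are still revisited occasionally. This balance between exploiting the currently best heuristic and exploring the others is what the MAB adds to the framework.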

Lin et al. (2019) proposed a DBN optimized with a GA (DBNGA) for predicting wind speed from weather-related data collected by Taiwan's Central Weather Bureau. The seasonal autoregressive integrated moving average (SARIMA) method, least squares support vector regression for time series with genetic algorithms (LSSVRTSGA) and least squares support vector regression with genetic algorithms (LSSVRGA) were also used to forecast wind speed as a time series. In the DBN, the momentum and learning rate were considered in both the unsupervised and supervised learning stages. Two parameters of the LSSVR and LSSVRTS models were tuned by the GA, using the negative RMSE as the fitness function. The DBNGA models outperformed the other models in forecasting accuracy.

Guo et al. (2019) combined GA and TS for hyper-parameter tuning to avoid wasting computational budget on repeated searches. They reported that grid search becomes unsuitable as the number of hyper-parameters increases. To avoid repeated searches in GAs, the proposed method integrated a tabu list into the GA (TabuGA) and was validated on the MNIST and Flower5 (Mamaev 2018) datasets. The Flower5 dataset was found to be more sensitive to the hyper-parameter values than the MNIST dataset. The MNIST experiment used the classic LeNet-5 convolutional neural network, while the Flower5 experiment used a four-layer CNN. For LeNet-5, the learning rate, batch size, number of F1 units, dropout rate and l2 weight decay were optimized. For the four-layer CNN, the learning rate, batch size, numbers of F1, F2, F3 and F4 units, l2 weight decay and dropout rates 1 to 4 were evolved. On both MNIST and Flower5, TabuGA was preferable because it reduced the number of evaluations required to achieve the highest classification accuracy, and it was superior to popular methods including RS, SA, CMA-ES, TPE, GA and TS.
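The idea of pairing a GA with a tabu list can be sketched as follows. This is a minimal illustration under stated assumptions, not the TabuGA of Guo et al. (2019): the hyper-parameters are categorical, the tabu list stores every configuration already evaluated (and doubles as a cache of its fitness), and an offspring that is already tabu is mutated until it is new, up to a retry limit.

```python
import random


def tabu_ga(fitness, choices, pop_size=8, generations=20, seed=0, max_retries=20):
    """GA over categorical hyper-parameters; evaluated configurations are tabu."""
    rng = random.Random(seed)
    tabu = {}  # configuration tuple -> fitness (never recomputed)

    def evaluate(x):
        if x not in tabu:
            tabu[x] = fitness(x)
        return tabu[x]

    pop = [tuple(rng.choice(c) for c in choices) for _ in range(pop_size)]
    for _ in range(generations):
        pop.sort(key=evaluate, reverse=True)
        parents = pop[: pop_size // 2]  # truncation selection
        children = []
        while len(children) < pop_size - len(parents):
            a, b = rng.sample(parents, 2)
            child = tuple(rng.choice(pair) for pair in zip(a, b))  # uniform crossover
            for _ in range(max_retries):
                if child not in tabu:
                    break
                j = rng.randrange(len(choices))  # mutate one gene of a tabu child
                child = child[:j] + (rng.choice(choices[j]),) + child[j + 1:]
            children.append(child)
        pop = parents + children
    for x in pop:
        evaluate(x)  # score the final generation as well
    best = max(tabu, key=tabu.get)
    return best, tabu[best], len(tabu)
```

Because every configuration is scored at most once, the number of expensive training runs equals the number of distinct configurations visited, which is the saving that motivates combining the GA with a tabu list.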

Yuliyono and Girsang (2019) proposed using ABC to automate the search for LSTM hyper-parameters for bitcoin price prediction. The sliding window size, number of LSTM units, dropout rate, regularizer, regularizer rate, optimizer and learning rate were encoded in a solution, and RMSE was used as the fitness function. ABC-LSTM achieved an RMSE of 189.61, compared with 236.17 for the LSTM without tuning.

5.2.2 Conference papers

Zhang et al. (2015) applied an ensemble of deep belief networks (DBNs) together with MOEA/D to diagnosis problems with multivariate sensory data. Multi-objective ensemble learning was used to find the optimal ensemble weights of the DBNs: MOEA/D adjusted the weights with diversity and accuracy as the conflicting objectives. The turbofan engine degradation dataset (Saxena and Goebel 2008) was used to validate the efficacy of the proposed model. The results indicated that the multi-objective DBN ensemble achieved a higher average accuracy than the mean and majority voting ensemble schemes.
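MOEA/D decomposes a multi-objective problem into scalar subproblems, each defined by a weight vector and typically aggregated with the Tchebycheff function. The sketch below applies this idea to ensemble weights under loudly stated assumptions: the three base-model errors in `ERRORS`, the weighted-error proxy for accuracy and the weight-concentration proxy for (lack of) diversity are hypothetical, and Zhang et al.'s actual objective definitions and operators may differ.

```python
import random

# Hypothetical validation errors of three base DBNs (illustrative numbers only).
ERRORS = [0.10, 0.15, 0.20]


def normalise(w):
    total = sum(w)
    return [x / total for x in w]


def objectives(w):
    """Two conflicting objectives to minimise for ensemble weights w:
    f1, a weighted-error proxy for (1 - accuracy), and f2, the weight
    concentration sum(w_i^2), which is lowest when models contribute evenly."""
    w = normalise(w)
    f1 = sum(wi * ei for wi, ei in zip(w, ERRORS))
    f2 = sum(wi * wi for wi in w)
    return (f1, f2)


def tchebycheff(f, lam, z):
    """Tchebycheff aggregation of objective vector f for weight vector lam and ideal point z."""
    return max(lam_m * abs(fm - zm) for fm, lam_m, zm in zip(f, lam, z))


def moead(n_sub=11, n_neigh=3, gens=200, seed=2):
    rng = random.Random(seed)
    # evenly spread weight vectors, one scalar subproblem each
    lams = [(i / (n_sub - 1), 1 - i / (n_sub - 1)) for i in range(n_sub)]
    # neighbourhood of subproblem i: the n_neigh closest weight vectors
    neigh = [sorted(range(n_sub), key=lambda j: abs(lams[i][0] - lams[j][0]))[:n_neigh]
             for i in range(n_sub)]
    pop = [[rng.random() + 1e-6 for _ in ERRORS] for _ in range(n_sub)]
    fs = [objectives(w) for w in pop]
    z = [min(f[m] for f in fs) for m in range(2)]  # ideal point
    for _ in range(gens):
        for i in range(n_sub):
            a, b = rng.sample(neigh[i], 2)
            # recombine two neighbours, add Gaussian noise, keep weights positive
            child = [max(1e-6, (x + y) / 2 + rng.gauss(0.0, 0.1))
                     for x, y in zip(pop[a], pop[b])]
            fc = objectives(child)
            z = [min(zm, fm) for zm, fm in zip(z, fc)]
            for j in neigh[i]:  # the child replaces neighbours it improves on
                if tchebycheff(fc, lams[j], z) <= tchebycheff(fs[j], lams[j], z):
                    pop[j], fs[j] = child, fc
    return [normalise(w) for w in pop], fs
```

Each returned weight vector is the current solution of one subproblem, so together they approximate the trade-off front between the two proxies.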

Rosa et al. (2016) used the Firefly Algorithm (FFA), PSO, HS and IHS to fine-tune RBM and DBN hyper-parameters in the learning step, including the learning rate \(\eta\), weight decay \(\lambda\), penalty parameter \(\alpha\), and the number of hidden units n. They considered three DBN structures with one, two and three layers. The experiments were performed on the MNIST (Lecun et al. 1998), CalTech 101 Silhouettes (Li et al. 2003) and Semeion (Semeion 2008) datasets. The results showed that FFA produced the best results with fewer layers and less computational effort than the other optimization techniques considered in the study.
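A minimal Firefly Algorithm for tuning two continuous hyper-parameters is sketched below. Brighter fireflies (lower error) attract dimmer ones with an attractiveness \(\beta_0 e^{-\gamma r^2}\) that decays with distance, plus a shrinking random step. The search ranges and `toy_error`, which stands in for training an RBM and measuring its reconstruction error, are assumptions for illustration, not Rosa et al.'s setup.

```python
import math
import random

# Hypothetical search ranges: learning rate eta and weight decay lambda.
BOUNDS = [(0.001, 0.5), (0.0, 0.01)]


def decode(u):
    """Map a firefly position in the unit square to hyper-parameter values."""
    return [lo + ui * (hi - lo) for ui, (lo, hi) in zip(u, BOUNDS)]


def toy_error(params):
    """Stand-in for RBM reconstruction error (lower is better); minimum at (0.1, 0.002)."""
    eta, decay = params
    return (eta - 0.1) ** 2 + (decay * 100 - 0.2) ** 2


def firefly(n=12, iters=60, beta0=1.0, gamma=1.0, alpha=0.2, seed=3):
    rng = random.Random(seed)
    xs = [[rng.random() for _ in BOUNDS] for _ in range(n)]
    cost = [toy_error(decode(x)) for x in xs]
    for _ in range(iters):
        for i in range(n):
            for j in range(n):
                if cost[j] < cost[i]:  # j is brighter, so i moves toward j
                    r2 = sum((a - b) ** 2 for a, b in zip(xs[i], xs[j]))
                    beta = beta0 * math.exp(-gamma * r2)  # attractiveness decays with distance
                    xs[i] = [min(1.0, max(0.0, a + beta * (b - a) + alpha * (rng.random() - 0.5)))
                             for a, b in zip(xs[i], xs[j])]
                    cost[i] = toy_error(decode(xs[i]))
        alpha *= 0.97  # shrink the random step over time
    best = min(range(n), key=cost.__getitem__)
    return decode(xs[best]), cost[best]
```

Because the currently brightest firefly never moves toward a dimmer one, the best error in the swarm never increases from one pass to the next.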

Nalepa and Lorenzo (2017) introduced sequential and parallel PSO techniques for automatically tuning DNN hyper-parameters, including the receptive field size, number of receptive fields and stride size. The experiments were performed for several DNN architectures on the multi-class benchmark datasets MNIST (Lecun et al. 1998) and CIFAR-10 (Krizhevsky 2009). They also analyzed the convergence of their sequential and parallel PSO using Markov-chain theory, examining the convergence time, the number of processed generations, and the number of visited points in the search space with respect to the DNN hyper-parameter values. Adding particles to the swarm increased the computational cost significantly while improving the generalization performance of the final DNN only slightly; therefore, smaller swarms were noted to be preferable in practice.
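The sketch below is a sequential global-best PSO for integer hyper-parameters like those listed above (a parallel variant would evaluate the particles concurrently); continuous particle positions are clamped and rounded before evaluation. The bounds, the inertia and acceleration coefficients, and `toy_val_error`, a stand-in for the validation error of a trained DNN, are assumptions, not Nalepa and Lorenzo's configuration.

```python
import random

# Hypothetical integer hyper-parameters: (receptive field size, number of receptive fields, stride).
BOUNDS = [(3, 11), (8, 64), (1, 4)]


def toy_val_error(h):
    """Stand-in for the validation error of a DNN built from h (lower is better)."""
    size, n_fields, stride = h
    return (size - 5) ** 2 + ((n_fields - 32) / 8) ** 2 + (stride - 1) ** 2


def pso(n_particles=8, iters=40, w=0.7, c1=1.5, c2=1.5, seed=4):
    rng = random.Random(seed)
    dim = len(BOUNDS)

    def to_hparams(x):
        # clamp and round continuous positions to valid integer settings
        return [int(round(min(max(xi, lo), hi))) for xi, (lo, hi) in zip(x, BOUNDS)]

    xs = [[rng.uniform(lo, hi) for lo, hi in BOUNDS] for _ in range(n_particles)]
    vs = [[0.0] * dim for _ in range(n_particles)]
    pbest = [list(x) for x in xs]
    pcost = [toy_val_error(to_hparams(x)) for x in xs]
    g = min(range(n_particles), key=pcost.__getitem__)
    gbest, gcost = list(pbest[g]), pcost[g]
    for _ in range(iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # inertia + pull toward personal best + pull toward global best
                vs[i][d] = (w * vs[i][d]
                            + c1 * r1 * (pbest[i][d] - xs[i][d])
                            + c2 * r2 * (gbest[d] - xs[i][d]))
                lo, hi = BOUNDS[d]
                xs[i][d] = min(max(xs[i][d] + vs[i][d], lo), hi)
            cost = toy_val_error(to_hparams(xs[i]))
            if cost < pcost[i]:
                pbest[i], pcost[i] = list(xs[i]), cost
                if cost < gcost:
                    gbest, gcost = list(xs[i]), cost
    return to_hparams(gbest), gcost
```

Each run costs `n_particles * (iters + 1)` evaluations, so the cost grows linearly with the swarm size, which is the trade-off behind the recommendation for smaller swarms.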

Hossain and Capi (2017) integrated DBN with NSGA-II for real-time object recognition and robot grasping tasks. The number of hidden units and the number of epochs in each hidden layer were the decision variables, and accuracy and network training time were the objectives. Normalization and shuffling were performed in the preprocessing step. The optimization determined 515, 707 and 1024 hidden units and 129, 153 and 184 training epochs for the three hidden layers, respectively. Experimental results showed that the proposed method was efficient in object recognition and robot grasping tasks.
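NSGA-II ranks candidates by Pareto dominance before applying crowding distance and genetic operators. The function below sketches only that fast non-dominated sorting step, for two minimized objectives (classification error and training time). The six (error, seconds) pairs in `CANDIDATES` are invented for illustration and are not results from Hossain and Capi.

```python
# Hypothetical (classification error, training time in seconds) for six DBN configurations.
CANDIDATES = [(0.08, 120), (0.10, 60), (0.07, 300), (0.12, 50), (0.10, 130), (0.09, 200)]


def dominates(a, b):
    """a dominates b if it is no worse in every objective and strictly better in one."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def non_dominated_sort(points):
    """Fast non-dominated sorting as used in NSGA-II; returns fronts as index lists."""
    n = len(points)
    dominated_by = [[] for _ in range(n)]  # S_p: indices that p dominates
    counts = [0] * n                       # n_p: how many solutions dominate p
    fronts = [[]]
    for p in range(n):
        for q in range(n):
            if dominates(points[p], points[q]):
                dominated_by[p].append(q)
            elif dominates(points[q], points[p]):
                counts[p] += 1
        if counts[p] == 0:
            fronts[0].append(p)
    i = 0
    while fronts[i]:
        nxt = []
        for p in fronts[i]:
            for q in dominated_by[p]:
                counts[q] -= 1
                if counts[q] == 0:  # q is dominated only by earlier fronts
                    nxt.append(q)
        i += 1
        fronts.append(nxt)
    return fronts[:-1]  # drop the trailing empty front
```

Here `non_dominated_sort(CANDIDATES)` gives `[[0, 1, 2, 3], [5, 4]]`: the first four configurations form the Pareto front, and configurations 4 and 5 are each dominated by at least one of them.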

Qolomany et al. (2017) used a PSO algorithm to optimize parameter settings while reducing the computational resources required by the tuning process. The deep learning models were trained to predict the number of occupants and their locations using a dataset from a campus Wi-Fi network. PSO optimized the number of hidden layers and the number of neurons in each layer. They reported that the proposed method tuned the parameters efficiently and reduced the training time compared with grid search.

Fujino et al. (2017) proposed a GA-based deep learning technique for recognizing human sketches. They focused on evolving the hyper-parameters of AlexNet, including the number of filters, filter sizes and max-pooling sizes. To reduce the training time of the GA-based CNN evolution, a memory was integrated into the GA so that the fitness of a solution encountered again in a later generation was retrieved rather than recalculated. The approach was applied to a sketch recognition dataset containing 250 object categories (Eitz et al. 2012). The proposed method showed higher performance even on smaller images and provided high generalization ability.

Bochinski et al. (2017) introduced EA-based hyper-parameter optimization for a committee of multiple CNNs. An individual was represented by the hyper-parameters h, a set of configuration tuples, one for each layer i, together with the layer sizes. A \((\mu + \lambda )\) EA was applied with one-point crossover and mutation operators that vary, eliminate or create a gene. They evaluated the evolutionary hyper-parameter optimization on a committee of independent CNNs. The experiments were conducted on the MNIST dataset, and significant improvements over the state of the art were reported.
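One way to realize these operators on variable-length genomes is sketched below: one-point crossover with a separate cut point in each parent, a mutation that varies, deletes or creates a gene, and \((\mu + \lambda)\) survivor selection. The gene alphabet, the layer limit and `toy_fitness`, which stands in for the validation accuracy of a CNN committee, are hypothetical; Bochinski et al.'s exact encoding may differ.

```python
import random

FILTERS = [16, 32, 64, 128]  # hypothetical gene alphabet
KERNELS = [3, 5, 7]
MAX_LAYERS = 6


def random_layer(rng):
    """One gene: a (number of filters, kernel size) tuple for a conv layer."""
    return (rng.choice(FILTERS), rng.choice(KERNELS))


def toy_fitness(genome):
    """Stand-in for committee validation accuracy (higher is better)."""
    return -abs(len(genome) - 3) - 0.5 * sum(k != 3 for _, k in genome)


def one_point_crossover(a, b, rng):
    # separate cut points let parents of different lengths be combined
    i, j = rng.randint(1, len(a)), rng.randint(0, len(b))
    return (a[:i] + b[j:])[:MAX_LAYERS]


def mutate(genome, rng):
    g = list(genome)
    op = rng.choice(("vary", "delete", "create"))
    if op == "delete" and len(g) > 1:
        del g[rng.randrange(len(g))]  # eliminate a gene
    elif op == "create" and len(g) < MAX_LAYERS:
        g.insert(rng.randrange(len(g) + 1), random_layer(rng))  # create a gene
    else:
        g[rng.randrange(len(g))] = random_layer(rng)  # vary a gene (also the fallback)
    return g


def evolve(mu=4, lam=8, generations=30, seed=5):
    rng = random.Random(seed)
    parents = [[random_layer(rng) for _ in range(rng.randint(1, MAX_LAYERS))]
               for _ in range(mu)]
    for _ in range(generations):
        offspring = []
        for _ in range(lam):
            a, b = rng.sample(parents, 2)
            offspring.append(mutate(one_point_crossover(a, b, rng), rng))
        # (mu + lambda) selection: parents and offspring compete for mu slots
        parents = sorted(parents + offspring, key=toy_fitness, reverse=True)[:mu]
    return parents[0]
```

Because parents survive alongside their offspring, the best fitness in the population never decreases, which is the main difference from a \((\mu, \lambda)\) scheme.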

Sinha et al. (2018) optimized CNN parameters by PSO on the CIFAR-10 (Krizhevsky 2009) and road-side vegetation (RSVD) datasets. Each particle was represented by a binary vector encoding a choice among 4 input image sizes, 8 filter sizes, 8 numbers of filters and 2 architectures. The top-1 error was used to evaluate the performance of each approach. On CIFAR-10, the CNN optimized by the proposed approach achieved higher accuracy than grid search and standard AlexNet classification. On RSVD, the best network achieved higher accuracy than the grid search approach and the class-semantic color-texture textons approach. Increasing the swarm size and the number of iterations was shown to increase accuracy.
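Assuming a compact binary code in which a field with k options uses \(\lceil \log_2 k \rceil\) bits, the option counts above give \(2 + 3 + 3 + 1 = 9\) bits per particle. The decoder below sketches that mapping; the option values are hypothetical placeholders, since only their counts are given, and the binary PSO velocity and position updates are omitted.

```python
# Hypothetical option lists: only the option counts (4, 8, 8, 2) come from the text.
INPUT_SIZES = [32, 64, 128, 224]
FILTER_SIZES = [1, 3, 5, 7, 9, 11, 13, 15]
NUM_FILTERS = [8, 16, 32, 48, 64, 96, 128, 256]
ARCHITECTURES = ["arch_A", "arch_B"]
FIELDS = [INPUT_SIZES, FILTER_SIZES, NUM_FILTERS, ARCHITECTURES]
BITS = [(len(opts) - 1).bit_length() for opts in FIELDS]  # [2, 3, 3, 1] -> 9 bits


def decode(bits):
    """Map a binary particle (list of 0/1 values) to one option per field."""
    assert len(bits) == sum(BITS)
    config, pos = [], 0
    for options, n in zip(FIELDS, BITS):
        index = int("".join(map(str, bits[pos:pos + n])), 2)  # read the field as an integer
        config.append(options[index])
        pos += n
    return config
```

For example, `decode([1, 0, 0, 1, 1, 1, 1, 1, 0])` yields `[128, 7, 256, 'arch_A']`. Because every option count is a power of two, every bit pattern decodes to a valid configuration and no repair step is needed.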

Sun et al. (2018b) investigated stacked AE models, including AEs, SAEs, DAEs and CAEs, optimized using PSO, and compared them with the grid search method. Stacked AEs were chosen because they have few enough hyper-parameters for grid search to evaluate all combinations within the given experimental time. The hyper-parameters optimized were the number of neurons n, the weight balance factor \(\lambda\), the predefined sparsity \(\rho\) and sparsity balance factor \(\beta\) of the SAE, the corruption level z of the DAE, and the contractive term balance factor \(\gamma\) of the CAE. The experiments were conducted on variations of the MNIST dataset (Larochelle et al. 2007), namely MNIST with Background Images (MBI), with Random Background (MRB), with Rotated Digits (MRD) and with Rotated Digits plus Background Images (MRDBI), together with the Rectangle, Rectangle Images (RI) and Convex Sets (CS) benchmarks. They reported that the PSO-optimized AE models achieved comparable classification accuracy while requiring only 1% to 10% of the computational cost of grid search.

Agbehadji et al. (2018) introduced an approach, based on two different aspects of kestrel bird behavior (KSA), to adjust the learning rate of LSTM. Six biological datasets (Carcinom, SMK_CAN_187, Tox_171, CLL_SUB_111, Glioma, Lung) (Li et al. 2018) were used in the experiments. KSA obtained the best learning parameter on three of the six datasets.

Sabar et al. (2017) proposed an evolutionary hyper-heuristic (EHH) framework for automatically optimizing DBN parameters, a task that is tedious and time-consuming when done manually. EHH combined a high-level strategy with a large pool of evolutionary operators, such as crossover and mutation, and employed a non-parametric statistical test to identify a subset of effective operators. The number of hidden units, learning rate, penalty parameter and weight decay were optimized, with the mean squared error as the objective function. Three datasets, MNIST, CalTech 101 Silhouettes and Semeion Handwritten Digit, were used to evaluate the approach. Three DBN models using three different learning algorithms were tested, and the results were compared with those of existing metaheuristic-based DBN optimization methods. The proposed approach was competitive with state-of-the-art methods.

Sehgal et al. (2019) employed GA to tune the hyper-parameters of Deep Deterministic Policy Gradient (DDPG) combined with Hindsight Experience Replay (HER) in order to speed up the learning of the agent. GA maximized task performance and minimized the number of training epochs by tuning the discount factor \(\gamma\), the Polyak-averaging coefficient \(\tau\), the learning rate of the critic network \(\alpha _{critic}\), the learning rate of the actor network \(\alpha _{actor}\), and the percentage of time a random action is taken \(\varepsilon\). The experiments were conducted on the fetch-reach, slide, push, pick-and-place and door-opening robotic manipulation tasks. They reported that the proposed method found parameter values yielding faster learning and better or similar performance on the chosen tasks.

5.2.3 Others

Jaderberg et al. (2017) introduced Population-Based Training (PBT), which jointly optimizes a population of models and their hyper-parameters to maximize performance within a fixed computational budget. PBT was validated on reinforcement learning, supervised learning for machine translation, and the training of Generative Adversarial Networks (GANs). The GANs were evaluated with a variant of the Inception score computed on a pretrained CIFAR classifier, which used a much smaller network and made evaluation much faster. PBT optimized the learning rates of the discriminator and the generator separately. In all cases, PBT produced stable training and better final performance by automatically discovering hyper-parameter schedules.
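PBT alternates partial training with an exploit step, in which poorly performing members copy the weights and hyper-parameters of better ones, and an explore step, in which the copied hyper-parameters are perturbed. The sketch below uses truncation selection (the bottom 20% copy from the top 20%) and multiplicative perturbation by 0.8 or 1.2, following one of the variants described by Jaderberg et al.; the one-parameter toy model trained by gradient descent on \(\theta^2\) stands in for a real network and is an assumption.

```python
import random


def train_step(member):
    """Toy partial training: one gradient step on loss(theta) = theta**2,
    using the member's own learning rate (stand-in for real training)."""
    member["theta"] -= member["lr"] * 2 * member["theta"]


def score(member):
    return -member["theta"] ** 2  # higher is better


def pbt(pop_size=10, rounds=20, steps_per_round=2, seed=6):
    rng = random.Random(seed)
    population = [{"theta": 5.0, "lr": 10 ** rng.uniform(-4, -1)} for _ in range(pop_size)]
    cut = max(1, pop_size // 5)  # size of the top and bottom 20%
    for _ in range(rounds):
        # members train sequentially here; in PBT they train asynchronously in parallel
        for member in population:
            for _ in range(steps_per_round):
                train_step(member)
        ranked = sorted(population, key=score, reverse=True)
        for loser in ranked[-cut:]:
            winner = rng.choice(ranked[:cut])
            loser["theta"] = winner["theta"]                     # exploit: copy weights
            loser["lr"] = winner["lr"] * rng.choice((0.8, 1.2))  # explore: perturb
    return max(population, key=score)
```

Because members copy hyper-parameters mid-training and then perturb them, the sequence of `lr` values held by a surviving member acts as a learning-rate schedule rather than a single fixed value.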

Steinholtz (2018) evaluated the Nelder-Mead (NM) algorithm, PSO, Bayesian Optimization with Gaussian Processes (BO-GP) and the Tree-structured Parzen Estimator (TPE) on two hyper-parameter optimization problem instances. In the first experiment, the LeNet-5 structure was adopted for the CIFAR-10 dataset. In the second experiment, an RNN with LSTM units was applied to a language modeling problem on the PTB dataset to predict the next word in a sentence given the history of previous words. For LeNet-5, the learning rate, numbers of filters C1 and C2, receptive field sizes C1, C2, P1 and P2, stride sizes P1 and P2, numbers of neurons FC1 and FC2, and dropout probability FC2 were optimized. For the LSTM, the learning rate, learning rate decay, pre-decay epochs, maximum gradient norm, initialization scale, number of layers, layer size, number of steps and dropout probability were optimized. TPE achieved the highest mean solution quality on both problems, and NM, PSO and BO-GP outperformed RS in the first experiment.

These studies are summarized in Table 1.

5.3 Studies using metaheuristics for training deep neural networks

5.3.1 Articles

Rere et al. (2015) used SA to train a CNN on the MNIST (Lecun et al. 1998) dataset of handwritten digit images. The best solution produced by SA was used as the weight and bias values of the CNN, and the output of the CNN was then used to compute the loss function. Among the neighbourhood sizes investigated (10, 20 and 50), a size of 50 gave the highest accuracy for the SA-trained CNN. They concluded that, although the computation time increased, the proposed method improved accuracy compared with the traditional CNN.
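A minimal sketch of training model weights with SA is shown below. To keep it self-contained, a three-parameter linear model on a tiny synthetic dataset stands in for the CNN, and `neighbourhood` is interpreted, as an assumption, as the number of perturbed weight vectors sampled per step, the best of which is proposed for the Metropolis acceptance test. The step size and the geometric cooling schedule are also illustrative choices.

```python
import math
import random

# Tiny hypothetical dataset for y = 2*x1 - x2 + 0.5 (stand-in for CNN training data).
DATA = [((x1, x2), 2 * x1 - x2 + 0.5) for x1 in (0.0, 0.5, 1.0) for x2 in (0.0, 1.0)]


def loss(w):
    """Mean squared error of a linear model with weights w = (w1, w2, bias)."""
    return sum((w[0] * x1 + w[1] * x2 + w[2] - y) ** 2 for (x1, x2), y in DATA) / len(DATA)


def simulated_annealing(neighbourhood=10, iters=300, t0=1.0, cooling=0.98, step=0.1, seed=7):
    rng = random.Random(seed)
    current = [0.0, 0.0, 0.0]
    cur_loss = loss(current)
    best, best_loss = list(current), cur_loss
    t = t0
    for _ in range(iters):
        # sample `neighbourhood` perturbed weight vectors and propose the best one
        candidates = [[wi + rng.gauss(0.0, step) for wi in current] for _ in range(neighbourhood)]
        cand = min(candidates, key=loss)
        cand_loss = loss(cand)
        # Metropolis rule: always accept improvements, sometimes accept worse moves
        if cand_loss < cur_loss or rng.random() < math.exp((cur_loss - cand_loss) / t):
            current, cur_loss = cand, cand_loss
            if cur_loss < best_loss:
                best, best_loss = list(current), cur_loss
        t *= cooling  # geometric cooling
    return best, best_loss
```

Raising `neighbourhood` evaluates more candidate weight vectors per step, trading extra computation for better-informed moves, in line with the accuracy and time trade-off described above.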

Rere et al. (2016) used SA, DE and Harmony Search (HS) to improve the accuracy of CNNs by minimizing the loss function of the solution vector, i.e., the standard error on the training set. The experiments were performed on the MNIST and CIFAR-10 datasets. The proposed methods and the original CNN were compared using the LeNet-5 architecture. CNNSA produced the best accuracy for all epochs, and its computation time was about 1.01 times that of the original CNN. They concluded that although the proposed methods increased the computation time, they also improved accuracy by up to 7.14%.
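The DE variant below, DE/rand/1/bin, is a common textbook choice and is assumed here for illustration, since the mutation and crossover settings used by Rere et al. are not stated in this summary. A two-parameter linear model on synthetic data stands in for the CNN weights, and the population size, F and CR values are illustrative.

```python
import random

# Hypothetical toy data for y = 3x - 1 (stand-in for a network's training set).
DATA = [(x / 4, 3 * (x / 4) - 1) for x in range(9)]


def loss(w):
    """Mean squared error of the 'network' y = w[0] * x + w[1]."""
    return sum((w[0] * x + w[1] - y) ** 2 for x, y in DATA) / len(DATA)


def differential_evolution(pop_size=12, gens=100, f=0.5, cr=0.9, seed=8):
    rng = random.Random(seed)
    dim = 2
    pop = [[rng.uniform(-5, 5) for _ in range(dim)] for _ in range(pop_size)]
    costs = [loss(p) for p in pop]
    for _ in range(gens):
        for i in range(pop_size):
            # DE/rand/1: three distinct members other than i
            a, b, c = rng.sample([j for j in range(pop_size) if j != i], 3)
            mutant = [pop[a][d] + f * (pop[b][d] - pop[c][d]) for d in range(dim)]
            # binomial crossover; j_rand guarantees at least one gene from the mutant
            j_rand = rng.randrange(dim)
            trial = [mutant[d] if (rng.random() < cr or d == j_rand) else pop[i][d]
                     for d in range(dim)]
            t_cost = loss(trial)
            if t_cost <= costs[i]:  # greedy one-to-one selection
                pop[i], costs[i] = trial, t_cost
    best = min(range(pop_size), key=costs.__getitem__)
    return pop[best], costs[best]
```

In a CNN setting, each vector would hold the weights and biases being optimized and `loss` would run the network on the training set, which is where the extra computation time reported above comes from.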

Syulistyo et al. (2016) used PSO in CNNs to improve recognition accuracy on the MNIST handwritten digit dataset. The system achieved 95.08% accuracy in 4 epochs, which was better than the conventional CNN and DBN, without increasing the execution time. They also concluded that a CNN trained by PSO was more accurate than one trained by SA, and that CNNPSO had the advantage of fast search with a minimal number of CNN iterations. In terms of execution time, the DBN using contrastive divergence was very fast, while CNNPSO consumed more time because of the extra calculations in the PSO algorithm.

Badem et al. (2017) combined ABC and the L-BFGS method to tune the parameters of a DNN. The DNN architecture used in the study included one or more AE layers cascaded to a softmax layer. The proposed method combines the exploitation ability of L-BFGS with the exploration capability of ABC to avoid getting trapped in local minima. Its performance was validated on 15 benchmark datasets from the UCI repository (Dua and Graff 2017) and compared with state-of-the-art classifiers, including MLP, SVM, KNN, DT and NB. The hybrid strategy achieved better classification performance on a wide range of the datasets and boosted the performance of the DNN classifier on both binary and multi-class datasets.

Deepa and Baranilingesan (2018) designed a DLNN model predictive controller for a non-linear continuous stirred tank reactor (CSTR) and investigated its performance. The training data were collected from a state-space model of the CSTR. The weights of the DLNN were tuned by a hybrid of PSO and the Gravitational Search Algorithm (GSA) to avoid random weight initialization. The hybrid aimed to overcome the weaknesses of each individual algorithm in exploration and exploitation. The proposed approach was compared with several state-of-the-art techniques and was reported to achieve a lower integral square error.