Abstract

Medical imaging is an invaluable resource in medicine as it enables to peer inside the human body and provides scientists and physicians with a wealth of information indispensable for understanding, modelling, diagnosis, and treatment of diseases. Reconstruction algorithms entail transforming signals collected by acquisition hardware into interpretable images. Reconstruction is a challenging task given the ill-posedness of the problem and the absence of exact analytic inverse transforms in practical cases. While the last decades witnessed impressive advancements in terms of new modalities, improved temporal and spatial resolution, reduced cost, and wider applicability, several improvements can still be envisioned such as reducing acquisition and reconstruction time to reduce patient’s exposure to radiation and discomfort while increasing clinics throughput and reconstruction accuracy. Furthermore, the deployment of biomedical imaging in handheld devices with small power requires a fine balance between accuracy and latency. The design of fast, robust, and accurate reconstruction algorithms is a desirable, yet challenging, research goal. While the classical image reconstruction algorithms approximate the inverse function relying on expert-tuned parameters to ensure reconstruction performance, deep learning (DL) allows automatic feature extraction and real-time inference. Hence, DL presents a promising approach to image reconstruction with artifact reduction and reconstruction speed-up reported in recent works as part of a rapidly growing field. We review state-of-the-art image reconstruction algorithms with a focus on DL-based methods. First, we examine common reconstruction algorithm designs, applied metrics, and datasets used in the literature. Then, key challenges are discussed as potentially promising strategic directions for future research.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Biomedical image reconstruction translates signals acquired by a wide range of sensors into images that can be used for diagnosis and discovery of biological processes in cell and organ tissue. Each biomedical imaging modality leverages signals in different bands of the electromagnetic spectrum, e.g. from gamma rays ( Positron emission tomography PET/SPECT)), X-rays (computed tomography (CT)), visible light (microscopy, endoscopy), infrared (thermal images), and radio-frequency (Nuclear magnetic resonance imaging (MRI)), as well as pressure sound waves (in ultrasound (US) imaging) (Webb and Kagadis 2003). Reconstruction algorithms transform the collected signals into a 2, 3, or 4-dimensional image.

The accuracy of each reconstruction is critical for discovery and diagnosis. Robustness to noise and generalization cross modality specifications’ (e.g., sampling pattern, rate, etc.) and imaging devices parameters’ allow a reconstruction algorithm to be used in wider applications. The time required for each reconstruction determines the number of subjects that can be diagnosed as well as the suitability of the technique in operating theatres and emergency situations. The number of measurements needed for a high quality reconstruction impacts the exposure a patient or sample will have to endure. Finally, the hardware requirements define whether a reconstruction algorithm can be used only in a dedicated facility or in portable devices thus dictating the flexibility of deployment.

The study of image reconstruction is an active area of research in modern applied mathematics, engineering and computer science. It forms one of the most active interdisciplinary fields of science (Fessler 2017) given that improvement in the quality of reconstructed images offers scientists and clinicians an unprecedented insight into the biological processes underlying disease. Figure 1 provides an illustration of the reconstruction problem and shows a typical data flow in a medical imaging system.

Over the past few years, researchers have begun to apply machine learning techniques to biomedical reconstruction to enable real-time inference and improved image quality in a clinical setting. Here, we first provide an overview of the image reconstruction problem and outline its characteristics and challenges (Sect. 1.1) and then outline the purpose, scope, and the layout of this review (Sect. 1.2).

Data flow in a medical imaging and image interpretation system. Forward model encodes the physics of the imaging system. The inverse model transforms the collected signals by the acquisition hardware into a meaningful image. The success of diagnosis, evaluation, and treatment rely on accurate reconstruction, image visualization and processing algorithms

1.1 Inverse problem and challenges

1.1.1 From output to input

Image reconstruction is the process of forming an interpretable image from the raw data (signals) collected by the imaging device. It is known as an inverse problem where given a set of measurements, the goal is to determine the original structure influencing the signal collected by a receiver given some signal transmission medium properties (Fig. 2). Let \(y \in {\mathbb {C}}^{M}\) represent a set of raw acquired sensor measurements subject to some unknown noise (or perturbation) vector \({\mathcal {N}}\in {\mathbb {C}}^{M}\) intrinsic to the acquisition process. The objective is to recover the spatial-domain (or spatio-temporal) unknown image \(x \in {\mathbb {C}}^{N}\) such that:

where \({\mathcal {F}}(\cdot )\) is the forward model operator that models the physics of image-formation, which can include signal propagation, attenuation, scattering, reflection and other transforms, e.g. Radon or Fourier transform. \({\mathcal {F}}(\cdot )\) can be a linear or a nonlinear operator depending on the imaging modality. \({\mathcal {A}}\) is an aggregation operation representing the interaction between noise and signal, in the assumption of additive noise \({\mathcal {A}} = +\).

Propagation of signals from sender to receiver. While passing through a transmission channel, signals s pick up noise (assuming additive) along the way, until the acquired sensor data y = s+\({\mathcal {N}}\) reaches the receiver. The properties of the received signal may, via a feedback loop, affect properties of future signal transmission. Sender and receiver modeling differ within modalities. For example the lower figure illustrates in the left ultrasound probe used to send and collect signals (S = R); in the middle: X-ray signal propagates through the subject toward the detector (S \(\rightarrow\) R); Single molecule localization microscopy is an instance where S and R are components of a feedback loop (S \(\leftrightarrow\) R). The signal, optical measurements of nano-meter precise fluorescent emissions, is reconstructed in a discrete (temporal) X/Y/Z domain. An example of a feedback loop in SMLM is the auto-tuning of the laser power in response to an increase in density (R) that can compromise the spatio-temporal sparsity required for accurate reconstruction (Cardoen et al. 2019)

While imaging systems are usually well approximated using mathematical models that define the forward model, an exact analytic inverse transform \({\mathcal {A}}^{-1}(\cdot )\) is not always possible. Reconstruction approaches usually resort to iteratively approximate the inverse function and often involve expert-tuned parameters and prior domain knowledge considerations to optimize reconstruction performance.

(A) A problem is ill-conditioned when two different objects produce very close observed signals. When the observed signals are identical and hence identical reconstructed images, the inverse solution is non-unique. Prior knowledge can be leveraged to rule out certain solutions that conflict with the additional knowledge about the object beyond the measurement vectors. (B) A use case toy example of two objects with the same acquired signals. Prior knowledge about homogeneity of object rules out the second object

1.1.2 An ill-posed problem

A basic linear and finite-dimensional model of the sampling process leads us to study a discrete linear system of the imaging problem of the form:

For full sampling, in MRI for instance, we have M = N and the matrix F \(\in {\mathbb {C}}^{M \times N}\) is a well-conditioned discrete Fourier transform matrix. However, when (\(M<N\)), there will be many images x that map to the same measurements y, making the inverse an ill-posed problem (Fig. 3). Mathematically, the problem is typically under-determined as there would be fewer equations to describe the model than unknowns. Thus, one challenge for the reconstruction algorithm is to select the best solution among a set of potential solutions (McCann and Unser 2019). One way to reduce the solution space is to leverage domain specific knowledge by encoding priors, i.e. regularization.

Sub-sampling in MRI or sparse-view/limited-angle in CT are examples of how reducing data representation (\(M < N\)) can accelerate acquisition or reduce radiation exposure. Additional gains can be found in lowered power, cost, time, and device complexity, albeit at the cost of increasing the degree of ill-posedness and complexity of the reconstruction problem. This brings up the need for sophisticated reconstruction algorithms with high feature extraction power to make the best use of the collected signal, capture modality-specific imaging features, and leverage prior knowledge. Furthermore, developing high-quality reconstruction algorithms requires not only a deep understanding of both the physics of the imaging systems and the biomedical structures but also specially designed algorithms that can account for the statistical properties of the measurements and tolerate errors in the measured data.

(A) The marked increase in publications on biomedical image reconstruction and deep learning in the past 10 years. In red: results obtained by PubMed query that can be found at: https://bit.ly/recon_hit. Search query identified 310 contributions which were filtered according to quality, venue, viability and idea novelty, resulting in a total of 95 contributions as representatives covered in this survey (blue). (B) The pie chart represents the frequency of studies per modality covered in this survey

1.2 Scope of this survey

The field of biomedical image reconstruction has undergone significant advances over the past few decades and can be broadly classified into two categories: conventional methods (analytical and optimization based methods) and data-driven or learning-based methods. Conventional methods (discussed in Sect. 2) are the most dominant and have been extensively studied over the last few decades with a focus on how to improve their results (Vandenberghe et al. 2001; Jhamb et al. 2015; Assili 2018) and reduce their computational cost (Despres and Jia 2017).

Researchers have recently investigated deep learning (DL) approaches for various biomedical image reconstruction problems (discussed in Sect. 3) inspired by the success of deep learning in computer vision problems and medical image analysis (Goceri and Goceri 2017). This topic is relatively new and has gained a lot of interest over the last few years, as shown in Fig. 4a and listed in Table 1, and forms a very active field of research with numerous special journal issues (Wang 2016; Wang et al. 2018; Ravishankar et al. 2019). MRI and CT received the most attention among studied modalities, as illustrated in Fig. 4b, given their widespread clinical usage, the availability of analytic inverse transform and the availability of public (real) datasets. Several special issues journals devoted to MRI reconstruction have been recently published (Knoll et al. 2020; Liang et al. 2020; Sandino et al. 2020).

Fessler (2017) wrote a brief chronological overview on image reconstruction methods highlighting an evolution from basic analytical to data-driven models. McCann et al. (2017)) summarized works using CNNs for inverse problems in imaging. Later, Lucas et al. (2018) provided an overview of the ways deep neural networks may be employed to solve inverse problems in imaging (e.g., denoising, superresolution, inpainting). As illustrated in Fig. 4 since their publications a great deal of work has been done warranting a review. Recently, McCann and Unser (2019) wrote a survey on image reconstruction approaches where they presented a toolbox of operators that can be used to build imaging systems models and showed how a forward model and sparsity-based regularization can be used to solve reconstruction problems. While their review is more focused on the mathematical foundations of conventional methods, they briefly discussed data-driven approaches, their theoretical underpinning, and performance. Similarly, Arridge et al. (2019) gave a comprehensive survey of algorithms that combine model and data-driven approaches for solving inverse problems with focus on deep neural based techniques and pave the way towards providing a solid mathematical theory. Zhang and Dong (2019) provided a conceptual review of some recent DL-based methods for CT with a focus on methods inspired by iterative optimization approaches and their theoretical underpinning from the perspective of representation learning and differential equations.

This survey provides an overview of biomedical image reconstruction methods with a focus on DL-based strategies, discusses their different paradigms (e.g., image domain, sensor domain (raw data) or end to end learning, architecture, loss, etc. ) and how such methods can help overcome the weaknesses of conventional non-learning methods. To summarize the research done to date, we provide a comparative, structured summary of works representative of the variety of paradigms on this topic, tabulated according to different criteria, discusses the pros and cons of each paradigm as well as common evaluation metrics and training dataset challenges. The theoretical foundation was not emphasized in this work as it was comprehensively covered in the aforementioned surveys. A summary of the current state of the art and outline of what we believe are strategic directions for future research are discussed.

The remainder of this paper is organized as follows: in Sect. 2 we give an overview of conventional methods discussing their advantages and limitations. We then introduce the key machine learning paradigms and how they are being adapted in this field complementing and improving on conventional methods. A review of available data-sets and performance metrics is detailed in Sect. 3. Finally, we conclude by summarizing the current state-of-the-art and outlining strategic future research directions (Sect. 6).

2 Conventional image reconstruction approaches

A wide variety of reconstruction algorithms have been proposed during the past few decades, having evolved from analytical methods to iterative or optimization-based methods that account for the statistical properties of the measurements and noise as well as the hardware of the imaging system (Fessler 2017). While these methods have resulted in significant improvements in reconstruction accuracy and artifact reduction, are in routine clinical use currently, they still present some weaknesses. A brief overview of these methods’ principles is presented in this section outlining their weaknesses.

2.1 Analytical methods

Analytical methods are based on a continuous representation of the reconstruction problem and use simple mathematical models for the imaging system. Classical examples are the inverse of the Radon transform such as filtered back-projection (FBP) for CT and the inverse Fourier transform (IFT) for MRI. These methods are usually computationally inexpensive (in the order of ms) and can generate good image quality in the absence of noise and under the assumption of full sampling/all angles projection (McCann and Unser 2019). They typically consider only the geometry and sampling properties of the imaging system while ignoring the details of the system physics and measurement noise (Fessler 2017).

Reprinted by permission from Springer Nature (Thaler et al. 2018)

CT Image reconstruction from sparse view measurements. (A) Generation of 2D projections from a target 2D CT slice image × for a number of N fixed angles \(\alpha _i\). (B) Image reconstructed using conventional filtered back projection method for different number of a projection angles N. Modified figures from (Thaler et al. 2018)

When dealing with noisy or incomplete measured data e.g., reducing the measurement sampling rate, analytical methods results deteriorate quickly as the signal becomes weaker. Thus, the quality of the produced image is compromised. Thaler et al. (2018) provided examples of a CT image reconstruction using the FBP method for different limited projection angles and showed that analytical methods are unable to recover the loss in the signal (Fig. 5), resulting in compromised diagnostic performance.

2.2 Iterative methods

Iterative reconstruction methods, based on more sophisticated models for the imaging system’s physics, sensors and noise statistics, have attracted a lot of attention over the past few decades. They combine the statistical properties of data in the sensor domain (raw measurement data), prior information in the image domain, and sometimes parameters of the imaging system into their objective function (McCann and Unser 2019). Compared to analytical methods iterative reconstruction algorithms offer a more flexible reconstruction framework and better robustness to noise and incomplete data representation problems at the cost of increased computation (Ravishankar et al. 2019).

Iterative image reconstruction workflow example. (A) Diffuse optical tomography (DOT) fibers brain probe consisting of a set of fibers for illumination and outer bundle fibers for detection (Hoshi and Yamada 2016). (B) Probe scheme and light propagation modelling in the head, used by the forward model. (C) Iterative approach pipeline. (D) DOT reconstructed image shows the total Hemoglobin (Hb) concentrations in the brain. (Figure licensed under CC-BY 4.0 Creative Commons license)

Iterative reconstruction methods involve minimizing an objective function that usually consists of a data term and a regularization terms imposing some prior:

where \(||{\mathcal {F}}({\hat{x}}) -y\Vert\) is a data fidelity term that measures the consistency of the approximate solution \({\hat{x}}\), in the space of acceptable images e.g 2D, 3D and representing the physical quantity of interest, to the measured signal y, which depends on the imaging operator and could include images, Fourier samples, line integrals, etc. \(\mathcal R(\cdot )\) is a regularization term encoding the prior information about the data, and \(\lambda\) is a hyper-parameter that controls the contribution of the regularization term. The reconstruction error is minimized iteratively until convergence. We note that the solution space after convergence does not need to be singular, for example in methods where a distribution of solutions is sampled (Bayesian neural networks (Yang et al. 2018; Luo et al. 2019)). In such cases, the “\({\hat{x}}^* \in\)” notation replaces the current “\({\hat{x}}^* =\)”. However, for consistency with recent art we use the simplified notation assuming a singular solution space at convergence.

The regularization term is often the most important part of the modeling and what researchers have mostly focused on in the literature as it vastly reduces the solution space by accounting for assumptions based on the underlying data (e.g., smoothness, sparsity, spatio-temporal redundancy). The interested reader can refer to (Dong and Shen 2015; McCann and Unser 2019) for more details on regularization modeling. Figure 6 shows an example of an iterative approach workflow for diffuse optical tomography (DOT) imaging.

Several image priors were formulated as sparse transforms to deal with incomplete data issues. The sparsity idea, representing a high dimensional image x by only a small number of nonzero coefficients, is one dominant paradigm that has been shown to drastically improve the reconstruction quality especially when the number of measurements M or theirs signal to noise ratio (SNR) is low. Given the assumption that an image can be represented with a few nonzero coefficients (instead of its number of pixels), it is possible to recover it from a much smaller number of measurements. A popular choice for a sparsifying transform is total variation (TV) that is widely studied in academic literature. The interested reader is referred to Rodríguez (2013) for TV based algorithms modeling details. While TV imposes a strong assumption on the gradient sparsity via the non-smooth absolute value that is more suited to piece-wise constant images, TV tends to cause artifacts such as blurred details and undesirable patchy texture in the final reconstructions (Fig. 7 illustrates an example of artifacts present in TV-based reconstruction). Recent work aimed at exploiting richer feature knowledge to overcome TV’s weaknesses, for example TV-type variants (Zhang et al. 2016a), non-local means (NLM) (Zhang et al. 2016b), wavelet approaches (Gao et al. 2011), and dictionary learning (Xu et al. 2012). Non-local means filtering methods, widely used for CT (Zhang et al. 2017), are operational in the image domain and allow the estimation of the noise component based on multiple patches extracted at different locations in the image (Sun et al. 2016).

Figure Reconstruction results of a limited-angle CT real head phantom (120 projections) using different conventional reconstruction methods. (A) Reference image reconstructed from 360 projections, (B) Image reconstruction using analytical FBP, (C) Images reconstructed by iterative POCS-TV with initial zero image (Guo et al. 2016). Arrows point out to artifacts present in the reconstructed images. (Figure licensed under CC-BY 4.0 Creative Commons license)

While sparsity measures the first order sparsity, i.e the sparsity of vectors (the number of non-zero elements), some models exploited alternative properties such as the low rank of the data, especially when processing dynamic or time-series data, e.g. dynamic and functional MRI (Liang 2007; Singh et al. 2015) where data can have a correlation over time. Low rank can be interpreted as the measure of the second order (i.e., matrix) sparsity (Lin 2016). Low rank is of particular interest to compression given its requirement to a full utilization of the spatial and temporal correlation in images or videos leading to a better exploitation of available data. Recent work combined low rank and sparsity properties for improved reconstruction results (Zhao et al. 2012). Guo et al. (2014); Otazo et al. (2015) decomposed the dynamic image sequence into the sum of a low-rank component, that can capture the background or slowly changing parts of the dynamic object, and sparse component that can capture the dynamics in the foreground such as local motion or contrast changes. The interested reader is referred to (Lin 2016; Ravishankar et al. 2019) for low rank based algorithms modeling survey.

The difficulties of solving the image reconstruction problem motivated the design of highly efficient algorithms for large scale, nonsmooth and nonconvex optimization problems such as the alternating direction method of multipliers (ADMM) (Boyd et al. 2011; Gabay and Mercier 1976), primal-dual algorithm (Chambolle and Pock 2011b), iterative shrinkage-thresholding algorithm (ISTA) (Daubechies et al. 2004), to name just a few. For instance, using the augmented Lagrangian function (Sun et al. 2016) of Eq. 3, the ADMM algorithm solves the reconstruction problem by breaking it into smaller subproblems that are easier to solve. Despite the problem decomposition, a large number of iterations is still required for convergence to a satisfactory solution. Furthermore, performance is defined to a large extent by the choice of transformation matrix and the shrinkage function which remain challenging to choose (McCann and Unser 2019).

ISTA is based on a simpler gradient-based algorithm where each iteration leverages hardware accelerated matrix-vector multiplications representing the physical system modeling the image acquisition process and its transpose followed by a shrinkage/soft-threshold step (Daubechies et al. 2004). While the main advantage of ISTA relies on its simplicity, it has also been known to converge quite slowly (Zhang and Dong 2019). Convergence was improved upon by a fast iterative soft-thresholding algorithm (FISTA)(Beck and Teboulle 2009) based on the idea of Nesterov (1983).

Overall, although iterative reconstruction methods showed substantial accuracy improvements and artifact reductions over the analytical ones, they still face three major weaknesses: First, iterative reconstruction techniques tend to be vendor-specific since the details of the scanner geometry and correction steps are not always available to users and other vendors. Second, there are substantial computational overhead costs associated with popular iterative reconstruction techniques due to the load of the projection and back-projection operations required at each iteration. The computational cost of these methods is often several orders of magnitude higher than analytical methods, even when using highly-optimized implementations. A trade-off between real-time performance and quality is made in favor of quality in iterative reconstruction methods due to their non-linear complexity of quality in function of the processing time. Finally, the reconstruction quality is highly dependent on the regularization function form and the related hyper-parameters settings as they are problem-specific and require non-trivial manual adjustments. Over-imposing sparsity (\({\mathcal {L}}{\mathcal {1}}\) penalties) for instance can lead to cartoon-like artifacts (McCann and Unser 2019). Proposing a robust iterative algorithm is still an active research area (Sun et al. 2019c; Moslemi et al. 2020).

Visualization of common deep learning-based reconstruction paradigms from raw sensor data. (A) A two-step processing model is shown where deep learning complements conventional methods (Sect. 3.1). A typical example would be to pre-process the raw sensor data using a conventional approach \(f_1\), enhance the resulting image with a deep learning model \(f_{\theta _2}\) then perform task processing using \(f_{\theta _3}\). The overall function will thus be \(f_{\theta _3} \circ f_{\theta _2} \circ f_1\). Vice versa, one can pre-process the raw sensor data using a DL-based approach \(f_{\theta _2}\) to enhance the collected raw data and recover missing details then apply conventional method \(f_1\) to reconstruct image and finally perform task processing using \(f_{\theta _3}\). The overall function will be \(f_{\theta _3}\circ f_1 \circ f_{\theta _2}\) in this case. (B) An end-to-end model is shown: The image is directly estimated from the raw sensor data with a deep learning model 3.2 followed by downstream processing tasks. The overall function will be \(f_{\theta _3} \circ f_{\theta _2}\). (C) Task results can be inferred with or without explicit image reconstruction (Sect. 3.3) using \(f_{\theta _3}\) function

3 Deep learning based image reconstruction

To further advance biomedical image reconstruction, a more recent trend is to exploit deep learning techniques for solving the inverse problem to improve resolution accuracy and speed up reconstruction results. As a deep neural network represents a complex mapping, it can detect and leverage features in the input space and build increasingly abstract representations useful for the end-goal. Therefore, it can better exploit the measured signals by extracting more contextual information for reconstruction purposes. In this section, we summarize works using DL for inverse problems in imaging.

Learning-based image reconstruction is driven by data where a training dataset is used to tune a parametric reconstruction algorithm that approximates the solution to the inverse problem with a significant one-time, offline training cost that is offset by a fast inference time. There is a variety of these algorithms, with some being closely related to conventional methods and others not. While some methods considered machine learning as a reconstruction step by combining a traditional model with deep modeling to recover the missing details in the input signal or enhance the resulting image (Sect. 3.1), some others considered a more elegant solution to reconstruct an image from its equivalent initial measurements directly by learning all the parameters of a deep neural network, in an end-to-end fashion, and therefore approximating the underlying physics of the inverse problem (Sect. 3.2), or even going further and solving for the target task directly (Sect. 3.3). Figure 8 shows a generic example of the workflow of these approaches. Table 1 surveys various papers based on these different paradigms and provides a comparison in terms of used data (Table 1-Column “Mod.”,“Samp.”,“D”), architecture (Table 1-Column “TA”,“Arch.”), loss and regularization (Table 1-Column “Loss”,“Reg.”), augmentation (Table 1-Column “Aug.”), etc .

3.1 Deep learning as processing step: two step image reconstruction models

Complementing a conventional image reconstruction approach with a DL-model enables improved accuracy while reducing the computational cost. The problem can be addressed either in the sensor domain (pre-processing) or the image domain (post-processing) (Fig. 8a, Table 1-Column “E2E”).

3.1.1 A pre-processing step (sensor domain)

The problem is formulated as a regression in the sensor domain from incomplete data representation (e.g., sub-sampling, limited view, low dose) to complete data (full dose or view) using DL methods and has led to enhanced results (Hyun et al. 2018; Liang et al. 2018; Cheng et al. 2018). The main goal is to estimate, using a DL model, missing parts of the signal that have not been acquired during the acquisition phase in order to input a better signal to an analytical approach for further reconstruction.

Hyun et al. (2018) proposed a k-space correction based U-Net to recover the unsampled data followed by an IFT to obtain the final output image. They demonstrated artifact reduction and morphological information preservation when only 30% of the k-space data is acquired. Similarly, Liang et al. (2018) proposed a CT angular resolution recovery based on deep residual convolutional neural networks (CNNs) for accurate full view estimation from unmeasured views while reconstructing images using filtered back projection. Reconstruction demonstrated speed-up with fewer streaking artifacts along with the retrieval of additional important image details. Unfortunately, since noise is not only present in the incomplete data acquisition case, but also in the full data as well, minimizing the error between the reference and the predicted values can cause the model to learn to predict the mean of these values. As a result, the reconstructed images can suffer from lack of texture detail.

Huang et al. (2019b) argue that DL-based methods can fail to generalize to new test instances given the limited training dataset and DL’s vulnerability to small perturbations especially in noisy and incomplete data case. By constraining the reconstructed images to be consistent with the measured projection data, while the unmeasured information is complemented by learning based methods, reconstruction quality can be improved. DL predicted images are used as prior images to provide information in missing angular ranges first followed by a conventional reconstruction algorithm to integrate the prior information in the missing angular ranges and constrain the reconstruction images to be consistent to the measured data in the acquired angular range.

Signal regression in the sensor domain reduces signal loss enabling improved downstream results from the coupled analytic method. However, the features extracted by DL methods are limited to the sensor domain only while analytical methods’ weaknesses are still present in afterword processing.

3.1.2 A post-processing step (image domain)

The regression task is to learn the mapping between the low-quality reconstructed image and its high-quality counterpart. Although existing iterative reconstruction methods improved the reconstructed image quality, they remain computationally expensive and may still result in reconstruction artifacts in the presence of noise or incomplete information, e.g. sparse sampling of data (Cui et al. 2019; Singh et al. 2020). The main difficulty arises from the non-stationary nature of the noise and serious streaking artifacts due to information loss (Chen et al. 2012; Al-Shakhrah and Al-Obaidi 2003). Noise and artifacts are challenging to isolate as they may have strong magnitudes and do not obey specific model distributions in the image domain (Wang et al. 2016). The automatic learning and detection of complex patterns offered by deep neural networks can outperform handcrafted filters in the absence of complete information (Yang et al. 2017a; Singh et al. 2020).

Given an initial reconstruction estimate from a direct inverse operator e.g., FBP (Sun et al. 2018; Gupta et al. 2018; Chen et al. 2017a), IFT (Wang et al. 2016), or few iterative approach steps (Jin et al. 2017; Cui et al. 2019; Xu et al. 2017), deep learning is used to refine the initialized reconstruction and produce the final reconstructed image. For example, Chen et al. (2017b) used an autoencoder to improve FBP results on a limited angle CT projection. Similarly, Jin et al. (2017) enhanced FBP results on a sparse-view CT via subsequent filtering by a U-Net to reduce artifacts. U-Net and other hour-glass shaped architectures rely on a bottleneck layer to encode a low-dimensional representation of the target image.

Generative adversarial networks (GAN) (Goodfellow et al. 2014) were leveraged to improve the quality of reconstructed images. Wolterink et al. (2017) proposed to train an adversarial network to estimate full-dose CT images from low-dose CT ones and showed empirically that an adversarial network improves the model’s ability to generate images with reduced aliasing artifacts. Interestingly, they showed that combining squared error loss with adversarial loss can lead to a noise distribution similar to that in the reference full-dose image even when no spatially aligned full-dose and low dose scans are available.

Yang et al. (2017a) proposed a deep de-aliasing GAN (DAGAN) for compressed sensing MRI reconstruction that resulted in reduced aliasing artifacts while preserving texture and edges in the reconstruction. Remarkably, a combined loss function based on content loss ( consisting of a pixel-wise image domain loss, a frequency domain loss and a perceptual VGG loss) and adversarial loss were used. While frequency domain information was incorporated to enforce similarity in both the spatial (image) and the frequency domains, a perceptual VGG coupled to a pixel-wise loss helped preserve texture and edges in the image domain.

Combining DL and conventional methods reduce the computational cost but has its own downsides. For instance, the features extracted by DL methods are highly impacted by the results of the conventional methods, especially in case of limited measurements and the presence of noise where the initially reconstructed image may contain significant and complex artifacts that may be difficult to remove even by DL models. In addition, the information missing from the initial reconstruction is challenging to be reliably recovered by post-processing like many inverse problems in the computer vision literature such as image inpainting. Furthermore, the number of iterations required to obtain a reasonable initial image estimate using an iterative method can be hard to define and generally requires a long run-time (in the order of several min) to be considered for real-time scanning. Therefore, the post-processing approach may be more suitable to handle initial reconstructions that are of relatively good quality and not drastically different from the high-quality one.

Common network architectures used for image reconstruction. From left to right: a multilayer perceptron network based on fully connected layers; an encoder-decoder architecture based convolutional layers; a residual network, e.g. ResNet (He et al. 2016), utilizing skip connections (skip connection is crucial in facilitating stable training when the network is very deep); and a generative adversarial network (GAN). A decoder like architecture includes only the decoder part of the encoder-decoder architecture and may be preceded by a fully connected layer to map measurements to the image space depending on the input data size. A U-Net (Ronneberger et al. 2015) resembles the encoder-decoder architecture while it uses skip connections between symmetric depths. A GAN (Goodfellow et al. 2014) includes a generator and descriminor that contest with each other in a min-max game. The generator learns to create more realistic data by incorporating feedback from the discriminator. We refer the interested reader to (Alom et al. 2019; Khan et al. 2020) for a an in depth survey of various types of deep neural network architectures

3.2 End-to-end image reconstruction: direct estimation

An end-to-end solution leverages the image reconstruction task directly from sensor-domain data using a deep neural network by mapping sensor measurements to image domain while approximating the underlying physics of the inverse problem (Fig. 8b). This direct estimation model may represent a better alternative as it benefits from the multiple levels of abstraction and the automatic feature extraction capabilities of deep learning models.

Given pairs of measurement vectors \(y \in {\mathbb {C}}^{M}\) and their corresponding ground truth images \(x \in {\mathbb {C}}^{N}\) (that produce y), the goal is to optimize the parameters \(\theta \in {\mathbb {R}}^{d}\) of a neural network in an end-to-end manner to learn the mapping between the measurement vector y and its reconstructed tomographic image x, which recovers the parameters of underlying imaged tissue. Therefore, we seek the inverse function \({\mathcal {A}}^{-1}(\cdot )\) that solves:

where \({\mathcal {L}}\) is the loss function of the network that, broadly, penalizes the dissimilarity between the estimated reconstruction and the ground truth. The regularization term \({\mathcal {R}}\), often introduced to prevent over-fitting, can apply penalties on layer parameters (weights) or layer activity (output) during optimization. \({\mathcal {L}}1\)/\({\mathcal {L}}2\) norms are the most common choices. \(\lambda\) is a hyper-parameter that controls the contribution of the regularization term. Best network parameters (\(\theta {^*}\)) depend on hyper-parameters, initialization and a network architecture choice.

Recently, several paradigms have emerged for end-to-end DL-based reconstruction the most common of which are generic DL models and DL models that unroll an iterative optimization.

3.2.1 Generic models

Although some proposed models rely on multilayer perceptron (MLP) feed-forward artificial neural network (Pelt and Batenburg 2013; Boublil et al. 2015; Feng et al. 2018; Wang et al. 2019), CNNs remain the most popular generic reconstruction models mainly due to their data filtering and features extraction capabilities. Specifically, encoder-decoder (Nehme et al. 2018; Häggström et al. 2019), U-Net (Waibel et al. 2018), ResNet (Cai et al. 2018) and decoder like architecture (Yoon et al. 2018; Wu et al. 2018; Zhu et al. 2018) are the most dominant architectures as they rely on a large number of stacked layers to enrich the level of features extraction. A set of skip connections enables the later layers to reconstruct the feature maps with both the local details and the global texture and facilitates stable training when the network is very deep. Figure 9 illustrates some of the architectures that are widely adopted in medical image reconstruction.

The common building blocks of neural network architectures are convolutional layers, batch normalization layers, and rectified linear units (ReLU). ReLU is usually used to enforce information non-negativity properties, given that the resulting pixels values represent tissue properties e.g., chromophores concentration maps (Yoo et al. 2020), refractive index (Sun et al. 2016), and more examples in Table 1. Batch normalization is used to reduce the internal covariate shift and accelerates convergence. The resulting methods can produce relatively good reconstructions in a short time and can be adapted to other modalities but require a large training dataset and good initialization parameters. Table 1-Column “E2E” (check-marked) summarizes papers by architecture, loss, and regularization for 2D, 3D, 4D, and different modalities.

Zhu et al. (2018) proposed a manifold learning framework based decoder neural network to emulate the fast-Fourier transform (FFT) and learn an end-to-end mapping between k-space data and image domains where they showed artifact reduction and reconstruction speed up. However, when trained with an \({\mathcal {L}}1\) or \(\mathcal L2\) loss only, a DL-based reconstructed image still exhibits blurring and information loss, especially when used with incomplete data. Similarly, Yedder et al. (2018) proposed a decoder like model for DOT image reconstruction. While increased reconstruction speed and lesion localization accuracy are shown, some artifacts are still present in the reconstructed image when training with \({\mathcal {L}}2\) loss only. This motivated an improved loss function in their follow-up work (Yedder et al. 2019) where they suggested combining \({\mathcal {L}}2\) with a Jaccard loss component to reduce reconstructing false-positive pixels.

Thaler et al. (2018) proposed a Wasserstein GAN (WGAN) based architecture for improving the image quality for 2D CT image slice reconstruction from a limited number of projection images using a combination of \({\mathcal {L}}1\) and adversarial losses. Similarly, Ouyang et al. (2019) used a GAN based architecture with a task-specific perceptual and \({\mathcal {L}}1\) losses to synthesize PET images of high quality and accurate pathological features. Some attempts were made to reconstruct images observed by the human visual system directly from brain responses using GAN (Shen et al. 2019; St-Yves and Naselaris 2018). Shen et al. (2019) trained a GAN for functional magnetic resonance imaging (fMRI) reconstruction to directly map between brain activity and perception (the perceived stimulus). An adversarial training strategy which consists of three networks: a generator, a comparator, and a discriminator was adopted. The comparator is a pre-trained neural network on natural images used to calculate a perceptual loss term. The loss function is based on \({\mathcal {L}}2\) distance in image space, perceptual loss (similarity in feature space) and adversarial loss.

To further enhance results and reduce artifacts due to motion and corruption of k-space signal, Oksuz et al. (2019) proposed a recurrent convolutional neural network (RCNN) to reconstruct high quality dynamic cardiac MR images while automatically detecting and correcting motion-related artifacts and exploiting the temporal dependencies within the sequences. Proposed architecture included two sub-networks trained jointly: an artifact detection network that identifies potentially corrupted k-space lines and an RCNN for reconstruction.

To relax the requirement of a large number of training samples, a challenging requirement in a medical setting, simulating data was proposed as an alternative source of training data. However, creating a realistic synthetic dataset is a challenging task in itself as it requires careful modeling of the complex interplay of factors influencing real-world acquisition environment. To bridge the gap between the real and in silico worlds, transfer learning provides a potential remedy as it helps transfer the measurements from the simulation domain to the real domain by keeping the general attenuation profile while accounting for real-world factors such as scattering, etc.

Yedder et al. (2019) proposed a supervised sensor data distribution adaptation based MLP to take advantage of cross-domain learning and reported accuracy enhancement in detecting tissue abnormalities. Zhou et al. (2019) proposed unsupervised CT sinograms adaptation, based on CycleGAN and content consistent regularization, to further alleviate the need for real measurement-reconstruction pairs. Interestingly, the proposed method integrated the measurement adaptation network and the reconstruction network in an end-to-end network to jointly optimize the whole network.

Waibel et al. (2018) investigated two different variants of DL-based methods for photo-acoustique image reconstruction from limited view data. The first method is a post-processing DL method while the second one is an end-to-end reconstruction model. Interestingly, they showed empirically that an end-to-end model achieved qualitative and quantitative improvements compared to reconstruction with a post-processing DL method. Zhang and Liang (2020) studied the importance of fully connected layers, commonly used in end-to end model (Zhu et al. 2018; Yedder et al. 2019), to realize back-projection data from the sensor domain to the image domain and showed that while a back-projection can be learned through neural networks it is constrained by the memory requirements induced by the non-linear number of weights in the fully connected layers. Although several generic DL architectures and loss functions have been explored to further enhance reconstruction results in different ways (resolution, lesion localization, artifact reduction, etc.), a DL-based method inherently remains a black-box that can be hard to interpret. Interpretability is key not only for trust and accountability in a medical setting but also to correct and improve the DL model.

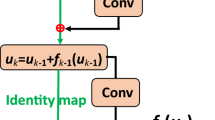

3.2.2 Unrolling iterative methods

Unrolling conventional optimization algorithms into a DL model has been suggested by several works (Qin et al. 2018; Schlemper et al. 2017; Würfl et al. 2018; Sun et al. 2016; Adler and Öktem 2018; Hosseini et al. 2019) in order to combine the benefits of traditional iterative methods and the expressive power of deep models (Table 1-Column “E2E”). Rajagopal et al. (2019) proposed a theoretical framework demonstrating how to leverage iterative methods to bootstrap network performance while preserving network convergence and interpretability featured by the conventional approaches.

Deep ADMM-Net (Sun et al. 2016) was the first proposed model reformulating the iterative reconstruction ADMM algorithm into a deep network for accelerating MRI reconstruction, where each stage of the architecture corresponds to an iteration in the ADMM algorithm. In its iterative scheme, the ADMM algorithm requires tuning of a set of parameters that are difficult to determine adaptively for a given data set. By unrolling the ADMM algorithm into a deep model, the tuned parameters are now all learnable from the training data. The ADMM-Net was later further improved to Generic-ADMM-Net (Yang et al. 2017b) where a different variable splitting strategy was adopted in the derivation of the ADMM algorithm and demonstrated state-of-the-art results with a significant margin over the BM3D-based algorithm (Dabov et al. 2007). Similarly, the PD-Net (Adler and Öktem 2018) adopted neural networks to approximate the proximal operator by unrolling the primal-dual hybrid gradient algorithm (Chambolle and Pock 2011a) and demonstrated performance boost compared with conventional and two step image reconstruction models. Figure 10 shows results of their proposed method in a simplified setting of a two-dimensional CT image reconstruction problem.

Reconstructions of the Shepp–Logan phantom in a two-dimensional CT image reconstruction problem using different reconstruction methods. (a) Reference image.(b)–(c) The standard FBP and TV-regularized reconstruction. (d) FBP reconstruction followed by a U-Net architecture. (e) The learned primal-dual scheme (Adler and Öktem 2018). One can observe the artifacts in the conventional methods removed by the DL-based approaches. Overall, the unrolled-iterative method gave the best results and outperformed even the DL as a post-processing step method. The interested reader is referred to (Arridge et al. 2019) for more details about the training setting. (Reproduced with permission of Cambridge University Press)

In like manner, Schlemper et al. (2017) proposed a cascade convolutional network that embeds the structure of the dictionary learning-based method while allowing end-to-end parameter learning. The proposed model enforces data consistency in the sensor and image domain simultaneously, reducing the aliasing artifacts due to sub-sampling. An extension for dynamic MR reconstructions (Schlemper et al. 2018) exploits the inherent redundancy of MR data.

While, the majority of the aforementioned methods used shared parameters over iterations only, Qin et al. (2018) proposed to propagate learnt representations across both iteration and time. Bidirectional recurrent connections over optimization iterations are used to share and propagate learned representations across all stages of the reconstruction process and learn the spatio–temporal dependencies. The proposed deep learning based iterative algorithm can benefit from information extracted at all processing stages and mine the temporal information of the sequences to improve reconstruction accuracy. The advantages of leveraging temporal information was also demonstrated in single molecule localization microscopy (Cardoen et al. 2019). An LSTM was able to learn an unbiased emission density prediction in a highly variable frame sequence of spatio-temporally separated fluorescence emissions. In other words, joint learning over the temporal domain of each sequence and across iterations leads to improved de-aliasing.

3.2.3 Semi-supervised and unsupervised learning methods

The majority of deep learning methods for image reconstruction are based on supervised learning where the mapping between signal and reconstructed image is learned. However, the performance of supervised learning is, to a large degree, determined by the size of the available training data which is constrained in a medical setting. In an effort to work around this requirement, Gong et al. (2018) applied the deep image prior method (Ulyanov et al. 2018) to PET image reconstruction. The network does not need prior training matching pairs, as it substitutes the target image with a neural network representation of the target domain but rather requires the patient’s own prior information. The maximum-likelihood estimation of the target image is formulated as a constrained optimization problem and is solved using the ADMM algorithm. Gong et al. (2019) have extended the approach to the direct parametric PET reconstruction where acquiring high-quality training data is more difficult. However, to obtain high-quality reconstructed images without prior training datasets, registered MR or CT images are necessary. While rigid registration is sufficient for brain regions, accurate registration is more difficult for other regions.

Instead of using matched high-dose and a low-dose CT, Kang et al. (2019) proposed to employ the GAN loss to match the probability distribution where a cycleGAN architecture based cyclic loss and identity loss for multiphase cardiac CT problems is used. Similarly, Li et al. (2020b) used an unsupervised data fidelity enhancement network that uses an unsupervised network to fine-tune a supervised network to different CT scanning protocol properties. Meng et al. (2020) proposed to use only a few supervised sinogram-image pairs to capture critical features (i.e., noise distribution, tissue characteristics) and transfer these features to larger unlabeled low-dose sinograms. A weighted least-squares loss model based TV regularization term and a KL divergence constraint between the residual noise of supervised and unsupervised learning is used while a FBP is employed to reconstruct CT images from the obtained sinograms.

3.3 Raw-to-task methods: task-specific learning

Typical data flow in biomedical imaging involves solving the image reconstruction task followed by preforming an image processing task (e.g., segmentation, classification) (Fig 11a). Although each of these two tasks (reconstruction and image processing) is solved separately, the useful clinical information extracted for diagnosis by the second task is highly dependent on the reconstruction results. In the raw-to-task paradigm, task results are directly inferred from raw data, where image reconstruction and processing are lumped together and reconstructed image may not be necessarily outputted (Fig. 8c, Fig. 11b)

Jointly solving for different tasks using a unified model is frequently considered in the computer vision field, especially for image restoration (Sbalzarini 2016), and has lead to improved results than solving tasks sequentially (Paul et al. 2013). The advantages explain the recent attention this approach received in biomedical image reconstruction (Sun et al. 2019a; Huang et al. 2019a). For instance, a unified framework allows joint parameters tuning for both tasks and feature sharing where the two problems regularize each other when considered jointly. In addition, when mapping is performed directly from the sensor domain, the joint task can even leverage sensor domain features for further results enhancing while it can be regarded as a task-based image quality metric that is learned from the data. Furthermore, Sbalzarini (2016) argues that solving ill-posed problems in sequence can be more efficient than in isolation. Since the output space of a method solving an inverse problem is constrained by forming the input space of the next method, the overall solution space contracts. Computational resources can then be focused on this more limited space improving runtime, solution quality or both.

Image reconstruction is not always necessary for optimal performance. For example, in single-pixel imaging recent work (Latorre-Carmona et al. 2019) illustrates that an online algorithm can ensure on-par performance in image classification without requiring the full image reconstruction. A typical pipeline in processing compressive sensing requires image reconstruction before classical computer vision (CV) algorithms process the images in question. Recent work (Braun et al. 2019) has shown that CV algorithms can be embedded into the compressive sensing domain directly, avoiding the reconstruction step altogether. In discrete tomography the full range of all possible projections is not always feasible or available due to time, resource, or physical constraints. A hybrid approach in discrete tomography (Pap et al. 2019) finds a minimal number of projections required to obtain a close approximation of the final reconstruction image. While an image is still reconstructed after the optimal number of projections is found, determining this optimal set required no reconstructions, a significant advantage compared to an approach where either an iterative or combinatorial selection of reconstructions to determine the optimal set of projections.

4 Medical training datasets

The performance of learning-based methods is dictated to a large extent by the size and diversity of the training dataset. In a biomedical setting, the need for large, diverse, and generic datasets is non-trivial to satisfy given constraints such as patient privacy, access to acquisition equipment and the problem of divesting medical practitioners to annotate accurately the existing data. In this section, we will discuss how researchers address the trade-offs in this dilemma and survey the various publicly available dataset type used in biomedical image reconstruction literature.

Table 1-Columns “Data”, “Site” and “Size” summarise details about dataset used by different surveyed papers, which are broadly classified into clinical (real patient), physical phantoms, and simulated data. The sources of used datasets have been marked in the last column of Table 1-Columns “Pub”. Data” in case of their public availability to other researchers. Since phantom data are not commonly made publicly available, the focus was mainly given to real and simulated data whether they are publicly available or as part of challenges. Used augmentation techniques have been mentioned in Table 1-Columns “Aug”. Remarkably, augmentation is not always possible in image reconstruction task especially in sensor domain given the non-symmetries of measurements in some case, the nonlinear relationship between raw and image data, and the presence of other phenomena(e.g., scattering). We herein survey the most common source of data and discuss their pros and cons.

4.1 Real-world datasets

Some online platforms (e.g., Lung Cancer Alliance , Mridata , MGH-USC HCP (2016), and Biobank) made the initiative to share datasets between researchers for image reconstruction task. Mridata, for example, is an open platform for sharing clinical MRI raw k-space datasets. The dataset is sourced from acquisitions of different manufacturers, allowing researchers to test the sensitivity of their methods to overfitting on a single machine’s output while may require the application of transfer-learning techniques to handle different distributions. As of writing, only a subset of organs for well known modalities e.g., MRI and CT are included (Table 1-Columns “Site”). Representing the best reconstruction images acquired for a specific modality, the pairs of signal-image form a gold standard for reconstruction algorithms. Releasing such data, while extremely valuable for researchers, is a non-trivial endeavour where legal and privacy concerns have to be taken into account by, for example, de-anonymization of the data to make sure no single patient or ethnographically distinct subset of patients can ever be identified in the dataset. Source of real-word used datasets on surveyed papers has been marked in Table 1-Columns “Pub. Data” where the sizes remain relatively limited to allow a good generalization of DL-based methods.

4.2 Physics-based simulation

Physics-based simulation (Schweiger and Arridge 2014; Harrison 2010; Häggström et al. 2016) provides an alternative source of training data that allows generating a large and diversified dataset. The accuracy of a physical simulation with respect to real-world acquisitions increases at the cost of an often super-linear increase in computational resources. In addition, creating realistic synthetic datasets is a nontrivial task as it requires careful modeling of the complex interplay of factors influencing real-world acquisition environment. With a complete model of the acquisition far beyond computational resources, a practitioner needs to determine how close to reality the simulation needs to be in order to allow the method under development to work effectively. Transfer learning provides a potential remedy to bridge the gap between real and in silico worlds and alleviates the need for a large clinical dataset (Zhu et al. 2018; Yedder et al. 2019). In contrast, the approach of not aiming for complete realism but rather using the simulation as a tool to sharpen the research question can be appropriate. Simulation is a designed rather than a learned model. For both overfitting to available data is undesirable. The assumptions underlying the design of the simulation are more easily verified or shown not to hold if the simulation is not fit to the data, but represents a contrasting view. For example, simulation allows the recreation and isolation of edge cases where a current approach is performing sub-par. As such simulation is a key tool for hypothesis testing and validation of methods during development. For DL-based methods the key advantage simulation offers is the almost unlimited volume of data that can be generated to augment limited real-world data in training. With the size of datasets as one of the keys determining factors for DL-based methods leveraging simulation is essential. Surveyed papers that used simulated data as a training or augmentation data have been marked in Table 1-Columns “Pub”.

4.3 Challenge datasets

There are only a few challenge (competition) datasets for image reconstruction task e.g., LowDoseCT (2014) FastMRI (Zbontar et al. 2018), Fresnel (Geffrin et al. 2005) and SMLM challenge (Holden and Sage 2016) that includes raw measurements. Low-quality signals can be simulated by artificially subsampling the full-dose acquired raw signal while keeping their corresponding high resolution images pair (Gong et al. 2018; Xu et al. 2017). While this method offers an alternative source of training data, the downsampling is only one specific artificial approximation to the actual acquisition of low-dose imaging and may not reflect the real physical condition. Hence, performance can be compromised by not accounting for the discrepancy between the artificial training data and real data. Practitioners can leverage techniques such as transfer learning to tackle the discrepancy. Alternatively, researchers collect high-quality images from other medical imaging challenges, e.g., segmentation (MRBrainS challenge (Mendrik et al. 2015), Bennett and Simon (2013)), and use simulation, using a well known forward model, to generate full and/or incomplete sensor domain pairs. Here again, only a subset of body scans and diseases for well-studied modalities are publicly available as highlighted in Table 1-Columns “Site” and “Pub. Data”.

5 Reconstruction evaluation metrics

5.1 Quality

Measuring the performance of the reconstruction approaches is usually performed using three metrics, commonly applied in computer vision, in order to access the quality of the reconstructed images. These metrics are the root mean squared error (RMSE) or normalized mean squared error, structural similarity index (SSIM) or its extended version multiscale structural similarity (Wang et al. 2003), and peak signal to noise ratio (PSNR).

While RMSE measures the pixel-wise intensity difference between ground truth and reconstructed images by evaluating pixels independently, ignoring the overall image structure, SSIM, a perceptual metric, quantifies visually perceived image quality and captures structural distortion. SSIM combines luminance, contrast, and image structure measurements and ranges between [0,1] where the higher SSIM value the better and SSIM = 1 means that the two compared images are identical.

PSNR (Eq. 5) is a well-known metric for image quality assessment which provides similar information as the RMSE but in units of dB while measuring the degree to which image information rises above background noise. Higher values of PSNR indicate a better reconstruction.

where \(X_{max}\) is the maximum pixel value of the ground truth image.

Illustrating modality specific reconstruction quality is done by less frequently used metrics such as contrast to noise ratio (CNR) (Wu et al. 2018) for US. Furthermore, modalities such as US, SMLM or some confocal fluorescence microscopy can produce a raw image where the intensity distribution is exponential or long-tailed. Storing the raw image in a fixed-precision format would lead to unacceptable loss of information due to uneven sampling of the represented values. Instead, storing and more importantly processing the image in logarithmic or dB-scale preserves the encoded acquisition better. The root mean squared logarithmic error (RMSLE) is then a logical extension to use as an error metric (Beattie 2018).

Normalized mutual information (NMI) is a metric used to determine the mutual information shared between two variables, in this context image ground truth and reconstruction (Wu et al. 2018; Zhou et al. 2019). When there is no shared information NMI is 0, whereas if both are identical a score of 1 is obtained. To illustrate NMI’s value, consider two images X, Y with random values. When generated from two different random sources, X and Y are independent, yet RMSE (X,Y) can be quite small. When the loss function minimizes RMSE, such cases can induce stalled convergence at a suboptimal solution due to a constant gradient. NMI, on the other hand, would return zero (or a very small value), as expected. RMSE minimizes average error which may make it less suitable for detailed distribution matching tasks in medical imaging such as image registration where NMI makes for a more effective optimization target (Wells III et al. 1996).

The intersection over union, or Jaccard index, is leveraged to ensure detailed accurate reconstruction (Yedder et al. 2018; Sun et al. 2019a). In cases where the object of interest is of variable size and small with respect to the background, an RMSE score is biased by matching the background rather than the target of interest. In a medical context, it is often small deviations (e.g. tumors, lesions, deformations) that are critical to diagnosis. Thus, unlike computer vision problems where little texture changes might not alter the overall observer’s satisfaction, in medical reconstruction, further care should be taken to ensure that practitioners are not misled by a plausible but not necessarily correct reconstruction. Care should be taken to always adjust metrics with respect to their expected values under an appropriate random model (Gates and Ahn 2017). The understanding of how a metric responds to its input should be a guideline to its use. As one example, the normalization method in NMI has as of writing no less than 6 (Gates and Ahn 2017) alternatives with varying effect on the metric. Table 1-Columns “Metrics” surveys the most frequently used metrics on surveyed papers.

5.2 Inference speed

With reconstruction algorithms constituting a key component in time-critical diagnosis or intervention settings, the time complexity is an important metric in selecting methods. Two performance criteria are important in the context of time: throughput measures how many problem instances can be solved over a time period, and latency measures the time needed to process a single problem instance. In a non-urgent medical setting, a diagnosing facility will value throughput more than latency. In an emergency setting where even small delays can be lethal, latency is critical above all. For example, if a reconstruction algorithm is deployed on a single device it is not unexpected for there to be waiting times for processing. As a result latency, if the waiting time is included, will be high and variable, while throughput is constant. In an emergency setting there are limits as to how many devices can be deployed, computing results on scale in a private cloud on the other hand can have high throughput, but higher latency as there will be a need to transfer data offsite for processing. In this regard it is critical for latency sensitive applications to allow deployment on mobile (low-power) devices. To minimize latency (including wait-time), in addition to parallel deployment, the reconstruction algorithm should have a predictable and constant inference time, which is not necessarily true for iterative approaches.

Unfortunately, while some papers reported their training and inference times, (Table 1-Columns “Metrics-IS”) it is not obvious to compare their time complexity given the variability in datasets, sampling patterns, hardware, and DL frameworks. A dedicated study, out of the scope of this survey, needs to be conducted for a fair comparison. Overall, the offline training of DL methods bypasses the laborious online optimization procedure of conventional methods, and has the advantage of lower inference time over all but the simplest analytical. In addition, when reported, inference times are usually in the order of milliseconds per image making them real time capable. The unrolled iterative network, used mainly in the MR image reconstruction (Sun et al. 2016; Yang et al. 2017b) as the forward and backward projections can be easily performed via FFT, might be computationally expensive for other modality where the forward and backward projections can not be computed easily. Therefore, the total training time can be much longer. In addition when data is acquired and reconstructed in 3D, the GPU memory becomes a limiting factor especially when multiple unrolling modules are used or the network architecture is deep.

6 Conclusion, discussion and future direction

Literature shows that DL-based image reconstruction methods have gained popularity over the last few years and demonstrated image quality improvements when compared to conventional image reconstruction techniques especially in the presence of noisy and limited data representation. DL-based methods address the noise sensitivity and incompleteness of analytical methods and the computational inefficiency of iterative methods. In this section we will illustrate the main trends in DL-based medical image reconstruction: a move towards task-specific learning where image reconstruction is no longer required for the end task, and the focus on a number of strategies to overcome the inherent sparsity of manually annotated training data.

6.1 Discussion

Learning: Unlike conventional approaches that work on a single image in isolation and require prior knowledge, DL-based reconstruction methods leverage the availability of large-scale datasets and the expressive power of DL to impose implicit constraints on the reconstruction problem. While DL-based approaches do not require prior knowledge, their performance can improve with it. By not being dependent on prior knowledge, DL-based methods are more decoupled from a specific imaging (sub)modality and thus can be more generalizable. The ability to integrate information from multiple sources without any preprocessing is another advantage of deep neural networks. Several studies have exploited GANs for cross-modal image generation (Li et al. 2020a; Kearney et al. 2020) as well as to integrate prior information (Lan et al. 2019; Bhadra et al. 2020). Real-time reconstruction is offered by DL-based methods by performing the optimization or learning stage offline, unlike conventional algorithms that require iterative optimization for each new image. The diagnostician can thus shorten diagnosis time increasing the throughput in patients treated. In operating theatres and emergency settings this advantage can be life saving.

Interpretability: While the theoretical understanding and the interpretability of conventional reconstruction methods are well established and strong (e.g., one can prove a method’s optimality for a certain problem class), it is weak for the DL-based methods (due to the black-box nature of DL) despite the effort in explaining the operation of DL-based methods on many imaging processing tasks. However, one may accept the possibility that interpretabilty is secondary to performance as fully understanding DL-based approaches may never become practical.

Complexity: On the one hand, conventional methods can be straightforward to implement, albeit not necessarily to design. On the other, they are often dependent on parameters requiring manual intervention for optimal results. DL-based approaches can be challenging to train with a large if not intractable hyper-parameter space (e.g., learning rate, initialization, network design). In both cases, the hyper-parameters are critical to results and require a large time investment from the developer and the practitioner. In conclusion, there is a clear need for robust self-tuning algorithms, for both DL-based and conventional methods.

Robustness: Conventional methods can provide good reconstruction quality when the measured signal is complete and the noise level is low, their results are consistent across datasets and degrade as the data representation and/or the signal to noise ratio is reduced by showing noise or artifacts (e.g., streaks, aliasing). However, a slight change in the imaging parameters (e.g., noise level, body part, signal distribution, adversarial examples, and noise) can severely reduce the DL-based approaches’ performances and might lead to the appearance of structures that are not supported by the measurements (Antun et al. 2019; Gottschling et al. 2020). DL based approaches still leave many unsolved technical challenges regarding their convergence and stability that in turn raise questions about their reliability as a clinical tool. A careful fusion between DL-based and conventional approaches can help mitigate these issues and achieve the performance and robustness required for biomedical imaging.

Speed: DL-based methods have the advantage in processing time over all but the most simple analytical methods at inference time. As a result, latency will be low for DL-based methods. However, one must be careful in this analysis. DL-based methods achieve fast inference by training for a long duration, up to weeks, during development. If any changes to the method are needed and retraining is required, even partial, a significant downtime can ensue. Typical DL-based methods are not designed to be adjusted at inference time. Furthermore, when a practitioner discovers that, at diagnosis time, the end result is sub-par, an iterative method can be tuned by changing its hyper-parameters. For a DL-based approach, this is non-trivial if not infeasible.

A final if not less important distinction is adaptive convergence. At deployment, a DL-based method has a fixed architecture and weights with a deterministic output. Iterative methods can be run iterations until acceptable performance is achieved. This is a double-edged sword as convergence is not always guaranteed and the practitioner might not know exactly how many more iterations are needed.

Training Dataset: Finally, the lack of large scale biomedical image datasets for training due to privacy, legal, and intellectual property related concerns, limits the application of DL-based methods on health care. Training DL-based models often requires scalable high performance hardware provided by cloud based offerings. However, deploying on cloud computing and transmitting the training data risks the security, authenticity, and privacy of that data. Training on encrypted data offers a way to ensure privacy during training (Gilad-Bachrach et al. 2016). More formally a homomorphic encryption algorithm (Rivest et al. 1978) can ensure evaluation (reconstruction) on the encrypted data results are identical after decryption to reconstruction on the non-encrypted data. In practice, this results in an increase in dataset size as compression becomes less effective, a performance penalty is induced by the encryption and decryption routines, and interpretability and debugging the learning algorithm becomes more complex since it operates on human unreadable data.

The concept of federated learning, where improvements of a model (weights) are shared between distributed clients without having to share datasets, has seen initial success in ensuring privacy while enabling improvements in quality (Geyer et al. 2017). However a recent work by Zhu et al. (2019) has shown that if an attacker has access to the network architecture and the shared weights, the training data can be reconstructed with high fidelity from the gradients alone. Data sharing security in a federated setting still presents a concern that requires further investigations.

Simulating a suitable training set also remains a challenge that requires careful tuning and more realistic physical models to improve DL-based algorithm generalization.

Performance: Reconstruction is sensitive to missing raw measurements (false negative, low recall) and erroneous signal (false positive, low precision). Sensitivity in reconstruction is critical to ensure all present signals are reconstructed but high recall is not sufficient as in this setting a reconstructed image full of artifacts and noise can still have high recall. Specificity may be high in reconstructed images, where little to no artifacts or noise is present, but at the cost of omitting information. In the following we discuss how the indicators of both are affected by training regimes and dataset size.

Deep learning reconstruction quality varies when using different loss metrics. For instance, when a basic \({\mathcal{L}{1} \text{ or } \mathcal{L}{2}}\) loss in image space is used solely, reconstruction results tend to be worse quality because it generates an average of all possible reconstructions having the same distance in image space, hence the reconstructed images might still present artifacts (Shen et al. 2019; Yedder et al. 2018). Adding feature loss in high dimensional space like perceptual loss and frequency domain loss helps to better constrain the reconstruction to be perceptually more similar to the original image (Yang et al. 2017a; Shen et al. 2019; Huang et al. 2019b). Furthermore, reconstruction quality varies with dataset size. To analyze the effect of training-dataset size on reconstruction quality, Shen et al. (2019) trained their reconstruction model with a variable number of training samples while gradually increasing the dataset size from 120 to 6,000 samples. Interestingly, they reported that while the reconstruction quality improved with the number of training samples, the quality increase trend did not converge which suggests that better reconstruction quality might be achieved if larger datasets are available. Similarly, Sun and Kamilov (2018) studied the effect of decreasing the number of measurements and varying the noise level on multiple scattering based deep learning reconstruction methods and concluded that better feature extraction from higher dimensional space are required for very low sampling cases. In addition, data is usually imbalanced, with the unhealthy (including anomalies) samples being outnumbered by the healthy ones resulting in reduced performance and compromised reconstruction of tumor related structures (Wen et al. 2019).