Abstract

The Index is a data structure which stores data in a suitably abstracted and compressed form to facilitate rapid processing by an application. Multidimensional databases may have a lot of redundant data also. The indexed data, therefore need to be aggregated to decrease the size of the index which further eliminates unnecessary comparisons. Feature-based indexing is found to be quite useful to speed up retrieval, and much has been proposed in this regard in the current era. Hence, there is growing research efforts for developing new indexing techniques for data analysis. In this article, we propose a comprehensive survey of indexing techniques with application and evaluation framework. First, we present a review of articles by categorizing into a hash and non-hash based indexing techniques. A total of 45 techniques has been examined. We discuss advantages and disadvantages of each method that are listed in a tabular form. Then we study evaluation results of hash based indexing techniques on different image datasets followed by evaluation campaigns in multimedia retrieval. In this paper, in all 36 datasets and three evaluation campaigns have been reviewed. The primary aim of this study is to apprise the reader of the significance of different techniques, the dataset used and their respective pros and cons.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

With the explosive growth of multimedia data technologies, it becomes challenging to fulfill diverse user needs related to textual, visual and audio data retrieval. The advances in the integration of computer vision, machine learning, database systems, and information retrieval have enabled the development of advanced information retrieval systems (Gani et al. 2016). As multidimensional databases are gigantic, it has become important to develop data accessing and querying techniques that could facilitate fast similarity search. The issues of feature extraction and high-dimensional indexing mechanism are crucial in visual information retrieval (VIR) due to the massive amount of data collections. A typical VIR system (Wang et al. 2016) operates in three phases namely feature extraction phase, high-dimensional indexing phase, and retrieval system design phase. Potential applications (Datta et al. 2005, 2008) include digital libraries, commerce, medical, biodiversity, copyright, law enforcement and architectural design. Figure 1, below, displays the block diagram of the query by visual example.

The most important aspect of any indexing technique is to make a quick comparison between the query and object in the multidimensional database (Bohm et al. 2001). Multidimensional databases may have a lot of redundant data also. The indexed data, therefore, need to be aggregated to decrease the size of the index which further eliminates unnecessary comparisons.

1.1 Basic concepts

Feature and feature extraction Feature corresponds to the overall description of the image contents. ‘Local’ and ‘global’ are the terms used in the context of image features. Shape, color, and texture individually describe contents of an image, but that information is not descriptive enough. In this regard Histograms, SIFT, and CNN based computer vision techniques are developed to extract more informative contents. Feature aggregation techniques like Bag-of-visual-words (BoVW), VLAD, and Fisher vector produces fixed length vector which helps to approximate the performance of similarity metrics.

Index The image index is a data structure which stores data in a suitably abstracted and compressed form to facilitate rapid processing by an application. Feature-based indexing is found to be quite useful to speed up retrieval and is currently needed in this generation. Typically, any information retrieval system demands the following principle requirements (Téllez et al. 2014): size of the index, parallelism, the speed of index generation and speed of search.

Query processing The retrieval process starts with feature extraction for a query image. The primary aim is to extract and match the corresponding query features with pre-computed image dataset features under issue such as scalability of image descriptors and user intent to search. A query can be processed in a number of ways, depending on the type of indexing and extracted features.

Query formation Query formation is an attempt to define user’s precise needs and subjectivity. It is very difficult to capture the human perception and intention into a query. There is different query formation schemes proposed in literature such as query by text, query by image example, query by sketch, query by color layout etc. In Fig. 2 different query formation techniques are presented.

Relevance feedback The different user intent may contain image clarity, quality, and associated meta-data. With the use of earlier user logs and semantic feedback; query refinement and iterative feedback techniques are highly recommended to satisfy the user. The ultimate goal is to optimize the interaction between system and user during a session. Feedback methods may range from short-term techniques, that directly modify the queries to long-term methods, that make the use of query logs.

1.2 Indexing techniques

Indexing phase is one of the most important aspects of any VIR system. Indexing techniques are a powerful means to make a quick comparison between the query and object in the multidimensional database (Bohm et al. 2001). Multidimensional databases may have a lot of redundant data also. The indexed data, therefore, need to be aggregated to decrease the size of the index which further eliminates unnecessary comparisons. Most of the VIR systems are designed for specific types of image such as sports, species, architecture design, art galleries, fashion, etc. Generally, in VIR four kinds of techniques are adopted for indexing: inverted files, hashing based, space partitioning and neighborhood graph construction. To facilitate the indexing and image similarity there is need to pack the visual features. The different techniques of image similarity and indexing are highly affected by the feature extraction/aggregation and query formation as discussed above. For hashing techniques we refer readers to previous survey (Gani et al. 2016; Wang et al. 2016, 2018). In Gani et al. 2016 surveyed indexing techniques in the past 15 years from the perspective of big data. They categorize the indexing techniques in three different categories viz. Artificial Intelligence, Non-artificial Intelligence and Collaborative Artificial Intelligence on the basis of time and space consumption. The main intent of the paper is to identify indexing techniques in cloud computing. Other recent surveys on hashing are reported in Wang et al. (2016, 2018). The concept of learning to hash are delineated there. The survey in Wang et al. (2016) only categorizes and emphasizes on the methodology of data sensitive hashing techniques with big data perspective without any performance analysis. Wang et al. (2018) analyze and compare a comprehensive list of learning to hash algorithms in brief. In this work, they consider similarity preserving and quantization to group the hashing techniques exists in the literature. In addition, they have analyzed the query performance of pair-wise, multi-wise and quantization techniques for limited datasets. The recently conducted survey has been focused only on hash based indexing techniques for which there are only a limited support for experimental analysis and applications.

Therefore, we found that there is a need of survey article which has to cover detail of applications, datasets, performance analysis, evaluation challenges and non-hash based indexing techniques as well. In this regard, we analyze and compare a comprehensive list of indexing techniques. Here, we are focusing on overview of hash and non-hash based indexing techniques of the recent years. We provide more categorical detail of indexing techniques in Sect. 4. In comparison to earlier surveys (Gani et al. 2016; Wang et al. 2016, 2018), the contributions of our article are as follows:

-

1.

It provides splendid image retrieval application areas.

-

2.

It provides an immense description and categorization of hash and non-hash based indexing techniques.

-

3.

It extensively discussed and listed 36 different datasets in detail.

-

4.

It presents a separate evaluation section to examine the performance of different hashing techniques for different datasets.

-

5.

It presents details of multimedia evaluation programs covering top conferences related to indexing techniques.

1.3 Organization of the article

The rest of the article has been organized as follows: Sect. 2 describes the significant challenges in VIR system; Sect. 3, presents promising application areas followed by Sect. 4, which categorizes the indexing techniques. Section 5 provides an evaluation framework for different hash based indexing techniques followed by Sect. 6, that presents some evaluation campaign organized for multimedia indexing. Section 7 addresses the future work, and in the last section, we draw a conclusion.

2 Major challenges in VIR systems

2.1 Similarity search

Content-based search extends our capability to explore/search the visual data in different domains. This operation relies on the notion of similarity for search e.g. to search for images with content similar to a query image (Kurasawa et al. 2010). Translating the similarity search into the nearest neighbor (NN) (Uysal et al. 2015), search problem finds many applications for information retrieval, machine learning, and data mining. The context of large-scale unstructured data envisages finding approximate solutions. Approximate similarity (Pedreira and Brisaboa 2007; Hjaltason and Samet 2003) search relaxes the search constraints to get acceptable results at a lower cost (e.g. computation, memory, time).

2.2 Curse of dimensionality

All the research works have a common concern of scaling up indexing from low dimensional feature space to high dimensional feature space in getting good results, and it is a significant problem due to the phenomenon so called “the curse of dimensionality” (Wang et al. 1998). Recent studies show that most of the indexing schemes even become less efficient than sequential indexing for high dimensions. Such degradations and shortcomings prevent a widespread usage of such indexing structures, especially on multimedia collections.

2.3 Semantic gap

The field of semantic based image retrieval first received active research interest in the late 2000s (Zhang and Rui 2013). Both the single feature and the combination of multiple features are lacking in capturing the high-level concept of images. It is essential to understand the discrepancy between low-level image features and high-level concept to design good applications for VIR. The disparity leads to the so-called semantic gap (Sharif et al. 2018) in the VIR context. Describing images in semantic terms is the highest level of visual information retrieval, and it is a challenging task (Wang et al. 2016).

3 Applications

Indexing is widely used in visual information retrieval systems to make fast offline and online comparisons among data items. With the increase of storage devices as well as progress on the internet, image retrieval is growing with diverse application domains. In the literature a very few fully operational VIR systems are available but the importance of image retrieval has been highlighted in many fields. Though these certainly represent only the tip of the mountain, some potentially productive areas at the end of 2017 are as follows:

-

a.

Medical applications A rapid evolution in diagnostic techniques results in a large archives of medical images. At present this area is largely publicized as the prime users of VIR systems. This area has great potentials to be developed as huge markets for VIR system as it has unique ingredients (feature set viz. shape, texture etc.) for feature selection and indexing. The use of VIR can result in valuable services that can benefit biomedical information systems. The retrieval, monitoring, and decision-making should be integrated seamlessly to design an efficient medical information system for radiologist, cardiologist, and others.

-

b.

Biodiversity information systems Researchers in the life sciences are becoming increasingly concerned about to detect various diseases related to agricultural plants and to understand habitats of species. The in-time gathering and monitoring of visual data consistently achieve objectives as well as minimize the effect of diseases in plants/animals and monitors the lack of nutrients in plants.

-

c.

Remote sensing applications VIR system can be used to retrieve images related to fire detection, flood detection, land sliding, rainfall observation in agriculture, etc. For the query “show all forest area having less rainfall in last ten years” system replies with images having a region of interest. From military applications point of view probably this area is well developed and less publicized.

-

d.

Trademarks and copyrights This is one of the mature areas and on the advanced stage of development. In recent years, illegal use of logos and trademarks of noted brands has been emerged for business benefits. VIR is used as a counter mechanism in the identification of duplicate/similar trademark symbols which further helps in law enforcement and copyright violation investigation.

-

e.

Criminal investigation As an application this is not a truly VIR system as it purely supports identity matching rather than similarity matching. The VIR systems have a big significance in the criminal investigation. The identification of mugshot images, tattoos, fingerprint and shoeprint can be supported by these systems. Practically a large number of systems are used throughout the world for criminal investigation.

-

f.

Architectural and interior design Images that visually model the inner and outer structure of a building are containing more diagrammatic information. The use of VIR can result in important services that can benefit interior design or decorating and floor plan of a building.

-

g.

Fashion and fabric design The fashion and fabric industry have a predominant position among other industries all over world. For the product development purpose, designer of cloths has to refer previous designs. For the online shopping purpose, the user has to retrieve similar product options. As an application the aim of VIR system is to search the similar fabrics and products for designers and buyers respectively.

-

h.

Cultural heritage In comparison to other areas, image retrieval in art galleries and museum highly depends upon the creativity of user as images have heterogeneous specifics. In digitized art gallery and museum, the feature set is of high dimensionality which in turn requires advanced VIR systems.

-

i.

Education and manufacturing The main paradigm for performing 3D model retrieval has been using query-by example and query-by-sketch approach. The 3D image retrieval can be seen, as a toolbox for computer aided design, video game industry, teaching material and different manufacturing industries.

Other examples of database applications where visual information retrieval and indexing is useful: Personal Archives, Scientific Databases, Journalism and advertising, Storage of fragile documents, Biometric identification and Sketch-based Information Retrieval.

4 Categorization of indexing techniques

This section provides a background on indexing techniques and how they facilitate visual information retrieval and visual query by example. Many of the existing indexing techniques may range from the simple tree based (Robinson 1981; Lazaridis and Mehrotra 2001; Uhlmann 1991; Baeza-Yates et al. 1994) approaches to complex approaches that include deep learning (Babenko et al. 2014; Donahue et al. 2014; Dosovitskiy et al. 2014; Fischer et al. 2014) and hashing based (Andoni and Indyk 2008; Baluja and Covell 2008; He et al. 2011; Zhuang et al. 2011; Mu and Yan 2010; Liu et al. 2012). By approximate nearest neighbor search there exist hash and non-hash based indexing techniques and methodology of both turns around various concepts in the literature. But our finding says it is limited to some quality concepts. The hash based techniques are basically turnaround these concepts: Graph based, Matrix Factorization, Column Sampling, Weight Ranking, Rank Preserving, List-wise Ranking, Quantization, Semantic similarity (Text/image), Bit Scalability, Variable bit etc. whereas the non hash based techniques contains concepts namely Pivot Selection, Ball Partitioning, Pruning Rules, Semantic Similarity, Queue based Clustering, Manifold Ranking, Hybrid Segmentation, Approximation etc. Out of these significant concepts related methods have been detailed in this section. The categorization of these indexing techniques is presented in Fig. 3.

4.1 Hash based indexing

The Hashing has its origins in different fields including computer vision, graphics, and computational geometry. It was first introduced as locality sensitive hashing (Datar et al. 2004) in an approximate nearest neighbor (ANN) (Muja and Lowe 2009) search context. Any hash based ANN search works in three basic steps: figuring out the hash function, indexing the database objects and querying with hashing. Most ordinary hash functions are of the form

here f() is nonlinear function. The projection vector w and the corresponding bias b are estimated during the training procedure. sgn() is the element wise function which returns 1 if element is positive number and return − 1 otherwise. In addition, the choice of f() varies with type of hashing under consideration. Further, the choice of Hashing technique is highly dependent and mostly effected by the following factors: type of hash function, hash code balancing, similarity measurement and code optimization. The hash functions are of following forms: Linear, Non-linear, Kernelized, Deep CNN. The concept of code balancing is transfigured to bit balance and bit uncorrelation. Bit bi is balanced iff it is set to 1 in one half of all hash codes. Bit uncorrelation means that all pair of bits of hash code B is uncorrelated. Hash codes similarity is measured by hamming distance and its variants. Further, in optimization process sign function keeping, dropping and other relaxation methods are available. All these factors are denoted by legend in Fig. 3. To improve the search performance by fast hash function learning, researchers come up with new hashing methods of different flavors:

4.1.1 Data-dependent hashing (unsupervised)

The design of hash functions subject to analysis of available data and to integrate different properties of data. The main aim is to learn features from the particular dataset and to preserve similarity among the various spaces viz. data space and Hamming space. The unsupervised data dependent method uses unlabeled data to learn hash codes and committed to maintaining Euclidean similarity between the samples of training data. A representative method includes Kernelized LSH (Kulis and Grauman 2012), Spectral Hashing (Weiss et al. 2008), Spherical Hashing (Heo et al. 2012) and much more. Some of these techniques are discussed below.

4.1.1.1 Discrete graph hashing

Liu et al. (2014) proposed a mechanism for nearest neighbor search. To introduce a graph- based hashing approach, the author uses anchor graph to capture neighborhood structure in a dataset. The anchor graph provides nonlinear data-to-anchor mapping, and they are easy to build in linear time which is directly proportional to a number of data points. The proposed discrete graph hashing is an asymmetric hashing approach as it has different ways to deal with queries and in and out of sample data points (Table 1). The objective function written in matrix form is:

Define graph Laplacian L as anchor graph Laplacian \( L = I_{n} - S \), then the objective function is rewritten as:

By softening the constraints and ignoring error prone relaxation the objective function can be transformed as

4.1.1.2 Asymmetric inner product hashing

Fumin Shen et al. (Shen et al. 2017) address the asymmetric inner product hashing to learn binary code efficiently. In another context, it maintains inner product similarity of feature vectors. With the help of asymmetric hash function the Maximum Inner Product Search (MIPS) problem is formulated as

Here \( h\left( \cdot \right) \) and \( z\left( \cdot \right) \) are the hash functions, \( \cdot \) is the Frobenius norm and S is the similarity matrix computed as \( S = A^{T} X \). Further with linear form of hash functions the proposed Asymmetric Inner-product Binary Coding problem is formulated as (in matrix form):

The author incorporates the discrete variable as a substitute for sign function to optimize the bit generation approach.

4.1.1.3 Scalable graph hashing

Jiang and Li (2015) get inspiration from asymmetric LSH (Datar et al. 2004) to propose an unsupervised graph hashing. The proposed scheme formulates the approximation of the whole graph through feature transformation. Here approximation of graph is made without computing pair-wise similarity graph matrix. It learns hash functions bit by bit. The objective function is:

In particular, it uses concept of kernel bases to learn hash function and the objective function is defined as (in matrix form):

Subject to: \( K\left( X \right) \in {\mathbb{R}}^{n \times m} \) is the kernel feature matrix.

4.1.1.4 Locally linear hashing to capture non-linear manifolds

Irie et al. (2014) suggest a locally linear hashing to obtain the manifolds structure concealed in visual data. To identify the nearest neighbor in the same manifold related to the query, a local structure preserving scheme is proposed. In particular, it uses Locally Linear Sparse Reconstruction to capture locally linear structure:

The proposed model maintains the linear structure in a Hamming space by simultaneously minimizing the errors and losses due to reconstruction weights and quantization respectively. Therefore, the optimization problem is defined as:

4.1.1.5 Non-linear manifold hashing to learn compact binary embeddings

Shen et al. (2015) address the embedding to learn Nonlinear Manoifolds efficiently. The proposed method is entirely unsupervised which is used to uncover the multiple structures obscured in image data via Linear embedding and t-SNE (t-distributed stochastic neighbor embedding). For the construction of embedding a prototype algorithm has been proposed. For a given data point \( x_{q} \), the embedding \( y_{q} \) can be generated as

4.1.2 Data-dependent hashing (supervised)

Another category of learning to hash technique is supervised hashing. The supervised data dependent method uses labeled data to learn hash codes and committed to maintaining semantic similarity constructed from semantic labels of training samples. In comparison to unsupervised methods, supervised methods are slower during learning of large hash codes and labeled data. Further, it is limited to applications as it is not possible to get semantic labels always. The level of supervision further categorizes supervised methods in point-wise, triplets and list-wise approaches. Representative method includes Supervised Discrete hashing (Shen 2015), Minimal loss hashing (Norouzi and Fleet 2011) and many more (Lin et al. 2013; Ding et al. 2015; Ge et al. 2014; Neyshabur et al. 2013). Some of these techniques are listed and discussed below.

4.1.2.1 Discrete hashing

Shen et al. (2015) proposed a new learning-based data dependent hashing framework. The main aim is to optimize the binary codes for linear classification. This method jointly learns bit embedding and linear classifier under the optimization. For the optimization of hash bits, the discrete cyclic coordinate descent (DCC) algorithm is proposed. The objective function is defined as (in matrix form):

here \( \uplambda\;{\text{and}}\; v \) are the regularization parameter and penalty term respectively. They use hinge loss and \( l_{2 } \) loss for linear classifier. The proposed method showed improvement in results when it is compared with state-of-the-art supervised hashing methods (Zhang et al. 2014; Lin et al. 2014; Deng et al. 2015; Wang et al. 2013) and addressed their limitations. Further, in (Shen et al. 2016) they presented fast optimization of binary codes for linear classification and retrieval. Supervised and Unsupervised losses are considered for the development of scalable and computationally efficient method.

4.1.2.2 Discrete hashing by applying column sampling approach

Kang et al. (2016) presented discrete supervised hashing method based on column sampling for learning hash codes generated from semantic data. The proposed scheme is an iterative scheme where sampling of similarity matrix columns has been done through a column sampling technique (Li et al. 2013) i.e. the technique sample current available training data point into a single iteration. A randomized approach is used to sample data points i.e. few of the possible data points are selected for random sampling. Further, it partitions the sample space into two unequal halves and objective function is formulated as:

\( s.t. \)\( \tilde{S} \in \left\{ { - 1,1} \right\}^{\varGamma \times \varOmega } \), \( \varOmega \) and \( \varGamma \) are two halves of sample space, \( \varGamma = \)N – \( \varOmega \) with N = {1, 2,… n}.

It is a multi-iterative approach where the sample spaces updated with each iteration on an alternate basis.

4.1.2.3 Adaptive relevance based multi-task visual labeling

Deng et al. (2015) developed an image classification approach to overcome issues like data scarcity and scalability of learning technique. A use of hashing based feature dimension reduction reported much better image classification and stepped down required storage. The proposed method is a two-pronged multi-task hashing learning. Firstly, in learning step, each task suggests learning the defined model for a particular label. Further, this learning step executed in two levels viz. tasks and features simultaneously. The idea suggests that the complicated structure of processed features efficiently handle by task relevance scheme. Secondly, in the prediction step test datasets and trained model simultaneously classify and predicts the multiple labels. Outcomes reveal the algorithm potential of enhancing the quality of classification across multiple modalities.

4.1.3 Ranking-based hashing

With the advent of supervised, unsupervised and semi-supervised learning algorithms it is easy to generate optimized compact hash codes. Nearest neighbor search in large dataset under data-dependent methods produces suboptimal results. By exploring the ranking order and accuracy, it is easy to evaluate the quality of hash codes. Associated relevant values of hash codes help to maintain the ranking order of search results. A representative method includes Ranking-based Supervised Hashing, Column Generation Hashing and Rank Preserving Hashing and much more. Some of these methods are listed and discussed below.

4.1.3.1 Weighting scheme for hash code ranking

Ji et al. (2014) presented a ranking method involving weighting system. They aim to make an improved hamming distance ranking. However hamming distance ranking loses some valuable information during quantization of hash functions. To get highly efficient hamming distance measurement the proposed scheme learns weights [Qsrank (Zhang et al. 2012) and Whrank (Zhang et al. 2013)] during similarity search. To cope with expensive computing of higher order independence among hash function, this method uses mutual information of hash bit to propose mutual independence among hash bits. The neighbour preservation weighting scheme is defined as:

Here p and q are two weight vectors to capture the shared structure among task parameters, and variations specific to each task respectively. The objective function is maximized as follows:

s.t. \( 1^{T} \pi = 1, \pi \ge 0, \gamma \ge 0, \)\( a_{ij} \) is mutual independence between bit variables and \( w_{i}^{*} \) = \( w_{k} \pi_{k} \).

The anchor graph is used to represent sample to make similarity measurement useful for various datasets.

4.1.3.2 Ranking preserving hashing

Wang et al. (2015) proposed ranking preserving hashing to improve the ranking accuracy named Normalized Discounted Cumulative Gain (NDCG) which is the quality measure for hashing codes. The main aim is to learn new devised hashing codes that can maintain the ranking order and relevance values both for data examples. The ranking accuracy is calculated as follows:

here ranking position is defined as \( , \pi \left( {x_{i} } \right) = 1 + \mathop \sum \limits_{k = 1}^{n} I(b_{q}^{T} \left( {b_{k} - b_{i} } \right) > 0) \), Z is the normalization factor and \( r \) is the relevance value of data item \( x \). It’s hard to optimize NDCG directly due to its dependency on the ranking of data standards. Optimization of NDCG is done through linear hashing function which evaluates the expectation of NDCG.

4.1.3.3 Use of list-wise supervision for hash codes learning

Wang et al. (2013) presented an interesting variant for learning hash function. To this end, ranking order is used for learning procedure. The proposed approach implemented in three steps: (a) Firstly, transforms Ranking lists of queries into triplet matrix. (b) Secondly, the inner product has been used to compute the hash codes similarity which further derives the rank triplet matrix in Hamming space. (c) Finally, triplets are set to minimize inconsistency. The formulation of Listwise supervision is based on ranking triplet defines as:

The objective is to measure the quality of ranking list, which is formally calculated through loss function written in matrix form:

s.t. \( C \in {\mathbb{R}}^{\text{dxk}} \) is the coefficient matrix, \( p_{m} = \mathop \sum \limits_{i,j} \left[ {x_{i} - x_{j} } \right]S_{mij} \) and G = \( \mathop \sum \limits_{m} p_{m} q_{m}^{T} \).

The measurement of Loss function differentiates two ranking lists. They used Augmented Lagrange Multipliers (ALM) for optimization to reduce the computation time.

4.1.4 Multi-modal hashing

Developing a new retrieval model that is focusing on different types of multimedia data is a challenging task. Cross view or multimodal hashing techniques map different high dimensional data modalities into a small dimensional Hamming space. The main issue in performing joint modality hashing is preserving of similarity among inter and intra modalities. Utilization of information further categorize these methods in real-valued (Rasiwasia et al. 2010) and binary representation learning (Song et al. 2013) approaches. Some of the representative techniques are listed and discussed below.

4.1.4.1 Semantic-preserving hashing

Lin et al. (2015) proposed semantic preserving hashing named SPH for multimodal retrieval. The proposed scheme formulate the procedure in two steps: Firstly, it transforms semantic labels of data into a probability distribution. Secondly, it approximates data in Hamming space by minimizing its Kullback-Leiber divergence. The objective function is written as follow:

s.t. \( \alpha \) is the parameter for balancing the KL divergence and \( No \) is the normalizing factor

here the probabilities \( p_{i,j} \) and \( q_{i,j} \) are the probability of observing the similarity among training data and similarity among data items in hamming space respectively. I is the matrix having all entries 1. Quantization loss is measured as \( \left| {\widehat{H}} \right| - I^{2} \). They used kernel logistic regression boosted by sampling technique to introduce projection, the regression is done through k-mean and random sampling similarly.

4.1.4.2 Semantic topic hashing via latent semantic information

Wang et al. (2015) addresses the issues with graph-based and matrix decomposition based multimodal hashing methods. The long training time, decrease in mapping quality, and large quantization errors are the generic drawbacks of above-mentioned techniques. In the proposed work, the discrete nature of hash code has been considered. The overall objective function for text and image concept (\( L_{T} , L_{I} , L_{C} \)) is defined as:

Here, F is a set of latent semantic topic, P is the correlation matrix between text and image, U and V are the set of semantic concepts, \( \uplambda, \mu , \gamma \) are the tradeoff parameters and \( {\text{R}}\left( \cdot \right) \) is the regularization term to avoid over fitting.

4.1.4.3 Multiple complementary hash tables learning

Liu et al. (2015) switches research direction from compact hash codes to multiple additional hash tables. The author claims that this is the first approach which takes into account the multi-view complementary hash tables. In this method, additional hash tables are considered as clusters that use exemplars-based feature fusion. They extend exemplar based approximation techniques by adopting a new feature representation \( \left[ {z_{i} } \right]_{k} = \frac{{\delta_{k} K\left( {x_{i } , u_{k} } \right)}}{{\mathop \sum \nolimits_{{k^{\prime} = 1}}^{M} \delta_{k} K\left( {x_{i } , u_{{k^{\prime}}} } \right)}} \) here \( \delta_{k} \in \left\{ {0,1} \right\}, u_{k} \) are exemplar points \( \in \)\( \{ {\mathbb{R}}^{\text{d}} \} _{k = 1}^{M} \) and \( \kappa \left( { \cdot , \cdot } \right) \) is kernel function. The overall objective is to minimize:

Here μ is the weight vector. The strength of presented scheme is that they assume a few cluster centers to measure the similarity in between entire data points. They defend linear weighting and bundling of multiple features in one vector using nonlinear transformation.

4.1.4.4 Alternating co-quantization based hashing

Irie et al. (2015) proposed an improved multimodal retrieval which is based on the binary hash codes. The primary goal is to minimize the binary quantization error. To reduce the errors, the proposed model learns hash functions that provide a uniform mapping to one standard hash space with minimum distance among projected data points. The overall objective function (similarity preserving + quantization error) is formulated as:

Here λ, η are the balancing parameter, A and D are parameters of the binary quantizer, C is used to define inter and intra modal correlation matrix between X and Y and \( U,V \in \left\{ { \pm 1} \right\} \) are binary codes for X and Y. Later in quantization phase, two different quantizers are generated for two separate modalities viz. image and data which leads to end-to-end binary optimizers.

4.1.5 Deep hashing

In all hashing methods, the quality of extracted image features will affect the quality of generated hash codes. To ensure good quality and error free compact hash codes a joint learning model is needed to incorporate feature learning and hash value learning simultaneously. The model consists of different stages of learning and training deep neural networks. Deep hashing model has three simple steps to generate individual hash codes: (a) Image input (b) generation of intermediate features using convolution layer (c) a divide and encode module to distribute intermediate features into different channels further each channel is encoded into the hash bit. Representative methods (Abbas et al. 2018) are based on Recurrent Neural Networks (RNN) and Convolutional Networks (CNN). Some of these methods are listed and discussed below.

4.1.5.1 Point-wise deep learning

Lin et al. (2015) suggested a deep learning framework for fast ANN search. The main aim is to generate compact binary codes driven by CNN. Instead of applying learning separately on image representation and binary codes, simultaneous learning has been adopted with the assumption of labeled data. The proposed approach implemented in three steps: (a) supervised pre-training on the dataset (b) fine tuning the network (c) image retrieval. For computationally cheap large scale learning with compact binary codes, multiple visual signatures are converted to binary codes. Following the data independence approach, the proposed approach is highly scalable which lead to very efficient and practical large scale learning algorithms.

4.1.5.2 Pair-wise labels based supervised hashing

Li et al. (2016) addresses the issue of optimal compatibility among handcrafted features and hashing function used in various hashing methods. Simultaneous learning has been adopted, instead of applying learning separately on feature and hash code. The proposed end-to-end learning framework contains three main elements: (a) deep neural network for learning (b) hash function, which takes care of mapping between two spaces (c) loss function that is used to grade the hash code led by the pairwise label. The overall problem (feature learning + objective function) is formulated as:

Here θ represents the all parameters of the 7 layers, \( \emptyset \left( {{\text{x}}_{\text{i}} ;\uptheta} \right) \) denotes the output of the last Full layer, v is the bias vector and \( \eta \) is the hyper-parameter. Following the principles, deep architecture integrates all three components which further permit the cyclic chain of feedback among different parts.

4.1.5.3 Regularized learning based bit scalable hashing

Zhang et al. (2015) incorporate the concept of bit scalability to compute similarity among the images. For rapid and efficient image retrieval the author group training images into a pack of triplet sample. The author assumes that each sample consists of two images with a similar label and one with the dissimilar label. In particular, the learning algorithm has been implemented in a batch-process fashion that makes use of stochastic gradient descent (SGD) for minimizing the objective function in large-scale learning. The objective function is formally written as follows:

Here \( D_{{\mathbf{w}}} \left( {r_{i} ,r_{i}^{ + } ,r_{i}^{ - } } \right) = {\text{M}}\left( {r_{i} ,r_{i}^{ + } } \right) - {\text{M}}\left( {r_{i} ,r_{i}^{ - } } \right) \) with \( r_{i} ,r_{i}^{ + } ,r_{i}^{ - } \) are the approximated hash codes,\( {\text{M}} \) is weighted Euclidean distance, \( C = - \frac{\text{q}}{2} \) for q bit hashing code, \( R = \left[ {r_{1} \widehat{\text{w}}^{{\frac{1}{2}}} ,r_{2} \widehat{\text{w}}^{{\frac{1}{2}}} , \ldots ,r_{T} \widehat{\text{w}}^{{\frac{1}{2}}} } \right] \) for T number of images and \( \omega \) and \( \uplambda \) are the parameter of hashing function and hyper-parameter for balancing respectively.

4.1.5.4 Similarity-adaptive deep hashing

For learning similarity-preserving binary codes Shen et al. (2018) proposed an unsupervised deep hashing method. To introduce similarity preserving binary codes, the author uses anchor graph to propose pair-wise similarity graph. The anchor graph provides nonlinear data-to-anchor mapping, and they are easy to build in linear time which is directly proportional to a total number of data points. The proposed approach implemented in three steps: (a) Firstly, Euclidean loss layer has been introduced to train the deep model for error control (b) Secondly, the pair-wise similarity graph has been updated to make deep hash model and binary codes more compatible. (c) Finally, alternating direction method of multipliers (Boyd et al. 2010) is used for optimization. The updating of similarity graph is highly dependent on the output representations of deep hash model which subsequently improves the code optimization.

4.1.6 Online hashing

Existing data dependent and independent hashing schemes follows batch mode learning algorithms. That is learning based hashing methods demands massive labeled data set in advance for training and learning. Providing gigantic data in advance is infeasible. A new hashing technique that is focusing on updating of a hash function with a continuous stream of data has been developed known as online hashing. Some of the representative methods are listed and discussed below.

4.1.6.1 Kernel-based hashing

Huang et al. (2013) proposed a real-time online kernel based hashing which has its origins in the problem of large data handling in existing batch-based learning schemes. In other contexts, the online learning is known as the passive aggressive learning. It is first introduced by Crammer et al. (2006). The objective function for updating projection matrix is inspired by structured prediction and given as below:

Here C is the aggressiveness parameter and \( \upxi \) ≥ 0.

4.1.6.2 Adaptive hashing

Cakir and Sclaroff (2015) presented adaptive hashing, a new hashing approach that makes use of SGD for minimizing the objective function in large-scale learning. The objective function is defined as:

An update strategy is utilized for deciding in what amount any hash function need to update and which of the hash function need to be corrected. W is updated as follows: \( W^{t + 1} = W^{t} - \eta^{t} \nabla_{\text{W}} l\left( {f\left( {x_{i} } \right),f\left( {x_{j} } \right);W^{t} } \right) \). Here \( \eta^{t} \) is the constant value, \( \nabla_{\text{W}} \) is obtained approximating the sgn() function with sigmoid. During online learning orthogonality regularization is required to break the correlation among decision boundaries. Adaptive hashing is highly flexible and iterative as it updated the hash function with the speed of streaming data.

4.1.7 Quantization for hashing

Every approximate nearest neighbor searching techniques comprise two stages: projection and quantization. Initially, the data points are mapped into low dimensional space. Next, each assigned value quantized into binary code. During quantization information loss is instinctive, but in any bit selection approach for quantization, similarity preservation of Hamming and Euclidean distance and independence between bits are other major issues. A representative method includes single bit quantization (Indyk and Motwani 1998), double bit quantization (Kong and Li 2012), multiple bit quantizations (Moran et al. 2013) and much more. Some of these methods are listed and discussed below.

4.1.7.1 Variable bit quantization

Moran et al. (2013) proposed a data driven variable bit allocation per locality sensitive hyperplane hashing for quantization stage of hashing based ANN search. In previous widely popular approaches SBQ (Indyk and Motwani 1998), DBQ (Kong and Li 2012), NPQ (Moran et al. 2013) and MQ (Kong et al. 2012) it is taken for granted that each hyperplane has been assigned with 1 or 2 bit respectively and in case of any method violate the defined assignment principle then either bits are discarded or other hyperplanes serves with lesser bits accordingly. Initially, in order to allocate a variable number of bits to each hyperplane, the F-measure has been computed for each hyperplane. The principle idea behind F-measure calculation is that large informative hyper-planes results in higher F-measure.

4.1.7.2 Most informative hash bit selection

Liu et al. (2013) proposed a bit selection method named NDomSet which unify different selection problem into a single framework. The author presents a new family of hash bit selection from a pool of hashed bits which further grows into the discovery of standard dominant set in the bit graph. In this approach, firstly an edge-weighted graph is made, representing the bit pool. The proposed approach consisted of bit selection as quadratic programming to deal with similarity preservation and non-redundancy properties of bits. The experimental results show that the proposed non-uniform bit selection strategy perform well while using hash bits generated by different hashing methods viz. ITQ (Gong et al. 2013), SPH (Weiss et al. 2008), RMMH (Joly and Buisson 2011).

4.1.7.3 Exploring code space through multi bit quantization

Wang et al. (2015) address the issue of quantization error and neighborhood structure of raw data. The author introduced an innovative multi-bit quantization scheme to use available code space at its maximum. To depict the similarity preservation among Hamming and Euclidean distance space, a distance error function has been introduced. They also proposed an O(n3) algorithm for optimization to reduce the computation time. Results obtained by experiments demonstrate a possible improvement in search accuracy due to proposed quantization method. They also demonstrate the effectiveness of Hamming, Quadra and Manhattan distance on multi-bit quantization approach.

Table 2 compares various hash based indexing techniques regarding their pros, cons, dataset, feature used, evaluation measure and experimental results. Table 3 lists a brief introduction to different datasets used in hash based indexing techniques.

4.2 Non-hash based indexing

Non-hash based methods are classified in various categories viz. tree, bitmap, machine learning, deep learning and soft computing based. To maximize the scope of non-hash based methods here, we consider every technique one by one in order.

4.2.1 Tree based techniques

Earlier nearest neighbor searching methods are tree-based, and there is a need for indexing structure to partition the data space. Further different similarity measurement metrics, space partitioning, and pivot selection techniques are adopted to compute the nearest neighbor among image features. Due to these joint efforts, a large class of tree-based indexing techniques is available in literature such as R-Tree (Guttman 1984), KD-Tree (Friedman et al. 1977), VP-Tree (Markov 2004) and M-tree (Ciaccia et al. 1997). Studies below stressed on some essential techniques in large image datasets.

4.2.1.1 Pivot selection based

Indexing schemes based on reference (pivot) objects results in minor distance computation and disk accesses. The different pivot selection algorithms compete for selection of right pivots, the number of pivots, pre-computed distances, and distribution of pivots. Pivot selection techniques are classified into two categories: Pivot partitioning and Pivot filtering. Further partitioning can be done in two different ways ball partitioning and hyperplane partitioning. Some of these techniques are listed and discussed below.

-

(i)

Use of Spacing-Correlation Objects Selection for Vantage Indexing

Van Leuken et al. (2011) propose an algorithm to select a set of pivots carefully. The proposed vantage indexing makes use of a randomized incremental algorithm for the selection of a set of pivots. The two-pronged scheme firstly proposes criteria to measure the quality of pivots and secondly provides a pivot selection scheme with the condition of no random pre-selection. They have proposed two new quality criteria for variance of spacing and correlation, defined as:

Here \( \mu \) is the average spacing \( \sigma \) be the variance of spacing, A and V are objects and vantage objects respectively, \( d\left( {\cdot,\cdot} \right) \) be the distance function and C be the linear correlation coefficient.

-

(ii)

Cost-Based Approach for Optimizing Query Evaluation

Erik and Hetland (2012) proposed a cost-based approach to evaluating pivot selection dynamically. The main aim is to find selective pivots and an exact number of pivots required to assess a query. Initially, to perform a sequential search by skipping the searching in indexes, the cost model has been used. Quadratic Form Distance is used to compare histograms, and Euclidean distance has been used for experimental measurements. This approach believes in the static use of pivots (Traina et al. 2007) and the principle of maximizing the distance between pivots strengthen the approach.

-

(iii)

Improving Node Split Strategy for Ball-Partitioning

De Souza et al. (2013) described ball-partitioning-based metric access methods that able to reduce the number of distance calculation and fast execution of distance-based queries. Node split strategies of M-tree and slim tree are too complex. The main aim is to propose the modified node split strategies. For better pivot selection, to avoid unbalanced splits and to categorize the nodes in different sets, three different algorithms viz. maximum dissimilarity, path distance sum based on prim’s algorithm and reference element have been proposed. The proposed methods are shown to be efficient in the number of distance calculations and the time spent to build the structures.

-

(iv)

Efficient k-closest pair queries by considering Effective Pruning Rules

Gao et al. (2015) proposed several algorithms for closest pair query processing by developing more effective pruning rule. The contribution of this paper is twofold: minimizes the number of distance computations as well as the number of node accesses. The proposed approach consisted of depth-first and best-first traversals to deal with duplicate accesses. Query efficiency is achieved by the employment of new pruning rules based on metric space. Experimental results on different data sets proved that the proposed scheme reached minimum distance computation and minimized I/O overhead.

-

(v)

Metric all-kNN search by Considering Grouping, Reuse and Progressive Pruning Techniques

Chen et al. (2016) proposed a novel method for All-k-Nearest-Neighbor Search named Count M-tree (COM). The contribution of this paper is twofold: minimizes the number of distance computations as well as some node accesses. The indexing method relies on dynamic disk-based metric indexes which use different pruning rules, grouping, recycle and pruning methods. The strength of presented scheme is that the query set and the object set share the same dataset as no different dataset required to train them separately.

-

(vi)

Radius-Sensitive Objective Function for Pivot Selection

Mao et al. (2016) proposed an improved metric space indexing which is based on the selection of several pivots. The system performs following functions: Firstly, they present importance of pivot selection. Their criterion for pivot selection is based on relevance and distance among pivot objects. An extended pruning mechanism has been presented with a framework to fix and select some relevant pivots. Radius-sensitive objective function for pivot selection is to maximize:

Here S is the dataset in metric space, \( L_{\infty } \) is the distance.

4.2.1.2 Clustering based

The grouping of semantically similar images into clusters suggests a novel framework for nearest neighbor search in image retrieval. Instead of matching large part of image data set with query image it is meaningful to match a representative image(s) from a cluster. Following the principles, clustering based techniques work for a particular dataset. Varieties of clustering methods are available in the literature. Some of these methods are listed and discussed below.

-

(i)

Priority Queues and Binary Feature based Scalable Nearest Neighbor Search

Muja and Lowe (2014) proposed a priority queue based algorithms for approximate nearest neighbor matching and proposed an algorithm for matching binary features also. The focus of this algorithm is to extend in finding a large number of closest neighbors. They have developed an extended version using a well-known best-bin-first strategy. A small number of iterations considerably cut down the tree build time which further maintains the search performance. The author also comes up with an open source library called the fast library for approximate nearest neighbors (FLANN) for the use of research community.

-

(ii)

Indexing and Packaging of Semantically Similar Images into Super-Images

Luo et al. (2014) work on the packaging of semantically similar images into super-images. The fact behind this proposal is the strong relevance of the images into a dataset. The concept of super-image effectively bundle the multiple images into a single unit of same relevance and significantly decreases the size of the index. Semantic attribute extraction is the main issue in index construction. The attributes are extracted during packaging of one super-image i.e. during off-line indexing to make fast index structure. Visual compactness of a superimage candidate is calculated as:

Here TF is the normalized term frequency vector and dist() is the cosine distance.

-

(iii)

Image Discovery through Clustering Similar Images

Zhang and Qiu (2015) proposed a scheme to discover landmark images in large image datasets. For rapid and efficient image retrieval the author group semantically similar images into clusters. One landmark image with different viewpoints adequately packed into a sub-cluster. Clusters can be partially overlapped. Each sub-cluster contains a center called as bundling center. Further, the bundling center of sub-cluster acts as a representative of the sub-cluster, to avoid exact image matching the scheme performs image matching by placing the bundling center.

4.2.1.3 Other techniques

This half contains the approximation, relevance feedback and some other techniques for VIR. Retrieval of images by approximation, relevance feedback or by some other means viz. online techniques and query as video demands extra efforts but results in fine-grained results. Some of the representative methods are listed and discussed below.

-

(i)

Indexing via Sparse Approximation

Borges et al. (2015) propose a high-dimensional indexing scheme based on sparse approximation techniques. The focus of this scheme is to improve the retrieval efficiency and to reduce the data dimensionality by designing a dictionary for mapping the feature vectors onto sparse feature vectors. The proposed scheme switches its direction to compute the high-dimensional sparse representations based on regression with the condition of preserved maximum locality. They showed that traversal of the data structure would be independent of metric function, low storage space required for efficient encoding of sparse representation and search space pruned efficiently.

-

(ii)

Use of Hybrid Segmentation and Relevance Feedback for Colored Image Retrieval

Bose et al. (2015) proposed a new Relevance Feedback (RF) based VIR. The first advantage of the proposed scheme is feature-reweighting for relevance feedback i.e. to compute relevance score and weights of features the combination of feature-reweighting and instance based cluster density approach are used. The second advantage is a good utilization of image and shape contents. This scheme extracts the color and shape information through the color co-occurrence matrix (CCM) and segmentation of the image respectively. Here, unrestricted segmentation (k-mean) is used to segment the images. The relevance feedback scheme is initialized with three different approaches: intersection approach, union approach, and a combination approach. They have proposed two new measures retrieval efficiency, false discovery respectively to address the accuracy of retrieval.

-

(iii)

Parallelism based Online Image Classification

Xie et al. (2015) propose a united algorithm for classification and retrieval named ONE (Online Nearest-neighbor Estimation). They observed that image classification and retrieval fundamentals are same and similarity measurement function could launch both of them. Its overall aim is to utilize the GPU parallelization to make the fast computation fully. The dimension reduction scheme is initiated with the help of both PCA and PQ. The scheme relies on feature extraction, training, quantization and an inverted index structure.

-

(iv)

Query by Video Search

Yang et al. (2013) proposed a priority queue based algorithms for feature description and proposed a cache based bi-quantization algorithm also for information retrieval concept implementation. This method considers a short video clip as a query. Further, to find stable and representative good points among SIFT features, scheme perform feature tracking (Ramezani and Yaghmaee 2016) within video frames. The calculation of good point is formulated as:

Here \( S\left( {p_{i}^{j} } \right) \) denotes stableness of the point, Len() represents number of frames being tracked, and Framecount denotes the total number of frames in video query. Query representation is initiated by combining good points into a histogram.

Table 4 compares various tree-based indexing techniques regarding their pros, cons, dataset, feature used, evaluation measure and experimental results. Table 5 lists a brief introduction to different datasets used in tree-based indexing techniques.

4.2.2 Ranking based

Another category of tree-based indexing technique exists in literature to rectify the distance computation cost and index building cost by the use of different ranking strategies. In comparison to other methods, most of the ranking based methods are independent of distance measures. By exploring the ranking order and post processing, it is easy to the accurate and fast construction of index. The ranking scheme can be further extended to graph based, manifold ranking, supervised and unsupervised techniques.

4.2.3 Deep learning based

The need for full utilization of feature extraction, processing, and indexing in VIR shifted the research direction towards deep learning. The recently proposed models map low-level features into a high level with the help of nonlinear mapping techniques. Different feature extraction networks are available in literature viz. Alexnet (Krizhevsky et al. 2012), Goognet (Szegedy et al. 2015) and VGG (Simonyan and Zisserman 2014). Issues like number of layers in a network, distance metric, indexing techniques and much more are still unanswered and need to be benchmarked. Representative methods are based on RNN and CNN.

4.2.4 Machine learning based

It is essential to understand the discrepancy between low-level image features and high-level concept to design good applications for VIR. This leads to the so-called semantic gap in the VIR context. To reduce the semantic gap different classification and clustering techniques under machine learning are available. The support vector machine (SVM) and manifold learning are used to identify the category of the images in the dataset. Relevance feedback is also a good alternative. The level of learning and feedback further categorize learning methods in ‘active’ and ‘passive’ learning and ‘long’ and ‘short’ term learning approaches.

4.2.5 Soft computing based

Soft computing is combined effort of reasoning and deduction that employ development of membership and classification. The key to any productive soft computing based CBIR technique is to choose the best feature extraction scheme. Some of the soft computing techniques are Artificial Neural Network, Fuzzy Logic, and Evolutionary Computation. Above listed methods are discussed in Table 6.

In the previous half we present a detailed review of hash and non-hash based indexing techniques and we found that hash and non hash (tree) based techniques are totally different in nature. Generally speaking, in comparison to hash based techniques, the tree based techniques have following serious issues:

-

(1)

Tree based methods are in need of large storage requirement in comparison to hashing based methods (sometimes more than the size of dataset itself) and the situation becomes worse when managing high dimensional datasets.

-

(2)

For high dimensional datasets, in comparison to hashing based methods the retrieval accuracy of tree based methods approaches to linear search as backtracking takes long search time.

-

(3)

The use of branch and bound criteria in tree based method makes them computationally more expensive as they are unable to stop after finding the optimal point and continuing in search of other points whereas in hashing based methods the criteria is to stop the search once they find a good enough points.

-

(4)

On behalf of partitioning the entire dataset the hashing based methods repeatedly partition the dataset to generate a one ‘bit’ hash from each partitioning whereas tree based methods uses the recursive one.

On the application side tree based methods are applicable and useful when we have low dimensional datasets and user wanting exact nearest neighbor search. In the era of big data and deep learning, hashing based techniques are more suitable for high dimensional datasets and nearest neighbor search with low online computational overheads. Further, different intents and needs of users bring up unheard challenges as discussed in Sect. 7 later, all these challenges are in the scope of hash based methods. So we surely handle all of these issues by employing advanced hashing techniques. Therefore, to ensure fair comparisons a summary of their potential is presented in Table 7.

5 Evaluation framework

In this section, we evaluate hashing techniques by careful analysis of results in the literature and approaches surveyed in this paper. By focusing on experimental works, we make an analysis of large number of notable hash based indexing techniques whose codes are available online. The experiments are run on an Intel Core i7 (4.20 GHz) with 32 GB of RAM, and Windows 10 OS. All the strategies are implemented in Matlab R2017b using the same framework to allow a fair comparison (Table 8).

5.1 Description of data

For the experiments regarding large scale similarity search and image retrieval we resorted to the five data sets: NUS-WIDE, CIFAR, SUN397, LabelMe, Wiki. The five datasets are chosen for their qualities viz. diverse, accessible, large size, and a rich set of descriptor considering different properties of the image. A large number of datasets are available in the literature as listed in Table 3 with their license, content, and accessibility issues. We have only opted general image datasets as they are largely opted by state-of the-art.

5.2 Evaluation metrices

The majority of datasets and techniques use Mean Average Precision (MAP) as the central evaluation metric for experiments. Along with MAP, they consider Mean Precision, Mean Classification Accuracy (MCA), Precision and Recall of Hamming distance 2. They also use two different metrics for evaluating retrieval: (i) Normalized Discounted Cumulative Gain (NDCG) using Hamming Ranking (ii) Average Cumulative Gain (ACG) using Hamming Ranking.

5.3 Evaluation mechanisms

The evaluation of system decides how far the system accomplish user’s needs and technically which methodology is best for feature selection feature weighting, and hash function generation to make efficient and accurate retrieval process. Accordingly, researchers have explored a variety of ways to assessing user satisfaction and general evaluation of Image Retrieval system. It has always been a challenging and difficult matter for image retrieval importantly due to semantic gap (Wang et al. 2016) and further it is more problematic to pick out relevant set in the image database. There exist different ways of evaluating Image Retrieval systems in the literature are described below.

-

(1)

Precision It is a measure of exactness which pertains to the fraction of the retrieved images that is relevant to the query.

-

(2)

Recall It is the measure of completeness which refers to the fraction of relevant images that is responded to the query.

-

(3)

Average precision This is the ratio of relevant images to irrelevant images in a specific number of retrieved images.

-

(4)

Mean average precision This is the average of the average precision value for a set of queries.

-

(5)

Normalized discounted cumulative gain (NDCG): It is the measure of uniformity between ground-truth relevance list to a query and estimated ranking positions.

-

(6)

F-measure It is combined measure that assesses precision/recall tradeoff.

Besides these evaluation measures there exist some measures which can strengthen the evaluation procedure despite semantic gap type of challenges.

-

(1)

The size of index It determines the storage utilization of generated Index. Practically the size of the index must be a fraction of dataset size.

-

(2)

Index compression Some indexing techniques generate short hash codes or other similar codes for image data thereby reducing the storage requirement of Index.

-

(3)

Multimodal indexing This refers to the ability of the index to support cross-media retrieval. As per current scenario the user intent to search via query by keywords, query by image or combination of both. Practically being multimodal, a system must support text to text, text to image and image to image search.

The metrics and measures mentioned above do not quantify user requirements. Other than image semantic, the different user intent may contain image clarity, quality, and associated meta-data. The satisfaction of user highly depends on the following factors:

-

(1)

User effort This factor decides the role of the user and their efforts in devising queries, conducting the search, and viewing the output.

-

(2)

Visualization This refers to the different ways to display the results to the users either in linear list format or 2-D grid format. Further, it influences the user’s ability to employ the retrieved results.

-

(3)

Outcome coverage This factor decides to which level the relevant images (by agreed relevant score) are included in the output.

5.4 Evaluated techniques and results

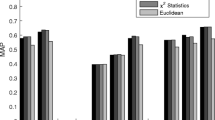

To evaluate the large-scale similarity search accuracy and effectiveness, we compare some hashing methods. To allow the comparison the most important aspects to evaluate is the algorithm performance metric as discussed in Sect. 5.2. Figure 4 below shows the comparison of various unsupervised data dependent techniques on CIFAR (GIST features) and SUN397 (CNN features) datasets.

It is evident from the Fig. 4a that AIBC (Shen et al. 2017) performed better than other unsupervised techniques. The AIBC improved Mean Average Precision nearly 2%, 4% and 9% for code length 32, 64 and 128 bits. The MAP results for various unsupervised data dependent are examined on the SUN397 dataset. Best score of MAP are obtained by AIBC because the correlation among inner products in this approach is maximum, the key to generating high-quality codes. Figure 4b displays the comparison of various techniques with AIBC regarding MAP values. The AIBC improved the MAP nearly 8% for code length 64 and 128 bits.

In Fig. 5 we compare the performance of Column Sampling based Discrete Supervised Hashing (Kang et al. 2016) with state-of-the-art. Figure 5a presents the MAP obtained with values of code length ranging from 32, 64 and 128 bits. It can be seen that the column sampling based discrete hashing improved the MAP nearly 16%, 12% and 12% for code length 32, 64 and 128 bits respectively on CIFAR (GIST features) dataset. Figure 5b displays the comparison regarding MAP value for NUSWIDE (GIST features) dataset. The COSDISH improved MAP nearly 6% for code length 32, 64 and 128 bits with the use of column sampling technique to sample similarity matrix columns iteratively.

The ranking quality of retrieved results for Ranking based Hashing on the NUS-WIDE (GIST features) dataset is displayed in Fig. 6. NDCG@K is used to evaluate the ranking quality. Here K represents the value of retrieved instance. The figure presents the value of NDCG obtained with values of K ranging from 5 to 20 for 64 hashing bits. The Ranking preserving hashing improved NDCG nearly 1% under NDCG@5, 10 and 20 measures.

Next, the comparison of various Multi-Modal techniques on WiKi (CNN features for image data and skipgrams for text data) and NUS-WIDE (SIFT features for image data and binary tagging vector for text data) datasets has been represented. Figure 7a depicts the MAP values results on Wiki dataset for the image to image search. The MAP value results are observed for 32, 48 and 64-bit code length. The ACQ (Irie et al. 2015) improved the values approximately 1.5%, for three different code lengths respectively. Figure 7b also displays the comparison regarding MAP values among various techniques on Wiki dataset for the text to image search. Here we show the comparison of ACQ (Irie et al. 2015) with state-of-the-art multi-modal techniques. The ACQ improved the values nearly 4, 3 and 2% for 32, 48 and 64-bit code length respectively. Figure 7c depicts the MAP values results on NUS-WIDE dataset for the text to image search. Here we show the comparison of Semantics-Preserving Hashing (Lin et al. 2015) with state-of-the-art multi-modal techniques. The probability based SPH showed an average maximum improvement of 19% for 32, 48 and 64-bit code length respectively.

The image retrieval results for Deep Hashing on CIFAR and NUSWIDE dataset are displayed in Fig. 8. The figure presents the value of MAP obtained for different values of hash code length viz. 32, 48 and 64 bits. Figure 8a depicts the MAP values results on CIFAR dataset. The Pair-wise Labels based Supervised Hashing (Li et al. 2016) improved MAP by 13, 12 and 12% respectively. Figure 8b also displays the comparison regarding MAP values among various techniques on NUS-WIDE dataset. The DPSH (Li et al. 2016) improved the values nearly 2, 3 and 3% respectively.

Figure 9 list the retrieval results of Adaptive Hashing, Online hashing and other Batch Hashing techniques are examined on LabelMe (GIST features) dataset. The MAP values for different hash code length generated by Adaptive hashing are not very promising. BRE (Kulis and Darrell 2009) showed an average maximum improvement of 6% in MAP on other batch techniques and 4% as compared to online methods. Further, it is observed that the concept of adaptive hashing (Cakir and Sclaroff 2015) does not put much impact on MAP values of kernel-based online hashing (Huang et al. 2013).

From the above evaluations, we draw the following conclusions:

-

(1)

Supervised learning methods mostly attain good performance in comparison to unsupervised learning methods. As, the supervised method uses labeled data to learn hash codes and committed to maintaining semantic similarity constructed from semantic labels. In comparison to unsupervised methods, supervised methods are slower during learning of large hash codes due to labeled data. This slowness can be overcome by incorporating deep learning concept.

-

(2)

The performance of multimodal retrieval methods is totally guided by the quality of feature set. The results of text to image search are better than image to image search for multimodal datasets. The direct extension of two- modality algorithm into three or more modality is not possible.

-

(3)

The methods like adaptive hashing and online hashing does not provide promising results. Even, the concept of adaptive hashing does not put much impact on performance of kernel-based online hashing.

-

(4)

Nearest neighbor search on optimized compact hash codes of large dataset induces suboptimal results. By exploring the ranking order and accuracy, it is easy to evaluate the quality of hash codes. Associated relevant values of hash codes help to maintain the ranking order of search results.

6 Multimedia indexing evaluation programs

There are several well-instituted evaluation campaigns and meetings which provide test-bed and metric based environment to compare different proposed solutions in image retrieval domain. In this section, we describe various evaluation campaigns in image retrieval organized by the University of Sheffield, Stanford University, Princeton University and various research groups.

6.1 MediaEval

MediaEvalFootnote 1 Benchmarking Initiative is a benchmarking activity organized by various research groups devoted to evaluating a new algorithm for multilingual multimedia content access and retrieval. It set out initially to benchmark some tasks related to the image, video, and music viz. Tagging Task for videos including Social Event Detection, Subject Classification, affect task, later from 2013 it sets to benchmark Diverse Images task.

The goal of Diverse Images task is to retrieve images from tourist images dataset that is participants has to refine (reorder) provided a ranked list of photos by maintaining diversity and representativeness. As a novelty in 2015, the diverse image task has been extended to multi-concept, ad-hoc, queries scenario. The dataset used for campaign is of small size having general-purpose visual/textual descriptors. It contains 95,000 images, splits into 50% for designing/training and 50% for evaluating. Different metric are considered to evaluate the system: Cluster Recall, Precision and F-measure. The evaluation of ranking (F-measure score) has been done by visiting the first page of the displayed outcome only. Other popular evaluation metrics are intent-aware expected reciprocal rank and the Normalized discounted cumulative gain (NDCG). Table 9 shows the evolution of MediaEval tasks over the year.

6.2 ImageCLEF

This evaluation forumFootnote 2 was initiated by University of Sheffield in 2003 nowadays it is run by individual different research groups. Initially, it is launched as a part of CLEF.Footnote 3 As mentioned on the website: It is a series of challenges to promote concept based annotation of images and multimodal and multilingual retrieval both. The first image retrieval track was included in 2003, where the objective was to perform similarity search and to find relevant images related to a topic in the cross-language environment. In 2004, Visual features were included the first time in any image CLEF track. The task was to perform image (tagged by English captions) search with text queries and visual features based medical image retrieval and classification. Over the year, it considers a broad range of topics related to multimedia retrieval and analysis. Different tracks under imageCLEF challenge are listed in Table 10.

6.3 ILSVRC

ILSVRC is in its 8th year in 2017 and is governed by a research team from Stanford and Princeton University respectively. The ImageNetFootnote 4 large scale task organized (since 2010) some object category classification and category detection task to promote the evaluation of proposed retrieval and annotation methods. Over the year ILSVRC consists of following tasks: Image classification (2010–2014), Single-object localization (2011–2014) and Object detection (2013–2014) and different dataset for testing and training and evolution tasks are listed in Tables 11 and 12 respectively.

7 Open issues and future challenges

Over the years, image retrieval has come a long way from simple linear scan techniques to more traditional learning and hashing techniques such as rank-based, deep learning, multimodal and online hashing techniques. Interest in topics such as quantization, supervised and unsupervised hashing is also increasing. The field of multimedia retrieval has witnessed different indexing techniques for data analysis. Further, different intents and needs of users bring up unheard challenges. We discuss some open and unresolved issues as follows:

7.1 A collection of big multimodal dataset

Instead of the uni-modal retrieval system, multiple unimodal systems can be combined to obtain multimodal retrieval system. To study and retrieve information across various modalities have been widely adopted by different research communities. There is an urgent need of large, annotated, easily available, and benchmarked multimodal dataset to train, test and evaluate multimodal algorithms.

7.2 Utilization of labeled and unlabeled data samples to learn

Earlier VIR systems were simple, and were answerable to small datasets only i.e. they were independent or partially dependent on side (extra/labeled information. Simultaneous learning of labeled and unlabeled data samples put more complications in modern labeled information based systems. The ignorance of unlabelled database samples and a limited selection of these samples are the generic drawbacks of current approaches. Hence, need to utilize labeled and unlabeled data samples jointly for a better active learning.

7.3 Unsupervised deep learning

The need for full utilization of feature extraction, processing and indexing in VIR shifted the research direction towards deep learning. The recently proposed models map low-level features into a high level with the help of nonlinear mapping techniques. Researchers adopt the idea of supervised deep learning because it is a mature field and is in its middle stages of development. Human and animal learning is largely unsupervised which opens the door for researchers to develop future VIR system.

7.4 Multi feature fusion

To fulfil diverse user needs it becomes more challenging to develop fast and efficient multimodal VIR system as the traditional single feature or uni-modal based VIR system are lopsided. Better mechanism for fusing the multiple features for hash generation and learning are to be determined as assimilation of feature fusion concept can lessen the effect of well known Semantic gap.

7.5 Open evaluation program

Differences in technical capacities and data availability enlarge the VIR research gap between academics and industry based real application. A large number of researchers in academics bound to available resources and it is difficult to achieve industry based real application solution for them. It is necessary to organize open evaluation program to bridge this gap. The most felicitous part of organizing the open program is that they provide a common platform to industry and academic researchers to exchange more practical solutions. Further they jointly look into the key difficulties in different real time scenarios to develop application-independent methods.

The complete report of datasets, evaluation results, and the list of participants can be reviewed from the annual evaluation reports and websites of the challenges discussed above.

8 Conclusion