Abstract

Feature selection is the process of reducing the number of collected features to a relevant subset of features and is often used to combat the curse of dimensionality. This paper provides a review of the literature on feature selection techniques specifically designed for Gaussian mixture models (GMMs) and hidden Markov models (HMMs), two common parametric latent variable models. The primary contribution of this work is the collection and grouping of feature selection methods specifically designed for GMMs and for HMMs. An additional contribution lies in outlining the connections between these two groups of feature selection methods. Often, feature selection methods for GMMs and HMMs are treated as separate topics. In this survey, we propose that methods developed for one model can be adapted to the other model. Further, we find that the number of feature selection methods for GMMs outweighs the number of methods for HMMs and that the proportion of methods for HMMs that require supervised data is larger than the proportion of GMM methods that require supervised data. We conclude that further research into unsupervised feature selection methods for HMMs is required and that established methods for GMMs could be adapted to HMMs. It should be noted that feature selection can also be referred to as dimensionality reduction, variable selection, attribute selection, and variable subset reduction. In this paper, we make a distinction between dimensionality reduction and feature selection. Dimensionality reduction, which we do not consider, is any process that reduces the number of features used in a model and can include methods that transform features in order to reduce the dimensionality. Feature selection, by contrast, is a specific form of dimensionality reduction that eliminates feature as inputs into the model. The primary difference is that dimensionality reduction can still require the collection of all the data sources in order to transform and reduce the feature set, while feature selection eliminates the need to collect the irrelevant data sources.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Gaussian mixture models (GMMs) and hidden Markov models (HMMs) are two common parametric latent variable models. GMMs are often used for clustering or modeling multi-modal data. HMMs, on the other hand, are often used for modeling time series data. However, both of these models have similar traits in that the distribution of the observed or collected data is dependent upon a hidden or latent random variable. In the case of GMMs, the latent variables are independent and identically distributed, while the latent variable corresponding to a specific observation for an HMM is dependent upon the previous latent variable in the sequence. The successful application of GMMs and HMMs, domains ranging from speech recognition to financial data analytics, has led to a corresponding explosion in the amount and variety of data being collected for analysis using these methods. This growth in data is not limited to the number of observations but extends to the type of information collected or features.

Generally, the more information that can be observed the better a machine learning method will perform. However, there are a broad set of issues, collectively known as the curse of dimensionality, associated with high-dimensional data. Training times and algorithmic complexity, storage space requirements, and the noise in data sets all may scale poorly with the number of features. Many pattern recognition and machine learning techniques have trouble dealing with a large number of irrelevant features. Both GMMs and HMMs can handle high-dimensional data. However, increasing the dimensionality of the data, or adding more features to the data set, can decrease the accuracy of a model by introducing noise, a phenomenon known as peaking (Jain et al. 2000). Further, the number of observations needed to accurately train a model increases with the number of dimensions or features due to class conditional sparsity.

Feature selection is the process of reducing the number of collected features to a relevant subset of features and is often used to combat the curse of dimensionality. Feature selection can increase the performance of models by eliminating noise in the data, increase the training and prediction speed of the model, improve model interpretation, decrease the risk of overfitting, and decrease the cost of a system by eliminating the need to collect certain features. Feature selection has been widely studied in multiple fields and for various models (Dash and Liu 1997; Guyon and Elisseeff 2003; Liu and Yu 2005). For example, in the field of bioinformatics where high-dimensional data is prevalent, feature selection has become a prerequisite for constructing mathematical models (Saeys et al. 2007). There is a clear and growing need for feature selection methods as the complexity of collected data grows. In 2003, at the dawn of the big data age, Guyon and Elisseeff (2003) wrote a review of feature selection techniques and stated that most of the papers they cite deal with hundreds to tens of thousands of features. The number of features available in most domains has surely grown since then as a result of the many successful applications of artificial intelligence and machine learning and in correspondence with the capabilities of systems and sensors for collecting contextual and transactional data.

Extensive research has been performed on feature selection for clustering and the specific case of GMMs. However, the work on feature selection for HMMs is limited. In this paper, we survey and review the literature on feature selection techniques specifically designed for GMMs and HMMs. We cover both GMMs and HMMs because of the similar nature of these two models and that techniques designed for one can often be applied to the other. General feature selection techniques can be applied to these models. However, there are two challenges that must be considered when selecting a feature selection technique to be used with these models. First, both GMMs and HMMs are latent variable models, and the latent variables might not be available in the collected data set. Therefore, an unsupervised feature selection technique (Dy 2008), which can estimate the set of relevant features without knowledge about the latent variables, could be required. Second, if unsupervised methods are used for feature selection, they are often iterative and highly computational. Feature selection can be a highly computational process when all the information is known, and this trait often limits the feature selection process in application. Therefore, a highly computational feature selection technique is often undesirable. Feature selection methods developed specifically for GMMs and HMMs often take into account these challenges. Further, the specific feature selection techniques often outperform general techniques that can be applied to any type of model.

Given the vast amount of general feature selection methods and the large number of methods specific to GMMs and HMMs, a survey of the feature selection methods for these models is needed. The primary contribution of this work is the collection and grouping of feature selection methods specifically designed for GMMs and for HMMs. An additional contribution lies in outlining the connections between these two groups of feature selection methods. Often, feature selection methods for GMMs and HMMs are treated as separate topics. In this survey, we propose that methods developed for one model can be adapted to the other model. For example, the feature saliency feature selection technique was originally developed for GMMs but was later extended to HMMs. Further, this survey finds that the number of feature selection methods for GMMs outweighs the number of methods for HMMs and that the proportion of methods for HMMs that require supervised data is larger than the proportion of GMM methods that require supervised data. We conclude that further research into unsupervised feature selection methods for HMMs is required and that established methods for GMMs could be adapted to HMMs.

In this survey, we primarily focus on Gaussian mixture models and HMMs that have a Gaussian observation distribution. Other distributions, such as the gamma distribution or the Student’s t-distribution, could be used in place of the Gaussian for both models. Further, HMMs are often modeled with discrete observations and non-parametric observation distributions. Our review of the literature on feature selection techniques for these models reflects the popularity of the Gaussian distribution. However, we believe that most of the methods discussed in this review could be easily extended to models with non-Gaussian distributions.

Feature selection can also be referred to as dimensionality reduction, variable selection, attribute selection, and variable subset reduction. In this paper, we would like to make a distinction between dimensionality reduction and feature selection. Dimensionality reduction is any process that reduces the number of features used in a model and can include methods that transform features in order to reduce the dimensionality. Feature selection is a specific form of dimensionality reduction that eliminates feature as inputs into the model. The primary difference is that dimensionality reduction can still require the collection of all the data sources in order to transform and reduce the feature set, while feature selection eliminates the need to collect the irrelevant data sources. Principal component analysis (PCA) is an example of dimensionality reduction that we do not consider feature selection.

This paper is organized as follows. Section 2 outlines GMMs and HMMs. Section 3 describes feature selection and general feature selection methods. Section 4 describes the feature selection methods specific to GMMs, and Sect. 5 describes the feature selection methods specific to HMMs. Finally, Sect. 6 concludes with a discussion and outlines needed research areas.

2 Gaussian mixture models and hidden Markov models

In this section, we review the technical aspects of GMMs and HMMs. GMMs are used for clustering unsupervised data and detect hidden structure among the individual data points. Clustering algorithms are often divided into two types: distance-based and model-based. The former method clusters data using a distance metric while the latter fits a predefined model to the data. GMMs are a model-based clustering approach that assumes each cluster has a multivariate normal distribution. The cluster assignment is considered a random variable. Model-based clustering offers the advantage of a framework for assessing the number of clusters and the significance of each variable in the clustering process (Maugis et al. 2009a).

Similar to GMMs, HMMs are probabilistic models consisting of two sets of correlated random variables. The first set is a hidden state sequences X, which is modeled as a Markov chain. The second set consists of the observations or emissions Y, which are modeled with state-conditional distributions. The emission distribution is often represented by a Gaussian distribution; but it can take on any parametric or non-parametric distribution and be either continuous or discrete. These models are widely applied in finance, speech recognition, medicine, activity recognition, and manufacturing. In some cases, the observations for HMMs are modeled as GMMs.

2.1 GMMs

GMMs are parametric models used for clustering data. Given N observations and I mixtures, let \(X = \{x_1, \ldots ,x_N\}\) represent the observation’s cluster or mixture assignment random variable. Let \(Y = \{y_1, \ldots ,y_N\}\) represent the multivariate data where \(y_{ln}\) is the lth component of the nth observation. Specifically for GMMs, we assume that each mixture emits the data from a multivariate Gaussian \(\mathcal {N}(y_n|\varvec{\mu }_i,\varSigma _i)\), where \(\varvec{\mu }_i\) is the mixture dependent mean vector and \(\varSigma _i\) is the mixture dependent covariance matrix. A graphical model for a GMM is displayed in Fig. 1. The likelihood of observing the data given the model is

where \(\pi _i = P(x=i)\) and \(\varLambda \) is the set of model parameters \(\{\pi _1, \ldots ,\pi _I,\varvec{\mu }_1, \ldots ,\varvec{\mu }_I,\varSigma _1, \ldots ,\varSigma _I\}\). If the covariance matrix is diagonal, which represents the assumption that each feature is independent, the likelihood can be rewritten as

where \(\sigma _{il}^2\) is the variance of the lth feature of the ith mixture.

Let \(\mathfrak {x}_{in}\) indicate if the nth observation belongs to the ith cluster, i.e \(\mathfrak {x}_{in} = 1\) if \(x_n = i\) and \(\mathfrak {x}_{in} = 0\) otherwise. Using this indicator, the joint probability of the mixture random variable X and the observations Y can be written as

In many cases, the expectation-maximization (EM) algorithm is used to solve for the parameters in the model (Bilmes 1998). In the E-step, calculate the probability that each observation belongs to the ith mixture using

In the M-step, update the parameter estimates using

and

There are numerous methods for estimating the parameters of a GMM including variational Bayesian methods (Corduneanu and Bishop 2001) and Bayesian sampling methods (Frühwirth-Schnatter 2001; Jasra et al. 2005; Richardson and Green 1997).

2.2 HMMs

HMMs (Rabiner 1989) are parametric models used for representing time series data. Two sequences of random variables are represented by a joint probability distribution. The hidden state sequence \(X = \{x_1, \ldots ,x_T\}\) is modeled as a Markov chain and follows the Markovian property that the current state is solely based on the previous state. The Markov chain has parameters \(\pi \) representing the initial state distribution and \(a_{ij}\) representing the transition probability between state i and j. The transition probabilities are collected into the transition matrix A. At each time step t, an observable emission \(Y = \{y_1, \ldots ,y_T\}\) is generated from a state dependent distribution. When there are L features, the lth feature is represented by \(y_{lt}\). For an HMM with I states, the state dependent distribution is represented by \(p(y_t|x_t=i,\theta )\) where \(\theta \) represents the parameters of the distribution. The set of all model parameters for the HMM is represented by \(\varLambda = \{\pi ,A,\theta \}\). A graphical model for an HMM is displayed in Fig. 2.

The joint probability for the state sequence X and the emission sequence Y is given by

where \(f_{x_t}(y_t)\) is the emission distribution given \(x_t=i\). The probability for the emission sequence can be found by summing Equation (8) over all possible state sequences.

The Baum–Welch algorithm (Bilmes 1998; Rabiner 1989) is the most common algorithm used for unsupervised parameter estimation and is the EM algorithm applied to HMMs. In the expectation step, the forward and backward probabilities are calculated

and

These probabilities are used to calculate the posterior probabilities for the state and the transitions

and

In the M-step, the Markov chain parameters are updated using

and

When the emission distribution is assumed to be Gaussian and the features are assumed to be conditionally independent, the mean \(\mu \) and standard deviation \(\sigma \) are updated using the following equations

and

Other popular methods for estimating the parameters of an HMM given an observation sequence include variational Bayes estimation (Chatzis and Kosmopoulos 2011; Ji et al. 2006; McGrory and Titterington 2009; Wei and Li 2011), descent methods (Bagos et al. 2004; Cappé et al. 1998), Bayesian sampling methods such as Markov chain Monte Carlo or Gibbs sampling (Boys and Henderson 2001; Robert et al. 2000; Rydén 2008; Scott 2002), and maximum mutual information estimation (Bahl et al. 1986; Merialdo 1988). Online or incremental learning algorithms for HMMs are surveyed by in Khreich et al. (2012).

The Viterbi algorithm (Rabiner 1989) can be used to predict the most likely state sequence given an emission sequence. If classes or labels are mapped to the states of an HMM, the Viterbi algorithm can be used for classification and prediction. Another formulation of a classification problem using HMM maps a class or label to an individual HMM. In this formulation, a different HMM is trained for each class. During prediction, the likelihood that the observed data was generated from each HMM is calculated. The observed sequence is labeled with the class corresponding to the HMM with the highest likelihood of generating the observed data.

3 Feature selection literature review

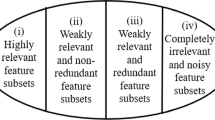

Feature selection is the process of reducing the set of collected features \(\mathcal {L}\) to a subset of relevant features \(\mathcal {L}^\prime \); see Fig. 3 for a visual representation of the feature selection process. Feature selection is also called variable selection, attribute selection, or feature subset selection. Feature selection speeds the learning process, improves model interpretation, reduces the risk of overfitting, and alleviates the effects of the curse of dimensionality. When the feature set is small, an exhaustive search can be performed. As the number of features grows, this method becomes impractical, because the number of possible feature subsets grows exponentially.

As an example, suppose that two features can be collected on a two-class problem. Let the two features be designated by x1 and x2. In Fig. 4, neither feature is relevant. In Fig. 5, x1 is a relevant feature and x2 is irrelevant. In Fig. 6, both features are relevant, however only a single feature is needed to distinguish between the two classes. Thus, x1 and x2 are considered redundant features. Figure 7 shows an example of the two-class problem where both features are needed to adequately distinguish between classes. A good feature selection algorithm should be able to identify the single relevant feature in Fig. 5 and select both features in Fig. 7.

Feature extraction is a separate problem from feature selection. Feature selection identifies relevant features from a candidate set of features, while feature extraction calculates new features from a given set. Feature selection does not alter the original features, while extraction generates new features. Dimensionality reduction is usually applied to extracted features. Principal component analysis (PCA) (Murphy 2012) is a feature extraction method. Dimensionality reduction is performed by selecting the first m principal components. This method differs from feature selection, however, because all input features must still be collected in order to extract the new principal components. Conversely, after feature selection has been performed, the irrelevant features no longer need to be collected. Further, the selected subset of features can give insight into the process and be interpreted by a domain expert. However, methods such as PCA reduce the noise in the extracted features, and can provide more discriminating features than the original raw features. Independent component analysis (ICA) (Murphy 2012) is another form of feature extraction similar to PCA.

John et al. (1994) and Kohavi and John (1997) outline four definitions for relevant features that were current in the literature in the mid 1990’s. Using a correlated XOR problem, they show that different definitions can lead to the selection of different feature subsets, and, after arguing that definitions of strong and weak relevance are necessary, they provide such. However, Kohavi and John (1997) go on to show, using an example, that relevant features are not always included in the optimal feature set and irrelevant features are not always excluded from the optimal feature set. Blum and Langley (1997) provide definitions of feature relevance that include a target concept and a measure of complexity of the selected feature subset. Blum and Langley’s final definition of relevance, which first appeared in Caruana and Freitag (1994), introduces the idea of relevance with respect to a specific learning algorithm.

Guyon and Elisseeff (2003) lay out several steps for general feature selection. These are in the form of questions, and the answers lead to specific actions to be taken or a particular feature selection method to be used. For example, their fifth question is, “Do you need to assess features individually?” If the answer is yes, a procedure that ranks features should be used.

Molina et al. (2002) test and compare 10 feature selection algorithms that require the use of supervised data. They characterize each algorithm based on search optimization (exploring the feature space), generation of successors (selecting the next feature to add or subtract from the proposed feature set), and the evaluation measure. The authors evaluate each algorithm based on the number of relevant or irrelevant features included in the final feature subset, as well as the number of redundant features included in the final subset. Their tests demonstrate that the algorithm that performs the best is highly dependent upon the data, leading to the conclusion that there is no optimal feature selection algorithm for all data sets.

Feature selection methods can be divided into three groups: filters, wrappers, and embedded methods. Filters assess feature relevance by investigating the feature’s properties. They address the problems of selecting features and building models independently. Wrappers assess feature relevance with regard to a specific learning algorithm. In most cases, a model is built with respect to a subset of features and the model’s performance is evaluated based on specified criteria. Wrappers then move through the subset space evaluating feature subsets with regard to the evaluation function. Embedded methods simultaneously select features and construct models. Figure 8 gives visual displays of each of these methods. There are numerous feature selection methods that can be applied to any type of model, therefore we briefly outline and group a selection of popular feature methods that could be applied to GMMs and HMMs but are not specific to these models.

Types of feature selection methods. a The filter F takes as inputs the set of all features \(\mathcal {L}\) and reduces it to the relevant subset \(\mathcal {L}^\prime \) independent of the model parameters \(\varLambda \), which are constructed or estimated only on \(\mathcal {L}^\prime \). b The wrapper first selects a subset of features \(\mathcal {L}^\prime \) using the a search algorithm S, estimates the model parameters \(\varLambda \) on \(\mathcal {L}^\prime \), and then evaluates \(\mathcal {L}^\prime \) the using an evaluation function E. This process continues until a stopping criterion is met. c The embedded method Em takes the full feature set \(\mathcal {L}\) and outputs both the reduced set \(\mathcal {L}^\prime \) and the model parameters \(\varLambda \)

3.1 General filters

Filters treat feature selection as a preprocessing step and select features with no regard to the model, i.e. filters only consider the properties of the collected features and how they distinguish themselves from one another or how they relate to a target class label. These methods are generally fast in terms of computation, but can result in feature subsets that do not yield satisfactory predictive accuracy. Certain types of filters can be applied to unsupervised data. In addition, filters usually scale well to the number of features. Under certain conditions, e.g. if supervised data is available, the filters described in this section can be applied to feature sets before training GMMs or HMMs.

The simplest filtering technique is to select features based on their correlation with the class or continuous response variable. More complex filters include the FOCUS (Almuallim and Dietterich 1991) and Relief (Kira and Rendell 1992) algorithms. Extensions of Relief (Kononenko 1994; Robnik-Šikonja and Kononenko 2003) can be applied to multi-class problems and unsupervised data. Feature-similarity feature selection (Mitra et al. 2002) is an unsupervised filter that groups the k closest features. A single feature from each group is chosen to represent the group in the reduced feature subset. The idea behind this method is that features that are similar by some metric are redundant and can be removed with insignificant change in prediction accuracy. Some filters rank or assign weights to features. Correlation-based methods (Yu and Liu 2003) rank features based on their association with a class label. Forman (2003) compares several metrics for ranking features. The evaluation is specific to text classification, so results may not generalize to all classification problems. The author concludes that the bi-normal separation metric outperforms other filters on the chosen data sets.

Bins and Draper (2001) propose using both filters and a traditional wrapper in a three-stage feature selection method. The first stage removes the irrelevant features using a filter. The second stage removes the redundant features also using a filter. The first two stages reduce the number of features to a point where a traditional wrapper can be applied without significant computational cost. The authors suggest using either forward or backward search depending on how many features remain after the first two stages.

3.2 General wrappers

Wrappers test feature subsets given a model and an evaluation function. These methods are more computationally expensive than filters but take the model into account and often yield feature subsets with better predictive ability. However, wrappers generally require the use of supervised data for the evaluation function. In a wrapper approach, data are typically divided into three groups: training, evaluation, and testing. A model using a subset of the candidate features is trained on the training set, then evaluated using the evaluation set. The feature subset is then augmented in some fashion and the process repeated. The feature subset that optimizes the evaluation function is chosen as the final feature subset and tested on the withheld testing set. When data are scarce, the evaluation set can be eliminated by evaluating the feature subset on the training data. However, this can cause a poor generalization error and increase the likelihood of overfitting to the training data. Cross validation can be used in the case of small data sets.

Sequential forward search and sequential backward search are two types of exploration algorithms (John et al. 1994; Kohavi and John 1997). These are considered greedy algorithms, as opposed to an exhaustive search of the feature subsets. Sequential search methods are often referred to as hill-climbing strategies, because they look for improvement in the evaluation function. However, a non-exhaustive search cannot guarantee the optimal feature subset (Cover and Campenhout 1977). Aha and Bankert (1995) compare forward and backward search algorithms to filters. They found that these wrappers, and some of the tested variants, generally outperform filters on the tested data sets and that backward search does not significantly outperform forward search as previously claimed by Doak (1992). Another exploration method, the stepwise search, combines forward and backward sequential search so that at each step in the algorithm a feature can either be added or removed. When compared to unidirectional methods and filters, stepwise search algorithms outperform unidirectional search and some filtering techniques (Caruana and Freitag 1994).

Kohavi and John (1997) propose a best-first-search feature-exploration technique with compound operators. Compound operators add or remove groups of features, as opposed to adding or removing a single feature in sequential forward search and sequential backward search. The branch-and-bound algorithm (Narendra and Fukunaga 1977; Somol et al. 2004) can yield an optimal feature subset, but requires a monotonic evaluation function that is generally not practical. Floating-search methods (Pudil et al. 1994a, b) add and remove different numbers of features to avoid the nested-feature problem. Ng (1998) presents theoretical bounds on the performance of wrappers and a new search procedure called ORDERED-FS that only searches over all subsets of the same size to reduce computational cost.

When using wrappers, the choice of an evaluation function greatly affects the outcome of the method. Dash et al. (2000) compare a consistency measure to distance measures, information measures, and dependence measures. The authors argue for a consistency-measure evaluation function, because it is monotonic and lacks search bias. Obviously, the type of evaluation function is based on the available data. Functions that require the class label, such as consistency or accuracy, cannot be implemented in unsupervised learning.

While wrappers can be used to select features for both GMMs and HMMs, there are two primary drawbacks to these methods both having to do with the unsupervised data. First, the wrapper will need to use an unsupervised evaluation metric. In unsupervised feature selection, these metrics often trade off the likelihood the model fits the data with the number of parameters or features included in the model. These metrics can be inaccurate and uninformative for feature selection. Second, the computation required for the search procedure is amplified due to the fact that unsupervised learning techniques for GMMs and HMMs are often iterative and computationally expensive.

3.3 General embedded techniques

Embedded techniques simultaneously select features and construct models. Therefore, these techniques have the wrapper’s advantage of selecting feature subsets with respect to a specific learning algorithm, and the filter’s advantage of being more computationally efficient than wrappers. Classification and regression trees (CART) (Murphy 2012) are one example of an embedded feature selection method. CART recursively divides the feature space to create a classification model. Irrelevant features will not be selected for inclusion in the tree. Daelemans et al. (2003) show that jointly selecting features and optimizing model parameters in a natural-language-processing context outperforms optimizing the two processes separately.

While the number of general embedded feature selection techniques discussed in this section is limited, there are numerous embedded techniques for GMMs and HMMs that will be discussed in the following sections.

4 Feature selection for GMM literature review

Feature selection methods specific to GMMs are discussed in this section. It begins with an overview of the research that uses some form of feature selection with GMMs in an applied setting. Later in the section, filters, wrappers, and embedded techniques specifically designed for GMMs are outlined. Table 1 lists the feature selection methods for GMMs discussed in this section.

Ribeiro and Santos-Victor (2005) combine several types of feature selection methods in an application of feature selection to human activity recognition from video. The authors do not propose a new feature selection technique but rather test four different methods with the ultimate goal of finding the best subset for their application. They limit their search by using methods with a predefined number of features. The four methods are a brute search (testing all possible combinations of subsets contain the preselected number of features), a lite search (grouping the individual features with the best predictive ability into a single subset), a lite-lite search (similar to the lite search but ranking features), and the Relief algorithm. In their testing, they look for feature subsets of only 1, 2, or 3 features, thus limiting the computation. They find that the brute search method with 3 features yields the highest recognition accuracy on the tested data.

In another applied setting, Godino-Llorente et al. (2006) use two feature selection methods to select features derived from mel frequency cepstral coefficients in a voice impairment assessment tool. The two methods, F-ratio and Fisher’s discriminant ratio, both compare metrics derived from class assignments. Therefore, observations must be classified using the learned model or supervised data must be provided to utilize the techniques proposed in this study. The work of Godino-Llorente, Gomez-Vilda, and Blanco-Velasco is included in this review to present another application of feature selection when GMMs are used to model data.

Kerroum et al. (2010) propose a feature selection method for classification that uses GMMs but is not specifically designed for selecting features for a GMM. This method is a wrapper that employs mutual information as the evaluation function. GMMs are used to model the data during feature selection but, in the end, the selected features are fed to a standard classifier such as a support vector machine. More specifically, the mutual information of two continuous random variables is

Let X represent the data, and C represent the class. The mutual information between the input X and the output C is

where H(C) and H(C|X) are entropy and conditional entropy. If the number of classes is known, the entropy H(C) is fixed and the information is dependent upon the conditional differential entropy. A mixture model is used to model the data in the conditional probability p(c|x). Let \(g_c\) be the number of Gaussian mixtures in class c and \(N_c\) be the number of classes. Then

where \(f(x|\theta )\) is a Gaussian distribution, \(\pi \) is the mixture prior probability, and \(\theta \) is the set of parameters for the Gaussian distribution. The GMM is used to get a better fit to the data, but this proposed feature selection method can only be used if the class labels are provided.

Steinley and Brusco (2008) conducted an empirical study of eight feature selection techniques for clustering including two techniques specific to GMMs (Law et al. 2004; Raftery and Dean 2006). Numerical experiments were only performed on simulated data. The authors conclude that the feature selection techniques using GMMs did not perform as well as the other methods on the synthetic data. They also conclude that a major limitation of these methods is that the number of clusters must be known a priori. We feel that an updated empirical study is needed which includes a wider variety of techniques and numerical experiments performed on real data sets.

4.1 Filters for GMMs

Krishnan et al. (1996) propose a pruning feature selection technique specific to GMMs based on the Fisher ratio. We refer to this method as a pruning technique rather than a filtering technique because it requires the model parameters, where filters are independent of the model. However, this method does not iterate through the feature space nor simultaneously estimate model parameters and feature subsets so we consider it closer to a filter than either a wrapper or an embedded method. This method assumes that each class has a multi-modal distribution modeled as a GMM. The Fisher ratio between two classes is

where \(\mu _{ik}\) and \(\mu _{jk}\) are the cluster means for the kth feature of classes i and j, and \(\sigma _{ik}\) and \(\sigma _{jk}\) are the corresponding standard deviations of the kth feature. This ratio can be extended to multiple classes and averaged

where J is the number of classes, and \(P_i\) and \(P_j\) represent the prior probability of belonging to each class. Discriminating features have a higher Fisher ratio, and all features in the data set can be ranked based on this metric. Krishnan et al. generalize this metric to GMMs and use it for feature selection.

4.2 Wrappers for GMMs

Dy and Brodley (2004) evaluate a general feature selection criterion for clustering on GMMs. This method, called feature subset selection using expectation-maximization (FSSEM), was previously presented in Dy (2000) but not specified to GMMs. FSSEM uses one of two methods for evaluating features: scatter separability and maximum likelihood. Both of these criteria contain dimension-based bias. Therefore, the authors propose a normalization to reduce bias. Dy and Brodley (2004) cite the lack of research on unsupervised feature selection and attempt to explore wrappers as a method for feature selection for unsupervised learning, identify issues in the area of unsupervised feature selection, propose ways to address these issues, point out lessons learned, and give direction to future research. The numerical experiments on both synthetic and real data using GMMs as the clustering algorithm demonstrate that feature selection outperforms using the entire feature set, one evaluation criteria is not better than the other, and both criteria still contain bias. The maximum likelihood approach selects features that yield a high-density clustering while the scatter separability approach selects features that maximize separability.

Raftery and Dean (2006) formulate the feature selection process for clustering as a comparison between competing models. In this method, variables are divided into three groups: those relevant to clustering, those irrelevant to clustering, and those proposed for inclusion or exclusion from the set of variables relevant to clustering. At each step, models are compared using the Bayesian information criterion (BIC). Raferty and Dean define BIC as

where n is the number of observations. Let \(Y^{(1)}\) represent the set of variables already selected for clustering, \(Y^{(2)}\) be the set of variables being considered for inclusion in the model, and \(Y^{(3)}\) be the set of remaining variables. The proposed feature selection method compares model M1

to model M2

where z represents the class memberships. Figure 9 displays a graphical representation of M1 and M2. A greedy stepwise search through the feature space is performed until convergence. BIC is an unsupervised evaluation metric which assesses both features in the model and the number of clusters. Therefore, this technique can both select features and the number of clusters.

Graphical representation of M1 (left) and M2 (right) from Raftery and Dean (2006)

Maugis et al. (2009a) propose a feature selection method for GMMs based on backward stepwise selection. This method is an extension of the work previously conducted by Raftery and Dean (2006) as the authors use a similar method for dividing variables into sets of relevant, irrelevant, and proposed for inclusion or exclusion. However, the method in Maugis et al. (2009a) allows for the possibility that irrelevant variables can be independent of the relevant variables. Further, the authors investigate the more general case where blocks of variables cannot be split. Like the method in Raftery and Dean (2006), the method proposed by Maugis et al. can be used to select features and the number of clusters. Maugis, Celeux, and Martin-Magniette later generalize this technique by assuming that some of the irrelevant features can be linked to some of the relevant features while other irrelevant features remain independent of the relevant features (Maugis et al. 2009b). Celeux et al. (2014) present a study comparing the method proposed in Maugis et al. (2009b) to a regularization method specific to the K-means algorithm (Witten and Tibshirani 2010). This study concluded that feature selection improves clustering accuracy and that the model selection method outperformed the regularization method on simulated data. Galimberti et al. (2017) further generalize this concept by allowing features to provide information about multiple clustering structures. This is accomplished by modeling the dependence between subgroups of features as a multiple regression linear model.

Scrucca (2016) suggests using the evaluation criteria proposed by Raftery and Dean (2006) but a genetic algorithm to search the feature space. A genetic algorithm is composed of several parts. The genetic coding scheme represents the feature subsets, where inclusion in the subset is indicated by a binary variable. The population size is an input parameter into the genetic algorithm and represents the number of models to generate. The fitness function evaluates the feature subset. In the proposed method, the BIC criteria from Raftery and Dean (2006) is used as the fitness function. The genetic operators mutate the genetic code with the intention of improving the fitness function. The genetic algorithm tends to select smaller feature sets than the stepwise search while maintaining accuracy.

Marbac and Sedki (2017) cast the feature selection problem as a model selection problem and propose a new information criterion for model evaluation, the maximum integrated complete likelihood (MICL). This method assumes that an irrelevant feature will have the same estimates for \(\mu \) and \(\sigma \) across all clusters. A binary variable is introduce that indicates the relevance of each feature. Specifically, if \(\omega _l = 1\) the lth feature is relevant, and if \(\omega _l = 0\) the lth feature is irrelevant. A model \(\mathbf {m}\) is defined by \(\omega \) and the number of clusters I. Feature selection is performed by finding the model that maximizes the posterior distribution. If a uniform prior is assumed over the models, then

where \(\mathcal {M}\) is the set of possible models, and

The integrated complete-data likelihood is

The binary relevance variable \(\omega \) is incorporated into the modeling process through the prior distribution on the model parameters \(P(\varLambda |\mathbf {m})\). The MICL evaluation criterion is

where

An iterative optimization algorithm alternates between estimating X given Y and \(\mathbf {m}\) and maximizing \(\omega \) given Y and X. We classify this method as a wrapper because of this iterative process of selecting the feature set and then estimating the cluster assignment. However, it is generally more computationally efficient than other wrappers because estimating the model parameters has been integrated out.

Zeng and Cheung (2009) construct two new metrics for evaluating feature sets. The objective of the first is to select features that are relevant to clustering. The objective of the second is to remove redundant features. An iterative process of constructing a clustering model and performing feature selection is proposed with the objective of determine both the optimal number of clusters and the relevant non-redundant feature set. The authors build on their previous work that proposes using the rival penalized EM algorithm to determine the optimal number of clusters for a GMM (Cheung 2004, 2005). The relevant feature metric utilizes the ratio of cluster specific variance to total variance

where \(\sigma _l\) is the total variance of the lth feature. This scoring metric is used to rank features, and features with a score below a given threshold can be removed. Redundant features are found using a Markov blanket. Let \(M_l\) represent the Markov blanket for the lth feature, F represent the entire feature set, and \(F_l\) represent the lth feature. If the Markov blanket for \(F_l\) can be found in F, the information in \(F_l\) is redundant and can be eliminated without affecting the ability of the model to predict the cluster. We consider this method a wrapper because of its iterative nature, and its reliance on constructing a model.

Some feature selection methods for clustering find cluster-specific feature sets. To illustrate this point, we present the example from Li et al. (2008), a study on localized feature selection for general clustering. Consider a four cluster problem with four features. Clusters 1 and 2 have two relevant features, designated X1 and X2, and two irrelevant features, designated X3 and X4. Clusters 3 and 4 have X2 and X3 as relevant features and X1 and X4 as irrelevant features. The model parameters are \(\mu _{C1} = [0.5,-0.5,0,0]\), \(\mu _{C2} = [-0.5,-0.5,0,0]\), \(\mu _{C3} = [0,0.5,0.5,0]\), \(\mu _{C4} = [0,0.5,-0.5,0]\), \(\sigma _{C1} = \sigma _{C2} = [0.2,0.2,0.6,0.6]\), and \(\sigma _{C3} = \sigma _{C4} = [0.6,0.2,0.2,0.6]\). A hundred observations are generated from each cluster and the simulated results are presented in Fig. 10. Li, Dong, and Hua concluded that a general clustering algorithm may not be able to adequately cluster this data due to each cluster having an irrelevant feature. Further, a global feature selection method could find it difficult to determine a relevant feature subset for all 4 clusters. A localized feature selection method, which finds a relevant feature subset for each cluster, is needed for this type of problem.

Data generated by model presented in Li et al. (2008)

Liu et al. (2002) develop a cluster-specific feature selection method for document clustering where the features are word occurrences. In this method, a GMM is trained on all the features using the EM algorithm, and then each document is assigned to a cluster. Discriminative features are determined using a discriminative feature metric. A word or feature is considered discriminative if that word has the highest number of occurrences inside the assigned cluster and a low number of occurrences outside the assigned cluster. This feature selection algorithms iterates between finding discriminative features and assigning documents to clusters based on those features, therefore we classify the algorithm as a wrapper.

4.3 Embedded feature selection techniques

In this section, embedded feature selection techniques for GMMs are reviewed. There are numerous types of embedded methods for GMMs so this section is broken down into several subsections, each focused on a different type of embedded technique.

4.3.1 Penalized model-based clustering

Pan and Shen (2007) propose a penalized model-based clustering algorithm for feature selection. This method is specifically designed to address data sets that are “high dimension, low sample size”. The primary idea behind this feature selection technique is that if the cluster means for the lth feature is close to 0, then the lth feature is irrelevant. The cluster means are driven toward 0 by penalizing the cluster means during estimation. Similar methods are used in regression to penalize coefficients.

The log-likelihood can be found by taking the logarithm of Eq. (1)

Similarly, when assuming that each feature is independent, the log-likelihood of the joint probability of X and Y is

where \(\mathfrak {x}_{in} = 1\) if the nth observation belongs to the ith mixture and 0 otherwise.

Pan and Shen regularize the complete log-likelihood by adding a penalty term

Equation 33 replaces the conventional complete log-likelihood in the EM algorithm, and BIC is used for selecting the number of mixtures. In an earlier paper, Pan et al. (2006) developed a semi-supervised version of this penalized mixture model and applied it to microarray classification.

Wang and Zhu (2008) build on the work of Pan and Shen by proposing two new penalty terms that “group” the parameters of each cluster. Let \(\mathcal {L}(\varLambda )\) represent the complete log-likelihood from Eq. (32) and \(\mathcal {L}_P(\varLambda )\) the penalized form of the complete log-likelihood. The first novel penalized log-likelihood is called the Adaptive \(L_{\infty }\) Penalized Gaussian Mixture Model (ALP-GMM)

Weights \(w_i\) can be added to the penalty to give preference to different variables

The second novel penalty term yields a model named the Adaptive Hierarchically Penalized Gaussian Mixture Model (AHP-GMM). First, the cluster means are reparameterized \(\mu _{il} = \gamma _l \theta _{il}\) for \(i=1, \ldots ,I\) and \(l = 1, \ldots ,L\) where \(\gamma _l \ge 0\). The hierarchical penalized complete log-likelihood is

As with the previous penalty term, weights can be added

The EM algorithm is used to estimate model parameters, however ALP-GMM does not have a closed form solution for the cluster mean update. Therefore, a quadratic program is derived. Through tests on simulated data and microarray data, the authors conclude that both ALP-GMM and AHP-GMM outperform the method in Pan and Shen (2007), but ALP-GMM is more computationally expensive due to the quadratic program.

Xie et al. (2008b) develop vertical and horizontal penalties. The vertical penalty term assumes that if a feature is irrelevant, the means of all mixtures will be equal to 0. This results in the following penalized log-likelihood

where \(||\mu _{\cdot l}|| = \sqrt{\sum _I \mu _{il}^2}\). The horizontal penalty term assumes that features can be divided into subgroups. Establishing the subgroups often requires some form of prior knowledge. The subgroups are penalized using the following log-likelihood

where there are M subgroups of features and \(L^m\) represents the number of features in subgroup m. These two penalties can be combined into the following log-likelihood

The vertical, horizontal, and combined penalties are shown to outperform the original penalized method in Pan and Shen (2007) on both synthetic and real data.

Several other penalty methods have been suggested in the literature. Bhattacharya and McNicholas (2014) propose a LASSO-like penalty term. The log likelihood function which is maximized during parameter estimation is

where \(\lambda _n\) is dependent upon the observation. The authors use a modified form of the EM algorithm. Guo et al. (2010) propose a pairwise penalty term with equal covariance across the clusters

The previous penalty methods discussed shrink the cluster means of non-informative features to 0, while the pairwise penalty shrinks non-informative cluster means towards each other.

In addition to the \(L_1\) regularization proposed by Pan and Shen (2007), Xie et al. (2008a) penalize the variance. They propose two schemes and both penalize the mean and variance of each mixture. The first penalized log-likelihood is

and the second is

The previously discussed penalized methods all assume a diagonal covariance matrix. In practice, it is unlikely for this assumption to hold. Zhou et al. (2009) relax the assumption of a diagonal covariance matrix by penalizing the terms of the precision matrix W. More specifically, \(\lambda _2 \sum _{l=1}^L \sum _{l^\prime =1}^L |W_{ll^\prime }|\) is added to Eq. (33). Xie et al. (2010) build on their prior work by generalizing to non-diagonal covariance matrices. This generalization leads to a significant increase in the number of parameters that need to be estimated. Further, there are computational issues stemming from the requirement that the estimated covariance matrix be positive definite. To overcome these obstacles, Xie, Pan, and Shen utilize a mixture of factor analyzers (McLachlan and Peel 2000) and model the covariance matrix using latent variables. As with the previous penalized methods, the EM algorithm is used for estimating parameters of the penalized GMM.

The concept of a penalized likelihood can be extended to a mixture of factors (Galimberti et al. 2009). In this method, it is assumed that the observation vector y is generated by a linear factor model

where \(\tilde{\varLambda }\) is a factor loading matrix, \(\tilde{z}\) is an R-dimensional latent variable, and \(\tilde{\mu }\) is a L-dimensional Gaussian term with a mean of 0 and covariance \(\varPsi \). It is also assumed that the latent variable \(\tilde{z}\) is a mixture of Gaussian distributions \(\tilde{z} \sim \sum _{i=1}^I \pi _i \mathcal {N}(\tilde{z}|\tilde{\mu }_i,\varSigma _i)\). The log-likelihood for y can now be written as

The penalty term for the mixture of factors shrinks the components of the factor loading matrix \(\tilde{\varLambda }\) towards zero.

Maugis and Michel (2011) derive a non-asymptotic penalized criterion for model and feature selection. In their approach, the “best” model, in terms of both the number of clusters and the relevant feature subset, minimizes the estimation error between the estimated likelihood given the data and the true but unknown likelihood. The authors consider four collections of Gaussian mixtures, where each collection is defined by the structure of the variance matrix for both the relevant and irrelevant sets of features. One major drawback to this method is that it is purely theoretical. Maugis and Michel acknowledge that these theoretical results are not immediately usable because they rely upon unknown constants and the mixture parameters are unbounded.

Bouveyron and Brunet-Saumard (2014) propose a feature selection method for a discriminative latent mixture (DLM) model using penalization to impose sparsity in the projection matrix. A DLM, as described in Bouveyron and Brunet (2012), models the observations as a linear combination of an unobserved random vector

where U is the loading matrix and \(\epsilon \sim \mathcal {N}(\epsilon |0,\varPsi )\). The unobserved variable is dependent upon the cluster, represented by Z, and follows a Gaussian distribution \(P(X|Z=i) \sim \mathcal {N}(X|\varvec{\mu }_i,\varSigma _i)\). The conditional probability of Y given both X and Z also follows a Gaussian distribution \(P(Y|X,Z=i) \sim \mathcal {N}(Y|UX,\varPsi )\). The marginal distribution of Y is

where \(m_i = U\mu _i\) and \(S_i = U\varSigma _i U^T+\varPsi \). A modified version of the EM algorithm, which adds an intermediate F-step to estimate the loading matrix, is derived and used to estimate model parameters. The discriminative variables are linear combinations of the original variables making interpretation difficult. Bouveyron and Brunet-Saumard (2014) propose three methods for penalizing the loading matrix which imposes sparsity and allows for feature selection. The first method adds an \(L_1\) regularization term to the estimation of U in the F-step, the second method implements a penalized regression criterion, and the third method implements penalized singular value decomposition.

4.3.2 Bayesian methods

Carbonetto et al. (2003) propose a Bayesian feature weighting method for unsupervised feature selection for GMMs. In this Bayesian technique, the means and covariance matrices of the Gaussian distributions are treated as random variables and assigned prior distributions. The component or cluster means, designated by \(\mu _c\), are assigned a Gaussian prior with the mean designated by \(\mu ^*\) and a diagonal covariance matrix designated by T with elements \(\tau ^2\). \(\mu ^*\) is the sample mean of the observed data, and the \(\tau \)’s are estimated using the EM algorithm. Estimated \(\tau \)’s near 0 are assumed to be irrelevant. An inverse Gamma distribution is placed on each \(\tau \). Figure 11 displays a graphical model of this Bayesian GMM. This method could be viewed as being similar to the penalized likelihood methods of Pan and Shen (2007) and Wang and Zhu (2008), but instead of shrinking the mean of the cluster to 0, Carbonetto et al. shrink variance of the of the prior distribution on the cluster means to determine the relevance of features.

Graphical model of Bayesian GMM from Carbonetto et al. (2003)

4.3.3 Feature saliency

Feature saliency, which recasts the feature selection problem as a parameter-estimation problem, is an embedded feature selection technique first developed for GMMs. New parameters, called feature saliencies, are added to the conditional distribution of the GMM. The emission probability consists of mixture-dependent and mixture-independent distributions, and feature saliencies represent the probability of belonging to the mixture-dependent distribution. The saliencies can be interpreted as the probability that a feature is relevant. The feature-saliency selection method is an embedded feature selection technique, because it simultaneously constructs a model and performs selection. However, the estimated feature saliency can be used to rank feature relevance, as is the case with many filtering techniques.

A method that does not estimate feature saliencies but uses a similar idea of mixture-dependent and mixture-independent distributions was developed by Pudil et al. (1995). In this model, each observation in Y is considered to belong to one of C classes \(\varOmega = {\omega _1,\omega _2, \ldots ,\omega _C}\), and the labels for each observation are assumed to be known. The class conditional distribution \(P(Y|\omega )\) is assumed to be a mixture of distributions f(y|b), where b represents the distribution’s parameters. The probability density function can be written as

where \(\phi _l\) is binary \(\{0,1\}\), \(b_{0l}\) represents the parameters for the background distribution, and \(b_{il}^\omega \) are the parameters for the mixture and class dependent distribution. Feature selection is performed by finding the set of \(\phi \)’s that minimize the Kullback-Liebler divergence between the true and postulated class-conditional probability density function. The EM algorithm is used to estimate model parameters. This method was later refined and specified for a two class problem in Novovicová et al. (1996).

In the work that would eventually lead to feature saliency, Figueiredo et al. (2003) use the idea of splitting the likelihood into relevant and irrelevant distributions. In their formulation, the available features are divided into two subsets \(y^\mathscr {U}\) and \(y^\mathscr {N}\), which represent the disjoint “useful” and “non-useful” feature sets. The data likelihood can be written as

where \(\varLambda ^\mathscr {N}\) represents the set of model parameters for the “non-useful” feature distribution, \(\varLambda _i^\mathscr {U}\) represents the set of model parameters for the ith mixture of the “useful” feature distribution, and \(\mathscr {U}\) specifies the set of useful features. This feature selection method relies on the assumption that the non-useful features are independent of the mixture. This can be seen by deriving the posterior probability that y belongs to the ith mixture. Let \(P(x=i|y,\varLambda ) = w_i\), then

where \(w_i\) can be obtained using the EM algorithm. Let \(v_i(\mathscr {N})\) be the expected label using only the set of useful features, and let \(\hat{\varLambda }\) be the corresponding model parameters. A measure of non-usefullness can now be defined by

This non-usefullness measure is used in a backward sequential search algorithm to rank the features. The cutoff point between the useful and non-useful features is determined using minimum description length

In their initial studies of feature saliency and GMMs, Law et al. (2002) and Law et al. (2004) used the EM algorithm and the minimum-message length (MML) criterion to estimate model parameters and the number of clusters. The likelihood for the feature saliency GMM is written as

where \(p(\cdot |\cdot )\) is a mixture-dependent Gaussian distribution with parameters \(\theta _{il} = \{\mu _{il},\sigma _{il}\}\), \(q(\cdot |\cdot )\) is a mixture-independent Gaussian distribution with parameters \(\lambda _l = \{\mu _l,\sigma _l\}\), and \(\rho _l\) is the feature saliency which represents the probability that a feature is relevant. The MML penalty, which is used to aid in model selection, encourages feature-saliency estimates for irrelevant features to go to zero and helps estimate the number of mixtures by forcing the probability of sparsely occupied components to zero. Law et al. (2004) propose a post-processing step in which feature saliencies are optimized to discriminate between components, after which other parameters of the model are estimated using EM. They also note that a limitation to this method is the assumption that all the features are independent.

There are several variational Bayesian (VB) approaches for estimating feature saliency model parameters for GMMs. VB is an approximate learning method for latent variables models. In a general sense, let Y represent the given data, X represent the hidden variables, and \(\varLambda \) represent the model parameters. The VB approach assumes that the true posterior distributions for the model parameters \(p(\varLambda |Y)\) and the hidden variable p(X|Y) can be approximated by \(q(\varLambda |Y)\) and q(X|Y). The objective is to find the approximating distributions that maximize the upper bound of the marginal likelihood

where \(p(\varLambda )\) is the prior distribution of the parameters and \(D(\cdot |\cdot )\) is the Kullback-Leibler divergence. The learning procedure is similar to the EM algorithm in that it is based on iteratively updating quantities in two steps

and

Valente and Wellekens (2004) and Constantinopoulos et al. (2006) both implement VB on a feature saliency GMM. Valente and Wellekens treat all model parameters as random variables and assign them prior distributions, while Constantinopoulos, Titsias, and Likas only treat some of the model parameters as random variables. Both studies conclude that the VB approach outperforms the MML approach proposed in Law et al. (2002, 2004).

Li et al. (2009) propose a localized feature saliency model which assumes that the relevance of each feature is dependent upon the cluster. A matrix S represents the feature relevance where \(s_{il}=1\) indicates that the lth feature is associated with the ith cluster, and \(s_{il}=0\) otherwise. The feature saliency can now be interpreted as \(\rho _{il} = P(s_{il}=1)\), and the likelihood is rewritten as

VB approximation is used to estimate posterior distributions for model parameters. The numerical experiments demonstrate that the localized feature selection method outperforms the global method proposed in Constantinopoulos et al. (2006).

Expectation propagation (EP) is another Bayesian inference technique that Chang et al. 2005 apply to a feature saliency GMM model for feature selection. EP is an extension of assumed density filtering (ADF) (Minka 2001), a sequential method for estimating posterior distributions. In a general ADF formulation, let \(Y = \{y_1,y_2, \ldots ,y_N\}\) represent the observed data and \(\varLambda \) represent the parameters for the model that generated the data. The joint distribution of Y and \(\varLambda \) is given by \(P(Y,\varLambda ) = P(\varLambda ) \prod _{n=1}^Np(y_n|\varLambda )\). ADF assumes that the joint distribution can be factored into simple terms denoted by \(t_n(\varLambda )\). The objective of ADF is to estimate the approximating distribution \(q(\varLambda |h)\), where h is the set of hyperparameters. EP is an extension of ADF that incorporates iterative refinements of the approximating distribution. More specifically, EP starts by estimating \(t_n(\varLambda )\) by \(\tilde{t}_n\) and iteratively updating the hyperparameters until convergence. Given \(\tilde{t}_n\), the approximate posterior can be written as

Let \(q^{\backslash i}(\varLambda |h^{\backslash i})\) represent the approximate posterior without the ith observation

The contribution of the ith distribution is incorporated into the posterior distribution through

The approximate posterior \(q^{\backslash i}(\varLambda |h^{\backslash i})\) is found by minimizing the KL divergence between \(\hat{p}(\varLambda )\) and \(q(\varLambda )\). The update for \(\tilde{t}_n\) is

with \(Z_n = \int _{\varLambda '} t_n(\varLambda ') q^{\backslash i}(\varLambda |h^{\backslash i}) d\varLambda '\). When EP is applied to the feature saliency GMM, Chang, Dasgupta, and Carin found that it outperformed the maximum likelihood formulation proposed in Law et al. (2004).

Graham and Miller (2006) use the mixture-dependent and mixture-independent model for model and feature selection on general mixture models. They assume that features are mixture dependent and write the likelihood as

where \(\varTheta (I)\) is the set of model parameters for I mixtures, \(P(Y_l|\theta _i)\) and \(P(Y_l|\theta _s)\) are mixture-dependent and shared distributions of any form with parameter sets \(\theta _i\) and \(\theta _s\) (the s indicates the shared or mixture-independent distribution), and \(v_{il}\) is a binary model parameter indicating if the feature belongs to the mixture-dependent or shared distribution. The major differences between this formulation and the feature saliency formulation proposed by Law et al. (2004) is the lack of a feature saliency parameter and treating \(v_{il}\) as a model parameter as opposed to a random variable. The learning procedure proposed by Graham and Miller (2006) is composed of two steps. In the first step, BIC is used to estimate the number of mixtures. In the second step, a modified EM algorithm is performed to estimate model parameters and select features. The E-step is modified to estimate the expected complete penalized log-likelihood, where the penalty comes from replacing the complete log-likelihood with BIC in the expectation. The M-step is modified by splitting it into two sub-steps: (1) estimates a subset of the model parameters given a fixed \(v_{il}\) and (2) finds the optimal configuration of \(v_{il}\). Graham and Miller derive learning procedures for GMMs and multinomial models.

Allili et al. (2010) adapt the feature saliency model to mixtures of generalized Gaussian distributions and apply it to image and video segmentation. Their primary contribution is the assumption that the mixture-independent distribution can be composed of K mixtures. The updated likelihood is now

where \(p(\cdot |\cdot )\) is a generalized Gaussian distribution (Allili et al. 2008). As in Law et al. (2004), the minimum message length criteria and the EM algorithm are used to estimate model parameters. Similarly, Boutemedjet et al. (2007) adapt feature saliency to mixtures of general Dirichlet distributions and solve for model parameters using the MML criteria and the EM algorithm.

Tadesse et al. (2005) propose a Bayesian feature selection method based on the idea of mixture-dependent and mixture-independent distributions. In this formulation, the features are not assumed to be independent but come from multivariate Gaussian distributions. Estimation of the model parameters, including the assignment of features to the mixture-dependent and mixture-independent distributions, is carried out using a Markov chain Monte Carlo (MCMC) algorithm. The MCMC algorithm can split and merge mixtures as well as change the assignment of each feature to the preferred distribution. This method is not tested against any of the other feature saliency methods outlined in this section, but we speculate that the computational cost of this MCMC algorithm would not scale well with the number of features as the possible combinations of feature assignments grows exponentially. This model is expanded to infinite mixtures of distributions using a Dirichlet process (Kim et al. 2006). Swartz et al. (2008) add a known structure to the Tadesse model by assigning groups of observations to specific clsuters. This method is particularly useful for modeling microarray data. Later, Vannucci and Stingo (2010) outline a Bayesian approach to variable selection that is similar to feature saliency: A binary random variable is used to represent belonging to either a component-dependent and component-independent distribution, and priors can be used to convey biological information to the system. The authors outline both supervised and unsupervised models, and suggest that Gibbs sampling be used to estimate model parameters. However, only the supervised model is tested and evaluated on data.

Feature saliency has also been expanded to generative topographical mapping (GTM) (Bishop et al. 1998), a constrained form of the GMM. A GTM is essentially a GMM with equal mixture weights (\(\pi _i = I^{-1}\;\forall i\)), a shared covariance matrix across the mixtures, and Gaussian means that do not move independently. Vellido (2006) uses feature saliency to select features for a GTM. Vellido et al. (2006) use feature saliency on a GTM that assumes a Student’s t-distribution instead of the standard Gaussian. The t-distribution allows the model to be more robust to outliers. This is demonstrated on MRS data. However, the MRS results suggest that the performance of the feature saliency GTM may be affected by low sample sizes and noise in the collected data. Vellido and Velazco (2008) investigate these limitations using synthetic data. They conclude that these limitations are a characteristic of the model and suggest that future research should test if regularization could be added to improve model performance in high noise environments.

5 Feature selection for HMM literature review

While numerous studies have investigated feature selection in general and GMMs specifically, research on feature selection methods specific for HMMs is lacking. This section begins by outlining studies addressing feature extraction and dimensionality reduction for HMMs as opposed to feature selection. These methods include principal component analysis, independent component analysis, and feature pruning. Then, we outline studies which compare different types of general feature selection methods and use HMMs as the predictive model. Finally, there are subsections for filters, wrappers, and embedded feature selection techniques with specific application to HMMs. Table 2 lists the feature selection methods for HMMs discussed in this section.

In most applications of HMMs, features are pre-selected based on domain knowledge and the feature selection procedure is completely omitted. Xie et al. (2002) use HMMs for analyzing soccer videos. The objective is to classify if the video is showing a soccer game that is in progress or a soccer game that is on a break. Features are extracted from the video that give information on the ratio of grass pixels to non-grass pixels and the motion intensity which estimates the gross motion in the frame. These features are selected purely on the basis of domain knowledge and there is no analysis of their impact on the classification ability of the HMM. Montero and Sucar (2004) use HMMs for visual human gesture recognition. They use domain knowledge to select different trajectory-based features, and then they experimentally evaluate different feature subsets. The final feature subset is chosen based on the experimental evaluation but they do present a methodology for feature selection. This could be considered a brute-force search method over subsets of features where the subsets are grouped based on objective similarity, i.e. all feature generated by a certain extraction function are in the same feature subset.

A few attempts have been made to reduce the likelihood calculation of the HMM, but these methods are not truly feature selection techniques, as all data streams must be collected. Bocchieri (1993) proposes to use vector quantization to efficiently compute continuous Gaussian likelihoods. In this method, the likelihoods of observations that are not classified as outliers will be calculated using a Gaussian distribution, while the likelihoods of outliers will only be approximated. Vector quantization is used to determine the the boundary between an outlier and a non-outlier. Li and Bilmes (2003) and Li and Bilmes (2005) propose a similar idea where the likelihood for a subset of features is calculated exactly, and the likelihood for the remaining features is approximated. The authors refer to this method as feature pruning, and the amount of pruning is determined by the algorithm. Another attempt to reduce the computation of HMMs was proposed by Gales et al. (1999). In this method, the number of Gaussian components or number of mixtures is trimmed.

Principal component analysis (PCA) is often used to reduce the size of the feature space and extract features for HMMs. Bashir et al. (2007) use PCA to extract features from object trajectories for activity classification using HMMs. Similarly, Liu and Chen (2003) use PCA to extract features from video for face recognition. Independent component analysis (ICA), which is similar to PCA, has also been used with HMMs and attempts to identify independent noise components of a signal. Windridge and Bowden (2005) use ICA to extract features and then perform feature selection on the extracted features to eliminate the noise components. However, this method is dependent on the ICA transformation and the feature selection technique cannot be successfully utilized without ICA. Therefore, we consider the method proposed by Windridge and Bowden to be a dimensionality reduction technique because ICA does not eliminate data streams. Zhou and Zhang (2008) use ICA to extract features for video content analysis using HMMs which they call the ICA Mixture Hidden Markov Model.

Other methods for transforming the original feature set for use with HMMs have also been studied. Yin et al. (2004) present asymmetrically boosted HMMs that use a form of AdaBoost (Meyer 2002; Schwenk 1999) to construct a new feature set. Yin et al. (2008) use segmental boosting, which creates an ensemble of weak learners or HMMs to create a new feature space. This method is presented as feature selection, however we classify it as feature extraction because it does not select a subset of relevant features but instead constructs a new relevant feature set.

Gales (1999) introduces the idea of semi-tied covariance matrices for HMMs with Gaussian conditional likelihoods. This method does not select features but reduces the number of parameters in the covariance matrix.

5.1 Feature selection comparison for HMMs

Nouza (1996) compares three feature selection techniques when using HMMs: sequential forward search (SFS), discriminate feature analysis (DFA), and PCA. The HMM used in this study assumes that each feature in the conditional likelihood follows a Gaussian mixture distribution. The PCA feature extraction technique used in this comparison calculates principal components using eignenvalues and eigenvectors.

DFA evaluates the contribution of each feature to correctly classifying an observation. Consider the case where the covariance matrix is diagonal and there is only one mixture in the conditional likelihood. The log-likelihood given model \(\varPsi \) can be written as

where K represents the part of the likelihood that is not dependent on the features, and \(D_l\) is

The state at each time period \(x_t\) given the observation sequence is estimated using the Viterbi algorithm. Now, let \(C_l = \mathbb {E}[D_l]\) when \(\varPsi \) is the correct model, and \(W_l = \mathbb {E}[D_l]\) when \(\varPsi \) is the incorrect model. The feature significance factor \(R_l\), which is used for evaluating each feature in DFA, is defined as

Features with a higher \(R_l\) contribute more to classification and should be considered more relevant than features with lower values of \(R_l\).

The SFS method implemented in this comparison uses recognition rate as the evaluation function. The PCA method can be considered an unsupervised feature extraction technique akin to a filter because it does not require class labels and is performed before training the HMM. The DFA method is a supervised filtering feature selection technique. The SFS is a supervised wrapper. This study demonstrates that SFS and DFA both outperform PCA, but require more computation and supervised data.

Paliwal (1992) compares four feature reduction techniques for use on an HMM-based speech recognizer. The first method is a filtering feature selection technique that uses the F-ratio – the between class variance over the pooled within class variance. The second method constructs an HMM for each feature and then tests the recognition rate of each HMM. The top d features are selected based on the top d recognition rates of the corresponding single feature HMMs. The third method extracts features using linear discriminant analysis, and the fourth method extracts features using PCA. The study concludes that the method that evaluates features using recognition rate and single feature HMMs outperforms the other methods. However, a set of features selected based on domain knowledge was shown to outperform all of the feature reduction techniques in this specific application.

5.2 Filters for HMMs

Zhu et al. (2008) present a discriminant feature selection method specific to HMMs and apply the method to estimating the condition of a micro-milling tool. The authors compare their proposed method to PCA and automatic relevance determination (ARD). ARD is a Bayesian single layer neural network. First, we describe ARD in a general application. Let \(D = \{x_n,y_n\}\) for \(n=1, \ldots ,N\) be a data set drawn from a random distribution where y is the output, and x is the input to the neural network. The distribution of y can be written as

where P(w|D) is the posterior distribution for the weights in the neural network. ARD assumes that the weights have a Gaussian prior so training simplifies to finding the weights that minimize the cost function

where \(\alpha \) and \(\beta \) are hyperparameters that control the cost function. These hyperparameters are reestimated using the evidence framework (MacKay 1992), and the optimal set of hyperparameters is linked to the relevance of each feature, i.e. a small value for the hyperparameter corresponds to a large weight and relevance.

For the proposed feature selection method, Zhu, Hong, and Wong adapt Fisher’s linear discriminant analysis (Duda et al. 2001) to the feature selection problem. Given K classes and a data set x with N observations and L features, the sample mean and covariance for each class are represented by \(\mu _k\) and \(\varSigma _k\). The within-class covariance \(\varSigma _w\) can be calculated by

where \(D_k\) is the subset of observations for class k. The between-class covariance \(\varSigma _b\) can be calculated by

where \(n_k\) is the number of observations in class k, and \(\mu \) is the overall mean of the data. In the two class problem, the absolute values of \(\varSigma _w\) and \(\varSigma _b\) are proportional to \(s_1+s_2\) (the sum of the variances for each class) and \((\mu _1+\mu _2)^2\), and the Fisher’s discriminant ratio (FDR) can be written as

The multiclass FDR for the lth feature is

The proposed method modifies the multiclass FDR because it emphasizes class separability over feature ranking

For feature selection, the FDR is computed for each feature and then ranked in descending order based upon this calculation. The top m features are selected for inclusion in the relevant feature subset.