Abstract

Deep reinforcement learning (RL) has achieved outstanding results in recent years. This has led to a dramatic increase in the number of applications and methods. Recent works have explored learning beyond single-agent scenarios and have considered multiagent learning (MAL) scenarios. Initial results report successes in complex multiagent domains, although there are several challenges to be addressed. The primary goal of this article is to provide a clear overview of current multiagent deep reinforcement learning (MDRL) literature. Additionally, we complement the overview with a broader analysis: (i) we revisit previous key components, originally presented in MAL and RL, and highlight how they have been adapted to multiagent deep reinforcement learning settings. (ii) We provide general guidelines to new practitioners in the area: describing lessons learned from MDRL works, pointing to recent benchmarks, and outlining open avenues of research. (iii) We take a more critical tone raising practical challenges of MDRL (e.g., implementation and computational demands). We expect this article will help unify and motivate future research to take advantage of the abundant literature that exists (e.g., RL and MAL) in a joint effort to promote fruitful research in the multiagent community.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Almost 20 years ago Stone and Veloso’s seminal survey [305] laid the groundwork for defining the area of multiagent systems (MAS) and its open problems in the context of AI. About 10 years ago, Shoham et al. [289] noted that the literature on multiagent learning (MAL) was growing and it was not possible to enumerate all relevant articles. Since then, the number of published MAL works continues to steadily rise, which led to different surveys on the area, ranging from analyzing the basics of MAL and their challenges [7, 55, 333], to addressing specific subareas: game theory and MAL [233, 289], cooperative scenarios [213, 248], and evolutionary dynamics of MAL [38]. In just the last couple of years, three surveys related to MAL have been published: learning in non-stationary environments [141], agents modeling agents [6], and transfer learning in multiagent RL [290].

The research interest in MAL has been accompanied by successes in artificial intelligence, first, in single-agent video games [221]; more recently, in two-player games, for example, playing Go [291, 293], poker [50, 224], and games of two competing teams, e.g., DOTA 2 [235] and StarCraft II [339].

While different techniques and algorithms were used in the above scenarios, in general, they are all a combination of techniques from two main areas: reinforcement learning (RL) [315] and deep learning [184, 281].

RL is an area of machine learning where an agent learns by interacting (i.e., taking actions) within a dynamic environment. However, one of the main challenges to RL, and traditional machine learning in general, is the need for manually designing quality features on which to learn. Deep learning enables efficient representation learning, thus allowing the automatic discovery of features [184, 281]. In recent years, deep learning has had successes in different areas such as computer vision and natural language processing [184, 281]. One of the key aspects of deep learning is the use of neural networks (NNs) that can find compact representations in high-dimensional data [13].

In deep reinforcement learning (DRL) [13, 101] deep neural networks are trained to approximate the optimal policy and/or the value function. In this way the deep NN, serving as function approximator, enables powerful generalization. One of the key advantages of DRL is that it enables RL to scale to problems with high-dimensional state and action spaces. However, most existing successful DRL applications so far have been on visual domains (e.g., Atari games), and there is still a lot of work to be done for more realistic applications [359, 364] with complex dynamics, which are not necessarily vision-based.

DRL has been regarded as an important component in constructing general AI systems [179] and has been successfully integrated with other techniques, e.g., search [291], planning [320], and more recently with multiagent systems, with an emerging area of multiagent deep reinforcement learning(MDRL) [232, 251].Footnote 1

Learning in multiagent settings is fundamentally more difficult than the single-agent case due to the presence of multiagent pathologies, e.g., the moving target problem (non-stationarity) [55, 141, 289], curse of dimensionality [55, 289], multiagent credit assignment [2, 355], global exploration [213], and relative overgeneralization [105, 247, 347]. Despite this complexity, top AI conferences like AAAI, ICML, ICLR, IJCAI and NeurIPS, and specialized conferences such as AAMAS, have published works reporting successes in MDRL. In light of these works, we believe it is pertinent to first, have an overview of the recent MDRL works, and second, understand how these recent works relate to the existing literature.

This article contributes to the state of the art with a brief survey of the current works in MDRL in an effort to complement existing surveys on multiagent learning [56, 141], cooperative learning [213, 248], agents modeling agents [6], knowledge reuse in multiagent RL [290], and (single-agent) deep reinforcement learning [13, 191].

First, we provide a short review of key algorithms in RL such as Q-learning and REINFORCE (see Sect. 2.1). Second, we review DRL highlighting the challenges in this setting and reviewing recent works (see Sect. 2.2). Third, we present the multiagent setting and give an overview of key challenges and results (see Sect. 3.1). Then, we present the identified four categories to group recent MDRL works (see Fig. 1):

-

Analysis of emergent behaviors: evaluate single-agent DRL algorithms in multiagent scenarios (e.g., Atari games, social dilemmas, 3D competitive games).

-

Learning communication: agents learn communication protocols to solve cooperative tasks.

-

Learning cooperation: agents learn to cooperate using only actions and (local) observations.

-

Agents modeling agents: agents reason about others to fulfill a task (e.g., best response learners).

For each category we provide a description as well as outline the recent works (see Sect. 3.2 and Tables 1, 2, 3, 4). Then, we take a step back and reflect on how these new works relate to the existing literature. In that context, first, we present examples on how methods and algorithms originally introduced in RL and MAL were successfully been scaled to MDRL (see Sect. 4.1). Second, we provide some pointers for new practitioners in the area by describing general lessons learned from the existing MDRL works (see Sect. 4.2) and point to recent multiagent benchmarks (see Sect. 4.3). Third, we take a more critical view and describe practical challenges in MDRL, such as reproducibility, hyperparameter tunning, and computational demands (see Sect. 4.4). Then, we outline some open research questions (see Sect. 4.5). Lastly, we present our conclusions from this work (see Sect. 5).

Our goal is to outline a recent and active area (i.e., MDRL), as well as to motivate future research to take advantage of the ample and existing literature in multiagent learning. We aim to enable researchers with experience in either DRL or MAL to gain a common understanding about recent works, and open problems in MDRL, and to avoid having scattered sub-communities with little interaction [6, 81, 141, 289].

Categories of different MDRL works. a Analysis of emergent behaviors: evaluate single-agent DRL algorithms in multiagent scenarios. b Learning communication: agents learn with actions and through messages. c Learning cooperation: agents learn to cooperate using only actions and (local) observations. d Agents modeling agents: agents reason about others to fulfill a task (e.g., cooperative or competitive). For a more detailed description see Sects. 3.3–3.6 and Tables 1, 2, 3 and 4

2 Single-agent learning

This section presents the formalism of reinforcement learning and its main components before outlining deep reinforcement learning along with its particular challenges and recent algorithms. For a more detailed description we refer the reader to excellent books and surveys on the area [13, 101, 164, 315, 353].

2.1 Reinforcement learning

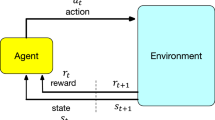

RL formalizes the interaction of an agent with an environment using a Markov decision process (MDP) [261]. An MDP is defined by the tuple \(\langle \mathcal {S},\mathcal {A},R,T,\gamma \rangle \) where \(\mathcal {S}\) represents a finite set of states. \(\mathcal {A}\) represents a finite set of actions. The transition function \(T : \mathcal {S} \times \mathcal {A} \times \mathcal {S} \rightarrow [0,1]\) determines the probability of a transition from any state \(s \in \mathcal {S}\) to any state \(s' \in \mathcal {S}\) given any possible action \(a \in \mathcal {A}\). The reward function \(R : \mathcal {S} \times \mathcal {A} \times \mathcal {S} \rightarrow \mathbb {R}\) defines the immediate and possibly stochastic reward that an agent would receive given that the agent executes action a while in state s and it is transitioned to state \(s'\), \(\gamma \in [0, 1]\) represents the discount factor that balances the trade-off between immediate rewards and future rewards.

MDPs are adequate models to obtain optimal decisions in single agent fully observable environments.Footnote 2 Solving an MDP will yield a policy \(\pi : \mathcal {S} \rightarrow \mathcal {A}\), which is a mapping from states to actions. An optimal policy \(\pi ^*\) is the one that maximizes the expected discounted sum of rewards. There are different techniques for solving MDPs assuming a complete description of all its elements. One of the most common techniques is the value iteration algorithm [33], which requires a complete and accurate representation of states, actions, rewards, and transitions. However, this may be difficult to obtain in many domains. For this reason, RL algorithms often learn from experience interacting with the environment in discrete time steps.

Q-learning One of the most well known algorithms for RL is Q-learning [346]. It has been devised for stationary, single-agent, fully observable environments with discrete actions. A Q-learning agent keeps the estimate of its expected payoff starting in state s, taking action a as \(\hat{Q}(s,a)\). Each tabular entry \(\hat{Q}(s,a)\) is an estimate of the corresponding optimal \(Q^*\) function that maps state-action pairs to the discounted sum of future rewards starting with action a at state s and following the optimal policy thereafter. Each time the agent transitions from a state s to a state \(s'\) via action a receiving payoff r, the Q table is updated as follows:

with the learning rate \(\alpha \in [0,1]\). Q-learning is proven to converge to \(Q^*\) if state and action spaces are discrete and finite, the sum of the learning rates goes to infinity (so that each state-action pair is visited infinitely often) and that the sum of the squares of the learning rates is finite (which is required to show that the convergence is with probability one) [94, 154, 168, 318, 319, 329, 346]. The convergence of single-step on-policy RL algorithms, i.e, SARSA (\(\lambda =0\)), for both decaying exploration (greedy in the limit with infinite exploration) and persistent exploration (selecting actions probabilistically according to the ranks of the Q values) was demonstrated by Singh et al. [294]. Furthermore, Van Seijen [337] has proven convergence for Expected SARSA (see Sect. 3.1 for convergence results in multiagent domains).

REINFORCE (Monte Carlo policy gradient) In contrast to value-based methods, which do not try to optimize directly over a policy space [175], policy gradient methods can learn parameterized policies without using intermediate value estimates.

Policy parameters are learned by following the gradient of some performance measure with gradient descent [316]. For example, REINFORCE [354] uses estimated return by Monte Carlo (MC) methods with full episode trajectories to learn policy parameters \(\theta \), with \(\pi (a ; s,\theta ) \approx \pi (a ; s)\), as follows

where \(G_{t}\) represents the return, \(\alpha \) is the learning rate, and \(A_{t} \sim \pi \). A main limitation is that policy gradient methods can have high variance [175].

The policy gradient update can be generalized to include a comparison to an arbitrary baseline of the state [354]. The baseline, b(s), can be any function, as long as it does not vary with the action; the baseline leaves the expected value of the update unchanged, but it can have an effect on its variance [315]. A natural choice for the baseline is a learned state-value function, this reduces the variance, and it is bias-free if learned by MC.Footnote 3 Moreover, when using the state-value function for bootstrapping (updating the value estimate for a state from the estimated values of subsequent states) it assigns credit (reducing the variance but introducing bias), i.e., criticizes the policy’s action selections. Thus, in actor-critic methods [175], the actor represents the policy, i.e., action-selection mechanism, whereas a critic is used for the value function learning. In the case when the critic learns a state-action function (Q function) and a state value function (V function), an advantage function can be computed by subtracting state values from the state-action values [283, 315]. The advantage function indicates the relative quality of an action compared to other available actions computed from the baseline, i.e., state value function. An example of an actor-critic algorithm is Deterministic Policy Gradient (DPG) [292]. In DPG [292] the critic follows the standard Q-learning and the actor is updated following the gradient of the policy’s performance [128], DPG was later extended to DRL (see Sect. 2.2) and MDRL (see Sect. 3.5). For multiagent learning settings the variance is further increased as all the agents’ rewards depend on the rest of the agents, and it is formally shown that as the number of agents increase, the probability of taking a correct gradient direction decreases exponentially [206]. Recent MDRL works addressed this high variance issue, e.g., COMA [97] and MADDPG [206] (see Sect. 3.5).

Policy gradient methods have a clear connection with deep reinforcement learning since the policy might be represented by a neural network whose input is a representation of the state, whose output are action selection probabilities or values for continuous control [192], and whose weights are the policy parameters.

2.2 Deep reinforcement learning

While tabular RL methods such as Q-learning are successful in domains that do not suffer from the curse of dimensionality, there are many limitations: learning in large state spaces can be prohibitively slow, methods do not generalize (across the state space), and state representations need to be hand-specified [315]. Function approximators tried to address those limitations, using for example, decision trees [262], tile coding [314], radial basis functions [177], and locally weighted regression [46] to approximate the value function.

Similarly, these challenges can be addressed by using deep learning, i.e., neural networks [46, 262] as function approximators. For example, \(Q(s,a ; \theta )\) can be used to approximate the state-action values with \(\theta \) representing the neural network weights. This has two advantages, first, deep learning helps to generalize across states improving the sample efficiency for large state-space RL problems. Second, deep learning can be used to reduce (or eliminate) the need for manually designing features to represent state information [184, 281].

However, extending deep learning to RL problems comes with additional challenges including non-i.i.d. (not independently and identically distributed) data. Many supervised learning methods assume that training data is from an i.i.d. stationary distribution [36, 269, 281]. However, in RL, training data consists of highly correlated sequential agent-environment interactions, which violates the independence condition. Moreover, RL training data distribution is non-stationary as the agent actively learns while exploring different parts of the state space, violating the condition of sampled data being identically distributed [220].

In practice, using function approximators in RL requires making crucial representational decisions and poor design choices can result in estimates that diverge from the optimal value function [1, 21, 46, 112, 334, 351]. In particular, function approximation, bootstrapping, and off-policy learning are considered the three main properties that when combined, can make the learning to diverge and are known as the deadly triad [315, 334]. Recently, some works have shown that non-linear (i.e., deep) function approximators poorly estimate the value function [104, 151, 331] and another work found problems with Q-learning using function approximation (over/under-estimation, instability and even divergence) due to the delusional bias: “delusional bias occurs whenever a backed-up value estimate is derived from action choices that are not realizable in the underlying policy class”[207]. Additionally, convergence results for reinforcement learning using function approximation are still scarce [21, 92, 207, 217, 330]; in general, stronger convergence guarantees are available for policy-gradient methods [316] than for value-based methods [315].

Below we mention how the existing DRL methods aim to address these challenges when briefly reviewing value-based methods, such as DQN [221]; policy gradient methods, like Proximal Policy Optimization (PPO) [283]; and actor-critic methods like Asynchronous Advantage Actor-Critic (A3C) [158]. We refer the reader to recent surveys on single-agent DRL [13, 101, 191] for a more detailed discussion of the literature.

Value-based methods The major breakthrough work combining deep learning with Q-learning was the Deep Q-Network (DQN) [221]. DQN uses a deep neural network for function approximation [268]Footnote 4 (see Fig. 2) and maintains an experience replay (ER) buffer [193, 194] to store interactions \(\langle s,a,r,s' \rangle \). DQN keeps an additional copy of neural network parameters, \(\theta ^-\), for the target network in addition to the \(\theta \) parameters to stabilize the learning, i.e., to alleviate the non-stationary data distribution.Footnote 5 For each training iteration i, DQN minimizes the mean-squared error (MSE) between the Q-network and its target network using the loss function:

where target network parameters \(\theta ^-\) are set to Q-network parameters \(\theta \) periodically and mini-batches of \(\langle s,a,r,s' \rangle \) tuples are sampled from the ER buffer, as depicted in Fig. 3.

Deep Q-Network (DQN) [221]: Inputs are four stacked frames; the network is composed of several layers: Convolutional layers employ filters to learn features from high-dimensional data with a much smaller number of neurons and Dense layers are fully-connected layers. The last layer represents the actions the agent can take (in this case, 10 possible actions). Deep Recurrent Q-Network (DRQN) [131], which extends DQN to partially observable domains [63], is identical to this setup except the penultimate layer (\(1 \times 256\) Dense layer) is replaced with a recurrent LSTM layer [147]

The ER buffer provides stability for learning as random batches sampled from the buffer helps alleviating the problems caused by the non-i.i.d. data. However, it comes with disadvantages, such as higher memory requirements and computation per real interaction [219]. The ER buffer is mainly used for off-policy RL methods as it can cause a mismatch between buffer content from earlier policy and from the current policy for on-policy methods [219]. Extending the ER buffer for the multiagent case is not trivial, see Sects. 3.5, 4.1 and 4.2. Recent works were designed to reduce the problem of catastrophic forgetting (this occurs when the trained neural network performs poorly on previously learned tasks due to a non-stationary training distribution [111, 214]) and the ER buffer, in DRL [153] and MDRL [246].

DQN has been extended in many ways, for example, by using double estimators [130] to reduce the overestimation bias with Double DQN [336] (see Sect. 4.1) and by decomposing the Q-function with a dueling-DQN architecture [345], where two streams are learned, one estimates state values and another one advantages, those are combined in the final layer to form Q values (this method improved over Double DQN).

In practice, DQN is trained using an input of four stacked frames (last four frames the agent has encountered). If a game requires a memory of more than four frames it will appear non-Markovian to DQN because the future game states (and rewards) do not depend only on the input (four frames) but rather on the history [132]. Thus, DQN’s performance declines when given incomplete state observations (e.g., one input frame) since DQN assumes full state observability.

Real-world tasks often feature incomplete and noisy state information resulting from partial observability (see Sect. 2.1). Deep Recurrent Q-Networks (DRQN) [131] proposed using recurrent neural networks, in particular, Long Short-Term Memory (LSTMs) cells [147] in DQN, for this setting. Consider the architecture in Fig. 2 with the first dense layer after convolution replaced by a layer of LSTM cells. With this addition, DRQN has memory capacity so that it can even work with only one input frame rather than a stacked input of consecutive frames. This idea has been extended to MDRL, see Fig. 6 and Sect. 4.2. There are also other approaches to deal with partial observability such as finite state controllers [218] (where action selection is performed according to the complete observation history) and using an initiation set of options conditioned on the previously employed option [302].

Policy gradient methods For many tasks, particularly for physical control, the action space is continuous and high dimensional where DQN is not suitable. Deep Deterministic Policy Gradient (DDPG) [192] is a model-free off-policy actor-critic algorithm for such domains, based on the DPG algorithm [292] (see Sect. 2.1). Additionally, it proposes a new method for updating the networks, i.e., the target network parameters slowly change (this could also be applicable to DQN), in contrast to the hard reset (direct weight copy) used in DQN. Given the off-policy nature, DDPG generates exploratory behavior by adding sampled noise from some noise processes to its actor policy. The authors also used batch normalization [152] to ensure generalization across many different tasks without performing manual normalizations. However, note that other works have shown batch normalization can cause divergence in DRL [274, 335].

Asynchronous Advantage Actor-Critic (A3C) [219] is an algorithm that employs a parallelized asynchronous training scheme (using multiple CPU threads) for efficiency. It is an on-policy RL method that does not use an experience replay buffer. A3C allows multiple workers to simultaneously interact with the environment and compute gradients locally. All the workers pass their computed local gradients to a global NN which performs the optimization and synchronizes with the workers asynchronously (see Fig. 4). There is also the Advantage Actor-Critic (A2C) method [234] that combines all the gradients from all the workers to update the global NN synchronously. The loss function for A3C is composed of two terms: policy loss (actor), \(\mathcal {L}_{\pi }\), and value loss (critic), \(\mathcal {L}_{v}\). A3C parameters are updated using the advantage function \(A(s_t, a_t; \theta _v)=Q(s,a)-V(s)\), commonly used to reduce variance (see Sect. 2.1). An entropy loss for the policy, \(H(\pi )\), is also commonly added, which helps to improve exploration by discouraging premature convergence to suboptimal deterministic policies [219]. Thus, the loss function is given by: \(\mathcal {L}_{\text {A3C}} = \lambda _v \mathcal {L}_{v} + \lambda _{\pi } \mathcal {L}_{\pi } - \lambda _{H} \mathbb {E}_{s \sim \pi } [H(\pi (s, \cdot , \theta )]\) with \(\lambda _{v}, \lambda _{\pi },\) and \(\lambda _{H}\), being weighting terms on the individual loss components. Wang et al. [344] took A3C’s framework but used off-policy learning to create the Actor-critic with experience replay (ACER) algorithm. Gu et al. [118] introduced the Interpolated Policy Gradient (IPG) algorithm and showed a connection between ACER and DDPG: they are a pair of reparametrization terms (they are special cases of IPG) when they are put under the same stochastic policy setting, and when the policy is deterministic they collapse into DDPG.

Asynchronous Advantage Actor-Critic (A3C) employs multiple (CPUs) workers without needing an ER buffer. Each worker has its own NN and independently interacts with the environment to compute the loss and gradients. Workers then pass computed gradients to the global NN that optimizes the parameters and synchronizes with the worker asynchronously. This distributed system is designed for single-agent deep RL. Compared to different DQN variants, A3C obtains better performance on a variety of Atari games using substantially less training time with multiple CPU cores of standard laptops without a GPU [219]. However, we note that more recent approaches use both multiple CPU cores for more efficient training data generation and GPUs for more efficient learning

Jaderberg et al. [158] built the Unsupervised Reinforcement and Auxiliary Learning (UNREAL) framework on top of A3C and introduced unsupervised auxiliary tasks (e.g., reward prediction) to speed up the learning process. Auxiliary tasks in general are not used for anything other than shaping the features of the agent, i.e., facilitating and regularizing the representation learning process [31, 288]; their formalization in RL is related to the concept of general value functions [315, 317]. The UNREAL framework optimizes a combined loss function \(\mathcal {L}_{\text {UNREAL}} \approx \mathcal {L}_{\text {A3C}} + \sum _i \lambda _{AT_i} \mathcal {L}_{AT_i} \), that combines the A3C loss, \(\mathcal {L}_{\text {A3C}}\), together with auxiliary task losses \(\mathcal {L}_{AT_i}\), where \(\lambda _{AT_i}\) are weight terms (see Sect. 4.1 for use of auxiliary tasks in MDRL). In contrast to A3C, UNREAL uses a prioritized ER buffer, in which transitions with positive reward are given higher probability of being sampled. This approach can be viewed as a simple form of prioritized replay [278], which was in turn inspired by model-based RL algorithms like prioritized sweeping [10, 223].

Another distributed architecture is the Importance Weighted Actor-Learner Architecture (IMPALA) [93]. Unlike A3C or UNREAL, IMPALA actors communicate trajectories of experience (sequences of states, actions, and rewards) to a centralized learner, thus IMPALA decouples acting from learning.

Trust Region Policy Optimization (TRPO) [283] and Proximal Policy Optimization (PPO) [284] are recently proposed policy gradient algorithms where the latter represents the state-of-the art with advantages such as being simpler to implement and having better empirical sample complexity. Interestingly, a recent work [151] studying PPO and TRPO arrived at the surprising conclusion that these methods often deviate from what the theoretical framework would predict: gradient estimates are poorly correlated with the true gradient and value networks tend to produce inaccurate predictions for the true value function. Compared to vanilla policy gradient algorithms, PPO prevents abrupt changes in policies during training through the loss function, similar to early work by Kakade [166]. Another advantage of PPO is that it can be used in a distributed fashion, i.e, Distributed PPO (DPPO) [134]. Note that distributed approaches like DPPO or A3C use parallelization only to improve the learning by more efficient training data generation through multiple CPU cores for single agent DRL and they should not be considered multiagent approaches (except for recent work which tries to exploit this parallelization in a multiagent environment [19]).

Lastly, there’s a connection between policy gradient algorithms and Q-learning [282] within the framework of entropy-regularized reinforcement learning [126] where the value and Q functions are slightly altered to consider the entropy of the policy. In this vein, Soft Actor-Critic (SAC) [127] is a recent algorithm that concurrently learns a stochastic policy, two Q-functions (taking inspiration from Double Q-learning) and a value function. SAC alternates between collecting experience with the current policy and updating from batches sampled from the ER buffer.

We have reviewed recent algorithms in DRL, while the list is not exhaustive, it provides an overview of the different state-of-art techniques and algorithms which will become useful while describing the MDRL techniques in the next section.

3 Multiagent deep reinforcement learning (MDRL)

First, we briefly introduce the general framework on multiagent learning and then we dive into the categories and the research on MDRL.

3.1 Multiagent learning

Learning in a multiagent environment is inherently more complex than in the single-agent case, as agents interact at the same time with environment and potentially with each other [55]. The independent learners, a.k.a. decentralized learners approach [323] directly uses single-agent algorithms in the multi-agent setting despite the underlying assumptions of these algorithms being violated (each agent independently learns its own policy, treating other agents as part of the environment). In particular the Markov property (the future dynamics, transitions, and rewards depend only on the current state) becomes invalid since the environment is no longer stationary [182, 233, 333]. This approach ignores the multiagent nature of the setting entirely and it can fail when an opponent adapts or learns, for example, based on the past history of interactions [289]. Despite the lack of guarantees, independent learners have been used in practice, providing advantages with regards to scalability while often achieving good results [213].

To understand why multiagent domains are non-stationary from agents’ local perspectives, consider a simple stochastic (also known as Markov) game \((\mathcal {S},\mathcal {N},\mathcal {A},\mathcal {T},\mathcal {R})\), which can be seen as an extension of an MDP to multiple agents [198, 200]. One key distinction is that the transition, \(\mathcal {T}\), and reward function, \(\mathcal {R}\), depend on the actions \(\mathcal {A}= A_1\times \cdots \times A_\mathcal {N}\) of all, \(\mathcal {N}\), agents, this means, \(\mathcal {R}=R_1\times \cdots \times R_\mathcal {N}\) and \(\mathcal {T}=\mathcal {S} \times A_1\times \cdots \times A_\mathcal {N}\).

Given a learning agent i and using the common shorthand notation \(\varvec{-i} = \mathcal {N} \setminus \{ i \}\) for the set of opponents, the value function now depends on the joint action \(\varvec{a} = (a_i, \varvec{a_{-i}})\), and the joint policy \(\varvec{\pi }(s, \varvec{a}) = \prod _j \pi _j (s, a_j) \)Footnote 6:

Consequently, the optimal policy is dependent on the other agents’ policies,

Specifically, the opponents’ joint policy \(\varvec{\pi _{-i}}(s,\varvec{a_{-i}})\) can be non-stationary, i.e., changes as the opponents’ policies change over time, for example with learning opponents.

Convergence results Littman [200] studied convergence properties of reinforcement learning joint action agents [70] in Markov games with the following conclusions: in adversarial environments (zero-sum games) an optimal play can be guaranteed against an arbitrary opponent, i.e., Minimax Q-learning [198]. In coordination environments (e.g., in cooperative games all agents share the same reward function), strong assumptions need be made about other agents to guarantee convergence to optimal behavior [200], e.g., Nash Q-learning [149] and Friend-or-Foe Q-learning [199]. In other types of environments no value-based RL algorithms with guaranteed convergence properties are known [200].

Recent work on MDRL have addressed scalability and have focused significantly less on convergence guarantees, with few exceptions [22, 40, 255, 297]. One notable work has shown a connection between update rules for actor-critic algorithms for multiagent partially observable settings and (counterfactual) regret minimizationFootnote 7: the advantage values are scaled counterfactual regrets. This lead to new convergence properties of independent RL algorithms in zero-sum games with imperfect information [300]. The result is also used to support policy gradient optimization against worst-case opponents, in a new algorithm called Exploitability Descent [204].Footnote 8

We refer the interested reader to seminal works about convergence in multiagent domains [23, 41, 42, 45, 113, 165, 167, 277, 295, 357, 367]. Note that instead of convergence, some MAL algorithms have proved learning a best response against classes of opponents [66, 326, 349].

There are other common problems in MAL, including action shadowing [105, 347], the curse of dimensionality [55], and multiagent credit assignment [2]. Describing each problem is out of the scope of this survey. However, we refer the interested reader to excellent resources on general MAL [209, 333, 350], as well as surveys in specific areas: game theory and multiagent reinforcement learning [55, 233], cooperative scenarios [213, 248], evolutionary dynamics of multiagent learning [38], learning in non-stationary environments [141], agents modeling agents [6], and transfer learning in multiagent RL [290].

3.2 MDRL categorization

In Sect. 2.2 we outlined some recent works in single-agent DRL since an exhaustive list is out of the scope of this article. This explosion of works has led DRL to be extended and combined with other techniques [13, 191, 251]. One natural extension to DRL is to test whether these approaches could be applied in a multiagent environment.

We analyzed the most recent works (that are not covered by previous MAL surveys [6, 141] and we do not consider genetic algorithms or swarm intelligence in this survey) that have a clear connection with MDRL. We propose 4 categories which take inspiration from previous surveys [6, 55, 248, 305] and that conveniently describe and represent current works. Note that some of these works fit into more than one category (they are not mutually exclusive), therefore their summaries are presented in all applicable Tables 1, 2, 3 and 4, however, for the ease of exposition when describing them in the text we only do so in one category. Additionally, for each work we present its learning type, either a value-based method (e.g., DQN) or a policy gradient method (e.g., actor-critic); also, we mention if the setting is evaluated in a fully cooperative, fully competitive or mixed environment (both cooperative and competitive).

-

Analysis of emergent behaviors These works, in general, do not propose learning algorithms—their main focus is to analyze and evaluate DRL algorithms, e.g., DQN [188, 264, 322], PPO [24, 264] and others [187, 225, 264], in a multiagent environment. In this category we found works which analyze behaviors in the three major settings: cooperative, competitive and mixed scenarios; see Sect. 3.3 and Table 1.

-

Learning communication [96, 183, 225, 253, 256, 312]. These works explore a sub-area in which agents can share information with communication protocols, for example through direct messages [96] or via a shared memory [256]. This area is attracting attention and it had not been explored much in the MAL literature. See Sect. 3.4 and Table 2.

-

Learning cooperation While learning to communicate is an emerging area, fostering cooperation in learning agents has a long history of research in MAL [213, 248]. In this category the analyzed works are evaluated in either cooperative or mixed settings. Some works in this category take inspiration from MAL (e.g., leniency, hysteresis, and difference rewards concepts) and extend them to the MDRL setting [98, 244, 247]. A notable exception [99] takes a key component from RL (i.e., experience replay buffer) and adapts it for MDRL. See Sect. 3.5 and Table 3.

-

Agents modeling agents Albrecht and Stone [6] presented a thorough survey in this topic and we have found many works that fit into this category in the MDRL setting, some taking inspiration from DRL [133, 148, 265], and others from MAL [97, 136, 180, 263, 358]. Modeling agents is helpful not only to cooperate, but also for modeling opponents [133, 136, 148, 180], inferring goals [265], and accounting for the learning behavior of other agents [97]. In this category the analyzed algorithms present their results in either a competitive setting or a mixed one (cooperative and competitive). See Sect. 3.6 and Table 4.

In the rest of this section we describe each category along with the summaries of related works.

3.3 Emergent behaviors

Some recent works have analyzed the previously mentioned independent DRL agents (see Sect. 3.1) from the perspective of types of emerging behaviors (e.g., cooperative or competitive).

One of the earliest MDRL works is by Tampuu et al. [322], which had two independent DQN learning agents to play the Atari Pong game. Their focus was to adapt the reward function for the learning agents, which resulted in either cooperative or competitive emergent behaviors.

Leibo et al. [188] meanwhile studied independent DQNs in the context of sequential social dilemmas: a Markov game that satisfies certain inequalities [188]. The focus of this work was to highlight that cooperative or competitive behaviors exist not only as discrete (atomic) actions, but they are temporally extended (over policies). In the related setting of one shot Markov social dilemmas, Lerer and Peysakhovich [189] extended the famous Tit-for-Tat (TFT)Footnote 9 strategy [15] for DRL (using function approximators) and showed (theoretically and experimentally) that such agents can maintain cooperation. To construct the agents they used self-play and two reward schemes: selfish and cooperative. Previously, different MAL algorithms were designed to foster cooperation in social dilemmas with Q-learning agents [77, 303].

Self-play is a useful concept for learning algorithms (e.g., fictitious play [49]) since under certain classes of games it can guarantee convergenceFootnote 10 and it has been used as a standard technique in previous RL and MAL works [43, 291, 325]. Despite its common usage self-play can be brittle to forgetting past knowledge [180, 186, 275] (see Sect. 4.5 for a note on the role of self-play as an open question in MDRL). To overcome this issue, Leibo et al. [187] proposed Malthusian reinforcement learning as an extension of self-play to population dynamics. The approach can be thought of as community coevolution and has been shown to produce better results (avoiding local optima) than independent agents with intrinsic motivation [30]. A limitation of this work is that it does not place itself within the state of the art in evolutionary and genetic algorithms. Evolutionary strategies have been employed for solving reinforcement learning problems [226] and for evolving function approximators [351]. Similarly, they have been used multiagent scenarios to compute approximate Nash equilibria [238] and as metaheuristic optimization algorithms [53, 54, 150, 248].

Bansal et al. [24] explored the emergent behaviors in competitive scenarios using the MuJoCo simulator [327]. They trained independent learning agents with PPO and incorporated two main modifications to deal with the MAL nature of the problem. First, they used exploration rewards [122] which are dense rewards that allow agents to learn basic (non-competitive) behaviors—this type of reward is annealed through time giving more weight to the environmental (competitive) reward. Exploration rewards come from early work in robotics [212] and single-agent RL [176], and their goal is to provide dense feedback for the learning algorithm to improve sample efficiency (Ng et al. [231] studied the theoretical conditions under which modifications of the reward function of an MDP preserve the optimal policy). For multiagent scenarios, these dense rewards help agents in the beginning phase of the training to learn basic non-competitive skills, increasing the probability of random actions from the agent yielding a positive reward. The second contribution was opponent sampling which maintains a pool of older versions of the opponent to sample from, in contrast to using the most recent version.

Raghu et al. [264] investigated how DRL algorithms (DQN, A2C, and PPO) performed in a family of two-player zero-sum games with tunable complexity, called Erdos-Selfridge-Spencer games [91, 299]. Their reasoning is threefold: (i) these games provide a parameterized family of environments where (ii) optimal behavior can be completely characterized, and (iii) support multiagent play. Their work showed that algorithms can exhibit wide variation in performance as the algorithms are tuned to the game’s difficulty.

Lazaridou et al. [183] proposed a framework for language learning that relies on multiagent communication. The agents, represented by (feed-forward) neural networks, need to develop an emergent language to solve a task. The task is formalized as a signaling game [103] in which two agents, a sender and a receiver, obtain a pair of images. The sender is told one of them is the target and is allowed to send a message (from a fixed vocabulary) to the receiver. Only when the receiver identifies the target image do both agents receive a positive reward. The results show that agents can coordinate for the experimented visual-based domain. To analyze the semantic propertiesFootnote 11 of the learned communication protocol they looked whether symbol usage reflects the semantics of the visual space, and that despite some variation, many high level objects groups correspond to the same learned symbols using a t-SNE [210] based analysis (t-SNE is a visualization technique for high-dimensional data and it has also been used to better understand the behavior of trained DRL agents [29, 362]). A key objective of this work was to determine if the agent’s language could be human-interpretable. To achieve this, learned symbols were grounded with natural language by extending the signaling game with a supervised image labelling task (the sender will be encouraged to use conventional names, making communication more transparent to humans). To measure the interpretability of the extended game, a crowdsourced survey was performed, and in essence, the trained agent receiver was replaced with a human. The results showed that 68% of the cases, human participants picked the correct image.

Similarly, Mordatch and Abbeel [225] investigated the emergence of language with the difference that in their setting there were no explicit roles for the agents (i.e., sender or receiver). To learn, they proposed an end-to-end differentiable model of all agent and environment state dynamics over time to calculate the gradient of the return with backpropagation.

3.4 Learning communication

As we discussed in the previous section, one of the desired emergent behaviors of multiagent interaction is the emergence of communication [183, 225]. This setting usually considers a set of cooperative agents in a partially observable environment (see Sect. 2.2) where agents need to maximize their shared utility by means of communicating information.

Reinforced Inter-Agent Learning (RIAL) and Differentiable Inter-Agent Learning (DIAL) are two methods using deep networks to learn to communicate [96]. Both methods use a neural net that outputs the agent’s Q values (as done in standard DRL algorithms) and a message to communicate to other agents in the next timestep. RIAL is based on DRQN and also uses the concept of parameter sharing, i.e., using a single network whose parameters are shared among all agents. In contrast, DIAL directly passes gradients via the communication channel during learning, and messages are discretized and mapped to the set of communication actions during execution.

Memory-driven (MD) communication was proposed on top of the Multi-Agent Deep Deterministic Policy Gradient (MADDPG) [206] method. In MD-MADDPG [256], the agents use a shared memory as a communication channel: before taking an action, the agent first reads the memory, then writes a response. In this case the agent’s policy becomes dependent on its private observation and its interpretation of the collective memory. Experiments were performed with two agents in cooperative scenarios. The results highlighted the fact that the communication channel was used differently in each environment, e.g., in simpler tasks agents significantly decrease their memory activity near the end of the task as there are no more changes in the environment; in more complex environments, the changes in memory usage appear at a much higher frequency due to the presence of many sub-tasks.

Dropout [301] is a technique to prevent overfitting (in supervised learning this happens when the learning algorithm achieves good performance only on a specific data set and fails to generalize) in neural networks which is based on randomly dropping units and their connections during training time. Inspired by dropout, Kim et al. [173] proposed a similar approach in multiagent environments where direct communication through messages is allowed. In this case, the messages of other agents are dropped out at training time, thus the authors proposed the Message-Dropout MADDPG algorithm [173]. This method is expected to work in fully or limited communication environments. The empirical results show that with properly chosen message dropout rate, the proposed method both significantly improves the training speed and the robustness of learned policies (by introducing communication errors) during execution time. This capability is important as MDRL agents trained in simulated or controlled environments will be less fragile when transferred to more realistic environments.

While RIAL and DIAL used a discrete communication channel, CommNet [312] used a continuous vector channel. Through this channel agents receive the summed transmissions of other agents. The authors assume full cooperation and train a single network for all the agents. There are two distinctive characteristics of CommNet from previous works: it allows multiple communication cycles at each timestep and a dynamic variation of agents at run time, i.e., agents come and go in the environment.

In contrast to previous approaches, in Multiagent Bidirectionally Coordinated Network (BiCNet) [253], communication takes place in the latent space (i.e., in the hidden layers). It also uses parameter sharing, however, it proposes bidirectional recurrent neural networks [285] to model the actor and critic networks of their model. Note that in BiCNet agents do not explicitly share a message and thus it can be considered a method for learning cooperation.

Learning communication is an active area in MDRL with many open questions, in this context, we refer the interested reader to a recent work by Lowe et al. [205] where it discusses common pitfalls (and recommendations to avoid those) while measuring communication in multiagent environments.

3.5 Learning cooperation

Although explicit communication is a new emerging trend in MDRL, there has already been a large amount of work in MAL for cooperative settingsFootnote 12 that do not involve communication [213, 248]. Therefore, it was a natural starting point for many recent MDRL works.

Foerster et al. [99] studied the simple scenario of cooperation with independent Q-learning agents (see Sect. 3.1), where the agents use the standard DQN architecture of neural networks and an experience replay buffer (see Fig. 3). However, for the ER to work, the data distribution needs to follow certain assumptions (see Sect. 2.2) which are no loger valid due to the multiagent nature of the world: the dynamics that generated the data in the ER no longer reflect the current dynamics, making the experience obsolete [99, 194]. Their solution is to add information to the experience tuple that can help to disambiguate the age of the sampled data from the replay memory. Two approaches were proposed. The first is Multiagent Importance Sampling which adds the probability of the joint action so an importance sampling correction [36, 260] can computed when the tuple is later sampled for training. This was similar to previous works in adaptive importance sampling [4, 102] and off-environment RL [68]. The second approach is Multiagent Fingerprints which adds the estimate (i.e., fingerprint) of other agents’ policies (loosely inspired by Hyper-Q [326], see Sect. 4.1). For the practical implementation, good results were obtained by using the training iteration number and exploration rate as the fingerprint.

Gupta et al. [123] tackled cooperative environments in partially observable domains without explicit communication. They proposed parameter sharing (PS) as a way to improve learning in homogeneous multiagent environments (where agents have the same set of actions). The idea is to have one globally shared learning network that can still behave differently in execution time, i.e., because its inputs (individual agent observation and agent index) will be different. They tested three variations of this approach with parameter sharing: PS-DQN, PS-DDPG and PS-TRPO, which extended single-agent DQN, DDPG and TRPO algorithms, respectively. The results showed that PS-TRPO outperformed the other two. Note that Foerster et al. [96] concurrently proposed a similar concept, see Sect. 3.4.

Lenient-DQN (LDQN) [247] took the leniency concept [37] (originally presented in MAL) and extended their use to MDRL. The purpose of leniency is to overcome a pathology called relative overgeneralization [249, 250, 347]. Similar to other approaches designed to overcome relative overgeneralization (e.g., distributed Q-learning [181] and hysteretic Q-learning [213]) lenient learners initially maintain an optimistic disposition to mitigate the noise from transitions resulting in miscoordination, preventing agents from being drawn towards sub-optimal but wide peaks in the reward search space [246]. However, similar to other MDRL works [99], the LDQN authors experienced problems with the ER buffer and arrived at a similar solution: adding information to the experience tuple, in their case, the leniency value. When sampling from the ER buffer, this value is used to determine a leniency condition; if the condition is not met then the sample is ignored.

In a similar vein, Decentralized-Hysteretic Deep Recurrent Q-Networks (DEC-HDRQNs) [244] were proposed for fostering cooperation among independent learners. The motivation is similar to LDQN, making an optimistic value update, however, their solution is different. Here, the authors took inspiration from Hysteretic Q-learning [213], originally presented in MAL, where two learning rates were used. A difference between lenient agents and hysteretic Q-learning is that lenient agents are only initially forgiving towards teammates. Lenient learners over time apply less leniency towards updates that would lower utility values, taking into account how frequently observation-action pairs have been encountered. The idea being that the transition from optimistic to average reward learner will help make lenient learners more robust towards misleading stochastic rewards [37]. Additionally, in DEC-HDRQNs the ER buffer is also extended into concurrent experience replay trajectories, which are composed of three dimensions: agent index, the episode, and the timestep; when training, the sampled traces have the same starting timesteps. Moreover, to improve on generalization over different tasks, i.e., multi-task learning[62], DEC-HDRQNs make use of policy distillation [146, 273] (see Sect. 4.1). In contrast to other approaches, DEC-HDRQNS are fully decentralized during learning and execution.

Weighted Double Deep Q-Network (WDDQN) [365] is based on having double estimators. This idea was originally introduced in Double Q-learning [130] and aims to remove the existing overestimation bias caused by using the maximum action value as an approximation for the maximum expected action value (see Sect. 4.1). It also uses a lenient reward [37] to be optimistic during initial phase of coordination and proposes a scheduled replay strategy in which samples closer to the terminal states are heuristically given higher priority; this strategy might not be applicable for any domain. For other works extending the ER to multiagent settings see MADDPG [206], Sects. 4.1 and 4.2.

A schematic view of the architecture used in FTW (For the Win) [156]: two unrolled recurrent neural networks (RNNs) operate at different time-scales, the idea is that the Slow RNN helps with long term temporal correlations. Observations are latent space output of some convolutional neural network to learn non-linear features. Feudal Networks [338] is another work in single-agent DRL that also maintains a multi-time scale hierarchy where the slower network sets the goal, and the faster network tries to achieve them. Fedual Networks were in turn, inspired by early work in RL which proposed a hierarchy of Q-learners [82, 296]

While previous approaches were mostly inspired by how MAL algorithms could be extended to MDRL, other works take as base the results by single-agent DRL. One example is the For The Win (FTW) [156] agent which is based on the actor-learner structure of IMPALA [93] (see Sect. 2.2). The authors test FTW in a game where two opposing teams compete to capture each other’s flags [57]. To deal with the MAL problem they propose two main additions: a hierarchical two-level representation with recurrent neural networks operating at different timescales, as depicted in Fig. 5, and a population based training [157, 185, 271] where 30 agents were trained in parallel together with a stochastic matchmaking scheme that biases agents to be of similar skills. The Elo rating system [90] was originally devised to rate chess player skills,Footnote 13 TrueSkill [138] extended Elo by tracking uncertainty in skill rating, supporting draws, and matches beyond 1 vs 1; \(\alpha -\)Rank is a more recent alternative to ELO [243]. FTW did not use TrueSkill but a simpler extension of Elo for n vs n games (by adding individual agent ratings to compute the team skill). Hierarchical approaches were previously proposed in RL, e.g., Feudal RL [82, 296], and were later extended to DRL in Feudal networks [338]; population based training can be considered analogous to evolutionary strategies that employ self-adaptive hyperparameter tuning to modify how the genetic algorithm itself operates [20, 85, 185]. An interesting result from FTW is that the population-based training obtained better results than training via self-play [325], which was a standard concept in previous works [43, 291]. FTW used heavy compute resources, it used 30 agents (processes) in parallel where every training game lasted 4500 agent steps (\(\approx \) 5 min) and agents were trained for two billion steps (\(\approx \) 450K games).

Lowe et al. [206] noted that using standard policy gradient methods (see Sect. 2.1) on multiagent environments yields high variance and performs poorly. This occurs because the variance is further increased as all the agents’ rewards depend on the rest of the agents, and it is formally shown that as the number of agents increase, the probability of taking a correct gradient direction decreases exponentially [206]. Therefore, to overcome this issue Lowe et al. proposed the Multi-Agent Deep Deterministic Policy Gradient (MADDPG) [206], building on DDPG [192] (see Sect. 2.2), to train a centralized critic per agent that is given all agents’ policies during training to reduce the variance by removing the non-stationarity caused by the concurrently learning agents. Here, the actor only has local information (turning the method into a centralized training with decentralized execution) and the ER buffer records experiences of all agents. MADDPG was tested in both cooperative and competitive scenarios, experimental results show that it performs better than several decentralized methods (such as DQN, DDPG, and TRPO). The authors mention that traditional RL methods do not produce consistent gradient signals. This is exemplified in a challenging competitive scenarios where agents continuously adapt to each other causing the learned best-response policies oscillate—for such a domain, MADDPG is shown to learn more robustly than DDPG.

Another approach based on policy gradients is the Counterfactual Multi-Agent Policy Gradients (COMA) [98]. COMA was designed for the fully centralized setting and the multiagent credit assignment problem [332], i.e., how the agents should deduce their contributions when learning in a cooperative setting in the presence of only global rewards. Their proposal is to compute a counterfactual baseline, that is, marginalize out the action of the agent while keeping the rest of the other agents’ actions fixed. Then, an advantage function can be computed comparing the current Q value to the counterfactual. This counterfactual baseline has its roots in difference rewards, which is a method for obtaining the individual contribution of an agent in a cooperative multiagent team [332]. In particular, the aristocrat utility aims to measure the difference between an agent’s actual action and the average action [355]. The intention would be equivalent to sideline the agent by having the agent perform an action where the reward does not depend on the agent’s actions, i.e., to consider the reward that would have arisen assuming a world without that agent having ever existed (see Sect. 4.2).

On the one hand, fully centralized approaches (e.g., COMA) do not suffer from non-stationarity but have constrained scalability. On the other hand, independent learning agents are better suited to scale but suffer from non-stationarity issues. There are some hybrid approaches that learn a centralized but factoredQ value function [119, 174]. Value Decomposition Networks (VDNs) [313] decompose a team value function into an additive decomposition of the individual value functions. Similarly, QMIX [266] relies on the idea of factorizing, however, instead of sum, QMIX assumes a mixing network that combines the local values in a non-linear way, which can represent monotonic action-value functions. While the mentioned approaches have obtained good empirical results, the factorization of value-functions in multiagent scenarios using function approximators (MDRL) is an ongoing research topic, with open questions such as how well factorizations capture complex coordination problems and how to learn those factorizations [64] (see Sect. 4.4).

3.6 Agents modeling agents

An important ability for agents to have is to reason about the behaviors of other agents by constructing models that make predictions about the modeled agents [6]. An early work for modeling agents while using deep neural networks was the Deep Reinforcement Opponent Network (DRON) [133]. The idea is to have two networks: one which evaluates Q-values and a second one that learns a representation of the opponent’s policy. Moreover, the authors proposed to have several expert networks to combine their predictions to get the estimated Q value, the idea being that each expert network captures one type of opponent strategy [109]. This is related to previous works in type-based reasoning from game theory [129, 167] later applied in AI [6, 26, 109]. The mixture of experts idea was presented in supervised learning where each expert handled a subset of the data (a subtask), and then a gating network decided which of the experts should be used [155].

a Deep policy inference Q-network: receives four stacked frames as input (similar to DQN, see Fig. 2). b Deep Policy Inference Recurrent Q-Network: receives one frame as input and has an LSTM layer instead of a fully connected layer (FC). Both approaches [148] condition the \(Q_M\) value outputs on the policy features, \(h^{PI}\), which are also used to learn the opponent policy \(\pi _o\)

DRON uses hand-crafted features to define the opponent network. In contrast, Deep Policy Inference Q-Network (DPIQN) and its recurrent version, DPIRQN [148] learn policy features directly from raw observations of the other agents. The way to learn these policy features is by means of auxiliary tasks [158, 317] (see Sects. 2.2 and 4.1) that provide additional learning goals, in this case, the auxiliary task is to learn the opponents’ policies. This auxiliary task modifies the loss function by computing an auxiliary loss: the cross entropy loss between the inferred opponent policy and the ground truth (one-hot action vector) of the opponent. Then, the Q value function of the learning agent is conditioned on the opponent’s policy features (see Fig. 6), which aims to reduce the non-stationarity of the environment. The authors used an adaptive training procedure to adjust the attention (a weight on the loss function) to either emphasize learning the policy features (of the opponent) or the respective Q values of the agent. An advantage of these approaches is that modeling the agents can work for both opponents and teammates [148].

In many previous works an opponent model is learned from observations. Self Other Modeling (SOM) [265] proposed a different approach, this is, using the agent’s own policy as a means to predict the opponent’s actions. SOM can be used in cooperative and competitive settings (with an arbitrary number of agents) and infers other agents’ goals. This is important because in the evaluated domains, the reward function depends on the goal of the agents. SOM uses two networks, one used for computing the agents’ own policy, and a second one used to infer the opponent’s goal. The idea is that these networks have the same input parameters but with different values (the agent’s or the opponent’s). In contrast to previous approaches, SOM is not focused on learning the opponent policy, i.e., a probability distribution over next actions, but rather on estimating the opponent’s goal. SOM is expected to work best when agents share a set of goals from which each agent gets assigned one at the beginning of the episode and the reward structure depends on both of their assigned goals. Despite its simplicity, training takes longer as an additional optimization step is performed given the other agent’s observed actions.

There is a long-standing history of combining game theory and MAL [43, 233, 289]. From that context, some approaches were inspired by influential game theory approaches. Neural Fictitious Self-Play (NFSP) [136] builds on fictitious (self-) play [49, 135], together with two deep networks to find approximate Nash equilibriaFootnote 14 in two-player imperfect information games [341] (for example, consider Poker: when it is an agent’s turn to move it does not have access to all information about the world). One network learns an approximate best response (\(\epsilon -\)greedy over Q values) to the historical behavior of other agents and the second one (called the average network) learns to imitate its own past best response behaviour using supervised classification. The agent behaves using a mixture of the average and the best response networks depending on the probability of an anticipatory parameter [287]. Comparisons with DQN in Leduc Hold’em Poker revealed that DQN’s deterministic strategy is highly exploitable. Such strategies are sufficient to behave optimally in single-agent domains, i.e., MDPs for which DQN was designed. However, imperfect-information games generally require stochastic strategies to achieve optimal behaviour [136]. DQN learning experiences are both highly correlated over time, and highly focused on a narrow state distribution. In contrast to NFSP agents whose experience varies more smoothly, resulting in a more stable data distribution, more stable neural networks and better performance.

The (N)FSP concept was further generalized in Policy-Space Response Oracles (PSRO) [180], where it was shown that fictitious play is one specific meta-strategy distribution over a set of previous (approximate) best responses (summarized by a meta-game obtained by empirical game theoretic analysis [342]), but there are a wide variety to choose from. One reason to use mixed meta-strategies is that it prevents overfittingFootnote 15 the responses to one specific policy, and hence provides a form of opponent/teammate regularization. An approximate scalable version of the algorithm leads to a graph of agents best-responding independently called Deep Cognitive Hierarchies (DCHs) [180] due to its similarity to behavioral game-theoretic models [59, 72].

Minimax is a paramount concept in game theory that is roughly described as minimizing the worst case scenario (maximum loss) [341]. Li et al. [190] took the minimax idea as an approach to robustify learning in multiagent environments so that the learned robust policy should be able to behave well even with strategies not seen during training. They extended the MADDPG algorithm [206] to Minimax Multiagent Deep Deterministic Policy Gradients (M3DDPG), which updates policies considering a worst-case scenario: assuming that all other agents act adversarially. This yields a minimax learning objective which is computationally intractable to directly optimize. They address this issue by taking ideas from robust reinforcement learning [227] which implicitly adopts the minimax idea by using the worst noise concept [257]. In MAL different approaches were proposed to assess the robustness of an algorithm, e.g., guarantees of safety [66, 259], security [73] or exploitability [80, 161, 215].

Previous approaches usually learned a model of the other agents as a way to predict their behavior. However, they do not explicitly account for anticipated learning of the other agents, which is the objective of Learning with Opponent-Learning Awareness (LOLA) [97]. LOLA optimizes the expected return after the opponent updates its policy one step. Therefore, a LOLA agent directly shapes the policy updates of other agents to maximize its own reward. One of LOLA’s assumptions is having access to opponents’ policy parameters. LOLA builds on previous ideas by Zhang and Lesser [363] where the learning agent predicts the opponent’s policy parameter update but only uses it to learn a best response (to the anticipated updated parameters).

Theory of mind is part of a group of recursive reasoning approaches[60, 61, 109, 110] in which agents have explicit beliefs about the mental states of other agents. The mental states of other agents may, in turn, also contain beliefs and mental states of other agents, leading to a nesting of beliefs [6]. Theory of Mind Network (ToMnet) [263] starts with a simple premise: when encountering a novel opponent, the agent should already have a strong and rich prior about how the opponent should behave. ToMnet has an architecture composed of three networks: (i) a character network that learns from historical information, (ii) a mental state network that takes the character output and the recent trajectory, and (iii) the prediction network that takes the current state as well as the outputs of the other networks as its input. The output of the architecture is open for different problems but in general its goal is to predict the opponent’s next action. A main advantage of ToMnet is that it can predict general behavior, for all agents; or specific, for a particular agent.

Deep Bayesian Theory of Mind Policy (Bayes-ToMoP) [358] is another algorithm that takes inspiration from theory of mind [76]. The algorithm assumes the opponent has different stationary strategies to act and changes among them over time [140]. Earlier work in MAL dealt with this setting, e.g., BPR+ [143] extends the Bayesian policy reuseFootnote 16 framework [272] to multiagent settings (BPR assumes a single-agent environment; BPR+ aims to best respond to the opponent in a multiagent game). A limitation of BPR+ is that it behaves poorly against itself (self-play), thus, Deep Bayes-ToMoP uses theory of mind to provide a higher-level reasoning strategy which provides an optimal behavior against BPR+ agents.

Deep BPR+ [366] is another work inspired by BPR+ which uses neural networks as value-function approximators. It not only uses the environment reward but also uses the online learned opponent model [139, 144] to construct a rectified belief over the opponent strategy. Additionally, it leverages ideas from policy distillation [146, 273] and extends them to the multiagent case to create a distilled policy network. In this case, whenever a new acting policy is learned, distillation is applied to consolidate the new updated library which improves in terms of storage and generalization (over opponents).

4 Bridging RL, MAL and MDRL

This section aims to provide directions to promote fruitful cooperations between sub-communities. First, we address the pitfall of deep learning amnesia, roughly described as missing citations to the original works and not exploiting the advancements that have been made in the past. We present examples on how ideas originated earlier, for example in RL and MAL, were successfully extended to MDRL (see Sect. 4.1). Second, we outline lessons learned from the works analyzed in this survey (see Sect. 4.2). Then we point the readers to recent benchmarks for MDRL (see Sect. 4.3) and we discuss the practical challenges that arise in MDRL like high computational demands and reproducibility (see Sect. 4.4). Lastly, we pose some open research challenges and reflect on their relation with previous open questions in MAL [6] (see Sect. 4.5).

4.1 Avoiding deep learning amnesia: examples in MDRL

This survey focuses on recent deep works, however, in previous sections, when describing recent algorithms, we also point to original works that inspired them. Schmidhuber said “Machine learning is the science of credit assignment. The machine learning community itself profits from proper credit assignment to its members” [280]. In this context, we want to avoid committing the pitfall of not giving credit to original ideas that were proposed earlier, a.k.a. deep learning amnesia. Here, we provide some specific examples of research milestones that were studied earlier, e.g., RL or MAL, and that now became highly relevant for MDRL. Our purpose is to highlight that existent literature contains pertinent ideas and algorithms that should not be ignored. On the contrary, they should be examined and cited [58, 79] to understand recent developments [343].

Dealing with non-stationarity in independent learners It is well known that using independent learners makes the environment non-stationary from the agent’s point of view [182, 333]. Many MAL algorithms tried to solve this problem in different ways [141]. One example is Hyper-Q [326] which accounts for the (values of mixed) strategies of other agents and includes that information in the state representation, which effectively turns the learning problem into a stationary one. Note that in this way it is possible to even consider adaptive agents. Foerster et al. [96] make use of this insight to propose their fingerprint algorithm in an MDRL problem (see Sect. 3.5). Other examples include the leniency concept [37] and Hysteretic Q-learning [213] originally presented in MAL, which now have their “deep” counterparts, LDQNs [247] and DEC-HDRQNs[244], see Sect. 3.5.

Multiagent credit assignment In cooperative multiagent scenarios, it is common to use either local rewards, unique for each agent, or global rewards, which represent the entire group’s performance [3]. However, local rewards are usually harder to obtain, therefore, it is common to rely only on the global ones. This raises the problem of credit assignment: how does a single agent’s actions contribute to a system that involves the actions of many agents [2]. A solution that came from MAL research that has proven successful in many scenarios is difference rewards [3, 86, 332], which aims to capture an agent’s contribution to the system’s global performance. In particular the aristocrat utility aims to measure the difference between an agent’s actual action and the average action [355], however, it has a self-consistency problem and in practice it is more common to compute the wonderful life utility [355, 356], which proposes to use a clamping operation that would be equivalent to removing that player from the team. COMA [98] builds on these concepts to propose an advantage function based on the contribution of the agent, which can be efficiently computed with deep neural networks (see Sect. 3.5).

Multitask learning In the context of RL, multitask learning [62] is an area that develops agents that can act in several related tasks rather than just in a single one [324]. Distillation, roughly defined as transferring the knowledge from a large model to a small model, was a concept originally introduced for supervised learning and model compression [52, 146]. Inspired by those works, Policy distillation [273] was extended to the DRL realm. Policy distillation was used to train a much smaller network and to merge several task-specific policies into a single policy, i.e., for multitask learning. In the MDRL setting, Omidshafiei et al. [244] successfully adapted policy distillation within Dec-HDRQNs to obtain a more general multitask multiagent network (see Sect. 3.5). Another example is Deep BPR+ [366] which uses distillation to generalize over multiple opponents (see Sect. 3.6).

Auxiliary tasks Jaderberg et al. [158] introduced the term auxiliary task with the insight that (single-agent) environments contain a variety of possible training signals (e.g., pixel changes). These tasks are naturally implemented in DRL in which the last layer is split into multiple parts (heads), each working on a different task. All heads propagate errors into the same shared preceding part of the network, which would then try to form representations, in its next-to-last layer, to support all the heads [315]. However, the idea of multiple predictions about arbitrary signals was originally suggested for RL, in the context of general value functions [315, 317] and there still open problems, for example, better theoretical understanding [31, 88]. In the context of neural networks, early work proposed hints that improved the network performance and learning time. Suddarth and Kergosien [311] presented a minimal example of a small neural network where it was shown that adding an auxiliary task effectively removed local minima. One could think of extending these auxiliary tasks to modeling other agents’ behaviors [142, 225], which is one of the key ideas that DPIQN and DRPIQN [148] proposed in MDRL settings (see Sect. 3.6).

Experience replay Lin [193, 194] proposed the concept of experience replay to speed up the credit assignment propagation process in single agent RL. This concept became central to many DRL works [220] (see Sect. 2.2). However, Lin stated that a condition for the ER to be useful is that “the environment should not change over time because this makes past experiences irrelevant or even harmful” [194]. This is a problem in domains where many agents are learning since the environment becomes non-stationary from the point of view of each agent. Since DRL relies heavily on experience replay, this is an issue in MDRL: the non-stationarity introduced means that the dynamics that generated the data in the agent’s replay memory no longer reflect the current dynamics in which it is learning [96]. To overcome this problem different methods have been proposed [99, 244, 247, 365], see Sect. 4.2.

Double estimators Double Q-learning [130] proposed to reduce the overestimation of action values in Q-learning, this is caused by using the maximum action value as an approximation for the maximum expected action value. Double Q-learning works by keeping two Q functions and was proven to convergence to the optimal policy [130]. Later this idea was applied to arbitrary function approximators, including deep neural networks, i.e., Double DQN [336], which were naturally applied since two networks were already used in DQN (see Sect. 2.2). These ideas have also been recently applied to MDRL [365].

4.2 Lessons learned

We have exemplified how RL and MAL can be extended for MDRL settings. Now, we outline general best practices learned from the works analyzed throughout this paper.

-

Experience replay buffer in MDRL While some works removed the ER buffer in MDRL [96] it is an important component in many DRL and MDRL algorithms. However, using the standard buffer (i.e., keeping \(\langle s,a,r,s' \rangle \)) will probably fail due to a lack of theoretical guarantees under this setting, see Sects. 2.2 and 4.1. Adding information in the experience tuple that can help disambiguate the sample is the solution adopted in many works, whether a value based method [99, 244, 247, 365] or a policy gradient method [206]. In this regard, it is an open question to consider how new DRL ideas could be best integrated into the ER [11, 83, 153, 196, 278] and how those ideas would fare in a MDRL setting.

-

Centralized learning with decentralized execution Many MAL works were either fully centralized or fully decentralized approaches. However, inspired by decentralized partially observable Markov decison processes (DEC-POMDPs) [34, 237],Footnote 17 in MDRL this new mixed paradigm has been commonly used [98, 99, 180, 206, 247, 266] (a notable exception are DEC-HDRQNs [244] which perform learning and execution in a decentralized manner, see Sect. 3.5). Note that not all real-world problems fit into this paradigm and it is more common for robotics or games where a simulator is generally available [96]. The main benefit is that during learning additional information can be used (e.g., global state, action, or rewards) and during execution this information is removed.

-