Abstract

Computational trust and reputation models are key elements in the design of open multi-agent systems. They offer means of evaluating and reducing risks of cooperation in the presence of uncertainty. However, the models proposed in the literature do not consider the costs they introduce and how they are affected by environmental aspects. In this paper, a cognitive meta-model for adaptive trust and reputation in open multi-agent systems is presented. It acts as a complement to a non-adaptive model by allowing the agent to reason about it and react to changes in the environment. We demonstrate how the meta-model can be applied to existing models proposed in the literature, by adjusting the model’s parameters. Finally, we propose evaluation criteria to drive meta-level reasoning considering the costs involved when employing trust and reputation models in dynamic environments.

1 Introduction

Trust and reputation have become common approaches in supporting the management of interactions in distributed environments [17]. In a multi-agent system (MAS), autonomous agents often need to cooperate to achieve their goals. Consequently, agents need to trust each other, explicitly or not. When choosing its partners, an agent must have knowledge of their capabilities, as well as their competence and commitment to cooperate. This decision can be based on previous, direct experience or by obtaining information about the reputation of potential partners.

In this study we adopt the definition of trust offered by Jøsang et al. [16]: “Trust is the extent to which one party is willing to depend on something or somebody in a given situation with a feeling of relative security, even though negative consequences are possible.” As for reputation, it is defined by Keung and Griffiths [17] as “the information received from third parties by agents about the behaviour of their partners”. For Jøsang et al. [16], reputation can also be viewed as a “collective measure of trustworthiness (in the sense of reliability) based on the referrals or ratings from members in a community”. Teacy et al. [35] note that “an agent must not assume that the opinions of others are accurate or based on actual experience”. The effectiveness of a reputation system is, therefore, based on the premise that the reputation of an agent reflects the quality of previous interactions and can be used as an estimate, but not a guarantee, of its quality. Consequently, the credibility and reliability of the information sources must also be taken into account.

Previous works show that most models focus on matters of representation and internal components, with less emphasis on procedural details, such as how to bootstrap and maintain the model [16, 17, 23, 30]. An aspect that is overlooked is the cost that such models introduce. For example, reputation models often rely on external sources to obtain and verify reputation information. This procedure introduces additional communication costs. In [8], the authors suggest that reputation costs should be considered, since “high reputation costs may make reputation-based trust modeling infeasible, even when reputations are very accurate”. In a dynamic environment, changes in these costs would alter the agent’s capability to obtain and verify such information. Before seeking information about another agent, one must consider whether the marginal utility of such information will be superior to its cost of acquisition [3].

While tens of models with different characteristics have been proposed in the literature, from the standpoint of an autonomous agent, there are no evaluation criteria to guide its decision on which model to use and how to adjust it to each scenario it faces. This prompts some questions. Considering the diversity of models, could an autonomous agent reason about its use of trust and reputation in a model-independent way? Would it be capable of using the available models adaptively? What criteria could it use to evaluate its models and adaptation strategies?

To address these questions, we studied common elements found in trust and reputation models (TRM) and how they are affected in dynamic environments. To reason about trust and reputation in a model-independent way, a meta-model containing such elements is proposed, as is a domain-independent meta-model of the environmental aspects involved. The latter allows the agent to take environmental aspects into consideration even if they are not an explicit part of the original model. To adapt the models, a BDI-like approach is proposed along with the necessary evaluation criteria. The proposed components form a cognitive meta-model for adaptive trust and reputation in open MAS. The meta-model acts as a complement to existing models, by giving agents the capacity to react to changes in the system and adjust the model in response. We posit that agents in an open MAS that have such capacity are more adaptable and obtain better overall utility when compared to those using a predetermined, fixed model. We demonstrate how the meta-model can be applied to existing models proposed in the literature, including experimental results showing improvements obtained by adapting the models’ parameters in a dynamic environment.

This paper is organized as follows: Sect. 2 discusses related works; the proposed meta-model and its components are presented in detail in Sects. 3 to 6; experimental results are shown and discussed in Sect. 7; Sect. 8 presents the conclusions and future works.

2 Related works

In the study of trust and reputation, the problem of providing a generalized approach to trust and reputation adaptation has not been explored, but some works have tackled the need to learn about the parameters of trust and reputation. Fullam and Barber [8] propose the use of reinforcement learning to dynamically learn whether to choose experience or reputation as an information source, depending on the conditions. Their approach considers variations in the frequency of transactions, partner trustworthiness and reputation accuracy. Regarding the costs involved in the application of trust and reputation, Fullam [7] proposes an adaptive cost selection algorithm to assess the value of reputation information and decide what information to acquire.

Kinateder et al. [18] propose UniTEC, a generic trust model to provide a common trust representation for the class of trust update algorithms. The authors exemplify how other models can be integrated, mostly by adapting their trust measure representation to a real number in the interval \([0,1]\). The model generalizes some model parameters related to memory and recency, but does not use them in any way to adapt the models. Experiments comparing the trust update algorithms of several models, including ReGreT [29] and BRS [15], show how the models’ performance varies with the test conditions and model parameters.

Regarding the use of meta-models, Staab and Muller [34] propose MITRA, a meta-model for information flow in trust and reputation. It generalizes how trust and reputation information flows inside and between agents, dividing the trust modelling process into four consecutive parts: observation, evaluation (of its own observations), fusion (combination of trust and reputation evaluations) and decision making. As MITRA is focused on information flow, it does not consider an additional phase of adaptation.

Considering BDI agents, Koster et al. [20] discuss how to incorporate a trust model into an extended BDI framework to allow the agent to reason about it and adapt its parameters. They propose a logic-based approach that uses a multi-context system (MCS) to specify each element of the BDI agent and its trust model. This differs from our meta-model approach, as each model must be represented using the proposed MCS. Also, our model focuses the BDI framework on the adaptation process of trust and reputation and does not interfere with other reasoning elements.

In a more general approach, Raja and Lesser [24] propose a framework for meta-level control in MAS. They augment the classical agent model [28] by adding a meta-level control layer to reason about control actions such as gathering information about other agents and the environment, planning and scheduling, and coordination. A meta-level controller uses the current state of the agent to make appropriate decisions. The authors point out that the complexity of the real state of the agent may lead to a very large search space. To bound the size of the search space, they suggest the simplification of features, for example, by making them time independent.

Each of these works deals separately with some of the issues addressed in this study. The generic model proposed by [18] generalizes some components of trust and reputation, but it is missing components when compared to the proposed meta-model. It also does not address the need for adaptation. The works of [8] and [7] address the adaptation of some trust and reputation components, such as reputation weighting and information acquisition, including a discussion about the costs involved in the latter.

While [34] proposes meta-modelling of trust and reputation information flow, its approach is fundamentally different, as it considers neither individual components at the meta-level nor the need for trust and reputation adaptation. In this sense, the works of [24] and [20] are closer to our proposal, as they also consider the view of a deliberative agent.

3 A meta-model for trust and reputation adaptation

Trust and reputation concepts are adaptive in the sense that changes in the behavior and performance of agents are ultimately reflected in both evaluations. However, trust and reputation are also affected by environmental aspects that impact how models work, such as the time scale and frequency of transactions in the system. These aspects impact not only the performance of the models, but the costs of employing the models themselves. As noted by Raja and Lesser [24], “an agent is not performing rationally if it fails to account for all the costs involved in achieving a desired goal”.

In this paper, we propose a meta-model for adaptive use of trust and reputation in MAS, whose goal is to allow agents to adjust their models in response to changes in the environment. According to Seidewitz [32], “a meta-model makes statements about what can be expressed in the valid models of a certain modelling language”. In MAS engineering, a meta-model can be used to separate abstract representations from concrete implementations, as exemplified by Ferber and Gutknecht [6]. The authors propose a meta-model for the analysis and design of organizations divided into two levels: abstract, that defines possible roles, interactions and organizations; and concrete, describing an actual agent organization.

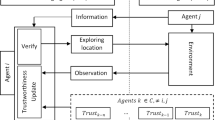

For the purposes of adapting a TRM, we also divide the agent model into two levels: the meta-level, consisting of abstract representations of environmental aspects and common elements of trust and reputation; and the implementation level, that includes the concrete, model-specific realization of these elements. This model is presented in Fig. 1. This approach divides the trust and reputation meta-model into three submodels that encompass the elements of trust, reputation and exploration that can be adapted in response to changing environmental conditions. The adaptation model connects both levels by providing BDI-like reasoning components that execute the adaptation process. The following sections discuss each part of the model in detail.

4 The trust and reputation meta-model

In order to study how an agent can better adapt a TRM to suit its needs, we focus on the common elements that can be generalized to most models, regardless of the domain or application involved. The proposed trust and reputation meta-model, shown in Fig. 1, is divided into three submodels that represent the elements of trust, reputation and exploration that are subject to adaptation. Figure 2 shows the meta-model division and the elements contained in each submodel. Each of these components is a generalization of functions, algorithms or beliefs proposed in models found in the literature. Appendix 1 presents some models as an illustration of the diversity, but also similarities, in the approaches to trust and reputation.

As stated by [23], “trust can be seen as a process of practical reasoning that leads to the decision to interact with somebody”. Following this rationale, the trust model includes elements related to its information sources (direct trust, biases and norms), how dimensions and contexts are considered, the weight of reputation in trust evaluation and the decision making process of whether to trust or not.

The reputation model includes elements related to the other information sources used in the evaluation of reputation. The choice of what information to acquire and the weighting of the sources in the final value of reputation are also part of this model.

The exploration model defines how to bootstrap the TRM, and also methods for seeking new partnerships and information sources. Most models proposed in the literature do not define these aspects at all, considering pre-established settings that cannot be changed. For this reason, the exploration model is included as a separate, explicit component, as it clearly indicates if the model defines such elements. In an open, dynamic environment, the capacity to seek new partners and information sources is essential to adaptiveness, as agents may join or leave the system (or become unavailable) at any time.

The meta-model presented in this section is geared towards trust and reputation adaptation, and is not intended as a meta-model of trust and reputation as a whole. For this reason, it does not encompass aspects of how the models represent trust and reputation values internally, such as boolean values, numerical intervals, probability distributions, fuzzy sets or beliefs. It also assumes the existence of minimum interoperability between the models, without explicitly representing this component, as the cost of interoperability is already considered in the information acquisition process of each information source. Finally, this meta-model is not targeted at specifying the information flow of the TRM, as most models follow the same basic flow [34]. In the following sections, the elements that compose each of the submodels and their information sources are presented along with a discussion on how they are affected in a dynamic environment.

4.1 Information sources

Models rely on distinct information sources to calculate the values of trust and reputation. The two main sources of information are direct experience and witness information. In larger agent societies, using only direct experience is infeasible. The agent would take a long time to interact with every other agent, and even longer to establish confidence in them. In such cases, seeking witness information provides a quicker way to learn about other agents. However, this leads to an additional problem: what is the cost of such information and how reliable is it? Moreover, an agent entering the system has no knowledge of other agents with which to interact directly, nor does it have enough information about the sources to trust the information they provide. The problem of credibility and reliability is discussed in Sect. 4.1.2.

Other information sources have been proposed by several authors. Models described in [5, 31] and [37] consider the possibility of observed interaction (direct observation) as another source. In this case, an agent can observe other agents interacting to evaluate their performance. The FIRE [13] model also introduces the concept of certified reputation, described in Appendix 1.

Prejudice is a more unusual source [5, 29]. In human societies, the term prejudice has a very negative connotation. For the purposes of this study, we use the concept of bias, since it can be used to represent both negative and positive preferences. Bias, one of the components of the meta-model shown in Fig. 2, represents a preference internal to the agent, based on the generalization of previous experiences or specific, pre-existing rules. In this sense, the social relationships involving agents may also be used as an information source [29]. This is often used to reduce uncertainty in the reputation by using a priori knowledge. As an example, consider a hierarchical system in which a subordinate agent could assume that its superiors always have maximum credibility. Stereotypes [2] and roles [11] also have been used to generalize the past behavior of similar agents in order to predict the outcome of interactions with similar, albeit unknown agents. In this work, these approaches are also considered in the concept of bias.

Norms, when employed in the system, can also be used as a source. In the LIAR model [37], the violation of or respect for the social norms has a direct impact on the reputation of the interacting agent. As stated by [4], in open MAS, norms are a mechanism that can “inspire trust into the agents that will join them”. A norm will only be reliable if it is properly enforced; otherwise, its credibility will be diminished to the point where it no longer inspires any additional trust. In this category, we also include the situation of “trust as a three party relationship”, described by Castelfranchi and Falcone [3]. In this case, the trust of agent \(x\) in agent \(y\) depends on \(y\)’s fear of, and \(x\)’s trust in, a third-party authority \(A\). Examples of such situations include promises involving witnesses and contracts.

The different types of information sources are exemplified in Fig. 3. Agents \(a\) and \(c\) interact directly with agent \(b\). To estimate trust in \(b\), agent \(a\) can use, apart from this direct interaction (\(DI\)), the witness information (\(WI\)) provided by \(c\) and the certified reputation (CR) provided by \(b\). If possible, agent \(a\) can also directly observe (DO) the interaction between \(b\) and \(c\) to obtain more information. In the illustration, agent \(e\) acts as a reputation aggregator. Although it has not interacted directly with \(b\), it receives reports from \(c\) and \(d\) to provide an indirect recommendation to \(a\). Lastly, the resulting trust also depends on any bias (\(B\)) that agent \(a\) may have or norms (\(N\)) in effect in the system that affect its perception of \(b\).

Each type of information source used in the meta-model is described by a set of components that define its main characteristics, as shown in Fig. 4. These include the information used (memory), its recency, the methods of information acquisition and sharing, the credibility of the source and the reliability of the obtained value. Each component is discussed in detail in the following sections.

With several information sources available, the agent must decide how to weight each source and how to combine them with its internal sources, such as direct experience and bias. In Fig. 2, this is represented, respectively, by the Information Source Weighting and Reputation Weighting components.

Dynamic characteristics such as the cost of information acquisition, the availability and credibility of the sources are very relevant when deciding the sources to be used. The cost of using each source will vary with the environment and actual implementation. Bias, for instance, is a source internal to the agent and, as such, has a minimum cost of use. Witness information, on the other hand, has higher communication costs to contact the witnesses for information.

The availability of sources also varies. Consider the example of direct observation. In a very noisy environment, the observation of other agents’ interactions may not be possible, and even if possible, its reliability will be affected negatively. Likewise, the reliability of witness information depends on the availability of the best witnesses. Certified reputation, proposed by FIRE, may not always be possible, as it depends on the cooperation of agents to provide the certified references and the existence of mechanisms to check the authenticity of the references. Even direct experience is not always available, as is the case with a newly joined agent.

4.1.1 Memory and recency

Trust establishes itself over time as a result of previous transactions. Consequently, temporal considerations are necessary in models of trust. While considering past experiences, the agent must define how far back it will look and how much emphasis it will put on more recent interactions. These two definitions also apply to the information used in reputation evaluation. They are represented in Fig. 4 by the Memory and Recency components.

Marsh [21] discusses the concept of memory and how it affects the agent’s disposition to trust. Memory is a limited resource that can be bounded, for instance, by time or by a limited number of interactions. Depending on the agent’s memory span, a partner’s past performance could be forgotten after a period of time. In [21], the agents assume one of three dispositions: (i) optimistic, that considers the maximum trust value in its memory; (ii) pessimistic, that considers the worst possible value; and (iii) realistic, that considers the average of experience within its memory span. Note that this model does not consider the recency of the experience, as every experience in the agent’s memory span is equally considered.
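As an illustration of the three dispositions, the following minimal sketch aggregates the experiences inside a bounded memory span. It assumes that experiences are stored as numbers in \([0,1]\) and that the span is expressed as a number of interactions; the function name `trust_from_memory` and its parameters are hypothetical and are not taken from [21].

```python
from statistics import mean

def trust_from_memory(experiences, span, disposition="realistic"):
    """Aggregate the last `span` experiences (assumed to be values in [0, 1]).

    Hypothetical helper: the representation and names are assumptions,
    not Marsh's original formalization.
    """
    if span <= 0:
        raise ValueError("memory span must be positive")
    window = experiences[-span:]  # bounded memory: older experiences are forgotten
    if not window:
        raise ValueError("no experiences within the memory span")
    if disposition == "optimistic":
        return max(window)   # best value remembered
    if disposition == "pessimistic":
        return min(window)   # worst value remembered
    if disposition == "realistic":
        return mean(window)  # every remembered experience counts equally
    raise ValueError(f"unknown disposition: {disposition}")

history = [0.2, 0.9, 0.6, 0.8]
for d in ("optimistic", "pessimistic", "realistic"):
    print(d, trust_from_memory(history, span=3, disposition=d))
```

With a span of 3, the oldest experience (0.2) is forgotten and no longer influences any of the three dispositions, which is the effect of a bounded memory described above.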

SPORAS [40] deals with the issue of recency by considering only the most recent rating given by an agent about another. The number of ratings considered in the reputation calculations can also be set, limiting the agent’s memory span. Other models, like ReGreT [29], use a time dependent function to emphasize recent transactions without an explicit limitation in memory span. The FIRE model [14] adopts the direct trust component of ReGreT, and improves on the recency calculations by introducing a recency scaling factor that can be adjusted depending on the time granularity of the environment.
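A time dependent recency function can be sketched as follows. The example assumes an exponential decay whose `scale` parameter plays the role of a recency scaling factor; this is one possible choice made for illustration and should not be read as the exact formula of ReGreT or FIRE. All names are hypothetical.

```python
import math

def recency_weight(age, scale):
    """Weight of a rating that is `age` time units old (assumed exponential decay)."""
    return math.exp(-age / scale)

def recency_weighted_trust(ratings, now, scale):
    """Weighted mean of (timestamp, value) ratings, emphasizing recent ones.

    `scale` is an assumed tuning knob: small values forget quickly,
    large values treat old and new ratings almost equally.
    """
    weights = [recency_weight(now - t, scale) for t, _ in ratings]
    total = sum(weights)
    if total == 0:
        raise ValueError("no usable ratings")
    return sum(w * v for w, (_, v) in zip(weights, ratings)) / total

ratings = [(0, 0.0), (10, 1.0)]  # an old bad rating and a recent good one
print(recency_weighted_trust(ratings, now=10, scale=5))     # recent rating dominates
print(recency_weighted_trust(ratings, now=10, scale=1000))  # close to the plain mean
```

Adjusting `scale` to the time granularity of the environment changes how quickly old evidence loses influence without changing the stored ratings.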

Environments with a high frequency of transactions allow a shorter memory span with higher emphasis on recent interactions, as new evidence on the trust and reputation of agents is abundant. A lower frequency of transactions requires a wider memory span, with less emphasis on recency. In a dynamic environment where time granularity changes over time, a fixed policy on these elements can have a negative impact on the agent’s performance. Thus, an agent must adjust the time scale in response to changes in the time granularity, possibly affecting the agent’s memory span as well.

4.1.2 Credibility and reliability

As discussed in Sect. 4.1, every individual information source has an associated measure of credibility. The credibility of an information source is a measure proposed by several models that can be used to compare and combine information from different sources and also to obtain a reliability measure on the final reputation value. Changes in the credibility of an information source should not only change the weight of its contributions in the final value, but should also change how often the source is consulted for information. With various sources to choose from, credibility is an important discriminant of how valuable a source is; together with recency, memory and reliability, it is one of the meta-level components of an information source presented in Fig. 4.

In direct trust, an agent must establish a degree of reliability in others. Consider two agents trusted equally by a third, but with a different number of transactions involving them. To choose one of them, a measure of reliability can be used. Two factors can be considered in this measure: the number of interactions and the deviation in performance.

The ReGreT model [29] illustrates these two factors. It defines an intimate level, as a domain dependent value that depends on the interaction frequency of the individuals. If the number of interactions between two agents is below the intimate level, it reduces the reliability of the direct trust measure. Additionally, the deviation of performance is also considered in the reliability measure. The more the agent’s results vary from the expected, calculated trust, the lower the reliability.
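The two factors can be combined as in the sketch below. It assumes outcomes and expected trust in \([0,1]\), a linear ramp up to the intimate level and a simple product of both factors; these are simplifying assumptions made for illustration, not the functions actually defined by ReGreT, and the names are hypothetical.

```python
def direct_trust_reliability(outcomes, expected, intimate_level):
    """Reliability in [0, 1] of a direct trust value (illustrative only).

    outcomes       -- observed results of past interactions, assumed in [0, 1]
    expected       -- the calculated trust value the outcomes are compared to
    intimate_level -- domain dependent number of interactions regarded as enough
    """
    n = len(outcomes)
    if n == 0:
        return 0.0
    # Factor 1: fewer interactions than the intimate level reduce reliability.
    count_factor = min(1.0, n / intimate_level)
    # Factor 2: the more outcomes deviate from the expected value, the lower it gets.
    mean_abs_dev = sum(abs(o - expected) for o in outcomes) / n
    deviation_factor = max(0.0, 1.0 - mean_abs_dev)
    return count_factor * deviation_factor

print(direct_trust_reliability([0.8] * 10, expected=0.8, intimate_level=10))
print(direct_trust_reliability([0.8] * 5, expected=0.8, intimate_level=10))
print(direct_trust_reliability([0.6, 1.0] * 5, expected=0.8, intimate_level=10))
```

In this sketch, `intimate_level` is the parameter an adaptive agent would revise when the frequency of interactions in the environment changes.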

Once again, the frequency of interactions is mentioned as a defining environmental factor in adjusting the model. In an environment with a higher frequency of interactions, a higher threshold for intimacy may be used. Another factor is the deviation in performance. As mentioned, changes in the environment, such as changes in communication costs, have an impact on the agent’s performance. Consequently, a stronger deviation will be observed.

Other models proposed in the literature have addressed the subject of credibility and reliability of trust and reputation measures using a probabilistic approach. In probabilistic models, the dimensions of trust are modeled as random variables using different probability distributions. BRS [15] and TRAVOS [35] are based on the beta distribution and consider, as input, only the number of positive and negative outcomes. BLADE [27] uses a similar approach that can deal with discrete representations of multiple values, while HABIT [36] proposes a model that allows multiple confidence models to be used and combined into the reputation model.
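To make the beta-based approach concrete, the sketch below computes the expected value and variance of a beta distribution from counts of positive and negative outcomes. It assumes a uniform prior, that is, Beta(1, 1) before any evidence is seen; the function names are hypothetical, and the use of the variance as an uncertainty indicator is an illustrative choice rather than the confidence measure of any particular model.

```python
def beta_expected_trust(positive, negative):
    """Expected value of Beta(positive + 1, negative + 1), assuming a uniform prior."""
    alpha = positive + 1
    beta = negative + 1
    return alpha / (alpha + beta)

def beta_variance(positive, negative):
    """Variance of the same distribution; it shrinks as evidence accumulates."""
    alpha = positive + 1
    beta = negative + 1
    total = alpha + beta
    return (alpha * beta) / (total ** 2 * (total + 1))

print(beta_expected_trust(0, 0))   # no evidence: 0.5
print(beta_expected_trust(8, 2))   # 9 / 12 = 0.75
print(beta_variance(8, 2) > beta_variance(80, 20))  # more evidence, less uncertainty
```

Only two counters per partner need to be stored and exchanged, which illustrates the low overhead of this binary representation.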

In conclusion, the choice of the credibility model, probabilistic or not, depends on the type and amount of information that can be exchanged between the agents regarding their experiences. Some systems, such as BRS [15], only require the number of satisfactory/unsatisfactory interactions. This binary approach is very simple, with little overhead in transferring and storing the information, but also too limited for more sophisticated, multi-dimensional representations. Other systems, like ReGreT [29], require, in addition to a trust value, a reliability measure provided by the source. This depends on the disposition of all agents in the referral chain to provide such information in order to reach a proper estimate.

4.1.3 Information acquisition and sharing

The process of acquiring and sharing information is another important element of a TRM. In an open MAS, recent information about the performance of the target agent is essential to the reliable evaluation of trust and reputation. In order to obtain this information, the truster must query the available information sources. The agent itself may also share its information with others. Each type of information source has its own method of information acquisition and sharing, as shown in Fig. 4. Additionally, the agent must decide the sources to query, considering their availability, credibility and cost of information acquisition. This is represented in Fig. 2 by the reputation information management component.

A centralized approach, such as the one used in SPORAS [40], offers a very accessible information source that receives ratings from various sources. However, in an open MAS, it cannot be assumed that every agent will contribute to and accept the evaluations of this system. In a distributed approach, the individual agents store their observations locally and query their neighbors about other agents’ reputation [12]. However, in a system with a large number of agents, it could prove difficult to find someone with information regarding the desired agent.

A feasible distributed approach based on a referral network is proposed by [39] and used by FIRE [13]. As the agent receives information from external sources, it establishes a network of referrers. After evaluating the reliability of its information sources, the agent must choose which sources to query. With time, the agent learns who to seek for information on a specific agent. However, in a dynamic environment, the availability of the preferred referrers is not guaranteed.

Also, the costs of acquiring the information must be considered. If obtaining reputation information were free, an agent would be able to periodically query the whole MAS for information about an agent. Realistically, it must consider the time it will spend waiting for the information and, in some cases, the cost of paying for it.

The model of [39] defines two parameters related to the referral process: a branching factor and a referral length threshold. These have a direct impact on the cost of acquiring reputation information, as they define the number of sources to be queried in order to find the required information. A higher cost of communication, for instance, would force the agent to reduce both parameters. The need for lower response times could suggest a reduction in the referral length and an increase in the branching factor.

The process of information acquisition in a dynamic environment is illustrated by Fig. 5. In this example, agent \(a\) wants information about agent \(x\). Agents \(b, c, d\) and \(e\) are known referrers. Agent \(a\) decides to query its neighborhood with a branching factor of 3, so it chooses agents \(b, c\) and \(e\). It also sets a referral length threshold of 3, as indicated by the numbers in the graph. Since the environment is dynamic, agent \(b\) is not available, so \(a\) receives no response from it. Agent \(c\) consults the only agent it knows, which answers that it does not have information on \(x\). Agent \(e\) uses the maximum allowed length threshold to obtain information on \(x\) by forwarding the query to other referrers known to it. Note that agent \(a\) could have chosen to query agent \(d\) instead of \(e\) and, as a result, would not obtain any information on agent \(x\). This shows how reputation information acquisition is subject to the dynamics of the environment, such as the sparsity of connections and the availability of referrers.
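The effect of the branching factor and the referral length threshold can be sketched with a bounded, level-by-level search. The topology below is hypothetical and only loosely mirrors the scenario described for Fig. 5 (agents `f`, `g` and `h` are invented for the example), and referrers are simply taken in list order, which is an assumption rather than the selection policy of [39].

```python
def query_referrals(network, knows_target, unavailable, start, branching, max_length):
    """Bounded referral search; returns (witnesses found, messages sent).

    network      -- dict: agent -> list of known referrers, in preference order
    knows_target -- set of agents holding information about the target
    unavailable  -- set of agents that do not answer (dynamic environment)
    """
    found, messages = [], 0
    frontier, visited = [start], {start}
    for _ in range(max_length):  # referral length threshold
        next_frontier = []
        for agent in frontier:
            # Each agent contacts at most `branching` referrers not yet queried.
            unseen = [n for n in network.get(agent, []) if n not in visited]
            for referrer in unseen[:branching]:
                visited.add(referrer)
                messages += 1  # every query has a communication cost
                if referrer in unavailable:
                    continue  # no response received
                if referrer in knows_target:
                    found.append(referrer)
                else:
                    next_frontier.append(referrer)  # may forward the query
        frontier = next_frontier
    return found, messages

network = {"a": ["b", "c", "e", "d"], "c": ["f"], "e": ["g"], "g": ["h"]}
print(query_referrals(network, {"h"}, {"b"}, "a", branching=3, max_length=3))
print(query_referrals(network, {"h"}, {"b"}, "a", branching=3, max_length=2))
```

Lowering either parameter reduces the number of messages, but in this hypothetical topology a threshold of 2 is no longer enough to reach the only witness.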

4.2 Dimensions and contexts

Trust and reputation may be viewed as multi-dimensional elements that depend on the context of the interaction [9]. Agents define how different aspects of the interaction contribute to the evaluation of trust, and under which conditions they were observed. In Fig. 2, this is represented by the Dimension & Context components.

Regarding context, Marsh [21] defines situational trust, that takes into account previous interactions in a specific situation. In the absence of previous experience in a specific situation, the agent can consider trust obtained in other situations as an estimate. The FIRE model defines role-based trust as a way to define domain-specific ways of calculating trust [14].

ReGreT [29] evaluates trust considering the specific behavioral aspect. It defines an ontological dimension, that decomposes trust as a graph of related aspects (e.g. timeliness, quality, price). To calculate trust, the values of each node must be combined, for example, using a weighted average. Figure 6 illustrates the concept of multi-dimensional trust. Trust in a seller is composed by three dimensions: delivery time, product quality and price. The product quality is further divided into two dimensions: durability and performance. The numbers indicate the weight of each dimension in the composition of the upper dimensions.
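The combination of dimensions by weighted average can be sketched as a recursive aggregation over a tree. The tree shape follows the seller example above, but the weights and leaf values used here are hypothetical, since the numbers of Fig. 6 are not reproduced in the text; the dictionary layout is also an assumption.

```python
def aggregate(node):
    """Weighted average over a tree of trust dimensions.

    A leaf is {"value": v}; an inner node is {"children": [(weight, node), ...]}.
    """
    if "value" in node:
        return node["value"]
    children = node["children"]
    total_weight = sum(weight for weight, _ in children)
    return sum(weight * aggregate(child) for weight, child in children) / total_weight

# Hypothetical weights and values for the seller example.
seller = {"children": [
    (0.3, {"value": 0.9}),                 # delivery time
    (0.5, {"children": [                   # product quality
        (0.6, {"value": 0.8}),             #   durability
        (0.4, {"value": 0.5}),             #   performance
    ]}),
    (0.2, {"value": 0.7}),                 # price
]}
print(aggregate(seller))
```

A change in the environment that degrades a single leaf, such as delivery time, propagates upwards in proportion to its weight, and an adaptive agent could revise the weights themselves.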

Multi-dimensional and contextual trust are better suited for dynamic environments, as changes can affect one or more dimensions and, consequently, be reflected in the trust value. For example, in a distributed system, changes in the network conditions can impact the response time dimension, thus reducing trust in the affected agents.

Using a particular representation, including a multi-dimensional one, for the values of trust and reputation makes models harder to interpret. If other agents do not share the same representation, when queried for specific dimensions, the answering agent may simply return the overall reputation, without the intended, specific value. The issues of semantic alignment of trust dimensions and interoperability of models are beyond the scope of this study. Works in these directions can be found in [22, 33] and [19]. For the purposes of our approach, the dimension and context components assume the existence of minimal interoperability to allow information exchange. Difficulties in interoperability will reflect on the usefulness of the information source. If the exchanged reputation information cannot be correctly interpreted, the credibility of the source will be impacted. On the other hand, if two agents share the same elements of dimension and context, their sharing of reputation information will be improved.

4.3 Decision making

A review of trust and reputation models by [23] shows that not every model has a clear definition of how the decision to trust another agent is made. For that reason, the trust and reputation meta-model includes an explicit Decision Making component that represents this process (Fig. 2). This can be as simple as choosing the agent with the highest trust value or the best reputation, but it may also consider other elements. For example, if agent \(a\) has a trust value of 0.7 (with reliability 0.9) and agent \(b\) has a trust value of 0.9 (with reliability 0.6), this component defines which one will be chosen. It could choose \(b\), since it is the most trusted agent, but it could also choose \(a\), if it considers the trust measure of \(b\) to be unreliable; or it could multiply both values to obtain the expected trust (0.63 for \(a\) against 0.54 for \(b\)), also resulting in \(a\) being chosen.
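The three selection policies in this example can be sketched as follows. The function names and the reliability cut-off of 0.8 are assumptions made for illustration; they are not taken from any of the reviewed models.

```python
# agent -> (trust value, reliability of that value), as in the example above
candidates = {"a": (0.7, 0.9), "b": (0.9, 0.6)}


def most_trusted(cands):
    """Pick the agent with the highest trust value, ignoring reliability."""
    return max(cands, key=lambda name: cands[name][0])


def most_trusted_reliable(cands, min_reliability):
    """Pick the most trusted agent among those whose trust measure is reliable enough."""
    eligible = {n: v for n, v in cands.items() if v[1] >= min_reliability}
    return most_trusted(eligible) if eligible else None


def highest_expected_trust(cands):
    """Pick the agent that maximizes trust multiplied by reliability."""
    return max(cands, key=lambda name: cands[name][0] * cands[name][1])


choice_plain = most_trusted(candidates)                      # "b"
choice_filtered = most_trusted_reliable(candidates, 0.8)     # "a" (0.8 is an assumed cut-off)
choice_expected = highest_expected_trust(candidates)         # "a" (0.63 against 0.54)
```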

Consequently, the decision making process also relies on additional components such as the weighting of the available information. If multiple reputation information sources are used, the agent must decide the weight to give to each one in the final reputation evaluation. In ReGreT [29], sources with a credibility measure under a certain threshold could be disregarded, while the remaining sources could be weighted according to their credibility. In Fig. 2, this is represented by the Information Source Weighting (ISW) component. Additionally, after defining the ISW, the agent must define how to consider the reputation evaluation in the final calculation of trust. The Reputation Weighting component is responsible for this. In FIRE [12], this is done by assigning a weight to each component considered in the calculation of trust.
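A minimal sketch of the two weighting steps is given next, assuming a simple credibility-weighted mean. It is not the formula used by ReGreT or FIRE; the function names, the threshold of 0.5 and the weights 0.7 and 0.3 are hypothetical.

```python
def weighted_reputation(reports, min_credibility=0.5):
    """Information Source Weighting: drop low-credibility sources, weight the rest.

    reports is a list of (reputation value, source credibility) pairs.
    Returns None when no source passes the (assumed) credibility threshold.
    """
    kept = [(value, cred) for value, cred in reports if cred >= min_credibility]
    if not kept:
        return None
    total_credibility = sum(cred for _, cred in kept)
    return sum(value * cred for value, cred in kept) / total_credibility


def overall_trust(direct_trust, reputation, w_direct=0.7, w_reputation=0.3):
    """Reputation Weighting: mix direct trust and reputation with assumed weights."""
    if reputation is None:
        return direct_trust  # nothing credible to add
    return (w_direct * direct_trust + w_reputation * reputation) / (w_direct + w_reputation)


reports = [(0.8, 1.0), (0.4, 0.5), (0.1, 0.2)]   # the last source is disregarded
reputation = weighted_reputation(reports)          # (0.8*1.0 + 0.4*0.5) / 1.5
trust = overall_trust(0.5, reputation)
```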

4.4 Bootstrapping and exploration

The issue of how to start operating in the absence of previous information is often ignored [23]. Models assume the presence of a pre-existing referral network accessible to the new agent, without the need to gradually discover its information sources. In dynamic environments, agents will face this situation more frequently due to changes in the population and in the availability/credibility of referrers and information sources. This makes the use of bootstrapping and exploration mechanisms an important part of the agent’s ability to adapt. These mechanisms can make use of any information source available to deal with the lack of more precise or recent information. They also have to define the environmental conditions that trigger their use.

Three components, shown in Fig. 2, constitute the exploration model: (i) Bootstrapping, which defines the initial exploration of the environment; (ii) Direct Trust Exploration, used to explore new direct partnerships; and (iii) Information Source Exploration, used to explore new referrers and sources of information. We consider bootstrapping and exploration as essential elements of adaptiveness. In dynamic environments, severe and sudden changes (such as agent migration or losing connectivity with a significant portion of the system) may force the agent to return to an exploration state. Also, after continuously using a limited number of sources, the agent loses perspective of other, potentially better sources. This is also related to the memory and recency elements, shown in Sect. 4.1.1, which tend to “forget” older interactions, thus reducing the credibility of the source.

Bootstrapping strategies, for example, are suggested in HABIT [36] and SPORAS [40]. HABIT predicts the behavior of an unknown agent based on past interactions with other agents with similar attributes. The model learns the reliability of newcomers in general, by considering the average performance of similar agents already in the system. This introduces the difficulty of finding similar groups of agents, but should allow for more reliable results. In SPORAS, a newcomer has zero reputation, the lowest possible value. The rationale behind this decision is that agents have no incentive to start anew, since a new identity can never have a better reputation than the one it replaces. Trusting a newcomer in this case depends solely on the agent’s disposition to try a direct interaction without any reputation information.

Exploration strategies are suggested in [10] and [14]. In the absence of trustworthy partners, [10] defines a rebootstrap rate, which is the probability an agent will choose the best available partner that is neither trusted, nor distrusted. Using Boltzmann exploration, FIRE [14] allows agents to choose unknown partners according to a temperature parameter that is decreased over time. Initially, agents risk losing utility by trusting an unknown agent, but they also have the chance to discover new and possibly better partners. The same is valid for referrers. Agents need to discover new referrers to extend the referral network and increase the quality of reputation information obtained. As the temperature parameters decrease, agents restrict their choices to known partners and referrers.
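Boltzmann exploration with a decreasing temperature can be sketched as below. This is the textbook softmax selection rule, written under the assumption that every candidate (known or unknown) has some expected utility; the names and the example utilities are hypothetical and the code is not taken from FIRE.

```python
import math
import random


def boltzmann_probabilities(expected_utilities, temperature):
    """Selection probability of each partner, proportional to exp(utility / temperature)."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    peak = max(expected_utilities.values())  # subtracted for numerical stability
    weights = {name: math.exp((u - peak) / temperature)
               for name, u in expected_utilities.items()}
    total = sum(weights.values())
    return {name: w / total for name, w in weights.items()}


def choose_partner(expected_utilities, temperature, rng=random):
    """Sample one partner according to the Boltzmann distribution."""
    probs = boltzmann_probabilities(expected_utilities, temperature)
    names = list(probs)
    return rng.choices(names, weights=[probs[n] for n in names], k=1)[0]


# Assumed utilities: a known partner and an unknown one given a default estimate.
utilities = {"known": 0.8, "unknown": 0.5}
hot = boltzmann_probabilities(utilities, temperature=10.0)    # close to uniform: explores
cold = boltzmann_probabilities(utilities, temperature=0.05)   # almost always "known"
picked = choose_partner(utilities, temperature=1.0, rng=random.Random(0))
```

At a high temperature the two partners are chosen almost equally often; as the temperature decreases, the choice concentrates on the partner with the best known utility.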

5 Environment meta-model

The environment is another important element of the agent model shown in Fig. 1. This model is used to translate the agent’s perceptions of the actual environment to the agent’s inner representation. As discussed in Sect. 4, several elements of trust and reputation are affected by environmental aspects. Modelling these aspects allows the agent to correctly perceive and react to changes in the environment.

For the purpose of adaptation, not every aspect of the environment model needs to be represented in the meta-level. Population size, for instance, is not explicitly considered at the meta-level, as the agent will not reason about population size directly, but about aspects that are affected by it.

The environment meta-model (\(Env\)), shown in Fig. 7, is an abstract representation of the environment model that generalizes the domain-independent aspects that are part of the adaptation process. The environment is represented by an 8-tuple that includes:

- operating costs (\(C_{op}\));
- earned and total utility per unit of time (\(U_{earned}, U_{total}\));
- frequency of transactions (\(F\));
- availability of trusted partners (\(Av_{tp}\));
- availability of information sources (\(Av_{is}\));
- communication costs (\(C_{comm}\)); and
- cost of information acquisition (\(C_{info}\)).

Note that domain-specific aspects contained in the environment model can be mapped to one or more meta-level aspects. For instance, consider weather conditions as a domain-specific environmental aspect. The impact of these conditions on the TRM is indicated by their mappings to the affected aspects of the meta-model. If weather conditions, for example, disrupt communications between the agent and its information sources, \(Av_{is}\) will reflect this. This meta-level allows the adaptation process to reason about the impacted aspects and not their domain-specific cause. Later in the adaptation process, the domain-specific aspects can be reintroduced in the agent’s adaptation plans, described in Sect. 6.
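One possible encoding of the 8-tuple, together with a domain-specific mapping, is sketched here. The field names mirror the symbols of the meta-model; the `apply_weather` function and its `link_quality` parameter are hypothetical and only illustrate how a domain-specific aspect could be projected onto \(Av_{is}\).

```python
from dataclasses import dataclass, fields, replace


@dataclass(frozen=True)
class Env:
    """Environment meta-model as an 8-tuple (units are left to the application)."""
    c_op: float      # operating costs
    u_earned: float  # utility earned per unit of time
    u_total: float   # total utility offered per unit of time
    f: float         # frequency of transactions
    av_tp: float     # availability of trusted partners
    av_is: float     # availability of information sources
    c_comm: float    # communication costs
    c_info: float    # cost of information acquisition


def apply_weather(env, link_quality):
    """Hypothetical mapping: weather degrades link quality in [0, 1], which scales Av_is."""
    return replace(env, av_is=env.av_is * link_quality)


calm = Env(c_op=1.0, u_earned=20.0, u_total=100.0, f=2.0,
           av_tp=0.9, av_is=0.8, c_comm=0.5, c_info=0.2)
stormy = apply_weather(calm, link_quality=0.25)
```

The adaptation process then reasons only about the changed value of `av_is`, without needing to know that weather was the cause.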

5.1 Operating costs

When seeking cooperation, an agent can choose not to trust any of the available partners and wait for a more trusted option to become available. Castelfranchi and Falcone [3] cite “doing nothing” as a valid choice the agent has when seeking cooperation. Such a choice is represented as a neutral choice in terms of utility and, as such, is later omitted. However, in a realistic MAS, an agent uses resources that have an associated cost, regardless of how efficiently the agent uses them.

As mentioned before, the operating costs (\(C_{op}\)) represent a value paid by the agent for the right to use a resource. This means that, whether the agent is busy or not, this cost will be discounted from the agent’s utility. The existence of this basic cost has an impact on the agent’s disposition to trust, since it adds a sense of urgency to the decision of trusting another agent. Without this urgency, the agent could wait indefinitely for the most trustworthy agent, thus minimizing its risks at the cost of potential utility gains with other agents. When assessing the risk of partnership, if the cost of “doing nothing” is high enough, the agent is better off risking cooperation. How high this cost is depends on two other aspects of the environment: the expected utility gains and the frequency of transactions.

5.2 Utility and frequency of transactions

In order to better reason about the risks of cooperation, an agent must perceive how much utility it expects to obtain. This allows the agent to put other costs in perspective and to evaluate whether an action is cheap or expensive. For example, in service-oriented systems, utility is a dynamic value depending on the demand for services. An agent that offers a service with lower demand will obtain less utility per unit of time (\(U_{earned}\)). As a result, this agent’s \(C_{op}\) will be proportionally higher when compared to an agent with the same \(C_{op}\) that obtains more \(U_{earned}\). An agent that earns very little \(U_{earned}\) may also be restricted in its use of trust and reputation, since costlier actions (such as contacting a large number of agents for reputation information) may not be viable. In a dynamic environment, changes in the relation between \(U_{earned}\) and \(C_{op}\) require the agent to modify its model to adapt to the new conditions. We differentiate between utility earned by the agent (\(U_{earned}\)) and total utility offered in the system (\(U_{total}\)), both per unit of time.

The frequency of transactions is a complementary aspect of utility. The agent must perceive how many opportunities per unit of time it has. Utility alone is not enough to precisely characterize the environment. As seen in Sect. 4, the frequency of transactions affects distinct elements of trust and reputation, such as memory span and credibility measures. Consider an environment where \(U_{total} = 100/hour\) and frequency of transactions is 2/hour, meaning that, on average, only two transactions per hour are offered with a total utility of \(100\). Now, consider an environment with the same \(U_{total}\) and frequency of transactions of 10/hour. The sense of urgency to trust is higher in the first case, since the opportunities are scarcer.
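The two environments can be compared with a few lines of arithmetic. The sketch assumes that opportunities are evenly spaced and that \(C_{op}\) accrues continuously; the operating cost of 5 per hour is a hypothetical value that does not appear in the text.

```python
def per_opportunity(u_total, frequency, c_op):
    """Return (average utility per transaction, idle cost accrued between two opportunities)."""
    if frequency <= 0:
        raise ValueError("frequency must be positive")
    return u_total / frequency, c_op / frequency


C_OP = 5.0  # hypothetical operating cost per hour

# Same total utility (100/hour), different frequency of transactions.
scarce = per_opportunity(u_total=100.0, frequency=2.0, c_op=C_OP)      # (50.0, 2.5)
plentiful = per_opportunity(u_total=100.0, frequency=10.0, c_op=C_OP)  # (10.0, 0.5)
```

Under these assumptions, skipping one opportunity in the first environment forgoes five times more utility and accrues five times more idle cost before the next opportunity arrives.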

5.3 Availability of agents

The availability of trusted partners is also an aspect to be considered. Burnett et al. [2] state that selecting the most trusted agent is the most commonly found method in the literature. However, the most trusted agent is not always available. It is reasonable to expect that agents with better reputation will be busier than others. The same can be said about information sources: in a referral network, referrers may not be available all the time. Therefore, the agent cannot assume that the best option will always be available. By considering the next best available option, risks are increased and the dilemma of taking the increased risk versus waiting for a better opportunity becomes more pressing. We differentiate partners’ availability (\(Av_{tp}\)) from information sources’ availability (\(Av_{is}\)), as they often refer to distinct groups of agents, such as providers and clients, respectively.

Availability may also be limited by other aspects such as location or capacity. As agents and providers change their location, they may become too distant, and consequently unavailable [14]. Likewise, if the most trusted provider cannot attend to the agent’s request, the agent must again choose the next best option or simply wait for the preferred partner to become available.

5.4 Communication costs

Exchanging information is the core of reputation. As such, there are always communication costs (\(C_{comm}\)) involved. As seen in Sect. 4, there are several types of information sources with varying degrees of credibility and complexity. An agent must be capable of choosing information sources whose benefits outweigh their costs of information acquisition and maintenance. Other aspects, such as the frequency of transactions, may amplify \(C_{comm}\). If the frequency of transactions is high, the \(C_{comm}\) involved in constantly requesting newer information about the reputation of agents would also increase. In this case, there is a tradeoff between \(C_{comm}\) and always having the latest information available.

Consider an extreme example, where a temporary network failure results in very high \(C_{comm}\). In such a scenario, consulting with external sources of information may become infeasible. Similarly, if the agent or its most trusted referrers change location, the resulting increase in \(C_{comm}\) may force the agent to look for new, lesser known referrers. Since information sources have different \(C_{comm}\), agents can modify their choice according to the situation, seeking a less credible source with a lower \(C_{comm}\). Obviously, the choice of a lower cost, less credible source must be made only if the added risk is worth it.
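The trade-off between credibility and \(C_{comm}\) can be sketched with a simple net-benefit rule. The linear value model (credibility multiplied by the value of the information, minus the communication cost) is an assumption made for illustration, as are the source names and numbers.

```python
def pick_source(sources, information_value):
    """Choose the source with the highest positive net benefit, or None if none is worth it.

    sources maps a source name to (credibility, c_comm).
    """
    best_name, best_net = None, 0.0
    for name, (credibility, c_comm) in sources.items():
        net = credibility * information_value - c_comm
        if net > best_net:
            best_name, best_net = name, net
    return best_name


normal = {"trusted_referrer": (0.9, 2.0), "local_witness": (0.6, 0.5)}
relocated = {"trusted_referrer": (0.9, 8.0), "local_witness": (0.6, 0.5)}
network_down = {"trusted_referrer": (0.9, 20.0), "local_witness": (0.6, 20.0)}

choice_normal = pick_source(normal, information_value=10.0)        # 7.0 against 5.5
choice_relocated = pick_source(relocated, information_value=10.0)  # 1.0 against 5.5
choice_down = pick_source(network_down, information_value=10.0)    # no source is viable
```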

5.5 Cost of information

The importance of knowing other agents’ reputation in the absence of direct experience is undeniable. Nevertheless, some models that consider the use of reputation information do not give the necessary attention to the costs involved in gathering and verifying it.

Apart from the communication costs involved, the cost of a piece of information (\(C_{info}\)) is something to be considered. If an agent \(a\) queries \(b\) about the reputation of another agent, \(b\) may request a payment in return for the information. By receiving an adequate compensation, even if \(b\) does not have any information, it could forward the query to other referrers it knows, deducting any costs from its initial compensation. The reliability of an information source may also affect the cost of information, as an agent may choose to pay a higher cost for information from a more reliable source. In [7], an adaptive cost selection algorithm is proposed to evaluate the utility of the information acquired previously in order to determine how much and which information to purchase.

Verifying the received information is another cost. For example, in certified reputation, proposed in FIRE [14] and described in Appendix 1, agent \(a\) receives references from \(b\)’s previous interactions that are certified by other agents. To verify these references, \(a\) must check their authenticity either by directly contacting the referrers or using a security mechanism, such as digital signatures. Both ways incur additional costs. As a result, an agent may choose to pay a higher cost for more reliable information.

In a referral network [39], an indirect recommendation could also require verification. Suppose that \(b\), as referrer, forwards \(a\)’s query to other agents. Later, \(b\) provides \(a\) with a piece of information given by agent \(x\), who is not known by \(a\). Agent \(a\) could decide to contact \(x\) to verify the information, to ascertain if \(x\) actually sent the information and if the information was not tampered with by \(b\) or any other agent in the referral path.

6 Adaptation model

The trust and reputation meta-model abstracts the elements of the implementation-level model, while the environment meta-model maps adaptation-related aspects that are part of the agent’s perception of the environment. Based on these two meta-level components, the adaptation model works to provide the capabilities to adjust the model to changing conditions. For this purpose, the Belief-Desire-Intention (BDI) model of agency was chosen. In this model, beliefs, desires and intentions represent, respectively, the informative, motivational and deliberative components of the agent’s mental attitudes [26]. We take advantage of these representations and the traditional BDI architecture to allow the agent to reason about trust and reputation adaptation. The adaptation model is shown in Fig. 8, where elements in gray are related to the agent’s reasoning process.

As shown in Fig. 1, the adaptation model is a component that connects the meta-level to the implementation level. Although we have chosen a BDI approach, agents with other reasoning models can still make use of the mentioned meta-models by implementing their own adaptation model. A reactive agent, for instance, could implement a simplified detection/reaction cycle to perform the adaptation.

In the proposed model, beliefs are used to represent facts about the environment and the TRM, as represented by their meta-level counterparts. To represent the agent’s desires, two types of goals are used: monitoring (\(G_{M}\)) and adaptation goals (\(G_{A}\)). Monitoring goals are related to detecting changes that signal the need to adjust the model in use. They monitor one or more aspects mentioned in Sect. 5, and the performance of the components. A goal \(g \in G_{M}\) is defined as \(\{Env' \subseteq Env, m' \subseteq m_{meta}, \langle decl \rangle \}\), where \(Env'\) and \(m'\) are, respectively, subsets of the environmental aspects and the components of the trust and reputation meta-model that are referenced in the goal declaration \(\langle decl \rangle \). This declaration depends on the logic being used by the agent. At the implementation level, this depends on the agent platform or reasoner.

Next, an adaptation event \(evt = \{ t, g \in G_{M}, env_{t} \}\) contains the time (\(t\)) of the event, the goal that triggered it (\(g \in G_{M}\)), and the conditions of the environment at that time (\(env_{t}\)). Multiple events can be generated in a given interval of time. The event handling process (EHP) is responsible for analyzing the event queue and setting the adaptation goals (\(G_{A}\)). This includes eliminating conflicting goals and merging duplicate goals.
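A minimal sketch of the event handling process follows. The policy used here (events are processed in time order, the first goal set wins over later conflicting ones) is an assumption, as are the goal names and the `adaptation_goal_for` mapping.

```python
from dataclasses import dataclass


@dataclass
class AdaptationEvent:
    t: int       # time of the event
    goal: str    # name of the monitoring goal that triggered it
    env: dict    # snapshot of the environment conditions at time t


def handle_events(events, adaptation_goal_for, conflicts=frozenset()):
    """Turn queued events into adaptation goals, merging duplicates and dropping conflicts.

    conflicts is a set of frozenset pairs of goal names that cannot be pursued together.
    """
    goals = []
    for evt in sorted(events, key=lambda e: e.t):
        goal = adaptation_goal_for.get(evt.goal)
        if goal is None or goal in goals:
            continue  # no adaptation goal mapped, or duplicate of one already set
        if any(frozenset((goal, g)) in conflicts for g in goals):
            continue  # conflicts with a goal set by an earlier event
        goals.append(goal)
    return goals


queue = [
    AdaptationEvent(3, "idle_cost_ok", {"c_op": 0.11}),
    AdaptationEvent(1, "idle_cost_ok", {"c_op": 0.06}),
    AdaptationEvent(2, "comm_cost_ok", {"c_comm": 4.0}),
]
mapping = {"idle_cost_ok": "lower_idle_cost", "comm_cost_ok": "lower_comm_cost"}

merged = handle_events(queue, mapping)
exclusive = handle_events(
    queue, mapping, conflicts={frozenset(("lower_idle_cost", "lower_comm_cost"))}
)
```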

With the adaptation goals set, the plan selection process (PSP) evaluates the set of available adaptation plans \(P\). Since more than one plan may be suitable to the same situation, it builds a list of candidate plans (\(P' \subseteq P\)) that can be used to achieve these goals. If more than one plan is available, the PSP will choose the candidate plan with the highest estimated utility.

Lastly, the plan execution process (PEP) implements the adaptations in the concrete model. An adaptation plan, \(p \in P\), can be divided into two parts: (i) a model-independent part, which references only the elements of the trust and reputation meta-model, and (ii) a model-specific part, which uses the functions and parameters of the concrete model. As the result of the PEP, the adapted model is obtained and the related beliefs are also updated through the meta-model.

To illustrate the use of the adaptation model, consider the following practical example. Suppose that the model proposed by Marsh [21], presented in Appendix 1, is being used by an agent to choose an online service it needs. For example, let us consider a remote storage service where the agent can keep the files it produces. If the agent does not trust any service provider, then it must store files locally at a higher cost. In this case, the difference in cost the agent pays for not trusting represents the agent’s operating cost. If this cost becomes higher than the utility the agent earns from its work, then the agent must reevaluate its trust criteria. In Marsh’s model, this can be achieved by modifying the cooperation threshold that is used to determine if there is sufficient trust for cooperation. The cooperation threshold is represented in the trust and reputation meta-model by the decision making component.

To use the adaptation model in this situation, a monitoring goal can be defined to observe whether the operating cost (a component of the environment meta-model) is above a given percentage of the utility earned by the agent. The operating cost observed by the agent is fed as a belief to the adaptation model, as is the TRM (and its configuration) being used by the agent. If the cost is above the limit, an adaptation event is triggered and an adaptation goal to lower the cost is created. An adaptation plan, if available for this situation, is then selected and executed. A possible plan for this situation is to adjust the decision making component. In the case of Marsh’s model, the model-specific part of this plan is to lower the cooperation threshold, so that less trusted providers can be chosen.
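The storage example can be sketched end to end as follows. The code is a simplified stand-in for a BDI reasoner: the 20 % cost limit, the step of 0.05, the plan names and the dictionary used as concrete model are all assumptions.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Plan:
    name: str
    component: str                              # meta-model component (model-independent part)
    estimated_utility: float
    apply: Callable[[Dict[str, float]], None]   # model-specific part


def lower_cooperation_threshold(model, step=0.05, floor=0.0):
    """Model-specific part for Marsh's model: accept slightly less trusted providers."""
    model["cooperation_threshold"] = max(floor, model["cooperation_threshold"] - step)


def monitoring_goal_violated(c_op, u_earned, max_ratio):
    """Monitoring goal: the operating cost must stay below a share of the earned utility."""
    return c_op > max_ratio * u_earned


def adapt(model, plans: List[Plan], c_op, u_earned,
          max_ratio=0.2, target_component="decision_making") -> Optional[str]:
    """Run one detection/selection/execution cycle; return the applied plan name, if any."""
    if not monitoring_goal_violated(c_op, u_earned, max_ratio):
        return None
    candidates = [p for p in plans if p.component == target_component]
    if not candidates:
        return None
    best = max(candidates, key=lambda p: p.estimated_utility)
    best.apply(model)
    return best.name


marsh = {"cooperation_threshold": 0.8}
plans = [
    Plan("lower_threshold", "decision_making", 2.0, lower_cooperation_threshold),
    Plan("widen_sources", "information_acquisition", 5.0, lambda m: None),
]
applied = adapt(marsh, plans, c_op=3.0, u_earned=10.0)  # 3.0 exceeds 20 % of 10.0
```

Only `lower_cooperation_threshold` depends on Marsh's model; the rest of the cycle refers to meta-model components and could be reused with a different concrete model.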

It is important to note that the use of the adaptation model does not guarantee improvements in the overall utility earned, as this depends on the quality and adequacy of adaptation plans.

6.1 Application and evaluation

In order to incorporate an existing TRM into the meta-model, its functions, algorithms, parameters or beliefs must be mapped into the meta-model’s components. These mappings allow the agent to choose the goals and plans that can be applied to the concrete model. For instance, if the model does not use any external information, then the agent does not set any goals or plans related to reputation information acquisition.

To illustrate the meta-model application, four models were chosen: Marsh’s [21], SPORAS [40], ReGreT [29] and FIRE [12]. They are described in detail in Appendix 1. This does not mean that the meta-model was defined based only on these models, or that it is applicable only to them. In Sect. 4, other models are mentioned that have proposed different approaches to the components in the meta-model. Also, some works have no intention of providing a complete TRM, focusing instead on the definition of specific components, like credibility models or different representations of trust (such as fuzzy sets and probability distributions).

Figure 9 shows an informal representation of the mappings of some of Marsh’s model components. Since this model makes no use of reputation, the associated components are not mapped. Basic trust is considered here as a rudimentary bootstrapping strategy.

Formally, the mapping of a component in the trust and reputation meta-model is a set containing the associated elements (functions, algorithms, parameters and beliefs). In the previous example, the mapping of the DT component would be a set composed of the basic trust, general trust and situational trust components.

Figure 10 shows the case of SPORAS, which defines only reputation-related components. It also illustrates how some of the model’s components are mapped directly into information source components. Note that the model does not provide a component that specifies how information acquisition and sharing occurs.

In Fig. 11, we show the mappings of some of the components defined by ReGreT and FIRE. Notably, the models lack a decision making component. Although these models share some components (like direct trust), they use different information sources.

These mappings show that not every model implements all the specified components, and that models can share some elements. This suggests the possibility of adapting models beyond the adjustment of parameters and functions, by switching model components or implementing missing features. For instance, the simple bootstrapping component of Marsh’s model (based on the notion of basic trust) could be replaced by a more sophisticated exploration strategy, such as the Boltzmann exploration used in FIRE’s experiments. Similarly, the decision making component from Marsh could be used to complement FIRE, as it lacks an explicit decision-making component.

6.1.1 Goals and plans

In addition to these mappings, a set of valid configurations for each component must be provided. In the case of Marsh’s model, the set of valid configurations defines the range of the memory span, values for the cooperation threshold parameter, and possible variations of the basic and situational trust calculations. In the case of SPORAS, these could be the functions used in the model, or the number of observations used in the calculations. Likewise, both ReGreT and FIRE define several parameters in their configurations, shown in Appendix 1.

The definition of valid configurations, together with the aforementioned mappings, allows the adaptation model to define potential adaptation plans. Valid configurations can be as simple as a numeric interval, or they can consider environmental parameters such as the frequency of interactions. Later, the adaptation model can verify if a configuration that is better suited for the current conditions is available and apply it to the TRM. To decide which plan to adopt, evaluation criteria for the adaptation process must be defined.
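Both kinds of valid configuration (a fixed interval and one that depends on the environment) can be sketched as functions returning bounds. The parameter names, the intervals and the rule tying the memory span to the frequency of interactions are hypothetical.

```python
# Each entry maps a parameter to a function of the environment returning (low, high).
VALID_CONFIGURATIONS = {
    # A plain numeric interval (assumed bounds).
    "cooperation_threshold": lambda env: (0.3, 0.9),
    # An interval that grows with the frequency of interactions (assumed rule).
    "memory_span": lambda env: (10, max(10, int(50 * env["frequency"]))),
}


def clamp_to_valid(parameter, proposed, env):
    """Bring a proposed parameter value inside its valid configuration."""
    low, high = VALID_CONFIGURATIONS[parameter](env)
    return min(max(proposed, low), high)


busy = {"frequency": 2.0}
quiet = {"frequency": 0.1}

threshold = clamp_to_valid("cooperation_threshold", 0.1, busy)  # raised to the lower bound
span_busy = clamp_to_valid("memory_span", 500, busy)            # capped at 100
span_quiet = clamp_to_valid("memory_span", 500, quiet)          # capped at 10
```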

As mentioned in Sect. 6, monitoring and adaptation goals can be expressed using the model-agnostic components of the environment and trust and reputation meta-models. Although the components of these meta-models are model- and domain-independent, the conditions expressed in the goals may not be. These depend on aspects such as the acceptable levels of performance (for example, cost limits) and the resources allocated to the agent’s reasoning process, which limit the amount of deliberation in the adaptation model.

6.1.2 Evaluation criteria for the adaptation process

To evaluate the performance of a TRM \(m\) under the current environment conditions \(env\), the adaptation model considers the costs associated with the three submodels that represent the elements of trust, reputation and exploration:

- the cost associated with the trust model (\(C_{trust}\));
- the cost associated with the reputation model (\(C_{rep}\));
- the cost of exploration (\(C_{exp}\)).

These costs are a result of the costs of the individual components in each model. Two additional costs must also be considered: the cost of staying idle due to the decision of not trusting any of the available partners (\(C_{idle}\)) and the cost of deliberation (\(C_{del}\)).

The sum of these costs is used to obtain the final evaluation, as shown in Eq. 1. Naturally, they are to be measured using the actual implementation of the TRM, with the measured cost of each component. For instance, \(C_{comm}\) and \(C_{info}\) depend on the information acquisition and sharing strategy, such as the quantity and type of information sources. The time spent on deliberation (\(C_{del}\)) will vary depending on the model’s decision making component. The minimization of these costs offers guidance to the adaptation process, as changes in one aspect of a TRM can have an impact on the cost of others.

The cost of staying idle depends on the operating costs (\(C_{op}\)) of the environment, the frequency of transactions and the availability of the most trustworthy agent (\(Av\)). This estimates the time the agent will spend idle if no suitable partners are available and it decides not to pursue other opportunities. The operating cost is not under direct control of the agent. To change the operating costs, the agent would have to be able to negotiate its values or migrate to a less expensive resource.

The cost of deliberation (\(C_{del}\)) depends on the complexity of the agent’s reasoning and the amount of evidence used in the evaluation of trust and reputation. It is the cost associated with the evaluation of trust and reputation, using the already available information, for each type of information source. As such, it is affected by the components shown in Fig. 4, but mainly by the decision making component.

The cost associated with the trust model (\(C_{trust}\)) is based on the expected loss of utility due to mistrust. This is a result of the reputation weighting and the decision making components, shown in Fig. 2. An estimate of the utility loss depends on trust (and its reliability) and the availability of the trustworthy agents. If the model’s adaptation worsens its performance (when compared to the original configuration), this will be reflected in \(C_{trust}\).

The cost associated with the reputation model \(C_{rep}\) is mostly related to the reputation information management component, and depends on the communication costs (\(C_{comm}\)) and the cost of information (\(C_{info}\)) for each information source used by the agent.

Lastly, the cost of exploration (\(C_{exp}\)) indicates the cost incurred in searching for new partners or referrers. This cost is calculated as the difference between the costs of trust and reputation before and during the exploration process. For example, the exploration of new information sources would increase \(C_{rep}\), as new agents are contacted and new information is acquired. Likewise, the exploration of direct interaction could result in loss of utility with unknown agents, negatively affecting \(C_{trust}\). On the other hand, if the exploration process is successful, \(C_{trust}\) will be positively impacted by the discovery of better partners and more credible information sources.
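The cost terms described in this section can be combined as in the following sketch. It assumes an unweighted sum of the five costs, a per-source sum for \(C_{rep}\), and that \(C_{exp}\) is added to the pre-exploration values of \(C_{trust}\) and \(C_{rep}\); the function names and numbers are hypothetical.

```python
def reputation_cost(sources):
    """C_rep: communication plus information cost, summed over the sources in use.

    sources is a list of (c_comm, c_info) pairs.
    """
    return sum(c_comm + c_info for c_comm, c_info in sources)


def exploration_cost(c_trust_before, c_rep_before, c_trust_during, c_rep_during):
    """C_exp: difference in trust and reputation costs before and during exploration."""
    return (c_trust_during + c_rep_during) - (c_trust_before + c_rep_before)


def total_cost(c_trust, c_rep, c_exp, c_idle, c_del):
    """Overall evaluation as a plain sum of the five costs (assumed to be unweighted)."""
    return c_trust + c_rep + c_exp + c_idle + c_del


c_rep = reputation_cost([(0.5, 1.0), (0.25, 0.25)])            # two sources
c_exp = exploration_cost(c_trust_before=4.0, c_rep_before=c_rep,
                         c_trust_during=5.0, c_rep_during=3.5)
evaluation = total_cost(c_trust=4.0, c_rep=c_rep, c_exp=c_exp, c_idle=1.0, c_del=0.5)
```

A negative value of `c_exp` would indicate that exploration has already paid off, for instance through the discovery of better partners.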

7 Experimental results

To evaluate whether the proposed meta-model can be used to improve existing models under dynamic environmental conditions, two experiments were performed with two models: Marsh’s and FIRE. In both experiments, four agents use the same model with different configurations. We chose this number to allow a better visualization of the results they obtain as a consequence of having different configurations.

These agents interact randomly with another fifty agents whose parameters follow a normal distribution with a given mean and standard deviation. Due to the random nature of interactions and the type of the distribution used, a higher number of agents would result in a similar sample of interactions with no significant difference in the overall results. It is also a number compatible with the experiments in the original models presented in Appendix 1. Since the environment is dynamic, one aspect is changed after a number of iterations. In the first round, agents interact without changing their configurations. In a second round, they are allowed to use the meta-model to adapt. Table 1 presents a summary of these experiments.

These experiments do not exhaust all possible combinations of meta-model components, environmental aspects, models’ parameters and adaptation plans. Likewise, the experiments are not intended to evaluate the quality of the adaptation goals and plans used or the performance of the original TRM. The goal is to illustrate how the proposed meta-model allows the agent to reason about its use of trust and reputation in a model- and domain-independent way. For this reason, there is no specific domain attached to these experiments. Agents in these experiments perform the roles of truster and trustee, which can be generalized to a diversity of domains.

To perform the experiments, a proof of concept prototype was built using Jason [1] as an interpreter for the adaptation model’s elements expressed in AgentSpeak [25]. Each experiment was repeated 30 times in order to obtain the mean results for two groups of agents: with and without the ability to adapt. The first group obtained significantly better results, which are presented and discussed in the following sections.

7.1 Experiment A

In this experiment, we observe how changes in the operating costs affect Marsh’s model, presented in detail in Appendix 1. Marsh’s model is used in this test as it explicitly defines a decision making component. The cooperation threshold is used to determine whether an agent will trust another. If trust is below this threshold, cooperation does not take place.

In this test, a population of four agents with different values for the cooperation threshold is used. For the potential partners, a population of fifty agents is generated according to a normal distribution of availability and reliability. In each iteration, the four agents are presented with the most reliable agent available. Each agent then chooses whether to trust the selected partner based on its cooperation threshold. If the partner’s reliability is above the threshold, the agent decides to trust it. Otherwise, it decides to skip the opportunity and it pays the operating costs. If the interaction is successful, the agent gains one point of utility. If the interaction fails, the agent loses one point.

The operating costs \(C_{op}\) start at 1 % of the earned utility (\(U_{earned}\)). After each 100 iterations, an additional cost of 5 % is added. The cost \(C_{idle}\) is calculated as the number of skipped opportunities (the choice not to trust) multiplied by the operating costs. The cost \(C_{trust}\) is calculated as the number of failed interactions multiplied by the utility of the task.
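As a rough sketch of these cost definitions, the following Python functions compute the operating cost rate and the two derived costs. The function names are hypothetical, iterations are assumed to be counted from zero, and the schedule assumes an additive reading of the increase (5 percentage points added after every 100 iterations), which the text does not state explicitly.

```python
def operating_cost_rate(iteration: int, base: float = 0.01,
                        step: float = 0.05, period: int = 100) -> float:
    """Operating cost as a fraction of the earned utility.

    Starts at 1 % and, under the additive assumption, grows by
    5 percentage points after every 100 iterations.
    """
    return base + step * (iteration // period)


def idle_cost(skipped: int, operating_cost: float) -> float:
    """C_idle: number of skipped opportunities times the operating cost."""
    return skipped * operating_cost


def trust_cost(failed: int, task_utility: float = 1.0) -> float:
    """C_trust: number of failed interactions times the utility of the task."""
    return failed * task_utility
```

With these definitions, an agent that skips many opportunities sees \(C_{idle}\) grow both with the number of skips and with each step of the cost schedule.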

For this scenario, the four agents consider beliefs related to a subset of the elements contained in the meta-models that are relevant to the tests. The beliefs and goals used in this experiment are as follows:

- Beliefs (from environment): \(Env' = \{C_{op}, Av_{tp}, U_{earned}\}\)
- Beliefs (from meta-model): \(m' = \{directTrust, decisionMaking(cooperationThreshold) \}\)
- Monitoring goal: \(g_{m} = \{ Env', m', maintain(C_{idle} < applicationThreshold) \}\)
- Adaptation goal: \(g_{a} = \{ Env', m', achieve(C_{idle} < applicationThreshold) \}\)

The monitoring goal \(g_{m}\) checks that \(C_{idle}\) does not exceed the threshold defined for this application. Once this cost increases beyond the threshold, the adaptation goal \(g_{a}\) seeks to bring \(C_{idle}\) back below this limit. Notice that the beliefs and goals include only elements specified in the meta-model. The \(decisionMaking\) belief only specifies that the decision making component of the meta-model is implemented by the \(cooperationThreshold\) component of the concrete model.

The adaptation plan used in this experiment modifies the decision making component. In the case of Marsh’s model, the plan gradually reduces the cooperation threshold, allowing the agent to interact with less trusted agents. Table 2 presents the settings used in Experiment A.
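The interplay between the monitoring goal and this adaptation plan can be illustrated with a short Python sketch. It is not the AgentSpeak plan used in the prototype. The function name, the step size and the lower bound are hypothetical values chosen for illustration and are not the settings of Table 2.

```python
def adapt_threshold(threshold: float, c_idle: float,
                    application_threshold: float,
                    step: float = 0.05, minimum: float = 0.0) -> float:
    """Return the cooperation threshold after one monitoring cycle.

    If the monitoring goal holds (C_idle < applicationThreshold), the
    threshold is left unchanged. Otherwise the threshold is lowered by one
    step, never going below the assumed minimum.
    """
    if c_idle < application_threshold:
        return threshold
    return max(minimum, threshold - step)
```

Calling the function once per monitoring cycle yields the gradual reduction described above. Once the threshold reaches the lower bound, the plan has no further effect.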

Figure 12 shows the results for Agent 4 with and without the use of the adaptation plan. Since Agent 4 has the highest cooperation threshold, meaning a lower disposition to trust and a tendency to stay idle more often, it shows more clearly the impact that changes in the operating cost have on the utility earned. It also shows how the adaptation plan results in increased utility. Using the adaptation plan, Agent 4 decreases its cooperation threshold when the operating cost is increased and goes above the defined limit. With a reduced threshold, it interacts with less trusted agents, but on the other hand it avoids the penalty of staying idle. The utility values in Fig. 12 were scaled to an interval of \([0, 100]\).

This plan has a higher impact on agents with higher cooperation thresholds, since they have the range available to decrease their threshold. Since the plan only affects the cooperation threshold, after a certain point, it cannot do anything else to improve the utility being reduced by the operating costs. The oscillation in utility after this point is the result of the adaptation that follows the increased cost. As the operating cost increases, the utility is reduced until the agent reacts and performs the adaptation to reduce \(C_{idle}\) and regain some utility.

The mean utility obtained by each agent, with and without adaptation, is shown in Fig. 13. The error bars indicate the 95 % confidence interval for the mean. In this case, the adaptation plan is more useful for the agents with higher cooperation thresholds. In the case of Agent 1, that already had a low threshold, the results of the adaptation plan were not significant. The other agents have significant increases in their mean utilities.
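For readers who wish to reproduce this kind of summary, a mean with a 95 % confidence interval over the 30 repetitions could be computed as sketched below. The use of a Student t interval is an assumption, since the text does not state how the intervals were obtained. The function name is hypothetical, and the default critical value 2.045 applies only to 30 samples (29 degrees of freedom).

```python
import math
import statistics
from typing import Sequence, Tuple


def mean_ci95(samples: Sequence[float],
              t_crit: float = 2.045) -> Tuple[float, float, float]:
    """Return (mean, lower, upper) of a two-sided 95 % interval for the mean.

    t_crit defaults to the Student t critical value for 29 degrees of
    freedom, i.e. 30 repetitions. Pass another value for other sample sizes.
    """
    n = len(samples)
    mean = statistics.fmean(samples)
    # Standard error of the mean, using the sample standard deviation.
    sem = statistics.stdev(samples) / math.sqrt(n)
    half_width = t_crit * sem
    return mean, mean - half_width, mean + half_width
```

Two mean utilities can then be compared by checking whether their intervals overlap, which is the reading suggested by the error bars.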

In this experiment, it is possible to observe that agents with a static cooperation threshold do not respond to changes in the operating costs and, as such, their utility is reduced as soon as the operating costs are increased. When using the meta-model, the agents reason about the effects of the changing operating costs on their decision making process, and change the cooperation threshold accordingly. Consequently, an agent can improve its utility by setting the cooperation threshold that is most adequate to the situation.

7.2 Experiment B

In the second experiment, we observe the impact of the communication costs/information price on the process of acquiring reputation information. To do this, we use FIRE’s information source for obtaining witness information, called a referral network. As in the first experiment, these costs start at 1 % and are increased by 5 % every 100 iterations, starting at the 200th iteration.

Four agents are used with different configurations of the information acquisition component of the information source. Fifty agents are used as referrers, whose availability and reliability of recommendations are defined by normal distributions with the given mean and standard deviation. The beliefs and goals used in this experiment are shown next.

- Beliefs (from environment): \(Env' = \{C_{comm}, C_{info}\}\)
- Beliefs (from meta-model): \(m' = \{referralNetwork(infoAcquisition)\}\)
- Monitoring goal: \(g_{m} = \{ Env', m', maintain(C_{rep} < applicationThreshold) \}\)
- Adaptation goal: \(g_{a} = \{ Env', m', achieve(C_{rep} < applicationThreshold) \}\)

The monitoring goal \(g_{m}\) checks that the cost of reputation \(C_{rep}\) is below the threshold defined for this application. Here, \(C_{rep}\) is calculated as the sum of the communication costs (\(C_{comm}\)) and the price paid for information (\(C_{info}\)). When \(C_{rep}\) goes above the threshold, an adaptation goal \(g_{a}\) is set to reduce this cost below the threshold again.

The adaptation plan used in this experiment changes two parameters of the information acquisition process in referral networks (discussed in Sect. 4.1.3): the branching factor (number of referrers directly contacted) and the referral length threshold (the maximum number of referrers in a referral path). In this plan, an agent first reduces the referral length and then reduces the branching factor, thus reducing the costs involved. Table 3 presents the settings used in Experiment B.
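A possible reading of this plan is sketched below in Python. It is an illustration only, not the AgentSpeak plan of the prototype. The names `ReferralConfig`, `reputation_cost` and `adapt_referrals` are hypothetical, as are the lower bounds of 1. The sketch also assumes that the referral length is reduced to its minimum before the branching factor is touched, which is one interpretation of "first ... and then".

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class ReferralConfig:
    branching_factor: int  # number of referrers contacted directly
    referral_length: int   # maximum number of referrers in a referral path


def reputation_cost(c_comm: float, c_info: float) -> float:
    """C_rep: communication costs plus the price paid for information."""
    return c_comm + c_info


def adapt_referrals(cfg: ReferralConfig, c_rep: float,
                    application_threshold: float,
                    min_length: int = 1,
                    min_branching: int = 1) -> ReferralConfig:
    """Return the configuration after one monitoring cycle."""
    if c_rep < application_threshold:
        return cfg  # monitoring goal holds, nothing to adapt
    if cfg.referral_length > min_length:
        # First shorten the referral paths.
        return replace(cfg, referral_length=cfg.referral_length - 1)
    if cfg.branching_factor > min_branching:
        # Then contact fewer referrers directly.
        return replace(cfg, branching_factor=cfg.branching_factor - 1)
    return cfg  # nothing left to reduce
```

Each call removes at most one unit from one parameter, so the number of referrers used decreases gradually over successive monitoring cycles.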

Figure 14 shows the results for Agent 1 with and without the use of the adaptation plan. After the first change in the cost of reputation information (after 200 iterations), the agent using the adaptation plan gradually reduces the number of referrers used, while the other suffers the loss of utility due to the increased costs. In this case, the level of availability and the credibility of referrers are enough to allow this reduction in the number of referrers without a significant loss of utility. The jagged nature of both lines shows the variance of the availability and credibility distributions. The agent using the adaptation plan presents a lower variance in its results, as shown by the confidence interval band. The values in Fig. 14 were scaled to an interval of \([0, 100]\).

The cost of reputation information for each agent is shown in Fig. 15 in three settings: no adaptation, adaptation when costs reach 15 % of the earned utility, and adaptation when costs reach 5 % of the earned utility. The error bars indicate the 95 % confidence interval for the mean. Naturally, Agent 4 has higher costs since it uses more referrers. Even when it adapts, it takes longer to reach a lower number of referrers. Consequently, it accumulates more costs. For agents that adapt earlier, when the cost is still low (at 5 %), the overall cost of reputation information is also lower.

By using the meta-model to reason about the number of referrers used in the reputation information acquisition process, the agents were able to reduce the costs involved without a significant loss of utility. Considering the evaluation criteria proposed in Sect. 6.1.2, the strategy to adapt earlier was the most successful.

8 Conclusions

In this paper we presented a meta-model for adaptive use of trust and reputation in dynamic multi-agent systems. We avoid assumptions of a stable environment, since it does not reflect the true dynamics of a distributed, open MAS, where aspects such as fluctuations in the cost of communication and the occurrence of failures can affect trust among agents. The main contributions of this model are that it:

- allows the agent to autonomously reason, in a model-independent way, about trust and reputation and to adapt the models to better suit the system’s conditions;
- allows the introduction of adaptiveness in models that did not consider their use in dynamic environments;
- proposes a domain-independent representation of environmental aspects that affect trust in dynamic systems;
- proposes model-independent evaluation criteria to be used in the adaptation process.

The proposed meta-model was successfully used with two models in a dynamic setting. By mapping concrete components of trust and reputation models to a meta-model, agents were able to reason at the meta-level, in a model-independent way, about their trust and reputation mechanisms. This allows the agents to react to changes in the environment that affect their use of these mechanisms, by choosing and executing one of the available adaptation plans.

The experimental results show the importance of the adaptation model as a complement to the currently proposed models. Agents using the meta-model were able to adapt their models to the environmental conditions and improve the overall utility. Agents using static configurations, on the other hand, had poorer results.

In Marsh’s model, excessive idleness due to mistrust in a high operating cost environment was avoided by adjusting the cooperation threshold without incurring excessive loss of utility as a result of the increased risk. In FIRE, the cost of information acquisition was adjusted according to changes in the communication costs, without excessive impact on the reliability of reputation evaluation. While these are just two possible adaptation plans, they illustrate the power of such an approach. This agrees with the view of Raja and Lesser [24] that the use of “efficient and inexpensive meta-level control which reasons about the costs and benefits of alternative computations leads to improved agent performance in resource-bounded environments”.

Further research should improve the capacity to identify and react to changes in the environment. We plan to experiment with learning methods for discovering new adaptation plans. As the number of available models, and of their possible configurations, increases, so does the diversity of options for the adaptation process. We also intend to experiment with multi-model composition, by combining components from different models into one hybrid model. For instance, the credibility model could be switched depending on the type of reputation information available. If the interactions are binary in nature (only complete success or failure), a credibility model based on the beta distribution, such as BRS [15], could be employed, without modifying the other components of the original model. Different algorithms for reputation information acquisition could also be used interchangeably. This kind of composition would be possible since the meta-model elements abstract the actual implementation of the TRM, although this would require a more explicit solution to interoperability issues.
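To make the beta-based example concrete, the sketch below computes the expected value of a beta distribution parameterised by counts of binary outcomes, which is the usual basis of beta-based reputation measures. The function name is hypothetical, and whether such a component would be plugged into a hybrid model in exactly this form is an assumption, since this composition is left as future work.

```python
def beta_expected_value(positive: int, negative: int) -> float:
    """Expected value of Beta(positive + 1, negative + 1).

    positive and negative are the numbers of successful and failed binary
    interactions observed. With no observations the result is 0.5.
    """
    if positive < 0 or negative < 0:
        raise ValueError("outcome counts must be non-negative")
    return (positive + 1) / (positive + negative + 2)
```

For example, eight successes and two failures give an expected value of 0.75, while an agent with no recorded interactions is rated at 0.5.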

References

Bordini, R. H., Hübner, J. F., & Wooldridge, M. (2007). Programming multi-agent systems in AgentSpeak using Jason. Hoboken: Wiley.

Burnett, C., Norman, T., & Sycara, K. (2010). Bootstrapping trust evaluations through stereotypes. In Proceedings of the 9th International Conference on Autonomous Agents and Multiagent Systems (Vol. 1, no. 1, pp. 241–248).

Castelfranchi, C., & Falcone, R. (2001). Social trust: A cognitive approach. In C. Castelfranchi & Y. H. Tan (Eds.), Trust and deception in virtual societies (pp. 55–90). Dordrecht: Kluwer Academic Publishers.

Dignum, V., Vázquez-Salceda, J., & Dignum, F. (2005). Omni: Introducing social structure, norms and ontologies into agent organizations. In Programming Multi-Agent Systems (pp. 181–198). Berlin: Springer.

Esfandiari, B., & Chandrasekharan, S. (2001). On how agents make friends: Mechanisms for trust acquisition. In Proceedings of the Fourth Workshop on Deception, Fraud and Trust in Agent Societies (Vol. 222).

Ferber, J., & Gutknecht, O. (1998). A meta-model for the analysis and design of organizations in multi-agent systems. In International Conference on Multi Agent Systems (pp. 128–135). IEEE Comput. Soc.

Fullam, K. K. (2007). Adaptive trust modeling in multi-agent systems: Utilizing experience and reputation. Doctoral thesis, University of Texas.

Fullam, K. K., & Barber, K. S. (2007). Dynamically learning sources of trust information: Experience vs. reputation. In Proceedings of the 6th International Conference on Autonomous Agents and Multiagent Systems (Vol. 5, pp. 1055–1062).

Griffiths, N. (2005). Task delegation using experience-based multi-dimensional trust. In Proceedings of the Fourth International Joint Conference on Autonomous Agents and Multiagent Systems—AAMAS’05 (pp. 489–496). New York, NY: ACM.

Griffiths, N. (2006). A fuzzy approach to reasoning with trust, distrust and insufficient trust. In M. Klusch, M. Rovatsos, & T. R. Payne (Eds.), Cooperative Information Agents X (Lecture Notes in Computer Science, Vol. 4149, pp. 360–374). Berlin: Springer.

Hermoso, R., Billhardt, H., & Ossowski, S. (2010). Role evolution in open multi-agent systems as an information source for trust. In Proceedings of the 9th International Conference on Autonomous Agents and Multiagent Systems (Vol. 1, pp. 217–224).

Huynh, T. D. (2006). Trust and reputation in open multi-agent systems. Doctoral thesis, University of Southampton.

Huynh, T., & Jennings, N. (2006). Certified reputation: How an agent can trust a stranger. In The Fifth International Joint Conference on Autonomous Agents and Multiagent Systems (pp. 1217–1224).