Abstract

One of the most important signals to assess respiratory function, especially in patients with sleep apnea, is airflow. A convenient method to estimate airflow is based on analyzing tracheal sounds and movements. However, this method requires accurate identification of respiratory phases. Our goal is to develop an automatic algorithm to analyze tracheal sounds and movements to identify respiratory phases during sleep. Data from adults with suspected sleep apnea who were referred for in-laboratory sleep studies were included. Simultaneously with polysomnography, tracheal sounds and movements were recorded with a small wearable device attached to the suprasternal notch. First, an adaptive detection algorithm was developed to localize the respiratory phases in tracheal sounds. Then, for each phase, a set of morphological features from sound energy and tracheal movement were extracted to classify the localized phases into inspirations or expirations. The average error and time delay of detecting respiratory phases were 7.62% and 181 ms during normal breathing, 8.95% and 194 ms during snoring, and 13.19% and 220 ms during respiratory events, respectively. The average classification accuracy was 83.7% for inspirations and 75.0% for expirations. Respiratory phases were accurately identified from tracheal sounds and movements during sleep.

Introduction

Airflow is one of the main physiological signals used to assess the respiratory system and diagnose sleep apnea.25 Sleep apnea occurs due to full or partial cessation of airflow during sleep.11 Airflow is commonly measured using a nasal cannula or a pneumotachometer. However, the mask connected to the pneumotachometer is uncomfortable, can change the normal pattern of breathing, and can make sleeping difficult.8 With growing interest in developing portable devices to monitor sleep apnea at home, ease of use in non-laboratory settings is an important consideration. Nasal cannulae are inconvenient for users and sensitive to airflow leakage and movement, which are particularly difficult to control at home and can reduce accuracy. Moreover, nasal cannulae and pneumotachometers can be costly, as they contain several parts that are disposable or need to be sterilized after each use. Hence, it is important to explore other modalities for accurate and convenient estimation of airflow, especially during sleep and in patients with sleep apnea.

An alternative way of estimating respiratory airflow is to analyze respiratory sounds such as tracheal sounds or lung sounds, which can be recorded over the suprasternal notch or the chest, respectively. Lung sounds are usually quiet during expiration, which makes it easy to identify respiratory phases. However, recording lung sounds can be uncomfortable during sleep, and lung sounds have lower amplitude than tracheal sounds during shallow breathing.38 In contrast, tracheal sounds are more convenient to record and have higher amplitude during both inspiration and expiration.27

Previous studies have shown that the energy of tracheal sounds is strongly related to the respiratory airflow.10,13,28,31,39,34,36 Such a relationship between flow and sound energy is different during inspirations compared to expirations in terms of amplitude and also in polarity.15,1,33 While airflow is positive/negative during inspirations/expirations, this polarity does not exist in tracheal sound energy, which is always positive. Hence, accurate estimation of airflow from tracheal sound requires respiratory phase detection,2 which includes detecting the onset of respiratory phases (localization) and labeling each phase as inspiration or expiration (identification).

There have been a few studies on respiratory phase detection from tracheal sounds. In some studies, breath localization in sound signals was performed manually,15,34,33 which is tedious for overnight sleep screening. Also, during overnight recording, tracheal sound features can change due to sleeping posture, sleep stage, the presence of snoring sounds, and partial or complete collapse of the pharyngeal airway. The presence of various noises such as swallowing, background noise, and body movement makes it more challenging to detect respiratory phases during sleep than under controlled conditions during wakefulness. Along with tracheal sounds, respiratory related movements can be recorded over the trachea using an accelerometer.23 During inspiration and expiration, the pharyngeal wall under the suprasternal notch moves up and down. Such movements, referred to as tracheal movements in this paper, can be recorded over the skin by attaching an accelerometer. However, the accelerometer signal is very sensitive to body and neck motions, and severe snoring can distort its shape. Thus, tracheal movements alone may not be accurate enough to detect the respiratory phases and require additional information from tracheal sounds. The aim of this study is to identify respiratory phases during overnight sleep screening using tracheal sounds and movements.

Materials and Methods

Study Participants and Protocol

Adult individuals aged 18 years and above with suspected sleep breathing disorder who were referred to the sleep laboratory of Toronto Rehabilitation Institute were recruited for this study. The protocol was approved by the Research Ethics Board of the University Health Network (IRB #: 15-8967). All participants gave written consent prior to participation in the study. Sixty-eight individuals agreed to participate in the study. Data recorded from 6 out of 68 participants were excluded due to the misplacement of the sensors resulting in poor quality of sound signal.

Data Collection

Participants underwent overnight in-laboratory polysomnography (PSG) using Embla® N7000/S4500 (Natus Medical Incorporated). Electroencephalograms were recorded using standard surface electrodes. Airflow was monitored by nasal cannula, thoracoabdominal movements using respiratory inductance plethysmography, and arterial oxyhemoglobin saturation (SaO2) by pulse oximetry. Sleep stages, arousals, apneas and hypopneas were annotated according to the American Academy of Sleep Medicine standard.3 Apneas and hypopneas were defined as more than 90% and 30% drops in airflow or respiratory effort for a duration of more than 10 seconds, respectively.3 Additionally, hypopneas had to be associated with arousals or a decrease in SaO2 of more than 3%.

Simultaneously with PSG, respiratory sounds and respiratory related movements over the trachea were recorded with a small wearable device, called the Patch.30 The Patch was developed by our team and includes a small microphone and a three-dimensional accelerometer. The Patch was attached over the suprasternal notch with double-sided tape. It records respiratory sound and respiratory related movement at sampling rates of 15 kHz and 60 Hz, respectively.

Data Analysis

The recorded signals were processed and analyzed using Matlab (2016b, MathWorks, Natick, MA) software.

Preprocessing: The signal recorded by the microphone attached over the trachea contains several sounds with overlapping frequency content, such as low-frequency muscle movement (about 25 Hz),26 breathing sounds (50–4000 Hz),5 heart sounds (20–500 Hz),29 snoring sounds (20–1500 Hz),18 and high-frequency ambient noise (> 2000 Hz).29 To extract the breathing sounds and remove the low- and high-frequency noise, the recorded tracheal sound was filtered using a zero-phase fifth-order Butterworth bandpass filter with a passband of 70–2000 Hz,37 which includes the main frequency components of tracheal respiratory sounds.35 Tracheal sound energy (TSEng) was calculated as the logarithm of the variance of the bandpass-filtered signal within a moving window of 20 ms length and 75% overlap.13,28,33
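The windowed log-variance computation described above can be sketched as follows. This is a minimal illustration, not the authors' Matlab code: the function name `tracheal_sound_energy`, the decibel scaling (10·log10) and the small `eps` guard are assumptions, and the input is assumed to be already bandpass filtered.

```python
import math


def tracheal_sound_energy(signal, fs=15000, win_ms=20.0, overlap=0.75):
    """Log-variance of `signal` in sliding windows (one value per window).

    `signal` is assumed to be the bandpass-filtered tracheal sound.
    """
    win = int(round(fs * win_ms / 1000.0))            # 300 samples at 15 kHz
    hop = max(1, int(round(win * (1.0 - overlap))))   # 75 samples for 75% overlap
    eps = 1e-12  # assumption: guards log(0) on perfectly flat windows
    energy = []
    for start in range(0, len(signal) - win + 1, hop):
        frame = signal[start:start + win]
        mean = sum(frame) / win
        var = sum((x - mean) ** 2 for x in frame) / win
        # The text only says "logarithm"; the dB scale used here is an assumption.
        energy.append(10.0 * math.log10(var + eps))
    return energy
```

With these settings, each TSEng sample advances by 75 audio samples, so the energy signal has an effective rate of 200 Hz.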

Heart sound removal involves two steps: localizing the heart sounds and removing their effects from TSEng. The spectral content of heart sounds is typically below 150 Hz.29 Thus, to localize heart sounds, the breathing components of the recorded tracheal sounds were attenuated using a bandpass filter with a frequency range of 20–150 Hz. Then, the peaks in the envelope of the filtered signal were detected. To remove the effects of heart sounds from TSEng, the localized heart sound segments were replaced by cubic interpolation of TSEng in the adjacent segments.35

Snoring sounds are another common noise superimposed with breathing sounds during sleep. Snoring sounds usually occur during respiratory phases and not during transitions between respiratory phases. Therefore, the presence of snoring sounds is not expected to affect our proposed algorithm to detect respiratory phases. As a result, snoring sounds were not removed from the recorded tracheal sounds. However, we investigated the performance of our proposed method during periods with snoring sounds to ensure its validity.

Our proposed method for respiratory phase identification has two main steps: to localize the respiratory phase; and to classify each phase into inspiration or expiration (Fig. 1).

Respiratory phase localization: Energy of tracheal sound is directly related to the amount of airflow.28,36 During inspiration or expiration, the surge of airflow through the trachea leads to higher levels of energy in tracheal sound. In contrast, during the breathing pauses between respiratory phases, there is no flow of air. Thus, the microphone attached over the trachea records only the background noise with lower level of energy. Therefore, breathing pauses before the onset of respiratory phases appear as local minima in sound energy (Fig. 2a and b). On the other hand, similar to the chest movements during breathing, tracheal movements can be sensed by an accelerometer placed over the trachea. During inspiration and expiration, the tracheal movement signal fluctuates with upward and downward movements of the trachea. Accordingly, a local minimum or maximum appears at the onset of these movements (Fig. 2c). An automatic algorithm was used to detect the onset of respiratory phases through analyzing tracheal sound and movement.

Example traces of data recorded during sleep, demonstrating (a) apnea marked by complete cessation of airflow, (b) hypopnea with a reduction in airflow rate, (c) severe continuous snoring, and (d) milder snoring. The panels include the airflow signal recorded by nasal pressure, the tracheal sound signal recorded by the Patch, the filtered movement data in the anteroposterior direction recorded by the Patch, and the extracted tracheal sound energy with the corresponding segments during inspiration, expiration and silent periods. In (c) and (d), the unfiltered movement signal is shown to better demonstrate the impact of snoring. In (c), snoring appears in every inspiration and can be recognized from the (unfiltered) movement signal. Snoring has also distorted the filtered movement signal, which becomes out of phase with airflow. In (d), the first inspiration shows a snoring pattern that is apparently not intense enough to affect the filtered movement signal. The silent segments were detected by comparing the energy of each segment with the energy of the background noise. Note that the sound energy is positive for both inspiration and expiration phases.

To detect the onsets, the tracheal sound energy (TSEng) was first smoothed using a zero-phase fifth-order Butterworth low-pass filter with a 2 Hz cut-off frequency. A K-means algorithm12 was employed to cluster the samples of TSEng into 4 groups, and the group with the lowest mean value was chosen. This group forms a neighborhood around each local minimum. Within each neighborhood, the point with the lowest power represents the respiratory phase onset. However, this estimate is not precise because of baseline changes in tracheal sound during sleep, likely caused by snoring sounds or respiratory events. Thus, the set of local minima (SLV) obtained by clustering was further revised using complementary information from tracheal movement.
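The clustering step can be illustrated with a small one-dimensional K-means. This is a sketch under stated assumptions: the helper names `kmeans_1d` and `candidate_onsets`, the evenly spaced initial centers and the iteration limit are illustrative choices, and the input is assumed to be the already smoothed TSEng.

```python
def kmeans_1d(values, k=4, iters=50):
    """Plain k-means on scalars; returns (centers, labels)."""
    lo, hi = min(values), max(values)
    # Assumption: deterministic start with centers spread evenly over the range.
    centers = [lo + (hi - lo) * (i + 0.5) / k for i in range(k)]
    labels = [0] * len(values)
    for _ in range(iters):
        labels = [min(range(k), key=lambda c: abs(v - centers[c])) for v in values]
        new_centers = []
        for c in range(k):
            members = [v for v, lab in zip(values, labels) if lab == c]
            new_centers.append(sum(members) / len(members) if members else centers[c])
        if new_centers == centers:
            break
        centers = new_centers
    return centers, labels


def candidate_onsets(energy, k=4):
    """Index of the minimum inside each run of lowest-cluster samples."""
    centers, labels = kmeans_1d(energy, k)
    low = min(range(k), key=lambda c: centers[c])
    onsets, run = [], []
    for i, lab in enumerate(labels):
        if lab == low:
            run.append(i)            # still inside a low-energy neighborhood
        elif run:
            onsets.append(min(run, key=lambda j: energy[j]))
            run = []
    if run:                          # neighborhood that reaches the end of the signal
        onsets.append(min(run, key=lambda j: energy[j]))
    return onsets
```

Each returned index is one candidate phase onset; the revision against the movement signal is a separate step.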

To revise SLV, the tracheal movement in the axial direction was low-pass filtered with a 0.7 Hz cut-off frequency, and the local minima and maxima of the movement signal were extracted. These points (STM) ideally represent the onsets of inspirations and expirations and were used to estimate the duration of breath phases. A local minimum was added to SLV if the time elapsed between two consecutive local minima was more than one and a half times the phase duration estimated from STM. Conversely, a local minimum was removed from SLV if the time elapsed between two consecutive local minima was less than half of the phase duration estimated from STM. Additionally, the area under the curve (AUC) and the height of the energy segment between two consecutive local minima were compared to those of the preceding segment. If the AUC ratio was less than a threshold (TAUC) and the height ratio was less than a threshold (Th), the phase was merged with the previous one. To determine the optimal values of TAUC and Th, the phase localization algorithm was applied to 10-min segments of data with stable breathing in NREM sleep, selected from three subjects randomly chosen from the groups with normal, moderate and severe AHI. The thresholds were then varied between 10 and 90%. The best phase localization performance, reported as training accuracy, was achieved with a TAUC of 40% and a Th of 25%. Using these data-driven values, validation accuracy was calculated over the remaining 59 subjects. The final version of SLV contains the locations of the power drops that segment the energy signal into a sequence of respiratory phases and silent parts.
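The timing and merging rules above can be expressed compactly. The sketch below only flags gaps rather than editing SLV, because the text does not say where an added minimum is placed. The function names, the use of the median to estimate phase duration from STM, and the assumption that AUC and height are positive quantities are all illustrative.

```python
import statistics


def estimate_phase_duration(extrema_times):
    """Median spacing (s) between consecutive movement extrema (STM).

    Using the median is an assumption; the text does not name the estimator.
    """
    gaps = [b - a for a, b in zip(extrema_times, extrema_times[1:])]
    return statistics.median(gaps)


def review_gaps(onset_times, phase_duration):
    """Label each gap between consecutive sound-energy minima (times in s)."""
    labels = []
    for t0, t1 in zip(onset_times, onset_times[1:]):
        gap = t1 - t0
        if gap > 1.5 * phase_duration:
            labels.append("missing_onset")    # a minimum should be added here
        elif gap < 0.5 * phase_duration:
            labels.append("spurious_onset")   # one of the two minima should go
        else:
            labels.append("ok")
    return labels


def should_merge(auc, height, prev_auc, prev_height, t_auc=0.40, t_h=0.25):
    """True when a segment is small enough, relative to the preceding one,
    to be merged into it. Defaults are the reported TAUC and Th values."""
    return (auc / prev_auc) < t_auc and (height / prev_height) < t_h
```

For example, with an estimated phase duration of 2 s, gaps of 0.5 s and 4.5 s would be flagged as spurious and missing, respectively.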

Silent segments were defined as segments for which TSEng is close to the energy of the background noise.38 Since the energy of the background noise can change over time, the average value of TSEng at the preceding 5 local minima was used as the noise energy. If the difference between the average energy of a segment and that of the background noise was less than 2 dB, the segment was considered silent; otherwise, its morphology was further processed to classify the segment as inspiration or expiration.
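The adaptive silence test reduces to a comparison against a running noise estimate. A minimal sketch, assuming TSEng values are expressed in dB and using the hypothetical function name `is_silent`:

```python
def is_silent(segment_energy_db, preceding_minima_db, margin_db=2.0, n_minima=5):
    """Compare a segment's mean energy with the adaptive noise floor.

    The noise floor is the mean TSEng at the last `n_minima` local minima
    that precede the segment.
    """
    recent = preceding_minima_db[-n_minima:]
    noise = sum(recent) / len(recent)
    mean_energy = sum(segment_energy_db) / len(segment_energy_db)
    return (mean_energy - noise) < margin_db
```

Because the noise estimate is recomputed from the most recent minima, the threshold follows slow changes in background noise over the night.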

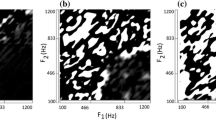

Respiratory phase classification: To classify each respiratory phase as inspiration or expiration, three criteria (logics) were extracted and combined in a voting strategy. The first logic, termed movement logic, was extracted from the tracheal movement signal recorded by the accelerometer. During each respiratory phase, inspirations and expirations were marked based on the rising and falling slopes of the movement signal, respectively (Fig. 2c). The second logic, termed previous-phase logic, assumes that each inspiration is followed by an expiration. The third logic, termed shape logic, is based on the fact that the pattern of TSEng differs between inspiration and expiration.15 Therefore, five morphological features related to the shape of each phase were extracted as follows (Fig. 3):

- Skewness: Negative of the skewness of TSEng, which shows the asymmetry in the shape of a curve. Skewness is zero for a bell-shaped signal such as normal inspiration and is positive for left skewed shapes such as expiration.

- Widthphase: The width of the TSEng envelope during each respiratory phase at the points where TSEng is equal to the average of TSEng.

- SlopeFall: The slope of the line fitted to the falling edge of the phase.

- AUC2/1: The ratio of the AUC of TSEng in the second half of the respiratory phase over that in the first half.

- AUC3/1: The ratio of the last third of the AUC over the first third of the AUC.
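Four of the features listed above can be sketched in a few lines (SlopeFall depends on the falling-edge localization and is left out here). This is an illustration under several assumptions that are not stated in the text: the energy is shifted so that the phase minimum is zero, skewness is computed by treating the curve as weights over the sample index, and the halves and thirds are symmetric index ranges. The name `shape_features` is hypothetical.

```python
def _auc(y):
    """Trapezoidal area under equally spaced samples (unit spacing)."""
    return sum((a + b) / 2.0 for a, b in zip(y, y[1:]))


def shape_features(tseng, dt=1.0):
    """Return Skewness, Widthphase, AUC2/1 and AUC3/1 for one phase."""
    n = len(tseng)
    if n < 4 or max(tseng) == min(tseng):
        raise ValueError("need at least 4 samples and a non-flat segment")
    base = min(tseng)
    y = [v - base for v in tseng]     # assumption: energy above the phase minimum
    eps = 1e-12                       # avoids division by zero

    # Skewness of the curve shape: y acts as weights over the sample index.
    total = sum(y)
    mu = sum(w * i for i, w in enumerate(y)) / total
    m2 = sum(w * (i - mu) ** 2 for i, w in enumerate(y)) / total
    m3 = sum(w * (i - mu) ** 3 for i, w in enumerate(y)) / total
    skewness = -m3 / (m2 ** 1.5 + eps)   # sign flipped, as in the definition

    # Width at the mean-energy level: first to last sample at or above it.
    mean_level = total / n
    above = [i for i, v in enumerate(y) if v >= mean_level]
    width = (above[-1] - above[0]) * dt

    # Symmetric halves and thirds, counted in sampling intervals.
    h, t = (n - 1) // 2, (n - 1) // 3
    auc21 = _auc(y[n - 1 - h:]) / (_auc(y[:h + 1]) + eps)
    auc31 = _auc(y[n - 1 - t:]) / (_auc(y[:t + 1]) + eps)
    return {"Skewness": skewness, "Widthphase": width,
            "AUC2/1": auc21, "AUC3/1": auc31}
```

Under these assumptions, a symmetric phase gives a skewness of zero and both AUC ratios equal to one, while a phase whose energy peaks late gives values above those levels.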

To localize the falling edge, the breath phase was first partitioned into two segments: one segment with the breathing pattern and the other with lower power, containing background noise. This was performed using a thresholding technique based on Lloyd quantization20 to separate the points of a breath phase into two groups. The Lloyd algorithm clusters the points of a segment into partitions according to their closeness to the center of each partition (its average value) while preserving their continuous geometrical shape. The group with the lower mean value was considered a short pause in breathing and was used to calculate the background noise power. The other group was taken to contain the breathing information. Then, the falling edge of a breath phase was extracted within the breathing part by taking the samples from the peak to the end.
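A two-level Lloyd quantizer and the subsequent falling-edge fit can be sketched as below. This is a simplified illustration: it thresholds on amplitude only and does not enforce the contiguity constraint mentioned in the text, the function names are hypothetical, and the sign convention of the slope is not stated in the text, so the signed least-squares slope is returned.

```python
def lloyd_two_level_threshold(values, iters=100, tol=1e-9):
    """Iterate threshold = midpoint of the two group means until stable."""
    thr = sum(values) / len(values)
    for _ in range(iters):
        low = [v for v in values if v <= thr]
        high = [v for v in values if v > thr]
        if not high:                 # all samples equal: nothing to split
            break
        new_thr = 0.5 * (sum(low) / len(low) + sum(high) / len(high))
        if abs(new_thr - thr) < tol:
            break
        thr = new_thr
    return thr


def falling_edge_slope(tseng, dt=1.0):
    """Least-squares slope from the peak to the end of the breathing part."""
    thr = lloyd_two_level_threshold(tseng)
    peak = max(range(len(tseng)), key=lambda i: tseng[i])
    end = peak
    while end + 1 < len(tseng) and tseng[end + 1] > thr:
        end += 1                     # stay inside the higher-energy group
    edge = tseng[peak:end + 1]
    if len(edge) < 2:
        return 0.0
    t = [i * dt for i in range(len(edge))]
    t_bar = sum(t) / len(t)
    y_bar = sum(edge) / len(edge)
    num = sum((ti - t_bar) * (yi - y_bar) for ti, yi in zip(t, edge))
    den = sum((ti - t_bar) ** 2 for ti in t)
    return num / den
```

Samples at or below the threshold form the lower group, whose mean could serve as the background noise power for that phase.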

Based on the morphology of inspirations and expirations, we expect that all the extracted features would have smaller values during expiration than inspiration. The morphological features were extracted for each respiratory phase and compared with those of the preceding phase. If more than three of the features were higher for the respiratory phase compared to those extracted from the preceding phase, the shape logic was set as inspiration; otherwise it was marked as expiration. Finally, voting was applied to the three logics to determine the type of the respiratory phase. The performance of the phase identification algorithm was further analyzed using different combinations of morphological features (see the Appendix).
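The three logics and the vote can be sketched as follows. The function names are hypothetical, and using the net change of the movement signal over the phase as a proxy for its slope is an assumption.

```python
def shape_logic(features, prev_features):
    """Inspiration if more than three features exceed the preceding phase's."""
    higher = sum(1 for name in features if features[name] > prev_features[name])
    return "inspiration" if higher > 3 else "expiration"


def movement_logic(movement_segment):
    """Rising movement marks inspiration, falling movement marks expiration.

    Assumption: the net change over the phase stands in for the slope.
    """
    rising = movement_segment[-1] > movement_segment[0]
    return "inspiration" if rising else "expiration"


def previous_phase_logic(prev_label):
    """Inspirations and expirations are assumed to alternate."""
    return "expiration" if prev_label == "inspiration" else "inspiration"


def classify_phase(features, prev_features, movement_segment, prev_label):
    """Majority vote over the three equally weighted logics."""
    votes = [
        shape_logic(features, prev_features),
        movement_logic(movement_segment),
        previous_phase_logic(prev_label),
    ]
    return "inspiration" if votes.count("inspiration") >= 2 else "expiration"
```

With three binary voters a tie is impossible, so no tie-breaking rule is needed.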

Validation and Statistical Analysis

Statistical analyses were performed using R (i386 3.4.1) software. After examining the normality of the data with the Shapiro-Wilk test, we used the paired t-test for normally distributed data to compare the morphological features extracted from inspiration and expiration. Otherwise, the paired Wilcoxon signed-rank test was used. Moreover, for each respiratory phase, repeated measures analysis of variance (ANOVA) was performed to compare the morphological features at different airflow levels and sleep stages. Airflow levels were categorized relative to the average of the airflow signal during the first 5 minutes of data, when the subject was awake before falling asleep. Accordingly, four groups were defined: normal breathing (flow rates of >70% of the awake airflow), mild flow reduction (50–70%), moderate flow reduction (10–50%), and shallow breathing (<10%). To compare the effects of sleep stages, data during wakefulness, rapid eye movement (REM) sleep, and non-REM stages 1, 2, and 3 (N1, N2, N3, respectively) were considered. Tukey’s post hoc test was used to detect variables that were significantly different. All annotations related to sleep stages and body postures were extracted from PSG. Nasal pressure was used to determine the airflow rates. A p value of less than 0.05 was considered statistically significant.
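The airflow grouping amounts to a simple lookup against the awake baseline. A minimal sketch with a hypothetical function name; how values exactly on a boundary (70%, 50%, 10%) are assigned is an assumption, since the text does not specify it.

```python
def airflow_level(flow, awake_baseline):
    """Map a flow amplitude to one of the four groups used in the analysis."""
    pct = 100.0 * flow / awake_baseline
    if pct > 70.0:
        return "normal breathing"
    if pct >= 50.0:          # assumption: boundary values fall in the lower group
        return "mild flow reduction"
    if pct >= 10.0:
        return "moderate flow reduction"
    return "shallow breathing"
```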

The performance of the respiratory phase localization algorithm was assessed by calculating the normalized mean error (NME) between the number of phases detected overnight using the Patch and the number detected from PSG-based thoracoabdominal movements as the reference signal. Furthermore, the average detection time delay between the respiratory phases detected by the Patch and the reference respiratory phases was calculated. NME and detection time delay were reported during periods of normal breathing, snoring segments, and respiratory events (apneas and hypopneas). Since thoracoabdominal movements can be sensitive to motion artifacts, nasal airflow was used as the gold standard for validating the classification algorithm. Nasal airflow has been validated against a face mask in sleep assessment.32 Moreover, in our study protocol, we used a large nasal cannula and taped it to the face to minimize leakage. The classification algorithm was evaluated at different airflow levels, during wakefulness and sleep stages, and in different body postures. The performance of the algorithm was analyzed in terms of classification accuracy.
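The text does not give formulas for the two localization metrics, so the following sketch is one plausible reading, stated as an assumption: NME as the absolute difference in phase counts relative to the reference count, and delay as the mean absolute time difference between each reference onset and its nearest detected onset. Both function names are hypothetical.

```python
def normalized_mean_error(n_detected, n_reference):
    """Assumed definition: |detected - reference| / reference, in percent."""
    return 100.0 * abs(n_detected - n_reference) / n_reference


def mean_onset_delay(detected_times, reference_times):
    """Assumed definition: mean absolute offset (s) between each reference
    onset and the nearest detected onset."""
    delays = [min(abs(d - r) for d in detected_times) for r in reference_times]
    return sum(delays) / len(delays)
```

For example, 93 detected phases against 100 reference phases would give an NME of 7% under this definition.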

Results

Data Demographics

Sixty-two subjects (age: 51 ± 15 years, 30 females) with a body mass index of 29.0 ± 5.5 kg/m² were considered for this study. Demographics and sleep structure of the subjects are presented in Table 1.

Respiratory Phase Identification

Figure 2 demonstrates an example of the respiratory phases identified by the proposed algorithms. The NME of the respiratory phase localization algorithm was 7.62% (3.72–14.53) during normal breathing, 8.95% (4.96–15.32) during periods of snoring and 13.19% (5.33–18.83) during respiratory events (Fig. 4). The delay of the algorithm in detecting the onset of each respiratory phase was 181 ms (131–251) during normal breathing, 194 ms (124–292) during snoring segments, and 220 ms (179–268) during respiratory events. To validate the values of TAUC and Th, the training accuracy for detecting inspirations (79.46 ± 20.56%) and expirations (75.81 ± 14.97%) was compared to the test accuracy for inspirations (84.69 ± 8.84%) and expirations (76.93 ± 10.11%). Over 62 subjects, we analyzed 715,334 breaths; we successfully detected 666,002 (93%) and missed 49,332 (7%). On average, there were 11,727 ± 2,064 breaths for each subject; we detected 10,918 ± 2,059 of them and missed 809 ± 881. The results of validating the phase identification algorithm with different subsets of morphological features are reported in the Appendix.

Statistical Analyses

All morphological features were significantly larger during inspiration than during expiration (Fig. 5): Skewness (0.42 ± 0.23 vs. 0.05 ± 0.27, p < 0.001), Widthphase (1.30 ± 0.20 vs. 1.20 ± 0.22, p < 0.01), SlopeFall (0.012 ± 0.004 vs. 0.011 ± 0.003, p < 0.05), AUC2/1 (1.67 ± 0.34 vs. 0.68 ± 0.13, p < 0.001), and AUC3/1 (2.31 ± 0.70 vs. 0.63 ± 0.16, p < 0.001).

During both inspiration and expiration phases, the values of all morphological features were similar during normal breathing and mild flow reduction. The features in these two flow levels were larger than those during moderate reduction in airflow and very shallow breathing. Moreover, during inspiration, the values of all morphological features were larger during moderate reduction in airflow than those during shallow breathing. During expiration, the values of all morphological features, except for AUC3/1, were larger during moderate airflow reduction than those during shallow breathing (Fig. 6a).

Separate comparison of the average values of different morphological features in inspirations and expirations in terms of (a) different airflow levels defined as normal breathing (>70%), mild flow reduction (50–70%), moderate flow reduction (10–50%) and shallow breathing (<10%), and (b) wakefulness and different sleep stages. Data is presented as mean ± STD. All the values were normalized by supine wakefulness data for each subject. *p < 0.05, ** p < 0.01, *** p < 0.001.

Respiratory Phase Classification

Table 2 shows the accuracy of respiratory phase classification during inspiration and expiration for different flow levels, sleep stages and body positions. For each of these variables and each respiratory phase, the accuracy in each group was compared with that during normal breathing, wakefulness and the supine position, respectively. For inspiration, accuracy was significantly lower during shallow breathing (p < 0.001). For expiration, accuracy was significantly lower during moderate flow reduction (p < 0.001) and shallow breathing (p < 0.001) than during normal breathing. During inspiration, accuracy was significantly higher during N2 (p = 0.011) and N3 (p < 0.001) than during wakefulness. For expiration, accuracy in all sleep stages except N1 was significantly higher than during wakefulness. Accuracy was significantly higher in lateral postures than in the supine posture. The overall classification accuracy of the proposed algorithm based on both tracheal sounds and movements was 83.63% (80.42–88.77) during inspiration and 74.90% (69.44–80.87) during expiration. In contrast, the classification accuracy based on tracheal sound alone was 63.10% (56.93–67.25) during inspiration and 53.52% (51.08–54.56) during expiration. Similarly, the classification accuracy using only tracheal movements was 77.20% (71.69–88.91) during inspiration and 71.21% (65.60–79.11) during expiration. Note that the presented classification results were based on the phase identification algorithm, which was mostly based on analyzing the sound signal.

Discussion

The main contribution of this study is the development of the first automatic algorithm to detect inspiratory and expiratory phases during sleep in patients with sleep apnea using respiratory related sounds and movements. We have demonstrated that the proposed algorithm was able to 1) detect the onset of respiratory phases with a delay of less than 300 ms; 2) integrate the morphological features extracted from tracheal sounds and the direction of tracheal movement to classify respiratory phases into inspirations and expirations; and 3) achieve high classification accuracy in the presence of respiratory events and snoring segments. In our recent study, we showed reliable estimation of airflow shape using tracheal signals during sleep tests, which was based on the identification of respiratory phases.2 In this study, we present our approach to the identification of respiratory phases in detail.

Previous studies successfully differentiated inspirations from expirations during wakefulness using tracheal sounds,1,36,33 seismocardiogram signals,42,41 lung sounds,22,24 and the combination of lung sounds with tracheal sounds.19 However, analysis of tracheal sound recorded during a clinical sleep test is more challenging, especially in patients with sleep apnea, whose airflow level varies considerably due to the presence of respiratory events.

To address this challenge, we analyzed tracheal sounds combined with tracheal movements. The accelerometer over the trachea records the upward and downward movements of the trachea associated with inhalation and exhalation; herein the recorded signal is called the “movement signal”. However, the movement signal does not provide an accurate measure of the onset of each breathing phase and can be easily distorted by snoring and body movements (Fig. 2c). Thus, in this study, the movement signal was used only for a rough estimation of the breathing phase as part of the phase localization algorithm. On the other hand, tracheal sounds, as shown before,27 provide a much more accurate measure of the onset of each breathing phase. Thus, in this study, the analysis of tracheal sounds played a major role in the phase localization algorithm (Fig. 2). However, in the voting algorithm for phase identification, the shape logic extracted from tracheal sounds had the same weight as the movement logic and the previous-phase logic. Therefore, respiratory phase localization and identification were improved by analyzing both tracheal sounds and tracheal movements.

Our proposed localization algorithm is based on a novel approach to detect the onset of respiratory phases in overnight recordings through detecting breathing pauses from tracheal sound energy and revising them based on phase transitions in tracheal movement. Most previous studies on respiratory phase localization using tracheal sound were validated on a few subjects and only during wakefulness with controlled airflow rates or during simulated apnea.14,16 In a study during sleep,17 respiratory phases were detected in selected 10-minute segments of sound signals from normal breathing of 10 participants. In another study, respiratory phase localization was investigated using chest sound signals in 2 subjects during a seated position.40 In this paper, the phase localization algorithm was validated for the whole sleep data. Additionally, the algorithm performs adaptive assessment of the background noise level to differentiate respiratory phases from silent segments. The error of phase localization was higher during respiratory events due to: i) reduction in tracheal sound amplitude and the consequent reduction in signal to noise ratio; and ii) the increased noise levels in thoracoabdominal bands due to respiratory related movements. Nevertheless, the localization algorithm was able to determine the onset of each phase with delay <300 ms, which is small compared to duration of a respiratory phase (1.2–2.5 s).

To classify the detected respiratory phases into inspiration or expiration, the patterns of tracheal sound and movement were analyzed. Due to the distinct airflow patterns during different respiratory phases19,4,43 and the relationships between airflow and tracheal sound energy,5,35 it was expected that the sound energy pattern would be different during inspiration compared to expiration.33 This was statistically investigated by showing significant changes of morphological features between different respiratory phases in this study.

All the morphological features except Skewness were used as defined previously in Ref. 15. However, there are other differences between the detection algorithm presented in Ref. 15 and our algorithm. In this study, to extract the width of a respiratory phase (Widthphase), the average value of the sound energy was first calculated. Then, the duration of the phase was calculated at the level of the average energy. This was done because of the ambiguity in detecting the onset and termination of the phase, especially during respiratory events. Also, in contrast to Ref. 15, where the features of a phase were compared to those of the preceding and following phases, we compared each phase only with its preceding phase. Additionally, in this study, the direction of tracheal movement, which changes in relation to respiratory phases, was extracted. Using the logics extracted from tracheal movements and sounds, the classification algorithm was able to demonstrate high performance without the need for model training, which would add complexity to the algorithm.

The performance of classification algorithm was affected by airflow level. Although our algorithm showed high performance, the lowest accuracy was obtained during shallow breathing possibly due to lower signal to noise ratio. Additionally, based on the changes in the value of AUC2/1 and AUC3/1 during shallow breathing, inspirations and expirations resembled each other and were hard to differentiate.

Sleep stages also affected the performance of the algorithm. Compared to wakefulness, the pattern of breathing and the activity level of the pharyngeal dilator muscles differ during sleep and across sleep stages.7 In those with sleep apnea, very shallow breathing and respiratory events rarely occur in stage N3.6 Since the accuracy of the classification algorithm was lower during shallow breathing, its performance was further studied during wakefulness and across sleep stages. Higher accuracy was obtained in NREM sleep, especially in N3. Moreover, the differences in morphological features, particularly AUC2/1 and AUC3/1, were larger in N3, which suggests that inspirations and expirations are more separable in this stage. In terms of body posture, the accuracy was higher in the lateral position, in line with a lower chance of shallow breathing due to a lower susceptibility of the upper airway to collapse.9

This work has several limitations. First, we did not exclude any low-quality sound or movement segments that may have been caused by motion artifacts or loose attachment of the device. This could contribute to some of the discrepancies in our results. For phases during noisy segments in which the algorithm generated outlier values for morphological features or was not able to detect steadily falling or rising patterns, the respiratory phase was assumed to be inspiration; however, the algorithm was able to correct the labels automatically after a few phases. Second, the proposed algorithm was validated on a population with suspected sleep apnea; hence, further studies with a wider range of individuals are required. Third, hardware constraints prevented us from adding a second microphone to record lung sounds along with tracheal sounds. This would require more complex hardware and synchronization between the two microphones, which was out of the scope of this work. Fourth, we used the nasal cannula as the gold standard for validation, which could be less accurate during shallow breathing or mouth breathing. However, a previous study has shown comparable results for the nasal cannula and a face mask in assessing airflow during sleep.32 We did not control for mouth breathing in our population. While mouth breathing occurs in less than 12–15% of individuals during sleep,21 future studies may control for mouth breathing to increase validation accuracy. Finally, we have not developed algorithms to detect swallowing. However, some short swallowing sounds were merged with the previous respiratory phase.

In conclusion, the current study shows the feasibility of automatic localization and identification of respiratory phases during sleep using tracheal sounds and movements. The proposed algorithm can be employed in the development of wearable devices for robust detection of respiratory phases from tracheal sounds, which is the first step toward accurate and convenient estimation of airflow during sleep, especially in patients with sleep apnea.

References

Chuah, J. S., and Z. K. Moussavi, “Automated respiratory phase detection by acoustical means,” in Syst Cybernet Informatics (SCI) Conf, 2000, pp. 228-231.

Ghahjaverestan, N. M. et al., “Estimation of Respiratory Signals using Tracheal Sound and Movement,” ed: Eur Respiratory Soc, 2019.

Berry, R. B., R. Brooks, C. E. Gamaldo, S. M. Harding, C. Marcus, and B. Vaughn, “The AASM manual for the scoring of sleep and associated events,” Rules, Terminology and Technical Specifications, Darien, Illinois, American Academy of Sleep Medicine, 2012.

Broaddus, V.C. et al., Murray & Nadel’s Textbook of Respiratory Medicine E-Book. Elsevier Health Sciences, 2015.

Beck, R., G. Rosenhouse, M. Mahagnah, R. M. Chow, D. W. Cugell, and N. Gavriely. Measurements and theory of normal tracheal breath sounds. Ann. Biomed. Eng. 33(10):1344–1351, 2005.

Becker, H. F., et al. Breathing during sleep in patients with nocturnal desaturation. Am. J. Respir. Crit. Care Med. 159(1):112–118, 1999.

Carberry, J. C., A. S. Jordan, D. P. White, A. Wellman, and D. J. Eckert. Upper airway collapsibility (Pcrit) and pharyngeal dilator muscle activity are sleep stage dependent. Sleep 39(3):511–521, 2016.

Fleming, P. J., M. Levine, and A. Goncalves. Changes in respiratory pattern resulting from the use of a facemask to record respiration in newborn infants. Pediatr. Res. 16(12):1031, 1982.

George, C. F., T. Millar, and M. Kryger. Sleep apnea and body position during sleep. Sleep 11(1):90–99, 1988.

Golabbakhsh, M. Tracheal breath sound relationship with respiratory flow: Modeling, the effect of age and airflow estimation. Elec. & Comp. Engg.: University of Manitoba, 2004.

Guilleminault, C., A. Tilkian, and W. C. Dement. The sleep apnea syndromes. Annu. Rev. Med. 27(1):465–484, 1976.

Hartigan, J. A., and M. A. Wong. Algorithm AS 136: A k-means clustering algorithm. J. R. Stat. Soc. Ser. C 28(1):100–108, 1979.

Hossain, I., and Z. Moussavi, “Respiratory airflow estimation by acoustical means,” in Proceedings of the Second Joint 24th Annual Conference and the Annual Fall Meeting of the Biomedical Engineering Society, Engineering in Medicine and Biology, 2002, vol. 2: IEEE, pp. 1476-1477.

Hult, P., B. Wranne, and P. Ask. A bioacoustic method for timing of the different phases of the breathing cycle and monitoring of breathing frequency. Med. Eng. Phys. 22(6):425–433, 2000.

Huq, S., and Z. Moussavi. Acoustic breath-phase detection using tracheal breath sounds. Med. Biol. Eng. Comput. 50(3):297–308, 2012.

Jin, F., F. Sattar, and D. Y. Goh. An acoustical respiratory phase segmentation algorithm using genetic approach. Med. Biol. Eng. Comput. 47(9):941–953, 2009.

Kulkas, A., et al. Intelligent methods for identifying respiratory cycle phases from tracheal sound signal during sleep. Comput. Biol. Med. 39(11):1000–1005, 2009.

Lee, G.-S., et al. The frequency and energy of snoring sounds are associated with common carotid artery intima-media thickness in obstructive sleep apnea patients. Sci. Rep. 6:30559, 2016.

Li, H., and W. M. Haddad. “Optimal determination of respiratory airflow patterns using a nonlinear multicompartment model for a lung mechanics system,” Comput. Math. Methods Med. 2012. https://doi.org/10.1155/2012/165946.

Linde, Y., A. Buzo, and R. Gray. An algorithm for vector quantizer design. IEEE Trans. Commun. 28(1):84–95, 1980.

Martins, D. L. L., et al. The mouth breathing syndrome: prevalence, causes, consequences and treatments. A literature review. J. Surg. Clin. Res. 5(1):47–55, 2014.

Messner, E., M. Hagmüller, P. Swatek, F.-M. Smolle-Jüttner, and F. Pernkopf, “Respiratory airflow estimation from lung sounds based on regression,” in 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2017: IEEE, pp. 1123-1127.

Morillo, D. S., J. L. R. Ojeda, L. F. C. Foix, and A. L. Jiménez. An accelerometer-based device for sleep apnea screening. IEEE Trans. Inf. Technol. Biomed. 14(2):491–499, 2010.

Moussavi, Z. K., M. T. Leopando, H. Pasterkamp, and G. Rempel. Computerised acoustical respiratory phase detection without airflow measurement. Med. Biol. Eng. Comput. 38(2):198–203, 2000.

Nakano, H., et al. Validation of a single channel airflow monitor for screening of sleep-disordered breathing. Eur. Respir. J. 32:1060–1067, 2008.

Oster, G., and J. S. Jaffe. Low frequency sounds from sustained contraction of human skeletal muscle. Biophys. J. 30(1):119, 1980.

Penzel, T., and A. Sabil. The use of tracheal sounds for the diagnosis of sleep apnoea. Breathe 13(2):e37–e45, 2017.

Que, C.-L., C. Kolmaga, L.-G. Durand, S. M. Kelly, and P. T. Macklem. Phonospirometry for noninvasive measurement of ventilation: methodology and preliminary results. J. Appl. Physiol. 93(4):1515–1526, 2002.

Reichert, S., R. Gass, C. Brandt, and E. Andrès, “Analysis of respiratory sounds: state of the art,” Clinical medicine. Circulatory, respiratory and pulmonary medicine, vol. 2, p. CCRPM. S530, 2008.

Saha, S., et al. Apnea-hypopnea index (AHI) estimation using breathing Sounds, accelerometer and pulse oximeter. ERJ Open Res. 5(suppl 3):P63, 2019. https://doi.org/10.1183/23120541.sleepandbreathing-2019.P63.

Soufflet, G., et al. Interaction between tracheal sound and flow rate: a comparison of some different flow evaluations from lung sounds. IEEE Trans. Biomed. Eng. 37(4):384–391, 1990.

Thurnheer, R., X. Xie, and K. E. Bloch. Accuracy of nasal cannula pressure recordings for assessment of ventilation during sleep. Am. J. Respir. Crit. Care Med. 164(10):1914–1919, 2001.

Yadollahi, A., A. Montazeri, A. Azarbarzin, and Z. Moussavi. Respiratory flow–sound relationship during both wakefulness and sleep and its variation in relation to sleep apnea. Ann. Biomed. Eng. 41(3):537–546, 2013.

Yadollahi, A., and Z. M. Moussavi. A robust method for estimating respiratory flow using tracheal sounds entropy. IEEE Trans. Biomed. Eng. 53(4):662–668, 2006.

Yadollahi, A., and Z. M. Moussavi. A robust method for heart sounds localization using lung sounds entropy. IEEE Trans. Biomed. Eng. 53(3):497–502, 2006.

Yadollahi, A., and Z. M. Moussavi. Acoustical respiratory flow. IEEE Eng. Med. Biol. Mag. 1(26):56–61, 2007.

Yadollahi, A., and Z. Moussavi. Automatic breath and snore sounds classification from tracheal and ambient sounds recordings. Med. Eng. Phys. 32(9):985–990, 2010.

Yadollahi, A., and Z. Moussavi, “Measuring minimum critical flow for normal breath sounds,” in Engineering in Medicine and Biology Society, 2005. IEEE-EMBS 2005. 27th Annual International Conference of the, 2006: IEEE, pp. 2726-2729.

Yap, Y.L., and Z. Moussavi, “Acoustic airflow estimation from tracheal sound power,” in IEEE CCECE2002. Canadian Conference on Electrical and Computer Engineering. Conference Proceedings (Cat. No. 02CH37373), 2002, vol. 2: IEEE, pp. 1073-1076.

Yildirim, I., R. Ansari, and Z. Moussavi, “Automated respiratory phase and onset detection using only chest sound signal,” in 2008 30th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, 2008: IEEE, pp. 2578-2581.

Zakeri, V., A. Akhbardeh, N. Alamdari, R. Fazel-Rezai, M. Paukkunen, and K. Tavakolian. Analyzing seismocardiogram cycles to identify the respiratory phases. IEEE Trans. Biomed. Eng. 64(8):1786–1792, 2016.

Zakeri, V. and K. Tavakolian, “Identification of respiratory phases using seismocardiogram: A machine learning approach,” in 2015 Computing in Cardiology Conference (CinC), 2015: IEEE, pp. 305-308.

Zubair, M., K. A. Ahmad, M. Z. Abdullah, and S. F. Sufian. Characteristic airflow patterns during inspiration and expiration: Experimental and numerical investigation. J. Med. Biol. Eng. 35(3):387–394, 2015.

Acknowledgments

This study was supported by FedDev Ontario, Ontario Centers of Excellence (OCE), NSERC Discovery grant, and BresoTEC Inc. Toronto, ON, Canada.

Additional information

Associate Editor Peter E. McHugh oversaw the review of this article.

Appendix

The classification performance was validated using different subsets of features over the training data (3 subjects randomly selected from the groups with normal, moderate, and severe sleep apnea). Each subset needed to contain an odd number of features because of the voting process used to extract the shape logic. Table 3 compares the classification performance of subsets with three features against that of the set including all the features.
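The odd-size requirement can be illustrated with a minimal sketch. It assumes that each feature casts one binary vote (inspiration or expiration) and that the phase label is the simple majority, so an odd number of voters can never tie. The function names, the label strings, and the placeholder feature names `f3` to `f5` are hypothetical; only AUC2/1 and AUC3/1 are named in the text, and the actual voting rule used in the study may differ.

```python
from collections import Counter
from itertools import combinations


def odd_feature_subsets(features, size=3):
    """Enumerate every feature subset of a given odd size."""
    if size % 2 == 0:
        raise ValueError("subset size must be odd so a two-class vote cannot tie")
    return list(combinations(features, size))


def majority_vote(votes):
    """Return the label chosen by most features.

    `votes` holds one label per feature, each either "insp" or "exp"
    (hypothetical label names). An odd count guarantees a strict majority.
    """
    if len(votes) % 2 == 0:
        raise ValueError("an even number of votes can tie")
    return Counter(votes).most_common(1)[0][0]


# Hypothetical feature list: two names from the text plus three placeholders.
features = ["AUC2/1", "AUC3/1", "f3", "f4", "f5"]
subsets = odd_feature_subsets(features, size=3)

# Example: two of three features vote for inspiration.
label = majority_vote(["insp", "exp", "insp"])
```

With five candidate features, this enumeration yields ten three-feature subsets, each of which could be scored on the training data and compared with the full set in the manner of Table 3.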

Cite this article

Montazeri Ghahjaverestan, N., Kabir, M., Saha, S. et al. Automatic Respiratory Phase Identification Using Tracheal Sounds and Movements During Sleep. Ann Biomed Eng 49, 1521–1533 (2021). https://doi.org/10.1007/s10439-020-02651-5

DOI: https://doi.org/10.1007/s10439-020-02651-5