Abstract

Angioectasias are lesions that occur in the blood vessels of the bowel and are the cause of more than 8% of all gastrointestinal bleeding episodes. They are usually classified as bleeding related lesions, however current state-of-the-art bleeding detection algorithms present low sensitivity in the detection of these lesions. This paper proposes a methodology for the automatic detection of angioectasias in wireless capsule endoscopy (WCE) videos. This method relies on the automatic selection of a region of interest, selected by using an image segmentation module based on the Maximum a Posteriori (MAP) approach where a new accelerated version of the Expectation-Maximization (EM) algorithm is also proposed. Spatial context information is modeled in the prior probability density function by using Markov Random Fields with the inclusion of a weighted boundary function. Higher order statistics computed in the CIELab color space with the luminance component removed and intensity normalization of high reflectance regions, showed to be effective features regarding angioectasia detection. The proposed method outperforms some current state of the art algorithms, achieving sensitivity and specificity values of more than 96% in a database containing 800 WCE frames labeled by two gastroenterologists.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

Introduction

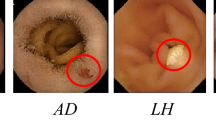

Angioectasias are degenerative lesions of the blood vessels, caused by microvascular abnormalities that may appear in the mucosa and submucosa of the bowel wall.9 These lesions are the most common source of bleeding from the small bowel in patients older than 50 years,26 and are the cause of approximately 8% of all gastrointestinal (GI) bleeding episodes.6 These lesions have a cherry red appearance, and usually have a diameter from 2 to 10 mm. They are superficial lesions, therefore easily spotted by imaging techniques that capture images from the inside of the GI tract.32

Wireless capsule endoscopy (WCE) is a medical device that was introduced as a novel technology that, contrary to the conventional endoscopy, allows the inspection of the entire GI tract without major risks and discomfort to the patients, not requiring specialized endoscopic operators.17 The WCE is a pill-like device that includes a miniaturized camera, a light source consisting on four LED’s and a wireless circuit for the acquisition and posterior transmission of signals. After the acquisition, the video frames are wirelessly transmitted to an external receiver, worn in a belt by the patient, and stored in a hard drive. The images are captured by a short focal length lens, as the capsule is propelled through the GI tract, with an acquisition of 2 frames per second.14 The resulting video, with a duration of about 8 hours, consists of about 60.000 frames. This is a large number of images to be analyzed by a physician and being a boring task, is predisposed to subjective errors since most frames contain only normal tissue.1 A 2012 study36 reports that only 69% of angioectasias were detected by selected experts, which potentiates the inclusion of automatic diagnosis systems for this particular pathology.

Segmentation-based approaches present two major advantages: assessment of areas/volumes of lesions and lesion localization inside the image. When comparing different exams over time, treatment efficiency and/or severity of the pathology can be obtained. Lesion localization separates the lesion from normal tissue which can be used for physicians’ training.

Suspected Blood Indicator (SBI) is a tool included in the software of PillCam™ devices, helping the detection of small bowel bleeding lesions in capsule endoscopy procedures. During the last years, several published studies analyzed SBI performance of detecting the presence of blood and several lesions, including angioectasias. A meta-analysis review for the validity of the SBI in clinical context clearly shows the high sensitivity of this tool for detecting active bleeding (around 99%), although it still shows a low specificity (around 65%). However, the detection of lesions with bleeding potential is clearly reduced, with values of 55 and 58% for sensitivity and specificity, respectively.35 When looking for angioectasia detection only, a significant loss of performance occurs, with values of 13 and 85%21; 41 and 68%5; 39 and 54%2 of sensitivity and specificity, respectively. Therefore more efficient tools than SBI are needed for the diagnosis of angioectasias.

Literature Review

The first study with the purpose of detecting blood in WCE frames is of Hwang et al.,10 that proposed a segmentation of bleeding regions, using the Expectation-Maximization (EM) algorithm for clustering and the Bayesian information criterion for helping to choose the number of clusters, using the values of RGB channels as observations. A frame is considered as bleeding if after the segmentation, a blood region is found. Alternative approaches also using the RGB color space can be found in Reference 16 where a Karhunen-Loeve based color space transformation is followed by a Fuzzy based segmentation, or in Reference 15 where the removal of under and over illuminated regions, and a transformation of the red channel are both explored for segmentation purposes, to name only a few. Alternative color spaces such as CIELab have also been used for the same purpose such as in Reference 23 where the Euclidean distance from each pixel to the bleeding pattern cluster center is used for classification purposes, or in Reference 7 where the second component is used with the objective of highlight blood regions. Some algorithms focus only on the blood detection by dividing the image in a fixed number of regions by using RGB8, HSV19 or HIS20 color spaces. Such methods have the advantage of not classifying the whole frame, so reducing the probability of diluting the lesion tissue on the rest of the image. However the division of the frame in rigid blocks may also divide lesions making them small in each of the patches; so a traditional segmentation algorithm may be preferable.

In spite of the high number of papers involving WCE research only a few refer specifically to angioectasias. A saliency maps based approach from RGB color space (only channels red and green) was proposed in References 3 and 4, where a sensitivity of 94% and a specificity of 84% were reported in a database with more than 3600 images. Handcraft (color and texture) and Deep Learning (DL) based features were compared in Reference 25 for angioectasia lesion detection in a database of 600 frames having been reported a sensitivity and specificity of 62 e 78%, respectively. A DL based architecture for pixel-wise segmentation purposes was proposed in Reference 27 by using AlbuNet and TernausNet networks where a Dice coefficient of 85% in a database of 600 images was reported. Although it is stated that it can be used for a detection purpose, the paper does not present these results. In general small datasets are not appropriate for DL based strategies; therefore, alternative approaches may achieve higher performances. As an example we can focus on Reference 22 where a histogram equalization step is used to increase image contrast with a posterior decorrelation between RGB channels to enhance color differences in the images. To select a ROI, a threshold is used in the green channel to provide a seed to a region growing algorithm. The resultant regions are then splitted when the variance is high enough. Then 24 statistical textural and geometrical features are extracted for several color spaces. A decision tree is used to classify each region as normal and abnormal reaching an accuracy of 96.80%.

Angioectasia segmentation by using the EM algorithm with Markov Random Fields (MRF) was proposed in Reference 30 and improved in several aspects in the current paper. As shown in that paper angioectasias seem to have a better characterization in the CIELab than in the RGB color spaces. Therefore, CIELab will be the color space chosen in the current approach. A new acceleration procedure for the EM algorithm is proposed in order to avoid slow convergence especially in normal frames where the classes may be poorly separated. A MRF approach allows to compensate the assumption of independence inside the Gaussian Mixture Model (GMM) model among different components, and also to add spatial information to the segmentation procedure. A relaxation coefficient computed on the basis of pixel intensities is proposed in order to cope with boundaries’ imperfections. A sigmoid-based function is proposed to compute this coefficient, which was obtained by experimentation. Regarding feature extraction for classification purposes several statistical measures are computed in both regions where one is considered background (normal tissue). This procedure leads the system to focus more on the differences between regions than in absolute values in each region, improving perhaps robustness against patient and device variability being a significant novelty of the proposed approach.

Materials and Methods

Figure 1 shows the flowchart of the proposed system, which consists in two major blocks: segmentation and classification modules. The purpose of the segmentation module is to break the image into two logical regions, where each logical region may have several space regions since lesions may be scattered across the frame. The purpose of the classification module is to characterize the lesion nature by extracting adequate features in both logical regions. Lesion nature knowledge was previously acquired in the training phase. This approach presents sufficient versatility to control the sensibility to unwanted type of lesions which can be of utmost importance in multipathology automatic diagnosis.

Minor blocks include color space transformation from RGB to CIELab since as shown in "Color Space and Pre-processing", angioectasia lesions are better characterized in this color space. Sub-modules such as pre- and post-processing are included for the goal of emphasizing lesions and improving the edges and are described in "Color Characterization of Angioectasias" and "Post-processing", respectively. The segmentation of normal frames present one of the logical regions broken into several very small spatial regions which in some cases can disappear during the post-processing stage. Therefore, in these cases, after the segmentation module, these frames are automatically selected as normal. The rest of the images have statistical features extracted from the regions selected in the previous step, that are then considered as input to a supervised classifier ("Feature Extraction")

Color Characterization of Angioectasias

CIELab is characterized by three statistical independent components30,34: L represents the lightness information that goes from 0 (black) to 100 (diffuse white), and the components a and b represent the color-opponent dimensions. Negative values of channel a indicate green and positive indicate magenta (adequate for the detection of red color); and negative values of b indicate blue and positive indicate yellow.33

Figure 2a shows a frame with an angioectasia lesion and in Figure 2b the correspondent component a of the same frame. It is clear that the angioectasia tissue (red arrow) is highlighted when comparing with normal tissue. Nevertheless, the set of pixels next to the yellow arrows also appear highlighted, not corresponding to angioectasia tissue. These regions correspond to shadows of some bubbles; which are characterized by low levels of intensity for green and/or blue components which usually don’t characterize tissue regions.

Therefore, a pre-processing algorithm is proposed to improve the performance of the segmentation process. Let C be a RGB image with a \(M\times N\) size and D the corresponding image in CIELab color space. \(C^k(i,j)\) and \(D^l(i,j)\) represent the correspondent pixel in the component \(k={R,G,B}\) and \(l={L,a,b}\), respectively, and with the coordinates \(i=1,2,...,M\) and \(j=1,2,...,N\). In this algorihtm (Algorithm 1), the pixels that present values of green or blue components lower than a chosen threshold (\(\delta\)), are replaced by an average of a neighboring region (with a variable size) centered in that pixel (\(\aleph \{D^l(i,j)\}\)).

Next section details the proposed segmentation module emphasizing the effectiveness of this procedure.

Segmentation

The segmentation module is based on the Maximum a Posteriori (MAP) approach by using the EM algorithm. A modified version of the Andersen acceleration algorithm is proposed in order to make the EM usable in poor separated cases. A modified MRF, with a weighted boundary function, was included for spatial context modeling purposes.

Expectation-Maximization

The segmentation module uses a statistical classification based on Bayes rule (Eq. (1)). This rule indicates how the posterior probability of each class is calculated.

In this equation, \(\omega _j\) refers to the jth class and \({\mathbf{x }}\) to the feature vector, while \(p(\omega _j|{{\mathbf{x }}})\), \(p({\mathbf{x}}|\omega _j)\) and \(p(\omega _j)\) are the a posteriori probability of class \(\omega _j\), the class conditional probability density function and the a priori probability, respectively. The term \(p({\mathbf{x }})\) is a scaling factor which purpose is setting the a posteriori probability to the range between zero and one, that however can be ignored since it takes the same value for each class \(\omega _j\). Therefore, for comparison purposes among classes, Eq. (1) can be written as:

Assuming the number of classes as \(n_c\), for a given \({\mathbf{x }}\), the MAP is computed for all classes and \({\mathbf{x }}\) is assigned to the class with maximum MAP. Class conditional probability density function is usually assigned to the Gaussian function, being the observations modeled as a Gaussian mixture whose parameters can be iteratively estimated by using the EM algorithm. The a priori probability has a precise meaning in the model regarding data partition over all classes, however it is frequently used as a spatial regularizer by capturing neighboring information, not taken into consideration in the Gaussian mixture model that models pixel intensities as random variables independent and identically distributed. Neighborhood information can be modelled by MRFs.

The most appropriate parameters of the GMM are then estimated according to the Maximum Likelihood (ML) criterion. This likelihood, regarding one feature vector \({\mathbf{x }}\) is given by:

In Eq. (3), \(\varphi _j\) corresponds to the vector of estimated parameters for class j, in this case mean, variance and class coefficient. Because the measures are considered independent, the likelihood for N measures \(p(X|\varphi )\) can be written as:

Maximizing the likelihood can be achieved indirectly by maximizing the log-likelihood, since the logarithm is a crescent function. Apart from that, having Gaussian functions involved using the logarithm is advantegeous regarding derivative calculations since the exponential is anulated.

The maximization of the log-likelihood results in the following re-estimation equations for the parameter’s update:

The weight of class j shown in Eq. (8) will be affected by pixel neighborhood, using MRFs ("Computation of a Priori Probability with MRF").

The process to find the best parameters is iterative and proceeds as follows:

-

1.

Initialization of parameters, which in this case was done by using K-means algorithm

-

2.

E-step (expectation): calculation of likelihood of each sample for each class

-

3.

M-step (maximization): find maximum likelihood value and recalculate the parameters

Steps 2 and 3 are repeated until convergence is achieved.

Convergence Acceleration of the EM Algorithm

One of the most important properties of the EM algorithm is the linearity of its convergence that can be unacceptably slow when the populations are poorly separated. The Anderson acceleration method is based on a fixed-point iteration and tries to make use of information gained from previous iterations for accelerating the convergence process. The goal of fixed-point iteration is to solve \(g(x) = x\) in an iterative manner. For any fixed-point problem we have the general Algorithm 2.

A fixed-point problem can be adapted to the EM algorithm by using the Q-function (Eq. (5)) through the relationship \(f(x) = g(x) - x = 0\), or in other words a maximum-likelihood estimate is a fixed-point of the iteration.

Let \(x_{k-m},...,x_k \in \mathbb {R}^d\) denote the most current \(m+1\) iterations and \(f_{k-m},...,f_k \in \mathbb {R}^d\), where m represents the number of stored iterations to be able to accurately predict the next. In this case, the Anderson Acceleration Algorithm applied in the context of the EM can be described as it can be seen in Algorithm 3.31

This is a constrained problem, which can be reformulated in an unconstrained optimization problem, as can be seen in Reference 31. However, the EM algorithm is characterized as having a smooth curve, meaning that last iterations lead to better predictions than older iterations. Therefore, our implementation have one more constraint characterized by \(\alpha _j < \alpha _{j+1}\) subjected to the constraint \(\alpha > \delta\), being \(\delta\) a small constant assuring that all the previous iterations are effectively used. This constrained optimization problem can be solved by using the Lagrange multipliers method as follows:

First, a vector containing the difference of the log-likelihood between successive iterations must be composed. These differences capture the rate of convergence of the EM algorithm among iterations. Defining this vector as \(\bar{c} = \{c_1,c_2,...,c_n\}\) and assuming the existence of a \(\alpha\) vector such as \(\bar{\alpha } = \{\alpha _1,\alpha _2,...,\alpha _n\}\), the main goal is to find the alpha vector that minimizes the following function:

subject to the following constraints:

Applying Lagrange multipliers we obtain:

The solution for this problem requires the simultaneous solution of a set of equations that include the non-negativity conditions (\(\lambda _i, \mu _i \ge 0\)) and the differentiation of the Lagrangian function in order to all the unknowns, known as the stationary of Lagrange and the complementarity conditions given by \(\lambda _i\alpha _i = 0\) and \(\mu _i(\alpha _i - \alpha _{i+1}) = 0\).

The proposed algorithm outperforms the conventional algorithm for the poor separated cases presented in Reference 24 in approximately \(12.5\%\). We used \(m=7\) and \(I=13\) to make the algorithm with a quick and reliable behaviour.

Computation of a Priori Probability with MRF

MRF models have the ability of capturing neighborhood information to improve a priori probabilities \(p(\omega )\). An image can be considered as a random field, or a collection of random variables (\(\Omega = {\Omega _1,...,\Omega _N}\)) that are defined on the set S. A random field is considered an MRF only when the following conditions are fulfilled:

-

1.

\(p(\omega )>0, \forall \omega \in \Omega\), which is the condition of positivity,

-

2.

\(p(\omega _j | \omega _{S-\{j\}} ) = p(\omega _j | \omega _{N_j})\), which is the condition of Markovianity.

The first condition is straightforward fulfilled just because the values are probabilities, so by definition, greater than 0. The condition of Markovianity states that the probability of an observation \(\omega _j\), given the other random variables in the field, is equal to the probability of the same observation, given a neighborhood around its location (\(\mathcal {N}_j\)), or in other words, given its neighborhood, a variable is independent on the rest of the variables. Fulfilling this condition is in fact modelling the neighborhood effect. Using Gibbs Random Field (GRF), the apriori class probability can be assigned such as:

In this equation, the constant T represents the temperature and controls the level of peaking in the probability density, and the quantity Z is a normalizing constant which guarantees that \(p(\omega )\) is always between zero and one. \(U(\omega )\) is an energy function and is obtained by summing all functions \(V(\omega )\) (clique potential) over all C possible cliques. A clique is defined as a grouping of pixels in a neighborhood system, such that the grouping includes pixels that are neighbors of another in the same system.

The Hammersley-Clifford theorem defines that if and only if a random field \(\Omega\) on S is a MRF with respect to neighborhood system \(\mathcal {N}\), then \(\Omega\) is a GRF on S with respect to a neighborhood system \(\mathcal {N}\). This fact allows to convert the conditional probability as a Markovianity condition of a MRF to the non-conditional probability of a Gibbs distribution of Eq. (12).

To compute the estimation of \(p(\omega )\), the energy function used was based on Reference 28:

In Eq. (15), k is the direction (in this case it can be horizontal or vertical) and \(l_{k,j}\) is the Dirac impulse function in such a way that \(U(\omega _j)\) depends on the count of pixels in neighborhood that do not belong to class j.

Usually, in practice, models are considered as isotropic, so the amount of variables to estimate is strongly decreased, becoming in this case \(\beta _k\) a constant. However pixels near the borders are sometimes wrongly classified in the Gaussian Mixture especially due to the partial volume effect. Therefore using the \(\beta _k\) parameter to model intensity differences in neighborhood pixels in order to reinforce border conditions has been used in several works where several functions have been suggested. The main idea is to set \(\beta _k\) in such a way that a direct interference on border location is achieved. Heuristically we want to avoid class j under situations of high variance that usually appear near borders, even if a large number of pixels belong to class j. Under relative smooth conditions the border can also be present and can be detected by pixel intensity variations which occurs at corners of small structures. Some tests were conducted in order to compute \(\beta _k\) for pixels on and near the border of several angioectasias and the approximated function given by Eq. (16) was found and is proposed in the ambit of this paper as follows:

In Eq. (16) \(\beta _k\) is dependent on the difference of intensities (\(|I_i-I_c|\)) of the neighbor in the direction k, but also of the distance between the pixel in the center and the neighbouring pixel (\(\text {dist}(I_i,I_c)\)). The term \(\sigma\) is the standard deviation of the neighboring used in this case and presented in Fig. 3.

This energy function uses a 2D-neighboring system of 8 pixels that can be seen in Fig. 3, where the darker pixel is the current observation.

Post-processing

The purpose of the post-processing step is to improve the segmentation results, where the main limitations are:

-

1.

isolated pixels are sometimes selected as abnormal region,

-

2.

sharp and irregular edges in some lesions,

-

3.

selection of tissue region as abnormal that are in fact normal.

Problem 1 can be solved by using the mathematical morphology opening operation. Using a small structuring element, the regions that consist of isolated pixels will disappear from the binary image. This will also help to correct some of the irregularity described in problem 2, but using also a mathematical morphology closing operation with the same structuring element the results can be improved. In fact, while opening has the objective to regularize sharp edges to the outside of the lesion, closing will smooth the edges to the inside of the lesion, so the result will be a smooth contour for both directions. Regarding the problem 3, the regions that are incorrectly selected as angioectasia are usually small vessels that appear on the surface of the tissue, and the biggest difference between these two types of tissue are their shape. In the case of angioectasias, they are usually circular lesions, while vessels are elongated regions; so, applying the connected components algorithm, each lesion could be analyzed individually. In this regard, lesion tissue can be selected by using the ratio between major and minor axis length of each region, that being compared with a predefined threshold, established on the basis of previous tests; which value was set to 3.

Feature Extraction

The output of the segmentation module can have 3 different results:

-

1.

after post-processing step, only one region exists in the image,

-

2.

the image is divided into two different regions (one of them contains an angioectasia lesion),

-

3.

the image is divided in two different regions (none of them contains an angioectasia lesion).

In the first situation the image is automatically classified as normal, since no significant differences are found in channel a values that support different classes under the constraint of contiguous minimum area. Second and third cases require a classifier module that rely on features extracted from both regions. This approach models the difference between both regions, in order to improve robustness against environmental conditions (related to device and subject changes). In fact, light characteristics may vary among different devices while tissue color may vary among different subjects.

Figures 4 and 5 show the histograms of channel a and b, respectively, for angioectasia and normal tissue. It is clear that for both channels both shape and mean (more in channel a than in b) of distributions can help to distinguish normal from angioectasia regions. Therefore, histogram measures seem adequate to detect the presence of an angioectasia lesion. In the current work mean, variance, entropy and kurtosis are proposed as features, and were computed by the following expressions:

These four measures are then computed in both regions and concatenated forming the feature vector; which will feed the supervised classifiers.

Results

This section presents the used datasets and some implementation details, followed by some partial results focused on the segmentation module and ending with global classification results.

Datasets and Implementation

In this work, two different databases were used:

-

1.

For the evaluation of the segmentation module, the public database KID was used.11,12,13, 18 This database consists of 27 images, divided into 3 groups of different bleeding probabilities (P0, P1 and P2; from the lowest probability to the highest). All the images were manually segmented by experienced physicians and were all acquired with MiroCam®.

-

2.

For detection purposes, a bigger database was used, with 798 images ( 248 images with angioectasias and 550 images labeled as normal). All the images with lesions and 300 normal frames were taken from 20 exams from PillCam™ SB2, where the rest of normal images (250) were taken from 5 normal exams from MiroCam® in order to obtain a higher degree of generalization. All the exams were performed in Hospital of Braga (Portugal) and were examined by two expert physicians in the diagnosis of WCE exams. The images were included in the database only when both agreed with the diagnosis.

Experimental results of classification were obtained by using WEKA—an open source machine learning package. A stratified 10-fold cross-validation was used, taking into consideration subject variability along folds, with a Multilayer Perceptron (MLP) neural network and a Support Vector Machine (SVM).

For evaluation purposes, several metrics were computed for each test: sensitivity, specificity and accuracy, computed as follows, as well as area under the ROC curve (AUC):

A baseline system was implemented, with the purpose of comparing the proposed method with a reference result. This implementation was based on the algorithm described in Reference 22, which was already described in the "Introduction". This method applies a histogram equalization step to increase contrast of the images and a decorrelation between RGB channels to enhance color differences. After, a threshold is applied to the green channel, which will work as a seed to a region growing algorithm. Regions with specific values of area, perimeter and extent are then removed; and the rest of regions are splitted when their variance were higher than a specific value. Then, 24 statistical, textural and geometrical features are extracted for several color spaces (RGB, HSV, CIELab and YCbCr). A decision tree (RUSBoosted) is used to classify each region as normal or abnormal.

Color Space and Pre-processing

The purpose of this sub-section is to show that the CIELab Color Space has some advantages over RGB even if the relative Red value is used, as defined in Eq. (24). For this purpose Fig. 6 presents a frame containing an angioectasia shown in different color channels of different color spaces. It is clear that the a channel (Fig. 6d) clearly shows the lesion, while some potential false positives are avoided. R* channel (Fig. 6c) also avoids potential false positives however the lesion appears much more subtle. Because RGB shows a high correlation among the three channels,29 the relationship between color red and channel R is not direct. In other words, information carried by the red component is also carried by the other two components, hence a large range of information is simultaneously carried by the different color components. Therefore, even using the R* component, the discrimination remains difficult, however slightly better when compared to the red component (R).

Figure 7 shows some results after using the pre-processing step, previously explained in "Color Characterization of Angioectasias", where an angioectasia frame is used as input with different neighborhood sizes (7, 21 and 51). Results show that with an increasing size of \(\aleph \{D^l(i,j)\}\), the algorithm shows a better performance. Although the lesion also becomes less intense when increasing the neighborhood size, this pre-processing step improves the overall results of segmentation (as will be discussed in the next subsection). The chosen \(\delta\) was 5, because presented good results for removing these highlighted regions, not affecting the lesion area.

Segmentation

The segmentation of angioectasias is an important part of this system, because it strongly influences the next step (Classification). The segmentation algorithm proposed in this paper is an improvement of the one presented in Reference 30, which was also tested in the KID dataset. Major improvements are the acceleration of convergence of the EM algorithm and a new parameter \(b_k\) which incorporates pixel intensity in the computation of a priori probabilities provided by the MRF.

Figure 8 shows segmentation results of an image with an angioectasia lesion. Figure 8b shows the performance of the Otsu algorithm applied to the channel a of the frame. It is clear that with this basic segmentation method it is impossible to correctly differentiate normal from angioectasia tissue. Figure 8c shows that the use of MAP algorithm leads to a major improvement when compared to the Otsu method. The same argument can be used when looking at Figs. 8d and 8e; where both pre- and post-processing methods lead to an improvement in the segmentation of this image. In the case of the pre-processing step, almost all non-lesion zones highlighted with channel a were removed. With the use of post-processing, small regions were also removed, reaching a result where the only selected pixels are the ones belonging to the angioectasia.

Figure 9 shows another example of a frame with an angioectasia, but in this case there is a higher incidence of bubbles in the image. This fact increases the number of pixels corresponding to reflections in these bubbles, that contain a high component of a (which can be verified with the analysis of Fig. 9b). When no pre-processing is applied, the region of angioectasia is not selected by the segmentation module (Fig. 9c). The result is improved with the inclusion of the pre-processing step, in which the angioectasia lesion is also selected (Fig. 9d). This result is also improved with the inclusion of the post-processing step (Fig. 9e), exactly as in the previous example.

KID Database was used to validate the segmentation algorithm since manual segmentation of all the images are available. To compare the different methods the Dice metric (Eq. (25)) was computed over all the 27 images of the database. In this equation A is the set of pixels segmented by the algorithm and B is the set of pixels in the manual segmentation. The higher the Dice metric, the better is the performance of the segmentation algorithm.

To see how every step would influence the segmentation of the images 4 different experiments were carried out:

-

1.

Exp A Results without pre and post-processing, using MRF with constant \(\beta\) values.

-

2.

Exp B Results with pre-processing but without post-processing, using MRF with constant \(\beta\) values.

-

3.

Exp C Results with pre and post-processing, using MRF with constant \(\beta\) values.

-

4.

Exp D Results with pre and post-processing, using MRF with varying \(\beta\) values.

Figure 10 shows Dice values for both the whole KID dataset (a) and only P2 lesions from the same dataset (b). These lesions are the ones with the biggest probability to bleed, therefore they are most dangerous for the patients. Also, it is common for these lesions to appear bigger and more reddish.

Results allow to infer the efficiency of each block of segmentation algorithm; pre-processing, post-processing and intensity based anisotropic MRF modeling. The improvement is more prominent from Exp. A to Exp. B, showing that indeed the pre-processing step has a relevant role in the segmentation method as a whole. The inclusion of post-processing also leads to an improvement in the segmentation performance (Exp. B to Exp. C). Lastly, an improvement is also notorious when comparing standard MRF with the new approach proposed in this paper (Exp. C to Exp. D).

When comparing the results from Figs. 10a and 10b, the more immediate conclusion is that Dice values are bigger when only using P2 lesions, which was already concluded in Reference 30. It is also important to notice that P2 lesions show a bigger improvement when the proposed equation for MRF is included. This fact shows that this new parameter is important for segmentation of this type of lesions that have sharper edges.

It can be also interesting to analyze some images that show some flaws in the Segmentation step, which will directly affect their classification afterwards. In Fig. 11, both images led to bad segmentation results, as it can be seen in the images on the right. The first example (top), the lesion is behind the bubbles, which becomes difficult for the system to localize it (channel a is highlighted in lesion tissue and in presence of bubbles). Although the system could find some of the angioectasia tissue, most part of the result is not correct. In the bottom example, the angioectasia is at an early stage, appearing with a very soft red appearance in the middle of the tissue. As it can be seen in Fig. 11b, abnormal tissue is not that highlighted, which leads to the result on the right where several false positives appear.

Classification

One of the advantages of the approach presented in this paper is that not all images need to be passed in the classifier to be classified. Some of the normal ones are classified by the segmentation module because they do not have ROI. In fact, normal frames present smooth amplitude variations between neighboring pixels, which causes the most intense class to be spread in numerous groups of few pixels that are easily eliminated in the post-processing step. Table 1 shows that more than 33% of normal frames were correctly classified as normal and none of the pathological ones was classified as normal. This is a major advantage for the classifier enabling a more effective discrimination by reducing the sample space.

Given the findings and conclusions of "Feature Extraction", second and higher order statistics were used in the ambit of this paper. A feature analysis algorithm was used to rank the different features according to its discriminative and predictive power. A correlation based feature selection, which computes the Pearson’s correlation value between the value and its class, was used. These results can be analyzed in Table 2, where all the features were ranked according to the correlation values obtained using WEKA.

It is possible to conclude that channel a presents the most representative features, where the mean reaches the highest value. It was decided to group features into different sets, which were built according to the results in the previous table and according to the statistical measures that were expected to represent in a better way the data. The chosen sets can be seen in Table 3.

In Tables 4 and 5 were included the results with a MLP classifier and a SVM classifier, respectively. Not surprisingly, given the non-Gaussian nature of the intensity distribution, the best results were achieved by including higher order statistics. It was also observed that entropy seems to have more discriminative power than kurtosis. Results present higher sensitivity than specificity, which is usually the purpose with medical applications, because it is more acceptable to have some false positives than false negatives.

Another interesting finding is that, when using the features that were chosen to be more representative according to Table 2, the results did not show an improvement. For example, the set C (which is a set with also four features), presents similar results both when using MLP or SVM. When using SVM, set A is the set with only four features with the best performance.

Both tables also show that MLP and SVM perform similarly (mainly in accuracy), where the sensitivity values are higher in SVM and specificity lower. The best result was achieved using a MLP classifier (96.60% of sensitivity and 94.08% of specificity, leading to an accuracy value of 95.58%).

When comparing to the reference algorithm implemented by the authors (Table 6), the proposed approach lead to higher results both in sensitivity and specificity. The selected method22 is based on several parameters chosen by its authors, when using a specific database, which is why the results shown in here are not equivalent to those shown in the paper. We can conclude that the performance of the proposed overall system is better than the one considered as a reference.

Discussion

Lesion detection in WCE exams is of a tremendous interest for the future of medicine, and the development of an automatic diagnosis system that deals with this problem will allow for the physicians to reduce the reviewing time of these exams. Although there are already commercial tools for the detection of blood-based lesions, these show a sensitivity of 41% when angioectasia are considered. This work presents an effective approach for segmentation and detection of angioectasias in small bowel tissue in WCE exams.

With this paper it was possible to conclude that the use of CIELab color space indeed improves the highlight of angioectasia lesions in WCE frames, specifically when the proposed pre-processing algorithm is applied. The use of MRFs to model the pixels’ neighborhood also shows improvements in lesion segmentation, more specifically with the inclusion of the proposed weighted-boundary function. Both of these improvements were shown visually (Figs. 8 and 9) and graphically (Fig. 10). Another important novelty presented in this paper is that the segmentation module has the ability of correctly classify a substantial percentage of normal images of the dataset. This is important to reduce the time needed to classify an entire WCE video.

The use of a supervised classifier showed that these lesions can be detected with the use of a small set of features. As expected, higher order statistics improve the system performance, given the non-Gaussianity observed in intensity distributions.

When compared to the methods already published to detect angioectasias in WCE images, the proposed system shows a better performance. This method does not use algorithms with a high computational complexity (like deep learning), which can be an advantage to be used in a clinical practice, where machines with a high computational power are not usually available. Also, the work described in this paper does not just has the ability of detect angioectasia lesions, but also can localize them in the image; which can be an extra help for the physician.

Nevertheless, there are still some problems to address. As was shown in Fig. 11, some images were misclassified with the proposed method. The pre-processing step should be improved so images with different backgrounds would have the lesions better highlighted. Also, in future works, maybe other color channels could be used simultaneously to segment angioectasias. Also, the segmentation has room for further improvement (specially when smaller lesions are present), which consequently will improve the classification. More promising features to encode color information should be tested, like histogram of oriented gradients (HOG). And improved classification methods should be used (like deep learning or ensemble learning), that usually need larger databases in order to work properly. Looking at the performance values that were reached, we can conclude that the use of this system in the clinical practice can be started, as well as tests with entire videos of WCE and the test of this system in clinical practice.

References

Barbosa, D., Ramos, J., Lima, C. S. Detection of small bowel tumors in capsule endoscopy frames using texture analysis based on the discrete wavelet transform. Conference proceedings: Annual International Conference of the IEEE Engineering in Medicine and Biology Society IEEE Engineering in Medicine and Biology Society Conference 2008:3012–3015, 2008

Boal Carvalho, P., Magalhães, J., Dias de Castro, F., Monteiro, S., Rosa, B., Moreira, M. J., Cotter, J. Suspected blood indicator in capsule endoscopy: a valuable tool for gastrointestinal bleeding diagnosis. Arquivos de Gastroenterol. 54(1):16–20, 2017

Deeba, F., Mohammed, S. K., Bui, F. M., Wahid, K. A. A saliency-based unsupervised method for angioectasia detection in capsule endoscopic images. In: The 39th Conference of The Canadian Medical and Biological Engineering/La Societe Canadiénné de Génie Biomédical, 2016

Deeba, F., Mohammed, S. K., Bui, F. M., Wahid, K. A. A saliency-based unsupervised method for angiectasia detection in endoscopic video frames. J. Med. Biol. Eng. 38(2), 325–335, 2017.

D’Halluin, P. N., Delvaux, M., Lapalus, M. G., Sacher-Huvelin, S., Ben Soussan, E., Heyries, L., Filoche, B., Saurin, J. C., Gay, G., Heresbach, D. Does the “Suspected blood indicator” improve the detection of bleeding lesions by capsule endoscopy? Gastrointest. Endosc. 61(2), 243–249, 2005.

Fan, G.W., Chen, T. H., Lin, W. P., Su, M. Y., Sung, C. M., Hsu, C. M., Chi, C. T. Angiodysplasia and bleeding in the small intestine treated by balloon-assisted enteroscopy. J. Digest. Dis. 14(3), 113–116, 2013.

Figueiredo, I. N., Kumar, S., Leal, C., Figueiredo, P. N. (2013) Computer-assisted bleeding detection in wireless capsule endoscopy images. Comput. Methods Biomech. Biomed. Eng. 1(4), 198–210.

Fu, Y., Zhang, W., Mandal, M., Meng, M. Q. H. Computer-aided bleeding detection in WCE video. IEEE J. Biomed. Health Inf. 18(2), 636–642, 2014.

Hemingway, A.P. Angiodysplasia: current concepts. Postgrad. Med. J. 64(750), 259–63, 1988.

Hwang, S., Oh, J., Cox, J., Tang, S. J., Tibbals, H. F. Blood detection in wireless capsule endoscopy using expectation maximization clustering. In: Medical Imaging 2006: Image Processing, edited by J. M. Reinhardt, J. P. W. Pluim. SPIE, 2006

Iakovidis, D. K., Koulaouzidis, A. Automatic lesion detection in capsule endoscopy based on color saliency: closer to an essential adjunct for reviewing software. Gastrointest Endosc 80(5), 877–883, 2014.

Iakovidis, D. K., Koulaouzidis, A. Automatic lesion detection in wireless capsule endoscopy—a simple solution for a complex problem. In: IEEE International Conference on Image Processing (ICIP), IEEE, pp 2236–2240, 2014

Iakovidis, D. K., Koulaouzidis, A. Software for enhanced video capsule endoscopy: challenges for essential progress. Nat. Rev. Gastroenterol. Hepatol. 12(3), 172–186, 2015.

Iddan, G., Meron, G., Glukhovsky, A., Swain, P. Wireless capsule endoscopy. Nature 405(6785):417, 2000.

Jung, Y. S., Kim, Y. H., Lee, D. H., Kim, J. H. Active blood detection in a high resolution capsule endoscopy using color spectrum transformation. 2008 International Conference on BioMedical Engineering and Informatics pp 859–862, 2008

Karargyris, A., Bourbakis, N. A methodology for detecting blood-based abnormalities in wireless capsule endoscopy videos. In: 2008 8th IEEE International Conference on BioInformatics and BioEngineering, IEEE, pp 1–6, 2008

Kodogiannis, V., Boulougoura, M., Wadge, E., Lygouras, J. The usage of soft-computing methodologies in interpreting capsule endoscopy. Eng. Appl. Artif. Intell. 20(4), 539–553, 2007.

Koulaouzidis, A., Iakovidis, D. K. KID: Koulaouzidis-Iakovidis Database for Capsule Endoscopy. 2016. http://is-innovation.eu/kid

Lau, P. Y., Correia, P. L. Detection of bleeding patterns in WCE video using multiple features. Conference proceedings: Annual International Conference of the IEEE Engineering in Medicine and Biology Society IEEE Engineering in Medicine and Biology Society Conference 2007:5601–5604, 2007.

Li, B., Meng, M. Computer-Aided Detection of Bleeding Regions for Capsule Endoscopy Images. IEEE Trans. Biomed. Eng. 56(4), 1032–1039, 2009.

Liangpunsakul, S., Mays, L., Rex, D. K. Performance of given suspected blood indicator. Am. J. Gastroenterol. 98(12), 2676–2678, 2003.

Noya, F., Alvarez-Gonzalez, M. A., Benitez, R. Automated angiodysplasia detection from wireless capsule endoscopy, IEEE, pp 3158–3161, 2017

Pan, G. B., Yan, G. Z., Song, X. S., Qiu, X. l. Bleeding detection from wireless capsule endoscopy images using improved euler distance in CIELab. J. Shanghai Jiaotong Univ. (Sci.) 15(2):218–223, 2010

Plasse, J. H. (2013) The EM Algorithm in Multivariate Gaussian Mixture Models using Anderson Acceleration. PhD thesis, Master thesis in applied mathematics, Worcester Polytechnic Institute

Pogorelov, K., Ostroukhova, O., Petlund, A., Halvorsen, P., de Lange, T., Espeland, H. N., Kupka, T., Griwodz, C., Riegler, M. Deep learning and handcrafted feature based approaches for automatic detection of angiectasia. In: 2018 IEEE EMBS International Conference on Biomedical and Health Informatics (BHI), IEEE. 2018. https://doi.org/10.1109/bhi.2018.8333444

Regula, J., Wronska, E., Pachlewski, J. Vascular lesions of the gastrointestinal tract. Best Pract. Res. Clin. Gastroenterol. 22(2), 313–328, 2008.

Shvets, A., Iglovikov, V., Rakhlin, A., Kalinin, A. A. Angiodysplasia detection and localization using deep convolutional neural networks. CoRR. 2018. doi: 10.1101/306159.

Van Leemput, K., Maes, F., Vandermeulen, D., Suetens, P. Automated model-based tissue classification of MR images of the brain. IEEE Trans. Med. Imaging 18:897–908, 1999.

Vieira, P., Ramos, J., Barbosa, D., Roupar, D., Silva, C., Correia, H., Lima, C. S. Segmentation of small bowel tumor tissue in capsule endoscopy images by using the MAP algorithm. Conference proceedings: Annual International Conference of the IEEE Engineering in Medicine and Biology Society IEEE Engineering in Medicine and Biology Society Conference 2012:4010–4013, 2012.

Vieira, P. M., Goncalves, B., Goncalves, C. R., Lima, C. S. Segmentation of angiodysplasia lesions in WCE images using a MAP approach with Markov random fields. In: 2016 38th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), IEEE, pp 1184–1187. http://ieeexplore.ieee.org/document/7590916/

Walker, H. F., Ni, P. Anderson acceleration for fixed-point iterations. SIAM J. Num. Anal. 49(4), 1715–1735, 2011.

Warkentin, T., Moore, J. C., Anand, S. S., Lonn, E. M., Morgan, D. G. (2003) Gastrointestinal bleeding, angiodysplasia, cardiovascular disease, and acquired von Willebrand syndrome. Transf. Med. Rev. 17(4), 272–286, 2003.

Weatherall, I. L., Coombs, B. D. Skin color measurements in terms of CIELAB color space values. J. Investig. Dermatol. 99(4), 468–473, 1992.

Woodland, A., Labrosse, F. On the separation of luminance from colour in images. In: Proceedings of the International Conference on Vision, Video and Graphics, Edinburgh, pp 29–36, 2005

Yung, D. E., Sykes, C., Koulaouzidis, A. The validity of suspected blood indicator software in capsule endoscopy: a systematic review and meta-analysis. Expert Rev. Gastroenterol. Hepatol. 11(1), 43–51, 2017.

Zheng, Y., Hawkins, L., Wolff, J., Goloubeva, O., Goldberg, E. Detection of lesions during capsule endoscopy: physician performance is disappointing. Am. J. Gastroenterol. 107(4), 554–60, 2012.

Acknowledgments

This work is supported by FCT (Fundação para a Ciência e Tecnologia) with the reference Project UID/EEA/04436/2019 and with the PhD Grant SFRH/BD/92143/2013.

Author information

Authors and Affiliations

Corresponding author

Additional information

Associate Editor Jane Grande-Allen oversaw the review of this article.

Rights and permissions

About this article

Cite this article

Vieira, P.M., Silva, C.P., Costa, D. et al. Automatic Segmentation and Detection of Small Bowel Angioectasias in WCE Images. Ann Biomed Eng 47, 1446–1462 (2019). https://doi.org/10.1007/s10439-019-02248-7

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10439-019-02248-7