Abstract

The primary code is further encoded with a secondary code for many modern global navigation satellite system (GNSS) signals. The secondary code usually has a higher chip rate than navigation message symbol rate, preventing integration time from being extended. Based on the partial-matched filter with FFT (PMF-FFT) algorithm, we propose the improved high-sensitivity partial-matched filter with FFT (IHSPF) algorithm. The proposed parallel secondary code phase estimate (PScPE) algorithm combined with PMF-FFT can achieve parallel search in three dimensions: primary code, secondary code, and Doppler frequency. The envelope loss mitigation (ELM) method is used for the integration gain loss issue due to the FFT scalloping loss. Finally, the improved multi-search code phase compare detection method (I-MCCD) is also presented to improve the detection performance. The proposed algorithm achieves extremely high sensitivity and is suitable for hardware implementation. The BDS B1I, B2I, B3I, and B2a signals are used to simulate and analyze the algorithm performance. The simulation results show that the acquisition sensitivity of the B1I signal is effectively improved up to 23 dB-Hz. The success probability can get 93% at the maximum gain points and 74% at the minimum gain points.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

Introduction

Signals broadcast by global navigation satellite system (GNSS), such as the GPS, BDS, Galileo, and GLONASS, are made up of three major components: (1) navigation message, which is a sequence of + 1 and − 1 transmitted at a low rate to provide ephemeris and time information needed for navigation and positioning; (2) a pseudo-random noise (PRN) code, also known as pseudo-code, spreading code, or ranging code, which is used for ranging and distinguishing signals from different satellites; and (3) a carrier, a sinusoidal signal in the L band (Leclere et al. 2014).

After being processed by the RF front end of the receiver, the satellite signal is converted to an intermediate frequency (IF) digital signal. The acquisition is the first stage of the following processes, which detects the visible satellites and roughly estimates Doppler frequencies and code phases. The implementation of the acquisition is based on the auto-correlation properties of the PRN codes. First, multiplying with the local replica of the carrier signal, the IF signal is stripped off the carrier whose center frequency is the sum of the Doppler shift and the IF. Then, the carrier-peeled signal is correlated with the local replica of the PRN code. When the estimates of the Doppler frequency and code phase are both close to the actual values, the auto-correlation peak appears, and the acquisition is finished (Xie 2017).

The secondary code is widely applied in modern GNSS signals (Leclere and Landry 2018), such as the BDS B1I, B2a, GPS L5, Galileo E1, and E5a signals. Long spreading codes are generated by a tiered code construction, whereby the secondary code multiplies the primary code. Each secondary code chip's edge coincides with the primary code chip's edge. A primary code start coincides with the beginning of a secondary code chip. Using the tiered code, better cross-correlation and anti-jamming performance can be obtained (Svatoň and Vejražka 2018). However, the tiered code makes the acquisition more complicated at the same time. First, it adds the secondary code phase dimension to the conventional two-dimensional searching space (the Doppler frequency and primary code dimensions), which results in more searching stages and longer acquisition time. Second, the secondary code usually has a higher chip rate than the navigation message symbol rate, preventing the coherent time from being extended, which worsens the acquisition sensitivity. Meanwhile, for high-sensitivity acquisition, a long integration time increases computational complexity and acquisition time. Consequently, modern GNSS receivers should concurrently consider the two competing aims of high sensitivity and fast speed.

State of the art

A high-performance acquisition algorithm requires consideration of three parts: the integration method, the search strategy, and the decision method. These parts directly determine the sensitivity and speed of acquisition (Kong 2017).

First, selecting an integration method with higher signal-to-noise ratio (SNR) gain is the primary way to improve sensitivity. Currently, coherent integration, non-coherent integration, and differential integration are the three main integration techniques used in acquiring GNSS signals. Coherent integration coherently adds up the correlation results and provides the highest gain but is constrained by bit transitions. The other two techniques are unaffected by bit transitions. Still, their gains are lower than coherent integration due to the squaring loss of non-coherent integration (Xie 2017) and the monotonous phase shift loss of differential integration (Corazza and Pedone 2007). There are various approaches to increase the integration gain. The main consideration for the non-coherent and differential integration is to lessen the loss of gain. The generalized differential combination (GDC) method proposed by Corazza and Pedone (2007) and the improved generalized differential combination (MGDC) presented by Ta et al. (2012) both improved the differential integration performance. For coherent integration, the aim is to overcome bit transitions and extend the integration time. Lo Presti et al. (2009) proposed a new 2-D function on the search space insensitive to the bit transitions based on the energy invariance property of the total useful signal energy. Zhu and Fan (2015) proposed a time parallel acquisition algorithm with transition detection to overcome the bit transition limitation. Foucras et al. (2016) focused on the bit transition and its impact on acquisition performance by providing a general mathematical study.

Second, improving search parallelism is vital to improving acquisition speed (Kong 2017). Commonly used parallel search algorithms include the parallel code phase search, parallel Doppler search, and hybrid parallel search (Liu et al. 2019). The FFT/IFFT-based circular correlation algorithm (Van Nee and Coenen 1991) and its extensions are typical parallel code phase search algorithms. As for the parallel Doppler search algorithms, FFT is employed to complete the coherent integration. In recent years, researchers have also been exploring new ideas to reduce the computational effort of conventional algorithms and improve the acquisition speed (Gao and Xia 2018). Kong (2013) proposed an acquisition method based on compressed sensing, whereby the GNSS signal was sampled at a lower rate than that required by Nyquist’s theorem, reducing the computational effort of the associated operations and Kim and Kong (2014) further extended this approach using FFT. The synthesized Doppler frequency hypothesis testing (SDHT) method proposed by Kong (2015) used interpolation estimation to calculate the correlation results between two Doppler frequency bins, thus reducing the number of actual correlation operations. However, such methods generally harm the acquisition sensitivity and are unsuitable for designing high-sensitivity receivers.

Third, the decision strategy of acquisition is also important to the acquisition sensitivity and speed performance (Duan et al. 2015). The threshold method (Viterbi 1995) was proposed for serial search algorithms that compared the current test statistic against the detection threshold until the statistic exceeded the threshold. The maximum-to-threshold method (Corazza 1996) extended the serial threshold method used in parallel search algorithms. This method found the maximum value after all test statistics had been obtained and then compared it to the detection threshold to complete the decision. The maximum-to-second-maximum ratio (MTSMR) (Geiger et al. 2012) used the first and second peaks ratio as the test statistic, not the correlation results. The K-largest method selected the K-largest correlation values and compared them twice to test the first verdict (Kong 2017).

Due to the use of the tiered code, the bit transitions problem can be overcome naturally. Because the secondary code period is perfectly synchronized with the navigation bit, the coherent integration time can be extended to a navigation bit when the secondary code phase is identified. It allows the gain advantage of coherent integration to be fully exploited. Much relevant research has emerged over the past decade. Tawk et al. (2011) proposed a secondary code wipe-off technique for Galileo signals. Meng et al. (2017) proposed the Neumann–Hoffman (NH) code evasion and stripping method based on a statistical analysis of NH codes and enhanced the acquisition sensitivity of BDS signals using NH codes. Liu et al. (2018) proposed a two-stage high-sensitivity acquisition method based on sign combinations. Svatoň et al. (2020) proposed a modified single-block zero-padding (mSBZP) partially correlated code phase parallel search algorithm to achieve high sensitivity. However, it is worth noting that all the above works are based on the circular correlation algorithm, which uses FFT/IFFT to replace correlation in the time domain. Although this algorithm can be easily implemented in DSP (Kong 2015) or software-defined receiver (Guo et al. 2017), the vast computational load, coming with conducts of two Fourier transforms as well as one Fourier inversion (Guo et al. 2017), making it unsuitable for hardware receivers. Furthermore, these works pay insufficient attention to the search strategy and the decision method.

The partial-matched filter with FFT (PMF-FFT) algorithm is proposed initially in the communication literature (Spangenberg et al. 2000). It enables a two-dimensional parallel search of code phase and carrier Doppler and provides a good trade-off between acquisition performance and complexity (De et al. 2006; Guo et al. 2017). Based on the conventional PMF-FFT algorithm, we fully consider the integration, search, and decision methods. Then, a high-sensitivity acquisition algorithm is proposed for designing acquisition modules in high-sensitivity hardware receivers.

Summary of work

We propose a high-sensitivity GNSS signal acquisition algorithm, the improved high-sensitivity partial-matched filter with FFT (IHSPF), which is compatible with acquiring all BDS-2 and BDS-3 signals. In the IHSPF, we make the following improvements to the PMF-FFT algorithm:

The parallel secondary code phase estimate (PScPE) can extend the coherent integration time to one secondary code period and search for all secondary code phases in parallel, significantly compressing the time consumed in the secondary code phase dimension search.

The envelope loss mitigation (ELM) combines spectrum interleaving and zero-padding FFT to mitigate the scalloping loss caused by the FFT.

The improved multi-search code phase compare detection algorithm (I-MCCD), which introduces the comparison of the secondary code phase, is proposed in the detection process to improve the acquisition sensitivity further.

These improvements are described in subsequent sections. Simulation results of the IHSPF show that the acquisition sensitivity of the BDS B1I signal can reach 23 dB-Hz.

First, a mathematical model of the signal is used to analyze the challenge of acquiring tiered-code-modulated GNSS signals. Then, we introduce the conventional PMF-FFT algorithm, describe the proposed IHSPF acquisition algorithm, and present simulations and analysis of the IHSPF’s performance. Finally, conclusions are drawn.

Model description

To better introduce the proposed algorithm, we describe some basic models in this section. First, we give the models of tiered-code-modulated GNSS signal and signal acquisition. Then, the bit transitions problem is introduced. Finally, we describe the traditional PMF-FFT algorithm, which is the basis of our proposed algorithm.

Mathematical model of tiered-code-modulated signal

We use the binary phase shift keying (BPSK) signal as an example. The GNSS IF signal sequence \(r\) obtained after the RF front end can be written as:

where \(A\) is the signal amplitude, \(k\) is the time of the sample point, \(c[\bullet ]\) is the primary code sequence, \({\tau }_{c}\) is the primary code phase, \(\mathrm{sc}[\bullet ]\) is the secondary code, \({\tau }_{\mathrm{sc}}\) is the secondary code phase, \(d[\bullet ]\) is the data bit, \({T}_{s}\) is the sampling interval, \({f}_{d}\) is the Doppler frequency, \({\varphi }_{0}\) is the initial phase of the carrier, and \({W}_{n}[k]\) is the signal noise.

Tiered-code-modulated GNSS signal acquisition

The acquisition is a typical maximum likelihood (ML) estimation process (Lo Presti et al. 2009). This process, seen as a two-dimensional search, is finished when the estimates are close to the actual value and the maximal correlation value is obtained. This process sees each possible code phase and Doppler frequency value as a search point.

In the two-dimensional search, we do not care about the secondary codes and data bits, i.e., we consider \(\mathrm{sc}\left[k\right]\) and \(d[k]\) to be invariant constants. The receiver only needs to provide \({\widehat{\tau }}_{c}\) and \({\widehat{f}}_{d}\) as the estimates of \({\tau }_{c}\) and \({f}_{d}\). According to the estimates, the local replica of the carrier is generated to strip off the Doppler frequency, and the local replica of the primary code sequence is generated to complete the correlation and coherent integration as:

where \({c}_{\mathrm{L}}[\bullet ]\) represents the local replica of the primary sequence, \(n\) represents the \(n\) th coherent integration in a single non-coherent integration, \(l\) is the number of samples used for integration, and \(\Delta {\tau }_{c}= {{\widehat{\tau }}_{c}-\tau }_{c}\) and \(\Delta f= {\widehat{f}}_{d}-{f}_{d}\) are the estimated deviation of the primary code phase and Doppler, respectively. The several consecutive results of coherent integration are non-coherently accumulated to obtain the tests statistic:

where \({N}_{\mathrm{NC}}\) is the times of non-coherent accumulation. When the set of estimated deviations corresponding to the search cells is small enough, the \({I}_{\mathrm{NC}}\) tends to the maximum.

It is worth noting that equation (2) is based on the assumption that the secondary codes and data bits can be ignored under the limited length of integration, i.e., the size of \(l\). The length of a message bit limits the integration time due to the bit transitions. Figure 1 depicts the bit transitions phenomenon, which means the sign transitions may occur and reduce the correlation value. The orange line represents the data bit. Furthermore, the use of the tiered code complicates the question. The possible sign transitions between the secondary code chips may also cause gain loss.

Here, the structure of the tiered code is described using the BDS B1I signal as an example (shown in Fig. 2). The B1I signal's message symbol rate is 50 bps. The primary code rate is 2.046 Mbps, with 2046 code chips in one period. The secondary code employs NH code with a chip rate of 1kbps and 20 code chips in one period. One secondary code period's edges are aligned with the edges of the message bits, and one primary code period's edges are aligned with a secondary code chip. Due to the potential secondary code sign transitions, we can only guarantee an effective integration time of 1 ms.

One solution to extend the coherent integration time is to transform the acquisition process into a three-dimensional search process to locate the secondary code phase \({\widehat{\tau }}_{sc}\). A local replica of the secondary code sequence can be introduced concurrently in the correlation operation, extending the coherent integration time to 20 ms and bringing about a noticeably higher acquisition sensitivity. In this case, Equation (2) is modified as:

where \({\mathrm{sc}}_{L}\left[\bullet \right]\) is the local replica of the secondary code sequence and \(\Delta {\tau }_{\mathrm{sc}}={\widehat{\tau }}_{\mathrm{sc}}-{\tau }_{\mathrm{sc}}\) is the estimated deviation of the secondary code phase. The addition of a new dimension greatly increases the computational load. Covering all search spaces is time-consuming and needs an efficient search strategy. The PMF-FFT uses partial-matched filters for correlation to realize a parallel search of the primary code phase. The FFT operation is performed on the partial correlation results to achieve a parallel search of the Doppler frequency domain while completing coherent integration.

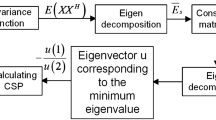

Conventional PMF-FFT algorithm

Here is a description of the PMF-FFT as shown in Fig. 3. For brevity, the following phrases are defined and used throughout the text:

Coarse Doppler estimates are used as the main frequency point for generating the local replica of the carrier

Fine Doppler estimates are determined by FFT spectrum lines. A Doppler estimate consists of both a coarse and a fine estimate.

A search cell comprises a Doppler estimate and a primary code phase estimate.

A test statistic is the final integration result at a search cell.

All search cells in a single partial parallel search are combined to form a search space.

The conventional PMF-FFT algorithm flow is as follows:

-

(1)

The input IF signal, whose center frequency is the sum of the Doppler shift and the IF, is sampled at twice the primary code rate. A PMF bank consists of \(P\) sections of PMF. Each section can complete the correlation of \(S\) sample points with the local replica of code (\(P\) and \(S\) are chosen by combining the desired level of parallelism with the hardware consumption, e.g., \(P=6\) and \(S=341\), allowing 2046 correlation operations on different code phases to be performed in parallel). The IF signal sample points are multiplied by the local replica of the carrier (main frequency point \({f}_{dc}\) plus IF) and moved into the PMF bank.

-

(2)

The local replica of the code sequence is set as the matched filter's coefficients, and the code phase is changed by shifting the sample points. The correlation procedure is continued until all the correlation operations of \({T}_{\mathrm{CI}}\) duration on each primary code phase are finished. The partial correlation results are assembled into an M-segment partial correlation matrix with \(P\times S\times M\) correlation results (each correlation time is \({T}_{p}\)).

-

(3)

The coherent integration results are obtained by performing FFT at each primary code phase. Only the first and last \(1/\left(4M\right)\) of the M points are used as Doppler fine estimates. It is due to the significant correlation loss that occurs when the estimated residuals of Doppler are large. In this case, an FFT covers a frequency range from \(-1/\left(4{T}_{p}\right)+{f}_{dc}\) to \(1/\left(4{T}_{p}\right)+{f}_{dc}\), and the frequency resolution is \(1/\left(M\times {T}_{p}\right)\).

-

(4)

The non-coherent integration is applied after code Doppler compensation to increase the SNR gain further. To cover all search cells, we should serially switch the search space. Repeat the procedure above until all search spaces are searched. The detection is completed by testing the maximum test statistics of all search spaces.

Because the secondary code phase is not considered and the FFT introduces envelope loss, the conventional PMF-FFT cannot be used directly to acquire tiered-code-modulated GNSS signals in weak signal environments. We propose the IHSPF algorithm, which enhances the conventional PMF-FFT algorithm to attain high sensitivity.

Improved high-sensitivity partial-matched filter with FFT (IHSPF)

The IHSPF algorithm is shown in Fig. 4, where the improvements have been marked with dashed boxes. The phrases defined in the last chapter are made the following modifications:

A search cell comprises a Doppler estimate, a primary code phase estimate, and a secondary code phase estimate. Accordingly, the search space becomes three-dimensional.

The process of the IHSPF is described using the example of acquiring the B1I signal, where \(P=6\), \(S=341\), and \(M=128\). Figure 5 shows the correlation and coherent integration process.

-

(1)

The sample points are mixed with the local replica of the carrier. Then, successively shifting \(2046\times 40\) times obtains 240 partial correlation results under 2046 primary code phase estimates. The length of each segment is 1/12 ms. The four adjacent partial correlation results are added to obtain 1/3 ms correlation results. The local replica of the secondary code sequence is then multiplied by 60 1/3 ms correlation results. The correlation results are multiplied by 20 secondary code sequences with different initial phases.

-

(2)

Each of the 20 sets of partial correlation values is subjected to a zero-padding 128-point FFT operation. One 20 ms coherent integration is completed at all search cells in a search space. A search space is made up of \(2046\times 20\times 64\) search cells. The coherent integration process is repeated, and the non-coherent integration is performed.

-

(3)

The processing above continues until the 4092 primary code phases, 20 secondary code phases, and \(\pm\) 5000 Hz Doppler range are covered. Here, the main frequency points are set using spectrum interleaving. When the above process is finished, the test statistics’ value, Doppler, and code phase matrices are recorded. Then, one search is completed.

-

(4)

When the signal strength is high enough, the maximum threshold compare detection (MTCD, which compares the maximum test statistic to the threshold to complete the detection) is used to complete the detection. If not, the search is repeated, and the I-MCCD is used.

Parallel secondary code phase estimate (PScPE)

Using the tiered code enables us to extend the length of the coherent integration to the length of a message bit without considering bit transitions. By including a third dimension (the secondary code phase) in the conventional search process, we extend the acquisition to a three-dimensional search process.

Algorithm principle

The IF signal samples (length of one secondary code period) can be written as a row vector:

where \({r}_{k}=r[k]\). The estimates of a search cell \(\left({\widehat{\tau }}_{c},{\widehat{\tau }}_{sc},{\widehat{f}}_{d}\right)\) can be used to generate local replicas in the form of row vectors:

where \({c}_{k}=c\left[k-{\widehat{\tau }}_{c}\right]\), \(s{c}_{k}=sc\left[k-{\widehat{\tau }}_{sc}\right]\), \(c{a}_{k}=\mathrm{exp}\left(2\pi k{T}_{s}{\widehat{f}}_{d}+{\varphi }_{L}\right)\). In the order of stripping off the carrier, stripping off the secondary code, stripping off the primary code, and correlating, the coherent integration at a search cell is:

where \(\circ\) denotes Hadamard product. This step can easily be mathematically extended to parallel search by extending vector operations to matrix operations. Assuming that the number of the Doppler frequency estimates, secondary code phase estimates, and primary code phase estimates are \(A\), \(B,\) and \(\Upsilon\), respectively, we can form the local replica of the carrier matrix, the local replica of the secondary code matrix, and the local replica of the primary code matrix as:

where the first number in the lower corner of each element is the ordinal number of the estimate and the second number is the chronological number. Each row of the matrix represents a local replica sequence.

The flow of a fully parallel search in three dimensions can be expressed as:

where \(\odot\) denotes Khatri–Rao product. We can obtain a \(\left(AB\right)\times \Upsilon\) coherent integration matrix \({\mathbf{I}}_{(AB)\times \Upsilon }\), where each matrix element is a coherent integration value at a search cell. Since the local primary and secondary code sequences have only two numbers, 1 and − 1, the code stripping operation only requires the multiplication of ± 1. The original value of multiplication by 1 remains unchanged, and multiplication by -1 can be replaced by inverting the sign. To obtain \({\mathbf{I}}_{(AB)\times \Upsilon }\), \(AB\times l+AB\Upsilon \times l\) ± 1 multiplication (code stripping), \(A\times l\) complex multiplication operations and \(AB\times \Upsilon \times \left(l-1\right)\) addition operations are required.

The number of code chips in a secondary code period is \(B\), and one chip has \(l/B\) sample points. Therefore, we can use PMF for partial correlation and strip off the secondary code over the correlation results rather than all sample points. The primary code sequence matrix can be rewritten as a matrix of \(D\cdot B\) (\(D\) is the partial correlation results in one primary code period) chunks:

where \(1\le i\le DB\). Carrier stripping yields matrix \({\mathbf{J}}_{{A}_{r}\times l}\) , which is written in the same chunked form:

where \({A}_{r}=A/\left(M/2\right)\) will be described later. Partial correlation produces the correlation results matrix:

where \({\mathbf{K}}_{i}\) is the partial correlation results for \(A\) different Doppler frequency and \(\Upsilon\) different primary code phases. The same-position element of different \({\mathbf{K}}_{i}\) matrix is extracted to create a row vector, which is then combined to create a \({A}_{r}\Upsilon \times DB\) partial correlation results matrix (16). \(\widetilde{\mathbf{K}}\) and \(\mathbf{K}\) differ only in the order of elements.

Each partial correlation time is \(1/D\) primary code period. A single secondary code period has \(D\cdot B\) correlation results.

Accordingly, we generate \(B\) secondary code sequences of length \(D\cdot B\) based on different secondary code phases, forming a secondary code sequence matrix:

Then, complete secondary code stripping and coherent integration over the partial correlation results:

M-point FFT is used for parallel search in the Doppler frequency domain, with carrier stripping applied only at the \({A}_{r}=A/\left(M/2\right)\) main frequency points and other frequency points covered by FFT spectrum lines. The PMF partial correlation and M-point FFT operations carry out the coherent integration.

To obtain the coherent integration matrix \({\mathbf{I}}_{{A}_{r}\Upsilon B\times \left(M/2\right)}\), a \(M\)-point FFT operation is performed on each row vector, taking the results on \(M/2\) spectrum lines in each FFT. A total of \({A}_{r}\Upsilon \times \left(l/DB\right)\times \mathrm{DB}+{A}_{r}\mathrm{\Upsilon DB}\times B\)±1 multiplications (code stripping), \({A}_{r}\times l+{A}_{r}\Upsilon B\times \left(M/2\right){\mathrm{log}}_{2}M\) complex multiplication and \({A}_{r}\Upsilon \times (\left(l/DB\right)\times \mathrm{DB}-\mathrm{DB})+{A}_{r}\Upsilon B\times M{\mathrm{log}}_{2}M\) additions are carried out. The integration gain envelope is as:

where \(\Delta {\tau }_{c}\)and \(\Delta {\tau }_{sc}\) are the estimated residuals of the primary code phases and secondary code phases, respectively, and \(\Delta f\) is the estimated residual of the coarse Doppler estimates.

Analysis of computational complexity

To analyze the computational complexity, the B1I signal is used as an example, in which \({A}_{r}=7\),\(B=20\),\(\Upsilon =4092\),\(l=81840\) (\({T}_{\mathrm{CI}}=20\mathrm{ms}\)),\(D=3\), \(M=128\) , and the radix-2 FFT is used. To compare the computational complexity, the conventional algorithm, in this case, also executes a coherent integration of 20 ms. The conventional two-dimensional parallel search algorithms must serially change the local secondary phase 20 times to obtain the secondary code phase estimate. Thanks to the three-dimensional parallel search, which reduces repetitive correlation operations, the PScPE algorithm greatly reduces the computational complexity (as shown in Table 1).

Envelope loss mitigation (ELM)

The scalloping loss problem caused by the discrete Fourier transform (DFT) (Lyons 2015) reflects fluctuations in the overall amplitude–frequency response of the N-point DFT. In the PMF-FFT algorithm, the FFT (fast algorithm of the DFT) accomplishes two tasks, one is to complete the coherent integration as the last step and the other is to complete the determination of the spectrum peak. To analyze the integration gain envelope, we are only concerned with the effect of \(\Delta f\). The envelope gain is shown in the following equation:

where the left factorization is the partial correlation gain term and the right factorization in bold font is the FFT gain term. When the Doppler precisely lies in the middle of the two spectrum lines, the amplitude of the coherent integration result obtained is cut, and the acquisition sensitivity is decreased. At this point, the \(\Delta f{T}_{p}-n/M\) is maximal and the gain is minimal.

Zero-padding FFT

A common solution to reduce the scalloping loss is using zero padding. The FFT/DFT is a sampling of the DTFT function. By padding zeros in the time domain, the DTFT is sampled at smaller intervals in the frequency domain, leading to a smaller spacing between spectrum lines, effectively reducing the scalloping loss. Padding zeros does not add more noise or exacerbate spectral leakage. However, zero padding means that the number of points involved in the FFT operation increases, which causes additional computation complexity. Therefore, we must choose the appropriate FFT points to balance envelope gain and computational complexity.

The B1I signal acquisition is used as an example to analyze the number of main frequency points and the Doppler frequency resolution when various FFT points and zero-padding numbers are used. The main frequency points are set to cover a Doppler frequency range of \(\pm\) 5000 Hz from 0. The coherent integration time is 20 ms. In one search space, it is necessary to search through 4092 primary code phase estimates and 20 estimates of the secondary code phase, performing a total of 81,840 128-FFT operations. The complexity and search time directly increase with adding one search space. Table 2 lists potential segmentation and FFT points combinations.

A smaller hardware implementation is achieved by selecting fewer FFT points, compromising the acquisition speed. We use a 128-point FFT to keep the hardware size small when the acquisition speed is adequate. There is an analysis of the gain envelope curve of the 128-point FFT. After completing the partial correlation, 120 partial correlation results of 1/6 ms were obtained for a 128-point FFT. Reserving half of the FFT spectrum, the maximum gain loss was 4.29 dB, with 3.4 dB due to the scalloping loss. Such a significant loss would severely deteriorate the acquisition sensitivity at this Doppler frequency (shown in Fig. 6). We use the zero-padding FFT method. The partial correlation results are recombined into 60 partial correlation results, padding zeros and performing a 128-point FFT. Reserving half of the FFT spectrum, the maximum gain loss is 1.68 dB, with the loss due to the scalloping loss being 0.8 dB (shown in Fig. 6).

Spectrum interleaving

Another way to reduce the effect of scalloping loss is to interleave the spectrum by adding main frequency points at 1/2 spectral resolution next to the original main frequency points. There is no conflict between spectrum interleaving and zero padding, so we can combine them. A 128-point zero-padding FFT on 60 partial correlation results is performed, and spectrum interleaving is used (shown in Fig. 7). After interleaving the spectrum lines, the lowest gain point of the original curve (blue) is precisely the highest gain point of the new curve (red). The higher gain part of the two gain curves forms the final gain curve (black). The maximum gain loss is 1.09 dB, of which the scalloping loss causes 0.20 dB. The combination of zero padding and spectrum interleaving reduces the loss effectively.

Improved multi-search code phase compare detection (I-MCCD) method

The MCCD method records multiple maximums from multiple consecutive searches and compares the phase of the primary code between multiple searches to complete the detection. Duan et al. (2015) provide an experimental analysis of the acquisition sensitivity of the MCCD method. It shows that, when using the MCCD method, the acquisition sensitivity of the L1CA signal is about 2 dB higher than using the MTCD method.

Based on the MCCD method, we introduce the comparison of the secondary code phase. We denote it as I-MCCD (\(R,{N}_{M}\)), where \(R\) denotes the number of searches and \({N}_{M}\) denotes the first \({N}_{M}\) maximums recorded per search. Unlike the primary code phase, which is significantly affected by Doppler, the secondary code has a low chip rate, eliminating the need for a code Doppler calculation. As the first step in detection, we can compare the secondary code phase. Only two possibilities exist: phase alignment and phase deviation by one chip. Comparing the primary code phases is unnecessary if the requirements are not satisfied. Instead, the following cell is compared. Otherwise, the comparison of the primary code phase continues, and the code Doppler estimates must be determined from the Doppler frequency estimates. Introducing a secondary code phase comparison can avoid unnecessary primary code Doppler calculations while ensuring a low false alarm rate. The algorithm flow is shown in Fig. 8.

-

(1)

The same satellite is searched \(R\) times consecutively. Each search starts at time \(T\left(1\right)\), \(T\left(2\right)\), … \(T\left(R\right)\).

-

(2)

The \({N}_{M}\) maximal test statistics of each search and the corresponding Doppler frequency matrix \({\mathbf{F}}_{R\times {N}_{M}}\), the primary code phase matrix \({\mathbf{C}}_{R\times {N}_{M}}\), and the secondary code phase matrix \(\mathbf{S}{\mathbf{C}}_{R\times {N}_{M}}\) are recorded. The first value \({f}_{ij}\) in \({\mathbf{F}}_{R\times {N}_{M}}\) is the object of comparison, and the N elements of the matrix whose difference is less than \({f}_{th}\) are selected. The corresponding primary code and the secondary code phase array constitute V.

-

(3)

The first array \({v}_{{i}_{0}}\) in V is compared with the rest of the arrays in V. Taking the second array \({v}_{{i}_{1}}\) as an example. First, the secondary code phases are compared, and when the phases aligned or differ by one chip, the code shift is calculated based on the acquisition time \(T\left({i}_{0}\right)\) and \(T\left({i}_{1}\right)\) corresponding to \({v}_{{i}_{0}}\) and \({v}_{{i}_{1}}\), and then the primary code phases are compared. If the difference between secondary code phases is greater than one chip, there is no need to compare the phase of the primary code.

-

(4)

The difference between the two primary code phases is calculated directly if the secondary code is in phase. If the secondary code phases differ by one, the number of half-chips in a code period is added to the smaller one, and the difference is made. If the absolute value of the difference is less than or equal to \(|B|+1\), the statistic \(\mathrm{SD}\) is added by one. Otherwise, \(\mathrm{SD}\) remains unchanged.

-

(5)

After all values in V are compared with \({\mathrm{v}}_{{\mathrm{i}}_{0}}\), if \(\mathrm{SD}\)>\(th\), \({f}_{{i}_{0},{j}_{0}}\), \({v}_{{i}_{0}}\) is the Doppler and code phase of the auto-correlation peak; \(\mathrm{SD}\)<\(th\), then the second value in \({\mathbf{F}}_{R\times {N}_{M}}\) is taken for comparison, and processes (3) and (4) are repeated.

In the detection process, four parameters need to be set as follows: \(R\), \({N}_{M}\), \({f}_{th}\) and \(th\). Taking speed and sensitivity into account, we use I-MCCD (3,20), set \({f}_{th}\) as the resolution of the frequency search, and set \(th\) = 1.

Simulation results and analysis

Our work focuses on BDS-2 and BDS-3 signals with secondary code modulation. But it applies equally to other GNSS signals with secondary code modulation (including Galileo E1C, E5, and GPS L5). To confirm this, in addition to BDS signals, we have also implemented the acquisition of E1C, and the acquisition of other signals will be realized in the future work.

The algorithm simulation uses software-generated BDS B1I, B2I, B3I, B2a, and Galileo E1B/C signal IF data. No less than 100 Monte Carlo simulations are performed in each condition.

Sensitivity simulation results

The carrier-to-noise ratio (\(C/{N}_{0}\)) of the signal is controlled by the signal amplitude. Under each \(C/{N}_{0}\), the Doppler of the IF data is generated separately at the main frequency point and the scalloping loss maximum point. The former is where the integration gain is highest and has the best theoretical sensitivity performance. The latter is where the integration gain is lowest and has the worst theoretical sensitivity performance. Counting the acquisition success probability at both points fully confirms the algorithm's sensitivity performance. The properties of signals are listed in Table 3.

The algorithm is implemented by a playback acquisition framework. The envisaged acquisition engine implementation uses a 5Mbits storage space for storing and playing back the IF data at high speed. The acquisition parameters are listed in Table 4. The CodeBlocks in the table is \(\left(\mathrm{Primary\;code\;length}\right)\times 2/2046\).

The simulations are performed in a weak signal environment. The estimation accuracy and acquisition speed that can be achieved are shown in Table 5, where the acquisition speed is calculated based on the acquire engine working at 200 MHz.

The probability of correct acquisition at different signals \(C/{N}_{0}\) is shown in Fig. 9. We can see that the IHSPF algorithm has extremely good acquisition sensitivity performance, especially for B1I and B2I.

The IHSPF algorithm can complete nine searches of the B1I signal in five seconds. Considering the worst case (all satellites with Doppler frequency at the gain nadir), the probability that four and more satellites (enough for positioning) can be acquired in five seconds is 98%. It is not a bottleneck for TTFF.

Analysis

The analysis of the sensitivity gap between the B3I/B2a and B1I/B2I signals is provided in the following. Equation (23) illustrates the SNR gain of coherent integration compared to the 1-ms correlation. By deducting the non-coherent loss (25) from the gain, (24) calculates the SNR gain produced by the non-coherent integration (Tsui 2005):

where \({T}_{\mathrm{coh}}\) is the coherent integration time in milliseconds and \({N}_{\mathrm{nc}}\) is the number of non-coherent accumulations. The ideal measurement factor, \({D}_{c}\left(1\right)\), is related to the system's required probability of detection and the probability of false alarms. Here, it is taken to have a typical value of 21.001. Due to limited IF storage capacity, the integration time for the B3I and B2a signal is much shorter because of the higher code rate. The strength of a single channel is -3 dB of the total signal strength, with the energy of the B2a signal being equally distributed between the data and pilot channels. Additionally, the coherent integration time is constrained by the secondary code period of B2a. The total integration SNR gain of the B1I, B2I, B3I, and B2a signals is calculated in Table 6.

Figure 10 shows the statistical results of the Doppler error of the acquisition results. For various \(C/{N}_{0}\), the percentage of errors less than or equal to the resolution (shown in Table 5) is plotted. The signal \(C/{N}_{0}\) has little impact on the Doppler estimation.

Comparison

The acquisition parameters of the simulation in the last subsection are set to exactly simulate the receiver chip in the expectation that the best acquisition sensitivity will be obtained in the hardware implementation. Here, we compare the acquisition sensitivity of the IHSPF with that of the receiver chips and other algorithms.

Comparison 1: Beidou B1I

Table 7 shows two commercial GNSS receiver SOC with the highest B1I signal acquisition sensitivity (Unicorecomm 2022; u-blox 2020). Calculating according to ambient temperature 290 K and RF Front-End loss 3 dB, the simulation results are converted to input power (26) to compare with the two chips. Correspondingly, the power of the signal input to SOC can also be converted to the \(C/{N}_{0}\) of the signal input to the acquire engine

where \({N}_{0}=kT=1.38\times 1{0}^{-23}\times 290=4.00\times 1{0}^{-21}=-203.98\mathrm{dBW}/\mathrm{Hz}=-173.98\mathrm{dBm}/\mathrm{Hz}\) and \({L}_{\mathrm{RF}}=3 \mathrm{dB}\).

The sensitivity of IHSPF is estimated here based on the BD 410034-2022 (2022), an evaluation standard specified by China Satellite Navigation Office. The standard states that the sensitivity is the minimum input signal power at which the receiver can complete positioning within 300 s. There are 45 satellites of BDS-2 and BDS-3 in space. The IHSPF takes 0.5156 s to complete one search, enabling 12 rounds of 45 satellites to be searched in 300 s. Considering the worst case at 23 dB-Hz (shown in Fig. 9), the successful probability that at least one of the 12 searches for a satellite is up to \(100\mathrm{\%}-{\left(100-74\mathrm{\%}\right)}^{12}=99.9999904\mathrm{\%}\). There are about ten BDS satellites visible in the sky. The success probability is sufficient for acquiring four or more satellites to achieve positioning. Therefore, the sensitivity of the IHSPF algorithm is 23 dB-Hz.

The Grouping-FFT-2stage algorithm proposed by Liu et al. (2018) can actualize the 90% success possibility of the B1I signal at a carrier-to-noise ratio (\(C/{N}_{0}\)) of 23.9 dB-Hz with a Doppler accuracy of about 31 Hz. In their work, several conventional algorithms were simulated at the same time, including the Conventional-NC and DC-with-sign-recovery. Here, the simulation results of the IHSPF are compared with them (shown in Fig. 11).

The proposed IHSPF algorithm can achieve an acquisition success probability of 93% (at the maximum gain points) at 23 dB-Hz with a Doppler accuracy of 11.72 Hz. The IHSPF algorithm has a better sensitivity performance under all conditions except for 22 dB-Hz, where the success probability (at the minimum gain points) is lower than other algorithms. The Grouping-FFT-2stage algorithm uses a two-stage structure. The first stage uses the conventional NC method based on circular correlation to obtain a coarse estimate of the phase of the main code, and the second stage performs an FFT on the sample points stripped off the primary code to obtain a Doppler estimate.

Complex multiplication is the most computationally loaded of the acquisition algorithms and is used in many works to evaluate algorithm complexity (Liu et al. 2018; Svatoň et al. 2020). In the Grouping-FFT-2stage algorithm, the number of complex multiplication operations in correlation and integration is taken as the computational complexity, and the number of addition operations is ignored. Meanwhile, the FFT of the local code is calculated and stored in the receiver in advance. It is not counted in the computational complexity. Here, we compare the computational complexity of the IHSPF with that of the Grouping-FFT-2stage algorithm (as shown in Table 8, \({A}_{r}=14\), \(B=20\), \(\Upsilon =4092\), \(l=81840\), \(M=128\)). The computational complexity of the IHSPF is higher than the Grouping-FFT-2stage. It is mainly due to using the ELM method, which increases the number of main frequency points. However, higher acquisition success probability and frequency resolution are also obtained.

Comparison 2: Galileo E1C

The mSBZP method proposed by Svatoň et al. (2020) performed simulations for E1C acquisition sensitivity at different lengths of coherent integration. Our work also performs sensitivity simulations for the Galileo E1C signal (the signal properties shown in Table 9) to verify adaptability to other constellations’ signals. The Doppler of the IF data is also generated separately at the main frequency point and the scalloping loss maximum point. The acquisition engine uses the parameter settings in Table 10. A comparison of the success probability of acquisition is shown in Fig. 12.

The 'mSBZP' method in Fig. 12 results from the proposed improved algorithm in Svatoň et al. (2020). The acquisition success probability of the IHSPF outperforms the 'mSBZP' method. The advantage of IHSPF is also in the reduction of computational complexity. Table 11 shows the comparison of the computational complexity of the search for 8184 primary and 25 secondary code phases at one main frequency point using 100 ms integration (where \({A}_{r}=1\), \(B=25\), \(\Upsilon =8184\), \(l=8184\times 25\), \(M=128\)). The complexity of the IHSPF is only about 1/15 of the mSBZP. This is because the mSBZP method uses a serial search for the secondary code phase. The IHSPF does not need to repeat the correlation operations thanks to parallel search in the secondary code domain.

Conclusion

The IHSPF algorithm is proposed based on the PMF-FFT. The conventional 2-D parallel search in the primary code phase and Doppler frequency is extended to a 3-D parallel search in the primary code phase, secondary code phase, and Doppler frequency using the PScPE. The envelope loss problem of the FFT is analyzed, and the integration gain envelope is improved by the ELM method, which combines zero padding and spectrum interleaving. An improved detection method, the I-MCCD, is introduced to improve the acquisition sensitivity further.

Simulations show that the proposed IHSPF algorithm has superior acquisition sensitivity performance to the BDS-2 and BDS-3 signals with secondary code modulation. The acquisition sensitivity of the B1I signal is effectively improved up to 23 dB-Hz. The success probability can get 93% at the maximum gain points and 74% at the minimum gain points. Theoretically, when the algorithm is implemented using hardware and the circuit works at 200 MHz, the time to complete a search is 515.6 ms. The IHSPF can also be applied to other GNSS signals, and the acquisition of E1C signals is implemented in our work.

In future, we will validate the IHSPF algorithm on FPGAs. The total integration time and the number of searches in the I-MCCD can be further researched to balance the acquisition sensitivity and speed in different applications. The IHSPF algorithm was designed with multi-constellation and multi-frequency compatibility in mind. It can be extended to a full-constellation and full-frequency acquisition algorithm.

Data Availability

The data that support this research are available from the corresponding author upon reasonable request.

References

BD 410034-2022 (2022) Performance requirements and test methods for BeiDou multi-constellation multi-frequency System-on-Chip. http://www.beidou.gov.cn/yw/gfgg/202211/t20221114_24871.html

Corazza G (1996) On the MAX/TC criterion for code acquisition and its application to DS-SSMA systems. IEEE Trans Commun 44:1173–1182

Corazza G, Pedone R (2007) Generalized and average likelihood ratio testing for post detection integration. IEEE Trans Commun 55(11):2159–2171

Duan H, Ba X, Chen J (2015) Detection method for weak GPS signal under cross-correlation effect. J Data Acquisit Processg 30(3):677–682

Foucras M, Julien O, Macabiau C, Ekambi B, Bacard F (2016) Probability of detection for GNSS signals with sign transitions. IEEE Trans Aerosp Electron Syst 52(3):1296–1308

Gao F, Xia H (2018) Fast GNSS signal acquisition with Doppler frequency estimation algorithm. GPS Solutions 22(4):103

Geiger B, Vogel C, Soudan M (2012) Comparison between ratio detection and threshold comparison for GNSS acquisition. IEEE Trans Aerosp Electron Syst 48:1772–1779

Guo W, Niu X, Guo C, Cui J (2017) A new FFT acquisition scheme based on partial matched filter in GNSS receivers for harsh environments. Aerosp Sci Technol 61:66–72

Kim B, Kong SH (2014) Design of FFT-based TDCC for GNSS acquisition. IEEE Trans Wireless Commun 13:11

Kong SH (2013) A deterministic compressed GNSS acquisition technique. IEEE Trans Veh Technol 62:511–521

Kong SH (2015) SDHT for fast detection of weak GNSS signals. IEEE J Sel Areas Commun 33:2366–2378

Kong SH (2017) High sensitivity and fast acquisition signal processing techniques for GNSS receivers. IEEE Signal Process Mag 34(5):59–71

Leclere J, Botteron C, Farine P (2014) Acquisition of modern GNSS signals using a modified parallel code phase search architecture. Signal Process 72:177–191

Leclere J, Landry R (2018) Combining secondary code correlations for fast GNSS signal acquisition. In: 2018 IEEE/ION position, location and navigation symposium (PLANS). IEEE, Monterey, pp 46–55.

Liu Q, Kou Y, Huang Z, Wang J, Yao Y (2019) Mean acquisition time analysis for GNSS parallel and hybrid search strategies. GPS Solutions 23(4):94

Liu A, Zhao L, Ding J, et al (2018) Grouping FFT based two-stage high sensitivity signal acquisition with sign transitions. Journal of Navigation 6:52479–52489

Lo Presti L, Zhu X, Fantino M, Mulassano P (2009) GNSS signal acquisition in the presence of sign transition.IEEE J Selected Topics Signal Process 3(4):557–570

Lyons RG (2015) Understanding digital signal processing, 3rd edn. Publish House of Electronics Industry Press, Beijing

Meng Q, Liu J, Zeng Q, Feng S, Chen R (2017) Neumann-Hoffman code evasion and stripping method for BeiDou software-defined receiver. J Navig 70(1):101–119

Spangenberg S, Scott I, McLaughlin S, Povey G, Cruickshank D, Grant P (2000) An FFT-based approach for fast acquisition in spread spectrum communication systems. Wireless Pers Commun 13(1–2):27–56

Svatoň J, Vejražka F, Kubalík P, Schmidt J, Borecký J (2020) Novel partial correlation method algorithm for acquisition of GNSS tiered signals. Navigation 67(4):745–762

Svatoň J, Vejražka F (2018) Pre- and postcorrelation method for acquisition of new GNSS signals with secondary code. In: 2018 IEEE/ION position, location and navigation symposium (PLANS). IEEE, Monterey, pp 1422–1427.

Ta T, Qaisar S, Dempster A, Dovis F (2012) Partial differential postcorrelation processing for GPS L2C signal acquisition. IEEE Trans Aerosp Electron Syst 48(2):1287–1305

Tawk Y, Jovanovic A, Leclere J, Botteron C, Farine P-A (2011) A New FFT-Based Algorithm for Secondary Code Acquisition for Galileo Signals. In: 2011 IEEE Vehicular Technology Conference (VTC Fall). IEEE, San Francisco, CA, USA, pp 1–6

Tsui JBY (2005) Fundamentals of global positioning system receivers: a software approach, 2nd edn. Wiley, New York

u-blox (2020) NEO-M9N datasheet https://www.u-blox.com/en/positioning-chips-and-modules

Unicorecomm (2022) UFirebird UC6226 datasheet https://en.unicorecomm.com/products/detail/11

Van Nee DJR, Coenen AJRM (1991) New fast GPS code-acquisition technique using FFT. Elect Lett 27(2):158–160

Viterbi AJ (1995) CDMA: principles of spread spectrum communication. Addison-Wesley, Reading

Xie G (2017) Principles of GPS and receiver design. Publish House of Electronics Industry Press, Beijing

Zhu C, Fan X (2015) A novel method to extend coherent integration for weak GPS signal acquisition. IEEE Commun Lett 19(8):1343–1346

Acknowledgements

This work was supported by high-technology project between Jilin Province and the Chinese Academy of Sciences (Grant Number 2022SYHZ0030).

Author information

Authors and Affiliations

Contributions

XD was involved in methodology, conceptualization, software, formal analysis, visualization, and writing—original draft. YY was involved in methodology, validation, software, and writing—review and editing. JC was involved in supervision.

Corresponding author

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Ding, X., Yang, Y. & Chen, J. Improved high-sensitivity partial-matched filter with FFT-based acquisition algorithm for BDS-2 and BDS-3 signals with secondary code modulation. GPS Solut 27, 143 (2023). https://doi.org/10.1007/s10291-023-01480-y

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s10291-023-01480-y