Abstract

Lately, the model-driven engineering community has been paying more attention to the techniques offered by the search-based software engineering community. However, even though the conformance of models and metamodels is a topic of great interest for the modeling community, the works that address model-related problems through the use of search metaheuristics are not taking full advantage of the strategies for handling nonconforming individuals. The search space can be huge when searching in model artifacts (magnitudes of around \(10^{150}\) for models of 500 elements). By handling the nonconforming individuals, the search space can be drastically reduced. In this work, we present a set of nine generic strategies for handling nonconforming individuals that are ready to be applied to model artifacts. The strategies are independent from the application domain and only include constraints derived from the meta-object facility. In addition, we evaluate the strategies with two industrial case studies using an evolutionary algorithm to locate features in models. The results show that the use of the strategies presented can reduce the number of generations needed to reach the solution by 90% of the original value. Generic strategies such as the ones presented in this work could lead to the emergence of more complex fitness functions for searches in models or even new applications for the search metaheuristics in model-related problems.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Since the term search-based software engineering (SBSE) was coined [46] in 2001, it has attracted many research efforts from many different research fields such as testing [3, 6], maintenance [47], requirements [47] and Software Product Lines [45]. Part of the success of SBSE resides in the fact that many of the problems present in the field of software engineering can be expressed in a way that can be successfully addressed by existing metaheuristic algorithms, such as evolutionary algorithms. In fact, only three key ingredients are needed to begin: (1) a representation (encoding) of the problem, (2) the definition of a fitness function and (3) the definition of a set of operators. Then, candidate solutions (which are encoded following the representation chosen) are evolved (by applying the operators) and are evaluated (by the fitness function) in an iterative process until optimal solutions to the problem are found.

Similarly, model-driven engineering (MDE) [52] aims to facilitate the development of complex systems by using models as the main artifacts of the software development process. However, with the widespread application of MDE to larger and more complex systems, new software engineering issues are emerging to support the development, evolution and maintenance of large models. SBSE techniques are best applied in situations where a large search space is present with a set of conflicting constraints.

This has led to the combination of MDE and SBSE techniques into a new field of study known as search-based model-driven engineering (SBMDE) [14, 53], where search-based techniques are applied for MDE-related tasks, such as discovering or optimizing models, automatically generating test procedures, and maintaining consistency between models and metamodels.

However, when applying SBSE to model artifacts, the search space can grow too large (a model of 500 elements can yield a search space of around \(10^{150}\) individuals [35]), making the search impractical if the search space is not reduced. Another problem that has originated when SBSE techniques are applied to MDE is the generation of models that do not conform to the metamodel. Conformance between the model and its metamodel has been widely studied [39, 72] and is required by existing tools [62, 80, 81].

One solution for reducing the search space while managing the conformance between the metamodel and the models generated by the search metaheuristic is the use of strategies to handle nonconforming individuals. In other words, the conformance between a model and its metamodel can be formulated as a constraint that needs to be guaranteed by the metaheuristic algorithm being applied. Therefore, we will refer to conforming or nonconforming individuals, depending on whether or not the model encoded by the individual conforms to its metamodel. There are several methods proposed in the literature [19, 60] to handle these constraints, which belong to different categories:

-

Penalty functions The application of penalty functions to the nonconforming individuals that hinders their spread during the evolution. [10, 51, 75, 89]

-

Strong encoding The use of a representation for the problem that guarantees (by construction) that all the individuals are conforming individuals [12].

-

Closed operators The use of operators that return conforming individuals as output when provided with conforming individuals as input [61].

-

Repair operators The use of repair operators that transform nonconforming individuals into conforming ones. [18, 65, 66]

The application of these strategies will result in a reduction of the search space and fine-grained control over the conformance between the models and the metamodel. However, most of the works in the literature do not apply these strategies to MDE problems [19, 60], or they do not encode model artifacts as individuals. This results in a lack of strategies to handle nonconforming individuals when working directly with model artifacts as individuals.

The meta-object facility (MOF) [64] is a specification from the Object Management Group to define a universal metamodel for describing modeling languages. In this paper, we present and compare nine different generic strategies for coping with nonconforming individuals when applying SBSE techniques that encode model artifacts built within the MOF modeling space. Specifically, we present and compare: (1) a set of five different penalty functions; (2) a strong encoding and its associated operations; (3) a set of closed operations; and (4) a set of two repair operators. All of these strategies have been designed to work with MOF models as individuals, and, therefore, are generic in the sense that they do not contain any particularities of the application domain; they only include constraints derived from the definition of MOF models.

In our previous works [37, 38] we have successfully applied SBSE techniques to perform Feature Location in Models (FLiM). Feature Location (FL) is one of the most common activities performed by developers during software maintenance and evolution [26] and is known as the process of finding the set of software artifacts that realize a specific feature. We use the FLiM problem as a running example throughout the paper.

We evaluate the different strategies for coping with nonconforming individuals by applying them to perform FLiM on the product models from two industrial domains: BSH, the leading manufacturer of home appliances in Europe; and CAF, a leading company that manufactures railway solutions all over the world. The evaluation is performed using two fitness functions, an optimal fitness based on an oracle and a state-of-the-art fitness based on textual similarity. The results show that these strategies for handling nonconforming individuals can reduce the number of generations needed to reach the solution to 90% of the original value. This can result in gains in performance of more than 20% for some of the metrics analyzed. In addition, we provide a statistical analysis to ensure the significance of the results obtained.

The community that is currently applying SBSE solutions to MDE problems is not taking full advantage of the improvements that the use of strategies such as the one presented in this paper can provide. Therefore, we want to provide evidence of their benefits and contribute to the community with a set of domain-independent strategies that have been evaluated on two industrial case studies of FLiM and can be applied by other researchers when applying SBSE to MDE problems. The strategies can be applied without modification to other FLiM problems whose models are created with any MOF domain-specific language expecting similar results. In addition, the encoding presented has been used in other SBMDE problems as Bug Location [7] and Traceability Link Recovery [70] and we expect similar results when applying the strategies. For SBMDE problems requiring a different encoding (with expressiveness to generate model fragments that are not part of the parent model), modifications of the strategies may be required, and further experimentation is needed to evaluate if the results are similar.

The rest of the paper is structured as follows. Section 2 discusses related work. Section 3 establishes the foundations for the rest of the paper, including the problem of FLiM and the Evolutionary Algorithm that we use to address it, the model and metamodel conformance, and the search space. Section 4 presents the nine generic strategies for handling the nonconforming individuals introduced in this work. Section 5 presents the evaluation performed with two industrial case studies. Section 6 provides a discussion of the results obtained. Section 7 discusses the threats to validity, and Sect. 8 concludes the paper.

2 Related work

This section presents works from the literature that are related to the approach presented here. There are some works that apply SBSE strategies to address MDE problems. However, not all of them use models as the individuals; some apply the searches to model transformation rules, while others focus on the improvement of the metamodel through the use of Object Constraint Language (OCL) rules. We also present works about feature location in models. Finally, we discuss works that are related to models in the context of a Software Product Line.

2.1 Model transformation rules

Some works that apply EAs to models use model transformation rules to encode the individuals. Nonconforming individuals are mainly handled through repair operators or death penalties:

The work from [2] applies a Non-dominated Sorting Genetic Algorithm (NSGA) to the problem of rule-based, design-space exploration. The aim is to find the candidates that are reachable from an initial model by applying a sequence of exploration rules. In that work, the authors make use of a custom repair operator that fixes nonconforming individuals. However, their individuals are encoded as sequences of exploration rules, not models themselves, and, therefore, their repair operator is specific to their particular domain. In our work individuals are encoded as model fragments and the repair operators that we propose in this paper can be applied to individuals encoding models from any domain.

In [25], the authors apply search directly to model transformations, without the need for an intermediate representation. The approach proposes the creation of model transformation rules that are capable of performing the tasks associated with an Evolutionary Algorithm (EA), such as the creation of the initial population. The approach is applied to a problem of resource allocation, where the nonconforming individuals are pruned out through the use of one of these model transformation rules. Similarly to our work, the authors apply a death penalty to prune out nonconforming individuals. However, the rule that is used to identify those individuals is specific to their particular domain, and cannot be applied to identify nonconforming model fragments from other domains.

In [32], the authors present MOMoT, a tool that applies SBSE strategies to optimize the set of model transformation rules needed to maximize the requested quality goals of a given model. The approach is further refined in [31] to include support for many objectives and an exhaustive performance comparison of different search strategies is presented in [13]. The tool makes use of three different strategies to handle duplicated or non-executable sets of transformations that could arise when performing genetic operations. The first one is the use of a death penalty, removing those transformations sets. The second one is the replacement of the malformed transformation by a random transformation (or a placeholder transformation) so the set of transformations can be executed. The third strategy is the use of a dedicated re-combination operator (such as the partially matched crossover [42]) that is able to consider some constraints avoiding the creation of non-executable transformation sets. However, the strategies used in those works are designed to work on individuals encoding the order of the transformations, and cannot be applied to repair nonconforming individuals that encode model fragments. Furthermore, the impact of the use of those strategies on the performance of the approach is not evaluated.

In [15], the authors describe strategies for generating closed operators. They use graph transformation rules to encode the mutation operators that are then automatically generated in the form of transformations. These operators guarantee the consistency of the mutated models with the metamodel multiplicity constraints. The resulting operators are similar to the closed operators proposed in this work, but their operators are generated taking into account the multiplicities from the metamodel. In this work, we obtain the constraints for the closed operators from the inherent constraints of the metalanguage used to build the metamodels, instead of using the multiplicities of the metamodel. In addition, our operations are designed to work over EA encoding model fragments.

2.2 Metamodel enhancement

Other works try to enhance the metamodel to avoid the generation of models that should not be part of the modeling space for that metamodel. This is usually done through the use of the Object Constraint Language (OCL) rules defined throughout the metamodel.

In [43], the authors propose an approach that helps the modeler find the boundaries of the modeling space of a metamodel. To do this, the approach generates samples of all of the models that can be built with a given metamodel and iterates those samples (through a simulated annealing algorithm) to maximize the coverage of the sample. Then, the sample is presented to experts so that they can fix the metamodel if any of the presented models should not be allowed. By doing this, the gap between the modeling space (all of the models that are reachable from a metamodel) and the intended modeling space (the models that the experts want to be built with the metamodel) can be reduced, and the accuracy of the metamodel can be increased. Similar to our work, their work deals with nonconforming individuals. However, in [43], the undesired individuals are identified by experts and then turned into nonconforming by modifications of the metamodel that was used to create the individuals. In its current form, their approach cannot be applied to handle nonconforming individuals of a running EA.

In [29], the authors take two sets of models (one that includes valid models and another one that includes invalid models) and use an EA to automatically generate well-formedness rules that are derived from the two sets of models provided. As a result, they provide a set of OCL rules that can be used to improve the metamodel into a more precise metamodel + well-formedness rules.

Other works, such as [4], take into account the OCL constraints that are embedded throughout the metamodel and try to generate sets of parameters that fulfill the OCL constraints with the aim of using them as test data. The approach is further refined in [5] to include more types from OCL and heuristics to guide the search. They compare themselves with the most widely used OCL constraint solver, achieving better results. Similarly, the graph solver presented in [78, 79] generates consistent models of a designated size from a specification defined by a metamodel and a set of well-formedness constraints. However, these approaches do not solve the problem of handling nonconforming individuals when using search strategies. They do take into account constraints that models should fulfill and use EAs or other generators to help in this task. In contrast, the strategies that we present in this paper are designed to be applied to existing searches in models that are not benefiting from the advantages associated with the proper management of nonconforming individuals.

In [86, 87], the authors propose Crepe, a domain-specific language (DSL) that can be used to specify individuals that represent any model conforming to a specific metamodel. Thus, they are able to encode individuals in the form of a model (or model fragments) as we do in our work. In [88], the authors report the generation of nonconforming individuals when applying their encoding for models as individuals, which allowed them to improve the DSL being used. In [56], the authors identify the generation of nonconforming individuals in Crepe, and propose a repair operator to address this issue. After an individual is modified, they use a re-coding operation to repair the individual, preserving the semantics of the model in those aspects not directly affected by the crossover and mutation operations. However, individuals are only partially repaired as the expressiveness of the operators and encoding being used allows for the generation of individuals that cannot be automatically repaired. We believe that approaches such as [56, 88] could be improved through the use of the strategies to handle nonconforming individuals presented in this paper.

2.3 Feature location in models

Some works from the literature focus only on capturing guidelines and techniques for manual transformations of a set of existing products into assets of a Software Product Line. Those works are interesting because they are based on industrial experiences; however, there is almost no automation in the process.

Other works [35, 40, 49, 58, 85, 90, 91] focus on the location of features in models through comparisons with each other. As a result, the variability is expressed in the form of a model expressing the differences (which is eventually turned into a Software Product Line). These include the following:

-

The authors in [90] present a generic approach that is able to perform comparisons of MOF models, resulting in the features being located in the form of a Common Variability Model [82]. The approach in [90] was further refined in [91] to allow the extension of the model capturing the features, when new models are added to the comparison. This reduces the complexity of the process, avoiding the need for executing all of the comparisons from scratch and allowing them to be performed incrementally.

-

Wille et al. [85] present an approach based on an exchangeable metric that is used to measure the similarity between different attributes of the models. The approach in [85] was further refined in [49] to minimize the number of comparisons needed to obtain the model representing the similarities and differences among the different models.

-

Martinez et al. [58] propose an extensible approach based on models’ comparisons that can obtain the features from a family of related models. The approach can be extended through a system of templates, allowing the identification of differences of any type of model-based content (as long as the comparison method is provided)

However, all of these approaches are based on mechanical comparisons among the models, classifying the elements based on their similarity, identifying the dissimilar elements and formalizing them as the feature realizations. In contrast, in our work the feature location is applied to a single model, so it does not rely on model comparisons to locate the features; instead it relies on searches across the modeling space performed by an EA.

Some of our previous works focus on the topic of feature location in models, ranging from approaches based on comparisons [40] to human-in-the-loop approaches [35] or searches based on metaheuristics [16, 36,37,38]. One of them focuses on the influence of genetic operations on the quality of the results [71]. Some of them focus on the possibility of sharing the information scattered among different engineers in order to empower them to produce better queries that guide the EA [68, 69], while other works explore the use of Multi-Objective Evolutionary Algorithms [16, 70]. However, none of those works has ever investigated the possibility of handling nonconforming individuals to boost the search process, as is the case in this work.

2.4 Software product lines

Finally, some works report problems when nonconforming individuals are automatically generated by their genetic operations in the context of a software product line. In [20], the authors propose a representation of a software product line architecture that can be used by search-based techniques. This allows the optimization of the architecture model through the use of different search operations. The authors report the generation of some solutions that are non-consistent with their definition of the product line architecture that are repaired by a domain-dependant repair operator.

In [77], the authors present ETHOM, an EA that is capable of generating computationally hard feature models in order to use them to feed analysis tools for feature models. To this end, the EA encodes feature models as a combination of a tree and the related cross-tree constraints. Since the use of this encoding leads to the generation of nonconforming individuals, the authors use a repair operator or discard the individual (death penalty), depending on the complexity of repairing the individual. However, since the encoding used by the authors is particular to their specific domain (their representation of feature models), the repair operator proposed is also particular to their domain and captures inconsistencies that only occur in their representation of feature models. In contrast, the strategies presented in our work are designed to work with models created with any domain-specific language created using the meta-object facility [64] metalanguage, improving its re-usability by different practitioners whose domain-specific languages are created using MOF.

3 Overview of the problem

This section provides the foundation for the rest of the paper. It describes the following: (1) what Feature Location in Models is; (2) how it is achieved through an evolutionary algorithm; (3) what model and metamodel conformance is and what makes an individual nonconforming; (4) what the search space is when searching for model fragments and what it looks like.

3.1 Feature location in models (FLiM)

Feature Location [26, 74] is the process of identifying the set of software artifacts that realize a specific feature. That is, Feature Location requires to find and indicate all the software artifacts that are used for the design, development and further maintenance of a specific feature (such as requirements, source code, documentation, or tests). Depending on the nature of the software artifacts and the features being located, a different granularity may be applied; for instance, when features are located across source code, a feature could correspond to a single class, a set of methods from different classes, some conditions inside a switch statement, or even a whole package.

We define the Feature Location in Models (FLiM) as the process of identifying the set of model elements that realize a specific feature. The results of the FLiM process are model fragments that represent a specific feature. At this point, it is important to define what a model fragment [36,37,38] is: A model fragment is always defined in reference to a parent model. A model fragment is a subset of the elements of the parent model. Therefore, all of the model fragments of a given parent model are subsets of the parent model.

Similarly to other software artifacts, the granularity can vary depending on the nature of the models and the features being located. Taking into account the MOF specification from the Object Management Group (OMG) and our experience with models from industrial domains [37,38,39], we divide the relevant elements of a model into a set of atomic elements (meta-class, meta-reference and meta-property), and we do not consider further subdivisions of those units in this work.

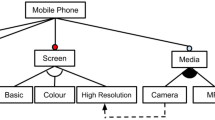

To illustrate the elements, Fig. 1 (top-left) shows the induction hob domain-specific language (IHDSL) metamodel, which is a simplificationFootnote 1 of the DSL used by one of our industrial partners. The DSL is used to model the firmware of the Induction Hobs in the context of a model-based software product line, where some of the features are reused by different products. In the following, we explain the concepts of meta-class, meta-reference and meta-property.

-

Meta-class is the core element, holds a set of meta-properties and meta-references, e.g., the Inductor meta-class element from the metamodel in Fig. 1 (top-left).

-

Meta-reference relates two meta-class elements and includes a source and a target meta-class element, a multiplicity for the target and the source meta-classes, and a name. Meta-references can also be distinguished by whether or not they are containment meta-references. For instance, the inductors meta-reference from the metamodel in Fig. 1 (top-left) is a containment meta-reference whose source is the Induction Hob meta-class (multiplicity 1) and whose target is the Inductor meta-class (multiplicity any), while the from meta-reference is a meta-reference (non-containment) whose source is the Provider Channel meta-class (multiplicity 1) and whose target is the Inverter meta-class (multiplicity 1).

-

Meta-property gives information about a meta-class, including the meta-property name, the type and the value. For instance, the Inverter meta-class element from the metamodel in Fig. 1 (top-left) contains a meta-property named pow whose type is a String.

Based on this division, a model fragment is a subset of the model elements that are present in the parent model, with the granularity of the elements being meta-classes, meta-references, or meta-properties.

3.2 Feature location in models by an evolutionary algorithm (FLiMEA)

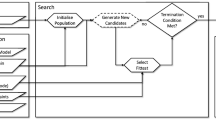

Figure 2 depicts an activity diagram for a generic EA. First, a set of individuals is obtained (following a previously designed specific representation) to be the initial population of solution candidates for the EA. Then, a fitness function is designed to assess the quality of individuals as solutions to the problem. If the stop condition is met, the execution ends; otherwise, a set of operators that is compatible with the representation and capable of evolving the individuals is executed to evolve the population. The following subsections present each of the EA parts in detail.

3.2.1 Representation of the individuals

Traditionally, the representation used in EAs comes in the form of binary strings [73]. For this EA, the individuals encode model fragments that are defined in the context of a parent model. Therefore, the representation needs to be able to capture any model fragment that can be generated from a given parent model. We use a binary string where each bit in the sequence represents the presence or absence of one specific element of the candidate solution.

In our case, the different elements that may or may not be part of an individual are the ones defined in the previous subsection (class, reference and property). Each of the elements present in the parent model will be ‘tagged’ with a position in the binary string, and then the binary string will be filled with either 0 (to indicate the absence of that element in the model fragment) or 1 (to indicate the presence of that element in the model fragment).

Figure 1 (bottom-left) shows a parent model of an example Induction Hob that contains one of each of the elements defined by the metamodel and the encoding associated with it. All of the individuals encoded in reference to this parent model will use a string of the same length, one gene for each of the elementsFootnote 2 present in the parent model (\(G_0\) to \(G_9\), up to a total of ten elements that may or may not be part of model fragments of this parent model). It is important to note that all of the elements (classes, references and properties) that are present in the parent model need to be present in the encoded binary string so that we are able to represent any possible model fragment using it.

Figure 1 (right) shows two individuals that are encoded in reference to this parent model (Model Fragment 1 and Model Fragment 2). Below each individual, a string with ten genes where the presence or absence of each element can be indicated is depicted. For instance, Model Fragment 1 is composed of an Inverter class element (\(G_0\)), a Provider Channel class element (\(G_3\)), a Power Manager class element (\(G_5\)), a from reference element (\(G_2\)), a to reference element (\(G_4\)) and a pow property element (\(G_1\)).

The function value(mf, i) is used to retrieve the value of a gene of a given model fragment (mf) and a given gene index i. For instance, the value(MF1, 2) is 1 while value(MF2, 0) is 0 (Fig. 1).

It is important to note that the presented encoding can be applied without changes to any MOF-compliant metamodel since it is expressed at the level of the building blocks of MOF metamodels. No domain-dependant information is embedded into the encoding (although it is presented in the context of a specific Induction Hob metamodel).

3.2.2 Fitness function

The fitness function is used to evaluate how good each of the individuals is as a solution to the problem. In the past [33, 37, 38], we have successfully applied fitness functions based on textual similarity between a feature definition and a model fragment. However, we identified some issues that influenced negatively the value of the fitness when using textual similarity as the fitness for FLiM [16]: (i) some feature descriptions may be incomplete (guiding the search to an incomplete model fragment), (ii) there may be vocabulary mismatch (the specific concepts defining the feature are different from those present in the feature, even though both represent the same concept), and (iii) there may be some concepts related to the domain that are not present in the model fragments but that are present in the model transformation rules applied afterward.

Therefore, in this work we perform the evaluation applying two different fitness functions. First, to isolate the effect of the strategies used to handle nonconforming individuals from the fitness function chosen, we make use of an optimal fitness function. Secondly, to study the effect of the strategies on a realistic scenario we apply a state-of-the-art fitness function based on textual similarity.

The Optimal Fitness function is used only for evaluation purposes; it relies on an oracle to guide the search (and the existence of an oracle is not always the case on real scenarios). An oracle (or golden set) is a set of problems and the solutions to those problems. In the case of FLiM, we have two oracles that were extracted from industry that include a set of problems of Feature Location in industrial models. Each of them includes a parent model where the feature should be located and a model fragment that realizes the feature. By using this oracle as the fitness function, we can remove the noise that is produced by fitness functions based on textual similarity, and focus only on the different strategies for handling nonconforming individuals and their impact on the search.

where m any given model fragment, n size of the model fragment, o model fragment from the oracle.

Equation (1) shows how to compute the fitness of a model fragment (m). The fitness is the result of adding the g(i) values for all of the genes present in the model fragment (from 0 to n) and dividing the sum by the size of the fragment. The g(i) is 1 if the gene value is the same in the model fragment and in the oracle (\(\mathrm{value}(m,i) = \mathrm{value}(o,i)\)) or \(-1\), otherwise.

Figure 3 shows an example of the fitness calculation for Model Fragment 1 (left). First, the binary string of the individual is compared with the solution that was extracted from the oracle (right). If the value of the gene is the same in both model fragments, \(\frac{1}{10}\) (it is divided by ten as there are ten genes) is added to the result. If the value of the gene is not the same in both model fragments, \(\frac{1}{10}\) is subtracted from the result. Finally, the resulting fitness(mf1) is equal to \(\frac{6}{10}\).

The resulting fitness value of the model fragment ranges from the worst value, \(-1\) (if all of the genes are the opposite of the oracle), to the best value, 1 (if all of the genes are the same as the oracle). In the case of a randomly generated individual, the fitness value should be close to 0 since the probability of correctly guessing a gene is the same as incorrectly guessing it.

The Textual Similarity Fitness that we apply in this work relies on Latent Semantic Indexing (LSI) [48] to determine how similar is each of the individuals of the population compared to a textual query that describes the feature being located. Before comparing the textual query and the texts obtained from the individuals of the population, texts need to be homogenized through the use of Natural Language Processing techniques [54].

First, the text is tokenized into words, different tokenizers can be applied based on the type of text being processed (i.e., white space for regular text, camelCase or underscore for source code). Secondly, the Parts-Of-Speech (POS) tagging technique can be applied to identify the grammatical role of each word, allowing to filter out some categories that do not contain relevant information and may introduce noise in the search process (i.e., prepositions). Thirdly, some words may not contain semantic information when used in particular domains (given their widespread), so they can be automatically removed if a list of stop words or domain terms is provided (e.g., in the induction hob domain, the word ‘hob’ will appear too many times, being no useful at all). Fourthly, stemming or lemmatization techniques can be applied to reduce the words to its root or lemma, enabling grouping and comparison of terms from the same family (e.g., ‘induction’ and ‘inductors’ would be reduced to ‘induct’).

LSI builds a vector representation of the query and a set of text documents, arranging them as a term-by document co-occurrence matrix. The rows of the matrix include all the terms present across the documents, the columns represent each of the individuals of the population and the query as last column, each cell indicates the number of occurrences of a particular term in an individual (or the query). Then, the matrix is decomposed applying the Singular Value Decomposition [54], resulting in a set of vectors that represents the latent semantic (one vector for each individual of the population and one vector for the query). Then, to compare the vectors we apply the cosine similarity between each of the vectors representing an individual and the vector of the query, resulting in the fitness value of each individual. The values range from \(-1\) (no similarity at all) to 1 (both vectors are the same).

3.2.3 Genetic operators

There are four basic operators that are generally applied in EAs (as depicted in Fig. 2):

-

Parent selection This operator selects the parents that will be used as the basis for the new individuals of the population. In this case, we use the Roulette Wheel Selection operator. This selection strategy assigns a probability of being selected to each individual in the population proportional to their fitness score. As a result, the fittest individuals are selected more often than individuals that are unfitted.

-

Crossover The aim of the crossover operator is to combine the genetic material from some individuals into new ones. In our case, we use a crossover operation that is based on a mask [37] that combines two parent individuals into two new offspring individuals.

-

Mutation The aim of the mutation operator is to emulate the spontaneous mutations that occur in nature. In this case, we use an evenly distributed mutation where each gene of each individual has the same probability of undergoing a mutation.

-

Replacement The aim of the replacement operator is to modify the population, adding the new offspring generated by the evolution and replacing some of the old individuals of the population. In this case, we apply widespread replacement of the least fit individuals by the new offspring.

3.3 Model and metamodel conformance

A model conforms to a metamodel if it is expressed by the terms that are encoded in the metamodel. In other words, the metamodel specifies all of the concepts used by the model, and the model uses those concepts following the rules specified by the metamodel. Conformance between a model and the metamodel can be described as a set of constraints between the two [67, 72]. For example, one of the constraints could be that all multiplicities specified in references must be fulfilled.

In addition, as presented in [30], current metamodel techniques tend to define the metamodel as having two parts: a domain structure that captures the context and relationships used to build the models (typically expressed as class diagrams), and well-formedness rules that impose further constraints that must be satisfied by the models (typically expressed as logical formulas). In this work, we focus on the constraints imposed by the domain structure. The additional constraints imposed by the well-formedness rules are out of the scope of this work, and some works on the topic are available elsewhere [4, 5].

MDE is built around the concept of modeling, and several tasks can be automated through the use of models and specific tools (e.g., the generation of graphical editors and tools [62, 80] or model-to-text transformation [22]). However, those tools implicitly require that models conform to a metamodel in order to be used. Model and metamodel conformance is a topic that is widely studied in the field of software evolution [39, 72].

Model and metamodel conformance is a desired property of models, which is implicitly required by the MDE tools and approaches. However, when working with model fragments, the constraints that ensure conformance are not so clear (as we are not dealing with whole models, but only with model fragments). In this work, we explore nine strategies that are built around the conformance of model fragments and metamodels in order to boost the search process of model fragments that realize a specific feature (subsets of a parent model that conforms to a metamodel).

In what follows, we will work with a conformance between a model fragment and the metamodel where some constraints should be preserved:

-

Valid reference A reference is considered to be valid if both the source and target model classes pointed by the reference are present in the model fragment. For instance, Fig. 1 shows Model Fragment 1, where the reference encoded by G2 is valid (the source of G2 is G3 and the target is G1, and both are present in the model fragment). In contrast, in Model Fragment 2, the reference encoded by G2 is not considered to be valid (the source of the reference is G0, which is not present in the model fragment).

-

Valid property A property is considered to be valid if its parent class is present in the model fragment. For instance, Fig. 1 shows Model Fragment 1, where the property encoded by G1 is valid (the parent class, G0, is present in the model fragment). In contrast, in Model Fragment 2, the property encoded by G1 is not valid (the parent class G0 is not present in the model fragment).

With these conformance constraints, model fragments can be classified into conforming individuals if they fulfill the constraints for all of the elements present in the model fragment (Model Fragment 1) or into nonconforming individuals, where any of the constraints are violated by any of the elements present in the model fragment (Model Fragment 2).

3.4 Search space

The search space is the space where the EA performs the search, i.e., the set of all possible individuals that an EA is able to reach by applying the different operations. Depending on the encoding and operations being used by the EA, different search spaces will result.

In general, a search space consists of two disjoint subsets of feasible and unfeasible subspaces (\(\mathcal {F}\) and \(\mathcal {U}\), respectively) [59]. In this work, we use the term conforming subspace instead of the term feasible subspace and nonconforming subspace instead of unfeasible subspace. We make this distinction in order to focus on the conformance between models and metamodels since it is what determines if an individual resides in one subspace or in the other. The individuals in the \(\mathcal {F}\) subspace satisfy the constraints for the problem (conforming model fragments), while the individuals in the \(\mathcal {U}\) subspace do not satisfy the constraints (nonconforming model fragments).

Figure 4 shows a representation of an example of a search space. The gray areas correspond to the conforming subspace, and white areas correspond to the nonconforming subspace. Each point corresponds to a specific individual, while the star corresponds to the solution of the problem, which is the individual that gets the best fitness value. When applying a Multi-Objective Evolutionary Algorithm such as NSGA-II [24], the search is guided by a fitness with multiple objectives and the solution is output as non-dominated set of solutions where all the objectives are optimal. In this work we apply a single objective fitness function, so we only depict a solution in Fig. 4, but we plan to study the application of these strategies in combination with Multi-Objective Evolutionary Algorithms in the future.

For instance, the individual tagged with an ‘a’ is a conforming individual, such as the one depicted in Model Fragment 1. The point tagged with a ‘d’ is a nonconforming individual, such as the one depicted in Model Fragment 2. The fittest individual that fulfills the constraints is considered the best-solution to the problem and resides in the conforming subspace. The fittest individual in Fig. 4 is depicted by the star tagged with an ‘s’.

In the case of SBSE applied to MDE, we want the EA to produce a conforming individual as a solution to the problem. Nevertheless, exploring nonconforming individuals could also lead to the solution faster and benefit the search. Therefore, we will study different methods to cope with nonconforming individuals. The next section presents our strategies for handling nonconforming individuals and how they can be applied to individuals encoding MOF models.

4 Handling nonconforming individuals in SBSE encoding model artifacts

This section presents the main strategies that are available in the literature for handling nonconforming individuals and how they can be applied to work when individuals encode model fragments. The main strategies studied are penalty functions, strong representations, closed operators and repair operators.

4.1 Penalty functions

Penalty functions [10, 51, 75, 89] are functions applied to nonconforming individuals that are designed to hinder their spread during the evolution. There are different variants of the penalty function method, ranging from static penalty functions and dynamic penalty functions, to the death penalty function, which is the most extreme one.

4.1.1 Static penalty

A static penalty applies a reduction to the fitness value of nonconforming individuals. In static penalties, the value can be a static constant or it can be proportional to the degree of violation of the constraints. To apply a static penalty in EAs, we need to identify nonconforming individuals and then modify their fitness value by subtracting the penalty value. This is done as an extra step after calculating the fitness value of the individuals.

Equation 2 shows the definition of sta, which is a static penalty function that applies a constant penalty value (\(\lambda _{s}\)) to the fitness of an individual (I) if it is a nonconforming individual. The value of the penalty applied (\(\lambda _{s}\)) needs to be adjusted depending on the domain.

Equation 3 shows the definition of staDeg, which is a static penalty function that applies a penalty value (\(\lambda _\mathrm{sd}\)) to the fitness of a nonconforming individual proportional to the degree of violation of the constraints (deg(I)) of the given individual. The degree of violation of an individual (deg(I)) is calculated as the sum of the violation degree of each gene (vio(i)), where all violations of a constraint are weighted the same. A gene that is not violating any constraint is not taken into account for the calculations.

Static penalty functions can be easily applied to EAs that are used to find model fragments, with the trickiest parts being the adjustment of the constant (\(\lambda _\mathrm{sd}\)) and the selection of the method used to assess the degree of violation of the constraints (deg(I)). As part of this work, we try different values and use the ones that provide the best results.

4.1.2 Dynamic penalty

Dynamic penalty functions are similar to static penalty functions in that they apply a reduction to the fitness value of nonconforming individuals. The difference with static penalty functions is that the penalty value applied is proportional to the current generation, making it more difficult for nonconforming individuals to survive as the evolution goes on. This penalty is well suited for the problem since we do not want nonconforming individuals as solutions; however, nonconforming individuals can lead to better results early in the process and removing them prematurely can affect the search negatively.

Depending on the representation used for the problem, some of the optimal individuals (those with the highest fitness scores) will be close to the boundaries between the \(\mathcal {U}\) and \(\mathcal {F}\) subspaces. Therefore, evolving a nonconforming individual into a conforming and optimal individual may be less expensive (in computational costs) than reaching the same optimal conforming individual through the evolution of another conforming individual (e.g., in Fig. 4, evolving ‘d’ to ‘s’ may be less expensive than evolving ‘a’ to ‘s’).

Equation 4 shows the definition of dyn, which is a dynamic penalty function that applies a penalty value (\(\lambda {d}\)) to the fitness of a nonconforming individual proportional to the number of the current generation (g).

Similarly, Eq. 5 shows the definition of dynDeg, which is a dynamic penalty function that applies a penalty value (\(\lambda _\mathrm{dd}\)) to the fitness of a nonconforming individual proportional to the degree of violation of the constraints (deg(I)) of the given individual and the number of the current generation (g).

4.1.3 Death penalty

The death penalty is the most extreme case of penalty. When new offspring are created through the combination of the genetic operators, the individuals are evaluated to check whether they belong to the conforming or the nonconforming subspace. If they belong to the nonconforming subspace, they are removed from the offspring, so they do not end up in the population of the next generation. If they belong to the conforming subspace, the EA continues normally, adding them to the population through the replace operator. When using this strategy, the population will never contain a nonconforming element, guaranteeing that the solution is a conforming individual.

4.2 Strong encoding

The second strategy for handling nonconforming individuals is the use of a strong representation (or encoding) for the problem. Changing the encoding may also involve a change in some of the genetic operators being applied since the operations are designed to work on a specific representation. The main idea is to devise a strong encoding that guarantees by construction that any individual encoded using this representation is a conforming individual. Having this type of encoding makes the search space change reducing the \(\mathcal {U}\) subspace to the empty set, thus simplifying the search space.

This solution has been successfully applied to problems that can be represented as a permutation of a set of values. For instance, the Travelling Salesman Problem poses the next question: Given a set of cities and their distances from each other, what is the shortest path to visits all of the cities? A typically strong encoding to solve these problems is a list that includes all of the cities. Each city appears once in each individual in the order it is visited, ensuring that all of the candidates fulfill the constraint (since all of the cities are visited).

In the case of model fragments, some restrictions must be introduced in the encoding to guarantee that all individuals fulfill the constraints (valid references and valid properties) in order to be considered a conforming individual. Our strong encoding consists in introducing a hierarchy of requirements among the genes; that is, some genes require other genes and can only be set to true if the required genes are also true.

Figure 5 (left) shows an example of our proposed strong encoding for models in EAs that use model fragments as individuals. It shows the encoding for a parent model, including the correspondence between each gene and the model elements (dashed arrows) and the requirements among the genes (regular arrows). For instance, the gene \(G_0\) indicates the presence or absence of the inverter class element, while the gene \(G_1\) corresponds to the pow property of the inverter class. The gene \(G_1\) requires the gene \(G_0\), so \(G_1\) can only be true if \(G_0\) is also true.

To ensure the ‘valid reference’ constraint, all of the reference elements require that their source and target class element are present in the model fragment. Therefore, a reference element can only be set to true if both the source and target class elements are also true. For instance, in Fig. 5 (left) the gene \(G_2\) corresponds to the from reference of the provider channel class element. \(G_2\) can only be true if the source of the reference (\(G_3\)) and the target of the reference (\(G_0\)) are also true in the model fragment.

To ensure the ‘valid property’ constraint, all of the property elements require their parent class element. Therefore, a gene representing a property can only be set to true if the parent element is also true.

By doing this, the representation ensures that both constraints are fulfilled by all of the individuals that are encoded using this strong encoding. Therefore, all of the individuals will be conforming individuals and the nonconforming space is reduced to the empty set.

Figure 5 (center) shows the representation of a conforming individual, Model Fragment 1, encoded following the strong encoding instead of the regular encoding (as in Fig. 1). All of the genes that require other genes (\(G_1, G_2, G_4, G_6, G_8\)) can only be set to 1 if the required genes ( \(G_0,G_3,G_5,G_7,G_9\)) are also set to 1.

Figure 5 (right) shows a wrong and invalid representation of a nonconforming individual, Model Fragment 2 (the same model fragment as in Fig. 1). This is a nonconforming individual and is not allowed by the strong encoding. It is only depicted for clarification purposes (as the encoding will not allow it to exist). Gene \(G_1\) requires \(G_0\) and since \(G_0\) is set to 0, \(G_1\) cannot be set to 1. Similarly, \(G_2\) requires \(G_0\) and cannot be set to 1 either. Model Fragment 2 is nonconforming, so it cannot be built using the strong encoding.

The new strong encoding just introduced also needs genetic operations that are designed to work properly for this representation. The selection and replacement operations used by the regular encoding can also be applied directly to the strong encoding. However, the new strong encoding requires new mutation and crossover operations.

4.2.1 Mutation operation for strong encoding

The Mutation operation that is used with the strong encoding is similar to the operation used with the regular encoding. Each gene will have a probability of mutation, changing its value (from 1 to 0 or from 0 to 1). However, the operator will act differently in some cases due to the dependencies. Figure 6 shows a summary of how the mutation behaves when a gene affected by requirements is going to mutate. It also includes examples of mutations applied to Model Fragment 1.

The first row of Fig. 6 shows the behavior when the gene that is going to mutate has a value of 0 and is going to mutate to 1. The second row shows the behavior when the gene mutates from 1 to 0. The first column shows the behavior when the gene that is going to mutate requires other genes. The second column shows the behavior when the gene that is going to mutate is required by other genes.

For instance, in a mutation of a gene from 0 to 1 when the mutating gene requires other genes (\(G_6\) mutation), the gene will only mutate if all of the genes required are set to 1 (otherwise, the strong encoding does not allow setting it to 1). Since \(G_7\) is set to 0, the mutation will not take place, and \(G_6\) will remain unchanged with a value of 0.

In a mutation of a gene from 1 to 0 when the mutating gene is required by other genes (\(G_0\) mutation), the gene can mutate from 1 to 0, but then all of the genes that require it also need to mutate to 0 (as the strong encoding requires). Since \(G_1\) and \(G_2\) require \(G_0\), they will also mutate to 0 (if their previous value was already 0, nothing changes).

In a mutation of a gene from 0 to 1 when the mutating gene is required by others genes (\(G_9\) mutation), the mutation does not need any special action, so it proceeds as usual (e.g., mutating gene \(G_9\) from 0 to 1). Similarly, in a mutation of a gene from 1 to 0 when the mutating gene requires other genes (\(G_1\) mutation), the mutation proceeds without further action (e.g., mutating gene \(G_1\) from 1 to 0).

4.2.2 Crossover operation for strong encoding

The crossover operation that is used with the strong encoding needs to take into account the hierarchy of the representation. To do this, it follows three steps: (1) generate a random mask; (2) check the validity of the random mask; (3) generate offspring. Figure 7 shows this three-step process along with an example.

-

Generate a random mask The random mask is randomly generated each time a crossover operation is performed. The idea is to divide the set of genes that are present in the representation of an individual into two subsets (\(G_A\) and \(G_B\)) and then use them to determine which elements come from one parent and which from the other when performing the crossover. First, a random point in the encoding is selected (a random number from 0 to the size of the individual). Then, all of the elements below that index will be the first subset (\(G_A\)), while the rest will be the second subset (\(G_B\)). Figure 7 (center) shows an example of a mask. In this case, the randomly selected index is 3, so genes \(G_0,G_1,G_2,\) and \(G_3\) are the subset \(G_A\) (the encoding is shaded in dark gray); the rest of the genes are the second subset \(G_B\) (the encoding is empty and the elements of the individual are faded out).

-

Check validity The next step is to check the validity of the mask. Some masks could lead to nonconforming individuals (which is not possible in the strong encoding), so they cannot be applied. The purpose of this step is to detect those situations and generate a new mask when the current one is not valid. First, the boundaries between the two subsets are identified. In other words, any element from subset \(S_A\) that requires or is required by an element from subset \(S_B\) is considered a boundary. Each boundary has two parts, a requiring gene and a required gene each of which is in a different subset, \(S_A\) or \(S_B\). Then, each boundary is classified into one of the following categories depending on the values of the boundary in each of the parents:

-

The requiring gene is 0 in both parents In this case, the value of the required gene does not matter since the requiring gene is not going to be part of any of the two combinations generated as offspring. The mask is not invalidated.

-

The required gene is 1 in both parents In this case, the value of the requiring gene does not matter since the required gene will always be part of the two combinations generated as offspring. The mask is not invalidated.

-

Otherwise In the rest of the cases, the value of the requiring and required genes is different in each of the parents. This leads to a situation where one of the combinations generated as offspring is nonconforming. The mask is invalidated (making it necessary to generate a new mask)

-

-

Generate offspring Finally, the crossover is applied following the valid mask, and two new individuals are generated. The first individual obtains the value for the genes contained in subset \(S_A\) from the Parent 1 and the value for the genes contained in subset \(S_B\) from the Parent 2. The second individual is the opposite and takes the values for genes in subset \(S_A\) from Parent 2 and the values for genes in subset \(S_B\) from Parent 1.

As a result of the crossover operation, two new conforming individuals that inherit genes from both parents are generated. By using these two new operations, the resulting individual will always be in the conforming subspace.

4.3 Closed operators

Another method for coping with nonconforming individuals in EAs is the development of closed operators. Closed operators have their roots in mathematics. Specifically, a set has closure under an operation if the application of that operation to elements of the set always produces an element of the set. For instance, the set \(\mathbb {N}\) of positive numbers (some definitions also include 0) has closure under the addition operation (+); the addition of any two numbers from \(\mathbb {N}\) will produce a number in \(\mathbb {N}\). Or more formally:

By extending this concept of closure, we can create operators that guarantee that if the individuals used as input are in the conforming subspace, the resulting individual produced by the operator will also be in the conforming space. Closed operators are similar to the operators used with strong encoding because they also ensure that resulting individuals reside in the conforming subspace. In addition to the definition of closed operations, the EA must be initiated with a set of conforming elements. By doing so, the population will always be part of the conforming space, guaranteeing that the solution will be a conforming individual.

In this work, we use two closed operators, which are adapted from the ones presented for the strong encoding, to apply them directly to the regular encoding. In order to obtain the initial population, we generate two types of seeds: (1) the empty model fragment (a model fragment where all of the genes are set to 0), which is a conforming individual since no constraint is violated; and (2) the whole model fragment (a model fragment where all of the genes are set to 1), which is also a conforming individual since all of the constraints are satisfied. The evolution of those individuals (through mutations and crossovers) will eventually lead to the solution model fragment.

4.4 Repair operators

Repair operators [18, 65, 66] are those capable of turning a nonconforming individual into a conforming one. The repair operator is an operator that is applied after the evolution has taken place (selection, crossover, mutation) but before the individuals are included in the population (replacement).

Repair operators are usually bound to the domain since knowledge about how to repair an individual is needed. However, in this work, we have identified different generic scenarios where the repair operators can be applied. First, when taking into account the valid reference constraint, two scenarios may arise: missing Source and missing Target (Table 1). Taking into account the valid property constraint, a new scenario may arise: missing Parent (Table 2):

-

Missing source This scenario occurs when the individual includes the reference element and the target element of the reference but not the source element of the reference (See Initial situation of the first row in Table 1).

-

Missing target This scenario occurs when the individual includes the reference element and the source element of the reference but not the target element of the reference (See Initial situation of the second row in Table 1).

-

Missing parent This scenario occurs when a property element is present in the individual, but the parent element of the property is not present (See Initial situation of the first row in Table 2).

To repair these scenarios, we propose two different repair operators based on the addition or removal of elements

4.4.1 Add repair

Add Repair will be applied to the repair scenarios described above and repair them by adding the missing elements:

-

Missing source The repair operator will add the source element of the reference to the individual (See Add Repair of the first row in Table 1).

-

Missing target The repair operator will add the target element of the reference to the individual (See Add Repair of the second row in Table 1).

-

Missing parent The repair operator will add the parent element of the property to the individual (See Add Repair of the first row in Table 2).

4.4.2 Remove repair

Remove Repair will be applied to the repair scenarios described above and repair them by removing the elements causing the individual to be nonconforming:

-

Missing source The repair operator will remove the reference element of the reference to the individual (See Remove Repair of the first row in Table 1).

-

Missing target The repair operator will remove the reference element of the reference to the individual (See Remove Repair of the second row in Table 1).

-

Missing parent The repair operator will remove the property element of the individual (See Remove Repair of the first row in Table 2).

After applying the operators, the nonconforming individual will turn into a conforming one (either by adding or removing elements). One problem that may arise with the remove operator is that it hinders the evolution of the model fragment because the operator does not allow new elements to emerge if they are not valid.

5 Evaluation

This section presents the evaluation performed to address the following research questions.

RQ1 Can the strategies for handling nonconforming individuals presented so far (penalty functions, strong encoding, closed operations or repair operators) improve the results of SBSE on models in terms of the number of generations and/or wall-clock time needed to reach the solution?

RQ2 If so, which strategies produce better results?

RQ3 Can any of the strategies produce solutions of better quality, in terms of precision, recall, F-measure and MCC, than those produced by the baseline when combined with a state-of-the-art fitness function as the textual similarity fitness presented?

To address these research questions, the following subsections present a description of the experimental setup, the set of performance metrics used, a description of the two case studies where the strategies were applied, the results obtained, and the statistical analysis performed on the results.

5.1 Experimental setup

To evaluate the different strategies, we apply them as part of the EA explained in Sect. 3.2 following the process depicted in Fig. 8.

The oracles (left) contain a set of product models and several features contained in those product models. The oracles were obtained from industry and contain the realization of each feature in the form of a model fragment. In other words, the oracle can be considered a set of ‘problems’ and the ‘answer’ to each one. We use it to evaluate the impact of each of the strategies proposed in the search process. Each oracle is organized as a set of test cases where each test case contains a model (where the feature must be located) a feature that is already located, and a feature description (elaborated by the engineers of our industrial partners).

Most of the execution time of an EA is spent on the evaluation of the fitness function. Specifically, in the case of FLiM using a fitness function based on textual similarity [37], we have reported that around 85% of the execution time is spent on the fitness function. Therefore, to evaluate the impact of the search strategies in the search process, we will perform two experiments, using a different fitness function each time. First, to avoid the impact of the fitness function on the results, we use the optimal fitness function (see Sect. 3.2.2), which indicates how far from or how close to the solution each of the individuals is. This setup will allow to answer RQ1 and RQ2, although is not possible to apply it to solve real problems (as it needs the existence of an oracle containing the answers to the questions beforehand). Secondly, to answer RQ3 and test the impact of the strategies on a real scenario, we repeat the experiment using a state-of-the-art fitness, the textual similarity fitness function (see Sect. 3.2.2).

For each test case (n) and each of the strategies (s), we executed 30 independent runs [8] (to avoid the effect of chance due to the stochastic nature of EAs) for each of the experiments. The set of strategies tested are the ones presented in Sect. 4. The EA with no strategy for handling nonconforming individuals is used as the baseline. The resulting data of the first experiment was averaged and is compared in Table 4 and statistically analyzed to guarantee the validity of the results obtained (Tables 7, 8, 9, 10 and 11, available in the Appendix). Similarly, the data obtained from the second experiment was averaged and used to build the confusion matrix of the result of each test case. Then, the performance measures (precision, recall, F-measure and MCC) were derived from the confusion matrix, compared in Table 5 and statistically analyzed to guarantee the validity of the results obtained (Tables 7, 12, 13, 14, 15, 16, 17, 18 and 19, available in the Appendix).

5.2 Performance metrics

To measure the performance of the strategies on the search process, we make use of standard metrics from the literature, so comparisons among different studies can be performed. In general, there are two types of performance measures that are relevant for EAs: solution quality and search effort. The experiment using the optimal fitness is designed to measure the impact of the strategies on the search effort of the algorithm. To do so, we use the number of generations and the wall-clock time. The experiment using the textual similarity fitness is designed to measure the solution quality. To do so, we use a confusion matrix to extract four metrics, precision, recall, F-measure and Mathew Correlation Coefficient (MCC).

The performance of each of the strategies is directly related to the number of times that the fitness function needs to be executed (i.e., the number of generations). Therefore, for the experiment using the optimal fitness we measure the performance of each strategy as the number of generations needed to find the optimal solution (extracted from the oracle), as suggested in the literature [50]. The fitness function is executed once for each individual in the population for each generation. Using the number of generations as metric allows us to compare the impact of the different strategies and the baseline (no strategy) fairly.

In addition, we use the wall-clock time as metric to measure the performance of each strategy. However, the time spent by the EA to find the solution does not depend only on the strategy being applied, the computing power of the computer used to run the experiments will influence the results. Similarly, the differences in performance of the implementation of each of the strategies can also introduce noise into the results. Therefore, the number of generations should be used to compare the performance of different strategies and the wall-clock time can be used as an indicator on the time needed by each strategy but should not be used to compare performance among strategies.

For the experiment using the textual similarity fitness, the EA will run for a fixed amount of time and then the best candidate obtained so far will be compared to the optimal solution from the oracle. To perform that comparison we make use of a confusion matrix, a table typically used to describe the performance of a classification model (the EA + strategy) on a set of test data (each of the test cases) for which the true values are known (the optimal solution from the oracle). The confusion matrix distinguishes between the predicted values (solution of the EA + strategy) and the real values (optimal solution from oracle) and arranges the elements (each of the genes of each individual) into four categories:

-

True Positive (TP) Values that are true in the real scenario and have been predicted as true.

-

True Negatives (TN) Values that are false in the real scenario and have been predicted as false.

-

False Positive (FP) Values that are false in the real scenario but have been predicted as true.

-

False Negative (FN) Values that are true in the real scenario but have been predicted as false.

Then, performance metrics can be derived from the confusion matrix, in this experiment we use precision, recall, F-measure and MCC.

Precision (see Eq. 7) measures the number of elements from the solution that are correct according to the optimal solution from the oracle. Precision values can range from 0% (no single element present in the solution is also present in the optimal solution from the oracle) to 100% (all the elements present in the solution are also present in the optimal solution from the oracle).

Recall (see Eq. 8) measures the number of elements of the optimal solution that have been correctly retrieved in the solution. Recall values range from 0% (none of the elements that are true in the oracle solutions is present in the solution) to 100% (all the elements that are true in the optimal solution are also present in the solution).

However, achieving a high value in precision or recall alone is not enough. The empty model fragment (where all the genes are set to false) would achieve 100% in precision (but 0% in recall). Similarly, the complete model fragment (where all the genes are set to true) would achieve 100% recall (but 0% in precision). Therefore, there is a need for overall measures that take into account all the figures present in the confusion matrix.

F-measure (see Eq. 9) is the harmonic mean between precision and recall, and provides a good overview of the overall performance of a strategy. Values can range from 0% (either precision or recall is 0%) to 100% (both, precision and recall are 100%).

Finally, MCC (see Eq. 10) has recently proven to be more informative than F-measure as metric of the overall performance [17], as it takes into account all the values from the confusion matrix (including the TN, which is not used by the F-measure). The values range from \(-1\) (worst value possible) to 1 (best value possible).

5.3 Case studies: BSH and CAF

The present work has been evaluated in two industrial case studies. The first case study used for the evaluation was BSH, the leading manufacturer of home appliances in Europe. Their induction division has been producing induction hobs (under the brands of Bosch and Siemens among others) for more than 15 years. The second case study used for the evaluation was CAF, a worldwide provider of railway solutions. They have been developing a family of PLC software to control their trains for more than 25 years.

The BSH case study has already been used as a running example throughout the paper. Their newest induction hobs include full cooking surfaces where dynamic heating areas are dynamically generated and activated or deactivated depending on the cookware placed on top of them. In addition, the new hobs have increased the amount and type of feedback provided to the user while cooking, providing data such as the temperature of the food being cooked or real-time measures of the power consumption of the hob. These changes have been made possible by increasing the complexity of the software that drives the induction hob.

The DSL used by our industrial partner to specify the induction hobs is composed of 46 meta-classes, 74 references with each other, and more than 180 properties. The running example presented in 3.2 shows a simplification of their DSL (to increase legibility and due to intellectual property rights concerns).

Their oracle is composed of 46 product models (induction hob), where each product contains (on average) around 500 model elements. The oracle is composed of 96 features that may or may not be part of a specific product model. Those features correspond to products that are currently on the market or will be released to the market in the near future. Each of the 96 features can be part of several product models, making a total of 608 occurrences of any of the features in any of the product models. Therefore, there are 608 test cases, each of which includes the product model where the feature should be located and the model fragment itself that realizes the feature (which is used as fitness).

The CAF case study is based on the family of software products used to manage their trains in different forms (regular train, subway, light rail, monorail, etc.) all over the world. Each train unit is equipped with different pieces of hardware installed on their vehicles and cabins. Those pieces of equipment are often provided by different companies, and their aim is to carry out specific tasks for the train such as traction, compression for the hydraulic brakes and harvesting of power from the overhead wires. The control software is responsible for making the cooperation among all the equipment possible in order to achieve the functionality desired for a specific train and guaranteeing compliance with the specific regulations of each country.

The DSL used to specify the products from CAF has expressiveness to describe the interaction among the equipment pieces. In addition, the DSL also provides expressiveness to specify non-functional aspects that are related to specific regulations (such as the quality of the signals or the different levels of redundancies needed).

The CAF oracle is composed of 23 product models (train units), where each product contains (on average) 1200 elements. The products are built from 121 different features that may or may not be part of a specific product model. Again, some features are present in more than one product model, making a total of 140 occurrences. For each occurrence, there is a test case that includes the product model and the model fragment that realizes the feature (which is used as fitness).

For the evaluation with the BSH oracle, we performed 608 (test cases) * 30 (repetitions) * 10 (baseline + strategies) * 2 (fitness functions) = 364,800 independent runs. For the evaluation with the CAF oracle, we performed 140 (test cases) * 30 (repetitions) * 10 (baseline + strategies) * 2 (fitness functions) = 84,000 independent runs.

To prepare the oracles, our industrial partners provided us with the product models and the model fragments that were used to build those product models. Therefore, the information about which elements realize each of the features comes directly from industry. For each test case, we had previously checked that the model fragment exists in the provided product model and that there are no inconsistencies (such as the empty model fragment or the complete model fragment).

5.4 Implementation details

The presented strategies were implemented within the Eclipse environment and the source code has been released to the public [34] as part of this work. We have used the Eclipse Modeling Framework [81] to manipulate the models from our industrial partner. The EA is based on the watchmaker framework [27] for evolutionary computation, creating custom genetic operators and representations to implement the strategies. The IR techniques that were used to process the language were implemented using OpenNLP [1] for the POS-Tagger and the English (Porter2) [83] as stemming algorithm. Finally, the LSI fitness was implemented using the Efficient Java Matrix Library (EJML [28]). We performed the execution of the EA with the strategies using an array of computers with processors ranging from 4 to 8 cores, clock speeds between 2.2 and 4 GHz, and 4–16 GB of RAM. All of them were running Windows 10 Pro N 64 bits as the hosting operative system and the Java SE runtime environment (build 1.8.0_73-b02).

5.5 Parameters and budget

There are some parameters in EAs that need to be configured prior to running them. We use default parameter values extracted from the literature [9] (and previously tested for this EA [38]) when available. However, the new penalty functions proposed in Sect. 4.1 also need values for some parameters. To give those values we have performed a tuning to determine which parameters work better for this problem. In other words, we have tried different combinations of parameters to determine the ones that result in a faster search. The parameters that we use are shown in Table 3.