Abstract

QVT Relations (QVT-R) is the standard language proposed by the OMG to specify bidirectional model transformations. Unfortunately, in part due to ambiguities and omissions in the original semantics, acceptance and development of effective tool support have been slow. Recently, the checking semantics of QVT-R has been clarified and formalized. In this article, we propose a QVT-R tool that complies with this semantics. Unlike any other existing tool, it also supports meta-models enriched with OCL constraints (thus avoiding returning ill-formed models) and proposes an alternative enforcement semantics that works according to the simple and predictable “principle of least change.” The implementation is based on an embedding of both QVT-R transformations and UML class diagrams (annotated with OCL) in Alloy, a lightweight formal specification language with support for automatic model finding via SAT solving. We also show how this technique can be applied to bidirectionalize ATL, a popular (but unidirectional) model transformation language.

1 Introduction

Model-driven engineering (MDE) is an approach to software development that focuses on models as the primary development entity. In MDE, different models may capture different views of the same system (typically different models are used to specify structural and dynamic issues) or may be used at different levels of abstraction (code is obtained by refining platform-independent models to platform-specific ones). All these (possibly overlapping) models should be kept somehow consistent, and changes to one model should be propagated to all the others in a consistent manner. Ideally, specifications of transformations between models should be bidirectional, in the sense that a single artifact denotes transformations that can be used in both directions. Moreover, these transformations cannot just map a source to a target model and vice-versa: If some source information is discarded by the transformation, to propagate an update in the target back to a new consistent source, access to the original source model is also required, so that discarded information can be recovered.

To support the MDE approach, the Object Management Group (OMG) has launched the Model-driven Architecture (MDA) initiative, which prescribed the usage of MOF [43] (usually presented as UML class diagrams [41]) and OCL [42] for the specification of (object oriented) models and constraints over them. To specify transformations between models, the OMG proposed the Query/View/Transformation (QVT) standard [40]. While QVT provides three different languages for the specification of transformations, the most relevant to MDE is the QVT Relations (QVT-R) language, which allows the specification of a bidirectional transformation by defining a single declarative consistency relation between two (or more) meta-models. Given this specification, the transformation can be run in two modes: checkonly, to test whether two models are consistent according to the specified relation; or enforce, which, given two models and an execution direction (picking one of them as the target), updates the target model in order to recover consistency. The standard prescribes a “check-before-enforce” semantics, that is, enforce mode cannot modify the target if the models happen to be already consistent according to the checking semantics.

Effective tool support for QVT-R has been slow to emerge, which hinders the universal adoption of this standard. In part, this is due to the incomplete and ambiguous semantics defined in [40]. While the checking semantics has recently been clarified and formalized [4, 19, 47], the enforcement semantics still remains largely obscure and even incompatible with other OMG standards, despite some recent efforts to provide a formal specification [5]. Namely, it completely ignores possible OCL constraints over the meta-models, thus allowing updates that can lead to ill-formed target models. Likewise, none of the existing QVT-R model transformation tools supports such constraints, which makes them unusable in many realistic scenarios. Unfortunately, there are other problems that affect them. Some do not even comply with the standard syntax and support only a “QVT-like” language (including not providing both running modes as required by the standard). Others do not support truly non-bijective bidirectional transformations (for example, ignoring the original target model in the enforce mode). Some purposely disregard the intended QVT-R semantics (including checking semantics) and implement a new (still unclear and ambiguous) one. In most cases, it is not clear whether the supported checking semantics is equivalent to the one formalized in [4, 19, 47]. And finally, none clarifies the problems and ambiguities in the standard concerning enforcement semantics, and none presents a simple enough alternative for this mode that makes its behavior predictable to the user.

In this article, we propose a QVT-R bidirectional model transformation tool that addresses all these issues. Both the meta-models and transformation specifications may be annotated with OCL, and it supports a large subset of the standard QVT-R language, including execution of both modes independently as prescribed. The main restriction is that recursion must be non-circular (or well-founded), which is satisfied by most of the interesting case studies. The checking semantics closely follows the one specified in the standard, being equivalent to the one formalized in [4, 19, 47]. Finally, instead of the ambiguous (and OCL-incompatible) enforcement semantics proposed in the standard, our tool follows the clear and predictable principle of least change [35] and just returns updated consistent target models that are at a minimal distance from the original. In particular, the “check-before-enforce” policy required by QVT-R is trivially satisfied by this semantics. Our tool supports two different mechanisms to measure the distance between two models: the graph edit distance [51], which just counts insertions and deletions of nodes and edges in the graph that corresponds to a model; and a variation where the user is allowed to parameterize which operations should count as valid edits, by attaching them to the meta-model and specifying their pre- and post-conditions in OCL.

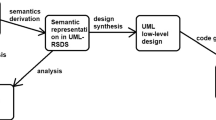

To achieve this, we propose an embedding of both QVT-R transformations and UML class diagrams (annotated with OCL) in Alloy [24], a lightweight formal specification language with support for automatic model finding via SAT solving. Alloy is based on relational logic, which has been shown to be very effective for validating and verifying object-oriented models. Its connection with the MDA has also been explored before through tools that translate UML class diagrams annotated with OCL to Alloy [1, 9], on top of which we build our embedding. The proposed technique has been implemented as part of Echo, a tool for the consistent exploration and transformation of models through model finding [33], and has already proved effective in debugging existing transformations, namely helping us unveil several errors in the well-known object-relational mapping that illustrates the QVT-R specification [40].

Our approach is sufficiently general to be applied to other model transformation languages. To exemplify, we apply our bidirectionalization technique to a significant subset of the Atlas Transformation Language (ATL) [25], a widely used, but unidirectional, model transformation language. From the specification of an ATL forward transformation, we first infer a consistency relation between source and target meta-models, which then enables us to apply our bidirectionalization engine and automatically obtain a backward transformation that follows the principle of least change.

The present article is an extended version of a previous conference paper [31]. The application of our technique to bidirectionalize ATL is the main new contribution, but in addition to the previous content, this article describes the proposed technique in more detail, explains how it was deployed in a user-friendly tool, and includes a new case study and an extensive evaluation to assess its effectiveness. Section 2 introduces the QVT-R language, describes the standard checking semantics, presents some of the problems with the enforcement semantics and proposes and formalizes a simpler alternative based on the principle of least change. Section 3 presents our embedding of UML class diagrams (annotated with OCL) and QVT-R transformations in Alloy. Section 4 explores how the proposed technique can also be used to bidirectionalize ATL. Section 5 describes how it was deployed as part of the Echo framework as an Eclipse IDE plugin for managing model consistency, while Sect. 6 presents the evaluation and scalability tests. Finally, Sect. 7 analyzes related work, while Sect. 8 draws conclusions and points to future work.

2 QVT relations

In this section, the basic concepts and the semantics of the QVT-R language are introduced. A more detailed presentation can be found in the OMG standard [40].

2.1 Basic concepts

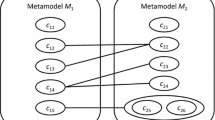

A QVT-R specification consists of a transformation \({ T }\) between a set of meta-models that states under which conditions their conforming models are considered consistent. For the remainder of this article, we will restrict ourselves to transformations between two meta-models for simplicity purposes, although most concepts could be generalized to multi-directional transformations [32]. From \({ T }\), QVT-R requires the inference of three artifacts: a relation \(\varvec{{ T }} \subseteq { M } \times { N }\) that tests if two models \({ m }\mathbin {:}{ M }\) and \({ n }\mathbin {:}{ N }\) are consistent and transformations \(\overrightarrow{ \varvec{{ T }} } \mathbin {:}{ M } \times { N }\rightarrow { N }\) and \(\overleftarrow{ \varvec{{ T }} } \mathbin {:}{ M } \times { N }\rightarrow { M }\) that propagate changes on a source model to a target model, restoring consistency between the two. Thus, transformations can be executed in two modes: checkonly mode, where the models are simply checked for consistency, denoted as \(\varvec{{ T }}\;({ m },{ n })\); and enforce mode, where \(\overrightarrow{ \varvec{{ T }} } \) or \(\overleftarrow{ \varvec{{ T }} } \) is applied to inconsistent models in order to restore consistency, depending on which of the two models should be updated. Note that both transformations take as extra argument the original opposite model: if models \({ m }\mathbin {:}{ M }\) and \({ n }\mathbin {:}{ N }\) are initially consistent, and \({ m }\) is updated to \({ m' }\), \(\overrightarrow{ \varvec{{ T }} } \) takes as input both \({ m' }\) and \({ n }\) to produce the new consistent \({ n' }\). This way the system is able to retrieve from \({ n }\) information discarded by the transformation. This formalization of QVT-R is inspired by the concept of maintainer [35] and was first proposed in [46]. Naturally, when the transformations propagate an update, the result is expected to be consistent. 
Formally, the transformation is said to be correct if:
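In the notation introduced above, a standard way to state this property is that both enforcement directions always return a model consistent with the unchanged one. The layout below is an assumption; only the symbols already defined in the text are used.

```latex
\[
\forall\, m \mathbin{:} M,\; n \mathbin{:} N \mid\;
  \varvec{T}\,(m,\ \overrightarrow{\varvec{T}}\,(m,n))
  \;\wedge\;
  \varvec{T}\,(\overleftarrow{\varvec{T}}\,(m,n),\ n)
\]
```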

The transformations are also required to follow a “check-before-enforce” policy (also referred to as hippocraticness [46]), which can be formalized as follows:
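A common formulation of this policy, written with the symbols defined above (the exact layout is an assumption), states that already consistent models are returned unchanged:

```latex
\[
\forall\, m \mathbin{:} M,\; n \mathbin{:} N \mid\;
  \varvec{T}\,(m,n) \;\Rightarrow\;
  \overrightarrow{\varvec{T}}\,(m,n) = n
  \;\wedge\;
  \overleftarrow{\varvec{T}}\,(m,n) = m
\]
```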

A QVT-R transformation is defined by a set of relations. A relation consists of a domain pattern for each meta-model of the transformation that defines which objects of the model it relates by pattern matching. It may also include when and where constraints, which act as a kind of pre- and post-condition for the relation application, respectively. These constraints may contain arbitrary OCL expressions. The abstract syntax of a relation is the following:

In relation \(R\), the domain pattern for meta-model \(M\) consists of a domain variable \(a\) and a template \(\pi _M\) that binds the values of some of its properties (attributes or related associations), which candidate objects of type \(A\) must match. Likewise for the domain pattern \(\pi _N\) for meta-model \(N\). To simplify the presentation, the above syntax restricts relations to have exactly one domain variable per meta-model. If the multiplicity of a navigated property \({ R }\) is different from one, pattern templates involving it denote inclusion tests, i.e., a pattern \({ R }\mathrel {=}{ a }\) denotes the test \({ a } \in { R }\). Properties can also be navigated backwards by using the opposite keyword. Templates can be complemented with arbitrary OCL constraints. Relations can optionally be marked as top, in which case they must hold for all objects of the specified class. Otherwise, they are only tested for particular objects when invoked in when or where clauses.

2.2 Examples

As a first example, we will define a simplified version of the classic object-relational mapping transformation that illustrates the QVT-R specification [40]. Although simplified, this version still exhibits some of the problems of the original version, which we will describe in the next section. Figure 1 depicts a simplified version of the object (UML) and relational (RDBMS) meta-models, including signatures of possible edit operations. Figure 2 defines a transformation uml2rdbms, whose goal is to map every persistent class in a package to a table in a schema with the same name. Each table should contain a column for each attribute (including inherited ones) of the corresponding class. A constraint of the UML meta-model that can be captured neither by class diagrams nor by QVT-R key constraints is the requirement that the association \( \mathtt{general } \) should be acyclic. One must resort to OCL to express it, for example by adding the following invariant to the UML meta-model:
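To illustrate what such an invariant demands, the following Python sketch (not OCL, and not part of the tool) checks that no class reaches itself through the transitive closure of general. The dictionary encoding of the association and the function names are assumptions made for this example.

```python
from typing import Dict, Set


def closure(general: Dict[str, Set[str]], start: str) -> Set[str]:
    """Classes reachable from `start` by following `general` one or more times."""
    seen: Set[str] = set()
    stack = list(general.get(start, ()))
    while stack:
        current = stack.pop()
        if current not in seen:
            seen.add(current)
            stack.extend(general.get(current, ()))
    return seen


def general_is_acyclic(general: Dict[str, Set[str]]) -> bool:
    """True when no class is among its own (transitive) generals."""
    return all(c not in closure(general, c) for c in general)


# Hypothetical models: B generalizes A in the first, A and B form a cycle in the second.
well_formed = {"A": {"B"}, "B": set()}
ill_formed = {"A": {"B"}, "B": {"A"}}
```

Here `general_is_acyclic(well_formed)` holds while `general_is_acyclic(ill_formed)` does not, which is the distinction the invariant is meant to enforce.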

The constraint relies on the transitive closure operator, which has recently been introduced to the OCL standard [42, p. 168].

There are two top relations: P2S, which maps each package to a schema with the same name, and C2T, which maps each class to a table with the same name. To ensure that a class is only mapped to a table if the respective package and schema are related, relation C2T invokes P2S (with concrete domain variables) in the when clause. For concrete class \(c\) and table \(t\), C2T also calls relation A2C in the where clause, which is responsible for mapping each attribute in \(c\) to a column in \(t\). A2C directly calls PA2C, which translates each attribute directly declared in \(c\) to a column in \(t\), and SA2C, which recursively calls A2C on the general class of \(c\), so that each inherited attribute is also translated to a column in \(t\).

Another classical bidirectional model transformation example is that of the expansion/collapse of a hierarchical state machine (HSM). In a HSM, states may themselves contain sub-states (in which case they are called composite states), as defined by the HSM meta-model in Fig. 3. Transitions may exist between sub-states and states outside their owning composite state. As with the UML meta-model, the HSM meta-model also requires an additional OCL constraint to avoid circular containment. One advantage of HSMs is abstraction, and a HSM can be collapsed into a non-hierarchical state machine (NHSM) that presents only top-level states, inheriting the incoming and outgoing transitions of their sub-states. The NHSM meta-model is similar to HSM without the container association and the CompositeState class and thus is omitted.

The consistency relation between a HSM and its collapsed view is specified by the hsm2nhsm QVT-R transformation in Fig. 4. Top relation S2S relates every state of a HSM with a NHSM state with the same name as the top-level state owning it. The where clause of top relation S2S tests whether the HSM state is top-level or not: If so, TS2S is called, which matches itself to a NHSM state with the same name; otherwise, SS2S is called, which recursively calls S2S with its container state. Each transition is mapped by the top relation T2T, which can be trivially specified by resorting to a where clause stating that two transitions are related if their source and target states are related by S2S. Since sub-states in a HSM are related to top-states in a NHSM, every transition is automatically pushed up to the top-states.
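As an informal illustration of the intended forward behavior, the following Python sketch computes one NHSM that is consistent with a given HSM under this reading: every state is mapped to its top-level container and every transition is lifted accordingly. The encoding (a container dictionary and transitions as pairs of state names) and all function names are assumptions of this example; hsm2nhsm itself is a declarative relation, not this function.

```python
from typing import Dict, Optional, Set, Tuple

Transition = Tuple[str, str]  # (source state name, target state name)


def top_state(container: Dict[str, Optional[str]], state: str) -> str:
    """Follow `container` upwards to the top-level state (containment assumed acyclic)."""
    while container[state] is not None:
        state = container[state]
    return state


def collapse(container: Dict[str, Optional[str]],
             transitions: Set[Transition]) -> Tuple[Set[str], Set[Transition]]:
    """Return the top-level states and the transitions pushed up to them."""
    states = {top_state(container, s) for s in container}
    lifted = {(top_state(container, src), top_state(container, tgt))
              for src, tgt in transitions}
    return states, lifted


# Hypothetical HSM: Running and Paused are sub-states of the composite state Active.
container = {"Idle": None, "Active": None, "Running": "Active", "Paused": "Active"}
transitions = {("Idle", "Running"), ("Running", "Paused"), ("Paused", "Idle")}
states, lifted = collapse(container, transitions)
```

Note that the transition between the two sub-states becomes a self-transition on Active, since both of its ends are related to the same top-level state.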

2.3 Checking semantics

QVT-R’s checking semantics assesses whether two models are consistent according to the specified transformation. Although the consistency check is by itself important, it is also an essential feature in enforce mode since the latter must “check-before-enforce.” The semantics of a relation differs depending on whether it is invoked at the top-level or with concrete domain variables in when and where clauses. The specified top-level semantics is directional. As such, from each relation \(R\), two consistency relations \({ R } _\blacktriangleright \mathbin {:}{ M } \times { N }\) and \({ R } _\blacktriangleleft \mathbin {:}{ M } \times { N }\) must be derived, to check whether \(m : M\) is \(R\)-consistent with \(n : N\) and whether \(n : N\) is \(R\)-consistent with \(m : M\), respectively. The former can be formalized as follows:
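One way to write this definition, consistent with the explanation that follows (the exact form of the variable sets is an assumption), is:

```latex
\[
R_\blacktriangleright\,(m,n) \;\equiv\;
  \forall\, xs \mid \psi_\vartriangleright \wedge \pi_M
  \;\Rightarrow\;
  (\exists\, ys \mid \pi_N \wedge \phi_\vartriangleright)
\]
\[
\text{where}\quad
  xs = \mathsf{fv}(\psi) \cup \mathsf{fv}(\pi_M),
  \qquad
  ys = (\mathsf{fv}(\pi_N) \cup \mathsf{fv}(\phi)) - xs
\]
```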

Here, \(\mathsf {fv}({ e })\) retrieves the set of free variables from the expression \({ e }\), so \({ xs }\) denotes the set of variables used in the when constraint and the source pattern, while \({ ys }\) is the set of variables used exclusively in the where constraint and in the target pattern. Given a formula \(\psi \), \(\psi _\vartriangleright \) denotes the same formula with all relation invocations replaced by the respective directional version. This semantics is rather straightforward: Essentially, for every element \({ a }\mathbin {:}{ A }\) that satisfies the when condition \(\psi \) and matches the \(\pi _M\) domain pattern, there must exist an element \({ b }\mathbin {:}{ B }\) that satisfies the where condition \(\phi \) and matches the \(\pi _N\) domain pattern. The semantics in the opposite direction is dual. Two models are consistent according to a QVT-R transformation \({ T }\) if they are consistent for all top relations in both directions. Assuming that \(\mathcal {R}_{{ T }}\) is the set of all top-level relations of transformation \({ T }\), we have:
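Read literally, the sentence above amounts to a conjunction of both directional checks over all top relations, which can be written as follows (the layout is an assumption):

```latex
\[
\varvec{T}\,(m,n) \;\equiv\;
  \forall\, R \in \mathcal{R}_{T} \mid\;
  R_\blacktriangleright\,(m,n) \;\wedge\; R_\blacktriangleleft\,(m,n)
\]
```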

The QVT-R standard [40] defines rather precisely the top-level semantics, but is omissive about the semantics of relations invoked with concrete domain variables. Recent works on the formalization of QVT-R checking semantics [4, 19, 47] clarify that it is essentially the same as the top-level—still directional, but defined over specific objects by fixing the domain variables. As such, from each relation \(R\) with domain variables of type \({ A }\) and \({ B }\), two consistency relations \({ R } _\vartriangleright \mathbin {:}{ M } \times { N } \times { A } \times { B }\) and \({ R } _\vartriangleleft \mathbin {:}{ M } \times { N } \times { A } \times { B }\) are inferred, to check if two concrete objects \({ a }\) and \({ b }\) (belonging to models \({ m }\mathbin {:}{ M }\) and \({ n }\mathbin {:}{ N }\), respectively) are consistent:
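Following that description, the non-top-level version has the same shape as the top-level one, except that the domain variables \(a\) and \(b\) are fixed arguments instead of being quantified. A possible rendering, in which the precise variable sets are an assumption, is:

```latex
\[
R_\vartriangleright\,(m,n,a,b) \;\equiv\;
  \forall\, xs \mid \psi_\vartriangleright \wedge \pi_M
  \;\Rightarrow\;
  (\exists\, ys \mid \pi_N \wedge \phi_\vartriangleright)
\]
\[
\text{where}\quad
  xs = (\mathsf{fv}(\psi) \cup \mathsf{fv}(\pi_M)) - \{a, b\},
  \qquad
  ys = (\mathsf{fv}(\pi_N) \cup \mathsf{fv}(\phi)) - xs - \{a, b\}
\]
```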

Although it may be tempting (and probably more intuitive) to define \({ R } _\blacktriangleright \) in terms of \({ R } _\vartriangleright \), that is \({ R } _\blacktriangleright \;({ m },{ n })\equiv \forall \;{ a }\mathbin {:}{ A }\mid \exists \;{ b }\mathbin {:}{ B }\mid { R } _\vartriangleright \;({ m },{ n },{ a },{ b })\), this definition is not semantically equivalent to the one presented above, as already discussed in [4]. For instance, consider the semantics (in the direction of UML) of relation \( \mathtt{PA2C } \) from the uml2rdbms transformation:

Consider a simple UML model where a class \({ a }\) with an attribute \({ x }\) extends a class \({ b }\) with an attribute \({ y }\). Consider also a RDBMS model with a single table \({ a }\) containing a column \({ x }\) and a column \({ y }\). While \( \mathtt{PA2C } _\blacktriangleleft \) holds for this pair of models, \( \mathtt{PA2C } _\vartriangleleft \) returns false for every pair of \( \mathtt{Class } \) and \( \mathtt{Table } \). Of course, there are cases where the two semantics are equivalent. For instance, C2T could be defined as a non-top relation and be called from the where clause of \( \mathtt{P2S } \). The behavior is equivalent because the only free variable (\(n\)) is bound to a unitary attribute.

Due to this asymmetry and the directionality of the semantics, QVT-R transformations may not have the expected behavior. In particular, uml2rdbms as defined in the standard does not have a bidirectional semantics, in the sense that the only pairs of consistent and valid finite models are ones where all classes are non-persistent and there are no tables. To see why this happens, consider the relations \( \mathtt{A2C } \) and \( \mathtt{SA2C } \) when checked in the direction of \( \mathtt{UML } \). These relations call each other recursively, and their non-top-level semantics is:

If the transformation takes into account the OCL constraint requiring general to be acyclic, the predicate \( \mathtt{A2C } _\vartriangleleft \;({ uml },{ rdb },{ c },{ t })\) never holds in a finite model, since \({ c }\) will be required to have an infinite ascending chain of general objects. This is due to the under-restrictive \( \mathtt{SA2C } \) domain pattern in the RDBMS side (empty in this case), that requires every table to have a matching class with a general, which, due to recursion, is also required to have a general, and so on. This is but one of the problems that occur in the original specification of this transformation and is another example of the ambiguities that prevail in the QVT standard [40]: While it requires consistency to be checked in both directions, the case study used to illustrate it was clearly not developed with bidirectionality in mind. Note that checking consistency only in the direction of RDBMS does not suffice, since, for example, it will not prevent spurious tables from appearing in the target schema.

Concerning recursion, we can distinguish two situations: one is well-founded recursion, where the call graph of the transformation contains a loop, but in any evaluation, it is traversed only finitely many times; another is cyclic (or infinite) recursion, where such a loop may actually be traversed infinitely many times (e.g., when a relation directly or indirectly calls itself with the same arguments). The semantics of well-founded recursion is not problematic, but the standard is omissive about what should happen when infinite recursion occurs. A possible interpretation is that it should not be allowed, although in general, it is undecidable to detect whether that is the case. Similarly to some QVT-R formalizations [19, 47], the embedding presented in this article is not well-defined when infinite recursion occurs.

Recently, a formal semantics of QVT-R was proposed [4] that is well-defined even in the presence of infinite recursion, by resorting to modal mu calculus. To see why taking OCL constraints into account is fundamental, note that a transformation conforming to this semantics, but ignoring the requirement that general is acyclic, would consider an (ill-formed) UML model with a single persistent \( \mathtt{Class } \) \({ a }\) that generalizes itself consistent with a RDBMS model with a \( \mathtt{Table } \) \({ a }\).

To prevent the problem in the uml2rdbms transformation described above, one could tag each column with the path to the particular \( \mathtt{general } \) it originated from and then refine the RDBMS domain pattern to prevent problematic recursive calls. A simpler alternative is to resort to the transitive closure operation and map at once every declared or inherited attribute of a given class to a column of the respective table. In this new version of uml2rdbms (that will be considered in the remainder of the article), A2C, PA2C and SA2C are replaced by the following alternative definition of A2C:

The additional OCL constraint in the UML domain pattern acts as a pre-condition when applying the transformation in the direction of RDBMS, and as a post-condition in the other direction. As such, it could not be specified in the when clause, since it would act as an (undesired) pre-condition for both scenarios.

Unlike uml2rdbms, the recursive version of hsm2nhsm does produce the intended behavior. The reason is that, while a single attribute in uml2rdbms may give rise to columns in multiple tables, a HSM transition in hsm2nhsm gives rise to a single NHSM transition. As a consequence, unlike \( \mathtt{A2C } \) that must be defined over class elements, \( \mathtt{T2T } \) can be defined directly over transition elements. These particularities are difficult to grasp at design time, thus effective tool support for QVT-R is essential for the design of consistency relations that embody the intentions of the user.

2.4 Enforcement semantics

The enforcement semantics in the standard shows many ambiguities and omissions. Beyond that, for the reasons presented next, we believe that the semantics intended in the standard for this mode is quite undesirable. Instead, we propose an alternative that is easy to formalize, more flexible, and more predictable to the end-user.

In the QVT-R standard, update propagation is required to be deterministic. This is a desirable property, since it makes its behavior more predictable. However, to ensure determinism, every transformation is required to follow very stringent syntactic rules that reduce update translation to a trivial imperative procedure. Namely, it should be possible to order all constraints in a relation (except for the target domain pattern), such that the value of every free variable is fixed by a previous constraint. Although not clarified in the standard, this means that every relation invoked in when and where constraints is either invoked with previously bound variables, or required to also be deterministic, even if the intention was to only make update propagation deterministic. For example, in transformation uml2rdbms, update propagation in the RDBMS direction will only be deterministic for relation C2T if at most one \({ s }\) is consistent with \({ p }\) according to relation P2S (note that \({ s }\) is still free in the when clause). In this particular example, that happens to be true, but in general such determinism is undesirable since it forces relations to be one-to-one mappings, limiting the expressiveness of the language. Moreover, it defeats the purpose of a declarative transformation language, since one is forced to think in terms of imperative execution and write more verbose transformations. For example, our simpler version of A2C using transitive closure would not be allowed, since the value of \({ g }\) is not known a priori when enforcing consistency in the direction of UML.

Another problem is the predictability of update propagation. Being deterministic is just part of the story: it should be clear to the user why some particular element was chosen to be updated instead of another. The only mechanism proposed by QVT-R to control updatability is keys. For example, one could add the statement key Table (name, schema); to the running example to assert that each table is uniquely identified by the pair of properties name and schema. If an update is required on a table to restore consistency (for example, when an attribute is added to a class), such a key is used to find a matching table. When found, an update is performed, otherwise a new table is created. This works well when all domains involved in relations have natural keys, which again points to one-to-one mappings only, but fails if such keys do not exist. In those cases, the standard prescribes that update propagation should always be made by means of creation of new elements, even if sometimes a simple update to an existing element would suffice. Since creation requires defaults for mandatory (multiplicity one) properties, this would result in models with little resemblance to the original (which would basically be discarded).

Our alternative enforcement semantics is based on the principle of least change, first proposed in the context of maintainers [35], which promotes predictability by requiring updates to be as small as possible. The QVT-R “check-before-enforce” policy is just a particular case of this more general principle. Let \({ \varDelta }_{{ M }}\mathbin {:}{ M } \times { M }\rightarrow \mathbb {N} \) be an operation that computes the update distance between \({ M }\) models. Then, the principle of least change states that the models returned by the transformations \(\overrightarrow{ \varvec{{ T }} } \) and \(\overleftarrow{ \varvec{{ T }} } \) are just the consistent models closest to the original. Formally, we have:
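One way to write the two laws, using only the symbols introduced above (the layout is an assumption), is to require that no other consistent model is strictly closer to the original than the returned one:

```latex
\[
\forall\, m \mathbin{:} M,\; n, n' \mathbin{:} N \mid\;
  \varvec{T}\,(m,n') \;\Rightarrow\;
  \varDelta_{N}\,(n,\ \overrightarrow{\varvec{T}}\,(m,n)) \;\le\; \varDelta_{N}\,(n, n')
\]
\[
\forall\, m, m' \mathbin{:} M,\; n \mathbin{:} N \mid\;
  \varvec{T}\,(m',n) \;\Rightarrow\;
  \varDelta_{M}\,(m,\ \overleftarrow{\varvec{T}}\,(m,n)) \;\le\; \varDelta_{M}\,(m, m')
\]
```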

Assuming that the distance is only null when the model is unchanged (i.e., \( \varDelta \;({ n },{ n' })\mathrel {=}\mathrm {0}\equiv { n }\mathrel {=}{ n' }\)), it is trivial to show that these laws reduce to hippocraticness when the models \({ m }\) and \({ n }\) are already consistent. Note that this principle by itself does not ensure determinism, although it substantially reduces the set of possible results. If among the returned models, the user wishes to favor a particular subset, keys or OCL constraints can be added to the meta-model to further guide the transformation engine.
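The least-change reading can be illustrated by an exhaustive search over a small, explicitly enumerated space of candidate targets. The Python sketch below is only an illustration under toy assumptions (models as finite sets, a hypothetical consistency predicate, and the symmetric difference as distance); the tool itself relies on model finding in Alloy rather than on enumeration.

```python
from itertools import chain, combinations
from typing import FrozenSet, List, Sequence, Tuple

Source = FrozenSet[str]              # toy source model: a set of names
Target = FrozenSet[Tuple[str, int]]  # toy target model: (name, flag) pairs


def consistent(m: Source, n: Target) -> bool:
    """Hypothetical consistency relation: both models mention the same names."""
    return {name for name, _ in n} == set(m)


def distance(n1: Target, n2: Target) -> int:
    """Number of pairs inserted or deleted; zero only when n1 == n2."""
    return len(n1 ^ n2)


def all_targets(names: Sequence[str], flags: Sequence[int]) -> List[Target]:
    """Enumerate every target model over a small finite universe."""
    universe = sorted((name, flag) for name in names for flag in flags)
    subsets = chain.from_iterable(
        combinations(universe, k) for k in range(len(universe) + 1))
    return [frozenset(s) for s in subsets]


def enforce_forward(m: Source, n: Target, candidates: List[Target]) -> List[Target]:
    """Return every consistent candidate at minimal distance from n."""
    valid = [c for c in candidates if consistent(m, c)]
    if not valid:
        return []
    best = min(distance(n, c) for c in valid)
    return [c for c in valid if distance(n, c) == best]


candidates = all_targets(["a", "b"], [0, 1])
n = frozenset({("a", 1)})

# Already consistent: the only result is n itself (check-before-enforce).
unchanged = enforce_forward(frozenset({"a"}), n, candidates)

# Name "b" was added to the source: two minimal repairs exist, one per flag.
repairs = enforce_forward(frozenset({"a", "b"}), n, candidates)
```

The second call returns two equally close models, which shows concretely why least change alone does not guarantee determinism.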

We propose two different techniques to measure the update distance between models. In the first one, models are interpreted as graphs and the graph edit distance (GED) [51] is measured. GED measures the distance between two graphs as the number of node and edge insertions and deletions needed to obtain one from the other. Concretely, graph nodes denote model elements and literal values (i.e., primitive type values or enumeration literals), while edges denote links between model elements or attributes between model elements and literal values. GED counts changes in this graph representation, with the exception of literal values, which are considered external to particular model instances and thus do not affect model distance. This is a meta-model-independent metric that is automatically inferred by our tool for any meta-model provided by the user.

The simple definition for distance provided by GED assumes a fixed repertoire of edit operations which may not be desirable. In particular, there is no control over the “cost” of complex operations. For example, changing the name of a Class will have a cost of 2, since it requires deleting the current name edge and inserting a new one, while adding a new attribute to a class will cost 3, since it requires creating a new attribute, setting its name, and adding it to the class. One may wish both these operations to be atomic edits and have the same unitary cost. Also, one may wish to allow only particular edits in order to control non-determinism of enforcement runs.
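The two costs mentioned above can be reproduced with a small Python sketch. It assumes that model elements keep stable identifiers across the two models, so that the distance reduces to counting inserted and deleted nodes and edges; the `Model` encoding and the identifiers `c1` and `a1` are hypothetical. Literal values appear only as edge targets and are therefore not counted as nodes.

```python
from typing import FrozenSet, NamedTuple, Tuple

Edge = Tuple[str, str, str]  # (source element, label, target element or literal)


class Model(NamedTuple):
    elements: FrozenSet[str]  # model elements only; literals are not nodes here
    edges: FrozenSet[Edge]


def ged(a: Model, b: Model) -> int:
    """Count element and edge insertions and deletions between two models."""
    return len(a.elements ^ b.elements) + len(a.edges ^ b.edges)


before = Model(frozenset({"c1"}),
               frozenset({("c1", "name", "Person")}))

# Renaming the class: one name edge deleted, one inserted.
renamed = Model(frozenset({"c1"}),
                frozenset({("c1", "name", "Customer")}))

# Adding an attribute: one new element, its name edge, and the link from the class.
with_attribute = Model(frozenset({"c1", "a1"}),
                       frozenset({("c1", "name", "Person"),
                                  ("a1", "name", "age"),
                                  ("c1", "attribute", "a1")}))

rename_cost = ged(before, renamed)            # 2
attribute_cost = ged(before, with_attribute)  # 3
```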

To address such limitations, we propose as an alternative measure an operation-based distance (OBD) that allows the user to control the range of valid repairs by specifying in the meta-model which edit operations can be applied to update the model. These are specified using pre- and post-conditions defined in (a subset of) OCL. For the purposes of our running example, we assume the existence of the edit operations whose interfaces are defined in Fig. 1. The following is an OCL specification of the operation setName from Class:

In this case, \(\varDelta \) will be the length of the edit operation sequence (built over the user-defined operations) required to achieve the new model. Enforcing the principle of least change entails minimizing this sequence between the original and the updated models. While OBD allows the assignment of lower costs to complex updates (simply create an operation that composes smaller operations), assigning higher costs to simple operations is not as straightforward, as they may not be decomposable. This would require customizable operation costs, which is left as future work.
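A minimal Python sketch of this idea computes the distance as the length of the shortest sequence of operations leading from one model to another, using breadth-first search. The model encoding, the finite universes of names and attributes, and the pre-conditions chosen for the two operations are all assumptions of this example, not the OCL specifications used by the tool.

```python
from collections import deque
from typing import Callable, FrozenSet, Iterable, Iterator, Optional, Tuple

ClassFact = Tuple[str, str, FrozenSet[str]]  # (identifier, name, attribute names)
Model = FrozenSet[ClassFact]
Operation = Callable[[Model], Iterator[Model]]

NAMES = ("Person", "Customer")  # hypothetical universe of class names
ATTRS = ("age", "email")        # hypothetical universe of attribute names


def set_name(model: Model) -> Iterator[Model]:
    """Rename one class; assumed pre-condition: the new name differs from the old one."""
    for cid, name, attrs in model:
        for new in NAMES:
            if new != name:
                yield (model - {(cid, name, attrs)}) | {(cid, new, attrs)}


def add_attribute(model: Model) -> Iterator[Model]:
    """Add one attribute; assumed pre-condition: the class does not have it yet."""
    for cid, name, attrs in model:
        for attr in ATTRS:
            if attr not in attrs:
                yield (model - {(cid, name, attrs)}) | {(cid, name, attrs | {attr})}


def obd(start: Model, goal: Model, operations: Iterable[Operation],
        limit: int = 5) -> Optional[int]:
    """Length of the shortest operation sequence from start to goal, if any within limit."""
    operations = list(operations)
    seen = {start}
    frontier = deque([(start, 0)])
    while frontier:
        model, depth = frontier.popleft()
        if model == goal:
            return depth
        if depth == limit:
            continue
        for operation in operations:
            for successor in operation(model):
                if successor not in seen:
                    seen.add(successor)
                    frontier.append((successor, depth + 1))
    return None


start = frozenset({("c1", "Person", frozenset())})
renamed = frozenset({("c1", "Customer", frozenset())})
extended = frozenset({("c1", "Person", frozenset({"age"}))})
```

With these operations, both `obd(start, renamed, [set_name, add_attribute])` and `obd(start, extended, [set_name, add_attribute])` have unitary cost, whereas going from `extended` back to `start` yields `None`, because no operation deletes attributes. This is how restricting the repertoire of operations narrows the range of valid repairs.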

A source of ambiguity in OCL concerns frame conditions. Assuming that everything that is not mentioned in the post-condition is not changed is generally a reasonable assumption, but this is not trivial to infer from declarative specifications. Given the lack of OCL statements focusing on frame conditions, we introduce “modifies” clauses, through which the user must explicitly specify which elements of the model may be modified by the operation—the remainder are assumed to remain unchanged. This mechanism is similar to those introduced by behavioral interface specification languages, like the Java Modeling Language (JML) [29]. In the previous example, the modifies keyword states that only the attribute name in Class is modified by operation setName.

While our semantics, following the constraint maintainers framework and the QVT-R standard, was developed in a bidirectional transformation scenario (in the sense that consistency is restored by updating a single model), it is worth noting that it could be adapted to synchronization scenarios where both models can be updated simultaneously: Resorting to the same consistency relation, enforcement runs would try to minimize the distance of both models to the original ones, rather than just one. Given a synchronization procedure \(\overleftrightarrow { \varvec{{ T }} } \mathbin {:}{ M } \times { N }\rightarrow { M } \times { N }\) and a distance metric over pairs of models \({ \varDelta }_{{ M } \times { N }}\mathbin {:}({ M } \times { N }) \times ({ M } \times { N })\rightarrow \mathbb {N} \), typically

the least-change principle would be formalized as:
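One plausible reading of the two formulas, assuming that the pair distance is simply the sum of the per-model distances and writing \((m', n')\) for an arbitrary consistent pair, is sketched below. Both lines are a reconstruction under these stated assumptions, in the notation used so far.

```latex
% Assumed pair distance: the sum of the distances of each component.
\varDelta_{M \times N}\,((m, n), (m', n')) = \varDelta_{M}\,(m, m') + \varDelta_{N}\,(n, n')

% Least change: no consistent pair is closer to the original pair than the result.
\forall m, m' \in M,\; n, n' \in N :\quad
  \varvec{T}\,(m', n') \;\Rightarrow\;
  \varDelta_{M \times N}\,(\overleftrightarrow{\varvec{T}}\,(m, n), (m, n))
    \le \varDelta_{M \times N}\,((m', n'), (m, n))
```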

While this generalization allows the application of the technique to simple synchronization problems that can be solved by minimal updates, addressing more complex synchronization problems that require user interaction to solve conflicts between the domains cannot be attained directly by least-change techniques.

This generalization is related to the multi-directional transformation scenario, where the user may wish to update multiple models in order to restore consistency, to which our technique can also be adapted [32]. Here, the system tries to minimize the distance between the set of original and target models that the user chose as targets of the enforcement run.

3 Embedding QVT-R in Alloy

In this section, we present how the semantics proposed in the previous section can be operationalized by an embedding in Alloy. To keep the paper self-contained, a brief introduction to Alloy is presented, focusing on the concepts deemed essential to understand our embedding; for a deeper exposition, the reader is referred to [24]. The reader not interested in the technical details of the embedding can skip over to Sect. 4.

3.1 A brief introduction to Alloy

Alloy is a lightweight formal specification language that, supported by the Alloy Analyzer, provides bounded model checking and model finding functionalities through an embedding in off-the-shelf SAT solvers. Alloy is a rich and flexible language; in this section, we focus only on concepts deemed essential for the scope of this article.

An Alloy specification is developed in modules that consist of paragraphs: signature declarations, constraints and commands. A signature declaration introduces a set of elements sharing a similar structure and properties. In Alloy, such elements are uninterpreted, immutable and indivisible and are thus denoted atoms. A signature declaration may also introduce fields, i.e., relations that connect its atoms to those of other (or the same) signatures. These are represented as sets of tuples of atoms in instances. Alloy is not restricted to binary relations, and it is not uncommon to have fields that relate three or more signatures. A signature that extends other signatures inherits their fields. It can also be contained in another signature, in which case it is simply a subset of the parent signature.

Signatures may be annotated with multiplicity keywords to restrict their cardinality, namely some (at least one element), lone (at most one element) and one (exactly one element). The range signature in a field declaration can also be annotated with such multiplicities, to restrict the number of atoms that can be connected to each atom of the source signature. If that number is arbitrary, the special multiplicity keyword set should be used.

Facts specify properties that must hold in every instance. These may call functions and predicates that are essentially containers for reusable expressions. Commands are used to perform particular analyses, by invoking the underlying solver. Run commands try to find instances for which the specified properties hold, while check commands try to find counter-examples that refute them. Commands can be parametrized by scopes for the declared signatures, thus bounding the search-space for the solver. If no scope is specified, a default of 3 is assumed.

Figure 5 depicts a possible (incomplete) specification of the UML class diagram meta-model using Alloy. Signatures Package, Class and Attribute declare the corresponding classes and introduce (binary) fields to represent the classes’ attributes and associations. Alloy does not have a primitive boolean type, so boolean attributes are usually represented by subset signatures containing the elements that have the attribute set to true. This is the case of the persistent attribute of Class, here represented by the Persistent subset signature. The run command instructs the analyzer to search for instances conforming to the acyclic predicate, setting a specific scope for each of the signatures.

Formulas in Alloy are defined in relational logic, an extension of first-order logic with relational and closure operators. Everything in Alloy is a relation, i.e., a set of tuples of atoms (with uniform arity). Signatures are unary relations (sets) containing the respective atoms and scalar values (including quantified variables) are just singleton sets. This uniformity of concepts leads to a very simple semantics. The relational logic operators also favor a navigational style of specification that is appealing to software engineers, as it resembles object-oriented languages.

The key operator in Alloy is the dot join composition that allows the navigation through fields (and relational expressions in general). For example, if c is a Class, c.name denotes its name (a scalar) and c.general accesses its super-class (a set containing at most one Class). Besides composition, relational expressions can also be built using the union (+), intersection (&), difference (-) and cartesian product (->) operators. In particular, singleton tuples can be defined by taking the cartesian product of two (or more) scalars. Relations can also have their domain restricted to a given set (<:) and likewise for the range (:>). For example, Persistent <: name is the binary relation that associates persistent classes with the respective names. Binary relational expressions can also be reversed (~), extended with the transitive closure (^), or with the reflexive transitive closure (*). For example, in the acyclic predicate, expression self.^general retrieves all the super-classes of self. Relational expressions may also be created by set comprehension. Finally, there are some primitive relations pre-defined in Alloy: univ denotes the universe, i.e., the set of all atoms, none denotes the empty set, and iden the binary identity relation over the universe.
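To make the relational reading concrete, the following sketch mimics a few of these operators over plain sets of tuples, with sets of atoms represented as sets of 1-tuples. The function names are hypothetical and the sample atoms are invented; this only illustrates the semantics and says nothing about how the Alloy Analyzer evaluates expressions.

```python
def join(r, s):
    """Dot join: match the last column of r with the first column of s and drop both."""
    return {a[:-1] + b[1:] for a in r for b in s if a[-1] == b[0]}


def domain_restrict(s, r):
    """Domain restriction: keep the tuples of r whose first atom belongs to the set s."""
    return {t for t in r if (t[0],) in s}


def transpose(r):
    """Converse of a binary relation."""
    return {(b, a) for (a, b) in r}


def closure(r):
    """Transitive closure of a binary relation, computed as a least fixed point."""
    result = set(r)
    while True:
        step = result | join(result, r)
        if step == result:
            return result
        result = step


def acyclic(classes, general):
    """No class appears among its own (transitive) super-classes."""
    supers = closure(general)
    return all(c not in join({c}, supers) for c in classes)


# Invented sample instance: C is the root, B extends C, A extends B.
Class = {("A",), ("B",), ("C",)}
Persistent = {("A",)}
name = {("A", "a"), ("B", "b"), ("C", "c")}
general = {("A", "B"), ("B", "C")}

print(join({("A",)}, closure(general)) == {("B",), ("C",)})  # True
print(acyclic(Class, general))                                # True
```

Treating scalars as singleton sets is what lets the same `join` serve both for navigating from one class and for navigating from a whole set of classes.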

Alloy has limited support for integers: The pre-defined Int signature contains all available integers. In commands, the scope of Int determines the available number of bits to represent them (in two’s complement notation). Integers can be added and subtracted with the functions plus and minus, respectively. The default semantics for integer operations is wrap around: for example, if the scope for Int is 3, plus [3,1] is \(-4\). Every relational expression can have its cardinality determined with the # operator.
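The wrap-around example can be checked with a small sketch of two's complement arithmetic at a given bit width. The helper names `wrap`, `plus` and `minus` are hypothetical and only imitate the behavior described above.

```python
def wrap(value: int, bits: int) -> int:
    """Interpret value modulo 2**bits as a signed two's complement integer."""
    span = 1 << bits   # number of representable integers
    half = span >> 1   # magnitude of the smallest (most negative) integer
    return (value + half) % span - half


def plus(a: int, b: int, bits: int) -> int:
    return wrap(a + b, bits)


def minus(a: int, b: int, bits: int) -> int:
    return wrap(a - b, bits)


print(plus(3, 1, bits=3))    # -4
print(minus(-4, 1, bits=3))  # 3
```

With 3 bits only the integers from -4 to 3 are available, so any distance computed this way silently loses meaning once it exceeds 3, which is why overflow has to be ruled out when sizes are summed.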

Atomic formulas are built from relational expressions using inclusion (in), equality (=) or cardinality checks (besides lone, some and one, keyword no can also be used to check if a relational expression is empty). Formulas can be combined with conjunction (&&), disjunction (||), implication (=>), possibly associated with an else formula, equivalence (<=>), and negation (not). Besides the universal (all) and existential (some) quantifiers, Alloy also supports lone (property holds for at most one atom), one (property holds for exactly one atom), and no (property holds for no atom) quantifiers. In the acyclic predicate, as expected, the formula quantifies over all atoms of signature Class and tests if the inheritance chain is acyclic.

3.2 Meta-models annotated with OCL

The models upon which our transformations are defined consist of UML class diagrams annotated with OCL constraints. Some translations have been proposed to embed such models in Alloy, namely [1, 9]. Our embedding will be based on the translation proposed in [9], since, unlike other proposals, it covers an expressive OCL subset that includes closure and operation specification via pre- and post-conditions. Here, we will just briefly present this translation.

Classes, their attributes and related associations can be directly translated to signatures and fields in Alloy. The same holds for the inheritance relationship, which Alloy also supports. The main difference from the embedding in the previous section is that, since Alloy instances are built from immutable atoms, the transformation resorts to the well-known local state idiom [24] to capture updates to a given model. This means that a special signature will be introduced to represent each meta-model, whose atoms will denote different models (or evolutions of a given model). To each field (representing an association or an attribute), an extra column of this type is added, to allow its value to change in different models. The translation proposed in [9] is also extended to allow classes to have different elements in different models: For each class, a special binary field (with the same name) will capture the objects of that class that exist in each model, to which we will refer as the signature’s state field. Boolean attributes are encoded similarly: A binary field captures which objects have the attribute set to true in each model. For example, class Class of our UML meta-model is translated to the following signature declaration.

The binary state field class captures the Class objects that exist in each UML model. The remaining fields model the respective Class associations and attributes. With the relational composition operator, we can access the values of these fields for a given UML model m. For example, class.m is the set of Class objects that exist in model m, general.m is a binary relation that maps each Class to its general in model m, and persistent.m is the set of Class objects that have the attribute persistent set to true in model m.
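The local state idiom can be pictured with a small sketch in which every field carries the model as an extra last column, and projecting a field over a model keeps the matching tuples and drops that column. The helper names `at` and `well_formed`, the trailing underscore in `class_` (needed because `class` is reserved in Python), and the sample atoms are all hypothetical.

```python
# State field: which Class atoms exist in which model.
class_ = {("A", "m1"), ("B", "m1"), ("A", "m2")}
# Association general, with the model as the extra last column.
general = {("A", "B", "m1")}
# Boolean attribute persistent: the atoms for which it is true in each model.
persistent = {("A", "m1"), ("A", "m2")}


def at(field, model):
    """Mimic field.m: keep tuples whose last column is the model, then drop that column."""
    return {t[:-1] for t in field if t[-1] == model}


def well_formed(model):
    """Check that general.m and persistent.m only mention classes existing in the model."""
    existing = {atom for (atom,) in at(class_, model)}
    links_ok = all(sub in existing and sup in existing for (sub, sup) in at(general, model))
    flags_ok = all(atom in existing for (atom,) in at(persistent, model))
    return links_ok and flags_ok


print(at(class_, "m1") == {("A",), ("B",)})  # True
print(at(general, "m2") == set())            # True
```

Here m2 can be read as an evolution of m1 in which class B was deleted: the atoms themselves never change, only the tuples recorded for each model do.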

Constraints must also be generated to ensure the correct multiplicities and that fields only relate atoms existing in the same model (inclusion dependencies). For example, fact

is generated to capture the cardinality constraints of relation namespace, and to force it, for each UML model m, to be a subset of the cartesian product between class.m and package.m (respectively, the sets of Class and Package elements of model m). Constraints that guarantee the integrity of the class hierarchy are also inserted; for instance, in the HSM meta-model, fact

would ensure that if a CompositeState exists in model m, it is also registered as a State in that model. OCL invariants in a given context are also automatically translated to Alloy facts, resulting in universal quantifications over the respective signature state fields, following the technique previously developed in [9]. Table 1 summarizes the currently supported operations from the OCL standard library [42] (operation oclIsNew may only be used in controlled contexts as explained in Sect. 3.4). For example, the OCL invariant stating that association general is acyclic is translated to Alloy as

Here, ^(general.m) is the transitive closure of field general projected over m.

The QVT standard extends the OCL language with the insertion of the opposite keyword that allows the navigation of associations in the opposite direction, which can be directly translated to Alloy using the converse operator \(\sim \).

3.3 QVT-R transformations

Top relations \({ R } _\blacktriangleright \) and \({ R } _\blacktriangleleft \) are specified by predicates parameterized by the model instances. The definition of all these predicates follows closely the formalization in Sect. 2.3. In particular, auxiliary predicates are used to specify the when and where clauses, and the domain patterns of each relation. For example, back to uml2rdbms, Fig. 6 presents the result of embedding \( \mathtt{C2T } _\blacktriangleright \) in Alloy as the predicate Top_C2T_RDBMS, as well as the necessary auxiliary predicates. Note how, in the specification of \( \mathtt{C2T } _\blacktriangleright \), quantifications are restricted to range over atoms existing in the respective models.

For each relation \({ R }\), we also declare two Alloy predicates to specify \({ R } _\vartriangleright \) and \({ R } _\vartriangleleft \). For example, in Fig. 6 the omitted predicates P2S_RDBMS and A2C_RDBMS specify \( \mathtt{P2S } _\vartriangleright \) and \( \mathtt{A2C } _\vartriangleright \), respectively. Besides the respective domain elements, these are also parameterized by the models they are being applied to. Since in Alloy predicates cannot call each other recursively, predicates \({ R } _\vartriangleright \) and \({ R } _\vartriangleleft \) are defined in terms of auxiliary relations over the model state signatures, specified by comprehension. For instance, the following recursive predicates, that would arise from a direct encoding of \( \mathtt{S2S } _\vartriangleright \) and \( \mathtt{SS2S } _\vartriangleright \) in hsm2nhsm, are invalid in Alloy.

Instead, we declare auxiliary relations S2S_NHSM’ and SS2S_NHSM’ with types HSM->NHSM->State->State and HSM->NHSM->State->State, respectively, and axiomatize their value using set comprehension as follows:

Note how predicate invocation is replaced by a membership check: For example, instead of the predicate call TS2S_NHSM[hsm,nhsm,s,t], we check that the tuple hsm->nhsm->s->t is included in relation TS2S_NHSM’. By resorting to these relations, the predicate encodings \( \mathtt{S2S } _\vartriangleright \) and \( \mathtt{SS2S } _\vartriangleright \) can now be redefined simply as

As discussed in Sect. 2.3, this embedding will not be well behaved in presence of cyclic recursion.

The checking semantics of the transformation is represented by a predicate that checks all top relations in both directions. In the uml2rdbms example, we have:

Regarding enforcement semantics, to implement the principle of least change as described in Sect. 2.4, we require the measurement of the update distance between two models. The first proposed metric is GED, which interprets models as graphs and measures the distance as the number of node and edge insertions and deletions needed to obtain one graph from the other. Note that an Alloy instance is isomorphic to a labeled graph whose nodes are the atoms and whose edges are the tuples in fields (technically, to a hypergraph, since fields are n-ary). With this mechanism, \(\varDelta _\mathtt{UML }\) can be computed as follows:

Assuming m’ represents an updated version of m, this function sums up, for every signature and field, the size of their symmetric difference in both models. To avoid Alloy’s standard wrap around semantics for integers, model finding is executed with the option Forbid Overflow set [36].

Regarding OBD, the edit operations, specified by the user in OCL using pre- and post-conditions, are automatically converted to Alloy using the translation procedure defined in [9]. Essentially, each operation will originate an Alloy predicate that specifies when it can hold between two models. The resulting Alloy predicate takes as arguments the pre- and post-states of the affected model (with the post-state being denoted by a primed variable), the receiver element of the edit operation (denoted by argument self of the appropriate context class), as well as the stated operation parameters. For example, the result of translating setName to Alloy is the following:

The body of the predicate consists of the translation of the pre- and post-conditions from the OCL specification. Pre-conditions (if any) are evaluated over the pre-state of the UML model m, while post-conditions refer to the respective post-model m’, except in the case of operations and properties marked by the tag @pre, which are still evaluated in the pre-state. Frame conditions for all classes and associations not included in the modifies clause are also automatically inferred.

Given the specifications of operations, we constrain models to form a sequence, where each step corresponds to the application of an edit operation:

The Alloy module ordering imposes a total order on all atoms of the given signature (in this case, UML) and declares a binary relation next that captures such order. The presented fact restricts the possible values of next, by requiring each state m and subsequent state m.next to be related by one of the specified operations.

In this case, \(\varDelta _\mathtt{UML }\) will be the number of models (intermediate steps) required to achieve a consistent target, which, as we will see next, will be determined by the scope of the signature denoting the respective meta-model, UML in this case.

3.4 Executing the semantics

Executing the transformation in checkonly mode is fairly simple: We just need to check the consistency predicate for a pair of concrete models. To represent a concrete model, since Alloy has no specific constructs to denote model instances, we use singleton signatures to denote specific objects and facts to fix the interpretation of fields. For example, a UML model M with Class A and Class B, with no Attribute elements, in a single Package P, where A is persistent and extends the non-persistent B, can be specified as follows:

To check whether UML model M is consistent with an RDBMS model N, the command check { uml2rdbms[M,N] } is issued, with the scope of each signature being set to the number of elements of the respective class in each of the two models. Regarding enforce mode with GED minimization, in order to determine a new UML model M’ consistent with RDBMS model N, with original model M, the command

is issued with increasing \(\varDelta \) values (starting at 0). In this case, the scope of each signature is set to the number of elements of the respective class plus \(\varDelta \), to allow complete freedom in the choice of edit operations. The calculation and increment of both \(\varDelta \) and the scope are performed automatically by our tool. Since we are dealing with exact scopes, the class hierarchy must also be taken into consideration. For instance, for a HSM solution with one CompositeState and one State, the scope of State must be set to \(2\).
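The search strategy just described can be sketched as a loop that tries increasing distances and stops at the first one admitting a consistent model. The sketch assumes a toy setting in which a model is a set of class names, the distance is the size of the symmetric difference, and candidates are enumerated by brute force instead of being found by a solver. `enforce`, `candidates` and `two_classes` are hypothetical names.

```python
from itertools import combinations


def candidates(universe):
    """Enumerate every subset of the universe, smallest first."""
    items = sorted(universe)
    for size in range(len(items) + 1):
        for combo in combinations(items, size):
            yield frozenset(combo)


def enforce(original, consistent, universe, max_delta):
    """Return (delta, models) for the smallest delta admitting consistent models, or None."""
    for delta in range(max_delta + 1):
        found = [
            c for c in candidates(universe)
            if len(c ^ original) == delta and consistent(c)
        ]
        if found:
            return delta, found  # every alternative at the minimum distance
    return None


def two_classes(uml):
    """Hypothetical stand-in for the consistency predicate: exactly two classes are required."""
    return len(uml) == 2


delta, models = enforce(frozenset({"A"}), two_classes, {"A", "B", "C"}, max_delta=3)
print(delta)        # 1
print(len(models))  # 2
```

Starting at distance 0 means an already consistent model is returned unchanged, and returning all models found at the minimum distance exposes any remaining non-determinism to the user.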

Regarding enforce mode with OBD minimization, the command

is issued with increasing scopes \(\varDelta \) (plus one) for signature UML, as all UML atoms will belong to the total order entailed by the operations. Singleton fields first and last denote the first and last atoms of the next total order: They are constrained to be the original and updated model, respectively, meaning that the latter should be obtained from the former using \(\varDelta \) edit operations. The scope of the remaining signatures is inferred from the operations specified in the meta-model, allowing a finer control over the scopes of the model finder, since we know the behavior of all possible update steps. This requires the creation of elements by the operations to be detected, which is by itself an ambiguous issue in OCL-specified operations. For our technique, we assume that every new element created by an operation is identified with the oclIsNew() operation in the post-condition and inside a one quantification (a predicate which holds for exactly one element [42, p. 170]). With oclIsNew() tags inside other quantifiers, we would not be able to precisely measure the scope increment. For instance, consider the operation addAttribute(n:String) from the Class class. Its post-condition would contain, among others, the following constraint:

This in turn would be translated to Alloy as:

The user is required to specify an upper-bound for \(\varDelta \) that limits the search for consistent targets. If several consistent models are found at the minimum distance, our tool warns the user and allows them to inspect the different alternatives. If the user then wishes to reduce such non-determinism, they can, for example, add extra OCL constraints to the meta-model or narrow the set of allowed edit operations to target a specific class of solutions. Section 6 will present a concrete example of how such narrowing can be done.

4 Bidirectionalizing ATL

ATL [25] is a widely used model transformation language created to answer the original QVT RFP and thus shares some characteristics with the standardized QVT languages. Unlike QVT-R, ATL has de facto standard operational semantics implemented as a plugin for the Eclipse IDE. However, it is unidirectional, in the sense that a transformation between \(M\) and \(N\) meta-models (which will be denoted by \(\overrightarrow{ \varvec{{ t }} } \mathbin {:}{ M }\rightarrow { N }\), as it is a deterministic procedure), only specifies how to create an \(N\) model from an \(M\) model. The prescribed method to obtain bidirectional transformations with ATL is to write two unidirectional transformations. Unfortunately, this leads to obvious correctness and maintenance problems, since the language provides no means to check that they are inverses of each other, nor to automatically derive one from the other. Moreover, that only works well for essentially bijective transformations, since, unlike in QVT-R, in ATL transformations are not able to recover missing information from the previous target model, as they only receive the source model as input (as specified in the type of \(\overrightarrow{ \varvec{{ t }} } \)). In this section, we explore how our technique can be adapted to confer bidirectional semantics to ATL transformations.

4.1 ATL language

ATL is a hybrid language with both declarative and imperative constructs. The authors advocate that transformations should be declarative whenever possible, and imperative specifications should only be used if specifying the transformation declaratively proves to be difficult [25]. In this work, we restrict ourselves to declarative constructs, disregarding imperative ones (known in ATL as called rules and do blocks). Giving semantics to imperative constructs in Alloy’s relational logic is doable (see, for example, [38]), but the need to explicitly represent all intermediate updates to the models in execution traces would render our solver-based approach infeasible, in particular in the presence of loops. The main constituents of ATL transformations are rules, the ATL equivalent to QVT-R relations, whose abstract syntax is:

Rules consist of a single source element \(a\) from the source meta-model and a set of target elements (for the scope of this presentation we restrict ourselves to a single target element \(b\)) from the target meta-model. Source elements are selected by an OCL pattern \(\pi _M\) over their properties, while target patterns consist of bindings \(\phi \) over the target element, which may consider values from source elements. Roughly, the execution semantics creates a target element for every source element that matches \(\pi _M\). Source models are read-only and target models write-only, and thus, transformations are not able to take into consideration existing elements in the target model, not even being able to “check-before-enforce” (although more recent work on incremental ATL executions could eventually be used to address this issue [27]).

Default rules are called matched rules and must be executed for all elements of the source model (similarly to QVT-R top relations). Lazy rules, unlike matched rules, are only executed if explicitly called from other rules (similarly to QVT-R non-top relations). They can either be unique or not: In unique lazy rules, a source element is always matched to the same target element, no matter how many times the rule is called over that source element; in non-unique lazy rules, a new target element is created every time it is called.

One particular characteristic of ATL is that target bindings may rely on implicit traceability links between elements created by other matched rules. Target elements may be directly “assigned” source elements, in which case, traces are used to retrieve the corresponding target element. This is possible because execution is divided in two phases: The first phase binds source elements to the source patterns and creates the target elements, implicitly creating traces between them; the second phase applies the bindings to the target elements, resorting to the traces if necessary. Since a source element cannot be matched by more than one rule [2], there is no ambiguity in the choice of the target element for each source element. Lazy rules must be explicitly called and thus are not taken into consideration in implicit resolutions.
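The two-phase execution with implicit traces can be sketched as follows, assuming source elements are dictionaries indexed by identifier and a rule is a guard, a target kind and a set of bindings. The `Ref` marker, the `t_` prefix for created elements, and the rule encoding are hypothetical simplifications and do not reflect the actual ATL engine.

```python
class Ref(str):
    """Marks a binding value that points at a source element (hypothetical helper)."""


def run_matched_rules(source, rules):
    traces, target, matched = {}, {}, []
    # Phase 1: match each source element with at most one rule and create its target.
    for elem_id, elem in source.items():
        hits = [rule for rule in rules if rule["guard"](elem)]
        if len(hits) > 1:
            raise ValueError(f"{elem_id} is matched by more than one rule")
        if hits:
            traces[elem_id] = "t_" + elem_id
            target[traces[elem_id]] = {"kind": hits[0]["to"]}
            matched.append((elem_id, hits[0]))
    # Phase 2: apply the bindings, resolving assigned source elements through the traces.
    for elem_id, rule in matched:
        for prop, binding in rule["bindings"].items():
            value = binding(source[elem_id])
            if isinstance(value, Ref):
                value = traces[value]
            target[traces[elem_id]][prop] = value
    return target, traces


# Invented source model: the transition is listed before the states it refers to.
source = {
    "t1": {"kind": "Transition", "source": Ref("s1"), "target": Ref("s2")},
    "s1": {"kind": "State", "name": "Idle"},
    "s2": {"kind": "State", "name": "Busy"},
}
rules = [
    {"guard": lambda e: e["kind"] == "State", "to": "State",
     "bindings": {"name": lambda e: e["name"]}},
    {"guard": lambda e: e["kind"] == "Transition", "to": "Transition",
     "bindings": {"source": lambda e: e["source"], "target": lambda e: e["target"]}},
]

target, traces = run_matched_rules(source, rules)
print(target["t_t1"])  # {'kind': 'Transition', 'source': 't_s1', 'target': 't_s2'}
```

Because all target elements and traces exist before any binding is applied, the transition can be bound to the images of its states even though it is visited first.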

Figure 7 presents an ATL version of the hsm2nhsm transformation using transitive closure. Rule S2S relates every top-level state in HSM to a state in NHSM with the same name, while rule T2T maps every transition in HSM to a transition in NHSM between the top-level containers of its source and target states. These are retrieved by filtering the result of the closure operation by a select operation. Note how in T2T, states of the HSM input model are being attributed to the source and target of transitions in the NHSM output model: The rule is taking advantage of the implicit traces created by S2S between HSM and NHSM states. A similar situation occurs in S2S when assigning state machines previously bound by M2M.

4.2 Overview of the bidirectionalization technique

To bidirectionalize ATL transformations, we will first derive a consistency relation \(\varvec{{ T }} \subseteq { M } \times { N }\) from an ATL transformation \(\overrightarrow{ \varvec{{ t }} } \mathbin {:}{ M }\rightarrow { N }\) and then use it to determine suitable (inverse) transformations according to the least-change semantics proposed in Sect. 2.4 for QVT-R enforce mode.

Since we are given the forward transformation \(\overrightarrow{ \varvec{{ t }} } \mathbin {:}{ M }\rightarrow { N }\), one could imagine that it would suffice to derive a suitable backward transformation \(\overleftarrow{ \varvec{{ t }} } \mathbin {:}{ M } \times { N }\rightarrow { M }\), thus lifting ATL transformations to the framework of lenses [13], as attempted before by Sasano et al. [45]. A well-behaved lens consists precisely of a pair of transformations \(\overrightarrow{ \varvec{{ t }} } \mathbin {:}{ M }\rightarrow { N }\) (usually known as get) and \(\overleftarrow{ \varvec{{ t }} } \mathbin {:}{ M } \times { N }\rightarrow { M }\) (usually known as put), that is acceptable, \(\overrightarrow{ \varvec{{ t }} } \;(\overleftarrow{ \varvec{{ t }} } \;({ m },{ n }))\mathrel {=}{ n }\), guaranteeing that an update on \(n\) is indeed propagated to \(m\), and stable, \(\overleftarrow{ \varvec{{ t }} } \;({ m },\overrightarrow{ \varvec{{ t }} } \;({ m }))\mathrel {=}{ m }\), the lens equivalent to hippocraticness. The lens framework is designed to deal with transformations that are abstractions (i.e., surjective transformations), as implied by the asymmetric nature of the two transformations: The view \(n\) can always be derived solely from a source \(m\) as it contains less information. In particular, if a model \(n\) is updated to \(n'\) that falls outside the range of \(\overrightarrow{ \varvec{{ t }} } \), the behavior of \(\overleftarrow{ \varvec{{ t }} } \) is undefined. Such a well-behaved lens could be obtained in our least-change maintainer framework by using the forward transformation as an implicit consistency relation, \(\varvec{{ T }}\;({ m },{ n })\equiv { n }\mathrel {=}\overrightarrow{ \varvec{{ t }} } \;({ m })\).
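The two lens laws can be checked mechanically on a small example. The sketch below assumes a hypothetical source that records a name and a salary and a view that keeps only the name, so that the forward transformation is a genuine abstraction; `get`, `put`, `acceptable` and `stable` are illustrative names.

```python
def get(m):
    """Forward transformation: the view keeps only the name."""
    return m["name"]


def put(m, n):
    """Backward transformation: install the updated name and recover the salary from m."""
    return {"name": n, "salary": m["salary"]}


def acceptable(m, n):
    """Acceptability: the view of the updated source is exactly the updated view."""
    return get(put(m, n)) == n


def stable(m):
    """Stability: putting back an unchanged view leaves the source untouched."""
    return put(m, get(m)) == m


source = {"name": "Ann", "salary": 1000}
print(acceptable(source, "Anna"))  # True
print(stable(source))              # True
```

Every name is the view of some source here, so `put` is defined for any updated view; that is precisely the surjectivity the lens framework relies on.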

Unfortunately, this imposes some undesirable limitations in the allowed usage scenarios. Consider a very simple example where a source model World consists of a set of Person elements with a name, and a target model Company consists of a set of Employee elements with a name and an (optional) salary (Fig. 8), and a trivial ATL transformation employ that maps every Person to an Employee with the same name and an empty salary (Fig. 9). This transformation is clearly not surjective since it only targets the subset of Company models where Employee elements have no salary. Now, consider a model \({ wrd }\mathbin {:} \mathtt{World } \) with a single person \(p\) and the corresponding model \({ cpn }\mathbin {:} \mathtt{Company } \) created by \(\overrightarrow{ \varvec{ \mathtt{employ } } } \). If the user updates \(cpn\) to \(cpn'\) by assigning a salary to \(p\), there will be no valid \(wrd'\) such that \({ cpn' }\mathrel {=}\overrightarrow{ \varvec{ \mathtt{employ } } } \;{ wrd' }\), and thus \(cpn'\) would be an invalid model. This limitation would greatly reduce the updatability of the framework.

A possible solution to this problem would be to weaken the lens laws, as suggested in [45], by allowing \(\overleftarrow{ \varvec{{ t }} } \;({ m },{ n })\) to produce a source \(m'\) whose view \({ n' }\mathrel {=}\overrightarrow{ \varvec{{ t }} } \;({ m' })\) is not \(n\) (breaking acceptability) as long as propagating \(n'\) backward produces \(m'\) again, i.e., \(\overleftarrow{ \varvec{{ t }} } \;({ m },{ n' })\mathrel {=}{ m' }\) (deemed weakly acceptable). However, even if the above update is now allowed, if the user updates the \( \mathtt{World } \) model (for example, inserting a new person) and wishes to propagate such change to the \( \mathtt{Company } \), the forward transformation \(\overrightarrow{ \varvec{ \mathtt{employ } } } \) would erase the previously assigned salary of \(p\), since it is not incremental. Embedding ATL in a lens framework with such weakened laws presumes that once \(\overrightarrow{ \varvec{{ t }} } \) is run to generate a new target model from a source, subsequent updates can only be safely propagated backwards.

To overcome this limitation, we opt instead to embed ATL transformations in the framework of maintainers, as done for QVT-R. The main idea is to infer from \(\overrightarrow{ \varvec{{ t }} } \mathbin {:}{ M }\rightarrow { N }\) a consistency relation \(\varvec{{ T }} \subseteq { M } \times { N }\) such that every model \(m\) is considered consistent with any model that extends \(\overrightarrow{ \varvec{{ t }} } \;({ m })\), in the sense that it sets values for properties not bound by \(\overrightarrow{ \varvec{{ t }} } \). This of course implies that \(\overrightarrow{ \varvec{{ t }} } \subseteq \varvec{{ T }}\). From \(\varvec{{ T }}\) a new forward transformation \(\overrightarrow{ \varvec{{ T }} } \mathbin {:}{ M } \times { N }\rightarrow { N }\) and a backward transformation \(\overleftarrow{ \varvec{{ T }} } \mathbin {:}{ M } \times { N }\rightarrow { M }\) can then be derived to propagate updates in both directions, using the least-change semantics described in Sect. 2.4 (obviously satisfying both the correctness and hippocraticness laws). Back to the example from Fig. 9, since \(\overrightarrow{ \mathtt{employ } } \) does not bind the salary attribute in Company models, the World with a single Person \(p\) would be consistent with any Company with a single Employee \(p\), whatever his salary. The following section will present a technique to infer one such possible \(\varvec{{ T }}\) from \(\overrightarrow{ \varvec{{ t }} } \).
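For the employ example, the idea can be sketched by hand, assuming a World is a set of person names and a Company maps employee names to an optional salary. The functions `consistent`, `forward` and `backward` below are hypothetical, manually written stand-ins for the inferred consistency relation and the derived transformations; in the tool these are obtained through model finding, not coded per transformation.

```python
def employ(world):
    """The original ATL-like forward transformation: every salary is left empty."""
    return {name: None for name in world}


def consistent(world, company):
    """A company is consistent if it extends employ(world): same names, any salary."""
    return set(company) == set(world)


def forward(world, company):
    """Least-change forward propagation: keep known salaries, add or drop employees as needed."""
    return {name: company.get(name) for name in world}


def backward(world, company):
    """Least-change backward propagation: the persons are exactly the employees."""
    return frozenset(company)


wrd = frozenset({"p"})
cpn = {"p": 1000}                  # the user assigned a salary to p
print(consistent(wrd, cpn))        # True
print(employ(wrd) == cpn)          # False: outside the range of employ

wrd2 = wrd | {"q"}                 # a new person is inserted
print(forward(wrd2, cpn))          # {'p': 1000, 'q': None}
print(employ(wrd2))                # {'p': None, 'q': None}
```

The last two lines contrast the two forward directions: the derived transformation preserves the salary of p, while rerunning employ from scratch erases it.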

The bidirectional ATL framework obtained with this technique satisfies the following properties. First, since \(\overrightarrow{ \varvec{{ t }} } \subseteq \varvec{{ T }}\), the consistency relation trivially holds for pairs of models \(({ m },{ n })\) such that \(\overrightarrow{ \varvec{{ t }} } \;({ m })\mathrel {=}{ n }\). Second, if \(\overrightarrow{ \varvec{{ t }} } \) is surjective, applying either \(\overrightarrow{ \varvec{{ t }} } \) or \(\overrightarrow{ \varvec{{ T }} } \) to an updated source will yield the same updated target: \(\overrightarrow{ \varvec{{ t }} } \) then completely defines the target elements, so \(\varvec{{ T }}\) only relates a model \(m\) to \(\overrightarrow{ \varvec{{ t }} } \;({ m })\), and the pair of transformations \(\overrightarrow{ \varvec{{ t }} } \) and \(\overleftarrow{ \varvec{{ T }} } \) forms a well-behaved lens. In contrast, for non-surjective ATL transformations this is no longer the case. It is easy to see why by considering the toy example from Fig. 9: by updating a view \(\overrightarrow{ \varvec{{ t }} } \;{ wrd }\) to \(cpn\) with the insertion of a salary, \(wrd\) and \(cpn\) will still be consistent by \(\varvec{ \mathtt{Employ } }\), and thus neither \(\overrightarrow{ \varvec{ \mathtt{Employ } } } \) nor \(\overleftarrow{ \varvec{ \mathtt{Employ } } } \) will update the models; applying \(\overrightarrow{ \varvec{ \mathtt{employ } } } \), however, would revert \(cpn\) back to \(\overrightarrow{ \varvec{{ t }} } \;{ wrd }\). Here \(\overrightarrow{ \varvec{ \mathtt{employ } } } \) and \(\overleftarrow{ \varvec{ \mathtt{Employ } } } \) do not form a well-behaved lens, satisfying only weak acceptability.
Thus, while the derivation of a maintainer \(\varvec{{ T }}\) does not invalidate the use of \(\overrightarrow{ \varvec{{ t }} } \) paired with \(\overleftarrow{ \varvec{{ T }} } \)—the pair comprises a stable and weakly acceptable lens—due to its non-incrementality, \(\overrightarrow{ \varvec{{ t }} } \) is better suited to initially create the target model from a source, at which point \(\overrightarrow{ \varvec{{ T }} } \) and \(\overleftarrow{ \varvec{{ T }} } \) can be used to propagate updates in both directions to maintain consistency.
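The difference between the non-incremental forward transformation and the relaxed consistency relation can be illustrated with a minimal Python sketch of the toy example from Fig. 9. The class and function names (`Person`, `Employee`, `employ`, `employ_consistent`, `strip_unbound`) are hypothetical and chosen only for illustration; the sketch assumes that names are the only bound property and that salary is the only unbound one. It is not the encoding used by the tool.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Person:
    name: str


@dataclass(frozen=True)
class Employee:
    name: str
    salary: Optional[int] = None  # assumption: the only property not bound by employ


def employ(world: List[Person]) -> List[Employee]:
    """Non-incremental forward transformation: rebuilds the target from scratch."""
    return [Employee(p.name) for p in world]


def strip_unbound(company: List[Employee]) -> List[str]:
    """Forget the unbound salary property, keeping only what employ binds."""
    return sorted(e.name for e in company)


def employ_consistent(world: List[Person], company: List[Employee]) -> bool:
    """Relaxed relation: the company may extend employ(world) with salaries."""
    return strip_unbound(company) == strip_unbound(employ(world))


wrd = [Person("p")]
cpn = [Employee("p", salary=1000)]  # the view, updated with a salary

print(employ_consistent(wrd, cpn))  # consistent: nothing needs to be repaired
print(employ(wrd) == cpn)           # re-running employ would discard the salary
```

In this sketch the pair `(wrd, cpn)` is consistent, so a least-change repair would leave both models untouched, whereas re-running `employ` produces an Employee without a salary.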

4.3 Inferring a consistency relation

At first glance, the semantics of an ATL transformation shares some similarities with the checking semantics of QVT-R described in Sect. 2.3: Pattern matching is used to filter candidate source elements, and it resembles the forall-there-exists quantification pattern to relate source and target elements. There are also some apparent differences: It is a directional semantics, in the sense that the above forall-there-exists quantification in principle should only be checked in the direction of the target (the direction of the transformation), and the existential quantifier should be unique, that is, for all candidate source elements, there must exist exactly one target element built with the target bindings. However, there are also some subtle differences: As the following example will show, the forall-there-exists semantics cannot be realized using quantifiers, and explicit traceability links must be used instead; furthermore, checks must also be performed in the opposite direction to avoid spurious target elements.

Consider again the simple transformation from Fig. 9, and the following semantics for the rule \( \mathtt{P2E } \) with quantifiers, using a notation similar to the one introduced in Sect. 2.3 for QVT-R:

Given a source model \(wrd\) with two Person elements with the same name \(a\), this semantics would force a consistent target model \(cpn\) with exactly one Employee with name \(a\). This is obviously not the intended ATL semantics, as two Employee elements with the same name are created by the transformation, one for each source Person. Relaxing the uniqueness constraint of the existential quantifier will not solve the problem either, as an arbitrary number of Employee elements would then be allowed. The one-to-one mapping between candidate source and generated target elements cannot be realized by quantifiers alone, but only through an explicit traceability relation between them. Moreover, this directional check does not guarantee that the only Employee elements in the target model are the ones created by the transformation, and some check in the opposite direction must be performed to ensure that every Employee originates from a Person. Such semantics can be encoded through a higher-order quantification as follows:

That is, the transformation ensures that there exists a traceability relation \( \mathtt{P2E } _{\vartriangleleft \! \vartriangleright } \) relating every Person (since \(\pi _\mathtt{World }\) is empty) to a unique Employee with the same name, and vice-versa. Note that this semantics considers target elements that fall outside the range of \(\overrightarrow{ \varvec{ \mathtt{employ } } } \), namely those that have the salary defined.
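The contrast between the quantifier-only reading and the trace-based reading of \( \mathtt{P2E } \) can be made concrete with a small Python sketch. It is an illustration under stated assumptions, not the Alloy encoding: elements are identified by their list index, Persons are represented by their name, Employees by a `(name, salary)` pair, and the hypothetical function `find_trace` searches for the traceability relation by brute force over permutations, which is only feasible for tiny models.

```python
from itertools import permutations
from typing import List, Optional, Tuple

Employee = Tuple[str, Optional[int]]  # (name, salary); salary is left unconstrained


def quantifier_check(persons: List[str], employees: List[Employee]) -> bool:
    """Naive reading: for every Person there is exactly one Employee with its name."""
    return all(
        sum(1 for (name, _) in employees if name == p) == 1
        for p in persons
    )


def find_trace(
    persons: List[str], employees: List[Employee]
) -> Optional[List[Tuple[int, int]]]:
    """Search for a one-to-one trace pairing each Person index with an Employee
    index of the same name; return None if no such relation exists."""
    if len(persons) != len(employees):
        return None  # a one-to-one relation covering both sides is impossible
    for perm in permutations(range(len(employees))):
        if all(persons[i] == employees[j][0] for i, j in enumerate(perm)):
            return list(enumerate(perm))
    return None


two_persons = ["a", "a"]
# The naive check accepts a single Employee for two Persons named "a" ...
print(quantifier_check(two_persons, [("a", None)]))
# ... while no trace exists for that target, and one exists for two Employees.
print(find_trace(two_persons, [("a", None)]))
print(find_trace(two_persons, [("a", None), ("a", 500)]))
# A spurious Employee "b" passes the directional check but admits no trace.
print(quantifier_check(["a"], [("a", None), ("b", None)]))
print(find_trace(["a"], [("a", None), ("b", None)]))
```

Since the trace only constrains names, an Employee with a defined salary is still accepted, which matches the remark that targets outside the range of the forward transformation are considered consistent.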

In general, the semantics of a matched rule \({ R }\) can be specified as follows:

This defines \({ R } _{\vartriangleleft \! \vartriangleright } \) as a one-to-one relation between every candidate source element and a single corresponding valid target element. The first expression states that every \(a : A\) that matches the pattern \(\pi _M\) must be related to a single \(b : B\) with the bindings \(\phi \); the second expression states that every \(b : B\) must be related to a valid \(a : A\). The binding \(\phi \) in a rule assigns to the target element values possibly from the source element: Unlike in QVT-R target patterns, all variables in \(\phi \) must be previously assigned in the source pattern \(\pi _M\). As such, they are interpreted in the same way as where conditions in QVT-R.

An implicit call that might occur in the right-hand side \(e\) of a binding is handled as follows. If \(e\) has a primitive type or is a target element, then it is directly translated to Alloy. If \(e\) denotes a source element of type \(A\), we retrieve the matching target element of type \(B\) from the respective traceability relation \({ R } _{\vartriangleleft \! \vartriangleright } \) (notice that it is always possible to uniquely determine \({ R } _{\vartriangleleft \! \vartriangleright } \), since source elements of a given type are restricted to be matched by a single rule [2]). Traceabilities can also be implicitly called over collections, as in the T2T transformation. Our tool also supports these implicit calls for collections that are sets, the above procedures being applied for every element \(e\) in the set.
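The case analysis for implicit calls can be sketched in Python as follows. The names `Src`, `Tgt`, `resolve` and the `traces` dictionary are hypothetical; the sketch assumes that each trace is available as a finite map from source to target elements and that, as stated above, each source type is matched by a single rule, so the type name suffices to select the trace.

```python
from dataclasses import dataclass
from typing import Any, Dict


@dataclass(frozen=True)
class Src:
    kind: str   # source type name, e.g. "Person"
    ident: str  # element identity


@dataclass(frozen=True)
class Tgt:
    kind: str   # target type name, e.g. "Employee"
    ident: str


def resolve(e: Any, traces: Dict[str, Dict[Src, Tgt]]) -> Any:
    """Resolve the right-hand side of a binding.

    - sets: resolve every element of the set;
    - source elements: look up the matching target in the trace of the
      (single) rule that matches their type;
    - primitive values and target elements: used as they are.
    """
    if isinstance(e, (set, frozenset)):
        return {resolve(x, traces) for x in e}
    if isinstance(e, Src):
        return traces[e.kind][e]
    return e


p1, p2 = Src("Person", "p1"), Src("Person", "p2")
e1, e2 = Tgt("Employee", "e1"), Tgt("Employee", "e2")
traces = {"Person": {p1: e1, p2: e2}}

print(resolve("some name", traces))  # primitive value, unchanged
print(resolve(p1, traces))           # source element, replaced by its trace image
print(resolve({p1, p2}, traces))     # set of source elements, resolved pointwise
```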

Although the semantics of unique lazy rules also relies on explicit traceability links, there is a subtlety that prevents their encoding in a similar way to (top) matched rules: it is quite difficult to infer statically which elements a unique lazy rule must relate (recall that they only create unique target elements when called from another rule). As such, the semantics of these rules will be divided into two parts. Given a unique lazy rule \({ R }\), the following predicate will only enforce the correctness of the respective traceability relation, with the existence and uniqueness checks being deferred to the rule call:

Moreover, when a unique lazy rule \(R\) is called over an expression \(e\), we insert the following additional constraints to ensure that the trace between \(e\) and the matched element \(b\) exists and is unique:

where \(\mathcal {T}_{{ U }}\) denotes the union of all unique lazy traces. The first part of the conjunction states that \(e\) is uniquely matched to a \(b\) by \({ R } _{\vartriangleleft \! \vartriangleright } \), and the second that \(b\) is not being matched to any other element by any other rule.

Finally, for an ATL transformation \({ T }\), we assume that two models are consistent if the above semantics holds for all (matched and unique lazy) rules \(\mathcal {R}_{{ T }}\):

This semantics can be encoded in Alloy in a similar manner to that of QVT-R, as described in Sect. 3.3. The higher-order existential quantification that asserts the existence of the traceability relation \({ R } _{\vartriangleleft \! \vartriangleright } \) can be encoded by skolemization, by explicitly declaring an Alloy relation that represents it. This ends up being similar to the actual encoding of QVT-R, where an auxiliary relation was also declared to encode \({ R } _\vartriangleright \), albeit for a different reason, namely to support recursion. Non-unique lazy rules are currently not supported by our technique.

5 Deployment

The technique for bidirectional model transformations presented in this article has been implemented for both QVT-R and ATL transformations as part of the Echo framework, a tool for managing intra- and inter-model consistency. In this section, we first briefly present Echo's features and architecture and then describe in more detail two of its key components: the model visualizer and the transformation optimizer.

5.1 The Echo framework

The focus of this framework is to help users develop and keep their models consistent. It supports both intra-model (i.e., consistency between a model and its meta-model) and inter-model consistency (relating several models via bidirectional transformations, the focus of this article). In both cases, Echo can detect and repair inconsistencies.

Concerning intra-model consistency, given a meta-model \(M\) with OCL constraints, Echo can automatically check whether a model \(m\) is consistent with \(M\), that is \(m : M\). This can be done for newly created models or every time the user updates an existing model. If consistency of model \(m\) is broken, for example because one of the OCL constraints is violated, then Echo can automatically suggest minimally repaired models \(m' : M\) using the model finding procedure described in this article, that is, it can find consistent models \(m'\) at minimum GED or OBD from \(m\). Various alternatives for repaired models are presented to the user in order of increasing distance from the original model, among which the user is able to choose the preferred one. To help the user choose the preferred model, they can be depicted as graphs by resorting to the Alloy visualizer, as seen in Fig. 10. For better readability, an Alloy theme is automatically inferred from the meta-models (as described in Sect. 5.2). A user-defined theme can also be provided if desired. To help kickstart model development, Echo can also be used to generate a new minimal model \(m : M\) (notice that often models cannot be empty due to meta-model constraints), or to generate scenarios for meta-model validation, that is, models parameterized by particular scopes and/or additional OCL constraints targeting specific configurations.

Concerning inter-model consistency, given a QVT-R or ATL transformation \({ T }\), from which consistency relation \(\varvec{{ T }} \subseteq { M } \times { N }\) is inferred, and models \(m : M\) and \(n : N\), Echo can automatically check if \(m\) and \(n\) are \(\varvec{{ T }}\)-consistent, that is \(\varvec{{ T }}\;({ m },{ n })\). In the case of QVT-R, it follows the standard-compliant checking semantics presented in Sect. 2.3. For ATL, the semantics described in Sect. 4.3 is used. Given a transformation \(\varvec{{ T }} \subseteq M \times N\) and models \(m : M\) and \(n : N\) such that \(\lnot \varvec{{ T }}\;({ m },{ n })\), Echo can perform a minimal update to one of the models to recover consistency, for example produce \(n' : N\) such that \(\varvec{{ T }}\;({ m },{ n' })\). This repair follows the enforcement semantics satisfying the principle of least change, as described in Sect. 2.4. As with intra-model consistency recovery, the user is able to choose the desired repaired model among all minimal consistent models. Finally, given a transformation \(\varvec{{ T }} \subseteq M \times N\) and a model \(m : M\), Echo can produce a minimal model \(n : N\) such that \(\varvec{{ T }}\;({ m },{ n })\) (likewise for the opposite direction). This is useful at early phases of model-driven software development, when the user has developed a first version of the source model, from which he wishes to derive a first version of the target. Afterward, updates can be performed and consistency recovered incrementally to any of the models, by resorting to the same transformation.

While also available as a command-line application, Echo’s main distribution platform is as a plugin for the Eclipse IDE, which automates the features just presented. Echo’s environment consists of a set of models, conforming to OCL-annotated meta-models, and a set of inter-model constraints specified by QVT-R and ATL transformations. Each model is thus restricted by the intra-model constraint entailed by the meta-model and any number of inter-model constraints simultaneously. The Echo plugin was designed to be used in an online setting, in the sense that the consistency tests are automatically applied as the user is editing the models and, thus, updates are expected to be incremental, leaving the original models as unmodified as possible. Every time the user updates a model, the system automatically checks its consistency in relation to the other artifacts. If a model is deemed inconsistent, the plugin displays an inconsistency error and proposes possible fixes. As there may be more than one consistent model at minimal distance, Echo presents all possible models in succession, allowing the user to choose the desired one, at which time the update is effectively applied to the model instance. If none of the minimal solutions is chosen, Echo presents models at increasingly higher distances from the original.
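The repair loop described above, which looks for consistent models at increasing distance from the original, can be sketched in Python as follows. This is a simplified illustration: Echo relies on Alloy and SAT-based model finding, whereas the sketch substitutes brute-force enumeration, represents a model as a set of facts, and measures distance as the number of facts added or removed (a crude stand-in for GED). The function `minimal_repairs` and the fact representation are assumptions made for this example.

```python
from itertools import combinations
from typing import Callable, FrozenSet, Iterable, List, Optional, Tuple

Fact = Tuple[str, str]  # e.g. ("Employee", "a")
Model = FrozenSet[Fact]


def minimal_repairs(
    model: Iterable[Fact],
    universe: Iterable[Fact],
    consistent: Callable[[Model], bool],
) -> Tuple[Optional[int], List[Model]]:
    """Return the smallest distance at which consistent models exist, together
    with all consistent models at that distance (None and [] if there are none)."""
    original = frozenset(model)
    facts = sorted(set(universe) | original)
    for distance in range(len(facts) + 1):
        found = []
        for flips in combinations(facts, distance):
            # Flipping a fact removes it if present and adds it otherwise.
            candidate = original.symmetric_difference(flips)
            if consistent(candidate):
                found.append(candidate)
        if found:
            return distance, found
    return None, []


# Inter-model example: the Company must contain exactly the Persons of the World.
world = {"a", "b"}
universe = {("Employee", n) for n in ("a", "b", "c")}
company = {("Employee", "a"), ("Employee", "c")}


def matches_world(m: Model) -> bool:
    return {name for (_, name) in m} == world


distance, repairs = minimal_repairs(company, universe, matches_world)
print(distance, repairs)
```

When several models are returned for the minimal distance, they correspond to the alternatives among which the user would choose; continuing the loop past the first successful distance would yield the models at increasingly higher distances.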

The plugin is built on top of the Eclipse Modeling Framework (EMF) and resorts to the Model Development Tools (MDT) component to process OCL formulas and to the Model-to-Model Transformation (MMT) component to parse QVT-R and ATL specifications. EMF prescribes Ecore for the specification of meta-models, while model instances are presented as XMI resources. To enhance the meta-models with additional constraints, we follow the technique proposed by MDT, of embedding the OCL constraints in meta-model annotations. Both Ecore meta-models and XMI instances are translated to Alloy following the techniques from Sect. 3, so that the transformation engine described in this article can be applied.