Abstract

In this work, we study the problem of learning a partial differential equation (PDE) from its solution data. PDEs of various types are used to illustrate how much the solution data can reveal the PDE operator depending on the underlying operator and initial data. A data-driven and data-adaptive approach based on local regression and global consistency is proposed for stable PDE identification. Numerical experiments are provided to verify our analysis and demonstrate the performance of the proposed algorithms.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Partial differential equations (PDE) have been used as a powerful tool in modeling, studying, and predicting in science, engineering, and many real-world applications. Many of them are naturally time-dependent, i.e., modeling the dynamics of an underlying system that is evolving in time, such as heat/diffusion equation, convection/transport equation, Schrödinger equation, Navier–Stokes equation. In particular, a PDE can be an effective way to model the underlying dynamics or represent a map from the initial input to the terminal output. Effectiveness comes from the fact that while a PDE model typically does not have many terms, it can capture various physical laws, diverse mechanisms, and rich dynamics. Moreover, a PDE model is easy to interpret and the parameters (coefficients) are meaningful.

In the past, most of these equations are derived from basic physical laws and assumptions, such as Newton’s laws of motion [30], conservation laws [33], Fick’s laws of diffusion [12], plus simplification/approximation to various extent. They have been extremely successful in modeling and studying physical, biological, environmental, and social systems and solving real-world problems. With the advance of technologies, abundant data are available from measurements and observations in many complex situations where the underlying model is not yet available or not accurate enough. Whether one can learn a PDE model directly from measured or observed data becomes an interesting question. Here, data mean the observed/measured solution and its (numerically computed) derivatives, integrals, and other transformed/filtered quantities.

Many methods have been proposed for PDE learning [7, 11, 17, 19, 22, 23, 27, 28, 31, 34, 38, 39, 42,43,44, 46, 48,49,50]. In general, there are two approaches. One is to directly approximate the operator/mapping, i.e., \(u(t)\rightarrow u(t+\Delta t)\), learned/trained from solution data. We call this approach differential operator approximation (DOA). The approximation is restricted to a chosen finite-dimensional space such as on discretized meshes in the physical domain, or a transformed space, e.g., Fourier/Galerkin space. In particular, several recent works proposed using various types of neural networks to approximate the operator/mapping [26,27,28, 48]. Although this approach is general and flexible, it does not explicitly reconstruct the differential operator and hence does not help to understand the underlying physics or laws. Also, it does not take advantage of the compact parameterized form of a differential operator and hence requires a large degree of freedom to represent the mapping. Moreover, it means more data and more computational costs are required to train such a model. Another approach is to approximate the underlying PDE using a combination of candidates from a dictionary of basic differential operators and their functions. We call this approach differential operator identification (DOI), which determines the explicit form of differential operators. Since DOI uses terms from a predetermined dictionary (prior knowledge) to approximate the underlying PDE, it involves much fewer degrees of freedom, data, and computational cost compared to the previous approach. Moreover, using the explicit form of differential operator allows approximation using local measurements in space and time, which is more practical in real applications. Once found it is also more helpful to understand the underlying physics or laws. Several models and methods [7, 17, 23, 27, 28, 31, 34, 38, 39, 42,43,44] have been proposed along this line. However, most of the previous works are focused on PDE identification with constant coefficients, which has only a few unknowns. The problem is formulated as a regression problem from a prescribed dictionary, e.g., polynomials of the solution and its derivatives. Model selection by promoting sparsity may be applied. Typically single or multiple solutions to the PDE are sampled on a global and dense rectangular grid in space and time (from \(t=0\)) to identify a PDE model.

A common issue in most of the previous studies is that PDE learning is posed in an ideally controlled setup in terms of both initial data and the number of solution trajectories, which are as diverse and as many as one wants. Moreover, the solution data are sampled on a uniform and dense grid in space and time, including \(t=0\). In many real applications, one only has the chance to observe a phenomenon and its dynamics once it happens, for examples, recording seismic waves [10], satellite data of weather development [16], remote sensing of air pollution [5], etc. Either the event does not happen often or when it happens next time, the environment and setup are very different. This means that the observation data are the solution to a PDE corresponding to one initial condition, i.e., a single trajectory. Moreover, one may not afford if not impossible to measure or observe the data everywhere in space and time (especially close to initial time). In other words, only one solution trajectory with uncontrollable initial data measured by local sensors (for some time duration) at certain locations after some time lapses may be available for PDE learning in practice. In addition to these realities, heterogeneous environment or material properties require PDE learning with unknown coefficients varying in space and time, which is even more challenging due to the coupling of the unknown functions with the solution data. Last but not least, not all solution data should be used in PDE learning indifferently since PDE solution may be locally degenerate, e.g., zero, or singular in certain regions in space and time, which may lead to significant errors due to measurement noise and/or numerical discretization errors.

In this work, we will study a few basic questions in PDE learning using various types of PDE models. In particular, we will characterize the data space, the dimension of the space spanned by all snapshots of a solution trajectory with certain tolerance, and show how it is affected by the underlying PDE operator and initial data. This information is of particular importance for DOA approaches. Since the operator approximation is trained by snapshots along a trajectory, it means the operator can be possibly learned only in this data space. Then we focus on the PDE identification problem using a single trajectory. We first study the identifiability issue and then propose a data-driven and data-adaptive approach for general PDEs with variable coefficients. The main goal is to identify the PDE type correctly and robustly using a minimal amount of local data. The required data are a few patches of local measurements that can resolve the variation of the coefficients and the solution. Our proposed Consistent and Sparse Local Regression (CaSLR) method finds a differential operator which is 1) globally consistent, 2) built from as few terms as possible from the dictionary, and 3) a good local fit to data using different linear combinations at different locations. Once the PDE type is determined, a more accurate estimation of coefficients can be achieved by using regression on (more refined) local measurements and/or proper regularization. Numerical examples are used to verify our analysis and demonstrate the performance of the proposed algorithms.

We note that our CaSLR method has some similarities to the method proposed in [38] which uses group sparsity to enforce global consistency. However, [38] uses solution data on a dense grid in space and time and restrained their exploration to PDEs with coefficients varying either in space or in time, but not both. When the coefficients depend only on space, they propose to identify a constant coefficient PDE for each time using the corresponding snapshot data in whole space, and they address the case where coefficients depend only on time analogously. The CaSLR is different from this previous work in several major aspects. First, our method identifies PDE with coefficients varying both in space and time based on patches of local data. Second, a different feature identification process that does not require additional thresholding is used. We also provide an identification guarantee analysis.

In our theoretical analysis, we use linear evolution partial differential equations of the following form as examples:

To avoid the complication of different possible boundary conditions for different operator \({\mathcal {L}}\) in our study, we consider the periodic setup: \(\Omega = {\mathbb {T}}^d:= {\mathbb {R}}^d /(2\pi {\mathbb {Z}})^d\) as the d-torus. The time-independent linear differential operator \({\mathcal {L}}\) is parameterized by the unknown coefficients \(\{ p_{\alpha }(x) \}_{|\alpha |=0}^n\subset {\mathcal {K}}(\Omega ; {\mathbb {R}})\),

where \({\mathcal {K}}(\Omega ;{\mathbb {R}})\) is a certain set of real-valued periodic functions.

Remark 1.1

When one learns a PDE operator, a source term should not be part of the unknown. Otherwise, there is no unique solution.

2 Data Space Spanned by Solution Trajectory

As discussed before, a single solution trajectory, i.e., the solution u(x, t) corresponding to an initial condition, is likely what one can observe in practice. Hence a basic question in PDE learning from its solution is how large is the data space spanned by all snapshots \(u(x,t), 0\le t\le T<\infty \), along a solution trajectory. In the DOA approach, snapshot pairs \((u(x,t), u(x,t+\Delta t))\) are typically used to train the approximation, which implies that the action of the operator restricted to this data space can be observed. In the following study, we characterize the information content of a solution trajectory by estimating the least dimension of a linear space (in \(L^{2}(\Omega )\)) where all snapshots \(u(\cdot ,t)\), \(0\le t\le T\) are \(\varepsilon \) close to in \(L^2\) norm. This characterization is dual to the Kolmogorov n-width [24] of the solution trajectory as a family of functions in \(L^2(\Omega )\) parameterized by time t.

As examples, we use two different types of operators where \({\mathcal {L}}\) is, 1) a strongly elliptic operator, and 2) a first-order hyperbolic operator, to show different behaviors. These two types of operators are ubiquitous for physical models such as diffusion equation, viscous Stokes flow, transport equation. Intuitively, when \({\mathcal {L}}\) is the Laplace operator, the solution stays close to a low-dimensional space since \(u(\cdot , t)\), \(t>0\) is analytic in space due to the smoothing effect. We show in Theorem 2.8 that for a general elliptic operator \({\mathcal {L}}\), all snapshots of any single trajectory u(x, t), i.e., \(u(\cdot ,t)\), \(0<t<T\), stay \(\varepsilon \) close to a linear space of dimension at most of the order \(O(|\log \varepsilon |^2)\). This implies the intrinsic difficulty in a direct approximation of the mapping, \(u(x,t)\rightarrow u(x,t+\Delta t)\) for small \(\Delta t\), i.e., the generator, for a parabolic differential operator by a single trajectory since the test function space spanned by all snapshots of a solution trajectory is very small. On the other hand, if \({\mathcal {L}}\) is a first-order hyperbolic operator, the data space spanned by all snapshots of a single trajectory stays \(\varepsilon \) close to linear space of dimension \(O(\varepsilon ^{-\gamma })\), where \(\gamma \) depends on the regularity of the initial data.

2.1 Strongly Elliptic Operator

2.1.1 Preliminaries

Let X be a Banach space and \({\mathcal {A}}: X\rightarrow X\) is a linear, densely defined, closed operator with the spectral set \(\text {sp}({\mathcal {A}})\) and the resolvent set \(\rho ({\mathcal {A}})\).

Definition 2.1

Let the sector region \(\Sigma _{\delta }\subset {\mathbb {C}}\) be

We say the operator \({\mathcal {A}}\) is admissible if

where M is a positive constant.

When the operator \({\mathcal {A}}\) is admissible, one can take a suitable contour integration along \({\mathcal {C}}\) for the following Dunford-Cauchy integral

the evaluation of \(e^{-{\mathcal {A}}t}\) then can be approximated through a well-designed quadrature scheme on \({\mathcal {C}}\). It has been shown in [29] that the approximation can have exponential convergence. Similar results are found in [14, 15] as well. Such exponential convergence also enables fast algorithms for solutions of (1.1), see [21].

Theorem 2.2

(Theorem 1, [29]) If the operator \({\mathcal {A}}\) is admissible, then there exists an operator \({\mathcal {A}}_N\) in the form of

with constants \(c_k, z_k\in {\mathbb {C}}\) and

uniformly on the time interval \([t_0, \Lambda t_0]\) with \(c = {\mathcal {O}}(1/\log \Lambda )\).

It should be noticed that in (2.5) the exponent’s dependence on time is quite weak. By a simple modification of the above theorem, we have tailored the following corollary for later use.

Corollary 2.3

For \(t\in [t_0, T]\) that \(t_0 = \varepsilon ^{\kappa }\), \(\kappa >0\), one can take \(N = C_{{\mathcal {A}}}(\kappa )|\log \varepsilon |^2\) such that

for certain constant \(C_{{\mathcal {A}}}(\kappa ) > 0\) depending on \({\mathcal {A}}\) and \(\kappa \).

2.1.2 Properties of Strongly Elliptic Operator

Assume \({\mathcal {L}}\) is a strongly elliptic operators of order \(n=2m\), that is, its principal part

for some constant \(\nu > 0\) for all \(x\in {\overline{\Omega }}\), \(\xi \in {\mathbb {Z}}^d\). Let the Hilbert space \(H^{s}(\Omega )\)

we cite the following two classical lemmas [2, 32] for our case.

Lemma 2.4

There exist a constant \(C > 0\) such that

for all \(u\in H^{2m}(\Omega )\).

Lemma 2.5

Let \({\mathcal {L}}\) be a strongly elliptic operator of order \(n = 2m\), then there exists constants \(C, R > 0\) and \(\theta \in (0, \frac{\pi }{2})\) that

for all \(u\in H^{2m}(\Omega )\) and \(z\in {\mathbb {C}}\) satisfying \(|z|\ge R\) and \(\theta - \pi<\arg z<\pi - \theta \).

From Lemma 2.4, \({\mathcal {L}}\) is a closed operator and because the domain of \({\mathcal {L}}\) includes all \(C^{\infty }(\Omega )\) functions, therefore \({\mathcal {L}}\) is also densely defined. The following is a direct corollary of Lemma 2.5.

Corollary 2.6

Let \({\mathcal {L}}\) be a strongly elliptic operator of order \(n = 2m\), then there exists a positive constant \(\mu >0\) such that \({\mathcal {L}}+\mu \) is admissible.

Since the parameters \(\{ p_{\alpha } \}_{|\alpha |=0}^n\) are real-valued, then the strongly elliptic operator \({\mathcal {L}}\) permits a decomposition \({\mathcal {L}}= {\mathcal {L}}_0 + {\mathcal {B}}\) such that \({\mathcal {L}}_0\) is a self-adjoint operator of order n and \({\mathcal {B}}\) is a differential operator of order \(<n\); therefore, the spectrum of \({\mathcal {L}}\) is discrete, there are only a finite number of eigenvalues outside the sector \(\Sigma _{\delta }\) and the eigenfunctions may form a complete basis in \(L^2(\Omega )\) under certain circumstances [1, 8]. Let \(\mu > 0\) such that \({\mathcal {L}}_{\mu }:={\mathcal {L}}+ \mu \) is admissible, in the following we assume the initial condition \(u_0\in L^2(\Omega )\) can be represented by eigenfunctions

where \(\{\lambda _k, \phi _k\}_{k\ge 1}\) are the eigenpairs of \({\mathcal {L}}_{\mu }\) sorted by \(\Re \lambda _k\) in ascending order including multiplicity. Furthermore, the eigenvalues \(\{ \lambda _k \}_{k\ge 1}\) satisfy the growth rate \(\Re \lambda _k = {\mathcal {O}}(k^{\beta })\) with \(\beta =n/d\) [13].

Lemma 2.7

Let the eigenpairs of \({{\mathcal {L}}}_{\mu }\) be \(\{\lambda _k, \phi _k\}_{k\ge 1}\), then the solution of (1.1) can be represented by

where the coefficients \(\{c_k\}_{k=1}^{\infty }\) satisfy \( u_0(x) = \sum _{k=1}^{\infty } c_k \phi _k(x)\).

Theorem 2.8

Suppose \({\mathcal {L}}_{\mu }\) is admissible that the spectrum sits in the interior of the sector \(\Sigma _{\delta }\) and the coefficients in (2.12) decay as \(|c_k|\le \theta k^{-\gamma }\), \(\theta> 0, \gamma > 1\), then there exists a linear space \(V\subset L^2(\Omega )\) of dimension \(C_{{\mathcal {L}}}(\kappa ) |\log \varepsilon |^2\) such that

where \(P_V\) is the projection operator onto V and \(C=C(\theta , \gamma )\). The constant \(C_{{\mathcal {L}}}(\kappa )\) is chosen from Corollary 2.3 and \(\kappa ={\mathcal {O}}(\beta /(\gamma -1))\).

Proof

Without loss of generality, we assume that \(\Vert u_0\Vert =1\). Let \(u_M\) be the truncated series from Lemma 2.7,

then

Let \(q\in {\mathbb {N}}\) such that \(\Re \lambda _q > \mu \), we denote \(M_{\varepsilon } = q + \varepsilon ^{1/(1-\gamma )}\), \(L = |\log \varepsilon |\) and define the following approximation

then for each t, \(w_{\varepsilon }\) sits in the linear space

For \(t\in [0, \frac{1}{C_{\delta }\Re \lambda _{M_{\varepsilon }}}]\), \(C_{\delta } = 1+\tan |\delta |\) since the spectrum is included in the sector region, we have

for \( k=1,\dots , M_{\varepsilon } \), then by the error estimate of Taylor expansion on \([0, \frac{1}{(1+\delta )\Re \lambda _{M_{\varepsilon }}}]\),

which implies

On the interval \([ \frac{1}{(1+\delta )\Re \lambda _{M_{\varepsilon }}},T]\), since \(\Re \lambda _{M_{\varepsilon }} = {\mathcal {O}}(M_{\varepsilon }^{\beta })\), we take \(\kappa = \log (\lambda _{M_{\varepsilon }})/|\log \varepsilon | = {\mathcal {O}}(\beta / (\gamma - 1))\), then by Corollary 2.3, there exists a linear space \(V_2\) of dimension \(C_{{\mathcal {L}}}(\kappa )|\log \varepsilon |^2\), i.e., spanned by \((z_k-{\mathcal {A}})^{-1}u_0, k=1, \ldots , N=C_{{\mathcal {L}}}(\kappa )|\log \varepsilon |^2\), such that

Now we define \(V = V_1\cup V_2\), then \(\dim V\le \dim V_1+\dim V_2 = {\mathcal {O}}(|\log \varepsilon |^2)\) and

\(\square \)

Remark 2.9

If the elliptic operator \({\mathcal {L}}_{\mu }\) in Theorem 2.8 has eigenfunctions that form an orthonormal basis, e.g., a self-adjoint elliptic operator, the statement is still true for \(|c_k|\le \theta k^{-\gamma }\) for some \(\theta>0, \gamma >\frac{1}{2}\).

Following Lemma 2.10 shows that, for a self-adjoint elliptic operator \({\mathcal {L}}\), even if multiple trajectories are available, the data space stays \(\varepsilon \) close to the space spanned by the first \({\mathcal {O}}((\tau ^{-1}|\log \varepsilon |)^{d/n})\) eigenfunctions of \({\mathcal {L}}\) after certain \(\tau >0\). This poses two difficulties for the direct operator approximation approach. First, unless diverse initial data u(x, 0) can be used and the corresponding solution u(x, t) can be observed at \(t\ll 1\), an accurate approximation of the mapping is not possible. Second, although all solution trajectories stay close to a low-dimensional space spanned by a few leading eigenfunctions of \({\mathcal {L}}\), it is not known a priori unless in the special case of constant coefficients and simple geometry.

Lemma 2.10

If \({\mathcal {L}}\) is a self-adjoint strongly elliptic operator, then there exists a linear space \(V\subset L^2(\Omega ) \) such that for any solution data u(x, t) to the equation

with initial condition \(u_0\in L^2(\Omega )\),

where \(\dim V = {\mathcal {O}}((\tau ^{-1}|\log \varepsilon |)^{d/n})\).

Proof

Let \(\phi _1, \phi _2, \dots \) be the eigenfunctions of \({\mathcal {L}}\), which forms an orthonormal basis for \(L^2(\Omega )\), with eigenvalues \(\lambda _1, \lambda _2, \dots \) and \(V = \text {span} \{\phi _1, \dots , \phi _M\}\) is the subspace formed by the first M eigenfunctions of \({\mathcal {L}}\). For each \(t\in [\tau , T]\),

We select M such that \(e^{(-\lambda _{M+1}+\mu )\tau }\le \varepsilon \), then \(\lambda _{M+1} \ge \frac{|\log \varepsilon |}{\tau } + \mu \). From the growth rate that \(\lambda _k\sim {\mathcal {O}}(k^{n/d})\), we see \(M = {\mathcal {O}}((\tau ^{-1}|\log \varepsilon |)^{d/n}) \) would suffice. \(\square \)

2.2 Hyperbolic PDE

Next, we show that the behavior of solution trajectory for hyperbolic PDEs can be quite different. The data space spanned by snapshots of a single solution trajectory depends on the regularity of the initial data and can be quite rich. We use the following first-order hyperbolic PDE defined on a torus \(x\in \Omega =[0,2\pi ]^d\) with the periodic condition and \(t\in [0, T]\) as an example

Define the following two correlation functions in space and time of a solution trajectory,

where K(x, y) and G(s, t) define two symmetric semi-positive compact integral operators on \(L^2(\Omega )\) and \(L^2[0,T]\), respectively. They have the same non-negative eigenvalues \(\lambda _1\ge \lambda _2 \ge \ldots \ge \lambda _j\ge \ldots \) with \(\lambda _j\rightarrow 0\) as \(j\rightarrow \infty \). Their normalized eigenfunctions form an orthonormal basis in \(L^2(\Omega )\) and \(L^2[0, T]\). Define \(V_K^k\) and \(V_G^k\) to be the linear space spanned by their k leading eigenfunctions of K(x, y) and G(s, t), respectively, which provides the best k-dimensional linear spaces that approximate the family of functions \(u(\cdot ,t)\) (in \(L^2(\Omega )\)) and \(u(x, \cdot )\) (in \(L^2([0,T])\)). We have

where \(P_{V_K^k}\) and \(P_{V_G^k}\) denote the projection operator to \(V_K^k\subset L^2(\Omega )\) and \(V_G^k\subset L^2[0,T]\).

Below we show an upper bound for the dimension of the best linear subspace in \(L^2(\Omega )\) that can approximate all snapshots of a single trajectory to \(\varepsilon \) tolerance in \(L^2(\Omega \times [0,T])\).

Lemma 2.11

Let \({\textbf{c}}(x)\in C^p(\Omega )\) be a velocity field and \(u_0 \in C^p(\Omega )\), then there exists a subspace \(V\subset L^2(\Omega )\) of dimension \(o(\varepsilon ^{-2/p})\) that

Proof

From (2.26), the linear space spanned by the leading k eigenfunctions of the compact operator induced by the kernel function K(x, y) is the best approximation of the family of functions \(u(\cdot ,t)\) and satisfies

where \(\lambda _j\) is the eigenvalues of the compact operator induced by kernel function K(x, y) or G(s, t) defined in (2.25).

Let Z(t; x) solve the ODE

Since \({\textbf{c}}(x)\in C^p(\Omega )\), then the solution \(Z(t; x)\in C^p[0, T]\), The solution to hyperbolic PDE (2.24) is \(u(x,t) = u_0(Z(t;x))\), therefore the correlation

Since \(G(s,t)\in C^p([0,T]^2)\), its eigenvalue decay follows \(\lambda _n = o(n^{-(p+1)})\) [35, 36]. Therefore

which completes the proof. \(\square \)

Remark 2.12

For hyperbolic PDE, the trajectory of a solution corresponding to initial data with less regularity contains more information about the underlying differential operator. For example, when the initial data \(u_0(x)\) with \(\Vert u_0\Vert _2=1\) have a compact support of size h. As the support size h goes to zero, the correlation between two snapshots at two times separated by \({\mathcal {O}}(h)\) is zero assuming the magnitude of the velocity field \({\textbf{c}}(x)\) is \({\mathcal {O}}(1)\). Hence the dimension of the data space spanned by a single trajectory with any fixed tolerance is at least \({\mathcal {O}}(h^{-1})\). On the other hand, if \({\textbf{c}}(x)\) is analytic, the dimension of the data space spanned by a single trajectory corresponding to an analytic initial data with tolerance \(\varepsilon \) would become \({{\mathcal {O}}(|\log \varepsilon |^{d})}\). However, the low regularity of the solution u(x, t) can cause large discretization errors in the numerical approximation of derivatives of the solution which leads to poor PDE identification. If initial condition design is part of the PDE learning process, it should be a function with its spatial and Fourier support as large as possible while being resolved by the measurement and computation resolution.

Remark 2.13

Now we use an example to give a lower bound for the dimension of the best linear subspace in \(L^2(\Omega )\) that can approximate all snapshots of a single trajectory to \(\varepsilon \) tolerance. Let \({\textbf{c}}(x)\) be the unit vector parallel to \(x_1\) axis, \(T=2\pi \), \(u_0(x) = \sum _{n=1}^{\infty } \sqrt{a_n} \cos n x_1\) with \(0<a_n = o(n^{-2(p+1)})\), then \(\partial _{x_1}^p u_0\in C(\Omega )\), \(u(x,t)=u_0(x_1-t)\). Assume \(s>t>0\),

The eigenvalues of G(s, t) on interval \([0,2\pi ]\) are \(\frac{\pi }{2}(2\pi )^{d} a_n\) with multiplicity of two. Hence we have

Since \(\max _{0\le t \le 2\pi }\Vert u(\cdot ,t)-P_{V_K^k}u(\cdot ,t)\Vert _{L^2(\Omega )}\!\ge \! \sqrt{\frac{1}{2\pi }\int _0^{2\pi }\!\Vert u(\cdot ,t)\!-\!P_{V_K^k}u(\cdot ,t)\Vert ^2_{L^2(\Omega )}\textrm{d}t}\), we see that the dimension of the best linear subspace in \(L^2(\Omega )\) that can approximate all snapshots of a single trajectory with this chosen initial condition \(u_0\) to \(\varepsilon \) tolerance has to be of order \( {\mathcal {O}}(\varepsilon ^{-1/(p+\frac{1}{2})})\).

Remark 2.14

For a hyperbolic operator with multiple trajectories, unlike the case for elliptic operator stated by Lemma 2.10, the solution data space on an interval [0, T] is as rich as the solution data space on \([\tau , T+\tau ]\) due to the diffeomorphism induced by the flow X in (2.29).

The above study shows two possible challenges for a DOA approach in practice: 1) limited data space to train the approximation if the underlying differential operator is compressive or smoothing, such as an elliptic operator, or 2) a large number of parameters and a large amount of data as well as an expensive training process are required to approximate a differential operator, such as a hyperbolic operator, with rich trajectory dynamics.

2.3 Numerical Examples

Here we use a few numerical examples to corroborate our analysis above of the dimension estimates of the space spanned by all snapshots along a single solution trajectory for different types of PDEs.

First, we show how the dimension of the data space corresponding to a single solution trajectory depends on the PDE operator.

-

I.

Transport equation.

$$\begin{aligned} \begin{aligned} u_t(x,t)&= 4u_{x}(x,t),\quad (x,t)\in [-8,8)\times (0,5]\\ u(-8,t)&= u(8,t),\quad t\in (0, 5]\\ u(x,0)&={\left\{ \begin{array}{ll}\exp (-\frac{1}{1-x^2})\,&{}\quad x\in (-1,1)\\ 0,&{}\text {otherwise.} \end{array}\right. } \end{aligned} \end{aligned}$$(2.34) -

II.

Heat equation.

$$\begin{aligned} \begin{aligned} u_t(x,t)&= 4u_{xx}(x,t),\quad (x,t)\in [-8,8)\times (0,5]\\ u(-8,t)&= u(8,t),\quad u_x(-8, t) = u_x(8, t),\quad t\in (0, 5]\\ u(x,0)&={\left\{ \begin{array}{ll}\exp (-\frac{1}{1-x^2})\,&{}\quad x\in (-1,1)\\ 0,&{}\text {otherwise.} \end{array}\right. } \end{aligned} \end{aligned}$$(2.35)

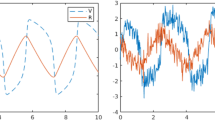

Taking the maximal time \(T=5\), we split the observation into two phases: the early phase \(t\in [0, 2.5)\) and the late phase \(t\in (2.5, 5]\). Within each phase, we compute the singular values of the solution matrix \(u_{jk}=u(x_j, t_k)\) sampled on the space-time grid with grid size \(\Delta x = 16/500\) and \(\Delta t = 5/5000\). In Fig. 1, (a) shows the singular value distribution of the solution space for transport equation (2.34), and (b) shows that for heat equation (2.35). We see that the data space spanned by a single solution trajectory of a hyperbolic operator has a significantly larger dimension than that of a parabolic operator. Also, we see that the dimension of the data space at a later time interval does not decrease for a hyperbolic PDE, whereas it decreases considerably for a parabolic PDE.

Singular value distribution of the solution matrix \(u_{jk}=u(x_j,t_k)\) restricted to the first half and the second half of the time interval of (a) transport equation (2.34); b diffusion equation (2.35). The indices annotated in the figures indicate the numbers of singular values that are greater than \(10^{-3}\). In both cases, \(T_{\max }=5\) is the maximal observation time

Now we test the following PDEs with variable coefficients over a time-space grid with widths \(\Delta x = 16/500\) and \(\Delta t = 5/5000\).

-

I’.

Transport equation.

$$\begin{aligned} \begin{aligned} u_t(x,t)&= \left( 2+\cos \left( \frac{\pi x}{8}\right) \right) u_{x}(x,t),\quad (x,t)\in [-8,8)\times (0,5]\\ u(-8,t)&= u(8,t),\quad t\in (0, 5]\\ u(x,0)&=\sin \left( \frac{\pi x}{8}\right) . \end{aligned} \end{aligned}$$(2.36) -

II’.

Heat equation.

$$\begin{aligned} \begin{aligned} u_t(x,t)&= \left( 2+\cos \left( \frac{\pi x}{8}\right) \right) u_{xx}(x,t),\quad (x,t)\in [-8,8)\times (0,5]\\ u(-8,t)&= u(8,t),\quad u_x(-8, t) = u_x(8, t),\quad t\in (0, 5]\\ u(x,0)&=\sin \left( \frac{\pi x}{8}\right) . \end{aligned} \end{aligned}$$(2.37)

The results are shown in Fig. 2. For the transport equation with constant coefficients, since the initial condition contains only a single sinusoidal mode, the whole solution trajectory contains this single mode with a phase shift and hence lives in a two-dimensional space. For the heat equation with constant coefficients, the whole solution trajectory contains this single mode with a decaying magnitude, which lives in a one-dimensional space. However, the variable coefficients “pump” various modes into the solution trajectory. We see the dimension of the data space of a single solution trajectory behaves similarly to the counterpart with constant coefficients except that the variation of the coefficient in the diffusion equation keeps pumping in modes into the solution and slows down the decay of the singular values in a later time interval.

Finally, we show how the dimension of the solution data space of a single solution trajectory depends on the initial data. We use two types of initial data. One is initial data with compact support with different regularities. The other one is using initial data that contain a different number of Fourier modes with random amplitude for each mode. For initial data with different regularity, we consider

and \(u_{\text {hat}}(x, 0) = {\mathcal {G}}(u_{\text {square}}(x, 0))\), \( u_{\text {int}}(x, 0) = {\mathcal {G}}(u_{\text {hat}}(x, 0))\), where \({\mathcal {G}}\) is the mapping:

And for the random initial data, we consider

Here M is the total number of Fourier modes in the initial data and the amplitudes \(a_0,a_j,b_j\sim {\mathcal {N}}(0,1/(2M+1))\), \(j=1,2,\dots , M\).

For initial data with different regularities, we show the percentage of dominant singular values, \(\lambda >\varepsilon \), for different threshold \(\varepsilon >0\) in Fig. 3. Figure 3a shows the percentage in a log-log plot of the exact solution matrix \(u_{jk}=u(x_j, t_k)\) sampled on the space-time grid for the transport equation with constant speed (2.34). As shown by the argument in Lemma 2.11, the less regular the initial data is, the faster the two solution snapshots decorrelate in time and hence the larger the space spanned by the solution trajectory for the transport equation. For the tested initial data given by (2.38) and (2.39), according to the example given in Remark 2.13, the corresponding singular values of the solution matrix decay at an algebraic rate \(\lambda _n={\mathcal {O}}(n^{-p-1})\) with \(p=0,1,2\), which is verified by the numerical result shown in Fig. 3a. Figure 3b shows the percentage of dominant singular values in terms of \(\log \epsilon \) for the heat equation with constant conductivity (2.35). Due to exponential decay in time for all eigenmodes in the initial data, the singular value of the solution matrix decays very quickly for all cases, i.e., the space spanned by all snapshots along a single solution trajectory is small for the diffusion equation. As shown by Theorem 2.8, the growth cannot be more than \(|\log \epsilon |^2\). Actually, the numerical results suggest the growth is \(c|\log \epsilon |\), where c depends on the regularity of the initial data. The smoother the initial data, the smaller the c is.

Figure 4 shows the percentage of dominant singular values for solutions of (2.34), (2.36), (2.35), and (2.37) with random initial data constructed as in (2.40). We see that the more Fourier modes the initial data contain, the larger the space spanned by the solution trajectory, while the growth rate for the diffusion equation is much slower than that of the transport equation. Also, variable coefficients can introduce Fourier modes into the solution and hence increase the space spanned by the solution trajectory. The increment is more significant when initial data contain fewer Fourier modes.

Singular value distribution of the solution matrix \(u_{jk}=u(x_j,t_k)\) with random initial functions with a varying number of modes for a transport equations (constant coef. (2.34); variable coef. (2.36)); b heat equations (constant coef. (2.35); variable coef. (2.37)). In both figures, the y-axes denote the percentage of dominant singular values (\(\lambda _n>1\times 10^{-3}\)). Each plot is the average of 20 experiments

3 PDE Identification from a Single Solution Trajectory

In this section, we study PDE identification problem based on a combination of candidates from a dictionary of basic differential operators and their functions using a single trajectory. We first focus on the basic question of identifiability and stability. We then propose a data-driven and data-adaptive computational model based on local regression and global consistency for PDE identification with variable coefficients.

Identifying a differential operator \({\mathcal {L}}\) of form (1.1) from its solution data u(x, t) can be formulated in the following weak form: Choose a filter function \(\psi (x)\) and use integration by part (in a weak sense if needed),

where \({\mathcal {L}}^{*}\) is the formal adjoint of \({\mathcal {L}}\). The left-hand side of weak formulation (3.1), denoted by h(t), can be computed from the trajectory data and identification of \({\mathcal {L}}\) can be cast in a Galerkin formulation

It shows that the information of a differential operator \({\mathcal {L}}\) is projected to the space spanned by snapshots of solution trajectory \(u(\cdot , t)\) through its operation on the filter function \(\psi (x)\). For example, if \({\mathcal {L}}\) is a differential operator with constant coefficients and one chooses \(\psi (x;y)=\delta (x-y)\), the PDE identification problem becomes a linear regression problem using the solution and its derivatives sampled at different locations in space and time, which has been the main study in the literature.

Remark 3.1

Theorem 2.8 shows that when \({\mathcal {L}}\) is a strongly elliptic operator, a single trajectory of the solution stays \(\varepsilon \) close in \(L^2\) norm to a linear space of dimensions of at most \({\mathcal {O}}(|\log \varepsilon |^2)\). It implies the eigenvalues of the compact operator induced by the correlation function between two snapshots \(G(t,s)=\int _{\Omega }u(x,t)u(x,s)\textrm{d}x\) has at least an exponential decay as \(\lambda _k={\mathcal {O}}(e^{-c\sqrt{k}})\). This implies that, when Galerkin formulation (3.1) is discretized and many snapshots along a single trajectory are used as the test functions, the eigenvalues of the resulting linear system also have a fast decay and hence are ill-conditioned, which will affect both accuracy and stability of the identification problem.

3.1 PDE Identification with Constant Coefficient

For PDE identification with constant coefficients, one can transform it into the Fourier domain and show that the underlying differential operator \({\mathcal {L}}\) can be identified by one trajectory at two different instants if and only if the solution contains enough Fourier modes.

Defining the Fourier transform of u with respect to the space variable as

the PDE \(\partial _t u = {\mathcal {L}}u\) is converted to an ODE for each frequency \(\zeta \in {\mathbb {Z}}^d\),

whose solution is

Suppose there is a \(\zeta \in {\mathbb {R}}^d\) such that \({\widehat{u}}(0,\zeta ) \ne 0\), then for any \(t_2> t_1>0\), we have

By denoting \(c_\alpha = p_\alpha i^{|\alpha |}\) when \(|\alpha |\) is even, and \(c_\alpha = p_\alpha i^{|\alpha |-1}\) when \(|\alpha |\) is odd, we associate the \((\zeta , t)\) pair with the following decoupled system

It is thus clear that, given a single solution u(x, t) corresponding to an initial data u(0, t), the underlying constant coefficient PDE is identifiable, i.e., there exists a unique set of parameters \(p_\alpha \) such that \(\partial _t u = -{\mathcal {L}}u\) if and only if (3.5) and (3.6) admit unique solutions for \(c_\alpha \), which are coefficients of two polynomials. If \(t_2-t_1>0\) is small enough, the phase ambiguity in (3.6) is removed. Hence one can apply standard polynomial regression results to this problem in the spectral domain.

Theorem 3.2

Let \(Q = \{\zeta \in {\mathbb {Z}}^d: {\widehat{u}}_0(\zeta )\ne 0\}\), then if

and Q is not located on an algebraic polynomial hypersurface of degree \(\le n\) consists of only even-order terms or odd-order terms, then the parameters \(p_{\alpha }\) are uniquely determined by the solution at two instants \(u(x,t_2), u(x,t_1)\) if \(|t_2-t_1|\) is small enough.

Proof

Choose Fourier modes \(\zeta _k\in Q, k=1, 2, \ldots , K\ge \max \left( \sum _{k=0}^{\lfloor \frac{n}{2}\rfloor }\left( {\begin{array}{c}2k+d-1\\ d-1\end{array}}\right) , \sum _{k=0}^{\lfloor \frac{n-1}{2}\rfloor }\left( {\begin{array}{c}2k+d\\ d-1\end{array}}\right) \right) \) and \(t_2>t_1\ge 0\), (3.5) and (3.6) imply

respectively, where

and

By the assumption, \(A_e\) and \(A_o\) are both of full ranks. Hence \(p_{\alpha }\) can be determined uniquely. \(\square \)

Remark 3.3

Theorem 4.4 in Sect. 4.2 states that actually there exist solution data on a local patch (in space and time) that can identify PDE with constant coefficients by local regression if the solution has enough Fourier modes.

Determining a differential operator \({\mathcal {L}}\) in the spectral domain requires observing the solution globally in space. In reality, it may be more practical to observe the solution merely by local sensors. In other words, one can approximate the filter function in (3.1) by the delta function sampled at certain points \((x_k, t_k), k=1, 2, \ldots , K\) in space and time and identify the PDE through the following least-squares problem

where \({\textbf{F}}\) is called the feature matrix defined by a set of basic partial differential operators, a linear combination of which can form \({\mathcal {L}}\), acted on the observed solution at sampled locations \((t_k,x_k)\), \({\textbf{p}}\) represents the unknown coefficient vector, and

For example, in one dimension \(d=1\), the feature matrix

Assume the solution and its derivatives are sampled on an equally spaced grid \(x_k\), \(k=1,\dots , K\), at a single observation time \(t_k \equiv \tau \),

Since the discrete Fourier transform (DFT) matrix is unitary, the singular values of \({\textbf{F}}\) are identical to its discrete Fourier transform

where \({\widehat{u}}\) is the discrete Fourier transform of u and

The matrix \(\widehat{{\textbf{F}}}\) can be factorized as

where \(\mathbf {\Lambda }\) is a \(K\times K\) diagonal matrix with \(\mathbf {\Lambda }_{kk}={\widehat{u}}(\zeta _k,0)W(\zeta _k,\tau )\) and \({\textbf{V}}\) is the Vandermonde matrix with \({\textbf{V}}_{kj} = (i\zeta _k)^{j}\), \(k=1,2,\dots , K\), \(j=0,1, 2,\dots , n\). We can see that the PDE identification problem using pointwise information is a little different from a polynomial regression problem. In addition to the Vandermonde matrix \({\textbf{V}}\), we see that initial/input data \(u_0\) and sampling can also affect the conditioning of \({\textbf{F}}\). Let \({\overline{\lambda }}=\max _{1\le k\le K} |\mathbf {\Lambda }_{kk}|\), \(\underline{\lambda }=\min _{1\le k\le K} |\mathbf {\Lambda }_{kk}|\) and \({\overline{\sigma }}=\max _{0\le j\le n} |\sigma _j|, \underline{\sigma }=\min _{0\le j\le n} |\sigma _j|\), where \(\sigma _j\) are eigenvalues of the corresponding Vandermonde matrix. Assume \(K=n+1\), denote \(L_j(\zeta )=\sum _{k=0}^{n}=\xi _{jk}(i\zeta )^k\) to be the Lagrange basis polynomials, i.e., \(L_j(\zeta _k)=\delta _{jk}\) and \(\mathbf {\xi }_j=[\xi _{j0}, \xi _{j1}, \ldots , \xi _{jn}]^T\). We have \({\textbf{V}}\mathbf {\xi }_j={\textbf{e}}_j\), where \({\textbf{e}}_j\) is the canonical basis in \({\mathbb {R}}^{n+1}\), and \({\overline{\sigma }}^{-1}\le \Vert \mathbf {\xi }_j\Vert \le \underline{\sigma }^{-1}\). On the other hand, let \(\omega _j, j=0,1, \ldots , n\) be the eigenvalues of \(\widehat{{\textbf{F}}}\) and \({\overline{\omega }}=\max _{0\le j\le n}|\omega _j|\), \(\underline{\omega }=\min _{0\le j\le n} |\omega _j|\). We have \(\underline{\lambda } =\min _{0\le j\le n} \Vert \widehat{{\textbf{F}}}\mathbf {\xi }_j\Vert , ~{\overline{\lambda }}=\max _{0\le j\le n} \Vert \widehat{{\textbf{F}}}\mathbf {\xi }_j\Vert \), which implies \({\overline{\omega }} \ge {\overline{\lambda }} \underline{\sigma }\) and \(\underline{\omega } \le \underline{\lambda } {\overline{\sigma }}\), and hence \(\frac{{\overline{\omega }} }{\underline{\omega }}\ge \frac{{\overline{\lambda }} \underline{\sigma }}{\underline{\lambda }{\overline{\sigma }}}\). For example, \({\textbf{F}}\) may become ill-conditioned if

-

(1)

Fourier modes with small amplitudes in the initial/input data are involved;

-

(2)

the differential operator \({\mathcal {L}}\) is elliptic and the solution is sampled at a large time (due to the exponential decay of \(|W(\zeta _k,\tau )|\) in time).

In general, using the solution data at all points on a rectangular grid in space and time, which most existing methods are based on, may be too costly or not practical in real applications. In particular, for PDEs with constant coefficients, it is unnecessary. Moreover, it may not be a good strategy for PDE identification even if the data are available. For example, at certain sampling locations, the solution may be degenerate, e.g., (nearly) zero in a neighborhood, which is then sensitive to noise, or becomes singular, which can lead to large numerical errors. However, we do not know what type of PDE or initial data a priori, so the sampling strategy and PDE identification method should be data-driven and data-adapted.

3.2 PDE Identification with Variable Coefficients Using a Single Trajectory

For the identification of time-dependent PDEs with coefficients varying in space, the unknowns are (coefficient) functions, which means 1) the regression problems at different spatial locations are different; 2) the variations of the coefficients are intertwined with the solution in both frequency and physical domains.

In the following, we will start with an identifiability and stability study. Then we propose a computational model for PDE identification with variable coefficients that enforces both local regression and global pattern consistency. The main goal is to identify a consistent differential operator that is built from as few terms as possible from the library that can fit observed data well locally by using different linear combinations at different locations. Once the PDE type is determined, a more accurate estimation of coefficients can be achieved by independent local regression and/or appropriate regularization.

3.3 Identifiability with a Single Trajectory

In this section, we focus on the question that whether or not a single solution trajectory u(x, t) can determine the underlying differential operator from a given library, i.e., identifiability of the differential operator \({\mathcal {L}}\). In the sequel, we first introduce the general statement in parallel to the identifiability statement for PDE with constant coefficients, which states if there are enough Fourier modes in the initial data and one can observe a single trajectory at two different instants, one can recover those constant coefficients. For PDEs with variable coefficients, one has to recover these unknown functions. Hence more Fourier modes in the initial data and more snapshots along the trajectory depending on the order of the differential operators and space dimensions are needed for identifiability.

Theorem 3.4

Let \(m = \left( {\begin{array}{c}n+d\\ d\end{array}}\right) \). For any given \(x\in \Omega \), the parameters \(p_{\alpha }(x)\) can be recovered if and only if one can find m instants \(t_1,\dots , t_m\) that the matrix \(A = (A_{k,\alpha } ) \) is non-singular where \(A_{k,\alpha }:= \partial ^{\alpha } u(x, t_k) \).

Proof

This is directly from the following linear system of \(p_{\alpha }(x)\),

\(\square \)

Consider the limiting case that \(t_1,\dots , t_m\rightarrow 0\). One can then take k-th derivatives of the solution in time at \(t=0\). The above lemma becomes the requirement that the matrix \(S:= (S_{k, \alpha }), k=1, 2, \ldots , m\) with \(S_{k, \alpha }:= \partial ^{\alpha }{\mathcal {L}}^{k-1} u_0(x) \) is non-singular almost everywhere. In the following, we show that if the initial condition is randomly generated, then S is almost surely non-singular if the parameters \(p_{\alpha }\) are sufficiently smooth. In the following, we denote the diagonal multi-indices set \(D_n\) by

Theorem 3.5

Assume the coefficients \(p_{\alpha }\in C^{mn}(\Omega )\) and let the initial condition \(u_0\) be generated by

where \(r > \left( {\begin{array}{c}mn + d\\ d\end{array}}\right) \) and the vectors \(\zeta _j\in {\mathbb {Z}}^d\) are not on an algebraic hypersurface of degree mn and the weights \(w_j\) are random variables that \(w_j\sim {\mathcal {U}}[a_j, b_j]\) with \(a_j < b_j\). Denote \({\mathbb {P}}\) the induced probability measure on \(\Omega \times \prod _{j=1}^m[a_j, b_j]\). If \(\sum _{\alpha \in D_n} |p_{\alpha }(x)|^2\ne 0\) almost everywhere in \(\Omega \), then the matrix S is non-singular \({\mathbb {P}}\)-almost surely.

Proof

Let \(x'\in \Omega \) be a fixed point that \(\sum _{D_n} |p_{\alpha }(x ')|^2\ne 0\). Notice that the probability \({\mathbb {P}}[S \text { is non-singular}] = 1 - {\mathbb {P}}[\det S = 0]\), since \(S_{k, \alpha }(x ') = \sum _{j=1}^r w_j \partial ^{\alpha } {\mathcal {L}}^{k-1} e^{i\zeta _j\cdot x} |_{x=x'}\), the determinant \(\det (S)\) can be written in the following form

where \(\beta = (\beta _1,\beta _2,\dots , \beta _r)\) and each \(F_{\beta }\) is a polynomial of \(\partial ^{\gamma } p_{\alpha }(x)\) with \(|\alpha |\le n\), \(|\gamma |\le (m-1)n\), and the degree of the polynomial is at most \(m-1\), each coefficient of the polynomial is uniquely determined by a polynomial in terms of \(\zeta _j\), \(1\le j\le r\).

It is clear that the set of \(w_i, i=1, 2,\ldots , r\) that satisfy (3.13) has measure zero in \(\prod _{j=1}^r[a_j, b_j]\) unless all of the coefficients \(F_{\beta } = 0\) at \(x'\in \Omega \). If the later is true, it means \(\det (S) = 0\) at \(x'\in \Omega \) for any \(w_j\in [a_j, b_j]\), \(j=1,\dots , r\). Let the matrix \(S^j:= (S_{k,\alpha }^j)\), where \(S_{k,\alpha }^j = \partial ^{\alpha }{\mathcal {L}}^{k-1} e^{i\zeta _j\cdot x}|_{x=x'}\) and we denote the linear functional \(f_j:{\mathbb {C}}^{m\times m}\rightarrow {\mathbb {C}}\), \(j=1,2,\dots , r\) by

In the following, we show that \(\bigcap _{j=1}^r \ker f_j \ne \{{{\textbf{0}}}\}\). By Lemma 3.9 of [37], if \( \bigcap _{j=1}^r \ker f_j = \{{{\textbf{0}}}\}\), then any linear functional f on \({\mathbb {C}}^{m\times m}\) can be written as linear combination

However, if we take a full rank matrix \(B\in {\mathbb {C}}^{m\times m}\) and define \({\tilde{f}}(X) = \textrm{trace}(X B)\), then B can be represented by \(B = \sum _{j=1}^r w_j S^j\), which violates the condition that \(\det (\sum _{j=1}^r w_j S^j) = 0\). Hence there exists a nonzero matrix \({\textbf{c}}= (c_{k,\alpha })\in {\mathbb {C}}^{m\times m}\) such that

The differential operator \({\mathcal {L}}':= \sum _{|\alpha |\le n}\sum _{k=1}^m c_{k,\alpha }\partial ^{\alpha } {\mathcal {L}}^{k-1}\) is uniquely determined by the derivatives \(\partial ^{\gamma } p_{\alpha }\) and has the order of at most mn. Without loss of generality, we denote \({\mathcal {L}}'\) by

We first show \({\mathcal {L}}'\) is non-trivial. Let \(1\le m'\le m\) be the largest index that \(c_{m',\alpha '}\ne 0\) for some \(\alpha '\), then according to the assumption, almost everywhere in \(\Omega \), at least one of the leading-order terms \(c_{m',\alpha '}p_{\alpha }^{m'-1}(x)\partial ^{(m'-1)\alpha +\alpha '}\) for certain \(\alpha \in D_n\) in \(c_{m',\alpha '}\partial ^{\alpha '}{\mathcal {L}}^{m'-1}\) is nonzero. In the next, we denote \((i\zeta )^{\gamma }:= \prod _{k=1}^d (i\zeta _{k})^{\gamma _k}\), where \(\zeta = (\zeta _{1},\dots , \zeta _{d})\) and \(\gamma = (\gamma _1,\dots , \gamma _d)\). Since \(r > \left( {\begin{array}{c}mn+d\\ d\end{array}}\right) \) and the vectors \(\zeta _j\in {\mathbb {Z}}^d\), \(1\le j\le r\) are not located on an algebraic polynomial hypersurface of degree \(\le mn\), then the equation

implies \(\sum _{|\gamma |=0}^{mn} q_{\gamma }(x ') (i\zeta _j)^{\gamma }=0\) which gives a contradiction. \(\square \)

3.3.1 Ergodic Orbits

In the following, we consider the solution to (2.24) with infinite observation time \(T=\infty \). The previous dimensionality analysis of the solution space would not work due to the non-compactness. For the sake of simplicity, we assume \({\textbf{c}}(x)\) is measure-preserving and has no singular points, that is

For dimension \(d=2\), the ergodic properties of the measure-preserving dynamic system

on torus have been studied by [3, 40, 47]. Let

it has been proved that if \(a_1/a_2\) is irrational, then the flow X(t) is ergodic and can be regarded as a rectilinear flow on a two-dimensional Euclidean torus by some suitable choice of coordinate change. The dynamics on a high-dimensional torus are studied by [25, 41].

Theorem 3.6

([40]) For \(d=2\), every orbit of (3.20) is ergodic if and only if \(a_1, a_2\) are linearly independent with respect to integral coefficients.

Theorem 3.7

([41]) For \(d\ge 3\) and assume every orbit of (3.20) is Lyapunov stable in both directions in time, then every orbit is ergodic if and only if \(a_1, a_2,\dots , a_d\) are linearly independent with respect to integral coefficients.

Using the ergodic property, we can derive the following simple corollary to characterize the solution data by tracking the locally unique value in time.

Corollary 3.8

Let \(u_0\in C^1(\Omega )\), suppose every orbit of (3.20) is ergodic and there exists a point \(x_0\in \Omega \) such that for any \(y\in \Omega \), \(\textrm{dist}(y,x_0)\in (0, \delta )\), \(u_0(y)\ne u_0(x_0)\), then \({\textbf{c}}(x)\) is uniquely determined from u(x, t), \(t\in [0,\infty )\).

Proof

Let X(t) be the orbit

Then \({\textbf{c}}(X(t))\) is uniquely determined by tracing the location of the local unique value \(u_0(x_0)\). By the ergodic property of X(t) and continuity of \({\textbf{c}}(x)\), for any \(z\in \Omega \) one can find a sequence \(X(t_j)\rightarrow z\), \(j\rightarrow \infty \), which reconstructs \({\textbf{c}}(z)=\lim _{j\rightarrow \infty } {\textbf{c}}(X(t_j))\). \(\square \)

3.4 Possible Instability for Identification of Elliptic Operator

In this section, we study the stability issue for PDE identification and use the elliptic operator as an example to show possible instability. First, we show local instability when one has a short observation time for a single trajectory. Suppose each coefficient \(p_{\alpha }\) is sufficiently smooth, we consider a small perturbation \(p_{\alpha }(x)\rightarrow p_{\alpha }(x) + \mu f_{\alpha }(x)\) that \(|\mu |\ll 1\), then the Frechét derivative \(w(x,t) = \partial _{\mu } u(x,t)\) satisfies the equation

When \({\mathcal {L}}= \sum _{|\alpha |=0}^n p_{\alpha }(x)\partial ^{\alpha }\) is a dissipative operator, there exists a constant \(c > 0\) such that

Define the functions \(K_{\alpha \beta }(x)\) by

and denote the normalized set \(F = \{\{ f_{\alpha } \}_{|\alpha |=0}^n\subset C^n(\Omega ;{\mathbb {R}})\mid \sum _{\alpha }\Vert f_{\alpha }\Vert ^2_{L^2(\Omega )}=1 \}\), then the local instability amounts to find the minimum

where \(K(x) = (K_{\alpha \beta }(x))\) is a positive definite matrix for each \(x\in \Omega \). Given any fixed \(x\in \Omega \),

where \(m = \left( {\begin{array}{c}n+d\\ d\end{array}}\right) \) and \(\lambda _1\ge \lambda _2\ge \cdots \ge \lambda _m \ge 0\) are the eigenvalues of \(K_{\alpha \beta }(x)\), the equality holds when \(f = (f_{\alpha }(x))\) is the eigenvector of K(x) for \(\lambda _m\). Therefore

It should be noted that if \(\partial ^{\alpha } u\) are continuous, then \(K_{\alpha \beta }(x)\) varies continuously; therefore, \({\lambda }_m(x)\) is also a continuous function over \(\Omega \). On the other hand, use Taylor expansion for short time that \(T\ll 1\), the matrix entries of K are

For each \(0\le l\le m-2\) in the summation, the matrix formed by the entry

is rank one; therefore, the summation of the terms \(0\le l\le m-2\) of (3.28) is at most rank \(m-1\), then by Theorem VI.3.3 of [6],

where M(x) is the rank one matrix defined by \(M_{\alpha \beta } (x):= \partial ^{\alpha }{\mathcal {L}}^{m-1} u_0(x) \partial ^{\beta } u_0(x) \), then the norm \(\Vert M(x)\Vert \) is bounded by

Combining above estimate with (3.27) and Cauchy-Schwartz inequality, we obtain

Remark 3.9

In fact, one can show \(\lambda _k \le {\mathcal {O}}(T^{k})\), \(1\le k\le m\) by following the similar argument. Furthermore, from estimate (3.32), one can observe that \({\mathcal {L}}^{m-1} u_0\) plays a role. Indeed, if \({\mathcal {L}}^{m-1} u_0 = 0\), then the solution is simply

which means the matrix formed by \(A_{\alpha , k}:=\partial ^{\alpha } u(x, t_k)\), \(k=1,2,\dots , m\),

has a rank at most \(m-1\). Hence the identification is non-unique.

Now we show instability for high-frequency perturbations for elliptic operators. For the sake of simplicity, in the following we let \({\mathcal {L}}= -\sum _{\alpha = 0}^n p_{\alpha }(x) \partial ^{\alpha }\) be an elliptic differential operator in 1D where \(p_{\alpha }(x)\) are constant functions. Consider the perturbation \({\widetilde{{\mathcal {L}}}} = {\mathcal {L}}- \delta e^{i q \cdot x } \partial ^{\alpha '}\), where \(q \in {\mathbb {Z}}\) is some frequency and \(\alpha '\) is certain index. Denote u and \({{\tilde{u}}}\) the solutions to (1.1) with respect to the operators \({\mathcal {L}}\) and \({{\widetilde{{\mathcal {L}}}}}\), respectively. Then we have

where \(\lambda _k = -\sum _{\alpha =0}^n p_{\alpha } (i k)^{\alpha }\), \(\phi _k(t) = c_k e^{-\lambda _k t}\), and the constant \(c_k\) is the Fourier coefficient of \(u_0\). In the following, we study the instability of identification for large |q| under the assumptions that \(\Re \lambda _k \ge C_1 \left\langle {k} \right\rangle ^{n}\) and \(|c_k|\le C_2 \left\langle {k} \right\rangle ^{-\beta }\), \(\beta > \frac{1}{2}\), where \(C_1, C_2\) are two positive constants and \(\left\langle {k} \right\rangle := (1+k^2)^{1/2}\). Using Fourier transform, we may write the solution \({\tilde{u}}\) in the form

where \( {{\widetilde{\phi }}}_k(t)\) satisfies the coupled ODE,

The solution can be written as

since \(c_k = {{\widetilde{\phi }}}_k(0)\). Therefore using Cauchy-Schwartz inequality, we obtain the estimate

Lemma 3.10

Suppose \(0\le \alpha ' \le \frac{n}{2}\), \(\beta > \frac{1}{2}\), and \(\delta ^2 T < \gamma C_1\) for some \(\gamma \in (0,1)\), then

If \(kq \le 0\), we would have

Proof

The first inequality can be easily proved by summation of (3.39) on both sides over \(k\in {\mathbb {Z}}\). For the second inequality, by iteratively using (3.39), we find that

If \(k q \le 0\), we would have \(|k - l q|\ge |k|\) for all \(l\ge 0\), then

\(\square \)

Theorem 3.11

Assuming the conditions of Lemma 3.10, the following inequality holds for any \(q\in {\mathbb {Z}}\),

where \(C_3 = \sum _{k\in {\mathbb {Z}}} \left\langle {k} \right\rangle ^{2\alpha ' - 2 \beta - n}\) and \(C_4 = \sum _{k\in {\mathbb {Z}}} \left\langle {k} \right\rangle ^{- n}\).

Proof

Let \(w_k = \phi _k(t) - {{\widetilde{\phi }}}_k(t)\), then

and note that \(\delta ^2 T \le \gamma C_1\),

The last inequality uses the fact that \(|k+q|\le \frac{|q|}{2}\) implies \(|k| \ge \frac{|q|}{2}\) when \(kq \le 0\) and vice versa, therefore

\(\square \)

Remark 3.12

From the assumption in the above theorem, one can view \(\gamma ={\mathcal {O}}(\delta ^2)\). The result shows that the high-frequency component of perturbation will have a limited impact on the solution. In particular, both the order of the differential operator and the smoothness of the initial data affect the instability estimate. The above analysis can also possibly be extended to high-dimensional and smooth coefficient cases.

4 Local Regression and Global Consistency Enforced PDE Identification Method

For PDE identification in practice, the first and most important task is to robustly identify the PDE type using a combination of the fewest possible candidates from a (relatively large) dictionary with minimal data, e.g., a single trajectory (corresponding to unknown and uncontrollable initial data) with local (in space and time) measurements. When the PDE has variable coefficients, this amounts to different regression problems at different measurement locations. Since a variable coefficient may be degenerate or small at certain locations, e.g., certain components of a velocity field, independent local regressions may render different types of PDEs at different locations. The key idea is to enforce consistency and sparsity across local regressions to enhance both robustness and accuracy. Here we propose a local regression and global consistency enforced strategy, the Consistent and Sparse Local Regression (CaSLR) method, which enforces global consistency of the differential operator and involves as few terms as possible from the dictionary while having the flexibility to fit local measurements as well as possible. Numerical tests show that CaSLR can identify PDE successfully even with a small amount of local data.

4.1 Proposed PDE Identification Method

Suppose the solution data can be observed/measured by local sensors in a neighborhood at different locations, i.e., local patches, that can be used to approximate the solution derivatives and their functions corresponding to those terms in the dictionary. Once the measured/computed solution and its derivatives are available at certain locations we propose the following local regression and global consistency-enforced PDE identification method.

Assume that the unknown PDE takes the following form:

where \({\mathcal {F}} = \{f_k:\Omega \times [0,T]\rightarrow {\mathbb {R}}\}_{k=1}^K\) is a dictionary of features that contains partial derivatives of u with respect to the space (e.g., \(u_x,u_{xxx}\), etc.), functions of these terms (e.g., \(uu_x\) and \(u^2\), \(\sin (u)\) etc.); and \(c_k:\Omega \times [0,T]\rightarrow {\mathbb {R}}\), \(k=1,2,\dots , K\) are the respective coefficients. We assume that the dictionary is rich enough that it is over-complete, i.e., the underlying PDE is expressed as (4.1) when at least one of the feature’s coefficients is null. One also has to assume the measurements can resolve local variation of the coefficients, i.e., one can approximate the PDE coefficients by constants in each small patch neighborhood (in space and time) centered at \((x_j,t_j), j=1, 2, \ldots , J\). Otherwise, the measurement data are not enough to identify the PDE. Denote \(\Omega _j\) to be the local neighborhood centered at \((x_j,t_j)\) and \(\hat{{\textbf{c}}}_j=({\hat{c}}_1^j,\ldots , {\hat{c}}_K^j))\), we define the local regression error in each patch as

Define the global regression error as

For each \(l=1,2,\dots , K\), we search for l terms from the dictionary \({\mathcal {F}}\) whose linear combination using \(\hat{{\textbf{c}}}_j\) minimizes the global fitting error. In particular, we optimize

using the Group Subspace Pursuit (G-SP) algorithm proposed in [18]. As a generalization of the well-known subspace pursuit [9], G-SP sequentially chooses K groups of variables from the pool of candidates that best fit the residual obtained from the previous iteration. Here the group sparsity is defined by

where \(\tilde{{\textbf{c}}}_k=({\widehat{c}}_k^1,\dots ,{\widehat{c}}_k^J)\in {\mathbb {R}}^J, k=1,2,\dots ,K\). As we increase the sparsity level l, \({\mathcal {E}}(\widehat{{\textbf{c}}}^{l})\) decreases. Setting \(\widehat{{\textbf{c}}}^0 = [0,\dots ,0]\), we employ the model score

for \(l=1,2,\dots , K-1\), where \(\rho >0\) is a penalty parameter for using more terms from the dictionary. We decide that the candidate with \(l^*\) features is the optimal if \(S^{l^*}=\min _{l=1,\dots ,K-1}S^l\). The metric \(S^k\) in (4.5) evaluates a candidate model with l features by considering two factors: the fitting error and the model complexity penalty controlled by the parameter \(\rho \). In this work, we fix \(\rho \) to be the mean of \(\{{\mathcal {E}}(\widehat{{\textbf{c}}}^{k})\}_{k=1}^K\) so that the two components of (4.5) are balanced. We find it applicable for both clean and noisy data.

Once the PDE operator type is identified, one may use various regression techniques to each patch locally to refine the recovery of those coefficients. In case there are sufficiently many sensors densely distributed in the space, one may employ different types of regularizations to further improve the reconstruction, e.g., TV-norm. We leave such explorations to future works and focus on situations where few sensors are used.

4.2 Identification Guarantee by Local Regression

In this section, we show that local patch regression using operators with constant coefficients approximation indeed can identify the underlying PDE with variable coefficients under these conditions: 1) The variable coefficients are bounded away from zero and vary slowly on the patch, 2) the solution data contain diverse information content, on the patch. The first condition basically requires the mathematical problem to be well-posed, and the second condition says the local regression system determined by the solution data is well-conditioned.

First, we define the admissible set for the coefficients \({\mathcal {C}}_{\varepsilon } \subset {\mathbb {R}}\) by

which means the nonzero coefficients in the unknown PDE should be above a certain threshold \(\varepsilon \) on a local patch. The local regression-based identification problem is to find what terms in the PDE operator those nonzero coefficients correspond to. We also introduce the condition constant of the solution data corresponding to the set of candidates in the dictionary \({\mathcal {F}}=\{f_k\}_{k=1}^K\) in a neighborhood \(B\subset \Omega \),

where \({{\hat{{\textbf{c}}}}} = ({{\hat{c}}}_{k})\in {\mathbb {R}}^{K}\), \(k=1,2,\dots , K\). We say a vector \({{\hat{{\textbf{c}}}}} \in {\mathcal {C}}_{\varepsilon }\) if each element of \({{\hat{{\textbf{c}}}}}\) is in \({\mathcal {C}}_{\epsilon }\).

Theorem 4.1

Suppose the coefficients \(c_k(x,t)\) in (4.1) are admissible at some point \((x_0, t_0)\) and take B as a neighborhood of \((x_0, t_0)\). Let \({\mathcal {A}}= \{k\mid c_k\ne 0\}\) be the index set for nonzero coefficients at \((x_0, t_0)\). Let \(\{{\overline{c}}_{k}^{*}\}_{k=1}^K\) be an optimal set of constant coefficients such that

If we denote \({\mathcal {A}}^{*} = \{k \mid {\overline{c}}_k^{*}\ne 0\}\), then \({\mathcal {A}}={\mathcal {A}}^{*}\) if the patch size \(R = \text {diam}(B)\) satisfies

where L is the Lipschitz constant for all \(c_{k}(x,t)\).

Proof

We prove this by contradiction. Let \({\tilde{c}}_{k} = c_{k}(x_0, t_0)\) for \(k\in {\mathcal {A}}\) and \({\tilde{c}}_{k} = 0\) for \(k\in {\mathcal {A}}^{\complement }\), then

On the other hand, if the conclusion does not hold, then there exists an index \(k'\) such that \(|{\overline{c}}_{k'}^{*} - {\tilde{c}}_{k'}|\ge \varepsilon \) and

Note the linear scaling,

Therefore, if

we would obtain that

which is a contradiction with the optimal choice of \({\overline{c}}^{*}_{k}\). \(\square \)

From the above result, we can immediately see the following.

Corollary 4.2

For a differential operator, \({\mathcal {L}}\) with constant coefficients, local regression on a neighborhood B can identify the PDE exactly if \({\mathcal {K}}_B^{{\mathcal {F}}}>0\).

Lemma 4.3

For a linear differential operator \({\mathcal {L}}\) with constant coefficients, if the solution u contains enough Fourier modes, we have \({\mathcal {K}}_{B}^{{\mathcal {F}}} > 0\) for \(B = \Omega \times (t_0, t_1)\).

Proof

Suppose \({\mathcal {K}}_B^{{\mathcal {F}}} = 0\) on \(B= \Omega \times (t_0, t_1)\). It implies that \(\sum _{k=1}^{K} {\hat{c}}_{k} f_k(y, \tau ) = 0\) for any \((y,\tau )\in B\) and some \({\hat{{\textbf{c}}}}=({\hat{c}}_k)\in {\mathbb {R}}^K, \Vert {\hat{{\textbf{c}}}}\Vert _{\infty }=1\). Therefore, we must have

for all \(y\in \Omega \) and \(\tau \in (t_0, t_1)\). Expanding the solution in terms of the Fourier series

Since each \(f_k\) is a partial derivative, \(f_k(e^{iv\cdot y}) = P_k(iv) e^{iv\cdot y}\) for certain polynomial \(P_k\), we have \( d_{v}(\tau ) \sum _{k=1}^{K} {\hat{c}}_{k} P_k(iv) = 0\). Each \(d_v(\tau )\) satisfies an autonomous ODE \(d_v^{'}= (\sum _{k=1}^{K} c_{k} P_k(iv))d_v\) from (4.1), which means either \(d_{k}(\tau ) \equiv 0\) on \((t_0, t_1)\) or

Since the above algebraic equation only permits finitely many solutions of v, we prove the result. \(\square \)

The above lemma indicates that if \({\mathcal {L}}\) is a linear differential operator with constant coefficients and the solution has sufficiently many Fourier modes, then we can always find a local patch \(B\subset \Omega \times (t_0, t_1)\) such that \({\mathcal {K}}_{B}^{{\mathcal {F}}} > 0\). Combined with Corollary 4.2, we have the following Corollary.

Theorem 4.4

A linear differential operator \({\mathcal {L}}\) with constant coefficients can be identified exactly by local regression if the solution u contains sufficiently many Fourier modes.

Remark 4.5

The numerator in the definition of \({\mathcal {K}}_B^{{\mathcal {F}}}\) measures the minimal support of the set \({\mathcal {S}}(B):=\{(f_1(y,\tau ),\ldots , f_K(y,\tau ))\in {\mathbb {R}}^K, (y,\tau )\in B\}\) on the unit \(\ell ^{\infty }\) sphere. The denominator can be relaxed to \(\max _{(y,\tau )\in B}\sum _{k\in {\mathcal {A}}} | f_k(y, \tau )| \).

If the solution varies little on B, e.g., R is small, the set \({\mathcal {S}}(B)\) is close to a constant vector in \({\mathbb {R}}^K\); hence, \({\mathcal {K}}_B^{{\mathcal {F}}}\) is small. On the other hand, the coefficients need to vary slowly on B so that LR is small. So some scale separation between the coefficients and the solution is needed on B.

In general, the larger K is, the smaller \({\mathcal {K}}_B^{{\mathcal {F}}}\) will be. In other words, the larger the dictionary, the harder the identification of the underlying PDE.

Remark 4.6

For robust identification of PDE with variable coefficients using local patch data, it is desirable to have local sensors that support local patches whose patch size R is small enough to resolve the variation of the coefficients, while the measurement resolution h is fine enough so that \(\frac{R}{h}\) is large enough and hence can detect a good bandwidth of modes in the solution. At the same time, one needs to select patch data that contain as many modes as possible that can be resolved by patch resolution.

4.3 Data-Driven and Data-Adaptive Measurement Selection

In practice, using the solution data indiscriminately for PDE identification may not be a good strategy.

On each patch, the local regression is approximated by a PDE with constant coefficients as studied. For a stable local identification, the solution should contain some variation as shown in the previous section. On the other hand, the data on those patches whose measurement resolution cannot resolve the rapid variation of the solution lead to significant error, e.g., truncation error in numerical differentiation, and hence should not be used either. To improve CaSLR’s robustness and accuracy, we propose the following process to select patches containing reliable and accurate information.

First, we propose to use the following numerical estimate of the local Sobolev semi-norm to filter out those patches in which the solution may be singular or oscillate rapidly,

where \(P_{\max }\) is the maximal order of partial derivatives in the dictionary. We remove those local regressions at \((x_j,t_j)\) in (4.2) if \(\beta (x_j,t_j) < \underline{\beta }\) or \(\beta (x_j,t_j)>{\bar{\beta }}\) for some thresholds \(\underline{\beta },{\bar{\beta }}>0\). In this work, we fix \(\underline{\beta }\) to be the 1st percentile and \({\bar{\beta }}\) the 99th percentile of all the collected local Sobolev semi-norms.

However, when the measurement data contain noise, the constant solution may not be detected using numerical approximation of (4.18). Here we design the following criterion to detect whether the solution is almost constant in a neighborhood. For an arbitrary \(({\textbf{x}},t)\in \Omega \times [0,T_{\max }]\), consider a neighborhood centered at \(({\textbf{x}},t)\) denoted by \(B_{({\textbf{x}},t)}=\prod _{i=1}^{d}[x_i-r_i,x_i+r_i]\times [t-r,t+r]\) with radius in each dimension \(r_i>0\), \(i=1,2,\dots ,d\), \(r>0\). Suppose we observe a discrete set of data (including \(({\textbf{x}},t)\)) with noise in this neighborhood: \({\widehat{B}}_{({\textbf{x}},t)}=\{{\widehat{u}}({\textbf{y}},s):=u({\textbf{y}},s)+\varepsilon ({\textbf{y}},s):~\varepsilon ({\textbf{y}},s)\overset{\text {i.i.d.}}{\sim }{\mathcal {N}}(0,\sigma ^2),~({\textbf{y}},s)\in B_{({\textbf{x}},t)}\}\). Furthermore, we assume that u is locally Lipschitz continuous in \(B(({\textbf{x}},t))\) with constant \(L>0\). Let \(|{\widehat{B}}_{({\textbf{x}},t)}|\) be the cardinality of \({\widehat{B}}_{({\textbf{x}},t)}\) and \(R = \max \{r_1,\dots ,r_d,r\}\). We note that

where \(\mu _{({\textbf{x}},t)}=u({\textbf{x}},t)-{\overline{u}}_{{\widehat{B}}}\), \({\overline{u}}_{{\widehat{B}}}\) and \(\overline{{\widehat{u}}}_{{\widehat{B}}}\) is the average of u and \({\widehat{u}}\) over \({\widehat{B}}\), respectively. By the assumption of Lipschitz continuity of u, \(|\mu _{({\textbf{x}},t)}|\le \sqrt{D}LR\), where \(D:=d+1\).

Hence, to estimate \(\sigma ^2\), we consider a collection of points \(\{({\textbf{x}}_1,t_1),\dots , ({\textbf{x}}_N,t_N)\}\subset \Omega \times [0,T_{\max }]\) with non-intersecting boxes \(B_{({\textbf{x}}_n,t_n)}\), \(n=1,2,\dots , N\) centered at each of them, respectively. Within each \(B_{({\textbf{x}}_n,t_n)}\), we observe noisy data over a finite discrete subset \({\widehat{B}}_{({\textbf{x}}_n,t_n)}\subset B_{({\textbf{x}}_n,t_n)}\) having cardinality \(B>0\). Denote \(\zeta _n={\widehat{u}}({\textbf{x}}_n,t_n) -\overline{{\widehat{u}}}_{{\widehat{B}}_{({\textbf{x}}_n,t_n)}}\), then by the computations detailed in Appendix A,

is a biased estimator for \(\sigma ^2\) with

and

where \(\gamma : = 4DL^2R^2\). From the results above, we observe that to reduce the estimator’s bias, we should use small patches, i.e., small R, and to control the estimator’s variance, we need to use more patches, i.e., larger N. Notice that the upper bound of the bias in (4.19) is closely related to the magnitude of u’s local variation in \(B({\textbf{x}},t)\) expressed as \(\gamma \). This implies that when u has mild variation in \(B({\textbf{x}},t)\), estimator (4.19) becomes approximately unbiased. This was expected since the statistical characteristics of the additive noise become more identifiable if the underlying function does not introduce extra variations. We note that the upper bound of variance (4.21) consists of two terms. The first term reflects the fact that when sufficiently many samples are provided, the estimator becomes more stable, while the second term also shows the influence of the magnitude of u’s variation.

Now for any two distinct points \(({\textbf{x}},t),({\textbf{y}},s)\in \Omega \times [0,T_{\max }]\), we know that \({\widehat{u}}({\textbf{x}},t)-{\widehat{u}}({\textbf{y}},s)\sim {\mathcal {N}}(u({\textbf{x}},t)-u({\textbf{y}},s),2\sigma ^2)\); hence, under the hypothesis \({\mathcal {H}}_0:\) \(u({\textbf{x}},t)=u({\textbf{y}},s)\), we would have

where \(\alpha _{0.90}=1.644853\). By estimation (4.19), if

we reject \({\mathcal {H}}_0\); otherwise, we accept \({\mathcal {H}}_0\). In case of PDE systems of multiple unknown functions \(\{u_j\}_{j=1}^J\), we consider the hypothesis \({\mathcal {H}}_0: u_j({\textbf{x}},t)=u_j({\textbf{y}},s)\) at least for one \(j=1,\dots ,J\), and reject \({\mathcal {H}}_0\) if \(\min _{j=1,\dots ,J}\{|{\widehat{u}}_j({\textbf{x}},t)-{\widehat{u}}_j({\textbf{y}},t)|\}>\sqrt{2}\alpha _{0.90}{\widehat{\sigma }}\); otherwise, we accept \({\mathcal {H}}_0\).

Statistical test (4.23) provides guidance on determining if the underlying function restricted to a patch shows variation when the collected data are contaminated by Gaussian noise. Specifically, for each pair of sampled points in a patch, we conduct comparison (4.23): If any of them yields a rejection of \({\mathcal {H}}_0\), we keep the patch; otherwise, we discard the data collected in the patch. Due to multiple tests [4, 45], the proposed approach does not imply that the type-I error of concluding a patch contains only noise is controlled by 0.1. To appropriately address this issue, we need to adjust the p-value for each test while considering strong correlations due to the function’s continuity, which can be complicated. We thus take this heuristic criterion guided by (4.23), and numerical experiments show that such a procedure is practically effective.

4.4 Numerical Experiments

In this section, we present numerical examples to demonstrate our local regression and global consistency-enforced PDE identification method. In some of the numerical examples, we use the following transition function in time with slope \(s\in {\mathbb {R}}\) and critical point \(t_c\in {\mathbb {R}}\) as

which models a smooth emergence (when \(s>0\)) or a smooth decaying (when \(s<0\)) behavior; when |s| increases, the transition becomes sharper. The critical point \(t_c\) signifies the boundary point between two phases. Moreover, we use the Jaccard score [20]

as a metric for identification accuracy. Here \(S_0\) and \(S_1\) denote two sets and the Jaccard score measures the similarity between them. In our case, \(S_0\) represents the set of indices of the true features in a given dictionary and \(S_1\) denotes the set of indices of features in an identified PDE model. We note that \(J(S_0,S_1) \in [0,1]\), and \(J(S_0,S_1) = 1\) if and only if \(S_0=S_1\). A higher Jaccard score indicates that the sets to be compared are more similar.

In the following, we consider a general setup for PDE identifications using a collection of local sensors. For each experiment, we put sensors randomly in space, and each of them collects data at some fixed intervals in time. Each sensor collects data in a cubic neighborhood in space and time whose side length is \( 2r+1\) in each spatial dimension and \(2r_t+1\) in time. Here \(r>0\) and \(r_t>0\) are referred to as the sensing radius and time duration, respectively.

Example 1

Transport equation.

First, we examine a transport equation with a periodic boundary condition whose speed varies both in space and time:

We solved it numerically over a grid with 100 points in space and 5000 points in time, and Fig. 5a shows the solution.

Identification of transport equation (4.26) with space-time-varying speed

Based on local patch data and using a dictionary of size 59 including up to 4-th-order partial derivatives of the solution and products of them up to 3 terms, plus \(\sin (u)\), \(\cos (u)\), \(\sin (u_x)\), and \(\cos (u_x)\), we employed the proposed patch selection scheme described in Sect. 4.3 and applied CaSLR to identify the PDE type as well as to reconstruct the associated local coefficients. For a series of combinations of different numbers of sensors and sensing radii, we conducted 20 independent experiments and recorded the mean values of identification accuracy, shown in (b), and reconstruction error, shown in (c). A set of sensor distributions is shown in (a) as red markers. We observe that the identification of a transport equation is highly reliable even with a single sensor whose patch size is small. This considerable robustness is closely related to the fact that the solution data contain diverse Fourier modes over the time-space domain. As we will see in Sect. 4.4.2, our data-driven patch selection helps to preserve such performances even when the solution has compact support at all snapshots.

Example 2

KdV-type equation.

Next, we test on the KdV-type equation with space-time-varying coefficients and a periodic boundary condition in the following form

Identification of KdV-type equation (4.27) with space-time-varying coefficients

Figure 6a shows its numerical solution with a set of exemplary sensors indicated by red markers. Using a dictionary of size 59 as specified in the previous example, the identification and reconstruction results of CaSLR are reported in (b) and (c), respectively. As there are more terms and of different orders in the PDE, more Fourier modes, in particular, relatively low-frequency modes for lower-order terms in the PDE, need to be included in each local patch measurement for accurate and robust identification. That is why patches of size larger than that in the transport equation example are needed.

Example 3

Schrödinger equation.

We perform a test on the Schrödinger equation