Abstract

This paper addresses the optimal control problem known as the linear quadratic regulator in the case when the dynamics are unknown. We propose a multistage procedure, called Coarse-ID control, that estimates a model from a few experimental trials, estimates the error in that model with respect to the truth, and then designs a controller using both the model and uncertainty estimate. Our technique uses contemporary tools from random matrix theory to bound the error in the estimation procedure. We also employ a recently developed approach to control synthesis called System Level Synthesis that enables robust control design by solving a quasi-convex optimization problem. We provide end-to-end bounds on the relative error in control cost that are optimal in the number of parameters and that highlight salient properties of the system to be controlled such as closed-loop sensitivity and optimal control magnitude. We show experimentally that the Coarse-ID approach enables efficient computation of a stabilizing controller in regimes where simple control schemes that do not take the model uncertainty into account fail to stabilize the true system.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Having surpassed human performance in video games [41] and Go [51], there has been a renewed interest in applying machine learning techniques to planning and control. In particular, there has been a considerable amount of effort in developing new techniques for continuous control where an autonomous system interacts with a physical environment [16, 34]. A tremendous opportunity lies in deploying these data-driven systems in more demanding interactive tasks including self-driving vehicles, distributed sensor networks, and agile robotics. As the role of machine learning expands to more ambitious tasks, however, it is critical these new technologies be safe and reliable. Failure of such systems could have severe social and economic consequences including the potential loss of human life. How can we guarantee that our new data-driven automated systems are robust?

Unfortunately, there are no clean baselines delineating the possible control performance achievable given a fixed amount of data collected from a system. Such baselines would enable comparisons of different techniques and would allow engineers to trade off between data collection and action in scenarios with high uncertainty. Typically, a key difficulty in establishing baselines is in proving lower bounds that state the minimum amount of knowledge needed to achieve a particular performance, regardless of method. However, in the context of controls, even upper bounds describing the worst-case performance of competing methods are exceptionally rare. Without such estimates, we are left to compare algorithms on a case-by-case basis, and we may have trouble diagnosing whether poor performance is due to algorithm choice or some other errors such as a software bug or a mechanical flaw.

In this paper, we attempt to build a foundation for a theoretical understanding of how machine learning interfaces with control by analyzing one of the most well-studied problems in classical optimal control, the Linear Quadratic Regulator (LQR). Here we assume that the system to be controlled obeys linear dynamics, and we wish to minimize some quadratic function of the system state and control action. This problem has been studied for decades in control: it has a simple, closed-form solution on the infinite time horizon and an efficient, dynamic programming solution on finite-time horizons. When the dynamics are unknown, however, there are far fewer results about achievable performance.

Our contribution is to analyze the LQR problem when the dynamics of the system are unknown, and we can measure the system’s response to varied inputs. A naïve solution to this problem would be to collect some data of how the system behaves over time, fit a model to this data, and then solve the original LQR problem assuming this model is accurate. Unfortunately, while this procedure might perform well given sufficient data, it is difficult to determine how many experiments are necessary in practice. Furthermore, it is easy to construct examples where the procedure fails to find a stabilizing controller.

As an alternative, we propose a method that couples our uncertainty in estimation with the control design. Our main approach uses the following framework of Coarse-ID control to solve the problem of LQR with unknown dynamics:

-

1.

Use supervised learning to learn a coarse model of the dynamical system to be controlled. We refer to the system estimate as the nominal system.

-

2.

Using either prior knowledge or statistical tools like the bootstrap, build probabilistic guarantees about the distance between the nominal system and the true, unknown dynamics.

-

3.

Solve a robust optimization problem over controllers that optimizes performance of the nominal system while penalizing signals with respect to the estimated uncertainty, ensuring stable and robust execution.

We will show that for a sufficient number of observations of the system, this approach is guaranteed to return a control policy with small relative cost. In particular, it guarantees asymptotic stability of the closed-loop system. In the case of LQR, step 1 of Coarse-ID control simply requires solving a linear least squares problem, step 2 uses a finite sample theoretical guarantee or a standard bootstrap technique, and step 3 requires solving a small semidefinite program. Analyzing this approach, on the other hand, requires contemporary techniques in nonasymptotic statistics and a novel parameterization of control problems that renders nonconvex problems convex [38, 59].

We demonstrate the utility of our method on a simple simulation. In the presented example, we show that simply using the nominal system to design a control policy frequently results in unstable closed-loop behavior, even when there is an abundance of data from the true system. However, the Coarse-ID approach finds a stabilizing controller with few system observations.

1.1 Problem Statement and Our Contributions

The standard optimal control problem aims to find a control sequence that minimizes an expected cost. We assume a dynamical system with state\(x_t\in {\mathbb {R}}^n\) can be acted on by a control\(u_t \in {\mathbb {R}}^p\) and obeys the stochastic dynamics

where \(w_t\) is a random process with \(w_t\) independent of \(w_{t'}\) for all \(t\ne t'\). Optimal control then seeks to minimize

Here, \(c_t\) denotes the state-control cost at every time step, and the input \(u_t\) is allowed to depend on the current state \(x_t\) and all previous states and actions. In this generality, problem (2) encapsulates many of the problems considered in the reinforcement learning literature.

The simplest optimal control problem with continuous state is the linear quadratic regulator (LQR), in which costs are a fixed quadratic function of state and control and the dynamics are linear and time-invariant:

Here Q (resp. R) is a \(n\times n\) (resp. \(p\times p\)) positive definite matrix, A and B are called the state-transition matrices, and \(w_t\in {\mathbb {R}}^n\) is Gaussian noise with zero mean and covariance \(\Sigma _w\). Throughout, \(M^*\) denotes the Hermitian transpose of the matrix M.

In what follows, we will be concerned with the infinite time horizon variant of the LQR problem where we let the time horizon T go to infinity and minimize the average cost. When the dynamics are known, this problem has a celebrated closed-form solution based on the solution of matrix Riccati equations [64]. Indeed, the optimal solution sets \(u_t = K x_t\) for a fixed \(p\times n\) matrix K, and the corresponding optimal cost will serve as our gold-standard baseline to which we will compare the achieved cost of all algorithms.

In the case when the state-transition matrices are unknown, fewer results have been established about what cost is achievable. We will assume that we can conduct experiments of the following form: given some initial state \(x_0\), we can evolve the dynamics for T time steps using any control sequence \(\{u_0,\ldots , u_{T-1}\}\), measuring the resulting output \(\{x_1,\ldots , x_T\}\). If we run N such independent experiments, what infinite time horizon control cost is achievable using only the data collected? For simplicity of bookkeeping, in our analysis we further assume that we can prepare the system in initial state \(x_0 = 0\).

In what follows, we will examine the performance of the Coarse-ID control framework in this scenario. We will estimate the errors accrued by least squares estimates \(({\widehat{A}},{\widehat{B}})\) of the system dynamics. This estimation error is not easily handled by standard techniques because the design matrix is highly correlated with the model to be estimated. Regardless, for theoretical tractability, we can build a least squares estimate using only the final sample \((x_{T},x_{T-1},u_{T-1})\) of each of the N experiments. Indeed, in Sect. 2 we prove the following

Proposition 1

Define the matrices

Assume we collect data from the linear, time-invariant system initialized at \(x_0 = 0\), using inputs \(u_t~{\mathop {\sim }\limits ^{{{\mathrm{i.i.d.}}}}}{\mathcal {N}}(0,\sigma _u^2 I_p)\) for \(t= 0, \ldots ,T - 1\), with \(T \ge 2\). Suppose that the process noise is \(w_t~{\mathop {\sim }\limits ^{{{\mathrm{i.i.d.}}}}}{\mathcal {N}}(0,\sigma _w^2 I_n)\) and that

Then, with probability at least \(1-\delta \), the least squares estimator using only the final sample of each trajectory satisfies both the inequality

and the inequality

The details of the estimation procedure are described in Sect. 2. Note that this estimation result seems to yield an optimal dependence in terms of the number of parameters: (A, B) together have \(n(n+ p)\) parameters to learn and each measurement consists of \(n\) values. Moreover, this proposition further illustrates that not all linear systems are equally easy to estimate. The matrices \(G_T G_T^*\) and \(F_T F_T^*\) are finite-time controllability Gramians for the control and noise inputs, respectively. These are standard objects in control: each eigenvalue/vector pair of such a Gramian characterizes how much input energy is required to move the system in that particular direction of the state space. Therefore, \(\lambda _{\min }\left( \sigma _u^2G_T G_T^* + \sigma _w^2F_T F_T^*\right) \) quantifies the least controllable, and hence most difficult to excite and estimate, mode of the system. This property is captured nicely in our bound, which indicates that for systems for which all modes are easily excitable (i.e., all modes of the system amplify the applied inputs and disturbances), the identification task becomes easier.

While we cannot compute the operator norm error bounds (5) and (6) without knowing the true system matrices (A, B), we present a data-dependent bound in Proposition 3. Moreover, as we show in Sect. 2.3, a simple bootstrap procedure can efficiently upper bound the errors \(\epsilon _A:=\Vert A-{\widehat{A}} \Vert _2\) and \(\epsilon _B:=\Vert B-{\widehat{B}} \Vert _2\) from simulation.

With our estimates \(({\widehat{A}},{\widehat{B}})\) and error bounds \((\epsilon _A,\epsilon _B)\) in hand, we can turn to the problem of synthesizing a controller. We can assert with high probability that \(A = {\widehat{A}}+ \Delta _A\) and \(B = {\widehat{B}}+ \Delta _B\), for \(\Vert \Delta _A \Vert _2 \le \epsilon _A\) and \(\Vert \Delta _B \Vert _2 \le \epsilon _B\), where the size of the error terms is determined by the number of samples N collected. In light of this, it is natural to pose the following robust variant of the standard LQR optimal control problem (3), which computes a robustly stabilizing controller that seeks to minimize the worst-case performance of the system given the (high probability) norm bounds on the perturbations \(\Delta _A\) and \(\Delta _B\):

Although classic methods exist for computing such controllers [21, 45, 53, 60], they typically require solving nonconvex optimization problems, and it is not readily obvious how to extract interpretable measures of controller performance as a function of the perturbation sizes \(\epsilon _A\) and \(\epsilon _B\). To that end, we leverage the recently developed System Level Synthesis (SLS) framework [59] to create an alternative robust synthesis procedure. Described in detail in Sect. 3, SLS lifts the system description into a higher-dimensional space that enables efficient search for controllers. At the cost of some conservatism, we are able to guarantee robust stability of the resulting closed-loop system for all admissible perturbations and bound the performance gap between the resulting controller and the optimal LQR controller. This is summarized in the following proposition.

Proposition 2

Let \(({\widehat{A}},{\widehat{B}})\) be estimated via the independent data collection scheme used in Proposition 1 and \({\varvec{{\widehat{K}}}}\) synthesized using robust SLS. Let \({\widehat{J}}\) denote the infinite time horizon LQR cost accrued by using the controller \({\varvec{{\widehat{K}}}}\) and \(J_\star \) denote the optimal LQR cost achieved when (A, B) are known. Then the relative error in the LQR cost is bounded as

with probability \(1-\delta \) provided N is sufficiently large.

The complexity term \({\mathcal {C}}_{\mathrm {LQR}}\) depends on the rollout length T, the true dynamics, the matrices (Q, R) which define the LQR cost, and the variances \(\sigma _u^2\) and \(\sigma _w^2\) of the control and noise inputs, respectively. The \(1-\delta \) probability comes from the probability of estimation error from Proposition 1. The particular form of \({\mathcal {C}}_{\mathrm {LQR}}\) and concrete requirements on N are both provided in Sect. 4.

Though the optimization problem formulated by SLS is infinite dimensional, in Sect. 5 we provide two finite-dimensional upper bounds on the optimization that inherit the stability guarantees of the SLS formulation. Moreover, we show via numerical experiments in Sect. 6 that the controllers synthesized by our optimization do indeed provide stabilizing controllers with small relative error. We further show that settings exist wherein a naïve synthesis procedure that ignores the uncertainty in the state-space parameter estimates produces a controller that performs poorly (or has unstable closed-loop behavior) relative to the controller synthesized using the SLS procedure.

1.2 Related Work

We first describe related work in the estimation of unknown dynamical systems and then turn to connections in the literature on robust control with uncertain models. We will end this review with a discussion of a few works from the reinforcement learning community that have attempted to address the LQR problem and related variants.

Estimation of Unknown Dynamical Systems

Estimation of unknown systems, especially linear dynamical systems, has a long history in the system identification subfield of control theory. While the text of Ljung [36] covers the classical asymptotic results, our interest is primarily in nonasymptotic results. Early results [10, 57] on nonasymptotic rates for parameter identification featured conservative bounds which are exponential in the system degree and other relevant quantities. More recently, Bento et al. [46] show that when the A matrix is stable and induced by a sparse graph, then one can recover the support of A from a single trajectory using \(\ell _1\)-penalized least squares. Furthermore, Hardt et al. [26] provide the first polynomial-time guarantee for identifying stable linear systems with outputs. Their guarantees, however, are in terms of predictive output performance of the model and require an assumption on the true system that is more stringent than stability. It is not clear how their statistical risk guarantee can be used in a downstream robust synthesis procedure.

Next, we turn our attention to system identification of linear systems in the frequency domain. A comprehensive text on these methods (which differ from the aforementioned state-space methods) is the work by Chen and Gu [11]. For stable systems, Helmicki et al. [29] propose to identify a finite impulse response (FIR) approximation by directly estimating the first r impulse response coefficients. This method is analyzed in a nonadversarial probabilistic setting by [23, 54], who prove that a polynomial number of samples are sufficient to recover a FIR filter which approximates the true system in both \(\ell _p\)-norm and \({\mathcal {H}}_\infty \)-norm. However, transfer function methods do not easily allow for optimal control with state variables, since they only model the input/output behavior of the system.

In parallel with the system identification community, identification of auto-regressive time-series models is a widely studied topic in the statistics literature (see, e.g., Box et al. [7] for the classical results). Goldenshluger and Zeevi [24] show that the coefficients of a stationary auto-regressive model can be estimated from a single trajectory of length polynomial in \(1/(1-\rho )\) via least squares, where \(\rho \) denotes the stability radius of the process. They also prove that their rate is minimax optimal. More recently, several authors [33, 39, 42] have studied generalization bounds for non-i.i.d. data, extending the standard learning theory guarantees for independent data. At the crux of these arguments lie various mixing assumptions [63], which limits the analysis to only hold for stable dynamical systems. Results in this line of research suggest that systems with smaller mixing time (i.e., systems that are more stable) are easier to identify (i.e., take less samples). Our result in Proposition 1, however, suggests instead that identification benefits from more easily excitable systems. While our analysis holds when we have access to full state observations, empirical testing suggests that Proposition 1 reflects reality more accurately than arguments based on mixing. In follow-up work, we have begun to reconcile this issue for stable linear systems [52].

Robust Controller Design

For end-to-end guarantees, parameter estimation is only half the picture. Our procedure provides us with a family of system models described by a nominal estimate and a set of unknown but bounded model errors. It is therefore necessary to ensure that the computed controller has stability and performance guarantees for any such admissible realization. The problem of robustly stabilizing such a family of systems is one with a rich history in the controls community. When modeling errors to the nominal system are allowed to be arbitrary norm-bounded linear time-invariant (LTI) operators in feedback with the nominal plant, traditional small-gain theorems and robust synthesis techniques can be applied to exactly solve the problem [13, 64]. However, when the errors are known to have more structure, there are more sophisticated techniques based on structured singular values and corresponding \(\mu \)-synthesis techniques [15, 19, 44, 62] or integral quadratic constraints (IQCs) [40]. While theoretically appealing and much less conservative than traditional small-gain approaches, the resulting synthesis methods are both computationally intractable (although effective heuristics do exist) and difficult to interpret analytically. In particular, we know of no results in the literature that bound the degradation in performance of controlling an uncertain system in terms of the size of the perturbations affecting it.

To circumvent this issue, we leverage a novel parameterization of robustly stabilizing controllers based on the SLS framework for controller synthesis [59]. We describe this framework in more detail in Sect. 3. Originally developed to allow for scaling optimal and robust controller synthesis techniques to large-scale systems, the SLS framework can be viewed as a generalization of the celebrated Youla parameterization [61]. We show that SLS allows us to account for model uncertainty in a transparent and analytically tractable way.

PAC Learning and Reinforcement Learning

In terms of end-to-end guarantees for LQR, our work is most comparable to that of Fiechter [22], who shows that the discounted LQR problem is PAC-learnable. Fietcher analyzes an identify-then-control scheme similar to the one we propose, but there are several key differences. First, our probabilistic bounds on identification are much sharper, by leveraging modern tools from high-dimensional statistics. Second, Fiechter implicitly assumes that the true closed-loop system with the estimated controller is not only stable but also contractive. While this very strong assumption is nearly impossible to verify in practice, contractive closed-loop assumptions are actually pervasive throughout the literature, as we describe below. To the best of our knowledge, our work is the first to properly lift this technical restriction. Third, and most importantly, Fietcher proposes to directly solve the discounted LQR problem with the identified model and does not take into account any uncertainty in the controller synthesis step. This is problematic for two reasons. First, it is easy to construct an instance of a discounted LQR problem where the optimal solution does not stabilize the true system (see, e.g., [47]). Therefore, even in the limit of infinite data, there is no guarantee that the closed-loop system will be stable. Second, even if the optimal solution does stabilize the underlying system, failing to take uncertainty into account can lead to situations where the synthesized controller does not. We will demonstrate this behavior in our experiments.

We are also particularly interested in the LQR problem as a baseline for more complicated problems in reinforcement learning (RL). LQR should be a relatively easy problem in RL because one can learn the dynamics from anywhere in the state space, vastly simplifying the problem of exploration. Hence, it is important to establish how well a pure exploration followed by exploitation strategy can fare on this simple baseline.

There are indeed some related efforts in RL and online learning. Abbasi-Yadkori and Szepesvari [1] propose to use the optimism in the face of uncertainty (OFU) principle for the LQR problem, by maintaining confidence ellipsoids on the true parameter, and using the controller which, in feedback, minimizes the cost objective the most among all systems in the confidence ellipsoid. Ignoring the computational intractability of this approach, their analysis reveals an exponential dependence in the system order in their regret bound and also makes the very strong assumption that the optimal closed-loop systems are contractive for every A, B in the confidence ellipsoid. The regret bound is improved by Ibrahimi et al. [30] to depend linearly on the state dimension under additional sparsity constraints on the dynamics.

In response to the computational intractability of the OFU principle, researchers in RL and online learning proposed the use of Thompson sampling [49] for exploration. Abeille and Lazaric [2] show that the regret of a Thompson sampling approach for LQR scales as \(\widetilde{{\mathcal {O}}}(T^{2/3})\) and improve the result to \(\widetilde{{\mathcal {O}}}(\sqrt{T})\) in [3], where \(\widetilde{{\mathcal {O}}}(\cdot )\) hides poly-logarithmic factors. However, their results are only valid for the scalar \(n=d=1\) setting. Ouyang et al. [43] show that in a Bayesian setting, the expected regret can be bounded by \(\widetilde{{\mathcal {O}}}(\sqrt{T})\). While this matches the bound of [1], the Bayesian regret is with respect to a particular Gaussian prior distribution over the true model, which differs from the frequentist setting considered in [1,2,3]. Furthermore, these works also make the same restrictive assumption that the optimal closed-loop systems are uniformly contractive over some known set.

Jiang et al. [31] propose a general exploration algorithm for contextual decision processes (CDPs) and show that CDPs with low Bellman rank are PAC-learnable; in the LQR setting, they show the Bellman rank is bounded by \(n^2\). While this result is appealing from an information-theoretic standpoint, the proposed algorithm is computationally intractable for continuous problems. Hazan et al. [27, 28] study the problem of prediction in a linear dynamical system via a novel spectral filtering algorithm. Their main result shows that one can compete in a regret setting in terms of prediction error. As mentioned previously, converting prediction error bounds into concrete bounds on sub-optimality of control performance is an open question. Fazel et al. [20] show that randomized search algorithms similar to policy gradient can learn the optimal controller with a polynomial number of samples in the noiseless case; an explicit characterization of the dependence of the sample complexity on the parameters of the true system is not given.

2 System Identification Through Least Squares

To estimate a coarse model of the unknown system dynamics, we turn to the simple and classical method of linear least squares. By running experiments in which the system starts at \(x_0=0\) and the dynamics evolve with a given input, we can record the resulting state observations. The set of inputs and outputs from each such experiment will be called a rollout. For system estimation, we excite the system with Gaussian noise for N rollouts, each of length T. The resulting dataset is \(\{({x}_t^{(\ell )}, {u}_t^{(\ell )}) ~:~ 1 \le \ell \le N, 0 \le t \le T\}\), where t indexes the time in one rollout and \(\ell \) indexes independent rollouts. Therefore, we can estimate the system dynamics by

For the Coarse-ID control setting, a good estimate of error is just as important as the estimate of the dynamics. Statistical theory and tools allow us to quantify the error of the least squares estimator. First, we present a theoretical analysis of the error in a simplified setting. Then, we describe a computational bootstrap procedure for error estimation from data alone.

2.1 Least Squares Estimation as a Random Matrix Problem

We begin by explicitly writing the form of the least squares estimator. First, fixing notation to simplify the presentation, let \(\Theta := \begin{bmatrix} A&B \end{bmatrix}^* \in {\mathbb {R}}^{(n+ p) \times n}\) and let \(z_t := \begin{bmatrix} x_t \\ u_t \end{bmatrix} \in {\mathbb {R}}^{n+ p}\). Then, the system dynamics can be rewritten, for all \(t \ge 0\),

Then in a single rollout, we will collect

The system dynamics give the identity \( X = Z \Theta + W \). Resetting state of the system to \(x_0=0\) each time, we can perform N rollouts and collect N datasets like (10). Having the ability to reset the system to a state independent of past observations will be important for the analysis in the following section, and it is also practically important for potentially unstable systems. Denote the data for each rollout as \((X^{(\ell )}, Z^{(\ell )}, W^{(\ell )})\). With slight abuse of notation, let \(X_N\) be composed of vertically stacked \(X^{(\ell )}\), and similarly for \(Z_N\) and \(W_N\). Then, we have

The full data least squares estimator for \(\Theta \) is (assuming for now invertibility of \(Z_N^*Z_N\)),

Then the estimation error is given by

The magnitude of this error is the quantity of interest in determining confidence sets around estimates \(({{\widehat{A}}}, {{\widehat{B}}})\). However, since \(W_N\) and \(Z_N\) are not independent, this estimator is difficult to analyze using standard methods. While this type of analysis is an open problem of interest, in this paper we turn instead to a simplified estimator.

2.2 Theoretical Bounds on Least Squares Error

In this section, we work out the statistical rate for the least squares estimator which uses just the last sample of each trajectory \(({x}_T^{(\ell )}, {x}_{T-1}^{(\ell )}, {u}_{T-1}^{(\ell )})\). This estimation procedure is made precise in Algorithm 1. Our analysis ideas are analogous to those used to prove statistical rates for standard linear regression, and they leverage recent tools in nonasymptotic analysis of random matrices. The result is presented above in Proposition 1.

In the context of Proposition 1, a single data point from each T-step rollout is used. We emphasize that this strategy results in independent data, which can be seen by defining the estimator matrix directly. The previous estimator (11) is amended as follows: the matrices defined in (10) instead include only the final time step of each trial, \(X_N = \begin{bmatrix} x_T^{(1)}&x_T^{(2)}&\ldots&x_T^{(N)} \end{bmatrix}^*\), and similar modifications are made to \(Z_N\) and \(W_N\). Estimator (11) uses these modified matrices, which now contain independent rows. To see this, recall the definition of \(G_T\) and \(F_T\) from (4),

defined for \(T \ge 2\). We can unroll the system dynamics and see that

Using Gaussian excitation, \(u_t\sim {\mathcal {N}}(0,\sigma ^2_u I_p)\) gives

Since \(F_TF_T^*\succ 0\), as long as both \(\sigma _u,\sigma _w\) are positive, this is a nondegenerate distribution.

Therefore, bounding the estimation error can be achieved via proving a result on the error in random design linear regression with vector-valued observations. First, we present a lemma which bounds the spectral norm of the product of two independent Gaussian matrices.

Lemma 1

Fix a \(\delta \in (0, 1)\) and \(N\ge 2(n+m)\log (1/\delta )\). Let \(f_k \in {\mathbb {R}}^m\), \(g_k \in {\mathbb {R}}^n\) be independent random vectors \(f_k \sim {\mathcal {N}}(0, \Sigma _f)\) and \(g_k \sim {\mathcal {N}}(0, \Sigma _g)\) for \(1 \le k \le N\). With probability at least \(1-\delta \),

We believe this bound to be standard and include a proof in the appendix for completeness. Lemma 1 shows that if X is \(n_1 \times N\) with i.i.d. \({\mathcal {N}}(0, 1)\) entries and Y is \(N \times n_2\) with i.i.d. \({\mathcal {N}}(0, 1)\) entries, and X and Y are independent, then with probability at least \(1-\delta \) we have

Next, we state a standard nonasymptotic bound on the minimum singular value of a standard Wishart matrix (see, e.g., Corollary 5.35 of [56]).

Lemma 2

Let \(X \in {\mathbb {R}}^{N \times n}\) have i.i.d. \({\mathcal {N}}(0, 1)\) entries. With probability at least \(1-\delta \),

We combine the previous lemmas into a statement on the error of random design regression.

Lemma 3

Let \(z_1, \ldots , z_N \in {\mathbb {R}}^{n}\) be i.i.d. from \({\mathcal {N}}(0, \Sigma )\) with \(\Sigma \) invertible. Let \(Z^*:= \begin{bmatrix} z_1&...&z_N \end{bmatrix}\). Let \(W \in {\mathbb {R}}^{N \times p}\) with each entry i.i.d. \({\mathcal {N}}(0, \sigma _w^2)\) and independent of Z. Let \(E := (Z^*Z)^{\dag } Z^*W\), and suppose that

For any fixed matrix Q, we have with probability at least \(1-\delta \),

Proof

First, observe that Z is equal in distribution to \(X \Sigma ^{1/2}\), where \(X \in {\mathbb {R}}^{N \times n}\) has i.i.d. \({\mathcal {N}}(0, 1)\) entries. By Lemma 2, with probability at least \(1-\delta /2\),

The last inequality uses (15) combined with the inequality \((a+b)^2 \le 2(a^2 + b^2)\). Furthermore, by Lemma 1 and (15), with probability at least \(1-\delta /2\),

Let \({\mathcal {E}}\) denote the event which is the intersection of the two previous events. By a union bound, \({\mathbb {P}}({\mathcal {E}}) \ge 1-\delta \). We continue the rest of the proof assuming the event \({\mathcal {E}}\) holds. Since \(X^*X\) is invertible,

Taking operator norms on both sides,

Combining the inequalities above,

The result now follows. \(\square \)

Using this result on random design linear regression, we are now ready to analyze the estimation errors of the identification in Algorithm 1 and provide a proof of Proposition 1.

Proof

Consider the least squares estimation error (12) with modified single-sample-per-rollout matrices. Recall that rows of the design matrix \(Z_N\) are distributed as independent normals, as in (14). Then applying Lemma 3 with \(Q_A = \begin{bmatrix} I_n&0 \end{bmatrix}\) so that \(Q_A E\) extracts only the estimate for A, we conclude that with probability at least \(1 - \delta /2\),

as long as \(N \ge 8(n+ p) + 16\log (4/\delta )\). Now applying Lemma 3 under the same condition on N with \(Q_B = \begin{bmatrix} 0&I_p\end{bmatrix}\), we have with probability at least \(1 - \delta /2\),

The result follows by application of the union bound. \(\square \)

There are several interesting points to make about the guarantees offered by Proposition 1. First, as mentioned in the introduction, there are \(n(n+ p)\) parameters to learn and our bound states that we need \(O(n+ p)\) measurements, each measurement providing \(n\) values. Hence, this appears to be an optimal dependence with respect to the parameters \(n\) and \(p\). Second, note that intuitively, if the system amplifies the control and noise inputs in all directions of the state space, as captured by the minimum eigenvalues of the control and disturbance Gramians \(G_TG_T^*\) or \(F_TF_T^*\), respectively, then the system has a larger “signal-to-noise” ratio, and the system matrix \(A\) is easier to estimate. On the other hand, this measure of the excitability of the system has no impact on learning \(B\). Unlike in Fiechter’s work [22], we do not need to assume that \(G_T G_T^*\) is invertible. As long as the process noise is not degenerate, it will excite all modes of the system.

Finally, we note that Proposition 1 offers a data-independent guarantee for the estimation of the parameters (A, B). We can also provide data-dependent guarantees, which will be less conservative in practice. The next result shows how we can use the observed states and inputs to obtain more refined confidence sets than the ones offered by Proposition 1. The proof is deferred to Appendix B.

Proposition 3

Assume we have N independent samples \((y^{(\ell )}, x^{(\ell )}, u^{(\ell )})\) such that

where \(w^{(\ell )}\) are i.i.d. \({\mathcal {N}}(0,\sigma _w^2 I_n)\) and are independent from \(x^{(\ell )}\) and \(u^{(\ell )}\). Also, let us assume that \(N \ge n+ p\). Then, with probability \(1 - \delta \), we have

where \(C(n, p, \delta ) = \sigma _w^2 (\sqrt{n+ p} + \sqrt{n} + \sqrt{2 \log (1/\delta )})^{2}\). If the matrix on the right-hand side has zero as an eigenvalue, we define the inverse of that eigenvalue to be infinity.

Proposition 3 is a general result that does not require the inputs \(u^{(\ell )}\) to be normally distributed and it allows the states \(x^{(\ell )}\) to be arbitrary as long as all the samples \((y^{(\ell )}, x^{(\ell )}, u^{(\ell )})\) are independent and the process noise \(w^{(\ell )}\) is normally distributed. Nonetheless, both Propositions 1 and 3 require estimating (A, B) from independent samples. In practice, one would collect rollouts from the system, which consist of many dependent measurements. In that case, using all the data is preferable. Since the guarantees offered in this section do not apply in that case, in the next section we study a different procedure for estimating the size of the estimation error.

2.3 Estimating Model Uncertainty with the Bootstrap

In the previous sections, we offered theoretical guarantees on the performance of the least squares estimation of \(A\) and \(B\) from independent samples. However, there are two important limitations to using such guarantees in practice to offer upper bounds on \(\epsilon _A = ||A- {\widehat{A}} ||_{2}\) and \(\epsilon _B = ||B- {\widehat{B}} ||_{2}\). First, using only one sample per system rollout is empirically less efficient than using all available data for estimation. Second, even optimal statistical analyses often do not recover constant factors that match practice. For purposes of robust control, it is important to obtain upper bounds on \(\epsilon _A\) and \(\epsilon _B\) that are not too conservative. Thus, we aim to find \({\widehat{\epsilon }}_A\) and \({\widehat{\epsilon }}_B\) such that \(\epsilon _A \le {\widehat{\epsilon }}_A\) and \(\epsilon _B \le {\widehat{\epsilon }}_B\) with high probability.

We propose a vanilla bootstrap method for estimating \({\widehat{\epsilon }}_A\) and \({\widehat{\epsilon }}_B\). Bootstrap methods have had a profound impact in both theoretical and applied statistics since their introduction [18]. These methods are used to estimate statistical quantities (e.g., confidence intervals) by sampling synthetic data from an empirical distribution determined by the available data. For the problem at hand, we propose the procedure described in Algorithm 2.Footnote 1

For \({\widehat{\epsilon }}_A\) and \({\widehat{\epsilon }}_B\) estimated by Algorithm 2, we intuitively have

There are many known guarantees for the bootstrap, particularly for the parametric version we use. We do not discuss these results here; for more details, see texts by Van Der Vaart and Wellner [55], Shao and Tu [50], and Hall [25]. Instead, in Appendix F we show empirically the performance of the bootstrap for our estimation problem. For mission critical systems, where empirical validation is insufficient, the statistical error bounds presented in Sect. 2.2 give guarantees on the size of \(\epsilon _A\), \(\epsilon _B\). In general, data-dependent error guarantees will be less conservative. In follow-up work, we offer guarantees similar to the ones presented in Sect. 2.2 for estimation of linear dynamics from dependent data [52].

3 Robust Synthesis

With estimates of the system \(({\widehat{A}},{\widehat{B}})\) and operator norm error bounds \((\epsilon _A,\epsilon _B)\) in hand, we now turn to control design. In this section, we introduce some useful tools from System Level Synthesis (SLS), a recently developed approach to control design that relies on a particular parameterization of signals in a control system [38, 59]. We review the main SLS framework, highlighting the key constructions that we will use to solve the robust LQR problem. As we show in this and the following section, using the SLS framework, as opposed to traditional techniques from robust control, allows us to (a) compute robust controllers using semidefinite programming and (b) provide sub-optimality guarantees in terms of the size of the uncertainties on our system estimates.

3.1 Useful Results from System Level Synthesis

The SLS framework focuses on the system responses of a closed-loop system. As a motivating example, consider linear dynamics under a fixed static state-feedback control policy K, i.e., let \(u_k = Kx_k\). Then, the closed-loop map from the disturbance process \(\{w_0, w_1, \dots \}\) to the state \(x_k\) and control input \(u_k\) at time k is given by

Letting \(\Phi _x(k) := (A+ BK)^{k-1}\) and \(\Phi _u(k) := K(A+ BK)^{k-1}\), we can rewrite Eq. (18) as

where \(\{\Phi _x(k),\Phi _u(k)\}\) are called the closed-loop system response elements induced by the static controller K.

Note that even when the control is a linear function of the state and its past history (i.e., a linear dynamic controller), expression (19) is valid. Though we conventionally think of the control policy as a function mapping states to input, whenever such a mapping is linear, both the control input and the state can be written as linear functions of the disturbance signal \(w_t\). With such an identification, the dynamics require that the \(\{\Phi _x(k),\Phi _u(k)\}\) must obey the constraints

As we describe in more detail below in Theorem 1, these constraints are in fact both necessary and sufficient. Working with closed-loop system responses allows us to cast optimal control problems as optimization problems over elements \(\{\Phi _x(k),\Phi _u(k)\}\), constrained to satisfy the affine Eq. (20). Comparing Eqs. (18) and (19), we see that the former is nonconvex in the controller K, whereas the latter is affine in the elements \(\{\Phi _x(k),\Phi _u(k)\}\).

As we work with infinite horizon problems, it is notationally more convenient to work with transfer function representations of the above objects, which can be obtained by taking a z-transform of their time-domain representations. The frequency-domain variable z can be informally thought of as the time-shift operator, i.e., \(z\{x_k,x_{k+1},\dots \} = \{x_{k+1},x_{k+2},\dots \}\), allowing for a compact representation of LTI dynamics. We use boldface letters to denote such transfer functions signals in the frequency domain, e.g., \({\varvec{\Phi }}_x(z) = \sum _{k = 1}^\infty \Phi _x(k) z^{-k}\). Then, constraints (20) can be rewritten as

and the corresponding (not necessarily static) control law \(\mathbf {u} = \mathbf {K} \mathbf {x}\) is given by \(\mathbf {K} = {\varvec{\Phi }}_u {\varvec{\Phi }}^{-1}_x\). The relevant frequency-domain connections for LQR are illustrated in Appendix C.

We formalize our discussion by introducing notation that is common in the controls literature. For a thorough introduction to the functional analysis commonly used in control theory, see Chapters 2 and 3 of [64]. Let \({\mathbb {T}}\) (resp. \({\mathbb {D}}\)) denote the unit circle (resp. open unit disk) in the complex plane. The restriction of the Hardy spaces \({\mathcal {H}}_\infty ({\mathbb {T}})\) and \({\mathcal {H}}_2({\mathbb {T}})\) to matrix-valued real-rational functions that are analytic on the complement of \({\mathbb {D}}\) will be referred to as \({{\mathcal {R}}}{{\mathcal {H}}}_\infty \) and \({{\mathcal {R}}}{{\mathcal {H}}}_2\), respectively. In controls parlance, this corresponds to (discrete-time) stable matrix-valued transfer functions. For these two function spaces, the \({\mathcal {H}}_\infty \) and \({\mathcal {H}}_2\) norms simplify to

Finally, the notation \(\frac{1}{z} {{\mathcal {R}}}{{\mathcal {H}}}_\infty \) refers to the set of transfer functions \(\mathbf {G}\) such that \(z \mathbf {G} \in {{\mathcal {R}}}{{\mathcal {H}}}_\infty \). Equivalently, \(\mathbf {G} \in \frac{1}{z} {{\mathcal {R}}}{{\mathcal {H}}}_\infty \) if \(\mathbf {G} \in {{\mathcal {R}}}{{\mathcal {H}}}_\infty \) and \(\mathbf {G}\) is strictly proper.

The most important transfer function for the LQR problem is the map from the state sequence to the control actions: the control policy. Consider an arbitrary transfer function \(\mathbf {K}\) denoting the map from state to control action, \(\mathbf {u} = \mathbf {K} \mathbf {x}\). Then the closed-loop transfer matrices from the process noise \(\mathbf {w}\) to the state \(\mathbf {x}\) and control action \(\mathbf {u}\) satisfy

We then have the following theorem parameterizing the set of stable closed-loop transfer matrices, as described in Eq. (22), that are achievable by a given stabilizing controller \(\mathbf {K}\).

Theorem 1

(State-Feedback Parameterization [59]) The following are true:

-

The affine subspace defined by

$$\begin{aligned} \begin{bmatrix} zI - A&- B \end{bmatrix} \begin{bmatrix} {\varvec{\Phi }}_x \\ {\varvec{\Phi }}_u \end{bmatrix} = I, \ {\varvec{\Phi }}_x, {\varvec{\Phi }}_u \in \frac{1}{z}{{\mathcal {R}}}{{\mathcal {H}}}_\infty \end{aligned}$$(23)parameterizes all system responses (22) from \(\mathbf {w}\) to \((\mathbf {x}, \mathbf {u})\), achievable by an internally stabilizing state-feedback controller \(\mathbf {K}\).

-

For any transfer matrices \(\{{\varvec{\Phi }}_x, {\varvec{\Phi }}_u\}\) satisfying (23), the controller \(\mathbf {K} = {\varvec{\Phi }}_u {\varvec{\Phi }}_x^{-1}\) is internally stabilizing and achieves the desired system response (22).

Note that in particular, \(\{{\varvec{\Phi }}_x, {\varvec{\Phi }}_u\}=\{ (zI - A-B\mathbf {K})^{-1}, \mathbf {K} (zI-A-B \mathbf {K})^{-1} \}\) as in (22) are elements of the affine space defined by (23) whenever \(\mathbf {K}\) is a causal stabilizing controller.

We will also make extensive use of a robust variant of Theorem 1.

Theorem 2

(Robust Stability [38]) Let \({\varvec{\Phi }}_x\) and \({\varvec{\Phi }}_u\) be two transfer matrices in \(\frac{1}{z}{{\mathcal {R}}}{{\mathcal {H}}}_\infty \) such that

Then the controller \(\mathbf {K} = {\varvec{\Phi }}_u {\varvec{\Phi }}_x^{-1}\) stabilizes the system described by (A, B) if and only if \((I+{\varvec{\Delta }})^{-1} \in {{\mathcal {R}}}{{\mathcal {H}}}_\infty \). Furthermore, the resulting system response is given by

Corollary 1

Under the assumptions of Theorem 2, if \(\Vert {\varvec{\Delta }}\Vert <1\) for any induced norm \(\Vert \cdot \Vert \), then the controller \(\mathbf {K} = {\varvec{\Phi }}_u {\varvec{\Phi }}_x^{-1}\) stabilizes the system described by (A, B).

Proof

Follows immediately from the small-gain theorem, see for example Sect. 9.2 in [64]. \(\square \)

3.2 Robust LQR Synthesis

We return to the problem setting where estimates \(({\widehat{A}}, {\widehat{B}})\) of a true system (A, B) satisfy

where \(\Delta _A := {\widehat{A}}-A\) and \(\Delta _B := {\widehat{B}}-B\) and where we wish to minimize the LQR cost for the worst instantiation of the parametric uncertainty.

Before proceeding, we must formulate the LQR problem in terms of the system responses \(\{\Phi _x(k),\Phi _u(k)\}\). It follows from Theorem 1 and the standard equivalence between infinite horizon LQR and \({\mathcal {H}}_2\) optimal control that, for a disturbance process distributed as \(w_t \overset{i.i.d.}{\sim {}} {\mathcal {N}}(0,\sigma _w^2 I)\), the standard LQR problem (3) can be equivalently written as

We provide a full derivation of this equivalence in Appendix C. Going forward, we drop the \(\sigma _w^2\) multiplier in the objective function as it affects neither the optimal controller nor the sub-optimality guarantees that we compute in Sect. 4.

We begin with a simple sufficient condition under which any controller \(\mathbf {K}\) that stabilizes \(({\widehat{A}},{\widehat{B}})\) also stabilizes the true system (A, B). To state the lemma, we introduce one additional piece of notation. For a matrix M, we let \({\mathfrak {R}}_{M}\) denote the resolvent

We now can state our robustness lemma.

Lemma 4

Let the controller \(\mathbf {K}\) stabilize \(({\widehat{A}}, {\widehat{B}})\) and \(({\varvec{\Phi }}_x,{\varvec{\Phi }}_u)\) be its corresponding system response (22) on system \(({\widehat{A}},{\widehat{B}})\). Then if \(\mathbf {K}\) stabilizes (A, B), it achieves the following LQR cost

Furthermore, letting

a sufficient condition for \(\mathbf {K}\) to stabilize (A, B) is that \(\Vert {\varvec{\hat{\Delta }}} \Vert _{{\mathcal {H}}_\infty } <1\).

Proof

Follows immediately from Theorems 1, 2 and Corollary 1 by noting that for system responses \(({\varvec{\Phi }}_x, {\varvec{\Phi }}_u)\) satisfying

it holds that

for \(\hat{{\varvec{\Delta }}}\) as defined in Eq. (29). \(\square \)

We can therefore recast the robust LQR problem (7) in the following equivalent form

The resulting robust control problem is one subject to real-parametric uncertainty, a class of problems known to be computationally intractable [8]. Although effective computational heuristics (e.g., DK iteration [64]) exist, the performance of the resulting controller on the true system is difficult to characterize analytically in terms of the size of the perturbations.

To circumvent this issue, we take a slightly conservative approach and find an upper bound to the cost \(J(A,B,\mathbf {K})\) that is independent of the uncertainties \(\Delta _A\) and \(\Delta _B\). First, note that if \(\Vert \hat{{\varvec{\Delta }}} \Vert _{{\mathcal {H}}_\infty } < 1\), we can write

Because \(J({\widehat{A}},{\widehat{B}},\mathbf {K})\) captures the performance of the controller \(\mathbf {K}\) on the nominal system \(({\widehat{A}},{\widehat{B}})\), it is not subject to any uncertainty. It therefore remains to compute a tractable bound for \(\Vert \hat{{\varvec{\Delta }}} \Vert _{{\mathcal {H}}_\infty }\), which we do using the following fact.

Proposition 4

For any \(\alpha \in (0,1)\) and \({\varvec{\hat{\Delta }}}\) as defined in (29)

Proof

Note that for any block matrix of the form \(\begin{bmatrix} M_1&M_2 \end{bmatrix}\), we have

To verify this assertion, note that

With (33) in hand, we have

completing the proof. \(\square \)

The following corollary is then immediate.

Corollary 2

Let the controller \(\mathbf {K}\) and resulting system response \(({\varvec{\Phi }}_x, {\varvec{\Phi }}_u)\) be as defined in Lemma 4. Then if \(H_\alpha ({\varvec{\Phi }}_x, {\varvec{\Phi }}_u) < 1\), the controller \(\mathbf {K} = {\varvec{\Phi }}_u {\varvec{\Phi }}_x^{-1}\) stabilizes the true system (A, B).

Applying Proposition 4 in conjunction with bound (31), we arrive at the following upper bound to the cost function of the robust LQR problem (7), which is independent of the perturbations \((\Delta _A,\Delta _B)\):

The upper bound is only valid when \(H_\alpha ({\varvec{\Phi }}_x, {\varvec{\Phi }}_u) < 1\), which guarantees the stability of the closed-loop system as in Corollary 2. We remark that Corollary 2 and the bound in (34) are of interest independent of the synthesis procedure for \(\mathbf {K}\). In particular, they can be applied to the optimal LQR controller \({\widehat{K}}\) computed using the nominal system \(({\widehat{A}},{\widehat{B}})\).

As the next lemma shows, the right-hand side of Eq. (34) can be efficiently optimized by an appropriate decomposition. The proof of the lemma is immediate.

Lemma 5

For functions \(f:{\mathcal {X}}\rightarrow {\mathbb {R}}\) and \(g:{\mathcal {X}}\rightarrow {\mathbb {R}}\) and constraint set \(C\subseteq {\mathcal {X}}\), consider

Assuming that \(f(x) \ge 0\) and \(0 \le g(x) < 1\) for all \(x\in C\), this optimization problem can be reformulated as an outer single-variable problem and an inner-constrained optimization problem (the objective value of an optimization over the emptyset is defined to be infinity):

Then combining Lemma 5 with the upper bound in (34) results in the following optimization problem:

We note that this optimization objective is jointly quasi-convex in \((\gamma , {\varvec{\Phi }}_x, {\varvec{\Phi }}_u)\). Hence, as a function of \(\gamma \) alone the objective is quasi-convex and furthermore is smooth in the feasible domain. Therefore, the outer optimization with respect to \(\gamma \) can effectively be solved with methods like golden section search. We remark that the inner optimization is a convex problem, though an infinite-dimensional one. We show in Sect. 5 that a simple finite impulse response truncation yields a finite-dimensional problem with similar guarantees of robustness and performance.

We further remark that because \(\gamma \in [0,1)\), any feasible solution \(({\varvec{\Phi }}_x, {\varvec{\Phi }}_u)\) to optimization problem (35) generates a controller \(\mathbf {K} = {\varvec{\Phi }}_u {\varvec{\Phi }}_x^{-1}\) satisfying the conditions of Corollary 2 and hence stabilizes the true system (A, B). Therefore, even if the solution is approximated, as long as it is feasible, it will be stabilizing. As we show in the next section, for sufficiently small estimation error bounds \(\epsilon _A\) and \(\epsilon _B\), we can further bound the sub-optimality of the performance achieved by our robustly stabilizing controller relative to that achieved by the optimal LQR controller \(K_\star \).

4 Sub-optimality Guarantees

We now return to analyzing the Coarse-ID control problem. We upper bound the performance of the controller synthesized using optimization (35) in terms of the size of the perturbations \((\Delta _A\), \(\Delta _B)\) and a measure of complexity of the LQR problem defined by A, B, Q, and R. The following result is one of our main contributions.

Theorem 3

Let \(J_\star \) denote the minimal LQR cost achievable by any controller for the dynamical system with transition matrices (A, B), and let \(K_\star \) denote the optimal controller. Let \(({\widehat{A}},{\widehat{B}})\) be estimates of the transition matrices such that \(\Vert \Delta _A \Vert _2 \le \epsilon _A\), \(\Vert \Delta _B \Vert _2 \le \epsilon _B\). Then, if \(\mathbf {K}\) is synthesized via (35) with \(\alpha = 1/2\), the relative error in the LQR cost is

as long as \((\epsilon _A + \epsilon _B\Vert K_\star \Vert _2)\Vert {\mathfrak {R}}_{A+BK_\star }\Vert _{{\mathcal {H}}_\infty }\le 1/5\).

This result offers a guarantee on the performance of the SLS synthesized controller regardless of the estimation procedure used to estimate the transition matrices. Together with our result (Proposition 1) on system identification from independent data, Theorem 3 yields a sample complexity upper bound on the performance of the robust SLS controller \(\mathbf {K}\) when (A, B) are not known. We make this guarantee precise in Corollary 3. The rest of the section is dedicated to proving Theorem 3.

Recall that \(K_\star \) is the optimal LQR static state-feedback matrix for the true dynamics (A, B), and let \( {\varvec{\Delta }}:= -\left[ \Delta _A + \Delta _BK_\star \right] {\mathfrak {R}}_{A+BK_\star }\). We begin with a technical result.

Lemma 6

Define \(\zeta := (\epsilon _A + \epsilon _B\Vert K_\star \Vert _2)\Vert {\mathfrak {R}}_{A + BK_\star } \Vert _{{\mathcal {H}}_\infty }\), and suppose that \(\zeta < (1 +\sqrt{2})^{-1}\). Then \((\gamma _0, \tilde{{\varvec{\Phi }}}_x, \tilde{{\varvec{\Phi }}}_u)\) is a feasible solution of (35) with \(\alpha = 1/2\), where

Proof

By construction \(\tilde{{\varvec{\Phi }}}_x, \tilde{ {\varvec{\Phi }}}_u \in \frac{1}{z}{{\mathcal {R}}}{{\mathcal {H}}}_\infty \). Therefore, we are left to check three conditions:

The first two conditions follow by simple algebraic computations. Before we check the last condition, note that \(\Vert {{\varvec{\Delta }}} \Vert _{{\mathcal {H}}_\infty } \le (\epsilon _A + \epsilon _B \Vert K_\star \Vert _2)\Vert {\mathfrak {R}}_{A+BK_\star } \Vert _{{\mathcal {H}}_\infty } = \zeta < 1\). Now observe that,

\(\square \)

Proof of Theorem 3

Let \((\gamma _\star , {{\varvec{\Phi }}}_x^\star , {{\varvec{\Phi }}}_u^\star )\) be an optimal solution to problem (35) and let \(\mathbf {K} = {\varvec{\Phi }}_u^\star ({{\varvec{\Phi }}}_x^\star )^{-1}\). We can then write

where the first inequality follows from bound (31), and the second follows from the fact that \(\Vert \hat{{\varvec{\Delta }}} \Vert _{{\mathcal {H}}_\infty } \le \gamma _\star \) due to Proposition 4 and the constraint in optimization problem (35).

From Lemma 6, we know that \((\gamma _0, \tilde{{\varvec{\Phi }}}_x, \tilde{{\varvec{\Phi }}}_u)\) defined in Eq. (37) is also a feasible solution. Therefore, because \(K_\star = \tilde{{\varvec{\Phi }}}_u \tilde{{\varvec{\Phi }}}_x^{-1} \), we have by optimality,

where the second inequality follows by the argument used to derive (31) with the true and estimated transition matrices switched. Recall that \(\Vert {\varvec{\Delta }} \Vert _{{\mathcal {H}}_\infty } \le \zeta \) and that \(\gamma _0 = \sqrt{2}\zeta /(1 + \zeta )\). Therefore,

where the last inequality follows because \(\zeta< 1/5 < 1/(2 + 2\sqrt{2})\). The conclusion follows. \(\square \)

With this sub-optimality result in hand, we are now ready to give an end-to-end performance guarantee for our procedure when the independent data estimation scheme is used.

Corollary 3

Let \(\lambda _G = \lambda _{\min }(\sigma _u^2 G_TG_T^*+ \sigma _w^2 F_TF_T^*)\), where \(F_T, G_T\) are defined in (4). Suppose the independent data estimation procedure described in Algorithm 1 is used to produce estimates \(({\widehat{A}},{\widehat{B}})\) and \(\mathbf {K}\) is synthesized via (35) with \(\alpha = 1/2\). Then, there are universal constants \(C_0\) and \(C_1\) such that the relative error in the LQR cost satisfies

with probability \(1 - \delta \) if \(N \ge C_1(n+ p)\sigma _w^2 \Vert {\mathfrak {R}}_{A + BK_\star } \Vert _{{\mathcal {H}}_\infty }^2(1 / \lambda _G + \Vert K_\star \Vert _2^2/\sigma _u^2) \log (1/\delta )\).

Proof

Recall from Proposition 1 that for the independent data estimation scheme, we have

with probability \(1 - \delta \), as long as \(N \ge 8 (n+ p) + 16 \log (4/\delta )\).

To apply Theorem 3, we need \((\epsilon _A + \epsilon _B\Vert K_\star \Vert _2)\Vert {\mathfrak {R}}_{A + BK_\star } \Vert _{{\mathcal {H}}_\infty } < 1/5\), which will hold as long as \(N \ge {\mathcal {O}}\left\{ (n+ p)\sigma _w^2 \Vert {\mathfrak {R}}_{A + BK_\star } \Vert _{{\mathcal {H}}_\infty }^2(1 / \lambda _G + \Vert K_\star \Vert _2^2/\sigma _u^2) \log (1/\delta )\right\} \). A direct plug in of (40) in (36) yields the conclusion. \(\square \)

This result fully specifies the complexity term \({\mathcal {C}}_{\mathrm {LQR}}\) promised in the introduction:

Note that \({\mathcal {C}}_{\mathrm {LQR}}\) decreases as the minimum eigenvalue of the sum of the input and noise controllability Gramians increases. This minimum eigenvalue tends to be larger for systems that amplify inputs in all directions of the state space. \({\mathcal {C}}_{\mathrm {LQR}}\) increases as function of the operator norm of the gain matrix \(K_\star \) and the \({\mathcal {H}}_\infty \) norm of the transfer function from disturbance to state of the closed-loop system. These two terms tend to be larger for systems that are “harder to control.” The dependence on Q and R is implicit in this definition since the optimal control matrix \(K_\star \) is defined in terms of these two matrices. Note that when R is large in comparison with Q, the norm of the controller \(K_\star \) tends to be smaller because large inputs are more costly. However, such a change in the size of the controller could cause an increase in the \({\mathcal {H}}_\infty \) norm of the closed-loop system. Thus, our upper bound suggests an odd balance. Stable and highly damped systems are easy to control but hard to estimate, whereas unstable systems are easy to estimate but hard to control. Our theorem suggests that achieving a small relative LQR cost requires for the system to be somewhere in the middle of these two extremes.

Finally, we remark that the above analysis holds more generally when we apply additional constraints to the controller in the synthesis problem (35). In this case, the sub-optimality bounds presented in Theorem 3 and Corrollary 3 are true with respect to the minimal cost achievable by the constrained controller with access to the true dynamics. In particular, the bounds hold unchanged if the search is restricted to static controllers, i.e., \(u_t = K x_t\). This is true because the optimal controller is static and therefore feasible for the constrained synthesis problem.

5 Computation

As posed, the main optimization problem (35) is a semi-infinite program, and we are not aware of a way to solve this problem efficiently. In this section, we describe two alternative formulations that provide upper bounds to the optimal value and that can be solved in polynomial time.

5.1 Finite Impulse Response Approximation

An elementary approach to reducing the aforementioned semi-infinite program to a finite-dimensional one is to only optimize over the first L elements of the transfer functions \({\varvec{\Phi }}_x\) and \({\varvec{\Phi }}_u\), effectively taking a finite impulse response (FIR) approximation. Since these are both stable maps, we expect the effects of such an approximation to be negligible as long as the optimization horizon L is chosen to be sufficiently large—in what follows, we show that this is indeed the case.

By restricting our optimization to FIR approximations of \({\varvec{\Phi }}_x\) and \({\varvec{\Phi }}_u\), we can cast the \({\mathcal {H}}_2\) cost as a second-order cone constraint. The only difficulty arises in posing the \({\mathcal {H}}_\infty \) constraint as a semidefinite program. Though there are several ways to cast \({\mathcal {H}}_\infty \) constraints as linear matrix inequalities, we use the formulation in Theorem 5.8 of Dumitrescu’s text to take advantage of the FIR structure in our problem [17]. We note that using Dumitrescu’s formulation, the resulting problem is affine in \(\alpha \) when \(\gamma \) is fixed, and hence, we can solve for the optimal value of \(\alpha \). Then the resulting system response elements can be cast as a dynamic feedback controller using Theorem 2 of [4].

5.1.1 Sub-optimality Guarantees

In this subsection, we show that optimizing over FIR approximations incurs only a small degradation in performance relative to the solution to the infinite horizon problem. In particular, this degradation in performance decays exponentially in the FIR horizon L, where the rate of decay is specified by the decay rate of the spectral elements of the optimal closed-loop system response \({\mathfrak {R}}_{A+ BK_\star }\).

Before proceeding, we introduce additional concepts and notation needed to formalize guarantees in the FIR setting. A linear-time-invariant transfer function is stable if and only if it is exponentially stable, i.e., \({\varvec{\Phi }}= \sum _{t=0}^\infty z^{-t}\Phi (t) \in {{\mathcal {R}}}{{\mathcal {H}}}_\infty \) if and only if there exists positive values C and \(\rho \in [0,1)\) such that for every spectral element \(\Phi (t)\), \(t\ge 0\), it holds that

In what follows, we pick \(C_\star \) and \(\rho _\star \) to be any such constants satisfying \(||{\mathfrak {R}}_{A+ BK_\star }(t) ||_{2} \le C_\star \rho _\star ^t\) for all \(t\ge 0\).

We introduce a version of the optimization problem (30) with a finite number of decision variables:

In this optimization problem, we search over finite response transfer functions \({\varvec{\Phi }}_x\) and \({\varvec{\Phi }}_u\). Given a feasible solution \({\varvec{\Phi }}_x \), \( {\varvec{\Phi }}_u\) of problem (42), we can implement the controller \(\mathbf {K}_L = {\varvec{\Phi }}_u {\varvec{\Phi }}_x^{-1}\) with an equivalent state-space representation \((A_K, B_K, C_K, D_K)\) using the response elements \(\{ \Phi _x(k) \}_{k=1}^{L}\) and \(\{ \Phi _u(k) \}_{k=1}^{L}\) via Theorem 2 of [4].

The slack term V accounts for the error introduced by truncating the infinite response transfer functions of problem (30). Intuitively, if the truncated tail is sufficiently small, then the effects of this approximation should be negligible on performance. The next result formalizes this intuition.

Theorem 4

Set \(\alpha = 1/2\) in (42) and choose two real values \(C_\star > 0\) and \(\rho _\star \in [0,1)\) such that \(||{\mathfrak {R}}_{(A+ BK_\star )}(t) ||_{2} \le C_\star \rho _\star ^t\) for all \(t \ge 0\). Then, if \(\mathbf {K}_L\) is synthesized via (42), the relative error in the LQR cost is

as long as

The proof of this result, deferred to Appendix D, is conceptually the same as that of the infinite horizon setting. The main difference is that care must be taken to ensure that the approximation horizon L is sufficiently large so as to ensure stability and performance of the resulting controller. From the theorem statement, we see that for such an appropriately chosen FIR approximation horizon L, our performance bound is the same, up to universal constants, to that achieved by the solution to the infinite horizon problem. Furthermore, the approximation horizon L only needs to grow logarithmically with respect to one over the estimation rate in order to preserve the same statistical rate as the controller produced by the infinite horizon problem. Finally, an end-to-end sample-complexity result analogous to that stated in Corollary 3 can be easily obtained by simply substituting in the sample-complexity bounds on \(\epsilon _A\) and \(\epsilon _B\) specified in Proposition 1.

5.2 Static Controller and a Common Lyapunov Approximation

As we have reiterated above, when the dynamics are known, the optimal LQR control law takes the form \(u_t = K x_t\) for properly chosen static gain matrix K. We can reparameterize the optimization problem (35) to restrict our attention to such static control policies:

Under this reparameterization, the problem is no longer convex. Here we present a simple application of the common Lyapunov relaxation that allows us to find a controller K using semidefinite programming.

Note that the equality constraints imply:

revealing that we must have

With these identifications, (43) can be reformulated as

Using standard techniques from the robust control literature, we can upper bound this problem via the semidefinite program

Note that this optimization problem is affine in \(\alpha \) when \(\gamma \) is fixed. Hence, in practice we can find the optimal value of \(\alpha \) as well. A static controller can then be extracted from this optimization problem by setting \(K=Z X^{-1}\). A full derivation of this relaxation can be found in Appendix E. Note that this compact SDP is simpler to solve than the truncated FIR approximation. As demonstrated experimentally in the following section, the cost of this simplification is that the common Lyapunov approach provides a controller with slightly higher LQR cost.

6 Numerical Experiments

We illustrate our results on estimation, controller synthesis, and LQR performance with numerical experiments of the end-to-end Coarse-ID control scheme. The least squares estimation procedure (9) is carried out on a simulated system in Python, and the bootstrapped error estimates are computed in parallel using PyWren [32].

All of the synthesis and performance experiments are run in MATLAB. We make use of the YALMIP package for prototyping convex optimization [37] and use the MOSEK solver under an academic license [5]. In particular, when using the FIR approximation described in Sect. 5.1, we find it effective to make use of YALMIP’s dualize function, which considerably reduces the computation time.

6.1 Estimation of Example System

We focus experiments on a particular example system. Consider the LQR problem instance specified by

The dynamics correspond to a marginally unstable graph Laplacian system where adjacent nodes are weakly connected, each node receives direct input, and input size is penalized relatively more than state. Dynamics described by graph Laplacians arise naturally in consensus and distributed averaging problems. For this system, we perform the full data identification procedure in (9), using inputs with variance \(\sigma _u^2=1\) and noise with variance \(\sigma _w^2=1\). The errors are estimated via the bootstrap (Algorithm 2) using \(M=2000\) trials and confidence parameter \(\delta = 0.05\).

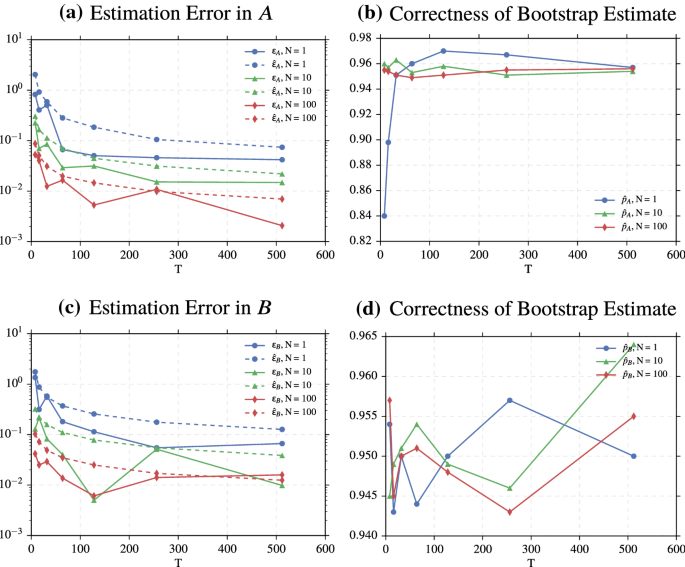

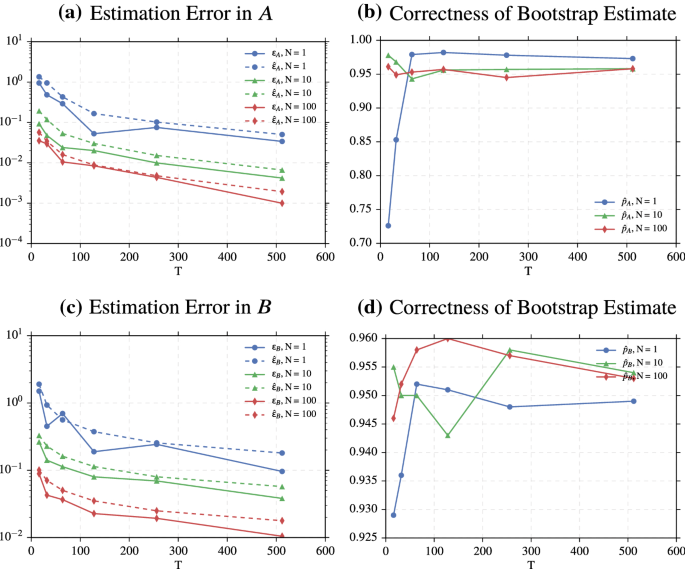

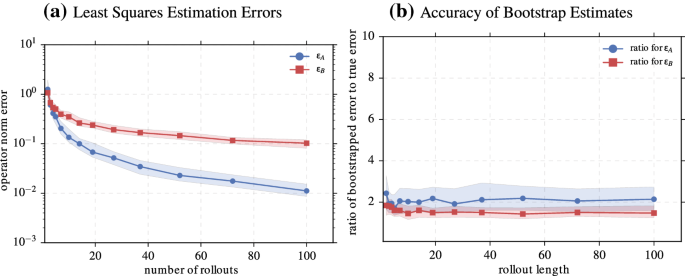

The behavior of the least squares estimates and the bootstrap error estimates is illustrated in Fig. 1. The rollout length is fixed to \(T=6\), and the number of rollouts used in the estimation is varied. As expected, increasing the number of rollouts corresponds to decreasing errors. For large enough N, the bootstrapped error estimates are of the same order of magnitude as the true errors. In Appendix G, we show plots for the setting in which the number of rollouts is fixed to \(N=6\), while the rollout length is varied.

The resulting errors from 100 repeated least squares identification experiments with rollout length \(T=6\) is plotted against the number of rollouts. In (a), the median of the least squares estimation errors decreases with N. In (b), the ratio of the bootstrap estimates to the true estimates hovers at 2. Shaded regions display quartiles

6.2 Controller Synthesis on Estimated System

Using the estimates of the system in (46), we synthesize controllers using two robust control schemes: the convex problem in 42 with filters of length \(L=32\) and V set to 0, and the common Lyapunov (CL) relaxation of the static synthesis problem (43). Once the FIR responses \(\{ \Phi _x(k) \}_{k=1}^{F}\) and \(\{ \Phi _u(k) \}_{k=1}^{F}\) are found, we need a way to implement the system responses as a controller. We represent the dynamic controller \(\mathbf {K} = {\varvec{\Phi }}_u {\varvec{\Phi }}_x^{-1}\) by finding an equivalent state-space realization \((A_K, B_K, C_K, D_K)\) via Theorem 2 of [4]. In what follows, we compare the performance of these controllers with the nominal LQR controller (the solution to (3) with \({\widehat{A}}\) and \({\widehat{B}}\) as model parameters), and explore the trade-off between robustness, complexity, and performance.

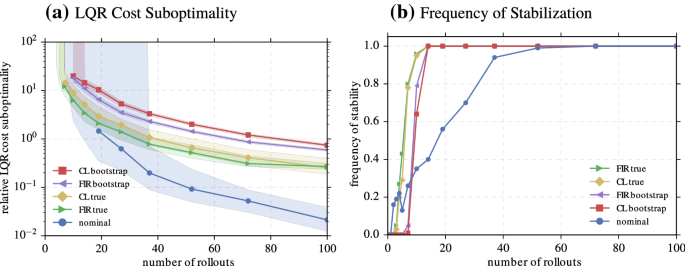

The relative performance of the nominal controller is compared with robustly synthesized controllers in Fig. 2. For both robust synthesis procedures, two controllers are compared: one using the true errors on \(A\) and \(B\), and the other using the bootstrap estimates of the errors. The robust static controller generated via the common Lyapunov approximation performs slightly worse than the more complex FIR controller, but it still achieves reasonable control performance. Moreover, the conservative bootstrap estimates also result in worse control performance, but the degradation of performance is again modest.

Furthermore, the experiments show that the nominal controller often outperforms the robust controllers when it is stabilizing. On the other hand, the nominal controller is not guaranteed to stabilize the true system, and as shown in Fig. 2, it only does so in roughly 80 of the 100 instances after \(N=60\) rollouts. It is also important to note a distinction between stabilization for nominal and robust controllers. When the nominal controller is not stabilizing, there is no indication to the user (though sufficient conditions for stability can be checked using our result in Corollary 4 or structured singular value methods [48]). On the other hand, the robust synthesis procedure will return as infeasible, alerting the user by default that the uncertainties are too high. We observe similar results when we fix the number of trials but vary the rollout length. These figures are provided in Appendix G.

Figure 3 explores the trade-off between performance and complexity for the computational approximations, both for FIR truncation and for the common Lyapunov relaxation. We examine the trade-off in terms of both the bound on the LQR cost (given by the value of the objective) and the actual achieved value. It is interesting that for smaller numbers of rollouts (and therefore larger uncertainties), the benefit of using more complex FIR models is negligible, in terms of both the actual costs and the upper bound. This trend makes sense: as uncertainties decrease to zero, the best robust controller should approach the nominal controller, which is associated with infinite impulse response (IIR) transfer functions. Furthermore, for the experiments presented here, FIR length of \(L = 32\) seems to be sufficient to characterize the performance of the robust synthesis procedure in (35). Additionally, we note that static controllers are able to achieve costs of a similar magnitude.

The SLS framework guarantees a stabilizing controller for the true system, provided that the computational approximations are feasible for any value of \(\gamma \) between 0 and 1, as long as the system errors \((\epsilon _A,\epsilon _B)\) are upper bounds on the true errors. Figure 4 displays the controller performance for robust synthesis when \(\gamma \) is set to 0.999. Simply ensuring a stable model and neglecting to optimize the nominal cost yields controllers that perform nearly an order of magnitude better than those where we search for the optimal value of \(\gamma \). This observation aligns with common practice in robust control: constraints ensuring stability are only active when the cost tries to drive the system up against a safety limit. We cannot provide end-to-end sample-complexity guarantees for this method and leave such bounds as an enticing challenge for future work.

Performance of controllers synthesized on the results of the 100 identification experiments is plotted against the number of rollouts. Controllers are synthesis nominally, using FIR truncation, and using the common Lyapunov (CL) relaxation. In (a), the median sub-optimality of nominal and robustly synthesized controllers is compared, with shaded regions displaying quartiles, which go off to infinity in the case that a stabilizing controller was not found. In (b), the frequency that the synthesis methods found stabilizing controllers

Performance of controllers synthesized with varying FIR filter lengths on the results of 10 of the identification experiments using true errors. The median sub-optimality of robustly synthesized controllers does not appear to change for FIR lengths greater than 32, and the common Lyapunov (CL) synthesis tracks the performance in both upper bound and actual cost

7 Conclusions and Future Work

Coarse-ID control provides a straightforward approach to merging nonasymptotic methods from system identification with contemporary Systems Level Synthesis approaches to robust control. Indeed, many of the principles of Coarse-ID control were well established in the 1990s [11, 12, 29], but fusing together an end-to-end result required contemporary analysis of random matrices and a new perspective on controller synthesis. These results can be extended in a variety of directions, and we close this paper with a discussion of some of the shortcomings of our approach and of several possible applications of the Coarse-ID framework to other control settings.

Other Performance Metrics Though we focused exclusively on LQR in this paper, we note that all of our results on robust synthesis and end-to-end performance analysis extend to other metrics popular in control. Indeed, any norm on the system responses \(\{\Phi _x(k),\Phi _u(k)\}\) can be solved robustly using our approach; Lemma 4 holds for any norm. In turn, we can mimic the derivation in Sect. 3 to yield a constrained optimization problem with respect to the nominal dynamics and a norm on the uncertainty \({\varvec{{\hat{\Delta }}}}\). This means that our sub-optimality bound in Corollary 3 holds true when we replace \({\mathcal {H}}_2({\mathbb {T}})\) with \({\mathcal {H}}_\infty ({\mathbb {T}})\). Furthermore, similar results can be derived for other norms, so long as care is placed on the associated sub-multiplicative properties of the norms in question. For example, in follow-up work we analyze robustness under the \({\mathcal {L}}_1\) norm in the context of constraints on the states and control signals [14].

Improving the End-to-End Analysis There are several places where our analysis could be substantially improved. The most obvious is that in our estimator for the state-transition matrices, our algorithm only uses the final time step of each rollout. This strategy is data inefficient, and empirically, accuracy only improves when including all of the data. Analyzing the full least squares estimator is nontrivial because the design matrix strongly depends on data to be estimated. This poses a challenging problem in random matrix theory that has applications in a variety of control and reinforcement learning settings. In follow-up work, we have begun to address this issue for stable linear systems [52].

In the context of SLS, we use a very coarse characterization of the plant uncertainty to bound the quantity in Lemma 4 and to yield a tractable optimization problem. Indeed, the only property we use about the error between our nominal system and the true system is that the maps

are contractions. Nowhere do we use the fact that these are linear operators, or even the fact that they are the same operator from time step to time step. Indeed, there are stronger bounds that could be engineered using the theory of integral quadratic constraints [40] that would take into account these additional properties. Such tighter bounds could yield considerably less conservative control schemes in both theory and practice.

Additionally, it would be of interest to understand the loss in performance incurred by the common Lyapunov relaxation we use in our experiments. Empirically, we see that the approximation leads to good performance, suggesting that it does not introduce much conservatism into the synthesis task. Further, our numerical experiments suggest that optimizing a nominal cost subject to robust stability constraints, as opposed to directly optimizing the SLS upper bound, leads to better empirical performance. Future work will seek to understand whether this is a phenomenological observation specific to the systems used in our experiments, or if there is a deeper principle at play that leads to tighter sub-optimality guarantees.

Lower Bounds Finding lower bounds for control problems when the model is unknown is an open question. Even for LQR, it is not at all clear how well the system (A, B) needs to be known in order to attain good control performance. While we produce reasonable worst-case upper bounds for this problem, we know of no lower bounds. Such bounds would offer a reasonable benchmark for how well one could ever expect to do with no priors on the linear system dynamics.

Integrating Coarse-ID Control in Other Control Paradigms The end-to-end Coarse-ID control framework should be applicable in a variety of settings. For example, in model predictive control (MPC), controller synthesis problems are approximately solved on finite-time horizons, one step is taken, and then this process is repeated [6]. MPC is an effective solution which substitutes fast optimization solvers for clever, complex control design. We believe it will be straightforward to extend the Coarse-ID paradigm to MPC, using a similar perturbation argument as in Sect. 3. The main challenges in MPC lie in how to guarantee that safety constraints are maintained throughout execution without too much conservatism in control costs.

Another interesting investigation lies in the area of adaptive control, where we could investigate how to incorporate new data into coarse models to further refine constraint sets and costs. Indeed, some work has already been done in this space. We propose to investigate how to operationalize and extend the notion of optimistic exploration proposed in the context of continuous control by Abbasi-Yadkori and Szepesvari [1]. The idea behind optimistic improvement is to select the model that would give the best optimization cost if the current model was true. In this way, we fail fast, either receiving a good cost or learning quickly that our model is incorrect. It would be worth investigating whether the Coarse-ID framework can make it simple to update a least squares estimate for the system parameters and then provide an efficient mechanism for choosing the next optimistic control.