Abstract

Mixtures of t-factor analyzers have been broadly used for model-based density estimation and clustering of high-dimensional data from a heterogeneous population with longer-than-normal tails or atypical observations. To reduce the number of parameters in the component covariance matrices, the mixtures of common t-factor analyzers (MCtFA) have been recently proposed by assuming a common factor loading across different components. In this paper, we present an extended version of MCtFA using distinct covariance matrices for component errors. The modified mixture model offers a more appropriate way to represent the data in a graphical fashion. Two flexible EM-type algorithms are developed for iteratively computing maximum likelihood estimates of parameters. Practical considerations for the specification of starting values, model-based clustering, classification of new subject and identification of potential outliers are also provided. We demonstrate the superiority of the proposed methodology by analyzing the Italian wine data and a simulation study.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Mixtures of factor analyzers (MFA) originally introduced by Ghahramani and Hinton (1997) have become the most popular tool for clustering and local dimensionality reduction of high-dimensional data, especially when the number of observations is not relatively large than their dimension. The MFA along with their applications have been extensively studied by Hinton et al. (1997), McLachlan and Peel (2000) and McLachlan et al. (2002, 2003), among others. To reduce the number of parameters, especially when the number of components or features is quite large, Baek et al. (2010) extended the MFA using common component-factor loadings, called mixtures of common factor analyzers (MCFA), and described an alternating expectation conditional maximization (AECM) algorithm (Meng and Dyk 1997) for conducting maximum likelihood (ML) estimation. Wang (2013) further studied an extension of the MCFA approach, which allows practitioners to handle model-based density estimation, clustering, visualization and discriminant analysis of high-dimensional data in the presence of missing values.

A number of different Bayesian strategies have been developed for inferring finite mixture models and its extensions through factor-analytic representations. Diebolt and Robert (1994) presented a Gibbs-sampling scheme to perform posterior inference on Gaussian mixture (GMIX) models. Zhang et al. (2004) advocated the use of the reversible jump Markov chain Monte Carlo (MCMC) algorithm (Green 1995; Richardson and Green 1997) for fitting GMIX models with unknown number of components. Lopes and West (2004) explored feasible MCMC methods for Bayesian model assessments in factor analysis models. Bayesian treatments on MFA have been investigated through a variational Bayes (VB) approximation (Ghahramani and Beal 2000) and a stochastic simulation procedure (Fokouè and Titterington 2003), where there is uncertainty about the dimensionality of the latent spaces, i.e., the unknown number of mixture components and common factors. Recently, Wei and Li (2013) proposed a VB algorithm for learning MCFA from a Bayesian perspective.

In the MFA and MCFA frameworks, component factors and errors are routinely assumed to be normally distributed for mathematical convenience and computational tractability. However, the normality assumption is not always realistic because of its known sensitivity to outliers. Furthermore, a poor fit for the data with longer than normal tails may subsequently yield a wrong clustering identification. To cope with such an obstacle, McLachlan et al. (2007) proposed the mixtures of t-factor analyzers (MtFA), whereby the multivariate t family (Kotz and Nadarajah 2004) with dimension p, mean vector \({\varvec{\mu }} (\nu >1)\), covariance matrix \(\nu (\nu -2)^{-1}{\varvec{\varSigma }} (\nu >2\)), and degrees of freedom (df) \(\nu \), denoted by \(t_p({\varvec{\mu }},{\varvec{\varSigma }},\nu )\), is used to be the underlying distribution for both component factors and errors. The multivariate t density is

where the df \(\nu \) may be viewed as a robustness turning parameter that is used to control the fatness of the tails of the probability distribution.

Specifically, let \({\varvec{y}}_j=(y_{j1},\ldots ,y_{jp})^{\mathrm{T}}\), \(j=1,\ldots ,n\), be np-dimensional vectors of feature variables. The MtFA approach formulates \({\varvec{y}}_j\) as:

where \({\varvec{\mu }}_i\) is a \(p\times 1\) vector of component location, \({\varvec{B}}_i\) is a \(p\times q\) matrix of component factor loadings, \({\varvec{u}}_{ij}\) is a q-dimensional vector of component factors, and \({\varvec{e}}_{ij}\) is a p-dimensional vector of component errors. Here, \(({\varvec{u}}_{ij}^{\mathrm{T}},{\varvec{e}}_{ij}^{\mathrm{T}})^\mathrm{T}\) is assumed to jointly follow a multivariate t distribution with zero mean, a block-diagonal scale-covariance matrix \(\text{ diag }\{{\varvec{I}}_q,{\varvec{D}}_i\}\), and the df \(\nu _i\), where \({\varvec{I}}_q\) is an identity matrix of size q and \({\varvec{D}}_i\) is a diagonal matrix. Consequently, the density of \({\varvec{y}}_j\) for the MtFA is

where \({\varvec{\varSigma }}_i={\varvec{B}}_i{\varvec{B}}_i^{\mathrm{T}}+{\varvec{D}}_i\). Note that MtFA will be reduced to MFA as all component dfs \(\nu _i\)’s tend to infinity. McNicholas and Murphy (2008) introduced a new class of Gaussian mixture models with several parsimonious covariance structures, called parsimonious Gaussian mixture models (PGMM). Andrews and McNicholas (2011) investigated a restricted MtFA model, which is obtained by imposing constraints on the df, the factor loadings, and the error covariance matrices. Recently, Wang and Lin (2013) proposed an ad-hoc expectation conditional maximization (ECM; Meng and Rubin 1993) algorithm on the basis of a much smaller hidden data space for fast ML estimation of MtFA. They have also done a simulation study to show that their new procedure can substantially outperform the commonly used expectation maximization (EM; Dempster et al. 1977) algorithm and the AECM algorithm used in McLachlan et al. (2007) in most situations, regardless of how the convergence speed is assessed by the computing time and/or number of iterations.

For model-based clustering of high-dimensional data, in practice, the dimension p is sometime quite large and/or the number of components (clusters) g is sometimes not small. Therefore, the number of parameters in model (1) might be unmanageable and, thus, encounters near-singular estimates or inestimable component covariance matrices. As a robust extension of MCFA, Baek and McLachlan (2011) proposed a parsimonious version of the MtFA, named as mixtures of common t-factor analyzers (MCtFA), which utilizes common factor loadings to reduce further the number of parameters in the specification of the component-covariance matrices. For the consideration of different covariance matrices for latent factors, this paper presents an extended version of MCtFA, called the EMCtFA, studies its essential properties and describes two variants of the EM algorithm, including the ECM and the expectation conditional maximization either (ECME; Liu and Rubin 1994) algorithms for ML estimation of model parameters.

As an alternative to exact ML methods, the simulated ML estimation can be implemented for the model using the Monte Carlo (MC) or importance sampling (IS) methods, known as the MCEM and ISEM algorithms. One drawback of simulated ML methods is that the model fitting procedure relies on MC estimates which can be difficult to implement due to the heavy computational burden. Another issue is that an increase in log-likelihood at each iteration is not guaranteed because of MC errors (McLachlan and Krishnan 2008). Our proposed EM-type algorithms have exactly closed-form expressions in the E-step and analytically reduced expressions in CM-steps, yielding more accurate estimates than the simulated ML methods.

In this work, we provide a guideline for choosing a set of suitable initial values. Furthermore, the probabilistic classification of new subjects and estimation of latent factors are also investigated. Under the assumption of non-normality, importantly, there is also a problem of outlier detection in mixture modeling. Outliers usually lead to overestimating the number of components to offer a good presentation of the data (Fraley and Raftery 2002). We also offer a rule for identifying which observations are suspected outliers under the EMCtFA framework.

The remainder of this paper is structured as follows. In Sect. 2, we establish the notation and formulate the EMCtFA model. Section 3 presents two EM-type algorithms for fitting EMCtFA and outlines a simple way of setting the initialization. Section 4 describes some practical tools, including model-based clustering, classification, outlier detection and model selection. In Sect. 5, the application of the proposed methodology is illustrated through analyzing the Italian wine data. In Sect. 6, we conduct a simulation study to compare the performance of our recommended initialization procedure with the existing method. We conclude the paper with a short summary in Sect. 7. The detailed derivations are sketched in “Appendix”.

2 Extended mixtures of common t-factor analyzers (EMCtFA)

Consider n independent p-dimensional feature vectors \({\varvec{y}}_1,\ldots ,{\varvec{y}}_n\), which come independently from a nonhomogeneous population with g subgroups. In the sense of dimensionality reduction, q must be smaller than p. The EMCtFA model for continuous features \({\varvec{y}}_j\) can be described as:

where \({\varvec{A}}\) is a \(p\times q\) matrix of common factor loadings, \({\varvec{e}}_{ij}\) is a p-dimensional vector of component errors, and \(\pi _i\in (0,1)\) is the mixing proportion subject to \(\sum _{i=1}^g\pi _i=1\). The joint distribution of \({\varvec{u}}_{ij}\) and \({\varvec{e}}_{ij}\) for the ith component is assumed to be

where \({\varvec{\beta }}_i\) is a q-dimensional location vector, \({\varvec{\varOmega }}_i\) is a \(q\times q\) positive-definite scale-covariance matrix, \({\varvec{D}}_i\) is a \(p\times p\) diagonal covariance matrix, and \(\nu _i\) is the df. We further assume that the joint distributions of \(({\varvec{u}}_{ij}^{\mathrm{T}},{\varvec{e}}_{ij}^{\mathrm{T}})^\mathrm{T}\) for distinct subjects are independent. When \({\varvec{D}}_i={\varvec{D}}\) for all i, the EMCtFA reduces to the original MCtFA model (Baek and McLachlan 2011). Generally, the component dfs are allowed to vary for flexibly controlling possibly different degrees of the tail thickness of component distributions. The special case of equal df, say \(\nu _i=\nu \) for all i, is usually considered for the sake of parsimony and fast convergence of the algorithm. The MCtFA includes the MCFA as a limiting/special case when all component dfs approach infinity simultaneously. It can also be shown that MCtFA is a special case of MtFA by virtue of Eqs. (17)–(21) in Baek et al. (2010).

For the MtFA in (1), \(q(q-1)/2\) uniqueness constraints are imposed for component factor loadings \({\varvec{B}}_i\) and, thus, the total number of parameters in (1) is

For the EMCtFA in (2) along with assumption (3), the common factor loadings \({\varvec{A}}\) must be unique only up to postmultiply by a nonsingular matrix such that its number of free parameters is \(pq-q^2\). As a result, the total number of parameters in (2) is

while that in the MCtFA (Baek and McLachlan 2011) is

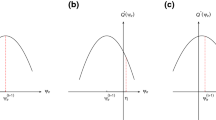

It follows straightforwardly that the difference in numbers of parameters between MtFA and EMCtFA is \(d_1-d_2=(g-1)q(p-q)+g(p-q)\), which is nonnegative when \(p\ge q\) and \(g\ge 1\). Meanwhile, the difference in numbers of parameters between the EMCtFA and MCtFA is \(d_2-d_3=(g-1)p\), which is also nonnegative when \(g\ge 1\). Therefore, we have \(d_1\ge d_2\ge d_3\) if and only if \(p\ge q\) and \(g\ge 1\). Clearly, the EMCtFA reaches a compromise between the MtFA and MCtFA approaches through the specification of distinct covariance matrices for component errors. The EMCtFA as well as MCtFA are preferable to the MtFA model if the dimension p or the number of component g is relatively large to suffer from the convergence problems. Furthermore, unlike MtFA, the estimated posterior means of factor scores of EMCtFA can be used to portray the data in low-dimensional subspaces.

Let \({\varvec{\varTheta }}=\{{\varvec{A}},{\varvec{\theta }}_1,\ldots ,{\varvec{\theta }}_g\}\) denote the entire unknown model parameters where \({\varvec{\theta }}_i=(\pi _i,{\varvec{\beta }}_i,{\varvec{\varOmega }}_i,{\varvec{D}}_i,\nu _i)\), \(i=1,\ldots ,g\), represents the parameter vector for the ith component. According to (2) and (3), the probability density function (pdf) of \({\varvec{y}}_j\) is

where \({\varvec{\varSigma }}_i={\varvec{A}}{\varvec{\varOmega }}_i{\varvec{A}}^{\mathrm{T}}+{\varvec{D}}_i\). Therefore, the ML estimates \(\hat{{\varvec{\varTheta }}}\) based on a set of independent observations \({\varvec{y}}=\{{\varvec{y}}_1,\ldots ,{\varvec{y}}_n\}\) is \(\hat{{\varvec{\varTheta }}}=\mathop {\text{ argmax }}_{{\varvec{\varTheta }}}\ell ({\varvec{\varTheta }}\mid {\varvec{y}})\), where \(\ell ({\varvec{\varTheta }}|{\varvec{y}})=\sum _{j=1}^n\log f({\varvec{y}}_j|{\varvec{\varTheta }})\) is the observed log-likelihood function. Unfortunately, there are no explicit analytical solutions for ML estimator of \({\varvec{\varTheta }}\). In this case, we resort to the EM-type algorithm (Dempster et al. 1977), which is popular iterative device for ML estimation in models incorporating new hidden variables.

In the EM framework on supporting the interpretation of missing data problem, it is convenient to introduce a set of allocation variables \({\varvec{Z}}_j=(z_{1j},\ldots ,z_{gj}), j=1,\ldots ,n\), where the component membership \(z_{ij}=1\) if \({\varvec{y}}_j\) belongs to the ith component and \(z_{ij}=0\) otherwise. This indicates that \({\varvec{Z}}_j\) independently follows a multinomial distribution with one trial and mixing properties \((\pi _1,\ldots ,\pi _g)\) subject to \(\sum _{i=1}^g\pi _i=1\), denoted as \({\varvec{Z}}_j\sim {\mathscr {M}}(1;\pi _1,\ldots ,\pi _g)\). Based on the essential property of multivariate t distribution, we also utilize the scaling variables \(\tau _j\)s following the gamma distribution with shape \(\nu _i/2\) and rate \(\nu _i/2\) conditioning on \(z_{ij}=1\). Through introducing the latent variables \({\varvec{Z}}_j\) and \(\tau _j\), for \(j=1,\ldots ,n\), three hierarchical representations of the EMCtFA are sketched in “Appendix”.

As a consequence, we establish Proposition 1, which is useful for evaluating the conditional expectations involved in the ECME algorithm described in the next section.

Proposition 1

Given the hierarchical representations (18)–(20), we have

It follows that

A simple algebra shows that

where \({\varvec{\gamma }}_i={\varvec{\varSigma }}_i^{-1}{\varvec{A}}{\varvec{\varOmega }}_i\) and \(\delta _{ij}=({\varvec{y}}_j-{\varvec{A}}{\varvec{\beta }}_i)^\mathrm{T}{\varvec{\varSigma }}_i^{-1}({\varvec{y}}_j-{\varvec{A}}{\varvec{\beta }}_i)\) denotes the Mahalanobis distance between the observation \({\varvec{y}}_j\) and the component mean \({\varvec{A}}{\varvec{\beta }}_i\). Subsequently, multiplying (4) by (5) and then integrating out \(\tau _{j}\) implies

Proof

The proof is straightforward and, hence, is omitted. \(\square \)

3 Parameter estimation

3.1 ML estimation via the ECM and ECME algorithms

The EM algorithm has several appealing features including simplicity of implementation and monotone convergence with each iteration increasing the likelihood. However, the EM algorithm is not straightforward for ML estimation of the model (2) because its M-step is computationally difficult. To go further, we exploit a variant of the EM algorithm, called the ECME (Liu and Rubin 1994) algorithm. The ECME algorithm proceeds to estimate parameters by replacing the M-steps of EM with either CM-steps that maximize a sequence of constrained Q functions, as in ECM, or CML-steps that maximize the correspondingly constrained actual likelihood function. Furthermore, it shares the appealing features of EM (Dempster et al. 1977) and ECM (Meng and Rubin 1993), and possesses typically a faster convergence rate than either EM or ECM in terms of CPU time and/or number of iterations.

For notational convenience, we denote the allocation variables by \({\varvec{Z}}=({\varvec{Z}}_1,\ldots ,{\varvec{Z}}_n)\), the scaling variables by \({\varvec{\tau }}=\{\tau _1,\ldots ,\tau _n\}\) and unobservable factors by \({\varvec{U}}=\{{\varvec{u}}_{ij};i=1,\ldots ,g,j=1,\ldots ,n\}\). Treating \(({\varvec{Z}}, {\varvec{\tau }}, {\varvec{U}})\) as the “missing” data and combining them with the observed data \({\varvec{y}}\) as the “complete” data, the complete-data log-likelihood function of \({\varvec{\varTheta }}\) based on hierarchy (20) is

where \(\phi _p(\cdot |{\varvec{\mu }},{\varvec{\varSigma }})\) stands for the pdf of the p-variate normal distribution with mean vector \({\varvec{\mu }}\) and covariance matrix \({\varvec{\varSigma }}\), and \(\mathscr {G}(\cdot |a,b)\) denotes the pdf of the gamma distribution with mean a / b and variance \(a/b^2\).

Let \(\hat{{\varvec{\varTheta }}}^{(k)}=(\hat{{\varvec{A}}}^{(k)},\hat{\pi }_i^{(k)},\hat{{\varvec{\beta }}}_i^{(k)},\hat{{\varvec{\varOmega }}}_i^{(k)},\hat{{\varvec{D}}}_i^{(k)},\hat{\nu }_i^{(k)},i=1,\ldots ,g)\) be the estimates of \({\varvec{\varTheta }}\) at the kth iteration. In the E-step of ECME, one needs to evaluate the conditional expectation of (6) at \({\varvec{\varTheta }}=\hat{{\varvec{\varTheta }}}^{(k)}\), which is the so-called Q-function:

All necessary conditional expectations in (7) can result from Eq. (21). The CM-steps, each of which maximizes the constrained Q-function or the constrained log-likelihood function over \({\varvec{\varTheta }}\) step-by-step but conditioned on some vector functions of \({\varvec{\varTheta }}\) being estimated at its previous step, proceed as follows:

-

CM-step 1 for ECM and ECME Fix \(\nu _i=\hat{\nu }_i^{(k)} (i=1,\ldots ,g)\), and update \(\hat{\pi }_i^{(k)}, \hat{{\varvec{A}}}^{(k)}\), \(\hat{{\varvec{\beta }}}_i^{(k)}, \hat{{\varvec{\varOmega }}}_i^{(k)}\), and \(\hat{{\varvec{D}}}_i^{(k)}\) by maximizing (7), which gives

$$\begin{aligned} \hat{\pi }_i^{(k+1)}= & {} \sum _{j=1}^n\hat{z}^{(k)}_{ij}/n,\nonumber \\ \hat{{\varvec{A}}}^{(k+1)}= & {} \left\{ \sum _{j=1}^n\sum _{i=1}^g\hat{z}_{ij}^{(k)} \hat{\tau }_{ij}^{(k)}{\varvec{y}}_j\Big [\hat{{\varvec{\beta }}}_i^{(k)\mathrm{T}}+\hat{{\varvec{y}}}_{ij}^{(k) \mathrm{T}}\hat{{\varvec{\gamma }}}_i^{(k)}\Big ]\right\} \nonumber \\&\times \left\{ \sum _{j=1}^n\sum _{i=1}^g\hat{z}_{ij}^{(k)} \Big [({\varvec{I}}_q-\hat{{\varvec{\gamma }}}_i^{(k)\mathrm{T}}\hat{{\varvec{A}}}^{(k)})\hat{{\varvec{\varOmega }}}_i^{(k)}+\hat{\tau }_{ij}^{(k)}\hat{{\varvec{u}}}_{ij}^{(k)}\hat{{\varvec{u}}}_{ij}^{(k)\mathrm T}\Big ]\right\} ^{-1}, \end{aligned}$$(8)$$\begin{aligned} \hat{{\varvec{\beta }}}_i^{(k+1)}= & {} \hat{{\varvec{\beta }}}_i^{(k)}+\frac{\sum _{j=1}^n\hat{z}_{ij}^{(k)}\hat{\tau }_{ij}^{(k)}\hat{{\varvec{\gamma }}}_i^{(k)\mathrm T}\hat{{\varvec{y}}}_{ij}^{(k)}}{\sum _{j=1}^n\hat{z}_{ij}^{(k)}\hat{\tau }_{ij}^{(k)}}, \end{aligned}$$(9)$$\begin{aligned} \hat{{\varvec{\varOmega }}}_i^{(k+1)}= & {} \frac{\sum _{j=1}^n\hat{z}_{ij}^{(k)}\hat{\tau }_{ij}^{(k)}\hat{{\varvec{\gamma }}}_i^{(k)\mathrm T}\hat{{\varvec{y}}}_{ij}^{(k)}\hat{{\varvec{y}}}_{ij}^{(k) \mathrm T}\hat{{\varvec{\gamma }}}_i^{(k)}}{\sum _{j=1}^n\hat{z}_{ij}^{(k)}}+({\varvec{I}}_q-\hat{{\varvec{\gamma }}}_i^{(k)\mathrm T}\hat{{\varvec{A}}}^{(k)})\hat{{\varvec{\varOmega }}}_i^{(k)},\nonumber \\ \end{aligned}$$(10)and

(11)

(11)for \(i=1,\ldots ,g\). When \({\varvec{D}}_i\)s are assumed to be the same across components, that is \({\varvec{D}}_1=\cdots ={\varvec{D}}_g={\varvec{D}}\), the updated formula for \({\varvec{D}}\) is given by

$$\begin{aligned} \hat{{\varvec{D}}}^{(k+1)}= & {} \text{ diag }\left\{ \frac{\sum _{j=1}^n \sum _{i=1}^g\hat{z}_{ij}^{(k)}\hat{{\varvec{D}}}^{(k)}- \sum _{j=1}^n\sum _{i=1}^g\hat{z}_{ij}^{(k)}\hat{{\varvec{D}}}^{(k)} \hat{{\varvec{\varSigma }}}_i^{(k)-1}\hat{{\varvec{D}}}^{(k)}}{\sum _{j=1}^n \sum _{i=1}^g\hat{z}_{ij}^{(k)}}\right. \nonumber \\&\left. +\frac{\sum _{j=1}^n\sum _{i=1}^g\hat{z}_{ij}^{(k)} \hat{\tau }_{ij}^{(k)}\hat{{\varvec{D}}}^{(k)} \hat{{\varvec{\varSigma }}}_i^{(k)-1}\hat{{\varvec{y}}}_{ij}^{(k)}\hat{{\varvec{y}}}_{ij}^{(k) \mathrm T}\hat{{\varvec{\varSigma }}}_i^{(k)-1}\hat{{\varvec{D}}}^{(k)}}{\sum _{j=1}^n\sum _{i=1}^g\hat{z}_{ij}^{(k)}}\right\} . \end{aligned}$$ -

CM-step 2 for ECM Solve the roots of the following equation, which maximizes the constrained Q-functions:

$$\begin{aligned} \log \Bigg (\frac{\nu _i}{2}\Bigg )+1-\mathscr {D_G} \Bigg (\frac{\nu _i}{2}\Bigg )+\frac{\sum ^n_{j=1}\hat{z}_{ij}^{(k)} (\hat{\kappa }_{ij}^{(k)}-\hat{\tau }_{ij}^{(k)})}{\sum ^n_{j=1} \hat{z}_{ij}^{(k)}}=0,\quad i=1,\ldots ,g.\nonumber \\ \end{aligned}$$(12)As \(\nu _1=\cdots =\nu _g=\nu \), we obtain \(\hat{\nu }^{(k+1)}\) as the solution of the following equation:

$$\begin{aligned} \log \Bigg (\frac{\nu }{2}\Bigg )+1-\mathscr {D_G} \Bigg (\frac{\nu }{2}\Bigg )+\frac{\sum ^g_{i=1}\sum ^n_{j=1} \hat{z}_{ij}^{(k)}(\hat{\kappa }_{ij}^{(k)}-\hat{\tau }_{ij}^{(k)})}{n}=0. \end{aligned}$$(13) -

CM-step 2 for ECME Alternatively, to improve the convergence, we may exploit the advantage of the ECME step. Given current estimates, calculate \(\hat{\nu }_i^{(k+1)}\) by maximizing the constrained log-likelihood function, i.e.,

$$\begin{aligned} \hat{\nu }_i^{(k+1)}=\arg \max _{\nu _i}\left\{ \sum _{j=1}^n\log \Big (\hat{\pi }_i^{(k+1)}t_p({\varvec{y}}_j|\hat{{\varvec{A}}}^{(k+1)} \hat{{\varvec{\beta }}}_i^{(k+1)},\hat{{\varvec{\varSigma }}}_i^{(k+1)},\nu _i)\Big )\right\} . \end{aligned}$$(14)Similarly, as the case of common dfs \((\nu _1=\cdots =\nu _g=\nu )\), we calculate

$$\begin{aligned} \hat{\nu }^{(k+1)}=\arg \max _{\nu }\left\{ \sum _{j=1}^n\log \Bigg (\sum _{i=1}^g\hat{\pi }_i^{(k+1)}t_p({\varvec{y}}_j| \hat{{\varvec{A}}}^{(k+1)}\hat{{\varvec{\beta }}}_i^{(k+1)}, \hat{{\varvec{\varSigma }}}_i^{(k+1)},\nu )\Bigg )\right\} .\nonumber \\ \end{aligned}$$(15)

Note that the solutions of Eqs. (12) and (13) involve a one-dimensional search for \(\nu _i\) one at a time or for the common df \(\nu \), which can be directly done by employing the uniroot routine built in the R package (R Development Core Team 2009) constrained within an appropriate [2, 200] interval. The procedures (14) and (15) can be implemented straightforwardly using the optim routine with starting value \(\hat{\nu }_i^{(k)}\) at each iteration. Given a set of suitable initial values \(\hat{{\varvec{\varTheta }}}^{(0)}\) recommended in the next subsection, the ECM or ECME algorithms are performed to obtain the ML estimates \(\hat{{\varvec{\varTheta }}}=(\hat{{\varvec{A}}},\hat{\pi }_i,\hat{{\varvec{\beta }}}_i,\hat{{\varvec{\varOmega }}}_i,\hat{{\varvec{D}}}_i,\hat{\nu }_i,i=1,\ldots ,g)\) iteratively until the user’s specified stopping rule is achieved. While carrying out quantitative analysis of experimental data, the stopping rule \(\ell (\hat{{\varvec{\varTheta }}}^{(k+1)}|{\varvec{y}})-\ell (\hat{{\varvec{\varTheta }}}^{(k)}|{\varvec{y}})<10^{-6}\) is employed.

3.2 Initialization

The EM-type algorithm, like other iteration-based methods, may suffer from computational difficulties such as slow or even non-convergence. In particular, when the data are too sparse or the dimension of latent factors is over-specified, a poor choice of initial values \(\hat{{\varvec{\varTheta }}}^{(0)}\) may lead to the convergence in the boundary of the parameter space. To alleviate such potential problems, a simple way of automatically generating a set of suitable initial values is recommended below:

-

1.

Perform a K-means clustering (Hartigan and Wong 1979) initialized with respect to a random start. Specify the zero-one component indicator \(\hat{{\varvec{Z}}}_j^{(0)}=(\hat{z}_{1j}^{(0)},\ldots ,\hat{z}_{gj}^{(0)})\) according to the K-means results. The initial values of the mixing proportions \(\pi _i\)s are taken as

$$\begin{aligned} \hat{\pi }_i^{(0)}=n^{-1}\sum _{j=1}^n\hat{z}_{ij}^{(0)},\quad i=1,\ldots ,g. \end{aligned}$$ -

2.

Let \({\varvec{y}}_{(i)}\) be the data in the ith subpopulation (group). Perform the ordinary factor analysis (Spearman 1904) for \({\varvec{y}}_{(i)}\). The initial estimate of \(\hat{{\varvec{\varOmega }}}_i^{(0)}\) is chosen as the sample variance–covariance matrix of the estimated factor scores.

-

3.

Obtain the factor loading matrix for \({\varvec{y}}_{(i)}\) via the principle components analysis (PCA; Flury 1984) method, denoted by \(\hat{{\varvec{B}}}^{(0)}_i\) for \(i=1,\ldots ,g\). Set the initial estimate of \({\varvec{A}}\) as

$$\begin{aligned} \hat{{\varvec{A}}}^{(0)}=\sum _{i=1}^g\hat{\pi }^{(0)}_i\hat{{\varvec{B}}}^{(0)}_i\hat{{\varvec{\varOmega }}}_i^{{(0)}^{-1/2}}. \end{aligned}$$ -

4.

As for initial estimate of \({\varvec{\beta }}_i\), set \(\hat{{\varvec{\beta }}}_i^{(0)}=\hat{{\varvec{A}}}^{(0)}\bar{{\varvec{y}}}_i\), where \(\bar{{\varvec{y}}}_i\) is the sample mean vector of \({\varvec{y}}_{(i)}\), \(i=1,\ldots ,g\).

-

5.

The initial estimate of \({\varvec{D}}_i\) is obtained as a diagonal matrix formed from the diagonal elements of the sample covariance matrix of \({\varvec{y}}_{(i)}\). As \({\varvec{D}}_1=\cdots ={\varvec{D}}_g={\varvec{D}}\), we set \(\hat{{\varvec{D}}}^{(0)}\) as a diagonal matrix formed from the diagonal elements of the pooled within-cluster sample covariance matrix of g partitioned groups of the data.

-

6.

With regard to the initial estimate of \(\nu _i\), we recommend setting a relatively large initial value, say \(\hat{\nu }_i^{(0)}=50\), \(\forall i\), which corresponds to an initial assumption of near-normality for the component factors and errors.

When implementing ECM and ECME for the EMCtFA, it is advantageous to use the Sherman–Morrison–Woodbury formula (Golub and Loan 1989) to avoid inverting any large \(p\times p\) matrix. That is, the inversion of the \(p\times p\) matrix \(({\varvec{A}}{\varvec{\varOmega }}_i{\varvec{A}}^\mathrm{T}+{\varvec{D}}_i)\) can be undertaken using the following result:

which involves only the inverse of \(q\times q\) matrix on the right hand side. It follows that \({\varvec{\gamma }}_i\) can be rewritten as \({\varvec{D}}_i^{-1}{\varvec{A}}({\varvec{\varOmega }}_i^{-1}+{\varvec{A}}^\mathrm{T}{\varvec{D}}_i^{-1}{\varvec{A}})^{-1}\). Moreover, to obtain the unique solution of \({\varvec{A}}\), as suggested by Baek et al. (2010), we perform the Cholesky decomposition on \(\hat{{\varvec{A}}}\) such that \(\hat{{\varvec{A}}}^{\mathrm{T}}\hat{{\varvec{A}}}={\varvec{C}}^{\mathrm{T}}{\varvec{C}}\), where \({\varvec{C}}\) is the upper triangular matrix of order q. If we replace \(\hat{{\varvec{A}}}\) by \(\hat{{\varvec{A}}}{\varvec{C}}^{-1}\), then the orthonormal estimate of \({\varvec{A}}\), which satisfies the condition of \(\hat{{\varvec{A}}}^{\mathrm{T}}\hat{{\varvec{A}}}={\varvec{I}}_q\), can be obtained. Consequently, the limiting estimates \(\hat{{\varvec{\beta }}}_i\) and \(\hat{{\varvec{\varOmega }}}_i\) are given as \({\varvec{C}}\hat{{\varvec{\beta }}}_i\) and \({\varvec{C}}\hat{{\varvec{\varOmega }}}_i{\varvec{C}}^{\mathrm{T}}\), respectively.

Notably, the EM-based procedures can get trapped in one of the many local maxima of the likelihood function, and such a phenomenon may still occur in the estimation of the EMCtFA, especially when the number of latent factors is over-specified. To circumvent such a limitation, we recommend initializing the algorithm with a variety of slightly different initial values by performing the K-means allocation of subjects with various random starts. The global optimum is obtained by choosing the one with the largest log-likelihood value.

4 Computational aspects

4.1 Clustering

The estimation of the component labels \({\varvec{Z}}_j\) and factor scores \({\varvec{u}}_j\) is meaningful for clustering each observation \({\varvec{y}}_j\) to a suitable cluster and displaying the high-dimensional data in lower-dimensional plots. Once the EMCtFA model has been fitted, a probabilistic clustering of the data into g clusters can be determined based on the maximum a posteriori (MAP) of component membership. That is, \(\hat{z}_{ij}^{(k)}\) evaluated at \({\varvec{\varTheta }}=\hat{{\varvec{\varTheta }}}\), denoted by \(\hat{z}_{ij}\), indicates the estimated posterior probability that \({\varvec{y}}_j\) belongs to the ith component. A natural assignment is achieved by assigning each observation to the component which has the highest estimated posterior probability.

From Eq. (21), we calculate the estimated conditional expectation of component factors \({\varvec{u}}_{ij}\) corresponding to \({\varvec{y}}_j\) evaluated at \({\varvec{\varTheta }}=\hat{{\varvec{\varTheta }}}\), denoted by \(\hat{{\varvec{u}}}_{ij}\). Then, it is straightforward to estimate the jth factor scores corresponding to \({\varvec{y}}_j\) as

Let \(\tilde{z}_{ij}=1\) if \(\hat{z}_{ij}\ge \hat{z}_{hj}\) for \(h\ne i\), \(i,h=1,\ldots ,g\), and \(\tilde{z}_{ij}=0\) otherwise. Alternatively, substituting \(\tilde{z}_{ij}\) for \(\hat{z}_{ij}\) in (16) leads to the other posterior estimates of factor scores. Therefore, we can display the p-dimensional observations \({\varvec{y}}_j\) in a q-dimensional subspace by plotting the corresponding values \(\hat{{\varvec{u}}}_j\). In addition, the fitted values of \({\varvec{y}}_j\) can be calculated as \(\hat{{\varvec{y}}}_j=\hat{{\varvec{A}}}\hat{{\varvec{u}}}_j\).

4.2 Classification for new subjects

It is also of interest to classify a new subject using the EMCtFA approach. For this purpose, let \({\varvec{y}}_\mathrm{new}=(y_\mathrm{new 1},\ldots ,y_{\mathrm{new}p})^{\mathrm{T}}\) be the observations for a new subject. Suppose that the model for \({\varvec{y}}_\mathrm{new}\) can be written as:

where the joint distribution of \({\varvec{u}}_{i,\mathrm{new}}\) and \({\varvec{e}}_{i,\mathrm{new}}\) satisfies the assumption (3). We now turn our attention to diagnose the allocated group of the new subject and characterize its predictive density. The work of classification of the new subject is based on a fitted (i) conditional distribution of the observed vector \({\varvec{y}}_\mathrm{new}\) given an appropriate predictor of factor scores (conditional prediction) and (ii) marginal distribution of the observed vector \({\varvec{y}}_\mathrm{new}\) (marginal prediction).

Given the model parameters, the strength of allocating \({\varvec{y}}_\mathrm{new}\) to the ith group is characterized by a predictive density \(p({\varvec{y}}_\mathrm{new}|{\varvec{A}},{\varvec{\theta }}_i)\) whose estimated expression is discussed below. The predictive density of \({\varvec{y}}_\mathrm{new}\) is

in which the predictive density belonging to component i, say \(\hat{p}({\varvec{y}}_\mathrm{new}|{\varvec{A}},{\varvec{\theta }}_i)\), can be estimated by the conditional and marginal predictions described below.

For conditional prediction, the predictive density \(p({\varvec{y}}_\mathrm{new}|{\varvec{A}},{\varvec{\theta }}_i)\) is the conditional density of \({\varvec{y}}_\mathrm{new}\) given the estimated factor scores \(\hat{{\varvec{u}}}_{i,\mathrm{new}}\). Specifically,

As in (16), a suitable estimate of component factors is the conditional mean of \({\varvec{u}}_{i,\mathrm{new}}\) which is calculated using an expression analogous to \(\hat{{\varvec{u}}}_{ij}^{(k)}\) with \({\varvec{y}}_j, {\varvec{u}}_{ij}\) and \(\hat{{\varvec{\varTheta }}}^{(k)}\) replaced by \({\varvec{y}}_\mathrm{new}, {\varvec{u}}_{i,\mathrm{new}}\) and \(\hat{{\varvec{\varTheta }}}\), respectively.

For marginal prediction, the predictive density \(p({\varvec{y}}_\mathrm{new}|{\varvec{A}},{\varvec{\theta }}_i)\) is the marginal density of \({\varvec{y}}_\mathrm{new}\), where the term ‘marginal’ reflects the fact that the component factors \({\varvec{u}}_{i,\mathrm{new}}\) are integrated out from the joint density of \(({\varvec{y}}_\mathrm{new}^{\mathrm{T}}, {\varvec{u}}_{i,\mathrm{new}}^{\mathrm{T}})^\mathrm{T}\). We, thus, have

Subsequently, the estimated allocation of the new subject to group i is according to a combination of the prior probabilities \(\pi _1,\ldots ,\pi _g\) and the estimated values of predictive densities \(\hat{p}({\varvec{y}}_\mathrm{new}|{\varvec{A}},{\varvec{\theta }}_1),\ldots ,\hat{p}({\varvec{y}}_\mathrm{new}|{\varvec{A}},{\varvec{\theta }}_g)\), given by

Within the likelihood-based approach, all model parameters are estimated by the ML estimates \(\hat{{\varvec{A}}}\) and \(\hat{{\varvec{\theta }}}_i\). Consequently, based on the MAP classification rule, the feature vector \({\varvec{y}}_\mathrm{new}\) is classified to group i if \(\hat{\mathscr {P}}_{i,\mathrm{new}}>\hat{\mathscr {P}}_{h,\mathrm{new}}\), for \(h\ne i, i,h=1,\ldots ,g\).

4.3 Outlier identification

Identification of outliers is an important issue because few outliers may produce poor clustering results. Just like the use of allocation indicator \(z_{ij}\), introducing the scaling variable \(\tau _{j}\) not only facilitates the implementation of the EM-type algorithm but also enables the interpretation of the estimated model. As can be seen from (8) to (11), \(\hat{\tau }_{ij}^{(k)}\) can be treated as the weight in the estimation of \({\varvec{A}}, {\varvec{\beta }}_i, {\varvec{\varOmega }}_i\) and \({\varvec{D}}_i\). Because the estimated value of \(\tau _j\) is negatively correlated with the estimated Mahalanobis distance \(\delta _{ij}\) between \({\varvec{y}}_j\) and \({\varvec{A}}{\varvec{\beta }}_i\), a small value of \(\hat{\tau }_{ij}\) (i.e., \(\hat{\tau }_{ij}^{(k)}\) at convergence) would downweight the influence of the corresponding subject, which can be thought of as a suspected outlier.

To explicitly identify which subject should be an outlier, we follow the idea of Lo and Gottardo (2012) to establish a convenient rule of judging a subject with the associated \(\hat{\tau }_j=\sum _{i=1}^g\tilde{z}_{ij}\hat{\tau }_{ij}\) value smaller than a critical value, where \(\tilde{z}_{ij}=1\) if \(\hat{z}_{ij}\ge \hat{z}_{hj}\) for \(h\ne i\), and \(\tilde{z}_{ij}=0\) otherwise. From a viewpoint of hypothesis testing, if we treat \(\hat{\tau }_j\) as a test statistic, then the critical value should be theoretically selected based on some standard distributions. Given \(z_{ij}=1\), \({\varvec{y}}_j\) follows a p-dimensional t-distribution with location \({\varvec{A}}{\varvec{\beta }}_i\), scale-covariance \({\varvec{\varSigma }}_i\) and df \(\nu _i\), and the Mahalanobis distance \(\delta _{ij}\) follows \(p\mathscr {F}(p,\nu _i)\), where \(\mathscr {F}(a,b)\) denotes a F distribution with dfs a and b. Thus, \(\hat{\tau }_{j}\) has a scale Beta distribution, say \((1+p/\nu _i)\mathscr {B}eta(\nu _i/2, p/2)\). Under a significance level of \(\alpha \), the critical value is determined as:

where \(\mathscr {B}_\alpha (\cdot ,\cdot )\) denotes the \(\alpha \) quantile of the Beta distribution such that \(P(B\ge \mathscr {B}_{\alpha })=1-\alpha \). Consequently, given \({\varvec{y}}_j\) belonging to the ith group, if \(\hat{\tau }_j<c\) then the corresponding subject will be treated as a suspect outlier.

4.4 Model selection

To choose the preferred models and determine the numbers of latent factors q and components g, we adopt two widely used model selection criteria. Let \(\ell _\mathrm{max}\) be the maximized log-likelihood, and m the number of free parameters in the model. The Bayesian information criterion (BIC; Schwarz 1978), defined as

is the most commonly employed approach to identifying which model gives the best approximation to the underlying density. Accordingly, models with smaller BIC scores are preferred. Under certain regularity conditions, Keribin (2000) presented a theoretical justification for the efficacy of the BIC in determining the number of components of a mixture model. Fraley and Raftery (2002) gave some empirical evidence that the BIC performs well in model-based clustering tasks.

As argued by Biernacki et al. (2000), BIC may not be an ideal way of identifying the number of clusters. Indeed, BIC favors models with more mixture components to provide a good density estimation of the data. Instead they proposed an alternative promising measure for estimating the proper number of clusters based on the integrated completed likelihood (ICL), calculated as:

where \(EN({\varvec{z}})=-\sum _{j=1}^n\sum _{i=1}^g\hat{z}_{ij}\log \hat{z}_{ij}\) is the entropy of the classification matrix with the (i, j)th entry being \(\hat{z}_{ij}\). In the same vein, the smaller the ICL value, the better the model. Typically, the ICL is preferable to BIC for EMCtFA as it leads to fewer factors since it places a higher penalty on more complex models. Nevertheless, there is no unanimity about which criterion is always the best, and a combined use of BIC and ICL could be of help in screening reasonable candidate models.

From a classification viewpoint, the accuracy of classification can be taken as an alternative measure of fitness of data in some sense. To measure the agreement between a clustering of the data and their true group labels, we employ the leave-one-out (LOO) cross validation of the MAP classifications against the true group labels to evaluate the correct classification rate (CCR; Lee et al. 2003) and the adjusted Rand index (ARI; Hubert and Arabie 1985). The LOO technique is to take one out of subjects and use the remaining subjects as the training data to update the parameters. The CCR, which ranges from zero to one, is computed as the proportion of correct clusters with respect to the true group labels. As a measure of class agreement between two data clustering, the ARI has an expected value of zero under random classification and takes the maximized value one for perfect classification.

5 Application: the Italian wine data

Forina et al. (1986) reported 28 chemical and physical properties of three types of Italian wine, including 59 Barolo, 71 Grignolino and 48 Barbera. A subset of \(p = 13\) of these variables (listed in the first column of Table 2) for \(n = 178\) wines is available as part of the gclus package (Hurley 2004) of R software. The proposed techniques are demonstrated on the analysis of these Italian wines.

For the sake of comparison, in addition to the EMCtFA model, the MFA, MtFA, MCFA, MCtFA and EMCFA (extended MCFA, which is the original MCFA with distinct variance–covariance matrices for latent factors) approaches are also fitted to the data. Prior to analyses, each variable is standardized to have zero mean and unit standard deviation. For the MtFA, EMCtFA, and MCtFA, the assumption of equal and unequal dfs is imposed on the component factors and errors. Henceforth, their counterparts in the case of equal dfs, say \(\nu _i=\nu \) for all i, are named as the ‘MtFAe’, ‘EMCtFAe’, and ‘MCtFAe’, respectively. For supervised learning of the wine data with three class labels, the nine candidate models are fitted with \(g=3\) components and q varying from 1 to 8, where the choice of maximum \(q=8\) satisfies the restriction of \((p-q)^2\ge (p+q)\), as recommended by McLachlan and Peel (2000, Chapter 8). All models are trained by the proposed ECME algorithm over five trials of different K-means initializations. The optimal solution is the one providing the largest log-likelihood value.

Table 1 reports the number of model parameters m and the values of BIC and ICL for the considered 72 scenarios in terms of the specification of models and the number of factors q. In light of BIC and ICL, the t-based models outperform their normal counterparts except for the case of \(q=1\). Furthermore, it is evident that both criteria give a consistent preference in the study, that is, the best fit to the data is EMCtFAe (\(q=4\)), followed by EMCtFA (\(q=4\)), MCtFA (\(q=4\)) and MCtFAe (\(q=4\)).

The resulting ML estimates of common factor loadings \(\hat{{\varvec{A}}}\) and component means \(\hat{{\varvec{\mu }}}_i=\hat{{\varvec{A}}}\hat{{\varvec{\beta }}}_i\) (\(i=1,2,3\)) together with the empirical sample means \(\bar{{\varvec{y}}}_i\) for the best model are presented in Table 2. Herein, the names for the cluster components are matched with the shortest Euclidean norm of the distance between the sample class means \(\bar{{\varvec{y}}}_i\) and the estimated component means \(\hat{{\varvec{\mu }}}_i\), for \(i=1,2,3\). The estimates of mixing proportions are \(\hat{\pi }_1=0.331\), \(\hat{\pi }_2=0.382\) and \(\hat{\pi }_3=0.287\), respectively, and they are very close to the proportions of the corresponding groups of wine data. Besides, the estimate of common df (\(\hat{\nu }=12.658\)) is somewhat small, signifying that the heavy-tailed behavior exhibits within the multi-dimensional Italian wine data.

In the model-based classification framework, the main objective is to estimate the group memberships for new subjects with unknown group memberships. To empirically demonstrate the performance of the proposed classification procedure described in Sect. 4.2, we calculate the CCR and ARI of the classification results based on both marginal and conditional predictions. Table 3 tabulates the classification performance of the best fitting models under each class of EMCtFA and MCtFA models, respectively, namely EMCtFAe (\(q=4\)) and MCtFA (\(q=4\)). The EMCtFAe model shows a slight improvement on the classification accuracy by virtue of having higher CCR and ARI values compared to the MCtFA.

Figure 1 shows the 3D scatter-ellipsoids plots of two triple estimated factor scores calculated using (16) for the best model, where the colors of the dots correspond to the true class labels. It is interesting to see that the three groups of wines can be visually separated by mapping the estimated factor scores to a low-dimensional space. Furthermore, it is of interest to detect outlying observations based on the identification rule described in Sect. 4.2. With a significance level of \(\alpha =0.05\), any subject with the estimated \(\hat{\tau }_j\) which is less than the critical value \(c=0.552\), calculated by (17), will be deemed as an outlier. Using such an identification rule indicates that Barolo wine 14, Grignolino wines 62, 69, 70, 74, 96, 97, 111 and 122, and Barbera wines 159 and 160 can be thought of as potential outliers. The finding is consistent with the estimate of df, reflecting that the wine data have longer-than-normal noises.

6 Simulation

In this section, we conduct a small-scale simulation study to compare the performance of the initialization method presented in Sect. 3.2 (Method 1) with the strategy described in the Appendix of Baek et al. (2010) (Method 2). The computation was carried out by R package 2.13.1 in win 64 environment of desktop PC machine with 3.40 GHz/Intel Core(TM) i7-2600 CPU Processor and 8.0 GB RAM. We generate 100 artificial data points in \(\mathscr {R}^{10+p_2}\) of size \(n=100\) and 250 from a five-component EMCtFA model with \(q=2\). The dimension for noise variables \(p_2\) is set to 0 and 20, so the numbers of total variables p are equal to 10 and 30, respectively. Specifically, the artificial data were generated from

in which the distributional assumption for \(({\varvec{u}}_{ij}^\mathrm{T},{\varvec{e}}_{ij}^{\mathrm{T}})^\mathrm{T}\) satisfies Eq. (3). The presumed model parameters are the same with those specified in Section 6 of Baek et al. (2010), except for \(\nu _i=5\) for \(i=1,\ldots ,5\). Each simulated dataset was fitted with the EMCtFA (\(g=5,~q=2\)) by implementing the ECME algorithm with parameters initialized once from each of the two methods. A total of 100 independent replications were run for each simulated case.

Table 4 lists the averages of required numbers of iterations, consumed CPU time (in seconds) until convergence, initial and maximized (converged) log-likelihood values, and the CCR and ARI values for clustering results along with the number of non-convergence cases (in parentheses) over 100 trials. Those non-convergence cases occur due mostly to the singularity of scale-covariance matrices \({\varvec{\varSigma }}_i\) during the iterations before convergence. Figure 2 displays the typical evolvements of log-likelihood values for one of the 100 replicates for each considered case. The numerical results indicate that the initialization of Method 1 leads to much faster convergence speed as it requires smaller numbers of iterations and less CPU time than Method 2. Meanwhile, Method 1 can obtain higher starting log-likelihood values (closer to the maximized log-likelihood values upon convergence of ECME) and maximized log-likelihood values as well as better classification performance in terms of CCR and ARI. This study illustrates the effectiveness of our recommended initialization procedure. The poor performance of Method 2 is largely attributable to the fact that it generates inappropriate initial values simply from the standard normal distribution for each entry of \(\hat{{\varvec{A}}}^{(0)}\).

7 Conclusion

The MtFA approach indeed provides a more flexible formulation of the component scale-covariances and the component means without restrictions. Hence, it is useful for analyzing the high-dimensional data with heavy tails or atypical observations. In this paper, we have studied a comparable approach, named as EMCtFA, using a factor-analytic representation of the multivariate t-component scale-covariance matrices with common factor loadings and distinct covariance matrices for latent factors and errors. The EMCtFA approach, which contains the MCtFA as a special case, achieves a compromised reduction in the number of parameters, particularly when the dimension p and the number of clusters g are not small. This approach is very well suited for clustering a wide variety of high-dimensional data into several clusters and provides robustness (less sensitive to outliers) in the sense of resulting number of clusters.

In this work, we have developed two computationally flexible EM-type algorithms and offered a simple way of generating suitable initial values for carrying out ML estimation of the EMCtFA model within a convenient complete data framework. The utility of the proposed approach has been demonstrated through experimental studies based on the real and simulated datasets. Numerical results have also shown that the proposed techniques perform reasonably well for the Italian wine data and outperform some common existing approaches.

To alleviate some limitations associated with the deterministic likelihood-based approach, one may resort to the VB approximation method working with maximization of a lower bound on the marginal log-likelihood (Jordan 1999; Corduneanu and Bishop 2001; Tzikas et al. 2008; Zhao and Yu 2009). The VB strategy has been shown effective to simultaneous estimate model parameters and determine the number of components for the MFA (Ghahramani and Beal 2000) and MCFA (Wei and Li 2013) models. Therefore, it is worthwhile to establish a novel VB scheme for learning the EMCtFA model under an approximated Bayesian paradigm. Besides, it is of interest to extend the EMCtFA based on a broader mixture family of component densities such as the multivariate skew t (Lin 2010; Lee and McLachlan 2014) and the canonical fundamental multivariate skew t (Lee and McLachlan 2016) distributions.

References

Andrews, J.L., McNicholas, P.D.: Extending mixtures of multivariate \(t\)-factor analyzers. Stat. Comput. 21(3), 361–373 (2011)

Baek, J., McLachlan, G.J.: Mixtures of common \(t\)-factor analyzers for clustering high-dimensional microarray data. Bioinformatics 27(9), 1269–1276 (2011)

Baek, J., McLachlan, G.J., Flack, L.K.: Mixtures of factor analyzers with common factor loadings: applications to the clustering and visualization of high-dimensional data. IEEE Trans. Pattern Anal. Mach. Intell. 32(7), 1–13 (2010)

Biernacki, C., Celeux, G., Govaert, G.: Assessing a mixture model for clustering with the integrated completed likelihood. IEEE Trans. Pattern Anal. Mach. Intell. 22, 719–725 (2000)

Corduneanu, A., Bishop, C.: Variational Bayesian model selection for mixture distributions. In: Proceedings of the AI and Statistics Conference, pp. 27–34 (2001)

Dempster, A.P., Laird, N.M., Rubin, D.B.: Maximum likelihood from incomplete data via the EM algorithm (with discussion). J. R. Stat. Soc. B 39(1), 1–38 (1977)

Diebolt, J., Robert, C.: Estimation of finite mixtures through Bayesian sampling. J. R. Stat. Soc. B 56, 363–375 (1994)

Flury, B.N.: Common principle components in \(k\) groups. J. Am. Stat. Assoc. 79(388), 892–898 (1984)

Fokouè, E., Titterington, D.M.: Mixtures of factor analyzers. Bayesian estimation and inference by stochastic simulation. Mach. Learn. 50, 73–94 (2003)

Forina, M., Armanino, C., Castino, M., Ubigli, M.: Multivariate data analysis as a discriminating method of the origin of wines. Vitis 25(3), 189–201 (1986)

Fraley, C., Raftery, A.E.: Model-based clustering, discriminant analysis and density estimation. J. Am. Stat. Assoc. 97(458), 611–631 (2002)

Ghahramani, Z., Beal, M.: Variational inference for Bayesian mixture of factor analysers. In: Solla, S., Leen, T., Muller, K.-R (eds.) Advances in Neural Information Processing Systems, vol. 12. MIT Press, Cambridge, pp. 449–455 (2000)

Ghahramani, Z., Hinton, G.E.: The EM algorithm for factor analyzers. In: Technical Report No. CRG-TR-96-1. The University of Toronto, Toronto (1997)

Golub, G.H., Van Loan, C.F.: Matrix Computations, 2nd edn. Johns Hopkins University Press, Baltimore (1989)

Green, P.J.: Reversible jump Markov chain Monte Carlo computation and Bayesian model determination. Biometrika 82(4), 711–732 (1995)

Hartigan, J.A., Wong, M.A.: Algorithm AS 136: a \(K\)-means clustering algorithm. Appl. Stat. 28(1), 100–108 (1979)

Hinton, G., Dayan, P., Revow, M.: Modeling the manifolds of images of handwritten digits. IEEE Trans. Neural Netw. 8(1), 65–73 (1997)

Hubert, L., Arabie, P.: Comparing partitions. J. Classif. 2(1), 193–218 (1985)

Hurley, C.: Clustering visualizations of multivariate data. J. Comput. Graph. Stat. 13(4), 788–806 (2004)

Jordan, M.I.: An introduction to variational methods for graphical models. Mach. Learn. 37, 183–233 (1999)

Keribin, C.: Consistent estimation of the order of mixture models. Sankhyã Indian J. Stat. 62, 49–66 (2000)

Kotz, S., Nadarajah, S.: Multivariate \(t\) Distributions and Their Applications. Cambridge University Press, Cambridge (2004)

Lee, S., McLachlan, G.J.: Finite mixtures of multivariate skew \(t\)-distributions: some recent and new results. Stat. Comput. 24, 181–202 (2014)

Lee, S.X., McLachlan, G.J.: Finite mixtures of canonical fundamental skew \(t\)-distributions. Stat. Comput. 26, 573–589 (2016)

Lee, W.L., Chen, Y.C., Hsieh, K.S.: Ultrasonic liver tissues classification by fractal feature vector based on M-band wavelet transform. IEEE Trans. Med. Imaging 22, 382–392 (2003)

Lin, T.I.: Robust mixture modeling using multivariate skew \(t\) distributions. Stat. Comput. 20, 343–356 (2010)

Liu, C., Rubin, D.B.: The ECME algorithm: a simple extension of EM and ECM with faster monotone convergence. Biometrika 81(4), 633–648 (1994)

Lo, K., Gottardo, R.: Flexible mixture modeling via the multivariate \(t\) distribution with the Box–Cox transformation: an alternative to the skew-\(t\) distribution. Stat. Comput. 22, 33–52 (2012)

Lopes, H.F., West, M.: Bayesian model assessment in factor analysis. Stat. Sin. 14, 41–67 (2004)

McLachlan, G.J., Bean, R.W., Jones, L.B.T.: Extension of the mixture of factor analyzers model to incorporate the multivariate \(t\)-distribution. Comput. Stat. Data Anal. 51(11), 5327–5338 (2007)

McLachlan, G.J., Bean, R.W., Peel, D.: A mixture model-based approach to the clustering of microarray expression data. Bioinformatics 18(3), 413–422 (2002)

McLachlan, G.J., Krishnan, T.: The EM Algorithm and Extensions, 2nd edn. Wiley, New York (2008)

McLachlan, G.J., Peel, D., Bean, R.W.: Modelling high-dimensional data by mixtures of factor analyzers. Comput. Stat. Data Anal. 41, 379–388 (2003)

McNicholas, P.D., Murphy, T.B.: Parsimonious Gaussian mixture models. Stat. Comput. 18(3), 285–296 (2008)

McLachlan, G.J., Peel, D.: Finite Mixture Models. Wiley, New York (2000)

Meng, X.L., Rubin, D.B.: Maximum likelihood estimation via the ECM algorithm: a general framework. Biometrika 80(2), 267–278 (1993)

Meng, X.L., van Dyk, D.: The EM algorithm—an old folk-song sung to a fast new tune. J. R. Stat. Soc. B 59, 511–567 (1997)

R Development Core Team: A Language and Environment for Statistical Computing. R Foundation for Statistical Computing, Vienna (ISBN 3-900051-07-0). http://www.R-project.org (2009)

Richardson, S., Green, P.J.: On Bayesian analysis of mixtures with an unknown number of components. J. R. Stat. Soc. B 59, 731–792 (1997)

Schwarz, G.: Estimating the dimension of a model. Ann. Stat. 6, 461–464 (1978)

Spearman, C.: ‘General intelligence’, objectively determined and measured. Am. J. Psychol. 15, 201–292 (1904)

Tzikas, D.G., Likas, A.C., Galatsanos, N.P.: The variational approximation for Bayesian inference. IEEE Signal Process. 25, 131–146 (2008)

Wang, W.L.: Mixtures of common factor analyzers for high-dimensional data with missing information. J. Multivar. Anal. 117, 120–133 (2013)

Wang, W.L., Lin, T.I.: An efficient ECM algorithm for maximum likelihood estimation in mixtures of \(t\)-factor analyzers. Comput. Stat. 28, 751–769 (2013)

Wei, X., Li, C.: Bayesian mixtures of common factor analyzers: model, variational inference, and applications. Signal Process. 93, 2894–2905 (2013)

Zhang, Z., Chan, K.L., Wu, Y., Chen, C.: Learning a multivariate Gaussian mixture model with the reversible jump MCMC algorithm. Stat. Comput. 14, 343–355 (2004)

Zhao, J., Yu, P.L.H.: A note on variational Bayesian factor analysis. Neural Netw. 22, 988–997 (2009)

Acknowledgments

The authors are grateful to the Chief Editor, the Associate Editor, and two anonymous reviewers for their insightful comments and suggestions that greatly improved this article. This work was partially supported by the Ministry of Science and Technology of Taiwan under Grant Nos. MOST 105-2118-M-035-004-MY2 and MOST 105-2118-M-005-003-MY2.

Author information

Authors and Affiliations

Corresponding author

Appendix A: Hierarchies and some properties for the EMCtFA

Appendix A: Hierarchies and some properties for the EMCtFA

For the convenience of developing ML estimation, we have the first hierarchy of model (2) with assumption (3):

Based on the characterization of multivariate t distributions, the second hierarchy can be expressed as:

From (3), the zero covariance of \(({\varvec{u}}_{ij}^\mathrm{T},{\varvec{e}}_{ij}^{\mathrm{T}})^\mathrm{T}\) implicitly implies that \({\varvec{u}}_{ij}\mid \tau _{j}\) and \({\varvec{e}}_{ij}\mid \tau _{j}\) are assumed to be independent. The third hierarchy can be written as:

Furthermore, we need the following conditional moments of latent variables for E-step of the ECM and ECME procedures. According to hierarchies (18)–(20), it follows from Proposition 1 that

where \(\hat{{\varvec{\varSigma }}}_i^{(k)}=\hat{{\varvec{A}}}^{(k)}\hat{{\varvec{\varOmega }}}_i^{(k)}\hat{{\varvec{A}}}^{{(k)}^{\mathrm{T}}}+\hat{{\varvec{D}}}_i^{(k)}\), \(\hat{{\varvec{\gamma }}}_i^{(k)}=\hat{{\varvec{\varSigma }}}_i^{{(k)}^{-1}}\hat{{\varvec{A}}}^{(k)}\hat{{\varvec{\varOmega }}}_i^{(k)}\), \(\hat{\delta }_{ij}^{(k)}=\hat{{\varvec{y}}}_{ij}^{(k)\mathrm T}\hat{{\varvec{\varSigma }}}_i^{{(k)}^{-1}}\hat{{\varvec{y}}}_{ij}^{(k)}\), \(\hat{{\varvec{y}}}_{ij}^{(k)}={\varvec{y}}_j-\hat{{\varvec{A}}}^{(k)}\hat{{\varvec{\beta }}}_i^{(k)}\), and \(\mathscr {D_G}(\cdot )\) is the digamma function.

Rights and permissions

About this article

Cite this article

Wang, WL., Lin, TI. Flexible clustering via extended mixtures of common t-factor analyzers. AStA Adv Stat Anal 101, 227–252 (2017). https://doi.org/10.1007/s10182-016-0281-0

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10182-016-0281-0