Abstract

Prediction-based estimating functions (PBEFs), introduced in Sørensen (Econom J 3:123–147, 2000), are reviewed, and PBEFs for the Heston (Rev Financ Stud 6:327–343, 1993) stochastic volatility model are derived with and without the inclusion of market microstructure (MMS) noise in the data. The finite sample performance of the PBEF-based estimator is investigated in a Monte Carlo study and compared to that of the Generalized Method of Moments (GMM) estimator from Bollerslev and Zhou (J Econom 109:33–65, 2002), which is based on conditional moments of integrated variance. We derive new moment conditions in the presence of noise, and we also consider noise-correcting the GMM estimator by basing it on a realized kernel instead of realized variance. Our Monte Carlo study shows great promise for the estimator based on PBEFs: it outperforms the GMM estimator both in the setting with MMS noise and in the setting without MMS noise, especially for small sample sizes. Finally, in an empirical application we fit the Heston model to SPY data and investigate how the two methods handle real data and possible model misspecification. The empirical study also shows how the flexibility of the PBEF-based method can be used for robustness checks.


1 Introduction

Continuous time stochastic volatility (SV) models are widely used in econometrics and empirical finance for modeling prices of financial assets. Considerable efforts have been put into modeling and estimation of the latent volatility process. Most of this research is surveyed in part II of Andersen et al. (2009). Stochastic volatility diffusion models, such as the Heston (1993) model, represent a popular class of models within the continuous time framework. The Heston model will be the baseline model considered in this paper, since it is one of the most widely used models in financial institutions, due to its analytical tractability.

Parameter estimation in SV models is difficult because the volatility process is latent. The hidden Markov structure complicates inference, since the observed log-price process will not in itself be a Markov process, which implies that computing conditional expectations of functions of the observed process is practically infeasible. As a consequence, martingale estimating functions will not be a useful tool for conducting inference in SV models. Likewise, likelihood inference is not straightforward, because an analytical expression for the transition density is almost never available and methods based on extensive simulations are called for.

We circumvent these problems by conducting inference in SV models with prediction-based estimating functions (PBEFs), introduced in Sørensen (2000), which are a generalization of martingale estimating functions. This generalization is particularly useful for observations from a non-Markovian model. PBEFs are estimating functions based on predictors of functions of the observed process.

In this paper, we investigate and contrast two estimation approaches. First, PBEFs are reviewed, detailed, and used for parameter estimation in the Heston model. The estimation method is fairly easy to implement and fast to execute, as the construction of PBEFs relies only on the computation of unconditional moments. When the Heston SV model is considered, no simulations are needed for constructing the PBEFs used in the paper. As a benchmark, we consider the method suggested in Bollerslev and Zhou (2002), where a Generalized Method of Moments (GMM) type estimator based on the first- and second-order conditional moments of the integrated variance (IV) is derived. Since IV is latent, realized variance (RV) is used as a proxy, and the sample moments of RV are matched to the population moments of IV implied by the model. When high-frequency data are available, several other simulation-free methods have been suggested in the literature; see for instance Barndorff-Nielsen and Shephard (2002), Corradi and Distaso (2006), and Todorov (2009). Common to these methods, including the GMM-based estimator from Bollerslev and Zhou (2002), is that they are all based on time series of daily realized measures, such as RV and bipower variation (BV). When constructing PBEFs, the squared intra-daily returns are instead used directly, rather than being aggregated into daily realized measures. PBEFs therefore have a potential informational advantage, the strength of which is investigated throughout this paper. More specifically, this paper investigates the finite sample properties of the PBEF-based estimator in a Monte Carlo study and compares its performance to that of the GMM estimator from Bollerslev and Zhou (2002).

Both the case where the efficient price is assumed to be directly observable and the case where noise is present in the data are considered. In particular, we contribute by extending the two competing methods to handle the presence of noise. The use of PBEFs for estimating SV models was suggested by, among others, Barndorff-Nielsen and Shephard (2001), but to the best of our knowledge this is the first time the finite sample performance of PBEFs applied to SV models is studied, and it is the most extensive Monte Carlo study of the finite sample performance of the PBEF-based estimation method to date. Nolsøe et al. (2000) conduct a small Monte Carlo study for the case where a Cox–Ingersoll–Ross (CIR) process is observed with additive white noise, but the potential of using PBEFs to estimate SV models has not previously been studied.

This paper also addresses the link between the estimation method based on PBEFs and GMM based on the moment conditions underlying the PBEF. In particular, we establish the connection between the optimal PBEF and the optimal choice of the weight matrix in GMM estimation.

Lastly, an empirical application using SPY data is carried out, investigating how the two estimation methods handle real data characteristics and possible model misspecification. In the empirical application we also study how different choices in the flexible PBEF-based estimation method might impact the parameter estimates. In particular, we investigate how considering different choices of the predictor space might serve as a robustness check of whether there is a need for additional volatility factors in the model.

This paper is organized as follows: In the following section the PBEF estimation method is reviewed and detailed. The connection to GMM estimation is established, and a brief review of the GMM-based estimator from Bollerslev and Zhou (2002) is provided. For both methods, the estimator of the parameters in the Heston model is derived with and without the inclusion of noise in the data. In Sect. 3, we present our Monte Carlo study. This includes an investigation of how i.i.d. noise impacts the performances of the two methods and how the noise-corrected estimators perform. Section 4 contains an empirical application to SPY data that investigates how the methods handle real data and if and how the choice of estimation method impacts the parameter estimates. The final section concludes, and ideas on further research are outlined. Some details have been relegated to a separate appendix that can be obtained from the authors upon request.

2 Estimating stochastic volatility models

In this section, the two estimation methods from Sørensen (2000) and Bollerslev and Zhou (2002) are reviewed and extended to handle market microstructure (MMS) noise. We also discuss the link between the optimal PBEF and a GMM estimator with the optimal choice of weight matrix. The focus of this paper is on the performance of the two considered estimation methods when used for estimating SV models of the form

\[ \mathrm{d}X_t=\sqrt{v_t}\,\mathrm{d}W_t, \qquad \mathrm{d}v_t=\mu(v_t;\theta)\,\mathrm{d}t+\eta(v_t;\theta)\,\mathrm{d}B_t, \tag{1} \]

where \(W\) and \(B\) are independent standard Brownian motions. The independence assumption rules out leverage effects, but it is imposed only for computational ease and could be relaxed in other applications. In particular, we are interested in studying inference for the Heston SV model

\[ \mathrm{d}X_t=\sqrt{v_t}\,\mathrm{d}W_t, \qquad \mathrm{d}v_t=-\kappa(v_t-\alpha)\,\mathrm{d}t+\sigma\sqrt{v_t}\,\mathrm{d}B_t, \tag{2} \]

where the spot volatility, \(v_t\), is a CIR-process. The parameter, \(\alpha \), is the long run average variance of the observed process, \(\{X_t\}\), and the other drift parameter, \(\kappa \), is the rate at which \(v_t\) reverts to the long run average. The third parameter, \(\sigma \), can be interpreted as the volatility of volatility. The Heston model is widely used in mathematical finance where the observed process, \(\{X_t\}\), would be the logarithm of an asset price. The popularity of the Heston model in financial institutions is primarily due to the analytical tractability of the model, which allows for (quasi) closed form expressions for prices of financial derivatives, such as European options.

2.1 Estimation using prediction-based estimating functions

First, we explain the general setup and ideas underlying the estimation method based on PBEFs that was introduced in Sørensen (2000) and further developed in Sørensen (2011). Then, following Sørensen (2000), we derive the PBEFs for the Heston model without MMS noise. Finally, we add MMS noise to the observations and derive PBEFs in this setting.

2.1.1 The general setup and ideas

The estimation method based on PBEFs is used for conducting parametric inference on observations \(Y_1, Y_2, \ldots , Y_n\) from a general stochastic process. The stochastic process is assumed to belong to a class of models parametrized by a \(p\)-dimensional vector \(\theta \in \Theta \subseteq \mathbb {R}^p\) that we wish to estimate. An estimating function is a \(p\)-dimensional function \(G_n(\theta )\) that depends on the data \(Y_1, Y_2, \ldots , Y_n\) and on \(\theta \), and an estimator is obtained by solving the \(p\) equations \(G_n(\theta ) = \underline{0}\) w.r.t. \(\theta \). Specifically, the estimating functions we study have the form

\[ G_n(\theta)=\sum_{i=1}^{n}\Pi^{(i-1)}(\theta)\big[f(Y_i)-\hat{\pi}^{(i-1)}(\theta)\big], \tag{3} \]

where the function to be predicted, \(f(Y_i)\), is defined on the state space of the data generating process \(Y\). The function \(f\) is assumed to satisfy the condition \(E_{\theta }[f(Y_i)^2]<\infty \) for all \(\theta \in \Theta \) and for \(i=1, \ldots ,n\). The vector \(\Pi ^{(i-1)}(\theta )=\big (\pi _{1}^{(i-1)}(\theta ),\ldots , \pi _{p}^{(i-1)}(\theta )\big )'\) has elements that belong to \(\mathcal {P}^{\theta }_{i-1}\), the predictor space for predicting \(f(Y_i)\), and \(\hat{\pi }^{(i-1)}(\theta )\) is the minimum mean square error (MMSE) predictor of \(f(Y_i)\) in \(\mathcal {P}^{\theta }_{i-1}\). For each \(i\), the predictor space \(\mathcal {P}^{\theta }_{i-1}\) can be chosen as a closed linear subspace of the Hilbert space \(\mathcal {H}^{\theta }_{i-1}\) of square-integrable functions of \(Y_1,\ldots,Y_{i-1}\). Hence \(\hat{\pi }^{(i-1)}(\theta )\) is the orthogonal projection of \(f(Y_i)\) onto \(\mathcal {P}^{\theta }_{i-1}\) w.r.t. the inner product in \(\mathcal {H}_i^{\theta }\). This orthogonal projection exists and is uniquely determined by the normal equations

\[ E_{\theta}\Big[\pi\,\big(f(Y_i)-\hat{\pi}^{(i-1)}(\theta)\big)\Big]=0 \quad \text{for all } \pi\in\mathcal{P}^{\theta}_{i-1}, \tag{4} \]

see e.g. Theorem 3.1 in Karlin and Taylor (1975).

A special class of PBEFs is the class of martingale estimating functions (MGEFs), which is obtained by choosing \(\mathcal {P}^{\theta }_{i-1}:=\mathcal {H}^{\theta }_{i-1}\). In this case, the MMSE predictor of \(f(Y_i)\) in \(\mathcal {P}_{i-1}^{\theta }\) is the conditional expectation \(\hat{\pi }^{(i-1)}(\theta ) = E_{\theta }\big [f(Y_i)|Y_1,\ldots , Y_{i-1}\big ]\), and \(G_n(\theta )\) becomes a \(P_{\theta }\)-martingale w.r.t. the filtration generated by the data process. However, MGEFs are mainly useful for Markovian models, since for non-Markovian models it is practically infeasible to calculate conditional expectations given the entire past of the observations. The idea underlying PBEFs is to use a smaller and more tractable predictor space in place of \(\mathcal {H}^{\theta }_{i-1}\) and to think of the resulting PBEF as an approximation of the MGEF. The advantage of this approximation is that it is based only on unconditional moments, which are much easier to compute, or simulate, than conditional moments.

In the rest of the paper, we restrict our attention to finite-dimensional predictor spaces \(\mathcal {P}^{\theta }_{i-1}\) and assume that the observed process \(\{Y_i\}\) is stationary. For asymptotic properties of the estimator in this setting, consult Sørensen (2000) and Sørensen (2011). To obtain even more tractable PBEFs, we only consider \((q+1)\)-dimensional predictor spaces with basis elements of the form \(Z_k^{(i-1)}=h_k(Y_{i-1},\ldots ,Y_{i-s})\), \(k=0,\ldots ,q\), where \(h_k: \mathbb {R}^s \to \mathbb {R}\), \(s\in \mathbb {N}\), and where the functions \(h_0, h_1, \ldots , h_q\) are linearly independent and do not depend on \(\theta \). The predictor space used for predicting \(f(Y_i)\) is then given by \(\mathcal {P}_{i-1}^{\theta }={\hbox {span}}\{Z_{0}^{(i-1)},Z_{1}^{(i-1)},\ldots ,Z_{q}^{(i-1)}\}\). The basis elements of the predictor space are thus no longer functions of the entire past, but only of the \(s\) most recent observations. Imposing \(h_0=1\), the predictors in \(\mathcal {P}_{i-1}^{\theta }\) are of the form \(a_0 + a^{\prime }Z^{(i-1)}\), where \(a^{\prime }=(a_1,\ldots ,a_q)\) and \(Z^{(i-1)}=\big (Z_{1}^{(i-1)},\ldots ,Z_{q}^{(i-1)}\big )^{\prime }\). The normal equations (4) lead to the MMSE predictor

\[ \hat{\pi}^{(i-1)}(\theta)=\hat{a}_0(\theta)+\hat{a}(\theta)'Z^{(i-1)}, \tag{5} \]

where \(\hat{a}(\theta )=C(\theta )^{-1}b(\theta )\) and \(\hat{a}_{0}(\theta ) = E_{\theta }[f(Y_i)] - \hat{a}(\theta )^{\prime }E_{\theta }[Z^{(i-1)}]\). Here \(C(\theta )\) denotes the \(q \times q\) covariance matrix of \(Z^{(i-1)}\) and \(b(\theta ) = \big [\text {Cov}_{\theta }(Z_{1}^{(i-1)},f(Y_i)),\ldots , \text {Cov}_{\theta }(Z_{q}^{(i-1)},f(Y_i)) \big ]^{\prime }\). Note that, since the observed process \(\{Y_i\}\) is stationary, the coefficients of the MMSE predictor do not depend on \(i\) but stay constant across time. For a formal derivation of the expressions for the coefficients in (5), see Appendix A. From (3)–(5) it follows that PBEFs can be calculated whenever we can calculate the first- and second-order moments of the random vector \(\big (f(Y_i),Z_{1}^{(i-1)},\ldots , Z_{q}^{(i-1)} \big )\).
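The coefficient formulas above require only first- and second-order moments, so they translate into a few lines of code. The following is a minimal NumPy sketch, not the authors' implementation; the function name `mmse_coefficients` and its argument names are hypothetical. It solves \(C(\theta)\hat{a}(\theta)=b(\theta)\) with a linear solver rather than forming \(C(\theta)^{-1}\) explicitly.

```python
import numpy as np


def mmse_coefficients(mean_f, mean_z, cov_z, cov_zf):
    """Return (a0_hat, a_hat) for the MMSE predictor a0 + a'Z of f(Y_i).

    mean_f : E[f(Y_i)], a scalar
    mean_z : E[Z^{(i-1)}], shape (q,)
    cov_z  : C, the q x q covariance matrix of Z^{(i-1)}
    cov_zf : b, entries Cov(Z_k^{(i-1)}, f(Y_i)), shape (q,)
    """
    mean_z = np.asarray(mean_z, dtype=float)
    # Solve C a = b instead of inverting C (numerically more stable).
    a_hat = np.linalg.solve(np.asarray(cov_z, dtype=float), np.asarray(cov_zf, dtype=float))
    # a0 = E[f(Y_i)] - a' E[Z^{(i-1)}]
    a0_hat = float(mean_f - a_hat @ mean_z)
    return a0_hat, a_hat


# Single-regressor example: E[f] = 2, E[Z] = 2, Var(Z) = 4, Cov(Z, f) = 1.
a0, a = mmse_coefficients(2.0, [2.0], [[4.0]], [1.0])
```

In this example \(\hat{a}=1/4\) and \(\hat{a}_0=2-\tfrac{1}{4}\cdot 2=1.5\).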

Within the setup of the finite-dimensional predictor spaces considered above, we now turn to the specification of the \(p\times 1\) vector \(\Pi ^{(i-1)}(\theta )\) from (3). Since each element of \(\Pi ^{(i-1)}(\theta )\) belongs to the predictor space \(\mathcal {P}^{\theta }_{i-1}\), the \(j\)th element of \(\Pi ^{(i-1)}(\theta )\) is of the form \(\pi _{j}^{(i-1)}(\theta ) = \sum _{k=0}^{q}a_{jk}(\theta )Z^{(i-1)}_{k}\), where, as before, \(Z_{0}^{(i-1)}=1\). Note that the coefficients \(a_{jk}(\theta )\) do not depend on \(i\); like the coefficients \(\hat{a}(\theta )\) and \(\hat{a}_0(\theta )\) of the MMSE predictor, they are constant over time. Therefore, to ease notation, we define

\[ H^{(i)}(\theta):=\tilde{Z}^{(i-1)}\big[f(Y_i)-\hat{\pi}^{(i-1)}(\theta)\big], \qquad \tilde{Z}^{(i-1)}:=\big(1,Z_1^{(i-1)},\ldots,Z_q^{(i-1)}\big)', \]

for \(i=s+1,\ldots ,n\), and \(F_n(\theta ):=\sum _{i=s+1}^n H^{(i)}(\theta )\). With this notation at hand, and with \(A(\theta)\) denoting the \(p\times(q+1)\) matrix with entries \(a_{jk}(\theta)\), we consider PBEFs of the form

\[ G_n(\theta)=A(\theta)F_n(\theta), \tag{6} \]

where we need \(p \le q+1\) to identify the \(p\) unknown parameters. Finding the optimal PBEF within a class of PBEFs of the type (6) is then a question of choosing an optimal weight matrix, \(A^*(\theta )\). The optimal PBEF will then be the estimating function, within the considered class of estimating functions of type (6), that is closest to the score in an \(\mathcal {L}^2\)-sense. For further details on the optimal PBEF see Sørensen (2000) or Appendix B.
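Given the MMSE coefficients, evaluating a PBEF of type (6) amounts to forming the residuals \(f(Y_i)-\hat{\pi}^{(i-1)}(\theta)\), multiplying them by \(\tilde{Z}^{(i-1)}\), summing over \(i\), and applying the weight matrix. The sketch below assumes the data have already been arranged so that row r of `z_lagged` holds \(Z^{(i-1)}\) for the same \(i\) as entry r of `f_values`; all names are illustrative, not taken from the paper.

```python
import numpy as np


def pbef(f_values, z_lagged, a0_hat, a_hat, A):
    """Evaluate G_n(theta) = A(theta) F_n(theta).

    f_values : f(Y_i) for i = s+1, ..., n, shape (N,)
    z_lagged : Z^{(i-1)} for the same i, one row per i, shape (N, q)
    a0_hat, a_hat : MMSE predictor coefficients evaluated at theta
    A : p x (q + 1) weight matrix A(theta)
    """
    f_values = np.asarray(f_values, dtype=float)
    z_lagged = np.asarray(z_lagged, dtype=float)
    # Prediction errors f(Y_i) - pi_hat^{(i-1)}(theta)
    residuals = f_values - (a0_hat + z_lagged @ np.asarray(a_hat, dtype=float))
    # Rows of Z_tilde^{(i-1)} = (1, Z^{(i-1)'})
    z_tilde = np.column_stack([np.ones(len(f_values)), z_lagged])
    # Row r is H^{(i)}(theta)'
    H = z_tilde * residuals[:, None]
    F_n = H.sum(axis=0)  # length q + 1
    return np.asarray(A, dtype=float) @ F_n
```

If the predictor reproduces the data exactly, all residuals vanish and \(G_n(\theta)=\underline{0}\) for any weight matrix.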

2.1.2 Relating PBEFs to GMM estimation

The PBEFs and martingale estimating functions share many similarities with GMM estimation from the econometrics literature. In this subsection we will explain the link between the optimal PBEF and the optimal GMM estimator based on the moment conditions from the normal equations.

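The PBEF-based estimator is obtained by solving \(G_n(\theta )=\underline{0}\) for \(\theta \), but for numerical reasons it is often easier to minimize \(G_n(\theta )'G_n(\theta )\) w.r.t. \(\theta \in \Theta \). We employ this approach and find an estimator by solving

\[ \hat{\theta}_n=\arg\min_{\theta\in\Theta}\,G_n(\theta)'G_n(\theta)=\arg\min_{\theta\in\Theta}\,F_n(\theta)'A(\theta)'A(\theta)F_n(\theta). \]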

This expression looks very similar to the GMM objective function that emerges if we perform GMM estimation using the \(q+1\) moment conditions \(E_{\theta }[H^{(i)}(\theta )]=\underline{0}\). In this case the GMM objective function to be minimized is \(\left( \frac{1}{n-s}F_n(\theta )\right) 'W_n(\theta )\left( \frac{1}{n-s}F_n(\theta )\right) \), which is equivalent to minimizing \(F_n(\theta )'W_n(\theta )F_n(\theta )\). In the latter case, the corresponding \(p\) first-order conditions are

\[ \frac{\partial F_n(\theta)'}{\partial\theta}\,W_n(\hat{\theta})\,F_n(\theta)=\underline{0}, \tag{7} \]

if we evaluate the GMM weight matrix \(W_n(\hat{\theta })\) at some consistent parameter estimate \(\hat{\theta }\), such that the weight matrix does not depend on \(\theta \). The first-order conditions (7) have the same structure as the PBEFs in (6). The only difference is that the term in front of \(F_n(\theta )\) in (7) is data dependent. Nevertheless, there is a strong link between (7) with \(W_n(\hat{\theta })\) chosen optimally and the optimal PBEF of type (6). The optimal PBEF takes the form \(G^*_n(\theta )=A^*_n(\theta )F_n(\theta )\), where \(A^*_n(\theta )=U(\theta )'\overline{M}_n(\theta )^{-1}\); the expressions for \(U(\theta )\) and \(\overline{M}_n(\theta )\) can be found in Sørensen (2000) or Appendix B. Straightforward calculations show that

From the theory on GMM estimation, we know that the optimal choice of weight matrix, \(W_n(\theta )\), is the inverse of the covariance matrix of \(F_n(\theta )\) since the \(H^{(i)}\)’s are correlated. In the GMM setting this weight matrix will in practice be constructed using the sample version of the covariance matrix. When \(W_n(\theta )\) is chosen optimally, \(W_n(\theta )\) equals \(\frac{1}{n-s}\overline{M}_n(\theta )^{-1}\) and (7) becomes the empirical analog of the optimal PBEF, \(G^*_n(\theta )=A^*_n(\theta )F_n(\theta )\). Constructing the optimal PBEF is therefore the same as constructing the theoretical first-order conditions that emerge from the optimal GMM objective functions based on the moment conditions, \(E_{\theta }[H(\theta )]=\underline{0}\), from the normal equations. The choice of \(f\) and predictor space then translates into which moment conditions to use in the GMM estimation. Hence, these choices will also impact the efficiency of the resulting PBEF-based estimator. Once these choices are made, the optimal PBEF-based estimator is linked to the optimal GMM estimator, based on the moment conditions from the normal equations, as described above. Note that a sub-optimal choice of the weight matrix \(W_n(\theta )\) will lead to a sub-optimal PBEF, but the class of PBEFs is in general broader than the ones having the structure (7).
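One common way to build the sample version of the covariance matrix mentioned above is a Bartlett-kernel (Newey–West) estimate of the long-run covariance of the \(H^{(i)}\)'s, which accounts for their serial correlation and is positive semi-definite by construction. The paper does not state which estimator it uses, so this choice, the function name, and the truncation lag are assumptions. The estimate targets \(\text{Cov}_{\theta}(F_n(\theta))/(n-s)\), so its inverse differs from the optimal weight matrix only by a scalar factor, which does not change the minimizer.

```python
import numpy as np


def newey_west_weight(H, lags):
    """Inverse of a Bartlett-kernel long-run covariance estimate of the rows of H.

    H : shape (N, q + 1); row r is H^{(i)}(theta_tilde)' evaluated at a
        preliminary consistent estimate theta_tilde
    lags : truncation lag L >= 0 (a tuning choice)
    """
    H = np.asarray(H, dtype=float)
    Hc = H - H.mean(axis=0)  # centre the moment contributions
    N = Hc.shape[0]
    S = Hc.T @ Hc / N  # lag-0 autocovariance
    for lag in range(1, lags + 1):
        gamma = Hc[lag:].T @ Hc[:-lag] / N  # lag-l autocovariance
        S += (1.0 - lag / (lags + 1.0)) * (gamma + gamma.T)  # Bartlett weight
    return np.linalg.inv(S)
```

With `lags=0` this reduces to the inverse of the ordinary sample covariance, which ignores the correlation between the \(H^{(i)}\)'s.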

2.1.3 PBEFs for SV models without noise in the data

We now return to the setup from (1) and, following Sørensen (2000), review how to compute PBEFs for SV models without MMS noise.

Suppose the process \(X\) has been observed at the discrete time points \(0, \Delta, 2\Delta, \ldots, n\Delta\), giving \(X_0, X_{\Delta }, X_{2\Delta },\ldots , X_{n\Delta }\). In this setup, inference based on MGEFs is practically infeasible, since the conditional expectations \(E_{\theta }[f(Y_i)|Y_{i-1},\ldots , Y_1]\) appearing in the MGEFs are difficult to compute both analytically and numerically. A feasible approach for conducting inference is to use PBEFs instead. In fact, for many models, such as the Heston model, we are able to derive analytical expressions for the PBEFs. The returns \(Y_i:=X_{i\Delta}-X_{(i-1)\Delta}\) implied by (1) are given by

\[ Y_i=\int_{(i-1)\Delta}^{i\Delta}\sqrt{v_t}\,\mathrm{d}W_t, \qquad i=1,\ldots,n, \tag{9} \]

which allows for the decomposition \(Y_i = \sqrt{S_i}Z_i\), where the \(Z_i\)’s are i.i.d. standard normal random variables independent of \(\{S_i\}\), and where the process \(\{S_i\}\) is given by \(S_i = \int _{(i-1)\Delta }^{i\Delta } \! v_t \, \mathrm {d}t\). We will assume \(v\) to be a positive, ergodic, diffusion process with invariant measure \(\mu _{\theta }\) and that \(v_0\sim \mu _{\theta }\) is independent of \(B\), which implies that \(v\) is stationary.
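For Monte Carlo work, the decomposition \(Y_i=\sqrt{S_i}Z_i\) suggests simulating the CIR variance on a fine grid, approximating \(S_i\) by a Riemann sum, and then drawing independent standard normals. The sketch below uses a full-truncation Euler scheme and starts \(v\) in its stationary Gamma distribution (shape \(2\kappa\alpha/\sigma^2\), scale \(\sigma^2/(2\kappa)\)). The scheme, the number of substeps, the function name, and the parameter values in the example are assumptions for illustration, not the design of the paper's Monte Carlo study.

```python
import numpy as np


def simulate_heston_returns(kappa, alpha, sigma, delta, n, substeps=50, seed=None):
    """Simulate Y_1, ..., Y_n from the Heston model (2) via Y_i = sqrt(S_i) Z_i.

    Returns (y, S) where S_i approximates the integrated variance over
    ((i-1) delta, i delta] by a left-point Riemann sum on `substeps` points.
    """
    rng = np.random.default_rng(seed)
    dt = delta / substeps
    # Stationary law of the CIR process: Gamma with mean alpha, variance alpha sigma^2 / (2 kappa).
    v = rng.gamma(shape=2.0 * kappa * alpha / sigma**2, scale=sigma**2 / (2.0 * kappa))
    S = np.empty(n)
    for i in range(n):
        integrated = 0.0
        for _ in range(substeps):
            v_pos = max(v, 0.0)  # full truncation: use the positive part in drift and diffusion
            integrated += v_pos * dt
            v += -kappa * (v_pos - alpha) * dt + sigma * np.sqrt(v_pos * dt) * rng.standard_normal()
        S[i] = integrated
    Z = rng.standard_normal(n)  # independent of the volatility path since W and B are independent
    return np.sqrt(S) * Z, S


# Illustrative (hypothetical) parameters: 78 intra-day returns per unit of time.
y, S = simulate_heston_returns(kappa=2.0, alpha=0.04, sigma=0.3, delta=1 / 78, n=100, substeps=10, seed=1)
```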

To construct PBEFs, we have to decide which function of the data to predict. Since the \(Y_i\)'s are uncorrelated, trying to predict \(Y_i\) using \(Y_{i-1},Y_{i-2}, \ldots, Y_{i-q}\) will not work, so \(f(y)=y\) is a bad choice. Squared returns from the considered SV models, on the other hand, are correlated, in line with the volatility clustering observed in empirical data, and a natural choice for \(f\) is therefore \(f(y)=y^2\). The decomposition \(Y_i = \sqrt{S_i}Z_i\) also reveals that \(f(y)=y^2\) is a convenient choice, as it eases the computation of the moments required to construct the PBEFs. Other choices of \(f\) might result in efficiency gains, but without further knowledge of the intractable score function that the PBEF approximates, we stick to the class of polynomial PBEFs because of their computational ease. We choose \(\mathcal {P}_{i-1}^{\theta } = \{a_0 + a_1Y_{i-1}^2 + \cdots + a_qY_{i-q}^2 \mid a_k \in \mathbb {R},\ k=0,1,\ldots ,q\}\) as our predictor space. Hence \(Z_k^{(i-1)}=Y_{i-k}^2\) for \(k=1,2,\ldots ,q\), which has the same functional form as the function of the data to predict. Notice that in this case \(s=q\), since \(\mathcal {P}_{i-1}^{\theta }\) is spanned by the \(q\) most recent squared returns. With the above choice of \(f\) and predictor space, the MMSE predictor is given by

\[ \hat{\pi}^{(i-1)}(\theta)=\hat{a}_0(\theta)+\sum_{k=1}^{q}\hat{a}_k(\theta)Y_{i-k}^2, \qquad \big(\hat{a}_1(\theta),\ldots,\hat{a}_q(\theta)\big)'=C(\theta)^{-1}b(\theta), \qquad \hat{a}_0(\theta)=E_{\theta}[Y_i^2]\Big(1-\sum_{k=1}^{q}\hat{a}_k(\theta)\Big). \]

As before, \(C\) denotes the covariance matrix of \(Z^{(i-1)}\), and \(b\) is the \(q\times 1\) vector with \(j\)th element \(\text {Cov}_{\theta }(Y_{i-j}^2,Y_i^2)\). Together with (6), this means that we consider PBEFs of the form

\[ G_n(\theta)=\sum_{i=q+1}^{n}\Pi^{(i-1)}(\theta)\big[Y_i^2-\hat{\pi}^{(i-1)}(\theta)\big], \]

with \(\Pi ^{(i-1)}(\theta )= A(\theta )\tilde{Z}^{(i-1)}\), where \(\tilde{Z}^{(i-1)}=(1,Y_{i-1}^2, \ldots , Y_{i-q}^2)^{\prime }\). In our Monte Carlo study we will use the following sub-optimal, yet simple weight matrix

since computing the optimal weight matrix \(A^*(\theta )\) involves computing the covariance matrix of \(F_n(\theta )\). The resulting sub-optimal PBEF is

Equating (12) to zero and solving for \(\theta \) gives a \(\sqrt{n}\)-consistent estimator, but we may lose some efficiency by not using the optimal weight matrix \(A^*(\theta )\). However, the aim of the paper is to study the finite sample performance of an easily implementable and simulation-free PBEF. As we shall see in our Monte Carlo study, the estimator based on the sub-optimal PBEF performs well in finite samples; a study of the possible further improvements from using the optimal PBEF is left for future research.
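With \(f(y)=y^2\) and the predictor space above, evaluating the PBEF on a sample of returns requires pairing each \(Y_i^2\), \(i=q+1,\ldots,n\), with its \(q\) lagged squared returns \(Y_{i-1}^2,\ldots,Y_{i-q}^2\). A small NumPy helper that produces these arrays is sketched below; the name `squared_return_design` is hypothetical.

```python
import numpy as np


def squared_return_design(y, q):
    """Return f(Y_i) = Y_i^2 and rows (Y_{i-1}^2, ..., Y_{i-q}^2) for i = q+1, ..., n.

    y holds Y_1, ..., Y_n in a 0-based array, so Y_i sits at index i-1.
    """
    y2 = np.asarray(y, dtype=float) ** 2
    n = len(y2)
    f_values = y2[q:]
    # Column k-1 holds the k-th lag Y_{i-k}^2 (array index i-1-k).
    z_lagged = np.column_stack([y2[q - k:n - k] for k in range(1, q + 1)])
    return f_values, z_lagged


f_values, z_lagged = squared_return_design([1.0, 2.0, 3.0, 4.0], q=2)
```

Here the two usable targets are \(Y_3^2=9\) and \(Y_4^2=16\), with lag rows \((4,1)\) and \((9,4)\).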

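The sub-optimal PBEF from (12) can now be computed if we can calculate \(\hat{a}_0(\theta ),\hat{a}_1(\theta ),\ldots , \hat{a}_q(\theta )\). For this we need \(E_{\theta }[Y_i^2]\), \(\text {Var}_{\theta }(Y_i^2)\) and \(\text {Cov}_{\theta }(Y_i^2,Y_{i+j}^2)\) for \(j=1,\ldots , q\). Following Barndorff-Nielsen and Shephard (2001, pp. 179–181), the required moments can be calculated from the moments of the volatility process \(\{v_t\}\): since \(Y_i=\sqrt{S_i}Z_i\), we have \(E_{\theta}[Y_i^2]=E_{\theta}[S_i]\), \(\text{Var}_{\theta}(Y_i^2)=3\,\text{Var}_{\theta}(S_i)+2E_{\theta}[S_i]^2\) and \(\text{Cov}_{\theta}(Y_i^2,Y_{i+j}^2)=\text{Cov}_{\theta}(S_i,S_{i+j})\) for \(j\ge 1\). For the Heston model they are given by

\[ E_{\theta}[Y_i^2]=\alpha\Delta, \qquad \text{Var}_{\theta}(Y_i^2)=2\alpha^2\Delta^2+\frac{3\alpha\sigma^2}{\kappa^3}\big(e^{-\kappa\Delta}-1+\kappa\Delta\big), \]

\[ \text{Cov}_{\theta}(Y_i^2,Y_{i+j}^2)=\frac{\alpha\sigma^2}{2\kappa^3}\big(1-e^{-\kappa\Delta}\big)^2e^{-\kappa\Delta(j-1)}, \qquad j\ge 1. \]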
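The Heston moment expressions and the resulting MMSE coefficients can be coded directly. The sketch below implements the three moments using \(\text{Var}_{\theta}(v_t)=\alpha\sigma^2/(2\kappa)\) and the exponential autocorrelation \(e^{-\kappa u}\) of the CIR process, then builds the Toeplitz matrix \(C(\theta)\) and the vector \(b(\theta)\). It is an illustrative sketch with hypothetical function names; the weight matrix and the root-finding or minimization step are left out.

```python
import numpy as np


def heston_squared_return_moments(kappa, alpha, sigma, delta, q):
    """E[Y_i^2], Var(Y_i^2) and Cov(Y_i^2, Y_{i+j}^2), j = 1..q, for Heston returns without noise."""
    e = np.exp(-kappa * delta)
    mean = alpha * delta                                           # E[S_i]
    var_S = alpha * sigma**2 * (e - 1.0 + kappa * delta) / kappa**3
    var = 3.0 * var_S + 2.0 * mean**2                              # Var(S_i Z_i^2), Z_i ~ N(0, 1)
    j = np.arange(1, q + 1)
    cov = alpha * sigma**2 * (1.0 - e) ** 2 * e ** (j - 1) / (2.0 * kappa**3)  # Cov(S_i, S_{i+j})
    return mean, var, cov


def heston_predictor_coefficients(kappa, alpha, sigma, delta, q):
    """MMSE coefficients (a0_hat, a_hat) for predicting Y_i^2 from Y_{i-1}^2, ..., Y_{i-q}^2."""
    mean, var, cov = heston_squared_return_moments(kappa, alpha, sigma, delta, q)
    acov = np.concatenate(([var], cov))                            # gamma(0), ..., gamma(q)
    lag_gap = np.abs(np.subtract.outer(np.arange(q), np.arange(q)))
    C = acov[lag_gap]                                              # Toeplitz: C[j, k] = gamma(|j - k|)
    b = cov                                                        # b_j = Cov(Y_{i-j}^2, Y_i^2) = gamma(j)
    a_hat = np.linalg.solve(C, b)
    a0_hat = mean * (1.0 - a_hat.sum())                            # every E[Y^2] equals alpha * delta
    return a0_hat, a_hat


mean, var, cov = heston_squared_return_moments(1.0, 1.0, 1.0, 1.0, q=1)
a0_hat, a_hat = heston_predictor_coefficients(1.0, 1.0, 1.0, 1.0, q=1)
```

With \(q=1\) the predictor coefficient reduces to the first-order autocorrelation of the squared returns, \(\hat{a}_1=\text{Cov}_{\theta}(Y_i^2,Y_{i+1}^2)/\text{Var}_{\theta}(Y_i^2)\).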

2.1.4 PBEFs for SV models with noisy data

We now add noise to the observation scheme from (9). More specifically, we add i.i.d. Gaussian noise to the log-price process

\[ X_{i\Delta}=X^*_{i\Delta}+U_i, \qquad U_i\sim\text{i.i.d. } N(0,\omega^2), \tag{13} \]

where the efficient log-price process \(X^*\) comes from the Heston model, and \(X^*\) and \(U\) are assumed to be independent. The additive error term \(U_i\) is interpreted as market microstructure (MMS) noise due to market frictions such as bid-ask bounce, liquidity changes, and discreteness of prices. When MMS noise is present, the observed returns have the following structure

\[ Y_i=Y^*_i+\epsilon_i, \qquad \epsilon_i=U_i-U_{i-1}, \tag{14} \]

where the MA(1) process, \(\epsilon \), is normally distributed, \(N(0,2\omega ^2)\), and independent of the efficient return process \(Y^*\).

To correct for MMS noise in the PBEFs, the moments used to construct the MMSE predictor have to be recalculated. That is, \(E_{\theta }[Y_i^2], \text {Var}_{\theta }(Y_i^2)\) and \(\text {Cov}_{\theta }(Y_i^2,Y_{i+j}^2)\) need to be computed in the setting from (14).

Straightforward calculations give \(E_{\theta }[Y_i^2] = \Delta \alpha + 2\omega ^2\), since \(Y^*\) and \(\epsilon \) are independent and have mean zero. We can now derive the bias in \(\alpha \) that can be expected when the PBEF-based estimation procedure is performed without correcting for MMS noise. If the MMS noise is not taken into account, the equation \(E_{\theta }[Y_i^2]= \Delta \alpha \) is erroneously used for constructing the PBEF, so the excess \(2\omega^2\) in the mean of the squared returns is attributed to \(\Delta\alpha\). The expected bias in \(\alpha \) is therefore \(\frac{2\omega ^2}{\Delta }\), and, as we shall see, this quantity matches the bias found in our Monte Carlo study. Since we do not have analytical expressions for the estimators, we do not attempt to derive the biases in \(\kappa \) and \(\sigma \).
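As a small worked illustration of the bias formula: the bias \(2\omega^2/\Delta\) grows linearly in the sampling frequency \(1/\Delta\), so halving \(\Delta\) doubles it. The values in the example are hypothetical and chosen only to make the arithmetic easy to follow.

```python
def alpha_bias_uncorrected(omega2, delta):
    """Expected bias in alpha when E[Y_i^2] = delta*alpha is used although E[Y_i^2] = delta*alpha + 2*omega2."""
    return 2.0 * omega2 / delta


# Hypothetical values: noise variance 1e-4 and sampling interval 0.01 give a bias of 0.02.
bias = alpha_bias_uncorrected(1e-4, 0.01)
# Sampling twice as often doubles the bias.
bias_finer = alpha_bias_uncorrected(1e-4, 0.005)
```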

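As for the variance of the squared returns, it follows from (14) that \(Y_i^2 = Y^{*^2}_i + \epsilon _i^2 + 2Y^*_i\epsilon _i\), and since the three terms are uncorrelated, we have that

\[ \text{Var}_{\theta}(Y_i^2)=\text{Var}_{\theta}(Y^{*^2}_i)+\text{Var}_{\theta}(\epsilon_i^2)+4\,\text{Var}_{\theta}(Y^*_i\epsilon_i). \tag{15} \]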

Since the noise term \(\epsilon_i\) is normally distributed with mean zero and variance \(2\omega ^2\), we find that \(\text {Var}_{\theta }(\epsilon _i^2)=2(2\omega^2)^2=8\omega ^4\). The efficient return process and the noise process are independent, and both have mean zero, so \(\text {Var}_{\theta }(Y^*_i\epsilon _i)=E_{\theta }[\epsilon ^2_i]E_{\theta }[Y^{*^2}_i]=2\omega ^2\Delta \alpha \). Plugging this into (15) yields

\[ \text{Var}_{\theta}(Y_i^2)=\text{Var}_{\theta}(Y^{*^2}_i)+8\omega^4+8\omega^2\Delta\alpha. \tag{16} \]

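Regarding the covariance structure of the squared returns, only the first-order covariance changes, due to the MA(1) structure of the return errors \(\epsilon \). Exploiting once again that \(Y^*\) and \(\epsilon \) are independent and both have mean zero, that the efficient returns are uncorrelated, and that \(\text{Cov}_{\theta}(\epsilon_i,\epsilon_{i+1})=-\omega^2\) implies \(\text{Cov}_{\theta}(\epsilon_i^2,\epsilon_{i+1}^2)=2\omega^4\) for jointly Gaussian variables, we obtain the following expression for the first-order covariance of the observed squared return series

\[ \text{Cov}_{\theta}(Y_i^2,Y_{i+1}^2)=\text{Cov}_{\theta}(Y^{*^2}_i,Y^{*^2}_{i+1})+2\omega^4, \tag{17} \]

while \(\text{Cov}_{\theta}(Y_i^2,Y_{i+j}^2)=\text{Cov}_{\theta}(Y^{*^2}_i,Y^{*^2}_{i+j})\) for \(j\ge 2\).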

We can now compute the noise-corrected version of the PBEF described above. One option is to estimate the noise variance \(\omega ^2\) in a first step, for instance with a non-parametric estimator, and plug the estimate into the noise-corrected PBEF used for estimating \(\kappa , \alpha \) and \(\sigma \). Another option is to expand the parameter vector to \(\theta = (\kappa , \alpha , \sigma , \omega ^2)\) and use the noise-corrected PBEF to estimate all four parameters. In the latter approach one has to choose a \(4 \times (q+1)\) weight matrix \(A(\theta )\), which results in a \(4\times 1\) estimating function \(G_n(\theta )\), so that the estimator \(\hat{\theta }\) is obtained by solving four equations in four unknowns. We follow the latter approach and estimate all four parameters in one step. Since we have chosen \(q=3\) and wish to estimate \(\omega ^2\), the weight matrix is a \(4 \times 4\) matrix. Provided \(A(\theta )\) is invertible, \(G_n(\theta )=\underline{0}\) is then equivalent to \(F_n(\theta )=\underline{0}\), so the weight matrix can be ignored when solving \(G_n(\theta )=\underline{0}\), and the sub-optimal PBEF considered so far is, in this setting, optimal.
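The noise corrections amount to a simple map from the moments of the efficient squared returns to those of the observed squared returns: \(E_{\theta}[Y_i^2]=\Delta\alpha+2\omega^2\), the variance correction in (16), and the first-order covariance correction in (17). The sketch below takes the noise-free moments as inputs (for the Heston model they would come from the formulas in Sect. 2.1.3) and \(\omega^2\) as an extra parameter, matching the one-step approach with \(\theta=(\kappa,\alpha,\sigma,\omega^2)\). The function name is hypothetical.

```python
import numpy as np


def noisy_squared_return_moments(mean_eff, var_eff, cov_eff, omega2):
    """Moments of observed Y_i^2 = (Y*_i + eps_i)^2, eps_i = U_i - U_{i-1}, U_i i.i.d. N(0, omega2).

    mean_eff : E[Y*_i^2] (= Delta * alpha)
    var_eff  : Var(Y*_i^2)
    cov_eff  : Cov(Y*_i^2, Y*_{i+j}^2) for j = 1, ..., q
    """
    mean = mean_eff + 2.0 * omega2                               # E[Y_i^2]
    var = var_eff + 8.0 * omega2**2 + 8.0 * omega2 * mean_eff    # equation (16)
    cov = np.array(cov_eff, dtype=float)                         # copy; the input is left unchanged
    cov[0] += 2.0 * omega2**2                                    # equation (17); lags j >= 2 unchanged
    return mean, var, cov


mean, var, cov = noisy_squared_return_moments(1.0, 3.0, [0.2, 0.1], omega2=0.5)
```

Setting `omega2=0` returns the noise-free moments unchanged, which is a convenient sanity check when switching between the two settings.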

2.2 A GMM estimator based on moments of integrated volatility

In this subsection, the GMM-based estimation procedure from Bollerslev and Zhou (2002) is reviewed and extended to handle MMS noise. In Bollerslev and Zhou (2002), the moment conditions for constructing the GMM estimator arise from the analytical derivations of the conditional first- and second-order moments of the daily integrated variance (IV) process. We will consider both a parametric and a non-parametric way of accounting for the presence of MMS noise in the data used for constructing the GMM estimator. In the parametric approach, the moment conditions are adjusted to hold in the MMS noise setting, and in the non-parametric approach we use a noise robust estimate of IV, namely the realized kernel (RK) from Barndorff-Nielsen et al. (2008a).

2.2.1 The GMM estimator without noise in the data

We now review the GMM estimator from Bollerslev and Zhou (2002) in the case where we have observations from the Heston model without MMS noise. Since the daily IV is latent, its realizations are approximated by the daily realized variance (RV). Replacing population moments of IV with sample moments of RV results in an easy-to-implement GMM estimator. The GMM-based estimation method crucially depends on the availability of high-frequency data, since such data ensure that RV is a good approximation of IV, so that the moment conditions hold approximately for RV.

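When considering the Heston model, the conditional moment conditions used for constructing the GMM estimator are given by

\[ E_{\theta}\big[\hbox{IV}_{t+1,t+2}-\delta\,\hbox{IV}_{t,t+1}-\beta\,\big|\,\mathcal{G}_t\big]=0, \qquad E_{\theta}\big[\hbox{IV}^2_{t+1,t+2}-H\,\hbox{IV}^2_{t,t+1}-I\,\hbox{IV}_{t,t+1}-J\,\big|\,\mathcal{G}_t\big]=0, \tag{18} \]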

where \(\hbox {IV}_{t,t+1}\) denotes the integrated variance from day \(t\) to day \(t+1\) and \(\mathcal {G}_t=\sigma \big (\{\hbox {IV}_{t-s-1,t-s} \,|\, s=0,1,2,\ldots \}\big )\). The functions \(\delta , \beta , H, I\), and \(J\) are functions of the parameters \(\kappa ,\alpha \), and \(\sigma \) and can be found in Appendix C. The functions \(\delta \) and \(\beta \) depend only on the drift parameters \(\kappa \) and \(\alpha \), which is why the second moment condition is needed to identify \(\sigma\). For further details on the derivation of the two conditional moment conditions, see Bollerslev and Zhou (2002) or Appendix C. To get enough moment conditions to identify \(\theta \), the two moment conditions are augmented by the instruments \(\hbox {IV}_{t-1,t}\) and \(\hbox {IV}_{t-1,t}^2\), yielding a total of six moment conditions. By replacing daily IV with daily RV and using the unconditional versions of the moment conditions, a feasible GMM estimator is obtained from

\[ \hat{\theta}=\arg\min_{\theta\in\Theta}\,g_T(\theta)'\,\hat{W}\,g_T(\theta), \tag{19} \]

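with \(\hat{W}=\hat{S}^{-1}\), where \(\hat{S}\) is a consistent estimate of the asymptotic covariance matrix of \(g_T(\theta )= \frac{1}{T-2}\sum _{t=1}^{T-2}f_t(\theta )\) and \(f_t(\theta )\) is given by

\[ f_t(\theta)=\begin{pmatrix} u_{1,t}(\theta)\\ u_{1,t}(\theta)\,\hbox{RV}_{t-1,t}\\ u_{1,t}(\theta)\,\hbox{RV}^2_{t-1,t}\\ u_{2,t}(\theta)\\ u_{2,t}(\theta)\,\hbox{RV}_{t-1,t}\\ u_{2,t}(\theta)\,\hbox{RV}^2_{t-1,t} \end{pmatrix}, \qquad \begin{aligned} u_{1,t}(\theta)&=\hbox{RV}_{t+1,t+2}-\delta\,\hbox{RV}_{t,t+1}-\beta,\\ u_{2,t}(\theta)&=\hbox{RV}^2_{t+1,t+2}-H\,\hbox{RV}^2_{t,t+1}-I\,\hbox{RV}_{t,t+1}-J. \end{aligned} \tag{20} \]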
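The six unconditional moment contributions \(f_t(\theta)\) and the objective \(g_T(\theta)'\hat{W}g_T(\theta)\) can be assembled from a daily RV series as sketched below. This is a sketch under stated assumptions: `rv[k]` holds \(\hbox{RV}_{k,k+1}\) in 0-based order, the model-implied coefficients \(\delta,\beta,H,I,J\) (whose Heston expressions are in Appendix C) are passed in as plain numbers, and the function names are hypothetical.

```python
import numpy as np


def bz_moment_contributions(rv, delta_, beta, H, I, J):
    """Rows f_t(theta)', t = 1, ..., T-2, from a daily RV series of length T."""
    rv = np.asarray(rv, dtype=float)
    lag, cur, lead = rv[:-2], rv[1:-1], rv[2:]          # RV_{t-1,t}, RV_{t,t+1}, RV_{t+1,t+2}
    u1 = lead - delta_ * cur - beta                      # first conditional moment residual
    u2 = lead**2 - H * cur**2 - I * cur - J              # second conditional moment residual
    instruments = np.column_stack([np.ones_like(lag), lag, lag**2])
    # Six conditions: u1 and u2, each interacted with 1, RV_{t-1,t} and RV_{t-1,t}^2.
    return np.column_stack([u1[:, None] * instruments, u2[:, None] * instruments])


def gmm_objective(f_rows, W):
    """g_T(theta)' W g_T(theta), where g_T is the average of the rows f_t(theta)'."""
    g = f_rows.mean(axis=0)
    return float(g @ W @ g)


rows = bz_moment_contributions([1.0, 2.0, 3.0, 4.0], delta_=1.0, beta=0.0, H=1.0, I=0.0, J=0.0)
value = gmm_objective(rows, np.eye(6))
```

In practice the rows would be recomputed for each trial value of \(\theta\) inside a numerical optimizer, with \(\hat{W}\) fixed at an estimate obtained in a first step.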

2.2.2 Parametrically correcting for noisy data in the GMM approach

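Recall that, when MMS noise is present, the observed returns have the following structure

\[ Y_{i,t}=Y^*_{i,t}+\epsilon_{i,t}, \]

where \(Y_{i,t}\), \(Y^*_{i,t}\) and \(\epsilon_{i,t}\) denote the \(i\)th intra-day observed return, efficient return and noise term on day \(t\).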

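If we denote the realized variance based on the MMS noise contaminated returns by \(\hbox {RV}^{\mathrm{MMS}}\) and the realized variance based on the efficient return process by \(\hbox {RV}^*\), we can rewrite \(\hbox {RV}^{\mathrm{MMS}}\) over day \(t\) as

\[ \hbox{RV}^{\mathrm{MMS}}_{t,t+1}=\sum_{i=1}^{m}Y_{i,t}^2=\hbox{RV}^*_{t,t+1}+\sum_{i=1}^{m}\epsilon_{i,t}^2+2\sum_{i=1}^{m}Y^*_{i,t}\epsilon_{i,t}, \tag{21} \]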

where the number of intra-day observations is given by \(m:=\Delta ^{-1}\).
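The decomposition (21) is an algebraic identity, and the expected value of the noise term is \(E_{\theta}\big[\sum_{i=1}^m\epsilon_{i,t}^2\big]=2m\omega^2\). The sketch below computes the three terms for one simulated day. The efficient returns are a Gaussian stand-in rather than Heston returns, the first return of the day is assumed to use the noise at the opening price (no overnight returns), and \(m\) and \(\omega^2\) are hypothetical values.

```python
import numpy as np


def rv_decomposition(y_eff, eps):
    """Split RV of Y_{i,t} = Y*_{i,t} + eps_{i,t} into the three terms of (21)."""
    y_eff = np.asarray(y_eff, dtype=float)
    eps = np.asarray(eps, dtype=float)
    rv_mms = np.sum((y_eff + eps) ** 2)   # RV^MMS_{t,t+1}
    rv_eff = np.sum(y_eff**2)             # RV*_{t,t+1}
    noise_sq = np.sum(eps**2)             # sum of squared noise increments
    cross = 2.0 * np.sum(y_eff * eps)     # cross term
    return rv_mms, rv_eff, noise_sq, cross


rng = np.random.default_rng(0)
m, omega2 = 390, 1e-6                                  # hypothetical: m intra-day returns, noise variance
y_eff = 0.01 / np.sqrt(m) * rng.standard_normal(m)     # stand-in for the efficient returns of one day
u = np.sqrt(omega2) * rng.standard_normal(m + 1)       # noise U at the m + 1 price observations
eps = np.diff(u)                                       # eps_{i,t} = U_i - U_{i-1}
rv_mms, rv_eff, noise_sq, cross = rv_decomposition(y_eff, eps)
expected_noise = 2 * m * omega2                        # E[noise_sq]
```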

The idea is to noise-correct the GMM estimation approach from Bollerslev and Zhou (2002) by adjusting the moment conditions from (20) so that they hold for \(\hbox {RV}^{\mathrm{MMS}}\). To do so, we have to extend the filtration we condition on to a larger filtration with respect to which \(\hbox {RV}^{\mathrm{MMS}}\) is measurable. The moment conditions from Bollerslev and Zhou (2002) were derived using the sigma-algebra \(\mathcal {G}_t=\sigma \big (\{\hbox {IV}_{t-s-1,t-s}\,|\, s=0,1,2,\ldots \}\big )\), which was approximated by \(\hat{\mathcal {G}}_t=\sigma \big (\{\hbox {RV}^*_{t-s-1,t-s}\,|\, s=0,1,2,\ldots \}\big )\). Instead of \(\hat{\mathcal {G}}_t\), we now consider the larger filtration \(\hat{\mathcal {H}}_t\) generated by \(\hbox {RV}^*\), the efficient return process \(Y^*\), and the noise process \(\epsilon \) up until the beginning of day \(t\). Define

\[ \hat{\mathcal{H}}_t=\sigma\big(\{\hbox{RV}^*_{t-s-1,t-s},\,Y^*_{i,t-s-1},\,\epsilon_{i,t-s-1}\,|\,i=1,\ldots,m,\ s=0,1,2,\ldots\}\big), \]

where \(Y^*_{i,t-1}\) and \(\epsilon _{i,t-1}\) for \(i=1,2,\ldots ,m\) denote the intra-day returns of the efficient price process and the MMS noise process during day \(t-1\) respectively. We now consider how to extend the first conditional moment condition from (18), using the decomposition from (21)

Let us first consider (22). From the moment conditions used in the no-noise case, we know that we approximately get zero when conditioning on \(\hat{\mathcal {G}}_t\) in (22) instead. Under the assumption that the efficient return series \(\{Y^*\}\) up until time \(t\) does not add significant explanatory power compared to the information contained in \(\hat{\mathcal {G}}_t\), (22) will also be approximately zero, since both the \(\{\hbox {RV}^*\}\) and \(\{Y^*\}\) series are independent of the noise process. We do not use overnight returns, so the MA(1) structure in the noise process only holds within the trading day. It therefore does not affect the conditional expectation of the squared noise terms, which simply equals the unconditional expectation \((1-\delta )2\omega ^2m\). Finally, since the noise process is independent of all realizations from previous days and has mean zero, the conditional expectation of the cross terms equals zero. All in all, this leaves us with the noise-adjusted moment condition

\[ E_{\theta}\big[\hbox{RV}^{\mathrm{MMS}}_{t+1,t+2}-\delta\,\hbox{RV}^{\mathrm{MMS}}_{t,t+1}-\beta-(1-\delta)2\omega^2m\,\big|\,\hat{\mathcal{H}}_t\big]=0. \tag{23} \]

As in the no MMS noise case we will augment the conditional moment condition (23) by \(\hbox {RV}^{\mathrm{MMS}}_{t-1,t}\) and \((\hbox {RV}^{\mathrm{MMS}}_{t-1,t})^2\) to get two additional moment conditions.
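The argument above can be summarized as follows. This is a sketch under stated assumptions rather than a reproduction of (21)–(23): it assumes that the first conditional moment condition in (18) has the Bollerslev and Zhou (2002) form \(E_{\theta }[\hbox {IV}_{t+1,t+2}-\delta \,\hbox {IV}_{t,t+1}-\beta \mid \mathcal {G}_t]=0\) with \(\delta =\hbox {e}^{-\kappa }\) and \(\beta =\alpha (1-\delta )\), with time measured in trading days, and that the noise is i.i.d. with variance \(\omega ^2\), so that the \(m\) noise returns of a day contribute \(2\omega ^2 m\) to RV on average.

```latex
\begin{aligned}
\hbox{RV}^{\mathrm{MMS}}_{t,t+1} &= \hbox{RV}^*_{t,t+1} + 2\sum_{i=1}^{m} Y^*_{i,t}\,\epsilon_{i,t} + \sum_{i=1}^{m}\epsilon_{i,t}^2,
\qquad E_{\theta}\Big[\sum_{i=1}^{m}\epsilon_{i,t}^2\Big] = 2\omega^2 m,\\
0 &\approx E_{\theta}\Big[\hbox{RV}^{\mathrm{MMS}}_{t+1,t+2} - \delta\,\hbox{RV}^{\mathrm{MMS}}_{t,t+1} - \beta - (1-\delta)\,2\omega^2 m \,\Big|\, \hat{\mathcal{H}}_t\Big].
\end{aligned}
```

Under these assumptions the only change relative to the no noise condition is the constant shift \((1-\delta )2\omega ^2 m\): the noise term enters with mean \(2\omega ^2m\) on both days, and the cross term drops out because it has mean zero.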

Turning our attention to the second conditional moment condition from (18), we wish to compute

This task is, however, not feasible, since it involves computing \(E_{\theta }[Y^{*^2}_{i,t+1}|\mathcal {H}_t]\) and \(E_{\theta }[Y^{*^2}_{i,t}|\mathcal {H}_t]\). If this were possible, we could use these expressions to form martingale estimating functions and would not need PBEFs for the estimation of our SV model. The problem is that we do not have an analytical expression for the conditional expectation of the squared returns during day \(t+1\) and day \(t\) given the filtration generated by the return series up until time \(t\). Instead, we settle for four moment conditions and simply use the unconditional expectation of (24), given by

The derivation of the moment condition above can be found in Appendix C.

2.2.3 Non-parametrically correcting for noisy data in the GMM approach

In the presence of i.i.d. MMS noise that is independent of the efficient log-price process, Hansen and Lunde (2006) show that the bias in RV equals \(2 \Delta ^{-1}\omega ^2\).
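The size of this bias can be checked numerically. The sketch below is a hypothetical illustration, not the paper's code: it assumes i.i.d. Gaussian noise \(u\) with variance \(\omega ^2\) added to the log-price, so that each of the \(m=1/\Delta \) intra-day noise returns is \(u_i-u_{i-1}\), and it only simulates the noise contribution \(\sum _i (u_i-u_{i-1})^2\) to RV (the cross term with the efficient returns has mean zero). The function name `noise_rv_contribution` is illustrative.

```python
import numpy as np

def noise_rv_contribution(omega2, m, n_days, rng):
    """Average over simulated days of sum_i (u_i - u_{i-1})^2 for i.i.d. N(0, omega2) noise u.

    Each day has m + 1 noisy price observations and hence m noise returns, which
    form an MA(1) sequence within the day. Returns the Monte Carlo mean.
    """
    u = rng.normal(0.0, np.sqrt(omega2), size=(n_days, m + 1))
    eps = np.diff(u, axis=1)                  # m noise returns per day
    return float((eps ** 2).sum(axis=1).mean())

m, omega2, alpha = 78, 0.001, 0.25            # Delta = 1/78; values from the Monte Carlo study
theory = 2 * m * omega2                       # 2 * Delta^{-1} * omega^2
sim = noise_rv_contribution(omega2, m, n_days=20_000, rng=np.random.default_rng(1))
print(f"theory {theory:.4f}, simulated {sim:.4f}, relative to alpha {theory / alpha:.1%}")
```

With \(\Delta =1/78\) and \(\omega ^2=0.001\) the bias is \(0.156\), that is, \(62.4\%\) of \(\alpha =0.25\), which is the relative bias in \(\alpha \) that appears when the noise is ignored in the Monte Carlo study.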

In fact, the variance of RV also diverges to infinity as the sampling frequency increases. In the setting with MMS noise, RV is no longer a consistent estimator of IV. We will therefore use a noise robust estimate of IV when constructing the GMM estimator. Instead of basing the estimation procedure on the time series of daily RV, we will use the time series of daily realized kernels (RK) from Barndorff-Nielsen et al. (2008a). The estimator is then constructed using the moment conditions (20), replacing RV with RK. We use the flat-top Tukey–Hanning\(_2\) kernel, since the resulting RK is closest to being efficient in the setting of i.i.d. noise that is independent of the observed process. As for the bandwidth, \(H\), we follow the asymptotic derivations from Barndorff-Nielsen et al. (2008a) and let \(H\propto (1/\Delta )^{1/2}\), in order to obtain the optimal rate of convergence, \((1/\Delta )^{1/4}\), of RK to IV.Footnote 11
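A minimal sketch of the flat-top realized kernel, \(\hbox {RK}=\gamma _0+\sum _{h=1}^{H}k\big (\tfrac{h-1}{H}\big )(\gamma _h+\gamma _{-h})\) with \(\gamma _h=\sum _j x_jx_{j-h}\) and the Tukey–Hanning\(_2\) weight \(k(x)=\sin ^2\{\tfrac{\pi }{2}(1-x)^2\}\), as defined in Barndorff-Nielsen et al. (2008a). Simplifications (assumptions, not the paper's implementation): the autocovariances use only returns inside the day, so \(\gamma _{-h}=\gamma _h\) and no end-point treatment is applied, and the constant in \(H=c\,(1/\Delta )^{1/2}\) is set to a hypothetical \(c=1\). The simulated days have constant volatility purely for illustration.

```python
import numpy as np

def tukey_hanning2(x):
    """Tukey-Hanning_2 weight k(x) = sin^2(pi/2 * (1 - x)^2) for 0 <= x <= 1."""
    return np.sin(np.pi / 2 * (1.0 - x) ** 2) ** 2

def realized_autocov(x, h):
    """gamma_h = sum_j x_j x_{j-h}, using only the returns of the given day."""
    return float(np.dot(x[h:], x[: len(x) - h]))

def flat_top_rk(x, H, k=tukey_hanning2):
    """Flat-top realized kernel gamma_0 + sum_{h=1}^H k((h-1)/H) (gamma_h + gamma_{-h})."""
    rk = realized_autocov(x, 0)
    for h in range(1, H + 1):
        rk += k((h - 1) / H) * 2.0 * realized_autocov(x, h)   # gamma_{-h} = gamma_h here
    return rk

rng = np.random.default_rng(0)
m, iv, omega2, n_days = 78, 0.25, 0.001, 5000   # constant daily IV (illustrative)
H = int(np.ceil(np.sqrt(m)))                     # H = c * m^{1/2} with hypothetical c = 1
eff = rng.normal(0.0, np.sqrt(iv / m), size=(n_days, m))
noise = np.diff(rng.normal(0.0, np.sqrt(omega2), size=(n_days, m + 1)), axis=1)
x = eff + noise                                  # observed 5-min returns
rv = (x ** 2).sum(axis=1)
rk = np.array([flat_top_rk(day, H) for day in x])
print(rv.mean(), rk.mean())   # RV is pushed up by about 2*m*omega2; RK stays close to iv
```

Because \(k(0)=1\), the first autocovariance enters with full weight, which cancels the leading noise term \(2m\omega ^2\) in \(\gamma _0\) and leaves a residual noise bias of only about \(2\omega ^2\).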

3 A Monte Carlo study of the finite sample performances

3.1 The setup and the case without noisy data

In this subsection and the next, the finite sample performances of the PBEF-based estimator from Sørensen (2000) and the GMM estimator from Bollerslev and Zhou (2002) are investigated in a Monte Carlo study. This subsection investigates the potential of using the intra-day returns directly in the PBEF-based estimator in a setting without MMS noise. The benchmark used for evaluating the performance of the PBEF-based method is the GMM approach from the previous section. In the next subsection, we first consider a setup with mild model misspecification, in the sense that MMS noise is added to the simulated data, and investigate how this affects the two estimation methods. Afterwards, the performance of the noise corrected estimation methods is studied.

The data used for constructing the estimators are simulated realizations from the Heston model (2). We use a first-order Euler scheme to simulate the volatility and log-price processes. The log-price is sampled every 30 s in the artificial 6.5 h of daily trading, for sample sizes of \(T=100,400,1000\) and \(4000\) trading days. From the simulated data, we construct daily realized variances based on the artificial 5-min returns, which we think of as our available data. Since we are using 5-min returns over 6.5 h of trading, we have \(\Delta = 1/78\).
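A sketch of the simulation step. Model (2) is not reproduced in this section, so the code assumes the specification \(dX_t=\sqrt{V_t}\,dW_t\), \(dV_t=\kappa (\alpha -V_t)\,dt+\sigma \sqrt{V_t}\,dB_t\) with zero drift, independent \(W\) and \(B\), time measured in trading days, and \(V_0=\alpha \). The "full truncation" rule \(\max (V,0)\) inside the square roots is a swapped-in detail to keep the Euler scheme well defined; it is not taken from the paper. The function name is hypothetical.

```python
import math
import numpy as np

def simulate_heston_5min(kappa, alpha, sigma, n_days, rng, steps_per_5min=10, m=78):
    """Euler scheme with 30-s steps; returns an (n_days, m) array of 5-min log-returns.

    Assumed model: dX = sqrt(V) dW, dV = kappa (alpha - V) dt + sigma sqrt(V) dB,
    W and B independent, V_0 = alpha, full truncation max(V, 0) in the diffusion terms.
    """
    n_steps = n_days * m * steps_per_5min
    dt = 1.0 / (m * steps_per_5min)                      # 30 s as a fraction of a 6.5-h day
    zx = (rng.standard_normal(n_steps) * math.sqrt(dt)).tolist()
    zv = (rng.standard_normal(n_steps) * math.sqrt(dt)).tolist()
    dx = [0.0] * n_steps
    v = alpha
    for j in range(n_steps):
        vp = v if v > 0.0 else 0.0                       # full truncation
        sv = math.sqrt(vp)
        dx[j] = sv * zx[j]                               # log-price increment
        v = v + kappa * (alpha - vp) * dt + sigma * sv * zv[j]
    # aggregate ten 30-s increments into each 5-min return
    return np.asarray(dx).reshape(n_days, m, steps_per_5min).sum(axis=2)

rng = np.random.default_rng(42)
y = simulate_heston_5min(0.10, 0.25, 0.10, n_days=400, rng=rng)   # Scenario 2 parameters
rv = (y ** 2).sum(axis=1)            # daily realized variance with Delta = 1/78
print(y.shape, rv.mean())            # E[RV] = E[IV over one day] = alpha under these assumptions
```

The Python loop is kept deliberately simple; for \(T=4000\) days one would vectorize across Monte Carlo replications rather than across time.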

To get a better grasp of the finite sample performance of the estimator based on PBEFs, as well as the GMM estimator, we conduct our Monte Carlo experiment in three different scenarios of parameter configurations.

- Scenario 1: \((\kappa , \alpha , \sigma ) = (0.03, 0.25, 0.10)\). The volatility process is highly persistent (near unit-root). The autocorrelation function of the volatility process is given by \(r(u,\theta )=\hbox {e}^{-\kappa u}\), so the correlation between values of the volatility process sampled five minutes apart equals \(\hbox {e}^{-0.03\cdot 1/78}=0.9996\). The half-life of the volatility process, \(\ln 2/\kappa \), equals 23.1 days.

- Scenario 2: \((\kappa , \alpha , \sigma )= (0.10, 0.25, 0.10)\). Here the volatility process is slightly less persistent due to the increase in the mean-reversion parameter. The half-life now equals 6.93 days.

- Scenario 3: \((\kappa , \alpha , \sigma )=(0.10, 0.25, 0.20)\). The volatility of volatility is now increased. This process is also close to the region where the Feller condition, \(2\kappa \alpha \ge \sigma ^2\), is violated; the condition guarantees that the CIR process stays strictly positive, and here \(2\kappa \alpha -\sigma ^2=0.01\) (compared to 0.04 in Scenario 2).
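The quantities quoted for the three scenarios follow from simple arithmetic, sketched below with \(\Delta =1/78\) trading days: the 5-min autocorrelation \(\hbox {e}^{-\kappa \Delta }\), the half-life \(\ln 2/\kappa \) in days, and the Feller margin \(2\kappa \alpha -\sigma ^2\). The dictionary layout is just an illustrative choice.

```python
import math

scenarios = {1: (0.03, 0.25, 0.10), 2: (0.10, 0.25, 0.10), 3: (0.10, 0.25, 0.20)}
delta = 1 / 78                                   # 5-min sampling interval in trading days

results = {}
for s, (kappa, alpha, sigma) in scenarios.items():
    rho_5min = math.exp(-kappa * delta)          # r(Delta, theta) = exp(-kappa * Delta)
    half_life = math.log(2) / kappa              # days until the autocorrelation halves
    feller_margin = 2 * kappa * alpha - sigma ** 2
    results[s] = (rho_5min, half_life, feller_margin)
    print(f"Scenario {s}: rho={rho_5min:.4f}, half-life={half_life:.2f} days, "
          f"2*kappa*alpha - sigma^2 = {feller_margin:.3f}")
```

The margin is 0.005 in Scenario 1 and 0.01 in Scenario 3, against 0.04 in Scenario 2, so Scenarios 1 and 3 are the two configurations closest to the Feller boundary.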

The same scenarios were considered in the Monte Carlo study conducted in Bollerslev and Zhou (2002). In Bollerslev and Zhou (2002), the authors only consider the rather large sample sizes \(T=1000\) and \(T=4000\), corresponding to 4 and 16 years of data. Our Monte Carlo study therefore also contributes by investigating the usability of this method when less data are available. We impose strict positivity of the parameter estimates \(\hat{\kappa },\hat{\alpha }\) and \(\hat{\sigma }\) and use the true values of \(\theta \) as starting values in the numerical routines. In each case the number of Monte Carlo replications is 1000.

An interesting question that arises when considering PBEFs is how to optimally choose \(q\). No theory exists for this choice, as this would require knowledge of the intractable conditional expectation \(E_{\theta }\big [f(Y_i)|Y_1,\ldots , Y_{i-1}\big ]\) that we wish to approximate. One approach for answering this question could be to consider the partial-autocorrelation function for \(f(Y_i)\) and the functions used for predicting \(f(Y_i)\) and then choose \(q\) as the cutoff point where the function dies out. In our setting this corresponds to considering the partial-autocorrelation function for the squared returns. However, inverting the covariance matrix \(C(\theta )\) can cause numerical challenges and inaccuracies for large values of \(q\).
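To make the roles of \(q\) and \(C(\theta )\) concrete, here is a sketch of the PBEF for \(f(y)=y^2\) with predictor space spanned by \(1,Y^2_{i-1},\ldots ,Y^2_{i-q}\), for the noise-free case. It assumes zero drift and no leverage, in which case \(E[Y_i^2]=\alpha \Delta \), \(\hbox {Var}(Y_i^2)=3\,\hbox {Var}(\hbox {IV}_i)+2(\alpha \Delta )^2\) with \(\hbox {Var}(\hbox {IV}_i)=\alpha \sigma ^2(\kappa \Delta -1+\hbox {e}^{-\kappa \Delta })/\kappa ^3\), and \(\hbox {Cov}(Y_i^2,Y_{i+k}^2)=\tfrac{\alpha \sigma ^2}{2\kappa ^3}(1-\hbox {e}^{-\kappa \Delta })^2\hbox {e}^{-\kappa (k-1)\Delta }\) for \(k\ge 1\). The weight matrix is taken to be the identity, so \(G_n(\theta )=\sum _i Z^{(i-1)}\{Y_i^2-\hat{\pi }^{(i-1)}(\theta )\}\); this is our reading of a "simple" choice, not necessarily the paper's. All function names are hypothetical.

```python
import numpy as np

def sq_return_moments(theta, delta, q):
    """Mean, variance and lag-1..q autocovariances of Y_i^2 (no drift, no leverage)."""
    kappa, alpha, sigma = theta
    ekd = np.exp(-kappa * delta)
    mean = alpha * delta                                          # E[Y_i^2] = E[IV_i]
    var_iv = alpha * sigma**2 * (kappa * delta - 1.0 + ekd) / kappa**3
    var_y2 = 3.0 * var_iv + 2.0 * mean**2                         # E[Y^4] = 3 E[IV^2]
    lags = np.arange(1, q + 1)
    acov = alpha * sigma**2 / (2.0 * kappa**3) * (1.0 - ekd) ** 2 * ekd ** (lags - 1)
    return mean, var_y2, acov

def predictor_coefficients(theta, delta, q):
    """MMSE predictor of Y_i^2 from 1, Y_{i-1}^2, ..., Y_{i-q}^2; returns (a0, a)."""
    mean, var_y2, acov = sq_return_moments(theta, delta, q)
    gamma = np.concatenate(([var_y2], acov))                      # gamma(0), ..., gamma(q)
    idx = np.arange(q)
    C = gamma[np.abs(idx[:, None] - idx[None, :])]                # Toeplitz matrix C(theta)
    a = np.linalg.solve(C, gamma[1:])   # solve C a = b rather than forming C^{-1} explicitly
    a0 = mean * (1.0 - a.sum())
    return a0, a

def pbef(theta, y, delta, q=3):
    """G_n(theta) = sum_i Z^{(i-1)} (Y_i^2 - pi^{(i-1)}(theta)), identity weight matrix."""
    y2 = np.asarray(y, dtype=float) ** 2
    n = len(y2)
    a0, a = predictor_coefficients(theta, delta, q)
    lagged = np.column_stack([y2[q - k : n - k] for k in range(1, q + 1)])  # Y_{i-k}^2
    resid = y2[q:] - a0 - lagged @ a                              # prediction errors
    Z = np.column_stack([np.ones(n - q), lagged])                 # Z^{(i-1)} = (1, lags)
    return Z.T @ resid

def pbef_objective(theta, y, delta, q=3):
    """Criterion G_n(theta)' G_n(theta), to be minimized numerically over theta > 0."""
    g = pbef(theta, y, delta, q)
    return float(g @ g)
```

With \(q=3\) the estimating function has four components for three parameters, which is why the criterion \(G_n'G_n\) is minimized rather than solving \(G_n(\theta )=\underline{0}\). Positivity can be imposed, for instance, by optimizing over \(\log \theta \) with a general-purpose minimizer.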

Instead, we start at the smallest interesting choice, \(q=3\), and later investigate the sensitivity w.r.t. the choice of \(q\).Footnote 12 When the parameters \(\theta =(\kappa ,\alpha ,\sigma )\) are estimated, we minimize \(G_n(\theta )'G_n(\theta )\) instead of solving \(G_n(\theta )=\underline{0}\). In the implementation of the GMM estimation procedure, we use continuously updated GMM, where the weight matrix is estimated simultaneously with the parameters \(\theta \). The asymptotic covariance matrix of \(g_T(\theta )\) is estimated using the heteroscedasticity and autocorrelation consistent estimator from Newey and West (1987). For the lag length in the Bartlett kernel, we follow the rule of thumb \(\lfloor 4(T/100)^{2/9}\rfloor \) from Newey and West (1987). The results on the finite sample performance of the two estimation methods in the absence of noise are summarized in Table 1.
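The GMM side of the implementation can be sketched as follows: the rule-of-thumb lag length, a Bartlett-kernel (Newey–West) estimate of the long-run covariance of the moment contributions, and the continuously updated criterion \(T\,\bar{g}'S(\theta )^{-1}\bar{g}\), in which \(S\) is re-estimated at every candidate \(\theta \). The input `g` is assumed to be the \(T\times r\) array of moment contributions \(g_t(\theta )\) from the Bollerslev and Zhou (2002) conditions, which are not coded here; demeaning `g` before forming \(S\) is our choice, and the function names are hypothetical.

```python
import numpy as np

def nw_lag(T):
    """Rule-of-thumb lag length floor(4 (T/100)^(2/9))."""
    return int(np.floor(4.0 * (T / 100.0) ** (2.0 / 9.0)))

def newey_west(g):
    """Bartlett-kernel HAC estimate of the long-run covariance of a T x r moment series."""
    g = np.asarray(g, dtype=float)
    T = g.shape[0]
    L = nw_lag(T)
    u = g - g.mean(axis=0)                       # demeaned moment contributions
    S = u.T @ u / T
    for lag in range(1, L + 1):
        w = 1.0 - lag / (L + 1.0)                # Bartlett weight
        gamma_l = u[lag:].T @ u[:-lag] / T       # lag-l autocovariance matrix
        S += w * (gamma_l + gamma_l.T)
    return S

def cu_gmm_objective(g):
    """Continuously updated GMM criterion T * gbar' S^{-1} gbar for g = g_t(theta)."""
    g = np.asarray(g, dtype=float)
    gbar = g.mean(axis=0)
    S = newey_west(g)
    return float(g.shape[0] * gbar @ np.linalg.solve(S, gbar))

print([nw_lag(T) for T in (100, 400, 1000, 4000)])
```

For the sample sizes in the study the rule gives lag lengths 4, 5, 6 and 9. The Bartlett weights keep \(S\) positive semi-definite, which matters because \(S\) is inverted at every step of the optimization.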

The potential of PBEFs is clear from Panel A in Table 1. The estimators are practically unbiased for the large sample sizes, \(T=1000,4000\), and the biases for the small sample sizes are acceptable. For the small sample sizes, there is a small downwards bias in \(\kappa \) and a more pronounced upwards bias in \(\sigma \). The root MSREs behave as expected: they decay with \(T\) and are roughly halved when the sample size quadruples from \(T=100\) to \(T=400\) and from \(T=1000\) to \(T=4000\). The mean-reversion rate, \(\kappa \), is most accurately estimated in terms of root MSRE, whereas the other drift parameter, \(\alpha \), has the highest root MSRE. All three parameters are easiest to estimate in Scenario 2, where the volatility process is less persistent and less volatile. This could be because the other two scenarios are closer to the region where the Feller condition is violated. In Scenario 1, with a highly persistent volatility process, the root MSREs are higher than in the other two scenarios.

Turning our attention to Panel B of Table 1, we see that the GMM estimator from Bollerslev and Zhou (2002) performs poorly when the sample size is small. In fact, the results for \(T=100\) indicate that the method is not working for sample sizes this small. For the larger sample sizes, our results match those found in Bollerslev and Zhou (2002). The table reveals an upwards bias in \(\kappa \) and a smaller downwards bias in \(\alpha \). The volatility of volatility parameter \(\sigma \) has a small, yet systematic, upwards bias that actually seems to worsen when the sample size increases.Footnote 13 The drift parameters again appear to be easiest to estimate in Scenario 2. In contrast to the results for the PBEF-based estimator, the most accurate estimates of \(\sigma \) are now found in Scenario 3, where the volatility of volatility is high.

If we compare Panels A and B of Table 1, it is clear that the PBEF-based method outperforms the GMM approach, especially when the sample size is small. The informational content of 100 observations of daily realized variance is too small to fully extract the dynamics of the underlying volatility process, compared to 7800 observations of intra-day squared returns, and in general the PBEF-based estimator appears able to exploit the extra information contained in the intra-daily returns. Furthermore, a GMM estimator based on only 100 observations will often produce inaccurate estimates. The gains from using PBEFs are most prominent for the mean-reversion rate, \(\kappa \), whereas the root MSREs for \(\alpha \) are similar across the two estimation methods for the larger sample sizes.

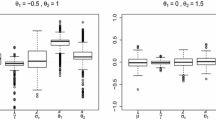

To investigate the optimal choice of \(q\) in the PBEF, we examine how the root MSREs change as \(q\) increases. In Fig. 1 the three scenarios are considered for \(T=1000\), and the root MSREs for the three parameters are plotted against the choice of \(q\). The plots for \(\kappa \) and \(\sigma \) have almost identical shapes. In Scenarios 1 and 3, where the volatility process is close to the region where the Feller condition is violated, \(q=3\) seems to be the optimal choice for \(\kappa \) and \(\sigma \). In Scenario 2, \(q=6\) appears to be the optimal choice for both parameters. For \(\alpha \), there does not seem to be much variation in the root MSRE across the choice of \(q\), although in Scenarios 1 and 3 the root MSRE decreases slightly when \(q\) increases. Since \(q=3\) is the optimal choice for \(\kappa \) and \(\sigma \) in two of the three scenarios, we use \(q=3\) in our Monte Carlo study, even though the variations in the root MSREs are small.

3.2 Including noise in the observations

In this section, the impact of MMS noise on the parameter estimates from the two estimation procedures is investigated. We consider the noise level, \(\omega ^2=0.001\), as this choice is in line with the empirical estimates found for stock returns in Hansen and Lunde (2006).Footnote 14 First, we will simulate data from the Heston model with the inclusion of MMS noise and then perform parameter estimation ignoring the presence of noise. The resulting estimates will be analyzed in the next subsection. Then, in the following subsection, the finite sample performance of the noise corrected estimators will be investigated.

3.2.1 The impact of failing to correct for the presence of noise

The finite sample performances of the two estimation methods without noise correction are summarized in Table 2. Panel A of the table reports the results for the PBEF-based estimator, and Panel B reports the results for the GMM estimator based on RV.

From the results, it follows that the inclusion of MMS noise in the observed process leads to biases in the parameters. Panel A of the table shows that the downwards bias in \(\kappa \) has worsened in the presence of noise and the small downwards bias in \(\alpha \) has turned into a severe upwards bias. The bias in \(\alpha \) matches exactly the expected bias, \(\tfrac{2\omega ^2}{\Delta }\), which in relative terms equals \(62.4\,\%\). The upwards bias in \(\sigma \) has also worsened, but \(\alpha \) is the parameter that is most affected by ignoring the presence of noise. For all three parameters, the highest root MSREs still occur in Scenario 1, and the lowest in Scenario 2. In Panel B, the results for the performance of the GMM estimator based on RV reveal that the bias in \(\kappa \) has roughly doubled compared to the no noise setting, and it is approximately twice the size of the bias in \(\kappa \) in Panel A. As for the other drift parameter, the downwards bias in \(\alpha \) has been turned into a severe upwards bias of a similar size as the one found in Panel A. The sign of the bias in \(\sigma \) has also changed, and the table now reports a downwards bias around the same size as the upwards bias in Panel A.

The root MSREs reported in Table 2 have all gone up compared to the no noise case from Table 1, and the results show that failing to correct for noise strongly impacts the parameter estimates. The long run mean of volatility, \(\alpha \), appears to be affected the most. As in the no noise setting of Table 1, the performance of the GMM-based estimator is quite poor for small sample sizes, and it only produces trustworthy results when \(T=400\) and we are in Scenario 2 or 3. When the larger sample sizes \(T=1000\) and \(T=4000\) are considered, the root MSREs of \(\kappa \) are higher in Panel B, but the impact of noise on the root MSREs of \(\alpha \) and \(\sigma \) appears to be the same across the two estimation methods.

3.2.2 Finite sample performances of the noise corrected estimation procedures

The performances of the estimator based on the noise corrected PBEF and the GMM estimator where noise is corrected for parametrically are reported in Table 3. Table 4 presents the results for the GMM estimator based on RK.

The results in Panel A of Table 3 show that the noise corrected PBEF-based estimation procedure is able to correctly account for the presence of noise and produce unbiased estimates for the larger sample sizes. In fact, the small upwards bias in \(\sigma \) found in Table 1 has decreased substantially for the smaller sample sizes, and it disappears as the sample size grows. The variance of the noise process, \(\omega ^2\), is also accurately estimated. The root MSREs for \(\kappa \) are in general very similar to those reported in Table 1. For the other drift parameter, \(\alpha \), the root MSREs have now gone down compared to the no noise setting. Due to the bias reduction in \(\sigma \), the root MSREs for \(\sigma \) at the smaller sample sizes \(T=100\) and \(T=400\) are also lower than those reported in Table 1. For the larger sample sizes \(T=1000\) and \(T=4000\), the root MSREs for \(\sigma \) are similar to or slightly higher than those found in Table 1. For all three parameters, the root MSREs are smallest in Scenario 2, as was also the case in the no noise setting.

The performance of the parametrically noise corrected GMM estimator is summarized in Panel B of Table 3. An inspection of the results shows that although the GMM estimator does not produce unbiased estimates, it succeeds at incorporating the noise and produces estimates with small biases comparable to those from Table 1. In fact, the biases are now a bit lower than in Table 1, with the difference being most apparent in Scenario 3. The results for the smallest sample size, \(T=100\), appear unreliable, in the sense that 100 observations are simply not enough to infer the dynamics of the underlying volatility process and produce estimates with an acceptable level of bias and root MSREs. Comparing the root MSREs to those reported in Table 1, we find that the root MSREs are in general similar to the no noise setting, with the root MSREs for \(\kappa \) and \(\sigma \) being a bit bigger than in Table 1 and the root MSREs for \(\alpha \) a bit smaller. The patterns across the different scenarios found in Panel B of Table 1 are repeated: the drift parameters have lower root MSREs in Scenario 2, whereas \(\sigma \) is most accurately estimated in Scenario 3.

When comparing Panels A and B of Table 3, it is clear that the PBEF-based estimation method produces more accurate parameter estimates. The root MSREs of \(\alpha \) are quite similar for the two methods, but for the other two parameters and the noise variance the root MSREs are lower in Panel A. The difference between the two methods is most prominent for the mean-reversion parameter, which is extremely well estimated with the PBEF-based method.

We also considered estimating the Heston model using noisy data, by simply replacing RV with a realized kernel, RK, in the original moment conditions from Bollerslev and Zhou (2002). The finite sample performance of this estimator is summarized in Table 4. Even though the estimator is based on six moment conditions, compared to the four moment conditions used for constructing the parametrically noise corrected estimator, the performance is not nearly as good. The biases in the parameters are larger, but have the same signs as in Panel B of Table 3. The small systematic bias in \(\sigma \) due to the discretization error and noisy data has also increased compared to Table 1. This could be explained by the slower rate of convergence of RK to IV, compared to the convergence rate of RV. The biases in the drift parameters are however comparable to those reported in the no noise setting, and for \(\kappa \) the bias is in fact a bit lower. The two ways of correcting for noise in the GMM approach give rise to somewhat similar results for the mean-reversion rate \(\kappa \), but the root MSREs are lower for \(\alpha \) and \(\sigma \) when the noise corrected estimator based on RV is employed. The investigation of robustness towards misspecification of the noise process is outside the scope of this paper. In our setting, without model misspecification, the parametric approach of correcting for the noise outperforms the non-parametric approach.

In conclusion, the PBEF-based estimation method produced promising results, and it appears that the method is able to exploit the extra information contained in directly using the dynamics of the intra-day returns, instead of aggregating them into realized measures. What remains to be investigated is how the two estimation methods perform empirically.

4 Empirical application

In this section, we use actual 5-min returns as input in the two estimation methods analyzed in our Monte Carlo study. We are aware that the Heston model might not fit the chosen data. As such our application should be seen as a check of what happens when the estimation methods are used to fit a (possibly misspecified) model to real data.

4.1 Data description

For our empirical illustration we use 5-min returns for SPDR S&P 500 (SPY). SPY is an exchange traded fund (ETF) that tracks the S&P 500. The sample covers the period from January 4, 2010 through December 31, 2013. We sample the first price each day at 9:35 and then every 5 minutes until the close at 16:00. Thus, we have 77 daily 5-min returns for each of the 1006 trading days in our sample, yielding a total of 77,462 5-min returns. We cleaned the data following the step-by-step cleaning procedure used in Barndorff-Nielsen et al. (2008b).

As a first inspection of the data characteristics, we consider the empirical autocorrelation functions for the squared 5-min returns, reported in the top panel of Fig. 2. The autocorrelation function does not seem to be exponentially decaying, revealing that the Heston model will not be able to properly account for the dynamics of the data. However, our main interest lies in investigating whether the two estimation methods will yield similar parameter estimates or whether they perform differently. The autocorrelation function also exhibits cyclical patterns corresponding to a lag length of one trading day. This is due to the well-documented intra-day periodicity in volatility in foreign exchange and equity markets, see for instance Andersen and Bollerslev (1997) or Dacorogna et al. (1993).

The intra-daily periodicity in volatility might cause the two estimation methods to perform differently. It should not affect the GMM-based estimator much, since the intra-daily pattern in volatility is “smoothed out” when the 5-min returns are aggregated into the daily realized measures. The same does not apply to the estimator based on PBEFs, since this estimator is based directly on the squared 5-min returns. Hence, the intra-daily periodicity might affect the parameter estimates when the PBEF-based estimation method is carried out. To avoid this, the intra-daily volatility pattern is captured by fitting a spline function to the intra-daily averages of squared 5-min returns using a non-parametric kernel regression.

The data are then adjusted for periodicity in intra-daily volatility by dividing the squared returns by the fitted values from the spline function, matched according to the intra-daily 5-min interval in which the observation falls. Finally, the squared returns are normalized such that the overall variance of the squared returns remains unchanged. The bottom panel of Fig. 2 displays the autocorrelation function for the adjusted data. From the figure, it is clear that the intra-daily periodicity has been removed. It is, however, also evident that the autocorrelation function is not exponentially decaying, rendering the Heston model a poor model choice. There seems to be a need for at least a two-factor SV model to properly capture the dynamics of the autocorrelation function. One factor is needed for capturing the fast decay in the autocorrelation function at the short end, whereas the other factor should be slowly mean reverting and thereby account for the persistent, long memory-like component of the variance.
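A minimal sketch of this adjustment. It swaps in a Gaussian-kernel (Nadaraya–Watson) smoother over the intra-day slot index in place of the spline fit, uses a hypothetical bandwidth of 3 slots, and reads the final normalization as keeping the overall mean of the squared returns unchanged; all of these are assumptions. The U-shaped volatility pattern in the example is synthetic, not SPY data.

```python
import numpy as np

def periodicity_adjust(r, bandwidth=3.0):
    """Divide squared 5-min returns by a smoothed intra-day pattern.

    r: (n_days, m) array of 5-min returns. Returns adjusted squared returns,
    rescaled so that their overall mean equals that of the raw squared returns.
    """
    r2 = np.asarray(r, dtype=float) ** 2
    m = r2.shape[1]
    slot_avg = r2.mean(axis=0)                                  # average squared return per slot
    s = np.arange(m)
    w = np.exp(-0.5 * ((s[:, None] - s[None, :]) / bandwidth) ** 2)
    pattern = (w @ slot_avg) / w.sum(axis=1)                    # kernel-smoothed pattern
    adj = r2 / pattern[None, :]                                 # match each slot to its fit
    return adj * (r2.mean() / adj.mean())                       # restore the overall level

rng = np.random.default_rng(0)
n_days, m = 2000, 77
slot = np.arange(m)
sd = 1.0 + 2.0 * ((slot - 38) / 38.0) ** 2                      # synthetic U-shaped volatility
r = 0.001 * sd[None, :] * rng.standard_normal((n_days, m))
adj = periodicity_adjust(r)
before, after = (r ** 2).mean(axis=0), adj.mean(axis=0)
print(before.max() / before.min(), after.max() / after.min())  # ratio shrinks towards 1
```

Because the smoother averages over neighbouring slots, it slightly underestimates the pattern at the open and close, where the U-shape is steepest; a boundary-corrected smoother or a spline, as in the text, reduces this effect.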

4.2 Estimation results

In the Heston model, the decay rate of the autocorrelation function for the squared returns is uniquely governed by the mean-reversion parameter \(\kappa \). Due to the dynamic structure of the autocorrelation function discussed above, the choice of predictor space might heavily influence the estimated value of \(\kappa \). Depending on the largest time lag of past squared returns included in the predictor space, different dynamics might be captured. When fitting the Heston model to the adjusted data, we hold the dimension of the predictor space fixed at 4 (\(q=3\)) but consider four different choices of basis elements spanning the predictor space. The four cases correspond to having 1 h, 1, 2, and 4 days between each of the basis elements.Footnote 15 The time lag between the basis elements is denoted by \(l\). The simple choice of weight matrix used in the Monte Carlo study is also employed when constructing the PBEFs. We only consider the noise corrected estimators, as MMS noise is a stylized feature of the present data. The variance of the noise process is not estimated in the GMM approach based on RK, so instead we report the non-parametric estimate of the daily MMS noise variance, using the formula favored in Barndorff-Nielsen et al. (2008a),

with RK and RV constructed using the intra-daily adjusted 5-min returns and where \(\hat{\omega }^2= \hbox {RV}/(2m)\). In our case \(m=77\), and the overall estimate of the MMS noise variance is found by averaging the 1006 daily estimates. For the SPY data we find \(\check{\omega }^2_{\mathrm{avg}}=0.0015629\). The realized kernel is now computed using the Parzen kernel with \(H\propto (1/\Delta )^{3/5}\), as recommended in Barndorff-Nielsen et al. (2008b) for empirical applications. The resulting convergence rate of RK to IV is \((1/\Delta )^{1/5}\). The choice of bandwidth \(H\) that gave the convergence rate \((1/\Delta )^{1/4}\) in our Monte Carlo study relies heavily on the assumption of i.i.d. MMS noise, which might not hold in practice. The results from fitting the Heston model to the data, using the various estimators, are reported in Table 5. Computation of asymptotic standard errors for the PBEF-based estimator is challenging. It involves computation of the matrix \(\overline{M}_n(\theta )\), which also enters the expression for the optimal weight matrix \(A^*(\theta )\), and this was in fact the main reason why we focused on the sub-optimal PBEF in our Monte Carlo study. Therefore, we resort to bootstrap methods for computing standard errors. The standard errors and \(95\%\) confidence intervals (CI) reported in Table 5 are computed using the moving block bootstrap, as recommended in Lahiri (1999). The confidence intervals are equal-tailed intervals, constructed using the percentile method. For the block length, \(T^{1/3} (\approx \!10)\) days is the rule of thumb advocated in Hall et al. (1995) for constructing standard errors. Due to the strong persistence in the data, we choose to be conservative and use a block length of 20 days.
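A sketch of the moving block bootstrap with equal-tailed percentile intervals. It assumes the estimator can be written as a function of an array whose first axis indexes trading days (for example an \((n_{\mathrm{days}}, m)\) array of 5-min returns), so that whole days are resampled in overlapping blocks of consecutive days; the function name, the number of replications and the placeholder data are illustrative choices.

```python
import numpy as np

def moving_block_bootstrap(x, estimator, block_len=20, n_boot=999, level=0.95, rng=None):
    """Moving block bootstrap standard errors and equal-tailed percentile CIs.

    x: array whose first axis indexes trading days; estimator maps such an array
    to a parameter value or vector. Returns (se, lower, upper).
    """
    rng = np.random.default_rng() if rng is None else rng
    x = np.asarray(x)
    T = x.shape[0]
    n_blocks = int(np.ceil(T / block_len))
    n_starts = T - block_len + 1                         # number of overlapping blocks
    stats = []
    for _ in range(n_boot):
        starts = rng.integers(0, n_starts, size=n_blocks)
        idx = (starts[:, None] + np.arange(block_len)[None, :]).ravel()[:T]
        stats.append(np.atleast_1d(estimator(x[idx])))   # re-estimate on resampled days
    stats = np.array(stats)
    se = stats.std(axis=0, ddof=1)
    tail = 100 * (1 - level) / 2
    lower, upper = np.percentile(stats, [tail, 100 - tail], axis=0)
    return se, lower, upper

rng = np.random.default_rng(0)
x = rng.standard_normal(1006)                  # placeholder daily series, not SPY data
se, lower, upper = moving_block_bootstrap(x, np.mean, block_len=20, rng=rng)
print(se, lower, upper, 1006 ** (1 / 3))       # rule-of-thumb block length is about 10
```

In the application, `estimator` would wrap the full PBEF or GMM estimation, so each replication requires a numerical optimization; this is what makes the bootstrap the expensive part of the empirical analysis.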

Table 5 also reports the fit to the moments of the adjusted squared returns implied by the parameter estimates from the various estimation methods. The parameter estimates vary across the estimation methods, but all are meaningful and within the same range. From Table 5, we see that the two different ways of noise correcting the GMM estimator affect the parameter estimates, especially \(\sigma \). The estimated noise variance in the parametrically noise corrected method is only about half of the non-parametric estimate \(\check{\omega }^2_{\mathrm{avg}}\). It also becomes evident that the choice of the predictor space, represented by the variable \(l\), strongly affects the parameter estimates. When \(l\) is low, \(\kappa \) is high, and the PBEF estimator puts more emphasis on capturing the fast decaying part in the short end of the autocorrelation function. As \(l\) increases, \(\kappa \) drops drastically, revealing the need for several volatility factors in order to fully capture the dynamics of the data. The split of the mean of the squared adjusted returns between the long run mean of the volatility process, \(\alpha \), and the variance of the MMS noise, \(\omega ^2\), also varies with \(l\). An exception is when \(l=77\), where the estimated noise variance is similar to the estimate obtained with the parametrically noise corrected GMM method; otherwise the noise variance is either severely underestimated or potentially overestimated. This could be a consequence of the i.i.d. Gaussian noise assumption, which might not hold. It could also be that the PBEF-based method has problems identifying \(\omega ^2\) and \(\alpha \) in the data at hand. We therefore also consider fixing the noise variance at the estimate \(\check{\omega }^2_{\mathrm{avg}}\) and only estimating the three parameters of the Heston model. This procedure is denoted PBEF 2 step in the table.
The results from the PBEF 2 step method reveal that fixing the noise variance mainly affects the estimate of \(\alpha \), which is now constant across the choice of predictor space. The PBEF 2 step method also appears more stable, with the relative standard errorsFootnote 16 being constant across the values of the time lag in the predictor space, except for the intra-day time lag (\(l=12\)). Checking for parameter stability across the different predictor spaces employed could serve as a general robustness check that might reveal model misspecification.

The last three columns of Table 5 report the model-implied fit to the sample moments of the data. The mean of the adjusted squared returns is extremely well fitted by the PBEF 2 step procedure, whereas the other methods do not give as good fits. The variance is however reasonably well matched when the lower values of \(l\) are used in the PBEF 1 step procedure, but is poorly matched when \(l\) is high. The PBEF 2 step procedure and the GMM estimator based on RK also produce reasonable fits to the variance. The first-order autocorrelation of the squared returns is best matched by the GMM method based on RV and the PBEF 1 step method with \(l=12\), with the PBEF 2 step approach being the runner-up. Not surprisingly, the overall best fit is obtained by the PBEF-based method, and the fit appears more stable when the PBEF 2 step approach is used.

From Table 5 we also observe that the Feller condition is violated in all the estimation procedures, indicating that the Heston model provides a poor fit to the data.Footnote 17 However, this does not affect the specific aim of this section, which was to investigate how the two different estimation methods handle real data with possible model misspecification. The problem seems to be that the dynamic structure implied by the Heston model is not flexible enough to adequately model the observed dynamics. The need for several volatility factors is best highlighted by the PBEF-based estimation method. The flexibility of the PBEF-based method can in general serve as a robustness check of the specified model, including the noise specification.

5 Conclusion and final remarks

The general theory underlying PBEFs was reviewed and detailed. We explicitly constructed PBEFs for parameter estimation in the Heston model with and without the inclusion of noise in the data. The link between optimal GMM estimation and the optimal PBEF was also derived. As a benchmark, we considered the GMM estimator from Bollerslev and Zhou (2002), and we extended the method to handle noisy data in a parametric and non-parametric way. The finite sample performance of the estimator based on PBEFs was investigated in a Monte Carlo study and compared to that of the GMM estimator. In the no MMS noise setting, there are gains to be made from using PBEFs, both in terms of bias and root MSRE, especially when the sample size is small. The gain from using the PBEF-based method was most prominent for the mean-reversion parameter, \(\kappa \), that was extremely well estimated. The PBEF-based method produced promising results in all three parameter configurations, but the root MSREs were lower when the volatility process was less persistent and less volatile.

Including MMS noise in the observation equation, but neglecting to correct for it, produced biased estimates with the upwards bias in the long run average variance, \(\alpha \), being most severe.

We then considered the performance of the noise corrected estimation methods. The PBEF-based estimator and the parametrically noise corrected GMM estimator produced results similar to those found in the no MMS noise setting. The non-parametric approach, where the GMM estimator is based on RK, did not perform as well. Hence, the GMM estimator could not compete with the results obtained using the noise corrected PBEF.

In our empirical application, by fitting the Heston model to SPY data, we investigated how the two different approaches handle real data. The empirical application revealed that the choice of estimation approach affects the parameter estimates. The study also made clear how the great flexibility of the PBEF-based estimation method can serve as a way of conducting robustness checks, for instance by checking for parameter stability across different time spans of the predictor space.

It would be of interest to see how the estimation method based on PBEFs performs if we extend the Monte Carlo setup by relaxing the assumption of i.i.d. noise. This would, however, complicate the construction of the MMS noise corrected PBEF and the recalculation of the moments used for constructing the GMM estimator. A solution to this potential problem could be to filter out the noise in a first step using the method of pre-averaging introduced by Jacod et al. (2009), instead of modeling the noise directly. The performance of this approach is still to be investigated. Since the PBEF-based estimation method is quite general, an important contribution to the existing literature would be to consider PBEFs in a setting where the driving sources of randomness are general Lévy processes, like the models considered in Brockwell (2001), Barndorff-Nielsen and Shephard (2001), and Todorov and Tauchen (2006). Finally, quantifying the gain from using the optimal PBEF in different settings, as well as how to simulate or approximate the optimal weight matrix, would also be a topic for future research.

Notes

An implementable version of the optimal PBEF will, however, require simulation of a covariance matrix. Simulation-based estimation methods for continuous time SV models, such as indirect inference (see Gourieroux et al. 1993), the efficient method of moments (EMM; see Gallant and Tauchen 1996) or Markov chain Monte Carlo (MCMC; see Eraker 2001), are not as easily implemented, since many of them require substantial computational effort. Another way of tackling the difficulties that arise in parameter estimation for continuous time SV models is based on approximations of the likelihood function; see for example Aït-Sahalia and Kimmel (2007).

One could also choose to predict functions of the type \(f(Y_i,\ldots ,Y_{i-s})\), see Sørensen (2011), but for the purpose of this study \(f(Y_i)\) is general enough. The function \(f\) can be chosen freely but will often take the form \(f(Y_i)=Y_i^{\nu },\; \nu \in \mathbb {N}\), so that the moments needed to find the (optimal) PBEF are easier to calculate. PBEFs can in fact be further generalized to a setup where several functions of the data, \(f_j(Y_i),\; j=1,\ldots ,N\), are predicted, see Sørensen (2000) and Sørensen (2011). This generalization will, however, not be necessary for estimating the SV model we are considering.

Unique in the sense of mean square distance.

In the case of the Heston model, we will consider basis elements of the form \(Z_k^{(i-1)}=Y_{i-k}^2, \, k=1,\ldots ,q\).

PBEFs with finite-dimensional predictor spaces can also be computed for non-stationary processes, but in this case computing the MMSE predictor, \(\hat{\pi }(\theta )\), is a bit more complicated since the coefficients, \(\hat{a}_0(\theta ),\ldots , \hat{a}_q(\theta )\), become time varying.

Note that the sum starts at \(i=s+1\) since \(Z_k^{(i-1)}\) is only well defined for \(i\ge s+1\).
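As a sketch of how this sum can be evaluated, the Python code below computes \(G_n(\theta)=A(\theta)\sum_{i=s+1}^{n} Z^{(i-1)}[f(Y_i)-\hat{\pi}^{(i-1)}(\theta)]\) for \(f(y)=y^2\) and the basis \(Z_k^{(i-1)}=Y_{i-k}^2,\ k=1,\ldots,q\), so that \(s=q\). We assume that \(Z^{(i-1)}\) is the vector of basis elements including the constant. The functions `lagged_squares` and `pbef` are hypothetical. The predictor coefficients and \(A\) are passed in directly, whereas in the paper \(\hat{a}_0(\theta),\ldots,\hat{a}_q(\theta)\) follow from model-implied moments of the Heston model, which the sketch does not compute.

```python
import numpy as np

def lagged_squares(y, q):
    """Rows (1, Y_{i-1}^2, ..., Y_{i-q}^2) and targets Y_i^2 for i = q+1, ..., n."""
    y2 = np.asarray(y, dtype=float) ** 2
    n = y2.size
    cols = [np.ones(n - q)] + [y2[q - k : n - k] for k in range(1, q + 1)]
    return np.column_stack(cols), y2[q:]

def pbef(y, a0, a, A):
    """G_n = A @ sum_{i=q+1}^{n} Z^{(i-1)} [Y_i^2 - a0 - sum_k a_k Y_{i-k}^2]."""
    a = np.asarray(a, dtype=float)
    Z, f = lagged_squares(y, a.size)
    resid = f - (a0 + Z[:, 1:] @ a)   # prediction errors f(Y_i) - pi_hat^{(i-1)}
    return A @ (Z.T @ resid)          # sum over i = s+1, ..., n, then weight by A

# Sanity check (not part of the method): sample OLS coefficients make the
# unweighted sum vanish, because these are exactly the sample normal equations.
rng = np.random.default_rng(1)
y = rng.standard_normal(2_000)
Z, f = lagged_squares(y, q=3)
beta = np.linalg.lstsq(Z, f, rcond=None)[0]
G = pbef(y, beta[0], beta[1:], np.eye(4))
print(G)
```

Starting the sum at \(i=s+1\) corresponds to the slices above. The first row uses \(Y_1^2,\ldots,Y_q^2\) to predict \(Y_{q+1}^2\), so no observation before \(Y_1\) is ever needed.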

Higher powers of \(y\) could also have been considered, but very high moments are often unreliable in empirical work, so we follow Sørensen (2000) and use \(f(y)=y^2\).

It should be noted that one does not have to choose a predictor space spanned by variables of the same functional form as \(f\), even though it seems like the most natural choice.

When the volatility process \(\{v_t\}\) is \(\rho \)-mixing, the coefficient \(\hat{a}_k(\theta )\) decreases exponentially with \(k\). Hence \(q\) need not be very large, since \(q\) represents the “required” information for predicting \(f(Y)\) (see Theorem 3.3 in Bradley 2005).
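The decay can be checked numerically. The slope coefficients of the MMSE linear predictor solve \(C\hat{a}=b\), where \(C\) is the covariance matrix of the basis elements and \(b\) holds their covariances with \(f(Y_i)\). The Python sketch below assumes, purely for illustration, a stationary series with autocovariance \(\gamma(0)=1\) and \(\gamma(h)=0.3\cdot 0.9^h\) for \(h\ge 1\). These numbers are hypothetical and are not Heston moments. The sketch computes the coefficients for \(q=10\).

```python
import numpy as np

def gamma(h, var=1.0, c=0.3, phi=0.9):
    """Hypothetical autocovariance: var at lag 0, c * phi**h at lags h >= 1."""
    h = np.asarray(h)
    return np.where(h == 0, var, c * phi ** h.astype(float))

def mmse_slopes(q):
    """Slopes a_1..a_q of the best linear predictor of X_i given X_{i-1}, ..., X_{i-q}."""
    lags = np.arange(q)
    C = gamma(np.abs(lags[:, None] - lags[None, :]))   # Cov(X_{i-j}, X_{i-k})
    b = gamma(lags + 1)                                 # Cov(X_{i-k}, X_i), k = 1..q
    return np.linalg.solve(C, b)

a = mmse_slopes(10)
print(np.round(a, 4))
```

The coefficients at long lags come out much smaller than \(\hat{a}_1\), which is why a moderate \(q\) already captures most of the predictive information.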

This is a task that involves computing \(E_{\theta }[Y_i^2Y_j^2Y_k^2Y_1^2]\) for \(i\ge j \ge k\). For further details on how to compute optimal PBEFs for stochastic volatility models, see Sørensen (2000), which also gives an analytical formula for the optimal PBEF for an affine SV model such as the Heston model. Even though an analytical expression for \(A^*(\theta )\) is in principle available, it is very complicated and not easily implemented. In practice, a feasible strategy could be to simulate \(A^*(\theta )\).

For further details on how the bandwidth is chosen, consult section 4 of Barndorff-Nielsen et al. (2008a).

Choosing \(q=2\) would automatically result in an optimal PBEF, because the weight matrix \(A(\theta )\) would then be a square \(3 \times 3\) matrix and, provided it is invertible, could be disregarded when solving \(G_n(\theta )=\underline{0}\).
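Spelled out, and assuming the PBEF has the form \(G_n(\theta)=A(\theta)\sum_{i=s+1}^{n}Z^{(i-1)}[f(Y_i)-\hat{\pi}^{(i-1)}(\theta)]\) with \(Z^{(i-1)}=(1,Z_1^{(i-1)},\ldots,Z_q^{(i-1)})^T\) and three model parameters, the argument follows directly from invertibility:

```latex
q = 2:\quad A(\theta)\in\mathbb{R}^{3\times 3}, \qquad
H_n(\theta) := \sum_{i=s+1}^{n} Z^{(i-1)}\bigl[f(Y_i)-\hat{\pi}^{(i-1)}(\theta)\bigr] \in \mathbb{R}^{3},
\\[4pt]
\det A(\theta) \neq 0 \;\Longrightarrow\;
\Bigl( G_n(\theta) = A(\theta)\,H_n(\theta) = \underline{0} \iff H_n(\theta) = \underline{0} \Bigr).
```

Every nonsingular weight matrix, including the optimal \(A^*(\theta)\), therefore yields the same estimator.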

As found in Bollerslev and Zhou (2002), this bias in \(\sigma \) can be explained by the variance of the discretization error \(u_{t,t+1} := \hbox {RV}_{t,t+1} - \hbox {IV}_{t,t+1}\), since Barndorff-Nielsen and Shephard (2002) show that, for any fixed sampling frequency, \(\hbox {RV}^2_{t,t+1}\) is an upward biased estimator of \(\hbox {IV}^2_{t,t+1}\). To account for this discretization error, Bollerslev and Zhou (2002) introduce a nuisance parameter, \(\gamma \), and approximate \(\hbox {IV}_{t+1,t+2}^2\) by \(\hbox {RV}_{t+1,t+2}^2 -\gamma \). We also implemented this simple discretization error correction and found, in line with Bollerslev and Zhou (2002), that it helps remove the systematic bias in \(\sigma \) but roughly doubles the root MSRE. We therefore proceed without this correction in the rest of our Monte Carlo study. The results with the discretization error correction are available upon request.
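The upward bias is easy to see in the simplest case, which we assume for this sketch only: constant volatility \(\sigma^2\) over the day and \(M\) equally spaced Gaussian returns \(r_j \sim N(0,\sigma^2/M)\). Then \(\hbox{IV}=\sigma^2\), \(E[\hbox{RV}]=\hbox{IV}\) and \(\hbox{Var}(\hbox{RV})=2\sigma^4/M\), so \(E[\hbox{RV}^2]=\hbox{IV}^2+2\sigma^4/M\). In this special case the nuisance parameter would be \(\gamma = 2\sigma^4/M\). The Monte Carlo check below uses the assumed values \(\sigma^2=1\) and \(M=78\), corresponding to five-minute returns over a 6.5-hour session.

```python
import numpy as np

rng = np.random.default_rng(0)
sigma2, M, days = 1.0, 78, 50_000        # assumed: constant variance, 78 returns per day
r = rng.normal(0.0, np.sqrt(sigma2 / M), size=(days, M))
rv = (r**2).sum(axis=1)                  # daily realized variance; IV = sigma2 every day
iv2 = sigma2**2

print("mean RV   :", rv.mean())                  # close to IV = 1 (RV is unbiased for IV)
print("mean RV^2 :", (rv**2).mean())             # above IV^2 = 1
print("theory    :", iv2 + 2.0 * sigma2**2 / M)  # IV^2 + 2 sigma^4 / M
```

The bias \(2\sigma^4/M\) vanishes as \(M\to\infty\) but is strictly positive for any fixed sampling frequency.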

We also considered the noise level \(\omega ^2=0.0005\); the results are available upon request.

That is, in the first case we let the predictor space be spanned by \(Y_{i-1}^2, Y_{i-13}^2, Y_{i-25}^2\) and a constant. In the second case we choose \(Y_{i-1}^2, Y_{i-78}^2, Y_{i-155}^2\) and a constant as the basis elements of the predictor space, and so on.
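The lag pattern in this note can be generated mechanically. In the hypothetical helper below, `q` is the number of lagged squares and `l` the spacing, so the lags are \(1, 1+l, \ldots, 1+(q-1)l\). With \(q=3\) and \(l=12\) this gives lags 1, 13, 25, and with \(l=77\) it gives 1, 78, 155. The largest lag fixes \(s = 1+(q-1)l\), so rows are only produced for \(i=s+1,\ldots,n\).

```python
import numpy as np

def spaced_basis(y, q, l):
    """Rows (1, Y_{i-1}^2, Y_{i-1-l}^2, ..., Y_{i-1-(q-1)l}^2) and targets Y_i^2."""
    y2 = np.asarray(y, dtype=float) ** 2
    n = y2.size
    s = 1 + (q - 1) * l                        # largest lag: first usable i is s + 1
    lags = [1 + m * l for m in range(q)]       # 1, 1 + l, ..., 1 + (q - 1) l
    cols = [np.ones(n - s)] + [y2[s - lag : n - lag] for lag in lags]
    return np.column_stack(cols), y2[s:]

y = np.arange(1, 31, dtype=float)              # toy series Y_1, ..., Y_30
Z, f = spaced_basis(y, q=3, l=12)
print(Z.shape, Z[0], f[0])
```

With this toy series the first row is \((1, Y_{25}^2, Y_{13}^2, Y_1^2) = (1, 625, 169, 1)\), paired with the target \(Y_{26}^2 = 676\).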

The standard errors normalized by the parameter estimate.

Zero is in fact contained in the CIs for the FC variable when the GMM method based on RK and the PBEF 1-step method with \(l=12\) and \(l=77\) are employed, but only barely.

References

Andersen, T.G., Bollerslev, T.: Intraday periodicity and volatility persistence in financial markets. J. Empir. Financ. 4, 115–158 (1997)

Andersen, T.G., Davis, R., Kreiss, J.-P., Mikosch, T.: Handbook of Financial Time Series. Springer, Berlin (2009)

Aït-Sahalia, Y., Kimmel, R.: Maximum likelihood estimation of stochastic volatility models. J. Financ. Econ. 83, 413–452 (2007)

Barndorff-Nielsen, O.E., Hansen, P.R., Lunde, A., Shephard, N.: Designing realized kernels to measure the ex post variation of equity prices in the presence of noise. Econometrica 76, 1481–1536 (2008a)

Barndorff-Nielsen, O.E., Hansen, P.R., Lunde, A., Shephard, N.: Realised kernels in practice: trades and quotes. Econom. J. 04, 1–32 (2008b)

Barndorff-Nielsen, O.E., Shephard, N.: Non-Gaussian OU-based models and some of their uses in financial economics (with discussion). J. R. Stat. Soc. B 63, 167–241 (2001)

Barndorff-Nielsen, O.E., Shephard, N.: Econometric analysis of realized volatility and its use in estimating stochastic volatility models. J. R. Stat. Soc. B 64, 253–280 (2002)

Bollerslev, T., Zhou, H.: Estimating stochastic volatility diffusions using conditional moments of integrated volatility. J. Econom. 109, 33–65 (2002)

Bradley, R.C.: Basic properties of strong mixing conditions: a survey and some open questions. Probab. Surv. 2, 107–144 (2005)

Brockwell, P.J.: Lévy-driven CARMA processes. Ann. Inst. Statist. Math. 53, 113–124 (2001)

Corradi, V., Distaso, W.: Semi-parametric comparison of stochastic volatility models using realized measures. Rev. Econ. Stud. 73, 635–667 (2006)

Dacorogna, M., Müller, U., Nagler, R., Olsen, R., Pictet, O.: A geographical model for the daily and weekly seasonal volatility in the foreign exchange market. J. Int. Money Financ. 12, 413–438 (1993)

Eraker, B.: Markov Chain Monte Carlo analysis of diffusion models with application to finance. J. Bus. Econ. Stat. 19(2), 177–191 (2001)

Gallant, A.R., Tauchen, G.: Which moments to match? Econom. Theory 12, 657–681 (1996)

Gourieroux, C., Monfort, A., Renault, E.: Indirect inference. J. Appl. Econom. 8, S85–S118 (1993)

Hall, P., Horowitz, J., Jing, B.: On blocking rules for the bootstrap with dependent data. Biometrika 82, 561–574 (1995)

Hansen, P.R., Lunde, A.: Realized variance and market microstructure noise. J. Bus. Econ. Stat. 24, 127–218 (2006)

Heston, S.L.: A closed-form solution for options with stochastic volatility with applications to bond and currency options. Rev. Financ. Stud. 6, 327–343 (1993)

Jacod, J., Li, Y., Mykland, P., Podolskij, M., Vetter, M.: Microstructure noise in the continuous case: the pre-averaging approach. Stoch. Process. Appl. 119, 2249–2276 (2009)

Karlin, S., Taylor, H.M.: A First Course in Stochastic Processes. Academic Press, New York (1975)

Lahiri, S.N.: Theoretical comparison of block bootstrap methods. Ann. Stat. 27, 386–404 (1999)

Newey, W.K., West, K.D.: A simple positive semi-definite, heteroskedasticity and autocorrelation consistent covariance matrix. Econometrica 55, 703–708 (1987)

Nolsøe, K., Nielsen, J.N., Madsen, H.: Prediction-based estimating functions for diffusion processes with measurement noise. In: Technical Reports no. 10, Informatics and mathematical modelling. Technical University of Denmark (2000)

Sørensen, M.: Prediction-based estimating functions. Econom. J. 3, 123–147 (2000)

Sørensen, M.: Prediction-based estimating functions: review and new developments. Braz. J. Probab. Stat. 25, 362–391 (2011)

Todorov, V.: Estimation of continuous-time stochastic volatility models with jumps using high-frequency data. J. Econom. 148, 131–148 (2009)

Todorov, V., Tauchen, G.: Simulation methods for Lévy-driven CARMA stochastic volatility models. J. Bus. Econ. Stat. 24, 455–469 (2006)

Acknowledgments

The authors acknowledge financial support by the Center for Research in Econometric Analysis of Time Series, CREATES, funded by the Danish National Research Foundation.


Additional information

We are grateful to Michael Sørensen for interesting discussions as well as many useful comments. We also appreciate the comments from the editor and two anonymous referees, which have improved the quality of the paper significantly.



About this article

Cite this article

Brix, A.F., Lunde, A. Prediction-based estimating functions for stochastic volatility models with noisy data: comparison with a GMM alternative. AStA Adv Stat Anal 99, 433–465 (2015). https://doi.org/10.1007/s10182-015-0248-6


DOI: https://doi.org/10.1007/s10182-015-0248-6