Abstract

Process mining aims at obtaining information about processes by analysing their past executions in event logs, event streams, or databases. Discovering a process model from a finite amount of event data thereby has to correctly infer infinitely many unseen behaviours. Thereby, many process discovery techniques leverage abstractions on the finite event data to infer and preserve behavioural information of the underlying process. However, the fundamental information-preserving properties of these abstractions are not well understood yet. In this paper, we study the information-preserving properties of the “directly follows” abstraction and its limitations. We overcome these by proposing and studying two new abstractions which preserve even more information in the form of finite graphs. We then show how and characterize when process behaviour can be unambiguously recovered through characteristic footprints in these abstractions. Our characterization defines large classes of practically relevant processes covering various complex process patterns. We prove that the information and the footprints preserved in the abstractions suffice to unambiguously rediscover the exact process model from a finite event log. Furthermore, we show that all three abstractions are relevant in practice to infer process models from event logs and outline the implications on process mining techniques.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Process mining comprises methods and techniques for understanding possibly unknown processes from recorded events. Input to process mining is usually an event log containing sequences of events that describe activity occurrences observed in the past. An event log is always a finite sample of the possibly infinite behaviour of the underlying process. Process discovery has the aim of learning a process model that can describe the behaviour of this unknown process [1].

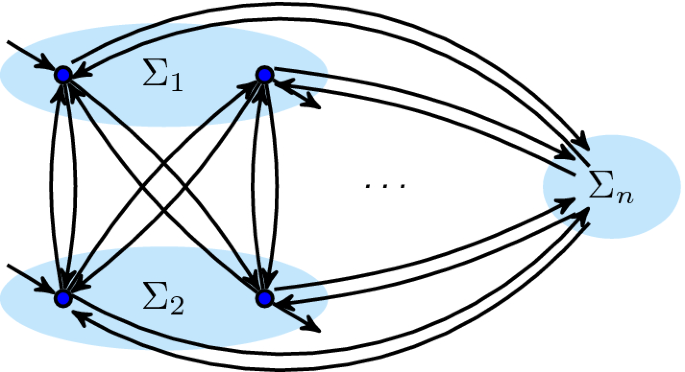

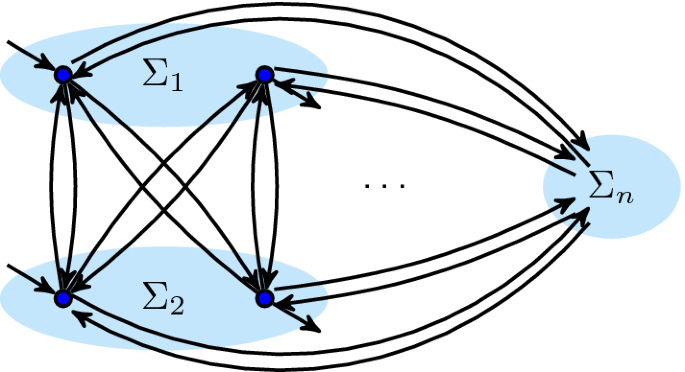

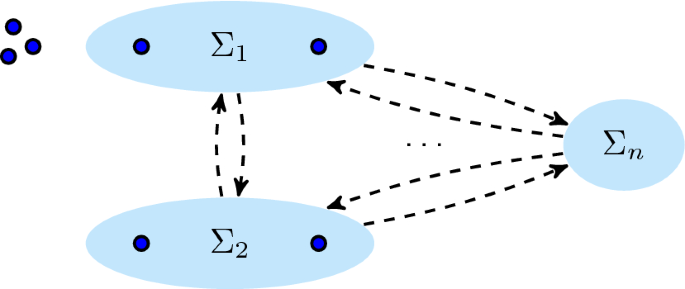

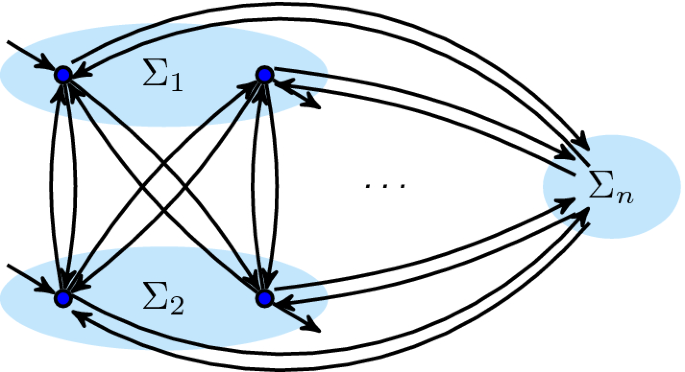

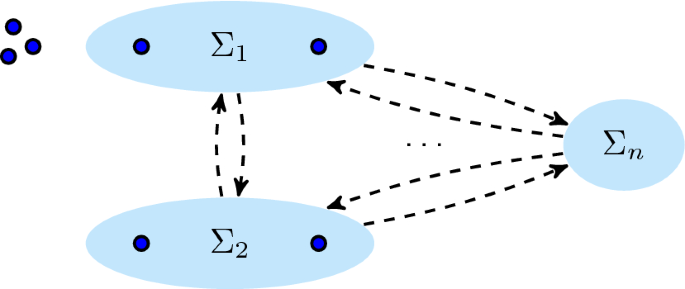

Process discovery is typically studied in terms of formal languages as illustrated in Fig. 1: process S has unknown behaviour \(\mathcal {L}(S)\) from which some process executions are recorded in a log \(L \subseteq \mathcal {L}(S)\)—a finite language over a set of activities. A process discovery algorithm then shall infer from finite L the remaining, infinite, unobserved behaviour of S and encode them in a model M, describing possible process executions as language \(\mathcal {L}(M)\). Ideally, the discovered model M describes precisely the behaviour of the underlying process S, that is, \(\mathcal {L}(M) = \mathcal {L}(S)\). In practice, the underlying process S is unknown, so finding the “right” model M is often seen as a pareto-optimization problem between fitness (maximize \(L \cap \mathcal {L}(M)\)), precision (minimize \(\mathcal {L}(M) \setminus L\)), generalization (maximize behaviour not seen in L but likely to occur in the future), and simplicity of M [2].

1.1 Information preservation in process discovery

In many time-efficient discovery algorithms [3,4,5,6], a function \(\alpha \) first abstracts the known behaviour seen in L into abstract behavioural information \(B = \alpha (L)\) on the activities recorded in L, thereby generalizing from the sample L. Common abstractions are the “directly follows” [3] and “eventually follows” [4] relations and their variants [7], and “behavioural profiles” [8] that also include “conflict” and “concurrency”. The discovery algorithm then synthesizes a model \(M = \gamma (B)\) from B using a concretization function \(\gamma \), after optionally processing B [4, 9, 10], thereby interpreting information in abstraction B.

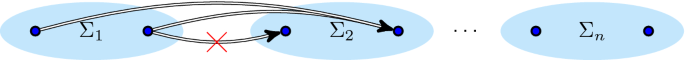

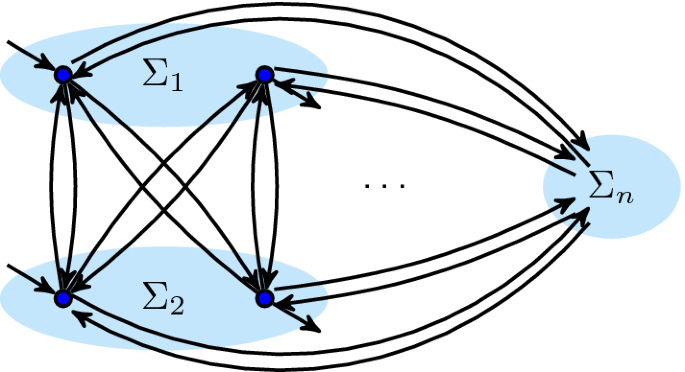

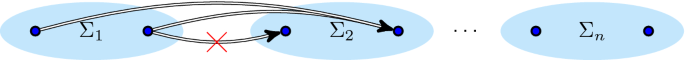

Two process models in BPMN [11], (a, b), with a different language but the same directly follows abstraction (c)

This renders the abstraction central to information preservation and inference. If for two logs (two different samples) \(L_x\) and \(L_y\), the abstraction yields \(\alpha (L_x) = \alpha (L_y)\), then any discovery algorithm using \(\alpha \) will return the same model \(\gamma (\alpha (L_x)) = \gamma (\alpha (L_y))\), even if \(L_x\) and \(L_y\) come from different processes. For example, the two different processes in Fig. 2a, b may yield logs \(L_{1} = \{ \langle a, b, c \rangle , \langle a, c, b\rangle \}\) and \(L_{2} = \{ \langle a, b, c\rangle , \langle a, c, b \rangle , \langle a, b\rangle , \langle a, c\rangle \}\) both having the same directly follows abstraction shown in Fig. 2c. The directly follows abstraction is not preserving all information of Fig. 2a, b, and any discovery algorithm working with this abstraction alone cannot distinguish these logs.

Beyond event logs, stream process discovery techniques require information-preserving abstraction functions to retain information from event streams in a memory-efficient representation [12], and event databases use indexes based on abstractions [13] to efficiently discover models from very large event data sets [14]. This importance of \(\alpha \) gives rise to two questions:

When does \(\alpha \) extract the “right” behavioural information about the underlying process S so that \(\gamma \) can use this information to obtain the “right” model, M, with \(\mathcal {L}(S) = \mathcal {L}(M)\)?

What information from a log has to be used and stored in a finite abstraction \(\alpha \) to allow inferring the “right” model M?

1.2 State of the art

Although considerable progress has been made in recent years by using different abstraction and concretization functions in process discovery, more complex behaviour can still not be accurately discovered from event data [10, 15]. At the same time, the inherent information-preserving properties of the used abstractions have received considerable less attention. That is, the class of models that can be (re-)discovered has been precisely characterized for specific algorithms [3, 16,17,18,19,20,21,22]; however, a systematic study into the information preserved by the abstractions that these algorithms use has not been performed.

Information preservation and recovery for behavioural profiles independent of the alpha-algorithm are only known for acyclic processes, while behavioural profiles cannot preserve information of regular languages in general [8]. Information-preserving “footprints” of directly follows abstraction have been studied [5, 23] and used in multiple discovery algorithms [5, 6] for basic process operators. No existing study considers information preservation in abstractions for practically relevant and frequent process patterns such as unobservable skip paths, interleaving or the inclusive gateways of Fig. 2b.

1.3 Contribution

In this paper, we study how and under which circumstances event data abstractions preserve behavioural information in a way that allows to unambiguously recover basic and complex process patterns for process discovery. Specifically, we consider sequence, exclusive choice (split/join), structured loops, concurrency (split/join), critical section and inclusive choice (split/join), and unobservable paths for skipping [24]. The last three patterns have not been studied before systematically in process mining.

In Sect. 2, we expose the problem in more detail against related work and we contribute a framework to systematically investigate whether for a specific class C of languages (that are generated by process models having a particular set of operators), we can define an abstraction function \(\alpha \) that can abstract any language to a finite representation and is injective: \(\alpha (L_1) = \alpha (L_2)\) implies \(L_1 = L_2\) (for languages \(L_1\) and \(L_2\)), or \(\alpha (\mathcal {L}(M_1)) = \alpha (\mathcal {L}(M_2))\) implies \(\mathcal {L}(M_1) = \mathcal {L}(M_2)\). In these cases, discovering \(\gamma (\alpha (L_1)) = \gamma (\alpha (L_2))\) is correct. Central to our proof is the definition of footprints that allow to precisely recover behavioural information from an abstraction. As the problem has no general solution, even on regular languages [8], we restrict ourselves to languages that can be described syntactically by the relevant subclass of block-structured process models in which each activity is represented at most once (that is, no duplicate activities), which we explain in Sect. 3.

We then use our framework to consolidate previous results on the information-preserving properties and to sharply characterize the limitations of the well-known directly follows abstraction \(\alpha _{\text {dfg}}\) in Sect. 5. To overcome these limitations, we then propose and study two new abstractions: the minimum self-distance abstraction and its footprints discussed in Sect. 6 preserve information about loops in the context of concurrency, and our results close a gap left in earlier works [5]. The concurrent-optional-or abstraction discussed in Sect. 7 preserves information about optional behaviour, and we are the first to show that unobservable paths for skipping and inclusive choices are preserved and can be recovered from footprints in finite abstractions of languages, and hence event logs. The operational nature of the footprints allows applying them in process discovery algorithms. We report on experiments showing the practical relevance and applicability of these information-preserving abstractions for process discovery on real-life event logs in Sect. 8. Further, we discuss practical implications of our results on process mining research, mining from streams, and index design for event databases in Sect. 9.

2 Background, problem exposition, and research framework

We first discuss existing abstractions of processes and their applications. We then work out the research problem of information preservation in behavioural abstractions and propose a framework to research this problem systematically.

2.1 Background

Behavioural abstractions of process behaviour have been studied previously, generally with the aim to obtain a finite representation structure for reasoning about and comparing process behaviours. The directly follows graph (DFG) or Transition-adjacency relations [3, 5, 25] are derived from behaviour (a language or log) and relate two activities ‘A’ and ‘B’ if and only if ‘A’ can be directly followed by ‘B’; this relation can be enriched with arc weights (based on frequency of observed behaviour) [9] or semantic information from external sources [26]. Causal footprints [27] abstract model structures to graphs where multi-edges between activities (visible process steps) represent ‘and’ (concurrent) and ‘xor’ (exclusive choice) pre- and post-dependencies between activities. Behavioural profiles (BPs) [8] derived from behaviour or models combine DFGs and causal footprints by providing binary relations for directly-following, concurrent, and conflicting (mutually exclusive) activities. Further, binary relations between activities can be defined to preserve more subtle aspects of concurrency [28]. Principal transition sequences abstract behaviour as a truncated execution tree of the model that contains all acyclic executions and truncates repetitions and unbounded behaviour [29].

Most behavioural abstractions have been used to measure similarity between process models [30]; BPs [31] and abstraction in [26] preserve more information and allow to define a metric space in order to perform searches in process model collections. In this context also non-behaviour preserving abstractions have been studied, such as projections to “relevant” subsequences [32] and isotactics for preserving concurrency of user-defined sets of activities in true-concurrency semantics [33]. BPs have also been used to propagate changes in behaviour in process model collections to other related process models [34]. Various sets of binary relations in BPs give rise to implications between relations structured along a lattice, allowing to reason within the abstractions [23, 28]. Most process discovery techniques use BPs or DFGs in several variations to abstract and generalize behavioural information from event logs [3,4,5,6, 35].

Information preservation for BPs has been studied in various ways. van der Aalst et al. [3] characterize the rediscoverable models S where from a “complete” log \(L \subseteq \mathcal {L}(S)\) the discovered model M describes the same process executions: \(\mathcal {L}(M) = \mathcal {L}(S)\). Their proof (1) sufficiently characterizes the rediscoverable model structures that remain distinguished under abstraction \(\alpha \) into BPs, and (2) shows that the model synthesis \(\gamma \) step of the alpha-algorithm reconstructs the original model. Badouel et al. [16] also provide necessary conditions. While the alpha-algorithm preserves complex structures including non-free choice constructs, behaviours such as unobservable steps, inclusive choices or interleaving are not preserved, and information is only preserved under the absence of deviations or noise [15]. Furthermore, the characterization and proofs are tied to the alpha-algorithm and cannot be applied to other contexts and discovery algorithms. Independent of an algorithm, BPs are not expressive enough to preserve even trace equivalence for any general class of behaviour (within regular languages) [8]. BPs preserve behaviour for acyclic, unlabelled models, i.e. where each activity in the model is observable and distinct from all other activities [8, 36]. For cyclic models, only for unlabelled, free-choice workflow nets a variant of BPs including the “up-to-k” successor relation preserves trace equivalence, though k is model specific [7]; for large enough k, this resembles the eventually follows relation.

Information preservation for DFGs has been studied as rediscoverability for the Inductive Miner [5] and the alpha-algorithm [3, 16] and its variants. For instance, several variants of the alpha-algorithm have been introduced to include short loops (alpha\(^+\) [17]), to include long-distance dependencies [18] (using the eventually follows relation, but only for acyclic processes), to include non-free-choice constructs (alpha\(^{++}\) [19]) and to include certain types of silent transitions (alpha\(^\#\) [20, 21], alpha\(^{\$}\) [22]). For these algorithms, it was shown that they could obtain enough information from an event log to reliably rediscover certain constructs; however, these characterizations and proofs are tied to the specific algorithms and cannot be applied to other contexts and discovery algorithms. Information preservation in Inductive Miner [5] was proven by showing that behavioural information can be completely retrieved from the abstraction itself, in the form of footprints in the DFG, independent of a specific model synthesis algorithm, and characterizing the block-structured models for which this information is preserved in the DFG. However, these footprints allow reusing information abstraction and recovery in other (non-block-structured) contexts such as the Split Miner [6], which uses footprints from the DFG in a different way to synthesize a model, or Projected Conformance Checking [37], which uses abstractions to provide guarantees in approximative fast conformance checking measures. Other process discovery techniques might be able to obtain enough information from event logs to reconstruct a model, such as (conjectured) the ILP miner [35] and several genetic techniques [38, 39], but no insights into guarantees and limits of information preservation are available.

2.2 Problem exposition

This paper particularly addresses information-preserving capabilities of abstractions of languages in general, that hold in any application using these abstractions, rather than just a single specific algorithm. To approach the problem in an algorithm-independent setting, we generalize prior work on rediscoverability to the following sub-problems:

- RQ1

For which class \(\textsc {C}\) of languages is each language Luniquely defined by its syntactic model M with \(L = \mathcal {L}(M)\), i.e. \(\mathcal {L}(M_1) = \mathcal {L}(M_2)\) implies \(M_1 = M_2\)?

- RQ2

For which class \(\textsc {C}\) of languages does an abstraction \(\alpha \) distinguish languages on their abstractions alone, that is, when does \(\alpha (L_1) = \alpha (L_2)\) imply \(L_1 = L_2\) for \(L_1,L_2 \in \textsc {C}\)?

- RQ3

For which class \(\textsc {C}\) of languages does \(\alpha \) preserve the information about the entire model syntax, i.e. when does \(\alpha (\mathcal {L}(M_1)) = \alpha (\mathcal {L}(M_2))\) imply \(M_1 = M_2\)?

- RQ4

For which classes \(\textsc {C}\) of languages and which abstractions \(\alpha \) can syntactic constructs of model M be recognized in \(\alpha (\mathcal {L}(M))\)?

The specific objective of RQ4 is to not only ensure that an abstraction \(\alpha \) can distinguish different types of behaviour, but that information about the behaviour can be recovered from the abstraction, so the abstraction can be exploited by different process discovery algorithms.

2.3 Research framework

As discussed in Sect. 1, we will answer the above questions for three different abstractions: directly follows \(\alpha _{\text {dfg}}\), minimum self-distance \(\alpha _{\text {msd}}\), and concurrent-optional-or \(\alpha _{\text {coo}}\). Each abstraction function \(\alpha _x\) returns a finite abstraction \(\alpha _x(L)\) for any language L in the form of finite graphs over the alphabet \(\Sigma \) of L. As RQ2 has no general answer [8], we limit our model class to a very general class of block-structured models \(C_\mathcal {B}\) defined in Sect. 3. We then employ the following framework to answer RQ1–RQ4 for each abstraction:

Using a system of sound rewriting rules on block-structured process models [40, p. 113] explained in Sect. 4, we obtain a unique syntactic normal form \( NF (M)\) for each model \(M\in C_\mathcal {B}\).

We show that each abstraction \(\alpha _x(L)\) of any language L of some model \(M \in C_\mathcal {B}, L=\mathcal {L}(M)\) preserves not only the behaviour but also syntax of M in an implicit form: we can recover from \(\alpha _x(L)\) the top-level operator of the normal form \( NF (M)\) through specific characteristic features in \(\alpha _x(L)\), called footprints, answering RQ4. Specifically, much of the information preserved in BPs is already contained in the more basic \(\alpha _{\text {dfg}}\) from which not only sequence, choice, loops and concurrency but also interleaving (Sect. 5) can be recovered. The minimum self-distance abstraction \(\alpha _{\text {msd}}\) preserves information about loops in the context of concurrency (Sect. 6) allowing to preserve information from a larger model class. The new concurrent-optional-or abstraction \(\alpha _{\text {coo}}\) (Sect. 7) preserves information about unobservable skipping and inclusive choices not considered before.

We then characterize for each abstraction \(\alpha _x\) the class of models \(C_x \subset C_\mathcal {B}\) for which the entire recursive structure of \(M \in C_x\) is uniquely preserved in the abstraction of its language \(\alpha _x(\mathcal {L}(M))\); each \(C_x\) partially constrains the nesting of operators, most notably concurrency.

As our core result, we then show that the languages \(\mathcal {L}(M_1),\mathcal {L}(M_2)\) of any two models \(M_1,M_2 \in C_x\) with different normal forms \( NF (M_1) \ne NF (M_2)\) can always be distinguished in their finite abstractions \(\alpha _x(\mathcal {L}(M_1)) \ne \alpha _x(\mathcal {L}(M_2))\) based on the footprints in \(\alpha _x\). Our characterization is sharp in the sense that for any necessary condition, we provide a counterexample, answering RQ3.

As the normal form \( NF (M)\) is unique, we can then conclude RQ2: any two different languages\(L_1 \ne L_2\) of any two models \(L_i = \mathcal {L}(M_i), M_i \in C_x\) can always be distinguished by their finite abstractions \(\alpha _x(L_1) \ne \alpha _x(L_2)\).

We finally can answer RQ1: by RQ3 two different syntactic normal forms \( NF (M_1) \ne NF (M_2)\) have different abstractions of their languages \(\alpha _x(L_1) \ne \alpha _x(L_2), L_i = \mathcal {L}( NF (M_i))\) and have therefore different languages \(L_1 \ne L_2 \in C_x\) (as \(\alpha _x\) is a deterministic function).

3 Preliminaries

3.1 Traces, languages, partitions

A trace is a sequence of events, which are executions of activities (i.e. the process steps). The empty trace is denoted with \(\epsilon \).

A language is a set of traces, and an event log is a finite set of traces. For instance, the language \(L_{3} = \{\langle a, b, c \rangle , \langle a, c, b\rangle \}\) consists of two traces. For the first trace, first activity a was executed, followed by b and c. Traces can be concatenated using \(\cdot \).

In this paper, we limit ourselves to regular languages, that is, languages that can be defined using the regular expression patterns sequence, exclusive choice and Kleene star.

We refer to the activities that appear in a language or event log as the alphabet\(\Sigma \) of the language or event log. A partition of an alphabet consists of several sets, such that each activity of the alphabet appears in precisely one of the sets, and each activity in the sets appears in the alphabet. For instance, the alphabet of \(L_{3}\) is \(\{a, b, c\}\) and a partition of this alphabet is \(\{\{a, b\}, \{c\}\}\).

3.2 Block-structured models

A block-structured process model can be recursively broken up into smaller pieces. These smaller pieces form a hierarchy of model constructs, that is, a tree [41].

A process tree is an abstract representation of a block-structured workflow net [1]. The leaves are either unlabelled or labelled with activities, and all other nodes are labelled with process tree operators. A process tree describes a language: the leaves describe singleton or empty languages, and an operator describes how the languages of its subtrees are to be combined. We formally define process trees recursively:

Definition 3.1

(process tree syntax) Let \(\Sigma \) be an alphabet of activities, then

activity\(a \in \Sigma \) is a process tree;

the silent activity\(\tau \) (\(\tau \notin \Sigma \)) is a process tree;

let \(M_1 \ldots M_n\) with \(n > 0\) be process trees and let \(\oplus \) be a process tree operator; then, the operator node\(\oplus (M_1, \ldots M_n)\) is a process tree. We also write this as

Each process tree describes a language: an activity describes the execution of that activity, a silent activity describes the empty trace, while an operator node describes a combination of the languages of its children. Each operator combines the languages of its children in a specific way.

In this paper, we consider six process tree operators:

- 1.

exclusive choice

,

, - 2.

sequence \(\rightarrow \),

- 3.

interleaved (i.e. not overlapping in time) \(\leftrightarrow \),

- 4.

concurrency (i.e. possibly overlapping in time) \(\wedge \),

- 5.

inclusive choice

and

and - 6.

structured loop \(\circlearrowleft \) (i.e. the loop body \(M_1\) and alternative loop back paths \(M_2 \ldots M_n\)).

For instance, the language of

is \(\{\langle a, b, c \rangle \), \(\langle b, a, c \rangle \), \(\langle b, c, a \rangle \), \(\langle d, e, f \rangle \), \(\langle e, f, d \rangle \), \(\langle g \rangle \), \(\langle h \rangle \), \(\langle g, h \rangle \), \(\langle h, g \rangle \), \(\langle i \rangle \), \(\langle i, j, i \rangle \), \(\langle i, j, i, j, i \rangle \)\(\ldots \}\). A formal definition of these operators is given in “Appendix A”.

Each of the process tree operators can be translated to a sound block-structured workflow net, and to, for instance, a BPMN model [11]. Figure 3 provides some intuition by giving the translation of the process tree operators to (partial) Petri nets [42].

We refer to a process tree that is part of a larger process tree as a subtree of the larger tree.

4 A canonical normal form for process trees

Some process trees are structurally different but nevertheless express the same language. For instance, the process trees

and

have a different structure but the same language, consisting of the choice between a, b and c. Such trees are indistinguishable by their language, and thus also indistinguishable by any language abstraction. To take such structural differences out of the equation, we use a set of rules that, when applied, preserve the language of a tree while reducing the structural size and complexity of the tree. It does not matter which rules or in which order the rules are applied: applying the rules exhaustively always yields the same canonical normal form.

The set of rules consist of four types of rules: (1) a singularity rule, which removes operators with only a single child: \(\oplus (M) \Rightarrow M\) for any process tree M and operator \(\oplus \) (except \(\circlearrowleft \)); (2) associativity rules, which remove nested operators of the same type, such as \(\circlearrowleft ( \circlearrowleft ( M, \ldots _1 ), \ldots _2 ) \Rightarrow \,\circlearrowleft ( M, \ldots _1, \ldots _2 )\); (3) \(\tau \)-reduction rules, which remove superfluous \(\tau \)s, such as \(\wedge (\ldots , M, \tau ) \Rightarrow \wedge (\ldots , M)\); and (4) rules that establish the relation between concurrency and other operators, such as \(\leftrightarrow (M_1, \ldots M_n) \Rightarrow \wedge (M_1, \ldots M_n)\) with \(\forall _{1 \leqslant i \leqslant n, t \in \mathcal {L}(M_i)}~ |t| \leqslant 1\).

The set of rules is terminating, that is, to any process tree only a finite number of rules can be applied sequentially; the set is locally confluent, that is, if two rules are applicable to a single tree, yielding trees A and B, then it is possible to reduce A and B to a common tree C; and by extension, the set of rules is confluent and canonical. For more details, please refer to [40, p. 118].

As a result, the rules are canonical, so in the remainder of this paper, we only need to consider process trees in canonical normal form, to which we refer as reduced process trees; a reduced process tree is a process to which the reduction rules have been applied exhaustively. This allows us to reason based on the structure of reduced trees, rather than on the behaviour of arbitrarily structured trees.

5 Preservation and recovery with directly follows graphs

In order to study the information preserved in abstractions, in this section we study the first abstraction: directly follows graphs. This abstraction has been well studied in context of process discovery. For instance, in the context of the alpha-algorithm, the directly follows abstraction has been shown to preserve information about a certain class of Petri nets. In this section, we show that directly follows graphs preserve even more information than previously known.

We first formally introduce the abstraction, after which we introduce characteristics of the recursive process tree operators in the abstraction: footprints. Using these footprints, we show that every two reduced process trees that are structurally different have different abstractions. That is, we show that if two trees have the same directly follows abstraction, then there cannot be a structural difference between the two trees. For subsequent abstractions in this paper, which get more involved, we will use a similar proof strategy.

We first introduce the abstraction. Second, we introduce the footprints of the recursive process tree operators in this abstraction. Third, we introduce a class of recursively defined languages and show that this class is tight, that is, structurally different trees outside this class might yield the same abstraction. Finally, we show that different normal forms within the class have different abstractions (and hence languages), thereby using the research framework of Sect. 2.

5.1 Directly follows graphs

A directly follows graph abstracts from a language by only expressing which activities (the nodes of the graph) are followed directly by which activities in a trace in the language. Furthermore, a directly follows graph expresses the activities with which traces start or end in the language, and whether the language contains the empty trace.

Definition 5.1

(directly follows graph) Let \(\Sigma \) be an alphabet and let L be a language over \(\Sigma \) such that \(\epsilon \notin \Sigma \). Then, the directly follows graph\(\alpha _{\text {dfg}}\) of L consists of:

For a directly follows graph \(\alpha _{\text {dfg}}\), \(\Sigma (\alpha _{\text {dfg}})\) denotes the alphabet of activities, i.e. the nodes of \(\alpha _{\text {dfg}}\).

For instance, let \(L_{4} = \{ \langle a, b, c \rangle , \langle a, c, b \rangle , \epsilon \} \) be a language. Then, the directly follows graph of \(L_{4}\) consists of the edges \(\alpha _{\text {dfg}}(a, b)\), \(\alpha _{\text {dfg}}(b, c)\), \(\alpha _{\text {dfg}}(a, c)\), \(\alpha _{\text {dfg}}(c, b)\). The start activity is a, the end activities are b and c and the directly follows graph contains \(\epsilon \). A graphic representation of this directly follows graph is shown in Fig. 4.

5.2 Footprints

Each process tree operator has a particular characteristic in a directly follows graph, a footprint. We will use footprints in our uniqueness proofs to show differences between trees: if one tree contains a particular footprint and another one does not, the languages of these trees must be different. Furthermore, process discovery algorithms can use footprints to infer syntactic constructs that capture the abstracted behaviour.

In this section, we introduce these characteristics and show that process tree operators exhibit them.

A cut is a tuple \((\oplus , \Sigma _1, \ldots \Sigma _m)\), such that \(\oplus \) is a process tree operator and \(\Sigma _1 \ldots \Sigma _m\) are sets of activities. A cut is non-trivial if \(m > 1\) and no \(\Sigma _i\) is empty.

Definition 5.2

(directly follows footprints) Let \(\alpha _{\text {dfg}}\) be a directly follows relation and let \(c = (\oplus , \Sigma _1, \ldots \Sigma _n)\) be a cut, consisting of a process tree operator  and a partition of activities with parts \(\Sigma _1 \ldots \Sigma _n\) such that \(\Sigma (\alpha _{\text {dfg}}) = \bigcup _{1 \leqslant i \leqslant n} \Sigma _i\) and \(\forall _{1 \leqslant i < j \leqslant n}~ \Sigma _i \cap \Sigma _j = \emptyset \).

and a partition of activities with parts \(\Sigma _1 \ldots \Sigma _n\) such that \(\Sigma (\alpha _{\text {dfg}}) = \bigcup _{1 \leqslant i \leqslant n} \Sigma _i\) and \(\forall _{1 \leqslant i < j \leqslant n}~ \Sigma _i \cap \Sigma _j = \emptyset \).

Exclusive choice. c is an exclusive choice cut in \(\alpha _{\text {dfg}}\) if

and

and

- x.1:

No part is connected to any other part:

Sequential. c is a sequence cut in \(\alpha _{\text {dfg}}\) if \(\oplus = \rightarrow \) and

- s.1:

Each node in a part is indirectly and only connected to all nodes in the parts “after” it:

Interleaved. c is an interleaved cut in \(\alpha _{\text {dfg}}\) if \(\oplus = \leftrightarrow \) and

- i.1:

Between parts, all and only connections exist from an end to a start activity:

Concurrent. c is a concurrent cut in \(\alpha _{\text {dfg}}\) if \(\oplus = \wedge \) and

- c.1:

Each part contains a start and an end activity.

- c.2:

All parts are fully interconnected.

Loop. c is a loop cut in \(\alpha _{\text {dfg}}\) if \(\oplus \,=\,\circlearrowleft \) and

- l.1:

All start and end activities are in the body (i.e. the first) part.

- l.2:

Only start/end activities in the body part have connections from/to other parts.

- l.3:

Redo parts have no connections to other redo parts.

- l.4:

If an activity from a redo part has a connection to/from the body part, then it has connections to/from all start/end activities.

A formal definition is included in “Appendix B.1”.

For instance, consider the directly follows graph of \(L_{4}\) shown in Fig. 4: in this graph, the cut \((\rightarrow , \{a\}, \{b, c\})\) is a \(\rightarrow \)-cut.

Inspecting the semantics of the process tree operators (Definition A.1), it follows that in the directly follows graphs of process trees, these footprints are indeed present:

Lemma 5.1

(Directly follows footprints) Let \(M = \oplus (M_1, \ldots M_m)\) be a process tree without duplicate activities, with  . Then, the cut \((\oplus \), \(\Sigma (M_1)\), \(\ldots \Sigma (M_n))\) is a \(\oplus \)-cut in \(\alpha _{\text {dfg}}(M)\) according to Definition 5.2.

. Then, the cut \((\oplus \), \(\Sigma (M_1)\), \(\ldots \Sigma (M_n))\) is a \(\oplus \)-cut in \(\alpha _{\text {dfg}}(M)\) according to Definition 5.2.

5.3 A class of trees: \(\textsc {C}_{\text {dfg}}\)

Not all process trees can be distinguished using the introduced footprints. In this section, we describe the class of trees that can be distinguished by directly follows graphs: \(\textsc {C}_{\text {dfg}}{}\). To illustrate that this class is tight, we give counterexamples of pairs of trees outside of \(\textsc {C}_{\text {dfg}}{}\) and show that these trees cannot be distinguished using their directly follows graphs.

Definition 5.3

(Class \(\textsc {C}_{\text {dfg}}\)) Let \(\Sigma \) be an alphabet of activities. Then, the following process trees are in \(\textsc {C}_{\text {dfg}}\):

a with \(a \in \Sigma \) is in \(\textsc {C}_{\text {dfg}}\)

Let \(M_1 \ldots M_n\) be reduced process trees in \(\textsc {C}_{\text {dfg}}\) without duplicate activities:

\(\forall _{i \in [1\ldots n], i \ne j \in [1\ldots n]}~ \Sigma (M_i) \cap \Sigma (M_j) = \emptyset \). Then,

\(\oplus (M_1, \ldots M_n)\) with

is in \(\textsc {C}_{\text {dfg}}\);

is in \(\textsc {C}_{\text {dfg}}\);\(\leftrightarrow (M_1, \ldots M_n)\) is in \(\textsc {C}_{\text {dfg}}\) if all:

- i.1:

At least one child has disjoint start and end activities:

\(\exists _{i \in [1\ldots n]}~ {{\,\mathrm{Start}\,}}(M_i) \cap {{\,\mathrm{End}\,}}(M_i) = \emptyset \);

- i.2:

No child is interleaved itself:

\(\forall _{i\in [1\ldots n]}~ M_i \ne \leftrightarrow (\ldots )\);

- i.3:

Each concurrent child has at least one child with disjoint start and end activities:

\(\forall _{i \in [1\ldots n] \wedge M_i = \wedge (M_{i_1}, \ldots M_{i_m}) }~ \exists _{j \in [1 \ldots m]}~ {{\,\mathrm{Start}\,}}(M_{i_j}) \cap {{\,\mathrm{End}\,}}(M_{i_j}) = \emptyset \)

\(\circlearrowleft (M_1, \ldots M_n)\) is in \(\textsc {C}_{\text {dfg}}\) if:

- l.1:

the first child has disjoint start and end activities:

\({{\,\mathrm{Start}\,}}(M_1) \cap {{\,\mathrm{End}\,}}(M_1) = \emptyset \).

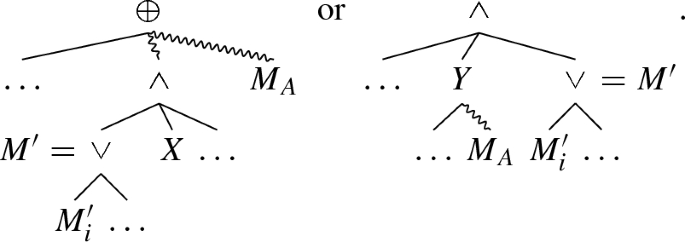

These requirements are tight as for each requirement there exists counterexamples of the following form: two process trees whose syntax violates the requirement and who have different languages but have identical directly follows graphs. Consequently, the two trees cannot be distinguished by the directly follows abstraction.

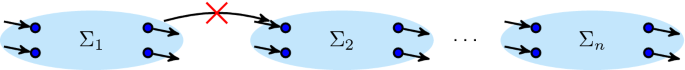

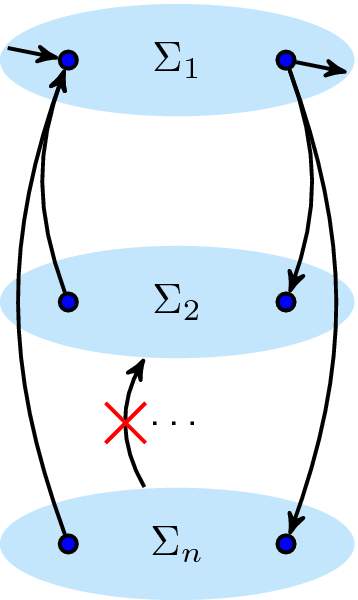

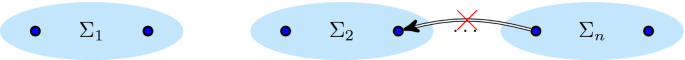

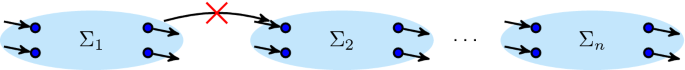

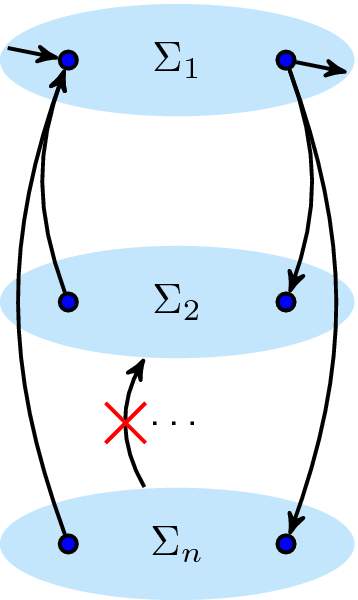

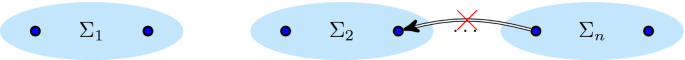

Figure 5 illustrates the counterexample for \(\hbox {C}_{\mathrm{dfg}}.\hbox {l}.1\). This requirement has some similarity with the so-called short loops of the \(\alpha \) algorithm [1].

The counterexamples for \(\hbox {C}_{\mathrm{dfg}}.\hbox {i}.1\), \(\hbox {C}_{\mathrm{dfg}}.\hbox {i}.2\) and \(\hbox {C}_{\mathrm{dfg}}.\hbox {i}.3\) follow a similar reasoning and are given in “Appendix C.1”.

Trees with \(\tau \) and  nodes are excluded from \(\textsc {C}_{\text {dfg}}\) entirely. The corresponding counterexamples for the directly follows abstraction and how to preserve information on \(\tau \) and

nodes are excluded from \(\textsc {C}_{\text {dfg}}\) entirely. The corresponding counterexamples for the directly follows abstraction and how to preserve information on \(\tau \) and  will both be discussed in Sect. 7.

will both be discussed in Sect. 7.

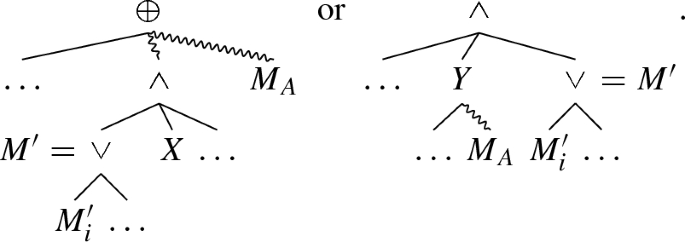

A weaker requirement one could consider to replace \(\hbox {C}_{\mathrm{dfg}}.\hbox {l}.1\)is that at least one child of the loop should have disjoint start and end activities: \(M'\,=\,\circlearrowleft (M'_1, \ldots M'_n): \exists _{1 \leqslant i \leqslant n}~ {{\,\mathrm{Start}\,}}(M'_i) \cap {{\,\mathrm{End}\,}}(M'_i) = \emptyset \). This weaker requirement would allow both \(M_{7}\) and \(M_{8}\) (see Fig. 6), i.e. c can be in the redo of the inner or outer \(\circlearrowleft \)-nodes. However, the counterexample in Fig. 6 shows that this weaker constraint is not strong enough.

5.4 Uniqueness

Now that all concepts for abstraction and recovery have been introduced, what is left is to prove for \(\alpha _{\text {dfg}}\) which behaviour can be preserved. Here, we provide the lemmas and theorems according to the framework of Sect. 2 to establish the fundamental formal limits. This proof structure will be reused on the more complex abstractions later.

That is, in this section, we prove that two structurally different reduced process trees of \(\textsc {C}_{\text {dfg}}\) always have different directly follows graphs. To prove this, we exploit the recursive structure of process trees: we first show that if the root operator of two process trees differs, then their directly follows graphs differ. Second, we show that if the activity partition (that is, the distribution of activities over the children of the root) of two trees differs, then their directly follows graphs differ. These two results yield that any structural difference over the entire tree will result in a different directly follows graph.

Lemma 5.2

(Operators are mutually exclusive) Take two reduced process trees of \(\textsc {C}_{\text {dfg}}\)\(K = \oplus (K_1, \ldots K_n)\) and \(M = \otimes (M_1, \ldots M_m)\) such that \(\oplus \ne \otimes \). Then, \(\alpha _{\text {dfg}}(K) \ne \alpha _{\text {dfg}}(M)\).

This lemma is proven by showing that for each pair of operators, there is always a difference in their directly follows graphs. For instance, the directly follows graph of a  node is not connected, while all other operators make the graph connected. For a detailed proof, please refer to “Appendix D.1”.

node is not connected, while all other operators make the graph connected. For a detailed proof, please refer to “Appendix D.1”.

Lemma 5.3

(Partitions are mutually exclusive) Take two reduced process trees of \(\textsc {C}_{\text {dfg}}\)\(K = \oplus (K_1 \ldots K_n)\) and \(M = \oplus (M_1 \ldots M_m)\) such that their activity partition is different: \(\exists _{1 \leqslant w \leqslant \text {min}(n, m)}~ \Sigma (K_w) \ne \Sigma (M_w)\). Then, \(\alpha _{\text {dfg}}(K) \ne \alpha _{\text {dfg}}(M)\).

For this proof, we assume a fixed order of children for the non-commutative operators \(\rightarrow \) and \(\circlearrowleft \). Then, we show, for each operator, that a difference in activity partitions leads to a difference in directly follows graphs. For a detailed proof, please refer to “Appendix D.2”.

Lemma 5.4

(Abstraction uniqueness for \(\textsc {C}_{\text {dfg}}\)) Take two reduced process trees of \(\textsc {C}_{\text {dfg}}\)K and M such that \(K \ne M\). Then, \(\alpha _{\text {dfg}}(K) \ne \alpha _{\text {dfg}}(M)\).

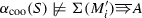

Proof

Towards contradiction, assume that there exist two reduced process trees K and M, both of \(\textsc {C}_{\text {dfg}}\), such that \(\alpha _{\text {dfg}}(K) = \alpha _{\text {dfg}}(M)\), but \(K \ne M\). Then, there exist topmost subtrees \(K'\) in K and \(M'\) in M such that \(\alpha _{\text {dfg}}(K') = \alpha _{\text {dfg}}(M')\) and such that \(K'\), \(M'\) are structurally different in their activity, operator or activity partition, i.e. either

\(K'\) or \(M'\) is a \(\tau \), while the other is not. Then, obviously \(\alpha _{\text {dfg}}(K') \ne \alpha _{\text {dfg}}(M')\).

\(K'\) or \(M'\) is a single activity while the other is not. Then, by the restrictions of \(\textsc {C}_{\text {dfg}}\), \(\alpha _{\text {dfg}}(K') \ne \alpha _{\text {dfg}}(M')\).

\(K' = \otimes (K'_1 \ldots K'_ n)\) and \(M' = \oplus (M'_1 \ldots M'_n)\) such that \(\oplus \ne \otimes \). By Lemma 5.2, \(\alpha _{\text {dfg}}(K') \ne \alpha _{\text {dfg}}(M')\).

\(K' = \oplus (K'_1 \ldots K'_ n)\) and \(M' = \oplus (M'_1 \ldots M'_n)\) such that the activity partition is different, i.e. there is an i such that \(\Sigma (K'_i) \ne \Sigma (M'_i)\). By Lemma 5.3, \(\alpha _{\text {dfg}}(K') \ne \alpha _{\text {dfg}}(M')\).

Hence, there cannot exist such K and M, and therefore, \(\alpha _{\text {dfg}}(K) \ne \alpha _{\text {dfg}}(M)\). \(\square \)

As a language abstraction is solely derived from a language, different directly follows graphs imply different languages (the reverse not necessarily holds), we immediately conclude uniqueness:

Corollary 5.1

(Language uniqueness for \(\textsc {C}_{\text {dfg}}\)) There are no two different reduced process trees of \(\textsc {C}_{\text {dfg}}\) with equal languages. Hence, for trees of class \(\textsc {C}_{\text {dfg}}\), the normal form of Sect. 4 is uniquely defined.

6 Preservation and recovery with minimum self-distance

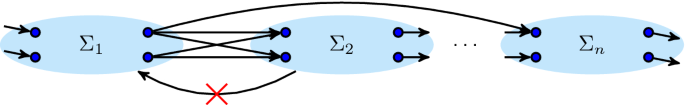

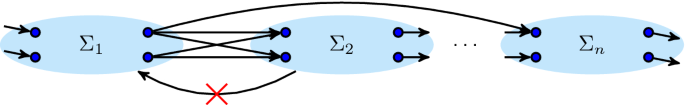

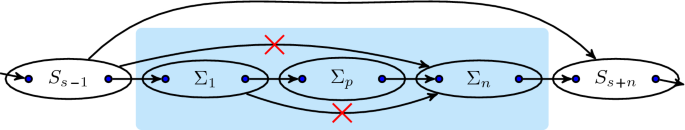

A structure that is difficult to distinguish in directly follows graphs is the nesting of \(\circlearrowleft \) and \(\wedge \) operators, resembling the so-called short loops of the \(\alpha \) algorithm. For instance, Fig. 7 shows an example of two process trees with such a nesting, having the same directly follows graph. In \(\textsc {C}_{\text {dfg}}\), such nestings were excluded.

In this section, we introduce a new abstraction, the minimum self-distance abstraction, that distinguishes some of such nestings by considering which activities must be in between two executions of the same activity. As illustrated in Fig. 7, the minimum self-distance abstraction captures more information of process trees, enabling discovery and conformance checking algorithms to distinguish the languages of these trees.

The remainder of this section follows a strategy similar to Sect. 5: first, we introduce the language abstraction of minimum self-distance graphs. Second, we introduce adapted footprints of the recursive process tree operators in minimum self-distance graphs. Third, we characterize the extended class of recursively defined languages and show where this class is tight (conditions cannot be dropped). Fourth, we show that different trees in normal form of this class have different combinations of directly follows graphs and minimum self-distance graphs (and hence languages), using the research framework of Sect. 2.

6.1 Minimum self-distance

The minimum self-distancem of an activity is the minimum number of events in between two executions of that activity:

Definition 6.1

(Minimum self-distance) Let L be a language, and let \(a, b \in \Sigma (L)\). Then,

A minimum self-distance graph\(\alpha _{\text {msd}}\) is a directed graph whose nodes are the activities of the alphabet \(\Sigma \). An edge \(\alpha _{\text {msd}}(a, b)\) in a minimum self-distance graph denotes that b is a witness of the minimum self-distance of a, i.e. activity b can appear in between two minimum-distant executions of activity a:

Definition 6.2

(Minimum self-distance graph) Let L be a language, and let \(a, b \in \Sigma (L)\). Then,

For a minimum self-distance graph \(\alpha _{\text {msd}}\), \(\Sigma (\alpha _{\text {msd}})\) denotes the alphabet of activities, i.e. the nodes of \(\alpha _{\text {msd}}\).

For instance, consider the log \(L_{11} = \{ \langle a, b \rangle , \langle b, a, c, a, b \rangle , \langle a, b, c, a, b, c, b, a \rangle \}\). In this log, the minimum self-distances are \(m(a) = 1\), \(m(b) = 1\) and \(m(c) = 2\), witnessed by the subtraces \(\langle a, c, a \rangle \) (for a), \(\langle b, c, b \rangle \) (for b) and \(\langle c, a, b, c \rangle \) (for c). Thus, the minimum self-distance relations are \(\alpha _{\text {msd}}(a, c)\), \(\alpha _{\text {msd}}(b, c)\), \(\alpha _{\text {msd}}(c, a)\) and \(\alpha _{\text {msd}}(c, b)\). Figure 8 visualizes this minimum self-distance relation as a graph.

6.2 Footprints

In this section, we introduce the characteristics that process tree operators leave in minimum self-distance graphs.

Definition 6.3

(minimum self-distance footprints) Let \(\alpha _{\text {msd}}\) be a minimum self-distance graph and let \(c = (\oplus , \Sigma _1, \ldots \Sigma _n)\) be a cut, consisting of a process tree operator  and a partition of activities with parts \(\Sigma _1 \ldots \Sigma _n\) such that \(\Sigma (\alpha _{\text {msd}}) = \bigcup _{1 \leqslant i \leqslant n} \Sigma _i\) and \(\forall _{1 \leqslant i < j \leqslant n}~ \Sigma _i \cap \Sigma _j = \emptyset \).

and a partition of activities with parts \(\Sigma _1 \ldots \Sigma _n\) such that \(\Sigma (\alpha _{\text {msd}}) = \bigcup _{1 \leqslant i \leqslant n} \Sigma _i\) and \(\forall _{1 \leqslant i < j \leqslant n}~ \Sigma _i \cap \Sigma _j = \emptyset \).

Concurrent and interleaved. If \(\oplus = \wedge \) or \(\oplus = \leftrightarrow \), then in \(\alpha _{\text {msd}}\):

- ci.1:

There are no \(\alpha _{\text {msd}}\) connections between parts:

Loop. If \(\oplus = \circlearrowleft \) then in \(\alpha _{\text {msd}}\):

- l.1:

Each activity has an outgoing edge.

- l.2:

All redo activities that have a connection to a body activity have connections to the same body activities:

- l.3:

All body activities that have a connection to a redo activity have connections to the same redo activities:

- l.4:

No two activities from different redo children have an \(\alpha _{\text {msd}}\)-connection:

A formal definition is included in “Appendix B.2”.

For instance, consider the example shown in Fig. 7: the two process trees \(M_{9}\) and \(M_{10}\) have the same directly follows graph (Fig. 7c). However, the minimum self-distance graphs of these two trees differ (Fig. 7d, e). To exploit this difference, the footprints in both graphs differ: in \(\alpha _{\text {msd}}(M_{9})\), the only footprint is \((\circlearrowleft , \{a, b\}, \{c\})\), while in \(\alpha _{\text {msd}}(M_{9})\) the only footprint is \((\wedge , \{a, c\}, \{b\})\) footprint.

From the semantics of process trees, it follows that these footprints are present in minimum self-distance graphs of process trees without duplicate activities:

Lemma 6.1

(Minimum self-distance footprints) Let \(M = \oplus (M_1, \ldots M_m)\) be a process tree without duplicate activities, with  . Then, the cut \((\oplus \), \(\Sigma (M_1)\), \(\ldots \Sigma (M_n))\) is a \(\oplus \)-cut in \(\alpha _{\text {msd}}(M)\) according to Definition 6.3.

. Then, the cut \((\oplus \), \(\Sigma (M_1)\), \(\ldots \Sigma (M_n))\) is a \(\oplus \)-cut in \(\alpha _{\text {msd}}(M)\) according to Definition 6.3.

6.3 A class of trees: \(\textsc {C}_{\text {msd}}\)

Using the minimum self-distance graph, we can lift a restriction of \(\textsc {C}_{\text {dfg}}\) partially. In this section, we introduce the extended class of process trees \(\textsc {C}_{\text {msd}}\), in which a \(\circlearrowleft \)-node can be a direct child of a \(\wedge \)-node.

Definition 6.4

(Class\(\textsc {C}_{\text {msd}}\)) Let \(\Sigma \) be an alphabet of activities. Then, the following process trees are in \(\textsc {C}_{\text {msd}}\):

\(M \in \textsc {C}_{\text {dfg}}\) is in \(\textsc {C}_{\text {msd}}\);

Let \(M_1 \ldots M_n\) be reduced process trees in \(\textsc {C}_{\text {msd}}\) without duplicate activities: \(\forall _{i \in [1\ldots n], i \ne j \in [1\ldots n]}~ \Sigma (M_i) \cap \Sigma (M_j) = \emptyset \). Then, \(\circlearrowleft (M_1, \ldots M_n)\) is in \(\textsc {C}_{\text {msd}}\) if:

- l.1:

the body child is not concurrent:

\(M_1 \ne \wedge (\ldots )\)

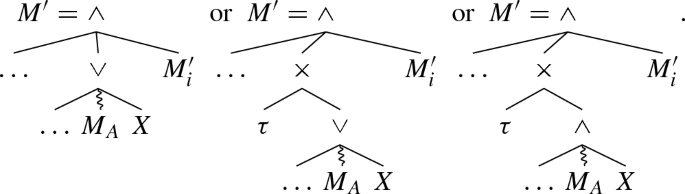

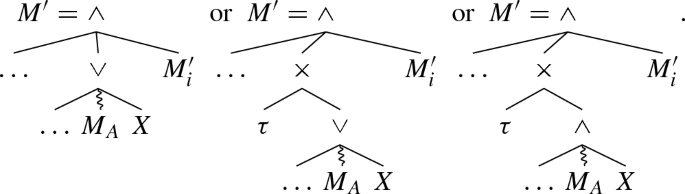

We neither have a proof nor a counterexample for the necessity of Requirement \(\hbox {C}_{\mathrm{msd}}.\hbox {l}.1\). Figure 9 illustrates this: the two trees have a different language, an equivalent \(\alpha _{\text {dfg}}\)-graph (shown in Fig. 9b) but a different \(\alpha _{\text {msd}}\)-graph (shown in Fig 9c, d). Thus, they could be distinguished using their \(\alpha _{\text {msd}}\)-graph. However, the footprint (Definition 6.3) cannot distinguish these trees: both cuts \((\circlearrowleft , \{a, b, c\}, \{d\})\) and \((\circlearrowleft , \{a, c, d\}, \{b\})\) are valid in both \(\alpha _{\text {msd}}\)-graphs, where \((\circlearrowleft , \{a, b, c\}, \{d\})\) corresponds to \(M_{12}\) and \((\circlearrowleft , \{a, c, d\}, \{b\})\) corresponds to \(M_{13}\). This implies that a discovery algorithm that uses only the footprint cannot distinguish these two trees. In “Appendix E.1”, we elaborate on this.

6.4 Uniqueness

To prove that the combination of directly follows and minimum self-distance graphs distinguishes all reduced process trees of \(\textsc {C}_{\text {msd}}\), we follow a strategy that is similar to the one used in Sect. 5.4: we first show that, given two process trees, a difference in root operators implies a difference in either the \(\alpha _{\text {dfg}}\) or \(\alpha _{\text {msd}}\)-abstractions of the trees. Second, we show that, given two process trees, a difference in root activity partitions implies a difference in the abstractions. Third, using the first two results, we show that any structural difference between two process trees implies that the trees have different abstractions. For the full proofs, please refer to “Appendix E”. Consequently:

Corollary 6.1

(Language uniqueness for \(\textsc {C}_{\text {msd}}\)) There are no two different reduced process trees of \(\textsc {C}_{\text {msd}}\) with equal languages. Hence, for trees of class \(\textsc {C}_{\text {msd}}\), the normal form of Sect. 4 is uniquely defined.

7 Preserving and recovering optionality and inclusive choice

The abstractions discussed in previous sections preserve information only if all behaviour is always fully observable. The \(\alpha _{\text {dfg}}\) and \(\alpha _{\text {msd}}\) abstractions cannot preserve information about unobservable behaviour, or skips, caused by the inclusive choice operator  or by an alternative silent \(\tau \) step making behaviour optional.

or by an alternative silent \(\tau \) step making behaviour optional.

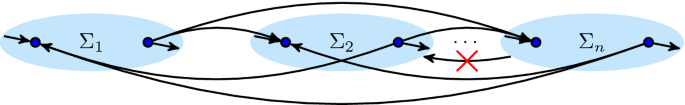

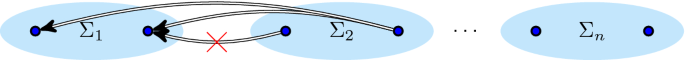

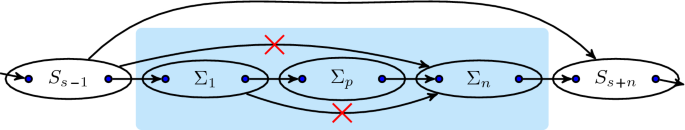

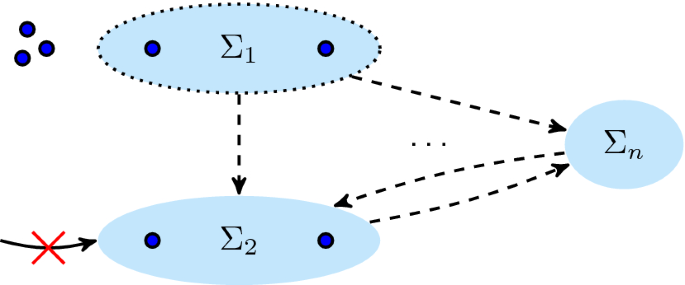

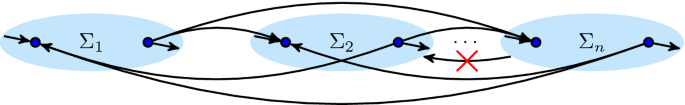

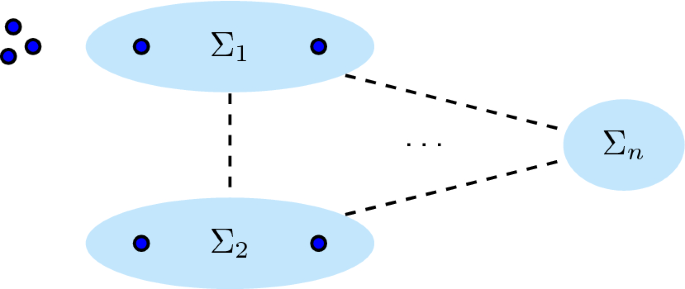

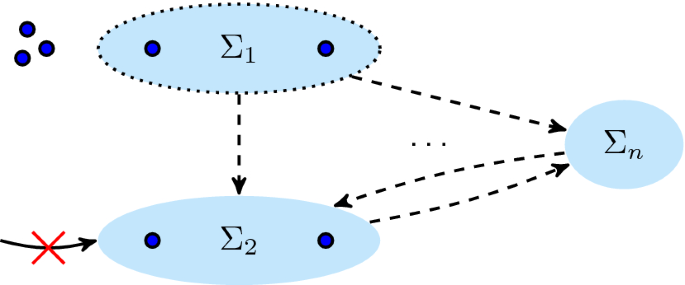

The two models \(M_{16} \in \textsc {C}_{\text {msd}}{}\) and \(M_{17} \notin \textsc {C}_{\text {msd}}{}\) have different behaviour but the same \(\alpha _{\text {dfg}}\)-graph (c) and the same \(\alpha _{\text {msd}}\)-graph (d). Information about \(\tau \) and  in \(M_{17}\) is preserved in the \(\alpha _{\text {coo}}\)-graphs in (f) and (g)

in \(M_{17}\) is preserved in the \(\alpha _{\text {coo}}\)-graphs in (f) and (g)

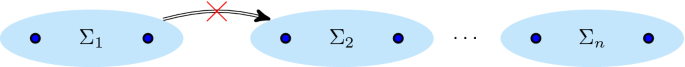

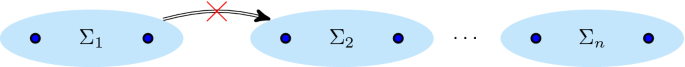

The model \(M_{15}\) in Fig. 10b exhibits optionality of the sequence b, c due to a silent activity \(\tau \) under a choice  . Although its \(\alpha _{\text {dfg}}\)-graph in Fig. 10c differs from the \(\alpha _{\text {dfg}}\)-graph of \(M_{14}\) in Fig. 10a without optionality, the optionality of b, c cannot be recovered as both models have the same \(\rightarrow \)-footprint of Sect. 5.2. Model \(M_{17}\) in Fig. 11a exhibits skips due to the inclusive choice operator

. Although its \(\alpha _{\text {dfg}}\)-graph in Fig. 10c differs from the \(\alpha _{\text {dfg}}\)-graph of \(M_{14}\) in Fig. 10a without optionality, the optionality of b, c cannot be recovered as both models have the same \(\rightarrow \)-footprint of Sect. 5.2. Model \(M_{17}\) in Fig. 11a exhibits skips due to the inclusive choice operator  and

and  under \(\wedge \). Yet, its \(\alpha _{\text {dfg}}\) and \(\alpha _{\text {msd}}\) abstractions are identical to the \(\alpha _{\text {dfg}}\) and \(\alpha _{\text {msd}}\) abstractions (Fig. 11c and d) of the model of Fig. 11b having no skips, making their behaviour indistinguishable under these abstractions.

under \(\wedge \). Yet, its \(\alpha _{\text {dfg}}\) and \(\alpha _{\text {msd}}\) abstractions are identical to the \(\alpha _{\text {dfg}}\) and \(\alpha _{\text {msd}}\) abstractions (Fig. 11c and d) of the model of Fig. 11b having no skips, making their behaviour indistinguishable under these abstractions.

In this section, we introduce techniques to preserve and recover information about these unobservable types of behaviour. We first discuss the influence of optionality on \(\alpha _{\text {dfg}}\)-footprints and exclude some cases, in particular some types of loops, from further discussion in this paper (Sect. 7.1). We then follow our framework and provide abstractions and footprints to preserve information about optionality and inclusive choices. We show that partial cuts (that consider only a subset of the activities in a language) allow to localize skips.

For example, to recover the optionality in \(\alpha _{\text {dfg}}(M_{15})\) of Fig. 10c, we find the partial \(\rightarrow \)-cut footprint\((\rightarrow ,\{b\},\{c\})\) as a subgraph that adheres to the \(\rightarrow \)-cut of Sect. 5.2and can be tightly skipped by \(\alpha _{\text {dfg}}(M_{15})(a, d)\). We formalize this notion and show that it correctly recovers skips in a sequence from \(\alpha _{\text {dfg}}\) in Sect. 7.2.

Preserving information about  requires an entirely new abstraction as the \(\alpha _{\text {dfg}}\)-graph for

requires an entirely new abstraction as the \(\alpha _{\text {dfg}}\)-graph for  and \(\wedge \) is identical. We introduce the concurrent-optional-or (coo, \(\alpha _{\text {coo}}\)) abstraction which preserves information on a family of graphs “bottom-up”. For instance, the traces of \(M_{17}\) such as \(\langle b \rangle \), \(\langle a, b\rangle \), \(\langle c, d, b\rangle \), \(\langle c, b, d, a\rangle \), \(\langle c, d\rangle \) and \(\langle c, d, a\rangle \) show that if \(\{a\}\) occurs there is a similar trace in which \(\{a\}\) does not occur (\(\{a\}\) is optional), and that if any of the sets \(\{b\}\) and \(\{c,d\}\) occur, then similar traces with any combination of \(\{b\}\) and \(\{c, d\}\) occur as well (are interchangeable), which we encode in the coo-graph of Fig. 11f. A pattern for also relating \(\{a\}\) emerges by grouping b, c and d: the presence of \(\{a\}\) in a trace implies the occurrence of \(\{b, c, d\}\) in that trace (but not vice versa), which we encode in the coo-graph of Fig. 11g. Both coo-graphs together are the \(\alpha _{\text {coo}}\)-abstraction of \(M_{17}\). Similarly, Fig. 11e is the \(\alpha _{\text {coo}}\)-abstraction of 11a. In Sect. 7.3, we further explain and define \(\alpha _{\text {coo}}\) for languages and we show that

and \(\wedge \) is identical. We introduce the concurrent-optional-or (coo, \(\alpha _{\text {coo}}\)) abstraction which preserves information on a family of graphs “bottom-up”. For instance, the traces of \(M_{17}\) such as \(\langle b \rangle \), \(\langle a, b\rangle \), \(\langle c, d, b\rangle \), \(\langle c, b, d, a\rangle \), \(\langle c, d\rangle \) and \(\langle c, d, a\rangle \) show that if \(\{a\}\) occurs there is a similar trace in which \(\{a\}\) does not occur (\(\{a\}\) is optional), and that if any of the sets \(\{b\}\) and \(\{c,d\}\) occur, then similar traces with any combination of \(\{b\}\) and \(\{c, d\}\) occur as well (are interchangeable), which we encode in the coo-graph of Fig. 11f. A pattern for also relating \(\{a\}\) emerges by grouping b, c and d: the presence of \(\{a\}\) in a trace implies the occurrence of \(\{b, c, d\}\) in that trace (but not vice versa), which we encode in the coo-graph of Fig. 11g. Both coo-graphs together are the \(\alpha _{\text {coo}}\)-abstraction of \(M_{17}\). Similarly, Fig. 11e is the \(\alpha _{\text {coo}}\)-abstraction of 11a. In Sect. 7.3, we further explain and define \(\alpha _{\text {coo}}\) for languages and we show that  , \(\wedge \), and

, \(\wedge \), and  can be correctly recovered from \(\alpha _{\text {coo}}\) through corresponding partial cut footprints. In Sect. 7.4, we characterize the class \(\textsc {C}_{\text {coo}}{}\) of behaviour (in terms of process trees) and prove that \(\alpha _{\text {dfg}}\), \(\alpha _{\text {msd}}\), and \(\alpha _{\text {coo}}\) abstractions and footprints together uniquely preserve behavioural information in \(\textsc {C}_{\text {coo}}{}\), thereby using the research framework outlined in Sect. 2.

can be correctly recovered from \(\alpha _{\text {coo}}\) through corresponding partial cut footprints. In Sect. 7.4, we characterize the class \(\textsc {C}_{\text {coo}}{}\) of behaviour (in terms of process trees) and prove that \(\alpha _{\text {dfg}}\), \(\alpha _{\text {msd}}\), and \(\alpha _{\text {coo}}\) abstractions and footprints together uniquely preserve behavioural information in \(\textsc {C}_{\text {coo}}{}\), thereby using the research framework outlined in Sect. 2.

7.1 Optionality

Unobservable skips can be described syntactically by an  construct and a \(\circlearrowleft (\tau ,.)\) construct, making one or more subtrees optional. We discuss the influence of optional behaviour on abstractions for these two cases.

construct and a \(\circlearrowleft (\tau ,.)\) construct, making one or more subtrees optional. We discuss the influence of optional behaviour on abstractions for these two cases.

The effect of a  construct is that its subtree is optional, that is, it can be skipped. We call a process tree optional (\({\overline{?}}\)) if its language contains the empty trace:

construct is that its subtree is optional, that is, it can be skipped. We call a process tree optional (\({\overline{?}}\)) if its language contains the empty trace:

Definition 7.1

(optionality)

Optionality has surprisingly little direct influence on the directly follows graph: in a directly follows graph, optionality shows up as an empty trace (by Definition 5.1).

Lemma 7.1

( footprint) Let M be a process tree such that \({\overline{?}}(M)\). Then, \(\epsilon \in \alpha _{\text {dfg}}(M)\).

footprint) Let M be a process tree such that \({\overline{?}}(M)\). Then, \(\epsilon \in \alpha _{\text {dfg}}(M)\).

However, optionality may influence the footprints in the \(\alpha _{\text {dfg}}\)-graph of other behaviour it is embedded in, that is, when an optional subtree is below another operator. The case of  under

under  can be eliminated through syntactical normal forms;

can be eliminated through syntactical normal forms;  under \(\leftrightarrow \) does not influence the footprints in the \(\alpha _{\text {dfg}}\)-graph [40, p. 150]. The case of

under \(\leftrightarrow \) does not influence the footprints in the \(\alpha _{\text {dfg}}\)-graph [40, p. 150]. The case of  under \(\rightarrow \) changes the \(\alpha _{\text {dfg}}\)-graph and requires a new \(\rightarrow \)-footprint which we introduce in Sect. 7.2.

under \(\rightarrow \) changes the \(\alpha _{\text {dfg}}\)-graph and requires a new \(\rightarrow \)-footprint which we introduce in Sect. 7.2.

The cases of  under \(\circlearrowleft \) and of \(\circlearrowleft (\tau ,.)\) also change the \(\alpha _{\text {dfg}}\)-graph but cannot be recovered by existing \(\circlearrowleft \)-footprints. However, in contrast to \(\rightarrow \), we may find two syntactically different models with

under \(\circlearrowleft \) and of \(\circlearrowleft (\tau ,.)\) also change the \(\alpha _{\text {dfg}}\)-graph but cannot be recovered by existing \(\circlearrowleft \)-footprints. However, in contrast to \(\rightarrow \), we may find two syntactically different models with  under \(\circlearrowleft \) and \(\circlearrowleft (\tau ,.)\) which have identical languages, which renders our proof strategy inapplicable as it relies on the assumption that each behaviour (language) has a unique syntactic description, see [40, p. 150] for details. We exclude these cases in the following.

under \(\circlearrowleft \) and \(\circlearrowleft (\tau ,.)\) which have identical languages, which renders our proof strategy inapplicable as it relies on the assumption that each behaviour (language) has a unique syntactic description, see [40, p. 150] for details. We exclude these cases in the following.

Finally, the \(\alpha _{\text {dfg}}\)-graph cannot distinguish whether  is present under \(\wedge \) or not. Likewise,

is present under \(\wedge \) or not. Likewise,  and \(\wedge \) yield the same \(\alpha _{\text {dfg}}\)-graph. Preserving information requires a new abstraction which we introduce in Sect. 7.3.

and \(\wedge \) yield the same \(\alpha _{\text {dfg}}\)-graph. Preserving information requires a new abstraction which we introduce in Sect. 7.3.

In [20], five types of silent Petri net transitions were identified: Initialize and Finalize, which start or end a concurrency, are captured by the \(\wedge \)-operator and considered in this work. Skips, which bypass one or more transitions, are the transitions under study in this section, that is,  -constructs and

-constructs and  -constructs, see Fig. 3. Redo, which allows the model to go back and redo transitions, are not studied in this paper, that is, \(\circlearrowleft (., \tau )\)-constructs. Switch-transitions, which allow jumping between exclusive branches, have no equivalent in block-structured models and are typically mimicked by duplicating activities.

-constructs, see Fig. 3. Redo, which allows the model to go back and redo transitions, are not studied in this paper, that is, \(\circlearrowleft (., \tau )\)-constructs. Switch-transitions, which allow jumping between exclusive branches, have no equivalent in block-structured models and are typically mimicked by duplicating activities.

7.2 Sequence

The example of Fig. 10 showed that optionality cannot be detected from \(\alpha _{\text {dfg}}\) when it is contained in a sequence. The reason is, as shown in the proofs for \(\alpha _{\text {dfg}}\), that the footprints of Sect. 5.2 recover behaviour for all activities together, i.e. the \(\rightarrow \)-cut at the root level, from the \(\alpha _{\text {dfg}}\) abstraction. As shown, in the presence of  -constructs, a new directly follows footprint is necessary for \(\rightarrow \).

-constructs, a new directly follows footprint is necessary for \(\rightarrow \).

To detect optionality for only a subset of activities, we use cuts that partition an alphabet only partially, called partial cuts. Our proof strategy will be to show that if two trees differ in the \(\alpha _{\text {dfg}}\)-abstraction, then they differ in at least one such partial cut showing a particular \(\rightarrow \) relation that can be recovered from \(\alpha _{\text {dfg}}\).

Definition 7.2

(partial cut) Let \(\Sigma \) be an alphabet of activities and let \(\oplus \) be a process tree operator. Then, \((\oplus , \Sigma _1, \ldots \Sigma _n)\) is a partial cut of \(\Sigma \) if \(\Sigma _1, \ldots \Sigma _n \subseteq \Sigma \) and \(\forall _{1 \leqslant i < j \leqslant n}~\Sigma _i \cap \Sigma _j = \emptyset \). A partial cut expresses an \(\oplus \)-relation between its parts \(\Sigma _1 \ldots \Sigma _n\).

For the partial \(\rightarrow \)-cut footprint, one set \(\Sigma _p\) of activities has a special role which we call a pivot. Intuitively, occurrence of \(a\in \Sigma _p\) signals the occurrence of the optional behaviour: if one of the activities in the partial cut appears in a trace, then the pivot must appear in the trace as well.

Definition 7.3

(Partial\(\rightarrow \)-cut footprint) Let \(\Sigma \) be an alphabet of activities, let \(\alpha _{\text {dfg}}\) be a directly follows graph over \(\Sigma \) and let \(C = (\rightarrow , S_1, \ldots S_m)\) be a \(\rightarrow \)-cut of \(\alpha _{\text {dfg}}\) according to Definition 5.2. Then, a partial cut \((\rightarrow , \Sigma _1, \ldots \Sigma _n)\) is a partial \(\rightarrow \)-cut if there is a pivot\(\Sigma _p\) such that in \(\alpha _{\text {dfg}}\):

- s.1:

The partial cut is a consecutive part of C.

- s.2:

There are no end activities before the pivot in the partial cut.

- s.3:

There are no start activities after the pivot in the partial cut.

- s.4:

There are no directly follows edges bypassing the pivot in the partial cut.

- s.5:

The partial cut can be tightly avoided:

“Appendix B.3” provides the complete formal definition and proves information preservation according to our framework: in a process tree each \(\rightarrow \)-node has a pivot and adheres to the footprint. The proof that the pivot, and hence the \(\rightarrow \)-node, can be uniquely rediscovered uses that by the reduction rules, at least one of the children of any \(\rightarrow \)-node is not optional. This child is the pivot, and by the reduction rules, a pivot cannot be a sequential node itself.

For instance, consider the process trees \(M_{14}\) and \(M_{15}\) shown in Fig. 10. Their directly follows graphs shown in Fig. 10c are obviously different, and this becomes apparent in the new directly follows footprint: in \(\alpha _{\text {dfg}}(M_{14})\), there is no pivot present, and therefore, there is partial cut that satisfies Definition 7.3 (this allows the conclusion that there is no nested \(\rightarrow \)-behaviour). However, in \(\alpha _{\text {dfg}}(M_{15})\), both b and c are pivots, yielding the partial \(\rightarrow \)-cut \((\rightarrow , \{b\}, \{c\})\). Intuitively, considering the structure of the directly follows graph, the combination of b and c forms a partial cut because executing either implies executing the other, but both together can be avoided.

7.3 Inclusive choice

7.3.1 Idea

Preserving and recovering inclusive choice require remembering more behaviour than what is stored in \(\alpha _{\text {dfg}}\) as illustrated by Fig. 11. We show that this additional information can be stored and recovered in a new abstraction, called \(\alpha _{\text {coo}}\). The different nature of the \(\alpha _{\text {coo}}\)-abstraction requires a slightly different proof and reasoning strategy as we illustrate next on the example tree \(M_{17}\) of Fig. 11 before providing the definitions.

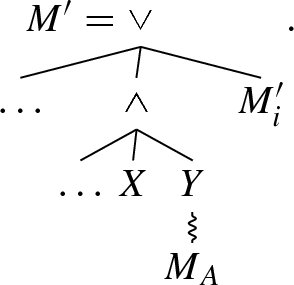

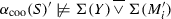

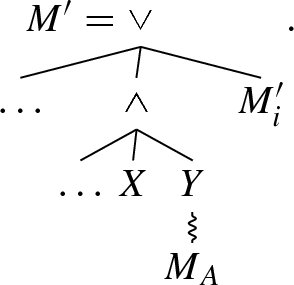

While the directly follows graph does not suffice to distinguish \(M_{17}\) from other process trees, it yields the concurrent cut \(C = (\wedge , \{a\}, \{b\}, \{c, d\})\). From this concurrent cut, we conclude that c and d are not of interest for distinguishing  - from \(\wedge \)-behaviour; thus, we group them together.

- from \(\wedge \)-behaviour; thus, we group them together.

From this concurrent cut, we work our way upwards. That is, we consider the \(\alpha _{\text {coo}}\)-graph for our partition \(\{\{a\}, \{b\}, \{c, d\}\}\), shown in Fig. 11f. In this \(\alpha _{\text {coo}}\)-graph, we identify a footprint that involves two or more sets of activities of the partition. In our example, we identify that the sets \(\{b\}\) and \(\{c, d\}\) are related using  . Then, we merge b, c and d in our partition, which becomes \(\{\{a\}, \{b, c, d\}\}\) and repeat the procedure.

. Then, we merge b, c and d in our partition, which becomes \(\{\{a\}, \{b, c, d\}\}\) and repeat the procedure.

Figure 11g shows the \(\alpha _{\text {coo}}\)-graph belonging to \(\{\{a\}, \{b, c, d\}\}\). In this graph, a footprint is present linking \(\{a\}\) to \(\{b, c, d\}\) using \(\wedge \). Thus, we have shown that the \(\alpha _{\text {coo}}\)-abstraction, that is, the family of graphs \(\alpha _{\text {coo}}(M_{17})\), can only belong to a process tree with root \(\wedge \) and activity partition \(\{a\}\), \(\{b, c, d\}\).

The proof strategy of this section formalizes each of these steps and proves that at each step, footprints can only hold in the relevant \(\alpha _{\text {coo}}\)-graph if and only if they correspond to nodes in the tree.

7.3.2 Coo-abstraction

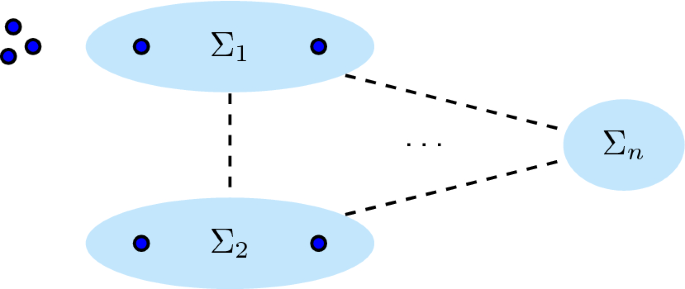

A coo-abstraction \(\alpha _{\text {coo}}\) is a family of coo-graphs, that is, a coo-abstraction contains a coo-graph for each partition of the alphabet of the language. Intuitively, the coo-abstraction indicates dependencies between the sets of activities that can occur in the language described by the \(\alpha _{\text {coo}}\)-abstraction. Therefore, we first introduce a helper function that expresses which sets of activities occur together in a language. Second, we introduce coo-graphs and third, the coo-abstraction.

Definition 7.4

(occurrence function\(f_o\)) Let \(S = \{\Sigma _1, \ldots \Sigma _n\}\) be a partition, let t be a trace and let L be a language. Then, the occurrence function of t under S yields the sets of S that occur in t or L:

For instance:

Using this occurrence function, we can define coo-graphs. A coo-graph expresses three types of properties of and between sets:

The unary optionality (\({\overline{?}}\)) expresses that if the set occurs in a trace, then there is a trace without the set as well;

The binary implication (\(\overline{\Rightarrow }\)) expresses that if one set occurs, then the other set occurs as well;

The binary interchangeability (

) expresses that if one of the two sets occur, then either or both can occur.

) expresses that if one of the two sets occur, then either or both can occur.

Notice that \(\overline{\Rightarrow }\)-edges are directed, while  -edges are undirected. Formally:

-edges are undirected. Formally:

Definition 7.5

(coo-graph\(\alpha _{\text {coo}}\)) Let L be a language, let S be a partition of \(\Sigma (L)\) and let \(A, B \in S\). Then, a coo-graph\(\alpha _{\text {coo}}(L, S)\) is a graph in which the nodes are the activities of \(\Sigma (L)\) and the edges connect sets of activities of S:

For instance, Fig. 12 shows several \(\alpha _{\text {coo}}\)-graphs of our example log \(L_{18}\). Coo-graphs bear some similarities with directed hypergraphs [43], however use two types of edges (\(\overline{\Rightarrow }\) and  ) and a node annotation (\({\overline{?}}{}\)).

) and a node annotation (\({\overline{?}}{}\)).

Finally, we combine all possible coo-graphs for a language into the coo-abstraction:

Definition 7.6

(coo-abstraction\(\alpha _{\text {coo}}\)) Let L be a language. Then, the coo-abstraction\(\alpha _{\text {coo}}(L)\) is the family of coo-graphs consisting of one coo-graph \(\alpha _{\text {coo}}(L, S)\) for each possible partition S of \(\Sigma (L)\).

In the remainder of this section, we might omit L: \(\alpha _{\text {coo}}\) denotes a particular coo-abstraction and \(\alpha _{\text {coo}}(S)\) denotes one of its coo-graphs for a particular partition S. Next, we introduce footprints that link the coo-relations to process tree operators.

7.3.3 Footprints

For inclusive choice and concurrency, we can now introduce the \(\alpha _{\text {coo}}\)-abstraction footprints. We first give the footprints, after which we illustrate them using an example.

Definition 7.7

(partial -cut) Let \(\Sigma \) be an alphabet of activities, S a partition of \(\Sigma \), let \(\alpha _{\text {coo}}(S)\) be a coo-graph, let \(\alpha _{\text {dfg}}\) be a directly follows graph, and let

-cut) Let \(\Sigma \) be an alphabet of activities, S a partition of \(\Sigma \), let \(\alpha _{\text {coo}}(S)\) be a coo-graph, let \(\alpha _{\text {dfg}}\) be a directly follows graph, and let  be a partial cut such that \(\forall _{1 \leqslant i \leqslant n}~ \Sigma _i \in S\). Then, C is a partial

be a partial cut such that \(\forall _{1 \leqslant i \leqslant n}~ \Sigma _i \in S\). Then, C is a partial  -cut if in \(\alpha _{\text {coo}}(S)\) and \(\alpha _{\text {dfg}}\):

-cut if in \(\alpha _{\text {coo}}(S)\) and \(\alpha _{\text {dfg}}\):

- o.1:

C is a part of a \(\alpha _{\text {dfg}}\)-concurrent cut (Definition 5.2).

- o.2:

All parts are interchangeable (

) in \(\alpha _{\text {coo}}(S)\)::

) in \(\alpha _{\text {coo}}(S)\)::

A formal definition is included in “Appendix B.4”.

Definition 7.8

(partial\(\wedge \)-cut) Let \(\Sigma \) be an alphabet of activities, S a partition of \(\Sigma \), let \(\alpha _{\text {coo}}(S)\) be a coo-graph, let \(\alpha _{\text {dfg}}\) be a directly follows graph, and let \(C = (\wedge , \Sigma _1, \ldots \Sigma _n)\) be a partial cut such that \(\forall _{1 \leqslant i \leqslant n}~ \Sigma _i \in S\). Then, C is a partial \(\wedge \)-cut if in \(\alpha _{\text {coo}}(S)\) and \(\alpha _{\text {dfg}}\):

- c.1:

C is a part of an \(\alpha _{\text {dfg}}\)-concurrent cut (Definition 5.2).

Furthermore, in \(\alpha _{\text {coo}}(S)\):

– EITHER –

- c.2.1:

All parts bi-imply (\(\overline{\Rightarrow }\)) one another::

– OR –

- c.3.1:

The first part is optional (\({\overline{?}}\)).

- c.3.2:

The first part implies all other parts.

- c.3.3:

All non-first parts bi-imply one another.

- c.3.4:

No non-first part \(\Sigma _i\) is implied by any part not in C::

A formal definition and a proof that these footprints apply in process trees without duplicate activities are included in “Appendix B.5”.

For instance, consider a process tree \(M_{19}\) without duplicate activities, and one of its subtrees  , in which \(M_1\) and \(M_2\) are arbitrary subtrees. Furthermore, consider a partition S of the activities of \(M_{20}\). In the language of \(M_{20}\), whenever in a trace \(M_1\) is executed, there must be a similar trace in which both \(M_1\) and \(M_2\) are executed, and there must be a trace in which \(M_2\) is executed but \(M_1\) is not. Then in \(\alpha _{\text {coo}}(\mathcal {L}(M_{19}), S)\), the coo-relation

, in which \(M_1\) and \(M_2\) are arbitrary subtrees. Furthermore, consider a partition S of the activities of \(M_{20}\). In the language of \(M_{20}\), whenever in a trace \(M_1\) is executed, there must be a similar trace in which both \(M_1\) and \(M_2\) are executed, and there must be a trace in which \(M_2\) is executed but \(M_1\) is not. Then in \(\alpha _{\text {coo}}(\mathcal {L}(M_{19}), S)\), the coo-relation  holds, and hence,

holds, and hence,  is a partial

is a partial  -cut of \(\alpha _{\text {coo}}(\mathcal {L}(M_{19}), S)\).

-cut of \(\alpha _{\text {coo}}(\mathcal {L}(M_{19}), S)\).

7.4 A class of trees: \(\textsc {C}_{\text {coo}}\)

In this section, we introduce the class of trees that we consider for the handling of  - and

- and  -constructs: \(\textsc {C}_{\text {coo}}\). This class of trees extends \(\textsc {C}_{\text {dfg}}\) by including

-constructs: \(\textsc {C}_{\text {coo}}\). This class of trees extends \(\textsc {C}_{\text {dfg}}\) by including  -nodes and some \(\tau \)-leaves.

-nodes and some \(\tau \)-leaves.

Definition 7.9

(Class\(\textsc {C}_{\text {coo}}\)) Let \(\Sigma \) be an alphabet of activities, then the following process trees are in \(\textsc {C}_{\text {coo}}{}\):

\(\tau \) is in \(\textsc {C}_{\text {coo}}{}\);

a with \(a \in \Sigma \) is in \(\textsc {C}_{\text {coo}}\);

Let \(M_1 \ldots M_n\) be reduced process trees in \(\textsc {C}_{\text {coo}}{}\) without duplicate activities: \(\forall _{i \in [1\ldots n], i \ne j \in [1\ldots n]}~ \Sigma (M_i) \cap \Sigma (M_j) = \emptyset \). Then,

A node \(\oplus (M_1, \ldots M_n)\) with

is in \(\textsc {C}_{\text {coo}}{}\)

is in \(\textsc {C}_{\text {coo}}{}\)An interleaved node \(\leftrightarrow (M_1, \ldots , M_n)\) is in \(\textsc {C}_{\text {coo}}{}\) if all:

- i.1:

At least one child has disjoint start and end activities: \(\exists _{i \in [1\ldots n]}~ {{\,\mathrm{Start}\,}}(M_i) \cap {{\,\mathrm{End}\,}}(M_i) = \emptyset \)

- i.2:

no child is interleaved itself: \(\forall _{i\in [1\ldots n]}~ M_i \ne \leftrightarrow (\ldots )\)

- i.3:

no child is optionally interleaved:

- i.4:

each concurrent or inclusive choice child has at least one child with disjoint start and end activities: \(\forall _{i \in [1\ldots n]}~ M_i = \oplus (M'_1, \ldots M'_m) \Rightarrow \exists _{j \in [1 \ldots m]}~ {{\,\mathrm{Start}\,}}(M'_j) \cap {{\,\mathrm{End}\,}}(M'_j) = \emptyset \) with

A loop node \(\circlearrowleft (M_1, \ldots M_n)\) is in \(\textsc {C}_{\text {coo}}{}\) if all:

- l.1:

the body child is not concurrent: \(M_1 \ne \wedge (\ldots )\)

- l.2:

no redo child can produce the empty trace: \(\forall _{i \in [2\ldots n]}~ \epsilon \notin \mathcal {L}(M_i)\)

Notice that \(\textsc {C}_{\text {dfg}}{} \subseteq \textsc {C}_{\text {msd}}{} \subseteq \textsc {C}_{\text {coo}}{}\). We illustrate the necessity of the newly added or relaxed requirements:

Requirement \(\hbox {C}_{\mathrm{coo}}.\hbox {i}.3\): the child of an interleaved node cannot be an optional interleaved node. Nested interleaved nodes cannot be distinguished using directly follows graphs, as was shown in Sect. 5.3. As an optional nested interleaved has the same directly follows graph as a nested interleaving, a similar argument applies here.

Requirement \(\hbox {C}_{\mathrm{coo}}.\hbox {l}.2\): redo children of loops cannot be optional. As shown in Sect. 7.1, the reduction rules of Sect. 4 are not strong enough to distinguish trees with \(\circlearrowleft (.,\tau )\) constructs. Furthermore, as shown in Sect. 7.1, optional children under a \(\circlearrowleft \) might invalidate directly follows footprints.

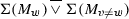

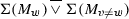

From our discussion in Sect. 7.1, it follows that  constructs preserve the footprints of the process tree operators

constructs preserve the footprints of the process tree operators  , \(\rightarrow \), \(\leftrightarrow \), \(\wedge \) and \(\circlearrowleft \) for trees in \(\textsc {C}_{\text {coo}}\).

, \(\rightarrow \), \(\leftrightarrow \), \(\wedge \) and \(\circlearrowleft \) for trees in \(\textsc {C}_{\text {coo}}\).

Corollary 7.1

(optionality preserves cuts) Take two reduced process trees \(M \in \textsc {C}_{\text {dfg}}{}\), and \(M' \in \textsc {C}_{\text {coo}}{}\), such that \(M = \oplus (M_1, \ldots M_m)\), \(M' = \oplus (M'_1, \ldots M'_n)\) and each \(M'_i\) is equal to either \(M_i\) or  . Then, \(\alpha _{\text {dfg}}(M')\) contains a cut \((\oplus , \Sigma (M_1) \ldots \Sigma (M_m))\), i.e. a footprint according to Definition 5.2.

. Then, \(\alpha _{\text {dfg}}(M')\) contains a cut \((\oplus , \Sigma (M_1) \ldots \Sigma (M_m))\), i.e. a footprint according to Definition 5.2.

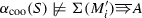

7.5 Uniqueness