Abstract

Besides the text content, documents usually come with rich sets of meta-information, such as categories of documents and semantic/syntactic features of words, like those encoded in word embeddings. Incorporating such meta-information directly into the generative process of topic models can improve modelling accuracy and topic quality, especially in the case where the word-occurrence information in the training data is insufficient. In this article, we present a topic model called MetaLDA, which is able to leverage either document or word meta-information, or both of them jointly, in the generative process. With two data augmentation techniques, we can derive an efficient Gibbs sampling algorithm, which benefits from the fully local conjugacy of the model. Moreover, the algorithm is favoured by the sparsity of the meta-information. Extensive experiments on several real-world datasets demonstrate that our model achieves superior performance in terms of both perplexity and topic quality, particularly in handling sparse texts. In addition, our model runs significantly faster than other models using meta-information.

1 Introduction

With the rapid growth of the internet, huge amounts of text data are generated in social networks, online shopping and news websites, etc. These data are generally short but may contain rich and complex kinds of information that can be difficult to find in traditional information sources [44], and they therefore create demand for machine learning techniques that are both effective and efficient. Probabilistic topic models such as Latent Dirichlet Allocation (LDA) [4] are among the popular approaches for this task. In topic modelling, a document is assumed to be generated from a mixture of topics, where each topic is a probability distribution over a vocabulary. However, most existing topic models discover topics purely based on word occurrences, ignoring the meta-information (a.k.a., side information) associated with the content, which often results in degraded performance. We argue that meta-information associated with diverse texts can play the role of background knowledge in human text comprehension. When we humans read text, it is natural for us to leverage metadata, such as categories, authors, timestamps, and words’ semantic/syntactic information, to improve our understanding of the text. Therefore, it is reasonable to expect that topic models can also benefit from the meta-information and yield improved modelling accuracy and topic quality.

In practice, various kinds of meta-information are associated with tweets, product reviews, blogs, etc. They are often available at both the document level and the word level. At the document level, labels of documents can be used to guide topic learning so that more meaningful topics can be discovered. It is likely that documents with common labels discuss similar topics, which can be modelled by similar distributions over topics. In the case of tweets, as shown in Fig. 1, they can have an author, hashtag, timestamp, etc. Previous work on tweet pooling [12, 19] has shown that aggregating tweets according to their authors or hashtags can significantly improve topic modelling. Furthermore, if we use authors as labels for scientific papers, the research topics of the papers published by the same researcher can be closely related, and authors having similar research topics are more likely to collaborate [34].

At the word level, different semantic/syntactic features are also accessible. For example, there are features regarding word relationships, such as synonyms obtained from WordNet [22], word co-occurrence patterns obtained from a large corpus, and linked concepts from knowledge graphs. It is preferable that words having similar meanings but different morphological forms, like “dog” and “puppy”, be assigned to the same topic, even if they barely co-occur in the modelled corpus. Recently, word embeddings generated by GloVe [27] and word2vec [20, 21] have attracted a lot of attention in natural language processing and related fields. It has been shown that word embeddings can capture both the semantic and syntactic features of words so that similar words are close to each other in the embedding space. It is reasonable to expect that these word embeddings will improve topic modelling [8, 26]. Figure 1 also shows some word-level meta-information associated with the tweet.

It is known that most conventional topic models can suffer from a large performance degradation on short texts (e.g., tweets and news headlines) due to insufficient word co-occurrence information. In such cases, meta-information of documents and words can play the role of auxiliary information in analysing short texts, which can compensate for the lost information in word co-occurrences. At the document level, we can leverage the hashtags, users, locations, and timestamps of tweets so that the data sparsity problem can be alleviated. At the word level, word semantic similarity and embeddings obtained from or trained on large external corpora (e.g., Google News or Wikipedia) can also be built into the generative process of topic models [17, 26, 36].

Recently, significant research effort has been devoted to handling short texts in topic modelling. Models along this line often take classical topic models, like LDA, as a building block, and manipulate the graphical structure to incorporate meta-information into the generative process [23, 26, 30]. However, we found that those models make use of either the document-level or the word-level meta-information, rather than both. The limitation is often caused by their complicated model structures, which lose the conjugacy favoured by sampling methods and in turn result in inefficient inference algorithms.

In this article, we propose MetaLDA (see footnote 1), a new topic model that can effectively and efficiently make use of arbitrary document and word meta-information encoded in binary form. Specifically, the labels of a document in MetaLDA are incorporated in the prior of the per-document topic distributions. If two documents have similar labels, their topic distributions should be generated with similar Dirichlet priors. Analogously, at the word level, the features of a word are incorporated in the prior of the per-topic word distributions, which encourages words with similar features to have similar proportions across topics. Therefore, both document and word meta-information, if and when they are available, can be flexibly and simultaneously incorporated in the generative process. MetaLDA has the following key properties:

1. MetaLDA jointly incorporates various kinds of document and word meta-information for both regular and short texts, yielding better modelling accuracy and topic quality.

2. With data augmentation techniques, the inference of MetaLDA can be done by an efficient and closed-form Gibbs sampling algorithm that benefits from the full local conjugacy of the model.

3. The simple structure of incorporating meta-information and the efficient inference algorithm give MetaLDA an advantage in terms of running speed over other models with meta-information.

4. MetaLDA has improved interpretability. For example, the inclusion of the document labels directly in the generative process makes it possible both to explain each label with topics and to assign labels to each topic.

We conduct extensive experiments with several real datasets including regular and short texts in various domains. The experimental results demonstrate that MetaLDA outperforms all the competitors we considered in terms of perplexity, topic coherence and running time. The rest of the article, which extends our earlier contribution [42], is organised as follows. We first briefly discuss the related work in Sect. 2. Then, we elaborate on MetaLDA and derive its sampling algorithm in Sects. 3 and 4, respectively. The experimental results derived on several real-world datasets are reported in Sect. 5. We conclude the article in Sect. 6.

2 Related work

In this section, we review three lines of related work: models with document meta-information, models with word meta-information, and models for short texts.

At the document level, Supervised LDA (sLDA) [18] models document labels by learning a generalised linear model with an appropriate link function and exponential family dispersion function. The restriction of sLDA, however, is that one document can only have one label. Labelled LDA (LLDA) [29] assumes that each label has a corresponding topic and a document is generated by a mixture of the topics. Although multiple labels are allowed in LLDA, it requires that the number of topics equal the number of labels, i.e., exactly one topic per label. As an extension to LLDA, Partially Labelled LDA (PLLDA) [30] relaxes this requirement by assigning multiple topics to a label. The Dirichlet Multinomial Regression (DMR) model [23] incorporates document labels on the prior of the topic distributions like our MetaLDA but with the logistic-normal transformation. As full conjugacy does not exist in DMR, a part of the inference has to be done by numerical optimisation, which is slow for large sets of labels and topics. Similarly, in the Hierarchical Dirichlet Scaling Process (HDSP) [14], conjugacy is broken as well since the topic distributions have to be renormalised. A Poisson factorisation model with hierarchical document labels is introduced in [13], but the technique cannot be applied to regular topic models as the topic proportion vectors are also unnormalised.

There has been growing interest in incorporating word features in topic models. For example, DF-LDA [2] incorporates word must-links and cannot-links using a Dirichlet forest prior in LDA; MRF-LDA [35] encodes word semantic similarity in LDA with a Markov random field; WF-LDA [28] extends LDA to model word features with the logistic-normal transform; LF-LDA [26] integrates word embeddings into LDA by replacing the topic-word Dirichlet multinomial component with a mixture of a Dirichlet multinomial component and a word embedding component; and Gaussian LDA (GLDA) [8], instead of generating word types (tokens), directly generates word embeddings with the Gaussian distribution. Despite the exciting applications of the above models, their inference is usually less efficient due to non-conjugacy and/or complicated model structures.

Analysis of short text with topic models has been an active area with the development of social networks. Generally, there are two ways to deal with the sparsity problem in short texts: using the intrinsic properties of short texts or leveraging meta-information. For the first way, one popular approach is to aggregate short texts into pseudo-documents. For example, [12] introduces a model that aggregates tweets containing the same word, and, more recently, PTM [46] aggregates short texts into latent pseudo-documents. Another approach is to assume one topic per short document, known as the mixture of unigrams or Dirichlet Multinomial Mixture (DMM), as in [36, 39]. For the second way, document meta-information can be used to aggregate short texts. For example, [12] aggregates tweets by the corresponding authors and [19] shows that aggregating tweets by their hashtags yields superior performance over other aggregation methods. Closely related to our work are models that use word features for short texts. For example, [36] introduces an extension of GLDA for short texts, which samples an indicator variable that chooses to generate either the type of a word or the embedding of a word, and GPU-DMM [17] extends DMM with word semantic similarity obtained from embeddings for short texts. Although performance has improved, challenges remain for existing models:

- for aggregation-based models, it is usually hard to choose which meta-information to use for aggregation;

- the “single topic” assumption makes DMM models lose the flexibility to capture different topic ingredients of a document;

- the incorporation of meta-information in the existing models is usually less efficient.

To our knowledge, attempts to jointly leverage document and word meta-information are relatively rare. For example, meta-information can be incorporated by first-order logic in Logic-LDA [3] and by score functions in SC-LDA [37]. However, the first-order logic and score functions need to be defined separately for different kinds of meta-information, and defining them can be infeasible when both document and word meta-information are to be incorporated simultaneously.

3 The MetaLDA model

Given a corpus, LDA uses the same Dirichlet prior for all the per-document topic distributions and the same prior for all the per-topic word distributions [33]. In MetaLDA, by contrast, each document has a specific Dirichlet prior on its topic distribution, which is computed from the meta-information of the document, and the parameters of the prior are estimated during training. Similarly, each topic has a specific Dirichlet prior computed from the word meta-information. In this section we elaborate on MetaLDA, in particular on how the meta-information is incorporated. Hereafter, we will use labels as document meta-information, unless otherwise stated. Table 1 summarises the notation used in this section.

The basic formulation mirrors that of standard LDA. Given a collection of D documents \({\mathcal {D}}\), MetaLDA generates document \(d \in \{1,\ldots ,D\}\) with a mixture of K topics and each topic \(k \in \{1,\ldots ,K\}\) is a distribution over the vocabulary with V tokens, denoted by \({\varvec{\phi }}_{k} \in {\mathbb {R}}^{V}_{+}\). For document d with \(N_d\) words, to generate the ith (\(i \in \{1,\ldots ,N_d\}\)) word \(w_{d,i}\), we first sample a topic \(z_{d,i} \in \{1,\ldots ,K\}\) from the document’s topic distribution \(\varvec{\theta _d} \in {\mathbb {R}}^{K}_{+}\), and then sample \(w_{d,i}\) from \({\varvec{\phi }}_{z_{d,i}}\). This formulation is now extended with meta-information. Assume the labels of document d are encoded in a binary vector \(\varvec{f_d} \in \{0,1\}^{L_\mathrm{doc}}\) where \(L_\mathrm{doc}\) is the total number of unique labels. \(f_{d,l}=1\) indicates that label l is active in document d, and \(f_{d,l}=0\) that it is not. MetaLDA allows each document to have multiple labels. Similarly, the \(L_\mathrm{word}\) features of token v are stored in a binary vector \({\varvec{g}}_v \in \{0,1\}^{L_\mathrm{word}}\). Therefore, the document and word meta-information associated with \({\mathcal {D}}\) are stored in the matrices \(\mathbf {F} \in \{0,1\}^{D \times L_\mathrm{doc}}\) and \(\mathbf {G} \in \{0,1\}^{V \times L_\mathrm{word}}\), respectively. Although MetaLDA incorporates binary features, categorical features and real-valued features can be converted into binary values with proper transformations such as discretisation and binarisation [10].

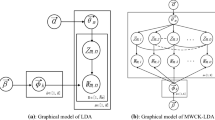

Figure 2 shows the graphical model of MetaLDA and the generative process is as follows:

1. For each topic k:

    - (a) For each doc-label l: Draw \(\lambda _{l,k} \sim \mathrm {Ga}(\mu _0,\mu _0)\)

    - (b) For each word-feature \(l'\): Draw \(\delta _{l',k} \sim \mathrm {Ga}(\nu _0,\nu _0)\)

    - (c) For each token v: Compute \(\beta _{k,v} = \prod _{l'=1}^{L_\mathrm{word}} \delta _{l',k}^{g_{v,l'}}\)

    - (d) Draw \({\varvec{\phi }}_k \sim \text {Dir}_V({\varvec{\beta }}_{k})\)

2. For each document d:

    - (a) For each topic k: Compute \(\alpha _{d,k} = \prod _{l=1}^{L_\mathrm{doc}} \lambda _{l,k}^{f_{d,l}}\)

    - (b) Draw \({\varvec{\theta }}_d \sim \text {Dir}_K({\varvec{\alpha }}_d)\)

    - (c) For each word in document d:

        - (i) Draw topic \(z_{d,i} \sim \text {Cat}_K(\varvec{\theta }_d)\)

        - (ii) Draw word \(w_{d,i} \sim \text {Cat}_V(\varvec{\phi }_{z_{d,i}})\)

where \(\text {Ga}(\cdot ,\cdot )\), \(\text {Dir}(\cdot )\), \(\text {Cat}(\cdot )\) are the gamma distribution with shape and rate parameters, the Dirichlet distribution, and the categorical distribution, respectively. K, \(\mu _0\), and \(\nu _0\) are the hyper-parameters.
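The steps above can be sketched in a few lines of NumPy. This is only an illustrative simulation of the generative process, not the Mallet-based implementation; the function name `generate_corpus` and its arguments are hypothetical, and the gamma draws use shape and rate as stated above (NumPy expects a scale, so the rate is inverted).

```python
import numpy as np

def generate_corpus(F, G, K, doc_lengths, mu0=1.0, nu0=1.0, seed=0):
    """Sample a synthetic corpus following Steps 1-2 of the generative process."""
    rng = np.random.default_rng(seed)
    D, L_doc = F.shape
    V, L_word = G.shape
    # Steps 1a-1b: Ga(shape, rate) draws; NumPy takes scale = 1 / rate.
    lam = rng.gamma(mu0, 1.0 / mu0, size=(L_doc, K))
    delta = rng.gamma(nu0, 1.0 / nu0, size=(L_word, K))
    # Step 1c: beta[k, v] is the product of delta[l', k] over the active features of v.
    beta = np.exp(G @ np.log(delta)).T          # shape (K, V)
    # Step 1d: one word distribution per topic.
    phi = np.vstack([rng.dirichlet(beta[k]) for k in range(K)])
    # Step 2a: alpha[d, k] is the product of lam[l, k] over the active labels of d.
    alpha = np.exp(F @ np.log(lam))             # shape (D, K)
    docs = []
    for d in range(D):
        theta = rng.dirichlet(alpha[d])                       # Step 2b
        z = rng.choice(K, size=doc_lengths[d], p=theta)       # Step 2c(i)
        w = np.array([rng.choice(V, p=phi[k]) for k in z])    # Step 2c(ii)
        docs.append((z, w))
    return docs, alpha, beta

# Toy meta-information: 3 documents with 2 labels, 4 tokens with 3 word features.
F = np.array([[1, 0], [1, 1], [0, 1]])
G = np.array([[1, 0, 1], [0, 1, 1], [1, 1, 0], [0, 0, 1]])
docs, alpha, beta = generate_corpus(F, G, K=3, doc_lengths=[5, 6, 7], mu0=5.0, nu0=5.0)
```

Computing the products in log space (`np.exp(F @ np.log(lam))`) is a design choice of the sketch: it turns the product over active labels into a single matrix multiplication.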

To incorporate document labels, MetaLDA learns a specific Dirichlet prior over the topics for each document by using the label information. Specifically, the information of document d’s labels is incorporated in \(\varvec{\alpha }_d\), the parameter of the Dirichlet prior on \(\varvec{\theta }_d\). As shown in Step 2a, \(\alpha _{d,k}\) is computed as a log-linear combination of the labels \(f_{d,l}\). Since \(f_{d,l}\) is binary, \(\alpha _{d,k}\) is simply the product of \(\lambda _{l,k}\) over all the active labels of document d, i.e., \(\{l \mid f_{d, l}=1\}\). Drawn from the gamma distribution with mean 1, \(\lambda _{l,k}\) controls the impact of label l on topic k. If label l has no or little impact on topic k, \(\lambda _{l,k}\) is expected to be 1 or close to 1, and then \(\lambda _{l,k}\) will have no or little influence on \(\alpha _{d,k}\), and vice versa. The hyper-parameter \(\mu _0\) controls the variation of \(\lambda _{l,k}\). The incorporation of word features is analogous but acts on the parameter of the Dirichlet prior on the per-topic word distributions, as shown in Step 1c.
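A small worked example may make the product form concrete. The values of `lam` below are made up for illustration, and `dirichlet_params` is a hypothetical helper name; the only thing taken from the text is the rule in Step 2a.

```python
import numpy as np

def dirichlet_params(F, lam):
    """alpha[d, k] = prod_l lam[l, k] ** F[d, l], evaluated in log space."""
    return np.exp(F @ np.log(lam))

# Two documents, three labels, two topics (illustrative numbers only).
F = np.array([[1, 0, 1],    # document 0 has labels 0 and 2
              [0, 0, 0]])   # document 1 has no active label
lam = np.array([[2.0, 0.5],
                [3.0, 1.0],
                [1.0, 4.0]])
alpha = dirichlet_params(F, lam)
# Document 0: topic 0 gets 2.0 * 1.0 and topic 1 gets 0.5 * 4.0.
# Document 1: the empty product leaves both entries at 1.
```

Label 2 has weight 1 on topic 0, so it leaves that entry untouched, which is the "no influence" case described above.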

The intuition behind our way of incorporating meta-information is as follows. At the document level, if two documents have more labels in common, their Dirichlet parameters \(\varvec{\alpha }_d\) will be more similar, resulting in more similar topic distributions \(\varvec{\theta }_d\). At the word level, if two words have similar features, their \(\beta _{k,v}\) in topic k will be similar and then we can expect that their \(\phi _{k,v}\) could be more or less the same. Finally, the two words will have similar probabilities of showing up in topic k. In other words, if a topic “prefers” a certain word, we expect that it will also prefer other words with similar features to that word. Moreover, at both the document and the word level, different labels/features may have different impact on the topics (\(\lambda \)/\(\delta \)), which can be automatically learnt in MetaLDA from the data.

4 Inference

Unlike most existing methods, our way of incorporating the meta-information facilitates the derivation of an efficient Gibbs sampling algorithm. With two data augmentation techniques (i.e., the introduction of auxiliary variables), MetaLDA admits local conjugacy, which in turn gives us a closed-form Gibbs sampling algorithm. Note that MetaLDA incorporates the meta-information on the Dirichlet priors, so we can still use LDA’s collapsed Gibbs sampling algorithm for the topic assignment \(z_{d,i}\). Thus, there is no need to use a hybrid learning algorithm (i.e., optimisation + sampling), such as those in [23, 26]. Moreover, as shown in Steps 2a and 1c, we only need to consider nonzero entries of \(\mathbf {F}\) and \(\mathbf {G}\) in computing the full conditionals, which further reduces the inference complexity, particularly when the feature space is sparse. This is often the case in real-world scenarios. In the rest of this section, we will focus on the derivation of the full conditionals for sampling the two Gamma random variables, \(\varvec{\lambda }\) and \(\varvec{\delta }\), used to model the influence of document labels and word features on topics. Table 2 shows the statistics that we need while running the inference.

Given \(\varvec{\phi }_{1:K}\) and \(\varvec{\theta }_{1:D}\), the complete model likelihood (i.e., joint distribution) of MetaLDA is exactly the same as LDA’s likelihood, which is as follows:
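Written in terms of the count statistics defined next, the likelihood takes the following form. The display is reconstructed from those definitions and from the generative process, so the exact typesetting and the left-hand-side notation are assumptions.

```latex
\[
p(\mathbf{w}, \mathbf{z} \mid \boldsymbol{\phi}_{1:K}, \boldsymbol{\theta}_{1:D})
= \prod_{d=1}^{D} \prod_{i=1}^{N_d} \theta_{d, z_{d,i}} \, \phi_{z_{d,i}, w_{d,i}}
= \prod_{d=1}^{D} \prod_{k=1}^{K} \theta_{d,k}^{m_{d,k}}
  \cdot \prod_{k=1}^{K} \prod_{v=1}^{V} \phi_{k,v}^{n_{k,v}}
\tag{1}
\]
```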

where \(n_{k,v} = \sum _{d=1}^{D} \sum _{i=1}^{N_d} \varvec{1}_{(w_{d,i} = v, z_{d,i} = k)}\) counts the number of occurrences of word v assigned to topic k, \(m_{d,k} = \sum _{i=1}^{N_d} \varvec{1}_{(z_{d,i} = k)}\) counts the number of words in document d assigned to topic k, and \(\varvec{1}_{(\cdot )}\) is the indicator function. In the standard LDA model, we can marginalise out \(\varvec{\phi }\) and \(\varvec{\theta }\) using Dirichlet-multinomial conjugacy, which yields
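With document-specific \(\boldsymbol{\alpha}_d\) and topic-specific \(\boldsymbol{\beta}_k\), the collapsed likelihood is a product of two Beta ratios. The form below is a reconstruction consistent with the later references to the "first" (document) and "second" (word) Beta ratio; the vectors \(\boldsymbol{m}_d\) and \(\boldsymbol{n}_k\) collecting the counts are notation assumed here.

```latex
\[
p(\mathbf{w}, \mathbf{z} \mid \boldsymbol{\alpha}_{1:D}, \boldsymbol{\beta}_{1:K})
= \prod_{d=1}^{D}
  \frac{\mathrm{Beta}_K(\boldsymbol{\alpha}_d + \boldsymbol{m}_d)}{\mathrm{Beta}_K(\boldsymbol{\alpha}_d)}
  \prod_{k=1}^{K}
  \frac{\mathrm{Beta}_V(\boldsymbol{\beta}_k + \boldsymbol{n}_k)}{\mathrm{Beta}_V(\boldsymbol{\beta}_k)}
\tag{2}
\]
```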

where \(\varGamma (\cdot )\) is the Gamma function and \(\text{ Beta }_N(\cdot )\) is the N-dimensional beta function, defined as
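The multivariate beta function has the standard definition below, stated here for a generic positive vector \(\boldsymbol{x}\) (the argument name is an assumption).

```latex
\[
\mathrm{Beta}_N(\boldsymbol{x})
= \frac{\prod_{n=1}^{N} \Gamma(x_n)}{\Gamma\!\left(\sum_{n=1}^{N} x_n\right)}
\]
```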

and here we assume that the Dirichlet priors are document and topic specific. Given \(\varvec{\beta }_k\) and \({\varvec{\alpha }}_d\), it is straightforward to compute the full conditional for sampling topic assignment \(z_{d,i}\), i.e.,
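The full conditional has the usual collapsed-LDA form with the document- and topic-specific priors plugged in. It is reconstructed here under the standard assumption that the counts exclude the token currently being resampled; \(\beta_{k,\cdot}\) and \(n_{k,\cdot}\) denote sums over the vocabulary.

```latex
\[
p(z_{d,i} = k \mid \mathbf{z}^{\neg (d,i)}, w_{d,i} = v, \boldsymbol{\alpha}_d, \boldsymbol{\beta}_{1:K})
\;\propto\;
(\alpha_{d,k} + m_{d,k}) \,
\frac{\beta_{k,v} + n_{k,v}}{\beta_{k,\cdot} + n_{k,\cdot}}
\tag{3}
\]
```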

In MetaLDA, we have replaced \({\varvec{\alpha }}_d\) and \(\varvec{\beta }_k\) with a log-linear model in order to build informative priors from various side information associated with both documents and words. They are deterministically computed from a set of Gamma random variables, as shown in Steps 2a and 1c in the generative process. Equation (3) can still be used in MetaLDA to sample the topic assignments. However, the major challenge is to sample the Gamma random variables, \(\varvec{\lambda }\) and \(\varvec{\delta }\), without significantly complicating the inference procedure.
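As a minimal sketch of the topic-assignment step, the function below evaluates the collapsed conditional for one token, assuming the usual form (alpha + document count) times (beta + word count) over (beta total + topic total), with all counts already excluding the token being resampled. The function name and array layout are hypothetical.

```python
import numpy as np

def topic_conditional(alpha_d, beta, m_d, n, v):
    """Normalised conditional over topics for one token of word type v.

    alpha_d: (K,) prior of the document, beta: (K, V) priors of the topics,
    m_d: (K,) topic counts in the document, n: (K, V) topic-word counts.
    The counts must already exclude the token being resampled.
    """
    weights = (alpha_d + m_d) * (beta[:, v] + n[:, v]) / (beta.sum(axis=1) + n.sum(axis=1))
    return weights / weights.sum()

rng = np.random.default_rng(0)
p = topic_conditional(
    alpha_d=np.array([1.0, 1.0]),
    beta=np.ones((2, 3)),
    m_d=np.array([0, 2]),
    n=np.array([[1, 0, 0], [0, 1, 1]]),
    v=0,
)
new_topic = rng.choice(len(p), p=p)   # draw the new assignment for this token
```

Because the meta-information only enters through `alpha_d` and `beta`, this step is identical to standard LDA once those arrays are cached.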

4.1 Sampling Gamma random variable \(\lambda _{l,k}\)

\(\lambda _{l,k}\) is involved in computing the Dirichlet prior over \(\varvec{\theta }_{1:D}\) via the parameter \({\varvec{\alpha }}_{1:D}\). To sample \(\lambda _{l,k}\), we expand the first Beta ratio in Eq. (2) with Gamma functions as follows:
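Expanding each beta function into Gamma functions gives two groups of terms per document, which the following paragraphs call Gamma ratio 1 and Gamma ratio 2. The display is a reconstruction from the definition of the beta function; the brace labels are added to match those names.

```latex
\[
\prod_{d=1}^{D}
\frac{\mathrm{Beta}_K(\boldsymbol{\alpha}_d + \boldsymbol{m}_d)}{\mathrm{Beta}_K(\boldsymbol{\alpha}_d)}
= \prod_{d=1}^{D}
  \underbrace{\frac{\Gamma(\alpha_{d,\cdot})}{\Gamma(\alpha_{d,\cdot} + m_{d,\cdot})}}_{\text{Gamma ratio 1}}
  \prod_{k=1}^{K}
  \underbrace{\frac{\Gamma(\alpha_{d,k} + m_{d,k})}{\Gamma(\alpha_{d,k})}}_{\text{Gamma ratio 2}}
\tag{4}
\]
```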

where \(\alpha _{d,\cdot } = \sum _{k=1}^{K} \alpha _{d,k}\), and \(m_{d,\cdot } = \sum _{k=1}^{K} m_{d,k}\). These Gamma functions are not easy to work with directly once \(\alpha _{d,k}\) is replaced with \(\prod _{l=1}^{L_\mathrm{doc}} \lambda _{l,k}^{f_{d,l}}\). In order to retain the sampling efficiency of the standard LDA model, we appeal to data augmentation.

Gamma ratio 1 in Eq. (4) can be seen as the marginalisation of a set of Beta random variables, and can therefore be augmented as follows (similar to the sampling of the Pitman–Yor concentration parameter in [9]):
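The augmentation follows from the integral representation of the beta function, \(\Gamma(a)/\Gamma(a+b) = \frac{1}{\Gamma(b)} \int_0^1 q^{a-1}(1-q)^{b-1}\,dq\). The display below is a reconstruction that drops the factor \(1/\Gamma(m_{d,\cdot})\), which does not depend on \(\alpha\), and assumes \(m_{d,\cdot} > 0\).

```latex
\[
\prod_{d=1}^{D} \frac{\Gamma(\alpha_{d,\cdot})}{\Gamma(\alpha_{d,\cdot} + m_{d,\cdot})}
\;\propto\;
\prod_{d=1}^{D} \int_{0}^{1} q_d^{\alpha_{d,\cdot} - 1} (1 - q_d)^{m_{d,\cdot} - 1} \, \mathrm{d}q_d
\tag{5}
\]
```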

where for each document d, \(q_d \sim \text {Beta}(\alpha _{d,\cdot }, m_{d,\cdot })\). Given \(q_{1:D}\) for all the documents, Gamma ratio 1 can be replaced, up to factors that do not depend on \(\alpha \), by the product \(\prod _{d=1}^{D} q_d^{\alpha _{d, \cdot }}\).

Gamma ratio 2 in Eq. (4) is the Pochhammer symbol for a rising factorial, which can be augmented with an auxiliary variable \(t_{d,k}\) [7, 31, 40, 45] as follows:
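The rising factorial expands into powers of \(\alpha_{d,k}\) with unsigned Stirling numbers of the first kind as coefficients, which is the identity used for the augmentation. The display is reconstructed from that standard identity.

```latex
\[
\frac{\Gamma(\alpha_{d,k} + m_{d,k})}{\Gamma(\alpha_{d,k})}
= \sum_{t_{d,k} = 0}^{m_{d,k}} S^{m_{d,k}}_{t_{d,k}} \, \alpha_{d,k}^{t_{d,k}}
\tag{6}
\]
```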

where \(S^{m}_{t}\) indicates an unsigned Stirling number of the first kind. Since Gamma ratio 2 is a normalising constant for the probability of the number of tables in the Chinese Restaurant Process (CRP) [5], \(t_{d,k}\) can be sampled by a CRP with \(\alpha _{d,k}\) as the concentration and \(m_{d,k}\) as the number of customers:

where \(\text {Bern}(\cdot )\) samples a sequence of binary variables from the Bernoulli distribution. The complexity of sampling \(t_{d,k}\) by Eq. (7) is \({\mathcal {O}}(m_{d,k})\). For large \(m_{d,k}\), as the standard deviation of \(t_{d,k}\) is \({\mathcal {O}}(\sqrt{\log m_{d,k}})\) [5], one can sample \(t_{d,k}\) in a small window around the current value in complexity \({\mathcal {O}}(\sqrt{\log m_{d,k}})\).
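The linear-time table-count draw can be sketched as a sum of independent Bernoulli variables. In this sketch customers are indexed from zero, so the first customer always opens a table; that index range follows the usual CRP convention and is an assumption here, as is the function name.

```python
import numpy as np

def sample_table_count(alpha, m, rng):
    """Draw the number of occupied tables for m customers in a CRP with concentration alpha."""
    if m == 0:
        return 0
    i = np.arange(m)                    # customers indexed 0 .. m-1
    open_prob = alpha / (alpha + i)     # probability that customer i opens a new table
    return int((rng.random(m) < open_prob).sum())

rng = np.random.default_rng(0)
t = sample_table_count(alpha=0.5, m=20, rng=rng)
```

The cost is linear in `m`, matching the complexity stated above; the windowed variant mentioned in the text is not implemented in this sketch.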

By ignoring the terms unrelated to \(\alpha \), the augmentation of Eq. (6) can be simplified to a single term \(\alpha _{d,k}^{t_{d,k}}\). With those auxiliary variables, we can simplify Eq. (4) as:
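Combining \(q_d^{\alpha_{d,\cdot}} = \prod_k q_d^{\alpha_{d,k}}\) from the first augmentation with \(\alpha_{d,k}^{t_{d,k}}\) from the second, the part of the augmented likelihood that depends on \(\alpha\) is proportional to the expression below (a reconstruction from the two preceding steps).

```latex
\[
\prod_{d=1}^{D} \prod_{k=1}^{K} q_d^{\alpha_{d,k}} \, \alpha_{d,k}^{t_{d,k}}
\tag{8}
\]
```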

Now, replacing \(\alpha _{d,k}\) with \(\lambda _{l,k}\) (i.e., \(\alpha _{d,k} = \prod _{l=1}^{L_\mathrm{doc}} \lambda _{l,k}^{f_{d,l}}\) ), we get:
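Substituting the product form of \(\alpha_{d,k}\) into both factors gives the expression below. It is reconstructed by direct substitution, using \(\alpha_{d,k}^{t_{d,k}} = \prod_l \lambda_{l,k}^{f_{d,l} t_{d,k}}\).

```latex
\[
\prod_{d=1}^{D} \prod_{k=1}^{K}
q_d^{\prod_{l=1}^{L_\mathrm{doc}} \lambda_{l,k}^{f_{d,l}}}
\prod_{l=1}^{L_\mathrm{doc}} \lambda_{l,k}^{f_{d,l} \, t_{d,k}}
\tag{9}
\]
```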

Recall that all the document labels are binary and \(\lambda _{l,k}\) is involved in computing \(\alpha _{d,k}\) if and only if \(f_{d,l}=1\). Extracting all the terms related to \(\lambda _{l,k}\) in Eq. (9), we get the posterior likelihood of \(\lambda _{l,k}\):
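Keeping only the factors that involve \(\lambda_{l,k}\), and writing \(q_d^{\alpha_{d,k}} = \exp(\alpha_{d,k} \log q_d)\), the likelihood term takes a gamma-like shape. The display is a reconstruction consistent with the definition of \(\alpha_{d,k}/\lambda_{l,k}\) given next.

```latex
\[
p(\,\cdot \mid \lambda_{l,k}) \;\propto\;
\exp\!\left( -\lambda_{l,k} \sum_{d : f_{d,l} = 1} \frac{\alpha_{d,k}}{\lambda_{l,k}} \log \frac{1}{q_d} \right)
\lambda_{l,k}^{\sum_{d : f_{d,l} = 1} t_{d,k}}
\tag{10}
\]
```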

where \(\frac{\alpha _{d,k}}{\lambda _{l,k}}\) is the value of \(\alpha _{d,k}\) with \(\lambda _{l,k}\) removed when \(f_{d,l}=1\). With these data augmentation techniques, the likelihood is transformed into a form that is conjugate to the gamma prior of \(\lambda _{l,k}\).

Therefore, it is straightforward to yield the following sampling strategy for \(\lambda _{l,k}\):
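Multiplying the likelihood term above by the \(\mathrm{Ga}(\mu_0, \mu_0)\) prior gives a gamma posterior. The parameters below are derived under the shape and rate parameterisation stated in Sect. 3; the symbols \(\mu'\) and \(\mu''\) and the layout are assumptions of this reconstruction.

```latex
\[
\lambda_{l,k} \sim \mathrm{Ga}(\mu', \mu''), \qquad
\mu' = \mu_0 + \sum_{d : f_{d,l} = 1} t_{d,k}, \qquad
\mu'' = \mu_0 - \sum_{d : f_{d,l} = 1} \frac{\alpha_{d,k}}{\lambda_{l,k}} \log q_d
\]
```

Since \(0 < q_d < 1\), each \(-\log q_d\) is positive, so the rate \(\mu''\) stays positive.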

Before \(\lambda _{l,k}\) is sampled, the value of \(\alpha _{d,k}\) can be computed and cached. After a new value of \(\lambda _{l,k}\) is sampled, \(\alpha _{d,k}\) is updated by:
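Because \(\lambda_{l,k}\) enters \(\alpha_{d,k}\) as a single multiplicative factor, the cached value can be refreshed by a ratio rather than recomputed. The update below is reconstructed from that observation and applies to the documents with \(f_{d,l} = 1\).

```latex
\[
\alpha_{d,k} \leftarrow \alpha_{d,k} \, \frac{\lambda'_{l,k}}{\lambda_{l,k}}
\qquad \text{for all } d \text{ with } f_{d,l} = 1
\]
```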

where \(\lambda '_{l,k}\) is the newly sampled value of \(\lambda _{l,k}\).

To sample/compute Eqs. (10)–(13), one only iterates over the documents where label l is active (i.e., \(f_{d,l}=1\)). Thus, the sampling for all \(\lambda \) takes \({\mathcal {O}}(D'KL_\mathrm{doc})\) where \(D'\) is the average number of documents where a label is active (i.e., the column-wise sparsity of \(\mathbf {F}\)). Usually \(D' \ll D\), because a label that exists in nearly all the documents provides little discriminative information and can then be neglected. This demonstrates how the sparsity of document meta-information is leveraged. Moreover, sampling all the tables t takes \({\mathcal {O}}(\tilde{N})\) (\(\tilde{N}\) is the total number of words in \({\mathcal {D}}\)), which can be accelerated with the window sampling technique explained above.
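One sweep over all \(\lambda_{l,k}\) can be sketched as follows. The posterior parameters used here (shape \(\mu_0\) plus the table counts, rate \(\mu_0\) minus the weighted \(\log q_d\) terms) are derived from the shape and rate parameterisation stated in Sect. 3 and are an assumption of the sketch, as are the function name, the dense array layout, and the clipping guard.

```python
import numpy as np

def resample_lambda(F, lam, alpha, m, t, mu0, rng):
    """One Gibbs sweep over every lambda[l, k]; lam and the cached alpha are updated in place.

    F: (D, L) binary labels, lam: (L, K), alpha: (D, K) float cache,
    m: (D, K) topic counts, t: (D, K) table counts.
    """
    # Auxiliary q_d ~ Beta(alpha_{d,.}, m_{d,.}); assumes every document is non-empty.
    q = rng.beta(alpha.sum(axis=1), m.sum(axis=1))
    neg_log_q = -np.log(np.clip(q, 1e-300, 1.0))   # guard against log(0)
    for l in range(F.shape[1]):
        docs = np.flatnonzero(F[:, l])             # only documents where label l is active
        for k in range(lam.shape[1]):
            shape = mu0 + t[docs, k].sum()
            rate = mu0 + np.sum(alpha[docs, k] / lam[l, k] * neg_log_q[docs])
            new_value = rng.gamma(shape, 1.0 / rate)     # NumPy takes scale = 1 / rate
            alpha[docs, k] *= new_value / lam[l, k]      # refresh the cached alpha
            lam[l, k] = new_value
    return lam, alpha

rng = np.random.default_rng(1)
F = np.array([[1, 0], [1, 1], [0, 1]])
lam = np.ones((2, 2))
alpha = np.exp(F @ np.log(lam))
m = np.array([[3, 2], [1, 4], [2, 2]])
t = np.array([[1, 1], [1, 2], [1, 1]])
lam, alpha = resample_lambda(F, lam, alpha, m, t, mu0=1.0, rng=rng)
```

Each inner step touches only the rows in `docs`, which is where the \({\mathcal {O}}(D'KL_\mathrm{doc})\) cost comes from; a sparse column format for `F` would avoid the `flatnonzero` scan.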

4.2 Sampling Gamma random variable \(\delta _{l',k}\)

The derivation of sampling \(\delta _{l',k}\) is analogous to \(\lambda _{l,k}\). Here, we use the same data augmentation methods for re-parameterising the second Beta ratio in Eq. (2), i.e.,
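For reference, the second Beta ratio is the word-side factor of the collapsed likelihood, reconstructed here with \(\boldsymbol{n}_k\) denoting the vector of counts \(n_{k,1:V}\) (assumed notation).

```latex
\[
\prod_{k=1}^{K}
\frac{\mathrm{Beta}_V(\boldsymbol{\beta}_k + \boldsymbol{n}_k)}{\mathrm{Beta}_V(\boldsymbol{\beta}_k)}
\]
```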

as
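Applying the same two augmentations per topic instead of per document gives the following form, reconstructed by analogy with the document-side derivation and with factors independent of \(\beta\) dropped.

```latex
\[
\prod_{k=1}^{K} \prod_{v=1}^{V} \hat{q}_k^{\,\beta_{k,v}} \, \beta_{k,v}^{t'_{k,v}}
\]
```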

where \(\hat{q}_k \sim \text {Beta}(\beta _{k,\cdot }, n_{k,\cdot })\) and \(t'_{k,v} = \sum _{i=1}^{n_{k,v}} \text {Bern}\left( \frac{\beta _{k,v}}{\beta _{k,v}+i}\right) \). Now, we replace \(\beta _{k,v}\) with \(\prod _{l'=1}^{L_\mathrm{word}} \delta _{l',k}^{g_{v,l'}}\),
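After the substitution the expression reads as below; this is a reconstruction obtained by direct substitution, mirroring the document-side step.

```latex
\[
\prod_{k=1}^{K} \prod_{v=1}^{V}
\hat{q}_k^{\,\prod_{l'=1}^{L_\mathrm{word}} \delta_{l',k}^{g_{v,l'}}}
\prod_{l'=1}^{L_\mathrm{word}} \delta_{l',k}^{g_{v,l'} \, t'_{k,v}}
\]
```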

and then extract all the terms related to \(\delta _{l',k}\) in Eq. (15) and add the Gamma prior to derive the posterior of \(\delta _{l',k}\):
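With the \(\mathrm{Ga}(\nu_0, \nu_0)\) prior in shape and rate form, the posterior density is proportional to the expression below. It is a reconstruction by analogy with \(\lambda_{l,k}\); note that \(\hat{q}_k\) is shared by all tokens of topic k and can be pulled out of the sum.

```latex
\[
p(\delta_{l',k} \mid \cdot) \;\propto\;
\delta_{l',k}^{\,\nu_0 - 1 + \sum_{v : g_{v,l'} = 1} t'_{k,v}}
\exp\!\left( -\delta_{l',k} \left( \nu_0 - \log \hat{q}_k \sum_{v : g_{v,l'} = 1} \frac{\beta_{k,v}}{\delta_{l',k}} \right) \right)
\]
```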

We can then sample \(\delta _{l',k}\) from a Gamma distribution parameterised with
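Reading off the shape and rate from the gamma-form posterior gives the parameters below. The symbols \(\nu'\) and \(\nu''\) are assumed names, and the shape and rate convention follows Sect. 3.

```latex
\[
\delta_{l',k} \sim \mathrm{Ga}(\nu', \nu''), \qquad
\nu' = \nu_0 + \sum_{v : g_{v,l'} = 1} t'_{k,v}, \qquad
\nu'' = \nu_0 - \log \hat{q}_k \sum_{v : g_{v,l'} = 1} \frac{\beta_{k,v}}{\delta_{l',k}}
\]
```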

\(\beta _{k,v}\) can be updated in a similar way to \(\alpha _{d,k}\), i.e.,
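The cached \(\beta_{k,v}\) is rescaled by the ratio of new to old value for the tokens in which the feature is active, reconstructed by analogy with the update of \(\alpha_{d,k}\).

```latex
\[
\beta_{k,v} \leftarrow \beta_{k,v} \, \frac{\delta'_{l',k}}{\delta_{l',k}}
\qquad \text{for all } v \text{ with } g_{v,l'} = 1
\]
```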

where \(\delta '_{l',k}\) is the newly sampled value of \(\delta _{l',k}\). Sampling all \(\delta \) takes \({\mathcal {O}}(V'KL_\mathrm{word})\) where \(V'\) is the average number of tokens where a feature is active (i.e., the column-wise sparsity of \(\mathbf {G}\) and usually \(V' \ll V\)) and sampling all the tables \(t'\) takes \({\mathcal {O}}(\tilde{N})\). Figure 3 illustrates the full sampling algorithm.

4.3 MetaLDA as a hyper-parameter sampling approach

Besides the observed labels/features associated with the datasets, a default label/feature for each document/word is introduced in MetaLDA, which is always equal to 1. The default can be interpreted as the bias term in \(\alpha \)/\(\beta \), which is supposed to capture the information unrelated to the labels/features. When working with only the default and no document labels, MetaLDA samples the Dirichlet parameters (i.e., the hyper-parameters of LDA) of the document-topic distributions, \(\alpha \), according to the statistics in the target corpus. Similarly, without word features, the Dirichlet parameters of the topic-word distributions, \(\beta \), are sampled. We demonstrate this by taking the document-topic distributions as an example.

Now assume each document only has a default label that is always equal to 1, i.e., \(f_{d, 0} = 1\) and \(f_{d, l} = 0\) for all \(l > 0\). According to our construction (Steps 1 and 2a), \(\alpha _{d, k} = \lambda _{0,k}\) for all the documents. In other words, all the documents share the same asymmetric Dirichlet prior on the document-topic distributions (\(\varvec{\theta }_d\)) which is constructed as follows:
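With only the default label, the construction reduces to one gamma-distributed parameter per topic, shared by all documents. The display is a reconstruction from Steps 1a, 2a and 2b, writing \(\alpha_k\) for the shared value \(\lambda_{0,k}\).

```latex
\[
\alpha_k = \lambda_{0,k} \sim \mathrm{Ga}(\mu_0, \mu_0), \qquad
\boldsymbol{\theta}_d \sim \mathrm{Dir}_K(\alpha_1, \ldots, \alpha_K)
\]
```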

In this case, we can sample \(\alpha _k\) as follows:
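Specialising the posterior of \(\lambda_{l,k}\) to a label that is active in every document, so that \(\alpha_{d,k}/\lambda_{0,k} = 1\), gives the update below. It is a reconstruction under the shape and rate convention of Sect. 3, with \(q_d \sim \mathrm{Beta}(\alpha_{\cdot}, m_{d,\cdot})\) and \(\alpha_{\cdot} = \sum_k \alpha_k\).

```latex
\[
\alpha_k \sim \mathrm{Ga}\!\left( \mu_0 + \sum_{d=1}^{D} t_{d,k}, \;\; \mu_0 - \sum_{d=1}^{D} \log q_d \right)
\]
```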

Alternatively, we can vary MetaLDA to have a symmetric Dirichlet prior:
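In the symmetric variant a single scalar is shared by all topics and documents. The display is a reconstruction, with the scalar written as \(\alpha\) to match the following sentence.

```latex
\[
\alpha \sim \mathrm{Ga}(\mu_0, \mu_0), \qquad
\boldsymbol{\theta}_d \sim \mathrm{Dir}_K(\alpha, \ldots, \alpha)
\]
```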

In this case, we can sample \(\alpha \) as follows:
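Here the augmented likelihood is \(\prod_d \prod_k q_d^{\alpha} \alpha^{t_{d,k}}\), so each document contributes \(K \log q_d\) to the rate. The update below is reconstructed on that basis, with \(q_d \sim \mathrm{Beta}(K\alpha, m_{d,\cdot})\) and the shape and rate convention of Sect. 3.

```latex
\[
\alpha \sim \mathrm{Ga}\!\left( \mu_0 + \sum_{d=1}^{D} \sum_{k=1}^{K} t_{d,k}, \;\; \mu_0 - K \sum_{d=1}^{D} \log q_d \right)
\]
```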

As discussed in [6, 33], sampling the Dirichlet priors can yield significant performance improvements in topic models. In the case where document labels/word features are not used, MetaLDA offers an alternative hyper-parameter sampling approach to methods such as fixed-point iterations [24] and Newton–Raphson [32]. These methods use MAP to optimise the hyper-parameters while ours uses MCMC sampling. We would like to point out that MetaLDA’s sampling of the symmetric Dirichlet prior is similar to the approach introduced in [31]. However, the sampling of the asymmetric prior was not considered in [31]. Compared with the built-in hyper-parameter sampling methods in Mallet (see footnote 2), which are based on histograms of the statistics, our approach is more robust in the case where the statistics are not sufficient (e.g., short texts). This is further discussed with experiments in Sect. 5.4.3.

5 Experiments

In this section, we evaluate the proposed MetaLDA against several recent alternatives that also incorporate meta-information, using 6 real datasets including both regular and short texts. We will focus on the evaluation of

- the modelling accuracy of MetaLDA in terms of perplexity, a standard measure used in topic modelling. The goal is to study how the meta-information contributes to the predictive likelihood of unseen documents.

- the quality of topics learned by MetaLDA. It is interesting to see whether or not the meta-information will positively affect the topic coherence. We will report both quantitative and qualitative analyses.

- the running time of MetaLDA. The introduction of meta-information increases the modelling complexity to some extent. However, as we discussed in previous sections, MetaLDA can benefit from the local conjugacy given by the data augmentation methods, and can also be parallelised using the same distributed framework [25] as in Mallet. Therefore, we will empirically study the efficiency of MetaLDA.

Besides, we will also study how word embeddings learnt by different techniques affect both perplexity and topic coherence.

5.1 Datasets

In the experiments, we used three regular and three short text datasets, which are as follows:

- Reuters is a widely used corpus extracted from the Reuters-21578 dataset, from which documents without any labels were removed (see footnote 3). There are 11,367 documents and 120 labels. Each document is associated with multiple labels. The vocabulary size is 8817, and the average document length is 73.

- 20NG: 20 Newsgroups is a widely used dataset consisting of 18,846 news articles in 20 categories. The vocabulary size is 22,636 and the average document length is 108.

- NYT: New York Times is extracted from the documents in the category “Top/News/Health” in the New York Times Annotated Corpus (see footnote 4). There are 52,521 documents and 545 unique labels. Each document has multiple labels. The vocabulary contains 21,421 tokens, and there are 442 words in a document on average.

- WS: Web Snippets, used in [17], contains 12,237 web search snippets and each snippet belongs to one of 8 categories. The vocabulary contains 10,052 tokens, and there are 15 words in one snippet on average.

- TMN: Tag My News, used in [26], consists of 32,597 English RSS news snippets from Tag My News. With a title and a short description, each snippet belongs to one of 7 categories. There are 13,370 tokens in the vocabulary, and the average length of a snippet is 18.

- AN: ABC News is a collection of 12,495 short news descriptions, each of which belongs to several of 194 categories. There are 4255 tokens in the vocabulary, and the average length of a description is 13.

All the datasets were tokenised by Mallet (see footnote 2) and we removed the words that appear in fewer than 5 documents or in more than 95% of the documents.
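The document-frequency filter can be sketched in plain Python as follows. This is not the Mallet pipeline used in the experiments; the function name and the treatment of the boundary cases (exactly 5 documents, exactly 95%) are assumptions.

```python
from collections import Counter

def prune_vocabulary(docs, min_docs=5, max_frac=0.95):
    """Drop words that occur in fewer than min_docs documents or in more than max_frac of them."""
    doc_freq = Counter()
    for doc in docs:
        doc_freq.update(set(doc))          # count each word once per document
    limit = max_frac * len(docs)
    keep = {w for w, c in doc_freq.items() if min_docs <= c <= limit}
    return [[w for w in doc if w in keep] for doc in docs], keep

# Toy corpus of 20 tokenised documents.
docs = [["the", "cat"] for _ in range(6)] + [["the", "rare"] for _ in range(2)] + [["the"] for _ in range(12)]
pruned, keep = prune_vocabulary(docs)
```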

5.2 Meta-information settings

At the document level, the labels associated with documents in each dataset were used as the meta-information. At the word level, we used a set of binarised word embeddings as word features (see footnote 3), which are obtained from real-valued word embeddings such as GloVe or word2vec. To binarise word embeddings, we first adopted the following method similar to [11]:
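Based on the description that follows, the rule can be written as a three-way threshold on each element. This is a reconstruction: whether the comparisons are strict and how ties are handled are assumptions.

```latex
\[
g'_{v,j} =
\begin{cases}
\;\;\,1, & \text{if } g''_{v,j} \ge \mathrm{Mean}_{+}(\boldsymbol{g}''_{v}) \\
-1, & \text{if } g''_{v,j} \le \mathrm{Mean}_{-}(\boldsymbol{g}''_{v}) \\
\;\;\,0, & \text{otherwise}
\end{cases}
\]
```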

where \(\varvec{g}''_{v}\) is the original embedding vector for word v, \(g'_{v,j}\) is the binarised value for the jth element of \(\varvec{g''_{v}}\), and \(\text {Mean}_{+}(\cdot )\) and \(\text {Mean}_{-}(\cdot )\) are the average values of all the positive elements and of all the negative elements, respectively.

The insight is that we only consider features with strong opinions (i.e., large positive or negative values) on each dimension. To transform \(g' \in \{-\,1,1\}\) to the final \(g \in \{0,1\}\), we use two binary bits to encode one dimension of \(g'_{v,j}\): the first bit is on if \(g'_{v,j} = 1\) and the second is on if \(g'_{v,j} = -\,1\). This means that if the original embeddings are 100-dimensional, the binarised embeddings will have 200 dimensions. In our experiments, we also tried some other word embedding binarisation methods, including the one in [10]. However, the performance with those binarisation methods was not comparable with that of the one proposed above. Therefore, the experimental results with different binarisation methods are not reported.
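As an illustration, the two-step binarisation can be sketched in NumPy. The thresholding rule below is an assumption inferred from the description above: an element counts as strongly positive if it is at least the mean of the word's positive elements, and strongly negative if it is at most the mean of its negative elements. The use of non-strict inequalities and the interleaved bit layout are also assumptions, and `binarise_embeddings` is a hypothetical name.

```python
import numpy as np


def binarise_embeddings(emb):
    """emb: (V, D) real-valued embeddings. Returns a (V, 2 * D) 0/1 matrix."""
    emb = np.asarray(emb, dtype=float)
    pos = emb > 0
    neg = emb < 0
    # Per-word mean of the positive / negative elements (guarding empty sets).
    pos_cnt = np.maximum(pos.sum(axis=1, keepdims=True), 1)
    neg_cnt = np.maximum(neg.sum(axis=1, keepdims=True), 1)
    mean_pos = np.where(pos, emb, 0.0).sum(axis=1, keepdims=True) / pos_cnt
    mean_neg = np.where(neg, emb, 0.0).sum(axis=1, keepdims=True) / neg_cnt
    # Assumed rule: keep only elements with "strong opinions".
    strong_pos = pos & (emb >= mean_pos)  # corresponds to g' = 1
    strong_neg = neg & (emb <= mean_neg)  # corresponds to g' = -1
    # Two bits per original dimension: [is g' = 1, is g' = -1].
    out = np.zeros((emb.shape[0], 2 * emb.shape[1]), dtype=np.int8)
    out[:, 0::2] = strong_pos
    out[:, 1::2] = strong_neg
    return out
```

With this layout a D-dimensional embedding yields 2D binary features, which matches the 100-to-200 dimension example in the text.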

In the perplexity and topic coherence evaluation, i.e., Sects. 5.4 and 5.5, we will use the 50-dimensional GloVe word embeddings pre-trained on Wikipedia (see footnote 5) as the source of word features. We then study how different word embedding sources influence the performance of our model in Sect. 5.6. It is noteworthy that MetaLDA can also work with other word features such as semantic similarity.

5.3 Compared models and parameter settings

We evaluate the performance of the following models:

-

MetaLDA and its variants: the proposed model and its variants. Here we use MetaLDA to indicate the model considering both document labels and word features. Several variants of MetaLDA with document labels and word features separately were also studied, which are shown in Table 3. These variants differ in the method of estimating \({\varvec{\alpha }}\) and \(\varvec{\beta }\). All the models listed in Table 3 were implemented on top of Mallet. The hyper-parameters \(\mu _0\) and \(\nu _0\) were set to 1.0.

-

LDA [4]: the baseline model. The Mallet implementation of SparseLDA [38] is used.

-

LLDA, Labelled LDA [29] and PLLDA, Partially Labelled LDA [30]: two models that make use of multiple document labels. The original implementation (see footnote 6) is used.

-

DMR, LDA with Dirichlet Multinomial Regression [23]: a model that can use multiple document labels. The Mallet implementation of DMR based on SparseLDA was used. Following Mallet, we set the mean of \(\lambda \) to 0.0 and set the variances of \(\lambda \) for the default label and the document labels to 100.0 and 1.0, respectively.

-

WF-LDA, Word Feature LDA [28]: a model with word features. We implemented it on top of Mallet and used the default settings in Mallet for the optimisation.

-

LF-LDA, Latent Feature LDA [26]: a model that incorporates word embeddings. The original implementation (see footnote 7) was used. Following the original paper, we used 1500 and 500 MCMC iterations for initialisation and sampling, respectively, set \(\lambda \) to 0.6, and used the original 50-dimensional GloVe word embeddings as word features.

-

GPU-DMM, Generalized Pólya Urn DMM [17]: a model that incorporates word semantic similarity. The original implementation (see footnote 8) was used. The word similarity was generated from the distances of the word embeddings. Following the original paper, we set the hyper-parameters \(\mu \) and \(\epsilon \) to 0.1 and 0.7, respectively, and the symmetric document Dirichlet prior to 50 / K.

-

PTM, Pseudo document based Topic Model [46]: a model for short text analysis. The original implementation (see footnote 9) was used. Following the paper, we set the number of pseudo-documents to 1000 and \(\lambda \) to 0.1.

For all the models, except where noted, the symmetric parameters of the document and the topic Dirichlet priors were set to 0.1 and 0.01, respectively, and 2000 MCMC iterations were used to train the models. We summarise the compared models in terms of their usage of meta-information in Table 4.

5.4 Perplexity evaluation

Perplexity is a measure that is widely used [33] to evaluate the modelling accuracy of topic models. The lower the score, the higher the modelling accuracy. To compute perplexity, we randomly selected some documents in a dataset as the training set and the remaining as the test set. We first trained a topic model on the training set to get the word distributions of each topic k (\(\varvec{\phi }_k^\mathrm{train}\)). Each test document d was split into two halves containing every first and every second word, respectively. We then fixed the topics and trained the models on the first half to get the topic proportions (\(\varvec{\theta }_d^\mathrm{test}\)) of test document d and compute perplexity for predicting the second half. With regard to MetaLDA, we fixed the matrices \(\mathbf {\Phi }^\mathrm{train}\) and \(\mathbf {\Lambda }^\mathrm{train}\) output from the training procedure. On the first half of test document d, we computed the Dirichlet prior \(\varvec{\alpha }_d^\mathrm{test}\) with \(\mathbf {\Lambda }^\mathrm{train}\) and the labels \(\varvec{f}_d^\mathrm{test}\) of test document d (See Step 2a), and then point-estimated \(\varvec{\theta }_d^\mathrm{test}\). We ran all the models 5 times with different random number seeds and report the average scores and the standard deviations.
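The document-completion protocol above can be sketched as follows. This is an illustration under stated assumptions rather than the evaluation code used in the experiments: topic proportions are point-estimated with a simple smoothed EM-style fixed-point update instead of Gibbs sampling, \(\varvec{\phi }\) is assumed strictly positive, and the function names are hypothetical. Perplexity is taken as the exponential of the negative average log-likelihood per token of the second halves.

```python
import numpy as np


def split_halves(doc):
    """Split a document into every first and every second word."""
    return doc[0::2], doc[1::2]


def estimate_theta(words, phi, alpha, n_iter=50):
    """Point-estimate topic proportions with the topics phi (K, V) held fixed."""
    words = np.asarray(words, dtype=int)
    n_topics = phi.shape[0]
    theta = np.full(n_topics, 1.0 / n_topics)
    for _ in range(n_iter):
        # Responsibility of each topic for each token, shape (K, N).
        resp = theta[:, None] * phi[:, words]
        resp /= resp.sum(axis=0, keepdims=True)
        # Smoothed update using the document's Dirichlet prior alpha (K,).
        theta = (resp.sum(axis=1) + alpha) / (len(words) + alpha.sum())
    return theta


def heldout_perplexity(test_docs, phi, alpha):
    """Perplexity of the second halves given theta fitted on the first halves."""
    log_lik, n_tokens = 0.0, 0
    for doc in test_docs:
        first, second = split_halves(doc)
        theta = estimate_theta(first, phi, alpha)
        second = np.asarray(second, dtype=int)
        probs = theta @ phi[:, second]  # p(w) = sum_k theta_k * phi_{k,w}
        log_lik += np.log(probs).sum()
        n_tokens += len(second)
    return float(np.exp(-log_lik / n_tokens))
```

For MetaLDA, `alpha` would be replaced per test document by the prior computed from \(\mathbf {\Lambda }^\mathrm{train}\) and the document's labels; the sketch uses a single vector for brevity.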

In testing, we may encounter words that never occur in the training documents (a.k.a., unseen words or out-of-vocabulary words). There are two strategies for handling unseen words for calculating perplexity on test documents: ignoring them or keeping them in computing the perplexity. Here we investigate both strategies:

5.4.1 Perplexity computed without unseen words

In this experiment, the perplexity is computed only on the words that appear in the training vocabulary. Here we used 80% documents in each dataset as the training set and the remaining 20% as the test set.

Tables 5 and 6 show the average perplexity scores with standard deviations for all the models (see footnote 10). Note that: (1) The scores on AN with 150 and 200 topics are not reported due to overfitting observed in all the compared models. (2) Given the size of NYT, the scores of 200 and 500 topics are reported. (3) The number of latent topics in LLDA must equal the number of document labels. (4) For PLLDA, we varied the number of topics per label from 5 to 50 (2 and 5 topics on NYT). The total number of topics used by PLLDA is the product of the number of labels and the number of topics per label.

The results show that the proposed MetaLDA outperformed all the competitors in terms of perplexity on nearly all the datasets, showing the benefit of using both document and word meta-information. Specifically, we have the following remarks:

-

By looking at the models using only the document-level meta-information, we can see the significant improvement of these models over LDA, which indicates that document labels can play an important role in guiding topic modelling. Although the performance of the two variants of MetaLDA with document labels and DMR is comparable, our models run much faster than DMR, which will be studied later in Sect. 5.8.

-

It is interesting that PLLDA with 50 topics for each label has better perplexity than MetaLDA with 200 topics in the 20NG dataset. With the 20 unique labels, the actual number of topics in PLLDA is 1000. However, if 10 topics for each label in PLLDA are used, which is equivalent to 200 topics in MetaLDA, PLLDA is outperformed by MetaLDA significantly.

-

At the word level, MetaLDA-def-wf performed the best among the models with word features only. Moreover, our model has a clear advantage in running speed (see Table 13). Furthermore, comparing MetaLDA-def-wf with MetaLDA-def-def and MetaLDA-0.1-wf with LDA, we can see using the word features indeed improved perplexity.

-

The scores show that the improvement gained by MetaLDA over LDA on the short text datasets is larger than that on the regular text datasets. This is expected because meta-information serves as complementary information in MetaLDA and can have significant impact when the data is sparse.

-

It can be observed that models usually achieved better perplexity when the Dirichlet parameter \(\alpha \) was sampled/optimised, in line with [33]. We further study this in Sect. 5.4.3.

-

On the AN dataset, there is no statistically significant difference between MetaLDA and DMR. On NYT, a similar trend is observed: the improvement in the models with the document labels over LDA is obvious but not in the models with the word features. Given the number of the document labels (194 of AN and 545 of NYT), it is possible that the document labels already offer enough information and the word embeddings have little contribution in the two datasets.

5.4.2 Perplexity computed with unseen words

To test the hypothesis that the incorporation of meta-information in MetaLDA can significantly improve the modelling accuracy in the cases where the corpus is sparse, we varied the proportion of documents used in training from 20 to 80% and used the remaining documents for testing. Naturally, when the training proportion is small, the number of unseen words in the test documents will be large. Instead of simply excluding the unseen words as in the previous experiments, here we compute the perplexity with unseen words for LDA, DMR, WF-LDA and the proposed MetaLDA. For perplexity calculation, \(\phi ^\mathrm{test}_{k,v}\) for each topic k and each token v in the test documents is needed. If v occurs in the training documents, \(\phi ^\mathrm{test}_{k,v}\) can be directly obtained. If v is unseen, \(\phi ^\mathrm{unseen}_{k,v}\) can be estimated by the prior:

For LDA and DMR, which do not use word features, \(\beta ^\mathrm{unseen}_{k,v} = \beta ^\mathrm{train}_{k,v}\). For WF-LDA and MetaLDA, which use word features, \(\beta ^\mathrm{unseen}_{k,v}\) is computed from the features of the unseen token. Following Step 1c, for MetaLDA, \(\beta ^\mathrm{unseen}_{k,v} = \prod _{l'}^{L_\mathrm{word}} \delta _{l',k}^{g^\mathrm{unseen}_{v,l'}}\).
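The product form of \(\beta ^\mathrm{unseen}_{k,v}\) for MetaLDA can be computed for all unseen tokens at once. The sketch below assumes that the weights \(\delta \) are stored as an array of shape (L_word, K) with strictly positive entries and the binary word features as an array of shape (V, L_word); the function name is hypothetical.

```python
import numpy as np


def beta_from_features(delta, g):
    """beta[k, v] = prod_l delta[l, k] ** g[v, l] for binary features g.

    delta: (L_word, K) strictly positive weights.
    g:     (V, L_word) 0/1 word features.
    Returns an array of shape (K, V).
    """
    delta = np.asarray(delta, dtype=float)
    g = np.asarray(g, dtype=float)
    # The product over active features becomes a sum in log space.
    return np.exp(g @ np.log(delta)).T
```

A token with no active features receives \(\beta = 1\) for every topic, since the empty product equals one.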

Figure 4 shows the perplexity scores on Reuters, 20NG, TMN and WS with 200, 200, 100 and 50 topics, respectively. MetaLDA outperformed the other models significantly with a lower proportion of training documents and a relatively higher proportion of unseen words. The gap between MetaLDA and the other three models increases as the training proportion decreases. This indicates that the meta-information helps MetaLDA to achieve better modelling accuracy in predicting unseen words.

Fig. 4 Perplexity comparison with unseen words for different proportions of training documents. Each pair of numbers on the horizontal axis gives the proportion of training documents and the proportion of unseen tokens in the vocabulary of the test documents, respectively. For each setting, the four coloured bars from left to right correspond to LDA, WF-LDA, DMR and MetaLDA. The error bars are the standard deviations over 5 runs. a Reuters with 200 topics, b 20NG with 200 topics, c TMN with 100 topics, d WS with 50 topics

5.4.3 Perplexity evaluation for using MetaLDA as a hyper-parameter sampling approach

We further study how MetaLDA performs in terms of perplexity when used as a hyper-parameter sampling approach without meta-information. The experimental settings are the same as the ones used in Sect. 5.4.1. Table 7 shows the results of different variants of MetaLDA on hyper-parameter sampling. We would like to point out that MetaLDA-0.1-asym is equivalent to MetaLDA-0.1-def, MetaLDA-asym-0.01 is equivalent to MetaLDA-def-0.01, and MetaLDA-asym-asym is equivalent to MetaLDA-def-def in Table 3. Here we use the former to make the comparison clear. We have the following observations:

-

In general, the best perplexity score is derived with the use of both asymmetric \(\alpha \) and asymmetric \(\beta \).

-

If we fix the setting for the topic side and vary the setting for the document side (for example, compare MetaLDA-0.1-0.01, MetaLDA-sym-0.01 and MetaLDA-asym-0.01), we can see that (1) the use of sampled priors (either symmetric or asymmetric) can significantly lower the perplexity scores, which is in line with the findings in [33]; and (2) using an asymmetric prior can further decrease perplexity.

-

Similarly, fixing the setting for the document side and varying the setting for the topic side (for example, comparing MetaLDA-sym-0.01, MetaLDA-sym-sym and MetaLDA-sym-asym), we found that sampling either symmetric or asymmetric prior on per-topic word distributions does not significantly affect the perplexity scores, which also complies with [33]. However, there is a subtle difference: for our method an asymmetric prior on per-topic word distributions is marginally better, whereas it is often worse in [33].

-

Comparing the last row in Table 7 with the corresponding results in Tables 5 and 6 shows that constructing the priors with meta-information can further decrease the perplexity scores, which further supports our assumption that it is beneficial to use meta-information in topic modelling.

5.5 Topic coherence evaluation

We further evaluate the semantic coherence of the words in a topic learnt by LDA, PTM, DMR, LF-LDA, WF-LDA, GPU-DMM and MetaLDA. Here we use the normalised point-wise mutual information (NPMI) [1, 16] to calculate topic coherence score for topic k with top T words:

where \(p(w_i)\) is the probability of word i, and \(p(w_i,w_j)\) is the joint probability of words i and j co-occurring within a sliding window. Those probabilities were computed on an external large corpus, i.e., a 5.48 GB Wikipedia dump in our experiments. The NPMI score of each topic in the experiments is calculated with top 10 words (\(T=10\)) by the Palmetto package (see footnote 11). Again, we report the average scores and the standard deviations over 5 random runs.
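For reference, the standard pairwise NPMI is \(\log \frac{p(w_i,w_j)}{p(w_i)p(w_j)} / (-\log p(w_i,w_j))\), which ranges from -1 to 1. The sketch below is a minimal illustration and not the Palmetto implementation: it assumes the probabilities have already been estimated from the reference corpus and are supplied as dictionaries, it averages over all pairs of the top words (summing instead changes the score only by a constant factor for fixed T), and it assigns -1 to pairs that never co-occur, which is a common convention rather than something stated in the text.

```python
import math
from itertools import combinations


def npmi_pair(p_i, p_j, p_ij):
    """Normalised PMI of one word pair, in [-1, 1]."""
    if p_ij <= 0.0:
        return -1.0  # assumed convention: the words never co-occur
    if p_ij >= 1.0:
        return 1.0  # limiting value: the words always co-occur
    return math.log(p_ij / (p_i * p_j)) / (-math.log(p_ij))


def npmi_topic(top_words, p_word, p_joint):
    """Average NPMI over all pairs of a topic's top words.

    p_word:  dict mapping word -> probability.
    p_joint: dict mapping frozenset({w_i, w_j}) -> joint probability;
             missing pairs are treated as never co-occurring.
    """
    scores = [
        npmi_pair(p_word[a], p_word[b], p_joint.get(frozenset((a, b)), 0.0))
        for a, b in combinations(top_words, 2)
    ]
    return sum(scores) / len(scores)
```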

It is known that conventional topic models directly applied to short texts suffer from low-quality topics, caused by the insufficient word co-occurrence information. Here we study whether or not the meta-information helps MetaLDA improve topic quality, compared with other topic models that can also handle short texts. Table 8 shows the NPMI scores on the three short text datasets. Higher scores indicate better topic coherence. All the models were trained with 100 topics. Besides the NPMI scores averaged over all the 100 topics, we also show the scores averaged over the top 20 topics with the highest NPMI, where “rubbish” topics are eliminated, following [37]. It is clear that MetaLDA performed significantly better than all the other models on the WS and AN datasets in terms of NPMI, which indicates that MetaLDA can discover more meaningful topics with the document and word meta-information. We would like to point out that on the TMN dataset, even though the average score of MetaLDA is still the best, the score of MetaLDA overlaps with the others’ when allowing for standard deviation, which indicates the difference is not statistically significant.

5.6 Changing word embeddings

In the above experiments, we used the binarised 50-dimensional GloVe embeddings as word features to demonstrate the superiority of MetaLDA over all the other competitors. It is also interesting to study how the performance of MetaLDA changes when we use different word embeddings. In this set of experiments, we varied the sources (i.e., the methods used to train the word embeddings) as well as the dimensions of those word embeddings. Here we used the embeddings pre-trained by three methods: GloVe, SkipGram and CBOW [20] (see footnote 12). For each word embedding method, 50- and 100-dimensional embeddings were used.

Tables 9 and 10 show the perplexity and topic coherence performance of MetaLDA, respectively, on the WS and TMN datasets. We followed the experiment settings used in the previous sections, except for the word features. MetaLDA works marginally better with GloVe embeddings than with word2vec embeddings. However, the difference is not significant, given the standard errors. The reasons might be:

1. The binarisation could water down the differences between word embeddings. Therefore, minor differences in word embeddings might not significantly influence the performance. It would nevertheless be interesting to develop a model that can directly utilise real-valued word embeddings.

2. Using the embeddings as prior information could make MetaLDA insensitive to the quality of the binarised word embeddings.

5.7 Qualitative analysis

Now we show that besides better quantitative performance, MetaLDA with meta-information also allows more informative and interesting interpretation of the discovered topics.

As discussed in Sect. 3, the latent variable \(\lambda _{l,k}\) is the weight measuring the association between document label l and topic k. Each label can be interpreted as an unnormalised mixture of topics, represented by a K-dimensional vector \(\varvec{\lambda }_{l}\). Therefore, similar to finding the top words for each topic, ranking \(\lambda _{l,k}\) can give us the most related topics for each label. Table 11 shows the top 5 related topics among 100 discovered by MetaLDA for the 9 document labels in the WS dataset. For each topic, the top 5 words (ranked with \(\phi _{k,v}\)) are listed. The results show that the topics are closely related to the labels. For example, the top 5 topics for the “Computers” category describe hardware, software, internet, and system, which are different aspects of computers. The “Sports” category broadly covers football, rugby, tennis, golf, cricket, etc. The major topics discussed in the “Health” related documents include diet, infectious diseases, lung cancer and its causes, and so on.

Furthermore, MetaLDA can also automatically assign the labels to the latent topics, which is known as automatic topic labelling [15]. The method proposed in [15] generates labels from the top-ranked topic terms and the titles of Wikipedia articles containing these terms. It is an ad hoc process. In contrast, MetaLDA automatically learns the association between the document labels and the latent topics via the association matrix \(\varvec{\lambda }\). Specifically, for each topic k, we rank the labels according to the weight \(\lambda _{l,k}\), and then retrieve the most likely labels for each topic. Table 12 shows some examples derived on the WS dataset. For instance, topic 46 is about web programming. The most probable label for this topic assigned by MetaLDA is “Computers”. The second and third most probable labels are also closely related to this topic. Topic 17 is about movies, and the most probable label found by MetaLDA is “Culture&Arts&Entertainment”. It is clear that topics and their most probable labels are well correlated. All these findings demonstrate that MetaLDA is able to discover meaningful topics and label the topics automatically.
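Both rankings used in this section, the most related topics for each label and the most likely labels for each topic, amount to sorting the rows or columns of the association matrix. A minimal sketch, assuming \(\varvec{\lambda }\) is available as a NumPy array of shape (number of labels, K) and using hypothetical function names:

```python
import numpy as np


def top_topics_per_label(lam, n=5):
    """For each label l, indices of the n topics with the largest lambda[l, k]."""
    lam = np.asarray(lam, dtype=float)
    return np.argsort(-lam, axis=1)[:, :n]


def top_labels_per_topic(lam, n=3):
    """For each topic k, indices of the n labels with the largest lambda[l, k]."""
    lam = np.asarray(lam, dtype=float)
    return np.argsort(-lam, axis=0)[:n, :].T
```

The returned indices can then be mapped back to label names and to the top words of each topic for display.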

5.8 Running time

In this section, we empirically study the efficiency of the models in terms of per-iteration running time. The implementation details of our MetaLDA are as follows:

-

The SparseLDA framework [38] reduces the complexity of LDA to be sub-linear by breaking the conditional of LDA into three “buckets”, where the “smoothing only” bucket is cached for all the documents and the “document only” bucket is cached for all the tokens in a document. We adopted a similar strategy when implementing MetaLDA. When only the document meta-information is used, the Dirichlet parameters \(\alpha \) for different documents in MetaLDA are different and asymmetric. Therefore, the “smoothing only” bucket has to be computed for each document, but we can cache it for all the tokens, which still gives us a considerable reduction in computing complexity. However, when the word meta-information is used, the SparseLDA framework no longer works in MetaLDA as the \(\beta \) parameters for each topic and each token are different.

-

By adapting the Distributed framework in [25], our MetaLDA implementation runs in parallel with multiple threads, which makes MetaLDA able to handle larger document collections. The parallel implementation was tested on the NYT dataset.
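To make the three-bucket idea of SparseLDA concrete, the sketch below splits the usual collapsed Gibbs conditional for plain LDA, \((\alpha _k + n_{d,k})(\beta + n_{k,w}) / (\beta V + n_k)\), into a “smoothing only”, a “document only” and a “topic word” term. The count notation and the symmetric scalar \(\beta \) are assumptions of this illustration, and the code is not the Mallet implementation. Because \(\alpha \) enters the smoothing term, a per-document \(\alpha \) forces that bucket to be recomputed for each document, as noted above.

```python
import numpy as np


def sparse_lda_buckets(alpha, beta, n_dk, n_kw, n_k, vocab_size):
    """Split the unnormalised LDA conditional for one token into three buckets.

    alpha: (K,) document Dirichlet parameters (may differ per document).
    beta:  scalar symmetric topic Dirichlet parameter.
    n_dk:  (K,) topic counts in the current document.
    n_kw:  (K,) counts of the current word in each topic.
    n_k:   (K,) total token counts of each topic.
    """
    denom = beta * vocab_size + n_k
    smoothing = alpha * beta / denom          # depends on neither document counts nor word
    document = n_dk * beta / denom            # non-zero only for topics in the document
    topic_word = (alpha + n_dk) * n_kw / denom  # non-zero only for topics containing the word
    return smoothing, document, topic_word
```

The three terms sum exactly to the full conditional, and the last two are sparse in practice, which is where the saving comes from. When \(\beta \) varies per topic and per token, the first two terms also depend on the word and can no longer be cached in this way.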

The per-iteration running time of all the models is shown in Table 13. Note that:

-

On the Reuters and WS datasets, all the models ran with a single thread on a desktop PC with a 3.40 GHz CPU and 16 GB RAM.

-

Due to the size of NYT, we report the running time for the models that are able to run in parallel. All the parallelised models ran with 10 threads on a cluster with a 14-core 2.6 GHz CPU and 128 GB RAM.

-

All the models were implemented in Java.

-

As the models with meta-information add extra complexity to LDA, the per-iteration running time of LDA can be treated as the lower bound.

At the document level, both MetaLDA-df-0.01 and DMR use priors to incorporate the document meta-information and both of them were implemented in the SparseLDA framework. However, our variant is about 6 to 8 times faster than DMR on the Reuters dataset and more than 10 times faster on the WS dataset. Moreover, it can be seen that the larger the number of topics, the larger the speed advantage of our variant over DMR. At the word level, similar patterns can be observed: our MetaLDA-0.1-wf ran significantly faster than WF-LDA and LF-LDA, especially when more topics are used (20–30 times faster on WS). It is not surprising that GPU-DMM has a running speed comparable to that of our variant, because only one topic is allowed for each document in GPU-DMM. With both document and word meta-information, MetaLDA still ran several times faster than DMR, LF-LDA, and WF-LDA. On NYT with the parallel settings, MetaLDA maintains its efficiency advantage as well.

To further examine our model’s scalability, we report the per-iteration running time of MetaLDA on NYT with 500 topics in Fig. 5. For this, we varied the proportion of training documents from 20 to 80% as well as the number of threads from 1 to 8. For the single-thread version, when the training proportion changes from 40 to 80%, the per-iteration running time becomes 4 times slower. However, with multi-threading, our model scales much better: the per-iteration running time only doubles while the training proportion quadruples. In terms of speed-up, the per-iteration speed increases nearly linearly with the number of threads. For example, given 60% training data, the per-iteration running time is halved when the number of threads doubles.

6 Conclusion

In this article, we have presented a topic modelling framework named MetaLDA that can efficiently incorporate document and word meta-information. This results in a significant improvement over other models in terms of perplexity and topic quality. With two data augmentation techniques, MetaLDA enjoys full local conjugacy, allowing efficient Gibbs sampling, demonstrated by superiority in the per-iteration running time. MetaLDA (see footnote 1) has been implemented within Mallet using the DistributedLDA framework, and works efficiently in a multicore context. Furthermore, without losing generality, MetaLDA can work with both regular texts and short texts. The improvement of MetaLDA over other models that also use meta-information is remarkable, particularly when the word-occurrence information is insufficient. Moreover, MetaLDA efficiently demonstrates that asymmetric-asymmetric LDA does beat regular symmetric LDA.

MetaLDA takes a particular approach to incorporating meta-information into topic models. However, the approach is general enough to be applied to other Bayesian probabilistic models that go beyond topic modelling, such as multi-label learning with sparse features [43]. Moreover, it would be interesting to extend our method to use real-valued meta-information directly without binarisation [41], which is the subject of future work.

Notes

MetaLDA is able to handle documents/words without labels/features. But for fair comparison with other models, we removed the documents without labels and words without features.

For GPU-DMM and PTM, perplexity is not evaluated because the inference code for unseen documents is not publicly available. The random number seeds used in the code of LLDA and PLLDA are pre-fixed in the package, so the standard deviations of the two models are not reported.

References

Aletras N, Stevenson M (2013) Evaluating topic coherence using distributional semantics. In: Proceedings of the 10th international conference on computational semantics, p 13–22

Andrzejewski D, Zhu X, Craven M (2009) Incorporating domain knowledge into topic modeling via Dirichlet forest priors. In: Proceedings of the 26th annual international conference on machine learning, p 25–32

Andrzejewski D, Zhu X, Craven M, Recht B (2011) A framework for incorporating general domain knowledge into Latent Dirichlet Allocation using first-order logic. In: Proceedings of the twenty-second international joint conference on artificial intelligence, p 1171–1177

Blei DM, Ng AY, Jordan MI (2003) Latent Dirichlet allocation. J Mach Learn Res 3:993–1022

Buntine W, Hutter M (2010) A Bayesian view of the Poisson–Dirichlet process. arXiv preprint arXiv:1007.0296

Buntine WL, Mishra S (2014) Experiments with non-parametric topic models. In: Proceedings of the 20th ACM SIGKDD international conference on knowledge discovery and data mining, p 881–890

Chen C, Du L, Buntine W (2011) Sampling table configurations for the hierarchical Poisson–Dirichlet process. In: Proceedings of the 2011 European conference on Machine learning and knowledge discovery in databases, p 296–311

Das R, Zaheer M, Dyer C (2015) Gaussian LDA for topic models with word embeddings. In: Proceedings of the 53rd annual meeting of the association for computational linguistics and the 7th international joint conference on natural language processing, p 795–804

Du L, Buntine W, Jin H, Chen C (2012) Sequential latent Dirichlet allocation. Knowl Inf Syst 31(3):475–503

Faruqui M, Tsvetkov Y, Yogatama D, Dyer C, Smith N (2015) Sparse overcomplete word vector representations. In: Proceedings of the 53rd annual meeting of the association for computational linguistics and the 7th international joint conference on natural language processing, p 1491–1500

Guo J, Che W, Wang H, Liu T (2014) Revisiting embedding features for simple semi-supervised learning. In: Proceedings of the 2014 conference on empirical methods in natural language processing, p 110–120

Hong L, Davison BD (2010) Empirical study of topic modeling in Twitter. In: Proceedings of the first workshop on social media analytics, p 80–88

Hu C, Rai P, Carin L (2016) Non-negative matrix factorization for discrete data with hierarchical side-information. In: Proceedings of the 19th international conference on artificial intelligence and statistics, p 1124–1132

Kim D, Oh A (2017) Hierarchical Dirichlet scaling process. Mach Learn 106(3):387–418

Lau JH, Grieser K, Newman D, Baldwin T (2011) Automatic labelling of topic models. In: Proceedings of the 49th annual meeting of the association for computational linguistics: human language technologies, p 1536–1545

Lau JH, Newman D, Baldwin T (2014) Machine reading tea leaves: automatically evaluating topic coherence and topic model quality. In: Proceedings of the 14th conference of the European chapter of the association for computational linguistics, p 530–539

Li C, Wang H, Zhang Z, Sun A, Ma Z (2016) Topic modeling for short texts with auxiliary word embeddings. In: Proceedings of the 39th international ACM SIGIR conference on research and development in information retrieval, p 165–174

McAuliffe JD, Blei DM (2008) Supervised topic models. Adv Neural Inf Process Syst 20:121–128

Mehrotra R, Sanner S, Buntine W, Xie L (2013) Improving LDA topic models for microblogs via tweet pooling and automatic labeling. In: Proceedings of the 36th international ACM SIGIR conference on research and development in information retrieval, p 889–892

Mikolov T, Chen K, Corrado G, Dean J (2013) Efficient estimation of word representations in vector space. In: International conference on learning representations (workshop)

Mikolov T, Sutskever I, Chen K, Corrado GS, Dean J (2013) Distributed representations of words and phrases and their compositionality. Adv Neural Inf Process Syst 26:3111–3119

Miller GA (1995) WordNet: a lexical database for English. Commun ACM 38(11):39–41

Mimno D, McCallum A (2008) Topic models conditioned on arbitrary features with Dirichlet-multinomial regression. In: Proceedings of the 24th conference in uncertainty in artificial intelligence, p 411–418

Minka T (2000) Estimating a Dirichlet distribution

Newman D, Asuncion A, Smyth P, Welling M (2009) Distributed algorithms for topic models. J Mach Learn Res 10:1801–1828

Nguyen DQ, Billingsley R, Du L, Johnson M (2015) Improving topic models with latent feature word representations. Trans Assoc Comput Linguist 3:299–313

Pennington J, Socher R, Manning C (2014) GloVe: global vectors for word representation. In: Proceedings of the 2014 conference on empirical methods in natural language processing, p 1532–1543

Petterson J, Buntine W, Narayanamurthy SM, Caetano TS, Smola AJ (2010) Word features for latent Dirichlet allocation. Adv Neural Inf Process Syst 23:1921–1929

Ramage D, Hall D, Nallapati R, Manning CD (2009) Labeled LDA: a supervised topic model for credit attribution in multi-labeled corpora. In: Proceedings of the 2009 conference on empirical methods in natural language processing, p 248–256

Ramage D, Manning CD, Dumais S (2011) Partially labeled topic models for interpretable text mining. In: Proceedings of the 17th ACM SIGKDD international conference on Knowledge discovery and data mining, p 457–465

Teh YW, Jordan MI, Beal MJ, Blei DM (2006) Hierarchical Dirichlet processes. J Am Stat Assoc 101(476):1566–1581

Wallach HM (2008) Structured topic models for language. Ph.D. thesis, University of Cambridge

Wallach HM, Mimno DM, McCallum A (2009) Rethinking LDA: why priors matter. Adv Neural Inf Process Syst 22:1973–1981

Wang C, Blei DM (2011) Collaborative topic modeling for recommending scientific articles. In: Proceedings of the 17th ACM SIGKDD international conference on knowledge discovery and data mining, p 448–456

Xie P, Yang D, Xing E (2015) Incorporating word correlation knowledge into topic modeling. In: Proceedings of the 2015 conference of the North American chapter of the association for computational linguistics: human language technologies, p 725–734

Xun G, Gopalakrishnan V, Ma F, Li Y, Gao J, Zhang A (2016) Topic discovery for short texts using word embeddings. In: Proceedings of IEEE 16th international conference on data mining, p 1299–1304

Yang Y, Downey D, Boyd-Graber J (2015) Efficient methods for incorporating knowledge into topic models. In: Proceedings of the 2015 conference on empirical methods in natural language processing, p 308–317

Yao L, Mimno D, McCallum A (2009) Efficient methods for topic model inference on streaming document collections. In: Proceedings of the 15th ACM SIGKDD international conference on Knowledge discovery and data mining, p 937–946

Yin J, Wang J (2014) A Dirichlet multinomial mixture model-based approach for short text clustering. In: Proceedings of the 20th ACM SIGKDD international conference on knowledge discovery and data mining, p 233–242

Zhao H, Du L, Buntine W (2017) Leveraging node attributes for incomplete relational data. In: Proceedings of the 34th international conference on machine learning, p 4072–4081

Zhao H, Du L, Buntine W (2017) A word embeddings informed focused topic model. In: Proceedings of the ninth Asian conference on machine learning, p 423–438

Zhao H, Du L, Buntine W, Liu G (2017) MetaLDA: a topic model that efficiently incorporates meta information. In: Proceedings of 2017 IEEE international conference on data mining, p 635–644

Zhao H, Rai P, Du L, Buntine W (2018) Bayesian multi-label learning with sparse features and labels, and label co-occurrences. In: Proceedings of the 21st international conference on artificial intelligence and statistics (in press)

Zhao WX, Jiang J, Weng J, He J, Lim EP, Yan H, Li X (2011) Comparing twitter and traditional media using topic models. In: Proceedings of the 33rd European conference on advances in information retrieval, p 338–349

Zhou M, Carin L (2015) Negative binomial process count and mixture modeling. IEEE Trans Pattern Anal Mach Intell 37(2):307–320

Zuo Y, Wu J, Zhang H, Lin H, Wang F, Xu K, Xiong H (2016) Topic modeling of short texts: a pseudo-document view. In: Proceedings of the 22nd ACM SIGKDD international conference on knowledge discovery and data mining, p 2105–2114

Cite this article

Zhao, H., Du, L., Buntine, W. et al. Leveraging external information in topic modelling. Knowl Inf Syst 61, 661–693 (2019). https://doi.org/10.1007/s10115-018-1213-y

DOI: https://doi.org/10.1007/s10115-018-1213-y