Abstract

The accuracy of a text classification method based on a SVM learner depends on the weighting metric used in order to assign a weight to a term. Weighting metrics can be classified as supervised or unsupervised according to whether they use prior information on the number of documents belonging to each category. A supervised metric should be highly informative about the relation of a document term to a category, and discriminative in separating the positive documents from the negative documents for this category. In this paper, we propose 80 metrics never used for the term-weighting problem and compare them to 16 functions of the literature. A large number of these metrics were initially proposed for other data mining problems: feature selection, classification rules and term collocations. While many previous works have shown the merits of using a particular metric, our experience suggests that the results obtained by such metrics can be highly dependent on the label distribution on the corpus and on the performance measures used (microaveraged or macroaveraged \(F_1\)-Score). The solution that we propose consists in combining the metrics in order to improve the classification. More precisely, we show that using a SVM classifier which combines the outputs of SVM classifiers that utilize different metrics performs well in all situations. The second main contribution of this paper is an extended term representation for the vector space model that improves significantly the prediction of the text classifier.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Text classification is the problem of automatically labeling natural language texts with predefined thematic categories. In the last two decades, a huge number of machine learning techniques were proposed to automatically classify and organize text documents [1, 28]. These studies were motivated by the exponential growing of the number of texts available online. Applications includes classification of news articles, web pages and scientific publications into controlled vocabulary, sentiment analysis and opinion mining among social networks, spam filtering, protein classification.

In text classifier systems, documents are preprocessed in order to be suitable as training data for a learning algorithm. Traditionally, each text document is converted into a vector where each dimension represents a term which value is the weight that will be used in the learning process. As the weight reflects the importance of the term in the document, an appropriate choice of the metric function used for weighting terms is crucial for correct classification.

Traditional unsupervised term weights metrics, as the popular TFIDF, depend only on term frequency in the document and the (inverse) number of training documents containing this term. For the purpose of text classification, supervised alternatives have been developed to take into account the categories of the corpus documents [3, 5, 7–10, 14, 20, 21, 23, 24, 27]. The key idea is to build a metric function that discriminates the terms according to the category for which we are testing the document membership. Such a metric should be highly informative about the relation of a document term t to a category c and discriminative in separating the positive documents from the negative documents of category c. While unsupervised term weights depend only on term frequency in the document and the number of training corpus documents containing this term, supervised term weights are computed for each category and use the number of documents belonging to the category containing this term. Intuitively, supervised term weights measure the degree of correlation between term’s presence in a document and membership of this document in the category.

In this work, we propose to experiment for term weighting the large number of metric functions which were proposed for other data mining problems in order to measure the correlation between two events. We use metrics collected from papers dealing with feature selection [13, 26, 31], supervised term weighting [3, 8, 10, 14, 21, 23, 27], classification rules [15] and term collocations [25]. We compare experimentally 96 metrics for term weighting. Only 16 of them have been used for term-weighting problem in the literature, and 9 are new metrics designed by the authors of this paper. It appears that using these metrics instead of already used weights can improve the performances of SVM classifiers. Moreover, we show that combining metrics improves the quality of the classification. While many previous works have shown the merits of using a particular metric [3, 5, 7–10, 14, 20, 21, 23, 24, 27], our experience suggests that the results obtained by such metrics can be highly dependent on the label distribution on the corpus and also on the performance measures used (microaveraged or macroaveraged \(F_1\)-Score). However, we show that using a SVM classifier which combines the outputs of SVM classifiers that use different metrics improves significantly text classification performances in all situations.

The second main contribution of this paper is an extended term representation for the vector space model. Following the scheme used by TFIDF metric, which is the product of the term frequency (TF) and inverse document frequency (IDF), alternative supervised forms have been traditionally formulated by replacing the IDF term with a supervised metric function [3]. However, merging by product TF with a factor such as IDF, Chi square or odds ratio is problematic. It is not clear why a two times larger TF is equivalent to a two times larger IDF (Chi square or odds ratio). From our point of view, TFIDF is the product of two quantities that are not of the same statistical nature. TF counts the number of occurrences of a term t in a document, while IDF counts the (inverse) number of documents containing the term t. We propose as an alternative to count the (inverse) number of documents containing the term with frequency at least n. By doing this, we integrate the term frequency in a document in the number of documents. The IDF quantity becomes the number of documents containing the term t at least n times. Precisely, in our model, we propose to convert a text document into a vector where each dimension represents a feature of the form (t, n), meaning that the term t appears in the document at least n times. If the term t appears 10 times in the document, we generate all the term frequency features (t, n) with \(n=1,2,4\text { and }8\) (powers of 2 in order to limit the number of features). Hence, rather than associating the weight TFIDF to a term t, we affect in our model the weight IDF to features (t, n) which depends on the inverse number of documents containing a term t at least n times. Our intuition is that this new definition of IDF keeps more information for learning. This assumption is confirmed experimentally as it improves the quality of the text classification. As all term weighting metrics (such as Chi square or odds ratio) depend on the document frequency, our extended term representation, explained here for IDF, is applicable to any metric. We also propose another type of extended term feature based on the idea that the terms which are more correlated with the subject of the document tend to appear at the beginning. We generate term position features (t, p), meaning that the first position of t in the document is lower or equal to p. Experimental results show that using these two extended term representations improves significantly the prediction of the text classifier.

A brief review about supervised term-weighting metrics for text classification is presented in Sect. 2. Our extended term representation is presented in Sect. 3. The metrics that we proposed to compare are described in Sect. 4. The experimental comparison on Reuters-21578, Ohsumed and 20 Newsgroups datasets are presented in Sect. 5.

2 Related works

First term-weighting metrics for text classification were unsupervised and generally borrowed from information retrieval (IR) field. The simplest IR metric is the binary representation BIN which assigns a weight of 1 if the term appears in the document and 0 otherwise. The term can be assigned a weight TF that depends on its frequency \(\textit{tf}\) in the document. Different variants of term frequency have been presented, for example, the raw term frequency \(\textit{tf}\) or its logarithm \(\log (1+\textit{tf})\). TFIDF is the most commonly used weighting metric in text classification. TFIDF is the product of TF and IDF, the inverse document frequency which favors rare terms in the corpus over frequent ones. However, there are some drawbacks on using unsupervised weighting functions, as the category information is omitted.

Previous studies proposed different supervised weighting metrics where the document frequency factor IDF of TFIDF is replaced by a factor that use prior information on the number of documents belonging to each category. Several classical metrics were tested in the literature, for instance, Chi square (\(\chi ^{2}\)), information gain (IG), gain ratio (GR) and odds ratio (OR) [5, 7, 8, 10]. These early studies get an improvement with TF.\(\chi ^{2}\), TF.IG, TF.GR and TF.OR term weights trained with SVM.

Accurate SVM text classification was obtained using Bi-Normal Separation (BNS) metric [13] for supervised term weighting [14]. In the later study, Forman tested two variants TF.BNS and BIN.BNS (considering the term frequency or not) and noticed that “best F-measure was obtained by using binary features with BNS scaling” (BIN.BNS) but “recall was slightly better with TF.BNS features”. This observation shows that merging TF with a factor such as BNS is problematic, i.e., not using TF yields to a better \(F_1\)-Score but decreases the fraction of the predicted categories that are relevant for a document.

More recently, other specific metrics were proposed for the supervised term-weighting problem. Liu et al. [21] use a probability-based (PB) term weight in order to tackle the problem of imbalanced distribution of documents among categories. Lan et al. [20] utilize a term weight TF.RF based of the relevance frequency (RF) metric. The relationship and differences between these term-weighting metrics are studied in [2]. Martineau et al. [23] propose a metric TF.\(\delta \)IDF where IDF is replaced by the class inverse document frequency difference (\(\delta \)IDF). Altinçay and Erenel [3] combine RF metric with mutual information and the difference of term occurrence probabilities in the collection of the documents belonging to the category and in its complementary set. Nguyen et al. [24] propose a weighting scheme based on the Kullback–Leibler (KL) and Jensen–Shannon (JS) divergence measures for centroid-based classifiers. Ren and Sohrab [27] test two metrics TF.IDF.ICF and TF.IDF.ICS\(_\delta \)F that incorporate the inverse class frequency (ICF) and inverse class space density frequency (ICS\(_\delta \)F) to TF.IDF. Bouillot et al. [6] propose alternative metrics for centroid term weighting and investigated the influence of numbers of categories, documents and terms in the classification of small datasets. Deng et al. [9] and Fattah [12] adapt and compare various text classification weighting metrics for sentiment analysis. This application is also considered in the two pre-cited papers [23] and [24]. Badawi and Altinçay [4] propose a framework based on employing the co-occurrence statistics of pairs of terms for term selection and weighting in binary text classification. Escalante et al. [11] use genetic programming for weighting terms. Ko [19] use a weighting scheme based on the term relevance ratio (TRR).

From this state-of-the-art, we notice that each paper in the literature gives a new metric and demonstrates its classification improvement on some corpora considering a certain number of categories (typically 10 categories for Reuters corpus). However, as we will show in the following, there is no metric among the literature and also among the 80 metrics we propose that yields the best results in all situations (corpus and number of categories). In order to overcome this problem, we propose to combine the metrics.

3 Extended term representation

Text classification is traditionally achieved by applying a learning method to a representation of the text document. In the vector space model, the document is represented as a vector in the term space. Each dimension of the vector space represents a term which value is the weight that will be used in the learning process. In this section, we propose to represent each dimension by a term together with its minimal frequency or its minimal first position in the document. We call these alternatives extended term representations.

3.1 Term features

In this classical representation, terms are viewed as the dimensions of the learning space. A term may be a single word or a phrase (n-gram).

3.2 Term frequency features

The number of occurrences of a term t in a document d is by itself a property that we propose to use as a feature. Let us consider, for example, a particular term t such that 25 % of the documents where t appears are in category c. If 45 % of the documents where t appears at least 3 times are in category c, then the term t is probably more correlated with the category c when its frequency exceeds 2. Hence, we propose features of the form (t, n) in documents containing t with a term frequency at least n. If a document d contains ten times a term t, we must generate ten features (t, i) (\(i = 1,2, \ldots , 10\)), meaning that t occurs at least once, twice, \(\ldots \), ten times. This could unnecessarily grow the number of features so we consider only powers of 2 less or equal to n. Then, if t occurs ten times, we will generate the features (t, 1), (t, 2), (t, 4) and (t, 8). The number of frequency features associated with a term t which appears n times in a document d will only be \(\log _2{n}\) in the worst case. In practice, however, most terms have a low frequency and the number of features grows moderately as we will show in the experiments (see Sect. 5.4).

3.3 Term position features

Most of the terms that are related to the main topics of a document occur at its beginning. In order to validate this assumption, we propose features of the form (t, p), meaning that the first position of t in the document is lower or equal to p. The position being defined as the number of words preceding the term occurrence. As for term frequency features, we generate only features (t, p) when p is a power of 2. For example, if a term t first appears at position 5 in a document of size 100 words, we generate the features (t, 8), (t, 16), (t, 32) et (t, 64), meaning that the first position of t is lower or equal than 8, 16, 32 and 64. The number of position features associated with a term t which appears in a document d at first position p will be \(\log _2{|d|}\) in the worst case, where |d| is the size of d in number of words. However, using term frequency features augments moderately the number of features (see Sect. 5.4).

4 Weighting metrics

Supervised term metrics try to give a high weight to a feature that is particularly present in documents that belong to a category. Hence, a good term-weighting metric must be a measure of an observed correlation between two events in the set of training documents: containing a term and belonging to a category. In this section, we propose to use the large number of metric functions proposed for other data mining problems, but not yet used for term weighting, in order to measure the correlation between the two events.

4.1 Notations

We consider a corpus D of N documents and d a particular document of D.

Let x denotes a nominal feature of d representing either:

-

t a term that occurs in d,

-

(t, n) a term that occurs at least n times in d,

-

or (t, p) a term which first position is lower or equal to p in the document d.

Each document can belong to one or many categories (labels or classes) \(c_{1}, c_{2}, \ldots , c_{M}\). We denote by y a particular category \(c_{i}\).

We denote by \(\bar{x}\) the fact that the feature x is not present in d and by \(\bar{y}\) the fact that d does not belong to the category y.

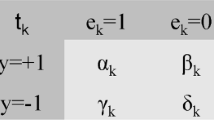

The number of documents containing the feature x and belonging to the category y is denoted by f(xy) and represents the document frequency. In general, f(uv) denotes the number of documents containing u and belonging to v, u being x, \(\bar{x}\) or \(*\) (documents containing any term) and v being y, \(\bar{y}\) or \(*\) (documents belonging to any category). These frequencies are represented in the contingency table (Table 1) in which the number of documents is denoted by N, f(xy) by a and \(f_{11}\), \(f(x\bar{y})\) by b and \(f_{12}\), and so on.

Many metrics are based on the estimation of the probability P(uv), the probability that a document containing u belongs to the category v, u being x, \(\bar{x}\) or \(*\) and v being y, \(\bar{y}\) or \(*\). Under the maximum-likelihood hypothesis, this probability is estimated by:

Some metrics are based on the difference between the observed and the expected frequencies. The expected contingency frequencies under the null hypothesis of independence \(H_{0}\) are given in Table 2.

Few metrics use the number of categories containing a document that contains a feature x. This quantity is denoted by \(f_c(x)\) and corresponds to:

4.2 Metrics

Giving a weight to a feature x associated with a term in a document labeled with y depends on the correlation between x and y in the training corpus. This correlation can be estimated by different metrics, and all the metrics used in this paper depend only on five values:

-

N the number of training documents.

-

f(xy) the joint frequency.

-

\(f(x *)\) and \(f(*{y})\) the marginal frequencies.

-

\(f_c(x)\) the number of categories containing (a document that contains) feature x.

-

M the number of categories.

Given these values, one can compute the contingency table and then compute any of the 96 metrics described in Table 3. Most of these metrics are collected from papers dealing with feature selection [13, 26, 31], supervised term weighting [3, 8, 10, 14, 21, 23, 27], classification rules [15] and term collocations [25]. The first 16 metrics of Table 3 are those already used for the term- weighting problem in the literature [3, 8, 10, 14, 20, 21, 23, 27]. The last 9 metrics are proposed by the authors of this paper.

5 Experiments

5.1 Benchmark

In order to compare experimentally the metrics, we use Reuters-21578, Ohsumed and 20 Newsgroups corpora. These datasets are the most widely used benchmarks for text classification.

The distribution of the categories in Reuters-21578 corpus is highly unbalanced. In order to study the performances obtained by each weighting metric in more or less unbalanced situations, our results on Reuters-21578 are reported:

-

for the 115 categories with at least one training example,

-

for the 52 categories with at least 16 training examples,

-

and for the set of the 10 categories with the highest number of training examples.

Ohsumed is a medical abstract corpus with 23 cardiovascular diseases categories. Twenty Newsgroups corpus contains articles taken from 20 Usenet newsgroups (categories).

Term variation can affect its frequency which is an important parameter in the term weight, and the solution consists in replacing each word by its stem. For all the corpora, we used Porter stemmer which gives the best performances in our experiments. After stemming, we have tokenized the text documents. For each sentence in a document, we generate all possible n-grams (terms). We choose the size of n-grams according to the performances obtained in each corpus. For Reuters-21578 corpus, the size of n was fixed to 1; for Ohsumed and 20 Newsgroups corpora, we fixed \(n \le 2\).

We used the training/testing split proposed in Reuters-21578 (Mobapte split) and Ohsumed corpora. There is no fixed literature split for 20 Newsgroups. It is usually used for cross-validation. We have adopted a fivefold cross-validation on 20 Newsgroups corpus in order to evaluate the statistical significance of the achieved performance improvements.

Traditionally, the performance of a classifier on a corpus is estimated by learning the classification on the training data and evaluating the accuracy of the prediction obtained on the evaluation data. The evaluation metrics used are the precision which is the proportion of documents placed in the category that are really in the category, the recall which is the proportion of documents in the category that are actually placed in the category, and the \(F_1\)-Score defined as:

The microaveraged \(F_1\)-Score is computed globally for all the categories, while the macroaveraged \(F_1\)-Score is the average of the \(F_1\)-Scores computed for each category. The latter measures the ability of a classifier to perform well when the distribution of the categories is unbalanced, while the microaveraged \(F_1\)-Score gives a global view of the document classification performance.

5.2 Learning

In multi-label text classification, each document d belongs to one or many of the categories in \(C = \{c_{1}, c_{2}, \ldots , c_{l}\}\). In order to learn for multi-label classification, we use the traditional binary relevance (BR) strategy [22, 29, 32], the well-known one-against-all problem transformation method that learns |C| independent binary classifiers, one for each category. Each binary classifier gives a probability that d belongs or not to the category \(y = c_{i}\).

5.2.1 Weighting

For each binary classifier associated with a category y, every document d is transformed to a vector \(W_d = (w(x_1,y,d), w(x_2,y,d),\ldots , w(x_n,y,d))\) where each feature x is weighted by:

The term frequency weight \(w_\mathrm{TF}\) depends on the frequency of x in the document d. The document frequency weight \(w_\mathrm{DF}\) is one of the metrics described in Table 3.

Each feature x can be either:

-

a term feature t in the classical model,

-

or a term frequency feature (t, n) or a term position (t, p) feature as defined in Sect. 3.

For the classical term representation, following [20], we experimented three possible term frequency weights (see Table 4). For our model, we use only binary term weights (\(w_\mathrm{TF}(x,d) = \text {BIN}(x,d)\)), because the frequency of the term is already considered in the extended term representation \(x=(t,n)\).

5.2.2 SVM classifier

For each category, we have used a SVM binary classifier which learns a linear combination of the features in order to define the decision hyperplane. We adopted the SVMLight tool [17] with a linear kernel and used the default settings. Previous studies show that SVMLight performs well for text classification [16].

5.2.3 SVM classifier combination

Classifier combination [30] methods are ensemble techniques that use the predictions of several classifiers to obtain better predictive performance than could be obtained from any of the constituent classifiers. One way to combine several classifiers is to consider the multiple classifier outputs as inputs to a generic classifier called secondary classifier.

In our case for each category, we combine with a SVM classifier the scores given by multiple base SVM binary classifiers, each classifier uses one of the 96 metrics for weighting the features. Base SVM learners are trained with the same set of documents. The classification scores obtained by each training document are used as input features for the secondary SVM learner. We also tried random forest as secondary classifier, but SVM gives better results.

5.3 Results

In order to estimate the performance of both our model and the 96 metrics, we have compared the \(F_1\)-Score of SVM classification on Reuters-21578, Ohsumed and 20 Newsgroups documents with classical and extended term representations using different weighting schemes.

5.3.1 Metric comparison

We recall that for a fixed category y the weight w(x, y, d) of a feature x in a document d is:

where the term frequency weight \(w_\mathrm{TF}\) (see Table 4) depends on the frequency of x in the document d and the document frequency weight \(w_\mathrm{DF}\) is one of the metrics described in Table 3. The feature x represents either a term feature t, a term frequency feature (t, n) or a term position feature (t, p).

For each document frequency weight metric \(w_\mathrm{DF}\) we have experimented 5 weighting schemes:

-

raw term frequency weight (\(w_\mathrm{TF}=\text {RTF}\)) for term features t

-

term frequency logarithm weight (\(w_\mathrm{TF}=\text {LTF}\)) for term features t

-

inverse term frequency weight (\(w_\mathrm{TF}=\text {ITF}\)) for term features t

-

binary term frequency weight (\(w_\mathrm{TF}=\text {BIN}\)) for term frequency features (t, n)

-

binary term frequency weight (\(w_\mathrm{TF}=\text {BIN}\)) for term frequency features (t, n) and term position features (t, p)

Table 5 reports the microaveraged \(F_1\)-Score for Reuters-21578 (10, 52 and 115 categories), Ohsumed and 20 Newsgroups. After calculation of the \(F_1\)-Score for each classifier, the metrics are ranked in descending order of the best weighting scheme score. Table shows only the top-10 metrics. It is clearly observed that the proposed representation models (t, n) and (t, n) and (t, p) perform significantly better than the classical representation and achieve the best performances in all experiments in terms of microaveraged \(F_1\)-scores for all the metrics. The model (t, n) and (t, p) performs better than the model (t, n), which means that using the position improves the performances. The only exception is Reuters-21578 with 115 categories. We think this is due to the fact that a significant number of categories (40/115) are represented by only up to 3 training documents, and the influence of position in the document cannot be learned correctly.

These observations comfort our intuition that including term frequency in document frequency formula as a feature is more relevant than multiplying those quantities. For the classical term representation model, the inverse term frequency (ITF) weight gives better \(F_1\)-scores than raw and logarithm term frequency (RTF and LTF). We also notice that the baseline metric TFIDF with the classical representation, precisely ITF.IDF, performs significantly worse than other metrics. Put together using other metrics than TFIDF and using extended term representation gives significant improvement to the classification. For example, the \(F_1\)-Score increased from 0.892 for TFIDF to 0.947 for Yulle’s \(\omega \) with the extended term representation (t, n) and (t, p) in the Reuters-21578 corpus with 10 categories. The best improvement is obtained in Ohsumed corpus as we move from a \(F_1\)-Score of 0.380 to 0.639 with one-way Klosgen metric with an extended term representation. However, we notice that the best metrics are very different according to the corpus used and whether the label distribution is balanced or not for Reuters-21578 corpus.

Table 6 provides the macroaveraged \(F_1\)-Scores of the top-10 metrics among all weighting schemes. We observe also that the proposed representation models (t, n) and (t, n) and (t, p) achieve better macroaveraged \(F_1\)-scores. However, the top-10 metrics when we consider the macroaveraged \(F_1\)-scores are generally different from the top-10 metrics considering the microaveraged \(F_1\)-scores.

We also notice that lots of metrics proposed in this paper for the first time in term weighting give better results than metrics previously used for this problem.

5.3.2 Classifier combination

As no metric gives the best results in all situations, we have tested classifier combination. The classification of a new document is done in two steps: We first compute classification scores with 96 base SVM learners, and each learner uses a different metric for weighting the features, and then, we use these scores as features for classifying the document with the secondary SVM learner.

Table 7 provides the \(F_1\)-Scores obtained by different weighting metrics and their combination when we use extended term representation (t, n) and (t, p) on Reuters (10, 52 and 115 categories), Ohsumed and 20 Newsgroups corpora. We can see that the performances of the classifier combination are always better according to all criteria: the microaveraged and the macroaveraged \(F_1\)-score for all the corpora. This confirms that by combining the predictions of several classifiers using different metrics one obtains better predictive performance than could be obtained from any of the constituent classifiers that use one metric.

The statistical significance of the achieved performances on 20 Newsgroups corpus are given in Table 8. Besides the fact that the \(F_1\)-score obtained by the classifier combination is more (in 20 Newsgroups) or less (in Reuters with 10 categories) significantly better than the best metric for each corpus, combination is the only method that gives good results for all corpora, all number of categories and both type of \(F_1\)-Scores (micro and macro).

5.4 Computation time and time complexity

Table 9 gives the number of features considered in the training set according to the term representations we have considered in our experiments. It shows a moderate growth in the number of features, by a factor of 3, when we consider term frequency and position features compared to traditional term features.

It is interesting to note in the same table that SVM learning computation time (on one processor of an Intel(R) Core(TM) i7-3520M at 2.90 GHz) is almost proportional to the number of features. Indeed, Thorsten Joachims showed that training linear SVM can be achieved in time O(ns), where s is the number of nonzero features (terms) in each example (document) and n the number of examples [18], with the assumption that \(s\ll N\), N being the number of features in the entire corpus. This last assumption is verified for a corpus of text documents. As we have already discussed in Sects. 3.2 and 3.3, the number of frequency and position features associated with a term t in each document grows with the logarithm of s. This means that the time complexity for training a corpus with documents containing at most s terms is \(O(n s \log s)\). In practice, \(\log s\) is small as it is confirmed by both the number of features and the computation time presented in Table 9.

Our classifier combination method implies the use of 96 SVM learners in the first step, then another SVM learner for the final classification. The theoretical time complexity is not affected because 96 is a constant value. However, in practice, it means that it multiplies the computation time by 96. In many text classification problems, one can afford such computation time constant growth in order to obtain significant improvement in the quality of the classification (from a microaveraged \(F_1\)-Score of 0.444 with IDF to 0.679 by combining 96 metrics in the case of Ohsumed corpus).

6 Conclusion

In this paper, we have studied 96 term-weighting metrics, and among them 80 metrics have not been used for this problem in the literature. Many of them provide better results than those already used for term weighting. We have also proposed an extended term representation where the term frequency and the term position in the document are adequately integrated to the document frequency. As no metric gives the best results according to whether the label distribution is balanced or not, we have proposed a classifier combination method with different metrics that performs well for both macroaveraged and microaveraged \(F_1\)-scores for different cases of label distribution.

Future work includes searching for superior weighting metrics, using other learning methods (Naives Bayes, centroid, etc.) and testing on large-scale benchmark data sets. In particular, it would be interesting to improve the computation time in order to apply our ideas on MEDLINE and Wikipedia corpora.

References

Aggarwal CC, Zhai C (2012) A survey of text classification algorithms. In: Aggarwal CC, Zhai C (eds) Mining text data. Springer, New York, pp 163–222

Altinçay H, Erenel Z (2010) Analytical evaluation of term weighting schemes for text categorization. Pattern Recognit Lett 31(11):1310–1323

Altinçay H, Erenel Z (2012) Using the absolute difference of term occurrence probabilities in binary text categorization. Appl Intell 36(1):148–160

Badawi D, Altinçay H (2014) A novel framework for termset selection and weighting in binary text classification. Eng Appl Artif Intell 35:38–53

Batal I, Hauskrecht M (2009) Boosting KNN text classification accuracy by using supervised term weighting schemes. In: Cheung DW-L, Song I-Y, Chu WW, Hu X, Lin JJ (eds), Proceedings of the 18th ACM conference on information and knowledge management, CIKM 2009. Hong Kong, China, November 2–6, 2009. ACM, pp 2041–2044

Bouillot F, Poncelet P, Roche M (2014) Classification of small datasets: why using class-based weighting measures?. In: Andreasen T, Christiansen H, Talavera JCC, Ras ZW (eds), Foundations of intelligent systems–21st international symposium, ISMIS 2014, Roskilde, Denmark, June 25–27, 2014. Proceedings, vol 8502 of Lecture notes in computer science, Springer, pp 345–354

Debole F, Sebastiani F (2002) Supervised term weighting for automated text categorization, Technical Report Technical Report 2002-TR-08. Istituto di Scienza e Tecnologie dellInformazione, Consiglio Nazionale delle Ricerche, Pisa, IT

Debole F, Sebastiani F (2003) Supervised term weighting for automated text categorization. In: Proceedings of the 2003 ACM symposium on applied computing (SAC), March 9–12, 2003. Melbourne, FL, USA. ACM, pp 784–788

Deng Z-H, Luo K-H, Yu H (2014) A study of supervised term weighting scheme for sentiment analysis. Expert Syst Appl 41(7):3506–3513

Deng Z-H, Tang S, Yang D, Zhang M, Li L, Xie K (2004) A comparative study on feature weight in text categorization. In: Yu JX, Lin X, Lu H, Zhang Y (eds), Advanced web technologies and applications, 6th Asia-Pacific web conference, APWeb 2004, Hangzhou, China, April 14–17, 2004, Proceedings, vol 3007 of Lecture notes in computer science, Springer, pp 588–597

Escalante HJ, García-Limón MA, Morales-Reyes A, Graff M, Montes-y-Gómez M, Morales EF, Martínez-Carranza J (2015) Term-weighting learning via genetic programming for text classification. Knowl Based Syst 83:176–189

Fattah MA (2015) New term weighting schemes with combination of multiple classifiers for sentiment analysis. Neurocomputing 167:434–442

Forman G (2003) An extensive empirical study of feature selection metrics for text classification. J Mach Learn Res 3:1289–1305

Forman G (2008) BNS feature scaling: an improved representation over tf-idf for svm text classification. In: Shanahan JG, Amer-Yahia S, Manolescu I, Zhang Y, Evans DA, Kolcz A, Choi K-S, Chowdhury A (eds), Proceedings of the 17th ACM conference on information and knowledge management, CIKM 2008, Napa Valley, California, USA, October 26–30, 2008. ACM, pp 263–270

Geng L, Hamilton HJ (2006) Interestingness measures for data mining: a survey. ACM Comput Surv 38(3):9

Guan H, Zhou J, Guo M (2009) A class-feature-centroid classifier for text categorization. In: Quemada J, León G, Maarek YS, Nejdl W (eds), Proceedings of the 18th international conference on world wide web, WWW 2009, Madrid, Spain, April 20–24, 2009. ACM, pp 201–210

Joachims T (1999) Making large-scale SVM learning practical. In: Schölkopf B, Burges C, Smola A (eds) Advances in kernel methods–support vector learning. MIT Press, Cambridge, pp 169–184 (Chapter 11)

Joachims T (2006) Training linear SVMs in linear time. In: Eliassi-Rad T, Ungar LH, Craven M, Gunopulos D (eds), Proceedings of the Twelfth ACM SIGKDD international conference on knowledge discovery and data mining. Philadelphia, PA, USA, August 20–23, 2006. ACM, pp 217–226

Ko Y (2015) A new term-weighting scheme for text classification using the odds of positive and negative class probabilities. J Assoc Inf Sci Technol 66:2553–2565

Lan M, Tan CL, Su J, Lu Y (2009) Supervised and traditional term weighting methods for automatic text categorization. IEEE Trans Pattern Anal Mach Intell 31(4):721–735

Liu Y, Loh HT, Sun A (2009) Imbalanced text classification: a term weighting approach. Expert Syst Appl 36(1):690–701

Madjarov G, Kocev D, Gjorgjevikj D, Dzeroski S (2012) An extensive experimental comparison of methods for multi-label learning. Pattern Recognit 45(9):3084–3104

Martineau J, Finin T, Joshi A, Patel S (2009) Improving binary classification on text problems using differential word features. In: Cheung DW-L, Song I-Y, Chu WW, Hu X, Lin JJ (eds), Proceedings of the 18th ACM conference on information and knowledge management, CIKM 2009. Hong Kong, China, November 2–6, 2009. ACM, pp 2019–2024

Nguyen TT, Chang K, Hui SC (2013) Supervised term weighting centroid-based classifiers for text categorization. Knowl Inf Syst 35(1):61–85

Pecina P (2010) Lexical association measures and collocation extraction. Lang Resour Eval 44(1–2):137–158

Rehman A, Javed K, Babri HA, Saeed M (2015) Relative discrimination criterion–a novel feature ranking method for text data. Expert Syst Appl 42(7):3670–3681

Ren F, Sohrab MG (2013) Class-indexing-based term weighting for automatic text classification. Inf Sci 236:109–125

Sebastiani F (2002) Machine learning in automated text categorization. ACM Comput Surv 34(1):1–47

Tsoumakas G, Katakis I, Vlahavas IP (2010) Mining multi-label data. In: Maimon O, Rokach L (eds) Data mining and knowledge discovery handbook, 2nd edn. Springer, New York, pp 667–685

Tulyakov S, Jaeger S, Govindaraju V, Doermann DS (2008) Review of classifier combination methods. In: Marinai S, Fujisawa H (eds) Machine learning in document analysis and recognition, vol 90 of Studies in computational intelligence. Springer, New York, pp 361–386

Yang Y, Pedersen JO (1997) A comparative study on feature selection in text categorization. In: Fisher DH (eds), Proceedings of the fourteenth international conference on machine learning (ICML 1997), Nashville, Tennessee, USA, July 8–12, 1997. Morgan Kaufmann, pp 412–420

Zhang M, Zhou Z (2014) A review on multi-label learning algorithms. IEEE Trans Knowl Data Eng 26(8):1819–1837

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Haddoud, M., Mokhtari, A., Lecroq, T. et al. Combining supervised term-weighting metrics for SVM text classification with extended term representation. Knowl Inf Syst 49, 909–931 (2016). https://doi.org/10.1007/s10115-016-0924-1

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10115-016-0924-1