Abstract

First-order primal-dual methods are appealing for their low memory overhead, fast iterations, and effective parallelization. However, they are often slow at finding high accuracy solutions, which creates a barrier to their use in traditional linear programming (LP) applications. This paper exploits the sharpness of primal-dual formulations of LP instances to achieve linear convergence using restarts in a general setting that applies to alternating direction method of multipliers (ADMM), primal-dual hybrid gradient method (PDHG) and extragradient method (EGM). In the special case of PDHG, without restarts we show an iteration count lower bound of \(\Omega (\kappa ^2 \log (1/\epsilon ))\), while with restarts we show an iteration count upper bound of \(O(\kappa \log (1/\epsilon ))\), where \(\kappa \) is a condition number and \(\epsilon \) is the desired accuracy. Moreover, the upper bound is optimal for a wide class of primal-dual methods, and applies to the strictly more general class of sharp primal-dual problems. We develop an adaptive restart scheme and verify that restarts significantly improve the ability of PDHG, EGM, and ADMM to find high accuracy solutions to LP problems.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Linear programming (LP) is a fundamental tool in operations research, with applications in transportation, scheduling, inventory control, revenue management [4, 10, 16, 35, 50, 53]. An LP problem with n variables and m constraints in standard form is

where \({\mathbb {R}}\) denotes the set of real numbers.

Currently, most practitioners solve LP problems with either simplex [18] or interior-point methods [41]. There are excellent reasons for this, namely that both methods typically find highly accurate solutions in a reasonable amount of time, and reliable implementations are widely available. While the two methods are quite different, both exactly solve linear system subproblems using factorizations (i.e., matrix inversion). These factorizations can be extremely fast, even for large problems. However, there are some drawbacks of relying on methods that use factorizations. Factorization speed heavily depends on the sparsity pattern of the linear systems, and in some examples, can be very slow. Moreover, for large enough problems, factorizations will run out of memory, even when the original problem data can fit into memory. Finally, factorizations are challenging to parallelize or distribute across multiple machines.

For this reason, there is a recent interest in developing methods for LP that forgo factorizations and instead use matrix–vector multiplies [5, 7, 8, 32, 45, 49]. Methods built on matrix–vector multiplication have many computationally appealing aspects. For example, they are readily parallelized or distributed across multiple machines. Moreover, their memory footprint is almost the same as the original problem data, and each matrix–vector multiply is reliably fast. Because of these fundamental differences, Nesterov [58] categorizes optimization methods that use factorizations as capable of handling medium-scale problems, and those that instead use matrix–vector multiplies as capable of handling large-scale problems.

A major reason why methods based on matrix–vector multiplies remain unsuitable replacements for factorization-based methods for LP is that they often struggle to obtain moderate to high accuracy solutions in a reasonable time-frame [32]. This paper attempts to tackle this issue in an important setting: first-order primal-dual methods for LP. Primal-dual methods solve:

where \(\mathcal {L}(x,y)\) is differentiable, convex in x and concave in y, and X, Y are closed convex sets. For notational convenience, we denote \(z=(x,y)\) as the primal-dual variables and \(Z=X\times Y\) as the feasible region. Furthermore, denote

as the first-order derivatives of \(\mathcal {L}(x,y)\). Throughout the paper, we assume the feasible region of (1) is non-empty and that (1) has a bounded optimal value, which are standard assumptions in the convex optimization literature [61].Footnote 1. Well-known primal-dual methods include proximal point method (PPM) [74], extragradient method (EGM) [43, 77], primal-dual hybrid gradient (PDHG) [14], and alternating direction method of multipliers (ADMM) [21]. We can solve LP problems with primal-dual methods by writing them in the form:

To obtain high accuracy solutions for (2), our key idea is to use restarts and sharpness. A restart feeds the output of an algorithm back in as input; a restart scheme will restart an algorithm whenever certain criteria are met. Sharpness presents a lower bound on the objective function growth in terms of the distance to the optimal solution set. Restart schemes and sharpness conditions are well-studied in the context of unconstrained minimization [64, 73, 78]. In this context they can improve the theoretical and practical convergence of minimization algorithms to high accuracy solutions. We analyze restarts in a different context: primal-dual methods for solving sharp primal-dual problems, such as those derived from LP, obtaining similar improvements.

1.1 Contributions

The contributions of the paper can be summarized as follow:

-

We introduce a sharpness condition of primal-dual problems, and show LP problems are sharp.

-

We propose restarted algorithms for solving sharp primal-dual problems, and present their linear convergence rates. Their linear convergence rate matches the lower bound for a general class of primal-dual methods.

-

We analyze primal-dual hybrid gradient without restarts and show that it is slower than its restarted variant on bilinear problems.

-

We present an adaptive restart scheme that requires no hyperparameter searching over restart lengths. Numerical experiments showcase the effectiveness of our scheme.

While not a contribution of this paper, a subsequent computational study [5] evaluates a method named PDLP, which builds on top of the theory in the present paper by implementing a restarted version of PDHG with various additional enhancements. The study shows that adaptive restarts contribute significantly to PDLP’s ability to solve a wide variety of benchmark LP problems to high levels of accuracy (see Section C.2 in [5]). Applegate et al. [5] includes an open source Julia prototype of PDLP.Footnote 2 More recently, a C++ version of PDLP was released within the open source OR-Tools library.Footnote 3

There are two concepts we introduce to facilitate our implementation and analysis: the normalized duality gap and sharpness.

1.2 Normalized duality gap

The traditional metric for measuring solution quality for primal-dual problems is the primal-dual gap [14, 57] defined as

where for brevity we denote \((\hat{x}, \hat{y}) = \hat{z}\). A solution to (1) has zero primal-dual gap. However, when the feasible region is unbounded, such as in LP (2), this quantity can be infinite. For this reason, for unbounded problems, we cannot provide convergence guarantees on this quantity. This makes it a poor progress metric. To overcome this issue we introduce the normalized duality gap:

where \(W_{r}(z) {:}{=}\{\hat{z}\in Z \mid \Vert z-\hat{z}\Vert \le r\}\) is the ball centered at z with radius \(r \in (0, \infty )\) intersected with the set Z, and \(\Vert \cdot \Vert \) is a carefully selected semi-norm on Z. If \(r = 0\) then for completeness we defineFootnote 4:

Similar to primal-dual gap, we show the normalized duality gap attains zero if and only if it is evaluated at a solution to (1). Unlike the primal-dual gap, if \(\Vert \cdot \Vert \) is a norm, then the normalized duality gap \(\rho _{r}(z)\) is always finite at any point \(z \in Z\) with \(r \in (0, \infty )\).

The normalized duality gap plays two roles in our work. The first is that it is used as a potential function in our restart scheme. In particular, our adaptive restarts are triggered by constant factor reductions in the normalized duality gap. Secondly, it forms the foundations of our analysis for restart schemes and is directly involved in our definition of sharpness for primal-dual problems.

1.3 Sharpness for primal-dual problems

Sharpness, first introduced by Polyak [68], has been an important concept and a useful analytical tool in convex minimization. For a given function f, we say f is \(\alpha \)-sharp if it holds for any \(z\in Z\) that

where \(Z^{\star }\) is the set of minima of the function f, \(f^{\star }\) is the optimal function value, and \(\mathbf{dist{}}(z, Z) {:}{=} \inf _{\hat{z} \in Z} \Vert z - \hat{z} \Vert \). When f is Lipschitz continuous, convex and sharp, it is well-known that restarted sub-gradient descent obtains linear convergence. In contrast, without sharpness it obtains a slow sublinear rate [78]. In this work, we generalize sharpness from minimization problems to primal-dual problems (1). Informally, we say a primal-dual problem is sharp if and only if the normalized duality gap \(\rho _r(z)\) is sharp as per (5) with \(f=\rho _r\). This condition is satisfied by LP problems, and the sharpness constant is proportional to the inverse of the Hoffman constant [38] of the matrix that forms the KKT conditions.

1.4 Notation

Let \({\mathbb {N}}\) denote the set of natural numbers (starting from one). Let \(\log (\cdot )\) and \(\exp (\cdot )\) refer to the natural log and exponential function respectively. Let \(\sigma _{\min }( \cdot )\), \({\sigma _{\min }^+}{(\cdot )}\), \({\sigma _{\max }}{(\cdot )}\) denote the minimum singular value, minimum nonzero singular value and maximum singular value of a matrix. Let \(\Vert z\Vert _2=\sqrt{\sum _j z_j^2}\) be the \(\ell _2\) norm of z. For a bounded set \(S \subseteq Z\), \(\mathbf{diam{}}( S )\) denotes the diameter of S, i.e., \(\mathbf{diam{}}( S ) {:}{=} \sup _{s, s' \in S} \Vert s - s' \Vert \).

The remainder of this section discusses related literature. In Sect. 2, we present a unified viewpoint of the sublinear rate of classic primal-dual algorithms. In Sect. 3, we discuss the basic properties and examples of sharp primal-dual problems. In Sect. 4, we present two restart schemes: fixed frequency restarts and adaptive restarts, and prove the linear convergence of both algorithms. In Sect. 5, we consider the special case of bilinear problems. In this setting, we show restarts obtain optimal convergence rates and that standard PDHG is provably slower than restarted PDHG for solving bilinear problems. In Sect. 6, we present efficient algorithms to compute the normalized duality gap. In Sect. 7, we present numerical experiments that showcase the effectiveness of our proposed restart schemes.

1.5 Related literature

1.5.1 Convex–convave primal-dual problems

The study of convex-concave primal-dual problems has a long history. Convex-concave primal-dual problems are a special case of monotone variational inequalities. In his seminal work [74], Rockafellar studied PPM for solving monotone variational inequalities. Later on, Tseng [77] shows that both PPM and EGM have a linear convergence rate for solving variational inequalities when certain complicated conditions are satisfied, and these conditions are satisfied for solving strongly-convex-strongly-concave primal-dual problems. In 2004, Nemirovski [57] proposed the Mirror Prox algorithm (a special selection of the prox function recovers EGM), which first showed that EGM has \(O(\frac{1}{\varepsilon })\) sub-linear convergence rate for solving convex-concave primal-dual problems over a bounded and compact set.

There are several works that study the special case of (1) when the primal-dual function has bilinear interaction terms, i.e., \(\mathcal {L}(x,y)=f(x)+x^{\top } B y - g(y)\) where \(f(\cdot )\) and \(g(\cdot )\) are both convex functions. Algorithms for solving these bilinear interaction problems include Douglas–Rachford splitting (a special case is Alternating Direction Method of Multipliers (ADMM)) [21, 22] and Primal-Dual Hybrid Gradient Method (PDHG) [14].

The linear convergence of primal-dual methods is widely studied. Daskalakis et al. [19] studies the Optimistic Gradient Descent Ascent (OGDA), designed for training GANs, and shows that OGDA converges linearly for bilinear problems \(\mathcal {L}(x,y)=x^{\top } A y\) with a full rank matrix A. Mokhtari et al. [56] shows that OGDA and EGM both approximate PPM (indeed, the fact that EGM is an approximation to PPM was first shown in Nemirovski’s earlier work [57]). They further show that these three algorithms have a linear convergence rate when \(\mathcal {L}(x,y)\) is strongly-convex strongly-concave or when \(\mathcal {L}(x,y)=x^{\top } A y\) is bilinear with a square and full rank matrix. Lu [51] presents an ODE framework to analyze the dynamics of unconstrained primal-dual algorithms, yielding tight conditions under which different algorithms exhibit linear convergence, including bilinear problems with nonsingular A. Eckstein and Bertsekas [23] use a variant of ADMM to solve LP problems and show the linear convergence of their method. More recently, [44, 46] show that many primal-dual algorithms, including PDHG and ADMM, have eventual linear convergence when applied to LP problems satisfying a non-degeneracy condition. Very recently, for PDHG on LP problems, linear convergence is established without requiring a non-degeneracy assumption [1].

However, not all linear convergence rates are equal. For example, it is well known that both gradient descent and accelerated gradient descent (AGD) obtain linear convergence on smooth, strongly convex functions. However, the gradient descent bound is \(O(L / \theta \log (1/\epsilon ))\) and accelerated gradient descent’s bound is \(O(\sqrt{L / \theta } \log (1/\epsilon ))\) where L is the smoothness and \(\theta \) is strong convexity parameter [61]. Therefore, accelerated gradient descent has a better dependence on the condition number (i.e., the ratio between \(\theta \) and L) by a square root factor. This significant improvement is seen in practice too. A similar phenomena occurs for PDHG where we show for bilinear problems, the dependency on the condition number improves by a square root with introduction of restarts. We also show that, in practice, restarts improve the performance of PDHG. A more detailed discussion of this issue and related literature appears in Sect. 5.

1.5.2 Sharpness conditions

The concept of sharpness of a minimization problem was first proposed by Polyak [68] in 1970s. The original work by Polyak assumes the optimal solution set is a singleton. This work was then generalized by Burke and Ferris [12] to include the possibility of multiple optima. The early work on sharp minimization problems focused on analyzing the finite termination of certain algorithms, for example, projected gradient descent [69], proximal-point method [26], and Newton’s method [13]. Sharpness of monotone variational inequalities with bounded feasible regions was studied by Marcotte and Zhu [54], and they focused on the finite convergence of a two-stage algorithm. Notice the primal-dual problem (1) is a special case of a variational inequality (VI) [36]. Indeed, when the feasible region is bounded, the sharpness of primal-dual problems we propose, translating to VI form, is equivalent to the sharpness of variational inequalities up to a constant. Our results generalize the concept for unbounded regions (when translating to VI). More recently, sharpness plays an important role for developing faster convergence rates for first-order methods. In particular, linear convergence for subgradient (restarted) descent on sharp non-smooth functions [78] and sharp non-convex (weakly-convex) minimization [20]. Finally, similar to strong convexity, sharpness provides a lower bound on the optimality gap in distance to optimality. Both sharpness and strong convexity can be viewed as error bound conditions with different parameters [73].

1.5.3 Restart schemes

Restarting is a powerful technique in continuous optimization that can improve the practical and theoretical convergence of a base algorithm [67]. The approach does not require modifications to the base algorithm, and thus is in general easy to incorporate within standard implementations. Recently, there have been extensive work on this technique, in smooth convex optimization [64, 73], non-smooth convex optimization [27, 78], and stochastic convex optimization [40, 47, 75]. Many of the previous works require estimating algorithmic parameters such as the strong convexity constant. This estimation often requires computationally intensive hyperparameter searches. An important topic is to avoid this estimation by developing adaptive methods, which were well-studied for accelerated gradient descent [2, 25, 30, 48, 60, 64]. In this paper, we present two restart schemes for primal-dual methods: fixed frequency restarts and adaptive restarts, where the former needs an estimation of the sharpness constant, while the latter does not.

1.5.4 Hoffman constant

The Hoffman constant [38], upper bounds the ratio between the distance to a non-empty polyhedron and the corresponding constraint violation. More formally, for a given non-empty polyhedron \(P =\{z \in {\mathbb {R}}^{n + m} \mid K z \ge h\}\), the Hoffman constant H(K) satisfies \(\mathbf{dist{}}(z, P)\le H(K) \Vert (h-K z)^+\Vert \) for all z. The Hoffman constant can also be viewed as the inverse of the sharpness constant of the function \(f(z)=\Vert (h-Kz)^+\Vert \), namely,

by noticing the minimal objective is \(f^{\star }=0\) when the polyhedron is non-empty. Furthermore, there is a natural connection between the Hoffman constant and Renegar’s condition number [71, 72], which measures the distance to ill-posedness of a system of linear inequalities. The Hoffman constant also characterizes the inherent difficulty to solve a system of linear inequalities, and plays an important role in establishing the convergence properties of many optimization methods [34, 52, 66, 72].

1.5.5 Concurrent work

After posting the first version of our manuscript, we noticed a concurrent and independent work [24]. This work presents a new regularity condition of primal-dual problems called quadratic error bound of the smoothed gap (QEB). Furthermore, [24] shows that LP problems satisfy QEB, and restarted PDHG has linear convergence under QEB condition if the QEB parameter is known to the user. Our condition is different from the QEB condition as it involves the sharpness of the normalized duality gap. Contributions of our paper that do not appear in [24] include: (i) we present an adaptive scheme that achieves the optimal convergence rate without knowing the sharpness parameter; (ii) we provide a lower bound that proves our restarted algorithms achieve the optimal linear convergence rate under our sharpness condition, whereas it is unclear whether the algorithms presented in [24] are optimal in their setting; (iii) our analysis applies to a variety of primal-dual algorithms including PPM, EGM, ADMM and PDHG, whereas [24] focuses on PDHG. On the other hand, contributions of [24] that do not appear in our paper include that (i) our sharpness condition is tied to LP, while the QEB condition is possibly more general; (ii) we show the linear convergence of the current iterate only in the bilinear setting whereas [24] analyzes the linear convergence of the current iterate under the QEB condition.

2 A unified viewpoint of the sublinear ergodic convergence rate of primal-dual algorithms

While there have been recent works on studying the last iterations of primal-dual algorithms [17], many previous convergence bounds for primal-dual problems (1) are with respect to the average over the algorithm’s iterates [15, 37, 57]. This bound is known as the algorithm’s ergodic convergence rate. In this section, we discuss a unified viewpoint of the sublinear ergodic convergence rate for classic primal-dual algorithms, which we will later use to develop the analysis for our restart schemes.

We consider a generic class of primal-dual algorithms for primal-dual problems starting from \(t = 0\) with iterate update

where \(z^t\) is the iterate solution at time t, \(z^{t+1}\) is the iterate solution at time \(t+1\), \(\hat{z}^{t+1}\) is the target solution at time \(t+1\), and \(\eta ~{ \in (0,\infty )}\) is the step size of the algorithm. As we will see later, the convergence guarantee of some primal-dual algorithms may not be on the iterate solution \(z^t\); hence, we introduce the target solution \(\hat{z}^t\). The output of the algorithm is a function of target sequence \(\hat{z}^1,\ldots , \hat{z}^t\), and for the standard ergodic rate it is the average of target solutions, namely, \(\bar{z}^t=\frac{1}{t}\sum _{j=1}^t \hat{z}^i\). Here we discuss four classic primal-dual algorithms that can be represented as (6):

-

(Proximal point method) Proximal point method (PPM) [74] is a classic algorithm for solving (1). The update can be written as:

$$\begin{aligned} \hat{z}^{t+1}=\,&z^{t+1}=\left( x^{t+1},y^{t+1}\right) \in \arg \min _{x\in X}\max _{y\in Y} \mathcal {L}(x,y)\nonumber \\&+\frac{1}{2\eta } \Vert x-x^t\Vert _2^2-\frac{1}{2\eta } \Vert y-y^t\Vert _2^2\ . \end{aligned}$$(7) -

(Extragradient method) Extragradient method [77] (a special case of mirror prox [57]) is another classic method for solving (1). It is an approximation of PPM [57]. The update can be written as:

$$\begin{aligned} \begin{aligned} \hat{z}^{t+1}&\in {\mathop {\textrm{argmin}}\limits _{z\in Z}} \left\{ F(z^t)^{\top } z + \frac{1}{2\eta } \Vert z-z^t\Vert _2^2 \right\} \\ z^{t+1}&\in {\mathop {\textrm{argmin}}\limits _{z\in Z}} \left\{ F(\hat{z}^{t+1})^{\top } z + \frac{1}{2\eta } \Vert z-z^t\Vert _2^2 \right\} \ . \end{aligned} \end{aligned}$$(8)For this method, we assume F(z) is \(L\)-Lipschitz continuous.

-

(Primal-dual hybrid gradient) Primal-dual hybrid gradient (also called Chambolle and Pock method) targets primal-dual problems with bilinear interaction term, namely, \(\mathcal {L}(x,y)=f(x)+y^{\top } Ax - g(y)\), and it is widely used in image processing [14, 15]. The update can be written asFootnote 5:

$$\begin{aligned} \begin{aligned} x^{t+1}&\in {\mathop {\textrm{argmin}}\limits _{x\in X}} f(x)+(y^t)^{\top } Ax+\frac{1}{2\eta } \Vert x-x^t\Vert _2^2 \\ y^{t+1}&\in {\mathop {\textrm{argmin}}\limits _{y\in Y}} g(y)-y^{\top } A(2 x^{t+1}-x^t) +\frac{1}{2\eta } \Vert y-y^t\Vert _2^2 \\ \hat{z}^{t+1}&=z^{t+1} =(x^{t+1}, y^{t+1}) \ . \end{aligned} \end{aligned}$$(9) -

(Alternating direction method of multipliers) Alternating direction method of multipliers (ADMM) [11, 21, 22, 37] targets linearly constrained convex optimization problems with separable structure

$$\begin{aligned} \min _{x_U\in {X}_{U}, x_V\in {X}_{V}}\&\ \theta _1(x_U) + \theta _2(x_V) \\ \text {s.t.}\&\ U x_U + V x_V = q \ , \end{aligned}$$for which the Lagrangian becomes

$$\begin{aligned} \min _{x\in X}\max _{y\in Y} \mathcal {L}(x,y) := \theta (x) - y^{\top } \begin{pmatrix} U&V\end{pmatrix} \begin{pmatrix} x_{U} \\ x_{V} \end{pmatrix} + q^{\top } y \ , \end{aligned}$$(10)where \(x=(x_{U},x_{V})\), \(\theta (x) = \theta _{U}(x_{U}) + \theta _{V}(x_{V})\), \(X=X_U \times X_V\), and \(Y={\mathbb {R}}^m\). The update of ADMM is

$$\begin{aligned} \begin{aligned} x_{U}^{t+1}&\in {\mathop {\textrm{argmin}}\limits _{x_{U}\in X_{U}}}\left\{ \theta _1(x_{U})+\frac{\eta }{2} \left\| \left( U x_U+V x_V^t - q\right) -\frac{1}{\eta } y^t\right\| ^2_2 \right\} \\ x_{V}^{t+1}&\in {\mathop {\textrm{argmin}}\limits _{x_{V}\in X_{V}}}\left\{ \theta _2(x_{V})+\frac{\eta }{2} \left\| \left( U x_U^{t+1}+V x_V - q\right) -\frac{1}{\eta } y^t\right\| ^2_2 \right\} \\ y^{t+1}&=y^t-\eta \left( U x_U^{t+1}+V x_V^{t+1} - q\right) \\ \hat{z}^{t+1}&= \left( x_{U}^{t+1}, x_{V}^{t+1}, y^t-\eta \left( U x_U^{t+1}+V x_V^{t} - q\right) \right) \\ z^{t+1}&=\left( x^{t+1}_U, x^{t+1}_V, y^{t+1}\right) \end{aligned} \end{aligned}$$(11)

Although the above four classic primal-dual algorithms have different update rules and may even target different primal-dual problems, it turns out they all satisfy the following assumption, which provides a unified viewpoint of primal-dual algorithms as well as a unified analysis on their ergodic rate.

Property 1

Consider a primal-dual algorithm with iterate update (6). There exists \( C>0\) and a semi-norm \(\Vert \cdot \Vert \) such that it holds for any \(t \in {\mathbb {N}}\) and \(z\in Z\) that

Property 1 presents an upper bound on the primal-dual gap at the target solution \(\hat{z}^{t+1}\). Notice the RHS of (12) involves the iterate solution \(z^t\) instead of the target solution \(\hat{z}^t\), which is intentional. The next proposition shows how classic primal-dual algorithms satisfy Property 1 as well as their corresponding constants C and semi-norms \(\Vert \cdot \Vert \). These are known results for individual algorithms; here we provide a unified viewpoint.

Proposition 1

PPM, EGM, PDHG and ADMM satisfy Property 1 with

-

(PPM) \(C=\frac{1}{\eta }\) and Euclidean norm for any \(\eta >0\);

-

(EGM) \(C=\frac{1}{\eta }\) and Euclidean norm for \(0<\eta \le \frac{1}{L}\);

-

(PDHG) \(C=\frac{1}{\eta }\) and \(\Vert z\Vert ^2=z^{\top } M z\) for \(0<\eta \le \frac{1}{{\sigma _{\max }}{(A)}}\), where \(M=\begin{pmatrix} {{\textbf {I}}} &{} -\eta A^{\top }\\ -\eta A &{} {{\textbf {I}}} \end{pmatrix}\);

-

(ADMM) \(C=1\) and \(\Vert z\Vert ^2=z^{\top } M z\) for \(\eta >0\), where \(M=\begin{pmatrix} 0 &{} 0 &{} 0 \\ 0 &{} \eta V^{\top } V&{} 0 \\ 0 &{} 0 &{} \frac{1}{\eta } {{\textbf {I}}}\end{pmatrix}\).

Proof

(PPM) It follows from the optimality condition of (7) that for all \( z\in Z \),

After rearranging the above inequality, we obtain

We finish the proof for PPM by noticing \(\mathcal {L}(x^{t+1},y) - \mathcal {L}(x,y^{t+1})\le \left\langle F(z^{t+1}), z^{t+1} - z \right\rangle \).

(EGM) See, for example, the proof of Theorem 3.2 in [57] (a simplified version of the proof can be found in Theorem 18.2 in [63]).

(PDHG) See, for example, Lemma 1 and the proof of Theorem 1 in [15].

(ADMM) See, for example, Lemma 3.1 in [37]. \(\square \)

The next proposition presents how to obtain the ergodic sublinear convergence rate and non-expansiveness of primal-dual algorithms as a direct consequence of Property 1, which will be later used in developing the convergence results for our restart scheme. Again, these are known results for each individual algorithm.

Proposition 2

Consider the sequences \(\{\hat{z}^t, z^t\}\) generated from a generic primal-dual algorithm with iterate update (6) and initial solution \(z^{0}\). Denote \(\bar{z}^{t} = \frac{1}{t}\sum _{i=1}^t \hat{z}^i\) as the average target solution. Suppose the primal-dual algorithm satisfies Property 1, then it holds for any \(z^{\star }\in Z^{\star }\) and \(z\in Z\) that

-

i.

(Non-expansiveness) \(\Vert z^{t+1}- z^{\star }\Vert \le \Vert z^{t}- z^{\star }\Vert \).

-

ii.

(Sublinear rate on the primal-dual gap) \(\mathcal {L}(\bar{x}^t, y)-\mathcal {L}(x,\bar{y}^t)\le \frac{C \Vert z-z^{0}\Vert ^2}{2 t}\).

Proof

First we prove i. By setting \(z=z^{\star }\) in (12) and rearranging the inequality, it holds for any t that

where the second inequality is because  and

and  .

.

We now prove ii:

where the first inequality is from convexity-concavity of \(\mathcal {L}(x,y)\) and Jensen’s inequality, and the second inequality holds by summing up (12) over t and telescoping. \(\square \)

The non-expansiveness in Proposition 2 implies that \(z^t\) stays in a bounded region. The next assumption assumes the target solution \(\hat{z}^t\) is not far away from either \(z^t\) or \(z^{t-1}\), thus \(\hat{z}^t\) also stays in a bounded region.

Property 2

There exists \(q > 0\) such that for all \(t \in {\mathbb {N}}\) either \(\Vert \hat{z}^t-z^t\Vert \le q \mathbf{dist{}}{(z^t, Z^{\star })}\), or \(\Vert \hat{z}^t-z^{t-1}\Vert \le q \mathbf{dist{}}{(z^t, Z^{\star })}\).

The four classic primal-dual algorithms we discussed above satisfy Property 2:

Proposition 3

Under the same conditions as Proposition 1, PPM, EGM, PDHG and ADMM satisfy Assumption 2 since

-

(PPM) \(\hat{z}^t = z^{t}\) for all \(t \in {\mathbb {N}}\), and therefore \(q = 0\);

-

(EGM) \(\Vert \hat{z}^{t} - z^{t-1} \Vert \le 3 \mathbf{dist{}}( z^t, Z^{\star })\) for all \(t \in {\mathbb {N}}\), and therefore \(q = 3\);

-

(PDHG) \(\hat{z}^t = z^{t}\) for all \(t \in {\mathbb {N}}\), and therefore \(q = 0\);

-

(ADMM) \(\Vert \hat{z}^t - z^{t} \Vert \le 2 \mathbf{dist{}}(z^t, Z^{\star })\) for all \(t \in {\mathbb {N}}\), and therefore \(q = 2\).

Proof

Note that the results for PPM and PDHG hold immediately.

Proof for EGM. The proof for EGM is somewhat technical so we defer it to “Appendix B”.

Proof for ADMM. Let \(z^{\star }= \min _{z \in Z^{\star }} \Vert z^{t} - z \Vert \). By (11) we have \(\hat{x}^{t+1}_U = x^{t+1}_U\), \(\hat{x}^{t+1}_V = x^{t+1}_V\), and \(\hat{y}^{t+1} = y^{t+1} + \eta V (x_{V}^{t+1} - x_{V}^t)\). Therefore,

where the last inequality uses Proposition i. \(\square \)

Property 3 captures the minimal algorithmic properties required for restart schemes to achieve linear convergence, as shown in Sect. 4. This technical assumption essentially states that the primal-dual algorithm imposes an order 1/t sublinear convergence on the decay of the normalized duality gap (Property i.) and the iterates do not move too far away from the starting point (Property ii.). Proposition 4 demonstrates that if Property 1 and 2 hold, then Property 3 also holds (with different parameters q and C), so Property 3 holds for the classic primal-dual algorithms (PPM, EGM, PDHG and ADMM).

Property 3

There exist scalar constants \(q, C >0\) such that for all initial points \(z^{0} \in Z\), the output sequence \(\{\bar{z}^{t}\}_{t=1}^{\infty }\) generated by the algorithm satisfies \(\bar{z}^{t} \in Z\) and

-

i.

\(\rho _{\Vert \bar{z}^{t} - z^{0} \Vert } (\bar{z}^{t}) \le \frac{2 C \Vert \bar{z}^{t} - z^{0} \Vert }{t}\),

-

ii.

\(\Vert \bar{z}^{t} - z^{0} \Vert \le (q + 2) \mathbf{dist{}}( z^{0}, Z^{\star })\).

Proposition 4

Consider the sequences \(\{\hat{z}^t, z^t\}\) generated from a generic primal-dual algorithm with iterate update (6) and initial solution \(z^{0}\). Suppose the primal-dual algorithm satisfies Property 1 and 2, then Property 3 holds with \(\bar{z}_t=\frac{1}{t}\sum _{i=1}^t \hat{z}_t\).

Proof

Suppose \(r=\Vert \bar{z}^{t} - z^{0} \Vert >0\), then

where the first inequality is from \(W_{r}(\bar{z}^{t}) \subseteq W_{2 r}(z^{0})\) by the definition of r, and the second inequality uses Proposition ii.. Alternatively, if \(\Vert \bar{z}^{t} - z^{0} \Vert = 0\) then it suffices to observe that \(\rho _r(\bar{z}^{t}) \le 2 C r / t\) and \(\rho _r(z) \ge 0\) implies \(\limsup _{r \rightarrow 0^{+}}\rho _r(\bar{z}^{t}) = 0\). This establishes Property i..

We turn to proving Property ii.. Let \(z^{\star }\in {\mathop {\textrm{argmin}}\limits _{z \in Z^{\star }}} \Vert z - z^{0} \Vert \), then for either \(i = t\) or \(i = t - 1\),

where the first inequality uses the triangle inequality, the second inequality uses Proposition i, and the third inequality uses \(\Vert \hat{z}^{t} - z^{i} \Vert \le q \mathbf{dist{}}( z^{i}, Z^{\star })\) as per Property 2. From \(\Vert \hat{z}^{t} - z^{0} \Vert \le (q + 2) \Vert z^{0} - z^{\star }\Vert \) it follows that

which establishes Property ii.. \(\square \)

3 Sharpness for primal-dual problems

This section discusses the basic properties of the normalized duality gap and sharp primal-dual problems, and then presents the concept of sharp primal-dual problems along with examples.

Critical to the paper is the concept of a sharp primal-dual problem. Indeed, when this condition holds, we are able to establish linear convergence using restarts (Sect. 4).

Definition 1

We say a primal-dual problem (1) is \(\alpha \)-sharp on the set \(S\subseteq Z\) if \(\rho _{r}\) is \(\alpha \)-sharp (see (5)) on S for all \(r \in (0, \mathbf{diam{}}(S)]\), i.e., it holds for all \(z \in S\) that

As we can see, the sharpness of a primal-dual problem essentially says the localized duality gap \(\rho _r(z)\) is sharp in the traditional sense. A key concept is that the sharpness is defined on a set \(S\subseteq Z\). Even if the domain Z is unbounded, the sharpness can be defined on a bounded set S.

From the definition of the normalized duality gap given in (4) and the sharpness condition, we can immediately establish the monotonicity of sharpness in r and S (Facts 1 and 2 and Proposition 5).

Fact 1

It holds for any z that \(r \rho _r(z)\) is monotonically non-decreasing for \(r \in [0,\infty )\).

Fact 2

The sharpness condition is monotone in set S, namely, suppose \(S_1 \subseteq S_2\subseteq Z\) and \(\rho _r(z)\) is \(\alpha \)-sharp in \(S_2\), then \(\rho _r(z)\) is \(\alpha \)-sharp in \(S_1\).

Proposition 5

It holds for any fixed z that \(\rho _r(z)\) is monotonically non-increasing for \(r \in [0,\infty )\).

Proof

We prove the result for \(r \in (0,\infty )\) which immediately implies the result also holds for \(r = 0\) by definition of \(\rho _0(z)\). Consider any \(z\in Z\) and \(0<r_1<r_2\). Let \(z_2\in {\mathop {\textrm{argmax}}\limits _{ \hat{z}\in W_{r_2}(z)}} \left\{ \mathcal {L}(x, \hat{y}) - \mathcal {L}(\hat{x}, y) \right\} \). Then we have that \(z_1{:}{=}z+\frac{r_1}{r_2} (z_2-z)\in W_{r_1}(z)\). Moreover, it follows from convexity of \(\mathcal {L}(x,y)\) over x that \(\mathcal {L}(x_1, y)\le \frac{r_1}{r_2} \mathcal {L}(x_2, y) + (1- \frac{r_1}{r_2}) \mathcal {L}(x,y)\), which after rearrangement becomes

Similarly, it follows from concavity of \(\mathcal {L}(x,y)\) over y that

Therefore, it holds that

which finishes the proof for the proposition. \(\square \)

Proposition 6 shows that when the normalized duality gap is a reasonable metric to measure the quality of a solution: the normalized duality gap is zero if and only if the primal-dual gap is also zero.

Proposition 6

For any \(r \in [0,\infty )\), the primal-dual gap (3) at z is zero if and only if \(\rho _r(z) = 0\).

Proof

Suppose that the primal-dual gap at z is zero, then by definition of \(\rho _r\) we get for all \(r \in (0,\infty )\) that \(0 \le \rho _r(z) \le \frac{1}{r} \max _{\hat{z}\in Z}\{ \mathcal {L}(x, \hat{y}) - \mathcal {L}(\hat{x}, y)\} = 0\). Taking the limit as \(r \rightarrow 0^{+}\) shows \(\rho _0(z) = 0\).

For the other direction argue by contrapositive. In particular, we assume the primal-dual gap at z is nonzero, and using this assumption show that for all \(r\in [0,\infty )\) that \(\rho _r(z)>0\). From the assumption that the primal-dual gap is nonzero, there exists \(\hat{z} \in Z\) such that \(\mathcal {L}(x, \hat{y}) - \mathcal {L}(\hat{x}, y) > 0\). For any \(r \in (0,\infty )\), setting \(\alpha = \min \left\{ 1, \frac{r}{\Vert \hat{z} - z \Vert } \right\} \) yields \(\Vert \alpha (\hat{z} - z) \Vert = \alpha \Vert \hat{z}- z \Vert \le r\) and \(z + \alpha (\hat{z}- z) = (1 - \alpha ) z + \alpha \hat{z}\in Z\). Therefore \(z + \alpha (\hat{z} - z) \in W_{r}(z)\). By definition of \(\hat{z}\), \(\alpha > 0\), convex-concavity of \(\mathcal {L}\), and \(z + \alpha (\hat{z} - z) \in W_{r}(z)\) we get

thus \(\rho _r(z)>0\) for \(r \in (0,\infty )\). Moreover, since \(\rho _r(z) > 0\) for \(r \in (0,\infty )\) we deduce \(\rho _0(z) > 0\) by Proposition 5. This finishes the proof. \(\square \)

We next present examples of sharp primal-dual problems. Here we utilize Euclidean norm in the sharpness definition to illustrate the examples. For any other norms, the sharpness condition still holds (upto a constant) by noticing that any norms in finite space are equivalent to each other (See, for example, Theorem 5.36 in [39]). As an illustrating example, we show below that the corresponding norm for PDHG in Proposition 1 is approximately Euclidean (up to a constant):

Proposition 7

Let \(\eta \in (0, 1/{\sigma _{\max }}{(A)})\). If \(\Vert z \Vert ^2 = \Vert x \Vert _2^2 - 2\eta y^{\top } A x + \Vert y \Vert _2^2\), which is the PDHG norm, then

Proof

Lower bounding \(\Vert z \Vert ^2\) gives, \(\Vert z \Vert ^2 \ge \Vert x \Vert _2^2 -2 \eta {\sigma _{\max }}{(A)} \Vert y \Vert _2 \Vert x \Vert _2 + \Vert y \Vert _2^2 = {(1 - \eta {\sigma _{\max }}{(A)})} \Vert z \Vert _2^2 + \eta {\sigma _{\max }}{(A)} (\Vert x \Vert _2 - \Vert y \Vert _2)^2 \ge {(1 - \eta {\sigma _{\max }}{(A)})} \Vert z \Vert _2^2\). Similarly, we have the other side. \(\square \)

3.1 Sharpness of primal-dual problems with bounded feasible regions

Lemma 1

Consider a primal-dual problem (1) with a bounded feasible set \(Z = X \times Y\). Suppose the constraint set Z has diameter R, and Z is equipped with the Euclidean norm. Let \(P(x)=\max _{y\in Y} \mathcal {L}(x,y)\) and \(D(y)=\min _{x\in X} \mathcal {L}(x,y)\) denote the primal objective and the dual objective, respectively. If P(x) is \(\alpha _1\)-sharp in x and D(y) is \(\alpha _2\)-sharp in y, then the primal-dual problem (1) is \(\min \{\alpha _1,\alpha _2\}/R\)-sharp on \(S=Z\).

Proof

Let \(X^{\star }\) and \(Y^{\star }\) denote the minimizers of P(x) and D(x) respectively. Then it holds for any \(z\in Z\) and \(r\le R\) that

where the first inequality utilizes Proposition 5, the first equality is from the definition of P(x) and D(y), the second inequality uses sharpness, and the third inequality uses the triangle inequality. \(\square \)

3.2 Sharpness of bilinear problems

We will find the following well-known Lemma useful. The proof of Lemma 2 appears in the “Appendix A” for completeness.

Lemma 2

Suppose \(\Vert \cdot \Vert \) is the Euclidean norm. Let n and m be positive integers, \(H \in {\mathbb {R}}^{m \times n}\) and \(h \in {\mathbb {R}}^{m}\). Define \(S {:}{=} \{ z \in {\mathbb {R}}^{n}: H z = h \}\). If \(S \ne \emptyset \), then \(\mathbf{dist{}}(S, z) \le \frac{1}{{\sigma _{\min }^+}{(H)}} \Vert H z - h \Vert \).

Lemma 3

Suppose \(\mathcal {L}(x,y)= c^{\top } x - y^{\top } Ax + b^{\top } y\), \(Z={\mathbb {R}}^{m+n}\) and Z is equipped with the Euclidean norm. Suppose there exists a finite solution to (1). Then the primal-dual problem (1) is \(\alpha \)-sharp on Z with \(\alpha ={\sigma _{\min }^+}{(A)}\).

Proof

Since \(\mathcal {L}(x,y) = c^{\top } x - y^{\top } Ax + b^{\top } y\) it holds for any z and \(\hat{z}\) that

Therefore, it holds that

Meanwhile, notice \(Z^{\star }=\{z \in Z \mid F(z)=0\}=\{z \in Z \mid Hz=h\}\), where \(H=\begin{pmatrix} &{} A^{\top }\\ A &{} \end{pmatrix}\) and \(h=\begin{pmatrix} c \\ b \end{pmatrix}\). It then follows from Lemma 2 that

Combining (15) and (16), we arrive at

which finishes the proof. \(\square \)

Remark 1

In the above bilinear example, we see that the primal-dual problem (1) is \(\alpha \)-sharp if and only if \(\Vert F(z)\Vert \) is \(\alpha \)-sharp in the standard sense. Indeed, \(\Vert F(z)\Vert \) being a sharp function is a necessary condition for \(\mathcal {L}(x,y)\) to be sharp for any unconstrained convex-concave primal-dual problem. This can be seen by noticing

and by utilizing the monotonicity of \(\rho _r(z)\) stated in Proposition 5.

3.3 Sharpness of standard linear programming

Consider a generic LP problem:

its dual:

and its Lagrangian form:

Suppose (17) and (18) have feasible solutions. By strong duality we can reduce (17) and (18) to solving the system \(K z \ge h\) where

The term \(\Vert (h - K z)^{+} \Vert \) is a common metric for the termination of algorithms for LP [3]. Lemma 4 shows that this metric can be bounded via the normalized duality gap.

Lemma 4

Let \(\Vert \cdot \Vert \) be the Euclidean norm. Consider the Lagrangian (19), formed from the LP setup. Suppose that there is a finite solution to (19). Then, for all \(R \in (0,\infty )\), \(r \in (0, R]\), and \(z \in W_{R}(0)\) we have

Proof

Consider \(z \in Z\). Let \(v = \begin{pmatrix} (-c+A^{\top }y)^+ \\ b - Ax \end{pmatrix}\) and note that \(F(z) = \begin{pmatrix} c-A^{\top }y \\ Ax - b \end{pmatrix}.\) Recall from (14) that \(\mathcal {L}(x, \hat{y})-\mathcal {L}(\hat{x},y)=F(z)^{\top } (z-\hat{z})\). Therefore, it follows from the definition of \(\rho _r\) that for all \(\hat{z}\in W_{r}(z)\),

Let \(z_1 = z + r \frac{v}{\Vert v\Vert } \), then \(\Vert z_1-z\Vert = r\). Meanwhile we have \((-c+A^{\top }y)^+ \ge 0\), thus \(z_1\in Z\) by noticing \(z\in Z\). Therefore, \(z_1\in W_{r}(z)\) and it follows from (21) that

where the last inequality utilizes the definition of v.

If \(z=0\), let \(z_2=0\in W_{r}(z)\), then we have from (21) that

Otherwise, let \(z_2= z - \min \left\{ \frac{r}{\Vert z \Vert }, 1 \right\} z\), then \(\Vert z_2-z\Vert \le \frac{r}{\Vert z \Vert }\Vert z\Vert \le r\). Meanwhile, we have \(x_2\ge x-x =0\), thus \(z_2\in Z\). Therefore, \(z_2 \in W_{r}(z)\) and it follows from (21) that

Taking the worst case bound in (23) and (24) by noticing that \(0<r\le R\), \(0\le \Vert z\Vert \le R\) and \(\rho _r(z)\ge 0\) yields

Combining (22) and (25), we obtain

where the equality utilizes the fact \(x \ge 0\) and the property of \(\ell _2\) norm. Taking the square root finishes the proof. \(\square \)

Let H(K) be the Hoffman constant [38] of the matrix K in Euclidean norm, i.e., it holds for any \(z \in {\mathbb {R}}^{n+m}\) that

Remark 2

A popular characterization of \(H(A)\) for linear inequalities is (see for example [33, 42, 66])

where \(A_J\) is the matrix with the corresponding rows of \(A\) indexed by J. In terms of the singular values of \(A\), a slightly looser bound would be

which ignores the constraints \(v\in {\mathbb {R}}_+^J\) in (27) and take advantage of the Euclidean norm.

Lemma 5

(Sharpness of linear programming) Let \(R \in (0, \infty )\) and assume (19) has a solution. Then, the primal-dual problem (19) is \(\alpha \)-sharp on the set \(W_{R}({0})\) where \(\alpha =\frac{1}{H(K)\sqrt{1+4R^2}}\).

Proof

For all \(r \in (0, \mathbf{diam{}}(W_{R}(0))] \subseteq (0, 2R]\), by Lemma 4 and (26),

\(\square \)

3.4 Sharpness of ADMM for linear programming

Following Lemma 5, we can show different formulations of LP may also be sharp. In particular, one popular method for LP is using ADMM [65]. Let \(X_U=\{x \in {\mathbb {R}}^{n} \mid Ax=b\}\) and \(X_V=\{x \in {\mathbb {R}}^{n} \mid x\ge 0\}\). Consider the following form of LP:

and its Lagrangian form

Lemma 6

(Sharpness of ADMM for linear programming) Suppose (28) has a solution. Then there exists a matrix \(K'\) and vector \(h'\) such that the set of solutions to (28) are equal to \(\{ z: K' z \ge h' \}\). Let \(z^{\star }\in Z^{\star }\), then it holds for any set \(S(R, z^{\star })=\{z \in Z: \Vert z^{\star }- z \Vert \le R\}\) that the primal-dual problem (28) is \(\alpha \)-sharp where \(\alpha =\frac{1}{ \max \{ \eta ^2, 1/\eta ^2 \} H(K')\sqrt{1+4R^2}}\).

The proof of Lemma 6 appears in Section C and follows the same structure as the proof of Lemma 5.

4 Restart schemes for primal-dual methods

In this section, we present a generic restart scheme for primal-dual methods and show its linear convergence rate for solving sharp primal-dual problems. We discuss two restart schemes: fixed frequency restart and adaptive restarts, where the former requires knowledge of the sharpness constant \(\alpha \) while the latter does not.

Algorithm 1 presents our nested-loop restarted primal-dual algorithm. We initialize with \(z^{0,0}\in Z\), a suitable primal-dual algorithm, and a step-size of the algorithm \(\eta \). At each outer loop, we keep running \(\text {PrimalDualStep}\) until one of the restart conditions holds (to be discussed later). More specifically, at the t-th inner loop of the n-th outer loop, we call PrimalDualStep to update the solution \(z^{n,t}\) and keep track of the target solution \(\hat{z}^{n,t}\) as well as the running average \(\bar{z}^{n,t}\). At the end of each outer loop, we restart the next outer loop from the output of a primal-dual algorithm, namely, the running average \(\bar{z}^{n,\tau ^{n}}\), and store the length of its inner loops as \(\tau ^{n}\). Here we propose two restart schemes:

4.1 Fixed frequency restarts

Suppose we know the sharpness constant \(\alpha \) of the primal-dual problem and an upper bound on C. In this scheme, we break the inner loop and restart the outer loop with a fixed frequency \(t^{\star }\). Namely, we restart the algorithm if

where C and q are the corresponding parameters of a given primal-dual algorithm, as stated in Property 1 and 2. The parameter \(\beta \in (0,1)\) controls when a restart is triggered. The value of \(\beta \) can be tuned to improve both practical performance and the constants in the theoretical bound. Concretely, it follows from Proposition 1, Proposition 3 and Proposition 4 that

-

For PPM with \(\eta \in (0,\infty )\), \(t^{\star }= \bigg \lceil { \frac{4}{\alpha \beta \eta }} \bigg \rceil \);

-

For PDHG with \(\eta \in (0, 1/{\sigma _{\max }}{(A)}]\), \(t^{\star }= \bigg \lceil { \frac{4}{\alpha \beta \eta }} \bigg \rceil \);

-

For EGM with \(\eta \in (0,1/L]\), \(t^{\star }= \bigg \lceil { \frac{10}{\alpha \beta \eta }} \bigg \rceil \);

-

For ADMM, \(t^{\star }= \bigg \lceil { \frac{8}{\alpha \beta }} \bigg \rceil \).

4.2 Adaptive restarts

In practice, it can be non-trivial to compute the sharpness constant \(\alpha \) of a given primal-dual problem. Here we propose an adaptive restart scheme that does not need an estimate of \(\alpha \). In this scheme, we restart when the normalized duality gap has sufficient decay; more specifically, we restart the algorithm if

where the first restart interval length \(\tau ^{0} \in {\mathbb {N}}\) can be selected by the user as a hyperparameter of the algorithm. In practice, one can pick \(\tau ^{0} = 1\) for simplicity.

Although the two restarting schemes (29) and (30) look disconnected, it turns out the restart interval length in (30) is upper bounded by \(t^{\star }\), as shown later in Theorem 2. Furthermore, although the parameter \(\beta \in (0,1)\) seems to play different roles in fixed frequency restart scheme (29) and adaptive restart scheme (30), it determines the contraction of the distance to optimal solution set for both schemes.

The rest of this section presents the linear convergence rate of the two restart schemes stated above. We start from the fixed frequency restart scheme.

Proposition 8 is useful throughout this section to ignore awkward cases in our proofs when \(\Vert \bar{z}^{n,t} - z^{n, 0} \Vert = 0\). For example, technically sharpness (Definition 1) is only defined for \(r > 0\).

Proposition 8

If Property 3 holds and \(\Vert \bar{z}^{n,t} - z^{n, 0} \Vert = 0\) then \(\bar{z}^{n,t} = z^{n, 0} \in Z^{\star }\).

Proof

Property 3 implies \(\rho _0(\bar{z}^{n,t}) = 0\) and therefore by Proposition 6 we get \(\bar{z}^{n,t} \in Z^{\star }\). \(\square \)

Theorem 1

(Fixed frequency restarts) Consider the sequence \(\{ z^{n, 0} \}_{i=0}^{\infty }\), \(\{ \tau ^{n} \}_{i=1}^{\infty }\) generated by Algorithm 1 with the fixed frequency restart scheme, namely, we restart the outer loop if (29) holds. Suppose the (6) satisfies Property 3, and the primal-dual problem (1) is \(\alpha \)-sharp on the set \(W_{R}(z^{0,0})\) with \(R = \frac{q + 2}{1 - \beta } \mathbf{dist{}}(z^{0,0},Z^{\star })\). Then it holds for each outer iteration \(n \in {\mathbb {N}}\cup \{ 0 \}\) that:

Proof

We argue by induction. Note this hypothesis trivially holds for \(n = 0\). Suppose the hypothesis (31) holds for all \(n \le N\). Then it holds that

where the first inequality uses triangle inequality, the second inequality is from Property ii., the third inequality uses the induction hypothesis, and the last inequality is from bounds on sum of a geometric sequence. This implies \(z^{N + 1, 0} \in W_{R}(z^{0,0})\). It then follows that

where the first inequality uses \(\alpha \)-sharpness of the primal-dual problem by choosing \(r=\Vert z^{N+1,0}-z^{N,0}\Vert \) and \(z=z^{N + 1, 0} \in W_{R}(z^{0,0})\) in (13), the second inequality utilizes Property i., the third inequality is from Property ii. by noticing \(z^{N+1,0}=\bar{z}^{t^{\star }}\), and the fourth inequality comes from the definition of \(t^{\star }\). This finishes the proof by induction. \(\square \)

Remark 3

As a direct consequence of Theorem 1, we need \(O(\frac{C}{\alpha }\log (\frac{1}{\epsilon }))\) total iterations of PDHG to find an approximate solution z such that the distance \(\mathbf{dist{}}(z,Z^*)\le \epsilon \).

Remark 4

We comment the proof logic of Theorem 1, and compare it with the standard linear convergence proofs for restart schemes in convex optimization. Consider restarted accelerated gradient descent to minimize a convex, L-smooth, and that grows \(\mu \)-quadraticallyFootnote 6 function f. The standard analysis of restarted accelerated gradient descent can be written as (see, for example, [60])

where the second inequality uses the \(O(1/k^2)\) rate of accelerated gradient descent [61, 62] when the inner loop iteration is larger than \(\hat{t}^{\star }\), and the last inequality is from \(\hat{t}^{\star }= \frac{1}{\beta } \sqrt{\frac{ 2\,L}{\mu }}\). This showcases the (accelerated) linear convergence rate of restarted accelerated gradient descent. Our analysis of Theorem 1 follows from a similar argument: sharpness allows us to transform the sublinear bound on the normalized duality gap \(\rho _{\Vert z^{n+1, 0} - z^{n, 0} \Vert }(z^{n+1, 0})\) (of a classic primal-dual algorithm) to a constant factor contraction in the distance to optimality after \(t^{\star }\) inner iterations. A major difference is that we are able to evaluate the normalized duality gap in our setting, while in convex optimization, it can be non-trivial to evaluate the optimality gap \(f(z^{n+1,0})-f^{\star }\). Indeed, this feature is crucial as we develop the adaptive restarting scheme.

The next theorem presents the linear convergence rate of the adaptive restart scheme:

Theorem 2

(Adaptive restarts) Consider the sequence \(\{ z^{n, 0} \}_{i=0}^{\infty }\), \(\{ \tau ^{n} \}_{i=1}^{\infty }\) generated by Algorithm 1 with the adaptive restart scheme, namely, we restart the outer loop if (30) holds. Suppose (6) satisfies Property 3. Suppose there exists a set \(S\subseteq Z\) such that \(z^{n,0}\in S\) for any \(n\ge 0\), and the primal-dual problem (1) is \(\alpha \)-sharp on the set S. Then it holds for each outer iteration \(n \in {\mathbb {N}}\) that

-

i.

The restart length, \(\tau ^{n}\), is upper bounded by \(t^{\star }\):

$$\begin{aligned} \tau ^{n} \le t^{\star }= \bigg \lceil { \frac{2 C (q+2)}{\alpha \beta }} \bigg \rceil \ ; \end{aligned}$$(33) -

ii.

The distance to primal-dual solution set decays linearly:

$$\begin{aligned} \mathbf{dist{}}(z^{n, 0}, Z^{\star }) \le \beta ^{n} \frac{t^{\star }}{\tau ^{0}}\mathbf{dist{}}(z^{0,0}, Z^{\star }). \end{aligned}$$

Proof

We prove (33) by contradiction. If it is not satisfied, there exists \(n\ge 1\) such that \(\tau ^n>t^{\star }\), and it holds for \(t=t^{\star }\) that

where the first inequality utilizes Property i., the second inequality follows from sharpness of the primal-dual problem (13) by choosing \(r=\Vert z^{n,0} - z^{n-1,0} \Vert \) and \(z=z^{n,0}\in S\), the third inequality utilizes Property ii., and the last inequality is from the definition of \(t^{\star }\). This is a contradiction: the restart condition (30) should have been triggered at \(t = t^{\star }< \tau ^{n}\). Therefore (33) holds.

For ii., it follows from sharpness condition that

where the second inequality recursively utilizes the restarting condition (30), the third inequality is from Property i. and the last inequality utilizes Property ii.. \(\square \)

Remark 5

(Choice of \(\beta \)) From Theorem 2 we can obtain

If we assume \(\frac{\mathbf{dist{}}(z^{0,0}, Z^{\star })}{\mathbf{dist{}}(z^{n,0}, Z^{\star })} \gg t^{\star }/ \tau ^{0}\) and use \(t^{\star }\approx \frac{2 C (q+2)}{\alpha \beta }\) the right hand side of (34) is approximately:

optimizing this with respect to \(\beta \) yields \(\beta = \exp (-1)\).

Compared with the assumptions for fixed frequency restarts (Theorem 1), Theorem 2 additionally requires that \(z^{n,0}\) stays in a set \(S\subseteq Z\), where the primal-dual problem is sharp. Suppose the feasible region Z is bounded, we can simply choose \(S=Z\). However, in the LP example (i.e., Lemma 5) where Z can be unbounded, and the primal-dual problem is sharp only on a bounded region. Below we show that the iterates of restarted PDHG, PPM, EGM and ADMM stay in a bounded region:

Proposition 9

Consider the sequence \(\{ z^{n, 0} \}_{i=0}^{\infty }\), \(\{ \tau ^{n} \}_{i=1}^{\infty }\) generated by Algorithm 1 with proper step-size \(\eta \) (see Table 1) and \(\beta \in (0,1)\). For restarted PDHG or restarted PPM, there exists a constant \(R >0\) such that \(z^{n,0} \in W_{R}(z^{0,0})\) for all \(n \in {\mathbb {N}}\).

Proof

For PDHG and PPM, the proof uses the non-expansiveness property of \(\hat{z}^{t,n}=z^{t,n}\) (see Proposition i). In particular, let \(z^{\star }\in {\mathop {\textrm{argmin}}\limits _{z \in Z^{\star }}} \Vert z - z^{0,0} \Vert \), then it holds for any n, t that

where the first inequality is from triangle inequality and the second inequality uses Proposition i. Thus, we have

whereby \(\Vert z^{\star }- z^{n,0} \Vert \le \Vert z^{\star }- z^{0,0} \Vert \) by induction. It then follows from the triangle inequality that

which concludes the proof for PDHG and PPM with \(R=2 \mathbf{dist{}}(z^{0,0}, Z^{\star })\). \(\square \)

Proposition 10

Consider the sequence \(\{ z^{n, 0} \}_{i=0}^{\infty }\), \(\{ \tau ^{n} \}_{i=1}^{\infty }\) generated by Algorithm 1 with the adaptive restart scheme, namely, we restart the outer loop if (30) holds. If \(0< \beta < \frac{1}{q+3}\) then, there exists a constant \(R >0\) such that \(z^{n,0} \in W_{R}(z^{0,0})\) for all \(n \in {\mathbb {N}}\) in the following settings:

The proof of Proposition 10 is more technical than Proposition 9 because \(\hat{z}^{t,n}=z^{t,n}\) no longer holds, and we defer it to “Appendix D”.

5 Tight convergence results for bilinear problems

Bilinear problems are a special case of LP where there are no nonnegativity or inequality constraints. Moreover, in many situations the asymptotic behaviour of primal-dual methods applied to LP is equivalent to applying the method to a bilinear problem. In particular, if after a certain number of iterations the set of active variablesFootnote 7 does not change, then the subset of the matrix, objective, and right hand side corresponding to this active set defines such a bilinear subproblem. Recent results [44, 46] show that for many primal-dual algorithms, including PDHG and ADMM, if the problem is non-degenerateFootnote 8 then after a finite number of iterations the active set freezes. See [44] for a differential geometry argument for this phenomenon. Thus, the convergence of methods for bilinear problems can characterize their asymptotic behavior on nondegenerate LP problems.

Already we have some basic results in this setup. In particular, Lemma 3, and Proposition 7 imply that the primal-dual problem is \(\Omega ({\sigma _{\min }^+}{(A)})\)-sharp on bilinear problems with respect to the PDHG norm for \(\eta \le 1/(2{\sigma _{\max }}{(A)})\). Combining the sharpness result with Table 1, and Theorem 1 (or Theorem 2 with a small loss in the log term) implies that after

total matrix–vector multiplications restarted PDHG finds a point \(z^{n, 0}\) satisfying

The remainder of this section proceeds as follows. First we present a lower bound showing that, up to a constant factor, restarted PDHG yields the best convergence rates for solving bilinear problems. Next, we show tight convergence bounds for the average and last iterate of PDHG which we use to demonstrate our restarted PDHG convergence rate represents a strict improvement over the latter two methods. Finally, we compare the performance of other methods from literature for solving bilinear problems.

5.1 Lower bounds for primal-dual algorithms

In this subsection, we present a lower bound for solving a sharp primal-dual problem (1) demonstrating that restarting gives the best possible worst-case convergence bound for a large class of primal-dual methods. First, we review related lower bounds for first-order unconstrained function minimization.

Definition 2

An algorithm is span-respecting for a minimization problem \(\min _{x \in {\mathbb {R}}^{m}} f(x)\) if

for all \(t \in {\mathbb {N}}\).

Span-respecting algorithms cover a large number of unconstrained optimization algorithms including gradient descent, conjugate gradient, and accelerated gradient descent. As is well-known one can form a lower bound using convex quadratics for these types of algorithms as given in Theorem 3.

Theorem 3

(Theorem 2.1.12 of [61]) For all \(\gamma _{\max }> \gamma _{\min } > 0\), \(m \in {\mathbb {N}}\), there exists a positive definite matrix \(H \in {\mathbb {R}}^{m \times m}\) and vector \(h \in {\mathbb {R}}^{m}\) such that \(\sigma _{\max }(H) = \gamma _{\max }\), \(\sigma _{\min }(H) = \gamma _{\min }\), and any span-respecting unconstrained minimization algorithm for solving

satisfies for \(t < m\) that

Our goal is to supply a similar result to Theorem 3 but in the primal-dual setting.

Definition 3

An algorithm is span-respecting for a unconstrained primal-dual problem \(\max _{y \in {\mathbb {R}}^{n}} \min _{x \in {\mathbb {R}}^{n}} \mathcal {L}(x, y)\) if:

Definition 3 is analogous to Definition 2 but in the primal-dual setting. If \(\mathcal {L}\) is bilinear then with appropriate indexing of their iterates, primal-dual algorithms including primal-dual hybrid gradient, extragradient and their restarted variants satisfy Definition 3. Corollary 1 provides a lower bound on span-respecting primal-dual algorithms. The proof is by reduction to Theorem 3.

Corollary 1

Let \(\Vert \cdot \Vert \) be the Euclidean norm. For all \(\alpha , C \in (0, \infty )\), \(m \in {\mathbb {N}}\), there exists a matrix \(A \in {\mathbb {R}}^{m \times m}\) and vector \(b \in {\mathbb {R}}^{m}\) such that

is \(\alpha \)-sharp on \({\mathbb {R}}^{m \times m}\), C-smooth and any span-respecting unconstrained primal-dual algorithm satisfies

for \(t < m\).

Proof

Consider initial solution \(y^0 = x^0 = \textbf{0}\), where A and b. Then, for any span-respecting primal-dual method satisfies

thus it holds that

Let \(\gamma _{\max } = C^2\), \(\gamma _{\min } = \alpha ^2\), and consider the positive definite matrix H and vector h supplied by Theorem 3. Define \(A = H^{1/2}, \quad b = H^{-1/2} b\). Note \(H^{1/2}\) exists and is positive definite as H is positive definite. Also, note that the unique solution to the primal-dual problem is \(x^{\star }= A^{-1} b\) and \(y^{\star }= A^{-1} \textbf{0} = \textbf{0}\) with \(x^{\star }\) matching the optimal solution to (35). Then by (37) we have \(x^{t} \in {\textit{Span}}\left\{ H x^{0} - h, \dots , H x^{t-1} - h\right\} \) which implies \(x^{t}\) is span-respecting for (35). Applying the iteration bound of Theorem 3 and using that \(y^{\star }= y^{0} = \textbf{0}\) yields the result. \(\square \)

Therefore we have established that the convergence bounds of our restart schemes (Theorems 1 and 2) matches the lower bound (Corollary 1) up to a constant. In other words, a restarted method gives the best worst-case bounds.

5.2 Tight bounds for the average and last iterate

Previous section shows that our restart schemes match the lower bound (up to a constant) for solving sharp primal-dual problems. However, at this point it is unclear if standard approaches, e.g., the last and average iterate do not also obtain this worst-case bound with a careful analysis for sharp primal-dual problems. In this section, we show for bilinear problems that the last iterate and the average iterate of PDHG cannot match convergence bounds of restarted PDHG. Such statement is genuinely the case for other primal-dual first-order methods with a similar arguments.

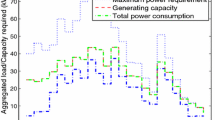

Simple empirical tests support the hypothesis that the restarted PDHG is geninuely faster than the average or last iterate. In particular, Fig. 1 plots the last iterate (\(z_t\)) and the average iterate (\(\bar{z}_t\)) of both non-restarted PDHG (i.e., PDHG without restarting) and restarted PDHG (with fixed frequency) for solving a simple two-dimensional bilinear problem \(\mathcal {L}(x,y)=x y\) with step-size \(\eta =0.2\). As we can see, the average solution of the restarted algorithm has faster convergence to the primal-dual solution (0, 0) than the average or last iterate of PDHG.

We can formalize this improvement from restarted PDHG. Table 2 compares the complexity of the last iterate of PDHG, the average iterate of PDHG, and restarted PDHG on bilinear problems. From the table we can see that only the last iterate achieves linear convergence and its linear convergence constant is a square root factor worse than restarted PDHG. We expect similar results would also apply to other primal-dual algorithms, such as EGM. The remainder of this subsection is devoted to proving the results in Table 2.

Consider an bilinear problem with \(\mathcal {L}(x,y)=y^{\top } A x\). Without loss of generality, we can assume A is a diagonal matrix with entries \(0<\sigma _1\le \cdots \le \sigma _m\) and \(x,y\in {\mathbb {R}}^m\) (See the beginning of the proof of Theorem 6 in Applegate et. al [6] for an explanation.)

The unique optimal solution to this primal-dual problem is (0, 0). PDHG with step-size \(\eta \le \frac{1}{\sigma _m}\) has iterate update

where \(z^t=\begin{pmatrix} x^t \\ y^t \end{pmatrix}\). We study the convergence of three sequences: the last iterates of PDHG (\(z^t\)), the average iterates of PDHG (\(\bar{z}^{K}=\frac{1}{K}\sum _{t=1}^{K} z^t\)) and the solution \(\bar{z}^{n,t}\) obtained in our restart scheme with PDHG as a subroutine.

Notice the PDHG dynamic (38) is separable over coordinates, and there are m independently evolving 2-d linear dynamical systems given by:

for \(i = 1, \dots , m\) where \(z_i=\begin{pmatrix} x_i \\ y_i \end{pmatrix}\)and \(P_i=\begin{pmatrix} 1 &{} -\eta \sigma _i\\ \eta \sigma _i &{} 1-2\eta ^2\sigma _i^2 \end{pmatrix}\). Let \(P_i=Q_i\Gamma _i Q^{-1}_i\) be the eigen-decomposition of \(P_i\), where

and \(\textbf{i}\) denotes the imaginary part of a complex number.

Moreover, with \(\tilde{z}_i^t = Q_i^{-1} z_i^{t}\), \(B_i = (Q_i^{-1})^\dagger Q_i^{-1}\) and \(\Vert v \Vert _M = \sqrt{v^\dagger M v}\), where \(\dagger \) is the conjugate transpose, we get

where the second to last equality uses

Moreover, note that

with eigenvalues \(2 \pm 2 \sigma _i \eta \). Therefore, as \(\eta \in [0, 1/(2 \sigma _m)]\) we get \(1 \le 2 \pm 2 \sigma _i \eta \le 3\), which implies

for all \(v \in \mathcal {C}^{n}\) where \(\mathcal {C}\) is the set of complex numbers and the implication uses that \(B_i Q_i Q_i^\dagger = {{\textbf {I}}}\).

By (42) we get \(\frac{1}{3} \Vert z^t-z^{\star }\Vert _{2}^2 \le \sum _{i=1}^{n} \Vert z_i - z_i \Vert _{B_i}^2 \le \Vert z^t-z^{\star }\Vert _{2}^2\) and therefore (40) implies that we reach a solution \(z^t\) such that

within \(O\left( \left( \frac{1}{\eta \sigma _1}\right) ^2 \log \left( \frac{1}{\epsilon }\right) \right) \) iterations.

From (42) and (40) we deduce that

Therefore by \(\sigma _1 \le \sigma _m\) and \(\eta = 1 / (2 \sigma _m)\) we get \(\Omega \left( \left( \frac{\sigma _m}{\sigma _1}\right) ^2 \log \left( \frac{1}{\epsilon }\right) \right) \) as a lower bound for the last iterate convergence of PDHG.

Define \(\Vert M \Vert _2 {:}{=} \sup _{\Vert v \Vert _2 = 1} \Vert M v \Vert _2\) be the \(\ell _2\) norm of a matrix M. Now we study the solution of the average iterates \(\bar{z}^K=\frac{1}{K}\sum _{t=1}^{K} z^t\), and we claim \(\bar{z}^K\) converges to the unique optimal solution \(z^{\star }=0\) with sublinear complexity \(\Omega (\frac{\sigma _m}{\sigma _1 \epsilon })\). Notice that

where the second inequality uses

and the third inequality utilizes \(\Vert \Gamma _i\Vert _2\le 1\). Setting \(\eta = \frac{1}{2 \sigma _m}\) and using (42) demonstrates \(\bar{z}^t\) converges to \(z^{\star }\) in \(O\left( \frac{\sigma _m}{\sigma _1 \epsilon }\right) \) iterations, which is consistent with the ergodic rate of PDHG.

We will now establish a matching  lower bound for the average iterate. Observe that

lower bound for the average iterate. Observe that

where the third inequality uses the fact that \((1 - a)^b \le \frac{1}{1 + b a}\) for \(a \ge 0\), \(b \ge 0\) (to see this note that \(f(a,b) = (1 - a)^b (1 + b a)\) satisfies \(f(0,b) = 1\) and \(\frac{\partial f}{\partial a} \le 0\)). Therefore,

where the second equality uses that the absolute value is completely multiplicative, the second inequality uses (41), (44) and (45) and the final inequality uses \(\eta = \sigma _m / 2\). This establishes the lower bound for the average iterate. We note that this lower bound only matches the upper bound for \(K = \Omega (\frac{1}{\eta ^2 \sigma _1^2})\). We believe matching the upper bound across all K is possible but the analysis appears to be much more technical.

5.3 Comparison with other methods

Mokhtari et al. [56] study EGM and the optimistic gradient descent, in this setting they show an

bound for both methods on bilinear problems (recall \(\sigma _{\min }(A^{\top } A) = \sigma _{\min }(A)\) and \(\sigma _{\max }(A^{\top } A) = {\sigma _{\max }}{(A)}\)). As expected, this matches the last iterate performance of PDHG.

We can use other methods to solve bilinear problems. One approach is reduce the bilinear game to a pair of nonsmooth optimization problems:

Each of these problems is \({\sigma _{\min }^+}{(A)}\)-sharp (Lemma 2) and \({\sigma _{\max }}{(A)}\)-Lipschitz. Therefore according to [78, Corollary 9] restarted subgradient method requires

iterations on each problem to find an approximate solution satisfying \(\frac{\Vert A x - b \Vert _2}{\Vert A x_{0} - b \Vert _2} \le \varepsilon \) and \(\frac{\Vert A^{\top } y - c \Vert _2}{\Vert A^{\top } y_{0} - c \Vert _2} \le \varepsilon \). Another approach is to reformulate solving bilinear game as a pair of smooth unconstrained problems:

One can apply conjugate gradient or accelerated gradient descent to each of these subproblems. The well-known convergence bounds for these methods [61] imply that they have the following iteration bounds

which matches the bounds we obtained by restarted PDHG. We also point the reader to Gilpin et al. [29], who obtain similar results to ours in the LP (with bounded feasible region) and bilinear setting. The main difference is that they modify Nesterov’s smoothing algorithm for nonsmooth convex optimization [59], which is a primal method, whereas we combine restarts with primal-dual methods to achieve our result.

6 Efficient computation of the normalized duality gap

6.1 Separable norm

This subsection shows that the normalized duality gap can be computed efficiently under the mild assumption that the norm is separable:

To evaluate (4a) we need to find a solution to

Recall that due to Proposition 8 solving (4b) is unnecessary for adaptive restarts.

Solving (46) can be done using standard methods which we briefly outline here. By the assumption that \(\mathcal {L}\) is convex-concave and by duality, there exists a \(\lambda \in [0,\infty ]\) such that (46) is equivalent to

For any \(\lambda \in (0,\infty )\), define

where \(\bar{z}(\tau )\) is the unique solution to (47) (the solution is unique because \(\mathcal {L}\) is convex-concave which means (47) is strongly concave). Observe the function \(h(\lambda )\) is nonincreasing. If \(h(\lambda ) < 0\) for all \(\lambda \in (0,\infty )\) then the solution to (47) with \(\lambda = 0\) that is closest to z solves (46) (and can be approximated by picking an arbitrarily tiny \(\lambda \)). If \(h(\lambda ) > 0\) for all \(\lambda \in (0,\infty )\) then \(r = 0\), and the optimal solution to (46) is \(\hat{z}= z\). The final possibility is that there exist \(\lambda , \lambda ' \in (0,\infty )\) such that \(h(\lambda )< 0 < h(\lambda ')\) in which case there exists a root of h which we can find using a standard root finding methods such as the bisection method. This root corresponds to the optimal solution of (46).

Problem (47) is separable into

which is equivalent to the subproblem iterations for PPM, PDHG, and ADMM (Sect. 2). Even if this root finding only requires evaluating (48) a small number of times it could significantly increase the cost of running the algorithm. Therefore, it makes sense to only test condition (30) at fixed intervals with large separation, e.g., every 200 iterations.

6.2 Primal-dual hybrid gradient

Section 6.1 considered the case where \(\Vert \cdot \Vert \) is seperable. However, the (natural) norm that we previously used to analyze PDHG is \(\Vert z \Vert = \sqrt{ \Vert x \Vert _2^2 - 2 \eta y^{\top } A x + \Vert y \Vert _2^2}\) which is not separable. Fortunately, the following Corollary shows that Property 3 also holds for PDHG with the Euclidean norm. This allows us the possibility of computing \(\rho _r\) with \(\Vert \cdot \Vert \) being the Euclidean norm and retaining our theoretical guarantees. Indeed this is how we implement the adaptive restart scheme for PDHG in Sect. 7.

Corollary 2

For PDHG with \(\eta \in (0, 1/{\sigma _{\max }}{(A)})\), Property 3 holds when \(\Vert \cdot \Vert \) is the Euclidean norm, \(C = \frac{2}{\eta (1 - \eta {\sigma _{\max }}{(A)})}\) and \(q = 4 \frac{1 + \eta {\sigma _{\max }}{(A)}}{1 - \eta {\sigma _{\max }}{(A)}}\).

The proof uses that Property 3 holds for the PDHG norm, and Proposition 7 which establishes that \(\Vert z \Vert \) is approximately Euclidean. The proof appears in “Appendix E”.

By Proposition 9 we also know that the iterates will remain bounded even after this change of norm. Therefore, using Lemma 5 we have established the premise of Theorem 2 for PDHG with the Euclidean norm on LP problems.

6.3 Linear time implementation for linear programming

This subsection shows that we can more efficiently compute the normalized duality gap in the LP setting.

6.3.1 Linear time algorithm for linear trust region problems

The key ingredient to the linear time computation of the normalized duality gap is a linear time algorithm for linear trust region problems. In particular, consider a trust region problem with a linear objective g, center z, Euclidean norm, and lower bounds l (possibly infinite) on the variables:

If \(g_i < 0\) then setting \(g_i = -g_i\) and \(l_i = -\infty \) will create an equivalent problem satisfying \(g_i > 0\). Therefore without loss of generality we can assume \(g_i > 0\) for all i.

The optimal \(\hat{z}\) will be of the form

for some \(\lambda \in [0,\infty )\), so (49) reduces to the univariate problem

Letting \(\hat{\lambda }_i\) be the value of \(\lambda \) at which \(\hat{z}(\lambda )_i\) encounters the bound, that is, \(\hat{\lambda }_i {:}{=} (z_i - l_i) / g_i\), we have

This forms the basis for a linear time algorithm: by computing (52) for the median value \(\lambda _{\text {med}}\) of \(\hat{\lambda }_i\) (in linear time [9]), and comparing it with \(r^2\), we determine whether or not the optimal \(\lambda \) for (50) is greater or less than \(\lambda _{\text {med}}\). This lets us eliminate half of the \(\hat{\lambda }_i\), collapsing their contributions into the appropriate summand. Applying this recursively gives a running time recurrence \(f(n+m) = O(n+m) + f((n+m)/2)\), i.e., \(f(n+m) = O(n+m)\). Details are in “Appendix F”.

6.3.2 Extragradient and primal-dual hybrid gradient

Recall that for extragradient the norm for defining \(\rho _r\) is Euclidean. For LP with extragradient (Sect. 3.3), we use the Lagrangian:

for which the trust region problem (46) is equivalent to

which is a trust region problem with a linear objective, Euclidean norm, and lower bounds on variables (the dual lower bounds are \(-\infty \)). Given \(y^{\top } A\) and \(Ax\), this can be solved in time \(O(n+m)\) using the algorithm in Sect. 6.3.1. The same result hold for PDHG with Euclidean norm as described in Corollary 2.

6.3.3 ADMM

Recall for ADMM for LP (Lemma 6) that

and \(\Vert \cdot \Vert = \Vert \cdot \Vert _M\) with

Therefore, for \(Q_r(x_{V}, y) = \{ (\hat{x}_{V}, \hat{y}): \hat{x}_{V} \ge 0, { \eta \Vert x_V - \hat{x}_{V} \Vert _2^2 + \frac{1}{\eta } \Vert y - \hat{y}\Vert _2^2 } \le r^2 \}\) we have

where the first equality follows from definition of the normalized duality gap, the second equality uses the definition of M and \(W_{r}(z)\), and the third equality uses that \(\bar{y}^t \cdot \hat{x}_{U} = \bar{y}^t \cdot \bar{x}_U\). To see this claim that \(\bar{y}^t \cdot \hat{x}_{U} = \bar{y}^t \cdot \bar{x}_U\) note that \(\bar{x}^t_U \in X_{U} = \{ x: A x = b\}\) and that if \(\bar{y}^t \cdot \hat{x}_{U} \ne \bar{y}^t \cdot \bar{x}^t_{U}\) for \(\hat{x}_{U} \in X_{U}\) then \(\bar{x}^t_{U} + \alpha (\hat{x}_{U} - \bar{x}^t) \in X_{U}\) for all \(\alpha \in {\mathbb {R}}\) and either \(\lim _{\alpha \rightarrow \infty } \bar{y}^t \cdot ( \bar{x}^t_{U} + \alpha (\hat{x}_{U} - \bar{x}^t) ) = \infty \) or \(\lim _{\alpha \rightarrow -\infty } \bar{y}^t \cdot ( \bar{x}^t_{U} + \alpha (\hat{x}_{U} - \bar{x}^t) ) = \infty \), this contradicts that \(\rho _r(\bar{z}^t) < \infty \) (Proposition 4).

Equation (55) is again a trust region problem with a linear objective, Euclidean norm, and bounds on the variables, so can be solved in linear time using the algorithm from Sect. 6.3.1.

7 Numerical experiments

As validation of the linear convergence rates predicted by Theorems 1 and 2, we implemented and tested the proposed restart schemes for PDHG, EGM and ADMM applied to LP. Indeed, for our test problems we are able to achieve very high accuracy solutions, commensurate with the accuracy expected from second-order methods on LP problems. The experiments also show that our adaptive scheme is competitive with the best fixed frequency restart scheme without any need for hyperparameter search. This section primarily covers the results for PDHG. Additional details and results for EGM and ADMM are presented in “Appendix G”. The code for the numerical experiments is publicly available at https://github.com/google-research/google-research/tree/master/restarting_FOM_for_LP.

We select four LP instances, qap10, qap15, nug08-3rd, and nug20, from the Mittelmann collection set [55], a standard benchmark set for LP. These four problems are relaxations of quadratic assignment problems [70], a classical NP-hard combinatorial optimization problem. These instances are known to be challenging for traditional solvers and amenable to first-order methods [28]. The instance sizes are given in Table 3.

For the step size of PDHG we use \(\eta = 0.9 {\sigma _{\max }}{(A)}\) which ensures the theoretical bounds for PDHG hold, where \({\sigma _{\max }}{(A)}\) is estimated using power iteration.

It is known that a major factor in the practical performance of PDHG is the relative scaling of the primal and dual [14, 31]. We call this the primal weight (\(\omega \)). It can be viewed as a scaling factor on the objective value, i.e., setting \(c_{\text {new}} = c / \omega ^2\), or as the relative step size for the primal and dual, i.e., the primal step size is \(\eta / \omega \) and the dual step size is \(\eta \omega \). Before running PDHG with restarts on each of these problems we estimate the optimal primal weight by running the non-restarted PDHG algorithm for 5000 iterations across the range \(\omega \in \{ 4^{-5}, 4^{-4}, 4^{-3}, 4^{-2}, 4^{-1}, 1, 4^{1}, 4^{2}, 4^{3}, 4^{4}, 4^{5} \}\). We then choose the \(\omega \) value with the smallest value of the KKT error \(\Vert (K z - h)^+ \Vert _2\) where K and h are defined as per (20). We use this \(\omega \) value for our experiment across all restart schemes.

On these problems we run PDHG with: no restarts, fixed frequency restarts with different restart lengths, and adaptive restarts (where the norm used for computing the normalized duality gap is Euclidean as described in Sect. 6.3.2). Fixed restart lengths of \(4^{1}, 4^2, \dots , 4^9\) were tested and the best three restart lengthsFootnote 9 were plotted for each problem. In Fig. 2, we plot the value of the normalized duality gap against the number of iterations for each of the method where the input radius for the normalized duality gap is equal to the distance traveled since the last restart (for no restarts this is the starting point). For the adaptive restarts we use \(\beta = \exp (-1)\) as per Remark 5 and only evaluate (30) every 30 iterations to ensure the cost of evaluating the normalized duality gap is negligible. There are a few consistent patterns. One is that often a few iterations after a restart, the normalized duality gap spikes and then falls quickly below its previous value. This is particularly apparent for nug20. Another observation is that after the active set is identified, the linear convergence is often apparent in the plot. However, for qap10, qap15, and nug20, restarted PDHG outperforms non-restarted PDHG, much earlier than when the active set freezes.

Plots show normalized duality gap (in \(\log \) scale) versus number of iterations for restarted PDHG. Results from left to right, top to bottom for qap10, qap15, nug08-3rd, and nug20. Each plot compares no restarts, adaptive restarts, and the three best fixed restart lengths. For the restart schemes we evaluate the normalized duality gap at the average, for no restarts we evaluate it at the last iterate as the average performs much worse. A star indicates the last iteration that the active set changed before the iteration limit was reached. Series markers indicate when restarts occur