Abstract

Many fundamental \(\mathsf {NP}\)-hard problems can be formulated as integer linear programs (ILPs). A famous algorithm by Lenstra solves ILPs in time that is exponential only in the dimension of the program, and polynomial in the size of the ILP. That algorithm became a ubiquitous tool in the design of fixed-parameter algorithms for \(\mathsf {NP}\)-hard problems, where one wishes to isolate the hardness of a problem by some parameter. However, in many cases using Lenstra’s algorithm has two drawbacks: First, the run time of the resulting algorithms is often double-exponential in the parameter, and second, an ILP formulation in small dimension cannot easily express problems involving many different costs. Inspired by the work of Hemmecke et al. (Math Program 137(1–2, Ser. A):325–341, 2013), we develop a single-exponential algorithm for so-called combinatorial n-fold integer programs, which are remarkably similar to prior ILP formulations for various problems, but unlike them, also allow variable dimension. We then apply our algorithm to many relevant problems problems like Closest String, Swap Bribery, Weighted Set Multicover, and several others, and obtain exponential speedups in the dependence on the respective parameters, the input size, or both. Unlike Lenstra’s algorithm, which is essentially a bounded search tree algorithm, our result uses the technique of augmenting steps. At its heart is a deep result stating that in combinatorial n-fold IPs, existence of an augmenting step implies existence of a “local” augmenting step, which can be found using dynamic programming. Our results provide an important insight into many problems by showing that they exhibit this phenomenon, and highlights the importance of augmentation techniques.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The Integer Linear Programming (ILP) problem is fundamental as it models many combinatorial optimization problems. Since it is NP-complete, we naturally ask about the complexity of special cases. A fundamental algorithm by Lenstra from 1983 shows that ILPs can be solved in polynomial time when their number of variables (the dimension) d is fixed [52]; that algorithm is thus a natural tool to prove that the complexity of some special cases of other NP-hard problems is also polynomial.

A systematic way to study the complexity of “special cases” of NP-hard problems was developed in the past 25 years in the field of parameterized complexity. There, the problem input is augmented by some integer parameter k, and one then measures the problem complexity in terms of both the instance size n as well as k. Of central importance are algorithms with run times of the form \(f(k) n^{{\mathcal {O}}(1)}\) for some computable function f, which are called fixed-parameter algorithms. The key idea is that the degree of the polynomial does not grow with k. For background on parameterized complexity, we refer to the monograph [13].

Kannan’s improvement [42] of Lenstra’s algorithm runs in time \(d^{{\mathcal {O}}(d)} \langle I \rangle ^{{\mathcal {O}}(1)}\), where \(\langle I \rangle \) is the binary encoding length of the instance, which is thus a fixed-parameter algorithm for parameter d. Gramm et al. [33] pioneered the application of Lenstra’s and Kannan’s algorithm in parameterized complexity: they modeled Closest String with k input strings as an ILP of dimension \(k^{{\mathcal {O}}(k)}\), and thereby concluded with the first fixed-parameter algorithm for Closest String. This success led Niedermeier [59] to propose in his book:

[...] It remains to investigate further examples besides Closest String where the described ILP approach turns out to be applicable. More generally, it would be interesting to discover more connections between fixed-parameter algorithms and (integer) linear programming.

Since then, many more applications of Lenstra’s and Kannan’s algorithm for parameterized problems have been proposed. However, essentially all of them, e.g. [8, 16, 23, 37, 49, 58], share a common trait with the algorithm for Closest String: they have a double-exponential run time dependence on the parameter. This is because solving an ILP takes time exponential in the number of variables, and these algorithms amount to solving ILP formulations with exponentially many variables. Moreover, it is difficult to find ILP formulations with small dimension for problems whose input contains many objects with varying cost functions, such as in Swap Bribery [6, Challenge #2].

1.1 Our contributions

We show that a certain form of ILP, which is closely related to the previously used formulations for Closest String and Swap Bribery and other problems, can be solved in single-exponential time, even if the dimension is allowed to be variable. For example, Gramm et al.’s [33] algorithm for Closest String runs in time \(2^{2^{{\mathcal {O}}(k \log k)}} {\mathcal {O}}(\log L)\) for k strings of length L and has not been improved since 2003, while our algorithm runs in time \(k^{{\mathcal {O}}(k^2)} {\mathcal {O}}(\log L)\). Moreover, our algorithm has a strong combinatorial flavor and is based on different notions than are typically encountered in parameterized complexity, most importantly so-called augmenting steps (cf. Sect. 1.3). We note that all formal definitions are postponed until or repeated in Sect. 2.

As an example of our form of ILP, its motivation and connections to earlier formulations, consider the Closest String problem. We are given k strings \(s_1, \dots , s_k\) of length L that come (after some preprocessing) from the alphabet \([k]{:}{=}\{1,\ldots ,k\}\), and an integer d. The goal is to find a string \(y \in [k]^L\) such that, for each \(s_i\), the Hamming distance \(d_H(y, s_i)\) between y and \(s_i\), i.e., the number of positions on which y and \(s_i\) differ, is at most d, if such y exists.

Arranging the input strings in an \(k \times L\) matrix whose ith row is \(s_i\), we have that, for \(j \in [L]\), \((s_1[j], \dots , s_k[j])\) is the jth column of this input representation. Clearly, there are at most \(k^k\) different column types in the input, and we can represent the input succinctly with multiplicities \(b^{{\mathbf{f }}}\) of each column type \({\mathbf{f }}\in [k]^k\). Moreover, there are k choices for the output string y in each column. Thus, we can encode the solution by describing, for each column type \({\mathbf{f }}\in [k]^k\) and each output character \(e \in [k]\), how many solution columns are of type \(({\mathbf{f }}, e)\) with a variable \(x_{{\mathbf{f }}, e}\). This is the basic idea behind the formulation of Gramm et al. [33], as depicted on the left:

The formulation on the right is obtained from the one on the left by copying each variable once for each \({\mathbf{f }}\in [k]^k\), and “turning off” all variables \(x^{{\mathbf{f }}'}_{{\mathbf{f }},e}\) with \({\mathbf{f }}' \ne {\mathbf{f }}\). In other words, the columns of the formulation on the left are a subset of the columns on the right, and variables corresponding to columns which only exist in the formulation on the right are set to zero.

Let \((1~\ldots ~1) = {\mathbf {1}}^{\intercal }\) be a row vector of all ones. Alternatively, we can view the above as

where \(D = (D_1~D_2~\dots ~D_{k^k})\) and \({\mathbf{d }}= (d \ldots d)^{\intercal } \in \mathbb {Z}^{k}\). Our motivation for the formulation on the right is that it has the nicely uniform structure

with \(D \in \mathbb {Z}^{r \times t}\), \(A \in \mathbb {Z}^{s \times t}\), and containing \(n=k^k\) blocks D and A. Integer programming (IP) with constraint matrix of the form (1) is known as n-fold integer programming. An algorithm of Hemmecke et al. [36] solves n-fold IP in time \(\varDelta ^{(rst + t^2s)} n^3 \langle I \rangle \), where \(\varDelta = 1+\left\| E^{(n)}\right\| _\infty \). However, since the ILP on the right for Closest String has \(t = k^k\), applying this algorithm gives no advantage over applying Lenstra’s algorithm to solve the Closest String problem.

We overcome this impediment by harnessing the special structure of the ILP for Closest String. Observe that its constraint matrix A has the form

We call any n-fold IP with this matrix A a combinatorial n-fold IP. Our main result is a fast algorithm for combinatorial n-fold IPs, which is exponentially faster in t than previous works for general n-fold IPs.

Theorem 1

(simplified) Any combinatorial n-fold IP of size L can be solved in time \(t^{{\mathcal {O}}(r)} (\varDelta r)^{{\mathcal {O}}(r^2)} {\mathcal {O}}(n^3 \langle I \rangle ) + {{\,\mathrm{\mathrm{poly}}\,}}(n,\langle I \rangle )\), where \(\varDelta = 1 + \Vert D\Vert _\infty \).

Observe that, when applicable, our algorithm is not only asymptotically faster than Lenstra’s, but provides a fixed-parameter algorithm even if the dimension is variable and not a parameter. Moreover, note that n-fold IP is allowed to have not only a linear objective function, but also a separable convex objective function. Therefore, while Theorem 1 does indeed show that certain ILPs can be solved in single-exponential time, it also shows that certain IPs with more general objective functions can also be solved efficiently.

1.2 Applications

We apply Theorem 1 to several fundamental combinatorial optimization problems, for which we obtain exponential improvements in the parameter dependence, the input length, or both. For a summary of results, see Table 1; this list is not meant to be exhaustive. In fact, we believe that for any Lenstra-based result in the literature which only achieves double-exponential run times, there is a good chance that it can be sped up using our algorithm. The only significant obstacles seem to be either large coefficients in the constraint matrix or an exponential number of rows of the matrix D.

We discuss applications in detail in Sect. 4, which is structured as follows. We first show (Sect. 4.1) it is possible to use inequalities (and not only equations) and discuss the representation of the input (Sect. 4.2). In Sect. 4.3 we use the Weighted Set Multicover problem (which has applications for example in graph algorithms and computational social choice) as a detailed example of modeling a problem as a combinatorial n-fold IP. Then, in Sects. 4.4 and 4.5 we discuss many variations on the Closest String and Bribery problems, respectively, and give combinatorial n-fold IP formulations for them. In Sect. 4.6 we discuss the Huge n-fold IP problem. Finally, in Sect. 4.7, we show that many other ILP formulations in the literature have a format very close to combinatorial n-fold IP and can in fact be modeled as one. Together, we obtain exponential speed-ups for all of the discussed problems.

1.3 Comparison with Lenstra’s algorithm

The basic idea behind Lenstra’s algorithm is the following. Given a system \(A {\mathbf{x }}\le {\mathbf{b }}\) it is possible to compute the volume of the polyhedron it defines and determine that it is either too large not to contain an integer point, or too small not to be flat in some direction. In the first case we are done. In the second case we can take d slices of dimension \(d-1\) and recurse into them, achieving a \(d^{{\mathcal {O}}(d)} \langle I \rangle ^{{\mathcal {O}}(1)}\) runtime. Note that we only decide feasibility and optimization can be then done by binary search. On the other hand, the basic idea behind our algorithm is the following. We only focus on optimizing and later show that testing feasibility reduces to it. Starting from some feasible solution, the crucial observation is that if there is a step improving the objective, there is one which does not modify many variables, and can be found quickly by dynamic programming. Moreover, if the current solution is far from the optimum, then it is possible to make a long step, and polynomially many long steps will reach the optimum.

More concretely, consider the run of these two algorithms on an instance of Closest String consisting of k strings, each of length L. Lenstra’s algorithm essentially either determines that the bounds are loose enough that there must exist a solution, or (oversimplifying) determines that there is a column type \({\mathbf{f }}\in [k]^k\) and a character \(e \in [k]\) such that there are at most \(k^k\) consecutive choices for how many times the solution contains character e at a column of type \({\mathbf{f }}\). Then, we recurse, obtaining a \(2^{2^{{\mathcal {O}}(k \log k)}}{\mathcal {O}}(\log L)\)-time algorithm. On the other hand, our algorithm views the problem as an optimization problem, so we think of starting with a string of all blanks which is trivially at distance 0 from any string, and the goal is to fill in all blanks such that the result is still at distance at most d from the input strings. An augmenting step is a set of character swaps that decreases the number of blanks. The crucial observation is that if an augmenting step exists, then there is also one only changing few characters, and it can be found in time \(k^{{\mathcal {O}}(k^2)}{\mathcal {O}}(\log L)\). Thus (omitting details), we can iteratively find augmenting steps until we reach the optimum.

Related work. Our main inspiration are augmentation methods based on Graver bases, especially a fixed-parameter algorithm for n-fold IP of Hemmecke et al. [36]. Our result improves the runtime of their algorithm for a special case. More generally, our work follows in the vein of Papadimitriou [64], whose algorithm was recently improved by Eisenbrand and Weismantel [19] and Jansen and Rohwedder [41]. Subsequent to the publication of the extended abstract version of this work, the main technical result (Theorem 1) was improved by Koutecký et al. [47] and Eisenbrand et al. [17]. All the following related work is orthogonal to ours in either the achieved result, or the parameters used for it.

In fixed dimension, Lenstra’s algorithm [52] was generalized for arbitrary convex sets and quasiconvex objectives by Grötschel et al. [34, Theorem 6.7.10]. The currently fastest algorithm of this kind is due to Dadush et al. [14]. The first notable fixed-parameter algorithm for a non-convex objective is due to Lokshtanov [54], who showed that optimizing a quadratic function over the integers of a polytope is fixed-parameter tractable if all coefficients are small. Ganian and Ordyniak [30] and Ganian et al. [31] studied the complexity of ILP with respect to structural parameters such as treewidth and treedepth, and introduced a new parameter called torso-width.

Besides fixed-parameter tractability, there is interest in the (non-)existence of polynomial kernels of ILPs, which formalize the (im)possibility of various preprocessing procedures. Jansen and Kratsch [39] showed that ILPs containing parts with simultaneously bounded treewidth and bounded domains are amenable to polynomial kernelization, unlike ILPs containing totally unimodular parts. Kratsch [48] studied the kernelizability of sparse ILPs with small coefficients.

Outline. The paper is structured as follows. In Sect. 2 we provide the definitions and notions necessary for the proof of Theorem 1. Section 3 is dedicated to the proof of Theorem 1. Finally, in Sect. 4 we turn to applications of Theorem 1.

2 Preliminaries

For positive integers m, n we set \([m : n] = \{m,\ldots , n\}\) and \([n] = [1 : n]\). For a graph G we denote by V(G) its set of vertices. We write vectors in boldface (e.g., \({\mathbf{x }}, {\mathbf{y }}\)) and their entries in normal font (e.g., the ith entry of \({\mathbf{x }}\) is \(x_i\)).

For a matrix \(A \in \mathbb {Z}^{m \times n}\), vectors \({\mathbf{b }}\in \mathbb {Z}^{m}\), \({\mathbf{l }}, {\mathbf{u }}\in \mathbb {Z}^{n}\), and a function \(f:\mathbb {Z}^n \rightarrow \mathbb {Z}\), let \((IP)_{A, {\mathbf{b }}, {\mathbf{l }}, {\mathbf{u }}, f}\) be the problem

We say that a vector \({\mathbf{x }}\) is a feasible solution to \((IP)_{A, {\mathbf{b }}, {\mathbf{l }}, {\mathbf{u }}, f}\) if \(A{\mathbf{x }}= {\mathbf{b }}\) and \({\mathbf{l }}\le {\mathbf{x }}\le {\mathbf{u }}\).

n-fold IP. Let \(r,s,t,n \in \mathbb {N}\) be integers, \({\mathbf{u }}, {\mathbf{l }}\in \mathbb {Z}^{nt}\) and \({\mathbf{b }}\in \mathbb {Z}^{r+ns}\) be vectors, and \(f:\mathbb {Z}^{nt} \rightarrow \mathbb {Z}\) be a separable convex function (i.e., \(f({\mathbf{x }}) = \sum _{i=1}^n \sum _{j=1}^t f^i_j(x^i_j)\) with every \(f^i_j:\mathbb {Z}\rightarrow \mathbb {Z}\) univariate convex). Let \(D \in \mathbb {Z}^{r \times t}\) be an \(r\times t\)-matrix and \(A \in \mathbb {Z}^{s \times t}\) be an \(s\times t\)-matrix. We define a matrix \(E^{(n)}\) as

and we call \(E^{(n)}\) the n-fold product of \(E = \left( {\begin{matrix}D\\ A\end{matrix}}\right) \). The problem (IP) with the constraint matrix \(E^{(n)}\) is called n-fold integer programming \((IP)_{E^{(n)},{\mathbf{b }},{\mathbf{l }},{\mathbf{u }},f}\). By \(\langle I \rangle = \langle {\mathbf{b }}, {\mathbf{l }}, {\mathbf{u }}, f \rangle \) we denote the length of the binary encoding of the (IP) instance, which is given by vectors \({\mathbf{b }}, {\mathbf{l }}, {\mathbf{u }}\) and the objective function f. Here, \(\langle f \rangle = \langle \max _{{\mathbf{x }}: {\mathbf{l }}\le {\mathbf{x }}\le {\mathbf{u }}} |f({\mathbf{x }})| \rangle \) is the encoding length of the maximum absolute value attained by f over the feasible region.

Building on a dynamic program of Hemmecke, Onn, and Romanchuk [36] and a so-called proximity technique of Hemmecke, Köppe and Weismantel [35], Knop and Koutecký [43] proved that:

Proposition 1

([43, Thm. 5]) There is an algorithm that solvesFootnote 1\((IP)_{E^{(n)},{\mathbf{b }},{\mathbf{l }},{\mathbf{u }},f}\) encoded with \(\langle I \rangle = \langle {\mathbf{b }}, {\mathbf{l }}, {\mathbf{u }}, f \rangle \) bits in time \(\varDelta ^{O(trs + t^2s)}\cdot n^3 \langle I \rangle \), where \(\varDelta = 1 + \max \{\Vert D\Vert _\infty , \Vert A\Vert _\infty \}\).

The structure of \(E^{(n)}\) (in equation (3)) allows us to divide any nt-dimensional object, such as the vector of variables \({\mathbf{x }}\), the bounds \({\mathbf{l }}\) and \({\mathbf{u }}\), or the objective function f, into n bricks of size t. We use subscripts to index within a brick and superscripts to denote the index of the brick, i.e., we write \({\mathbf{x }}= \left( {\mathbf{x }}^1, \dots , {\mathbf{x }}^n\right) \) with \({\mathbf{x }}^i\) being the ith brick of \({\mathbf{x }}\), and \(x_j^i\) denotes the jth variable of the ith brick with \(j \in [t]\) and \(i \in [n]\). We refer to the constraints \(\left( D \cdots D\right) \cdot {\mathbf{x }}\le {\mathbf{d }}\) as globally uniform constraints and we call the other constraints locally uniform.

Our focus here is on the following special form of n-fold IP which we call combinatorial n-fold IP.

Definition 1

(Combinatorial n-fold IP) Let \(A = (1~\cdots ~1)\in {\mathbb {Z}}^{1\times t}\), let \(D\in \mathbb {Z}^{r\times t}\) be a matrix, and let \(E = \left( {\begin{matrix}D\\ A\end{matrix}}\right) \). Let \(f:\mathbb {Z}^{nt} \rightarrow \mathbb {Z}\) be a separable convex function represented by an evaluation oracle. A combinatorial n-fold IP is

An approximate continuous relaxation oracle is an algorithm which, queried on an instance of (IP), returns a solution \({\mathbf{x }}_\varepsilon \) of the continuous relaxation of (IP)Footnote 2 such that there exists an optimum \({\tilde{{\mathbf{x }}}}\) of the relaxation satisfying \(\Vert {\mathbf{x }}_\varepsilon - {\tilde{{\mathbf{x }}}}\Vert _\infty \le \varepsilon \). For any separable convex function such an oracle can be implemented in polynomial time by Chubanov’s algorithm [11, Corollary 14]. We denote by \({\mathcal {R}}\) the time needed to realize one call to this oracle when the instance is clear from the context. For more details cf. De Loera et al. [15, Problem 4.3.1].

Graver bases and augmentation. Let us now introduce Graver bases and discuss how they can be used for optimization. For background, we refer to the books of Onn [61] and De Loera et al. [15].

Let \({\mathbf{x }}, {\mathbf{y }}\) be n-dimensional integer vectors. We call \({\mathbf{x }}, {\mathbf{y }}\) sign-compatible if they lie in the same orthant, that is, for each \(i \in [n]\) the sign of \(x_i\) and \(y_i\) is the same. We call \(\sum _i {\mathbf{g }}^i\) a sign-compatible sum if all \({\mathbf{g }}^i\) are pairwise sign-compatible. Moreover, we write \({\mathbf{y }}\sqsubseteq {\mathbf{x }}\) if \({\mathbf{x }}\) and \({\mathbf{y }}\) are sign-compatible and \(|y_i| \le |x_i|\) for each \(i \in [n]\), and write \({\mathbf{y }}\sqsubset {\mathbf{x }}\) if at least one of the inequalities is strict. Clearly, \(\sqsubseteq \) imposes a partial order called “conformal order” on n-dimensional vectors. For an integer matrix \(A \in \mathbb {Z}^{m \times n}\), its Graver basis \(\mathcal{G}(A)\) is the set of \(\sqsubseteq \)-minimal non-zero elements of the lattice of A, \(\ker _{\mathbb {Z}}(A) = \{{\mathbf{z }}\in \mathbb {Z}^n \mid A {\mathbf{z }}= {\mathbf {0}}\}\). An important property of \(\mathcal{G}(A)\) is the following.

Proposition 2

([61, Lemma 3.2]) Every integer vector \({\mathbf{x }}\ne {\mathbf {0}}\) with \(A {\mathbf{x }}= {\mathbf {0}}\) is a sign-compatible sum \({\mathbf{x }}= \sum _i {\mathbf{g }}^i\) of Graver basis elements \({\mathbf{g }}^i \in \mathcal{G}(A)\), with some elements possibly appearing with repetitions.

Let \({\mathbf{x }}\) be a feasible solution to \((IP)_{A, {\mathbf{b }}, {\mathbf{l }}, {\mathbf{u }}, f}\). We call a vector \({\mathbf{g }}\) a feasible step if \({\mathbf{x }}+ {\mathbf{g }}\) is feasible for \((IP)_{A, {\mathbf{b }}, {\mathbf{l }}, {\mathbf{u }}, f}\). Further, call a feasible step \({\mathbf{g }}\) augmenting if \(f({\mathbf{x }}+{\mathbf{g }}) < f({\mathbf{x }})\). An augmenting step \({\mathbf{g }}\) and a step length \(\alpha \in \mathbb {Z}\) form an \({\mathbf{x }}\)-feasible step pair with respect to a feasible solution \({\mathbf{x }}\) if \({\mathbf{l }}\le {\mathbf{x }}+ \alpha {\mathbf{g }}\le {\mathbf{u }}\). An augmenting step \({\mathbf{g }}\) and a step length \(\alpha \in \mathbb {Z}\) form a Graver-best step if \(f({\mathbf{x }}+ \alpha {\mathbf{g }}) \le f({\mathbf{x }}+ \alpha ' {\tilde{{\mathbf{g }}}})\) for all \({\mathbf{x }}\)-feasible step pairs \(({\tilde{{\mathbf{g }}}},\alpha ') \in \mathcal{G}(A)\times \mathbb {Z}\).

The Graver-best augmentation procedure for \((IP)_{A, {\mathbf{b }}, {\mathbf{l }}, {\mathbf{u }}, f}\) with given feasible solution \({\mathbf{x }}_0\) works as follows:

-

1.

If there is no Graver-best step for \({\mathbf{x }}_0\), return it as optimal.

-

2.

If a Graver-best step \((\alpha , {\mathbf{g }})\) for \({\mathbf{x }}_0\) exists, set \({\mathbf{x }}_0 {:}= {\mathbf{x }}_0 + \alpha {\mathbf{g }}\) and go to 1.

Proposition 3

([15, implicit in Theorem 3.4.1]) Given a feasible solution \({\mathbf{x }}_0\) for \((IP)_{A, {\mathbf{b }}, {\mathbf{l }}, {\mathbf{u }}, f}\) where f is separable convex, the Graver-best augmentation procedure finds an optimum of \((IP)_{A, {\mathbf{b }}, {\mathbf{l }}, {\mathbf{u }}, f}\) in at most \((2n-2) \log M\) steps, where \(M = f({\mathbf{x }}_0) - f({\mathbf{x }}^*)\) and \({\mathbf{x }}^* \in \mathbb {Z}^n\) is an optimum of \((IP)_{A, {\mathbf{b }}, {\mathbf{l }}, {\mathbf{u }}, f}\).

Graver complexity. The key property of the n-fold product \(E^{(n)}\) is that, for any \(n \in \mathbb {N}\), the number of nonzero bricks of any \({\mathbf{g }}\in \mathcal{G}\big (E^{(n)}\big )\) is bounded by some constant g(E) called the Graver complexity of E. A proof is given for example by Onn [61, Lemma 4.3]. To emphasize the differences with our approach, we give a rough outline of the proof, omitting several details.

Consider any \({\mathbf{g }}\in \mathcal{G}\big (E^{(n)}\big )\) and take its restriction to its nonzero bricks \({\bar{{\mathbf{g }}}}\). Since each brick \({\bar{{\mathbf{g }}}}^i\) of \({\bar{{\mathbf{g }}}}\) satisfies \(A{\bar{{\mathbf{g }}}}^i = {\mathbf{0 }}\), by Proposition 2 it can be decomposed into elements from \(\mathcal{G}(A)\). Let \({\mathbf{h }}\) be a concatenation of all members of \(\mathcal{G}(A)\) from the decompositions obtained above. Since \({\mathbf{g }}\in \mathcal{G}\big (E^{(n)}\big )\) and \({\mathbf{h }}\) is obtained from decompositions of its nonzero bricks, we have that \(\sum _{j} D {\mathbf{h }}^j = {\mathbf{0 }}\). Then, consider a compact representation \({\mathbf{v }}\) of \({\mathbf{h }}\) by counting how many times each element from \(\mathcal{G}(A)\) appears. Let G be a matrix with the elements of \(\mathcal{G}(A)\) as columns. It is then not difficult to show that \({\mathbf{v }}\in \mathcal{G}(DG)\). Since \(\Vert {\mathbf{v }}\Vert _1\) is an upper bound on the number of bricks of \({\mathbf{h }}\) and thus of nonzero bricks of \({\mathbf{g }}\) and clearly does not depend on n, \(g(E) = \max _{{\mathbf{v }}\in \mathcal{G}(DG)} \Vert {\mathbf{v }}\Vert _1\) is finite.

Let us make precise two observations from this proof, which we will use later.

Lemma 1

([36, Lemma 3.1], [61, implicit in the proof of Lemma 4.3]) Let \(D \in \mathbb {Z}^{r \times t}\), \(A \in \mathbb {Z}^{s \times t}\) and \(E = \left( {\begin{array}{c}D\\ A\end{array}}\right) \).

Let \(({\mathbf{g }}^1, \dots , {\mathbf{g }}^n) \in \mathcal{G}\big (E^{(n)}\big )\). Then for \(i = 1,\ldots ,n\) there exist vectors \({\mathbf{h }}^{i,1}, \dots , {\mathbf{h }}^{i,m_i} \in \mathcal{G}(A)\) such that \({\mathbf{g }}^i = \sum _{k=1}^{m_i} {\mathbf{h }}^{i,k}\), and \(\sum _{i=1}^n m_i \le g(E)\).

Lemma 2

([36, Lemma 6.1], [61, implicit in the proof of Lemma 4.3]) Let \(D \in \mathbb {Z}^{r \times t}\), \(A \in \mathbb {Z}^{s \times t}\) and let \(G \in \mathbb {Z}^{t \times p}\) be the matrix whose columns are the elements of \(\mathcal{G}(A)\). Then \(|\mathcal{G}(A)| \le \Vert A\Vert _\infty ^{st}\), and for \(E = \left( {\begin{matrix}D\\ A\end{matrix}}\right) \) it holds

3 Combinatorial \({\varvec{n}}\)-fold IPs

This section is dedicated to proving Theorem 1:

Theorem 1

(repeated) Let \(D \in \mathbb {Z}^{r \times t}\), \({\mathbf{l }}, {\mathbf{u }}\in \mathbb {Z}^{nt}\), \({\mathbf{b }}\in \mathbb {Z}^{r+n}\), and a separable convex function \(f:\mathbb {Z}^{nt} \rightarrow \mathbb {R}\) be given. There is an algorithm that solves the combinatorial n-fold IP \((IP)_{E^{(n)},{\mathbf{b }}, {\mathbf{l }}, {\mathbf{u }}, f}\) of size \(\langle I \rangle = \langle {\mathbf{b }}, {\mathbf{l }}, {\mathbf{u }}, f \rangle \) in time \(t^{{\mathcal {O}}(r)} (\varDelta r)^{{\mathcal {O}}(r^2)} {\mathcal {O}}(n^3 \langle I \rangle ) + {\mathcal {R}}\), where \(\varDelta = 1 + \Vert D\Vert _\infty \), \(E = \left( {\begin{matrix} D \\ {\mathbf {1}}^{\intercal }\end{matrix}} \right) \), and \({\mathcal {R}}\) is the time required for one call to an optimization oracle for the continuous relaxation of \((IP)_{E^{(n)},{\mathbf{b }}, {\mathbf{l }}, {\mathbf{u }}, f}\).

We fix an instance of combinatorial n-fold IP, that is, a tuple \(\left( n, D, {\mathbf{b }}, {\mathbf{l }}, {\mathbf{u }}, f\right) \) that we use throughout this whole section.

3.1 Graver complexity of combinatorial n-fold IP

Recall that g(E) denotes the maximum number of non-zero bricks of any augmenting step \({\mathbf{g }}\in \mathcal{G}\big (E^{(n)}\big )\). The bound on g(E) we get from Lemma 2 is exponential in t. Our goal now is to improve the bound on g(E) in terms of t, exploiting the simplicity of the matrix A in combinatorial n-fold IPs.

To see this, we will need to understand the structure of \(\mathcal{G}({\mathbf {1}}^{\intercal })\):

Lemma 3

For any \(i \ne j \in [t]\), let \({\varvec{\zeta }}(i,j) \in \{-1,0,1\}^t\) satisfy \(\zeta _i = 1\), \(\zeta _j = -1\), and \(\zeta _\ell =0\) for all \(\ell \in [t] {\setminus } \{i,j\}\). It holds that

-

\(\mathcal{G}({\mathbf {1}}^{\intercal }) = \{{\varvec{\zeta }}(i,j) \mid i,j \in [t], i \ne j\}\),

-

\(p = |\mathcal{G}({\mathbf {1}}^{\intercal })| = t(t-1)\), and

-

\(\Vert {\mathbf{g }}\Vert _1 = 2\) for all \({\mathbf{g }}\in \mathcal{G}({\mathbf {1}}^{\intercal })\).

Proof

Observe that the claimed set of vectors is clearly \(\sqsubseteq \)-minimal in \(\ker _{\mathbb {Z}}({\mathbf {1}}^{\intercal })\). We are left with proving there is no other non-zero \(\sqsubseteq \)-minimal vector in \(\ker _{\mathbb {Z}}({\mathbf {1}}^{\intercal })\). For contradiction assume there is such a vector \({\mathbf{h }}\). Since it is non-zero, it must have a positive entry \(h_i\). On the other hand, since \({\mathbf {1}}^{\intercal } {\mathbf{h }}= {\mathbf {0}}\), it must also have a negative entry \(h_j\). But then for a vector \({\mathbf{g }}\) with \(g_i = 1\), \(g_j = -1\) and \(g_k = 0\) for all \(k \not \in \{i,j\}\) it holds that \({\mathbf{g }}\sqsubset {\mathbf{h }}\), a contradiction. The rest follows. \(\square \)

With this lemma at hand, we can prove the following.

Lemma 4

Let \(D \in \mathbb {Z}^{r \times t}\) and \(\varDelta = 1 + \Vert D\Vert _\infty \). Then, \(g(\left( {\begin{matrix}D\\ {\mathbf {1}}^{\intercal }\end{matrix}}\right) ) \le t^2 (2 r \varDelta )^r\).

Proof

We simply plug the correct values into the bound of Lemma 2. By Lemma 3, \(p = t(t-1) \le t^2\). Also, \(\Vert DG\Vert _\infty \le \max _{{\mathbf{g }}\in \mathcal{G}({\mathbf {1}}^{\intercal })} \left\{ \Vert D\Vert _\infty \cdot \Vert {\mathbf{g }}\Vert _1 \right\} \le 2\varDelta \), where the last inequality follows from \(\Vert {\mathbf{g }}\Vert _1 = 2\) for all \({\mathbf{g }}\in \mathcal{G}({\mathbf {1}}^{\intercal })\), again by Lemma 3. \(\square \)

3.2 Dynamic programming

Hemmecke et al. [36] devised a clever dynamic programming algorithm to find augmenting steps for a feasible solution of an n-fold IP. Lemma 1 is crucial in their approach, as they continue by building a set Z(E) of all sums of at most g(E) elements of \(\mathcal{G}(A)\) and then use it to construct the dynamic program. However, such a set Z(E) would clearly be of size exponential in t—too large to achieve our single-exponential run times. In their dynamic program, a directed acyclic graph is constructed whose layers correspond to partial sums of elements of \(\mathcal{G}(A)\).

Our insight is to build a different dynamic program. In our dynamic program, we will exploit the simplicity of \(\mathcal{G}(A) = \mathcal{G}({\mathbf {1}}^{\intercal })\) so that the constructed directed acyclic graph will have layers which correspond to individual coordinates \(h^i_j\) of an augmenting vector \({\mathbf{h }}\). Additionally, we also differ in how we enforce feasibility with respect to the globally uniform constraints \((D~D~\cdots ~D) {\mathbf{x }}= {\mathbf{b }}^0\).

We now give the details of our approach. The signature set of E is \(\varSigma (E) = \prod _{j = 1}^r \left[ -2\varDelta \cdot g(E) : 2\varDelta \cdot g(E) \right] \); its elements are called signatures. Essentially, we will use the signature set to keep track of partial sums of prefixes of the augmenting vector \({\mathbf{h }}\) to ensure that it satisfies \(D {\mathbf{h }}= {\mathbf {0}}\). Crucially, we notice that to ensure \(D {\mathbf{h }}= {\mathbf {0}}\), it suffices to remember the partial sum of the prefixes of \({\mathbf{h }}\) multiplied by D, thus shrinking them from dimension t to dimension r. This is another insight which allows us to avoid the exponential dependence on t. Note that \(\left| \varSigma (E)\right| = \left( 1 + \varDelta 4 g(E) \right) ^r\).

Definition 2

(Augmentation graph) Let \({\mathbf{x }}\) be a feasible solution for \((IP)_{E^{(n)}, {\mathbf{b }}, {\mathbf{l }}, {\mathbf{u }}, f}\) and let \({\alpha \in \mathbb {N}}\). The augmentation graph \(DP({\mathbf{x }}, \alpha )\) is a vertex-weighted directed layered graph with two distinguished vertices S and T called the source and the sink, and nt layers \({\mathcal{L}}(1,1),\ldots , {\mathcal{L}}(n,t)\) structured according to the bricks, such that for all \(i \in [n]\), \(j \in [t]\),

Thus, each vertex is a tuple \(\big (i,j, h^i_j, \beta ^i_j, {\varvec{\sigma }}^i_j\big )\), with the following meaning:

-

\(i \in [n]\) is the index of the brick,

-

\(j \in [t]\) is the position within the brick,

-

\(h^i_j \in [-g(E) : g(E)]\) is the value of the corresponding coordinate of a proposed augmenting vector \({\mathbf{h }}\),

-

\(\beta ^i_j \in [-g(E) : g(E)]\) is a brick prefix sum \(\sum _{\ell =1}^j h^i_\ell \) of the proposed augmenting vector \({\mathbf{h }}\), and,

-

\({\varvec{\sigma }}^i_j \in \varSigma (E)\) is the signature that represents the prefix sum \(\sum _{k=1}^i D {\mathbf{h }}^k + \sum _{\ell =1}^j D_\ell h^i_\ell \), where \(D_\ell \) is the \(\ell \)th column of the matrix D.

A vertex \(\big (i,j, h^i_j, \beta ^i_j, {\varvec{\sigma }}^i_j\big )\) has weight \(f^i_j(\alpha h^i_j + x^i_j) - f^i_j(x^i_j)\).

Let \(S = \big (0,t,0,0, {\mathbf {0}}\big )\) and \(T = \big (n+1,1,0,0, {\mathbf {0}}\big )\), where the last coordinate is an r-dimensional all-zero vector.

Edges to the first layer of a brick. Every vertex \(\big (i,t, h^i_t, 0, {\varvec{\sigma }}^i_t\big )\) has edges to each vertex \(\big (i+1,1, h^{i+1}_1, h^{i+1}_1, {\varvec{\sigma }}^{i+1}_1\big )\) for which \(l^{i+1}_1 \le x^{i+1}_1 + \alpha h^{i+1}_1 \le u^{i+1}_1\) and \({\varvec{\sigma }}^{i+1}_1 = {\varvec{\sigma }}^{i}_t + D_1 h^{i+1}_1\). We emphasize that there are no other outgoing edges from layer \({\mathcal{L}}(i,t)\) to layer \({\mathcal{L}}(i+1,1)\).

Edges within a brick. Every vertex \(\big (i,j, h^i_j, \beta ^i_j, {\varvec{\sigma }}^i_j)\) with \(j < t\) has edges to each vertex \(\big (i, j+1, h^i_{j+1}, \beta ^i_{j+1}, {\varvec{\sigma }}^i_{j+1} \big )\) for which

-

\(l^{i}_{j+1} \le x^{i}_{j+1} + \alpha h^{i}_{j+1} \le u^{i}_{j+1}\),

-

\(\beta ^{i}_{j+1} = \beta ^i_j + h^{i}_{j+1}\), with \(\beta ^i_{j+1} \in \left[ -g(E):g(E)\right] \), and,

-

\({\varvec{\sigma }}^{i}_{j+1} = {\varvec{\sigma }}^{i}_{j} + D_{j+1} h^{i}_{j+1}\).

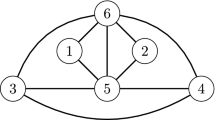

See Fig. 1 for a scheme of the augmentation graph.

Note that by the bounds on g(E) by Lemma 4 the number of vertices in each layer (recall that there is a layer for each \(i \in [n]\) and \(j \in [t]\)) of \(DP({\mathbf{x }}, \alpha )\) is bounded by

Let P be an S–T path in \(DP({\mathbf{x }},\alpha )\). We define the P-augmentation vector \({\mathbf{h }}\in \mathbb {Z}^{nt}\) by the \(h^i_j\)-coordinates of the vertices of P.

Let \({\mathbf{x }}\) be a feasible solution of the combinatorial n-fold IP instance fixed at the beginning of Sect. 3. We say that \({\mathbf{h }}\) is a solution of \(DP({\mathbf{x }},\alpha )\) if there exists an S–T path P such that \({\mathbf{h }}\) is the P-augmentation vector. The weight \(w({\mathbf{h }})\) is then defined as the weight of the path P (i.e., the sum of weights of vertices of the path P). Note that \(w({\mathbf{h }}) = f({\mathbf{x }}+ \alpha {\mathbf{h }}) - f({\mathbf{x }})\).

The following lemma relates solutions of \(DP({\mathbf{x }}, \alpha )\) to potential feasible steps in \(\mathcal{G}\big (E^{(n)}\big )\).

Lemma 5

Let \({\mathbf{x }}\in \mathbb {Z}^{nt}\) be a feasible solution, \(\alpha \in \mathbb {N}\), and let \({\mathbf{h }}\) be a solution of \(DP({\mathbf{x }}, \alpha )\). Then \({\mathbf{l }}\le {\mathbf{x }}+\alpha {\mathbf{h }}\le {\mathbf{u }}\) and \(E^{(n)}{\mathbf{h }}={\mathbf {0}}\).

Proof

To see that \({\mathbf{l }}\le {\mathbf{x }}+ \alpha {\mathbf{h }}\le {\mathbf{u }}\), recall that there is no incoming edge to a vertex \((i,j, h^i_j, \beta ^i_j, {\varvec{\sigma }}^i_j)\) which would violate the bound \(l^i_j \le x^i_j + \alpha h^i_j \le u^i_j\).

To see that \(E^{(n)}{\mathbf{h }}= {\mathbf {0}}\), first observe that by the definition of \(\beta ^i_j\), and the condition that only if \(\beta ^i_t = 0\) for all \(i = 1, \ldots , n\) there is an outgoing edge, we have that every brick \({\mathbf{h }}^i\) satisfies \({\mathbf {1}}^{\intercal } {\mathbf{h }}^i = 0\). Second, by the definition of \({\varvec{\sigma }}^i_j\) and the edges incoming to T, we have that \(D {\mathbf{h }}= {\mathbf {0}}\). Together, this implies \(E^{(n)}{\mathbf{h }}= {\mathbf {0}}\). \(\square \)

Lemma 6

Let \({\mathbf{x }}\in \mathbb {Z}^{nt}\) be a feasible solution. Then every \({\mathbf{g }}\in \mathcal{G}\big (E^{(n)}\big )\) with \({\mathbf{l }}\le {\mathbf{x }}+ \alpha {\mathbf{g }}\le {\mathbf{u }}\) is a solution of \(DP({\mathbf{x }}, \alpha )\).

Proof

Let \({\mathbf{g }}\in \mathcal{G}\big (E^{(n)}\big )\) satisfy \({\mathbf{l }}\le {\mathbf{x }}+ \alpha {\mathbf{g }}\le {\mathbf{u }}\). We shall construct an S–T path P in \(DP({\mathbf{x }}, \alpha )\) such that \({\mathbf{g }}\) is the P-augmentation vector. We will describe which vertex is selected from each layer, and argue that this is well defined. Then, by the definition of \(DP({\mathbf{x }}, \alpha )\), it will be clear that the selected vertices are indeed connected by edges.

In layer \({\mathcal{L}}(1,1)\), we select vertex \(\big (1,1,g^1_1, g^1_1, D_1 g^1_1\big )\). In layer \({\mathcal{L}}(i,j)\), we select vertex \(\big (i,j, g^i_j, \beta ^i_j, {\varvec{\sigma }}^i_j \big )\) with \(\beta ^i_j = \beta ^i_{j-1} + g^i_j\) and \({\varvec{\sigma }}^i_j = {\varvec{\sigma }}^i_{j-1} + D_j g^i_j\) if \(j > 1\), and \(\beta ^i_j = \beta ^{i-1}_{t} + g^i_j\) and \({\varvec{\sigma }}^i_j = {\varvec{\sigma }}^{i-1}_t + D_1 g^{i-1}_t\) otherwise.

We shall argue that this is well defined, i.e., that all of the specified vertices actually exist. From Lemma 1 it follows that \({\mathbf{g }}\) can be decomposed into \(M \le g(E)\) vectors \({\tilde{{\mathbf{g }}}}^1, \dots {\tilde{{\mathbf{g }}}}^M \in \mathcal{G}({\mathbf {1}}^{\intercal })\). Moreover, since \(M \le g(E)\) and each \({\tilde{g}}^\ell \), \(\ell \in [M]\), has one 1 and one \(-1\) (again by Lemma 1), we have that for every \(i \in [n]\) and every \(j \in [t]\), \(\left| \sum _{\ell = 1}^j g^i_\ell \right| \le g(E)\) (i.e., the brick prefix sum is also bounded by g(E) in absolute value). Thus, vertices with the appropriate \(g^i_j\)- and \(\beta ^i_j\)- coordinates exist. Regarding the \({\varvec{\sigma }}^i_j\) coordinate, we make a similar observation: for every \(i \in [n]\) and \(j \in [t]\), \(\left( \sum _{{\hat{\ell }}=1}^{i-1} D {\mathbf{g }}^{{\hat{\ell }}} \right) + \left( \sum _{\ell = 1}^{j} D_\ell g^i_\ell \right) \in \varSigma (E)\).

From the definition of the edges in \(DP({\mathbf{x }}, \alpha )\), and the fact that \({\mathbf{l }}\le {\mathbf{x }}+ \alpha {\mathbf{g }}\le {\mathbf{u }}\), the selected vertices create a path. \(\square \)

Lemma 7

(optimality certification) There is an algorithm that, given a feasible solution \({\mathbf{x }}\in \mathbb {Z}^{nt}\) for \((IP)_{E^{(n)}, {\mathbf{b }}, {\mathbf{l }}, {\mathbf{u }}, f}\) and \(\alpha \in \mathbb {N}\), in time \(t^{{\mathcal {O}}(r)}(\varDelta r)^{{\mathcal {O}}(r^2)} n\) either finds a vector \({\mathbf{h }}\) such that (i) \(E^{(n)}{\mathbf{h }}= {\mathbf {0}}\), (ii) \({\mathbf{l }}\le {\mathbf{x }}+ \alpha {\mathbf{h }}\le {\mathbf{u }}\), and (iii) \(f({\mathbf{x }}+ \alpha {\mathbf{h }}) < f({\mathbf{x }})\), or decides that no such \({\mathbf{h }}\) exists.

Proof

It follows from Lemma 5 that all solutions of \(DP({\mathbf{x }})\) fulfil (i) and (ii). Observe that if we take \({\mathbf{h }}\) to be a solution of \(DP({\mathbf{x }}, \alpha )\) with minimum weight, then either \(f({\mathbf{x }}) = f({\mathbf{x }}+\alpha {\mathbf{h }})\) or \(f({\mathbf{x }}) > f({\mathbf{x }}+\alpha {\mathbf{h }})\). Due to Lemma 6 the set of solutions of \(DP({\mathbf{x }}, \alpha )\) contains all \({\mathbf{h }}\in \mathcal{G}\big (E^{(n)}\big )\) with \({\mathbf{l }}\le {\mathbf{x }}+ \alpha {\mathbf{h }}\le {\mathbf{u }}\). Thus, by Proposition 3, if \(f({\mathbf{x }}) = f({\mathbf{x }}+\alpha {\mathbf{h }})\), no \({\mathbf{h }}\) satisfying all three conditions (i), (ii), (iii) exists.

Our goal is then to find the lightest S–T path in the graph \(DP({\mathbf{x }}, \alpha )\). However, since edges of negative weight will be present, we cannot use, e.g., Dijkstra’s algorithm. Still, it can be observed that \(DP({\mathbf{x }}, \alpha )\) is a directed acyclic graph, and moreover, finding the lightest path can be done in a layer-by-layer manner (by the standard algorithm of relaxing edges in a directed acyclic graph [12, Theorem 24.5]) in time \({\mathcal {O}}(|V(DP({\mathbf{x }}, \alpha ))|\cdot L_{\max }) = {\mathcal {O}}(nt L_{\max }^2)\), also cf. [36, Lemma 3.4]. The claimed run time follows from the bound on the maximum size of a layer (4). \(\square \)

3.3 Step lengths

We have shown how to find a feasible step \({\mathbf{h }}\) for any given step length \(\alpha \in \mathbb {N}\) such that \(f({\mathbf{x }}\,+\, \alpha {\mathbf{h }}) {\le } f({\mathbf{x }}\,+\, \alpha {\mathbf{g }})\) for any feasible step \({\mathbf{g }}\in \mathcal{G}\big (E^{(n)}\big )\). Now, we will show that there are not too many step lengths that need to be considered in order to find a Graver-best step, which, by Proposition 3, leads to a good bound on the total required number of steps. Observe that since all feasible solutions are contained in a box \({\mathbf{l }}\le {\mathbf{x }}\le {\mathbf{u }}\), no steps of length \(\alpha > \Vert {\mathbf{u }}- {\mathbf{l }}\Vert _\infty \) are feasible. Thus, if the quantity \(\Vert {\mathbf{u }}- {\mathbf{l }}\Vert _\infty \) can be controlled, we may control the number of step-lengths which need to be checked.

In the following, we use the proximity technique pioneered by Hochbaum and Shantikumar [38] in the case of totally unimodular matrices and extended to the setting of Graver bases by Hemmecke et al. [35]. This technique allows to show that, provided some structure of the constraints (e.g., total unimodularity or bounded \(\ell _\infty \)-norm of its Graver elements), any continuous optimum is not too far from an integer optimum of the instance.

Proposition 4

( [35, Theorem 3.14]) Consider a combinatorial n-fold IP \((IP)_{E^{(n)}, {\mathbf{b }}, {\mathbf{l }}, {\mathbf{u }}, f}\). Then for any optimal solution \({\hat{{\mathbf{x }}}}\) of its continuous relaxation there is an optimal solution \({\mathbf{x }}^*\) of \((IP)_{E^{(n)}, {\mathbf{b }}, {\mathbf{l }}, {\mathbf{u }}, f}\) with

This allows us then to reduce the original instance to an “equivalent” instance contained in a small box, as hinted at above.

Lemma 8

(equivalent bounded instance) Let \((IP)_{E^{(n)},{\mathbf{b }}, {\mathbf{l }}, {\mathbf{u }}, f}\) be a combinatorial n-fold IP. With one call to an optimization oracle of its continuous relaxation, one can construct \({\hat{{\mathbf{l }}}}, {\hat{{\mathbf{u }}}} \in \mathbb {Z}^{nt}\) such that

and \(\left\| {\hat{{\mathbf{u }}}} - {\hat{{\mathbf{l }}}} \right\| _\infty \le nt\cdot g(E)\).

Proof

Returning to Proposition 4, observe that the quantity \(\max \left\{ \Vert {\mathbf{g }}\Vert _\infty \mid {\mathbf{g }}\in \mathcal{G}\big (E^{(n)}\big )\right\} \) is bounded by g(E) (Lemma 3 and Lemma 1). Hence, we can set new lower and upper bounds \({\hat{{\mathbf{l }}}}\) and \({\hat{{\mathbf{u }}}}\) defined by \({\hat{l}}_j^i {:}{=} \max \left\{ \lfloor {\hat{x}}_j^i \rfloor - nt g(E), l_j^i \right\} \) and \({\hat{u}}_j^i {:}{=} \min \left\{ \lceil {\hat{x}}_j^i \rceil + nt g(E), u_j^i \right\} \), and Proposition 4 assures that the integer optimum also lies within the new bounds. \(\square \)

3.4 Finishing the proof

We are now going to prove Theorem 1 by combining the results we have established in the previous sections.

Proof of Theorem 1

We proceed in three steps.

Step 1: Bounding the feasible region. First, we use Lemma 8 to construct new lower and upper bounds \({\hat{{\mathbf{l }}}}, {\hat{{\mathbf{u }}}}\) satisfying \(\Vert {\hat{{\mathbf{u }}}} - {\hat{{\mathbf{l }}}} \Vert _\infty \le nt\cdot g(E)\) and preserving the optimal value of \((IP)_{E^{(n)},{\mathbf{b }}, {\mathbf{l }}, {\mathbf{u }}, f}\). Thus, we shall replace \({\mathbf{l }}\) and \({\mathbf{u }}\) by \({\hat{{\mathbf{l }}}}\) and \({\hat{{\mathbf{u }}}}\) from now on and assume that \(\Vert {\mathbf{u }}- {\mathbf{l }}\Vert _\infty \le nt\cdot g(E)\).

Step 2: Optimization. Let us assume that we have an initial feasible solution \({\mathbf{x }}_0\) of \((IP)_{E^{(n)},{\mathbf{b }}, {\mathbf{l }}, {\mathbf{u }}, f}\). Given a step length \(\alpha \in \mathbb {N}\), it takes time \(t^{{\mathcal {O}}(r)} (\varDelta r)^{{\mathcal {O}}(r^2)} n\) by Lemma 7 to find a feasible step \({\mathbf{h }}\) satisfying \(f({\mathbf{x }}+ \alpha {\mathbf{h }}) \le f({\mathbf{x }}+ \alpha {\mathbf{g }})\) for all \({\mathbf{g }}\in \mathcal{G}\big (E^{(n)}\big )\). Recall that no \({\mathbf{g }}\in \mathcal{G}\big (E^{(n)}\big )\) can be feasible for \(\alpha > n t g(E)\) by our bound on \(\Vert {\mathbf{u }}- {\mathbf{l }}\Vert _\infty \). Thus, applying Lemma 7 for all \(\alpha \in [nt\cdot g(E)]\) and choosing a pair \((\alpha , {\mathbf{h }})\) which minimizes \(f({\mathbf{x }}+ \alpha {\mathbf{h }})\) surely finds a Graver-best step in time \(t^{{\mathcal {O}}(r)} (\varDelta r)^{{\mathcal {O}}(r^2)} n^2\) (or reports that no such \({\mathbf{h }}\) exists). In order to reach the optimum, by Proposition 3 we need to make at most \((2nt - 2) \cdot {\mathcal {O}}(\langle I \rangle )\) Graver-best steps, where \(\langle I \rangle = \langle {\mathbf{b }}, {\mathbf {0}}, {\mathbf{u }}, f \rangle \). This follows from \({\mathcal {O}}(\langle I \rangle )\) being an upper bound on \(f({\mathbf{x }}^0) - f({\mathbf{x }}^*)\) for some optimal solution \({\mathbf{x }}^*\) of \((IP)_{E^{(n)},{\mathbf{b }}, {\mathbf{l }}, {\mathbf{u }}, f}\). In total, we need time \(t^{{\mathcal {O}}(r)} (\varDelta r)^{{\mathcal {O}}(r^2)} n^3 \langle I \rangle \).

Step 3: Feasibility. Now we are left with the task of finding a starting feasible solution \({\mathbf{x }}_0\) in the case when we do not have it. We follow the lines of Hemmecke et al. [36, Lemma 3.8] and solve an auxiliary combinatorial n-fold IP given by the matrix \({\bar{E}} = \left( {\begin{matrix}{\bar{D}}\\ {\bar{A}}\end{matrix}}\right) \) with \({\bar{D}} {:}{=} (D ~ I_{r} ~ -I_{r} ~ {\mathbf {0}})\) and \({\bar{A}} {:}{=} (A ~ {\mathbf {1}}^{\intercal }_{2r+1}) = {\mathbf {1}}^{\intercal } \in \mathbb {Z}^{t+2r+1}\), where \(I_r\) is the identity matrix of dimension r, \({\mathbf {0}}\) is the zero vector of length r and \({\mathbf {1}}_{2r+1}\) is the vector of all 1s of length \(2r+1\). The variables \({\bar{{\mathbf{x }}}}\) of this auxiliary problem have a natural partition into nt variables \({\mathbf{x }}\) corresponding to the original problem fixed at the beginning of Sect. 3, and \(n(2r+1)\) new auxiliary variables \({\tilde{{\mathbf{x }}}}\). Keep the original lower and upper bounds on \({\mathbf{x }}\) and introduce a lower bound 0 and upper bound \(ntg(E)\varDelta \) on each auxiliary variable. Finally, let the new objective be the linear function \(f({\mathbf{x }}) = {\bar{{\mathbf{w }}}}^\intercal {\bar{{\mathbf{x }}}}\) which expresses the sum of the auxiliary variables, i.e., \({\bar{{\mathbf{w }}}} = \left( {\bar{{\mathbf{w }}}}^1, \dots , {\bar{{\mathbf{w }}}}^n\right) \) and \({\bar{{\mathbf{w }}}}^i = (0, \dots , 0, 1, \dots , 1)\) with t zeroes and \(2r+1\) ones for all \(i = 1, \ldots , n\). Observe that it is easy to construct an initial feasible solution by setting \({\mathbf{x }}= {\mathbf{l }}\) and computing \({\tilde{{\mathbf{x }}}}\) accordingly: \({\tilde{{\mathbf{x }}}}\) serve the role of slack variables, and the slack in any constraint is at most \(nt^2g(E)\varDelta \) by the fact that \(\Vert {\mathbf{u }}- {\mathbf{l }}\Vert _\infty \le nt\cdot g(E)\) and \(\varDelta = 1 + \Vert D\Vert _\infty \).

Then, applying Step 1 and Step 2 either finds a solution to the auxiliary problem with objective value 0, implying \({\tilde{{\mathbf{x }}}} = {\mathbf {0}}\), and thus \({\mathbf{x }}\) is feasible for the original problem, or no such solution exists, meaning that the original problem is infeasible. \(\square \)

4 Applications

Our aim in this section is to spell out the implications of Theorem 1 for several problems from the literature. In preparation for this, first we show how to deal with inequalities (Sect. 4.1), and second, we discuss some common encoding aspects (Sect. 4.2). Then, we give combinatorial n-fold IP formulations for several specific problems (Sects. 4.3–4.6), which constitute the bulk of this section. Finally, we show that many ILP formulations in the literature can be turned into a combinatorial n-fold IP formulations, implying speed-ups for the corresponding problems (Sect. 4.7).

All the formulations in this section use integer variables. We implicitly assume it throughout and specifically highlight it in the first formulation for the sake of completeness.

4.1 Inequalities in constraints

In applications, it is common for problems to exhibit a structure similar to that of combinatorial n-fold IP with the only difference being that some of the equations \(E^{(n)} {\mathbf{x }}= {\mathbf{b }}\) are actually inequalities. Given an n-fold IP (in particular a combinatorial n-fold IP), we call the upper rows \((D~D~\cdots ~D) {\mathbf{x }}= {\mathbf{b }}^{0}\) globally uniform constraints, and the lower rows \(A {\mathbf{x }}^i = {\mathbf{b }}^i\), for all \(i \in [n]\), locally uniform constraints. So we first show that introducing inequalities into a combinatorial n-fold IP is possible. However, in the case of globally uniform constraints, we need a slightly different approach than in a standard n-fold IP to keep the rigid format of a combinatorial n-fold IP.

Lemma 9

For an integer programming problem with equations and inequalities

where \(E^{(n)}\) is a combinatorial n-fold IP matrix, there exists an equivalentFootnote 3 combinatorial n-fold IP instance \((IP)_{{\bar{E}}^{(n)},{\mathbf{b }},{\bar{{\mathbf{l }}}},{\bar{{\mathbf{u }}}},{\bar{f}}}\) of dimension \(n {\bar{t}}\) with \({\bar{t}} \le t+r+1\) and \(\langle {\bar{{\mathbf{l }}}}, {\bar{{\mathbf{u }}}}, {\bar{f}} \rangle \le {\mathcal {O}}(\langle {\mathbf{l }}, {\mathbf{u }}, f \rangle )\).

Remark

For both types of constraints, a strict inequality “<” can be enforced by enforcing a “\(\le \)” inequality and increasing the corresponding right hand side of the inequality by one, and similarly for “>”.

Proof of Lemma 9

Inequalities in locally uniform constraints. We add n variables \(x_{t+1}^i\) for all \(i \in [n]\) and we replace D with \((D~{\mathbf {0}})\). For each row i where we wish to enforce \({\mathbf {1}}^{\intercal } {\mathbf{x }}^i \le b^i\), we set the upper bound on \(u_{t+1}^i = \Vert {\mathbf{u }}^i-{\mathbf{l }}^i\Vert _1\) and lower bound \(l_{t+1}^i = 0\). Similarly, for each row i where we wish to enforce \({\mathbf {1}}^{\intercal } {\mathbf{x }}^i \ge b^i\), we set a lower bound \(l_{t+1}^i = -\Vert {\mathbf{u }}^i-{\mathbf{l }}^i\Vert _1\) and an upper bound \(u_{t+1}^i = 0\). For all remaining rows we set \(l_{t+1}^i = u_{t+1}^i = 0\), enforcing \({\mathbf {1}}^{\intercal } {\mathbf{x }}^i = b^i\).

Inequalities in globally uniform constraints. We replace the (already augmented) matrix D with \((D~I_r)\), where \(I_r\) is the \(r \times r\) identity matrix. Thus, we have introduced r new variables \(x_{t+j}^i\) with \(i \in [n]\) and \(j \in [r]\); however, we enforce them all to be 0 by setting \(l_{t+j}^i = u_{t+j}^i = 0\) for all \(i \in [n]\) and \(j \in [r]\). Next, we introduce an \((n+1)\)-st brick, set \(l_j^{n+1} = u_j^{n+1} = 0\) for all \(j \in [t]\) and set \(b^{n+1} = \Vert D\Vert _\infty \cdot \Vert (b^1, \dots , b^n)\Vert _1\). Then, for each row \(i \in [r]\) where we wish to enforce a “\(\le \)” inequality, we set \(l_{t+i}^{n+1} = 0\) and \(u_{t+i}^{n+1} = b^{n+1}\), and for each row \(i \in [r]\) with a “\(\ge \)” inequality, we set \(l_{t+i}^{n+1} = -b^{n+1}\) and \(u_{t+i}^{n+1} = 0\). We let \(l_{t+i}^{n+1} = u_{t+i}^{n+1} = 0\) for equality.

It remains to argue how for \(i \in [n], j \in [t]\) obtain the value of the original variable \(x^i_j\). Note that we have only added new (slack) variables to the original IP and thus for this we only have to project out the newly added variables. An injection \(\varphi \) certifying the equivalence of I and \(I'\) is the mapping which projects out the new variables (\(x^i_{t+1}\) for all i, \(x^i_{t+1+j}\) for all \(j \in [r]\), and \(x^{n+1}_j\) for all \(j \in [t + r + 1]\)). \(\square \)

4.2 Succinctness

Before approaching specific applications we first discuss our particular choice of encoding.

A common aspect shared by all of our applications is that bounding some parameter of the instance makes it preferable to view the instance in a succinct way (following the terminology of Faliszewski et al. [21], Onn [62, 63] calls these problems huge whereas Goemans and Rothvoß [32] call them high multiplicity). The standard way of viewing an instance is that the input is a collection of individual objects (bricks, matrix columns, voters, covering sets etc.). The succinct way of viewing an instance is by saying that identical objects are of the same type, giving a bound T on the number of distinct types, and then presenting the input as numbers \(n_1, \dots , n_{T}\) such that \(n_i\) is the number of objects of type i. Clearly, any standard instance can be converted to a succinct instance of roughly the same size (the number of objects is an upper bound on T), but the converse is not true as the numbers \(n_i\) might be large. Also, it is sometimes non-trivial to see (see Sect. 4.6) that the output can be represented succinctly, but we show that in all relevant cases it can.

In our applications we always state what are the types and what is the upper bound T on the number of types. We assume some arbitrary enumeration of the types. We also assume that the input is presented succinctly and thus we do not include the time needed to read and convert a standard instance into a succinct instance in the runtime of our algorithms.

4.3 Weighted set multicover

Bredereck et al. [8] defined the Weighted Set Multicover (WSM) problem, which is a significant generalization of the classical Set Cover problem:

The motivation of Bredereck et al. to study WSM was that it captures several problems from computational social choice and optimization problems on graphs implicit to previous works [24, 28, 49]. Bredereck et al. [8] designed an algorithm for WSM that runs in time \(2^{2^{{\mathcal {O}}(k\log k)}}{\mathcal {O}}(n)\), using Lenstra’s algorithm.

Our result yields an exponential improvement over the algorithm by Bredereck et al. [6], both in the dependence on the parameter and instance size:

Theorem 2

There is an algorithm that solves Weighted Set Multicover in time \(k^{{\mathcal {O}}(k^2)} {\mathcal {O}}(\log n + \log w_{\max })\) for succinctly represented instances of n sets over a universe of size k, where \(w_{\max }\) is the maximum weight of any set.

Proof

Observe that there are at most \(2^k\) different sets \(F \in 2^U\); let T be the number of these sets. We classify each pair (F, w) in the input into one of \(T \le 2^k\) different types, where (F, w) and \((F',w')\) are of the same type if \(F = F'\). Let \(n_i\), \(i \in [T]\), be the number of sets of type i. Further, for any two pairs (F, w) and \((F, w')\) with \(w \le w'\), for any solution containing \((F', w')\) and not containing (F, w) there is another solution which is at least as good and contains (F, w). We thus order the pairs \((F,w),(F,w'),\cdots \) by non-decreasing weight, so that lighter elements are used before heavier ones in any optimal solution.

This allows us to represent the input instance in a succinct way by T functions \(g^i, \dots , g^T:[n] \rightarrow \mathbb {N}\) such that, for any \(i \in [T]\), \(g^i(\ell )\) is defined as the sum of the \(\ell \) lightest elements of type i or \(+\infty \) in case that there are less than \(\ell \) elements of type i. Observe that since each \(g^i\) is a partial sum of a non-decreasing sequence of weights, it is a convex function.

We construct a combinatorial n-fold IP to solve the problem. Let \(x_{{\mathbf{f }}}^\tau \) for each \({\mathbf{f }}\in 2^U\) and each \(\tau \in [T]\) be a variable. Let \(l_{{\mathbf{f }}}^\tau = u_{{\mathbf{f }}}^\tau = 0\) for each \({\mathbf{f }}\in 2^U\) such that \({\mathbf{f }}\ne F^\tau \), and let \(l_{{\mathbf{f }}}^\tau = 0\) and \(u_{{\mathbf{f }}}^\tau = n_\tau \) for \({\mathbf{f }}= F^\tau \). The variable \(x_{{\mathbf{f }}}^\tau \) with \({\mathbf{f }}= F^\tau \) represents the number of sets of type \(\tau \) in the solution. The IP formulation then reads

note that \(f_i\) is 1 if \(i \in {\mathbf{f }}\) and 0 otherwise.

This formulation is a combinatorial n-fold IP for the following reason. First, its objective function is separable convex. Second, its constrains are divided into two sets as follows. The first k constraints are described by a sum whose indices run from 1 to T and whose coefficients are identical for each \(\tau \in [T]\) – these are the globally uniform constraints. The second T constraints are described by a sum whose indices run over \({\mathbf{f }}\in 2^U\) and each constraint only applies to a subset of variables determined by \(\tau \in [T]\) – these are the locally uniform constraints.

Let us determine the parameters \({\hat{\varDelta }},{\hat{r}},{\hat{t}},{\hat{n}}\) and \(\langle {\hat{I}} \rangle \) of this combinatorial n-fold IP instance \({\hat{I}}\). Clearly, the largest coefficient \(||{\hat{D}}||_\infty \) is 1, the number of globally uniform constraints \({\hat{r}}\) is k, the number of variables per brick \({\hat{t}}\) is \(2^k\), the number of bricks \({\hat{n}}\) is T, and the length of the input \(\langle {\hat{I}} \rangle \) is at most \(\log n + \log w_{\max }\). Thus, instance \({\hat{I}}\) can be solved using Theorem 1 in the claimed time \(k^{{\mathcal {O}}(k^2)} {\mathcal {O}}(\log n + \log w_{\max })\). \(\square \)

4.4 Stringology

A typical problem from stringology is to find a string y satisfying certain distance properties with respect to k strings \(s_1, \dots , s_k\). All previous fixed-parameter algorithms for such problems we are aware of for parameter k rely on Lenstra’s algorithm, or their complexity status was open (e.g., the complexity of Optimal Consensus [2] was unknown for all \(k \ge 4\)). Interestingly, Boucher and Wilkie [5] showed the counterintuitive fact that Closest String is easier to solve when k is large, which makes the parameterization by k even more significant. Finding an algorithm with run time only single-exponential in k was a repeatedly posed problem, e.g. by Bulteau et al. [9, Challenge #1] and Avila et al. [3, Problem 7.1]. By applying our result, we close this gap for a wide range of problems.

In order to do so, we show a single-exponential algorithm for an artificial “meta-problem” called \(\delta \)-Multi Strings which generalizes many previously studied problems in stringology.

We call a distance function \(\delta :\varSigma ^* \times \varSigma ^* \rightarrow \mathbb {N}\) character-wise wildcard-compatible if \(\delta (x,y) = \sum _{i=1}^L \delta (x[i], y[i])\) for any two strings \(x,y \in \varSigma ^L\), and \(\delta (e, \star ) = 0\) for all \(e \in \varSigma \). Note that a character-wise wildcard-compatible can be described by a \(|\varSigma | \times |\varSigma |\) sized table of the character distances.

Theorem 3

There is an algorithm that solves instances of \(\delta \)-Multi Strings in time \(K^{{\mathcal {O}}(k^2)} {\mathcal {O}}(\log L)\), where \(K = \max \left\{ |\varSigma |, k, \max _{e,f \in \varSigma } \delta (e,f) \right\} \) and \(\delta \) is a character-wise wildcard-compatible function.

Proof

Let us fix an instance of \(\delta \)-Multi Strings. We create an instance of combinatorial n-fold IP and show how solving it corresponds to solving the original \(\delta \)-Multi Strings problem.

As is standard, we represent the input as an \(L \times k\) matrix C with entries from \(\varSigma \cup \{\star \}\) whose rows are the input strings \(s_1, \dots , s_k\). There are at most \(T = (|\varSigma |+1)^k\) different input column types. Let \(n_{{\mathbf{e }}}\) be the number of columns of type \({\mathbf{e }}\in (\varSigma \cup \{\star \})^k\) and denote \(\mathcal{T}_c \subseteq (\varSigma \cup \{\star \})^k\) the set of input column types. A solution can be represented as an \(L \times (k+1)\) matrix with entries from \(\varSigma \cup \{\star \}\) whose last row does not contain any \(\star \) symbol. Thus, there are at most \((|\varSigma |+1)^k \cdot |\varSigma |\) solution column types \({\varvec{\alpha }}= ({\mathbf{e }}, f) \in \mathcal{T}_s\), where \(\mathcal{T}_s = \mathcal{T}_c \times \varSigma \) is the set of all solution column types. We say that an input column type \({\mathbf{e }}\in \mathcal{T}_c\) is compatible with a solution column type \({\varvec{\alpha }}\in \mathcal{T}_s\) if \({\varvec{\alpha }}= ({\mathbf{e }}, f)\) for some \(f \in \varSigma \).

Let us describe the combinatorial n-fold IP formulation. It consists of variables \(x_{{\varvec{\alpha }}}^{{\mathbf{e }}}\) for each \({\varvec{\alpha }}\in \mathcal{T}_s\) and each \({\mathbf{e }}\in \mathcal{T}_c\). Intuitively, the variable \(x_{{\varvec{\alpha }}}^{{\mathbf{e }}}\) encodes the number of columns \({\varvec{\alpha }}\) in the solution. However, to obey the format of combinatorial n-fold IP, we need a copy of this variable for each brick, hence the upper index \({\mathbf{e }}\). We set an upper bound \(u_{{\varvec{\alpha }}}^{{\mathbf{e }}} = n_{{\mathbf{e }}}\) for each two compatible \({\mathbf{e }}\) and \({\varvec{\alpha }}\), and we set \(u_{{\varvec{\alpha }}}^{{\mathbf{e }}} = 0\) for each pair which is not compatible; all lower bounds are set to 0. The locally uniform constraints are simply \(\sum _{{\varvec{\alpha }}\in \mathcal{T}_s} x_{{\varvec{\alpha }}}^{{\mathbf{e }}} = n_{{\mathbf{e }}}\) for all \({\mathbf{e }}\in \mathcal{T}_c\). The globally uniform constraints are

and the objective is

We then apply Theorem 1 with the following set of parameters:

-

\({\hat{\varDelta }}\) is one plus the largest coefficient in D, which is \({1+\max \nolimits _{e,f \in \varSigma } \delta (e,f) \le 1+ K}\),

-

\({\hat{r}}\) is the number of globally uniform constraints, which is 2k,

-

\({\hat{t}}\) is the number of variables per brick, which is \(|\mathcal{T}_s| \le (|\varSigma |+1)^k |\varSigma | \le K^{2k}\),

-

\({\hat{n}}\) is the number of bricks, which is \(|\mathcal{T}_c| \le (|\varSigma |+1)^k \le (K+1)^k\), and,

-

\(\langle {\hat{I}} \rangle \) is the size of the input \(\langle {\mathbf{b }}, {\mathbf {0}}, {\mathbf{u }}, {\mathbf{w }}\rangle \le \log L\).

Now, applying Theorem 1 yields the running time

where we have used \(k \le K\) and \({\mathcal {R}}\) is the time required to solve the continuous relaxation of the above IP. Note that \({\mathcal {R}}\) is polynomial in \({\hat{r}},{\hat{t}},{\hat{n}},\langle {\hat{I}} \rangle \). \(\square \)

When \(\delta \) is the Hamming distance \(d_H\), it is standard to first “normalize” the input to an equivalent instance over the alphabet [k] [37, Lemma 1]. With a slight abuse of notation we also define the Hamming distance for the wildcard character \(\star \) to be \(d_H(e, \star )=0\) for all \(e \in \varSigma \). Note that the “normalization” procedure still works in that case, cf. [37, Lemma 1]. Thus, for \(\delta = d_H\) we get rid of the dependence on \(|\varSigma |\):

Corollary 1

\(d_H\)-Multi Strings can be solved in time \(k^{{\mathcal {O}}(k^2)} {\mathcal {O}}(\log L)\).

That is, \(d_H\)-Multi Strings is fixed-parameter tractable for parameter k. Now we will show that many other string problems are single-exponential fixed-parameter tractable parameterized by k as well because they either can be seen as special cases of \(d_H\)-Multi Strings or (Turing) reduce to it. Foremost, this is the case for Closest String.

It is easy to see that Closest String is a special case of \(d_H\) -Multi Strings with \(b=0\), and \(D_i=d\) and \(d_i=0\) for all \(i \in [k]\).

Similar reasoning applies to many other problems. We first give their definitions and either explain how they reduce to Closest String, or in Table 2 explain how they reduce to \(d_H\)-Multi Strings.

Note. c-HRC has a Turing reduction (with \(k^k\) instances) to Closest String [1].

See Table 2 for a summary of our improvements for the above-mentioned problems. Now, the following theorem is a simple corollary of Corollary 1 and the fact that \(d_H\)-Multi Strings generalizes all of the problems defined above.

Theorem 4

The problems

-

Closest String, Farthest String, Distinguishing String Selection, Neighbor String, Closest String with Wildcards, Closest to Most Strings, c-HRC and Optimal Consensus are solvable in time \(k^{{\mathcal {O}}(k^2)} {\mathcal {O}}(\log L)\), and,

-

d-Mismatch is solvable in time \(k^{{\mathcal {O}}(k^2)} {\mathcal {O}}(L^2 \log L)\),

for inputs consisting of k strings of length L succinctly encoded by multiplicities of identical columns.

4.5 Computational social choice

A typical problem in computational social choice takes as input an election consisting of a set V of voters and a set C of candidates which are ranked by the voters, and the objective is to manipulate the election in certain ways to let a desired candidate win the election under some voting rule \({\mathcal {R}}\). This setup leads to a class of bribery problems, a prominent example of which is \({\mathcal {R}}\)-Swap Bribery where manipulation is by swaps of candidates which are consecutive in voters’ preference orders. For a long time, the only known algorithms minimizing the number of swaps required run times which were double-exponential in |C|. Improving those run times was posed as a challenge [6, Challenge #1]. Recently, Knop et al. [46] solved the challenge using Proposition 1. However, Knop et al.’s result has a cubic dependence \({\mathcal {O}}(|V|^3)\) on the number of voters, and the dependence on the number of candidates is still quite large, namely \(|C|^{{\mathcal {O}}(|C|^6)}\).

We improve their result to logarithmic dependence on |V|, and smaller dependence on |C| – as we shall see. However, before we do so, we first introduce the necessary definitions and terminology.

Elections. An election (C, V) consists of a set C of candidates and a set V of voters, who indicate their preferences over the candidates in C, represented via a preference order \(\succ _v\) for every \(v \in V\) which is a total order over C. For a candidate c we denote by \({\text {rank}}(c,v)\) their rank in \(\succ _v\) (that is, \({\text {rank}}(c,v) = \left| \left\{ c' \in C \mid c \succ _v c' \right\} \right| \)). Then, v’s most preferred candidate has rank 1 and their least preferred candidate has rank |C|. For distinct candidates \(c,c'\in C\), we write \(c\succ _v c'\) if voter v prefers c over \(c'\). To simplify notation, we sometimes identify the candidate set C with \(\{1,\cdots ,|C|\}\), in particular when expressing permutations over C. We sometimes identify a voter v with their preference order \(\succ _v\), as long as no confusion arises.

Swaps. Let (C, V) be an election and let \(\succ _v\in V\) be a voter. For candidates \(c,c'\in C\), a swap \(s = (c,c')_v\) means to exchange the positions of c and \(c'\) in \(\succ _v\). We denote the perturbed order by \(\succ _v^s\). A swap \((c,c')_v\) is admissible in \(\succ _v\) if \({{\,\mathrm{\mathrm{rank}}\,}}(c,v) = {{\,\mathrm{\mathrm{rank}}\,}}(c',v)-1\). A set S of swaps is admissible in \(\succ _v\) if they can be applied sequentially in \(\succ _v\), one after the other, in some order, such that each one of them is admissible. Note that the perturbed vote, denoted by \(\succ _v^S\), is independent from the order in which the swaps of S are applied (if each swap is admissible when applied). We also extend this notation for applying swaps in several votes and denote it \(V^S\). We specify v’s cost of swaps by a function \(\sigma ^v: C\times C\rightarrow \mathbb {Z}\), where \(\sigma ^v(c,c')\) is the cost of swapping c and \(c'\).

Voting rules. A voting rule \({\mathcal {R}}\) is a function that maps an election (C, V) to a subset \(W\subseteq C\) of winners. Let us define two significant classes of voting rules:

Scoring protocols. A scoring protocol is defined through a vector \({\mathbf{s }}= (s_1,\ldots ,s_{|C|})\) of integers with \(s_1\ge \cdots \ge s_{|C|} \ge 0\). For each position \({p\in \{1,\ldots ,|C|\}}\), value \(s_p\) specifies the number of points that each candidate c receives from each voter that ranks c as \(p^{\text {th}}\) best. Any candidate with the maximum number of points is a winner. Examples of scoring protocols include the Plurality rule with \({\mathbf{s }}= (1,0,\ldots ,0)\), the d-Approval rule with \({\mathbf{s }}= (1,\ldots ,1,0,\ldots ,0)\) with d ones, and the Borda rule with \({\mathbf{s }}= (|C|-1, |C|-2, \ldots , 1, 0)\). Throughout, we consider only natural scoring protocols for which \(s_1 \le |C|\), as is the case for the aforementioned popular rules.

C1 rules. A candidate \(c\in C\) is a Condorcet winner if any other \(c'\in C \setminus \{c\}\) satisfies \(|\{\succ _v \in V \mid c\succ _v c' \}| > |\{v \in V \mid c' \succ _v c\}|\). Fishburn [26] classified voting rules as C1, C2 or C3, depending on the kind of information needed to determine the winner. For candidates \(c,c' \in C\) let \(v(c,c')\) be the number of voters who prefer c over \(c'\), that is, \(v(c,c') = |\{\succ _v \in V \mid c \succ _v c'\}|\); we write \(c <_M c'\) if c beats \(c'\) in a head-to-head contest, that is, if \(v(c,c') > v(c',c)\).

A rule \({\mathcal {R}}\) is C1 if knowing \(<_M\) suffices to determine the winner, that is, for each pair of candidates \(c,c'\) we know whether \(v(c,c') > v(c',c), v(c,c') < v(c',c)\) or \(v(c,c') = v(c',c)\). An example is the \(\hbox {Copeland}^\alpha \) rule for \(\alpha \in [0,1]\), which specifies that for each head-to-head contest between two distinct candidates, if some candidate is preferred by a majority of voters then they obtain one point and the other candidate obtains zero points, and if a tie occurs then both candidates obtain \(\alpha \) points; the candidate with the largest sum of points wins.

We say that two voters \(v,v'\) are of the same type if \(\succ _{v} = \succ _{v'}\) and \(\sigma ^{v} = \sigma ^{v'}\); clearly \(T \le |V|\).

Theorem 5

\(\mathcal{R}\)-Swap Bribery can be solved in time

-

\(|C|^{{\mathcal {O}}(|C|^2)} {\mathcal {O}}(T^3 (\log |V| + \log \sigma _{\max }))\) for \(\mathcal{R}\) any natural scoring protocol, and,

-

\(|C|^{{\mathcal {O}}(|C|^4)} {\mathcal {O}}(T^3 (\log |V| + \log \sigma _{\max }))\) for \(\mathcal{R}\) any C1 rule,

where T is the number of voter types and \(\sigma _{\max }\) is the maximum cost of a swap.

Remark

For simplicity, we only show how Theorem 1 can be applied to speed up the \({\mathcal {R}}\)-Swap Bribery for two representative voting rules \({\mathcal {R}}\).

Proof of Theorem 5

Let \(n_1, \dots , n_{T}\) be the numbers of voters of given types. We enumerate all total orders on C by numbers in [|C|!]; that is, a \(j \in [|C|!]\) uniquely determines a total order on C. Let \(x_j^i\) for \(j \in [|C|!]\) and \(i \in [T]\) be a variable encoding the number of voters of type i that are bribed to be of order j in the solution. With slight abuse of notation, we denote by \(\sigma ^i(i,j)\) the cost of bribery for a voter of type i to change order to j (as by [20, Proposition 3.2] this cost is fixed). Regardless of the voting rule \(\mathcal{R}\), the objective and the locally uniform constraints are identical:

The number of variables per brick \({\hat{t}}\) is |C|!, the number of bricks \({\hat{n}}\) is T, and the size of the instance \(\langle {\hat{I}} \rangle \) is \(\log n + \log (|C|^2 \sigma _{\max })\), because at most \(|C|^2\) swaps suffice to permute any order \(i \in \left[ |C|!\right] \) to any other order \(j \in [|C|!]\) [20, Proposition 3.2]. Let us now describe the globally uniform constraints separately for the two classes of voting rules which we study here.

Natural scoring protocol. Let \({\mathbf{s }}= (s_1, \dots , s_{|C|})\) be a natural scoring protocol, i.e., \(s_1 \ge \dots \ge s_{|C|}\) and \(\Vert {\mathbf{s }}\Vert _\infty \le |C|\). With slight abuse of notation, we denote \(s_j(c)\), for \(j \in [|C|!]\) and \(c \in C\), the number of points obtained by candidate c from a voter of order j. The globally uniform constraints then enforce that \(c^{\star }\) gets at least as many points as any other candidate c:

The number \({\hat{r}}\) of these constraints is \(|C|-1\), and the largest coefficient in them is \(\Vert {\mathbf{s }}\Vert _\infty \le |C|\) (since \({\mathbf{s }}\) is a natural scoring protocol). Therefore, \({\hat{\varDelta }} = 1 + \Vert {\mathbf{s }}\Vert \le 1 + |C|\).

Any C1 rule. Let \(\alpha _j(c,c')\) be 1 if a voter with order \(j \in [|C|!]\) prefers c to \(c'\) and 0 otherwise. Recall that a voting rule is C1 if, to determine the winner, it is sufficient to know, for each pair of candidates \(c,c'\), whether \(v(c,c') > v(c',c), v(c,c') < v(c',c)\) or \(v(c,c') = v(c',c)\), where \(v(c,c') = |\{v \mid c \succ _v c'\}|\). We call a tuple \(<_M \in \{<, =, >\}^{|C|^2}\) a scenario. Thus, a C1 rule can be viewed as partitioning the set of all scenarios into those that select \(c^{\star }\) as a winner and those that do not. Then, it suffices to enumerate all the at most \(3^{|C|^2}\) scenarios \(<_M\) where \(c^{\star }\) wins, and for each of them to solve a combinatorial n-fold IP with the following globally uniform constraints enforcing the scenario \(<_M\).

The number \({\hat{r}}\) of these constraints is \(\left( {\begin{array}{c}|C|\\ 2\end{array}}\right) \le |C|^2\), and the largest coefficient in them is 1, so \({\hat{\varDelta }} = 2\). The proof is finished by plugging in the values \({\hat{\varDelta }}, {\hat{r}}, {\hat{t}}, {\hat{n}}\) and \(\langle {\hat{I}} \rangle \) into Theorem 1. \(\square \)

Connections between stringology and computational social choice. Challenge #3 of Bulteau et al. [9] asks for connections between problems in stringology and computational social choice. We demonstrate that in both fields combinatorial n-fold IP is an important tool. An important feature of both Bribery-like problems and Closest String-like problems is that permuting voters or characters does not have any effect. This fits well the n-fold IP format, which does not allow any interaction between bricks. It seems that this feature is important, as when it is taken away, such as in Closest Substring, the problem becomes \({\mathsf {W}}[1]\)-hard [56], even for parameter \(d+k\).

Another common feature is that both types of problems naturally admit ILP formulations for succinct variants of the problems, as mentioned above. Moreover, it was precisely this fact that made all previous algorithms double-exponential—the natural succinct formulation has exponentially many (in the parameter) variables and thus applying Lenstra’s algorithm leads to a double-exponential runtime.

4.6 Huge n-fold integer programming with small domains

Onn [62] introduced a high-multiplicity version of the standard n-fold IP problem, where the number of bricks is now given in binary. It thus closely relates to the Cutting Stock problem, the high-multiplicity version of Bin Packing, where the number of items of each size is given in binary. The complexity of Cutting Stock for constantly many item sizes was a long-standing open problem that was recently shown to be polynomial-time solvable by Goemans and Rothvoß [32] and by Jansen and Klein [40]. Previously, Huge n-fold IP was shown to be fixed-parameter tractable when \(D=I\) and A is totally unimodular. Using our result, we show that it is also fixed-parameter tractable when D and A are arbitrary, but the size of variable domains is bounded by a parameter.

Huge n-fold IP concerns problems that can be formulated as an n-fold IP with the number of bricks n given in binary. Bricks are thus represented not explicitly, but succinctly by their multiplicity. It is at first unclear if this problem admits an optimal solution which can be encoded in polynomial space, but this is possible by a theorem of Eisenbrand and Shmonin [18, Theorem 2], as pointed out by Onn [62, Theorem 1.3 (1)].

Let \(E = \left( {\begin{matrix}D\\ A\end{matrix}}\right) \in \mathbb {Z}^{(r+s) \times t}\). Let T be a positive integer representing the number of types of bricks. We are given T positive integers \(n_1, \dots , n_{T}\) with \(n = \sum _{i=1}^{T} n_i\) and vectors \({\mathbf{b }}^0 \in \mathbb {Z}^r\) and \({\mathbf{l }}^i, {\mathbf{u }}^i \in \mathbb {Z}^t\) and \({\mathbf{b }}^i \in \mathbb {Z}^s\), and for every \(i\in [T]\) a separable convex function \(f^i:\mathbb {Z}^t \rightarrow \mathbb {Z}\). For \(i \in [T]\) and \(\ell \in [n_i]\) we define the index function \(\iota \) as \(\iota (i, \ell ) {:}{=} \left( \sum _{j=1}^{i-1} n_j \right) + \ell \).

We call an n-fold IP instance given by the constraint matrix \(E^{(n)}\) with the right hand side \({\hat{{\mathbf{b }}}}\) defined by \({\hat{{\mathbf{b }}}}^{\iota (i,\ell )} \,{:=}\, {\mathbf{b }}^i\) and \({\hat{{\mathbf{b }}}}^0 \,{:=}\, {\mathbf{b }}^0\), lower and upper bounds defined by \({\hat{{\mathbf{l }}}}^{\iota (i, \ell )} := {\mathbf{l }}^i\) and \({\hat{{\mathbf{u }}}}^{\iota (i,\ell )} := {\mathbf{u }}^i\) with the objective function \(f({\mathbf{x }}) := \sum _{i=1}^T \sum _{\ell =1}^{n_i} f^i\big ({\mathbf{x }}^{\iota (i,\ell )}\big )\) the huge instance.