Abstract

We examine the behavior of accelerated gradient methods in smooth nonconvex unconstrained optimization, focusing in particular on their behavior near strict saddle points. Accelerated methods are iterative methods that typically step along a direction that is a linear combination of the previous step and the gradient of the function evaluated at a point at or near the current iterate. (The previous step encodes gradient information from earlier stages in the iterative process). We show by means of the stable manifold theorem that the heavy-ball method is unlikely to converge to strict saddle points, which are points at which the gradient of the objective is zero but the Hessian has at least one negative eigenvalue. We then examine the behavior of the heavy-ball method and other accelerated gradient methods in the vicinity of a strict saddle point of a nonconvex quadratic function, showing that both methods can diverge from this point more rapidly than the steepest-descent method.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

We consider methods for the smooth unconstrained optimization problem

where \(f: \mathbb {R}^n \rightarrow \mathbb {R}\) is a twice continuously differentiable function. We say that \(x^*\) is a critical point of (1) if \(\nabla f(x^*)=0\). Critical points that are not local minimizers are of little interest in the context of the optimization problem (1), so a desirable property of any algorithm for solving (1) is that it not be attracted to such a point. Specifically, we focus on functions with strict saddle points, that is, functions where the Hessian at each saddle point has at least one negative eigenvalue.

Our particular interest here is in methods that use gradients and momentum to construct steps. In many such methods, each step is a linear combination of two components: the gradient \(\nabla f\) evaluated at a point at or near the latest iterate, and a momentum term, which is the step between the current iterate and the previous iterate. There are rich convergence theories for these methods in the case in which f is convex or strongly convex, along with extensive numerical experience in some important applications. However, although these methods are applied frequently to nonconvex functions, little is known from a mathematical viewpoint about their behavior in such settings. Early results showed that a certain modified accelerated gradient method achieves the same order of convergence on a nonconvex problem as gradient descent [7, 10]—not a faster rate, as in the convex setting.

The heavy-ball method was studied in the nonconvex setting in [17]. From an argument based on a Lyapunov function, this work shows that heavy-ball converges to some set of stationary points when short step sizes are used. Their result also implies that with these shorter stepsizes, heavy-ball converges to these stationary points with a sublinear rate, just as gradient descent does in the nonconvex case. Another work studied the continuous time heavy-ball method [2]. For Morse functions (functions where all critical points have a non-singular Hessian matrix), this paper shows that the set of initial conditions from which heavy-ball converges to a local minimizer is an open dense subset of \(\mathbb {R}^n \times \mathbb {R}^n\). We present a similar result for a larger class of functions, using techniques like those of [9], where the authors show that gradient descent, started from a random initial point, converges to a strict saddle point with probability zero. We show that the discrete heavy-ball method essentially shares this property. We also study whether momentum methods can “escape” strict saddle points more rapidly than gradient descent. Experience with nonconvex quadratics indicate that, when started close to the (measure-zero) set of points from which convergence to the saddle point occurs, momentum methods do indeed escape more quickly.

After submission of our paper, [8] described a method that combines accelerated gradient, perturbation at points with small gradients and explicit negative curvature detection to attain a method with worst-case complexity guarantees.

Notation For compactness, we sometimes use the notation (y, z) to denote the vector \([y^T z^T]^T\), for \(y \in \mathbb {R}^n\) and \(z \in \mathbb {R}^n\).

2 Heavy-ball is unlikely to converge to strict saddle points

We show in this section that the heavy-ball method is not attracted to strict saddle points, unless initialized in a very particular way, that cannot occur if the starting point is chosen at random and the algorithm is modified slightly. Following [9], our proof is based on the stable manifold theorem.

We make the following assumption throughout this section.

Assumption 1

The function \(f: \mathbb {R}^n \rightarrow \mathbb {R}\) is \(r+1\) times continuously differentiable, for some integer \(r \ge 1\), and \(\nabla f\) has Lipschitz constant L.

Under this assumption, the eigenvalues of the Hessian \(\nabla ^2 f(x^*)\) are bounded in magnitude by L.

The heavy-ball method is a prototypical momentum method (see [13]), which proceeds as follows from a starting point \(x^0\):

Following [13], we can write (2) as follows:

Convergence for this method is known for the special case in which f is a strongly convex quadratic. Denote by m the positive lower bound on the eigenvalues of the Hessian of this quadratic, and recall that L is the upper bound. For the settings

a rigorous version of the eigenvalue-based argument in [13, Section 3.2] can be applied to show R-linear convergence with rate constant \(\sqrt{\beta }\), which is approximately \(1-\sqrt{m/L}\) when the ratio L / m is large. This suggests a complexity of \(O(\sqrt{L/m} \log \epsilon )\) iterations to reduce the error \(\Vert x^k - x^* \Vert \) by a factor of \(\epsilon \) (where \(x^*\) is the unique solution). Such rates are typical of accelerated gradient methods. They contrast with the \(O((L/m) \log \epsilon )\) rates attained by the steepest-descent method on such functions.

We note that the eigenvalue-based argument that is “sketched” by [13] does not extend rigorously beyond strongly convex quadratic functions. A more sophisticated argument based on Lyapunov functions is needed, like the one presented for Nesterov’s accelerated gradient method in [14, Chapter 4].

The key to our argument for non-convergence to strict saddle points lies in formulating the heavy-ball method as a mapping whose fixed points are stationary points of f and to which we can apply the stable manifold theorem. Following (3), we define this mapping to be

Note that

We have the following elementary result about the relationship of critical points for (1) to fixed points for the mapping G.

Lemma 1

If \(x^*\) is a critical point of f, then \((z_1^*,z_2^*)=(x^*,x^*)\) is a fixed point for G. Conversely, if \((z_1^*,z_2^*)\) is a fixed point for G, then \(x^*=z_1^*=z_2^*\) is a critical point for f.

Proof

The first claim is obvious by substitution into (5). For the second claim, we have that if \((z_1^*,z_2^*)\) is a fixed point for G, then

from which we have \(z_2^*=z_1^*\) and \(\nabla f(z_1^*)=0\), giving the result. \(\square \)

We now establish that G is a diffeomorphic mapping, a property needed for application of the stable manifold result.

Lemma 2

Suppose that Assumption 1 holds. Then the mapping G defined in (5) is a \(C^r\) diffeomorphism.

Proof

We need to show that G is injective and surjective, and that G and its inverse are r times continuously differentiable.

To show injectivity of G, suppose that \(G(x_1,x_2)=G(y_1,y_2)\). Then, we have

Therefore, \(x_1=y_1\), and so

demonstrating injectivity. To show that G is surjective, we construct its inverse \(G^{-1}\) explicitly. Let \((y_1,y_2)\) be such that

Then \(z_1=y_2\). From the first partition in (9), we obtain \(z_2 = (z_1-y_1-\alpha \nabla f(z_1) )/\beta + z_1\), which after substitution of \(z_1=y_2\) leads to

Thus, G is a bijection. Both G and \(G^{-1}\) are continuously differentiable one time less than f, so by Assumption 1, G is a \(C^r\)-diffeomorphism. \(\square \)

We are now ready to state the stable manifold theorem, which provides tools to let us characterize the set of escaping points.

Theorem 1

(Theorem III.7 of [15]) Let 0 be a fixed point for the \(C^r\) local diffeomorphism \(\phi : U \rightarrow E\) where U is a neighborhood of 0 in the Banach space E. Suppose that \(E = E_{cs} \oplus E_u\), where \(E_{cs}\) is the invariant subspace corresponding to the eigenvalues of \(D\phi (0)\) whose magnitude is less than or equal to 1, and \(E_u\) is the invariant subspace corresponding to eigenvalues of \(D\phi (0)\) whose magnitude is greater than 1. Then there exists a \(C^r\) embedded disc \(W_{loc}^{cs}\) that is tangent to \(E_{cs}\) at 0 called the local stable center manifold. Additionally, there exists a neighborhood B of 0 such that \(\phi (W_{loc}^{cs}) \cap B \subset W_{loc}^{cs}\), and that if z is a point such that \(\phi ^k (z) \in B\) for all \(k \ge 0\), then \(z \in W_{loc}^{cs}\).

This is a similar statement of the stable manifold theorem to the one found in [9], except that since we have to deal with complex eigenvalues here, we emphasize that the decomposition is between the eigenvalues whose magnitude is less than or equal to 1, and greater than 1, respectively. It guarantees the existence of a stable center manifold of dimension equal to the number of eigenvalues of the Jacobian at the critical point that are less than or equal to 1.

We show now that the Jacobian \(DG(x^*,x^*)\) has the properties required for application of this result, for values of \(\alpha \) and \(\beta \) similar to the choices (4) (note that the conditions on \(\alpha \) and \(\beta \) in this result hold when \(\alpha \in (0, 4/L)\) and \(\beta \in (-1+\alpha L/2,1)\), where L is the Lipschitz constant from Assumption 1). For purposes of this and future results in this section, we assume that at the point \(x^*\) we have \(\nabla f(x^*)=0\) and that the eigenvalue decomposition of \(\nabla ^2 f(x^*)\) can be written as

where the eigenvalues \(\lambda _1,\lambda _2,\ldots ,\lambda _n\) have

for some p with \(1 \le p < n\), where \(\varLambda = {{\mathrm{diag}}}(\lambda _1,\lambda _2, \ldots , \lambda _n)\), and where \(v_i\), \(i=1,2,\cdots ,n\) are the orthonormal set of eigenvectors that correspond to the eigenvalues in (12). The matrix \(V = [ v_1 \, | \, v_2 \, | \, \cdots \, | \, v_n]\) is orthogonal.

Theorem 2

Suppose that Assumption 1 holds. Let \(x^*\) be a critical point for f at which \(\nabla ^2 f(x^*)\) has p negative eigenvalues, where \(p \ge 1\). Consider the mapping G defined by (5) where

where \(\lambda _1\) is the largest positive eigenvalue of \(\nabla ^2 f(x^*)\). Then there are matrices \({\tilde{V}}_s \in \mathbb {R}^{2n \times (2n-p)}\) and \({\tilde{V}}_u \in \mathbb {R}^{2n \times p}\) such that (a) the \(2n \times 2n\) matrix \({\tilde{V}} = [ {\tilde{V}}_s \, | \, {\tilde{V}}_u ]\) is nonsingular; (b) the columns of \({\tilde{V}}_s\) span an invariant subspace of \(DG(x^*,x^*)\) corresponding to eigenvalues of \(DG(x^*,x^*)\) whose magnitude is less than or equal to 1; (c) the columns of \({\tilde{V}}_u\) span an invariant subspace of \(DG(x^*,x^*)\) corresponding to eigenvalues of \(DG(x^*,x^*)\) whose magnitude is greater than 1.

Proof

Since

we have from (11) that

By performing a symmetric permutation P on this matrix, interleaving rows/columns from the first block with rows/columns from the second block, we obtain a block diagonal matrix with \(2 \times 2\) blocks of the following form on the diagonals, that is,

where

The eigenvalues of \(M_i\) are obtained from the following quadratic in \(\mu \):

that is,

for which the roots are

We examine first the matrices \(M_i\) for which \(\lambda _i<0\). We have

so both roots in (18) are real. Since \(t(\cdot )\) is convex quadratic, with \(t(0) = \beta >0\) and \(t(1) = \alpha \lambda _i <0\), one root is in (0, 1) and the other is in \((1,\infty )\). We can thus write

where \(\mu _i^{\text {hi}}\) is the eigenvalue of \(M_i\) in the range \((1,\infty )\) and \(\mu _i^{\text {lo}}\) is the eigenvalue of \(M_i\) in the range (0, 1) (This claim can be verified by direct calculation of the product (19a)).

Consider now the matrices \(M_i\) for which \(\lambda _i = 0\). From (18), we have that the roots are 1 and \(\beta \), which are distinct, since \(\beta \in (0,1)\). The eigenvalue decompositions of these matrices have the form

and the \(S_i\) are \(2 \times 2\) nonsingular matrices.

When \(\lambda _i>0\), we show that the eigenvalues of \(M_i\) both have magnitude less than 1, under the given conditions on \(\alpha \) and \(\beta \). Both roots in (18) are complex exactly when the term under the square root is negative, and in this case the magnitude of both roots is

which is less than 1 by assumption. When both roots are real, we have \((1+\beta -\alpha \lambda _i)^2 - 4 \beta \ge 0\), and we require the following to be true to ensure that both are less than 1 in absolute value:

We deal with the right-hand inequality in (21) first. By rearranging, we show that this is implied by the following sequence of equivalent inequalities:

where the last is clearly true, because of \(\alpha >0\) and \(\lambda _i>0\). Thus the right-hand inequality in (21) is satisfied.

For the left-hand inequality in (21), we have

and the last condition holds because of the assumption that \(\beta > -1 + \alpha \lambda _1/2\). This completes our proof of the claim (21). Thus our assumptions on \(\alpha \) and \(\beta \) suffice to ensure that both eigenvalues of \(M_i\) defined in (15) have magnitude less than 1 when \(\lambda _i>0\).

By defining

where \(S_i\), \(i=n-p+1,\cdots ,n\) are the matrices defined in (19), we have from (14) that

We now define another 2n-dimensional permutation matrix \({\tilde{P}}\) that sorts the entries of the diagonal matrices \(\varLambda _i\), \(i=n-p+1, \cdots ,n\) into those whose magnitude is greater than one and those whose magnitude is less than or equal to one, to obtain

where

We now define

which is a nonsingular matrix, by nonsingularity of S and orthogonality of V, P, and \({\tilde{P}}\). As in the statement of the theorem, we define \({\tilde{V}}_s\) to be the first \(2n-p\) columns of \({\tilde{V}}\) and \({\tilde{V}}_u\) to be the last p columns. These define invariant spaces. For the stable space, we have

where all eigenvalues of \({\tilde{\varLambda }}_s\) have magnitude less than or equal to 1. For the unstable space, we have

where \({\tilde{\varLambda }}^{\text {hi}}\) is a diagonal matrix with all diagonal elements greater than 1. \(\square \)

We find a basis for the eigenspace that corresponds to the eigenvalues of \(DG(x^*,x^*)\) that are greater than 1 (that is, the column space of \({\tilde{V}}_u\)) in the following result.

Corollary 1

Suppose that the assumptions of Theorem 2 hold. Then the eigenvector of \(DG(x^*,x^*)\) that corresponds to the unstable eigenvalue \(\mu _i^{\text {hi}} >1\), \(i=n-p+1,\ldots ,n\) defined in (18) is

where \(v_i\) is an eigenvector of \(\nabla ^2 f(x^*)\) that corresponds to \(\lambda _i<0\). The set of such vectors forms an orthogonal basis for the subspace of \(\mathbb {R}^{2n}\) corresponding to the eigenvalues of \(DG(x^*,x^*)\) whose magnitude is greater than 1.

Proof

We have from (13) that

so the result holds provided that

But this is true because of (17), so (24) is an eigenvector of \(DG(x^*,x^*)\) corresponding to the eigenvalue \(\mu _i^{\text {hi}}\). Since the vectors \(\{ v_i \, | \, i=n-p+1, \ldots ,n \}\) form an orthogonal set, so do the vectors (24) for \(i=n-p+1, \ldots ,n\), completing the proof. \(\square \)

Our next result is similar to [9, Theorem 4.1]. It is for a modified version of the heavy-ball method in which the initial value for \(x^{-1}\) is perturbed from its usual choice of \(x^0\).

Theorem 3

Suppose that the assumptions of Theorem 2 hold. Suppose that the heavy-ball method is applied from an initial point of \((x^0,x^{-1}) = (x^0,x^0+\epsilon y)\), where \(x^0\) and y are random vectors with i.i.d. elements, and \(\epsilon >0\) is small. We then have

where the probability is taken over the starting vectors \(x^0\) and y.

Proof

Our proof tracks that of [9, Theorem 4.1]. As there, we define the stable set for \(x^*\) to be

For the neighborhood B of \((x^*,x^*) \in \mathbb {R}^{2n}\) promised by Theorem 1, we have for all \(z \in W^s (x^*)\) that there is some \(l \ge 0\) such that \(G^t(z) \in B\) for all \(t \ge l\), and therefore by Theorem 1 we must have \(G^l(z) \in W^{cs}_{loc} \cap B\). Thus \(W^s(x^*)\) is the set of points z such that \(G^l(z) \in W^{cs}_{loc}\) for some finite l. From Theorem 1, \( W^{cs}_{loc}\) is tangent to the subspace \(E_{cs}\) at \((x^*,x^*)\), and the dimension of \(E_{cs}\) is \(2n-p\), by Theorem 2 (since \(E_{cs}\) is the space spanned by the columns of \({\tilde{V}}_s\)). This subspace has measure zero in \(\mathbb {R}^{2n}\), since \(p \ge 1\). Since diffeomorphisms map sets of measure zero to sets of measure zero, and countable unions of measure zero sets have measure zero, we conclude that \(W^s(x^*)\) has measure zero. Thus the initialization strategy we have outlined produces a starting vector in \(W^s(x^*)\) with probability zero. \(\square \)

Theorem 3 does not guarantee that once the iterates leave the neighborhood of \(x^*\), they never return. It does not exclude the possibility that the sequence \(\{ (x^{k+1},x^k) \}\) returns infinitely often to a neighborhood of \((x^*,x^*)\).

We note that the tweak of taking \(x^{-1}\) slightly different from \(x^0\) does not affect practical performance of the heavy-ball method, and has in fact been proposed before [17]. It also does not disturb the theory that exists for this method, which for the case of quadratic f discussed in [13] rests on an argument based on the eigendecomposition of the (linear) operator DG, which is not affected by the modified starting point. We note too that the accelerated gradient methods to be considered in the next section can also allow \(x^{-1} \ne x^0\) without significantly affecting the convergence theory. A Lyapunov-function-based convergence analysis of this method (see, for example [14, Chapter 4], based on arguments in [16]) requires only trivial modification to accommodate \(x^{-1} \ne x^0\).

For the variant of heavy-ball method in which \(x^0=x^{-1}\), we could consider a random choice of \(x^0\) and ask whether there is zero probability of \((x^0,x^0)\) belonging to the measure-zero set \(W^s(x^*)\) defined by (25). The problem is of course that \((x^0,x^0)\) lies in the n-dimensional subspace \(Y^n := \{ (z_1,z_1) \, | \, z_1 \in \mathbb {R}^n \}\), and we would need to establish that the intersection \(W^s(x^*) \cap Y^n\) has measure zero in \(Y^n\). In other words, we need that the set \(\{ z_1 \, | \, (z_1,z_1) \in W^s(x^*) \}\) has measure zero in \(\mathbb {R}^n\). We have a partial result in this regard, pertaining to the set \(W_{loc}^{cs}\), which is the local counterpart of \(W^s(x^*)\). This result also makes use of the subspace \(E_{cs}\), defined as in Theorem 1, which is the invariant subspace corresponding to eigenvalues of \(DG(x^*,x^*)\) whose magnitudes are less than or equal to one.

Theorem 4

Suppose that the assumptions of Theorem 2 hold. Then any vector of the form (w, w) where \(w \in \mathbb {R}^n\) lies in the stable subspace \(E_{cs}\) only if \(w \in \text{ span } \{ v_1,v_2, \ldots , v_{n-p} \}\) that is, the span of eigenvectors of \(\nabla ^2 f(x^*)\) that correspond to nonnegative eigenvalues of this matrix.

Proof

We write \(w = \sum _{i=1}^n \tau _i v_i\) for some coefficients \(\tau _i\), \(i=1,2,\ldots ,n\), and show that \(\tau _i=0\) for \(i=n-p+1,\ldots ,n\).

We first show that

where \(\sigma _{0,i}=\tau _i\) and \(\eta _{0,i}=\tau _i\), \(i=1,2,\ldots ,n\). To derive recurrences for \(\sigma _{k,i}\) and \(\eta _{k,i}\), we consider the multiplication by \(DG(x^*,x^*)\) that takes us from stages k to \(k+1\). We have

By matching terms, we have

where \(M_i\) is defined in (15). Using the factorization (19), we have

By substitution from (19), we obtain

Because \(0< \mu _i^{\text {lo}}<1<\mu _i^{\text {hi}}\), it follows from this formula that

so if w has any component in the span of \(v_i\), \(i=n-p+1,\ldots ,n\) (that is, if \(\tau _i \ne 0\)), repeated multiplications of \((-w,w)\) by \(DG(x^*,x^*)\) will lead to divergence, so \((-w,w)\) cannot be in the subspace \(E_{cs}\). \(\square \)

A consequence of this theorem is that for a random choice of \(x^0\), there is probability zero that \((x^0-x^*,x^0-x^*) \in E_{cs}\), which is tangential to \(W^{cs}_{loc}\) at \(x^*\). Thus for \(x^0\) close to \(x^*\), there is probability zero that \((x^0,x^0)\) is in the measure-zero set \(W^{cs}_{loc}\). Successive iterations of (2) are locally similar to repeated multiplications of \((x^0-x^*,x^0-x^*)\) by the matrix \(DG(x^*,x^*)\), that is, for \((x^{k+1}-x^*,x^k-x^*)\) small, we have

Under the probability-one event that \(x^0-x^* \notin E_{cs}\), this suggests divergence of the iteration (2) away from \((x^*,x^*)\).

On the other hand, we can show that if the sequence passes sufficiently close to a point \((x^*,x^*)\) such that \(x^*\) satisfies second-order sufficient conditions to be a solution of (1), it subsequently converges to \((x^*,x^*)\). For this result we need the following variant of the stable manifold theorem.

Theorem 5

(Theorem III.7 of [15]) Let 0 be a fixed point for the \(C^r\) local diffeomorphism \(\phi : U \rightarrow E\) where U is a neighborhood of 0 in the Banach space E. Suppose that \(E_{s}\) is the invariant subspace corresponding to the eigenvalues of \(D\phi (0)\) whose magnitude is strictly less than 1. Then there exists a \(C^r\) embedded disc \(W_{loc}^{s}\) that is tangent to \(E_{s}\) at 0, and a neighborhood B of 0 such that \(W_{loc}^s \subset B\), and for all \(z \in W_{loc}^{s}\), we have \(\phi ^k(z) \rightarrow 0\) at a linear rate.

When \(x^*\) satisfies second-order conditions for (1), all eigenvalues of \(\nabla ^2 f(x^*)\) are strictly positive. It follows from the proof of Theorem 2 that under the assumptions of this theorem, all eigenvalues of \(DG(x^*,x^*)\) have magnitude strictly less than 1. Thus, the invariant subspace \(E_s\) in Theorem 5 is the full space (in our case, \(\mathbb {R}^{2n}\)), so \(W_{loc}^s\) is a neighborhood of \((x^*,x^*)\). It follows that there is some \(\epsilon >0\) such that if \(\Vert (x^{K+1},x^K) - (x^*,x^*) \Vert < \epsilon \) for some K, the sequence \((x^{k+1},x^k)\) for \(k \ge K\) converges to \((x^*,x^*)\) at a linear rate.

3 Speed of divergence on a Toy problem

In this section, we investigate the rate of divergence of an accelerated method on a simple nonconvex objective function, the quadratic with \(n=2\) defined by

Obviously, this function is unbounded below with a saddle point at \((0,0)^T\). Its gradient has Lipschitz constant \(L=1\). Despite being a trivial problem, it captures the behavior of gradient algorithms near strict saddle points for indefinite quadratics of arbitrary dimension, as is apparent from the analysis below.

We have described the heavy-ball method in (2). The steepest-descent method, by contrast, takes steps of the form

for some \(\alpha _k>0\). When \(\nabla f(x)\) has Lipschitz constant L, the choice \(\alpha _k \equiv 1/L\) leads to decrease in f at each iteration that is consistent with convergence of \(\Vert \nabla f(x^k) \Vert \) to zero at a sublinear rate when f is bounded below [12] (The classical theory for gradient descent says little about the case in which f is unbounded below, as in this example).

The gradient descent and heavy-ball methods will converge to the saddle point 0 for (27) only from starting points of the form \(x^0=(x^0_1,0)\) for any \(x^0_1 \in \mathbb {R}\). (In the case of heavy-ball, this claim follows from Theorem 4, using the fact that \((1,0)^T\) is the eigenvector of \(\nabla ^2 f\) that corresponds to the positive eigenvalue 1.) From any other starting point, both methods will diverge, with function values going to \(-\infty \). When the starting point \(x^0\) is very close to (but not on) the \(x_1\) axis, the typical behavior is that these algorithms pass close to 0 before diverging along the \(x_2\) axis. We are interested in the question: Does the heavy-ball method diverge away from 0 significantly faster than the steepest-descent method? The answer is “yes,” as we show in this section.

We consider a starting point that is just off the horizontal axis, that is,

For the steepest-descent method with constant steplength, we have

so that

One measure of repulsion from the saddle point is the number of iterations required to obtain \(|x^k_2| \ge 1\). Here it suffices for k to be large enough that \((1+\delta \alpha )^k \epsilon \ge 1\), for which (using the usual bound \(\log (1+\gamma ) \le \gamma \)) a sufficient condition is that

Making the standard choice of steplength \(\alpha = 1/L = 1\), we obtain

Consider now the heavy-ball method. Following (2), the iteration has the form:

(For this quadratic problem, the operator G defined by (5) is linear, so that DG is constant.) We can partition this recursion into \(x_1\) and \(x_2\) components, and write

where

The eigenvalues of these two matrices are given by (18), by setting \(\lambda _1=1\) and \(\lambda _2=-\delta \), respectively. For \(\alpha \) and \(\beta \) satisfying the conditions of Theorem 2, which translate here to

both eigenvalues of \(M_1\) are less than 1 in magnitude (as we show in the proof of Theorem 2), so the \(x_1\) components converge to zero. Again referring to the proof of Theorem 2, the eigenvalues of \(M_2\) are both real, with one of them greater than 1, suggesting divergence in the \(x_2\) component.

To understand rigorously the behavior of the \(x_2\) sequence, we make some specific choices of \(\alpha \) and \(\beta \). Consider

for some parameter \(\gamma \ge 0\). Note that for small \(\delta \) and \(\gamma \), these choices are consistent with (35). By substituting into (18), we see that the two eigenvalues of \(M_2\) are

For reasonable choices of \(\gamma \), we have that \(\mu _2^{\text {hi}} = 1 + c \sqrt{\delta }\) for a modest positive value of c. For specificity (and simplicity) let us consider \(\alpha =3\) and \(\gamma =0\), for which we have

The formula (19) yields \(M_2 = S_2 \varLambda _2 S_2^{-1}\), where \(\varLambda _2 = {{\mathrm{diag}}}( 1+\sqrt{3 \delta } , 1-\sqrt{3 \delta })\) and

From (33), and setting \(x^0_2=x^{-1}_2 = \epsilon \), we have

By substituting for \(\varLambda _2\) and \(S_2\), we obtain

where we simply drop the term involving \(\mu _2^{\text {lo}}\) in the final step and use \(1-\sqrt{3 \delta }>0\). It follows that

It follows from this bound, by a standard argument, that a sufficient condition for \(x_2^k \ge 1\) is

Thus we have confirmed that divergence from the saddle point occurs in

\(O(| \log \epsilon |/\sqrt{\delta })\) iterations for heavy-ball, versus \(O(| \log \epsilon |/\delta )\) iterations for gradient descent.

For larger values of \(\delta \), the divergence of steepest-descent and heavy-ball methods are both rapid, For appropriate choices of \(\alpha \) and \(\beta \), the iterates generated by both algorithms leave the vicinity of the saddle point quickly.

Steepest descent and heavy-ball on (27) with \(\delta =0.02\), from starting point \((0.25,0.01)^T\), with \(\alpha =0.75\), \(\beta =1-\alpha \delta = 0.985\). Every 5th iterate is plotted for each method

Figure 1 illustrates the divergence behavior of steepest descent and heavy-ball on the function (27) with \(\delta =.02\). We set \(\alpha =.75\) for both steepest descent and heavy-ball. For heavy-ball, we chose \(\beta =1-\alpha \delta = .985\). Both methods were started from \(x^0=(.25,.01)^T\). We see that the trajectory traced by steepest descent approaches the saddle point quite closely before diverging slowly along the \(x_2\) axis. The heavy-ball method “overshoots” the \(x_2\) axis (because of the momentum term) but quickly returns to diverging along the \(x_2\) direction at a faster rate than for steepest descent.

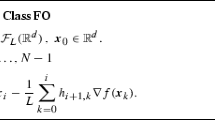

4 General accelerated gradient methods applied to quadratic functions

Here we analyze the rate at which a general class of accelerated gradient methods escape the saddle point of an n-dimensional quadratic function:

where H is a symmetric matrix with eigenvalues satisfying (12). We assume without loss of generality that H is in fact diagonal, that is,

The Lipschitz constant L for \(\nabla f\) is \(L=\max (\lambda _1,-\lambda _n)\).

As in Sect. 3, gradient descent with \(\alpha \in (0,1/L)\) satisfies

It follows that for all \(i \ge n-p+1\), for which \(\lambda _i<0\), gradient descent diverges in that component at a rate of \((1-\alpha \lambda _i)\).

Algorithm 1 describes a general accelerated gradient framework, including gradient descent when \(\gamma _k = \beta _k = 0\), heavy-ball when \(\gamma _k = 0\) and \(\beta _k > 0\), and accelerated gradient methods when \(\gamma _k = \beta _k > 0\). With f defined by (38), the update formula can be written as

which because of (39) is equivalent to

The following theorem describes the dynamics of \(x^{k+1}_i\) in (41) when \(\lambda _i < 0\).

Theorem 6

For all i such that \(\lambda _i < 0\), we have from (41) that

where

In addition if \(\gamma _{k+1} \ge \gamma _k\) and \(\beta _{k+1} \ge \beta _k\) for all k then,

Proof

We begin by showing that (43) holds for \(k=0\) and \(k=1\). The case for \(k=0\) is trivial as \(x^1 = x^0\). In addition, for \(k=1\), the update formula (41) becomes

Thus because \(b_{i,0} = 0\), we can make this consistent with (42) by setting \(b_{i,1} = \alpha |\lambda _i|\) which is exactly (43) for \(k = 1\).

Now assume that (42) holds for all \(k \le K-1\). From (41), using the inductive hypothesis for \(K-1\) and \(K-2\), we need to show

by the given definition of \(b_{i,K}\) in (43). Dividing both sides by \(x^0_i \prod _{m=0}^{K-1} (1 + b_{i,m})\), this is equivalent to

which is true because

is (43) with \(k = K\), as required.

Now we assume that \(\gamma _{K+1} \ge \gamma _K\) and \(\beta _{K+1} \ge \beta _K\) holds for all \(K \ge 1\) and show by induction that \(b_{i,K+1} \ge b_{i,K}\) holds for all \(K \ge 0\). This is clearly true for \(K=0\) since \(\alpha |\lambda _i| > 0\). Assume now that \(b_{i,k+1} \ge b_{i,k}\) holds for all \(0 \le k \le K-1\). We have

where the second inequality above follows from \(\gamma _{K+1} \ge \gamma _K\), \(\beta _{K+1} \ge \beta _K\) and \(b_{i,K-1} \ge b_{i,0} = 0\). \(\square \)

Since \(b_{i,k} \ge \alpha |\lambda _i|\) for all \(k \ge 1\), Theorem 6 shows that Algorithm 1 diverges at a faster rate than gradient descent when at least one of \(\gamma _k > 0\) or \(\beta _k > 0\) is true. Now we explore the rate of divergence by finding a limit for the sequence \(\{b_{i,k}\}_{k=1,2,\ldots }\).

Theorem 7

Let \(\gamma _{k+1} \ge \gamma _k\) and \(\beta _{k+1} \ge \beta _k\) hold for all k and denote \(\bar{\gamma } = \lim _{k \rightarrow \infty } \gamma _k\) and \(\bar{\beta } = \lim _{k \rightarrow \infty } \beta _k\). Then, for all i such that \(\lambda _i < 0\), we have

\(\lim _{k \rightarrow \infty } b_{i,k} = {\bar{b}}_i\), where \({\bar{b}}_i\) is defined by by

Proof

We can write (41) as follows:

Recall from Theorem 6 that \(x_i^k = (1 + b_{i,k-1}) x_i^{k-1}\). By substituting into the equation above, we have

Using Theorem 6 again, we have

By matching this expression with (47), we obtain

which after division by \(b_{i,k-1}\) yields

Now assume for contradiction that the nondecreasing sequence \(\{ b_{i,k}\}_{k=1,2,\ldots }\) has no finite limit, that is, \(b_{i,k} \rightarrow \infty \). Recalling that \(\gamma _k\) and \(\beta _k\) have a finite limit (as they are nondecreaseing sequences restricted to the interval [0, 1]), we have by taking the limit as \(k \rightarrow \infty \) in (49) that the left-hand side approaches \(\left( 1 + \alpha |\lambda _i| + \bar{\beta } + \bar{\gamma } \alpha |\lambda _i| \right) \), while the right-hand side approaches \(\infty \), a contradiction. Thus, the nondecreasing sequence \(\{b_{i,k}\}_{k=1,2,\ldots }\) has a finite limit, which we denote by \({\bar{b}}_i\).

To find the value for \({\bar{b}}_i\), we take limits as \(k \rightarrow \infty \) in (48) to obtain

By solving this quadratic for \({\bar{b}}_i\), we obtain

By Theorem 6, we know that \(b_{i,k} \ge 0\) for all k, so that \({\bar{b}}_i \ge 0\). Therefore, \({\bar{b}}_i\) satisfies (46), as claimed. \(\square \)

We apply Theorem 7 to parameter choices that typically appear in accelerated gradient methods.

Corollary 2

Let the assumptions of Theorem 7 hold, let \(\gamma _k = \beta _k\) hold for all k and let \(\bar{\gamma } = \bar{\beta } = 1\). Then,

Proof

By direct computation with \(\bar{\beta } = \bar{\gamma } = 1\), we have

\(\square \)

The above corollary gives a rate of divergence for many standard choices of the extrapolation parameters found in the accelerated gradient literature. In particular, it includes the sequence \(\beta _k = \gamma _k = \frac{t_{k-1}-1}{t_k}\) where \(t_0 = 1\) and

which was used in a seminal work by Nesterov [11]. (For completeness, we provide a proof that \(t_k \rightarrow \infty \), so that the assumptions of Corollary 2 hold for this sequence in the “Appendix”). Another setting used in recent works \(\beta _k = \gamma _k = \frac{k-1}{k+\eta +1}\) [1, 3, 5]. For proper choices of \(\eta > 0\), this scheme has a number of impressive properties such as fast convergence of iterates for accelerated proximal gradient as well as achieving a \(o(\frac{1}{k^2})\) of convergence in the weakly convex case.

We can also use Theorem 7 to derive a bound for the heavy-ball method. If we target the n-th eigenvalue and set \(\gamma _k = 0\) and \(\beta _k = 1 - \alpha |\lambda _n|\) for all k, simple manipulation shows that \({\bar{b}}_n = \sqrt{\alpha |\lambda _n|}\), which gives us an equivalent rate to that derived in (37). Note that for \({\bar{b}}_n\) defined in (50) we also have \({\bar{b}}_n \ge \sqrt{\alpha | \lambda _n|}\).

The divergence rates for accelerated gradient and heavy-ball methods are significantly faster than the per-iteration rate of \((1+\alpha |\lambda _n|)\) obtained for steepest descent.

5 Experiments

Some computational experiments verify that accelerated gradient methods escape saddle points on nonconvex quadratics faster than steepest descent.

We apply these methods to a quadratic with diagonal Hessian, with \(n=100\) and a single negative eigenvalue, \(\lambda _n = -\delta = -0.01\). The nonnegative eigenvalues are i.i.d. from the uniform distribution on [0, 1], and starting vector \(x^0\) is drawn from a uniform distribution on the unit ball. Figure 2 plots the norm of the component of \(x^k\) in the direction of the negative eigenvector \(e_n = (0,0, \ldots ,0,1)^T\) at each iteration k, for accelerated gradient, heavy-ball, and steepest descent. It also shows the divergence that would be attained if the theoretical limit \({\bar{b}}_i\) from Theorem 7 applied at every iteration. Steepest descent and heavy-ball were run with \(\alpha = 1/L\). Heavy-ball uses (36) to calculate \(\beta \), yielding \(\beta = 0.989\) in the case of \(\delta =.01\). Accelerated gradient is run with \(\alpha = 0.99/L\) and \(\beta _k = \gamma _k = \frac{t_k-1}{t_{k+1}}\) where \(t_k\) is defined in (51).

It is clear from Fig. 2 that accelerated gradient and heavy-ball diverge at a significantly faster rate than steepest descent. In addition, there is only a small discrepancy between applying accelerated gradient and its limiting rate that is derived in Corollary 2, suggesting that \(b_{i,k}\) approaches \({\bar{b}}_i\) rapidly as \(k \rightarrow \infty \).

Next we investigate how these methods behave for various dimensions n and various distributions of the eigenvalues. For two values of n (\(n=100\) and \(n=1000\)), we generate 100 random matrices with \(n-5\) eigenvalues uniformly distributed in the interval [0, 1], with the 5 negative eigenvalues uniformly distributed in \([-2\delta , -\delta ]\). The starting vector \(x^0\) is uniformly distributed on the unit ball. Algorithmic constants were the same as those used to generate Fig. 2. Each trial was run until the norm of the projection of the current iterate into the negative eigenspace of the Hessian was greater than the dimension n. The results of these trials are shown in Table 1.

As expected, accelerated gradient outperforms gradient descent in all respects. All convergence results are slightly faster for \(n=100\) than for \(n=1000\), because the random choice of \(x^0\) will, in expectation, have a smaller component in the span of the negative eigenvectors in the latter case. The eigenvalue spectrum has a much stronger effect on the divergence rate. For steepest descent, an order of magnitude decrease in the absolute value of the negative eigenvalues corresponds to an order of magnitude increase in iterations, whereas Nesterov’s accelerated gradient sees significantly less growth in the iteration count. While the accelerated gradient method diverges at a slightly slower rate than the theoretical limit, the relative difference between the two does not change much as the dimensions change. Thus, Theorem 7 provides a strong indication of the practical behavior of Nesterov’s method on these problems.

6 Conclusion

We have derived several results about the behavior of accelerated gradient methods on nonconvex problems, in the vicinity of critical points at which at least one of the eigenvalues of the Hessian \(\nabla ^2 f(x^*)\) is negative. Section 2 shows that the heavy-ball method does not converge to such a point when started randomly, while Sects. 3 and 4 show that when f is an indefinite quadratic, momentum methods diverge faster than the steepest-descent method.

It would be interesting to extend the results on speed of divergence to non-quadratic smooth functions f. It would also be interesting to know what can be proved about the complexity of convergence to a point satisfying second-order necessary conditions, for unadorned accelerated gradient methods. A recent work [6] shows that gradient descent can take exponential time to escape from a set of saddle points. We believe that a similar result holds for accelerated methods as well. The report [8], which appeared after this paper was submitted, describes an accelerated gradient method that add noise selectively to some iterates, and exploits negative curvature search directions when they are detected in the course of the algorithm. This approach is shown to have the \(O(\epsilon ^{-7/4})\) rate that characterizes the best known gradient-based algorithms for finding second-order necessary points of smooth nonconvex functions.

References

Attouch, H., Cabot, A.: Convergence rates of inertial forward–backward algorithms. SIAM J. Optim. 28(1), 849–874 (2018)

Attouch, H., Goudou, X., Redont, P.: The heavy ball with friction method, I. The continuous dynamical system: global exploration of the local minima of a real-valued function by asymptotic analysis of a dissipative dynamical system. Commun. Contemp. Math. 2(01), 1–34 (2000)

Attouch, H., Peypouquet, J.: The rate of convergence of Nesterov’s accelerated forward-backward method is actually faster than \(1/k^2\). SIAM J. Optim. 26(3), 1824–1834 (2016)

Bubeck, S.: Convex optimization: algorithms and complexity. Found. Trends Mach. Learn. 8(3–4), 231–357 (2015)

Chambolle, A., Dossal, Ch.: On the convergence of the iterates of the “fast iterative shrinkage/thresholding algorithm”. J. Optim. Theory Appl. 166(3), 968–982 (2015)

Du, S.S., Jin, C., Lee, J.D., Jordan, M.I., Singh, A., Poczos, B.: Gradient descent can take exponential time to escape saddle points. In: Guyon, I., Luxburg, U.V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R. (eds.) Advances in Neural Information Processing Systems, vol. 30, pp. 1067–1077. Curran Associates Inc, Red Hook (2017)

Ghadimi, S., Lan, G.: Accelerated gradient methods for nonconvex nonlinear and stochastic programming. Math. Program. 156(1–2), 59–99 (2016)

Jin, C., Netrapalli, P., Jordan, M.I.: Accelerated gradient descent escapes saddle points faster than gradient descent. arXiv preprint arXiv:1711.10456, (2017)

Lee, J.D., Simchowitz, M., Jordan, M.I., Recht, B.: Gradient descent only converges to minimizers. JMLR Workshop Conf. Proc. 49(1), 1–12 (2016)

Li, H., Lin, Z.: Accelerated proximal gradient methods for nonconvex programming. In: Cortes, C., Lawrence, N.D., Lee, D.D., Sugiyama, M., Garnett, R. (eds.) Advances in Neural Information Processing Systems, vol. 28, pp. 379–387. Curran Associates Inc, Red Hook (2015)

Nesterov, Y.: A method for unconstrained convex problem with the rate of convergence \(O(1/k^2)\). Dokl AN SSSR 269, 543–547 (1983)

Nesterov, Y.: Introductory Lectures on Convex Optimization: A Basic Course. Springer, New York (2004)

Polyak, B.T.: Introduction to Optimization. Optimization Software (1987)

Recht, B., Wright, S.J.: Nonlinear Optimization for Machine Learning (2017). (Manuscript in preparation)

Shub, M.: Global Stability of Dynamical Systems. Springer, Berlin (1987)

Tseng, P.: On accelerated proximal gradient methods for convex-concave optimization. Technical report, Department of Mathematics, University of Washington, (2008)

Zavriev, S.K., Kostyuk, F.V.: Heavy-ball method in nonconvex optimization problems. Comput. Math. Model. 4(4), 336–341 (1993)

Acknowledgements

We are grateful to Bin Hu for his advice and suggestions on the manuscript. We are also grateful to the referees and editor for helpful suggestions.

Author information

Authors and Affiliations

Corresponding author

Additional information

This work was supported by NSF Awards IIS-1447449, 1628384, 1634597, and 1740707; AFOSR Award FA9550-13-1-0138; and Subcontract 3F-30222 from Argonne National Laboratory. Part of this work was done while the second author was visiting the Simons Institute for the Theory of Computing, and partially supported by the DIMACS/Simons Collaboration on Bridging Continuous and Discrete Optimization through NSF Award CCF-1740425.

A properties of the sequence \(\{t_k\}\) defined by (51)

A properties of the sequence \(\{t_k\}\) defined by (51)

In this “Appendix” we show that the following two properties hold for the sequence defined by (51):

and

We begin by noting two well known properties of the sequence \(t_k\) (see for example [4, Section 3.7.2]):

and

To prove that \(\frac{t_{k-1}-1}{t_k}\) is monotonically increasing, we need

Since \(t_{k+1} \ge t_k\) (which follows immediately from (51)), it is sufficient to prove that

By manipulating this expression and using (54), we obtain the equivalent expression

By definition of \(t_{k+1}\), we have

Thus (56) holds, so the claim (52) is proved. The sequence \(\{ (t_{k-1}-1)/t_k \}\) is nonnegative, since \((t_0-1)/t_1 = 0\).

Now we prove (53). We can lower-bound \((t_{k-1}-1)/t_k\) as follows:

For an upper bound, we have from \(t_k \ge t_{k-1}\) that

Since \(t_{k-1} \rightarrow \infty \) (because of (55)), it follows from (57) and (58) that (53) holds.

Rights and permissions

About this article

Cite this article

O’Neill, M., Wright, S.J. Behavior of accelerated gradient methods near critical points of nonconvex functions. Math. Program. 176, 403–427 (2019). https://doi.org/10.1007/s10107-018-1340-y

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10107-018-1340-y