Abstract

This paper proposes tight semidefinite relaxations for polynomial optimization. The optimality conditions are investigated. We show that generally Lagrange multipliers can be expressed as polynomial functions in decision variables over the set of critical points. The polynomial expressions are determined by linear equations. Based on these expressions, new Lasserre type semidefinite relaxations are constructed for solving the polynomial optimization. We show that the hierarchy of new relaxations has finite convergence, or equivalently, the new relaxations are tight for a finite relaxation order.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

A general class of optimization problems is

where f and all \(c_i, c_j\) are polynomials in \(x :=(x_1, \ldots , x_n)\), the real decision variable. The \(\mathcal {E}\) and \(\mathcal {I}\) are two disjoint finite index sets of constraining polynomials. Lasserre’s relaxations [17] are generally used for solving (1.1) globally, i.e., to find the global minimum value \(f_{\min }\) and minimizer(s) if any. The convergence of Lasserre’s relaxations is related to optimality conditions.

1.1 Optimality conditions

A general introduction of optimality conditions in nonlinear programming can be found in [1, Section 3.3]. Let u be a local minimizer of (1.1). Denote the index set of active constraints

If the constraint qualification condition (CQC) holds at u, i.e., the gradients \(\nabla c_i(u)\) \((i \in J(u))\) are linearly independent (\(\nabla \) denotes the gradient), then there exist Lagrange multipliers \(\lambda _i \, (i \in \mathcal {E} \cup \mathcal {I})\) satisfying

The second equation in (1.4) is called the complementarity condition. If \(\lambda _j + c_j(u) >0\) for all \(j \in \mathcal {I}\), the strict complementarity condition (SCC) is said to hold. For the \(\lambda _i\)’s satisfying (1.3)–(1.5), the associated Lagrange function is

Under the constraint qualification condition, the second order necessary condition (SONC) holds at u, i.e., (\(\nabla ^2\) denotes the Hessian)

Here, \(\nabla c_i(u)^\perp \) is the orthogonal complement of \(\nabla c_i(u)\). If it further holds that

then the second order sufficient condition (SOSC) is said to hold. If the constraint qualification condition holds at u, then (1.3), (1.4) and (1.6) are necessary conditions for u to be a local minimizer. If (1.3), (1.4), (1.7) and the strict complementarity condition hold, then u is a strict local minimizer.

1.2 Some existing work

Under the archimedean condition (see Sect. 2), the hierarchy of Lasserre’s relaxations converges asymptotically [17]. Moreover, in addition to the archimedeanness, if the constraint qualification, strict complementarity, and second order sufficient conditions hold at every global minimizer, then the Lasserre’s hierarchy converges in finitely many steps [33]. For convex polynomial optimization, the Lasserre’s hierarchy has finite convergence under the strict convexity or sos-convexity condition [7, 20]. For unconstrained polynomial optimization, the standard sum of squares relaxation was proposed in [35]. When the equality constraints define a finite set, the Lasserre’s hierarchy also has finite convergence, as shown in [18, 24, 31]. Recently, a bounded degree hierarchy of relaxations was proposed for solving polynomial optimization [23]. General introductions to polynomial optimization and moment problems can be found in the books and surveys [21, 22, 25, 26, 39]. Lasserre’s relaxations provide lower bounds for the minimum value. There also exist methods that compute upper bounds [8, 19]. A convergence rate analysis for such upper bounds is given in [9, 10]. When a polynomial optimization problem does not have minimizers (i.e., the infimum is not achievable), there are relaxation methods for computing the infimum [38, 42].

A new type of Lasserre’s relaxations, based on Jacobian representations, were recently proposed in [30]. The hierarchy of such relaxations always has finite convergence, when the tuple of constraining polynomials is nonsingular (i.e., at every point in \(\mathbb {C}^n\), the gradients of active constraining polynomial are linearly independent; see Definition 5.1). When there are only equality constraints \(c_1(x)=\cdots = c_m(x)=0\), the method needs the maximal minors of the matrix

When there are inequality constraints, it requires to enumerate all possibilities of active constraints. The method in [30] is expensive when there are a lot of constraints. For unconstrained optimization, it is reduced to the gradient sum of squares relaxations in [27].

1.3 New contributions

When Lasserre’s relaxations are used to solve polynomial optimization, the following issues are typically of concerns:

-

The convergence depends on the archimedean condition (see Sect. 2), which is satisfied only if the feasible set is compact. If the set is noncompact, how can we get convergent relaxations?

-

The cost of Lasserre’s relaxations depends significantly on the relaxation order. For a fixed order, can we construct tighter relaxations than the standard ones?

-

When the convergence of Lasserre’s relaxations is slow, can we construct new relaxations whose convergence is faster?

-

When the optimality conditions fail to hold, the Lasserre’s hierarchy might not have finite convergence. Can we construct a new hierarchy of stronger relaxations that also has finite convergence for such cases?

This paper addresses the above concerns. We construct tighter relaxations by using optimality conditions. In (1.3)–(1.4), under the constraint qualification condition, the Lagrange multipliers \(\lambda _i\) are uniquely determined by u. Consider the polynomial system in \((x,\lambda )\):

A point x satisfying (1.8) is called a critical point, and such \((x,\lambda )\) is called a critical pair. In (1.8), once x is known, \(\lambda \) can be determined by linear equations. Generally, the value of x is not known. One can try to express \(\lambda \) as a rational function in x. Suppose \(\mathcal {E}\cup \mathcal {I} = \{1,\ldots , m\}\) and denote

When \(m\le n\) and \(\text{ rank }\,G(x)=m\), we can get the rational expression

Typically, the matrix inverse \(\big ( G(x)^TG(x) \big )^{-1}\) is expensive for usage. The denominator \(\det \big ( G(x)^TG(x) \big )\) is typically a high degree polynomial. When \(m>n\), \(G(x)^TG(x)\) is always singular and we cannot express \(\lambda \) as in (1.9).

Do there exist polynomials \(p_i\) (\(i \in \mathcal {E} \cup \mathcal {I}\)) such that each

for all \((x,\lambda )\) satisfying (1.8)? If they exist, then we can do:

-

The polynomial system (1.8) can be simplified to

$$\begin{aligned} \sum _{ i \in \mathcal {E} \cup \mathcal {I} } p_i(x) \nabla c_i(x) = \nabla f(x), \quad c_i(x) = 0 (i \in \mathcal {E}), \quad p_j(x) c_j(x) = 0 (j \in \mathcal {I}). \end{aligned}$$(1.11) -

For each \(j \in \mathcal {I}\), the sign condition \(\lambda _j \ge 0\) is equivalent to

$$\begin{aligned} p_j(x) \ge 0. \end{aligned}$$(1.12)

The new conditions (1.11) and (1.12) are only about the variable x, not \(\lambda \). They can be used to construct tighter relaxations for solving (1.1).

When do there exist polynomials \(p_i\) satisfying (1.10)? If they exist, how can we compute them? How can we use them to construct tighter relaxations? Do the new relaxations have advantages over the old ones? These questions are the main topics of this paper. Our major results are:

-

We show that the polynomials \(p_i\) satisfying (1.10) always exist when the tuple of constraining polynomials is nonsingular (see Definition 5.1). Moreover, they can be determined by linear equations.

-

Using the new conditions (1.11)–(1.12), we can construct tight relaxations for solving (1.1). To be more precise, we construct a hierarchy of new relaxations, which has finite convergence. This is true even if the feasible set is noncompact and/or the optimality conditions fail to hold.

-

For every relaxation order, the new relaxations are tighter than the standard ones in the prior work.

The paper is organized as follows. Section 2 reviews some basics in polynomial optimization. Section 3 constructs new relaxations and proves their tightness. Section 4 characterizes when the polynomials \(p_i\)’s satisfying (1.10) exist and shows how to determine them, for polyhedral constraints. Section 5 discusses the case of general nonlinear constraints. Section 6 gives examples of using the new relaxations. Section 7 discusses some related issues.

2 Preliminaries

Notation The symbol \(\mathbb {N}\) (resp., \(\mathbb {R}\), \(\mathbb {C}\)) denotes the set of nonnegative integral (resp., real, complex) numbers. The symbol \(\mathbb {R}[x] := \mathbb {R}[x_1,\ldots ,x_n]\) denotes the ring of polynomials in \(x:=(x_1,\ldots ,x_n)\) with real coefficients. The \(\mathbb {R}[x]_d\) stands for the set of real polynomials with degrees \(\le d\). Denote

For a polynomial p, \(\deg (p)\) denotes its total degree. For \(t \in \mathbb {R}\), \(\lceil t \rceil \) denotes the smallest integer \(\ge t\). For an integer \(k>0\), denote \( [k]:= \{1,2,\ldots , k\}. \) For \(x =(x_1,\ldots , x_n)\) and \(\alpha = (\alpha _1, \ldots , \alpha _n)\), denote

The superscript \(^T\) denotes the transpose of a matrix/vector. The \(e_i\) denotes the ith standard unit vector, while e denotes the vector of all ones. The \(I_m\) denotes the m-by-m identity matrix. By writing \(X\succeq 0\) (resp., \(X\succ 0\)), we mean that X is a symmetric positive semidefinite (resp., positive definite) matrix. For matrices \(X_1,\ldots , X_r\), \(\text{ diag }(X_1, \ldots , X_r)\) denotes the block diagonal matrix whose diagonal blocks are \(X_1,\ldots , X_r\). In particular, for a vector a, \(\text{ diag }(a)\) denotes the diagonal matrix whose diagonal vector is a. For a function f in x, \(f_{x_i}\) denotes its partial derivative with respect to \(x_i\).

We review some basics in computational algebra and polynomial optimization. They could be found in [4, 21, 22, 25, 26]. An ideal I of \(\mathbb {R}[x]\) is a subset such that \( I \cdot \mathbb {R}[x] \subseteq I\) and \(I+I \subseteq I\). For a tuple \(h := (h_1,\ldots ,h_m)\) of polynomials, \(\hbox {Ideal}(h)\) denotes the smallest ideal containing all \(h_i\), which is the set

The 2kth truncation of \(\hbox {Ideal}(h)\) is the set

The truncation \(\hbox {Ideal}(h)_{2k}\) depends on the generators \(h_1,\ldots , h_m\). For an ideal I, its complex and real varieties are respectively defined as

A polynomial \(\sigma \) is said to be a sum of squares (SOS) if \(\sigma = s_1^2+\cdots + s_k^2\) for some polynomials \(s_1,\ldots , s_k \in \mathbb {R}[x]\). The set of all SOS polynomials in x is denoted as \(\varSigma [x]\). For a degree d, denote the truncation

For a tuple \(g=(g_1,\ldots ,g_t)\), its quadratic module is the set

The 2kth truncation of \(\hbox {Qmod}(g)\) is the set

The truncation \(\hbox {Qmod}(g)_{2k}\) depends on the generators \(g_1,\ldots , g_t\). Denote

The set \(\hbox {IQ}(h,g)\) is said to be archimedean if there exists \(p \in \hbox {IQ}(h,g)\) such that \(p(x) \ge 0\) defines a compact set in \(\mathbb {R}^n\). If \(\hbox {IQ}(h,g)\) is archimedean, then

must be a compact set. Conversely, if K is compact, say, \(K \subseteq B(0,R)\) (the ball centered at 0 with radius R), then \(\hbox {IQ}(h, (g, R^2-x^Tx) )\) is always archimedean and \(h=0, \, (g, R^2-x^Tx) \ge 0\) define the same set K.

Theorem 2.1

(Putinar [36]) Let h, g be tuples of polynomials in \(\mathbb {R}[x]\). Let K be as above. Assume \(\hbox {IQ}(h,g)\) is archimedean. If a polynomial \(f\in \mathbb {R}[x]\) is positive on K, then \(f\in \hbox {IQ}(h,g)\).

Interestingly, if f is only nonnegative on K but standard optimality conditions hold (see Sect. 1.1), then we still have \(f \in \hbox {IQ}(h,g)\) [33].

Let \(\mathbb {R}^{\mathbb {N}_d^n}\) be the space of real multi-sequences indexed by \(\alpha \in \mathbb {N}^n_d\). A vector in \(\mathbb {R}^{\mathbb {N}_d^n}\) is called a truncated multi-sequence (tms) of degree d. A tms \(y :=(y_\alpha )_{ \alpha \in \mathbb {N}_d^n}\) gives the Riesz functional \(\mathscr {R}_y\) acting on \(\mathbb {R}[x]_d\) as

For \(f \in \mathbb {R}[x]_d\) and \(y \in \mathbb {R}^{\mathbb {N}_d^n}\), we denote

Let \(q \in \mathbb {R}[x]_{2k}\). The kth localizing matrix of q, generated by \(y \in \mathbb {R}^{\mathbb {N}^n_{2k}}\), is the symmetric matrix \(L_q^{(k)}(y)\) such that

for all \(a_1,a_2 \in \mathbb {R}[x]_{k - \lceil \deg (q)/2 \rceil }\). (The \(vec(a_i)\) denotes the coefficient vector of \(a_i\).) When \(q = 1\), \(L_q^{(k)}(y)\) is called a moment matrix and we denote

The columns and rows of \(L_q^{(k)}(y)\), as well as \(M_k(y)\), are indexed by \(\alpha \in \mathbb {N}^n\) with \(2|\alpha | + \deg (q) \le 2k\). When \(q = (q_1, \ldots , q_r)\) is a tuple of polynomials, we define

a block diagonal matrix. For the polynomial tuples h, g as above, the set

is a spectrahedral cone in \(\mathbb {R}^{ \mathbb {N}_n^{2k} }\). The set \(\hbox {IQ}(h,g)_{2k}\) is also a convex cone in \(\mathbb {R}[x]_{2k}\). The dual cone of \(\hbox {IQ}(h,g)_{2k}\) is precisely \(\mathscr {S}(h,g)_{2k}\) [22, 25, 34]. This is because \(\langle p, y \rangle \ge 0\) for all \(p \in \hbox {IQ}(h,g)_{2k}\) and for all \(y \in \mathscr {S}(h,g)_{2k}\).

3 The construction of tight relaxations

Consider the polynomial optimization problem (1.1). Let

be the vector of Lagrange multipliers. Denote the critical set

Each point in \(\mathcal {K}\) is called a critical pair. The projection

is the set of all real critical points. To construct tight relaxations for solving (1.1), we need the following assumption for Lagrange multipliers.

Assumption 3.1

For each \(i \in \mathcal {E} \cup \mathcal {I}\), there exists a polynomial \(p_i \in \mathbb {R}[x]\) such that for all \((x,\lambda ) \in \mathcal {K}\) it holds that

Assumption 3.1 is generically satisfied, as shown in Proposition 5.7. For the following special cases, we can get polynomials \(p_i\) explicitly.

-

(Simplex) For the simplex \(\{e^Tx-1 =0, \, x_1 \ge 0, \ldots , x_n \ge 0\}\), it corresponds to that \(\mathcal {E} = \{0\}\), \(\mathcal {I} = [n]\), \(c_0(x)=e^Tx-1, \, c_j(x)=x_j \, (j\in [n]).\) The Lagrange multipliers can be expressed as

$$\begin{aligned} \lambda _0 = x^T \nabla f(x), \quad \lambda _j = f_{x_j}-x^T\nabla f(x) \quad (j \in [n]). \end{aligned}$$(3.3) -

(Hypercube) For the hypercube \([-1,1]^n\), it corresponds to that \(\mathcal {E} = \emptyset \), \(\mathcal {I} = [n]\) and each \(c_j(x)= 1-x_j^2\). We can show that

$$\begin{aligned} \lambda _j = - \frac{1}{2}x_j f_{x_j} \quad (j\in [n]). \end{aligned}$$(3.4) -

(Ball or sphere) The constraint is \(1-x^Tx=0\) or \(1-x^Tx\ge 0\). It corresponds to that \(\mathcal {E} \cup \mathcal {I} = \{1\}\) and \(c_1 = 1-x^Tx\). We have

$$\begin{aligned} \lambda _1 = -\frac{1}{2}x^T \nabla f(x). \end{aligned}$$(3.5) -

(Triangular constraints) Suppose \(\mathcal {E} \cup \mathcal {I} =\{1, \ldots , m\} \) and each

$$\begin{aligned} c_i(x) = \tau _i x_i + q_i(x_{i+1}, \ldots , x_n) \end{aligned}$$for some polynomials \(q_i \in \mathbb {R}[x_{i+1}, \ldots , x_n]\) and scalars \(\tau _i \ne 0\). The matrix T(x), consisting of the first m rows of \([ \nabla c_1(x), \ldots , \nabla c_m(x) ],\) is an invertible lower triangular matrix with constant diagonal entries. Then,

$$\begin{aligned} \lambda = T(x)^{-1} \cdot \begin{bmatrix} f_{x_1}&\quad \cdots&\quad f_{x_m} \end{bmatrix}^T. \end{aligned}$$Note that the inverse \(T(x)^{-1}\) is a matrix polynomial.

For more general constraints, we can also express \(\lambda \) as a polynomial function in x on the set \(\mathcal {K}_c\). This will be discussed in Sect. 4 and Sect. 5.

For the polynomials \(p_i\) as in Assumption 3.1, denote

When the minimum value \(f_{\min }\) of (1.1) is achieved at a critical point, (1.1) is equivalent to the problem

We apply Lasserre’s relaxations to solve it. For an integer \(k>0\) (called the relaxation order), the kth order Lasserre’s relaxation for (3.7) is

Since \(x^0 = 1\) (the constant one polynomial), the condition \(\langle 1, y \rangle = 1\) means that \((y)_0=1\). The dual optimization problem of (3.8) is

We refer to Sect. 2 for the notation used in (3.8)–(3.9). They are equivalent to semidefinite programs (SDPs), so they can be solved by SDP solvers (e.g., SeDuMi [40]). For \(k=1,2,\ldots \), we get a hierarchy of Lasserre’s relaxations. In (3.8)–(3.9), if we remove the usage of \(\phi \) and \(\psi \), they are reduced to standard Lasserre’s relaxations in [17]. So, (3.8)–(3.9) are stronger relaxations.

By the construction of \(\phi \) as in (3.6), Assumption 3.1 implies that

By Lemma 3.3 of [6], f achieves only finitely many values on \(\mathcal {K}_c\), say,

A point \(u \in \mathcal {K}_c\) might not be feasible for (3.7), i.e., it is possible that \(c_{in}(u) \not \ge 0\) or \(\psi (u) \not \ge 0\). In applications, we are often interested in the optimal value \(f_c\) of (3.7). When (3.7) is infeasible, by convention, we set

When the optimal value \(f_{\min }\) of (1.1) is achieved at a critical point, \(f_c = f_{\min }\). This is the case if the feasible set is compact, or if f is coercive (i.e., for each \(\ell \), the sublevel set \(\{f(x) \le \ell \}\) is compact), and the constraint qualification condition holds. As in [17], one can show that

for all k. Moreover, \(\{ f_k \}\) and \(\{ f_k^\prime \}\) are both monotonically increasing. If for some order k it occurs that

then the kth order Lasserre’s relaxation is said to be tight (or exact).

3.1 Tightness of the relaxations

Let \(c_{in},\psi , \mathcal {K}_c,f_c\) be as above. We refer to Sect. 2 for the notation \(\hbox {Qmod}\,(c_{in},\psi )\). We begin with a general assumption.

Assumption 3.2

There exists \(\rho \in \hbox {Qmod}(c_{in},\psi )\) such that if \(u\in \mathcal {K}_c\) and \(f(u) < f_c\), then \(\rho (u) < 0\).

In Assumption 3.2, the hypersurface \(\rho (x) = 0\) separates feasible and infeasible critical points. Clearly, if \(u\in \mathcal {K}_c\) is a feasible point for (3.7), then \(c_{in}(u) \ge 0\) and \(\psi (u) \ge 0\), and hence \(\rho (u) \ge 0\). Assumption 3.2 generally holds. For instance, it is satisfied for the following general cases.

-

(a)

When there are no inequality constraints, \(c_{in}\) and \(\psi \) are empty tuples. Then, \(\hbox {Qmod}(c_{in},\psi ) = \varSigma [x]\) and Assumption 3.2 is satisfied for \(\rho =0\).

-

(b)

Suppose the set \(\mathcal {K}_c\) is finite, say, \(\mathcal {K}_c = \{ u_1, \ldots , u_D \}\), and

$$\begin{aligned} f(u_1), \ldots , f(u_{t-1}) < f_c \le f(u_{t}), \ldots , f(u_D). \end{aligned}$$Let \(\ell _1,\ldots , \ell _D\) be real interpolating polynomials such that \(\ell _i(u_j) = 1\) for \(i=j\) and \(\ell _i(u_j) = 0\) for \( i \ne j\). For each \(i = 1, \ldots , t{-}1\), there must exist \(j_i \in \mathcal {I}\) such that \(c_{j_i}(u_i) < 0\). Then, the polynomial

$$\begin{aligned} \rho := \sum _{i < t} \frac{-1}{c_{j_i}(u_i)} c_{j_i}(x) \ell _i(x)^2 + \sum _{i \ge t} \ell _i(x)^2 \end{aligned}$$(3.12)satisfies Assumption 3.2.

-

(c)

For each x with \(f(x)=v_i<f_c\), at least one of the constraints \(c_j(x)\ge 0, p_j(x) \ge 0 (j \in \mathcal {I})\) is violated. Suppose for each critical value \(v_i < f_c\), there exists \(g_i \in \{ c_j, p_j \}_{j \in \mathcal {I} }\) such that

$$\begin{aligned} g_i < 0 \quad \hbox { on } \quad \mathcal {K}_c \cap \{ f(x) = v_i \}. \end{aligned}$$Let \(\varphi _1, \ldots , \varphi _N\) be real univariate polynomials such that \(\varphi _i( v_j) = 0\) for \(i \ne j\) and \(\varphi _i( v_j) = 1\) for \(i = j\). Suppose \(v_t = f_c\). Then, the polynomial

$$\begin{aligned} \rho := \sum _{ i < t } g_i(x) \big ( \varphi _i(f(x)) \big )^2 + \sum _{ i \ge t } \big ( \varphi _i(f(x)) \big )^2 \end{aligned}$$(3.13)satisfies Assumption 3.2.

We refer to Sect. 2 for the archimedean condition and the notation \(\hbox {IQ}(h,g)\) as in (2.1). The following is about the convergence of relaxations (3.8)–(3.9).

Theorem 3.3

Suppose \(\mathcal {K}_c \ne \emptyset \) and Assumption 3.1 holds. If

-

(i)

\(\hbox {IQ}(c_{eq},c_{in}) + \hbox {IQ}(\phi , \psi )\) is archimedean, or

-

(ii)

\(\hbox {IQ}(c_{eq},c_{in}) \) is archimedean, or

-

(iii)

Assumption 3.2 holds,

then \(f_k = f_k^\prime = f_c\) for all k sufficiently large. Therefore, if the minimum value \(f_{\min }\) of (1.1) is achieved at a critical point, then \(f_k = f_k^\prime = f_{\min }\) for all k big enough if one of the conditions (i)–(iii) is satisfied.

Remark

In Theorem 3.3, the conclusion holds if any of conditions (i)–(iii) is satisfied. The condition (ii) is only about constraining polynomials of (1.1). It can be checked without \(\phi ,\psi \). Clearly, the condition (ii) implies the condition (i).

The proof for Theorem 3.3 is given in the following. The main idea is to consider the set of critical points. It can be expressed as a union of subvarieties. The objective f is a constant in each one of them. We can get an SOS type representation for f on each subvariety, and then construct a single one for f over the entire set of critical points.

Proof of Theorem 3.3

Clearly, every point in the complex variety

is a critical point. By Lemma 3.3 of [6], the objective f achieves finitely many real values on \(\mathcal {K}_c = \mathcal {K}_1 \cap \mathbb {R}^n\), say, they are \(v_1< \cdots < v_N\). Up to the shifting of a constant in f, we can further assume that \( f_c = 0. \) Clearly, \(f_c\) equals one of \(v_1, \ldots , v_N\), say \( v_t = f_c = 0. \)

Case I Assume \(\hbox {IQ}(c_{eq},c_{in}) + \hbox {IQ}(\phi , \psi )\) is archimedean. Let

the critical ideal. Note that \(\mathcal {K}_1 = \mathcal {V}_{\mathbb {C}}(I)\). The variety \(\mathcal {V}_{\mathbb {C}}(I)\) is a union of irreducible subvarieties, say, \(V_1, \ldots , V_\ell \). If \(V_i \cap \mathbb {R}^n \ne \emptyset \), then f is a real constant on \(V_i\), which equals one of \(v_1, \ldots , v_N\). This can be implied by Lemma 3.3 of [6] and Lemma 3.2 of [30]. Denote the subvarieties of \(\mathcal {V}_{\mathbb {C}}(I)\):

Let \(T_{t-1}\) be the union of irreducible subvarieties \(V_i\), such that either \(V_i \cap \mathbb {R}^n = \emptyset \) or \(f \equiv v_j\) on \(V_i\) with \(v_j < v_t = f_c\). Then, it holds that

By the primary decomposition of I [11, 41], there exist ideals \(I_{t-1}, I_t, \ldots , I_N \subseteq \mathbb {R}[x]\) such that

and \(T_i = \mathcal {V}_{\mathbb {C}}(I_i)\) for all \(i = t-1, t, \ldots , N\). Denote the semialgebraic set

For \(i=t-1\), we have \(\mathcal {V}_{\mathbb {R}}(I_{t-1}) \cap S = \emptyset \), because \(v_1, \ldots , v_{t-1} < f_c\). By the Positivstellensatz [2, Corollary 4.4.3], there exists \(p_0 \in \hbox {Preord}(c_{in},\psi )\)Footnote 1 satisfying \(2 + p_0 \in I_{t-1}\). Note that \(1+p_0 >0\) on \(\mathcal {V}_{\mathbb {R}}(I_{t-1}) \cap S\). The set \(I_{t-1} + \hbox {Qmod}(c_{in}, \psi )\) is archimedean, because \(I \subseteq I_{t-1}\) and

By Theorem 2.1, we have

Then, \(1+p_1 \in I_{t-1}\). There exists \(p_2 \in \hbox {Qmod}(c_{in}, \psi )\) such that

Since \(f = (f/4+1)^2 -1 \cdot (f/4-1)^2\), we have

So, when k is big enough, we have \(\sigma _{t-1} \in \hbox {Qmod}(c_{in}, \psi )_{2k}\).

For \(i=t\), \(v_t=0\) and f(x) vanishes on \(\mathcal {V}_{\mathbb {C}}(I_t)\). By Hilbert’s Strong Nullstellensatz [4], there exists an integer \(m_t > 0\) such that \(f^{m_t} \in I_t\). Define the polynomial

Then, we have that

This is because in the subtraction of \(D_1\), after expanding \(\big ( s_t(\epsilon ) \big )^2\), all the terms \(f^j\) with \(j < m_t\) are cancelled and \(f^j \in I_t\) for \(j \ge m_t\). So, \(D_1 \in I_t\). Let \(\sigma _t(\epsilon ) := s_t(\epsilon )^2\), then \(f + \epsilon - \sigma _t(\epsilon ) = D_1\) and

for some real scalars \(b_j(\epsilon )\), depending on \(\epsilon \).

For each \(i = t+1, \ldots , N\), \(v_i>0\) and \(f(x)/v_i - 1\) vanishes on \(\mathcal {V}_{\mathbb {C}}(I_i)\). By Hilbert’s Strong Nullstellensatz [4], there exists \(0< m_i \in \mathbb {N}\) such that \((f/v_i - 1)^{m_i} \in I_i.\) Let

Like for the case \(i=t\), we can similarly show that \(f - s_i^2 \, \in \, I_i\). Let \(\sigma _i = s_i^2\), then \(f - \sigma _i \, \in \, I_i\).

Note that \(\mathcal {V}_{\mathbb {C}}(I_i) \cap \mathcal {V}_{\mathbb {C}}(I_j) = \emptyset \) for all \(i \ne j\). By Lemma 3.3 of [30], there exist polynomials \(a_{t-1}, \ldots , a_N \in \mathbb {R}[x]\) such that

For \(\epsilon >0\), denote the polynomial

then

For each \(i \ne t\), \(f-\sigma _i \in I_i\), so

Hence, there exists \(k_1 >0\) such that

Since \(f+\epsilon -\sigma _t(\epsilon ) \in I_t\), we also have

Moreover, by the Eq. (3.15),

Each \(f^{m_t+j} a_t^2 \in I\), since \(f^{m_t+j} \in I_t\). So, there exists \(k_2 >0\) such that for all \(\epsilon >0\)

Since \(1-a_{t-1}^2-\cdots -a_N^2 \in I\), there also exists \(k_3 >0\) such that for all \(\epsilon >0\)

Hence, if \(k^* \ge \max \{k_1, k_2, k_3\}\), then we have

for all \(\epsilon >0\). By the construction, the degrees of all \(\sigma _i\) and \(a_i\) are independent of \(\epsilon \). So, \(\sigma _\epsilon \, \in \, \hbox {Qmod}(c_{in}, \psi )_{2k^*}\) for all \(\epsilon >0\) if \(k^*\) is big enough. Note that

This implies that \(f_{k^*} \ge f_c -\epsilon \) for all \(\epsilon >0.\) On the other hand, we always have \(f_{k^*} \le f_c\). So, \(f_{k^*} = f_c\). Moreover, since \(\{ f_k \}\) is monotonically increasing, we must have \(f_{k} = f_c\) for all \(k\ge k^*\).

Case II Assume \(\hbox {IQ}(c_{eq},c_{in})\) is archimedean. Because

the set \(\hbox {IQ}(c_{eq},c_{in})+ \hbox {IQ}(\phi ,\psi )\) is also archimedean. Therefore, the conclusion is also true by applying the result for Case I.

Case III Suppose the Assumption 3.2 holds. Let \(\varphi _1, \ldots , \varphi _N\) be real univariate polynomials such that \(\varphi _i( v_j) = 0\) for \(i \ne j\) and \(\varphi _i( v_j) = 1\) for \(i = j\). Let

Then, \(s \in \varSigma [x]_{2k_4}\) for some integer \(k_4 > 0\). Let

We show that there exist an integer \(\ell > 0\) and \(q \in \hbox {Qmod}(c_{in}, \psi )\) such that

This is because, by Assumption 3.2, \(\hat{f}(x) \equiv 0\) on the set

It has only a single inequality. By the Positivstellensatz [2, Corollary 4.4.3], there exist \(0< \ell \in \mathbb {N}\) and \(q = b_0 + \rho b_1 \) (\(b_0, b_1 \in \varSigma [x]\)) such that \(\hat{f}^{2\ell } + q \in \hbox {Ideal}(c_{eq},\phi ).\) By Assumption 3.2, \(\rho \in \hbox {Qmod}(c_{in}, \psi )\), so we have \(q \in \hbox {Qmod}(c_{in}, \psi )\).

For all \(\epsilon >0\) and \(\tau >0\), we have \(\hat{f} + \epsilon = \phi _\epsilon + \theta _\epsilon \) where

By Lemma 2.1 of [31], when \(\tau \ge \frac{1}{2\ell }\), there exists \(k_5\) such that, for all \(\epsilon >0\),

Hence, we can get

where \(\sigma _\epsilon = \theta _\epsilon + s \in \hbox {Qmod}(c_{in},\psi )_{2k_5}\) for all \(\epsilon >0\). Note that

For all \(\epsilon >0\), \(\gamma = f_c-\epsilon \) is feasible in (3.9) for the order \(k_5\), so \(f_{k_5} \ge f_c\). Because of (3.11) and the monotonicity of \(\{ f_k \}\), we have \(f_{k} = f_{k}^\prime = f_c\) for all \(k \ge k_5\). \(\square \)

3.2 Detecting tightness and extracting minimizers

The optimal value of (3.7) is \(f_c\), and the optimal value of (1.1) is \(f_{\min }\). If \(f_{\min }\) is achievable at a critical point, then \(f_c = f_{\min }\). In Theorem 3.3, we have shown that \(f_k = f_c\) for all k big enough, where \(f_k\) is the optimal value of (3.9). The value \(f_c\) or \(f_{\min }\) is often not known. How do we detect the tightness \( f_k = f_c \) in computation? The flat extension or flat truncation condition [5, 14, 32] can be used for checking tightness. Suppose \(y^*\) is a minimizer of (3.8) for the order k. Let

If there exists an integer \(t \in [d, k]\) such that

then \(f_k = f_{c}\) and we can get \(r := \text{ rank }\, M_t (y^*)\) minimizers for (3.7) [5, 14, 32]. The method in [14] can be used to extract minimizers. It was implemented in the software GloptiPoly 3 [13]. Generally, (3.17) can serve as a sufficient and necessary condition for detecting tightness. The case that (3.7) is infeasible (i.e., no critical points satisfy the constraints \(c_{in} \ge 0, \psi \ge 0\)) can also be detected by solving the relaxations (3.8)–(3.9).

Theorem 3.4

Under Assumption 3.1, the relaxations (3.8)–(3.9) have the following properties:

-

(i)

If (3.8) is infeasible for some order k, then no critical points satisfy the constraints \(c_{in} \ge 0, \psi \ge 0\), i.e., (3.7) is infeasible.

-

(ii)

Suppose Assumption 3.2 holds. If (3.7) is infeasible, then the relaxation (3.8) must be infeasible when the order k is big enough.

In the following, assume (3.7) is feasible (i.e., \(f_c < + \infty \)). Then, for all k big enough, (3.8) has a minimizer \(y^*\). Moreover,

-

(iii)

If (3.17) is satisfied for some \(t \in [d,k]\), then \(f_k = f_c\).

-

(iv)

If Assumption 3.2 holds and (3.7) has finitely many minimizers, then every minimizer \(y^*\) of (3.8) must satisfy (3.17) for some \(t \in [d,k]\), when k is big enough.

Proof

By Assumption 3.1, u is a critical point if and only if \(c_{eq}(u) = 0, \phi (u)=0\).

-

(i)

For every feasible point u of (3.7), the tms \([u]_{2k}\) (see Sect. 2 for the notation) is feasible for (3.8), for all k. Therefore, if (3.8) is infeasible for some k, then (3.7) must be infeasible.

-

(ii)

By Assumption 3.2, when (3.7) is infeasible, the set

$$\begin{aligned} \{x\in \mathbb {R}^n:c_{eq}(x)=0, \phi (x)=0, \rho (x) \ge 0 \} \end{aligned}$$is empty. It has a single inequality. By the Positivstellensatz [2, Corollary 4.4.3], it holds that \( -1\in \hbox {Ideal}(c_{eq}, \phi ) + \hbox {Qmod}(\rho ). \) By Assumption 3.2,

$$\begin{aligned} \hbox {Ideal}(c_{eq}, \phi ) + \hbox {Qmod}(\rho ) \subseteq \hbox {IQ}(c_{eq}, c_{in}) + \hbox {IQ}(\phi , \psi ). \end{aligned}$$Thus, for all k big enough, (3.9) is unbounded from above. Hence, (3.8) must be infeasible, by weak duality. When (3.7) is feasible, f achieves finitely many values on \(\mathcal {K}_c\), so (3.7) must achieve its optimal value \(f_c\). By Theorem 3.3, we know that \(f_k = f_k^\prime = f_c\) for all k big enough. For each minimizer \(u^*\) of (3.7), the tms \([u^*]_{2k}\) is a minimizer of (3.8).

-

(iii)

If (3.17) holds, we can get \(r := \text{ rank }\, M_t (y^*)\) minimizers for (3.7) [5, 14], say, \(u_1, \ldots , u_r\), such that \(f_k = f(u_i)\) for each i. Clearly, \(f_k = f(u_i) \ge f_c\). On the other hand, we always have \(f_k \le f_c\). So, \(f_k = f_c\).

-

(iv)

By Assumption 3.2, (3.7) is equivalent to the problem

$$\begin{aligned} \left\{ \begin{array}{rl} \min &{} f(x) \\ s.t. &{} c_{eq}(x) = 0, \, \phi (x) = 0, \, \rho (x) \ge 0. \end{array} \right. \end{aligned}$$(3.18)The optimal value of (3.18) is also \(f_c\). Its kth order Lasserre’s relaxation is

$$\begin{aligned} \left\{ \begin{array}{rl} \gamma _k^\prime := \min &{} \langle f, y \rangle \\ s.t. &{} \langle 1, y \rangle = 1, M_k(y) \succeq 0, \\ &{} L_{c_{eq}}^{(k)}(y) = 0, \, L_{\phi }^{(k)}(y) = 0, \, L_{\rho }^{(k)}(y) \succeq 0. \end{array} \right. \end{aligned}$$(3.19)Its dual optimization problem is

$$\begin{aligned} \left\{ \begin{array}{rl} \gamma _k := \, \max &{} \gamma \\ s.t. &{} f-\gamma \in \hbox {Ideal}(c_{eq},\phi )_{2k} + \hbox {Qmod}(\rho )_{2k}. \end{array} \right. \end{aligned}$$(3.20)By repeating the same proof as for Theorem 3.3(iii), we can show that

$$\begin{aligned} \gamma _k = \gamma _k^\prime = f_c \end{aligned}$$for all k big enough. Because \(\rho \in \hbox {Qmod}(c_{in}, \psi ),\) each y feasible for (3.8) is also feasible for (3.19). So, when k is big, each \(y^*\) is also a minimizer of (3.19). The problem (3.18) also has finitely many minimizers. By Theorem 2.6 of [32], the condition (3.17) must be satisfied for some \(t\in [d,k]\), when k is big enough. \(\square \)

If (3.7) has infinitely many minimizers, then the condition (3.17) is typically not satisfied. We refer to [25, §6.6].

4 Polyhedral constraints

In this section, we assume the feasible set of (1.1) is the polyhedron

where \(A = \begin{bmatrix} a_1&\cdots&a_m \end{bmatrix}^T \in \mathbb {R}^{m \times n}\), \(b = \begin{bmatrix} b_1&\cdots&b_m \end{bmatrix}^T \in \mathbb {R}^m\). This corresponds to that \(\mathcal {E}=\emptyset \), \(\mathcal {I}=[m]\), and each \(c_i(x) = a_i^Tx - b_i\). Denote

The Lagrange multiplier vector \(\lambda := \begin{bmatrix} \lambda _1&\cdots&\lambda _m \end{bmatrix}^T\) satisfies

If \(\text{ rank }\, A= m\), we can express \(\lambda \) as

If \(\text{ rank }\, A < m\), how can we express \(\lambda \) in terms of x? In computation, we often prefer a polynomial expression. If there exists \(L(x) \in \mathbb {R}[x]^{m \times (n+m)}\) such that

then we can get

where \(L_1(x)\) consists of the first n columns of L(x). In this section, we characterize when such L(x) exists and give a degree bound for it.

The linear function \(Ax-b\) is said to be nonsingular if \(\text{ rank }\, C(u) = m\) for all \(u \in \mathbb {C}^n\) (also see Definition 5.1). This is equivalent to that for every u, if \(J(u) = \{ i_1, \ldots , i_k \}\) (see (1.2) for the notation), then \( a_{i_1}, \ldots , a_{i_k} \) are linearly independent.

Proposition 4.1

The linear function \(Ax-b\) is nonsingular if and only if there exists a matrix polynomial L(x) satisfying (4.4). Moreover, when \(Ax-b\) is nonsingular, we can choose L(x) in (4.4) with \(\deg (L) \le m-\text{ rank }\,A\).

Proof

Clearly, if (4.4) is satisfied by some L(x), then \(\text{ rank }\,C(u) \ge m\) for all u. This implies that \(Ax-b\) is nonsingular.

Next, assume that \(Ax-b\) is nonsingular. We show that (4.4) is satisfied by some \(L(x) \in \mathbb {R}[x]^{m\times (n+m)}\) with degree \(\le m-\text{ rank }\,A\). Let \(r = \text{ rank }\,A\). Up to a linear coordinate transformation, we can reduce x to a r-dimensional variable. Without loss of generality, we can assume that \(\text{ rank }\, A = n\) and \(m \ge n\).

For a subset \(I := \{i_1, \ldots , i_{m-n}\}\) of [m], denote

For the case that \(I=\emptyset \) (the empty set), we set \(c_{\emptyset }(x) = 1\). Let

Step I For each \(I \in V\), we construct a matrix polynomial \(L_I(x)\) such that

The matrix \(L_I:= L_I(x)\) satisfying (4.5) can be given by the following \(2\times 3\) block matrix (\(L_I(\mathcal {J},\mathcal {K})\) denotes the submatrix whose row indices are from \(\mathcal {J}\) and whose column indices are from \(\mathcal {K}\)):

Equivalently, the blocks of \(L_I\) are:

For each \(I \in V\), \( A_{[m]\backslash I}\) is invertible. The superscript \(^{-T}\) denotes the inverse of the transpose. Let \(G:=L_I(x) C(x)\), then one can verify that

This shows that the above \(L_I(x)\) satisfies (4.5).

Step II We show that there exist real scalars \(\nu _I\) satisfying

This can be shown by induction on m.

-

When \(m=n\), \(V = \emptyset \) and \(c_{\emptyset }(x) = 1\), so (4.7) is clearly true.

-

When \(m > n\), let

$$\begin{aligned} N := \{ i \in [m] \, \mid \, \text{ rank }\, A_{[m]\backslash \{i\} } = n \}. \end{aligned}$$(4.8)

For each \(i \in N\), let \(V_i\) be the set of all \(I' \subseteq [m]\backslash \{i\}\) such that \(|I'|=m-n-1\) and \(\text{ rank }\, A_{[m]\backslash (I'\cup \{i\}) } = n\). For each \(i \in N\), by the assumption, the linear function \(A_{m \backslash \{i\} } x - b_{m \backslash \{i\}}\) is nonsingular. By induction, there exist real scalars \(\nu _{I'}^{(i)}\) satisfying

Since \(\text{ rank }\, A = n\), we can generally assume that \(\{a_1, \ldots , a_n\}\) is linearly independent. So, there exist scalars \(\alpha _1, \ldots , \alpha _n\) such that

If all \(\alpha _i =0\), then \(a_m = 0\), and hence A can be replaced by its first \(m-1\) rows. So, (4.7) is true by the induction. In the following, suppose at least one \(\alpha _i \ne 0\) and write

Then, \(a_{i_1}, \ldots , a_{i_k}, a_{m}\) are linearly dependent. For convenience, set \(i_{k+1} := m\). Since \(Ax-b\) is nonsingular, the linear system

has no solutions. Hence, there exist real scalars \(\mu _1, \ldots , \mu _{k+1}\) such that

This above can be implied by echelon’s form for inconsistent linear systems. Note that \(i_1, \ldots , i_{k+1} \in N\). For each \(j = 1,\ldots , k+1\), by (4.9),

Then, we can get

Since each \(I' \cup \{i_j\} \in V\), (4.7) must be satisfied by some scalars \(\nu _I\).

Step III For \(L_I(x)\) as in (4.5), we construct L(x) as

Clearly, L(x) satisfies (4.4) because

Each \(L_I(x)\) has degree \(\le m-n\), so L(x) has degree \(\le m-n\). \(\square \)

Proposition 4.1 characterizes when there exists L(x) satisfying (4.4). When it does, a degree bound for L(x) is \(m-\text{ rank }\,A\). Sometimes, its degree can be smaller than that, as shown in Example 4.3. For given A, b, the matrix polynomial L(x) satisfying (4.4) can be determined by linear equations, which are obtained by matching coefficients on both sides. In the following, we give some examples of \(L(x)C(x)=I_m\) for polyhedral sets.

Example 4.2

Consider the simplicial set

The equation \(L(x)C(x) = I_{n+1}\) is satisfied by

Example 4.3

Consider the box constraint

The equation \(L(x)C(x) = I_{2n}\) is satisfied by

Example 4.4

Consider the polyhedral set

The equation \(L(x)C(x) = I_{5}\) is satisfied by

Example 4.5

Consider the polyhedral set

The matrix L(x) satisfying \(L(x)C(x) = I_4\) is

5 General constraints

We consider general nonlinear constraints as in (1.1). The critical point conditions are in (1.8). We discuss how to express Lagrange multipliers \(\lambda _i\) as polynomial functions in x on the set of critical points.

Suppose there are totally m equality and inequality constraints, i.e.,

If \((x,\lambda )\) is a critical pair, then \(\lambda _i c_i(x) = 0\) for all \(i \in \mathcal {E} \cup \mathcal {I}\). So, the Lagrange multiplier vector \(\lambda := \begin{bmatrix} \lambda _1&\cdots&\lambda _m \end{bmatrix}^T\) satisfies the equation

Let C(x) be as in above. If there exists \(L(x) \in \mathbb {R}[x]^{m \times (m+n)}\) such that

then we can get

where \(L_1(x)\) consists of the first n columns of L(x). Clearly, (5.2) implies that Assumption 3.1 holds. This section characterizes when such L(x) exists.

Definition 5.1

The tuple \(c := (c_1, \ldots , c_m)\) of constraining polynomials is said to be nonsingular if \(\text{ rank }\, C(u) = m\) for every \(u \in \mathbb {C}^n\).

Clearly, c being nonsingular is equivalent to that for each \(u \in \mathbb {C}^n\), if \(J(u) = \{ i_1, \ldots , i_k \}\) (see (1.2) for the notation), then the gradients \( \nabla c_{i_1}(u), \ldots , \nabla c_{i_k}(u) \) are linearly independent. Our main conclusion is that (5.2) holds if and only if the tuple c is nonsingular.

Proposition 5.2

-

(i)

For each \(W(x) \in \mathbb {C}[x]^{s \times t}\) with \(s \ge t\), \(\text{ rank }\,W(u) = t\) for all \(u \in \mathbb {C}^n\) if and only if there exists \(P(x) \in \mathbb {C}[x]^{t \times s}\) such that

$$\begin{aligned} P(x) W(x) = I_t. \end{aligned}$$Moreover, for \(W(x) \in \mathbb {R}[x]^{s \times t}\), we can choose \(P(x) \in \mathbb {R}[x]^{t \times s}\) for the above.

-

(ii)

The constraining polynomial tuple c is nonsingular if and only if there exists \(L(x) \in \mathbb {R}[x]^{m \times (m+n)}\) satisfying (5.2).

Proof

(i)“\(\Leftarrow \)”: If \(L(x) W(x) = I_t\), then for all \(u\in \mathbb {C}^n\)

So, W(x) must have full column rank everywhere.

“\(\Rightarrow \)”: Suppose \(\text{ rank }\,W(u) = t\) for all \(u\in \mathbb {C}^n\). Write W(x) in columns

Then, the equation \(w_1(x)=0\) does not have a complex solution. By Hilbert’s Weak Nullstellensatz [4], there exists \(\xi _1(x) \in \mathbb {C}[x]^s\) such that \(\xi _1(x)^T w_1(x) = 1.\) For each \(i = 2, \ldots , t\), denote

then (use \(\sim \) to denote row equivalence between matrices)

where each (\(i=2,\ldots ,m\))

So, there exists \(P_1(x) \in \mathbb {R}[x]^{(s+1) \times s}\) such that

Since W(x) and \(W_1(x)\) are row equivalent, \(W_1(x)\) must also have full column rank everywhere. Similarly, the polynomial equation

does not have a complex solution. Again, by Hilbert’s Weak Nullstellensatz [4], there exists \(\xi _2(x) \in \mathbb {C}[x]^{s}\) such that

For each \(i = 3, \ldots , t\), let \(r_{2,i}(x):= \xi _2(x)^T w_2^{(1)}(x)\), then

where each (\(i=3,\ldots ,m\))

Similarly, \(W_1(x)\) and \(W_2(x)\) are row equivalent, so \(W_2(x)\) has full column rank everywhere. There exists \(P_2(x) \in \mathbb {C}[x]^{(s+2) \times (s+1)}\) such that

Continuing this process, we can finally get

Consequently, there exists \(P_i(x) \in \mathbb {R}[x]^{(s+i) \times (s+i-1)}\) for \(i=1,2,\ldots , t\), such that

Since \(W_t(x)\) is a unit upper triangular matrix polynomial, there exists \(P_{t+1}(x) \in \mathbb {R}[x]^{t \times (s+t)}\) such that \(P_{t+1}(x) W_t(x) = I_t.\) Let

then \(P(x) W(x) = I_m\). Note that \(P(x) \in \mathbb {C}[x]^{t \times s}\). For \(W(x) \in \mathbb {R}[x]^{s\times t}\), we can replace P(x) by

\(\big (P(x) + \overline{P(x)}\big )/2\) (the \(\overline{P(x)}\) denotes the complex conjugate of P(x)), which is a real matrix polynomial. (ii) The conclusion is implied directly by the item (i). \(\square \)

In Proposition 5.2, there is no explicit degree bound for L(x) satisfying (5.2). This question is mostly open, to the best of the author’s knowledge. However, once a degree is chosen for L(x), it can be determined by comparing coefficients of both sides of (5.2). This can be done by solving a linear system. In the following, we give some examples of L(x) satisfying (5.2).

Example 5.3

Consider the hypercube with quadratic constraints

The equation \(L(x)C(x)=I_n\) is satisfied by

Example 5.4

Consider the nonnegative portion of the unit sphere

The equation \(L(x)C(x)=I_{n+1}\) is satisfied by

Example 5.5

Consider the set

The equation \(L(x)C(x)=I_2\) is satisfied by

Example 5.6

Consider the quadratic set

The matrix \(L(x)^T\) satisfying \(L(x)C(x)=I_2\) is

We would like to remark that a polynomial tuple \(c=(c_1, \ldots , c_m)\) is generically nonsingular and Assumption 3.1 holds generically.

Proposition 5.7

For all positive degrees \(d_1, \ldots , d_m\), there exists an open dense subset \(\mathcal {U}\) of \(\mathcal {D} := \mathbb {R}[x]_{d_1} \times \cdots \times \mathbb {R}[x]_{d_m}\) such that every tuple \(c=(c_1, \ldots , c_m) \in \mathcal {U}\) is nonsingular. Indeed, such \(\mathcal {U}\) can be chosen as a Zariski open subset of \(\mathcal {D}\), i.e., it is the complement of a proper real variety of \(\mathcal {D}\). Moreover, Assumption 3.1 holds for all \(c \in \mathcal {U}\), i.e., it holds generically.

Proof

The proof needs to use resultants and discriminants, which we refer to [29].

First, let \(J_1\) be the set of all \((i_1, \ldots , i_{n+1})\) with \(1 \le i_1< \cdots < i_{n+1} \le m\). The resultant \(Res(c_{i_1}, \ldots , c_{i_{n+1}})\) [29, Section 2] is a polynomial in the coefficients of \(c_{i_1}, \ldots , c_{i_{n+1}}\) such that if \(Res(c_{i_1}, \ldots , c_{i_{n+1}}) \ne 0\) then the equations

have no complex solutions. Define

For the case that \(m\le n\), \(J_1 = \emptyset \) and we just simply let \(F_1(c) = 1\). Clearly, if \(F_1(c) \ne 0\), then no more than n polynomials of \(c_1, \ldots , c_m\) have a common complex zero.

Second, let \(J_2\) be the set of all \((j_1, \ldots , j_k)\) with \(k \le n\) and \(1 \le j_1< \cdots < j_k \le m\). When one of \(c_{j_1}, \ldots , c_{j_k}\) has degree bigger than one, the discriminant \(\varDelta (c_{j_1}, \ldots , c_{j_k})\) is a polynomial in the coefficients of \(c_{j_1}, \ldots , c_{j_k}\) such that if \(\varDelta (c_{j_1}, \ldots , c_{j_k}) \ne 0\) then the equations

have no singular complex solution [29, Section 3], i.e., at every complex common solution u, the gradients of \(c_{j_1}, \ldots , c_{j_k}\) at u are linearly independent. When all \(c_{j_1}, \ldots , c_{j_k}\) have degree one, the discriminant of the tuple \((c_{j_1}, \ldots , c_{j_k})\) is not a single polynomial, but we can define \(\varDelta (c_{j_1}, \ldots , c_{j_k})\) to be the product of all maximum minors of its Jacobian (a constant matrix). Define

Clearly, if \(F_2(c) \ne 0\), then then no n or less polynomials of \(c_1, \ldots , c_m\) have a singular complex comon zero.

Last, let \(F(c) := F_1(c) F_2(c)\) and

Note that \(\mathcal {U}\) is a Zariski open subset of \(\mathcal {D}\) and it is open dense in \(\mathcal {D}\). For all \(c\in \mathcal {D}\), no more than n of \(c_1, \ldots , c_m\) can have a complex common zero. For any k polynomials (\(k \le n\)) of \(c_1, \ldots , c_m\), if they have a complex common zero, say, u, then their gradients at u must be linearly independent. This means that c is a nonsingular tuple.

Since every \(c \in \mathcal {U}\) is nonsingular, Proposition 5.2 implies (5.2), whence Assumption 3.1 is satisifed. Therefore, Assumption 3.1 holds for all \(c \in \mathcal {U}\). So, it holds generically. \(\square \)

6 Numerical examples

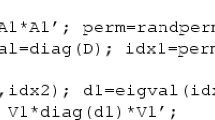

This section gives examples of using the new relaxations (3.8)–(3.9) for solving the optimization problem (1.1), with usage of Lagrange multiplier expressions. Some polynomials in the examples are from [37]. The computation is implemented in MATLAB R2012a, on a Lenovo Laptop with CPU@2.90GHz and RAM 16.0G. The relaxations (3.8)–(3.9) are solved by the software GloptiPoly 3 [13], which calls the SDP package SeDuMi [40]. For neatness, only four decimal digits are displayed for computational results.

The polynomials \(p_i\) in Assumption 3.1 are constructed as follows. Order the constraining polynomials as \(c_1, \ldots , c_m\). First, find a matrix polynomial L(x) satisfying (4.4) or (5.2). Let \(L_1(x)\) be the submatrix of L(x), consisting of the first n columns. Then, choose \((p_1, \ldots , p_m)\) to be the product \(L_1(x) \nabla f(x)\), i.e.,

In all our examples, the global minimum value \(f_{\min }\) of (1.1) is achieved at a critical point. This is the case if the feasible set is compact, or if f is coercive (i.e.,the sublevel set \(\{f(x) \le \ell \}\) is compact for all \(\ell \)), and the constraint qualification condition holds.

By Theorem 3.3, we have \(f_k = f_{\min }\) for all k big enough, if \(f_c = f_{\min }\) and any of its conditions i)-iii) holds. Typically, it might be inconvenient to check these conditions. However, in computation, we do not need to check them at all. Indeed, the condition (3.17) is more convenient for usage. When there are finitely many global minimizers, Theorem 3.4 proved that (3.17) is an appropriate criteria for detecting convergence. It is satisfied for all our examples, except Examples 6.1, 6.7 and 6.9 (they have infinitely many minimizers).

We compare the new relaxations (3.8)–(3.9) with standard Lasserre’s relaxations in [17]. The lower bounds given by relaxations in [17] (without using Lagrange multiplier expressions) and the lower bounds given by (3.8)–(3.9) (using Lagrange multiplier expressions) are shown in the tables. The computational time (in seconds) is also compared. The results for standard Lasserre’s relaxations are titled “w./o. L.M.E”, and those for the new relaxations (3.8)–(3.9) are titled “with L.M.E.”.

Example 6.1

Consider the optimization problem

The matrix polynomial L(x) is given in Example 4.2. Since the feasible set is compact, the minimum \(f_{\min } =0\) is achieved at a critical point. The condition ii) of Theorem 3.3 is satisfied.Footnote 2 Each feasible point \((x_1,x_2,x_3)\) with \(x_1x_2=0\) is a global minimizer. The computational results for standard Lasserre’s relaxations and the new ones (3.8)–(3.9) are in Table 1. It confirms that \(f_k = f_{\min }\) for all \(k\ge 3\), up to numerical round-off errors.

Example 6.2

Consider the optimization problem

The matrix polynomial \(L(x) = \begin{bmatrix} \frac{1}{2}x_1&\frac{1}{2}x_2&\frac{1}{2}x_3&-1 \end{bmatrix}\). The objective f is the sum of the positive definite form \(x_1^4+x_2^4+x_3^4\) and the Motzkin polynomial

Note that M(x) is nonnegative everywhere but not SOS [37]. Clearly, f is coercive and \(f_{\min }\) is achieved at a critical point. The set \(\hbox {IQ}(\phi ,\psi )\) is archimedean, because

defines a compact set. So, the condition i) of Theorem 3.3 is satisfied.Footnote 3 The minimum value \(f_{\min }= \frac{1}{3}\), and there are 8 minimizers \( (\pm \frac{1}{\sqrt{3}}, \, \pm \frac{1}{\sqrt{3}}, \, \pm \frac{1}{\sqrt{3}}). \) The computational results for standard Lasserre’s relaxations and the new ones (3.8)–(3.9) are in Table 2. It confirms that \(f_k = f_{\min }\) for all \(k\ge 4\), up to numerical round-off errors.

Example 6.3

Consider the optimization problem:

The matrix polynomial L(x) is

The feasible set is compact, so \(f_{\min }\) is achieved at a critical point. One can show that \(f_{\min } =0\) and the minimizer is the origin. The condition ii) of Theorem 3.3 is satisfied, because \(\hbox {IQ}(c_{eq},c_{in})\) is archimedean.Footnote 4 The computational results for standard Lasserre’s relaxations and the new ones (3.8)–(3.9) are in Table 3.

Example 6.4

Consider the polynomial optimization problem

It is motivated from an example in [12, §3]. The first column of L(x) is

and the second column of L(x) is

The objective is coercive, so \(f_{\min }\) is achieved at a critical point. The minimum value \(f_{\min } = 56+3/4+25\sqrt{5} \approx 112.6517\) and the minimizers are \((\pm \,\sqrt{1/2}, \pm \,(\sqrt{5/8}+\sqrt{1/2}))\). The computational results for standard Lasserre’s relaxations and the new ones (3.8)-(3.9) are in Table 4. It confirms that \(f_k = f_{\min }\) for all \(k\ge 4\), up to numerical round-off errors.

Example 6.5

Consider the optimization problem

The matrix polynomial L(x) is

The objective is a variation of Robinson’s form [37]. It is a positive definite form over the nonnegative orthant \(\mathbb {R}_+^3\), so the minimum value is achieved at a critical point. In computation, we got \(f_{\min } \approx 0.9492\) and a global minimizer (0.9071, 1.1024, 0.9071). The computational results for standard Lasserre’s relaxations and the new ones (3.8)-(3.9) are in Table 5. It confirms that \(f_k = f_{\min }\) for all \(k\ge 3\), up to numerical round-off errors.

Example 6.6

Consider the optimization problem (\(x_0 := 1\))

The matrix polynomial \(L(x) = \begin{bmatrix} \frac{1}{2}\text{ diag }(x)&-I_4 \end{bmatrix}\).The first part of the objective is \(x^Tx\), while the second part is a nonnegative polynomial [37]. The objective is coercive, so \(f_{\min }\) is achieved at a critical point. In computation, we got \(f_{\min } = 4.0000\) and 11 global minimizers:

The computational results for standard Lasserre’s relaxations and the new ones (3.8)-(3.9) are in Table 6. It confirms that \(f_k = f_{\min }\) for all \(k\ge 4\), up to numerical round-off errors.

Example 6.7

Consider the optimization problem

The matrix polynomial \( L(x) = \left[ \begin{array}{rrrrr} 1 &{} 0 &{} 0 &{} 0 &{} 0\\ - x_{3} &{} -1 &{} 0 &{} 0 &{} 0 \end{array}\right] . \) By the arithmetic-geometric mean inequality, one can show that \(f_{\min } = 0 \). The global minimizers are \((x_1,0,x_3)\) with \(x_1 \ge 0\) and \(x_1x_3 =0\). The computational results for standard Lasserre’s relaxations and the new ones (3.8)-(3.9) are in Table 7. It confirms that \(f_k = f_{\min }\) for all \(k\ge 5\), up to numerical round-off errors.

Example 6.8

Consider the optimization problem

The matrix polynomial \(L(x) = \left[ \begin{array}{rrrrrr} 1 &{} 0 &{} 0 &{} 0 &{} 0 &{} 0\\ 1 &{} 1 &{} 0 &{} 0 &{} 0 &{} 0 \end{array}\right] .\) In the objective, the sum of the first 4 terms is a nonnegative form [37], while the sum of the last 3 terms is a coercive polynomial. The objective is coercive, so \(f_{\min }\) is achieved at a critical point. In computation, we got \(f_{\min } \approx 0.9413 \) and a minimizer

The computational results for standard Lasserre’s relaxations and the new ones (3.8)–(3.9) are in Table 8. It confirms that \(f_k = f_{\min }\) for all \(k\ge 3\), up to numerical round-off errors.

Example 6.9

Consider the optimization problem

The matrix L(x) is given in Example 4.3. The objective is the dehomogenization of Horn’s form [37]. The feasible set is compact, so \(f_{\min }\) is achieved at a critical point. The condition ii) of Theorem 3.3 is satisfied.Footnote 5 The minimum value \(f_{\min }=0\). For each \(t\in [0,1]\), the point \((t,0,0,1-t)\) is a global minimizer. The computational results for standard Lasserre’s relaxations and the new ones (3.8)–(3.9) are in Table 9.

For some polynomial optimization problems, the standard Lasserre’s relaxations might converge fast, e.g., the lowest order relaxation may often be tight. For such cases, the new relaxations (3.8)–(3.9) have the same convergence property, but might take more computational time. The following is such a comparison.

Example 6.10

Consider the optimization problem (\(x_0 :=1\))

where each coefficient \(c_{ijk}\) is randomly generated (by randn in MATLAB). The matrix L(x) is the same as in Example 4.3. Since the feasible set is compact, we always have \(f_c = f_{\min }\). The condition ii) of Theorem 3.3 is satisfied, because of box constraints. For this kind of randomly generated problems, standard Lasserre’s relaxations are often tight for the order \(k=2\), which is also the case for the new relaxations (3.8)–(3.9). Here, we compare the computational time that is consumed by standard Lasserre’s relaxations and (3.8)–(3.9). The time is shown (in seconds) in Table 10. For each n in the table, we generate 10 random instances and we show the average of the consumed time. For all instances, standard Lasserre’s relaxations and the new ones (3.8)–(3.9) are tight for the order \(k=2\), while their time is a bit different. We can observe that (3.8)–(3.9) consume slightly more time.

7 Discussions

7.1 Tight relaxations using preorderings

When the global minimum value \(f_{\min }\) is achieved at a critical point, the problem (1.1) is equivalent to (3.7). We proposed relaxations (3.8)–(3.9) for solving (3.7). Note that

If we replace the quadratic module \(\hbox {Qmod}(c_{in},\psi )\) by the preordering of \((c_{in},\psi )\) [21, 25], we can get further tighter relaxations. For convenience, write \((c_{in},\psi )\) as a single tuple \((g_1, \ldots , g_\ell ).\) Its preordering is the set

The truncation \(\hbox {Preord}(c_{in},\psi )_{2k}\) is similarly defined like \(\hbox {Qmod}(c_{in},\psi )_{2k}\) in Sect. 2. A tighter relaxation than (3.8), of the same order k, is

Similar to (3.9), the dual optimization problem of the above is

An attractive property of the relaxations (7.1)–(7.2) is that: the conclusion of Theorem 3.3 still holds, even if none of the conditions (i)–(iii) there is satisfied. This gives the following theorem.

Theorem 7.1

Suppose \(\mathcal {K}_c \ne \emptyset \) and Assumption 3.1 holds. Then,

for all k sufficiently large. Therefore, if the minimum value \(f_{\min }\) of (1.1) is achieved at a critical point, then \(f_k^{pre} = f_k^{\prime ,pre} = f_{\min }\) for all k big enough.

Proof

The proof is very similar to the Case III of Theorem 3.3. Follow the same argument there. Without Assumption 3.2, we still have \(\hat{f}(x) \equiv 0\) on the set

By the Positivstellensatz, there exists an integer \(\ell >0\) and \(q \in \hbox {Preord}(c_{in},\psi )\) such that \( \hat{f}^{2\ell } + q \in \hbox {Ideal}(c_{eq},\phi ). \) The resting proof is the same. \(\square \)

7.2 Singular constraining polynomials

As shown in Proposition 5.2, if the tuple c of constraining polynomials is nonsingular, then there exists a matrix polynomial L(x) such that \(L(x) C(x) = I_m.\) Hence, the Lagrange multiplier \(\lambda \) can be expressed as in (5.3). However, if c is not nonsingular, then such L(x) does not exist. For such cases, how can we express \(\lambda \) in terms of x for critical pairs \((x,\lambda )\)? This question is mostly open, to the best of the author’s knowledge.

7.3 Degree bound for L(x)

For a nonsingular tuple c of constraining polynomials, what is a good degree bound for L(x) in Proposition 5.2? When c is linear, a degree bound is given in Proposition 4.1. However, for nonlinear c, an explicit degree bound is not known. Theoretically, we can get a degree bound for L(x). In the proof of Proposition 5.2, the Hilbert’s Nullstellensatz is used for t times. There exists sharp degree bounds for Hilbert’s Nullstellensatz [16]. For each time of its usage, if the degree bound in [16] is used, then a degree bound for L(x) can be eventually obtained. However, such obtained bound is enormous, because the one in [16] is already exponential in the number of variables. An interesting future work is to get a useful degree bound for L(x).

7.4 Rational representation of Lagrange multipliers

In (5.1), the Lagrange multiplier vector \(\lambda \) is determined by a linear equation. Naturally, one can get

when C(x) has full column rank. This rational representation is expensive for usage, because its denominator is typically a high degree polynomial. However, \(\lambda \) might have rational representations other than the above. Can we find a rational representation whose denominator and numerator have low degrees? If this is possible, the methods for optimizing rational functions [3, 15, 28] can be applied. This is an interesting question for future research.

Notes

It is the preordering of the polynomial tuple \((c_{in},\psi )\); see Sect. 7.1.

Note that \(1-x^Tx =(1-e^Tx)(1+x^Tx)+\sum _{i=1}^n x_i(1-x_i)^2+\sum _{i\ne j}x_i^2x_j \in \hbox {IQ}(c_{eq},c_{in}).\)

This is because \(-c_1^2p_1^2 \in \hbox {Ideal}(\phi ) \subseteq \hbox {IQ}(\phi ,\psi )\) and the set \(\{-c_1(x)^2p_1(x)^2 \ge 0 \}\) is compact.

This is because \(1-x_1^2-x_2^2\) belongs to the quadratic module of \((x_1, x_2,1-x_1-x_2)\) and \(1-x_3^2-x_4^2\) belongs to the quadratic module of \((x_3, x_4,1-x_3-x_4)\). See the footnote in Example 6.1.

Note that \(4-{\sum }_{i=1}^4 x_i^2 = {\sum }_{i=1}^4 \Big ( x_i (1-x_i)^2 + (1-x_i) (1+x_i^2) \Big ) \in \hbox {IQ}(c_{eq}, c_{in}).\)

References

Bertsekas, D.: Nonlinear Programming, 2nd edn. Athena Scientific, Cambridge (1995)

Bochnak, J., Coste, M., Roy, M.-F.: Real Algebraic Geometry. Springer, New York (1998)

Bugarin, F., Henrion, D., Lasserre, J.: Minimizing the sum of many rational functions. Math. Programm. Comput. 8, 83–111 (2016)

Cox, D., Little, J., O’Shea, D.: Ideals, Varieties, and Algorithms. An Introduction to Computational Algebraic Geometry and Commutative Algebra, 3rd edn. Undergraduate Texts in Mathematics. Springer, New York (1997)

Curto, R., Fialkow, L.: Truncated K-moment problems in several variables. J. Oper. Theory 54, 189–226 (2005)

Demmel, J., Nie, J., Powers, V.: Representations of positive polynomials on non-compact semialgebraic sets via KKT ideals. J. Pure Appl. Algebra 209(1), 189–200 (2007)

de Klerk, E., Laurent, M.: On the Lasserre hierarchy of semidefinite programming relaxations of convex polynomial optimization problems. SIAM J. Optim. 21, 824–832 (2011)

de Klerk, E., Lasserre, J., Laurent, M., Sun, Z.: Bound-constrained polynomial optimization using only elementary calculations. Math. Oper. Res. 42, 834–853 (2017)

de Klerk, E., Laurent, M., Sun, Z.: Convergence analysis for Lasserre’s measure-based hierarchy of upper bounds for polynomial optimization. Math. Programm. 162, 363–392 (2017)

de Klerk, E., Hess, R., Laurent, M.: Improved convergence rates for Lasserre-type hierarchies of upper bounds for box-constrained polynomial optimization. Preprint (2016). arXiv:1603.03329 [math.OC]

Greuel, G.-M., Pfister, G.: A Singular Introduction to Commutative Algebra. Springer, New York (2002)

He, S., Luo, Z., Nie, J., Zhang, S.: Semidefinite relaxation bounds for indefinite homogeneous quadratic optimization. SIAM J. Optim. 19(2), 503–523 (2008)

Henrion, D., Lasserre, J., Loefberg, J.: GloptiPoly 3: moments, optimization and semidefinite programming. Optim. Methods Softw. 24(4–5), 761–779 (2009)

Henrion, D., Lasserre, J.B.: Detecting global optimality and extracting solutions in GloptiPoly. Positive polynomials in control, 293–310. Lecture Notes in Control and Information Science 312. Springer, Berlin (2005)

Jibetean, D., de Klerk, E.: Global optimization of rational functions: a semidefinite programming approach. Math. Program. 106(1), 93–109 (2006)

Kollár, J.: Sharp effective Nullstellensatz. J. Am. Math. Soc. 1(4), 963–975 (1988)

Lasserre, J.B.: Global optimization with polynomials and the problem of moments. SIAM J. Optim. 11(3), 796–817 (2001)

Lasserre, J., Laurent, M., Rostalski, P.: Semidefinite characterization and computation of zero-dimensional real radical ideals. Found. Comput. Math. 8(5), 607–647 (2008)

Lasserre, J.: A new look at nonnegativity on closed sets and polynomial optimization. SIAM J. Optim. 21, 864–885 (2011)

Lasserre, J.B.: Convexity in semi-algebraic geometry and polynomial optimization. SIAM J. Optim. 19, 1995–2014 (2009)

Lasserre, J.B.: Moments, Positive Polynomials and Their Applications. Imperial College Press, London (2009)

Lasserre, J.B.: Introduction to Polynomial and Semi-algebraic Optimization. Cambridge University Press, Cambridge (2015)

Lasserre, J.B., Toh, K.C., Yang, S.: A bounded degree SOS hierarchy for polynomial optimization. EURO J. Comput. Optim. 5, 87–117 (2017)

Laurent, M.: Semidefinite representations for finite varieties. Math. Program. 109, 1–26 (2007)

Laurent, M.: Sums of squares, moment matrices and optimization over polynomials. In: Putinar, M., Sullivant, S. (eds.) Emerging Applications of Algebraic Geometry, vol. 149 of IMA Volumes in Mathematics and its Applications, pp. 157–270. Springer, New York (2009)

Laurent, M.: Optimization over polynomials: Selected topics. In: Proceedings of the International Congress of Mathematicians (2014)

Nie, J., Demmel, J., Sturmfels, B.: Minimizing polynomials via sum of squares over the gradient ideal. Math. Programm. Ser. A 106(3), 587–606 (2006)

Nie, J., Demmel, J., Gu, M.: Global minimization of rational functions and the nearest GCDs. J. Global Optim. 40(4), 697–718 (2008)

Nie, J.: Discriminants and nonnegative polynomials. J. Symb. Comput. 47(2), 167–191 (2012)

Nie, J.: An exact Jacobian SDP relaxation for polynomial optimization. Math. Programm. Ser. A 137, 225–255 (2013)

Nie, J.: Polynomial optimization with real varieties. SIAM J. Optim. 23(3), 1634–1646 (2013)

Nie, J.: Certifying convergence of Lasserre’s hierarchy via flat truncation. Math. Programm. Ser. A 142(1–2), 485–510 (2013)

Nie, J.: Optimality conditions and finite convergence of Lasserre’s hierarchy. Math. Programm. Ser. A 146(1–2), 97–121 (2014)

Nie, J.: Linear optimization with cones of moments and nonnegative polynomials. Math. Programm. Ser. B 153(1), 247–274 (2015)

Parrilo, P.A., Sturmfels, B.: Minimizing polynomial functions. In: Basu, S., Gonzalez-Vega, L. (eds.) Algorithmic and Quantitative Aspects of Real Algebraic Geometry in Mathematics and Computer Science, volume 60 of DIMACS Series in Discrete Mathematics and Computer Science, pp. 83–99. AMS (2003)

Putinar, M.: Positive polynomials on compact semi-algebraic sets. Ind. Univ. Math. J. 42, 969–984 (1993)

Reznick, B.: Some concrete aspects of Hilbert’s 17th problem. In: Contemporary Mathematics, vol 253, pp. 251–272. American Mathematical Society (2000)

Schweighofer, M.: Global optimization of polynomials using gradient tentacles and sums of squares. SIAM J. Optim. 17(3), 920–942 (2006)

Scheiderer, C.: Positivity and sums of squares: A guide to recent results. In: Putinar, M., Sullivant, S. (eds.) Emerging Applications of Algebraic Geometry, IMA Volumes Math. Appl. 149. Springer, pp. 271–324 (2009)

Sturm, J.F.: SeDuMi 1.02: a MATLAB toolbox for optimization over symmetric cones. Optim. Methods Softw. 11–12, 625–653 (1999)

Sturmfels, B.: Solving systems of polynomial equations. CBMS Regional Conference Series in Mathematics, vol. 97. American Mathematical Society, Providence (2002)

Vui, H.H., Són, P.T.: Global optimization of polynomials using the truncated tangency variety and sums of squares. SIAM J. Optim. 19(2), 941–951 (2008)

Acknowledgements

The author was partially supported by the NSF grants DMS-1417985 and DMS-1619973. He would like to thank the anonymous referees for valuable comments on improving the paper.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Nie, J. Tight relaxations for polynomial optimization and Lagrange multiplier expressions. Math. Program. 178, 1–37 (2019). https://doi.org/10.1007/s10107-018-1276-2

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10107-018-1276-2