Abstract

We propose a two-stage stochastic variational inequality model to deal with random variables in variational inequalities, and formulate this model as a two-stage stochastic programming with recourse by using an expected residual minimization solution procedure. The solvability, differentiability and convexity of the two-stage stochastic programming and the convergence of its sample average approximation are established. Examples of this model are given, including the optimality conditions for stochastic programs, a Walras equilibrium problem and Wardrop flow equilibrium. We also formulate stochastic traffic assignments on arcs flow as a two-stage stochastic variational inequality based on Wardrop flow equilibrium and present numerical results of the Douglas–Rachford splitting method for the corresponding two-stage stochastic programming with recourse.

1 Introduction

All stochastic variational models inherently involve a “dynamic” component that takes into account decisions taken over time, and/or space, where the decisions depend on the information that will become available as the decision process evolves. So far, the models proposed for stochastic variational inequalities have either bypassed this feature or not made it explicit. Various “stochastic” extensions of variational inequalities have been proposed in the literature but so far relatively little attention has been paid to the “dynamics” of the decision, or solution, process that is germane to all stochastic variational problems: stochastic programs, stochastic optimal control, stochastic equilibrium models in economics or finance, stochastic games, and so on. The “dynamics” of the model considered here are of limited scope. What is essential is that it makes a distinction between two families of variables: (i) those that are of the “here-and-now” type and cannot depend on the outcome of random events to be revealed at some future time or place and (ii) those that are allowed to depend on these outcomes. Our restriction to the two-stage model allows for a more detailed exposition and analysis as well as the development of computational guidelines, implemented here in a specific instance. By analogy with the terminology used for stochastic programming models, one might be tempted to refer to such a class of problems as stochastic variational inequalities with recourse but, as we shall see from the formulation and examples, that would not quite capture the full nature of the variables, mostly because the decision variables aren’t necessarily chosen sequentially. We shall refer to this “short horizon” version as a two-stage stochastic variational inequality.
In principle, the generalization to a multistage model is not challenging, at least conceptually, although a careful description of the model might become rather delicate and technically involved; a broad view of multistage models, as well as some of their canonical features, is provided in [59].

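We consider the two-stage stochastic variational inequality: Given the (induced) probability space \((\Xi \subset {\mathbb {R}}^N ,\mathcal{A},P)\), find a pair \(\big (x \in {\mathbb {R}}^{n_1}, u: \Xi \rightarrow {\mathbb {R}}^{n_2} \,\mathcal{A}\)-measurable\(\big ),\) such that the following collection of variational inequalities is satisfied (Footnote 1):

$$\begin{aligned} -\mathbb {E}[G({\varvec{\xi }}, x, u_{\pmb {\xi }})]&\in N_D(x), \\ -F\big ({\varvec{\xi }}, x, u_{\pmb {\xi }}\big )&\in _{a.s.} \;N_{C_{\pmb {\xi }}}(H(x,u_{\pmb {\xi }})), \end{aligned}$$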

with

-

\(G:(\Xi ,{\mathbb {R}}^{n_1}, {\mathbb {R}}^{n_2}) \rightarrow {\mathbb {R}}^{n_1}\) a vector-valued function, continuous with respect to (x, u) for all \(\xi \in \Xi \), \(\mathcal{A}\)-measurable and integrable with respect to \(\xi \).

-

\(N_{D}(x)\) the normal cone to the nonempty closed-convex set \(D\subset {\mathbb {R}}^{n_1}\) at \(x \in {\mathbb {R}}^{n_1}.\)

-

\(F:(\Xi ,{\mathbb {R}}^{n_1}, {\mathbb {R}}^{n_2}) \rightarrow {\mathbb {R}}^{n_2}\) a vector-valued function, continuous with respect to (x, u) for all \(\xi \in \Xi \) and \(\mathcal{A}\)-measurable with respect to \(\xi \).

-

\(N_{C_\xi }\big (v \big )\) the normal cone to the nonempty closed-convex set \(C_\xi \subset {\mathbb {R}}^{n_2}\) at \(v \in {\mathbb {R}}^{n_2}\); the random set \(C_{\pmb {\xi }}\) is \(\mathcal{A}\)-measurable.

-

\(H: ({\mathbb {R}}^{n_1}, {\mathbb {R}}^{n_2}) \rightarrow {\mathbb {R}}^{n_2}\) a continuous vector-valued function.

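The definition of the normal cone yields the following, somewhat more explicit, but equivalent formulation: find \(x\in D\) and an \(\mathcal{A}\)-measurable \(u: \Xi \rightarrow {\mathbb {R}}^{n_2}\) with \(H(x,u_{\pmb {\xi }}) \in _{a.s.} C_{\pmb {\xi }}\) such that

$$\begin{aligned} \langle \mathbb {E}[G({\varvec{\xi }}, x, u_{\pmb {\xi }})], x' - x \rangle&\ge 0, \quad \forall \, x'\in D, \\ \langle F({\varvec{\xi }}, x, u_{\pmb {\xi }}), v - H(x,u_{\pmb {\xi }}) \rangle&\ge _{a.s.} 0, \quad \forall \, v\in C_{\pmb {\xi }}. \end{aligned}$$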

The model assumes that the uncertainty can be described by a random vector \({\varvec{\xi }}\) with known distribution P and a two-stage decision process: (i) x to be chosen before the value \(\xi \) of \({\varvec{\xi }}\) is revealed (observed) and (ii) u to be selected with full knowledge (Footnote 2) of the realization \(\xi \). From the decision-maker’s viewpoint, the problem can be viewed as choosing a pair \((x,\xi \mapsto u_\xi )\) where u depends on the events that might occur, or in other words, is \(\mathcal{A}\)-measurable. It is noteworthy that in our formulation of the stochastic variational inequality this pair is present, in one way or another, in each one of our examples. We find it advantageous to work here with this slightly more explicit model where the first collection of inclusions suggests the presence of (expected) equilibrium constraints; in [59], taking a somewhat more comprehensive approach, it is shown how these “equilibrium constraints” can be incorporated in a global, possibly more familiar, variational inequality of the same type as the second inclusion.
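A normal-cone inclusion of the form \(-g \in N_D(x)\) can be checked numerically through the standard fixed-point characterization \(x = \Pi _D(x - g)\), valid for any nonempty closed convex set D. The following is a minimal sketch, assuming D is a box so that the projection has a closed form; the names `project_box` and `natural_residual` and the data are illustrative and do not come from the paper.

```python
def project_box(z, lo, hi):
    """Euclidean projection of z onto the box D = [lo_1, hi_1] x ... x [lo_n, hi_n]."""
    return [min(max(zi, l), h) for zi, l, h in zip(z, lo, hi)]


def natural_residual(x, g, lo, hi):
    """Max-norm of x - Proj_D(x - g); it vanishes iff -g lies in N_D(x)."""
    shifted = [xi - gi for xi, gi in zip(x, g)]
    proj = project_box(shifted, lo, hi)
    return max(abs(xi - pi) for xi, pi in zip(x, proj))


# Assumed toy data: D is the unit square, g plays the role of E[G(xi, x, u_xi)].
lo, hi = [0.0, 0.0], [1.0, 1.0]
g = [2.0, -3.0]

# At the corner x = (0, 1), -g = (-2, 3) points out of the box, so the inclusion holds.
res_corner = natural_residual([0.0, 1.0], g, lo, hi)
# At the interior point x = (0.5, 0.5) the normal cone is {0}, so a nonzero g fails.
res_interior = natural_residual([0.5, 0.5], g, lo, hi)
print(res_corner, res_interior)
```

The same residual, evaluated scenario by scenario with \(C_\xi \) in place of D, gives a simple feasibility check for the second-stage inclusion.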

This paper is organized as follows. In Sect. 2, we review some fundamental examples and applications that are special cases of this model. In the last two examples in Sect. 2, we concentrate our attention on stochastic traffic flow problems, with the accent being placed on getting implementable solutions. To do this we show how an alternative formulation, based on Expected Residual Minimization (ERM), might actually be better suited to coming up with solutions that are of immediate interest to the initial design or capacity expansion of traffic networks. In Sect. 3, we develop the basic properties of such a model, lay out the theory to justify deriving solutions via a sample average approximation approach in Sect. 4 and finally, in Sect. 5, describe an algorithmic procedure based on the Douglas–Rachford splitting method which is then illustrated by numerical experimentation involving a couple of “classical-test” networks. One of the basic goals of this article is to delineate the relationships between various formulations of stochastic variational inequalities as well as to show how the solution-type desired might also lead us to work with some variants that might not perfectly fit the canonical model.

2 Stochastic variational inequalities: examples

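When \({\varvec{\xi }}\) is discretely distributed with finite support, i.e., \(\Xi \) is finite, one can write the problem as:

$$\begin{aligned} -\sum _{\xi \in \Xi } P(\{\xi \})\, G(\xi , x, u_\xi )&\in N_D(x), \\ -F(\xi , x, u_\xi )&\in N_{C_\xi }(H(x,u_\xi )), \quad \forall \, \xi \in \Xi . \end{aligned}$$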

When expressed in this form, we are just dealing with a, possibly extremely large, deterministic variational inequality over the set \(X\times \Xi \). How large will depend on the cardinality \(|\Xi |\) of the support and this immediately raises the question of the design of solution procedures for large scale variational inequalities. The difficulty in finding a solution might also depend on how challenging it is to compute \(\mathbb {E}[G({\varvec{\xi }},x,u_\xi )]\), even when G doesn’t depend on u.
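To make the finite-support case concrete, the sketch below stacks the first-stage variable and one second-stage variable per scenario and applies a basic projection method to the resulting deterministic variational inequality. All data are assumptions chosen for illustration (scalar variables, \(D = C_\xi = [0,\infty )\), \(H(x,u)=u\), \(G(\xi ,x,u) = 2x - u\), \(F(\xi ,x,u) = 2u - \xi - x\)); the projection method is a generic textbook scheme, not the splitting method used later in the paper.

```python
def solve_two_stage_vi(xis, probs, step=0.1, iters=500):
    """Projection method on the stacked finite-scenario variational inequality.

    Toy data (assumed): D = C_xi = [0, inf), H(x, u) = u,
    G(xi, x, u) = 2x - u and F(xi, x, u) = 2u - xi - x.
    """
    x = 0.0
    u = [0.0 for _ in xis]
    for _ in range(iters):
        # First-stage map: the expectation becomes a finite weighted sum.
        g = sum(p * (2.0 * x - uk) for p, uk in zip(probs, u))
        # Second-stage maps: one block per scenario.
        f = [2.0 * uk - xi - x for uk, xi in zip(u, xis)]
        # Simultaneous projected step onto D x C x ... x C.
        x = max(0.0, x - step * g)
        u = [max(0.0, uk - step * fk) for uk, fk in zip(u, f)]
    return x, u


xis = [1.0, 2.0, 3.0]
probs = [1.0 / 3.0] * 3
x_star, u_star = solve_two_stage_vi(xis, probs)
print(x_star, u_star)
```

For this toy instance the constraints are inactive at the solution, so one can verify by hand that \(x = \mathbb {E}[{\varvec{\xi }}]/3\) and \(u_\xi = (\xi + x)/2\). The number of unknowns grows linearly with \(|\Xi |\), which is the scaling issue raised above.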

Of course, both the choice of x and the realization \(\xi \) of the random components of the problem influence the ‘upcoming’ environment so we can also think as the state of the system being determined by the pair \((\xi ,x)\) and occasionally it will be convenient, mostly for technical reasons, to view the u-decision as a function of the state, i.e., \((\xi , x) \mapsto u(\xi ,x)\), cf. Sect. 3.

On the other hand, various generalizations are possible:

-

(a)

One could also have D depend on \({\varvec{\xi }}\) and x, in which case we are dealing with a random convex-valued mapping:

and one would, usually, specify the continuity properties of D with respect to \({\varvec{\xi }}\) and x; the analysis then enters the domain of stochastic generalized equations and can get rather touchy [45, 46, 63]. Here, we restrict our analysis to the case when D is independent of \({\varvec{\xi }}\) and x.

-

(b)

Another extension is the case when there are further global type constraints on the choice of the functions u, for example, constraints involving \(\mathbb {E}[u_\xi ]\) or equilibrium-type constraints [9, 47].

-

(c)

The formulation can be generalized to a two-stage stochastic quasi variational inequality or generalized Nash equilibrium. For example the second-stage variational inequality problem can be defined as follows:

$$\begin{aligned} -F\big ({\varvec{\xi }}, x, u_{\pmb {\xi }}\big ) \in _{a.s.} \;N_{C_{\pmb {\xi }}(x)}(H(x,u_{\pmb {\xi }})), \end{aligned}$$

where the set \(C_{\pmb {\xi }}\) depends on x [21]. However, the analysis of this generalization is not trivial and deserves a separate treatment. In this paper, we restrict our analysis to the case when \(C_{\pmb {\xi }}\) is independent of x.

In order to illustrate the profusion of problems that are covered by this formulation we are going to go through a series of examples including some that are presently understood, in the literature, to fall under the “stochastic variational inequality” heading.

2.1 One-stage examples

Example 2.1

Single-stage problems. This is by far the class of problems that, so far, has attracted the most interest in the literature. In terms of our notation, it reads

$$\begin{aligned} -\mathbb {E}[G({\varvec{\xi }},x)] \in N_D(x), \end{aligned}$$

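where D is a closed convex set, possibly bounded and often polyhedral; for all \(x\in D\), the vector-valued mapping \(\xi \mapsto G(\xi ,x) \in {\mathbb {R}}^n\) is integrable and \(x \mapsto \mathbb {E}[G({\varvec{\xi }},x)]\) is continuous. Especially in the design of solution procedures, it is convenient to rely on the alternative formulation,

$$\begin{aligned} \text{ find } \; x\in D \;\text{ such } \text{ that } \quad \langle \mathbb {E}[G({\varvec{\xi }},x)], x' - x \rangle \ge 0, \quad \forall \, x'\in D. \end{aligned}$$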

Detail. It’s only with some hesitation that one should refer to this model as a “stochastic variational inequality.” Although certain parameters of the problem are random variables and the solution will have to take this into account, this is essentially a deterministic variational inequality with the added twist that evaluating \(\mathbb {E}[G({\varvec{\xi }},x)]\), usually a multidimensional integral, requires relying on quadrature approximation schemes. Typically, P is then approximated by a discrete distribution with finite support, obtained via a cleverly designed approximation scheme or as the empirical distribution derived from a sufficiently large sample. So far, no cleverly designed approximation scheme has been proposed, although the approach used by Pennanen and Koivu [48, 49] in the stochastic programming context might also prove to be effective in this situation. To a large extent the work has been focused on deriving convergence of the solutions of approximating variational inequalities where P has been replaced by a sequence of empirical probability measures \(P^\nu \), generated from independent samples of \({\varvec{\xi }}\): \(\xi ^1, \xi ^2, \ldots ,\xi ^\nu \). The approximating problem reads:

$$\begin{aligned} -\frac{1}{\nu } \sum _{k=1}^{\nu } G(\xi ^k, x) \in N_D(x). \end{aligned}$$

Two basic questions then become:

-

(i)

Will the solutions \(x^\nu \) converge to a solution of the given problem when the number of samples gets arbitrarily large?

-

(ii)

Can one find bounds that characterize the error, i.e., can one measure the distance between \(x^\nu \) and the (set of) solution(s) to the given variational inequality?

There is a non-negligible literature devoted to these questions which provides satisfactory answers under not too stringent additional restrictions, cf. [5, 25, 28, 30,31,32, 35, 38, 40, 41, 61, 66]. \(\square \)
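Question (i) can be observed on a toy instance. The sketch below solves the sample average approximation of a one-stage problem for increasing sample sizes; the data are assumptions made for illustration only (\(D=[0,1]\), \(G(\xi ,x) = x - \xi \), \({\varvec{\xi }}\) uniform on [0, 1], so that the true solution is \(\mathbb {E}[{\varvec{\xi }}] = 0.5\)), and the solver is a plain projection iteration.

```python
import random


def saa_solution(samples, step=0.5, iters=60):
    """Solve the SAA of -E[G(xi, x)] in N_D(x) with D = [0, 1], G(xi, x) = x - xi."""
    x = 0.0
    for _ in range(iters):
        # The empirical mean of G(xi^k, x) replaces the expectation.
        g_bar = sum(x - xi for xi in samples) / len(samples)
        # Projected step onto D = [0, 1].
        x = min(1.0, max(0.0, x - step * g_bar))
    return x


random.seed(0)
# Assumed distribution: xi uniform on [0, 1]; the true solution is 0.5.
for nu in (10, 100, 10000):
    samples = [random.random() for _ in range(nu)]
    x_nu = saa_solution(samples)
    print(nu, x_nu, abs(x_nu - 0.5))
```

In this instance the SAA solution is simply the projected sample mean, so the error behaves like the usual Monte Carlo error; the cited literature addresses the general case.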

In [27] Gürkan, Özge and Robinson rely on solving a variational inequality of this type to price an American call option with strike price K for an instrument paying a dividend d at some time \(\tau \le T\). The expiration date comes down to calculating the expectation of the option-value based on whether the option is exercised, or not, at time \(\tau ^{\scriptscriptstyle -}\) just before the price of the (underlying) instrument drops by d which otherwise follows a geometric Brownian motion, cf. [27, Section 3] and the references therein. The authors rely on the first order optimality conditions, generating a one-stage stochastic variational inequality where the vector-valued mapping \(G(\xi , \cdot )\) corresponds to the gradient of the option-value along a particular price-path. It turns out that contrary to an approach based on a sample-path average approximation of these step functions [27, Figure 1] the sample average approximation of their gradients is quite well-behaved [27, Figure 2]. \(\square \)

Example 2.2

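Stochastic linear complementarity problem. The stochastic linear complementarity version of the one-stage stochastic variational inequality,

$$\begin{aligned} 0 \le _{a.s.} M_{\pmb {\xi }} x + q_{\pmb {\xi }} \;\perp _{a.s.}\; x \ge 0, \end{aligned}$$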

where some or all the elements of the matrix M and vector q are potentially stochastic, was analyzed by Chen and Fukushima [8] suggesting in the process a solution procedure based on “ERM: Expected Residual Minimization.”

Details. Their residual function can be regarded as a relative of the gap function used by Facchinei and Pang to solve deterministic variational inequalities [21]. More specifically,

$$\begin{aligned} \mathbb {E}[\Vert \Phi ({\varvec{\xi }}, x)\Vert ^2] = \mathbb {E}\Big [\sum _{i=1}^n \varphi \big ((M_{\pmb {\xi }} x + q_{\pmb {\xi }})_i, x_i\big )^2\Big ] \end{aligned}$$

is minimized for \(x\in {\mathbb {R}}^n_{\scriptscriptstyle +}\), where \(\varphi : {\mathbb {R}}^2 \rightarrow {\mathbb {R}}\) is such that \(\varphi (a,b) = 0\) if and only if \((a,b)\in {\mathbb {R}}^2_+\), \(ab = 0\); for example, the min-function \(\varphi (a,b) = \min \big \lbrace a,b \big \rbrace \) and the Fischer–Burmeister function \(\varphi (a,b) = (a+b) - \sqrt{a^2 + b^2}\) satisfy these conditions. Quite sharp existence results are obtained, in particular, when only the vector q is stochastic. They are complemented by convergence results when the probability measure P is replaced by discrete empirical measures generated via (independent) sampling. The convergence of the stationary points is raised in [8, Remark 3.1].

The stochastic linear complementarity problem gets recast as a (stochastic) optimization problem where one is confronted with an objective function defined as a multi-dimensional integral. Convergence of the solutions of the discretized problems can then be derived by appealing to the law of large numbers for random lsc (lower semicontinuous) functions [2, 37].

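The solution provided by the ERM-reformulated problem

$$\begin{aligned} \min _{x\in {\mathbb {R}}^n_{\scriptscriptstyle +}} \; \mathbb {E}[\Vert \Phi ({\varvec{\xi }}, x)\Vert ^2] \end{aligned}$$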

doesn’t, strictly speaking, solve the originally formulated complementarity problem for every realization \(\xi \); this could only occur if the optimal value turns out to be 0, or equivalently, if one could find a solution that satisfies for (almost) all \(\xi \), the system \(0 \le _{a.s.} M_\xi x + q_\xi \;\perp _{a.s.}\; x \ge 0\).

The residual function \(\Vert \Phi (\xi , x)\Vert \) can be considered as a cost function which measures the loss at the event \(\xi \) and decision x. The ERM formulation minimizes the expected value of the loss, over all possible occurrences, due to failure of the equilibrium. Recently, Xie and Shanbhag constructed tractable robust counterparts as an alternative to the ERM approach [67]. \(\square \)
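The ERM idea can be illustrated on a scalar stochastic linear complementarity problem with two scenarios that admit no common solution. The sketch below evaluates the expected squared residual with both the min-function and the Fischer–Burmeister function and minimizes it by a crude grid search; the scenario data, the grid and the function names are assumptions made for illustration, not the procedure of [8].

```python
import math


def phi_min(a, b):
    """min-function: zero iff a >= 0, b >= 0 and a * b = 0."""
    return min(a, b)


def phi_fb(a, b):
    """Fischer-Burmeister function: zero under the same conditions."""
    return (a + b) - math.sqrt(a * a + b * b)


def erm_objective(x, scenarios, phi):
    """E[phi(M_xi * x + q_xi, x)^2] for a scalar LCP with finitely many scenarios."""
    return sum(p * phi(m * x + q, x) ** 2 for p, m, q in scenarios)


# Assumed toy data: (probability, M_xi, q_xi); each scenario alone is solved by x = -q_xi.
scenarios = [(0.5, 1.0, -1.0), (0.5, 1.0, -3.0)]
grid = [i / 1000.0 for i in range(5001)]  # crude grid search over x in [0, 5]

x_min = min(grid, key=lambda x: erm_objective(x, scenarios, phi_min))
x_fb = min(grid, key=lambda x: erm_objective(x, scenarios, phi_fb))
print(x_min, erm_objective(x_min, scenarios, phi_min))
print(x_fb, erm_objective(x_fb, scenarios, phi_fb))
```

Both optimal values are strictly positive here, which reflects the remark above: the ERM solution is a compromise and solves the complementarity problem for every realization only when the optimal value is 0.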

2.2 Two-stage examples

Our first two examples in this subsection haven’t been considered, so far, in the literature but in some ways best motivate our formulation, cf. Sect. 1, in the same way that optimality conditions for (deterministic) linear and nonlinear optimization problems lead us to a rich class of variational inequalities [21].

Example 2.3

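(Optimality conditions for a stochastic program) Not unexpectedly, the optimality conditions for a stochastic program with recourse (two-stage) lead immediately to a two-stage stochastic variational inequality. Here, we only develop this for a well-behaved simple (linear) recourse problem; to deal with more general formulations one has to rely on the full duality theory developed in [53, 59]. The class of stochastic programs considered are of the following (classical) type:

$$\begin{aligned} \min \; \langle c, x^1 \rangle + \mathbb {E}[Q({\varvec{\xi }}, x^1)] \quad \text{ subject } \text{ to } \;\; Ax^1 \ge b, \;\; x^1 \ge 0, \end{aligned}$$

with

$$\begin{aligned} Q(\xi , x^1) = \inf \big \lbrace \langle q_\xi , x^2_\xi \rangle {\,\big \vert \,}W_\xi x^2_\xi \ge d_\xi - T_\xi x^1, \; x^2_\xi \ge 0 \big \rbrace , \end{aligned}$$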

where the matrices and vectors subscripted by \(\xi \) indicate that they depend on the realization of a (global) random variable \({\varvec{\xi }}\) with support \(\Xi \); for any fixed \(\xi \): \(x^1\in {\mathbb {R}}^{n_1}, x^2_\xi \in {\mathbb {R}}^{n_2}, A\in {\mathbb {R}}^{m_1\times n_1}, b\in {\mathbb {R}}^{m_1}, q_\xi \in {\mathbb {R}}^{n_2}, W_\xi \in {\mathbb {R}}^{m_2\times n_2}, d_\xi \in {\mathbb {R}}^{m_2}\) and \(T_\xi \in {\mathbb {R}}^{m_2\times n_1}.\)

Detail. Let’s assume relatively complete recourse: for all \(x^1\) satisfying the (explicit) constraints \(C^1 = \big \lbrace x^1\in {\mathbb {R}}^{n_1} {\,\big \vert \,}Ax^1 \ge b, \,x^1\ge 0 \big \rbrace \) and all \(\xi \in \Xi \), one can always find a feasible recourse \(x^2_\xi \), i.e., \(Q(\xi ,x^1) < \infty \) on \(\Xi \times C^1\), and

strict feasibility: for some \(\varepsilon >0\), arbitrarily small, one can find \(x^1\in C^1\) and \(\tilde{x}^2\in \mathcal{L}_{n_2}^{\scriptscriptstyle \infty }\) such that \(\tilde{x}^2 \ge _{a.s.} 0\) and for almost all \(\xi \): \(W_\xi \tilde{x}^2_\xi > \tilde{\varepsilon }+ d_\xi - T_\xi x^1\), where \(\tilde{\varepsilon }\) is simply an \(m_2\)-dimensional vector of \(\varepsilon \)’s.

For a problem of this type, \(\big (\bar{x}^1, \bar{x}^2)\in {\mathbb {R}}^{n_1}_{\scriptscriptstyle +}\times \mathcal{L}^{\scriptscriptstyle \infty }_{n_2,{\scriptscriptstyle +}}\) is an optimal solution [52, 54] if and only if

-

(a)

it is feasible, i.e., satisfies the constraints,

-

(b)

\(\exists \,\) multipliers \((y^1\in {\mathbb {R}}^{m_1}, y^2\in \mathcal{L}^1_{m_2})\) such that

$$\begin{aligned} 0 \le y^1 \perp A\bar{x}^1 -b \ge 0, \quad 0 \le _{a.s.} y^2_\xi \perp _{a.s.} T_\xi \bar{x}^1 + W_\xi \bar{x}^2_\xi -d_\xi \ge _{a.s.} 0, \end{aligned}$$ -

(c)

\(\big (\bar{x}^1, \bar{x}^2)\) minimize

$$\begin{aligned} \mathbb {E}[\langle c - A^\top y^1 - T_\xi ^\top y^2_\xi , x^1 \rangle + \langle q_\xi - W_\xi ^\top y^2_\xi , x^2_\xi \rangle ] \end{aligned}$$

for \(x^1\in {\mathbb {R}}^{n_1}_{\scriptscriptstyle +}\) and \(x^2 \in \mathcal{L}^{\scriptscriptstyle \infty }_{n_2,{\scriptscriptstyle +}}\).

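This means that under these assumptions, the double pairs

$$\begin{aligned} \big ((\bar{x}^1, y^1), (\bar{x}^2, y^2)\big ) \end{aligned}$$

must satisfy the stochastic variational inequality:

$$\begin{aligned} 0 \le \bar{x}^1 \perp c - A^\top y^1 - \mathbb {E}[T_\xi ^\top y^2_\xi ] \ge 0, \quad 0 \le y^1 \perp A\bar{x}^1 -b \ge 0, \end{aligned}$$

and

$$\begin{aligned} 0 \le _{a.s.} \bar{x}^2_\xi \perp _{a.s.} q_\xi - W_\xi ^\top y^2_\xi \ge _{a.s.} 0, \quad 0 \le _{a.s.} y^2_\xi \perp _{a.s.} T_\xi \bar{x}^1 + W_\xi \bar{x}^2_\xi -d_\xi \ge _{a.s.} 0. \end{aligned}$$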

In terms of our general formulation in Sect. 1, the first pair of inequalities defines the function G and the set \(D={\mathbb {R}}^{n_1}_{\scriptscriptstyle +}\times {\mathbb {R}}^{m_1}_{\scriptscriptstyle +}\) whereas the second pair defines F and the random convex set \(C_{\pmb {\xi }}\) with \((x^1, y^1)\) corresponding to x and \((x^2, y^2)\) corresponding to u; \(C_\xi ={\mathbb {R}}^{n_2}_{\scriptscriptstyle +}\times {\mathbb {R}}^{m_2}_{\scriptscriptstyle +}\). Of course, this can also be viewed as a stochastic complementarity problem albeit, in general, an infinite dimensional one. When the probability distribution P has finite support, one can remove the “\(a.s.\)” in the second pair of inequalities and it’s a problem involving only a finite number of variables and inequalities, but this finite number might be truly considerable. \(\square \)
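As a worked check of conditions (a)–(c), the sketch below evaluates the complementarity residuals of a candidate primal-dual point for a scalar two-scenario recourse problem. The system tested is the one obtained by writing the minimization in (c) as complementarity conditions; the data (\(c=1\), \(A=1\), \(b=0\), \(q_\xi =3\), \(W_\xi =T_\xi =1\), \(d_\xi \in \{1,3\}\) with equal probability) and the function name `kkt_residuals` are assumptions for illustration.

```python
def kkt_residuals(x1, y1, second_stage, c, A, b):
    """Largest violation of the complementarity system for scalar data.

    second_stage: list of (prob, q, W, T, d, x2, y2), one tuple per scenario.
    """
    def comp(s, t):
        # Violation of 0 <= s  perp  t >= 0.
        return max(0.0, -s, -t, abs(s * t))

    # E[T_xi^T y2_xi] reduces to a finite weighted sum.
    expected_Ty2 = sum(p * T * y2 for p, q, W, T, d, x2, y2 in second_stage)
    # First pair: x1 against its reduced cost, y1 against the slack of A x1 >= b.
    worst = max(comp(x1, c - A * y1 - expected_Ty2), comp(y1, A * x1 - b))
    # Second pair, scenario by scenario.
    for p, q, W, T, d, x2, y2 in second_stage:
        worst = max(worst, comp(x2, q - W * y2), comp(y2, T * x1 + W * x2 - d))
    return worst


# Candidate optimal point: x1 = 3, no recourse needed, multipliers y1 = 0, y2 = (0, 2).
optimal = [(0.5, 3.0, 1.0, 1.0, 1.0, 0.0, 0.0), (0.5, 3.0, 1.0, 1.0, 3.0, 0.0, 2.0)]
res_opt = kkt_residuals(3.0, 0.0, optimal, c=1.0, A=1.0, b=0.0)

# A feasible but non-optimal point: x1 = 1 with recourse x2 = 2 in the second scenario.
other = [(0.5, 3.0, 1.0, 1.0, 1.0, 0.0, 0.0), (0.5, 3.0, 1.0, 1.0, 3.0, 2.0, 3.0)]
res_other = kkt_residuals(1.0, 0.0, other, c=1.0, A=1.0, b=0.0)
print(res_opt, res_other)
```

With finite support the “a.s.” qualifiers disappear and the check is a finite loop over scenarios, at the price of one block of variables and multipliers per scenario.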

Example 2.4

A Walras equilibrium problem. In some ways, this example is an extension of the preceding one except that it doesn’t lead to a stochastic complementarity problem but to a stochastic variational inequality that might not have the wished-for monotonicity properties for \(\mathbb {E}[G({\varvec{\xi }},\cdot )]\) and F.

Detail. We consider a stripped down version of the GEI-model, (General Equilibrium with Incomplete Markets), but even this model has a variety of immediate applications in finance, international commodity trading, species interaction in ecological models, \(\dots \). The major difference with the extensive (economic) literature devoted to the GEI-model is the absence of a so-called financial market that allows agents to enter into contracts involving the delivery of goods at some future date.

Again, \({\varvec{\xi }}\) provides the description of the uncertainty about future events. We are dealing with a finite number of (individual) agents \(i\in \mathcal{I}\). Each agent, endowed with vectors of goods \(e_i^1\in {\mathbb {R}}^L\) (here-and-now) and \(e_{\xi ,i}^2\) (in the future), chooses its consumption plan, \(c^{1\star }\) here-and-now and \(c^{2\star }_\xi \) after observing \(\xi \), so as to maximize its expected utility \(v^1_i(c^1)+\mathbb {E}[v_i^2(c^2_\xi )]\), where the utility functions \((v_i^1,v_i^2)\) are continuous, concave functions in \((c^1,c^2)\) on closed convex sets \(C_i^1 \subset {\mathbb {R}}^{n_1}_{\scriptscriptstyle +}\) and \(C_i^2 \subset {\mathbb {R}}^{n_2}_{\scriptscriptstyle +}\) respectively and \(v_i^2\) is \(\mathcal{A}\)-measurable with respect to \(\xi \). One often refers to \(C^1_i\) and \(C_i^2\) as agent-i’s survival sets; in some situations it would be appropriate to let \(C^2_i\) also depend on \(\xi \), but this wouldn’t significantly affect our ensuing development. Each agent can also engage in here-and-now activities \(y\in {\mathbb {R}}^{m_i}\) that will use up a vector of goods \(T^1_i y\) which, in turn, will generate, possibly depending on \(\xi \), goods \(T^2_{\xi ,i}y\) in the future; a simple example could be the saving of some goods and a more elaborate one would involve “home production.” The market process allows each agent to exchange its (modified) endowments \((e^1_i - T^1_i y, e^2_{\xi ,i}+T^2_{\xi ,i}y)\) for its consumption at the prevalent market prices \(p^1\) in the here-and-now market and \(p^2_\xi \) in the future market. Thus, given \((p^1, p^2_{\pmb {\xi }})\), each agent will aim, selfishly, to maximize its expected rewards taking only into account its survival and budgetary limitations: choose \(\big (c_i^{1\star }, (c_{\xi ,i}^{2\star },\,\xi \in \Xi ))\) that solves the following stochastic program with recourse:

The Walras equilibrium problem is to find a (nonnegative) price system \(\big (p^1, (p^2_\xi ,\,\xi \in \Xi )\big )\) that will clear the here-and-now market and the future markets, i.e., such that the total demand does not exceed the available supply:

Since the budgetary constraints of the agents are positively homogeneous with respect to the price system, up to eventual rescaling after a solution is found, one can, without loss of generality, restrict the prices \(p^1\) and \(p^2_\xi \) for each \(\xi \) to the unit simplex, i.e., a compact set.

At this point, by combining the optimality conditions associated with the individual agents’ problems with the “clearing the market” conditions it’s possible to translate the equilibrium problem into an equivalent two-stage stochastic variational inequality. Unfortunately, so far, our assumptions don’t guarantee existence of a solution of this variational inequality. At this stage, it’s convenient to proceed with the following assumptions that are common in the extensive literature devoted to the GEI-model:

-

the utility functions \(v^1_i\) and \(v^2_i\) are upper semicontinuous, strictly concave,

-

the agents’ endowments are such that \(e_i^1\in \mathop {\mathrm{int}}C_i^1\) and, for all \(\xi \in \Xi \), \(e_{\xi ,i}^2\in \mathop {\mathrm{int}}C_i^2\);

The GEI-literature makes these assumptions to be able to rely on differential topology methodology to obtain a “generic” existence proof. It’s rather clear that the implications of these assumptions are quite stiff. In particular, they imply that every agent must be endowed, in all situations, with a minimal amount, potentially infinitesimal, of every good and that this agent will be interested, possibly also minimally, in acquiring some more of every good (Footnote 3). Not only do these conditions yield existence [34], and not just generic existence, but they also imply that Walras’ Law must be satisfied, i.e., the following complementarity conditions involving equilibrium prices and excess supply will hold:

Moreover, it means that the agents’ problems are stochastic programs with relatively complete recourse which means that their optimality conditions can be stated in terms of \(\mathcal{L}^1\)-multipliers, refer to [53, 55]; note that here we haven’t restricted ourselves to a situation when the description of the uncertainty is limited to a finite number of events.

For any equilibrium price system \(\big (p^1\in \Delta , (p^2_\xi \in \Delta , \xi \in \Xi )\big )\) with \(\Delta \) \(=\{p \, | \, \sum ^L_{i=1} p_i \le 1, p \ge 0 \}\) the unit simplex in \({\mathbb {R}}^L\): the agents’ consumption plans must satisfy the following optimality conditions: the pair \(\big ((c_i^{1\star }, y_i^\star ), (c_{\xi ,i}^{2\star }, \xi \in \Xi )\big )\) is an optimal solution for agent-i if and only if

-

(a)

it satisfies the budgetary constraints,

-

(b)

\(\exists \) multipliers \(\big (\lambda _i^1\in {\mathbb {R}}, (\lambda _{\cdot ,i}^2\in \mathcal{L}^1)\big )\) such that

$$\begin{aligned} 0\le & {} \lambda _i^1 \perp \langle p^1, e^1_i - T_i^1 y_i^\star - c_i^{1\star } \rangle \ge 0, \\ 0\le & {} _{a.s.}\, \lambda ^2_{\xi ,i} \perp _{a.s.}\, \langle p^2_\xi , e^2_{\xi ,i} + T^2_{\xi ,i} y_i^\star - c^{2\star }_{\xi ,i} \rangle \ge _{a.s.}\, 0, \end{aligned}$$ -

(c)

and

$$\begin{aligned} c_i^{1\star } \in&\;\mathop {\mathrm{argmax}}\limits \nolimits _{c^1\in C^1_i} v^1_i(c^1) - \lambda ^1_i \langle p^1, c^1 \rangle , \\ c^{2\star }_{\xi ,i} \in \,&\;\mathop {\mathrm{argmax}}\limits \nolimits _{c^2\in C^2_i} v^2_i(c^2) - \lambda _{\xi ,i}^2 \langle p^2_\xi , c^2 \rangle , \; \forall _{a.s.}\, \xi \in \Xi ,\\ y_i^\star \in&\;\mathop {\mathrm{argmax}}\limits \nolimits _{y\in {\mathbb {R}}_{\scriptscriptstyle +}^{m_i}} -\lambda ^1_i \langle p^1, T_i^1 y \rangle + \mathbb {E}[\lambda ^2_{\xi ,i} \langle p^2_\xi , T^2_{\xi ,i} y \rangle ]. \end{aligned}$$

Assuming the utility functions are also differentiable, these last conditions can be translated in terms of the first order optimality conditions for these programs:

In conjunction with Walras law, we can regroup these conditions so that they fit the pattern of our two-stage formulation in Sect. 1: find \(x = \big ((p^1,c^1_i, y_i, \lambda ^1_i), i\in \mathcal{I}\big )\) and \(u_{\pmb {\xi }}= \big ((p^2_{\pmb {\xi }}, c^2_{{\pmb {\xi }},i}, \lambda ^2_{{\pmb {\xi }},i}), i\in \mathcal{I}\big )\) such that for all \(i\in \mathcal{I}\):

and

One approach in designing solution procedures for such a potentially whopping stochastic variational inequality is to attempt a straightforward approach relying, for example, on PATH Solver [18]. Notwithstanding the capabilities of this excellent package, it is bound to be quickly overwhelmed by the size of this problem even when the number of agents and potential \(\xi \)-events is still quite limited (Footnote 4). An approach based on decomposition is bound to be indispensable. One could rely on a per-agent decomposition first laid out in [24] and used in a variety of applications, cf. for example [50]. Another approach is via scenario (event) based decomposition, relying on the Progressive Hedging algorithm [56, 57] and an approximation scheme as developed in [17]. Finally, one should also be able to expand on a splitting algorithm elaborated in Sect. 5 to allow for an agent/scenario decomposition expected to be reasonably competitive. \(\square \)

The last two examples are stochastic variational inequalities that arise in connection with transportation/communication problems where one must take into account uncertainties about some of the parameters of the problem. To fix terminology and notation, we begin with a brief description of the deterministic canonical model, the so-called Wardrop equilibrium model; for excellent and thorough surveys of this model, see [14, 44], and as far as possible we follow their overall framework. There is no straightforward generalization to the “stochastic version” which is bound to depend very much on the motivation, or in other words, on the type of solution one is interested in. Here, we are going to be basically interested in problems where the uncertainty comes from the components of the underlying structure (network) or demand volume. Cominetti [13] and the references therein consider an interesting set of alternative questions primarily related to the uncertainty in the users’ information and behavior.

Consider an oriented network \(\mathcal{N}=\big (\mathcal{G}\text { (nodes)}, \mathcal{A}\text { (arcs)}\big )\) together with \(c_a\ge 0\), the maximum flow capacity of each arc a, and the demand \(h_{od}\) for each origin(o)-destination(d) pair. \(R_{od}\) is the set of all (acyclic) routes r connecting o to d, with N being the arcs(a)/routes(r) incidence matrix, i.e., \(N_{a,r} = 1\) if arc \(a\in r\) and \(N_{a,r} = 0\) otherwise. A route-flow \(f = \big \lbrace f_r, r \in \cup _{od} R_{od}\big \rbrace \) results in an arc-flow \(x = \big \lbrace x_a = \langle N_a, f \rangle , \;a\in \mathcal{A}\big \rbrace \). The travel time on route r, assumed to be additive, is \(\sum _{a\in r} t_a(x_a)\) where \(t_a(\cdot )\), a congestion dependent function, specifies the travel time on arc a. Let

be the polyhedral set of the arc-flows satisfying the flow conservation constraints. One refers to \(x^\star = N f^\star \in C\) as a Wardrop equilibrium if

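i.e., flow travels only on shortest routes. A minor variation, due to the capacity constraints on the arcs, of the authoritative argument of Beckmann et al. [4], shows that these feasibility and equilibrium conditions can be interpreted as the first order optimality conditions of the convex program

$$\begin{aligned} \min \; \sum _{a\in \mathcal{A}} \int _0^{x_a} t_a(z)\, dz \quad \text{ subject } \text{ to } \;\; x\in C. \end{aligned}$$

Indeed, \(x^\star \) is optimal if and only if it satisfies the variational inequality:

$$\begin{aligned} \langle t(x^\star ), x - x^\star \rangle \ge 0, \quad \forall \, x\in C, \qquad \text{ where } \;\; t(x) = \big (t_a(x_a), \, a\in \mathcal{A}\big ). \end{aligned}$$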
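The deterministic Wardrop model can be illustrated on the smallest nontrivial network: one origin-destination pair joined by two parallel arcs. The sketch below finds the equilibrium split by bisection on the travel-time gap and then checks the inequality \(\langle t(x^\star ), x - x^\star \rangle \ge 0\) on a grid of feasible flows. The affine travel-time functions, the demand and the assumption that capacities are large enough to be inactive are all illustrative choices, as is the function name.

```python
def wardrop_two_arcs(h, t1, t2, tol=1e-12):
    """Equilibrium split (x1, x2) of demand h over two parallel arcs by bisection."""
    def gap(x1):
        # Travel-time difference; increasing in x1 when t1 and t2 are increasing.
        return t1(x1) - t2(h - x1)

    if gap(0.0) >= 0.0:   # arc 1 is never faster: all flow on arc 2
        return 0.0, h
    if gap(h) <= 0.0:     # arc 1 is always at least as fast: all flow on arc 1
        return h, 0.0
    lo, hi = 0.0, h
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if gap(mid) < 0.0:
            lo = mid
        else:
            hi = mid
    x1 = 0.5 * (lo + hi)
    return x1, h - x1


# Assumed data: affine congestion functions and demand h = 8, capacities inactive.
t1 = lambda x: 10.0 + x
t2 = lambda x: 15.0 + 2.0 * x
h = 8.0
x1, x2 = wardrop_two_arcs(h, t1, t2)

# Check <t(x*), x - x*> >= 0 for feasible arc-flows x = (y, h - y).
vi_min = min(
    t1(x1) * (y - x1) + t2(x2) * ((h - y) - x2)
    for y in [k * h / 100.0 for k in range(101)]
)
print(x1, x2, t1(x1), t2(x2), vi_min)
```

Both arcs carry flow at equilibrium here, so their travel times coincide, which is the “shortest routes only” property stated above.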

Example 2.5

Prevailing flow analysis. The main motivation in [10], which is probably the first article to introduce an (elementary) formulation of a two-stage stochastic variational inequality, was to determine the steady flow f that would prevail given an established network but where the origin(o)-destination(d) demand as well as the arc capacities are subject to (stochastic) perturbations. The individual users would make adjustments to their steady-equilibrium routes, depending on these perturbations \(\xi \), by choosing a recourse decision \(u_\xi \) that ends up being the “nearest feasible” route to their steady-route. Although one would actually like to place as few restrictions as possible on the class of recourse functions \((\xi ,f) \mapsto u(\xi ,f)\), from a modeling as well as a computational viewpoint, one might be satisfied with some restrictions or the application might dictate these restrictions as being the only really implementable “recourse” decisions; in [10] “nearest feasible” was interpreted as meaning the projection on the feasible region.

Detail. Recasting the problem considered in [10] in our present notation, it would read: find \((f,u_\xi )\) such that for all \(\xi \in \Xi \),

and

This problem comes with no additional variational inequality, i.e., of the type \(-\mathbb {E}[G(\cdot )] \in N_D(\cdot )\). Actually, in [10], it’s assumed that one can restrict the choice of f to a set \(D\subset {\mathbb {R}}^n_{\scriptscriptstyle +}\) which will guarantee that for all \(\xi \), one can find \(u_\xi \) so that the corresponding variational inequality is solvable. This is a non-trivial assumption and it is only valid if we expect the perturbations of both the od-demand and those modifying the capacities to be relatively small, i.e., can essentially be interpreted as “noise” affecting these uncertain quantities. \(\square \)

Example 2.6

Capacity expansion. Arc capacity expansion, \(\big (c_a \rightarrow c_a + x_a, a\in \mathcal{A}\big )\), is being considered for an existing, or to be designed, network (traffic, data transmission, high-speed rail, \(\dots \)). Only a limited pool of resources is available, which imposes a number of constraints on the choice of x. To stay in tune with our general formulation of the two-stage model and provide a wide margin of flexibility in the formulation of these x-constraints, we express them as a variational inequality \(\langle G(x), x'-x\rangle \ge 0\) for all \(x'\in D\) where D is a closed convex subset of \({\mathbb {R}}^L\), \(G: {\mathbb {R}}^L \rightarrow {\mathbb {R}}^L\) is a continuous vector-valued mapping and \(L = |\mathcal{A}|\). The overall objective is to determine the arcs where capacity expansion will be most useful, i.e., will respond as well as possible to the “average” needs of the users of this network (minimal travel times, rapid connections, \(\dots \)). We interpret this to mean that the network flow will be at, or at least seek, a Wardrop equilibrium based on the information available: each od-pair demand and the arc capacities, both of which are subject to stochastic fluctuations. Taking our cue from the deterministic version described earlier, given a capacity expansion x and an environment \(\xi \in \Xi \) affecting both demands and capacities, a solution \(\big (u_{\xi ,a}^\star , a\in \mathcal{A}\big )\) of the following variational inequality would yield a Wardrop equilibrium:

with

Our two-stage stochastic variational inequality, cf. Sect. 1, would take the form:

where

Detail. However, finding the solution to this particular stochastic variational inequality might not provide us with a well-thought-out solution to the network design problem: find optimal arc-capacity extensions x that would result in expected minimal changes in the traffic flow when there are arbitrary, possibly significant, perturbations in the demand and the capacities. These concerns lead us to a modified formulation of this problem which, rather than simply asking for a (feasible) solution of the preceding stochastic variational inequality, takes into account a penalty cost associated with the appropriate recourse decisions when users are confronted with specific scenarios \(\xi \in \Xi \). A “recourse cost” needs to be introduced. It’s then also natural, and convenient computationally, to rely on an approach that allows for the possibility that in unusual circumstances some of the preceding variational inequalities might actually fail to be satisfied. This will be handled via a residual function. This application-oriented reformulation results in a recasting of the problem and brings it closer to what has been called an Expected Residual Minimization or ERM-formulation. The analysis and the proposed solution procedure in the subsequent sections are devoted to this re-molded problem. Although this ERM-reformulation, see (4) below and Sect. 5, might usually\(^{5}\) only provide an approximate solution of the preceding variational inequality, it provides a template for the design of optimal networks (traffic, communication,\(\dots \)) coming with both structural and demand uncertainties as argued in the next section. \(\square \)

3 An expected residual minimization formulation

We proceed with an ERM-formulation that in some instances might provide a solution which better suits the overall objective of the decision maker, cf. Sect. 5. Our focus will be on a particular class of stochastic variational inequalities which, in particular, include a number of traffic/communication problems (along the lines of Example 2.6):

where

D is closed and convex, and \(G: {\mathbb {R}}^n\rightarrow {\mathbb {R}}^n\) and \(F:\Xi \times {\mathbb {R}}^n\rightarrow {\mathbb {R}}^n\), as in Sect. 1, are continuous vector-valued functions in x and u respectively for all \(\xi \in \Xi \), and F is \(\mathcal{A}\)-measurable in \(\xi \) for each \(u\in {\mathbb {R}}^n\).

The dependence of F on x is assimilated in u by letting it depend on the state of the system determined by (\({\varvec{\xi }},x)\); it is thus convenient in the ensuing analysis to view F as just a function of \({\varvec{\xi }}\) and u. A convenient interpretation is to think of \(x_a\) as the “average” flow that would pass through arc a when uncertainties aren’t taken into account whereas, when confronted with scenario \(\xi \), the actual flow would turn out to be \(u_{\xi ,a}\). Let’s denote by \(y_\xi = \big (y_{\xi ,a} = u_{\xi ,a} - x_a, \;a\in \mathcal{A}\big )\) the “recourse” required to adapt \(x_a\) to the events/scenario \(\xi \); this will come at an expected cost (time delay) to the users. Since \(\bar{x}\) is conceivably a Wardrop equilibrium, these adjustments will usually result in less desirable solutions. Such flow adjustments will come at a cost, say \(\frac{1}{2}\langle y_\xi ,Hy_\xi \rangle \), i.e. a generalized version of a least square cost proportional to the recourse decisions. This means that H, usually but not necessarily a diagonal matrix, would be positive definite. So, rather than viewing our decision variables as being \(u_\xi \), we can equivalently formulate the recourse decisions in terms of the \(y_\xi \), but now at some cost, and define \(u_\xi = x + y_\xi \). In fact, let us go one step further and enrich our model by treating these \(y_\xi \) as activities\(^{6}\) that result in a flow adjustment \(Wy_\xi \) and, hence, \(u_\xi = x + Wy_\xi \); whenever \(W=I\), the \(y_\xi \) just compensate for deviations from x.


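When dealing with a deterministic variational inequality \(-G(x) \in N_D(x)\), it is well known that its solution can be found by solving the following optimization problem

$$\begin{aligned} \min \; \theta (x) \quad \mathrm{subject\ to }\ x\in D, \end{aligned}$$

where \(\theta \) is a residual (gap-type) function, i.e., such that \(\theta \ge 0\) on D and \(\theta (x) = 0\) if and only if \(x\in D\) solves the variational inequality. In this article, we work with the following residual function, usually referred to as the regularized gap function [26],

$$\begin{aligned} \theta (x) = \max _{v\in D} \Big \lbrace \langle x-v, G(x)\rangle - \frac{\alpha }{2}\Vert x-v\Vert ^2 \Big \rbrace \qquad (1) \end{aligned}$$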

for some \(\alpha > 0\). In terms of the overall framework of Sect. 1, this residual function-type will be attached to the inclusion \(-G(x):=-\mathbb {E}[G(\xi ,x)] \in N_D(x)\). The corresponding optimization problem reads

When dealing with the second inclusions \(-F(\xi ,u(\xi ,x)) \in N_{C_\xi }(u(\xi ,x))\) for P-almost all \(\xi \in \Xi \), one could similarly introduce a collection of residual functions \(\tilde{\theta }(\xi ,u)\) whose properties would be similar to those of \(\theta \) and ask that with probability 1, \(\tilde{\theta }(\xi ,u(\xi ,x)) = 0\) if and only if the function \((\xi , x) \mapsto u(\xi ,x)\) satisfies the corresponding stochastic variational inequality. This certainly might be appropriate in certain instances, but in a variety of problems one might want to relax this condition and replace it by a weaker criterion, namely that the Expected Residual \(\mathbb {E}[\tilde{\theta }(\xi ,u(\xi ,x))]\) be minimal. A somewhat modified definition of a residual function will be more appropriate when dealing with this “collection of variational inequalities”; we adopt here the one introduced in [10].

Definition 3.1

(SVI-Residual function) Given a closed, convex set \(D\subset {\mathbb {R}}^n\) and the random vector \({\varvec{\xi }}\) defined on \((\Xi ,\mathcal{A},P)\), let us consider the following collection of variational inequalities (SVI):

A function \(r: \Xi \times {\mathbb {R}}^{n} \rightarrow {\mathbb {R}}_+\) is a residual function for these inclusions if the following conditions are satisfied:

(i) For P-almost all \(\xi \in \Xi \), \(r(\xi , u)\ge 0\) for all \( u\in C_\xi \);

(ii) For any \(u:\Xi \times D\rightarrow {\mathbb {R}}^n\) \(\mathcal{A}\)-measurable, it holds that

$$\begin{aligned} -F\big (\xi ,u(\xi ,x) \big ) \in _{a.s.} N_{C_\xi }(u(\xi ,x)) \Leftrightarrow r(\xi , u(\xi ,x))=_{a.s.}0 \ \mathrm{and }\ u(\xi ,x)\in _{a.s.} C_\xi . \end{aligned}$$

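The stochastic variational inequality in this definition is in line with the second \(a.s.\)-inclusion in Example 2.6. We will work with a concrete example of SVI-residual functions: the regularized gap residual function with \(\alpha >0\),

$$\begin{aligned} r(\xi ,u) = \max _{v\in C_\xi } \Big \lbrace \langle u-v, F(\xi ,u)\rangle - \frac{\alpha }{2}\Vert u-v\Vert ^2 \Big \rbrace . \qquad (3) \end{aligned}$$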

We will show in Theorem 3.3 that the above function satisfies the two conditions in Definition 3.1. The use of residual functions leads us to seeking a solution of the stochastic program

With the positive definite property of H and the convexity of \(C_\xi \), \(u(\xi , x)\) is uniquely defined by the unique solution \(y^*_\xi \) of the second stage optimization problem in (4). Moreover, with the residual functions \(\theta \) and r defined in (1) and (3), respectively, the positive parameter \(\lambda \) in (4) allows for a further adjustment of the weight to ascribe to the required recourse decisions and residuals; with \(\lambda \) relatively large, and adjusting \(H\succ 0\) correspondingly, one should end up with a solution that essentially avoids any violation of the collection (SVI) of variational inequalities.\(^{7}\)
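To make the structure of the stochastic program (4) concrete, the following Python sketch evaluates its objective for finitely many equiprobable scenarios. It is an illustration only and rests on simplifying assumptions that are not part of the model above: \(W=I\), \(H=I\), the set D and every \(C_\xi \) are boxes, \(F(\xi ,u)=Mu+q_\xi \) is affine, and G is the scenario average of F. The regularized gap functions are taken in their standard form, with the maximizer obtained by projection, and the recourse cost is taken to be \(Q(\xi ,x)=\frac{1}{2}\langle y^*_\xi ,Hy^*_\xi \rangle \). All function names are hypothetical.

```python
def project_box(z, lo, hi):
    """Euclidean projection of z onto the box [lo, hi] (componentwise)."""
    return [min(max(zi, l), h) for zi, l, h in zip(z, lo, hi)]


def regularized_gap(u, Fu, lo, hi, alpha):
    """max over v in the box of <u - v, Fu> - (alpha/2)||u - v||^2.

    The maximizer is the projection of u - Fu/alpha onto the box.
    """
    v = project_box([ui - fi / alpha for ui, fi in zip(u, Fu)], lo, hi)
    return sum((ui - vi) * fi - 0.5 * alpha * (ui - vi) ** 2
               for ui, vi, fi in zip(u, v, Fu))


def affine(M, q, u):
    """Assumed affine map F(xi, u) = M u + q_xi."""
    return [sum(mij * uj for mij, uj in zip(row, u)) + qi
            for row, qi in zip(M, q)]


def erm_objective(x, scenarios, D, M, alpha=1.0, lam=1.0):
    """theta(x) + lam * mean over scenarios of [r(xi, u) + Q(xi, x)].

    scenarios: list of (q_xi, lo_xi, hi_xi); D: (lo, hi) box for x.
    Assumes W = I and H = I, so u = prj_{C_xi}(x) and y = u - x.
    """
    n_s = len(scenarios)
    # First stage: G(x) is the scenario average of F(xi, x).
    Fx = [affine(M, q, x) for q, _, _ in scenarios]
    Gx = [sum(col) / n_s for col in zip(*Fx)]
    total = regularized_gap(x, Gx, D[0], D[1], alpha)
    for q, lo, hi in scenarios:
        u = project_box(x, lo, hi)  # second-stage flow
        Q = 0.5 * sum((ui - xi) ** 2 for ui, xi in zip(u, x))  # recourse cost
        r = regularized_gap(u, affine(M, q, u), lo, hi, alpha)
        total += lam * (r + Q) / n_s
    return total


# One-dimensional illustration: F(xi, u) = u - c_xi on C_xi = [0, 1],
# with c_xi = 0.2 in the first scenario and c_xi = 0.8 in the second.
scen = [([-0.2], [0.0], [1.0]), ([-0.8], [0.0], [1.0])]
value = erm_objective([0.5], scen, D=([0.0], [1.0]), M=[[1.0]])
print(value)
```

In this toy instance the two scenarios disagree about the equilibrium flow, so no x makes both residuals vanish and the objective stays strictly positive, which is the situation the ERM formulation is meant to handle.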

Assumption 3.2

Assume

(i) W has full row rank;

(ii) for almost all \(\xi \), \(C_\xi \subset C^\dagger \), a compact convex set.

For almost all \(\xi \), since \(C_\xi \) is convex and compact, it is easy to show that for these \(\xi \), \(u\mapsto r(\xi ,u)\) is continuous. On the other hand, for each fixed u, it follows from [58, Theorem 14.37] that \(\xi \mapsto r(\xi ,u)\) is measurable. This means that r is a Carathéodory function. In addition, consider for each \(\xi \in \Xi \),

Recall that \(v(\xi ,u)\) attains the maximum in (3). Clearly, \(u\mapsto v(\xi ,u)\) is continuous, and for each fixed u, the measurability of \(\xi \mapsto v(\xi ,u)\) again follows from [58, Theorem 14.37]. Consequently, also v is a Carathéodory function.

Theorem 3.3

(Residual function) When Assumption 3.2 is satisfied, r is a residual function for our collection (SVI) and for any \(x \in D\) and almost every \(\xi \in \Xi \), the function \(r(\xi ,u(\xi ,x))+Q(\xi ,x)\) in (4) is finite, nonnegative with

as the unique maximizer of the maximization problem in (3).

Proof

Let \(x\in D\) and \(u:\Xi \times D\rightarrow {\mathbb {R}}^n\) be \(\mathcal A\)-measurable in \(\xi \). We now check the two conditions in Definition 3.1. First of all, we see that \(r(\xi , u)\) is nonnegative for all \(u\in C_\xi \) from the definition. Moreover, from the property of the regularized gap function for VI, we have \(r(\xi , u(\xi , x))=0\) and \(u(\xi ,x)\in C_\xi \) if and only if \(u(\xi , x)\) solves the (deterministic) variational inequality \(-F(\xi ,u(\xi ,x))\in N_{C_\xi }(u(\xi ,x))\) for fixed \(\xi \in \Xi \). Thus, it follows that r is a residual function of this collection of variational inequalities \(-F(\xi ,u(\xi ,x))\in _{a.s.} N_{C_\xi }(u(\xi ,x)), \;\xi \in \Xi \).

We next show that for any \(x\in D\), the \(y^*_\xi \) in (4) is, in fact, uniquely defined. To this end, consider \(y=W^\top (WW^\top )^{-1}(\mathop {\mathrm{prj}}_{C_\xi } x -x)\), where \(WW^\top \) is invertible by Assumption 3.2. Then \(Wy= \mathop {\mathrm{prj}}_{C_\xi }x-x\) and \(Wy +x \in C_\xi \) which means that the feasible set of the second stage optimization problem in (4), i.e., \(\big \lbrace y {\,\big \vert \,}x+Wy\in C_\xi \big \rbrace \), is nonempty. Moreover, for almost all \(\xi \in \Xi \), this set is closed and convex since \(C_\xi \) is compact and convex. Consequently, the second stage optimization problem in (4) has a strongly convex objective and a nonempty closed convex feasible set. Therefore, it has a unique minimizer \(y^*_\xi \), and \(\xi \mapsto y^*_\xi \) is measurable thanks to [58, Theorem 14.37].

Finally, for \(u(\xi ,x) = x + Wy^*_\xi \), we recall the well-known fact that the problem

has a solution, with the unique maximizer given by the projection (6). Thus, the function \(r(\xi , u(\xi ,x))\) in (4) is also finite-valued. Furthermore, since H is positive definite and r is nonnegative, \(r(\xi , u(\xi ,x))+Q(\xi , x)\) has a finite nonnegative value at any \(x \in D\) for almost every \(\xi \in \Xi \). This completes the proof. \(\square \)
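The role of the projection in the proof can be illustrated on a scalar example. The sketch below is a hypothetical instance, not taken from the paper: \(F(u)=u-c\) on \(C=[0,1]\) with \(c=0.3\), so that the variational inequality is solved by \(u=0.3\). It assumes the standard form of the regularized gap residual, computes the maximizer as the projection of \(u-\alpha ^{-1}F(u)\) onto C, and compares the resulting value with a brute-force maximization over a grid.

```python
def residual(u, alpha, c=0.3, lo=0.0, hi=1.0):
    """Return (r(u), maximizer v) for F(u) = u - c on C = [lo, hi]."""
    Fu = u - c
    v = min(max(u - Fu / alpha, lo), hi)  # projection of u - F(u)/alpha onto C
    return (u - v) * Fu - 0.5 * alpha * (u - v) ** 2, v


def brute_force(u, alpha, c=0.3, lo=0.0, hi=1.0, m=10001):
    """Grid maximization of v -> (u - v) F(u) - (alpha/2)(u - v)^2 over C."""
    Fu = u - c
    grid = (lo + (hi - lo) * k / (m - 1) for k in range(m))
    return max((u - v) * Fu - 0.5 * alpha * (u - v) ** 2 for v in grid)


for u in (0.0, 0.1, 0.3, 0.5, 1.0):
    r, v = residual(u, alpha=2.0)
    print(u, r, v, brute_force(u, alpha=2.0))
```

On this instance the residual vanishes at \(u=0.3\) and is positive at the other points of C, in line with the two conditions of Definition 3.1.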

Theorem 3.3 means that under Assumption 3.2, problem (4) is a stochastic program with complete recourse, that is, for any x and almost all \(\xi \), the second stage optimization problem in (4) has a solution. In general, this is not an innocuous assumption but in the context that we are considering it only means that the set D has singled out a set of possible traffic network layouts that will guarantee that traffic will proceed, possibly highly disturbed and arduously, whatever the perturbations resulting from the stochastic environment \(\xi \).

We need the following to guarantee the objective of (4) is finite-valued:

Assumption 3.4

The functions \(F(\xi ,\cdot )\) and \(G(\cdot )\) are continuously differentiable for all \(\xi \in \Xi \) and \(\nabla F\) is \(\mathcal{A}\)-measurable. Moreover, for any compact set \(\Omega \), there are functions \(d, \rho : \Xi \rightarrow {\mathbb {R}}_+\) such that

for all \(u\in \Omega \), where \(d\in \mathcal{L}_1^{\scriptscriptstyle \infty }\) and \(\rho \in \mathcal{L}_1^1\).

Lemma 3.5

Suppose Assumptions 3.2 and 3.4 hold. Then for almost all \(\xi \), r is continuously differentiable and its gradient is given by

Moreover, for any measurable \(u_\xi \in _{a.s.}C_\xi \), both \(\xi \mapsto r(\xi ,u_\xi )\) and \(\xi \mapsto \nabla r(\xi , u_\xi )\) are not only measurable but actually integrable uniformly in \(u_\xi \). In particular, this means that the objective function in (4) is well defined at any \(x\in D\), and the optimal value of (4) is finite.
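The differentiability claim can be sanity-checked numerically. The sketch below assumes the standard expression for the gradient of the regularized gap function from [26], namely \(\nabla _u r(\xi ,u) = F(\xi ,u) - (\nabla F(\xi ,u) - \alpha I)(v(\xi ,u) - u)\) with \(\nabla F\) the transposed Jacobian and \(v(\xi ,u)\) the maximizer in (3), and compares it with central finite differences. The map F, the box \(C=[0,1]^2\) and all function names are hypothetical choices made for illustration.

```python
import math

M = [[2.0, 1.0], [0.0, 3.0]]
q = [-1.0, 0.5]


def F(u):
    """Hypothetical smooth map F(u) = M u + q + 0.1 sin(u) (componentwise sine)."""
    return [sum(M[i][j] * u[j] for j in range(2)) + q[i] + 0.1 * math.sin(u[i])
            for i in range(2)]


def jac(u):
    """Jacobian of F at u: entry [i][j] = dF_i / du_j."""
    return [[M[i][j] + (0.1 * math.cos(u[i]) if i == j else 0.0)
             for j in range(2)] for i in range(2)]


def residual(u, alpha, lo=0.0, hi=1.0):
    """Regularized gap residual on the box [lo, hi]^2 and its maximizer."""
    Fu = F(u)
    v = [min(max(u[i] - Fu[i] / alpha, lo), hi) for i in range(2)]
    val = sum((u[i] - v[i]) * Fu[i] - 0.5 * alpha * (u[i] - v[i]) ** 2
              for i in range(2))
    return val, v


def residual_grad(u, alpha):
    """Assumed formula: F(u) - (J(u)^T - alpha I)(v - u)."""
    Fu, J = F(u), jac(u)
    _, v = residual(u, alpha)
    d = [v[i] - u[i] for i in range(2)]
    return [Fu[i] - (sum(J[j][i] * d[j] for j in range(2)) - alpha * d[i])
            for i in range(2)]


def fd_grad(u, alpha, h=1e-6):
    """Central finite-difference approximation of the gradient of r."""
    g = []
    for i in range(2):
        up, um = list(u), list(u)
        up[i] += h
        um[i] -= h
        g.append((residual(up, alpha)[0] - residual(um, alpha)[0]) / (2 * h))
    return g


u0 = [0.3, 0.7]
print(residual_grad(u0, 1.5), fd_grad(u0, 1.5))
```

The two gradients should agree up to discretization error, even though the maximizer itself is only piecewise smooth in u.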

Proof

From Theorem 3.2 in [26], we know that \(r(\xi , \cdot )\) is continuously differentiable and its gradient is given by (7). Moreover, notice that \(r(\xi ,u)\) and its gradients with respect to u are both Carathéodory functions. Hence, the measurability of \(r(\xi , u_\xi )\) and \(\nabla r(\xi , u_\xi )\) for any measurable function \(u_\xi \) follows. We now establish uniform integrability.

Since \(C^\dagger \) is compact, there is \(\gamma >0\) such that \(\Vert u\Vert \le \gamma \) for all \(u\in C^\dagger \). This together with Assumption 3.4 yields \(r(\xi ,u_\xi ) \le 2\gamma d_\xi + \frac{\alpha }{2} (2\gamma )^2\) and

This proves uniform integrability.

Finally, let x be feasible for (4) and consider the corresponding \(y^*_\xi \) (which exists and is measurable according to the proof of Theorem 3.3). Set \(\hat{y} = W^\top (WW^\top )^{-1}(\mathop {\mathrm{prj}}_{C_\xi }x - x)\). Then \(W\hat{y} + x \in C_\xi \) and hence we have from the definition of \(y^*_\xi \) and \(C^\dagger \) that \(\langle y^*_\xi ,Hy^*_\xi \rangle \le \langle \hat{y},H\hat{y}\rangle \le c\) for some constant \(c > 0\) (that depends on x but is independent of \(\xi \)). Hence

which implies the well-definedness of the objective in (4) and the finiteness of the optimal value. This completes the proof. \(\square \)

Lemma 3.5 establishes the differentiability of r and the finiteness of optimal value of (4). However, the objective function of problem (4) involves minimizers of constrained quadratic programs for \(\xi \in \Xi \) and is not necessarily differentiable even when the sample is finite, despite the fact that the function \(r(\xi ,\cdot )\) in (3) is continuously differentiable for almost all \(\xi \in \Xi \).

Below, we adopt the strategy of the L-shaped algorithm for two-stage stochastic optimization problems [6, 36, 65] to obtain a relaxation of (4), whose objective function will be smooth when the sample is finite. We start by rewriting the recourse program. First, observe that the second stage problem is the same as

With the substitution \(z = H^{\frac{1}{2}} y\), the above problem is equivalent to

Observe that for each fixed u and x, the minimizer of \(\min \big \lbrace \frac{1}{2}\Vert z\Vert ^2 {\,\big \vert \,}WH^{-\frac{1}{2}}z = u - x\big \rbrace \) is

Plugging this expression back in (8), the second stage problem is further equivalent to

whose unique solution \(u_\xi ^*\) can be interpreted as a weighted projection of x onto \(C_\xi \) for each \(\xi \in \Xi \). From this and (9), for each \(\xi \in \Xi \), the unique solution \(y^*_\xi \) of the second stage problem is given by

Combining the preceding reformulation of the second stage problem with the idea of the L-shaped algorithm, we are led to the following problem, whose objective is smooth when the sample is finite:

It is not hard to see that the optimal value of (11) is no larger than that of (4) since fewer restrictions are imposed on \(u_\xi \), or, equivalently on \(y_\xi \) in (10). Hence, it follows from Lemma 3.5 that the optimal value of (11) is also finite.
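The reformulation of the second stage problem described in (8) to (10) can be checked on a small instance. The sketch below takes \(B = (WH^{-1}W^\top )^{-1}\), which is an assumption here but is consistent with the identities \(y_\xi = H^{-1}W^\top B(u_\xi - x)\) and \(\langle y_\xi ,Hy_\xi \rangle = \langle u_\xi - x,B(u_\xi - x)\rangle \) used later in the proof of Theorem 3.7. The matrices W and H are hypothetical, and the code verifies that the candidate recourse is feasible, has the predicted cost, and is not improved by moving along the null space of W.

```python
def inv2(A):
    """Inverse of a 2x2 matrix."""
    (a, b), (c, d) = A
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]


W = [[1.0, 1.0, 0.0], [0.0, 1.0, 1.0]]  # 2x3, full row rank
h = [1.0, 2.0, 4.0]                      # diagonal of H (positive definite)

# B = (W H^{-1} W^T)^{-1}
WHW = [[sum(W[i][k] * W[j][k] / h[k] for k in range(3)) for j in range(2)]
       for i in range(2)]
B = inv2(WHW)


def recourse(d):
    """Minimum-cost y with W y = d, computed as y = H^{-1} W^T B d."""
    Bd = [sum(B[i][j] * d[j] for j in range(2)) for i in range(2)]
    return [sum(W[i][k] * Bd[i] for i in range(2)) / h[k] for k in range(3)]


def cost(y):
    """Recourse cost 0.5 <y, H y> for diagonal H."""
    return 0.5 * sum(hk * yk * yk for hk, yk in zip(h, y))


def weighted_quadratic(d):
    """0.5 <d, B d>, the second stage cost written in terms of d = u - x."""
    return 0.5 * sum(d[i] * B[i][j] * d[j] for i in range(2) for j in range(2))


d = [1.0, -2.0]  # a flow adjustment u - x
y = recourse(d)
print(y, cost(y), weighted_quadratic(d))
```

Since the cost depends on y only through \(d = u - x\) once the inner minimization is carried out, the second stage reduces to a weighted projection of x onto \(C_\xi \), as stated above.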

Unlike (4) which only depends explicitly on the finite dimensional decision variable x, problem (11) depends also explicitly on the measurable function \(\xi \mapsto u_\xi \). However, notice that (11) can be equivalently rewritten as

where \(\delta _{C_\xi }\) is the indicator function of the set \(C_\xi \) which is zero inside the set and is infinity otherwise. We next recall the following result, which allows interchange of minimization and integration. This is a consequence of the finiteness of the optimal value of the inner minimization in (12) (thanks to Lemma 3.5), Exercise 14.61 and Theorem 14.60 (interchange of minimization and integration) in [58].

Lemma 3.6

Under Assumptions 3.2 and 3.4, for any fixed \(x\in D\), we have

where

Moreover, for \(\bar{u}\in \mathcal{L}^{\scriptscriptstyle \infty }_{n}\),

Using the above result, one can further reformulate (11) equivalently as

which is an optimization problem that depends only explicitly on the finite dimensional decision variable x. Moreover, for each \(x\in D\), from (14), we have \(u^*\in \mathcal{L}_n^\infty \) attaining the minimum of the inner minimization problem in (12) if and only if its value at \(\xi \), i.e., \(u^*_\xi \), is a minimizer of the minimization problem defining \(\psi (\xi ,x)\) for almost all \(\xi \in \Xi \). We note that \(\psi (\xi ,x)\) is also a Carathéodory function: the measurability with respect to \(\xi \) for each fixed x follows from [58, Theorem 14.37], while the continuity with respect to x for almost all \(\xi \) follows from the compactness of \(C_\xi \) and the continuity of \((u,x)\mapsto r(\xi ,u) + \frac{1}{2}\langle u-x,B(u-x)\rangle \). In addition, thanks to Lemma 3.5, it is not hard to check that the objective in (15) is finite at any \(x\in D\) under Assumptions 3.2 and 3.4.

We show next that both (4) and (11) (and hence (15)) are solvable, and discuss their relationship.

Theorem 3.7

(Solvability) Suppose Assumptions 3.2 and 3.4 hold. Then problems (4) and (11) are solvable. Let \(\nu _1\) and \(\nu _2\) be the optimal values of (4) and (11), respectively. Then \(\nu _1\ge \nu _2\). Moreover, if for any \(x\in D\) and \(x+Wy\), \(x+Wz\in C_\xi \), we have

then the two problems have the same optimal values.

Proof

According to Lemma 3.5 and the preceding discussions, the optimal values of both problems (4) and (11) are finite. We show that the values are attained.

We first show that the optimal value of problem (4) is attained. To this end, consider \(\Vert x\Vert \rightarrow \infty \). Then from the boundedness of \(C^\dagger \supseteq C_\xi \), it follows that \(\Vert y^*_\xi \Vert \) in (4) must go to \(\infty \) uniformly in \(\xi \) except for a set of measure zero, which implies that \(\mathbb {E}[Q(\xi , x)]\) goes to \(\infty \) in (4). This together with the nonnegativity of r and \(\theta \) shows that the objective function of (4), as a function of x, is level bounded. Next, we show that the objective is lower semicontinuous. To this end, consider a sequence \(x_k\rightarrow x_0\). From the discussions in (8) to (10) and using the continuity of weighted projections, we see that \(u(\xi ,x_k)\rightarrow u(\xi ,x_0)\) for almost all \(\xi \in \Xi \). This implies the continuity of \(x\mapsto r(\xi ,u(\xi ,x)) +Q(\xi , x)\), which is a nonnegative function, and Fatou’s lemma gives the lower semicontinuity of \(\mathbb {E}[r(\xi ,u(\xi ,x)) +Q(\xi , x)]\). The lower semicontinuity of the objective of (4) now follows upon recalling that \(\theta (x)\) is continuous. Hence (4) has a solution \(x^*\), from which one can easily construct the second stage solution \(y^*_\xi \).

We now show that the optimal value of problem (11) is attained. Note that from the discussion preceding this theorem, one can equivalently consider problem (15). For this latter problem, observe that

where the first two inequalities follow from the nonnegativity of the residual functions, and the third inequality follows from \(C_\xi \subseteq C^\dagger \). The above relation together with the positive definiteness of B and the compactness of \(C^\dagger \) shows that the objective function of (15) is level bounded. We note also that the objective is lower semicontinuous, which is a consequence of the continuity of \(\theta (x)\), the continuity of \(\psi (\xi ,x)\) in x and Fatou’s lemma. Hence an optimal solution \(x^*\) exists, from which the corresponding \(u^*_\xi \) that minimizes the subproblem defining \(\psi (\xi ,x)\) can be obtained easily. From this and the relationship between the solutions of (11) and (15), one concludes that a solution of problem (11) exists.

Next, we consider the relationship between the optimal values of (4) and (11), which we denote by \(\nu _1\) and \(\nu _2\), respectively. Obviously, \(\nu _1\ge \nu _2\).

Suppose in addition that (16) holds, then \(\nu _1 = \nu _2\). To prove it, let \((x^2, u^2_\xi )\) be a solution of (11) and \(x^1\) be a solution of (4). Set

and \(y^2_\xi =H^{-1}W^\top B(u^2_\xi -x^2).\) Since \(u^2_\xi =x^2+Wy^2_\xi \in C_\xi \), we have

Using this inequality, condition (16) and \(\langle y_\xi ^2,Hy_\xi ^2\rangle =\langle u^2_\xi -x^2,B(u^2_\xi -x^2)\rangle \) yield

Therefore, problems (4) and (11) have the same optimal values. \(\square \)

Similarly to Lemma 3.5, we now study the differentiability of the objective function of problem (11). Let

where r is given by (3). The proof of the following proposition is similar to that of Lemma 3.5 and will thus be omitted.

Proposition 3.8

Suppose that Assumptions 3.2 and 3.4 hold. Then for almost all \(\xi \), f is continuously differentiable and its gradient is given by

Moreover, \(f(\xi , x, u_\xi )\) and \(\nabla f(\xi , x, u_\xi )\) are measurable in \(\xi \). Furthermore, for any compact set \(\bar{D}\subseteq D\), there are \(d^f, \rho ^f \in \mathcal{L}_1^1\) such that \(\Vert f(\xi , x, u_\xi )\Vert \le d^f_\xi \) and \(\Vert \nabla f(\xi , x, u_\xi )\Vert \le \rho ^f_\xi \) whenever \(u_\xi \in C_\xi \) and \(x\in \bar{D}\).

In general, a two-stage stochastic VI cannot be reformulated as a convex two-stage stochastic optimization problem. Below, we show that convexity is inherited partially in f from a linear two-stage stochastic optimization problem. This proposition will be used in the next section to establish, for this particular class of problems, a stronger convergence result for linear two-stage stochastic optimization problems.

Proposition 3.9

Under Assumptions 3.2 and 3.4, if for \(\xi \in \Xi \), \(F(\xi , x)=M_\xi x+q_\xi \) and \(\inf _{\xi \in \Xi }\lambda _{\min }(B+M_\xi +M_\xi ^\top ) > \alpha \), then \(f(\xi ,x, \cdot )\) is strongly convex for each \(x\in D\), with a strong convexity modulus independent of x and \(\xi \). Moreover, if \(\inf _{\xi \in \Xi }\lambda _{\min }(M_\xi +M_\xi ^\top ) \ge \alpha \), then \(f(\xi ,\cdot ,\cdot )\) is convex.

Proof

For a fixed \(\xi \), notice that the function

is convex in u if the function

is convex in u for any fixed \(v\in {\mathbb {R}}^n\) and \(x\in {\mathbb {R}}^n\). Since

is positive definite, \(\varphi (\xi , x, \cdot ,v)\) and thus \(f(\xi , x, \cdot )\) are strongly convex. The independence of the modulus on x can be seen from the fact that \(\nabla ^2_{uu}\varphi (\xi ,x,u,v)\) does not depend on x, while the independence on \(\xi \) can be seen from the assumption on the eigenvalue. Finally, when \(\inf _{\xi \in \Xi }\lambda _{\min }(M_\xi +M_\xi ^\top ) \ge \alpha \), the convexity of \(f(\xi , \cdot , \cdot )\) in (x, u) follows from the positive semi-definiteness of the matrix

This completes the proof. \(\square \)
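The eigenvalue condition of Proposition 3.9 is easy to test on a finite scenario set. The following sketch assumes that f has the form \(f(\xi ,x,u) = r(\xi ,u) + \frac{1}{2}\langle u-x,B(u-x)\rangle \), as suggested by the discussion following (15), and uses hypothetical \(2\times 2\) data with \(C_\xi = [0,1]^2\). It computes the candidate modulus \(\mu = \min _\xi \lambda _{\min }(B+M_\xi +M_\xi ^\top ) - \alpha \) and measures the slack in the midpoint form of strong convexity, \(f(\tfrac{1}{2}(u_1+u_2)) \le \tfrac{1}{2}f(u_1) + \tfrac{1}{2}f(u_2) - \tfrac{\mu }{8}\Vert u_1-u_2\Vert ^2\).

```python
import math


def lambda_min_sym2(S):
    """Smallest eigenvalue of a symmetric 2x2 matrix (closed form)."""
    a, b, c = S[0][0], S[0][1], S[1][1]
    return 0.5 * (a + c) - math.hypot(0.5 * (a - c), b)


def modulus(Ms, B, alpha):
    """min over scenarios of lambda_min(B + M + M^T), minus alpha."""
    return min(
        lambda_min_sym2([[B[i][j] + M[i][j] + M[j][i] for j in range(2)]
                         for i in range(2)])
        for M in Ms) - alpha


def f(u, x, M, q, B, alpha, lo=0.0, hi=1.0):
    """Assumed form f = r(xi, u) + 0.5 <u - x, B (u - x)> with C_xi = [lo, hi]^2."""
    Fu = [M[i][0] * u[0] + M[i][1] * u[1] + q[i] for i in range(2)]
    v = [min(max(u[i] - Fu[i] / alpha, lo), hi) for i in range(2)]
    r = sum((u[i] - v[i]) * Fu[i] - 0.5 * alpha * (u[i] - v[i]) ** 2
            for i in range(2))
    d = [u[i] - x[i] for i in range(2)]
    quad = 0.5 * sum(d[i] * B[i][j] * d[j] for i in range(2) for j in range(2))
    return r + quad


def midpoint_slack(u1, u2, x, M, q, B, alpha, mu):
    """Right-hand side minus left-hand side of the midpoint inequality."""
    mid = [0.5 * (a + b) for a, b in zip(u1, u2)]
    dist2 = sum((a - b) ** 2 for a, b in zip(u1, u2))
    avg = 0.5 * (f(u1, x, M, q, B, alpha) + f(u2, x, M, q, B, alpha))
    return avg - mu / 8.0 * dist2 - f(mid, x, M, q, B, alpha)


Ms = [[[1.0, 0.5], [-0.5, 1.0]], [[2.0, 1.0], [0.0, 1.0]]]  # hypothetical M_xi
B = [[1.0, 0.0], [0.0, 1.0]]
alpha = 1.0
mu = modulus(Ms, B, alpha)
print(mu, midpoint_slack([0.2, 0.9], [1.5, -0.4], [0.5, 0.5],
                         Ms[1], [0.1, -0.3], B, alpha, mu))
```

A nonnegative slack for every pair of points is what a strong convexity modulus independent of x and \(\xi \) predicts; the check is only a numerical illustration, not a proof.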

Remark 3.10

In [1], Agdeppa, Yamashita and Fukushima defined a convex ERM formulation for the stochastic linear VI by using the regularized gap function under the assumption that \(\inf _{\xi \in \Xi }\lambda _{\min }(M_\xi +M_\xi ^\top ) > \alpha \). In [10], Chen, Wets and Zhang defined a convex ERM formulation for the stochastic linear VI by using the gap function under a weaker assumption that \(E[ M_\xi +M^\top _\xi ]\) is positive semi-definite. In this paper, Proposition 3.9 shows that for any stochastic linear VI, we can choose a positive definite matrix B to define a convex optimization problem in the second stage of the generalized ERM formulation (11).

To end this section, we include a corollary concerning the entire objective function of (11).

Corollary 3.11

Suppose that Assumptions 3.2 and 3.4 hold. Then the objective function \(\theta (x)+\lambda \mathbb {E}[f(\xi , x, u_\xi )]\) is well defined at any \(x\in D\) and any measurable function \(u_\xi \in C_\xi \). Moreover, if \(G(x)=\mathbb {E}[M_\xi ] x + \mathbb {E}[q_\xi ]\), then the objective function is convex under all the assumptions of Proposition 3.9.

4 Convergence analysis

In this section, we discuss a sample average approximation (SAA) for (15) (and hence, equivalently, (11)) and derive its convergence. Let \(G(x)=\mathbb {E}[F(\xi , x)]\) and \(\xi ^1, \ldots , \xi ^\nu \) be iid (independent and identically distributed) samples of \({\varvec{\xi }}\) and

Note that \(G^\nu \) and \(\theta ^\nu \) are continuous P-a.s. for all \(\nu \), and recall that \(\psi \) is a Carathéodory function; cf. the discussion following (15). We consider the following SAA of (15) (and hence, equivalently, (11)):

Let \(X^*\) and \(X^\nu \) be the solution sets of problems (15) and (18). We will give sufficient conditions for \(X^*\) and \(X^\nu \) to be nonempty and bounded, and for any cluster point of a sequence \(\{x^\nu \}_{x^\nu \in X^\nu }\) to be in \(X^*\).
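The effect of sampling on the first-stage term can be illustrated with a small experiment. The sketch below is a hypothetical instance, not taken from the paper: \(F(\xi ,x) = Mx + q + \xi \) with the components of \(\xi \) independent and uniform on \([-1,1]\), so that \(G(x) = Mx+q\), and \(D=[0,1]^2\). It takes \(G^\nu \) to be the sample average of \(F(\xi ^i,\cdot )\) and \(\theta ^\nu \) to be the regularized gap function built from \(G^\nu \), and reports the largest deviation \(|\theta ^\nu (x)-\theta (x)|\) over a grid on D.

```python
import random

M = [[2.0, 1.0], [0.0, 3.0]]
q = [-1.0, 0.5]
ALPHA = 1.0


def G(x):
    """Expected map G(x) = M x + q (the noise xi has zero mean)."""
    return [sum(M[i][j] * x[j] for j in range(2)) + q[i] for i in range(2)]


def theta(x, Gx, alpha=ALPHA):
    """Regularized gap function on D = [0, 1]^2 for a given value Gx of the map."""
    v = [min(max(xi - gi / alpha, 0.0), 1.0) for xi, gi in zip(x, Gx)]
    return sum((xi - vi) * gi - 0.5 * alpha * (xi - vi) ** 2
               for xi, vi, gi in zip(x, v, Gx))


def sup_gap_error(nu, rng, grid=11):
    """Largest |theta^nu(x) - theta(x)| over a grid on D for one sample of size nu."""
    # With additive noise, G^nu(x) = G(x) + (sample mean of xi).
    xi_bar = [sum(rng.uniform(-1.0, 1.0) for _ in range(nu)) / nu
              for _ in range(2)]
    worst = 0.0
    for i in range(grid):
        for j in range(grid):
            x = [i / (grid - 1), j / (grid - 1)]
            Gx = G(x)
            Gnu = [g + e for g, e in zip(Gx, xi_bar)]
            worst = max(worst, abs(theta(x, Gnu) - theta(x, Gx)))
    return worst


rng = random.Random(0)
for nu in (10, 100, 1000, 10000):
    print(nu, sup_gap_error(nu, rng))
```

Because \(\theta \) is a maximum of functions that are affine in the value of G, the deviation is controlled by \(\Vert G^\nu - G\Vert \) times the diameter of D, which is why uniform convergence of \(G^\nu \) carries over to \(\theta ^\nu \).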

Lemma 4.1

Under Assumptions 3.2 and 3.4, \(X^*\) and \(X^\nu \) are nonempty P-a.s. for all \(\nu \). Moreover, there exists a compact convex set \(\bar{D}\subseteq D\) so that \(X^*\subseteq \bar{D}\) and \(X^\nu \subseteq \bar{D}\) P-a.s. for all \(\nu \).

Proof

From the nonnegativity of \(r(\xi ,u)\), we have for almost all \(\xi \in \Xi \),

where \(\lambda _B > 0\) is the smallest eigenvalue of the matrix B. On the other hand, recall from Assumption 3.2 that there exists \(\tau > 0\) so that \(\Vert u\Vert \le \tau \) for all \(u\in C^\dagger \), and from Assumption 3.4 that \(\Vert F(\xi ,u)\Vert \le d_\xi \) for some \(d\in \mathcal{L}_1^{\scriptscriptstyle \infty }\). Hence, for a fixed \(x_0 \in D\), we have

for some constant \(\gamma _1\) P-a.s. for all \(\nu \), since \(d\in \mathcal{L}_1^{\scriptscriptstyle \infty }\). Furthermore, we have

P-a.s. for all \(\nu \), since \(F(\cdot ,x_0)\in \mathcal{L}_1^{\scriptscriptstyle \infty }\).

Hence we have

P-a.s. for all \(\nu \), and that

These, together with the boundedness of \(C^\dag \), imply that the level sets of \(\varphi ^\nu (\cdot )\) and \(\varphi (\cdot )\) are bounded and are included in a compact set that is independent of \(\xi \), P-a.s. for all \(\nu \). Moreover, the objective functions of (15) and (18) are all lower semicontinuous, and \(\theta \), \(\theta ^\nu \), \(\psi (\xi , x)\) are nonnegative. Hence \(X^*\) and \(X^\nu \) are nonempty P-a.s. for all \(\nu \). Finally, from (19) and (20), one can choose \(\bar{D} = \{ x\in D \, | \, \lambda \cdot \lambda _B\Vert \mathop {\mathrm{prj}}\nolimits _{C^\dagger } x -x\Vert ^2\le 2\max \{\varphi (x_0),\lambda \gamma _1 + \gamma _2\} \}\). \(\square \)

Theorem 4.2

(Convergence theorem) Suppose Assumptions 3.2 and 3.4 hold. Then \(\varphi ^\nu \) converges to \(\varphi \) a.s.-uniformly on the compact set \(\bar{D}\) specified in Lemma 4.1. Let \(\{x^\nu \}\) be a sequence of minimizers of problems (18) generated by iid samples. Then \(\{x^\nu \}\) is P-a.s. bounded and any accumulation point \(x^*\) of \(\{x^\nu \}\) as \(\nu \rightarrow \infty \) is P-a.s. a solution of (15).

Proof

From Lemma 4.1, the convex compact set \(\bar{D}\subseteq D\) is such that \(X^*\subseteq \bar{D}\),

and, P-a.s. for all \(\nu \),

Note that \(\varphi ^\nu (x) = \theta ^\nu (x)+ \frac{\lambda }{\nu }\sum ^\nu _{i=1}\psi (\xi ^i, x)\). To analyze the convergence, we first show that \(\theta ^\nu (x)\) converges to \(\theta (x)\) P-a.s. uniformly on \(\bar{D}\). Since \(\bar{D}\) is a nonempty compact subset of \({\mathbb {R}}^n\), \(F(\xi , \cdot )\) is continuous at x for almost every \(\xi \in \Xi \), \(\Vert F(\xi , x)\Vert \le d_\xi \) for some \(d\in \mathcal{L}_1^{\scriptscriptstyle \infty }\) due to Assumption 3.4, and the sample is iid, we can apply Theorem 7.48 in [62] to claim that \(G^\nu (x)\) converges to G(x) a.s.-uniformly on \(\bar{D}\), that is, for any \(\varepsilon >0\), there is \(\hat{\nu }\) such that for any \(\nu \ge _{a.s.} \hat{\nu }\), one has

Moreover, from the definition of \(\theta \) in (1), we have

where

Obviously, one has for \(\nu \ge _{a.s.} \hat{\nu }\),

From the boundedness of \(\bar{D}\) and the continuity of G, there is \(\gamma >0\) such that \(\max \{\Vert z\Vert ,\Vert v(z)\Vert ,\Vert G(z)\Vert \}\le \gamma \) for any \(z\in \bar{D}\). Hence we obtain for \(\nu \ge _{ a.s.} \hat{\nu }\), that \(\Vert v^\nu (z)\Vert \le \gamma + \frac{\varepsilon }{\alpha }\) for any \(z\in \bar{D}\) and

Hence, \(\theta ^\nu (x)\) converges to \(\theta (x)\) a.s.-uniformly on \(\bar{D}\). Similarly, again by using Theorem 7.48 in [62], we can show that \(\frac{1}{\nu }\sum ^\nu _{i=1}\psi (\xi ^i, x)\rightarrow \mathbb {E}[\psi (\xi ,x)]\) a.s.-uniformly on \(\bar{D}\). Consequently, \(\varphi ^\nu \) converges to \(\varphi \) a.s.-uniformly on \(\bar{D}\) as claimed.

Combining the convergence result with Theorem 7.11, Theorem 7.14 and Theorem 7.31 in [58], we obtain further that

Since \(\bar{D}\) is compact, we conclude further that \(\{x^\nu \}\) is a.s. bounded and any accumulation point of \(\{x^\nu \}\), as \(\nu \rightarrow \infty \), is a.s. a solution \(x^*\) of (15). \(\square \)

It is worth noting that the function

is the sum of an EV formulation for the first stage and an ERM formulation for the second stage of the two stage stochastic VI. The convergence analysis of SAA for the EV formulation of a single stage stochastic VI in [62] cannot be directly applied to \(\varphi ^\nu \).

We next present a result in the presence of convexity: when \(F(\xi ,\cdot )\) is an affine mapping, we can obtain further results if a first-order growth condition is satisfied. For the same reason explained above, the corresponding results in [62] cannot be directly applied.

Theorem 4.3

(Convergence theorem for the convex problem) In addition to Assumptions 3.2 and 3.4, suppose that for \(\xi \in \Xi \), \(F(\xi , u)=M_\xi u+q_\xi \). Let \(\theta (x)\) be defined by (1) with \(G(x) = \mathbb {E}(F(\xi ,x))\) and \(\inf _{\xi \in \Xi }\lambda _{\mathop {\mathrm{min}}\nolimits }(M_\xi +M_\xi ^\top ) > \alpha \). Then \(\varphi \) and \(\varphi ^\nu \) are strongly convex P-a.s. for all \(\nu \). Let \(\{x^\nu \}\) be a sequence of minimizers of problems (18) and the samples be iid. Then \(\varphi ^\nu \) converges to \(\varphi \) a.s.-uniformly on the compact set \(\bar{D}\) specified in Lemma 4.1. Moreover, let \(x^*\) be the unique solution of (15) such that

where \(c>0\) is a constant. Then a.s., we have \(x^\nu =x^*\) for all sufficiently large \(\nu \).

Proof

From Theorem 3.2 in [26], the functions \(\theta \) and \(\theta ^\nu \) defined by the regularized gap function are strongly convex and continuously differentiable P-a.s. for all \(\nu \). Moreover, we also have the convexity of \(\psi (\xi ,\cdot )\) and \(\psi ^\nu (\xi ,\cdot )\) from the second conclusion in Proposition 3.9. Hence \(\varphi \) and \(\varphi ^\nu \) are strongly convex P-a.s. for all \(\nu \).

The a.s.-uniform convergence of \(\varphi ^\nu \) to \(\varphi \) on \(\bar{D}\) is obtained from Theorem 4.2. Alternatively, we have a much simpler proof thanks to the presence of convexity: since \(\varphi ^\nu (x)\rightarrow \varphi (x)\) at each \(x\in \bar{D}\) a.s., we have \(\varphi ^\nu (x) \rightarrow \varphi (x)\) for every x in any countable dense subset of \(\bar{D}\) a.s., and consequently, using Theorem 10.8 from [51], we can also conclude that \(\varphi ^\nu \) converges to \(\varphi \) a.s.-uniformly on \(\bar{D}\).

Next, assume in addition that (21) holds at the unique solution \(x^*\) of (15). We first recall from [62, Theorem 7.54] that using the convexity and the differentiability of \(\varphi ^\nu (\cdot )\) and \(\varphi (\cdot )\), we have \(\nabla \varphi ^\nu (x^*)\) converging to \(\nabla \varphi (x^*)\) a.s.-uniformly on the unit sphere. Combining this with (21), we have for large enough \(\nu \)

where \(\mathcal{T}\) is the tangent cone to \(\bar{D}\) at \(x^*\). This implies that \(x^*\) is the unique minimizer of \(\varphi ^\nu \) on \(\bar{D}\) and hence \(x^\nu = x^*\). \(\square \)
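The finite-convergence conclusion can be illustrated on a one-dimensional toy problem. The sketch below is not the traffic model of this paper: it assumes the hypothetical objective \(\tfrac{1}{2}x^2+\mathbb {E}[\xi ]x\) on \([0,1]\) with \(\mathbb {E}[\xi ]=1\), which is strongly convex and grows at least linearly away from its minimizer \(x^*=0\). The function name `saa_minimizer` and the sampling distribution are assumptions made only for illustration.

```python
import numpy as np

def saa_minimizer(xi):
    """Minimize 0.5*x**2 + mean(xi)*x over the interval [0, 1]."""
    # The unconstrained minimizer is -mean(xi); project it onto [0, 1].
    return float(np.clip(-np.mean(xi), 0.0, 1.0))

rng = np.random.default_rng(0)
# Hypothetical iid sample with E[xi] = 1 (assumption for this sketch).
xi = rng.normal(loc=1.0, scale=1.0, size=10_000)

# True problem: min 0.5*x**2 + x on [0, 1], so x* = 0 and
# phi(x) - phi(x*) = 0.5*x**2 + x >= |x - x*| (linear growth with c = 1).
for nu in (1, 10, 100, 1_000, 10_000):
    print(nu, saa_minimizer(xi[:nu]))
```

In this toy setting the SAA minimizer equals \(x^*=0\) exactly as soon as the sample mean is nonnegative, which happens a.s. for all sufficiently large \(\nu \) by the law of large numbers.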

We close this section with the following result concerning a Lipschitz continuity property of \(\psi \) when \(F(\xi ,\cdot )\) is affine. The conclusion might be useful for future sensitivity analysis.

Proposition 4.4

In addition to Assumptions 3.2 and 3.4, if for \(\xi \in \Xi \), \(F(\xi , u)=M_\xi u+q_\xi \) and \(\inf _{\xi \in \Xi }\lambda _{\mathop {\mathrm{min}}\nolimits }(B+M_\xi +M_\xi ^\top ) > \alpha \), then for any compact set \(\bar{D}\subseteq D\), there is a measurable function \(\kappa : \Xi \rightarrow {\mathbb {R}}_+\) with \(\kappa \in \mathcal{L}_1^1\) such that for any \(x, z\in \bar{D}\), we have

Proof

Let \(\bar{D}\subseteq D\) be an arbitrary compact set. For any x, \(z\in D\), and \(\xi \in \Xi \), let

In particular, we have from the definition of \(\psi \) that

From Proposition 3.9, \(f(\xi , x, \cdot )\) is strongly convex. Using this, Proposition 3.8, (22) and the first-order optimality conditions, we have

Adding these two inequalities, we obtain that

Combining this with the strong convexity of f with respect to u established in Proposition 3.9, we have further that

where \(\sigma > 0\) is independent of x, z and \(\xi \). Hence, we obtain that the solution is Lipschitz continuous, that is,

whenever x, \(z\in D\). Next, by the definition of r and Theorem 3.3, we have

Since the projection and F are Lipschitz continuous, \(\Vert F(\xi ,u)\Vert \le d_\xi \) and \(\Vert \nabla F(\xi ,u)\Vert \le \rho _\xi \) for some \(d\in \mathcal{L}_1^{\scriptscriptstyle \infty }\) and \(\rho \in \mathcal{L}_1^1\), and \(C_\xi \subseteq C^\dagger \) for almost all \(\xi \in \Xi \), there is \(\kappa _1\in \mathcal{L}_1^1\) such that whenever \(u,w\in C_\xi \),

Finally, since \(\bar{D}\) and \(C^\dagger \) are bounded, there is \(L>0\) such that for any \(y\in \bar{D}\cup C^\dagger \), we have \(\Vert y\Vert \le L/2.\)

Combining this with (23), (24) and (25), we obtain the following Lipschitz continuity property of \(\psi \) for any x, \(z\in \bar{D}\):

This completes the proof. \(\square \)

Proposition 4.4 provides sufficient conditions for the Lipschitz continuity of \(\psi \) with modulus \(\kappa (\xi )\), which can be used to derive convergence rates. As an illustration, suppose in addition that D is compact. Then, under the assumptions of Proposition 4.4, we have for any \(x_1\), \(x_2\), \(v_1\), \(v_2\in D\) and all \(\xi \in \Xi \) that

where \(r_D:= \sup _{x\in D}\Vert x\Vert \) and \(\zeta (\xi ):= \sqrt{2}(3r_D\Vert M_\xi \Vert + \Vert q_\xi \Vert )\).

Thus, if we further assume that

and for any x, \(v\in D\),

then for any \(\varepsilon >0\), there exist positive constants \(c(\varepsilon )\) and \(\beta (\varepsilon )\) such that

for all \(\nu \), where the first inequality follows from the elementary relation that \(|\max _{v\in D}g(v) - \max _{v\in D}h(v)|\le \max _{v\in D}|g(v) - h(v)|\) for any functions g and h, and we refer the reader to [62, Theorem 7.65] for the second inequality.
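For completeness, the elementary relation can be verified in two lines. The derivation below assumes that the maxima of g, h and \(|g-h|\) over D are attained (for instance, g and h continuous and D compact); the auxiliary variable w is introduced only for this sketch.

```latex
\begin{aligned}
g(v) &\le h(v) + |g(v)-h(v)|
      \le \max_{w\in D} h(w) + \max_{w\in D}|g(w)-h(w)|
      \quad \text{for all } v\in D, \\
\text{hence}\quad
\max_{v\in D} g(v) - \max_{v\in D} h(v) &\le \max_{v\in D}|g(v)-h(v)|.
\end{aligned}
```

Exchanging the roles of g and h gives the same bound for \(\max _{v\in D}h(v)-\max _{v\in D}g(v)\), and the two bounds together yield the stated inequality.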

A further convergence statement can be made if we assume in addition that \(\mathbb {E}[(\langle x-v, F(\xi ,x)\rangle -\frac{\alpha }{2}\Vert x-v\Vert ^2+\lambda \psi (\xi ,x))^2]\) is finite for some \((x,v)\in D\times D\) and that \(\inf _{\xi \in \Xi } \lambda _\mathrm{min}(M_\xi +M_\xi ^T)\ge \alpha .\) To see this, note that problem (15) can be written as a minimax stochastic problem

Thus, we may use [62, Theorem 5.10] to obtain the following convergence rate of optimal values

where \(\longrightarrow _d\) denotes convergence in distribution and z is a normally distributed random variable with zero mean and variance \(\sigma ^2=Var[F(x^*,v^*,\xi )]\), where \((x^*, v^*)\) is the unique solution of (28).

Finally, we note that under the assumptions of Theorem 4.3 and the linear growth condition (21), we have that \(x^\nu =x^*\) for all sufficiently large \(\nu \), which gives finite convergence of the SAA solutions.

5 Implementation and experimentation

We begin with a more detailed reformulation of Example 2.6 that is more compatible with our computational approach; in particular, it provides a more explicit version of the flow-conservation equations. In the traffic transportation community, all possible node pairs are implicitly included in the od-collection, even those with \(d=o\) and \(h_{oo} = 0\) when the o-node is simply a transhipment node. We follow the Ferris-Pang multicommodity formulation [23], which associates a (different) commodity with each destination node \(d\in \mathcal{D}\subseteq \mathcal{N}\). In this formulation, \(x^j\in {\mathbb {R}}^{|\mathcal{A}|}\) represents the flows of commodity \(j=1,2,\ldots ,|\mathcal{D}|\), with \(x^j_a\) denoting the flow of commodity j on arc \(a\in \mathcal{A}\).

Let \(V=(v_{ij})\) denote the node-arc incidence matrix with entries \(v_{ij}=1\) if node i has outgoing flows on arc j, \(v_{ij}=-1\) if node i has incoming flows on arc j, and \(v_{ij}=0\) otherwise. The following condition represents conservation of flows of commodities,
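The sign convention for V can be sketched in code. The network below is a hypothetical 4-node, 5-arc example with 0-based node labels, not the network of Fig. 1, and the helper name `node_arc_incidence` is an assumption made for illustration.

```python
import numpy as np

def node_arc_incidence(num_nodes, arcs):
    """Return V with V[i, j] = 1 if arc j leaves node i and -1 if it enters node i."""
    V = np.zeros((num_nodes, len(arcs)), dtype=int)
    for j, (tail, head) in enumerate(arcs):
        V[tail, j] = 1    # node `tail` has outgoing flow on arc j
        V[head, j] = -1   # node `head` has incoming flow on arc j
    return V

# Hypothetical network: each arc is a (tail, head) pair.
arcs = [(0, 1), (0, 2), (1, 2), (1, 3), (2, 3)]
V = node_arc_incidence(4, arcs)

# One unit of flow along the path 0 -> 1 -> 3 uses arcs 0 and 3.
x = np.array([1, 0, 0, 1, 0])
net_outflow = V @ x   # +1 at the origin, -1 at the destination, 0 elsewhere
print(V)
print(net_outflow)
```

Each column of V sums to zero because every arc leaves exactly one node and enters exactly one node, so \(Vx\) records the net outflow at each node.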

where \(d^j_i\) is demand at node \(i\in \mathcal{N}\) for commodity j.

Let

Then (30) can be written as

If we add the constraints on capacity \(c_a\) of travel flows on each arc a, then the constraints on the arc flows are presented as follows

where \(P=(I,\ldots , I) \in {\mathbb {R}}^{|\mathcal{A}|\times |\mathcal{D}||\mathcal{A}|}\) and I is the \(|\mathcal{A}|\times |\mathcal{A}|\) identity matrix.
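The role of P as an aggregation operator can be checked numerically. The sizes, flows and capacities in this sketch are hypothetical values chosen for illustration.

```python
import numpy as np

num_arcs, num_commodities = 5, 2            # hypothetical |A| and |D|
I = np.eye(num_arcs)
P = np.hstack([I] * num_commodities)        # P = (I, ..., I), shape |A| x |D||A|

rng = np.random.default_rng(0)
# Row j holds the arc flows x^j of commodity j (hypothetical values in [0, 1)).
flows = rng.uniform(0.0, 1.0, size=(num_commodities, num_arcs))
x = flows.reshape(-1)                       # stacked vector (x^1, ..., x^|D|)

total = P @ x                               # total flow on each arc
capacity = np.full(num_arcs, 2.0)           # hypothetical capacities c_a
print(total, bool((total <= capacity).all()))
```

Multiplying by P sums the commodity flows arc by arc, so the capacity constraint bounds the total flow on each arc rather than the flow of any single commodity.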

Traffic equilibrium models are built based on travel demand, travel capacity on each arc and travel flows via nodes. The demand and capacity depend heavily on various uncertain parameters, such as weather, accidents, etc. Let \(\Xi \subseteq {\mathbb {R}}^N\) denote the set of uncertain factors. Let \((d^j_\xi )_i>0\) denote the stochastic travel demand at the ith node for commodity j and \((c_{\xi })_a\) denote the stochastic capacity of arc a.

For a realization of random vectors \(d^j_\xi \in {\mathbb {R}}^{|\mathcal{N}|}\) and \(c_\xi \in {\mathbb {R}}^{|\mathcal{A}|}, \, \xi \in \Xi \), an assignment of flows to all arcs for commodity j is denoted by the vector \(u_\xi ^j\in {\mathbb {R}}^{|\mathcal{A}|}\), whose component \((u_\xi ^j)_a\) denotes the flow on arc a for commodity j.

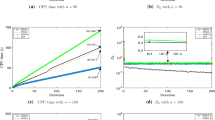

The network in Fig. 1 from [68] has 5 nodes, 7 arcs and 2 destination nodes \(\{4, 5\}\). The node-arc matrix for the network in Fig. 1 is given as follows.

and the matrices and vectors are as follows

For a robust forecast of arc flows x, let the feasible set for each realization \(\xi \) be

where \(Pu_\xi =\sum _{j=1}^{|\mathcal{D}|} u_\xi ^j\) is the vector of total travel flows.

The arc travel time function \(h(\xi , \cdot ):{\mathbb {R}}^{|\mathcal{D}||\mathcal{A}|}\rightarrow {\mathbb {R}}^{|\mathcal{A}|}\) is a stochastic vector and each of its entries \(h_a(\xi ,u_\xi )\) is assumed to follow a generalized Bureau of Public Roads (GBPR) function,

where \(\eta _a, \tau _a, (\gamma _\xi )_a\) and \(n_a\) are given positive parameters, and \((Pu_\xi )_a\) is the total travel flow on arc \(a\in \mathcal{A}\). Let

Then

which is symmetric positive semi-definite for any \(u_\xi \in C_\xi \subseteq {\mathbb {R}}_+^{|\mathcal{D}||\mathcal{A}|}\). One commonly considered special case is when \(n_a=1\), for all \(a\in \mathcal{A}\). In this case, \(F(\xi , u_\xi )=M_\xi u_\xi + q\), where

Here, we have rank\((P)=|\mathcal{A}|\), and for any \(\xi \in \Xi \), \(M_\xi \in {\mathbb {R}}^{|\mathcal{D}||\mathcal{A}|\times |\mathcal{D}||\mathcal{A}|}\) is a positive semi-definite matrix. Thus, \(\mathbb {E}[M_\xi ]\) is positive semi-definite. Another commonly considered case is when \(n_a=3\), for all \(a\in \mathcal A\); see [23] and our numerical experiments in Sect. 5.2.
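The affine case \(n_a=1\) can be examined numerically. The sketch below assumes the common BPR-type form \(h_a = \eta _a + \tau _a\big ((Pu)_a/\gamma _a\big )^{n_a}\) and the composition \(F = P^T h\); both are assumptions about the exact formulas, and all parameter values are hypothetical.

```python
import numpy as np

def gbpr_travel_time(total_flow, eta, tau, gamma, n):
    """Assumed GBPR form: h_a = eta_a + tau_a * (flow_a / gamma_a) ** n_a."""
    return eta + tau * (total_flow / gamma) ** n

num_arcs, num_commodities = 3, 2
P = np.hstack([np.eye(num_arcs)] * num_commodities)

eta = np.array([1.0, 2.0, 1.5])      # hypothetical free-flow travel times
tau = np.array([0.5, 0.3, 0.4])      # hypothetical congestion coefficients
gamma = np.array([10.0, 8.0, 12.0])  # hypothetical capacity realization
n = np.ones(num_arcs)                # special case n_a = 1

# With n_a = 1 the map is affine under the assumed form: F(u) = M u + q.
M = P.T @ np.diag(tau / gamma) @ P
q = P.T @ eta

u = np.array([1.0, 2.0, 0.5, 0.0, 1.0, 3.0])
F = P.T @ gbpr_travel_time(P @ u, eta, tau, gamma, n)

print(np.allclose(F, M @ u + q))         # affine representation matches
print(np.linalg.eigvalsh(M).min())       # smallest eigenvalue, zero up to rounding
print(np.linalg.matrix_rank(M))          # rank equals the number of arcs
```

Under these assumptions M is symmetric positive semi-definite but singular whenever there is more than one commodity, since its rank is \(|\mathcal{A}|\) while its dimension is \(|\mathcal{D}||\mathcal{A}|\).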

To define a here-and-now solution x, let

and \(G(x)=P^T\bar{h}(x)\) where the components of \(\bar{h}\) are defined by

The deterministic VI formulation for Wardrop’s user equilibrium seeks a forecast arc flow \(x \in D\) satisfying

On the other hand, the stochastic VI formulation for Wardrop’s user equilibrium seeks an equilibrium arc flow \(u_\xi \in C_\xi \) for a known event \(\xi \in \Xi \) such that

In general, the variational inequalities (33) and (34) can have multiple solutions. Naturally, a here-and-now solution x should have minimum total distance to the solution set of (34) for almost all observations \(\xi \in \Xi \). This requirement can be written as a mathematical program with equilibrium constraints [43] as follows

Recalling the definitions of the residual functions induced by the regularized gap function given in §3, we have

Note that \(\theta (x)\ge 0, r(\xi , u_\xi )\ge 0\) for \(x\in D\) and \(u_\xi \in C_\xi \), and they are continuously differentiable. It is natural to consider the following \(l_1\) penalty problem which trades off optimization of total distance and the violation of the constraints in (35):
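The nonnegativity of the residual can be checked on a small instance. The sketch below assumes the standard regularized gap function \(\theta (x)=\max _{v\in D}\{\langle x-v, G(x)\rangle -\frac{\alpha }{2}\Vert x-v\Vert ^2\}\) and takes D to be a box so that the maximizer is an explicit projection; the mapping G, the box and the function name `regularized_gap` are hypothetical choices for illustration.

```python
import numpy as np

def regularized_gap(x, G, alpha, lo, hi):
    """theta(x) = max over v in the box [lo, hi] of <x - v, G(x)> - alpha/2 * ||x - v||^2."""
    g = G(x)
    # The maximizer is the projection of x - G(x)/alpha onto the box.
    v = np.clip(x - g / alpha, lo, hi)
    d = x - v
    return float(d @ g - 0.5 * alpha * (d @ d))

# Hypothetical VI on D = [0, 1]^3 with G(x) = x - a; its solution is clip(a, 0, 1).
a = np.array([2.0, -1.0, 0.5])
G = lambda x: x - a
x_star = np.clip(a, 0.0, 1.0)

x_mid = np.full(3, 0.5)
print(regularized_gap(x_star, G, 1.0, 0.0, 1.0))  # zero at the VI solution
print(regularized_gap(x_mid, G, 1.0, 0.0, 1.0))   # positive at a non-solution
```

For x in D, the choice v = x is feasible and gives the value zero, which is why the maximum is nonnegative on D and vanishes exactly at solutions of the VI.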

for some positive numbers \(\lambda \) and \(\rho \). Notice that this is a special case of (11), which was derived in §3 as a relaxation of (4). Our discussion above provided an alternative interpretation of this problem as an \(l_1\) penalty problem.

Using Lemma 3.6, we see that (36) is further equivalent to the following problem

In this problem, for each \(\xi \in \Xi \), the decisions \(u_\xi \) depend on the forecast arc flow x. The two-stage stochastic program (37) uses x as the first-stage decision variable and \(u_\xi \) as the second-stage variable.

In a stochastic environment, \(\xi \) belongs to a set \(\Xi \) representing future states of knowledge. The stochastic optimization approach (36) (equivalently, (37)) is to find a vector \(x^*\) which minimizes the expected residual values with recourse cost. The main role of a traffic model is to provide a forecast for future traffic states. The solution of the stochastic optimization approach (36) is a “here-and-now” solution which provides a robust forecast and has advantages over other models for long-term planning. Stochastic traffic assignment on path flow has been formulated as stochastic complementarity problems and stochastic variational inequalities in [1, 10, 70].

5.1 The Douglas–Rachford splitting method

In this section, we focus on problem (36), which is a special case of (11) with \(B = 2\rho I\) and \(D = \{x\in {\mathbb {R}}^{|\mathcal{D}||\mathcal{A}|}_+\,|\, Ax=\mathbb {E}[b_\xi ],\, Px\le \mathbb {E}[c_\xi ]\}\). We will discuss an algorithm for solving the following SAA of problem (36), for any fixed \(\nu \):

where \(\big \lbrace \xi ^1,\ldots ,\xi ^\nu \big \rbrace \) is an iid sample of \(\Xi \) of size \(\nu \).

Notice that the objective of (38) is highly structured: the decision variables are coupled only through the quadratic term \(\frac{\lambda \rho }{\nu }\sum _{i=1}^\nu \Vert u_{\xi ^i} - x\Vert ^2\); if this quadratic term were absent, the problem would decompose into \(\nu +1\) smaller independent optimization problems that can be solved in parallel. This observation leads us to consider splitting methods in which the objective is decoupled into two parts that are minimized separately. One such method is the Douglas–Rachford (DR) splitting method. This method was first proposed in [19] for solving feasibility problems and has been extensively studied in the convex scenario; see, for example, [3, 20, 29]. Moreover, the global convergence of the method for some class of problems with nonconvex objectives has been recently studied and established in [39].
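The structure of a DR iteration can be sketched on a toy convex problem. The example below is not problem (38): it assumes the hypothetical choice \(f(y)=\frac{1}{2}\Vert y-a\Vert ^2\) and g equal to the indicator function of the box \([0,1]^3\), for which both proximal steps have closed forms. The function name `douglas_rachford`, the step size and the iteration count are assumptions made for illustration.

```python
import numpy as np

def douglas_rachford(prox_f, prox_g, x0, num_iters=200):
    """Generic DR iteration for minimizing f + g, given the two proximal maps."""
    x = np.asarray(x0, dtype=float)
    for _ in range(num_iters):
        y = prox_f(x)              # proximal step on the smooth part f
        z = prox_g(2.0 * y - x)    # proximal step on g at the reflected point
        x = x + (z - y)            # update of the governing sequence
    return y, z

a = np.array([2.0, -1.0, 0.5])     # hypothetical data
step = 1.0                         # hypothetical proximal step size

# f(y) = 0.5 * ||y - a||^2: the proximal map is a weighted average of x and a.
prox_f = lambda x: (x + step * a) / (1.0 + step)
# g = indicator of the box [0, 1]^3: the proximal map is the projection.
prox_g = lambda w: np.clip(w, 0.0, 1.0)

y, z = douglas_rachford(prox_f, prox_g, np.zeros(3))
print(y, z)   # both approach the projection of a onto the box
```

Each iteration touches f and g only through their own proximal maps, which is the property that allows a structured objective to be split into separately minimized parts.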

To apply the DR splitting method, we first note that (38) can be equivalently written as a minimization problem that minimizes the sum of the following two functions:

where g is a proper closed function and f is a quadratic function whose gradient is Lipschitz continuous with modulus \(2\lambda \rho \left( 1+\frac{1}{\nu }\right) \), and U is the vector in \({\mathbb {R}}^{\nu |\mathcal{D}||\mathcal{A}|}\) formed by stacking \(u_{\xi ^i}\), i.e.,