Abstract

Recently, some matrix exponential-based discriminant analysis methods were proposed for high dimensionality reduction. It has been shown that they often have more discriminant power than the corresponding discriminant analysis methods. However, one has to solve some large-scale matrix exponential eigenvalue problems which constitutes the bottleneck in this type of methods. The main contribution of this paper is twofold: First, we propose a framework of fast implementation on general matrix exponential-based discriminant analysis methods. The key is to equivalently transform large-scale matrix computation problems into much smaller ones. On the other hand, it was mentioned in Wang et al. (IEEE Trans Image Process 23:920–930, 2014) that the exponential model is more reliable than the original one and suppresses the sensitivity to pertubations. However, the interpretation is only heuristic, and to the best of our knowledge, there is no theoretical justification for reliability and stability of the matrix discriminant analysis methods. To fill in this gap, the second contribution of our work is to provide stability analysis for the fast exponential discriminant analysis method from a theoretical point of view. Numerical experiments illustrate the numerical behavior of the proposed algorithm, and demonstrate that our algorithm is more stable than many state-of-the-art algorithms for high dimensionality reduction.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Many objects in the real world are stored or represented as high dimensional data, and a direct processing of the data with regular methods is unfeasible and impractical [12, 35]. Dimensionality reduction is a technique to represent high dimensional data by their low-dimensional embedding, so that the low-dimensional data can be used effectively [10, 12, 20, 31, 35]. Nowadays, dimensionality reduction plays a crucial role in many application areas such as face recognition, machine learning, data mining, image reconstruction, information retrieval, and so on [12, 20, 24, 35, 38,39,40].

Principal Component Analysis (PCA) [19, 33] and Linear Discriminant Analysis (LDA) [2] are two extensively utilized approaches for dimensionality reduction. PCA is a unsupervised linear subspace transformation method that maximizes the variance of the transformed features in the projected subspace. LDA is a supervised linear subspace transformation method that encodes discriminant information by maximizing the between-class covariance, while minimizing the within-class covariance in the projected subspace. However, both PCA and LDA may fail to discover the underlying manifold structure [37], where the high dimensional image information lies in. In order to uncover the essential manifold structure of the data, some manifold learning methods have been proposed, to name a few, Laplacianfaces [17], Locality Preserving Projections (LPP) [16], Unsupervised Discriminant Projections (UDP) [43], Marginal Fisher Analysis (MFA) [42], Local Discriminant Embedding (LDE) [4], etc.

In Yan et al. [42], brought most of dimensionality reduction methods into a general framework called graph embedding. By embedding the high dimensional data into an optimal lower-dimensional space, the discriminant power obtained from graph embedding methods is usually stronger than classical classification methods. In the graph embedding framework, the neighbor relationship is measured by the artificially constructed adjacent graph. One concise criterion for feature extraction can be obtained from maximizing the ratio of the nonlocal scatter to the local scatter. Generally speaking, direct graph embedding and its extensions, such as linearization, kernelization and tensorization, all belong to this framework [42].

Most of the above approaches involve matrix inversion operation which may lead to matrix singularity problem in numerical computation, especially when the number of samples is (much) smaller than the dimension of samples. This is called the small sample size problem (SSS) or the undersampled problem [25]. In order to deal with the SSS problem, many techniques were proposed in decades. For example, the regularized method [11], PCA\(+\)LDA [2, 23, 33], the null-space method [5], LDA/QR [44], and so on.

Recently, some matrix exponential-based discriminant analysis methods were proposed to cure the drawback of SSS problem. For instance, Exponential Discriminant Analysis method (EDA) [46], Exponential Marginal Fisher Analysis (EMFA) [15, 37], Exponential Locality Preserving Projections (ELPP) [36], Exponential Local Discriminant Embedding (ELDE) [8], Exponential Elastic Preserving Projections (EEPP) [45], and so on. In [37], a general exponential framework for dimensionality reduction was proposed. As exponential of any square matrix is nonsingular [14], exponential transformation cures the drawback of the SSS problem naturally. Furthermore, the matrix exponential can be considered as the cumulative sum of the similarity/transition matrices after the random walk over the feature similarity matrix, and the behavior of the decay property of matrix exponential is more significant in emphasizing small distance pairs [37]. Consequently, the matrix exponential discriminant analysis methods often have more discriminant power than their original counterparts, and they are competitive candidates for dimensionality reduction [1, 8, 9, 15, 36, 37, 39, 41, 45, 46].

In all the matrix exponential discriminant analysis methods, however, one has to compute two large-scale matrix exponentials and to solve a large-scale eigenproblem. The computational cost is prohibitively large for data with high dimension. The first contribution of this paper is to propose a fast implementation framework for all the above mentioned matrix exponential-based discriminant analysis methods. The key is to equivalently transform the original large matrix computation problems of size d into smaller ones of size n, where n is the number of training samples being much smaller than the data dimension d. So the proposed algorithms will be much cheaper than their original counterparts. Moreover, we show that the transformation is mathematically equivalent, so there will be no recognition rate lost in the accelerated algorithms.

As pointed out in [37, 46], the exponential model can be roughly interpreted as a random walk over the feature similarity matrix, which makes the feature similarity matrix more reliable and suppresses the sensitivity to the size of neighbors. The interpretation is just heuristic, however, to the best of our knowledge, there is no theoretical justification for reliability and stability of the matrix discriminant analysis methods. To fill in this gap, the second contribution of our work is to provide stability analysis for the fast exponential discriminant analysis methods from a theoretical point of view, and show why it is stable to the perturbation of the data matrix. This is also the main contribution of our work.

The remainder of this work is organized as follows. In Sect. 2, we give some preliminaries for this work and present a general framework for the matrix exponential discriminant analysis methods. In Sect. 3, we focus on accelerating the matrix exponential discriminant algorithms and propose a fast implementation framework. In Sect. 4, we provide stability analysis on the proposed algorithm. In Sect. 5, we perform some numerical experiments on some benchmark face databases to show the numerical behavior of our new algorithm. Section 6 gives some concluding remarks.

Let us introduce some notations. Given a matrix A, we denote by \(\mathrm{tr}(A)\) the trace of A, and by \(\mathrm{exp}(A)\) the matrix exponential of A. Let \(\sigma _{\max }(A),\sigma _{\min }(A)\) be the maximal and the minimal (nonzero) singular values of A, and let span\(\{A\}\) be the space spanned by the columns of A. Denote by \(A^{T},A^{H}\) the transpose and conjugate transpose of A, respectively, and by \(A^{\dag }\) the Moore-Penrose inverse of A. In this paper, \(\mathbf{1}_i\) stands for the vector of all ones with dimension i; and r, L for the reducing dimension and for the number of training samples in each class, respectively. Let \(\Vert A\Vert _F\) be the Frobenius norm of A, and let \(\Vert A\Vert _2\) be the 2-norm of A, i.e., the largest singular value of A. Let A, B be two square matrices of the same size, then (A, B) represents a matrix pair. Suppose that the two matrices X, Y have the same rows, then [X, Y] denotes the matrix composed of the columns of X and Y, and \(\sin \angle (X,Y)\) denotes the distance between the two subspaces \(\mathrm{span}\{X\}\) and \(\mathrm{span}\{Y\}\). Let I be the identity matrix and \(\mathbf{0}\) be a zero matrix or vector, whose order are clear from the context.

2 Preliminaries

In this paper, we are interested in the following large generalized eigenvalue problem

where \(X=[\mathbf{x}_1,\mathbf{x}_2,\ldots ,\mathbf{x}_n ]\in {\mathbb {R}}^{d \times n}\) with each column \(\mathbf{x}_i~(1\le i\le n)\) being a sample, and the data dimension d is much larger than the number of samples, i.e., \(d\gg n\). The matrices \(F,H\in {\mathbb {R}}^{n\times n}\) are symmetric positive semidefinite matrices of order n. The large-scale eigenproblem (2.1) arises in many applications in machine learning and dimensionality reduction. Indeed, all the eigenproblems appeared in graph embedding methods, such as LDA [25], LDE [4], LPP [16], MFA [42], Fast and Orthogonal Locality Preserving Projections (FOLPP) [27], and Sparsity Preserving Projections (SPP) [26], can be reformulated as the form of (2.1) [20, 37, 42]. Moreover, in many popular dimension reduction methods such as PCA [19, 33], Multi-Dimensional Scaling (MDS) [32], ISOMAP [31], Neighborhood Preserving Projections (NPP) [21], Orthogonal Neighborhood Preserving Projection (ONPP) [22], and Orthogonal Locality Preserving Projections (OLPP) [21], all the eigenproblems involved are in the form of (2.1) [20]; see Table 1 in [42].

2.1 Linear discriminant analysis

LDA is one of notable subspace transformation methods for dimensionality reduction [25]. In this subsection, we illustrate how the generalized eigenvalues problem involved in LDA can be rewritten as the form of (2.1).

Suppose that the original data \(X=[C_1,C_2,\ldots ,C_K]\), where \(C_i\in {\mathbb {R}}^{ d \times n_i}\) is the data corresponding to the i-th class. We derive the mean vector of the data matrix as \(\mathbf{m}=\sum _{i=1}^{n}{} \mathbf{x}_i/n=X\mathbf{1}_n/n\), and the mean vector of the i-th class as \(\mathbf{m}_i={\sum }_{\mathbf{x}_j\in C_i} \mathbf{x}_j/n_i\), \(i=1,2,\ldots ,K\). Then we define the within-class scatter matrix as \(S_W=H_WH_W^T\) and the between-class scatter matrix as \(S_B=H_BH_B^T\) [25], with

and

Moreover, the total scatter matrix \(S_T=S_B+S_W\). LDA encodes discriminant information by maximizing the between-class scatter, and meanwhile minimizing the within-class scatter in the projected subspace. This resorts to solving the following optimization problem [2]

whose solution is obtained from solving the generalized eigenvalue problem as follows [2]

We now show that (2.3) can be reformulated as a form of (2.1). Consider the diagonal matrix \(D_K=diag(n_1,n_2,\ldots ,n_K)\), then under the above notations, we have

Recall that \(\mathbf{m}_i\) is the mean vector of i-th class, so it is a linear combination of data matrix X. Define

whose (i, j)-th entry is

Decompose the matrix column-by-column as \(P=[\mathbf{p}_{1},\mathbf{p}_{2},\ldots ,\mathbf{p}_{K}]\), where \(\mathbf{p}_{i}\) denotes the i-th column of P. Then we have \(\mathbf{m}_i={\sum }_{\mathbf{x}_j\in C_i} \mathbf{x}_j/n_i=X\mathbf{p}_{i}/n_i,~i=1,2,\ldots ,K,\) and

where \(L_B=PD_K^{-\frac{1}{2}}-\mathbf{1}_n\mathbf{1}_K^TD_K^{\frac{1}{2}}/n\in {\mathbb {R}}^{n\times K}\). As a result, the between-class matrix can be expressed as

Next, we focus on the within-scatter matrix \(S_W=H_WH_W^T\). Notice that

where \(L_W=I_n-PD_K^{-1}P^T\in {\mathbb {R}}^{n\times n}\). Thus, the within-class matrix \(S_W\) can be reformulated as

In conclusion, we have the following theorem.

Theorem 2.1

Under the above notations, we have

and (2.3) can be rewritten as

where

Remark 2.1

Denote by \({\widehat{T}}=\mathbf{1}_{n}\mathbf{1}_{n}^T/n\in {\mathbb {R}}^{n\times n}\), \(T_j=\mathbf{1}_{n_j}\mathbf{1}_{n_j}^T/n_j\in {\mathbb {R}}^{n_j\times n_j}\), and by the block diagonal matrix \(T=diag(T_1,T_2,\ldots ,T_K)\), it was pointed out that [6]

In addition, denote by \({\bar{X}}=X-\mathbf{m}{} \mathbf{1}_n^T\), we have that [20]

see also [42, Eqn(12)]. Note that (2.9) is different from (2.13), and we express \(H_B\) and \(H_W\) as a linear combination of the columns of X. Recall that \({\bar{X}}\) is a column rank-deficient matrix even when X is of full column rank.

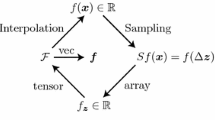

2.2 A general exponential framework for dimensionality reduction

The main difference between the matrix exponential-based discriminant analysis methods and the classical discriminant analysis methods is that the former applies matrix exponential transformation on scatter matrices. More precisely, if we denote by

then in the matrix exponential-based discriminant analysis methods, such as EDA [46], EMFA [15, 37], ELPP [15], ELDE [8], and Exponential Unsupervised Discriminant Projections (EUDP) [37], all of them resort to solving the objective function as follows [1, 8, 9, 15, 36, 37, 39, 41, 46]

As \(S_F\) and \(S_H\) are symmetric, both \(\mathrm{exp}(S_F)\) and \(\mathrm{exp}(S_H)\) are positive definite. The matrix exponential-based discriminant analysis methods seek the projection matrix \({V}^*\) via solving the following symmetrical generalized eigenvalue problem [1, 8, 9, 15, 36, 37, 39, 41, 45, 46]

A framework for the exponential-based discriminant analysis algorithms is presented in Algorithm 1. At the first glance, the computational complexities of the matrix exponential-based discriminant algorithms are prohibitively large and are much higher than those of the classical discriminant analysis algorithms. It will require \({\mathcal {O}}(d^3)\) flops to form the two large matrix exponentials \(\exp (S_H)\) and \(\exp (S_F)\) explicitly, and another \({\mathcal {O}}(d^3)\) flops to solve (2.14). Furthermore, we have to store the two large matrix exponentials which are d-by-d (possibly) dense matrices. Therefore, it is unfavorable to compute and store these large matrix exponentials explicitly, and to solve the large eigenproblem (2.14) directly. Therefore, it is necessary to seek new strategies to speedup the matrix exponential-based discriminant analysis algorithms in practical calculations.

3 A Fast Implementation on Matrix Exponential-based Discriminant Analysis Methods

In this section, we consider how to accelerate the matrix exponential-based discriminant analysis algorithms. The key is to equivalently transform the large matrix computation problems of size d into smaller ones of size n. Consequently, there is no need to form and store the two large matrix exponentials explicitly. To this aim, we first rewrite the matrices \(\mathrm{exp}(S_H)\) and \(\mathrm{exp}(S_F)\) as low-rank updates of the identity matrix, and then reduce the large eigenproblem (2.14) into smaller one.

3.1 Solving the large eigenvalue problem efficiently

The QR decomposition is a powerful tool for small-sample-size problems [25, 44]. Consider the “economical” QR decomposition [13] of the data matrix \(X\in {\mathbb {R}}^{d \times n}\): \(X=QR\), where \(Q \in {\mathbb {R}}^{d \times n}\) is orthogonal and \(R \in {\mathbb {R}}^{n \times n}\) is upper triangular. As \(d \gg n\), the QR decomposition refers to the economized QR decomposition throughout this paper. We have

where

are two n-by-n matrices. The following theorem indicates that there is a close relationship between the eigenpairs of the matrix pencil \(\big (\mathrm{exp}(S_F), \mathrm{exp}(S_H)\big )\) and those of \(\big (\mathrm{exp}({\widetilde{S}}_F), \mathrm{exp}({\widetilde{S}}_H)\big )\).

Theorem 3.1

Let \(X=QR\) be the economized QR decomposition of the data matrix X, where \(Q \in {\mathbb {R}}^{d \times n}\) is orthonormal and \(R \in {\mathbb {R}}^{n \times n}\) is upper triangular. Denote by \({\widetilde{S}}_H=RHR^T\in {\mathbb {R}}^{n \times n}\) and by \({\widetilde{S}}_F=RFR^T\in {\mathbb {R}}^{n \times n}\). If \((\lambda ,\mathbf{y})\) is an eigenpair of \(\big (\mathrm{exp}({\widetilde{S}}_F), \mathrm{exp}({\widetilde{S}}_H)\big )\), then \((\lambda ,Q\mathbf{y})\) is an eigenpair of \(\big (\exp (S_F),\exp (S_H)\big )\), and vice versa.

Proof

It is easy to verify that

On one hand, if \(\mathrm{exp}({\widetilde{S}}_F)\mathbf{y} = \lambda \mathrm{exp}({\widetilde{S}}_H)\mathbf{y}\), by (3.4)–(3.5), we have that

On the other hand, if \(\mathrm{exp}(S_F)(Q\mathbf{y}) = \lambda \mathrm{exp}(S_H)(Q\mathbf{y})\), then it follows from (3.4)–(3.5) that

Applying \(Q^T\) on both sides gives \(\mathrm{exp}({\widetilde{S}}_F)\mathbf{y}=\lambda \mathrm{exp}({\widetilde{S}}_H)\mathbf{y}\), which completes the proof. \(\square\)

Remark 3.1

By (3.1), one can reduce the d-by-d large-scale symmetric positive definite generalized eigenproblem of \((S_H,S_F)\) into an n-by-n symmetric positive definite generalized eigenproblem of \(({\widetilde{S}}_H,{\widetilde{S}}_F)\).

3.2 A fast matrix exponential discriminant analysis algorithm

In practice, it is required to compute \(r~(r\le n)\) eigenpairs of (2.14), where r is the reducing dimension. By Theorem 3.1, we only need to solve the eigenproblems of the \(n\times n\) symmetrical generalized eigenproblem with respect to the n-by-n matrix pencil \(\big (\mathrm{exp}({\widetilde{S}}_F), \mathrm{exp}({\widetilde{S}}_H)\big )\), whose cost is in \({\mathcal {O}}(n^3)\) flops [13]. The main algorithm of this paper is described as follows.

As was suggested in [15, 37, 46], to prevent overflow, it is necessary to normalize the scatter matrices \(S_F\) and \(S_H\) (say, by using their Frobenius norm) in Step 1 of Algorithm 1. However, it is unfavorable to form and store these two matrices as they are very large and dense. The following theorem indicates that it only needs to normalize \({\widetilde{S}}_F\) and \({\widetilde{S}}_H\) of size n in practice; see Step 3 of Algorithm 2.

Theorem 3.2

Under the above notations, we have

Proof

We only prove (3.6), and the proof of (3.7) is similar. From (3.1) and the fact that \(\Vert S_F\Vert _F=\Vert Q {\widetilde{S}}_FQ^T\Vert _{F}=\Vert {\widetilde{S}}_F\Vert _{F}\), we have

\(\square\)

So far we refrain from forming and storing the large matrices \(S_F,S_H\) and \(\exp (S_F)\), \(\exp (S_H)\) in Algorithm 2. On one hand, we only need to store a \(d\times n\) matrix Q and some \(n\times n\) matrices in the new algorithm, and the main storage requirement of the algorithm is \({\mathcal {O}}(dn)\) as \(d\gg n\), rather than \({\mathcal {O}}(d^2)\). On the other hand, it is only required to compute the economized QR decomposition of X once for all, in \({\mathcal {O}}(dn^2)\) flops, moreover, solving the small generalized eigenproblem \(\mathrm{exp}({\widetilde{S}}_F)\mathbf{y}=\lambda \mathrm{exp}({\widetilde{S}}_H)\mathbf{y}\) needs \({\mathcal {O}}(n^3)\) flops, rather than \({\mathcal {O}}(d^3)\) flops. As a result, there is no need to form and store the two large matrix exponentials explicitly. Moreover, the transformations are mathematically equivalent, so the recognition rate as well as the standard derivation obtained from Algorithm 2 would be the same to those from Algorithm 1; see the numerical experiments performed in Sect. 5.

Remark 3.2

Recently, two inexact Krylov subspace algorithms, Arnoldi-EDA and Lanczos-EDA were proposed for solving the large matrix exponential eigenproblem arising in the Exponential Discriminant Analysis (EDA) method [39]. These two algorithms are based on Krylov subspace projection techniques and inexact solvers, in which the matrix exponentials need not to form or store explicitly, and the eigenpairs are solved only approximately.

However, in each step of the Arnoldi or Lanczos process, one has to perform a matrix exponential-vector multiplication of size d, in addition to Gram-Schmidt orthogonalizations. Thus, the proposed algorithm is cheaper than the two inexact Krylov subspace algorithms advocated in [39]; one refers to Sect. 5 for a comprehensive comparison of Algorithm 2 with these two inexact Krylov subspace algorithms.

4 Stability analysis on the proposed algorithm

In practical applications, however, the data is often perturbed or contaminated, and a natural question is [28]: whether the classification performance of the discriminant methods will be affected by the perturbations seriously or not? In this section, we focus on this problem and show the stability of the exponential discriminant methods. More precisely, we consider how the recognition rate will be affected by the perturbation on the original data.

Without loss of generality, we suppose that X is of full column rank and \(\Vert X\Vert _F=1\). Let \(E\in {\mathbb {R}}^{d \times n}\) be a perturbation matrix with \(\Vert E\Vert _F=\varepsilon \ll 1\), and denote by \({\underline{X}}=X+E\) the perturbed matrix of X, such that both the rank and the classification of the data points after perturbation are unchanged. Indeed, as the elements in the matrices F and H are determined by the classification of the data points, we make the assumption that both F and H are unchanged under the perturbation of E. Otherwise, the modifications in H and F will be in the order of \({\mathcal {O}}(1)\), which is not a perturbation problem any more; see, for example, (2.6).

Denote \({\underline{S}}_F={\underline{X}}F{\underline{X}}^T\) and \({\underline{S}}_H={\underline{X}}H{\underline{X}}^T\), then the symmetric generalized exponential eigenvalue problem (2.14) turns out to be

To analyze the stability of the fast matrix exponential-based algorithms, we want to establish the relationship between the eigenspace of (2.14) and that of (4.1). Let V be the (orthonormalized) solution matrix obtained from X by using Algorithm 2, and let \({\underline{V}}\) be that from \({\underline{X}}\), then the distance (in terms of Frobinus norm) between the subspaces \(\mathrm{span}\{V\}\) and \(\mathrm{span}\{{\underline{V}}\}\) is defined as [29]

Let \(\widehat{\mathbf{x}}_{i}\) be a sample from the training set, and \(\widehat{\mathbf{y}}_{j}\) be a sample from the testing set. Then the nearest neighbour classifier gives class membership via investigating the Euclidean distance as follows [7]

where \(\Vert V^T(\widehat{\mathbf{x}}_{i}-\widehat{\mathbf{y}}_{j})\Vert _2\) is the 2-norm or the Euclidean norm of the vector \(V^T(\widehat{\mathbf{x}}_{i}-\widehat{\mathbf{y}}_{j})\). The following theorem indicates that one can use sine of the angle between the two subspaces \(\mathrm{span}\{V\}\) and \(\mathrm{span}\{{\underline{V}}\}\) as a criterion for stability of classification.

Theorem 4.1

[39] Let \(V,{\underline{V}}\in {\mathbb {R}}^{d\times r}\) be orthonormal matrices whose columns are the “exact” and “computed” solutions of (2.14) and (4.1), respectively. Denote by \(d_{ij}=\Vert V^T(\widehat{\mathbf{x}}_{i}-\widehat{\mathbf{y}}_{j})\Vert _2\) and \({\underline{d}}_{ij}=\Vert {\underline{V}}^T(\widehat{\mathbf{x}}_{i}-\widehat{\mathbf{y}}_{j})\Vert _2\) the “exact” and the “computed” Euclidean distances, respectively. If \(\Vert \widehat{\mathbf{x}}_{j}\Vert _2,\Vert \widehat{\mathbf{y}}_{j}\Vert _2=1\) and \(\cos \angle (V,{\underline{V}})\ne 0\), then

Indeed, the above theorem shows that if \(\sin \angle (V,{\underline{V}})\) is sufficiently small, then the \(\{d_{ij}\}'s\) and the \(\{{\underline{d}}_{ij}\}'s\) will be close to each other, where \({\underline{d}}_{ij}=\Vert {\underline{V}}^T(\widehat{\mathbf{x}}_{i}-\widehat{\mathbf{y}}_{j})\Vert _2\) is an approximation to \(d_{ij}\). Consequently, the recognition results of the “exact solution” and those of the “perturbed solution” are about the same. For more details, refer to [39].

Under the framework of Algorithm 2, we will divide the stability analysis into four steps.

(1) Firstly, according to Steps 1–2 of Algorithm 2, we consider the perturbation of E on \({\widetilde{S}}_H\) and \({\widetilde{S}}_F\).

If we denote by

and by \(X(t)=X+tG\), then \(X+tG\) has a unique QR decomposition \(X(t)=Q(t)R(t)\) for all \(|t|\le \varepsilon _1\) [3, Corollary 2.2]. Consider the (economized) QR decomposition of \({\underline{X}}\):

where \(\varDelta R=\varepsilon _1{\dot{R}}(0)+{\mathcal {O}}(\varepsilon _1^2)\), \(\varDelta Q=\varepsilon _1{\dot{Q}}(0)+{\mathcal {O}}(\varepsilon _1^2)\), and \({\dot{R}}(0),~{\dot{Q}}(0)\) are the first order derivatives of R(t) and Q(t) at \(t=0\), respectively. It was shown that [3]

where \(\kappa _2(R)\) and \(\kappa _2(X)\) are the 2-norm condition number of R and X, respectively. Note that Q is orthonormal, so \(\kappa _2(R)=\kappa _2(X)\), moreover, as \(\Vert X\Vert _F=1\), we have

where \(\sigma _{\max }(X)\) and \(\sigma _{\min }(X)\) are the largest and the smallest (nonzero) singular values of X, respectively. Thus,

Let \(\widetilde{{\underline{S}}}_H={\underline{R}}H{\underline{R}}^T\), then we have

If we denote by

then \(\widetilde{{\underline{S}}}_H={\widetilde{S}}_H+\varDelta _1\). Combining the above relation with (4.5) and (4.7), we arrive at

where we use the fact that \(\Vert R\Vert _2=\Vert X\Vert _2\le \Vert X\Vert _F=1\).

(2) Secondly, in Step 3 of Algorithm 2, we need to normalize \(\widetilde{{\underline{S}}}_H\). Let

we investigate the perturbation of E on \(\Vert \widehat{{\underline{S}}}_H-{\widehat{S}}_H\Vert _F\) and \(\Vert \mathrm{exp}(\widehat{{\underline{S}}}_H)-\mathrm{exp}({\widehat{S}}_H)\Vert _F\).

Suppose that \(\varepsilon /\sigma _{\min }(X)\ll 1\), then \(\Vert \triangle _1\Vert _F={\mathcal {O}}\big (\varepsilon /\sigma _{\min }(X)\big )\ll 1\), and there exists a constant \(0\ll \eta \le 1\) satisfying \(\Vert \widetilde{{\underline{S}}}_H\Vert _F=\eta \Vert {\widetilde{S}}_H\Vert _F+\Vert \varDelta _1\Vert _F\). Let \(\varDelta _2=\widehat{{\underline{S}}}_H-{\widehat{S}}_H\), we have from (4.8) that

Let

then

Next, we investigate the relationship between \(\mathrm{exp}(\widehat{{\underline{S}}}_H)\) and \(\mathrm{exp}({\widehat{S}}_H)\), under the condition that \(\varepsilon /{\sigma _{\min }(X)}\ll 1\). For any matrices \(A,\varDelta \in {\mathbb {R}}^{n\times n}\) and \(t>0\), it follows that [34]

Note that \(\Vert \exp (A)\Vert \le e^{\Vert A\Vert }\) for any subordinate matrix norm [14, pp.237], we have

Specifically, if A is a symmetric positive definite (SPD) matrix, we conclude that

Let \(\varDelta _3=\mathrm{exp}(\widehat{{\underline{S}}}_H)-\mathrm{exp}({\widehat{S}}_H)\), it follows from (4.11) and \(\varepsilon /{\sigma _{\min }(X)}\ll 1\) that

where we use \(\varDelta _2=\widehat{{\underline{S}}}_H-{\widehat{S}}_H\) and \(\lambda _{\max }({\widehat{S}}_H)\le \Vert {\widehat{S}}_H\Vert _F=1\). As \(\varepsilon /{\sigma _{\min }(X)}\ll 1\), we can apply the Taylor expansion of \(e^{\eta _1\varepsilon /{\sigma _{\min }(X)}}\) at 0, which gives

Thus,

Analogously, let \(\widetilde{{\underline{S}}}_F\equiv {\underline{R}}F{\underline{R}}^T\), \(\widehat{{\underline{S}}}_F=\widetilde{{\underline{S}}}_F/{\Vert \widetilde{{\underline{S}}}_F\Vert _F}\), \({\widehat{S}}_F={\widetilde{S}}_F/{\Vert {\widetilde{S}}_F\Vert _F}\), \(\varDelta _4\equiv \mathrm{exp}(\widehat{{\underline{S}}}_F)-\mathrm{exp}({\widehat{S}}_F)\), and

where \(0\ll {\widehat{\eta }}\le 1\) satisfying \(\Vert \widetilde{{\underline{S}}}_F\Vert _F={\widehat{\eta }}\Vert {\widetilde{S}}_F\Vert _F+\Vert \widetilde{{\underline{S}}}_F-{\widetilde{S}}_F\Vert _F\). We can prove that

(3) Thirdly, we focus on the distance between the eigenspace of \(\big (\mathrm{exp}({\widehat{S}}_F),\mathrm{exp}({\widehat{S}}_H)\big )\) and that of \(\big (\mathrm{exp}(\widehat{{\underline{S}}}_F),\mathrm{exp}(\widehat{{\underline{S}}}_H)\big )\).

We first need the following theorem:

Theorem 4.2

[30] Let the definite pair (J, S) be decomposed as following

and

where \(Y_1\) and \(Y_2\) have orthonormal columns and the column of \(Y_1\) span an eigenspace of (J, S). Let the analogous decomposition be given for the pair \(({\widetilde{J}},{\widetilde{S}})=(J+\varDelta J)(S+\varDelta S)\). Set

if \(\delta >0\), then

where

and

is a Crawford number [30].

Recall that both \(\big (\mathrm{exp}({\widehat{S}}_F),\mathrm{exp}({\widehat{S}}_H)\big )\) and \(\big (\mathrm{exp}(\widehat{{\underline{S}}}_F),\mathrm{exp}(\widehat{{\underline{S}}}_H)\big )\) are definite matrix pencils. According to [29, pp.79–80], let the columns of the orthonormal matrices \(Z_1\) and \({\underline{Z}}_1\) span an eigenspace of \(\big (\mathrm{exp}({\widehat{S}}_F),\mathrm{exp}({\widehat{S}}_H)\big )\) and \(\big (\mathrm{exp}(\widehat{{\underline{S}}}_F),\mathrm{exp}(\widehat{{\underline{S}}}_H)\big )\), respectively. Then there exist \(Z_2,{\underline{Z}}_2\) such that \([Z_1,Z_2],~[{\underline{Z}}_1,{\underline{Z}}_2]\) are nonsingular and \({\underline{Z}}_1,{\underline{Z}}_2\) are orthonormal, such that

and

In the terminology of Theorem 4.2, we denote by

and note that

where \(e=\exp (1)\), moreover,

where we use the fact that both \({\widehat{S}}_H\) and \({\widehat{S}}_F\) are semi-positive definite. Similarly, we have that \(c^2\big (\mathrm{exp}(\widehat{{\underline{S}}}_F),\mathrm{exp}(\widehat{{\underline{S}}}_H)\big )\ge 2\). As a result,

On the other hand, (4.13) and (4.15) yield

Let

if \(\delta _1>0\), we obtain from Theorem 4.2, (4.18) and (4.19) that

(iv) Finally, we determine the distance between the eigenspace of the matrix pair \(\big (\mathrm{exp}(S_F),\mathrm{exp}(S_H)\big )\) and that of \(\big (\mathrm{exp}({\underline{S}}_F),\mathrm{exp}({\underline{S}}_H)\big )\).

Indeed,

In summary, we have the main theorem in this paper:

Theorem 4.3

Under the above notations and assumptions, if \(\delta _1>0\), then

where \(V=QZ_1\), \({\underline{V}}={\underline{Q}}{\underline{Z}}_1\) are orthonormal bases for the eigenspaces of the definite pairs \(\big (\mathrm{exp}({\underline{S}}_F),\mathrm{exp}({\underline{S}}_H)\big )\) and \(\big (\mathrm{exp}(S_F),\mathrm{exp}(S_H)\big )\), respectively.

Remark 4.1

Given a perturbation matrix E to the data matrix X, whose norm is in the order of \(\varepsilon\), Theorem 4.3 indicates that the perturbation to the “exact” solution V will be in the order of \(\varepsilon /\sigma _{\min }(X)\). Thus, if \(\varepsilon \ll 1\) and \(\sigma _{\min }(X)\gg 0\), we have \(\mathrm{sin}_F\angle (V,{\underline{V}})\ll 1\), and the approximation obtained from Algorithm 2 will be insensitive to the perturbation.

On the other hand, we note that the upper bound provided in Theorem 4.3 may be not sharp and even pessimistic in practice. However, it reveals that the stability of matrix exponential discriminant analysis methods is closely related to the value of \(\varepsilon /\sigma _{min}(X)\), and thus \(\kappa (X)=\sigma _{\max }(X)/\sigma _{\min }(X)\) could be used as a condition number to the distance between the two subspaces span\(\{V\}\) and span\(\{{\underline{V}}\}\).

5 Numerical experiments

In this section, we carry out some numerical experiments to illustrate the numerical behavior and demonstrate the efficiency of Algorithm 2. One refers to [1, 8, 9, 15, 36, 37, 39, 41, 46] for superiority of the exponential-based methods over many state-of-the-art methods for recognition. The aim of this section is two-fold. First, we show that Algorithm 2 runs much faster than its original counterpart Algorithm 1, while the classification accuracy and the standard deviation of the former is comparable to that of the latter. Second, we show the stability of Algorithm 2 over many popular algorithms for dimensionality reduction and face recognition.

Four benchmark face databases, YaleFootnote 1, CMU PIEFootnote 2, ARFootnote 3 and FeretFootnote 4 are used. All the experiments are run on a Hp workstation with 16 cores double Intel(R)Xeon(R) E5-2640 v3 processors, and with CPU 2.60 GHz and RAM 128 GB. The operation system is 64-bit Windows 10. All the numerical results are obtained from using MATLAB R2018b. In all the algorithms, we use the nearest neighbor classifier (NN) [7] for classification, in which the distance is chosen as the Euclidean distance. In all the matrix exponential related algorithms, we use the MATLAB built-in functions expm.m to compute the matrix exponential, and make use of eig.m to solve the eigenvalue problem. Moreover, we exploit the MATLAB built-in functions qr.m and orth.m for the (economized) QR decomposition and for orthonormalizing the projection matrix V, respectively.

In each experiment, we randomly select L images from each class as the training set, and the rest of the images are used as the testing set. That is, there are \(n=KL\) images in the training set, where K is the number of classes. Each experiment will be repeated for 10 times, and the numerical results, i.e., the CPU time in seconds, the recognition rate and the standard deviation (SD), are the mean values from the 10 runs.

5.1 Efficiency of the proposed algorithm

In this subsection, we aim to show the superiority of Algorithm 2 over Algorithm 1. We apply our fast implementation on the following matrix exponential-based discriminant analysis methods proposed recently:

-

EDA: the Matrix Exponential Discriminant Analysis method proposed in [46]. The application of Algorithm 2 on EDA is denoted by FEDA (Alg. 2). As a comparison, we also run the two inexact Krylov-type algorithms, i.e., Arnoldi-EDA and Lanczos-EDA proposed in [39]. The stopping criterion for the two algorithms is chosen as \(\epsilon =10^{-4}\).

-

EMFA: the Exponential Marginal Fisher Analysis method proposed in [15, 37]. The application of Algorithm 2 on EMFA is denoted by FEMFA (Alg. 2).

-

ELPP: the Exponential Locality Preserving Projection method proposed in [36]. The application of Algorithm 2 on ELPP is denoted by FELPP (Alg. 2).

-

ELDE: the Exponential Local Discriminant Embedding method proposed in [8]. The application of Algorithm 2 on ELPP is denoted by FELDE(Alg.2).

In this experiment, we choose \(K_1=L-1\) and \(K_2=2K_1\) in MFA, EMFA and FEMFA (Alg. 2), and use \(K_1=L-1\) and \(K_2=10\) in LDE, ELDE and FELDE(Alg.2), where \(K_1\) and \(K_2\) are the number of nearest neighbors in the same class and different classes, respectively.

(1) First, we illustrate the superiority of Algorithm 2 over its original counterpart as well as the two inexact Krylov-type algorithms.

There are total 165 images for 15 persons in the Yale facial database, with 11 images for each individual. The images demonstrate variation with the following expressions or configurations: (1) lighting: center light, left light, and right light; (2) with/without glasses; (3) facial expressions: normal, happy, sad, sleepy, surprised, and winkling. The original size of images is \(320\times 243\) pixels, and we crop the images to \(d=64\times 64\) and \(100 \times 100\) pixels in this example. We randomly pick \(L=2,\ 3,\ 4,\ 5\) images from each class as the training set, and the remaining images are used as the testing set. The data dimension d is chosen as 4096 and 10000, respectively, the number of classes \(K=15\), and we choose the reducing dimension \(r=K-1=14\).

There are eighteen algorithms on this problem altogether: EDA and its fast implementation FEDA as well as its two inexact Krylov-type algorithms Arnoldi-EDA and Lanczos-EDA; EMFA, ELDE, ELPP and their fast implementations. In order to measure the times and accuracy when PCA is used as a preprocessing step on the exponential methods, the PCA plus exponential graph embedding methods are also run on this problem [28], i.e, PCA + EDA, PCA + LDA EMFA, PCA + LDA ELDE, PCA + LDA ELPP, in which we fix the dimensionality reduction of PCA to \(n-1\). Notice that Algorithm 2 and PCA plus exponential graph embedding methods have comparable amount of computational cost. We also list the numerical results of the original PCA plus graph embedding methods algorithms (PCA + LDA LDA, PCA + LDA MFA, PCA + LDA LDE, PCA + LDA LPP) in our experiment, where we preserve 99% energy in the PCA stage. Table 1 lists the numerical results.

It is obvious to see from Table 1 that our proposed algorithms are very effective. For example, as \(d=10{,}000\), the four matrix exponential-based algorithms are very slow, which used about 166 s. As a comparison, the four fast algorithms run much faster than their original counterparts, especially when the dimension d is large. Indeed, all the fast algorithms require less than 0.1 s, implying that our new algorithms are over 1660 times faster than the original algorithms. This is because our fast algorithms only need to compute matrix exponentials and solve eigenvalue problems of \(n\times n\) matrices, instead of \(d\times d\) matrices that are required in their original counterparts. Specifically, the proposed FEDA (Alg.2) is about three times faster than the two Krylov-type algorithms Arnoldi-EDA and Lanczos-EDA. On the other hand, we observe that the recognition rates and the standard deviations of the fast algorithms and their original counterparts are the same. This is because the fast algorithms and their original algorithms are mathematically equivalent.

Furthermore, it is seen that cropping the original images may lose some useful information and thus may result in a low recognition accuracy. For instance, the recognition rate of the exponential methods is about 86% when \(d=10000,L=2\), while it decreases to about 75% as \(d=4096,L=2\). Therefore, it is interesting to consider high dimensionality reduction problems for the (uncropped) data. In this case, our fast implementation is preferable as d is large.

For this Yale data base, we see that the CPU timings of PCA plus exponential graph embedding algorithms and PCA plus graph embedding algorithms are comparable, which is a little faster than Algorithm 2. This is because the PCA-preprocessing algorithms construct the adjacency matrices by using the “reduced” data matrices instead of the “original” data matrices; see also Table 2. On the other hand, the PCA plus exponential algorithms and Algorithm 2 share about the same recognition rates which are a little lower than those from the PCA plus exponential graph embedding algorithms in some cases. However, as will be shown latter, the exponential-based algorithms are more stable to perturbations.

(2) Next, we show the feasibility of our new algorithms on data with very high dimensionality.

We consider the CMU PIE (Pose, Illumination, Expression) database. It is taken from CMU 3D Room, which includes over 40000 facial images of 68 individuals. For each individual, it has 13 different poses, under 43 different illumination and 4 different expressions. We choose total 800 images of 40 people as the subset, and for each individual we select 20 images with different illuminations, poses and expressions. The size of images is \(d= 486\times 640\) pixels. We randomly choose \(L=2,\ 3,\ 4,\ 5\) images from each class as the training set, and use the rest as the testing set.

In the CMU PIE database, the data dimension \(d=311040\), the number of classes \(K=40\), and we choose the reducing dimension \(r=K-1=39\). We run the eighteen algorithms on this problem, and the numerical results are listed in Table 2. It is seen that EDA, EMFA, ELDE and ELPP do not work at all for this problem, because they have to deal with matrix exponential problems of size \(311040\times 311040\). Indeed, all of them suffer from the difficulty of out-of-memory (abbreviated as “O.M.” in Table 2). As a comparison, the four fast algorithms and two inexact Krylov-type algorithms perform quite well, and FEDA is about four times faster than Arnoldi-EDA and Lanczos-EDA. So Algorithm 2 can deal with data with very high dimensionality.

For the CMU PIE database, we see that the recognition rates from all the algorithms are comparable. On the other hand, Algorithm 2 is slower than the other two PCA-processing algorithms. Indeed, the PCA-preprocessing algorithms construct the adjacency matrices by using the “reduced” data matrices whose size is much smaller than the “original” data matrices. Furthermore, FEMFA (Alg. 2) is slower than the other three fast exponential-based algorithms for this problem. This is due to the fact that the overhead for constructing scatter matrices in the MFA-type algorithm is a little higher than that for the other three algorithms.

(3) We demonstrate that our fast algorithms can be more powerful than original PCA plus graph embedding methods and other popular LDA-based methods for dimensionality reduction.

We consider the AR database, which contains 1680 face images of 120 individuals, with 14 images per people. In the AR database, all images are cropped and scaled to \(50\times 40\). We randomly select \(L=2,\ 3,\ 4,\ 5\) images from each class as the training set, and the rest are used as the testing set. The data dimension \(d=2000\), the number of classes \(K=120\), and we choose the reducing dimension \(r=50\).

To illustrate the competitiveness of Algorithm 2, we compare it with some PCA plus graph embedding methods. PCA preprocessed algorithms are popular for dimensionality reduction [2, 33]. The algorithms for comparison include PCA + LDA LDA, PCA + LDA MFA, PCA + LDA LDE, and PCA + LDA LPP, in which we preserve \(99\%\) energy in the PCA stage, followed by the corresponding algorithms. Also we run some popular LDA-based methods for dimensionality reduction including the regularized LDA (RLDA) (the regularized parameter is chosen as 0.001) [11], and the null space LDA (NLDA) [5], QRLDA [44], GSVDLDA [18]. The numerical results are given in Table 3.

It is seen from Table 3 that the recognition rates obtained from the fast algorithms based on Algorithm 2 (FEDA, FEMFA, FELPP, FELDE) are (much) higher than the original PCA pre-processed methods (PCA + LDA LDA, PCA + LDA MFA, PCA + LDA LPP, PCA + LDA LDE), especially when L is small. Meanwhile, the standard deviations of the FEDA, FEMFA, FELPP, FELDE (Alg.2) are often lower than those of other original PCA pre-processed methods (PCA + LDA LDA, PCA + LDA MFA, PCA + LDA LPP, PCA + LDA LDE). Again, all the fast algorithms based on Algorithm 2 run much faster than the original matrix exponential-based algorithms. We see that the CPU time used in Algorithm 2 is comparable to the PCA pre-processed algorithm, and at the same time, Algorithm 2 shares the same recognition rates and standard derivations as the original matrix exponential-based algorithms. In conclusion, our new algorithms possess the advantages of both the original matrix exponential-based algorithms and the PCA pre-processed algorithms, and meanwhile diminish the disadvantages of those two algorithms.

In addition, we find that the recognition rates of FEDA (Alg. 2) are higher than those of PCA + LDA LDA and GSVDLDA. Though the recognition rates and standard deviations obtained form FEDA (Alg. 2), EDA, RLDA, NLDA, Arnoldi-EDA, Lanczos-EDA and QRLDA are similar, QRLDA runs faster than FEDA (Alg. 2), and FEDA(Alg.2) run much faster than other algorithms. This demonstrates that our fast algorithms FEDA (Alg. 2) is more powerful than these LDA-based methods, expect for QRLDA. Although our algorithm FEDA (Alg. 2) is weaker than QRLDA in terms of CPU time, in next subsection, we will show that FEDA (Alg. 2) is more stable than QRLDA.

5.2 Stability of the proposed algorithm

In this subsection, we aim to illustrate the stability of Algorithm 2, and show the effectiveness of the theoretical analysis given in Sect. 4. The test set is the FERET database, which consists of 14051 eight-bit grayscale images of human faces with views ranging from frontal to left and right profiles.

To show the stability of Algorithm 2, we perturb the training set X as \({\underline{X}}=X+E\), where E is a Gaussian matrix generated by using the MATLAB command normrnd(0,l,[d,n]), i.e., a d-by-n zero-mean Gaussian distributed matrix with variance l:

Here \(\varepsilon\) is a user-described parameter, which is chosen as \(\varepsilon =10^{-1}, 10^{-2}\), \(10^{-3}\) and \(10^{-4}\) in the experiment, moreover, \(\Vert E\Vert _F/\Vert X\Vert _F=\varepsilon\).

We first run the four fast algorithms FEDA (Alg. 2), FEMFA (Alg. 2), FELDE(Alg.2) and FELPP (Alg. 2) on this problem. We compare them with the four corresponding PCA plus algorithms PCA + LDA LDA, PCA + LDA MFA, PCA + LDA LDE and PCA + LDA LPP, where \(99\%\) energy is preserved in the PCA stage. Table 4 lists the recognition rates of the algorithms with and without (i.e., \(\varepsilon =0,~l=0\)) perturbation. All the experiments are repeated for 10 times, and the numerical results are the mean from the 10 runs.

Again, we see from Table 4 that the recognition rates of the four fast exponential-based algorithms are much higher than those from the four PCA pre-processed algorithms. This again demonstrates the efficiency of the exponential-based methods for face recognition. In terms of recognition accuracy, we see that the four fast exponential-based algorithms are much more stable than the four PCA pre-processed algorithms.

In order to show this more precisely, we define “variation” of recognition rates as follows:

where “Perturbed recognition rate” and “Original recognition rate” stand for the recognition rates of algorithms “with” and “without” perturbation, respectively. Figure 1 (the upper figure) plots the figure of variation for the eight algorithms when \(\varepsilon =10^{-1},10^{-2},10^{-3}\) and \(10^{-4}\) with \(l=0.01\). It is obvious to see from Table 5 and Fig. 1 (the upper figure) that the exponential-based algorithms are much more stable than the PCA plus algorithms, because the variation values of the former are much smaller than those of the latter.

To interpret this more precisely, we plot all the singular values of the training set X in Fig. 2, and the smallest nonzero singular value is about 0.0358, where those close to zero are caused by rounding off errors. By Theorem 4.3, the distance between V and \({\underline{V}}\) are bounded by \({\mathcal {O}}\big (\varepsilon /\sigma _{\min }(X)\big )\), which will be much less than 1 as \(\varepsilon\) is small enough, say, \(\varepsilon =10^{-4}\). In terms of Theorem 4.1, the recognition rates from the perturbed data are about the same as those from the unperturbed one. This shows the effectiveness of our theoretical results. Interestingly, we see that the four fast exponential-based algorithms are still very robust even when \(\varepsilon\) is much larger than the smallest singular value, say, \(\varepsilon =10^{-1}\).

Variation of the recognition rates on the FERET database, \(d=6400\), \(K=200\), \(L=3\), and \(n=KL\), \(l=0.01\). The upper is for comparing our fast algorithms (FEDA, FEMFA, FELPP, FELDE) with the four corresponding PCA plus algorithms (PCA + LDA LDA, PCA + LDA MFA, PCA + LDA LPP, PCA + LDA LDE). The lower is for comparing the six LDA-based methods including PCA + LDA LDA, FEDA, RLDA, NLDA, QRLDA and GSVDLDA

Next, we compare FEDA (Alg. 2) with some popular discriminant analysis methods including NLDA [5], QRLDA [44], GSVDLDA [18], and RLDA [11], where the regularized parameter in RLDA is set to be 0.001. The recognition rates with and without perturbation are listed on Table 6, and the variation of the recognition rates is given in Table 7. We see from Table 6 that the recognition rates of FEDA (Alg. 2) are higher than those of the others in most cases, which demonstrates the superiority of the exponential discriminant methods for recognition. Furthermore, it is observed from Table 7 and Fig. 1 (the lower figure) that FEDA (Alg. 2) is the most stable one compared with others. Thus, Algorithm 2 is both fast and stable, and it is a competitive candidate for high dimensionality reduction and recognition.

6 Conclusion

Exponential discriminant analysis methods can be utilized to settle the small-sample-size problem arising in dimensionality reduction, and they often have more discriminant power than their original counterparts. However, one has to solve large-scale matrix exponential eigenproblems which are the bottleneck in this type of methods.

The first contribution of this paper is to propose a fast implementation framework on exponential discriminant analysis methods. To this aim, we first reformulate large-scale matrix exponential of size d to a concise form, and then reduce the large-scale exponential eigenproblem to a small-sized one of size n, where d is dimension of the data and n is the number of samples. Our new algorithm runs much faster than its original counterpart, with no recognition rate lost.

The second contribution of this paper is to show the stability of the exponential discriminant analysis methods from a matrix perturbation point of view. The key result indicates that, unlike conventional discriminant analysis methods, the exponential discriminant methods refrain from the difficulty of (possible) small Crawford number; see (4.17). Numerical experiments illustrate the numerical behavior of the new algorithm and demonstrate the effectiveness of the theoretical results. Furthermore, we point out that our framework can be generalized to all exponential-based matrix transformation methods, and it is favorable for data with high dimension and small number of training samples.

References

Ahmed, N.: Exponential discriminant regularization using nonnegative constraint and image descriptor. In: IEEE 9th international conference on emerging technologies, pp. 1–6 (2013)

Belhumeur, P., Hespanha, J., Kriegman, D.: Eigenfaces vs Fisherface: recognition using class-specific linear projection. IEEE Trans. Pattern Anal. Mach. Intell. 19, 711–720 (1997)

Chang, X., Paige, C.C., Stewart, G.W.: Preturbation analysis for the QR factorization. SIAM J. Matrix Anal. Appl. 18, 775–791 (1997)

Chen, H., Chang, H., Liu, T.: Local discriminant embedding and its variants. In: IEEE Computer Society Conference on Computer Vision and Pattern Recognition, pp. 846–853 (2005)

Chen, L., Liao, H., Ko, M., Lin, J., Yu, G.: A new LDA-based face recognition system which can solve the small sample size problem. Pattern Recognit. 33, 1713–1726 (2000)

Chu, D., Thye, G.: A new and fast implementation for null space based linear discriminant analysis. Pattern Recognit. 43, 1373–1379 (2010)

Cover, T., Hart, P.: Nearest neighbor pattern classification. IEEE Trans. Inf. Theory 13, 21–27 (1967)

Dornaika, F., Bosaghzadeh, A.: Exponential local discriminant embedding and its application to face recognition. IEEE Trans. Syst. Man Cybern. Part B Cybern. 43, 921–934 (2013)

Dornaika, F., El Traboulsi, Y.: Matrix exponential based semi-supervised discriminant embedding for image classification. Pattern Recognit. 61, 92–103 (2017)

Duda, R., Hart, P., Stock, D.: Pattern Classification, 2nd edn. Wiley, New York (2004)

Friedman, J.: Regularized discriminant analysis. J. Am. Stat. Assoc. 84, 165–175 (1989)

Fukunaga, K.: Introduction to Statistical Pattern Recognition, 2nd edn. Elsevier Academic Press, London (1999)

Golub, G.H., Van Loan, C.F.: Matrix Computations, 4th edn. The Johns Hopkins university Press, Baltimore (2013)

Higham, N.J.: Functions of Matrices: Theory and Computation. SIAM, Philadelphia (2008)

He, J., Ding, L., Cui, M., Hu, Q.: Marginal Fisher analysis based on matrix exponential transformation. Chin. J. Comput. 10, 2196–2205 (2014). (in Chinese)

He, X., Niyogi, P.: Locality preserving projections. In: Thrun, S., Saul, L., Schölkopf, B. (eds.) Advances in Neural Information Processing Systems, vol. 16, pp. 153–160. MIT Press, Cambridge (2004)

He, X., Yan, S.C., Hu, Y., Niyogi, P., Zhang, H.J.: Face recognition using laplacianfaces. IEEE Trans. Pattern Anal. Mach. Intell. 27, 328–340 (2005)

Howland, P., Park, H.: Generalizing discriminant analysis using the generalized singular value decomposition. IEEE Trans. Pattern Anal. Mach. Intell. 26, 995–1006 (2004)

Jolliffe, I.: Principal Component Analysis. Springer, New York (1986)

Kokiopoulou, E., Chen, J., Saad, Y.: Trace optimization and eigenproblems in dimension reduction methods. Numer. Linear Algebra Appl. 18, 565–602 (2011)

Kokiopoulou, E., Saad, Y.: Orthogonal neighborhood preserving projections. In: Houston, T.X., Han, J., et al. (eds.) IEEE 5th International Conference on Data Mining (ICDM05), pp. 234–241. IEEE, New York (2005)

Kokiopoulou, E., Saad, Y.: Orthogonal neighborhood preserving projections: a projection-based dimensionality reduction technique. IEEE Trans. Pattern Anal. Mach. Intell. 29, 2143–2156 (2007)

Kramer, R., Young, A., Burton, A.: Understanding face familiarity. Cognition 172, 46–58 (2018)

Mohri, M., Rostamizadeh, A., Talwalkar, A.: Foundations of Machine Learning. The MIT Press, Cambridge (2012)

Park, C., Park, H.: A comparision of generalized linear discriminant analysis algorithms. Pattern Recognit. 41, 1083–1097 (2008)

Qiao, L., Chen, S., Tan, X.: Sparsity preserving projections with applications to face recognition. Pattern Recognit. 43, 331–341 (2010)

Seghouane, A., Shokouhi, N., Koch, I.: Sparse principal component analysis with preserved sparsity pattern. IEEE Trans. Image Process. 28, 3274–3285 (2019)

Shi, W., Wu, G.: Why PCA plus graph embedding methods can be unstable for extracting classification features? (submitted for publication)

Stewart, G.W.: Perturbation bounds for the definite generalized eigenvalue problem. Linear Algebra Appl. 23, 69–85 (1979)

Sun, J.: The perturbation bounds for eigenspaces of a definite matrix-pair. Numer. Math. 41, 321–343 (1983)

Tenenbaum, J., de Silva, V., Langford, J.: A global geometric framework for nonlinear dimensionality reduction. Science 290(5500), 2319–2323 (2000)

Torgerson, W.: Multidimensional scaling: I. Theory and method. Psychometrika 17, 401–419 (1952)

Turk, M., Pentland, A.: Eigenfaces for recognition. J. Cognit. Neurosci. 3, 71–86 (1991)

Van Loan, C.F.: The sensitivity of the matrix exponential. SIAM J. Numer. Anal. 14, 971–981 (1977)

Wang, J.: Geometric Structure of High-Dimensional Data and Dimensionality Reduction. Higher Education Press, Beijing (2011)

Wang, S., Chen, H., Peng, X., Zhou, C.: Exponential locality preserving projections for small sample size problem. Neurocomputing 74, 36–54 (2011)

Wang, S., Yan, S., Yang, J., et al.: A general exponential framework for dimensionality reduction. IEEE Trans. Image Process. 23, 920–930 (2014)

Webb, A.: Statistical Pattern Recognition, 2nd edn. Wiley, Hoboken (2002)

Wu, G., Feng, T., Zhang, L., Yang, M.: Inexact implementation using Krylov subspace methods for large scale exponential discriminant analysis with applications to high dimensionality reduction problems. Pattern Recogniti. 66, 328–341 (2017)

Wu, G., Xu, W., Leng, H.: Inexact and incremental bilinear Lanczos components algorithms for high dimensionality reduction and image reconstruction. Pattern Recognit. 48, 244–263 (2015)

Yan, L., Pan, J.: Two-dimensional exponential discriminant analysis and its application to face recognition. In: International Conference on Computational Aspects of Social Networks (CASoN), pp. 528–531 (2010)

Yan, S., Xu, D., Zhang, B., Zhang, H., Yang, Q., Lin, S.: Graph embedding and extensions: a general framework for dimensionality reduction. IEEE Trans. Pattern Anal. Mach. Intell. 29, 40–51 (2007)

Yang, J., Zhang, D., Yang, J., Niu, B.: Globally maximizing, locally minimizing: unsupervised discriminant projection with applications to face and palm biometrics. IEEE Trans. Pattern Anal. Mach. Intell. 29, 650–664 (2007)

Ye, J., Li, Q.: LDA/QR: an efficient and effective dimension reduction algorithm and its theoretical foundation. Pattern Recognit. 37, 851–854 (2004)

Yuan, S., Mao, X.: Exponential elastic preserving projections for facial expression recognition. Neurocomputing 275, 711–724 (2018)

Zhang, T., Fang, B., Tang, Y., Shang, Z., Xu, B.: Generalized discriminant analysis: a matrix exponential approach. IEEE Trans. Syst. Man Cybern. Part B Cybern. 40, 186–197 (2010)

Acknowledgements

We would like to express our sincere thanks to the anonymous referees and our editor for insightful comments and suggestions that greatly improved the representation of this paper.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This work is supported by the Natural Science Foundation of Jiangsu Province under grant BK20171185, and the Fundamental Research Funds for the Central Universities of China under grant 2019XKQYMS89.

Rights and permissions

About this article

Cite this article

Shi, W., Luo, Y. & Wu, G. On general matrix exponential discriminant analysis methods for high dimensionality reduction. Calcolo 57, 18 (2020). https://doi.org/10.1007/s10092-020-00366-6

Received:

Revised:

Accepted:

Published:

DOI: https://doi.org/10.1007/s10092-020-00366-6

Keywords

- Dimensionality reduction

- Small-sample-size problem

- Matrix exponential

- Large-scale eigenvalue problem

- Stability analysis