Abstract

Pseudo-Jacobi–Fourier moments (PJFMs) are a set of orthogonal moments that have been successfully applied in image processing and pattern recognition. In this paper, we present a new set of quaternion fractional-order orthogonal moments for color images, named accurate quaternion fractional-order pseudo-Jacobi–Fourier moments (AQFPJFMs). We first propose a fast and accurate algorithm for computing the PJFMs of an image using a new recursive approach and a polar pixel tiling scheme. Then, we define a new set of orthogonal moments, named accurate fractional-order pseudo-Jacobi–Fourier moments, which are characterized by their generic nature and time–frequency analysis capability. Finally, we extend the gray-level fractional-order PJFMs to color images and present the quaternion fractional-order pseudo-Jacobi–Fourier moments. In addition, we develop a new color image representation, called the mixed low-order moments feature, that simultaneously enhances discriminability and robustness. Extensive experiments evaluate the performance of the proposed AQFPJFMs, and the encouraging results demonstrate the efficacy and superiority of the proposed scheme.


1 Introduction

How to describe an image effectively is a vital issue in image processing: the goal is to represent an image with a small set of descriptors. In practice, the image to be recognized is usually distorted to some degree relative to the original (for example, by translation or rotation). Moments were proposed to address this problem because they are insensitive to various distortions and noise and have invariant characteristics. Because invariant moments summarize image features compactly and offer several kinds of invariance, they have been widely used in scene analysis, image classification, and image pattern recognition.

1.1 Brief review of moments

As early as 1962, Hu [1] proposed using geometric moments to describe images. As research deepened, however, it was found that low-order geometric moments do not adequately describe image details, while high-order geometric moments are less robust. It is therefore difficult to reconstruct the original image from geometric moments. In 1980, Teague [2] proposed orthogonal moments (OMs) to overcome the shortcomings of geometric moments. OMs have excellent image reconstruction ability and low sensitivity to noise [3], so they are widely used in object recognition. The most common orthogonal moments are the Zernike moments (ZMs), also proposed by Teague [2] in 1980. Although ZMs perform well in terms of information redundancy, noise sensitivity, and image description ability [4], they have difficulty describing small images. In 1994, Sheng [5] proposed orthogonal Fourier–Mellin moments (OFMMs). OFMMs describe small images better than ZMs, but their radial polynomials take large values at \(r = 0\), so OFMMs do not fully solve the problem. Many experiments [5,6,7] have shown that the zeros of the radial basis functions are related to the descriptive ability of the moments. Subsequently, Ping [6] proposed Chebyshev–Fourier moments (CHFMs), but CHFMs still failed to overcome the shortcomings of OFMMs. Later, Ping [7] proposed Jacobi–Fourier moments (JFMs) based on Jacobi polynomials. JFMs are generalized orthogonal moments: ZMs [8], pseudo-Zernike moments (PZMs) [9], pseudo-Jacobi–Fourier moments (PJFMs) [10], OFMMs, CHFMs, and Legendre–Fourier moments (LFMs) [11] are all special cases of JFMs. The radial basis functions of almost all JFMs have \(n\) zeros in the interval \({(0,1)}\), and these zeros are distributed nearly uniformly. PJFMs, in contrast, have \((n + 2)\) zeros, two more than the basis functions of the other moments based on Jacobi polynomials. The zeros of PJFMs are also more uniformly distributed, and the first zero is very close to the origin. Therefore, PJFMs are more suitable for describing and recognizing multi-distortion invariant images [12].

1.2 State of the art and motivation

Research on traditional orthogonal moments mainly covers five aspects: (1) accurate calculation; (2) fast calculation; (3) robustness/invariance optimization; (4) definition extension; (5) application [13]. Ideal moments should perform well in all of these aspects. However, existing moments are optimized in only a few of them and always fall short of the ideal in others. Therefore, how to design moments that are closer to the ideal remains an open problem.

The direct calculation of traditional OMs produces two kinds of errors: geometric errors and numerical integration errors [14]. These errors degrade image reconstruction accuracy. Wee [15] minimized geometric errors by circular mapping, which maps the entire square image into the unit disk and computes the integrals exactly, as in exact geometric moments. However, when the moment order exceeds 50, this method becomes numerically unstable, and the calculation time grows with the order. To reduce the calculation time, many researchers have proposed algorithms that accelerate the calculation of orthogonal moments [16,17,18,19]. The most common acceleration is to compute the coefficients of the radial basis functions recursively, which not only reduces the time complexity but also improves numerical stability. However, studies have found that recursive methods [20] can also introduce errors when computing high-order polynomials. Xin [21] proposed a high-precision numerical algorithm for ZMs that significantly improved image reconstruction ability. First, the algorithm re-divides the pixels with a specific strategy; then, the image in polar coordinates is generated through interpolation; finally, the integral is calculated directly on the polar image. This method eliminates geometric errors and computes the integrals more accurately. Inspired by this, Camacho-Bello [22] combined the polar pixel tiling scheme with recursive methods and achieved excellent speed and accuracy.

In recent years, great importance has been attached to orthogonal polynomials for solving fractional differential equations more accurately [23,24,25,26]. These works introduce a fractional-order parameter \(\alpha { > 0}\) to extend the integer order \(n\) to the fractional order \(\alpha n\) (\(n \in N\)). The classical orthogonal polynomials are recovered by setting \(\alpha = 1\). Fractional orthogonal polynomials of this kind can control the distribution of zeros by changing the fractional parameter, which addresses the problem of information suppression. Compared with traditional orthogonal moments, which reflect only global information, fractional orthogonal moments can better describe the image information in a region of interest. The same idea has therefore been applied to radial basis functions to define fractional orthogonal moments. Zhang [27] defined fractional-order orthogonal Fourier–Mellin moments (FOFMMs). Benouini [28] defined fractional-order Chebyshev moments (FCMs). Yang [29] defined fractional-order Jacobi–Fourier moments (FJFMs). Hosny [30] defined the fractional-order polar harmonic transform (FPHT). Wang [31] defined fractional-order polar harmonic Fourier moments (FPHFMs). To extend fractional orthogonal moments beyond grayscale images, Chen [32] proposed fractional-order orthogonal quaternion Zernike moments (FQZMs) for color images, Wang [33] proposed fractional-order quaternion exponential moments (FQEMs), and Yamni [34] proposed novel quaternion radial fractional Charlier moments (QRFCMs).

1.3 Contribution of this paper

To design a moment descriptor that is closer to the ideal, we propose accurate quaternion fractional-order pseudo-Jacobi–Fourier moments (AQFPJFMs) in this paper. Compared with PJFMs, AQFPJFMs offer improved accuracy, numerical stability, and applicability.

The contributions of this paper are as follows:

- We propose a new recursive method for PJFMs. The new recursive relationship increases the calculation speed and reduces the numerical instability of high-order radial basis functions.

- Based on the recursive relationship between radial basis functions and the polar pixel tiling scheme, we realize accurate pseudo-Jacobi–Fourier moments (APJFMs). The polar pixel tiling scheme eliminates geometric and numerical integration errors, so images can be reconstructed more accurately from high-order moments.

- To capture more detail, we extend APJFMs to accurate fractional-order pseudo-Jacobi–Fourier moments (AFPJFMs). The fractional-order parameter allows the distribution of the zeros of the radial basis functions to be adjusted for different application backgrounds.

- We extend AFPJFMs to color images using quaternions, which yields AQFPJFMs. AQFPJFMs reflect the color information and the correlation between the color channels, and therefore have better image representation and discrimination capabilities.

- To make full use of the time–frequency analysis capabilities of AQFPJFMs, we propose a scheme that simultaneously enhances the discriminability and robustness of global features, called the mixed low-order moments feature (MLMF).

- We apply AQFPJFMs and MLMF to zero-watermarking. Comparisons with other advanced algorithms show that the proposed algorithm has significant advantages in robustness and discriminability.

The structure of this paper is as follows. In Sect. 2, we first propose the recursive method for PJFMs and then use the polar pixel tiling scheme to eliminate errors, which yields APJFMs. In Sect. 3, we define AFPJFMs and discuss their properties. In Sect. 4, we use quaternions to handle color images, which yields AQFPJFMs. In Sect. 5, we define MLMF and discuss its time–frequency analysis capabilities. In Sect. 6, we present experimental results for AQFPJFMs and apply MLMF to a zero-watermarking algorithm. Finally, Sect. 7 summarizes our work and points out directions for future work.

2 Accurate pseudo-Jacobi–Fourier moments

In this section, first, we will review PJFMs; second, we will propose the recursive method of PJFMs; finally, we will define APJFMs.

2.1 Definition

The classical PJFMs’ radial basis functions \(J_{n} (r)\) in the polar coordinate system \((r,\theta )\) are defined as:

where \(G_{n} (r)\) represents the Jacobi polynomials with parameters \(p = 4,q = 3\) [21], \(w(r)\) is the weighting function and \(b_{n}\) are normalization factors:

The Jacobi polynomials \(G_{n} (r)\) satisfy the normalization condition:

where \(\delta\) is the Kronecker delta. Similarly:

Therefore, the classical PJFMs with order \(n \in N\) and repetition \(m \in Z\) can be defined as [10]:

\(f(r,\theta )\) can be approximately reconstructed from the classical PJFMs and the basis functions:

Some visual illustrations of the kernels are given in Fig. 1.

Some examples of the PJFMs’ kernels. a PJFMs’ radial kernels, b the 2D view of PJFMs’ phase angle \(\angle V_{nm} (r,\theta ) = \arctan ({\text{Im}} (V_{nm} (r,\theta ))/{\text{Re}} (V_{nm} (r,\theta )))\) (The color from blue to yellow indicates that the phase angle is from small to large), c The 3D view of PJFMs’ phase angle \(\angle V_{nm} (r,\theta ) = \arctan ({\text{Im}} (V_{nm} (r,\theta ))/{\text{Re}} (V_{nm} (r,\theta )))\). (\(n = 3,m = 3\), \(X\) and \(Y\) are the horizontal and vertical coordinates of the image)

2.2 Recursive computation

The Jacobi polynomials \(G_{n} (r)\) involve multiple factorials and gamma functions; therefore, the time complexity of the calculation is relatively high, and numerical overflow may occur when calculating high-order moments. To solve this problem, following [35, 36], we put forward a recursive calculation method for the PJFMs’ radial basis functions \(J_{n} (r)\). This method has the following two advantages:

2.2.1 The time complexity is low

For a digital image of size \(N \times N\), directly calculating \(J_{n} \left( {\sqrt {x_{i}^{2} + y_{j}^{2} } } \right)\) for all orders up to \(K\) requires \(O(K^{2} N^{2} )\) additions, whereas the recursive calculation of the same values requires only \(O(KN^{2} )\) additions.

2.2.2 Numerical stability is better

In double-precision arithmetic, the factorial term \(a!\) is computed accurately only for \(a \le 21\). When \(a > 21\), the direct calculation method of PJFMs becomes numerically unstable [10]. The recursive calculation method we propose does not involve the factorial (or gamma function) of large numbers, so it has better numerical stability.
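The benefit of avoiding factorials can be illustrated with a minimal sketch. It assumes the power-series form of the Jacobi polynomials commonly used in the Jacobi–Fourier literature, with \(p = 4\) and \(q = 3\); that series, the function names, and the term-ratio update are assumptions of this sketch and are not the order recursion proposed in this paper. The point is only that consecutive terms differ by a small rational factor, so the polynomial can be evaluated without ever forming a large factorial.

```python
from math import factorial

P, Q = 4, 3  # Jacobi parameters stated in the text for PJFMs


def jacobi_direct(n, r, p=P, q=Q):
    """Evaluate G_n(r) from the factorial-based power series (assumed form)."""
    lead = factorial(n) * factorial(q - 1) / factorial(p + n - 1)
    total = 0.0
    for s in range(n + 1):
        num = factorial(p + n + s - 1)
        den = factorial(n - s) * factorial(s) * factorial(q + s - 1)
        total += (-1) ** s * (num / den) * r ** s
    return lead * total


def jacobi_ratio(n, r, p=P, q=Q):
    """Evaluate the same series by updating each term from the previous one.

    The s = 0 term equals 1, and consecutive terms satisfy
    t_{s+1} / t_s = -(p + n + s)(n - s) r / ((s + 1)(q + s)),
    so no factorial is formed at any point.
    """
    term = 1.0
    total = 1.0
    for s in range(n):
        term *= -(p + n + s) * (n - s) * r / ((s + 1) * (q + s))
        total += term
    return total


for n in (1, 2, 5):
    print(n, jacobi_direct(n, 0.3), jacobi_ratio(n, 0.3))
```

Both functions agree at low order; the ratio form only multiplies by numbers of size comparable to \(n\), which is the same motivation the text gives for a recursive scheme.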

Based on Eq. (2), let:

Using the properties \(a! = a \cdot (a - 1)!\) and \(\Gamma (a) = (a - 1) \cdot \Gamma (a - 1)\), \(P_{n} (r)\) can be computed recursively:

where

and the initial values of \(P_{n} (r)\) are:

2.3 Accurate pseudo-Jacobi–Fourier moments

2.3.1 Error analysis in Cartesian coordinates

In the traditional moment calculation method, direct calculation in the Cartesian coordinate system is based on the zero-order approximation (ZOA). We denote the discrete digital image of size \(N \times N\) as \(f[i,j],\;(i,j) \in [1,2, \ldots ,N]^{2}\). The method rewrites the integral in Eq. (5) in the Cartesian coordinates \((x,y)\):

The discrete digital image cannot be used directly; therefore, we need to map the domain \([1,\;2,\; \ldots ,\;N]^{2}\) of the discrete digital image to the square \([ - 1,\;1]^{2}\) that encloses the unit circle, where \((x_{i} ,y_{j} ) \in [ - 1,\;1]^{2}\):

PJFMs’ discrete calculation method according to Eqs. (11) and (12) can be defined as:

\(x_{i}\) and \(y_{j}\) meet the condition \(x_{i}^{2} + y_{j}^{2} \le 1\), then:

The width of the integral unit area is \(\Delta = 2/N\). In the Cartesian coordinate system, the exact value of Eq. (14) cannot be obtained; only an estimate can be obtained from Eq. (15), which results in a numerical integration error:

Substituting the estimated value into Eq. (13), the discrete form of PJFMs is obtained:

As shown in Fig. 2, only points that lie inside the unit circle can be substituted. Therefore, when Eq. (20) is used to perform the discrete calculation of PJFMs in the Cartesian coordinate system, numerical integration errors arise from the above derivation.
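The effect of the square-pixel approximation can be seen in a small numerical sketch. It assumes the pixel-centre mapping \(x_{i} = (2i - N - 1)/N\), which is consistent with \(\Delta = 2/N\) but is an assumption here; the function names are illustrative. The sketch sums the areas of the pixels whose centres lie inside the unit circle and compares the result with \(\pi\), the exact area of the disk.

```python
import math


def pixel_centres(n):
    """Map indices 1..n to centres in [-1, 1] with spacing 2/n (assumed mapping)."""
    return [(2 * i - n - 1) / n for i in range(1, n + 1)]


def zoa_disk_area(n):
    """Sum the areas of the square pixels whose centres fall inside the unit circle."""
    delta = 2.0 / n
    xs = pixel_centres(n)
    inside = sum(1 for x in xs for y in xs if x * x + y * y <= 1.0)
    return inside * delta * delta


for n in (16, 64, 256):
    area = zoa_disk_area(n)
    # The mismatch with pi is the area-level footprint of the geometric error.
    print(n, area, area - math.pi)
```

The covered area is always a multiple of \(\Delta^{2}\) and so cannot match the disk exactly, which is the kind of mismatch a tiling that covers the disk exactly is meant to remove.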

2.3.2 Polar coordinate division and pixel interpolation

To eliminate geometric errors, the proposed method should satisfy two conditions: all pixels together cover the unit circle exactly, and the pixel areas do not intersect. These conditions can be expressed by the following equations, where each pixel is denoted by \(\Omega_{u,v}\) and \(D\) represents the unit circle:

The problem of eliminating geometric errors and integration errors now comes down to rearranging the pixels in polar coordinates. The following division method satisfies Eqs. (17) and (18) well. Figure 3a shows the result of the division. Assuming that PJFMs are computed over an image region of size \(N \times N\), so that the radius of the circular region is \(N{/}2\), the division method is as follows:

- The unit circle is divided along the radial direction into \(U\) rings of equal width by equidistant concentric circles. The ring closest to the center is the first layer, and the layer index increases outward.

- The unit circle is equally divided into \(V\) sectors. This divides every ring into \(V\) equal regions.

- Proceeding from the inside to the outside, the \(u\)th ring is equally divided into \(S_{u} = (2u - 1)\;V\) pixel areas.

Applying the above division method to the original image yields the pixel division in polar coordinates. After division, the unit circle consists of \(VU^{2}\) sectors, each of area \(\pi {/}VU^{2}\). The values of \(U\) and \(V\) affect both the amount of calculation and the accuracy: as \(VU^{2}\) increases, the number of pixels and the amount of calculation increase, and more accurate image moments are obtained. However, once the number of divided pixels exceeds the number of pixels in the original image, increasing it further greatly increases the calculation time without a corresponding benefit. We take \(U = N{/}2\) and \(V = 4\), which gives roughly the same number of pixels as the original image. We denote the divided pixels by \(\Omega_{u,v}\) (\(u = 1,2,3, \ldots ,U\); \(v = 1,2,3, \ldots ,V(2u - 1)\)). Figure 3b shows a schematic diagram of \(\Omega_{u,v}\). Each pixel \(\Omega_{u,v}\) of the polar division is represented by the polar coordinates \((\rho_{uv} ,\theta_{uv} )\). The start and end radii of \(\Omega_{u,v}\) are \(\rho_{uv}^{(s)}\) and \(\rho_{uv}^{(e)}\), and its start and end angles are \(\theta_{uv}^{(s)}\) and \(\theta_{uv}^{(e)}\), so \((\rho_{uv} ,\theta_{uv} )\) can be defined as:

A digital image is usually defined in Cartesian coordinates, so a polar pixel position does not correspond directly to a gray value of the original image. We therefore use pixel sampling: the gray value of each new pixel is estimated from points in the original image. The sampling method used in this article is nearest neighbor interpolation. More sophisticated nonlinear interpolation methods [14] can also be chosen to improve accuracy.
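The division and sampling steps can be sketched as follows. This is a minimal illustration, not the authors' implementation: taking each tile centre at the midpoint of its radial and angular ranges, placing image row 0 at the top, and the function names `polar_tiles` and `sample_nearest` are all assumptions of the sketch.

```python
import math


def polar_tiles(U, V=4):
    """Return (rho, theta, area) for every tile of the U-ring, V-sector scheme.

    Ring u (1-based) spans radii [(u - 1)/U, u/U] and is cut into (2u - 1)V
    equal angular sectors. Centres at range midpoints are an assumption.
    """
    tiles = []
    for u in range(1, U + 1):
        r_start, r_end = (u - 1) / U, u / U
        count = (2 * u - 1) * V
        width = 2.0 * math.pi / count
        area = 0.5 * (r_end ** 2 - r_start ** 2) * width
        rho = 0.5 * (r_start + r_end)
        for v in range(count):
            tiles.append((rho, (v + 0.5) * width, area))
    return tiles


def sample_nearest(image, tiles):
    """Give each tile the grey value of the Cartesian pixel containing its centre."""
    n = len(image)
    values = []
    for rho, theta, _ in tiles:
        x, y = rho * math.cos(theta), rho * math.sin(theta)
        col = min(int((x + 1.0) * n / 2.0), n - 1)
        row = min(int((1.0 - y) * n / 2.0), n - 1)  # row 0 is the top of the image
        values.append(image[row][col])
    return values


N = 16
tiles = polar_tiles(U=N // 2, V=4)
image = [[float(c) for c in range(N)] for _ in range(N)]  # toy gradient image
polar_values = sample_nearest(image, tiles)
print(len(tiles), sum(t[2] for t in tiles))
```

With \(U = N/2\) and \(V = 4\) the sketch produces \(VU^{2} = N^{2}\) tiles of equal area whose areas sum to \(\pi\), matching the counts stated in the text.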

2.3.3 Accurate computation of pseudo-Jacobi–Fourier moments

After completing the polar coordinate pixel sampling method, the image \(f^{\prime}\) in polar coordinates is obtained. The discrete PJFMs calculation method in polar coordinates can be obtained as follows:

\(f^{\prime}(r_{u} ,\theta_{uv} )\) is the approximate interpolation of \(f(r_{i,j} ,\theta_{i,j} )\) in the pixel block \(\Omega_{u,v}\). Therefore, the new complete orthogonal basis functions can be defined as:

Studies have found that recursive methods [20] can also produce numerical instability when calculating high-order polynomials. Many scholars have used Gaussian integration for the accurate calculation of orthogonal moments [37, 38]. Therefore, on top of the recursive calculation, we use ten-point Gaussian integration [39] to calculate the integral. This method maintains numerical stability when integrating high-order polynomials.

The compound Gauss quadrature rule for integrating the radial basis functions can be expressed as:

where \(\eta_{k}\) is the weight and \(z_{k}\) is the sampling point. Table 1 lists the nodes and weights of the ten-point Gaussian rule. Combining Eqs. (20)–(23), we obtain the accurate pseudo-Jacobi–Fourier moments (APJFMs), whose calculation does not introduce geometric errors or numerical integration errors.
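A ten-point Gauss–Legendre rule can be sketched in a few lines. The nodes and weights below are computed by Newton iteration on the Legendre polynomial rather than read from Table 1, and the helper names are illustrative; the sketch only shows how the weights \(\eta_{k}\) and sampling points \(z_{k}\) are applied to a radial interval \([a, b]\).

```python
import math


def gauss_legendre(n):
    """Nodes z_k and weights eta_k of the n-point Gauss-Legendre rule on [-1, 1]."""
    nodes, weights = [], []
    for i in range(1, n + 1):
        x = math.cos(math.pi * (i - 0.25) / (n + 0.5))  # standard initial guess
        for _ in range(100):
            p_prev, p = 1.0, x  # Legendre P_0 and P_1
            for k in range(2, n + 1):
                p_prev, p = p, ((2 * k - 1) * x * p - (k - 1) * p_prev) / k
            dp = n * (x * p - p_prev) / (x * x - 1.0)  # derivative P_n'(x)
            step = p / dp
            x -= step
            if abs(step) < 1e-15:
                break
        nodes.append(x)
        weights.append(2.0 / ((1.0 - x * x) * dp * dp))
    return nodes, weights


def integrate(f, a, b, n=10):
    """Integrate f over [a, b] with the n-point rule mapped from [-1, 1]."""
    z, eta = gauss_legendre(n)
    half, mid = 0.5 * (b - a), 0.5 * (a + b)
    return half * sum(w * f(mid + half * x) for x, w in zip(z, eta))


# A degree-19 monomial is the highest degree a ten-point rule integrates exactly.
print(integrate(lambda r: r ** 19, 0.0, 1.0))
```

An \(n\)-point Gauss–Legendre rule is exact for polynomials up to degree \(2n - 1\), which is why a fixed ten-point rule remains accurate on the small radial interval of each tile.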

3 Accurate fractional-order pseudo-Jacobi–Fourier moments

To analyze APJFMs’ characteristics in the fractional order, we give a general definition of AFPJFMs and discuss orthogonality, recursion, and invariance. Also, we investigate the influence of the fractional-order parameter on the zeros’ distribution and the ROI feature extraction ability.

3.1 Definition

Let the fractional-order parameter \(\alpha\) be a positive real number, \(\alpha \in R^{ + }\). Replacing the variable \(r\) with \(r^{\alpha }\) (\(r \in [0,\;1]\)), the accurate fractional-order recursive pseudo-Jacobi radial basis functions can be obtained (refer to “Appendix A”):

here

\(L_{1} ,L_{2} ,L_{3}\) are the same as Eq. (9).

The recursive initial values of \(P_{n} (\alpha ,r)\) are:

The radial basis functions satisfy the orthogonality condition:

Based on the above, we define AFPJFMs with repetition of \(m \in Z\) and order of \(n \in N\) as:

Similarly, the image can be reconstructed by AFPJFMs and basis functions as:

3.2 Zeros of radial kernels

The position and number of the zeros of the radial basis functions are related to the moments’ ability to capture high-frequency information [5,6,7]. The distribution of zeros is also a fundamental property because it is related to information suppression: the calculated moments emphasize certain parts of the image while neglecting others. Unlike classical PJFMs, AFPJFMs have a fractional-order parameter that controls the distribution of zeros, so the focus of the description can be shifted to a more discriminative image region. We call this local/ROI feature extraction. This ability comes from replacing the variable \(r\) with \(r^{\alpha }\) in Eq. (24). Figure 4 shows the relationship between \(r^{\alpha }\) and \(r\). Figures 5, 6, 7 show some visual examples of the radial kernels \(J_{n} (\alpha ,r)\). In summary, we draw the following conclusions on the distribution of zeros and the ROI feature extraction of AFPJFMs:

- When \(\alpha = 1\), the zeros of the radial kernels are distributed nearly uniformly. In this case, the moment values describe the entire image area equally.

- When \(\alpha < 1\), \(r^{\alpha } > r\) holds in the interval \((0,\;1)\), which moves the zeros of the radial kernels toward \(r = 0\). The moment values emphasize the information in the central area of the image.

- When \(\alpha > 1\), \(r^{\alpha } < r\) holds in the interval \((0,\;1)\), which moves the zeros of the radial kernels toward \(r = 1\). The moment values emphasize the information in the outer area of the image.
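The three cases above follow from a one-line calculation, sketched here under the assumption that the zeros of the fractional kernel in \((0, 1)\) come only from the substitution of \(r^{\alpha}\) for \(r\): if the classical kernel vanishes at \(r_{0}\), the fractional kernel vanishes where \(r^{\alpha } = r_{0}\), that is, at \(r = r_{0}^{1/\alpha }\). The function name and the sample zero 0.5 are illustrative.

```python
def shifted_zero(r0, alpha):
    """Location of the zero of J_n(r**alpha) that corresponds to a zero r0 of J_n(r)."""
    return r0 ** (1.0 / alpha)


for alpha in (0.1, 0.5, 1.0, 1.5, 2.0):
    # alpha < 1 pushes the zero toward r = 0, alpha > 1 pushes it toward r = 1.
    print(alpha, shifted_zero(0.5, alpha))
```

For a classical zero at 0.5, \(\alpha = 0.5\) moves it to 0.25 and \(\alpha = 2\) moves it to about 0.707, in line with the direction of movement stated in the list.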

The 2D view of AFPJFMs’ phase angle \(\angle V_{nm\alpha } (r,\theta ) = \arctan ({\text{Im}} (V_{nm\alpha } (r,\theta )){/}{\text{Re}} (V_{nm\alpha } (r,\theta )))\) with \(\alpha = 0.1,\;0.5,\;1,\;1.5,\;2\) from a–e (The color from blue to yellow indicates that the phase angle is from small to large)

The 3D view of AFPJFMs’ phase angle \(\angle V_{nm\alpha } (r,\theta ) = \arctan ({\text{Im}} (V_{nm\alpha } (r,\theta )){/}{\text{Re}} (V_{nm\alpha } (r,\theta )))\) with \(\alpha = 0.1,\;0.5,\;1,\;1.5,\;2\) from a–e (\(n = 3,m = 3\), \(X\) and \(Y\) are the horizontal and vertical coordinates of the image)

4 Accurate quaternion fractional-order pseudo-Jacobi–Fourier moments

In [10, 35, 36, 40], PJFMs are only applied to image processing of the grayscale images and cannot be directly applied to color images. Therefore, in this section, we will extend AFPJFMs to the color image domain and propose accurate quaternion fractional-order Pseudo-Jacobi–Fourier moments (AQFPJFMs) to suit color image processing. AQFPJFMs reflect the color information and the correlation between the color channels. Therefore, AQFPJFMs have better image representation and distinguishing capabilities.

4.1 Definition

Mathematically, the quaternions [41] are a number system that extends the complex numbers. A quaternion is usually written in the form \({\varvec{q}} = a + b{\varvec{i}} + c{\varvec{j}} + d{\varvec{k}}\):

\(a,\;b,\;c,\;d\) are real numbers, and \({\varvec{i}},\;{\varvec{j}},\;{\varvec{k}}\) are the imaginary units. We list some basic operation rules of the quaternion imaginary units below:

The quaternion conjugate and modulus are:

Given any two quaternions \({\varvec{q}}_{1}\) and \({\varvec{q}}_{2}\), they satisfy the following properties:

In recent years, quaternion theory has been widely used in color image processing. Consider a continuous color image:

\(f_{R} (r,\theta )\), \(f_{G} (r,\theta )\), and \(f_{B} (r,\theta )\) represent three color channels of the color image \(f(r,\theta ,z)\), respectively. Here we can define the color image \(f(r,\theta ,z)\) in pure quaternion form as:

Since quaternion multiplication is not commutative, from [42], we can get the following two definitions of AQFPJFMs:

\({\varvec{\mu}}\) is an arbitrary unit pure quaternion; \({\varvec{\mu}} = ({\varvec{i}} + {\varvec{j}} + {\varvec{k}}){/}\sqrt 3\) is used in this article. \(H_{nm\alpha }^{L}\) and \(H_{nm\alpha }^{R}\) are called the left AQFPJFMs coefficients and the right AQFPJFMs coefficients, respectively. According to Eq. (33), we prove that \(H_{nm\alpha }^{L}\) and \(H_{nm\alpha }^{R}\) have the following relationship (refer to “Appendix B”):

In this paper, AQFPJFMs refers to the left AQFPJFMs.
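The need for separate left and right definitions can be checked with a small sketch of the Hamilton product. Quaternions are stored as tuples \((a, b, c, d)\) standing for \(a + b{\varvec{i}} + c{\varvec{j}} + d{\varvec{k}}\); the tuple layout, the helper names, and the sample pixel are assumptions of this illustration.

```python
import math


def qmul(p, q):
    """Hamilton product of quaternions stored as (a, b, c, d) = a + bi + cj + dk."""
    a1, b1, c1, d1 = p
    a2, b2, c2, d2 = q
    return (
        a1 * a2 - b1 * b2 - c1 * c2 - d1 * d2,
        a1 * b2 + b1 * a2 + c1 * d2 - d1 * c2,
        a1 * c2 - b1 * d2 + c1 * a2 + d1 * b2,
        a1 * d2 + b1 * c2 - c1 * b2 + d1 * a2,
    )


def qconj(q):
    """Quaternion conjugate: negate the three imaginary parts."""
    a, b, c, d = q
    return (a, -b, -c, -d)


def qabs(q):
    """Quaternion modulus."""
    return math.sqrt(sum(x * x for x in q))


I, J, K = (0, 1, 0, 0), (0, 0, 1, 0), (0, 0, 0, 1)
s = 1.0 / math.sqrt(3.0)
MU = (0.0, s, s, s)            # unit pure quaternion (i + j + k) / sqrt(3)
pixel = (0.0, 0.2, 0.5, 0.9)   # hypothetical RGB pixel as a pure quaternion

print(qmul(I, J), qmul(J, I))  # ij = k while ji = -k
print(qmul(MU, MU))            # close to -1: mu acts like an imaginary unit
print(qmul(MU, pixel), qmul(pixel, MU))
```

Because \({\varvec{\mu}}\) squares to \(-1\) it can play the role of the complex unit in the kernel, but multiplying the pixel on the left or on the right gives different results, which is why two moment definitions arise.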

AQFPJFMs coefficients \(H_{nm\alpha }^{L}\) (or \(H_{nm\alpha }^{R}\)) and the complete orthogonal basis functions \(V_{nm\alpha } (r,\theta )\) can be used to reconstruct the color image \(f(r,\theta ,z)\):

If the reconstruction restriction condition \(\left| n \right|,\;\left| m \right| \le K\) is added, the approximate reconstruction color image \(\tilde{f}(r,\theta ,z)\) can be obtained by Eq. (39):

By Eqs. (20)–(23), we can obtain (refer to “Appendix C”):

where

Here \(H_{nm\alpha } ( \cdot )\) represents the AFPJFMs coefficients of \(\cdot\), \({\text{Im}} \;( \cdot )\) represents the imaginary part of the complex number \(\cdot\), and \({\text{Re}} \;( \cdot )\) represents the real part of the complex number \(\cdot\).

4.2 Invariance

4.2.1 Rotation invariance

\(f(r,\theta ,z)\) is the color image, \(\vartheta\) is the rotation angle. Then the rotated image \(f^{{{\text{rot}}}} (r,\theta ,z)\) can be defined as:

Next we will prove that the AQFPJFMs’ magnitude coefficients of the rotated image \(f^{{{\text{rot}}}} (r,\theta ,z)\) have rotation invariance:

It can be seen that rotating the image affects only the phase of AQFPJFMs and does not affect their magnitude. So there is:

4.2.2 Scaling invariance

Theoretically, AQFPJFMs are not scaling invariant. However, when AQFPJFMs are calculated, the image functions are all normalized and mapped to the unit circle, so scaled versions of an image become the same image after the mapping. Therefore, the AQFPJFMs coefficients can be considered scaling invariant.
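The rotation property of Sect. 4.2.1 can be illustrated on the angular part alone. The sketch below is a gray-level simplification, not the quaternion computation: it assumes an angular kernel of the form \(\exp ( - jm\theta )\) sampled on one ring of equal sectors, so that a rotation by a whole number of sectors is a cyclic shift of the samples. The function names and the sample ring are illustrative.

```python
import cmath
import math


def angular_coefficient(samples, m):
    """Project one ring of samples onto exp(-j * m * theta) (assumed kernel)."""
    count = len(samples)
    return sum(
        value * cmath.exp(-1j * m * 2.0 * math.pi * v / count)
        for v, value in enumerate(samples)
    ) / count


def rotate(samples, shift):
    """Rotate the ring by `shift` sectors (a cyclic shift of the samples)."""
    shift %= len(samples)
    return samples[-shift:] + samples[:-shift] if shift else list(samples)


ring = [3.0, 1.0, 4.0, 1.0, 5.0, 9.0, 2.0, 6.0]
for m in range(4):
    before = angular_coefficient(ring, m)
    after = angular_coefficient(rotate(ring, 3), m)
    # The phase changes with the rotation; the magnitude does not.
    print(m, abs(before), abs(after))
```

The shift multiplies each coefficient by a unit-modulus phase factor, so only the magnitude is usable as a rotation-invariant feature.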

5 Image representation via mixed low-order moments

Low-order moments reflect the more robust low-frequency information, so global feature descriptions usually use low-order moments to improve robustness. However, features that contain only a few low-order moments usually cannot distinguish multiple objects in an image. In practice, high-order moments are therefore needed to improve discriminability, but this comes at the cost of robustness. Based on this observation, we propose a strategy called the mixed low-order moments feature (MLMF), which uses the fractional-order parameter to improve both discriminability and robustness.

5.1 Definition

Mathematically, we define MLMF based on AQFPJFMs as:

The operator applied to \(H_{nm\alpha }\) in this definition generates the rotation invariant of AQFPJFMs; in this paper, it is taken to be the magnitude of AQFPJFMs. \(w(\alpha ,n,m)\) is the weight function, which can be set to strengthen specific features by changing the order \((n,m)\) and the fractional-order parameter \(\alpha\). \(\Phi\) is the set of orders \((n,m)\), in which priority is given to low orders. In this article, we use the largest order \(K\) to define \(\Phi\):

5.2 Robustness

In this section, we will design an experiment to verify the robustness of AQFPJFMs with mixed low-order moments features. First, we define the AQFPJFMs’ MLMF feature \({\varvec{v}}\) of the image \(f\) as:

where

To give an intuitive view of the robustness of the AQFPJFMs mixed low-order moments feature, Fig. 8 shows the feature \({\varvec{v}}\) of the original image and of each attacked image. Here we set \(w\;(\alpha ,n,m) = 1\); the attack parameters are listed in Table 2. The variation of the feature \({\varvec{v}}\) under each attack and intensity is small. Therefore, the experiment shows that the AQFPJFMs mixed low-order moments feature has good robustness.

5.3 Time–frequency analysis and discriminability

The MLMF strategy is based on the time–frequency analysis capabilities of AQFPJFMs. Figure 9 shows the different parts of the AQFPJFMs’ basis functions. The following conclusions can be drawn:

- When the fractional-order parameter \(\alpha\) is fixed and the order \((n,m)\) increases, the number of zeros of the basis functions \(V_{nm\alpha } (r,\theta )\) increases accordingly.

- When the order \((n,m)\) is fixed, the number of zeros of the basis functions \(V_{nm\alpha } (r,\theta )\) does not change, but their positions are affected by the fractional-order parameter \(\alpha\).

This behavior shows that AQFPJFMs have time–frequency analysis capabilities: the fractional-order parameter \(\alpha\) affects the time-domain characteristics, and the order \((n,m)\) affects the frequency-domain characteristics. Different fractional-order parameters \(\alpha\) reflect different time-domain characteristics, so combining the corresponding moments makes the features more distinguishable. At the same time, since the features contain only low-frequency components, good robustness is retained.
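The mixing idea can be sketched as a simple concatenation. Everything concrete below is hypothetical: the order set returned by `low_orders`, the toy complex values standing in for precomputed moments, and the function names are illustrative choices, not the definitions of \(\Phi\) or of the feature used in this paper. The sketch only shows how weighted magnitudes of the same low orders, taken at several values of \(\alpha\), are stacked into one feature vector.

```python
def low_orders(K):
    """Hypothetical order set: all (n, m) with 0 <= n <= K and 0 <= m <= K."""
    return [(n, m) for n in range(K + 1) for m in range(K + 1)]


def mlmf(moments_by_alpha, orders, weight=lambda alpha, n, m: 1.0):
    """Concatenate weighted moment magnitudes over several alpha values.

    moments_by_alpha maps each alpha to a dict {(n, m): moment value}.
    """
    feature = []
    for alpha in sorted(moments_by_alpha):
        moments = moments_by_alpha[alpha]
        for n, m in orders:
            feature.append(weight(alpha, n, m) * abs(moments[(n, m)]))
    return feature


# Toy stand-ins for moments computed elsewhere (values are made up).
orders = low_orders(1)
toy = {
    0.5: {(0, 0): 3 + 4j, (0, 1): 1j, (1, 0): 2.0, (1, 1): -1.0},
    1.0: {(0, 0): 6 + 8j, (0, 1): 2j, (1, 0): 1.0, (1, 1): 0.5},
}
v = mlmf(toy, orders)
print(len(v), v)
```

The feature length is the number of \(\alpha\) values times the number of low orders, so discriminability grows by adding \(\alpha\) values rather than by raising the order.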

6 Experiments and application

In this section, we demonstrate the effectiveness of AQFPJFMs and MLMF through several experiments. All tests are implemented in MATLAB R2017a and run under Microsoft Windows 7 on a computer with a 3.60 GHz CPU and 16 GB of memory.

6.1 Computation complexity and numerical stability

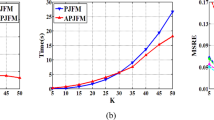

To verify the correctness and effectiveness of the PJFMs’ recursive calculation and the polar pixel tiling scheme, the image "Lena" (\(128 \times 128\)) is reconstructed in the following three ways:

- Direct calculation method of PJFMs.

- Recursive calculation method of PJFMs.

- Recursive calculation method of APJFMs.

We use the mean square reconstruction error (MSRE) here to evaluate the reconstruction quality of the image:

where \(\tilde{f}(x,y)\) represents the reconstructed image and \(f(x,y)\) represents the original image. Figure 10 shows the reconstructed images, and Fig. 11 compares the MSRE values and calculation times. These results indicate that:

- When \(K < 22\), the direct and recursive calculation methods of PJFMs give consistent subjective reconstruction results and objective reconstruction accuracy. Therefore, our recursive calculation method is correct.

- When \(K \ge 22\), the direct calculation method of PJFMs suffers from serious numerical instability, and its computational complexity is relatively high. Since the recursive calculation method of PJFMs does not involve the factorial (or gamma function) of large numbers, it is more stable for \(K \ge 22\), and its computational complexity is relatively low. Therefore, our recursive calculation method is effective and meets the theoretical expectations in Sect. 2.2.

- Compared with the recursive calculation of PJFMs, the image reconstruction ability of APJFMs is greatly improved. Therefore, our polar pixel tiling scheme is effective.

The recursive calculation method of APJFMs is used in the experiments after this section.
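For readers who want to reproduce the error measure, a minimal sketch of the MSRE follows. It assumes the normalized form commonly used in the moments literature, the squared reconstruction error summed over the image divided by the summed squared intensity of the original; this form and the function name are assumptions of the sketch.

```python
def msre(original, reconstructed):
    """Mean square reconstruction error, normalized by the original's energy."""
    num = sum(
        (f - g) ** 2
        for row_f, row_g in zip(original, reconstructed)
        for f, g in zip(row_f, row_g)
    )
    den = sum(f ** 2 for row in original for f in row)
    return num / den


image = [[3.0, 4.0], [0.0, 0.0]]
approx = [[3.0, 0.0], [0.0, 0.0]]
print(msre(image, image), msre(image, approx))
```

Under this normalization a perfect reconstruction gives 0 and an all-zero reconstruction gives 1, which makes values comparable across images of different brightness.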

6.2 Image reconstruction

This section analyzes the influence of the fractional-order parameter on the image reconstruction ability of AFPJFMs and AQFPJFMs. As mentioned in Sect. 3.2, when \(\alpha = 1\), AFPJFMs and AQFPJFMs extract global image features equally, and when \(\alpha\) deviates from 1, they reflect the local characteristics of the image more strongly. Therefore, this experiment sets the parameter to \(\alpha = \{ 0.1,\;0.5,\;1,\;1.5,\;2\}\). Figure 12 shows the gray image "Lena" (\(128 \times 128\)) reconstructed with different fractional-order parameters using the recursive method of AFPJFMs, and Fig. 13 shows the color image "Lena" (\(128 \times 128\)) reconstructed with different fractional-order parameters using the recursive method of AQFPJFMs. The corresponding reconstruction error, decomposition time, and reconstruction time are given in Figs. 14 and 15. We can see:

- When \(\alpha = 1\), the recursive calculation method of AFPJFMs produces subjective reconstruction results and objective reconstruction accuracy consistent with those of the recursive calculation method of APJFMs. Therefore, our fractional expansion is correct.
- The recursive calculation method of AQFPJFMs produces subjective reconstruction results and objective reconstruction accuracy similar to those of the recursive calculation method of AFPJFMs. Therefore, the quaternion expansion is effective.
- From the perspective of global features, the reconstructed image is better than that of PJFMs because of the adjusted distribution of zeros and the polar pixel tiling scheme. However, when \(\alpha < 0.5\), a significant integration error arises at the image center. In contrast, when \(\alpha \ge 0.5\), the integration accuracy and numerical stability at the image center are better.
- From the perspective of local features, when \(\alpha < 1\), the central area of the image is reconstructed better than the outer area. When \(\alpha > 1\), the outer area is reconstructed better than the central area. This is in line with the theory given in Sect. 3.2. By observing Figs. 12 and 14, we can see that AFPJFMs and AQFPJFMs show more obvious spatial characteristics when \(\alpha > 1\).
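One way to read the local behaviour described above, assuming (as in Appendix A) that the fractional radial basis function is obtained by substituting \(r^{\alpha}\) for \(r\): if the integer-order radial function has a zero at \(t_{k} \in (0,1)\), the fractional-order one has a zero at \(r_{k} = t_{k}^{1/\alpha}\). The short sketch below applies this mapping to a set of hypothetical zeros; the values in `HYPOTHETICAL_ZEROS` and the function name `shifted_zeros` are illustrative only and are not actual PJFM zeros.

```python
def shifted_zeros(base_zeros, alpha):
    """Map zeros t_k of R(t) to the zeros r_k = t_k**(1/alpha) of R(r**alpha)."""
    if alpha <= 0:
        raise ValueError("alpha must be positive")
    return [t ** (1.0 / alpha) for t in base_zeros]


# Hypothetical zeros in (0, 1), chosen only to make the shift visible.
HYPOTHETICAL_ZEROS = [0.25, 0.5, 0.75]

for alpha in (0.5, 1.0, 2.0):
    zeros = shifted_zeros(HYPOTHETICAL_ZEROS, alpha)
    print(alpha, [round(z, 4) for z in zeros])
```

For \(\alpha < 1\) every zero moves toward the image center \(r = 0\), and for \(\alpha > 1\) every zero moves toward the boundary \(r = 1\), which matches the observation that the central area is described better for \(\alpha < 1\) and the outer area for \(\alpha > 1\).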

6.3 Application to image zero-watermarking

The watermarked image produced by traditional digital watermarking is distorted. Such distortion is unacceptable in some applications, such as military imaging systems, artwork scanning, and medical diagnosis. Moreover, traditional watermarking struggles to balance robustness, imperceptibility, and capacity because these requirements inherently conflict [43]. To address this, Wen [44] proposed a new zero-watermarking algorithm, which does not change the host image but constructs a watermark from the host image’s characteristics. In this process, the host image keeps full fidelity. Therefore, zero-watermarking has perfect imperceptibility and effectively resolves the trade-off between robustness, imperceptibility, and watermark capacity.

Moments and moment invariants have long been research hotspots in zero-watermarking because of their excellent image description ability and invariance. However, moment-based zero-watermarking algorithms still have problems to be solved, including:

- Traditional zero-watermarking algorithms are generally designed for grayscale images, whereas in practice the images to be protected are more often color images;
- Traditional zero-watermarking algorithms pay too much attention to robustness and ignore discriminability, which leads to a high false detection rate;
- Most zero-watermarking algorithms are based on frequency-domain features that are not robust to geometric attacks. Therefore, they can only improve robustness through machine learning (such as SVM), and their time complexity and applicability are inferior;
- In algorithms based on moment features, most moment calculation methods suffer from high computational complexity, numerical instability, and low calculation accuracy, which directly affect the performance of the algorithm.

Therefore, we apply AQFPJFMs and MLMF to zero-watermarking. Compared with other advanced algorithms, our proposed algorithm has the following advantages:

- We propose a new moment, AQFPJFMs, that effectively reflects the correlation between color information and color channels.
- We propose an image description method based on AQFPJFMs, called the mixed low-order moments feature (MLMF). The low-order AQFPJFMs have good robustness, and moment values computed with different fractional-order parameters are mixed to enhance the discriminability of the features, so that MLMF achieves a good balance between robustness and discriminability.
- We propose a new moment calculation scheme for AQFPJFMs, which has significant advantages over existing calculation methods in calculation speed, numerical stability, and calculation accuracy.

The process of our zero-watermarking algorithm is as follows:

6.3.1 Initial setup

- Host image \(I = \{ f(i,j,z):f \in [0,\;255],(i,j) \in [1,\;N_{I} ]^{2} ,z \in \{ 1,\;2,\;3\} \}\).
- Fractional-order parameter \(\alpha = \{ 0.1,\;0.5,\;1,\;1.5,\;2\}\).
- Weight functions \(w(\alpha ,n,m) = 1\).
- Logo \(W = \{ w(i,j):w \in \{ 0,1\} ,(i,j) \in [1,\;N_{W} ]^{2} \}\).
- Key \({\text{key1}} = \{ x_{0} = 0.5,\;\mu = 0.45,\;n = N_{W} \}\), \({\text{key2}} = \{ a = 2,\;b = 3,\;It = 10\}\), \({\text{key3}} = \{ a = 1,\;b = 2,\;It = 5\}\).

6.3.2 Use AQFPJFMs and MLMF to generate the zero-watermark

1. Get the R, G, B color channel components of the host image \(I\).

2. Calculate the AQFPJFMs coefficients according to Eq. (41).

3. Generate the mixed low-order moments features:

   (50)

4. Quantize the MLMF \({\varvec{v}} = \{ v_{i} \}_{i = 1}^{{N_{W} }}\) to obtain the quantized features \({\varvec{bv}} = \{ bv_{i} \}_{i = 1}^{{N_{W} }}\):

   $$ \begin{aligned} bv_{i} & = \begin{cases} 0, & v_{i} < T \\ 1, & v_{i} \ge T \end{cases}, \\ T & = \operatorname{median}\left( \operatorname{mean}({\varvec{v}}_{1} ),\;\operatorname{mean}({\varvec{v}}_{2} ),\; \ldots ,\;\operatorname{mean}({\varvec{v}}_{{N_{W} }} ) \right). \end{aligned} \tag{51} $$

5. Use \({\text{key1}}\) and the asymmetric tent map to generate a chaotic sequence \(\{ x_{i} \}\):

   $$ x_{i} = \begin{cases} \dfrac{x_{i - 1}}{\mu }, & 0 \le x_{i - 1} \le \mu \\ \dfrac{1 - x_{i - 1}}{1 - \mu }, & \mu < x_{i - 1} \le 1 \end{cases}. \tag{52} $$

6. Use the chaotic sequence \(\{ x_{i} \}\) and the quantized features \({\varvec{bv}}\) to perform left, right, and cyclic shift operations [45] to generate the feature map \(B\).

7. Use \({\text{key2}}\) and \({\text{key3}}\) to scramble the watermark \(W\) and the feature map \(B\), respectively, through the generalized Arnold transformation to obtain the scrambled watermark \({\text{WS}}\) and the scrambled feature map \({\text{BS}}\):

   $$ \begin{pmatrix} x^{\prime } \\ y^{\prime } \end{pmatrix} = \begin{pmatrix} 1 & b \\ a & ab + 1 \end{pmatrix} \begin{pmatrix} x \\ y \end{pmatrix} \bmod N_{W} , \tag{53} $$

   where \((x^{\prime},y^{\prime})\) represents the position of the pixel after transformation, and \((x,y)\) represents the position of the pixel before transformation.

8. Take the XOR of the scrambled watermark \({\text{WS}}\) and the scrambled feature map \({\text{BS}}\) to get the zero-watermark \({\text{ZW}}\):

   $$ {\text{ZW}} = {\text{XOR}}\;({\text{WS}},\;{\text{BS}}). \tag{54} $$

9. Send the zero-watermark \({\text{ZW}}\), \({\text{key1}}\), \({\text{key2}}\), \({\text{key3}}\), and the identity information \({\text{ID}}_{{{\text{Owner}}}}\) of the copyright owner to a trusted organization through a confidential channel and request authentication. After accepting the request, the trusted organization verifies the received message and stamps it with a digital timestamp:

   $$ C_{TA} = h_{TA} ({\text{ZW}}||{\text{key1}}||{\text{key2}}||{\text{key3}}||{\text{ID}}_{{{\text{Owner}}}} ||t), \tag{55} $$

   where \(t\) is a digital timestamp giving the authentication time, \(h_{TA} ( \cdot )\) is a one-way hash function, and \(||\) denotes the concatenation of data.
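The chaotic sequence of Eq. (52), the scrambling of Eq. (53), and the XOR of Eq. (54) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: indices are 0-based rather than running over \([1, N_{W}]\), the parameter \(It\) of the keys is read as the number of Arnold iterations, the logo is a toy pattern, and the feature map \(B\) is replaced by a stand-in obtained by thresholding the chaotic sequence at 0.5 (the shift operations of step 6 and the moment computation of steps 1 to 4 are not reproduced). All function names are hypothetical.

```python
def tent_sequence(x0, mu, length):
    """Asymmetric tent map of Eq. (52), iterated `length` times starting from x0."""
    sequence, x = [], x0
    for _ in range(length):
        x = x / mu if x <= mu else (1.0 - x) / (1.0 - mu)
        sequence.append(x)
    return sequence


def arnold_scramble(image, a, b, iterations):
    """Generalized Arnold transform of Eq. (53) on a square map (0-based indices)."""
    n = len(image)
    for _ in range(iterations):
        scrambled = [[0] * n for _ in range(n)]
        for x in range(n):
            for y in range(n):
                new_x = (x + b * y) % n
                new_y = (a * x + (a * b + 1) * y) % n
                scrambled[new_x][new_y] = image[x][y]
        image = scrambled
    return image


def xor_maps(first, second):
    """Element-wise XOR of two equally sized binary maps, as in Eq. (54)."""
    return [[p ^ q for p, q in zip(r1, r2)] for r1, r2 in zip(first, second)]


N_W = 8  # small logo side length for the demo; the experiments use 32

# Toy binary logo W (2 x 2 checker blocks), for illustration only.
logo = [[(i // 2 + j // 2) % 2 for j in range(N_W)] for i in range(N_W)]

# key1 = {x0 = 0.5, mu = 0.45}
chaos = tent_sequence(x0=0.5, mu=0.45, length=N_W * N_W)

# Stand-in for the feature map B: thresholded chaotic sequence (an assumption).
feature_map = [[int(chaos[i * N_W + j] >= 0.5) for j in range(N_W)]
               for i in range(N_W)]

ws = arnold_scramble(logo, a=2, b=3, iterations=10)        # key2
bs = arnold_scramble(feature_map, a=1, b=2, iterations=5)  # key3
zw = xor_maps(ws, bs)                                      # zero-watermark ZW

for row in zw:
    print("".join(str(bit) for bit in row))
```

Because the matrix in Eq. (53) has determinant 1, the transform is a permutation of pixel positions, so scrambling only rearranges bits and never creates or destroys them.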

6.3.3 Use AQFPJFMs and MLMF to verify the zero-watermark

1. The verifier downloads the information from the trusted organization, including the zero-watermark \({\text{ZW}}\), \({\text{key1}}\), \({\text{key2}}\), \({\text{key3}}\), and the identity information \({\text{ID}}_{{{\text{Owner}}}}\), and uses the trusted organization to check whether the timestamp is complete and authentic:

   $$ C^{\prime}_{TA} = h_{TA} ({\text{ZW}}||{\text{key1}}||{\text{key2}}||{\text{key3}}||{\text{ID}}_{{{\text{Owner}}}} ||t). \tag{56} $$

2. Perform generation steps 1 to 6 (Sect. 6.3.2) on the image to be verified \(I^{\prime}\) to obtain a feature map \(B^{\prime}\).

3. Use \({\text{key3}}\) to scramble the feature map \(B^{\prime}\) through the generalized Arnold transformation to obtain the scrambled feature map \({\text{BS}}^{\prime }\):

   $$ \begin{pmatrix} x^{\prime } \\ y^{\prime } \end{pmatrix} = \begin{pmatrix} ab + 1 & - b \\ - a & 1 \end{pmatrix} \begin{pmatrix} x \\ y \end{pmatrix} \bmod N_{W} . \tag{57} $$

4. Take the XOR of the zero-watermark \({\text{ZW}}\) and the scrambled feature map \({\text{BS}}^{\prime }\) to get the scrambled watermark \({\text{WS}}^{\prime }\):

   $$ {\text{WS}}^{\prime } = {\text{XOR}}({\text{BS}}^{\prime } ,\;{\text{ZW}}). \tag{58} $$

5. Use \({\text{key2}}\) to reverse the scrambling of \({\text{WS}}^{\prime }\) and obtain the detected watermark \(W^{\prime }\):

   $$ W^{\prime} = \{ w^{\prime}(i,j):w^{\prime} \in \{ 0,1\} ,(i,j) \in [1,\;N_{W} ]^{2} \} . \tag{59} $$

The above is the complete process of the zero-watermarking algorithm. Figure 16 shows the generation process of our proposed zero-watermarking algorithm, and Fig. 17 shows the verification process.
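The matrix in Eq. (57) is the modular inverse of the matrix in Eq. (53), since the latter has determinant \((ab + 1) - ab = 1\). The sketch below uses this to run a complete generation and verification round trip on toy data. It assumes that \(B^{\prime}\) is scrambled with the forward matrix of Eq. (53) under \({\text{key3}}\), exactly as in generation, and that the matrix of Eq. (57) is applied in step 5 to undo the \({\text{key2}}\) scrambling; it also reads \(It\) as an iteration count and uses 0-based indices. The helper names and the toy watermark and feature map are hypothetical.

```python
def apply_matrix(image, matrix, iterations):
    """Move each pixel (x, y) to matrix * (x, y) mod n, repeated `iterations` times."""
    (m00, m01), (m10, m11) = matrix
    n = len(image)
    for _ in range(iterations):
        moved = [[0] * n for _ in range(n)]
        for x in range(n):
            for y in range(n):
                moved[(m00 * x + m01 * y) % n][(m10 * x + m11 * y) % n] = image[x][y]
        image = moved
    return image


def forward_matrix(a, b):
    """Matrix of Eq. (53)."""
    return ((1, b), (a, a * b + 1))


def inverse_matrix(a, b):
    """Matrix of Eq. (57); the inverse of Eq. (53) because its determinant is 1."""
    return ((a * b + 1, -b), (-a, 1))


def xor_maps(first, second):
    """Element-wise XOR of two equally sized binary maps."""
    return [[p ^ q for p, q in zip(r1, r2)] for r1, r2 in zip(first, second)]


n_w = 8
# Toy watermark W and toy feature map B (illustrative data only).
watermark = [[(i // 2 + j // 2) % 2 for j in range(n_w)] for i in range(n_w)]
feature = [[((3 * i + 5 * j) % 7) % 2 for j in range(n_w)] for i in range(n_w)]

# Generation: key2 = {a=2, b=3, It=10}, key3 = {a=1, b=2, It=5}.
ws = apply_matrix(watermark, forward_matrix(2, 3), 10)
bs = apply_matrix(feature, forward_matrix(1, 2), 5)
zw = xor_maps(ws, bs)

# Verification on an unattacked carrier, so that B' equals B.
bs_prime = apply_matrix(feature, forward_matrix(1, 2), 5)
ws_prime = xor_maps(bs_prime, zw)
detected = apply_matrix(ws_prime, inverse_matrix(2, 3), 10)

print(detected == watermark)
```

With an attacked carrier, \(B^{\prime}\) differs from \(B\) in some bits, and exactly those differences reappear (at permuted positions) as bit errors in the detected watermark.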

Next, the peak signal-to-noise ratio (PSNR) is used to measure the degradation of the carrier image after an attack:

We use the bit error rate (BER) of the detected watermark to estimate the robustness of the algorithm:

Here, we take the color image “Lena” (\(512 \times 512\)) as an example. Table 3 gives the images of “Lena” under various attacks, together with their PSNR values, the detected watermarks, and the BER values. These results show that our proposed algorithm has excellent robustness: the detected watermark remains identifiable under various attacks.
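For reference, the two metrics used above can be sketched as follows. The sketch assumes the standard definitions, namely PSNR \(= 10\log_{10}(255^{2}/{\text{MSE}})\) for 8-bit images and BER as the fraction of watermark bits that differ; the exact formulas of the original are not reproduced here, so the function names and the flat-sequence input format are illustrative.

```python
import math


def psnr(original, attacked, peak=255.0):
    """PSNR in dB between two equally long flat sequences of pixel values.

    For a color image, pass the values of all three channels in one sequence.
    """
    if len(original) != len(attacked) or len(original) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((p - q) ** 2 for p, q in zip(original, attacked)) / len(original)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak ** 2 / mse)


def bit_error_rate(embedded_bits, detected_bits):
    """Fraction of watermark bits that differ between the two sequences."""
    if len(embedded_bits) != len(detected_bits) or len(embedded_bits) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    errors = sum(1 for p, q in zip(embedded_bits, detected_bits) if p != q)
    return errors / len(embedded_bits)


# Toy data, for illustration only.
print(psnr([10, 20, 30, 40], [11, 21, 31, 41]))
print(bit_error_rate([1, 0, 1, 1], [1, 1, 1, 0]))
```

A lower PSNR indicates a stronger attack on the carrier, while a BER close to 0 indicates that the watermark was still recovered correctly.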

To test the effectiveness of our algorithm, we compare the mean BER of the detected watermark with that of other similar zero-watermarking algorithms. In this experiment, we use eighteen color MRI medical images of size \(256 \times 256\) from the “Whole Brain Atlas” image library [46] as test images (Fig. 18) and a binary image of size \(32 \times 32\) as the watermark. The experimental results are compared with five state-of-the-art zero-watermarking algorithms [47,48,49,50,51]. Among them, Yang2020 [47], Xia2019 [48], Wang2017 [49], and Wang2016 [50] are zero-watermarking algorithms based on moments, while Chang2008 [51] is a zero-watermarking algorithm based on spatial-domain features. Table 4 shows that, for most attacks, our proposed algorithm has the lowest bit error rate and higher robustness. This is because the low-order moments of AQFPJFMs and the MLMF are more robust to conventional image attacks (sharpening, blurring, compression, noise, etc.). The algorithms in [47,48,49,50] have to use high-order moments to increase the watermark capacity, so their robustness is poorer.

To ensure the universality of the proposed algorithm, we select 30 color images of size \(256 \times 256\) from the USC-SIPI image database [52] as carrier images and a binary image of size \(32 \times 32\) as the watermark. Figure 19 shows some of these images.

The watermarks of [53, 54] are composed of two different kinds of moments, and the watermark of [55] is composed of DTCWT-DCT. Table 5 compares the proposed algorithm with these algorithms. We use the accuracy rate (AR) of the detected watermark to estimate the robustness of the algorithm:

Under most attacks, the AR value of our proposed algorithm is the highest. These experiments further confirm our previous analysis: MLMF and AQFPJFMs improve the robustness of the proposed algorithm. Since most zero-watermarking algorithms are publicly verifiable, discriminability is as important as robustness. However, existing zero-watermarking algorithms often ignore discriminability, so they may mistakenly confirm a carrier that should fail verification. Therefore, we designed the following discriminability experiment. First, we choose five carrier images from the Coil-100 image database [56] and generate the corresponding zero-watermarks. Then, we use attacked versions (zero-mean Gaussian noise) of these five images for verification and record the BER of the detected watermark. Finally, we set a verification threshold; the algorithm confirms the verification only when the BER is less than this threshold. We use the false positive ratio (FPR) to measure the discriminability of the algorithm:

\({\text{TP}}\) and \({\text{FP}}\) represent the number of times the algorithm confirmed correctly and incorrectly, respectively. The test results of our algorithm are compared with Wang2017 [49] and Wang2021 [57]. Figure 20 compares the discriminability test results of Wang2017, Wang2021, and our algorithm. A red background marks a failed verification, and a green background marks a passed verification. The noise variance for Wang2017, Wang2021, and our proposed algorithm is 0.01; the verification thresholds are 0.13, 0.22, and 0.04, respectively; and the FPRs are 0.615, 0, and 0, respectively.

Here, robustness requires that verification be confirmed when the carrier content is consistent, even if the carrier to be verified has been attacked. This requires the BER on the diagonal of Fig. 20 to be as low as possible. Discriminability requires that verification be rejected when the carrier content is inconsistent. This means that the off-diagonal BER of Fig. 20 should be as high as possible. If the algorithm is good enough, there should be a clear boundary between the BER values of these two cases. For Wang2017, the length of the generated feature is equal to the length of the watermark, so the feature contains high-order PCET coefficients \((K = 32)\), which have better discriminability. At the same time, the robustness of high-order coefficients is poor (the BER on the diagonal of Fig. 20a is too high), which makes the FPR of Wang2017 too large. For Wang2021, the algorithm has an obvious boundary, so it has better discriminability than Wang2017. However, Fig. 20b shows that the discriminative values of Wang2021 are generally too large. Compared with Fig. 20c, the values on the diagonal of Wang2021 are not 0, whereas the values on the diagonal of our proposed algorithm are all 0, so the boundary is clearer. Therefore, the discriminability of our proposed algorithm is better than that of Wang2021. This is because the proposed algorithm is based on AQFPJFMs and MLMF. On the one hand, MLMF is composed of the low-order moments with \(K = 2\), which ensures that the algorithm has good robustness. On the other hand, owing to the different fractional-order parameters of AQFPJFMs, the rich time-domain information contained in MLMF enhances the discriminability of the algorithm. Compared with other zero-watermarking algorithms, our proposed algorithm therefore performs better in both robustness and discriminability.
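The discriminability test can be sketched as a small computation on a BER matrix. The sketch assumes that the FPR is \({\text{FP}}/({\text{TP}} + {\text{FP}})\), which follows the description of \({\text{TP}}\) and \({\text{FP}}\) above but is an assumption because the formula itself is not reproduced here. This reading is at least numerically compatible with the value reported for Wang2017, since \(8/(5 + 8) \approx 0.615\), although the individual counts are not given in the text. The BER matrices below are hypothetical and are not the values of Fig. 20.

```python
def false_positive_ratio(ber_matrix, threshold):
    """Return (FPR, TP, FP) for a square BER matrix.

    Entry [i][j] is the BER obtained when the zero-watermark of carrier i is
    verified with an attacked version of carrier j. A verification is
    confirmed when its BER is below `threshold`; confirmations on the
    diagonal count as TP, all others as FP.
    """
    tp = fp = 0
    for i, row in enumerate(ber_matrix):
        for j, ber in enumerate(row):
            if ber < threshold:
                if i == j:
                    tp += 1
                else:
                    fp += 1
    confirmed = tp + fp
    fpr = fp / confirmed if confirmed else 0.0
    return fpr, tp, fp


# Hypothetical BER matrices for three carriers (illustration only).
clear_boundary = [[0.00, 0.41, 0.47],
                  [0.39, 0.00, 0.44],
                  [0.45, 0.42, 0.00]]
blurred_boundary = [[0.05, 0.10, 0.47],
                    [0.39, 0.08, 0.44],
                    [0.45, 0.42, 0.09]]

print(false_positive_ratio(clear_boundary, threshold=0.04))
print(false_positive_ratio(blurred_boundary, threshold=0.13))
```

In the first matrix the diagonal and off-diagonal values are well separated, so a low threshold confirms only the matching carriers; in the second, one off-diagonal value falls below the threshold and is counted as a false confirmation.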

To demonstrate the security of our proposed algorithm, we test the impact of changing the single parameter \(x_{0} \in {\text{key1}}\) on the algorithm security. Here, AR is used to check the security of the zero-watermarking algorithm.

We increase \(x_{0}\) from 0.4 to 0.6 with a step size of 0.001. Figure 21 shows that the carrier image to be inspected passes verification only when \(x_{0}\) is correct (\(x_{0} = 0.5\)). When the key \(x_{0}\) is incorrect, the value of AR fluctuates around 0.8. This shows that the key is crucial to the security of the algorithm. As long as the key is not disclosed, our proposed zero-watermarking algorithm remains sufficiently secure even if all the algorithm details are exposed.

The watermark capacity of our proposed zero-watermarking algorithm is:

\(K\) is the maximum order, \(C\) is the watermark capacity (bit), and \(S\) is the number of selected parameters \(s\). Figure 22 shows the relationship between \(K\), \(S\), and the watermark capacity. Increasing \(K\) or \(S\) increases the watermark capacity of the zero-watermarking algorithm, but we recommend increasing \(S\) rather than \(K\), because low-order moment values are more robust.

The calculation time of our proposed algorithm is mainly spent on calculating the AQFPJFMs of the carrier image. Because we use a recursive calculation method, the time complexity of the moment calculation is reduced from \(O(N^{2} K^{2} )\) to \(O(N^{2} K)\), where \(N\) is the size of the carrier image and \(K\) is the maximum order. Although the polar pixel tiling scheme and additional fractional-order parameters increase the time complexity, the calculation time of our proposed zero-watermarking algorithm is not significantly affected, because we use low-order moments and the recursive calculation method. Therefore, the time complexity of our zero-watermarking algorithm is \(O\;(3 \cdot 10 \cdot N \cdot N^{2} \cdot S \cdot K) = O\;(SN^{3} K)\), where \(S\) is the number of fractional-order parameters \(\alpha\). Figure 23 shows the relationship between the running time of the zero-watermarking algorithm and the size of the carrier image.

7 Conclusion

In this paper, we first proposed a recursive method for PJFMs. Because the recursive calculation does not involve the factorial (or gamma function) of large numbers, it has better numerical stability. Second, we proposed an accurate algorithm for PJFMs, called APJFMs. The polar pixel tiling scheme cancels geometric and numerical integration errors, so images can be reconstructed better at high orders. Third, we proposed a new set of generalized fractional-order orthogonal moments, called AFPJFMs. Owing to the fractional-order parameter, users can adjust the distribution of the zeros of the radial basis functions according to the application. Fourth, we extended AFPJFMs to color images using quaternions, yielding AQFPJFMs, which improve accuracy, numerical stability, and applicability. Finally, we made full use of the time–frequency analysis capability of AQFPJFMs to propose MLMF and applied it to a digital image zero-watermarking algorithm. Compared with other advanced zero-watermarking algorithms, our proposed algorithm shows that MLMF has significant advantages in both global feature robustness and discriminability. Testing also shows that our proposed zero-watermarking algorithm has high security and certain advantages in zero-watermark capacity and calculation speed.

Several aspects of this work remain to be improved. First, the nearest-neighbor interpolation used in the AQFPJFMs algorithm affects its accuracy, so a higher-precision interpolation method should be used for upsampling. Second, given the powerful image description ability of MLMF, we will consider applying AQFPJFMs and MLMF in other digital image processing fields.

Abbreviations

- AFPJFMs: Accurate fractional-order pseudo-Jacobi–Fourier moments
- APJFMs: Accurate pseudo-Jacobi–Fourier moments
- AQFPJFMs: Accurate quaternion fractional-order pseudo-Jacobi–Fourier moments
- AR: Accuracy rate
- BER: Bit error rate
- CHFMs: Chebyshev–Fourier moments
- FCMs: Fractional-order Chebyshev moments
- FJFMs: Fractional-order Jacobi–Fourier moments
- FOFMMs: Fractional-order orthogonal Fourier–Mellin moments
- FPHFMs: Fractional-order polar harmonic Fourier moments
- FPHT: Fractional-order polar harmonic transforms
- FPR: False positive ratio
- FQEMs: Fractional-order quaternion exponential moments
- FQZMs: Fractional-order orthogonal quaternion Zernike moments
- JFMs: Jacobi–Fourier moments
- LFMs: Legendre–Fourier moments
- MLMF: Mixed low-order moments feature
- MSRE: Mean square reconstruction error
- OFMMs: Orthogonal Fourier–Mellin moments
- OMs: Orthogonal moments
- PJFMs: Pseudo-Jacobi–Fourier moments
- PSNR: Peak signal-to-noise ratio
- PZMs: Pseudo-Zernike moments
- QRFCMs: Quaternion radial fractional Charlier moments
- RST: Rotation, scaling, translation
- ZMs: Zernike moments
- ZOA: Zero-order approximation

References

Hu MK (1962) Visual pattern recognition by moment invariants. IRE Trans Inf Theory 8(2):179–187

Teague MR (1980) Image-analysis via the general-theory of moments. J Opt Soc Am 70(8):920–930

Pawlak M (2014) Over 50 years of image moments and moment invariants. Gate Comput Sci Res 73(2):91–110

Teh CH, Chin RT (1988) On image analysis by the method of moments. IEEE Trans Pattern Anal Mach Intell 10(4):556–561

Sheng YL, Shen LX (1994) Orthogonal Fourier–Mellin moments for invariant pattern recognition. J Opt Soc Am 11(6):1748–1757

Ping ZL, Wu RG, Sheng YL (2002) Image description with Chebyshev–Fourier moments. J Opt Soc Am 19(9):1748–1754

Khotanzad A, Hong YH (1990) Invariant image recognition by Zernike moments. IEEE Trans Pattern Anal Mach Intell 12(5):489–497

Ping Z, Ren H, Jian Z et al (2007) Generic orthogonal moments: Jacobi–Fourier moments for invariant image description. Pattern Recogn 40(4):1245–1254

Bailey RR, Srinath MD (1996) Orthogonal moment features for use with parametric and non-parametric classifiers. IEEE Trans Pattern Anal Mach Intell 18(4):389–399

Amu G, Hasi S, Yang X et al (2004) Image analysis by pseudo-Jacobi (p = 4, q = 3)-Fourier moments. Appl Opt 43(10):2093–2101

Camacho C, Báez-Rojas JJ, Toxqui-Quitl C, Padilla-Vivanco A (2014) Color image reconstruction using quaternion Legendre–Fourier moments in polar pixels. In: 2014 IEEE international conference on mechatronics, electronics and automotive engineering (ICMEAE), Cuernavaca, pp 3–8

Amu G, Hasi S, Ai AZ (2015) Research progress of moment invariant image analysis. J Inner Mongolia Agric Univ 26(4):146–150

Qi S, Zhang Y, Wang C et al (2023) A survey of orthogonal moments for image representation: theory, implementation, and evaluation. ACM Comput Surv 55(1):1–35. https://doi.org/10.1145/3479428

Liao SX, Pawlak M (1998) On the accuracy of Zernike moments for image analysis. IEEE Trans Pattern Anal Mach Intell 20:1358–1364

Wee CY, Paramesran R (2007) On the computational aspects of Zernike moments. Image Vis Comput 25(6):967–980

Biswas R, Biswas S (2012) Polar Zernike moments and rotational invariance. Opt Eng 51(8):1–9

Mukundan R, Ramakrishnan KR (1995) Fast computation of Legendre and Zernike moments. Pattern Recogn 28(9):1433–1442

Papakostas GA, Boutalis YS, Karras DA, Mertzios BG (2007) Fast numerically stable computation of orthogonal Fourier–Mellin moments. IET Comput Vis 1(1):11–16

Hosny KM, Shouman MA, Abdel Salam HM (2011) Fast computation of orthogonal Fourier–Mellin moments in polar coordinates. J Real-Time Image Process 6(2):73–80

Walia E, Singh C, Goyal A (2012) On the fast computation of orthogonal Fourier–Mellin moments with improved numerical stability. J Real-Time Image Process 7(4):247–256

Xin Y, Pawlak M, Liao S (2012) Accurate computation of Zernike moments in polar coordinates. IEEE Trans Image Process 6(7):996–1004

Camacho-Bello C, Padilla-Vivanco A, Toxqui-Quitl C et al (2016) Reconstruction of color biomedical images by means of quaternion generic Jacobi–Fourier moments in the framework of polar pixels. J Med Imaging 3(1):014004

Bhrawy A, Zaky M (2016) A fractional-order Jacobi Tau method for a class of time-fractional PDEs with variable coefficients. Math Methods Appl Sci 39(7):1765–1779

Vargas-Vargas H, Camacho-Bello C, Rivera-López JS et al (2021) Some aspects of fractional-order circular moments for image analysis. Pattern Recogn Lett 149:99–108

Su X, Tao R, Kang X (2019) Analysis and comparison of discrete fractional Fourier transforms. Signal Process 160(7):284–298

Kazem S, Abbasbandy S, Kumar S (2013) Fractional-order Legendre functions for solving fractional-order differential equations. Appl Math Model 37(7):5498–5510

Zhang H, Li Z, Liu Y (2016) Fractional orthogonal Fourier–Mellin moments for pattern recognition. In: 2016 Chinese conference on pattern recognition (CCPR). Springer, Singapore, pp 766–778

Benouini R, Batioua I, Zenkouar K, Zahi A, Najah S, Qjidaa H (2019) Fractional-order orthogonal Chebyshev moments and moment invariants for image representation and pattern recognition. Pattern Recogn 86:332–343

Yang H, Qi S, Tian J et al (2021) Robust and discriminative image representation: fractional-order Jacobi–Fourier moments. Pattern Recogn 115:107898

Hosny KM, Darwish MM, Aboelenen T (2020) Novel fractional-order polar harmonic transforms for gray-scale and color image analysis. J Frankl Inst 357(4):2533–2560

Wang C, Gao H, Yang M et al (2021) Invariant image representation using novel fractional-order polar harmonic Fourier moments. Sensors 21(4):1544

Chen B, Yu M, Su Q, Shim HJ, Shi YQ (2018) Fractional quaternion Zernike moments for robust color image copy-move forgery detection. IEEE Access 6:56637–56646

Wang C, Hao Q, Ma B et al (2021) Fractional-order quaternion exponential moments for color images. Appl Math Comput 400:126061

Yamni M, Karmouni H, Sayyouri M et al (2021) Robust zero-watermarking scheme based on novel quaternion radial fractional Charlier moments. Multimed Tools Appl 80(14):21679–21708

Upneja R, Singh C (2015) Fast computation of Jacobi–Fourier moments for invariant image recognition. Pattern Recogn 48(5):1836–1843

Sáez JL (2017) Comments on “fast computation of Jacobi–Fourier moments for invariant image recognition.” Pattern Recogn 67:16–22

Singh C, Upneja R (2012) Accurate computation of orthogonal Fourier Mellin moments. J Math Imaging Vis 44(3):411–431

Singh C, Walia E, Upneja R (2013) Accurate calculation of Zernike moments. Inf Sci 233:255–275

Karakasis EG, Papakostas GA, Koulouriotis DE, Tourassis VD (2013) A unified methodology for computing accurate quaternion color moments and moment invariants. IEEE Trans Image Process 23(2):596–611

Camacho-Bello C (2014) High-precision and fast computation of Jacobi–Fourier moments for image description. J Opt Soc Am 31(1):124–134

Hamilton WR (1866) Elements of quaternions. Longmans, Green, & Company, London

Camacho-Bello C, Padilla-Vivanco A, Toxqui-Quitl C et al (2016) Reconstruction of color biomedical images by means of quaternion generic Jacobi–Fourier moments in the framework of polar pixels. J Med Imaging 3(1):57–66

Petitcolas APF (2000) Watermarking schemes evaluation. IEEE Signal Process Mag 17(5):58–64

Wen Q, Sun TF, Wang SX (2003) Concept and application of zero-watermark. Acta Electron Sin 31:214–216

Shao Z, Shang Y, Zeng R, Shu H, Coatrieux G, Wu J (2016) Robust watermarking scheme for color image based on quaternion-type moment invariants and visual cryptography. Signal Process Image Commun 48:12–21

The Whole Brain Atlas. http://www.med.harvard.edu/AANLIB/home.html

Yang H, Qi S, Niu P, Wang X (2020) Color image zero-watermarking based on fast quaternion generic polar complex exponential transform. Signal Process Image Commun 82:115747

Xia ZQ, Wang XY, Zhou W, Li R, Wang C, Zhang C (2019) Color medical image lossless watermarking using chaotic system and accurate quaternion Polar Harmonic transforms. Signal Process 157:108–118

Wang CP, Wang XY, Chen XJ, Zhang C (2017) Robust zero-watermarking algorithm based on polar complex exponential transform and logistic mapping. Multimed Tools Appl 76(24):26355–26376

Wang CP, Wang XY, Xia ZQ, Zhang C, Chen XJ (2016) Geometrically resilient color image zero-watermarking algorithm based on quaternion exponent moments. J Vis Commun Image Represent 41:247–259

Chang CC, Lin PY (2008) Adaptive watermark mechanism for rightful ownership protection. J Syst Softw 81(7):1118–1129

The USC-SIPI image database. http://sipi.usc.edu/database/

Xia Z, Wang X, Han B et al (2021) Color image triple zero-watermarking using decimal-order polar harmonic transforms and chaotic system. Signal Process 180:0165–1684

Kang X, Zhao F, Chen Y et al (2020) Combining polar harmonic transforms and 2D compound chaotic map for distinguishable and robust color image zero-watermarking algorithm. J Vis Commun Image Represent 70:1047–3203

Liu J, Li J, Ma J et al (2019) A robust multi-watermarking algorithm for medical images based on DTCWT-DCT and Henon map. Appl Sci 9(4):700–722

Coil-100. http://www.cs.columbia.edu/CAVE/software/softlib/coil-100.php

Wang XY, Wang L, Tian JL et al (2021) Color image zero-watermarking using accurate quaternion generalized orthogonal Fourier–Mellin moments. J Math Imaging Vis 63:708–734

Acknowledgements

This work was supported partially by the National Natural Science Foundation of China (Nos. 61472171 & 61701212), Natural Science Foundation of Liaoning Province (No. 2019-ZD-0468), and Scientific Research Project of Liaoning Provincial Education Department (No. LJKZ0985).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical standards

All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki Declaration and its later amendments or comparable ethical standards.

Informed consent

Informed consent was obtained from all individual participants included in the study.

Appendices

1.1 Appendix A

By Eq. (4), the weighting function is \(w(r) = (1 - r) \cdot r^{2}\).

Here, we replace the independent variable \(r\) of the radial basis function with the new variable \(r^{\alpha }\), \(r \in [0,1]\), and the new weight function is obtained as:

We define the new radial basis functions:

The proof is complete.

1.2 Appendix B

Taking the conjugate of \(H_{nm\alpha }^{L}\), we have:

Therefore, \(H_{nm\alpha }^{L} = - (H_{( - n)( - m)\alpha }^{R} )^{ * }\).

1.3 Appendix C

Cite this article

Wang, X., Zhang, Y., Tian, J. et al. Accurate quaternion fractional-order pseudo-Jacobi–Fourier moments. Pattern Anal Applic 25, 731–755 (2022). https://doi.org/10.1007/s10044-022-01071-6
