Abstract

As a generalization of fuzzy set theory, intuitionistic fuzzy (IF) set theory has received considerable attention for its capability of dealing with uncertainty. Similarity measures of IF sets are used to indicate the degree of commonality between IF sets. Although several similarity measures for IF sets have been proposed in previous studies, some of them do not satisfy the axiomatic definition of similarity or produce counter-intuitive results. In this paper, a new similarity measure between IF sets is proposed. A similarity matrix is also defined to depict the relations among more than two IF sets. It is proved that the proposed similarity measure satisfies the properties of the axiomatic definition for similarity measures. A comparison between the previous similarity measures and the proposed one indicates that the proposed similarity measure does not produce counter-intuitive results in the widely used test cases. Moreover, it is demonstrated that the proposed similarity measure can be applied to define a positive definite similarity matrix.


1 Introduction

Since it was proposed by Zadeh [1], fuzzy set (FS) theory has achieved great success owing to its capability of handling uncertainty [2, 3]. Over the last decades, several higher order fuzzy sets have been introduced in the literature. The intuitionistic fuzzy (IF) set, one of these higher order FSs, was proposed by Atanassov [4] to deal with vagueness. The main advantage of an IF set is its capability of coping with the uncertainty that may arise from imprecise information. An IF set assigns to each element a membership degree, a non-membership degree and a hesitation degree; it thus constitutes an extension of Zadeh's fuzzy set, which assigns to each element only a membership degree and takes 1 minus it as the degree of non-membership [5]. Hence, IF sets are regarded as a more effective way to deal with vagueness than FSs [6].

Similarity measures are of great significance in almost every scientific field. The similarity between two IF sets reflects the commonality of the information they convey. Since it is difficult to measure the amount of information hidden in IF sets, two IF sets cannot be compared directly. Therefore, similarity measures and their counterpart, distance measures, play an important role in discriminating IF sets. With the development of IF set theory, the definition of similarity measures for IF sets has received considerable attention in recent years [7, 8], and such measures have become an important tool for decision making, fault detection, pattern recognition, machine learning, image processing, etc.

Owing to their fundamental importance in applications, many similarity measures have been proposed. The first study was carried out by Szmidt and Kacprzyk [9], who applied the Hamming and Euclidean distances to the IF environment and compared them with the approaches used for ordinary FSs. Following this work, many researchers presented similarity measures for IF sets by extending well-known distance measures, such as the Hamming, Euclidean and Hausdorff distances [10–16]. Meanwhile, some studies defined new similarity measures for IF sets through intermediate variables based on the membership and non-membership degrees [7, 17–21]. For example, Li and Cheng [17] suggested a new similarity measure for IF sets based on the definition of \(\varphi_{A}\). Recently, novel similarity measures have continued to emerge, for example, measures based on cosine similarity [8], the Sugeno integral [22], interval comparison [23] and intuitionistic entropy [24]. In addition, Boran and Akay [25] proposed a new general type of similarity measure for IF sets with two parameters, expressing the norm and the level of uncertainty, respectively. This similarity measure also gives reasonable results for the commonly cited counter-intuitive cases. As a comprehensive study on similarity measures of IF sets, Papakostas et al. [26] investigated the main theoretical and computational properties of the measures, as well as the relationships between them. Moreover, they compared the distance and similarity measures from a pattern recognition point of view.

Among the proposed similarity measures between IF sets, however, some do not satisfy the axioms of similarity, some produce counter-intuitive results, and some have complex forms. Therefore, we propose in this paper a new similarity measure between IF sets based on the cosine similarity and the Euclidean distance between IF sets. The axiomatic definitions of similarity and distance measures are presented first, followed by the relation between similarity and distance measures. A similarity matrix is also defined to describe the relationships among more than two IF sets. The properties of the similarity matrix are examined to explore the performance of our new similarity measure, which is defined after critically analyzing the cosine similarity and the similarity generated by the Euclidean distance. The properties and performance of the proposed similarity measure are demonstrated by both mathematical proofs and illustrative examples.

The remainder of this paper is organized as follows. Section 2 recalls the definitions related to IF sets. In Sect. 3, distance measures, similarity measures, the similarity matrix and their properties are presented along with proofs. The new similarity measure is defined in Sect. 4, where its properties are also proved. A comparison between similarity measures and an illustration of the positive definiteness of the similarity matrix are given in Sect. 5. Section 6 concludes the paper.

2 Preliminaries

In this section, we briefly recall the basic concepts related to IF sets and the basic relations between them.

Definition 1

[1] Let \(X = \{ x_{1} ,x_{2} , \ldots ,x_{n} \}\) be a universe of discourse. A FS A in X is defined as follows:

\[ A = \left\{ {\left\langle {x,\mu_{A} (x)} \right\rangle \left| {x \in X} \right.} \right\} \]

where \(\mu_{A} (x):X \to [0,1]\) is the membership degree.

Definition 2

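[4] An IF set A in X defined by Atanassov can be written as

\[ A = \left\{ {\left\langle {x,\mu_{A} (x),v_{A} (x)} \right\rangle \left| {x \in X} \right.} \right\} \]

where \(\mu_{A} (x):X \to [0,1]\) and \(v_{A} (x):X \to [0,1]\) are the membership degree and the non-membership degree, respectively, with the condition:

\[ 0 \le \mu_{A} (x) + v_{A} (x) \le 1,\quad \forall x \in X. \]

\(\pi_{A} (x)\), determined by the following expression:

\[ \pi_{A} (x) = 1 - \mu_{A} (x) - v_{A} (x), \]

is called the hesitancy degree of the element \(x \in X\) to the set A, and \(\pi_{A} (x) \in [0,1],\,\forall x \in X\).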

\(\pi_{A} (x)\) is also called the intuitionistic index of x to A. A greater \(\pi_{A} (x)\) indicates more vagueness about x. Obviously, when \(\pi_{A} (x) = 0,\,\forall x \in X\), the IF set degenerates into an ordinary FS.

In the sequel, for clarity, the pair \(\left\langle {\mu_{A} (x),v_{A} (x)} \right\rangle\) is also called an IF set or an IF value. Let IFSs(X) denote the set of all IF sets in X.
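A minimal Python sketch of Definition 2 follows. It assumes, purely for illustration, that an IF set over a finite universe \(X = \{ x_{1} , \ldots ,x_{n} \}\) is stored as a list of \((\mu, v)\) pairs in the order \(x_{1}, \ldots, x_{n}\); the names `IFSet`, `check_if_set`, `hesitancy` and `is_ordinary_fuzzy_set` are hypothetical and do not come from the paper.

```python
from typing import List, Tuple

# Hypothetical representation (not from the paper): an IF set over
# X = {x_1, ..., x_n} is a list of (mu, nu) pairs, where nu stands for v_A(x).
IFSet = List[Tuple[float, float]]


def check_if_set(a: IFSet, tol: float = 1e-12) -> None:
    """Raise ValueError if a pair violates 0 <= mu, 0 <= nu and mu + nu <= 1."""
    for i, (mu, nu) in enumerate(a, start=1):
        if mu < 0.0 or nu < 0.0 or mu + nu > 1.0 + tol:
            raise ValueError(f"invalid IF value at x_{i}: ({mu}, {nu})")


def hesitancy(a: IFSet) -> List[float]:
    """Hesitancy degree pi_A(x) = 1 - mu_A(x) - v_A(x) for every element."""
    check_if_set(a)
    return [1.0 - mu - nu for mu, nu in a]


def is_ordinary_fuzzy_set(a: IFSet, tol: float = 1e-12) -> bool:
    """True when pi_A(x) = 0 for all x, i.e. the IF set degenerates into an FS."""
    return all(abs(p) <= tol for p in hesitancy(a))


A = [(0.3, 0.2), (0.6, 0.4)]
print(hesitancy(A))              # pi_A(x_1) is about 0.5, pi_A(x_2) is 0
print(is_ordinary_fuzzy_set(A))  # False, because pi_A(x_1) > 0
```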

Definition 3

For \(A,B \in IFSs(X)\), the following relations are defined:

- (R1): \(A \subseteq B\) iff \(\forall x \in X\), \(\mu_{A} (x) \le \mu_{B} (x)\) and \(v_{A} (x) \ge v_{B} (x)\);
- (R2): \(A = B\) iff \(\forall x \in X\), \(\mu_{A} (x) = \mu_{B} (x)\) and \(v_{A} (x) = v_{B} (x)\);
- (R3): \(A^{C} = \left\{ {\left\langle {x,v_{A} (x),\mu_{A} (x)} \right\rangle \left| {x \in X} \right.} \right\}\), where \(A^{C}\) is the complement of A.
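Relations (R1)–(R3) translate directly into code. The sketch below uses a hypothetical list-of-\((\mu, v)\)-pairs representation (an assumption, not part of the paper) and implements (R2) through the equivalent condition \(A \subseteq B\) and \(B \subseteq A\).

```python
from typing import List, Tuple

IFSet = List[Tuple[float, float]]  # hypothetical: (mu, nu) pairs over x_1..x_n


def is_subset(a: IFSet, b: IFSet) -> bool:
    """(R1): A is contained in B iff mu_A <= mu_B and v_A >= v_B at every x."""
    if len(a) != len(b):
        raise ValueError("IF sets must be defined on the same universe")
    return all(ma <= mb and na >= nb for (ma, na), (mb, nb) in zip(a, b))


def is_equal(a: IFSet, b: IFSet) -> bool:
    """(R2): A = B, equivalently A is contained in B and B is contained in A."""
    return is_subset(a, b) and is_subset(b, a)


def complement(a: IFSet) -> IFSet:
    """(R3): A^C swaps the membership and non-membership degrees."""
    return [(nu, mu) for mu, nu in a]


A = [(0.2, 0.7), (0.4, 0.5)]
B = [(0.3, 0.6), (0.4, 0.4)]
print(is_subset(A, B), is_subset(B, A))        # True False
print(is_equal(complement(complement(A)), A))  # True
```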

It is worth noting that, besides Definition 2, other representations of IF sets have been proposed in the literature. Hong and Choi [27] proposed to use an interval representation \(\left[ {\mu_{A} (x),1 - v_{A} (x)} \right]\) of IF set A in X instead of the pair \(\left\langle {\mu_{A} (x),v_{A} (x)} \right\rangle\). This approach is equivalent to the interval-valued FS interpretation of IF sets, where the interval \(\left[ {\mu_{A} (x),1 - v_{A} (x)} \right]\) represents the membership degree of \(x \in X\) to the set A. This interval is always valid, since \(\mu_{A} (x) + v_{A} (x) \le 1\) implies \(\mu_{A} (x) \le 1 - v_{A} (x)\).
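The interval representation of Hong and Choi [27] is a one-line mapping in each direction. The helper names below are hypothetical; the inverse map assumes a valid interval \([l,u] \subseteq [0,1]\) with \(l \le u\).

```python
from typing import Tuple


def to_interval(mu: float, nu: float) -> Tuple[float, float]:
    """Map the IF value <mu, v> to the interval [mu, 1 - v]."""
    if mu < 0.0 or nu < 0.0 or mu + nu > 1.0 + 1e-12:
        raise ValueError("not a valid IF value")
    # mu + v <= 1 guarantees mu <= 1 - v, so the interval is well formed.
    return (mu, 1.0 - nu)


def from_interval(lower: float, upper: float) -> Tuple[float, float]:
    """Inverse map: the interval [l, u] corresponds to the IF value <l, 1 - u>."""
    if not 0.0 <= lower <= upper <= 1.0:
        raise ValueError("not a valid sub-interval of [0, 1]")
    return (lower, 1.0 - upper)


print(to_interval(0.4, 0.2))  # approximately (0.4, 0.8)
```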

3 Distance and similarity measures for IF sets

3.1 Related definitions and properties

Generally, a distance is a measure of the difference between two elements of a set. For IF sets, the distance between them must satisfy the axiomatic definition of a metric distance. Moreover, the distance should not lead to counter-intuitive results, i.e., the distance measure must be capable of reflecting the similarity among the IF sets. This leads to the following definition.

Definition 4

A mapping \(D:IFSs(X) \times IFSs(X) \to [0,1]\) is called a distance between \(A \in IFSs(X)\) and \(B \in IFSs(X)\) if, for all \(A,B,C \in IFSs(X)\), D satisfies the following properties:

- (D1): \(0 \le D(A,B) \le 1,\)
- (D2): \(D(A,B) = 0 \Leftrightarrow A = B,\)
- (D3): \(D(A,B) = D(B,A),\)
- (D4): If \(A \subseteq B \subseteq C\), then \(D(A,B) \le D(A,C)\) and \(D(B,C) \le D(A,C),\)
- (D5): \(D(A,B) + D(B,C) \ge D(A,C).\)

As the complementary concept of a distance measure, a similarity measure between two IF sets is defined as follows.

Definition 5

A mapping \(S:IFSs(X) \times IFSs(X) \to [0,1]\) is called a degree of similarity between \(A \in IFSs(X)\) and \(B \in IFSs(X)\) if, for all \(A,B,C \in IFSs(X)\), S satisfies the following properties:

- (S1): \(0 \le S(A,B) \le 1,\)
- (S2): \(S(A,B) = 1 \Leftrightarrow A = B,\)
- (S3): \(S(A,B) = S(B,A),\)
- (S4): If \(A \subseteq B \subseteq C\), then \(S(A,B) \ge S(A,C)\) and \(S(B,C) \ge S(A,C).\)

Theorem 1

Let D be a distance measure between IF sets. Then \(S_{D} = 1 - D\) is a similarity measure between IF sets.

Proof

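As a distance measure between IF sets, D satisfies the conditions in Definition 4, in particular:

\[ 0 \le D(A,B) \le 1,\quad D(A,B) = 0 \Leftrightarrow A = B,\quad D(A,B) = D(B,A). \]

Considering \(S_{D} = 1 - D\), we straightforwardly obtain (S1)–(S3):

\[ 0 \le S_{D} (A,B) \le 1,\quad S_{D} (A,B) = 1 \Leftrightarrow A = B,\quad S_{D} (A,B) = S_{D} (B,A). \]

Given IF sets A, B and C satisfying \(A \subseteq B \subseteq C\), we have \(D(A,B) \le D(A,C)\) and \(D(B,C) \le D(A,C).\)

So we get:

\[ 1 - D(A,B) \ge 1 - D(A,C),\quad 1 - D(B,C) \ge 1 - D(A,C). \]

Thus, \(S_{D} (A,B) \ge S_{D} (A,C)\) and \(S_{D} (B,C) \ge S_{D} (A,C)\), i.e., (S4) holds.

Hence, \(S_{D} = 1 - D\) is a similarity measure between IF sets. \(\square\)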

Theorem 1 shows that a distance measure can be applied to define its complementary concept, the similarity measure. Conversely, given a similarity measure S, the proof of Theorem 1 shows that D = 1 − S satisfies all the properties in Definition 4 except (D5), the triangle inequality, which does not follow from (S1)–(S4). Hence, every distance measure can be transformed into a similarity measure, but not vice versa. In this sense, the axiomatic definition of a distance measure is stricter than that of a similarity measure.
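The claim that D = 1 − S need not satisfy (D5) can be checked on a small example. The sketch below uses, as an illustrative choice that is not taken from the paper, the similarity \(S(A,B) = 1 - D_{o}^{2} (A,B)\), where \(D_{o}\) is the normalized Euclidean distance of Definition 7 in Sect. 4. Since \(D_{o}\) satisfies (D1)–(D4) and squaring preserves order on [0, 1], this S satisfies (S1)–(S4); yet \(1 - S = D_{o}^{2}\) violates the triangle inequality for \(A = \left\langle {0,1} \right\rangle \subseteq B = \left\langle {0.5,0.5} \right\rangle \subseteq C = \left\langle {1,0} \right\rangle\).

```python
import math
from typing import List, Tuple

IFSet = List[Tuple[float, float]]  # hypothetical: (mu, nu) pairs over x_1..x_n


def d_o(a: IFSet, b: IFSet) -> float:
    """Normalized Euclidean distance D_o between two IF sets (Definition 7)."""
    n = len(a)
    total = sum((ma - mb) ** 2 + (na - nb) ** 2 for (ma, na), (mb, nb) in zip(a, b))
    return math.sqrt(total / (2 * n))


def s_squared(a: IFSet, b: IFSet) -> float:
    """Illustrative similarity 1 - D_o^2 (an assumption made for this example)."""
    return 1.0 - d_o(a, b) ** 2


def induced_distance(a: IFSet, b: IFSet) -> float:
    """D = 1 - S, the candidate distance induced by the similarity."""
    return 1.0 - s_squared(a, b)


A, B, C = [(0.0, 1.0)], [(0.5, 0.5)], [(1.0, 0.0)]
lhs = induced_distance(A, B) + induced_distance(B, C)
rhs = induced_distance(A, C)
print(lhs, rhs)  # 0.5 1.0, so D(A,B) + D(B,C) < D(A,C): (D5) fails
```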

Since the inception of IF sets, many similarity measures between IF sets have been proposed in the technical literature. Table 1 summarizes several well-known similarity measures that will be analyzed in this paper. In this table, \(X = \{ x_{1} ,x_{2} , \ldots ,x_{n} \}\) is a universe of discourse, and \(A \in IFSs(X)\) and \(B \in IFSs(X)\) are two IF sets in X, denoted by \(A = \left\{ {\left\langle {x,\mu_{A} (x),v_{A} (x)} \right\rangle \left| {x \in X} \right.} \right\}\) and \(B = \left\{ {\left\langle {x,\mu_{B} (x),v_{B} (x)} \right\rangle \left| {x \in X} \right.} \right\}\), respectively. For brevity, we only give the expressions of the similarity measures and omit the interpretations of the intermediate variables, which can be found in the cited references.

3.2 Similarity matrix for IF sets

The distance and similarity measures describe the relationship between two IF sets. Under most circumstances, however, we are confronted with more than two IF sets, and pairwise distance and similarity measures alone cannot describe the relationships among all of them. We therefore define the similarity matrix for IF sets.

Definition 6

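Let \(A_{1} ,A_{2} , \ldots ,A_{N}\) denote \(N(N \ge 2)\) IF sets in the universe of discourse \(X = \{ x_{1} ,x_{2} , \ldots ,x_{n} \}\), and let S denote a similarity measure between IF sets. The similarity matrix between them is defined as:

\[ \varvec{S} = \left[ {\begin{array}{cccc} {S(A_{1} ,A_{1} )} & {S(A_{1} ,A_{2} )} & \cdots & {S(A_{1} ,A_{N} )} \\ {S(A_{2} ,A_{1} )} & {S(A_{2} ,A_{2} )} & \cdots & {S(A_{2} ,A_{N} )} \\ \vdots & \vdots & \ddots & \vdots \\ {S(A_{N} ,A_{1} )} & {S(A_{N} ,A_{2} )} & \cdots & {S(A_{N} ,A_{N} )} \\ \end{array} } \right] \tag{5} \]

Since \(S(A_{i} ,A_{j} ) = S(A_{j} ,A_{i} )\) and \(S(A_{i} ,A_{i} ) = 1\) for \(i,j = 1,2, \ldots ,N\), the similarity matrix \(\varvec{S}\) is a square symmetric matrix with all diagonal elements equal to 1.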
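Because of (S2) and (S3), only the strictly upper-triangular entries of the similarity matrix of Definition 6 need to be evaluated. In the sketch below the similarity measure is passed in as a function; as an illustrative assumption it is instantiated with \(S_{o} = 1 - D_{o}\), built from the normalized Euclidean distance of Definition 7 in Sect. 4. The names `similarity_matrix` and `s_o` and the list-of-pairs representation are hypothetical.

```python
import math
from typing import Callable, List, Tuple

IFSet = List[Tuple[float, float]]  # hypothetical: (mu, nu) pairs over x_1..x_n
Similarity = Callable[[IFSet, IFSet], float]


def s_o(a: IFSet, b: IFSet) -> float:
    """S_o = 1 - D_o, with D_o the normalized Euclidean distance."""
    total = sum((ma - mb) ** 2 + (na - nb) ** 2 for (ma, na), (mb, nb) in zip(a, b))
    return 1.0 - math.sqrt(total / (2 * len(a)))


def similarity_matrix(sets: List[IFSet], sim: Similarity) -> List[List[float]]:
    """Build the N x N similarity matrix [S(A_i, A_j)] of Definition 6."""
    size = len(sets)
    if size < 2:
        raise ValueError("Definition 6 requires N >= 2 IF sets")
    matrix = [[1.0] * size for _ in range(size)]  # S(A_i, A_i) = 1 by (S2)
    for i in range(size):
        for j in range(i + 1, size):
            # (S3) symmetry: compute once, mirror across the diagonal.
            matrix[i][j] = matrix[j][i] = sim(sets[i], sets[j])
    return matrix


sets = [[(0.3, 0.2)], [(0.4, 0.3)], [(0.1, 0.8)]]
for row in similarity_matrix(sets, s_o):
    print([round(v, 3) for v in row])
```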

Theorem 2

The similarity matrix \(\varvec{S}\) between IF sets is a non-singular matrix.

Proof

Suppose that \(\varvec{S}\) is a singular matrix. Then at least two of its column vectors are linearly dependent. Without any loss of generality, we assume that \(\varvec{x}_{j}\) and \(\varvec{x}_{k}\) are linearly dependent. So we have:

Hence,

Setting p = j and p = k, we have:

This yields two contradictory equations:

Consequently, the assumption that \(\varvec{S}\) is singular cannot hold.

So the similarity matrix \(\varvec{S}\) between IF sets is a non-singular matrix.\(\square\)

Theorem 3

Let D be a metric distance measure between IF sets. The similarity matrix \(\varvec{S}_{D}\) defined by \(S_{D} = 1 - D\) according to Eq. (5) is a positive definite matrix.

Proof

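Theorem 1 indicates that \(S_{D} = 1 - D\) is a similarity measure between IF sets. The similarity matrix can be expressed as:

\[ \varvec{S}_{D} = \left[ {1 - D(A_{i} ,A_{j} )} \right]_{N \times N} \]

Given \(D(A_{i} ,A_{i} ) = 0\) and \(D(A_{i} ,A_{j} ) = D(A_{j} ,A_{i} )\) for \(i,j = 1,2, \ldots ,N\), we also get:

\[ \varvec{S}_{D} = \left[ {\begin{array}{cccc} 1 & {1 - D(A_{1} ,A_{2} )} & \cdots & {1 - D(A_{1} ,A_{N} )} \\ {1 - D(A_{1} ,A_{2} )} & 1 & \cdots & {1 - D(A_{2} ,A_{N} )} \\ \vdots & \vdots & \ddots & \vdots \\ {1 - D(A_{1} ,A_{N} )} & {1 - D(A_{2} ,A_{N} )} & \cdots & 1 \\ \end{array} } \right] \]

Since all the eigenvalues of a real symmetric matrix are real, the similarity matrix \(\varvec{S}_{D}\) has N real eigenvalues (counted with multiplicity), denoted by \(\lambda_{1} ,\lambda_{2} , \ldots ,\lambda_{N}\).

Let λ be an arbitrary eigenvalue of \(\varvec{S}_{D}\), i.e., \(\lambda \in \left\{ {\lambda_{1} ,\lambda_{2} , \ldots ,\lambda_{N} } \right\}\). By the Gerschgorin circle theorem, there exists \(i \in \{ 1,2, \ldots ,N\}\) such that:

\[ \left| {\lambda - 1} \right| \le \sum\limits_{j \ne i} {\left( {1 - D(A_{i} ,A_{j} )} \right)} = N - 1 - \sum\limits_{j \ne i} {D(A_{i} ,A_{j} )} \]

Thus,

\[ 2 - N + \sum\limits_{j \ne i} {D(A_{i} ,A_{j} )} \le \lambda \le N - \sum\limits_{j \ne i} {D(A_{i} ,A_{j} )} \]

Considering the following relations:

\[ N\lambda_{\min } \le \sum\limits_{k = 1}^{N} {\lambda_{k} } = \text{tr}\,\varvec{S}_{D} = N \le N\lambda_{\max } \]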

where \(\lambda_{\min }\) and \(\lambda_{\max }\) are the minimum and maximum eigenvalues, respectively, we have \(\lambda_{\min } \le 1\) and \(\lambda_{\max } \ge 1\).

Then we get:

Without loss of generality, let i = 1, i.e., \(\lambda_{\min }\) lies in the first Gerschgorin disc of \(\varvec{S}_{D}\). So we have:

(i) For N = 2, we have:

(ii) For N = 3, we have:

Since \(D(A_{2} ,A_{3} )\) is arbitrary and \(D(A_{2} ,A_{3} ) - 1 \le 0\), we conclude that only \(\lambda_{\min } \ge 0\) can make \(\lambda_{\min } \ge D(A_{2} ,A_{3} ) - 1\) hold for any \(D(A_{2} ,A_{3} )\). So we have \(\lambda_{\min } \ge 0\).

(iii) Given \(N \ge 4\) and the triangle inequality \(D(A_{1} ,A_{2} ) + D(A_{1} ,A_{3} ) \ge D(A_{2} ,A_{3} )\), we have:

We can also get

Since the bound \(\lambda_{\min } \ge 2 - N + D(A_{2} ,A_{3} ) + \sum\nolimits_{j = 4}^{N} {D(A_{1} ,A_{j} )}\) holds for arbitrary \(D(A_{2} ,A_{3} )\) and \(D(A_{1} ,A_{j} )\) (\(j = 4,5, \ldots ,N\)), \(\lambda_{\min }\) should be no less than the maximum of the right-hand side. Then we have \(\lambda_{\min } \ge 0\).

Considering (i)–(iii), we conclude that all the eigenvalues of \(\varvec{S}_{D}\) are nonnegative, so the similarity matrix \(\varvec{S}_{D}\) is positive semidefinite.

Taking \(\prod\nolimits_{i = 1}^{N} {\lambda_{i} } = \det \varvec{S}_{D}\) and \(\det \varvec{S}_{D} \ne 0\) (\(\varvec{S}_{D}\) is non-singular by Theorem 2) into consideration, we know that no eigenvalue of \(\varvec{S}_{D}\) is zero, i.e., all the eigenvalues of \(\varvec{S}_{D}\) are strictly positive.

So the similarity matrix \(\varvec{S}_{D}\) defined by \(S_{D} = 1 - D\) is positive definite. \(\square\)
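The Gerschgorin step of the proof can be reproduced numerically. For a real symmetric matrix, every eigenvalue lies in at least one interval \([m_{ii} - R_{i} ,m_{ii} + R_{i} ]\) with \(R_{i} = \sum\nolimits_{j \ne i} {\left| {m_{ij} } \right|}\); for \(\varvec{S}_{D}\) the lower end of row i equals \(2 - N + \sum\nolimits_{j \ne i} {D(A_{i} ,A_{j} )}\), the starting point for the lower bounds on \(\lambda_{\min }\) in cases (i)–(iii). The sketch below computes these intervals from a table of pairwise distances; the distance values are hypothetical, chosen only to satisfy (D1)–(D5), and the function names are illustrative.

```python
from typing import List, Tuple


def similarity_from_distances(dist: List[List[float]]) -> List[List[float]]:
    """S_D = 1 - D entrywise (the diagonal becomes 1 because D(A_i, A_i) = 0)."""
    return [[1.0 - d for d in row] for row in dist]


def gerschgorin_intervals(m: List[List[float]]) -> List[Tuple[float, float]]:
    """Return [m_ii - R_i, m_ii + R_i] with R_i = sum of |m_ij| over j != i.

    For a real symmetric matrix every eigenvalue lies in the union of these
    intervals (the Gerschgorin discs intersected with the real line).
    """
    intervals = []
    for i, row in enumerate(m):
        radius = sum(abs(v) for j, v in enumerate(row) if j != i)
        intervals.append((row[i] - radius, row[i] + radius))
    return intervals


# Hypothetical pairwise distances for N = 3 IF sets (symmetric, zero diagonal,
# values in [0, 1], triangle inequality satisfied).
D = [[0.0, 0.5, 0.6],
     [0.5, 0.0, 0.7],
     [0.6, 0.7, 0.0]]
S_D = similarity_from_distances(D)
for lo, hi in gerschgorin_intervals(S_D):
    print(round(lo, 3), round(hi, 3))
# The smallest lower end is a (possibly weak) lower bound on lambda_min.
print(min(lo for lo, _ in gerschgorin_intervals(S_D)))
```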

4 A new similarity measure between IF sets

Among the similarity measures shown in Table 1, some are based on well-known distance measures, such as the Hamming distance and the Euclidean distance, while others are built from linear or non-linear combinations of the membership and non-membership functions of the IF sets. Since the similarity measures based on the Euclidean distance have a clear geometric meaning, we review the generalization of the Euclidean distance to the IF environment [11]. Moreover, some of its properties are presented along with their proofs.

Definition 7

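[11] The distance between two IF sets A and B in \(X = \{ x_{1} ,x_{2} , \ldots ,x_{n} \}\) can be defined as:

\[ D_{o} (A,B) = \sqrt {\frac{1}{2n}\sum\limits_{i = 1}^{n} {\left[ {\left( {\mu_{A} (x_{i} ) - \mu_{B} (x_{i} )} \right)^{2} + \left( {v_{A} (x_{i} ) - v_{B} (x_{i} )} \right)^{2} } \right]} } \tag{6} \]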
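A sketch of this distance follows, assuming the form \(D_{o} (A,B) = \sqrt {\frac{1}{2n}\sum\nolimits_{i} {[(\mu_{A} (x_{i} ) - \mu_{B} (x_{i} ))^{2} + (v_{A} (x_{i} ) - v_{B} (x_{i} ))^{2} ]} }\) and a hypothetical list-of-\((\mu, v)\)-pairs representation; the function name `d_o` is illustrative only. The usage lines check (D2), (D3) and (D5) on small inputs and show that the maximum value 1 is attained by \(\left\langle {1,0} \right\rangle\) and \(\left\langle {0,1} \right\rangle\).

```python
import math
from typing import List, Tuple

IFSet = List[Tuple[float, float]]  # hypothetical: (mu, nu) pairs over x_1..x_n


def d_o(a: IFSet, b: IFSet) -> float:
    """Normalized Euclidean distance between IF sets on the same finite universe."""
    if len(a) != len(b) or not a:
        raise ValueError("IF sets must share a non-empty universe")
    total = sum((ma - mb) ** 2 + (na - nb) ** 2
                for (ma, na), (mb, nb) in zip(a, b))
    return math.sqrt(total / (2 * len(a)))  # the 1/(2n) factor keeps D_o in [0, 1]


A = [(0.3, 0.2), (0.6, 0.1)]
B = [(0.4, 0.3), (0.5, 0.3)]
C = [(0.1, 0.8), (0.2, 0.7)]
print(d_o(A, A), d_o(A, B) == d_o(B, A))   # 0.0 True
print(d_o(A, B) + d_o(B, C) >= d_o(A, C))  # True (triangle inequality)
print(d_o([(1.0, 0.0)], [(0.0, 1.0)]))     # 1.0, the largest possible value
```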

Theorem 4

\(D_{o} (A,B)\) is a metric distance measure between IF sets.

Proof

(1) Since \(\mu (x),v(x) \in [0,1]\), we have:

Hence,

So we get:

Thus, \(0 \le D_{o} (A,B) \le 1\).

(2) \(D_{o} (A,A) = 0\) is straightforward. Conversely, if \(D_{o} (A,B) = 0\), according to the definition of \(D_{o}\), it must follow that \(\mu_{A} (x) = \mu_{B} (x)\) and \(v_{A} (x) = v_{B} (x)\) for all \(x \in X\), i.e., A = B. So we have \(D_{o} (A,B) = 0 \Leftrightarrow A = B\).

(3) It is evident that \(D_{o} (A,B) = D_{o} (B,A)\).

(4) Given A ⊆ B ⊆ C, we have \(\mu_{A} (x) \le \mu_{B} (x) \le \mu_{C} (x)\) and \(v_{A} (x) \ge v_{B} (x) \ge v_{C} (x)\) for all x ∊ X. Therefore,

Consequently,

According to Eq. (6), we can get: \(D_{o} (A,B) \le D_{o} (A,C)\) and \(D_{o} (B,C) \le D_{o} (A,C)\).

(5) By the Cauchy–Schwarz inequality, \(\left( {\sum\nolimits_{i = 1}^{n} {a_{i} b_{i} } } \right)^{2} \le \left( {\sum\nolimits_{i = 1}^{n} {a_{i}^{2} } } \right)\left( {\sum\nolimits_{i = 1}^{n} {b_{i}^{2} } } \right)\), we can get:

Making the following assignments:

we have:

Considering such inequality:

we get:

And then

Finally we obtain \(D_{o} (A,C) \le D_{o} (A,B) + D_{o} (B,C)\). So \(D_{o} (A,B)\) is a metric distance measure between IF sets.\(\square\)

According to Theorem 1, \(S_{o} = 1 - D_{o} (A,B)\) is a similarity measure between IF sets; this similarity measure was proposed by Li et al. [16]. However, it has the drawback of being insensitive to certain changes of IF sets. Consider an example where \(A = \left\{ {\left\langle {x,0.3,0.2} \right\rangle } \right\}\), \(B = \left\{ {\left\langle {x,0.4,0.3} \right\rangle } \right\}\), \(C = \left\{ {\left\langle {x,0.4,0.1} \right\rangle } \right\}\), \(D = \left\{ {\left\langle {x,0.2,0.1} \right\rangle } \right\}\) and \(E = \left\{ {\left\langle {x,0.2,0.3} \right\rangle } \right\}\). Since each of B, C, D and E differs from A by 0.1 in both the membership and the non-membership degree, we find \(S_{o} (A,B) = S_{o} (A,C) = S_{o} (A,D) = S_{o} (A,E) = 0.9\). So \(S_{o}\) is not capable of discriminating the differences between these IF sets.
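The insensitivity of \(S_{o}\) can be reproduced directly. The sketch below assumes \(S_{o} = 1 - D_{o}\) with the normalized Euclidean distance \(D_{o} (A,B) = \sqrt {\frac{1}{2n}\sum\nolimits_{i} {[(\mu_{A} (x_{i} ) - \mu_{B} (x_{i} ))^{2} + (v_{A} (x_{i} ) - v_{B} (x_{i} ))^{2} ]} }\) and a hypothetical function name; with n = 1, all four values come out as 0.9 up to floating-point rounding.

```python
import math
from typing import List, Tuple

IFSet = List[Tuple[float, float]]  # hypothetical: (mu, nu) pairs over x_1..x_n


def s_o(a: IFSet, b: IFSet) -> float:
    """Similarity induced by the normalized Euclidean distance: 1 - D_o."""
    total = sum((ma - mb) ** 2 + (na - nb) ** 2 for (ma, na), (mb, nb) in zip(a, b))
    return 1.0 - math.sqrt(total / (2 * len(a)))


A = [(0.3, 0.2)]
others = {"B": [(0.4, 0.3)], "C": [(0.4, 0.1)], "D": [(0.2, 0.1)], "E": [(0.2, 0.3)]}
for name, other in others.items():
    print(name, round(s_o(A, other), 6))  # 0.9 for every pair
```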

Besides, there is another interesting similarity measure between IF sets, the cosine similarity measure defined by Ye [8]:

\[ C_{IFS} (A,B) = \frac{1}{n}\sum\limits_{i = 1}^{n} {\frac{{\mu_{A} (x_{i} )\mu_{B} (x_{i} ) + v_{A} (x_{i} )v_{B} (x_{i} )}}{{\sqrt {\mu_{A}^{2} (x_{i} ) + v_{A}^{2} (x_{i} )} \sqrt {\mu_{B}^{2} (x_{i} ) + v_{B}^{2} (x_{i} )} }}} \]

Ye proved that \(C_{IFS} (A,B)\) satisfies properties (S1) and (S3) in Definition 5, but only showed that \(C_{IFS} (A,B) = 1\) if A = B. However, for the two different IF sets \(A = \left\{ {\left\langle {x,0.3,0.3} \right\rangle } \right\}\) and \(B = \left\{ {\left\langle {x,0.4,0.4} \right\rangle } \right\}\), we get \(C_{IFS} (A,B) = 1\), because the vectors (0.3, 0.3) and (0.4, 0.4) point in the same direction. That is, condition (S2) in Definition 5 is not satisfied, so \(C_{IFS} (A,B)\) is not a genuine similarity measure.
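A sketch of Ye's cosine similarity reproduces the failure of (S2). It assumes the averaged-cosine form of \(C_{IFS}\) and a hypothetical list-of-\((\mu, v)\)-pairs representation. The formula is undefined when \(\mu = v = 0\); the convention used here (a term counts as 1 if both vectors are zero and 0 if only one is) is our assumption and is not stated in the paper.

```python
import math
from typing import List, Tuple

IFSet = List[Tuple[float, float]]  # hypothetical: (mu, nu) pairs over x_1..x_n


def cosine_ifs(a: IFSet, b: IFSet) -> float:
    """Average cosine of the angles between the (mu, v) vectors of A and B."""
    total = 0.0
    for (ma, na), (mb, nb) in zip(a, b):
        norm_a, norm_b = math.hypot(ma, na), math.hypot(mb, nb)
        if norm_a == 0.0 and norm_b == 0.0:
            total += 1.0  # assumed convention: two zero vectors are identical
        elif norm_a == 0.0 or norm_b == 0.0:
            total += 0.0  # assumed convention: the term for one zero vector is 0
        else:
            total += (ma * mb + na * nb) / (norm_a * norm_b)
    return total / len(a)


A, B = [(0.3, 0.3)], [(0.4, 0.4)]
print(round(cosine_ifs(A, B), 12))  # 1.0 although A != B, so (S2) fails
```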

Taking a closer look at \(C_{IFS} (A,B)\) and \(D_{o} (A,B)\), we see that the cosine similarity measure reflects the angle between two IF sets viewed as vectors, i.e., how close to orthogonal they are, while the distance between IF sets quantifies how close the two IF sets are to each other. Therefore, the cosine similarity measure and the distance measure can be combined to define a new similarity measure for IF sets.

Definition 8

Let A and B be two IF sets in \(X = \{ x_{1} ,x_{2} , \ldots ,x_{n} \}\). A new similarity measure between them is defined as:

\[ S_{F} (A,B) = \frac{1}{2}\left[ {C_{IFS} (A,B) + 1 - D_{o} (A,B)} \right] \tag{7} \]
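A sketch of the proposed measure follows. It assumes \(S_{F} (A,B) = \frac{1}{2}[C_{IFS} (A,B) + 1 - D_{o} (A,B)]\) with Ye's cosine similarity and the normalized Euclidean distance; the zero-vector convention for \(C_{IFS}\) (a term counts as 1 if both \((\mu, v)\) vectors are zero, 0 if only one is) is our assumption. Under that convention the sketch reproduces the values \(S_{F} (A,C) \approx 0.15\) and \(S_{F} (B,C) = 0.25\) quoted in Sect. 5 for \(A = \left\langle {1,0} \right\rangle\), \(B = \left\langle {0.5,0.5} \right\rangle\) and \(C = \left\langle {0,0} \right\rangle\).

```python
import math
from typing import List, Tuple

IFSet = List[Tuple[float, float]]  # hypothetical: (mu, nu) pairs over x_1..x_n


def d_o(a: IFSet, b: IFSet) -> float:
    """Normalized Euclidean distance D_o."""
    total = sum((ma - mb) ** 2 + (na - nb) ** 2 for (ma, na), (mb, nb) in zip(a, b))
    return math.sqrt(total / (2 * len(a)))


def cosine_ifs(a: IFSet, b: IFSet) -> float:
    """Ye's cosine similarity with an assumed convention for zero vectors."""
    total = 0.0
    for (ma, na), (mb, nb) in zip(a, b):
        norm_a, norm_b = math.hypot(ma, na), math.hypot(mb, nb)
        if norm_a == 0.0 or norm_b == 0.0:
            # Assumed convention: 1 if both vectors are zero, otherwise 0.
            total += 1.0 if norm_a == norm_b else 0.0
        else:
            total += (ma * mb + na * nb) / (norm_a * norm_b)
    return total / len(a)


def s_f(a: IFSet, b: IFSet) -> float:
    """Proposed similarity: average of the cosine similarity and 1 - D_o."""
    return 0.5 * (cosine_ifs(a, b) + 1.0 - d_o(a, b))


print(round(s_f([(0.3, 0.3)], [(0.4, 0.4)]), 4))  # 0.95: no longer 1 for A != B
print(round(s_f([(1.0, 0.0)], [(0.0, 0.0)]), 2))  # 0.15
print(round(s_f([(0.5, 0.5)], [(0.0, 0.0)]), 2))  # 0.25
```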

Theorem 5

The measure \(S_{F} (A,B)\) is a similarity measure between IF sets A and B.

Proof

(1) Since \(C_{IFS} (A,B)\) is an average of cosines between vectors with nonnegative components, \(0 \le C_{IFS} (A,B) \le 1\). According to Theorems 1 and 4, \(0 \le 1 - D_{o} (A,B) \le 1\). Hence, \(0 \le S_{F} (A,B) \le 1\).

(2) \(S_{F} (A,A) = 1\) is straightforward. Conversely, since \(0 \le C_{IFS} (A,B) \le 1\) and \(0 \le 1 - D_{o} (A,B) \le 1\), \(S_{F} (A,B) = 1\) implies \(C_{IFS} (A,B) = 1 - D_{o} (A,B) = 1\).

\(C_{IFS} (A,B) = 1\) indicates that \(\mu_{A} (x) = k \cdot v_{A} (x),\mu_{B} (x) = k \cdot v_{B} (x)\) with \(k \in [0, + \infty )\) for all \(x \in X\). Moreover, \(1 - D_{o} (A,B) = 1\) gives \(D_{o} (A,B) = 0\), which implies A = B by Theorem 4. Hence we obtain the converse proposition \(S_{F} (A,B) = 1 \Rightarrow A = B\).

Finally, we get \(S_{F} (A,B) = 1 \Leftrightarrow A = B\).

(3) \(S_{F} (A,B) = S_{F} (B,A)\) follows directly from the symmetry of \(C_{IFS}\) and \(D_{o}\).

(4) For three IF sets A, B and C satisfying \(A \subseteq B \subseteq C\), we have \(\mu_{A} (x) \le \mu_{B} (x) \le \mu_{C} (x)\) and \(v_{A} (x) \ge v_{B} (x) \ge v_{C} (x)\) for all x ∊ X.

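For fixed \(a,b \ge 0\) with \(a^{2} + b^{2} > 0\), a function \(f(y,z)\) with \(y,z \ge 0\) and \(y^{2} + z^{2} > 0\) can be defined as:

\[ f(y,z) = \frac{ay + bz}{{\sqrt {a^{2} + b^{2} } \,\sqrt {y^{2} + z^{2} } }} \]

Its partial derivatives are:

\[ \frac{\partial f}{\partial y} = \frac{z(az - by)}{{\sqrt {a^{2} + b^{2} } \,(y^{2} + z^{2} )^{3/2} }},\quad \frac{\partial f}{\partial z} = \frac{y(by - az)}{{\sqrt {a^{2} + b^{2} } \,(y^{2} + z^{2} )^{3/2} }} \]

If \(y \ge a\) and \(z \le b\), then \(az \le ab \le by\), so \(\frac{\partial f}{\partial y} \le 0\) and \(\frac{\partial f}{\partial z} \ge 0\). So for \(a = \mu_{A} (x) \le \mu_{B} (x) \le \mu_{C} (x)\) and \(b = v_{A} (x) \ge v_{B} (x) \ge v_{C} (x)\), we have \(f(\mu_{C} (x),v_{C} (x)) \le f(\mu_{B} (x),v_{B} (x))\), which can be written as:

\[ \frac{{\mu_{A} (x)\mu_{C} (x) + v_{A} (x)v_{C} (x)}}{{\sqrt {\mu_{A}^{2} (x) + v_{A}^{2} (x)} \sqrt {\mu_{C}^{2} (x) + v_{C}^{2} (x)} }} \le \frac{{\mu_{A} (x)\mu_{B} (x) + v_{A} (x)v_{B} (x)}}{{\sqrt {\mu_{A}^{2} (x) + v_{A}^{2} (x)} \sqrt {\mu_{B}^{2} (x) + v_{B}^{2} (x)} }} \tag{8} \]

If \(y \le a\) and \(z \ge b\), then \(by \le ab \le az\), so \(\frac{\partial f}{\partial y} \ge 0\) and \(\frac{\partial f}{\partial z} \le 0\). So for \(a = \mu_{C} (x) \ge \mu_{B} (x) \ge \mu_{A} (x)\) and \(b = v_{C} (x) \le v_{B} (x) \le v_{A} (x)\), we have \(f(\mu_{A} (x),v_{A} (x)) \le f(\mu_{B} (x),v_{B} (x))\), which can be written as:

\[ \frac{{\mu_{C} (x)\mu_{A} (x) + v_{C} (x)v_{A} (x)}}{{\sqrt {\mu_{C}^{2} (x) + v_{C}^{2} (x)} \sqrt {\mu_{A}^{2} (x) + v_{A}^{2} (x)} }} \le \frac{{\mu_{C} (x)\mu_{B} (x) + v_{C} (x)v_{B} (x)}}{{\sqrt {\mu_{C}^{2} (x) + v_{C}^{2} (x)} \sqrt {\mu_{B}^{2} (x) + v_{B}^{2} (x)} }} \tag{9} \]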

Since \(\mu_{A} (x) \le \mu_{B} (x) \le \mu_{C} (x)\) and \(v_{A} (x) \ge v_{B} (x) \ge v_{C} (x)\) for all \(x \in X\), (8) and (9) hold for all \(x \in X\). Averaging (8) and (9) over \(x_{1} , \ldots ,x_{n}\), we get \(C_{IFS} (A,C) \le C_{IFS} (A,B)\) and \(C_{IFS} (A,C) \le C_{IFS} (B,C)\).

Moreover, \(D_{o} (A,B) \le D_{o} (A,C)\) and \(D_{o} (B,C) \le D_{o} (A,C)\) were established in the proof of Theorem 4. So we have \(1 - D_{o} (A,C) \le 1 - D_{o} (A,B)\) and \(1 - D_{o} (A,C) \le 1 - D_{o} (B,C)\). Since \(S_{F}\) is the average of \(C_{IFS}\) and \(1 - D_{o}\), we finally get \(S_{F} (A,C) \le S_{F} (A,B)\) and \(S_{F} (A,C) \le S_{F} (B,C)\).

Thus, \(S_{F} (A,B)\) satisfies all the properties in Definition 5, and it is a similarity measure between IF sets A and B. \(\square\)
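Property (S4) can be spot-checked numerically for a nested triple. The chain \(A = \left\langle {0.1,0.8} \right\rangle \subseteq B = \left\langle {0.4,0.5} \right\rangle \subseteq C = \left\langle {0.7,0.2} \right\rangle\) is a hypothetical example, \(S_{F}\) is assumed to take the form \(\frac{1}{2}[C_{IFS} + 1 - D_{o} ]\) with n = 1, and `s_f_single` is an illustrative name.

```python
import math


def s_f_single(a, b):
    """S_F for single-element IF sets given as (mu, nu) with nonzero vectors."""
    (ma, na), (mb, nb) = a, b
    cosine = (ma * mb + na * nb) / (math.hypot(ma, na) * math.hypot(mb, nb))
    distance = math.sqrt(((ma - mb) ** 2 + (na - nb) ** 2) / 2)  # D_o with n = 1
    return 0.5 * (cosine + 1.0 - distance)


A, B, C = (0.1, 0.8), (0.4, 0.5), (0.7, 0.2)  # mu increases, nu decreases
s_ab, s_bc, s_ac = s_f_single(A, B), s_f_single(B, C), s_f_single(A, C)
print(round(s_ab, 3), round(s_bc, 3), round(s_ac, 3))
print(s_ab >= s_ac and s_bc >= s_ac)  # True, as (S4) requires
```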

Theorem 6

Let \(A_{1} ,A_{2} , \ldots ,A_{N}\) denote N (N ≥ 2) IF sets in the universe of discourse X. The similarity matrix \(\varvec{S}_{F}\) defined by \(S_{F}\) according to Eq. (5) is a positive definite matrix.

Proof

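The similarity matrix \(\varvec{S}_{F}\) can be decomposed as:

\[ \varvec{S}_{F} = \frac{1}{2}\varvec{S}_{o} + \frac{1}{2}\varvec{S}_{C} \]

where

\[ \varvec{S}_{o} = \left[ {1 - D_{o} (A_{j} ,A_{k} )} \right]_{N \times N} ,\quad \varvec{S}_{C} = \left[ {C_{IFS} (A_{j} ,A_{k} )} \right]_{N \times N} \]

The cosine similarity can also be written as:

\[ C_{IFS} (A_{j} ,A_{k} ) = \frac{1}{n}\sum\limits_{i = 1}^{n} {\left[ {F_{j} (x_{i} )F_{k} (x_{i} ) + G_{j} (x_{i} )G_{k} (x_{i} )} \right]} \]

So \(\varvec{S}_{C}\) can be further decomposed as:

\[ \varvec{S}_{C} = \frac{1}{n}\sum\limits_{i = 1}^{n} {\varvec{S}_{\mu } (x_{i} )} + \frac{1}{n}\sum\limits_{i = 1}^{n} {\varvec{S}_{v} (x_{i} )} \]

where, for \(i = 1,2, \ldots ,n\),

\[ \varvec{S}_{\mu } (x_{i} ) = \left[ {F_{j} (x_{i} )F_{k} (x_{i} )} \right]_{N \times N} ,\quad \varvec{S}_{v} (x_{i} ) = \left[ {G_{j} (x_{i} )G_{k} (x_{i} )} \right]_{N \times N} \]

with \(F_{j} (x_{i} ) = F\left( {\mu_{{A_{j} }} (x_{i} ),v_{{A_{j} }} (x_{i} )} \right) = \frac{{\mu_{{A_{j} }} (x_{i} )}}{{\sqrt {\left( {\mu_{{A_{j} }} (x_{i} )} \right)^{2} + \left( {v_{{A_{j} }} (x_{i} )} \right)^{2} } }}\) and \(G_{j} (x_{i} ) = G\left( {\mu_{{A_{j} }} (x_{i} ),v_{{A_{j} }} (x_{i} )} \right) = \frac{{v_{{A_{j} }} (x_{i} )}}{{\sqrt {\left( {\mu_{{A_{j} }} (x_{i} )} \right)^{2} + \left( {v_{{A_{j} }} (x_{i} )} \right)^{2} } }}\), for \(j = 1,2, \ldots ,N\).

Let \(\varvec{\mu}_{i} = \left[ {F_{1} (x_{i} ),F_{2} (x_{i} ), \ldots ,F_{N} (x_{i} )} \right]\) and \(\varvec{v}_{i} = \left[ {G_{1} (x_{i} ),G_{2} (x_{i} ), \ldots ,G_{N} (x_{i} )} \right]\); then we get:

\[ \varvec{S}_{\mu } (x_{i} ) = \varvec{\mu}_{i}^{T} \varvec{\mu}_{i} ,\quad \varvec{S}_{v} (x_{i} ) = \varvec{v}_{i}^{T} \varvec{v}_{i} \]

Hence, for any \(\varvec{y} \in {\mathbb{R}}^{N}\), \(\varvec{y}^{T} \varvec{S}_{\mu } (x_{i} )\varvec{y} = (\varvec{\mu}_{i} \varvec{y})^{2} \ge 0\) and \(\varvec{y}^{T} \varvec{S}_{v} (x_{i} )\varvec{y} = (\varvec{v}_{i} \varvec{y})^{2} \ge 0\), so \(\varvec{S}_{\mu } (x_{i} )\) and \(\varvec{S}_{v} (x_{i} )\) are positive semidefinite.

Then \(\varvec{S}_{C} = \frac{1}{n}\sum\nolimits_{i = 1}^{n} {\varvec{S}_{\mu } (x_{i} )} + \frac{1}{n}\sum\nolimits_{i = 1}^{n} {\varvec{S}_{v} (x_{i} )}\) is also positive semidefinite, as a positively weighted sum of positive semidefinite matrices.

Since \(\varvec{S}_{o}\) is positive definite (Theorem 3 applied to the metric \(D_{o}\) of Theorem 4) and \(\varvec{S}_{C}\) is positive semidefinite, we conclude that \(\varvec{S}_{F} = \frac{1}{2}\varvec{S}_{o} + \frac{1}{2}\varvec{S}_{C}\) is positive definite.\(\square\)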
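The decomposition used in the proof can be checked numerically: building \(\varvec{S}_{C}\) entry by entry from the cosine similarity and as \(\frac{1}{n}\sum\nolimits_{i} {(\varvec{\mu}_{i}^{T} \varvec{\mu}_{i} + \varvec{v}_{i}^{T} \varvec{v}_{i} )}\) gives the same matrix, and the quadratic form \(\varvec{y}^{T} \varvec{S}_{C} \varvec{y}\) equals the nonnegative sum of squares \(\frac{1}{n}\sum\nolimits_{i} {[(\varvec{\mu}_{i} \varvec{y})^{2} + (\varvec{v}_{i} \varvec{y})^{2} ]}\). The sketch assumes every \((\mu, v)\) pair is nonzero, so that \(F_{j}\) and \(G_{j}\) are defined; the three IF sets and the vector \(\varvec{y}\) are hypothetical.

```python
import math
from typing import List, Tuple

IFSet = List[Tuple[float, float]]  # hypothetical: (mu, nu) pairs over x_1..x_n

# Hypothetical IF sets A_1, A_2, A_3 over X = {x_1, x_2}; no (mu, v) pair is zero.
sets: List[IFSet] = [
    [(0.3, 0.2), (0.6, 0.1)],
    [(0.5, 0.4), (0.2, 0.7)],
    [(0.1, 0.8), (0.4, 0.4)],
]
N, n = len(sets), len(sets[0])


def cosine_ifs(a: IFSet, b: IFSet) -> float:
    """Ye's cosine similarity, assuming every (mu, v) vector is nonzero."""
    return sum((ma * mb + na * nb) / (math.hypot(ma, na) * math.hypot(mb, nb))
               for (ma, na), (mb, nb) in zip(a, b)) / len(a)


# S_C built entry by entry from the cosine similarity.
S_C = [[cosine_ifs(a, b) for b in sets] for a in sets]

# Row vectors mu_i = [F_1(x_i), ..., F_N(x_i)] and v_i = [G_1(x_i), ..., G_N(x_i)].
F = [[sets[j][i][0] / math.hypot(*sets[j][i]) for j in range(N)] for i in range(n)]
G = [[sets[j][i][1] / math.hypot(*sets[j][i]) for j in range(N)] for i in range(n)]

# The same matrix from (1/n) * sum_i (mu_i^T mu_i + v_i^T v_i).
S_dec = [[sum(F[i][j] * F[i][k] + G[i][j] * G[i][k] for i in range(n)) / n
          for k in range(N)] for j in range(N)]
max_gap = max(abs(S_C[j][k] - S_dec[j][k]) for j in range(N) for k in range(N))

# y^T S_C y equals (1/n) * sum_i [(mu_i y)^2 + (v_i y)^2], hence it is >= 0.
y = [1.0, -2.0, 0.5]
quad = sum(y[j] * S_C[j][k] * y[k] for j in range(N) for k in range(N))
sos = sum(sum(F[i][j] * y[j] for j in range(N)) ** 2
          + sum(G[i][j] * y[j] for j in range(N)) ** 2 for i in range(n)) / n
print(max_gap < 1e-12, abs(quad - sos) < 1e-12, sos >= 0.0)  # True True True
```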

5 Illustrative examples

5.1 Performance of similarity measure S F

To illustrate the superiority of the proposed similarity measure, it is compared with the existing similarity measures listed in Table 1 on widely used counter-intuitive examples. Table 2 presents the results with p = 1 for \(S_{HB} ,\,S_{e}^{p} ,\,S_{s}^{p} ,\,S_{h}^{p}\) and with p = 1, t = 2 for \(S_{t}^{p}\).

From Table 2, we can see that \(S_{C} (A,B) = S_{DC} (A,B) = C_{IFS} (A,B) = 1\) for the two different IF sets \(A = \left\langle {0.3,0.3} \right\rangle\) and \(B = \left\langle {0.4,0.4} \right\rangle\). This indicates that the second axiom of similarity measures (S2) is not satisfied by \(S_{C}\), \(S_{DC}\) and \(C_{IFS}\). The same failure is shown by \(S_{C} (A,B) = S_{DC} (A,B) = 1\) for \(A = \left\langle {0.5,0.5} \right\rangle\), \(B = \left\langle {0,0} \right\rangle\) and for \(A = \left\langle {0.4,0.2} \right\rangle\), \(B = \left\langle {0.5,0.3} \right\rangle\). As for \(S_{H}\), \(S_{O}\), \(S_{HB}\), \(S_{e}^{p}\), \(S_{s}^{p}\) and \(S_{h}^{p}\), different pairs of A and B may yield identical results, which is unacceptable in pattern recognition applications. For example, Table 2 shows that \(S_{HB} = 0.9\) both for \(A = \left\langle {0.3,0.3} \right\rangle\), \(B = \left\langle {0.4,0.4} \right\rangle\) and for \(A = \left\langle {0.3,0.4} \right\rangle\), \(B = \left\langle {0.4,0.3} \right\rangle\). The situation is even worse for \(S_{HY}^{1}\), \(S_{HY}^{2}\) and \(S_{HY}^{3}\), which give the same similarity degree in all cases except cases 3 and 4. \(S_{t}^{p}\) seems reasonable, without any counter-intuitive results, but it introduces a new problem: the choice of the parameters p and t, which is still open. Moreover, an interesting situation arises when comparing cases 3 and 4. For the three IF sets \(A = \left\langle {1,0} \right\rangle\), \(B = \left\langle {0.5,0.5} \right\rangle\) and \(C = \left\langle {0,0} \right\rangle\), it is intuitively more reasonable to take the similarity degrees as \(S_{F} (A,C) = 0.15\) and \(S_{F} (B,C) = 0.25\) than as \(S_{t}^{p} (A,C) = 0.5\) and \(S_{t}^{p} (B,C) = 0.833\). In this sense, the proposed similarity measure is the most reasonable one, with a relatively simple expression and none of the counter-intuitive results.
Consider three IF sets \(A = \left\langle {0.4,0.2} \right\rangle\), \(B = \left\langle {0.5,0.3} \right\rangle\) and \(C = \left\langle {0.5,0.2} \right\rangle\), which can be written as interval values \(A = \left[ {0.4,0.8} \right]\), \(B = \left[ {0.5,0.7} \right]\) and \(C = \left[ {0.5,0.8} \right]\), respectively. Since C differs from A only in the lower endpoint, whereas B differs from A in both endpoints, the similarity degree between A and C should be greater than that between A and B.

Our proposed similarity measure agrees with this analysis, and it is the most reasonable of the compared measures, producing no counter-intuitive results. The reason is that it combines the cosine similarity \(C_{IFS}\) and the distance-induced similarity \((1 - D_{o} )\). If the IF values \(\left\langle {\mu_{A} (x),v_{A} (x)} \right\rangle\) and \(\left\langle {\mu_{B} (x),v_{B} (x)} \right\rangle\) are regarded as two vectors, \(C_{IFS} (A,B)\) reflects the angle between them, i.e., how close to orthogonal they are, while \(D_{o} (A,B)\) quantifies how far apart they are. In general, the relationship between two vectors is determined by both angle and distance, so the combination of \(C_{IFS} (A,B)\) and \((1 - D_{o} (A,B))\) yields a similarity measure for IF sets that avoids the counter-intuitive cases above. Moreover, no additional parameters need to be determined. This analysis is also potentially applicable to IF sets on an arbitrary universe \(X = \{ x_{1} ,x_{2} , \ldots ,x_{n} \}\).
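The agreement with the interval analysis can be reproduced numerically for \(A = \left\langle {0.4,0.2} \right\rangle\), \(B = \left\langle {0.5,0.3} \right\rangle\) and \(C = \left\langle {0.5,0.2} \right\rangle\). The sketch assumes \(S_{F} = \frac{1}{2}[C_{IFS} + 1 - D_{o} ]\) with n = 1 and a hypothetical helper name. Interestingly, the cosine term alone ranks the pairs the other way (\(C_{IFS} (A,B) \approx 0.9971 > C_{IFS} (A,C) \approx 0.9965\)); it is the distance term that makes \(S_{F} (A,C) \approx 0.9629\) exceed \(S_{F} (A,B) \approx 0.9485\).

```python
import math


def parts(a, b):
    """Return (C_IFS, 1 - D_o) for single-element IF sets (mu, nu), n = 1."""
    (ma, na), (mb, nb) = a, b
    cosine = (ma * mb + na * nb) / (math.hypot(ma, na) * math.hypot(mb, nb))
    closeness = 1.0 - math.sqrt(((ma - mb) ** 2 + (na - nb) ** 2) / 2)
    return cosine, closeness


A, B, C = (0.4, 0.2), (0.5, 0.3), (0.5, 0.2)
for label, other in (("A,B", B), ("A,C", C)):
    cosine, closeness = parts(A, other)
    s_f = 0.5 * (cosine + closeness)
    print(label, round(cosine, 4), round(closeness, 4), round(s_f, 4))
```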

Besides the satisfaction of the definitional axioms and the avoidance of counter-intuitive cases, the discrimination capability of a measure is another important property for similarity measures, which is very useful in pattern recognition applications. To study the effectiveness of the proposed similarity measure for IF sets in the application of pattern recognition, the widely used pattern recognition problem discussed in [8, 17] will be considered.

Suppose there are m patterns, represented by IF sets \(A_{j} = \left\{ {\left\langle {x_{i} ,\mu_{{A_{j} }} (x_{i} ),v_{{A_{j} }} (x_{i} )} \right\rangle \left| {x_{i} \in X} \right.} \right\}\), \(A_{j} \in IFSs(X)\), \(j = 1,2, \ldots ,m\). Let the sample to be recognized be denoted as \(B = \left\{ {\left\langle {x_{i} ,\mu_{B} (x_{i} ),v_{B} (x_{i} )} \right\rangle \left| {x_{i} \in X} \right.} \right\}\). According to the recognition principle of the maximum degree of similarity between IF sets, B is assigned to \(A_{k}\) by:

\[ k = \mathop {\arg \max }\limits_{1 \le j \le m} \left\{ {S(A_{j} ,B)} \right\} \]
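The maximum-similarity recognition principle, which assigns B to the pattern \(A_{k}\) with the largest \(S(A_{k} ,B)\), can be sketched as follows. The similarity is passed in as a function and instantiated with \(S_{F} = \frac{1}{2}[C_{IFS} + 1 - D_{o} ]\), assuming every \((\mu, v)\) vector is nonzero. The pattern library is hypothetical: only the sample B and the pattern it coincides with follow Example 1, and A2, A3 are invented for illustration. Ties are broken in favour of the first pattern, a design choice of this sketch.

```python
import math
from typing import Callable, Dict, List, Tuple

IFSet = List[Tuple[float, float]]  # hypothetical: (mu, nu) pairs over x_1..x_n


def s_f(a: IFSet, b: IFSet) -> float:
    """S_F = 0.5 * (C_IFS + 1 - D_o); assumes every (mu, v) vector is nonzero."""
    n = len(a)
    cosine = sum((ma * mb + na * nb) / (math.hypot(ma, na) * math.hypot(mb, nb))
                 for (ma, na), (mb, nb) in zip(a, b)) / n
    dist = math.sqrt(sum((ma - mb) ** 2 + (na - nb) ** 2
                         for (ma, na), (mb, nb) in zip(a, b)) / (2 * n))
    return 0.5 * (cosine + 1.0 - dist)


def classify(sample: IFSet, patterns: Dict[str, IFSet],
             sim: Callable[[IFSet, IFSet], float] = s_f) -> str:
    """Return the label k maximizing S(A_k, B); max() keeps the first of ties."""
    return max(patterns, key=lambda label: sim(patterns[label], sample))


# Hypothetical pattern library over X = {x_1, x_2, x_3}.
patterns = {
    "A1": [(0.3, 0.3), (0.2, 0.2), (0.1, 0.1)],
    "A2": [(0.5, 0.2), (0.6, 0.1), (0.7, 0.2)],
    "A3": [(0.1, 0.7), (0.2, 0.6), (0.1, 0.8)],
}
B = [(0.3, 0.3), (0.2, 0.2), (0.1, 0.1)]
print(classify(B, patterns))  # A1, since B coincides with A1
```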

To illustrate the discrimination capability of our proposed similarity measure, comparisons with the measures proposed earlier by other authors will be carried out based on the example analyzed in [22].

Example 1

Assume that there are three IF sets in \(X = \{ x_{1} ,x_{2} ,x_{3} \}\) representing three patterns. The three patterns are written as follows:

Assume that a sample \(B = \left\{ {\left\langle {x_{1} ,0.3,0.3} \right\rangle ,\left\langle {x_{2} ,0.2,0.2} \right\rangle ,\left\langle {x_{3} ,0.1,0.1} \right\rangle } \right\}\) is to be classified.

The similarity degrees of \(S(A_{1} ,B)\), \(S(A_{2} ,B)\) and \(S(A_{3} ,B)\) calculated for all similarity measures listed in Table 1 are shown in Table 3.

The proposed similarity measure \(S_{F}\) can be calculated by Eq. (7) as:

Since B is identical to \(A_{1}\), sample B should be classified to \(A_{1}\). However, the similarity degrees \(S(A_{1} ,B)\), \(S(A_{2} ,B)\) and \(S(A_{3} ,B)\) are all equal when \(S_{C}\), \(S_{H}\), \(S_{DC}\) and \(C_{IFS}\) are employed, so these four similarity measures are not capable of discriminating among the three patterns. By contrast, the results of \(S_{F} (A_{i} ,B)\,(i = 1,2,3)\) lead to the correct classification. This means that the proposed similarity measure has the same discrimination capability as the majority of the existing measures.

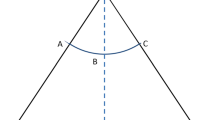

5.2 Properties of similarity matrix S F

As an illustration of Theorem 6, the positive definiteness of the similarity matrix \(\varvec{S}_{F}\) is verified numerically. We investigate the similarity matrix \(\varvec{S}_{F}\) among a group of five IF sets. To make the test more convincing, all IF sets are generated randomly, with 100 replications. The evolution of the smallest eigenvalue of \(\varvec{S}_{F}\) is displayed in Fig. 1. All the smallest eigenvalues are strictly positive, which indicates that the similarity matrices between these random IF sets are all positive definite. This result is consistent with Theorem 6 and supports the claim that the similarity matrix defined by our proposed similarity measure is positive definite.
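A sketch of this experiment, under stated assumptions: IF values are drawn as \(\mu \sim U(0,1)\) and then \(v \sim U(0,1 - \mu )\) (the sampling scheme is our assumption), n = 3 elements per set is an arbitrary choice, and positive definiteness is tested with a Cholesky factorization, which succeeds with strictly positive pivots exactly when a symmetric matrix is positive definite. The seed, sizes and helper names are hypothetical, and the sketch reports the fraction of positive definite matrices rather than plotting the smallest eigenvalue as in Fig. 1.

```python
import math
import random
from typing import List, Tuple

IFSet = List[Tuple[float, float]]  # hypothetical: (mu, nu) pairs over x_1..x_n


def s_f(a: IFSet, b: IFSet) -> float:
    """S_F = 0.5 * (C_IFS + 1 - D_o); a zero (mu, v) vector is assumed to give
    a cosine term of 1 against another zero vector and 0 otherwise."""
    cos_sum, sq_sum = 0.0, 0.0
    for (ma, na), (mb, nb) in zip(a, b):
        ra, rb = math.hypot(ma, na), math.hypot(mb, nb)
        if ra == 0.0 or rb == 0.0:
            cos_sum += 1.0 if ra == rb else 0.0
        else:
            cos_sum += (ma * mb + na * nb) / (ra * rb)
        sq_sum += (ma - mb) ** 2 + (na - nb) ** 2
    n = len(a)
    return 0.5 * (cos_sum / n + 1.0 - math.sqrt(sq_sum / (2 * n)))


def random_if_set(n: int, rng: random.Random) -> IFSet:
    """Draw mu ~ U(0, 1) and then v ~ U(0, 1 - mu), so that mu + v <= 1."""
    pairs = []
    for _ in range(n):
        mu = rng.random()
        pairs.append((mu, rng.uniform(0.0, 1.0 - mu)))
    return pairs


def is_positive_definite(m: List[List[float]]) -> bool:
    """Cholesky test: a symmetric matrix is PD iff every pivot is positive."""
    size = len(m)
    low = [[0.0] * size for _ in range(size)]
    for i in range(size):
        for j in range(i + 1):
            s = m[i][j] - sum(low[i][k] * low[j][k] for k in range(j))
            if i == j:
                if s <= 0.0:
                    return False
                low[i][i] = math.sqrt(s)
            else:
                low[i][j] = s / low[j][j]
    return True


rng = random.Random(2024)  # hypothetical seed
trials, pd_count = 100, 0
for _ in range(trials):
    sets = [random_if_set(3, rng) for _ in range(5)]  # five IF sets, n = 3
    matrix = [[s_f(a, b) for b in sets] for a in sets]
    pd_count += is_positive_definite(matrix)
print(f"{pd_count}/{trials} random similarity matrices were positive definite")
```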

6 Conclusion

The definition of similarity measures between IF sets has been studied for decades. Even though researchers have defined a large number of similarity measures to depict the similarity degree between IF sets, most of them suffer from counter-intuitive results. After critically analyzing these similarity measures, a new similarity measure is proposed in this paper. To explore the properties of the proposed similarity measure, the similarity matrix is also defined. A comparison between the proposed similarity measure and other existing measures is carried out on widely used counter-intuitive cases. It is illustrated that the proposed similarity measure is more reasonable and produces no counter-intuitive results in these cases. It has also been proved that the similarity matrix defined by the proposed similarity measure is positive definite, which is significant for applications of the similarity matrix.

It is worth mentioning that our proposed similarity measure is not the only similarity measure that can be used to define a positive definite similarity matrix. Besides the combination of cosine similarity and Euclidean distance, cosine similarity can also be combined with the Hamming distance to define a new similarity measure in a similar way. Much work therefore remains to be done to better explore and exploit IF set theory.

References

Zadeh LA (1965) Fuzzy sets. Inf Control 8:338–353

Saeedi J, Faez K (2013) A classification and fuzzy-based approach for digital multi-focus image fusion. Pattern Anal Appl 16(3):365–379

Moustakidis SP, Theocharis JB (2012) A fast SVM-based wrapper feature selection method driven by a fuzzy complementary criterion. Pattern Anal Appl 15(4):365–379

Atanassov KT (1986) Intuitionistic fuzzy sets. Fuzzy Set Syst 20:87–96

Xu Z, Yager RR (2008) Dynamic intuitionistic fuzzy multi-attribute decision making. Int J Approx Reason 48(1):246–262

Burduk R (2012) Imprecise information in Bayes classifier. Pattern Anal Appl 15(2):147–153

Xia M, Xu Z (2010) Some new similarity measures for intuitionistic fuzzy values and their application in group decision making. J Syst Sci Syst Eng 19(4):430–452

Ye J (2011) Cosine similarity measures for intuitionistic fuzzy sets and their applications. Math Comput Model 53(1–2):91–97

Szmidt E, Kacprzyk J (2000) Distances between intuitionistic fuzzy sets. Fuzzy Set Syst 114(3):505–518

Wang W, Xin X (2005) Distance measure between intuitionistic fuzzy sets. Pattern Recogn Lett 26(13):2063–2069

Grzegorzewski P (2004) Distances between intuitionistic fuzzy sets and/or interval-valued fuzzy sets based on the Hausdorff metric. Fuzzy Set Syst 148(2):319–328

Chen T-Y (2007) A note on distances between intuitionistic fuzzy sets and/or interval-valued fuzzy sets based on the Hausdorff metric. Fuzzy Set Syst 158(22):2523–2525

Hung WL, Yang MS (2004) Similarity measures of intuitionistic fuzzy sets based on Hausdorff distance. Pattern Recogn Lett 25(14):1603–1611

Hong DH, Kim C (1999) A note on similarity measures between vague sets and between elements. Inf Sci 115(1–4):83–96

Li F, Xu Z (2001) Similarity measures between vague sets. J Softw 12(6):922–927

Li Y, Chi Z, Yan D (2002) Similarity measures between vague sets and vague entropy. J Comput Sci 29(12):129–132

Li D, Cheng C (2002) New similarity measures of intuitionistic fuzzy sets and application to pattern recognitions. Pattern Recogn Lett 23(1–3):221–225

Mitchell HB (2003) On the Dengfeng-Chuntian similarity measure and its application to pattern recognition. Pattern Recogn Lett 24(16):3101–3104

Liang Z, Shi P (2003) Similarity measures on intuitionistic fuzzy sets. Pattern Recogn Lett 24(15):2687–2693

Li Y, Olson DL, Qin Z (2007) Similarity measures between intuitionistic fuzzy (vague) sets: a comparative analysis. Pattern Recogn Lett 28(2):278–285

Chen SM (1995) Measures of similarity between vague sets. Fuzzy Set Syst 74(2):217–223

Hwang CM, Yang MS, Hung WL et al (2012) A similarity measure of intuitionistic fuzzy sets based on the Sugeno integral with its application to pattern recognition. Inf Sci 189:93–109

Zhang H, Yu L (2013) New distance measures between intuitionistic fuzzy sets and interval-valued fuzzy sets. Inf Sci 245:181–196

Li J, Deng G (2012) The relationship between similarity measure and entropy of intuitionistic fuzzy sets. Inf Sci 188:314–321

Boran FE, Akay D (2014) A biparametric similarity measure on intuitionistic fuzzy sets with applications to pattern recognition. Inf Sci 255:45–57

Papakostas GA, Hatzimichailidis AG, Kaburlasos VG (2013) Distance and similarity measures between intuitionistic fuzzy sets: a comparative analysis from a pattern recognition point of view. Pattern Recogn Lett 34(14):1609–1622

Hong DH, Choi C-H (2000) Multicriteria fuzzy decision-making problems based on vague set theory. Fuzzy Set Syst 114(1):103–113

Appendix 1: Definitions and properties about positive definite matrix

The proofs of the theorems on positive definite matrices rely on several standard properties of such matrices. For ease of reference, the relevant background is collected in this appendix. Since all of these results are well known, we state the definitions and theorems without proofs.

Definition 9

An \(n \times n\) real symmetric matrix A is positive semidefinite (PSD) if \(\varvec{x}^{{\mathbf{T}}} \varvec{Ax} \ge 0\) for every \(n \times 1\) column vector \(\varvec{x} \ne {\mathbf{0}}\). It is (strictly) positive definite (PD) if, in addition, \(\varvec{x}^{{\mathbf{T}}} \varvec{Ax} = 0 \Rightarrow \varvec{x} = {\mathbf{0}}\), that is, \(\varvec{x}^{{\mathbf{T}}} \varvec{Ax} > 0\) for every \(\varvec{x} \ne {\mathbf{0}}\).
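
As a small worked instance of Definition 9 (the matrix is chosen here only for illustration), completing the square writes the quadratic form as a sum of squares that vanishes only at \(\varvec{x} = {\mathbf{0}}\), so this A is PD:

\[
\varvec{A}=\begin{pmatrix}2&1\\1&2\end{pmatrix},\qquad
\varvec{x}^{\mathbf{T}}\varvec{A}\varvec{x}
=2x_1^2+2x_1x_2+2x_2^2
=x_1^2+x_2^2+(x_1+x_2)^2 .
\]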

Theorem 7

All eigenvalues of a real symmetric matrix A are real.

Theorem 8

A real symmetric matrix A is PSD iff all of its eigenvalues are nonnegative, and A is PD iff all of its eigenvalues are strictly positive.
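
A 2 × 2 illustration of Theorem 8 (both matrices are chosen here only for illustration). The first matrix has eigenvalues 1 and 3 and is therefore PD. The second has the negative eigenvalue −1, and its eigenvector \(\varvec{x} = (1,-1)^{\mathbf{T}}\) gives a negative quadratic form, so the matrix is not PSD:

\[
\det\begin{pmatrix}2-\lambda&1\\1&2-\lambda\end{pmatrix}=(2-\lambda)^2-1=0\ \Rightarrow\ \lambda\in\{1,3\},
\qquad
\det\begin{pmatrix}1-\lambda&2\\2&1-\lambda\end{pmatrix}=(1-\lambda)^2-4=0\ \Rightarrow\ \lambda\in\{3,-1\},
\]
\[
\varvec{x}^{\mathbf{T}}\begin{pmatrix}1&2\\2&1\end{pmatrix}\varvec{x}=1-4+1=-2<0 .
\]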

Theorem 9

If two matrices A and B are both PSD, A + B is also PSD.

Consequently, any finite sum of PSD matrices is PSD, and the sum is PD if at least one of the summands is PD.
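
The argument behind Theorem 9 and its consequence is one line. For every \(n \times 1\) vector \(\varvec{x}\),

\[
\varvec{x}^{\mathbf{T}}(\varvec{A}+\varvec{B})\varvec{x}
=\varvec{x}^{\mathbf{T}}\varvec{A}\varvec{x}+\varvec{x}^{\mathbf{T}}\varvec{B}\varvec{x}\ \ge\ 0,
\]

and if, say, A is PD, the first term is strictly positive for every \(\varvec{x} \ne {\mathbf{0}}\), so A + B is PD. Induction on the number of summands extends both statements to any finite sum.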

Theorem 10

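An n × n real symmetric matrix A is PD iff all of its leading principal minors \(\Delta_{pp} (p = 1,2, \ldots ,n)\) are strictly positive (Sylvester's criterion). For PSD, nonnegativity of the leading principal minors is necessary but not sufficient: for example, \(\operatorname{diag}(0,-1)\) has \(\Delta_{11} = \Delta_{22} = 0\) but is not PSD. A is PSD iff all of its principal minors, not only the leading ones, are nonnegative.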
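
The PD part of Theorem 10 (Sylvester's criterion) can be checked mechanically. The sketch below uses helper names of our own choosing and computes the leading principal minors exactly with Python's `fractions.Fraction`, which avoids rounding. It tests positive definiteness only. A PSD test would need all principal minors, not just the leading ones.

```python
from fractions import Fraction


def determinant(matrix):
    """Exact determinant by Gaussian elimination over the rationals."""
    A = [[Fraction(x) for x in row] for row in matrix]
    n = len(A)
    det = Fraction(1)
    for col in range(n):
        # Find a nonzero pivot in this column; none means the matrix is singular.
        pivot = next((r for r in range(col, n) if A[r][col] != 0), None)
        if pivot is None:
            return Fraction(0)
        if pivot != col:
            A[col], A[pivot] = A[pivot], A[col]
            det = -det  # a row swap flips the sign
        det *= A[col][col]
        for r in range(col + 1, n):
            factor = A[r][col] / A[col][col]
            for c in range(col, n):
                A[r][c] -= factor * A[col][c]
    return det


def leading_principal_minors(matrix):
    """Delta_pp for p = 1..n: determinants of the top-left p x p submatrices."""
    n = len(matrix)
    return [determinant([row[:p] for row in matrix[:p]]) for p in range(1, n + 1)]


def is_pd_sylvester(matrix):
    """Sylvester's criterion: a real symmetric matrix is PD iff all Delta_pp > 0."""
    return all(d > 0 for d in leading_principal_minors(matrix))


print(leading_principal_minors([[2, 1], [1, 2]]))  # [Fraction(2, 1), Fraction(3, 1)]
print(is_pd_sylvester([[2, 1], [1, 2]]))           # True
print(is_pd_sylvester([[1, 2], [2, 1]]))           # False (Delta_22 = -3)
```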

Theorem 11

Let x be a nonzero n × 1 column vector. Then \(\varvec{A} = \varvec{x}\varvec{x}^{{\mathbf{T}}}\) is PSD.
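
A direct check of Theorem 11: for every \(n \times 1\) vector \(\varvec{y}\), since \(\varvec{x}^{\mathbf{T}}\varvec{y}\) is a scalar,

\[
\varvec{y}^{\mathbf{T}}(\varvec{x}\varvec{x}^{\mathbf{T}})\varvec{y}
=(\varvec{x}^{\mathbf{T}}\varvec{y})^{\mathbf{T}}(\varvec{x}^{\mathbf{T}}\varvec{y})
=(\varvec{x}^{\mathbf{T}}\varvec{y})^{2}\ \ge\ 0 .
\]

When \(n \ge 2\), any nonzero \(\varvec{y}\) orthogonal to \(\varvec{x}\) makes this expression zero, so \(\varvec{x}\varvec{x}^{\mathbf{T}}\) is PSD but not PD in that case.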

Theorem 12

If A is a real symmetric PD matrix, then A can be uniquely factorized as \(\varvec{A} = \varvec{U}^{{\mathbf{T}}} \varvec{U}\), where U is upper triangular with positive diagonal entries (the Cholesky decomposition).
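
The following is a minimal pure-Python sketch of the factorization in Theorem 12. The function names are ours. It builds U row by row from \(a_{ij} = \sum_{k} u_{ki} u_{kj}\). The converse also holds. If the factorization succeeds, U is nonsingular and \(\varvec{x}^{\mathbf{T}}\varvec{A}\varvec{x} = \|\varvec{U}\varvec{x}\|^{2} > 0\) for \(\varvec{x} \ne {\mathbf{0}}\), so attempting the factorization also serves as a practical PD test. That test holds only up to floating-point tolerance for nearly singular matrices.

```python
import math


def cholesky_upper(A):
    """Return upper-triangular U with positive diagonal such that A = U^T U.
    Raises ValueError if A is not (numerically) positive definite."""
    n = len(A)
    U = [[0.0] * n for _ in range(n)]
    for i in range(n):
        # Diagonal entry: a_ii minus the squares already placed in column i.
        s = A[i][i] - sum(U[k][i] ** 2 for k in range(i))
        if s <= 0.0:
            raise ValueError("matrix is not positive definite")
        U[i][i] = math.sqrt(s)
        # Remaining entries of row i, from a_ij = sum_k u_ki * u_kj.
        for j in range(i + 1, n):
            U[i][j] = (A[i][j] - sum(U[k][i] * U[k][j] for k in range(i))) / U[i][i]
    return U


def is_pd_cholesky(A):
    """PD test: True iff the Cholesky factorization succeeds."""
    try:
        cholesky_upper(A)
        return True
    except ValueError:
        return False


print(cholesky_upper([[4, 2], [2, 3]]))  # [[2.0, 1.0], [0.0, 1.4142135623730951]]
```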

Definition 10

Let \(\varvec{A} = (a_{ij})_{n \times n}\) be an n × n real matrix. The closed disc \(\{ z \in \mathbb{C} : \left| {z - a_{ii} } \right| \le \sum\nolimits_{j = 1,j \ne i}^{n} {\left| {a_{ij} } \right|} \}\) in the complex plane is called the ith Gerschgorin circle of A.

Theorem 13

Let \(\varvec{A} = (a_{ij})_{n \times n}\) be an n × n real matrix. Then every eigenvalue of A lies in the union of its n Gerschgorin circles (the Gerschgorin theorem).
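
For a real symmetric matrix, Theorems 7 and 13 together give a quick sufficient condition for positive definiteness. Every eigenvalue is at least \(\min_i (a_{ii} - \sum_{j \ne i} |a_{ij}|)\), so if this bound is positive, the matrix is PD by Theorem 8. A positive bound corresponds to a strictly diagonally dominant matrix with positive diagonal. The sketch below uses helper names of our own choosing and computes the discs and the bound. The condition is sufficient only, as the last example shows.

```python
def gerschgorin_discs(A):
    """(center, radius) of each Gerschgorin disc of the square matrix A."""
    n = len(A)
    return [(A[i][i], sum(abs(A[i][j]) for j in range(n) if j != i)) for i in range(n)]


def eigenvalue_lower_bound(A):
    """Lower bound on the (real) eigenvalues of a real symmetric matrix A:
    each eigenvalue lies in some disc, hence is >= center - radius of that disc."""
    return min(center - radius for center, radius in gerschgorin_discs(A))


# Eigenvalues of [[2, 1], [1, 2]] are 1 and 3; the bound is 1 > 0, so it is PD.
print(gerschgorin_discs([[2, 1], [1, 2]]))        # [(2, 1), (2, 1)]
print(eigenvalue_lower_bound([[2, 1], [1, 2]]))   # 1
# [[1, 2], [2, 5]] is PD (leading minors 1 and 1), yet the bound is -1:
print(eigenvalue_lower_bound([[1, 2], [2, 5]]))   # -1
```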

Let λ be an arbitrary eigenvalue of A. Then we have:

Cite this article

Song, Y., Wang, X. A new similarity measure between intuitionistic fuzzy sets and the positive definiteness of the similarity matrix. Pattern Anal Applic 20, 215–226 (2017). https://doi.org/10.1007/s10044-015-0490-2

DOI: https://doi.org/10.1007/s10044-015-0490-2