Abstract

The fundamental formula in an optical system is the Rayleigh diffraction integral. In practice, the Fresnel diffraction integral is used as an approximate diffraction formula. Fresnel transforms and inverse Fresnel transforms have been formulated systematically and defined as bounded, additive, unitary operators in Hilbert space. To investigate the band-limited effect in the Fresnel transform plane, we seek the function whose total power in a finite region of the Fresnel transform plane is maximized, under the condition that the input signal is zero outside a bounded region. This is a variational problem with an accessory condition, and it leads to the eigenvalue problem of a Fredholm integral equation of the first kind. The kernel of the integral equation is Hermitian and positive definite. Moreover, we prove that the eigenfunctions corresponding to distinct eigenvalues have a dual orthogonal property. By discretizing the kernel and the integration range to obtain approximate solutions, the eigenvalue problem of the integral equation reduces to that of a Hermitian matrix in a finite-dimensional complex vector space. We use the Jacobi method to compute all eigenvalues and eigenvectors of the matrix. We apply the eigenvectors to the approximation of arbitrary functions and show their validity and limitations in computer simulations.

1 Introduction

The solution of many optical problems in scalar diffraction theory can be obtained using either the Rayleigh diffraction integral or the Fresnel–Kirchhoff diffraction integral. In practice, the Fresnel diffraction integral is used as an approximate diffraction formula. The Fresnel transform of an optical image has been formulated mathematically and presented as a convolution formulation that describes Fresnel diffraction [1,2,3,4]. Moreover, Fresnel transforms and inverse Fresnel transforms have been formulated systematically and defined as bounded, additive, unitary operators in Hilbert space, and their algebraic and topological properties have been revealed by means of functional analytic methods. Furthermore, the set of Fresnel transform operators has the group property [5,6,7]. Recently, the Fresnel transform has been used in image processing, optical information processing, optical waveguides, computer-generated holograms, phase retrieval techniques, speckle pattern interferometry, and other areas [8,9,10,11,12,13,14,15].

The extent of optical fields passing through an optical instrument is in practice limited to some finite area. This leads to the spatially band-limited problem. The effect of band limitation has been studied for the optical Fourier transform: sampling theorems have been derived from the band-limited effect in the Fourier transform plane and applied in several areas. The sampling function systems are orthogonal systems in Hilbert space and can be regarded as coordinate systems in a function space, so developing orthogonal or orthonormal functional systems is central to sampling theory. Many examples of band-limited functions in the Fourier transform, their applications, and further references can be found in [16,17,18]. Band-limited effects in the Fresnel diffraction plane and in the Rayleigh–Sommerfeld equation have been investigated with the Fourier transform, based on the angular-spectrum method [19,20,21,22]. However, the band-limited effect in the Fresnel transform plane has not yet been sufficiently clarified.

In this paper, we investigate the band-limited effect in the Fresnel transform plane. To this end, we seek the function whose total power in a finite region of the Fresnel transform plane is maximized, under the condition that the input signal is zero outside a bounded region. This is a variational problem with an accessory condition, and it leads to the eigenvalue problem of a Fredholm integral equation of the first kind. The kernel of the integral equation is Hermitian and positive definite; therefore, the eigenvalues are real and nonnegative. Moreover, we prove that the eigenfunctions corresponding to distinct eigenvalues have a dual orthogonal property. By discretizing the kernel and the integration range to obtain approximate solutions, the eigenvalue problem of the integral equation reduces to that of a Hermitian matrix in a finite-dimensional complex vector space \(\left( {{\mathbb{C}}^{k} , k < \infty } \right)\). We use the Jacobi method to compute all eigenvalues and eigenvectors of the matrix. We then apply the eigenvectors to the approximation of arbitrary functions and show their validity and limitations in computer simulations.

2 Fresnel transformation

Assume that a diffracting screen is placed in the \(z = 0\) plane. The parameter \(z\) represents the normal distance from the input plane. Let \(\left( {\xi ,\eta } \right)\) be the coordinates of any point in the input plane. A plane of observation lies parallel to the screen at \(z > 0\). Let \(\left( {x,y} \right)\) be the coordinates of any point in this latter plane. If \(f\left( {\xi ,\eta } \right)\) represents the amplitude transmittance, an element of a Hilbert space, then the Fresnel transform is defined by

where \(k\) is the wave number and \(i = \sqrt { - 1}\). The inverse Fresnel transform is defined by

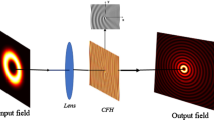

Figure 1 shows a general optical system and its coordinate system.

3 Spatial band-limited signal

We assume that the input signal is zero outside the bounded region R and consider the total power of \(g\left( {x,y} \right)\) within the bounded region S. As shown in Fig. 1, R is a rectangular region in the input plane and S is a rectangular region in the diffraction plane. In this section, we seek the function \(f\left( {\xi ,\eta } \right)\) that maximizes the total power of \(g\left( {x,y} \right)\) in S, provided that the total power in R is fixed.

3.1 Fredholm integral equation of the first kind

To simplify the discussion, we consider only the one-dimensional Fresnel transform, which is defined by

where the wave number is set to unity. The inverse Fresnel transform is defined by

Assume that \(f\left( \xi \right)\) is confined to the finite region \(R\) on the \({\upxi }\)-plane and that its total power \(P_{R}\), namely the inner product of the function with itself, is constant:

where \(f^{*} \left( \xi \right)\) denotes the complex conjugate of \(f\left( \xi \right)\). Let \(g\left( x \right)\) be the Fresnel transform of the function \(f\left( \xi \right)\), which is supported in the finite region \(R\), that is,

Then, the total power \(P_{S}\) of \(g\left( x \right)\) in the bounded region \(S\) is

where the kernel function \(K_{S} \left( { \cdot , \cdot } \right)\) is defined by

We seek the function \(f\left( \xi \right)\) that maximizes \(P_{S}\) provided that the total power \(P_{R}\) is fixed. This is a variational problem with an accessory condition, which we solve by the method of Lagrange multipliers. Let us define two functions \(G\left( \xi \right)\) and \(H\left( \xi \right)\) as follows:

We want to maximize \(H\left( \xi \right)\) subject to the constraint on \(G\left( \xi \right)\). With the Lagrange multiplier \({\uplambda }\), the Lagrangian is defined by

We set the gradient of Lagrangian to zero, that is,

where \(\nabla\) denotes the gradient. From Eqs. (7), (9), and (10), we obtain

We conclude that

This is a Fredholm integral equation of the first kind. Light spreads in the diffraction plane; for a spatially band-limited function \(f\left( \xi \right)\), however, the functional system in the object plane that maximizes the light energy of the Fresnel transform \(g\left( x \right)\) in the bounded region satisfies this integral equation. To raise the resolving power of general optical instruments, it is necessary to maximize the light energy. In signal processing, the input signal can be represented on the functional system that maximizes the total power in the Fresnel transform plane. Solving this equation reduces to the eigenvalue problem of the integral equation, which we deal with from now on. This equation is a modification of the integral equation for prolate spheroidal wave functions. The integral and differential equations for prolate spheroidal wave functions have been generalized, and their properties have been revealed. Moreover, discrete prolate spheroidal wave functions have been derived and their mathematical properties investigated [23,24,25,26,27,28,29]. Prolate spheroidal wave functions have been applied to several problems [30, 31].
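For readers who want the derivation of this subsection in one place, the variational steps can be summarized as below. This is a sketch under an assumption that the extracted text does not fix: the one-dimensional Fresnel kernel is taken as \(h(x) = (2\pi i z)^{-1/2}\exp(ix^{2}/(2z))\) with \(k = 1\), and \(f\) and \(f^{*}\) are treated as independent variables when the gradient is taken. The last line also shows why the largest eigenvalue matters: multiplying the eigenvalue equation by \(\varphi^{*}\) and integrating over \(R\) gives \(P_{S} = \lambda P_{R}\).

```latex
g(x) = \int_{R} f(\xi)\, h(x-\xi)\, d\xi ,
\qquad
h(x) = \frac{1}{\sqrt{2\pi i z}} \exp\!\left( \frac{i x^{2}}{2z} \right)

P_{R} = \int_{R} f^{*}(\xi)\, f(\xi)\, d\xi ,
\qquad
P_{S} = \int_{S} |g(x)|^{2}\, dx
      = \int_{R}\!\int_{R} f^{*}(\xi)\, K_{S}(\xi,\xi')\, f(\xi')\, d\xi\, d\xi' ,
\qquad
K_{S}(\xi,\xi') = \int_{S} h^{*}(x-\xi)\, h(x-\xi')\, dx

\mathcal{L}[f] = P_{S} - \lambda \left( P_{R} - \text{const} \right),
\qquad
\frac{\delta \mathcal{L}}{\delta f^{*}(\xi)}
  = \int_{R} K_{S}(\xi,\xi')\, f(\xi')\, d\xi' - \lambda\, f(\xi) = 0

\Longrightarrow\quad
\int_{R} K_{S}(\xi,\xi')\, \varphi(\xi')\, d\xi' = \lambda\, \varphi(\xi),
\qquad
P_{S} = \lambda\, P_{R}
```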

According to Eq. (7), we can write

Therefore, the kernel \(K_{S} \left( {\xi ,\xi^{\prime}} \right)\) of the integral equation is positive definite.
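The positivity of the quadratic form can be checked numerically. The following Python sketch assumes the kernel \(h(x) = (2\pi i z)^{-1/2}\exp(ix^{2}/(2z))\) with \(k = 1\), replaces every integral with a midpoint Riemann sum, and uses illustrative regions rather than the paper's; the helper names (`fresnel_kernel`, `fresnel_1d`, `kernel_gram`, `quadratic_form`) are hypothetical. It computes \(P_{S}\) twice, once from the transformed samples \(g(x_m)\) and once as \(f^{H} K f\) with the discretized kernel. The two agree because the discretized \(K_{S}\) is a Gram matrix, which is also why the form can never be negative.

```python
import cmath
import math
import random

def fresnel_kernel(x, z):
    """Assumed 1-D Fresnel kernel with k = 1: h(x) = exp(i x^2 / (2z)) / sqrt(2*pi*i*z)."""
    return cmath.exp(1j * x * x / (2 * z)) / cmath.sqrt(2j * math.pi * z)

def fresnel_1d(f, xi, x, z):
    """Riemann-sum approximation of g(x) = integral over R of f(xi) h(x - xi) d(xi)."""
    dxi = xi[1] - xi[0]
    return [sum(fn * fresnel_kernel(xm - xn, z) for fn, xn in zip(f, xi)) * dxi for xm in x]

def power_in(values, step):
    """Discrete total power: sum of |u|^2 times the sampling step."""
    return sum(abs(v) ** 2 for v in values) * step

def kernel_gram(xi, x, z):
    """K[a][b] = dx * dxi^2 * sum_m conj(h(x_m - xi_a)) h(x_m - xi_b): a Gram matrix."""
    dxi, dx = xi[1] - xi[0], x[1] - x[0]
    h = [[fresnel_kernel(xm - xn, z) for xn in xi] for xm in x]  # rows: x_m in S
    n = len(xi)
    return [[sum(row[a].conjugate() * row[b] for row in h) * dx * dxi * dxi
             for b in range(n)] for a in range(n)]

def quadratic_form(K, f):
    """f^H K f, the discrete counterpart of P_S written with the kernel K_S."""
    n = len(f)
    return sum(f[a].conjugate() * K[a][b] * f[b] for a in range(n) for b in range(n))

# Illustrative regions (not the paper's): R = [-2, 2], S = [-3, 3], z = 1.
xi = [-2 + 0.1 * (n + 0.5) for n in range(40)]
x = [-3 + 0.1 * (m + 0.5) for m in range(60)]
K = kernel_gram(xi, x, 1.0)
rng = random.Random(1)
f = [complex(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in xi]
p_direct = power_in(fresnel_1d(f, xi, x, 1.0), 0.1)  # P_S from the samples of g
p_form = quadratic_form(K, f)                         # P_S from the kernel
print(p_direct, p_form.real, abs(p_form.imag))
```

Any input vector gives a real, nonnegative `p_form`; its imaginary part is rounding noise only.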

To prove that the eigenvalues of the above integral equation are nonnegative, it suffices to show that \(\lambda \parallel \varphi \parallel^{2} \ge 0\), where \({\uplambda }\) is an eigenvalue, \(\varphi\) is a corresponding eigenfunction, and \(\parallel \cdot \parallel\) denotes the norm in Hilbert space [32]. Replacing \(f\left( \xi \right)\) with \(\varphi \left( \xi \right)\) and taking Eq. (14) into consideration, we can write

Therefore, the eigenvalues of the integral equation are real and nonnegative.

Denoting the largest eigenvalue of Eq. (14) by \(\lambda_{max}\), the maximum \(P_{S - max}\) of Eq. (7) becomes

Theorem 1

For a spatially band-limited function \(\varphi \left( \xi \right)\), the optimal function that maximizes the power of the Fresnel transform \(g\left( {x,z} \right)\) in the bounded region S is the eigenfunction corresponding to the largest eigenvalue of Eq. (14).
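Theorem 1 can be illustrated with power iteration, which converges to the eigenvector of the largest eigenvalue of a positive semidefinite Hermitian matrix. The sketch below is not the paper's procedure (the paper uses the Jacobi method); it assumes the closed-form kernel that follows from \(h(x) = (2\pi i z)^{-1/2}\exp(ix^{2}/(2z))\) with \(k = 1\) and \(S = [-a, a]\), a midpoint discretization of \(R = [-T, T]\), and small illustrative parameters. The function names are hypothetical. The Rayleigh quotient `rayleigh(M, v)` is the discrete ratio \(P_{S}/P_{R}\): no input can push it above \(\lambda_{max}\), and the dominant eigenvector attains it.

```python
import cmath
import math
import random

def kernel_entry(u, v, a, z):
    """Closed-form K_S(u, v) for S = [-a, a], derived under the assumed kernel (k = 1)."""
    if u == v:
        return complex(a / (math.pi * z))       # limit of sin(aX/z)/(pi X) as X -> 0
    phase = cmath.exp(1j * (v * v - u * u) / (2 * z))
    return phase * math.sin(a * (u - v) / z) / (math.pi * (u - v))

def kernel_matrix(T, a, z, n):
    """Midpoint discretization on R = [-T, T]: M[i][j] = K_S(xi_i, xi_j) * d."""
    d = 2 * T / n
    pts = [-T + (j + 0.5) * d for j in range(n)]
    return [[kernel_entry(u, v, a, z) * d for v in pts] for u in pts]

def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def inner(u, v):
    """<u, v> = sum conj(u_i) v_i."""
    return sum(p.conjugate() * q for p, q in zip(u, v))

def rayleigh(M, v):
    """Discrete ratio P_S / P_R for the input samples v."""
    return (inner(v, matvec(M, v)) / inner(v, v)).real

def largest_eigenpair(M, iters=200, seed=0):
    """Power iteration for the dominant eigenpair of a PSD Hermitian matrix."""
    rng = random.Random(seed)
    v = [complex(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in M]
    for _ in range(iters):
        w = matvec(M, v)
        norm = math.sqrt(inner(w, w).real)
        v = [x / norm for x in w]
    return rayleigh(M, v), v

M = kernel_matrix(T=1.0, a=1.0, z=1.0, n=40)   # illustrative: c = aT/z = 1
lam_max, phi = largest_eigenpair(M)
print(lam_max)                                  # best achievable P_S / P_R
```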

Let us consider the kernel of the integral equation. If the object plane and the Fresnel transform plane are bounded by finite regions, the kernel can be calculated analytically. We set the finite region \(S\) in the Fresnel transform plane to \(- {\text{a}} \le x \le {\text{a}}\):

Let us consider the complex conjugate of the kernel of the integral equation:

Therefore, the kernel has Hermitian symmetry.
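Under the assumed kernel \(h(x) = (2\pi i z)^{-1/2}\exp(ix^{2}/(2z))\) with \(k = 1\), the integral over \(S = [-a, a]\) can be carried out explicitly, because the exponent of \(h^{*}(x-\xi)h(x-\xi')\) is linear in \(x\). The sketch below is a derivation under that assumption, not a reproduction of the paper's displayed equation; it gives both the Hermitian symmetry and the limiting diagonal value used in Sect. 4.1. It also shows that \(K_{S}\) differs from the sinc kernel of the prolate spheroidal wave functions only by unimodular phase factors, so for \(R = [-T, T]\) the eigenvalues coincide with those of the prolate spheroidal problem with bandwidth parameter \(c = aT/z\).

```latex
K_{S}(\xi,\xi')
 = \frac{1}{2\pi z}
   \exp\!\left( \frac{i(\xi'^{2}-\xi^{2})}{2z} \right)
   \int_{-a}^{a} \exp\!\left( \frac{i x(\xi-\xi')}{z} \right) dx
 = \exp\!\left( \frac{i(\xi'^{2}-\xi^{2})}{2z} \right)
   \frac{\sin\!\left( a(\xi-\xi')/z \right)}{\pi(\xi-\xi')}

K_{S}^{*}(\xi',\xi) = K_{S}(\xi,\xi'),
\qquad
K_{S}(\xi,\xi) = \lim_{X \to 0} \frac{\sin(aX/z)}{\pi X} = \frac{a}{\pi z}

\int_{-T}^{T} K_{S}(\xi,\xi')\, \varphi(\xi')\, d\xi' = \lambda\, \varphi(\xi)
\quad\Longleftrightarrow\quad
\int_{-T}^{T} \frac{\sin\!\left( a(\xi-\xi')/z \right)}{\pi(\xi-\xi')}\, \psi(\xi')\, d\xi' = \lambda\, \psi(\xi),
\qquad
\psi(\xi) = e^{i\xi^{2}/(2z)}\, \varphi(\xi)
```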

3.2 Dual orthogonal property

Let \(\lambda_{m}\) and \(\lambda_{n}\) (\({\text{m}} \ne {\text{n}}\)) be distinct eigenvalues of the above integral equation, and let \(\varphi_{m}\) and \(\varphi_{n}\) be the corresponding eigenfunctions. They satisfy the following integral equations:

Let us consider the complex conjugate of the kernel of the integral equation:

From Eq. (8), we have

Therefore, we obtain

and the integral kernel \(K_{S} \left( {\xi ,\xi^{\prime}} \right)\) has Hermitian symmetry. Multiplying both sides of Eq. (20) by \(\varphi_{n}^{*} \left( \xi \right)\) and integrating with respect to \(\xi\) over \(R\), we obtain

Taking the complex conjugate of Eq. (21), multiplying both sides by \(\varphi_{m} \left( \xi \right)\), and integrating with respect to \(\xi\) over \(R\), we obtain

From Eqs. (26) and (24), we obtain

Since the left side of Eq. (25) and the right side of Eq. (27) are equal and \(\lambda_{n}\) is a real number, we have

For \(\lambda_{m} \ne \lambda_{n}\), we conclude

That is, \(\varphi_{m} \left( \xi \right)\) and \(\varphi_{n} \left( \xi \right)\) are orthogonal on \(R\). For \(m = n\), the orthonormality condition follows from Eq. (14), Mercer’s theorem [33], and the complete orthonormal system formed by the eigenfunctions.

Let us extend the domain of \({\upxi }\) to the one-dimensional Euclidean space \(E\). We can then redefine the integral equation as follows:

Then, for the eigenfunctions \(\varphi_{m}\) and \(\varphi_{n}\) with \(m \ne n\), we have

We now consider the integral involving the kernel:

Using the delta function \(\delta \left( \cdot \right)\), as shown in the Appendix, such that

the above equation can be expressed by the following form:

Substituting Eq. (34) into Eq. (32), we have

If the functional systems \(\left\{ {\varphi_{m} \left( \xi \right)} \right\}\) are orthogonal on \(E\), they are also orthogonal on \(R\). Therefore, the orthogonal functional systems have the dual orthogonal property.
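A compact version of this argument, written under the same assumed kernel \(h(x) = (2\pi i z)^{-1/2}\exp(ix^{2}/(2z))\) with \(k = 1\), runs as follows; it is a sketch consistent with, but not copied from, the equations of this section. The eigenfunctions are extended to \(E\) through the integral equation itself, and the reproducing property of \(K_{S}\) on \(E\) follows from the delta-function identity \(\int_{E} h(x-\xi)\, h^{*}(x'-\xi)\, d\xi = \delta(x-x')\), which expresses the unitarity of the Fresnel transform. The result is that eigenfunctions orthonormal on \(R\) are orthogonal on \(E\), with squared norm \(1/\lambda_{m}\).

```latex
\varphi_{n}(\xi) = \frac{1}{\lambda_{n}} \int_{R} K_{S}(\xi,\xi')\, \varphi_{n}(\xi')\, d\xi', \qquad \xi \in E

\int_{E} K_{S}(\xi'',\xi)\, K_{S}(\xi,\xi')\, d\xi
 = \int_{S}\!\int_{S} h^{*}(x-\xi'')\, h(x'-\xi')
   \left[ \int_{E} h(x-\xi)\, h^{*}(x'-\xi)\, d\xi \right] dx\, dx'
 = K_{S}(\xi'',\xi')

\int_{E} \varphi_{m}^{*}(\xi)\, \varphi_{n}(\xi)\, d\xi
 = \frac{1}{\lambda_{m}\lambda_{n}} \int_{R}\!\int_{R} \varphi_{m}^{*}(\xi'')\, K_{S}(\xi'',\xi')\, \varphi_{n}(\xi')\, d\xi''\, d\xi'
 = \frac{1}{\lambda_{m}} \int_{R} \varphi_{m}^{*}(\xi)\, \varphi_{n}(\xi)\, d\xi
 = \frac{\delta_{mn}}{\lambda_{m}}
```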

4 Numerical computations

In general, it is difficult to obtain the exact solution of the eigenvalue problem for the integral equation. Therefore, we seek approximate solutions with practically sufficient accuracy [34]. By discretizing the integral equation, we reduce it to the eigenvalue problem of a matrix, and we apply the eigenvectors of the matrix to the approximation of arbitrary functions by computer.

4.1 Discretization of the kernel function

By discretizing the kernel function and the integration range at equal intervals and using the values at the discrete sampling points, the integral equation can be written as follows:

where \(i,j\) are natural numbers with \(1 \le i \le M\) and \(1 \le j \le N\). The matrix \(K_{ij}\) is Hermitian if the kernel is discretized at evenly spaced points and \(M = N\). Therefore, the eigenvalue problem of the integral equation reduces to that of a Hermitian matrix in a finite-dimensional vector space. In a finite-dimensional vector space, the eigenvalues of a Hermitian matrix are real, and eigenvectors from different eigenspaces are orthogonal [35].
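The discretized matrix can be built directly from a closed form of the kernel. The sketch below assumes \(h(x) = (2\pi i z)^{-1/2}\exp(ix^{2}/(2z))\) with \(k = 1\) and \(S = [-a, a]\); under that assumption the kernel is \(\exp(i(\xi'^{2}-\xi^{2})/(2z))\sin(a(\xi-\xi')/z)/(\pi(\xi-\xi'))\), and its diagonal is the limiting value \(a/(\pi z)\), which is the indeterminate case the text treats next. The function names are hypothetical. The sketch compares the closed form with direct quadrature of the defining integral and confirms that the evenly spaced matrix with \(M = N\) is Hermitian.

```python
import cmath
import math

def kernel_closed(u, v, a, z):
    """Assumed closed form of K_S(u, v) for S = [-a, a] and k = 1."""
    if u == v:
        return complex(a / (math.pi * z))      # limiting diagonal value
    return (cmath.exp(1j * (v * v - u * u) / (2 * z))
            * math.sin(a * (u - v) / z) / (math.pi * (u - v)))

def kernel_quadrature(u, v, a, z, m=4000):
    """Midpoint rule for the defining integral over S of conj(h(x - u)) h(x - v) dx."""
    dx = 2 * a / m
    acc = 0j
    for k in range(m):
        x = -a + (k + 0.5) * dx
        acc += cmath.exp(1j * ((x - v) ** 2 - (x - u) ** 2) / (2 * z))
    return acc * dx / (2 * math.pi * z)          # |h|^2 = 1 / (2 pi z)

def discretized_matrix(T, a, z, n):
    """M[i][j] = K_S(xi_i, xi_j) * d on n evenly spaced midpoints of R = [-T, T]."""
    d = 2 * T / n
    pts = [-T + (j + 0.5) * d for j in range(n)]
    return pts, [[kernel_closed(p, q, a, z) * d for q in pts] for p in pts]

pts, M = discretized_matrix(T=15.0, a=15.0, z=27.0, n=30)
herm_err = max(abs(M[i][j] - M[j][i].conjugate()) for i in range(30) for j in range(30))
print(herm_err)
```

With evenly spaced midpoints, the trace of the matrix is \(2Ta/(\pi z)\) (about 5.3 for the parameters of Fig. 2), which under this assumed normalization equals the sum of all eigenvalues.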

However, the diagonal elements of our matrix take an indeterminate form, so we evaluate them as a limit. If \(\xi = \xi^{\prime}\), we can write

Replacing \(\xi - \xi^{\prime}\) with \(X\), we have

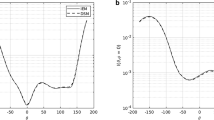

We use the Jacobi method [36] to compute all eigenvalues and eigenvectors of the matrix. The Jacobi method diagonalizes a complex Hermitian matrix by a sequence of plane rotations through complex angles. Because the eigenvectors are accumulated as a product of these unitary rotations, all eigenvectors computed by the Jacobi method are automatically orthonormal. We set \(M = N = 100\). Figure 2 shows the eigenvalues in descending order for \(z = 27\), \(S = \left[ { - 15.0, 15.0} \right]\), \(R = \left[ { - 15.0, 15.0} \right]\); they are real and nonnegative. In Fig. 3, (a) shows the real and imaginary parts of the eigenvector for the largest eigenvalue, (b) the eigenvector for the second-largest eigenvalue, (c) the eigenvector for the third-largest eigenvalue, and (d–f) the eigenvectors for the 4th–6th largest eigenvalues. Because the space is the 100-dimensional complex vector space \(\left( {{\mathbb{C}}^{100} } \right)\), there are 94 further eigenvectors besides these, and all 100 eigenvectors form an orthonormal basis of \({\mathbb{C}}^{100}\).
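The cyclic Jacobi method for a Hermitian matrix can be sketched in plain Python as follows. Each step first removes the phase of the pivot \(A_{pq}\) with a diagonal unitary factor and then applies the real Jacobi rotation of [36], so the combined transformation is a plane rotation through a complex angle. This is a minimal illustration, not the authors' code: `jacobi_hermitian` and `fresnel_kernel_matrix` are hypothetical names, the kernel uses the closed form that follows from the assumed normalization \(h(x) = (2\pi i z)^{-1/2}\exp(ix^{2}/(2z))\) with \(k = 1\), and the example uses \(N = 24\) rather than 100 to keep the pure-Python run time short.

```python
import cmath
import math

def jacobi_hermitian(a, tol=1e-12, max_sweeps=50):
    """Cyclic Jacobi eigen-decomposition of a complex Hermitian matrix (list of lists).

    Returns eigenvalues in descending order and the matching eigenvectors,
    taken as columns of the accumulated unitary matrix V."""
    n = len(a)
    A = [[complex(v) for v in row] for row in a]            # working copy
    V = [[1 + 0j if i == j else 0j for j in range(n)] for i in range(n)]
    total = sum(abs(v) ** 2 for row in A for v in row)
    for _ in range(max_sweeps):
        off = sum(abs(A[i][j]) ** 2 for i in range(n) for j in range(n) if i != j)
        if off <= tol * tol * total:                        # relative off-diagonal norm
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                r = abs(A[p][q])
                if r == 0.0:
                    continue
                e = A[p][q] / r                             # unit phase of the pivot
                theta = (A[q][q].real - A[p][p].real) / (2 * r)
                t = math.copysign(1.0, theta) / (abs(theta) + math.sqrt(theta * theta + 1))
                c = 1 / math.sqrt(t * t + 1)
                s = t * c
                ec = e.conjugate()
                # Columns p, q of U are (c, -s*conj(e)) and (s, c*conj(e)).
                # Apply A <- A U and accumulate V <- V U.
                for k in range(n):
                    akp, akq = A[k][p], A[k][q]
                    A[k][p] = c * akp - s * ec * akq
                    A[k][q] = s * akp + c * ec * akq
                    vkp, vkq = V[k][p], V[k][q]
                    V[k][p] = c * vkp - s * ec * vkq
                    V[k][q] = s * vkp + c * ec * vkq
                # Apply A <- U^H A (row operation with conjugated coefficients).
                for k in range(n):
                    apk, aqk = A[p][k], A[q][k]
                    A[p][k] = c * apk - s * e * aqk
                    A[q][k] = s * apk + c * e * aqk
                A[p][q] = A[q][p] = 0j                      # zero by construction
    vals = [A[i][i].real for i in range(n)]
    order = sorted(range(n), key=lambda i: vals[i], reverse=True)
    return [vals[i] for i in order], [[V[k][i] for k in range(n)] for i in order]

def fresnel_kernel_matrix(T, a, z, n):
    """Hypothetical helper: closed-form K_S (k = 1, S = [-a, a]) sampled on R = [-T, T]."""
    d = 2 * T / n
    pts = [-T + (j + 0.5) * d for j in range(n)]
    def entry(u, v):
        if u == v:
            return complex(a / (math.pi * z))
        return (cmath.exp(1j * (v * v - u * u) / (2 * z))
                * math.sin(a * (u - v) / z) / (math.pi * (u - v)))
    return [[entry(u, v) * d for v in pts] for u in pts]

M = fresnel_kernel_matrix(T=15.0, a=15.0, z=27.0, n=24)    # smaller N than the paper's 100
vals, vecs = jacobi_hermitian(M)
print([round(v, 6) for v in vals[:8]])
```

Because only unitary transformations are applied, the returned eigenvectors are orthonormal and the sum of the eigenvalues equals the trace of the input matrix, which gives a cheap consistency check.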

Fig. 3 Plots of the real and imaginary parts of the eigenvectors. a Eigenvector for the largest eigenvalue. b Eigenvector for the second-largest eigenvalue. c Eigenvector for the third-largest eigenvalue. d–f Eigenvectors for the 4th–6th largest eigenvalues. \(S = \left[ { - 15.0, 15.0} \right]\), \(R = \left[ { - 15.0, 15.0} \right]\), z = 27.0

4.2 Approximation of any functions

We consider the application of the eigenvectors to the problem of approximating arbitrary functions. Theoretically, this is the problem of expressing an arbitrary element of a finite N-dimensional Hilbert space \(H_{N}\) in an orthonormal basis. For any element \(u\) in \(H_{N}\) and an orthonormal basis \(\left\{ {\psi_{n} } \right\}_{n = 1}^{N}\), we can write

where \(\left\langle { \cdot \,,\, \cdot } \right\rangle\) denotes the inner product [32]. We now set \(N = 100\) and consider the set \({\mathbb{C}}^{100}\) of all 100-tuples:

where \(u_{1} , u_{2} , \cdots , u_{100}\) are complex numbers.

First, let us consider the following real-valued test function:

By discretizing the test function at 100 evenly spaced points, we created a test vector in the 100-dimensional real vector space \(\left( {{\mathbb{R}}^{100} } \right)\). We confirmed that this test vector can be expressed by the eigenvector expansion. Figure 4 illustrates the normalized mean square error versus the number of eigenvectors. The normalized mean square error is defined by

where \(u^{k}\) is the partial sum in Eq. (39) up to the \(k\)th term, \(u\) is the original vector, and \(\parallel \cdot \parallel_{2}\) is the \(\ell^{2}\)-norm. Figure 4 shows that the error decreases as the number of eigenvectors used in the expansion increases.
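The truncated expansion and its normalized error are easy to compute for any orthonormal basis. In the sketch below, the NMSE is assumed to be \(\parallel u - u^{k} \parallel_{2}^{2} / \parallel u \parallel_{2}^{2}\), the Gaussian test vector is hypothetical, and a unitary DFT basis stands in for the eigenvectors of Sect. 4.1 so that the block runs on its own; in the paper's setting, `basis` would hold the eigenvectors sorted by descending eigenvalue. Because the basis is orthonormal, the error after \(k\) terms equals the normalized energy of the discarded coefficients, so it can never increase with \(k\).

```python
import cmath
import math

def inner(u, v):
    """<u, v> = sum conj(u_i) v_i (conjugate-linear in the first argument)."""
    return sum(p.conjugate() * q for p, q in zip(u, v))

def partial_expansion(u, basis, k):
    """u^k: sum over the first k basis vectors psi of (sum_i conj(psi_i) u_i) * psi."""
    out = [0j] * len(u)
    for psi in basis[:k]:
        coef = inner(psi, u)
        out = [o + coef * p for o, p in zip(out, psi)]
    return out

def nmse(u, uk):
    """Assumed definition: ||u - u^k||_2^2 / ||u||_2^2."""
    return sum(abs(p - q) ** 2 for p, q in zip(u, uk)) / sum(abs(p) ** 2 for p in u)

def unitary_dft_basis(n):
    """Stand-in orthonormal basis of C^n (NOT the paper's eigenvectors),
    ordered by increasing |frequency| so that early terms are the smoothest."""
    order = sorted(range(n), key=lambda m: min(m, n - m))
    return [[cmath.exp(2j * math.pi * m * j / n) / math.sqrt(n) for j in range(n)] for m in order]

n = 100
basis = unitary_dft_basis(n)
u = [math.exp(-((j - 50) / 12.0) ** 2) for j in range(n)]  # hypothetical real test vector
errors = [nmse(u, partial_expansion(u, basis, k)) for k in range(0, n + 1, 10)]
print(errors)
```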

Second, let us consider the following real-valued test function, a hyperbolic cosine:

By discretizing the test function in the same way, we created a test vector in \({\mathbb{R}}^{100}\). Figure 5 illustrates the normalized mean square error versus the number of eigenvectors; again, the error decreases as the number of eigenvectors used in the expansion increases.

Third, let us consider the following complex-valued test function:

By discretizing the test function in the same way, we created a test vector in \({\mathbb{C}}^{100}\). Figure 6 illustrates the normalized mean square error versus the number of eigenvectors; the error again decreases as the number of eigenvectors used in the expansion increases.

4.3 Discrete Fresnel transforms of the eigenvectors

By discretizing the function and using the sampling theorem in Eqs. (3) and (4), we can derive the one-dimensional discrete Fresnel transform [5, 37]:

The corresponding inverse discrete Fresnel transform is

The eigenvectors computed in Sect. 4.1 form an orthonormal system in the input plane and can be regarded as a coordinate system. To examine how the eigenvectors appear in the Fresnel transform plane, we computed their one-dimensional discrete Fresnel transforms. In Fig. 7, (a) shows the real and imaginary parts of the eigenvector for the largest eigenvalue for \(z = 20.0\), \(S = \left[ { - 15.0, 15.0} \right]\), \(R = \left[ { - 15.0, 15.0} \right]\); (b) and (c) are the eigenvectors for the second- and third-largest eigenvalues, respectively; (d) is the discrete Fresnel transform of (a); and (e) and (f) are the discrete Fresnel transforms of (b) and (c), respectively. Figure 7 shows that the real and imaginary parts take similar forms in the discrete Fresnel transform plane.
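The exact form of the discrete Fresnel transform of [5, 37] is not reproduced in this section. As a hypothetical stand-in, sampling the assumed continuous kernel \((2\pi i z)^{-1/2}\exp(i(x-\xi)^{2}/(2z))\) (\(k = 1\)) with step \(\Delta = \sqrt{2\pi z/N}\) gives the matrix \(e^{-i\pi/4}N^{-1/2}\exp(i\pi(m-n)^{2}/N)\). Since \(\exp(i\pi(m-n)^{2}/N) = e^{i\pi m^{2}/N} e^{-2\pi i mn/N} e^{i\pi n^{2}/N}\), this matrix is a chirp, a unitary DFT, and another chirp, so it is unitary and its inverse is its conjugate transpose. The sketch checks the round trip and energy conservation on a random vector; `dfrt` is a hypothetical name.

```python
import cmath
import math
import random

def dfrt(g, inverse=False):
    """Hypothetical discrete Fresnel transform (k = 1, sampling step sqrt(2*pi*z/N)).

    Forward:  G[m] = exp(-i*pi/4)/sqrt(N) * sum_n g[n] * exp(+i*pi*(m - n)**2 / N)
    Inverse:  conjugate transpose of the (symmetric) forward matrix."""
    n = len(g)
    sgn = -1.0 if inverse else 1.0
    pre = cmath.exp(-sgn * 1j * math.pi / 4) / math.sqrt(n)
    return [pre * sum(g[k] * cmath.exp(sgn * 1j * math.pi * (m - k) ** 2 / n) for k in range(n))
            for m in range(n)]

rng = random.Random(3)
v = [complex(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(64)]
G = dfrt(v)
back = dfrt(G, inverse=True)
print(max(abs(p - q) for p, q in zip(v, back)))   # round-trip error, at rounding level
```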

Fig. 7 Plots of the real and imaginary parts of the eigenvectors. a Eigenvector for the largest eigenvalue. b Eigenvector for the second-largest eigenvalue. c Eigenvector for the third-largest eigenvalue. \(S = \left[ { - 15.0, 15.0} \right]\), \(R = \left[ { - 15.0, 15.0} \right]\), z = 20.0. d–f Discrete Fresnel transforms of a–c, respectively

4.4 Extension to two-dimensional case

We now extend the eigenvector expansion to two-dimensional images. Assume that a matrix \(V\) is written in the form

where the column vectors \(\left\{ {v_{i} } \right\}_{i = 1}^{N}\) are the eigenvectors computed in Sect. 4.1. Then \(V\) is unitary, and its column vectors form an orthonormal set in \({\mathbb{C}}^{N}\) with respect to the complex Euclidean inner product. Assume that the matrix \(A\) is an image that has been sampled and quantized and is a square matrix of size \(N \times N\). A general linear transformation of the image matrix \(A\) can be written in the form

The transpose of \(V\) is denoted by \(V^{t}\). The inverse transform of Eq. (48) can be written in the form

We define the standard unit vectors in \({\mathbb{R}}^{N}\).

The matrix \(B\) whose elements are \(b_{st}\) can be expressed as

The matrix \(A\) can be reconstructed by the following equation:

This is the eigenvector expansion of an image.

To confirm the effectiveness of our eigenvectors, we reconstructed images from their expansion coefficients using Eq. (52). Original test image 1, a rectangular uniform distribution in the center region, is shown in Fig. 8a. This image is discretized to \(100 \times 100\) pixels at \(8{\text{ bit/pixel}}\). We computed the expansion coefficients of the original image using Eq. (48) and all eigenvectors, which were computed for \(z = 27\), \(S = \left[ { - 15.0, 15.0} \right]\), \(R = \left[ { - 15.0, 15.0} \right]\). The reconstructed images in Fig. 8b–f were computed from the coefficients using Eq. (52), with the number of eigenvectors varied from 10 to 100. Figure 8b–f shows that the reconstructed image approximates the original image increasingly well as the number of eigenvectors increases. Original test image 2, which consists of letters, is shown in Fig. 9a; it has the same size as test image 1. The reconstructed images in Fig. 9b–f were computed under the same conditions as in Fig. 8. Figure 9b–f shows that the letters in the reconstructed image gradually approach those of the original image as the number of eigenvectors increases.

The image matrix norm is defined by

The normalized mean square error is defined by

where \(A^{k}\) is the image reconstructed with \(k\) eigenvectors and \(A_{o}\) is the original test image. Figure 10 shows the normalized mean square error versus the number of eigenvectors for the reconstructions of original test images 1 and 2; the NMSE decreases as the number of eigenvectors increases.
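The two-dimensional expansion and the image NMSE can be sketched in the same way. The block assumes the forward transform \(B = V^{H} A V\) with inverse \(A = V B V^{H}\) (for a real orthogonal \(V\) this reduces to the transpose form), keeps the leading \(k \times k\) block of coefficients for a \(k\)-term reconstruction, and measures the error with the Frobenius norm; these choices, the helper names, and the 16 × 16 test image are assumptions for illustration. A unitary DFT matrix ordered by frequency stands in for the eigenvector matrix of Sect. 4.1 so that the example is self-contained.

```python
import cmath
import math

def matmul(X, Y):
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]

def ctranspose(X):
    """Conjugate transpose X^H."""
    return [[X[i][j].conjugate() for i in range(len(X))] for j in range(len(X[0]))]

def forward(A, V):
    """Expansion coefficients B = V^H A V (assumed form for a unitary V)."""
    return matmul(ctranspose(V), matmul(A, V))

def reconstruct(B, V, k):
    """A^k = V_k B_k V_k^H = sum over s, t < k of b_st v_s v_t^H (first k columns of V)."""
    Vk = [row[:k] for row in V]
    Bk = [row[:k] for row in B[:k]]
    return matmul(matmul(Vk, Bk), ctranspose(Vk))

def nmse(A, Ak):
    """Assumed Frobenius-norm NMSE: ||A - A^k||_F^2 / ||A||_F^2."""
    num = sum(abs(a - b) ** 2 for ra, rb in zip(A, Ak) for a, b in zip(ra, rb))
    return num / sum(abs(a) ** 2 for ra in A for a in ra)

N = 16
# Stand-in unitary V: DFT columns ordered by |frequency| (NOT the paper's eigenvectors).
order = sorted(range(N), key=lambda m: min(m, N - m))
V = [[cmath.exp(2j * math.pi * i * m / N) / math.sqrt(N) for m in order] for i in range(N)]
# Hypothetical 8-bit test image: a uniform square in the centre, like test image 1.
A = [[255.0 if 4 <= i < 12 and 4 <= j < 12 else 0.0 for j in range(N)] for i in range(N)]
B = forward(A, V)
errors = [nmse(A, reconstruct(B, V, k)) for k in range(2, N + 1, 2)]
print([round(e, 4) for e in errors])
```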

5 Conclusions

We have investigated the band-limited effect in the Fresnel transform plane. To this end, we sought the function whose total power in a finite region of the Fresnel transform plane is maximized, under the condition that the input signal is zero outside a bounded region. We showed that this leads to the eigenvalue problem of a Fredholm integral equation of the first kind, proved that the kernel of the integral equation is Hermitian and positive definite, and showed that the eigenfunctions corresponding to distinct eigenvalues have the dual orthogonal property. By discretizing the kernel and the integration range, the problem reduces to the eigenvalue problem of a Hermitian matrix in a finite-dimensional vector space. Using the Jacobi method, we computed the eigenvalues and eigenvectors of the matrix. Furthermore, we applied the eigenvectors to the problem of approximating functions and evaluated the error. Computer simulations confirmed the validity of the eigenvectors for the finite Fresnel transform.

In the two-dimensional case, a modified Fredholm integral equation of the first kind can be derived by the same method under the same conditions, and the corresponding orthogonal functional systems also have the dual orthogonal property. However, computing all eigenvalues and eigenvectors by discretizing this equation is difficult because the matrix can become very large, so another method is needed.

This paper involves many parameters, in particular the bounded input region R, the band-limited region S in the Fresnel transform plane, the wave number k, and the normal distance z. For applications to optical systems, orthogonal functional systems must be constructed with parameters that are optimal for the finite Fresnel transform. Moreover, the matrix obtained by discretizing the kernel of the integral equation is not Hermitian in general; in that case, it is difficult to compute all eigenvalues and eigenvectors accurately, and other computational methods must also be considered.

Data availability

The authors confirm that the data supporting the findings of this study are available within the article.

References

Winthrop, J., Worthington, C.: Theory of Fresnel images. I. plane periodic objects in monochromatic light. J. Opt. Soc. Am. 55, 373–381 (1965)

Winthrop, J., Worthington, C.: Convolution formulation of Fresnel diffraction. J. Opt. Soc. Am. 56, 588–591 (1966)

Marom, E.: Rayleigh-Huygens diffraction formulas: boundary conditions and validity of approximations. J. Opt. Soc. Am. 57, 1390–1391 (1967)

Goodman, J.: Introduction to Fourier optics, 3rd edn. Roberts & company, Colorado (2005)

Aoyagi, N.: Theoretical study of optical Fresnel transformations. Dr. Thesis, Tokyo Institute of Technology, Tokyo (1973)

Aoyagi, N., Yamaguchi, S.: Functional analytic formulation of Fresnel diffraction. Jpn. J. Appl. Phys. 12, 336–370 (1973)

Aoyagi, N., Yamaguchi, S.: Generalized Fresnel transformations and their properties. Jpn. J. Appl. Phys. 12, 1343–1350 (1973)

Cong, W., Chen, N., Gu, B.: Phase retrieval in the Fresnel transform system: a recursive algorithm. J. Opt. Soc. Am. A 16, 1827–1830 (1999)

Kelly, P.: Numerical calculation of the Fresnel transform. J. Opt. Soc. Am. A 31, 755–764 (2014)

Zalevsky, Z., Mendlovic, D., Dorsch, R.: Gerchberg-Saxton algorithm applied in the fractional Fourier or Fresnel domain. Opt. Lett. 21, 842–844 (1996)

Gori, F.: Fresnel transform and sampling theorem. Optics Comm. 39, 293–297 (1981)

Gori, F.: The converging prolate spheroidal functions and their use in Fresnel optics. Optics Comm. 45, 5–10 (1983)

James, D., Agarwal, G.: The generalized Fresnel transform and its application to optics. Optics Comm. 126, 207–212 (1996)

Liebling, M., Blu, T., Unser, M.: Fresnelets: new multiresolution wavelet bases for digital holography. IEEE Trans. Image Proc. 12, 29–43 (2003)

Onural, L.: Diffraction from a wavelet point of view. Opt. Lett. 18, 846–848 (1993)

Ogawa, H.: What can we see behind sampling theorems? IEICE Trans. Fundam. E92-A, 688–695 (2009)

Kida, T.: On restoration and approximation of multi-dimensional signals using sample values of transformed signals. IEICE Trans. Fundam. E77-A, 1095–1116 (1994)

Jerri, A.: The Shannon sampling theorem –its various extensions and applications: a tutorial review. Proc. IEEE 65, 1565–1596 (1977)

Onural, L.: Sampling of the diffraction field. Appl. Opt. 39, 5929–5935 (2000)

Matsushima, K., Shimobaba, T.: Band-limited angular spectrum method for numerical simulation of free-space propagation in far and near fields. Opt. Express 17, 19662–19673 (2009)

Okada, N., Shimobaba, T., Ichihashi, Y., Oi, R., Yamamoto, K., Oikawa, M., Kakue, T., Masuda, N., Ito, T.: Band-limited double-step Fresnel diffraction and its application to computer-generated holograms. Opt. Express 21, 9192–9197 (2013)

Chacko, N., Liebling, M., Blu, T.: Discretization of continuous convolution operators for accurate modeling of wave propagation in digital holography. J. Opt. Soc. Am. A 30, 2021–2020 (2013)

Slepian, D., Pollak, H.: Prolate spheroidal wave functions, Fourier analysis and uncertainty -I. Bell Syst. Tech. J. 40, 43–63 (1961)

Landau, H., Pollak, H.: Prolate spheroidal wave functions, Fourier analysis and uncertainty -II. Bell Syst. Tech. J. 40, 65–84 (1961)

Landau, H., Pollak, H.: Prolate spheroidal wave functions, Fourier analysis and uncertainty -III: the dimension of the space of essentially time- and band-limited signals. Bell Syst. Tech. J. 41, 1295–1336 (1962)

Slepian, D.: Prolate spheroidal wave functions, Fourier analysis and uncertainty -IV. Bell Syst. Tech. J. 43, 3009–3058 (1964)

Slepian, D.: Prolate spheroidal wave functions, Fourier analysis and uncertainty -V. Bell Syst. Tech. J. 57, 1371–1430 (1978)

Slepian, D.: On bandwidth. Proc. IEEE 64, 292–300 (1976)

Slepian, D.: A numerical method for determining the eigenvalues and eigenfunctions of analytic kernels. SIAM J. Numer. Anal. 5, 586–600 (1968)

Itoh, Y.: Evaluation of aberrations using the generalized prolate spheroidal wavefunctions. J. Opt. Soc. Am. 60, 10–14 (1970)

Walter, G., Soleski, T.: Prolate spheroidal wavelet sampling in computerized tomography. J. Sampling theory in Sign. Image Proc. 5, 21–36 (2006)

Reed, M., Simon, B.: Methods of Modern Mathematical Physics, vol. 1. Academic Press, New York (1972)

Yoshida, K.: Integral Equation, 2nd edn. Iwanami Shoten, Tokyo (1950) (in Japanese)

Kondo, J.: Integral Equation. Baifukan, Tokyo (1954) (in Japanese)

Anton, H., Busby, R.: Contemporary Linear Algebra. John Wiley & Sons, NJ (2003)

Press, W., Teukolsky, S., Vetterling, W., Flannery, B.: Numerical Recipes in C, 2nd edn. Cambridge University Press, Cambridge (1992)

Aoyagi, T., Ohtsubo, K., Aoyagi, N.: Application of the discrete Fresnel transform to watermarking. Forum on Information Technology 2017, I-006 (2017) (in Japanese)

Appendix

The delta function can be defined as follows [4]:

where

Noting that

we can define \(S_{N} \left( \cdot \right)\) as follows:

We conclude that

About this article

Cite this article

Aoyagi, T., Ohtsubo, K. Numerical analysis of orthogonal functional systems for finite Fresnel transform. Opt Rev 30, 376–386 (2023). https://doi.org/10.1007/s10043-023-00809-9
