Abstract

When blurred images contain saturated or over-exposed pixels, conventional blind deconvolution approaches often fail to estimate an accurate point spread function (PSF) and introduce local ringing artifacts. In this paper, we propose a method that addresses this problem within a modified multi-frame blind deconvolution framework. First, in the kernel estimation step, a light streak detection scheme using multi-frame blurred images is incorporated into the regularization constraint. Second, we handle image regions affected by saturated pixels separately by modeling a weight matrix in each multi-frame deconvolution iteration. Both synthetic and real-world examples show that more accurate PSFs can be estimated and that the restored images have richer details and fewer artifacts than those of state-of-the-art methods.

1 Introduction

Image blur can significantly degrade image quality and is often caused by optical defocus, atmospheric disturbance, camera shake during exposure, etc. The blurring process is commonly formulated as a linear model in which a latent image is convolved with a point spread function (PSF) and corrupted by additive noise:

\[{\mathbf{g}} = {\mathbf{Hf}} + {\mathbf{n}} \qquad (1)\]

where \({\mathbf{g}}\), \({\mathbf{f}}\), and \({\mathbf{n}}\) denote the column-wise vector forms of the blurred image, latent image, and image noise, respectively, and \({\mathbf{H}}\) is the convolution matrix of the PSF \({\mathbf{h}}\). The goal of image deblurring is to recover the unknown latent image \({\mathbf{f}}\) from \({\mathbf{g}}\) given the estimated kernel matrix \({\mathbf{H}}\).

Existing restoration approaches can be classified into two categories according to whether the PSF is known: non-blind image deconvolution [1–4] and blind image deconvolution [5–8]. Both problems are ill-posed [9], and blind image deconvolution is the more severe case. Researchers have designed numerous approaches to deal with this ill-posedness, and in recent years various regularization methods have obtained good deblurring results. Tikhonov regularization [9] uses a simple quadratic regularization term \(\lambda ||{\mathbf{f}}||^{2}\). Since the image restored this way tends to be overly smooth, the total variation (TV) method [10] was designed as a more effective regularizer that better preserves sharp image edges. TV regularization leads to a nonlinear problem, and many algorithms [11, 12] aim at solving it efficiently and robustly. More recent methods [13–16] can remove image blur to a large extent when the blurry images have salient structures. Besides restoration from a single blurred image, multi-frame blurred images with different PSFs can be obtained in many situations; with proper regularization and optimization [17–19], better deblurring results can be expected since multiple images contain more information about the target to be restored.

In poorly lit scenes such as low-light night scenes or high dynamic range scenes, saturated or over-exposed regions appear because of the limited exposure quantization range. These clipped pixels do not follow the linear convolution model of the blurring process, so directly applying maximum a posteriori (MAP) deblurring algorithms to the blurred image sometimes gives poor restoration results. Some non-blind deblurring methods have been proposed to address this problem. Whyte et al. [20] propose a forward model that treats the saturated pixels under the Richardson–Lucy framework. Cho et al. [21] detect the outlier pixels and handle them in the MAP framework, obtaining satisfactory results. In blind deconvolution, simply discarding saturated pixels during kernel estimation sometimes does not work, so some researchers use light streaks to help deduce PSFs [22–24]. Relying on a single blurred image to detect light streaks has limitations, such as the need for interactive manual selection.

We propose a new strategy to restore partially saturated images using multi-frame blurred images. First, in the kernel estimation step, a regularization term is added that uses detected light streaks to help estimate the PSFs. We also combine the multi-frame deconvolution framework with a separate constraint on the related saturated regions during the deconvolution iterations. Compared with existing methods, our method attains fewer ringing artifacts and better deconvolution results. This paper is organized as follows: in Sect. 2, we describe the ringing artifacts caused by saturated pixels during image restoration and the basic multi-frame blind deconvolution framework of our algorithm. In Sect. 3, we present the light streak detection scheme and the solution of our alternating minimization approach, which accounts for the regions affected by saturated pixels. We present the experimental results of our method on synthetic and real captured images in Sect. 4. Section 5 contains our conclusions.

2 Problem formulation

As stated above, camera sensors have a limited dynamic range, and image pixels whose intensities exceed the maximum are clipped. Because the linear convolution model is violated in the saturated regions, ringing artifacts appear around them in the deblurred image. To illustrate this problem, we synthetically blur a clear image using known PSFs and clip the high intensity values. One of the blurred frames is shown in Fig. 1a, and the magnified patch of the red box is shown in Fig. 1b. Severe ringing artifacts can be seen in Fig. 1c, which is obtained with a single-image deconvolution technique. If we perform multi-frame image deconvolution (Fig. 1d), the deblurred image is better in terms of restored image details, and the ringing artifacts are alleviated. However, if we deblur the multi-frame images with our technique for modeling saturated pixels, we obtain the very satisfactory result shown in Fig. 1e: the restored light source is very similar to its original shape, without ringing around it.

Fig. 1 Illustration of the deconvolution artifacts around the saturated regions. a One of the blurred images, b magnified part of the red box in a, c restoration result using only a without considering saturated pixels, d restoration result using multi-frame images without considering saturated pixels, e restoration result using multi-frame images with saturated pixels modeled

Before introducing our multi-frame blind deconvolution approach that models saturated image pixels, we first formulate the framework of the optimization problem. With the development of camera devices, continuous capture of multi-frame images is increasingly common. Suppose that we have obtained \(m\) blurred frames of the same scene \({\mathbf{f}}\), denoted by \({\mathbf{g}}_{i} \;(i = 1, 2, \ldots ,m)\). If the corresponding PSF convolution matrices are \({\mathbf{H}}_{i} \;(i = 1, 2, \ldots ,m)\), then, considering the limited dynamic range of camera sensors, we have the following equations:

where \({\mathbf{n}}_{i}\) denotes the additive noise of blurred frame \({\mathbf{g}}_{i}\) and \(c(t)\) is a clipping function: if \(t\) is within the dynamic range, \(c(t) = t\); otherwise, \(c(t)\) returns the maximum or minimum intensity. This scenario is common in night images, high dynamic range images, images with artificial lights, etc.
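The clipped multi-frame blur model can be illustrated with a minimal Python sketch. The function names, the intensity range [0, 1], the noise level, and the choice to add noise before clipping are assumptions made for illustration only; they are not taken from the paper.

```python
import numpy as np
from scipy.signal import convolve2d

def clip_to_range(t, lo=0.0, hi=1.0):
    """Clipping function c(t): identity inside [lo, hi], saturating outside."""
    return np.clip(t, lo, hi)

def simulate_frames(latent, psfs, noise_sigma=0.01, seed=0):
    """Blur one latent image with several PSFs, add noise, then clip.

    Assumption: noise is added before clipping; the source does not
    reproduce the exact placement of the noise term here.
    """
    rng = np.random.default_rng(seed)
    frames = []
    for h in psfs:
        blurred = convolve2d(latent, h, mode="same", boundary="symm")
        noisy = blurred + rng.normal(0.0, noise_sigma, size=latent.shape)
        frames.append(clip_to_range(noisy))
    return frames

# A dim scene with one light source far brighter than the sensor range.
latent = np.full((32, 32), 0.2)
latent[16, 16] = 50.0
psfs = [np.full((1, 5), 0.2), np.full((5, 1), 0.2)]  # horizontal and vertical motion
frames = simulate_frames(latent, psfs)
```

In each simulated frame the light source spreads into a saturated streak that follows the shape of the corresponding PSF, which is the cue exploited later for kernel estimation.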

Under the Bayesian probabilistic framework, multi-frame blind deconvolution is formulated as the following MAP estimation problem:

Taking the negative logarithm of the above expression converts Eq. (3) into the following:

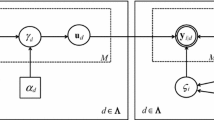
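For orientation, under the common assumptions that the frames are conditionally independent given \({\mathbf{f}}\) and \({\mathbf{h}}_{i}\) and that the priors on \({\mathbf{f}}\) and \({\mathbf{h}}_{i}\) are independent, a MAP problem of this kind and its negative-log form are usually written as follows. This is a generic sketch of that standard step, not a reproduction of the paper's Eqs. (3) and (4), whose exact terms may differ.

\[
\{ \hat{\mathbf{f}}, \hat{\mathbf{h}}_{1}, \ldots, \hat{\mathbf{h}}_{m} \}
= \arg\max_{{\mathbf{f}},\, {\mathbf{h}}_{i}} \; \prod_{i=1}^{m} P({\mathbf{g}}_{i} | {\mathbf{f}}, {\mathbf{h}}_{i}) \; P({\mathbf{f}}) \prod_{i=1}^{m} P({\mathbf{h}}_{i})
\]

\[
= \arg\min_{{\mathbf{f}},\, {\mathbf{h}}_{i}} \; \Big[ - \sum_{i=1}^{m} \ln P({\mathbf{g}}_{i} | {\mathbf{f}}, {\mathbf{h}}_{i}) - \ln P({\mathbf{f}}) - \sum_{i=1}^{m} \ln P({\mathbf{h}}_{i}) \Big]
\]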

Normally, under the assumption that image noise follows a Gaussian distribution, the conditional likelihood \(P({\mathbf{g}}_{i} |{\mathbf{f}},{\mathbf{h}}_{i} )\;(i = 1,2, \ldots ,m)\) is also Gaussian if an image has no clipped saturated pixels. Thus, the negative log-likelihood reduces, up to constants, to \(||{\mathbf{H}}_{i} {\mathbf{f}} - {\mathbf{g}}_{i} ||_{2}^{2}\), where \(|| \cdot ||_{2}\) denotes the \(l_{2}\)-norm. In partially saturated images, however, saturated pixels obviously do not conform to this distribution. Some pixels that are not saturated in the blurred image can also affect the restoration, since a saturated pixel that diffuses into the adjacent area during blurring may become unsaturated. To solve this problem, we define a corresponding weight matrix \({\mathbf{S}}_{i}\), which is of great importance in deblurring and will be discussed in the next section. The sum of the conditional likelihood terms is written as Eq. (5),

where \(\circ\) is the element-wise multiplication operator. The expression shows that pixels with smaller values in \({\mathbf{S}}_{i}\) contribute less to the restoration process. If all the elements of \({\mathbf{S}}_{i}\) are 1, it becomes the normal deconvolution problem.
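The effect of the weights can be sketched in a few lines of Python. The exact form of Eq. (5) is not reproduced here, so the sketch assumes a data term of the form \(\sum_{i} ||{\mathbf{S}}_{i} \circ ({\mathbf{H}}_{i} {\mathbf{f}} - {\mathbf{g}}_{i} )||_{2}^{2}\); the function name and the toy data are hypothetical.

```python
import numpy as np
from scipy.signal import convolve2d

def weighted_data_term(latent, psfs, frames, weights):
    """Sum over frames of || S_i o (h_i * f - g_i) ||_2^2 (assumed form)."""
    total = 0.0
    for h, g, s in zip(psfs, frames, weights):
        residual = convolve2d(latent, h, mode="same", boundary="symm") - g
        total += float(np.sum((s * residual) ** 2))
    return total

# Toy check with an identity PSF and one corrupted pixel in the observation.
latent = np.linspace(0.0, 1.0, 16).reshape(4, 4)
psf = np.array([[1.0]])
frame = latent.copy()
frame[1, 2] += 2.0                      # stands in for a clipped outlier

ones = np.ones_like(frame)
masked = ones.copy()
masked[1, 2] = 0.0                      # zero weight removes the outlier

cost_all_ones = weighted_data_term(latent, [psf], [frame], [ones])
cost_masked = weighted_data_term(latent, [psf], [frame], [masked])
```

With all weights equal to 1 the outlier dominates the cost, whereas a zero weight at that pixel removes its contribution entirely.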

We assume that the gradients of a natural image follow a sparse probabilistic distribution, and in this paper a hyper-Laplacian prior is used to model \(\ln P({\mathbf{f}})\). Since most elements of the PSF are zero, it also obeys a sparse distribution. In the kernel estimation step, we assume that the prior for each \({\mathbf{h}}_{i}\) follows the \(l_{1}\)-norm sparse distribution and add a light streak regularization term as a constraint on the PSFs. The specific expressions will be discussed in the following section.

3 Optimization approach

Our optimization approach adopts the multi-frame image restoration framework of Eq. (4) with the blur model of Eq. (2). In this section, we first present the light streak detection scheme used in the kernel estimation constraint term and then describe the solution of our alternating minimization approach, which accounts for the saturation-affected pixels. Finally, some necessary implementation details of the proposed method are given.

3.1 Light streak detection

In partially saturated blurred images, light streaks can be taken as a cue for PSF estimation since they contain rich blur information. Unlike other related approaches, our detection scheme uses all the blurred images. A light streak can approximately be seen as the PSF convolved with the unblurred light source. If we can find the overlapping parts of the light streaks in the multiple blurred images, the light source size and the corresponding light streak patches are determined.

We first apply the particle swarm optimization (PSO) image registration method [25] to estimate the global shifts and possible slight rotations between the images. Each blurred image is then binarized with a threshold to extract the relatively bright regions. Textured regions usually remain in the binary images and should be removed. To do so, we label the connected regions in each binary image, compute the area of each labeled region as its number of pixels, and use a lower and an upper area bound to discard regions that are implausibly small or large. After all images have been processed in this way, the intersections of the binary images are computed, which gives the size and location of the unblurred light source. Often more than one intersected region meets these requirements, so we use two rules to pick the most appropriate unblurred light source. The first is to compute the corresponding light intensities in the blurred frames and prefer the brighter candidates; the second is that the preferred diameter of the unblurred light source is 4–6. A very small light source diameter suggests that the detected light streaks may be noise or wrongly matched regions, whereas a large diameter causes the light streaks to lose PSF details to some extent, which harms image restoration.
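The binarize, label, area-filter, and intersect steps described above can be sketched as follows. This is an illustrative outline that assumes the frames are already registered; the function names, threshold, and area bounds are hypothetical, and the two selection rules (brightness and preferred diameter) are not implemented.

```python
import numpy as np
from scipy import ndimage

def bright_region_mask(image, threshold, min_area, max_area):
    """Binarize, label connected regions, and keep those within the area bounds."""
    binary = image > threshold
    labels, _ = ndimage.label(binary)
    areas = np.bincount(labels.ravel())[1:]          # pixel count of labels 1..count
    keep = np.flatnonzero((areas >= min_area) & (areas <= max_area)) + 1
    return np.isin(labels, keep)

def candidate_light_sources(frames, threshold=0.9, min_area=4, max_area=400):
    """Intersect the filtered bright regions of all (registered) frames."""
    masks = [bright_region_mask(f, threshold, min_area, max_area) for f in frames]
    common = np.logical_and.reduce(masks)
    return ndimage.label(common)                      # (label image, number of candidates)

# Two toy frames: one horizontal and one vertical streak crossing at (10, 9).
frame_a = np.zeros((20, 20))
frame_a[10, 5:13] = 1.0
frame_a[2, 2] = 1.0                                   # isolated bright pixel (too small)
frame_b = np.zeros((20, 20))
frame_b[6:14, 9] = 1.0

labels, count = candidate_light_sources([frame_a, frame_b], max_area=50)
```

Each labeled region in the result is a candidate for the unblurred light source; its extent gives an estimate of the source size and its position fixes where the light streak patches are cut out.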

After the unblurred light source diameter is determined, we can identify the corresponding light streak patch in each frame. The light streak patch size is an input parameter that serves as an estimate of the actual kernel size, and the patch location is determined from the light source location and the patch size. We filter out the background of each candidate light streak patch based on the intensity values to obtain the clean light streak patches \({\mathbf{p}}_{i} \;(i = 1,2, \ldots ,m)\). They serve as useful guidance for estimating the PSFs during optimization.

3.2 The optimization approach

Our proposed multi-frame blind deconvolution, with specific handling of the saturated pixels, aims to solve the following problem,

where \({\mathbf{S}}_{i}\) is the weight matrix already mentioned in Sect. 2, \({\mathbf{W}}_{j}\) is the convolution matrix of a derivative filter such as [−1, 1], and \(n\) denotes the total number of filters. \(|| \cdot ||_{p}^{p}\) denotes the \(p\)-th power of the \(l_{p}\)-norm. The \(l_{1}\)-norm-based term ensures that the estimated PSFs have a sparse energy distribution. \({\mathbf{R}}\) is the convolution matrix of the unblurred light source whose size is estimated in Sect. 3.1, and \({\mathbf{p}}_{i}\) denotes the light streak patch detected in the i-th blurred image. \(\lambda\), \(\xi\), and \(\mu\) are the regularization coefficients.

The overall solution is obtained by alternating the intermediate image deconvolution step (step 1) and the intermediate PSF estimation step (step 2) until convergence, which yields the final estimated PSFs; step 3 then performs the final image deconvolution using these PSFs.

Step 1 Fix the PSFs \({\mathbf{h}}_{i} \;(i = 1 , 2, \ldots ,m)\) and \({\mathbf{S}}_{i}\) estimated from the t-th iteration and estimate \({\mathbf{f}}\) for the iteration t + 1, i.e.,

Step 2 Fix current latent image \({\mathbf{f}}^{t + 1}\) and estimate the PSFs \({\mathbf{h}}_{i}^{t + 1} (i = 1 , 2, \ldots ,m)\), i.e.,

Step 3 After \(\tilde{T}\) alternating iterations of steps 1 and 2, we perform non-blind deconvolution using the final estimated PSFs \({\mathbf{h}}_{i}^{{\tilde{T}}}\). We again solve Eq. (7) for the final restoration, but with a different regularization coefficient \(\lambda\).

The overall restoration procedure is shown in Fig. 2.

The problem in step 1 includes determining the weight matrix \({\mathbf{S}}_{i}\) of each frame. This weight matrix is crucial for suppressing the deblurring artifacts caused by saturation. As in [21], we first classify the image pixels into two categories: inliers, whose formation satisfies the linear blur model, and outliers, which are clipped saturated pixels. Note that after blurring, a pixel that was originally clipped may be an inlier in the blurred image, so simply discarding saturated pixels with a threshold when deblurring is not suitable. A binary map \(b\) is introduced such that \(b_{x} = 1\) if the blurred pixel \(g_{x}\) is an inlier and \(b_{x} = 0\) otherwise, where \(x\) is the pixel index. The binary map can also be seen as a random variable since its true value is not known. We use the EM method to handle the expectation of the random variable \(b\) and compute the corresponding \({\mathbf{S}}\).

As stated above, if a pixel is an inlier, the conditional likelihood follows a Gaussian distribution. Otherwise, we also define the likelihood as a Gaussian distribution, but with a different mean and standard deviation. The distribution \(P({\mathbf{g}}_{ix} |b_{i} ,{\mathbf{h}}_{i} ,{\mathbf{f}})\) is as follows:

where \(N\) is the Gaussian distribution, \(\sigma\) and \(\sigma_{0}\) are standard deviations, and \(o\) is the constant Gaussian mean, which is assigned a high value. This distribution indicates that an outlier pixel follows a Gaussian distribution that is narrower than the inlier's distribution and centered at a relatively high intensity. This distribution models the saturated pixels better and produces better deblurring results. The likelihood \(P({\mathbf{g}}_{i} |b_{i} ,{\mathbf{h}}_{i} ,{\mathbf{f}})\) is the product of the per-pixel likelihoods of Eq. (9). After derivations using the EM method, the intermediate weight matrix \({\bar{\mathbf{S}}}_{i}\) is proportional to the expectation of \(b_{i}\) given \({\mathbf{g}}_{i} ,{\mathbf{h}}_{i} ,{\mathbf{f}}\). So we have

where \(P_{in}\) is the constant probability that a non-clipped observed pixel is assumed to be an inlier. When \(({\mathbf{H}}_{i} {\mathbf{f}})_{x}\) is outside the image dynamic range, the pixel cannot be an inlier, so its weight is zero. We find that regions which are outliers in one blurred frame and inliers in another can also affect the restoration of the original saturated pixels, and better results are obtained by further suppressing the weights of these related regions. To this end, each \({\bar{\mathbf{S}}}_{i}^{t}\) is segmented with a threshold (a value above 0.6 normally works) to obtain the binary mask \({\mathbf{M}}_{i}^{t}\). We then take the intersection of all the \({\mathbf{M}}_{i}^{t} \;(i = 1, \ldots ,m)\), namely \(\varOmega = \bigcap\nolimits_{i = 1}^{m} {{\mathbf{M}}_{i}^{t} }\), and define

Note that \(\varOmega\) is the same for all blurred frames. A pixel that is not in \(\varOmega\) indicates that, at that position, the area is saturated in at least one of the blurred images.
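The weight computation can be sketched as the posterior inlier probability of a two-component mixture, which is the usual outcome of an EM E-step for such a model. The displayed equations are not reproduced in this text, so the formula below is an assumption consistent with the description (Gaussian inlier and outlier likelihoods, prior \(P_{in}\), zero weight outside the dynamic range), not the paper's exact Eq. (10). The function names and the default \(\sigma\) are hypothetical, and the final rule that turns \(\varOmega\) into \({\mathbf{S}}_{i}\) is deliberately left out.

```python
import numpy as np

def gaussian_pdf(x, mean, sigma):
    return np.exp(-0.5 * ((x - mean) / sigma) ** 2) / (sigma * np.sqrt(2.0 * np.pi))

def inlier_weights(observed, predicted, sigma=0.02, sigma0=0.08, o=0.9,
                   p_in=0.9, lo=0.0, hi=1.0):
    """Posterior probability that each observed pixel is an inlier (assumed form).

    observed  : blurred frame g_i
    predicted : current prediction H_i f
    """
    inlier = p_in * gaussian_pdf(observed, predicted, sigma)
    outlier = (1.0 - p_in) * gaussian_pdf(observed, o, sigma0)
    weights = inlier / (inlier + outlier)
    in_range = (predicted >= lo) & (predicted <= hi)
    return np.where(in_range, weights, 0.0)           # out-of-range prediction: weight 0

def common_inlier_region(weight_maps, threshold=0.6):
    """Omega: pixels whose weight exceeds the threshold in every frame."""
    return np.logical_and.reduce([w > threshold for w in weight_maps])

predicted = np.array([0.30, 0.95, 1.50])
observed = np.array([0.30, 1.00, 1.00])
w = inlier_weights(observed, predicted)
omega = common_inlier_region([w, np.array([1.0, 1.0, 1.0])])
```

In this toy case the first pixel is a confident inlier, the second is ambiguous because it sits near the saturation level, and the third gets zero weight because the predicted intensity lies outside the dynamic range.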

Once \({\mathbf{S}}_{i}^{t}\) of the t-th iteration is determined, Eq. (7) can be minimized using the iteratively reweighted least squares (IRLS) algorithm [18]. The idea is to turn the \(l_{p}\) regularization term into a weighted \(l_{2}\)-norm term, which gives the following equation (for simplicity, we omit the iteration index t)

where \({\varvec{\upkappa}}_{j}\) is the weight matrix of \({\mathbf{W}}_{j} {\mathbf{f}}\) during each iteration of IRLS. The conjugate gradient (CG) method can then be used to obtain \({\mathbf{f}}\).
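The IRLS idea can be illustrated on a small 1-D problem. This is a minimal sketch under stated assumptions: a single derivative filter [−1, 1], an exponent \(p = 0.8\), a data term weighted directly by the entries of \({\mathbf{S}}\), and a dense linear solve standing in for CG. None of these choices, nor the function name, is taken from the paper.

```python
import numpy as np

def irls_deconv_1d(H, g, s, lam=0.01, p=0.8, iters=10, eps=1e-3):
    """Minimise sum_x s_x (Hf - g)_x^2 + lam * ||W f||_p^p by IRLS (1-D sketch)."""
    W = np.diff(np.eye(H.shape[1]), axis=0)       # rows hold the derivative filter [-1, 1]
    f = g.astype(float).copy()                    # initialise with the blurred signal
    for _ in range(iters):
        # Reweighting: |Wf|^p is replaced by kappa * (Wf)^2 with kappa = |Wf|^(p-2),
        # evaluated at the previous estimate (eps avoids division by zero).
        kappa = np.maximum(np.abs(W @ f), eps) ** (p - 2.0)
        A = H.T @ (s[:, None] * H) + lam * (W.T @ (kappa[:, None] * W))
        b = H.T @ (s * g)
        f = np.linalg.solve(A, b)                 # dense solve stands in for CG
    return f

n = 41
H = 0.6 * np.eye(n) + 0.2 * np.eye(n, k=1) + 0.2 * np.eye(n, k=-1)   # 3-tap blur
truth = np.where(np.arange(n) < 20, 0.0, 1.0)                         # step edge
blurred = H @ truth
restored = irls_deconv_1d(H, blurred, np.ones(n))
```

Each pass solves a weighted least-squares problem whose regularization weights are large where the current estimate is flat and small at edges, which is how the sparse gradient prior is approximated.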

In step 2, a new regularization term \(||{\mathbf{Rh}}_{i} - {\mathbf{p}}_{i} ||_{2}^{2}\) is added. It measures the similarity between the light streak and the PSF convolved with a disk-shaped unblurred light source, and it helps restrict the estimated PSF to approximately the right kernel shape. To solve the problem in this step, we again adopt the iteratively reweighted least squares (IRLS) method. Equation (8) is equivalent to the following equation:

where \(\varLambda_{i}\) is a diagonal matrix whose diagonal elements are the absolute values of \({\mathbf{h}}_{i}\), that is, \(\varLambda_{i} = {\text{diag}}(|{\mathbf{h}}_{i}^{t} |)\), and \(\varLambda_{i}^{ - 1}\) is the inverse matrix of \(\varLambda_{i}\). Note that \(\varLambda_{i}\) in each iteration is constructed from \({\mathbf{h}}_{i}^{t}\) of the previous iteration, so it is a known matrix in iteration t + 1. Setting the derivative of Eq. (13) with respect to \({\mathbf{h}}_{i}\) to zero gives the following:

where \({\mathbf{F}}\) is the convolution matrix of \({\mathbf{f}}\). This can be solved by the conjugate gradient (CG) method. In addition, saturated regions in the blurred images should be discarded to avoid their potential influence on the optimization. The estimated \({\mathbf{h}}_{i}\) should be normalized after each iteration to ensure that energy is preserved.
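Two ingredients of the kernel step, the light streak penalty \(||{\mathbf{Rh}}_{i} - {\mathbf{p}}_{i} ||_{2}^{2}\) and the normalization of the estimated PSF, can be sketched as follows. The helper names, the normalized disk, and the clipping of negative kernel values before normalization are assumptions made for illustration (the text only states that the kernel is normalized).

```python
import numpy as np
from scipy.signal import convolve2d

def disk(diameter):
    """Disk-shaped light source of the given diameter, normalised to sum 1 (assumed)."""
    r = (diameter - 1) / 2.0
    y, x = np.mgrid[:diameter, :diameter]
    mask = (x - r) ** 2 + (y - r) ** 2 <= (diameter / 2.0) ** 2
    return mask / mask.sum()

def light_streak_penalty(h, light_source, streak_patch):
    """|| R h - p ||_2^2, with R h computed as the PSF convolved with the light source."""
    predicted = convolve2d(h, light_source, mode="same")
    return float(np.sum((predicted - streak_patch) ** 2))

def normalize_psf(h):
    """Clip negative entries (an assumption) and rescale so the PSF sums to 1."""
    h = np.clip(h, 0.0, None)
    total = h.sum()
    if total <= 0.0:
        raise ValueError("PSF has no positive entries")
    return h / total

# Diagonal motion kernel and the light streak it would produce.
h_true = np.zeros((9, 9))
h_true[np.arange(2, 7), np.arange(2, 7)] = 1.0
h_true = normalize_psf(h_true)
source = disk(3)
patch = convolve2d(h_true, source, mode="same")

h_wrong = normalize_psf(np.fliplr(h_true))           # anti-diagonal kernel
penalty_true = light_streak_penalty(h_true, source, patch)
penalty_wrong = light_streak_penalty(h_wrong, source, patch)
```

The penalty vanishes for the kernel that generated the streak and grows for kernels of a different shape, which is how the term steers the estimate toward the observed streak.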

3.3 The implementation details

To use the proposed method, we first decide the estimated sizes of the PSFs and initialize the PSFs with the detected light streaks. \(\mu\) can be set to 0.1–1 according to the confidence in the light streak. If the saturated blurred images have no appropriate light streak patches, \(\mu\) should be set to 0, at the cost of a lower chance of obtaining accurate PSFs. The latent image can be initialized with any one of the blurred frames. The alternation of steps 1 and 2 for kernel estimation usually takes 5–10 iterations to converge. \(\lambda\) is usually set to 0.005–0.01 and \(\xi\) to 0.1–0.5. Setting both the constant probability \(P_{in}\) and the Gaussian mean \(o\) to 0.9 normally gives good results. \(\sigma\) is set according to the image noise level, and \(\sigma_{0} = 0.08\) is appropriate for most cases. In step 3, \(\lambda\) is usually set to a smaller value of 0.001–0.005.

4 Experimental results and evaluation

In this section, we first apply our proposed method to synthetic blurred images that are partially saturated. We compare the performance of our deblurring scheme with other methods, using the peak signal-to-noise ratio (PSNR) and the structural similarity index (SSIM) [26] to evaluate the results. Restoration results for real-world blurred saturated images are also presented. Our testing environment is a PC running Windows 7 with an Intel Core i7 CPU and 8 GB RAM.
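For reference, PSNR can be computed with the standard definition below. The function name and the peak value of 1.0 (images scaled to [0, 1]) are assumptions; SSIM is more involved and is available in common libraries, for example `skimage.metrics.structural_similarity` in scikit-image.

```python
import numpy as np

def psnr(reference, restored, peak=1.0):
    """Peak signal-to-noise ratio in dB for images with the given peak value."""
    reference = np.asarray(reference, dtype=float)
    restored = np.asarray(restored, dtype=float)
    mse = np.mean((reference - restored) ** 2)
    if mse == 0.0:
        return float("inf")                      # identical images
    return 10.0 * np.log10(peak ** 2 / mse)

reference = np.zeros((8, 8))
restored = np.full((8, 8), 0.1)                  # uniform error of 0.1, so MSE = 0.01
value = psnr(reference, restored)
```

A higher PSNR means a smaller mean squared error with respect to the ground-truth image, so it can only be used when the clear image is known, as in the synthetic experiments.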

4.1 Performance of the proposed method on synthetic images

An 800 × 800 clear image, shown in Fig. 3, is synthetically blurred with three given PSFs, and its high-intensity pixels are clipped, to generate the blurred frames. As the test image is large, we show only part of it so that details can be seen more clearly. The PSFs and parts of the three blurred frames are shown in Fig. 4. The latent image is initialized with the blurred image shown in Fig. 4a and the PSFs are initially estimated with the detected light streaks shown in Fig. 5a.

Figure 5d shows the restored image using our algorithm after 6 iterations of kernel estimation. Using the estimated kernels, the final PSNR value of the restored image is 24.38 dB. The estimated PSFs are shown in Fig. 5a. Compared with the given true PSFs, the estimated PSFs are basically accurate. The restored image is of high quality and has no artifacts around the saturated regions. We also compare deblurring results obtained with fewer blurred frames. The PSNR of the proposed method after each iteration using one, two, and three blurred frames, plotted in Fig. 6, clearly shows the better performance of multi-frame input. In particular, when only one blurred frame is provided, the problem reduces to single-image blind deconvolution. Here, we use the first blurred frame and the corresponding light streak, but the deconvolution result loses many details and is not satisfactory. In addition, we compare the PSNR obtained when restoring the blurred images without considering the saturated regions. Its relatively low value is mainly due to the ringing artifacts around the saturated pixels.

We also remove the light streak regularization constraint and try to restore the blurred frames. After various attempts, we find it hard to estimate the PSFs accurately, and the PSNR value decreases as the number of iterations increases. This demonstrates the effectiveness of adding the light streak constraint in the kernel estimation step.

4.2 Comparisons with other deconvolution methods

We compare our method with four deconvolution methods to verify its reliability: the methods proposed in Refs. [13, 16], which are widely used in single-image blind deconvolution, the saturation-suppression-based method presented in Ref. [22], and the recent multi-frame deconvolution method proposed in Ref. [19]. The PSNRs and SSIMs are listed in Table 1. Our algorithm achieves a much higher PSNR and SSIM than the others. The first two methods [13, 16] cannot estimate the PSF accurately, so their poor deconvolution results are to be expected. The PSF estimated using Ref. [22] is acceptable, and the restoration result is shown in Fig. 5b. Figure 5c is the image restored using the multi-frame method in Ref. [19], which can estimate the PSFs roughly but leaves artifacts around saturated pixels. In contrast, our proposed method gives better restoration in terms of both image details and saturated regions.

4.3 Experiments on real partially saturated blurred images

We capture the real partially saturated blurred images with a Canon 70D digital camera, using the continuous shooting mode so that every frame has the same exposure time. As we can see, the estimated PSFs and restored images in these experiments are of high quality.

In Fig. 7, the 800 × 800 blurred frames of a coffee shop are shown, and the images restored by the different methods are in Fig. 7d–f. The detected light streak patches and estimated PSFs are shown in Fig. 2. To show the results more clearly, we select two areas, marked with green and yellow squares in Fig. 7f. The green area is one of the saturated regions and the yellow area is one of the regular regions; our method gives the most satisfactory result in both areas. We can see from the results that the method in Ref. [16] has difficulty estimating the PSF, so the restored image is still blurry. The method in Ref. [13] can estimate the PSF after careful parameter selection, but the result has obvious ringing around saturated regions, as shown in Fig. 8b. We use Fig. 7c to perform the restoration with Ref. [22]; the result is acceptable but loses many details, as shown in Fig. 8h. This method is also not robust, as it cannot recover a reasonable PSF from the other two blurred images. Figure 7e and the corresponding patches in Fig. 8d, i are results from Ref. [19] using multiple blurred images. Because of the inaccurate PSF estimation and the untreated saturated pixels, the deconvolution result has obvious ringing artifacts.

We also test our method in another scenario, a low-light environment shown in Fig. 9. Even with the camera's ISO set to 2000, the captured images tend to be blurry, and the high ISO value implies a higher noise level. Nevertheless, the restored image in Fig. 9d is of high quality as well, which indicates the robustness of our method.

There are times when no appropriate light streaks are found in the blurred images. If the blur is not large and the saturated regions are relatively small, our method can still obtain a satisfactory estimate of the PSFs when the light streak regularization coefficient is set to 0. Figure 10 shows an example of this scenario; a good restoration result, shown in Fig. 10d, is obtained without the light streak constraint.

Despite the effective blind deconvolution results, our method has some limitations. Compared with single-image deconvolution methods, our algorithm is computationally expensive: one iteration takes about 10 s for an 800 × 800 image. We are working on combining our method with GPU acceleration. In addition, when the scene has too many saturated regions and light streaks cannot be found, our approach can produce unsatisfactory results since the PSFs cannot be estimated accurately.

5 Conclusion

In this paper, we propose an approach for restoring multi-frame blurred images that suffer from partial saturation. In the kernel estimation step, a regularization term based on light streaks is introduced to help estimate the PSFs. We also combine the multi-frame deconvolution framework with a separate constraint on the related saturated image regions during the deconvolution iterations. Visual inspection and the image assessment metrics PSNR and SSIM show that the proposed method is robust in kernel estimation and effective in dealing with the saturated deconvolution problem.

References

Richardson, W.H.: Bayesian-based iterative method of image restoration. J. Opt. Soc. Am. 62(1), 55–59 (1972)

Dong, W.D., Feng, H.J., Xu, Z.H., Li, Q.: A piecewise local regularized Richardson–Lucy algorithm for remote sensing image deconvolution. Opt. Laser Technol. 43(5), 926–933 (2011)

Krishnan, D., Fergus, R.: Fast image deconvolution using hyper-Laplacian priors. In: Proceedings of Neural Information Processing Systems (NIPS), pp. 1033–1041 (2009)

Li, A., Li, Y.B., Liu, Y.: Image restoration with dual-prior constraint models based on Split Bregman. Opt. Rev. 20(6), 491–495 (2013)

Xu, Z., Lam, E.Y.: Maximum a posteriori blind image deconvolution with Huber–Markov random-field regularization. Opt. Lett. 34(9), 1453–1455 (2009)

Xu, L., Jia, J.: Two-phase kernel estimation for robust motion deblurring. In: Proceedings of European Conference on Computer Vision (ECCV), pp. 157–170 (2010)

Zhang, J.L., Zhang, Q.H., He, G.M.: Blind image deconvolution by means of asymmetric multiplicative iterative algorithm. J. Opt. Soc. Am. 25(3), 710–717 (2008)

Suetake, N., Sakano, M., Uchino, E.: Gradient-based blind deconvolution with phase spectral constraints. Opt. Rev. 13(6), 417–423 (2006)

Tikhonov, A.N.: On the stability of inverse problems. Dokl. Akad. Nauk SSSR 39(3), 176–179 (1943)

Bioucas-Dias, J.M., Figueiredo, M.A.T., Oliveira, J.P.: Total variation-based image deconvolution: a majorization–minimization approach. In: Proceedings of ICASSP, pp. 861–864 (2006)

Wang, Y.L., Yang, J.F., Yin, W.T., Zhang, Y.: A new alternating minimization algorithm for total variation image reconstruction. SIAM J. Imaging Sci. 1(3), 248–272 (2008)

Cai, J.F., Dong, B., Osher, S., Shen, Z.: Image restoration: total variation, wavelet frames, and beyond. J. Am. Math. Soc. 25(4), 1033–1089 (2012)

Cho, S., Lee, S.: Fast motion deblurring. ACM Trans. Gr. 28(5), 89–97 (2009)

Fergus, R., Singh, B., Hertzmann, A., Roweis, S.T., Freeman, W.T.: Removing camera shake from a single photograph. ACM Trans. Gr. 25(3), 787–794 (2006)

Shan, Q., Jia, J., Agarwala, A.: High-quality motion deblurring from a single image. ACM Trans. Gr. 27(3), 15–19 (2008)

Xu, L., Zheng, S., Jia, J.: Unnatural L0 sparse representation for natural image deblurring. In: Computer vision and pattern recognition (CVPR), pp. 1107–1114 (2013)

Hope, D.A., Jefferies, S.M.: Compact multiframe blind deconvolution. Opt. Lett. 36(6), 867–869 (2011)

Dong, W., Feng, H., Xu, Z., Li, Q.: Multi-frame blind deconvolution using sparse priors. Opt. Commun. 285(9), 2276–2288 (2012)

Sroubek, F., Milanfar, P.: Robust multichannel blind deconvolution via fast alternating minimization. IEEE Trans. Image Process. 21(4), 1687–1700 (2012)

Whyte, O., Sivic, J., Zisserman, A.: Deblurring shaken and partially saturated images. Int. J. Comput. Vis. 110(2), 185–201 (2014)

Cho, S., Wang, J., Lee, S.: Handling outliers in non-blind image deconvolution. In: Proceedings of IEEE International Conference on Computer Vision (ICCV), pp. 495–502 (2011)

Hu, Z., Cho, S., Wang, J., Yang, M.H.: Deblurring low-light images with light streaks. In: Computer Vision and Pattern Recognition (CVPR), pp. 3382–3389 (2014)

Liu, H., Sun, X., Fang, L., Wu, F.: Deblurring saturated night image with function-form kernel. IEEE Trans. Image Process. 24(11), 4637–4650 (2015)

Hu, B. S., Low, K.L.: Interactive motion deblurring using light streaks. In: Proceedings of IEEE International Conference on Image Processing (ICIP), pp. 1553–1556 (2011)

Kennedy, J., Eberhart, R.: Particle swarm optimization. In: Proceedings of IEEE International Conference on Neural Networks, pp. 1942–1948 (1995)

Wang, Z., Bovik, A.C., Sheikh, H.R., Simoncelli, E.P.: Image quality assessment: from error visibility to structural similarity. IEEE Trans. Image Process. 13(4), 600–612 (2004)

Acknowledgments

We thank the reviewers for helping us to improve this paper. This work is supported by National Natural Science Foundation of China (Grant No. 61550003).

Ye, P., Feng, H., Xu, Z. et al. Multi-frame partially saturated images blind deconvolution. Opt Rev 23, 907–916 (2016). https://doi.org/10.1007/s10043-016-0269-8

DOI: https://doi.org/10.1007/s10043-016-0269-8