Abstract

The minimization of operation costs for natural gas transport networks is studied. Based on a recently developed model hierarchy ranging from detailed models of instationary partial differential equations with temperature dependence to highly simplified algebraic equations, modeling and discretization error estimates are presented to control the overall error in an optimization method for stationary and isothermal gas flows. The error control is realized by switching to more detailed models or finer discretizations if necessary to guarantee that a prescribed model and discretization error tolerance is satisfied in the end. We prove convergence of the adaptively controlled optimization method and illustrate the new approach with numerical examples.


1 Introduction

In this paper, we discuss the minimization of operation costs for natural gas transport networks based on a model hierarchy (see [11, 21]), which ranges from detailed models based on instationary partial differential equations with temperature dependence to highly simplified algebraic equations. The detailed models are necessary to achieve a good understanding of the system state, but in many practical optimization applications, only the stationary algebraic equations (or even further simplifications like piecewise linearizations as in [15, 16, 32]) are used in order to reduce the high computational effort of evaluating the state of the system with the more sophisticated models. However, it is then unclear how well the true state is approximated by these simplified models, and error bounds are typically not available in this context (see the chapter [22] in [23] for a more detailed discussion of this issue). Recently, in [37], a detailed error and perturbation analysis has been developed for several components in the model hierarchy, and it has been shown how the more detailed model components can be used to estimate the error obtained in the simplified models.

Here, we use these error estimates from the model hierarchy together with classical grid adaptation techniques based on error estimates for the space discretization within an optimization method to control the error adaptively by switching to more detailed models or finer discretizations if necessary. Moreover, our adaptive method also allows switching back locally to coarser models or to coarser discretizations if they are sufficiently accurate with respect to the local flow situation. Our new approach can, in general, be used for the entire model hierarchy by also using space-time grid adaptation. However, to keep things simple and to illustrate the functionality of the new adaptive approach, we will use three stationary isothermal models from the hierarchy in [11].

Using adaptive discretization methods to achieve a trade-off between computational efficiency and accuracy in optimization and optimal control problems is an important research topic, in particular in the context of real-time optimal control of constrained dynamical systems (see, e.g., [3, 8, 9, 29]) or in the context of optimal control of problems constrained by partial differential equations (see, e.g., [1, 24, 25, 26]). We extend these ideas and combine adaptive grid refinement and model selection in a model hierarchy in the context of nonlinear optimization problems. We also theoretically analyze the new algorithm. First promising numerical results for such an approach were presented in [34, 35].

The paper is structured as follows. The models used in this paper are described in Section 2 together with a simple first-order Euler method for the space discretization. In Section 3, we introduce model and discretization error estimators, which are used in Section 4 to derive an adaptive model and discretization control algorithm for the nonlinear optimization of gas transport networks that, in the end, delivers solutions that satisfy prescribed error tolerances. Numerical results are presented in Section 5 and the paper concludes in Section 6.

2 Problem Description, Modeling Hierarchy, and Discretizations

In this section, we introduce the problem of operation cost minimization for natural gas transport networks. We present our overall model of a gas transport network involving continuous nonlinear models describing a stationary flow for all the considered network elements. Since the majority of the elements are pipes, our focus lies on the precise and physically accurate modeling of these pipes. The typical models for the pipe flow are nonlinear instationary partial differential equations (PDEs) on a graph and their appropriate space-time discretizations. To address the fact that the behavior of the flow and the accuracy of the model may vary significantly in different regions of the network, we discuss a small part of the complete model hierarchy of instationary models (see [11]), where the lower level models in the hierarchy are simplifications of the higher level models. Which model is most appropriate to obtain a computationally tractable, adequately accurate, and finite-dimensional approximation depends on the task that needs to be performed with the model.

Our modeling approach is based on the following physical assumptions. First, we only consider a stationary gas flow, i.e., we neglect all time effects of gas dynamics, so that we have ordinary differential equations (ODEs) in space instead of systems of PDEs on a graph. Second, we assume an isothermal regime, i.e., we neglect all effects arising from changes in the gas temperature.

These assumptions are chosen carefully such that we still obtain physically meaningful solutions and are still able to derive and analyze an adaptive model and discretization control algorithm, without overloading the models with technical details of the application that may distract from the main mathematical ideas.

2.1 The Network

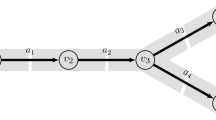

We model the gas transport network by a directed and connected graph G = (V, A). The node set is made up of entry nodes V+, where gas is supplied, of exit nodes V−, where gas is discharged, and of inner nodes V0, i.e., we have V = V+ ∪ V− ∪ V0. The set of arcs in our models comprises pipes Api and compressor machines Acm, i.e., we have A = Api ∪ Acm.

Real-world gas transport networks contain many other element types like (control) valves, short cuts, or resistors. For detailed information on modeling these devices, see [14] in general or [34, 35] for a focus on nonlinear programming (NLP) type models. However, we restrict ourselves to models with pipes and compressors in order to streamline the presentation of our basic ideas and methods, and to show in a prototypical way that our approach of space discretization and model adaptivity leads to major accuracy and efficiency improvements.

As basic quantities we introduce gas pressure variables pu at all nodes u ∈ V and mass flow variables qa at all arcs a ∈ A of the network. Both types of variables are bounded due to technical constraints on the pipes, i.e.,

All other required quantities are introduced where they are used first.

2.2 Nodes

In stationary gas network models, the nodes u ∈ V are modeled by a mass balance equation, i.e., we have the constraint

where for ingoing arcs we use the notation

and for outgoing arcs

Moreover, qu models the supplied or discharged mass flow at the corresponding node, i.e., we have
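As a small illustration of the node model, the following sketch evaluates mass balance residuals on a toy network. The sign convention (nodal flow counted positive at entry nodes and negative at exit nodes, so that inflow minus outflow plus nodal flow vanishes) and the names `mass_balance_residuals`, `arcs`, `flows`, and `nodal_flow` are assumptions made for this example; the convention used in the original equations may differ in sign.

```python
def mass_balance_residuals(nodes, arcs, flows, nodal_flow):
    """Return inflow - outflow + nodal flow for every node (zero means balanced).

    arcs is a list of (tail, head) pairs, flows holds the matching mass flows q_a,
    and nodal_flow maps a node u to q_u (assumed > 0 at entries, < 0 at exits).
    """
    residual = {u: nodal_flow.get(u, 0.0) for u in nodes}
    for (tail, head), q in zip(arcs, flows):
        residual[tail] -= q  # the arc leaves its tail node
        residual[head] += q  # the arc enters its head node
    return residual


# Toy network: entry s, inner node v, exit t, connected in series.
nodes = ["s", "v", "t"]
arcs = [("s", "v"), ("v", "t")]
nodal_flow = {"s": 5.0, "t": -5.0}
balanced = mass_balance_residuals(nodes, arcs, [5.0, 5.0], nodal_flow)
unbalanced = mass_balance_residuals(nodes, arcs, [5.0, 4.0], nodal_flow)
```

In the second call, one unit of mass flow is lost on the arc from `v` to `t`, which shows up as nonzero residuals at exactly these two nodes.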

2.3 Pipes

Isothermal gas flow through cylindrical pipes is described by the Euler equations for compressible fluids,

see, e.g., [13, 27] for a detailed discussion. Here and in what follows, ρ is the gas density, v is its velocity, λ = λ(q) is the friction term, A denotes the cross-sectional area of the pipe, h′ is its slope, and D is the diameter of the pipe. Furthermore, g is the acceleration due to gravity, t is the temporal coordinate, and x ∈ [0, L] is the spatial coordinate with L being the length of the pipe. Equation (3a) is called the continuity equation and (3b) the momentum equation. Since we only consider the stationary case, all partial derivatives with respect to time vanish and we obtain the simplified stationary model

Thus, the continuity equation in its stationary variant simply states that the mass flow along the pipe is constant, i.e., q(x) ≡ q = const for all x ∈ [0, L].

To simplify the stationary momentum equation (4b), we consider two more model equations. First, the equation of state

where c is the speed of sound, Rs is the specific gas constant, and z is the compressibility factor. The second model is the relation of gas mass flow, density, and velocity given by

Substituting both these models into (4b), we obtain

i.e., the stationary momentum equation written in dependence of the gas pressure p = p(x), x ∈ [0, L], and the mass flow q.

A simplified version of the latter equation can be obtained by ignoring the ram pressure term

in (4b), i.e., the total pressure exerted on the gas by the pipe wall, or, equivalently, the term

in (M1). For a discussion of this simplification step, see [38]. Neglecting the ram pressure term (5) yields

Finally, one may also neglect gravitational forces, i.e., set the term \(gh^{\prime } p / c^{2}\) to 0 and obtain

Analytical solutions for the models (M1)–(M3) are only rarely known (see, e.g., [18, 19, 34]). Thus, in order to obtain finite-dimensional nonlinear optimization models, we discretize these differential equations in space. Applying, e.g., the implicit Euler method, we obtain

where pk = p(xk) and \({\Gamma } = \{x_{0}, x_{1},\dots , x_{n}\}\) is an equidistant spatial discretization of the pipe with constant stepsize h = xk − xk−1 and x0 = 0, xn = L. Of course, one could also apply a higher-order Runge–Kutta method, which would allow a larger stepsize and would thus reduce the computational cost.
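To make the discretization concrete, the following sketch applies the implicit Euler method to the coarsest pipe model, assuming it has the form dp/dx = −K/p, where the hypothetical constant `K` lumps the friction coefficient λc²|q|q/(2A²D). Under this assumption, each implicit step reduces to a quadratic equation in pk, and the continuous solution p(x)² = p0² − 2Kx is available for comparison. All names and numerical values are illustrative.

```python
import math


def implicit_euler_m3(p0, K, L, n):
    """Integrate dp/dx = -K / p on [0, L] with n implicit Euler steps.

    Each step solves (p_k - p_prev) / h = -K / p_k, i.e. the quadratic
    p_k**2 - p_prev * p_k + h * K = 0. The larger root is the one that
    tends to p_prev as h -> 0.
    """
    h = L / n
    p = [p0]
    for _ in range(n):
        disc = p[-1] ** 2 - 4.0 * h * K
        if disc < 0.0:
            raise ValueError("stepsize too large for this pressure drop")
        p.append(0.5 * (p[-1] + math.sqrt(disc)))
    return p


def exact_m3(p0, K, x):
    """Closed-form solution of dp/dx = -K / p with p(0) = p0."""
    return math.sqrt(p0 ** 2 - 2.0 * K * x)


# Illustrative data: inlet pressure 60, K = 10, pipe length 100.
coarse = implicit_euler_m3(60.0, 10.0, 100.0, 100)
fine = implicit_euler_m3(60.0, 10.0, 100.0, 200)
err_coarse = abs(coarse[-1] - exact_m3(60.0, 10.0, 100.0))
err_fine = abs(fine[-1] - exact_m3(60.0, 10.0, 100.0))
```

Halving the stepsize should roughly halve the error at the pipe outlet, in line with the first-order convergence of the implicit Euler method.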

These discretizations extend the model hierarchy (M1)–(M3) for the Euler equations by infinitely many models that are parameterized by the discretization stepsize h applied in (D1)–(D3). In summary, we obtain the pipe model hierarchy of stationary Euler equations depicted in Fig. 1.
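For orientation, the three continuous pipe models can be written out as follows. This is a reconstruction from the definitions in the text, not a copy of the original displays: it assumes the stationary momentum equation in the standard form dp/dx + (1/A) d(qv)/dx = −λ|v|vρ/(2D) − gρh′, the equation of state p = c²ρ, and the relation q = Aρv with constant q. The gravity term agrees with the term \(gh^{\prime } p / c^{2}\) named in the text.

```latex
% Ram pressure term after substituting v = q c^2 / (A p), with q constant:
\[
\frac{1}{A}\frac{\mathrm{d}(qv)}{\mathrm{d}x}
  = \frac{c^2 q^2}{A^2}\,\frac{\mathrm{d}}{\mathrm{d}x}\!\left(\frac{1}{p}\right)
  = -\frac{c^2 q^2}{A^2 p^2}\,\frac{\mathrm{d}p}{\mathrm{d}x}
\]
% (M1): full stationary isothermal momentum equation in terms of p and q
\[
\left(1 - \frac{c^2 q^2}{A^2 p^2}\right)\frac{\mathrm{d}p}{\mathrm{d}x}
  = -\frac{\lambda c^2 |q|\,q}{2 A^2 D\,p} - \frac{g h' p}{c^2}
\]
% (M2): ram pressure term neglected
\[
\frac{\mathrm{d}p}{\mathrm{d}x}
  = -\frac{\lambda c^2 |q|\,q}{2 A^2 D\,p} - \frac{g h' p}{c^2}
\]
% (M3): ram pressure term and gravity neglected
\[
\frac{\mathrm{d}p}{\mathrm{d}x}
  = -\frac{\lambda c^2 |q|\,q}{2 A^2 D\,p}
\]
```

Each level drops one term of the level above, so the cost of evaluating the right-hand side decreases from (M1) to (M3).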

2.4 Compressors

Compressor machines a = (u, w) ∈ Acm increase the inflow gas pressure to a higher outflow pressure, i.e., they can be described in a simplified way by

Moreover, for simplicity, we assume that we are given cost coefficients ωa ≥ 0 for every compressor a ∈ Acm that convert the pressure increase into compression cost. Of course, this is an extremely coarse approximation of a compressor machine. An alternative would be to use a simple input-output surrogate model obtained from a realization or system identification of an input-output transfer function (see, e.g., [5]). However, our focus is on an accurate modeling of the gas flow in pipes and on deriving an adaptive model and discretization control algorithm. Model (6) allows for setting up a reasonable objective function for our NLPs and is thus appropriate in this work. For more details, see [31, 34, 35] or [14].

2.4.1 The Optimization Problem

We will use the adaptive model and discretization control algorithm in the context of the following nonlinear ODE-constrained optimization problem

where our objective function models the cost for the compressor activity that is constrained by an infinite-dimensional description of the gas flow in pipes. Problem (2.4.1) is a classical nonlinear optimal control problem. A typical approach to solve such problems in practice is the first-discretize-then-optimize paradigm (see, e.g., [2]). In this setting, one replaces the ODE constraints by finite sets of nonlinear constraints that arise, e.g., from implicit Euler discretizations like (D1) for (M1). Moreover, practical experience suggests that for the evaluation of the constraints, it is often not required to apply the most accurate model like (D1) with a small stepsize for every pipe in the network. Instead, in many situations, it is sufficient to use simplified models like (D2) and (D3) with a coarse grid, which then typically yields fast execution times for the evaluation of the constraint functions.

To this end, we define discretized problem variants of Problem (2.4.1) by specifying the model level ℓa ∈ {1, 2, 3} for every arc a ∈ Api (i.e., the discretized model (D1), (D2), or (D3), respectively) together with a stepsize ha. This yields the family of finite-dimensional NLPs

Note that the constraints (7b)–(7d) in the infinite-dimensional problem are exactly the same as constraints (8b)–(8d) in the family of discretized problems.

3 Error Estimators

In this section, we introduce a first-order estimate for the error between the most detailed infinite-dimensional and an arbitrary space-discretized model. This error estimator is obtained as the sum of a discretization and a model error estimator. Since we consider the stationary case, mass flows in pipes are constant in the spatial dimension. This is why we base our error estimators on the differences of the pressures p(x) for different models and discretizations.

Suppose that for a given pipe a ∈ Api, the model level ℓa ∈ {1, 2, 3} with discretization stepsize ha is currently used for the computations. The overall solution of the optimization problem for the entire network, also including pressure increases in compressors etc., is denoted by y and contains the discretized pressure distributions of the separate pipes a ∈ Api, which we denote by \(p^{\ell _{a}}(x_{k};h_{a})\) with discretization grid \({\Gamma }_{1} = \{x_{k}\}_{k = 0}^{L_{a}/h_{a}}\) obtained by using the stepsize ha. We now compute an estimate for the error between the solution of the currently used model \((\text {D}_{\ell _{a}})\) and the solution of the reference model (M1). Let the solution of model (M1) for pipe a ∈ Api be denoted by \(\hat {p}(x)\) with x ∈ [0, La].

Furthermore, let the solutions of Model (D1) with discretization grids \({\Gamma }_{2} = \{x_{s}\}_{s = 0}^{L_{a}/(2h_{a})}\) and \({\Gamma }_{3} = \{x_{r}\}_{r = 0}^{L_{a}/(4h_{a})}\) using stepsizes 2ha and 4ha, be denoted by p1(xs;2ha) and p1(xr;4ha), respectively. Due to the larger stepsize, the computation of these two solutions is in general less expensive than computing a solution of Model \((\text {D}_{\ell _{a}})\) on the grid Γ1. Since the discretization grid Γ3 is the coarsest grid and all computed pressure profiles can be evaluated on this grid, Γ3 is called the evaluation grid. This grid is used in the definitions of the following error estimators. The considered discretization grids and the evaluation grid are depicted in Fig. 2.

For a pipe a ∈ Api, let the discretization error estimator be defined by

and let the model error estimator be defined by

Here,

denotes the solution of Model \((\text {D}_{\ell _{a}})\) computed with stepsize ha that is evaluated at the gridpoints xr, i.e., on the grid Γ3. If ℓa = 1, i.e., if the considered solution already corresponds to the most accurate model, then we set the model error to zero, i.e., ηm,a(y) = 0. Furthermore, let the overall error estimator ηa(y) for a pipe a ∈ Api be defined to be a first-order upper bound for the maximum error between the solutions of models (M1) and \((\text {D}_{\ell _{a}})\) at gridpoints xr with stepsize 4ha. Thus, we have

where \(\doteq \) denotes a first-order approximation in ha (see [36, p. 420]), and we use that the implicit Euler method has convergence order 1. The error estimator ηa(y) is the absolute counterpart of the componentwise relative error estimator given in [37]. An overview of the considered models in this section together with the considered stepsizes is depicted in Fig. 3.

We close this section with a remark on the computation of the discretization error estimator in (9). A straightforward way is to solve Model (D1) once with stepsize 2ha and once again with stepsize 4ha for every a ∈ Api. Another possibility would be to use an embedded Runge–Kutta method (see, e.g., [20]), which in general saves computational cost due to the reduced number of function evaluations.
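The estimators of this section can be sketched in a few lines once the pressure profiles are available as lists of nodal values. The sketch below assumes the maximum norm over the evaluation grid Γ3, as suggested by the phrase "maximum error" above. Because the text refers to the level-ℓa profile both with stepsize ha and with stepsize 2ha, the hypothetical parameter `stride` selects how that profile is restricted to Γ3 (4 for stepsize ha, 2 for stepsize 2ha). All function names are illustrative.

```python
def max_abs_difference(u, v):
    """Maximum absolute nodal difference of two profiles on the same grid."""
    if len(u) != len(v):
        raise ValueError("profiles must live on the same grid")
    return max(abs(a - b) for a, b in zip(u, v))


def discretization_error_estimator(p1_2h, p1_4h):
    """Compare the (D1) profiles for stepsizes 2h and 4h on the 4h grid."""
    return max_abs_difference(p1_2h[::2], p1_4h)


def model_error_estimator(p1_2h, p_level, level, stride):
    """Compare the (D1) profile with the level-l profile on the 4h grid."""
    if level == 1:
        return 0.0  # the current model already is the most accurate one
    return max_abs_difference(p1_2h[::2], p_level[::stride])


# Made-up nodal pressures on a pipe of length 8h.
p1_2h = [10.0, 9.0, 8.0, 7.0, 6.0]                      # (D1), stepsize 2h
p1_4h = [10.0, 8.5, 6.4]                                # (D1), stepsize 4h
p3_h = [10.0, 9.6, 9.2, 8.7, 8.3, 7.8, 7.2, 6.7, 6.1]   # (D3), stepsize h
eta_d = discretization_error_estimator(p1_2h, p1_4h)
eta_m = model_error_estimator(p1_2h, p3_h, level=3, stride=4)
eta = eta_m + eta_d  # overall estimator as the sum of both parts
```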

4 The Grid and Model Adaptation Algorithm

In this section, we present and analyze an algorithm that adaptively switches between the model levels in the hierarchy of Fig. 1 and adapts discretization stepsizes in order to find a convenient trade-off between physical accuracy and computational costs. To this end, the algorithm iteratively solves NLPs and initial value problems (IVPs). Solutions of the latter are used to evaluate the error estimators discussed in the last section and to decide on the model levels and the discretization stepsizes for the next NLP.

Consider a single NLP of the sequence of NLPs that are solved during the algorithm and assume that pipe a ∈ Api is modeled using model \((\text {D}_{\ell _{a}})\) and stepsize ha. Let the solution of this NLP be denoted by y. According to the last section, the overall model and discretization error estimator for this pipe is given by ηa(y) as defined in (11). Thus, it is given by the error estimator between the solutions of the most accurate model (M1) and the current model \((\text {D}_{\ell _{a}})\).

The overall goal of our method is to compute a solution of a member of the family of discretized problems (8a) for which it is guaranteed that the estimated average error per pipe with respect to the reference model (M1) is less than a tolerance ε > 0 given a priori. This leads us to the following definition:

Definition 1

(ε-feasibility) Let ε > 0 be given. We say that a solution y of problem (8a) with discretized models \((\text {D}_{\ell _{a}})\), ℓa ∈ {1, 2, 3}, and stepsizes ha for the pipes a ∈ Api is ε-feasible with respect to the reference problem (2.4.1) if
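Read together with the goal stated before Definition 1, the condition bounds the estimated average error per pipe by ε. A minimal check under that reading could look as follows; the averaging over all pipes and the non-strict inequality are assumptions taken from the prose, and `is_eps_feasible` is a hypothetical name.

```python
def is_eps_feasible(eta, eps):
    """Check whether the average overall estimator per pipe is at most eps.

    eta maps each pipe a to its overall error estimator eta_a(y).
    """
    if not eta:
        raise ValueError("at least one pipe is required")
    return sum(eta.values()) / len(eta) <= eps


# Two pipes with estimators 0.25 and 0.75: the average error is 0.5.
estimators = {"pipe_1": 0.25, "pipe_2": 0.75}
loose = is_eps_feasible(estimators, eps=0.5)
tight = is_eps_feasible(estimators, eps=0.4)
```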

The remainder of this section is organized as follows. Section 4.1 introduces rules about how the model levels and discretization stepsizes are modified. The strategies for marking pipes for model or grid adaptation are explained in Section 4.2. The adaptive model and discretization control algorithm is introduced in Section 4.3, together with a theorem for the finite termination of the algorithm. Finally, some remarks regarding the adaptive control algorithm are given in Section 4.4.

4.1 Model and Discretization Adaptation Rules

Before we present and discuss the overall adaptive model control algorithm, we have to

1. describe the mechanisms of switching up or down pipe model levels as well as that of refining and coarsening the discretization grids, and

2. discuss our marking strategy that determines the arcs on which the model or grid should be adapted.

We start with the first issue and follow the standard PDE grid adaptation technique (see, e.g., [6, 7, 12] or [4]). The general strategy is as follows. We switch up one level in the model hierarchy if this yields an error reduction that is larger than ε; otherwise, we switch up to the most accurate discretized model (D1). Hence, for pipe a ∈ Api, we have the rule

for switching up levels in the model hierarchy. We apply this rule because it is possible that the effects of neglecting the ram pressure term (which is the difference between model levels ℓ = 1 and ℓ = 2) and neglecting gravitational forces for non-horizontal pipes (which is the difference between model levels ℓ = 2 and ℓ = 3) balance each other out in the computation of the pressure profile of model (D3). In this case, switching from model (D3) to (D2) would increase the model error, which is why we switch from (D3) to (D1) directly.
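The switching rule described above can be sketched as a small function. This follows the prose only (one level up if that reduces the model error estimator by more than ε, otherwise directly to the most accurate level). The argument names are hypothetical, and the estimator of the next finer level is assumed to be supplied by the caller.

```python
def switch_up_level(level, eta_m_current, eta_m_one_up, eps):
    """Return the new model level for a pipe marked for switching up.

    level:          current level in {1, 2, 3}, where 1 is the most accurate
    eta_m_current:  model error estimator at the current level
    eta_m_one_up:   model error estimator at level - 1
    """
    if level == 1:
        return 1  # already at the most accurate model
    if eta_m_current - eta_m_one_up > eps:
        return level - 1  # one step up yields a sufficient error reduction
    return 1  # otherwise jump to the most accurate discretized model


# A pipe at level 3 whose error barely changes when moving to level 2,
# e.g. because the ram pressure and gravity effects partly cancel.
new_level = switch_up_level(3, eta_m_current=0.20, eta_m_one_up=0.19, eps=0.05)
```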

A discretization grid refinement or coarsening with a factor γ > 1 is defined by taking the new stepsize as

For a discretization scheme of order β, it is well-known that a first-order approximation for the discretization error in x ∈ [0, La] is given by \(e_{\text {d},a}(x) \doteq c(x)h_{a}^{\beta }\), where c(x) is independent of ha (see, e.g., [36]). From this, it follows that the new discretization error after a grid refinement or coarsening can be written as

Since the implicit Euler method has convergence order β = 1, with \(h_{a}^{\text {new}}\) in (13) and γ = 2, for the new discretization error estimator after a grid refinement or coarsening, it holds that
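Written out for the setting used here, the stepsize update and its first-order effect on the discretization error read as follows. This is a sketch reconstructed from the surrounding text (refinement divides the stepsize by γ, coarsening multiplies it by γ, and the error scales like \(h_{a}^{\beta }\)); the numbering of the original displays is not reproduced.

```latex
% Stepsize update for a refinement or coarsening with factor \gamma > 1
\[
h_a^{\text{new}} =
  \begin{cases}
    h_a / \gamma & \text{for a grid refinement},\\
    \gamma\, h_a & \text{for a grid coarsening}.
  \end{cases}
\]
% First-order effect on the discretization error for a scheme of order \beta
\[
e_{\text{d},a}^{\text{new}}(x) \doteq c(x)\,\bigl(h_a^{\text{new}}\bigr)^{\beta} =
  \begin{cases}
    \gamma^{-\beta}\, e_{\text{d},a}(x) & \text{for a grid refinement},\\
    \gamma^{\beta}\, e_{\text{d},a}(x) & \text{for a grid coarsening}.
  \end{cases}
\]
% Implicit Euler (\beta = 1) with \gamma = 2
\[
\eta_{\text{d},a}^{\text{new}}(y) =
  \begin{cases}
    \tfrac{1}{2}\,\eta_{\text{d},a}(y) & \text{for a grid refinement},\\
    2\,\eta_{\text{d},a}(y) & \text{for a grid coarsening}.
  \end{cases}
\]
```

The last relation is the one used in the proof of Lemma 1, where halving the stepsize halves the discretization error estimator.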

4.2 Marking Strategies

We now describe our marking strategies, i.e., how we choose which pipes should be switched up or down in their model level and which pipes should get a refined or coarsened grid. Given marking strategy parameters Θd, Θm ∈ [0, 1], we compute subsets \(\mathcal {R}, \mathcal {U} \subseteq A_{\text {pi}}\) such that they are the minimal subsets of arcs that satisfy

and

with

where \(\ell _{a}^{\text {new}}\) is given in (12). Analogously, given marking strategy parameters Φd, Φm ∈ [0, 1] and τ ≥ 1, we compute \(\mathcal {C}, \mathcal {D} \subseteq A_{\text {pi}}\) such that they are the maximal subsets of arcs that satisfy

and

with

In (18), \(\ell _{a}^{\text {new}}\) is always set to \(\min \{\ell _{a} + 1, 3\}\). For every arc \(a \in \mathcal {R}\) (\(a \in \mathcal {C}\)), we refine (coarsen) the discretization grid by halving (doubling) the stepsize, i.e., we set γ = 2 in (13). We note that these marking strategies are very similar to the greedy strategies on a network described in [10], where those pipes are marked for a spatial, temporal, or model refinement which yield the largest error reduction.
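A common way to realize a marking step of this kind is a greedy bulk criterion: sort the pipes by their estimator and mark the largest ones until a fraction Θ of the total is covered. The sketch below assumes that the refinement set is the smallest set whose estimators sum to at least Θ times the total. This assumed form stands in for the criteria (15)–(18), which may contain further restrictions, and `mark_for_refinement` is a hypothetical name.

```python
def mark_for_refinement(estimators, theta):
    """Return a smallest set R with sum over R of eta_a >= theta * total.

    estimators maps each pipe to a nonnegative error estimator and
    theta in [0, 1] is the marking parameter.
    """
    total = sum(estimators.values())
    marked, covered = set(), 0.0
    # Greedy choice: visit the pipes in order of decreasing estimator.
    for arc, eta in sorted(estimators.items(), key=lambda kv: kv[1], reverse=True):
        if covered >= theta * total:
            break
        marked.add(arc)
        covered += eta
    return marked


# Four pipes with total estimator 10: half of it is covered by pipes a and b.
estimators = {"a": 4.0, "b": 3.0, "c": 2.0, "d": 1.0}
marked_half = mark_for_refinement(estimators, theta=0.5)
```

Picking the largest estimators first yields a set of minimal cardinality for the assumed criterion, which matches the greedy flavor mentioned above.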

4.3 The Algorithm

With these preliminaries, we can now state the overall adaptive model and discretization control algorithm for finding an ε-feasible solution of the reference problem (2.4.1). The formal listing is given in Algorithm 1.

The algorithm makes use of the safeguard parameter μ ∈ ℕ. This parameter ensures that the algorithm coarsens grids and switches down model levels only after μ rounds of grid refinements and model level increases. It thereby prevents alternating between switching model levels up and down, or between refining and coarsening the discretization grid. We note that this technique is similar to the use of hysteresis parameters (see, e.g., [28]). By employing this safeguard, we can prove that Algorithm 1 terminates after a finite number of iterations with an ε-feasible point of the reference model (M1).

To improve readability, we split the proof of our main theorem into two parts. The first lemma states finite termination at an ε-feasible point if only discretization grid refinements and coarsenings are applied, whereas the second lemma considers the case of switching levels in the model hierarchy only, i.e., with a fixed stepsize for every pipe.

Lemma 1

Suppose that the model level ℓa ∈ {1, 2, 3} is fixed for every pipe a ∈ Api. Let the resulting set of model levels be denoted by \(\mathcal {M}\). Suppose further that ηa(y) = ηd,a(y) holds in (11) and that every NLP is solved to local optimality. Consider Algorithm 1 without applying the model switching steps in Lines 10 and 17. Then, the algorithm terminates after a finite number of refinements in Line 11 and coarsenings in Line 18 with an ε-feasible solution with respect to model level set \(\mathcal {M}\) if there exists a constant C > 0 such that

holds for all k.

Proof

We consider the total discretization error

and show that for every iteration k the difference between the decrease obtained in the inner for-loop and the increase obtained due to the coarsenings applied in Line 18 is positive and uniformly bounded away from zero. In what follows, we only consider a single iteration and drop its index k for better readability.

First, we consider one refinement step in Line 11. Let \(\eta _{\text {d},a}^{j-1}\) denote the discretization error before the j th inner iteration and let \(\eta _{\text {d},a}^{j}\) denote the discretization error after the j th inner iteration. With this, we have

for every \(j = 1, \dots , \mu \). For the last equality, we have used that the implicit Euler method has convergence order 1, which (for small stepsizes ha) implies \(\eta _{\text {d},a}^{j} = \frac {1}{2} \eta _{\text {d},a}^{j-1}\) when we take the new stepsize as half the current stepsize (see (14)). Summing up over all μ inner iterations, we obtain a telescopic sum and finally get an error decrease of

We now consider the coarsening step. For this, let \(\eta _{\text {d},a}^{\mu }\) denote the discretization error before and \(\eta _{\text {d},a}^{\mu + 1}\) the discretization error after the coarsening step in Line 18. Using similar ideas as above, we obtain

Thus, we are finished if we prove that

is positive and uniformly bounded away from zero. Using

(15), (17), and (19), we obtain

which completes the proof. □
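The halving argument used in this proof can be observed numerically on a single pipe. The following toy loop is not Algorithm 1: it contains no NLP solve, no coarsening, and no model switching. It only refines the grid of one pipe until the discretization error estimator drops below ε, assuming the simplified pipe model dp/dx = −K/p with a hypothetical lumped friction constant `K` and illustrative data.

```python
import math


def implicit_euler_m3(p0, K, L, n):
    """Implicit Euler for dp/dx = -K / p with n equidistant steps on [0, L]."""
    h = L / n
    p = [p0]
    for _ in range(n):
        disc = p[-1] ** 2 - 4.0 * h * K
        if disc < 0.0:
            raise ValueError("stepsize too large for this pressure drop")
        p.append(0.5 * (p[-1] + math.sqrt(disc)))
    return p


def eta_d(p0, K, L, n):
    """Estimator max |p(x_r; 2h) - p(x_r; 4h)| on the 4h grid, with h = L / n."""
    p_2h = implicit_euler_m3(p0, K, L, n // 2)
    p_4h = implicit_euler_m3(p0, K, L, n // 4)
    return max(abs(a - b) for a, b in zip(p_2h[::2], p_4h))


def refine_until(p0, K, L, n, eps, max_rounds=12):
    """Halve h (double n) until eta_d <= eps; return n and the estimator history."""
    history = [eta_d(p0, K, L, n)]
    for _ in range(max_rounds):
        if history[-1] <= eps:
            break
        n *= 2  # n stays a multiple of 4, so the 2h and 4h grids exist
        history.append(eta_d(p0, K, L, n))
    return n, history


n_final, history = refine_until(p0=60.0, K=10.0, L=100.0, n=16, eps=0.05)
```

Consecutive entries of `history` should differ by a factor close to 2, which is the per-refinement decrease that enters the telescoping sum in the proof.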

Next, we prove an analogous lemma for the case that we fix the stepsize of every arc a ∈ Api and only allow for model switching.

Lemma 2

Suppose that the discretization stepsize ha is fixed for every pipe a ∈ Api. Suppose further that ηa(y) = ηm,a(y) holds in (11) and that every NLP is solved to local optimality. Consider Algorithm 1 without applying the discretization refinements in Line 11 and the coarsenings in Line 18. Then, Algorithm 1 terminates after a finite number of model switches in Lines 10 and 17 with an ε-feasible solution with respect to the stepsizes ha, a ∈ Api, if there exists a constant C > 0 such that

holds for all k.

Proof

We consider the total model error

and show that the difference between the decrease obtained in the inner loop and the increase obtained due to switching model levels down in Line 17 is positive and uniformly bounded away from zero for every iteration k. We again consider only a single iteration and drop the corresponding index.

First, we consider a single step of switching up the model level in Line 10. Let \(\eta _{\text {m},a}^{j-1}\) denote the model error before the j th inner iteration and \(\eta _{\text {m},a}^{j}\) the model error after the j th inner iteration. We then have

for every \(j = 1, \dots , \mu \). Summing up over all j yields the overall model error decrease after μ for-loop iterations of

We now consider the step of switching down the model level in Line 17. Let \(\eta _{\text {m},a}^{\mu }\) denote the model error before and \(\eta _{\text {m},a}^{\mu + 1}\) the model error after this step. It holds that

Thus, the proof is finished if we show that

is positive and uniformly bounded away from zero. With similar ideas as in the proof of Lemma 1 and using (16), (18), and (20), we obtain

where we used that \(|A_{\text {pi}}^{>\varepsilon }| \geq 1\). This completes the proof. □

Let \(\eta _{\text {m},a}^{\text {new}}(y)\) denote the new model error estimator after a grid refinement or coarsening. In order to prove our main theorem we need to assume that, for every pipe a ∈ Api, the change in the model error estimator after a grid refinement or coarsening can be neglected as compared to ηm,a(y), i.e., \(|\eta _{\text {m},a}(y) - \eta _{\text {m},a}^{\text {new}}(y)| \ll \eta _{\text {m},a}(y)\), such that we may write \(\eta _{\text {m},a}^{\text {new}}(y) = \eta _{\text {m},a}(y)\). A sufficient condition for this assumption to hold is given by ηd,a(y) ≪ ηm,a(y) for every a ∈ Api. This condition also implies that ηm,a(y) is a first-order approximation of the exact model error em,a(y) and is thus reliable for small stepsizes ha.

Lemma 3

Let the discretization and model error estimators ηd,a(y) and ηm,a(y) as defined in (9) and (10) be given for every a ∈ Api. Let further em,a(y) be the exact error between models (M1) and (M\(_{\ell _{a}}\)) and let \(\eta _{\text {m},a}^{\text {new}}(y)\) be the new model error estimator after a grid refinement or coarsening. Then, the implications

1. \(\eta _{\text {d},a}(y) \ll \eta _{\text {m},a}(y) \Longrightarrow \eta _{\text {m},a}(y) \doteq e_{\text {m},a}(y)\),

2. \(\eta _{\text {d},a}(y) \ll \eta _{\text {m},a}(y) \Longrightarrow \eta _{\text {m},a}^{\text {new}}(y) = \eta _{\text {m},a}(y)\)

hold for every a ∈ Api.

Proof

Let pipe a ∈ Api be arbitrary. To improve readability, in the following we drop the dependencies of the exact errors and the error estimators on a and y. Without loss of generality, we consider only one arbitrary spatial gridpoint xk.

Let us first introduce some notation. The exact model error is given by \(e_{\text {m}}(x_{k}) = \hat {p}(x_{k}) - p^{\text {M}_{\ell _{a}}}(x_{k})\) for the current model level ℓa, the exact discretization error for model (D1) is given by \(e_{\text {d}}^{1}(x_{k}) = \hat {p}(x_{k}) - p^{1}(x_{k};2h_{a})\) and the exact discretization error for model (\(\text {D}_{\ell _{a}}\)) is denoted by \(e_{\text {d}}^{\ell _{a}}(x_{k}) = p^{\text {M}_{\ell _{a}}}(x_{k}) - p^{\ell _{a}}(x_{k};2h_{a})\). Furthermore, the model error estimator is given by \(\eta _{\text {m}}(x_{k}) = p^{1}(x_{k};2h_{a}) - p^{\ell _{a}}(x_{k};2h_{a})\) (see (10)), and we define the discretization error estimators \(\eta _{\text {d}}^{1}(x_{k}) := p^{1}(x_{k};2h_{a}) - p^{1}(x_{k};4h_{a})\) and \(\eta _{\text {d}}^{\ell _{a}}(x_{k}):= p^{\ell _{a}}(x_{k};2h_{a}) - p^{\ell _{a}}(x_{k};4h_{a})\) as in (9). Then, we have \(\eta _{\text {d}}^{1}(x_{k}) \doteq e_{\text {d}}^{1}(x_{k})\) and \(\eta _{\text {d}}^{\ell _{a}}(x_{k}) \doteq e_{\text {d}}^{\ell _{a}}(x_{k})\) (see [36, p. 420]). Further, it holds that

because \(\eta _{\text {d}}^{1}(x_{k})\) and \(\eta _{\text {d}}^{\ell _{a}}(x_{k})\) use the same stepsizes 2ha and 4ha to compute the discrete pressure distributions.

We now prove the first implication. Using the previously defined notation, it holds that

Thus, if \(|\eta _{\text {d}}^{1}(x_{k})|\) and \(|\eta _{\text {d}}^{\ell _{a}}(x_{k})|\) may be neglected as compared to \(|\eta _{\text {m}}(x_{k})|\), then we have \(e_{\text {m}}(x_{k}) \doteq \eta _{\text {m}}(x_{k})\), i.e.,

Considering also the equivalence relation (21), it follows that

from which the first implication follows directly.

Finally, we prove the second implication. We show that this implication holds for the case that \(\eta _{\text {m}}^{\text {new}}(x_{k})\) is the new model error estimator after a grid coarsening. The case for a grid refinement can be shown analogously. It holds that

This yields

Again, considering (21) and (22) results in

from which the second implication follows immediately. □

With the three preceding lemmas at hand, we are now ready to state and prove our main theorem about finite termination of Algorithm 1.

Theorem 1

(Finite termination) Suppose that ηd,a ≪ ηm,a for every a ∈ Api and that every NLP is solved to local optimality. Then, Algorithm 1 terminates after a finite number of refinements, coarsenings, and model switches in Lines 10, 11, 17, and 18 with an ε-feasible solution with respect to the reference problem (2.4.1) if there exist constants C1, C2 > 0 such that

hold for all k.

Proof

We consider the total error \({\sum }_{a \in A_{\text {pi}}} \eta _{a}\) and show that the difference between the decrease obtained in the inner loop and the increase obtained due to switching down the model level and coarsening the grid is positive and uniformly bounded away from zero for every iteration k. Again, we consider only a single iteration and drop the corresponding index. We first consider Lines 10 and 11 for fixed j. It holds that

where we use that \(\eta _{\text {m},a}^{j} = \eta _{\text {m},a}^{j-1}\) for every \(a \in \mathcal {R}_{j} \setminus \mathcal {U}_{j}\) since \(\eta _{\text {d},a}^{j-1} \ll \eta _{\text {m},a}^{j-1}\) for every a ∈ Api (see Lemma 3). Moreover, the discretization error estimator ηd,a does not change after switching up the model level.

Again, summing up over all \(j = 1, \dots , \mu \) yields the overall error decrease after μ for-loop iterations of

With similar arguments as before for Lines 10 and 11, we consider Lines 17 and 18 and obtain

Finally, it remains to prove that

is positive and uniformly bounded away from zero. Using the proofs of Lemmas 1 and 2, we have

which completes the proof. □

4.4 Remarks

Before we close this section, we discuss some details and extensions regarding Algorithm 1. First, we give an overview of the main computations that are performed in the algorithm. In Lines 2 and 12, the NLP (8a) is solved using the current model level ℓa and the current stepsize ha for every pipe a ∈ Api. Most types of NLP algorithms are iterative methods. That is, the computational costs of the algorithms depend on the number of iterations required to converge to a (local) optimal solution and the costs per iteration. The latter mainly consist of the solution of a linear system (e.g., suitable forms of the KKT system for interior-point or active-set methods) for computing the search direction. The size of this linear system typically is \(\mathcal {O}(n+m)\), where n is the number of variables and m is the number of constraints of the NLP. Both n and m are directly controlled by the stepsizes ha that we use in our NLP models. The model level ℓa mainly determines the sparsity/density of the system matrices of the linear systems and the overall nonlinearity of the NLP, which typically influences the number of required iterations.

In Lines 3 and 13, the overall error estimator ηa(y) is computed for every pipe a ∈ Api. Thus, for all pipes, the solution of model (D1) is computed with both stepsizes 2ha and 4ha, and the solution of model (\(\text {D}_{\ell _{a}}\)) is computed with stepsize ha. These solutions are obtained by solving the initial value problems consisting of the ordinary differential equations (M1) and (\(\text {M}_{\ell _{a}}\)) together with the initial value p(x0), which is contained in the optimal solution y of Problem (8a). Continuing with the example of the implicit Euler method that we use as numerical integration scheme throughout this paper, the initial value problems can be solved (i) by considering the implicit equations in (D1) and (\(\text {D}_{\ell _{a}}\)) and using, e.g., the Newton method to solve for pk in every space integration step, or (ii) by using an existing software code and setting the order of the numerical integration scheme to one.
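Option (i) can be sketched as follows. This is a minimal illustration under stated assumptions, not the implementation used for the experiments: the right-hand side p'(x) = −k/p is a simplified stand-in for a stationary isothermal pipe model (it is not the model (M1) of this paper), and the function name, the lumped friction constant `k`, and all numerical values are hypothetical. The sketch solves the implicit Euler equation in every space step with a scalar Newton iteration and compares the end pressure on grids with 4, 8, and 16 intervals against the closed-form solution of the stand-in model.

```python
import math


def implicit_euler_end_value(f, dfdp, p0, length, n_steps,
                             tol=1e-12, max_newton=50):
    """Integrate p'(x) = f(p) on [0, length] with implicit Euler.

    Each step solves p_next - p - h * f(p_next) = 0 by Newton's method.
    Returns the value at x = length.
    """
    h = length / n_steps
    p = p0
    for _step in range(n_steps):
        p_next = p  # Newton start: value at the previous grid point
        for _it in range(max_newton):
            residual = p_next - p - h * f(p_next)
            if abs(residual) <= tol:
                break
            p_next -= residual / (1.0 - h * dfdp(p_next))
        else:
            raise RuntimeError("Newton iteration did not converge")
        p = p_next
    return p


# Hypothetical stand-in model: p' = -k / p with lumped friction term k.
k = 0.1


def f(p):
    return -k / p


def dfdp(p):
    return k / p ** 2


p0, length = 60.0, 100.0
# Closed-form solution of the stand-in model: p(x)^2 = p0^2 - 2 k x.
exact = math.sqrt(p0 ** 2 - 2.0 * k * length)

errors = {n: abs(implicit_euler_end_value(f, dfdp, p0, length, n) - exact)
          for n in (4, 8, 16)}
for n, err in errors.items():
    print(f"{n:2d} intervals: end-pressure error {err:.3e}")
```

Since the implicit Euler method is of first order, halving the stepsize should roughly halve the error, which is the behavior that estimators based on solutions for different stepsizes rely on.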

The subset \(\mathcal {R}\) in Line 9 can be determined efficiently, since ηd,a(y) has already been computed in Line 3 or 13 for every a ∈ Api. For subset \(\mathcal {U}\) in Line 9 and in (16), the error estimator ηm,a(y) has also already been computed in Line 3 or 13 for every a ∈ Api. Moreover, \(\ell _{a}^{\text {new}}\) in (12) has to be computed in order to determine \(\mathcal {U}\). For this, we compute ηm,a(y;ℓa − 1) if and only if ℓa = 3. In the case ℓa = 2 we have \(\eta _{\text {m},a}(y;\ell _{a}-1) = 0\) and for ℓa = 1 we have \(\eta _{\text {m},a}(y;\ell _{a}^{\text {new}}) = \eta _{\text {m},a}(y;\ell _{a}) = 0\). Subset \(\mathcal {C}\) in Line 16 can also be computed efficiently, since ηd,a(y) has already been computed in Line 3 or 13 for every a ∈ Api. For subset \(\mathcal {D}\) in Line 16 and in (18), the error estimator ηm,a(y) has been computed already in Line 3 or 13 for every a ∈ Api. If ℓa ∈{1,2}, then \(\eta _{\text {m},a}(y;\ell _{a}+ 1)\) has to be computed for every a ∈ Api in order to determine \(\mathcal {D}\).

We note that the optimal solution y of Problem (8a) contains, among others, the model level ℓa, stepsize ha, and pressure \(p^{\ell _{a}}(x_{0})\) at the beginning of the pipe, for every \(a \in A_{\text {pi}}\). Using ℓa, ha, and \(p^{\ell _{a}}(x_{0})\), the discretization and model error estimator for pipe \(a \in A_{\text {pi}}\) can be computed without information from other pipes. Hence, the error estimators, e.g., in Line 13, can be computed in parallel.
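Because each estimator only needs the data of its own pipe, the computation can be distributed with a standard worker pool. The following sketch assumes a hypothetical function `estimate_pipe_error` whose formula is a placeholder and not the estimator of this paper; the pipe names and values are made up as well. It only illustrates the independence of the per-pipe computations.

```python
from concurrent.futures import ThreadPoolExecutor


def estimate_pipe_error(pipe):
    """Placeholder per-pipe estimator (NOT the estimator of the paper).

    It uses only the pipe's own model level and stepsize; a real estimator
    would additionally integrate the models starting from pipe["p0"].
    """
    eta_d = 1e-3 * pipe["h"]              # stand-in discretization part
    eta_m = 1e-2 * (pipe["level"] - 1)    # stand-in model part
    return pipe["name"], eta_d + eta_m


# Hypothetical per-pipe data extracted from an NLP solution y.
pipes = [
    {"name": "a1", "level": 3, "h": 250.0, "p0": 60.0},
    {"name": "a2", "level": 2, "h": 125.0, "p0": 58.5},
    {"name": "a3", "level": 1, "h": 500.0, "p0": 57.2},
]

# No pipe needs information from another pipe, so a plain map suffices.
with ThreadPoolExecutor() as pool:
    eta = dict(pool.map(estimate_pipe_error, pipes))

print(eta)
```

For CPU-bound estimators in CPython, a process pool (`ProcessPoolExecutor`) would typically be the better choice; the thread pool is used here only to keep the sketch short.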

Up to now, we have discussed two types of errors: modeling and discretization errors. Both are handled by Algorithm 1 and we have shown that the algorithm terminates with a combined model and discretization error that satisfies a user-specified error tolerance ε > 0. What we have ignored so far is that the NLPs are also solved by a numerical method that introduces numerical errors as well. However, it is easy to integrate the control of this additional error source into Algorithm 1. Let εopt > 0 be the optimality tolerance that we hand over to the optimization solver and suppose that the solver always satisfies this tolerance. Furthermore, let the tolerance ε considered so far now be denoted by εdm. Using the triangle inequality, we easily see that an upper bound of the total error (that is, the aggregated modeling, discretization, and optimization error) is εopt + εdm. Hence, in order to satisfy an overall error tolerance ε > 0, we have to ensure that εopt + εdm ≤ ε holds, which can be formally introduced in Algorithm 1 by replacing ε with εopt + εdm.
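Written out, the triangle inequality argument reads as follows. The symbols are introduced here only for illustration and are assumptions: \(y^{*}\) denotes a solution of the reference problem, \(y_{\text {dm}}\) the exact solution of the NLP for the current models and grids, \(\hat {y}\) the approximation returned by the solver, and \(\|\cdot \|\) the measure in which the errors are controlled.

\[
\|y^{*} - \hat {y}\| \;\le \; \|y^{*} - y_{\text {dm}}\| + \|y_{\text {dm}} - \hat {y}\| \;\le \; \varepsilon _{\text {dm}} + \varepsilon _{\text {opt}} \;\le \; \varepsilon .
\]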

Finally, note that this additional error source directly suggests itself for adaptive treatment as well. In the early iterations of Algorithm 1, it is not important that εopt is small. That is, the optimization is allowed to produce coarser approximate local solutions. However, in the course of the algorithm, one can observe the achieved modeling and discretization error and can adaptively tighten the optimization tolerance. Since this strategy allows the optimization method to produce coarse approximate solutions in the beginning, it can be expected that this leads to a speed-up in the overall running times of Algorithm 1.
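One possible way to realize such an adaptive tightening is sketched below. The rule itself is an assumption and not part of Algorithm 1: the optimization tolerance is coupled to the currently observed combined model and discretization error, bounded above by a loosest admissible value, and bounded below by a fixed share of the overall tolerance ε, so that εopt + εdm ≤ ε can hold at termination with εdm = (1 − share) ε. The names `optimization_tolerance`, `share`, `coupling`, and `loosest` are hypothetical.

```python
def optimization_tolerance(eta_dm, eps, share=0.1, coupling=0.1, loosest=1e-2):
    """Return an optimality tolerance for the next NLP solve.

    eta_dm   -- current combined model and discretization error estimate
    eps      -- overall tolerance to be met at termination
    share    -- fraction of eps reserved for the optimization error
    coupling -- eps_opt is kept at this fraction of eta_dm while eta_dm is large
    loosest  -- upper bound on eps_opt in the early iterations
    """
    eps_opt_final = share * eps
    return max(eps_opt_final, min(loosest, coupling * eta_dm))


eps = 1e-4
# Hypothetical error history over the iterations of the adaptive algorithm.
eta_history = [1.0, 1e-1, 1e-2, 1e-3, 1e-4, 1e-5]
tolerances = [optimization_tolerance(eta, eps) for eta in eta_history]

for eta, tol in zip(eta_history, tolerances):
    print(f"eta_dm = {eta:.0e}  ->  eps_opt = {tol:.0e}")
```

With this rule, the early NLPs are solved only coarsely, and the tolerance never drops below the share of ε that is reserved for the optimization error.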

The choice of the error tolerance ε that has to be provided in Algorithm 1 depends on the user requirements. However, one should be aware that, due to the round-off errors committed during every single step of the procedure and due to possible ill-conditioning of the linear systems solved by the NLP solver, none of the three tolerances (for the discretization error, the modeling error, and the NLP error) can be chosen extremely small. Since the backward error and the associated condition number of the linear systems can be estimated during the procedure (see [17]) and since the error estimates for the discretization method are at hand, it is just the modeling error which is not known a priori. Estimating this latter error (of the finest model) usually requires a comparison with experimental data. If these are available during a real-world process, then it is possible to adjust the required tolerance ε in a feedback loop using a standard PI controller (see, e.g., [30]), i.e., if measured data are available that show that the finest model has a given accuracy, then ε should not be chosen smaller than this.

Finally, we want to stress that the described adaptive error control algorithm can be used with any number of model levels in the hierarchy, with any higher order discretization scheme, and with any number of grid refinement levels.

5 Computational Results

In this section, we present numerical results obtained by the adaptive error control algorithm. To this end, we compare the efficiency of the method with an approach that directly solves an NLP that satisfies the same error tolerance and that is obtained without using adaptivity. Before we discuss the results in detail, we briefly mention the computational setup and the gas transport network instances that we solve.

We implemented the adaptive error control Algorithm 1 in Python 2.7.13 and used the scipy 0.14.0 module for solving the initial value problems. All nonlinear optimization models have been implemented using the C++ framework LaMaTTO++ for modeling and solving mixed-integer nonlinear optimization problems on networks. The computations have been done on a six-core AMD Opteron™ Processor 2435 with 2.2 GHz and 64 GB of main memory. The NLPs have been solved using Ipopt 3.12 (see [39, 40]).

For our computational study, we choose publicly available GasLib instances (see [33]). This has the advantage that, if desired, all numerical results can be reproduced on the same data. In what follows, we consider the networks GasLib-40 and GasLib-135, since these are the largest networks in the GasLib that only contain pipes and compressor stations as arc types. Detailed statistics are given in Table 1.

Next, we describe the parameterization of Algorithm 1. We initialize every pipe a ∈ Api with the coarsest model level ℓa = 3 and with the coarsest possible discretization grid. In order to yield a well-defined algorithm, the number of discretization grid intervals has to be a multiple of 4 (see Fig. 2). Thus, we initially set ha = La/4 and ensure in Line 18 of Algorithm 1 that we never obtain a coarser grid size than the initial one. The overall tolerance is set to \(\varepsilon = 10^{-4}\) bar. Moreover, we set Θd = Θm = 0.7, Φd = Φm = 0.3, τ = 1.1, and μ = 4. Here, we refrain from updating these parameters from iteration to iteration, which is possible in general. Note that our parameter choice violates the second inequality of Theorem 1. This could be fixed by simply increasing the hysteresis parameter μ. However, we refrain from using a larger μ in order to give the adaptive algorithm more chances to also switch down in the model hierarchy or to coarsen discretization grids. Our numerical experiments show that the violation of the second inequality of Theorem 1 does not harm convergence in practice but leads to slightly faster computations.
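The parameterization and initialization described above can be collected in a few lines. The following is a sketch under assumptions: the class and function names, the pipe lengths, and the coarsening factor of 2 are hypothetical; only the parameter values, the initial model level, and the rule ha = La/4 are taken from the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AdaptiveParameters:
    """Parameter values as stated in the text."""
    eps: float = 1e-4       # overall tolerance in bar
    theta_d: float = 0.7
    theta_m: float = 0.7
    phi_d: float = 0.3
    phi_m: float = 0.3
    tau: float = 1.1
    mu: int = 4             # hysteresis parameter


def initialize_pipe(length):
    """Coarsest model level and coarsest admissible grid (4 intervals)."""
    return {"level": 3, "h": length / 4}


def coarsened_stepsize(h, length, factor=2):
    """Coarsen by an assumed factor, but never beyond the initial grid."""
    return min(factor * h, length / 4)


params = AdaptiveParameters()
# Hypothetical pipe lengths in meters.
lengths = {"pipe_1": 12000.0, "pipe_2": 30000.0}
state = {name: initialize_pipe(length) for name, length in lengths.items()}

print(params)
print(state)
print(coarsened_stepsize(750.0, lengths["pipe_1"]))   # coarsening is allowed
print(coarsened_stepsize(3000.0, lengths["pipe_1"]))  # capped at the initial grid
```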

The same rationale holds for the relation between model and discretization error as assumed in Theorem 1 (see also Lemma 3). To be fully compliant with the theory, the initial discretization grids need to be much finer. Again, coarser initial discretization grids do not harm convergence in our numerical experiments but yield much faster computations.

We now turn to the discussion of the numerical results. Both instances are solved using 8 iterations. Thus, together with the initially solved NLP, we have to solve 9 NLPs for each instance.

Using the adaptive control algorithm, it takes 3.82 s to solve the GasLib-40 instance and 7.50 s to solve the GasLib-135 instance. For the GasLib-40 network, the final NLP contains 2026 variables and 1988 constraints, whereas for the GasLib-135 the final NLP contains 3405 variables and 3271 constraints.

Most interesting is the speed-up that we obtain by using the adaptive control algorithm. Thus, we compare the solution times given above with the solution times for an NLP that satisfies the same error tolerances but that is obtained without using model level and discretization grid adaptivity. This NLP contains 40034 variables and 39996 constraints for the GasLib-40 instance and 144757 variables as well as 144623 constraints for the GasLib-135 instance. Compared to the final NLPs that have to be solved within the adaptive algorithm, the NLPs obtained without using adaptivity are considerably larger. This directly translates to solution times. The GasLib-40 instance requires 53.11 s and the GasLib-135 instance requires 122.42 s. Thus, we get a speed-up factor of 13.89 and 16.33, respectively.
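The reported factors can be checked from the rounded numbers given in the text. The short computation below uses only these published values; the variable names are chosen for illustration. Recomputing from the rounded times reproduces the reported speed-up factors up to small differences in the second decimal place, which presumably stem from rounding of the displayed times.

```python
# Solution times in seconds and NLP sizes as reported in the text.
adaptive_time = {"GasLib-40": 3.82, "GasLib-135": 7.50}
direct_time = {"GasLib-40": 53.11, "GasLib-135": 122.42}
adaptive_vars = {"GasLib-40": 2026, "GasLib-135": 3405}
direct_vars = {"GasLib-40": 40034, "GasLib-135": 144757}

speedup = {name: direct_time[name] / adaptive_time[name]
           for name in adaptive_time}
size_ratio = {name: direct_vars[name] / adaptive_vars[name]
              for name in adaptive_vars}

for name in adaptive_time:
    print(f"{name}: speed-up {speedup[name]:.2f}, "
          f"variable ratio {size_ratio[name]:.1f}")
```

The ratio of the numbers of variables is larger than the speed-up in both cases, which is plausible since the adaptive algorithm solves a sequence of 9 NLPs instead of a single one.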

Figure 4 illustrates the adaptivity of the algorithm by plotting how many pipe grids are refined (\(|\mathcal {R}|\)) and how many pipe models are switched up in the hierarchy (\(|\mathcal {U}|\)). It can be clearly seen that increasing the accuracy is only needed for a small fraction of the pipes. For the GasLib-40 network, we never refine grids for more than 9 pipes, whereas we never refine grids for more than 21 pipes for the GasLib-135 network. Thus, for the larger network, we never refine grids for more than 15% of all pipes.

For both networks, Lines 17 and 18 are reached only once. For the smaller network, only 1 pipe grid is coarsened, whereas 3 pipe grids are coarsened for the larger network. Moreover, the algorithm never switches down in the model hierarchy. Consequently, the NLPs get larger from iteration to iteration. This then yields increased running times for the NLP solver as depicted in Fig. 5. Nevertheless, it can be seen that the subsequent NLPs can be solved quite fast. There are two main reasons for this phenomenon. First, the NLP’s size only increases moderately due to the adaptive control strategy. Second, the overall algorithm allows for warm-starting: When solving a single NLP, we always use the last NLP’s solution to set up the initial iterate.
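When a pipe grid has been refined between two NLP solves, the previous solution has to be transferred to the new grid before it can serve as initial iterate. How this transfer is done is not specified here; the following sketch assumes linear interpolation of nodal pressure values between uniform grids. The function name `prolongate` and the sample values are hypothetical.

```python
def prolongate(values, n_new):
    """Linearly interpolate nodal values from a uniform grid with
    len(values) - 1 intervals onto a uniform grid with n_new intervals."""
    n_old = len(values) - 1
    result = []
    for i in range(n_new + 1):
        t = i * n_old / n_new        # position in old-grid coordinates
        j = min(int(t), n_old - 1)   # index of the old interval containing t
        w = t - j                    # local weight within that interval
        result.append((1.0 - w) * values[j] + w * values[j + 1])
    return result


# Hypothetical pressures (bar) on a pipe grid with 4 intervals.
old_pressures = [60.0, 59.0, 58.0, 57.0, 56.0]
# Initial iterate for the same pipe after refinement to 8 intervals.
warm_start = prolongate(old_pressures, 8)
print(warm_start)
```

Values at grid points shared by both grids are reproduced exactly, so the warm start coincides with the previous solution wherever the grid did not change.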

Lastly, we consider the decrease in the respective errors. In Fig. 6, the discretization, model, and total errors are plotted over the course of the iterations. Both profiles show the expected decrease in the errors.

6 Conclusion

We have considered the problem of operation cost minimization for gas transport networks. In this context, we have focused on stationary and isothermal models and developed an adaptive model and discretization error control algorithm for nonlinear optimization that uses a hierarchy of continuous and finite-dimensional models. Out of this hierarchy, the new method adaptively chooses different models in order to finally achieve an optimal solution that satisfies a prescribed combined model and discretization error tolerance. The algorithm is shown to be convergent and its performance is illustrated by several numerical results.

The results pave the way for future work in the context of model switching and discretization grid adaptation for nonlinear optimal control. On the one hand, the approach should be extended to non-isothermal and instationary models of gas transport, in particular, in a port-Hamiltonian formulation. On the other hand, it would be interesting to extend the new technique to mixed-integer nonlinear optimal control.

References

Becker, R., Kapp, H., Rannacher, R.: Adaptive finite element methods for optimal control of partial differential equations: basic concept. SIAM J. Control Optim. 39, 113–132 (2000)

Biegler, L.T.: Nonlinear Programming: Concepts, Algorithms, and Applications to Chemical Processes. MOS-SIAM Series on Optimization, vol. 10. SIAM, Philadelphia (2010)

Bock, H.G., Diehl, M., Kostina, E., Schlöder, J.P.: Constrained optimal feedback control of systems governed by large differential algebraic equations. In: Biegler, L.T., et al. (eds.) Real-Time PDE-Constrained Optimization. Computational Science & Engineering, pp 3–24. SIAM, Philadelphia (2007)

Brenner, S., Scott, R.: The Mathematical Theory of Finite Element Methods. Texts in Applied Mathematics, vol. 15. Springer, New York (2007)

Brockett, R.W.: Finite Dimensional Linear Systems. Classics in Applied Mathematics, vol. 74. SIAM, Philadelphia (2015)

Carstensen, C., Hoppe, R.: Convergence analysis of an adaptive nonconforming finite element method. Numer. Math. 103, 251–266 (2006)

Carstensen, C., Hoppe, R.: Error reduction and convergence for an adaptive mixed finite element method. Math. Comput. 75, 1033–1042 (2006)

Diehl, M., Bock, H. G., Schlöder, J.P.: Newton-type methods for the approximate solution of nonlinear programming problems in real-time. In: Di Pillo, G., Murli, A. (eds.) High Performance Algorithms and Software for Nonlinear Optimization, pp 177–200. Springer, Boston (2003)

Diehl, M., Bock, H.G., Schlöder, J.P.: A real-time iteration scheme for nonlinear optimization in optimal feedback control. SIAM J. Control Optim. 43, 1714–1736 (2005)

Domschke, P., Dua, A., Stolwijk, J.J., Lang, J., Mehrmann, V.: Adaptive refinement strategies for the simulation of gas flow in networks using a model hierarchy. Institut für Mathematik 2017/03, Berlin (2017)

Domschke, P., Hiller, B., Lang, J., Tischendorf, C.: Modellierung von Gasnetzwerken: Eine Übersicht. Technische Universität Darmstadt. http://www3.mathematik.tu-darmstadt.de/fb/mathe/preprints.html (2017)

Dörfler, W.: A convergent adaptive algorithm for Poisson’s equation. SIAM J. Numer. Anal. 33, 1106–1124 (1996)

Feistauer, M.: Mathematical Methods in Fluid Dynamics. Pitman Monographs and Surveys in Pure and Applied Mathematics Series, vol. 67. Longman Scientific & Technical, Harlow (1993)

Fügenschuh, A., Geißler, B., Gollmer, R., Morsi, A., Pfetsch, M.E., Rövekamp, J., Schmidt, M., Spreckelsen, K., Steinbach, M.C.: Physical and technical fundamentals of gas networks. In: Koch, T., et al. (eds.) Evaluating Gas Network Capacities, pp. 17–44. MOS-SIAM Series on Optimization. SIAM, Philadelphia (2015)

Geißler, B., Martin, A., Morsi, A., Schewe, L.: Using piecewise linear functions for solving MINLPs. In: Lee, J., Leyffer, S. (eds.) Mixed Integer Nonlinear Programming. The IMA Volumes in Mathematics and Its Applications, vol. 154, pp 287–314. Springer, New York (2012)

Geißler, B., Morsi, A., Schewe, L.: A new algorithm for MINLP applied to gas transport energy cost minimization. In: Jünger, M., Reinelt, G. (eds.) Facets of Combinatorial Optimization, pp 321–353. Springer, Berlin Heidelberg (2013)

Golub, G.H., Van Loan, C.F.: Matrix Computations. Johns Hopkins Studies in the Mathematical Sciences, 3rd edn. Johns Hopkins University Press, Baltimore, MD (1996)

Gugat, M., Hante, F.M., Hirsch-Dick, M., Leugering, G.: Stationary states in gas networks. Netw. Heterog. Media 10, 295–320 (2015)

Gugat, M., Schultz, R., Wintergerst, D.: Networks of pipelines for gas with nonconstant compressibility factor: stationary states. Comput. Appl. Math. 37, 1066–1097 (2018)

Hairer, E., Nørsett, S.P., Wanner, G.: Solving Ordinary Differential Equations I, 2nd edn. Springer Series in Computational Mathematics, vol. 8. Springer, Berlin (1993)

Hante, F.M., Leugering, G., Martin, A., Schewe, L., Schmidt, M.: Challenges in optimal control problems for gas and fluid flow in networks of pipes and canals: from modeling to industrial applications. In: Manchanda, P., Lozi, R., Siddiqi, A. (eds.) Industrial Mathematics and Complex Systems: Emerging Mathematical Models, Methods and Algorithms. Industrial and Applied Mathematics, pp 77–122. Springer Singapore, Singapore (2017)

Joormann, I., Schmidt, M., Steinbach, M.C., Willert, B.M., et al.: What does “Feasible” mean? In: Koch, T. (ed.) Evaluating Gas Network Capacities, pp. 211–232. MOS-SIAM Series on Optimization. SIAM, Philadelphia (2015)

Koch, T., Hiller, B., Pfetsch, M.E., Schewe, L. (eds.): Evaluating Gas Network Capacities. MOS-SIAM Series on Optimization. SIAM, Philadelphia (2015)

Kröner, A., Kunisch, K., Vexler, B.: Semismooth Newton methods for optimal control of the wave equation with control constraints. SIAM J. Control Optim. 49, 830–858 (2011)

Leykekhman, D., Vexler, B.: A priori error estimates for three dimensional parabolic optimal control problems with pointwise control. SIAM J. Control Optim. 54, 2403–2435 (2016)

Liu, F., Hager, W.W., Rao, A.V.: Adaptive mesh refinement method for optimal control using nonsmoothness detection and mesh size reduction. J. Frankl. Inst. 352, 4081–4106 (2015)

Lurie, M.V.: Modeling of Oil Product and Gas Pipeline Transportation. Wiley-VCH, Weinheim (2008)

Morse, A.S., Mayne, D.Q., Goodwin, G.C.: Applications of hysteresis switching in parameter adaptive control. IEEE Trans. Automat. Control 37, 1343–1354 (1992)

Nagy, Z., Agachi, S., Allgöwer, F., Findeisen, R., Diehl, M., Bock, H.G., Schlöder, J.P.: The tradeoff between modelling complexity and real-time feasibility in nonlinear model predictive control. In: Proceedings of the 6th World Multiconference on Systemics, Cybernetics and Informatics, SCI (2002)

Petkov, P.H., Christov, N.D., Konstantinov, M.M.: Computational Methods for Linear Control Systems. Prentice Hall International Ltd., Hertfordshire (1991)

Rose, D., Schmidt, M., Steinbach, M.C., Willert, B.M.: Computational optimization of gas compressor stations: MINLP models versus continuous reformulations. Math. Methods Oper. Res. 83, 409–444 (2016)

Schewe, L., Koch, T., Martin, A., Pfetsch, M.E.: Mathematical optimization for evaluating gas network capacities. In: Koch, T., et al. (eds.) Evaluating Gas Network Capacities. MOS-SIAM Series on Optimization, vol. 21, pp 87–102. SIAM, Philadelphia (2015)

Schmidt, M., Aßmann, D., Burlacu, R., Humpola, J., Joormann, I., Kanelakis, N., Koch, T., Oucherif, D., Pfetsch, M.E., Schewe, L., Schwarz, R., Sirvent, M.: GasLib—a library of gas network instances. Data 2017, 2 (2017). https://doi.org/10.3390/data2040040

Schmidt, M., Steinbach, M.C., Willert, B.M.: High detail stationary optimization models for gas networks. Optim. Eng. 16, 131–164 (2015)

Schmidt, M., Steinbach, M.C., Willert, B.M.: High detail stationary optimization models for gas networks: validation and results. Optim. Eng. 17, 437–472 (2016)

Stoer, J., Bulirsch, R.: Introduction to Numerical Analysis. Springer, New York (1980)

Stolwijk, J.J., Mehrmann, V.: Error analysis and model adaptivity for flows in gas networks. Anal. Stiintifice ale Univ. Ovidius Constanta. Ser. Mat. Accepted for publication (2017)

Wilkinson, J.F., Holliday, D.V., Batey, E.H., Hannah, K.W.: Transient Flow in Natural Gas Transmission Systems. American Gas Association, New York (1964)

Wächter, A., Biegler, L.T.: Line search filter methods for nonlinear programming: motivation and global convergence. SIAM J. Optim. 16, 1–31 (2005)

Wächter, A., Biegler, L.T.: On the implementation of an interior-point filter line-search algorithm for large-scale nonlinear programming. Math. Program. 106, 25–57 (2006)

Acknowledgements

This research has been performed as part of the Energie Campus Nürnberg and is supported by funding of the Bavarian State Government. The authors acknowledge funding through the DFG Transregio TRR 154, subprojects A05, B03, and B08.

Dedicated to Hans Georg Bock on the occasion of his 70th birthday.

Mehrmann, V., Schmidt, M. & Stolwijk, J.J. Model and Discretization Error Adaptivity Within Stationary Gas Transport Optimization. Vietnam J. Math. 46, 779–801 (2018). https://doi.org/10.1007/s10013-018-0303-1

DOI: https://doi.org/10.1007/s10013-018-0303-1

Keywords

- Gas transport optimization

- Isothermal stationary Euler equations

- Model hierarchy

- Adaptive error control

- Marking strategy