Abstract

Private selection algorithms, such as the exponential mechanism, noisy max and sparse vector, are used to select items (such as queries with large answers) from a set of candidates, while controlling privacy leakage in the underlying data. Such algorithms serve as building blocks for more complex differentially private algorithms. In this paper we show that these algorithms can release additional information related to the gaps between the selected items and the other candidates for free (i.e., at no additional privacy cost). This free gap information can improve the accuracy of certain follow-up counting queries by up to 66%. We obtain these results from a careful privacy analysis of these algorithms. Based on this analysis, we further propose novel hybrid algorithms that can dynamically save additional privacy budget.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Industry and government agencies are increasingly adopting differential privacy [18] to protect the confidentiality of users who provide data. Current and planned major applications include data gathering by Google [7, 21], Apple [43] and Microsoft [13]; database querying by Uber [28]; and publication of population statistics at the US Census Bureau [2, 9, 26, 34].

The accuracy of differentially private data releases is very important in these applications. One way to improve accuracy is to increase the value of the privacy parameter \(\epsilon \), known as the privacy loss budget, as it provides a tradeoff between an algorithm’s utility and its privacy protections. However, values of \(\epsilon \) that are deemed too high can subject a company to criticisms of not providing enough privacy [42]. For this reason, researchers invest significant effort in tuning algorithms [1, 11, 22, 29, 40, 47] and privacy analyses [8, 20, 38, 40] to provide better utility at the same privacy cost.

Differentially private algorithms are built on smaller components called mechanisms [37]. Popular mechanisms include the Laplace mechanism [18], geometric mechanism [24], Noisy Max [19], sparse vector technique (SVT) [19, 33] and the exponential mechanism [36]. As we will explain in this paper, some of these mechanisms, such as the exponential mechanism, Noisy Max and SVT, inadvertently throw away information that is useful for designing accurate algorithms. Our contribution is to present novel variants of these mechanisms that provide more functionality at the same privacy cost (under pure differential privacy).

Given a set of queries, Noisy Max returns the identity (not value) of the query that is likely to have the largest value—it adds noise to each query answer and returns the index of the query with the largest noisy value. The exponential mechanism is a replacement for Noisy Max in situations where query answers have utility scores. Meanwhile, SVT is an online algorithm that takes a stream of queries and a predefined public threshold T. It tries to return the identities (not values) of the first k queries that are likely larger than the threshold. To do so, it adds noise to the threshold. Then, as it sequentially processes each query, it outputs “\(\top \)” or “\(\bot \)”, depending on whether the noisy value of the current query is larger or smaller than the noisy threshold. The mechanism terminates after k “\(\top \)” outputs.

In recent work [45], using program verification tools, Wang et al. showed that SVT can provide additional information at no additional cost to privacy. That is, when SVT returns “\(\top \)” for a query, it can also return the gap between its noisy value and the noisy threshold.Footnote 1 We refer to their algorithm as SVT with Gap.

Inspired by this program verification work, we propose novel variations of exponential mechanism, SVT and Noisy Max that add new functionality. For SVT, we show that in addition to releasing this gap information, even stronger improvements are possible—we present an adaptive version that can answer more queries than before by controlling how much privacy budget it uses to answer each query. The intuition is that we would like to spend less of our privacy budget for queries that are probably much larger than the threshold (compared to queries that are probably closer to the threshold). A careful accounting of the privacy impact shows that this is possible. Our experiments confirm that Adaptive SVT with Gap can answer many more queries than the prior versions [19, 33, 45] at the same privacy cost.

For Noisy Max, we show that it too inadvertently throws away information. Specifically, at no additional cost to privacy, it can release an estimate of the gap between the largest and second largest queries (we call the resulting mechanism Noisy Max with Gap). We generalize this result to Noisy Top-K—showing that one can release an estimate of the identities of the k largest queries and, at no extra privacy cost, release noisy estimates of the pairwise gaps (differences) among the top \(k+1\) queries.

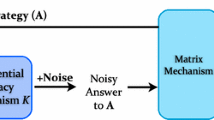

For exponential mechanism, we show that there is also a concept of a gap, which can be used to test whether a non-optimal query was returned. One of the challenges with the exponential mechanism is that for efficiency purposes it can use complex sampling algorithms to select the chosen candidate. We show that it is possible to release the noisy gap information even if the sampling algorithms are treated as black boxes (i.e., without access to its intermediate computations).

The extra noisy gap information opens up new directions in the construction of differentially private algorithms and can be used to improve accuracy of certain subsequent queries. For instance, one common task is to use Noisy Max to select the approximate top k queries and then use additional privacy loss budget to obtain noisy answers to these queries. We show that a postprocessing step can combine these noisy answers with gap information to improve accuracy by up to 66% for counting queries. We provide similar applications for the free gap information in SVT.

This paper is an extension of a conference paper [14]. For this extension we have added the following results: (a) free gap results for the exponential mechanism, (b) free gap results when Noisy Max and SVT are used with one-sided noise, which improves on the accuracy reported in [14] for two-sided noise, (c) novel hybrid algorithms that combine SVT and Noisy Max into an offline selection procedure; these algorithms return the identities of the approximate top-k queries, but only if they are larger than a pre-specified threshold. These algorithms save privacy budget if fewer than k queries are approximately over the threshold, in which case they also provide free estimates of the query answers. (If all k queries are approximately over the threshold, then we obtain information about the gaps between them).

We prove most of our results using the alignment of random variables framework [11, 33, 45, 46], which is based on the following question: If we change the input to a program, how must we change its random variables so that output remains the same? This technique is used to prove the correctness of almost all pure differential privacy mechanisms [19] but needs to be used in sophisticated ways to prove the correctness of the more advanced algorithms [11, 19, 33, 45, 46]. Nevertheless, alignment of random variables is often used incorrectly (as discussed by Lyu et al. [33]). Thus a secondary contribution of our work is to lay out the precise steps and conditions that must be checked and to provide helpful lemmas that ensure these conditions are met. The exponential mechanism does not fit in this framework and requires its own proof techniques, which we explain in Sect. 8. To summarize, our contributions are as follows:

-

1.

We provide a simplified template for writing correctness proofs for intricate differentially private algorithms.

-

2.

Using this technique, we propose and prove the correctness of two new mechanisms: Noisy Top-K with Gap and Adaptive SVT with Gap.

These algorithms improve on the original versions of Noisy Max and SVT by taking advantage of free information (i.e., information that can be released at no additional privacy cost) that those algorithms inadvertently throw away. We also show that the free gap information can be maintained even when these algorithms use one-sided noise. This variation improves the accuracy of the gap information.

-

3.

We demonstrate some of the uses of the gap information that is provided by these new mechanisms. When an algorithm needs to use Noisy Max or SVT to select some queries and then measure them (i.e., obtain their noisy answers), we show how the gap information from our new mechanisms can be used to improve the accuracy of the noisy measurements. We also show how the gap information in SVT can be used to estimate the confidence that a query’s true answer really is larger than the threshold.

-

4.

We show that the exponential mechanism can also release free gap information. Noting that the free gap extensions of Noisy Max and SVT required access to the internal state of those algorithms, we show that this is unnecessary for exponential mechanism. This is useful because implementations of exponential mechanism can be very complex and use a variety of different sampling routines.

-

5.

We propose two novel hybridizations of Noisy Max and SVT. These algorithms can release the identities of the approximate top-k queries as long as they are larger than a pre-specified threshold. If fewer than k queries are returned, the algorithms save privacy budget and the gap information they release directly turns into estimates of the query answers (i.e., the algorithm returns the query identities and their answers for free). If k queries are returned then the algorithms still return the gaps between their answers.

-

6.

We empirically evaluate the mechanisms on a variety of datasets to demonstrate their improved utility.

In Sect. 2, we discuss related work. We present background and notation in Sect. 3. We present simplified proof templates for randomness alignment in Sect. 4. We present Adaptive SVT with Gap in Sect. 5 and Noisy Top-K with Gap in Sect. 6. We present the novel algorithms that combine elements of Noisy Max and SVT in 7. We present exponential mechanism with gap algorithms in Sect. 8. We present experiments in Sect. 9, proofs underlying the alignment of randomness framework in Sect. 10 and conclusions in Sect. 11. Other proofs appear in “Appendix.”

2 Related works

Selection algorithms, such as exponential mechanism [36, 41], sparse vector technique (SVT) [19, 33] and Noisy Max [19] are used to select a set of items (typically queries) from a much larger set. They have applications in hyperparameter tuning [11, 32], iterative construction of microdata [27], feature selection [44], frequent itemset mining [6], exploring a privacy/accuracy tradeoff [31], data preprocessing [12], etc. Various generalizations have been proposed [5, 10, 31, 32, 41, 44]. Liu and Talwar [32] and Raskhodnikova and Smith [41] extend the exponential mechanism for arbitrary sensitivity queries. Beimel et al. [5] and Thakurta and Smith [44] use the propose-test-release framework [17] to find a gap between the best and second best queries and, if the gap is large enough, release the identity of the best query. These two algorithms rely on a relaxation of differential privacy called approximate \((\epsilon ,\delta )\)-differential privacy [16] and can fail to return an answer (in which case they return \(\perp \)). Our algorithms work with pure \(\epsilon \)-differential privacy. Chaudhuri et al. [10] also proposed a large margin mechanism (with approximate differential privacy) which finds a large gap separating top queries from the rest and returns one of them.

There have also been unsuccessful attempts to generalize selection algorithms such as SVT (incorrect versions are catalogued by Lyu et al. [33]), which has sparked innovations in program verification for differential privacy (e.g., [3, 4, 45, 46]) with techniques such as probabilistic coupling [4] and a simplification based on randomness alignment [46]. These are similar to ideas behind handwritten proofs [11, 19, 33]—they consider what changes need to be made to random variables in order to make two executions of a program, with different inputs, produce the same output. It is a powerful technique that is behind almost all proofs of differential privacy, but is very easy to apply incorrectly [33]. In this paper, we state and prove a more general version of this technique in order to prove correctness of our algorithms and also provide the additional results that simplify the application of this technique.

3 Background and notation

In this paper, we use the following notation. D and \(D^\prime \) refer to databases. We use the notation \(D\sim D^\prime \) to represent adjacent databases.Footnote 2M denotes a randomized algorithm whose input is a database. \(\varOmega \) denotes the range of M and \(\omega \in \varOmega \) denotes a specific output of M. We use \(E\subseteq \varOmega \) to denote a set of possible outputs. Because M is randomized, it also relies on a random noise vector \(H\in \mathbb {R}^\infty \). This noise sequence is infinite, but of course M will only use a finite-length prefix of H. Some of the commonly used noise distributions for this vector H include the Laplace distribution, the exponential distribution and the geometric distribution. Their properties are summarized in Table 1.

When we need to draw attention to the noise, we use the notation M(D, H) to indicate the execution of M with database D and randomness coming from H. Otherwise we use the notation M(D). We define \(\mathcal {H}^{M}_{D:E}= \left\{ {H\mid M(D,H)\in E }\right\} \) to be the set of noise vectors that allow M, on input D, to produce an output in the set \(E\subseteq \varOmega \). To avoid overburdening the notation, we write \(\mathcal {H}^{}_{D:E}\) for \(\mathcal {H}^{M}_{D:E}\) and \(\mathcal {H}^{}_{D^\prime :E}\) for \(\mathcal {H}^{M}_{D^\prime :E}\) when M is clear from the context. When E consists of a single point \(\omega \), we write these sets as \(\mathcal {H}^{}_{D:\omega }\) and \(\mathcal {H}^{}_{D^\prime :\omega }\). This notation is summarized in Table 2.

3.1 Formal privacy

Differential privacy [15, 18, 19] is currently the gold standard for releasing privacy-preserving information about a database. It has a parameter \(\epsilon >0\) known as the privacy loss budget. The smaller it is, the more privacy is provided. Differential privacy bounds the effect of one record on the output of the algorithm (for small \(\epsilon \), the probability of any output is barely affected by any person’s record).

Definition 1

(Pure differential privacy [15]) Let \(\epsilon >0\). A randomized algorithm M with output space \(\varOmega \) satisfies (pure) \(\epsilon \)-differential privacy if for all \(E\subseteq \varOmega \) and all pairs of adjacent databases \(D\sim D^\prime \), we have

where the probability is only over the randomness of H. With the notation in Table 2, the differential privacy condition from Eq. (1) is \(\mathbb {P}[\mathcal {H}^{}_{D:E}]\le e^\epsilon \mathbb {P}[\mathcal {H}^{}_{D^\prime :E}]\).

Differential privacy enjoys the following properties:

-

1.

Resilience to postprocessing. If we apply an algorithm A to the output of an \(\epsilon \)-differentially private algorithm M, then the composite algorithm \(A\circ M\) still satisfies \(\epsilon \)-differential privacy. In other words, privacy is not reduced by postprocessing.

-

2.

Composition. If \(M_1,M_2,\dots , M_k\) satisfy differential privacy with privacy loss budgets \(\epsilon _1,\dots ,\epsilon _k\), the algorithm that runs all of them and releases their outputs satisfies \((\sum _i\epsilon _i)\)-differential privacy.

Many differentially private algorithms take advantage of the Laplace mechanism [36], which provides a noisy answer to a vector-valued query \({\varvec{q}}\) based on its \(\ell _1\) global sensitivity \(\varDelta _{{\varvec{q}}}\), defined as follows:

Definition 2

(Global sensitivity [19]) The \(\ell _1\) global sensitivity of a query \({\varvec{q}}\) is \(\varDelta _{{\varvec{q}}} = \sup \nolimits _{D\sim D'} \left\Vert {\varvec{q}}(D)-{\varvec{q}}(D')\right\Vert _1.\)

Theorem 1

(Laplace mechanism [18]) Given a privacy loss budget \(\epsilon \), consider the mechanism that returns \({\varvec{q}}(D)+ H\), where H is a vector of independent random samples from the \({{\,\mathrm{Lap}\,}}(\varDelta _{{\varvec{q}}}/\epsilon )\) distribution. This Laplace mechanism satisfies \(\epsilon \)-differential privacy.

Other kinds of additive noise distributions that can be used in place of Laplace in Theorem 1 include discrete Laplace [24] (when all query answers are integers or multiples of a common base) and Staircase [23].

In some cases, queries may have additional structure, such as monotonicity, which can allow algorithms to provide privacy with less noise (such as one-sided noisy max [19]).

Definition 3

(Monotonicity) A list of queries \({\varvec{q}}=(q_1, q_2,\ldots )\) with numerical values is monotonic if for all pair of adjacent databases \(D\sim D'\) we have either \(\forall i: q_i(D) \le q_i(D')\), or \(\forall i: q_i(D) \ge q_i(D')\).

Monotonicity is a natural property that is satisfied by counting queries—when a person is added to a database, the value of each query either stays the same or increases by 1.

4 Randomness alignment

To establish that the algorithms we propose are differentially private, we use an idea called randomness alignment that previously had been used to prove the privacy of a variety of sophisticated algorithms [11, 19, 33] and incorporated into verification/synthesis tools [3, 45, 46]. While powerful, this technique is also easy to use incorrectly [33], as there are many technical conditions that need to be checked. In this section, we present the results (namely Lemma 1) that significantly simplify this process and make it easy to prove the correctness of our proposed algorithms.

In general, to prove \(\epsilon \)-differential privacy for an algorithm M, one needs to show \(\mathbb {P}[M(D,H)\in E]\le e^\epsilon \mathbb {P}[M(D^\prime ,H')\in E]\) for all pairs of adjacent databases \(D\sim D^\prime \) and sets of possible outputs \(E\subseteq \varOmega \). In our notation, this inequality is represented as \(\mathbb {P}[\mathcal {H}^{}_{D:E}]\le e^\epsilon \mathbb {P}[\mathcal {H}^{}_{D^\prime :E}]\). Establishing such inequalities is often done with the help of a function \(\phi _{D,D^\prime {}}\), called a randomness alignment (there is a function \(\phi _{D,D^\prime {}}\) for every pair \(D\sim D^\prime \)), which maps noise vectors H into noise vectors \(H^\prime \) so that \(M(D^\prime ,H^\prime )\) produces the same output as M(D, H). Formally,

Definition 4

(Randomness alignment) Let M be a randomized algorithm. Let \(D\sim D^\prime \) be a pair of adjacent databases. A randomness alignment is a function \(\phi _{D,D^\prime {}}: \mathbb {R}^\infty \rightarrow \mathbb {R}^\infty \) such that

-

1.

The alignment does not output invalid noise vectors (e.g., it cannot produce negative numbers for random variables that should have the exponential distribution).

-

2.

For all H on which M(D, H) terminates, \(M(D,H) = M(D',\phi _{D,D^\prime {}}(H))\).

Example 1

Let D be a database that records the salary of every person, which is guaranteed to be between 0 and 100. Let q(D) be the sum of the salaries in D. The sensitivity of q is thus 100. Let \(H=(\eta _1,\eta _2,\dots )\) be a vector of independent \({{\,\mathrm{Lap}\,}}(100/\epsilon )\) random variables. The Laplace mechanism outputs \(q(D) + \eta _1\) (and ignores the remaining variables in H). For every pair of adjacent databases \(D\sim D^\prime \), one can define the corresponding randomness alignment \(\phi _{D,D^\prime {}}(H)=H^\prime =(\eta ^\prime _1,\eta ^\prime _2,\dots )\), where \(\eta ^\prime _1=\eta _1 + q(D)-q(D^\prime )\) and \(\eta ^\prime _i=\eta _i\) for \(i>1\). Note that \(q(D)+\eta _1 = q(D^\prime )+\eta ^\prime _1\), so the output of M remains the same.

In practice, \(\phi _{D,D^\prime {}}\) is constructed locally (piece by piece) as follows. For each possible output \(\omega \in \varOmega \), one defines a function \(\phi _{D,D^\prime ,\omega }\) that maps noise vectors H into noise vectors \(H^\prime \) with the following properties: if \(M(D,H)=\omega \) then \(M(D^\prime , H^\prime )=\omega \) (that is, \(\phi _{D,D^\prime ,\omega }\) only cares about what it takes to produce the specific output \(\omega \)). We obtain our randomness alignment \(\phi _{D,D^\prime {}}\) in the obvious way by piecing together the \(\phi _{D,D^\prime ,\omega }\) as follows: \(\phi _{D,D^\prime {}}(H)=\phi _{D,D^\prime {,\omega ^*}}(H)\), where \(\omega ^*\) is the output of M(D, H). Formally,

Definition 5

(Local alignment) Let M be a randomized algorithm. Let \(D\sim D^\prime \) be a pair of adjacent databases and \(\omega \) a possible output of M. A local alignment for M is a function \(\phi _{D,D^\prime ,\omega }: \mathcal {H}^{}_{D:\omega }\rightarrow \mathcal {H}^{}_{D^\prime :\omega }\) (see notation in Table 2) such that for all \( H\in \mathcal {H}^{}_{D:\omega }\), we have \(M(D,H) = M(D',\phi _{D,D^\prime ,\omega }(H))\).

Example 2

Continuing the setup from Example 1, consider the mechanism \(M_1\) that, on input D, outputs \(\top \) if \(q(D)+\eta _1 \ge 10{,}000\) (i.e., if the noisy total salary is at least 10,000) and \(\perp \) if \(q(D)+\eta _1 < 10\),000. Let \(D^\prime \) be a database that differs from D in the presence/absence of one record. Consider the local alignments \(\phi _{D,D^\prime {,\top }}\) and \(\phi _{D,D^\prime {,\perp }}\) defined as follows. \(\phi _{D,D^\prime {,\top }}(H)=H^\prime =(\eta ^\prime _1,\eta ^\prime _2,\dots )\) where \(\eta ^\prime _1 = \eta _1 + 100\) and \(\eta ^\prime _i=\eta _i\) for \(i> 1\); and \(\phi _{D,D^\prime {,\perp }}(H)=H^{\prime \prime }=(\eta ^{\prime \prime }_1,\eta ^{\prime \prime }_2,\ldots )\) where \(\eta ^{\prime \prime }_1 = \eta _1 - 100\) and \(\eta ^{\prime \prime }_i=\eta _i\) for \(i> 1\). Clearly, if \(M_1(D,H)=\top \) then \(M_1(D^\prime , H^\prime )=\top \) and if \(M_1(D, H)=\perp \) then \(M_1(D^\prime , H^{\prime \prime })=\perp \). We piece these two local alignments together to create a randomness alignment \(\phi _{D,D^\prime {}}(H)=H^*=(\eta ^*_1,\eta ^*_2,\dots )\) where:

Special properties of alignments Not all alignments can be used to prove differential privacy. In this section we discuss some additional properties that help prove differential privacy. We first make two mild assumptions about the mechanism M: (1) it terminates with probabilityFootnote 3 one and (2) based on the output of M, we can determine how many random variables it used. The vast majority of differentially private algorithms in the literature satisfy these properties.

We next define two properties of a local alignment: whether it is acyclic and what its cost is.

Definition 6

(Acyclic) Let M be a randomized algorithm. Let \(\phi _{D,D^\prime ,\omega }\) be a local alignment for M. For any \(H=(\eta _1, \eta _2,\ldots )\), let \(H^\prime =(\eta _1^\prime , \eta _2^\prime , \ldots )\) denote \(\phi _{D,D^\prime ,\omega }(H)\). We say that \(\phi _{D,D^\prime ,\omega }\) is acyclic if there exists a permutation \(\pi \) and piecewise differentiable functions \(\psi ^{(j)}_{D,D^\prime ,\omega }\) such that:

Essentially, a local alignment \(\phi _{D,D^\prime ,\omega }\) is acyclic if there is some ordering of the variables so that \(\eta ^\prime _j\) is the sum of \(\eta _j\) and a function of the variables that came earlier in the ordering. The local alignments \(\phi _{D,D^\prime {,\top }}\) and \(\phi _{D,D^\prime {,\perp }}\) from Example 2 are both acyclic. (In general, each local alignment function is allowed to have its own specific ordering and differentiable functions \(\psi ^{(j)}_{D,D^\prime ,\omega }\).) The pieced-together randomness alignment \(\phi _{D,D^\prime {}}\) itself need not be acyclic.

Definition 7

(Alignment cost) Let M be a randomized algorithm that uses H as its source of randomness. Let \(\phi _{D,D^\prime ,\omega }\) be a local alignment for M. For any \(H=(\eta _1, \eta _2,\ldots )\), let \(H^\prime =(\eta _1^\prime , \eta _2^\prime , \ldots )\) denote \(\phi _{D,D^\prime ,\omega }(H)\). Suppose each \(\eta _i\) is generated independently from a distribution \(f_i\) with the property that, for some \(\alpha _i\), \(\ln (\frac{f_i(x)}{f_i(y)}) \le \frac{\left|x-y\right|}{\alpha _i}\) for all x, y in the domain of \(f_i\), then the cost of \(\phi _{D,D^\prime ,\omega }\) is defined as: \({{\,\mathrm{cost}\,}}(\phi _{D,D^\prime ,\omega }) = \sum _i \left|\eta _i - \eta ^\prime _i\right|/\alpha _i\). Distributions that we use in this paper (see Table 1) with this property include the Laplace (i.e., \({{\,\mathrm{Lap}\,}}(\alpha _i)\)), exponential (i.e., \({{\,\mathrm{Exp}\,}}(\alpha _i)\)), and geometric (i.e., \({{\,\mathrm{Geo}\,}}(1-e^{-1/\alpha _i})\)).

The following lemma uses those properties to establish that M satisfies \(\epsilon \)-differential privacy.

Lemma 1

Let M be a randomized algorithm with input randomness \(H=(\eta _1,\eta _2,\dots )\). If the following conditions are satisfied, then M satisfies \(\epsilon \)-differential privacy.

-

1.

M terminates with probability 1.

-

2.

The number of random variables used by M can be determined from its output.

-

3.

Each \(\eta _i\) is generated independently from a distribution \(f_i\) with the property that \(\ln (f_i(x)/f_i(y)) \le \left|x-y\right|/\alpha _i\) for all x, y in the domain of \(f_i\).

-

4.

For every \(\underline{D\sim D^\prime }\) and \(\underline{\omega }\) there exists a local alignment \(\phi _{D,D^\prime ,\omega }\) that is acyclic with \({{\,\mathrm{cost}\,}}(\phi _{D,D^\prime ,\omega })\le \epsilon \).

-

5.

For each \(\underline{D\sim D^\prime }\) the number of distinct local alignments is countable. That is, the set \(\{\phi _{D,D^\prime ,\omega }\mid \omega \in \varOmega \}\) is countable (i.e., for many choices of \(\omega \) we get the same exact alignment function).

We defer the proof to Sect. 10.

Example 3

Consider the randomness alignment \(\phi _{D,D^\prime {}}\) from Example 1. We can define all of the local alignments \(\phi _{D,D^\prime ,\omega }\) to be the same function: \(\phi _{D,D^\prime ,\omega }(H)=\phi _{D,D^\prime {}}(H)\). Clearly \({{\,\mathrm{cost}\,}}(\phi _{D,D^\prime ,\omega }) = \sum _{i=0}^\infty \frac{\epsilon }{100}\left|\eta '_i-\eta _i\right|\ =\frac{\epsilon }{100}\left|q(D')-q(D)\right| \le \epsilon \). For Example 2, there are two acyclic local alignments \(\phi _{D,D^\prime {\top }}\) and \(\phi _{D,D^\prime {\bot }}\), both have \({{\,\mathrm{cost}\,}}= 100\cdot \frac{ \epsilon }{100} = \epsilon \). The other conditions in Lemma 1 are trivial to check. Thus both mechanisms satisfy \(\epsilon \)-differential privacy by Lemma 1.

5 Improving sparse vector

In this section we propose an adaptive variant of SVT that can answer more queries than both the original SVT [19, 33] and the SVT with Gap of Wang et al. [45]. We explain how to tune its privacy budget allocation. We further show that using other types of random noise, such as exponential and geometric random variables, in place of the Laplace, makes the free gap information more accurate at the same cost to privacy. Finally, we discuss how the free gap information can be used for improved utility of data analysis.

5.1 Adaptive SVT with Gap

The sparse vector technique (SVT) is designed to solve the following problem in a privacy-preserving way: given a stream of queries (with sensitivity 1), find the first k queries whose answers are larger than a public threshold T. This is done by adding noise to the queries and threshold and finding the first k queries whose noisy answers exceed the noisy threshold. Sometimes this procedure creates a feeling of regret—if these k queries are much larger than the threshold, we could have used more noise (hence consumed less privacy budget) to achieve the same result. In this section, we show that sparse vector can be made adaptive—so that it will probably use more noise (less privacy budget) for the larger queries. This means if the first k queries are very large, it will still have privacy budget left over to find additional queries that are likely to be over the threshold. Adaptive SVT is shown in Algorithm 1.

The main idea behind this algorithm is that, given a target privacy budget \(\epsilon \) and an integer k, the algorithm will create three budget parameters: \(\epsilon _0\) (budget for the threshold), \(\epsilon _1\) (baseline budget for each query) and \(\epsilon _2\) (smaller alternative budget for each query, \(\epsilon _2<\epsilon _1\)). The privacy budget allocation between threshold and queries is controlled by a hyperparameter \(\theta \in (0,1)\) on Line 2. These budget parameters are used as follows. First, the algorithm adds \({{\,\mathrm{Lap}\,}}(1/\epsilon _0)\) noise to the threshold and consumes \(\epsilon _0\) of the privacy budget. Then, when a query comes in, the algorithm first adds a lot of noise (i.e., \({{\,\mathrm{Lap}\,}}(2/\epsilon _2)\)) to the query. The first “if” branch checks if this value is much larger than the noisy threshold (i.e., checks if the gap is \(\ge 2\sigma \) for someFootnote 4\(\sigma \)). If so, then it outputs the following three items: (1) \(\top \), (2) the noisy gap, and (3) the amount of privacy budget used for this query (which is \(\epsilon _2\)). The use of alignments will show that failing this “if” branch consumes no privacy budget. If the first “if” branch fails, then the algorithm adds more moderate noise (i.e., \({{\,\mathrm{Lap}\,}}(2/\epsilon _1)\)) to the query answer. If this noisy value is larger than the noisy threshold, the algorithm outputs: (1\(^\prime \)) \(\top \), (2\(^\prime \)) the noisy gap, and (3\(^\prime \)) the amount of privacy budget consumed (i.e., \(\epsilon _1\)). If this “if” condition also fails, then the algorithm outputs: (1\(^{\prime \prime }\)) \(\perp \) and (2\(^{\prime \prime }\)) the privacy budget consumed (0 in this case).

To summarize, there is a one-time cost for adding noise to the threshold. Then, for each query, if the top branch succeeds the privacy budget consumed is \(\epsilon _2\), if the middle branch succeeds, the privacy cost is \(\epsilon _1\), and if the bottom branch succeeds, there is no additional privacy cost. These properties can be easily seen by focusing on the local alignment—if M(D, H) produces a certain output, how much does H need to change to get a noise vector \(H^\prime \) so that \(M(D^\prime ,H^\prime )\) returns the same exact output.

Local alignment To create a local alignment for each pair \(D\sim D^\prime \), let \(H=(\eta ,\xi _1,\eta _1,\xi _2,\eta _2, \ldots )\) where \(\eta \) is the noise added to the threshold T, and \(\xi _i\) (resp. \(\eta _i\)) is the noise that should be added to the ith query \(q_i\) in Line 7 (resp. Line 8), if execution ever reaches that point. We view the output \(\omega =(w_1,\dots ,w_s)\) as a variable-length sequence where each \(w_i\) is either \(\perp \) or a nonnegative gap (we omit the \(\top \) as it is redundant), together with a \({{\,\mathrm{tag}\,}}\in \left\{ {0,\epsilon _1,\epsilon _2}\right\} \) indicating which branch \(w_i\) is from (and the privacy budget consumed to output \(w_i\)). Let \(\mathcal {I}_\omega = \left\{ {i\mid {{\,\mathrm{tag}\,}}(w_i) = \epsilon _2}\right\} \) and \(\mathcal {J}_\omega = \left\{ {i\mid {{\,\mathrm{tag}\,}}(w_i) = \epsilon _1}\right\} \). That is, \(\mathcal {I}_\omega \) is the set of indexes where the output is a gap from the top branch, and \(\mathcal {J}_\omega \) is the set of indexes where the output is a gap from the middle branch. For \(H\in \mathcal {H}^{}_{D:\omega }\) define \(\phi _{D,D^\prime ,\omega }(H)=H'=(\eta ',\xi _1',\eta _1',\xi _2', \eta _2', \ldots )\) where

In other words, we add 1 to the noise that was added to the threshold. (Thus if the noisy q(D) failed a specific branch, the noisy \(q(D^\prime )\) will continue to fail it because of the higher noisy threshold.) If a noisy q(D) succeeded in a specific branch, we adjust the query’s noise so that the noisy version of \(q(D^\prime )\) will succeed in that same branch.

Lemma 2

Let M be the Adaptive SVT with Gap algorithm. For all \(D\sim D^\prime \) and \(\omega \), the functions \(\phi _{D,D^\prime ,\omega }\) defined above are acyclic local alignments for M. Furthermore, for every pair \(D\sim D^\prime \), there are countably many distinct \(\phi _{D,D^\prime ,\omega }\).

Proof

Pick an adjacent pair \(D\sim D^\prime \) and an \(\omega =(w_1,\dots ,w_s)\). For a given \(H=(\eta , \xi _1,\eta _1,\dots )\) such that \(M(D,H)=\omega \), let \(H^\prime =(\eta ^\prime ,\xi _1^\prime , \eta _1^\prime , \dots ) = \phi _{D,D^\prime ,\omega }(H)\). Suppose \(M(D',H') = \omega ' =(w_1', \ldots , w_{t}')\). Our goal is to show \(\omega ^\prime =\omega \). Choose an \(i\le \min (s,t)\).

-

If \(i\in \mathcal {I}_\omega \), then by (2) we have

$$\begin{aligned}&q_i' + \xi _i' - (T + \eta ')\\&\quad = q_i' + \xi _i + 1 + q_i - q_i' - (T + \eta + 1) \\&\quad = q_i + \xi _i -(T+\eta ) \ge \sigma . \end{aligned}$$This means the first “if” branch succeeds in both executions and the gaps are the same. Therefore, \(w_i' = w_i\).

-

If \(i\in \mathcal {J}_\omega \), then by (2) we have

$$\begin{aligned}&q_i' + \xi _i' - (T + \eta ')= q_i' + \xi _i - (T + \eta + 1)\\&\quad = q_i' - 1 + \xi _i -(T+\eta ) \le q_i + \xi _i - (T+\eta ) < \sigma ,\\&q_i' + \eta _i' - (T + \eta ')= q_i' +\eta _i + 1\\&\qquad + q_i - q_i' - (T + \eta + 1)\\&\quad = q_i + \eta _i - (T+\eta ) \ge 0. \end{aligned}$$The first inequality is due to the sensitivity restriction: \(\left|q_i-q_i'\right|\le 1 \implies q_i'-1\le q_i\). These two equations mean that the first “if” branch fails and the second “if” branch succeeds in both executions, and the gaps are the same. Hence \(w_i' = w_i\).

-

If \(i\not \in \mathcal {I}_\omega \cup \mathcal {J}_\omega \), then by a similar argument we have

$$\begin{aligned} q_i' + \xi _i' - (T + \eta ')&\le q_i + \xi _i - (T+\eta )< \sigma ,\\ q_i' + \eta _i' - (T + \eta ')&\le q_i + \eta _i - (T+\eta ) < 0. \end{aligned}$$Hence both executions go to the last “else” branch and \(w_i' = (\bot , 0) = w_i\).

Therefore for all \(1\le i \le \min (s,t)\), we have \(w_i'=w_i\). That is, either \(\omega '\) is a prefix of \(\omega \), or vice versa. Let \({\varvec{q}}\) be the vector of queries passed to the algorithm and let \({{\,\mathrm{len}\,}}({\varvec{q}})\) be the number of queries it contains (which can be finite or infinity). By the termination condition of Algorithm 1 we have two possibilities.

-

1.

\(s={{\,\mathrm{len}\,}}({\varvec{q}})\): in this case there is still enough privacy budget left after answering \(s-1\) above-threshold queries, and we must have \(t={{\,\mathrm{len}\,}}({\varvec{q}})\) too because \(M(D',H')\) will also run through all the queries. (It cannot stop until it has exhausted the privacy budget or hits the end of the query sequence.)

-

2.

\(s<{{\,\mathrm{len}\,}}({\varvec{q}})\): in this case the privacy budget is exhausted after outputting \(w_s\) and we must also have \(t=s\).

Thus \(t=s\) and hence \(\omega ' = \omega \). The local alignments are clearly acyclic (e.g., use the identity permutation). Note that \(\phi _{D,D^\prime ,\omega }\) only depends on \(\omega \) through \(\mathcal {I}_\omega \) and \(\mathcal {J}_\omega \) (the sets of queries whose noisy values were larger than the noisy threshold). There are only countably many possibilities for \(\mathcal {I}_\omega \) and \(\mathcal {J}_\omega \) and thus countably many distinct \(\phi _{D,D^\prime ,\omega }\). \(\square \)

Alignment cost and privacy Now we establish the alignment cost and the privacy property of Algorithm 1.

Theorem 2

The Adaptive SVT with Gap satisfies \(\epsilon \)-differential privacy.

Proof

First, we bound the cost of the alignment function defined by Eq. (2). We use the \(\epsilon _0, \epsilon _1, \epsilon _2\) and \(\epsilon \) defined in Algorithm 1. From (2) we have

The first inequality is from the assumption on sensitivity: \(\left|1 + q_i - q_i'\right| \le 1 + \left|q_i - q_i'\right| \le 2\). The second inequality is from loop invariant on Line 17: \(\epsilon _0 + \epsilon _2\left|\mathcal {I}_\omega \right| + \epsilon _1\left|\mathcal {J}_\omega \right| =\mathtt {cost} \le \epsilon - \epsilon _1 + \max (\epsilon _1, \epsilon _2) = \epsilon \).

Conditions 1, 2, 3 of Lemma 1 are trivial to check, 4 and 5 follow from Lemma 2 and the above bound on cost. Thus Theorem 2 follows from Lemma 1. \(\square \)

Algorithm 1 can be easily extended with multiple additional “if” branches. For simplicity we do not include such variations. In our setting, \(\epsilon _2=\epsilon _1/2\) so, theoretically, if queries are very far from the threshold, our adaptive version of Sparse Vector will be able to find twice as many of them as the non-adaptive version. Lastly, if all queries are monotonic queries, then Algorithm 1 can be further improved: We can use \({{\,\mathrm{Lap}\,}}(1/\epsilon _2)\) in Line 7 and \({{\,\mathrm{Lap}\,}}(1/\epsilon _1)\) noises in 8 instead.Footnote 5

Choice of \(\theta \). We can optimize the budget allocation between threshold noise and query noises by following the methodology of [33], which is equivalent to minimizing the variance of the gap between a noisy query and the threshold. If the majority of gaps are expected to be returned from the top branch, then we optimize \({{\,\mathrm{Var}\,}}(\tilde{q}_i- \widetilde{T}) = \frac{2}{\epsilon _0^2} + \frac{8}{\epsilon _2^2} = \frac{2}{\epsilon ^2}(\frac{1}{\theta ^2} + \frac{16k^2}{(1-\theta )^2})\). This variance attains its minimum value of \(2(1+\root 3 \of {16k^2})^3/\epsilon ^2\) when \(\theta = 1/(1+\root 3 \of {16k^2})\). If on the other hand the majority of gaps are expected to be returned from the middle branch, then we optimize \({{\,\mathrm{Var}\,}}(\hat{q}_i- \widetilde{T}) = \frac{2}{\epsilon _0^2} + \frac{8}{\epsilon _1^2} = \frac{2}{\epsilon ^2}(\frac{1}{\theta ^2} + \frac{4k^2}{(1-\theta )^2})\). In this case, the minimum value is \(2(1+\root 3 \of {4k^2})^3/\epsilon ^2\) when \(\theta = 1/(1+\root 3 \of {4k^2})\). If all queries are monotone, then the optimal variance further reduces to \(2(1+\root 3 \of {4k^2})^3/\epsilon ^2\) in the top branch when \(\theta = 1/(1+\root 3 \of {4k^2})\), and \(2(1+\root 3 \of {k^2})^3/\epsilon ^2\) in the middle branch when \(\theta = 1/(1+\root 3 \of {k^2})\).

These allocation strategies also extend to SVT with Gap (originally proposed in [45]). SVT with Gap can be obtained by removing the first branch of Algorithm 1 (Line 9 through 11) or setting \(\sigma = \infty \). For reference, we show its pseudocode as Algorithm 2. In [45], \(\theta \) is set to 0.5, which is suboptimal. The optimal value is \(\theta = 1/(1+\root 3 \of {4k^2})\).

5.2 Using exponential or geometric noise

In this section, we show that Adaptive SVT with Gap also satisfies differential privacy if the Laplace noise is replaced by the exponential distribution or the geometric distribution (when query answers are guaranteed to be integers). Both of these are one-sided distributions that result in a gap estimate with lower variance (see Table 1 for information about those distributions). The same result carries over to SVT with Gap [45].

Exponential noise When using random noise from the exponential distribution, we need to subtract off the expected value of the noise from the queries and threshold. We make the following changes to Lines 3, 4, 7 and 8 of Algorithm 1:

In more detail, the changes are:

-

1.

Line 3: the algorithm changes the value of \(\sigma \) from \(2\sqrt{2}/\epsilon _2\), the standard deviation of \({{\,\mathrm{Lap}\,}}(2/\epsilon _2)\), to \(2/\epsilon _2\), the standard deviation of \({{\,\mathrm{Exp}\,}}(2/\epsilon _2)\). It is worth repeating that the one-sided exponential noise results in a reduction of variance.

-

2.

Lines 4, 7 and 8: change Laplace noises to exponential noises of the same scale, and then subtracts the expected values of the noises.

If all queries are counting queries, we further replace \(\epsilon _1\) and \(\epsilon _2\) in Line 3, 7 and 8 with \(2\epsilon _1\) and \(2\epsilon _2\) respectively.

Geometric noise When all queries have integer values (e.g., counting queries), we could utilize geometric noise to ensure that the gap is also an integer. To do so we make the following changes to Algorithm 1:

If all queries are counting queries, we further replace \(\epsilon _1\) and \(\epsilon _2\) in Line 3, 7 and 8 with \(2\epsilon _1\) and \(2\epsilon _2\) respectively.

Local alignment and privacy The alignment in Eq. 2 for the Adaptive SVT with Gap with Laplace noise also works for both exponential noise and geometric noise, because \(\eta ' - \eta = 1\) and \(\xi '_i - \xi _i, \eta '_i-\eta _i \in \left\{ {0, 1+q_i-q_i'}\right\} \). The value \(1+q_i - q_i'\) is always \(\ge 0\) and is an integer when \(q_i, q_i'\) are integers.

Recall that if f(x) is the probability density function of \({{\,\mathrm{Exp}\,}}(\beta )\), then \(\ln \frac{f(x)}{f(y)}\le \frac{1}{\beta }\left|x-y\right|\). Similarly, if g(x) is the probability mass function of \({{\,\mathrm{Geo}\,}}(p)\), then \(\ln \frac{g(x)}{g(y)} = \ln \frac{p(1-p)^x}{p(1-p)^y} \le -\ln (1-p)\left|x-y\right| \). Therefore, our choice of the parameters ensures that the alignment cost is the same as that of Laplace noise, which is bounded by \(\epsilon \). Thus both variants are \(\epsilon \)-differentially private.

Choice of \(\theta \). As before, we choose the \(\theta \) that minimizes the variance of the gap to make the result most accurate. Note that exponential distribution has half the variance of the Laplace distribution of the same scale. Thus, when exponential noise is used, the minimum variance of the gap is \((1+\root 3 \of {16k^2})^3/\epsilon ^2\) in the top branch when \(\theta = 1/(1+\root 3 \of {16k^2})\), and \((1+\root 3 \of {4k^2})^3/\epsilon ^2\) in the middle branch when \(\theta = 1/(1+\root 3 \of {4k^2})\). If all queries are monotone, then the optimal variance further reduces to \((1+\root 3 \of {4k^2})^3/\epsilon ^2\) in the top branch when \(\theta = 1/(1+\root 3 \of {4k^2})\), and \((1+\root 3 \of {k^2})^3/\epsilon ^2\) in the middle branch when \(\theta = 1/(1+\root 3 \of {k^2})\).

Since the geometric distribution is the discrete analogue of the exponential distribution, the above results apply to geometric noise as well. For example, when all queries are counting queries and geometric noise is used, then \({{\,\mathrm{Var}\,}}(\hat{q_i} - \widetilde{T}) = \frac{e^{\epsilon _0}}{(e^{\epsilon _0}-1)^2} + \frac{e^{\epsilon _1}}{(e^{\epsilon _1}-1)^2} = \frac{e^{\theta \epsilon }}{(e^{\theta \epsilon }-1)^2} + \frac{e^{(1-\theta )\epsilon /k}}{(e^{(1-\theta )\epsilon /k}-1)^2}\) in the middle branch. The variance of the gap, albeit complicated, is a convex function of \(\theta \) on (0, 1). We used the LBFGS algorithm [39] from SciPy to find the \(\theta \) where the variance is minimum, and found that those values are almost the same as those for exponential noise (see Fig. 1). Therefore, we can use the budget allocation strategy for exponential noise as the strategy for geometric noise too.

The blue dots are values of \(\theta _\text {min} ={{\,\mathrm{argmin}\,}}(\frac{e^{\theta \epsilon }}{(e^{\theta \epsilon }-1)^2} + \frac{e^{(1-\theta )\epsilon /k}}{(e^{(1-\theta )\epsilon /k}-1)^2})\) for k from 1 to 50. The orange curve is the function \(\theta ={1}/{(1+\root 3 \of {k^2})}\) (colour figure online)

5.3 Utilizing gap information

When SVT with Gap or Adaptive SVT with Gap returns a gap \(\gamma _i\) for a query \(q_i\), we can add to it the public threshold T. This means \(\gamma _i+T\) is an estimate of the value of \(q_i(D)\). We can ask two questions: how can we improve the accuracy of this estimate and how can we be confident that the true answer \(q_i(D)\) is really larger than the threshold T?

Lower confidence interval Recall that the randomness in the gap in Adaptive SVT with Gap (Algorithm 1) is of the form \(\eta _i - \eta \) where \(\eta \) and \(\eta _i\) are independent zero mean Laplace variables with scale \(1/\epsilon _0\) and \(1/\epsilon _*\), where \(\epsilon _*\) is either \(\epsilon _1\) or \(\epsilon _2\), depending on the branch. The random variable \(\eta _i-\eta \) has the following lower tail bound:

Lemma 3

For any \(t\ge 0\) we have

For proof see “Appendix.” For any confidence level, say 95%, we can use this result to find a number \(t_{0.95}\) such that \(\mathbb {P}((\eta _i-\eta ) \ge -t_{0.95}) = 0.95\). This is a lower confidence bound, so that the true value \(q_i(D)\) is \(\ge \) our estimated value \(\gamma _i + T\) minus \(t_{0.95}\) with probability 0.95.

Improving accuracy To improve accuracy, one can split the privacy budget \(\epsilon \) in half. The first half \(\epsilon '\equiv \epsilon /2\) can be used to run SVT with Gap (or Adaptive SVT with Gap) and the second half \(\epsilon ''\equiv \epsilon /2\) can be used to provide an independent noisy measurement of the selected queries (i.e., if we selected k queries, we add \({{\,\mathrm{Lap}\,}}(k/\epsilon '')\) noise to each one). Denote the k selected queries by \(q_1,\dots , q_k\), the noisy gaps by \(\gamma _1,\dots , \gamma _k\) and the independent noisy measurements by \(\alpha _1,\dots ,\alpha _k\). The noisy estimates can be combined together with the gaps to get improved estimates \(\beta _i\) of \(q_i(D)\) in the standard way (inverse-weighting by variance):

Note that \(\frac{{{\,\mathrm{Var}\,}}(\beta _i)}{{{\,\mathrm{Var}\,}}(\alpha _i)} = \frac{{{\,\mathrm{Var}\,}}(\gamma _i)}{{{\,\mathrm{Var}\,}}(\alpha _i)+{{\,\mathrm{Var}\,}}(\gamma _i)} < 1\).

As discussed in Sect. 5.1, the optimal budget allocation between threshold noise and query noises within SVT with Gap is the ratio 1:\(\root 3 \of {4k^2}\). Under this setting, we have \({{\,\mathrm{Var}\,}}(\gamma _i) = 8(1+\root 3 \of {4k^2})^3 /\epsilon ^2\). Also, we know \({{\,\mathrm{Var}\,}}(\alpha _i) = 8k^2/\epsilon ^2\). Therefore, \( \tfrac{E( |\beta _i - q_i|^2)}{E( |\alpha _i - q_i|^2)} = \tfrac{ {{\,\mathrm{Var}\,}}(\beta _i)}{ {{\,\mathrm{Var}\,}}(\alpha _i)} = \tfrac{(1+ \root 3 \of {4k^2})^3}{ (1+ \root 3 \of {4k^2})^3 + k^2}. \) Since \(\lim \nolimits _{k\rightarrow \infty } \frac{(1+ \root 3 \of {4k^2})^3}{ (1+ \root 3 \of {4k^2})^3 + k^2} = \frac{4}{5}\), the improvement in accuracy approaches \(20\%\) as k increases. For monotonic queries, the optimal budget allocation within SVT with Gap is \(1:\root 3 \of {k^2}\). Then we have \({{\,\mathrm{Var}\,}}(\gamma _i)=8(1+\root 3 \of {k^2})^3/\epsilon ^2\) and therefore \(\frac{{{\,\mathrm{Var}\,}}(\beta _i)}{{{\,\mathrm{Var}\,}}(\alpha _i)} =\frac{(1+ \root 3 \of {k^2})^3}{ (1+ \root 3 \of {k^2})^3 + k^2}\) which is close to 50% when k is large. When the algorithm uses exponential noise, the variance of the gap further reduces to \({{\,\mathrm{Var}\,}}(\gamma _i)=4(1+\root 3 \of {k^2})^3/\epsilon ^2\) and therefore \(\frac{{{\,\mathrm{Var}\,}}(\beta _i)}{{{\,\mathrm{Var}\,}}(\alpha _i)} =\frac{(1+ \root 3 \of {k^2})^3}{ (1+ \root 3 \of {k^2})^3 + 2k^2}\) which is close to a 66% reduction of mean squared errors when k is large. Our experiments in Sect. 9 confirm this improvement.

6 Improving report noisy max

In this section, we present novel variations of the Noisy Max mechanism [19]. Given a list of queries with sensitivity 1, the purpose of Noisy Max is to estimate the identity (i.e., index) of the largest query. We show that, in addition to releasing this index, it is possible to release a numerical estimate of the gap between the values of the largest and second largest queries. This extra information comes at no additional cost to privacy, meaning that the original Noisy Max mechanism threw away useful information. This result can be generalized to the setting in which one wants to estimate the identities of the top k queries—we can release (for free) all of the gaps between each top k query and the next best query (i.e., the gap between the best and second best queries, the gap between the second and third best queries, etc.). When a user subsequently asks for a noisy answer to each of the returned queries, we show how the gap information can be used to reduce squared error by up to 66% (for counting queries).

6.1 Noisy Top-K with Gap

Our proposed Noisy Top-K with Gap mechanism is shown in Algorithm 3. (The function \(\arg \max _c\) returns the top c items.) We can obtain the classical noisy max algorithm [19] from it by setting \(k=1\) and throwing away the gap information (the boxed items on Lines 6 and 7). The Noisy Top-K with Gap algorithm takes as input a sequence of n queries \(q_1,\dots , q_n\), each having sensitivity 1. It adds Laplace noise to each query. It returns the indexes \(j_1,\dots , j_k\) of the k queries with the largest noisy values in descending order. Furthermore, for each of these top k queries \(q_{j_i}\), it releases the noisy gap between the value of \(q_{j_i}\) and the value of the next best query. Our key contribution in this section is the observation that these gaps can be released for free. That is, the classical Top-K algorithm, which does not release the gaps, satisfies \(\epsilon \)-differential privacy. But, our improved version has exactly the same privacy cost yet is strictly better because of the extra information it can release.

We emphasize that keeping the noisy gaps hidden does not decrease the privacy cost. Furthermore, this algorithm gives estimates of the pairwise gaps between any pair of the k queries it selects. For example, suppose we are interested in estimating the gap between the ath largest and bth largest queries (where \(a < b\le k\)). This is equal to \(\sum _{i=a}^{b-1} g_i\) because: \( \sum _{i=a}^{b-1} g_i = \sum _{i=a}^{b-1} (\widetilde{q}_{j_i} - \widetilde{q}_{j_{i+1}}) = \widetilde{q}_{j_a} - \widetilde{q}_{j_b} \) and hence its variance is \({{\,\mathrm{Var}\,}}(\widetilde{q}_{j_a} - \widetilde{q}_{j_b}) = 16k^2/\epsilon ^2\).

The original Noisy Top-K mechanism satisfies \(\epsilon \)-differential privacy. In the special case that all the \(q_i\) are counting queries then it satisfies \(\epsilon /2\)-differential privacy [19]. We will show the same properties for Noisy Top-K with Gap. We prove the privacy property in this section and then in Sect. 6.3 we show how to use this gap information.

Local alignment To prove the privacy of Algorithm 3, we need to create a local alignment function for each possible pair \(D\sim D^\prime \) and output \(\omega \). Note that our mechanism uses precisely n random variables. Let \(H=(\eta _1,\eta _2,\dots )\) where \(\eta _i\) is the noise that should be added to the ith query. We view the output \(\omega =((j_1,g_1),\ldots , (j_k, g_k))\) as k pairs where in the ith pair \((j_i,g_i)\), the first component \(j_i\) is the index of ith largest noisy query and the second component \(g_i\) is the gap in noisy value between the ith and \((i+1)\)th largest noisy queries. As in prior work [19], we will base our analysis on continuous noise so that the probability of ties among the top \(k+1\) noisy queries is 0. Thus each gap is positive: \(g_i>0\).

Let \(\mathcal {I}_\omega = \left\{ {j_1,\ldots , j_k}\right\} \) and \(\mathcal {I}_\omega ^c=\left\{ {1, \ldots , n}\right\} \setminus \mathcal {I}_\omega \), i.e., \(\mathcal {I}_\omega \) is the index set of the k largest noisy queries selected by the algorithm and \(\mathcal {I}_\omega ^c\) is the index set of all unselected queries. For \(H\in \mathcal {H}^{}_{D:\omega }\) define \(\phi _{D,D^\prime ,\omega }(H) = H'=(\eta '_1, \eta '_2, \ldots )\) as

The idea behind this local alignment is simple: We want to keep the noise of the losing queries the same (when the input is D or its neighbor \(D^\prime )\). But, for each of the k selected queries, we want to align its noise to make sure it wins by the same amount when the input is D or its neighbor \(D'\).

Lemma 4

Let M be the Noisy Top-K with Gap algorithm. For all \(D\sim D^\prime \) and \(\omega \), the functions \(\phi _{D,D^\prime ,\omega }\) defined above are acyclic local alignments for M. Furthermore, for every pair \(D\sim D^\prime \), there are countably many distinct \(\phi _{D,D^\prime ,\omega }\).

Proof

Given \(D\sim D^\prime \) and \(\omega =((j_1,g_1),\ldots , (j_k,g_k))\), for any \(H=(\eta _1,\eta _2,\dots )\) such that \(M(D,H)=\omega \), let \(H^\prime =(\eta _1^\prime , \eta _2^\prime , \dots ) = \phi _{D,D^\prime ,\omega }(H)\). We show that \(M(D', H') = \omega \). Since \(\phi _{D,D^\prime ,\omega }\) is identity on components \(i\in \mathcal {I}_\omega ^c\), we have \(\max \nolimits _{l\in \mathcal {I}_\omega ^c}(q'_l+\eta '_l) = \max \nolimits _{l\in \mathcal {I}_\omega ^c}(q'_l+\eta _l)\). From (3) we have that when \(i\in \mathcal {I}_\omega \),

So, for the kth selected query, \( (q'_{j_k} +\eta '_{j_k}) - \max \nolimits _{l\in \mathcal {I}_\omega ^c}(q'_l+\eta _l') = (q_{j_k} + \eta _{j_k}) - \max \nolimits _{l\in \mathcal {I}_\omega ^c}(q_l+\eta _l) = g_{k} > 0. \) This means on \(D'\) the noisy query with index \(j_k\) is larger than the best of the unselected noisy queries by the same margin as it is on D. Furthermore, for all \(1\le i < k\), we have

In other words, the query with index \(j_i\) is still the ith largest query on \(D'\) by the same margin. Therefore, \(M(D', H') = \omega \).

The local alignments are clearly acyclic (any permutation that puts \(\mathcal {I}_\omega ^c\) before \(\mathcal {I}_\omega \) does the trick). Also, note that \(\phi _{D,D^\prime ,\omega }\) only depends on \(\omega \) through \(\mathcal {I}_\omega \) (the indexes of the k largest queries). There are n queries and therefore \(\left( {\begin{array}{c}n\\ k\end{array}}\right) = \frac{n!}{(n-k)!k!}\) distinct \(\phi _{D,D^\prime ,\omega }\). \(\square \)

Alignment cost and privacy To establish the alignment cost, we need the following lemma.

Lemma 5

Let \((x_1,\ldots , x_m), (x'_1,\ldots , x'_m)\in \mathbb {R}^m\) be such that \(\forall i, \left|x_i-x'_i\right|\le 1\). Then \(\left|\max _i(x_i) - \max _i (x'_i)\right|\le 1\).

Proof

Let s be an index that maximizes \(x_i\) and let t be an index that maximizes \(x'_i\). Without loss of generality, assume \(x_{s} \ge x'_{t}\). Then \(x_s \ge x'_t \ge x'_s \ge x_s-1\). Hence \( \left|x_s - x'_t\right| = x_s - x'_t \le x_s - (x_s-1) = 1.\)

Theorem 3

The Noisy Top-K with Gap mechanism satisfies \(\epsilon \)-differential privacy. If all of the queries are counting queries, then it satisfies \(\epsilon /2\)-differential privacy.

Proof

First we bound the cost of the alignment function defined in (3). Recall that the \(\eta _i\)’s are independent \({{\,\mathrm{Lap}\,}}(2k/\epsilon )\) random variables. By Definition 7

By the global sensitivity assumption we have \(\left|q_i-q_i'\right|\le 1\). Apply Lemma 5 to the vectors \((q_l+\eta _l)_{l\in \mathcal {I}_\omega ^c}\) and \((q'_l+\eta _l)_{l\in \mathcal {I}_\omega ^c}\), we have \(\left| \max \nolimits _{l\in \mathcal {I}_\omega ^c}(q_l'+\eta _l)- \max \nolimits _{l\in \mathcal {I}_\omega ^c}(q_l+\eta _l)\right|\le 1\). Therefore,

Furthermore, if \({\varvec{q}}\) is monotonic, then

-

either \(\forall i: q_i \le q'_i\) in which case \(q_{i}-q'_{i}\in [-1,0]\) and \(\max \nolimits _{l\in \mathcal {I}_\omega ^c}(q_l'+\eta _l)- \max \nolimits _{l\in \mathcal {I}_\omega ^c}(q_l+\eta _l)\in [0,1]\),

-

or \(\forall i: q_i \ge q'_i\) in which case \(q_{i}-q'_{i}\in [0,1]\) and \(\max \nolimits _{l\in \mathcal {I}_\omega ^c}(q_l'+\eta _l)- \max \nolimits _{l\in \mathcal {I}_\omega ^c}(q_l+\eta _l)\in [-1,0]\).

In both cases we have \(q_{i}-q_{i}'+ \max \nolimits _{l\in \mathcal {I}_\omega ^c}(q_l'+\eta _l)- \max \nolimits _{l\in \mathcal {I}_\omega ^c}(q_l+\eta _l) \in [-1,1]\) so \(|q_{i}-q_{i}'+ \max \nolimits _{l\in \mathcal {I}_\omega ^c}(q_l'+\eta _l)- \max \nolimits _{l\in \mathcal {I}_\omega ^c}(q_l+\eta _l)|\le 1\). Therefore,

Conditions 1 through 3 of Lemma 1 are trivial to check, 4 and 5 follow from Lemma 4 and the above bound on cost. Therefore, Theorem 3 follows from Lemma 1. \(\square \)

6.2 Noisy top-K with exponential noise

The original noisy max algorithm also works with one-sided exponential noise [19] with smaller variance than the Laplace noise. In this subsection, we show that this result extends to the Noisy Top-K with Gap algorithm by simply changing Line 3 of Algorithm 3 to \(\eta _i \leftarrow {{\,\mathrm{Exp}\,}}(2k/\epsilon )\) and privacy is maintained while the variance of the gap decreases. However, the proof relies on a different local alignment.

Local alignment The alignment used in Sect. 6.1 will not work here because it might set our noise random variables to negative numbers. Thus we need a new alignment. As before, let \(H=(\eta _1,\eta _2,\dots )\) where \(\eta _i\) is the noise that should be added to the ith query. We view the output \(\omega =((j_1,g_1),\ldots , (j_k, g_k))\) as k pairs where in the ith pair \((j_i,g_i)\), the first component \(j_i\) is the index of ith largest noisy query and the second component \(g_i>0\) is the gap in noisy value between the ith and \((i+1)\)th largest noisy queries.

Let \(\mathcal {I}_\omega = \left\{ {j_1,\ldots , j_k}\right\} \) and \(\mathcal {I}_\omega ^c=\left\{ {1, \ldots , n}\right\} \setminus \mathcal {I}_\omega \), i.e., \(\mathcal {I}_\omega \) is the index set of the k largest noisy queries selected by the algorithm and \(\mathcal {I}_\omega ^c\) is the index set of all unselected queries. For \(H\in \mathcal {H}^{}_{D:\omega }\) we will use \(\phi _{D,D^\prime ,\omega }(H) = H'=(\eta '_1, \eta '_2, \ldots )\) to refer to the aligned noise. In order to define the alignment, we need the following quantities:

Note that \(i_*\in \mathcal {I}_\omega \) and \(s, t\in \mathcal {I}_\omega ^c\). We define the alignment according to the value of \(\delta _*\). When \(\delta _* \ge 0\), we use the same alignment as in the Laplace version of the algorithm:

When \(\delta _* < 0\) that alignment could result in a negative \(\eta ^\prime _i\) for some \(i\in \mathcal {I}_\omega \). So instead, we take that alignment and further add the positive quantity \(-\delta _*\) in several places so that overall we are adding nonnegative numbers to each \(\eta _i\) to get \(\eta ^\prime _i\). (This ensures that \(\eta ^\prime _i\) is nonnegative for each i.) Thus, when \(\delta _* < 0\), define

Lemma 6

Let M be the Noisy Top-K with Gap algorithm that uses exponential noise. For all \(D\sim D^\prime \) and \(\omega \), the functions \(\phi _{D,D^\prime ,\omega }\) defined above are acyclic local alignments for M. Furthermore, for every pair \(D\sim D^\prime \), there are countably many distinct \(\phi _{D,D^\prime ,\omega }\).

Proof

First, we show that \(\forall i, \eta _i' \ge \eta _i\). Recall that \(\delta _* = \min _{i\in \mathcal {I}_\omega }\left\{ {q_i-q'_i+(q_t'+\eta _t)- (q_s+\eta _s)}\right\} \). When \(\delta _*\ge 0\), we have \(\eta '_i - \eta _i = q_i-q'_i+(q_t'+\eta _t)- (q_s+\eta _s) \ge \delta _* \ge 0 \) for all \(i\in \mathcal {I}_\omega \). When \(\delta _* < 0\), we have \(\eta '_t - \eta _t = -\delta _* >0\) and \(\eta '_i - \eta _i = (q_i - q_i') - (q_{i_*} - q'_{i_*}) \ge 0\) for \(i\in \mathcal {I}_\omega \). Therefore, all \(\eta '_i\) are nonnegative.

The proof that (4) is an alignment when \(\delta _*\ge 0\) is the same as in the Laplace noise case. To show that (5) is an alignment when \(\delta _* < 0\), first note that since \(t = \mathop {{{\,\mathrm{argmax}\,}}}\nolimits _{l\in \mathcal {I}_\omega ^c} (q'_l+\eta _l)\) and \(-\delta _* > 0\), we have \(t = \mathop {{{\,\mathrm{argmax}\,}}}\nolimits _{l\in \mathcal {I}_\omega ^c} (q'_l+\eta '_l)\). Then from (5), we have that when \(i\in \mathcal {I}_\omega \),

Thus by a similar argument in Lemma 4, all relative orders among the k largest noisy queries and their associated gaps are preserved. The facts that \(\phi _{D,D^\prime ,\omega }\) is acyclic and there are finitely many \(\phi _{D,D^\prime ,\omega }\) are clear. \(\square \)

Alignment cost and privacy Recall from Table 1 that if f(x) is the density of \({{\,\mathrm{Exp}\,}}(\beta )\), then for \(x,y\ge 0\), \(\ln \frac{f(x)}{f(y)} = \frac{y-x}{\beta } \le \frac{\left|y-x\right|}{\beta }.\) When \(\delta _*\ge 0\), the alignment cost computation is the same as with the Laplace version of the algorithm. When \(\delta _* < 0\), we have

and note that there are \(k-1\) terms in the right-most summation. It is clear that \(\left| q_i-q'_i-q_{i_*} + q'_{i_*}\right| \le 2\) (or 1 if \({\varvec{q}}\) is monotone). Also, it is shown in the proof of Theorem 3 that \(\left|\delta _*\right| = |{q_{i_*}-q'_{i_*}+\max \nolimits _{l\in \mathcal {I}_\omega ^c}(q_l'+\eta _l)- \max \nolimits _{l\in \mathcal {I}_\omega ^c}(q_l+\eta _l)}| \le 2\) (or 1 if \({\varvec{q}}\) is monotone). Therefore,

Thus, Algorithm 3 with \({{\,\mathrm{Exp}\,}}(2k/\epsilon )\) noise on Line 3 instead of \({{\,\mathrm{Lap}\,}}(2k/\epsilon )\) noise, satisfies \(\epsilon \)-differential privacy. If all of the queries are counting queries, then it satisfies \(\epsilon /2\)-differential privacy.

6.3 Utilizing gap information

Let us consider one scenario that takes advantage of the gap information. Suppose a data analyst is interested in the identities and values of the top k queries. A typical approach would be to split the privacy budget \(\epsilon \) in half—use \(\epsilon /2\) of the budget to identify the top k queries using Noisy Top-K with Gap. The remaining \(\epsilon /2\) budget is evenly divided between the selected queries and is used to obtain noisy measurements (i.e., add \({{\,\mathrm{Lap}\,}}(2k/\epsilon )\) noise to each query answer). These measurements will have variance \(\sigma ^2=8k^2/\epsilon ^2\). In this section we show how to use the gap information from Noisy Top-K with Gap and postprocessing to improve the accuracy of these measurements.

Problem statement Let \(q_1\),\(\dots \), \(q_k\) be the true answers of the top k queries that are selected by Algorithm 3. Let \(\alpha _1,\ldots , \alpha _k\) be their noisy measurements. Let \(g_1,\ldots ,g_{k-1}\) be the noisy gaps between \(q_1,\ldots ,q_k\) that are obtained from Algorithm 3 for free. Then \(\alpha _i=q_i + \xi _i\) where each \(\xi _i\) is a \({{\,\mathrm{Lap}\,}}(2k/\epsilon )\) random variable and \(g_i = q_i + \eta _i - q_{i+1} - \eta _{i+1}\) where each \(\eta _i\) is a \({{\,\mathrm{Lap}\,}}(4k/\epsilon )\) random variable, or a \({{\,\mathrm{Lap}\,}}(2k/\epsilon )\) random variable if the query list is monotonic (recall the mechanism was run with a privacy budget of \(\epsilon /2\)). Our goal is then to find the best linear unbiased estimate (BLUE) [30] \(\beta _i\) of \(q_i\) in terms of the measurements \(\alpha _i\) and gap information \(g_i\).

Theorem 4

With notations as above let \({\varvec{q}}=[q_1, \ldots , q_k]^{\mathrm{T}}\), \({\varvec{\alpha }}=[\alpha _1, \ldots , \alpha _k]^{\mathrm{T}}\) and \({\varvec{g}}=[g_1, \ldots , g_{k-1}]^{\mathrm{T}}\). Suppose the ratio \({{\,\mathrm{Var}\,}}(\xi _i) : {{\,\mathrm{Var}\,}}(\eta _i)\) is equal to \(1:\lambda \). Then the BLUE of \({\varvec{q}}\) is \({\varvec{\beta }}= \frac{1}{(1+\lambda )k} (X{\varvec{\alpha }}+ Y{\varvec{g}})\) where

For proof, see “Appendix.” Even though this is a matrix multiplication, it is easy to see that it translates into the following algorithm that is linear in k:

-

1.

Compute \(\alpha = \sum _{i=1}^k \alpha _i\) and \(p = \sum _{i=1}^{k-1} (k-i)g_i\).

-

2.

Set \(p_0=0\). For \(i=1, \ldots , k-1\) compute the prefix sum \(p_i= \sum _{j=1}^i g_j = p_{i-1} + g_i\).

-

3.

For \(i=1, \ldots , k\), set \(\beta _i = (\alpha + \lambda k\alpha _i + p - kp_{i-1})/(1+\lambda )k\).

Now, each \(\beta _i\) is an estimate of the value of \(q_i\). How does it compare to the direct measurement \(\alpha _i\) (which has variance \(\sigma ^2 = 8k^2/\epsilon ^2\))? The following result compares the expected error of \(\beta _i\) (which used the direct measurements and the gap information) with the expected error of using only the direct measurements (i.e., \(\alpha _i\) only).

Corollary 1

For all \(i=1,\ldots , k\), we have

For proof, see Appendix. In the case of counting queries, we have \({{\,\mathrm{Var}\,}}(\xi _i) = {{\,\mathrm{Var}\,}}(\eta _i) = 8k^2/\epsilon ^2\) and thus \(\lambda = 1\). The error reduction rate is \(\frac{k-1}{2k}\) which is close to \(50\%\) when k is large. If we use exponential noise instead, i.e., replace \(\eta _i \leftarrow {{\,\mathrm{Lap}\,}}(2k/\epsilon )\) with \(\eta _i \leftarrow {{\,\mathrm{Exp}\,}}(2k/\epsilon )\) at Line 3 of Algorithm 3, then \({{\,\mathrm{Var}\,}}(\eta _i) = 4k^2/\epsilon ^2 = {{\,\mathrm{Var}\,}}(\xi _i)/2\) and thus \(\lambda = 1/2\). In this case, the error reduction rate is \(\frac{2k-2}{3k}\) which is close to \(66\%\) when k is large. Our experiments in Sect. 9 confirm these theoretical results.

7 SVT/noisy max hybrids with gap

In this section, we present two hybrids of SVT with Gap and Noisy Top-K with Gap. Recall that SVT with Gap is an online algorithm that returns the identities and noisy gaps (with respect to the threshold) of the first k noisy queries it sees that are larger than the noisy threshold. Its benefits are: (i) Privacy budget is saved if fewer than k queries are returned. (ii) The queries that are returned come with estimates of their noisy answers (obtained by adding the public threshold to the noisy gap), while the drawbacks are that the returned queries are likely not to resemble the k largest queries. (Queries that come afterward are ignored, no matter how large their values are.)

Meanwhile, Noisy Top-K with Gap returns the identities and gaps (with respect to the runner-up query) of the top k noisy queries. Its benefits are: (i) The queries returned are approximately the top k. (ii) The gap tells us how large the queries are compared to the best non-selected noisy query. The drawbacks are: (i) k queries are always returned, even if their values are small. (ii) Only gap information is returned (not estimates of the query answers).

For users who are interested in identifying the top k queries that are likely to be over a threshold, we present two hybrid algorithms that try to combine the benefits of both algorithms while minimizing the drawbacks. Both algorithms take as input a number k, a list of answers to queries having sensitivity 1, and a public threshold T. They both return a subset of the top k noisy queries that are larger than the noisy threshold T; hence, the privacy cost is dynamic and is smaller if fewer than k queries are returned. The difference is in the gap information.

The first hybrid (Algorithm 4) is a variant of Noisy Top-K with gap. It adds the public threshold T to the list of queries (it becomes Query 0), adds the same noise to them (Lines 2 and 4). In line 6, it takes the top k noisy queries (sorted in decreasing order) and their gaps with the next best query. It filters out any that are smaller than the noisy Query 0. For the queries that did not get removed, it returns their identities (recall the threshold is Query 0) and their gap with the next best query. If the last returned item is Query 0, this means that the gap information tells us how much larger the other returned queries are compared to the noisy threshold Query 0, and this allows us to get numerical estimates for those query answers by adding in the public threshold.

Alignment and privacy cost for Algorithm 4. By replacing the index sets \(\mathcal {I}_\omega \) in Eqs. (4) and (5) with \(\mathcal {I}_\omega =\left\{ {j_1, \ldots , j_t}\right\} \), the same formula can be used as the alignment function for Algorithm 4. Note that since \(\left|\mathcal {I}_\omega \right| = t \le k\), the privacy cost is \((t/k)\epsilon \).

Lemma 7

If Algorithm 4 is run with privacy budget \(\epsilon \) and returns t queries (and their associated gaps), then the actual privacy cost is \((t/k)\epsilon \).

The second hybrid (Algorithm 5) is essentially SVT with Gap applied to the list of queries that is sorted in descending order by their noisy answers. We note that it adds more noise to each query than Algorithm 4 but always returns the noisy gap between the noisy query answer and the noisy threshold, just like SVT with Gap.

Alignment and privacy cost for Algorithm 5 The alignment for Algorithm 5 is the same as the one for SVT with Gap and is hence omitted here. Note that the privacy cost is \(\epsilon _0 + t\epsilon _1 = (\theta + (t/k)(1-\theta ))\epsilon \) where t is the number of queries returned. As discussed in Sect. 5.1, the optimal \(\theta \) is \(1/(1 + \root 3 \of {4k^2})\).

Lemma 8

If Algorithm 5 is run with privacy budget \(\epsilon \) and returns t queries (and their associated gaps), then the actual privacy cost is \((\theta + (t/k)(1-\theta ))\epsilon \).

Benefits of the Hybrid Algorithms Compared with Noisy Top-K with Gap, the hybrid algorithms have these advantages: (i) saving privacy budget: The actual privacy budget consumption for the hybrid algorithms is dynamic—it depends on the number of queries returned. Thus if the threshold T is set high, the hybrid algorithms will likely return fewer than k queries and consume less privacy budget; (ii) providing query estimates: Algorithm 5 always returns the noisy gap with the threshold. (Hence, by adding in the public threshold value, this gives an estimate of the query answer.) Meanwhile, Algorithm 4 only returns the noisy gap with the threshold if the last query returned is the noisy threshold Query 0. (Otherwise it functions like Noisy Top-K with Gap and returns the gaps with the runner up query.)

Compared with SVT with Gap, the hybrid algorithms are trying to select the overall top k queries that are above the threshold, whereas SVT with Gap tries pick the first k queries it sees that are above the threshold. So the queries returned by the hybrid algorithms are expected to have much higher values. There is an important distinction though: SVT with Gap is an online algorithm that can process queries as they arrive, whereas the hybrid algorithms require all queries to be known beforehand.

The first hybrid (Algorithm 4) is more likely to provide accurate identity information than the second hybrid (Algorithm 5). That is, the queries it returns are more likely to be the actual queries whose true values are largest (because the first algorithm adds less noise to the query answers). However, as mentioned before Algorithm 5 always provides estimates of query answers, whereas Algorithm 4 only provides such estimates if the last query returned is the noisy threshold Query 0. Therefore, if it is more desirable to always have query answer estimates then one should use Algorithm 5. Otherwise Algorithm 4 is a good default choice.

8 Improving the exponential mechanism

The exponential mechanism [36] was designed to answer non-numeric queries in a differentially private way. In this setting, \(\mathcal {D}\) is the set of possible input databases and \(\mathcal {R}=\left\{ {\omega _1, \omega _2, \ldots , \omega _n}\right\} \) is a set of possible outcomes. There is a utility function \(\mu : \mathcal {D}\times \mathcal {R}\rightarrow \mathbb {R}\) where \(\mu (D,\omega _i)\) gives us the utility of outputting \(\omega _i\) when the true input database is D. The exponential mechanism randomly selects an output \(\omega _i\) with probabilities that are defined by the following theorem:

Theorem 5

(The Exponential Mechanism [36]) Given \(\epsilon >0\) and a utility function \(\mu : \mathcal {D}\times \mathcal {R}\rightarrow \mathbb {R}\), the mechanism \(M(D, \mu , \epsilon )\) that outputs \(\omega _i\in \mathcal {R}\) with probability proportional to \(\exp (\frac{\epsilon \mu (D,\omega _i)}{2\varDelta _{\mu }})\) satisfies \(\epsilon \)-differential privacy where \(\varDelta _\mu \), the sensitivity of \(\mu \), is defined as

We show that the exponential mechanism can also output (for free) a type of gap information in addition to the selected index. This gap provides noisy information about the difference between the utility scores of the selected output and non-selected outputs. What is surprising about this result is that we can treat the exponential mechanism as a black box (i.e., it does not matter how the sampling is implemented). In contrast, the internal state of the noisy max algorithm was needed (i.e., the gap was computed from the noisy query answers). The details are shown in Algorithm 6, which makes use of the Logistic(\(\theta \)) distribution having pdf \(f(x;\theta ) = \frac{e^{-(x-\theta )}}{(1+e^{-(x-\theta )})^2}\).

Theorem 6

Algorithm 6 satisfies \(\epsilon \)-differential privacy and the expected value of \(g_s\) is \((1+e^{-\theta })\ln (1+e^\theta )\) where s is the index of the query returned by the exponential mechanism and \(\theta = \frac{\epsilon \mu (D, \omega _s)}{2\varDelta _{\mu }} - \ln \sum _{j\ne s}\exp ({\frac{\epsilon \mu (D, \omega _j)}{2\varDelta _{\mu }}})\) is the location parameter of the sampling distribution.

Utilizing the Gap Information. From Theorem 6 and Algorithm 6, we see that \(\theta \) is a kind of gap (scaled by \(\epsilon /2\varDelta _\mu \)) between the selected query \(\omega _s\) and a softmax of the remaining items. While \(\theta \) can be numerically estimated from \(g_s\), one can also use \(g_s\) for the following purpose.

The exponential mechanism is randomized, so an important question is whether it returned a query that has the highest utility. We can use the noisy gap information \(g_s\) from Algorithm 6 to answer this question in a hypothesis testing framework. Specifically, let \(H_0\) be the null hypothesis that the returned query \(\omega _s\) does not have the highest utility score. Then \(g_s\) can tell us how unlikely this null hypothesis is—the quantity \(\mathbb {P}[g_s \ge \gamma \mid H_0]\) is the significance level (also known as a p value), and small values indicate the null hypothesis is unlikely. Its computation is given in Theorem 7.

Theorem 7

\(\mathbb {P}[g_s \ge \gamma \mid H_0]\le {2}/{(1+e^{\gamma })}\).

We note that if we want a significance level of \(\alpha =0.05\) (i.e., there is less than a 5% chance that a non-optimal query could have produced a large noisy gap) then we need \(g_s \ge \ln (\tfrac{2}{\alpha }-1)\approx 3.66\).

9 Experiments

We evaluate the algorithms proposed in this paper using the two real datasets from [33]: BMP-POS, Kosarak and a synthetic dataset T40I10D100K created by the generator from the IBM Almaden Quest research group. These datasets are collections of transactions. (Each transaction is a set of items.) In our experiments, the queries correspond to the counts of each item (i.e., how many transactions contained item #23?)

The statistics of the datasets are listed below (Table 3).

9.1 Improving query estimates with gap information

The first set of experiments is to measure how gap information can help improve estimates in selected queries. We use the setup of Sects. 5.3 and 6.3. That is, a data analyst splits the privacy budget \(\epsilon \) into half. She uses the first half to select k queries using Noisy Top-K with Gap or SVT with Gap (or Adaptive SVT with Gap) and then uses the second half of the privacy budget to obtain independent noisy measurements of each selected query.

If one were unaware that gap information came for free, one would just use those noisy measurements as estimates for the query answers. The error of this approach is the gap-free baseline. However, since the gap information does come for free, we can use the postprocessing described in Sects. 5.3 and 6.3 to improve accuracy (we call this latter approach SVT with Gap with Measures and Noisy Top-K with Gap with Measures).

We first evaluate the percentage reduction of mean squared error (MSE) of the postprocessing approach compared to the gap-free baseline and compare this improvement to our theoretical analysis. As discussed in Sect. 5.3, we set the budget allocation ratio within the SVT with Gap algorithm (i.e., the budget allocation between the threshold and queries) to be \(1:k^\frac{2}{3}\) for monotonic queries and \(1:(2k)^\frac{2}{3}\) otherwise—such a ratio is recommended in [33] for the original SVT. The threshold used for SVT with Gap is randomly picked from the top 2k to top 8k in each dataset for each run.Footnote 6 All numbers plotted are averaged over 10,000 runs. Due to space constraints, we only show experiments for counting queries (which are monotonic).

Our theoretical analysis in Sects. 5.3 and 6.3 suggested that in the case of monotonic queries, the error reduction rate can reach up to 50% when Laplace noise is used, and 66% when exponential or geometric noise is used, as k increases. This is confirmed in Fig. 2a, for SVT with Gap and Fig. 2b, for our Top-K algorithm using the BMS-POS dataset. (the results for the other datasets are nearly identical.) These figures plot the theoretical and empirical percent reduction of MSE as a function of k and show the power of the free gap information.

We also generated corresponding plots where k is held fixed and the total privacy budget \(\epsilon \) is varied. We only present the result for the kosarak dataset as the results for the other datasets are nearly identical. For SVT with Gap, Fig. 3a confirms that this improvement is stable for different \(\epsilon \) values. For our Top-K algorithm, Fig. 3b confirms that this improvement is also stable for different values of \(\epsilon \).

9.2 Benefits of adaptivity