Abstract

Requirements specifications are essential to properly communicate requirements among the software development team members. However, each role in the team has different informational needs in order to perform their activities. Thus, the requirements engineer should provide the necessary information to meet each team member's needs and so reduce errors in software development caused by inadequate or insufficient communication. Although some research is concerned with communicating requirements among clients and analysts, no related research has been found to evaluate and improve requirements communication within the software development team. With this in mind, we present the ReComP framework, which assists in the identification of problems in the artifacts used to communicate requirements, the identification of informational requirements for each role of the development team, and the provision of improvement suggestions to address requirements communication problems. ReComP was developed using the Design Science Research (DSR) method, and this paper presents the results of two DSR cycles considering the use of ReComP for the developer and tester roles by using, respectively, user stories and use cases as requirements specifications. The results provide evidence that ReComP helps software development teams to identify and improve issues in the requirements specifications used for project communication. In two independent studies, ReComP was able to decrease the frequency of problems by 77% in user stories identified by developers and the frequency of all (100%) problems in use cases identified by testers.


1 Introduction

Requirements communication plays an essential role in software development projects for coordinating clients, commercial project functions, and software engineers [1]. According to Fricker et al. [2], requirements communication is the process of transmitting a customer's needs to a development team to implement a solution. Successful requirements communication leads to a common understanding among stakeholders and the development team of which requirements are relevant and what they mean for the system to be developed. Bjarnason et al. [3] state that requirements communication starts with contact with the customer and continues throughout the development of the project, involving different roles, for example, requirements engineers, developers, and testers. The authors also state that the initially elicited requirements need to be communicated and then refined into requirements that are negotiated and communicated among all affected roles. During software development, the team needs to communicate effectively and share requirements information in order to achieve understanding, consensus, and commitment to the project's objectives. Requirements communication problems can cause productivity losses or even design failures. For example, misunderstood or unreported requirements can lead to software that does not meet the customer’s requirements and a subsequent low number of sales or additional costs to alter or recreate the implementation [1, 4]. Méndez Fernández et al. [5] highlight that the critical requirements engineering (RE) problems are related to communication problems and incomplete/hidden or unspecified requirements.

The failure or success of any software depends mainly on the software requirement specification, as it contains all the requirements and characteristics of the product [6]. There are different ways to represent the requirements of a system, from the use of free texts to more structured forms, such as use cases, user stories, and prototypes. The use case is a description that is widely used to specify the purpose of a software system and produce a report in terms of interactions between the actors and the system [7,8,9,10]. Unlike user stories, use cases are often used to describe the behavior of a system from a more technical point of view [11]. User stories are a structure most often used in agile software development [12, 13]. User stories can help the development team to understand the requirements from the user's perspective [14]. However, according to Lucassen et al. [15], user stories are often poorly written in practice, and this can create communication problems during development. Prototypes are drawings that show what the system's user interface (UI) should look like during the interaction between the system and the end-user [16]. Prototypes can be used along with use cases and user stories to improve the understanding of functional requirements.

It is important to represent the requirements in such a way that all involved stakeholders can establish a common understanding of the system’s functionalities so that the final product developed meets the customers' expectations. According to Hoisl et al. [17], the way the analyst specifies the requirements information for stakeholders can influence the understanding of what should be developed. Different stakeholders have different roles and tasks within a project [18], as well as different information needs [19]. For this reason, the requirements specification documents (besides communicating the requirements to the customer) must provide each stakeholder with all the information necessary to perform their specific tasks properly. Stakeholders have difficulties related to the validation and understanding of the information found in the requirements specifications [5, 20]. Therefore, it is worth emphasizing the importance of understanding the requirements of each team member since each member has a role in the development of the system.

We conducted an in-depth study of related work [6, 7, 20, 34, 41] on software development teams' requirements communication problems. The results confirmed the relevance of the problems raised, and this motivated us to carry out our research. As such, the problem addressed in this study is related to the difficulty of communicating requirements between the development team members, considering the informational needs of each team member’s role. Our objective is to support the improvement of requirements communication through requirements specification artifacts, considering the perspectives of different development team members. To achieve this goal, we created the ReComP framework (a framework for Requirements Communication based on Perspectives) to identify the difficulties that developers and testers have in finding, in specifications such as use cases, user stories, and prototypes, the requirements information they need to perform their activities within the project. Furthermore, to meet team members' informational needs, ReComP is composed of a set of practical solutions to mitigate or eliminate problems, such as information that has been omitted, is poorly described, lacks detail, or contains errors.

To conduct our research, we applied a Design Science Research (DSR) approach. DSR has been widely used in information systems to create and evaluate new artifacts [21,22,23]. In addition, we conducted exploratory studies in order to fully understand the problem and the evolution of the ReComP framework. In this paper, we report two design cycles carried out to evaluate and evolve ReComP for user story artifacts based on the developers' perspective, and use cases based on the testers’ perspective.

The results of the two cycles show that ReComP helps software development teams in identifying and improving problems in the requirements specifications used for communication in software projects. In the first cycle, the use of ReComP managed to decrease the frequency of the problems identified by the developers in the user stories by 77%. In the second cycle, ReComP decreased the frequency of all (100%) problems identified by testers in use cases.

The remainder of this paper is organized as follows: Section 2 presents the concepts and related work. Section 3 details the DSR cycles for creating ReComP. Section 4 presents the first version of ReComP. Section 5 discusses the design of the empirical studies in which ReComP was assessed. Section 6 addresses the execution of the first DSR cycle and the improvements that led to the second version of ReComP. Section 7 presents the second DSR cycle and the third version of ReComP, with new improvements. Section 8 presents the limitations and threats to validity. Finally, Section 9 presents conclusions and an outlook on future work.

2 Background and related work

This research involves concepts regarding requirements specifications that can be used as a means for improving requirements communication in software development teams. The following sections present the background and related work.

2.1 Requirements specifications

The representation of software requirements is a topic that has been widely addressed in the literature. As such, a variety of methods, techniques and approaches have been applied in different domains [7]. Bjarnason et al. [24] state that requirements specifications are used for different purposes and support the main activities associated with obtaining and validating stakeholder requirements, software verification, tracking and management of requirements, and can also be used for contractual purposes through the documentation of customer agreements. The requirements specification contains the user and system requirements, that is, the specification of functional requirements and non-functional requirements [25].

According to Medeiros et al. [26], there are different ways to specify requirements. Three of the most used in the industry are use cases [8, 27], user stories [28] (due to the growth of agile development), and prototypes [12].

2.2 Use case

The use case description is a way to specify the functional requirements of a software system [29]. It is a technique used to specify the purpose of a software system and produce its description in terms of interactions among the actors and the system in question [7]. However, some common problems, such as ambiguities, incompleteness and inconsistencies, can arise when trying to describe the requirements through use cases. These problems can cause difficulties in understanding the requirements and, consequently, defects in the software system under development [7].

In their work, Tiwari and Gupta [29] identified the existence of different ways of representing use cases, which can be applied to various activities in the software development lifecycle. The authors observed that the use case models share some standard fields, such as the use case name, actors, preconditions, basic restrictions, alternative flows, postconditions. One of the structures widely used by requirements engineers is the structure proposed by Phalp et al. [30], which complements the fields suggested by Tiwari and Gupta [29] with items such as: description, containing a brief description of the use case and description of the purpose of the use case; exception flows, which describe ways to recover from errors that may occur in specific steps of the use case; and business rules, which are policies, procedures, or restrictions that must be considered during the execution of the use case.
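The field lists above lend themselves to a simple completeness check. The following is a minimal sketch, not part of ReComP or of the cited works: it assumes a use case is stored as a plain dictionary keyed by the field names quoted in the text, and the helper name `missing_fields` is hypothetical. It only reports which fields are absent or empty, which is one way to picture an "omission" problem in a specification.

```python
from typing import Dict, List

# Field names copied from the text; the grouping into two lists is an assumption.
STANDARD_FIELDS: List[str] = [
    "use case name",
    "actors",
    "preconditions",
    "basic restrictions",
    "alternative flows",
    "postconditions",
]
EXTENDED_FIELDS: List[str] = [
    "description",
    "exception flows",
    "business rules",
]


def missing_fields(use_case: Dict[str, str], fields: List[str]) -> List[str]:
    """Return the fields that are absent or contain only whitespace."""
    return [name for name in fields if not use_case.get(name, "").strip()]


# Hypothetical draft specification used only for illustration.
draft = {
    "use case name": "Register user",
    "actors": "Visitor",
    "preconditions": "",
    "business rules": "Email must be unique",
}

if __name__ == "__main__":
    for name in missing_fields(draft, STANDARD_FIELDS + EXTENDED_FIELDS):
        print(f"missing: {name}")
```

A check like this only detects omitted fields; ambiguity and inconsistency, the other problems mentioned above, still require human inspection.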

2.3 User story

User stories are the artifacts most frequently used in agile software development [12, 28]. They consist of brief descriptions, from the perspective of the end-user, of the desired functionalities, and encompass several aspects of the requirements specification represented in natural language [31]. However, when improperly defined, they can trigger several challenges in agile software development, due to incomplete or incorrect documentation [11, 32].

Gilson and Irwin [13] state that many templates have been proposed over the past years, ranging from templates without restrictions and free format to very stringent ones. However, Cohn’s [33] initial suggestion remains the most used model for stories. Cohn proposed the following structure: “As a < type of user > I want < some goal > so that < some reason > ”.
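To make the template concrete, the sketch below checks whether a sentence follows the "As a ... I want ... so that ..." structure and extracts its three parts. It is an illustration under stated assumptions, not a tool from the cited works: the names `UserStory` and `parse_user_story` are hypothetical, and the regular expression tolerates optional commas and "an" in place of "a", which the template itself does not specify.

```python
import re
from typing import NamedTuple, Optional


class UserStory(NamedTuple):
    role: str    # <type of user>
    goal: str    # <some goal>
    reason: str  # <some reason>


# Assumed pattern for "As a <type of user> I want <some goal> so that <some reason>".
_STORY_PATTERN = re.compile(
    r"^\s*As an?\s+(?P<role>.+?),?\s+I want\s+(?P<goal>.+?),?\s+"
    r"so that\s+(?P<reason>.+?)\.?\s*$",
    re.IGNORECASE,
)


def parse_user_story(text: str) -> Optional[UserStory]:
    """Return the parts of a story that follows the template, else None."""
    match = _STORY_PATTERN.match(text)
    if match is None:
        return None
    return UserStory(
        role=match["role"].strip(),
        goal=match["goal"].strip(),
        reason=match["reason"].strip(),
    )


if __name__ == "__main__":
    story = parse_user_story(
        "As a tester, I want to see the business rules so that I can derive test cases."
    )
    print(story)
    # A story without the "so that" clause does not match the template.
    print(parse_user_story("As a user I want to log in"))
```

Matching the template says nothing about whether the story carries enough information for a developer or tester; it only confirms that the three template slots are present.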

According to Soares et al. [34], the lack of a detailed specification in user stories can lead to the development of features that are not properly aligned with the customer’s expectations. This limitation of information imposed by the template makes it difficult to understand the requirements that are to be implemented. Thus, to make the specification of user stories more complete, the definition of acceptance criteria becomes necessary. The acceptance criteria describe the limits of a user story, and they are used as a parameter to measure whether a user story has been completed [35]. The acceptance criteria may contain information that was not originally in the user story template, such as business rules, exceptions, specific non-functional requirements, system’s screen specification, and other information that the team deems necessary to develop the specified requirement.

2.4 Prototype

The prototype is an excellent way to generate ideas about a user interface (UI) and allows one to evaluate a solution at an early stage of the project [36]. De Lucia et al. [37] recommend the use of prototypes to document the requirements for communication and knowledge sharing between stakeholders and agile teams. For Blomkvist et al. [38], some of the benefits of using prototypes are that they are easier to interpret, give a clearer overview of the design, and function as a stronger means of communication between stakeholders because of their inherent interactive qualities.

Several authors also use the terms “mockups” and “wireframes” to talk about prototypes [11]. Prototypes can be categorized into low, medium and high fidelity [39]. According to Walker [40], prototypes that are the most similar to the final product are “high fidelity” (e.g., prototypes made in HTML). In contrast, those less similar are “low fidelity” (e.g., paper prototype or sketches). According to Preece et al. [39], the overriding consideration is the prototype's purpose and what level of fidelity is needed to get useful feedback. Low-fidelity prototypes are useful because they tend to be simple, inexpensive, and quick to produce. They serve to identify issues in the early stages of design and, through role interpretation, users can get a real sense of what it will be like to interact with the product. High-fidelity prototyping is useful for selling ideas to people and for testing technical problems.

Prototypes can be used in conjunction with use cases and user stories to improve the understanding of functional requirements [7] and, at the same time, to allow the representation of non-functional requirements related to the user interface [41]. However, prototypes do not merely support the understanding of requirements; they are a fundamental part of requirements specifications when they present relevant information that is not documented in use cases or user stories [42].

This study focuses on using low-fidelity prototypes, since the study participants created drawings on paper to represent the system’s user interface and the interaction between the user and the system. For Reggio et al. [7], mockups are drawings that show what the system’s user interface should look like during the interaction between it and the end-user (user-system interaction). Therefore, throughout this article, we will use the UI Mockups nomenclature to reference the prototypes developed by the participants in the studies.

2.5 Related work

Hess et al. [43] present a comparison between traditional requirements artifacts and agile practices used to document requirements information. They conducted empirical studies to investigate the priorities of the agile RE practices most frequently used in projects (user stories, sprint backlog, epics, product backlog, planning meetings, face-to-face communication, personas, requirements prioritization, and time-boxed iterations) from the point of view of team members (developer/tester, Scrum master, product owner, and requirements engineer). In addition, Hess et al. [43] investigated the challenges faced by participants in their projects. Analysis of the study data revealed that the relevance of agile RE practices differed among the members of the agile team, and that the biggest challenges in agile projects are: insufficient communication with customer(s) due to lack of documentation; rework due to neglect of non-functional requirements; rework due to inadequate quality of documented requirements; communication lapses due to unavailability of appropriate customer representative(s) (product owner); insufficient requirements communication in teams due to lack of documentation; and communication lapses due to sudden changes in the requirements.

Soares et al. [34] analyzed the use of agile requirements (user stories) with traditional approaches (use cases) for specifying requirements in software development projects. For this, they carried out a literature review, which identified the main difficulties when working with agile requirements, and an exploratory study that characterized the difficulties in using user stories compared to use cases. The results indicated that the main difficulties in using user stories to specify requirements are related to: sparse detailing of requirements information, difficulty in identifying non-functional requirements, non-definition of dependency between requirements, user dependence, lack of definition of business rules, volatility of requirements, communication and collaboration with users, lack of information for validation of requirements. In view of this, prototypes may be needed to assist in understanding this type of specification. In addition, the study participants’ perceptions indicated that, although user stories can provide an initial time gain during the requirements specification activities, difficulties such as the lack of a detailed specification can lead to the development of features that are poorly aligned with customer expectations. Furthermore, using user stories to specify requirements can bring additional challenges to other development activities, such as maintenance and architecture design.

Tu et al. [20] state that the use of more transparent documents, with greater visibility of information for stakeholders, is an essential factor in communication effectiveness in software projects. The authors conducted a study with students and software professionals with different profiles using requirements documents of varying levels of transparency and employed two questionnaires. One had questions about the system described in the received document and required the subjects to identify problems if they could not answer the question about the requirement in the document, and the other asked them to give an opinion on the three attributes of transparency (accessibility, ease of understanding and relevance) in the document they received. The study results showed evidence that participants with the most transparent document spent less time seeking information, answered more questions correctly, and were more confident in their answers than participants with the least transparent document. As such, having a transparent requirements document is useful for communicating requirements to interested parties.

To improve the quality of requirements specification documents, Ali et al. [6] developed a methodology to identify and solve problems with the quality of the requirements specification using four processes to improve different quality attributes. In the first step, in the analysis phase, the input requirements are added, which ensures the integrity of the requirements, especially the domain requirements of the product. Then, the output requirements are inserted in the mapping phase, which acts as a stage for validating and verifying requirements from different stakeholders’ perspectives. After removing the incorrect requirements using the mapping process, the requirements are added to the SRS (software requirement specification) and then supplied to the stakeholders for further inspection. After inspecting the SRS using inspection templates and assigning the total quality score (TQS), the person responsible for the inspection sends a detailed report to the requirements engineering team.

Reggio et al. [7] proposed DUSM (disciplined use cases with screen mockups), a method for describing and refining requirements specifications based on use cases and screen mockups. The results show that, thanks to the screen prototypes, requirements specifications produced with DUSM are easier to understand, less prone to inconsistencies, ambiguities, and do not suffer from incompleteness, thanks to the glossary and many well-formed constraints. In general, DUSM produces “good quality” specifications and is inexpensive to apply.

2.6 Discussion

In the related work, we identified that the main problems in requirements communication are related to incomplete, inaccurate, or incorrect requirements specifications [3, 5] and a lack of standardization of terminologies, models, and documents used to communicate the requirements [5, 20, 25]. We also identified that different roles have different informational needs in relation to the requirements in the software development project [3, 19, 43]. According to Bjarnason et al. [3], the communication gaps between the requirements team and the testers and developers result in unclear, ambiguous, and non-verifiable requirements being specified, and in subsequent problems when implementing and verifying them. However, the solutions to the problems in the requirements specifications presented by these studies did not consider the difficulty that the team members have in identifying the requirements information that is necessary to carry out their project activities.

In view of this, we identified an opportunity to create the ReComP framework to evaluate and improve requirements communication. The framework targets requirements specification artifacts and considers the requirements information that developers and testers need in order to execute their activities on the project. Since the primary artifacts used to specify requirements are use cases [7, 8, 13, 19, 27, 29], user stories [12, 13, 28] and prototypes [12, 19, 37, 38], we have limited the evaluation and improvements to these types of specifications.

3 Applying DSR to develop ReComP

This section presents ReComP and how it was developed by following the Design Science Research (DSR) method. DSR aims to assist in the creation and evaluation of new artifacts in a given context [21, 22]. DSR seeks to understand the problem and build and evaluate artifacts that allow us to transform situations by changing their conditions to better and more desirable states [44]. According to Hevner [45], DSR is an iterative process that proposes three interlinked research cycles: the relevance cycle, the design cycle, and the rigor cycle.

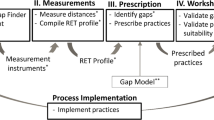

Figure 1 presents the Design Science Research applied in this research. The design cycle considers the relevance cycle and the rigor cycle. These three cycles must be present and clearly identifiable in any Design Science Research project [45]. The inputs for Design Science Research are requirements of the relevance cycle and the theories and methods of design and evaluation that are extracted from the rigor cycle. The relevance cycle links the application domain to the DSR effort, suggesting requirements and, specifically, requiring the artifact to be applied to the application domain to validate its practical use. The design cycle is repeated in two DSR activities: construction and evaluation. Finally, the rigor cycle bases the other cycles on the existing knowledge base and, due to research activities, determines which new knowledge should contribute to the growing knowledge base [45].

Fig. 1 Overview of the Design Science Research cycles in this study, based on Hevner and Chatterjee [22]

The purpose of the relevance cycle is to define the problem to be addressed, the requirements for the new artifact, and to define acceptance criteria for evaluating the research results [45]. To highlight the relevance of the present work, we conducted an in-depth study of papers related to the objective of identifying requirements communication problems within development teams and artifacts used for requirements communication. Furthermore, in this cycle, we conducted the following two exploratory studies to more fully understand the problem:

1. A comparative study between types of specification: we compared how requirements are conveyed and received using use cases and user stories, and evaluated and compared the degree of correctness of the specifications and the UI mockups created. The study comprised three steps. In step 1, groups received the scenarios to create the specifications with user stories and use cases. In step 2, the main activity was constructing UI mockups simulating the real system based on the specifications created. It is noteworthy that each group received a use case and user story specification different from the one it had specified in step 1. In step 3, the UI mockups were inspected, using the inspection checklists, by the groups that created the specification. To assess the correctness of the study participants’ specifications, the researchers inspected the specifications using the inspection checklists to help verify defects in the use case specifications and user stories. From a quantitative and qualitative analysis of the data [46], we observed that different specification formats could provide similar results when communicating requirements, but we should not ignore the human factor. We also noted that the impact and number of defects found in the requirements specification and the construction of UI mockups are not sufficient to determine which of the two specifications is better or worse for communicating requirements between software development teams. So, in this perspective, software development teams that are in doubt about which of the specification forms to adopt can choose to use both user stories and use cases.

2. Observation study with use cases: we improved our understanding of the needs of developers in the construction of UI mockups. We evaluated the difficulties faced by developers when building UI mockups based on use cases. In this study, participants received a use case specification developed by professional requirements analysts for a real industry system, and used it to construct prototypes that simulated the real system. Participants also inspected the specification to detect defects that hindered their understanding of the requirements for constructing the prototype. Finally, they highlighted which information specified in the use case was necessary for the construction of the prototype. From a quantitative and qualitative analysis of the data [47], we observed four reasons why developers do not follow the use case specifications: the existence of specification errors, ambiguous information, a lack of detailed specification or incomplete information, and suggestions for improvement. In addition, the types of defects that most impacted the creation of UI mockups were omission, ambiguity, and incorrect fact.

In these studies, it was possible to identify some requirements information needed in the use case specifications and user stories to help participants build UI models simulating real system construction. It was also possible to identify different formats adopted by the participants to specify use cases and user stories and to create prototypes.

Different studies were necessary for a better understanding of the requirement communication problems within software development teams and the diverse informational needs of the different roles of the members in the development teams. Therefore, the problem addressed by the present study involves the need to improve the communication of requirements within development teams based on the perspective of team members who use the requirements specification documents as input for the execution of their activities.

Thus, our main motivation for developing ReComP is related to the difficulty in communicating requirements between members of the development team. Although there are different ways of representing the requirements of a system, which range from the use of free texts to more structured forms, problems with requirements communication may arise due to the specification model chosen for the system development process [48]. Moreover, since each development team member has different informational needs to execute their activities in the software project, communication problems may arise not only from the chosen specification model [46] but also from the non-fulfillment of the different informational requirements of the team [19]. These problems occur because requirements engineers do not consider the information needs of each member of their development team when specifying the requirements.

After considering the identified problem, we decided to assist requirements engineers in identifying the flaws in existing requirements information in their artifacts, from the team members’ point of view, and propose improvements according to the development team members’ need for information. We thus defined two requirements for ReComP:

- R1: ReComP should help team members to identify problems in the artifacts used to communicate requirements within the software development team.

- R2: ReComP should provide suggestions for improvements to requirements communication problems found in the artifacts.

These requirements were established based on the aspects identified in the literature and the exploratory studies. The R1 requirement was defined based on Liskin [19] and Tu et al. [20], who state that the requirements artifacts are used by different people, with different roles and different needs, to carry out their activities throughout the project. The authors also claim that there is often no perfect type of artifact that suits the needs of all participants, which makes it necessary to adopt a variety of different artifacts. The R2 requirement was defined based on Méndez Fernández et al. [25], who state that the lack of an adequate template in the requirements specification can lead to failures in communication. We present other metrics for the evaluation of ReComP in Sect. 5.4.

The purpose of the design cycle is to develop a solution to the problem raised in the previous cycle and evaluate the solution against the requirements until reaching a project that is considered satisfactory [45]. We conducted two design cycles (empirical studies) to evaluate the use of ReComP. In the first design cycle, we generated and evaluated the first version of ReComP (v1) through a case study at the Federal University of Amazonas and at the Federal University of the State of Rio de Janeiro. Further information about this version is presented in Sect. 4.

In the second design cycle, we evolved ReComP into its second version (v2) and conducted a new empirical study at the Federal University of Amazonas. Further information about ReComP (v2) is presented in Sect. 6.3. This paper presents the results of both evaluations. Sections 7.3 and 7.4 present the improvements made to the second version of ReComP that led to the creation of the third version.

Finally, the purpose of the rigor cycle is the use and generation of knowledge [45]. According to Thuan et al. [49], the rigor cycle bases the other cycles on the existing knowledge base and, due to research activities, determines that new knowledge should add to the knowledge base. In this study, the main fundamentals are the knowledge related to the communication problems within the development teams, the requirements communication artifacts, the specific information that each member within the development team needs to perform their activities, and the evaluation method (the case study).

The main contribution to the knowledge base is ReComP itself, as a new framework to aid in the identification of requirements communication problems in the artifacts used by software development teams. The studies conducted to evaluate ReComP can also serve as examples for others who wish to apply it. We have also contributed knowledge regarding: (1) the main artifacts used to communicate requirements within software projects; (2) the requirements communication problems within software projects; (3) the informational needs regarding requirements from the perspective of the developer and tester roles; and (4) aspects to be considered when creating the requirements specification for software development teams.

4 ReComP—framework requirements communication based on perspectives (v1)

ReComP was built using the results found in the related work and the results of the exploratory studies. The ReComP framework (Footnote 1) is a structure that supports the improvement of requirements communication by supplying artifacts that assist the requirements engineer in identifying problems in the specification and in applying suggested improvements to the identified problems. Table 1 presents the results found in the relevance cycle that supported the creation of the ReComP framework.

We divided ReComP into two specific artifacts: TAX (Team Artifact eXperience) and TAI (Team Artifact Improvement), as described below. In its current version, there are different adaptations of TAX and TAI to assess various artifacts, and these are specific to each member who will make the assessment. In other words, both artifacts support two independent but complementary perspectives, those of the developer and tester roles, for three specification artifacts: UI mockups, use cases, and user stories.

1. Team Artifact eXperience (TAX): support for evaluating the experience of team members regarding the artifacts used to communicate requirements during the software project.

2. Team Artifact Improvement (TAI): support for improving the artifacts, proposing improvement suggestions to solve or mitigate the problems in the artifacts used to communicate requirements, with the aim of meeting the informational needs of requirements. The suggested patterns consist of the adoption of templates or elements in the artifacts to present the necessary information for the different roles of the members of the development team.

In a similar manner to the framework definition used by Lucassen et al. [15] and Jiang and Eberlein [50], the ReComP framework contains specific artifacts designed to identify the informational needs of the developer and tester roles, as well as to assess how requirements specifications written as user stories, use cases, and UI mockups meet these needs. Table 2 shows the names of the artifacts.

Because TAX evaluates the team members’ experience with the quality of requirements documents, it can be used independently of TAI. TAI, however, depends on the results obtained from the TAX assessment. Thus, an organization can use only TAX to discover possible problems in communicating requirements within the team, without adopting TAI to improve the artifacts. The solutions proposed by TAI are optional, and the requirements engineer decides whether to use them or not.

In the context of this research, we defined the experience of the members of the development team in a similar manner to the definition of Hassenzahl [51] for user experience (User Experience—UX). Hassenzahl [51] states that a good UX is a consequence of fulfilling human needs through interaction with the product or service. Thus, we consider that the experience of the development team members regarding artifacts consists in identifying the informational needs regarding requirements by team members when using a specific artifact as a source of information that aids them in the development of their activities within the project.

The primary users of the framework are requirements engineers, developers, and testers. ReComP aids requirements engineers in evaluating the informational needs of members of the development team and in improving the requirements specifications used in the project. Initially, developers and testers answer a guided TAX form to identify the problems in the specifications used to perform their activities on the project. After that, the requirements engineer has the opportunity to minimize these problems by using the TAI to make improvements in the specification.

The steps for using ReComP are: (1) identify the artifacts that are used to communicate the requirements and that will be evaluated, (2) identify the team member roles that will evaluate the artifact, (3) apply the assessment through TAX, (4) verify the result of the evaluation, that is, the problems identified in the artifacts, and, optionally, if you want to solve the problems, (5) apply the TAI improvement solutions. If you are going to perform a new evaluation of the improved artifact, you should go back to step (3).
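The five steps above can be read as a small evaluate-and-improve loop. The following is a minimal sketch of that reading, not part of the ReComP material: the `Evaluation` record, the `run_recomp` function, the `max_rounds` limit, and the two toy stand-ins for TAX and TAI are all hypothetical names introduced for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Evaluation:
    """One TAX assessment of an artifact by a role (hypothetical record type)."""
    artifact: str
    role: str
    problems: List[str] = field(default_factory=list)


def run_recomp(
    artifact: str,
    role: str,
    apply_tax: Callable[[str, str], List[str]],
    apply_tai: Callable[[str, List[str]], str],
    improve: bool = True,
    max_rounds: int = 3,  # assumption: the text sets no limit on re-evaluations
) -> List[Evaluation]:
    """Steps (1) and (2) are the arguments; steps (3) to (5) form the loop body."""
    history: List[Evaluation] = []
    for _ in range(max_rounds):
        problems = apply_tax(artifact, role)                   # step (3)
        history.append(Evaluation(artifact, role, problems))   # step (4)
        if not improve or not problems:
            break                                              # TAI is optional
        artifact = apply_tai(artifact, problems)               # step (5), back to (3)
    return history


def toy_tax(artifact: str, role: str) -> List[str]:
    # Toy stand-in for the guided form: flag each field name absent from the text.
    return [f for f in ("precondition", "messages") if f not in artifact]


def toy_tai(artifact: str, problems: List[str]) -> str:
    # Toy stand-in for an improvement: append a section for each flagged field.
    return artifact + "".join(f"\n{p}: ..." for p in problems)
```

Calling `run_recomp("As a user, I want to log in.", "developer", toy_tax, toy_tai)` yields two evaluations in this toy setting: the first lists both missing fields, and the second, made on the improved text, lists none. Passing `improve=False` models an organization that uses TAX alone.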

4.1 Initial proposal for TAX guided forms

TAX is composed of a guided form to be applied by the development team in order to identify problems in the artifacts that the different roles in the team use to communicate requirements, and to identify the information that each role considers important to carry out its activities in the development of the project.

Table 3 summarizes the TAX questions for the user story specification, from the developer’s perspective in a simplified way. We defined the questions for each specification type and member’s role that use the artifact to develop activities in the project according to the original templates of the use case specifications, user stories, and prototypes. In addition, we added questions about the informational needs requirements identified in the exploratory studies. The questions were divided into two aspects: (i) evaluation of the difficulty in obtaining information from the artifact used and (ii) evaluation of the needs for information about the artifact for the development of their activity.

It is important to note that the guided forms have requirements information that originally may not have been in the specification template used. However, according to the literature [35] and the result of exploratory studies [46] and [47], this requirement information that is not originally in the template is complementary information necessary for the execution of the activities of developers and testers in software projects.

If the team members have any difficulty in identifying any information related to requirements in the artifact adopted by the team, the requirements engineer can make the improvements suggested by the TAI guidelines presented in the following section.

4.2 The initial proposal for TAI guidelines

The templates used in the requirements documentation may be insufficient to communicate some information to all members of the development team. Thus, there was a need to propose improvement suggestions for the problems identified in the artifacts through TAX. The objective of TAI is to meet the informational needs of requirements for members of the software project development team. We created the improvement suggestions according to the information obtained from publications on requirements specification problems and from the exploratory studies. We defined two pieces of information to facilitate the adoption of each suggestion in the artifact to be improved: (1) the description of the problem that can occur in the artifact, and (2) suggestions for improving the artifact. All TAI improvement suggestions are related to the problems identified in TAX. For example, the problem identified in question TX1 has the proposed improvement solution TI1. Each TAI suggestion has a usage example to better assist the requirements engineer in changing requirements specifications.
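The pairing between TAX questions and TAI suggestions can be illustrated with a short lookup. This is only a sketch: the text gives the single example TX1 to TI1, so the assumption that every question TXn maps to a suggestion TIn with the same number is ours, and the function names are hypothetical.

```python
from typing import List


def suggestion_id(question_id: str) -> str:
    """Map a TAX question id such as 'TX1' to the TAI suggestion id 'TI1'.

    Assumption: ids share the same number, as in the TX1 -> TI1 example.
    """
    number = question_id[2:]
    if not question_id.startswith("TX") or not number.isdigit():
        raise ValueError(f"not a TAX question id: {question_id!r}")
    return "TI" + number


def suggestions_for(flagged_questions: List[str]) -> List[str]:
    """Return the TAI suggestion ids for the questions flagged in a TAX form."""
    return [suggestion_id(q) for q in flagged_questions]
```

For instance, a form in which a developer flagged TX1 and TX4 would point the requirements engineer to TI1 and TI4 under this assumption.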

Firstly, the company must use TAX to identify problems in the specification of requirements used in the project and, if the company so desires, it can use TAI as an aid to solve the problems identified. The improvement suggestions proposed are adaptations of the artifacts that already exist in the company so that they meet the needs of the members of the development team.

Table 4 presents part of the TAI guideline for user story specification from the developer’s perspective. The TAI and TAX used in the second empirical study can be found in the technical report available in [52].

5 Evaluating ReComP

To guide the research, we defined the research question: “How to aid the requirements communication based on the perspectives of each member role in the development team’s members?” To achieve this objective and answer the research question, we developed ReComP (a framework for Requirements Communication based on Perspectives). It was necessary to evaluate ReComP by applying it to the problem and context, checking whether it produced the desired effects and whether a new iteration and DSR cycle were necessary, while corroborating or questioning the validity of the theoretical assumptions [23]. Thus, to evaluate the use of ReComP in a practical context, we performed two cycles of DSR with an empirical study in each cycle. The following sections present the study planning, execution, and data analysis.

5.1 ReComP evaluation plan

In order to evaluate ReComP (v1) for the user story artifact from the perspective of software developers (ReComP_US_Dev), the first DSR cycle included an empirical study, which was planned based on the research question: “What are the difficulties encountered by developers when building UI mockups using user stories?”. In order to evaluate the ReComP (v2) for the use case artifact from the perspective of software testers (ReComP_UC_Test), the second DSR cycle featured yet another empirical study, which was planned based on the research question: “What are the difficulties encountered by testers when building test cases using use cases and UI mockups?”.

In both evaluations, the participants first created their artifacts from an actual requirements specification, then evaluated the specification using TAX, and finally improved the specification using TAI. After each evaluation, we evolved ReComP to solve the problems found in the study that was carried out. Figure 2 shows the stages of the execution of the two ReComP evaluations.

5.2 Participants

We carried out the first DSR cycle (1st empirical study) in two universities, with 50 undergraduate students taking the Agile Requirements and Systems Analysis and Design class. In total, 37 participants were characterized as novices, since they had only academic experience with the specification of user story requirements. The 13 participants who had already worked with user stories in the industry were characterized as experienced. The participants played the role of developers since they received a requirements specification in the format of user stories to build UI mockups, thus simulating the initial development of a system.

We carried out the second DSR cycle (2nd empirical study) in a university with 37 undergraduate students taking the Analysis and Systems Design class. In total, 32 participants were characterized as novices since they had only academic experience with the use case specification. Five participants had development experience in the industry and were characterized as experienced. Participants played the role of testers because they received a specification of use cases and UI mockups to build test cases. Table 5 presents the synthesis of the participants and the use of ReComP.

5.3 Study artifacts

All the participants signed the informed consent form, in which they agreed to provide the results for analysis. In addition, the participants answered a characterization questionnaire so that we could verify their experience with software development in the industry and understand their degree of familiarity with requirements specification documents and test cases. At the end of each cycle, all participants answered an evaluation questionnaire about the ease of use and the usefulness of ReComP for improving requirements communication. The other artifacts used during the study were requirements specifications with a similar complexity level and the specific ReComP for each study. Table 6 shows the artifacts used in each cycle.

The final evaluation questionnaire was defined based on the usefulness and ease-of-use indicators of the Technology Acceptance Model (TAM) [53]. It uses a 7-point Likert scale that assesses the participant’s level of agreement with each statement regarding the technology. The defined indicators were (1) perceived usefulness, which is the degree to which a person believes that the technology could improve their performance at work, and (2) perceived ease of use, which is the degree to which a person believes that using a specific technology would be effortless. The reason for focusing on these indicators is that these aspects are strongly correlated with the user’s acceptance of the technology [53]. The response scale used was as follows: strongly agree, generally agree, partially agree, neutral, partially disagree, generally disagree, and strongly disagree. Table 7 presents the ReComP evaluation questionnaire applied in the cycles.
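As an illustration of how responses on this seven-point scale can be condensed into the agree, neutral, and disagree shares reported later, the sketch below groups the three agreement levels and the three disagreement levels. This grouping is an assumption made for the example; the interpretation actually used in the study is the one given in Table 10, which is not reproduced here. The names `SCALE`, `group`, and `summarize` are hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable

# The seven response options, in the order given in the text.
SCALE = [
    "strongly agree", "generally agree", "partially agree",
    "neutral",
    "partially disagree", "generally disagree", "strongly disagree",
]


def group(response: str) -> str:
    """Collapse one response into 'agree', 'neutral', or 'disagree' (assumed grouping)."""
    position = SCALE.index(response)  # raises ValueError for unknown options
    if position < 3:
        return "agree"
    if position == 3:
        return "neutral"
    return "disagree"


def summarize(responses: Iterable[str]) -> Dict[str, int]:
    """Return the rounded percentage of responses in each group."""
    counts = Counter(group(r) for r in responses)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no responses to summarize")
    return {g: round(100 * counts[g] / total) for g in ("agree", "neutral", "disagree")}
```

With four responses, two of them on the agreement side, one neutral, and one on the disagreement side, `summarize` returns 50, 25, and 25 percent for the three groups.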

In addition to these artifacts, the first cycle also used: (a) textual description of two systems in the user story template with similar levels of complexity, evaluated by the researchers; and (b) TAX_US_Dev and TAI_US_Dev—ReComP for user stories and the role of developers.

In the first cycle, we used two specifications of user stories adopted in real industry projects, one regarding a job offers system and the other about ferry ticket sales. A summary of the scenarios is presented herein:

1. “The App RH Mobi is a system that allows users to view job opportunities offered by an HR consulting company and job seekers to submit their resume for analysis. The objective of this web system is for the HR analysts of the consulting company to register job openings for companies and analyze the resumes sent by the application”.

2. “The NetBarco app is a system developed to run on an Android platform that allows sellers, authorized by boats, to sell tickets to passengers who want to travel from one municipality to another by ferryboat. The NetBarco app has two databases, the local one stored on the smartphone itself and the web one located on one of our servers hosted in the cloud”.

Further details about scenarios and requirements specifications are available in the technical report [52].

In the second cycle, the artifacts used were (a) Problem scenario, a textual description of a use case; (b) UI mockups of the use case; and (c) TAX_UC_Test and TAI_UC_Test—ReComP for specification with use case and tester’s role.

In this cycle, we used a use case specification adopted by a real industry project regarding a document management system that prevents documents from expiring and causing business expenses such as fines and stoppages. The following scenario was presented:

The whole process of the company's legal documentation control is carried out through the use of a spreadsheet where information is updated manually. This document management model can result in potential risks, such as the expiration of some important tax documents for company compliance with the tax authorities. Thus, the software will be responsible for serving the sectors that need document validity control. It will allow real-time monitoring of the company’s documents to avoid them from running out of their validity and cause expenses to the company (fines and stoppage of activities).

We highlight that the specifications used in the studies, created by software engineers of real projects, had additional information that was not part of the original template of use cases and user stories. Further details of the scenario and specification of requirements are available in the technical report [52].

5.4 ReComP evaluation indicators

To assess whether ReComP achieved its objective, we must consider the two requirements set out in the relevance cycle presented in Sect. 3. The ability of ReComP to aid in the identification and improvement of problems found in the requirements specifications was also evaluated. For this, we considered its viability and utility. From this perspective, ReComP is considered viable if it can be executed according to its description, if it produces what it promises to deliver, and if its execution requires a level of effort that is deemed acceptable. In turn, the ReComP framework is considered useful if it provides benefits to the team that is using it. Thus, we defined viability and utility indicators as follows (Table 8).

For analysis purposes, when assessing the specification carried out by the participants, the following interpretations were considered regarding the responses indicated in the guided TAX forms and shown in Table 9.

To analyze the participants’ perceptions regarding their use of ReComP, the following interpretations were considered regarding the responses indicated in the final evaluation questionnaire based on TAM. These are presented in Table 10.

6 First DSR cycle (1st empirical study)

In the first cycle, we carried out the following 6 steps (Fig. 2): (1) building UI mockups using the user story, (2) application of TAX_US_Dev, (3) improvement of the user story with TAI_US_Dev, (4) building UI mockups using the improved user story, (5) reapplication of TAX_US_Dev, and (6) final evaluation of ReComP. From this point on, we adopt the following nomenclature: Round 1 for the first evaluation, using the original specification without changes and without regard to the team role; and Round 2 for the second evaluation, using the specification improved with the informational needs of the team role in mind.

Thus, the execution of the study was divided into two rounds:

- Round 1: using the original specification without changes (traditional), without regard to the team role. In Step 1, we divided the participants randomly into two groups: one group received the specification from RH Mobi, and the other group received the specification from NetBarco. In Step 2, the participants used the ReComP artifact TAX_US_Dev to evaluate the specification they had received to construct the UI mockup. In Step 3, the participants improved the specification received based on the problems they pointed out in the assessment. For this, they used the improvement suggestions provided by TAI_US_Dev.

- Round 2: using the specification with the improvements suggested by TAI. In this scenario, the participants used a document specified with the developer role in mind. In Step 4, participants received improved specifications to create the UI mockups. In this step, the participants did not make the UI mockup of the specification they had received in Step 1. To this end, we ensured the rotation of specifications among the participants between Round 1 and Round 2. For example, those who used the RH Mobi user story in Step 1 used the improved NetBarco user story in Step 4. Thus, we endeavored to reduce the learning bias in the type of specification.

In Step 5, participants received the TAX_US_Dev guided form and re-evaluated the specification received. It is worth mentioning that at this stage, the option “I do not need this information” was added to the guided TAX form so that participants had the option to indicate the information that they considered irrelevant to the development of their activities. Finally, in Step 6, a questionnaire was applied to assess the communication of requirements to the participants.
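The rotation of specifications between the two rounds can be sketched as a simple crossover assignment. This is an illustrative sketch only: the text states that the split was random, but the equal-sized halves, the fixed seed, and the names `SPECS` and `assign_specs` are assumptions introduced here.

```python
import random
from typing import Dict, List, Tuple

SPECS = ("RH Mobi", "NetBarco")


def assign_specs(participants: List[str], seed: int = 0) -> Dict[str, Tuple[str, str]]:
    """Return, per participant, the (Round 1, Round 2) specifications.

    Each participant works on one system in Round 1 and on the improved
    specification of the other system in Round 2.
    """
    rng = random.Random(seed)        # seeded only to make the sketch repeatable
    shuffled = list(participants)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2        # assumption: groups of (nearly) equal size
    plan: Dict[str, Tuple[str, str]] = {}
    for index, participant in enumerate(shuffled):
        first = SPECS[0] if index < half else SPECS[1]
        second = SPECS[1] if first == SPECS[0] else SPECS[0]
        plan[participant] = (first, second)
    return plan
```

Because every participant receives a different system in Round 2, familiarity with the Round 1 system cannot explain a lower number of reported problems, which is the learning bias the rotation is meant to reduce.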

6.1 ReComP_US_Dev study results

In this section, we present the quantitative and qualitative results regarding the analysis of the difficulties encountered by developers building UI mockups using user stories. We also analyzed the informational requirements needs to build the UI mockup by the developer. In addition, we analyzed the developers’ perceptions regarding the ReComP ease of use and usefulness.

6.1.1 Requirements specification evaluation

The guided TAX_US_Dev form applied in Round 1 allowed us to analyze the participants’ perceptions concerning the fields of the user story specification. In this round, the user stories of the RH Mobi or NetBarco systems were created without considering the needs for a developer’s role. Figure 3 presents the result of the problems encountered by the participants when building UI mockups using a user story in Round 1.

The information that most participants pointed out as having difficulty in identifying was related to the following: precondition (80%), the dependence between user stories (74%), messages (68%), and exception path (64%). On the other hand, the information that the participants had less difficulty in finding in the user story was related to customer requirements (26%), the reason for the functionality (14%), the user of the functionality (12%), and purpose of the functionality (10%).

6.1.2 Requirements specification improvements

As for the improvements made in the user story using TAI_US_Dev, we analyzed the quality of the improvement made by the participants. We checked the information registered in the TAX_US_Dev guided form and checked if the participants improved the US.

Analyzing the user stories, we classified them as: “Great” if the participants made all the improvements pointed out in the TAX (100% improvement); “Good” if the participants made most of the improvements pointed out in the TAX (51%-75% improvement); “Bad” if the participants made half or less of the improvements pointed out in the TAX (≤ 50% improvement); “Not recommended” if the participants made incorrect improvements (changes that modified the specified requirement instead) or made none of the improvements noted and, therefore, it was not possible to build UI mockups from the modified US (0% improvement). Figure 4 presents the evaluation of user stories.

Only 11 (22%) user stories were evaluated as “Great”, 27 (54%) were evaluated as “Good”, 7 (14%) were evaluated as “Bad”, and 5 (10%) were evaluated as “Not recommended”.
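The classification rule can be written as a small function. The sketch below is an approximation under stated assumptions: the text does not say how a story with between 76% and 99% of the improvements was rated, so the function treats any share above one half and below 100% as “Good”. The function and parameter names are hypothetical.

```python
def classify(requested: int, made: int, altered_requirement: bool = False) -> str:
    """Rate an improved user story from the share of TAX-flagged improvements made.

    requested: number of improvements pointed out in the TAX form
    made: number of those improvements correctly made
    altered_requirement: True if a change modified the specified requirement
    """
    if requested <= 0:
        raise ValueError("at least one improvement must have been requested")
    if not 0 <= made <= requested:
        raise ValueError("made must lie between 0 and requested")
    if altered_requirement or made == 0:
        return "Not recommended"
    if made == requested:
        return "Great"
    if 2 * made > requested:   # assumption: covers the unspecified 76%-99% band
        return "Good"
    return "Bad"


# Counts reported for the 50 user stories, and the percentages they imply.
REPORTED = {"Great": 11, "Good": 27, "Bad": 7, "Not recommended": 5}
SHARES = {label: round(100 * n / sum(REPORTED.values())) for label, n in REPORTED.items()}
```

The integer comparison `2 * made > requested` avoids floating-point edge cases at exactly 50%, which the text assigns to “Bad”.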

The guided TAX_US_Dev form applied in Round 2 allowed us to analyze the participants’ perceptions regarding the fields of user story specification improved by other participants in Round 1 (RH Mobi or NetBarco—based on the developer’s perspective) that they had difficulty in identifying for the UI mockup development. Figure 5 presents the result of the problems found by the participants when building UI mockups using user stories in Round 2.

During the execution of Round 2, the number of participants was reduced to 49 because one participant did not show up at the study site. We observed that the information that most of the participants indicated they had difficulty in identifying was the precondition, the dependence between user stories, and alternative paths. The information that the participants had less difficulty finding in the user story was the following: the reason for the functionality, the purpose of the functionality, and customer requirements. Some participants indicated information that they did not need to carry out their activities: dependence between user stories (3), the user of the functionality (1), the purpose of the functionality (1), navigability (1), screen layout (1), and non-functional requirements (7).

6.1.3 Final analysis of the participants’ perception when using ReComP_US_Dev

In this section, we present the results of the analysis of the participants’ responses (P) to the questionnaire applied after the study was carried out. Our objective was to investigate the acceptability of ReComP. In this questionnaire, the participants answered regarding their level of agreement in relation to ReComP’s usefulness and ease of use. Figure 6 shows the participants’ perceptions of ReComP_US_Dev.

The results shown in Fig. 6 reveal mainly positive perceptions and few negative perceptions. There were few participants who disagreed about the ease of use and usefulness of ReComP for improving the communication of requirements in the team. Further on in the paper, the participants’ perception of TAX and TAI will be detailed.

6.1.3.1 TAX_US_Dev

Regarding the ease of use of TAX_US_Dev, we analyzed the answers to the first statement (S1. The TAX questions are easy to understand). We can observe in Fig. 6 that 47% of the participants considered the questions in the guided TAX_US_Dev form easy and clear to understand. Also, the participants reported that the guided form helped them to identify problems in user stories.

“They have a vocabulary that is easy to understand because they clearly specify the objective of the question and use the same terms used in the user story.” - P8

“Questions can clearly address what they want to be specified”—P7

Only 4% of the participants disagreed that the questions found in the guided TAX_US_Dev form were easy to understand.

“The questions presented at TAX were not entirely clear, but they are important questions”—P13

“The questions could be asked more informally; they had a negative impact when reading for the first time”—P5

Figure 6 shows that 49% of the participants had no clear opinion or were neutral about the TAX_US_Dev’s ease of use.

Regarding the TAX_US_Dev’s usefulness, we analyzed the answers to the statement (S2. The TAX questions are useful for detecting problems in user stories), and it can be observed in Fig. 6 that 57% of the participants agreed that the questions in the guided TAX_US_Dev form were useful for detecting problems in user stories.

“(…) I can identify problems more efficiently and safely”-P1

“TAX focuses on the main points that the US should contain, so if those points are not present or if they are confused, it means that there is a problem in the description, and TAX helps to identify precisely those problems”.—P17B.

Only 4% of the participants disagreed that the questions found in the guided TAX_US_Dev form were useful.

“For the questions to be useful, it would be necessary for those who were applying the technique to have good knowledge of user story and prototyping.”—P6

The share of participants who had no clear opinion or were neutral about the usefulness of TAX_US_Dev was 39%.

6.1.3.2 TAI_US_Dev

Regarding the ease of use of TAI_US_Dev, we analyzed the answers to the statement (S3. The TAI improvement suggestions are easy to understand). In Fig. 6, we can observe that 63% of the participants agreed that the suggestions for improving TAI_US_Dev were easy to understand. A total of 35% of the participants had no clear opinion or were neutral about the ease of understanding TAI_US_Dev. Only 2% of the participants disagreed with the statement. As for the positive points of the ease of use of TAI, we can highlight the following quotes from the participants:

“The suggestions for improvement are simple to understand, and it is easy to implement them with the aid of examples”- P7

“It is possible to understand all the issues necessary for a better understanding of the requirements, and the examples presented further help in understanding.”—P2

Regarding the negative points of the ease of use of TAI, we can highlight the following quotes from the participants:

“It points out what should be done to correct errors, but at times I found the suggestions too generic”—P6

“Some explanations of errors are very formal, thus making the suggestions less useful.”—P20B

As for the usefulness of TAI_US_Dev, we analyzed the answers to the statement (S4. The TAI improvement suggestions are useful for solving problems in user stories). We observed that 69% of the participants considered the suggestions provided by TAI_US_Dev useful for improving user stories. A total of 29% of the participants had no clear opinion or were neutral about the usefulness of TAI_US_Dev, and only 2% of the participants disagreed that the suggestions for improvement are useful. Regarding the positive points of TAI’s usefulness, we can highlight the following quotes from the participants:

“It is very useful to find missing data at each stage of creating the story.”—P2

“It helped to rewrite the critical points and those that were in doubt on how to redo.”—P1B

Regarding the negative points of the usefulness of TAI, we can highlight the following quotes from the participants:

“I was not able to apply the suggestions very well because I had a hard time transferring the examples to my case.”—P6B

“At the time of applying the TAI, the technique seemed too vague. When correcting the user story, I used my knowledge about user story more than the technique.”—P6

It is worth mentioning that we treated all the opinions of the participants regarding the ease of use and usefulness of TAX as points of improvement for the next ReComP version.

6.1.3.3 General perception of ReComP

As for the general perception of ReComP in the evaluation and improvement of user stories, the participants acting in the role of developers highlighted that they would use it again, since ReComP was useful and straightforward, helped in the writing of user stories, served as an inspection guide, and presented itself as a manual for construction and error prevention. Below are some quotes from participants regarding the ease and usefulness of ReComP.

“It is useful because you do not need to review the entire description because the problems have already been identified by TAX, and TAI already shows the suggestion for improvement in the specific problem.”—P7B

“They are easy because if we identify a problem with TAX, the TAI solution already indicates exactly where and why the problem occurred.”—P17B

“All the problems that I identified in TAX and that I looked for in TAI were easy to implement.”—P22B

We observed that the participants approved of the way of applying TAX to assess the quality of the requirements specification and TAI to solve the problems with the improvements suggestions presented. Thus, ReComP proved to be effective in its purpose.

6.1.3.4 Improvement suggestions

Regarding suggestions for improvements in ReComP_US_Dev, the participants mainly pointed out improvements in the TAX guided form. The first suggestion for improvement refers to the need for improvement in the questions in the guided TAX form. In relation to this, participant P2 said that “… In TAX, it would be good to standardize the questions,” and P9 complemented the suggestion by saying that “A better description of the TAX questions is necessary.”

It was also suggested that some fields questioned in TAX should be part of TAI. Regarding this suggestion, P9 commented that: “… the main path and acceptance criteria should be part of TAI”. In addition, according to P13, TAX is “incomplete” and they mentioned that “TAX lacks several important issues for the user story. In addition, there are poorly worded questions”. They also added that in TAI “the questions presented are easier to understand.”

6.2 Discussion

According to the analysis carried out on the results obtained in Round 1 (first assessment using the actual specification without changes and without considering the role) and in Round 2 (second assessment using the specification with improvement considering the role), we can see in Fig. 7 that, out of 13 problems found in the specification of the user story in Round 1, 10 (77%) problems decreased their frequency in Round 2 after the use of ReComP. Only 3 (23%) problems in the specification increased their frequency in relation to Round 1 (the user of the functionality, the purpose of the functionality, and non-functional requirements).

We observed that, in Round 2, 10 (20%) participants said they had problems identifying the information “functionality user”, 7 (14%) participants had problems identifying the information “purpose for functionality,” and 20 (41%) participants had trouble identifying the “non-functional requirements” information. These participants confronted the problem of receiving user stories with improvement problems in Round 2. In other words, the participants who should have improved the US in Round 1 with the use of TAI_US_Dev did not do it correctly, creating difficulties for the participants who used the US in Round 2.

Because the field “I don't need this information” was added to the guided TAX form applied in Round 2, we can see that only 6 fields of information were considered unnecessary for the execution of activities. Only 1 (2%) participant pointed out that they do not need the information on the functionality user, the purpose of the functionality, navigability, and screen layout. Another 3 (6%) participants pointed out the dependency information among user stories as unnecessary and, finally, 7 (14%) participants said that information on non-functional requirements is not necessary for the performance of their activities.

Regarding the improvements made in user stories through the application of TAI_US_Dev, 38 (76%) participants improved the user stories according to the proposed suggestions and only 12 (24%) participants had their improvements in the user story considered “bad” or “not recommended.” The poorly rated improvements may be due to the difficulty of editing the user story, which the participants did manually. However, it is worth mentioning that the improvements made by the participants reduced the occurrence of problems found in the specifications of the second round, showing that ReComP was effective in identifying and improving the problems in user stories.

From the analysis of the participants’ perceptions, we can see that, in general, most participants agreed with the statements about ease of use and usefulness in identifying problems in the user stories (TAX_US_Dev) and suggestions for improving the user stories (TAI_US_Dev). These results provide evidence of ease of use when applying ReComP (v1). The fact that ReComP was widely accepted by the participants may indicate that this technique is also suitable for development teams that want to evaluate and improve their user stories in order to communicate requirements.

Regarding the negative points of the ReComP’s utility mentioned by the participants, we highlight the need to have a minimum amount of knowledge of the specification template used for best use of the framework. Moreover, the application of the improvement suggestions presented in TAI is optional. However, the problems pointed out by TAX must be considered to meet the needs of the specification user.

The participants pointed out some difficulties in using ReComP in this study. Based on these difficulties, some improvements were made to ReComP, with the aim of improving its usefulness, ease of use, and effectiveness.

6.3 ReComP improvements (v2)

The main problems pointed out by the participants in relation to the guided TAX_US_Dev form were that the questions were very general, non-standardized, and very extensive, which made the form difficult to use. From this, we realized that R1 (ReComP should allow team members to identify problems in the artifacts used to communicate requirements within the software development team) was not fully achieved in the study.

Therefore, we revised the guided form: we made it more compact, removed the open questions from all fields, standardized it, and gave the questions a more direct approach. In addition, we added the option for the participants to indicate that they “do not need the information”. This option gives insight into what is described in a specification but is not necessary for a given role, thereby helping to reduce irrelevant information in the specification.

Regarding R2 (ReComP should provide suggestions for solutions to improve the requirements communication problems found in the artifacts), we noticed that some information was missing from the improvement suggestions and that their use, like that of TAX_US_Dev, was confusing. Therefore, we reviewed all the ReComP TAI guidelines, added suggestions for improving every field corresponding to a problem found in TAX, and added instructions on how to use ReComP. ReComP (v2), which was used in the second empirical study, can be found in the technical report available in [52].

7 Second DSR cycle (2nd empirical study)

In the second DSR cycle, we conducted only one round of evaluation and improvement of ReComP (v2), which led to the third version of ReComP. As shown in Fig. 2, we performed this study in the following 4 steps: (1) Specification of the test cases, (2) Application of TAX_UC_Test, (3) Improvement of the use case with TAI_UC_Test, (4) ReComP final evaluation.

In Step 1, the participants received the use case specification—UC01: Configure Document and the use case UI mockup. In Step 2, they used ReComP artifact TAX_UC_Test to evaluate the specification received in order to construct the test cases. In Step 3, the participants improved the specification received based on the problems identified in the assessment. For this, they used the TAI_UC_Test improvement suggestions. In Step 4, we applied an evaluation questionnaire to assess the participants’ opinions regarding the communication of the requirements.

7.1 ReComP_UC_Test study results

In this section, we present the results regarding the analysis of the difficulties encountered by testers when building test cases using use cases and UI mockups. We also analyzed the testers’ informational needs when building test cases. In addition, we analyzed the perception of ReComP’s ease of use and usefulness.

7.1.1 Requirements specification evaluation

The results of the guided TAX_UC_Test form allowed us to analyze the participants’ perceptions of the fields of the use case specification (which was not written from the tester’s perspective) that they had difficulty identifying when creating the test cases. Figure 8 shows the problems encountered by the participants when creating test cases using use cases.

The information that the participants most often had difficulty identifying was the screen field mask (70%), mandatory fields (62%), dependencies among use cases (57%), and size of screen fields (46%). The information that the participants had the least difficulty finding in the use case concerned exception flows (16%), business rules (16%), main scenario (5%), and purpose of the use case (3%). In some cases, problems were attributed to alternative flows (14%) and messages (11%). Some participants pointed out that they do not need some information to perform their activities, such as main scenario (3%), screen layout (3%), types of screen fields (3%), screen field mask (3%), navigability (5%), post-condition (8%), size of screen fields (8%), and dependencies among use cases (14%).

7.1.2 Requirements specification improvements

As for the improvements made in the use cases using the TAI_UC_Test, we analyzed the quality of the improvements made by the participants. We compared the information marked in the guided TAX_UC_Test form with the use case to see whether the participants had made the corresponding improvements.

By analyzing the use cases, we classified them as follows: “Great” (76%–100% improvement) for the use cases in which the participants made all the improvements pointed out in the guided form; “Good” (51%–75% improvement) for the use cases in which the participants failed to make a few improvements, in other words, they indicated the problem in TAX but did not correct it in the use case; “Bad” (≤ 50% improvement) for the use cases in which the participants made at most half of the improvements pointed out; and “Not recommended” (0% improvement) for the use cases in which the participants made incorrect improvements (improvements that modified the specified requirements) or did not make any of the improvements pointed out. Figure 9 shows the evaluation of the use cases.
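The classification scheme above can be sketched as a small function. This is only an illustration under stated assumptions, not the procedure actually used in the study: the function name and parameters are hypothetical, ratios that fall between the stated bands (for example, 75.5%) are assigned by the thresholds "> 75" and "> 50", and any improvement that modified the specified requirements is treated as “Not recommended” regardless of how many problems were fixed.

```python
def classify_improvement(flagged: int, fixed: int,
                         altered_requirements: bool = False) -> str:
    """Classify one improved use case.

    flagged: number of problems marked in the guided TAX form.
    fixed: number of those problems corrected in the use case.
    altered_requirements: True if an improvement changed the specified
        requirements (assumption: this alone makes it "Not recommended").
    """
    if flagged <= 0:
        raise ValueError("flagged must be positive")
    if not 0 <= fixed <= flagged:
        raise ValueError("fixed must be between 0 and flagged")

    # Incorrect improvements or no improvements at all.
    if altered_requirements or fixed == 0:
        return "Not recommended"

    ratio = 100 * fixed / flagged
    if ratio > 75:   # band reported as 76%-100%
        return "Great"
    if ratio > 50:   # band reported as 51%-75%
        return "Good"
    return "Bad"     # band reported as <= 50%


print(classify_improvement(flagged=4, fixed=3))  # Good
```

Checking for “Not recommended” first resolves the overlap between the “Bad” band (≤ 50%) and the 0% case.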

Overall, all participants made improvements in the use cases using the TAI_UC_Test: 16 (43%) use cases were evaluated as “Great”, 20 (54%) as “Good”, and only 1 (3%) as “Bad”. No use cases were classified as “Not recommended”.
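As a quick consistency check, the reported counts imply 37 evaluated use cases, and the rounded shares add up to 100%. The sketch below uses only the counts given in the text; the variable names are ours.

```python
# Counts per category as reported for the second study.
counts = {"Great": 16, "Good": 20, "Bad": 1, "Not recommended": 0}

total = sum(counts.values())
shares = {label: round(100 * n / total) for label, n in counts.items()}

print(total)   # 37
print(shares)  # {'Great': 43, 'Good': 54, 'Bad': 3, 'Not recommended': 0}
```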

7.1.3 Final analysis of the participants’ perception when using ReComP_UC_Test

After the quantitative analysis, we evaluated ReComP’s acceptability using a questionnaire in which the participants indicated their degree of agreement with statements about the usefulness and ease of use of ReComP. Figure 10 shows the participants’ perceptions of ReComP_UC_Test.

The results shown in Fig. 10 reveal mainly positive perceptions. A small share of the participants, however, felt that ReComP is neither easy to use nor useful for improving requirements communication. The following subsections discuss the participants’ perceptions of TAX and TAI in detail.

7.1.3.1 TAX_UC_Test

Regarding the ease of use of TAX_UC_Test (S1. TAX questions are easy to understand), we can see in Fig. 10 that 60% of the participants found the questions in the guided TAX_UC_Test form easy to understand. Furthermore, the participants reported that the guided form helped them to identify problems in the use cases through objective and precise questions, as shown by the following quotes:

“Short, straightforward sentences, directly addressing the problem. It made it easier.”—P5

“The questions were objective and helped me to find the errors.”—P28

Only 3% of the participants disagreed that the questions found in the guided TAX_UC_Test form were easy to understand.

“… maybe the questions do not help you very much if you do not understand the subject well”—P10

“… it ends up being annoying to have to read many questions”—P32

Figure 10 also points out that 37% of the participants had no clear opinion or were neutral about the ease of using TAX_UC_Test.

As for the usefulness of TAX_UC_Test, we analyzed the answers to statement (S2. The TAX questions are useful for detecting problems in use cases), and the results showed that 62% of the participants agreed that the questions in the TAX_UC_Test were useful for detecting problems in use cases. A total of 33% of the participants had no clear opinion or were neutral about the usefulness of the TAX_UC_Test. Only 5% of the participants disagreed with the statement that the questions found in the TAX_UC_Test were useful. Regarding the positive points of TAX’s usefulness, we can highlight the following quotes from the participants:

“An excellent guide, it basically works as a work instruction.”—P3

“The questions direct the tester to points that are essential in any project.”—P20

Regarding the negative points in relation to the usefulness of TAX, we can highlight the following quotes from the participants:

“Useless, this document must be delivered before the TAI, there is just one more questionnaire to be answered”—P9.

“… TAX depends a little on the opinion of those who are reading the use case, that is, if I don't think something is a problem, I will not mark it as a problem on TAX. So, the usefulness of TAX depends on the perception of those who use it.”—P27.

7.1.3.2 TAI_UC_Test

Regarding the ease of use of the TAI_UC_Test, we analyzed the answers to statement (S3. The TAI improvement suggestions are easy to understand). The results showed that 81% of the participants agreed that the TAI_UC_Test improvement suggestions were easy to understand. Only 16% of the participants had no clear opinion or were neutral regarding how easy the TAI_UC_Test is to understand, and 3% of the participants disagreed with the statement. As for the positive points relating to the ease of use of TAI, we can highlight the following quotes from the participants:

“As TAI is practically a direct mapping of TAX, it is straightforward to understand and apply the improvements.”—P10

“TAI presents solutions clearly and objectively, so it is elementary to understand and know how to proceed to improve the use case.”—P30

Regarding the negative points of the ease of use of TAI, we can highlight the following quotes from the participants:

“In certain instances, TAI only indicates that we have to create a new field, but it does not help us to identify for sure who the actors are, for example.”—P16

“I would like you to have more solution options in each section, to cover more cases and choose from possible solutions that better solve the error.”—P23

As for the TAI_UC_Test’s usefulness, we analyzed the answers to the statement (S4. The TAI improvement suggestions are useful for solving problems in user stories). The results showed that 68% of the participants found the TAI_UC_Test improvement suggestions useful for improving use cases. A further 29% of the participants had no clear opinion or were neutral as to the usefulness of TAI_UC_Test, and 3% of the participants disagreed with the statement that the suggestions for improvement were useful. Regarding the positive points in relation to TAI’s usefulness, we can highlight the following quotes from the participants:

“They are handy for solving problems once they have been identified in TAX.”—P11

“TAI shows exactly where in the UC, the changes should take place.”—P14

Regarding the negative points in relation to the usefulness of TAI, we can highlight the following quotes from the participants:

“I do not usually need TAI since, in general, for the problems I identify, I immediately think of a solution.”—P3

“Generally, the solutions seem to be more complicated than we think. The examples seem to increase the complexity of the use case instead of showing a simpler path.”—P13

7.1.3.3 General perception of ReComP

As for the general perception of ReComP for evaluating and improving the use cases, the participants who played the role of testers pointed out that it was useful and straightforward. Furthermore, ReComP helped in the writing of use cases, served as an inspection and error prevention checklist, and made the specification more complete and simpler, as shown in the quotes below: