Abstract

The El Niño-Southern Oscillation (ENSO) phenomenon affects the global climate by changing temperature and precipitation patterns mainly in tropical climatic regions and median latitudes. Such event strongly influences agricultural activities and crop yields. The Niño Oceanic Index (ONI) of the US National Oceanic and Atmospheric Administration (NOAA) describes and monitors ENSO intensity from ocean temperature measurements. When ONI in the Niño 3.4 region was + 0.5 °C above normal or − 0.5 °C below normal for 5 consecutive 3-month running averages, El Niño (EN) or La Niña (LN) events, respectively, were established. The prediction of ENSO events is made by modeling at major global weather centers by atmosphere–ocean coupling models; however, no articles were found using decision tree classifier (DTC) for ENSO forecasting purposes. This modeling approach requires much less computational time and capacity. Furthermore, DTC can be sufficiently accurate for agricultural purposes. Thus, the objective of this research was to forecast as early as possible the El Niño and La Niña yearly events using a DTC technique from ONI data from 1950 to 2020. We used as input variables for DTC quarterly ONI values from 15 quarters prior the data of forecasting. The DTC showed an accuracy of 89%, 84%, and 78% to predict La Niña, El Niño, and neutral years, respectively, without training period. For validation, the accuracy was 100%, 79%, and 79% for La Niña, El Niño, and neutral years, respectively. The selected ONI quarters were July–August-September, January–February-March, and February–March-April of the previous year and January–February-March of the current year, allowing an 8-month advance forecast with an average accuracy of 78% (validation).

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Numerous processes drive the natural climatic variability, but the most important is the El Niño-Southern Oscillation (ENSO) phenomenon (Clarke 2008). ENSO is an atmospheric phenomenon characterized by anomalies in temperature in tropical areas of the Pacific Ocean, causing changes in wind and precipitation patterns in tropical and latitudinal regions (Souza Júnior et al. 2009). The ENSO has two distinct phases: El Niño, a hot phase, and La Niña, a cold phase (Berlato et al. 2005). The cycle period in which neither El Niño nor La Niña occur is classified as a neutral phase, which presents intermediate climatic conditions.

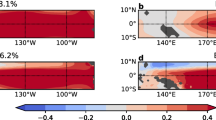

The ENSO phenomenon is tracked and quantified by the Oceanic Niño Index (ONI) which is defined as the average sea surface temperature (SST) anomalies in certain points of the Pacific Ocean (Ludescher et al. 2014). The reference region in the ocean is called “Niño 3.4” corresponding to the area formed between 5° S and 5° N and between 120 and 170° W. It was established that an episode of El Niño occurs when the index is above + 0.5 °C for a period of at least 5 months, just as an episode of La Niña occurs when this index is below − 0.5 °C for the same period of time (Climate Prediction Center, 2012).

Strong episodes of El Niño or La Niña can have major impacts on the world (Ludescher et al. 2014) such as tsunamis, storms, droughts, or abnormal rains (Zhang et al. 2006), as reported by Ronghui and Yifang (1989) in the drought caused in the Indochina Peninsula and southern China during the summer, by Chimeli et al. (2008) in the direct correlation between the effects of the SST anomalies and the price of maize, or by Bastianin et al. (2018) in the impact of ENSO on coffee plantations in Colombia. Therefore, early warning systems in cooperatives, government institutions, companies, and even producers, which have ENSO quality forecasts, are highly desirable.

Although ENSO forecasts using coupled atmosphere–ocean general circulation models (AOGCMs) generally outperform statistical models (Luo et al. 2008), AOGCM systems require high processing power due to the need for simulation of many scenarios (McPhaden et al. 2006) and still do not provide an accurate ENSO forecast for delivery times starting 1 year (Tang et al. 2018).

Several events can improve predictions of El Niño and La Niña episodes such as the evolution of current models, the improvement of observation and analysis systems, the higher spatial resolution, and the use of longer time series. In addition, the construction of statistical deterministic models, based on observed time series, has become increasingly common, especially in regional climate applications (Gavrilov et al. 2019).

Having a long series of observations allows to make the time series stationary by increasing the probability of accurate forecastings (Armstrong et al. 2005). However, statistical models continue to have problems with accuracy due to high spatial and temporal variability, limiting these types of models only in specific regions. Thus, artificial intelligence (AI) methods have been filling these gaps due to the great power of accurate predictions with great computational power associated with simple hardware.

AI methods are considered important when the systems under study are complex and with high data variability (Witten and Frank, 2005; Barsegyan et al. 2007). With the advent of “big data,” AI has had a dramatic impact on many domains of human knowledge (Austin et al. 2013; Arsanjani et al. 2014) making accurate estimates and forecasts possible in a relatively simple way.

Machine learning techniques (ML) are quite robust and can easily analyze patterns and rules in large data sets of multiple predictor variables that have nonlinear relationships with the target variable (Chandra et al. 2019). Aparecido et al. (2021) related that ML is a method that works with data analysis, and it seeks to automate the construction of analytical models. A widely used ML algorithm is the decision tree classifier (DTC) mainly in data prediction analysis (Zhu et al. 2018).

The basic objective of inducing a decision tree is to produce an accurate forecast model (Breiman et al. 1984); this method presents robustness regarding the presence of extreme values (outliers) or even values with error, besides traceability and good performance. For this reason, DTC has already been used for the organization and development of decision support systems related to agro-meteorological databases (Teli et al. 2020).

Nevertheless, few articles in the literature use machine learning (ML) techniques related to the occurrence of ENSO. Silva and Hornberger (2019) related ENSO to rainfall distribution in Sri Lanka using DTC, and Wei et al. (2020) predicted heavy rainfall in East Asia using DTCs in historical series of ENSO and precipitation. However, no work was found using DTCs for ENSO event forecast. Based on this gap, this study has used DTCs to predict ENSO conditions from ONI data, in order to obtain a simple and accurate model for predicting El Niño-Southern Oscillation events.

2 Data and methodology

2.1 Input data

We used quarterly data from the Oceanic Index Niño (ONI) of the ENSO cycles of 1950–2020, provided by the National Oceanic and Atmospheric Administration (NOAA). ENSO cycles in the tropical Pacific Ocean are detected by different methods, including satellite remote sensing, sea level analysis, and by anchored, floating, and disposable buoys (Climate Prediction Center, 2012). ONI is NOAA’s main indicator for monitoring LN and EN. It tracks mean sea surface temperatures (SSTs) quarterly in the eastern-central tropical Pacific, called as Niño index 3.4 (5° N and 5° S in latitude and 170 to 120° W in longitude), as proposed by Trenberth (1997) (Fig. 1). The ENSO is characterized based on the date which the events were mature/seasoned. The SST, in 125 m deep, carries relevant information and provides a source for predicting the extent of the ENSO in advance (Pinault, 2016). Niño 3.4 region is important to delimit the study area since it is accepted that ENSO exhibits a different behavior when observed in the central and/or eastern Pacific regions (Kao and Yu, 2009).

The ENSO extremes in the 1950–2020 period were classified according to the National Oceanic and Atmospheric Administration, which considers that EN conditions are present when ONI is above + 0.5 and LN conditions exist when ONI is under − 0.5, for 5 consecutive 3-month running averages (NOAA, 2017).

2.2 Induction of the decision tree classifier

The decision tree classifier (DTC) is nonparametric supervised methods used for classification. The objective of these methods is to create a model that estimates TARGET (dependent variable) values by learning simple decision rules from FEATURES (independent variables). In this case, TARGET is the annual average value of ONI, which ends in December, and FEATURES are the quarterly ONI values 2 years before.

The data input for the DTC was done as follows: 1 year of quarterly data from the previous year, adding the 3 quarters of the current year, totalling 15 quarters as input variables for the current year forecast (Fig. 2).

Schematic of the input variables in the decision tree model forecast. The letters represent the months, as the following: DJF-p, December, January, and February of previous year; JFM-p, January, February, and March of previous year; FMA-p, February, March, and April of the previous year; MAM-p, March, April, and May of previous year; AMJ-p, April, May, and June of previous year; MJJ-p, May, June, and July of previous year; JJA-p, June, July, and August of previous year; JAS-p, July, August, and September of previous year; ASO-p, August, September, and October of previous year; SON-p, September, October, and November of previous year; OND-p, October, November, and December of previous year; NDJ-p, November, December, and January of previous year; DJF, December, January, and February of current year; JFM, January, February, and March of current year; FMA, February, March, and April of current year

The DTCs build the tree recursively, from top to bottom (Han and Kamber 2001). It starts with the training set, which was divided according to a test on one of the FEATURES, forming a more homogeneous subset in relation to TARGET. This procedure was repeated until to obtain a very homogeneous set, for which it is possible to assign a single value to the dependent variable.

The DTCs have the advantages of being simple and easy to view, require little data preparation, can handle numerical and categorical data, and are robust models, making it possible to validate the models using statistical tests, even when some assumptions are violated. However, as results, complex trees can be generated; nevertheless, there are some mechanisms to avoid this problem, such as adjusting the minimum number of samples for each node and/or the maximum number of nodes in the tree. However, to solve this problem, a large and balanced number of data is normally used, and the appropriate FEATURES are selected in a way that the representation of the system under study is done in a parsimonious way.

The DTC algorithm takes into account the average values and annual variability of ONI quarterly data. It selects which quarters were most important for the annual forecast of the ENSO phenomenon. The criterion used to choose the independent variable that divides the set of examples in each repetition is the main aspect of the induction process. Among the most known and used criteria is the index of information gain or entropy (Quinlan, 1993), related to the (im)purity of the data, which was the chosen criterion for this work. Entropy is a measure of the lack of homogeneity of the input data in relation to its classification. For example, if the sample is completely homogeneous, then entropy is zero, and if it presents maximum entropy (equal to 1), it means that the data set is heterogeneous (Mitchell, 1997; Coimbra et al. 2014).

The induction of the DTC was performed binary, with two branches from each internal node. To avoid overfitting, which would compromise its generalization and performance with new examples, two criteria for stopping the induction algorithm were adopted. The first rule limited the depth of the tree, allowing it to have at most five levels (the root node is considered to be at level zero). The second rule limited the fragmentation of the training set, requiring a minimum of four examples at each node for a new division and at least four examples at each leaf node.

In addition to the stop criteria, called pre-pruning, a procedure of post-pruning was performed, after the induction of the complete tree, in order to decrease the dimensions of the tree. Together with this complete tree, its possible sub-trees were evaluated, and the smallest sub-tree (lowest complexity) with the lowest error rate over the training set was chosen, considering that complex trees can be worse than the simplest trees in predicting new data due to overfitting (Phillips et al. 2017).

2.3 Assessment metrics

Error rate and accuracy are the most common evaluation measures for decision trees (Han and Kamber 2001; Witten and Frank, 2005). The forecast error of a model is defined as the expected square error for new forecasts and for classification as the probability of incorrectly classifying new observations (De'ath and Fabricius 2000). Calculating the error rate on the training set usually results in a highly optimistic estimate, due to the model’s specialization with respect to examples. To work around this problem, the model was randomly divided into two independent sets, one for training and one for validation, with 80 and 20% of the data, respectively (Han and Kamber 2001).

In addition to these measures, the decision tree confusion matrix was produced, which offers effective means for evaluating the model based on each class (Monard and Baranauskas 2003). Each element of the matrix represents the number of examples, being the class observed in line and the class predicted in column. From the generated matrix were extracted the true positive (TP) values, which occur when the model predicts a positive case correctly, false positives (FP) which are those cases where the model is classified as a certain ENSO when in fact it is not, false negative (FN) when the model indicates that it is not a certain ENSO but in fact is, and, finally, the true negative (TN) which is when the model says that it is not determined ENSO and it hits (Hothorn et al. 2005).

The values of TP, TN, FP, and FN were determined as follows from the confusion tables (Fig. 3).

Confusing table used to determine true positive (TP), true negative (TN), false positive (FP), and false negative (FN) in the machine learning analysis by decision trees, for the cases of La Niña (LN) (A), El Niño (EN) (B), and neutral years (NE) (C). Being: OBS the values observed in the historical series and PRED the values predicted by the decision tree

Some statistics, described by Stojanovic et al. (2014), were calculated in two ways: as screening tests and as diagnostic tests. Screening tests are those made to know the proportions of classifications that have already occurred. In this case, the positive predictive value (PPV) is the proportion of correct classifications of the decision trees among all the correct classes of the same type (Eq. 1), and the negative predictive value (NPV) is the proportion of wrong classifications among all the wrong classes of the same type (Eq. 2).

The diagnostic tests, related to the estimated values, are those made to know the probability of the detections for the future: The sensitivity evaluates the capacity of the decision tree to detect a certain class of ENSO when it is really the correct one (Eq. 3). The specificity evaluates the ability of the decision tree not to detect a certain class when it is not really and correctly (Eq. 4). The accuracy is the general performance of the decision tree model (Eq. 5).

All the analyses and data organization were performed using Python 3.9.6 programming language, with the following libraries: numpy, pandas, statsmodels, sklearn, and the graphs with matplotlib, graphviz, and pysankey.

3 Results and discussion

The predictability of ENSO depends on the period in which it is estimated (Chen et al. 2004, 1995; Kirtman and Schopf 1998; Balmaseda et al. 1995). A period of study of 70 years is long enough for the forecast to be statistically robust, different from the work of some authors who are based on forecasts of the last two or three decades only (Goswami and Sukla, 1991; Chen et al. 1995). The evaluation of the time series of the observed seasonal SST anomalies of Niño 3.4 during the period from 1950 to 2020 can qualitatively evaluate the variability of ENSO, which at several moments demonstrates a multi-decadal pattern (Fig. 4), with peaks of El Niño, La Niña, and neutral constant.

During the years 1950 to 2020, at least moderate El Niño events took place in 1986/87, 1996/97, and 2014/15 the same way for La Niña events in 1954/55, 1974/75, and 1999/00. The weakest events, close to neutrality, for El Niño occurred in 1962/63, 1991/92, and 2003/04, already for La Niña, the same occurred in 1955/56, 1984/85, and 2006/07 (Fig. 4).

There was a frequency of neutral years during the analysis of the time series, with sequences marked between the years 1959/62, 1977/81, 2003/06, and 2012/14 indicating a multi-decadal period with these events happening in intervals of 10 or 20 years. The condition of neutral years presents less dispersion of ONI data when compared with the conditions of years of El Niño and La Niña (Fig. 4).

Despite the strong and regular oscillations in the 2000s, there were periods without much activity in El Niño or La Niña, as in the late 70 s and early 80 s. Considering an El Niño as a warm event when the ONI anomaly rate is greater than 0.5 °C and a La Niña as a cold event when the ONI anomaly rate is lower than − 0.5 °C, both for 5 consecutive 3-month running averages, we see that there were 20 El Niño events and 22 La Niña events from 1950 to 2020.

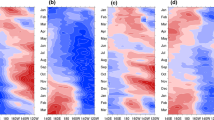

Stratifying the analysis to the quarterly scale, there was a lower variability of ONI during the middle of the calendar year, verified by the smallest standard deviation of seasonal gait. The fall/winter season in the Southern Hemisphere showed the smallest standard deviation from the spring/summer seasons. According to Ham et al. (2019), the ONI index has a high correlation with almost all target seasons, influencing more robust forecasts in the seasons between late spring and boreal fall; however, for southern conditions, there is the opposite making the most informative quarters for ML models the most variable, between August and March. This fact was corroborated by the present study, as the most important quarter for forecasting the annual ENSO condition was January–February-March (Fig. 5).

source NOAA). A quarter is classified as La Niña when the ONI of that period ≤ − 0.5. When the quarterly ONI value ≥ 0.5, the quarter is classified as El Niño. Neutral quarters occur when − 0.5 ≤ average ONI ≤ 0.5. The vertical bars indicate the standard deviation of ONI per year. The letters indicate the quarter, for example, DJF, December, January, and February; JFM, January, February, and March, following this way

Quarterly Oceanic Niño Index (ONI) for the period between 1950 and 2020 (

The boreal spring (between March and June) is seen as a barrier in the ENSO forecast because it causes a drop in ability to persist (decrease in standard deviations) in all ONI forecasts by the models (Chen et al. 2004). The same occurs in the southern hemisphere, however, in the fall season (between March and June) (Fig. 5).

Conversely, in the austral spring (between September and December) occurs the increase of ONI standard deviations allowing greater variability of information in the inputs of the decision tree making this period informative for the ML models (Fig. 5).

The decrease in standard deviations, between May and June, was probably related to the lack of very strong events, such as the El Niño events of 1991/92 and the La Niña events of 1984/84 and was also due to the seasonal dependence of the discharge/recharge cycle on the equatorial (or sea level) heat content, which leads to the SST anomaly for 6 to 8 months and plays a crucial role in ENSO (Jin, 1997; Meinen and McPhaden 2000; McPhaden 2003).

Analysis of the decision tree model showed that some quarters of the ONI index, including July–August-September (JAS-p), January–February-March (JFM-p), and February–March-April (FMA-p) of previous year and January–February-March (JFM) of current year, had greater influence on ENSO event prediction (Fig. 6). The first predictor (JFM) is related to the first quarter of the forecasted year, and the other three predictors used for the ONI prediction are related to quarters of the previous year, demonstrating the anticipation of the forecast and confirming that the ONI event prediction is largely determined by the initial oceanic conditions (Chen et al. 2004) in southern spring–summer.

Decision tree analysis for forecasting El Niño-Southern Oscillation annual events as a function of the quarterly Oceanic Niño Index (ONI). JFM, January–February-March; JAS-p, July–August-September of the previous year; JFM-p, January–February-March of the previous year; FMA-p, February–March-April of the previous year

The first division is based on JFM quarter, which was the most powerful predictor and used by the model for all forecasts, indicating that the model can predict an annual event until the third month of the current year. The left node is strongly homogeneous and is not later divided, being classified as a La Niña year.

Continuing with the right branch, comprising the JAS quarter of previous year, was divided with great information gain, spited into two other nodes at the third level of the decision tree. The right node, again comprising the JFM quarter, was strongly divided, ending with a classification of El Niño years on the right and maintaining the division on the left branch, which ends with a classification of La Niña years on the right and neutral years on the left.

As for the left node, also with the JFM quarter but now in relation to previous year, the division process was strongly repeated, with a classification process of neutral years on the right and with a last division on the left node. The left node again comprises the JFM quarter of current year, which was used for the third time in the decision tree and finalizes the division process by predicting neutral years if the quarter in question has an ONI index less than or equal to − 0.15 and otherwise finalizes with El Niño years on the right.

This delay of one to two stations occurs because the atmosphere takes about 2 weeks to respond to SST anomalies in the tropical Pacific, and then the ocean integrates the force associated with the atmospheric bridge in the coming months (Alexander et al. 2002). The decision tree responses were accurate and robust for both training and validation period, indicating that the proposal for annual ENSO prediction was successful.

During the training period, of the 21 years observed as NE, 18 were predicted as NE, 2 were predicted as EN, and 1 year as LN. For the LN years, of the 18 years observed, 13 were successfully predicted as LN, 4 were predicted as NE, and 1 as EN. Finally, of the 17 years observed of EN, 16 were successfully predicted, and only 1 was predicted as NE (Fig. 7, Appendices).

Therefore, the EN years do not have a 100% specificity for predicting NE and LN events as if they were El Niño years, but as they have a high ability to predict EN events, they have a high sensitivity of 94%, which results in a good accuracy of 93% for this model. A similar case occurs for NE years, which presents 86% sensitivity and 85% specificity, resulting in 86% accuracy.

The opposite occurs for years of LN, although the years of LN have a high specificity of 97%, due to the fact that the vast majority of the predicted events were actually LN years, that is, the model does not normally predict other phenomena as if they were LN years; the sensitivity of the model in the prediction of LN is the lowest, because 4 events were incorrectly predicted as NE years and another 1 event as EN year; therefore, the sensitivity of this model is 72%, resulting in an accuracy of 85% (Table 1).

In the validation period, from the 3 cases of EN, 2 were correctly predicted as EN; however, one-third of the years of this event were incorrectly predicted as NE events; for this, the sensitivity of the model in the prediction of EN is low (67%). For the LN years, with the highest hit rate, of the 3 observed years, the 3 were correctly predicted as LN, resulting in 100% sensitivity and specificity of the model for this event, since no other phenomena are incorrectly predicted as La Niña years. Finally, for the 8 NE years, 6 were predicted as NE, and 2 were predicted as EN, which decreases the sensitivity and, consequently, the accuracy of events of NE (Fig. 8, Appendix).

In the training period (n = 56 years), the decision tree predicted the ENSO events with overall accuracy of 84% and in the validation period (n = 14) equal to 78% (Table 1, Fig. 9). These results were similar to those obtained by other authors such as Nooteboom et al. (2018) who associated artificial neural networks with ARIMA models. However, our results were achieved applying a simpler approach with the same performance.

The highest accuracy of the model (positive predictive value, Table 1) was in the La Niña years forecast (93%), followed by El Niño years (84%) and neutral years (78%). In the validation period, the results improved for the La Niña and neutral years with 100% and 86% accuracy, but the accuracy decreased to 50% for the El Niño years.

The more sensitive the rating, the higher your NPV, meaning that there is more security that the decision tree did not go wrong. Usually, the sensitivity and specificity are inverse. The more specific the model, the more it is overfitting; however, for this analysis, values close to 100, both in training and validation, offer a lot of certainty in the response of the decision tree because we used all the available data from the historical ONI series. In this case, the more specific the better (≈100%). All events presented high specificity, the ability to say that the event did not occur when it really did not occur. For the training period, the best results were obtained for La Niña, El Niño, and neutral years with values of 97%, 92%, and 86%, respectively. In the validation period, the La Niña years presented high specificity (100%), and the neutral and El Niño years presented similar specificities, of 83 and 82%, respectively.

4 Conclusion

The ENSO signal forecast was more robust in the seasons between late spring and autumn boreal, and the most important predictors for El Niño and La Niña years were quarters of previous years. The forecast was possible with 8 months of anticipation, using only quarterly data from the Oceanic Niño Index (ONI) for the July–August-September, January–February-March, and February–March-April periods of the previous year and January–February-March of the current year.

The results indicate that it is possible to forecast the hot and cold events of El Niño-Southern Oscillation only with data of the sea surface temperature, being able to use decision trees for forecasting conditions of years of La Niño, El Niño, and neutral with an average accuracy of 78%. The DTC presented an accuracy of 89%, 84%, and 78% for La Niña, El Niño, and neutral years forecasts, respectively, in the training period and 100%, 79%, and 79%, respectively, for the validation period. However, the model performed best for predicting La Niña years, with high sensitivity and specificity for the cold ENSO event.

Data availability

The data/ material is opened.

Code availability

The software used was python, and scripts are available.

References

Alexander MA, Blade I, Newman M, Lanzante JR, Lau N-C, Scott JD (2002) The atmospheric bridge: the influence of ENSO teleconnections on air-sea interaction over the global oceans. J Climate 15:2205–2231

Aparecido LEO, Meneses KC, Rolim G, de Souza MJN, Carvalho WBS Pereira, Santos PA, Moraes TS, da Silva JRSC (2021) Algorithms for forecastingcotton yield based on climatic parameters in Brazil. Arch Agron Soil Sci 18:365–340. https://doi.org/10.1080/03650340.2020.1864821

Armstrong JS, Collopy F, Yokum JT (2005) Decomposition by causal forces: a procedure for forecasting complex time series. Int J Forecasting 21:25–36

Arsanjani R, Dey D, Khachatryan T, Shalev A, Hayes SW, Fish M (2014) Prediction of revascularization after myocardial perfusion SPECT by machine learning in a large population. J Nucl Cardiol 22(5):877–884

Austin PC, Tu JV, Ho JE, Levy D, Lee DS (2013) Using methods from the data-mining and machine-learning literature for disease classification and prediction: a case study examining classification of heart failure subtypes. J Clin Epidemiol 66(4):398–407

Balmaseda MA, Davey MK, Anderson DLT (1995) Decadal and seasonal dependence of ENSO prediction skill. J Climate 8:2705–2715

Barsegyan AA, Kupriyanov MS, Stepanenko VV, Kholod II (2007) Data analysis technologies. Data mining, visual mining, text mining, OLAP. SPb.: BHV-Petersburg, 384 p.

Bastianin A, Lanza A, Manera M (2018) Economic impacts of El Niño southern oscillation: evidence from the Colombian coffee market. Agric Econ 1:3–17

Berlato MA, Farenzena H, Fontana DC (2005) Associação entre El Niño Oscilação Sul e a produtividade do milho no Estado do Rio Grande do Sul. Pesq Agrop Brasileira 40(5):423–432

Breiman L, Friedman J, Stone CJ, Olshen RA (1984) Classification and Regression Trees. Taylor & Francis, The Wadsworth and Brooks-Cole statistics-probability series, p 369p

Chandra A, Mitra P, Dubey SK, Ray SS (2019) Machine learning approach for Kharif rice yield prediction integrating multi-temporal vegetation indices and weather and non-weather variables. ISPRS-Int Arch Photogramm Remote Sens Spat Inf Sci 423:187–194

Chen D, Zebiak SE, Busalacchi AJ, Cane MA (1995) An improved procedure for El Niño forecasting: implications for predictability. Science 269:1699–1702

Chen D, Cane MA, Kaplan A, Zebiak SE, Huang D (2004) Predictability of El Niño over the past 148 years. Nature 428(6984):733

Chimeli A, Souza Filho F, Holanda M, Petterini F (2008) Forecasting the impacts of climate variability: lessons from the rainfed corn market in Ceará. Brazil Environ Dev Econ 13(2):201–227

Clarke AJ (2008) An Introduction to the Dynamics of El Nino and the Southern Oscillation. Elsevier Academic Press, Londres

Climate Prediction Center – CPC (2012) Frequently asked questions about El Niño and La Niña. Disponível em: https://www.cpc.ncep.noaa.gov/products/analysis_monitoring/ensostuff/ensofaq.shtml#forecasts. Accessed 11 Jun 2021

Coimbra R, Rodriguez-Galiano V, Olóriz F, Chica-Olmo M (2014) Regression trees for modeling geochemical data - an application to Late Jurassic carbonates (Ammonitico Rosso). Comput Geosci 73:198–207

Death G, Fabricius KE (2000) Classification and regression trees: a powerful yet simple technique for ecological data analysis. Ecol 81:3178–3192

Gavrilov A, Seleznev A, Mukhin D, Loskutov E, Feigin A, Kurths J (2019) Linear dynamical modes as new variables for data-driven ENSO forecast. Clim Dyn 52(3–4):2199–2216

Goswami BN, Sukla J (1991) Predictability of a coupled ocean-atmosphere model. J Climate 4:3–22

Ham YG, Kim JH, Luo J-J (2019) Deep learning for multi-year ENSO forecasts. Nature 573:568–572

Han J, Kamber M (2011) Data Mining: Concepts and Techniques. Morgan Kaufmann, San Francisco, p 744

Hothorn T, Leisch F, Zeileis A (2005) The design and analysis of benchmark experimentos. J Comput Graph Stat 14(3):675–699

Jin FF (1997) An equatorial ocean recharge paradigm for ENSO. Part I: conceptual model. J Atmos Sci 54:811–829

Kao H-Y, Yu J-Y (2009) Contrasting eastern-Pacific and central-Pacific types of ENSO. J Clim 22(3):615–632

Kirtman BP, Schopf OS (1998) Decadal variability in ENSO predictability and prediction. J Clim 11:2804–2822

Ludescher J, Gozolchiani A, Bogachev MI, Bunde A, Havlin S, Schellnhuber HJ (2014) Very early warning of next El Niño. Proc Natl Acad Sci 111(6):2064–2066

Luo JJ, Masson S, Behera SK, Yamagata T (2008) Extended ENSO predictions using a fully coupled ocean–atmosphere model. J Clim 21(1):84–93

McPhaden MJ, Zebiak SE, Glantz MH (2006) ENSO as an integrating concept in earth science. Science 314(5806):1740–1745

McPhaden MJ (2003) Tropical Pacific Ocean heat content variations and ENSO persistent barriers. Geophys Res Lett 30:1480–1490

Meinen CS, McPhaden MJ (2000) Observations of warm water volume changes in the equatorial Pacific and their relationship to El Niño and La Niña. J Clim 13:3551–3559

Mitchell T (1997) Decision Tree Learning. In: Mitchell T (ed) Machine Learning. The McGraw-Hill Companies Inc, New York, pp 52–78

Monard MC, Baranauskas JA (2003) Indução de Regras e Árvores de Decisão. In: Rezende SO (ed) Sistemas Inteligentes - fundamentos e aplicações. Manole Ltda, Barueri, pp 115–139

NOAA (2017) Cold & warm episodes by season. Online available. https://origin.cpc.ncep.noaa.gov/products/analysis_monitoring/ensostuff/ONI_v5.php. Accessed 19 Jun 2021

Nooteboom PD, Feng QY, Lopez C, Hernandez-García E, Dijkstra A (2018) Using network theory and machine learning to predict El Nino. Earth Syst Dynam 9:969–983

Phillips ND, Neth H, Woike JK, Gaissmaier W (2017) FFTrees: a toolbox to create, visualize, and evaluate fast-and-frugal decision trees. Judgm Decis Mak 12(4):344–368

Pinault JL (2016) Anticipation of ENSO: what teach us the resonantly forced baroclinic waves. Geophy Astrophys Fluid Dyn 110(6):518–528

Quinlan JR (1992) C4.5 Programs for Machine Learning. San Mateo, CA: Morgan Kaufmann

Ronghui H, Yifang W (1989) The influence of ENSO on the summer climate change in China and its mechanism. Adv Atmos Sci 6(1):21–32

Silva TM, Hornberger GM (2019) Identifying El Niño-Southern Oscillation influences on rainfall with classification models: implications for water resource management of Sri Lanka. Hydrol Earth Syst Sci 23(4):1905–1929

Souza Júnior JA, Nechet D, Oliveira MCF, Albuquerque MF (2009) Estudo do comportamento da temperatura e precipitação nos períodos chuvosos e menos chuvosos em Belém-PA em anos de fortes eventos de El Niño e La Niña. Revista Brasileira De Climatologia 5:87–101

Stojanovic M, Apostolovic M, Stojanovic D, Miloševic Z, Toplaovic A, Lakušic VM, Golubovic M (2014) Understanding sensitivity, specificity and predictive values. Vojnosanit Pregl 71(11):1062–1065

Tang Y, Zhang RH, Liu T (2018) Progress in ENSO prediction and predictability study. Natl Sci Rev 5:826–839

Teli A, Amith A, Bhanu Kaushik K, Gopala Krishna Vasanth K, Sowmya BJ, Seema S (2020) Efficient decision support system on agrometeorological data. Adv Intell Syst Comput 940:875–890

Trenberth KE (1997) The definition of El Niño. Bull Amer Met Soc 78:2771–2777

Wei W, Yan Z, Jones PD (2020) A decision-tree approach to seasonal prediction of extreme precipitation in eastern China. Int J Climatol 40(1):255–72

Witten IH, Frank E (2005) Data Mining: Practical Machine Learning Tools and Techniques. Morgan Kaufmann, San Francisco

Zhang M-N, Liu J, Mao K-X, Li Y, Zhang X-H, Shi Y-J (2006) The general distribution characteristics of thermocline of China Sea. Mar Forecast 23(4):51–58

Zhu X, Xu Q, Tang M, Li H, Liu F (2018) A hybrid machine learning and computing model for forecasting displacement of multifactor-induced landslides. Neural Comput Appl 30(12):3825–3835

Funding

This study was financed in part by the Coordenação de Aperfeiçoamento de Pessoal de Nível Superior — Brasil (CAPES) — Finance Code 001.

Author information

Authors and Affiliations

Contributions

KAS: formal analysis, investigation, data curation, writing — original draft, writing — review and editing, and visualization. GdSR: conceptualization, methodology, supervision, and project administration. LEdOA: writing — review and editing.

Corresponding author

Ethics declarations

Ethics approval

It is not necessary.

Consent to participate

Not applicable.

Consent for publication

Not applicable.

Conflict of interest

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendices

Table 2

Table 3

Rights and permissions

About this article

Cite this article

Silva, K.A., de Souza Rolim, G. & de Oliveira Aparecido, L.E. Forecasting El Niño and La Niña events using decision tree classifier. Theor Appl Climatol 148, 1279–1288 (2022). https://doi.org/10.1007/s00704-022-03999-5

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00704-022-03999-5