Abstract

A novel feature selection algorithm is designed for high-dimensional data classification. The relevant features are selected with the least square loss function and \({\ell _{2,1}}\)-norm regularization term if the minimum representation error rate between the features and labels is approached with respect to only these features. Taking into account both the local and global structures of data distribution with subspace learning, an efficient optimization algorithm is proposed to solve the joint objective function, so as to select the most representative features and noise-resistant features to enhance the performance of classification. Sets of experiments are conducted on benchmark datasets, show that the proposed approach is more effective and robust than existing feature selection algorithms.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

In real applications, data are often of high dimension, i.e., with a large number of features [18, 22, 37]. And most features are hardly with contributions to data mining tasks. This kind of features is often attributed to irrelevant and redundant features that can affect the classification performance. In other words, high-dimensional datasets not only consume huge storage and computation resources, but also degrade the total performance of a learning algorithm, i.e., so-called the curse of dimensionality [6, 31]. Therefore, feature selection has been regarded as an important and effective technique to weaken the affection of high dimension to classification performance, as well as to significantly improve the comprehensibility of discovered results [17, 21, 30].

Feature selection aims to maintain the original features of the data, at the same time, seeks to identify the main features and weed out the irrelevant ones, so as to build an impactful learning model [1, 11, 23]. Traditional feature selection methods generally evaluate the features one by one without respect to the relationship between features, the local structure of the data, the local structure of the data, and the label distribution of the data. To deal with these issues, sparse learning has been applied to feature selection algorithms. However, there are still two limitations in these feature selection methods based on sparse learning algorithms [19]. One is that the sparse penalty terms, such as \({\ell _{1}}\)-norm and \({\ell _{2,1}}\)-norm, are not enough to regulate the sparseness of feature representation. Namely, they may not select the optimum subset features from the original data [33, 36]. Another is that these methods only consider the global structures of the data, but neglects the local information of the data which can also well impact on selecting the significant features.

Motivated by the above-observed points, we design a novel framework for efficiently integrating the sparse model and subspace learning, so as to select the important features for a given dataset [16, 34]. First of all, the least square loss function is applied to measure the correlation between the feature set and the label set. Furthermore, the \({\ell _{2,1}}\)-norm regularization term is employed to shrink the least square loss function, to generate a sparse model. Because this model only takes into account the global structure of the data, the local structure of the data is combined with manifold constraints during selecting the main relevant features. While the locality preserving projection (LPP) [5] is sensitive to outliers and noise features, the LPP is adopted to keep the local structure of the data. Finally, the Fisher’s LDA [4] is advocated to protect the global structure of the data. According to these measures, the proposed framework can select a subset of important relevant features for dataset which efficiently reduces the dimensionality and speeds up the learning process.

The primary contributions of the study are summarized as follows:

-

A novel framework is designed for feature selection for combining the subspace learning with the sparse graph representation. This framework can select the main discriminative features due to taking both the local and global structures of the data into account.

-

A novel iterative optimization algorithm is exploited to solve the joint objective function and obtain the optimum solution. It can also testify the optimization algorithm which can quickly yet efficiently converge to the optimum solution.

-

Compared with the generic dimensionality reduction algorithms, the proposed algorithm demonstrates the best performed results, especially on high-dimensional data.

The rest of this paper is organized as follows: Sect. 2 summarizes some recently works related to feature selection. Section 3 designs the feature selection algorithm and its improvement. Section 4 experimentally evaluates the proposed approach and compared it with existing feature selection methods. Finally, this paper is concluded in Sect. 5.

2 Related works

This section briefly recalls the recent studies concerning both feature selection algorithm and sparse representation which have been successfully applied in high-dimensional data. Nie et al. employed joint \({\ell _{2,1}}\)-norm minimization on both loss function and regularization to select the most relevant features. Moreover, they proposed an efficient method to solve the objective function of the feature selection [9]. With the graph-theoretic clustering technique, the original features are divided into different clusters. Features in different clusters are relatively independent. And then, the most representative features from each cluster are selected to makeup a subset of features. Based on the above two ideas, Song et al. proposed a fast feature selection algorithm [12]. Liu et al. proposed a novel feature selection algorithm that considered not only both maximal relevance to the class labels and minimal redundancy to the selected features, but also an agglomerative way to enhance the performance of pattern classification [6]. Qiao et al. advocated of preserving the sparse reconstructive structures of the data via minimizing a \({\ell _1}\)-norm regularization-related objective function, called sparsity preserving projection (SPP). Moreover, SPP can automatically choose the neighborhood of the features by the natural discriminating information [10]. Wang et al. integrated multiple kernel learning, sparse coding and graph regularization for feature selection, and developed a novel data representation algorithm to optimize the feature selection objective function [13]. Hence, this algorithm could consider the local manifold structure of the data. Zhu et al. utilized the relationship between indicator vectors, as well as considers the canonical correlations between features of different modalities via projecting them into a canonical space [35]. The framework has successfully been applied in Alzheimer’s disease diagnosis. Zhou et al. proposed to recover the true sparse centroid from the data and advocated the approximation set coding approach [24].

3 Approach

Throughout the paper, we denote matrices as boldface uppercase letters, vectors as boldface lowercase letters, and scalars as normal italic letters, respectively. For a matrix \({\mathbf{X}} = [{x_{ij}}]\), its ith row and jth column are denoted as \({x^i}\) and \({x_j}\), respectively. We denote \({\ell _2}\)-norm and \({\ell _{2,1}}\)-norm as \({\left\| {{x_j}} \right\| _2} = \sqrt{\sum \nolimits _{i = 1}^n {x_{i,j}^2} }\) and \({\left\| {{\mathbf {X}}} \right\| _{2,1}} = \sum \nolimits _{i = 1}^n {\sqrt{\sum \nolimits _{j = 1}^m {x_{i,j}^2} } }\), respectively. We further denote the transpose operator, the trace operator and the inverse of a matrix X as \({\mathbf {X}}^{\it T}\), \({\rm tr}({{\mathbf {X}}})\) and \({{\mathbf {X}}}^{-1}\), respectively.

3.1 Proposed algorithm

In this section, we present an efficient supervised feature selection algorithm to improve the classification performance. Given \({{\mathbf {X}}} \in {R^{p \times s}}\), where p is the number of feature variables and s is the number of samples. The samples corresponding to label matrix is \({\mathbf {Y}}\in {\mathbf {R}^{c \times s}}\), where c is the number of classes, i.e., 0–1 encoding. The proposed feature selection algorithm aims to seek a functional dependence between X and Y. First of all, we use the linear representation method to describe the relation between the feature sets X and the indicator matrix Y [28, 34]. Moreover, the least squares method is a classical estimator in which the method is chosen to minimize the reconstruction error. The objective function of least square is defined as follows:

where \({\mathbf {A}} \in {{\mathbf {R}}^{p \times c}}\) denotes the coefficient representation matrix, namely, the relation between the feature sets and the labels of class. The solution A cannot respond to the information, features of which are main relevant features and the others are the irrelevant or low-relevant features. Moreover, a multi-task learning formula with a sparse least square regression model has been successfully applied for a binary classification [20, 29]. Therefore, we use the follow formula instead of Eq. (1).

where \(\rho\) is a positive parameter and controls the sparsity. Therefore, we can assign a large weight to the main relevant features and a small or zero weight to the weak-relevant features via Eq. (2). Equation (2) has been proved efficient for binary classification [25, 26]. In this paper, we utilize the correlation of different classes via regarding each class as one task to make it deal with the multi-class classification. Nevertheless, it cannot guarantee to be selected which is conductive to better classification performance due to that Eq. (2) cannot ensure that the neighborhood structure of the selected features is preserved.

For the purpose of realizing multi-class classification, we consider the global and the local topological structures of the data according to the distribution of the data features into the proposed framework. Firstly, we employ a Fisher’s LDA [4, 27], which considers the global data distribution based between within-class variance and between-class variance to find the main relevant class. But the Fisher’s LDA penalize term \(\frac{{\mathbf{A ^{\it T}}{\sum _g}\mathbf A }}{{\mathbf{A ^{\it T}}{\sum _h}\mathbf A }}\) is the non-convexity, where \({\sum _g}\) denotes the within-class variance and \({\sum _h}\) denotes the between-class variance. Fortunately, Ye [20] proposed to utilize a multivariate linear regression model that defines the class label matrix \(\mathbf Y = [{y_{i,k}}]\) to replace the Fisher’s LDA penalized term.

where \({n_k}\) denotes the sample size of the class k and \(l({x_i})\) is a class label of \({x_i}\). So we can efficiently utilize the global structure of the data via the class indicator matrix Y, and cannot transform the original features space into a low-dimensional space. Secondly, we employ Locality preserving projection (LPP) [5], which is a subspace learning method to preserve the local structure of the features. The purpose of LPP is to seek an embedding space where the preserved local structure, i.e., maintain the local structure of the data. We briefly describe the definition of LPP as follows:

where the \(y = \mathbf{A ^{\it T}}{x_i}\) , i = \(1, 2,\ldots ,p\). and \({w_{i,j}}\) is a heat kernel \({w_{i,j}} = \exp \left( - \frac{{{{\left\| {{x_i} - {x_j}} \right\| }^2}}}{\delta }\right)\), \(\delta\) is a positive parameter. Substituting the \(y = \mathbf{A ^{\it T}}{x_i}\) into Eq. (2), we obtain:

where \(\mathbf D = [{d_{i,i}} = \sum \nolimits _j {{w_{i,j}}} ]\) is a diagonal matrix, and \(\mathbf L = \mathbf D - \mathbf W\) is a Laplacian matrix. Therefore, we use the \(\text{tr}(\mathbf{A ^{\it T}}\mathbf {XL} \mathbf{X ^{\it T}}\mathbf A )\) term to describe the topological relation of the data [32]. Hence, we present an efficient supervised feature selection algorithm and the objective function as follows.

where \({\rho _1}\) and \({\rho _2}\) are the positive tuning parameters, and the class indicator matrix Y is defined in Eq. (3). Therefore, according to the objective function, we can know that the proposed algorithm is the integration of subspace learning (i.e., LDA and LPP) and feature selection as a consolidated framework. In addition, we develop an optimal algorithm to solve the optimum solution of the objective function. The proposed optimal algorithm could very efficiently and quickly converge to the global optimum solution and the experiments also testify this.

3.2 Optimization

Note that Eq. (6) is a convex function and the last two terms are non-smooth, we cannot solve it straightforwardly. Therefore, we propose an efficient optimization algorithm to solve the objective function.

Denote \(\mathbf{A ^{{\it T}}}\mathbf X - \mathbf Y = \left[ {{v^1},\ldots ,{v^s}} \right]\), and we also define the diagonal matrixes as \({\bar{\mathbf{D }}}\) and \(\tilde{\mathbf{D }}\) with the kth diagonal element as \(\bar{\mathbf{D }}_{i}=\frac{1}{{2||{v^k}||_2}}\) and \(\tilde{\mathbf{D }}_{i}=\frac{1}{{2||{a^k}|{|_2}}}\), respectively. The objective function in Eq. (6) is equivalent to

Specifically, we take the derivative about each row \({a_i}(1 \le i \le s)\) and setting it to zero, we can obtain:

Thus, we can know the following formula:

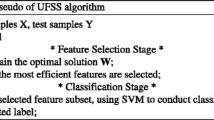

We can know that the \(\bar{\mathbf{D }}\) and \(\widetilde{\mathbf{D }}\) are unknown and depend on A. For the purpose of solving Eq. (9), we present an iteration algorithm as follows, the pseudo of the optimal algorithm is as shown in Algorithm 1.

Lemma 1

For any nonzero vectors \(a,b \in {R^m}\), the following inequality is always true.

Proof

There is an obviously right inequality: \({(\sqrt{l} - \sqrt{m} )^2} \ge 0\), then we have

And utilize the vectors a and b to replace the vectors l and m in Eq. (11) respectively. Hence, we can arrive at Eq. (10).

Theorem 1

Algorithm 1 decreases the objective value in Eq. (9) in each of iterations.

Proof

In terms of Step 2 in Algorithm 1, we have

Therefore, we have

According to Lemma 1, the term \({||a|{|_2}} - \frac{{||a||_2^2}}{{2||{a_0}|{|_2}}} \le ||{a_0}|{|_2} - \frac{{||{a_0}||_2^2}}{{2||{a_0}|{|_2}}}\) is always true for any nonzero vectors a and \(a_0\). Therefore, the objective value can be decreased in each of iteration in Algorithm 1. Moreover, \(\mathbf{A ^{(t)}}\) and \(\mathbf D _i^{(t)}\) will be satisfied when Eq. (12) converges. Thanks to Eq. (6) which is a convex function, so the matrix A is a global optimal solution of Eq. (6) when it satisfies Eq. (12). Thus, Algorithm 1 will converge to the global optimum of Eq. (6), and the experiment also demonstrates that the optimization method can quickly converge to the optimized solution.

4 Experiments

In this section, we adopt extensive experiments to test the performance of our feature selection algorithm for pattern classification. Furthermore, we compare our algorithm with the other dimension reduction methods from MATLAB toolbox.Footnote 1 The compared methods are used to getting the subset of attributions for pattern classification. The compared methods follow principal component analysis (PCA), multidimensional scaling (MDS), Sammon mapping (Sammon), Laplacian eigenmaps (Laplacian), diffusion maps (D-maps), kernel PCA (KPCA), stochastic neighbor embedding (SNE), symmetric stochastic neighbor embedding (SymSNE), t distributed stochastic neighbor embedding (tSNE), neighborhood components analysis (NCA), maximally collapsing metric learning (MCML). To evaluate the validity of the proposed algorithm, we employ the SVM classifier from the LIBSVM toolboxFootnote 2 with the parameter spaces of c and g as \(\left\{ {{2^{ - 5}},\ldots ,{2^5}} \right\}\). Moreover, we also utilize the original data to be classified via the SVM classifier as the baseline algorithms (Original for short).

4.1 Experiments verification and setup

Datasets in our experiments are detailed as follows:

Arcene and Train sets were downloaded from UCIFootnote 3 Breast cancer1, Breast cancer2, Breast cancer3, Breast cancer4 were obtained from works by West et al. [15], Laura J. van’t Veer et al. [7], Mj van de Vijver et al. [8] and Wang et al. [14], respectively. GDS531, GDS1027, GDS1319, GDS1-454 are publicly availableFootnote 4 Colon Cancer and Ovarian Cancer adopted came from the reports by Alon et al. [2] and Berchuck et al. [3], respectively.

Hence, we utilize the above twelve datasets to validate our feature selection algorithm. The detailed description of all datasets concerning samples, features and the number of classes is summarized in Table 1. The compared algorithm is also performed in the same experimental environment with the proposed algorithm. The original data are randomly divided into ten subsets. A single subset is regarded as the test sample and the rest subsets are used as training samples. Hence, we utilize tenfold cross validation in all experiments and repeat the whole process ten times to avoid the sample.

The parameters \({\rho _1}\) and \({\rho _2}\) are set to 0.001, 0.01, 0.1, 1, 10, 100 in Eq. (6). We are also setting the appropriate parameters for the other compared algorithms. The number of selected features is obtained by the maximum likelihood estimator (MLE) to select the optimum feature dimension for compared algorithms. We employ the classification accuracy to evaluate all the algorithms and report the best results of all algorithms using different optimal parameters. Finally, we summarize the results with the average value of 10 times plus or minus the standard deviation.

4.2 Experimental results

We summarize the classification accuracy results of all algorithms both binary classification and multi-class classification problems in the Tables 2 and 3. As shown in Tables 2 and 3, compared with the other algorithms, our proposed algorithm could yield the best performance. Our proposed algorithm had averagely improved at least 11.12 % of accuracy performance than simply classifying without any feature selection method (i.e., Original). Our proposed algorithm gained the best precision on gene dataset GDS1319, and improved about 34.18 % accuracy than SNE; moreover, the performance is best than the other algorithms. The reason is that our proposed algorithm takes into account the global and local structure of the original data space, the similarity of the data is preserved by our proposed framework during feature selection.

About compared algorithms, most of the results with feature selection algorithms are better than the results without feature selection. The reason is that feature selection algorithms not only wipe off the redundancy, noise samples and accelerated computing, but also obtained a better performance. However, there are some data sets with feature selection methods that obtain a worse performance than without feature selection algorithm, which is not surprising; many articles also said that feature selection algorithm could speed up computation and reduced storage cost.

The compared algorithms could be divided into two categories, i.e., manifold learning and others. Manifold learning contains MDS, Sammon, Laplacian, Dmaps et al. These algorithms recover the low-dimensional manifold structure from high-dimensional data, namely the high-dimensional space to find low-dimensional manifold, and to find the corresponding embedding maps. Analyzing these results, we can know that the proposed algorithm is best than these algorithms due to which these algorithms may ignore the global structure of the data.

Our algorithm has been successfully validated and can be applied in binary-class and multi-class classification problems. For example, the proposed algorithm can obtain a best performance than Original, PCA, MDS, Sammon, Laplacian, DMaps, KPCA, SNE, SymSNE, tSNE, NCA, MCML on multi-class datasets such as GDS1027, GDS1319, GDS1454, Train. And the performance of the proposed algorithm is outstanding. Moreover, our proposed algorithm could obtain some more stable results than the other compared algorithms.

5 Conclusions

In this paper, we have formulated a novel feature selection algorithm and focused on integration the subspace learning and feature selection method as a unified framework. The proposed algorithm is called as sparse graph representation feature selection for supervised classification. The proposed algorithm has two advances compared with the state-of-the-art methods: (1) the framework is based on sparse learning model and use an efficient optimization algorithm to solve it; (2) take into account both global and local structures of the data. The proposed feature selection can efficiently select the important features and apply the selected features for SVM classification, the performance is much better than other compared algorithms. Therefore, we could validate the performance of the proposed algorithm by analyzing the classification accuracies of both binary classification and multi-class classification problems.

References

Zhu, X., Suk, H., Lee, S., Shen, D.: Subspace regularized sparse multi-task learning for multi-class neurodegenerative disease identification. IEEE Trans. Biomed. Eng. PP(99), 1 (2015)

Alon, U., Barkai, N., Notterman, D.A., Gish, K., Ybarra, S., Mack, D., Levine, A.J.: Broad patterns of gene expression revealed by clustering analysis of tumor and normal colon tissues probed by oligonucleotide arrays. Proc. Natl. Acad. Sci. 96(12), 6745–6750 (1999)

Berchuck, A., Iversen, E.S., Lancaster, J.M., Pittman, J., Luo, J., Lee, P., Murphy, S., Dressman, H.K., Febbo, P.G., West, M.: Patterns of gene expression that characterize long-term survival in advanced stage serous ovarian cancers. Clin. Cancer Res. Off. J. Am. Assoc. Cancer Res. 11(10), 3686–3696 (2005)

Duda, R.O., Hart, P.E., Stork, D.G.: Pattern Classification. Wiley-Interscience, New York (2001)

He, X., Niyogi, P.: Locality preserving projections. In: NIPS, pp. 153–160 (2003)

Liu, H., Wu, X., Zhang, S.: A new supervised feature selection method for pattern classification. Comput. Intell. 30(2), 342–361 (2014)

Lj, V.T.V., Dai, H., Mj, V.D.V., He, Y.D., Hart, A.A., Mao, M., Peterse, H.L., Van, D.K.K., Marton, M.J., Witteveen, A.T.: Gene expression profiling predicts clinical outcome of breast cancer. Nature 415(6871), 530–536 (2002)

Mj, V.D.V., He, Y.D., van’t Veer, L.J., Dai, H., Hart, A.A., D. W. Voskuil, D.W., Schreiber, G.J., Peterse, J.L., Roberts, C., Marton, M.J.: A gene-expression signature as a predictor of survival in breast cancer. N. Engl. J. Med. 347(25), 1999–2009 (2002)

Nie, F., Huang, H., Cai, X., Ding, C.H.Q.: Efficient and robust feature selection via joint \({\ell _{2,1}}\)-norms minimization. In: Advances in Neural Information Processing Systems, pp. 1813–1821 (2010)

Qiao, L., Chen, S., Tan, X.: Sparsity preserving projections with applications to face recognition. Pattern Recognit. 43(1), 331–341 (2010)

Qin, Y., Zhang, S., Zhu, X., Zhang, J., Zhang, C.: Semi-parametric optimization for missing data imputation. Appl. Intell. 27(1), 79–88 (2007)

Song, Q., Ni, J., Wang, G.: A fast clustering-based feature subset selection algorithm for high-dimensional data. IEEE Trans. Knowl. Data Eng. 25(1), 1–14 (2013)

Wang, J.J., Bensmail, H., Gao, X.: Feature selection and multi-kernel learning for sparse representation on a manifold. Neural Netw. Off. J. Int. Neural Netw. Soc. 51c(3), 9C16 (2013)

Wang, Y., Klijn, J.G., Yi, Z., Sieuwerts, A.M., Look, M.P., Fei, Y., Talantov, D., Timmermans, M., Gelder, M.V., Yu, J.: Gene-expression profiles to predict distant metastasis of lymph-node-negative primary breast cancer. Lancet 365(9460), 671C679 (2005)

West, M., Blanchette, C., Dressman, H., Huang, E., Ishida, S., Spang, R., Zuzan, H., Olson, J.A., Marks, J.R., Nevins, J.R.: Predicting the clinical status of human breast cancer by using gene expression profiles. Proc. Natl. Acad. Sci. 98(20), 11462–11467 (2001)

Wu, X., Zhang, C., Zhang, S.: Efficient mining of both positive and negative association rules. ACM Trans. Inf. Syst. (TOIS) 22(3), 381–405 (2004)

Wu, X., Zhang, C., Zhang, S.: Database classification for multi-database mining. Inf. Syst. 30(1), 71–88 (2005)

Wu, X., Zhang, S.: Synthesizing high-frequency rules from different data sources. IEEE Trans. Knowl. Data Eng. 15(2), 353–367 (2003)

Yan, Y., Shen, H., Liu, G., Ma, Z., Gao, C., Sebe, N.: Glocal tells you more: Coupling glocal structural for feature selection with sparsity for image and video classification. Comput. Vis. Image Underst.124, 99–109 (2014)

Ye, J.: Least squares linear discriminant analysis. In: Proceedings of the 24th International Conference on Machine Learning, pp. 1087–1093 (2007)

Zhang, S., Qin, Z., Ling, C.X., Sheng, S.: “Missing is useful”: missing values in cost-sensitive decision trees. IEEE Trans. Knowl. Data Eng. 17(12), 1689–1693 (2005)

Zhang, S., Zhang, C., Yan, X.: Post-mining: maintenance of association rules by weighting. Inf. Syst. 28(7), 691–707 (2003)

Zhao, Y., Zhang, S.: Generalized dimension-reduction framework for recent-biased time series analysis. IEEE Trans. Knowl. Data Eng. 18(2), 231–244 (2006)

Zhou, G., Geman, S., Buhmann, J.M.: Sparse feature selection by information theory. In: 2014 IEEE International Symposium on Information Theory (ISIT), pp. 926–930 (2014)

Zhu, X., Huang, Z., Cheng, H., Cui, J., Shen, H.T.: Sparse hashing for fast multimedia search. ACM Trans. Inf. Syst. (TOIS) 31(2), 9 (2013)

Zhu, X., Huang, Z., Cui, J., Shen, H.T.: Video-to-shot tag propagation by graph sparse group lasso. IEEE Trans. Multimed. 15(3), 633–646 (2013)

Zhu, X., Huang, Z., Shen, H.T., Cheng, J., Xu, C.: Dimensionality reduction by mixed kernel canonical correlation analysis. Pattern Recognit. 45(8), 3003–3016 (2012)

Zhu, X., Huang, Z., Shen, H.T., Zhao, X.: Linear cross-modal hashing for efficient multimedia search. In: Proceedings of the 21st ACM international conference on Multimedia, pp. 143–152 (2013)

Zhu, X., Huang, Z., Yang, Y., Shen, H.T., Xu, C., Luo, J.: Self-taught dimensionality reduction on the high-dimensional small-sized data. Pattern Recognit. 46(1), 215–229 (2013)

Zhu, X., Li, X., Zhang, S.: Block-row sparse multiview multilabel learning for image classification. IEEE Trans. Cybern. (2015)

Zhu, X., Suk, H., Lee, S., Shen, D.: Canonical feature selection for joint regression and multi-class identification in Alzheimer’s disease diagnosis. Brain Imaging Behav. 1–11 (2015). doi:10.1007/s11682-015-9430-4

Zhu, X., Suk, H.-I., Shen, D.: Matrix-similarity based loss function and feature selection for Alzheimer’s disease diagnosis. In: 2014 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 3089–3096 (2014)

Zhu, X., Suk, H.-I., Shen, D.: Multi-modality canonical feature selection for Alzheimer’s disease diagnosis. In: Medical Image Computing and Computer-Assisted Intervention-MICCAI 2014, pp. 162–169 (2014)

Zhu, X., Suk, H.-I., Shen, D.: A novel matrix-similarity based loss function for joint regression and classification in ad diagnosis. NeuroImage 100, 91–105 (2014)

Zhu, X., Suk, H.-I., Shen, D.: Sparse discriminative feature selection for multi-class Alzheimer’s disease classification In: Machine Learning in Medical Imaging, pp. 157–164 (2014)

Zhu, X., Zhang, L., Huang, Z.: A sparse embedding and least variance encoding approach to hashing. IEEE Trans. Image Process. 23(9), 3737–3750 (2014)

Zhu, X., Zhang, S., Jin, Z., Zhang, Z., Xu, Z.: Missing value estimation for mixed-attribute data sets. IEEE Trans. Knowl. Data Eng. 23(1), 110–121 (2011)

Acknowledgments

This work is supported in part by the China “1000-Plan” National Distinguished Professorship; the China 973 Program under Grant 2013CB329404; the Natural Science Foundation of China under Grants 61170131, 61450001, 61363009, 61263035 and 61573270; the China Postdoctoral Science Foundation under Grant 2015M570837; the Guangxi Natural Science Foundation (Grant No: 2015GXNSFCB139011); the funding of Guangxi “100-Plan”; the Guangxi Natural Science Foundation for Teams of Innovation and Research under Grant 2012GXNSFGA060004; and the Guangxi “Bagui” Teams for Innovation and Research; Innovation Project of Guangxi Graduate Education YCSZ2015095, YCSZ2015096.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Cheng, D., Zhang, S., Liu, X. et al. Feature selection by combining subspace learning with sparse representation. Multimedia Systems 23, 285–291 (2017). https://doi.org/10.1007/s00530-015-0487-0

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00530-015-0487-0