Abstract

The existence of a smooth complete strictly locally convex hypersurface with prescribed scalar curvature and asymptotic boundary at infinity in \({\mathbb {H}}^{3}\) is proved under the assumption that there exists a strictly locally convex subsolution.


1 Introduction

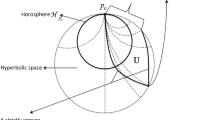

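In this paper, we are concerned with the asymptotic Plateau type problem in hyperbolic space \({\mathbb {H}}^{n+1}\): to find a complete strictly locally convex hypersurface \(\Sigma \) with prescribed curvature and prescribed asymptotic boundary at infinity. For hyperbolic space, we use the half-space model
\[ {\mathbb {H}}^{n+1} = \{ (x, x_{n+1}) \in {\mathbb {R}}^{n+1} : x_{n+1} > 0 \} \]
equipped with the hyperbolic metric
\[ ds^2 = \frac{1}{x_{n+1}^2} \sum _{i=1}^{n+1} dx_i^2 . \]
The ideal boundary at infinity of \({\mathbb {H}}^{n+1}\) can be identified with
\[ \partial _{\infty } {\mathbb {H}}^{n+1} = {\mathbb {R}}^n = {\mathbb {R}}^n \times \{0\} \subset {\mathbb {R}}^{n+1}, \]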

and the asymptotic boundary \(\Gamma \) of \(\Sigma \) is given at \(\partial _{\infty } {\mathbb {H}}^{n+1}\); it consists of a disjoint collection of smooth closed embedded \((n - 1)\)-dimensional submanifolds \(\{ \Gamma _1, \ldots , \Gamma _m \}\). Given a positive function \(\psi \in C^{\infty } ({\mathbb {H}}^{n+1})\), we are interested in finding a complete strictly locally convex hypersurface \(\Sigma \) in \({\mathbb {H}}^{n+1}\) satisfying the curvature equation

as well as with the asymptotic boundary

where \(\mathbf{x}\) is a conformal Killing field which will be specified in Sect. 6, \(\kappa = (\kappa _1, \ldots , \kappa _n)\) are the hyperbolic principal curvatures of \(\Sigma \) at \(\mathbf{x}\), and

is the k-th elementary symmetric function, defined on the k-th Gårding cone

\(\sigma _k (\kappa )\) is the so-called k-th Weingarten curvature of \(\Sigma \). In particular, the 1st, 2nd and n-th Weingarten curvatures correspond to the mean curvature, scalar curvature and Gauss curvature, respectively. We call a hypersurface \(\Sigma \) strictly locally convex (locally convex) if all principal curvatures at every point of \(\Sigma \) are positive (nonnegative).
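For reference, the standard definitions behind the text are \(\sigma _k (\kappa ) = \sum _{i_1< \cdots < i_k} \kappa _{i_1} \cdots \kappa _{i_k}\) and \(\Gamma _k = \{ \kappa \in {\mathbb {R}}^n : \sigma _j (\kappa ) > 0,\ j = 1, \ldots , k \}\); in particular \(\Gamma _n\) is the positive cone, so strict local convexity means \(\kappa \in \Gamma _n\). The plain-Python sketch below (the function names are our own, hypothetical choices) evaluates \(\sigma _k\) by brute force over index subsets and tests cone membership; it is only meant as an illustration for small n.

```python
from itertools import combinations
from math import prod


def sigma(k, kappa):
    """k-th elementary symmetric function of kappa (sigma_0 = 1)."""
    # Sum of products over all k-element index subsets i_1 < ... < i_k.
    return sum(prod(c) for c in combinations(kappa, k))


def in_garding_cone(k, kappa):
    """True if kappa lies in Gamma_k, i.e. sigma_j(kappa) > 0 for j = 1, ..., k."""
    return all(sigma(j, kappa) > 0 for j in range(1, k + 1))
```

For example, \(\kappa = (1, 1, -0.4)\) has \(\sigma _2 = 0.2 > 0\) but \(\sigma _3 = -0.4 < 0\), so it lies in \(\Gamma _2\) but not in \(\Gamma _3\). Brute force costs \(\binom{n}{k}\) products, which is fine for illustration; Newton's identities would be the usual choice for large n.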

In this paper, all hypersurfaces are assumed to be connected and orientable. We will see from Lemma 2.1 that a strictly locally convex hypersurface in \({\mathbb {H}}^{n+1}\) with compact (asymptotic) boundary must be a vertical graph over a bounded domain in \({\mathbb {R}}^n\). We thus assume the normal vector field on \(\Sigma \) to be upward. Write

where \(\Omega \) is the bounded domain on \(\partial _{\infty } {\mathbb {H}}^{n+1} = {\mathbb {R}}^n\) enclosed by \(\Gamma \). Consequently, (1.1)–(1.2) can be expressed in terms of u,

The essential difficulty in the Plateau type problem (1.3) is the singularity at \(u = 0\). When \(\psi \) is a positive constant, problem (1.3) has been extensively investigated in [1,2,3,4,5] (see also the references therein for earlier work). The basic idea there is first to prove the existence of a solution \(u^{\epsilon }\) to the approximate Dirichlet problem

and then to show that, after passing to a subsequence, the \(u^{\epsilon }\) converge to a solution of (1.3). For general \(\psi \), Szapiel [6] studied the existence of strictly locally convex solutions to (1.4) for general curvature functions f, but under a very strong assumption on f (see (1.11) in [6]) which excludes the case \(f = \sigma _n^{1/n}\). To the author's knowledge, no existence result for the asymptotic Plateau type problem (1.3) with general \(\psi \) is available in the literature.

Our first task in this paper is to improve the result of [6]. As in [7], we assume the existence of a strictly locally convex subsolution \({\underline{u}} \in C^4(\Omega )\), that is,

Unlike [2,3,4,5,6], we consider a new approximate Dirichlet problem

where the \(\epsilon \)-level set of \({\underline{u}}\) and its enclosed region in \({\mathbb {R}}^n\) are respectively

By Sard’s theorem, we may assume that \(\epsilon \) is a regular value of \({\underline{u}}\), so that \(\Gamma _{\epsilon }\) is an \((n - 1)\)-dimensional submanifold; moreover, \(\Gamma _{\epsilon } \in C^4\).

A crucial step in proving the existence of a strictly locally convex solution to (1.6) is to establish second order a priori estimates for strictly locally convex solutions u of (1.6) satisfying \(u \ge {\underline{u}}\) on \(\Omega _{\epsilon }\). An essential difference from [2,3,4,5] is that we allow the \(C^2\) bound to depend on \(\epsilon \). This looser requirement gives us more flexibility to apply techniques for general Dirichlet problems and requires fewer technical assumptions (for example, no upper bound on \(\psi \) is required). For the \(C^2\) boundary estimates, we change variables from u to v via \(u = \sqrt{v}\) (see [8] for a similar idea for radial graphs); this is the main difference from [2, 6] and fundamentally improves the result in [6].

One reason we restrict ourselves to strictly locally convex hypersurfaces is the \(C^2\) boundary estimates. In [3], Guan-Spruck assumed \(\Gamma \) to be mean convex; the solution u then behaves nicely near \(\Gamma \), so k-admissible solutions can be studied in their framework. However, without any geometric assumption on \(\Gamma _{\epsilon }\), \(C^2\) boundary estimates can only be obtained for strictly locally convex hypersurfaces.

In order to apply the continuity method and degree theory to prove the existence of a strictly locally convex solution to (1.6), strict local convexity has to be preserved during the continuity process. This holds when \(k = n\) in view of the nondegeneracy of (1.6), while for \(1 \le k < n\) we have to impose certain assumptions on \(\Omega \), \({\underline{u}}\) and \(\psi \) to guarantee that the second fundamental form of a locally convex \(\Sigma \) has full rank up to the boundary. In this paper, we apply the constant rank theorem developed in [9,10,11] to Dirichlet boundary value problems admitting a subsolution. For this, we assume

In addition, we need a condition guaranteeing that locally convex solutions to the associated equations of (1.6) are strictly locally convex near the boundary \(\Gamma _{\epsilon }\). However, we have not found such a condition. Therefore, our existence results are limited to \(k = n\).

Theorem 1.1

Under the subsolution condition (1.5), for \(k = n\), there exists a smooth strictly locally convex solution \(u^{\epsilon }\) to the Dirichlet problem (1.6) with \(u^{\epsilon } \ge {\underline{u}}\) in \(\Omega _{\epsilon }\).

Our second task in this paper is to solve (1.3). A central issue is to obtain a uniform \(C^2\) bound for \(u^\epsilon \). In contrast to [2,3,4,5], where the authors derived uniform bounds for certain quantities associated with solutions of (1.4) under additional assumptions, we use (1.6) as the approximate Dirichlet problem and tolerate an \(\epsilon \)-dependent \(C^2\) bound for its solutions, because we are able to use the idea of Guan-Qiu [12], who established interior \(C^2\) estimates for convex hypersurfaces with prescribed scalar curvature in \({\mathbb {R}}^{n+1}\). We extend their estimates to \({\mathbb {H}}^{n+1}\); together with the Evans-Krylov interior estimates (see [13, 14]) and a standard diagonal process, this leads to the following existence result. Since pure interior \(C^2\) estimates can only be derived up to the scalar curvature equation (see Pogorelov [15] and Urbas [16] for counterexamples when \(k \ge 3\)), we hope to treat the cases \(k \ge 3\) by other means in future work. Moreover, interior \(C^2\) estimates are limited to hypersurfaces satisfying a certain convexity property (see [12]), which also explains why we focus only on strictly locally convex hypersurfaces.

Theorem 1.2

In \({\mathbb {H}}^3\), for \(f = \sigma _2^{1/2}\), under the subsolution condition (1.5), there exists a smooth strictly locally convex solution \(u \ge {\underline{u}}\) to (1.3) on \(\Omega \), equivalently, there exists a smooth complete strictly locally convex vertical graph solving (1.1)–(1.2).

This paper is organized as follows: in Sect. 2, we provide some basic formulae, properties and calculations for vertical graphs. The \(C^2\) estimates for strictly locally convex solutions of (1.6) are presented in Sects. 3 and 4. In Sect. 5, we prove Theorem 1.1 via continuity method and degree theory. Section 6 provides the interior \(C^2\) estimates for convex solutions to prescribed scalar curvature equations in \({\mathbb {H}}^{n+1}\), which finishes the proof of Theorem 1.2.

2 Vertical graphs

Suppose \(\Sigma \) is locally represented as the graph of a positive \(C^2\) function over a domain \(\Omega \subset {\mathbb {R}}^n\):

The coordinate vector fields on \(\Sigma \) are

Hence the upward Euclidean unit normal vector field to \(\Sigma \), the Euclidean metric, its inverse and the Euclidean second fundamental form of \(\Sigma \) are given respectively by

Consequently, the Euclidean principal curvatures \({\tilde{\kappa }} [ \Sigma ]\) are the eigenvalues of the symmetric matrix:

where

and its inverse

For geometric quantities in hyperbolic space, we first note that the upward hyperbolic unit normal vector field to \(\Sigma \) is

and the hyperbolic metric of \(\Sigma \) is

To compute the hyperbolic second fundamental form \(h_{ij}\) of \(\Sigma \), applying the Christoffel symbols in \({\mathbb {H}}^{n + 1}\),

we obtain

where \(\mathbf{D}\) denotes the Levi-Civita connection in \({\mathbb {H}}^{n+1}\). Therefore,

The hyperbolic principal curvatures \(\kappa [ \Sigma ]\) are the eigenvalues of the symmetric matrix \(A [u] = \{ a_{ij} \}\):

Remark 2.1

The graph of u is strictly locally convex if and only if the symmetric matrix \(\{ a_{ij }\}\), \(\{ h_{ij} \}\) or \(\{ \delta _{ij} + u_i u_j + u u_{ij} \}\) is positive definite.
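To make Remark 2.1 concrete, here is a small pure-Python sketch for n = 2 (vertical graphs in \({\mathbb {H}}^3\)). It assumes the standard graph formulas \(g_{ij} = (\delta _{ij} + u_i u_j)/u^2\) and \(h_{ij} = (\delta _{ij} + u_i u_j + u u_{ij})/(u^2 w)\) with \(w = \sqrt{1 + |Du|^2}\), which are common in the literature on hyperbolic vertical graphs; the displays of this section are not reproduced here, so treat them as an assumption. With them, \(g^{-1} h = (I + u P D^2 u)/w\) with \(P = I - Du \otimes Du / w^2\), and its eigenvalues are \(\kappa _i = \nu ^{n+1} + u \tilde{\kappa }_i\) with \(\nu ^{n+1} = 1/w\). All function names are hypothetical.

```python
from math import sqrt


def _eig2(m):
    """Eigenvalues (ascending) of a 2x2 matrix known to have real spectrum."""
    (a, b), (c, d) = m
    half_tr = 0.5 * (a + d)
    disc = max(half_tr * half_tr - (a * d - b * c), 0.0)  # clip tiny negative round-off
    r = sqrt(disc)
    return (half_tr - r, half_tr + r)


def _graph_data(du, d2u):
    """Return w = sqrt(1 + |Du|^2) and S = (I - Du Du^T / w^2) D^2 u (assumed formulas)."""
    w = sqrt(1.0 + du[0] ** 2 + du[1] ** 2)
    p = [[(1.0 if i == j else 0.0) - du[i] * du[j] / w ** 2 for j in range(2)]
         for i in range(2)]
    s = [[sum(p[i][l] * d2u[l][j] for l in range(2)) for j in range(2)]
         for i in range(2)]
    return w, s


def euclidean_curvatures(du, d2u):
    """Euclidean principal curvatures w.r.t. the upward normal: eigenvalues of S / w."""
    w, s = _graph_data(du, d2u)
    return tuple(k / w for k in _eig2(s))


def hyperbolic_curvatures(u, du, d2u):
    """Hyperbolic principal curvatures: eigenvalues of g^{-1} h = (I + u S) / w."""
    w, s = _graph_data(du, d2u)
    m = [[((1.0 if i == j else 0.0) + u * s[i][j]) / w for j in range(2)]
         for i in range(2)]
    return _eig2(m)


def is_strictly_locally_convex(u, du, d2u, tol=1e-12):
    """Remark 2.1 test: the 2x2 matrix delta_ij + u_i u_j + u u_ij is positive definite."""
    m = [[(1.0 if i == j else 0.0) + du[i] * du[j] + u * d2u[i][j] for j in range(2)]
         for i in range(2)]
    return m[0][0] > tol and m[0][0] * m[1][1] - m[0][1] * m[1][0] > tol
```

Two sanity checks: a horizontal plane \(u \equiv c\) (a horosphere) gives \(\kappa = (1, 1)\), while the hemisphere \(u = \sqrt{1 - |x|^2}\) (a totally geodesic plane) gives \(\kappa = (0, 0)\) and fails the strict convexity test, since there \(\delta _{ij} + u_i u_j + u u_{ij} \equiv 0\).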

Remark 2.2

From the above discussion, we can see that

where \(\nu ^{n+1} = \nu \cdot \partial _{n + 1}\) and \(\cdot \) is the inner product in \({\mathbb {R}}^{n+1}\). This formula indeed holds for any local frame on any hypersurface \(\Sigma \) (which may not be a graph). The relation between \(\kappa [\Sigma ]\) and \({\tilde{\kappa }} [\Sigma ]\) is

We observe the following phenomenon for strictly locally convex hypersurfaces in \({\mathbb {H}}^{n+1}\) (see also Lemma 3.3 in [2] for a similar assertion).

Lemma 2.1

Let \(\Sigma \) be a connected, orientable, strictly locally convex hypersurface in \({\mathbb {H}}^{n+1}\) with a specially chosen orientation. Then \(\Sigma \) must be a vertical graph.

Proof

Suppose \(\Sigma \) is not a vertical graph. Then there exists a vertical line (of dimension 1) intersecting \(\Sigma \) at two distinct points \(p_1\) and \(p_2\). Since \(\Sigma \) is orientable, we may assume that \(\nu ^{n+1} (p_1) \cdot \nu ^{n+1} (p_2) \le 0\). Since \(\Sigma \) is connected, there exists a 1-dimensional curve \(\gamma \) on \(\Sigma \) connecting \(p_1\) and \(p_2\). Since \(\nu ^{n+1}\) changes sign (weakly) along \(\gamma \), by the intermediate value theorem there is a point \(p_3\) on \(\gamma \) at which the tangent hyperplane (of dimension n) to \(\Sigma \) is vertical. At \(p_3\), \(\nu ^{n+1} = 0\) and \(u > 0\). By (2.4), \({\tilde{\kappa }}_i > 0\) for all i at \(p_3\). On the other hand, let P be a 2-dimensional plane passing through \(p_1\), \(p_2\) and \(p_3\). If \(P \cap \Sigma \) is 1-dimensional and has nonpositive (Euclidean) curvature at \(p_3\) with respect to \(\nu \), we reach a contradiction; otherwise, after reversing the orientation of \(\Sigma \), either \(\Sigma \) is not strictly locally convex or we again reach a contradiction. If \(P \cap \Sigma \) is 2-dimensional, then any line in \(P \cap \Sigma \) through \(p_3\) leads to a contradiction. \(\square \)

Equation (1.1) can be written as

Recall that the curvature function f satisfies the fundamental structure conditions

3 Second order boundary estimates

In this section and the next, we derive a priori \(C^2\) estimates for strictly locally convex solutions u to the Dirichlet problem (1.6) with \(u \ge {\underline{u}}\) in \(\Omega _{\epsilon }\). Combined with the Evans-Krylov theory [13, 14], the classical continuity method and degree theory (see [17]), these estimates yield the existence of a strictly locally convex solution to (1.6). Higher-order regularity then follows from classical Schauder theory.

Let \(u \ge {\underline{u}}\) be a strictly locally convex function over \(\Omega _{\epsilon }\) with \(u = {\underline{u}}\) on \(\Gamma _{\epsilon }\). We have the following \(C^0\) estimate:

In fact, by Remark 2.1, for any \(x_0 \in \Omega _{\epsilon }\) the function \(u^2 + |x - x_0|^2\) is strictly locally convex in the Euclidean sense in \(\Omega _{\epsilon }\), so it attains its maximum over \(\overline{\Omega _{\epsilon }}\) on \(\Gamma _{\epsilon }\), and we have

Therefore we obtain (3.1).
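Both the convexity statement above and the substitution \(u = \sqrt{v}\) used next rest on one elementary identity. The derivation below is a sketch spelling out this step; the final bound is one natural form of a \(C^0\) estimate and is not claimed to be the exact statement of (3.1).

```latex
% Put v = u^2. Then v_i = 2 u u_i and v_{ij} = 2 (u_i u_j + u u_{ij}), so
\tfrac{1}{2}\, D_{ij}\big( u^2 + |x - x_0|^2 \big)
   = \delta_{ij} + u_i u_j + u\, u_{ij}
   = \delta_{ij} + \tfrac{1}{2}\, v_{ij} .
% By Remark 2.1 this matrix is positive definite, so u^2 + |x - x_0|^2 has no
% interior maximum in \Omega_\epsilon. Since u = \underline{u} = \epsilon on \Gamma_\epsilon,
\underline{u}(x_0)^2 \le u(x_0)^2
   \le \max_{x \in \Gamma_\epsilon} \big( \epsilon^2 + |x - x_0|^2 \big)
   \le \epsilon^2 + (\operatorname{diam} \Omega_\epsilon)^2 .
% Taking the trace of (v_{ij} + 2\delta_{ij}) > 0 also gives \Delta v + 2n > 0 in \Omega_\epsilon.
```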

For the gradient estimate, we perform a transformation \(u = \sqrt{v}\). Denote

The geometric quantities in Sect. 2 can be expressed in terms of v,

Since the graph is strictly locally convex, v satisfies

where \(\Delta \) is the Laplace-Beltrami operator in \({\mathbb {R}}^n\). Let \({\overline{v}}\) be the solution of

By the comparison principle,

Consequently,

where C is a positive constant depending on \(\epsilon \). Hereinafter in this section, C always denotes such a constant which may change from line to line. Equivalently,

For global gradient estimate, consider the test function

Assume its maximum is achieved at an interior point \(x_0 \in \Omega _{\epsilon }\). Then at \(x_0\),

Since the matrix \(\big ( v_{ki} + 2 \delta _{ki} \big )\) is positive definite, \(v_k = 0\) for all k at \(x_0\). Together with (3.1) and (3.2), we obtain

Equivalently,

For the second order boundary estimates, we rewrite (2.5) under the transformation \(u = \sqrt{v}\) as

By direct calculation, we obtain the following formulae.

Lemma 3.1

In addition,

Proof

Since

we have,

To compute \(G_v\), note that

Consequently,

Hence,

We then obtain \(G_v\) in view of

For \(G^s\), note that

It follows that

\(\square \)

Fix an arbitrary point of \(\Gamma _{\epsilon }\); we may assume it is the origin of \({\mathbb {R}}^n\). Choose a coordinate system so that the positive \(x_n\) axis points in the direction of the interior normal of \(\Gamma _{\epsilon }\) at the origin. There exists a uniform constant \(r > 0\) such that \(\Gamma _{\epsilon } \cap B_r (0)\) can be represented as a graph

Since

or equivalently

we have

and

Therefore,

Consequently,

where C is a constant depending on \(\epsilon \).
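The pure tangential bound follows from differentiating the boundary condition twice along \(\Gamma _{\epsilon }\). A sketch, writing (in our own notation, not necessarily that of the omitted display) the local graph as \(x_n = \rho (x')\) with \(x' = (x_1, \ldots , x_{n-1})\), \(\rho (0) = 0\) and \(D\rho (0) = 0\):

```latex
% \varphi := v - \underline{v} vanishes on \Gamma_\epsilon, i.e. \varphi(x', \rho(x')) \equiv 0.
% Differentiating once, then twice, in tangential variables (\alpha, \beta < n):
\varphi_\alpha + \varphi_n\, \rho_\alpha = 0, \qquad
\varphi_{\alpha\beta} + \varphi_{\alpha n}\rho_\beta + \varphi_{n\beta}\rho_\alpha
   + \varphi_{nn}\rho_\alpha\rho_\beta + \varphi_n\, \rho_{\alpha\beta} = 0 .
% At the origin D\rho(0) = 0, hence
v_{\alpha\beta}(0) = \underline{v}_{\alpha\beta}(0) - (v - \underline{v})_n(0)\, \rho_{\alpha\beta}(0),
\qquad |v_{\alpha\beta}(0)| \le C ,
% where C depends on \epsilon through the gradient bound and the C^2 norms of \underline{v} and \Gamma_\epsilon.
```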

For the mixed tangential-normal derivative \(v_{\alpha n} (0)\) with \(\alpha < n\), note that the graph of \({\underline{u}}\) is strictly locally convex on \(\overline{\Omega _{\epsilon }}\). Hence we have

for some positive constant \(c_0\). Let d(x) be the distance from \(x \in \overline{\Omega _{\epsilon }}\) to \(\Gamma _{\epsilon }\) in \({\mathbb {R}}^n\). Consider the barrier function

with

where the positive constants N, \(\tau \), B and A are to be determined.

Define the linear operator \( L = \, G^{s t} \,D_{s t} + G^s \,D_s\). By the concavity of G with respect to \(D^2 v\),

Note that

Denote \(\gamma = ( \gamma ^{ik} )\). We have

where \(\tilde{c}\) is a positive constant. Hence

Note that \({\mathcal {H}} = \text{ diag } \Big ( 2 c_0 - C N \delta ,\,\, \ldots ,\,\, 2 c_0 - C N \delta ,\,\, 2 c_0 - C N \delta + N\Big )\). We can choose N sufficiently large and \(\tau \), \(\delta \) sufficiently small (\(\delta \) depends on N) such that

Hence the above inequality becomes

We then require \(\delta \le \frac{\tau }{N}\) so that

By Lemma 3.1,

This, together with (3.9), yields

Now, we consider the operator

Note that for \(\delta > 0\) sufficiently small,

Also, in view of (3.7),

To compute L(Tv), we need the following lemma (see [2]).

Lemma 3.2

For \(1 \le i, j \le n\),

Proof

For \(\theta \in {\mathbb {R}}\), let

Since \(G - \psi \) is invariant under rotations of \({\mathbb {R}}^n\), we have

Differentiate with respect to \(\theta \) and change the order of differentiation,

Set \(\theta = 0\) in the above equality and notice that at \(\theta = 0\),

This proves the lemma. \(\square \)

Choose B sufficiently large such that

From (3.10) and (3.11) we have

Choose A sufficiently large such that

By the maximum principle,

which implies

So far, we have proved that

where \(\xi \) and \(\eta \) are arbitrary unit tangent vectors to \(\Gamma _{\epsilon }\) and \(\gamma \) is the interior unit normal vector to \(\Gamma _{\epsilon }\) (pointing into \(\Omega _{\epsilon }\)). It remains to establish the upper bound

Motivated by [18] (see also [19, 20]), we derive (3.13).

We first recall some general facts. The projection of \(\Gamma _k \subset {\mathbb {R}}^n\) onto \({\mathbb {R}}^{n - 1}\) is exactly

Let \(\kappa ' = (\kappa '_1, \ldots , \kappa '_{n-1})\) be the roots of

where \((h_{\alpha \beta })\) and \((g_{\alpha \beta })\) are the first \((n - 1) \times (n - 1)\) principal minors of \((h_{ij})\) and \((g_{ij})\) respectively. Then \(\kappa [v] \in \Gamma _k\) implies \(\kappa '[v] \in \Gamma '_{k - 1}\), and this is true for any local frame field. Note that \(\kappa '[v]\) may not be \((\kappa _1, \ldots , \kappa _{n-1})[v]\).
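The implication \(\kappa [v] \in \Gamma _k \Rightarrow \kappa '[v] \in \Gamma '_{k - 1}\) can be sanity-checked numerically in the orthonormal case \(g_{\alpha \beta } = \delta _{\alpha \beta }\), where \(\kappa '\) are simply the eigenvalues of the leading \((n-1) \times (n-1)\) principal submatrix. (One proof: \(\partial \sigma _j (A)/\partial a_{nn} = \sigma _{j-1}(A')\), and the matrix \((\partial \sigma _j/\partial a_{pq})\) is positive definite when the eigenvalues of A lie in \(\Gamma _j\).) The plain-Python sketch below is a random test, not a proof, and its function names are hypothetical; it uses the fact that \(\sigma _j\) of the eigenvalues of a symmetric matrix equals the sum of its \(j \times j\) principal minors, so no eigenvalue solver is needed.

```python
import random
from itertools import combinations


def det(m):
    """Determinant by cofactor expansion along the first row (fine for tiny matrices)."""
    if not m:
        return 1.0
    return sum((-1) ** j * m[0][j] * det([row[:j] + row[j + 1:] for row in m[1:]])
               for j in range(len(m)))


def sigma_eig(j, a):
    """sigma_j of the eigenvalues of symmetric a = sum of its j x j principal minors."""
    idx_sets = combinations(range(len(a)), j)
    return sum(det([[a[r][c] for c in idx] for r in idx]) for idx in idx_sets)


def eig_in_cone(k, a):
    """True if the eigenvalues of the symmetric matrix a lie in Gamma_k."""
    return all(sigma_eig(j, a) > 0 for j in range(1, k + 1))


def check_projection(n, k, trials=2000, seed=0):
    """Sample symmetric A with eigenvalues in Gamma_k and test that the leading
    (n-1) x (n-1) principal submatrix has eigenvalues in Gamma'_{k-1}."""
    rng = random.Random(seed)
    tested = 0
    for _ in range(trials):
        a = [[0.0] * n for _ in range(n)]
        for r in range(n):
            for c in range(r, n):
                a[r][c] = a[c][r] = rng.uniform(-1.0, 1.0)
            a[r][r] += 1.0  # shift toward the positive cone so many samples qualify
        if not eig_in_cone(k, a):
            continue
        tested += 1
        minor = [row[:n - 1] for row in a[:n - 1]]
        if not eig_in_cone(k - 1, minor):
            return False, tested
    return True, tested
```

Calling `check_projection(4, 3)` returns `(True, m)`, where m is the number of sampled matrices that landed in \(\Gamma _3\); a `False` would signal a counterexample.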

For \(x \in \Gamma _{\epsilon }\), let the indices in (3.14) be given by the tangential directions to \(\Gamma _{\epsilon }\) and \(\kappa '[v](x)\) be the roots of (3.14). Define

Choose a coordinate system in \({\mathbb {R}}^n\) such that m is achieved at \(0 \in \Gamma _{\epsilon }\) and the positive \(x_n\) axis points in the direction of the interior normal of \(\Gamma _{\epsilon }\) at 0. We want to prove that m has a uniform positive lower bound.

Let \(\xi _1, \ldots , \xi _{n-1}, \gamma \) be a local frame field around 0 on \(\Omega _{\epsilon }\), obtained by parallel translation of a local frame field \(\xi _1, \ldots , \xi _{n-1}\) around 0 on \(\Gamma _{\epsilon }\) satisfying

and of the interior unit normal vector field \(\gamma \) to \(\Gamma _{\epsilon }\), along the directions perpendicular to \(\Gamma _{\epsilon }\) in \(\Omega _{\epsilon }\). Note that this choice of frame field does not depend on v (or, equivalently, on u). In fact, if we denote

where \(e_1, \ldots , e_{n - 1}\) is a fixed local orthonormal frame on \(\Gamma _{\epsilon }\), and consider a general boundary value condition, say \(v = \varphi \) on \(\Gamma _{\epsilon }\), then on \(\Gamma _{\epsilon }\),

Note that there exist \(\eta _{\alpha }^{\tau }\) for \(\alpha , \tau = 1, \ldots , n-1\) such that \(g_{\alpha \beta } = \delta _{\alpha \beta }\) on \(\Gamma _{\epsilon }\). By a rotation, we can further make \((h_{\alpha \beta }(0))\) diagonal.

By Lemma 6.1 of [21], there exists \(\mu = (\mu _1, \ldots , \mu _{n-1}) \in {\mathbb {R}}^{n - 1}\) with \(\mu _1 \ge \ldots \ge \mu _{n - 1} \ge 0\) such that

Since \({\underline{v}}\) is strictly locally convex near \(\Gamma _{\epsilon }\) and \(\sum \mu _{\alpha } \ge 1\),

for a uniform positive constant \(c_1\). Consequently,

The first line in (3.16) is true, since we can write \(v - {\underline{v}} = \omega \,\,d\) for some function \(\omega \) defined in a neighborhood of \(\Gamma _{\epsilon }\) in \(\Omega _{\epsilon }\). Differentiate this identity,

Note that \(d_{\xi _{\alpha }} (0) = 0\) and \(d_{\gamma } (0) = 1\). Thus,

We may assume \(\tilde{d} (0) \le \, c_1\), for otherwise we are done. Then from (3.16),

Since \(0 < ( v - {\underline{v}} )_{\gamma } (0) \le C\),

for some uniform constant \(c_2 > 0\). By continuity of \(d_{\xi _{\alpha } \xi _{\alpha }} (x)\) at 0 and \(0 \le \mu _{\alpha } \le 1\),

for some uniform constant \(\delta > 0\). Thus

On the other hand, by Lemma 6.2 of [21], for any \(x \in \Gamma _{\epsilon }\) near 0,

Thus for any \(x \in \Gamma _{\epsilon }\) near 0,

In view of (3.17), define in \(\Omega _\epsilon \cap B_{\delta }(0)\),

By (3.17) and (3.18), \(\Phi \ge 0\) on \(\Gamma _{\epsilon } \cap B_{\delta } (0)\). In addition, we have in \(\Omega _\epsilon \cap B_{\delta }(0)\),

This is because \(0 \le \mu _{\alpha } \le 1\) and

By (3.10) and (3.19), we may choose \(A \gg B \gg 1\) such that \(\Psi + \Phi \ge 0\) on \(\partial (\Omega _{\epsilon } \cap B_{\delta }(0))\) and \(L(\Psi + \Phi ) \le 0\) in \(\Omega _{\epsilon } \cap B_{\delta }(0)\). By the maximum principle, \(\Psi + \Phi \ge 0\) in \(\Omega _{\epsilon } \cap B_{\delta }(0)\). Since \((\Psi + \Phi )(0) = 0\) by (3.18) and (3.15), we have \((\Psi + \Phi )_n (0) \ge 0\). Therefore, \(v_{nn} (0) \le C\), which, together with (3.8) and (3.12), gives a bound \(|D^2 v (0)| \le C\), and consequently a bound for all the principal curvatures at 0. By (2.8),

and therefore on \(\Gamma _{\epsilon }\),

where \(c_3\) and \(c_4\) are positive uniform constants.

By a proof similar to that of Lemma 1.2 in [21], there exists \(R > 0\) depending on the bounds (3.8) and (3.12) such that if \(v_{\gamma \gamma }(x_0) \ge R\) and \(x_0 \in \Gamma _{\epsilon }\), then the principal curvatures \((\kappa _1, \ldots , \kappa _n)\) at \(x_0\) satisfy

in the local frame \(\xi _1, \ldots , \xi _{n-1}, \gamma \) around \(x_0\). When R is sufficiently large, we have

contradicting (3.6). Hence \(v_{\gamma \gamma } < R\) on \(\Gamma _{\epsilon }\), which proves (3.13).

4 Global curvature estimates

For a hypersurface \(\Sigma \subset {\mathbb {H}}^{n+1}\), let g and \(\nabla \) be the induced hyperbolic metric and Levi-Civita connection on \(\Sigma \) respectively, and let \({\tilde{g}}\) and \({\tilde{\nabla }}\) be the metric and Levi-Civita connection induced from \({\mathbb {R}}^{n+1}\) when \(\Sigma \) is viewed as a hypersurface in \({\mathbb {R}}^{n+1}\). The Christoffel symbols associated with \(\nabla \) and \({\tilde{\nabla }}\) are related by the formula

Consequently, for any \(v \in C^2(\Sigma )\),

Note that (4.1) holds for any local frame.

Lemma 4.1

In \({\mathbb {R}}^{n+1}\), we have the following identities.

where \(\tau _1, \ldots , \tau _n\) is any local frame on \(\Sigma \).

Proof

To prove (4.2), we may write

Taking the inner product of (4.6) with \(\nu \) in \({\mathbb {R}}^{n+1}\), we obtain

Taking the inner product of (4.6) with \(\tau _j\) in \({\mathbb {R}}^{n+1}\), we have

where X is the position vector field of \(\Sigma \) (note that this is different from the conformal Killing field when using half space model for \({\mathbb {H}}^{n + 1}\)). Thus,

Therefore,

which implies (4.2).

For (4.3), note that

Here we have applied the Gauss formula for \(\Sigma \) as a hypersurface in \({\mathbb {R}}^{n+1}\).

For (4.4), by the Weingarten formula for \(\Sigma \) as a hypersurface in \({\mathbb {R}}^{n+1}\), we have

Finally, (4.5) follows from (4.4), (4.3) and the Codazzi equation for \(\Sigma \) as a hypersurface in \({\mathbb {R}}^{n + 1}\). In fact,

\(\square \)

Lemma 4.2

Let \(\Sigma \) be a strictly locally convex hypersurface in \({\mathbb {H}}^{n+1}\) satisfying equation (2.5). Then in a local orthonormal frame on \(\Sigma \),

Proof

Since \(\Sigma \) can also be viewed as a hypersurface in \({\mathbb {R}}^{n+1}\),

Differentiate this equation with respect to \({\tilde{\nabla }}_k\) and then multiply by \(\frac{u_k}{u}\),

Substituting this identity into (4.8),

In view of (2.3), we obtain (4.7). \(\square \)

For the global curvature estimates, we follow the method of [4]. Assume

for some constant a. Let \(\kappa _{\max } ({ \mathbf{x} })\) be the largest principal curvature of \(\Sigma \) at \(\mathbf{x}\). Consider

Assume \(M_0 > 0\) is attained at an interior point \({ \mathbf{x}}_0 \in \Sigma \). Let \(\tau _1, \ldots , \tau _n\) be a local orthonormal frame about \({ \mathbf{x}}_0\) such that \(h_{ij}(\mathbf{x}_0) = \kappa _i \,\delta _{ij}\), where \(\kappa _1, \ldots , \kappa _n\) are the hyperbolic principal curvatures of \(\Sigma \) at \(\mathbf{x}_0\). We may assume \(\kappa _1 = \kappa _{\max \,}(\mathbf{x}_0)\). Thus, \(\ln h_{11} - \ln ( {\nu }^{n+1} - a )\) has a local maximum at \(\mathbf{x}_0\), at which,

Differentiate equation (2.5) twice,

By the Gauss equation, we have the following formula for interchanging the order of covariant differentiation of the second fundamental form,

Combining (4.10), (4.11), (4.12) and (4.7) yields,

Next, substituting (4.4) and (2.3) into (4.9),

and recall an inequality of Andrews [22] and Gerhardt [23],

Therefore, (4.13) becomes,

For some fixed \(\theta \in (0, 1)\) which will be determined later, denote

The second line of (4.14) can be estimated as follows.

Here we have applied \({\tilde{g}}^{kl} u_k u_l = \frac{\delta _{kl}}{u^2} u_k u_l = 1 - (\nu ^{n+1})^2\) due to (4.2) in deriving the above inequality. Choosing \(\theta = \frac{a^2}{4}\) and taking the above inequality into (4.14), we obtain an upper bound for \(\kappa _1\).
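The identity \({\tilde{g}}^{kl} u_k u_l = 1 - (\nu ^{n+1})^2\) used above is frame independent (it is the squared Euclidean gradient of the height function \(u = x_{n+1}\) on \(\Sigma \)), so it can be double-checked in graph coordinates, where the Euclidean induced metric is \({\tilde{g}}_{ij} = \delta _{ij} + u_i u_j\) and \(\nu ^{n+1} = 1/w\) with \(w = \sqrt{1 + |Du|^2}\):

```latex
\tilde{g}^{ij} = \delta_{ij} - \frac{u_i u_j}{w^2}, \qquad
\tilde{g}^{ij} u_i u_j = |Du|^2 - \frac{|Du|^4}{w^2}
   = \frac{|Du|^2}{w^2} = 1 - \frac{1}{w^2} = 1 - (\nu^{n+1})^2 .
```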

5 Existence of strictly locally convex solutions to (1.6)

Convexity of solutions is an essential prerequisite in this paper, for two reasons: first, the \(C^2\) boundary estimates derived in Sect. 3 require convexity; second, the interior \(C^2\) estimates for prescribed scalar curvature equations in Sect. 6 require a certain convexity assumption (see [12]). Therefore, preserving the convexity of solutions is vital for carrying out the continuity process. In this section, we first state a constant rank theorem in hyperbolic space (see [9,10,11, 24]).

Theorem 5.1

Let \(\Sigma \) be a \(C^4\) oriented connected hypersurface in \({\mathbb {H}}^{n+1}\) satisfying the prescribed curvature equation

Assume that the second fundamental form \(\{h_{ij}\}\) on \(\Sigma \) is positive semi-definite, and for any \(\mathbf{x} \in \Sigma \) and a local orthonormal frame \(\tau _1, \ldots , \tau _n\) around \(\mathbf{x}\) with \(\{ h_{ij} (\mathbf{x}) \}\) diagonal,

where the symbol \(\lesssim \) is defined in [10] and B is the set of bad indices of \(\mathbf{x}\). Then the second fundamental form on \(\Sigma \) is of constant rank.

Let \(\Sigma \) be a locally convex hypersurface satisfying equation (5.1) for \(k < n\), with boundary \(\partial \Sigma \). If we could find a condition (which we call Condition I) guaranteeing that \(\Sigma \) is strictly locally convex in a neighbourhood of \(\partial \Sigma \), then, together with condition (5.2) in Theorem 5.1, we could prove that \(\Sigma \) is strictly locally convex up to the boundary. However, we have not found a suitable Condition I. Nevertheless, we carry out the existence proof as if Condition I held, in order to show how (5.2) and Condition I enter the continuity process.

We now prove existence. Using the geometric quantities of Sect. 2 expressed in terms of u, we write (2.5) as

For convenience, denote

Let \(\delta \) be a small positive constant such that

For \(t \in [0, 1]\), consider the following two auxiliary equations (see also [27]).

Lemma 5.1

Let \(\psi (x)\) be a positive function defined on \(\overline{\Omega _{\epsilon }}\). For \(x \in \overline{\Omega _{\epsilon }}\) and a positive \(C^2\) function u which is strictly locally convex near x, if

then

Proof

By direct calculation,

Since \( \sum f_i \kappa _i \le \psi (x) \,u\) by the concavity of f and \(f(0) = 0\),

\(\square \)
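The step \(\sum f_i \kappa _i \le f(\kappa )\) invoked in this proof is the tangent-plane inequality of a concave function with \(f(0) = 0\): \(f(0) \le f(\kappa ) + \sum f_i(\kappa )(0 - \kappa _i)\). For \(f = \sigma _k^{1/k}\), which is homogeneous of degree one, it even holds with equality by Euler's relation. The sketch below (helper names hypothetical) checks both facts numerically for positive vectors, using \(\partial \sigma _k / \partial \kappa _i = \sigma _{k-1}(\kappa | i)\), where \(\kappa | i\) denotes \(\kappa \) with the i-th entry removed.

```python
from itertools import combinations
from math import prod


def sigma(k, kappa):
    """k-th elementary symmetric function (sigma_0 = 1)."""
    return sum(prod(c) for c in combinations(kappa, k))


def f(k, kappa):
    """Curvature function f = sigma_k^{1/k}, used here on positive vectors."""
    return sigma(k, kappa) ** (1.0 / k)


def grad_f(k, kappa):
    """f_i = (1/k) * sigma_k^{1/k - 1} * sigma_{k-1}(kappa | i)."""
    s = sigma(k, kappa)
    out = []
    for i in range(len(kappa)):
        rest = kappa[:i] + kappa[i + 1:]  # kappa with the i-th entry removed
        out.append(s ** (1.0 / k - 1.0) * sigma(k - 1, rest) / k)
    return out


def tangent_gap(k, kappa, mu):
    """f(kappa) + sum_i f_i(kappa) (mu_i - kappa_i) - f(mu), nonnegative by concavity."""
    g = grad_f(k, kappa)
    return f(k, kappa) + sum(gi * (m - c) for gi, m, c in zip(g, mu, kappa)) - f(k, mu)
```

Taking `mu = (0.0, 0.0, 0.0)` gives `tangent_gap(k, kappa, mu) == f(k, kappa) - sum(f_i * kappa_i)`, which vanishes up to round-off for this homogeneous f.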

Lemma 5.2

For any \(t \in [0, 1]\), if \({\underline{U}}\) and u are respectively any positive strictly locally convex subsolution and solution of (5.5), then \(u \ge {\underline{U}}\). In particular, the Dirichlet problem (5.5) has at most one strictly locally convex solution.

Proof

We only need to prove that \(u \ge {\underline{U}}\) in \(\Omega _{\epsilon }\). If not, then \({\underline{U}} - u\) achieves a positive maximum at \(x_0 \in \Omega _{\epsilon }\), at which,

Note that for any \(s \in [0, 1]\), the deformation \(u[s] := s \,{\underline{U}} + (1 - s)\, u\) is strictly locally convex near \(x_0\). This is because at \(x_0\),

Denote

and define a differentiable function of \(s \in [0, 1]\):

Note that

and

Thus there exists \(s_0 \in [0, 1]\) such that \(a(s_0) = 0\) and \(a'(s_0) \ge 0\), i.e.,

and

However, this inequality cannot hold, in view of (5.7), (5.9) and Lemma 5.1. \(\square \)

Theorem 5.2

Under assumption (1.7) and Condition I, for any \(t \in [0, 1]\), the Dirichlet problem (5.5) has a unique strictly locally convex solution u, which satisfies \(u \ge {\underline{u}}\) in \(\Omega _{\epsilon }\).

Proof

Uniqueness is proved in Lemma 5.2. For existence of a strictly locally convex solution, we first verify that \(\Psi = (\theta (x, t) \, u)^k = \Theta (x, t) \,u^k\) satisfies condition (5.2) in the constant rank theorem. By direct calculation,

By (4.1), (4.3), (2.3) and (4.2), for \(i \in B\) and \(\alpha = 1, \ldots , n\), we have

and

Therefore by (1.7),

Next, we use the standard continuity method to prove existence. Note that \({\underline{u}}\) is a subsolution of (5.5) by (5.4). We have obtained the \(C^2\) bound for strictly locally convex solutions u of (5.5) (which satisfy \(u \ge {\underline{u}}\) by Lemma 5.2); this implies that (5.5) is uniformly elliptic. By the Evans-Krylov theory [13, 14], we obtain the \(C^{2, \alpha }\) estimate, which is independent of t,

Denote

We can see that \(C_0^{2, \alpha } (\, \overline{ \Omega _{\epsilon } } \,)\) is a subspace of \(C^{2, \alpha }( \,\overline{ \Omega _{\epsilon } }\, )\) and \({\mathcal {U}}\) is an open subset of \(C_0^{2, \alpha } (\,\overline{ \Omega _{\epsilon } }\,)\). Consider the map \({\mathcal {L}}: \,{\mathcal {U}} \times [ 0, 1 ] \rightarrow C^{\alpha }(\, \overline{ \Omega _{\epsilon } } \,)\),

Set

Note that \({\mathcal {S}} \ne \emptyset \) since \({\mathcal {L}}(0, 0) = 0\).

We claim that \({\mathcal {S}}\) is open in [0, 1]. In fact, for any \(t_0 \in {\mathcal {S}}\), there exists \(w_0 \in {\mathcal {U}}\) such that \({\mathcal {L}} ( w_0, t_0 ) = 0\). The Fréchet derivative of \({\mathcal {L}}\) with respect to w at \((w_0, t_0)\) is a linear elliptic operator from \(C^{2, \alpha }_0 (\, \overline{\Omega _{\epsilon }} \,)\) to \(C^{\alpha }(\, \overline{\Omega _{\epsilon }} \,)\),

By Lemma 5.1, \({\mathcal {L}}_w \big |_{(w_0, t_0)}\) is invertible. By the implicit function theorem, a neighborhood of \(t_0\) is also contained in \({\mathcal {S}}\).

Next, we show that \({\mathcal {S}}\) is closed in [0, 1]. Let \(t_i\) be a sequence in \({\mathcal {S}}\) converging to \(t_0 \in [0, 1]\) and \(w_i \in {\mathcal {U}}\) be the unique (by Lemma 5.2) solution corresponding to \(t_i\), i.e. \({\mathcal {L}} (w_i, t_i) = 0\). By Lemma 5.2, \(w_i \ge 0\). By (5.13), \(u_i := {\underline{u}} + w_i\) is a bounded sequence in \(C^{2, \alpha }(\,\overline{\Omega _{\epsilon }}\,)\), which possesses a subsequence converging to a locally convex solution \(u_0\) of (5.5). By Condition I and Theorem 5.1, we know that \(u_0\) is strictly locally convex in \(\overline{\Omega _{\epsilon }}\). Since \(w_0 := u_0 - {\underline{u}} \in {\mathcal {U}}\) and \({\mathcal {L}}(w_0, t_0) = 0\), thus \(t_0 \in {\mathcal {S}}\). \(\square \)
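The openness/closedness argument above is the abstract form of numerical continuation. As a toy illustration only (a hypothetical 1D model problem, not equation (5.5)), the sketch below follows the finite-difference solution of \(-u'' + t u^3 = 1\) on (0, 1), \(u(0) = u(1) = 0\), from \(t = 0\) to \(t = 1\). At each step, Newton's method started from the previous solution plays the role of the implicit function theorem (the linearization \(-\phi '' + 3 t u^2 \phi \) is invertible, much as \({\mathcal {L}}_w\) is in the text), and the a priori bound \(0 < u \le x(1 - x)/2\), obtained by comparison with the \(t = 0\) solution, plays the role of (5.13).

```python
def solve_tridiagonal(lower, diag, upper, rhs):
    """Thomas algorithm: lower[i] multiplies x[i-1], upper[i] multiplies x[i+1]."""
    n = len(diag)
    c, d = [0.0] * n, [0.0] * n
    c[0], d[0] = upper[0] / diag[0], rhs[0] / diag[0]
    for i in range(1, n):
        denom = diag[i] - lower[i] * c[i - 1]
        c[i] = upper[i] / denom
        d[i] = (rhs[i] - lower[i] * d[i - 1]) / denom
    x = [0.0] * n
    x[-1] = d[-1]
    for i in range(n - 2, -1, -1):
        x[i] = d[i] - c[i] * x[i + 1]
    return x


def residual(u, t, h):
    """Discrete -u'' + t u^3 - 1 with zero Dirichlet data at both ends."""
    n = len(u)
    r = []
    for i in range(n):
        left = u[i - 1] if i > 0 else 0.0
        right = u[i + 1] if i < n - 1 else 0.0
        r.append((-left + 2.0 * u[i] - right) / h ** 2 + t * u[i] ** 3 - 1.0)
    return r


def newton(u, t, h, tol=1e-8, max_iter=50):
    """Newton iteration; the Jacobian is the discrete linearized operator."""
    n = len(u)
    for _ in range(max_iter):
        r = residual(u, t, h)
        if max(abs(x) for x in r) < tol:
            return u
        diag = [2.0 / h ** 2 + 3.0 * t * ui ** 2 for ui in u]
        off = [-1.0 / h ** 2] * n
        step = solve_tridiagonal(off, diag, off, [-x for x in r])
        u = [ui + si for ui, si in zip(u, step)]
    raise RuntimeError("Newton did not converge")


def continuation(n=99, steps=10):
    """March t = 0, 1/steps, ..., 1, reusing each solution as the next initial guess."""
    h = 1.0 / (n + 1)
    u = [0.0] * n
    for s in range(steps + 1):
        u = newton(u, s / steps, h)
    return u, h
```

If a Newton step failed to converge, one would shrink the t-increment; in the PDE setting the analogous safeguard is the t-independent estimate that makes \({\mathcal {S}}\) closed.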

From now on we may assume \({\underline{u}}\) is not a solution of (1.6), since otherwise we are done.

Lemma 5.3

If \(u \ge {\underline{u}}\) is a strictly locally convex solution of (5.6) in \(\Omega _{\epsilon }\), then \(u > {\underline{u}}\) in \(\Omega _{\epsilon }\) and \((u - {\underline{u}})_{\gamma } > 0\) on \(\Gamma _{\epsilon }\).

Proof

To keep the strict local convexity of the variations in our proof, we rewrite (5.6) in terms of v,

Since \({\underline{u}}\) is a subsolution but not a solution of (5.6) (equivalently, \({\underline{v}}\) is a subsolution but not a solution of (5.14)), we have

Denote \(v[s] := s \,{\underline{v}} + (1 - s)\, v\), which is strictly locally convex over \(\Omega _{\epsilon }\) for any \(s \in [0, 1]\) since

From (5.15) we can deduce that

where

Applying the maximum principle and Lemma H (see p. 212 of [25]), we conclude that \(v > {\underline{v}}\) in \(\Omega _{\epsilon }\) and \((v - {\underline{v}})_{\gamma } > 0\) on \(\Gamma _{\epsilon }\). Hence the lemma is proved. \(\square \)

Theorem 5.3

Under assumptions (1.7), (1.8) and Condition I, for any \(t \in [0, 1]\), the Dirichlet problem (5.6) possesses a strictly locally convex solution satisfying \(u \ge {\underline{u}}\) in \(\Omega _{\epsilon }\). In particular, the Dirichlet problem (1.6) has a strictly locally convex solution \(u^{\epsilon }\) satisfying \(u^{\epsilon } \ge {\underline{u}}\) in \(\Omega _{\epsilon }\).

Proof

We first verify that

satisfies condition (5.2) in the constant rank theorem. In fact, by assumption (1.8), (5.11) and (5.12),

and consequently,

We have established \(C^{2, \alpha }\) estimates for strictly locally convex solutions \(u \ge {\underline{u}}\) of (5.6), which further imply \(C^{4, \alpha }\) estimates by classical Schauder theory,

In addition, we have

where \(C_4\), \(c_2\) are independent of t. Denote

and

which is a bounded open subset of \(C_0^{ 4, \alpha } (\,\overline{\Omega _{\epsilon }}\,)\). Define \({{\mathcal {M}}}_t (w): \,{{\mathcal {O}}} \times [ 0, 1 ] \rightarrow C^{2,\alpha }(\overline{\Omega _{\epsilon }})\),

Let \(u^0\) be the unique strictly locally convex solution of (5.5) at \(t = 1\) (its existence and uniqueness are guaranteed by Theorem 5.2 and Lemma 5.2). Observe that \(u^0\) is also the unique solution of (5.6) when \(t = 0\). By Lemma 5.2, \(w^0 := u^0 - {\underline{u}} \ge 0\) in \(\Omega _{\epsilon }\). By Lemma 5.3, \(w^0 > 0\) in \(\Omega _{\epsilon }\) and \({w^0}_{\gamma } > 0\) on \(\Gamma _{\epsilon }\). Also, \({\underline{u}} + w^0\) satisfies (5.16) and (5.17). Thus, \(w^0 \in {{\mathcal {O}}}\). By Condition I, Theorem 5.1, Lemma 5.3, (5.16) and (5.17), \({{\mathcal {M}}}_t(w) = 0\) has no solution on \(\partial {{\mathcal {O}}}\) for any \(t \in [0, 1]\). Moreover, \({{\mathcal {M}}}_t\) is uniformly elliptic on \({{\mathcal {O}}}\), with ellipticity constants independent of t. Therefore, the degree of \({{\mathcal {M}}}_t\) on \({{\mathcal {O}}}\) at 0 is well defined and independent of t:

To find this degree, we only need to compute \( \deg ({{\mathcal {M}}}_0, {{\mathcal {O}}}, 0) \). By the above discussion, we know that \({{\mathcal {M}}}_0 ( w ) = 0\) has a unique solution \(w^0 \in {{\mathcal {O}}}\). The Fréchet derivative of \({{\mathcal {M}}}_0\) with respect to w at \(w^0\) is a linear elliptic operator from \(C^{4, \alpha }_0 (\overline{\Omega _{\epsilon }})\) to \(C^{2, \alpha }(\overline{\Omega _{\epsilon }})\),

By Lemma 5.1, \(G_u [ u^0 ] - \,\delta \, < 0\) in \(\overline{\Omega _{\epsilon }}\) and thus \({{\mathcal {M}}}_{0,w} |_{w^0}\) is invertible. By the degree theory established in [17],

where \(B_1\) is the unit ball in \(C_0^{4,\alpha }(\overline{\Omega _{\epsilon }})\). Thus \(\deg ({{\mathcal {M}}}_t, {{\mathcal {O}}}, 0) \ne 0\) for all \(t \in [0, 1]\), which implies that the Dirichlet problem (5.6) has at least one strictly locally convex solution \(u \ge {\underline{u}}\) for any \(t \in [0, 1]\). \(\square \)
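Schematically, the degree argument above amounts to the following chain. The first equality is homotopy invariance (there are no zeros of \({{\mathcal {M}}}_t\) on \(\partial {{\mathcal {O}}}\)), the second localizes at the unique zero \(w^0\), where the linearization is invertible, and the value \(\pm 1\) is the degree of an invertible linear elliptic operator in the theory of [17]. This is a summary in our own notation, not a reproduction of the elided displays.

```latex
\deg(\mathcal{M}_t, \mathcal{O}, 0)
  = \deg(\mathcal{M}_0, \mathcal{O}, 0)
  = \deg\big(\mathcal{M}_{0,w}|_{w^0}, B_1, 0\big)
  = \pm 1 \ne 0,
  \qquad t \in [0, 1].
```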

6 Interior second order estimates for prescribed scalar curvature equations in \(\pmb {{\mathbb {H}}}^{n+1}\)

Let \(u^{\epsilon } \ge {\underline{u}}\) be a strictly locally convex solution over \(\Omega _{\epsilon }\) to the Dirichlet problem (1.6). For any fixed \( \epsilon _0 > 0\), we want to establish uniform \(C^2\) estimates for \(u^{\epsilon }\) on \(\overline{\Omega _{\epsilon _0}}\) for all \(0< \epsilon < \frac{\epsilon _0}{4}\), namely,

In what follows, C denotes a positive constant that is independent of \(\epsilon \) but may depend on \(\epsilon _0\). By (3.1), we immediately obtain the uniform \(C^0\) estimate:

For the uniform \(C^1\) estimate on \(\overline{\Omega _{\epsilon _0}}\), we make use of the Euclidean strict local convexity of \((u^\epsilon )^2 + |x|^2\) (see [26] for a similar idea) to obtain

It follows that,

We are now in a position to prove

which is equivalent to

Choose \(r = \text{ dist }(\overline{\Omega _{\epsilon _0}}, \Gamma _{\epsilon _0 / 2})\), and cover \(\overline{\Omega _{\epsilon _0}}\) by finitely many open balls \(B_{\frac{r}{2}}\) of radius \(\frac{r}{2}\) centered in \(\Omega _{\epsilon _0}\). Note that the number of such balls depends on \(\epsilon _0\). Moreover, the corresponding balls \(B_r\) are all contained in \(\Omega _{\epsilon _0 / 2}\), on which we can apply the gradient estimate given by (6.3):

If we are able to establish the following interior \(C^2\) estimate on each \(B_r\):

then (6.5) follows. Since the principal curvatures \(\kappa _i [u^{\epsilon }]\), \(i = 1, \ldots , n\), and the gradient \(D u^{\epsilon }\) are invariant under horizontal translations \(x \mapsto x - x_0\) of the Euclidean coordinates (which are isometries of \({\mathbb {H}}^{n+1}\)), we may assume that \(B_r\) is centered at 0. For convenience, we also drop the superscript and write u for \(u^{\epsilon }\).

In what follows, we adapt the idea of Guan and Qiu [12] to derive the interior \(C^2\) estimate

for strictly locally convex hypersurfaces \(\Sigma \) in \({\mathbb {H}}^{n+1}\) satisfying the following equation

where \(B_r \subset {\mathbb {R}}^n\) is the open ball with radius r centered at 0 and C is a positive constant depending only on n, r, \(\Vert \Sigma \Vert _{C^1( B_r )}\), \(\Vert \psi \Vert _{C^2(B_r)}\) and \(\inf _{B_r} \psi \).

For \(x \in B_r\) and \(\xi \in \mathbb {S}^{n-1} \cap T_{(x, u)} \Sigma \), consider the test function

where \(\rho (x) = r^2 - |x|^2\) with \(|x|^2 = \sum _{i = 1}^n x_i^2\), and \(\alpha \), \(\beta \) are positive constants to be determined later. We remind the reader that \(\cdot \) denotes the Euclidean inner product in \({\mathbb {R}}^{n+1}\), while \(\langle \,\, , \,\, \rangle \) denotes the hyperbolic inner product in \({\mathbb {H}}^{n+1}\).
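The cutoff \(\rho \) enters the case analysis below only through a few elementary bounds: \(\rho > 0\) in \(B_r\); \(1/\rho \le 2/r^2\) where \(|x|^2 \le r^2/2\); and \(\rho \le r^2/2\) together with \(|D\rho |^2 = 4|x|^2 \ge 2r^2\) (Euclidean gradient) where \(|x|^2 \ge r^2/2\). A minimal numerical sanity check of these facts follows. The dimension, radius, and sampling scheme are arbitrary illustrative choices, and the function names are hypothetical.

```python
import math
import random


def rho(x, r):
    """Cutoff rho(x) = r^2 - |x|^2 on the ball B_r in R^n."""
    return r * r - sum(xi * xi for xi in x)


def grad_rho(x):
    """Euclidean gradient of rho: D rho(x) = -2x."""
    return [-2.0 * xi for xi in x]


def sample_ball(n, r, rng):
    """Random point of the open ball B_r (not volume-uniform; enough for a sanity check)."""
    v = [rng.gauss(0.0, 1.0) for _ in range(n)]
    norm = math.sqrt(sum(vi * vi for vi in v))
    t = r * rng.random()  # rng.random() lies in [0, 1), so |x| = t < r
    return [t * vi / norm for vi in v]


def check_rho_bounds(n=3, r=1.5, trials=10_000, seed=0):
    """Check the elementary bounds on rho in both regions of B_r; return sample counts."""
    rng = random.Random(seed)
    counts = {"inner": 0, "outer": 0}
    for _ in range(trials):
        x = sample_ball(n, r, rng)
        x2 = sum(xi * xi for xi in x)
        p = rho(x, r)
        assert p > 0  # rho > 0 in the open ball
        if x2 <= r * r / 2:
            # |x|^2 <= r^2/2  =>  rho >= r^2/2  =>  1/rho <= 2/r^2
            assert 1.0 / p <= 2.0 / (r * r) + 1e-12
            counts["inner"] += 1
        else:
            # |x|^2 > r^2/2  =>  rho < r^2/2  and  |D rho|^2 = 4|x|^2 > 2 r^2
            g2 = sum(gi * gi for gi in grad_rho(x))
            assert p <= r * r / 2 + 1e-12
            assert g2 >= 2.0 * r * r - 1e-12
            counts["outer"] += 1
    return counts


if __name__ == "__main__":
    print(check_rho_bounds())
```

The check only concerns the Euclidean quantities \(\rho \) and \(D\rho \). The tangential derivatives \(\rho _i\) along the frame on \(\Sigma \) used later are not modeled here.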

We may assume that the maximum of \(\Theta \) is attained at an interior point \(x^0 = (x_1, \ldots , x_n) \in B_r\). Let \(\tau _1, \ldots , \tau _n\) be a normal coordinate frame around \((x^0, u(x^0))\) on \(\Sigma \), and assume that the maximizing direction is \(\xi = {\tau }_1\). By rotating \(\tau _2, \ldots , \tau _n\), we may assume that \(\big ( h_{ij}(x^0) \big )\) is diagonal. Thus, the function

also achieves its maximum at \(x^0\). Therefore, at \(x^0\),

To compute the quantities in (6.8) and (6.9), we first express them in terms of quantities in \({\mathbb {H}}^{n+1}\) and then apply the Gauss and Weingarten formulas

We also note that in \({\mathbb {H}}^{n+1}\),

where \(\mathbf{y}\) is any vector field in \({\mathbb {H}}^{n+1}\). This implies that \(\partial _{n+1}\) is a conformal Killing field in \({\mathbb {H}}^{n+1}\). By straightforward calculation, we obtain
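For the reader's convenience, here is a short verification that \(\partial _{n+1}\) is conformal Killing. It assumes the standard half-space metric \(\bar g = x_{n+1}^{-2} \sum _{i=1}^{n+1} dx_i^2\), i.e. \(\bar g = e^{2f}\delta \) with \(f = -\ln x_{n+1}\). The conformal-change formula for the Levi-Civita connection gives, for any vector field \(\mathbf{y} = y^i \partial _i\),

```latex
\bar\nabla_{\mathbf y}\,\partial_{n+1}
  = D_{\mathbf y}\partial_{n+1}
    + \mathbf y(f)\,\partial_{n+1}
    + \partial_{n+1}(f)\,\mathbf y
    - (\mathbf y\cdot\partial_{n+1})\,Df
  = 0 - \frac{y^{n+1}}{x_{n+1}}\,\partial_{n+1}
    - \frac{1}{x_{n+1}}\,\mathbf y
    + \frac{y^{n+1}}{x_{n+1}}\,\partial_{n+1}
  = -\frac{1}{x_{n+1}}\,\mathbf y ,
\qquad\text{hence}\qquad
\mathcal L_{\partial_{n+1}}\bar g = -\frac{2}{x_{n+1}}\,\bar g .
```

Here D and \(\cdot \) are the Euclidean connection and inner product. This is an independent check, and its normalization may differ from that of the elided display.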

Now we choose the conformal Killing field \(\mathbf{x}\) in \({\mathbb {H}}^{n+1}\) to be

We can verify that

where \(\mathbf{y}\) is any vector field in \({\mathbb {H}}^{n+1}\).

Again, by straightforward calculation, we find that

Also, since

by direct calculation we obtain

Differentiating (6.7) twice gives

Now, substituting (6.15), (6.10), (6.11), (6.13), (6.8), (6.16), (4.12) and (6.17) into (6.9), we obtain

By Theorem 1.2 of [28] (see also Lemma 2 of [12]), we have

Also,

Thus, when \(\kappa _1\) is sufficiently large, (6.18) reduces to

As in [12], we divide the discussion into three cases. We present all the details in order to indicate the slight differences caused by the ambient space \({\mathbb {H}}^{n+1}\).

Case (i): when \(|x|^2 \le \frac{r^2}{2}\), we have \(\frac{1}{\rho } \le \frac{2}{r^2}\). Then (6.19) reduces to

Choosing \(\alpha \) sufficiently large, we obtain an upper bound for \(\kappa _1\).

Next, we consider the cases when \(|x|^2 \ge \frac{r^2}{2}\), which implies \(\rho \le \frac{r^2}{2}\). We observe that

Therefore,

Case (ii): if for some \( 2 \le j \le n\), we have \(|\rho _j| > d\), where d is a small positive constant to be determined later.

By (6.8), (6.10) and (6.12), we have

It follows that

when \(\kappa _1\) is sufficiently large. Consequently, (6.19) reduces to

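Since \(\sigma _2^{jj} = \sigma _1 - \kappa _j\) and, for \(j \ge 2\), \(0 < \kappa _j \le \sigma _2(\kappa )/\kappa _1\) with \(\sigma _2(\kappa )\) bounded by the equation, we have \(\sigma _2^{jj} \ge \frac{9}{10} \,\sigma _1\) when \(\kappa _1\) is sufficiently large; hence we obtain an upper bound for \(\kappa _1\).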
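The bound \(\sigma _2^{jj} \ge \frac{9}{10}\sigma _1\) for \(j \ge 2\) rests on two facts. First, \(\partial \sigma _2/\partial \kappa _j = \sigma _1 - \kappa _j\). Second, for positive \(\kappa \), \(\sigma _2 \ge \kappa _1 \kappa _j\), so \(\kappa _j \le \sigma _2/\kappa _1 \le \sigma _1/10\) once \(\kappa _1^2 \ge 10\,\sigma _2\). The sketch below checks both numerically. It assumes positive principal curvatures with \(\kappa _1\) the largest, and it uses \(\kappa _1^2 \ge 10\,\sigma _2\) as a concrete stand-in for "\(\kappa _1\) sufficiently large". The sampling ranges and function names are hypothetical.

```python
import itertools
import math
import random


def sigma(k, kappa):
    """k-th elementary symmetric function sigma_k(kappa)."""
    return sum(math.prod(c) for c in itertools.combinations(kappa, k))


def sigma2_jj(kappa, j):
    """d sigma_2 / d kappa_j, which equals sigma_1(kappa) - kappa_j."""
    return sum(kappa) - kappa[j]


def central_difference(kappa, j, h=1e-3):
    """Finite-difference check of d sigma_2 / d kappa_j (sigma_2 is affine in each kappa_j)."""
    up, down = list(kappa), list(kappa)
    up[j] += h
    down[j] -= h
    return (sigma(2, up) - sigma(2, down)) / (2 * h)


def check_nine_tenths(n=4, trials=2000, seed=1):
    """Count samples with kappa_1^2 >= 10 sigma_2 and check sigma_2^{jj} >= 0.9 sigma_1 there."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        rest = [rng.uniform(0.01, 5.0) for _ in range(n - 1)]  # kappa_2, ..., kappa_n > 0
        kappa1 = max(rest) * rng.uniform(1.0, 50.0)             # kappa_1 is the largest
        kappa = [kappa1] + rest
        s1, s2 = sigma(1, kappa), sigma(2, kappa)
        for j in range(n):
            assert abs(central_difference(kappa, j) - sigma2_jj(kappa, j)) < 1e-6 * (1 + s1)
        if kappa1 ** 2 >= 10 * s2:  # "kappa_1 sufficiently large" relative to sigma_2
            for j in range(1, n):
                assert kappa[j] <= s2 / kappa1           # sigma_2 >= kappa_1 * kappa_j
                assert sigma2_jj(kappa, j) >= 0.9 * s1   # the 9/10 bound
            hits += 1
    return hits


if __name__ == "__main__":
    print(check_nine_tenths())
```

For example, \(\sigma _2(1, 2, 3) = 11\) and \(\sigma _2^{11}(1, 2, 3) = 5\). The returned count is the number of samples in which the large-\(\kappa _1\) regime was actually exercised.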

Case (iii): if \(|\rho _j| \le d\) for all \( 2 \le j \le n\), from (6.21) we can deduce that \(|\rho _1| \ge c_0 > 0\). By (6.8), (6.10) and (6.12), we have

where

Note that in the last equality we have applied (6.20). Hence

and (6.22) can be estimated as

when \(\beta \gg \alpha \) and \(\kappa _1 \rho \) is sufficiently large. Substituting this into (6.19) and observing that

when \(\kappa _1\) is sufficiently large, we obtain an upper bound for \(\rho ^2 \ln \kappa _1\).

References

[1] Guan, B., Spruck, J.: Hypersurfaces of constant mean curvature in hyperbolic space with prescribed asymptotic boundary at infinity. Amer. J. Math. 122, 1039–1060 (2000)

[2] Guan, B., Spruck, J., Szapiel, M.: Hypersurfaces of constant curvature in hyperbolic space I. J. Geom. Anal. 19, 772–795 (2009)

[3] Guan, B., Spruck, J.: Hypersurfaces of constant curvature in hyperbolic space II. J. Eur. Math. Soc. 12, 797–817 (2010)

[4] Guan, B., Spruck, J.: Convex hypersurfaces of constant curvature in hyperbolic space. Surv. Geom. Anal. Relativ., ALM 20, 241–257 (2011)

[5] Guan, B., Spruck, J., Xiao, L.: Interior curvature estimates and the asymptotic plateau problem in hyperbolic space. J. Differ. Geom. 96, 201–222 (2014)

[6] Szapiel, M.: Hypersurfaces of prescribed curvature in hyperbolic space. PhD thesis (2005)

[7] Guan, B., Spruck, J.: Locally convex hypersurfaces of constant curvature with boundary. Comm. Pure Appl. Math. 57, 1311–1331 (2004)

[8] Sui, Z.: Strictly locally convex hypersurfaces with prescribed curvature and boundary in space forms. Comm. Partial Differ. Equ. 45, 253–283 (2020)

[9] Korevaar, N.J., Lewis, J.L.: Convex solutions of certain elliptic equations have constant rank hessians. Arch. Ration. Mech. Anal. 97, 19–32 (1987)

[10] Guan, P., Ma, X.: The Christoffel-Minkowski problem I: convexity of solutions of a Hessian equation. Invent. Math. 151, 553–577 (2003)

[11] Guan, P., Lin, C., Ma, X.: The Christoffel-Minkowski problem II: Weingarten curvature equations. Chin. Ann. Math. Ser. B 27, 595–614 (2006)

[12] Guan, P., Qiu, G.: Interior \(C^2\) regularity of convex solutions to prescribing scalar curvature equations. Duke Math. J. 168, 1641–1663 (2019)

[13] Evans, L.C.: Classical solutions of fully nonlinear, convex, second order elliptic equations. Comm. Pure Appl. Math. 35, 333–363 (1982)

[14] Krylov, N.V.: Boundedly nonhomogeneous elliptic and parabolic equations in a domain. Izvestiya Rossiiskoi Akademii Nauk, Seriya Matematicheskaya 47, 75–108 (1983)

[15] Pogorelov, A.V.: The Minkowski multidimensional problem. Wiley, New York (1978)

[16] Urbas, J.: On the existence of nonclassical solutions for two classes of fully nonlinear elliptic equations. Indiana Univ. Math. J. 39, 355–382 (1990)

[17] Li, Y.Y.: Degree theory for second order nonlinear elliptic operators and its applications. Comm. Partial Differ. Equ. 14, 1541–1578 (1989)

[18] Cruz, F.: Radial graphs of constant curvature and prescribed boundary. Calc. Var. Partial Differ. Equ. 56, 83 (2017)

[19] Guan, B.: The Dirichlet problem for Hessian equations on Riemannian manifolds. Calc. Var. Partial Differ. Equ. 8, 45–69 (1999)

[20] Trudinger, N.: On the Dirichlet problem for Hessian equations. Acta Math. 175, 151–164 (1995)

[21] Caffarelli, L.A., Nirenberg, L., Spruck, J.: The Dirichlet problem for nonlinear second-order elliptic equations, III: Functions of the eigenvalues of the Hessian. Acta Math. 155, 261–301 (1985)

[22] Andrews, B.: Contraction of convex hypersurfaces in Euclidean space. Calc. Var. Partial Differ. Equ. 2, 151–171 (1994)

[23] Gerhardt, C.: Closed Weingarten hypersurfaces in Riemannian manifolds. J. Differ. Geom. 43, 612–641 (1996)

[24] Chen, D., Li, H., Wang, Z.: Starshaped compact hypersurfaces with prescribed Weingarten curvature in warped product manifolds. Calc. Var. Partial Differ. Equ. 57, 42 (2018)

[25] Gidas, B., Ni, W.M., Nirenberg, L.: Symmetry and related properties via the maximum principle. Comm. Math. Phys. 68, 209–243 (1979)

[26] Trudinger, N., Urbas, J.: On second derivative estimates for equations of Monge-Ampère type. Bull. Aust. Math. Soc. 30, 321–334 (1984)

[27] Su, C.: Starshaped locally convex hypersurfaces with prescribed curvature and boundary. J. Geom. Anal. 26, 1730–1753 (2016)

[28] Chen, C.: Optimal concavity of some Hessian operators and the prescribed \(\sigma _2\) curvature measure problem. Sci. China Math. 56, 639–651 (2013)

Acknowledgements

The author would like to thank Dr. Zhizhang Wang and Dr. Wei Sun for many useful and enlightening discussions. The author also wishes to express deep thanks to the reviewer, who pointed out a mistake in a previous version and gave many helpful suggestions, which helped the author gain a better understanding of the problem.


Additional information

Communicated by L. Caffarelli.


The author is supported by Grant No. AUGA5710000618 from Harbin Institute of Technology and by the National Natural Science Foundation of China (No. 12001138).


About this article

Cite this article

Sui, Z. Convex hypersurfaces with prescribed scalar curvature and asymptotic boundary in hyperbolic space. Calc. Var. 60, 45 (2021). https://doi.org/10.1007/s00526-020-01897-0


DOI: https://doi.org/10.1007/s00526-020-01897-0