Abstract

Ensemble learning has become a cornerstone in various classification and regression tasks, leveraging its robust learning capacity across disciplines. However, the computational time and memory constraints associated with almost all-learners-based ensembles necessitate efficient approaches. Ensemble pruning, a crucial step, involves selecting a subset of base learners to address these limitations. This study underscores the significance of optimization-based methods in ensemble pruning, with a specific focus on metaheuristics as high-level problem-solving techniques. It reviews the intersection of ensemble learning and metaheuristics, specifically in the context of selective ensembles, marking a unique contribution in this direction of research. Through categorizing metaheuristic-based selective ensembles, identifying their frequently used algorithms and software programs, and highlighting their uses across diverse application domains, this research serves as a comprehensive resource for researchers and offers insights into recent developments and applications. Also, by addressing pivotal research gaps, the study identifies exploring selective ensemble techniques for cluster analysis, investigating cutting-edge metaheuristics and hybrid multi-class models, and optimizing ensemble size as well as hyper-parameters within metaheuristic iterations as prospective research directions. These directions offer a robust roadmap for advancing the understanding and application of metaheuristic-based selective ensembles.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Ensemble learning, a powerful approach in machine learning, involves combining multiple classification and regression algorithms to enhance predictive performance [1]. Notably, Fernández-Delgado et al. [2] have highlighted ensemble learning as the state-of-the-art solution approach for resolving a wide range of machine learning challenges, particularly in classification tasks. Selective ensemble learning, or ensemble pruning, further refines this approach by strategically selecting a subset of base learners that maintains or even outperforms the performance of the entire ensemble [3].

As a matter of fact, the main challenge in selective ensemble learning is how to come up with useful algorithms that reduce the ensemble size without reducing generalization performance in comparison with all-member ensembles [4]. Three categories of ensemble selection techniques have been found common, namely clustering-based [5], ordering-based [6], and optimization-based techniques [7].

Optimization-based approaches, a focal point of our study, involve solving an optimization problem \(P\) expressed as a triple (\(S, \Omega , f\)). The search space \(S\) is defined over a finite collection of decision variables \({V=(v}_{1}, {v}_{2}, \dots , {v}_{G})\). The set of constraints/restrictions imposed on the variables \(V\) is symbolized by \(\Omega\). Ultimately, \(f\) is the objective function (sometimes called cost function or fitness function) that assigns a value to each element/solution of \(S\). For a maximization problem, the objective is to locate an optimal solution \({s}^{*}\in S\) such that \(f( {s}^{*}) \ge f(s), \forall s \in S\), whereas for minimization, the optimal solution \({s}^{*}\in S\) satisfies \(f( {s}^{*}) \le f(s), \forall s \in S\). Optimizing more than one objective function at the same time (i.e. multi-objective optimization) is a common target in real-world situations [8].

In terms of ensemble pruning, optimization-based methods attempt to locate the subset of base learners that maximizes diversity by selecting the most diverse classifiers to obtain a complementary subset of learners and/or minimizes the ensemble error of a particular combination rule [7]. Due to the practical importance of optimization problems, many algorithms to tackle them have been developed, including mathematical programming, probabilistic-based, and metaheuristic/search-based methods [9].

Metaheuristics, multipurpose problem-solving techniques, have emerged as effective tools for tackling optimization problems, offering approximate solutions within a reasonable timeframe [10, 11]. In terms of ensemble learning, these high-level algorithms, including trajectory and population-based approaches categorized in Fig. 1, play a pivotal role in pruning problems. Previous studies [12,13,14,15,16,17,18] demonstrate the success of metaheuristic-based selective ensemble models across diverse domains.

In order to structure and understand the perspective of metaheuristic-based selective ensemble learning, the present study provides a comprehensive review of publications over the past decade. While several reviews have explored ensemble learning [3, 5, 19, 20] and metaheuristics [21], [22], [23], [10], [24], [25] disjointly, none have thoroughly examined the publications joining the 2 subjects together. This paper aims to fill this gap by systematically categorizing metaheuristic-based selective ensembles, identifying common algorithms, and exploring their applications in various fields, such as information technology, medical applications, industrial engineering, financial management, as well as agricultural and environmental applications. In general, the key issues that the review aims to address could be summarized as follows:

-

Introducing a comprehensive review that address crucial research aspects that assess the advancements and the real-world applications of metaheuristics in ensemble pruning. In other words, it provides a comprehensive understanding of the current state of the field.

-

Methodologically, categorizing metaheuristic-based selective ensembles and systematically identifying the common employed algorithms and software programs so as to provide the active body of researchers and practitioners with a concrete structured overview.

-

Exploring and categorizing the criteria used to guide the selection of base learners, the practical fields of applications, and the future research directions.

It could be said that the current review not only contributes to a deeper understanding of recent advancements but also serves as a valuable guide for researchers and practitioners. By addressing crucial research aspects, categorizing methodologies, and highlighting application domains, the present study aims to offer insights into prevalent trends and future directions in the dynamic realm of metaheuristic-based selective ensembles within ensemble learning.

After this introductory section, the rest of this paper is structured as follows. A brief background on metaheuristics and ensemble selection is provided in Sect. 2. An exposition of review methodology and selection strategy of publications is given in Sect. 3. Section 4 provides a brief review of the preliminary statistics and publishing trends of metaheuristic-based selective ensembles. Section 5 introduces a taxonomy of technical developments in metaheuristic-based selective ensembles. Following that, a thorough discussion of how metaheuristic-based selective ensembles is employed in real-world practical contexts is outlined in Sect. 6. Section 7 discusses the study’s main results, followed by Sect. 8 which is dedicated to discussing the challenges and factors influencing the generalization of metaheuristic-based selective ensembles across diverse domains and practical contexts. Finally, Sect. 9 summarizes the main conclusions and offers some suggestions for future research directions.

2 Brief background on metaheuristics and ensemble selection

In terms of ensemble learning, the fundamental idea behind metaheuristics is to assign each individual learner a weight that measures how beneficial it would be to include that learner in the final ensemble. With respect to \(N\) individual learners, the weights can be arranged as an \(N\)-dimensional vector, wherein small elements in the weight vector indicate that the associated learners are candidates to be ignored. One ensemble pruning solution, therefore, corresponds to one weight vector. The foremost objective in this situation is to identify the optimal weight vector for choosing selective ensembles. It is crucial to remember that learners’ weights may be configured as integer, float, or bit-encoded vectors. Nonetheless, if the objective is to only select learners to be included in the final aggregated decision and those to be excluded, without using any threshold, the bit-encoded ones (i.e. 0, 1) are frequently employed [9].

In their algorithmic design for search process, metaheuristics usually make use of what is referred to as “neighbouring search” which is a well-known notion in optimization algorithms. The essential principle of this concept is that a set of solutions can be attained from the current one by making use of the considered search operators, if the neighbourhood of a particular solution is defined. The search is over when a predetermined stopping criterion is satisfied [22].

Metaheuristic search is typically conducted using either trajectory-based or population-based approaches. The primary distinction between the 2 types is based on how many tentative solutions are used at each stage of the iterative search process. The following subsections are devoted to introducing a brief background to representative methodologies of both types as well as their employment with regard to selective ensemble learning.

2.1 Trajectory-based selective ensembles

Trajectory-based metaheuristics are often used to identify a locally optimal solution rapidly, and as a result, they are also known as exploitation-oriented methods since they facilitate intensification in the search space, by locally upgrading good-quality acquired solutions [22, 24]. Most common trajectory-based methods include hill-climbing [26], simulated annealing [27], Tabu search [28], greedy randomized adaptive search procedure (GRASP) [29], variable neighbourhood search (VNS) [30], and iterated local search [31]. Details about all of these algorithms could be found at [10].

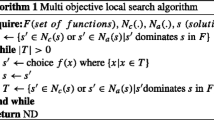

As depicted in Fig. 2, the majority of trajectory-based algorithms adhere to the same procedures. In general, a trajectory-based approach starts with one candidate solution and replaces it, at each iteration, with a new one (often the best found in its neighbourhood) by employing modification operators of the algorithm. According to the literature, the variation of trajectory-based algorithms is in how they alter/modify the current solution. Formally, the overall procedure of trajectory-based algorithms proceeds as shown in Algorithm 1.

When it comes to selective ensembles, algorithms of this category typically begin with an initial solution (i.e. initial weight vector or bit-encoded vector) and incrementally make some variations using modification operators of the algorithm so as to enhance the current solution. The objective function of the metaheuristic algorithm evaluating that solution provides the foundation for these modifications. When the algorithm achieves a local optimum, which means that no more improvements can be obtained with the present set of possible moves, the algorithm stops making further adjustments.

2.2 Population-based selective ensembles

In contrast to trajectory-based methods, population-based metaheuristics typically work with a collection of potential solutions. At each iteration, as depicted by the flow chart in Fig. 3 and algorithm in Algorithm 2, these solutions are modified and updated based on certain guidelines. Further, recombining solutions is permitted by the population paradigm in an effort to improve results by using the salient features of the initial solutions. Each iteration involves replacing some of the population’s solutions with newly produced ones, frequently the best ones, or with some solutions that have been chosen using a predetermined quality-based criterion. It could be said that population-based metaheuristics utilizes a group of search agents instead of a single one in order to take advantage of cooperation and parallelism in the search mechanism. Accordingly, they are commonly known as exploration-oriented methods [22].

In terms of population-based selective ensembles, according to most of the literature, the algorithm proceeds as follows. Firstly, an initial collection of weight vectors (or bit-encoded vectors) is selected or designed at random. The quality of each weight vector is then evaluated, depending on the performance of the relevant ensemble on validation data. The weight vectors are then improved using operators of variation/improvement, and the processes continue until convergence or satisfying a predetermined stopping criterion. In order to create the selective ensemble, the best weight vector is decoded, and learners with low weights are removed.

Even though they mostly adhere to the same procedures, population-based metaheuristics could be divided into many groups, including evolutionary computation, swarm intelligence, biological-based, chemical-based, nature-inspired, and physics-based algorithms. They generally diverge in terms of where they get inspiration from, and the processes that they employ to create new generations of improved solutions [21]. The present study is interested only in evolutionary computation and swarm intelligence.

2.2.1 Evolutionary computation

Evolutionary computation refers to a set of algorithms motivated by the process and mechanisms of biological evolution. They draw inspiration from evolutionary concepts such as natural selection and survival of the fittest. They commonly share adaptation-related characteristics through an iterative process that accumulates and amplifies beneficial variation through a process of trial and error [32].

The following is how evolutionary algorithms operate. An ever-evolving collective learning procedure is applied to a population of candidate solutions, each of which represents a search point in the space of possible solutions. In phases known as generations, the population is randomly seeded and then subjected to the processes of selection, recombination (crossover), and mutation. As a result, the population evolves towards better-suited areas of the search space where new generations are produced. So as to advance the search process, the population’s fitness gets evaluated, solutions with the best fitness values are chosen, and they are combined to form new candidate solutions with a higher probability of better fitness. The solution eventually converges after a certain number of generations, and the one with the highest fitness value denotes an optimal or nearly optimal solution [22], [33], [34].

In fact, genetic algorithm (GA) [35, 36], differential evolution [37], gene expression programming [38], evolutionary strategies [39], and estimation of distribution algorithm [40] are just a few instances of the numerous representative methods of evolutionary computation.

2.2.2 Swarm intelligence

Swarm intelligence refers to a set of distributed problem-solving algorithms motivated by the cooperative group intelligence of swarms. Swarm intelligence systems are often composed of a population of autonomous and self-organizing agents, or entities that are capable of completing certain tasks, interacting with one another and their immediate surroundings. The emergence of global behaviour frequently results through local interactions among such agents, even though there is typically no centralized control structure mandating how individual agents should behave [8, 21], [41].

Similar to evolutionary algorithms, swarm intelligence models are initialized with a population of potential solutions that are then modified over many generations by imitating the social behaviour of insects or other animals in order to locate the optimal solution. Instead of using evolutionary operators, as is the case with evolutionary algorithms, swarm intelligence techniques use possible solutions that fly around the search space by adjusting to their surroundings and interacting with other population members [34], [10].

Examples of swarm intelligence algorithms include, among others, ant colony optimization [42], artificial bee colony [43], firefly [44], bat [45], fireworks [46], particle swarm optimization (PSO) [47], cuckoo [48], glowworm (Y. [49], ant lion [50], moth-flame [51], salp swarm [52], crayfish [53], and puma optimizer [54].

3 Review methodology and selection strategy

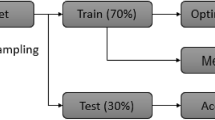

Pursuant to Fahimnia et al. [55], the purpose of literature reviews is to map, evaluate, and highlight the knowledge gaps and to emphasize the limitations of current knowledge. Selecting pertinent search terms, reading the literature, and then completing the analysis are all steps in the process of conducting systematic literature reviews. In contrast to conventional narrative reviews, systematic reviews employ a repeatable, scientific, and objective process that eliminates selection bias by conducting an in-depth literature search. Accordingly, inspired by Haixiang et al. [56], the review is conducted following the process illustrated in Fig. 4.

To fulfil the study’s primary objectives outlined in the Introduction, the research addresses various facets of the current advances concerning the use of metaheuristics in ensemble pruning. The following points encapsulate the key aspects of investigation:

-

Examining the main categories of metaheuristic-based selective ensembles.

-

Determining the algorithms with superior track records and frequent usage in metaheuristic-based selective ensembles.

-

Investigating the criteria employed in selecting the base learners for the selective ensemble model.

-

Identifying the most frequently utilized software programs for metaheuristic-based selective ensemble modelling.

-

Exploring methodologies for evaluating the performance of metaheuristic-based selective ensembles.

-

Analysing the practical applications of metaheuristic-based selective ensembles in real-world settings.

-

Exploring implementation challenges and limitations of metaheuristic-based selective ensembles.

-

Delving into prospective research directions for advancing metaheuristic-based selective ensembles.

After identifying the research questions, the 2-level searching process displayed in Table 1 is conducted on Scopus, an Elsevier’s abstract and citation database, using the methodology described by Fahimnia et al. [55] to collect pertinent research studies published between 2013 and July 2023.Footnote 1 On the first search level, the initial query is applied on the “title, abstract, keywords” fields. Then, the searching outcomes are further searched for the study’s indexed keywords using the filter query to order to draw more accurate analysis conclusions.

Following the initial search, which returned 1673 research results, the filtering procedure yielded 1109 research results. The search space is then restricted to English-language “journal” and “conference” papers only, excluding book series and chapters. This whittled down the raw data set to 1053 papers representing the main source of information for literature study with regard to ensemble selection in general. The publications of ensemble pruning that regards such metaheuristic-based methods are then identified through the following selection strategy.

The studies included for this review ought to make use of such a metaheuristic-based ensemble approach, and they should offer the barest amount of information regarding the suggested search approach to ensemble selection. Meanwhile excluded from the screening process are publications that are not available or inaccessible through an online repository. Additionally, publications that do not directly address the topic at hand are also disregarded.

So as to find the publications that should be considered for the review, the above-mentioned technical filtering procedure has been carried out using Pandas dataframes.Footnote 2 To be taken into account, an abstract of an extracted publication must contain at least one word from the inclusion_listFootnote 3 and must not contain any words from the exclusion_list.Footnote 4 The dataset was reduced by this approach to 287 publications. A manual filtering procedure is then used to remove papers that are not pertinent or those could not be reached. The final 201 research papers deemed relevant after that analysis were eventually moved on to the next step of the reviewing process. Additionally, the inclusion list is expanded by 29 papers based on cross-referencing, bringing the total number of publications reviewed to 230. It is worthy to emphasize that papers employed metaheuristics in their ensemble learning process but not during the ensemble pruning stage are regarded as being out of the scope of the current review.

4 Selective ensembles: preliminary statistics and bibliometric analysis

This section is devoted to introducing some preliminary statistics and the trends for metaheuristic-based selective ensembles. In particular, it offers a preliminary bibliometric analysis of the 230 publications that are chosen, of which 66% are journal articles and 34% are conference papers. The following subsections are covering distribution of publications over years (subSect. 4.1), top contributing affiliated countries (subSect. 4.2), top contributing co-authors (subSect. 4.3), co-occurrence network of indexed keywords (subSect. 4.4), and top-cited publications (subSect. 4.5).

4.1 Distribution of publications over time

Plotting the number of papers chronologically from 2013 to July 2023 reveals the publishing trend shown in Fig. 5. Except for a single significant drop in 2018, the gradual increase in the trend indicates that metaheuristic-based selective ensemble learning is an active field of research.

Additionally, the preliminary data show that the selected publications are appeared in 159 journals and conference proceedings with 59 publishers. Figure 6 shows the top 10 publishers with (198 papers). It could be seen that most studies have been published by Institute of Electrical and Electronics Engineers (IEEE) (32%), Elsevier (28%), and Springer (22%).

Figure 7 presents the top 10 journals/conferences based on the total contribution of each journal. These journals covered about 26% (i.e. 61 paper) of all selected publications in the past decade. It is worth noting that the majority of them are highly esteemed journals/conferences in the fields of computer science, artificial intelligence, engineering, operations research, and knowledge discovery. This further demonstrates how the research of selective ensemble learning has incorporated methods from the computer science community and real-world applications from a variety of fields.

4.2 Top contributing affiliated countries

Depending on author affiliations, Fig. 8—which is produced using the Datawrapper tool [57]—depicts the geographical locations of the top contributing countries in the 230 selected publications. The geographical dispersion makes it abundantly evident that organizations and research institutions around the world have expressed interest in metaheuristic-based selective ensemble modelling. As indicated by the colour legend showing the percentage of publications, greater contributions are made by authors affiliated with Chinese institutions, with 31% of all selected publications. The USA comes second (7%), Brazil (6%), and the UK (5%). Then, with 4% of selected publications, the third group of authors are affiliated to Canada, India, and Iran.

4.3 Top contributing co-authors

To form the collaboration network of authors who contribute to the publications of metaheuristic-based selective ensembles, co-authors of the chosen publications are all collected. Each identified author is therefore listed along with her/his co-authors. Then, the toolkits Gephi [58] and VOSviewer [59] are used to create the graph, shown in Fig. 9, and calculate nodes degrees (i.e. the number of connections that the node has to other nodes in the network) as well as the overall link weight (i.e. the total number of pairwise occurrences in selected publications). The size of nodes reflects the node degree, while the link-width represents to the connection weight.

It could be seen, from this graph, that Zhu, Xuhui; Tang, Jian; Liang, Jing; Hu, Yi; Nguyen, Tien Thanh; Ni, Zhiwei; and Krawczyk, Bartosz; are in general the most collaborative authors in the field within the period under consideration. Table 2 illustrates the top 10 joint authors based on link weights. The most prominent joint authors are Zhu, Xuhui, and Ni, Zhiwei, followed by Krawczyk, Bartosz, and Woźniak, Michał.

4.4 Co-occurrence network of indexed keywords

The indexed keywordsFootnote 5 from the selected publications are all collected to form a co-occurrence network. A minimum of 2 pairwise occurrences is required to include joint keywords in the analysis. Each identified keyword is therefore listed along with the other keywords that are occurred with it, and then, the network of the collected keywords is generated using Gephi and VOSviewer toolkits. Figure 10 reveals that genetic algorithms, learning systems, evolutionary algorithms, classification, particle swarm optimization, algorithms, and learning algorithms are the most often used technical keywords overall.

A significant portion of the selected publications are generally dealing with classification-related issues and primarily employing evolutionary metaheuristics, particularly genetic ones. The graph also shows that the most popular algorithms for swarm intelligence are particle swarm optimization (PSO) and ant colony optimizer. From the application perspective, the most commonly utilized keywords are anomaly detection, image analysis, digital storage, credit scoring, and medical diagnosis, especially breast cancer.

With regard to the joint occurrences, based on total link weights, Table 3 displays the most popular 10 joint indexed keywords. It could be seen that evolutionary algorithms with learning systems as well as classification with genetic algorithms both are coming together on the top of the list. Accordingly, the usage of all the mentioned keywords could enable accurate indexing and searching for papers pertaining to metaheuristic-based selective ensembles.

4.5 Top-cited publications

Focusing on citations,Footnote 6 the top 10 cited papers of metaheuristic-based selective ensembles from 2013 to July 2023 are: [60, 61], [62,63,64,65], [66,67,68] and [69].

The paper of Krawczyk et al. [60] entitled “cost-sensitive decision tree ensembles for effective imbalanced classification” is identified as the most cited paper. In this study, an effective ensemble design algorithm is proposed for imbalanced classification based on cost-sensitive learning and random feature subspaces. It is a technical paper where the authors propose effective framework for ensemble selection by the use of evolutionary algorithm to optimize a cost-sensitive ensemble decision trees (DTs).

The investigation also shows that the paper ranks second is the one entitled “A hybrid ensemble pruning approach based on consensus clustering and multi-objective evolutionary algorithm for sentiment classification” by Onan et al. [61]. The study aims to build an efficient sentiment classification technique, by developing a new hybrid ensemble pruning model that utilizes both multi-objective Pareto-based evolutionary algorithm and clustering techniques.

Additionally, the study entitled “A novel multi-stage hybrid model with enhanced multi-population niche genetic algorithm: An application in credit scoring” by W. Zhang et al. [65] is the third in citation ranks. In this study, a novel multi-stage hybrid ensemble model is proposed based on an enhanced multi-population niche GA. It is evident that the model is efficient especially with regard to the prediction of credit scoring.

5 Metaheuristics and ensemble selection: recent technical contributions

After conducting the preliminary systematic analysis based on the 230 papers selected as described in Sect. 3, and in order to ensure a comprehensive coverage of the in-depth review that follows, a robust selection process is employed to evaluate and identify the more significant publications for inclusion. In the first place, priority is given to incorporating the top 50 cited papers representing fundamental works that have considerable impacts on the topic at hand. Then, in order to acknowledge the most recent developments in the field, the rest of publications is evaluated, resulting in adding 39 more publications from the years 2022–2023. The final selected list of publications is, by no means inclusive, representing the outcome of a screening process to evaluate, uncover and group related studies, and give priority to those with original research or distinct viewpoints.

Based on a total of 89 publications reviewed, this section is dedicated to summarizing those that introduce novel technical developments with regard to metaheuristic-based selective ensemble learning (38 publications). The rest of the selected publications covering real-world applications will be reviewed in Sect. 6.

In the sequel, publications contribute to technical aspects are categorized into 3 groups based on the branch of metaheuristics that authors deploy. Table 4 summarizes the published technical developments that handling trajectory-based selective ensembles. Table 5 provides a summary of those concerned with evolutionary-based selective ensembles. Finally, Table 6 is specified for those interested in swarm-based selective ensembles. For every publication, the corresponding table demonstrates citation, objectives and main contribution, applied techniques, and models’ performance measures.Footnote 7

6 Metaheuristic-based selective ensembles in real-world applications

The main purpose of this section is to provide an overview of the practical contributions found in the publications of metaheuristic-based selective ensembles during the past decade (57% of the 89 publications selected). It should be mentioned that some of these publications contribute theoretically to the domain and then apply the newly developed models on specific real-world applications.

Overall, 5 main practical domains are covered based on the percentage of practical publications found in the selected literature. They could be arranged in descending order, as illustrated in Fig. 11, as follows. Information technology is the subject of 27% of applications, medical-based applications (24%), industrial engineering (20%), financial management (14%), and agricultural and environmental applications representing (8%).

6.1 Information technology applications

In this study, the applications of metaheuristic-based selective ensembles in information technology are categorized into 3 groups, involving human-based activity and emotion recognition, software engineering, and image-based applications.

Concerning human-based activity recognition, Fatima et al. [70] provide a new methodology for activity recognition in smart homes by using a GA to optimize the output generated by the multiple classifiers. For emotion recognition, He et al. [71] suggests a novel Firefly-integrated optimization algorithm to recognize different types of emotions. A ranking probability objective function is employed by the proposed algorithm to ensure adequate recognition accuracy with minimal features. Darekar et al. [72] introduces a novel and automated speech-emotion recognition model based on an ensemble of neural networks and a newly proposed optimization technique called “Arithmetic Exploration updated Wild-Beast Model”.

In terms of software engineering, Krawczyk and Woźniak [73] develop a new evolutionary-based ensemble model for malware detection by using cost-sensitive classification trees and random feature subspaces. Also, Jodavi et al. [74] introduce a novel ensemble-based anomaly detection method called “DbDHunter” for detecting drive-by download attacks. Jodavi et al. [75] suggest a new binary classifier ensemble to detect obfuscated JavaScript code based on PSO. Mauša and Galinac [76] analyse the performance of evolving diverse ensembles using genetic programming for software defect prediction with unbalanced data, by using colonization and migration operators in conjunction with 3 ensemble selection strategies for the multi-objective evolutionary algorithm. Malhotra and Khanna [77] present 4 ensemble learning algorithms by merging 7 separate PSO-based classifiers as ensemble constituents to forecast software change.

Furthermore, based on omni-ensemble learning, a new Bagging method for class-imbalance learning is devised by Mousavi et al. [78] for software defect prediction. Tajoddin and Abadi [79] suggest a new method for detecting anomalous malware in registry data. To identify known and particularly unknown malware that manipulate registry keys and values for malevolent purposes, the proposed model employs an ensemble classifier made up of multinomial classifiers. Recently, Jadhav et al. [80] propose a 3-phase selective ensemble methodology by using omni-ensemble learning to predict software effort in industrial and manufacturing processes.

When it comes to image-based applications, based on GA and SVMs, a new hybrid evolutionary framework is proposed by Goh and Thing [81] to select the best features and classifiers for detecting image tampering in digital forensics. In addition, Zhao et al. [82] offer a new multi-objective sparse ensemble learning model for remote sensing image change detection where an evolutionary multi-objective GA is exploited to find sparse ensemble classifiers with strong performance.

Another research that might be included in this category is the work conducted by Ramos et al. [83] for the purpose of classifying motor imagery in brain-computer interface applications. In this research, different designs of classifier ensembles are assessed and compared with other approaches on 3 different subjects.

6.2 Medical-based applications

For the medical-based applications, research studies conducted for breast cancer diagnosis are of the top category. By employing different techniques, many studies have been developed to provide new frameworks and medical decision systems for the early detection of breast cancer. For example, cost-sensitive DTs based on GA is the main framework used by Krawczyk et al. [84] and Krawczyk et al. [85] as well. Support vector data description, random subspace, and memetic firefly-based algorithm are the main components of the medical system suggested by Krawczyk and Filipczuk [86]. Naïve Bayes, KNN, random forest, and SVMs are used as base learners in the stacking-based genetic programming models developed by Ali and Majid [87] and Majid and Ali [88].

In addition to breast cancer, a novel approach for invasive ductal carcinoma classification is proposed by Alkhaldi and Salari [89] by utilizing Cartesian genetic programming algorithm for ensemble optimization with convolutional neural networks as base learners.

For diabetes, based on multiple criteria, ant colony optimization swarm intelligence algorithm is used by Alghamdi et al. [90] to build an accurate selective ensemble model for diabetic retinopathy detection. Also, Singh and Singh [91] design a new stacking-based evolutionary ensemble learning system called “NSGA-II-Stacking” to predict the beginning of Type-2 diabetic mellitus (T2DM) 5 years in advance.

Other medical-based applications, could be recognized as follows. Mousavi et al. [92] suggest an ensemble pruning and rotation forest (EP-RTF) model as a new method for predicting human microRNAs target. Akkasi and Varoglu [93] propose a new community-based decision-making approach to improve biochemical named entity recognition systems using PSO swarm-based selective ensemble learning. Also, L. Nguyen et al. [94] construct a new ensemble framework called “iANP-EC” for identifying anti-cancer natural products. Further, Notash et al. [95] develop a horizontal-vertical image scanning (HVIS) tool by using GA-based ensemble model to measure lymphedema arm volume.

6.3 Industrial engineering applications

For observing and diagnosing mean changes in multivariate manufacturing processes, a helpful model, made possible by swarm-based ensemble of quantization networks, is proposed by W. Yang [96]. Also, a hybrid selective ensemble model is introduced by W. Yang et al. [97] based on ANNs and PSO to classify out-of-control signals into categories of mean and/or variance abnormalities. In petroleum industry, Al-Qutami et al. [98] suggest a framework for virtual flow metering by using hybrid ensemble-based model where neural networks and regression trees are combined and pruned by a trajectory-based Simulated Annealing algorithm.

Moreover, based on multimodal evolutionary algorithm, Hu et al. [99] suggest a unique data-driven evolutionary ensemble learning forecasting model in an effort to enhance the precision and intelligence of short-term load forecasting systems which can lead to more efficient power generation and modern power systems management. In addition, the problem of feature selection in the Silicon content prediction model is considered from a different angle, and an altered non-dominated sorting differential evolution algorithm is proposed by X. Wang et al. [100] to simultaneously maximize 2 competing goals: accuracy and variety of base learners. Furthermore, a generic evolutionary ensemble learning approach for surface roughness prediction is proposed by Xie et al. [101] based on a pool of ten effective regressors.

For fault diagnosis in manufacturing processes, Peimankar et al. [102] propose a binary variant of the multi-objective particle swarm optimization (bi-MOPSO) technique to classify power transformer failures. The suggested approach simultaneously chooses the classifiers with the highest accuracy and diversity. Next, by utilizing dissolved gas analysis on the oil of the power transformers, the chosen classifiers are merged to identify the real defects in the power transformers. Based on the same techniques, Peimankar et al. [69] complete their research by developing a new 2-step ensemble selection approach for fault diagnosis of power transformers. For fault diagnosis of rotary machinery, a novel method is proposed by using PSO-based selective ensemble learning and probabilistic neural networks by Z. Wang et al. [64].

Finally, a multi-objective teaching–learning-based swarm optimization algorithm for ensemble pruning in random forest (RF) is proposed by Wan et al. [103], with the goal of enhancing both the accuracy and speed of RF in the context of rolling bearing fault diagnosis. The introduced algorithm is specifically designed to maximize classification accuracy and minimize classification time, enabling the identification of a subforest that achieves superior accuracy in diagnosing rolling bearing faults while expediting the classification process.

6.4 Financial management applications

With regard to credit scoring prediction and assessment, effective trajectory-based selective ensemble models, based on GRASP and ELMs, are proposed by T. Zhang et al. [104] as well as T. Zhang and Dai [105]. Ye and Dai [106] suggest a distinctive greedy randomized dynamic ensemble selection algorithm for credit risk assessment to generate relevant classifiers of the ensemble by employing SVMs, Path-Relinking, Variable Neighbourhood Search, and GRASP algorithms. Additionally, W. Zhang et al. [65] propose a novel multi-stage hybrid ensemble model based on an enhanced multi-population niche GA.

More financial-based applications in the selected publications are proposed, including the following. Dinh et al. [107] introduce a 2-phases ensemble learning model for daily exchange rate prediction by using ANNs and differential evolution algorithm. While, Safi et al. [108] use a novel cost sensitivity fitness function in an effort to provide an effective metaheuristic PSO-based ensemble model for financial distress prediction. As for customer churn prediction, a large-scale benchmark study is conducted by Bogaert and Delaere [109] to compare the most novel ensemble methods and their selection strategies. The conducted study involves extensive comparison between 14 homogeneous and 13 heterogeneous ensembles by employing 10 of the most common metaheuristics.

6.5 Agricultural and environmental applications

In agricultural domain, Chaudhary et al. [110] propose a new ensemble-based PSO approach with intent to enhance the performance outcomes of ensemble vote in order to predict vegetable crop diseases. Environmentally, Zhu et al. [111] introduce an efficient selective ensemble model based on artificial fish swarm algorithm and ELMs for the sake of effectively forecasting urban haze pollution. Ekmekcioğlu et al. [112] develop, based on GA and PSO combined with AdaBoost and random forest, an effective ensemble model for hydraulic efficiency assessment of stormwater grate inlets. Moreover, H. Zhang et al. [113] introduce a GA-based selective ensemble approach in order to estimate the uniaxial compressive strength of rock in a convenient and precise manner.

6.6 Other applications

In an effort to generate a population of high-performing networks with the appropriate configuration and initialization combinations, the evolutionary ensemble neural network pool (EENNP) approach is introduced by Ai et al. [33] to efficiently predict household power demand. Moreover, Koohestani et al. [114] introduce an ensemble model for analysing driving behaviour. Their model comprises 2 main components. The first involves ensembling, where KNN, SVMs, and naïve Bayes algorithms are enhanced through bagging, boosting, and voting ensemble learning techniques. And the second incorporates the application of 4 evolutionary optimization algorithms to reduce ensemble size, namely the ant lion optimizer, grey wolf optimizer, and PSO.

Finally, based on SVMs and random subspace, a new PSO selective ensemble model is introduced by Z. Wang et al. [41] for accurate highway traffic flow state identification. Furthermore, Bu et al. [115] develop a novel hybrid intelligent image generation method called “IGASEN-EMWGAN” for ship painting defects by using GA improved by the Simulated Annealing algorithm.

7 Discussions and main findings

This section aims to provide a comprehensive analysis of the current state-of-the-art metaheuristic-based selective ensembles based on the 89 publications that have been extensively addressed in Sects. 5 and 6. Therefore, in the following sequence, the research key aspects that have been identified, in Sect. 3, could be appropriately handled.

7.1 Main categories of metaheuristic-based selective ensembles

The systematic analysis conducted so far unequivocally demonstrates that there are 3 fundamental categories of metaheuristic-based selective ensembles, including trajectory-based, evolutionary population-based, and swarm population-based. A fourth hybrid-based category emerges from combining 2 or more of the mentioned 3 categories of metaheuristics in selecting classifiers. Figure 12 demonstrates that ensemble pruning is frequently carried out, with evolutionary-based algorithms in more than half of the selected publications (52%), while ensemble selection based on swarm intelligence ranks second with (32%). Studies using trajectory-based algorithms come in third place with 11%. Merely 5% of the chosen literature comprises research that integrates various types of metaheuristics.

In general, the utilization of population-based metaheuristics is prevalent, accounting for approximately 84% of cases, and is generally favoured over trajectory-based algorithms in the context of selective ensemble modelling. The extant literature under examination contributes valuable insights distinguishing between these distinct approaches. A comprehensive summary of the distinguishing factors between trajectory and population-based metaheuristics is provided in Table 7.

7.2 Key algorithms of metaheuristic-based selective ensembles

Out of the 89 selected publications, Fig. 13 displays the Treemap of the top 5 algorithms employed in the addressed literature in terms of base learners, ensembles, and optimizers. The figure shows that decision trees (28%), support vector machines (25%), K-nearest neighbours (23%), artificial neural networks (15%), and naïve Bayes (14%) have been employed in the majority of cases as base learners. The top ensemble learners, namely bagging (19%), weighted voting (18%), majority voting (18%), weighted average (9%), and AdaBoost (8%) are applied in most cases. The top metaheuristics used for ensemble pruning are genetic algorithm (GA) (35%), particle swarm optimization (PSO) (18%), firefly (7%), hill-climbing (6%), and greedy randomized adaptive search procedure (GRASP) (5%).

In practice, it is important to note that the in-depth analysis conducted in Sect. 6 reveals that Genetic algorithm is mostly used in information technology, medical, as well as agricultural and environmental applications. On the other hand, GRASP is popular among those provide practical studies in financial management, while PSO is popular among those interested in industrial engineering.

7.3 Criteria for choosing the ensemble base learners

With regard to the identified selection criteria, base learners could be selected based on the overall model performance, diversity, complexity, or efficiency. Performance suggests that the goal of ensemble selection is to maximize a certain performance metric, such as accuracy, or minimize model errors or misclassifications. Diversity denotes that the purpose is to identify the most diverse classifiers to obtain a complementary subset of learners, while complexity indicates that the intention is to minimize the model complexity by reducing the number of selected base learners. Finally, efficiency implies that the goal is to reduce the overall time of computations.

After a thorough analysis, it is evident, as shown in Fig. 14, that most published cases have interest in performance (54%) and diversity (35%). Merely 6% of authors are intrigued by complexity, whereas the remaining 5% are drawn to efficiency. Overall, none of these criteria is sufficient on its own in most cases. Approximately 72% of the addressed publications integrate multiple selection criteria, rendering them multi-objective, especially when it comes to performance together with diversity. In contrast, just 28% of the publications have been carried out with a single-objective basis.

7.4 Performance evaluation in metaheuristic-based selective ensembles

Once the selective ensemble model for prediction/classification has been developed, it is critical to understand how well it works. The exact performance of the model could be determined if it is possible to test it on all potential input objects. Unfortunately, this is unlikely to happen, so an estimate of the performance will have to suffice [116]. In the literature, there are many metrics that could be used to estimate the performance of supervised ensemble learning algorithms such as accuracy, precision, sensitivity, specificity, F-measure, and area under the ROC curve (AUC) [117], [118]. In selected publications, Fig. 15 shows the most widely used metrics. It is clear that overall accuracy is the most employed performance measure (23%) followed by sensitivity (18%), AUC (13%), F-measure (13%), and G-mean (10%).

In binary classification problems, instances of each class, whether being classified correctly or incorrectly, could be counted, and arranged in what is known as confusion matrix representing the 4 possible outcomes. As illustrated in Table 8, the correctly classified instances appear on the 2 cells of the matrix main diagonal, whereas the off-diagonal cells reveal the numbers of instances that have been misclassified.

Based on the confusion matrix, the mentioned top 5 metrics that could be used for evaluating a classifier’s performance with varying evaluation emphases could be summarized as follows [118], [119].

-

Accuracy is the proportion of the correctly classified instances over the total number of instances It is calculated as follows: \(\left[Accuracy= \frac{TP+TN}{TP+TN+FP+FN}\right]\)

-

Sensitivity refers to the rate at which positive instances are correctly classified. It evaluates the classifier’s ability to correctly distinguish positive instances. It is calculated as follows: \(\left[Sensitivity= \frac{TP}{TP+FN}\right]\)

-

F-Measure is the harmonized mean of both Precision and Sensitivity, and it could be calculated as follows: \(\left[F-Measure = \frac{2*TP}{FP+FN+2*TP}\right]\)

-

G-Mean is the geometric mean of true positive and true negative rates. It could be calculated as follows: \(\left[G-Mean = \sqrt{\frac{TP}{TP+FN}*\frac{TN}{TN+FP}}\right]\)

-

AUC is the area beneath the receiver operating characteristic (ROC curve) which is a 2-dimensional representation of the trade-off between true positive and false positive rates. AUC measures a classifier’s ability to discriminate between classes. It is the probability that the classifier will prioritize a randomly selected positive instance higher than a randomly selected negative instance. For more details and representations see [120].

Based on the investigated literature, the following issues are determined to be essential to consider while assessing the metaheuristic-based ensemble models in general. First and foremost, identifying the nature of the problem at hand, whether classification or regression, governs the selection of the relevant metrics that ensure accurate evaluation across different types of tasks. Also, of importance is to choose a measure that is robust to variations in the datasets and generalizable across different settings in order to provide consistent and reliable evaluations of model performance. Further, researchers should realize that using different performance metrics may emphasize different aspects of model performance that could be non-commensurable or even conflicting. Therefore, it is necessary to consider the trade-offs between these metrics and choose the most appropriate ones based on the specific requirements of the practical problem under concern [121].

With regard to classification tasks, additional points should also be considered. First, understanding the classification task—binary, multi-class, multi-label, or hierarchical—is deemed vital since different tasks might need different performance measures. Second, it is also important to consider the characteristics of the deployed dataset, especially the class distribution. This is due to the fact that there are some metrics that are not suitable to be used in class-imbalance situations [122, 123]. Overall, most machine learning researchers evaluate the performance of the developed classifiers by using various metrics, and there is no consensus on a single measure. However, Redondo et al. [124] propose a unified performance measure (UPM) to be used for assessing binary classifiers. The suggested UPM considers all components of the confusion matrix and is defined as the harmonic mean of recall, specificity, precision, and negative predictive rate. It evaluates classifier performance on both positive and negative classes, independently of the dominant class, making it stable and more suitable for imbalanced classification tasks. Diego et al. [125] extend the proposed metric to multi-class problems, calling it the general performance score (GPS), and Rezk and Selim [126] have recently suggested and applied a revised version of UPM measure called weighted unified performance measure (WUPM) which imposes trade-off weighting scheme for its 4 metric components.

7.5 Programmes for metaheuristic-based selective ensemble modelling

Based on the 48 publications in which authors mention the computational tools they used, Fig. 16 depicts the software programs and tools mentioned in the selected literature. With 52% of the deployment, it is evident that MATLAB is the most widely used programming tool for metaheuristic-based selective ensemble modelling. Python programming language ranks second with 19%, followed by Weka [127] with 19% of the publications addressed. Additional tools, each with 2%, include genetic algorithm optimization toolbox (GAOT) [128], Java programming language, machine learning for language toolkit (Mallet) [129], PR-Tools [130], and R programming language.

It is noteworthy that during the review process, it has been found that an extensive Python library for optimization, denoted as “pyMetaheuristic”, has been made available on GitHub by Raiser [131]. Also, a recently open-source library for contemporary metaheuristic algorithms in Python named “MEALPY”, is published by Van Thieu and Mirjalili [132]. Both of these libraries have demonstrated effectiveness, and so it could help many researchers in their computational work. Additionally, an online platform presenting recent metaheuristics, accompanied by supplementary MATLAB and Python codes, has been established by Mirjalili [133].

8 Implementation challenges, limitations, and key considerations of metaheuristic-based selective ensembles

This section is dedicated to discussing the challenges and limitations facing the implementations of metaheuristic-based selective ensembles across diverse domains and practical contexts. Identifying these issues could help researchers and practitioners better understand the practical implications and potential pitfalls of using these techniques and, accordingly, conduct successful application and interpretation in various problem-solving scenarios. It is believed that by addressing these challenges the robustness and applicability of metaheuristic-based ensemble methods could be enhanced in real-world applications.

In their research, Sorensen and Glover [11] clarify that there is a trade-off between deterministic/analytical algorithms and metaheuristics such that deterministic algorithms provide optimal solutions but struggle with computational complexity, while with metaheuristic algorithms, there is no guarantee that solutions found will be globally optimal as they prioritize finding satisfactory solutions within a reasonable timeframe.

Another significant issue of concern is the “curse of dimensionality”, which makes most metaheuristic algorithms less effective as the problem size increases, particularly in large-scale problems [134]. In this context, Chopard and Tomassini [135] illustrate that one of the most critical limitations that metaheuristics might encounter when dealing with complex problems is the fact that they do not have a worst-case time complexity bound like deterministic algorithms, making it difficult to predict how well they would perform in certain situations. Typically, while metaheuristic algorithms can perform extremely well in some cases, they may struggle or take excessive time in others, leading to unpredictable outcomes. In such a situation, Rajwar et al., [24] elucidate that although metaheuristics could theoretically find optimal solutions if time is unlimited, practical constraints necessitate finding solutions within reasonable timeframes, highlighting a gap between theory and implementation.

Additional drawback of several metaheuristic algorithms is their partially unresolved mathematical analysis, making them less mathematically rigorous compared to other domains, and a definitive theoretical framework to address this problem is lacking [24, 25]. Pursuant to X. S. Yang [136], this problem is mainly caused by the nonlinear, complicated, and stochastic nature of the interactions between the multiple components of metaheuristics.

Moreover, the characteristics of the problem being solved could greatly influence the efficacy of metaheuristics, producing a challenge in selecting the most suitable metaheuristic algorithm without knowing much about the problem beforehand [135]. This issue is closely related to the “No free lunch theorem”, which suggests that there is no one algorithm that outperforms all others on every possible problem. This emphasizes the importance of carefully understanding the problem domain before selecting or designing the metaheuristic algorithm. To put it another way, as acknowledged by X. S. Yang [137], while the theorem states that no optimization algorithm is superior to all others across all problems, there might still be opportunities to identify problem-specific characteristics that can be utilized to improve the performance of a particular metaheuristic algorithm. Additional information with regard to the challenges associated with each category of metaheuristic algorithms is available in [138], [139], [140].

When considering metaheuristics-based selective ensembles, understanding the challenges and limitations associated with hybridizing metaheuristics and ensemble learning methods is crucial for progress in the field’s literature. While combining metaheuristics with other ensemble algorithms can boost their performance and robustness, it also introduces complexities and potential drawbacks. One challenge lies in determining the optimal combination of algorithms and techniques so as to achieve the desired improvements without compromising efficiency [20, 141]. Moreover, pursuant to Surabhi and Yogesh [142], integrating diverse algorithms requires careful consideration of compatibility, hyper-parameter tuning, and computational cost. Furthermore, hybridization may increase the complexity of the resulting ensemble, making it more difficult to analyse its behaviour or to interpret its results. Generally, achieving a balance between the benefits of hybridization and its challenges necessitates thorough experimentation, validation, and refinement [143, 144]. Researchers often encounter similar challenges when attempting to combine multiple metaheuristics, as discussed by Ting et al. [145]. Further insights into the challenges of integrating or hybridizing metaheuristics with machine learning algorithms in general could be found in [146].

With regard to the methodological considerations, there are some issues that should be taken into account to enhance the understanding of metaheuristics as suitable strategies for ensemble pruning, including theoretical developments, standardizing procedures for statistical evaluation, and defining standards for problem design and metric definition [141], [24]. All of the mentioned restrictions highlight the field’s complexity and the necessity of rigorous and robust methodologies in undertaking metaheuristic-based ensemble research.

Based on the extensive review conducted and the challenges discussed, it is demonstrated that researchers should keep in mind several key factors and considerations when applying metaheuristic-based selective ensembles in diverse practical settings. These factors could be summarized as follows.

Firstly, it is essential to evaluate whether a given problem follows the assumptions and requirements of the chosen metaheuristic-based ensemble approach. This is owing to the fact that problem characteristics vary widely, including factors such as size, complexity, and structure. Secondly, so as to achieve best performance, metaheuristic algorithms often have various parameters that need to be fine-tuned, where optimal parameter settings may vary depending on the problem domain and context. Therefore, it is very important to emphasize that researchers should conduct hyper-parameter tuning experiments to determine the most effective parameter configurations for each application scenario. Thirdly, it is found that computational resources, such as computing power and memory, have a significant impact on the efficiency of metaheuristic-based selective ensemble models. To guarantee scalability and performance in resource-constrained environments, lightweight algorithms or parallel computing techniques might be required. Furthermore, the efficiency of metaheuristic-based selective ensembles is highly dependent on both the quantity and quality of the utilized datasets. As a result, when developing and assessing ensemble models, data issues (e.g. noise, duplicates, outliers, and missing values) must be taken into account.

By carefully considering these factors and conducting empirical studies across diverse domains, researchers can effectively evaluate the generalizability and applicability of metaheuristic-based selective ensembles, thus advancing their understanding and deployment in practical settings.

9 Conclusions and prospective research directions

The current study delves into the dynamic convergence of ensemble learning, with a specific focus on the emerging domain of metaheuristic-based selective ensembles with emphasis on optimization-based methods. The investigation encompasses diverse metaheuristics, including trajectory-based and population-based approaches. Noteworthy examples from the literature demonstrate the success of metaheuristic-based selective ensembles across diverse domains.

Based on a thorough examination of literature spanning the last decade, this comprehensive review aims to categorize trajectory-based and population-based methodologies, identify common algorithms, and systematically examine the practical applications of selective ensembles in various fields. By addressing the crucial research aspects of concern, the study provides profound insights into recent developments, prevalent trends, and potential directions, serving as a valuable guide for researchers and practitioners navigating the evolving landscape of metaheuristic-based selective ensembles.

Some of the prospective research directions that are elicited by the review systematic analysis could be highlighted as follows. First, employing selective ensemble techniques to unsupervised clustering-based problems by composing 2 or more clustering models, is a promising direction of improvements in this field. Ensemble clustering is still a subject of few studies, and more work must be done before it can be considered as recognized field of research [20].

Second, it is evident that the majority of the provided models are optimized based on genetic algorithms, particle swarm optimization, firefly, hill-climbing, and greedy randomized adaptive search procedure. Therefore, the impact of the new, cutting-edge metaheuristics, like artificial jellyfish search [147], corona virus [148], chef-based optimization [149], crayfish [53], and puma optimizer [54] need to be further investigated, compared, and applied.

Third, although many of the real-world problems require multiple class learning models, it is observed that just 5% of the addressed literature includes studies that incorporate multiple categories of metaheuristics. Thus, research in this field could be enhanced by developing appropriate hybrid multi-class models.

Fourth, instead of fixing the ensemble size beforehand, researchers may consider allowing the metaheuristic to dynamically determine the optimal ensemble size during the optimization process. This can be done by introducing an additional decision variable for the ensemble size and letting the metaheuristic decide the most suitable number of base learners.

Fifth, extending the optimization process to not only select classifiers but also dynamically optimize their hyper-parameters during the metaheuristic’s iterations could enhance the adaptability of the ensemble to different datasets.

In conclusion, the study identifies prospective research directions, including the exploration of selective ensemble techniques for unsupervised clustering-based problems, the investigation of cutting-edge metaheuristics, such as artificial jellyfish search, corona virus, and crayfish optimization algorithm, and the developments of hybrid multi-class models, augmenting the dynamic determination of ensemble size during optimization, and optimizing the hyper-parameters within metaheuristic iterations. These directions offer a robust roadmap for advancing the understanding and application of metaheuristic-based selective ensembles. At last, it is imperative to mention that the accuracy and completeness of this review are contingent on the limitations and constraints of Scopus bibliometric coverage.

Data availability

The study has no associated data.

Notes

Publications are extracted on 24th July 2023.

The analytical work is conducted by Python 3.10.9 programming language under Spyder (5.4.3) as a working platform, and nltk (3.7.2) as well as pandas (1.5.3) as implementation libraries.

Inclusion_list = ['meta-heuristic', 'Metaheuristic', 'heuristic', 'evolutionary', 'genetic', 'swarm', 'trajectory-based', 'population-based', 'search-based', 'search based'].

Exclusion_list = ['clustering-based', 'cluster ensemble', 'clustering ensemble', 'mathematical-based', 'mathematical programming', 'probabilistic-based', 'probabilistic ensemble', 'ordered-based', 'ordering-based', 'ordered ensemble', 'ranking-based'].

Indexed keywords are the classification keywords used by Scopus.

Citations are retrieved from the bibliometric data of the selected publications, as identified on 24th July 2023.

The most important of these performance measures are defined in Sect. 7.

References

Džeroski S, Panov P, Ženko B (2009) Machine learning, ensemble methods in. In: Meyers RA (ed) Encyclopedia of complexity and systems science. Springer, New York, pp 5317–5325

Fernández-Delgado M, Cernadas E, Barro S, Amorim D (2014) Do we need hundreds of classifiers to solve real world classification problems? J Mach Learn Res 15(1):3133–3181

Sagi O, Rokach L (2018) Ensemble learning: a survey. Wiley Interdiscip Rev Data Min Knowl Discov 8(4):1–18

Ruta D, Gabrys B (2001) Application of the evolutionary algorithms for classifier selection in multiple classifier systems with majority voting. In: J Kittler, F Roli (Eds.), Multiple classifier systems. MCS 2001. Lecture notes in computer science (Vol. 2096, pp. 399–408). Springer, Berlin, Heidelberg.

Boongoen T, Iam-On N (2018) Cluster ensembles: a survey of approaches with recent extensions and applications. Comput Sci Rev 28:1–25

Martínez-Muñoz G, Suárez A (2006) Pruning in ordered bagging ensembles. In: Proceedings of the 23rd international conference on machine learning, 609–616.

Mohammed A, Onieva E, Woźniak M (2022) Selective ensemble of classifiers trained on selective samples. Neurocomputing 482(1):197–211

Blum C, National S, Li X (2008). Swarm intelligence in optimization. In: C Blum, D Merkle (Eds.), Swarm intelligence. natural computing series. (pp. 43–85). Springer, Berlin, Heidelberg.

Zhou ZH (2012) Ensemble methods: foundations and algorithms. Taylor & Francis, Oxfordshire

Ferrer J, Delgado-pérez P (2023) Metaheuristics in a nutshell. In: Romero JR, Medina-Bulo I, Chicano F (eds) Optimising the software development process with artificial intelligence. Springer, Singapore, pp 279–307

Sorensen K, Glover FW (2013) Metaheuristics. In: Gass SI, Fu MC (eds) Encyclopedia of operations research and management science. Springer, Boston, MA, pp 960–970

Escovedo T, Da Cruz AA, Koshiyama A, Melo R, Vellasco M (2014) Neve++: a neuro-evolutionary unlimited ensemble for adaptive learning. In: Proceedings of the international joint conference on neural networks, 3331–3338.

Fernandes SEN, de Souza AN, Gastaldello DS, Pereira DR, Papa JP (2017) Pruning optimum-path forest ensembles using metaheuristic optimization for land-cover classification. Int J Remote Sens 38(20):5736–5762

Giovanini LHF, Manffra EF, Nievola JC (2018) Evolutionary ensemble approach for behavioral credit scoring. In: Proceedings of the international conference on computational science, 825–831

Tsakiridis NL, Tziolas NV, Theocharis JB, Zalidis GC (2019) A genetic algorithm-based stacking algorithm for predicting soil organic matter from vis—NIR spectral data. Eur J Soil Sci 70(3):578–590

Asadi S, Roshan SE (2021) A bi-objective optimization method to produce a near-optimal number of classifiers and increase diversity in bagging. Knowl-Based Syst. https://doi.org/10.1016/j.knosys.2020.106656

Hao X, Chen Z, Yi S, Liu J (2023) Application of improved stacking ensemble learning in NIR spectral modeling of corn seed germination rate. Chemom Intell Lab Syst. https://doi.org/10.1016/j.chemolab.2023.105020

Bu H, Ge Z, Zhu X, Yang T, Zhou H (2024) Prediction of ship painting man-hours based on selective ensemble learning. Coatings 14(3):1–23

Cagnini HEL, Dores SCN, Freitas AA, Barros RC (2004) A survey of evolutionary algorithms for supervised ensemble learning. Knowl Eng Rev 20(2):117–125

Mienye ID, Sun Y, Member S (2022) A survey of ensemble learning: concepts, algorithms, applications, and prospects. IEEE Access 10:99129–99149

Reddy MJ, Kumar DN (2012) Computational algorithms inspired by biological processes and evolution. Curr Sci 103(4):370–380

Nesmachnow S (2014) An overview of metaheuristics: accurate and efficient methods for optimisation. Int J Metaheuristics 3(4):320–347

Slowik A, Kwasnicka H (2020) Evolutionary algorithms and their applications to engineering problems. Neural Comput Appl 32(16):12363–12379

Rajwar K, Deep K, Das S (2023) An exhaustive review of the metaheuristic algorithms for search and optimization: taxonomy, applications, and open challenges. Artif Intell Rev 56(1):13187–13257

Kalita K, Ganesh N, Balamurugan S (2024) Metaheuristics for machine learning: algorithms and applications. Wiley, Hoboken

Lin S, Kernighan BW (1973) An effective heuristic algorithm for the traveling-salesman problem. Oper Res 21(2):498–516

Kirkpatrick S, Gelatt CD, Vecchi MP (1983) Optimization by simulated annealing. Science 220(4598):671–680

Glover F (1989) Tabu search-Part I. ORSA J Comput 1(3):190–206

Feo TA, Resende MG (1995) Greedy randomized adaptive search procedures. J Global Optim 6(2):109–133

Mladenovic N, Hansen P (1997) Variable neighborhood search. Comput Oper Res 24(11):1097–1100

Lourenço HR, Martin OC, Stützle T (2010) Iterated local search: framework and applications. In: M Gendreau, JY Potvin (Eds.), Handbook of metaheuristics international series in operations research and management science (Vol. 146, pp. 363–397). Springer:Boston

Mitchell M, Taylor CE (1999) Evolutionary computation: an overview. Annu Rev Ecol Syst 30:593–616

Ai S, Chakravorty A, Rong C (2019) Household power demand prediction using evolutionary ensemble neural network pool with multiple network structures. Sensors 19:721–740

Reddy MJ, Kumar DN (2020) Evolutionary algorithms, swarm intelligence methods, and their applications in water resources engineering: a state-of-the-art review. H2Open J 3(1):135–188

Goldberg DE (1989) Genetic algorithms in search, optimization and machine learning. Addison-Wesley Longman Publishing Co Inc, Boston

Holland JH (1992) Genetic algorithms. Sci Am 267:66–72

Storn R, Price K (1997) Differential evolution—a simple and efficient heuristic for global optimization over continuous spaces. J Global Optim 11:341–359

Ferreira C (2001) Gene Expression Programming: A New Adaptive Algorithm for Solving Problems. Complex Systems 13(2):87–129

Beyer HG, Schwefel HP (2002) Evolution strategies–a comprehensive introduction. Nat Comput 1(1):3–52

Larrañaga P, Lozano JA (2002) Estimation of distribution algorithms: a new tool for evolutionary computation. Springer, New York

Wang Z, Chu R, Zhang M, Wang X, Luan S (2020) An improved selective ensemble learning method for highway traffic flow state identification. IEEE Access 8:212623–212634

Dorigo M, Gambardella LM (1997) Ant colony system: a cooperative learning approach to the traveling salesman problem. IEEE Trans Evol Comput 1(1):53–66

Karaboga D, Basturk B (2007) A powerful and efficient algorithm for numerical function optimization: artificial bee colony (ABC) algorithm. J Global Optim 39:459–471

Yang XS (2009) Firefly Algorithms for Multimodal Optimization. In: O Watanabe, T Zeugmann (Eds.), Stochastic algorithms: foundations and applications. SAGA 2009. Lecture Notes in Computer Science (pp. 169–178). Springer, Berlin, Heidelberg.

Yang XS (2010) A new metaheuristic bat-inspired algorithm. In: González JR, Pelta DA, Cruz C, Terrazas G, Krasnogor N (eds) Nature inspired cooperative strategies for optimization (NICSO 2010) studies in computational intelligence. Springer, Heidelberg, pp 65–74

Tan Y, Zhu Y (2010) Fireworks Algorithm for Optimization. In: Proceedings of the international conference in swarm intelligence, 355–364.

Clerc M (2010) Particle swarm optimization. Wiley

Gandomi AH, Yang XS, Alavi AH (2013) Cuckoo search algorithm: a metaheuristic approach to solve structural optimization problems. Eng Comput 29(2):245

Zhou Y, Luo Q, Liu J (2014) Glowworm swarm optimization for dispatching system of public transit vehicles. Neural Process Lett 40(1):25–33

Mirjalili S (2015b) The ant lion optimizer. Adv Eng Softw 83:80–98

Mirjalili S (2015a) Moth-flame optimization algorithm: a novel nature-inspired heuristic paradigm. Knowl-Based Syst 89:228–249

Mirjalili S, Gandomi AH, Mirjalili SZ, Saremi S, Faris H, Mirjalili SM (2017) Salp swarm algorithm: a bio-inspired optimizer for engineering design problems. Adv Eng Softw 114:163–191

Jia H, Rao H, Wen C, Mirjalili S (2023) Crayfish optimization algorithm. Artif Intell Rev 56(2):1919–1979

Abdollahzadeh B, Khodadadi N, Barshandeh S, Trojovský P, Gharehchopogh FS, El-kenawy ESM, Abualigah L, Mirjalili S (2024) Puma optimizer (PO): a novel metaheuristic optimization algorithm and its application in machine learning. Clust Comput. https://doi.org/10.1007/s10586-023-04221-5

Fahimnia B, Tang CS, Davarzani H, Sarkis J (2017) Quantitative models for managing supply chain risks: a review. Eur J Oper Res 73(1):220–239

Haixiang G, Yijing L, Shang J, Mingyun G, Yuanyue H, Bing G (2017) Learning from class-imbalanced data: review of methods and applications. Expert Syst Appl 73(2):220–239

Lorenz M, Aisch G, Kokkelink D (2012). Datawrapper: create charts and maps. https://www.datawrapper.de/

Bastian M, Heymann S, Jacomy M (2009) Gephi: an open source software for exploring and manipulating networks. International AAAI conference on weblogs and social media. http://www.aaai.org/ocs/index.php/ICWSM/09/paper/view/154

van Eck NJ, Waltman L (2010). VOSViewer: Visualizing Scientific Landscapes (1.6.20). https://www.vosviewer.com

Krawczyk B, Woźniak M, Schaefer G (2014) Cost-sensitive decision tree ensembles for effective imbalanced classification. Appl Soft Comput J 14(1):554–562

Onan A, Korukoglu S, Bulut H (2017) A hybrid ensemble pruning approach based on consensus clustering and multi-objective evolutionary algorithm for sentiment classification. Inf Process Manag 53(1):814–833

Bhowan U, Johnston M, Zhang M, Yao X (2014) Reusing genetic programming for ensemble selection in classification of unbalanced data. IEEE Trans Evol Comput 18(6):893–908

Cavalcanti GDC, Oliveira LS, Moura TJM, Carvalho GV (2016) Combining diversity measures for ensemble pruning. Pattern Recogn Lett 74(1):38–45

Wang Z, Lu C, Zhou B (2018) Fault diagnosis for rotary machinery with selective ensemble neural networks. Mech Syst Signal Process 113(1):112–130

Zhang W, He H, Zhang S (2019) A novel multi-stage hybrid model with enhanced multi-population niche genetic algorithm: an application in credit scoring. Expert Syst Appl 121(1):221–232

Krawczyk B (2015) One-class classifier ensemble pruning and weighting with firefly algorithm. Neurocomputing 150(2):490–500

Li J, Member S, Zhan Z, Member S, Wang H (2020) Data-driven evolutionary algorithm with perturbation-based ensemble surrogates. IEEE Trans Cybern 51(8):3925–3937

Zhang S, Chen Y, Zhang W, Feng R (2021) A novel ensemble deep learning model with dynamic error correction and multi-objective ensemble pruning for time series forecasting. Inf Sci 544(1):427–445

Peimankar A, Weddell SJ, Jalal T, Lapthorn AC (2017) Evolutionary multi-objective fault diagnosis of power transformers. Swarm Evol Comput 36(1):62–75

Fatima I, Fahim M, Lee YK, Lee S (2013) A genetic algorithm-based classifier ensemble optimization for activity recognition in smart homes. KSII Trans Internet Inf Syst 7(11):2853–2873

He H, Tan Y, Ying J, Zhang W (2020) Strengthen EEG-based emotion recognition using firefly integrated optimization algorithm. Appl Soft Comput J. https://doi.org/10.1016/j.asoc.2020.106426

Darekar RV, Chavand MS, Sharanyaa S, Ranjan NM (2023) A hybrid meta-heuristic ensemble based classification technique speech emotion recognition. Adv Eng Softw. https://doi.org/10.1016/j.advengsoft.2023.103412

Krawczyk B, Woźniak M (2014) Evolutionary cost-sensitive ensemble for malware detection. Adv Intell Syst Comput 299(1):433–442

Jodavi M, Abadi M, Parhizkar E (2015a) Dbdhunter: an ensemble-based anomaly detection approach to detect drive-by download attacks. In: Proceedings of the 5th international conference on computer and knowledge engineering, ICCKE 2015, 273–278.

Jodavi M, Abadi M, Parhizkar E (2015b). JSObfusDetector: a binary PSO-based one-class classifier ensemble to detect obfuscated javascript code. In: Proceedings of the international symposium on artificial intelligence and signal processing, AISP, 322–327.

Mauša G, Galinac GT (2017) Co-evolutionary multi-population genetic programming for classification in software defect prediction: an empirical case study. Appl Soft Comput J 55(1):331–351

Malhotra R, Khanna M (2018) Particle swarm optimization-based ensemble learning for software change prediction. Inf Softw Technol 102(1):65–84

Mousavi R, Eftekhari M, Rahdari F (2018) Omni-ensemble learning (OEL): utilizing over-bagging, static and dynamic ensemble selection approaches for software defect prediction. Int J Artificial Intell Tools 27(6). https://doi.org/10.1142/S0218213018500240

Tajoddin A, Abadi M (2019) RAMD: registry-based anomaly malware detection using one-class ensemble classifiers. Appl Intell 49(7):2641–2658

Jadhav A, Shandilya SK, Izonin I, Gregus M (2023) Effective software effort estimation enabling digital transformation. IEEE Access. https://doi.org/10.1109/ACCESS.2023.3293432

Goh J, Thing VLL (2015) A hybrid evolutionary algorithm for feature and ensemble selection in image tampering detection. Int J Electron Secur Digit Forensics 7(1):76–104