Abstract

The least squares support vector machine (LS-SVM) is an effective method to deal with classification and regression problems and has been widely studied and applied in the fields of machine learning and pattern recognition. The learning algorithms of the LS-SVM are usually conjugate gradient (CG) and sequential minimal optimization (SMO) algorithms. Based on this, we propose a conjugate functional gain SMO algorithm and theoretically prove its asymptotic convergence. This algorithm combines the conjugate direction method and the functional gain SMO algorithm with second-order information, which increases the functional gain of the plain SMO algorithm. In addition, we also provide a generalized SMO-type algorithm framework with a simple iterative format and easy implementation for other LS-SVM training algorithms. The numerical results show that the execution time of this algorithm is significantly shorter than that of the other plain SMO-type algorithms and CG-type algorithms.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Support vector machines (abbreviated as SVMs) are an important class of methods to solve classification [1] and regression problems [2]. When it solves linear inseparable and nonlinear regression problems, it usually uses kernel tricks to map data from a low-dimensional input space to a high-dimensional space. SVMs have always had a place in the field of pattern recognition with their elegant mathematical optimization theory and excellent data prediction ability. After continuous research, the theory and application of SVMs have been well developed [3]. One of the most successful variants is the least squares support vector machine (abbreviated as LS-SVM). This model is also a powerful tool for handling classification and regression problems in machine learning.

The LS-SVM was first proposed by Suykens et al. [4]. Its primal problem is a convex optimization problem with a linear equality constraint. To make the training of the LS-SVM more efficient, researchers have proposed fast training algorithms. Jiao et al. [5] used an approximate algorithm to quickly train the LS-SVM and improve its sparsity. Yang et al. [6] proposed to use a pruning algorithm to effectively solve the optimization problem of the LS-SVM. Li et al. [7] proposed a fast iterative single-data method for training unconstrained LS-SVM. Xia [8] used QR factorization to train sparse LS-SVM. Chua [9] proposed a computationally efficient method for solving large-scale LS-SVM classifiers based on the Sherman–Morrison–Woodbury (abbreviated as SMW) matrix. However, the algorithm has high requirements on the kernel mapping, and the concrete form of kernel mapping must be given. Suykens et al. [10] used the conjugate gradient (abbreviated as CG) method to solve the Karush–Kuhn–Tucker (abbreviated as KKT) equations of the LS-SVM. However, in their method, the CG algorithm needs to be used twice. Hence, Chu et al. [11] proposed an improved CG (abbreviated as ICG) method to solve the KKT equations. This method only needs to use the CG method once and reduces the order of the KKT equations. Li et al. [12] transformed the equality constraint problem in the LS-SVM into an unconstrained optimization problem and proposed to use recursive formula and CG method to quickly train the LS-SVM. In general, for large-scale datasets, it is more efficient to use the sequential minimal optimization (abbreviated as SMO) algorithm to solve the LS-SVM [12].

The SMO algorithm was proposed by Platt [13] and used to train the standard SVMs. This method takes the decomposition algorithm to the extreme, and only two variables are selected for iteration at a time. For each subproblem, the SMO algorithm can obtain the analytical solution, avoiding the complex matrix computations of quadratic programming numerical algorithm. The SMO algorithm is not only effective for classification problems but also efficient for regression problems [2]. Keerthi et al. [14] first proposed a SMO algorithm for solving the LS-SVM. This algorithm also uses maximal violating pair (abbreviated as MVP) to select the working set and is also called the first-order SMO. In SVMs, the second-order SMO is more efficient than the first-order SMO [15]. Hence, Lopez et al. [16] introduced the second-order SMO into the LS-SVM to improve the training speed. Shao et al. [17] ignored the bias term of the LS-SVM and proposed a single-direction SMO algorithm (abbreviated as SD-SMO). Without considering the bias term, the efficiency of the SD-SMO is higher than that of the first-order SMO. Bo et al. [18] proposed the functional gain SMO algorithm (abbreviated as FGSMO) and verified its efficiency with numerical experiments. And FGSMO can degenerate into the second-order SMO. In general, parallel computing [19] and distributed algorithms can also improve the learning efficiency of the model, for instance, parallel SMO [20,21,22,23,24,25] and ADMM algorithms [26, 27], etc. However, they mostly divide the dataset and assign them to different processors.

Alternatively, the SMO algorithm can also be regarded as a special kind of projected gradient [28]. Naturally, some gradient acceleration tricks can also be applied to SMO-type algorithms. For instance, Lopez et al. [29] combined momentum and SMO algorithm to propose a momentum-accelerated SMO algorithm (abbreviated as MLS-SMO). Torres-Barrán et al. [30] used the Nestrove acceleration strategy on the SMO algorithm, reducing the number of iterations of the SMO algorithm. However, among the optimization algorithms, the conjugate direction method is also an important class of unconstrained optimization algorithms. It not only speeds up the convergence speed of the gradient descent algorithm, but also avoids a large number of numerical computation of Newton’s method. It is a relatively practical and effective optimization algorithm between gradient descent and Newton’s method. Hence, we will develop a new conjugate variant SMO (abbreviated as CSMO) algorithm for LS-SVM. Although the CSMO was originally used to train SVMs [28], from the mathematical model of LS-SVM and SVMs, LS-SVM is more suitable. Because the new CSMO algorithm for LS-SVM will not be affected by the box constraints, and there is no need to set a restart step, the iterative format will be simpler. In addition, the algorithm usually has a larger functional gain than the plain SMO-type algorithms and can converge to the optimal solution of the specified accuracy at a faster speed. Especially when the penalty parameter is large, the efficiency of the new CSMO of LS-SVM will be significantly higher than other plain SMO-type algorithms. Hence, we call it the conjugate functional gain SMO algorithm (abbreviated as CFGSMO)

The rest of this work is organized as follows. In Sect. 2, we will briefly introduce the LS-SVM. The solution algorithms for LS-SVM are summarized in Sect. 3. The CFGSMO algorithm is proposed in Sect. 4. The convergence analysis of the CFGSMO is discussed in Sect. 5. The numerical experiments are shown in Sect. 6. The last section is the summary of the work. Table 1 is the notation table.

2 Preliminaries of the LS-SVM model

The mathematical theory of LS-SVM and its connection with the system of linear equations will be briefly introduced in this section.

Consider a given sample training set \(\varvec{T}=\left\{ \left( \varvec{x}_{i},y_{i}\right) \right\} _{i=1}^{n}\), where \(\varvec{x}_{i}\in {\mathbb {R}}^{p}\) is the i-th pattern input vector, and n is the number of samples. In the binary classification problem, category label \(y_{i} \in \left\{ -1, 1\right\}\). At this time, the LS-SVM needs to solve a quadratic programming problem with linear equality constraints, that is

where the \(\varvec{\phi }:\varOmega \varvec{\rightarrow } \mathcal {\varvec{H}}\) is a kernel mapping from the input space \(\Omega (\Omega \subset {\mathbb {R}}^{p})\) to the high-dimensional feature space \(\mathcal {\varvec{H}}\). The purpose of introducing the kernel mapping \(\varvec{\phi }\) is to transform the nonlinear problem in the input space into the linear problem in the high-dimensional space. \(\varvec{\omega }\) is the weight parameter, b is the bias term, \(\gamma\) is the penalty parameter, and \(\xi _{i}\) is the error variable. When \(y_{i} \in {\mathbb {R}}\), LS-SVM is used to deal with the regression problems. The Lagrangian function of problem (1) is

where \(\varvec{\alpha } \in {\mathbb {R}}^{n}\) is the Lagrangian multiplier. According to the KKT condition of problem (1), we can get

After eliminating the weight variable \(\varvec{\omega }\) and the error variable \(\xi _{i}\), (3) can be further expressed as the linear equation system

where \({[\varvec{K}]_{ij}=k(\varvec{x}_{i}, \varvec{x}_{j})=\left\langle {\varvec{\phi }(\varvec{x}_{i}), \varvec{\phi }(\varvec{x}_{j})} \right\rangle }\) is the Gram matrix, \(k(\varvec{x}_{i}, \varvec{x}_{j})\) is the kernel function that satisfies the Mercer condition, \(\varvec{y}=\left( y_{1},y_{2},\dots ,y_{n}\right) ^{T}\), and \({\mathbf {1}}_{n \times 1}=\left( 1,1,\dots ,1\right) ^{T}\). The matrix \(\varvec{K}+\gamma ^{-1}\varvec{I}\) is a symmetric positive definite matrix, since matrix \(\varvec{K} \in {\mathbb {R}}^{n \times n}\) is a positive semi-definite matrix and \(\gamma\) is always positive, the diagonal of \(\gamma ^{-1}\varvec{I}\) is positive.

If \(\varvec{A}\) is the coefficient matrix of (4), then the matrix \(\varvec{A} \in {\mathbb {R}}^{(n+1) \times (n+1)}\). When the sample \(\varvec{T}=\left\{ (\varvec{x}_{i}, y_{i})\right\} _{i=1}^{n}\) is large, the dimension of the matrix \(\varvec{A}\) is large and storage is difficult. At this time, it is not particularly easy to solve the linear equation system (4). Moreover, the complexity of computing the inverse matrix \(\varvec{A}\) is as high as \(O(n^{3})\). Hence, we need to combine the feature of the LS-SVM to find some more efficient methods to solve the large-scale linear system (4) or optimization problem (1).

3 Related works of the LS-SVM solution

This section will briefly introduce several mainstream algorithms for the fast training of the LS-SVM.

3.1 Conjugate gradient

The conjugate gradient (CG) is an important method for solving large-scale linear equations. But this method requires the coefficient matrix to be symmetric and positive definite. However, the matrix \(\varvec{A}\) in (4) is a non-positive matrix. Hence, the CG method cannot be used directly to solve the problem. Based on this, Suykens et al. [10] presented a linear system with the same solution as the linear system (4), that is

where \({\varvec{\Lambda }:=\varvec{K}+\gamma ^{-1}\varvec{I} (\varvec{\Lambda }=\varvec{\Lambda }^{T}\succ 0)}\). Hence, solving the linear equations (4) is transformed into the solving linear equations (5). This method makes full of use of the properties of the symmetric matrix \(\varvec{A}\). The detailed steps for training LS-SVM using the CG method are summarized in Algorithm 1.

Note that, the method of Suykens [10] needs to use the twice CG to solve the linear equations (5), which increase the amount of arithmetic. Chu [11] proposed a novel computation method based on the characteristics of the block matrix \(\varvec{K}+\gamma ^{-1}\varvec{I}=\left( \begin{array}{ll} \varvec{{\bar{Q}}}\quad &{} \varvec{q}\\ \varvec{q}^{T}\quad &{} \varvec{Q}_{nn} \end{array}\right).\) Here, \(\varvec{{\bar{Q}}} \in {\mathbb {R}}^{(n-1) \times (n-1)}\), \(\varvec{Q}_{nn} \in {\mathbb {R}}\) and \(\varvec{q} \in {\mathbb {R}}^{n-1}\). This method not only reduces the order of the linear equations (4) but also calls the CG once. This greatly reduces the amount of arithmetic. Specifically, the CG is first used to solve the linear system

Then, the optimal solution is obtained according to the \(\varvec{\alpha }^{*}=\left( \begin{array}{c} \varvec{{\widetilde{\alpha }}}^{*} \\ -\varvec{1}^{T}_{n-1} \varvec{{\widetilde{\alpha }}}^{*} \end{array}\right)\) and \(b^{*}=y_{n}+\varvec{Q}_{nn} (\varvec{1}^{T}_{n-1} \varvec{{\widetilde{\alpha }}}^{*})-\varvec{q}^{T} \varvec{{\widetilde{\alpha }}}^{*}\), where \(\varvec{{\widetilde{Q}}}=\varvec{{\bar{Q}}}-\varvec{1}_{n-1} \varvec{q}^{T}-\varvec{q} \varvec{1}^{T}_{n-1}+\varvec{Q}_{nn} \varvec{1}_{n-1} \varvec{1}^{T}_{n-1}\) and \(\varvec{{\widetilde{y}}}=\left( y_{1},y_{2},\cdots ,y_{n-1}\right) ^{T}\). The detailed steps for training the LS-SVM using improved CG (ICG) method are summarized in Algorithm 2

3.2 First-order SMO

The CG method starts from the primal problem to obtain the optimal solution of problem (1). However, solving the dual problem of (1) can also get the optimal solution according to the duality theory. The sequential minimal optimization (SMO) is an algorithm designed for the dual problem of (1). To satisfy the constraints of the dual problem, the SMO algorithm only selects two variables for optimization during each iteration. Compared with CG, SMO is simpler to implement and can deal with large-scale datasets. In some cases, the performance of SMO may be better than CG.

According to the Wolf duality theory, the dual problem of (1) is

where \({[\widetilde{\varvec{K}}]}_{i j}=[\varvec{K}]_{i j}+\delta _{i j}/{\gamma }=k\left( \varvec{x}_{i}, \varvec{x}_{j}\right) +\delta _{i j}/{\gamma }\) and \(\delta _{i j}=1\) if \(i = j\) and 0 otherwise. Obviously, dual problem (7) is a simple convex optimization problem with a equality constraint. The Lagrangian function of (7) is

where \(\nu\) is Lagrangian multiplier. It follows from the KKT condition of (7) that

where \(\varvec{\nabla }_{\alpha _{i}}{\mathcal {D}}(\varvec{\alpha })=\sum _{j=1}^{n}\alpha _{j}{[\widetilde{\varvec{K}}]}_{i j}-y_{i}=G_{i}\left( \varvec{\alpha }\right) , \forall i\). Note that, when \({\max \limits _{i}(G_{i}\left( \varvec{\alpha }\right) )=\min \limits _{i}(G_{i}\left( \varvec{\alpha }\right) )}\) holds and \(\sum _{i=1}^{n}\varvec{\alpha }_{i}=0\), then \(\varvec{\alpha }\) is the optimal solution of the dual problem (7). In actual computation, the strict KKT conditions are generally not used, but a small positive number \(\epsilon\) is set in advance so that

is established. This method has also been adopted by the LIBSVM [31] and SVM Light [32]. Let \((\alpha ^{k}_{i},\alpha ^{k}_{j})\) be the variable pair selected in the k-th iteration. Since the equation constraint \(\sum _{i=1}^{n}\alpha _{i}=0\) must be satisfied, the update of \((\alpha ^{k}_{i},\alpha ^{k}_{j})\) needs to satisfy

\(\Delta \varvec{\alpha }^{k}\) is the amount of variation for \(\alpha ^{k}_{i}\) and \(\alpha ^{k}_{j}\). If the functional gain is denoted by \(f_{G} \left( \Delta \varvec{\alpha }^{k}\right)\), we have

\(f_{G}\) is the quadratic function of \(\Delta \varvec{\alpha }^{k}\). Let \(f_{G}^{\prime }(\Delta \varvec{\alpha }^{k})=0\), then we have

Substituting \(\Delta \varvec{\alpha }^{k}\) into (12) yields the functional gain

Obviously, working set (i, j) needs to be selected to maximize \(f_{G}\left( \Delta \varvec{\alpha }^{k}\right)\), namely

In the first-order SMO algorithm, Keerthi [14] neglected the denominator of \(f_{G}\left( \Delta \varvec{\alpha }^{k}\right)\) and only consider the numerator to reach the maximum, namely

Obviously, the selecting method of working set (i, j) is equivalent to

At this time, working set (i, j) is also called the MVP. The first-order SMO proposed by Keerthi [14] uses the dual gap as the stopping condition. However, we uniformly use (10) as the stopping condition. The detailed computation process of the first-order SMO is summarized in Algorithm 3. Here, \(\varvec{G}\left( {\varvec{\alpha }^{k} } \right) =\left( G_{1}\left( {\varvec{\alpha }^{k} } \right) ,G_{2}\left( {\varvec{\alpha }^{k} } \right) , \cdots , G_{n}\left( {\varvec{\alpha }^{k} } \right) \right) ^{T}\) denotes the gradient of \({\mathcal {D}}\left( \varvec{\alpha }\right)\) at the k-th iteration.

3.3 Second-order SMO

In LIBSVM [31], the SVMs model is trained using the second-order version of the SMO algorithm. Generally, the efficiency of the second-order SMO algorithm is higher than that of the first-order SMO algorithm [15]. Hence, López [16] extended the second-order SMO algorithm of the SVMs to the LS-SVM to improve the training efficiency of the LS-SVM.

Since the first-order SMO algorithm ignores the denominator of \(f_{G}\left( \Delta \varvec{\alpha }^{k}\right)\), the working set (i, j) selected with the MVP may not maximize \(f_{G}\left( \Delta \varvec{\alpha }^{k}\right)\). Based on this, the second-order SMO algorithm of LS-SVM takes the denominator of \(f_{G}\left( \Delta \varvec{\alpha }^{k}\right)\) into consideration when selecting the working set (i, j). However, note that, in order to find the optimal working set (i, j), it is necessary to traverse (15) to find the working set (i, j) corresponding to the maximum value, and the complexity is \(O(n^{2})\). When the sample size n is large, this is unacceptable. Fan [15] and López [16] used a compromise method. This method first uses the first-order SMO to find the coordinate i and then traverses (15) to find the coordinate j corresponding to the maximum value, namely

Although the second-order SMO algorithm increases some kernel operations, the functional gain of the dual function is greater than that of the first-order SMO algorithm in each iteration. Fan [15] and López’s [16] numerical experiments have shown that the efficiency of the second-order SMO is generally higher than that of the first-order SMO. But when the penalty parameter \(\gamma \approx 0\), the kernel parameter \(\sigma \approx 0\) and \(\sigma \gg 0\) of the RBF, the second-order SMO is almost equivalent to the first-order SMO [16]. Replacing the working set selection method of step 3 in Algorithm 3 with (18) is the computation process of the second-order SMO algorithm.

3.4 Functional gain SMO

The coordinate index corresponding to the minimum value of the gradient \(\varvec{G}\left( \varvec{\alpha }\right)\) is the selected i in the second-order SMO algorithm. There may be a problem with this selection method, namely, \(\ G_{i}\left( \varvec{\alpha }\right)\) may not be the most obvious gradient component that violates the KKT condition, namely, there may be a \({\hat{i}}\) that makes \(|\ G_{i}\left( \varvec{\alpha }\right) |\le \ G_{{\hat{i}}}\left( \varvec{\alpha }\right)\). Hence, Bo [18] used functional gain to select the working set (i, j). Specifically, the selection of index i is the coordinate index corresponding to the maximum value of \(|\varvec{G}\left( \varvec{\alpha }\right) |\), and the selection method of index j is consistent with the second-order SMO, namely

This selection method was called FGWSS (functional gain working selection strategy). Obviously, the method of selecting the working set (i, j) by the second-order SMO is only a special case of the FGWSS method. Because \(i=\varvec{\mathop {\arg \min }}_{\ell }\left( G_{\ell }\left( {\user2{\alpha }^{k} } \right) \right)\) or \(i=\varvec{\mathop {\arg \min }}_{\ell }\left( -G_{\ell }\left( {\user2{\alpha }^{k} } \right) \right) =\varvec{\mathop {\arg \max }}_{\ell }\left( G_{\ell }\left( {\user2{\alpha }^{k} } \right) \right)\). In the case of the same gradient \(\varvec{G}\left( \varvec{\alpha }\right)\), using the working set (i, j) selected by FGWSS can always ensure that the variation in the dual function is greater than or equal to the MVP method. However, second-order SMO may not have this property. The computation process of the functional gain SMO algorithm is not significantly different from that of the second-order SMO algorithm. It only needs to replace the working set selection method in Algorithm 3 with (19), and the rest of the computation steps are exactly the same.

4 The proposed conjugate functional gain SMO

The only difference between the three SMO algorithms described above is the way in which the working set is selected. The unified iteration format of the SMO algorithm is \(\varvec{\alpha }^{k+1}=\varvec{\alpha }^{k}+\rho _{k}\varvec{h}^{k}_{i j}\), where \(\varvec{h}^{k}_{i j}=\varvec{e}^{k}_{i}-\varvec{e}^{k}_{j}\) is the direction of the k-th iteration of the SMO algorithm, \(\rho _{k}\) is the step length parameter, and (i, j) is the working set. Next, we will use a conjugate direction \(\varvec{s}_{k}\) to replace the direction \(\varvec{h}_{ij}^{k}\). Consider iterative format

where the direction \(\varvec{h}_{i j}^{k}\) is determined by FGWSS, and \(r_{k}\) is the conjugate parameter. Because \(\varvec{\alpha }^{k+1}\) must satisfy the equality constraint, namely

Hence, if \(\sum _{i=1}^{n} s^{i}_{k-1}=0\) holds, then (21) naturally holds. By the line search criterion, let \(\varphi \left( \rho _{k}\right) ={\mathcal {D}}\left( \varvec{\alpha }^{k}+\rho _{k} \varvec{s}_{k}\right)\), then according to \(\varphi ^{\prime }\left( \rho _{k}\right) =\rho _{k} \varvec{s}_{k}^{T} {\widetilde{\varvec{K}}} \varvec{s}_{k}+\varvec{s}_{k}^{T}\varvec{G}\left( \varvec{\alpha }^{k}\right) =0\), we can obtain the optimal step length parameter

Note that, \(\varvec{s}_{k}^{T}\varvec{G}\left( \varvec{\alpha }^{k+1}\right) =0\). Hence, (22) can be further simplified as \(\rho _{k}^{*} = - \user2{G}\left( {{\text{ }}{\varvec{\alpha }^{k} }} \right)^{T} \user2{h}_{{ij}}^{k} /{\text{ }}\user2{s}_{k}^{T} {\text{ }}{\widetilde{\varvec{K}}}{\text{ }}\user2{s}_{k}\). Since the inner product of the direction \(\varvec{s}_{k}\) and the gradient \(\varvec{G}\left( \varvec{\alpha }^{k}\right)\) satisfies \(\varvec{s}_{k}^{T}\varvec{G}\left( \varvec{\alpha }^{k}\right) =\varvec{G} \left( \varvec{\alpha }^{k}\right) ^{T} \varvec{h}_{i j}^{k}<0\), the direction \(\varvec{s}_{k}\) is a descent direction. At this time, the functional gain is

Substitute \(\rho _{k}^{*}=-\varvec{G} \left( \varvec{\alpha }^{k}\right) ^{T} \varvec{h}_{i j}^{k} / \varvec{s}_{k}^{T} {\widetilde{\varvec{K}}} \varvec{s}_{k}\) and \(\varvec{s}_{k-1}^{T}\varvec{G}\left( \varvec{\alpha }^{k}\right) =0\) into (23) to get

where \(\psi (r)=\varvec{s}_{k}^{T} {\widetilde{\varvec{K}}} \varvec{s}_{k}=\left( \varvec{h}_{i j}^{k}+r \varvec{s}_{k-1}\right) ^{T} {\widetilde{\varvec{K}}} \left( \varvec{h}_{i j}^{k}+r \varvec{s}_{k-1}\right).\) Because there is a parameter r in the denominator \(\psi (r)\), \(f_{G}\left( \Delta \varvec{\alpha }^{k}\right)\) can be further maximized. Let \(\psi ^{\prime } (r) = 2\left( {{\text{ }}\varvec{s}_{k}^{T} {\text{ }}{\widetilde{\varvec{K}}}\varvec{h}_{{ij}}^{k} + r\varvec{s}_{{k - 1}} {\text{ }}{\widetilde{\varvec{K}}}{\text{ }}\varvec{s}_{{k - 1}} } \right) = 0\), we have

Notice that substituting (25) into (20) yields

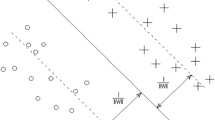

Because \({\widetilde{\varvec{K}}}\) is symmetric and positive definite, \(\varvec{s}_{k}\) is conjugate with \(\varvec{s}_{k-1}\). The above computation process is the conjugate functional gain SMO (CFGSMO) algorithm of LS-SVM. Algorithm 4 is the CFGSMO, and Fig. 1 shows its flowchart.

CFGSMO is an extension of the conjugate SMO (CSMO) [28] for solving SVMs. The dual problem of SVMs has a box constraint \(0 \le \alpha _{i} \le C, \forall i\), which indicates that the dual decision variable \(\varvec{\alpha }\) is confined within an interval. Whether it is the plain SMO or the CSMO, when \(\varvec{\alpha }\) exceeds the box constraint, it is necessary to strictly limit \(\varvec{\alpha }\) within the interval determined by the box constraint through the clipping operation. Not only that, when \(\varvec{\alpha }\) are clipped, the CSMO also needs to restart the entire algorithm with the plain SMO. However, the dual problem of LS-SVM does not contain box constraints. This means that the CFGSMO is not affected by the boundary caused by the box constraint, so it does not need to clip the dual decision variables \(\varvec{\alpha }\), and it does not have the restart step of the CSMO. Hence, the CFGSMO algorithm will be simpler.

5 Convergence

The first-order SMO, second-order SMO, and FGSMO algorithms are proven to converge. Naturally, whether the CFGSMO algorithm used to train the LS-SVM converges to an optimal solution or not should also be discussed. Hence, this section briefly discusses the convergence of CFGSMO algorithm.

5.1 Asymptotic

Next, we will illustrate this problem through several lemmas and a theorem. Lemma 5.1 is only an extension of the conclusion in [28].

Lemma 5.1

In the process of using the CFGSMO algorithm to train the LS-SVM model, the functional gain \(f_{G}(\Delta \varvec{\alpha })_{C F}\) of the CFGSMO is always greater than the functional gain \(f_{G}(\Delta \varvec{\alpha })_{F}\) of the FGSMO algorithm.

Proof

Suppose that in the k-th iteration, the working set selected by the FGSMO algorithm is (i, j), so \(\left( \alpha _{i}^{k}, \alpha _{j}^{k}\right)\) is the variable selected in the k-th iteration. At this time, the functional gain is

where \(\varvec{h}^{k}_{i j}\) is a descent direction of FGSMO algorithm in the k-th iteration. And because of

where \(\left\| \varvec{h}_{i j}^{k}\right\| _{\widetilde{\varvec{K}}}^{2}=\left( \varvec{h}_{i j}^{k}\right) ^{T} {\widetilde{\varvec{K}}} \varvec{h}_{i j}^{k}\). From (23), the functional gain of the CFGSMO algorithm can be further expressed as

Since \(\varvec{s}_{k-1}^{T} {\widetilde{\varvec{K}}} \varvec{h}_{i j}^{k} \le \left\| \varvec{h}_{i j}^{k}\right\| _{\widetilde{\varvec{K}}}\left\| \varvec{s}_{k-1}\right\| _{\widetilde{\varvec{K}}}\) holds, we can therefore obtain \(f_{G}(\Delta \varvec{\alpha })_{F} \le f_{G}(\Delta \varvec{\alpha })_{CF}\). \(\square\)

Lemma 5.2

For the CFGSMO algorithm, when \(k \ge 1\), the functional gain of any two adjacent iterations of the dual function satisfies

Proof

Suppose that the working set selected by the MVP is (i, j), and the working set selected by the FGWSS is \((i_{F},j_{F})\). By the Lemma 5.1, it follows that

As \(f_{G}(\Delta \varvec{\alpha }^{k})_{F} \ge \frac{\left\| \varvec{\alpha }^{k+1}-\varvec{\alpha }^{k}\right\| ^{2}}{2 \gamma }\) holds, we can therefore obtain

\(\square\)

Lemma 5.3

The dual function \({\mathcal {D}}(\varvec{\alpha })\) has a lower bound \(-2 \sum y_{i}^{2} / \sqrt{\lambda _{\min }({\widetilde{\varvec{K}}})}\), where \(\lambda _{\min }({\widetilde{\varvec{K}}}) > 0\) denotes the smallest eigenvalue of the matrix \({\widetilde{\varvec{K}}}\).

Proof

Obviously, the dual function satisfies inequality

By the Cauchy inequality, we can get

Because \(\lambda _{\min }({\widetilde{\varvec{K}}}) \varvec{\alpha }^{T} \varvec{\alpha } \le \varvec{\alpha }^{T} {\widetilde{\varvec{K}}} \varvec{\alpha }\) holds, so we have \(\sqrt{\sum _{i} \alpha _{i}^{2}}=\sqrt{\varvec{\alpha }^{T} \varvec{\alpha }} \le \sqrt{\varvec{\alpha }^{T} {\widetilde{\varvec{K}}} \varvec{\alpha } / \lambda _{\min }({\widetilde{\varvec{K}}})}\) and \(\sum _{i} \alpha _{i} y_{i} \le \sqrt{\sum _{i} y_{i}^{2}} \sqrt{\varvec{\alpha }^{T} {\widetilde{\varvec{K}}} \varvec{\alpha } / \lambda _{\min }({\widetilde{\varvec{K}}})}\). Note that, \(\varvec{0}\) is a feasible solution of dual problem (7), it follows that

According to (35), \(\sqrt{\varvec{\alpha }^{T} {\widetilde{\varvec{K}}} \varvec{\alpha }} \le 2 \sqrt{\sum _{i} y_{i}^{2} / \lambda _{\min }({\widetilde{\varvec{K}}})}\) can be derived. Hence, \(\sum _{i} \alpha _{i} y_{i} \le 2 \sum _{i} y_{i}^{2} / \lambda _{\min }({\widetilde{\varvec{K}}})\). To sum up, \({\mathcal {D}}(\varvec{\alpha }) \ge -2 \sum _{i} y_{i}^{2} / \sqrt{\lambda _{\min }({\widetilde{\varvec{K}}})}\) is true, as desired. \(\square\)

Theorem 5.1

The sequence \(\left\{ \varvec{\alpha }^{k}\right\}\) generated by the CFGSMO algorithm converges to the global optimal solution \(\varvec{\alpha }^{*}\) of the dual problem (7).

Proof

It is known from Lemma 5.2 that the dual function \({\mathcal {D}}(\varvec{\alpha })\) is strictly decreasing in the whole iteration process, because the change \(\varvec{G}(\varvec{\alpha })^{T} \varvec{h}_{i_{F} j_{F}} \ne 0\). According to the Lemma 5.3, \(f_{G}(\Delta \varvec{\alpha }^{k})_{CF}\) converges to 0. It follows that the sequence \(\left\{ \varvec{\alpha }^{k+1}-\varvec{\alpha }^{k}\right\}\) also converges to \(\varvec{0}\). Because the matrix \({\widetilde{\varvec{K}}}\) is a symmetric positive definite matrix, the dual function \({\mathcal {D}}(\varvec{\alpha })\) is strictly convex, there is a unique global optimal solution \(\varvec{\alpha }^{*}\), and \(\left\{ \varvec{\alpha }^{k}\right\} \subset \left\{ \varvec{\alpha }^{t} \mid {\mathcal {D}}\left( \varvec{\alpha }^{t}\right) \le {\mathcal {D}}\left( \varvec{\alpha }^{0}\right) \right\}\) is a compact set. Hence, \(\left\{ \varvec{\alpha }^{k}\right\}\) has a subsequence \(\left\{ \varvec{\alpha }^{k_{s}}\right\}\) with \(\hat{\varvec{\alpha }}\) as the limit point. Note that, dual variable \(\varvec{\alpha } \in {\mathbb {R}}^{n}\). So there are at most \(n^{2}\) options for working set \((i_{F},j_{F})\). Hence, there must be an infinite number of times a working set is selected. Without loss of generality, suppose the working set is \((i_{F},j_{F})\). Consider the limit

where \(A\left( k_{s}\right) =G_{i_{F}}\left( \varvec{\alpha }^{k_{s}}\right) -G_{i_{F}}\left( \varvec{\alpha }^{k_{s}+1}\right)\), \(B\left( k_{s}\right) =G_{i_{F}}\left( \varvec{\alpha }^{k_{s}+1}\right) -G_{j_{F}}\left( \varvec{\alpha }^{k_{s}+1}\right)\) and \(C\left( k_{s}\right) =G_{j_{F}}\left( \varvec{\alpha }^{k_{s}+1}\right) -G_{j_{F}}\left( \varvec{\alpha }^{k_{s}}\right)\). Because \(\left\{ \varvec{\alpha }^{k+1}-\varvec{\alpha }^{k}\right\}\) converges to 0, both \(A(k_{s})\) and \(C(k_{s})\) converge to \(\varvec{0}\) when ks→\(\infty\). Obviously, \(B(k_{s})=0\) always holds. It follows that \(G_{i_{F}}\left( {\hat{\varvec{\alpha }}}\right) -G_{j_{F}}\left( {\hat{\varvec{\alpha }}}\right) =0\). Recalling the selection method of working set \((i_{F},j_{F})\), we consider another limit

Furthermore, by the conclusion of Lemma 5.1 and the proof process of Lemma 5.2, we have

According to (38), we can obtain the \(G_{i}\left( {\hat{\varvec{\alpha }}}\right) =G_{j}\left( {\hat{\varvec{\alpha }}}\right) , \forall i \ne j\). This implies that the KKT condition holds and the limit point \({\hat{\varvec{\alpha} }}\) is a global optimal solution of \({\mathcal {D}}(\varvec{\alpha })\). Since the dual function \({\mathcal {D}}(\varvec{\alpha })\) is strictly convex, there is a unique global optimal solution. It follows that \({\hat{\varvec{\alpha }}}=\varvec{\alpha }^{*}\). \(\square\)

6 Numerical experiment

The main content of this section is to test the performance of the proposed CFGSMO algorithm. Algorithms used for comparison include first-order SMO (abbreviated as SMO1), second-order SMO (abbreviated as SMO2), FGSMO, CG, and ICG. The kernel function is RBF (Radial basis function), namely \(k\left( \varvec{x}_{i}, \varvec{x}_{j}\right) =\left( -\left\| \varvec{x}_{i}-\varvec{x}_{j}\right\| _{2}^{2} /2 \sigma ^{2} \right)\), where \(\sigma\) is the kernel width. To compare the efficiency of the algorithms as much as possible, the kernel width \(\sigma\) is not set to an optimal value, but to a set of grid values. Specifically, the value of the kernel width \(\sigma\) is \(2^{i} \left( -5, \cdots -1,0,1, \cdots 5 \right)\), a total of 11 different kernel widths. The stopping condition \(\epsilon\) is uniformly set to 0.001 [18], and the penalty coefficient \(\gamma\) is \(2^{i}\left( i=-1,0,1,...12\right)\), respectively. All algorithms are implemented using MATLAB R2020a and executed on a personal computer with 64 G memory, Inter\(\left( R\right)\) Xeon\(\left( R\right)\) W-2123 3.6GHz processor and operating system Ubuntu 20.04.

6.1 Benchmarking datasets

Twenty regression benchmark datasets and twenty classification benchmark datasets in Table 2 are used to test the efficiency of these six algorithms. Among them, except the regression datasets ailerons, cal_housing, bank32, kin8nm, cpu_act, puma32H, puma8NH, delta_ailerons and pol are from LIACC,Footnote 1 the rest of the datasets are taken from the LIBSVM Data.Footnote 2

For the regression datasets in Table 2, all are normalized to interval \(\left[ -1,1\right]\). The benchmark datasets a4a, a5a, a6a and a7a, as well as w2a, w3a, w4a and w7a are also used to observe the variation in the number of iterations with the benchmark datasets size.

6.2 Execution time comparison

Next, we will compare the execution time of the SMO1, SMO2, FGSMO, CG, ICG and CFGSMO on regression and classification datasets. Tables 3, 4, 5 and 6 record the total execution time of the six algorithms under 11 different kernel widths \(\sigma\). Table 3 is the execution time comparison of regression datasets ailerons, cal_housing, cart_delve and pol. Tables 4, 5 and 6 show the execution time comparison for the binary datasets a4a, a5a, a6a, a7a, a8a, a9a, w1a, w2a, w3a, w4a, w7a and svmguide1.

For the regression datasets ailerons, cal_housing, cart_delve and pol, the total execution time of CG and ICG methods is significantly more than that of SMO-type algorithms. In particular, the CG method is significantly less efficient than other algorithms. Although the efficiency of the CG-type method is higher than that of SMO1 when the penalty coefficient \(\gamma\) is large, it is still lower than other SMO-type algorithms. From the total execution time in Table 3, it can be seen that the larger the scale of the datasets, the lower the efficiency of the CG-type method and the higher the efficiency of the SMO-type algorithms. Hence, using the SMO-type algorithms to train the LS-SVM is more efficient than the CG-type method when dealing with large-scale datasets. The SMO-type algorithms are more suitable for the LS-SVM learning task of large-scale datasets. In addition, there are also obvious differences between SMO-type algorithms. Note that, when the penalty coefficient \(\gamma\) is small, the total execution time of SMO1 is significantly less than other SMO-type algorithms. However, when the penalty coefficient \(\gamma\) is gradually increased, the total execution time of SMO1 is gradually more than other SMO-type algorithms. This shows that the SMO1 is not efficient in the face of a large penalty coefficient \(\gamma\). At the same time, the efficiency of the SMO2 is gradually improved. However, when the penalty coefficient \(\gamma\) increases to a certain extent, the execution time of SMO2 is gradually longer than that of the CFGSMO. This shows that the CFGSMO is significantly more efficient than other SMO algorithms when faced with a larger penalty coefficient \(\gamma\). Moreover, in other cases, the total execution time of CFGSMO is not significantly different from other SMO-type algorithms.

For the 12 binary classification datasets a4a, a5a, a6a, a7a, a8a, a9a, w1a, w2a, w3a, w4a, w7a and svmguide1 in Table 2, their kernel matrices are usually sparse. We know that SMO-type algorithms are very efficient when dealing with sparse matrices. Hence, when dealing with classification tasks, the learning efficiency of the LS-SVM model using the SMO-type algorithms is usually higher than that of the CG-type algorithms. The total execution times in Tables 4– 6 confirm this conclusion. As with the regression datasets, when the penalty coefficient \(\gamma\) is small, the total execution time of the SMO1 is slightly less than that of the SMO2, FGSMO and CFGSMO and significantly less than that of the CG and ICG algorithms. Therefore, when \(\gamma\) is small, it is efficient to use SMO1 to solve the LS-SVM. But when \(\gamma\) is large, the first-order SMO is no longer suitable for training the LS-SVM. At this time, the efficiency of the SMO algorithm using the second-order information is significantly higher than that of the first-order SMO. Although the SMO2, FGSMO and CFGSMO algorithms all use second-order information, from the numerical results in Tables 4–6, the CFGSMO algorithm is obviously more suitable for the case where the penalty coefficient \(\gamma\) is large. Hence, when the penalty coefficient \(\gamma\) is large, using the CFGSMO algorithm to train the LS-SVM will be faster and more efficient. Similarly, when dealing with large-scale binary datasets, SMO-type algorithms also have advantages over CG-type algorithms. This shows that SMO-type algorithms are usually more efficient than CG-type algorithms for both classification and regression tasks. In addition, in the SMO-type algorithms, the CFGSMO algorithm will have more competitive advantages.

6.3 Comparison of iterative processes

Lemma 5.1 points out that the functional gain of each iteration of the CFGSMO is greater than that of the FGSMO. Naturally, it is also larger than other SMO-type algorithms. Because the functional gain of the SMO2 is greater than that of the SMO1. And SMO2 is a special case of FGSMO, which naturally satisfies the case of Lemma 5.1. Figures 2 and 3 show the iterative process of regression dataset pol and classification dataset w1a under different kernel width \(\sigma\), respectively. Notice that, the results in Figs. 2 and 3 are only the results of the first 2000 iterations. The “Dual” in Figs. 2 and 3 denotes the function value of the dual objective function (7). It can be clearly seen from Figs. 2 and 3 that the convergence speed of the CFGSMO algorithm is significantly faster than that of the other three SMO algorithms. Second only to the CFGSMO are SMO2 and FGSMO, and the slowest convergence speed is SMO1. No matter in the regression dataset pol or the classification dataset w1a, the functional gain of the CFGSMO algorithm is significantly more than that of other SMO-type algorithms. However, with the continuous increase in the kernel width \(\sigma\), the gap between the four SMO algorithms is gradually narrowed, because in the process of gradually reducing the \(\sigma\), the SMO2 will gradually degenerate into the form of the SMO1.

Figure 4 compares the average number of iterations for the four SMO-type algorithms with different training set sizes and different kernel width \(\sigma\). Hence, according to the basic information of the datasets in Table 2, we selected two groups datasets. The first group is a4a, a5a, a6a and a7a, and the second group is w1a, w2a, w3a, w4a and w7a. Note that, the sample sizes of both datasets do not grow strictly linearly.

From the results shown in Fig. 4, we can know that with the gradual increase in the training datasets size, the average number of iterations of the four SMO-type algorithms increases approximately linearly. Among them, the growth rate of SMO1 is the fastest, and the growth rate of CFGSMO algorithm is the slowest. This is because, in each iteration, the functional gain of the CFGSMO algorithm is greater than that of the other three SMO-type algorithms.

6.4 Comparison of accuracy

In this section, we will test the accuracy of CFGSMO. Similarly, the choice of kernel function is still RBF. The range of \(\gamma\) is \(2^{-5}, 2^{-3}, \cdots , 2^{9}, 2^{11}\), and the range of kernel width \(\sigma\) is \(2^{-11}, 2^{-9}, \cdots , 2^{1}, 2^{3}\). The evaluation criterion for classification is

The mean squared error (MSE) is used to evaluate the performance of the regression, that is

where \({\hat{y}}_{i}\) denotes the predicted target value. Tables 7 and 8 are the result of fivefold cross-validation.

The results in Tables 7 and 8 show that the cross-validation accuracy of SMO1, SMO2, FGSMO and CFGSMO is not much different. Although the accuracy of CFGSMO is sometimes lower than one of SMO1, SMO2 and FGSMO, it is not the worst one. In Table 8, CFGSMO has the smallest MSE on the bodyfat, delta_ailerons, mpg, puma8NH, pyrim and triazines datasets, and the accuracy is not bad on other datasets. In terms of the hyper-parameters, the results of \(\gamma ^{*}\) and \(\sigma ^{*}\) selection for four SMO algorithms are mostly the same, especially for the regression datasets. Overall, the accuracy of CFGSMO is not much worse than SMO1, SMO2 and FGSMO.

6.5 Short summary and discussion

At the \(k-\)th iteration, SMO1 and SMO2 need 2n flops (floating point operations) to update the working set, and the update of gradient \(\varvec{G}\left( \varvec{\alpha }\right)\) requires n flops, which requires about 3n iteration costs. Different from the SMO1 and SMO2, FGSMO needs to compute the absolute value of gradient \(\varvec{G}\left( \varvec{\alpha }\right)\) when updating the working set. If \(n_{\varvec{\alpha }} \left( 0 \le n_{\varvec{\alpha }} \le n\right)\) is used to represent the number of elements less than 0 in the gradient \(\varvec{G}\left( \varvec{\alpha }\right)\), the FGSMO update working set requires \(2n+n_{\varvec{\alpha }}\) flops, and the update of the gradient \(\varvec{G}\left( \varvec{\alpha }\right)\) requires n flops. In total, about \(3n+n_{\varvec{\alpha }}\) flops are required. For CFGSMO, updating the working set requires \(2n+n_{\varvec{\alpha }}\) flops, and updating the gradient \(\varvec{G}\left( \varvec{\alpha }\right)\) requires n flops. If \(n_{\varvec{s}}\) and \(n_{\varvec{t}}\) are used to represent the number of nonzero elements in the vectors \(\varvec{s}\) and \(\varvec{t}\), respectively, then the update of the vectors \(\varvec{s}\) and \(\varvec{\alpha }\) requires \(2n_{\varvec{s}}\) flops, and the update of the vector \(\varvec{t}\) requires \(n_{\varvec{t}}\) flops. Since the number of nonzero elements in the vector \(\varvec{s}\) is much less than n, \(n_{\varvec{t}} \approx n\). Hence, the cost of each iteration of CFGSMO is about \(3n+n_{\varvec{\alpha }}+2n_{\varvec{s}}+n_{\varvec{t}} \approx 4n+n_{\varvec{\alpha }}\). Note that, \(4n \le 4n+n_{\varvec{\alpha }} \le 5n\). Compared with SMO1, SMO2 and FGSMO, the cost of each iteration of CFGSMO increases about 25% to 40% of the computation. This shows that the advantages of CFGSMO are only evident when the total number of iterations of CFGSMO is less than 60% to 75% of the other three SMO algorithms.

From the numerical results in Table 3 to Table 6, it can be seen that the SMO-type algorithm with second-order information is more suitable for training the LS-SVM model than the CG-type method. As the penalty parameter increases gradually, the efficiency of SMO2, FGSMO and CFGSMO gradually increases, while the efficiency of SMO1 and CG-type algorithms gradually decreases. Note that, equation (14) can be further rewritten as

where \(\kappa (i, j)=\varvec{[K]}_{ii}+\varvec{[K]}_{jj}-\varvec{[K]}_{ij}-\varvec{[K]}_{ji}\). The SMO1 algorithm ignores the influence of \(\kappa (i, j)\) on \(f_{G}\left( \Delta \varvec{\alpha }^{k}\right)\). When \(\gamma\) is small, \(\kappa (i,j)\) has less influence on \(f_{G}\left( \Delta \varvec{\alpha }^{k}\right)\), and \(\kappa (i, j)\) can be ignored at this time. When \(\gamma\) is large, if \(\kappa (i,j)\) is ignored, \(f_{G}\left( \Delta \varvec{\alpha }^{k}\right)\) will be severely affected. Hence, the SMO1 algorithm is effective when \(\gamma\) is small and gradually becomes less efficient when \(\gamma\) becomes large. However, the FGSMO and SMO2 algorithms consider the influence of \(\kappa (i,j)\) on \(f_{G}\left( \Delta \varvec{\alpha }^{k}\right)\). When \(\gamma\) gradually becomes smaller, FGSMO and SMO2 are gradually equivalent to SMO1, but FGSMO and SMO2 increase some kernel evaluation, so the computational efficiency is slightly lower than that of SMO1. When \(\gamma\) is large, \(f_{G}\left( \Delta \varvec{\alpha }^{k}\right)\) is greatly affected by \(\kappa (i,j)\). At this time, the functional gain \(f_{G}\left( \Delta \varvec{\alpha }^{k}\right)\) of FGSMO and SMO2 far exceeds that of SMO1.

For the CFGSMO, when \(\gamma\) is small, the efficiency of this algorithm will be slightly lower than other SMO algorithms, but for larger \(\gamma\), the efficiency of CFGSMO algorithm is the highest. This may be because CFGSMO uses the descent direction of FGSMO to construct a feasible conjugate descent direction in the iterative process and retains the algorithm characteristics of FGSMO under different parameters \(\gamma\). But CFGSMO increases the functional gain of FGSMO. Therefore, in the face of larger \(\gamma\), the efficiency of CFGSMO is higher than that of FGSMO and SMO2. This indicates that the execution time of the program will be drastically reduced if CFGSMO is used for a wide range of cross-validation.

7 Conclusion

This work proposes a fast training algorithm for LS-SVM, namely the conjugate functional gain SMO algorithm, and theoretically proves its asymptotic convergence. This algorithm is based on the conjugate direction method and the SMO-type algorithm, which is a combination of these two algorithms. Theoretically, at each iteration, the functional gain of this algorithm is greater than or equal to that of the SMO1, SMO2 and FGSMO algorithms.

From the numerical results, the CFGSMO algorithm is indeed more efficient, especially when \(\gamma\) is large. The larger \(\gamma\), the more obvious the advantage of CFGSMO. When \(\gamma\) is small, the efficiency of CFGSMO is slightly lower than that of other SMO algorithms. It may be because the cost of each iteration of CFGSMO is higher than that of other SMO algorithms. In contrast, the efficiency of SMO1 is highest when \(\gamma\) is small, and the smaller \(\gamma\) is the higher the efficiency of SMO1. SMO2 and FGSMO are suitable for moderately sized \(\gamma\) (no more than about 1000). In addition, on large-scale datasets, the efficiency of SMO-type algorithms is significantly higher than that of CG-type, especially CFGSMO. For small- and medium-sized datasets, the efficiency of CG and SMO is not much different. Since CG needs to manipulate the entire kernel matrix every iteration, SMO only needs to manipulate two columns of the kernel matrix. Therefore, SMO-type algorithms may be more efficient than CG-type when training large-scale LS-SVM. In terms of hyper-parameter selection, the optimal hyper-parameters determined by cross-validation of the four SMO algorithms are consistent in most cases, and the performances (Acc and MSE) are not much different.

Since the dual problem of LS-SVM does not contain the box constraint \(0 \le \alpha _{i} \le C, \forall i\). Therefore, CFGSMO does not have a clipping and restart step like CSMO. In comparison, the iterative format of CFGSMO will be simpler and easier to implement and will not suffer from the effects of the bounds of inequalities.

Reviewing the computation flow of CFGSMO, it can be seen that the working set selection strategy of CFGSMO is not strictly limited to FGWSS. This suggests that the efficiency of CFGSMO may be further improved if other more efficient working set selection strategies are used instead of FGWSS. In addition, CFGSMO increases the computational cost by about 1/4–2/5 compared with plain SMO-type algorithms. Therefore, how to reduce the computational cost of CFGSMO on the premise of ensuring the efficiency of CFGSMO is also one of the work that needs to be further studied in the future. Besides, extending the conjugate SMO to other support vector machine models is also the next work to be considered.

Data availability

The authors declare that data supporting the findings of this study are available within the article.

References

Burges CJ (1998) A tutorial on support vector machines for pattern recognition. Data Min Knowl Discov 2(2):121–167

Smola AJ, Schölkopf B (2004) A tutorial on support vector regression. Stat Comput 14(3):199–222

Chen PH, Fan RE, Lin CJ (2006) A study on smo-type decomposition methods for support vector machines. IEEE Trans Neural Netw 17(4):893–908

Suykens JA, Vandewalle J (1999) Least squares support vector machine classifiers. Neural Process Lett 9(3):293–300

Jiao L, Bo L, Wang L (2007) Fast sparse approximation for least squares support vector machine. IEEE Trans Neural Netw 18(3):685–697

Yang X, Lu J, Zhang G (2010) Adaptive pruning algorithm for least squares support vector machine classifier. Soft Comput 14(7):667–680

Li B, Song S, Li K (2013) A fast iterative single data approach to training unconstrained least squares support vector machines. Neurocomputing 115:31–38

Xia X-L (2018) Training sparse least squares support vector machines by the qr decomposition. Neural Netw 106:175–184

Chua KS (2003) Efficient computations for large least square support vector machine classifiers. Pattern Recognit Lett 24(1–3):75–80

Suykens J, Lukas L, Van Dooren P, De Moor B, Vandewalle J, et al. (1999) Least squares support vector machine classifiers: a large scale algorithm. In: European Conference on Circuit Theory and Design, ECCTD, vol. 99, pp. 839–842. Citeseer

Chu W, Ong CJ, Keerthi SS (2005) An improved conjugate gradient scheme to the solution of least squares svm. IEEE Trans Neural Netw 16(2):498–501

Li B, Song S, Li K (2012) Improved conjugate gradient implementation for least squares support vector machines. Pattern Recognit Lett 33(2):121–125

Platt J (1998) Sequential minimal optimization: a fast algorithm for training support vector machines

Keerthi SS, Shevade SK (2003) Smo algorithm for least-squares svm formulations. Neural Comput 15(2):487–507

Fan R-E, Chen P-H, Lin C-J, Joachims T (2005) Working set selection using second order information for training support vector machines. J Mach Learn Res, 6(12)

López J, Suykens JA (2011) First and second order smo algorithms for LS-SVM classifiers. Neural Process Lett 33(1):31–44

Shao X, Wu K, Liao B (2013) Single directional smo algorithm for least squares support vector machines. Comput Intell Neurosci 2013

Bo L, Jiao L, Wang L (2007) Working set selection using functional gain for LS-SVM. IEEE Trans Neural Netw 18(5):1541–1544

Tavara S (2019) Parallel computing of support vector machines: a survey. ACM Comput Surv (CSUR) 51(6):1–38

Cao LJ, Keerthi SS, Ong CJ, Zhang JQ, Periyathamby U, Fu XJ, Lee H (2006) Parallel sequential minimal optimization for the training of support vector machines. IEEE Trans Neural Netw 17(4):1039–1049

Zeng Z-Q, Yu H-B, Xu H-R, Xie Y-Q, Gao J (2008) Fast training support vector machines using parallel sequential minimal optimization. In: 2008 3rd International Conference on Intelligent System and Knowledge Engineering, vol. 1, pp. 997–1001. IEEE

Cao L, Keerthi SS, Ong CJ, Uvaraj P, Fu XJ, Lee H (2006) Developing parallel sequential minimal optimization for fast training support vector machine. Neurocomputing 70(1–3):93–104

Noronha DH, Torquato MF, Fernandes MA (2019) A parallel implementation of sequential minimal optimization on fpga. Microprocess Microsyst 69:138–151

Zanghirati G, Zanni L (2003) A parallel solver for large quadratic programs in training support vector machines. Parallel Comput 29(4):535–551

Chang P, Bi Z, Feng Y (2014) Parallel smo algorithm implementation based on openmp. In: 2014 IEEE International Conference on System Science and Engineering (ICSSE), pp. 236–240. IEEE

Huang S-A, Yang C-H (2019) A hardware-efficient admm-based svm training algorithm for edge computing. arXiv preprint arXiv:1907.09916

Cipolla S, Gondzio J (2021) Training large scale SVMS using structured kernel approximations and ADMM. In: PROCEEDINGS OF SIMAI 2020+ 21

Torres-Barrán A, Alaíz CM, Dorronsoro JR (2021) Faster svm training via conjugate smo. Pattern Recognit 111:107644

López J, Barbero Á, Dorronsoro JR (2011) Momentum acceleration of least–squares support vector machines. In: International Conference on Artificial Neural Networks, pp. 135–142. Springer

Torres-Barrán, A., Dorronsoro, J.R.: Nesterov acceleration for the smo algorithm. In: International Conference on Artificial Neural Networks, pp. 243–250 (2016). Springer

Chang C-C, Lin C-J (2011) LIBSVM: a library for support vector machines. ACM Trans Intell Syst Technol(TIST) 2(3):1–27

Joachims T (1999) Svmlight: Support vector machine. SVM-light support vector machine http://svmlight.joachims.org/, University of Dortmund 19(4)

Acknowledgements

This research was supported by the Graduate Research and Innovation Foundation of Chongqing, China (CYS22074), the National Natural Science Foundation of China (71901184), Humanities and Social Science Fund of Ministry of Education of China (19YJCZH119).

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Yu, L., Ma, X. & Li, S. A fast conjugate functional gain sequential minimal optimization training algorithm for LS-SVM model. Neural Comput & Applic 35, 6095–6113 (2023). https://doi.org/10.1007/s00521-022-07875-1

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00521-022-07875-1