Abstract

Deep learning has been applied in mechanical fault diagnosis. Hereinto, the convolutional neural network (CNN) has the shallow convolution operation, supporting the function of feature learning. However, the interpretability of CNN has always been an urgent problem to be solved. Due to the advantages of lifting wavelets and their transforms for impact fault diagnosis, an interpretable network called LW-Net with smart lifting wavelet kernels is proposed for mechanical feature extraction and fault diagnosis. Different from the traditional CNN, the shallow layer of the net is designed to be the lifting layer, concluding split, prediction and update sublayers by the natural convolution operation of lifting wavelet transforms. The smart lifting wavelet kernels are constructed by the mathematic constraints of lifting wavelets, resulting in the nice properties of signal processing. Meanwhile, the kernels with only two parameters are learned from the input data and updated by the back-propagation process. The lifting layer is suitable to accurately extract the impact fault features, improving the effective fault diagnosis of LW-Net. Moreover, the interpretability of LW-Net to achieve shallow feature extraction is verified and discussed by the repeatable simulations. The underlying logic and physical meaning of the lifting layer is revealed to be the adaptive waveform matching and learning based on the inner product matching principle. LW-Net is applied to the engineering diagnostic cases of the Case Western Reserve University dataset and planetary gearbox dataset to verify the effectiveness. The results show the method outperforms the classical and popular methods on the converge speed, classification accuracy and feature extraction.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

With the rapid development of artificial intelligence technology, modern mechanical equipment has been widely used in various industries of the national economy. It is particularly important to ensure the safe operation of mechanical equipment and reduce its maintenance cost. Therefore, how to use fault diagnosis technology to identify and maintain the faults in the process of mechanical equipment operation has become the focus of researchers’ attention. The traditional intelligent diagnosis process includes signal processing and feature extraction, machine learning and pattern recognition, among which signal processing and feature extraction technology are the key techniques in mechanical fault diagnosis, directly related to the accuracy and reliability of fault diagnosis. Yuan et al. proposed dual-core denoised synchrosqueezing wavelet transform [1] and multi-lifting synchro-squeezing transform [2] for mechanical fault detection. Zhang et al. [3] proposed a whale optimization algorithm-optimized orthogonal matching pursuit with a combined time–frequency atom dictionary for bearing fault diagnosis. Qian et al. [4] developed an enhanced sparse regularization method by weighted l1-norm convex optimization for impact force identification. Based on the above studies, it can be found that the traditional fault diagnosis method should depend on manual feature extraction. If the extracted features are inadequate for the task, the performance of the final classification algorithm will greatly degenerate.

At the same time, deep learning has been developed for intelligent fault diagnosis, benefited from the development of advanced sensing technology and computing systems in recent years. Zhu et al. [5] proposed an intelligent fault diagnosis approach by principal component analysis and deep belief network for rolling bearings. Li et al. [6] designed a domain generalization framework, i.e., whitening-net for handling domain deviations caused by the different cases of machines, operating conditions and noise. Deep learning is a hierarchical method of machine learning involving multi-level nonlinear transformation, which could avoid feature extraction problems compared with traditional fault diagnosis methods. The deep learning model is directly processed with original signals, the establishment of which is a large amount of data as the support training model to improve diagnostic accuracy, robustness and sensitivity.

As one of the representative deep learning algorithms, convolutional neural network (CNN) contains convolution layer, pooling layer and complete connection layer and has strong robustness and fault tolerance with the easy training and optimization. Yu et al. [7] developed a multi-channel one-dimensional convolutional neural network for dealing with the noise and high dimension signals for fault diagnosis of industrial processes. Cao et al. [8] designed a pseudo-categorized maximum mean discrepancy and then applied it to the multi-input multi-output convolutional network for intelligent fault diagnosis of rolling bearings. CNN has the characteristics of local connection, weight sharing and pooling operation, which could effectively reduce the network complexity, accelerate neural network fast learning, and carry out translation invariant classification of input feature information. More importantly, the shallow convolution operation is very similar to the feature extraction function in fault diagnosis, which could support the function of feature learning for fault diagnosis. However, the interpretability of CNN has always been an urgent problem to be solved, especially the underlying logic and physical implications of the shallow convolution operation for feature extraction and learning.

For the challenging of the interpretability network, the researchers further promote the construction of dynamic, robust and credible intelligence models. Hereinto, several scholars have introduced wavelet transform into the convolutional layer design for CNN and successively proposed such new deep learning networks as wavelet kernel and lifting kernel, which provide the possible explanations for the physical meaning of ‘black box model.’ Li et al. [9] proposed a deep one-dimensional convolutional neural network driven by continuous wavelets at a shallow layer and compared the recognition ability of various types of wavelet cores for feature models. Wang et al. [10] designed an interpretable neural network for machine condition monitoring from the aspects of signal processing and physical feature extraction. Pan et al. [11] proposed lifting net by integrating the second-generation wavelet and CNN. Unfortunately, it only introduced the second-generation wavelet transform to the convolutional layer, lack of the constraint on the convolutional net with the properties of wavelets, which is not a real lifting wavelet kernel.

In the inner product matching principle for mechanical feature extraction and fault diagnosis, it is revealed that the essential nature of FFT, wavelet and lifting wavelet is to explore the components in the signals that are closest or most related to the ‘basis function’ [12, 13]. Hence, the critical issue for meaningful and effective mechanical fault diagnosis is to construct and choose the appropriate basis functions most similar or related to the desired fault features. Impact fault features are one of the most typical and common fault features in mechanical fault diagnosis caused by local faults of key parts such as gears and bearings. Therefore, the basis functions similar or related to impact fault features are pivotal for feature extraction and fault diagnosis of local faults. Compared with the classical wavelet, the lifting wavelet (i.e., the second-generation wavelet) proposed by Swendes [14,15,16] is a type of basis function with typical impact waveform characteristics, which is more suitable for local fault diagnosis than classical wavelets according to the inner product matching principle. Meanwhile, the design of lifting wavelet is in the time domain without Fourier transform, leading to the easy construction adapted to the input signals with the nice wavelet properties. More importantly, the lifting wavelet transform is a kind of natural convolution operation, which has the advantages of simple algorithm, fast operation speed and less memory space.

Thus, the lifting wavelet is introduced into the CNN convolution layer, and a new interpretability smart lifting wavelet kernel is proposed based on the inner product matching principle for mechanical feature extraction and fault diagnosis. Compared with the research of Ref. [11], the smart lifting wavelet kernel is developed by the lifting wavelet basis functions with the adaptive characteristic and the excellent properties of signal processing. With the smart lifting wavelet kernel, the shallow layer network is designed by lifting wavelet transforms to help CNN for discovering the feature extraction principle with physical meaning. On this basis, CNN driven by the smart lifting wavelet kernel is proposed and called LW-Net, which could offer a physical interpretation for the shallow layer of CNN. Particularly, different from the standard convolutional layer depending on a set of randomly initialized parameters, LW-Net convolves the input signals with a set of parameterized lifting wavelet kernels to achieve shallow feature extraction, with the two parameters of predictors and updaters learning from the input signals. Based on the inner product matching principle, the shallow layer of the network is focused on extracting the impact fault features, and also makes the results of the network output have clear physical meaning with the robustness to different data. Thus, the contributions of this paper are summarized as follows.

-

1)

A smart lifting wavelet kernel is constructed with the excellent signal processing properties of high-order vanishing moment, short compactly supported and regularity, restrained by the lifting wavelet theory. Moreover, the kernel could learn from and be adapted to various input signals, which is suitable to accurately extract the impact fault features resulting in the effective fault diagnosis of LW-Net.

-

2)

This paper designs a shallow feature extraction network that is embedded with underlying logic and physical meaning based on lifting wavelet transform. The interpretability of the shallow layer with the new kernel is verified and discussed by repeatable simulations, focusing on the aspects of waveform matching.

-

3)

A new LW-Net model is established and applied to two experimental data cases to verify the effectiveness, compared with the classical and popular networks. Moreover, the kernel layer of the lifting wavelet proposed in this model is universally applicable and can be applied to any convolutional network. In addition, compared with the traditional CNN, LW-Net greatly reduces the parameters of the shallow layer and thus improves the convergence speed of the network.

The remaining arrangement of this paper is as follows: The basic theory and the method of this paper are addressed in Sect. 2. Section 3 uses simulations to study the physical significance of LW-Net. In Sect. 4, two engineering datasets are used to verify the effectiveness of the proposed method. Section 5 is the conclusion.

2 Theoretical foundation

2.1 CNN

As a kind of neural, CNN is easy to train and has higher recognition rate compared with ordinary networks because of its convolutional layer and pooling layer. The working principle of the convolution layer is to take a kernel with a fixed size to scan the entire input matrix and filter out the useless information in the matrix so as to condense a small matrix of useful information. The convolution calculation of the j-th activated feature \(h_{j}^{l}\) of layer \(l\) is described as:

where \(x^{l - 1}\) is the input signal of layer \(l - 1\), \(w_{j}^{l}\) is the j-th convolution kernel of layer \(l\), the symbol \(*\) denotes the convolutional operation, and \(b_{j}^{l}\) is the corresponding deviation. Furthermore, \(f_{1} \left( \cdot \right)\) is the activation function after the convolution operation, where ReLU activation function is selected.

The pooling layer is used to reduce the dimension of data, and also has the function of feature extraction. The mathematical expression of the pooling layer is

where \(\beta_{j}^{l + 1}\) is the j-th weight matrix of layer \(l + 1\), and \(m \times n\) is the size of the matrix,\(\beta\) is set to be a \(\max ( \cdot )\) function when the pooling layer is maximum pooling.

After the pooling layer, a full-connection layer is used for selecting features shown as

where \(W\) is the full-connection matrix, \(y\) is the output of pooling layer, and \(z\) is output. Softmax is selected as the nonlinear function \(f_{2} \left( \cdot \right)\) of probability mapping, which is often used to map the last layer of the network to get the probability of categories.

where \(p_{i}\) is the probability of the i-th label, \(z_{i}\) is the i-th value of the output by the full connection layer, and \(n\) is the number of total label.

Different from the general loss function, the cross-entropy function increases geometrically with the increase of error; that is, the cross-entropy function is very sensitive to error. Its function expression is:

where \(r_{i}\) is the true value of the i-th sample in the class and \(p_{i}\) is the predicted value output in softmax function corresponding to the true value.

2.2 Lifting wavelet transform

Lifting wavelets could be easily designed in the time domain, breaking the convention that classical wavelets can only be constructed in the frequency domain. This not only greatly expands the types of wavelets, but also leads to construct appropriate wavelets in time domain according to the input signals to achieve the high degree of feature matching by the inner product matching principle. The framework of lifting wavelet transform consists of three steps: split, prediction and update. Figure 1 shows the operation process of lifting wavelet transform, and its specific formula is as follows [16].

where (6), (7) are the process of split for \(x\), generating the even samples \({\text{se}}\) and odd samples \({\text{so}}\); (8) is the prediction process, in which \({\text{se}}\) is convolved with the prediction coefficient \(P\) to predict \({\text{so}}\). \(d\) is the prediction error as the high-frequency part of \(x\) after the decomposition, and \(P\left( \cdot \right)\) is a convolutional mode of prediction; (9) is the update process, in which \(d\) is convolved with the update coefficient \(U\) to update \({\text{se}}\). \(s\) is the approximation signal as the low-frequency part of \(x\) after the decomposition, and \(U\left( \cdot \right)\) is a convolutional mode of update.

2.3 Lifting layer

Generally speaking, the features extracted from the first layer of convolutional neural network have a great relationship with the performance of the whole network [17]. The traditional convolution layer cannot effectively extract the useful impact fault features from the input signals. Due to the superiority of lifting wavelets along with the nature convolution of the transforms, the lifting layer is designed by the smart lifting wavelet kernel, which learns from and is adapted to the input signals for extracting the impact fault features.

The whole data flow path of lifting layer is a time-domain lifting processing including the split, prediction and update sublayers to obtain the underlying features.

Split sublayer. The input signal \(x\) is split into odd sample and even sample sequences, shown as:

Prediction sublayer. The calculation is as follows:

where \(f_{3} ( \cdot )\) is the convolutional mode. \(Ps\) is a predictor of the smart lifting wavelet.

Update sublayer. The calculation is as follows:

where \(Us\) is the updater of the smart lifting wavelet.

Next, the smart lifting wavelet kernel \(\left\{ {\omega_{i} } \right\}\) of the lifting layer will be designed. Based on the wavelet theory, the smart lifting wavelet \(\psi_{s}\) is constrained by the filter coefficients indeed related with \(Ps\) and \(Us\), which also have the nice properties of high order vanishing moment, short compactly supported and regularity [16]. First, we assume in the prediction sublayer \(Ps = [p_{1} ,p_{2} , \ldots ,p_{N/2} ,p_{N/2 + 1} , \ldots ,p_{N} ]\), and \(\widetilde{g}\) to be the equivalent high-pass filter coefficients of \(\psi_{s}\) corresponding to \(Ps\). The relation between \(\widetilde{g}\) and \(Ps\) is

It has been proved that the order of the polynomial is equal to the vanishing moment of the wavelet [18]. That is, \(\psi_{s}\) and \(\widetilde{g}\) have the same vanishing moments. Thus, for \(Ps\) with a length \(N\), the polynomial order is limited to be less than \(N\), and the remaining degree of freedom will be used to adjust to the signals, shown as follows [19]. The specific expression is

In the update sublayer, assume \(Us = [u_{1} ,u_{2} , \ldots ,u_{{\widetilde{N}}} ]\) with the length \(\widetilde{N}\). The relation between the dual equivalent high-pass filter \(g\) of the dual wavelet \(\tilde{\psi }_{s}\) for wavelet reconstruction and \(Us\) is given by

Then, it could be obtained from (15) that [19]

where \(\widetilde{V}\) is a matrix with the size of \(\tilde{N} \times (2N + 2\tilde{N} - 1)\), and its elements are represented as

where \(n = - N - \tilde{N} + 2, \ldots ,N + \tilde{N} - 3,N + \tilde{N} - 2\) and \(m = 0,\) \(1, \ldots \widetilde{N} - 1\). It could be seen from (13) and (14) that the coefficients of \(Ps\) is determined by restraining the polynomial order of \(Ps\). Meanwhile, (17) is a system of equations only containing \(Us\), so the coefficients of \(Us\) can be determined by solving the equations of coefficients.

To have the excellent performance on signal adaptation, the value of \(N\) minus \(M\) should be less than or equal to 2 for the predictors [20]. Therefore, the order of coefficient polynomial of \(Ps\) in \(\psi_{s}\) is set as \(N - 2\) in the paper. Then, the remaining degrees of freedom of \(Ps\) are determined by the LW-Net through training and learning to make the feature matching close to the input signals. In the actual prediction, these two parameters directly affect the results of network feature learning and training. It is known from the wavelet theory that the two parameters in the middle of the predictor directly affect the main waveform part of lifting wavelets. Thus, the i-th smart lifting wavelet kernel \(\left\{ {\omega_{i} } \right\}\) of lifting layer is set to be \(\left\{ {\omega_{i} } \right\} = [p_{N/2} ,p_{N/2 + 1} ]\) which is adaptively constructed by according to the input signals. Then, the coefficients of \(Ps\) can be obtained from the following relationship:

\(Us\) can be determined by \(Ps\) through (15)–(17).

It could be found that there are only two parameters in the smart lifting wavelet kernel \(\left\{ {\omega_{i} } \right\}\), improving the convergence speed of the network. By the kernel, the smart lifting wavelet with the high-order vanishing moment, short compactly supported and regularity could be acquired by \(Ps\) and \(Us\). Meanwhile, it is a type of basis function with typical impact waveform characteristics, which is suitable for local fault diagnosis according to the inner product matching principle and will discuss in the following simulations. More importantly, the smart lifting wavelet \(\psi_{s}\) could be adaptively constructed according to the feature learning by the two free parameters, which leads to the effective feature extraction of the designed lifting layer by (10)–(12). Thus, the lifting layer could be a shallow layer embedded with underlying logic and physical meaning.

2.4 Overall structure and parameters of LW-Net

Table 1 shows the specific parameters of LW-Net, hereinto the input sample in this paper is set to have 1024 points: (1) The size of convolution kernel of lifting layer is 2 with the kernel number 6 according to the experimental experience, which greatly promotes the training and convergence rate of the network; (2) Two one-dimensional convolutional layers (Conv1D) are set to conduct deeper mining of the feature information extracted after the lifting layer, one of which is with the kernel size 5 and the kernel number 10, and the other of which is with the kernel size 25 and the kernel number 16; 3) The adaptive maximum pooling layer (AdaptiveMaxPool1D) is designed with the kernel number 16 to make the characteristics obviously; 4) Linear layers with 1 kernel number is added to identify characteristics; 5) The final output is with the size of m, where m represents the number of labels.

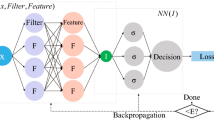

Figure2 shows the flowchart of LW-Net for mechanical feature extraction and fault diagnosis, describing as follows.

-

Step 1 In the stage of data processing, the collected fault data containing various types is divided into the training set and testing set.

-

Step 2 In the training stage, the LW-Net is initialized.

-

Step 3 According to the initialization of \(\left\{ {\omega_{i} } \right\}\) and the order constraint (\(N = \tilde{N} = 10\) here), \(Ps\) and \(Us\) are designed.

-

Step 4 The data are processed by the split, prediction and update sublayers by the adaptive \(\psi_{s}\) with \(Ps\) and \(Us\).

-

Step 5 Combined with the two Conv1Ds and AdaptiveMaxPool1D, the fault features of the input signals are extracted.

-

Step 6 The output results are calculated by the softmax mapping and cross-entropy function. At the same time, the gradient of \(\psi_{s}\) in lifting layer and parameters in other layer is calculated by using the back-propagation algorithm.

-

Step 7 Judge whether the network has been completely trained according to the training loss rate, setting as 0.001 considering the high accuracy and high efficiency.

-

Step 8 The well-trained LW-Net is applied to the testing data, and the classifier of fault types is performed.

-

Step 9 Output the labels for fault diagnosis.

It could see from the flowchart that LW-Net is a shallow feature extraction network that is embedded with underlying logic and physical meaning based on the inner product of lifting wavelet transforms.

3 Interpretability

In this section, the interpretability of the shallow feature extraction network will be studied by exploring the operation mechanism of lifting layer on the repeatable simulations. A commonly used standard lifting wavelet [16] is denoted as SLW(10,10), where \(N\) of \(Ps\) and \(\widetilde{N}\) of \(Us\) are both 10 and \(\left\{ {\omega_{i} } \right\} = [{0}{\text{.6056, 0}}{.6056}]\) is employed as the fault feature of the simulation signal. Meanwhile, two interference features constructed by Daubechies wavelets with the order 2 (Db2) and the order 12 (Db12) are introduced to expand the label types of the simulations. Figure 3 shows the waveforms of the three wavelet labels of the simulations. In addition, a sinusoidal signal restrained by (19) is added to three wavelet labels as the interfering noise.

The mixed signals are shown in Fig. 4. The simulated signals including three wavelet labels are rearranged and randomly intercepted to form the simulation data of each label number 60 with each signal length 1024. Then, the data are divided into the training set and testing set by the ratio of 2:1.

Due to the three wavelet labels, the number of output labels for LW-Net is 3. In the simulations, LW-Net has been trained for three times, and the changes of the loss and classification accuracy during the training are shown in Fig. 5. Specially, in the 30th iteration, the parameter update falls into a local optimization. But in the 31th iteration, the parameter update jumps out of the local optimal solution to find the global optimal solution. Then, it could be seen from Fig. 5 that LW-Net gradually converges in the training process. Meanwhile, the accuracy of LW-Net on the testing set after training is 100%.

The purpose of lifting layer is to make the smart lifting wavelet kernel learn from the characteristics of input signals for matching impact features, the corresponding lifting wavelet of which also possesses the nice properties of signal processing on the high-order vanishing moment, good compact-support and regularity. Next, we will adopt the inner product matching principle to verify the underlying logic and physical meaning of lifting layer. SLW(10,10) shown in Fig. 3a is the designed impact feature submerged in the input data and is supposed to be extracted by the lifting layer. Figure 6 shows the changes of lifting wavelet waveforms of the lifting wavelets in the lifting layer before and after three training sessions. Hereinto, smart lifting wavelet 1 and smart lifting wavelet 2 are, respectively, corresponding to two of the six smart lifting wavelet kernels, the first two of which are most similar to the simulated feature of SLW(10,10) after the training. We could see that although within the different initialization \(\left\{ {\omega_{i} } \right\}\), the smart lifting wavelet kernels could learn from the characteristic of input signals, i.e., the waveform of SLW(10,10). Thus, the various initialization of the smart lifting wavelet in the green lines is learned from the feature waveforms of SLW(10,10) in the input data and trained to approximate to the embedded impact features in the red lines. Especially, the waveforms of smart lifting wavelet 1 and smart lifting wavelet 2 after the third training are extremely similar to SLW(10,10), whose correlation coefficients between them are both more than 99.9%. Obviously, the lifting wavelet kernels of lifting layer perform the effectiveness of feature extraction by learning and matching the embedded features from the input signals, in order to improve the ability of convergence and the accuracy of the network. Meanwhile, the performance of lifting layer is indeed the inner product matching process, which constructs and chooses the appropriate basis function most similar or related to the desired fault features.

It needs to point out that because the smart lifting wavelets are derived from the type of lifting wavelets, the lifting layers could perform the inner product matching to the typical impact waveform characteristics similar to that of Fig. 3a. For example, for bearing fault diagnosis, the lifting layer is suitable to learn and extract the different impact fault feature waveforms for bearings from the noisy input signals, and the different labels of bearing fault types mainly lie in the fault feature frequencies, i.e., the inverse of the interval time among these extracted fault features. Hence, although with one type of lifting wavelets, the smart lifting layer of LW-Net is powerful for mechanical fault diagnosis, especially characterized as impact fault features.

To sum up, we could conclude from the simulations that the interpretability of LW-Net on the lifting layer conforms to the inner product matching principle for mechanical feature extraction and fault diagnosis [12]. The underlying logic and physical meaning of shallow lifting layer with the new kernel is the adaptive waveform matching for the impact fault features.

4 Engineering data validation

In this section, two fault datasets will be used to verify the performance of LW-Net, including bearing fault dataset collected on the bearing test rig of Case Western Reserve University (CWRU), and the gear and bearing fault dataset collected on the planetary gearbox test rig (planetary gearbox dataset). At the same time, a classical 1DCNN [21] and three popular intelligent models outlined in Ref. [22] are also introduced as the comparison methods. Three popular ones include LENet1D, bi-directional LSTM (BILSTM) and multilayer perceptron (MLP), whose details are referred to Ref. [22].

4.1 Case 1: CWRU dataset

CWRU dataset is a pubic experimental dataset widely used in mechanical fault diagnosis [23]. The test rig consists of a two-horsepower (2HP) reliance electric motor, a dynamometer and torque sensor for different motor load, shown in Fig. 7. Single point failures of different diameters are manufactured on the roller, inner ring and outer ring of the testing bearings. The accelerometers are fixed on the bearing house at the driving end and the fan end.

CWRU bearing test rig [23]

In the experiment, a total of four types of outer ring faults, inner ring faults and roller faults and normal state are selected. For bearings with the same fault type, there are three fault diameters, including 0.007 inches, 0.014 inches and 0.021 inches. Therefore, there are 10 kinds of labels in this dataset. The motor running speed is 1750 rpm, and the sampling frequency of the acceleration at the driving end is 12 kHz. Figure 8 shows the typical input signals of 10 labels. The length of each input signal is set to 1024, and 100 original signals of each label are intercepted, randomly allocated to the training set and testing set by a ratio of 3:2. Therefore, the total number of training samples is 600, and the total number of test samples is 400. It should be noted that the proposed method and the compared methods are all trained by the same data in the same environment, and each network has been trained four times, so as to ensure the objectivity and authenticity of the results. Figures 9 and 10 show the training and learning process of LW-Net and the compared methods for CWRU dataset. In Figs. 9 and 10, the abnormal jumping point appears for the same reason when training the iteration to 30 times of Fig. 5. It could be seen that the cross-entropy loss of LW-Net reduces to tiny close to 0 at the eighth round and is faster than those of the compared methods. Meanwhile, the classification accuracy of LW-Net is close to 1 more faster than those of the compared methods. The lifting layer in LW-Net has the fewer parameters than the general convolutional layer of the comparisons, leading to the quick converge of the network in training. Table 2 shows classification results of LW-Net and comparisons in case 1. It can be seen from Table 2 that LW-Net performs better on CWRU dataset than other classical and popular intelligent diagnosis models, with a mean accuracy of 99.37%. The effective fault diagnosis of LW-Net is due to the smart lifting wavelet kernels of lifting layer, which not only have the excellent properties of signal processing but also could adaptively match the fault features of the input signals.

4.2 Case 2: planetary gearbox dataset

A planetary gear test rig [24] is constituted by a motor, encoder, two-stage planetary gearbox, two-stage fixed-axle reducer and magnetic powder brake, shown in Fig. 11. In the experiments, different types of damage were preset on the planetary wheel, sun wheel and bearing of the two-stage planetary gearbox. The motor rotates at the frequencies of 35 Hz, 40 Hz and 45 Hz, and the sampling frequency of the sensor is 5120 Hz.

The labels of the dataset are comprised of the normal status (NO) and the five faults of the first planetary gearbox, i.e., first-stage planetary wheel crack (GCP), first-stage sun wheel pitting (GWS), first-stage sun wheel crack (GCS), first-stage bearing inner ring wear (BI) and first-stage bearing needle roller crack (BP). In the experimental analysis, 100 groups of input signals with a length of 1024 are intercepted from each type of data, and then randomly divided into the training data and testing data in a ratio of 6:4. Since there are six signal types, the total number of the training set is 360 and that of the testing set is 240.

The proposed method and the comparisons are applied to the dataset. Table 3 is the recognition results of Case 2 by each method at different rotational speeds. It can be seen that LW-Net has the best diagnostic performance among the five methods, the accuracy of which for each speed is, respectively, 95.17%, 95.3% and 99.03%. Moreover, due to a small floating range of the maximum and minimum recognition rates, LW-Net shows a stable performance on recognition accuracy.

T-distributed stochastic neighbor embedding (T-SNE) [25] can transform high-dimensional information into low-dimensional information, which is often used for network visualization to show the extracted features. Figure 12 plots a feature visualization of LW-Net for the three speeds. Obviously, the six features are significantly divisible, especially at the speed of 45 Hz, which also shows the excellent feature extraction and diagnostic performance of LW-Net.

5 Conclusion

To explore the ‘black box model’ of CNN and improve the effectiveness of CNN to extract the fault features and diagnose mechanical faults, a new deep convolutional neural network embedded with underlying logic and physical meaning based on the inner product matching principle of smart lifting wavelets are proposed and named LW-Net. The conclusions of the paper are summarized as follows.

-

1)

The first layer of the network, i.e., lifting layer, is designed to be the convolutional layer driven by smart lifting wavelet kernels. Split, prediction and update sublayers are set up in the layer. The smart lifting wavelet kernels are constructed by the mathematic constraints of wavelet scale and vanishing moment, resulting the nice properties of signal processing. Meanwhile, the kernels with only two parameters are learned from the fault features of input data and updated by the back-propagation process.

-

2)

The interpretability of LW-Net to achieve shallow feature extraction is verified and discussed by the repeatable simulations. The simulated results show that the interpretability of LW-Net on the lifting layer conforms to the inner product matching principle. The underlying logic and physical meaning of lifting layer is the adaptive waveform matching by continuously learning and matching the impact features from the signals during training.

-

3)

LW-Net is applied to the engineering diagnostic cases of CWRU dataset and planetary gearbox dataset, compared with the classical and popular methods. The results demonstrate that LW-Net can converge faster than the compared methods. Meanwhile, it performs the best classification accuracy along with the stable performance among all the tested methods. Furthermore, the feature visualization of LW-Net is studied for validating the excellent feature extraction of LW-Net.

References

Yuan J, Yao Z, Zhao Q et al (2021) Dual-Core denoised synchrosqueezing wavelet transform for gear fault detection. IEEE Trans Instrum Meas 70:3521611

Yuan J, Yao Z, Jiang H et al (2022) Multi-lifting synchrosqueezing transform for nonstationary signal analysis of rotating machinery. Measurement 191:110758

Zhang X, Liu Z, Miao Q et al (2018) Bearing fault diagnosis using a whale optimization algorithm-optimized orthogonal matching pursuit with a combined time-frequency atom dictionary. Mech Syst Signal Process 106:24–39

Qiao B, Liu J, Liu J et al (2019) An enhanced sparse regularization method for impact force identification. Mech Syst Signal Process 126:341–367

Zhu J, Hu T, Jiang B et al (2020) Intelligent bearing fault diagnosis using PCA–DBN framework. Neural Comput Appl 32:10773–10781

Li J, Wang Y, Zi Y et al (2021) Whitening-Net: a generalized network to diagnose the faults among different machines and conditions. IEEE Trans Neural Netw Learn Syst. https://doi.org/10.1109/TNNLS.2021.3071564

Yu J, Zhang C, Wang S (2021) Multichannel one-dimensional convolutional neural network-based feature learning for fault diagnosis of industrial processes. Neural Comput Appl 33:3085–3104

Cao X, Wang Y, Chen B et al (2021) Domain-adaptive intelligence for fault diagnosis based on deep transfer learning from scientific test rigs to industrial applications. Neural Comput Appl 33:4483–4499

Li T, Zhao Z, Sun C et al (2021) WaveletKernelNet: an interpretable deep neural network for industrial intelligent diagnosis. IEEE Trans Syst Man Cy-bern Syst. https://doi.org/10.1109/TSMC.2020.3048950

Wang D, Chen Y, Shen C et al (2022) Fully interpretable neural network for locating resonance frequency bands for machine condition monitoring. Mech Syst Signal Process 168:108673

Pan J, Zi Y, Chen J et al (2017) LiftingNet: a novel deep learning network with layerwise feature learning from noisy mechanical data for fault classification. IEEE Trans Ind Electron 65(6):4973–4982

Chen JL, Li ZP, Pan J et al (2016) Wavelet transform based on inner product in fault diagnosis of rotating machinery: a review. Mech Syst Signal Process 70–71:1–35

Yan R, Gao RX, Chen X (2014) Wavelets for fault diagnosis of rotary machines: a review with applications. Signal Process 96:1–15

Daubechies I, Sweldens W (2000) Factoring wavelet transforms into lifting steps. Wavelets Geosci 90:131–157

Sweldens W (1996) Wavelets and the lifting scheme: a 5 minute tour. Zeitschrift fuer Angewandte Mathematik -und Mechanik, ZAMM 76:41–44

Sweldens W, Schroder P (2000) Building your own wavelets at home. Wavelets Geosci 90:72–130

Zhang W, Peng G, Li C et al (2017) A new deep learning model for fault diagnosis with good anti-noise and domain adaptation ability on raw vibration signals. Sensors 17(2):425

Jawerth B, Sweldens W (1994) An overview of wavelet based multiresulotion analysis. SIAM Rev 36(3):377–412

Claypoole R, Baraniuk R, Nowak R (1998) Adaptive wavelet transforms via lifting. In: 1998 IEEE international conference on acoustics, speech and signal processing (ICASSP 98), vol 3, pp 1513–1516

Duan C (2004) Research on fault diagnosis techniques using second generation wavelet transform. Doctoral thesis, Xi’an Jiaotong University

Chen C, Liu Z, Yang G et al (2021) an Improved fault diagnosis using 1D-convolutional neural network model. Electronics 10(1):59

Zhao Z, Li T, Wu J et al (2020) Deep learning algorithms for rotating machinery intelligent diagnosis: an open source benchmark study. ISA Trans 107:224–255

Bearing Data Center, Case Western Reserve University, Cleve land, OH, USA, 2004. http://csegroups.case.edu/bearingdatacenter/home

Lei Y, Lin J, He Z et al (2012) A method based on multi-sensor data fusion for fault detection of planetary gearboxes. Sensors 12(2):2005–2017

Maaten L, Hinton G (2008) Visualizing data using t-SNE. J Mach Learn Res 9:2579–2605

Acknowledgements

This research is sponsored by the National Natural Science Foundations of China (Nos. 51975377 and 52005335) and Shanghai Sailing Program (No. 21YF1430600). The work is also partly supported by the Key Laboratory of Vibration and Control of Aero-Propulsion System Ministry of Education, Northeastern University (VCAME201907). Special thanks are due to Key Laboratory of Education Ministry for Modern Design and Rotor-Bearing System of Xi’an Jiaotong University for sharing the planetary gearbox dataset in Case 2.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interests

The authors declare no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Yuan, J., Cao, S., Ren, G. et al. LW-Net: an interpretable network with smart lifting wavelet kernel for mechanical feature extraction and fault diagnosis. Neural Comput & Applic 34, 15661–15672 (2022). https://doi.org/10.1007/s00521-022-07225-1

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00521-022-07225-1