Abstract

Omnipresent network/graph data generally have the characteristics of nonlinearity, sparseness, dynamicity and heterogeneity, which bring numerous challenges to network related analysis problem. Recently, influenced by the excellent ability of deep learning to learn representation from data, representation learning for network data has gradually become a new research hotspot. Network representation learning aims to learn a project from given network data in the original topological space to low-dimensional vector space, while encoding a variety of structural and semantic information. The vector representation obtained could effectively support extensive tasks such as node classification, node clustering, link prediction and graph classification. In this survey, we comprehensively present an overview of a large number of network representation learning algorithms from two clear points of view of homogeneous network and heterogeneous network. The corresponding algorithms are deeply analyzed. Extensive applications are introduced in an all-round way, and related experiments are conducted to validate the typical algorithms. Finally, we point out five future promising directions for next research in terms of theory and application.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Big data have aroused extensive attention of industry and academia [1,2,3]. It is worth noting that most of the current studies are based on the assumption of independence between data, which leads to the fact that mining large-scale data is far from enough. In fact, there is a general relation between these data. For example, large-scale image and text can be constructed as the network for realizing the multi-information fusion [4]. Polypharmacy side effects of the drug–drug interactions may be affected with protein–protein interactions, drug–protein target interactions [5]. That is to say, there not only exist big data with a single data type including image, text, speech and video, but also exists the ubiquitous network data, such as Google knowledge graph, protein–protein interaction network, gene network, brain network, Internet, social network, multimedia network, molecular compound network and traffic network. In particular, the recent online social network represented by Twitter, WeChat and Facebook has entered the era of billions of nodes, making it more urgent for researchers to study large-scale network data. However, different from image and text data, network data often have the characteristics of nonlinearity, sparseness, dynamicity and heterogeneity, which bring many challenges to deep and advanced data mining task.

Data representation has always been the top priority of research in the field of data mining and machine learning. The so-called data representation (or feature representation) means using a set of symbols or numbers, i.e., feature vectors, to describe an object and to be able to reflect some of its characteristics, structure and distribution. Good data representation can often greatly reduce the volume of data, while retaining the essential characteristics of the original data and capturing the posterior distribution of potential interpretable data and maintaining robustness against random noise. The success of machine learning algorithms generally depends on data representation. This is because different representations can entangle and hide more or less the distinctive explanatory factors of variation behind the data [6]. At the initial stage of development, data representation is mainly dependent on feature engineering by using domain knowledge or expert knowledge to create features. However, it is also the biggest constraint on machine learning applications. It is not only time-consuming and laborious, but also requires a lot of transcendental experience and complicated manual design. This has led to a study of representation learning.

Representation learning from data is an important research hotspot in the field of machine learning and pattern recognition [6]. Especially in recent years, representation learning has seen a surge of research in the well-known journals and conferences in the field of artificial intelligence and data mining, such as TPAMI, TNNLS, TKDE, KDD, NIPS, ICML, AAAI, IJCAI and ICLR. Representation learning can learn high-level abstract feature representation directly from original low-level perception data, which is more conducive to subsequent data analysis and processing [7]. That is, the original feature engineering is transformed into the process of automatic machine learning. In particular, deep learning is a typical method of representation learning, which can automatically extract the appropriate feature or obtain the multiple levels of representation from the data. Deep learning has been successfully applied in speech recognition and signal processing [8,9,10], image recognition [11, 12], object recognition [13,14,15,16,17,18] and other fields, especially in the field of natural language processing (NLP) [19,20,21,22].

In the upsurge of representation learning, network representations learning (a.k.a. network embedding) is in full swing. It is precisely because the traditional method of extracting structural information from network data usually depends on topological statistical information such as degree, aggregation coefficient or the limitations of well-designed manual features. This brings about many drawbacks as mentioned above. Therefore, inspired by the successful application of representation learning in the NLP fields (e.g., word2vec [19, 23]), network representations learning aims to automatically learn a variety of features from the given network-structured data to low-dimensional vector space, while encoded a variety of structural and semantic information. When the vector data representation is obtained, the problem of network data mining can be solved directly by the off-the-shelf machine learning method. As expected, network representations learning has been proven to be useful in many tasks of data mining and machine learning such as link prediction [24,25,26,27,28,29,30], node classification [26,27,28, 30,31,32,33,34,35,36,37,38,39,40,41], network reconstruction [25], recommendation [25, 29, 42], visualization [26, 33, 34, 36, 38, 43] and community detection [44, 45].

Network representation learning has become one of the indispensable and hot topics in domain of data mining and machine learning in recent years. We conduct academic search in important academic library such as Springer Link, IEEE xplore, DBLP and Elsevier Science Direct through a given keyword about network embedding and network representation learning and find that more than 400 related articles have been published in the past 5 years. Figure 1 shows the number of published papers on this topic from 2010 to March 2020.

It can be seen that from 2014 to 2019, the number of papers has increased year by year. Therefore, the research trend on this topic is growing. We believe it is the right time to have a paper to summarize this series of the representative work published in important journals and top conference papers in the field of data mining and machine learning. It is worth noting that we mainly focus on network embedding for network inference and analysis task rather than graph embedding for dimensionality reduction [46]. Therefore, the classical nonlinear dimensionality reduction methods such as ISOMAP [47], locally linear embedding (LLE) [48], Laplacian eigenmaps (LE) [49] and the latest manifold learning algorithms for dimensionality reduction such as the semi-supervised out-of-sample interpolation (SOSI) [50], the robust local manifold representation (RLMR) [51] and the projective unsupervised flexible embedding models with optimal graph (PUFE-OG) [52] will not be involved. The major difference between network embedding and graph embedding has detailed discussed by the recent work [53]. This article will not repeat. To the best of our knowledge, this is the first work to survey the network representation learning comprehensively and systematically, although there have already been a few survey works related to network representation learning problem. For example, Moyano [54] summarizes network representations learning from the point of view of network science. This work is valuable and multipurpose. However, as a new research direction of machine learning, it is still necessary to review from two perspectives of computer science and data science. Moreover, even though some literature has done relative work in the view of computer science, such as [53, 55,56,57], they all have the following defects more or less. Firstly, all the frameworks of the articles are not classified from different type of network data. It is clear that homogeneous network representations learning is greatly different from heterogeneous network representations learning. Secondly, there is no clear classification for different object-oriented embedding in homogeneous network embedding. Most of the articles are merely a general introduction to network embedding methods. Obviously, node embedding, subgraph embedding, whole network embedding and dynamic network embedding should have separate categories. Thirdly, most of the studies do not divide the embedding algorithm of heterogeneous networks comprehensively.

1.1 Contributions

Synthetically speaking, this review consists of three main contributions.

-

1.

Our work presents an unambiguous taxonomy of network embedding from the two perspective of homogeneous network and heterogeneous network. Apparently, heterogeneous network representation learning is more challenging.

-

2.

We propose a systematic taxonomy for homogeneous network representation learning and heterogeneous network representation learning. For homogeneous network, the embedding methods for different targets are summarized, respectively. Specifically, node embedding, subgraph embedding, whole network embedding and dynamic network embedding are listed. For heterogeneous network, the embedding approaches are clearly divided into two categories: knowledge graph embedding and heterogeneous information network embedding.

-

3.

We summarize and list the publicly available datasets used in network embedding, which can facilitate study for other researchers. We comprehensively summarize the related applications of network embedding and empirically evaluate the surveyed methods on several publicly available real-world network datasets. Finally, we point out five future directions in the view of theory and application, which provide valuable suggestions for future researchers.

1.2 Framework and organization of the survey

First of all, we survey the number of representative papers over the past ten years in different sub-directions of network representation learning. The results are shown in Fig. 2. We can find the current important points in the era of network representation learning are homogeneous node embedding and heterogeneous knowledge graph embedding. To provide a comprehensive overview, we cover all sub-directions. Our comprehensive framework of reviewing the network embedding is shown in Fig. 3.

The structure of this paper is organized as follows. In Sect. 2, we introduce the concepts and definitions related to network embedding. Section 3 introduces the homogeneous network embedding systematically. We analyze the algorithm in detail from four aspects of node embedding, subgraph embedding, whole network embedding and dynamic network embedding. Section 4 introduces heterogeneous network embedding comprehensively in the view of knowledge graph embedding and heterogeneous information network (HIN) embedding. Section 5 reviews extensively application of network representation learning. Section 6 includes some experiments on several representative datasets for testing some typical methods. Finally, we draw our conclusions and point out the five future research directions and prospects in terms of theory and application.

2 Definitions

In this section, we introduce some concepts and definitions related to network embedding.

2.1 Definitions of network data

Definition 1

(Network) Given a network \(G = (V,E)\), \(V\) denotes the sets of vertex (node) and \(E\) denotes the sets of edges between the vertex. According to the directivity of edges, it is divided into undirected network and directed network. If the weight of edges is considered, the network can be also divided into weighted and unweighted network.

Definition 2

(Homogeneous network, heterogeneous network) Given \(G = (V,E)\), \(\emptyset :V \to A\) and \(\varPsi :E \to R\) are type mapping functions for nodes and edges, respectively. When \(\left| A \right| > 1\) and/or \(\left| R \right| > 1\), the network is called a heterogeneous network. Otherwise, it is a homogeneous network.

Definition 3

(Knowledge graph) A knowledge graph is defined as a directed graph, whose nodes are entities and edges are subject–property–object triple facts. Each edge of the form (head entity, relation, tail entity) (denoted as \(\left\langle {h,r,t} \right\rangle\).) indicates a relationship of \(r\) from entity \(h\) to entity \(t\). Note that the entities and relations in a knowledge graph are usually of different types. Therefore, knowledge graph can be viewed as an instance of the heterogeneous network.

Definition 4

(Static network, dynamic network) The static network is the opposite direction to dynamic network. A dynamic homogeneous or heterogeneous network at time step \(t\) is defined as \(G^{t} = \left\{ {V^{t} ,E^{t} } \right\}\), where \(V^{t} = \left\{ {v_{1}^{t} ,v_{2}^{t} , \ldots ,v_{N}^{t} } \right\}\) denotes a set of nodes and \(N\) is the number of the nodes. \(E^{t}\) is the set of edges between the nodes. When the time \(t\) is a fixed value, the dynamic network becomes a static network. Without special explanation, this article generally refers to the static network.

2.2 Structure and other information in network

Definition 5

(First-order proximity) The first-order proximity is the weight \(w_{uv}\) between the edges of nodes \(u\) and \(v\). It is the local pairwise proximity between two vertices. If there is no edge between nodes, then its first-order proximity is 0 [33].

Definition 6

(Second-order and high-order proximity) The second-order proximity is the similarity of the first-order proximity of node pairs such as nodes \(u\) and \(v\) [33]. For example, we can let \(s_{u} = \left( {w_{u1} ,w_{u2} ,w_{u3} , \ldots ,w_{u\left| V \right|} } \right)\) denote the first-order proximity of \(u\) with all the other vertices. The second-order proximity between \(u\) and \(v\) can be computed by the cosine similarity between \(s_{u}\) and \(s_{v}\) [39]. Similar to the second-order proximity definition, the high-order (\(X\)-order) proximity is the similarity by calculating the lower-order (\(X - 1\) order) proximity.

Definition 7

(Meta-path of the network) Given a heterogeneous information network \(G = (V,E)\), a meta-path \(\pi\) is a sequence of node types \(v_{1} ,v_{2} , \ldots ,v_{n}\) and/or edge types \(e_{1} ,e_{2} , \ldots ,e_{n - 1} :\;\pi = v_{1} \mathop \to \limits^{{e_{1} }} \cdots v_{i} \mathop \to \limits^{{e_{i} }} \cdots \mathop \to \limits^{{e_{n - 1} }} v_{n}\).

2.3 Problem definition of network embedding

Problem 1

(Homogeneous network embedding) Given the input of a graph \(G\), homogeneous network embedding is designed to map node or subgraph or whole network into a low-dimensional space \(R^{d} \left( {d \ll \left| V \right|} \right)\). The embedding vectors are expected to preserve the various features of the network as much as possible.

Problem 2

(Heterogeneous network embedding) For network \(G\), the problem of heterogeneous network embedding aims to map the different type of entities or relations into a low-dimensional space \(R^{d} \left( {d \ll \left| V \right|} \right)\). The embedding vectors are expected to preserve the structure and semantics in \(G\) as rich as possible.

Problem 3

(Dynamic network embedding) Given a series of dynamic networks \(\left\{ {G^{1} ,G^{2} , \ldots ,G^{T} } \right\}\), dynamic homogeneous or heterogeneous network embedding aims to learn a mapping function \(f^{t} :v_{i} \to R^{d}\). The objective function is to preserve the structure and semantics between \(v_{i}\) and \(v_{j}\), with the evolution of the network at the time.

3 Homogeneous network embedding

The overall architecture of homogeneous network embedding could be presented as Fig. 4. The original raw network data are fed to network embedding algorithm, and the vectors obtained can be utilized to various graph analysis tasks by using the off-the-shelf machine learning algorithm. For homogeneous network embedding, we analyze the algorithm in detail from four aspects of node embedding, subgraph embedding, whole network embedding and dynamic network embedding. Besides, we summarize some representative homogeneous node embedding method, which is shown as Table 1. The graph type, evaluation task, advantages and disadvantages, time complexity are included. Fore time complexity, considering that some works lack of the technique details, therefore we mainly summarize the time complexity of some representative network embedding methods, where N and \(\left| E \right|\) are the number of nodes and edges, respectively. \(I\) is the number of iterations. \(d\) is the representation dimension. \(a\) is the length of node attributes. \(R\) is the number of samples per vertex. \(L\) is the expected sample length.\(d^{\prime }\) is the maximum number of hidden layer nodes in DNN. \(k\) is the number of layer. \(C\), \(H\) and \(F\) are the weight matrix of the \(W^{0} \in R^{C \times H}\) and \(W^{1} \in R^{H \times F}\). \(F\) and \(F^{\prime }\) are the number of input and attention head features. \(M\) is the average number of neighborhoods. Tables 2 and 3 show the open-source datasets used for node embedding and whole network embedding. The details are as follows.

3.1 Node embedding

3.1.1 Random walk-based methods

Motivation Random walk has been widely used in network data analysis to capture the topological structure information [77]. As the name suggests, random walk chooses a certain vertex in the graph for the first step and then randomly migrates through the edges. By Analogy, the walking path obtained when performing random walks can be taken as the sentence in the corpus, the vertices in the network could be regarded as vocabulary in the text corpus. Then, combining with mature natural language processing technology (e.g., word2vec [19, 23]), nodes could be mapped to low-dimensional vector space. Therefore, some methods are proposed based on the similarity of the node sequence and the text sequence. The representative methods are as follows.

The pioneering work of this kind of method was DeepWalk [32], which was inspired by word2vec algorithm [19, 23] of modeling language. In word2vec, the short sequences of words in text corpus could be embedded in continuous vector space. Similar to word2vec, DeepWalk generated sequences of nodes from a stream of truncated random walks on the graph. Each path sampled from the graph corresponds to a sentence from the corpus, where a node corresponded to a word. Then, the skip-gram model [23] was applied on the paths to maximize the probability of observing a node’s neighbor conditioned on its embedding. The hierarchical softmax [78] technology was to approximate the probability distribution. Node representation can be obtained, just like getting the representation of a word.

However, this model has different limitations. Firstly, researchers found that different random walk strategies may produce different node representations in DeepWalk. Therefore, Node2vec [27] improved DeepWalk method by employing biased strategy of breadth-first sampling (BFS) and depth-first sampling (DFS). By adjusting search bias parameters, the model can obtain a good node feature vector. Secondly, DeepWalk cannot obtain discriminative representation for specific node classification tasks. Thus, DDRW [37] was aimed at learn the latent space representations that well captured the topological structure and meanwhile were discriminative for the network classification task. The model extended DeepWalk by jointly optimizing the classification objective and the objective of embedding entities in a latent space. Thirdly, DeepWalk did not model the node label information. Therefore, TriDNR [38] learnt the network representation from three parties, namely node structure, node content and node label. Specifically, the inter-node relationship, node-word correlation and label-word correspondence were modeled by maximizing the probability of observing surrounding nodes given a node in random walks, maximizing the co-occurrence of word sequence given a node and maximizing the probability of word sequence given a class label. Finally, DeepWalk showed fail in structural equivalence tasks. Hence, Struct2vec [30] assessed structural similarity using a hierarchy to measure similarity at different scales and constructed a multilayer graph to encode the structural similarities and generate structural context for nodes via a biased random walk. Then, the skip-gram model was also adopted to learning latent representation of node, which maximized the likelihood of its context in a sequence.

Discussions and insights Although the method based on random walk has achieved certain effects, it cannot ignore the limitations brought by randomness. Therefore, designing a structure-oriented walking strategy will be very important for performance improvement, such as capturing the community structure (see [58]) and preserving the scale-free feature of real-world networks (see [59, 79]). Researcher also finds the limitation of adopting short random walks to explore the local neighborhoods of nodes and non-convex optimization which can become stuck in local minima in the model of DeepWalk and Node2vec, HARP [80] captured the global structure of the input network, by recursively coalescing the input graph into smaller but structurally similar graphs. By learning graph representation on these smaller graphs, a good initialization scheme for the input graph was derived. This multilevel paradigm can improve the graph embedding methods (e.g., DeepWalk, Node2vec) for yielding better node embedding. Besides, as we can see from Table 1, the time complexity of the model Node2vec in above method is linear with respect to the number of nodes N. Therefore, this model can be scalable for large networks.

3.1.2 Optimization-based methods

Motivation Optimization-based algorithm aims to design a proper objective function modeling various structural and semantic information of the graph, then minimize or maximize the objective function by optimization method to obtain node vector representation using a variety of optimization methods, such as Line [33] model using negative sampling [19] + asynchronous stochastic gradient algorithm (ASGD) [81] and APP [29] model using negative sampling + stochastic gradient descent (SGD) [82].

The representative Line model designed two optimization functions by first-order proximity [24] and second-order proximity [33]. The author proposed to minimize the Kullback–Leibler (KL) divergence of joint probability distribution and empirical probability distribution. To obtain the embedding of vertex, an edge-sampling strategy was proposed to minimize either one of the objective function of first-order proximity and second-order proximity. APP model captured both asymmetric proximities and high-order similarities between node pairs. To preserve the asymmetric proximity, each vertex \(v\) needed to have two different roles, the source role and the target role, represented by vector \(\overrightarrow {{s_{v} }}\) and \(\overrightarrow {{t_{v} }}\), respectively. The global objective function was designed by the inner product of \(s_{u}\) and \(t_{v}\), which can represent the proximity of vertex pair \((u,v)\).

Discussions and insights From Table 1, we can find that the Line model is linear with respect to the number of edges E, which can easily scale up to networks with millions of vertices and billions of edges. At the same time, it is not difficult to find that this type of method mainly depends on the modeling ability of the objective function. Therefore, the optimization-based method can further improve the performance of the algorithm by designing more accurate objective functions.

3.1.3 Matrix factorization-based methods

Motivation A network or graph can usually be expressed in some forms of matrix, such as adjacency matrix and Laplacian matrix. Therefore, it is an intuitively way to design node embedding method by matrix factorization. Matrix factorization (MF), such as singular value decomposition (SVD) [83] and eigenvalue decomposition, is an important method in math, which has many successful applications in many fields [84,85,86,87,88,89]. For node embedding methods based on MF, representative algorithms are as follows.

For modeling the different structural information and obtaining the node embedding in framework of MF, GraRep [34], HOPE [25] and M-NMF [39] have made some attempts. Specifically, GraRep presented a node embedding method by decomposing the global structural information matrix, which integrated various k-step relational information. HOPE [25] proposed a high-order proximity embedding which decomposed the high-order proximity matrix rather than adjacency matrix using a generalized SVD. M-NMF [39] proposed a modularized nonnegative matrix factorization model, which preserved both the microscope structure of first-order and second-order proximities and mesoscopic structure of community feature. Note that the real-world networks also contain rich information in addition to the structure. Therefore, for modeling such auxiliary information, TADW [35] introduced text features of vertices into matrix factorization framework. MMDW [36] incorporated the labeling information into vertex representations in an unified learning framework based on matrix factorization.

Discussions and insights The approach of GraRep [34] just performs linear dimension reduction by employing SVD, resulting in the loss of more nonlinear information. Typical extension work is DNGR [43], which will be analyzed in the next section. Considering the flexibility of matrix decomposition, the frameworks of TADW [35], MMDW [36] and M-NMF [39] are well scalable and can easily integrate other information. HOPE [25] could also be extended by designing new higher-order proximity matrices.

3.1.4 Deep learning-based methods

Motivation In recent years, deep learning technology has shown the strong ability to model the data in many fields (see [6]). Therefore, the network data are no exception. For example, autoencoder is first applied to network presentation learning which is influenced by the mature unsupervised learning ability (see [26, 43]). The variant of convolutional neural networks is also designed to network data (see [60]). Besides, attention models have been successfully adopted in many computer vision tasks, including object detection [90]. Inspired by it, the attention mechanism has also recently been applied to network data (see [64]). In addition, the principle of adversarial learning has been widely applied in many fields, such as image classification [91, 92], sequence generation [93], dialog generation [94] and information retrieval [95]. The typical method is generative adversarial networks (GAN) [96] of which the framework consists of two components, i.e., a generator and a discriminator. GAN can be formulated as a minimax adversarial game, where the generator aims to map data samples from some prior distribution to data space, while the discriminator tries to tell fake samples from real data. Considering this ubiquitous game problem, this thought for network representation learning has also made significant research progress (see [63, 65]). The specific branches are as follows.

3.1.4.1 Autoencoder-based methods

There are two main categories of these methods: classical autoencoder-based and graph autoencoder-based algorithms. The representative methods of the first category are SDNE [26] and DNGR [43]. SDNE [26] proposed a modified conventional autoencoder-based model, which simultaneously optimizes the first-order and second-order proximity to characterize the local and global network structure. The first-order proximity was preserved by idea of Laplacian Eigenmaps [49]. The second-order proximity was preserved by the extension of traditional deep autoencoder. Finally, the intermediate layer of deep autoencoder was used as the final node representation. DNGR [43] captured the structural information of the graph via determining the positive pointwise mutual information (PPMI) matrix [97] and learnt the stacked denoising autoencoders to obtain the node embedding. Note that the DNGR model is task-agnostic. This results in the fact that the performance of specific task cannot be guaranteed. Therefore, DNNNC [41] proposed an efficient classification-oriented node embedding method for improving the performance of node classification by extending DNGR in framework of deep learning. The representative algorithms in second class are GAE [98] and ARGA [66]. The former can be regarded as the pioneering work of such methods. The model designed a graph convolutional network (GCN) [60] encoder and a simple inner product decoder to perform the unsupervised learning on graph-structured data. However, the model has at least three defects. The first defect is that the model cannot obtain robust graph representation. In order to remedy this defect, ARGA [66] proposed an adversarial training scheme to regularize the latent codes, which is obtained by GAE. The second defect is that GAE is not designed for specific task of graph analysis. Therefore, DAEGC [99] proposed clustering-oriented node embedding method via jointly optimizing to simultaneously obtain both graph embedding and graph clustering result. The third flaw is that the decoder module is too simple and loses too much structural information. Thus, recent work TGA [100] proposed a triad decoder via modeling the triadic closure property that is fundamental in real-world networks.

3.1.4.2 Graph convolutional network-based methods

GCN [60] can be considered as the most representative work, which adopted an efficient variant of convolutional neural networks on graph-structured data. The main convolutional architecture was via a localized first-order approximation of spectral graph convolutions. However, the researchers found that the model had some representative limitations. Firstly, GCN cannot model the global information of the network. Thus, DGCN [61] extended the GCN model with dual graph convolutional networks, i.e., the graph adjacency matrix-based convolution ConvA and positive pointwise mutual information (PPMI) matrix-based convolution ConvP. In this way, the local-consistency-based knowledge and the global-consistency-based knowledge in the data are embedded. Secondly, GCN will need excessive memory and computational resources when the graph has a large amount of nodes. To overcome this limitation, LGCL [62] proposed a large-scale learnable GCN via the learnable graph convolutional layer and an efficient subgraph training strategy. Thirdly, GCN is too shallow to capture more information. Therefore, H-GCN [67] proposed a deep hierarchical GCN by introducing coarsening layers and symmetric refining layers to increase the receptive field. Finally, GCN has poor extrapolating ability. To improve this capability, HesGCN [69] proposed a more efficient convolution layer rule by optimizing the one-order spectral graph Hessian convolutions, which is a combination of the Hessian matrix and the spectral graph convolutions.

3.1.4.3 Graph attention-based methods

Recently, graph attention networks (GATs) were proposed by Veličković et al. [64], which was an attention-based architecture to perform node classification. The main idea was to compute the hidden representations of each node, by attending over its neighbors, following a self-attention strategy.

Discussions and insights For autoencoder-based methods and GAN-based method, they are all unsupervised learning model. The representations learned can accomplish a variety of tasks, such as node clustering and link prediction. It is noteworthy that the learning ability of simple application of autoencoder to network data is limited, because of the particularity of network data as stated above. Therefore, the design of graph-oriented autoencoder is an urgent research issue. Although the work mentioned above has been covered, there is still a lot of potential development space. E.g., most of the variational graph autoencoder and its variants all use the KL divergence as the similarity measure between the distributions, which is not the true metrics. Therefore, the next generation of the graph autoencoder will be inspired by the recent work of Wasserstein autoencoders [101]. The GAN-based method also has some limitations such as poor stability, which maybe come from the characteristics of GAN itself. Like GAN and its variants which have developed very prosperously in the field of image processing, there is still a great potential for the development of GAN for graph data. For graph convolutional network-based methods and graph attention-based methods, they are specific for the task of semi-supervised node classification. For GCN method, we can find its time complexity is linear in the number of graph edges E in Table 1. We also note that the time complexity of GAT is on par with GCN. Hence, both of the them are suitable for large-scale networks. Although GCN and its variants are inspired by convolutional neural network (CNN), are they necessary for graph data? Is there a linear but very efficient method? Similar to attention models in other field such as NLP, the graph attention-based embedding methods also can be extended to related tasks such as graph classification as a useful mechanism to improve performance (see Sect. 3.3.2).

3.1.4.4 GAN-based methods

The representative work of this kind of method mainly includes adversarial network embedding (ANE) [63], GraphGAN [65] and ProGAN [68]. ANE mainly consisted of two components, i.e., a structure preserving component and an adversarial learning component. Specifically, an inductive DeepWalk was proposed for structure preserving. The adversarial learning component consisted of two parts, i.e., a generator \(G( \cdot )\) and a discriminator \(D( \cdot )\). It was acting as a regularizer for learning stable and robust feature extractor, which was achieved by imposing a prior distribution on the embedding vectors through adversarial training. The parameterized function \(G( \cdot )\) was shared by both the structure preserving component and the adversarial learning component. GraphGAN was an another innovative graph representation learning framework with GAN idea. For a given vertex, the generative model aims to fit its underlying true connectivity distribution over all other vertices and produces “fake” samples to fool the discriminative model. In addition, with the generative capabilities of GAN networks, ProGAN proposed to generate proximity between different nodes which can help to discover the complicated underlying proximity to benefit network embedding.

3.1.5 Extra models

Different from the above method, GraphWave [102] learnt a multidimensional structural embedding for each node based on the diffusion of a spectral graph wavelet centered at the node. The method made it possible to learn nodes’ structural embeddings via spectral graph wavelets [103]. The key is to treat the wavelet coefficients as a probability distribution and characterize the distribution via empirical characteristic functions.

3.2 Subgraph embedding

Subgraph embedding aims to encode a set of nodes and edges into a low-dimensional vector. The representative work was Subgraph2vec [104], which encoded semantic substructure dependencies in a continuous vector space. Firstly, a rooted subgraph around every node in a given graph was extracted by using WL relabeling strategy [105]. Then, the algorithm proceeded to learn its embedding with the radial skip-gram model.

3.3 Whole network embedding

In this section, we focus on the whole graph embedding, which represented a graph as one vector. When such vectors are obtained, graph-level classification is often involved. The actual network is usually rich in a variety of structural information. In such situation, embedding a whole graph needs to capture the property of a whole graph. Therefore, it is a challenging task to design efficient algorithm. In this review, we summarize the representative state-of-the-art graph kernel methods and latest deep learning methods for whole graph embedding to perform graph classification task.

3.3.1 Graph kernel-based methods

Typical methods in this category are graph kernel [106] and deep graph kernel (DGK) [76]. Graph kernel defined a distance measure between subgraphs by the function. DGK was proposed to leverage the dependency information between substructures by learning their latent representations. DGK was one of the state-of-art methods in graph kernel family, which has been proven to outperform three popular graph kernel methods of Graphlet kernels [107], Weisfeiler–Lehman kernel [108] and shortest-path graph kernels [109].

Discussions and insights The main shortcomings of these types of methods are handcrafted feature. Besides, their similarity is directly calculated based on global graph structures and it is computationally expensive to calculate graph distance and prediction rules are hard to interpret, because graph features are numerous and implicit. And, it cannot capture the important substructures, which is useful for graph classification.

3.3.2 Deep learning-based methods

As mentioned above, deep learning has achieved great success in task of node embedding. For graph classification tasks, the corresponding deep-based method is also showing a booming trend. The typical method consists of two branches: CNN-based methods and attention-based methods. The details are as follows.

3.3.2.1 CNN-based methods

This kind of method can be divided into two categories: conventional CNN-based methods and spectral convolution based approaches. For the first type of method, the representation work is PSCN [110]. Niepert et al. [110] proposed the method of PSCN, which can be taken as the first work for learning convolutional neural networks for arbitrary graph classification. The model designed a general approach to extracting locally connected regions from graphs via analogizing image-based convolutional networks that operate on locally connected regions of the input. The final proposed architecture consisted of node sequence selection, neighborhood graph construction, graph normalization and convolutional architecture. However, researchers found that the PSCN model has at least three drawbacks, such as the selection of neighborhood, losing the complex subgraph feature and special labeling approach. Thus, recent work NgramCNN [111] proposed to tackle the above shortcomings. It consisted of three core components: (1) the n-gram normalization, which sorted the nodes in the adjacency matrix of a graph and produced the n-gram normalized adjacency matrix, (2) a special convolution layer, called diagonal convolution, which extracted the common subgraph patterns found within local regions of the input graph and (3) a stacked deep convolution structure, which was built on top of diagonal convolution and repeated by a series of convolution layers and a pooling layer. The researchers also noted the high complexity of the data preprocessing (such as using graph canonization tool NAUTY [112]) in the PSCN model. To avoid this question, the recent model DGCNN [113] proposed a pure neural network architecture for graph classification in an end-to-end fashion. The brief processing steps are as follows. An input graph of arbitrary structure was first passed through multiple graph convolution layers where node information was propagated between neighbors. Then, the vertex features were sorted and pooled with a SortPooling layer, and passed to traditional CNN structures to learn a predictive model.

For spectral convolution based method, early attempts can be traced back to the first spectral graph CNN model [114] and later extended model [115]. However, because the above models usually contain highly complex operations, they cannot be well applied to large-scale networks. In order to make up for the above defects and efficiently perform the task of graph classification, the model of GCN combined with other effective mechanisms to obtain graph-level embedding becomes very attractive and representative. Some recent works such as dense differentiable pooling [116] and self-attention pooling [117] have been introduced the pooling operation to GCN for obtaining whole graph embedding from node embedding, which have achieved good performance in graph classification task.

3.3.2.2 Attention-based methods

Similar to attention-based methods for node classification discussed above (see Sect. 3.1.4), attention thoughts have recently been applied to graph classification tasks. The representative work is graph attention model (GAM) [118], a novel RNN model, proposed to focus on small but informative parts of the graph, which avoided noise in the rest of the graph. The attention mechanism was mainly used to guide the walk toward more informative parts of the graph.

Discussions and insights We could find that many algorithms for graph classification are based on the conventional CNN model, such as NgramCNN [111] and DGCNN [113]. Their essence is still to use the traditional CNN to conduct feature processing and transformation. Although these models have proven effective, their ability to capture complex structures is inherently insufficient. To better identify complex structures in networks, recent work—Capsule Graph Neural Network (CapsGNN) [72] has proposed to graph classification task via leveraging the power of Capsule Neural Network (CapsNet) [119] in field of image processing. The model has achieved good classification results. Therefore, how to learn the most discriminative information from the complex structure space is the key to the problem.

3.4 Dynamic homogeneous network embedding

We can clearly observe that almost all the existing network embedding methods focus on static networks while ignoring network dynamics [25,26,27, 32,33,34,35,36,37,38,39, 43, 63, 65]. Therefore, network embedding for dynamic network is an open question, which is gradually gaining the attention of researchers. Consider the challenges of the network over time, designing efficient dynamic network embedding algorithm is not a trivial problem. Representative methods include DHPE [120], DynamicTriad [121] and Dynamic GCN [122]. The first two methods are non-deep learning methods. Specially, DHPE [120] proposed a dynamic network embedding method, which aimed to preserve the high-order proximity. The generalized SVD (GSVD) was firstly adopted to preserve the high-order proximity. Then, the researcher proposed a generalized eigen perturbation approach to incrementally update the results of GSVD. In this way, the dynamic problems are transformed into eigenvalue updating problems. DynamicTriad [121] designed a dynamic network embedding algorithm via modeling triadic closure process, which enables our model to capture network dynamics. The last method is deep learning-based approach named Dynamic GCN, which combines long short-term memory networks [123] and GCN to learn long short-term dependencies together with graph structure. The performance of the model has been proved to be outstanding in vertex-based semi-supervised classification and graph-based supervised classification tasks.

Discussions and insights Intuitively, how to model dynamic characteristics and update node embedding is the key to the problem of dynamic network embedding. With the development of deep learning, the dynamic network embedding method based on neural network will make greater progress.

4 Heterogeneous network embedding

Similar to homogeneous network, heterogeneous network also exists widely in the real world as mentioned earlier. Typical representatives of heterogeneous network are heterogeneous knowledge graph (KG) [124] and heterogeneous information network (HIIN) [125,126,127,128,129]. Taking online social network as an example, it could be composed of different types of multimodal nodes (e.g., users, image, text and videos) and complex relations (e.g., social or cross-media similarity relations) [130]. So, compared to homogeneous network embedding, heterogeneous network embedding is more challenging issue because of the heterogeneity and high complexity. The architecture of heterogeneous social network representation learning is shown as Fig. 5. Heterogeneous network embedding could map different heterogeneous objects in heterogeneous network into a unified latent space so that objects from different spaces can be directly compared. Consequently, heterogeneous network analysis tasks become feasible. For heterogeneous network embedding, we review the algorithm in detail from two aspects of knowledge graph embedding and heterogeneous information network embedding, in view of the rapid development and importance of knowledge graph embedding technology. Besides, we summarize the knowledge graph embedding method comprehensively, which is shown as Table 4. The score function, prerequisite and time complexity are included. Here, \(d\) and \(k\) are the dimensionality of entity and relation embedding space, respectively. \(\sigma ( \cdot )\) and \(\omega\) denote the nonlinear activation function and convolutional filters. Tables 5 and 6 show the open-source datasets used in knowledge graph embedding and heterogeneous information network embedding. The specific contents are as follows.

4.1 Knowledge graph embedding

4.1.1 Translation-based models

The core of translation-based model is based on translation distance. In the early exploration, researchers used intuitive distance model, such as SE [132]. However, the model loses too much information and results in poor performance. After that, it is more extensive to adopt the translation model introduced in this section.

The first translation-based model was TransE [124], which was the most representative translational distance model. It represented both entities and relations as vectors in the same space, as shown in Fig. 6a. It has gathered attention because of its effectiveness and simplicity. TransE was inspired by the skip-gram model [23], in which the differences between word embedding often represented their relation. TransE regarded the relation \(r\) as translation from entity \(h\) to entity \(t\) for a golden triplet \(\left\langle {h,r,t} \right\rangle\). Hence, \((h + r)\) was close to \((t)\). The score function used for training the vector embedding was defined as

Note that TransE was only suitable for 1-to-1 relations. There remain flaws for 1-to-N, N-to-1 and N-to-N relations.

To solve these problems of TransE, TransH [134] proposed an improved model named translation on a hyperplane. On hyperplanes of different relations, a given entity has different representations. As shown in Fig. 6b, TransH further projected the embedding \(h\) and \(t\) to a relation-specific hyperplane by a normal vector \(w_{r}\), where \(h^{\prime} = h - w_{r}^{T} hw_{r}\) and \(t^{\prime } = t - w_{r}^{T} tw_{r}\). Then, score function used for training was defined as

Note that both TransE and TransH assumed that entities and relations were in the same vector space. But relations and entities were different types of objects, they should not be in the same vector space.

TransR [135] was proposed based on the above idea. It extended the single vector space used in TransE and TransH to many vector spaces. TransR set a mapping matrix \(M_{r}\) for each relation \(r\) to map entity embedding into relation vector space, as shown in Fig. 6c, where \(h^{\prime } = M_{r} h\) and \(t^{\prime } = M_{r} t\). The score function was defined as

However, TransR also has several flaws: (1) For a typical relation \(r\), all entities share the same mapping matrix \(M_{r}\). In fact, the entities linked by a relation always contain various types and attributes. (2) The projection operation is an interactive process between an entity and a relation; it is unreasonable that the mapping matrices are determined only by relations. (3) Matrix–vector multiplication brings large amount of calculation, and when relation number is large, it also has much more parameters than TransE and TransH.

Thus, to improve the TransR, a more fine-grained model TransD [137] was proposed. Two vectors for each entity and relation were defined. The first vector represented the meaning of an entity or a relation, the other one represented the way that how to project an entity embedding into a relation vector space and it will be used to construct mapping matrices. Therefore, every entity-relation pair had a unique mapping matrix. In addition, TransD had no matrix-by-vector operations which can be replaced by vectors operations. The corresponding scoring function was defined as

where \(h_{p}\), \(r_{p}\) and \(t_{p}\) were projection vectors and \(I^{m \times n}\) was an identity matrix.

Although the above methods have strong ability to model knowledge graphs, it remains challenging for the reason that the objects (entities and relations) in a knowledge graph are heterogeneous and unbalanced. TranSparse [139] was proposed to overcome these two issues. \(M(\theta )\) denoted a matrix \(M\) with sparse degree \(\theta\). The score function was defined as

Besides, aiming at the limitations of optimization functions for specific graphs, TransA [138] adaptively found the optimal loss function according to the structure of knowledge graphs, and no closed set of candidates was needed in advance. It not only made the translation-based embedding more tractable in practice, but promoted the performance of embedding related applications. Its scoring function was defined as

Considering most of the knowledge graph embedding methods focused on completing monolingual knowledge graphs, MTransE [145], a translation-based model for multilingual knowledge graph embedding, was proposed. By encoding entities and relations of each language in a separated embedding space, MTransE provided transitions for each embedding vector to its cross-lingual counterparts in other spaces, while preserving the functionalities of monolingual embedding. The score function was the same with the TransA model.

Recently, the researchers noticed the regularization problem in TransE. TorusE [147] aimed to change the embedding space to solve the regularization problem while employing the same principle used in TransE. The required conditions for an embedding space were first considered. Then, a lie group was introduced as candidate embedding spaces. After that, the model embedded entities and relations without any regularization on a torus. Its scoring function was defined as

Discussions and insights Most of the above improved methods are based on TransE. Considering that the model is inspired by the translation model, intuitively, designing algorithms that incorporate the latest research in machine translation will further improve the performance of the task.

4.1.2 Semantic matching models

RESCAL [131] associated each entity with a vector to capture its latent semantics. Each relation was represented by an n-by-n matrix, and the score of triple \(\left\langle {h,r,t} \right\rangle\) was calculated by a bilinear map that corresponded to the matrix of the relation \(r\) and whose arguments are \(h\) and \(t\). The score was defined by a bilinear function

where \(h,t \in R^{d}\) are vector representations of the entities.\(M_{r} \in R^{d \times d}\) is a matrix associated with the relation.

Considering the limitation of the scoring function of RESCAL, some extensions based on this work have been proposed successively. NTN [133] presented a standard linear neural network structure and a bilinear tensor structure. This can be considered as a generalization of RESCAL. DistMult [136] simplified RESCAL by restricting the matrices representing relations to diagonal matrices. However, it had the problem that the scores of (h, r, t) and (t, r, h) were the same. To solve this problem, ComplEx [144] extended DistMult by using complex numbers instead of real numbers and taking the conjugate of the embedding of the tail entity before calculating the bilinear map. HOLE [141] proposed a holographic embedding method to learn compositional vector space representations of entire knowledge graphs via combining the expressive power of the tensor product used in RESCAL and simplicity of TransE. Analogy [146] extended RESCAL to model the analogical properties, which was particularly desirable for knowledge base completion. For example, if system A (a subset of entities and relations) was analogous to system B (another subset of entities and relations), then the unobserved triples in B could be inferred by mirroring their counterparts in A. It adopted the same score function as model RESCAL and adds constraints to matrix \(M_{r}\) m to model Analogy.

Discussions and insights Bilinear models have more redundancy than translation-based models and so easily become overfitting.

4.1.3 Information fusion-based models

For incorporating extra information to improve the performance of knowledge graph embedding, DKRL [142] proposed description-based representations for entities constructed from entity descriptions with continuous bag-of-words (CBOW) or Convolutional neural network (CNN) was capable of modeling entities in zero-shot scenario. Besides, rich information located in hierarchical entity types was also significant for knowledge graph. TKRL [140] was the first method which explicitly encoded type information into multiple representations in with the help of hierarchical structures.

Discussions and insights Multiple information like textual information and type information, considered as supplements for the structured information embedded in triples, is significant for representation learning in knowledge graph, as already reflected in the model DKRL [142] and TKRL [140]. Intuitively, models that incorporate more information will be closer to real problems and will greatly drive relevant practical applications.

4.1.4 GNN and CNN-based models

Graph neural networks (GNNs), including GCN and GAT, have been proved to have strong ability to model graph data. Naturally, knowledge graph is also their application scenario. Representative methods mainly include R-GCNs [158] and the recent work [159] based on the thought of GAT. Specifically, R-GCNs are an extension of applying GCN to knowledge graph. The model applies a convolution operation to the neighborhood of each entity and assigns them equal weights. Nathani et al. [159] proposed a graph attention-based feature embedding that captures both entity and relation features in a multi-hop neighborhood of a given entity. In addition to GNN, researchers also tried to solve the problem of knowledge graph embedding with the ability of multilayer convolutional neural networks to learn deep expressive features. Typical work is ConvE [148]. The model uses 2D convolution over embeddings and multiple layers of nonlinear features to model knowledge graphs.

Discussions and insights With the rapid development of deep learning, especially graph neural networks and convolutional neural networks in recent years, the application of these advanced methods in the field of knowledge graphs has become increasingly popular.

4.1.5 Extra models

In addition to the above methods, ManifoldE [143] applied the manifold-based principle \(M(h,r,t) = D_{r}^{2}\) for a specific triple \(\left\langle {h,t,t} \right\rangle\). When a head entity and a relation were given, the tail entities laid in a high-dimensional manifold. Intuitively, the score function was designed by measuring the distance of the triple away from the manifold

where \(D_{r}\) was a relation-specific manifold parameter.

4.2 Heterogeneous information network embedding

4.2.1 Optimization-based methods

The representative methods of this kind of methods are LSHM [130], PTE [160] and Hebe [161, 162]. The first method was latent space heterogeneous model, which is one of the initial works. The model assumed that two nodes connected in the network will tend to share similar representations regardless of their types. The node latent representation could be learnt by the function which combined the classification and regularization losses. The labels of different type node were then deduced from these representations. The second method was PTE model that utilizes both labeled and unlabeled data to learn the embedding of text for heterogeneous text network. The labeled information and different levels of word co-occurrence information were first represented as a large-scale heterogeneous text network. Then, the heterogeneous text network can be composed of three bipartite networks: word–word, word-document and word-label networks. To learn the embedding of the heterogeneous text network, an intuitive approach was to collectively embed the three bipartite networks, which can be achieved by minimizing conditional probabilities between the node pair in three networks. The third method was Hebe, which captured multiple interactions in the heterogeneous information networks as a whole. The hyperedge was defined as a set of objects forming a consistent and complete semantic unit, which was taken as event. Therefore, this method preserved more semantic information in the network. Two methods were presented based on the concept of hyperedge: HEBE-PO, modeling the proximity among the participating objects themselves on the same hyperedge, and HEBE-PE modeling proximity between the hyperedge and the participating objects.

Discussions and insights The above model has limited modeling of heterogeneous networks, resulting in the loss of a lot of information, such as node type information. Due to the complexity of heterogeneous networks, designing more accurate objective functions is the key to this type of methods.

4.2.2 Deep learning-based methods

Analogous to homogeneous network embedding, deep learning models are also applied to heterogeneous network representation learning, which could capture the complex interactions between heterogeneous components. These methods are mainly divided into two branches: conventional neural network-based methods and GNN-based methods.

4.2.2.1 Conventional neural network-based methods

Conventional neural networks include CNN, autoencoder, etc., which have achieved great success in image processing, computer vision and other fields. However, considering that heterogeneous networks are often rich in multiple types of information and complex structures, it is usually not a trivial problem for traditional neural networks to work well on such networks. In this type of approach, HNE [4] and DHNE [156] are the two representative methods.

The first method is HNE, which jointly modeled contents and topological structures in heterogeneous networks via deep architectures. Briefly, the model decomposed the feature learning process into multiple nonlinear layers of deep structure. Three modules of image–image, image–text and text–text were included in the model. The model used CNN network to learn the features of the image and adopted the fully connected layer to extract the discriminative text representation. These were connected to the prediction layer. Finally, it transferred different objects in heterogeneous networks to unified vector representations. Although the above method has achieved good results, it ignores the ubiquitous problem of indecomposable hyperedge [163, 164] in heterogeneous networks. Therefore, DHNE proposed to embed hypernetworks with indecomposable hyperedges by deep model. The model theoretically proved that any linear similarity metric in embedding space commonly used in existing methods cannot maintain the indecomposibility property in hypernetworks. A new deep model with autoencoder was designed to realize a nonlinear tuplewise similarity function while preserving both local and global proximities in the formed embedding space.

4.2.2.2 Graph neural network-based methods

Influenced by the successful implementation of graph neural network in processing homogeneous network, recently heterogeneous graph neural network has been developed gradually. The representative methods are HetGNN [165] and HAN [166].

HetGNN was a recent heterogeneous graph neural network model that jointly considered heterogeneous structural (graph) information and heterogeneous contents information of each node effectively. Firstly, the model introduced a random walk with restart strategy to sample a fixed size of strongly correlated heterogeneous neighbors for each node and group them based upon node types. Secondly, the model designed a neural network architecture with two modules to aggregate feature information of those sampled neighboring nodes. Finally, the model leveraged a graph context loss and a mini-batch gradient descent procedure to train the model in an end-to-end manner. Note that the above model does not consider the importance information in the heterogeneous network. Thus, HAN proposed a heterogeneous graph neural network based on the hierarchical attention, including node-level and semantic-level attentions. Specifically, the node-level attention aimed to learn the importance between a node and its meta-path based neighbors, while the semantic-level attention could learn the importance of different meta-paths.

Discussions and insights It can be seen that there are fewer heterogeneous network embedding models based on deep learning model comparing with homogeneous network embedding of the same type of methods, because it is a challenging task. At the same time, as mentioned above, deep learning technology has been booming in the field of homogeneous network embedding. Therefore, designing heterogeneous network embedding algorithms based on deep learning has greater development potential. In addition to deep learning, we also notice that broad learning [167] has been introduced in the field of network embedding, such as Zhang et al. [168]. Considering that broad learning is an emerging method, thus, network embedding based on broad learning technology will have great potential for development.

4.2.3 Meta-path-based methods

Meta-path has been widely used in heterogeneous graph analysis [169, 170]. Inspired by this, two representative works: Metapath2vec and metapath2vec++ [171] and HIN2Vec [154] are proposed. The former preserved both structural and semantic correlations of heterogeneous graphs. The model designed a random walk based on meta-path. It was used to generate heterogeneous neighborhoods with network semantics for various types of node. The heterogeneous skip-gram model to perform node embedding was constructed. The latter also explored meta-paths in heterogeneous networks for representation learning. Different from the former, HIN2Vec also learnt representations of meta-paths. Specifically, the model firstly adopted random walk generation and negative sampling to prepare training data. Then, the model trains a logistic binary classifier that predicts whether two input nodes has a specific relationship in order to efficiently learn model parameters, i.e., node vectors and meta-path vectors.

Discussions and insights Although meta-path based methods have achieved good performance, it is not enough to sample rich semantic information contained in heterogeneous information networks only by meta-path. Therefore, designing a better semantic information sampling strategy is greatly important to improve the performance of heterogeneous information network embedding algorithms. The latest model such as MetaGraph2vec [172] and HERec [173] has just explored how to learn better node embedding vectors by fusing semantic information from different meta-paths, and successfully applied it to node classification and recommendation tasks.

4.2.4 Extra models

There are some extra models, which have made a relatively new exploration, such as ASPEM [153] and Tree2Vector [174]. Specifically, ASPEM encapsulated information regarding each aspect individually, instead of preserving information of the network in one semantic space. The aspect selection method was proposed, which demonstrated that a set of representative aspects can be selected from any heterogeneous information networks using statistics of the network without additional supervision. To design the embedding algorithm for one aspect, the skip-gram model [23] was extended. The final embedding for node \(u\) was thereby given by the concatenation of the learned embedding vectors from all aspects involving \(u\). For tree-structured data, we can take it as a specific form of heterogeneous network. For example, a tree structure for representing the content of an author can be constructed by author biography node, written books nodes and book comments nodes [174, 175]. In such cases, researchers have designed effective methods to transform tree-structured data into vectorial representations. The representative work is Tree2Vector [174], which is mainly implemented through the node allocation process via employing the unsupervised clustering technique, and the locality-sensitive reconstruction method to model the reconstruction process. However, this is an unsupervised learning model, which cannot be applied to supervised learning directly. Therefore, designing an end-to-end supervised learning model for semantic tree-structured data is another promising issue.

Summary In order to show the development process of so many representation algorithms more clearly, we illustrated the development timeline that network representation learning experienced in Fig. 7.

5 Application

Because of the universality of the embedding vectors, network embedding technology can be applied to many fields and tasks by using the off-the-shelf machine learning method. Especially, with the rapid development of GNN, the application of GNN in many fields is in the ascendant. In this review, we introduce the classic and the latest applications in a more comprehensive way, i.e., node classification (Sect. 5.1), node clustering (Sect. 5.2), link prediction (Sect. 5.3), visualization (Sect. 5.4), recommendation (Sect. 5.5), graph classification (Sect. 5.6), application in knowledge graph (Sect. 5.7) and extra application scenarios (Sect. 5.8).

5.1 Node classification

Node classification is a very important issue in domain of graph mining and has a great theoretical significance and application value [176, 177]. Node classification aims to predict unlabeled nodes by training the samples of labeled nodes, which is the most widely used task of node embedding. After obtaining the vector representation of nodes, many off-the-shelf machine learning methods and packages (such as SVM [35, 37,38,39], logistic regression [27, 32,33,34, 62, 65, 178] and Liblinear package [26, 63, 179]) could be leveraged to implement the task. First, the feature vectors of the labeled nodes are used to train classification model. Then, the vectors of the unlabeled nodes are utilized as the input of the classification model, and their label categories are deduced. At the same time, there are few deep learning models using the softmax layer for classification, such as GATs [64].

5.2 Node clustering

One of the most important tasks in graph mining and network analysis is node clustering, whose target is to infer the clusters in graph based on the network structure [58]. Many practical applications can be transformed into node clustering problems, such as community detection in social networks [39]. After we learn the node features through the network embedding algorithm, we can use the off-the-shelf clustering algorithm such as K-means to achieve the node clustering task. Many works such as [34, 66, 68] have followed the above steps to verify the performance of the algorithm. In addition, in recent years, deep graph clustering method such as [99] has developed rapidly, aiming to obtain clustering-oriented node embedding in framework of deep learning.

5.3 Link prediction

Link prediction refers to the task of predicting either missing interactions or links that may appear in the future or in an evolving network. e.g., in social networks, friendship links can be missing between two users who actually know each other [180]. The basic assumption of graph embedding is that a good network representation should be able to capture and preserve rich structure and semantic information of graph, which can predict unobserved links in the graph. Therefore, the graph embedding method can further infer the graph structure. Recent work has successfully applied this approach to link prediction tasks in homogenous and heterogeneous graph [5, 25,26,27,28,29, 65, 120, 181,182,183]. For example, Node2vec [27] predicts the missing edges between two users in Facebook network, protein–protein interactions network and collaboration network. For detecting the new protein–protein interactions, Cannistraci et al. [181] offered a solution for topological link prediction by network embedding. Zitnik et al. [5] modeled polypharmacy side effects in multimodal heterogeneous networks with graph convolutional networks [60].

5.4 Visualization

Data visualization is a powerful technology for data exploration [184]. Once we obtain low-dimensional vector representation of nodes, we can use the commonly data visualization techniques (such as t-distributed stochastic neighbor embedding (t-SNE) [185] and LargeVis [186]) to visualize graph data in 2D space. Good vector representation often refers to the common thing that the nodes of the same category are close to each other in 2D space with different color. Some recent work has applied to this task [26, 33, 34, 36, 38, 43, 63], which can be used to visually reflect the data representation.

5.5 Recommendation

Recommender systems have been playing an increasingly important role in various online services [187]. The conventional recommendation methods can be mainly divided into content-based recommendation [188] and collaborative filtering based recommendation [189]. Additionally, it is worth noting that network embedding has been successfully applied to the recommendation in a new light, such as [25, 29, 65, 173]. For example, for incorporating various kinds of auxiliary data in the view of HIN, Shi et al. [173] modeled and utilized these heterogeneous and complex information in recommender systems.

5.6 Graph classification

Graphs are powerful structures that can be used to model almost any kind of data, such as social network [190]. An instinctive application of whole graph embedding is graph classification. Graph classification assigns a class label to a whole graph. It has a wide range of applications in bioinformatics, chemistry, drug design and other fields, such as [61, 110, 111, 118, 191,192,193,194,195,196].

5.7 Application in knowledge graph

The applications based on knowledge graph embedding technology have been widely deployed in knowledge graph [197]. The three main applications are link prediction, triplet classification and knowledge graph completion. Link prediction is to predict the missing \(h\) or \(t\) for a golden triplet \((h,r,t)\). There are some knowledge graphs embedding research work involved this task, such as [124, 134,135,136,137,138,139, 141, 143, 147]. Triplet classification aims to judge whether a given triplet \((h,r,t)\) is correct or not, which is a binary classification task. It has been successfully applied in these works including [133,134,135, 137,138,139,140, 143]. Knowledge graph completion aims to complete a triple \((h,r,t)\) when one of \(h\), \(r\), \(t\) is missing. The representative work is [140, 142].

5.8 Extra application scenarios

Network embedding technology is likewise widely used in other tasks or fields because of the ubiquity of graphs. E.g., for the question of NP-hard combinatorial optimization problems over graphs, Dai et al. [198] proposed a unique combination of reinforcement learning [199] and network embedding to address this challenge, which can automatically learn the optimization algorithm. In the field of multimodal data analysis, the heterogeneous networks can be constructed from images and text. The obtained vector via heterogeneous network embedding technology can be applied to cross-modal retrieval (see [4]). In domain of image processing and text analysis, Defferrard et al. [115] reported the application for MNIST image classification and text categorization. In the biochemistry field, there is an emerging field of graph convolutional networks and their applications in drug discovery and molecular informatics (see [200]). In the field of intelligent transportation, researchers have used GCN in traffic prediction and achieved excellent performance (see [201]). In short, given its powerful capabilities of processing omnipresent graph data, network embedding technology will have broader application prospects.

6 Experiments

For the sake of making a more comprehensive analysis of the algorithm, we conduct some experiments on some benchmark datasets. Owing to the large literature, we could not compare to every method but to test some representative network embedding algorithms with the open-source code and open platform such as PyTorch Geometric,Footnote 1 OpenNeFootnote 2 and OpenKe.Footnote 3 We try our best to cover the newer methods and show the trend of the problem. Our experiment is tested on the Ubuntu 16.04 LTS 64bit, RAM = 125 GB and CPU = Intel® Xeon(R) CPU E5-2650 v3 @ 2.30 GHz × 40 Cores. For homogeneous network embedding, we select the representative node embedding algorithms for performing the task of node classification and visualization which is the most direct and common task, and the representative whole network embedding algorithms for conducting the graph classification task. For the heterogeneous network embedding, we choose a widely developed knowledge graph embedding technology for testing the link prediction task and triple classification task, and some meta-path based methods with continuity in heterogeneous information network embedding for comparing the node classification performance. The specific experiments are as follows.

6.1 Homogeneous node embedding

For homogeneous node embedding, we employ three benchmark datasets from the open-source datasets in Table 2 and OpenNe, whose statistics are summarized in Table 7.

Blogcatalog: It is a network of social relationships of the bloggers listed on the BlogCatalog website. The labels of vertices represent blogger interests inferred through the metadata provided by the bloggers. This graph has 5196 vertices, 171,743 edges and 6 different labels.

Wiki: It contains 2405 documents from 17 classes and 17,981 links between them.

Cora: This is a citation network. The links are citation relationships between the documents. This graph contains 2708 machine learning papers from 7 classes and 5429 links between them. Each document is described by a binary vector of 1433 dimensions indicating the presence of the corresponding word.

6.1.1 Setting and metric

The latent dimension to learn for each node is 128 with default. For the model used for supervised node classification, the ratio of training data for node classification is 0.5. The training epoch of LINE is set to 25. The parameters \(p\) and \(q\) for DeepWalk and Node2vec are set to 1, 1 and 1, 0.25. The logistic regression classifier is adopted. For the model used for semi-supervised node classification, we train all models for a maximum of 200 epochs (training iterations) using Adam [202]. We set the training rate for ChebConv [115], GCN [60] to 0.01 and GAT [64] to 0.005. For comparing the performance of different algorithms, the Micro-F1, Macro-F1 and accuracy are adopted which are widely used metrics for node classification [27, 32, 35].

6.1.2 Result analysis

Table 8 compares the performance of different algorithms on node classification. For each dataset, the best performing method across all baselines is bold-faced. For supervised node classification on BlogCatalog datasets, GraRep model shows excellent results. This may be because our fixed parameters of this method are more suitable for this datasets. For Wiki datasets, the method of Node2vec achieves the best classification performance of Micro-F1 and Macro-F1 overall. This can be attributed to the advantages of BFS and DFS strategies.

For semi-supervised node classification on Cora datasets, we perform the task by reporting average accuracies of 10 runs for the fixed train/valid/test split from GCN model setting [60]. As shown in Table 8, the GAT model shows the best performance. This is due to the fact that the model fully considers the importance of different neighbor nodes through the attention mechanism.

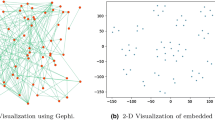

In order to make the comparison of the results more obvious, we use the following representative four methods for the visualization task, i.e., Gf [203], Line [33], GraRep [34] and Node2vec [27]. We visualize Wiki network using t-SNE (the dimension of embedding is 128) (see Figs. 8, 9, 10, 11) to illustrate the properties preserved by embedding methods. Each point corresponds to a node in the network. Color denotes the node label.

As shown in the results, the node layout using Gf in Fig. 8 is not very informative, since vertices of different colors are almost mixed with each other. For Line and Node2vec, results look much better, as most vertices of the same color appear to form groups. However, vertices still do not appear in clearly separable regions with clear boundaries. For GraRep, the boundaries of each group become much clearer, with vertices of different colors appearing in clearly distinguishable regions.

6.2 Homogeneous whole network embedding