Abstract

Photovoltaic (PV) power is one of the most promising renewable energy sources. To ensure secure operation and economic integration of PV in smart grids, accurate forecasting of PV power is essential. In this paper, we propose the use of a long short-term memory recurrent neural network (LSTM-RNN) to accurately forecast the output power of PV systems. LSTM networks can model the temporal changes in PV output power because of their recurrent architecture and memory units. The proposed method is evaluated using one year of hourly data from different sites. We compare the proposed method with three PV forecasting methods, and the use of LSTM further reduces the forecasting error compared with these methods. The proposed forecasting method can be a helpful tool for planning and controlling smart grids.


1 Introduction

Recently, the use of renewable energy sources (RESs) has increased markedly worldwide. This increase is driven by the environmental and technical benefits of RESs as well as the massive increase in load demand [15, 38]. Recent studies have proposed integrating 100% renewable energy penetration into smart power systems [12, 39]. RES units can be large-scale stations integrated into transmission systems, small-scale distributed generation (DG) integrated into medium-voltage distribution systems, or even rooftop-mounted units integrated into low-voltage distribution systems. One of the most notable RES types is the photovoltaic (PV) system. Owing to technological development and the exponential rise in demand for PV systems, their costs are continuously decreasing [16, 19]. The contribution of PV systems is expected to increase rapidly in several countries. In Egypt, for instance, there is great interest in developing small-, medium-, and large-scale PV projects, a trend strongly motivated by the high level of solar radiation and the sunny weather throughout the year in all parts of the country [3, 44].

PV systems convert sunlight directly into electric power. Their main characteristic is that their output power is intermittent and hard to predict, because it depends on fluctuating environmental conditions, e.g., sun conditions. For example, PV output power reaches its maximum on a clear day, whereas moving and transient clouds greatly reduce the generated power. The authors of [13, 33] demonstrated that high PV penetration can cause many technical problems in power systems, such as reverse power flow and voltage regulation problems. Undesired voltage and power fluctuations are also a common problem in distribution systems with high PV penetration. These fluctuations can destabilize small microgrids with limited storage capacity [52]. Several other technical aspects are also linked to intermittent PV generation, including power quality, generation control, and protection [49]. These aspects limit the allowable PV penetration level if secure and optimal system operation is to be maintained.

To guarantee safe operation and economic integration of electrical power systems with high PV penetration, accurate forecasting of PV power is essential. At the planning stage, PV systems must be optimally allocated, which requires accurate forecasting of the environmental conditions at their candidate sites [37, 48]. Forecasting the output power of PV systems enables system operators to monitor their performance, perform control actions, optimally dispatch various DG types, and manage voltage control devices.

Accurate forecasting of PV power is a complex task due to the fluctuating nature of the weather (e.g., cloud movement and temperature changes). Recently, recurrent neural networks have been used in various applications, such as optimal demand response in smart grids [54], control of single-phase converters [17], manipulator control [27, 28, 30, 31], modeling crack growth of aluminum alloy [56], distributed task allocation of multiple robots [26], text recognition [43], and localization of wireless sensor networks [29]. Recurrent neural networks achieve good results in these applications because they can model the dynamics of the data. This paper proposes the use of a long short-term memory (LSTM) recurrent neural network (LSTM-RNN) for forecasting PV output power. An LSTM-RNN can model the temporal changes in the data owing to its recurrent architecture and memory units. Unlike traditional recurrent neural networks, LSTMs were designed to avoid the long-term dependency problem, and they can capture abstract concepts in the PV power sequences. To the best of our knowledge, this is the first paper that uses LSTM-RNN to forecast PV power and considers the temporal changes in PV data when constructing the forecasting models. The main contributions of this paper can be summarized as follows:

1. We propose a novel PV power forecasting method based on deep LSTM recurrent neural networks. The proposed method considers the temporal changes in PV power when constructing the forecasting models.
2. We assess the performance of five LSTM models with different architectures in the forecasting of PV power.
3. To demonstrate the effectiveness of the proposed method, we compare it with three widely used PV power forecasting methods.

The rest of this paper is organized as follows. Section 2 presents the related work. Section 3 explains the proposed method. Section 4 presents and discusses the experimental results. The conclusions and some lines of future work are given in Sect. 5.

2 Related work

PV power forecasting horizons range from seconds to months depending on the application and can be classified into three categories: (1) short-term forecasting, (2) medium-term forecasting, and (3) long-term forecasting. Efficient methods are required to improve the accuracy of PV forecasting models and thus reduce the negative impacts of system uncertainty. Several forecasting methods for predicting PV power have been developed in the literature. These methods can be classified into four categories: (1) statistical methods, (2) artificial intelligence methods, (3) physical methods, and (4) hybrid methods [1, 51]. To achieve accurate results, a forecasting method suited to the PV data at hand and the required horizon should be used. The subsections below briefly review the four categories.

2.1 The statistical methods

Statistical methods rely on historical environmental data measured at the PV sites to build their forecasting models. Persistence models belong to the statistical category and are simple tools for PV power forecasting [7, 11, 34]. These models were developed for stationary time series, so they are not suitable for forecasting PV power because the solar radiation profile is non-stationary. Other examples of statistical methods are the auto-regressive moving average (ARMA) [22], the auto-regressive integrated moving average (ARIMA) [45], and the auto-regressive moving average model with exogenous inputs (ARMAX) [32]. In [5], a probabilistic model for forecasting PV power 6 h ahead was proposed for smart grid applications. These statistical methods are preferable for short-term and medium-term forecasting.
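The simplest persistence model assumes that the next hour's PV power equals the current hour's value. The following is a minimal sketch of that idea. The function name and the toy hourly values are hypothetical illustrations, not data from this study.

```python
def persistence_forecast(series):
    """One-step-ahead persistence forecast (hypothetical helper).

    The prediction for hour t+1 is simply the value observed at hour t,
    so the forecasts line up with series[1:].
    """
    return series[:-1]


hourly_power = [0.0, 0.2, 0.6, 0.9, 0.7, 0.3, 0.0]  # illustrative values only
predicted = persistence_forecast(hourly_power)
actual = hourly_power[1:]
print(list(zip(actual, predicted)))
```

Because it repeats the last observation, this baseline lags behind every rise and fall of the daily solar profile. That lag is one way to see why it struggles on non-stationary PV data.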

2.2 The artificial intelligence methods

Artificial intelligence techniques are widely used in several fields, including forecasting. In [42], an artificial neural network model was introduced to predict solar irradiation using physical and environmental data. An improved forecasting model that considers aerosol index data instead of using the traditional environmental data was proposed in [35]. In [41], different artificial neural network models were constructed according to sun condition (i.e., sunny, partly cloudy, and overcast) for short-term forecasting of PV production.

In [53], a Bayesian neural network model was proposed to predict solar irradiation. The efficiency of the model was demonstrated through comparisons with traditional neural network models. To improve the forecasting accuracy, the authors of [8] combined wavelet analysis with artificial neural networks. The long-term forecasting of PV output power was performed using historical data, fuzzy theory, and neural networks in [55]. In [24], an artificial neural network model was used to forecast PV power and determine the sufficient time horizon for accurate representation of PV data. Wavelet recurrent neural networks were used in [9] to predict solar radiation for two days ahead, where they considered the correlation between solar radiation, wind speed, air humidity, and temperature.

2.3 The physical methods

Unlike the aforementioned methods, physical methods require detailed models of the PV system and local measurements. Satellites, with their ability to monitor cloud movement over wide areas, have been employed for forecasting solar radiation [21, 36, 46]. In [18], an advanced model was proposed to estimate solar radiation by introducing new sensors that greatly improve the forecasting accuracy. In [10], a ground-based sky imager that records sky images every 30 s is employed for cloud and solar radiation forecasting.

Satellites are effective for short-term forecasting (up to 5 h). For long-term forecasting of solar radiation, numerical weather prediction models have been shown to be more effective than satellites [36]. The authors of [47] tested several numerical weather prediction models at sites in the USA, Europe, and Canada. A comprehensive study validating and testing the accuracy of several numerical weather prediction models and forecasting systems in the USA was performed in [40].

2.4 The hybrid methods

Efficient and accurate hybrid methods can be formulated by combining different forecasting methods. In [4], ARMA and nonlinear auto-regressive models were combined to achieve accurate forecasting results. The authors of [25] demonstrated that combining ARMA with a time delay neural network produces an efficient hybrid method for solar radiation prediction. The authors of [6] combined two traditional methods, auto-regressive integrated moving average and support vector machines, to forecast PV power. Bacher et al. [2] proposed a two-stage method that incorporates an auto-regressive model and an auto-regressive model with exogenous input for short-term forecasting of PV power. To forecast solar radiation, a hybrid of an exponential smoothing state space model and artificial neural networks was proposed in [14].

The above-mentioned methods do not consider the temporal changes in PV historical data when constructing the forecasting models, and thus they discard key information about the dynamics of the data. In this paper, we propose the use of LSTM-RNN to construct an accurate forecasting model of PV output power. LSTM-RNN considers the temporal changes in PV power, thereby producing more reliable models.

3 Proposed method

We use the LSTM-RNN to predict PV power one hour ahead. LSTM can model the temporal changes in the data and thus improve the forecasting results. In the subsections below, we briefly describe the LSTM unit, which is the basic building block of our PV forecasting method, and we explain the proposed PV forecasting models.

3.1 Basic LSTM unit

In the learning phase, traditional neural networks cannot utilize the information learned at previous time steps in the modeling of the data at the current step. This point represents a major shortcoming of traditional neural networks. RNNs try to solve this problem by using loops that pass information from one step of the network to the next steps, allowing information to persist. In other words, RNNs connect previous information to the present task. Indeed, using previous sequence samples may help in the understanding of the present sample.

LSTMs are a special kind of RNN that can learn short-term as well as long-term dependencies [23]. Unlike conventional RNNs, LSTMs were designed to avoid the long-term dependency problem. An LSTM network is trained using backpropagation through time and overcomes the vanishing gradient problem. Whereas traditional neural networks consist of neurons, LSTM networks consist of memory blocks that are connected through successive layers. Each block contains gates that manage the state of the block and its output. An LSTM unit has three types of gates: forget, input, and output. The task of each gate can be summarized as follows:

- Forget gate: decides which information to discard from the block, based on the current input and the block's previous state.
- Input gate: decides which values from the input are used to update the memory state.
- Output gate: decides what to output, based on the input and the memory of the block.

Fig. 1 LSTM unit [23]

As shown in Fig. 1, an LSTM block receives an input sequence, and each gate then uses an activation function to decide whether it is triggered. This makes both the change of state and the addition of information flowing through the block conditional. The gates have weights that are learned during the training phase. The gates make LSTM blocks more capable than classical neurons and enable them to memorize recent sequences.

Each LSTM unit contains a cell which has a state \(c_t\) at time t. This cell can be considered as a memory unit. Reading/modifying this cell is controlled through the input gate \(i_t\) (a sigmoidal gate), forget gate \(f_t\) and output gate \(o_t\). The LSTM unit receives inputs from two external sources at each of the four terminals (i.e., the three gates and the input) at each time step. The two external sources are:

- The current sample \(x_t\).
- The previous hidden states of all LSTM units in the same layer \(h_{t-1}\).

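Each gate also has an internal source, the cell state \(c_{t-1}\) of its cell block. The LSTM sums the inputs coming from the different sources together with a bias. Each gate is activated by passing its total input through the logistic (sigmoid) function \(\sigma\), and the total input at the input terminal is passed through a \(\tanh\) nonlinearity. The LSTM multiplies the resulting activation by the activation of the input gate and adds the product to the cell state after the cell state has been multiplied by the activation of the forget gate \(f_t\). Finally, the LSTM passes the updated cell state through a \(\tanh\) nonlinearity and multiplies it by the activation of the output gate \(o_t\) to obtain the final output of the LSTM unit, \(h_t\). These steps can be formulated as follows:

\[
\begin{aligned}
i_t &= \sigma\left(W_{xi} x_t + W_{hi} h_{t-1} + W_{ci} c_{t-1} + b_i\right),\\
f_t &= \sigma\left(W_{xf} x_t + W_{hf} h_{t-1} + W_{cf} c_{t-1} + b_f\right),\\
c_t &= f_t \odot c_{t-1} + i_t \odot \tanh\left(W_{xc} x_t + W_{hc} h_{t-1} + b_c\right),\\
o_t &= \sigma\left(W_{xo} x_t + W_{ho} h_{t-1} + W_{co} c_{t-1} + b_o\right),\\
h_t &= o_t \odot \tanh\left(c_t\right),
\end{aligned}
\]

where the \(W\) terms are learned weight matrices, the \(b\) terms are learned biases, and \(\odot\) denotes element-wise multiplication.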

The main advantage of using the LSTM unit, unlike the traditional neurons used in RNN, is that its cell state accumulates activities over time. Since derivatives distribute over sums, the derivatives of the error do not vanish quickly as they are sent back into time. In this way, LSTM can carry out tasks over long sequences and discover long-range features.
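The following is a minimal sketch of the update rules described in this subsection for a single scalar LSTM unit. As in the description above, every gate sees \(x_t\), \(h_{t-1}\), and \(c_{t-1}\) (peephole terms). The parameter names (`w_xi`, `b_f`, and so on) and their values are hypothetical. This sketch is not the Keras layer used in the experiments.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x_t, h_prev, c_prev, p):
    """One time step of a scalar LSTM unit with peephole terms.

    Each gate sums the current input, the previous hidden state and the
    previous cell state plus a bias, then applies the logistic function.
    """
    i_t = sigmoid(p["w_xi"] * x_t + p["w_hi"] * h_prev + p["w_ci"] * c_prev + p["b_i"])
    f_t = sigmoid(p["w_xf"] * x_t + p["w_hf"] * h_prev + p["w_cf"] * c_prev + p["b_f"])
    o_t = sigmoid(p["w_xo"] * x_t + p["w_ho"] * h_prev + p["w_co"] * c_prev + p["b_o"])
    g_t = math.tanh(p["w_xc"] * x_t + p["w_hc"] * h_prev + p["b_c"])  # candidate input
    c_t = f_t * c_prev + i_t * g_t   # keep part of the old memory, add gated candidate
    h_t = o_t * math.tanh(c_t)       # squashed cell state, gated by the output gate
    return h_t, c_t


def run_lstm(xs, p):
    """Unroll the unit over a sequence, starting from a zero state."""
    h, c = 0.0, 0.0
    hs = []
    for x in xs:
        h, c = lstm_step(x, h, c, p)
        hs.append(h)
    return hs, c


# Hypothetical parameter values, chosen only for illustration.
params = {k: 0.5 for k in ("w_xi", "w_hi", "w_ci", "w_xf", "w_hf", "w_cf",
                           "w_xo", "w_ho", "w_co", "w_xc", "w_hc")}
params.update(b_i=0.0, b_f=1.0, b_o=0.0, b_c=0.0)
hidden, cell = run_lstm([0.1, 0.4, 0.8, 0.6], params)
print(hidden, cell)
```

The additive update `c_t = f_t * c_prev + i_t * g_t` is the "accumulation over time" referred to above. With a constant candidate and half-open gates, the cell state grows step by step instead of being overwritten.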

3.2 PV power forecasting using different LSTM architectures

To forecast PV output power, we construct five LSTM models with different architectures. We used several LSTM models to identify the model that gives the most accurate results on each PV dataset. Below, we briefly explain each model.

3.2.1 Model1: basic LSTM network for regression

In this architecture, we phrase PV power forecasting as a regression problem: given the PV power in the current hour, we aim to predict the PV output power in the next hour. We design the LSTM network for this problem as follows. The network has a visible layer with one input, a hidden layer with four LSTM blocks (neurons), and an output layer that gives the predicted power. We used the default sigmoid activation function for the LSTM blocks. We trained the network for 20, 50, and 100 epochs with a batch size of 1.
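To show how small Model1 is, the following sketch counts its trainable parameters. It assumes a standard Keras-style LSTM layer with biases and no peephole connections, together with a dense output layer. Both function names are our own, hypothetical helpers.

```python
def lstm_param_count(input_dim, units):
    """Trainable parameters of a standard (no-peephole) LSTM layer with biases.

    There are four weight sets (input gate, forget gate, cell candidate,
    output gate), each with an input kernel, a recurrent kernel and a bias.
    """
    return 4 * (units * (input_dim + units) + units)


def dense_param_count(input_dim, units):
    """Weights plus biases of a fully connected layer."""
    return input_dim * units + units


# Model1 as described: 1 input feature, 4 LSTM blocks, 1 output value.
lstm_part = lstm_param_count(input_dim=1, units=4)    # 4 * (4 * 5 + 4) = 96
dense_part = dense_param_count(input_dim=4, units=1)  # 4 + 1 = 5
print(lstm_part, dense_part, lstm_part + dense_part)  # 96 5 101
```

With about a hundred weights and a batch size of 1, every hourly sample triggers a weight update. This keeps each epoch cheap, but the updates are noisy.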

3.2.2 Model2: LSTM for regression using the window technique

In this architecture, we use multiple recent time steps to predict the PV output power at the next time step (the window technique), and the size of the window can be tuned for the PV power forecasting problem. For instance, given the current time t, we aim to predict the PV power at the next time in the sequence, \(t+1\). To do so, we use the PV power at the current time t and at the two prior times (\(t-1\) and \(t-2\)) as input variables to the LSTM unit. In this case, the input variables of the LSTM unit are the PV power at \(t-2\), \(t-1\), and t, while the output variable is the PV power at \(t+1\).
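The window framing above can be sketched in plain Python as follows. The helper name and the toy hourly values are hypothetical. In this framing, the three lagged values become three separate features of a single LSTM time step.

```python
def make_window_features(series, window=3):
    """Frame a univariate series for one-step-ahead regression.

    The last `window` observations are separate input features, and the
    next observation is the target.
    """
    X, y = [], []
    for t in range(window - 1, len(series) - 1):
        X.append(series[t - window + 1 : t + 1])  # power at t-2, t-1, t
        y.append(series[t + 1])                    # power at t+1
    return X, y


power = [0.0, 0.1, 0.4, 0.8, 0.9, 0.6]  # hypothetical hourly values
X, y = make_window_features(power, window=3)
# X[0] == [0.0, 0.1, 0.4] and y[0] == 0.8
# For an LSTM, each row becomes ONE time step carrying `window` features:
X_lstm = [[row] for row in X]  # shape (samples, 1, 3)
```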

3.2.3 Model3: LSTM for regression with time steps

Time steps provide another way to phrase the PV output power forecasting problem. As in the previous model (Sect. 3.2.2), we take prior time steps of the PV power time series as inputs to predict the output power at the next time step. In this model, however, the past observations are used as time steps of a single input feature rather than as separate input features, which is a more accurate framing of the PV power forecasting problem. For instance, if the number of time steps is 3, the LSTM unit outputs the PV power at t after it processes the PV power at \(t-3\), \(t-2\), and \(t-1\).
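The following is a minimal sketch of the time-step framing, using a hypothetical helper and toy values. The lagged values are the same kind as in Model2, but each sample is now three time steps of one feature (shape `(time_steps, 1)`) rather than one time step with three features. The LSTM therefore updates its state once per past hour.

```python
def make_time_step_samples(series, time_steps=3):
    """Frame a univariate series so the previous `time_steps` values are
    fed to the LSTM as consecutive time steps of a single feature."""
    X, y = [], []
    for t in range(time_steps, len(series)):
        # One sample: [[p(t-3)], [p(t-2)], [p(t-1)]], i.e. shape (time_steps, 1)
        X.append([[v] for v in series[t - time_steps : t]])
        y.append(series[t])  # target: power at t
    return X, y


power = [0.0, 0.1, 0.4, 0.8, 0.9, 0.6]  # hypothetical hourly values
X, y = make_time_step_samples(power, time_steps=3)
# X has shape (samples, time_steps, features) = (3, 3, 1)
```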

3.2.4 Model 4: LSTM with memory between batches

The LSTM network has a memory that enables it to remember information across long sequences. When the model is fitted in the normal configuration, the state within the network is reset after each training batch. Making the LSTM layer stateful gives finer control over when the internal state of the network is cleared. In other words, the LSTM can build its state over the entire training sequence and even maintain that state, if needed, to predict PV output power. This requires that the training data not be shuffled when fitting the LSTM network.
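The difference between resetting the state after every batch and carrying it across batches can be shown without any deep learning library. The following sketch uses a hypothetical toy recurrent cell (an exponential moving average) as a stand-in for the LSTM state. In Keras, the corresponding behavior is enabled with the LSTM layer's `stateful=True` option, and the user then decides when to reset the state, typically once per epoch.

```python
def toy_cell(x, h, a=0.8):
    """Hypothetical stand-in for a recurrent cell: the new state blends
    the old state with the current input (an exponential moving average)."""
    return a * h + (1.0 - a) * x


def run_batches(series, batch_size, stateful):
    """Feed `series` in consecutive, unshuffled batches.

    stateless: the state is reset to zero at the start of every batch.
    stateful:  the state at the end of one batch seeds the next batch.
    """
    h = 0.0
    finals = []
    for start in range(0, len(series), batch_size):
        if not stateful:
            h = 0.0  # forget everything learned in the previous batch
        for x in series[start : start + batch_size]:
            h = toy_cell(x, h)
        finals.append(h)
    return finals


series = [1.0] * 6
print(run_batches(series, batch_size=2, stateful=False))
print(run_batches(series, batch_size=2, stateful=True))
```

In the stateful run, the final state equals the state obtained by processing the whole sequence at once. If the batches were shuffled, this continuity would no longer hold, which is why stateful training needs unshuffled data.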

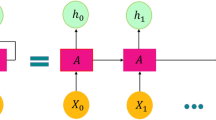

3.2.5 Model 5: stacked LSTMs with memory between batches

A stacked LSTM adds capacity by placing LSTM layers on top of each other [20, 50]. LSTM layers can be stacked in the same way as other layer types (e.g., the layers of conventional neural networks). Figure 2 demonstrates how LSTM layers can be stacked: the blue blocks belong to layer1, while the red blocks belong to layer2. The inputs to layer1 are the PV power values \(x_t, x_{t+1}, \ldots, x_N\), while the inputs to layer2 are the hidden states \(h_t, h_{t+1}, \ldots, h_N\) produced by layer1. The intuition is that higher LSTM layers can capture abstract concepts in the sequences, which can improve the PV power forecasting results.
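The stacking idea can be sketched with a scalar LSTM layer that returns its hidden state at every time step, so that a second layer can consume the whole sequence \(h_t, \ldots, h_N\). The weights below are hypothetical, and the cell omits peephole terms for brevity. In Keras, the lower layer would need `return_sequences=True` to provide this full sequence.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_layer(xs, w):
    """Run a single-unit LSTM (no peepholes) over `xs` and return the hidden
    state at EVERY time step, so another layer can be stacked on top."""
    h, c, hs = 0.0, 0.0, []
    for x in xs:
        i = sigmoid(w["xi"] * x + w["hi"] * h + w["bi"])
        f = sigmoid(w["xf"] * x + w["hf"] * h + w["bf"])
        o = sigmoid(w["xo"] * x + w["ho"] * h + w["bo"])
        g = math.tanh(w["xc"] * x + w["hc"] * h + w["bc"])
        c = f * c + i * g
        h = o * math.tanh(c)
        hs.append(h)
    return hs


def make_weights(scale):
    """Hypothetical weights: every weight equals `scale`, biases are zero."""
    w = {k: scale for k in ("xi", "hi", "xf", "hf", "xo", "ho", "xc", "hc")}
    w.update(bi=0.0, bf=0.0, bo=0.0, bc=0.0)
    return w


pv = [0.0, 0.2, 0.6, 0.9, 0.7]           # x_t ... x_N (illustrative values)
h1 = lstm_layer(pv, make_weights(0.5))   # layer1 outputs h_t ... h_N
h2 = lstm_layer(h1, make_weights(0.8))   # layer2 reads layer1's hidden states
prediction = h2[-1]                      # last state would feed the output layer
```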

4 Results and discussion

4.1 Datasets

We used two PV datasets from Aswan (Dataset1) and Cairo (Dataset2), Egypt. Figure 3 shows the distribution of PV power in Dataset1 by hour, day, week, and month. As shown in Fig. 3a, the maximum PV power is generated at approximately 12.00 h (Egypt time zone: GMT + 2). In Aswan, the PV system operates for a long period (from 7.00 to 18.00 h) throughout the year (Fig. 3d). This is because Aswan has a hot, arid, low-latitude subtropical desert climate: the summer runs from March to November, with temperatures reaching upwards of \(40^{\circ}\)C during June, July, and August. The PV power per week is almost constant (Fig. 3b), while the PV power per day shows small fluctuations (Fig. 3c).
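An hourly profile such as the one in Fig. 3a is obtained by grouping measurements by hour of day and averaging them. The following is a minimal sketch. The record format `(hour_of_day, power)` and the sample values are hypothetical and are not the Aswan data. Grouping by day, week, or month works the same way with a different key.

```python
from collections import defaultdict


def mean_power_by_hour(records):
    """Average PV power for each hour of the day.

    records: iterable of (hour_of_day, power) pairs, e.g. one year of
    hourly measurements.
    """
    totals = defaultdict(float)
    counts = defaultdict(int)
    for hour, power in records:
        totals[hour] += power
        counts[hour] += 1
    return {hour: totals[hour] / counts[hour] for hour in sorted(totals)}


# Hypothetical two-day sample (selected hours only), not the study's data.
sample = [(6, 0.0), (9, 0.5), (12, 1.0), (15, 0.6), (18, 0.0),
          (6, 0.0), (9, 0.3), (12, 0.8), (15, 0.4), (18, 0.1)]
profile = mean_power_by_hour(sample)
peak_hour = max(profile, key=profile.get)  # hour with the largest mean power
```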

4.2 Results

We divide each dataset into training and testing sets: 70% of the samples are used to train the PV power forecasting model, and the remaining 30% are used to test it. We used the root-mean-square error (RMSE) to evaluate the performance of the forecasting models. RMSE is defined as follows:

\[
\mathrm{RMSE} = \sqrt{\frac{1}{N}\sum_{i=1}^{N}\left(\hat{X}_i - X_i\right)^2}
\]

In this equation, \(\hat{X}_i\) and \(X_i\) are the ith forecasted and actual values, respectively, and N is the size of the testing dataset.
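The evaluation protocol can be sketched as follows. The paper does not state whether the 70/30 split preserves time order. The sketch assumes a chronological split, which is the usual choice for forecasting because it keeps future samples out of training. Both helper names are hypothetical.

```python
import math


def chronological_split(series, train_fraction=0.7):
    """Assumed split: first 70% of samples for training, the rest for testing."""
    cut = int(len(series) * train_fraction)
    return series[:cut], series[cut:]


def rmse(predicted, actual):
    """Root-mean-square error between forecasts and observations."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("need two non-empty sequences of equal length")
    n = len(actual)
    return math.sqrt(sum((p - a) ** 2 for p, a in zip(predicted, actual)) / n)


train, test = chronological_split(list(range(10)))
print(len(train), len(test))           # 7 3
print(rmse([3.0, 4.0], [0.0, 0.0]))    # sqrt((9 + 16) / 2), about 3.5355
```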

The loss function of the LSTM models was the mean-squared error, and the optimizer was Adam. The models were implemented using the Keras library (Theano backend). Model1 has a visible layer with 1 input, a hidden layer with 4 LSTM blocks, and an output layer that makes a single-value prediction. We evaluated the performance of the five models with 20, 50, and 100 epochs.

Table 1 shows the training errors of the five models on Dataset1. In the training phase, model4 gave the smallest training error with 100 epochs, while model1 gave the highest RMSE with 50 epochs. Table 2 shows the testing errors of the five models on Dataset1. With 50 epochs, model3 obtained the smallest RMSE value, while model1 gave the highest.

Figures 4, 5, 6, 7, and 8 present the PV power predicted by the five LSTM models with 20, 50, and 100 epochs on Dataset1. Model3 with 50 epochs predicts the PV power more accurately than the other models. In contrast, model1 with 50 epochs shows large errors.

Table 3 presents the training errors of the five models with Dataset2. In the training phase, model2 gave the smallest RMSE value, while model1 gave the highest error with 50 epochs. Table 4 presents the testing errors of the five models with Dataset2. Model2 and model3 gave the smallest RMSE value, while model1 gave the highest one with 50 epochs.

We conclude that model3 gives the best results compared with model1, model2, model4, and model5, and we therefore recommend using it for forecasting PV power. The main reason LSTM achieves good results (small RMSE) is its ability to model the temporal changes in PV power, whereas traditional PV forecasting methods do not exploit this temporal information. In other words, LSTM can capture abstract concepts in the PV power sequences and thus improve the forecasting results.

4.3 Comparison with related methods

In this section, we compare the performance of the proposed method (model3) with three PV forecasting methods: multiple linear regression (MLR), bagged regression trees (BRT), and neural networks (NN). As Table 5 shows, MLR and BRT give high RMSE values. These methods were developed for stationary time series forecasting, so they are not suitable for forecasting PV power, whose solar radiation profile is non-stationary. For NN, we tried different configurations, using 1 and 2 layers and varying the number of neurons from 1 to 50. On both Dataset1 and Dataset2, the NN model gives its best results with 2 layers and 7 neurons.

Unlike LSTM-RNN, the MLR, BRT, and NN methods do not contain memory units, so they cannot model the temporal changes in PV output power. The NN method has an architecture similar to that of the LSTM-RNN, but it has neither memory units nor a recurrent structure. LSTM-RNN, by contrast, uses the information learned at previous time steps in the prediction of the current value, yielding robust and accurate forecasting results. As shown in Table 5, the proposed method (model3) gives very small forecasting errors on both Dataset1 and Dataset2 compared with the other methods.

4.4 Applications of the proposed method

The proposed method can be used in several applications of smart grids, such as:

- Optimal planning of PV units in transmission/distribution systems, i.e., determining the optimal locations and sizes of PV plants while considering their intermittent nature.
- Optimal control of existing PV plants while avoiding operational problems, such as voltage rise and reverse power flow.
- Optimal scheduling of other generators (e.g., fuel-based generators), taking into account the predicted PV power, to minimize the operational costs of the grid.
- Optimal charging/discharging of storage devices (e.g., batteries) for profit maximization.

4.5 Limitations of the proposed method

As shown in Sects. 4.2 and 4.3, the proposed method outperforms the compared methods. However, the current study has some limitations, such as:

- The effect of outliers in PV power sequences has not been studied in this paper.
- We did not incorporate environmental parameters, such as wind speed, air temperature, and humidity, in the forecasting of PV power.

In future work, we will address these limitations in depth. Furthermore, we will use the proposed method in the applications mentioned in Sect. 4.4.

5 Conclusion and future work

In this paper, we have proposed a new method for forecasting PV output power using deep LSTM networks. Unlike traditional PV power forecasting methods, our LSTM-based method can capture abstract concepts in the PV power sequences, and LSTM networks can model the temporal changes in PV output power owing to their recurrent architecture and memory units. We have evaluated the performance of five LSTM models with different architectures in the forecasting of PV power. The proposed model3 (LSTM with time steps) gives the best results compared with the other models; we therefore recommend it for forecasting PV power. We also compared the proposed method (model3) with three PV forecasting methods based on MLR, BRT, and NN. The proposed method gave a very small forecasting error compared with these methods. Future work will focus on utilizing different RNN architectures and loss functions to further improve forecasting accuracy. Furthermore, we will use the proposed method to control and plan the operation of multiple renewable energy sources (e.g., PV, wind, and biomass) in smart grids.

References

Antonanzas J, Osorio N, Escobar R, Urraca R, Martinez-de Pison F, Antonanzas-Torres F (2016) Review of photovoltaic power forecasting. Sol Energy 136:78–111

Bacher P, Madsen H, Nielsen HA (2009) Online short-term solar power forecasting. Sol Energy 83(10):1772–1783

Barakat S, Samy M, Eteiba MB, Wahba WI (2016) Feasibility study of grid connected PV-biomass integrated energy system in Egypt. Int J Emerg Electr Power Syst 17(5):519–528

Benmouiza K, Cheknane A (2016) Small-scale solar radiation forecasting using ARMA and nonlinear autoregressive neural network models. Theoret Appl Climatol 124(3–4):945–958

Bessa R, Trindade A, Silva CS, Miranda V (2015) Probabilistic solar power forecasting in smart grids using distributed information. Int J Electr Power Energy Syst 72:16–23

Bouzerdoum M, Mellit A, Pavan AM (2013) A hybrid model (SARIMA–SVM) for short-term power forecasting of a small-scale grid-connected photovoltaic plant. Sol Energy 98:226–235

Campbell SD, Diebold FX (2005) Weather forecasting for weather derivatives. J Am Stat Assoc 100(469):6–16

Cao JC, Cao S (2006) Study of forecasting solar irradiance using neural networks with preprocessing sample data by wavelet analysis. Energy 31(15):3435–3445

Capizzi G, Napoli C, Bonanno F (2012) Innovative second-generation wavelets construction with recurrent neural networks for solar radiation forecasting. IEEE Trans Neural Netw Learn Syst 23(11):1805–1815

Chow CW, Urquhart B, Lave M, Dominguez A, Kleissl J, Shields J, Washom B (2011) Intra-hour forecasting with a total sky imager at the UC San Diego solar energy testbed. Sol Energy 85(11):2881–2893

Coimbra CF, Pedro HT (2013) Chapter 15—stochastic-learning methods. In: Kleissl J (ed) Solar energy forecasting and resource assessment. Academic Press, Boston, pp 383–406. doi:10.1016/B978-0-12-397177-7.00015-2

Connolly D, Lund H, Mathiesen BV, Leahy M (2011) The first step towards a 100% renewable energy-system for Ireland. Appl Energy 88(2):502–507

Ding M, Xu Z, Wang W, Wang X, Song Y, Chen D (2016) A review on China’s large-scale PV integration: progress, challenges and recommendations. Renew Sustain Energy Rev 53:639–652

Dong Z, Yang D, Reindl T, Walsh WM (2014) Satellite image analysis and a hybrid ESSS/ANN model to forecast solar irradiance in the tropics. Energy Convers Manag 79:66–73

Ellabban O, Abu-Rub H, Blaabjerg F (2014) Renewable energy resources: current status, future prospects and their enabling technology. Renew Sustain Energy Rev 39:748–764

Fantidis J, Bandekas D, Potolias C, Vordos N (2013) Cost of PV electricity-case study of Greece. Sol Energy 91:120–130

Fu X, Li S (2016) Control of single-phase grid-connected converters with LCL filters using recurrent neural network and conventional control methods. IEEE Trans Power Electron 31(7):5354–5364

Geraldi E, Romano F, Ricciardelli E (2012) An advanced model for the estimation of the surface solar irradiance under all atmospheric conditions using MSG/SEVIRI data. IEEE Trans Geosci Remote Sens 50(8):2934–2953

Ghafoor A, Munir A (2015) Design and economics analysis of an off-grid PV system for household electrification. Renew Sustain Energy Rev 42:496–502

Graves A, Mohamed A, Hinton G (2013) Speech recognition with deep recurrent neural networks. In: 2013 IEEE international conference on acoustics, speech and signal processing (ICASSP). IEEE, pp 6645–6649

Hammer A, Heinemann D, Lorenz E, Lückehe B (1999) Short-term forecasting of solar radiation: a statistical approach using satellite data. Sol Energy 67(1):139–150

Hassanzadeh M, Etezadi-Amoli M, Fadali M (2010) Practical approach for sub-hourly and hourly prediction of PV power output. In: North American power symposium (NAPS), 2010. IEEE, pp 1–5

Hochreiter S, Schmidhuber J (1997) Long short-term memory. Neural Comput 9(8):1735–1780

Izgi E, Öztopal A, Yerli B, Kaymak MK, Şahin AD (2012) Short-mid-term solar power prediction by using artificial neural networks. Sol Energy 86(2):725–733

Ji W, Chee KC (2011) Prediction of hourly solar radiation using a novel hybrid model of ARMA and TDNN. Sol Energy 85(5):808–817

Jin L, Li S (2016) Distributed task allocation of multiple robots: a control perspective. IEEE Trans Syst Man Cybern Syst PP(99):1–9

Jin L, Li S, La HM, Luo X (2017) Manipulability optimization of redundant manipulators using dynamic neural networks. IEEE Trans Ind Electron 64(6):4710–4720

Li S, He J, Li Y, Rafique MU (2017) Distributed recurrent neural networks for cooperative control of manipulators: a game-theoretic perspective. IEEE Trans Neural Netw Learn Syst 28(2):415–426

Li S, Qin F (2013) A dynamic neural network approach for solving nonlinear inequalities defined on a graph and its application to distributed, routing-free, range-free localization of WSNs. Neurocomputing 117:72–80

Li S, Wang H, Rafique MU (2017) A novel recurrent neural network for manipulator control with improved noise tolerance. IEEE Trans Neural Netw Learn Syst PP(99):1–11

Li S, Zhang Y, Jin L (2016) Kinematic control of redundant manipulators using neural networks. IEEE Trans Neural Netw Learn Syst 28(10):2243–2254

Li Y, Su Y, Shu L (2014) An ARMAX model for forecasting the power output of a grid connected photovoltaic system. Renew Energy 66:78–89

Lim YS, Tang JH (2014) Experimental study on flicker emissions by photovoltaic systems on highly cloudy region: a case study in Malaysia. Renew Energy 64:61–70

Lipperheide M, Bosch J, Kleissl J (2015) Embedded nowcasting method using cloud speed persistence for a photovoltaic power plant. Sol Energy 112:232–238

Liu J, Fang W, Zhang X, Yang C (2015) An improved photovoltaic power forecasting model with the assistance of aerosol index data. IEEE Trans Sustain Energy 6(2):434–442

Lorenz E, Hurka J, Heinemann D, Beyer HG (2009) Irradiance forecasting for the power prediction of grid-connected photovoltaic systems. IEEE J Sel Top Appl Earth Obs Remote Sens 2(1):2–10

Mahmoud K, Yorino N, Ahmed A (2016) Optimal distributed generation allocation in distribution systems for loss minimization. IEEE Trans Power Syst 31(2):960–969

Mahmud N, Zahedi A (2016) Review of control strategies for voltage regulation of the smart distribution network with high penetration of renewable distributed generation. Renew Sustain Energy Rev 64:582–595

Mathiesen BV, Lund H, Connolly D, Wenzel H, Østergaard PA, Möller B, Nielsen S, Ridjan I, Karnøe P, Sperling K et al (2015) Smart energy systems for coherent 100% renewable energy and transport solutions. Appl Energy 145:139–154

Mathiesen P, Kleissl J (2011) Evaluation of numerical weather prediction for intra-day solar forecasting in the continental United States. Sol Energy 85(5):967–977

Mellit A, Pavan AM, Lughi V (2014) Short-term forecasting of power production in a large-scale photovoltaic plant. Sol Energy 105:401–413

Mubiru J (2008) Predicting total solar irradiation values using artificial neural networks. Renew Energy 33(10):2329–2332

Naz S, Umar AI, Ahmed R, Razzak MI, Rashid SF, Shafait F (2016) Urdu nastaliq text recognition using implicit segmentation based on multi-dimensional long short term memory neural networks. SpringerPlus 5(1):2010

Nematollahi O, Hoghooghi H, Rasti M, Sedaghat A (2016) Energy demands and renewable energy resources in the Middle East. Renew Sustain Energy Rev 54:1172–1181

Pedro HT, Coimbra CF (2012) Assessment of forecasting techniques for solar power production with no exogenous inputs. Sol Energy 86(7):2017–2028

Perez R, Kivalov S, Schlemmer J, Hemker K, Renné D, Hoff TE (2010) Validation of short and medium term operational solar radiation forecasts in the US. Sol Energy 84(12):2161–2172

Perez R, Lorenz E, Pelland S, Beauharnois M, Van Knowe G, Hemker K, Heinemann D, Remund J, Müller SC, Traunmüller W et al (2013) Comparison of numerical weather prediction solar irradiance forecasts in the US, Canada and Europe. Sol Energy 94:305–326

Santos SF, Fitiwi DZ, Shafie-Khah M, Bizuayehu AW, Cabrita CM, Catalão JP (2017) New multistage and stochastic mathematical model for maximizing RES hosting capacity—part I: problem formulation. IEEE Trans Sustain Energy 8(1):304–319

Shivashankar S, Mekhilef S, Mokhlis H, Karimi M (2016) Mitigating methods of power fluctuation of photovoltaic (PV) sources—a review. Renew Sustain Energy Rev 59:1170–1184

Sutskever I, Vinyals O, Le QV (2014) Sequence to sequence learning with neural networks. In: Ghahramani Z, Welling M, Cortes C, Lawrence ND, Weinberger KQ (eds) Advances in neural information processing systems. Curran Associates, Inc., pp 3104–3112

Wan C, Zhao J, Song Y, Xu Z, Lin J, Hu Z (2015) Photovoltaic and solar power forecasting for smart grid energy management. CSEE J Power Energy Syst 1(4):38–46

Woyte A, Van Thong V, Belmans R, Nijs J (2006) Voltage fluctuations on distribution level introduced by photovoltaic systems. IEEE Trans Energy Convers 21(1):202–209

Yacef R, Benghanem M, Mellit A (2012) Prediction of daily global solar irradiation data using Bayesian neural network: a comparative study. Renew Energy 48:146–154

Yao Y, He X, Huang T, Li C, Xia D (2016) A projection neural network for optimal demand response in smart grid environment. Neural Comput Appl 1–9. doi:10.1007/s00521-016-2532-0

Yona A, Senjyu T, Funabashi T, Kim CH (2013) Determination method of insolation prediction with fuzzy and applying neural network for long-term ahead PV power output correction. IEEE Trans Sustain Energy 4(2):527–533

Zhi L, Zhu Y, Wang H, Xu Z, Man Z (2016) A recurrent neural network for modeling crack growth of aluminium alloy. Neural Comput Appl 27(1):197–203


Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.


Cite this article

Abdel-Nasser, M., Mahmoud, K. Accurate photovoltaic power forecasting models using deep LSTM-RNN. Neural Comput & Applic 31, 2727–2740 (2019). https://doi.org/10.1007/s00521-017-3225-z


DOI: https://doi.org/10.1007/s00521-017-3225-z