Abstract

There have been many studies on the runtime analysis of evolutionary algorithms in discrete optimization, and however, relatively few homologous results have been obtained on continuous optimization, such as evolutionary programming (EP). This paper presents an analysis of the running time (as approximated by the mean first hitting time) of two EP algorithms based on Gaussian and Cauchy mutations, using an absorbing Markov process model. Given a constant variation, we analyze the running-time upper bound of special Gaussian mutation EP and Cauchy mutation EP, respectively. Our analysis shows that the upper bounds are impacted by individual number, problem dimension number, searching range, and the Lebesgue measure of the optimal neighborhood. Furthermore, we provide conditions whereby the mean running time of the considered EPs can be no more than a polynomial of n. The condition is that the Lebesgue measure of the optimal neighborhood is larger than a combinatorial computation of an exponential and the given polynomial of n. In the end, we present a case study on sphere function, and the experiment validates the theoretical result in the case study.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

The running time to optimum is a key factor in determining the success of an evolutionary programming (EP) approach. Ideally, an implementation of an EP approach should run for a sufficient number of generations when the probability of achieving an optimum is greater than some desired value. However, few results on the running times of EP approaches can be found in the current literature.

As a technique of finite state machine, EP was first proposed for continuous optimization [1]. It has since been widely adopted as a powerful optimizing framework for solving continuous optimization problems [2–4]. More recently, EP research has mainly concentrated on the function of its parameters and its improvement by adaptive strategies. As a result, several EP variants have been proposed, distinguishing from each other mainly with different mutation schemes based on different probability distributions.

Arguably, the first EP algorithm that was widely considered successful was the one with Gauss mutation that has been termed as classical evolutionary programming (CEP) [2]. CEP has been intensively analyzed by Fogel [3, 4], Bäck and Schwefel [5, 6]. Subsequently, Yao et al. proposed a fast evolutionary programming algorithm (FEP) with a new mutation scheme based on the Cauchy distribution [7]. Computational experiments showed that FEP is superior to CEP when tackling the optimization problems of multimodal and dispersed peak functions. Another EP variant [8] was later proposed by using the Lévy distribution-based mutation, which we call \(L\acute{e} vy\) evolutionary programming (LEP) in this paper. Empirical analyses show that, overall, LEP exceeds CEP and FEP when solving the benchmark problems of multimodal and highly dispersed peak functions.

CEP [2], FEP [7], and LEP [8] can be considered as classical evolutionary programming algorithms. Several modified EPs have since been designed based on these three basic approaches [2, 7, 8].

The performances of EP approaches such as CEP, FEP, and LEP have oftentimes been verified experimentally rather than theoretically. The theoretical foundations of EP have been an open problem ever since it was first put forward [1, 2]. In particular, Bäck and Schwefel [5, 6] suggested the convergence analysis of EP algorithms as a research topic in their surveys of evolutionary algorithms. Fogel [2–4] presented an initial proof of EP convergence on the basis of the discrete solution space. Rodolph [9–11] then showed that CEP and FEP can converge with an arbitrary initialization; this result is more general since it applies to a continuous solution space.

Previous convergence studies only considered whether an EP algorithm is able to find an optimum within infinite iteration, but did not mention the speed of convergence, i.e., lacking of running-time analysis. To date, running-time analyses have mainly focused on Boolean-individual EAs like (\(1+1\))EA [12], (\(N+N\))EA [13], multi-objective EA [14–16]. Alternative theoretical measures for evaluating the running times of Boolean-individual EA approaches have also been proposed [17, 18]. Recently, the impact of particular components of EA on runtime has also been studied on mutation, selection [19], and population size [18, 20, 21]. In addition to these studies on EAs solving Boolean functions, some results of runtime analysis have been obtained on some combinatorial optimization problems [22–24]. Lehre and Yao [25] completed the runtime analysis of the (\(1+1\))EA on computing unique input output sequences. Zhou et al. presented a series of EA analysis results for discrete optimization like the minimum label spanning tree problem [26], the multiprocessor scheduling problem [27], and the maximum cut problem [28]. Other proposed studies are on the topics of tight bounds on the running time of EA and randomized search heuristic [29–31].

As summarized above, the majority of runtime analyses are limited to discrete search space; analyses for continuous search space require a more sophisticated modeling and remain relatively few, which is unsatisfactory from a theoretical point of view. Jägerskpper conducted a rigorous runtime analysis on (\(1+1\))ES, (\(1+\lambda\))ES minimizing the sphere function [32, 33]. Agapie et al. modeled the (\(1+1\))ES as a renewal process under some reasonable assumptions and analyzed the first hitting time on inclined plane [34] and sphere function [35]. Chen et al. [36] proposed general drift conditions to estimate the upper bound of the first hitting time for EAs to find \(\epsilon\)-approximation solutions. Inspired by the studies above, especially the estimating approach [17] for discrete situation, this paper presents an analytical framework for the running time of evolutionary programming. We also discuss whether the running time of CEP and FEP is influenced by individual number, problem dimension number, searching range, and the Lebesgue measure of the optimal neighborhood in the target problem. Furthermore, we give the approximate conditions under which the EPs can converge in a timespan less than a polynomial of n.

2 EP algorithms and their stochastic process model

2.1 Introduction to EP algorithms

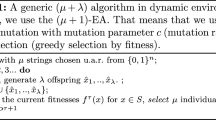

This section introduces the two EP algorithms CEP [2] and FEP [7] that are studied in this paper. The skeleton of EP algorithms analyzed in this paper is given in Algorithm 1; the sole difference among CEP, FEP, and LEP lies in their treatments of step 3.

In step 1, the generated k individuals are denoted by a couple of real vectors \(\mathbf {v}_i = ( \mathbf {x}_i , \varvec{\sigma }_i )\), \(i = 1,2,\ldots ,k\), where the elements \(\mathbf {x}_i = (x_{i1}, x_{i2}, \ldots , x_{in} )\) are the variables to be optimized, and the elements \(\varvec{\sigma }_i = (\sigma _{i1}, \sigma _{i2},\ldots , \sigma _{in})\) are variation variables that affect the generation of offspring. We set the iteration number \(t = 0\) and the initial \(\sigma _{ij} \le 2 (j = 1,\ldots , n)\) as proposed in reference [8]

The fitness values in steps 2 and 4 are calculated according to the objective function of the target problem.

In step 3, a single \({\bar{\mathbf{v}}}_i = ({\bar{\mathbf{x}}_i},\bar{\varvec{\sigma }_i})\) is generated for each individual \(\mathbf {v}_i\), where \(i = 1,\ldots ,k\). For \(j =1,\ldots ,n\),

where \(V(\sigma _{ij})\) denotes a renewing function of variation variable \(\sigma _{ij}\). The renewing function \(\bar{\sigma }_{ij} = V(\sigma _{ij})\) may have various forms, and a representative implementation of it can be found in reference [7]. However, for ease of theoretical analysis, we will consider a kind of simple renewing function, i.e., constant function, in our case studies (see Sect. 4).

The three EP algorithms differ in how \({\bar{\mathbf{x}}}_i\) is derived, which can be explained as follows(in this paper, we only consider CEP and FEP).

where \(N_j(0,1)\) is a newly generated random variable by Gaussian distribution for each j, \(\delta _j\) is a Cauchy random number produced anew for jth dimension, whose density function is

In step 5, for each individual in the set of all parents and offsprings, q different opponent individuals are uniformly and randomly selected to be compared. If the selected individual’s fitness value is more than the opponent’s, the individual obtains a “win.” The top k individuals with the most “wins’ are selected to be the parents in the next iteration, breaking ties by greater fitness.

2.2 Target problem of EP algorithms

Without loss of generality, we assume that the EP approaches analyzed in this study aim to solve a minimization problem in a continuous search space, defined as follows.

Definition 1

(minimization problem) Let \(\varvec{S} \subseteq \varvec{R}^n\) be a finite subspace of the n-dimensional real domain \(\varvec{R}^n\), and let \(f: \varvec{S}\rightarrow \varvec{R}\) be an n-dimensional real function. A minimization problem, denoted by the 2-tuple \((\varvec{S}, f)\), is to find an n-dimensional vector \(\mathbf {x}_{\text{min}}\in \varvec{S}\) such that \(\forall \mathbf {x}\in \varvec{S}, f(\mathbf {x}_{{\text{min}}}) \le f(\mathbf {x})\).

Without loss of generality, we can assume that \(\mathbf {S}=\prod _{i=1}^{n}[-b_{i},b_{i}]\) where \(b_{i}=b>0\). The function \(f:\mathbf {S}\rightarrow \mathbf {R}\) is called the objective function of the minimization problem. We do not require f to be continuous, but it must be bounded. Furthermore, we only consider the unconstrained minimization.

The following properties are assumed, and we will make use of them in our analyses.

-

1.

The subset of optimal solutions in \(\mathbf {S}\) is non-empty.

-

2.

Let \(f^{*}=\min \{f(x)|x\in \mathbf {S}\}\) be the fitness value of an optimal solution, and let \(\mathbf {S}^{*}(\varepsilon )=\{x\in \mathbf {S}|f(x)<f^{*}+\varepsilon \}\) be the optimal epsilon neighborhood. Every element of \(\mathbf {S}^{*}(\varepsilon )\) is an optimal solution of the minimization problem.

-

3.

\(\forall \varepsilon >0\), \(m\big (\mathbf {S}^{*}(\varepsilon )\big )>0\), where we denote the Lebesgue measure of \(\mathbf {S}^{*}(\varepsilon )\) as \(m\big (\mathbf {S}^{*}(\varepsilon )\big )\).

The first assumption describes the existence of optimal solutions to the problem. The second assumption presents a rigorous definition of optimal solution for continuous minimization optimization problems. The third assumption indicates that there are always solutions whose objective values are distributed continuously and arbitrarily close to the optimal, which makes the minimization problem solvable.

2.3 Stochastic process model of EP algorithms

Our running-time analyses are based on representing the EP algorithms as Markov processes. In this section, we explain the terminologies and notations used throughout the rest of this study.

Definition 2

(stochastic process of EP) The stochastic process of an evolutionary programming algorithm EP is denoted by \(\{\xi _{t}^{\text{EP}}\}_{t=0}^{+\infty }\); \(\xi _{t}^{{\text{EP}}}=(\mathbf {v}_{1}^{(t)},\mathbf {v}_{2}^{(t)}, \ldots ,\mathbf {v}_{k}^{(t)})\), where \(\mathbf {v}_{i}^{(t)}=(\mathbf {x}_{i}^{(t)}, \varvec{\sigma }_{i}^{(t)})\) is the ith individual at the tth iteration.

The stochastic status \(\xi _{t}^{{\text{EP}}}\) represents the individuals of the tth iteration population for the algorithm EP. All stochastic processes examined in this paper are discrete time, i.e., \(t\in \mathbf {Z}^{+}\).

Definition 3

(status space) The status space of EP is \(\varOmega _{{\text{EP}}}=\{(\mathbf {v}_{1},\mathbf {v}_{2},\ldots ,\mathbf {v}_{k})| \mathbf {v}_{i}=(\mathbf {x}_{i},\varvec{\sigma }_{i}),\mathbf {x}_{i}\in \mathbf {S},\sigma _{ij}\le 2,j=1,\ldots ,n\}\).

\(\varOmega _{{\text{EP}}}\) is the set of all possible population statuses for EP. Intuitively, each element of \(\varOmega _{{\text{EP}}}\) is associated with a possible population in the implementation of EP. Let \(\mathbf {x}^{*}\in \mathbf {S}^{*}(\varepsilon )\) be an optimal solution. We define the optimal status space as follows:

Definition 4

(optimal status space) The optimal status space of EP is the subset \(\varOmega _{{\text{EP}}}^{*}\subseteq \varOmega _{{\text{EP}}}\) such that \(\forall \xi _{{\text{EP}}}^{*}\in \varOmega _{{\text{EP}}}^{*}\), \(\exists (\mathbf {x}^{*},\varvec{\sigma })\in \xi _{{\text{EP}}}^{*}\), where \(\varvec{\sigma }=(\sigma ,\sigma ,\ldots ,\sigma )\), and \(\sigma >0\).

Hence, all members of \(\varOmega _{{\text{EP}}}^{*}\) contains at least one optimal solution represented by individual \(\mathbf {x}^{*}\).

Definition 5

(Markov process) A stochastic process \(\{\xi _{t}\}_{t=0}^{+\infty }\) with status space \(\varOmega\) is a Markov process if \(\forall \tilde{\varOmega }\subseteq \varOmega\), \(P\{\xi _{t+1}\in \tilde{\varOmega }|\xi _{0},\ldots ,\xi _{t}\}=P\{\xi _{t+1} \in \tilde{\varOmega }|\xi _{t}\}\).

Lemma 1

The stochastic process \(\{\xi _{t}^{{\text{EP}}}\}_{t=0}^{+\infty }\) of EP is a Markov process.

Proof

The proof is given in “Appendix” section.\(\{\xi _{t}^{{\text{EP}}}\}_{t=0}^{+\infty }\)

We now show that the stochastic process of EP is an absorbing Markov process, defined as follows.\(\square\)

Definition 6

(absorbing Markov process to optimal status space) A Markov process \(\{\xi _{t}\}_{t=0}^{+\infty }\) is an absorbing Markov process to \(\varOmega ^{*}\) if \(\exists \varOmega ^{*}\subset \varOmega\), such that \(P\{\xi _{t+1}\notin \varOmega ^{*}|\xi _{t}\in \varOmega ^{*}\}=0\) for \(t=0,1,\ldots\).

Lemma 2

The stochastic process \(\{\xi _{t}^{{\text{EP}}}\}_{t=0}^{+\infty }\) of EP is an absorbing Markov process to \(\varOmega _{{\text{EP}}}^{*}\).

Proof

The proof is given in “Appendix” section.\(\square\)

This property implies that once an EP algorithm attains an optimal state, it will never leave optimality.

3 General running-time analysis framework for EP algorithms

The analysis of EP algorithms has usually been approximated using a simpler measure known as the first hitting time [13, 17], which is employed in this study.

Definition 7

(first hitting time of EP) \(\mu _{{\text{EP}}}\) is the first hitting time of EP if \(\mu _{{\text{EP}}}=\min \{t\ge 0:\xi _{t}^{{\text{EP}}}\in \varOmega _{{\text{EP}}}^{*}\}\).

If an EP algorithm is modeled as an absorbing Markov chain, the running time of the EP can be measured by its first hitting time \(\mu _{{\text{EP}}}\). We denote its expected value by \(E\mu _{{\text{EP}}}\) which can be calculated by Theorem 1. Hereinafter, we use the term running time and first hitting time interchangeably.

Let \(\lambda _{t}^{{\text{EP}}}=P\{\xi _{t}^{{\text{EP}}}\in \varOmega _{{\text{EP}}}^{*}\}=P\{\mu _{{\text{EP}}}\le t\}\) be the probability that EP has attained an optimal state by time t.

Theorem 1

If \(\lim \nolimits _{t\rightarrow +\infty }\lambda _{t}^{{\text{EP}}}=1\) , the expected first hitting time \(E\mu _{{\text{EP}}}=\sum _{i=0}^{+\infty }(1-\lambda _{i}^{{\text{EP}}})\) .

Corollary 1

The expected hitting time can also be expressed as \(E\mu _{{\text{EP}}}=(1-\lambda _{0}^{{\text{EP}}})\sum\nolimits_{t=0}^{+\infty }\prod _{i=1}^{t}(1-P\{\xi _{i}^{{\text{EP}}}\in \varOmega _{{\text{EP}}}^{*}|\xi _{i-1}^{{\text{EP}}}\notin \varOmega _{{\text{EP}}}^{*}\})\).

Proof

The proof is given in “Appendix” section.\(\square\)

The conclusions of Theorem 1 and Corollary are a direct approach to calculating the first hitting time of EP. However, Corollary 1 is more practical than Theorem 1 for this purpose since \(p_{i}=P\{\xi _{i}^{{\text{EP}}}\in \varOmega _{{\text{EP}}}^{*}| \xi _{i-1}^{{\text{EP}}}\notin \varOmega _{{\text{EP}}}^{*}\}\), which is the probability that the process first finds an optimal solution at time i, is dependent on the mutation and selection techniques of the EP. Hence, the framework of Corollary 1 is similar to the one in [17]. However, the estimating method [17] is based on a probability \(q_{i}\) in discrete optimization \(q_{i}=\sum \nolimits _{y\notin \varOmega _{{\text{EP}}}^{*}}P\{\xi _{i}^{{\text{EP}}} \in \varOmega _{{\text{EP}}}^{*}|\xi _{i-1}^{{\text{EP}}}=y\}P\{\xi _{i-1}^{{\text{EP}}}=y\}\).

\(p_{i}\) is a probability for continuous status while \(q_{i}\) is for the discrete one. In general, the exact value of \(p_{i}\) is difficult to calculate. Alternatively, first hitting time can also be analyzed in terms of an upper and a lower bound of \(p_{i}\), as shown by the following Corollaries 2 and 3:

Corollary 2

If \(\alpha _{t}\le P\{\xi _{t}^{{\text{EP}}}\in \varOmega _{{\text{EP}}}^{*}|\xi _{t-1}^{{\text{EP}}}\notin \varOmega _{{\text{EP}}}^{*}\}\le \beta _{t}\),

-

1.

\(\sum\nolimits_{t=0}^{+\infty }[(1-\lambda _{0}^{{\text{EP}}})\prod\nolimits_{i=1}^{t}(1-\beta _{i})]\le E\mu _{{\text{EP}}}\le \sum\nolimits_{t=0}^{+\infty }[(1-\lambda _{0}^{{\text{EP}}})\prod\nolimits_{i=1}^{t}(1-\alpha _{i})]\) where \(0<\alpha _{t},\beta _{t}<1\).

-

2.

\(\beta ^{-1}(1-\lambda _{0}^{{\text{EP}}})\le E\mu _{{\text{EP}}}\le \alpha ^{-1}(1-\lambda _{0}^{{\text{EP}}})\) when \(\alpha _{t}=\alpha\) and \(\beta _{t}=\beta\).

Proof

The proof is given in “Appendix” section.\(\square\)

Corollary 2 indicates that \(E\mu _{{\text{EP}}}\) can be studied by the lower bound and upper bound of \(P\{\xi _{t}^{{\text{EP}}}\in \varOmega _{{\text{EP}}}^{*}|\xi _{t-1}^{{\text{EP}}} \notin \varOmega _{{\text{EP}}}^{*}\}\). Therefore, the theorem and corollaries introduce a first-hitting-time framework for the running-time analysis of EP. The running times of EPs based on Gaussian and Cauchys mutation are studied following the framework.

4 Running-time upper bounds of CEP and FEP

In this section, we use the framework proposed in Sect. 3 to study the running times of EPs based on Gaussian and Cauchy mutations, i.e., CEP and FEP. Moreover, our running-time analysis aims at a class of EPs with constant variation, as shown in Eq. (2).

4.1 Mean running time of CEP

CEP [2] is a classical EP, from which several continuous evolutionary algorithms are derived. This subsection mainly focuses on the running time of CEP proposed by Fogel [3], Bäck and Schwefel [5, 6]. The mutation of CEP is based on the standard normal distribution indicated by Eq. (3).

According to the running-time analysis framework, the probability \(p_{t}=P\{\xi _{t}^{C}\in \varOmega _{{\text{EP}}}^{*}|\xi _{t-1}^{C} \notin \varOmega _{{\text{EP}}}^{*}\}\) for CEP is a crucial factor, and its property is introduced by the following.

Theorem 2

Let the stochastic process of CEP be denoted by \(\{\xi _{t}^{C}\}_{t=0}^{+\infty }\) . Let k be the population size of CEP with solution space \(\mathbf {S}=\prod _{i=1}^{n}[-b_{i},b_{i}]\) where \(b_{i}=b>0\) . Then \(\forall \varepsilon >0\) , we have

-

1.

For fixed individual \((\mathbf {x},{\bar{\varvec{\sigma }}})\), \(P\{{\bar{\mathbf{x}}}\in \mathbf {S}^{*}(\varepsilon )\}\ge \frac{m\big (\mathbf {S}^{*}(\varepsilon )\big )}{(\sqrt{2\pi })^{n}}(\prod \nolimits _{j=1}^{n}\frac{1}{\sigma }) \exp \{-\sum \nolimits _{j=1}^{n}\frac{2b^{2}}{\sigma ^{2}}\}\) where the renewing function \(V(\sigma _{ij})=\sigma\).

-

2.

The right part of the inequality of conclusion (1) is maximum when \(\sigma =2b\).

-

3.

\(P\{\xi _{t}^{C}\in \varOmega _{{\text{EP}}}^{*}|\xi _{t-1}^{C}\notin \varOmega _{{\text{EP}}}^{*}\}\ge 1-(1-\frac{m\big (\mathbf {S}^{*}(\varepsilon )\big )}{(4\sqrt{e}\pi b)^{n}})^{k}\) if \(\sigma =2b\).

Proof

The proof is given in “Appendix” section.\(\square\)

In Theorem 2, Part (1) indicates a lower bound of the probability that an individual \(\mathbf {x}\) can be renewed to be the \({\bar{\mathbf{x}}}\) in the optimal neighborhood \(\mathbf {S}^{*}(\varepsilon )\). As a result, the lower bound is a function of variation \(\sigma\). Part (2) presents the lower bound that Part (1) will be tightest if \(\sigma =2b\). By this, the key factor of Corollary 2\(P\{\xi _{t}^{C}\in \varOmega _{{\text{EP}}}^{*}|\xi _{t-1}^{C} \notin \varOmega _{{\text{EP}}}^{*}\}\) may have a tight lower bound shown by Part (3).

Theorem 2 indicates that a larger \(m\big (\mathbf {S}^{*}(\varepsilon )\big )\) leads to faster convergence for CEP since \(P\{\xi _{t}^{C}\in \varOmega _{{\text{EP}}}^{*}|\xi _{t-1}^{C}\notin \varOmega _{{\text{EP}}}^{*}\}\) becomes larger when \(m\big (\mathbf {S}^{*}(\varepsilon )\big )\) is larger. Moreover, Theorem 2 produces the lower bound of \(P\{\xi _{t}^{C}\in \varOmega _{{\text{EP}}}^{*}|\xi _{t-1}^{C}\notin \varOmega _{{\text{EP}}}^{*}\}\), with which the first hitting time of CEP can be estimated following Corollary 2. Corollary 3 indicates the convergence capacity and running-time upper bound of CEP.

Corollary 3

Supposed conditions of Theorem 2 are satisfied,

-

1.

\(\lim \nolimits _{t\rightarrow +\infty }\lambda _{t}^{C}=1\) \((\lambda _{t}^{C}=P\{\xi _{t}^{C}\in \varOmega _{{\text{EP}}}^{*}\})\) and

-

2.

\(\forall \varepsilon >0\),

$$\begin{aligned} E\mu _{C}\le (1-\lambda _{0}^{C})\big (1-(1-\frac{m\big (\mathbf {S}^{*}(\varepsilon )\big )}{(4\sqrt{e}\pi b)^{n}})^{k}\big )^{-1} \end{aligned}$$(6)

Proof

The proof is given in “Appendix” section.\(\square\)

Corollary 3 shows that CEP converge globally and that the upper bound of CEP’s running time decreases as the Lebesgue measure of the optimal \(\varepsilon\) neighborhood \(m\big (\mathbf {S}^{*}(\varepsilon )\big )\) of \(\mathbf {S}^{*}(\varepsilon )\) increases. A larger \(m\big (\mathbf {S}^{*}(\varepsilon )\big )\) translates to a larger a larger optimal \(\varepsilon\) neighborhood \(\forall \varepsilon >0\), which allows the solution of CEP more easily. Moreover, an increase in problem dimension number can increase the upper bound, and enlarging the individual number will make the upper bound smaller.

According to Eq. (6), \(E\mu _{C}\) has a smaller upper bound when the population size k increases. Approximately,

since \(\Big (1-\frac{m\big (\mathbf {S}^{*}(\varepsilon )\big )}{(4\sqrt{e}\pi b)^{n}}\Big )^{k}\approx 1-k\frac{m\big (\mathbf {S}^{*}(\varepsilon )\big )}{(4\sqrt{e}\pi b)^{n}}\) when k is large. Furthermore, the running time of CEP is similar to an exponential order function of size n, if \(m\big (\mathbf {S}^{*}(\varepsilon )\big )\ge C_{0}>0\) where \(C_{0}\) is a positive constant. Hence, the running time of CEP is nearly \(O\big ((4\sqrt{e}\pi b)^{n}\big )\).

Moreover, we can give an approximate condition under which CEP can converge in time polynomial to n when \(m\big (\mathbf {S}^{*}(\varepsilon )\big )\ge c_{C}\frac{(4\sqrt{e}\pi b)^{n}}{P_{n}}\), where \(c_{C}=\frac{(1-\lambda _{0}^{C})}{P_{n}}>0\).

Suppose Eq. (7) holds. Then, \(m\big (\mathbf {S}^{*}(\varepsilon )\big )\ge \frac{(1-\lambda _{0}^{C})(4\sqrt{e}\pi b)^{n}}{P_{n}^2}>0\Leftrightarrow E\mu _{C}\le \frac{(1-\lambda _{0}^{C})(4\sqrt{e}\pi b)^{n}}{P_{n}\cdot m\big (\mathbf {S}^{*}(\varepsilon )\big )}\le P_{n}.\) Thus, the running time of CEP can be polynomial to n, under the constraint that \(\forall \varepsilon >0\), \(m\big (\mathbf {S}^{*}(\varepsilon )\big )\ge \frac{(1-\lambda _{0}^{C})}{k}\cdot \frac{(4\sqrt{e}\pi b)^{n}}{P_{n}}\), where \(\mathbf {S}^{*}(\varepsilon )=\{\mathbf {x}\in \mathbf {S}|f(\mathbf {x})<f^{*}+\varepsilon \}\).

4.2 Mean running time of FEP

FEP was proposed by Yao [7] as an improvement to CEP. The mutation for FEP is based on the Cauchy distribution indicated by Eq. (4). The property of the probability \(p_{t}=P\{\xi _{t}^{F}\in \varOmega _{{\text{EP}}}^{*}| \xi _{t-1}^{F}\notin \varOmega _{{\text{EP}}}^{*}\}\) is discussed by Theorem 3 \((t=0,1,\ldots )\).

Theorem 3

Let \(\{\xi _{t}^{F}\}_{t=0}^{+\infty }\) be the stochastic process of FEP,

-

1.

\(P\{{\bar{\mathbf{x}}}\in \mathbf {S}^{*}(\varepsilon )\}\ge \frac{m\big (\mathbf {S}^{*}(\varepsilon )\big )}{(\pi )^{n}} (\sigma +\frac{4b^{2}}{\sigma })^{-n}\)

-

2.

The right part of the inequality of conclusion (1) is maximum when \(\sigma =2b\)

-

3.

\(P\{\xi _{t}^{F}\in \varOmega _{{\text{EP}}}^{*}|\xi _{t-1}^{F}\notin \varOmega _{{\text{EP}}}^{*}\}\ge 1-(1-\frac{m\big (\mathbf {S}^{*}(\varepsilon )\big )}{(4\pi b)^{n}})^{k}\) if \(\sigma =2b\).

Proof

The proof is given in “Appendix” section.\(\square\)

Similarly to Theorem 2, FEP with \(\sigma =2b\) may lead to a tight lower bound of the probability in Part (1) and (3). Theorem 3 indicates that \(m\big (\mathbf {S}^{*}(\varepsilon )\big )\) affects the convergence of FEP directly. A larger \(m\big (\mathbf {S}^{*}(\varepsilon )\big )\) allows FEP to more easily arrive at a status in the optimal state space (Definition 4). Conversely, a bigger b (the bounds of search space) increases the difficulty of the problem for FEP. Given this convergence time framework, Corollary 4 summarizes the convergence capacity and running-time upper bound of FEP.

Corollary 4

Supposed conditions of Theorem 3 are satisfied,

-

1.

\(\lim \nolimits _{t\rightarrow +\infty }\lambda _{t}^{F}=1\) \((\lambda _{t}^{F}=P\{\xi _{t}^{F}\in \varOmega _{{\text{EP}}}^{*}\})\) and

-

2.

for \(\forall \varepsilon >0\), \(E\mu _{F}\le (1-\lambda _{0}^{F})\big (1-(1- \frac{m\big (\mathbf {S}^{*}(\varepsilon )\big )}{(4b\pi )^{n}})^{k}\big )\).

Proof

The proof is given in “Appendix” section.\(\square\)

Corollary 4 proves the convergence of FEP and indicates that a larger \(m\big (\mathbf {S}^{*}(\varepsilon )\big )\) can make FEP converge faster. Using a similar analysis to that for CEP, a larger dimension number of problem n can increase the upper bound of FEP, but larger individual number k can lead to a smaller upper bound. Furthermore, [\(-b,b\)] is the maximum interval bound for each dimension of the variables to be optimized (Definition 1). As a result, the second conclusion of Corollary 4 also implies that a larger search space will increase the upper bound of \(E\mu _{{\text{EP}}}\).

If k becomes large enough, we have \(\Big (1-\frac{m\big (\mathbf {S}^{*}(\varepsilon )\big )}{(4b\pi )^{n}}\Big )^{k}\approx 1-k\frac{m\big (\mathbf {S}^{*}(\varepsilon )\big )}{(4b\pi )^{n}}\) such that

Hence, the running time of FEP is nearly \(O((4b\pi )^{n})\) when \(m\big (\mathbf {S}^{*}(\varepsilon )\big )\) is a constant greater than zero.

Moreover, we can give an approximate condition where FEP can converge in polynomial time P(n), i.e., \(m\big (\mathbf {S}^{*}(\varepsilon )\big )\ge \frac{1-\lambda _{0}^{F}}{k}\cdot \frac{(4b\pi )^{n}}{P(n)}\), \(\forall \varepsilon >0\) when Eq. (8) is true. The analysis is similar to the one for CEP at the end of Sect. 4.1.

4.3 Case study: simple EPs for sphere function problem

In this section, we analyzed the running time of simple EPs for a concrete problem presented by Definition 8.

Definition 8

A sphere function problem is to minimize the value of function \(f({\mathbf{y}}) = \sum \nolimits _{i = 1}^n {y_i^2}\) where \({y_i} \in [ - 1,1]\).

Obviously, the optimal solution of sphere function problem is the vector \((0,0,\ldots ,0)\).

Definition 9

A simple EP is an EP algorithm such that \({\varvec{\sigma }} = (1,1,\ldots ,1)\),there is only one individual in the population and the variation renewing function \(V({\sigma _j}) = 1\) where \(j = 1,\ldots ,n\).

Then, we have

Simple CEP:

Simple FEP:

We will use the proposed theories of running time to analyze simple CEP and FEP to investigate which algorithm may have a smaller upper bound of running time.

Given the solution space \(\mathbf {S}=\prod \nolimits _{j=1}^{n}[-1,1]\), optimal neighborhood \(\mathbf {S}^{*}(\varepsilon )=\{\mathbf{y }\in \mathbf {S}|f(\mathbf y )<\varepsilon \},\varepsilon \le 1\). Let \(\tilde{\mathbf{S}}=\{\mathbf {z}|\mathbf {z}= {\bar{\mathbf{x}}}-\mathbf {x},{\bar{\mathbf{x}}}\in \mathbf {S}^{*}(\varepsilon ),\mathbf {x}\in \mathbf {S}\}\). We have the following results.

Theorem 4

Let \(\mu _{1}\) and \(\mu _{2}\) be the first hitting time of simple CEP and FEP, then

-

1.

\(E{\mu _1} \le {(\frac{{\sqrt{2\pi e} }}{\varepsilon })^n}\)

-

2.

\(E{\mu _2} \le {(\frac{{2\pi }}{\varepsilon })^n}\)

Proof

The proof is given in “Appendix” section.\(\square\)

As a result, we find simple CEP has a little smaller upper bound than simple FEP. CEP was proved to be a little better than FEP in solving sphere function problem by experimental data [7], respectively. Therefore, Theorem 4 might also reveal that Gauss distribution is better for sphere function problem than Cauchy distribution.

5 Experiment

Theorem 4 gives the upper bounds of the expected first hitting time for simple CEP and FEP on n-dimensional sphere function problem. We conduct experiment to validate the theoretical result, the experiment settings: fix the error \(\varepsilon =0.1\); fix the initial solution \({{\varvec{x}}}_0=(1,1,\ldots ,1)\); for simple CEP and FEP, respectively, we conduct 500 runs and denote \(T_i\) as the first hitting time at the ith run, then \(\frac{{\sum \nolimits _{i = 1}^{500} {{T_i}}} }{{500}}\) is considered to be the estimation of the expected first hitting time.

From Fig. 1, we can see that the expected first hitting time of simple CEP and FEP grows exponentially when the dimension n of the sphere function problem increases, and the time complexity of the FEP is higher than that of CEP, which validates the theoretical analysis in Sect. 4.3. We need to point out that the time complexity of CEP and FEP grows so fast that when the dimension \(n>7\), the calculation of the first hitting time is too long to obtain a result on our computer, so we only present the case of \(n\le 7\).

6 Conclusion

In this paper, we propose a running-time framework to calculate the mean running time of EP and present a case study and experiment. Based on this framework, the convergence and mean running time of CEP and FEP with constant variation are studied. We also obtain some results at the worst running time of the considered EPs, although the results show that the upper bounds can be tighter if the variation \(\sigma =2b\) where 2b is the length of the searching interval per dimension. It is shown that the individual number, problem dimension number, searching range, and the Lebesgue measure of the optimal neighborhood of the optimal solution have a direct impact on the bounds of the expected convergence times of the considered EPs. Moreover, the larger the Lebesgue measure of the optimal neighborhood of the optimum, the lower the upper bound of the mean convergence time. In addition, the convergence time of the EPs can be polynomial on average on the condition that the Lebesgue measure is greater than a value that is exponential to b.

However, it is possible to make an improvement on the running-time framework and analysis given in this study. The deduction process in the proofs for Theorems 2 and 3 uses few properties of the distribution functions of the mutations, and so it is possible to tighten the results. By introducing more information on the specific mutation operations, more sound theoretical conclusions may be derivable. More importantly, unlike the rigorous conditions under which CEP and FEP can converge in polynomial time, the running-time analysis of specific EP algorithms for real-world and constructive problems would have a more significant and practical impact. Future research could also focus on the runtime analysis of specific case studies of EPs.

The proposed framework and results can be considered as a first step for analyzing the running time of evolutionary programming algorithms. We hope that our results can serve as a basis for further theoretical studies.

References

Fogel LJ, Owens AJ, Walsh MJ (1996) Artificial intelligence through simulated evolution. Wiley, New York

Fogel DB (1991) System identification through simulated evolution: a machine learning approach to modeling. Ginn Press, Washington

Fogel DB (1992) Evolving artificial intelligence. Dissertation, University of California at San Diego

Fogel DB (1993) Applying evolutionary programming to selected traveling salesman problems. Cybern Syst 24(1):27–36

Bäck T, Schwefel HP (1993) An overview of evolutionary algorithms for parameter optimization. Evol Comput 1(1):1–23

Schwefel HP (1995) Evolution and optimum seeking. Wiley, New York

Yao X, Liu Y, Lin G (1999) Evolutionary programming made faster. IEEE Trans Evol Comput 3(2):82–102

Lee CY, Yao X (2004) Evolutionary programming using mutations based on the Lvy probability distribution. IEEE Trans Evol Comput 8(1):1–13

Rudolph G (2001) Self-adaptive mutations may lead to premature convergence. IEEE Trans Evol Comput 5(4):410–414

Rudolph G (1994) Convergence of non-elitist strategies. Proceedings of the first IEEE conference on evolutionary computation 600:63–66

Rudolph G (1996) Convergence of evolutionary algorithms in general search spaces. Proceedings of IEEE international conference on evolutionary computation 180:50–54

Droste S, Jansen T, Wegener I (2002) On the analysis of the (1 + 1) evolutionary algorithm. Theor Comput Sci 276(1):51–81

He J, Yao X (2001) Drift analysis and average time complexity of evolutionary algorithms. Artif Intell 127(1):57–85

Laumanns M, Thiele L, Zitzler E (2004) Running time analysis of multiobjective evolutionary algorithms on pseudo-boolean functions. IEEE Trans Evolut Comput 8(2):170–182

Kumar R, Banerjee N (2006) Analysis of a multiobjective evolutionary algorithm on the 0C1 knapsack problem. Theor Comput Sci 358(1):104–120

Neumann F (2007) Expected runtimes of a simple evolutionary algorithm for the multi-objective minimum spanning tree problem. Eur J Oper Res 181(3):1620–1629

Yu Y, Zhou ZH (2008) A new approach to estimating the expected first hitting time of evolutionary algorithms. Artif Intell 172(15):1809–1832

Chen T, Tang K, Chen G et al (2012) A large population size can be unhelpful in evolutionary algorithms. Theor Comput Sci 436:54–70

Lehre PK, Yao X (2012) On the impact of mutation-selection balance on the runtime of evolutionary algorithms. IEEE Trans Evolut Comput 16(2):225–241

Witt C (2008) Population size versus runtime of a simple evolutionary algorithm. Theor Comput Sci 403(1):104–120

Chen T, He J, Sun G et al (2009) A new approach for analyzing average time complexity of population-based evolutionary algorithms on unimodal problems. IEEE Trans Syst Man Cybern Part B Cybern 39(5):1092–1106

Yu Y, Yao X, Zhou ZH (2012) On the approximation ability of evolutionary optimization with application to minimum set cover. Artif Intell 180:20–33

Zhou Y, He J, Nie Q (2009) A comparative runtime analysis of heuristic algorithms for satisfiability problems. Artif Intell 173(2):240–257

Oliveto PS, He J, Yao X (2009) Analysis of the-EA for finding approximate solutions to vertex cover problems. IEEE Trans Evol Comput 13(5):1006–1029

Lehre PK, Yao X (2014) Runtime analysis of the (1 + 1) EA on computing unique input output sequences. Inf Sci Int J 259:510–531

Lai X, Zhou Y, He J et al (2014) Performance analysis of evolutionary algorithms for the minimum label spanning tree problem. IEEE Trans Evolut Comput 18(6):860–872

Zhou Y, Zhang J, Wang Y (2014) Performance analysis of the \((1+ 1)\) Evolutionary Algorithm for the multiprocessor scheduling problem. Algorithmica 5:1–21

Zhou Y, Lai X, Li K (2014) Approximation and parameterized runtime analysis of Evolutionary Algorithms for the maximum cut problem. IEEE Trans Cybern. doi:10.1109/tcyb.2014.2354343

Witt C (2013) Tight bounds on the optimization time of a randomized search heuristic on linear functions. Combin Probab Comput 22(02):294–318

Witt C (2014) Fitness levels with tail bounds for the analysis of randomized search heuristics. Inf Process Lett 114(1):38–41

Sudholt D (2013) A new method for lower bounds on the running time of evolutionary algorithms. IEEE Trans Evolut Comput 17(3):418–435

Jägerskpper J (2007) Algorithmic analysis of a basic evolutionary algorithm for continuous optimization. Theor Comput Sci 379(3):329–347

Jägerskpper J (2008) Lower bounds for randomized direct search with isotropic sampling. Oper Res Lett 36(3):327–332

Agapie A, Agapie M, Baganu G (2013) Evolutionary algorithms for continuous-space optimisation. Int J Syst Sci 44(3):502–512

Agapie A, Agapie M, Rudolph G, Zbaganu G (2013) Convergence of evolutionary algorithms on the n-dimensional continuous space. IEEE Trans Cybern 43(5):1462–1472

Chen Y, Zou X, He J (2011) Drift conditions for estimating the first hitting times of evolutionary algorithms. Int J Comput Math 88(1):37–50

Acknowledgements

This work is supported by National Natural Science Foundation of China (61370102, 61370177), Humanity and Social Science Youth Foundation of Ministry of Education of China (14YJCZH216), Guangdong Natural Science Funds for Distinguished Young Scholar (2014A030306050), the Fundamental Research Funds for the Central Universities, SCUT (2015PT022) and Guangdong High-level personnel of special support program (2014TQ01X664).

Author information

Authors and Affiliations

Corresponding author

Appendix: Proof of the Lemmas, Theorems and Corollaries

Appendix: Proof of the Lemmas, Theorems and Corollaries

Proof of Lemma 1

Recall that \(\xi _{t}^{{\text{EP}}}=(\mathbf {v}_{1}^{(t)},\mathbf {v}_{2}^{(t)},\ldots ,\mathbf {v}_{k}^{(t)})\) is a real vector and the status space \(\varOmega _{{\text{EP}}}\) of EP is continuous. Furthermore, \(\xi _{t}^{{\text{EP}}}\) is only dependent on \(\xi _{t-1}^{{\text{EP}}}\) for \(t=0,1,\ldots\), as evidenced by steps 3–6 of the EP process. Hence, \(\{\xi _{t}^{{\text{EP}}}\}_{t=0}^{+\infty }\) is a Markov process.

Proof of Lemma 2

\(\xi _{t}^{{\text{EP}}}\in \varOmega _{{\text{EP}}}^{*}\) when \(\exists (\mathbf {x}_{i}^{*(t)},\mathbf {\sigma }_{i}^{(t)})\in \xi _{t}^{{\text{EP}}}\) where \(\mathbf {x}_{i}^{*(t)}\) is an optimal solution. Given steps 5 and 6 of EP, \(\mathbf {x}_{i}^{*(t)}\) will have the most “wins” as well as the highest fitness, so \(\mathbf {x}_{i}^{*(t)}\) will be selected for the next iteration with probability one if there are less than k optimal solutions in the tournament. \(\mathbf {x}_{i}^{*(t)}\) might be lost, but other optimal solutions will be selected if there are more than k optimal solutions in the parent and offspring. Thus, \(P\{\xi _{t+1}^{{\text{EP}}}\notin \varOmega _{{\text{EP}}}^{*}|\xi _{t}^{{\text{EP}}} \in \varOmega _{{\text{EP}}}^{*}\}=0\), and \(\{\xi _{t}^{{\text{EP}}}\}_{t=0}^{+\infty }\) is an absorbing Markov process to \(\varOmega _{{\text{EP}}}^{*}\).

Proof of Theorem 1

Observe that \(\lambda _{t}^{{\text{EP}}}-\lambda _{t-1}^{{\text{EP}}}=P\{\mu _{{\text{EP}}}\le t\}-P\{\mu _{{\text{EP}}}\le t-1\}=P\{\mu _{{\text{EP}}}=t\}\). Therefore, we have

Proof of Corollary 1

Let \(p_{i}=P\{\xi _{i}^{{\text{EP}}}\in \varOmega _{{\text{EP}}}^{*}|\xi _{i-1}^{{\text{EP}}}\notin \varOmega _{{\text{EP}}}^{*}\}\). Based on the total probability equation, \(\lambda _{t}^{{\text{EP}}}=(1-\lambda _{t-1}^{{\text{EP}}})p_{t}+\lambda _{t-1}^{{\text{EP}}}(1-p_{t})\). Substituting it into Theorem 1, we have \(E\mu _{{\text{EP}}}=\sum \nolimits _{t=0}^{+\infty }[(1-\lambda _{0}^{{\text{EP}}})\prod _{i=1}^{t}(1-p_{i})]\).

Proof of Corollary 2

According to Corollary 1, \(E\mu _{{\text{EP}}}\ge \sum _{t=0}^{+\infty }[(1-\lambda _{0}^{{\text{EP}}}) \prod _{i=1}^{t}(1-\beta _{i})]\). Similarly, we have \(E\mu _{{\text{EP}}}\le \sum _{t=0}^{+\infty }[(1-\lambda _{0}^{{\text{EP}}}) \prod _{i=1}^{t}(1-\alpha _{i})]\). Thus, \(\beta ^{-1}(1-\lambda _{0}^{{\text{EP}}})\le E\mu _{{\text{EP}}} \le \alpha ^{-1}(1-\lambda _{0}^{{\text{EP}}})\) when \(\alpha _{t}=\alpha\) and \(\beta _{t}=\beta\).

Proof of Theorem 2

For fixed individual \((\mathbf {x},{\bar{\varvec{\sigma }}})\), \({\bar{\mathbf{x}}}=\mathbf {x}+\mathbf {u}\,\bigotimes\,{\bar{\varvec{\sigma }}}\), where \(\mathbf {\bar{\sigma }}=(\bar{\sigma }_1,\bar{\sigma }_2,\ldots ,\bar{\sigma }_n)\) is a n-dimension variation variable and the stochastic disturbance \(\mathbf {u}=(u_1,u_2,\ldots ,u_n)\) follows n-dimensional standard Gaussian distribution. Let \(\mathbf {z}=(z_1,z_2,\ldots ,z_n)\), that is, \(\mathbf {z}=\mathbf {u}\,\bigotimes\,{\bar{\varvec{\sigma }}}=(u_1\bar{\sigma }_1,u_2\bar{\sigma }_2,\ldots ,u_n\bar{\sigma }_n)\). According to Expressions (1) and (2), \(z_{j}=\mu _{j}\bar{\sigma }_{j}=\mu _{j}\sigma\). Then, we have \(z_{j}\sim N(0,\bar{\sigma }_{j}^{2})\) since \(u_{j}\sim N(0,1)\), where \(j=1,\ldots ,n\). Therefore, \({\bar{\mathbf{x}}}=\mathbf {x}+\mathbf {u}\,\bigotimes\,{\bar{\varvec{\sigma }}}=\mathbf {x}+\mathbf {z}.\)

Given \(\mathbf {S}=\prod \nolimits _{j=1}^{n}[-b_{j},b_{j}], \mathbf {S}^{*}(\varepsilon )\subseteq \mathbf {S}.\) Let \(\tilde{\mathbf {S}}=\{\mathbf {z}|\mathbf {z}={\bar{\mathbf{x}}}-\mathbf {x},{\bar{\mathbf{x}}}\in \mathbf {S}^{*}(\varepsilon ),\mathbf {x}\in \mathbf {S}\}\). According to the property of measure, \(m\big (\mathbf {S}^{*}(\varepsilon )\big )=m(\tilde{\mathbf {S}})\). \(P\{{\bar{\mathbf{x}}}\in \mathbf {S}^{*}(\varepsilon )\}=P\{\mathbf {z}\in \tilde{\mathbf {S}}\}=\int \cdots \int _{\tilde{\mathbf {S}}}\prod \nolimits _{j=1}^{n}\frac{1}{\sqrt{2\pi \bar{\sigma }_{j}}}\exp \{-\frac{z_{j}^{2}}{2\bar{\sigma }_{j}^{2}}\}dz_{1}\ldots dz_{n}.\)

Note that \({\bar{\mathbf{x}}},\mathbf {x}\in \mathbf {S}\) and \(\mathbf {z}={\bar{\mathbf{x}}}-\mathbf {x}\), so we have \(|z_{j}|=|\bar{x}_{j}-x_{j}|\le 2b_{j}=2b\) (\(j=1,\ldots ,n\)), which leads to the following inequality:

That is, we obtain a lower bound of \(P\{{\bar{\mathbf{x}}}\in \mathbf {S}^{*}\}\) as follows:

Noting that \(m\big (\mathbf {S}^{*}(\varepsilon )\big )=m(\tilde{\mathbf {S}})\), we also have \(P\{{\bar{\mathbf{x}}}\in \mathbf {S}^{*}(\varepsilon )\}\ge m\big (\mathbf {S}^{*}(\varepsilon )\big )(\frac{1}{\sqrt{2\pi }})^{n} (\prod \nolimits _{j=1}^{n}\frac{1}{\sigma })\exp \{-\sum \nolimits _{j=1}^{n}\frac{2b^{2}}{\sigma ^{2}}\}\) (2) Let \(f({\bar{\varvec{\sigma }}})=\log \big (\prod \nolimits _{j=1}^{n}\frac{1}{\sigma }\exp \{-\sum \nolimits _{j=1}^{n}\frac{2b^{2}}{\sigma ^{2}}\}\big ) =-\sum \nolimits _{j=1}^{n}\big (\log \sigma +a_{j}\frac{1}{\sigma ^{2}}\big )\)where \(a_{j}=2b^{2}\). \(\max f({\bar{\varvec{\sigma }}})\Leftrightarrow \min \sum \nolimits _{j=1}^{n}\big (\log \sigma +a_{j}\frac{1}{\sigma ^{2}}\big )\), \(\frac{d\big (\log \sigma +a_{j}\frac{1}{\sigma ^{2}}\big )}{d\sigma }=0\Rightarrow \frac{1}{\sigma }-2a_{j}\frac{1}{\sigma ^{3}}=0\Rightarrow \sigma =\sqrt{2a_{j}}.\)

Noticing that \(a_{j}=2b_{j}^{2}=2b^{2}\), we have \(\sigma =2b\), which implies the lower bound of \(P\{{\bar{\mathbf{x}}}\in \mathbf {S}^{*}(\varepsilon )\}\) can be improved into

Therefore, \(P\{{\bar{\mathbf{x}}}_{i}^{t}\in \mathbf {S}^{*}(\varepsilon )\}\ge m\big (\mathbf {S}^{*}(\varepsilon )\big )(4\sqrt{e}\pi b)^{-n}\).

(3) By the property of \(\varOmega _{{\text{EP}}}^{*}\) and \(m\big (\mathbf {S}^{*}(\varepsilon )\big )\) in Definition 4, \(\forall \varepsilon >0\) and \(t=1,2,\ldots\), \(P\{\xi _{t}^{C}\in \varOmega _{{\text{EP}}}^{*}|\xi _{t-1}^{C} \notin \varOmega _{{\text{EP}}}^{*}\}=1-\prod _{i=1}^{k}\big (1-P\{{\bar{\mathbf{x}}}_{i}\in \mathbf {S}^{*}(\varepsilon )\}\big )\)

Thus, \(P\{\xi _{t}^{C}\in \varOmega _{{\text{EP}}}^{*}|\xi _{t-1}^{C}\notin \varOmega _{{\text{EP}}}^{*}\}\ge 1-\big (1-m(\mathbf {S}^{*})(4\sqrt{e}\pi b)^{-n}\big )^{k}\;\text {for}\;\forall \varepsilon >0\).

Proof of Corollary 3

1. Based on the total probability equation, we have

Because \(\{\xi _{t}^{C}\}_{t=0}^{+\infty }\) can be considered as an absorbing Markov process following Lemma 2, \(P\{\xi _{t}^{C}\in \varOmega _{{\text{EP}}}^{*}|\xi _{t-1}^{C}\in \varOmega _{{\text{EP}}}^{*}\}=1\).\(\lambda _{t}^{C}=(1-\lambda _{t-1}^{C})P\{\xi _{t}^{C}\in \varOmega _{{\text{EP}}}^{*}|\xi _{t-1}^{C}\notin \varOmega _{{\text{EP}}}^{*}\}+\lambda _{t-1}^{C}\), we have \(1-\lambda _{t}^{CEP}=(1-\lambda _{t-1}^{C})(1-P\{\xi _{t}^{C}\in \varOmega _{{\text{EP}}}^{*}|\xi _{t-1}^{C}\notin \varOmega _{{\text{EP}}}^{*}\})\). According to Theorem 2,

For \(m\big (\mathbf {S}^{*}(\varepsilon )\big )(\frac{1}{4\sqrt{e}\pi b} )^{n}>0\), \(d=1-\Big (1-m\big (\mathbf {S}^{*}(\varepsilon )\big )(\frac{1}{4\sqrt{e}\pi b})^{n}\Big )^{k}>0\) and \(\lim \nolimits _{t\rightarrow +\infty }(1-d)^{t}=0\). Thus, \(1-\lambda _{t}^{C}\le (1-d)(1-\lambda _{t-1}^{C})=(1-\lambda _{0}^{C})(1-d)^{t}\), so \(\lim \nolimits _{t\rightarrow +\infty }\lambda _{t}^{C}\ge 1-(1-\lambda _{0}^{C})\lim \nolimits _{t\rightarrow +\infty }(1-d)^{t}=1\). Since \(\lambda _{t}^{C}\le 1\), \(\lim \nolimits _{t\rightarrow +\infty }\lambda _{t}^{C}=1\).

2. Given \(\alpha =1-\Big (1-m\big (\mathbf {S}^{*}(\varepsilon )\big )(\frac{1}{4\sqrt{e}\pi b})^{n}\Big )^{k}>0\), we know that \(P\{\xi _{t}^{C}\in \varOmega _{{\text{EP}}}^{*}|\xi _{t-1}^{C}\notin \varOmega _{{\text{EP}}}^{*}\}\ge \alpha\) based on Theorem 2. According to Corollary 3, \(\forall \varepsilon >0\), \(E\mu _{C}\le (1-\lambda _{0}^{C})\Big (1-\big (1-m(\mathbf {S}^{*}(\varepsilon ))(\frac{1}{4\sqrt{e}\pi b})^{n}\big )^{k}\Big )^{-1}\).

Proof of Theorem 3

1. For fixed individual \((\mathbf {x},{\bar{\varvec{\sigma }}})\), \({\bar{\mathbf{x}}}=\mathbf {x}+\mathbf {\delta }\,\bigotimes\, {\bar{\varvec{\sigma }}}\), where the stochastic disturbance \(\mathbf {\delta }\) follows n-dimensional standard Cauchy distribution. Let \(\mathbf {z}=\mathbf {\delta }\,\bigotimes\, {\bar{\varvec{\sigma }}}\), that is, \(z_{j}=\sigma \delta _{j}\) (\(j=1,\ldots ,n\)). Then, we have \({\bar{\mathbf{x}}}=\mathbf {x}+\mathbf {z}\) and \(z_{j}\sim \frac{1}{\sigma }C_{\phi =1}(\frac{y}{\sigma })\) since \(\delta _{j}\sim C_{\phi =1}(y)\) (\(j=1,\ldots ,n\)).

In a manner similar to the Proof of Theorem 2, we derive

(Note that \(|z_{j}|=|\bar{x}_{j}-x_{j}|\le 2b\) (\(j=1,\ldots ,n\)).)

2. Letting \(f({\bar{\varvec{\sigma }}})=-\log (\sigma +\frac{4b^{2}}{\sigma })\) , we have

It can be improved into \(P\{{\bar{\mathbf{x}}}\in \mathbf {S}^{*}\}\ge \frac{m\big (\mathbf {S}^{*}(\varepsilon )\big )}{(4b\pi )^{n}}\) when \(\sigma =2b\).

3. Hence, \(\forall \varepsilon >0\) and \(t=1,2,\ldots\), if \(\sigma =2b\), \(P\{\xi _{t}^{F}\notin \varOmega _{{\text{EP}}}^{*}|\xi _{t-1}^{F}\in \varOmega _{{\text{EP}}}^{*}\}\ge 1-\big (1-\frac{m\big (\mathbf {S}^{*}(\varepsilon )\big )}{(4b\pi )^{n}}\big )^{k}\).

Proof of Corollary 4

1. According to Theorem 3, \(P\{\xi _{t}^{F}\notin \varOmega _{{\text{EP}}}^{*}|\xi _{t-1}^{F}\in \varOmega _{{\text{EP}}}^{*}\}\ge 1-\big (1-\frac{m\big (\mathbf {S}^{*}(\varepsilon )\big )}{(4b\pi )^{n}}\big )^{k}\). \(0<\frac{m\big (\mathbf {S}^{*}(\varepsilon )\big )}{(4b\pi )^{n}}<1\) due to the second assumption in Sect. 2.2. Let \(\theta =1-\Big (1-\frac{m\big (\mathbf {S}^{*}(\varepsilon )\big )}{(4b\pi )^{n}}\Big )^{k}\) for \(t=1,2,\ldots\). Then \(0<\theta <1\) and \(\lim \nolimits _{t\rightarrow +\infty }(1-\theta )^{t}=0\).

Thus, \(\lim \nolimits _{t\rightarrow +\infty }\lambda _{t}^{F}\ge 1- (1-\lambda _{0}^{F})\lim \nolimits _{t\rightarrow +\infty }(1-\theta )^{t}=1\). \(\lim \nolimits _{t\rightarrow +\infty }\lambda _{t}^{F}=1\) since \(\lambda _{t}^{F}\le 1\).

2. Since \(\lim \nolimits _{t\rightarrow +\infty }\lambda _{t}^{F}=1\), following Corollary 2 and Theorem 4, we find that

Proof of Theorem 4

1. According to Eq. (9), for the only individual \({{{\varvec{x}}}}\) per iteration, \(\bar{{{\varvec{x}}}} = {{\varvec{x}}} + {{\varvec{u}}}\) , where the stochastic disturbance \({{\varvec{u}}}\) follows n-dimensional standard Gauss distribution. Then, we have \({{\varvec{u}}}=\bar{{{\varvec{x}}}} - {{\varvec{x}}}\) and \({u_j}\sim N(0,1)\) (\(j=1, 2, \ldots , n\)). Following the Proof of Theorem 2,

Based on Corollary 3, \(E{\mu _1} \le {(\frac{{\sqrt{2\pi e} }}{\varepsilon })^n}\)

2. According to Eq. (10), \(\bar{{{\varvec{x}}}} = {{\varvec{x}}} + \varvec{\delta }\) where the stochastic disturbance \(\varvec{\delta }\) follows n-dimensional standard Cauchy distribution. Then, we have \(\varvec{\delta }=\bar{{{\varvec{x}}}} - {{\varvec{x}}}\) and \({\delta _j}\sim {C_{\phi = 1}}(y)\) (\(j=1, 2, \ldots , n\)).

Following the Proof of Theorem 3,

Hence, \(E{\mu _2} \le {(\frac{{2\pi }}{\varepsilon })^n}\) owning to Corollary 4.

Rights and permissions

About this article

Cite this article

Zhang, Y., Huang, H., Lin, Z. et al. Running-time analysis of evolutionary programming based on Lebesgue measure of searching space. Neural Comput & Applic 30, 617–626 (2018). https://doi.org/10.1007/s00521-016-2651-7

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00521-016-2651-7