Abstract

This paper considers the finite-time stability and finite-time boundedness problems for switched neural networks with time-varying delay, together with \(L_2\)-gain analysis under external disturbances. Sufficient conditions for the switched neural networks to be finite-time stable and finite-time bounded are derived. These conditions are delay-dependent and are given in terms of linear matrix inequalities. A bound on the average dwell time of the switching signal is also given such that the switched neural networks are finite-time stable or finite-time bounded. By resorting to the average dwell time approach and the Lyapunov–Krasovskii functional technique, new delay-dependent criteria guaranteeing finite-time boundedness and stabilizability with \(L_2\)-gain performance are developed. Illustrative examples are given to demonstrate the effectiveness of the proposed method.

1 Introduction

It is well known that neural networks have become a popular topic that attracts researchers' attention. Various delayed neural networks, such as Hopfield neural networks, Cohen–Grossberg neural networks, cellular neural networks and bidirectional associative memory neural networks, have been extensively investigated [1–7]. Artificial neural networks have been the focus of intensive research during the last decades, since these networks have found wide applications in areas such as associative memory, pattern classification, reconstruction of moving images, signal processing and optimization (see [8–15]).

In hardware implementations of neural networks, time delay frequently occurs, and its presence may cause instability and poor performance. Therefore, much effort has been devoted to the delay-dependent stability analysis of delayed neural networks, since delay-dependent stability criteria are generally less conservative than delay-independent ones, especially when the time delay is small (see, for example, [16–24]).

In recent years, switched neural networks (SNNs), whose individual subsystems are a set of neural networks, have attracted significant attention and have been successfully applied in many fields such as high-speed signal processing, artificial intelligence and gene selection in DNA microarray analysis. Recent research on SNNs typically focuses on the analysis of dynamic behaviors such as stability, controllability, reachability and observability, aiming to design controllers with guaranteed stability and performance [25–27]. Besides the aforementioned problems, designing a controller to achieve tracking for SNNs is challenging. Thus, many researchers have focused on the tracking control problem for SNNs with time-varying delays using the average dwell time approach and piecewise Lyapunov functional methods (see [28–31]).

Over the past few years, considerable effort has been dedicated to the finite-time stability of SNNs because of its wide applications [32–35]. Finite-time stability concerns the behavior of a system over a finite interval of time and plays an important role in the study of transient behavior (for example, [36–39]). It is worth emphasizing that finite-time stability and classical Lyapunov asymptotic stability are different concepts and are independent of each other. Finite-time stability with \(L_2\)-gain analysis has been widely studied in the literature [40–42]. Recently, the finite-time stability and finite-time boundedness of SNNs with time delays have been investigated using the average dwell time technique (see [43–46]).

As an important feature of switched systems, the average dwell time is commonly adopted in the finite-time boundedness analysis of SNNs [47–49]. In [50], the authors studied finite-time stabilization of high-order stochastic nonlinear systems in strict-feedback form. However, the property of the average dwell time switching signal, which requires that the average interval between two successive switching instants be at least \(\tau _a\), is independent of the system modes. Therefore, conservativeness still exists in the minimum admissible average dwell time. The mode-dependent average dwell time concept, which makes full use of the mode-dependent information, was first considered for general switched linear systems in [51]. However, to the best of the authors' knowledge, only a few attempts have been made to study finite-time boundedness via the average dwell time approach, especially for switched neural networks with time-varying delays, which motivates this study.

Motivated by the above discussion, we investigate finite-time boundedness and finite-time \(L_2\)-gain analysis for SNNs. The novel features are that a new Lyapunov–Krasovskii functional is constructed and that the average dwell time approach is applied for the first time to the study of finite-time boundedness for switched neural networks. By applying the Newton–Leibniz formula, Jensen’s inequality and the Schur complement lemma, a switching rule for finite-time boundedness of SNNs with interval time-varying delay is derived, and delay-dependent finite-time \(L_2\)-gain conditions for SNNs with interval time-varying delay are established in terms of linear matrix inequalities (LMIs), which allow simultaneous computation of the two bounds that characterize the finite-time boundedness and the finite-time \(L_2\)-gain of the solution. The obtained results are less conservative than the results in [14–16, 21–24].

The outline of the paper is as follows. Section 2 presents the problem formulation, notation, definitions and technical lemmas. In Sect. 3, a delay-dependent finite-time boundedness criterion and a switching rule for finite-time \(L_2\)-gain analysis of SNNs with interval time-varying delay are derived. Numerical examples in Sect. 4 show the effectiveness of the results. The paper ends with conclusions in Sect. 5, followed by the cited references.

2 Problem formulation and preliminaries

Consider the following n-neuron switched neural networks with time-varying delays,

where \(x(t)=[x_1(t),x_2(t),\ldots ,x_n(t)]^T\in R^n\) is the state, \(z(t)\in R^{q}\) is the controlled output and \(w(t)\in L^q_2[0,\infty )\) satisfies the constraint:

\(f(x(t))= [f_1(x_1(t)), f_2(x_2(t)), \ldots , f_n(x_n(t))]^T\in R^n\) is the neuron activation function, \(A_{\sigma (t)}\) is a positive diagonal matrix, and \(B_{\sigma (t)},\, C_{\sigma (t)},\, D_{1\sigma (t)}, \, E_{\sigma (t)}, \, D_{2\sigma (t)}\) are the connection weight matrices with appropriate dimensions. \(\tau (t)\) is a time-varying delay function with \(0\le \tau (t) \le h\) and \(\dot{\tau }(t)\le \tau,\) where \(h\) is the upper bound of the time-varying delay \(\tau (t)\) and \(\tau\) is the upper bound of its derivative. \(\phi (t)\) is a continuous vector-valued initial function on \([-h,0]\). \(\sigma (t): [0,\infty ) \rightarrow \mathcal {N}=\{1,2,\ldots ,N\}\) is the right-continuous piecewise constant switching signal to be designed, where \(\mathcal {N}\) is a finite set.

Corresponding to the switching signal \(\sigma (t)\), we get the following switching sequence:

where \(t_0\) is the initial time and \(x(t_0)\) is the initial state. When \(t \in [t_k,t_{k+1})\), we have \(\sigma (t)=i_k\), that is, the \(i_k\)th subsystem is active. Throughout this paper, we assume that the state of the switched neural networks does not jump at the switching instants, that is, the trajectory \(x(t)\) is everywhere continuous. Moreover, the switching signal \(\sigma (t)\) has a finite number of switchings on any finite time interval. It is worth pointing out that almost all results for switched systems rely on the continuity of the state and the finiteness of the number of switchings on any finite time interval, which is the elementary assumption. For the activation function, we make the following assumption.

Assumption 1

[53] The activation functions satisfy the following condition: for any \(p=1,2,\ldots ,n\), there exist constants \(G_p^-\), \(G_p^+\) such that

For presentation convenience, in the following, we denote

Definition 2.1

[41] For any \(T_{2}>T_{1}\ge 0\), let \(N_{\sigma }(T_{1},T_{2})\) denote the switching number of \(\sigma (t)\) on the interval \((T_{1},T_{2})\). If

holds for given \(N_{0}\ge 0\), \(\tau _{a}>0\), then the constant \(\tau _{a}\) is called the average dwell time and \(N_{0}\) is the chatter bound. Without loss of generality, we choose \(N_{0}=0\) throughout this paper.
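As a small illustration of Definition 2.1, the following sketch checks the average dwell time bound on a single window, assuming the usual form of the condition: the switching number on \((T_{1},T_{2})\) is at most \(N_{0}+(T_{2}-T_{1})/\tau _{a}\). The function names and the switching instants are hypothetical and are not taken from the paper.

```python
def count_switches(switch_times, t1, t2):
    """Count the switching instants that lie in the open interval (t1, t2)."""
    return sum(1 for t in switch_times if t1 < t < t2)


def adt_bound_holds(switch_times, t1, t2, tau_a, n0=0.0):
    """Check the assumed bound N(t1, t2) <= n0 + (t2 - t1) / tau_a on one window."""
    if tau_a <= 0 or t2 <= t1:
        raise ValueError("need tau_a > 0 and t2 > t1")
    return count_switches(switch_times, t1, t2) <= n0 + (t2 - t1) / tau_a


# Hypothetical switching instants on the horizon [0, 10].
switch_times = [2.0, 4.5, 7.0]
print(adt_bound_holds(switch_times, 0.0, 10.0, tau_a=3.0))  # 3 switches vs 10/3
print(adt_bound_holds(switch_times, 0.0, 10.0, tau_a=4.0))  # 3 switches vs 2.5
```

With \(N_{0}=0\), as chosen in this paper, the check on the window \((0,T)\) reduces to comparing the number of switchings with \(T/\tau _{a}\).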

Definition 2.2

[36] Switched system (1) is said to be finite-time bounded with respect to \((c_{1},c_{2},T,R,d)\) if the following condition holds:

where \(c_{2}>c_{1}\ge 0\) and \(R>0\) is a positive definite matrix.
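To make the definition concrete, the sketch below tests it on sampled data. It assumes the usual reading of finite-time boundedness: if \(x^T(\theta )Rx(\theta )\le c_{1}\) on the initial interval \([-h,0]\), then \(x^T(t)Rx(t)<c_{2}\) for all \(t\in [0,T]\) and all admissible disturbances. The helper names and sample values are hypothetical, and a check on finitely many samples is only a necessary test, not a proof.

```python
def quad_form(x, R):
    """Return x^T R x for a vector x and a square matrix R given as nested lists."""
    n = len(x)
    return sum(x[i] * R[i][j] * x[j] for i in range(n) for j in range(n))


def ftb_on_samples(initial_samples, trajectory_samples, R, c1, c2):
    """Test the assumed implication of Definition 2.2 on sampled states.

    Returns True if the premise fails on the initial samples (vacuous case)
    or if every sampled state on [0, T] satisfies x^T R x < c2.
    """
    premise = all(quad_form(x, R) <= c1 for x in initial_samples)
    if not premise:
        return True
    return all(quad_form(x, R) < c2 for x in trajectory_samples)


# Hypothetical data: R = I, c1 = 1, c2 = 4.
R = [[1.0, 0.0], [0.0, 1.0]]
initial = [[0.5, 0.5]]
print(ftb_on_samples(initial, [[1.0, 1.0], [1.5, 0.5]], R, 1.0, 4.0))
print(ftb_on_samples(initial, [[2.0, 1.0]], R, 1.0, 4.0))
```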

Definition 2.3

[32] For \(\gamma>0,d>0,T>0,\eta>0,\Lambda >0\), and \(c_2>c_1>0\), system (1) is said to be finite-time stable with a weighted \(L_2\) performance \(\gamma\) with respect to \((c_{1},c_{2},T,R,d)\), if the following condition holds:

and under zero initial condition, it holds for all nonzero \(w: \int _0^Tw^T(s)w(s){\rm{d}}s \le d\).

Lemma 2.4

[52] For any constant matrix \(Z \in \mathcal {R}^{n \times n}, Z=Z^T>0\), any scalar \(h>0\), and any vector function for which the following integrals are well defined, we have
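In its commonly cited form, Jensen's integral inequality states that \(\left( \int _0^h \omega (s)\,{\rm{d}}s\right) ^T Z \left( \int _0^h \omega (s)\,{\rm{d}}s\right) \le h\int _0^h \omega ^T(s)Z\omega (s)\,{\rm{d}}s\). Assuming that form, the sketch below checks the scalar case numerically with midpoint sums. The test function \(\omega\), the scalar weight `z` and the function name are illustrative assumptions.

```python
import math


def jensen_sides(omega, h, z, steps=2000):
    """Return (lhs, rhs) of the scalar Jensen inequality using midpoint sums.

    lhs = z * (integral of omega over [0, h]) ** 2
    rhs = h * integral of z * omega(s) ** 2 over [0, h]
    """
    ds = h / steps
    samples = [omega((k + 0.5) * ds) for k in range(steps)]
    integral = sum(samples) * ds
    integral_sq = sum(v * v for v in samples) * ds
    return z * integral ** 2, h * z * integral_sq


# Illustrative choice: omega(s) = sin(s) + 0.5 on [0, 2] with z = 3.
lhs, rhs = jensen_sides(lambda s: math.sin(s) + 0.5, h=2.0, z=3.0)
print(lhs <= rhs)
```

For a constant \(\omega\) the two sides coincide, which is the equality case of the inequality.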

Lemma 2.5

[52] (Schur complement) Given constant matrices X, Y, Z, where \(X=X^T\) and \(0<Y=Y^T\), then \(X+Z^TY^{-1}Z<0\) if and only if
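The equivalence is usually stated with the block matrix \(\begin{bmatrix} X &amp; Z^T\\ Z &amp; -Y \end{bmatrix}<0\). Assuming that form, the following sketch verifies the scalar case on a small grid, where \(X\), \(Y\), \(Z\) reduce to numbers `x`, `y`, `z` with `y > 0`. The function names and grid values are hypothetical.

```python
def is_negative_definite_2x2(a, b, c):
    """A symmetric matrix [[a, b], [b, c]] is negative definite iff a < 0 and det > 0."""
    return a < 0 and (a * c - b * b) > 0


def schur_condition(x, y, z):
    """Scalar version of X + Z^T Y^{-1} Z < 0, assuming y > 0."""
    return x + z * z / y < 0


def block_condition(x, y, z):
    """Scalar version of the block matrix [[X, Z^T], [Z, -Y]] < 0."""
    return is_negative_definite_2x2(x, z, -y)


# Both conditions should agree at every grid point with y > 0.
grid_x = [-3.0, -1.0, -0.5, 0.5]
grid_y = [0.5, 1.0, 2.0]
grid_z = [-1.0, 0.0, 0.5, 2.0]
agree = all(
    schur_condition(x, y, z) == block_condition(x, y, z)
    for x in grid_x for y in grid_y for z in grid_z
)
print(agree)
```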

3 Main results

3.1 Finite-time boundedness analysis

In this section, we focus on the finite-time boundedness of the switched neural networks (1). First, consider a switched neural network with external disturbance as follows:

Theorem 3.1

System (3) is finite-time bounded with respect to \((c_1,c_2,T,R,d)\) if there exist symmetric positive definite matrices \(P_i, Q_{1i},Q_{2i},S_{1i},S_{2i}, Y_i\), matrices \(N_{si} (s=1,\,2,\,3)\), \(U_{1i}> 0, U_{2i} > 0\) and scalars \(\eta \ge 0, \mu \ge 1, \lambda _l>0 (l=1,2,\ldots ,8), d>0, h>0, \Lambda>0, \tau >0\) such that, \(\forall i,j \in \mathcal {N}\), the following linear matrix inequalities hold:

with the average dwell time of the switching signal \(\sigma\) satisfying

where

Proof

We consider the following Lyapunov–Krasovskii functional:

where

Taking the time derivative of \(V_{\sigma (t)}(x_t,t)\) along the trajectory of system (3) with \(\sigma (t)=i\), we obtain

From Lemma 2.4, we have

Based on Assumption 1, we obtain

which can be compactly written as

Then, for any positive diagonal matrices \(U_{1i}={\rm{diag}}\{u_{1i},u_{2i},\ldots ,u_{ni}\}\) and \(U_{2i}={\rm{diag}}\{\hat{u}_{1i},\hat{u}_{2i},\ldots ,\hat{u}_{ni}\}\), the following inequalities hold:

From the Newton–Leibniz formula, the following equation holds for any matrices \(N_{1i}, N_{2i}\) and \(N_{3i}\) with appropriate dimensions:

Therefore, for a given \(\eta >0\) and from (9)–(18), one can obtain that

where

The inequality (19) is equivalent to (4).

Thus, we obtain

Notice that

Integrating (21) from \(t_k\) to t, we can get that

Note that (5) and \(\mu \ge 1\) yield

Then, we can easily have

Define \(\bar{P}_{i}=R^{-1/2}P_{i}R^{-1/2}\), \(\bar{Q}_{1i}=R^{-1/2}Q_{1i}R^{-1/2}\), \(\bar{Q}_{2i}=R^{-1/2}Q_{2i}R^{-1/2}\), \(\bar{S}_{1i}=R^{-1/2}S_{1i}R^{-1/2}\), \(\bar{S}_{2i}=R^{-1/2}S_{2i}R^{-1/2}\), \(\bar{Y}_{i}=R^{-1/2}Y_{i}R^{-1/2}\).

Note that

where \(g={\rm{max}}(|G_p^-|,|G_p^+|)\)

where

Thus,

On the other hand,

When \(\mu =1\), which is the trivial case, from (6)

When \(\mu > 1\), from (6),

we have

Substituting (30) into (29) yields

The proof is complete. \(\square\)

Remark 3.2

The function \(V(t)\) in the proof of Theorem 3.1 is a Lyapunov–Krasovskii functional. Unlike the classical Lyapunov function for switched systems in the case of asymptotic stability, there is no requirement of negative definiteness or negative semi-definiteness on \(\dot{V}(t).\) In fact, if the exogenous disturbance \(w(t) = 0\) and we restrict the constant \(\delta < 0\), then \(\dot{V}(t)\) is a negative definite function. In this case, system (1) is asymptotically stable on the infinite interval \([0,\infty )\) under a suitable condition on the average dwell time.

Remark 3.3

When \(D_{1\sigma (t)}=0,\) the system (3) reduces to

Corollary 3.4

System (32) is asymptotically stable if there exist symmetric positive definite matrices \(P_i, Q_{1i},Q_{2i},S_{1i},S_{2i}, Y_i\), matrices \(N_{si} (s=1,\,2,\,3)\), \(U_{1i}> 0, U_{2i} > 0\) and scalars \(h>0, \tau >0\) such that, \(\forall i\in \mathcal {N}\), the following linear matrix inequalities hold:

Proof

Let \(\sigma (t)=1.\) The proof is similar to that of Theorem 3.1 and is omitted here. \(\square\)

3.2 Finite-time weighted \(L_2\)-gain analysis

Theorem 3.5

System (1) is finite-time bounded with respect to \((c_1, c_2, T, R, d)\) if there exist symmetric positive definite matrices \(P_i, Q_{1i},Q_{2i},S_{1i},S_{2i}, Y_i\), matrices \(N_{si} (s=1,\,2,\,3)\), \(U_{1i}> 0, U_{2i} > 0\) and scalars \(\eta \ge 0, \gamma>0, \mu \ge 1, \lambda _l>0 \,(l=1,2,\ldots ,7), d>0, h>0, \Lambda>0, \tau >0\) such that, \(\forall i,j \in \mathcal {N}\), the following linear matrix inequalities hold:

with the average dwell time of the switching signal \(\sigma\) satisfying

Proof

Choosing the Lyapunov–Krasovskii functional as in Theorem 3.1, after some mathematical manipulation and using Schur complement, we can get

Define

We obtain,

When \(t\in [t_k,t_{k+1}],\) where \(t_k\) is the switching instant,

Notice that \(x(t_k)=x(t_k^-);\) then one obtains

For any \(t\in [0,T],\) one has

Under zero initial condition, we have

which implies that

Multiplying both sides of (39) by \(\mu ^{-N_{\sigma }(0,T)}\) yields

It is easy to deduce from (37) that

Since \(\mu \ge 1\), we have

Therefore, we can obtain

This completes the proof by Definition 2.3. \(\square\)

Remark 3.6

Note that for the switched neural networks (1), finite-time boundedness can be regarded as an extension of the energy-value or peak-value performance of the system. It should be pointed out that the switching rule obtained in this paper takes into account both the time-varying delays appearing in switched neural networks and the stability analysis over a finite time interval; in this sense, the main results of this paper are more general.

Remark 3.7

In this paper, a finite-time boundedness condition is derived for the switched neural networks (3). Finite-time boundedness with \(L_2\)-gain analysis is also discussed for the switched neural networks (1), with noise attenuation \(\gamma ^2\). In the analysis, the Lyapunov–Krasovskii functional method and the average dwell time technique are used to obtain the main results.

Remark 3.8

In Theorem 3.1, a new Lyapunov–Krasovskii functional is constructed, and exponential functions are used, which gives the convergence rate. The obtained results are compared with existing results to assess their conservativeness, and they are less conservative than the results in [14–16, 21–24].

Remark 3.9

In this paper, the influence of disturbance signals on the system dynamics is not ignored. The concept of finite-time boundedness therefore describes the behavior of the system over a finite time interval in the presence of external disturbances.

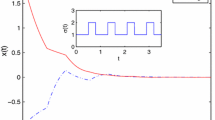

4 Numerical examples

In this section, numerical examples are provided to illustrate the validity and the advantage of the proposed finite-time boundedness and finite-time \(L_2\)-gain analysis results.

Example 4.1

Consider a switched neural network with time-varying delay:

with

For the activation function, we take \(G_t={\rm{diag}}\{0,0\},\ G_u={\rm{diag}}\{1,1\}\); the values of \(c_1, c_2, T, d\) are given as follows:

When \(c_2=77.59\), the maximum admissible bound of \(h\) is 2.01. Solving LMIs (3)–(6) with the Matlab LMI Toolbox gives the feasible solutions

Example 4.2

Consider the neural networks with time-varying delays (32) with the following parameters given in [14–16, 21–24]:

and

with \(\delta =0\). Solving the LMIs of Corollary 3.4 for Example 4.2, we obtain the maximum admissible upper bounds (MAUB) of \({\tau }\) for different \(h\), as shown in Table 1. The results obtained in this paper are significantly better than those in [14–16, 21–24], which clearly shows the effectiveness of our work. The time responses of the state variables are shown in Table 1.

The admissible upper bounds of \({\tau }\) are listed in Table 1.

Example 4.3

Consider a switched neural network with time-varying delay,

with

The values of \(c_1,c_2,T,d\) are given as follows:

and \(G_t={\rm{diag}}\{0.5,0.5\},\) \(G_u={\rm{diag}}\{1,1\}.\) Solving LMIs (31)–(34) gives \(\gamma =1.362\), and the average dwell time \(\tau _a\) is calculated as \(\tau _a={\ln \mu }/{\delta }=81.0930\).
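The dwell time formula \(\tau _a={\ln \mu }/{\delta }\) can be evaluated directly. The values of \(\mu\) and \(\delta\) used in the example are not reproduced in this text, so the pair \(\mu =1.5\), \(\delta =0.005\) below is an assumption, chosen only because it is consistent with the reported value; the function name is also hypothetical.

```python
import math


def average_dwell_time(mu, delta):
    """Evaluate tau_a = ln(mu) / delta for mu >= 1 and delta > 0."""
    if mu < 1 or delta <= 0:
        raise ValueError("need mu >= 1 and delta > 0")
    return math.log(mu) / delta


# Assumed parameters (not stated in the text): mu = 1.5, delta = 0.005.
tau_a = average_dwell_time(1.5, 0.005)
print(round(tau_a, 4))
```

Note that \(\mu =1\) gives \(\tau _a=0\), which corresponds to the trivial case in which no dwell time restriction is imposed.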

5 Conclusion

In this paper, finite-time boundedness and finite-time weighted \(L_2\)-gain analysis for SNNs with time-varying delay have been investigated. Based on the linear matrix inequality technique, the Lyapunov–Krasovskii functional method and the average dwell time approach, sufficient conditions are derived. Numerical examples are given to demonstrate the effectiveness of the proposed approach. In future work, we will extend our results to the finite-time stability analysis of Markovian jumping switched neural networks with time-varying delays.

References

Syed Ali M, Arik S, Saravanakumar R (2015) Delay-dependent stability criteria of uncertain Markovian jump neural networks with discrete interval and distributed time-varying delays. Neurocomputing 158:167–173

Syed Ali M, Balasubramaniam P (2011) Global asymptotic stability of stochastic fuzzy cellular neural networks with multiple discrete and distributed time-varying delays. Commun. Nonlinear Sci. Numer. Simul. 16:2907–2916

Hou L, Zong G, Wu Y (2011) Robust exponential stability analysis of discrete-time switched Hopfield neural networks with time delay. Nonlinear Anal. Hybrid Syst 5:525–534

Zhu Q, Cao J, Rakkiyappan R (2015) Exponential input-to-state stability of stochastic Cohen–Grossberg neural networks with mixed delays. Nonlinear Dyn 79:1085–1098

Xi J, Park JH, Zeng H (2015) Improved delay-dependent robust stability analysis for neutral-type uncertain neural networks with Markovian jumping parameters and time-varying delays. Neurocomputing 149:1198–1205

Syed Ali M (2015) Stability of Markovian jumping recurrent neural networks with discrete and distributed time-varying delays. Neurocomputing 149:1280–1285

Balasubramaniam P, Syed Ali M (2010) Global asymptotic stability of stochastic fuzzy cellular neural networks with multiple time-varying delays. Expert Syst Appl 37:7737–7744

Feng W, Yang SX, Wu H (2009) On robust stability of uncertain stochastic neural networks with distributed and interval time-varying delays. Chaos Solitons Fractals 42:2095–2104

Zhu Q, Cao J (2014) Mean-square exponential input-to-state stability of stochastic delayed neural networks. Neurocomputing 131:157–163

Xiong W, Meng J (2013) Exponential convergence for cellular neural networks with continuously distributed delays in the leakage terms. Electron J Qual Theory Differ Equ 10:1–12

Zhu Q, Cao J (2011) Exponential stability of stochastic neural networks with both Markovian jump parameters and mixed time delays. IEEE Trans Syst Man Cybern Part B 41:341–353

Zhu Q, Cao J (2012) Stability of Markovian jump neural networks with impulse control and time varying delays. Nonlinear Anal Real World Appl 13:2259–2270

Qiu J, Yang H, Zhang J, Gao Z (2009) New robust stability criteria for uncertain neural networks with interval time-varying delays. Chaos Solitons Fractals 39:579–585

Li T, Zheng WX, Lin C (2011) Delay-slope-dependent stability results of recurrent neural networks. IEEE Trans Neural Netw 22:2138–2143

Zeng HB, He Y, Wu M, Zhang C (2011) Complete delay-decomposing approach to asymptotic stability for neural networks with time-varying delays. IEEE Trans Neural Netw 22:806–812

Ge C, Hua C, Guan X (2014) New delay-dependent stability criteria for neural networks with time-varying delay using delay-decomposition approach. IEEE Trans Neural Netw 25(7):1378–1383

Zhu Q, Cao J (2012) Stability analysis of Markovian jump stochastic BAM neural networks with impulse control and mixed time delays. IEEE Trans Neural Netw Learn Syst 23:467–479

Yin C, Chen Y, Zhong S (2014) Fractional-order sliding mode based extremum seeking control of a class of nonlinear systems. Automatica 50:3173–3181

Yin C, Cheng Y, Chen Y, Stark B, Zhong S (2015) Adaptive fractional-order switching-type control method design for 3D fractional-order nonlinear systems. Nonlinear Dyn 82:39–52

Zhu Q, Rakkiyappan R, Chandrasekar A (2014) Stochastic stability of Markovian jump BAM neural networks with leakage delays and impulse control. Neurocomputing 136:136–151

Tian JK, Xiong WJ, Xu F (2014) Improved delay-partitioning method to stability analysis for neural networks with discrete and distributed time-varying delays. Appl Math Comput 233:152–164

Zhou XB, Tian JK, Ma HJ, Zhong SM (2014) Improved delay-dependent stability criteria for recurrent neural networks with time-varying delays. Neurocomputing 129:401–408

Zhang HG, Yang FS, Liu XD, Zhang QJ (2013) Stability analysis for neural networks with time-varying delay based on quadratic convex combination. IEEE Trans Neural Netw Learn Syst 24:513–521

Shi K, Zhong S, Zhu H, Liu X, Zeng Y (2015) New delay-dependent stability criteria for neutral-type neural networks with mixed random time-varying delays. Neurocomputing 168:896–907

Wang S, Shi T, Zeng M, Zhang L, Alsaadi FE, Hayat T (2015) New results on robust finite-time boundedness of uncertain switched neural networks with time-varying delays. Neurocomputing 151:522–530

Wu Y, Cao J, Alofi A, AL-Mazrooei A, Elaiw A (2015) Finite-time boundedness and stabilization of uncertain switched neural networks with time-varying delay. Neural Netw 69:135–143

Wu X, Tang Y, Zhang W (2014) Stability analysis of switched stochastic neural networks with time-varying delays. Neural Netw 51:39–49

Yan P, Ozbay H (2008) Stability analysis of switched time delay systems. SIAM J Control Optim 47:936–949

Ahn CK (2010) An \(H_\infty\) approach to stability analysis of switched Hopfield neural networks with time-delay. Nonlinear Dyn 60:703–711

Zhong Q, Cheng J, Zhao Y (2015) Delay-dependent finite-time boundedness of a class of Markovian switching neural networks with time-varying delays. ISA Trans 57:43–50

Lin X, Du H, Li S, Zou Y (2013) Finite-time boundedness and finite-time \(l_2\) gain analysis of discrete-time switched linear systems with average dwell time. J Frankl Inst 350:911–928

Lin X, Du H, Li S (2011) Finite-time boundedness and \(L_2\)-gain analysis for switched delay systems with norm-bounded disturbance. Appl Math Comput 217:5982–5993

Cheng J, Zhong S, Zhong Q, Zhu H, Du Y (2014) Finite-time boundedness of state estimation for neural networks with time-varying delays. Neurocomputing 129:257–264

He S, Liu F (2013) Finite-time boundedness of uncertain time-delayed neural network with Markovian jumping parameters delays. Neurocomputing 103:87–92

Zhang Y, Shi P, Nguang SK, Zhang J, Karimi HR (2014) Finite-time boundedness for uncertain discrete neural networks with time-delays and Markovian jumps. Neurocomputing 140:1–7

Bai J, Lu R, Xue A, She Q, Shi Z (2015) Finite-time stability analysis of discrete-time fuzzy Hopfield neural network. Neurocomputing 159:263–267

Cai Z, Huang L, Zhu M, Wang D (2016) Finite-time stabilization control of memristor-based neural networks. Nonlinear Anal Hybrid Syst 20:37–54

Niamsup P, Ratchagit K, Phat VN (2015) Novel criteria for finite-time stabilization and guaranteed cost control of delayed neural networks. Neurocomputing 160:281–286

Yao D, Lu Q, Wu C, Chen Z (2015) Robust finite-time state estimation of uncertain neural networks with Markovian jump parameters. Neurocomputing 159:257–262

Wu ZG, Shi P, Su HY, Chu J (2014) Asynchronous \(L_2-L_\infty\) filtering for discrete-time stochastic Markov jump systems with randomly occurred sensor nonlinearities. Automatica 50:180–186

Sun XM, Zhao J, Hill DJ (2006) Stability and \(L_2\)-gain analysis for switched delay systems: a delay-dependent method. Automatica 42:1769–1774

Lin XZ, Du HB, Li SH (2011) Finite-time boundedness and \(L_2\)-gain analysis for switched delay systems with norm-bounded disturbance. Appl Math Comput 217:5982–5993

Zhong QS, Cheng J, Zhao YQ, Ma JH, Huang B (2013) Finite-time filtering for a class of discrete-time Markovian jump systems with switching transition probabilities subject to average dwell time switching. Appl Math Comput 255:278–294

Liu L, Sun J (2008) Finite-time stabilization of linear systems via impulsive control. Int J Control 81:905–909

He S, Liu F (2013) Finite-time boundedness of uncertain time-delayed neural network with Markovian jumping parameters. Neurocomputing 103:87–92

Li X, Lin X, Li S, Zou Y (2015) Finite-time stability of switched nonlinear systems with finite-time unstable subsystems. J Frankl Inst 352:1192–1214

Liu H, Shen Y (2012) Asynchronous finite-time stabilization of switched systems with average dwell time. IET Control Theory Appl 6:1213–1219

Liu H, Shen Y, Zhao X (2012) Delay-dependent observer-based \(H_\infty\) finite-time control for switched systems with time-varying delay. Nonlinear Anal Hybrid Syst 6:885–898

Syed Ali M, Saravanan S (2016) Robust finite-time \(H_\infty\) control for a class of uncertain switched neural networks of neutral-type with distributed time varying delays. Neurocomputing 177:454–468

Wang H, Zhu Q (2015) Finite-time stabilization of high-order stochastic nonlinear systems in strict-feedback form. Automatica 54:284–291

Zhao X, Zhang L, Shi P, Liu M (2012) Stability and stabilization of switched linear systems with mode-dependent average dwell time. IEEE Trans Autom Control 57:1809–1815

Gu K, Kharitonov VL, Chen J (2003) Stability of time delay systems. Birkhäuser, Boston

Liu Y, Wang Z, Liu X (2006) Global exponential stability of generalized recurrent neural networks with discrete and distributed delays. Neural Netw 19:667–675

Cite this article

Syed Ali, M., Saravanan, S. Finite-time \(\bf{{\it{L}}_2}\)-gain analysis for switched neural networks with time-varying delay. Neural Comput & Applic 29, 975–984 (2018). https://doi.org/10.1007/s00521-016-2498-y

DOI: https://doi.org/10.1007/s00521-016-2498-y