Abstract

EEG signals play an important role in both the diagnosis of neurological diseases and the understanding of psychophysiological processes. Classification of EEG signals comprises feature extraction and feature classification. This paper uses approximate entropy and sample entropy based on wavelet packet decomposition as the feature extraction methods and employs the support vector machine and the extreme learning machine as the classifiers. Experiments are performed on epileptic EEG data and on five mental tasks, respectively. Experimental results show that the combination of sample entropy and extreme learning machine performs well, achieving good classification accuracy with low training time.


1 Introduction

Electroencephalogram (EEG) signals play an important role in both the diagnosis of neurological diseases and the understanding of psychophysiological processes. Although several methods for brain function analysis, such as magnetoencephalography (MEG), functional magnetic resonance imaging (fMRI), and positron emission tomography (PET), have been introduced, the EEG signal is still a valuable tool for monitoring brain activity because of its relatively low cost and its convenience for the patient [1]. Effective EEG classification is the foundation for building usable Brain–Computer Interfaces (BCI) and for improving the performance of EEG analysis software used in clinical and research settings.

Feature extraction and classification of EEG signals are a challenge for engineers and scientists. In recent years, there has been increasing interest in applying machine learning methods to EEG signals, and many methods have been proposed to classify and analyze them. Among these methods, the support vector machine (SVM) and the artificial neural network [2, 3] are two widely used classification techniques. Most EEG classification schemes combine time–frequency-domain feature extraction with one of a variety of classification models, so their classification performance depends on the quality of the extracted features.

Wavelets have played an important role in biomedical signal processing for their ability to capture localized spatial-frequency information of EEG signals [4]. In the feature extraction phase, this paper uses approximate entropy (ApEn) and sample entropy (SampEn) based on wavelet packet decomposition (WPD). The obtained feature values are used as the inputs of a classifier, either the extreme learning machine (ELM) or the SVM. Experiments are performed with the four resulting combinations of feature extraction method and classifier. Experimental results on epileptic EEG data and five mental tasks indicate that the combination of sample entropy and ELM performs well.

The remainder of the paper is organized as follows. Section 2 briefly surveys the related literature. Section 3 describes the materials and methods. Section 4 compares the classification results of the four combinations on epileptic EEG data and five mental tasks, respectively. Section 5 concludes the paper.

2 Related work

In the feature extraction for EEG signals, Burke et al. [5] extend the usual autoregressive (AR) models to an AR with exogenous input (ARX) model for combined filtering and feature extraction. Polat and Günes [6] use the fast Fourier transform as the feature extraction method. The wavelet transform is also widely used in extracting EEG features [7, 8]; there, EEG signals are decomposed by the wavelet transform to calculate approximation and detail coefficients. ApEn is a statistical parameter that measures the predictability of the current amplitude values of a physiological signal based on its previous amplitude values. ApEn was first used for the detection of epilepsy with neural networks in [2]. Guo et al. [9] propose a feature extraction method that derives approximate entropy features from the multi-wavelet transform and combines them with an artificial neural network to classify EEG signals. Song et al. [10, 11] use sample entropy (SampEn) as a feature extraction method for detecting epileptic seizures. In Ref. [10], SampEn is selected as the feature extraction method to classify three different kinds of EEG signals and to detect epileptic seizures. Building on Ref. [10], the authors propose an optimized sample entropy algorithm to identify EEG signals in [11].

Artificial neural networks (ANNs) have been widely applied to classify EEG signals [2, 9]. Kumar et al. [7] use discrete wavelet transform (DWT)-based ApEn and an ANN to detect epileptic seizures. SVM is also one of the classification methods for EEG signals [3, 4]. Zhang et al. [12] present an improved method to calculate the phase locking value (PLV) based on the Hilbert–Huang transform, and the PLVs are used as features for a least squares support vector machine (LS-SVM) to recognize normal and hypoxia EEG. Bajaj et al. [13] present a method for classification of EEG signals using empirical mode decomposition (EMD); it is based on bandwidth features and employs LS-SVM for classifying seizure and non-seizure EEG signals. Wu et al. [14] propose a multiscale cross-approximate entropy method to quantify the complex fluctuation between R–R interval series and pulse transit time series. Ahangi et al. [15] use a multiple classifier system for classification of EEG signals; their method uses DWT decomposition and evaluates a variety of classifier combination methods along with genetic algorithm feature selection.

3 Materials and methods

In this section, we describe the proposed method for the EEG signal classification problem, present feature extraction based on the WPD, and give a brief review of the two classifiers, ELM and SVM.

Figure 1 shows the block diagram of the proposed method based on WPD. In our method, we first use the WPD to decompose EEG data into sub-band signals. Then, ApEn values or SampEn values are calculated from the approximation and detail coefficients. The obtained feature vectors are used as the inputs of the classifiers, SVM and ELM. Last, we evaluate the classification accuracy.

3.1 Feature extraction based on wavelet packet decomposition

Feature extraction of EEG signals includes two phases. In the first phase, EEG signals are decomposed by the WPD to calculate approximation and detail coefficients. In the second phase, approximate entropy values or sample entropy values of the approximation and detail coefficients are calculated, which form the feature vectors. These feature vectors are used as the inputs of a classifier.

In this subsection, we first outline the characteristics of the WPD and then give a brief review of approximate entropy and sample entropy.

3.1.1 Wavelet packet decomposition

Feature extraction is very important to EEG signal analysis and processing. WPD is a wavelet transform in which the discrete-time signal is passed through more filters than in the DWT, providing a multi-level time–frequency decomposition of the signal [16]. In the DWT, each level is computed from the approximation coefficients of the previous level only, which are decomposed into approximation and detail coefficients at the next level. In the WPD, however, both the detail and the approximation coefficients are decomposed, which creates the full binary tree.

For n levels of decomposition, the WPD produces \(2^{n}\) different sets of coefficients. Figure 2 gives the three-level WPD. In Fig. 2, the symbol “s” represents an original signal. Symbols “A” and “D” are the approximation and detail coefficients, respectively. It can be seen in Fig. 2 that in the wavelet packet analysis, both the approximation and the details at a certain level are further decomposed into the next level, which means the wavelet packet analysis can provide a more precise frequency resolution than the wavelet analysis.

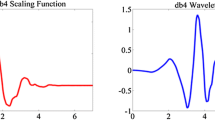
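The full binary tree described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: it uses the two-tap Haar filter pair for brevity (the experiments in this paper use a Daubechies wavelet), and the function names `haar_split` and `wavelet_packet_decompose` as well as the node labels built from "A" and "D" are hypothetical choices, not part of the original method.

```python
import math

def haar_split(signal):
    """One Haar analysis step: return (approximation, detail), each half as long."""
    scale = 1.0 / math.sqrt(2.0)
    pairs = range(0, len(signal) - 1, 2)
    approx = [(signal[i] + signal[i + 1]) * scale for i in pairs]
    detail = [(signal[i] - signal[i + 1]) * scale for i in pairs]
    return approx, detail

def wavelet_packet_decompose(signal, levels):
    """Split every node at every level and return the leaf nodes.

    Unlike the DWT, the detail branch is decomposed as well, so `levels`
    levels yield 2 ** levels leaves. Keys are hypothetical path labels
    such as "AAD" (approximation, approximation, detail).
    """
    nodes = {"": list(signal)}
    for _ in range(levels):
        next_nodes = {}
        for label, coeffs in nodes.items():
            approx, detail = haar_split(coeffs)
            next_nodes[label + "A"] = approx
            next_nodes[label + "D"] = detail
        nodes = next_nodes
    return nodes

# Toy signal of length 16 (a multiple of 2 ** 3, so every split is even).
signal = [float((i * 7) % 5) for i in range(16)]
leaves = wavelet_packet_decompose(signal, levels=3)
print(sorted(leaves))  # the eight leaf labels of the three-level tree
```

Each leaf would then be passed to the entropy computation to produce one feature value per sub-band. Because the Haar pair is orthonormal, the sum of squared coefficients over all leaves equals the energy of the input signal, which is a convenient sanity check.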

Many wavelets can be used to analyze the EEG signal and extract the feature vector. The most important wavelet families are Haar, Daubechies, Symlets, Coiflets, and biorthogonal [16]. It is very important to select a suitable wavelet and number of decomposition levels in the analysis of EEG signals. Among the various wavelet bases, the Daubechies family of wavelets is known for its orthogonality property and efficient filter implementation.

3.1.2 Approximate entropy and sample entropy

Approximate entropy was first proposed by Pincus [17]. It is a statistical parameter that quantifies the regularity of time series data such as physiological signals. EEG signals are essentially time series data, in which the raw values are measurements equally spaced in time.

Let m be an integer that represents the length of the compared vectors, and let r be the similarity tolerance. Given a time series u(1), u(2), …, u(N), where N is the total number of data points, ApEn is computed as follows.

1. Use the time series u(1), u(2),…,u(N) to form a sequence of m-length vectors x(1), x(2),…,x(N − m + 1), where \(x(i) = [u(i),u(i + 1), \ldots ,u(i + m - 1)]\), i = 1,…, N − m + 1.

2. Define the distance between each vector x(i) and x(j), \({\text{d}}[x(i),x(j)]\), as the maximum difference between their scalar components:

$${\text{d}}[x(i),x(j)] = \max_{k = 1,2, \ldots ,m} \left( {|u(i + k - 1) - u(j + k - 1)|} \right) .$$(1)

3. For a given x(i), count the number of j, such that the distance between x(i) and x(j) is less than or equal to r. Then, for each i, compute

$$C_{i}^{m} (r) = \frac{{\sum\nolimits_{j} {\{ 1|{\text{d}}[x(i),x(j)] \le r\} } }}{N - m + 1}$$(2)

4. Define \(\varPhi^{m} (r)\) and \(\varPhi^{m + 1} (r)\) as

$$\varPhi^{m} (r) = \frac{1}{N - m + 1}\sum\limits_{i = 1}^{N - m + 1} {\ln \,C_{i}^{m} (r)} ,$$(3)

$$\varPhi^{m + 1} (r) = \frac{1}{N - m + 1}\sum\limits_{i = 1}^{N - m + 1} {\ln \,C_{i}^{m + 1} (r)}$$(4)

5. ApEn(m, r, N) is computed by \(\varPhi^{m} (r)\) and \(\varPhi^{m + 1} (r)\) as follows:

$${\text{ApEn}}(m,r,N) = \varPhi^{m} (r) - \varPhi^{m + 1} (r) = \frac{1}{N - m + 1}\sum\limits_{i = 1}^{N - m + 1} {\ln \,\frac{{C_{i}^{m} (r)}}{{C_{i}^{m + 1} (r)}}} .$$(5)

Two input parameters of approximate entropy, m and r, must be specified. Usually, m is set to 1, 2, or 3, and r is set to some percentage of the standard deviation of the amplitude of the time series.
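Steps 1–5 can be sketched directly in Python. This is a minimal illustration, not the implementation used in the experiments: the names `population_std`, `_phi`, and `approximate_entropy` and the default `r_factor=0.2` are assumptions made here. The sketch follows the common convention of averaging over the N − m vectors that actually exist at length m + 1, which differs slightly from the N − m + 1 written in Eq. (4).

```python
import math

def population_std(u):
    """Standard deviation of the series, used to scale the tolerance r."""
    mean = sum(u) / len(u)
    return math.sqrt(sum((v - mean) ** 2 for v in u) / len(u))

def _phi(u, m, r):
    """Average of ln C_i^m(r) over all m-length vectors (steps 1 to 4)."""
    n_vectors = len(u) - m + 1
    vectors = [u[i:i + m] for i in range(n_vectors)]
    total = 0.0
    for xi in vectors:
        # Chebyshev distance of Eq. (1); the self-match (xi == xj) is counted.
        matches = sum(
            1 for xj in vectors
            if max(abs(a - b) for a, b in zip(xi, xj)) <= r
        )
        total += math.log(matches / n_vectors)
    return total / n_vectors

def approximate_entropy(u, m=2, r_factor=0.2):
    """ApEn(m, r, N) with r = r_factor * std(u) (step 5)."""
    r = r_factor * population_std(u)
    return _phi(u, m, r) - _phi(u, m + 1, r)

# A perfectly regular series versus a chaotic logistic-map series.
periodic = [float(i % 2) for i in range(100)]
chaotic = [0.4]
for _ in range(199):
    chaotic.append(3.9 * chaotic[-1] * (1.0 - chaotic[-1]))

print(approximate_entropy(periodic))  # close to zero: highly regular
print(approximate_entropy(chaotic))   # clearly larger: less predictable
```

The pairwise comparison makes the cost quadratic in N, which is acceptable for short sub-band coefficient sequences but is one reason why feature extraction time matters in the experiments.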

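ApEn counts self-matches (i = j in Eq. 2) to avoid taking the logarithm of zero, which biases its estimates. Sample entropy [18] was developed to reduce the bias caused by self-matching. The computation of SampEn shares steps 1–3 with ApEn, except that self-matched templates are not counted in step 3 and only the first N − m vectors are used, so that templates of length m and m + 1 are both defined. Then, define

$$B^{m} (r) = \frac{1}{N - m}\sum\limits_{i = 1}^{N - m} {B_{i}^{m} (r)} ,$$(6)

$$A^{m} (r) = \frac{1}{N - m}\sum\limits_{i = 1}^{N - m} {A_{i}^{m} (r)} ,$$(7)

where \(B_{i}^{m} (r)\) is \((N - m - 1)^{ - 1}\) times the number of m-length vectors x(j), j ≠ i, within distance r of x(i), and \(A_{i}^{m} (r)\) is the same quantity computed for vectors of length m + 1.

Finally, sample entropy can be defined as

$${\text{SampEn}}(m,r) = \mathop {\lim }\limits_{N \to \infty } \left\{ { - \ln \frac{{A^{m} (r)}}{{B^{m} (r)}}} \right\} .$$(8)

And it is estimated by the statistic

$${\text{SampEn}}(m,r,N) = - \ln \frac{{A^{m} (r)}}{{B^{m} (r)}} .$$(9)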

The parameters m and r are critical in determining the outcome of SampEn. Usually, SampEn is estimated using widely established parameter values, where m is set to 1 or 2 and r is set between 0.11 and 0.25 times the standard deviation of the original time series.
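For comparison with ApEn, sample entropy can be sketched as below. The sketch follows the standard formulation of Richman and Moorman [18]: self-matches are excluded and the same N − m template positions are used for both lengths, so the normalising constants cancel in the ratio. The function name `sample_entropy`, the helper `count_matches`, and the default `r_factor=0.2` are assumptions introduced for illustration.

```python
import math

def sample_entropy(u, m=2, r_factor=0.2):
    """SampEn(m, r, N) = -ln(A / B), with r = r_factor * std(u)."""
    n = len(u)
    mean = sum(u) / n
    r = r_factor * math.sqrt(sum((v - mean) ** 2 for v in u) / n)

    def count_matches(length):
        # Use the first N - m start positions for both template lengths.
        templates = [u[i:i + length] for i in range(n - m)]
        count = 0
        for i, xi in enumerate(templates):
            for j, xj in enumerate(templates):
                if i != j and max(abs(a - b) for a, b in zip(xi, xj)) <= r:
                    count += 1  # self-matches (i == j) are never counted
        return count

    b = count_matches(m)      # matches of length m
    a = count_matches(m + 1)  # matches of length m + 1
    if a == 0 or b == 0:
        return float("inf")   # undefined for this N and r; flagged as infinite
    return -math.log(a / b)

periodic = [float(i % 2) for i in range(100)]
chaotic = [0.4]
for _ in range(199):
    chaotic.append(3.9 * chaotic[-1] * (1.0 - chaotic[-1]))

print(sample_entropy(periodic))  # zero for a perfectly periodic series
print(sample_entropy(chaotic))   # positive for the chaotic series
```

Because no logarithm is taken per template, a template with no match does not break the computation; only the totals A and B must be non-zero.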

3.2 Classification methods

3.2.1 Extreme learning machine

The ELM [19, 20] is a newly proposed algorithm for generalized single-hidden layer feedforward neural networks (SLFNs) with randomly chosen hidden nodes and analytically determined output weights.

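Given N distinct samples \(({\mathbf{x}}_{i} ,{\mathbf{t}}_{i} )\), where \({\mathbf{x}}_{i} = \left[ {x_{i1} ,x_{i2} , \ldots ,x_{in} } \right]^{\text{T}}\) is the ith sample with n-dimensional features and \({\mathbf{t}}_{i} = \left[ {t_{i1} ,t_{i2} , \ldots ,t_{im} } \right]^{\text{T}}\) represents the actual labels of \({\mathbf{x}}_{i}\), standard SLFNs with M hidden neurons can be defined as:

$$\sum\limits_{j = 1}^{M} {\beta_{j} \,g(w_{j} \cdot {\mathbf{x}}_{i} + b_{j} )} = {\mathbf{o}}_{i} ,\quad i = 1, \ldots ,N,$$(10)

where \(w_{j} = \left[ {w_{j1} ,w_{j2} , \ldots ,w_{jn} } \right]^{\text{T}}\) represents the weight vector connecting the jth hidden neuron and the input nodes, \(\beta_{j}\) is the output weight connecting the jth hidden neuron and the output nodes, \(b_{j}\) is the bias of the jth hidden neuron, \({\mathbf{o}}_{i}\) is the network output for the ith sample, and g(∙) denotes the activation function.

If there exist \(\beta_{j}\), \(w_{j}\), and \(b_{j}\) such that an SLFN with M hidden neurons approximates these N samples with zero error, we have

$$\sum\limits_{j = 1}^{M} {\beta_{j} \,g(w_{j} \cdot {\mathbf{x}}_{i} + b_{j} )} = {\mathbf{t}}_{i} ,\quad i = 1, \ldots ,N.$$(11)

Equation (11) can be rewritten in a matrix form:

$${\mathbf{H}}\beta = {\mathbf{T}},$$(12)

where

$${\mathbf{H}} = \left[ {\begin{array}{*{20}c} {g(w_{1} \cdot {\mathbf{x}}_{1} + b_{1} )} & \cdots & {g(w_{M} \cdot {\mathbf{x}}_{1} + b_{M} )} \\ \vdots & \ddots & \vdots \\ {g(w_{1} \cdot {\mathbf{x}}_{N} + b_{1} )} & \cdots & {g(w_{M} \cdot {\mathbf{x}}_{N} + b_{M} )} \\ \end{array} } \right]_{N \times M} ,\quad \beta = \left[ {\begin{array}{*{20}c} {\beta_{1}^{\text{T}} } \\ \vdots \\ {\beta_{M}^{\text{T}} } \\ \end{array} } \right]_{M \times m} ,\quad {\mathbf{T}} = \left[ {\begin{array}{*{20}c} {{\mathbf{t}}_{1}^{\text{T}} } \\ \vdots \\ {{\mathbf{t}}_{N}^{\text{T}} } \\ \end{array} } \right]_{N \times m} .$$

H is called the hidden layer output matrix of the network. In many real applications, the training error cannot be made exactly zero. To obtain the smallest nonzero training error, a simple representation of the solution of Eq. (12) is given explicitly by Huang et al. [19] as

$$\tilde{\beta } = {\mathbf{H}}^{ + } {\mathbf{T}},$$(13)

where \({\mathbf{H}}^{ + }\) is the Moore–Penrose generalized inverse of H. The general procedure of ELM is as follows: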

- Step 1: Assign the input weights \(w_{j}\) and hidden layer biases \(b_{j}\) randomly;

- Step 2: Compute the hidden layer output matrix H;

- Step 3: Obtain the output weight \(\tilde{\beta }\) according to Eq. (13).
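The three steps can be illustrated with a small, dependency-free Python sketch for a two-class problem with labels −1 and +1. Several choices here are assumptions rather than the authors' implementation: the sigmoid activation, the uniform range of the random weights, the toy data, and all function names. Instead of forming the Moore–Penrose inverse explicitly, the sketch solves the ridge-regularised normal equations \((H^{\text{T}} H + \lambda I)\beta = H^{\text{T}} T\) with a very small \(\lambda\); when H has full column rank this closely approximates the pseudo-inverse solution.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    aug = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda row: abs(aug[row][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for row in range(col + 1, n):
            factor = aug[row][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[row][c] -= factor * aug[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        tail = sum(aug[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (aug[i][n] - tail) / aug[i][i]
    return x

def hidden_output(x, weights, biases):
    """One row of H: the activations of all hidden neurons for sample x."""
    return [sigmoid(sum(wk * xk for wk, xk in zip(w, x)) + b)
            for w, b in zip(weights, biases)]

def train_elm(samples, targets, n_hidden, seed=0, ridge=1e-8):
    rng = random.Random(seed)
    n_features = len(samples[0])
    # Step 1: random input weights and biases, never updated afterwards.
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_features)]
               for _ in range(n_hidden)]
    biases = [rng.uniform(-1.0, 1.0) for _ in range(n_hidden)]
    # Step 2: hidden layer output matrix H (one row per training sample).
    h = [hidden_output(x, weights, biases) for x in samples]
    # Step 3: output weights from the regularised normal equations.
    hth = [[sum(row[i] * row[j] for row in h) + (ridge if i == j else 0.0)
            for j in range(n_hidden)] for i in range(n_hidden)]
    htt = [sum(row[i] * t for row, t in zip(h, targets))
           for i in range(n_hidden)]
    beta = solve_linear(hth, htt)
    return weights, biases, beta

def predict_elm(model, x):
    weights, biases, beta = model
    score = sum(b * h for b, h in zip(beta, hidden_output(x, weights, biases)))
    return 1 if score >= 0.0 else -1

# Toy two-class data standing in for entropy feature vectors.
samples = [[0.1, 0.2], [0.2, 0.1], [0.0, 0.3],
           [0.9, 0.8], [0.8, 1.0], [1.0, 0.7]]
targets = [-1, -1, -1, 1, 1, 1]
model = train_elm(samples, targets, n_hidden=5)
predictions = [predict_elm(model, x) for x in samples]
print(predictions)
```

Training involves no iterative optimisation, only one linear solve, which is the reason ELM training time is low compared with classifiers that require iterative tuning.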

In addition, SVM is applied for comparison with ELM. A brief description of SVM follows.

3.2.2 SVM classifier

SVM, developed by Vapnik [21], has received considerable attention. Based on statistical learning theory, it has shown promising empirical results in many fields. SVM can prevent over-fitting based on the structural risk minimization principle.

Given a training data set \(\{ (x_{1} ,y_{1} ),(x_{2} ,y_{2} ), \ldots ,(x_{l} ,y_{l} )\}\), where \(x_{i} \in \Re^{n}\) denotes an n-dimensional sample and \(y_{i} \in \{ + 1, - 1\}\) is the class of sample \(x_{i}\), an SVM training algorithm constructs an optimal separating hyperplane in a high-dimensional space to classify new samples. In order to find the optimal hyperplane, the training samples are first transformed into a higher dimensional feature space by a mapping function \(\phi\). Then, a possible separating hyperplane can be represented by [21]

$$w \cdot \phi (x) + b = 0.$$

The above classification problem can be formalized as the following optimization problem:

$$\mathop {\min }\limits_{w,b,\xi } \;\frac{1}{2}\left\| w \right\|^{2} + C\sum\limits_{i = 1}^{l} {\xi_{i} }$$

subject to

$$y_{i} (w \cdot \phi (x_{i} ) + b) \ge 1 - \xi_{i} ,\quad \xi_{i} \ge 0,\quad i = 1, \ldots ,l,$$

where parameter C is a user-specified positive parameter representing the penalty of misclassifying the training samples. It should be noted that the larger the C, the more the error is penalized. The parameter C can be chosen based on the model’s performance on the validation set. The slack variables \(\xi_{i} \ge 0\) provide an estimate of the error of the decision boundary on the training samples \(x_{i}\), which are mapped into a higher dimensional space by the function \(\phi\).

This constrained optimization problem can be solved by constructing a Lagrangian representation and transforming it into the dual problem. When we obtain the optimal solution (w*, b*) of the decision boundary, the SVM decision function can be given by

$$f(x) = {\text{sgn}}\left( {\sum\limits_{i = 1}^{l} {y_{i} \alpha_{i} K(x_{i} ,x)} + b^{*} } \right),$$

where \(\alpha_{i}\) are the Lagrange multipliers of the dual problem, l is the number of support vectors, and \(K(x_{i} ,x_{j} )\) is the kernel function as follows:

$$K(x_{i} ,x_{j} ) = \phi (x_{i} ) \cdot \phi (x_{j} ).$$

Thus, the kernel function is the key that determines the performance of the SVM. Commonly suggested kernel functions are the linear kernel \((K(x_{i} ,x_{j} ) = x_{i} \cdot x_{j} )\), the polynomial kernel with degree d \((K(x_{i} ,x_{j} ) = (x_{i} \cdot x_{j} + 1)^{d} )\), the Gaussian kernel \((K(x_{i} ,x_{j} ) = \exp ( - \gamma ||x_{i} - x_{j} ||^{2} ))\), and the sigmoid kernel \((K(x_{i} ,x_{j} ) = \tanh (\eta \, x_{i} \cdot x_{j} + \theta ))\).
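The four kernels listed above translate directly into code. The following sketch is illustrative only; the function names and the parameter values in the example calls are arbitrary choices and are not taken from the experiments.

```python
import math

def dot(x, y):
    return sum(a * b for a, b in zip(x, y))

def linear_kernel(x, y):
    return dot(x, y)

def polynomial_kernel(x, y, d):
    return (dot(x, y) + 1.0) ** d

def gaussian_kernel(x, y, gamma):
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-gamma * squared_distance)

def sigmoid_kernel(x, y, eta, theta):
    return math.tanh(eta * dot(x, y) + theta)

xi = [1.0, 2.0]
xj = [3.0, 4.0]
print(linear_kernel(xi, xj))             # 1*3 + 2*4 = 11.0
print(polynomial_kernel(xi, xj, d=2))    # (11 + 1)^2 = 144.0
print(gaussian_kernel(xi, xi, gamma=0.5))  # identical points give 1.0
```

For the Gaussian kernel, \(\gamma\) controls how quickly similarity decays with distance, which is why it is tuned together with C.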

4 Experimental results

This section describes the details of the experiments performed in order to evaluate classification capabilities of the proposed method. The experimental study is divided in two sets of experiments. One is tested by the epileptic EEG data, and the other is implemented in the data set of five mental tasks.

In the experiments, we first use the wavelet packet decomposition to decompose EEG signals and then extract features using approximate entropy or sample entropy. ELM and SVM are selected as classification methods. To evaluate classification capabilities, we combine each feature extraction method with each classification method. This leads to four cases, namely ApEn + SVM, ApEn + ELM, SampEn + SVM, and SampEn + ELM.

Before performance evaluation, the SVM parameters that give the best generalization performance are estimated. The SVMs in this paper use the Gaussian kernel, so the penalty parameter C and the kernel parameter \(\gamma\) must be optimized. We determined \(\gamma\) and C with standard tenfold cross validation: the original data are randomly partitioned into ten equal-size subsets; in each round, a single subset is retained as the validation data for testing the model, and the remaining nine subsets are used as training data. The initial search for the optimal \(\gamma\) and C was done on a 10 × 10 coarse grid, uniform in the exponent, in the (\(\gamma\), C) space, namely \(\gamma = [2^{-10}, 2^{-8}, \ldots, 2^{6}, 2^{8}]\) and \(C = [2^{-10}, 2^{-8}, \ldots, 2^{6}, 2^{8}]\). We used LIBSVM [22] in our experiments.
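The parameter grid and the tenfold partition can be sketched as follows. The sketch only enumerates the candidate (\(\gamma\), C) pairs and the train/validation index sets; it does not call LIBSVM, and the function names, the fixed random seed, and the sample count of 500 are assumptions made for illustration.

```python
import random

def power_of_two_grid(low_exp=-10, high_exp=8, step=2):
    """Values 2^-10, 2^-8, ..., 2^6, 2^8 (ten values for the defaults)."""
    return [2.0 ** e for e in range(low_exp, high_exp + 1, step)]

def cross_validation_splits(n_samples, k=10, seed=0):
    """Yield (training_indices, validation_indices) for each of k folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]
    for held_out in range(k):
        validation = folds[held_out]
        training = [idx for f, fold in enumerate(folds)
                    if f != held_out for idx in fold]
        yield training, validation

gammas = power_of_two_grid()
costs = power_of_two_grid()
grid = [(gamma, c) for gamma in gammas for c in costs]
splits = list(cross_validation_splits(500, k=10))

print(len(grid))                             # number of candidate pairs
print(len(splits[0][0]), len(splits[0][1]))  # training and validation sizes
```

In a full search, each candidate pair would be scored by its mean validation accuracy over the ten splits, and the best pair would be kept (optionally followed by a finer grid around it).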

4.1 Epileptic EEG data

4.1.1 Data description

The epileptic EEG data from the Clinique of Epileptology of Bonn University [23] are used as the experimental data for our proposed method. The epileptic EEG data include five data sets denoted by A–E, respectively. Each data set contains 100 single-channel EEG segments recorded at a sampling rate of 173.61 Hz for 23.6 s, and each segment contains 4,096 samples. These EEG data are obtained from three groups of subjects, namely healthy people, epileptic patients during seizure-free intervals, and epileptic patients during seizures.

Data sets A and B are obtained from five healthy volunteers in an awake state with eyes open and eyes closed, respectively. Data sets C and D are obtained from five epileptic patients during seizure-free intervals (interictal). Data set E is obtained from five epileptic patients during seizure activity (ictal).

All EEG signals were recorded with the same 128-channel amplifier system, using an average common reference. After 12-bit analog-to-digital conversion, the data were written continuously onto the disk of a data acquisition computer system at a sampling rate of 173.61 Hz [23].

4.1.2 Experiment analysis

We first consider a two-class problem, where the seizure activity (E) is labeled as the positive class, while the other data (A, B, C, and D) form the negative class. Our experiments are run with four different combinations, namely ApEn + SVM, ApEn + ELM, SampEn + SVM, and SampEn + ELM. The data are randomly divided into two sets: 80 % of the data from the positive class and from the negative class, respectively, are used to train the classifiers, and the rest are used for testing the model. The r value is varied from 0.11 to 0.25, while the m value is equal to 1, 2, or 3. The performance of each combination is evaluated by the overall accuracy, defined as

$${\text{Overall accuracy}} = \frac{{\text{number of correctly classified samples}}}{{\text{total number of samples}}} \times 100\,\% .$$
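The 80/20 split per class and the accuracy measure can be sketched as below. The function names, the seed, and the label encoding (+1 for set E, −1 for sets A–D) are assumptions for illustration; the segment counts follow the data description (100 segments per set).

```python
import random

def stratified_split(labels, train_fraction=0.8, seed=0):
    """Split indices so that each class contributes train_fraction to training."""
    rng = random.Random(seed)
    by_class = {}
    for index, label in enumerate(labels):
        by_class.setdefault(label, []).append(index)
    train, test = [], []
    for indices in by_class.values():
        shuffled = indices[:]
        rng.shuffle(shuffled)
        cut = int(round(train_fraction * len(shuffled)))
        train.extend(shuffled[:cut])
        test.extend(shuffled[cut:])
    return train, test

def overall_accuracy(true_labels, predicted_labels):
    """Percentage of samples whose predicted label equals the true label."""
    correct = sum(1 for t, p in zip(true_labels, predicted_labels) if t == p)
    return 100.0 * correct / len(true_labels)

# 100 seizure segments (E) against 400 non-seizure segments (A, B, C, D).
labels = [1] * 100 + [-1] * 400
train_idx, test_idx = stratified_split(labels)

print(len(train_idx), len(test_idx))
print(overall_accuracy([1, 1, -1, -1], [1, -1, -1, -1]))  # 3 of 4 correct: 75.0
```

Splitting within each class keeps the 1:4 class ratio identical in the training and test sets, which matters because the negative class is four times larger.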

In order to select a suitable Daubechies wavelet function and level of decomposition, we take the epileptic EEG data as the experimental data set and give the experimental results in Fig. 3. The left panel of Fig. 3 shows the classification accuracy, and the right panel shows the feature extraction time.

From Fig. 3, we can see that the experiment obtains the best accuracy with the Daubechies “db2” wavelet function and 5-level decomposition, which also gives the shortest feature extraction time. So, in this experiment, we select the Daubechies “db2” wavelet function with 5-level decomposition to analyze the signal by the wavelet packet transform.

Experiments are repeated ten times, and we compute their average. Figure 4 shows the overall accuracies of four different combination strategies in the case of different parameters m and r.

Based on the experimental results in Fig. 4, the ApEn + SVM strategy achieves higher accuracy values than the other strategies. In particular, the overall accuracy of the ApEn + SVM strategy reaches 100 % in the cases of m = 2, r = 0.15 and m = 3, r = 0.23. The SampEn + ELM strategy also performs very well, achieving 99.6 % overall accuracy in the case of m = 2 and r = 0.17. The ApEn + ELM strategy achieves 99.4 % overall accuracy in the case of m = 1 and r = 0.19, while the SampEn + SVM strategy only obtains 96.3 % overall accuracy in the case of m = 2 and r = 0.19. Generally, the overall accuracy using ApEn is higher than the overall accuracy using SampEn when the same classification method, SVM or ELM, is adopted.

In order to further analyze the proposed methods, we employ four different classification problems presented by Tzallas et al. [24] in our experiments. The first and second problems are binary classification problems, while the third and fourth are three-class problems. The first problem includes normal (A) and seizure (E). The second problem includes non-seizure (A, B, and C) and seizure (E). The third problem includes normal (A), seizure-free (C), and seizure (E). In the fourth problem, EEG data are classified into three categories: normal (A and B), seizure-free (C and D), and seizure (E). These classification problems are described in Table 1.

Because SampEn is superior to ApEn in training time, we select two combination strategies for experimental comparison, namely SampEn + SVM and SampEn + ELM. Referring to the experimental results in Fig. 4, we set m = 2 and r = 0.19 for the combination of SampEn and SVM, and m = 2 and r = 0.17 for the combination of SampEn and ELM. Table 2 shows the classification accuracy of the SampEn + SVM and SampEn + ELM approaches for the four classification problems. Based on the results in Table 2, SampEn + ELM achieves higher accuracy than SampEn + SVM for classification problems 1, 3, and 4, while the two achieve similar accuracy in the second classification problem.

4.2 Five mental tasks

4.2.1 Data description

In this paper, we used EEG data from Colorado State University recorded by Keirn and Aunon [25] as the experimental data set. The data set contains signals from seven participants performing five different mental tasks; each trial is stored as a matrix of 7 rows by 2,500 columns. The 7 rows represent the corresponding seven channels, namely c3, c4, p3, p4, o1, o2, and EOG. Each task was repeated no fewer than five times, with each trial lasting 10 s. The acquired signal is sampled at a frequency of 250 Hz. Recording of these EEG data was performed with a bank of Grass 7P511 amplifiers whose bandpass analog filters were set at 0.1–100 Hz. Information about the five mental tasks in the experiment is shown in Table 3 [26].

Before feature extraction, EEG signals are first normalized to zero mean and unit variance and then divided into 0.5-s segments with an overlap of 0.25 s between adjacent segments. Thus, each 10-s trial yields 39 segments.
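The segment count follows from (10 − 0.5)/0.25 + 1 = 39, and the preprocessing can be sketched as below. The function names and the synthetic test signal are assumptions. Note that a 0.25-s step corresponds to 62.5 samples at 250 Hz, so the sketch computes each start index from the time in seconds and truncates it to an integer; how the original experiments handled this is not stated.

```python
import math

def normalize(signal):
    """Scale a channel to zero mean and unit variance."""
    n = len(signal)
    mean = sum(signal) / n
    std = math.sqrt(sum((v - mean) ** 2 for v in signal) / n)
    return [(v - mean) / std for v in signal]

def segment(signal, fs=250.0, window_s=0.5, step_s=0.25):
    """Cut a channel into windows of window_s seconds every step_s seconds."""
    window = int(round(window_s * fs))  # 125 samples at 250 Hz
    duration_s = len(signal) / fs
    n_segments = int(math.floor((duration_s - window_s) / step_s)) + 1
    segments = []
    for k in range(n_segments):
        start = int(k * step_s * fs)    # fractional starts are truncated
        segments.append(signal[start:start + window])
    return segments

# Synthetic 10-s channel sampled at 250 Hz (2,500 samples).
channel = [math.sin(0.1 * i) for i in range(2500)]
segments = segment(normalize(channel))

print(len(segments), len(segments[0]))
```

Each segment would then be decomposed by the WPD and summarised by one entropy value per sub-band.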

4.2.2 Experiment analysis

In this study, we compare, for each subject, different combinations of mental tasks. In view of overall accuracy and training time, we select the combination of SampEn and ELM to perform this experiment. The experiment uses the Daubechies “db2” wavelet function with 5-level decomposition and sets the parameters m = 2 and r = 0.17. Tables 4, 5, 6, and 7 show the classification accuracy for 2, 3, 4, and 5 mental tasks, respectively, for each subject.

Tables 4 and 5 show that the employed strategy achieves good accuracies for the combinations of two and three mental tasks, respectively. However, for the combinations of four mental tasks shown in Table 6, subject 3 achieves its lowest accuracy, 66.05 %, in the case of the B, C, M, and R mental tasks, and subject 6 achieves its lowest accuracy, 70.59 %, in the case of the B, L, M, and R mental tasks. The other subjects maintain better classification accuracy for the combinations of four mental tasks. From Table 7, we can see that subject 3 and subject 6 achieve less than 80 % accuracy, while the other subjects perform with over 90 % accuracy. In particular, subject 1 obtains 96.74 % accuracy.

Figure 5 gives an intuitive description of the average classification accuracy in different tasks for each subject.

5 Conclusions

Wavelets have played an important role in biomedical signal processing for their ability to capture localized spatial-frequency information of EEG signals. In this paper, we have evaluated the performance of two classifiers, namely SVM and ELM, on two EEG data sets. The proposed scheme uses approximate entropy and sample entropy based on WPD to calculate the feature values, which serve as the inputs of the two classifiers. The combination of SampEn and ELM has shown good performance in both classification accuracy and training time.

References

Parvinnia E, Sabeti M, Zolghadri Jahromi M, Boostani R (2014) Classification of EEG signals using adaptive weighted distance nearest neighbor algorithm. J King Saud Univ-Comput Inform Sci 26(1):1–6

Srinivasan V, Eswaran C, Sriraam N (2007) Approximate entropy-based epileptic EEG detection using artificial neural networks. IEEE Trans Inform Technol Biomed 11(3):288–295

Guo L, Wu Y, Cao T, Yan W, Shen X (2011) Classification of mental task from EEG signals using immune feature weighted support vector machines. IEEE Trans Magn 47(5):866–869

Murugavel ASM, Ramakrishnan S (2012) Multi-class SVM for EEG signal classification using wavelet based approximate entropy. CCSIT 2012, pp 335–344

Burke DP, Kelly SP, de Chazal P, Reilly RB, Finucane C (2005) A parametric feature extraction and classification strategy for brain–computer interfacing. IEEE Trans Neural Syst Rehabil Eng 13(1):12–17

Polat K, Günes S (2007) Classification of epileptiform EEG using a hybrid system based on decision tree classifier and fast Fourier transform. Appl Math Comput 187(2):1017–1026

Kumar Y, Dewal ML, Anand RS (2014) Epileptic seizures detection in EEG using DWT-based ApEn and artificial neural network. Signal Image Video Proc 8(7):1323–1334

Subasi A (2007) EEG signal classification using wavelet feature extraction and a mixture of expert model. Expert Syst Appl 32(4):1084–1093

Guo L, Rivero D, Pazos A (2010) Epileptic seizure detection using multiwavelet transform based approximate entropy and artificial neural networks. J Neurosci Methods 193:156–163

Song Y, Liò P (2010) A new approach for epileptic seizure detection: sample entropy based feature extraction and extreme learning machine. J Biomed Sci Eng 6:556–567

Song Y, Crowcroft J, Zhang J (2012) Automatic epileptic seizure detection in EEGs based on optimized sample entropy and extreme learning machine. J Neurosci Methods 210:132–146

Zhang J, Wang N, Kuang H, Wang R (2014) An improved method to calculate phase locking value based on Hilbert-Huang transform and its application. Neural Comput Appl 24(1):125–132

Bajaj V, Pachori RB (2012) Classification of seizure and nonseizure EEG signals using empirical mode decomposition. IEEE Trans Inform Technol Biomed 16(6):1135–1142

Wu HT, Lee CY, Liu CC, Liu AB (2013) Multiscale cross-approximate entropy analysis as a measurement of complexity between ECG R–R interval and PPG pulse amplitude series among the normal and diabetic subjects. Comput Math Methods Med, vol. 2013, Article ID 231762

Ahangi A, Karamnejad M, Mohammadi N, Ebrahimpour R, Bagheri N (2013) Multiple classifier system for EEG signal classification with application to brain–computer interfaces. Neural Comput Appl 23(5):1319–1327

Hu DY, Li W, Chen X (2011) Feature extraction of motor imagery EEG signals based on wavelet packet decomposition. In: Proceedings of the 2011 IEEE/ICME international conference on complex medical engineering, pp 694–697

Pincus SM (1991) Approximate entropy as a measure of system complexity. Proc Natl Acad Sci USA 88(6):2297–2301

Richman J, Moorman J (2000) Physiological time series analysis using approximate entropy and sample entropy. Am J Physiol 278(6):2039–2049

Huang G-B, Zhu Q-Y, Siew C-K (2006) Extreme learning machine: theory and applications. Neurocomputing 70:489–501

Huang G-B, Zhou H, Ding X, Zhang R (2011) Extreme learning machine for regression and multiclass classification. IEEE Trans Syst Man Cybern 42(2):513–529

Vapnik V (1995) The nature of statistical learning theory. Springer, New York

Chang C-C, Lin C-J (2011) LIBSVM: a library for support vector machines. ACM Trans Intell Syst Technol 2(3):1–27. http://www.csie.ntu.edu.tw/~cjlin/libsvm

Andrzejak RG, Lehnertz K, Mormann F, Rieke C, David P, Elger CE (2001) Indications of nonlinear deterministic and finite-dimensional structures in time series of brain electrical activity: dependence on recording region and brain state. Phys Rev E Stat Nonlinear Soft Matter Phys 64:061907-1–061907-8

Tzallas AT, Tsipouras MG, Fotiadis DI (2007) Automatic seizure detection based on time-frequency analysis and artificial neural networks. Comput Intell Neurosci. Article ID 80510

EEG Data. http://www.cs.colostate.edu/eeg/main/data/1989_Keirn_and_Aunon

Geethanjali P, Mohan YK, Jinisha S (2012) Time domain Feature extraction and classification of EEG data for brain computer interface. In: Proceedings of the 9th international conference on fuzzy systems and knowledge discovery (FSKD 2012), May 29–31, 2012, IEEE Press, pp 1136–1139

Acknowledgments

This work is partly supported by National Natural Science Foundation of China (No. 61373127), the China Postdoctoral Science Foundation (No. 20110491530), and the University Scientific Research Project of Liaoning Education Department of China (No. 2011186).


Cite this article

Zhang, Y., Zhang, Y., Wang, J. et al. Comparison of classification methods on EEG signals based on wavelet packet decomposition. Neural Comput & Applic 26, 1217–1225 (2015). https://doi.org/10.1007/s00521-014-1786-7


DOI: https://doi.org/10.1007/s00521-014-1786-7