Abstract

Mathematical models derived from the physical systems are usually complex and in the form of higher order differential equations. Such systems are difficult for analysis and controller synthesis. Therefore, it is desirable to develop an efficient algorithm for reducing such higher order systems to a lower order model by preserving all the significant characteristics of the original higher order system. For this, we have proposed an improved adaptive differential evolution algorithm (I-ADE), which is mixed with Routh approximation (RA) to determine the numerator and denominator coefficients of the corresponding lower order stable model (LOSM) by preserving the fundamental characteristics of a higher order stable system (HOSS). The superiority of the proposed method is illustrated by numerical test cases of single-input single-output (SISO) systems and multiple-input multiple-output (MIMO) systems. To evaluate the efficiency of proposed I-ADE algorithm, 23 benchmark functions are considered, and the results are statistically compared with nine promising meta-heuristic algorithms. The proposed I-ADE algorithm and the Routh approximation technique provide superior results in reducing the model order of SISO and MIMO systems. It achieves statistically the best rank among nine promising meta-heuristic algorithms employing the Friedman and Nemenyi Hypothesis test for optimizing 23 benchmark functions. This shows the proposed I-ADE algorithm’s reliability, robustness and applicability for other optimization problems.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

In the last few decades, the model order reduction of higher order systems has been a significant research topic in the domain of control engineering. Mathematical modelling for complex physical systems is too expensive and tedious. Indeed, the mathematical modelling of intricate physical systems frequently yields higher-order differential equations or transfer functions. These more complex mathematical models can pose significant difficulties when it comes to both analyzing and constructing controller design. To overcome this scenario, model order reduction (MOR) has become the most promising technique to construct a corresponding lower order model that retains the significant characteristics of the higher order stable system (HOSS). A lower order stable model (LOSM) can enhance the comprehension of the original system, reduce computational complexities, and streamline the control design.

Various model order reduction techniques for linear continuous-time SISO systems have been documented in existing literature, encompassing both time-domain and frequency domain approaches (Fortuna et al. 2012; Schilders et al. 2008). Moreover, the model order reduction methods initially developed for SISO systems have also been extended to encompass MIMO systems. In the context of model order reduction for linear time-invariant (LTI) systems in the frequency domain, various techniques (Shamash 1974; Ashoor and Singh 1982) have been developed to match the time moments of HOSSs with LOSMs. One commonly used method is Padé approximation (Shamash 1974). Padé approximation is a straightforward approach that retains the initial time moments of the HOSS in the LOSM, while also matching their steady-state characteristics (Ashoor and Singh 1982). However, a significant limitation of Padé approximation is that it does not guarantee the stability of the lower order model, even when the higher order system is stable. This drawback raises concerns about the reliability of this technique. Researchers have introduced hybrid approaches to address the stability issue associated with Padé approximation (Hwang 1984; Lucas 1993; Mukherjee and Mittal 2005; Padhy et al. 2023). These hybrid techniques combine the characteristics of two different algorithms to generate more stable and accurate reduced-order models. Hwang (Hwang 1984) proposed a method that combines the Routh approximation (RA) technique with an integral square error (ISE) criterion to compute stable LOSMs. This approach minimizes the steady-state error between the original system and the reduced-order model. Lucas (Lucas 1993) presented a popular multipoint Padé approximation iterative technique focusing on generating optimal models by iteratively refining the approximation. This iterative process helps to improve the accuracy of the reduced-order model. Mukherjee and Mittal (Mukherjee and Mittal 2005) introduced a pole pattern technique for model reduction. This technique is designed to minimize the ISE between the higher order system and the lower order model, emphasizing the importance of capturing the system’s dynamic behaviour accurately. Padhy et al. (Padhy et al. 2023) introduced a combined approach that utilizes both time moment (TM) matching and integrated stability equation methods to calculate lower order models. Furthermore, this technique aids in enhancing the stability performance of the lower order model. These hybrid approaches attempt to overcome the stability issues associated with Padé approximation and improve the accuracy of reduced-order models by integrating stability-retaining methods and optimal reduction techniques. Researchers continue to explore and develop various methodologies to effectively address the challenges of model reduction in the frequency domain.

Nowadays, soft computing techniques have fascinated researchers and industry to solve a wide variety of complex problems, including reducing large-scale systems. The recent emergence of nature-inspired swarm and evolutionary algorithms, namely, genetic algorithm (GA) (Goldberg 1989), particle swarm optimization (PSO) (Kennedy and Eberhart 1995), Moth-flame optimization algorithm (Mirjalili 2015), Sine cosine algorithm (Mirjalili 2016a; Padhy et al. 2021), Dragon fly algorithm (Mirjalili 2016b; Padhy 2022), artificial gorilla troops optimizer (Abdollahzadeh et al. 2021) have been used in the optimization problems. In the field of model order reduction, these algorithms are applied to minimize the objective/fitness function. The fitness function is often the integral square error (ISE), mean square error (MSE), or root mean square error (RMSE). Several authors (Vishwakarma and Prasad 2009; Desai and Prasad 2013a; Sikander and Prasad 2015a) proposed combined MOR methods in which denominator coefficients are computed using a mathematical technique, and the numerator polynomials are obtained by using swarm and evolutionary algorithms to match the system’s transient response. A combined order reduction technique based on modified pole clustering and genetic algorithm is proposed in Vishwakarma and Prasad (2009). Desai and Prasad (Desai and Prasad 2013a) integrated the benefits of the Big Bang Big Crunch (BBBC) optimization technique and stability equation method for MOR. Another mixed technique for obtaining a lower order model based on the stability equation method and the nature-inspired PSO algorithm is presented in Sikander and Prasad 2015a. Furthermore, single nature-inspired optimization algorithms like PSO, GA, DF, SCA, etc., cannot provide global optimal solutions because of their limited feature and poor convergence characteristics. Therefore, now, researchers give attention towards hybrid optimization algorithms in the application of MOR. Evolutionary algorithms are frequently utilized to identify global maxima or minima of intricate fitness functions. In a referenced paper (Ganji et al. 2017), the author introduced a hybrid algorithm named PSO-DV. This algorithm integrates a selection mechanism into the Particle Swarm Optimization (PSO) approach and incorporates the differential vector operator from Differential Evolution (DE) to enhance the velocity update scheme within the PSO framework. Recently, a new optimization algorithm has been proposed by combining the benefits of the firefly algorithm (FA) (Yang and Slowik 2020) and Grey wolf optimization (GWO) (Mirjalili et al. 2014). In this hybrid algorithm, the solution vectors are initialised using the FA and the GWO algorithm is used to fine-tune the solution.

The main objective of this manuscript is to simplify the SISO and MIMO higher order linear continuous system by minimizing the integral square error of step response between HOSS and LOSM. The Routh approximation technique determines the denominator polynomial of the LOSM, while the numerator polynomial is determined using the proposed I-ADE algorithm. In summary, the key contributions of the paper are:

-

i.

An improved adaptive DE algorithm (I-ADE) is proposed, which is reliable and robust.

-

ii.

The denominator polynomial of the lower-order model is determined by the Routh approximation technique.

-

iii.

The numerator polynomial of the lower-order model is determined by minimizing the step response ISE and using the proposed I-ADE algorithm.

-

iv.

Numerical test cases of SISO systems and MIMO systems illustrate the superiority of the proposed method.

-

v.

To evaluate the reliability, robustness and applicability of proposed I-ADE algorithm to different optimization problems, statistical comparative performance analysis is carried out in optimizing 23 benchmark functions using nine promising meta-heuristic algorithms.

This article has been structured into six sections. Section 1 contains the introduction and a comprehensive literature survey on model order reduction. The problem formulation of the order reduction has been given in Sect. 2. Differential evolution and algorithmic overview are discussed in Sect. 3. The proposed method of order reduction is illustrated in Sect. 4. Simulated results are provided in Sect. 5. Finally, Sect. 6 comprises the conclusion and the future scope of the work presented.

2 Problem formulation

The assessment of the proposed method’s performance involves the utilization of both SISO and MIMO systems. Therefore, in this section, the SISO and MIMO systems are briefly mentioned.

2.1 Single-input single-output (SISO) system

Consider an hth order large-scale dynamic HOSS transfer function described in the form of Eq. 1

where \(c_{0} ,c_{1} ,c_{2} , \cdots ,c_{h - 1}\) and \(d_{0} ,d_{1} , \cdots ,d_{h}\) are the coefficients of higher order stable system. The prime objective of the representation (given in Eq. 2) is to determine an order ‘l’ of the lower order model, which is less than the order ‘h’ of higher order system. Further, the lower order model must retain the significant characteristics of the higher order system in terms of its transient and steady-state parameters.

where \(\hat{e}_{0} ,\hat{e}_{1} ,\hat{e}_{2} \cdots \hat{e}_{l - 1}\) and \(\hat{f}_{0} ,\hat{f}_{1} ,\hat{f}_{2} \cdots \hat{f}_{l}\) are the unknown coefficients of lower order stable model.

2.2 Multi-input multi-output (MIMO) system

This paper extends the research to encompass systems with m inputs and n outputs, which are represented by the transfer matrix illustrated in Eq. 3. Such systems are commonly referred to as multi-input multi-output (MIMO) systems. These systems are frequently employed in the modelling of real time control structure of power plant model. Thus, the nth-order MIMO model is expressed as in Eq. 3.

where \(i = 1,2,3, \cdots ,n;\quad j = 1,2,3, \cdots ,m\)

Now, individual transfer functions are expressed in corresponding numerator and denominator form as given by Eq. 4.

In general,\(h{}_{ij}\left( s \right) = \frac{{C_{ij} \left( s \right)}}{{D_{h} \left( s \right)}},\)

where \(i = 1,2,3, \cdots ,n;\quad j = 1,2,3, \cdots ,m\)

The MIMO model of order l(l < h) is represented by Eq. 5

where \(\hat{E}_{ij} \left( s \right)\) and \(\hat{F}_{l} \left( s \right)\) are the numerator and denominator polynomial of LOSM transfer functions, respectively. \(i = 1,2,3, \cdots ,n;\quad j = 1,2,3, \cdots ,m\)

3 Differential evolution and algorithmic overview

Storn and Price (Kenneth and Storn 1997) proposed an efficient evolutionary algorithm called differential evolution (DE) whose extended versions have shown promising results in the CEC competition series (Awad et al. 2016, 2017; Akhmedova et al. 2018). The recent review papers (Bilal et al. 2020; Das et al. 2016) present the algorithmic developments of DE algorithm. It is a stochastic search algorithm initiating chromosome set, commonly known as decision vectors or population) where each chromosome has of a set of genes, often called as decision variables or individuals. In this technique, chromosomes of a generation follows three major steps such as mutation, crossover and optimal selection iteratively to feed the population forwardly till an optimal solution is obtained.

The approach towards DE algorithm is mentioned in the stepwise manner:

-

1.

Parameter initialization for problem statement, technique to be used and decision vector.

-

2.

Computation of the fitness value for each decision variable.

-

3.

This step comprises of three sub-steps.

-

a.

Apply the mutation operator to produce the mutant vector.

-

b.

Crossover between the target vector and mutant vector to generate trial vector.

-

c.

Selection of the fittest between target vector and trial vector

Sub-step a to c to be followed to produce chromosomes for following generation.

-

4.

If the stopping criteria has achieved, then continue with step-5 otherwise back to step-3.

-

5.

Determine the most suitable fitness value as the optimal solution.

Consider the set of decision vector of nth generation is \(pop_{n} = \left( {P_{n}^{1} ,P_{n}^{2} , \cdots ,P_{n}^{i} , \cdots P_{n}^{psz} } \right),\) with \(P_{n}^{i} = \left( {G_{n}^{j1} ,G_{n}^{j2} , \cdots G_{n}^{jk} \cdots G_{n}^{jl} } \right)\) for \(j = 1,2,3 \cdots psz,\) \(l =\) each chromosome length,\(G_{n}^{jk} =\) kth gene of the jth variable in nth generation. Thereafter, mutation for jth chromosome generates a mutant vector \(MV_{n}^{j} = \left( {MV_{n}^{j1} ,M_{n}^{j2} , \cdots M_{n}^{jk} \cdots M_{n}^{jl} } \right)\), which is implemented in the crossover operator with the chosen chromosome \(P_{n}^{i} = \left( {G_{n}^{j1} ,G_{n}^{i2} , \cdots G_{n}^{jk} \cdots G_{n}^{jl} } \right)\) to generate a trial vector \(TV_{n}^{j} = TV_{n}^{j1} ,TV_{n}^{j2} , \cdots TV_{n}^{jk} \cdots TV_{n}^{jl}\).

The DE algorithm shows changes in characteristics through the mutation and crossover operators. Different mutation operators are proposed in Price et al. (2006), Corne et al. (1999) to produce mutant vector. Different DE mutation schemes include DE/best/1 (Eq. 6), DE/rand/1 (Eq. 7), DE/rand/2 (Eq. 8), DE/best/2 (Eq. 9) and DE/target-to-best/1 (Eq. 10). Once the mutant vector is generated using any of the mutation scheme, crossover operators such as binomial (Eq. 11) or exponential (Eq. 12) is applied to obtain a trial vector. Thereafter, selection process (Eq. 13) is implemented to identify fittest chromosomes between trial vector and target vector for choosing the fittest individual among the chromosomes for the following generations.

The abbreviations used in this paper are denoted as \(DE/c/d\), where DE is differential evolution, \(c\) is meant for choosing the base vector in mutation, \(d\) denotes the number of difference vectors used in mutation; \(MV_{n}^{j}\) meant for jth mutant vector in nth generation;\(p_{n}^{best}\) is the most suitable vector among the decision vector set in nth generation;\(p_{n}^{rnd}\) is a randomly selected decision vector;\(p_{n}^{j}\) is the target vector; the crossover probability is denoted as \(Cp\); \(S^{f}\) is termed to be the scale factor;\(TV_{n}^{ji}\) represents the ith gene of jth chromosome in nth generation; rand (0,1) ranges between 0 and 1 chosen randomly. It should be noted that all the selected decision vectors for mutation must be distinct to each other.

4 Methodology

This research aims to introduce a novel mixed-order reduction method applicable to continuous-time fixed coefficient systems. The proposed approach leverages the Routh Approximation method to calculate the denominator coefficients of the lower order model and employs the I-ADE optimization algorithm to determine the optimal numerator coefficients for the lower order model. The primary objective of this study is to minimize the Integral of Squared Error (ISE) between the step responses of the original higher order system and the lower order model. In this article, SISO and MIMO test systems from existing literature are selected as benchmarks to evaluate the effectiveness of the proposed technique. To gauge its performance, the proposed method is compared against established reduction methods in the literature. The paper provides step responses and Bode diagrams for both the original higher order system and its corresponding lower order models. A comprehensive comparative analysis is conducted using various error metrics and time-domain specifications. The simulation results and the comparative study collectively demonstrate that the proposed approach yields an acceptably accurate lower order model for the original higher order system.

4.1 Proposed improved adaptive differential evolution algorithm (I-ADE)

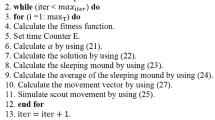

In this paper, an improved adaptive differential evolution algorithm (I-ADE) is proposed. Figure 1 presents the flowchart of the proposed I-ADE algorithm. The algorithm operates in three stages: initialization, iteration and termination. In the initialization stage, the algorithm starts with a psz number of randomly generated decision vectors from an upper bound and lower bound with each decision vector denoting a candidate solution of the problem. Each decision vector has a set of variables with each variable denoting an independent variable of the problem. The generation counter n, mean of crossover probability \({Cp}_{m}\) and number of trial vectors upon stagnation is initialized. In the iteration stage, mutation, crossover and selection operations are repeatedly applied to move the population of decision vectors to next generation until a termination criterion is satisfied. In the proposed algorithm, DE/best/1 mutation scheme is used with a modification in generating multiple mutant vectors upon stagnation. In this algorithm, it is assumed that the population stagnates if the rate of improvement of best solution is less than 1%. The novelty of the proposed algorithm lies in its parameter adaption which is presented in Algorithm 1.

When stagnation occurs, NT scale factors (\(S^{f}\)) are employed to generate NT number of mutant vectors. Then, the target vector and mutant vectors undergo a crossover operation to generate multiple trial vectors. Finally, the best trial vector is used for selection operation between the trial vector and target vector. The generation of multiple trial vectors around the existing solution helps in getting out of stagnation. This is because: a) If the difference vector \(P_{n}^{r1} - P_{n}^{r2}\) becomes small, or the vector converges to a tiny domain, then the \(S^{f}\) having larger values are used to generate a larger difference vector which ultimately assists in avoiding the suboptimal peaks causing stagnation, b) If the difference vector \(P_{n}^{r1} - P_{n}^{r2}\) is large, the decision vector may jump the near-optimal solutions. But the use of \(S^{f}\) with smaller value may overcome this problem. This is because when a larger difference vector is multiplied with a smaller scale factor (less than one), the difference vector large value reduces significantly which will assist in making local search around the existing solution. Thereby avoids the chances of overshooting the near-optimal solution due to addition of larger difference vector value. Thus, the multiple \(S^{f}\) from a uniform distribution ranging (0–2) will be beneficial for both local search and global search.

When stagnation does not occur, the initial generation scale factor values are larger while the later generation scale factor values are smaller. Thus, initially, it makes exploration of the search space while in later generations, it does exploitation of the solution by making local search around the existing solutions. Thereby, a trade between exploration and exploitation is implicitly maintained.

The crossover probability (\(Cp\)) does not depend on any stagnation criterion. Additionally, the \(Cp\) is generated from a large Gaussian distribution with a fixed value of standard deviation and mean of 0.1 and \(Cp_{m}\), respectively. The adaptation of \(Cp_{m}\) is predominantly depends on the preserving the better crossover probabilities to obtain the most suitable trial vectors. The implication of arithmetic mean during adjusting \(Cp_{m}\) avoids the bias of \(Cp\) towards smaller values (as in Eq. 14). Additionally, \(Cp_{m}\) uses \(Cp_{success}\) along with a factor of randomness. Thereby, it not only makes use of better crossover probabilities but also adds an element of randomness into it. In this research, Gaussian distribution is used on \(Cp_{m}\) which improvises the chances to obtain the fittest trial vectors with the help of \(Cp_{m}\) values within a unity range. The value of \(Cp_{m}\) is initially set to 0.5, thereby gradually updated in every iteration (using Eq. 14).

where \(W_{cr}^{f}\) is the weight factor in the range of (0.7, 1).\(Cp_{success}\) is the set of \(Cp\) generating better trial vectors.

Once the best trial vector is obtained, it is used in selection to move the candidate solution with better fitness to next generation. Once the termination criteria are satisfied, the decision vector of final generation with best fitness value is used as the solution of the problem. The proposed algorithm is an improved and faster version of ADE-ANNT (Panigrahi and Behera 2020) algorithm. Hence, it is named as I-ADE.

Algorithm 1 Pseudo-code of parameter adaptation

Here rand (1, NT) represents NT numbers from a uniform distribution space in a span of (0, 1), Gaussianrnd (\(Cp_{m}\), 0.1) is generated from a Gaussian distribution having standard deviation and mean of 0.1 and \(Cp_{m}\), respectively. In this algorithm, when there is less than 1% change in the best solution between two generations then only multiple trial vectors are generated. Otherwise, only one trial vector will be generated and the computational efficiency of the algorithm is same to other algorithms. Additionally, one can employ parallel computing to compute the multiple trial vectors parallel which will again diminish the questions on computational complexity and applicability of the proposed algorithm in practice. Of course, the number of trial vectors and choice of stagnation criteria maintains a trade between exploration and exploitation which need to be carefully chosen based on the problem in hand.

4.2 Application of Routh approximation for computation of denominator polynomials of lower-order model

Routh approximation (RA) is initially highlighted by Hutton et al. (1975) to reduce the order of HOSS to its corresponding LOSM in the frequency domain. The technique is quite simple to use, thereby used for preserving the stable approximants in LOSM, only if the HOSS is termed to be stable. This technique is suitable to evaluate denominator coefficients of the LOSM depending on the \(\beta\)-table (as in Table 1).

Step-1: Compute the reciprocal transformation of HOSS using Eq. 15.

Step-2: Considering the coefficients of \(\overline{Q}\left( s \right)\), determine the values of \(\beta_{1} ,\beta_{2} , \cdots \beta_{n} ,\) by using Beta table.

Step-3: Obtain lth-order denominator polynomial \(\hat{Q}_{l}\) considering the Beta coefficients of \(\overline{Q}_{h} \left( s \right)\).

For second order, \(\hat{Q}_{2} (s) = \beta_{1} \beta_{2} s^{2} + \beta_{2} s + 1\).

For third order, \(\hat{Q}_{3} (s) = \beta_{1} \beta_{2} \beta_{3} s^{3} + \beta_{2} \beta_{3} s^{2} + \left( {\beta_{1} + \beta_{3} } \right)s + 1\).

Equation 16 represents the generalized form of denominator polynomial.

4.3 Application of proposed I-ADE algorithm for computation of numerator polynomials of lower order model

The proposed I-ADE algorithm is used to compute the numerator polynomials of lower order model. For this each numerator polynomial is considered as a decision variable and all numerator polynomials form a decision vector. Then, the mutation, crossover and selection scheme of I-ADE algorithm employing the proposed parameter adaption scheme is repeatedly applied until the termination criteria are satisfied. Note that the objective function is a minimization one and is used to minimize the integral square error (ISE) between the step responses of original higher order system and the reduced model. Once the I-ADE algorithm terminates, the decision vector of the final generation with the best fitness (lowest ISE) is selected as the values of numerator polynomials of lower order model.

5 Simulated results and discussion

5.1 Experimental evaluation of proposed I-ADE algorithm for optimizing benchmark functions

The proposed I-ADE algorithm’s performance is evaluated using benchmark functions, both unimodal and multimodal, as detailed in Mirjalili’s work (El-Kenawy et al. 2022). This includes information on the dimensionality (Dim), ranges, and optimal solutions (fmin) for these benchmark functions. To assess the effectiveness of the proposed I-ADE algorithm, it is compared with several well-known meta-heuristic algorithms. Specifically, these algorithms are the original SCA, PSO, ALO, DE, MFO, and DF. Since the proposed I-ADE method is a modification of the DE (Differential Evolution) algorithm, the original DE algorithm is chosen as a comparison benchmark. Additionally, PSO, ALO, and DE have recently been applied in the context of model order reduction, making them relevant for comparison. Two other popular meta-heuristic algorithms, MFO and DF, are also included as comparative methods. The control parameters for SCA, PSO, ALO, and MFO remain the same. However, for the DE algorithm, a specific strategy called DE/best/1/bin is employed, with control parameter values F set to 0.5 and Cr set to 0.6. All algorithms, including the proposed I-ADE, are run independently 20 times, each with a swarm size of 20 search agents (also referred to as individuals or chromosomes) and a maximum of 400 iterations. For the convenience of interested readers, the algorithm parameters are summarized in Table 2.

In this field, it is a common practice to evaluate the performance of algorithms using a set of well-established mathematical functions that have known global optima. Computational experiments are typically conducted on a set of 23 benchmark functions, which can be categorized into three groups: unimodal functions, multimodal functions with varying numbers of local optima, and fixed-dimension multimodal functions. The details of these three categories of functions are mentioned below.

-

Benchmark functions 1–7 are unimodal functions (as in Table 3). These functions are primarily used to assess how well algorithms can exploit the search space and find the global optimum. Unimodal functions have a single global optimum.

-

Benchmark functions 8–13 are flexible dimension multimodal functions (as in Table 4). These functions are characterized by having multiple local optima, and the number of local optima tends to increase with the number of dimensions. These functions are utilized to evaluate the ability of algorithms to explore the search space effectively and locate multiple local optima.

-

Benchmark functions 14–23 are fixed-dimension multimodal benchmark functions (as in Table 5). They are designed to test the exploration capabilities of algorithms in situations where the dimensionality of the optimization problem is fixed, and multiple local optima exist.

In summary, these benchmark functions serve as a standardized way to test and compare the performance of algorithms in terms of their ability to exploit global optima, explore the search space efficiently, and handle optimization problems with various characteristics and dimensions. The global optimum values of the benchmark functions are also given in Tables 3, 4 and 5 to give an idea to the readers about the performances of the proposed algorithm.

Table 6 provides a summary of the mean and standard deviation (StDev) results obtained from the suggested I-ADE algorithm as well as the compared algorithms across the benchmark functions (f1 to f23). These results reveal that the I-ADE algorithm has achieved impressive performance, even obtaining zero values for both mean and standard deviation in some cases. Additionally, it outperforms the compared algorithms in various instances. Table 7, on the other hand, presents the outcomes of the Wilcoxon signed-rank test (WSRT) conducted on the 23 sample functions (f1 to f23). Since meta-heuristic algorithms exhibit stochastic behaviour, it is essential to employ statistical tests to make conclusive assessments. The WSRT, conducted at a 95% significance level, is used to determine whether a method is inferior (−), superior ( +), or equivalent (≈) to the proposed I-ADE algorithm. These statistical tests aid in providing a rigorous and reliable evaluation of the algorithms, allowing for comparisons and identifying the strengths and weaknesses of each method. These results are crucial in making informed decisions about the algorithm’s suitability for optimization tasks.

Table 8 presents the frequency of statistical significance comparative algorithms than proposed I-ADE algorithm than other in optimizing 23 benchmark functions. The ADE-ANNT algorithm provides inferior, superior and equivalent results than proposed I-ADE algorithm in 10, 1 and 12 benchmark functions, respectively. Table 8 reveals that the proposed I-ADE algorithm has demonstrated statistically superior performance when compared to several other algorithms, including the original ADE, DE, GWO, WOA, PSO, MFO, ALO, DF, and SCA. This statistical analysis provides strong evidence of the effectiveness of the I-ADE algorithm across various benchmark functions. To rank the meta-heuristic algorithms comprehensively, taking into account all 23 functions, a Friedman and Nemenyi hypothesis test is conducted at a 95% confidence level, and the results are depicted in Fig. 2. It is evident from Fig. 2 that the proposed I-ADE algorithm attains the lowest mean rank (51.34), which is significantly lower than the mean ranks of other algorithms by at least a critical distance of 2.8. ADE-ANNT acquires the second rank, SCA acquires the worst rank, and so on. The GWO and PSO algorithms are statistically equivalent to each other, since the difference in mean rank is less than the critical distance 2.8.

5.2 Performance evaluation of proposed method for optimizing the parameters of numerator and denominator polynomial of SISO system

In order to evaluate the performance of proposed method for model order reduction of SISO system, the fourth-order system (Vishwakarma and Prasad 2008) is considered which is expressed in Eq. 17. Equation 18 presents the reciprocal of denominator polynomial of HOSS. Then Routh approximation is used and the computed Beta table for denominator polynomial of the fourth-order SISO system is presented in Table 9.

The second-order denominator polynomial is obtained by using Table 9 and is presented in Eq. 19.

For computing the denominator polynomial of the desired reduced LOSM, the reciprocal transformation of Eq. 19 is taken and it is presented in Eq. 20.

After computation of denominator polynomial mentioned in Eq. 20, the numerator polynomial of the LOSM is computed using I-ADE by setting population size to 50, the maximum number of iterations to 400 and minimizing the ISE between HOSS and LOSM. The numerator polynomial is presented in Eq. 21.

Thus, the obtained second-order transfer function stable model using the proposed technique is presented in Eq. 22.

Figures 3 and 4 demonstrate that the error gap between the step and frequency responses of the HOSS and the LOSM obtained through the proposed method is remarkably small. This observation indicates that the LOSM effectively captures the essential characteristics of the original system. Equation 22 represents a second-order steady-state model for a fourth-order system, and it is worth noting that this reduced model encapsulates all the significant attributes of the original system. Furthermore, Table 10 provides evidence that the reduced-order model obtained through the proposed method maintains the stability of the original system while achieving impressive results in various error metrics, including integral square error (ISE), integral absolute error (IAE), integral time square error (ITSE), mean square error (MSE), and root mean square error (RMSE). These error indices demonstrate that the proposed LOSM performs better than other well-known reduction techniques found in the literature. As a result, it can be concluded that the simplified LOSM derived from the proposed method is the closest match to the original system’s behaviour. This makes it highly useful for dynamic simulations of large-scale systems, as it reduces computational complexity, simulation time, and memory requirements while maintaining accuracy and stability.

5.3 Performance evaluation of proposed method for optimizing the parameters of numerator and denominator polynomial of MIMO system

Consider a sixth order MIMO system (Padhy et al. 2021; Bistritz and Shaked 1984) having two inputs two outputs (TITO) described by Eq. 23

The denominator and numerator polynomial of the MIMO system is represented by Eqs. 24 and 25, respectively.

By using beta table, the lower-order denominator polynomial is represented by Eq. 26.

The numerator coefficients of the LOSMs are computed using I-ADE algorithm by minimizing the integral square error between higher-order system and lower-order model which are represented in Eq. 27.

For transfer function \(H_{11} \left( s \right)\).

The transfer matrix of second-order lower order model obtained by using proposed method which are represented by Eq. 28.

For transfer function \(H_{12} \left( s \right)\).

For transfer function \(H_{21} \left( s \right)\).

For transfer function \(H_{22} \left( s \right)\).

For MIMO system, the proposed LOSM is compared with the lower order models obtained by the particle swarm optimization and SE (Sikander and Prasad 2015a), BBBC optimization algorithm and RA (Desai and Prasad 2013b), cuckoo search optimization and SE (Narwal and Prasad 2016), GA and SE method (Parmar et al. 2007a), factor division (FD) and Eigen spectrum analysis (ESA) (Parmar et al. 2007b), FD and SE method (Sikander and Prasad 2015b) to assess the efficacy of the proposed model order reduction technique. Figures 5, 6, 7, and 8 illustrate the step responses of the LOSM alongside its corresponding HOSS. Additionally, Figs. 9, 10, 11, and 12 clearly demonstrate that the frequency responses of the LOSM by the proposed method closely resemble those of the HOSS. To further evaluate the performance of the LOSM, various performance indices, such as ISE, IAE, ITSE, MSE, and RMSE, are employed. These indices are used for comparing the LOSM with models obtained through existing methods, as outlined in Tables 11, 12, 13, and 14. The optimal values for each of the test systems are obtained using the proposed method which are highlighted in bold face for easy identification.

6 Conclusion

A method based on the proposed improved adaptive differential evolution algorithm (I-ADE) and Routh approximation is implicated in the model order reduction of higher order SISO and MIMO systems. In the proposed method, the denominator polynomial is calculated using the Routh approximation technique and the numerator polynomial is obtained by employing the proposed I-ADE algorithm. The key factor of the proposed model order reduction method is obtaining an LOSM for an HOSS with minimum error bounds and retaining the significant characteristics of the HOSS. Additionally, the performance of the model order reduction method is evaluated by employing diverse test case studies for SISO and MIMO systems. The frequency and step responses show the transient and steady-state behaviour of HOSS and LOSM. The results obtained using the proposed method are better than those obtained using different model order reduction methods of the published literature pertaining to stability checks by employing step and frequency responses. Performance indices results indicate that the proposed method for LOSM is effective, efficient, reliable and feasible. Hence, it can be concluded that the proposed approximation method is stable and superior to the other alternative methods existing in the literature. Additionally, the proposed I-ADE algorithm is robust and reliable, which is statistically confirmed from the simulation results for optimizing 23 benchmark functions. The proposed I-ADE algorithm is so adaptive that it can be applied to a wide range of optimization problems with the least intervention. In the future, one can extend the proposed scheme for simplifying discrete, fractional-order SISO and MIMO systems.

Data availability

Data can be available on request to the corresponding author.

References

Abdollahzadeh B et al (2021) Artificial gorilla troops optimizer: a new nature-inspired metaheuristic algorithm for global optimization problems. Int J Intell Syst 36:5887–5958

Akhmedova S, Stanovov V, Semenkin E (2018) LSHADE Algorithm with a Rank-based Selective Pressure Strategy for the Circular Antenna Array Design Problem. In ICINCO (1). 2018

Ashoor N, Singh V (1982) A note on low-order modeling. IEEE Trans Autom Control 27:1124–1126

Awad NH et al. (2016) An ensemble sinusoidal parameter adaptation incorporated with L-SHADE for solving CEC2014 benchmark problems. In 2016 IEEE congress on evolutionary computation (CEC). IEEE

Awad NH, Ali MZ, Suganthan PN (2017) Ensemble sinusoidal differential covariance matrix adaptation with Euclidean neighborhood for solving CEC2017 benchmark problems. in 2017 IEEE Congress on Evolutionary Computation (CEC). IEEE

Bilal, Pant M, Zaheer H, Garcia-Hernandez L, Abraham A (2020) Differential evolution: a review of more than two decades of research. Eng Appl Artif Intell 90:103479

Bistritz Y, Shaked U (1984) Minimal Pade model reduction for multivariable systems. J Dyn Syst Meas Control. https://doi.org/10.1115/1.3140688

Corne D et al (1999) New ideas in optimization. McGraw-Hill Ltd., UK

Das S, Mullick SS, Suganthan PN (2016) Recent advances in differential evolution–an updated survey. Swarm Evol Comput 27:1–30

Desai S, Prasad R (2013a) A new approach to order reduction using stability equation and big bang big crunch optimization. Syst Sci Control Eng an Open Access J 1:20–27

Desai S, Prasad R (2013b) A novel order diminution of LTI systems using Big Bang Big Crunch optimization and Routh Approximation. Appl Math Model 37:8016–8028

El-Kenawy E-SM et al (2022) Novel meta-heuristic algorithm for feature selection, unconstrained functions and engineering problems. IEEE Access 10:40536–40555

Fortuna L, Nunnari G, Gallo A (2012) Model order reduction techniques with applications in electrical engineering. Springer Science and Business Media

Ganji V et al (2017) A novel model order reduction technique for linear continuous-time systems using PSO-DV algorithm. J Control Autom Electr Syst 28:68–77

Goldberg DE (1989) Genetic algorithms in search optimization and machine learning. Addison-Wesley Reading. https://doi.org/10.1007/s10589-009-9261-6

Hutton M, Friedland B (1975) Routh approximations for reducing order of linear, time-invariant systems. IEEE Trans Autom Control 20:329–337

Hwang C (1984) Mixed method of Routh and ISE criterion approaches for reduced-order modeling of continuous-time systems. J Dyn Syst Meas Control. https://doi.org/10.1115/1.3140697

Kennedy J, Eberhart R (1995) Particle swarm optimization. In IEEE International Conference on Neural Networks, vol. 4, IEEE, pp. 1942–1948. https://doi.org/10.1109/ICNN.1995.488968

Kenneth SR, Storn RM (1997) Differential evolution-a simple and efficient heuristic for global optimization over continuous spaces. J Global Optim 11(4):341–359

Lucas TN (1993) Optimal model reduction by multipoint Padé approximation. J Franklin Inst 330:79–93

Mirjalili S (2015) Moth-flame optimization algorithm: a novel nature-inspired heuristic paradigm. Knowl-Based Syst 89:228–249

Mirjalili S (2016a) SCA: a sine cosine algorithm for solving optimization problems. Knowl-Based Syst 96:120–133

Mirjalili S (2016b) Dragonfly algorithm: a new meta-heuristic optimization technique for solving single-objective, discrete, and multi-objective problems. Neural Comput Appl 27:1053–1073

Mirjalili S et al (2014) Grey wolf optimizer. Adv Eng Softw 69:46–61

Mukherjee S, Mittal R (2005) Model order reduction using response-matching technique. J Franklin Inst 342:503–519

Narwal A, Prasad BR (2016) A novel order reduction approach for LTI systems using cuckoo search optimization and stability equation. IETE J Res 62:154–163

Padhy AP (2022) Model order approximation of linear time invariant system using dragonfly algorithm. J Algebr Stat 13:2890–2897

Padhy AP et al (2021) Model order reduction of continuous time multi-input multi-output system using sine cosine algorithm. Congress Intell Syst Proceed CIS 2(2022):503–513

Padhy AP, Singh V, Singh VP (2023) Stable approximation of S and MIMO linear dynamic systems. Sādhanā. https://doi.org/10.1007/s12046-023-02151-x

Panigrahi S, Behera H (2020) Time series forecasting using differential evolution-based ANN modelling scheme. Arab J Sci Eng 45:11129–11146

Parmar G, Prasad R, Mukherjee S (2007a) Order reduction of linear dynamic systems using stability equation method and GA. Int J Comput Inform Syst Sci Eng 1:26–32

Parmar G, Mukherjee S, Prasad R (2007b) System reduction using factor division algorithm and eigen spectrum analysis. Appl Math Model 31:2542–2552

Philip B, Pal J (2010) “An evolutionary computation based approach for reduced order modelling of linear systems,” in 2010 IEEE International Conference on Computational Intelligence and Computing Research, 2010, pp. 1–8

Price K, Storn RM, Lampinen JA (2006) Differential evolution: a practical approach to global optimization. Springer Science and Business Media

Schilders WH et al (2008) Model order reduction: theory, research aspects and applications, vol 13. Springer

Shamash Y (1974) Stable reduced-order models using Padé-type approximations. IEEE Trans Autom Control 19:615–616

Sikander A, Prasad R (2015a) Soft computing approach for model order reduction of linear time invariant systems. Circuits Syst Signal Process 34:3471–3487

Sikander A, Prasad R (2015b) Linear time-invariant system reduction using a mixed methods approach. Appl Math Model 39:4848–4858

Smamash Y (1981) Truncation method of reduction: a viable alternative. Electron Lett 17:97–99

Vishwakarma C, Prasad R (2008) Clustering method for reducing order of linear system using Pade approximation. IETE J Res 54:326–330

Vishwakarma C, Prasad R (2009) MIMO system reduction using modified pole clustering and genetic algorithm. Model Simul Eng 2009:1–5

Yang X-S, Slowik A (2020) Firefly algorithm. Swarm intelligence algorithms. CRC Press, pp 163–174

Funding

The authors have not disclosed any funding.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare no conflict of interest in the manuscript.

Ethical approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Padhy, A.P., Panigrahi, S., Singh, V.P. et al. Model order reduction for SISO and MIMO system using improved adaptive differential evolution algorithm. Soft Comput (2024). https://doi.org/10.1007/s00500-023-09489-8

Accepted:

Published:

DOI: https://doi.org/10.1007/s00500-023-09489-8