Abstract

Recently, the latticized linear programming problems subjected to max–min and max-product fuzzy relational inequalities (FRI) have been studied extensively and have been utilized in many interesting applications. In this paper, we introduce a new generalization of the latticized optimization problems whose objective is a non-linear function defined by an arbitrary continuous s-norm (t-conorm), and whose constraints are formed as an FRI defined by an arbitrary continuous t-norm. Firstly, the feasible region of the problem is completely characterized and two necessary and sufficient conditions are proposed to determine the feasibility of the problem. Also, a general method is proposed for finding the exact optimal solutions of the non-linear model. Then, to accelerate the general method, five simplification techniques are provided that reduce the work of computing an optimal solution. Additionally, a polynomial-time method is presented for solving general latticized linear optimization problems subjected to the continuous FRI. Moreover, an application of the proposed non-linear model is described where the objective function and the FRI are defined by the well-known Lukasiewicz s-norm and product t-norm, respectively. Finally, a numerical example is provided to illustrate the proposed algorithm.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Resolution of fuzzy relational equations (FRE) with max–min composition was first studied by Sanchez (1993). Besides, Sanchez developed the application of FRE in medical diagnosis in biotechnology. Nowadays, it is well known that many of the issues associated with body knowledge can be treated as FRE problems (Pedrycz 2013). The fundamental result for FRE with max-product composition goes back to Pedrycz (1985), and was further studied in Bourke and Fisher (1998); Loetamonphong and Fang (1999). Since then, many researchers studied different FREs defined by various types of t-norm operators (Ghodousian and Babalhavaeji 2018; Ghodousian et al. 2018; Wu et al. 2008). Moreover, some other researchers have worked on introducing a novel concept, and at times improving some of the existing theoretical aspects and applications of fuzzy relational inequalities (FRI) (Ghodousian 2019; Ghodousian and Parvari 2017; Ghodousian and Khorram 2012; Guo and Xia 2006; Guo et al. 2013; Li and Yang 2012; Yang et al. 2016a).

Generally, there are three important difficulties related to the optimization problems subject to FRE or FRI regions. First, to completely determine FREs and FRIs, we must initially find all the minimal solutions, and, the finding of all the minimal solutions is an NP-hard problem (Markovskii 2005). Second, a feasible region formed as FRE or FRI is often a non-convex set determined by one maximum solution and a finite number of minimal solutions (Ghodousian and Babalhavaeji 2018; Ghodousian et al. 2018; Ghodousian 2019; Ghodousian and Khorram 2012). Third, FREs and FRIs as feasible regions lead to optimization problems with highly non-linear constraints. Due to the above mentioned difficulties, the optimization problem subject to FRE and FRI is one of the most interesting and on-going research topics among similar problems (Ghodousian and Babalhavaeji 2018; Ghodousian 2019; Ghodousian and Parvari 2017; Ghodousian and Khorram 2012; Guo and Xia 2006; Guo et al. 2013; Yang et al. 2016a; Liu et al. 2016).

The linear optimization problem subjected to various versions of FRI is widely available in the literature (Ghodousian 2019; Ghodousian and Khorram 2012; Guo and Xia 2006; Guo et al. 2013; Li and Yang 2012; Yang et al. 2016a; Matusiewicz and Drewniak 2013; Yang 2014; Yang et al. 2016b, 2015a). Guo et al. (2013) studied the linear programming problem with max–min FRI constraint. Li and Yang (2012) introduced the so-called addition-min FRI to characterize a peer-to-peer file-sharing system. Based on the concept of the pseudo-minimal index, Yang (2014) developed a pseudo-minimal-index algorithm to minimize a linear objective function with addition-min FRI constraint, defined as \(a_{i1}\wedge {x_1}+a_{i2}\wedge {x_2}+ \cdots +a_{in}\wedge {x_n} \ge b_i\), for \(i=1,...,m\), where \(a_{ij}\) is \((i,j)\textrm{th}\) entry of the coefficient matrix A, \({x_j}\) is the \(j\textrm{th}\) component of the unknown vector x, \({b_i}\) is the \(i\textrm{th}\) component of the right hand side vector b and \(a\wedge b = \min \{a,b\}\) (Yang 2014). To improve the results presented in Yang (2014), Yang et al. (2016b) proposed the min-max programming subject to addition-min fuzzy relational inequalities. They also studied the multi-level linear programming problem with the addition-min FRI constraint (Yang et al. 2015a). Drewniak and Matusiewicz were interested in max-* fuzzy relation equations and inequalities with the increasing operation * continuous on the second argument (Matusiewicz and Drewniak 2013). Ghodousian and Khorram (2012) studied the linear optimization with constraints formed by \(X(A,D,b^1,b^2)=\{x \in [0,1]^n: A\varphi x \le b^1, D\varphi x \ge b^2\}\) where \(\varphi \) represents an operator with convex solutions (e.g., non-decreasing or non-increasing operator), A and D are fuzzy matrices, \(b^1\) and \(b^2\) are fuzzy vectors and x is unknown vector. They showed that the feasible region can be expressed as the union of a finite number of convex sets.

Recently, many interesting forms of generalizations of the linear programming applied to the system of fuzzy relations have been introduced and developed based on composite operations used in FRE or FRI, fuzzy relations used in the definition of the constraints, some developments on the objective function of the problems and other ideas (Ghodousian and Babalhavaeji 2018; Ghodousian et al. 2018; Wu et al. 2008; Liu et al. 2016; Dempe and Ruziyeva 2012; Dubey et al. 2012; Freson et al. 2013; Li and Liu 2014). For example, Wu et al. represented an efficient method to optimize a linear fractional programming problem under FRE with max-Archimedean t-norm composition (Wu et al. 2008). Dempe and Ruziyeva generalized the fuzzy linear optimization problem by considering fuzzy coefficients (Dempe and Ruziyeva 2012). In addition, Dubey et al. studied linear programming problems involving interval uncertainty modeled using an intuitionistic fuzzy set (Dubey et al. 2012). The linear optimization of bipolar FRE was also the focus of the study carried out by some researchers where FRE was defined with max–min composition (Freson et al. 2013) and max-Lukasiewicz composition (Ghodousian and Babalhavaeji 2018; Liu et al. 2016; Li and Liu 2014; Cornejo et al. 2022). For example, in Li and Liu (2014), the authors introduced a linear optimization problem subjected to a system of bipolar FRE defined as \(X(A^+,A^-,b)=\{x\in [0,1]^m: x \circ A^+\vee \tilde{x} \circ A^- = b\}\), where \(A^+\) and \(A^-\) are fuzzy matrices, and \(\tilde{x}_i = 1-x_i\), for each component of \(\tilde{x} = (\tilde{x}_i)_{1\times m}\) and the notations "\(\vee \)" and "\(\circ \)" denote max operation and the max-Lukasiewicz composition, respectively. They translated the original problem into a \(0-1\) integer linear problem which is then solved using well-developed techniques. In a separate, the foregoing bipolar linear optimization problem was solved by an analytical method based on the resolution and some structural properties of the feasible region (using a necessary condition for characterizing an optimal solution and a simplification process for reducing the problem) (Liu et al. 2016).

The optimization problems with general nonlinear objective functions and FRE or FRI constraints were studied in Ghodousian and Babalhavaeji (2018), Ghodousian et al. (2018), Ghodousian and Parvari (2017), Lu and Fang (2001). In general, some heuristic algorithms were applied to deal with this kind of problem. However, some fuzzy relation nonlinear optimization problems such as geometric programming problems (Yang et al. 2015b) could be solved by some specific method. Yang et al. (2015b) studied the single-variable term semi-latticized geometric programming subject to max-product fuzzy relation equations. The proposed problem was devised from the peer-to-peer network system and the target was to minimize the biggest dissatisfaction degrees of the terminals in such system.

Latticized optimization problem was introduced in Wang et al. (1991), where the conservative path method was proposed to find out all the minimal solutions of the max–min FRI. Subsequently, the optimal solutions were selected from the minimal solutions by pairwise comparison. The latticized linear programming problem subjected to max–min FRI was also investigated in the works (Li and Fang 2009; Li and Wang 2013). Li and Fang (2009) obtained an optimal solution to the latticized linear programming problem. Besides they studied some variants of the problem. In Li and Wang (2013), based on the concept of semi-tensor product, a matrix approach was applied to handle the latticized linear programming problem subjected to max–min FRI. Also, Yang et al. (2016a) introduced the latticized programming problem defined by minimizing objective function \(f(x) = x_1 \vee x_2 \vee \cdots \vee x_n\) subject to the feasible region \(X(A,b)=\{x\in [0,1]^n: A\circ x \ge b\}\), where "\(\circ \)" denotes fuzzy max-product composition. They, also, presented a solution matrix approach for solving the problem.

In this paper, we investigate a non-linear generalization of the latticized linear programming problems that are formulated in the problem below:

where \(\varphi : [0,1]^2 \rightarrow [0,1]\) is an arbitrary continuous s-norm. \(I=\{1,2,...,m\}\) and \(J=\{1,2,...,n\}\). \(A=(a_{ij})_{m\times n}\) is a fuzzy matrix such that \(0 \le a_{ij} \le 1\) (\(\forall i \in I\) and \(\forall j\in J\)) and \(b=[b_1,b_2,...,b_m] \in [0,1]^m\) is a fuzzy vector. Also, \(A\psi x \ge b\) denotes fuzzy max-\(\psi \) composition where \(\psi :[0,1]^2 \rightarrow [0,1]\) is an arbitrary continuous t-norm. So, if \(a_i\) (\(i\in I)\) denotes the \(i\textrm{th}\) row of matrix A, then the \(i\textrm{th}\) constraint of the problem (1) can be expressed as \(a_i\psi x\ge b_i\), where \(a_i\psi x=\max \nolimits ^n_{j=1}\{\psi (a_{ij},x_j)\}\).

Especially, if \(\varphi \) is considered as the maximum s-norm, then the objective function of the problem (1) is transformed into \(\max \{ x_1,x_2,...,x_n\}\). In this case, if \(A\psi x\ge b\) is defined by the max-product composition, then the problem (1) is reduced to the model studied in Yang et al. (2016a). Also, in Sect. 4, an application of the problem (1) is described where \(\varphi \) and \(\psi \) are defined by the Lukasiewicz s-norm and the product t-norm, respectively.

The rest of the paper is organized as follows. Section 2 discusses basic results on the feasible solutions set of problem (1) where \(\varphi \) represents an arbitrary continuous t-norm. In Sect. 3, an algorithm is presented to find the exact optimal solutions to the problem. The general latticized linear programming problems are described in Sect. 4, where a polynomial-time method is presented without finding all the minimal solutions of the feasible region. Section 5 introduces five techniques to reduce the size of the main problem during the process of finding an optimal solution. Based on the techniques, a procedure for finding an optimal solution is summarized as well. Finally, Sect. 6 presents an illustrative example that demonstrates the effectiveness of the proposed method. Moreover, we show the application background of a special case of the non-linear latticized optimization problem in which the objective function is defined by the Lukasiewicz s-norm and the constraints are formed as an FRI defined by the max-product composition.

2 Feasible solution set of the problem (1)

In Ghodousian and Khorram (2012), the authors discussed some properties of FRIs defined by operators with (closed) convex solutions. In this section, some relevant results are studied about the solutions to a system of max-\(\psi \)-continuous FRIs introduced in the problem (1). For the sake of simplicity, let S(A, b) denote the feasible region of the problem (1), that is, \(S(A,b)=\{x\in [0,1]^n: A\psi x\ge b\}\). Also, for each constraint \(a_i \psi x\ge b_i\) (\(i\in I\)), let \(S_i(A,b)=\{x\in [0,1]^n: a_i\psi x\ge b_i\}\). So, \(S_i(A,b)\) denotes the feasible solution set of the \(i\textrm{th}\) constraint, and therefore, we have \(S(A,b)=\bigcap \nolimits _{i\in I}S_i(A,b)\).

Definition 2.1

For each \(i\in I\) and each \(j\in J\), let \(\Psi _{ij} = \{x_j\in [0,1]: \psi (a_{ij},x_j)\ge b_i\}\). Moreover, if \(\Psi _{ij}\ne \emptyset \), we define \(\underline{x}(a_{ij},b_i)=\inf \Psi _{ij}\) and \(\overline{x}(a_{ij},b_i)=\sup \Psi _{ij}\).

Remark 2.1

From the least-upper-bound property of \(\mathbb {R}\), it is clear that \(\underline{x}(a_{ij},b_i)\), and \(\overline{x}(a_{ij},b_i)\) exist, if \(\Psi _{ij}\ne \emptyset \). Moreover, since \(\psi \) is a t-norm, its monotonicity property implies that \(\Psi _{ij}\) is indeed a connected subset of [0, 1]. Additionally, by the continuity of \(\psi \), we must have \(\Psi _{ij}=\left[ \underline{x}(a_{ij},b_i),\overline{x}(a_{ij},b_i)\right] \).

Lemma 2.1

For each \(i\in I\) and \(j\in J\), \(\Psi _{ij}\ne \emptyset \) iff \(a_{ij}\ge b_i\). Moreover, if \(\Psi _{ij}\ne \emptyset \), then \(\Psi _{ij}=\left[ \underline{x}(a_{ij},b_i),1\right] \).

Proof

Let \(\Psi _{ij}\ne \emptyset \) and \(x^\prime \in \Psi _{ij}\), i.e., \(\psi (a_{ij},x^\prime )\ge b_i\). From the identity law and monotonicity of \(\psi \), we have \(b_i\le \psi (a_{ij},x^\prime )\le \psi (a_{ij},1)=a_{ij}\) which means \(b_i\le a_{ij}\) and \(1\in \Psi _{ij}\). Conversely, if \(a_{ij}\ge b_i\), then, \(b_i\le a_{ij}=\psi (a_{ij},1)\) that implies \(1\in \Psi _{ij}\), i.e., \(\Psi _{ij}\ne \emptyset \). So, \(\Psi _{ij}\ne \emptyset \) iff \(a_{ij}\ge b_i\). By the above argument, we can also conclude that \(\Psi _{ij}\ne \emptyset \) iff \(1\in \Psi _{ij}\). This fact together with Remark 2.1 result in \(\Psi _{ij}=\left[ \underline{x}(a_{ij},b_i),1\right] \), if \(\Psi _{ij}\ne \emptyset \). \(\square \)

Lemma 2.1 results in the following important corollaries that provide two equivalent necessary and sufficient conditions for the feasibility of set \(S_i(A,b)\), \((i\in I)\).

Corollary 2.1

Let \(i\in I\). Then, \(S_i(A,b)\ne \emptyset \) iff there exists at least one \(j\in J\) such that \(\Psi _{ij}\ne \emptyset \).

Proof

Let \(x^\prime \in S_i(A,b)\). By contradiction, suppose that \(\Psi _{ij}=\emptyset \) for each \(j\in J\). So, from Lemma 2.1, we have \(a_{ij}<b_i\), \(\forall j\in J\). Hence,

that contradicts \(x^\prime \in S_i(A,b)\). Conversely, suppose that \(\Psi _{ij_0}\ne \emptyset \) (equivalently, \(a_{ij_0}\ge b_i\)) for some \(j_0\in J\). Also, let \({\textbf {1}}\) be an n-dimensional vector with each component equal to one. So, we have \(a_i\psi {\textbf {1}}= \max \nolimits ^n_{j=1}\{\psi (a_{ij},1)\}\ge \psi (a_{ij_0},1)=a_{ij_0}\ge b_i\), that means, \({\textbf {1}}\in S_i(A,b)\). \(\square \)

Corollary 2.2

Let \(i\in I\). Then, \(S_i(A,b)\ne \emptyset \) iff \({\textbf {1}}\in S_i(A,b)\), where \({\textbf {1}}\) is an n-dimensional vector with each component equal to one.

Proof

If \({\textbf {1}}\in S_i(A,b)\), then obviously \(S_i(A,b)\ne \emptyset \). Conversely, suppose that \(S_i(A,b)\ne \emptyset \). Corollary 2.1 implies \(\Psi _{ij_0}\ne \emptyset \) (equivalently, \(a_{ij_0}\ge b_i\)) for some \(j_0\in J\). So, similar to the proof of Corollary 2.1, we have \(a_i\psi {\textbf {1}} \ge \psi (a_{ij_0},1)=a_{ij_0}\ge b_i\), that means, \({\textbf {1}}\in S_i(A,b)\). \(\square \)

Remark 2.2

Since \(S(A,b)=\bigcap \nolimits _{i\in I}S_i (A,b)\), Corollary 2.2 implies that \(S(A,b)\ne \emptyset \) iff \({\textbf {1}}\in S(A,b)\). Therefore, if problem (1) is feasible, then vector \({\textbf {1}}\) is the unique maximum solution of the feasible region.

Corollary 2.3 below provides a necessary and sufficient condition for a vector \(x\in [0,1]^n\) to be a feasible solution to the constraint \(a_i\psi x\ge b_i\) (\(i\in I\)).

Corollary 2.3

Suppose that \(S_i(A,b)\ne \emptyset \) for some \(i\in I\) and \(x^\prime \in [0,1]^n\). Then, \(x^\prime \in S_i(A,b)\) iff there exists at least one \(j_0\in J\) such that \(x_{j_0}^\prime \in \Psi _{ij_0}\).

Proof

Let \(x^\prime \in S_i(A,b)\). So, \(a_i\psi x^\prime = \max \nolimits ^n_{j=1} \{\psi (a_{ij},x^\prime _j)\}\ge b_i\). Hence, there exist some \(j_0\in J\) such that \(\psi (a_{ij_0},x_{j_0}^\prime )\ge b_i\), i.e., \(x_{j_0}^\prime \in \Psi _{ij_0}\). Conversely, suppose that \(x_{j_0}^\prime \in \Psi _{ij_0}\) for some \(j_0\in J\). Thus, \(\psi (a_{ij_0},x_{j_0}^\prime )\ge b_i\), and therefore, \(a_i\psi x^\prime \ge \psi (a_{ij_0},x_{j_0}^\prime )\ge b_i\) which implies \(x^\prime \in S_i(A,b)\). \(\square \)

Remark 2.3

Let \(x\in S(A,b)\). So, from the equality \(S(A,b)=\bigcap \nolimits _{i\in I}S_i(A,b)\), we have \(x\in S_i(A,b)\), \(\forall i\in I\). Thus, Corollary 2.1 implies that for each \(i\in I\) there exists at least one \(j\in J\) such that \(\Psi _{ij}\ne \emptyset \). Conversely, suppose that for each \(i\in I\) there exists at least one \(j\in J\) such that \(\Psi _{ij}\ne \emptyset \). So, Corollary 2.1 implies that \(S_i(A,b)\ne \emptyset \), \(\forall i\in I\), and then, by Corollary 2.2 we have \({\textbf {1}}\in S_i(A,b)\), \(\forall i\in I\). Hence, \({\textbf {1}}\in S(A,b)\) that means \(S(A,b)\ne \emptyset \).

Definition 2.2

Let \(i\in I\) and \(S_i(A,b)\ne \emptyset \). So, we define \(J(i)=\{j\in J: \Psi _{ij}\ne \emptyset \}\). Also, for each \(j\in J(i)\), define \(\underline{x}(i,j)\in [0,1]^n\) such that \(\underline{x}(i,j)_k = \underline{x}(a_{ij},b_i)\) for \(k=j\), and \(\underline{x}(i,j)_k=0\) otherwise.

Lemma 2.2

Suppose that \(S_i(A,b)\ne \emptyset \) and \(j_0\in J(i)\). Then, \(\underline{x}(i,j_0)\) is a minimal solution of \(S_i(A,b)\).

Proof

From Definition 2.2 and Corollary 2.3, \(\underline{x}(i,j_0)\in S_i(A,b)\). Suppose that \(x^\prime \in S_i(A,b)\), \(x^\prime \le \underline{x}(i,j_0)\) and \(x^\prime \ne \underline{x}(i,j_0)\). So, \(x^\prime _j=0\), \(\forall j\in J-\{j_0\}\), and \(x^\prime _{j_0} < \underline{x}(a_{ij_0},b_i)\). However, in this case we will have \(a_i\psi x^\prime =\max \nolimits ^n_{j=1}\{\psi (a_{ij},x^\prime _j)\}= \psi (a_{ij_0},x^\prime _{j_0})<b_i\) (see Lemma 2.1) that contradicts \(x^\prime \in S_i(A,b)\). \(\square \)

Corollary 2.4

Let \(x^\prime \in S_i(A,b)\). There exists some \(j_0\in J(i)\) such that \(\underline{x}(i,j_0)\le x^\prime \).

Proof

From Corollary 2.3, \(x^\prime _{j_0}\in \Psi _{ij_0}\) for some \(j_0\in J(i)\). Hence, \(\underline{x}(a_{ij_0},b_i)\le x^\prime _{j_0}\le 1\). Now, the result follows from the definition of \(\underline{x}(i,j_0)\) (Definition 2.2). \(\square \)

Theorem 2.1

Suppose that \(i\in I\) and \(S_i(A,b)\ne \emptyset \). Then, \(S_i(A,b)=\bigcup \nolimits _{j\in J(i)}\left[ \underline{x}(i,j),{\textbf {1}}\right] \).

Proof

Let \(x^\prime \in S_i(A,b)\). From Corollary 2.4, \(\underline{x}(i,j_0)\le x^\prime \) for some \(j_0\in J(i)\). Therefore, \(x^\prime \in \left[ \underline{x}(i,j_0),{\textbf {1}}\right] \). Conversely, let \(x^\prime \in \left[ \underline{x}(i,j_0),{\textbf {1}}\right] \) for some \(j_0\in J(i)\). Thus, \(x^\prime _{j_0}\in \Psi _{ij_0}\) that implies \(x^\prime \in S_i(A,b)\) from Corollary 2.3. \(\square \)

Definition 2.3

Suppose that \(S(A,b)\ne \emptyset \). Let \(e:I\rightarrow \bigcup \nolimits _{i\in I}J(i)\) be a function from I to \(\bigcup \nolimits _{i\in I}J(i)\) such that \(e(i)\in J(i)\), \(\forall i\in I\), and let E denote the set of all the functions e. Sometimes, for the sake of convenience, each \(e\in E\) is presented as an m-dimensional vector \(e=\left[ j_1,j_2,...,j_m\right] \) in which \(j_k=e(k)\), \(k=1,2,...,m\).

Definition 2.4

Suppose that \(S(A,b)\ne \emptyset \) and \(e\in E\). We define \(\underline{x}(e)\in [0,1]^n\) whose components are defined as \(\underline{x}(e)_j=\max \nolimits _{i\in I}\{\underline{x}(i,e(i))_j\}\), \(\forall j\in J\).

Remark 2.4

Let \(I(j)=\{i\in I:\Psi _{ij}\ne \emptyset \}\), \(\forall j\in J\). Also, for each \(e\in E\) we define \(I_j(e)=\{i\in I(j):e(i)=j\}\). So, according to Definitions 2.3 and 2.4, each solution \(\underline{x}(e)\) can be equivalently obtained as follows:

Theorem 2.2

Suppose that \(S(A,b)\ne \emptyset \). Then, \(S(A,b)=\bigcup \nolimits _{e\in E}[\underline{x}(e),{\textbf {1}}]\).

Proof

From Theorem 2.1 and the equality \(S(A,b)=\bigcap \nolimits _{i\in I}S_i(A,b)\), we have \(S(A,b)=\bigcap \nolimits _{i\in I}\bigcup \nolimits _{j\in J(i)}[\underline{x}(i,j),{\textbf {1}}]\), or equivalently \(S(A,b)=\bigcup \nolimits _{e\in E}\bigcap \nolimits _{i\in I}[\underline{x}(i,e(i)),{\textbf {1}}]\). Therefore, \(S(A,b)=\bigcup \nolimits _{e\in E}[\max \nolimits _{i\in I}\{\underline{x}(i,e(i))\},{\textbf {1}}]\). Now, the result follows from the definition of \(\underline{x}(e)\). \(\square \)

Based on Theorem 2.2, the feasible region of the problem (1) is completely determined by the union of a finite number of closed convex sets \([\underline{x}(e),{\textbf {1}}]\), \((e\in E)\).

3 A general method for the resolution of the problem (1)

In contrast to vectors \(\underline{x}(i,j) \, (j\in J(i))\), that are the minimal solutions of \(S_i(A,b)\) (Theorem 2.1), all the vectors \(\underline{x}(e) \, (e\in E)\) may not be necessarily the minimal solutions of S(A, b) (Theorem 2.2). In other words, there may exist \(e_1,e_2\in E\) such that \(\underline{x}(e_1)\le \underline{x}(e_2)\). However, the following lemma shows that each minimal solution of S(A, b) can be written in the form of \(\underline{x}(e)\) for some \(e\in E\).

Lemma 3.1

Let \(\underline{S}(A,b)\) denote the set of all the minimal solutions of S(A, b) and \(S_E(A,b)=\{\underline{x}(e):e\in E\}\). Then, \(\underline{S}(A,b)\subseteq S_E(A,b)\).

Proof

Suppose that \(\underline{x}\in \underline{S}(A,b)\). From Theorem 2.2, there exists some \(e\in E\) such that \(\underline{x}\in [\underline{x}(e),{\textbf {1}}]\). Since \(\underline{x}(e)\le \underline{x}\), and \(\underline{x}\) is a minimal solution, then we must have \(\underline{x}=\underline{x}(e)\), that is, \(\underline{x}\in S_E(A,b)\). \(\square \)

Based on Lemma 3.1, Theorem 2.2 can be strengthened as follows.

Corollary 3.1

Suppose that \(S(A,b)\ne \emptyset \). Then, \(S(A,b)=\bigcup \nolimits _{\underline{x}\in \underline{S}(A,b)}[\underline{x},{\textbf {1}}]\).

Proof

According to Lemma 3.1, for each \(\underline{x}(e)\in S_E(A,b)\) there exists some minimal solution \(\underline{x}\in \underline{S}(A,b)\) such that \(\underline{x}\le \underline{x}(e)\), and therefore \([\underline{x}(e),{\textbf {1}}]\subseteq [\underline{x},{\textbf {1}}]\). Now, the result follows from Theorem 2.2. \(\square \)

It is found in Theorem 2.2 that solving \(\max -\psi \) fuzzy relational inequalities is equivalent to finding out all the minimal solutions of the feasible region. Theorem 3.1 below shows that the minimal solutions of S(A, b) also play a significant role in solving the problem (1).

Theorem 3.1

If \(S(A,b)\ne \emptyset \), then there exists a solution \(\underline{x}^*\in \underline{S}(A,b)\) such that \(\underline{x}^*\) is an optimal solution of the problem (1).

Proof

Suppose that \(f(x)=\varphi (...(\varphi (\varphi (x_1,x_2),x_3)...,x_n)\), where \(\varphi \) is an arbitrary continuous s-norm. Also, suppose that minimal solution \(\underline{x}^*\) minimizes f(x) among all minimal solutions, i.e., \(f(\underline{x}^*)\le f(\underline{x})\), \(\forall \underline{x}\in \underline{S}(A,b)\). From Corollary 3.1, for an arbitrary feasible solution \(x^\prime \in S(A,b)\), there exists some \(\underline{x}^\prime \in \underline{S}(A,b)\) such that \(x^\prime \in [\underline{x}^\prime ,{\textbf {1}}]\) (i.e., \(\underline{x}^\prime \le x^\prime \)). So, the monotonicity law of s-norms implies that \(\varphi (\underline{x}_1^\prime ,\underline{x}_2^\prime )\le \varphi (x_1^\prime ,x_2^\prime )\). By applying the same argument, \(\varphi (\varphi (\underline{x}_1^\prime , \underline{x}_2^\prime ), \underline{x}_3^\prime )\le \varphi (\varphi (x_1^\prime ,x_2^\prime ), x_3^\prime )\), and if we continue in this way, then (in \(n-1\) steps) we obtain \(f(\underline{x}^\prime )\le f(x^\prime )\). But, since \(f(\underline{x}^*)\le f(\underline{x}^\prime )\), we have \(f(\underline{x}^*)\le f(x^\prime )\). Since \(x^\prime \) was an arbitrary feasible solution, the result follows. \(\square \)

Now, we summarize the preceding discussion as an algorithm.

Algorithm 3.1 (General method)

Given problem (1):

-

1.

Compute \(\Psi _{ij}\) for each \(i\in I\) and \(j\in J\) (Lemma 2.1).

-

2.

If there exists some \(i\in I\) such that \(\Psi _{ij}=\emptyset \), \(\forall j\in J\), then stop; S(A, b) is empty (Remark 2.3).

-

3.

Compute J(i), \(\forall i\in I\) (Definition 2.2).

-

4.

Compute solutions \(\underline{x}(e)\in S_E(A,b)\), \(\forall e\in E\) (Definitions 2.3 and 2.4).

-

5.

Find minimal solutions by pairwise comparison between vectors \(\underline{x}(e)\) (Lemma 3.1).

-

6.

Select the optimal solution \(\underline{x}^*\) from the set \(\underline{S}(A,b)\) (Lemma 3.1 and Theorem 3.1).

If the constraints of problem (1) are replaced with \(A\psi x\le b\), it can be easily shown that the feasible region of the problem will be in the form of \([{\textbf {0}},{\textbf {Y}}]\), where \({\textbf {0}}\) is the zero vector and \({\textbf {Y}}\) is the unique maximum solution. Moreover, the constraint \(A\psi x=b\) can be equivalently expressed as \(A\psi x\le b\) and \(A\psi x\ge b\), and therefore the feasible region is then attained by \(\bigcup \nolimits _{e\in E}[\underline{x}(e),{\textbf {1}}]\cap [{\textbf {0}},{\textbf {Y}}]=\bigcup \nolimits _{e\in E}[\underline{x}(e),{\textbf {Y}}]\) (see Theorem 2.2). However, since according to Theorem 3.1, the optimal solution will be one of the solutions of \(\underline{S}(A,b)\), Algorithm 3.1 can also be used to solve problems with the constraints \(A\psi x=b\).

In the general method, Step 3 is a major disadvantage of the algorithm. As mentioned before, \(S_E(A,b)\) often contains many solutions \(\underline{x}(e)\) that are not minimal. Moreover, the cardinality of \(S_E(A,b)\), denoted by \(|S_E(A,b)|\), grows exponentially with the size of the sets J(i), \(\forall i\in I\). More precisely, we have \(|S_E(A,b)|=\prod \nolimits _{i\in I}|J(i)|\), where |J(i)| denotes the cardinality of the set J(i). To accelerate the algorithm, we can initially remove some \(e\in E\) that generate non-minimal solutions \(\underline{x}(e)\). For this purpose, five simplification techniques will be described in Sect. 5. Furthermore, in some special cases, we can find a fast optimal solution to problem (1) by an efficient algorithm that is of polynomial complexity in the size of the problem. These special cases will be studied in the next section.

4 Special cases with fast optimal solutions

In this section, an algorithm is presented for solving some special cases of problem (1) without finding all the solutions \(\underline{x}(e)\in S_E(A,b)\). It is shown that the computational complexity of the algorithm is O(mn).

Definition 4.1

Define \(\underline{x}(i)=\min \nolimits _{j\in J(i)}\{\underline{x}(a_{ij},b_i)\}\) and \(\underline{J}(i)=\{j\in J(i):\underline{x}(a_{ij},b_i)=\underline{x}(i)\}\), \(\forall i\in I\). Also, similar to Definition 2.3, let \(\underline{E}= \{e\in E:e:I\rightarrow \bigcup \nolimits _{i\in I}\underline{J}(i)\}\).

By Definition 4.1, it is clear that \(\underline{J}(i)\subseteq J(i)\) and \(\underline{E}\subseteq E\). Also, for each \(e\in \underline{E}\) we have \(e(i)\in \underline{J}(i)\), \(\forall i\in I\).

Remark 4.1

Consider a fixed \(i\in I\). For each \(e\in E\) and \(e^\prime \in \underline{E}\), we have \(e(i)\in J(i)\) and \(e^\prime (i)\in \underline{J}(i)\). Therefore, from Definition 4.1, \(\underline{x}(a_{ie^\prime (i)},b_i)= \underline{x}(i)\le \underline{x}(a_{ie(i)},b_i)\), \(\forall i\in I\).

Theorem 4.1

Let \(e^\prime \in \underline{E}\) and \(e\in E\). Then, \(\max \nolimits _{j\in J}\{\underline{x}(e^\prime )_j\}\le \max \nolimits _{j\in J}\{\underline{x}(e)_j\}\).

Proof

From Remark 2.4, \(\max \nolimits _{j\in J}\{\underline{x}(e)_j\}=\max \nolimits _{j\in J}\max \nolimits _{i\in I}\{\underline{x}(a_{ij},b_i)\}\) that is equal to \(\max \nolimits _{i\in I}\{\underline{x}(a_{ie(i)},b_i)\}\). Also, from Remark 4.1, we have \(\max \nolimits _{i\in I}\{\underline{x}(a_{ie(i)},b_i)\}\ge \max \nolimits _{i\in I}\{\underline{x}(a_{ie^\prime (i)},b_i)\}\). Consequently, \(\max \nolimits _{j\in J}\{\underline{x}(e)_j\}\ge \max \nolimits _{i\in I}\{\underline{x} (a_{ie^\prime (i)},b_i)\}\) (*). But, \(\max \nolimits _{i\in I}\{\underline{x} (a_{ie^\prime (i)},b_i)\} = \max \nolimits _{j\in J}\max \nolimits _{i\in I_j(e^\prime )} \{\underline{x}(a_{ij},b_i)\}=\max \nolimits _{j\in J}\{\underline{x}(e^\prime )_j\}\) (**). Now, the result follows from (*) and (**). \(\square \)

Corollary 4.1

For each \(e_1,e_2\in \underline{E}\), \(\max \nolimits _{j\in J}\{\underline{x}(e_1)_j\} = \max \nolimits _{j\in J}\{\underline{x}(e_2)_j\}\).

Proof

Since, \(\underline{E}\subseteq E\), then we also have \(e_1,e_2\in E\). So, by considering \(e_1\in \underline{E}\) and \(e_2\in E\), Theorem 4.1 implies that \(\max \nolimits _{j\in J}\{\underline{x}(e_1)_j\} \le \max \nolimits _{j\in J}\{\underline{x}(e_2)_j\}\). By the same argument, \(\max \nolimits _{j\in J}\{\underline{x}(e_2)_j\} \le \max \nolimits _{j\in J}\{\underline{x}(e_1)_j\}\). Hence \(\max \nolimits _{j\in J}\{\underline{x}(e_1)_j\} = \max \nolimits _{j\in J}\{\underline{x}(e_2)_j\}\). \(\square \)

Theorem 4.2

Consider problem (1) where \(\psi \) is an arbitrary continuous t-norm and \(\varphi \) is the maximum s-norm. If \(S(A,b)\ne \emptyset \), then all the solutions \(\underline{x}(e)\) generated by \(e\in \underline{E}\) are the optimal solutions to the problem with the same objective function value.

Proof

By assuming that \(\varphi \) is the maximum s-norm, the objective function \(f(x)=\varphi (...(\varphi (\varphi (x_1,x_2),x_3)...,x_n)\) is reduced to \(f(x)=\max \nolimits _{j\in J}\{x_j\}\). From Theorem 3.1, for each optimal solution \(x^*\) we must have \(x^*\in \underline{S}(A,b)\). On the other hand, Lemma 3.1 implies that \(\underline{x}^*\in S_E(A,b)\). Now, the result follows from Theorem 4.1 and Corollary 4.1. \(\square \)

Theorem 4.2 proposes an efficient polynomial-time algorithm for solving the special cases of problem (1) where \(\varphi \) is the maximum s-norm. Algorithm 4.1 below shows the steps of this algorithm followed by the complete description of its complexity.

Algorithm 4.1 (Polynomial-time algorithm for \(\varphi =\textrm{maximum}\))

Given problem (1), where \(\varphi \) is the maximum s-norm:

-

1.

Compute \(\Psi _{ij}\) for each \(i\in I\) and \(j\in J\) (Lemma 2.1).

-

2.

If there exists some \(i\in I\) such that \(\Psi _{ij}=\emptyset \), \(\forall j\in J\), then stop; S(A, b) is empty (Remark 2.3).

-

3.

Compute \(\underline{J}(i)\), \(\forall i\in I\) (Definition 4.1).

-

4.

Select an arbitrary \(e\in \underline{E}\).

-

5.

Obtain the optimal solution \(x^*\) by computing \(\underline{x}(e)\) (Theorem 4.2).

In Step 1, computing \(\Psi _{ij}\) costs mn operations. In Step 2, checking the feasibility of the problem costs mn pairwise comparisons. In Step 3, computing the index sets costs 2mn operations, and finally each of Steps 4 and 5 costs mn operations. Therefore, it costs 6mn operations to carry out all the steps of the algorithm, that is, the computational complexity is obtained as O(mn).

5 Simplification techniques

In this section, five simplification techniques are presented to accelerate the resolution of the problem. Throughout this section, \(x^*\) denotes an optimal solution for problem (1) and f(x) denotes the objective function of problem (1); that is, \(f(x)=\varphi (...(\varphi (\varphi (x_1,x_2),x_3)...,x_n)\).

Lemma 5.1

Suppose that \(J(i_0)=\{j_0\}\) for some \(i_0\in I\) and \(j_0\in J\). Also, Suppose that \(\underline{x}(a_{i_0j_0},b_{i_0})\ge \underline{x}(a_{ij_0},b_i)\), \(\forall i\in I(j_0)\). Then, \(\underline{x}(e)_{j_0}=\underline{x}(a_{i_0j_0},b_{i_0})\), \(\forall e\in E\).

Proof

Since for any \(e\in E\), we have \(e(i_0)\in J(i_0)\) and by the assumption, \(J(i_0)\) is a singleton set, then \(e(i_0)=j_0\), \(\forall e\in E\). Therefore, \(i_0\in I_{j_0}(e)\), \(\forall e\in E\). Now, since \(I_{j_0}(e)\ne \emptyset \), from relation (2) we obtain \(\underline{x}(e)_{j_0}=\max \nolimits _{i\in I_{j_0}(e)} \{\underline{x}(a_{ij_0},b_i)\}\). The latter equality together with \(I_{j_0}(e)\subseteq I(j_0)\) (see Remark 2.4) and the assumption \(\underline{x}(a_{i_0j_0},b_{i_0})\ge \underline{x}(a_{ij_0},b_i)\), \(\forall i\in I(j_0)\), result in \(\underline{x}(e)_{j_0}= \underline{x}(a_{i_0j_0},b_{i_0})\). \(\square \)

By Theorem 3.1 and Lemma 3.1, we know that \(x^*\in S_E(A,b)\). Hence, under the assumptions of Lemma 5.1, we can set \(x_{j_0}^*= \underline{x}(a_{i_0j_0},b_{i_0})\). Therefore, since the \({j_0}\textrm{th}\) variable of the optimal solution is known, we can remove this variable from the problem by deleting its corresponding coefficients, i.e., the \({j_0}\textrm{th}\) column of A. On the other hand, \(x_{j_0}^*=\underline{x} (a_{i_0j_0},b_{i_0})\) means \(x_{j_0}^*\in \Psi _{i_0j_0}=\left[ \underline{x}(a_{i_0j_0},b_{i_0}),1\right] \) that together with Corollary 2.4 imply \(x^*\in S_{i_0}(A,b)\). Therefore, by assignment \(x_{j_0}^*=\underline{x}(a_{i_0j_0},b_{i_0})\), the \({i_0}\textrm{th}\) constraint of the problem is always satisfied. Hence, we can remove this constraint from the problem by deleting the \({i_0}\textrm{th}\) row of A and \(b_{i_0}\). Moreover, let \(i^\prime \in I(j_0)\) and \(i^\prime \ne i_0\). Since \(\underline{x}(a_{i_0j_0},b_{i_0})\ge \underline{x}(a_{ij_0},b_i)\), \(\forall i\in I(j_0)\), then \(\underline{x}(a_{i_0j_0},b_{i_0})\ge \underline{x}(a_{i^\prime j_0},b_{i^\prime })\) that means \(x_{j_0}^*\in \Psi _{i^\prime j_0}=\left[ \underline{x}(a_{i^\prime j_0},b_{i^\prime }),1\right] \). Therefore, Corollary 2.4 implies \(x^*\in S_{i^\prime }(A,b)\), i.e., \(x^*\) also satisfies the \({i^\prime }\textrm{th}\) constraint of the problem. Hence, we can remove this constraint from the problem by deleting the \({i^\prime }\textrm{th}\) row of A and \(b_{i^\prime }\). The above discussions are summarized in the following corollary.

Corollary 5.1

(First simplification technique). If there exist \(i_0\in I\) and \(j_0\in J\) such that \(J(i_0)=\{j_0\}\) and \(\underline{x}(a_{i_0j_0},b_{i_0})\ge \underline{x}(a_{ij_0},b_i)\), \(\forall i\in I(j_0)\), then set \(x_{j_0}^*=\underline{x}(a_{i_0j_0},b_{i_0})\) and delete the \({j_0}\textrm{th}\) column of A. Moreover, for each \(i\in I(j_0)\), delete the \(i\textrm{th}\) row of A and component \(b_i\).

Definition 5.1

Let \(A^\prime \psi x \ge b^\prime \) be a system resulted from \(A\psi x\ge b\) by deleting the \(i\textrm{th}\) constraint \(a_i\psi x=\max \nolimits _{j=1}^{n}\{\psi (a_{ij},x_j)\}\ge b_i\). So, this constraint is called redundant if \(S(A^\prime ,b^\prime )=S(A,b)\), that is, each feasible solution to the system \(A^\prime \psi x\ge b^\prime \) also satisfies the constraint \(a_i \psi x\ge b_i\).

It is to be noted that from Theorem 2.2 we can find a simpler condition for the identification of a redundant constraint. Indeed, a constraint of \(A\psi x\ge b\) is redundant if each feasible solution \(\underline{x}(e)\in S_E(A^\prime ,b^\prime )\) also satisfies that constraint.

Lemma 5.2

Suppose that \(i_1,i_2\in I\) such that \(J(i_1)\subseteq J(i_2)\) and \(\underline{x}(a_{i_2j},b_{i_2})\le \underline{x}(a_{i_1j},b_{i_1})\), \(\forall j\in J(i_1)\). Then, \({i_2}\textrm{th}\) constraint is redundant.

Proof

Let \(A^\prime \psi x\ge b^\prime \) be a system resulted from \(A\psi x\ge b\) by deleting the \({i_2}\textrm{th}\) constraint \(\max \nolimits _{j=1}^{n}\{\psi (a_{i_2j},x_j)\}\ge b_{i_2}\). In the new system \(A^\prime \psi x\ge b^\prime \), consider an arbitrary \(e\in E\) and suppose that \(e(i_1)=j\). Therefore, \(i_1\in I_j(e)\) that means \(I_j(e)\ne \emptyset \). Moreover, by Theorem 2.2, \(\underline{x}(e)\) is a feasible solution to \(A^\prime \psi x\ge b^\prime \). On the other side, since \(j\in J(i_1)\) and \(J(i_1)\subseteq J(i_2)\), then \(j\in J(i_2)\) for the system \(A\psi x\ge b\). Now, due to the fact that \(I_j(e)\ne \emptyset \), by relation (2) we obtain \(\underline{x}(e)_j=\max \nolimits _{i\in I_j(e)}\{\underline{x}(a_{ij},b_i)\} \ge \underline{x}(a_{i_1j},b_{i_1})\ge \underline{x}(a_{i_2j},b_{i_2})\), where the last inequality is resulted from the assumption of the lemma. Therefore, \(\underline{x}(e)_j\ge \underline{x}(a_{i_2j},b_{i_2})\), which means, \(\underline{x}(e)_j\in \Psi _{i_2j}=\left[ \underline{x}(a_{i_0j_0},b_{i_0}),1\right] \). Now, Corollary 2.4 implies \(\underline{x}(e)\in S_{i_2}(A,b)\), that is, \(\underline{x}(e)\) also satisfies the \({i_2}\textrm{th}\) constraint of the primal system. \(\square \)

Corollary 5.2

(Second simplification technique). If there exist \(i_1, i_2 \in I\) such that \(J(i_1)\subseteq J(i_2)\) and \(\underline{x}(a_{i_2j},b_{i_2})\le \underline{x}(a_{i_1j},b_{i_1})\), \(\forall j\in J(i_1)\), then delete the \({i_2}\textrm{th}\) row of A and \(b_{i_2}\).

Proof

According to the assumptions and Lemma 5.2, \({i_2}\textrm{th}\) row is a redundant constraint, and therefore it can be deleted from the problem. \(\square \)

Let \(x_{j_1}\) and \(x_{j_2}\) be two arbitrary variables of vector x. Since each s-norm \(\varphi \) is both commutative and associative, then the objective function of problem (1) can be equivalently rewritten as follows:

Lemma 5.3

Suppose that \(j_1, j_2 \in J\) such that \(I(j_2)\subseteq I(j_1)\) and \(\underline{x}(a_{ij_1},b_i)\le \underline{x}(a_{ij_2},b_i)\), \(\forall i\in I(j_2)\). Then, \(x_{j_2}^*=0\).

Proof

To prove the lemma, it is sufficient to show that a solution \(\underline{x}(e)\) with \(\underline{x}(e)_{j_2}>0\) cannot be an optimal solution. It is to be noted that \(\underline{x}(e)_{j_2}>0\) implies \(I_{j_2}(e)\ne \emptyset \), that is, there exists at least one \(i\in I\) such that \(e(i)=j_2\). Based on this vector e, define \(e^\prime \in E\) as follows:

According to (3), we have \(\underline{x}(e^\prime )_k= \underline{x}(e)_k\), \(\forall k\in J-\{j_1,j_2\}\), and \(\underline{x}(e^\prime )_{j_2}=0<\underline{x}(e)_{j_2}\). From the assumption of the lemma and the fact that \(I_{j_2}(e)\subseteq I(j_2)\) (see Remark 2.4), we have \(\max \nolimits _{i\in I_{j_2}(e)}\{\underline{x}(a_{ij_1},b_i)\}\le \max \nolimits _{i\in I_{j_2}(e)}\{\underline{x}(a_{ij_2},b_i)\}\). Now, from the latter inequality and relation (2), we obtain:

where the last inequality is resulted from the fact that \(\max \{x,y\}\le \varphi (x,y)\) for any s-norm \(\varphi \). Consequently, from (5) we have:

Thus, by the equalities \(\underline{x}(e^\prime )_{j_2}=0\) and \(\varphi (0,\underline{x}(e^\prime )_{j_1})=\underline{x}(e^\prime )_{j_1}\) (resulted from the identity law of s-norms), it follows that \(\varphi (\underline{x}(e^\prime )_{j_1},\underline{x}(e^\prime )_{j_2})= \varphi (\underline{x}(e^\prime )_{j_1},0)=\underline{x}(e^\prime )_{j_1}\) which together with (6) imply:

Now, since \(\underline{x}(e^\prime )_k=\underline{x}(e)_k\) (\(\forall k\in J-\{j_1,j_2\}\)), from (3) and (7) and the monotonicity property of s-norms, we obtain \(f(\underline{x}(e^\prime ))\le f(\underline{x}(e))\); that is, \(\underline{x}(e)\) is not an optimal solution. \(\square \)

Corollary 5.3

(Third simplification technique). If there exist \(j_1, j_2\in J\) such that \(I(j_2)\subseteq I(j_1)\) and \(\underline{x}(a_{ij_1},b_i)\le \underline{x}(a_{ij_2},b_i)\), \(\forall i\in I(j_2)\), then set \(x_{j_2}^{*}=0\) and delete the \({j_2}\textrm{th}\) column of A.

Proof

From the assumptions and Lemma 5.3, \(x_{j_2}^{*}=0\) and then we can consequently delete the \({j_2}\textrm{th}\) column of A. \(\square \)

Lemma 5.4

Suppose that \(x_0\) is an arbitrary feasible solution to problem (1) with the objective value \(f(x_0)\). Also, suppose \(\underline{x}(a_{i_0j_0},b_{i_0})\ge f(x_0)\) for some \(i_0\in I\) and \(j_0\in J(i_0)\). Then, for each \(e\in E\) such that \(e(i_0)=j_0\), the corresponding solution \(\underline{x}(e)\) is not an optimal solution.

Proof

Let \(e\in E\) such that \(e(i_0)=j_0\) and suppose that \(\underline{x}(e)\) is generated by relation (2). Define \(x^\prime \in [0,1]^n\) such that \(x_{j_0}^\prime =\underline{x} (a_{i_0j_0},b_{i_0})\) and \(x_j^\prime =0\), \(\forall j\in J-\{j_0\}\). So, according to relation (2), we have \(x_j^\prime \le \underline{x}(e)_j\), \(\forall j\in J\). Hence, based on the monotonicity law of s-norms, \(f(x^\prime )\le f(\underline{x}(e))\). On the other hand, from the identity law of s-norms, we obtain:

that is, \(f(x^\prime )\ge f(x_0)\). Consequently, the inequalities \(f(x^\prime )\ge f(x_0)\) and \(f(x^\prime )\le f(\underline{x}(e))\) imply \(f(\underline{x}(e))\ge f(x_0)\), where \(x_0\) is a feasible solution to the problem. This completes the proof. \(\square \)

Corollary 5.4

(Fourth simplification technique). Let \(x_0\) be an arbitrary initial feasible solution of the problem. If \(\underline{x}(a_{i_0j_0},b_{i_0})\ge f(x_0)\) for some \(i_0\in I\) and \(j_0\in J(i_0)\), then delete \(j_0\) from \(J(i_0)\).

Proof

From Lemma 5.4, for each \(e\in E\) such that \(e(i_0)=j_0\), the corresponding solution \(\underline{x}(e)\) is not an optimal solution. So, we can delete such points by removing \(j_0\) from \(J(i_0)\). \(\square \)

Remark 5.1

In Corollary 5.4, by deleting \(j_0\) from \(J(i_0)\), the cardinality of \(S_E(A,b)\) is reduced from \(\prod \nolimits _{i \in I}|J(i)|\) to \(|J(i_0)-1|\).\(\prod \nolimits _{i\in I-\{i_0\}}|J(i)|\). Moreover, according to Lemma 2.1 and Definition 2.2, the deletion of \(j_0\) from \(J(i_0)\) can be equivalently accomplished by assigning an arbitrary value from \([0,b_{i_0})\) to \(a_{i_0j_0}\), e.g., \(a_{i_0j_0}=0\), or by setting \(\Psi _{i_0j_0}=\emptyset \).

Remark 5.2

As a general method in Corollary 5.4, we can consider \(x_0=\underline{x}(e_0)\) as an initial feasible solution where \(\underline{x}(e_0)\) is obtained by relation (2) for any arbitrary \(e_0\in \underline{E}\) as defined in Definition 4.1.

Lemma 5.5

Suppose that \(I(j_0)=\emptyset \) for some \(j_0\in J\). Then, \(\underline{x}(e)_j=0\), \(\forall \underline{x}(e)\in S_E(A,b)\).

Proof

Since \(I_{j_0}(e)\subseteq I(j_0)\) (see Remark 2.4), \(\forall e\in E\), then we have \(I_{j_0}(e)=\emptyset \), \(\forall e\in E\). Now, the result directly follows from relation (2). \(\square \)

Corollary 5.5

(Fifth simplification technique). If there exist \(j_0\in J\) such that \(I(j_0)=\emptyset \), then set \(x_{j_0}^*=0\) and delete the \({j_0}\textrm{th}\) column of A.

Proof

From the assumptions and Lemma 5.5, \(x_{j_0}^*=0\) and then we can consequently delete the \({j_0}\textrm{th}\) column of A. \(\square \)

Now, we summarize the preceding discussion as an algorithm.

Algorithm 5.1 (Accelerated method)

Given problem (1):

-

1.

Compute \(\Psi _{ij}\) for each \(i\in I\) and \(j\in J\) (Lemma 2.1).

-

2.

If there exists some \(i\in I\) such that \(\Psi _{ij}=\emptyset \), \(\forall j\in J\), then stop; S(A, b) is empty (Remark 2.3).

-

3.

Compute J(i), \(\forall i\in I\) (Definition 2.2).

-

4.

Apply the simplification techniques (Corollaries 5.1-5.5) to determine the values of decision variables as many as possible. Denote the remaining problem by \(A^\prime \psi x\ge b^\prime \).

-

5.

Compute solutions \(\underline{x}(e)\in S_E(A^\prime ,b^\prime )\), \(\forall e\in E\) (Definitions 2.3 and 2.4).

-

6.

Find minimal solutions by pairwise comparison between vectors \(\underline{x}(e)\) (Lemma 3.1).

-

7.

Select the optimal solution \(\underline{x}^*\) from the set \(\underline{S}(A^\prime ,b^\prime )\) (Lemma 3 and Theorem 4).

Steps 5 and 6 provide all the minimal solutions of the feasible region that is equivalent to the complete resolution of the feasible solution set (Theorem 2.2). However, as it is well-known in FRE (FRI) theory, the complete resolution of FREs (FRIs) has a high computational complexity in the sense that each minimal solution corresponds to an irredundant covering (in terms of the covering problem). Therefore, although the above algorithm accelerates the resolution of the problem by taking advantage of the five simplification techniques and discarding some non-minimal solutions, it should be noted that the number of minimal solutions may also grow exponentially in terms of the problem size. Consequently, finding other simplification rules or providing some methods (such as appropriate branch-and-bound techniques) that can reach the optimal solution by examining a smaller number of minimal (candidate) solutions is always in demand.

6 Numerical example and application

Consider a type of wireless communication management models in which the information is transmitted by the electromagnetic wave (Yang et al. 2016a). The electromagnetic wave is emitted from some fixed emission base stations (EBSs), \(A_1,A_2,...,A_n\). The \(j\textrm{th}\) EBS will emit electromagnetic waves with radiation intensity \(x_j>0\), \(j=1,2,...,n\). The sum of the intensities is restricted by the maximum allowable emissions limits for EBSs, so that, \(\sum \nolimits ^n_{j=1}x_j\) cannot exceed L units. On the other hand, the communication quality level is determined by the intensity of electromagnetic radiation. To satisfy the requirement of communication quality level, m testing points, \(B_1,B_2...,B_m\) are selected to test the intensity of electromagnetic radiation. In the cell phone network, places with higher population density are considered as the testing points. Let \(B_i\) denote the \(i\textrm{th}\) testing point and \(r_{ij}\) denote the intensity of electromagnetic radiation emitted from \(A_j\). So, \(r_{ij}\in [0,x_j]\) where \(i\in I=\{1,...,m\}\), and \(j\in J=\{1,...,n\}\). Since \(r_{ij}\) is related to the distance between \(B_i\) and \(A_j\), there exists a positive real number \(k_{ij}\) such that \(r_{ij}=k_{ij}x_j\). Therefore, the intensity of electromagnetic radiation at \(B_i\) is attained by \(\max \nolimits ^n_{j=1}\{r_{ij}\}=\max \nolimits ^n_{j=1}\{k_{ij}x_j\}\). Suppose the least requirement of communication quality level at \(B_i\) is \(L_i\), \(\forall i\in I\). So, we have \(\max \nolimits ^n_{j=1}\{k_{ij}x_j\}\ge L_i\), \(\forall i\in I\). By normalizing the variables and parameters into the unit interval [0, 1], we get \(\max \nolimits ^n_{j=1}\{a_{ij}x_j\}\ge b_i\), where \(a_{ij}\in [0,1]\) (\(\forall i\in I\) and \(\forall j\in J\)), \(b_i\in [0,1]\) (\(\forall i\in I\)) and \(\sum \nolimits ^n_{j=1}x_j\le 1\). Although high radiation intensity ensures good communication quality, it harms human health at the same time. For this reason, the objective function is \(f(x)=\min \{\sum \nolimits ^n_{j=1}x_j,1\}\). Hence, the wireless communication EBS model is reduced into the problem (1) in which \(\varphi \) and \(\psi \) are defined by the Lukasiewicz s-norm and the product t-norm, respectively.

Example 6.1

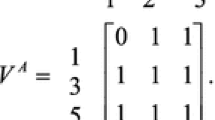

Consider the optimization problem (1) in which the feasible region has been randomly generated by the following A and b.

Also, \(\varphi \) is the Lukasiewicz s-norm and \(\psi \) is the product t-norm, i.e., \(\varphi (x,y)=\min \{x+y,1\}\) and \(\psi (x,y)=xy\). The steps of Algorithm 5.1 are as follows:

Step 1. Based on Lemma 2.1, it is easily verified that closed intervals \(\Psi _{ij}\) are obtained as follows:

These intervals are summarized in matrix \(\Psi =(\Psi _{ij})_{5\times 7}\), where \(\Psi _{ij}=[\underline{x}(a_{ij},b_i),{\textbf {1}}]\):

Step 2. From Remark 2.3, the necessary and sufficient condition holds for the feasibility of the problem. More precisely, we have

that means \(1\in S(A,b)\) (see Remark 2.2).

Step 3. By Definition 2.2, \(J(1)=\{1,3,4,6,7\}\), \(J(2)=\{2,3,5,6\}\), \(J(3)=\{4,7\}\), \(J(4)=\{1,...,7\}\), and \(J(5)=\{1,2,3\}\).

Also, for example, the minimal solutions of \(S_3(A,b)\) are attained as \(\underline{x}(1,4)=[0,0,0,0.3793,0,0,0]\) and \(\underline{x}(1,7)=[0,0,0,0,0,0,1]\) (Definition 2.2). Thus, by Theorem 2.1, \(S_3(A,b)=[\underline{x}(1,4),{\textbf {1}}] \cup [\underline{x}(1,7),{\textbf {1}}]\).

In this example, we have \(|E|=\prod \nolimits ^5_{i=1}|J(i)|=840\). Therefore, according to Definitions 2.3 and 2.4, the number of all vectors \(\underline{x}(e)\) \((e\in E)\) is equal to 840. However, each feasible solution \(\underline{x}(e)\) \((e\in E)\) is not a minimal solution for the problem. For example, by selecting \(e^\prime =[1,2,7,6,3]\), the corresponding solution is obtained as \(\underline{x}(e^\prime )=[0.1961,0.2646,0.9136,0,0,0.011,1]\). Although \(\underline{x}(e^\prime )\) is feasible, but it is not a minimal solution. To see this, let \(e^{\prime \prime }=[7,2,7,2,2]\). Then, \(\underline{x}(e^{\prime \prime })=[0,0.2646,0,0,0,0,1]\). Obviously, \(\underline{x}(e^{\prime \prime })\le \underline{x}(e^\prime )\) which shows that \(\underline{x}(e^\prime )\) is not a minimal solution.

By Definition 4.1, \(\underline{x}(1)=0.1961\), \(\underline{x}(2)=0.2646\), \(\underline{x}(3)=0.3793\), \(\underline{x}(4)=0.0101\), and \(\underline{x}(5)=0.2601\). Also, \(\underline{J}(1)=\{1\}\), \(\underline{J}(2)=\{2\}\), \(\underline{J}(3)=\{4\}\), \(\underline{J}(4)=\{5\}\), and \(\underline{J}(5)=\{2\}\). So, \(\underline{E}\) includes only one element \(e_0=[1,2,4,5,2]\) whose corresponding solution is obtained by relation (2) as \(\underline{x}(e_0)=[0.1961,0.2646,0,0.3793,0.0101,0,0]\). If the objective function is defined by the maximum s-norm, then from Theorem 4.2, \(\underline{x}(e_0)\) is the unique optimal solution (the uniqueness is resulted from \(|\underline{E}|=1\)). However, in this example where \(\varphi \) is the Lukasiewicz s-norm, we may consider \(\underline{x}(e_0)\) as an initial feasible solution with the objective value \(f(\underline{x}(e_0))=0.8501\) (see Remark 5.2).

Step 4. By considering columns 4 and 7 of matrix A (and the corresponding columns in matrix \(\Psi \)), it follows that \(\{1,3,4\}=I(7)\subseteq I(4)=\{1,3,4\}\), \(0.3262=\underline{x}(a_{14},b_1)\le \underline{x}(a_{17},b_1)=0.8932\), and \(0.3793=\underline{x}(a_{34},b_3)\le \underline{x}(a_{37},b_3)=1\), that is, \(\underline{x}(a_{i4},b_i)\le \underline{x}(a_{i7},b_i)\), \(\forall i\in I(7)\). So, by applying the third simplification technique (Corollary 5.3), we set \(x_7^*=0\) and delete column 7 in matrices A and \(\Psi \). After deletion, the reduced matrices \(A^\prime =(a_{ij}^\prime )_{5\times 6}\) and \(\Psi ^\prime = (\Psi _{ij}^\prime )_{5\times 6}\) are obtained as follows:

The reduced matrices \(A^\prime \) and \(\Psi \) are equivalent to five inequalities (constraints) with six variables. As is clear from the matrix \(\Psi ^\prime \), by deleting column 7, the set \(J(3)=\{4,7\}\) is reduced to \(J(3)=\{4\}\), that is, a singleton set. Also, \(I(4)=\{1,3,4\}\) and we have \(0.3793=\underline{x}(a_{34},b_3)\ge \underline{x}(a_{14},b_1)=0.3262\), and \(0.3793=\underline{x}(a_{34},b_3)\ge \underline{x}(a_{44},b_4)=0.0119\), i.e., \(\underline{x}(a_{34},b_3)\ge \underline{x}(a_{i4},b_i)\), \(\forall i\in I(4)\). Therefore, by applying the first simplification technique (Corollary 5.1), we set \(x_4^*=\underline{x}(a_{34},b_3)=0.3793\), and delete column 4 and rows 1, 3, and 4 of matrices \(A^\prime \) and \(\Psi ^\prime \), and \(b_1\), \(b_3\), and \(b_4\). Hence, the new reduced matrices \(A^\prime \) and \(\Psi ^\prime \) become

It is to be noted that the first and second rows of the matrices \(A^\prime \) and \(\Psi ^\prime \) correspond to the second and fifth rows of the primal matrices A and \(\Psi \), respectively. Also, the columns 4 and 5 in the reduced matrices correspond to the columns 5 and 6 in the primal matrices, respectively. In the current matrix \(\Psi ^\prime =(\Psi _{ij}^\prime )_{2\times 5}\), we have \(\underline{x}(a_{13}^\prime ,b_1^\prime )=\underline{x}(a_{14}^\prime ,b_1^\prime )=\underline{x}(a_{15}^\prime ,b_1^\prime )=1\ge 0.8501=f(\underline{x}(e_0))\) and \(\underline{x}(a_{23}^\prime ,b_2^\prime )=0.9136\ge 0.8501=f(\underline{x}(e_0))\), where \(\underline{x}(e_0)\) is the initial feasible solution obtained at Step 6. Hence, by applying the fourth simplification technique (Corollary 5.4), we set \(a_{13}^\prime =a_{14}^\prime = a_{15}^\prime =a_{23}^\prime =0\) and \(\Psi _{13}^\prime =\Psi _{14}^\prime = \Psi _{15}^\prime =\Psi _{23}^\prime =\emptyset \) (see Remark 5.1). The new matrices \(A^\prime \) and \(\Psi ^\prime \) are attained as follows:

Since \(\{2\}=J(1)\subseteq J(2)=\{1,2\}\) and \(0.2601=\underline{x}(a_{22}^\prime ,b_2^\prime )\le \underline{x}(a_{12}^\prime ,b_1^\prime )=0.2646\), i.e., \(\underline{x}(a_{2j}^\prime ,b_2^\prime )\le \underline{x}(a_{1j}^\prime ,b_1^\prime )\), \(\forall j\in J(1)\), then the second row of the matrices \(A^\prime \) and \(\Psi ^\prime \) (i.e., the fifth row in A and \(\Psi \)) and \(b_2^\prime \) (i.e., \(b_5\) in the main problem) are deleted by the second simplification technique (Corollary 5.2). So, we have the following reduced matrices:

Finally, since in the matrices \(A^\prime =(a_{ij}^\prime )_{1\times 5}\) and \(\Psi ^\prime =(\Psi _{ij}^\prime )_{1\times 5}\) we have \(I(1)=I(3)=I(4)=I(5)=\emptyset \), then we can delete columns 1, 3, 4, and 5 by the fifth simplification technique (Corollary 5.5) to obtain the following new reduced matrices:

As mentioned before, the columns 4 and 5 of the matrix \(A^\prime \) correspond to the columns 5 and 6 of the main matrix A, respectively. So, by the fifth simplification technique, we set \(x_1^*=x_3^*=x_5^*=x_6^*=0\).

Step 5. 6. 7. After applying the simplification techniques, the problem is reduced to \(A^\prime \psi x\ge b^\prime \) where \(A^\prime \) is a \(1\times 1\) matrix with one entry corresponding to the entry (2, 2) in A. In the matrix \(A^\prime \), the set E includes only one element \(e=[1]\) with corresponding solution \(\underline{x}(e)=[0.2646]\) that is the unique element of \(S_E(A^\prime ,b^\prime )\). Therefore, \(\underline{x}(e)=0.2646\) is clearly the unique optimal solution to the problem \(A^\prime \psi x\ge b^\prime \). Hence, \(x_2^*=0.2646\), and finally the optimal solution of the primal problem is obtained as \(x^*=[x_1^*,x_2^*,x_3^*,x_4^*,x_5^*,x_6^*,x_7^*]= [0, 0.2646, 0, 0.3793, 0, 0, 0]\) with the objective value of \(f(x^*)=0.64393\).

7 Conclusion

In this paper, we introduced a generalization of the latticized optimization problem. The proposed model consists of a non-linear objective function defined by any continuous s-norm and a set of constraints in the form of a system of fuzzy relational inequalities defined by an arbitrary continuous t-norm. Feasible solution sets for such continuous FRIs were completely resolved. Moreover, two necessary and sufficient conditions were presented to determine the feasibility of the problem. Based on the theoretical results, an algorithm was presented for finding the exact optimal solutions of the proposed non-linear optimization model. In contrast to FRI optimization problems with the linear objective functions, analytical results revealed that the maximum solution does not contain useful information for obtaining an optimal solution. Theorem 3.1 indicated that an optimal solution is one of the minimal solutions of the continuous FRI. However, finding all the minimal solutions is usually NP-hard work. To avoid this NP-hard problem, an alternative strategy was adopted in this paper; five simplification techniques were developed to pre-assign values to as many decision variables as possible. Consequently, problem size was quickly reduced. In addition, we discussed a special case of the non-linear model in which the objective function was defined by the Lukasiewicz s-norm, and the feasible region was formed as a continuous FRI with max-product composition. This model was used in a type of wireless communication management problem. Furthermore, a polynomial-time method was presented for solving the latticized linear programming problems subjected to FRI defined by an arbitrary continuous t-norm. These problems unified several interesting properties of the latticized linear programming problems with max–min and max-product type (used by Yang et. al.) through the framework of the \(\max -\varphi \) composition with \(\varphi \) as a continuous t-norm. As future works, we aim at developing the current model defined by different t-norms and s-norms to other applications.

Data availability

Enquiries about data availability should be directed to the authors.

References

Bourke MM, Fisher DG (1998) Solution algorithms for fuzzy relational equations with max-product composition. Fuzzy Sets Syst 94:61–69

Dempe S, Ruziyeva A (2012) On the calculation of a membership function for the solution of a fuzzy linear optimization problem. Fuzzy Sets Syst 188:58–67

Dubey D, Chandra S, Mehra A (2012) Fuzzy linear programming under interval uncertainty based on IFS representation. Fuzzy Sets Syst 188:68–87

Eugenia Cornejo M, Lobo D, Medina J (2022) Comment on “Bipolar fuzzy relation equations systems based on the product t-norm’’. Math Methods Appl Sci 45(8):4661–4664

Freson S, DeBaets B, Meyer HD (2013) Linear optimization with bipolar max–min constraints. Inf Sci 234:3–15

Ghodousian A (2019) Optimization of linear problems subjected to the intersection of two fuzzy relational inequalities defined by Dubois–Prade family of t-norms. Inf Sci 503:291–306

Ghodousian A, Babalhavaeji A (2018) An efficient genetic algorithm for solving nonlinear optimization problems defined with fuzzy relational equations and max-Łukasiewicz composition. Appl Soft Comput 69:475–492

Ghodousian A, Khorram E (2012) Linear optimization with an arbitrary fuzzy relational inequality. Fuzzy Sets Syst 206:89–102

Ghodousian A, Parvari MR (2017) A modified PSO algorithm for linear optimization problem subject to the generalized fuzzy relational inequalities with fuzzy constraints (FRI-FC). Inf Sci 418–419:317–345

Ghodousian A, Naeeimi M, Babalhavaeji A (2018) Nonlinear optimization problem subjected to fuzzy relational equations defined by Dubois–Prade family of t-norms. Comput Ind Eng 119:167–180

Guo FF, Xia ZQ (2006) An algorithm for solving optimization problems with one linear objective function and finitely many constraints of fuzzy relation inequalities. Fuzzy Optim Decis Mak 5:33–47

Guo FF, Pang LP, Meng D, Xia ZQ (2013) An algorithm for solving optimization problems with fuzzy relational inequality constraints. Inf Sci 252:20–31

Li PK, Fang SC (2009) A survey on fuzzy relational equations, part I: classification and solvability. Fuzzy Optim Decis Mak 8:179–229

Li PK, Liu Y (2014) Linear optimization with bipolar fuzzy relational equation constraints using Lukasiewicz triangular norm. Soft Comput 18:1399–1404

Li H, Wang Y (2013) A matrix approach to latticized linear programming with fuzzy-relation inequality constraints. IEEE Trans Fuzzy Syst 21:781–788

Li JX, Yang SJ (2012) Fuzzy relation inequalities about the data transmission mechanism in BitTorrent-like peer-to-peer file sharing systems. In: Proceedings of the 9th international conference on fuzzy systems and knowledge discovery (FSKD), pp 452–456

Liu CC, Lur YY, Wu YK (2016) Linear optimization of bipolar fuzzy relational equations with max-Łukasiewicz composition. Inf Sci 360:149–162

Loetamonphong J, Fang SC (1999) An efficient solution procedure for fuzzy relation equations with max-product composition. IEEE Trans Fuzzy Syst 7:441–445

Lu J, Fang SC (2001) Solving nonlinear optimization problems with fuzzy relation equations constraints. Fuzzy Sets Syst 119:1–20

Markovskii AV (2005) On the relation between equations with max-product composition and the covering problem. Fuzzy Sets Syst 153:261–273

Matusiewicz Z, Drewniak J (2013) Increasing continuous operations in fuzzy max-* equations and inequalities. Fuzzy Sets Syst 232:120–133

Pedrycz W (1985) On generalized fuzzy relational equations and their applications. J Math Anal Appl 107:520–536

Pedrycz W (2013) Granular computing: analysis and design of intelligent systems. CRC Press, Boca Raton

Sanchez E (1993) Solution in composite fuzzy relation equations: application to medical diagnosis in Brouwerian logic. Read Fuzzy Sets Intell Syst 178:159–165

Wang PZ, Zhang DZ, Sanchez E, Lee E (1991) Latticized linear programming and fuzzy relation inequalities. J Math Anal Appl 159(1):72–87

Wu YK, Guu SM, Liu JY (2008) Reducing the search space of a linear fractional programming problem under fuzzy relational equations with max-Archimedean t-norm composition. Fuzzy Sets Syst 159:3347–3359

Yang SJ (2014) An algorithm for minimizing a linear objective function subject to the fuzzy relation inequalities with addition-min composition. Fuzzy Sets Syst 255:41–51

Yang XP, Zhou XG, Cao BY (2015a) Multi-level linear programming subject to addition-min fuzzy relation inequalities with application in peer-to-peer file sharing system. J Intell Fuzzy Syst 28:2679–2689

Yang XP, Zhou XG, Cao BY (2015b) Single-variable term semi-latticized fuzzy relation geometric programming with max-product operator. Inf Sci 325:271–287

Yang XP, Zhou XG, Cao BY (2016a) Latticized linear programming subject to max-product fuzzy relation inequalities with application in wireless communication. Inf Sci 358–359:44–55

Yang XP, Zhou XG, Cao BY (2016b) Min-max programming problem subject to addition-min fuzzy relation inequalities. IEEE Trans Fuzzy Syst 24(1):111–119

Funding

The authors did not receive support from any organization for the submitted work.

Author information

Authors and Affiliations

Contributions

AG: Conception and design of the work, mathematical analyzing and proofs, critical revision of the article, final approval of the version to be published. BSR and OG: Investigation, Software implementation.

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Ghodousian, A., Rad, B.S. & Ghodousian, O. A non-linear generalization of optimization problems subjected to continuous max-t-norm fuzzy relational inequalities. Soft Comput 28, 4025–4036 (2024). https://doi.org/10.1007/s00500-023-09376-2

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00500-023-09376-2