Abstract

Multiobjective optimization techniques are important for solving real-life problems, which often involve neutral thoughts and hesitation. This paper studies multiobjective programming problems (MOPPs) under neutrosophic hesitant fuzzy uncertainty. We introduce degrees of neutrality and hesitation into MOPPs and, accordingly, develop neutrosophic hesitant fuzzy multiobjective programming problems (NHFMOPPs) under a neutrosophic hesitant fuzzy environment. In addition, a new robust solution concept for NHFMOPPs, the neutrosophic hesitant fuzzy Pareto optimal solution, is investigated, and two optimization techniques are proposed to obtain it. The validity and applicability of the proposed methods are demonstrated on several real-life multiobjective problems. Finally, conclusions, a comparative study, and future research directions are presented.


1 Introduction

Real-life optimization problems inherently involve more than one objective function to be optimized (minimized or maximized) under the given circumstances. A mathematical programming problem with two or more objectives is termed a multiobjective programming problem (MOPP). The modeling and optimization structure of MOPPs depends on the nature of the optimization environment, such as a deterministic framework or vague and random uncertainties. In MOPPs, a single solution rarely optimizes all objectives simultaneously, but it is usually possible to obtain a compromise solution that satisfies all objective functions to an acceptable degree. Consequently, a considerable number of solution methods for MOPPs have been suggested in the literature.

Zadeh (1965) proposed the fuzzy set (FS), which was subsequently applied to multicriteria, multiattribute, and multiobjective decision-making problems. Zimmermann (1978) then developed the fuzzy programming technique for multiobjective optimization problems, based on the membership function (degree of belongingness) that gives the marginal evaluation of each objective function. The fuzzy programming approach (FPA) maximizes the satisfaction degree of the decision-maker(s) (DM(s)) while dealing with multiple objectives simultaneously. Ahmad (2021a, 2021b) discussed the modeling and optimization of MOPPs under uncertainty.

A limitation of the fuzzy set is that it cannot define the non-membership degree of an element in the fuzzy set. To address this, Atanassov (1986) introduced the intuitionistic fuzzy set (IFS). Based on the IFS, Angelov (1997) first proposed the intuitionistic fuzzy programming approach (IFPA) for real-life decision-making problems. The IFPA is more flexible and realistic than the fuzzy technique because it handles the membership and non-membership functions simultaneously. Mahmoodirad et al. (2018), Ahmad et al. (2021b), and Ahmadini and Ahmad (2021b) studied the multiobjective transportation problem under an intuitionistic fuzzy environment. Ahmad and John (2021) and Ahmad et al. (2021a, 2021c, 2021e) suggested intuitionistic fuzzy programming methods for multiobjective linear programming problems.

In practice, hesitation is a common concern in the decision-making process. DM(s) are often unsure about assigning a single specific value to the membership degree (degree of belongingness) of an element in the feasible decision set; instead, a set of several, possibly conflicting, values may be assigned. Motivated by such cases, Torra and Narukawa (2009) proposed the hesitant fuzzy set (HFS), an extension of the FS. An HFS allows a set of possible membership degrees of an element in the feasible decision set. The hesitant fuzzy technique has been widely used. Ahmad et al. (2018) and Ahmadini and Ahmad (2021a) studied MOPPs under hesitant situations. Rouhbakhsh et al. (2020) studied MOPPs under a hesitant fuzzy environment. Bharati (2018) discussed hesitant fuzzy optimization techniques for multiobjective linear programming problems. Zhou and Xu (2018) proposed a solution algorithm for portfolio optimization under a hesitant fuzzy environment.

Smarandache (1999) presented the neutrosophic set (NS) as an extension and generalization of the FS and IFS. The NS involves three membership functions, namely the truth (degree of belongingness), indeterminacy (degree of partial belongingness), and falsity (degree of non-belongingness) of an element in the feasible decision set. Later, many researchers, such as Ahmad and Adhami (2019a, 2019b), used the neutrosophic decision set to develop solution methods for MOPPs. Ahmad et al. (2020) and Ahmad et al. (2021c) presented modified neutrosophic optimization techniques for multiobjective supply chain planning problems. Abdel-Basset et al. (2018) studied a fully neutrosophic linear programming problem. Ahmad et al. (2019), Adhami and Ahmad (2020), and Ahmad (2021a) addressed the neutrosophic goal programming technique for shale gas water management under uncertainty. Ahmad and Adhami (2019a), Ahmad (2021b), and Ahmad et al. (2021a) suggested neutrosophic optimization models for multiobjective transportation problems.

None of the decision sets and optimization techniques discussed above can simultaneously capture two different aspects of human perception, namely the indeterminacy and hesitation degrees, that arise together in practical decision-making. To address this situation, we use a single-valued neutrosophic hesitant fuzzy decision set and, accordingly, develop a neutrosophic hesitant fuzzy multiobjective programming problem (NHFMOPP). Since the proposed NHFMOPP is a continuous optimization problem, we define the membership functions based on the opinions of different experts/managers regarding the decisions (Bharati 2018; Ahmad et al. 2018). In multicriteria decision-making problems, the neutrosophic hesitant fuzzy set is more complex, and its mathematical calculations are more involved than those of the classical sets (Ye 2015); in that setting, the parameters themselves are taken as neutrosophic hesitant fuzzy. For more details about neutrosophic hesitant fuzzy parameters and their numerical operations, see Ye (2015), Bharati (2018), and Ahmad et al. (2022). The modeling and optimization framework of NHFMOPPs closely reflects real-life scenarios. It provides a promising optimization environment that reduces the violation of risk reliability for the decision-maker(s) (DM(s)) under a neutrosophic hesitant fuzzy environment. A novel solution concept, called neutrosophic hesitant fuzzy Pareto optimal solutions (NHFPOSs), is investigated for the proposed NHFMOPPs, and two optimization techniques are suggested to determine the NHFPOSs. The robustness of the NHFPOSs is verified by performing optimality tests.
The proposed optimization techniques explicitly consider the neutral thoughts (indeterminacy degrees) and the differing opinions (hesitation degrees) of various experts or DMs when making decisions. The opportunity to incorporate the views of several distinguished experts or DMs is also advantageous in quantitative decision-planning scenarios. Hence, the proposed techniques capture the vagueness, imprecision, and incompleteness that inevitably arise in real-life optimization problems and provide flexibility in decision-making. This paper can thus be considered an extension of the work of Ahmad et al. (2018), Ahmadini and Ahmad (2021a, 2021b), Bharati (2018), Ahmad and Adhami (2019a), Adhami et al. (2021), and Rouhbakhsh et al. (2020) to a neutrosophic hesitant fuzzy environment. The proposed modeling and optimization structure can serve as a general technique for solving NHFMOPPs that contains the other techniques as special cases. To the authors' knowledge, no such solution concept has so far been proposed in the literature for modeling and solving NHFMOPPs.

The remainder of the manuscript is organized as follows. Section 2 presents the basic concepts related to neutrosophic, hesitant fuzzy, and single-valued neutrosophic hesitant fuzzy sets. Section 3 describes the modeling of MOPPs under a neutrosophic hesitant fuzzy environment. Section 4 presents a computational study containing three real-life applications and compares the proposed NHFPOSs of NHFMOPPs with those of other approaches. Section 5 addresses the conclusions and future research directions.

2 Preliminaries

Definition 1

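Smarandache (1999) A single-valued neutrosophic set (SVNS) A on a universe of discourse X is an NS whose membership functions are represented as follows:

\[ A = \{ \langle x, \mu_{A}(x), \lambda_{A}(x), \nu_{A}(x) \rangle \mid x \in X \}, \]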

where \( \mu _{A}(x) , \lambda _{A}(x)\) and \(\nu _{A}(x) \in [0,1]\) and \(0 \le \mu _{A}(x) + \lambda _{A}(x) + \nu _{A}(x) \le 3\) for all \(x \in X\).

Definition 2

Torra and Narukawa (2009) A hesitant fuzzy set (HFS) H over a universe of discourse X is defined in terms of a function \(h_{H}(x)\) that returns a set of values in [0,1]:

\[ H = \{ \langle x, h_{H}(x) \rangle \mid x \in X \}, \]

where \(h_{H}(x)\) is a set of values in [0,1] representing the possible membership degrees of the element \(x \in X \) in H. \(h_{H}(x)\) is also called a hesitant fuzzy element (HFE).

Definition 3

Torra and Narukawa (2009) For each hesitant fuzzy element h, the lower and upper bounds are defined as \(h^{-}(x)= \mathrm{min}~h(x)\) and \(h^{+}(x)= \mathrm{max}~h(x)\), respectively.

Definition 4

Ye (2015) Let X be a fixed set; then a single-valued neutrosophic hesitant fuzzy set (SVNHFS) \(N_{h}\) on X is expressed as follows:

\[ N_{h} = \{ \langle x, \mu_{h}(x), \lambda_{h}(x), \nu_{h}(x) \rangle \mid x \in X \}, \]

where \(\mu _{h}(x), \lambda _{h}(x)\) and \(\nu _{h}(x)\) are three sets of values in [0,1] representing the truth, indeterminacy, and falsity hesitant membership degrees of the element \(x \in X \) in the set \(N_{h}\), respectively. The conditions \(0 \le \alpha ,~\beta ,~\gamma \le 1\) and \(0 \le \alpha ^{+} + \beta ^{+} + \gamma ^{+} \le 3\) hold, where \(\alpha \in \mu _{h}(x)\), \(\beta \in \lambda _{h}(x)\), \(\gamma \in \nu _{h}(x)\) with \(\alpha ^{+}\in \mu ^{+}_{h}(x)= \cup _{\alpha \in \mu _{h}(x)}\mathrm{max} \{ \alpha \}\), \(\beta ^{+}\in \lambda ^{+}_{h}(x)= \cup _{\beta \in \lambda _{h}(x)}\mathrm{max} \{ \beta \}\) and \(\gamma ^{+}\in \nu ^{+}_{h}(x)= \cup _{\gamma \in \nu _{h}(x)}\mathrm{max} \{ \gamma \}\) for all \(x \in X \).
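Definitions 3 and 4 can be checked mechanically. The following minimal Python sketch computes the bounds \(h^{-}\) and \(h^{+}\) of a hesitant fuzzy element and tests the SVNHFS conditions for one element x, assuming the degrees are stored as plain lists of floats. The function names `hfe_bounds` and `is_svnhfe` and the sample degrees are illustrative, not taken from the source.

```python
from __future__ import annotations

from typing import Sequence


def hfe_bounds(h: Sequence[float]) -> tuple[float, float]:
    """Return (h^-, h^+) = (min h, max h) for a hesitant fuzzy element (Definition 3)."""
    if len(h) == 0:
        raise ValueError("a hesitant fuzzy element needs at least one value")
    return min(h), max(h)


def is_svnhfe(mu: Sequence[float], lam: Sequence[float], nu: Sequence[float]) -> bool:
    """Check the Definition 4 conditions for one element x of an SVNHFS.

    Every truth, indeterminacy and falsity degree must lie in [0, 1], and the
    largest degrees must satisfy 0 <= alpha^+ + beta^+ + gamma^+ <= 3.
    (With all degrees in [0, 1] the sum condition always holds; it is checked
    explicitly only to mirror the definition.)
    """
    if len(mu) == 0 or len(lam) == 0 or len(nu) == 0:
        return False
    if any(not 0.0 <= v <= 1.0 for v in (*mu, *lam, *nu)):
        return False
    alpha_plus, beta_plus, gamma_plus = max(mu), max(lam), max(nu)
    return 0.0 <= alpha_plus + beta_plus + gamma_plus <= 3.0


# Hypothetical degrees elicited from several experts for one element x.
truth, indeterminacy, falsity = [0.6, 0.7, 0.8], [0.1, 0.2], [0.2, 0.3]
print(hfe_bounds(truth))                         # (0.6, 0.8)
print(is_svnhfe(truth, indeterminacy, falsity))  # True
```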

Definition 5

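Ye (2015) Suppose that \(N_{{h}_{1}}\) and \(N_{{h}_{2}}\) are two SVNHFSs; then their union is given by

\[ N_{h_{1}} \cup N_{h_{2}} = \bigcup_{\substack{\alpha_{1} \in \mu_{h_{1}},\, \beta_{1} \in \lambda_{h_{1}},\, \gamma_{1} \in \nu_{h_{1}} \\ \alpha_{2} \in \mu_{h_{2}},\, \beta_{2} \in \lambda_{h_{2}},\, \gamma_{2} \in \nu_{h_{2}}}} \left\{ \{ \max(\alpha_{1}, \alpha_{2}) \}, \{ \min(\beta_{1}, \beta_{2}) \}, \{ \min(\gamma_{1}, \gamma_{2}) \} \right\}. \]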

Definition 6

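Ye (2015) Suppose that \(N_{{h}_{1}}\) and \(N_{{h}_{2}}\) are two SVNHFSs; then their intersection is given by

\[ N_{h_{1}} \cap N_{h_{2}} = \bigcup_{\substack{\alpha_{1} \in \mu_{h_{1}},\, \beta_{1} \in \lambda_{h_{1}},\, \gamma_{1} \in \nu_{h_{1}} \\ \alpha_{2} \in \mu_{h_{2}},\, \beta_{2} \in \lambda_{h_{2}},\, \gamma_{2} \in \nu_{h_{2}}}} \left\{ \{ \min(\alpha_{1}, \alpha_{2}) \}, \{ \max(\beta_{1}, \beta_{2}) \}, \{ \max(\gamma_{1}, \gamma_{2}) \} \right\}. \]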
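As an illustration of Definitions 5 and 6, the sketch below computes the union and intersection of two SVNHFS elements at a fixed x. It assumes the componentwise rules in which the union takes the maximum of truth degrees and the minimum of indeterminacy and falsity degrees, and the intersection does the reverse; the intersection rule matches how the decision set is built in Sect. 3.1. The helper names are hypothetical and the sample degrees are made up.

```python
from itertools import product
from typing import Callable, Sequence, Tuple

# One SVNHFS element at a fixed x: (truth set, indeterminacy set, falsity set).
Element = Tuple[Tuple[float, ...], Tuple[float, ...], Tuple[float, ...]]


def _pairwise(s1: Sequence[float], s2: Sequence[float],
              op: Callable[[float, float], float]) -> Tuple[float, ...]:
    """Apply op to every pair (a1, a2) in s1 x s2; keep the distinct results, sorted."""
    return tuple(sorted({op(a1, a2) for a1, a2 in product(s1, s2)}))


def svnhfs_union(n1: Element, n2: Element) -> Element:
    """Union: max of truth degrees, min of indeterminacy and falsity degrees."""
    (mu1, lam1, nu1), (mu2, lam2, nu2) = n1, n2
    return (_pairwise(mu1, mu2, max), _pairwise(lam1, lam2, min), _pairwise(nu1, nu2, min))


def svnhfs_intersection(n1: Element, n2: Element) -> Element:
    """Intersection: min of truth degrees, max of indeterminacy and falsity degrees."""
    (mu1, lam1, nu1), (mu2, lam2, nu2) = n1, n2
    return (_pairwise(mu1, mu2, min), _pairwise(lam1, lam2, max), _pairwise(nu1, nu2, max))


n1 = ((0.5, 0.7), (0.2,), (0.1, 0.3))
n2 = ((0.6,), (0.1, 0.4), (0.2,))
print(svnhfs_union(n1, n2))         # ((0.6, 0.7), (0.1, 0.2), (0.1, 0.2))
print(svnhfs_intersection(n1, n2))  # ((0.5, 0.6), (0.2, 0.4), (0.2, 0.3))
```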

Definition 7

The general form of MOPPs is given as follows:

where \(O_{p}(x)\) is the pth objective function, B(x) denotes the real-valued constraint functions, and x is the vector of decision variables.

3 Formulation of MOPPs under neutrosophic hesitant fuzzy environment

In this section, we present the modeling approach for the MOPP with neutrosophic hesitant fuzzy goals for each objective function under a neutrosophic hesitant fuzzy environment. We also propose two optimization techniques to solve the resulting neutrosophic hesitant fuzzy multiobjective programming problems (NHFMOPPs).

In the MOPP (2.1), we assume that the DM has neutrosophic fuzzy goals to be achieved for each objective function. In such circumstances, the MOPP (2.1) can be transformed into the following neutrosophic fuzzy multiobjective programming problem (NFMOPP):

where the notation \(\widetilde{(\cdot )}\) represents a flexible, or neutrosophic fuzzy, version of \((\cdot )\), meaning that “the functions should be minimized as much as possible under the neutrosophic fuzzy environment” subject to the given constraints (Ahmad et al. 2021d; Ahmad and Smarandache 2021). A neutrosophic optimization problem is determined by the set X of possible solutions, a set of neutrosophic goals \(G_{i},~i=1,2, \ldots , p\), and a set of neutrosophic constraints \(C_{j},~j=1,2, \ldots , m\), each represented by a neutrosophic set on X.

The concept of the fuzzy decision set was introduced by Bellman and Zadeh (1970) and has since been widely used in real-life optimization problems. In this concept, the fuzzy decision set is defined as \( D= G \cap C\).

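Analogously, the neutrosophic decision set \(D_{N}\) is represented mathematically as follows:

\[ D_{N} = \left( \bigcap_{i=1}^{p} G_{i} \right) \cap \left( \bigcap_{j=1}^{m} C_{j} \right) = \{ \langle x, \mu_{D}(x), \lambda_{D}(x), \nu_{D}(x) \rangle \mid x \in X \}, \]

where

\[ \mu_{D}(x) = \min \{ \mu_{G_{1}}(x), \ldots, \mu_{G_{p}}(x);~ \mu_{C_{1}}(x), \ldots, \mu_{C_{m}}(x) \}, \]
\[ \lambda_{D}(x) = \max \{ \lambda_{G_{1}}(x), \ldots, \lambda_{G_{p}}(x);~ \lambda_{C_{1}}(x), \ldots, \lambda_{C_{m}}(x) \}, \]
\[ \nu_{D}(x) = \max \{ \nu_{G_{1}}(x), \ldots, \nu_{G_{p}}(x);~ \nu_{C_{1}}(x), \ldots, \nu_{C_{m}}(x) \}. \]

The truth, indeterminacy, and falsity membership degrees of the decision set are denoted by \( \mu _{D}(x) , \lambda _{D}(x)\) and \(\nu _{D}(x)\), respectively.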
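The neutrosophic decision set can be evaluated pointwise. The sketch below is a minimal illustration that assumes a finite list of candidate points in place of the continuous feasible set X: at each point it takes the minimum truth degree and the maximum indeterminacy and falsity degrees over all goals, and then picks the point with the largest decision truth degree, which is the Bellman-Zadeh max-min rule. The function names and degrees are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple


def decision_degrees(mu: Sequence[float], lam: Sequence[float],
                     nu: Sequence[float]) -> Tuple[float, float, float]:
    """Degrees of the neutrosophic decision set D_N at one point x.

    mu, lam, nu hold the truth, indeterminacy and falsity degrees of every
    goal (and constraint) at x; D_N takes their min / max / max.
    """
    return min(mu), max(lam), max(nu)


def max_min_choice(candidates: Dict[str, Tuple[List[float], List[float], List[float]]]) -> str:
    """Pick the candidate whose decision truth degree mu_D is largest (ties: first seen)."""
    return max(candidates, key=lambda name: decision_degrees(*candidates[name])[0])


# Hypothetical degrees of two goals at two candidate points.
points = {
    "x1": ([0.8, 0.6], [0.3, 0.2], [0.1, 0.3]),
    "x2": ([0.7, 0.7], [0.2, 0.25], [0.2, 0.2]),
}
for name, degrees in points.items():
    print(name, decision_degrees(*degrees))
print(max_min_choice(points))  # x2
```

In this sample, x2 also has the smaller indeterminacy and falsity degrees, but in general the three criteria can disagree, which is why the models in this section treat all three degrees together rather than the truth degree alone.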

The minimum and maximum values \(L_{i}\) and \(U_{i}\) of each objective are given as follows:

The bounds for the ith objective function in the neutrosophic environment are determined by the following expressions:

where \(y_{i}, z_{i} \in (0,1)\) are known real numbers.

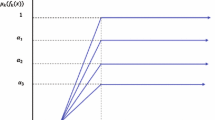

With the help of lower and upper bounds, the linear membership functions can be defined as follows:

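where \(L_{i}^{(\cdot)} \ne U_{i}^{(\cdot)}\) for all p objective functions. Once the neutrosophic decision set \(D_{N}\) is derived, the optimal decision \(x^{*} \in X\) is determined by

\[ \mu_{D}(x^{*}) = \max_{x \in X} \mu_{D}(x), \quad \lambda_{D}(x^{*}) = \min_{x \in X} \lambda_{D}(x), \quad \nu_{D}(x^{*}) = \min_{x \in X} \nu_{D}(x). \]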
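The following Python sketch illustrates one common way to build the bounds and linear membership functions for a minimization objective. The exact forms of Eqs. (3.6)-(3.8) may differ, so every shape here is an assumption: \(L_{i}\) and \(U_{i}\) are taken as the column minima and maxima of a payoff table of individual optima, the truth degree decreases linearly on \([L_{i}, U_{i}]\), the indeterminacy degree increases on \([L_{i}, L_{i} + y_{i}(U_{i}-L_{i})]\), and the falsity degree increases on \([L_{i} + z_{i}(U_{i}-L_{i}), U_{i}]\), so that smaller indeterminacy and falsity are better. All names and numbers are hypothetical.

```python
from typing import List, Sequence, Tuple


def payoff_bounds(payoff: Sequence[Sequence[float]]) -> List[Tuple[float, float]]:
    """payoff[k][i] = value of objective i at the individual optimum of objective k.
    Returns (L_i, U_i) as the column-wise minimum and maximum."""
    return [(min(col), max(col)) for col in zip(*payoff)]


def decreasing(o: float, lo: float, hi: float) -> float:
    """Linear membership equal to 1 for o <= lo and 0 for o >= hi."""
    if hi == lo:
        raise ValueError("bounds must differ (L != U)")
    if o <= lo:
        return 1.0
    if o >= hi:
        return 0.0
    return (hi - o) / (hi - lo)


def increasing(o: float, lo: float, hi: float) -> float:
    """Linear membership equal to 0 for o <= lo and 1 for o >= hi."""
    return 1.0 - decreasing(o, lo, hi)


def memberships(o: float, L: float, U: float, y: float, z: float) -> Tuple[float, float, float]:
    """(mu, lambda, nu) of a minimization objective value o.
    ASSUMED intervals: truth on [L, U], indeterminacy on [L, L + y(U - L)],
    falsity on [L + z(U - L), U]."""
    mu = decreasing(o, L, U)
    lam = increasing(o, L, L + y * (U - L))
    nu = increasing(o, L + z * (U - L), U)
    return mu, lam, nu


bounds = payoff_bounds([[10.0, 40.0], [30.0, 20.0]])
print(bounds)                                     # [(10.0, 30.0), (20.0, 40.0)]
lo1, hi1 = bounds[0]
print(memberships(15.0, lo1, hi1, y=0.5, z=0.5))  # (0.75, 0.5, 0.0)
```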

Definition 8

\(x^{*} \in X\) is a neutrosophic fuzzy Pareto optimal solution (NFPOS) to the NFMOPP (3.1) if and only if there does not exist any other \(x \in X\) such that \(\mu _{G_{i}}(x) \ge \mu _{G_{i}}(x^{*}),~~\lambda _{G_{i}}(x) \le \lambda _{G_{i}}(x^{*}) \text{ and } \nu _{G_{i}}(x) \le \nu _{G_{i}}(x^{*})~~ \forall i=1,2, \ldots , p\); and \(\mu _{G_{j}}(x) > \mu _{G_{j}}(x^{*}),~~\lambda _{G_{j}}(x)< \lambda _{G_{j}}(x^{*}) \text{ and } \nu _{G_{j}}(x) < \nu _{G_{j}}(x^{*})\) for at least one j.
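Definition 8 translates directly into a dominance test. The sketch below, with hypothetical names and made-up degrees, checks it over a finite set of candidate points, each described by one \((\mu, \lambda, \nu)\) triple per goal, and keeps the candidates that no other candidate dominates. It follows the definition as stated, so the strict improvement must occur in all three degrees of the same goal j.

```python
from typing import Dict, List, Sequence, Tuple

Triple = Tuple[float, float, float]  # (mu, lambda, nu) of one goal


def nf_dominates(a: Sequence[Triple], b: Sequence[Triple]) -> bool:
    """True if point a dominates point b in the sense of Definition 8:
    no goal is worse at a, and at least one goal j is strictly better in all
    three degrees (higher truth, lower indeterminacy, lower falsity)."""
    no_worse = all(ma >= mb and la <= lb and na <= nb
                   for (ma, la, na), (mb, lb, nb) in zip(a, b))
    strictly_better = any(ma > mb and la < lb and na < nb
                          for (ma, la, na), (mb, lb, nb) in zip(a, b))
    return no_worse and strictly_better


def nf_pareto_front(points: Dict[str, List[Triple]]) -> List[str]:
    """Names of the candidate points not dominated by any other candidate."""
    return [name for name, degs in points.items()
            if not any(nf_dominates(other, degs)
                       for other_name, other in points.items() if other_name != name)]


# Hypothetical (mu, lambda, nu) values of two goals at three candidate points.
pts = {
    "a": [(0.8, 0.1, 0.1), (0.6, 0.2, 0.2)],
    "b": [(0.7, 0.2, 0.2), (0.6, 0.2, 0.2)],
    "c": [(0.9, 0.3, 0.3), (0.5, 0.1, 0.1)],
}
print(nf_pareto_front(pts))  # ['a', 'c']
```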

After the DM specifies the membership functions \(\mu _{G_{i}}(x),~~\lambda _{G_{i}}(x)\text { and }\nu _{G_{i}}(x)\) for each objective function \(O_{i}(x)\), and the neutrosophic decision set (Ahmad et al. 2019) is applied, the NFMOPP (3.1) is converted into the following equivalent problem:

Problem (3.9) is equivalent to the following problem (3.10):

Problem (3.10) is a neutrosophic optimization model that has been used by many researchers in various real-life applications (see Ahmad et al. 2020; Ahmad and Adhami 2019a; Ahmad et al. 2018). Extending the concept of Sakawa (2013), if the membership functions (3.6), (3.7) and (3.8) are used and problem (3.10) has a unique optimal solution, then that solution is an NFPOS. Otherwise, Pareto optimality can be examined by solving problem (3.11):

where \(\eta =(\eta _{1}, \eta _{2}, \ldots , \eta _{p})^{T}\) and \(x^{*}\) is an optimal solution of problem (3.9). If \(({\bar{\eta }},~{\bar{x}})\) is an optimal solution of problem (3.11), then one of the following two cases holds:

(1) If \( {\bar{\eta }}_{i} \ne 0\) for at least one i, then \({\bar{x}}\) is an NFPOS for (3.1).

(2) If \( {\bar{\eta }}_{i} = 0\) for all \(i=1,2, \ldots ,p\), then \(x^{*}\) is an NFPOS for (3.1).
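Solving problem (3.11) exactly requires a mathematical programming solver. As a rough, solver-free illustration of the two cases above, the sketch below applies a discrete analogue of the test to a finite list of candidate points: the slacks are the gains in truth, indeterminacy, and falsity over \(x^{*}\) for every goal (mirroring the definition of \(\eta_{i}\) used in the proof of Theorem 2), candidates that worsen any degree are excluded, and the candidate with the largest total slack is returned. The function name, the tolerance, and the data are hypothetical.

```python
from typing import Callable, Hashable, Iterable, List, Tuple

Triple = Tuple[float, float, float]  # (mu, lambda, nu) of one goal


def pareto_test(x_star: Hashable, candidates: Iterable[Hashable],
                degrees: Callable[[Hashable], List[Triple]],
                tol: float = 1e-12) -> Tuple[Hashable, float]:
    """Discrete analogue of the Pareto optimality test over a finite candidate list.

    For each candidate x, the slacks are the gains of every degree over x_star
    (truth up, indeterminacy and falsity down). Candidates with a negative slack
    are infeasible. Returns (x_bar, total slack); a total of 0 means that no
    listed candidate improves on x_star.
    """
    ref = degrees(x_star)
    best_x, best_total = x_star, 0.0
    for x in candidates:
        eta = []
        for (mu, lam, nu), (mu0, lam0, nu0) in zip(degrees(x), ref):
            eta.extend((mu - mu0, lam0 - lam, nu0 - nu))
        if min(eta) < -tol:  # x is worse than x_star in some degree
            continue
        total = sum(eta)
        if total > best_total + tol:
            best_x, best_total = x, total
    return best_x, best_total


# Hypothetical single-goal degrees at three candidate points.
table = {"a": [(0.6, 0.2, 0.2)], "b": [(0.7, 0.1, 0.2)], "c": [(0.9, 0.3, 0.1)]}
print(pareto_test("a", table, table.__getitem__))  # x_bar is 'b', positive total
print(pareto_test("b", table, table.__getitem__))  # ('b', 0.0)
```

A zero total corresponds to case (2), where \(x^{*}\) passes the test; a positive total corresponds to case (1), where the returned point plays the role of \(\bar{x}\). Unlike problem (3.11), the result only covers the listed candidates.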

In NFMOPP (3.1), a single DM incorporates his/her neutral thoughts, or indeterminacy degree, when making decisions. It is often preferable to incorporate the evaluations of several experts or decision-makers under the neutrosophic environment.

Hence, a novel solution method based on the single-valued neutrosophic hesitant fuzzy set is developed for solving the MOPP. The proposed method combines two sets, namely the neutrosophic set (Smarandache 1999) and the hesitant fuzzy set (Torra and Narukawa 2009). A key feature of the proposed method is that it accommodates conflicting opinions of various experts about the parameters, enabling the DM(s) to determine the most suitable outcomes in the neutrosophic environment.

Thus, a neutrosophic hesitant fuzzy multiobjective programming problem (NHFMOPP) can be formulated as an extension of the NFMOPP to the neutrosophic hesitant fuzzy setting:

where the notation \(\widetilde{\widetilde{(\cdot )}}\) represents a relaxed, or neutrosophic hesitant fuzzy, form of \((\cdot )\), meaning that “the functions should be minimized as much as possible under the neutrosophic hesitant fuzzy environment” subject to the given constraints.

In the NHFMOPP (3.12), the truth, indeterminacy, and falsity hesitant membership degrees of each objective are defined by the marginal evaluations of several experts or decision-makers. The NHFMOPP (3.12) therefore requires some parameters to be specified before it is solved. To define them, the opinions of different experts are elicited as aspiration values between 0 and 1: a value closer to 0 signifies a lower satisfaction degree for the corresponding objective, and a value closer to 1 signifies a higher satisfaction degree.

A neutrosophic hesitant fuzzy optimization problem is determined by the set X of possible solutions and the neutrosophic hesitant fuzzy goals \(\widetilde{G_{i}},~i=1,2, \ldots , p\), of the objective functions \(\widetilde{\widetilde{O_{i}}},~i=1,2, \ldots , p\), each of which is represented by a neutrosophic hesitant fuzzy set on X.

Accordingly, the neutrosophic hesitant fuzzy decision set \( D^{N}_{h} \) can be expressed as follows:

More explicitly, the set of membership functions under the neutrosophic hesitant fuzzy environment can be represented as follows:

Remark 1

Note that the membership functions \(\mu _{{G}^{k_{i}}_{i}}(x),~\lambda _{{G}^{k_{i}}_{i}}(x)\) and \(\nu _{{G}^{k_{i}}_{i}}(x)\) for all \(i=1,2, \ldots , p\) and \(k_{i}=1,2, \ldots , l_{i}\) are decreasing (or increasing) functions similar to Eqs. (3.6), (3.7) and (3.8), where \(l_{i}\) is the number of experts who assign attainment levels to the objective function \(\widetilde{\widetilde{O_{i}}}(x)\), \(i=1,2, \ldots , p\), in the neutrosophic environment. Furthermore, let \(H_{p}(x)= \{ \mu _{{G}^{k_{i}}_{i}}(x),~\lambda _{{G}^{k_{i}}_{i}}(x),~\nu _{{G}^{k_{i}}_{i}}(x)~|~i=1,2, \ldots , p,~~k_{i}=1,2, \ldots , l_{i} \}\). Based on these functions, the neutrosophic hesitant fuzzy Pareto optimal solution (NHFPOS) to the NHFMOPP (3.12) is defined as follows.

Definition 9

\(< x^{*},~H_{p}(x^{*})~|~ x^{*} \in X>\) is an NHFPOS to the NHFMOPP (3.12) if and only if there does not exist any other \(x \in X\) such that \(\mu _{{G}^{k_{i}}_{i}}(x) \ge \mu _{{G}^{k_{i}}_{i}}(x^{*}),~~\lambda _{{G}^{k_{i}}_{i}}(x) \le \lambda _{{G}^{k_{i}}_{i}}(x^{*})\) and \(\nu _{{G}^{k_{i}}_{i}}(x) \le \nu _{{G}^{k_{i}}_{i}}(x^{*})~~ \forall i=1,2, \ldots , p,~~k_{i}=1,2, \ldots , l_{i}\); and \(\mu _{{G}^{k_{j}}_{j}}(x) > \mu _{{G}^{k_{j}}_{j}}(x^{*}),~~\lambda _{{G}^{k_{j}}_{j}}(x) < \lambda _{{G}^{k_{j}}_{j}}(x^{*})\) and \(\nu _{{G}^{k_{j}}_{j}}(x) < \nu _{{G}^{k_{j}}_{j}}(x^{*})\) for at least one \(j \in \{1,2, \ldots , p \}\) and \(k_{j}=1,2, \ldots , l_{j}\).

Remark 2

Note that an NHFPOS reduces to an NFPOS if \(l_{i}=1\) for all i in (3.13); hence, the NFPOS is a special case of the NHFPOS.

The following subsections present two optimization techniques for the MOPP under a neutrosophic hesitant fuzzy environment.

3.1 Proposed optimization technique-I

Consider the NHFS \(\widetilde{G_{i}}\) of each objective function in the NHFMOPP (3.12). Applying the intersection of NHFSs yields the neutrosophic hesitant fuzzy decision set \( D^{N}_{h} \), which can be stated as follows:

with the neutrosophic hesitant fuzzy membership element of \(h_{D^{N}_{h}}(x)\)

for each \(x \in X\), where \(\tau = l_{1} l_{2} \cdots l_{p}\) is the number of ways of choosing one expert evaluation per objective and \(\theta _{ir} \in \{ 1,2, \ldots , l_{i}\}\) denotes the evaluation chosen for the ith objective in the rth combination. The members of \(\mu _{D^{N}_{h}} (x)\) are the minima of the truth hesitant membership degrees, whereas the members of \(\lambda _{D^{N}_{h}} (x)\) and \(\nu _{D^{N}_{h}} (x)\) are the maxima of the indeterminacy and falsity hesitant membership degrees, respectively. Thus, \(\mu _{D^{N}_{h}} (x),~\lambda _{D^{N}_{h}}(x)\) and \(\nu _{D^{N}_{h}} (x)\) contain the truth, indeterminacy, and falsity hesitant degrees of acceptance of the neutrosophic hesitant fuzzy solutions.
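The construction of \(h_{D^{N}_{h}}(x)\) can be sketched as follows, assuming each expert's evaluation of objective i at a fixed x is available as a \((\mu, \lambda, \nu)\) triple. Every combination \(\theta_{r}\) picks one expert per objective, giving \(\tau = l_{1} l_{2} \cdots l_{p}\) members, each formed by the minimum truth degree and the maximum indeterminacy and falsity degrees. The function name and sample values are illustrative only.

```python
from itertools import product
from math import prod
from typing import List, Sequence, Tuple

Triple = Tuple[float, float, float]  # (mu, lambda, nu) from one expert


def nhf_decision_members(hesitant: Sequence[Sequence[Triple]]) -> List[Triple]:
    """Members of the neutrosophic hesitant fuzzy decision set at a fixed x.

    hesitant[i][k] holds expert k's (mu, lambda, nu) for objective i, so
    len(hesitant[i]) = l_i. Each combination theta_r picks one expert per
    objective; its member is (min of truth, max of indeterminacy, max of falsity).
    """
    members = []
    for theta in product(*hesitant):  # tau = l_1 * l_2 * ... * l_p combinations
        members.append((min(t[0] for t in theta),
                        max(t[1] for t in theta),
                        max(t[2] for t in theta)))
    return members


# Hypothetical: objective 1 has two experts, objective 2 has one (tau = 2).
hes = [[(0.8, 0.1, 0.2), (0.6, 0.2, 0.1)], [(0.7, 0.3, 0.1)]]
members = nhf_decision_members(hes)
assert len(members) == prod(len(h) for h in hes)
print(members)  # [(0.7, 0.3, 0.2), (0.6, 0.3, 0.1)]
```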

The maximum satisfaction degree of the rth member \((r=1,2, \ldots , \tau )\) of the membership functions under the neutrosophic hesitant fuzzy environment is defined as follows:

Using auxiliary variables \(\alpha ,~\beta \) and \(\gamma \), problem (3.15) can be rewritten as follows:

Solving the rth problem (3.16) gives the maximal attainment degree \(\phi ^{*r}\) and the optimal solution \(x^{*r}\). Thus, solving all \(\tau \) problems of the form (3.16) yields the maximal aspiration degrees \(\phi ^{*1},~\phi ^{*2}, \ldots , \phi ^{*\tau }\) and the corresponding optimal solutions \(x^{*1},~x^{*2}, \ldots , x^{*\tau }\).

Remark 3

If the DM is not satisfied with a particular NHFPOS among the \(\tau \) NHFPOSs, the pessimistic or the optimistic NHFPOS can be chosen instead. To this end, let \(\phi ^{*m}=~\mathrm{min}~\{\phi ^{*1},~\phi ^{*2}, \ldots , \phi ^{*\tau }\}\) and \(\phi ^{*M}=~\mathrm{max}~\{\phi ^{*1},~\phi ^{*2}, \ldots , \phi ^{*\tau }\}\); then \(< x^{*m},~H_{p}(x^{*m})~|~ x^{*m} \in X>\) is the pessimistic NHFPOS and \(< x^{*M},~H_{p}(x^{*M})~|~ x^{*M} \in X>\) is the optimistic NHFPOS.
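Remark 3 amounts to taking a minimum and a maximum over the \(\tau\) solved problems. Assuming the outcomes of problem (3.16) are already available as pairs \((x^{*r}, \phi^{*r})\) (the numbers below are hypothetical, not results from the paper), a minimal Python sketch is:

```python
from typing import List, Sequence, Tuple

Result = Tuple[Sequence[float], float]  # (x^{*r}, phi^{*r}) from problem r


def pessimistic_optimistic(results: List[Result]) -> Tuple[Result, Result]:
    """Return the pessimistic (smallest phi) and optimistic (largest phi) results."""
    if not results:
        raise ValueError("need at least one solved problem (3.16)")
    pessimistic = min(results, key=lambda res: res[1])
    optimistic = max(results, key=lambda res: res[1])
    return pessimistic, optimistic


# Hypothetical outcomes of tau = 3 problems of type (3.16).
solved = [((1.0, 2.0), 0.62), ((1.5, 1.0), 0.71), ((0.5, 2.5), 0.55)]
pess, opt = pessimistic_optimistic(solved)
print(pess)  # ((0.5, 2.5), 0.55)
print(opt)   # ((1.5, 1.0), 0.71)
```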

Theorem 1 shows that the solutions obtained from problem (3.16) are NHFPOSs whenever they are unique.

Theorem 1

If there exists a unique optimal solution \((x^{*r},\phi ^{*r})\) of problem (3.16), then \(< x^{*r},~H_{p}(x^{*r})~|~ x^{*r} \in X>\) is an NHFPOS for the NHFMOPP (3.12), where \(H_{p}(x^{*r})=\{ \mu _{{\widetilde{G}}^{k_{i}}_{i}}(x^{*r}),~\lambda _{{\widetilde{G}}^{k_{i}}_{i}}(x^{*r}),~\nu _{{\widetilde{G}}^{k_{i}}_{i}}(x^{*r})~|~i=1,2, \ldots , p,~~k_{i}=1,2, \ldots , l_{i} \}\).

Proof

Assume that \(< x^{*r},~H_{p}(x^{*r})~|~ x^{*r} \in X>\) is not an NHFPOS for the NHFMOPP (3.12). Then, there exists an \(x \in X\) with \(< x,~H_{p}(x)>\) such that \(\mu _{{\widetilde{G}}^{k_{i}}_{i}}(x) \ge \mu _{{\widetilde{G}}^{k_{i}}_{i}}(x^{*r}),~\lambda _{{\widetilde{G}}^{k_{i}}_{i}}(x) \le \lambda _{{\widetilde{G}}^{k_{i}}_{i}}(x^{*r})\) and \(\nu _{{\widetilde{G}}^{k_{i}}_{i}}(x) \le \nu _{{\widetilde{G}}^{k_{i}}_{i}}(x^{*r})\) for all \(i=1,2, \ldots , p,~~k_{i}=1,2, \ldots , l_{i}\), and \(\mu _{{\widetilde{G}}^{k_{j}}_{j}}(x) > \mu _{{\widetilde{G}}^{k_{j}}_{j}}(x^{*r}),~\lambda _{{\widetilde{G}}^{k_{j}}_{j}}(x) < \lambda _{{\widetilde{G}}^{k_{j}}_{j}}(x^{*r})\) and \(\nu _{{\widetilde{G}}^{k_{j}}_{j}}(x) < \nu _{{\widetilde{G}}^{k_{j}}_{j}}(x^{*r})\) for at least one \(j \in \{1,2, \ldots , p \}\) and \(~k_{j}=1,2, \ldots , l_{j}\). In particular, for all \(r=1,2, \ldots , \tau \), \(\mu _{\widetilde{G_{i}}^{\theta _{ir}}}(x) \ge \mu _{\widetilde{G_{i}}^{\theta _{ir}}}(x^{*r}),~\lambda _{\widetilde{G_{i}}^{\theta _{ir}}}(x) \le \lambda _{\widetilde{G_{i}}^{\theta _{ir}}}(x^{*r})\) and \(\nu _{\widetilde{G_{i}}^{\theta _{ir}}}(x) \le \nu _{\widetilde{G_{i}}^{\theta _{ir}}}(x^{*r})\) for all \(i=1,2, \ldots , p\); and \(\mu _{\widetilde{G_{j}}^{\theta _{jr}}}(x) > \mu _{\widetilde{G_{j}}^{\theta _{jr}}}(x^{*r}),~\lambda _{\widetilde{G_{j}}^{\theta _{jr}}}(x) < \lambda _{\widetilde{G_{j}}^{\theta _{jr}}}(x^{*r})\) and \(\nu _{\widetilde{G_{j}}^{\theta _{jr}}}(x) < \nu _{\widetilde{G_{j}}^{\theta _{jr}}}(x^{*r})\) for at least one \(j \in \{1,2, \ldots , p \}\).

Hence, we have

\(\mathrm{min}~ \{ \mu _{\widetilde{G_{i}}^{\theta _{ir}}}(x) \}^{p}_{i=1} \ge \mathrm{min}~ \{ \mu _{\widetilde{G_{i}}^{\theta _{ir}}}(x^{*r}) \}^{p}_{i=1}\),

\(\mathrm{max}~ \{ \lambda _{\widetilde{G_{i}}^{\theta _{ir}}}(x) \}^{p}_{i=1} \le \mathrm{max}~ \{ \lambda _{\widetilde{G_{i}}^{\theta _{ir}}}(x^{*r}) \}^{p}_{i=1}\),

\(\mathrm{max}~ \{ \nu _{\widetilde{G_{i}}^{\theta _{ir}}}(x) \}^{p}_{i=1} \le \mathrm{max}~ \{ \nu _{\widetilde{G_{i}}^{\theta _{ir}}}(x^{*r}) \}^{p}_{i=1}\).

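These inequalities show that x, together with suitable values of the auxiliary variables, is feasible for problem (3.16) and attains an objective value no worse than that of \(x^{*r}\). This contradicts the optimality or the uniqueness of the optimal solution \(x^{*r}\) of problem (3.16), which proves Theorem 1.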

3.2 Neutrosophic hesitant fuzzy Pareto optimality test

If the uniqueness of the optimal solution \(x^{*r}\) of problem (3.16) is not guaranteed, a Pareto optimality test in the neutrosophic hesitant setting can be performed to obtain an NHFPOS. The neutrosophic hesitant fuzzy Pareto optimality test (NHFPOT) for \(x^{*r}\) is carried out by solving the following mathematical programming problem (3.17):

Theorem 2

Let \((x^{*r}, \phi ^{*r})\) be an efficient solution of problem (3.16), and let \(({\bar{x}}^{r}, {\bar{\eta }}^{r})\) be an optimal solution of problem (3.17). Then the following two statements hold:

(a) If \( {\bar{\eta }}^{r}_{i} = 0\) for all \(i=1,2, \ldots ,p\), then \(< x^{*r},~H_{p}(x^{*r})~|~ x^{*r} \in X>\) is an NHFPOS for the NHFMOPP (3.12).

(b) If \( {\bar{\eta }}^{r}_{i} \ne 0\) for at least one i, then \(< {\bar{x}}^{r},~H_{p}({\bar{x}}^{r})~|~ {\bar{x}}^{r} \in X>\) is an NHFPOS for the NHFMOPP (3.12).

Proof

(a): Assume that \(< x^{*r},~H_{p}(x^{*r})~|~ x^{*r} \in X>\) is not an NHFPOS for the NHFMOPP (3.12). Then, as in Theorem 1, there exists \(< x,~H_{p}(x)~|~ x \in X>\) such that, for all \(r=1,2, \ldots , \tau \), \(\mu _{\widetilde{G_{i}}^{\theta _{ir}}}(x) \ge \mu _{\widetilde{G_{i}}^{\theta _{ir}}}(x^{*r}),~\lambda _{\widetilde{G_{i}}^{\theta _{ir}}}(x) \le \lambda _{\widetilde{G_{i}}^{\theta _{ir}}}(x^{*r})\) and \(\nu _{\widetilde{G_{i}}^{\theta _{ir}}}(x) \le \nu _{\widetilde{G_{i}}^{\theta _{ir}}}(x^{*r})\) for all \(i=1,2, \ldots , p\); and \(\mu _{\widetilde{G_{j}}^{\theta _{jr}}}(x) > \mu _{\widetilde{G_{j}}^{\theta _{jr}}}(x^{*r}),~\lambda _{\widetilde{G_{j}}^{\theta _{jr}}}(x) < \lambda _{\widetilde{G_{j}}^{\theta _{jr}}}(x^{*r})\) and \(\nu _{\widetilde{G_{j}}^{\theta _{jr}}}(x) < \nu _{\widetilde{G_{j}}^{\theta _{jr}}}(x^{*r})\) for at least one \(j \in \{1,2, \ldots , p \}\). Hence, defining \(\eta _{i}\) as \(\eta _{i}= \{ (\mu _{\widetilde{G_{i}}^{\theta _{ir}}}(x) - \mu _{\widetilde{G_{i}}^{\theta _{ir}}}(x^{*r})), (\lambda _{\widetilde{G_{i}}^{\theta _{ir}}}(x^{*r}) - \lambda _{\widetilde{G_{i}}^{\theta _{ir}}}(x)), (\nu _{\widetilde{G_{i}}^{\theta _{ir}}}(x^{*r}) - \nu _{\widetilde{G_{i}}^{\theta _{ir}}}(x) )\}\), \(i=1,2, \ldots , p\), we have \(\eta _{i} \ge 0,~~i=1,2, \ldots , p\), and \(\eta _{j} >0\) for at least one j. Thus, problem (3.17) has a feasible solution \((x, \eta )\) with objective function value \( \sum _{i=1}^{p} \eta _{i}>0\), which contradicts the assumption that \(({\bar{x}}^{r}, {\bar{\eta }}^{r})\) is an optimal solution of (3.17) with optimal objective value \( \sum _{i=1}^{p} {\bar{\eta }}^{r}_{i} = 0\).

(b): Assume that \(< {\bar{x}}^{r},~H_{p}({\bar{x}}^{r})~|~ {\bar{x}}^{r} \in X>\) is not an NHFPOS for the NHFMOPP (3.12). Then, as in Theorem 1, there exists \(< x,~H_{p}(x)~|~ x \in X>\) such that, for all \(r=1,2, \ldots , \tau \), \(\mu _{\widetilde{G_{i}}^{\theta _{ir}}}(x) \ge \mu _{\widetilde{G_{i}}^{\theta _{ir}}}({\bar{x}}^{r}),~\lambda _{\widetilde{G_{i}}^{\theta _{ir}}}(x) \le \lambda _{\widetilde{G_{i}}^{\theta _{ir}}}({\bar{x}}^{r})\) and \(\nu _{\widetilde{G_{i}}^{\theta _{ir}}}(x) \le \nu _{\widetilde{G_{i}}^{\theta _{ir}}}({\bar{x}}^{r})\) for all \(i=1,2, \ldots , p\); and \(\mu _{\widetilde{G_{j}}^{\theta _{jr}}}(x) > \mu _{\widetilde{G_{j}}^{\theta _{jr}}}({\bar{x}}^{r}),~\lambda _{\widetilde{G_{j}}^{\theta _{jr}}}(x) < \lambda _{\widetilde{G_{j}}^{\theta _{jr}}}({\bar{x}}^{r})\) and \(\nu _{\widetilde{G_{j}}^{\theta _{jr}}}(x) < \nu _{\widetilde{G_{j}}^{\theta _{jr}}}({\bar{x}}^{r})\) for at least one \(j \in \{1,2, \ldots , p \}\). Define \(\eta _{i}\) as \(\eta _{i}= \{ (\mu _{\widetilde{G_{i}}^{\theta _{ir}}}(x) - \mu _{\widetilde{G_{i}}^{\theta _{ir}}}(x^{*r})), (\lambda _{\widetilde{G_{i}}^{\theta _{ir}}}(x^{*r}) - \lambda _{\widetilde{G_{i}}^{\theta _{ir}}}(x)), (\nu _{\widetilde{G_{i}}^{\theta _{ir}}}(x^{*r}) - \nu _{\widetilde{G_{i}}^{\theta _{ir}}}(x) )\}\), \(i=1,2, \ldots , p\). Then, \((x, \eta )\) is a feasible solution for problem (3.17). We know that

for at least one j. Thus, \( \sum _{i=1}^{p} \eta _{i} > \sum _{i=1}^{p} {\bar{\eta }}^{r}_{i}\), which contradicts the assumption that \(({\bar{x}}^{r}, {\bar{\eta }}^{r})\) is an optimal solution of (3.17).

Remark 4

We now show that the pessimistic NHFPOS can also be determined by extending the concept of Bellman and Zadeh (1970) to all the membership functions under the neutrosophic hesitant fuzzy environment.

To this end, assume that problem (3.16) yields the pessimistic NHFPOS, and consider the maximum satisfaction degree of the mth member \((m \in \{1,2, \ldots , \tau \})\) of the membership functions under the neutrosophic hesitant fuzzy environment:

Using auxiliary variables \(\alpha ,~\beta \) and \(\gamma \), problem (3.18) can be rewritten as follows:

Suppose that \((x^{*m}, \phi ^{*m})\) is an efficient solution of (3.19) with \(\phi ^{*m} = \mathrm{min}~(\phi ^{*1}, \phi ^{*2}, \ldots , \phi ^{*\tau })\). Further, assume that the extended concept of Bellman and Zadeh (1970) is applied in the neutrosophic hesitant fuzzy environment, which gives problem (3.20):

Equivalently, the problem (3.20) can be rewritten as follows:

Theorem 3

Problems (3.19) and (3.21) have equal optimal objective values.

Proof

Assume that \((x^{*}, \phi ^{*})\) and \((x^{*m}, \phi ^{*m})\) are optimal solutions of (3.21) and (3.19), respectively. We need to prove that \(\phi ^{*} = \phi ^{*m}\), or equivalently

First, we show that \(\phi ^{*} \le \phi ^{*m}\). The feasible set of (3.21) is a subset of that of (3.16), which in turn is a subset of the feasible set of (3.19). Thus, \(\phi ^{*} \le \phi ^{*m}\), or equivalently

Next, we show that \( \phi ^{*m} \le \phi ^{*}\). Since problem (3.18) yields the pessimistic NHFPOS, we have \( \phi ^{*m} \le \phi ^{*r}\) for all \(r \in \{ 1,2, \ldots , \tau \}\), or equivalently

\(\mathrm{max}~\mathrm{min}~ \{ \mu _{\widetilde{G_{i}}^{\theta _{im}}}(x^{*m}) \}^{p}_{i=1} \le \mathrm{max}~\mathrm{min}~ \{ \mu _{\widetilde{G_{i}}^{\theta _{ir}}}(x^{*r}) \}^{p}_{i=1}\forall ~r \in \{ 1,2, \ldots , \tau \}\),

\(\mathrm{min}~\mathrm{max}~ \{ \lambda _{\widetilde{G_{i}}^{\theta _{im}}}(x^{*m}) \}^{p}_{i=1} \ge \mathrm{min}~\mathrm{max}~ \{ \lambda _{\widetilde{G_{i}}^{\theta _{ir}}}(x^{*r}) \}^{p}_{i=1} \forall ~r \in \{ 1,2, \ldots , \tau \}\),

\(\mathrm{min}~\mathrm{max}~ \{ \nu _{\widetilde{G_{i}}^{\theta _{im}}}(x^{*m}) \}^{p}_{i=1} \ge \mathrm{min}~\mathrm{max}~ \{ \nu _{\widetilde{G_{i}}^{\theta _{ir}}}(x^{*r}) \}^{p}_{i=1}~~\forall ~r \in \{ 1,2, \ldots , \tau \}\).

Therefore, it is obvious that

Therefore, \( \phi ^{*m} \le \phi ^{*}\). Thus, the two inequalities in Eqs. (3.23) and (3.24) confirm that \(\phi ^{*} = \phi ^{*m}\), which proves Theorem 3.

In optimization technique-II, the weighted arithmetic-mean score functions of the NHFEs \(\mu _{D^{N}_{h}}(x),~\lambda _{D^{N}_{h}} (x)\) and \(\nu _{D^{N}_{h}} (x)\) are used to formulate a problem whose optimal solution gives an NHFPOS of the NHFMOPP.

3.3 Proposed optimization technique-II

Suppose that the DM(s) intend to solve NHFMOPP (3.12). The arithmetic-mean score function of each of \(\mu _{D^{N}_{h}}(x),~\lambda _{D^{N}_{h}} (x)\) and \(\nu _{D^{N}_{h}} (x)\) is computed, and problem (3.25) is developed:

where \(\chi (\mu _{{\widetilde{G_{i}}}}(x)) = \frac{\sum _{j=1}^{l_{i}} \mu _{{\widetilde{G^{j}_{i}}}}(x) }{l_{i}}\), \(\chi (\lambda _{{\widetilde{G_{i}}}}(x)) = \frac{\sum _{j=1}^{l_{i}} \lambda _{{\widetilde{G^{j}_{i}}}}(x) }{l_{i}}\) and \(\chi (\nu _{{\widetilde{G_{i}}}}(x)) = \frac{\sum _{j=1}^{l_{i}} \nu _{{\widetilde{G^{j}_{i}}}}(x) }{l_{i}}\) are the arithmetic-mean score functions of \(\mu _{{\widetilde{G_{i}}}}(x),~\lambda _{{\widetilde{G_{i}}}}(x)\) and \(\nu _{{\widetilde{G_{i}}}}(x)\), respectively.
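The score function \(\chi \) simply averages the \(l_{i}\) hesitant degrees elicited for one goal. The following is a minimal Python sketch, assuming that the truth, indeterminacy, and falsity degrees of one goal at a fixed \(x\) are already available as lists of numbers in [0, 1]. The function name `arithmetic_mean_score` and the sample degrees are hypothetical.

```python
from statistics import fmean


def arithmetic_mean_score(hesitant_values):
    """chi(.) = (1 / l_i) * (sum of the l_i hesitant membership degrees).

    `hesitant_values` holds the degrees that the l_i experts assigned to one
    goal at a fixed point x; each degree is assumed to lie in [0, 1].
    """
    values = list(hesitant_values)
    if not values:
        raise ValueError("a hesitant element needs at least one value")
    if any(not 0.0 <= v <= 1.0 for v in values):
        raise ValueError("membership degrees must lie in [0, 1]")
    return fmean(values)


# Hypothetical degrees from l_i = 3 experts for one objective at some x.
mu = [0.7, 0.6, 0.8]     # truth
lam = [0.2, 0.3, 0.1]    # indeterminacy
nu = [0.1, 0.2, 0.15]    # falsity
print(arithmetic_mean_score(mu), arithmetic_mean_score(lam), arithmetic_mean_score(nu))
```

Each \(\chi \) value reduces a set of expert opinions to a single number. This is why technique-II needs only one model, and also why it discards the spread of the opinions.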

To solve problem (3.25), we use the weighted sum method, which reformulates problem (3.25) as follows (3.26):

where \(w= (w_{1}, w_{2}, \ldots , w_{p})\) is a vector of positive weights such that \(\sum _{i=1}^{p} w_{i} =1\). By Theorem 4, it suffices to solve this single-objective mathematical programming problem instead of NHFMOPP (3.12).

Theorem 4

Suppose that \(w= (w_{1}, w_{2}, \ldots , w_{p})\) is a vector of positive weights assigned to the objectives such that \(\sum _{i=1}^{p} w_{i} =1\). If \(x^{*}\) is an optimal solution of (3.26), then \(< x^{*},~H_{p}(x^{*})~|~ x^{*} \in X>\) is an NHFPOS for the NHFMOPP.

Proof

Assume that \(< x^{*},~H_{p}(x^{*})~|~ x^{*} \in X>\) is not an NHFPOS for the NHFMOPP. Then there exists an \(< x,~H_{p}(x)~|~ x \in X>\) such that \(\mu _{{\widetilde{G}}^{k_{i}}_{i}}(x) \ge \mu _{{\widetilde{G}}^{k_{i}}_{i}}(x^{*}),~\lambda _{{\widetilde{G}}^{k_{i}}_{i}}(x) \le \lambda _{{\widetilde{G}}^{k_{i}}_{i}}(x^{*})\) and \(\nu _{{\widetilde{G}}^{k_{i}}_{i}}(x) \le \nu _{{\widetilde{G}}^{k_{i}}_{i}}(x^{*})\) for all \(i=1,2, \ldots , p,~~k_{i}=1,2, \ldots , l_{i}\), and \(\mu _{{\widetilde{G}}^{k_{j}}_{j}}(x) > \mu _{{\widetilde{G}}^{k_{j}}_{j}}(x^{*}),~\lambda _{{\widetilde{G}}^{k_{j}}_{j}}(x) < \lambda _{{\widetilde{G}}^{k_{j}}_{j}}(x^{*})\) and \(\nu _{{\widetilde{G}}^{k_{j}}_{j}}(x) < \nu _{{\widetilde{G}}^{k_{j}}_{j}}(x^{*})\) for at least one \(j \in \{1,2, \ldots , p \}\) and \(k_{j}=1,2, \ldots , l_{j}\). Since all weights are positive, we get

Together, the inequalities in Eq. (3.27) contradict the optimality of \(x^{*}\) for (3.26). Thus, Theorem 4 is proved.
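Theorem 4 rests on the standard weighted-sum argument: a maximizer of a positively weighted sum cannot be dominated. The Python sketch below checks this on a finite set of candidate points. For illustration only, it assumes that each candidate is summarized by one score per objective that is to be maximized, such as \(\chi (\mu ) - \chi (\lambda ) - \chi (\nu )\). This aggregate, the candidate data, and all function names are hypothetical and are not the exact problem (3.26).

```python
def weighted_sum(scores, weights):
    """Scalarize one candidate's score vector (every entry is to be maximized)."""
    if len(scores) != len(weights):
        raise ValueError("one weight per score is required")
    return sum(w * s for w, s in zip(weights, scores))


def dominates(a, b):
    """True if `a` is no worse than `b` in every entry and strictly better in one."""
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))


def weighted_sum_optimum(candidates, weights):
    """Return the candidate name that maximizes the weighted sum of its scores."""
    if any(w <= 0 for w in weights) or abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights must be positive and sum to 1")
    return max(candidates, key=lambda name: weighted_sum(candidates[name], weights))


# Hypothetical per-objective scores s_i(x) for four candidate points, p = 2.
candidates = {
    "x_a": (0.62, 0.40),
    "x_b": (0.55, 0.58),
    "x_c": (0.50, 0.50),
    "x_d": (0.30, 0.70),
}
best = weighted_sum_optimum(candidates, (0.65, 0.35))
dominated = any(dominates(s, candidates[best]) for s in candidates.values())
print(best, dominated)  # x_b False
```

The sketch rejects zero weights on purpose. If some \(w_{i}=0\), a weighted-sum maximizer may be only weakly Pareto optimal. For example, with weights (1, 0), the points (0.5, 0.2) and (0.5, 0.4) tie, and the first one is dominated.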

4 Computational study

The proposed optimization techniques are applied to three real-life optimization problems, namely manufacturing, system design, and production planning. All the multiobjective programming problems in the examples are coded in SAS/OR software (see Rodriguez 2011; Ruppert 2004).

4.1 Manufacturing system problem

Example 1 (see Ahmad et al. 2018; Singh and Yadav 2015): A manufacturing factory intends to produce three types of products \(P_{1}, P_{2}\), and \(P_{3}\) in a specified period (say, one year). The production processes of \(P_{1}, P_{2}\) and \(P_{3}\) require three kinds of resources, \(R_{1}, R_{2}\) and \(R_{3}\). Each unit of product \(P_{1}\) requires 2, 3, and 4 units of \(R_{1}, R_{2}\) and \(R_{3}\), respectively. Each unit of \(P_{2}\) requires around 4, 2, and 2 units, and each unit of \(P_{3}\) requires approximately 3, 2, and 3 units. The total availability of resources \(R_{1}\) and \(R_{2}\) is around 325 and 360 units, respectively. In addition, around 30 and 20 units of additional stock of these resources are held under the control of the factory manager. To ensure better product quality, at least 365 units of resource \(R_{3}\) should be utilized, and the managerial board can make an additional 20 units of \(R_{3}\) available in an emergency. The estimated completion times per unit of products \(P_{1}, P_{2}\), and \(P_{3}\) are 4, 5, and 6 hours, respectively. Let the production quantities of \(P_{1}, P_{2}\) and \(P_{3}\) be \(x_{1}, x_{2}\) and \(x_{3}\) units, respectively. The unit costs of \(P_{1}, P_{2}\) and \(P_{3}\) are \(c_{1}=8, c_{2}=10.125\) and \(c_{3}=8\), and their sale prices are \(s_{1}=\frac{99.875}{x_{1}^{-1/2}}\), \(s_{2}=\frac{119.875}{x_{2}^{-1/2}}\) and \(s_{3}=\frac{95.125}{x_{3}^{-1/3}}\), respectively. The manager wants to maximize profit and minimize the total time requirement. The resulting mathematical formulation is the nonlinear programming problem (4.1), presented as follows:

Solving problem (4.1) gives the individual minimum and maximum values of each objective function: \(L_{1}=-516.70\), \(U_{1}=-180.72\), \(L_{2}=599.23\) and \(U_{2}=620.84\). Initially, assume that one expert provides aspiration levels for the first objective, given by the following three membership functions:

One DM or expert also provides aspiration levels for the second objective, expressing the neutrosophic fuzzy goals by the following three membership functions:

Using problem (3.10), the equivalent neutrosophic decision-making problem can be stated as follows (4.8):

At \(t=2\), the optimal solution of (4.8) is \(x=(60.48,~5.26,~58.37)\), with \(O_{1}=409.70\), \(O_{2}=607.28\) and degree of satisfaction \(\phi ^{*}=0.62\). In other words, the overall satisfaction of the DM’s neutrosophic fuzzy goals is 62%. Now assume that three other DMs or experts contribute to the decision-making process under a neutrosophic hesitant fuzzy environment. One DM gives an opinion on the first objective function, and two DMs give their opinions on the second. The neutrosophic hesitant fuzzy goals of the DM or expert for the first objective function are expressed by the following membership functions:

The neutrosophic hesitant fuzzy goals of the DMs or experts for the second objective function are expressed by the following membership functions:

Thus, we have the following neutrosophic hesitant fuzzy decision set:

where X is a feasible solution region and

In optimization technique-I, the neutrosophic hesitant fuzzy decision for Example 1 is stated as follows:

with

for each \(x \in X\). Our aim is to maximize the truth hesitant membership function \(\mu _{\widetilde{G_{i}}}(x)\) and to minimize the indeterminacy \(\lambda _{\widetilde{G_{i}}}(x)\) and falsity \(\nu _{\widetilde{G_{i}}}(x)\) hesitant membership functions of \(h_{D^{N}_{h}}(x)\). Table 1 summarizes the obtained NHFPOSs. Table 2 shows the optimistic and pessimistic NHFPOSs, together with the solutions from the approaches discussed in Ahmad et al. (2018) and Singh and Yadav (2015).

Using Remark 4, the pessimistic NHFPOS is obtained from problem (4.20):

The optimal solution of problem (4.20) is \(x^{*}=(60.48,~5.26,~58.37)\) with \(\phi ^{*}=0.96\) and objective function values \(O_{1}=288.86,~O_{2}=599.64\). This is the pessimistic NHFPOS shown in Table 2.

Furthermore, suppose that the first objective \(O_{1}\) is more important than the second objective \(O_{2}\), with weights \(w_{1}=0.65\) and \(w_{2}=0.35\). Applying optimization technique-II then yields the following problem (4.21):

Solving problem (4.21) gives the optimal solution \(x^{*}=(60.48,~5.26,~58.37)\) with \(\phi ^{*}=0.99\) and objective function values \(O_{1}=409.70,~O_{2}=607.28\). By Theorem 4, \(< x^{*},~H_{p}(x^{*})~|~ x^{*} \in X>\) is an NHFPOS.
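As a sanity check on the verbal description of Example 1, the sketch below evaluates the three resource requirements at the reported solution \(x^{*}=(60.48,~5.26,~58.37)\). It uses only the crisp limits stated in the text (\(R_{1} \le 325\), \(R_{2} \le 360\), \(R_{3} \ge 365\)) and ignores the tolerances of 30, 20, and 20 units. It is therefore one reading of the prose, not the exact constraint set of problem (4.1), which is not reproduced here. The function names are hypothetical.

```python
# Per-unit resource requirements as read from the text of Example 1
# (rows: R1, R2, R3; columns: P1, P2, P3).
REQUIREMENTS = [
    [2.0, 4.0, 3.0],  # R1
    [3.0, 2.0, 2.0],  # R2
    [4.0, 2.0, 3.0],  # R3
]


def resource_usage(x):
    """Total amount of each resource consumed by the production plan x."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in REQUIREMENTS]


def crisp_feasible(x):
    """Check the crisp limits only; the fuzzy tolerances are not modeled."""
    r1, r2, r3 = resource_usage(x)
    return r1 <= 325 and r2 <= 360 and r3 >= 365 and all(xi >= 0 for xi in x)


x_star = (60.48, 5.26, 58.37)
print([round(u, 2) for u in resource_usage(x_star)])  # [317.11, 308.7, 427.55]
print(crisp_feasible(x_star))  # True
```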

4.2 System design problem

Example 2 (see Sakawa 2013; Rouhbakhsh et al. 2020): Suppose that a machine park consisting of six machine types with different capacities is available for producing three distinct products \(P_{1}\), \(P_{2}\), and \(P_{3}\). All relevant data are summarized in Table 3. The decision-maker(s) intend to optimize three objectives: (i) total profit, (ii) product quality, and (iii) worker satisfaction.

Let \(x_{1}\), \(x_{2}\), and \(x_{3}\) denote the numbers of units of \(P_{1}\), \(P_{2}\), and \(P_{3}\) to be produced. The multiobjective programming problem (4.22) can then be formulated as follows:

We examine this problem in the neutrosophic hesitant fuzzy environment. Solving problem (4.22) gives the individual minimum and maximum values of the objective functions: \(L_{1}=5452.63\), \(U_{1}=8041.14\), \(L_{2}=10020.33\), \(U_{2}=10950.59\), \(L_{3}=5903\) and \(U_{3}=9355.90\). Assume that one expert provides aspiration levels for the first objective:

Furthermore, suppose that three experts provide aspiration levels for the second objective, expressing their neutrosophic hesitant fuzzy goals by the following membership functions:

Also, suppose that two experts provide aspiration levels for the third objective, expressing their neutrosophic hesitant fuzzy goals by the following membership functions:

Hence, we have the following neutrosophic hesitant fuzzy decision set:

where X is a feasible solution region and

In optimization technique-I, the neutrosophic hesitant fuzzy decision for Example 2 can be stated as follows:

Our aim is to maximize the truth hesitant membership function \(\mu _{\widetilde{G_{i}}}(x)\) and to minimize the indeterminacy \(\lambda _{\widetilde{G_{i}}}(x)\) and falsity \(\nu _{\widetilde{G_{i}}}(x)\) hesitant membership functions of \(h_{D^{N}_{h}}(x)\). Table 4 summarizes the NHFPOSs of the NHFMOPP obtained with optimization technique-I, and Table 5 shows the optimistic and pessimistic NHFPOSs.

With the aid of Remark 4, the pessimistic NHFPOS is obtained by solving (4.44):

The optimal solution of problem (4.44) is \(x^{*}=(54.97,~38.56,~46.59)\) with \(\phi ^{*}=0.58\) and objective function values \(O_{1}=7419.82\), \(O_{2}=10278.74\) and \(O_{3}=8724.50\). This is the pessimistic NHFPOS shown in Table 5.

Moreover, consider three weighting schemes: (\(w_{1}=0.6\), \(w_{2}=0.2\), \(w_{3}=0.2\)), (\(w_{1}=0.2\), \(w_{2}=0.6\), \(w_{3}=0.2\)) and (\(w_{1}=0.2\), \(w_{2}=0.2\), \(w_{3}=0.6\)). Applying optimization technique-II yields the following problem (4.45):

Table 6 summarizes the optimal solutions of problem (4.45) for the different weight vectors. By Theorem 4, \(< x^{*},~H_{p}(x^{*})~|~ x^{*} \in X>\) is an NHFPOS. Table 7 compares these results for Example 2 with those of other existing methods. The proposed optimization techniques give better solutions in this comparison. However, we cannot claim that they always outperform other methods, because the outcome depends on the experience and opinions of the experts involved. We argue instead that the results are closer to reality, because they incorporate the opinions of several experts together with their degrees of neutral thought in the decision-making process.

4.3 Production planning problem

Example 3 (see Zeleny 1986; Rouhbakhsh et al. 2020): A production company produces two items, \(I_{1}\) and \(I_{2}\), using three raw materials, \(R_{1}\), \(R_{2}\) and \(R_{3}\), and intends to maximize its total profit from sales. Table 8 summarizes the resources required to produce each unit of \(I_{1}\) and \(I_{2}\). The available amounts of \(R_{1}\), \(R_{2}\), and \(R_{3}\) are limited to 27, 45, and 15 tons, respectively. The unit profits are known in advance: item \(I_{1}\) yields a profit of 1 million yen per ton, whereas \(I_{2}\) yields 2 million yen per ton. Given the available resources, the company aims to determine the production quantities of \(I_{1}\) and \(I_{2}\) that maximize overall profit. However, item \(I_{1}\) releases three units of pollution per ton, while \(I_{2}\) generates two units of pollution per ton. The decision-maker(s) or experts must therefore not only increase total profit but also reduce the amount of pollution.

Let \(x_{1}\) and \(x_{2}\) denote the numbers of tons of items \(I_{1}\) and \(I_{2}\) produced, respectively. The production planning problem can then be formulated as follows (4.46):

Solving problem (4.46) gives the individual minimum and maximum values of the objective functions: \(L_{1}=-10\), \(U_{1}=0\), \(L_{2}=0\) and \(U_{2}=16.5\).

Also, one DM or expert provides aspiration levels for the second objective, expressing the neutrosophic fuzzy goals by the following three membership functions:

Using problem (3.10), the equivalent neutrosophic decision-making problem can be stated as follows (4.53):

The optimal solution of the bi-objective programming problem (4.53) is \(x^{*}=(x_{1}^{*},x_{2}^{*})=(0.87,~4.21)\), with \(O_{1}=-9.29,~O_{2}=11.03\) and degree of satisfaction \(\phi ^{*}=0.64\). In other words, the overall satisfaction of the DM’s neutrosophic fuzzy goals is 64%. Furthermore, assume that the neutrosophic hesitant fuzzy goals of the DMs or experts for the first objective function are expressed by the following membership functions:

Therefore, we have the following neutrosophic hesitant fuzzy decision set:

where X is a feasible solution region and

Thus, the neutrosophic hesitant fuzzy decision for Example 3 is stated as follows:

with

for each \(x \in X\). Our aim is to maximize the truth hesitant membership function \(\mu _{\widetilde{G_{i}}}(x)\) and to minimize the indeterminacy \(\lambda _{\widetilde{G_{i}}}(x)\) and falsity \(\nu _{\widetilde{G_{i}}}(x)\) hesitant membership functions of \(h_{D^{N}_{h}}(x)\). Table 9 summarizes the obtained NHFPOSs. Table 10 gives the optimistic and pessimistic NHFPOSs and compares them with the HFPOSs of Rouhbakhsh et al. (2020).

With the aid of Remark 4, the pessimistic NHFPOS can be determined by solving the following problem (4.71):

The optimal solution of problem (4.71) is \(x^{*}=(0,~4.3)\) with \(\phi ^{*}=0.21\) and objective function values \(O_{1}=-8.60,~O_{2}=8.60\). This is the pessimistic NHFPOS shown in Table 10.

Furthermore, suppose that \(O_{1}\) is more important than \(O_{2}\), with weights \(w_{1}=0.75\) and \(w_{2}=0.25\). Applying optimization technique-II yields the following problem (4.72):

Solving problem (4.72) gives the optimal solution \(x^{*}=(0,~4.2)\) with \(\phi ^{*}=0.2\) and objective function values \(O_{1}=-8.40,~O_{2}=8.40\). By Theorem 4, \(< x^{*},~H_{p}(x^{*})~|~ x^{*} \in X>\) is an NHFPOS.
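The profit and pollution coefficients stated in Example 3 are enough to recompute the reported objective values. The sketch below assumes that \(O_{1}\) is the negated profit \(x_{1}+2x_{2}\) (in million yen, minimized) and that \(O_{2}=3x_{1}+2x_{2}\) is the pollution (minimized). The first assumption is consistent with \(L_{1}=-10\) and the negative \(O_{1}\) values. The raw-material constraints of Table 8 are not needed for this check and are omitted. The function name is hypothetical.

```python
def objectives(x1: float, x2: float) -> tuple:
    """Return (O1, O2) for Example 3, both to be minimized.

    O1: negated profit in million yen (1 per ton of I1, 2 per ton of I2).
    O2: pollution units (3 per ton of I1, 2 per ton of I2).
    """
    profit = 1.0 * x1 + 2.0 * x2
    pollution = 3.0 * x1 + 2.0 * x2
    return -profit, pollution


# Solutions reported in the text for problems (4.53), (4.71), and (4.72).
reported = {
    "(4.53)": (0.87, 4.21),
    "(4.71)": (0.0, 4.3),
    "(4.72)": (0.0, 4.2),
}
for label, (x1, x2) in reported.items():
    o1, o2 = objectives(x1, x2)
    print(label, round(o1, 2), round(o2, 2))
```

The printed pairs (−9.29, 11.03), (−8.6, 8.6), and (−8.4, 8.4) agree with the \(O_{1}\) and \(O_{2}\) values reported for the three solutions.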

4.4 Computational steps and discussion

This paper has investigated two optimization techniques for MOPPs in the neutrosophic hesitant fuzzy environment and has examined their robustness through Pareto optimality tests. The stepwise solution algorithm for optimization technique-I is as follows:

1. Elicit from the DMs the membership functions \( \mu _{{\widetilde{G^{k_{i}}_{i}}}} (x), \lambda _{{\widetilde{G^{k_{i}}_{i}}}} (x)\) and \( \nu _{{\widetilde{G^{k_{i}}_{i}}}} (x)\) for all \(k_{i}=1,2, \ldots , l_{i}\). Then construct \(\widetilde{G_{i}}= \{ x,~\mu _{{\widetilde{G_{i}}}}(x),~\lambda _{\widetilde{G_{i}}}(x),~\nu _{\widetilde{G_{i}}}(x)~|~ x \in X\}\), where \(\mu _{\widetilde{G_{i}}}(x)=\{\mu _{{G}^{1}_{i}}(x), \mu _{{G}^{2}_{i}}(x), \ldots , \mu _{{G}^{l_{i}}_{i}}(x) \} \), \(\lambda _{\widetilde{G_{i}}}(x)=\{\lambda _{{G}^{1}_{i}}(x), \lambda _{{G}^{2}_{i}}(x), \ldots , \lambda _{{G}^{l_{i}}_{i}}(x) \} \) and \( \nu _{\widetilde{G_{i}}}(x)=\{\nu _{{G}^{1}_{i}}(x), \nu _{{G}^{2}_{i}}(x), \ldots , \nu _{{G}^{l_{i}}_{i}}(x) \}\), as the neutrosophic hesitant fuzzy goal for objective function \(O_{i}\), for all \(i=1,2, \ldots , p \).

2. For every \(r=1,2, \ldots , \tau \), where \(\tau = l_{1}l_{2} \ldots l_{p}\), select one \(\theta _{ir} \in \{1,2, \ldots , l_{i}\}\) for each \(i=1,2, \ldots , p \), so that each \(r\) corresponds to a distinct combination, and construct problem (3.16).

3. Solve the rth model of problem (3.16) to determine the maximal degree of the aspiration level \(\phi ^{*r}\) and the optimal solution \(x^{*r}\). Then compute \(H_{p} (x^{*r}) = \{ \mu _{{\widetilde{G}}^{k_{i}}_{i}}(x^{*r}),~\lambda _{{\widetilde{G}}^{k_{i}}_{i}}(x^{*r}),~\nu _{{\widetilde{G}}^{k_{i}}_{i}}(x^{*r})~|~i=1,2, \ldots , p,~k_{i}=1,2, \ldots , l_{i} \}\).

4. Take \( < \phi ^{*m}, x^{*m}, H_{p} (x^{*m})>\) as the pessimistic NHFPOS, where \(\phi ^{*m}= \mathrm{min}~\{\phi ^{*1}, \phi ^{*2}, \ldots , \phi ^{*\tau } \} \). Take \( < \phi ^{*M}, x^{*M}, H_{p} (x^{*M})>\) as the optimistic NHFPOS, where \(\phi ^{*M}= \mathrm{max}~\{\phi ^{*1}, \phi ^{*2}, \ldots , \phi ^{*\tau } \} \).
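Steps 2 to 4 of technique-I enumerate all \(\tau = l_{1}l_{2} \ldots l_{p}\) combinations of hesitant membership functions, solve one model per combination, and keep the smallest and largest optimal satisfaction degrees. The Python sketch below assumes a hypothetical callback `solve_model(theta)` that returns \((\phi ^{*r}, x^{*r})\) for a combination \(\theta \). In practice this would be an LP/NLP solver applied to problem (3.16). The toy solver and its \(\phi \) values are invented only to make the example runnable.

```python
from itertools import product
from math import prod


def technique_one(levels, solve_model):
    """Solve one model per combination theta and return the extreme solutions.

    levels      -- [l_1, ..., l_p]: number of hesitant membership functions per goal
    solve_model -- hypothetical callback mapping theta (1-based indices theta_ir,
                   one per goal) to (phi_r, x_r), the optimum of that model
    Returns (pessimistic, optimistic), each a (phi, x, theta) triple.
    """
    thetas = list(product(*(range(1, l + 1) for l in levels)))
    assert len(thetas) == prod(levels)  # tau = l_1 * l_2 * ... * l_p models
    results = [(*solve_model(theta), theta) for theta in thetas]
    pessimistic = min(results, key=lambda r: r[0])  # phi*m = min_r phi*r
    optimistic = max(results, key=lambda r: r[0])   # phi*M = max_r phi*r
    return pessimistic, optimistic


def toy_solver(theta):
    """Stand-in for an LP/NLP solver: looks up made-up phi values."""
    made_up_phi = {(1, 1): 0.62, (1, 2): 0.58, (2, 1): 0.71, (2, 2): 0.66}
    return made_up_phi[theta], f"x*{theta}"


pessimistic, optimistic = technique_one([2, 2], toy_solver)
print(pessimistic)  # (0.58, 'x*(1, 2)', (1, 2))
print(optimistic)   # (0.71, 'x*(2, 1)', (2, 1))
```

The number of models grows multiplicatively with the number of experts per goal. Technique-I is therefore most practical when each \(l_{i}\) is small.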

The stepwise solution algorithm for optimization technique-II is summarized as follows:

1. Follow Step 1 of optimization technique-I.

2. Evaluate the arithmetic-mean score functions \( \chi (\mu _{{\widetilde{G_{i}}}}(x))\), \(\chi (\lambda _{{\widetilde{G_{i}}}}(x))\) and \(\chi (\nu _{{\widetilde{G_{i}}}}(x))\) of \(\mu _{{\widetilde{G_{i}}}}(x),~\lambda _{{\widetilde{G_{i}}}}(x)\) and \(\nu _{{\widetilde{G_{i}}}}(x) \), respectively.

3. Assign a positive weight \(w_{i}\) to the ith objective function \(O_{i}(x)\) according to the decision makers’ preferences, with \(\sum _{i=1}^{p} w_{i} =1\). Construct problem (3.26) and solve it to obtain the optimal solution \(x^{*}\). Then \( < x^{*}, H_{p} (x^{*})>\) is an NHFPOS for the NHFMOPP.
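The following is a compact Python sketch of technique-II over a finite set of candidate points. It relies on three assumptions. First, the hesitant degrees of each goal at each candidate are given as lists. Second, each objective is scored as \(\chi (\mu ) - \chi (\lambda ) - \chi (\nu )\); this is an illustrative aggregate and may differ from the exact objective of problem (3.26). Third, a real implementation would optimize over the continuous region \(X\) with a solver instead of scanning candidates. All names and numbers are hypothetical.

```python
from statistics import fmean


def objective_score(mu, lam, nu):
    """Illustrative aggregate: reward mean truth, penalize mean indeterminacy/falsity."""
    return fmean(mu) - fmean(lam) - fmean(nu)


def technique_two(candidates, weights):
    """Return the candidate that maximizes sum_i w_i * score_i.

    candidates -- {name: [(mu_list, lam_list, nu_list) for each objective i]}
    weights    -- positive weights w_i summing to 1
    """
    if any(w <= 0 for w in weights) or abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights must be positive and sum to 1")

    def total(name):
        goals = candidates[name]
        if len(goals) != len(weights):
            raise ValueError("one weight per objective is required")
        return sum(w * objective_score(*g) for w, g in zip(weights, goals))

    return max(candidates, key=total)


candidates = {
    # name: [(mu, lam, nu) for O1 with 2 experts, (mu, lam, nu) for O2 with 3 experts]
    "x_a": [
        ([0.8, 0.7], [0.1, 0.2], [0.1, 0.1]),
        ([0.4, 0.5, 0.3], [0.3, 0.2, 0.3], [0.4, 0.3, 0.5]),
    ],
    "x_b": [
        ([0.6, 0.6], [0.2, 0.2], [0.2, 0.3]),
        ([0.7, 0.8, 0.6], [0.1, 0.1, 0.2], [0.1, 0.2, 0.1]),
    ],
}
print(technique_two(candidates, [0.65, 0.35]))
```

With weights (0.65, 0.35), candidate `x_b` wins because of its much better second objective. Shifting the weight to (0.9, 0.1) favors `x_a`, which illustrates how the DMs’ priorities drive the single aggregated model.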

To analyze computational complexity, Table 11 compares the problem dimensions required by the first and second optimization techniques and by other methods, for an NHFMOPP (3.12) with n variables, m constraints, and p objectives.

In all three examples, the truth, indeterminacy, and falsity membership functions were constructed from the marginal evaluations of each objective function. We then asked different experts/managers to specify, based on their prior knowledge or experience, the satisfaction values corresponding to each membership function.

A natural question is why the proposed optimization techniques should yield better solutions than existing approaches. Incorporating the neutral thoughts and opinions of several experts or DMs brings the model closer to the real decision situation and can make the results more informative and reliable. We do not claim that seeking the opinions of several experts together with their neutral thoughts (indeterminacy degrees) always produces quantitatively better solutions in the neutrosophic hesitant fuzzy environment, since the outcome depends on the indeterminacy degrees that the experts express. Rather, the proposed optimization techniques unify and capture the degrees of neutrality and hesitancy of several experts or DMs simultaneously when solving MOPPs.

In optimization technique-I, the alternative solutions reflect the DMs’ specific viewpoints, so each solution, such as the optimistic or the pessimistic NHFPOS, has its own use for the DMs. Each NHFPOS in optimization technique-I is described by the experts’ satisfaction degrees with respect to the objective function values. A DM can therefore choose one NHFPOS from the set of solutions, and in this way the technique reaches a final decision from a set of solutions. A DM who does not wish to work with the full neutrosophic hesitant fuzzy set can simply select one of these, such as the optimistic or pessimistic NHFPOS.

In optimization technique-II, a single programming problem is solved by assigning weights to the objective functions when their priorities differ. This technique is therefore suited to real-life MOPPs in the neutrosophic hesitant fuzzy environment where the objectives conflict and their priorities play an essential role. Because the experts’ or DMs’ opinions, including their neutral thoughts, are aggregated, only a single programming problem needs to be solved. However, averaging the hesitant values in the score function loses some information.

5 Conclusions

In this study, an effective modeling and optimization framework for MOPPs has been presented under neutrosophic hesitant fuzzy uncertainty. Neutrosophic hesitant fuzzy sets were used to model and optimize multiobjective programming problems with neutrosophic hesitant fuzzy objectives, called neutrosophic hesitant fuzzy multiobjective programming problems (NHFMOPPs). A novel solution concept, the neutrosophic hesitant fuzzy Pareto optimal solution, was then developed for NHFMOPPs. Neutrality (indeterminacy) is the region of ignorance about a proposition’s value, lying between the degrees of truth and falsity, and incorporating this indeterminacy factor makes the decision-making process more realistic. Two techniques have been proposed under the neutrosophic hesitant fuzzy environment, and both accommodate independent indeterminacy (neutral thoughts) under hesitation in decision-making processes. A key advantage of the proposed techniques is that they provide a set of solutions based on the satisfaction levels of various experts in the neutrosophic hesitant fuzzy environment. The two techniques yield different NHFPOSs, and these alternative solutions reflect the DMs’ predetermined points of view. Each solution therefore has its own importance for the DM(s), who may select a suitable optimal solution according to the situation at hand.

The present study has some limitations that can be addressed in future research. For example, metaheuristic approaches could be applied to solve the NHFMOPP. The NHFMOPP framework could also be applied to other real-life problems such as transportation, supplier selection, inventory control, and portfolio optimization. The proposed techniques should be useful wherever neutral thoughts and hesitation coexist in decision-making processes.

Data availability

Enquiries about data availability should be directed to the authors.

References

Abdel-Basset M, Gunasekaran M, Mohamed M, Smarandache F (2018) A novel method for solving the fully neutrosophic linear programming problems. Neural Comput Appl 6:1595–1605

Adhami AY, Ahmad F, Wani N (2021) Overall shale gas water management: a neutrosophic optimization approach. Optim Decis Making Oper Res Stat Methodol Appl, p 321

Adhami AY, Ahmad F (2020) Interactive Pythagorean-hesitant fuzzy computational algorithm for multiobjective transportation problem under uncertainty. Int J Manag Sci Eng Manag 15(4):288–297

Ahmad F (2021a) Interactive neutrosophic optimization technique for multiobjective programming problems: an application to pharmaceutical supply chain management. Ann Oper Res, pp 1–35

Ahmad F (2021b) Robust neutrosophic programming approach for solving intuitionistic fuzzy multiobjective optimization problems. Complex Intell Syst 7:1935–1954

Ahmad F, Adhami AY (2019) Neutrosophic programming approach to multiobjective nonlinear transportation problem with fuzzy parameters. Int J Manag Sci Eng Manag 14(3):218–229

Ahmad F, Adhami AY (2019b) Total cost measures with probabilistic cost function under varying supply and demand in transportation problem. Opsearch 56(2):583–602

Ahmad F, Adhami AY, Smarandache F (2018) Single valued neutrosophic hesitant fuzzy computational algorithm for multiobjective nonlinear optimization problem. Neutrosophic Sets Syst 22:76–86

Ahmad S, Ahmad F, Sharaf M (2021a) Supplier selection problem with type-2 fuzzy parameters: a neutrosophic optimization approach. Int J Fuzzy Syst 23(3):755–775

Ahmad F, Ahmad S, Soliman AT, Abdollahian M (2021b) Solving multi-level multiobjective fractional programming problem with rough interval parameter in neutrosophic environment. RAIRO-Oper Res 55(4):2567–2581

Ahmad F, Ahmad S, Zaindin M (2021c) A sustainable production and waste management policies for covid-19 medical equipment under uncertainty: a case study analysis. Comput Indus Eng 157(3):107381

Ahmad F, Ahmad S, Zaindin M, Adhami AY (2021d) A robust neutrosophic modeling and optimization approach for integrated energy-food-water security nexus management under uncertainty. Water 13(2):121

Ahmad F, Alnowibet KA, Alrasheedi AF, Adhami AY (2021e) A multi-objective model for optimizing the socio-economic performance of a pharmaceutical supply chain. Socio-Econ Plann Sci, 101126

Ahmad F, Adhami AY, John B, Reza A A novel approach for the solution of multiobjective optimization problem using hesitant fuzzy aggregation operator

Ahmad F, Adhami AY, Smarandache F (2019) Neutrosophic optimization model and computational algorithm for optimal shale gas water management under uncertainty. Symmetry 11(4)

Ahmad F, Adhami AY, Smarandache F (2020) Modified neutrosophic fuzzy optimization model for optimal closed-loop supply chain management under uncertainty. In: Abdel-Basset M (ed) Optimization theory based on neutrosophic and plithogenic sets. Elsevier, pp 343–403

Ahmadini AAH, Ahmad F (2021) A novel intuitionistic fuzzy preference relations for multiobjective goal programming problems. J Intell Fuzzy Syst 40(3):4761–4777

Ahmadini AAH, Ahmad F (2021) Solving intuitionistic fuzzy multiobjective linear programming problem under neutrosophic environment. AIMS Mathematics 6(5):4556–4580

Ahmad F, John B (2021) A fuzzy quantitative model for assessing the performance of pharmaceutical supply chain under uncertainty. Kybernetes

Ahmad F, Smarandache F (2021) Neutrosophic fuzzy goal programming algorithm for multi-level multiobjective linear programming problems. In: Smarandache F (ed) Neutrosophic operational research. Springer, pp 593–614

Angelov PP (1997) Optimization in an intuitionistic fuzzy environment. Fuzzy Sets Syst 86(3):299–306

Atanassov KT (1986) Intuitionistic fuzzy sets. Fuzzy Sets Syst 20(1):87–96

Bellman RE, Zadeh LA (1970) Decision-making in a fuzzy environment. Manag Sci 17(4):B141–B164

Bharati SK (2018) Hesitant fuzzy computational algorithm for multiobjective optimization problems. Int J Dyn Control, 1–8

Bharati S, Singh S (2018) Solution of multiobjective linear programming problems in interval-valued intuitionistic fuzzy environment. Soft Comput, 1–8

Mahmoodirad A, Allahviranloo T, Niroomand S (2018) A new effective solution method for fully intuitionistic fuzzy transportation problem. Soft Comput, 1–10

Rodriguez RN (2011) SAS. Wiley Interdiscip Rev Comput Stat 3(1):1–11

Rouhbakhsh F, Ranjbar M, Effati S, Hassanpour H (2020) Multi objective programming problem in the hesitant fuzzy environment. Appl Intell, 1–16

Ruppert D (2004) Statistics and finance: an introduction, vol 27. Springer, Berlin

Sakawa M (2013) Fuzzy sets and interactive multiobjective optimization. Springer, Berlin

Singh SK, Yadav SP (2015) Modeling and optimization of multi objective non-linear programming problem in intuitionistic fuzzy environment. Appl Math Model 39(16):4617–4629

Smarandache F (1999) A unifying field in logics: neutrosophic logic

Torra V, Narukawa Y (2009) On hesitant fuzzy sets and decision. In: IEEE international conference on fuzzy systems, pp 1378–1382

Ye J (2015) Multiple-attribute decision-making method under a single-valued neutrosophic hesitant fuzzy environment. J Intell Syst 24(1):23–36

Zadeh LA (1965) Fuzzy sets. Inf Control 8(3):338–353

Zeleny M (1986) Optimal system design with multiple criteria: De novo programming approach. Eng Costs Prod Econ 10(1):89–94

Zhou W, Xu Z (2018) Portfolio selection and risk investment under the hesitant fuzzy environment. Knowl-Based Syst 144:21–31

Zimmermann HJ (1978) Fuzzy programming and linear programming with several objective functions. Fuzzy Sets Syst 1(1):45–55

Acknowledgements

We are very thankful to the Editor-in-Chief, the anonymous Guest Editor, and the reviewers for their comments, which improved the readability and clarity of the manuscript.

Funding

No funding is received for this research.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

About this article

Cite this article

Ahmad, F., John, B. Modeling and optimization of multiobjective programming problems in neutrosophic hesitant fuzzy environment. Soft Comput 26, 5719–5739 (2022). https://doi.org/10.1007/s00500-022-06953-9

DOI: https://doi.org/10.1007/s00500-022-06953-9