Abstract

Rough set theory is a non-statistical approach to handling uncertainty and uncertain knowledge. It is characterized by two tools: classification (lower and upper approximations) and the accuracy measure. The closeness of notions and results in topology and rough set theory motivates researchers to explore topological aspects and their applications in rough set theory. To contribute to this area, this paper applies a topological concept called “somewhere dense sets” to improve the approximations and accuracy measure in rough set theory. We first discuss further topological properties of somewhere dense and cs-dense sets and give explicit formulas for calculating the S-interior and S-closure operators. Then, we utilize these two kinds of sets to define new concepts in the rough set context, such as SD-lower and SD-upper approximations, the SD-boundary region, and the SD-accuracy measure of a subset. We establish the fundamental properties of these concepts and show their relationships with the previous ones. Finally, we compare the current method of approximations with the previous ones and provide two examples to show that the current method is more accurate.

1 Introduction

Simply put, a phenomenon or problem is vague if it contains elements or components that we cannot decide to be inside or outside it. Rough set theory, initiated by Pawlak (1982, 1991), is one of the techniques used to deal with vagueness (uncertainty) of information systems data and imperfect knowledge. This theory starts from an equivalence relation, which is the basis for defining the concepts of lower and upper approximations. However, an equivalence relation is a very strict condition that limits the applications of rough set theory. This has led many authors to study approximation operators using specific kinds of binary relations (Abo-Tabl 2013) or arbitrary binary relations (El-Bably and Al-shami 2021).

The boundary region is calculated as the difference between the upper and lower approximations, and according to whether the boundary region is empty or not, a set is classified as a crisp (exact) set or a rough (inexact) set. A larger boundary region hampers appropriate decision-making; therefore, we mainly aim to reduce the boundary region by decreasing the upper approximation and increasing the lower approximation. Another important concept of rough set theory is the accuracy measure of a set, which expresses the degree of completeness of our knowledge. The accuracy measure of a set shows how large the boundary region is; however, it says nothing about the structure of the boundary region. On the other hand, the approximations provide some insight into the structure of the boundary region without information about its size.

The concepts in rough set theory such as membership of elements and inclusion and equality relations cannot be determined in the absolute sense, but only relative to the information which we know about them. For example, sets with different elements can be equal in the rough sense if they have the same lower and/or upper approximations. This idea parallels the topological setting, where we compare sets in terms of their interior and closure points, not the elements they consist of. Skowron (1988) and Wiweger (1989) first investigated the role of topological aspects in rough sets. Lashin et al. (2005) introduced a topology using binary relations and applied it to generalize the basic rough set concepts. A notable use of topological notions to solve the missing attribute values problem was given in Salama (2010). In fact, combining rough set theory and topology became the main goal of many studies, for example the interaction between rough sets and digital topology (Abo-Tabl 2014), separation axioms of approximation spaces (Al-shami et al. 2021), approximations generated from rough sets and ideals (Hosny et al. 2021), topological properties of rough approximations (Kondo and Dudek 2006; Kozae et al. 2007), continuous functions between approximation spaces (Salama et al. 2021), rough sets and topological algebra (Wu et al. 2008), and topological methods induced from covering rough sets (Zhu 2007). This interaction also included some generalizations of topology such as minimal structures (Azzam et al. 2020) and bitopology (Salama 2020b). Some interesting applications to supply chain management were given in Chen et al. (2021), Wang et al. (2020) and Xiao et al. (2021).

The notion of near open sets (also called generalized open sets) is a major area of research in classical topology. They are utilized to initiate wider classes of topological structures and to extend some topological concepts such as compact and connected spaces and separation axioms. The most celebrated types of near open sets are those formulated by using the closure and interior operators, such as \(\alpha \)-open, pre-open, semi-open, b-open and \(\beta \)-open sets. Quite recently, Al-shami (2017) investigated a wider class of near open sets, namely somewhere dense sets, and then Al-shami and Noiri (2019) applied them to define new types of continuous maps.

El-Monsef et al. (2014) exploited some near open sets to define new types of approximations in the case of fore-set and after-set. Amer et al. (2017) and Salama (2018) generalized these approximations and presented several types of j-near approximations. These approximations are regarded as vital methods for reducing the boundary region using topological concepts. Hosny (2018) defined four new approximations by use of the concepts of \(\delta \beta \)-open sets and \(\bigwedge _{\beta }\)-sets. Recently, Salama (2020a) defined the concept of higher order sets as a new class of near open and closed sets. He initiated this class by repeated iteration of the topological closure and interior operations on a given set. This manuscript contributes to this direction by making use of the concept of somewhere dense sets. We initiate new rough set models and present some comparisons which illustrate that our approach produces better approximations and accuracy measures than those given in El-Monsef et al. (2014) and Amer et al. (2017). Moreover, we prove that our approach keeps most properties of Pawlak’s approximations, as illustrated in Propositions 7 and 8.

The remainder of this manuscript is organized as follows. In Sect. 2, we recall some definitions and properties of rough sets and topological spaces that the reader needs to follow this manuscript. In Sect. 3, we explore more properties of somewhere dense and cs-dense sets. In Sect. 4, we apply somewhere dense sets to establish new kinds of approximation operators. We scrutinize the main properties of these approximation operators, and demonstrate that they produce accuracy measures greater than those of Amer et al. (2017). Finally, we give some conclusions and outline a plan for future work in Sect. 5.

2 Preliminaries

Now, we proceed to give the main notions in rough set theory. We begin with the fundamental concepts in this field, which rest on the idea of classifying a set by means of two other sets.

Definition 1

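(Pawlak 1982, 1991) Let \({\mathcal {R}}\) be an equivalence relation (i.e., reflexive, symmetric and transitive) on a finite set \(E\ne \emptyset \). We associate every \(X\subseteq E\) with two subsets:

$$\begin{aligned} \underline{{\mathcal {R}}}(X)=\bigcup \{U\in E/{\mathcal {R}}: U\subseteq X\}, \qquad \overline{{\mathcal {R}}}(X)=\bigcup \{U\in E/{\mathcal {R}}: U\cap X\ne \emptyset \}, \end{aligned}$$

where \(E/{\mathcal {R}}\) denotes the family of equivalence classes of \({\mathcal {R}}\).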

The two sets \(\underline{{\mathcal {R}}}(X)\) and \(\overline{{\mathcal {R}}}(X)\) are, respectively, called lower and upper approximations of X.

From now on, we consider E a nonempty finite set, unless stated otherwise.
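The lower and upper approximations of Definition 1 can be illustrated with a minimal Python sketch. The universe, the relation (congruence modulo 3) and all function names are illustrative assumptions and do not come from the paper; the boundary is taken as the difference between the upper and lower approximations, as described in the Introduction.

```python
def equivalence_classes(universe, related):
    """Partition `universe` into the classes of the equivalence relation `related`."""
    classes = []
    for element in universe:
        for cls in classes:
            # One representative suffices because `related` is an equivalence.
            if related(element, next(iter(cls))):
                cls.add(element)
                break
        else:
            classes.append({element})
    return [frozenset(cls) for cls in classes]


def pawlak_lower(classes, subset):
    """Union of the equivalence classes contained in `subset`."""
    return frozenset().union(*(c for c in classes if c <= subset))


def pawlak_upper(classes, subset):
    """Union of the equivalence classes that meet `subset`."""
    return frozenset().union(*(c for c in classes if c & subset))


# Hypothetical data: E = {1, ..., 6}, and a R b iff a and b agree modulo 3.
E = frozenset(range(1, 7))
classes = equivalence_classes(E, lambda a, b: a % 3 == b % 3)
X = frozenset({1, 4, 5})

lower = pawlak_lower(classes, X)
upper = pawlak_upper(classes, X)
boundary = upper - lower
print(sorted(lower), sorted(upper), sorted(boundary))
```

In this toy case the boundary is nonempty, so X is a rough set with respect to the chosen relation.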

Proposition 1

(Pawlak 1982, 1991) Let \({\mathcal {R}}\) be an equivalence relation on E and \(U, V\subseteq E\). Then, the following properties hold.

The core of Pawlak's approach is the equivalence relation, which causes some problems and limitations in both theoretical and practical aspects. Therefore, many proposals have been made to overcome these obstacles and generalize this approach. One of them is the idea of \(N_j\)-neighborhoods, which are defined for any binary relation as follows.

Definition 2

(El-Monsef et al. 2014; Allam et al. 2005, 2006; Yao 1996, 1998) Let \({\mathcal {R}}\) be a binary relation on E. The j-neighborhoods of \(v\in E\) (denoted by \(N_j(v)\)) are defined for each \(j\in \{r, l,<r>,<l>, i, u,<i>, <u>\}\) as follows:

(i) \(N_r(v)=\{w\in E: v {\mathcal {R}} w\}\).

(ii) \(N_l(v)=\{w\in E: w {\mathcal {R}} v\}\).

(iii) $$\begin{aligned}N_{<r>}(v)=\left\{ \begin{array}{lcl} \bigcap \limits _{v\in N_r(w)} N_r(w) & : & \text {there exists}~N_r(w)~\text {containing}~ v \\ \emptyset & : & \text {otherwise} \end{array} \right. \end{aligned}$$

(iv) $$\begin{aligned}N_{<l>}(v)=\left\{ \begin{array}{lcl} \bigcap \limits _{v\in N_l(w)} N_l(w) & : & \text {there exists}~N_l(w)~\text {containing}~ v \\ \emptyset & : & \text {otherwise} \end{array} \right. \end{aligned}$$

(v) \(N_i(v)=N_r(v)\bigcap N_l(v)\).

(vi) \(N_u(v)=N_r(v)\bigcup N_l(v)\).

(vii) \(N_{<i>}(v)=N_{<r>}(v)\bigcap N_{<l>}(v)\).

(viii) \(N_{<u>}(v)=N_{<r>}(v)\bigcup N_{<l>}(v)\).

From now on, we consider \(j\in \{r,l,<r>,<l>, i, u,<i>, <u>\}\), unless stated otherwise.
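The eight neighborhoods of Definition 2 can be computed directly from a relation given as a set of ordered pairs. The following Python sketch is only illustrative: the relation on \(E=\{v, w, x, y\}\) is a hypothetical choice (it is not claimed to be the relation of Example 5), and the function and variable names are assumptions.

```python
def neighborhoods(universe, relation):
    """Return {j: {v: N_j(v)}} for the eight j-neighborhoods of Definition 2."""
    n_r = {v: frozenset(w for w in universe if (v, w) in relation) for v in universe}
    n_l = {v: frozenset(w for w in universe if (w, v) in relation) for v in universe}

    def minimal(base):
        # Intersection of all base neighborhoods containing v, or the empty set if none exists.
        result = {}
        for v in universe:
            containing = [base[w] for w in universe if v in base[w]]
            result[v] = frozenset.intersection(*containing) if containing else frozenset()
        return result

    n_mr, n_ml = minimal(n_r), minimal(n_l)
    return {
        "r": n_r,
        "l": n_l,
        "<r>": n_mr,
        "<l>": n_ml,
        "i": {v: n_r[v] & n_l[v] for v in universe},
        "u": {v: n_r[v] | n_l[v] for v in universe},
        "<i>": {v: n_mr[v] & n_ml[v] for v in universe},
        "<u>": {v: n_mr[v] | n_ml[v] for v in universe},
    }


# Hypothetical relation on E = {v, w, x, y}.
E = frozenset("vwxy")
R = {("v", "w"), ("w", "v"), ("x", "y"), ("y", "y")}
nbhds = neighborhoods(E, R)

for j, mapping in nbhds.items():
    print(j, {v: sorted(mapping[v]) for v in sorted(E)})
```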

Definition 3

Let \({\mathcal {R}}\) be a binary relation on E and \(\phi _j: E\longrightarrow 2^E\) be a map which associates each \(v\in E\) with its j-neighborhood in \(2^E\). The triple \((E, {\mathcal {R}}, \phi _j)\) is called a j-neighborhood space (in short, j-NS).

Remark 1

Neighborhood systems originally come from topology; the study of rough sets in view of different kinds of neighborhood systems has attracted the attention of several researchers. In this regard, many types of neighborhood systems were defined and discussed, such as containment neighborhoods (Al-shami 2021), \(E_j\)-neighborhoods (Al-shami et al. 2021), core neighborhoods (Mareay 2016), and remote neighborhoods (Sun et al. 2019).

Recall that a topology on a nonempty set E is a family of subsets of E which is closed under arbitrary union and finite intersection. Some authors do not list membership of the universal and empty sets among the conditions of a topology because the universal set is obtained as the empty intersection and the empty set as the empty union.

If every open subset of a topological space is also closed, then the topology is called a clopen topology. A topological space is called disconnected if its universal set can be written as a union of two disjoint nonempty open sets, and it is called extremally disconnected if the closure of any open set is open.

The result below explains one of the most important and interesting methods of generating topological structures by use of the notion of neighborhood systems; also, it allows a wide interaction between the concepts of rough set theory and topological space.

Theorem 1

(El-Monsef et al. 2014) If \((E, {\mathcal {R}}, \phi _j)\) is a j-NS, then a class \(\theta _{j}=\{U\subseteq E: N_j(v)\subseteq U\) for each \(v\in U\}\) is a topology on E for each j.
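For a finite universe, the topology of Theorem 1 can be generated by brute force over the power set. The sketch below assumes a hypothetical r-neighborhood map on \(E=\{v, w, x, y\}\); the map and the function names are illustrative assumptions, chosen so that the resulting family coincides with the topology \(\theta _r\) stated in Example 1.

```python
from itertools import combinations


def powerset(universe):
    """All subsets of `universe` as frozensets."""
    items = sorted(universe)
    return [frozenset(c) for r in range(len(items) + 1) for c in combinations(items, r)]


def topology_from_neighborhoods(universe, nbhd):
    """theta_j = {U : N_j(v) is contained in U for each v in U}."""
    return {u for u in powerset(universe) if all(nbhd[v] <= u for v in u)}


def is_topology(universe, family):
    """Finite check: contains the empty set and the universe, closed under pairwise union and intersection."""
    return (
        frozenset() in family
        and frozenset(universe) in family
        and all(a | b in family and a & b in family for a in family for b in family)
    )


# Hypothetical r-neighborhoods: N_r(v)={w}, N_r(w)={v}, N_r(x)={y}, N_r(y)={y}.
E = frozenset("vwxy")
n_r = {"v": frozenset("w"), "w": frozenset("v"), "x": frozenset("y"), "y": frozenset("y")}

theta_r = topology_from_neighborhoods(E, n_r)
print(sorted(sorted(u) for u in theta_r))
print(is_topology(E, theta_r))
```

The enumeration is exponential in the size of E, which is acceptable only for small universes such as the one used here.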

Definition 4

A subset A of a j-NS \((E, {\mathcal {R}}, \phi _j)\) is called a j-open set if \(A\in \theta _{j}\). Its complement is called a j-closed set.

We denote the family of all j-closed sets by \(\varGamma _j\). That is, \(\varGamma _j=\{V\subseteq E: V^c\in \theta _{j}\}\).

The following two approximations are formulated with a topological flavor.

Definition 5

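(El-Monsef et al. 2014) The j-lower and j-upper approximations of a subset A of a j-NS \((E, {\mathcal {R}}, \phi _j)\) are formulated, respectively, by

$$\begin{aligned} \underline{{\mathcal {R}}}_j(A)=\bigcup \{G\in \theta _{j}: G\subseteq A\}, \qquad \overline{{\mathcal {R}}}_j(A)=\bigcap \{H\in \varGamma _{j}: A\subseteq H\}. \end{aligned}$$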

One easily notes that \(\underline{{\mathcal {R}}}_j(A)\) and \(\overline{{\mathcal {R}}}_j(A)\) are, respectively, the interior and closure of A in the topological space \((E, \theta _j)\); hence, we sometimes write \(\underline{{\mathcal {R}}}_j(A)=int_j(A)\) and \(\overline{{\mathcal {R}}}_j(A)=cl_j(A)\), where \(int_j\) and \(cl_j\) denote the interior and closure operators in the topological space \((E, \theta _j)\), and j denotes the type of neighborhood given in Definition 2 that generates the topology \(\theta _j\) on E.
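Since the j-lower and j-upper approximations are the interior and closure in \((E, \theta _j)\), they can be computed directly from a finite topology. The sketch below uses the topology \(\theta _r\) stated in Example 1; the function names and the test subset are illustrative assumptions.

```python
E = frozenset("vwxy")
# theta_r as stated in Example 1.
THETA_R = {frozenset(s) for s in ["", "vwxy", "y", "xy", "vw", "vwy"]}


def interior(topology, subset):
    """Union of the open sets contained in `subset` (the j-lower approximation)."""
    return frozenset().union(*(u for u in topology if u <= subset))


def closure(universe, topology, subset):
    """Complement of the interior of the complement (the j-upper approximation)."""
    return universe - interior(topology, universe - subset)


A = frozenset("vx")
print(sorted(interior(THETA_R, A)), sorted(closure(E, THETA_R, A)))
```

For this subset the lower approximation is empty while the upper approximation has three elements, so the boundary is large.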

Definition 6

(El-Monsef et al. 2014) The j-boundary, j-positive and j-negative regions and j-accuracy measure of a subset A of a j-NS \((E, {\mathcal {R}}, \phi _j)\) are formulated, respectively, by

It is clear that \(M_j(A)\) is greater than or equal to zero and less than or equal to one for each \(A\subseteq E\).

Levine (1963) defined the concept of a semiopen set, which yields a class wider than that of open sets. After that, topologists introduced many wider classes of open sets; the most celebrated of them are mentioned in the definition below and in Definition 11, and each of these classes is called a class of near open sets. Many topological concepts, notions and properties were re-examined using near open sets, which now form a central area of topological research. Note that near open sets are formulated by use of the closure and interior operators.

Definition 7

A subset A of a topological space \((E, \zeta )\) is said to be:

(i) semiopen (Levine 1963) if \(A\subseteq cl(int(A))\); equivalently, there is an open set U such that \(U\subseteq A\subseteq cl(U)\).

(ii) preopen (Mashhour et al. 1982) if \(A\subseteq int(cl(A))\); equivalently, there is an open set U such that \(A\subseteq U\subseteq cl(A)\).

(iii) \(\alpha \)-open (Njastad 1965) if \(A\subseteq int(cl(int(A)))\); equivalently, if it is both a semiopen and a preopen set.

(iv) b-open (Andrijevic 1996) if \(A\subseteq int(cl(A))\bigcup cl(int(A))\) (it is also called a \(\gamma \)-open set).

(v) \(\beta \)-open (El-Monsef et al. 1983) if \(A\subseteq cl(int(cl(A)))\) (sometimes, it is called a semi-preopen set).

The complements of the above sets are, respectively, called semiclosed, preclosed, \(\alpha \)-closed, b-closed and \(\beta \)-closed sets.
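The five conditions of Definition 7 translate directly into membership tests on a finite topological space. The sketch below evaluates them on the topology \(\theta _r\) stated in Example 1; the helper names and the chosen subsets are illustrative assumptions.

```python
E = frozenset("vwxy")
# theta_r as stated in Example 1.
THETA_R = {frozenset(s) for s in ["", "vwxy", "y", "xy", "vw", "vwy"]}


def interior(a):
    """Union of the open sets contained in `a`."""
    return frozenset().union(*(u for u in THETA_R if u <= a))


def closure(a):
    """Complement of the interior of the complement."""
    return E - interior(E - a)


def near_open_kinds(a):
    """Evaluate the five conditions of Definition 7 for the subset `a`."""
    return {
        "semi": a <= closure(interior(a)),
        "pre": a <= interior(closure(a)),
        "alpha": a <= interior(closure(interior(a))),
        "b": a <= (interior(closure(a)) | closure(interior(a))),
        "beta": a <= closure(interior(closure(a))),
    }


for label in ["vxy", "xy", "x"]:
    print(label, near_open_kinds(frozenset(label)))
```

The subset \(\{v, x, y\}\) is preopen, b-open and \(\beta \)-open but neither semiopen nor \(\alpha \)-open, which shows that the classes differ even on a four-point space.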

In a similar way, the above near open sets were formulated and studied in a j-NS.

Definition 8

(El-Monsef et al. 2014) A subset A of a j-NS \((E, {\mathcal {R}}, \phi _j)\) is said to be \(j\alpha \)-open (resp. j-semiopen, j-preopen, jb-open, \(j\beta \)-open) if \(A\subseteq int_j(cl_j(int_j(A)))~(resp.~ A\subseteq cl_j(int_j(A)),~ A\subseteq int_j(cl_j(A)),~ A\subseteq int_j(cl_j(A))\bigcup cl_j(int_j(A)),~ A\subseteq cl_j(int_j(cl_j(A))))\).

The complement of A is called a \(j\alpha \)-closed (resp. j-semiclosed, j-preclosed, jb-closed, \(j\beta \)-closed) set.

Remark 2

(i) The families of \(j\alpha \)-open, j-semiopen, j-preopen, jb-open and \(j\beta \)-open subsets of \((E, \theta _j)\) are, respectively, denoted by \(\alpha O(\theta _{j})\), \(semiO(\theta _{j})\), \(preO(\theta _{j})\), \(bO(\theta _{j})\) and \(\beta O(\theta _{j})\).

(ii) The families of \(j\alpha \)-closed, j-semiclosed, j-preclosed, jb-closed and \(j\beta \)-closed subsets of \((E, \theta _j)\) are, respectively, denoted by \(\alpha C(\varGamma _{j})\), \(semiC(\varGamma _{j})\), \(preC(\varGamma _{j})\), \(bC(\varGamma _{j})\) and \(\beta C(\varGamma _{j})\).

Similarly to Definition 5, Amer et al. (2017) and Salama (2018) introduced the concepts of j-near lower and j-near upper approximations of a subset using \(j\alpha \)-open and \(j\alpha \)-closed, j-semiopen and j-semiclosed, j-preopen and j-preclosed, jb-open and jb-closed, and \(j\beta \)-open and \(j\beta \)-closed sets; also, they formulated the concepts of j-near boundary, j-near positive and j-near negative regions and j-near accuracy measure.

Definition 9

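(Amer et al. 2017; Salama 2018) For each \(k\in \{\alpha , semi, pre, b, \beta \}\), the jk-near lower and jk-near upper approximations of a subset A of a j-NS \((E, {\mathcal {R}}, \phi _j)\) are formulated, respectively, by

$$\begin{aligned} \underline{{\mathcal {R}}}^{k}_j(A)=\bigcup \{G\in kO(\theta _{j}): G\subseteq A\}, \qquad \overline{{\mathcal {R}}}^{k}_j(A)=\bigcap \{H\in kC(\varGamma _{j}): A\subseteq H\}. \end{aligned}$$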

From now on, we consider \(k\in \{\alpha , semi, pre, \beta , b\}\), unless stated otherwise.

Definition 10

(Amer et al. 2017; Salama 2018) The jk-near boundary, jk-near positive and jk-near negative regions and jk-near accuracy measure of a subset A of a j-NS \((E, {\mathcal {R}}, \phi _j)\) are formulated, respectively, by

It is clear that \(M^k_j(A)\) is greater than or equal to zero and less than or equal to one for each \(A\subseteq E\).

Definition 11

(Al-shami 2017) A subset A of \((X, \zeta )\) is said to be somewhere dense if \(int(cl(A))\ne \emptyset \). Its complement is said to be cs-dense.

Theorem 2

(Al-shami 2017) A subset H of \((X, \zeta )\) is cs-dense iff there is a closed set \(F\ne X\) such that \(int(H)\subseteq F\).

Definition 12

(Al-shami 2017) For a subset A of \((X, \zeta )\), the S-interior of A (briefly, Sint(A)) is the union of all somewhere dense sets that are contained in A, and the S-closure of A (briefly, Scl(A)) is the intersection of all cs-dense sets containing A.

In Al-shami (2017) and Al-shami and Noiri (2019), the authors revealed the main properties of somewhere dense and cs-dense sets. They proved that every nonempty \(\alpha \)-open (preopen, semiopen, b-open and \(\beta \)-open) set is somewhere dense, but the converse need not be true. Also, they demonstrated some characteristics of somewhere dense sets that the previous classes do not possess: every set in a topological space is somewhere dense or cs-dense, every superset of a somewhere dense set is somewhere dense, and every subset of a cs-dense set is cs-dense. It is worth noting that the family of somewhere dense sets forms a generalized topology, whereas the other families of near open sets form supra topologies.
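The defining condition \(int(cl(A))\ne \emptyset \) and the properties just listed can be checked exhaustively on a small space. The sketch below uses the topology \(\theta _r\) stated in Example 1 as test data; the function names are illustrative assumptions.

```python
from itertools import combinations

E = frozenset("vwxy")
# theta_r as stated in Example 1.
THETA_R = {frozenset(s) for s in ["", "vwxy", "y", "xy", "vw", "vwy"]}


def powerset(s):
    """All subsets of `s` as frozensets."""
    items = sorted(s)
    return [frozenset(c) for r in range(len(items) + 1) for c in combinations(items, r)]


def interior(a):
    return frozenset().union(*(u for u in THETA_R if u <= a))


def closure(a):
    return E - interior(E - a)


def is_somewhere_dense(a):
    """Definition 11: the interior of the closure is nonempty."""
    return bool(interior(closure(a)))


def is_cs_dense(a):
    """Complement of a somewhere dense set."""
    return is_somewhere_dense(E - a)


subsets = powerset(E)
not_sd = [a for a in subsets if not is_somewhere_dense(a)]
print([sorted(a) for a in not_sd])

# Every subset is somewhere dense or cs-dense.
print(all(is_somewhere_dense(a) or is_cs_dense(a) for a in subsets))
# Every superset of a somewhere dense set is somewhere dense.
print(all(is_somewhere_dense(b) for a in subsets if is_somewhere_dense(a) for b in subsets if a <= b))
```

On this space only the empty set and \(\{x\}\) fail to be somewhere dense.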

Definition 13

(Al-shami 2017) A topological space \((X, \zeta )\) is called \(ST_1\) if for every \(v\ne w\in X\), the space \((X, \zeta )\) contains two somewhere dense sets such that one of them contains v but not w, and the other contains w but not v.

3 Further properties of somewhere dense sets

In this section, we explore more properties of somewhere dense sets which help us to establish some properties of the approximations and accuracy measure of a set in the next section.

Proposition 2

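The S-interior of any subset H of \((X, \zeta )\) is given by the following formulation.

$$\begin{aligned} Sint(H)=\left\{ \begin{array}{ll} \emptyset & \text {if}~H~\text {is cs-dense but not somewhere dense}, \\ H & \text {if}~H~\text {is somewhere dense but not cs-dense}, \\ H & \text {if}~H~\text {is both somewhere dense and cs-dense}. \end{array} \right. \end{aligned}$$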

Proof

Let H be only a cs-dense set, that is, cs-dense but not somewhere dense. Suppose that \(Sint(H)\ne \emptyset \). Then, H contains a somewhere dense set; since every superset of a somewhere dense set is somewhere dense, H is also a somewhere dense set. This contradicts the assumption. Hence, \(Sint(H)=\emptyset \), as required. In the remaining two cases, H is somewhere dense; hence, \(Sint(H)= H\). \(\square \)

Corollary 1

For any subset H of \((X, \zeta )\), we have \(Sint[Sint(H)]= Sint(H)\).

Proof

It is clear that \(Sint(H)=\emptyset \) implies that \(Sint[Sint(H)]=\emptyset \). If \(Sint(H)\ne \emptyset \), then it follows from the above proposition that \(Sint(H)=H\). Hence, \(Sint[Sint(H)]= Sint(H)\), as required. \(\square \)

Proposition 3

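The S-closure of any subset H of \((X, \zeta )\) is given by the following formulation.

$$\begin{aligned} Scl(H)=\left\{ \begin{array}{ll} X & \text {if}~H~\text {is somewhere dense but not cs-dense}, \\ H & \text {if}~H~\text {is cs-dense but not somewhere dense}, \\ H & \text {if}~H~\text {is both somewhere dense and cs-dense}. \end{array} \right. \end{aligned}$$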

Proof

Let H be only a somewhere dense set, that is, somewhere dense but not cs-dense. Then, \(H\ne \emptyset \). Suppose that \(Scl(H)=F \ne X\). Therefore, F is a cs-dense set containing H. Since every subset of a cs-dense set is cs-dense, H is also cs-dense, but this contradicts the assumption. Hence, \(Scl(H)= X\), as required. In the remaining two cases, H is cs-dense; hence, \(Scl(H)= H\). \(\square \)

Corollary 2

For any subset H of \((X, \zeta )\), we have \(Scl[Scl(H)]= Scl(H)\).

Proof

If \(Scl(H)=X\), then \(Scl[Scl(H)]= X\). If \(Scl(H)\ne X\), then Scl(H) is a proper cs-dense subset of \((X, \zeta )\). It follows from the above proposition that \(Scl[Scl(H)]= Scl(H)\), as required. \(\square \)
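The case descriptions in the proofs of Propositions 2 and 3 and the idempotence in Corollaries 1 and 2 can be checked numerically. The sketch below computes Sint and Scl from Definition 12 (with the empty intersection taken to be the whole space) and compares them with the closed forms "H if somewhere dense, else \(\emptyset \)" and "H if cs-dense, else X". The test space is the topology \(\theta _r\) stated in Example 1; all names are illustrative assumptions.

```python
from itertools import combinations

E = frozenset("vwxy")
# theta_r as stated in Example 1.
THETA_R = {frozenset(s) for s in ["", "vwxy", "y", "xy", "vw", "vwy"]}


def powerset(s):
    items = sorted(s)
    return [frozenset(c) for r in range(len(items) + 1) for c in combinations(items, r)]


def interior(a):
    return frozenset().union(*(u for u in THETA_R if u <= a))


def closure(a):
    return E - interior(E - a)


def is_somewhere_dense(a):
    return bool(interior(closure(a)))


def is_cs_dense(a):
    return is_somewhere_dense(E - a)


def s_interior(a):
    """Definition 12: union of all somewhere dense sets contained in `a`."""
    return frozenset().union(*(s for s in powerset(a) if is_somewhere_dense(s)))


def s_closure(a):
    """Definition 12: intersection of all cs-dense sets containing `a` (E if there is none)."""
    supersets = [s for s in powerset(E) if a <= s and is_cs_dense(s)]
    return E.intersection(*supersets)


closed_forms_agree = all(
    s_interior(a) == (a if is_somewhere_dense(a) else frozenset())
    and s_closure(a) == (a if is_cs_dense(a) else E)
    for a in powerset(E)
)
idempotent = all(
    s_interior(s_interior(a)) == s_interior(a) and s_closure(s_closure(a)) == s_closure(a)
    for a in powerset(E)
)
print(closed_forms_agree, idempotent)
```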

Proposition 4

Every singleton subset of a disconnected topological space is a cs-dense set.

Proof

Let \((X, \zeta )\) be disconnected. Then, there exists a proper nonempty subset H of X which is both open and closed. This means that there exist proper closed subsets M and N of X such that \(int(H)\subseteq M\) and \(int(H^c)\subseteq N\). Therefore, for each \(v\in X\) either \(v\in H\) or \(v\in H^c\). Thus, \(int(\{v\})\subseteq M\) or \(int(\{v\})\subseteq N\). Hence, \(\{v\}\) is a cs-dense set. \(\square \)

Corollary 3

Every disconnected topological space is an \(ST_1\)-space.

Proof

Let \(v\ne w\) in a disconnected topological space \((X, \zeta )\). Then, \(\{v\}\) and \(\{w\}\) are cs-dense sets. Obviously, \(\{v\}^c\) and \(\{w\}^c\) are somewhere dense sets containing w and v, respectively, such that \(v\not \in \{v\}^c\) and \(w\not \in \{w\}^c\). Hence, the proof is complete. \(\square \)

Theorem 3

If \((U, \zeta _{U})\) is an open subspace of \((X, \zeta )\) and \(F\subseteq X\), then \(U \bigcap cl(F)=cl_U(U \bigcap F)\), where \(cl_U(U\bigcap F)\) is the closure of \(U\bigcap F\) in the subspace \((U, \zeta _{U})\).

Proof

For any \(v\not \in U\bigcap cl(F)\), one of the following cases holds.

(i) Either \(v\not \in U\). This directly implies that \(v\not \in cl_U(U\bigcap F)\).

(ii) Or \(v\in U\). In this case, note that \(v\not \in cl(F)\). Consequently, \(G\bigcap F=\emptyset \) for some open subset G of \((X, \zeta )\) containing v. It is clear that \(G\bigcap U\) is an open set containing v such that \((G \bigcap U)\bigcap (F \bigcap U)=\emptyset \); thus, \(v\not \in cl_U(U\bigcap F)\).

Hence, \(cl_U(U\bigcap F)\subseteq U\bigcap cl(F)\).

On the other hand, let \(v\not \in cl_U(U\bigcap F)\). Then, the subspace \((U, \zeta _U)\) contains an open set G such that \(v\in G\) and \(G\bigcap (U\bigcap F)=\emptyset \). By hypothesis, U is an open subset of X; therefore, G is also an open subset of \((X, \zeta )\). It is clear that \((G\bigcap U)\bigcap F=\emptyset \). Then, \(v\not \in U\bigcap cl(F)\). Thus, \(U\bigcap cl(F)\subseteq cl_U(U\bigcap F)\). Hence, we obtain the desired result. \(\square \)

Corollary 4

Let U be a dense subset of \((X, \zeta )\) containing H. Then, H is a somewhere dense subset of \((X, \zeta )\) iff H is a somewhere dense subset of \((U, \zeta _U)\).

Proof

To prove the necessary part, let H be a somewhere dense subset of \((X, \zeta )\). Then, there exists a nonempty open set G such that \(G\subseteq cl(H)\). Now, there exists an open set \(F\in \zeta _U\) such that \(F=G \bigcap U\subseteq cl(H)\bigcap U \subseteq cl_U(H)\). Since U is dense, then \(F \ne \emptyset \). Thus, H is a somewhere dense subset of \((U, \zeta _U)\).

One can prove the sufficient part using a similar technique. \(\square \)

Proposition 5

The families of \(\alpha \)-open and semi-open subsets of an extremally disconnected topological space are identical.

Proof

It is well known that every \(\alpha \)-open set is semi-open. To prove the converse, let H be a semi-open set. Then, \(H\subseteq cl(int(H))\). By hypothesis of extremally disconnectedness, cl(int(H)) is an open set; therefore, \(int(cl(int(H)))=cl(int(H))\). Thus, \(H\subseteq int(cl(int(H)))\), and this means that H is an \(\alpha \)-open set. Hence, the proof is finished. \(\square \)

Proposition 6

The following properties hold in a finite clopen topological space.

(i) The family of preopen (b-open, \(\beta \)-open and somewhere dense) sets is the power set of the universal set.

(ii) The families of open and semi-open sets are identical.

Proof

(i) It is clear that cl(H) is a closed set for every subset H of \((E, \theta _j)\). By hypothesis of a clopen topology, \(int(cl(H))=cl(H)\supseteq H\); therefore, \(H\subseteq int(cl(H))\). This means that H is a preopen set. Thus, the collection of preopen sets is P(E). It is well known that: pre-open set \(\Longrightarrow \) b-open set \(\Longrightarrow \) \(\beta \)-open set \(\Longrightarrow \) somewhere dense set. This implies that the families of b-open, \(\beta \)-open and somewhere dense sets are also P(E). Hence, the proof is finished.

(ii) It is obvious.

\(\square \)

4 Approximations using somewhere dense sets

In this section, we employ the concepts of somewhere dense and cs-dense sets to define new approximations in the rough set context, namely jSD-near lower and jSD-near upper approximations. Based on them, we introduce the concepts of jSD-near boundary, jSD-near positive and jSD-near negative regions and jSD-near accuracy measure. We prove their main properties with the help of some examples. We complete this section by comparing the new approximations with the previous ones and showing the importance of the current approximations in improving the accuracy measure.

Definition 14

A subset A of a j-NS \((E, {\mathcal {R}}, \phi _j)\) is said to be j-somewhere dense if \(int_j(cl_j(A))\ne \emptyset \). The complement of A is called jcs-dense.

The families of j-somewhere dense and jcs-dense sets are, respectively, denoted by \(SD(\theta _{j})\) and \(CS(\varGamma _{j})\).

Definition 15

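The jSD-near lower and jSD-near upper approximations of a subset A of a j-NS \((E, {\mathcal {R}}, \phi _j)\) are formulated, respectively, by

$$\begin{aligned} \underline{{\mathcal {R}}}^{SD}_j(A)=\bigcup \{U\in SD(\theta _{j}): U\subseteq A\}, \qquad \overline{{\mathcal {R}}}^{SD}_j(A)=\bigcap \{V\in CS(\varGamma _{j}): A\subseteq V\}. \end{aligned}$$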

The following two results present the main features of the above two approximations.

Proposition 7

Let \((E, {\mathcal {R}}, \phi _j)\) be a j-NS and \(X, Y\subseteq E\). Then, the following properties hold.

(i) \(\underline{{\mathcal {R}}}^{SD}_j(X)\subseteq X\).

(ii) \(\underline{{\mathcal {R}}}^{SD}_j(\emptyset )= \emptyset \).

(iii) \(\underline{{\mathcal {R}}}^{SD}_j(E)= E\).

(iv) If \(X\subseteq Y\), then \(\underline{{\mathcal {R}}}^{SD}_j(X)\subseteq \underline{{\mathcal {R}}}^{SD}_j(Y)\).

(v) \(\underline{{\mathcal {R}}}^{SD}_j(X\cap Y)\subseteq \underline{{\mathcal {R}}}^{SD}_j(X)\cap \underline{{\mathcal {R}}}^{SD}_j(Y)\).

(vi) \(\underline{{\mathcal {R}}}^{SD}_j(X)\cup \underline{{\mathcal {R}}}^{SD}_j(Y)\subseteq \underline{{\mathcal {R}}}^{SD}_j(X\cup Y)\).

(vii) \(\underline{{\mathcal {R}}}^{SD}_j(X^c)= (\overline{{\mathcal {R}}}^{SD}_j(X))^c\).

(viii) \(\underline{{\mathcal {R}}}^{SD}_j(\underline{{\mathcal {R}}}^{SD}_j(X))= \underline{{\mathcal {R}}}^{SD}_j(X)\).

Proof

(i) According to Definition 15, we obtain \(\underline{{\mathcal {R}}}^{SD}_j(X)=\bigcup \{U\in SD(\theta _{j}): U\subseteq X\}\subseteq X\).

(ii) We know that \(\emptyset \) is not a somewhere dense set; also, \(\bigcup \limits _{i\in I}A_i=\emptyset \), where I is an empty index set. Hence, \(\underline{{\mathcal {R}}}^{SD}_j(\emptyset )=\emptyset \), as required.

(iii) Since E is a somewhere dense set, \(E\subseteq \underline{{\mathcal {R}}}^{SD}_j(E)\). It follows from (i) above that \(\underline{{\mathcal {R}}}^{SD}_j(E)\subseteq E\). Hence, \(\underline{{\mathcal {R}}}^{SD}_j(E)= E\).

(iv) Since \(X\subseteq Y\), we obtain \(\bigcup \{U\in SD(\theta _{j}): U\subseteq X\}\subseteq \bigcup \{U\in SD(\theta _{j}): U\subseteq Y\}\). Therefore, \(\underline{{\mathcal {R}}}^{SD}_j(X)\subseteq \underline{{\mathcal {R}}}^{SD}_j(Y)\).

(v) Since \(X\cap Y\subseteq X\) and \(X\cap Y\subseteq Y\), it follows from (iv) above that \(\underline{{\mathcal {R}}}^{SD}_j(X\cap Y)\subseteq \underline{{\mathcal {R}}}^{SD}_j(X)\) and \(\underline{{\mathcal {R}}}^{SD}_j(X\cap Y)\subseteq \underline{{\mathcal {R}}}^{SD}_j(Y)\). Hence, we obtain the desired result.

(vi) One can prove it by following arguments similar to those given in (v).

(vii) \(\underline{{\mathcal {R}}}^{SD}_j(X^c)=\bigcup \{U\in SD(\theta _{j}): U\subseteq X^c\}=(\bigcap \{U^c\in CS(\varGamma _{j}): X\subseteq U^c\})^c= (\overline{{\mathcal {R}}}^{SD}_j(X))^c\).

(viii) It follows from (i) and (iv) above that \(\underline{{\mathcal {R}}}^{SD}_j(\underline{{\mathcal {R}}}^{SD}_j(X))\subseteq \underline{{\mathcal {R}}}^{SD}_j(X)\). On the other hand, let \(v\in \underline{{\mathcal {R}}}^{SD}_j(X)\). Then, there is a \(U\in SD(\theta _{j})\) such that \(v\in U\subseteq X\). This implies that \(U\subseteq \underline{{\mathcal {R}}}^{SD}_j(X)\). Therefore, \(v\in \underline{{\mathcal {R}}}^{SD}_j(\underline{{\mathcal {R}}}^{SD}_j(X))\). Hence, the proof is complete.

\(\square \)

To elucidate that the inclusion relations are proper in the properties (i) and (iv-vi) of the above proposition, we provide the following example.

Example 1

Let \((E, {\mathcal {R}}, \phi _j)\) be the j-NS given in Example 5. In the case of \(j=r\) (one can similarly check the other cases of j), we have \(\theta _r=\{\emptyset , E, \{y\}, \{x, y\}, \{v, w\}, \{v, w, y\}\}\) and \(\varGamma _r=\{\emptyset , E, \{x\}, \{x, y\}, \{v, w\}, \{v, w, x\}\}\). Let \(V=\{v, x\}\), \(W=\{v, w\}\), \(X=\{x\}\) and \(Y=\{x, y\}\). By calculation, we obtain \(\underline{{\mathcal {R}}}^{SD}_r(V)=V\), \(\underline{{\mathcal {R}}}^{SD}_r(W)=W\), \(\underline{{\mathcal {R}}}^{SD}_r(X)=\emptyset \) and \(\underline{{\mathcal {R}}}^{SD}_r(Y)=Y\). Now, we note the following.

(i) \(\underline{{\mathcal {R}}}^{SD}_r(X)\nsupseteq X\).

(ii) \(\underline{{\mathcal {R}}}^{SD}_r(X)\subseteq \underline{{\mathcal {R}}}^{SD}_r(W)\), but \(X\not \subseteq W\).

(iii) \(\underline{{\mathcal {R}}}^{SD}_r(V)\cap \underline{{\mathcal {R}}}^{SD}_r(Y)=\{x\}\not \subseteq \underline{{\mathcal {R}}}^{SD}_r(V\cap Y)=\emptyset \).

(iv) \(\underline{{\mathcal {R}}}^{SD}_r(W\cup X)=\{v, w, x\}\not \subseteq \underline{{\mathcal {R}}}^{SD}_r(W)\cup \underline{{\mathcal {R}}}^{SD}_r(X)=\{v, w\}\).
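The calculations of Example 1 can be reproduced from the stated topology \(\theta _r\) alone. The following Python sketch computes the SD lower approximation as the union of the somewhere dense subsets of a set, which is the form used in the proof of Proposition 7(i); the function names are illustrative assumptions.

```python
from itertools import combinations

E = frozenset("vwxy")
# theta_r as stated in Example 1.
THETA_R = {frozenset(s) for s in ["", "vwxy", "y", "xy", "vw", "vwy"]}


def powerset(s):
    items = sorted(s)
    return [frozenset(c) for r in range(len(items) + 1) for c in combinations(items, r)]


def interior(a):
    return frozenset().union(*(u for u in THETA_R if u <= a))


def closure(a):
    return E - interior(E - a)


def is_somewhere_dense(a):
    return bool(interior(closure(a)))


def sd_lower(a):
    """Union of the somewhere dense subsets of `a`."""
    return frozenset().union(*(s for s in powerset(a) if is_somewhere_dense(s)))


V, W, X, Y = (frozenset(s) for s in ["vx", "vw", "x", "xy"])
for name, subset in [("V", V), ("W", W), ("X", X), ("Y", Y)]:
    print(name, sorted(sd_lower(subset)))

# Items (iii) and (iv): the inclusions in Proposition 7(v) and (vi) can be proper.
print(sorted(sd_lower(V) & sd_lower(Y)), sorted(sd_lower(V & Y)))
print(sorted(sd_lower(W | X)), sorted(sd_lower(W) | sd_lower(X)))
```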

Proposition 8

Let \((E, {\mathcal {R}}, \phi _j)\) be a j-NS and \(X, Y\subseteq E\). Then, the following properties hold.

(i) \(X\subseteq \overline{{\mathcal {R}}}^{SD}_j(X)\).

(ii) \(\overline{{\mathcal {R}}}^{SD}_j(\emptyset )= \emptyset \).

(iii) \(\overline{{\mathcal {R}}}^{SD}_j(E)= E\).

(iv) If \(X\subseteq Y\), then \(\overline{{\mathcal {R}}}^{SD}_j(X)\subseteq \overline{{\mathcal {R}}}^{SD}_j(Y)\).

(v) \(\overline{{\mathcal {R}}}^{SD}_j(X\cap Y)\subseteq \overline{{\mathcal {R}}}^{SD}_j(X)\cap \overline{{\mathcal {R}}}^{SD}_j(Y)\).

(vi) \(\overline{{\mathcal {R}}}^{SD}_j(X)\cup \overline{{\mathcal {R}}}^{SD}_j(Y)\subseteq \overline{{\mathcal {R}}}^{SD}_j(X\cup Y)\).

(vii) \(\overline{{\mathcal {R}}}^{SD}_j(X^c)= (\underline{{\mathcal {R}}}^{SD}_j(X))^c\).

(viii) \(\overline{{\mathcal {R}}}^{SD}_j(\overline{{\mathcal {R}}}^{SD}_j(X))= \overline{{\mathcal {R}}}^{SD}_j(X)\).

Proof

The proof follows a technique similar to that given in the proof of Proposition 7. \(\square \)

To elucidate that the inclusion relations are proper in the properties (i) and (iv-vi) of the above proposition, we provide the following example.

Example 2

Let \(V=\{x, y\}\), \(W=\{v, w\}\), \(X=\{y\}\) and \(Y=\{v, w, y\}\) be subsets of the j-NS \((E, {\mathcal {R}}, \phi _j)\) given in Example 5. By calculation, we obtain \(\overline{{\mathcal {R}}}^{SD}_r(V)=V\), \(\overline{{\mathcal {R}}}^{SD}_r(W)=W\), \(\overline{{\mathcal {R}}}^{SD}_r(X)=X\) and \(\overline{{\mathcal {R}}}^{SD}_r(Y)=E\). Now, we note the following.

(i) \(Y\nsupseteq \overline{{\mathcal {R}}}^{SD}_r(Y)\).

(ii) \(\overline{{\mathcal {R}}}^{SD}_r(V)\subseteq \overline{{\mathcal {R}}}^{SD}_r(Y)\), but \(V\not \subseteq Y\).

(iii) \(\overline{{\mathcal {R}}}^{SD}_r(V)\cap \overline{{\mathcal {R}}}^{SD}_r(Y)=V\not \subseteq \overline{{\mathcal {R}}}^{SD}_r(V\cap Y)=\{y\}\).

(iv) \(\overline{{\mathcal {R}}}^{SD}_r(W\cup X)=E\not \subseteq \overline{{\mathcal {R}}}^{SD}_r(W)\cup \overline{{\mathcal {R}}}^{SD}_r(X)=\{v, w, y\}\).

Remark 3

Equality holds in property (v) of Proposition 7 and in property (vi) of Proposition 8 if \((E, \theta _j)\) is strongly hyperconnected (i.e., a set is dense \(\Longleftrightarrow \) it is nonempty open).

The next result is the key point to improve the accuracy measure by use of the concept of near open sets; it also explains the grades of accuracy measure according to the different types of near open sets.

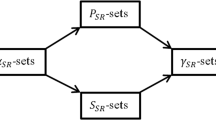

Theorem 4

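Let \((E, {\mathcal {R}}, \phi _j)\) be a j-NS and \(X\subseteq E\). Then, \(\underline{{\mathcal {R}}}_j(X)\subseteq \underline{{\mathcal {R}}}^{k}_j(X)\subseteq \underline{{\mathcal {R}}}^{SD}_j(X)\subseteq X\subseteq \overline{{\mathcal {R}}}^{SD}_j(X)\subseteq \overline{{\mathcal {R}}}^{k}_j(X)\subseteq \overline{{\mathcal {R}}}_j(X)\) for each k.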

Proof

It is well known that the collection of k-open subsets of \((E, \theta _j)\) contains the topology \(\theta _j\); therefore, \(\underline{{\mathcal {R}}}_j(X)\subseteq \underline{{\mathcal {R}}}^{k}_j(X)\). Also, it was shown in Al-shami (2017) that the collection of somewhere dense subsets of \((E, \theta _j)\) contains the collection of nonempty k-open subsets of \((E, \theta _j)\) for each k; therefore, \(\underline{{\mathcal {R}}}^{k}_j(X)\subseteq \underline{{\mathcal {R}}}^{SD}_j(X)\). It follows from Proposition 7 that \(\underline{{\mathcal {R}}}^{SD}_j(X)\subseteq X\). Hence, \(\underline{{\mathcal {R}}}_j(X)\subseteq \underline{{\mathcal {R}}}^{k}_j(X)\subseteq \underline{{\mathcal {R}}}^{SD}_j(X)\subseteq X\). Following similar arguments, one can prove that \(X\subseteq \overline{{\mathcal {R}}}^{SD}_j(X)\subseteq \overline{{\mathcal {R}}}^{k}_j(X)\subseteq \overline{{\mathcal {R}}}_j(X)\). \(\square \)
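The chain of inclusions can be checked exhaustively on a small space. The sketch below does so for \(k=\beta \) on \((E, \theta _r)\) from Example 5. It rests on assumptions not stated in this section: a set \(A\) is taken to be \(\beta \)-open when \(A\subseteq cl(int(cl(A)))\), the \(\beta \)-lower approximation is taken as the union of the \(\beta \)-open subsets, and the \(\beta \)-upper approximation as its dual. The helper names are hypothetical.

```python
from itertools import combinations

E = frozenset("vwxy")
THETA_R = [frozenset(s) for s in ("", "y", "xy", "vw", "vwy", "vwxy")]
SUBSETS = [frozenset(c) for n in range(5) for c in combinations(sorted(E), n)]

def interior(a):
    """Largest open subset of a; plays the role of the r-lower approximation."""
    return frozenset().union(*(o for o in THETA_R if o <= a))

def closure(a):
    """Smallest closed superset of a; plays the role of the r-upper approximation."""
    return E - interior(E - a)

# Assumed definition of beta-open: A is contained in cl(int(cl(A))).
BETA_OPEN = [a for a in SUBSETS if a <= closure(interior(closure(a)))]

def beta_lower(x):
    """Union of all beta-open sets contained in x."""
    return frozenset().union(*(b for b in BETA_OPEN if b <= x))

def beta_upper(x):
    """Dual of beta_lower: intersection of the beta-closed supersets of x."""
    return E - beta_lower(E - x)

def is_somewhere_dense(a):
    return bool(interior(closure(a)))

def sd_lower(x):
    """S-interior by cases: x if x is somewhere dense, else the empty set."""
    return x if is_somewhere_dense(x) else frozenset()

def sd_upper(x):
    """S-closure by cases: x if its complement is somewhere dense, else E."""
    return x if is_somewhere_dense(E - x) else E

def chain_holds(x):
    """Check lower_r <= lower_beta <= lower_SD <= x <= upper_SD <= upper_beta <= upper_r."""
    return (interior(x) <= beta_lower(x) <= sd_lower(x) <= x
            <= sd_upper(x) <= beta_upper(x) <= closure(x))

print(all(chain_holds(x) for x in SUBSETS))
```

Running the check over all sixteen subsets of \(E\) exercises every inclusion in the statement at once; it illustrates the theorem on one space and is not a substitute for the proof.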

Definition 16

A subset X of a j-NS \((E, {\mathcal {R}}, \phi _j)\) is called jSD-exact if \(\underline{{\mathcal {R}}}^{SD}_j(X)=\overline{{\mathcal {R}}}^{SD}_j(X)=X\). Otherwise, it is called a jSD-rough set.

It is clear that every j-near exact set is jSD-exact, but the converse is not always true. We give the next example to support this result.

Example 3

Let \(X=\{v, x, y\}\) be a subset of a j-NS \((E, {\mathcal {R}}, \phi _r)\) given in Example 5. On the one hand, we note that \(\underline{{\mathcal {R}}}^{SD}_r(X)=\overline{{\mathcal {R}}}^{SD}_r(X)=X\). Then, X is an rSD-exact set. On the other hand, \(\underline{{\mathcal {R}}}^{semi}_r(X)=\{x, y\}\ne \overline{{\mathcal {R}}}^{semi}_r(X)=E\) and \(\underline{{\mathcal {R}}}^{\alpha }_r(X)=\{x, y\}\ne \overline{{\mathcal {R}}}^{\alpha }_r(X)=E\). Then, X is neither an rsemi-exact set nor an \(r\alpha \)-exact set.

Definition 17

The jSD-near boundary, jSD-near positive, and jSD-near negative regions and jSD-near accuracy measure of a subset A of a j-NS \((E, {\mathcal {R}}, \phi _j)\) are formulated, respectively, by

It is clear that \(0\le M^{SD}_j(A)\le 1\) for each \(A\subseteq E\).
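For reference, one way to write these four notions that agrees with how \(B^{SD}_j\) is expanded in the proof of Proposition 10 and with how the accuracy measure is used in the proof of Proposition 9 is sketched below. The symbols \(POS^{SD}_j\) and \(NEG^{SD}_j\) are assumed names for the positive and negative regions, and the restriction to nonempty \(A\) is an assumption made so that the quotient is defined.

```latex
\[
B^{SD}_j(A)=\overline{\mathcal{R}}^{SD}_j(A)\setminus \underline{\mathcal{R}}^{SD}_j(A),
\qquad
POS^{SD}_j(A)=\underline{\mathcal{R}}^{SD}_j(A),
\]
\[
NEG^{SD}_j(A)=E\setminus \overline{\mathcal{R}}^{SD}_j(A),
\qquad
M^{SD}_j(A)=\frac{\mid \underline{\mathcal{R}}^{SD}_j(A)\mid}{\mid \overline{\mathcal{R}}^{SD}_j(A)\mid}
\quad (A\ne \emptyset).
\]
```

With this reading, \(A\) is jSD-exact precisely when \(M^{SD}_j(A)=1\).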

Proposition 9

Let \((E, {\mathcal {R}}, \phi _j)\) be a j-NS and \(X\subseteq E\). Then,

-

(i)

\(B^{SD}_j(X)\subseteq B^k_j(X)\subseteq B_j(X)\).

-

(ii)

\(M_j(X)\le M^k_j(X)\le M^{SD}_j(X)\).

Proof

The proof of (i) immediately follows from Theorem 4.

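To prove (ii): Theorem 4 shows that \(\underline{{\mathcal {R}}}^{k}_j(X)\subseteq \underline{{\mathcal {R}}}^{SD}_j(X)\) and \(\overline{{\mathcal {R}}}^{SD}_j(X)\subseteq \overline{{\mathcal {R}}}^{k}_j(X)\). Then, \(\mid \underline{{\mathcal {R}}}^{k}_j(X)\mid \le \mid \underline{{\mathcal {R}}}^{SD}_j(X)\mid \) and \(\mid \overline{{\mathcal {R}}}^{SD}_j(X)\mid \le \mid \overline{{\mathcal {R}}}^{k}_j(X)\mid \). Multiplying these two inequalities gives \(\mid \underline{{\mathcal {R}}}^{k}_j(X)\mid \times \mid \overline{{\mathcal {R}}}^{SD}_j(X)\mid \le \mid \underline{{\mathcal {R}}}^{SD}_j(X)\mid \times \mid \overline{{\mathcal {R}}}^{k}_j(X)\mid \). Dividing both sides by \(\mid \overline{{\mathcal {R}}}^{SD}_j(X)\mid \times \mid \overline{{\mathcal {R}}}^{k}_j(X)\mid \), which is nonzero for nonempty X, we obtain the following inequality.

\(M^{k}_j(X)=\frac{\mid \underline{{\mathcal {R}}}^{k}_j(X)\mid }{\mid \overline{{\mathcal {R}}}^{k}_j(X)\mid }\le \frac{\mid \underline{{\mathcal {R}}}^{SD}_j(X)\mid }{\mid \overline{{\mathcal {R}}}^{SD}_j(X)\mid }=M^{SD}_j(X) \qquad (1)\)

Following similar arguments, we obtain the following inequality.

\(M_j(X)=\frac{\mid \underline{{\mathcal {R}}}_j(X)\mid }{\mid \overline{{\mathcal {R}}}_j(X)\mid }\le \frac{\mid \underline{{\mathcal {R}}}^{k}_j(X)\mid }{\mid \overline{{\mathcal {R}}}^{k}_j(X)\mid }=M^{k}_j(X) \qquad (2)\)

It follows from the two inequalities (1) and (2) that

\(M_j(X)\le M^{k}_j(X)\le M^{SD}_j(X).\)

Hence, we prove the desired result. \(\square \)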

Proposition 10

A subset A of a j-NS \((E, {\mathcal {R}}, \phi _j)\) is jSD-exact iff \(B^{SD}_j(A)=\emptyset \).

Proof

Necessity: Let A be a jSD-exact set. Then, \(B^{SD}_j(A)=\overline{{\mathcal {R}}}^{SD}_j(A)\setminus \underline{{\mathcal {R}}}^{SD}_j(A)= \overline{{\mathcal {R}}}^{SD}_j(A)\setminus \overline{{\mathcal {R}}}^{SD}_j(A)=\emptyset \).

Sufficiency: If \(B^{SD}_j(A)=\emptyset \), then \(\overline{{\mathcal {R}}}^{SD}_j(A)\setminus \underline{{\mathcal {R}}}^{SD}_j(A)=\emptyset \). Since \(\underline{{\mathcal {R}}}^{SD}_j(A)\subseteq \overline{{\mathcal {R}}}^{SD}_j(A)\), then \(\overline{{\mathcal {R}}}^{SD}_j(A)= \underline{{\mathcal {R}}}^{SD}_j(A)\). Thus, A is jSD-exact. \(\square \)

Proposition 11

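The SD-near accuracy measure of any nonempty subset X of \((E, \theta _j)\) is given by the following formulation.

\(M^{SD}_j(X)=\begin{cases} 1 & \text{if } X \text{ is somewhere dense and cs-dense},\\ 0 & \text{if } X \text{ is only cs-dense},\\ \frac{\mid X\mid }{\mid E\mid } & \text{if } X \text{ is only somewhere dense}. \end{cases}\)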

Proof

Let X be a nonempty subset of \((E, \theta _j)\). Then, we have only the following three cases.

Case 1: If X is somewhere dense and cs-dense, then it follows from Propositions 2 and 3 that \(Sint(X)=Scl(X)=X\). Therefore, \(M^{SD}_j(X)=\frac{\mid Sint(X)\mid }{\mid Scl(X)\mid }=\frac{\mid X\mid }{\mid X\mid }=1\).

Case 2: If X is only cs-dense, then it follows from Propositions 2 and 3 that \(Sint(X)=\emptyset \) and \(Scl(X)=X\). Therefore, \(M^{SD}_j(X)=\frac{\mid \emptyset \mid }{\mid X\mid }=0\).

Case 3: If X is only somewhere dense, then it follows from Propositions 2 and 3 that \(Sint(X)=X\) and \(Scl(X)= E\). Therefore, \(M^{SD}_j(X)=\frac{\mid X\mid }{\mid E\mid }\).

\(\square \)
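The three cases can be compared against a direct computation of \(\mid Sint(X)\mid / \mid Scl(X)\mid \). The sketch below does this on \((E, \theta _r)\) from Example 5. It assumes that \(Sint(X)\) is the union of the somewhere dense subsets of \(X\) and that \(Scl(X)\) is the intersection of the cs-dense supersets of \(X\) (taken to be \(E\) when there are none); these definitions are recalled from outside this section, and all names are hypothetical.

```python
from fractions import Fraction
from itertools import combinations

E = frozenset("vwxy")
THETA_R = [frozenset(s) for s in ("", "y", "xy", "vw", "vwy", "vwxy")]
SUBSETS = [frozenset(c) for n in range(5) for c in combinations(sorted(E), n)]
NONEMPTY = [x for x in SUBSETS if x]

def interior(a):
    return frozenset().union(*(o for o in THETA_R if o <= a))

def closure(a):
    return E - interior(E - a)

# Somewhere dense: nonempty interior of the closure; cs-dense: complement of such a set.
SD = [a for a in SUBSETS if interior(closure(a))]
CS = [E - a for a in SD]

def s_int(x):
    """Assumed definition: union of the somewhere dense subsets of x."""
    return frozenset().union(*(a for a in SD if a <= x))

def s_cl(x):
    """Assumed definition: intersection of the cs-dense supersets of x (E if none)."""
    result = E
    for c in CS:
        if x <= c:
            result &= c
    return result

def accuracy_direct(x):
    return Fraction(len(s_int(x)), len(s_cl(x)))

def accuracy_by_cases(x):
    """Three-case formula of Proposition 11 for a nonempty x."""
    sd, cs = x in SD, x in CS
    if sd and cs:
        return Fraction(1)
    if cs:
        return Fraction(0)
    assert sd, "on this space every nonempty set is somewhere dense or cs-dense"
    return Fraction(len(x), len(E))

print(all(accuracy_direct(x) == accuracy_by_cases(x) for x in NONEMPTY))
```

For instance, \(\{v, w, y\}\) is somewhere dense but not cs-dense, so both computations give \(\frac{3}{4}\), while \(\{x\}\) is only cs-dense and both give 0.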

The above property is one of the unique characteristics of somewhere dense sets. To validate this matter, consider the following example in the cases of \(k=\alpha , ~semi\) and \(j=r\).

Example 4

It was shown in Example 3 that \(\underline{{\mathcal {R}}}^{\alpha }_r(X)=\underline{{\mathcal {R}}}^{semi}_r(X)=\{x, y\}\) and \(\overline{{\mathcal {R}}}^{\alpha }_r(X)=\overline{{\mathcal {R}}}^{semi}_r(X)=E\). Then, \(M^{\alpha }_{r}(X)=M^{semi}_{r}(X)=\frac{1}{2}\ne \frac{|X|}{|E|}=\frac{3}{4}\).

In Amer et al. (2017) and Salama (2018), the authors discussed different methods of approximating sets and showed that the best of these approximations is obtained from \(\beta \)-open sets. They justified this by the reduction (or cancellation) of the boundary regions. However, we show in the following example that the concept of somewhere dense sets improves the approximations of a set beyond those obtained using \(\beta \)-open sets.

Example 5

Let \((E, {\mathcal {R}}, \phi _j)\) be a j-NS, where \({\mathcal {R}}=\{(v, v), (v, w), (w, v), (x, y)\}\) is a binary relation on \(E=\{v, w, x, y\}\). Then,

-

(i)

\(\theta _r=\{\emptyset , E, \{y\}, \{x, y\}, \{v, w\}, \{v, w, y\}\}\).

-

(ii)

\(\theta _l=\{\emptyset , E, \{x\}, \{x, y\}, \{v, w\}, \{v, w, x\}\}\).

-

(iii)

\(\theta _{<r>}=\{\emptyset , E, \{v\}, \{x\}, \{y\}, \{v, x\}, \{v, y\}, \{v, w\}, \{x, y\},\{v, w, x\}, \{v, w, y\}, \{v, x, y\}\}\).

-

(iv)

\(\theta _{<l>}=\{\emptyset , E, \{v\}, \{x\}, \{y\}, \{v, x\}, \{v, y\}, \{v, w\}, \{x, y\},\{v, w, x\}, \{v, w, y\}, \{v, x, y\}\}\).

-

(v)

\(\theta _u=\{\emptyset , E, \{v, w\}, \{x, y\}\}\).

-

(vi)

\(\theta _i=\{\emptyset , E, \{x\}, \{y\}, \{x, y\}, \{v, w\}, \{v, w, x\}, \{v, w, y\}\}\).

-

(vii)

\(\theta _{<u>}=\{\emptyset , E, \{v\}, \{x\}, \{y\}, \{v, x\}, \{v, y\}, \{x, y\}, \{v, w\}, \{v, w, x\}, \{v, w, y\}, \{v, x, y\}\}\).

-

(viii)

\(\theta _{<i>}=\{\emptyset , E, \{v\}, \{x\}, \{y\}, \{v, x\}, \{v, y\}, \{x, y\}, \{v, w\},\{v, w, x\}, \{v, w, y\}, \{v, x, y\}\}\).

It is easy to calculate \(\varGamma _j\) from \(\theta _j\) for each j as follows.

-

(i)

\(\varGamma _r=\{\emptyset , E, \{x\}, \{x, y\}, \{v, w\}, \{v, w, x\}\}\).

-

(ii)

\(\varGamma _l=\{\emptyset , E, \{y\}, \{x, y\}, \{v, w\}, \{v, w, y\}\}\).

-

(iii)

\(\varGamma _{<r>}=\{\emptyset , E, \{w\}, \{x\}, \{y\}, \{w, y\}, \{w, x\}, \{v, w\}, \{x, y\}, \{v, w, x\}, \{v, w, y\}, \{w, x, y\}\}\).

-

(iv)

\(\varGamma _{<l>}=\{\emptyset , E, \{w\}, \{x\}, \{y\}, \{w, y\}, \{w, x\}, \{v, w\}, \{x, y\}, \{v, w, x\}, \{v, w, y\}, \{w, x, y\}\}\).

-

(v)

\(\varGamma _u=\{\emptyset , E, \{v, w\}, \{x, y\}\}\).

-

(vi)

\(\varGamma _i=\{\emptyset , E, \{x\}, \{y\}, \{x, y\}, \{v, w\}, \{v, w, x\}, \{v, w, y\}\}\).

-

(vii)

\(\varGamma _{<u>}=\{\emptyset , E, \{w\}, \{x\}, \{y\}, \{w, x\}, \{w, y\}, \{x, y\}, \{v, w\}, \{v, w, x\}, \{v, w, y\}, \{w, x, y\}\}\).

-

(viii)

\(\varGamma _{<i>}=\{\emptyset , E, \{w\}, \{x\}, \{y\}, \{w, x\}, \{w, y\}, \{x, y\}, \{v, w\}, \{v, w, x\}, \{v, w, y\}, \{w, x, y\}\}\).
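The topologies for \(j\in \{r, l, u, i\}\) and their closed sets can be regenerated from \({\mathcal {R}}\). The sketch below assumes the usual definitions from the literature on j-neighborhood spaces, which are not restated in this section: \(N_r(a)=\{b : a{\mathcal {R}}b\}\), \(N_l(a)=\{b : b{\mathcal {R}}a\}\), \(N_u=N_r\cup N_l\), \(N_i=N_r\cap N_l\), and \(\theta _j=\{A\subseteq E : N_j(a)\subseteq A \text{ for all } a\in A\}\). The minimal-neighborhood variants \(\langle j\rangle \) are left out, and the function names are hypothetical.

```python
from itertools import combinations

E = frozenset("vwxy")
R = {("v", "v"), ("v", "w"), ("w", "v"), ("x", "y")}
SUBSETS = [frozenset(c) for n in range(5) for c in combinations(sorted(E), n)]

def n_r(a):
    """Assumed right neighborhood: everything a is related to."""
    return frozenset(b for (p, b) in R if p == a)

def n_l(a):
    """Assumed left neighborhood: everything related to a."""
    return frozenset(p for (p, b) in R if b == a)

def n_u(a):
    return n_r(a) | n_l(a)

def n_i(a):
    return n_r(a) & n_l(a)

def topology(neighborhood):
    """All subsets A that contain the neighborhood of each of their points."""
    return {A for A in SUBSETS if all(neighborhood(a) <= A for a in A)}

def closed_sets(theta):
    """Complements of the open sets."""
    return {E - A for A in theta}

def show(family):
    """Readable listing: each set as a sorted string, ordered by size."""
    return sorted(("".join(sorted(A)) for A in family), key=lambda s: (len(s), s))

for name, nbhd in (("r", n_r), ("l", n_l), ("u", n_u), ("i", n_i)):
    theta = topology(nbhd)
    print("theta_" + name, show(theta))
    print("Gamma_" + name, show(closed_sets(theta)))
```

Under these assumed definitions the output reproduces items (i), (ii), (v) and (vi) of both lists; the empty string in the output stands for \(\emptyset \).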

Now, it was shown in Al-shami (2017) that every \(\alpha \)-open (preopen, semi-open, b-open) set is a \(\beta \)-open set. To demonstrate the efficiency of the new approximations that are based on somewhere dense sets, it therefore suffices to calculate all \(\beta \)-open and \(\beta \)-closed sets for \(j=r\).

To show that the accuracy measure with respect to somewhere dense sets is greater than the accuracy measure with respect to \(\beta \)-open sets, we first calculate the collection of somewhere dense and cs-dense subsets of \((E, \theta _r)\).
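Both families can be enumerated by brute force on this four-point space. The sketch below assumes that \(A\) is \(\beta \)-open when \(A\subseteq cl(int(cl(A)))\) and that \(A\) is somewhere dense when \(int(cl(A))\ne \emptyset \); the lists it prints are computed under those assumptions rather than copied from the paper's displays, and the names are hypothetical.

```python
from itertools import combinations

E = frozenset("vwxy")
THETA_R = [frozenset(s) for s in ("", "y", "xy", "vw", "vwy", "vwxy")]
SUBSETS = [frozenset(c) for n in range(5) for c in combinations(sorted(E), n)]

def interior(a):
    return frozenset().union(*(o for o in THETA_R if o <= a))

def closure(a):
    return E - interior(E - a)

def show(family):
    """Readable listing: each set as a sorted string, ordered by size."""
    return sorted(("".join(sorted(A)) for A in family), key=lambda s: (len(s), s))

# Assumed definitions of the four families on (E, theta_r).
BETA_OPEN = [a for a in SUBSETS if a <= closure(interior(closure(a)))]
BETA_CLOSED = [E - a for a in BETA_OPEN]
SOMEWHERE_DENSE = [a for a in SUBSETS if interior(closure(a))]
CS_DENSE = [E - a for a in SOMEWHERE_DENSE]

# Somewhere dense sets that no beta-open set accounts for.
EXTRA = set(SOMEWHERE_DENSE) - set(BETA_OPEN)

print("beta-open:      ", show(BETA_OPEN))
print("beta-closed:    ", show(BETA_CLOSED))
print("somewhere dense:", show(SOMEWHERE_DENSE))
print("cs-dense:       ", show(CS_DENSE))
print("SD, not beta:   ", show(EXTRA))
```

On \((E, \theta _r)\) the sets \(\{v, x\}\), \(\{w, x\}\) and \(\{v, w, x\}\) come out as somewhere dense without being \(\beta \)-open, which is where the SD-approximations can differ from the \(\beta \)-near ones.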

In Table 1, we calculate r-lower and r-upper approximations, \(r\beta \)-near lower and \(r\beta \)-near upper approximations, and rSD-near lower and rSD-near upper approximations for every subset of E.

Based on Table 1, we construct Table 2, which presents the r-accuracy, \(r\beta \)-accuracy, and rSD-accuracy for every subset of E.

From the above two tables, which show the differences between the approximations and accuracy measures under three different cases, we note that the new approximations reduce the size of the boundary regions; also, the accuracy measure obtained from somewhere dense sets is higher than the other measures; the green rows in the above two tables illustrate this fact. To analyze the obtained results, note that the family of somewhere dense sets is wider than the family of nonempty \(\alpha \)-open (semiopen, preopen, b-open, \(\beta \)-open) sets. This maximizes the SD-near lower approximations, which are the counterparts of the S-interior operator. In addition, the family of cs-dense sets is wider than the family of nonempty \(\alpha \)-closed (semiclosed, preclosed, b-closed, \(\beta \)-closed) sets. This minimizes the SD-near upper approximations, which are the counterparts of the S-closure operator.
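The comparison of the three accuracy measures can be reproduced for any single subset. The sketch below computes the r-, \(r\beta \)- and rSD-accuracy on \((E, \theta _r)\), assuming each accuracy is the quotient of the sizes of the lower and upper approximations, that \(\beta \)-open means \(A\subseteq cl(int(cl(A)))\), and that the SD-approximations follow the case description in the proof of Proposition 11. The values are computed by the sketch, not taken from Tables 1 and 2, and the names are hypothetical.

```python
from fractions import Fraction
from itertools import combinations

E = frozenset("vwxy")
THETA_R = [frozenset(s) for s in ("", "y", "xy", "vw", "vwy", "vwxy")]
SUBSETS = [frozenset(c) for n in range(5) for c in combinations(sorted(E), n)]
NONEMPTY = [x for x in SUBSETS if x]

def interior(a):
    return frozenset().union(*(o for o in THETA_R if o <= a))

def closure(a):
    return E - interior(E - a)

# Assumed definition of beta-open sets.
BETA_OPEN = [a for a in SUBSETS if a <= closure(interior(closure(a)))]

def beta_lower(x):
    return frozenset().union(*(b for b in BETA_OPEN if b <= x))

def beta_upper(x):
    return E - beta_lower(E - x)

def is_somewhere_dense(a):
    return bool(interior(closure(a)))

def sd_lower(x):
    return x if is_somewhere_dense(x) else frozenset()

def sd_upper(x):
    return x if is_somewhere_dense(E - x) else E

def accuracies(x):
    """Return (r-accuracy, r-beta-accuracy, rSD-accuracy) of a nonempty x."""
    return (Fraction(len(interior(x)), len(closure(x))),
            Fraction(len(beta_lower(x)), len(beta_upper(x))),
            Fraction(len(sd_lower(x)), len(sd_upper(x))))

X = frozenset("vx")
print(accuracies(X))
print(all(a <= b <= c for a, b, c in map(accuracies, NONEMPTY)))
```

For \(X=\{v, x\}\) the three measures are \(0\), \(\frac{1}{2}\) and \(1\), and the second check confirms the ordering of Proposition 9 (ii) for every nonempty subset of \(E\).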

In what follows, we introduce a practical example to elucidate the significance of applying somewhere dense sets in the information system.

Example 6

Consider the information system as given in Table 3, where \(S=\{s_1, s_2, s_3, s_4\}\), \(A=\{Maths, physics, chemistry\}\), and \(E=\{Good, Very~good, Excellent\}\) represent, respectively, the universal set of students, the set of attributes and the set of values.

The equivalence classes of S are \(\{\{s_1, s_4\}, \{s_2\}, \{s_3\}\}\). Then, \(\theta _j\) in the case of \(j=r\) is \(\{\emptyset , \{s_2\}, \{s_3\}, \{s_2, s_3\}, \{s_1, s_4\}, \{s_1, s_2, s_4\},\{s_1, s_3, s_4\}, S\}\). Since \({\mathcal {R}}\) is an equivalence relation, \(\theta _j\) is a clopen topology; therefore \(\theta _j= \varGamma _j\). It follows from Proposition 6 that the family of semi-open subsets of \((S, \theta _j)\) is \(\theta _j\), and the family of somewhere dense subsets of \((S, \theta _j)\) is the power set of S.

Now, we compare Pawlak’s approach, the approach given in Amer et al. (2017) and Salama (2018) with respect to \(k=semi\), and our approach.

Based on Table 4, we construct Table 5, which presents the r-accuracy, rsemi-near accuracy, and rSD-near accuracy for every subset of S.

From the above two tables which show the differences between the approximations and accuracy measure under three different cases, we note that the new approximations reduce the size of boundary regions; also, the accuracy measure induced from somewhere dense sets is higher than the other measures; the green rows in the above two tables illustrate this fact.
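The effect described in this example can be seen on a single set of students. The sketch below uses only the equivalence classes stated above; since the open sets are the unions of classes, the interior and closure coincide with Pawlak's lower and upper approximations, and, because the semi-open sets coincide with \(\theta _j\) here, the semi-near accuracy equals Pawlak's. The somewhere dense test (nonempty interior of the closure) and the function names are assumptions of the sketch.

```python
from fractions import Fraction
from itertools import combinations

S = frozenset({"s1", "s2", "s3", "s4"})
CLASSES = [frozenset({"s1", "s4"}), frozenset({"s2"}), frozenset({"s3"})]
NONEMPTY = [frozenset(c) for n in range(1, 5) for c in combinations(sorted(S), n)]

def pawlak_lower(x):
    """Union of the equivalence classes contained in x (the interior)."""
    return frozenset().union(*(c for c in CLASSES if c <= x))

def pawlak_upper(x):
    """Union of the equivalence classes that meet x (the closure)."""
    return frozenset().union(*(c for c in CLASSES if c & x))

def is_somewhere_dense(x):
    """Assumed definition: the interior of the closure is nonempty."""
    return bool(pawlak_lower(pawlak_upper(x)))

def sd_lower(x):
    return x if is_somewhere_dense(x) else frozenset()

def sd_upper(x):
    return x if is_somewhere_dense(S - x) else S

def pawlak_accuracy(x):
    return Fraction(len(pawlak_lower(x)), len(pawlak_upper(x)))

def sd_accuracy(x):
    return Fraction(len(sd_lower(x)), len(sd_upper(x)))

X = frozenset({"s1", "s2"})
print(pawlak_accuracy(X), sd_accuracy(X))
print(all(sd_accuracy(x) == 1 for x in NONEMPTY))
```

For \(X=\{s_1, s_2\}\) Pawlak's accuracy is \(\frac{1}{3}\) because \(s_1\) cannot be separated from \(s_4\), whereas the SD-accuracy is 1; the second line confirms that every nonempty subset of S is SD-exact in this space.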

5 Conclusion

Rough set theory is an efficacious technique for handling uncertainty. In recent decades, many authors interested in rough set theory have endeavored to reduce the boundary region for the sake of increasing the accuracy measure of decision-making. One of the approaches followed is to create new types of neighborhoods and exploit them to establish new types of approximations with a view to increasing the accuracy measure.

Topology forms a type of geometry that relies on nearness or neighborhoods of points instead of measuring the distance between them. Classifications of sets in the rough set context are based on the approximation operators, which have topological properties similar to all or some properties of the interior and closure operators. Therefore, investigating rough sets by means of topological concepts is fruitful for modeling real-life problems such as image processing, machine learning, data mining, pattern recognition and medical events.

Although many scholars have done a great job in developing many notions in the rough set context, there is still a lot of room in this area. In this paper, we have scrutinized further properties of somewhere dense sets, which form a wider class than \(\beta \)-open sets. Then, we have employed them to introduce new types of approximations, boundary regions, and accuracy measures of a subset. With the help of examples, we have elucidated the relationships between them and the previous ones and investigated their main properties. We conclude this manuscript by comparing the current approach of approximations with the previous ones given in El-Monsef et al. (2014), Amer et al. (2017), Salama (2018) and showing that the family of somewhere dense sets utilized in our approach is the largest granulation, which ultimately makes the accuracy measures higher than those of the other types; see Examples 5 and 6.

Another merit of the technique followed herein is that it keeps most properties of Pawlak’s approximations, whereas many properties are lost when these approximations are generated directly from \(N_j\)-neighborhoods. In our approach, we can impose some conditions, such as strong hyperconnectedness, to preserve all properties of Pawlak’s approximations. But in the general case, Pawlak’s properties L6 and U6 given in Proposition 1 are not kept by our approach, which is a limitation of the technique followed in this manuscript.

In upcoming works, we shall endeavor to achieve the following goals.

-

(i)

Define new types of neighborhoods in rough set theory and use them to define a topological structure.

-

(ii)

Study the approximations and accuracy measures of a set using the technique followed herein (somewhere dense sets) via topological structures induced from different neighborhood systems such as \(E_j\)-neighborhoods and \(C_j\)-neighborhoods.

-

(iii)

Apply the current technique in the soft rough set context using soft somewhere dense sets (Al-shami 2018; Al-shami et al. 2020).

-

(iv)

As we know, the class of \(\alpha \)-open sets forms a topology, so the approximations defined by this class keep Pawlak’s properties L6 and U6 given in Proposition 1, which do not hold for the approximations given herein. On the other hand, our approach produces higher accuracy measures than the approach generated by \(\alpha \)-open sets. To obtain both properties (best approximations and highest accuracy), we will suggest a new class of generalizations of open sets which is wider than the class of somewhere dense sets and forms a topological structure.

References

Abo-Tabl EA (2013) Rough sets and topological spaces based on similarity. Int J Mach Learn Cybern 4:451–458

Abo-Tabl EA (2014) On links between rough sets and digital topology. Appl Math 5:941–948

Abu-Donia HM (2008) Comparison between different kinds of approximations by using a family of binary relations. Knowl Based Syst 21:911–919

Al-shami TM (2017) Somewhere dense sets and \(ST_1\)-spaces. Punjab Univ J Math 49(2):101–111

Al-shami TM (2018) Soft somewhere dense sets on soft topological spaces. Commun Korean Math Soc 33(4):1341–1356

Al-shami TM (2021) An improvement of rough sets accuracy measure using containment neighborhoods with a medical application. Inform Sci 569:110–124

Al-shami TM, Fu WQ, Abo-Tabl EA (2021) New rough approximations based on \(E\)-neighborhoods, Complexity, 2021:6, Article ID 6666853

Al-shami TM, Alshammari I, Asaad BA (2020) Soft maps via soft somewhere dense sets. Filomat 34(10):3429–3440

Al-shami TM, Noiri T (2019) More notions and mappings via somewhere dense sets. Afrika Matematika 30(7):1011–1024

Al-shami TM, El-Shafei ME, Alshammari I (2021) A comparison of two types of rough approximations based on \(N_j\)-neighborhoods. J Intell Fuzzy Syst 41(1):1393–1406

Al-shami TM, Mhemdi A, Rawshdeh A, Aljarrah H (2021) Soft version of compact and Lindelöf spaces using soft somewhere dense set. AIMS Math 6(8):8064–8077

Allam AA, Bakeir MY, Abo-Tabl EA (2005) New approach for basic rough set concepts. In: International workshop on rough sets, fuzzy sets, data mining, and granular computing. Lecture Notes in Artificial Intelligence, 3641, Springer, Regina, 64–73

Allam AA, Bakeir MY, Abo-Tabl EA (2006) New approach for closure spaces by relations. Acta Math Acad Paedagogicae Nyiregyháziensis 22:285–304

Amer WS, Abbas MI, El-Bably MK (2017) On \(j\)-near concepts in rough sets with some applications. J Intell Fuzzy Syst 32(1):1089–1099

Andrijevic D (1996) On \(b\)-open sets. Mat Vesnik 48:59–64

Azzam A, Khalil AM, Li S-G (2020) Medical applications via minimal topological structure. J Intell Fuzzy Syst 39(3):4723–4730

Chen L, Gao R, Bian Y, Di H (2021) Elliptic entropy of uncertain random variables with application to portfolio selection. Soft Comput 25:1925–1939

El-Monsef MEA, El-Deeb SN, Mahmoud RA (1983) \(\beta \)-open sets and \(\beta \)-continuous mappings. Bull Fac Sci Assiut Univ 12:77–90

El-Monsef MEA, Embaby OA, El-Bably MK (2014) Comparison between rough set approximations based on different topologies. Int J Gran Comput Rough Sets Intell Syst 3(4):292–305

El-Bably MK, Al-shami TM (2021) Different kinds of generalized rough sets based on neighborhoods with a medical application. Int J Biomath. https://doi.org/10.1142/S1793524521500868

Hosny M (2018) On generalization of rough sets by using two different methods. J Intell Fuzzy Syst 35(1):979–993

Hosny RA, Asaad BA, Azzam AA, Al-shami TM (2021) Various topologies generated from \(E_j\)-neighbourhoods via ideals, Complexity, Volume 2021, Article ID 4149368, 11 pages

Kondo M, Dudek WA (2006) Topological structures of rough sets induced by equivalence relations. J Adv Comput Intell Intell Inform 10(5):621–624

Kozae AM, Khadra AAA, Medhat T (2007) Topological approach for approximation space (TAS). In: Proceedings of the 5th International Conference INFOS 2007 on Informatics and Systems, Faculty of Computers and Information, Cairo University, Cairo, Egypt, pp 289–302

Lashin EF, Kozae AM, Khadra AAA, Medhat T (2005) Rough set theory for topological spaces. Int J Approx Reason 40:35–43

Levine N (1963) Semi-open sets and semi-continuity in topological spaces. Am Math Month 70:36–41

Li Z, Xie T, Li Q (2012) Topological structure of generalized rough sets. Comput Math Appl 63:1066–1071

Mareay R (2016) Generalized rough sets based on neighborhood systems and topological spaces. J Egypt Math Soc 24:603–608

Mashhour AS, El-Monsef MEA, El-Deeb SN (1982) On precontinuous and weak precontinuous mappings. Proc Math Phys Soc Egypt 53:47–53

Njastad O (1965) On some classes of nearly open sets. Pac J Math 15:961–970

Pawlak Z (1982) Rough sets. Int J Comput Inform Sci 11(5):341–356

Pawlak Z (1991) Rough sets: theoretical aspects of reasoning about data. Kluwer Academic Publishers, Dordrecht

Salama AS (2020) Sequences of topological near open and near closed sets with rough applications. Filomat 34(1):51–58

Salama AS (2020) Bitopological approximation space with application to data reduction in multi-valued information systems. Filomat 34(1):99–110

Salama AS (2018) Generalized topological approximation spaces and their medical applications. J Egypt Math Soc 26(3):412–416

Salama AS (2010) Topological solution for missing attribute values in incomplete information tables. Inform Sci 180:631–639

Salama AS, Mhemdi A, Elbarbary OG, Al-shami TM (2019) Topological approaches for rough continuous functions with applications, Complexity, Volume 2021, Article ID 5586187, 12 pages

Skowron A (1988) On topology in information system. Bull Polish Acad Sci Math 36:477–480

Sun S, Li L, Hu K (2019) A new approach to rough set based on remote neighborhood systems, Mathematical Problems in Engineering, Volume 2019, Article ID 8712010, 8 pages

Wang R, Nan G, Chen L, Li M (2020) Channel integration choices and pricing strategies for competing dual-channel retailers. IEEE Trans Eng Manag. https://doi.org/10.1109/TEM.2020.3007347

Wiweger A (1989) On topological rough sets. Bull Polish Acad Sci Math 37:89–93

Wu QE, Wang T, Huang YX, Li JS (2008) Topology theory on rough sets. IEEE Trans Syst Man Cybern Part B (Cybernetics) 38(1):68–77

Xiao Q, Chen L, Xie M, Wang C (2021) Optimal contract design in sustainable supply chain: Interactive impacts of fairness concern and overconfidence. J Oper Res Soc 72(7):1505–1524

Yao YY (1996) Two views of the theory of rough sets in finite universes. Int J Approx Reason 15:291–317

Yao YY (1998) Generalized rough set models, Rough sets in knowledge Discovery 1, L. Polkowski, A. Skowron (Eds.), Physica Verlag, Heidelberg, 286–318

Zhu W (2007) Topological approaches to covering rough sets. Inform Sci 177:1499–1508

Acknowledgements

The author would like to thank the referees for their valuable comments, which helped us to improve the manuscript.

Funding

Not applicable.

Ethics declarations

Conflict of interest

The author declares that there is no conflict of interests regarding the publication of this article.

Cite this article

Al-shami, T.M. Improvement of the approximations and accuracy measure of a rough set using somewhere dense sets. Soft Comput 25, 14449–14460 (2021). https://doi.org/10.1007/s00500-021-06358-0

DOI: https://doi.org/10.1007/s00500-021-06358-0